Abstract

For the interaction energy with repulsive–attractive potentials, we give generic conditions which guarantee the radial symmetry of the local minimizers in the infinite Wasserstein distance. As a consequence, we obtain the uniqueness of local minimizers in this topology for a class of interaction potentials. We introduce a novel notion of concavity of the interaction potential allowing us to show certain fractal-like behavior of the local minimizers. We provide a family of interaction potentials such that the support of the associated local minimizers has no isolated points and any superlevel set has no interior points.

1 Introduction

Explaining the intricate behavior of coherent structures of active/passive media composed of many interacting agents in mathematical biology and technology has attracted considerable attention in the applied mathematics community. These questions are ubiquitous in the collective behavior of animal species, cell aggregates driven by chemical cues or adhesion forces, granular media and self-assembly of particles, see for instance [17, 34, 36, 49] and the references therein. In many of these models, particular solutions emerge from consensus of movement while their relative positions are determined based only on attraction and repulsion effects [23, 28]. These equilibrium shapes at the continuum level can be characterized by probability measures \(\rho \) for which the balance of attractive and repulsive forces holds. This is equivalent to finding probability measures \(\rho \) such that

with \(W:{{\mathbb {R}}^d}\rightarrow (-\infty ,\infty ]\) being an attractive-repulsive interaction potential between the particles. The richness of the shapes of the support of these aggregation equilibria is quite surprising even for simple potentials [37]. Finding particular configurations satisfying (1.1) is a challenging problem due to its highly nonlinear nature since the support of the measure itself is part of the problem and the regularity of the potential plays a key role. These configurations appear naturally as the steady states for the mean-field dynamics associated to the particle system

Notice that the system of ODEs (1.2) is the finite dimensional gradient flow of a discrete interaction energy. Its formal mean-field limit follows the nonlocal partial differential equation

usually referred to as the aggregation equation, where the transport velocity field \(\textbf{u}(t,\textbf{x})\) is given by

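The aggregation Eq. (1.3) is the 2-Wasserstein gradient flow of the total potential energy

\[ E[\rho ] = \frac{1}{2}\int _{{\mathbb {R}}^d}\int _{{\mathbb {R}}^d} W(\textbf{x}-\textbf{y})\rho (\textbf{y})\,\textrm{d}{\textbf{y}}\,\rho (\textbf{x})\,\textrm{d}{\textbf{x}}. \tag{1.5} \]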

In the sequel, we will denote by \(V = V[\rho ] = W*\rho \) the interaction potential generated by the particle density \(\rho \). Notice that \(\rho (\textbf{x})\) is a steady state of (1.3) if \(\textbf{u}(\textbf{x})\) satisfies (1.1), or equivalently \(V[\rho ]\) is constant on each of the connected components of \(\text {supp}\,\rho \), modulo regularity of the velocity field.
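The characterization of steady states through a constant generated potential can be checked numerically on a classical explicit example. The following is a minimal sketch under assumptions that are not taken from the text: we work in one dimension with \(W(x)=\tfrac{x^2}{2}-|x|\), we take the candidate density \(\rho =\tfrac{1}{2}\chi _{[-1,1]}\), we use a midpoint quadrature rule, and we assume the normalization \(E[\rho ]=\tfrac{1}{2}\int V[\rho ]\,\rho \,\textrm{d}x\) for the energy. The function name `uniform_state_potential` is hypothetical.

```python
import numpy as np

def W(r):
    """Illustrative 1D potential x^2/2 - |x| (an assumption, not from the text)."""
    return 0.5 * r**2 - np.abs(r)

def uniform_state_potential(n=2000):
    """Midpoint-rule approximation of V = W * rho for rho = 1/2 on [-1, 1]."""
    h = 2.0 / n
    y = -1.0 + h * (np.arange(n) + 0.5)      # cell midpoints in [-1, 1]
    rho = np.full(n, 0.5)                    # uniform density with unit mass
    kernel = W(y[:, None] - y[None, :])      # W(x_i - y_j)
    V = kernel @ rho * h                     # V(x_i) ~ int W(x_i - y) rho(y) dy
    E = 0.5 * np.sum(V * rho) * h            # assumed normalization E = 1/2 int V rho
    return y, V, E

if __name__ == "__main__":
    y, V, E = uniform_state_potential()
    print("spread of V on the support:", V.max() - V.min())
    print("mean V:", V.mean(), " 2E:", 2 * E)
```

In this sketch the computed values of V on the support agree with each other, and with \(2E[\rho ]\), up to quadrature error, which is the discrete counterpart of V being constant on \(\text {supp}\,\rho \).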

We emphasize that even if the interaction potential W is radially symmetric, it is quite challenging to prove or disprove radial symmetry of global or local minimizers of the interaction energy (1.5). Notice that despite the fact that the energy is rotationally invariant for radial functions with radially symmetric interaction potentials, the uniqueness of global minimizers of the interaction energy, modulo translations, is not known except for particular cases [32, 40] using the linear interpolation convexity (LIC).

Essentially nothing is known about uniqueness for local minimizers. In order to discuss local minimizers of the interaction energy (1.5), we need to specify the topology on probability measures that we use to measure the distance. Here, we follow previous works [2, 10, 13] that showed that transport distances between probability measures are the right tool to deal with this variational problem. We recall the main properties of transport distances in Sect. 2; in particular, the infinity Wasserstein distance \(d_\infty \) plays an important role in order to write Euler-Lagrange conditions for local minimizers [2, 14].

The problem of finding global minimizers of the interaction energy, modulo translations, for particular potentials is a classical problem in potential theory [32, 43]. More precisely, for the repulsive logarithmic potential with quadratic confinement \(W(\textbf{x})=\tfrac{|\textbf{x}|^2}{2}-\ln |\textbf{x}|\) in 2D, it is known [32] that the unique global minimizer is the characteristic function of a suitable Euclidean ball. When the repulsive singularity at zero is stronger than Newtonian but still locally integrable, regularity results for \(d_\infty \)-local minimizers have been obtained [13] and the uniqueness is known for particular power-law potential cases again [11, 12, 40]. The existence of compactly supported global minimizers of the interaction energy for generic interaction potentials was obtained in [10, 47] based on the condition of H-stability of interaction potentials.

Some qualitative properties of the support of the minimizers are known depending on the smoothness/singularity of the potential at the origin. Interaction potentials which are at least \(C^2\) smooth at the origin generically lead to minimizers concentrated on Dirac points for which particular geometric constraints and explicit forms are known [16, 39]. The dimensionality of the support of the \(d_\infty \)-local minimizers was estimated in terms of the repulsive singularity strength of weakly singular interaction potentials at the origin in [2], showing that the more repulsive the potential at zero gets, the larger the support of the \(d_\infty \)-local minimizers is. We refer to weakly singular repulsive–attractive potentials as potentials with a repulsive singularity at the origin behaving between Newtonian singularity and smooth quadratic behavior at the origin. Other related problems include singular anisotropic potentials [24, 25, 42], in which the explicit form of the global minimizers is known in particular cases, and interaction energies with constraints [9, 26, 30, 31]. Explicit stationary solutions of (1.1) are known for some power-law potentials [22], namely when the attractive power is an even integer and the potential is weakly singular at the origin. They were expected to be global minimizers, as supported by strong numerical evidence [33].

As a conclusion, a key problem not covered by the current literature is to find sufficient conditions by variational methods for radial symmetry or the break of radial symmetry of \(d_\infty \)-local minimizers for weakly singular repulsive–attractive potentials. In order to attack these issues, our first objective is to further exploit and refine the convexity properties of the interaction energy to study the radial symmetry and uniqueness of \(d_\infty \)-local minimizers of (1.5). The crucial assumption on the interaction energy is the LIC property, which basically means that E is convex along the linear interpolation between any two measures \(\rho _0\) and \(\rho _1\). It is well-known that the global minimizer is unique and radially-symmetric under the LIC assumption for certain particular functionals [38, 40, 42].

The main goal of the first part of this work, Sects. 3, 4 and 5, is to find sufficient conditions leading to radial symmetry of local minimizers of (1.5) and its consequences. Assuming the LIC property, we first show that any \(d_\infty \)-local minimizer of the interaction energy (1.5) is radially-symmetric, see Theorem 3.1. Then, by imposing an extra assumption on the sign of \(\Delta ^2 W\), we obtain the uniqueness of \(d_\infty \)-local minimizers modulo translations, see Theorem 4.1. As a test case of our theory, we apply our results to the power-law potentials \(W(\textbf{x}) = \frac{|\textbf{x}|^a}{a}-\frac{|\textbf{x}|^b}{b}\), and identify the ranges of a and b for which we show the radial symmetry and uniqueness of \(d_\infty \)-local minimizers, see Theorem 5.1. In particular, we prove that some of the steady states given by the explicit formula in [22] are the global minimizers of the interaction energy (1.5), and also their unique \(d_\infty \)-local minimizer, see also related results in one dimension [29]. This also confirms accurate numerical simulations of equilibrium measures [33].

We emphasize that Sects. 3, 4 and 5 together lead to the first results in the literature proving radial symmetry and uniqueness of \(d_\infty \)-local minimizers of the interaction energy (1.5) for a general family of interaction potentials. The importance of showing radial-symmetry and uniqueness is not only from the variational viewpoint but also from the evolutionary viewpoint of gradient flows associated to the interaction energy. The connection to the long time asymptotics of the corresponding aggregation equation [1,2,3,4, 6, 8, 15] is not explored in this work although there are still important open problems under different assumptions on the interaction potential. Nevertheless, we remark that the radial symmetry of steady states and the gradient flow structure of the aggregation equation are crucial properties for showing precise long time asymptotics both for the aggregation equation with particular interaction potentials in [7, 11, 12, 46] and also for the aggregation–diffusion equations in [20, 21, 27, 35, 44, 45].

The next main question that we want to address in this work is to give sufficient conditions to allow for non-radial local/global minimizers and even fractal behavior on the structure of their support. The radial symmetry is broken for interaction potentials at least \(C^2\) smooth at the origin. The support of their stationary states consists of a finite number of isolated Dirac points under some conditions [16], and some particular configurations such as simplices appear as asymptotic limits for power-law potentials [39]. Stationary states with complex structure have been reported in the numerical literature and by studying the stability/instability of Delta ring solutions [1, 5, 48]. The dimensionality of the support of stationary states was estimated in [2] as mentioned earlier. In this quest, we can wonder if fractal behavior of the support of the minimizers appears among the natural family of power-law potentials. It was numerically observed in [2] that stationary states for power-law potentials seem not to show fractal behavior, i.e., the dimension of the support of the steady states seems to be an integer. We show in Sect. 6, Theorem 6.1, that this is in fact the case in one dimension, i.e., fractal behavior is not possible at least in one dimension for some power-law potentials.

The main result of the second part of this work is to give a generic family of potentials for which we have fractal-like behavior. In order to achieve this, Sect. 7 introduces a novel notion of concavity of the interaction potential W allowing us to show certain fractal behavior on superlevel sets of \(d_\infty \)-local minimizers of the interaction energy (1.5). This notion of concavity is based on negative regions of the Fourier transform of the potential, in contrast to the LIC property and a slightly stronger notion of convexity, the Fourier-LIC (FLIC) property defined in Sect. 2. More precisely, the main result, Theorem 7.1, asserts that if the interaction potential is infinitesimal-concave, then any superlevel set of \(d_\infty \)-local minimizers of the interaction energy (1.5) does not contain interior points. Sect. 8 provides explicit constructive examples of infinitesimal-concave potentials in any dimension based on a careful modification of power-law kernels in Fourier variables, see Theorem 8.1. We finally show in Corollary 8.4 that for these potentials the behavior of the support of the corresponding \(d_\infty \)-local minimizers of the interaction energy (1.5) is almost fractal, in the sense that the interior of any superlevel set is empty, and moreover the support does not contain isolated points. A related idea of breaking the symmetry of minimizers of the interaction energy (1.5) via a finite number of unstable Fourier modes for the uniform distribution on the sphere was described in [50].

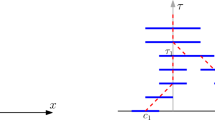

Finally, Sect. 9 provides another constructive example in which a steady state is the uniform distribution on a Cantor set, see Theorems 9.2 and 9.6. The main idea of this construction is to produce a potential in a recursive hierarchical manner that introduces some kind of concavity at a sequence of small scales. Taken together, Sects. 7, 8 and 9 show that the behavior of the support of \(d_\infty \)-local minimizers of infinitesimal-concave potentials can be highly sophisticated. This is further corroborated by the numerical simulations in Sect. 10, which illustrate by means of particle methods the intricate fractal-like structures of the steady states. One of the remaining open problems is to prove or disprove the fractal behavior of \(d_\infty \)-local minimizers for weakly singular power-law like interaction potentials in \(d\ge 2\).
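To make the notion of a particle method concrete, the following minimal sketch evolves a one-dimensional particle system by explicit Euler steps. It is not the scheme used for the simulations of this work. The assumptions are ours: the smooth power-law potential \(W(x)=\tfrac{|x|^4}{4}-\tfrac{|x|^2}{2}\), the particle dynamics \(\dot{x}_i=-\tfrac{1}{N}\sum _j W'(x_i-x_j)\), the discrete energy \(E_N=\tfrac{1}{2N^2}\sum _{i,j}W(x_i-x_j)\), and the step size. All function names are hypothetical.

```python
import numpy as np

def W(r, a=4.0, b=2.0):
    """Power-law potential |r|^a/a - |r|^b/b (illustrative exponents)."""
    r = np.abs(r)
    return r**a / a - r**b / b

def dW(r, a=4.0, b=2.0):
    """Derivative of W along the line; odd in r and zero at r = 0."""
    return np.sign(r) * (np.abs(r) ** (a - 1) - np.abs(r) ** (b - 1))

def discrete_energy(x):
    """E_N = (1 / 2N^2) sum_{i,j} W(x_i - x_j); the diagonal adds W(0) = 0."""
    diff = x[:, None] - x[None, :]
    return W(diff).sum() / (2 * x.size**2)

def euler_step(x, dt):
    """One explicit Euler step of dx_i/dt = -(1/N) sum_j W'(x_i - x_j)."""
    diff = x[:, None] - x[None, :]
    return x - dt * dW(diff).mean(axis=1)

def run_particle_flow(n=50, steps=2000, dt=0.01, seed=0):
    """Evolve n particles from a random start and record the energy."""
    rng = np.random.default_rng(seed)
    x0 = rng.uniform(-1.0, 1.0, n)
    x = x0.copy()
    energies = [discrete_energy(x)]
    for _ in range(steps):
        x = euler_step(x, dt)
        energies.append(discrete_energy(x))
    return x0, x, np.array(energies)

if __name__ == "__main__":
    x0, x, energies = run_particle_flow()
    print("initial energy:", energies[0], "final energy:", energies[-1])
    print("center of mass drift:", abs(x.mean() - x0.mean()))
```

Since \(W'\) is odd, the center of mass is preserved by each step, and for a sufficiently small step size the discrete energy does not increase along the iteration, reflecting the gradient flow structure at the particle level.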

2 Preliminaries and convexity

We write \({\mathcal {P}}({\mathbb {R}}^d)\) for the set of Borel probability measures. Given \(1\le p<\infty \), we write \({\mathcal {P}}_p({\mathbb {R}}^d)\) for the subset of \({\mathcal {P}}({\mathbb {R}}^d)\) of measures with finite pth moment. The pth Wasserstein distance \(d_p(\mu ,\nu )\) between two probability measures \(\mu \) and \(\nu \) belonging to \({\mathcal {P}}_p({\mathbb {R}}^d)\) is

\[ d_p(\mu ,\nu ) = \left( \inf _{\pi \in \Pi (\mu ,\nu )} \int _{{{\mathbb {R}}^d}\times {{\mathbb {R}}^d}} |\textbf{x}-\textbf{y}|^p \,\textrm{d}{\pi (\textbf{x},\textbf{y})} \right) ^{1/p}, \]

where \(\Pi (\mu ,\nu )\) is the set of transport plans between \(\mu \) and \(\nu \); i.e., \(\Pi (\mu ,\nu )\) is the subset of \({\mathcal {P}}({{\mathbb {R}}^d}\times {{\mathbb {R}}^d})\) of measures with \(\mu \) as first marginal and \(\nu \) as second marginal. We also define the \(\infty \)-Wasserstein distance \(d_\infty (\mu ,\nu )\), whenever \(\mu \) and \(\nu \) are compactly supported, by

\[ d_\infty (\mu ,\nu ) = \inf _{\pi \in \Pi (\mu ,\nu )} \sup _{(\textbf{x},\textbf{y})\in \text {supp}\,\pi } |\textbf{x}-\textbf{y}|, \]

where \(\text {supp}\,\) denotes the support.
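For intuition, the transport distances can be computed explicitly in a simple special case. The sketch below assumes two empirical measures on the real line with the same number of atoms and equal weights. In that setting the monotone (sorted) pairing is an optimal plan for every \(1\le p<\infty \), and taking \(p\rightarrow \infty \) gives the largest displacement of that pairing as \(d_\infty \). The function name `wasserstein_1d` is hypothetical, and the sketch does not cover general measures or higher dimensions.

```python
import numpy as np

def wasserstein_1d(x, y, p):
    """d_p between two equal-weight empirical measures on the real line.

    Assumes x and y have the same number of atoms; pass p = np.inf for the
    infinity Wasserstein distance.
    """
    xs, ys = np.sort(x), np.sort(y)          # monotone pairing of the atoms
    gaps = np.abs(xs - ys)                   # displacement of each atom
    if np.isinf(p):
        return gaps.max()                    # largest displacement
    return np.mean(gaps**p) ** (1.0 / p)     # p-th mean of the displacements

if __name__ == "__main__":
    x = np.array([0.0, 1.0, 3.0])
    y = np.array([5.0, 0.5, 1.0])
    for p in (1, 2, 8, np.inf):
        print(p, wasserstein_1d(x, y, p))
```

The printed values increase with p and are bounded by the \(d_\infty \) value, which illustrates why a \(d_\infty \)-neighborhood is the smallest among the neighborhoods induced by these distances.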

We will adopt the following notation for Fourier transform and its inverse

for all \(\xi ,\textbf{x}\in {{\mathbb {R}}^d}\). Then \( {\hat{\delta }} = 1,\, {\check{\delta }} = (2\pi )^{-d} \), and

We will use in this work different notions of convexity for the interaction energy functional (1.5). For the sake of notational simplicity, we will denote by E either the energy functional (1.5) acting on probability measures or the bilinear form acting on signed measures associated to the interaction potential W. We also denote particle densities by \(\rho \) whenever it is a probability measure and \(\mu \) if it is a signed measure. For notational simplicity, we will use \(\rho (\textbf{x}) \,\,\textrm{d}{\textbf{x}}\) as the integration against the measure \(\rho \) no matter if it can be identified with a Lebesgue integrable function or not. In the sequel, we also use C and c to refer to generic positive constants.

We start with the simplest notion of linear interpolation convexity (LIC): we say that the interaction energy E is LIC, if for any probability measures \(\rho _0,\rho _1\in {\mathcal {P}}_2({\mathbb {R}}^d)\), \(\rho _0\ne \rho _1\), such that \(E[\rho _0]<\infty \) and \(E[\rho _1]<\infty \) with the same total mass and center of mass, the function \(t\mapsto E[(1-t)\rho _0 + t \rho _1]\) is strictly convex, or equivalently, \(E[\mu ]> 0\) for every nonzero signed measure \(\mu \in {\mathcal {M}}({\mathbb {R}}^d)\) with \(\int _{{\mathbb {R}}^d}\mu (\textbf{x})\,\textrm{d}{\textbf{x}}=\int _{{\mathbb {R}}^d}\textbf{x}\mu (\textbf{x})\,\textrm{d}{\textbf{x}}=0\) and \(E[|\mu |]<\infty \). Notice the equivalence by taking \(\mu =\rho _0-\rho _1\). This notion of convexity has been classically used in statistical mechanics, see [38].
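The equivalence between the two formulations of LIC rests on the fact that E is quadratic, so that \(\frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2}E[(1-t)\rho _0+t\rho _1]=2E[\rho _1-\rho _0]\). The following minimal sketch checks this on atomic measures on the real line. The assumptions are ours: the normalization \(E[\mu ]=\tfrac{1}{2}\sum _{i,j}\mu _i\mu _jW(x_i-x_j)\), the attractive potential \(W(x)=\tfrac{|x|^a}{a}\), and the particular atoms and weights. The function names are hypothetical.

```python
import numpy as np

def energy(points, weights, a):
    """E[mu] = 1/2 sum_{i,j} mu_i mu_j |x_i - x_j|^a / a for an atomic measure."""
    r = np.abs(points[:, None] - points[None, :])
    return 0.5 * weights @ (r**a / a) @ weights

def second_difference(points, rho0, rho1, a):
    """f(0) - 2 f(1/2) + f(1) for f(t) = E[(1 - t) rho0 + t rho1]."""
    mid = 0.5 * (rho0 + rho1)
    return (energy(points, rho0, a)
            - 2.0 * energy(points, mid, a)
            + energy(points, rho1, a))

if __name__ == "__main__":
    points = np.array([-1.0, 0.0, 1.0])
    rho0 = np.array([0.25, 0.5, 0.25])   # same mass and center of mass as rho1
    rho1 = np.array([0.5, 0.0, 0.5])
    for a in (2.0, 3.0):
        lhs = second_difference(points, rho0, rho1, a)
        rhs = 0.5 * energy(points, rho1 - rho0, a)   # f''/4 with f'' = 2 E[rho1 - rho0]
        print(a, lhs, rhs)
```

For \(a=3\) both printed quantities are positive, while for \(a=2\) they vanish: in this example the quadratic potential is convex along the interpolation but not strictly so.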

A stronger convexity property is the following: if for any \(0<r<R<\infty \), there exists \(c>0\) such that

then we say E has Fourier linear interpolation convexity (FLIC). It is straightforward to check that FLIC implies LIC for the interaction energy. As already mentioned in the introduction, these notions of convexity were used in [40], where the author showed that the interaction energy (1.5) associated to the attractive potential \(W(\textbf{x})=\tfrac{|\textbf{x}|^a}{a}\) has the LIC property for \(2\le a \le 4\), and that the interaction energy (1.5) associated to the repulsive potential \(W(\textbf{x})=-\tfrac{|\textbf{x}|^b}{b}\) has the FLIC property for \(-d< b < 0\), since its Fourier transform is shown to be \(c|\xi |^{-d-b}\) for some \(c>0\).

It is clear that LIC implies the uniqueness of global minimizers for the interaction energy (1.5) in \({\mathcal {P}}_2({\mathbb {R}}^d)\) with fixed total mass and center of mass. Observe also that the sum of an LIC potential and an (F)LIC potential is an (F)LIC potential. The positivity of the Fourier transform of the interaction potential, related to the FLIC convexity, has been used to prove uniqueness of minimizers for interaction energies with asymmetric potentials, see [24, 42]. It is also related to the concept of H-stability in statistical mechanics, used to detect phase transitions in aggregation–diffusion equations [18, 19], and to the existence of compactly supported minimizers for the interaction energy [10, 47].

It is important to remark that linear interpolation convexity of the interaction energy as defined above is totally different from displacement convexity of (1.5) in the optimal transportation sense, see [41]. In fact, the energy functional (1.5) is strictly displacement convex as soon as the potential W is strictly convex.

We now remind the reader about the necessary conditions for local minimizers of the interaction energy (1.5) in [2, 14]. The following conditions come from Euler-Lagrange variational arguments using the mass constraint, and they are related to obstacle-like problems as in [13]. Although further hypotheses on the interaction potential W may be considered in various places below, we minimally assume that

From now on, we will assume without loss of generality that the interaction potential \(W\ge 0\). Notice that these assumptions imply that \(V=W*\rho \ge 0\) is always a lower semicontinuous function for all \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\), see [2, Lemma 2]. The results in [2, Proposition 1], together with [13, Remark 2.3], give

Lemma 2.1

If \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) is a compactly supported local energy minimizer in the \(d_\infty \)-sense for the energy E with interaction potential W satisfying ((H)), then there exists \(\epsilon _0>0\), such that for any \(\textbf{x}\in \text {supp}\,\rho \),

Lemma 2.2

If \(\rho \in {\mathcal {P}}_2({\mathbb {R}}^d)\) is a local energy minimizer in the \(d_2\)-sense for the energy E with interaction potential W satisfying ((H)), then V is constant on \(\text {supp}\,\rho \) in the sense that \(V(\textbf{x})=C_\rho :=2 E[\rho ]\) \(\rho \)-a.e., and

In order to show the FLIC property of the interaction energy, it suffices to show (2.1) for compactly supported signed measures \(\mu \).

Lemma 2.3

Assume the interaction potential W satisfies ((H)) and that the associated interaction energy E satisfies (2.1) for compactly supported signed measures \(\mu \). Then the interaction energy E is FLIC.

Proof

To see this, assume that we already proved (2.1) for compactly supported \(\mu \in {\mathcal {M}}({\mathbb {R}}^d)\). Then let \(\mu \in {\mathcal {M}}({\mathbb {R}}^d)\) be a signed measure with \(E[|\mu |]<\infty \) and \(\mu =\rho _0-\rho _1\) for some \(\rho _0,\rho _1\in {\mathcal {P}}_2({\mathbb {R}}^d)\) with the same center of mass. Then

and one can write \(\mu =\mu _+-\mu _-\) as the positive and negative parts, with \(m_\mu :=\int _{{{\mathbb {R}}^d}}\mu _+\,\textrm{d}{\textbf{x}}=\int _{{{\mathbb {R}}^d}}\mu _-\,\textrm{d}{\textbf{x}}>0\) and \(E[\mu _+]<\infty ,\,E[\mu _-]<\infty \).

Fix a compactly supported smooth non-negative radial function \(\psi (\textbf{x})\) with \(\int _{{{\mathbb {R}}^d}}\psi (\textbf{x})\,\textrm{d}{\textbf{x}}=1\). Let \(N\in {\mathbb {N}}\) be sufficiently large, and let

be a compactly supported signed measure. Since \(\lim _{N\rightarrow \infty }\int _{[-N,N]^d}\mu _+\,\textrm{d}{\textbf{x}} = m_\mu >0\), \(\lambda _N\) is well-defined for sufficiently large N with \(\lim _{N\rightarrow \infty }\lambda _N=1\), and we have \(\int _{{{\mathbb {R}}^d}}{\tilde{\mu }}_N\,\textrm{d}{\textbf{x}}=0\).

Then define

Then \(\mu _N\) is compactly supported, satisfies \(\int _{{{\mathbb {R}}^d}} \mu _N(\textbf{x})\,\textrm{d}{\textbf{x}}=0\), and

Also, \(\lim _{N\rightarrow \infty }c_N=0\). Then we will show that

converges to zero as \(N\rightarrow \infty \). For the first term on the RHS, this is a consequence of \(E[|\mu |]<\infty \) since

and the RHS converges to zero as \(N\rightarrow \infty \). For the second term, this is a consequence of \(E[|\mu |]<\infty \) and \(\lim _{N\rightarrow \infty }\lambda _N=1\), since \({\tilde{\mu }}_N=\mu \chi _{[-N,N]^d}+(\lambda _N-1)\mu _+\chi _{[-N,N]^d}\) and then

with the last integral and \(E[\mu _+\chi _{[-M,M]^d}]\) being finite.

For the third term, we first take a non-negative compactly-supported radial smooth function \(\Psi \) with \(\Psi (\textbf{x}-\textbf{z})\ge \psi (\textbf{x}-\textbf{x}_1)\) for any \(|\textbf{x}_1|=1\) and

Notice that \(E[\Psi ]<\infty \). Take \(M>0\) large enough so that \(\int _{{{\mathbb {R}}^d}}|\mu |\chi _{[-M,M]^d}\,\textrm{d}{\textbf{x}}\ge \frac{1}{2}\int _{{{\mathbb {R}}^d}}|\mu |\,\textrm{d}{\textbf{x}}\). Then the FLIC property for compactly supported measures implies an estimate along a linear interpolation curve

with

since both \(\Lambda _M\Psi (\cdot -\textbf{z}_M)\) and \(|\mu |\chi _{[-M,M]^d}\) have the same total mass and center of mass. Also, since \(\int _{{{\mathbb {R}}^d}}|\mu |\chi _{[-M,M]^d}\,\textrm{d}{\textbf{x}}\ge \frac{1}{2}\int _{{{\mathbb {R}}^d}}|\mu |\,\textrm{d}{\textbf{x}}\), \(\textbf{z}_M\) satisfies (2.4). This implies

for any M sufficiently large and \(|\textbf{x}_1|=1\). Taking \(M\rightarrow \infty \), we get

Therefore, we obtain that

converges to zero as \(N\rightarrow \infty \), since \(\lim _{N\rightarrow \infty }c_N=0\).

Similarly one can show that \(\lim _{N\rightarrow \infty }\int _{r\le |\xi | \le R} |{\hat{\mu }}_N(\xi )|^2\,\textrm{d}{\xi }=\int _{r\le |\xi | \le R} |{\hat{\mu }}(\xi )|^2\,\textrm{d}{\xi }\). In fact, we write

First notice that \({\hat{\mu }}, {\mathcal {F}}(\mu \chi _{[-N,N]^d}), \hat{{\tilde{\mu }}}_N\) are uniformly bounded in \(L^\infty ({{\mathbb {R}}^d})\). The first term in (2.5) converges to zero as \(N\rightarrow \infty \) since

uniformly in \(\xi \in {{\mathbb {R}}^d}\). The second term converges to zero since

The third term converges to zero since

The compactly supported signed measure \(\mu _N\) satisfies (2.3), and therefore satisfies (2.1) by assumption. Then (2.1) for \(\mu \) follows by taking \(N\rightarrow \infty \). \(\square \)

In order to obtain further consequences of the LIC convexity of the interaction energy, we need stronger assumptions on the potential W:

These hypotheses can be verified for power law interaction potentials. An important consequence of the LIC convexity of the interaction energy is:

Theorem 2.4

Assume the interaction energy E associated to a potential W satisfying ((H-s)) is LIC and that there exists a global minimizer of E in \({\mathcal {P}}_2({\mathbb {R}}^d)\). Then any \(\rho \in {\mathcal {P}}_2({\mathbb {R}}^d)\) satisfying the necessary condition (2.2) for \(d_2\)-local minimizers is the global minimizer with the same center of mass.

To show this, we need a technical lemma where the stronger hypotheses are essential.

Lemma 2.5

Consider the interaction energy E associated to a potential W satisfying ((H-s)) and suppose \(\rho \in {\mathcal {P}}_2({\mathbb {R}}^d)\) is compactly supported and satisfies \(E[\rho ]<\infty \). Let \(\psi \) be a non-negative radially decreasing smooth mollifier supported on \(B_1\) with \(\int _{{{\mathbb {R}}^d}}\psi \,\textrm{d}{\textbf{x}}=1\), and denote \(\psi _\alpha (\textbf{x}) = \frac{1}{\alpha ^d}\psi (\frac{\textbf{x}}{\alpha })\). Then \(E[\rho *\psi _\alpha ]<\infty \) for sufficiently small \(\alpha >0\), and

Proof

Notice it is straightforward to show that \(\rho *\psi _\alpha \) converges weakly to \(\rho \) as \(\alpha \rightarrow 0+\), and conclude that \(E[\rho ]\le \liminf _{\alpha \rightarrow 0+}E[\rho *\psi _\alpha ]\). This lemma improves this \(\liminf \) result to a limit. Let \(R>0\) be such that \(\text {supp}\,\rho \subseteq B_{R/2}\).

Notice that \(\Psi _\alpha =\psi _\alpha *\psi _\alpha \) is also a compactly supported (on \(B_{2\alpha }\)) non-negative radially decreasing smooth function with \(\int _{{{\mathbb {R}}^d}}\Psi _\alpha \,\textrm{d}{\textbf{x}}=1\). Therefore, for any \(\textbf{x}\in B_{R/2}\),

where \(\Psi _\alpha ^{-1}\) denotes the inverse function of \(\Psi _\alpha \) as a decreasing function of \(r\in [0,2\alpha ]\). By the assumptions ((H-s)), if \(\alpha \le R/2\), we have

This implies

i.e., \(E[\rho *\psi _\alpha ]\) is finite.

Then write

We now use the continuity assumptions on W in ((H-s)). If W is continuous on \({\mathbb {R}}^d\), then \((W*\Psi _\alpha )(\textbf{x}-\textbf{y})\) converges to \(W(\textbf{x}-\textbf{y})\) at every \((\textbf{x},\textbf{y})\in {{\mathbb {R}}^d}\times {{\mathbb {R}}^d}\). Otherwise, in case \(W(0)=+\infty \), by the continuity of W away from 0, \((W*\Psi _\alpha )(\textbf{x}-\textbf{y})\) converges to \(W(\textbf{x}-\textbf{y})\) at every \((\textbf{x},\textbf{y})\in {{\mathbb {R}}^d}\times {{\mathbb {R}}^d}\) with \(\textbf{x}\ne \textbf{y}\). We also have that the diagonal set \(\textbf{x}=\textbf{y}\) is negligible with respect to the product measure \(\rho (\textbf{y})\,\textrm{d}{\textbf{y}}\rho (\textbf{x})\,\textrm{d}{\textbf{x}}\) since \(E[\rho ]<\infty \). Therefore we see that \((W*\Psi _\alpha )(\textbf{x}-\textbf{y})\) converges to \(W(\textbf{x}-\textbf{y})\) almost everywhere with respect to the measure \(\rho (\textbf{y})\,\textrm{d}{\textbf{y}}\rho (\textbf{x})\,\textrm{d}{\textbf{x}}\).

In both cases, since we proved that \((W*\Psi _\alpha )(\textbf{x}-\textbf{y})\le C_R W(\textbf{x}-\textbf{y})\) for any \(\textbf{x}\ne \textbf{y}\), the integral in (2.6) is dominated by the integral

and the dominated convergence theorem gives the conclusion. \(\square \)

Proof of Theorem 2.4

Notice that the global minimizer is unique once we fix the center of mass according to our discussion above. Let \(\rho _\infty \in {\mathcal {P}}_2({\mathbb {R}}^d)\) be the global energy minimizer with the same center of mass, and assume on the contrary that \(\rho \ne \rho _\infty \). Define \(\rho _1=\rho _\infty *\psi _\alpha \) with \(\psi _\alpha \) as defined in Lemma 2.5, and

as the linear interpolation curve, satisfying \(\frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2}E[\rho _t] \ge 0,\,\forall t\in (0,1)\). Notice that \(E[\rho _\infty ] < E[\rho ]\) since \(\rho \ne \rho _\infty \) taking into account the uniqueness of global minimizer. By Lemma 2.5, \(E[\rho _1]<E[\rho ]\) if \(\alpha \) is sufficiently small. Then we see that

On the other hand, notice that by the bi-linearity of E and the necessary condition of the \(d_2\)-local minimizers in (2.2),

where the inequality uses the fact that \(\rho _1=\rho _\infty *\psi _\alpha \) is a smooth function and then the possible zero-measure exceptional set in (2.2) does not contribute. This gives a contradiction. \(\square \)

We now focus on defining properly the concept of steady state for the interaction energy E and the aggregation Eq. (1.3) we are dealing with. We will denote by \(\partial V(x)\) the subdifferential of V at the point \(x\in {{\mathbb {R}}^d}\).

Definition 2.6

We say \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) is a steady state of the interaction energy E if \(0\in \partial V (\textbf{x})\) \(\rho \)-a.e.

Remark 2.7

Assume that \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) is a steady state with \(V(\textbf{x})\in C^1({{\mathbb {R}}^d})\), then \(\textbf{u}=\nabla W*\rho \) is continuous on \({{\mathbb {R}}^d}\) and \(\textbf{u}=0\) on \(\text {supp}\,\rho \). Moreover, \(\rho \) is a stationary distributional solution to (1.3).

Notice also that any \(d_\infty \)-local minimizer is a steady state of the interaction energy E.

3 Radial symmetry of \(d_\infty \)-local minimizers and steady states

In this section, for the locally stable steady states (in the sense of Remark 2.7) of the aggregation Eq. (1.3), we give sufficient conditions for their radial symmetry.

Theorem 3.1

Assume \(d\ge 2\) and W satisfies ((H)), W is radially symmetric, and the interaction energy E is LIC. Then every compactly supported \(d_\infty \)-local minimizer \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) is radially symmetric.

Furthermore, suppose E is FLIC and \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) is a compactly supported steady state of the interaction energy E such that \(\nabla ^2 W*\rho \) is continuous and \(\nabla ^2 W*\rho \ge 0\) (i.e., being semi-positive-definite) on \(\text {supp}\,\rho \), then \(\rho \) is radially symmetric.

This theorem is proven in several steps. We first focus on the 2D case and argue by contradiction. In case of asymmetry, we either find better competitors (for local minimizers) or quantify the behavior of the Hessian at the boundary of the support (for steady states). The higher-dimensional cases can be treated similarly by choosing a direction along which \(\rho \) is not rotationally symmetric to reach a contradiction, but this needs some further technical details. Without loss of generality, we may assume due to translational invariance that a given \(d_\infty \)-local minimizer / steady state has zero center of mass \(\int _{{\mathbb {R}}^d}\textbf{x}\rho (\textbf{x})\,\textrm{d}{\textbf{x}}=0\). Let \({\mathcal {R}}_\theta f\) denote the rotation of a function f by the angle \(\theta \):

Proof of Theorem 3.1 (local minimizer case, for \(d=2\))

Assume on the contrary that a compactly supported \(d_\infty \)-local minimizer \(\rho \) is not radially symmetric. Define

\[ \rho _\theta = \frac{1}{2}(\rho + {\mathcal {R}}_\theta \rho ). \]

By the radial symmetry of W, we have \(E[\rho ] = E[{\mathcal {R}}_\theta \rho ]\). Then by LIC, we see that \(E[\rho _\theta ]<E[\rho ]\) since \(\rho \ne {\mathcal {R}}_\theta \rho \) if \(|\theta |\) is small enough. On the other hand, notice that \(d_\infty (\rho ,\rho _\theta ) \le R\theta \) where \(R=\max _{\textbf{x}\in \text {supp}\,\rho }|\textbf{x}|<\infty \). Therefore, by the definition of \(d_\infty \)-local minimizer, we have \(E[\rho _\theta ]\ge E[\rho ]\) for \(|\theta |\) small enough, leading to a contradiction. \(\square \)
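The mechanism of this proof can be observed on a small atomic example in the plane. The sketch below is only an illustration under our own assumptions: the purely attractive potential \(W(\textbf{x})=\tfrac{|\textbf{x}|^3}{3}\) (in the LIC range \(2\le a\le 4\) recalled in Sect. 2), the normalization \(E[\mu ]=\tfrac{1}{2}\sum _{i,j}\mu _i\mu _jW(\textbf{x}_i-\textbf{x}_j)\), three atoms with zero center of mass, and the competitor \(\tfrac{1}{2}(\rho +{\mathcal {R}}_\theta \rho )\). The function names are hypothetical.

```python
import numpy as np

def energy(points, weights, a=3.0):
    """E[mu] = 1/2 sum_{i,j} mu_i mu_j |x_i - x_j|^a / a for atoms in the plane."""
    diff = points[:, None, :] - points[None, :, :]
    r = np.linalg.norm(diff, axis=-1)
    return 0.5 * weights @ (r**a / a) @ weights

def rotate(points, theta):
    """Rotate every atom counterclockwise by the angle theta."""
    c, s = np.cos(theta), np.sin(theta)
    rotation = np.array([[c, -s], [s, c]])
    return points @ rotation.T

def rotation_competitor(points, weights, theta):
    """Compare E[rho] with E[(rho + R_theta rho) / 2] for an atomic rho."""
    stacked = np.vstack([points, rotate(points, theta)])
    e_rho = energy(points, weights)
    e_avg = energy(stacked, np.concatenate([weights, weights]) / 2.0)
    e_diff = energy(stacked, np.concatenate([weights, -weights]))  # E[rho - R rho]
    max_shift = np.linalg.norm(stacked[len(points):] - points, axis=1).max()
    return e_rho, e_avg, e_diff, max_shift

if __name__ == "__main__":
    points = np.array([[1.0, 0.0], [-0.5, 0.3], [-0.5, -0.3]])  # zero center of mass
    weights = np.full(3, 1.0 / 3.0)
    theta = 0.05
    e_rho, e_avg, e_diff, max_shift = rotation_competitor(points, weights, theta)
    radius = np.linalg.norm(points, axis=1).max()
    print("E[rho] =", e_rho, " E[rho_theta] =", e_avg)
    print("E[rho] - E[rho - R rho]/4 =", e_rho - e_diff / 4.0)
    print("largest displacement:", max_shift, " bound R*theta:", radius * theta)
```

In this example the averaged measure has strictly lower energy, with the gap given by \(\tfrac{1}{4}E[\rho -{\mathcal {R}}_\theta \rho ]\), while every atom moves by at most \(R\theta \). These are the two facts combined in the contradiction argument.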

To prove the statement on steady states, we first give a lower bound of the linear interpolation convexity.

Lemma 3.2

Assume \(d=2\) and the interaction energy E is FLIC. Assume \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) with zero center of mass is not radially-symmetric. Then for small \(\theta >0\),

To prove this, we first give a lemma:

Lemma 3.3

Let f(x) be a function defined on the torus \({\mathbb {T}}\). Then for \(|\theta | \le c_f\) small enough,

where c is an absolute constant.

Proof

Notice that by Fourier series expansion, we can write

and

Therefore, there exists \(K=K_f\) such that

We observe that \( |1-e^{ik\theta }|^2 \ge \sin ^2(k\theta ) \ge c\theta ^2\), for all \(1\le |k|\le K\), if \(|\theta |\le 0.1/K\). Then the conclusion follows.

\(\square \)
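The elementary inequality used at the end of the proof can be checked numerically. The sketch below is a sanity check rather than a proof. It assumes that only the nonzero modes \(1\le |k|\le K\) are relevant (the mode \(k=0\) is unaffected by the shift), and it uses the evenness of both sides in k to restrict to \(k\ge 1\). The function name `check_mode_inequality` is hypothetical.

```python
import numpy as np

def check_mode_inequality(K, n_theta=200):
    """Check |1 - e^{ik theta}|^2 >= sin^2(k theta) >= c theta^2 on a grid.

    Uses 1 <= k <= K and |theta| <= 0.1 / K; returns whether the first
    inequality holds on the grid and the smallest ratio sin^2(k theta) / theta^2.
    """
    thetas = np.linspace(-0.1 / K, 0.1 / K, n_theta)
    thetas = thetas[thetas != 0.0]                    # avoid dividing by zero
    ks = np.arange(1, K + 1)
    kt = ks[:, None] * thetas[None, :]
    lhs = np.abs(1.0 - np.exp(1j * kt)) ** 2          # equals 4 sin^2(k theta / 2)
    mid = np.sin(kt) ** 2
    first_ok = bool(np.all(lhs >= mid - 1e-15))
    smallest_ratio = float((mid / thetas[None, :] ** 2).min())
    return first_ok, smallest_ratio

if __name__ == "__main__":
    for K in (1, 5, 50):
        print(K, check_mode_inequality(K))
```

On these grids the smallest ratio stays close to 1, consistent with the constant c being absolute, that is, independent of K and of f.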

Proof of Lemma 3.2

Due to the FLIC assumption (2.1) of the interaction energy, it suffices to show that

for some \(0<R_1<R_2\). Since \(\rho \) is not radially symmetric, we have the same property for \({\hat{\rho }}\), which further implies

where we denote \(f(r,\phi ) = {\hat{\rho }}(r(\cos \phi ,\sin \phi )^T)\), \(\phi \in [0,2\pi ]\), and \({\bar{f}}(r)=\frac{1}{2\pi }\int _{{\mathbb {T}}}f(r,\phi )\,\textrm{d}{\phi }\) is the angular average of f. Therefore, there exists \(\epsilon >0\) such that

has positive measure. We may assume \(S\subseteq [R_1,R_2]\) for some \(0<R_1<R_2\) by replacing with a subset of itself if necessary. For each \(r\in S\), let \(c_r\) denote the constant \(c_f\) in Lemma 3.3 with \(f = f(r,\cdot )\). We can express the set S as a union of nested sets

for any \(\delta >0\), concluding that \(|S_\delta |>0\) for some \(\delta >0\). Therefore, using Lemma 3.3, we infer that

where c is an absolute constant. Therefore, we finally obtain

Then the conclusion follows. \(\square \)

Proof of Theorem 3.1, steady state case, for \(d=2\)

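Assume on the contrary that \(\rho \) is a steady state satisfying the assumptions but not radially symmetric. Define

$$\begin{aligned} \rho _\theta = \frac{1}{2}(\rho + {\mathcal {R}}_\theta \rho ). \end{aligned}$$

Then Lemma 3.2 shows that for small \(|\theta |\),

$$\begin{aligned} E[\rho _\theta ] \le E[\rho ] - c\theta ^2 \end{aligned} \tag{3.1}$$
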
by noticing that \(\frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2} E[(1-t)\rho +t {\mathcal {R}}_\theta \rho ] = 2E[\rho -{\mathcal {R}}_\theta \rho ]\).
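
The identity just quoted uses only that E is a quadratic form. As a sketch (the symbols \(\mu \) and B are introduced here for illustration, with \(\mu ={\mathcal {R}}_\theta \rho -\rho \) and B the cross term of E between \(\rho \) and \(\mu \)), one may write

$$\begin{aligned} E[(1-t)\rho +t{\mathcal {R}}_\theta \rho ] = E[\rho +t\mu ] = E[\rho ] + tB + t^2E[\mu ],\qquad \frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2}E[\rho +t\mu ] = 2E[\mu ] = 2E[\rho -{\mathcal {R}}_\theta \rho ]. \end{aligned}$$

Since \(E[{\mathcal {R}}_\theta \rho ]=E[\rho ]\), evaluating at \(t=1\) gives \(B=-E[\mu ]\), and hence, at the midpoint \(t=\tfrac{1}{2}\),

$$\begin{aligned} E\Big [\tfrac{1}{2}(\rho +{\mathcal {R}}_\theta \rho )\Big ] = E[\rho ] - \tfrac{1}{4}E[\rho -{\mathcal {R}}_\theta \rho ]. \end{aligned}$$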

Since \(\rho \) is a steady state, \(\nabla V=0\) on \(\text {supp}\,\rho \) by definition. By the continuity of \(\nabla ^2 V\) and its semi-positive-definiteness on \(\text {supp}\,\rho \), for any \(\epsilon >0\), there exists \(\delta >0\) such that

Here, we have used the classical notation for order of square matrices with \(I_d\) being the identity matrix in dimension d. Therefore, if \(\textbf{x}_1\in \text {supp}\,\rho \) and \(|\textbf{x}_2-\textbf{x}_1|\le \delta \), then

by Taylor expansion.

Notice that

due to rotational symmetry of W. Then, we have

if \(R\theta <\delta \), where \(R=\max _{\textbf{x}\in \text {supp}\,\rho }|\textbf{x}|<\infty \). Taking \(\epsilon =\frac{c}{R^2}\) where c is given by (3.1), we conclude that

for \(\theta \) small enough, contradicting (3.1). \(\square \)

We now go back to the general \(d\ge 3\) case. Denote \(\textbf{x}= (x_1,x_2,\textbf{y})^T\). Since \(\rho \) is not radially-symmetric, we may assume without loss of generality that the center of mass of \(\rho \) is 0, and

for small \(|\theta |\). The local minimizer case can be done similarly as above using \(\rho _\theta = \frac{1}{2}(\rho + {\tilde{{\mathcal {R}}}}_\theta \rho )\) instead. To treat the steady state case, we next generalize Lemma 3.2.

Lemma 3.4

Assume \(d\ge 3\) and the interaction energy E is FLIC. Assume \(\rho \in {\mathcal {P}}({\mathbb {R}}^d)\) with zero center of mass satisfies \(\rho \ne {\tilde{{\mathcal {R}}}}_\theta \rho \). Then for small \(\theta >0\), \(E[\rho -{\tilde{{\mathcal {R}}}}_\theta \rho ] \ge c\theta ^2\) for some \(c>0\).

Proof

Due to the FLIC property of E, it suffices to show that

for some \(0<R_1<R_2\). The condition \(\rho \ne {\tilde{{\mathcal {R}}}}_\theta \rho \) implies the same property of \({\hat{\rho }}\), which implies

where we denote \(f(r,\phi ,\eta ) = {\hat{\rho }}(r(\cos \phi ,\sin \phi )^T,\eta )\), \(\phi \in [0,2\pi ]\) and \({\bar{f}}(r,\eta ) = \frac{1}{2\pi }\int _{{\mathbb {T}}}f(r,\phi ,\eta )\,\textrm{d}{\phi } \). Therefore, there exists \(\epsilon >0\) such that

has positive measure. We may assume \(S\subseteq \{(r,\eta ):\sqrt{r^2+\eta ^2}\in [R_1,R_2]\}\) for some \(0<R_1<R_2\) by replacing with a subset of itself if necessary. For each \((r,\eta )\in S\), let \(c_{(r,\eta )}\) denote the constant \(c_f\) in Lemma 3.3 with \(f = f(r,\cdot ,\eta )\). Similarly as in the 2D case, we write S as

to conclude that \(|S_\delta |>0\) for some \(\delta >0\). Therefore, we obtain

where c is an absolute constant. As a consequence, we get

Then the conclusion follows. \(\square \)

Proof of Theorem 3.1, for \(d\ge 3\). Similarly as above, we define

Then Lemma 3.4 shows that for small \(|\theta |\), \(E[\rho _\theta ] \le E[\rho ] - c\theta ^2\), by noticing that \(\frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2} E[(1-t)\rho +t {\tilde{{\mathcal {R}}}}_\theta \rho ] = 2E[\rho -{\tilde{{\mathcal {R}}}}_\theta \rho ]\). Similar to the \(d=2\) case, we can use the steady state properties and the assumption on \(\nabla ^2 V\) to show that for any \(c_1>0\),

if \(|\theta |\) is small enough, leading to the contradiction. \(\square \)

Remark 3.5

(Failure of uniqueness and radial symmetry for \(d_\infty \)-local minimizers of 1D FLIC potentials) For FLIC potentials, the uniqueness of global minimizer clearly implies its radial symmetry in any dimension. However, for \(d_\infty \) local minimizers, although the radial symmetry is still true for \(d\ge 2\), it is generally false for \(d=1\). We provide an example of a \(d_\infty \)-local minimizer of a 1D FLIC potential that may fail to be unique and radially-symmetric (i.e., in 1D, an even function). Define

where \(\epsilon \ge 0\) and \(\phi \) is a non-negative smooth even function supported on \([-1/2,1/2]\) with \(\phi ''(0)<0\). The result in [40] shows that the interaction energy associated to the potential \(W_0\) is FLIC with

Since \(|{\hat{\phi }}(\xi )| \le C(1+|\xi |)^{-5}\), there holds \({\hat{W}}_\epsilon (\xi )>0,\,\forall \xi \ne 0\) if \(\epsilon >0\) is small enough, and then \(W_\epsilon \) is FLIC.

Notice now that \(W_\epsilon (x)\) has local minima at \(x=\pm 1\), with

Also notice that \(W_\epsilon ''(0) = -1\). It follows that for any \(0<\alpha <\tfrac{1}{2}\), \(\rho _\alpha (x) = (\tfrac{1}{2}-\alpha )\delta (x-\frac{1}{2}) + (\tfrac{1}{2}+\alpha )\delta (x+\frac{1}{2})\) is a steady state for \(W_\epsilon \), since

satisfies \((W_\epsilon *\rho _\alpha )'(\tfrac{1}{2})=(W_\epsilon *\rho _\alpha )'(-\tfrac{1}{2})=0\). Also, since

we observe that both quantities are positive by taking \(|\alpha |<\frac{\lambda -1}{2(\lambda +1)}\). This implies \(\rho _\alpha \) is a \(d_\infty \)-local minimizer for every such \(\alpha \) (which will be justified in the next paragraph), and this shows that the \(d_\infty \)-local minimizers of \(W_\epsilon \) are non-unique and non-radially-symmetric in general.

To see that \(\rho _\alpha \) is a \(d_\infty \)-local minimizer, we consider any alternate \(\rho \ne \rho _\alpha \) with the same total mass and center of mass and \(\beta :=d_\infty (\rho ,\rho _\alpha )\) being small. Then \(\text {supp}\,\rho \subseteq [-\tfrac{1}{2}-\beta ,-\tfrac{1}{2}+\beta ]\cup [\tfrac{1}{2}-\beta ,\tfrac{1}{2}+\beta ]\) with

Define \(\rho _t = (1-t)\rho _\alpha +t\rho \), and we have \(\frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2}E[\rho _t] > 0\) for any \(0\le t \le 1\) by the LIC property of E (with the interaction potential \(W_\epsilon \)). Denoting \(V_\alpha :=W_\epsilon *\rho _\alpha \), we have

Since \(V_\alpha \in C^2\) has vanishing first derivative and positive second derivative at \(\pm \tfrac{1}{2}\), we have \(V_\alpha (x)\ge V_\alpha (\pm \tfrac{1}{2})\) for \(|x-(\pm \tfrac{1}{2})|\le \beta \) if \(\beta >0\) is small enough. Then we see that the first integral in the last expression is non-negative since

and similarly for the second integral. Therefore we get \(\frac{\,\textrm{d}}{\,\textrm{d}{t}}|_{t=0}E[\rho _t]\ge 0\), which implies \(\frac{\,\textrm{d}}{\,\textrm{d}{t}}E[\rho _t]\ge 0\) for any \(0\le t \le 1\) since \(\frac{\,\textrm{d}^2}{\,\textrm{d}{t}^2}E[\rho _t] > 0\). Therefore

which shows that \(\rho _\alpha \) is a \(d_\infty \)-local minimizer.

4 From radially-symmetric steady states to uniqueness of \(d_\infty \)-local minimizer

We first show our main result concerning the uniqueness of minimizers as a consequence of their radial symmetry.

Theorem 4.1

Assume W satisfies ((H-s)), \(W\in C^4({{\mathbb {R}}^d}\setminus \{0\})\) is radially symmetric, the interaction energy (1.5) associated to the potential W is LIC and

and

Let \(\rho \) be a compactly supported \(d_\infty \)-local minimizer such that \(\nabla W * \rho \) is continuous with zero center of mass. Then \(\rho \) is the unique global minimizer of E over \({\mathcal {P}}_2({\mathbb {R}}^d)\) with zero center of mass.

We want to take advantage of the fourth derivative assumption (4.1) in order to apply maximum principle arguments for certain operators. We need some preliminary results in this direction.

Lemma 4.2

Let \(f\in C^1([x_1,x_2])\cap C^4((x_1,x_2))\). Assume

for some positive function \(a(x)\in C((x_1,x_2])\). Then either \(x_1\) or \(x_2\) is not a local minimum point of f.

Proof

Denote \(g = {\mathcal {L}}f\). By assumption \(({\mathcal {L}}g)(x)<0,\,\forall x\in (x_1,x_2)\). This implies that there exist at most 2 points in \((x_1,x_2)\) where \(g=0\). In fact, suppose \(g(y_1)=g(y_2)=g(y_3)=0\) with \(y_1<y_2<y_3\), then \(({\mathcal {L}}g)(x)<0\) forces g to be positive on \((y_1,y_2)\) and \((y_2,y_3)\) due to the classical maximum principle, and it follows that \(g'(y_2)=0\), \(g''(y_2)\ge 0\) which is a contradiction to \(({\mathcal {L}}g)(y_2)<0\).

Denote \(A(x) = - \int _x^{x_2}a(y)\,\textrm{d}{y}\) which is a non-positive increasing continuous function defined on \((x_1,x_2]\), satisfying \(A'(x)=a(x)\). Then notice that

where the integral is interpreted as an improper integral, and the existence of the last limit follows from the assumption \(f'(x_1)=0\) and the fact that A(x) is negative and increasing for \(x<x_2\). This requires that \(g=f''+a(x)f'\) is positive at some point in \((x_1,x_2)\), and g is negative at some other point in \((x_1,x_2)\), and therefore there exists at least 1 point in \((x_1,x_2)\) where \(g=0\).
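
The computation behind this step is the usual integrating-factor identity. As a sketch, using only \(A'=a\) and \(g=f''+a(x)f'\) as stated in the proof,

$$\begin{aligned} \big (e^{A(x)}f'(x)\big )' = e^{A(x)}\big (f''(x)+a(x)f'(x)\big ) = e^{A(x)}g(x),\qquad e^{A(x_2)}f'(x_2) - e^{A(x)}f'(x) = \int _x^{x_2}e^{A(z)}g(z)\,\textrm{d}{z} \end{aligned}$$

for \(x\in (x_1,x_2)\). If the left-hand side tends to 0 as \(x\rightarrow x_1+\), as it does when \(f'\) vanishes at both endpoints, then the weighted integral of g over \((x_1,x_2)\) is zero, which forces g to take both signs unless it vanishes identically.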

Now we separate into the following cases:

- There exists 1 point y in \((x_1,x_2)\) such that \(g(y)=0\), and \(g|_{(x_1,y)}>0\), \(g|_{(y,x_2)}<0\). In this case,

$$\begin{aligned} e^{A(x_2-\epsilon )}f'(x_2-\epsilon ) = e^{A(x_2)}f'(x_2)-\int _{x_2-\epsilon }^{x_2}e^{A(x)}g(x)\,\textrm{d}{x} > 0 \end{aligned}$$

if \(0<\epsilon <x_2-y\). This implies that \(x_2\) is not a local minimum point of f.

- There exists 1 point y in \((x_1,x_2)\) such that \(g(y)=0\), and \(g|_{(x_1,y)}<0\), \(g|_{(y,x_2)}>0\). In this case,

$$\begin{aligned} e^{A(x_1+\epsilon )}f'(x_1+\epsilon ) = \lim _{x\rightarrow x_1+}e^{A(x)}f'(x_1)+\int _{x_1}^{x_1+\epsilon }e^{A(x)}g(x)\,\textrm{d}{x} = \int _{x_1}^{x_1+\epsilon }e^{A(x)}g(x)\,\textrm{d}{x} < 0 \end{aligned}$$

if \(0<\epsilon <y-x_1\). This implies that \(x_1\) is not a local minimum point of f.

- There exist 2 points \(z_1<z_2\) in \((x_1,x_2)\) such that \(g(z_1)=g(z_2)=0\). Then \(({\mathcal {L}}g)(x)<0\) implies that \(g|_{(x_1,z_1)}<0\), \(g|_{(z_1,z_2)}>0\), \(g|_{(z_2,x_2)}<0\) since otherwise one gets a contradiction at \(z_1\) or \(z_2\) similarly as done above for \(y_2\). Then it follows as in the previous two cases that neither \(x_1\) nor \(x_2\) is a local minimum point of f.

\(\square \)

Lemma 4.3

Let \(f\in C^1([x_1,\infty ))\cap C^4((x_1,\infty ))\). Assume

for some \(x_2>x_1\) and some positive function \(a(x)\in C\big ((x_1,x_2]\big )\). Then either \(x_1\) is not a local minimum point of f, or \(x_1\) is the global minimum point of f on \([x_1,x_2]\).

Proof

Similar to the previous proof, there exist at most 2 points in \((x_1,x_2)\) where \(g={\mathcal {L}}f = 0\). We claim that, under the additional assumption \(g(x_2)>0\), there exists in fact at most one such point. Indeed, suppose \(g(y_1)=g(y_2)=0\) with \(y_1<y_2\), then \(({\mathcal {L}}g)(x)<0\) forces g to be positive on \((y_1,y_2)\) due to the classical maximum principle. Moreover, g is also positive on \((y_2,x_2)\) since there are no other zeros of g and \(g(x_2)>0\), and it follows as above that \(g'(y_2)=0\), \(g''(y_2)\ge 0\) which is a contradiction to \(({\mathcal {L}}g)(y_2)<0\). Now, we may separate into the following cases:

- There exists 1 point y in \((x_1,x_2)\) such that \(g(y)=0\), and \(g|_{(x_1,y)}<0\), \(g|_{(y,x_2)}>0\). Notice there is a change of sign on g at the zero and \(g(x_2)>0\), otherwise we arrive at a contradiction again. In this case, \(x_1\) is not a local minimum point of f proceeding as in the first two cases of Lemma 4.2.

- Assume now that \(g|_{(x_1,x_2)}>0\). In this case

$$\begin{aligned} e^{A(x)}f'(x) = \lim _{x\rightarrow x_1+}e^{A(x)}f'(x_1) + \int _{x_1}^x e^{A(z)}g(z)\,\textrm{d}{z} = \int _{x_1}^x e^{A(z)}g(z)\,\textrm{d}{z}> 0,\quad \forall x\in (x_1,x_2) \end{aligned}$$

which implies that f is increasing on \([x_1,x_2]\). Therefore \(x_1\) is a global minimum point of f on \([x_1,x_2]\).

\(\square \)

Proof of Theorem 4.1

Assume first that \(d\ge 2\). By Theorem 3.1, \(\rho \) is radially-symmetric.

Suppose there exists a subset of \((\text {supp}\,\rho )^c\), \(S_1 = \{R_1< |\textbf{x}| < R_2\}\) for some \(0\le R_1<R_2\), with \(\{|\textbf{x}|=R_1\}\subseteq \text {supp}\,\rho \) and \(\{|\textbf{x}|=R_2\}\subseteq \text {supp}\,\rho \). Denote \(({\mathcal {L}}V)(r) = V''(r) + \frac{d-1}{r}V'(r)\) as an operator on the radial direction, then \(\Delta V(\textbf{x}) = ({\mathcal {L}}V)(r)\), \(\Delta ^2 V(\textbf{x}) = ({\mathcal {L}}^2 V)(r) < 0\) for any \(R_1<r<R_2\). Since \(\rho \) is a \(d_\infty \)-local minimizer, we have \(V'(R_1)=V'(R_2)=0\) by Lemma 2.1 and the assumption that \(V\in C^1\). Therefore Lemma 4.2 shows that either \(R_1\) or \(R_2\) is not a local minimum point of V(r), which contradicts Lemma 2.1.

Suppose there exists a subset of \((\text {supp}\,\rho )^c\), \( S_2 = \{|\textbf{x}| < R_2\} \) for some \(R_2>0\), with \(\{|\textbf{x}|=R_2\}\subseteq \text {supp}\,\rho \). Then \(\Delta ^2 V(\textbf{x}) = ({\mathcal {L}}^2 V)(r) < 0\) for any \(0<r<R_2\). Since \(\rho \) is a \(d_\infty \)-local minimizer, we have \(V'(R_2)=0\) by Lemma 2.1 and the assumption that \(V\in C^1\), and \(V'(0)=0\) by the radial symmetry of V. Therefore Lemma 4.2 shows that either 0 or \(R_2\) is not a local minimum point of V(r). By Lemma 2.1 and \(\{|\textbf{x}|=R_2\}\subseteq \text {supp}\,\rho \), \(R_2\) is a local minimum point of V(r), and thus 0 is not a local minimum point of V(r), which implies \(\Delta V(0)\le 0\). Since \(\Delta V\) is radially symmetric and \(\Delta ^2 V(\textbf{x})<0\) for \(|\textbf{x}|<R_2\), we have \(\Delta V(\textbf{x})<0\) for any \(0<|\textbf{x}|<R_2\). This contradicts the fact that \(V'(R_2)=0\) by integrating on \(S_2\).

Therefore \(\text {supp}\,\rho \) is a ball; denote its radius by \(R_1\ge 0\). Since \(\Delta V(\textbf{x}) = ({\mathcal {L}}V)(r)>0\) for all r large enough (due to the assumption (4.2)), we apply Lemma 4.3 on \([R_1,R_2]\) for large \(R_2\), and obtain that \(R_1\) is a global minimum point of V(r) on any such \([R_1,R_2]\). Then the conclusion follows from Theorem 2.4.

For the case \(d=1\), one could argue similarly and show that there cannot be an interval \((x_1,x_2)\subseteq (\text {supp}\,\rho )^c\) with \(\{x_1,x_2\}\subseteq \text {supp}\,\rho \), and conclude that \(\text {supp}\,\rho \) is an interval \([X_1,X_2]\). Then one could obtain similarly that \(X_2\) is the global minimum point of V on \([X_2,\infty )\), and \(X_1\) is the global minimum point of V on \((-\infty ,X_1]\). Then Theorem 2.4 shows that \(\rho \) is the unique global minimizer of E. The radial symmetry of \(\rho \) is obtained by noticing that \(\rho (-x)\) is also a global minimizer with zero center of mass, and therefore equal to \(\rho (x)\). \(\square \)

Remark 4.4

In the previous proof, if \(d\ge 2\) and we know that \(\rho \) does not have an isolated Dirac mass at 0, then in the case of \(S_1\) we can also exclude the possibility of \(R_1=0\), and therefore we may weaken the continuity assumption to ‘\(\nabla W*\rho \) is continuous on \({{\mathbb {R}}^d}\backslash \{0\}\)’.

In case the interaction energy does not necessarily meet the FLIC property, we can obtain a similar result for stationary states under an additional regularity assumption: \(\nabla W*\rho \) and \(\Delta W*\rho \) are continuous.

Theorem 4.5

Assume W satisfies ((H)), \(W\in C^4({{\mathbb {R}}^d}\setminus \{0\})\) is radially symmetric with

and

Let \(\rho \) be a compactly supported steady state in the sense of Definition 2.6, with \(\nabla W*\rho \) and \(\Delta W*\rho \) being continuous, and \(\Delta W*\rho \ge 0\) on \(\text {supp}\,\rho \). Then the complement of \(\text {supp}\,\rho \) is connected. If in addition, \(\rho \) is radially-symmetric, or \(d=1\), then \(\rho \) satisfies the \(d_2\)-local minimizer condition (2.2).

If in addition, W satisfies FLIC, then any compactly supported steady state \(\rho \), with the regularity assumption that \(\nabla ^2 W*\rho \) is continuous and semi-positive definite on \(\text {supp}\,{\rho }\), is the global minimizer of the interaction energy.

We first remark that the last part of the previous theorem is a direct consequence of Theorem 3.1 and Theorem 2.4 together with the first part of it. To prove this result, we need the following lemmas, which are standard maximum principle arguments in elliptic theory.

Lemma 4.6

Let \(\Omega \) be a bounded open set, and \(f\in C({\bar{\Omega }})\cap C^2(\Omega )\). If \(\Delta f\le 0\) on \(\Omega \), then \(\min _{\textbf{x}\in {\bar{\Omega }}}f(\textbf{x}) = \min _{\textbf{x}\in \partial \Omega }f(\textbf{x})\). If, in addition, \(f\in C^1({\bar{\Omega }})\), \(\Omega \) is connected, and \(\nabla f = 0\) on \(\partial \Omega \), then f is constant on \({\bar{\Omega }}\).

Proof of Theorem 4.5

Take R large enough such that \(\text {supp}\,\rho \subseteq \{|\textbf{x}|<R\}\), and by (4.2), we have

for R large enough. Denote \(S = \{|\textbf{x}|<R\}\backslash \text {supp}\,\rho \).

STEP 1: Prove that \(\Delta V \ge 0\) on S. First notice that for any \(\textbf{x}\in S\),

by (4.1). Then applying Lemma 4.6 to \(\Delta V\) which is continuous on \({{\bar{S}}}\) by assumption, we get

Notice that \( \partial S= \partial (\text {supp}\,\rho ) \cup \{|\textbf{x}|=R\}\) and

since \(\Delta V\ge 0\) on \(\text {supp}\,\rho \) by assumption. Combined with (4.3), we get

which implies the claim.

STEP 2: Prove the connectivity of S. Suppose on the contrary that \(S_1\) is a connected component of S whose closure does not intersect \(\{|\textbf{x}|=R\}\). Then \(\partial S_1\subseteq \partial (\text {supp}\,\rho )\), which implies

by the continuity of \(\nabla V\) since \(\rho \) is a stationary state. Applying the second part of Lemma 4.6 to \(-V\), since \(\Delta (-V)\le 0\) on \(S_1\), we deduce that V is constant on \(S_1\). This fact contradicts (4.4).

STEP 3: Prove the \(d_2\)-local minimizer condition (2.2). Assume that \(d\ge 2\) and \(\rho \) is radially symmetric. Due to the connectivity of S, S has the form \({{\bar{S}}} = \{R_1 \le |\textbf{x}| \le R\}\) for some \(0\le R_1<R\). Let us denote for simplicity the radial potential generated by \(\rho \) as \(V(\textbf{x})=V(r),\,r=|\textbf{x}|\), then it satisfies

This implies that V(r) is increasing on \([R_1,\infty )\), which gives the desired conclusion. Indeed, to see this, notice that

Multiplying by \(r^{d-1}\) and integrating leads to \(V'(r)\ge 0\). The 1D case without the radial symmetry assumption can be handled similarly. \(\square \)
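
The last integration step can be written out as follows. This is a sketch under the assumptions that \(({\mathcal {L}}V)(r)=V''(r)+\frac{d-1}{r}V'(r)\ge 0\) for \(r>R_1\), as in the proof of Theorem 4.1 and STEP 1, and that \(V'(R_1)=0\), which holds by continuity of \(\nabla V\) for a steady state when \(R_1>0\) and by radial symmetry when \(R_1=0\):

$$\begin{aligned} \big (r^{d-1}V'(r)\big )' = r^{d-1}\Big (V''(r)+\frac{d-1}{r}V'(r)\Big ) = r^{d-1}({\mathcal {L}}V)(r)\ge 0,\qquad r^{d-1}V'(r) = \int _{R_1}^{r}s^{d-1}({\mathcal {L}}V)(s)\,\textrm{d}{s}\ge 0,\quad r>R_1. \end{aligned}$$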

5 Radial symmetry and global minimizers for power-law potentials

We apply the previous results to the power-law potential

where \(a>b>-d\), with the convention \(\tfrac{|x|^0}{0}:=\ln |x|\).

Let \(\rho \in {\mathcal {P}}\) be compactly supported with zero center of mass. We define \(\rho \) to be mild if \(d=1\) with \(\nabla W*\rho \) continuous, or \(d\ge 2\) with \(\nabla W*\rho \) continuous on \({\mathbb {R}}^d\backslash \{0\}\).

Theorem 5.1

Let W be defined by (5.1). If (a, b) satisfies

then, for \(d\ge 2\), any compactly supported \(d_\infty \)-local minimizer \(\rho \) is radially-symmetric. If (a, b) further satisfies

then the unique global minimizer \(\rho _\infty \) is mild, and it is the unique mild \(d_\infty \)-local minimizer.

If \(a=2\) and \(2-d<b<\min \{4-d,2\}\) or \(a=4\) and \(2-d<b<{{\bar{b}}} = (2+2d-d^2)/(d+1)\), then the global minimizer is given by the explicit formulas in (5.9) and (5.10).

Remark 5.2

In one dimension, the ranges \(-1<b<2\) for \(a=2\) and \(2<a<3\) for \(b=2\) were discussed in [29]. We now discuss the sharpness of the assumption (5.3) in the case \(d\ge 2\).

It is shown in [1] that for W given by (5.1) with \(a\ge 2\) and \(\frac{(3-d)a-10+7d-d^2}{a+d-3}=:b_{\max }<b<2\), the Dirac Delta on a particular \((d-1)\)-dimensional sphere is a \(d_\infty \)-local minimizer. Notice that \(b_{\max }<4-d\) if \(a>2\). Therefore the assumption \(a=2\) in (5.3) cannot be improved within our framework, in which a necessary step to get the uniqueness of \(d_\infty \)-local minimizer is to show the connectivity of \((\text {supp}\,\rho )^c\) for any \(d_\infty \)-local minimizer \(\rho \), see Theorem 4.5.

In the case \(d\ge 3\), the same reasoning also shows that the upper bound \(4-d\) for b cannot be improved within our framework, because for \(a=2\) and \(4-d=b_{\max }<b<2\) the Dirac Delta on a sphere is a \(d_\infty \)-local minimizer.
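
The two facts about \(b_{\max }\) used in this remark, namely \(b_{\max }=4-d\) for \(a=2\) and \(b_{\max }<4-d\) for \(a>2\), follow from the identity \(b_{\max }-(4-d)=\frac{2-a}{a+d-3}\). The following sketch confirms this identity with exact rational arithmetic on a few sample values; the function names and the sampled (a, d) pairs are illustrative choices, not part of the original argument.

```python
from fractions import Fraction


def b_max(a, d):
    """Threshold b_max from Remark 5.2, evaluated exactly with rationals."""
    a, d = Fraction(a), Fraction(d)
    return ((3 - d) * a - 10 + 7 * d - d * d) / (a + d - 3)


def gap_to_upper_bound(a, d):
    """Return b_max - (4 - d); algebraically this equals (2 - a)/(a + d - 3)."""
    return b_max(a, d) - (4 - Fraction(d))


for d in range(2, 8):
    # At a = 2 the threshold coincides with the upper bound 4 - d.
    assert b_max(2, d) == 4 - d
    for a in (Fraction(5, 2), 3, 4, 10):
        gap = gap_to_upper_bound(a, d)
        assert gap == (2 - Fraction(a)) / (Fraction(a) + d - 3)
        assert gap < 0  # b_max < 4 - d whenever a > 2
```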

To prove this theorem, we first analyze the FLIC property of power-law potentials to justify the radial symmetry of \(d_\infty \)-local minimizers. Then, using the radial symmetry, we show the mild property of the global minimizer \(\rho _\infty \) with the range of parameters (5.3). In the special case \(a=2\), we obtain a better regularity result, \(\nabla ^2 W*\rho _\infty \) is continuous, by using the explicit formulas given by [22]. Finally we show the uniqueness of mild \(d_\infty \)-local minimizer by applying Theorem 4.1.

5.1 The (F)LIC property and radial symmetry

We first determine the values of (a, b) for which W has the FLIC property. The author of [40] proved that \(\frac{|\textbf{x}|^a}{a}\) has the LIC property for \(2\le a \le 4\). It is clear, and also used in [40], that \(-\frac{|\textbf{x}|^b}{b}\) has the FLIC property for \(-d< b < 0\), since its Fourier transform is \(c|\xi |^{-d-b}\) for some \(c>0\). We next extend the range of b.

Theorem 5.3

The interaction energy E associated to the potential \(\frac{|\textbf{x}|^a}{a}-\frac{|\textbf{x}|^b}{b}\), with \(-d< b < 2\le a\le 4\), except \(0\le b<1\) in one dimension, has the FLIC property.

As a consequence, any compactly supported \(d_\infty \)-local minimizer of the interaction energy with \(-d< b < 2\le a\le 4\) and \(d\ge 2\) is radially symmetric. In particular, the unique global minimizer for the interaction energy with \(-d< b < 2\le a\le 4\) and \(d\ge 2\) is radially symmetric.

Proof

The range \(-d<b<0\) is done in [40]. Since W satisfies ((H)), Lemma 2.3 allows us to reduce to the case of nonzero compactly supported signed measures \(\mu \in {\mathcal {M}}({\mathbb {R}}^d)\), with

Notice that \(\frac{|\textbf{x}|^a}{a}\) has the LIC property, for \(2\le a \le 4\), as proven in [40]. Since \(\mu \) is compactly supported, we are reduced to showing the FLIC property for the repulsive part of the interaction potential, \(U(\textbf{x}) = -\frac{|\textbf{x}|^b}{b}\). Then we separate into cases:

Case 1 \(2-d<b<2\). Let \( f(\textbf{x}) = \Delta ^{-1}\mu (\textbf{x}) \). Then for \(|\textbf{x}|\) large, by (5.4), we have

Since \(b<2\), this suffices to justify the integration-by-parts below

Notice that \( \Delta U(\textbf{x}) = - (b+d-2)|\textbf{x}|^{b-2} \) has Fourier transform \( \widehat{(\Delta U)}(\xi ) = - (b+d-2)c|\xi |^{-d-b+2} \), where c is a positive constant. Then

Notice that \(b+d-2>0\) for all the stated cases. Therefore the conclusion follows.
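
The formula \(\Delta U(\textbf{x}) = -(b+d-2)|\textbf{x}|^{b-2}\) used in Case 1 can be sanity-checked numerically away from the origin. The sketch below compares it with a central finite-difference Laplacian; the helper names, the step size h and the sample points are assumptions made here for illustration, and the check covers only \(b\ne 0\).

```python
import math


def U(x, b):
    """Repulsive part U(x) = -|x|^b / b (b != 0)."""
    return -math.sqrt(sum(c * c for c in x)) ** b / b


def laplacian_fd(func, x, h=1e-3):
    """Second-order central finite-difference Laplacian of func at x."""
    total = 0.0
    for i in range(len(x)):
        plus = list(x)
        plus[i] += h
        minus = list(x)
        minus[i] -= h
        total += (func(plus) - 2.0 * func(x) + func(minus)) / (h * h)
    return total


def laplacian_exact(x, b):
    """Closed form -(b + d - 2)|x|^(b-2) stated in Case 1, with d = len(x)."""
    d = len(x)
    r = math.sqrt(sum(c * c for c in x))
    return -(b + d - 2) * r ** (b - 2)


# Compare both expressions at sample points in d = 2 and d = 3.
for point in ([0.7, -0.4], [0.7, -0.4, 1.1]):
    for b in (-0.5, 0.5, 1.5):
        fd = laplacian_fd(lambda y: U(y, b), point)
        print(len(point), b, fd, laplacian_exact(point, b))
```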

Case 2 The Newtonian cases \(b=2-d\), with \(d=1,2\). In this case, since \(-U\) is the fundamental solution to the Laplacian (up to a positive constant multiple), we have

where the integration-by-parts is justified by (5.5). Then, since \(\nabla f\) is \(L^1\) by (5.5), we have

which implies

Finally, we apply Theorem 3.1 to deduce the radial symmetry of any compactly supported \(d_\infty \)-local minimizer of E with \(-d< b < 2\le a\le 4\) and \(d\ge 2\).

Moreover, using [10, 47] the global minimizers of E in this range are compactly supported. Then, the last claim about global minimizers results directly from the FLIC property. \(\square \)

Remark 5.4

The cases \(0\le b <1\) and \(2\le a\le 4\) in one dimension are not covered by the previous arguments. In fact, the FLIC property is still true for these cases. However, these cases do not have the necessary properties for Theorem 4.1 to be applicable, and thus, we postpone the proof to the appendix.

5.2 Regularity properties

We first show ((H-s)) for the power-law potentials (5.1).

Lemma 5.5

For W given by (5.1) with \(a\ge 2\) and \(-d<b<2\), there exists \(C_1>0\) such that \(W+C_1\) satisfies ((H-s)).

Proof

Take \(C_1=\max \{-\inf W,0\}+1 > 0\), and then \(W_1:=W+C_1\) is bounded from below by 1. It suffices to show that for any \(R>0\), there exists \(C_R>0\) such that \(\frac{1}{|B(\textbf{x};r)|}\int _{B(\textbf{x};r)}W_1(\textbf{y})\,\textrm{d}{\textbf{y}} \le C_R W_1(\textbf{x}), \forall |\textbf{x}|\le R,\,0<r\le R\). If \(b>0\), then \(W_1\) is continuous, and the function

is defined and continuous on \(\overline{B(0;R)}\times [0,R]\). Therefore \(\phi \) achieves its maximum, and the conclusion follows.

If \(b< 0\), with the constants C depending on R,

for any \(|\textbf{x}|\le R\) and \(r\le R\). If \(r\le \frac{|\textbf{x}|}{2}\), then \(\frac{|\textbf{x}|}{2}\le |\textbf{y}| \le \frac{3|\textbf{x}|}{2}\) in the last integral, and

and the conclusion follows. If \(r> \frac{|\textbf{x}|}{2}\), then

using the radially-decreasing property of \(|\textbf{y}|^b\) and the assumption \(-d<b<0\). Therefore

and the conclusion follows.

The case \(b=0\) (i.e., \(-\frac{|\textbf{x}|^b}{b}:=-\ln |\textbf{x}|\)) can be treated similarly as the \(b<0\) case. \(\square \)

Next we give the mild property of the global minimizer for power-law potentials.

Lemma 5.6

Assume \(a\ge 2\), \(2-d<b<2\), and let W be given by (5.1). Assume \(\rho \in {\mathcal {P}}\) is compactly supported. If either of the following holds:

- \(d=1\);

- \(d\ge 2\), and \(\rho \) is radially-symmetric;

then \(\rho \) is mild. In particular, the global minimizer \(\rho _\infty \) with (a, b) satisfying the assumption of Theorem 5.3 is mild.

Proof

For the case \(d=1\), we have \(1<b<2\). Notice that \(\nabla W(x) = \text {sgn}(x)(|x|^{a-1}-|x|^{b-1})\) is continuous, and therefore \(\nabla W*\rho \) is continuous.

For the case \(2-d<b<2\) with \(d\ge 2\) and \(\rho \) being compactly-supported and radially-symmetric, there exists a measure \({\tilde{\rho }}\) supported on [0, R] for some \(R>0\) such that

for any continuous function \(\phi \), where \(\,\textrm{d}{S(\textbf{y})}\) denotes the surface measure on the unit sphere. We clearly have

by taking \(\phi =1\), since \(\rho \) has total mass 1. (5.6) is also applicable to \(W(\textbf{x}-\textbf{y})\) for fixed \(\textbf{x}\) by an approximation argument on the potential, and gives

To analyze the continuity of \(W*\rho \) for W given by (5.1) with \(a\ge 2\), we only need to consider the potential \(W=-\frac{|\textbf{x}|^b}{b}\). Also, due to the radial symmetry of \(W*\rho \), we only need to consider directional derivative along the radial direction, which is

for \(\textbf{x}\ne 0\), where on the RHS we write \(W(\textbf{x})=\omega (|\textbf{x}|)\). Here (5.8) can be justified as long as the RHS is dominated by an \(L^1\) function uniformly in a neighborhood of s, which we will prove in the rest of the proof. For \(W=-\frac{|\textbf{x}|^b}{b}\), we have

Here the last integrand is dominated by

Therefore the RHS of (5.8) is dominated by

which is uniformly bounded (by a constant multiple of (5.7)) for \(s\in [\epsilon ,R]\) for any \(\epsilon >0\) as long as \(b>2-d\). This justifies (5.8), and proves the continuity of \((\nabla W*\rho )(\textbf{x})\) by dominated convergence, for \(s=|\textbf{x}|\ne 0\).

Finally, if the assumption of Theorem 5.3 is satisfied for (a, b), then E has the FLIC property, and then the global minimizer is unique, compactly supported and radially-symmetric. Therefore the previous argument can be applied to conclude that the global minimizer is mild. \(\square \)

Finally, we recall the explicit construction from [22] of the steady state for power-law potentials defined in (5.1) with \(a=2\) and \(2-d<b<\min \{4-d,2\}\), given by:

where \(A=\frac{-d\Gamma (\frac{d}{2})\sin \frac{(b+d)\pi }{2}}{(b+d-2)\pi ^{\frac{d}{2}+1}}>0\), and R is uniquely determined by the total mass condition \(\int _{{\mathbb {R}}^d}\rho _\infty \,\textrm{d}{\textbf{x}}=1\). We also have the explicit formula [22] for the case \(a=4\) and \(2-d<b<{{\bar{b}}}<3-d\), given by:

with \(A_1\), \(A_2\) and R uniquely determined by the total mass condition \(\int _{{\mathbb {R}}^d}\rho _\infty \,\textrm{d}{\textbf{x}}=1\) and a second moment condition. Notice that the upper bound \({{\bar{b}}}\) is given by the relation \(A_1+A_2=0\) for which the function given by (5.10) touches 0 at the origin. We refer to [22] for further details.
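
Two elementary claims made above, the positivity of the constant A for \(2-d<b<\min \{4-d,2\}\) and the bound \({{\bar{b}}}<3-d\) (together with \({{\bar{b}}}>2-d\)), can be checked numerically. The following sketch only evaluates the printed formulas on sample points; the function names and the sampling grid are illustrative assumptions, and it does not verify the steady state itself.

```python
import math


def amplitude_A(b, d):
    """Constant A from the a = 2 formula, as printed in the text."""
    num = -d * math.gamma(d / 2) * math.sin((b + d) * math.pi / 2)
    den = (b + d - 2) * math.pi ** (d / 2 + 1)
    return num / den


def b_bar(d):
    """Upper bound for b in the a = 4 case: (2 + 2d - d^2)/(d + 1)."""
    return (2 + 2 * d - d * d) / (d + 1)


def sample_b(d, n=50):
    """n interior sample points of the interval (2 - d, min(4 - d, 2))."""
    lo, hi = 2 - d, min(4 - d, 2)
    return [lo + (hi - lo) * (j + 0.5) / n for j in range(n)]


for d in range(1, 7):
    # A > 0 because (b + d) * pi / 2 lies in (pi, 2 * pi), where sin < 0.
    assert all(amplitude_A(b, d) > 0 for b in sample_b(d))
    # b_bar sits strictly between 2 - d and 3 - d.
    assert 2 - d < b_bar(d) < 3 - d
```

The second check reflects the identities \(3-d-{{\bar{b}}}=\frac{1}{d+1}\) and \({{\bar{b}}}-(2-d)=\frac{d}{d+1}\).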

Lemma 5.7

If W is given by (5.1) with \(a=2\) and \(2-d<b<\min \{4-d,2\}\) or \(a=4\) and \(2-d<b<{{\bar{b}}}\), then \(\rho _\infty \) defined in (5.9) or (5.10) is a steady state with \(\nabla ^2 W*\rho _\infty \) being continuous.

Proof

It is proved in [22] that \(\rho _\infty \) defined in (5.9) or (5.10) is a steady state.

It is clear that \(\nabla ^2(\frac{|\textbf{x}|^a}{a}) * \rho _\infty \) is continuous. To deal with \(\nabla ^2(\frac{|\textbf{x}|^b}{b}) * \rho _\infty \), first notice that \(\rho _\infty \in L^p\) for any \(p<\frac{2d}{b+d-2}\). Then by the Hardy-Littlewood-Sobolev inequality,

for \(\epsilon >0\) small enough, by checking the index relation

for \(b>2-d\).

Then notice that

where the last parenthesis is in \(L^q\) for any \(1\le q < \infty \). Therefore we see that \(\Delta \Big (\frac{|\textbf{x}|^b}{b}\Big ) * \rho _\infty \) is continuous by taking q large enough. The continuity of \(\nabla ^2 (\frac{|\textbf{x}|^b}{b}) * \rho _\infty \) follows by taking the Riesz transform on \(\rho _\infty \) which is bounded on \(L^p\). \(\square \)

Finally we prove Theorem 5.1.

Proof of Theorem 5.1

We first notice that for the special case \((a,b)=(2,2-d)\), [13, Theorem 3.4(i)] shows that any \(d_\infty \)-local minimizer is in \(L^\infty \), with \(W*\rho _\infty \) being \(C^{1,1}\). Then the unique \(d_\infty \)-local minimizer is the characteristic function of a ball as shown in [46, Theorem 2.1], coinciding with the formula (5.9). We also refer to [32] for the classical proof that the characteristic function of the ball is the global minimizer. In the rest of the proof, we will assume \((a,b)\ne (2,2-d)\).

Let \(\rho \) be a compactly supported \(d_\infty \)-local minimizer. When \(d\ge 2\) and (5.2) is satisfied, W is FLIC by Theorem 5.3. Then Theorem 3.1 implies that \(\rho \) is radially-symmetric.

If (a, b) further satisfies \(2-d\le b<2\), then the unique global minimizer \(\rho _\infty \) is mild. In fact, for the Newtonian case \(b=2-d\), [13, Theorem 3.4(i)] shows that \(W*\rho _\infty \) is \(C^{1,1}\), and in particular, \(\nabla W*\rho _\infty \) is continuous and thus \(\rho _\infty \) is mild. For the case \(2-d<b<\min \{4-d,2\}\), since \(\rho _\infty \) is radially-symmetric, Lemma 5.6 shows that \(\rho _\infty \) is mild.

If (a, b) further satisfies (5.3), by calculating

we see that (4.1) and (4.2) are satisfied under the assumption (5.3) with \((a,b)\ne (2,2-d)\). Also, by Lemma 5.5, W satisfies ((H-s)) up to adding a constant. Moreover, the positive bound from below on the dimension of the support of \(d_\infty \)-local minimizers, due to [2, Theorem 1], implies that \(\rho _\infty \) does not contain an isolated Dirac at 0. Therefore, if the \(d_\infty \)-local minimizer \(\rho \) is mild, then Theorem 4.1 together with Remark 4.4 allows us to conclude that \(\rho \) is the global minimizer.

Finally, if \(a=2\) with \(2-d<b<4-d\), then Lemma 5.7 implies that \(\rho _\infty \) defined in (5.9) is a steady state with \(\nabla ^2W*\rho _\infty \) being continuous (and therefore \(\nabla ^2W*\rho _\infty =0\) on \(\text {supp}\,\rho _\infty =\overline{B(0;R)}\)). Then Theorem 4.5 and Theorem 2.4 imply that \(\rho _\infty \) is the global minimizer.

If \(a=4\) with \(2-d<b<{{\bar{b}}}\), then Lemma 5.7 implies that \(\rho _\infty \) defined in (5.10) is a steady state with \(\nabla ^2W*\rho _\infty \) being continuous. In particular, \((\Delta W*\rho _\infty )(\textbf{x})=0\) for \(|\textbf{x}|=R\). Notice that \(\Delta W\), viewed as a function of \(r=|\textbf{x}|\), is increasing on \(r\in (0,\infty )\). Therefore the radial function \(\Delta W*\rho _\infty \) is increasing on \([R,\infty )\), and thus \((\Delta W*\rho _\infty )(\textbf{x})\ge 0\) for any \(|\textbf{x}|\ge R\). This implies that \(\rho _\infty \) satisfies the condition (2.2), and then Theorem 2.4 implies that \(\rho _\infty \) is the global minimizer.

\(\square \)

Remark 5.8

In the case \(d\ge 3\), \(a=2\), \(b=4-d\), one can show that the steady state \(\rho \) in the form of a Dirac delta on a sphere (with radius R) given by [1] is the global minimizer. In fact, notice that \(\Delta ^2 W(\textbf{x})=0\) for any \(\textbf{x}\ne 0\), and therefore \(\Delta ^2 W*\rho =0\) in B(0; R), which implies that \(\Delta W*\rho \) is constant in B(0; R) by radial symmetry. The steady state \(\rho \) is mild by Lemma 5.6, i.e., \(\nabla W*\rho \) is continuous and vanishes for \(|\textbf{x}|=R\). Then we obtain \(\Delta W*\rho =0\) in B(0; R), which implies that \(W*\rho \) is constant in \(\overline{B(0;R)}\). Similarly to the proof of Lemma 5.6, one can show that \(\Delta W*\rho \) is continuous. Then an argument similar to the last paragraph of the proof of Theorem 5.1 shows that \(\Delta W*\rho \ge 0\) for any \(|\textbf{x}|\ge R\). Therefore we see that \(\rho \) is the global minimizer by Theorem 2.4.
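
The claim \(\Delta ^2 W(\textbf{x})=0\) for \(\textbf{x}\ne 0\) can be verified as follows. This is a sketch under the assumption that the potential has the power-law form \(W(\textbf{x})=\frac{|\textbf{x}|^a}{a}-\frac{|\textbf{x}|^b}{b}\) (defined outside this passage), using \(\Delta |\textbf{x}|^p=p(p+d-2)|\textbf{x}|^{p-2}\) away from the origin.

```latex
% Assumption: W(x) = |x|^2/2 - |x|^{4-d}/(4-d), with d >= 3 and r = |x| > 0.
\Delta W = d - 2\,r^{2-d},
\qquad
\Delta\big(r^{2-d}\big) = (2-d)\big((2-d)+d-2\big)\,r^{-d} = 0,
\qquad\text{hence}\qquad
\Delta^2 W = 0 \quad\text{for } \mathbf{x}\ne 0.
```

In words, \(\Delta W\) is a constant minus a multiple of the Newtonian kernel, which is harmonic away from the origin.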

6 \(d_\infty \)-local minimizers of near power-law potentials in 1D are not fractal

Consider a 1D potential of the form

where \(1<b<2\) and \(W_1\) is smooth. Applying the dimensionality result in [2], we know that \(1\ge \text {dim}(\text {supp}\,\rho )\ge 2-b\) for all \(d_\infty \)-local minimizers. We now prove that the dimension of the support actually attains the maximal value 1. It is in this sense that we call these \(d_\infty \)-local minimizers non-fractal, although this does not exclude the case where \(\text {supp}\,\rho \) is the union of some closed intervals and a set with fractal dimension.

Theorem 6.1

Let \(\rho \in {\mathcal {P}}({\mathbb {R}})\) be a \(d_\infty \)-local minimizer of (6.1) which is supported inside \((-R,R)\), and satisfies \(W*\rho \in C^1([-R,R])\). Then there exists \(c_s>0\) depending on b, R and \(\Vert W_1\Vert _{C^4([-2R,2R])}\), such that \(|\text {supp}\,\rho | \ge c_s\). In particular, \(\text {dim}(\text {supp}\,\rho ) = 1\).

Proof

By [2, Theorem 1], \(\text {supp}\,\rho \) does not contain any isolated point.

STEP 1: Rough estimate of the local mass. We first show that every connected component I of \(\text {supp}\,\rho \) which is a closed interval satisfies

where the \(C^2\) norm is on \([-2R,2R]\) (and similarly for the other \(C^2,C^4\) norms in this proof). In fact, for any fixed \(x\in I\), we have

since \(W*\rho \) is a constant on I. Notice that

and

and (6.2) follows.

Next we show that every open interval J such that \(J\cap \text {supp}\,\rho \) is nonempty and not connected satisfies

In fact, since \(J\cap \text {supp}\,\rho \) is nonempty and not connected, we may take \(x_1,x_2\in J\cap \text {supp}\,\rho \) with \(x_1<x_2\) and \([x_1,x_2]\not \subseteq \text {supp}\,\rho \). Then we may take a maximal open interval \((x_3,x_4)\) in \([x_1,x_2]\backslash \text {supp}\,\rho \).

Then for any \(x\in (x_3,x_4)\), we compute

and estimate

and

If (6.3) were not true, then \((W''''*\rho )(x)<0\) for any \(x\in (x_3,x_4)\). Notice that \(W\in C^1({\mathbb {R}})\), and so is \(W*\rho \). Therefore, applying Lemma 4.2 to \(W*\rho \) on \([x_3,x_4]\), we deduce that either \(x_3\) or \(x_4\) is not a local minimum of \(W*\rho \), which contradicts the \(d_\infty \)-local minimizer property of \(\rho \) in view of Lemma 2.1.

STEP 2: Decomposition of the support. Assume on the contrary that \(|\text {supp}\,\rho |< c_s\), with the constant \(c_s>0\) to be determined. Then we apply the technical Lemma 6.2, which is stated and proved below, to \(\text {supp}\,\rho \) (which has no isolated points) with \(\epsilon =c_s\). This gives us a decomposition of \(\text {supp}\,\rho \) into connected component intervals \(\{I_1,I_2,\ldots \}\) and a cover of intervals \(\{J_1,J_2,\ldots \}\) with the properties listed therein. In particular, we have \( \sum _k|I_k|+\sum _l |J_l|< |\text {supp}\,\rho |+\epsilon < 2c_s\) from item 4 of Lemma 6.2.

By item 3 of Lemma 6.2, we may apply the estimate (6.3) for \(J_1,J_2,\ldots \) to get

Therefore, with the condition

we get

Since \(\{I_1,I_2,\ldots \}\cup \{J_1,J_2,\ldots \}\) covers \(\text {supp}\,\rho \), we have \(\sum _k \int _{I_k}\rho \,\textrm{d}{x}+\sum _l \int _{J_l}\rho \,\textrm{d}{x} \ge \int _{[-R,R]}\rho \,\textrm{d}{x}= 1\), and then we get

Then we define

Then \(\sum _k \delta _k \le |\text {supp}\,\rho | < c_s\) since \(\{I_k\}\), as the connected component intervals of \(\text {supp}\,\rho \), are disjoint. Notice that

Combined with (6.7), we get

We will also use the fact that

for every k, which can be seen by applying (6.2) to \(I_k\).

STEP 3: 4-th order derivative estimate. Fix \(k\in S\). Denote \(I_k=[x_1,x_2]\) and then \(\delta _k=|I_k|=x_2-x_1\). Define

where \(C_1\) is a large constant to be determined. We first show

under a suitable condition (6.11). In fact, by (6.4), \((W''''*\rho )(x)\) is decomposed into two parts, with the second part controlled by (6.5). To control the first part,

where the second inequality uses the fact that \(|x-y|\le \delta _k+C_1m_k\) for \(x\in {\tilde{I}}_k\) and \(y\in I_k\), the third inequality uses the definition of S in (6.8), and the last inequality uses \(m_k\le m_s\) from (6.9). Then (6.10) follows if we assume the condition

STEP 4: Vacuum regions and exclusion. Using the same argument as in STEP 1, we claim that

Suppose not. Then there exists \(y\in ({\tilde{I}}_k\backslash I_k)\cap \text {supp}\,\rho \), which we may assume to satisfy \(x_2<y\le x_2+C_1m_k\) without loss of generality. Then \([x_2,y]\not \subseteq \text {supp}\,\rho \) since \(I_k=[x_1,x_2]\) is a connected component of \(\text {supp}\,\rho \). Then, in view of (6.10), we may find a maximal open interval \((x_3,x_4)\) in \([x_2,y]\backslash \text {supp}\,\rho \) to apply Lemma 4.2 and get a contradiction, similar to the last paragraph of STEP 1.

For \(I_k = [x_1,x_2]\), define

and we claim that \(\{{\bar{I}}_k:k\in S\}\) are disjoint under a suitable condition (6.13). In fact, suppose \({\bar{I}}_k\cap {\bar{I}}_{k'}\ne \emptyset \) and \(m_k\ge m_{k'}\), then by \(\delta _{k'}\le 4c_sm_{k'}\le 4c_sm_{k}\),

if one assumes the condition

This implies that \(I_{k'}\subseteq {\tilde{I}}_k\). Since \(I_k\) and \(I_{k'}\) are disjoint and \(I_{k'}\cap \text {supp}\,\rho \ne \emptyset \), we get a contradiction with (6.12).

The disjoint property of \(\{{\bar{I}}_k\}_{k\in S}\) and the fact that \(I_k\subseteq [-R,R]\) show that

since \({\bar{I}}_k\subseteq [-(R+\frac{C_1}{3}m_s),(R+\frac{C_1}{3}m_s)]\). On the other hand,

by (6.8). Recall from (6.9) that \(m_s\) can be made arbitrarily small by taking \(c_s\) small. To choose the parameters \(C_1\) and \(c_s\), we first choose \(C_1=9R\), and then choose \(c_s\) small enough so that \(m_s\) is small enough to satisfy (6.6), (6.11), (6.13) and \(m_s<1/9\), to obtain a contradiction between (6.14) and (6.15). \(\square \)

Lemma 6.2

For any closed set \(A\subseteq (-R,R)\) with no isolated points and \(\epsilon >0\), there exists a countable collection of intervals \(\{I_1,I_2,\ldots \}\cup \{J_1,J_2,\ldots \}\) which covers A, satisfying

1. \(\{I_1,I_2,\ldots ,J_1,J_2,\ldots \}\) are subsets of \((-R,R)\).

2. \(\{I_k\}\) are the connected components of A being closed intervals. \(\{I_k\}\) is a finite or countable collection.

3. For every l, \(J_l\) is an open interval and \(J_l\cap A\) is nonempty and not connected.

4. \(\sum _k |I_k|+\sum _l |J_l| < |A|+\epsilon \).

(Here we do not regard a single point as a closed interval.)

Proof

We first take \(\{I_k\}\) as the collection of all the connected components of A being closed intervals, and this collection is pairwise disjoint, and either finite or countable. Denote \(I=\bigcup _k I_k\), then

By the definition of the Lebesgue measure, there exists a countable collection of open intervals \(\{J_1,J_2,\ldots \}\) which are subsets of \((-R,R)\) and cover \(A\backslash I\), such that \(\sum _{l=1}^\infty |J_l| < |A\backslash I|+\epsilon \). By deleting those \(J_l\) with empty intersection with \(A\backslash I\), we may assume that \(J_l\cap (A\backslash I)\ne \emptyset \) for every l.

Then for every l, we claim that the nonempty set \(J_l\cap A\) is not connected. To see this, denote \(J_l=(x_1,x_2)\). Suppose \(J_l\cap A\) is connected. Since A does not contain isolated points, \(J_l\cap A\) has to be an interval, which is a subset of a connected component of A being an interval. Therefore \(J_l\cap A\subseteq I_k\) for some k, and we get a contradiction against \(J_l\cap (A\backslash I)\ne \emptyset \).

\(\square \)
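
To illustrate Lemma 6.2 on a concrete set, the following sketch takes A to be the middle-thirds Cantor set in \([0,1]\subseteq (-R,R)\) with \(R=2\). This example is ours and is not taken from the paper. Here A is closed, has no isolated points and has no interval components, so the family \(\{I_k\}\) is empty and only the open intervals \(J_l\) are needed. Each \(J_l\) is a slightly enlarged interval of the level-n Cantor construction; it contains both endpoints of that interval (which belong to A) and its removed middle third, so \(J_l\cap A\) is nonempty and not connected. The function names and the enlargement by half an interval length are illustrative choices.

```python
from fractions import Fraction

def cantor_intervals(n):
    """Closed intervals of the level-n middle-thirds Cantor construction in [0, 1]."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(n):
        refined = []
        for a, b in intervals:
            third = (b - a) / 3
            refined.append((a, a + third))   # keep the left third
            refined.append((b - third, b))   # keep the right third
        intervals = refined
    return intervals

def open_cover(n):
    """Open intervals J_l: each level-n interval enlarged by half its length on both sides."""
    eta = Fraction(1, 2 * 3**n)
    return [(a - eta, b + eta) for a, b in cantor_intervals(n)]

def total_length(intervals):
    """Sum of the lengths |J_l| (exact rational arithmetic)."""
    return sum(b - a for a, b in intervals)

def cover_for(eps):
    """Smallest level n whose enlarged cover has total length below eps, and that cover."""
    n = 0
    while total_length(open_cover(n)) >= eps:
        n += 1
    return n, open_cover(n)
```

Since \(|A|=0\) and the level-n cover has total length \(2(2/3)^n\), item 4 of the lemma holds once n is large enough; for instance, `cover_for(Fraction(1, 10))` stops at level 8.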

The main conclusion of this section is that if we were looking for fractal behavior on the support of global minimizers of the interaction energy, power-law potentials or their perturbations are not the right family of potentials at least in one dimension.

7 Linear interpolation concavity and its consequences on local minimizers

We say W is linear-interpolation-concave with size \(\delta \), if there exists a nonzero function \(\mu \in L^\infty ({{\mathbb {R}}^d})\) with \(\int _{{\mathbb {R}}^d}\mu \,\textrm{d}{\textbf{x}}=0\) and \(\text {diam}\,(\text {supp}\,\mu ) \le \delta \), such that \(E[\mu ] < 0\). We say W is infinitesimal-concave if W is linear-interpolation-concave with size \(\delta \) for any \(\delta > 0\).
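
As a sanity check of this definition, the sketch below evaluates \(E[\mu ]\) numerically in one dimension for a zero-mean test function. It rests on assumptions that should be stated clearly: we take the normalization \(E[\mu ]=\frac{1}{2}\iint W(x-y)\mu (x)\mu (y)\,\textrm{d}{x}\,\textrm{d}{y}\) (the definition of E lies outside this passage; a different positive constant would not change the sign), and we use the hypothetical potential \(W(x)=x^2\), chosen only because the answer is explicit and not claimed to satisfy ((H)). For this W and any \(\mu \) with zero mean, expanding the square gives \(E[\mu ]=-(\int x\mu \,\textrm{d}{x})^2\). With \(\mu =\textbf{1}_{(0,h)}-\textbf{1}_{(-h,0)}\), which has \(\text {diam}\,(\text {supp}\,\mu )=2h\), this equals \(-h^4<0\).

```python
def interaction_energy(W, xs, mu, dx):
    """Midpoint-rule approximation of E[mu] = 1/2 * double integral of W(x - y) mu(x) mu(y)."""
    total = 0.0
    for xi, mi in zip(xs, mu):
        for yj, mj in zip(xs, mu):
            total += W(xi - yj) * mi * mj
    return 0.5 * total * dx * dx

def dipole(h, n):
    """Midpoint grid on [-h, h] with 2n cells; mu = -1 on the left half, +1 on the right half."""
    dx = h / n
    xs = [-h + (i + 0.5) * dx for i in range(2 * n)]
    mu = [-1.0 if x < 0 else 1.0 for x in xs]
    return xs, mu, dx

def quadratic(x):
    """Hypothetical test potential W(x) = x^2 (illustration only)."""
    return x * x

# Usage: a zero-mean perturbation supported in [-0.1, 0.1] with negative energy.
xs, mu, dx = dipole(0.1, 50)
energy = interaction_energy(quadratic, xs, mu, dx)
```

Because h can be taken arbitrarily small, this test potential would be infinitesimal-concave in the sense above. The name reflects the fact that, for a quadratic energy, \(\frac{\textrm{d}^2}{\textrm{d}t^2}E[(1-t)\rho _0+t\rho _1]=2E[\rho _1-\rho _0]\), so \(E[\mu ]<0\) means E is strictly concave along the linear interpolation in the direction \(\mu \).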

Theorem 7.1

Assume that the interaction potential W satisfies ((H)). If W is linear-interpolation-concave with size \(\delta \), then for any \(d_\infty \)-local minimizer \(\rho \) and \(\epsilon _0>0\), \(\{\textbf{x}: \rho (\textbf{x})\ge \epsilon _0\}\) does not contain a ball of radius \(\delta \). In particular, if W is infinitesimal-concave, then for any \(d_\infty \)-local minimizer \(\rho \) and \(\epsilon _0>0\), \(\{\textbf{x}: \rho (\textbf{x})\ge \epsilon _0\}\) has no interior point.

Here the meaning of the condition \(\rho (\textbf{x})\ge \epsilon _0\) is clear if \(\rho \) is a continuous function. Otherwise, we may use the Radon-Nikodym decomposition to write \(\rho =\rho _c+\rho _s\) where \(\rho _c\) is an \(L^1\) function and \(\text {supp}\,\rho _s\) has Lebesgue measure zero. Then \(\{\textbf{x}: \rho (\textbf{x})\ge \epsilon _0\}\) is interpreted as \(\{\textbf{x}: \rho _c(\textbf{x})\ge \epsilon _0\}\), and the conclusion of Theorem 7.1 is that \(\{\textbf{x}: \rho _c(\textbf{x})\ge \epsilon _0\}\) does not contain a ball of radius \(\delta \) for any representative for \(\rho _c\in L^1\) (which may differ by a set with Lebesgue measure zero).

In order to show Theorem 7.1, we need an improvement on the necessary condition for \(d_\infty \)-local minimizers in Lemma 2.1.

Lemma 7.2

Assume that the interaction potential W satisfies ((H)), and \(\rho \) is a \(d_\infty \)-local minimizer for the corresponding interaction energy. If \(B(\textbf{x};\delta )\subseteq \text {supp}\,\rho \), then \(V=W*\rho \) is a constant on \(B(\textbf{x};\delta )\) almost everywhere.

Proof

We will prove an equivalent statement with \(B(\textbf{x};\delta )\) replaced by a cube \(Q=[x_1,x_1+\delta ]\times \cdots \times [x_d,x_d+\delta ]\). Let \(\epsilon _0\) be as in Lemma 2.1. By replacing \(\epsilon _0\) with a smaller number, we may assume \(\frac{\epsilon _0}{\sqrt{d}}\le \delta \). Take \(\{\textbf{z}_n\}_{n=1}^N\) as the set of points in Q with each coordinate in \(\frac{\epsilon _0}{3\sqrt{d}}{\mathbb {Z}}\). Then for any \(\textbf{y}\in Q\), there exists some \(\textbf{z}_n\) such that \(|\textbf{y}-\textbf{z}_n|\le \frac{\epsilon _0}{3}< \frac{\epsilon _0}{2}\), i.e., the collection of balls \(\{B(\textbf{z}_n;\frac{\epsilon _0}{2})\}\) covers Q.

Suppose it is not true that \(V=W*\rho \) is a constant on Q almost everywhere. Then there exists \(C_1\) such that both

have positive Lebesgue measure. We claim that for any \(n=1,\ldots ,N\),

In fact, if \(B(\textbf{z}_n;\frac{\epsilon _0}{2})\cap A_2\ne \emptyset \), then we may take \(\textbf{y}\in B(\textbf{z}_n;\frac{\epsilon _0}{2})\cap A_2\). Applying Lemma 2.1 to the point \(\textbf{y}\) shows that \(V(\textbf{y}_1)\ge V(\textbf{y})\ge C_1\) for \(\textbf{y}_1\in B(\textbf{y};\epsilon _0)\) almost everywhere. Since \(|\textbf{y}-\textbf{z}_n|<\frac{\epsilon _0}{2}\), we have \(B(\textbf{z}_n;\frac{\epsilon _0}{2})\subseteq B(\textbf{y};\epsilon _0)\), and the claim follows.

Since \(A_2\) has positive Lebesgue measure, we may take \(\textbf{y}\in A_2\), and take \(\textbf{z}_m\) with \(\textbf{y}\in B(\textbf{z}_m;\frac{\epsilon _0}{2})\). Applying (7.1), we see that \(|B(\textbf{z}_m;\frac{\epsilon _0}{2})\cap A_1|=0\). This implies that \(B(\textbf{z}_{m'};\frac{\epsilon _0}{2})\cap A_2\ne \emptyset \) for any \(\textbf{z}_{m'}\) with \(|\textbf{z}_m-\textbf{z}_{m'}|=\frac{\epsilon _0}{3\sqrt{d}}\), i.e., the closest neighbors of \(\textbf{z}_m\), since \(\textbf{z}_{m'}\) is an interior point of \(B(\textbf{z}_m;\frac{\epsilon _0}{2})\). Applying (7.1) iteratively, we obtain \(|B(\textbf{z}_n;\frac{\epsilon _0}{2})\cap A_1|=0\) for any n. Since \(\{B(\textbf{z}_n;\frac{\epsilon _0}{2})\}\) covers Q, we get \(|A_1|=0\), contradicting the assumption that \(A_1\) has positive measure.

\(\square \)
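
The covering step at the start of this proof can be checked numerically. The sketch below reads the lattice points \(\textbf{z}_n\) as the points of \(\frac{\epsilon _0}{3\sqrt{d}}{\mathbb {Z}}^d\) lying in the cube Q, samples random points \(\textbf{y}\in Q\), and measures the distance to the coordinate-wise nearest lattice point inside Q. Each coordinate differs by at most the lattice spacing, so the distance is at most \(\frac{\epsilon _0}{3}\). The function names, the sample parameters and the use of random sampling are our own illustrative choices.

```python
import math
import random

def nearest_lattice_point_in_cube(y, corner, delta, s):
    """Coordinate-wise nearest point of s*Z^d lying in the cube corner + [0, delta]^d."""
    z = []
    for yi, ci in zip(y, corner):
        lo = math.ceil(ci / s)              # smallest admissible lattice index
        hi = math.floor((ci + delta) / s)   # largest admissible lattice index
        k = min(max(round(yi / s), lo), hi) # nearest index, clamped to the cube
        z.append(k * s)
    return z

def max_cover_distance(d, eps0, delta, corner, samples, seed=0):
    """Largest sampled distance from a point of the cube to its nearest lattice point in the cube."""
    s = eps0 / (3 * math.sqrt(d))           # lattice spacing from the proof
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(samples):
        y = [c + delta * rng.random() for c in corner]
        z = nearest_lattice_point_in_cube(y, corner, delta, s)
        worst = max(worst, math.dist(y, z))
    return worst

# Usage: d = 2, eps0 = 0.3, delta = 1.0 (so eps0 / sqrt(d) <= delta holds).
worst = max_cover_distance(2, 0.3, 1.0, [0.137, -0.42], 2000)
```

The value returned stays below \(\frac{\epsilon _0}{3}\), hence below the radius \(\frac{\epsilon _0}{2}\) of the covering balls.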

Proof of Theorem 7.1