Abstract

We analyze under which conditions equilibration between two competing effects, repulsion modeled by nonlinear diffusion and attraction modeled by nonlocal interaction, occurs. This balance leads to continuous compactly supported radially decreasing equilibrium configurations for all masses. All stationary states with suitable regularity are shown to be radially symmetric by means of continuous Steiner symmetrization techniques. Calculus of variations tools allow us to show the existence of global minimizers among these equilibria. Finally, in the particular case of Newtonian interaction in two dimensions they lead to uniqueness of equilibria for any given mass up to translation and to the convergence of solutions of the associated nonlinear aggregation-diffusion equations towards this unique equilibrium profile up to translations as \(t\rightarrow \infty \).

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The evolution of interacting particles and their equilibrium configurations has attracted the attention of many applied mathematicians and mathematical analysts for years. Continuum description of interacting particle systems usually leads to analyze the behavior of a mass density \(\rho (t,x)\) of individuals at certain location \(x\in {\mathbb {R}}^d\) and time \(t\ge 0\). Most of the derived models result in aggregation-diffusion nonlinear partial differential equations through different asymptotic or mean-field limits [14, 29, 75]. The different effects reflect that equilibria are obtained by competing behaviors: the repulsion between individuals/particles is modeled through nonlinear diffusion terms while their attraction is integrated via nonlocal forces. This attractive nonlocal interaction takes into account that the presence of particles/individuals at a certain location \(y\in {\mathbb {R}}^d\) produces a force at particles/individuals located at \(x\in {\mathbb {R}}^d\) proportional to \(-\nabla W(x-y)\) where the given interaction potential \(W:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) is assumed to be radially symmetric and increasing consistent with attractive forces. The evolution of the mass density of particles/individuals is given by the nonlinear aggregation-diffusion equation of the form:

with initial data \(\rho _0 \in L^1_+({\mathbb {R}}^d)\cap L^m({\mathbb {R}}^d)\). We will work with degenerate diffusions, \(m>1\), that appear naturally in modelling repulsion with very concentrated repelling nonlocal forces [14, 75], but also with linear and fast diffusion ranges \(0<m\le 1\), which are also classical in applications [59, 77]. These models are ubiquitous in mathematical biology where they have been used as macroscopic descriptions for collective behavior or swarming of animal species, see [15, 20, 69,70,71, 84] for instance, or more classically in chemotaxis-type models, see [11, 13, 26, 53, 54, 59, 77] and the references therein.

On the other hand, this family of PDEs is a particular example of nonlinear gradient flows in the sense of optimal transport between mass densities, see [2, 33, 34]. The main implication for us is that there is a natural Lyapunov functional for the evolution of (1.1) defined on the set of centered mass densities \(\rho \in L^1_+({\mathbb {R}}^d)\cap L^m({\mathbb {R}}^d)\) given by

being the last integral defined in the improper sense, and if \(m=1\) we replace the first integral of \(\mathcal {E} [\rho ]\) by \( \int _{{\mathbb {R}}^d} \rho \log \rho dx\). Therefore, if the balance between repulsion and attraction occurs, these two effects should determine stationary states for (1.1) including the stable solutions possibly given by local (global) minimizers of the free energy functional (1.2).

Many properties and results have been obtained in the particular case of Newtonian attractive potential due to its applications in mathematical modeling of chemotaxis [59, 77] and gravitational collapse models [78]. In the classical 2D Keller–Segel model with linear diffusion, it is known that equilibria can only happen in the critical mass case [10] while self-similar solutions are the long time asymptotics for subcritical mass cases [13, 22]. For supercritical masses, all solutions blow up in finite time [54]. It was shown in [23, 63] that degenerate diffusion with \(m>1\) is able to regularize the 2D classical Keller–Segel problem, where solutions exist globally in time regardless of its mass, and each solution remain uniformly bounded in time. For the Newtonian attraction interaction in dimension \(d\ge 3\), the authors in [9] show that the value of the degeneracy of the diffusion that allows the mass to be the critical quantity for dichotomy between global existence and finite time blow-up is given by \(m=2-2/d\). In fact, based on scaling arguments it is easy to argue that for \(m>2-2/d\), the diffusion term dominates when density becomes large, leading to global existence of solutions for all masses. This result was shown in [80] together with the global uniform bound of solutions for all times.

However, in all cases where the diffusion dominates over the aggregation, the long time asymptotics of solutions to (1.1) have not been clarified, as pointed out in [8]. Are there stationary solutions for all masses when the diffusion term dominates? And if so, are they unique up to translations? Do they determine the long time asymptotics for (1.1)? Only partial answers to these questions are present in the literature, which we summarize below.

To show the existence of stationary solutions to (1.1), a natural idea is to look for the global minimizer of its associated free energy functional (1.2). For the 3D case with Newtonian interaction potential and \(m>4/3\), Lions’ concentration-compactness principle [67] gives the existence of a global minimizer of (1.2) for any given mass. The argument can be extended to kernels that are no more singular than Newtonian potential in \({\mathbb {R}}^d\) at the origin, and have slow decay at infinity. The existence result is further generalized by [5] to a broader class of kernels, which can have faster decay at infinity. In all the above cases, the global minimizer of (1.2) corresponds to a stationary solution to (1.1) in the sense of distributions. In addition, the global minimizer must be radially decreasing due to Riesz’s rearrangement theorem.

Regarding the uniqueness of stationary solutions to (1.1), most of the available results are for Newtonian interaction. For the 3D Newtonian potential with \(m>4/3\), for any given mass, the authors in [65] prove uniqueness of stationary solutions to (1.1) among radial functions, and their method can be generalized to the Newtonian potential in \({\mathbb {R}}^d\) with \(m>2-2/d\). For the 3D case with \(m>4/3\), [79] show that all compactly supported stationary solutions must be radial up to a translation, hence obtaining uniqueness of stationary solutions among compactly supported functions. The proof is based on moving plane techniques, where the compact support of the stationary solution seems crucial, and it also relies on the fact that the Newtonian potential in 3D converges to zero at infinity. Similar results are obtained in [28] for 2D Newtonian potential with \(m>1\) using an adapted moving plane technique. Again, the uniqueness result is based on showing radial symmetry of compactly supported stationary solutions. Finally, we mention that uniqueness of stationary states has been proved for general attracting kernels in one dimension in the case \(m=2\), see [21]. To the best of our knowledge, even for Newtonian potential, we are not aware of any results showing that all stationary solutions are radial (up to a translation).

Previous results show the limitations of the present theory: although the existence of stationary states for all masses is obtained for quite general potentials, their uniqueness, crucial for identifying the long time asymptotics, is only known in very particular cases of diffusive dominated problems. The available uniqueness results are not very satisfactory due to the compactly supported restriction on the uniqueness class imposed by the moving plane techniques. And thus, large time asymptotics results are not at all available due to the lack of mass confinement results of any kind uniformly in time together with the difficulty of identifying the long time limits of sequences of solutions due to the restriction on the uniqueness class for stationary solutions.

If one wants to show that the long time asymptotics are uniquely determined by the initial mass and center of mass, a clear strategy used in many other nonlinear diffusion problems, see [87] and the references therein, is the following: one first needs to prove that all stationary solutions are radial up to a translation in a non restrictive class of stationary solutions, then one has to show uniqueness of stationary solutions among radial solutions, and finally this uniqueness will allow to identify the limits of time diverging sequences of solutions, if compactness of these sequences is shown in a suitable functional framework. Let us point out that comparison arguments used in standard porous medium equations are out of the question here due to the lack of maximum principle by the presence of the nonlocal term.

In this work, we will give the first full result of long time asymptotics for a diffusion dominated problem using the previous strategy without smallness assumptions of any kind. More precisely, we will prove that all solutions to the 2D Keller–Segel equation with \(m>1\) converge to the global minimizer of its free energy using the previous strategy. The first step will be to show radial symmetry of stationary solutions to (1.1) under quite general assumptions on W and the class of stationary solutions. Let us point out that standard rearrangement techniques fail in trying to show radial symmetry of general stationary states to (1.1) and they are only useful for showing radial symmetry of global minimizers, see [28]. Comparison arguments for radial solutions allow to prove uniqueness of radial stationary solutions in particular cases [61, 65]. However, up to our knowledge, there is no general result in the literature about radial symmetry of stationary solutions to nonlocal aggregation-diffusion equations.

Our first main result is that all stationary solutions of (1.1), with no restriction on \(m>0\), are radially decreasing up to translation by a fully novel application of continuous Steiner symmetrization techniques for the problem (1.1). Continuous Steiner symmetrization has been used in calculus of variations [18] for replacing rearrangement inequalities [16, 64, 72], but its application to nonlinear nonlocal aggregation-diffusion PDEs is completely new. Most of the results present in the literature using continuous Steiner symmetrization deal with functionals of first order, i.e. functionals involving a power of the modulus of the gradient of the unknown, see [19, Corollary 7.3] for an application to p-Laplacian stationary equations, and in [58, Section II] and [18, 57], while in our case the functional (1.2) is purely of zeroth order. The decay of the attractive Newtonian potential interaction term in \(d\ge 3\) follows from [18, Corollary 2] and [72], which is the only result related to our strategy.

We will construct a curve of measures starting from a stationary state \(\rho \) using continuous Steiner symmetrization such that the functional (1.2) decays strictly at first order along that curve unless the base point \(\rho \) is radially symmetric, see Proposition 2.15. However, the functional (1.2) has at most a quadratic variation when \(\rho \) is a stationary state as the first term in the Taylor expansion cancels. This leads to a contradiction unless the stationary state is radially symmetric. The construction of this curve needs a non-classical technique of slowing-down the velocities of the level sets for the continuous Steiner symmetrization in order to cope with the possible compact support of stationary states in the degenerate case \(m>1\), see Proposition 2.8. This first main result is the content of Sect. 2 in which we specify the assumptions on the interaction potential and the notion of stationary solutions in details. We point out that the variational structure of (1.1) is crucial to show the radially decreasing property of stationary solutions.

The result of radial symmetry for general stationary solutions to (1.1) is quite striking in comparison to other gradient flow models in collective behavior based on the competition of attractive and repulsive effects via nonlocal interaction potentials. Actually, there exist numerical and analytical evidence in [4, 7, 62] that there should be stationary solutions of these fully nonlocal interaction models which are not radially symmetric despite the radial symmetry of the interaction potential. Our first main result shows that this break of symmetry does not happen whenever nonlinear diffusion is chosen to model very strong localized repulsion forces, see [84]. Symmetry breaking in nonlinear diffusion equations without interactions has also received a lot of attention lately related to the Caffarelli–Kohn–Nirenberg inequalities, see [45, 46]. Another consequence of our radial symmetry results is the lack of non-radial local minimizers, and even non-radial critical points, of the free energy functional (1.2), which is not at all obvious.

We also generalize our radial symmetry result when (1.1) has an additional term \(\nabla \cdot (\rho \nabla V)\) on the right-hand side, where V is a confining potential (see Sect. 2.5 for precise conditions on V), in the sense that it plays the role of preventing particles to drift away in the presence of the diffusion. It is known that with the extra term, the corresponding energy functional has an additional term \(\int V(x) \rho (x)\, dx\). The particular case of quadratic confinement \(V(x)=\tfrac{|x|^2}{2}\) is important since it leads to the free energy functional associated to (1.1) with homogeneous kernels in self-similar variables [24, 25, 36] and thus, characterizing the self-similar profiles for those problems.

Finally, let us remark that our radial symmetry result applies to stationary states of (1.1) for any \(m>0\) regardless of being in the diffusion dominated case or not. As soon as stationary states of (1.1) exist under suitable assumptions on the interaction potential W, and the confining potential V if present, they must be radially symmetric up to a translation. This fact makes our result applicable to the fair-competition cases [10,11,12] and the aggregation-dominated cases, see [39, 40, 68] with degenerate, linear or fast diffusion. Section 2.4 is finally devoted to deal with the most restrictive case of \(\lambda \)-convex potentials and the Newtonian potential with \(m\ge 1-\tfrac{1}{d}\). In these cases, we can directly make use of the key first-order decay result of the interaction energy along Continuous Steiner symmetrization curves in Proposition 2.15, bypassing the technical result in Proposition 2.8, in order to give a nice shortcut of the proof of our main Theorem 2.2 based on gradient flow techniques.

We next study more properties of particular radially decreasing stationary solutions. We make use of the variational structure to show the existence of global minimizers to (1.2) under very general hypotheses on the interaction potential W and \(m>1\). In Sect. 3, we show that these global minimizers are in fact radially decreasing continuous functions, compactly supported if \(m>1\). These results fully generalize the results in [28, 79]. Putting together Sects. 2 and 3, the uniqueness and full characterization of the stationary states is reduced to uniqueness among the class of radial solutions. This result is known in the case of Newtonian attraction kernels [65].

Finally, we make use of the uniqueness among translations for any given mass of stationary solutions to (1.1) to obtain the second main result of this work, namely to answer the open problem of the long time asymptotics to (1.1) with Newtonian interaction in 2D and \(m>1\). This is accomplished in Sect. 4 by a compactness argument for which one has to extract the corresponding uniform in time bounds and a careful treatment of the nonlinear terms and dissipation while taking the limit \(t\rightarrow \infty \). We do not know how to obtain a similar result for Newtonian interaction in \(d\ge 3\) due to the lack of uniform in time mass confinement bounds in this case. We essentially cannot show that mass does not escape to infinity while taking the limit \(t\rightarrow \infty \). However, the compactness and characterization of stationary solutions is still valid in that case.

The present work opens new perspectives to show radial symmetry for stationary solutions to nonlocal aggregation-diffusion problems. While the hypotheses of our result to ensure existence of global radially symmetric minimizers of (1.2), and in turn of stationary solutions to (1.1), are quite general, we do not know yet whether there is uniqueness among radially symmetric stationary solutions (with a fixed mass) for general non-Newtonian kernels. We even do not have available uniqueness results of radial minimizers beyond Newtonian kernels. Understanding if the existence of radially symmetric local minimizers, that are not global, is possible for functionals of the form (1.2) with radial interaction potential is thus a challenging question. Concerning the long-time asymptotics of (1.1), the lack of a novel approach to find confinement of mass beyond the usual virial techniques and comparison arguments in radial coordinates hinders the advance in their understanding even for Newtonian kernels with \(d\ge 3\). Last but not least, our results open a window to obtain rates of convergence towards the unique equilibrium up to translation for the Newtonian kernel in 2D. The lack of general convexity of this variational problem could be compensated by recent results in a restricted class of functions, see [32]. However, the problem is quite challenging due to the presence of free boundaries in the evolution of compactly supported solutions to (1.1) that rules out direct linearization techniques as in the linear diffusion case [22].

2 Radial symmetry of stationary states with degenerate diffusion

Throughout this section, we assume that \(m>0\), and \(W\) satisfies the following four assumptions:

-

(K1)

\(W\) is attracting, i.e., \(W(x) \in C^1({\mathbb {R}}^d \setminus \{0\})\) is radially symmetric

$$\begin{aligned} W(x)=\omega (|x|)=\omega (r) \end{aligned}$$and \(\omega '(r)>0\) for all \(r>0\) with \(\omega (1)=0\).

-

(K2)

\(W\) is no more singular than the Newtonian kernel in \({\mathbb {R}}^d\) at the origin, i.e., there exists some \(C_w>0\) such that \(\omega '(r) \le C_w r^{1-d}\) for \(r\le 1\).

-

(K3)

There exists some \(C_w>0\) such that \(\omega '(r) \le C_w\) for all \(r>1\).

-

(K4)

Either \(\omega (r)\) is bounded for \(r\ge 1\) or there exists \(C_w>0\) such that for all \(a,b\ge 0\):

$$\begin{aligned} \omega _+(a+b)\le C_w (1+\omega (1+a)+\omega (1+b))\,. \end{aligned}$$

As usual, \(\omega _\pm \) denotes the positive and negative part of \(\omega \) such that \(\omega =\omega _+-\omega _-\). In particular, if \(W=-{\mathcal N}\), modulo the addition of a constant factor, is the attractive Newtonian potential, where \({\mathcal N}\) is the fundamental solution of \(-\Delta \) operator in \({\mathbb {R}}^d\), then \(W\) satisfies all the assumptions. Since the Eq. (1.1) does not change by adding a constant to the potential W, we will consider that the potential W is defined modulo additive constants from now on.

We denote by \(L^{1}_{+}({\mathbb {R}}^{d})\) the set of all non-negative functions in \(L^{1}({\mathbb {R}}^{d})\). Let us start by defining precisely stationary states to the aggregation Eq. (1.1) with a potential satisfying (K1)–(K4).

Definition 2.1

Given \(\rho _s \in L^1_+({\mathbb {R}}^d)\cap L^\infty ({\mathbb {R}}^d)\) we call it a stationary state for the evolution problem (1.1) if \(\rho _s^{m}\in H^1_{loc} ({\mathbb {R}}^d)\), \(\nabla \psi _s:=\nabla W *\rho _s\in L^1_{loc} ({\mathbb {R}}^d)\), and it satisfies

in the sense of distributions in \({\mathbb {R}}^d\).

Let us first note that \(\nabla \psi _s\) is globally bounded under the assumptions (K1)–(K3). To see this, a direct decomposition in near- and far-field sets yields

where we split the integrand into the sets \(\mathcal {A} := \{ y : |x - y| \le 1 \}\) and \(\mathcal {B} := {\mathbb {R}}^d \setminus \mathcal {A}\), and apply the assumptions (K1)–(K3).

Under the additional assumptions (K4) and \(\omega (1+|x|)\rho _s \in L^1({\mathbb {R}}^d)\), we will show that the potential function \(\psi _s(x) = W*\rho _s(x)\) is also locally bounded. First, note that (K1)–(K3) ensures that \(|\omega (r)| \le {\tilde{C}}_w \phi (r)\) for all \(r\le 1\) with some \({\tilde{C}}_w>0\), where

Hence we can again perform a decomposition in near- and far-field sets and obtain

Our main goal in this section is the following theorem.

Theorem 2.2

Assume that W satisfies (K1)–(K4) and \(m>0\). Let \(\rho _s \in L^1_+({\mathbb {R}}^d)\cap L^\infty ({\mathbb {R}}^d)\) with \(\omega (1+|x|)\rho _s \in L^1({\mathbb {R}}^d)\) be a non-negative stationary state of (1.1) in the sense of Definition 2.1. Then \(\rho _s\) must be radially decreasing up to a translation, i.e. there exists some \(x_0\in {\mathbb {R}}^d\), such that \(\rho _s(\cdot - x_0)\) is radially symmetric, and \(\rho _s(|x-x_0|)\) is non-increasing in \(|x-x_0|\).

Before going into the details of the proof, we briefly outline the strategy here. Assume there is a stationary state \(\rho _s\) which is not radially decreasing under any translation. To obtain a contradiction, we consider the free energy functional \(\mathcal {E}[\rho ]\) associated with (1.1),

where \(\mathcal {S}[\rho ]\) is replaced by \(\int \rho \log \rho \,dx\) if \(m=1\). We first observe that \(\mathcal {I}[\rho _s]\) is finite since the potential function \(\psi _s=W*\rho _s \in {\mathcal W}^{1,\infty }_{loc}({\mathbb {R}}^d)\) satisfies (2.4) with \(\omega (1+|x|)\rho _s \in L^1({\mathbb {R}}^d)\). Since \(\rho _s \in L^1_+({\mathbb {R}}^d)\cap L^\infty ({\mathbb {R}}^d)\), \(\mathcal {S}[\rho _s]\) is finite for all \(m>1\), but may be \(-\infty \) if \(m\in (0,1]\).

Below we discuss the strategy for \(m>1\) first, and point out the modification for \(m\in (0,1]\) in the next paragraph. Using the assumption that \(\rho _s\) is not radially decreasing under any translation, we will apply the continuous Steiner symmetrization to perturb around \(\rho _s\) and construct a continuous family of densities \(\mu (\tau , \cdot )\) with \(\mu (0,\cdot )=\rho _s\), such that \(\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s] < -c\tau \) for some \(c>0\) and any small \(\tau >0\). On the other hand, using that \(\rho _s\) is a stationary state, we will show that \(|\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s]| \le C\tau ^2\) for some \(C>0\) and any small \(\tau >0\). Combining these two inequalities together gives us a contradiction for sufficiently small \(\tau >0\).

For \(m\in (0,1)\), even if \(\mathcal {S}[\rho _s]\) might be \(-\infty \) by itself, the difference \(\mathcal {S}[\mu (\tau )] - \mathcal {S}[\rho _s] \) can be still well-defined in the following sense, if we regularize the function \(\frac{1}{m-1}\rho ^{m}\) by \(\frac{1}{m-1}\rho (\rho +\epsilon )^{m-1}\) and take the limit \(\epsilon \rightarrow 0\):

and if \(m=1\) the integrand is replaced by \(\mu (\tau ,\cdot ) \log (\mu (\tau ,\cdot )+\epsilon ) - \rho _s \log (\rho _s +\epsilon )\). Note that as long as \(\mu (\tau )\) has the same distribution as \(\rho _s\), the above definition gives \(\mathcal {S}[\mu (\tau )] - \mathcal {S}[\rho _s] =0\). With such modification, we will show that the difference \(\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s]\) is well-defined and satisfies the same two inequalities as the \(m>1\) case, so we again have a contradiction for small \(\tau >0\).

If the kernel W has certain convexity properties and \(m\ge 1-\tfrac{1}{d}\), then it is known that (1.1) has a rigorous Wasserstein gradient flow structure. In this case, once we obtain the crucial estimate: \(\mathcal {E}[\mu (\tau )] -\mathcal {E}[\rho _s] <-c\tau \), there is a shortcut that directly leads to the radial symmetry result, which we will discuss in Sect. 2.4.

Let us characterize first the set of possible stationary states of (1.1) in the sense of Definition 2.1 and their regularity. Parts of these arguments are reminiscent from those done in [28, 79] in the case of attractive Newtonian potentials.

Lemma 2.3

Let \(\rho _s \in L^1_+({\mathbb {R}}^d)\cap L^\infty ({\mathbb {R}}^d)\) with \(\omega (1+|x|)\rho _s \in L^1({\mathbb {R}}^d)\) be a non-negative stationary state of (1.1) for some \(m>0\) in the sense of Definition 2.1. Then \(\rho _s \in \mathcal {C}({\mathbb {R}}^d)\), and there exists some \(C = C(\Vert \rho _s\Vert _{L^1}, \Vert \rho _s\Vert _{L^\infty }, C_w, d)>0\), such that

and

In addition, if \(m \in (0,1]\), then \(\mathrm {supp}\,\rho _s = {\mathbb {R}}^d\).

Proof

We have already checked that under these assumptions on W and \(\rho _s\), the potential function \(\psi _s\in {\mathcal W}^{1,\infty }_{loc}({\mathbb {R}}^d)\) due to (2.2)–(2.4). Since \(\rho _s^{m}\in H^1_{loc} ({\mathbb {R}}^d)\), then \(\rho _s^m\) is a weak \(H^1_{loc}({\mathbb {R}}^{d})\) solution of

with right hand side belonging to \({\mathcal W}^{-1,p}_{loc} ({\mathbb {R}}^d)\) for all \(1\le p \le \infty \). As a consequence, \(\rho _s^m\) is in fact a weak solution in \({\mathcal W}_{loc}^{1,p}({\mathbb {R}}^d)\) for all \(1<p<\infty \) of (2.9) by classical elliptic regularity results. Sobolev embedding shows that \(\rho _s^m\) belongs to some Hölder space \(\mathcal {C}_{loc}^{0,\alpha }({\mathbb {R}}^d)\), and thus \(\rho _s\in \mathcal {C}_{loc}^{0,\beta }({\mathbb {R}}^d)\) with \(\beta := \min \{\alpha /m, 1\}\). Let us define the set \(\Omega =\{x\in {\mathbb {R}}^d : \rho _s(x)>0\}\). Since \(\rho _s\in \mathcal {C}({\mathbb {R}}^d)\), then \(\Omega \) is an open set and it consists of a countable number of open possibly unbounded connected components. Let us take any bounded smooth connected open subset \(\Theta \) such that \(\overline{ \Theta } \subset \Omega \), and start with the case \(m\ne 1\). Since \(\rho _s\in \mathcal {C}({\mathbb {R}}^d)\), then \(\rho _s\) is bounded away from zero in \(\Theta \) and thus due to the assumptions on \(\rho _s\), we have that \(\frac{m}{m-1}\nabla \rho _s^{m-1} = \frac{1}{\rho _s} \nabla \rho _{s}^{m}\) holds in the distributional sense in \(\Theta \). We conclude that wherever \(\rho _s\) is positive, (2.1) can be interpreted as

in the sense of distributions in \(\Omega \). Hence, the function \(G(x)=\frac{m}{m-1}\rho _s^{m-1}(x) +\psi _s (x)\) is constant in each connected component of \(\Omega \). From here, we deduce that any stationary state of (1.1) in the sense of Definition 2.1 is given by

where G(x) is a constant in each connected component of the support of \(\rho _s\), and its value may differ in different connected components. Due to \(\psi _s\in {\mathcal W}^{1,\infty }_{loc}({\mathbb {R}}^d)\), we deduce that \(\rho _s \in \mathcal {C}_{loc}^{0,1/(m-1)}({\mathbb {R}}^d)\) if \(m\ge 2\) and \(\rho _s \in \mathcal {C}_{loc}^{0,1}({\mathbb {R}}^d)\) for \(m \in (0,1)\cup (1,2)\). Putting together (2.11) and (2.2), we conclude the desired estimate.

In addition, from (2.11) we have that \(\Omega = {\mathbb {R}}^d\) if \(m \in (0,1)\): if not, let \(\Omega _0\) be any connected component of \(\Omega \), and take \(x_0 \in \partial \Omega _0\). As we take a sequence of points \(x_n \rightarrow x_0\) with \(x_n \in \Omega _0\), we have that \(\rho _s(x_n)^{m-1}\rightarrow \infty \), whereas the sequence \(G(x_n) - \psi _s(x_n)\) is bounded [since \(\psi _s\) is locally bounded due to (2.4)], a contradiction.

If \(m=1\), the above argument still goes through except that we replace (2.10) by

in the sense of distributions in \(\Omega \). As a result, the function \(G(x)=\log \rho _s +\psi _s (x)\) is constant in each connected component of \(\Omega \). The same argument as the \(m\in (0,1)\) case then yields that \(\rho _s \in \mathcal {C}_{loc}^{0,1}({\mathbb {R}}^d)\) and \(\Omega = {\mathbb {R}}^d\), leading to the estimate \(|\nabla \log \rho | \le C\) in \({\mathbb {R}}^d\). \(\square \)

2.1 Some preliminaries about rearrangements

Now we briefly recall some standard notions and basic properties of decreasing rearrangements for non-negative functions that will be used later. For a deeper treatment of these topics, we address the reader to the books [6, 51, 56, 60, 64] or the papers [73, 81,82,83]. We denote by \(|E|_{d}\) the Lebesgue measure of a measurable set E in \({\mathbb {R}}^{d}\). Moreover, the set \(E^{\#}\) is defined as the ball centered at the origin such that \(|E^{\#}|_{d}=|E|_{d}\).

A non-negative measurable function f defined on \({\mathbb {R}}^{d}\) is called radially symmetric if there is a non-negative function \(\widetilde{f}\) on \([0,\infty )\) such that \(f(x)=\widetilde{f}(|x|)\) for all \(x\in {\mathbb {R}}^{d}\). If f is radially symmetric, we will often write \(f(x)=f(r)\) for \(r=|x|\ge 0\) by a slight abuse of notation. We say that f is rearranged if it is radial and \(\widetilde{f}\) is a non-negative right-continuous, non-increasing function of \(r>0\). A similar definition can be applied for real functions defined on a ball \(B_{R}(0)=\left\{ x\in {\mathbb {R}}^d:|x|<R\right\} \).

We define the distribution function of\(f\in L^{1}_{+}({\mathbb {R}}^{d})\) by

Then the function \(f^{*}:[0,+\infty )\rightarrow [0,+\infty ]\) defined by

will be called the Hardy–Littlewood one-dimensional decreasing rearrangement of f. By this definition, one could interpret \(f^{*}\) as the generalized right-inverse function of \(\zeta _{f}(\tau )\).

Making use of the definition of \(f^{*}\), we can define a special radially symmetric decreasing function \(f^{\#}\), which we will call the Schwarz spherical decreasing rearrangement of f by means of the formula

where \(\omega _d\) is the volume of the unit ball in \({\mathbb {R}}^d\). It is clear that if the set \(\Omega _{f}=\left\{ x\in {\mathbb {R}}^{d}:f(x)>0\right\} \) of f has finite measure, then \(f^{\#}\) is supported in the ball \(\Omega _{f}^{\#}\).

One can show that \(f^{*}\) (and so \(f^{\#}\)) is equidistributed with f (i.e. they have the same distribution function). Thus if \(f\in L^{p}({\mathbb {R}}^d)\), a simple use of Cavalieri’s principle (see e.g. [60, 82]) leads to the invariance property of the \(L^{p}\) norms:

In particular, using the layer-cake representation formula (see e.g. [64]) one could easily infer that

Among the many interesting properties of rearrangements, it is worth mentioning the Hardy–Littlewood inequality (see [6, 51, 60] for the proof): for any couple of non-negative measurable functions \(f,\,g\) on \({\mathbb {R}}^{d}\), we have

Since in Sect. 4 we will use estimates of the solutions of Keller–Segel problems in terms of their integrals, let us now recall the concept of comparison of mass concentration, taken from [85], that is remarkably useful.

Definition 2.4

Let \(f,g\in L^{1}_{loc}({\mathbb {R}}^{d})\) be two non-negative, radially symmetric functions on \({\mathbb {R}}^{d}\). We say that f is less concentrated than g, and we write \(f\prec g\) if for all \(R>0\) we get

The partial order relationship \(\prec \) is called comparison of mass concentrations. Of course, this definition can be suitably adapted if f, g are radially symmetric and locally integrable functions on a ball \(B_{R}\). The comparison of mass concentrations enjoys a nice equivalent formulation if f and g are rearranged, whose proof we refer to [1, 41, 86]:

Lemma 2.5

Let \(f,g\in L^{1}_{+}({\mathbb {R}}^{d})\) be two non-negative rearranged functions. Then \(f\prec g\) if and only if for every convex nondecreasing function \(\Phi :[0,\infty )\rightarrow [0,\infty )\) with \(\Phi (0)=0\) we have

From this Lemma, it easily follows that if \(f\prec g\) and \(f,g\in L^{p}({\mathbb {R}}^{d})\) are rearranged and non-negative, then

Let us also observe that if \(f,g\in L^{1}_{+}({\mathbb {R}}^{d})\) are non-negative and rearranged, then \(f\prec g\) if and only if for all \(s\ge 0\) we have

If \(f\in L^1_+({\mathbb {R}}^d)\), we denote by \(M_2[f]\) the second moment of f, i.e.

In this regard, another interesting property which will turn out useful is the following

Lemma 2.6

Let \(f, g \in L^1_+({\mathbb {R}}^d)\) with \(\Vert f\Vert _{L^1({\mathbb {R}}^d)} = \Vert g\Vert _{L^1({\mathbb {R}}^d)}\). If additionally g is rearranged and \(f^\# \prec g\), then \(M_2[f] \ge M_2[g]\).

Proof

Let us consider the sequence of bounded radially increasing functions \(\left\{ \varphi _{n}\right\} \), where \(\varphi _{n}(x)=\min \left\{ |x|^{2},n\right\} \) is the truncation of the function \(|x|^{2}\) at the level n and define the function

Then \(h_{n}\) is non-negative, bounded and rearranged. Thus using the Hardy–Littlewood inequality (2.14) and [1, Corollary 2.1] we find

Then passing to the limit as \(n\rightarrow \infty \) we find the desired result. \(\square \)

Remark 2.7

Lemma 2.6 can be easily generalized when \(|x|^{2}\) is replaced by any non-negative radially increasing potential \(V=V(r)\), \(r=|x|\), such that

2.2 Continuous Steiner symmetrization

Although classical decreasing rearragement techniques are very useful to study properties of the minimizers and for solutions of the evolution problem (1.1) in next sections, we do not know how to use them in connection with showing that stationary states are radially symmetric. For an introduction of continuous Steiner symmetrization and its properties, see [16, 18, 64]. In this subsection, we will use continuous Steiner symmetrization to prove the following proposition.

Proposition 2.8

Let \(\mu _0 \in \mathcal {C}({\mathbb {R}}^d) \cap L^1_+({\mathbb {R}}^d)\), and assume it is not radially decreasing after any translation.

Moreover, if \(m\in (0,1)\cup (1,\infty )\), assume that \(|\frac{m}{m-1}\nabla \mu _0^{m-1}| \le C_0\) in \(\mathrm {supp}\,\mu _0\) for some \(C_0\); and if \(m=1\) assume that \(|\nabla \log \mu _0| \le C_0\) in \(\mathrm {supp}\,\mu _0\) for some \(C_0\). In addition, if \(m\in (0,1]\), assume that \(\mathrm {supp}\,\mu _0 = {\mathbb {R}}^d\).

Then there exist some \(\delta _0>0, c_0>0, C_1>0\) (depending on m, \(\mu _0\) and \(W\)) and a function \(\mu \in C([0,\delta _0]\times {\mathbb {R}}^d)\) with \(\mu (0,\cdot ) = \mu _0\), such that \(\mu \) satisfies the following for a short time \(\tau \in [0,\delta _0]\), where \(\mathcal {E}\) is as given in (2.5):

2.2.1 Definitions and basic properties of Steiner symmetrization

Let us first introduce the concept of Steiner symmetrization for a measurable set \(E\subset {\mathbb {R}}^{d}\) . If \(d=1\), the Steiner symmetrization of E is the symmetric interval \(S(E)=\left\{ x\in {\mathbb {R}}:|x|<|E|_{1}/2\right\} \). Now we want to define the Steiner symmetrization of E with respect to a direction in \({\mathbb {R}}^{d}\) for \(d\ge 2\). The direction we symmetrize corresponds to the unit vector \(e_{1}=(1,0,\ldots ,0)\), although the definition can be modified accordingly when considering any other direction in \({\mathbb {R}}^{d}\).

Let us label a point \(x\in {\mathbb {R}}^{d}\) by \((x_{1},x^{\prime })\), where \(x^{\prime }=(x_{2},\ldots ,x_{d})\in {\mathbb {R}}^{d-1}\) and \(x_{1}\in {\mathbb {R}}\). Given any measurable subset E of \({\mathbb {R}}^{d}\) we define, for all \(x^{\prime }\in {\mathbb {R}}^{d-1}\), the section of E with respect to the direction \(x_{1}\) as the set

Then we define the Steiner symmetrization of E with respect to the direction \(x_{1}\) as the set S(E) which is symmetric about the hyperplane \(\left\{ x_{1}=0\right\} \) and is defined by

In particular we have that \(|E|_{d}=|S(E)|_{d}\).

Now, consider a non-negative function \(\mu _{0}\in L^{1}({\mathbb {R}}^{d})\), for \(d\ge 2\). For all \(x^{\prime }\in {\mathbb {R}}^{d-1}\), let us consider the distribution function of \(\mu _{0}(\cdot ,x^{\prime })\), i.e. the function

where

Then we can give the following definition:

Definition 2.9

We define the Steiner symmetrization (or Steiner rearrangement) of \(\mu _{0}\) in the direction \(x_{1}\) as the function \(S \mu _{0}=S \mu _{0}(x_{1},x^{\prime })\) such that \(S \mu _{0}(\cdot ,x^{\prime })\) is exactly the Schwarz rearrangement of \(\mu _{0}(\cdot ,x^{\prime })\)i.e. (see (2.12))

As a consequence, the Steiner symmetrization \(S\mu _{0}(x_{1},x^{\prime })\) is a function being symmetric about the hyperplane \(\left\{ x_{1}=0\right\} \) and for each \(h>0\) the level set

is equivalent to the Steiner symmetrization

which implies that \(S\mu _{0}\) and \(\mu _{0}\) are equidistributed, yielding the invariance of the \(L^{p}\) norms when passing from \(\mu _{0}\) to \(S\mu _{0}\), that is for all \(p\in [1,\infty ]\) we have

Moreover, by the layer-cake representation formula, we have

Now, we introduce a continuous version of this Steiner procedure via an interpolation between a set or a function and their Steiner symmetrizations that we will use in our symmetry arguments for steady states.

Definition 2.10

For an open set \(U\subset {\mathbb {R}}\), we define its continuous Steiner symmetrization\(M^\tau (U)\) for any \(\tau \ge 0\) as below. In the following we abbreviate an open interval \((c-r, c+r)\) by I(c, r), and we denote by \(\mathrm {sgn}\,c\) the sign of c (which is 1 for positive c, \(-1\) for negative c, and 0 if \(c=0\)).

-

(1)

If \(U = I(c,r)\), then

$$\begin{aligned} M^\tau (U):= {\left\{ \begin{array}{ll}I(c-\tau \,\mathrm {sgn}\,c, r) &{} \text { for }0\le \tau < |c|,\\ I(0,r) &{}\text { for }\tau \ge |c|. \end{array}\right. } \end{aligned}$$ -

(2)

If \(U = \cup _{i=1}^N I(c_i,r_i)\) (where all \(I(c_i, r_i)\) are disjoint), then \(M^\tau (U) := \cup _{i=1}^N M^\tau (I(c_i, r_i))\) for \(0\le \tau <\tau _1\), where \(\tau _1\) is the first time two intervals \(M^\tau (I(c_i, r_i))\) share a common endpoint. Once this happens, we merge them into one open interval, and repeat this process starting from \(\tau =\tau _1\).

-

(3)

If \(U = \cup _{i=1}^\infty I(c_i, r_i)\) (where all \(I(c_i, r_i)\) are disjoint), let \(U_N = \cup _{i=1}^N I(c_i, r_i)\) for each \(N>0\), and define \(M^\tau (U) := \cup _{N=1}^\infty M^\tau (U_N)\).

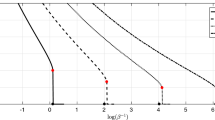

See Fig. 1 for illustrations of \(M^\tau (U)\) in the cases (1) and (2). Also, we point out that case (3) can be seen as a limit of case (2), since for each \(N_1<N_2\) one can easily check that \(M^\tau (U_{N_1}) \subset M^\tau (U_{N_2})\) for all \(\tau \ge 0\). Moreover, according to [18], the definition of \(M^{\tau }(U)\) can be extended to any measurable set U of \({\mathbb {R}}\), since

being \(O_{n}\supset O_{n+1}\)\(n=1,2,\ldots ,\) open sets and N a nullset.

In the next lemma we state four simple facts about \(M^\tau \). They can be easily checked for case (1) and (2) (hence true for (3) as well by taking the limit), and we omit the proof.

Lemma 2.11

Given any open set \(U\subset {\mathbb {R}}\), let \(M^\tau (U)\) be defined in Definition 2.10. Then

-

(a)

\(M^{0}(U)=U\), \(M^{\infty }(U)=S(E)\).

-

(b)

\(|M^\tau (U)| = |U|\) for all \(\tau \ge 0\).

-

(c)

If \(U_1 \subset U_2\), we have \(M^\tau (U_1) \subset M^\tau (U_2)\) for all \(\tau \ge 0\).

-

(d)

\(M^\tau \) has the semigroup property: \(M^{\tau +s}U = M^\tau (M^s(U))\) for any \(\tau ,s\ge 0\) and open set U.

Once we have the continuous Steiner symmetrization for a one-dimensional set, we can define the continuous Steiner symmetrization (in a certain direction) for a non-negative function in \({\mathbb {R}}^d\).

Definition 2.12

Given \(\mu _0 \in L^1_+({\mathbb {R}}^d)\), we define its continuous Steiner symmetrization \(S^\tau \mu _0\) (in direction \(e_1 = (1,0,\cdots ,0)\)) as follows. For any \(x_1 \in {\mathbb {R}}, x'\in {\mathbb {R}}^{d-1}, h>0\), let

where \(U_{x'}^h\) is defined in (2.19).

For an illustration of \(S^\tau \mu _0\) for \(\mu _0\in L^1({\mathbb {R}})\), see Fig. 2.

Using the above definition, Lemma 2.11 and the representation (2.20) one immediately has

Furthermore, it is easy to check that \(S^\tau \mu _0 = \mu _0\) for all \(\tau \) if and only if \(\mu _0\) is symmetric decreasing about the hyperplane \(H=\{x_1=0\}\). Below is the definition for a function being symmetric decreasing about a hyperplane:

Definition 2.13

Let \(\mu _0 \in L^1_+({\mathbb {R}}^d)\). For a hyperplane \(H \subset {\mathbb {R}}^d\) (with normal vector e), we say \(\mu _0\) is symmetric decreasing about H if for any \(x\in H\), the function \(f(\tau ):=\mu _0(x+\tau e)\) is rearranged, i.e. if \(f=f^{\#}\).

Next we state some basic properties of \(S^\tau \) without proof, see [18, 56, 58] for instance.

Lemma 2.14

The continuous Steiner symmetrization \(S^\tau \mu _0\) in Definition 2.12 has the following properties:

-

(a)

For any \(h>0\), \(|\{S^\tau \mu _0> h\}| = |\{\mu _0>h\}|\). As a result, \(\Vert S^\tau \mu _0\Vert _{L^p({\mathbb {R}}^d)} = \Vert \mu _0\Vert _{L^p({\mathbb {R}}^d)}\) for all \(1\le p\le +\infty \).

-

(b)

\(S^\tau \) has the semigroup property, that is, \(S^{\tau +s}\mu _0 = S^\tau (S^s\mu _0)\) for any \(\tau ,s\ge 0\) and non-negative \(\mu _0 \in L^1({\mathbb {R}}^d)\).

Lemma 2.14 immediately implies that \(\mathcal {S}[S^\tau \mu _0]\) is constant in \(\tau \), where \(\mathcal {S}[\cdot ]\) is as given in (2.5).

2.2.2 Interaction energy under Steiner symmetrization

In this subsection, we will investigate \(\mathcal {I}[S^\tau \mu _0]\). It has been shown in [18, Corollary 2] and [64, Theorem 3.7] that \(\mathcal {I}[S^\tau \mu _0]\) is non-increasing in \(\tau \). Indeed, in the case that \(\mu _0\) is a characteristic function \(\chi _{\Omega _0}\), it is shown in [72] that \(\mathcal {I}[S^\tau \mu _0]\) is strictly decreasing for \(\tau \) small enough if \(\Omega _0\) is not a ball. However, in order to obtain (2.16) for a strictly positive \(c_0\), some refined estimates are needed, and we will prove the following:

Proposition 2.15

Let \(\mu _0 \in \mathcal {C}({\mathbb {R}}^d) \cap L^1_+({\mathbb {R}}^d)\). Assume the hyperplane \(H=\{x_1=0\}\) splits the mass of \(\mu _0\) into half and half, and \(\mu _0\) is not symmetric decreasing about H. Let \(\mathcal {I}[\cdot ]\) be given in (2.5), where \(W\) satisfies the assumptions (K1)–(K3). Then \(\mathcal {I}[S^\tau \mu _0]\) is non-increasing in \(\tau \), and there exists some \(\delta _0>0\) (depending on \(\mu _0\)) and \(c_0>0\) (depending on \(\mu _0\) and \(W\)), such that

The building blocks to prove Proposition 2.15 are a couple of lemmas estimating how the interaction energy between two one-dimensional densities \(\mu _1, \mu _2\) changes under continuous Steiner symmetrization for each of them. That is, we will investigate how

changes in \(\tau \) for a given one-dimensional kernel \({\mathcal K}\) to be determined. We start with the basic case where \(\mu _1, \mu _2\) are both characteristic functions of some open interval.

Lemma 2.16

Assume \({\mathcal K}(x) \in \mathcal {C}^1({\mathbb {R}})\) is an even function with \({\mathcal K}'(x)<0\) for all \(x>0\). For \(i=1,2\), let \(\mu _i := \chi _{I(c_i,r_i)}\) respectively, where I(c, r) is as given in Definition 2.10. Then the following holds for the function \(I(\tau ) := I_{\mathcal K}[\mu _1, \mu _2](\tau )\) introduced in (2.21):

-

(a)

\(\frac{d^+}{d \tau } I(0) \ge 0\). (Here \(\frac{d^+}{d\tau }\) stands for the right derivative.)

-

(b)

If in addition \(\mathrm {sgn}\,c_1 \ne \mathrm {sgn}\,c_2\), then

$$\begin{aligned} \frac{d^+}{d \tau } I(0) \ge c_w \min \{r_1, r_2\} |c_2-c_1| > 0, \end{aligned}$$(2.22)where \(c_w\) is the minimum of \(|{\mathcal K}'(r)|\) for \(r\in [\frac{|c_2-c_1|}{2}, r_1+r_2 + |c_2-c_1|]\).

Proof

By definition of \(S^\tau \), we have \(S^\tau \mu _i = \chi _{M^\tau (I(c_i, r_i))}\) for \(i=1,2\) and all \(\tau \ge 0\). If \(\mathrm {sgn}\,c_1 = \mathrm {sgn}\,c_2\), the two intervals \(M^\tau (I(c_i, r_i))\) are moving towards the same direction for small enough \(\tau \), during which their interaction energy \(I(\tau )\) remains constant, implying \(\frac{d}{d \tau } I(0)=0\). Hence it suffices to focus on \(\mathrm {sgn}\,c_1 \ne \mathrm {sgn}\,c_2\) and prove (2.22).

Without loss of generality, we assume that \(c_2>c_1\), so that \(\mathrm {sgn}\,c_2- \mathrm {sgn}\,c_1\) is either 2 or 1. The definition of \(M^\tau \) gives

Taking its right derivative in \(\tau \) yields

Let us deal with the case \(r_1\le r_2\) first. In this case we rewrite \(\frac{d^+}{d\tau } I(0)\) as

where Q is the rectangle \([-r_1,r_1]\times [-r_2+(c_2-c_1), r_2+(c_2-c_1)]\), as illustrated in Fig. 3. Let \( Q^- = Q \cap \{x-y>0\}\), and \(Q^+ = Q \cap \{x-y<0\}\). The assumptions on \({\mathcal K}\) imply \({\mathcal K}'(x-y)<0\) in \(Q^-\), and \({\mathcal K}'(x-y)>0\) in \(Q^+\).

Illustration of the sets \(Q, Q^-, {\tilde{Q}}^+\) and D in the proof of Lemma 2.16

Let \({\tilde{Q}}^+ := Q^+ \cap \{y\le r_2\}\), and \(D:= [-r_1, r_1]\times [r_2 + \frac{c_2-c_1}{2}, r_2+(c_2-c_1)]\). (\({\tilde{Q}}^+\) and D are the yellow set and green set in Fig. 3 respectively). By definition, \({\tilde{Q}}^+\) and D are disjoint subsets of \(Q^+\), so

We claim that \(\int _{Q^-} {\mathcal K}'(x-y)dxdy + \int _{{\tilde{Q}}^+} {\mathcal K}'(x-y)dxdy \ge 0\). To see this, note that \( Q^- \cup {\tilde{Q}}^+ \) forms a rectangle, whose center has a zero x-coordinate and a positive y-coordinate. Hence for any \(h>0\), the line segment \({\tilde{Q}}^+ \cup \{x-y = -h\}\) is longer than \(Q^-\cup \{x-y = h\}\), which gives the claim.

Therefore, (2.24) becomes

Note that D is a rectangle with area \(r_1(c_2-c_1)\), and for any \((x,y)\in D\), we have (recall that \(r_{2}>r_{1}\))

This finally gives

Similarly, if \(r_1>r_2\), then \(I'(0)\) can be written as (2.23) with \({\tilde{Q}}\) defined as \([-r_1+(c_2-c_1),r_1+(c_2-c_1)]\times [-r_2, r_2]\) instead, and the above inequality would hold with the roles of \(r_1\) and \(r_2\) interchanged. Combining these two cases, we have

where \(c_w\) is the minimum of \(|{\mathcal K}'(r)|\) for \(r\in [\frac{|c_2-c_1|}{2}, r_1+r_2 + |c_2-c_1|]\). \(\square \)

The next lemma generalizes the above result to open sets with finite measures.

Lemma 2.17

Assume \({\mathcal K}(x) \in \mathcal {C}^1({\mathbb {R}})\) is an even function with \({\mathcal K}'(r)<0\) for all \(r>0\). For open sets \(U_1, U_2 \subset {\mathbb {R}}\) with finite measure, let \(\mu _i := \chi _{U_i}\) for \(i=1,2\), and \(I(\tau ) := I_{\mathcal K}[\mu _1,\mu _2](\tau )\) is as defined in (2.21). Then

-

(a)

\(\frac{d}{d \tau }I(\tau )\ge 0\) for all \(\tau \ge 0\);

-

(b)

In addition, assume that there exists some \(a\in (0,1)\) and \(R>\max \{|U_1|, |U_2|\}\) such that \(|U_1 \cap (\frac{|U_1|}{2}, R)|>a\), and \(|U_2 \cap (-R, -\frac{|U_2|}{2})|>a\). Then for all \(\tau \in [0,a/4]\), we have

$$\begin{aligned} \frac{d^+}{d \tau } I(\tau ) \ge \frac{1}{128} c_w a^3 > 0, \end{aligned}$$(2.25)where \(c_w\) is the minimum of \(|{\mathcal K}'(r)|\) for \(r\in [\frac{a}{4}, 4R]\).

Proof

It suffices to focus on the case when \(U_1, U_2\) both consist of a finite disjoint union of open intervals, and for the general case we can take the limit. Recall that \(S^\tau \mu _i = \chi _{M^\tau (U_i)}\) for \(i=1,2\) and all \(\tau \ge 0\).

To show (a), due to the semigroup property of \(S^\tau \) in Lemma 2.14, all we need to show is \(\frac{d^+}{d \tau }I(0)\ge 0\). By writing \(U_1, U_2\) each as a union of disjoint open intervals and expressing \(I(\tau )\) a sum of the pairwise interaction energy, (a) immediately follows from Lemma 2.16(a).

We will prove (b) next. First, we claim that

To see this, note that \(A_1(0)>\frac{3a}{4}\) due to the assumption \(|U_1 \cap (\frac{|U_1|}{2}, R)|>a\). Since each interval in \(M^\tau (U_1)\) moves with speed either 0 or \(\pm 1\) at each \(\tau \), we know \(A_1'(\tau )\ge -2\) for all \(\tau \), yielding the claim. (Similarly, \(A_2(\tau ) := |M^\tau (U_2) \cap (-R, -\frac{|U_2|}{2}-\frac{a}{4})|>\frac{a}{4}\) for all \(\tau \in [0,\frac{a}{4}]\).)

Now we pick any \(\tau _0 \in [0,\frac{a}{4}]\), and we aim to prove (2.25) at this particular time \(\tau _0\). At \(\tau =\tau _0\), write \(M^{\tau _0}(U_1):= \cup _{k=1}^{N_1} I(c_k^1, r_k^1)\), where all intervals \(I(c_k^1, r_k^1)\) are disjoint, and none of them share common endpoints – if they do, we merge them into one interval.

Note that for every \(x\in M^{\tau _0}(U_1) \cap (\frac{|U_1|}{2}+\frac{a}{4}, \,R)\), x must belong to some \(I(c_k^1, r_k^1)\) with \(a/4\le c_k^1 \le R+|U_1|/2\). Otherwise, the length of \(I(c_k^1, r_k^1)\) would exceed \(|U_1|\), contradicting Lemma 2.11(a). We then define

Combining the above discussion with (2.26), we have \(\sum _{k\in \mathscr {I}_1}|I(c_k^1, r_k^1)| \ge a/4\), i.e.

Likewise, let \(M^{\tau _0}(U_2) := \cup _{k=1}^{N_2} I(c_k^2, r_k^2)\), and denote by \(\mathscr {I}_2\) the set of indices k such that \(-R-|U_2|/2\le c_k^2 \le -\frac{a}{4}\), and similarly we have \(\sum _{k\in \mathscr {I}_2} r_k^2 \ge a/8\).

The semigroup property of \(M^\tau \) in Lemma 2.11 gives that for all \(s>0\),

Since none of the intervals \(I(c_k^1, r_k^1)\) share common endpoints, we have

A similar result holds for \(M^{\tau _0+s}(U_2)\), hence we obtain for sufficiently small \(s> 0\):

Applying Lemma 2.16(a) to the above identity yields

Next we will obtain a lower bound for \(T_{kl}\). By definition of \(\mathscr {I}_1\) and \( \mathscr {I}_2\), for each \(k\in \mathscr {I}_1\) and \(l\in \mathscr {I}_2\) we have that \(c_k^1 \ge \frac{a}{4}\) and \(c_l^2 \le -\frac{a}{4}\), hence \(|c_l^2 - c_k^1| \ge \frac{a}{2}\). Thus Lemma 2.16(b) yields

where \(c_w = \min _{r\in [\frac{a}{4}, 4R]} |{\mathcal K}'(r)|\) (here we used that for \(k\in \mathscr {I}_1, l\in \mathscr {I}_2\), we have \(r_k^1+r_l^2 + |c_l^2-c_k^1| \le |U_1|/2+|U_2|/2 + (R+|U_1|/2) + (R+|U_2|/2) \le 4R\), due to the assumption \(R>\max \{|U_1|, |U_2|\}\).)

Plugging the above inequality into (2.28) and using \(\min \{u,v\} \ge \min \{u,1\} \min \{v,1\}\) for \(u,v>0\), we have

here we applied (2.27) in the second-to-last inequality, and used the assumption \(a\in (0, 1)\) for the last inequality. Since \(\tau _0 \in [0,a/4]\) is arbitrary, we can conclude. \(\square \)

Now we are ready to prove Proposition 2.15.

Proof of Proposition 2.15

Since \(\mu _0 \in \mathcal {C}({\mathbb {R}}^d) \cap L^1_+({\mathbb {R}}^d)\) is not symmetric decreasing about \(H = \{x_1 = 0\}\), we know that there exists some \(x' \in {\mathbb {R}}^{d-1}\) and \(h>0\), such that \(U_{x'}^h := \{x_1\in {\mathbb {R}}: \mu _0(x_1, x')>h\}\) has finite measure, and its difference from \((-|U_{x'}^h|/2, |U_{x'}^h|/2)\) has nonzero measure.

For \(R>0, a>0\), define

Our discussion above yields that at least one of \(B_1^{R,a}\) and \(B_2^{R,a}\) is nonempty when R is sufficiently large and \(a>0\) sufficiently small (hence at least one of them must have nonzero measure by continuity of \(\mu _0\)). Indeed, using the fact that H splits the mass of \(\mu _0\) into half and half, we can choose R sufficiently large and \(a>0\) sufficiently small (both of them depend on \(\mu _0\) only), such that both \(B_1^{R,a}\) and \(B_2^{R,a}\) have nonzero measure in \({\mathbb {R}}^{d-1}\times (0,+\infty )\).

Now, let us define a one-dimensional kernel \(K_l(r) := -\tfrac{1}{2}W(\sqrt{r^2+l^2})\). Note that for any \(l>0\), the kernel \(K_l \in \mathcal {C}^1({\mathbb {R}})\) is even in r, and \(K_l'(r)<0\) for all \(r>0\). By definition of \(S^\tau \), we can rewrite \(\mathcal {I}[S^\tau \mu _0]\) as

Thus using the notation in (2.21), \(\mathcal {I}[S^\tau \mu _0] \) can be rewritten as

and taking its right derivative [and applying Lemma 2.17(a)] yields

By definition of \(B_1^{R,a}\) and \(B_2^{R,a}\), for any \((x',h_1)\in B_1^{R,a}\) and \((y',h_2)\in B_2^{R,a}\), we can apply Lemma 2.17(b) to obtain

where \(c_w\) is the minimum of \(|K_{|x'-y'|}'(r)|\) in [a / 4, 4R]. By definition of \(K_l(r)\), we have

Using \(|x'|\le R\) and \(|y'|\le R\) (due to definition of \(B_1, B_2\)), we have \( \frac{r}{\sqrt{r^2+|x'-y'|^2}} \ge \frac{a}{20R}\) for all \(r\in [a/4,4R]\), hence \(c_w \ge \frac{a}{40R} \min _{r\in [\frac{a}{4}, 4R]}W'(r)\).

Plugging (2.30) (with the above \(c_w\)) into (2.29) finally yields

hence we can conclude the desired estimate. \(\square \)

2.2.3 Proof of Proposition 2.8

In the statement of Proposition 2.8, we assume that \(\mu _0\) is not radially decreasing up to any translation. Since Steiner symmetrization only deals with symmetrizing in one direction, we will use the following simple lemma linking radial symmetry with being symmetric decreasing about hyperplanes. Although the result is standard (see [48, Lemma 1.8]), for the sake of completeness we include here the details of the proof.

Lemma 2.18

Let \(\mu _0 \in \mathcal {C}({\mathbb {R}}^d)\). Suppose for every unit vector e, there exists a hyperplane \(H \subset {\mathbb {R}}^d\) with normal vector e, such that \(\mu _0\) is symmetric decreasing about H. Then \(\mu _0\) must be radially decreasing up to a translation.

Proof

For \(i=1,\dots ,d\), let \(e_i\) be the unit vector with i-th coordinate 1 and all the other coordinates 0. By assumption, for each i, there exists some hyperplane \(H_i\) with normal vector \(e_i\), such that \(\mu _0\) is symmetric decreasing about \(H_i\). We then represent each \(H_i\) as \(\{(x_1,\dots ,x_d): x_i = a_i\)} for some \(a_i\in {\mathbb {R}}\), and then define \(a\in {\mathbb {R}}^d\) as \(a:=(a_1,\dots , a_d)\). Our goal is to prove that \(\mu _0(\cdot -a)\) is radially decreasing.

We first claim that \(\mu _0(x) = \mu _0(2a-x)\) for all \(x\in {\mathbb {R}}^d\). For any hyperplane \(H\subset {\mathbb {R}}^d\), let \(T_H: {\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\) be the reflection about the hyperplane H. Since \(\mu _0\) is symmetric with respect to \(H_1, \dots , H_d\), we have \(\mu _0(x) = \mu _0(T_{H_i}x)\) for \(x\in {\mathbb {R}}^d\) and all \(i=1,\dots ,d\), thus \(\mu _0(x) = \mu _0(T_{H_1}\dots T_{H_d} x) = \mu _0(2a-x)\).

The claim implies that every hyperplane H passing through a must split the mass of \(\mu _0\) into half and half. Denote the normal vector of H by e. By assumption, \(\mu _0\) is symmetric decreasing about some hyperplane \(H'\) with normal vector e. The definition of symmetric decreasing implies that \(H'\) is the only hyperplane with normal vector e that splits the mass into half and half, hence \(H'\) must coincide with H. Thus \(\mu _0\) is symmetric decreasing about every hyperplane passing through a, hence we can conclude. \(\square \)

Proof of Proposition 2.8

Since \(\mu _0\) is not radially decreasing up to any translation, by Lemma 2.18, there exists some unit vector e, such that \(\mu _0\) is not symmetric decreasing about any hyperplane with normal vector e. In particular, there is a hyperplane H with normal vector e that splits the mass of \(\mu _0\) into half and half, and \(\mu _0\) is not symmetric decreasing about H. We set \(e=(1,0,\dots ,0)\) and \(H = \{x_1=0\}\) throughout the proof without loss of generality. For the rest of the proof, we will discuss two different cases \(m\in (0,1]\) and \(m>1\), and construct \(\mu (\tau , \cdot )\) in different ways.

Case 1:\(m \in (0,1]\). In this case, we simply set \(\mu (\tau ,\cdot ) = S^\tau \mu _0\). By Proposition 2.15, \(\mathcal {I}[S^\tau \mu _0]\) is decreasing at least linearly for a short time. Since continuous Steiner symmetrization preserves the distribution function, even if \(\mathcal {S}[\mu _0] = -\infty \) by itself, we still have the difference \(\mathcal {S}[\mu (\tau )] - \mathcal {S}[\mu _0] \equiv 0\) in the sense of (2.6). Thus (2.16) holds for all sufficiently small \(\tau >0\). In addition, (2.18) is automatically satisfied since we assumed that \(\mathrm {supp}\,\mu _0 = {\mathbb {R}}^d\) for \(m\in (0,1]\), and recall that \(S^\tau \) is mass-preserving by definition.

It then suffices to prove (2.17) for all sufficiently small \(\tau >0\). Let us discuss the case \(m=1\) first. By assumption, \(|\nabla \log \mu _0| \le C_0\). For any \(y\in {\mathbb {R}}^d\) and \(\tau >0\) we claim that

To see this, let us fix any \(y = (y_1, y')\in {\mathbb {R}}^d\). Since \(\log \mu _0(\cdot , y')\) is Lipschitz with constant \(C_0\), for any \(\tau >0\), the following two inequalities hold:

and

Since the level sets of \(\mu _0\) are moving with velocity at most 1 (and note that any level set of \(\mu _0\) is also a level set of \(\log \mu _0\)), we obtain (2.31). It implies

We then have \(|\mu (\tau ,y) - \mu _0(y)| \le 2C_0\mu _0(y) \tau \) for all \(\tau \in (0, \frac{\log 2}{C_0})\) and all \(y\in {\mathbb {R}}^d\).

Now we move on to \(m\in (0,1)\), where we aim to show that \(|\mu (\tau ,y) - \mu _0(y)| \le C_1 \mu _0^{2-m}(y)\tau \) for some \(C_1\) for all sufficiently small \(\tau >0\). Using the assumption \(|\nabla \frac{m}{1-m} \mu _0^{m-1}| \le C_0\), the same argument to obtain (2.31) then gives the following for all \(y\in {\mathbb {R}}^d, \tau >0\):

Note that \(\mu _0^{m-1}(y)\ge \Vert \mu _0\Vert _\infty ^{m-1}\), since \(\mu _0 \in L^\infty \) and \(m\in (0,1)\). Let us set \(\delta _0 = \frac{m}{2(1-m)C_0}\Vert \mu _0\Vert _\infty ^{m-1}\). For any \(\tau \in (0,\delta _0)\), the left hand side of the above inequality is strictly positive, thus we have

and note that our choice of \(\delta _0\) ensures that

for all \(\tau \in (0,\delta _0)\). Let \(f(a) := \left( \mu _0^{m-1}(y) +a\right) ^{\frac{1}{m-1}} - \mu _0(y)\), which is a convex and decreasing function in a with \(f(0)=0\). Using this function f, the above inequality (2.32) can be rewritten as

Since f is convex and decreasing, for all \(|a| \le \frac{C_0(1-m)}{m}\delta _0=\frac{1}{2}\Vert \mu _{0}\Vert _{\infty }^{m-1}\) we have

and this leads to

with \(C_1 := \frac{2^{\frac{m-2}{m-1}}}{m}C_{0}\), which gives (2.17).

Case 2:\(m>1\). Note that if we set \(\mu (\tau ,\cdot ) = S^\tau \mu _0\), then it directly satisfies (2.16) for a short time, since \(\mathcal {I}[S^\tau \mu _0]\) is decreasing at least linearly for a short time by Proposition 2.15, and we also have \(\mathcal {S}[S^\tau \mu _0]\) is constant in \(\tau \). However, \(S^\tau \mu _0\) does not satisfy (2.17) and (2.18). To solve this problem, we will modify \(S^\tau \mu _0\) into \(\tilde{S}^\tau \mu _0\), where we make the set \(U_{x'}^h := \{x_1\in {\mathbb {R}}: \mu _0(x_1, x')>h\}\) travels at speed v(h) rather than at constant speed 1, with v(h) given by

for some sufficiently small constant \(h_0>0\) to be determined later. More precisely, we define \(\mu (\tau ,\cdot ) = {\tilde{S}}^\tau \mu _0\) as

with v(h) as in (2.33). For an illustration on the difference between \(S^\tau \mu _0\) and \({\tilde{S}}^\tau \mu _0\), see the left figure of Fig. 4.

Left: A sketch on \(\mu _0\) (grey), \(S^\tau \mu _0\) (blue) and \({\tilde{S}}^\tau \mu _0\) (red dashed) for a small \(\tau >0\). Right: In the construction of \({\tilde{S}}^\tau \), due to a reduced speed at lower values, a higher value level set may travel over a lower value level set. The figure illustrates this phenomenon for a large \(\tau >0\)

Note that \({\tilde{S}}^\tau \mu _0\) and \(S^\tau \mu _0\) do not necessarily have the same distribution function. Due to a reduced speed v(h) for \(h\in (0,h_0)\) in the construction of \(\tilde{S}^\tau \), a higher block may travel over a lower block, as illustrated in the right figure of Fig. 4. When this happens, the part that is hanging outside would “drop down” as we integrate in h in (2.34), thus changing the distribution function of \({\tilde{S}}^\tau \mu _0\). But, this is not likely (and even impossible) to happen when \(\tau \ll 1\): indeed, using the regularity assumption \(|\nabla \mu _0^{m-1}|\le C_0\) and the particular v(h) in (2.33), one can show that the level sets remain ordered for small enough \(\tau \). But we will not pursue in this direction, since later we will show in (2.38) that \(\mathcal {S}[{\tilde{S}}^\tau \mu _0] \le \mathcal {S}[\mu _0]\) for all \(\tau >0\), which is sufficient for us.

Our goal is to show that such \(\mu (\tau ,\cdot )\) satisfies (2.16), (2.17) and (2.18) for small enough \(\tau \). Let us first prove that for any \(h_0>0\), \(\mu (\tau ,\cdot )\) satisfies (2.17) and (2.18) for \(\tau \in [0,\delta _1]\), where \(\delta _1 = \delta _1(m,h_0,C_0)>0\). To show (2.18), note that the assumption \(|\nabla (\mu _0^{m-1})| \le C_0\) directly leads to the following: for any \(x,y\in {\mathbb {R}}^d\) with \(\mu _0(x)\ge h>0\) and \(\mu _0(y)=0\), we have that \(|x-y| \ge h^{m-1}/C_0\). This implies that for any connected component \(D_i \subset \mathrm {supp}\,\mu _0\),

Now define \(D_{i,x'}\) as the one-dimensional set \(\{x_1\in {\mathbb {R}}: (x_1, x') \in D_i\}\). The inequality (2.35) yields

and note that for any \(h>0\), we have \(h^{m-1}/(C_0 v(h))\ge h_0^{m-1}/C_0\) by definition of v(h). Using the above equation, the definition of \({\tilde{S}}^\tau \) and the fact that \(M^{v(h)\tau }\) is measure-preserving, we have that (2.18) holds for all \(\tau \le h_0^{m-1}/C_0\).

Next we prove (2.17). Let us fix any \(y = (y_1, y')\in {\mathbb {R}}^d\), and denote \(h=\mu _0(y)\). Using \(|\nabla \mu _0^{m-1}| \le C_0\), we have that for any \(\lambda >1\),

So we have \(y_1 \not \in M^{v(\lambda h)\tau } \left( U_{y'}^{\lambda h}\right) \) for all \(\tau \le \frac{(\lambda ^{m-1}-1)h^{m-1}}{C_0 v(\lambda h)}\), which is uniformly bounded below by \( \frac{(\lambda ^{m-1}-1)h_0^{m-1}}{C_0 \lambda ^{m-1}}\) due to the fact that \(v(\lambda h) \le (\lambda h/h_0)^{m-1}\) for all h. By definition of \({\tilde{S}}^\tau \) and the fact that \(\mu _0(y)=h\), the following holds for all \(\lambda > 1\):

Note that there exists \(c_m^1>0\) only depending on m, such that \(\lambda ^{m-1}-1 \ge c_m^1(\lambda - 1)\) for all \(1<\lambda <2\). Hence for all \(1<\lambda <2\) we have

and this directly implies

Similarly, for any \(0<\eta < 1\) we have \( \mathrm {dist}(y_1, (U_{y'}^{\eta h})^c)\ge \frac{(1-\eta ^{m-1}) h^{m-1}}{C_0}, \) and an identical argument as above gives us

Now we let \(c_m^2>0\) be such that \(1-\eta ^{m-1} \ge c_m^2(1-\eta )\) for all \(\frac{1}{2}<\eta <1\). Hence we have \({\tilde{S}}^\tau [\mu _0](y) - \mu _0(y) \ge -(1-\eta )\mu _0(y)\) for \(\tau = \dfrac{c_m^2 h_0^{m-1}}{C_0}(1-\eta )\), which implies

Combining (2.36) and (2.37), we have that for any \(h_0>0\), (2.17) holds for some \(C_1\) for all \(\tau \in [0,\delta _1]\), where both \(C_1>0\) and \(\delta _1>0\) depend on \(C_0, h_0\) and m.

Finally, we will show that (2.16) holds for \(\mu (\tau ) = {\tilde{S}}^\tau [\mu _0]\) if we choose \(h_0>0\) to be sufficiently small. First, we point out that \(\mathcal {S}[{\tilde{S}}^\tau \mu _0]\) is not preserved for all \(\tau \). This is because when different level sets are moving at different speed v(h), we no longer have that \(M^{v(h_1)\tau }(U_{x'}^{h_1}) \subset M^{v(h_2)\tau }(U_{x'}^{h_2}) \) for all \(h_1>h_2\). Nevertheless, we claim it is still true that

To see this, note that the definition of \({\tilde{S}}^\tau \) and the fact that \(M^{v(h)\tau }\) is measure preserving give us

regardless of the definition of v(h). This implies that \(\int f({\tilde{S}}^\tau \mu _0(x))dx \le \int f(\mu _0(x))dx\) for any convex increasing function f, yielding (2.38).

Due to (2.38) and the fact that \(\mathcal {E}[\cdot ] = \mathcal {S}[\cdot ]+\mathcal {I}[\cdot ]\), in order to prove (2.16), it suffices to show

Recall that Proposition 2.15 gives that \(\mathcal {I}[S^\tau \mu _0] \le \mathcal {I}[\mu _0] - c\tau \) for \(\tau \in [0,\delta ]\) with some \(c>0\) and \(\delta >0\). As a result, to show (2.39), all we need is to prove that if \(h_0>0\) is sufficiently small, then

To show (2.40), we first split \(S^\tau \mu _0\) as the sum of two integrals in \(h\in [h_0,\infty )\) and \(h\in [0,h_0)\):

We then split \({\tilde{S}}^\tau \mu _0\) similarly, and since \(v(h)=1\) for all \(h>h_0\) we obtain

For any \(\tau \ge 0\), we have \(\Vert f_1(\tau ,\cdot )\Vert _{L^\infty ({\mathbb {R}}^d)} \le \Vert \mu _0\Vert _{L^\infty ({\mathbb {R}}^d)}\), while \(\Vert f_2(\tau ,\cdot )\Vert _{L^\infty ({\mathbb {R}}^d)}\) and \(\Vert \tilde{f}_2(\tau ,\cdot )\Vert _{L^\infty ({\mathbb {R}}^d)}\) are both bounded by \(h_0\). As for the \(L^1\) norm, we have that \(\Vert f_1(\tau ,\cdot )\Vert _{L^1({\mathbb {R}}^d)} \le \Vert \mu _0\Vert _{L^1({\mathbb {R}}^d)}\), and

where \(m_{\mu _0}(h_0)\) approaches 0 as \(h_0\searrow 0\).

Also, since \(v(h)\le 1\), we know that for each \(\tau \ge 0\), there is a transport map \(\mathcal {T}(\tau ,\cdot ):[0,\infty )\times {\mathbb {R}}^d\rightarrow {\mathbb {R}}^d\) with \(\sup _{x\in {\mathbb {R}}^d} |\mathcal {T}(\tau ,x)-x|\le 2 \tau \), such that \(\mathcal {T}(\tau ,\cdot )\# f_2(\tau ,\cdot )=\tilde{f}_2(\tau ,\cdot )\) (that is, \(\int {\tilde{f}}_2(\tau ,x) \varphi (x)dx = \int f_2(\tau ,x)\varphi (\mathcal {T}(\tau ,x))dx\) for any measurable function \(\varphi \)). Indeed, since the level sets of \(f_2\) are traveling at speed 1 and the level sets of \({\tilde{f}}_2\) are traveling with speed v(h), for each \(\tau \) we can find a transport plan between them with maximal displacement \(L^\infty \) distance at most \(2\tau \) in its support. Let us remark that since these densities are both in \(L^\infty \), there is some optimal transport map \(\tilde{\mathcal {T}}\) for the \(\infty \)-Wasserstein such that \(|\tilde{\mathcal {T}}(\tau ,x)-x|\le 2\tau \). Although existence of an optimal map is known [38], we just need a transport map with this property below.

Using the decompositions (2.41), (2.42) and the definition of \(\mathcal {I}[\cdot ]\), we obtain, omitting the \(\tau \) dependence on the right hand side,

and we will bound \(A_1(\tau )\) and \(A_2(\tau )\) in the following. For \(A_1(\tau )\), denote \(\Phi (\tau ,\cdot ) =: W*f_1(\tau ,\cdot )\), and using the \(L^\infty \), \(L^1\) bounds on \(f_1\) and the assumptions (K2),(K3), we proceed in the same way as in (2.4) to obtain that \(\Vert \nabla \Phi \Vert _{L^\infty ({\mathbb {R}}^d)} \le C = C(\Vert \mu _0\Vert _{L^\infty ({\mathbb {R}}^d)}, \Vert \mu _0\Vert _{L^1({\mathbb {R}}^d)}, C_w, d)\).

Using that \(\mathcal {T}(\tau ,\cdot )\# f_2(\tau ,\cdot ) = \tilde{f}_2(\tau ,\cdot )\), we can rewrite \(A_1(\tau )\) as

where the coefficient of \(\tau \) can be made arbitrarily small by choosing \(h_0\) sufficiently small. To control \(A_2(\tau )\), we first use the identity \(\int f(W*g)dx = \int g(W*f)dx\) to bound it by

and both terms can be controlled in the same way as \(A_1(\tau )\), since both \(\Phi _2 := W*f_2\) and \({{\tilde{\Phi }}}_2 := W*{\tilde{f}}_2\) satisfy the same estimate as \(\Phi \). Combining the estimates for \(A_1(\tau )\) and \(A_2(\tau )\), we can choose \(h_0>0\) sufficiently small, depending on \(\mu _0\) and \(W\), such that Eq. (2.40) would hold for all \(\tau \), which finishes the proof. \(\square \)

2.3 Proof of Theorem 2.2

Proof

Towards a contradiction, assume there is a stationary state \(\rho _s\) that is not radially decreasing. Due to Lemma 2.3, we have that \(\rho _s \in \mathcal {C}({\mathbb {R}}^d) \cap L^1_+({\mathbb {R}}^d)\), and \(|\frac{m}{m-1}\nabla \rho _s^{m-1}| \le C_0\) in \(\mathrm {supp}\,\rho _s\) for some \(C_0>0\) (and if \(m=1\), it becomes \(|\nabla \log \rho _s|\le C_0\)). In addition, if \(m\in (0,1]\), the same lemma also gives \(\mathrm {supp}\,\rho _s = {\mathbb {R}}^d\). This enables us to apply Proposition 2.8 to \(\rho _s\), hence there exists a continuous family of \(\mu (\tau ,\cdot )\) with \(\mu (0,\cdot ) = \rho _s\) and constants \(C_1>0, c_0>0,\delta _0>0\), such that the following holds for all \(\tau \in [0,\delta _0]\):

Next we will use (2.44) and (2.45) to directly estimate \(\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s]\), and our goal is to show that there exists some \(C_2>0\), such that

We then directly obtain a contradiction between (2.43) and (2.46) for sufficiently small \(\tau >0\).

Let \(g(\tau ,x) := \mu (\tau ,x)-\rho _s(x)\). Due to (2.44), we have \(|g(\tau ,x)| \le C_1 \rho _s(x)^{\max \{1,2-m\}} \tau \) for all \(x\in {\mathbb {R}}^d\) and \(\tau \in [0,\delta _0]\). From now on, we set \(\delta _0\) to be the minimum of its previous value and \((2C_1(1+\Vert \rho _s\Vert _\infty ))^{-1}\). Such \(\delta _0\) ensures that \(\mathrm {supp}\,g(\tau ,\cdot ) \subset \mathrm {supp}\,\rho _s\) and \(|g(\tau ,x)/\rho _s(x)|\le \frac{1}{2}\) for all \(\tau \in [0,\delta _0]\).

Since the energy \(\mathcal {E}\) takes different formulas for \(m\ne 1\) and \(m=1\), we will treat these two cases differently. Let us start with the case \(m\in (0,1)\cup (1,+\infty )\). Using the notation \(g(\tau ,x)\), we have the following: (where in the integrand we omit the x dependence, due to space limitations)

Recall that for all \(|a|<1/2\), we have the elementary inequality

Since for all \(x\in \mathrm {supp}\,\rho _s\) and \(\tau \in [0,\delta _0]\) we have \(|g(\tau ,x)/\rho _s(x)|\le \frac{1}{2}\), we can replace a by \(g(x)/\rho _s(x)\) in the above inequality, then multiply \(\frac{1}{|m-1|}\rho _s^m\) to both sides to obtain the following (with \(C_2(m)=C(m)/|m-1|\)):

Applying this to (2.47), we have the following for all \(\tau \le \min \{\delta _0, C_1/2\}\):

Since \(\rho _s\) is a steady state solution, from (2.11) we have \(\frac{m}{m-1}\rho _s^{m-1} + W*\rho _s = C_i\) in each connected component \(D_i\subset \mathrm {supp}\,\rho _s\), hence \(I_1 \equiv 0\) for all \(\tau \in [0,\delta _0]\) due to (2.45) and the definition of \(g(\tau ,\cdot )\).

For \(I_2\) and \(I_3\), since \(|g(\tau ,x)| \le C_1 \rho _s(x)^{\max \{1,2-m\}} \tau \) for \(\tau \in [0,\delta _0]\), for \(m>1\) it becomes \(|g(\tau ,x)| \le C_1 \rho _s(x) \tau \), thus we directly have

for some \(A>0\) depending on \(\Vert \rho _s\Vert _{1}, \Vert \rho _s\Vert _{\infty }, m\) and d (where we use (2.4) and \(\rho _s \omega (1+|x|)\in L^1\) to control \(I_2\)). For \(m\in (0,1)\), the bound of g implies \(|g(\tau ,x)| \le C_1 \Vert \rho _s\Vert _{\infty }^{1-m} \rho _s(x) \tau \). Plugging this into \(I_2\) gives the same bound as above (with a different A). And for \(I_3\), plugging in \(|g(\tau ,x)| \le C_1 \rho _s(x)^{2-m} \tau \) gives

where in the last inequality we used that \(2-m>1\) and \(\rho _s \in L^1 \cap L^\infty \). Putting them together finally gives \(\big |\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s]\big | \le 2A\tau ^2\) for all \(\tau \le \delta _0\), finishing the proof for \(m\in (0,1)\cup (1,+\infty )\).

Next we move on to the case \(m=1\). Using the notation \(g(\tau ,x)\), the difference \(\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s]\) can be rewritten as follows: (where we again omit the x dependence in the integrand)

Again, we have \(J_1 = 0\) since \(\int g(\tau )dx = 0\), and \(\log \rho _s + W*\rho _s = C\) in \({\mathbb {R}}^d\). \(J_3\) is the same term as \(I_2\), thus again can be controlled by \(A\tau ^2\). Finally it remains to control \(J_2\). Let us break \(J_2\) into

For \(J_{22}\), using the inequality \(\log (1+a)<a\) for all \(a>0\), we have

where we use (2.44) in the second inequality. To control \(J_{21}\), due to the elementary inequality

for some universal constant C, letting \(a = \frac{g(\tau )}{\rho _s}\) and apply it to \(J_{21}\) gives

where the last inequality is obtained in the same way as (2.48). Combining these estimates above gives \(|\mathcal {E}[\mu (\tau )] - \mathcal {E}[\rho _s]| \le A\tau ^2\) for some \(A>0\) depending on \(\Vert \rho _s\Vert _{1}, \Vert \rho _s\Vert _{\infty }\) and d, which completes the proof. \(\square \)

2.4 A shortcut for equations with a gradient flow structure

In this subsection, we would like to discuss a shortcut for proving Theorem 2.2, once the first order decay under continuous Steiner symmetrization in Proposition 2.15 has been established, if the Eq. (1.1) has a rigorous gradient flow structure. Over the past two decades, it was discovered that many evolution PDEs have a Wasserstein gradient flow structure including the heat equation, porous medium equation, and the aggregation-diffusion Eq. (1.1) if the kernel W has certain convexity properties, see [2, 34, 42, 55, 76]. More precisely, for (1.1), if W is known to be \(\lambda \)-convex, then given any \(\rho _0 \in \mathcal {P}_2({\mathbb {R}}^d)\) (space of non-negative probability measures with finite second-moment) with \(\mathcal {E}[\rho _0]<\infty \), there exists a unique gradient flow \(\rho (t)\) of the free energy functional \(\mathcal {E}[\rho _0]\) in the space \(\mathcal {P}_2({\mathbb {R}}^d)\) endowed by the 2-Wasserstein distance. In addition, the gradient flow coincides with the unique weak solution if the velocity field has the necessary integrability conditions.

The \(\lambda \)-convexity of the potential W does not hold in the generality of our assumptions (K1)–(K4). However, the \(\lambda \)-convexity assumption on W has been recently relaxed in the following works for the particular, but important, case of the attractive Newtonian kernel. Craig [42] has shown that the gradient flow is well-posed if the energy \(\mathcal {E}\) is \(\xi \)-convex, where \(\xi \) is a modulus of convexity. Carrillo and Santambrogio [35] have recently shown that for (1.1) with attractive Newtonian potential, for any \(\rho _0\) in \(L^\infty ({\mathbb {R}}^d) \cap \mathcal {P}_2({\mathbb {R}}^d)\), there is a local-in-time gradient flow solution. The authors show that there are local in time \(L^\infty \) bounds at the discrete variational level allowing for local in time well defined gradient flow solutions. Furthermore, this gradient flow solution is unique among a large class of weak solutions due to the earlier results [32]. There, it was also shown that the free energy functional \(\mathcal {E}\) is \(\xi \)-convex for \(m\ge 1-\tfrac{1}{d}\) in the set of bounded densities \(L^\infty ({\mathbb {R}}^d) \cap \mathcal {P}_2({\mathbb {R}}^d)\) with a given fixed bound allowing the use of the recent theory of \(\xi \)-convex gradient flows in [42]. Summarizing, the recent results for the Newtonian attractive kernel [32, 35, 42] allow for a rigorous gradient flow structure of the Newtonian attractive kernel case for \(m\ge 1-\tfrac{1}{d}\) with initial data in \(L^\infty ({\mathbb {R}}^d) \cap \mathcal {P}_2({\mathbb {R}}^d)\).

In short we now know two particular more restrictive classes of potentials than the assumptions (K1)–(K4), including the Newtonian kernel case, for which a rigorous gradient flow theory has been developed for (1.1). Next we will show that under a rigorous gradient flow structure, once we use continuous Steiner symmetrization to obtain Proposition 2.15, it almost directly leads to radial symmetry via the following shortcut. In particular, Proposition 2.8 is not needed. Below is the statement and proof of the new proposition that we include for the sake of completeness. Note that it is weaker than Theorem 2.2, since Wasserstein gradient flow requires solutions to have a finite second moment, and furthermore for the existence of the gradient flow solutions we need to assume \(m\ge 1-\tfrac{1}{d}\). We will discuss this difference in Remark 2.20.

Proposition 2.19

Assume that W is such that (1.1) has a local-in-time unique gradient flow solution. Let \(\rho _s \in L^\infty ({\mathbb {R}}^d) \cap \mathcal {P}_2({\mathbb {R}}^d) \) be a stationary solution of (1.1) with \( \mathcal {E}[\rho _s]\) being finite. Then \(\rho _s\) must be radially decreasing after a translation.

Proof

Towards a contradiction, assume there is a stationary state \(\rho _s\) that is not radially decreasing after any translation. As before, Lemma 2.3 yields that \(\rho _s \in \mathcal {C}({\mathbb {R}}^d) \cap L^1_+({\mathbb {R}}^d)\). Applying Lemma 2.18 to \(\rho _s\) allows us to find a hyperplane H that splits the mass of \(\rho _s\) into half and half, but \(\rho _s\) is not symmetric decreasing about H. Without loss of generality assume \(H = \{x_1=0\}\). Applying Proposition 2.15 to \(\rho _s\) and using the fact that the \(L^m\) norm is conserved under the continuous Steiner symmetrization \(S^\tau \), we directly have that

where \(c_0, \delta _0\) are strictly positive constants that depend on \(\rho _s\). In addition, since the continuous Steiner symmetrization \(S^\tau \) gives an explicit transport plan from \(\rho _s\) to \(S^\tau \rho _s\), where each layer is shifted by no more than distance \(\tau \), we have \(W_\infty (\rho _s, S^\tau \rho _s) \le \tau \), thus

Using (2.49) and (2.50), the metric slope \(|\partial \mathcal {E}|(\rho _s)\) as defined in [2, Definition 1.2.4] satisfies

On the other hand, the local in time gradient flow solution \(\rho (t)\) with initial solution \(\rho _s\) satisfies an Evolution Differential Inequality (EVI) (see [42, Definition 2.10] when W is the Newtonian kernel), then arguing as in [3, Proposition 3.6], see also [32], we have that the following energy dissipation inequality is satisfied, for all \(t\ge 0\)