Abstract

Background

Multiple tools have been applied to radiomics evaluation, while evidence rating tools for this field are still lacking. This study aims to assess the quality of pancreatitis radiomics research and test the feasibility of the evidence level rating tool.

Results

Thirty studies were included after a systematic search of pancreatitis radiomics studies until February 28, 2022, via five databases. Twenty-four studies employed radiomics for diagnostic purposes. The mean ± standard deviation of the adherence rate was 38.3 ± 13.3%, 61.3 ± 11.9%, and 37.1 ± 27.2% for the Radiomics Quality Score (RQS), the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) checklist, and the Image Biomarker Standardization Initiative (IBSI) guideline for preprocessing steps, respectively. The median (range) of RQS was 7.0 (− 3.0 to 18.0). The risk of bias and application concerns were mainly related to the index test according to the modified Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) tool. The meta-analysis on differential diagnosis of autoimmune pancreatitis versus pancreatic cancer by CT and mass-forming pancreatitis versus pancreatic cancer by MRI showed diagnostic odds ratios (95% confidence intervals) of, respectively, 189.63 (79.65–451.48) and 135.70 (36.17–509.13), both rated as weak evidence mainly due to the insufficient sample size.

Conclusions

More research on the prognosis of acute pancreatitis is encouraged. The current pancreatitis radiomics studies have insufficient quality and share common scientific shortcomings. The evidence level rating is feasible and necessary for bringing the field of radiomics from the preclinical research area to the clinical stage.

Key points

- More high-quality research on prognosis of acute pancreatitis is encouraged, since it has great influence on clinical decision-making but cannot be easily predicted by radiologists’ assessment.
- The overall RQS rating could detect common methodological issues across radiomics research, but the biological correlation and comparison to “gold standard” item needs further modification for non-oncological radiomics studies.
- The RQS rating, TRIPOD checklist, and IBSI for preprocessing steps can serve as tools for radiomics quality evaluation in non-oncological field, while the development of a single comprehensive tool is more favorable for future evaluation.
- An evidence level rating tool has been confirmed to be feasible for the determination of the existing gap between preclinical and clinical use of radiomics research and is necessary for the overall assessment of specific clinical problems.

Background

Acute pancreatitis is a frequent pancreatic disease characterized by a local and systemic inflammatory response, with a clinical course that varies from self-limiting mild acute pancreatitis to moderate or severe acute pancreatitis, which has a substantial mortality rate [1]. Numerous scoring systems have been proposed to predict the severity of acute pancreatitis and guide clinical treatment, such as the Acute Physiology and Chronic Health Evaluation (APACHE) II [2], the bedside index for severity in acute pancreatitis (BISAP) [3], and the CT severity index (CTSI) [4]. However, their complexity may hinder clinical application, and they are not useful for predicting recurrence or local complications [2,3,4]. Approximately 20% of acute pancreatitis patients endure recurrent attacks and progress to chronic pancreatitis, a fibroinflammatory syndrome of the exocrine pancreas [5]. Chronic pancreatitis may present with a mass-like or cyst-like appearance, mimicking mass-forming pancreatitis, autoimmune pancreatitis, pancreatic cancer, and other pancreatic tumors [6]. Differentiating these lesions and determining whether they are malignant is difficult, but an accurate diagnosis is necessary to avoid unnecessary surgery in inflammatory conditions.

Radiomics is the process of extracting quantitative features to transform images into high-dimensional data, capturing deeper information to support decision-making [7,8,9,10,11]. Current studies have shown its potential for pancreatic precision medicine, especially in the diagnosis and management of pancreatic tumors [12,13,14]. Although radiomics is mainly used in oncology, the approach is by nature also suitable for non-oncological research [15,16,17]. However, only 5.6% of pancreatic radiomics studies investigated the role of radiomics in acute pancreatitis [18]. Most radiomics studies on chronic, mass-forming, or autoimmune pancreatitis aimed to differentiate these inflammatory conditions from malignant lesions [19,20,21,22]. Implementing radiomics in acute pancreatitis could provide predictive information to identify patients with a worse prognosis and therefore promote personalized medical treatment. It is also important to identify patients at high risk of chronic pancreatitis to allow for closer follow-up and early intervention. Further, although current radiomics reviews have applied multiple tools for quality assessment, the study quality and clinical value of radiomics in pancreatitis remain unknown. A high level of evidence is an essential prerequisite for translating radiomics into clinical use. To the best of our knowledge, the level of evidence supporting radiomics models for clinical practice has not been fully investigated.

Hence, our review aimed to systematically evaluate the methodological quality, reporting transparency, and risk of bias of current radiomics studies on pancreatitis, and to determine their level of evidence according to the results of meta-analyses.

Methods

Protocol and registration

The protocol of the current systematic review has been drafted and registered (Additional file 1: Note S1). This systematic review followed the Preferred Reporting Items for Systematic Reviews and Meta-analysis (PRISMA) statement [23], and the relevant checklists are available as Additional file 2.

Literature search and study selection

A systematic search of articles on radiomics in pancreatitis was performed via PubMed, Embase, Web of Science, China National Knowledge Infrastructure, and Wanfang Data until February 28, 2022, with a search string combining “radiomics” and “pancreatitis.” There was no restriction on the publication period, but only articles written in English, Chinese, Japanese, German, or French were eligible. The reference lists of included articles and relevant reviews were screened to identify additional eligible articles. We included primary radiomics articles whose purposes were diagnostic, prognostic, or predictive. Two reviewers, each with 4 years of experience in radiomics and systematic review, searched and selected articles independently. In case of disagreement, a third reviewer with 30 years of experience in abdominal radiology and experience in radiomics research was consulted. The detailed search strategy and eligibility criteria are available in Additional file 1: Note S2.

Data extraction and quality assessment

We modified a data extraction sheet for the current review, which includes literature information, study characteristics, radiomics considerations, and model metrics (Additional file 1: Table S1) [24]. One reviewer extracted the data independently and then the other reviewer cross-checked the results. The disagreements were resolved by a third reviewer.

The Radiomics Quality Score (RQS) [10], the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) checklist [25], the Image Biomarker Standardization Initiative (IBSI) guideline [11], and the modified Quality Assessment of Diagnostic Accuracy Studies (QUADAS-2) tool [26] were employed to assess the study quality (Additional file 1: Tables S2 to S5). These tools were adapted to the topic of the current review. Briefly, the RQS with 16 items was used to assess the methodological quality of radiomics according to six key domains [27]. The TRIPOD was partially modified into a 35-item checklist for application in radiomics, excluding the supplementary information and funding items [28]. Because of the overlap with the RQS and the TRIPOD, only seven items relevant to preprocessing steps were selected from the IBSI guideline [29]. The QUADAS-2 tool was tailored to the current research question through signaling questions for risk of bias and application concerns [24]. Two reviewers rated the articles independently, and disagreements were resolved by discussion with a third reviewer. The consensus reached during data extraction and quality assessment is described in Additional file 1: Note S3.

Data synthesis and analysis

The characteristics of included studies were descriptively summarized. For each item, the RQS score was described as the mean score, and the percentage of the ideal score as the ratio of the mean score to the ideal score. The adherence rates of the RQS rating, the TRIPOD checklist, and the IBSI guideline were calculated as the ratio of the number of articles with basic adherence to the number of all available articles. An item was considered to have basic adherence if it scored at least one point without minus points, as in previous reports [27,28,29]. When calculating the TRIPOD adherence rate, the “if done” or “if relevant” items (5c, 11, and 14b) and validation items (10c, 10e, 12, 13, 17, and 19a) were excluded from both the denominator and numerator [28, 29]. The results of the QUADAS-2 assessment were summarized as proportions of high risk, low risk, and unclear.
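The adherence rate and ideal percentage described above can be illustrated with a minimal Python sketch. It assumes that every study carries one rating per item, that adherence is pooled over all study-item pairs, and that the ideal RQS score is 36 points; the function names and the toy ratings are hypothetical and are not taken from the review's data sheets.

```python
from typing import Dict, FrozenSet, List

# TRIPOD items excluded from both numerator and denominator, as listed in the Methods.
TRIPOD_EXCLUDED: FrozenSet[str] = frozenset(
    {"5c", "11", "14b", "10c", "10e", "12", "13", "17", "19a"}
)


def adherence_rate(
    ratings: List[Dict[str, float]], excluded: FrozenSet[str] = frozenset()
) -> float:
    """Share of applicable study-item ratings that reach basic adherence.

    A rating counts as adhered when it scores at least one point,
    which also rules out minus points.
    """
    adhered = 0
    applicable = 0
    for study in ratings:
        for item, score in study.items():
            if item in excluded:
                continue  # dropped from numerator and denominator alike
            applicable += 1
            if score >= 1:
                adhered += 1
    if applicable == 0:
        raise ValueError("no applicable items to rate")
    return adhered / applicable


def ideal_percentage(mean_score: float, ideal_score: float = 36.0) -> float:
    """Mean score expressed as a percentage of the ideal score."""
    return 100.0 * mean_score / ideal_score


# Hypothetical toy ratings for two studies (item id -> points).
toy_ratings = [
    {"1": 1, "2": 0, "5c": 0},
    {"1": 2, "2": -5, "5c": 1},
]
print(adherence_rate(toy_ratings, excluded=frozenset({"5c"})))
print(round(ideal_percentage(7.3), 1))
```

In this toy example item 5c is ignored for both studies, so two of the four remaining ratings count as adhered.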

Subgroup analysis was performed to determine whether the journal type, first authorship, biomarker, or imaging modality influenced the ideal percentage of RQS, the TRIPOD adherence rate, or the IBSI adherence rate. Depending on the data distribution, Student’s t test or the Mann–Whitney U test was used for comparisons between two groups, and one-way analysis of variance or the Kruskal–Wallis H test was applied for comparisons among multiple groups. The Spearman correlation test was used to analyze the correlation between study quality (the ideal percentage of RQS, the TRIPOD adherence rate, and the IBSI adherence rate) and study characteristics (the sample size and the impact factor). SPSS software version 26.0 was used for statistical analysis. A two-tailed p value < 0.05 was considered statistically significant, unless otherwise specified.
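For readers unfamiliar with the Spearman correlation, the following standard-library Python sketch shows how the coefficient can be computed as the Pearson correlation of average ranks. It is an illustration only; the review itself used SPSS, and the function names and sample values below are hypothetical.

```python
from math import sqrt
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the run while the next sorted value ties with the current one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman correlation: Pearson correlation computed on the ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length of at least 2")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in rx))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in ry))
    return cov / (sd_x * sd_y)


# Hypothetical sample sizes and ideal percentages of RQS for five studies.
sample_sizes = [40, 55, 80, 120, 200]
rqs_percentages = [8.3, 19.4, 13.9, 25.0, 33.3]
print(round(spearman_rho(sample_sizes, rqs_percentages), 3))
```

Working on ranks rather than raw values is what makes the test suitable for skewed variables such as sample size.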

In the current review, the value of radiomics in the differential diagnosis of autoimmune pancreatitis versus pancreatic cancer by CT and of mass-forming pancreatitis versus pancreatic cancer by MRI was repeatedly addressed. Therefore, these two clinical questions were included in the meta-analysis. We performed the meta-analysis separately for each imaging modality to present clinically practicable estimates. One reviewer directly extracted or reconstructed the two-by-two tables based on available data, and then the other reviewer cross-checked the results. The diagnostic odds ratio (DOR) with its 95% confidence interval (CI) and the corresponding p value were calculated using a random-effects model. The sensitivity, specificity, positive and negative likelihood ratios, and their 95% CIs were also quantitatively synthesized. The hierarchical summary receiver operating characteristic (HSROC) curve was drawn for visual evaluation of diagnostic performance and heterogeneity. Cochran’s Q test and the Higgins I² statistic were used for heterogeneity assessment. The Deeks funnel plot was constructed to assess publication bias, and the Deeks funnel plot asymmetry test, Egger’s test, and Begg’s test were performed. A two-tailed p value > 0.10 indicated a low risk of publication bias. The trim and fill method was employed to evaluate the robustness of the meta-analyses. Stata software version 15.1 with the metan, midas, and metandi packages was employed for meta-analysis.
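To make the pooling step concrete, the sketch below computes a DOR from each two-by-two table and combines the log DORs with a DerSimonian–Laird random-effects model, together with Cochran’s Q and I². This is a simplified univariate illustration in Python and not the Stata routines used in the review; the 0.5 continuity correction, the function names, and the example tables are assumptions made for the example.

```python
from math import exp, log, sqrt
from typing import Dict, List, Tuple

Table = Tuple[float, float, float, float]  # (TP, FP, FN, TN)


def log_dor(tp: float, fp: float, fn: float, tn: float) -> Tuple[float, float]:
    """Log diagnostic odds ratio and its variance for one two-by-two table."""
    if 0 in (tp, fp, fn, tn):
        # Assumed convention: add 0.5 to every cell when any cell is empty.
        tp, fp, fn, tn = (cell + 0.5 for cell in (tp, fp, fn, tn))
    estimate = log((tp * tn) / (fp * fn))
    variance = 1 / tp + 1 / fp + 1 / fn + 1 / tn
    return estimate, variance


def pool_random_effects(tables: List[Table]) -> Dict[str, float]:
    """DerSimonian-Laird pooled DOR with 95% CI, Cochran's Q, I2 (%), and tau2."""
    effects = [log_dor(*table) for table in tables]
    y = [estimate for estimate, _ in effects]
    v = [variance for _, variance in effects]
    w = [1 / vi for vi in v]  # fixed-effect (inverse-variance) weights
    fixed = sum(wi * yi for wi, yi in zip(w, y)) / sum(w)
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, y))
    df = len(y) - 1
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - df) / c) if df > 0 and c > 0 else 0.0
    i2 = max(0.0, (q - df) / q) * 100 if df > 0 and q > 0 else 0.0
    w_re = [1 / (vi + tau2) for vi in v]  # random-effects weights
    pooled = sum(wi * yi for wi, yi in zip(w_re, y)) / sum(w_re)
    se = sqrt(1 / sum(w_re))
    return {
        "dor": exp(pooled),
        "ci_low": exp(pooled - 1.96 * se),
        "ci_high": exp(pooled + 1.96 * se),
        "q": q,
        "i2": i2,
        "tau2": tau2,
    }


# Hypothetical two-by-two tables; these are not the review's datasets.
example_tables = [(40, 5, 10, 45), (30, 2, 3, 50), (25, 4, 6, 38)]
result = pool_random_effects(example_tables)
print({name: round(value, 2) for name, value in result.items()})
```

The between-study variance tau2 widens the confidence interval when the studies disagree, which is why a random-effects estimate is preferred when heterogeneity is expected.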

The model type and the phase of image mining of the included studies were classified according to the TRIPOD statement (Additional file 1: Table S6) [25] and a previous review (Additional file 1: Table S7) [30]. The levels of evidence supporting clinical values were rated based on the results of meta-analyses (Additional file 1: Table S8) [31, 32]. The detailed analysis methods are described in Additional file 1: Note S4.

Results

Literature search

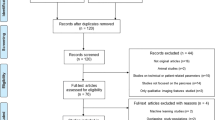

The search identified 587 records in total, 257 of which were excluded due to duplication. After screening the remaining 330 records, 73 full texts were retrieved and reviewed. Finally, 30 studies were included (Fig. 1) [33,34,35,36,37,38,39,40,41,42,43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62]. No additional eligible study was found through hand search of their reference lists or relevant reviews.

Study characteristics

The characteristics of the 30 included studies are summarized in Table 1. Figure 2 shows the topics of the 33 models included in the 30 studies. Of these models, 69.7% (23/33) focused on the role of radiomics in the differential diagnosis of pancreatitis from pancreatic tumors, while 12.1% (4/33) employed radiomics to distinguish chronic pancreatitis from normal pancreas tissue, functional abdominal pain, and acute pancreatitis. The remaining 18.2% (6/33) investigated the predictive potential of radiomics in the prognosis of acute pancreatitis. The literature information, model characteristics, and radiomics information of each study are presented in Additional file 1: Tables S9 to S11.

Fig. 2 Study topics and number of studies. Three studies each investigated two topics and were treated as two different studies in terms of topic. Therefore, there were thirty studies by article but thirty-three models by topic. The bolded number with modality indicates the studies included in the meta-analysis

Study quality

The overall mean ± standard deviation (median, range) of the RQS rating was 7.0 ± 5.0 (7.0, − 3.0 to 18.0), with an overall adherence rate of 38.3% (184/480), and an ideal percentage of RQS of 20.3% (7.3/36) (Table 2; Fig. 3). Although more than nine-tenths of the studies performed feature reduction steps and reported discrimination statistics, none of the studies conducted test–retest analysis, phantom study, cutoff analysis, or cost-effectiveness analysis. All six key domains of RQS were suboptimal, among which the model performance index domain showed the highest ideal percentage of 42.7% (2.1/5).

The overall adherence rate of the TRIPOD checklist was 61.3% (478/780), excluding “if relevant,” “if done,” and “validation” items (5c, 11, 14b, 10c, 10e, 12, 13, 17, and 19a) (Table 3; Fig. 3). None of the studies reported the blinded method during the outcome assessment (item 6b), sample size calculation (item 8), and handling of missing data (item 9). The discussion section reached the highest adherence rate of 90.0% (81/90), while the adherence rate of the validation section was only 17.3% (9/52).

The overall adherence rate of IBSI preprocessing steps was 37.1% (78/210) (Fig. 4). The software for feature extraction varied among studies, including MATLAB (7/30), Pyradiomics (6/30), IBEX (5/30), and others. Three studies did not report the software used. Among these software packages, Pyradiomics and IBEX were IBSI-compliant. The studies used manual (23/30) and automatic (1/30) methods for segmentation, and one study did not report the segmentation method. Robustness assessment was performed in 40.0% (12/30) of the studies, in all cases concerning inter- and intra-reader agreement. Other preprocessing steps were sometimes conducted.
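The denominators of the three adherence rates follow from the number of applicable items multiplied by the 30 included studies. A minimal Python sketch of that arithmetic, assuming each applicable item was rated once per study (the variable names are illustrative only):

```python
N_STUDIES = 30

# Applicable items per tool, as described in the Methods: 16 RQS items,
# 35 TRIPOD items minus the 9 excluded ones, and 7 selected IBSI items.
applicable_items = {"RQS": 16, "TRIPOD": 35 - 9, "IBSI": 7}

# Number of item ratings with basic adherence, as reported in the Results.
adhered = {"RQS": 184, "TRIPOD": 478, "IBSI": 78}

denominators = {tool: n * N_STUDIES for tool, n in applicable_items.items()}
rates = {
    tool: round(100 * adhered[tool] / denominators[tool], 1) for tool in adhered
}

for tool in rates:
    print(f"{tool}: {adhered[tool]}/{denominators[tool]} = {rates[tool]}%")
```

The resulting denominators (480, 780, and 210) and percentages match the values reported for the RQS, the TRIPOD checklist, and the IBSI preprocessing steps.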

The results of the QUADAS-2 assessment are presented in Fig. 3. The risk of bias and application concerns relating to the index test were the most frequently observed, mainly due to the lack of external validation. The risk of bias in patient selection was rated as high in two studies due to the case–control design. Most of the studies did not provide the timing of scanning; therefore, the corresponding risk of bias was unclear. The individual assessment per study per element is presented in Additional file 1: Tables S12 to S15.

Meta-analysis

The datasets for meta-analyses are presented in Additional file 1: Table S16. The pooled analysis showed that the DORs (95% CI) of radiomics for distinguishing autoimmune pancreatitis from pancreatic cancer by CT and mass-forming pancreatitis from pancreatic cancer by MRI were 189.63 (79.65–451.48) and 135.70 (36.17–509.13), respectively (Fig. 5 and Table 4). However, their levels of evidence were both weak, mainly due to the insufficient sample size. There was significant heterogeneity among studies, but the likelihood of publication bias was low. The trim and fill analysis indicated that there were missing datasets, but the adjusted diagnostic performance remained statistically significant. The results of meta-analyses regardless of imaging modality were also statistically significant (Additional file 1: Table S17). The corresponding plots of meta-analyses are presented in Additional file 1: Figures S1 to S9.

Correlations between study characteristics and quality

Figure 6 shows the potential correlations between study characteristics and study quality. The studies published before and after the publication of the RQS, the TRIPOD checklist, or the IBSI guideline did not show an obvious difference. Only the ideal percentage of RQS was related to the sample size (r = 0.456, p = 0.011). The results of subgroup analysis and correlation tests are presented in Additional file 1: Tables S18 and S19. No difference in the ideal percentage of RQS, the TRIPOD adherence rate, or the IBSI adherence rate was found among subgroups (all p > 0.05).
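As a rough plausibility check of the reported correlation, a coefficient can be converted to a t statistic with n − 2 degrees of freedom. The Python sketch below assumes that all 30 studies entered the test and that significance was assessed with the usual t approximation; neither detail is stated in the text, and the function name is illustrative.

```python
from math import sqrt


def correlation_t_statistic(r: float, n: int) -> float:
    """t statistic for a correlation coefficient r from n pairs (n - 2 df)."""
    return r * sqrt((n - 2) / (1 - r * r))


t_value = correlation_t_statistic(0.456, 30)
print(f"t = {t_value:.2f} with {30 - 2} degrees of freedom")
```

Under these assumptions, t is about 2.71 on 28 degrees of freedom, which lies between the two-tailed critical values for p = 0.02 and p = 0.01 and is therefore compatible with the reported p value of 0.011.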

Fig. 6 Correlations between study characteristics and quality. Swarm plots of (a) ideal percentage of RQS, (b) TRIPOD adherence rate, and (c) IBSI adherence rate. The diameter of bubbles indicates the sample size of studies. Seven studies published in journals without an impact factor were excluded. The lighter color indicates the studies published after the publication of RQS, TRIPOD, and IBSI; the darker color indicates those published before

Discussion

In our review, radiomics showed promising performance of diagnostic and prognostic models for multiple purposes in pancreatitis, but their levels of evidence were weak. The overall adherence rates of the RQS rating, the TRIPOD checklist, and the IBSI preprocessing steps were 38.3%, 61.3%, and 37.1%, respectively. The ideal percentage of RQS was positively related to the sample size. Our results implied that the level of evidence supporting clinical application and the overall study quality were suboptimal in pancreatitis radiomics research, requiring significant improvement.

Several reviews have summarized the use of radiomics in multiple pancreatic diseases, from pancreatic cystic lesions to pancreatic tumors [15,16,17,18,19,20,21,22]. A comprehensive review reported that most pancreatic radiomics studies investigated focal pancreatic lesions, but only four studies addressed pancreatitis [12]. In our review, radiomics was most frequently applied to the differential diagnosis of pancreatic cancer from autoimmune pancreatitis, chronic pancreatitis, or mass-forming pancreatitis. Misdiagnosis causes pancreatic cancer patients to miss the opportunity for surgery, while patients with inflammatory conditions may receive unnecessary treatment. The accurate diagnosis of these lesions is hindered by their overlapping imaging features [6]. Radiomics showed comparable or even better performance than radiologists’ assessment [38, 42, 46, 52, 56, 58], but the level of evidence supporting clinical translation is still weak. Therefore, further validation to establish a sound evidence basis is the main issue for diagnostic models. The prognosis prediction for acute pancreatitis is another topic of clinical significance. Although the CT severity index has been established for prognosis prediction of acute pancreatitis [4], pancreatic parenchymal injury and extra-pancreatic inflammation are not sufficiently visible in early pancreatitis. Conventional imaging features usually lag behind disease progression and therefore cannot support clinical decision-making. Current studies demonstrated the usefulness of radiomics in predicting severity, recurrence, progression, and extra-pancreatic necrosis [33, 35, 40, 41, 45, 59]. However, these studies used varying imaging modalities and addressed separate outcomes, which does not allow a meta-analysis to establish any evidence. Besides, as a continuously progressing disease, acute pancreatitis needs comprehensive prediction of multiple clinical outcomes, and corresponding models have not been developed yet. Thus, more investigation into prognosis is urgently needed.

The inadequate quality of radiomics studies has been addressed repeatedly [15,16,17,18,19,20,21,22,23,24,27,28,29]. In accordance with previous reviews, several items were consistently lacking, including test–retest analysis, phantom study, cutoff analysis, and cost-effectiveness analysis in RQS; the blinded method during outcome assessment, sample size calculation, and handling of missing data in TRIPOD; and details of image preprocessing in the selected IBSI items. Beyond these issues common to radiomics studies, there are some issues specific to non-oncological research. In contrast to the oncological field, the concept of biological correlate did not clearly fit the current topic [17], since inflammatory diseases do not always relate to genomics. In prognostic studies, comparison to a “gold standard” is not suitable for non-oncological diseases that lack a widely accepted “gold standard,” whereas tumor staging is usually employed as the “gold standard” for survival prediction. The TRIPOD items and IBSI preprocessing items were suitable for non-oncological studies, since they are not specific to the oncological field. We found that the ideal percentage of RQS was positively related to the sample size. We suspect that a larger sample size allows more adequate validation, evaluation of calibration statistics, and clinical utility assessment, which could yield a higher RQS rating.

Most radiomics studies were oncological, but radiomics has potential clinical application in the non-oncological field [30]. Several reviews have summarized the role of radiomics in non-oncological diseases, including mild cognitive impairment and Alzheimer’s disease [15], COVID-19 and viral pneumonia [16], and cardiac diseases [17]. The study quality evaluated by RQS was the main concern of these reviews. Their ideal percentages of RQS were 9.9%, 34.1%, and 19.4%, respectively. We suspect that the COVID-19 and viral pneumonia review reached a better RQS rating because the included studies were published recently with relatively larger sample sizes, which allowed adequate feature reduction and external validation. Indeed, all studies in that review performed feature reduction and validation [16]. In contrast, a significant number of previous studies did not perform feature reduction and validation. As a result, the other non-oncological radiomics reviews showed lower RQS ratings [15, 17]. Our review is in line with these non-oncological radiomics reviews, with a comparable ideal percentage of RQS of 20.3%. Nevertheless, the feasibility of the TRIPOD checklist [28] and the IBSI preprocessing steps [29] has only been assessed in the oncological field. Our study provides an initial test suggesting that they are useful in the non-oncological field, but further validation is needed.

An evidence level rating tool was tested in our review [31, 32]. The evidence level rating process is feasible for showing the gap between academic research and clinical application in radiomics studies. It is necessary to employ this tool, since impressive model performance does not by itself guarantee a strong level of evidence supporting clinical translation. However, this tool does not specify on which dataset a predictive model should be assessed, because it was originally developed for reviewing epidemiological studies and clinical trials [31, 32]. It is recommended to assess radiomics models on an external validation dataset [10, 11, 25]. We consider that future studies should determine the level of evidence based on the results of meta-analyses of validation datasets.

We believe that the whole radiomics research community should participate in improving the methodological and reporting quality, so that a higher level of evidence can support the translation of radiomics. Its members need to be involved in this process by critically appraising the study design, conducting and analyzing the model, and reporting the study. Indeed, the IBSI guideline used in our review is an achievement of an independent international collaboration that works towards standardization of the radiomics methodology and reporting [11]. There are many other guidelines developed or under development by the radiomics and artificial intelligence community with the purpose of improving study quality, including Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis based on Artificial Intelligence (TRIPOD-AI) [63], Prediction model Risk Of Bias ASsessment Tool based on Artificial Intelligence (PROBAST-AI) [63], Quality Assessment of Diagnostic Accuracy Studies centered on Artificial Intelligence (QUADAS-AI) [64], Developmental and Exploratory Clinical Investigations of DEcision support systems driven by Artificial Intelligence (DECIDE-AI) [65], Standard Protocol Items: Recommendations for Interventional Trials–Artificial Intelligence (SPIRIT-AI) [66], Consolidated Standards of Reporting Trials–Artificial Intelligence (CONSORT-AI) [67], Standards for Reporting of Diagnostic Accuracy Study centered on Artificial Intelligence (STARD-AI) [68], Checklist for Artificial Intelligence in Medical Imaging (CLAIM) [69], etc. Their project teams and steering committees usually consist of a broad range of experts to provide balanced and diverse views involving various stakeholder groups.

However, the importance of the participants varies with the stage, from early scientific validation to later regulatory assessment. For offline preclinical validations, reporting guidelines and risk of bias assessment tools for radiomics model studies are used, emphasizing the methodological and reporting quality [63, 64]. During this stage, the researchers, authors, reviewers, and editors of radiomics studies play an important role in improving the methodological and reporting quality and in making sure that only studies with adequate innovation are published. Next, at the stage of safety and utility, small-scale early live clinical evaluations are used to inform regulatory decisions and are part of the clinical evidence generation process [65]. With improvements in study quality, the radiomics research community could for the first time provide more robust scientific evidence for the translation of radiomics. Before clinical application, it is necessary to test radiomics for safety and effectiveness in large-scale, comparative, and prospective trials [66,67,68]. Similar to the randomized clinical trials that are considered the gold standard for drug therapies, these studies should aim to provide stronger evidence for the translation of radiomics from a research application into a clinically relevant tool. Nevertheless, given the somewhat different focuses of scientific evaluation and regulatory assessment, as well as differences between regulatory jurisdictions, health policy makers and legal experts may have a greater say in this stage.

The quality assessment results should be seen as a quality seal of the published results more than a way of underlining the possible weaknesses of the proposed model [70]. At present, the researchers are reticent in publishing the quality assessment results for their radiomics studies, and journals do not demand particular checklists for radiomics studies. Nevertheless, in this early stage of radiomics, the authors, editors, reviewers, and readers should be able to ascertain whether a radiomic study is compliant with good practice or whether the study has justified any noncompliance.

There are several limitations in our study. First, the RQS is far from perfect, and some of the TRIPOD items may not be suitable for radiomics studies. We did not apply the whole IBSI checklist, but focused on preprocessing steps. Nevertheless, the current review serves as an example of the application of these tools in the non-oncological field. Second, radiomics is considered a subset of artificial intelligence, but we did not apply the Checklist for Artificial Intelligence in Medical Imaging (CLAIM) in our review [69]. This tool allows assessment not only of artificial intelligence in medical imaging, including classification, image reconstruction, text analysis, and workflow optimization, but also of general manuscript review criteria. However, many items in this tool are too general [71] and are therefore hard to apply in radiomics. The tools we used could cover almost all the CLAIM items with more specific instructions. It would be interesting to assess the feasibility of CLAIM in radiomics, but this falls outside the scope of our study. Third, studies included in the current review focus on very different topics. It may not be fair to run meta-analyses of heterogeneous studies, and this process gives insights into clinical questions with a limited number of studies [24, 72]. Indeed, only two selected clinical questions with similar settings were included in meta-analyses for evidence level rating. An increasing number of studies would allow more robust scientific data aggregation in the future. Still, this is a timely attempt to test the feasibility of the evidence level rating tool for radiomics.

In conclusion, more high-quality studies on prognosis of acute pancreatitis are encouraged, since it has great influence on clinical decision-making but could not be easily predicted by radiologists’ assessment. Although meta-analysis of studies showed fascinating potential in differentiating pancreatitis from pancreatic cancer, the level of evidence was weak. The current methodological and reporting quality of radiomics studies on pancreatitis is insufficient. Moreover, evidence rating is needed before radiomics can be translated into clinical practice.

Availability of data and materials

All data generated or analyzed during this study are included in this published article and its Additional file 1.

Abbreviations

- CI: Confidence interval
- DOR: Diagnostic odds ratio
- HSROC: Hierarchical summary receiver operating characteristic
- IBSI: Image Biomarker Standardization Initiative
- QUADAS-2: Modified Quality Assessment of Diagnostic Accuracy Studies
- RQS: Radiomics Quality Score
- TRIPOD: Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis

References

Boxhoorn L, Voermans RP, Bouwense SA et al (2020) Acute pancreatitis. Lancet 396(10252):726–734

Banks PA, Bollen TL, Dervenis C et al (2013) Classification of acute pancreatitis—2012: revision of the Atlanta classification and definitions by international consensus. Gut 62(1):102–111

Knaus WA, Draper EA, Wagner DP, Zimmerman JE (1985) APACHE II: a severity of disease classification system. Crit Care Med 13(10):818–829

Wu BU, Johannes RS, Sun X et al (2008) The early prediction of mortality in acute pancreatitis: a large population-based study. Gut 57(12):1698–1703

Beyer G, Habtezion A, Werner J, Lerch MM, Mayerle J (2020) Chronic pancreatitis. Lancet 396(10249):499–512

Wolske KM, Ponnatapura J, Kolokythas O, Burke LMB, Tappouni R, Lalwani N (2019) Chronic pancreatitis or pancreatic tumor? A problem-solving approach. Radiographics 39(7):1965–1982

Lambin P, Rios-Velazquez E, Leijenaar R et al (2012) Radiomics: extracting more information from medical images using advanced feature analysis. Eur J Cancer 48(4):441–446

Gillies RJ, Kinahan PE, Hricak H (2016) Radiomics: images are more than pictures, they are data. Radiology 278(2):563–577

O’Connor JP, Aboagye EO, Adams JE et al (2017) Imaging biomarker roadmap for cancer studies. Nat Rev Clin Oncol 14:169–186

Lambin P, Leijenaar RTH, Deist TM et al (2017) Radiomics: the bridge between medical imaging and personalized medicine. Nat Rev Clin Oncol 14(12):749–762

Zwanenburg A, Vallières M, Abdalah MA et al (2020) The image biomarker standardization initiative: standardized quantitative radiomics for high-throughput image-based phenotyping. Radiology 295(2):328–338

Gu D, Hu Y, Ding H et al (2019) CT radiomics may predict the grade of pancreatic neuroendocrine tumors: a multicenter study. Eur Radiol 29(12):6880–6890

Rigiroli F, Hoye J, Lerebours R et al (2021) CT radiomic features of superior mesenteric artery involvement in pancreatic ductal adenocarcinoma: a pilot study. Radiology 301(3):610–622

Mapelli P, Bezzi C, Palumbo D et al (2022) 68Ga-DOTATOC PET/MR imaging and radiomic parameters in predicting histopathological prognostic factors in patients with pancreatic neuroendocrine well-differentiated tumours. Eur J Nucl Med Mol Imaging. https://doi.org/10.1007/s00259-022-05677-0

Won SY, Park YW, Park M, Ahn SS, Kim J, Lee SK (2020) Quality reporting of radiomics analysis in mild cognitive impairment and Alzheimer’s disease: a roadmap for moving forward. Korean J Radiol 21(12):1345–1354

Kao YS, Lin KT (2021) A meta-analysis of computerized tomography-based radiomics for the diagnosis of COVID-19 and viral pneumonia. Diagnostics 11(6):991

Ponsiglione A, Stanzione A, Cuocolo R et al (2021) Cardiac CT and MRI radiomics: systematic review of the literature and radiomics quality score assessment. Eur Radiol. https://doi.org/10.1007/s00330-021-08375-x

Abunahel BM, Pontre B, Kumar H, Petrov MS (2021) Pancreas image mining: a systematic review of radiomics. Eur Radiol 31(5):3447–3467

Virarkar M, Wong VK, Morani AC, Tamm EP, Bhosale P (2021) Update on quantitative radiomics of pancreatic tumors. Abdom Radiol (NY). https://doi.org/10.1007/s00261-021-03216-3

Bartoli M, Barat M, Dohan A et al (2020) CT and MRI of pancreatic tumors: an update in the era of radiomics. Jpn J Radiol 38(12):1111–1124

Dalal V, Carmicheal J, Dhaliwal A, Jain M, Kaur S, Batra SK (2020) Radiomics in stratification of pancreatic cystic lesions: machine learning in action. Cancer Lett 469:228–237

Bezzi C, Mapelli P, Presotto L et al (2021) Radiomics in pancreatic neuroendocrine tumors: methodological issues and clinical significance. Eur J Nucl Med Mol Imaging 48(12):4002–4015

Page MJ, McKenzie JE, Bossuyt PM et al (2021) The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372:n71

Zhong J, Hu Y, Si L et al (2021) A systematic review of radiomics in osteosarcoma: utilizing radiomics quality score as a tool promoting clinical translation. Eur Radiol 31(3):1526–1535

Collins GS, Reitsma JB, Altman DG, Moons KG (2015) Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. Ann Intern Med 162(1):55–63

Whiting PF, Rutjes AW, Westwood ME et al (2011) QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med 155(8):529–536

Park JE, Kim HS, Kim D et al (2020) A systematic review reporting quality of radiomics research in neuro-oncology: toward clinical utility and quality improvement using high-dimensional imaging features. BMC Cancer 20(1):29

Park JE, Kim D, Kim HS et al (2020) Quality of science and reporting of radiomics in oncologic studies: room for improvement according to radiomics quality score and TRIPOD statement. Eur Radiol 30(1):523–536

Park CJ, Park YW, Ahn SS et al (2022) Quality of radiomics research on brain metastasis: a roadmap to promote clinical translation. Korean J Radiol 23(1):77–88

Sollini M, Antunovic L, Chiti A, Kirienko M (2019) Towards clinical application of image mining: a systematic review on artificial intelligence and radiomics. Eur J Nucl Med Mol Imaging 46(13):2656–2672

Dang Y, Hou Y (2021) The prognostic value of late gadolinium enhancement in heart diseases: an umbrella review of meta-analyses of observational studies. Eur Radiol 31(7):4528–4537

Kalliala I, Markozannes G, Gunter MJ et al (2017) Obesity and gynaecological and obstetric conditions: umbrella review of the literature. BMJ 359:j4511

Chen Y, Chen TW, Wu CQ et al (2019) Radiomics model of contrast-enhanced computed tomography for predicting the recurrence of acute pancreatitis. Eur Radiol 29(8):4408–4417

Cheng MF, Guo YL, Yen RF et al (2018) Clinical utility of FDG PET/CT in patients with autoimmune pancreatitis: a case-control study. Sci Rep 8(1):3651

Cui W, Zhang W, Zhou L, Jin X, Xiao D (2021) Predictive value of CT texture analysis for recurrence in children with acute pancreatitis. Chin J Dig Surg 20(4):459–465. https://doi.org/10.3760/cma.j.cn115610-20210331-00156 (in Chinese)

Das A, Nguyen CC, Li F, Li B (2008) Digital image analysis of EUS images accurately differentiates pancreatic cancer from chronic pancreatitis and normal tissue. Gastrointest Endosc 67(6):861–867

Deng Y, Ming B, Zhou T et al (2021) Radiomics model based on MR images to discriminate pancreatic ductal adenocarcinoma and mass-forming chronic pancreatitis lesions. Front Oncol 11:620981

E L, Xu Y, Wu Z et al (2020) Differentiation of focal-type autoimmune pancreatitis from pancreatic ductal adenocarcinoma using radiomics based on multiphasic computed tomography. J Comput Assist Tomogr 44(4):511–518

Frøkjær JB, Lisitskaya MV, Jørgensen AS et al (2020) Pancreatic magnetic resonance imaging texture analysis in chronic pancreatitis: a feasibility and validation study. Abdom Radiol (NY) 45(5):1497–1506

Hu Y, Huang X, Liu N, Tang L (2021) The value of T2WI sequence-based radiomics in predicting recurrence of acute pancreatitis. Chin J Magn Reson Imaging 12(10):12–15. https://doi.org/10.12015/issn.1674-8034.2021.10.003 (in Chinese)

Iranmahboob AK, Kierans AS, Huang C, Ream JM, Rosenkrantz AB (2017) Preliminary investigation of whole-pancreas 3D histogram ADC metrics for predicting progression of acute pancreatitis. Clin Imaging 42:172–177

Li J, Liu F, Fang X et al (2021) CT radiomics features in differentiation of focal-type autoimmune pancreatitis from pancreatic ductal adenocarcinoma: a propensity score analysis. Acad Radiol 29(3):358–366

Li SS, Wu ZF, Lin N (2021) CT signs combined with texture features in the differential diagnosis between focal autoimmune pancreatitis and pancreatic cancer. J Chin Clin Med Imaging 32(5):347–350. https://doi.org/10.12117/jccmi.2021.05.010 (in Chinese)

Lin Y, Shen Y, Zou X, Li Z, Hu D, Feng C (2019) The value of CT texture analysis in differentiating autoimmune pancreatitis from pancreatic ductal adenocarcinoma. J Pract Radiol 35(11):1174–1178. https://doi.org/10.3969/ji.sn.1002G1671.2019.11.015 (in Chinese)

Lin Q, Ji YF, Chen Y et al (2020) Radiomics model of contrast-enhanced MRI for early prediction of acute pancreatitis severity. J Magn Reson Imaging 51(2):397–406

Liu Z, Li M, Zuo C et al (2021) Radiomics model of dual-time 2-[18F]FDG PET/CT imaging to distinguish between pancreatic ductal adenocarcinoma and autoimmune pancreatitis. Eur Radiol 31(9):6983–6991

Liu J, Hu L, Zhou B, Wu C, Cheng Y (2022) Development and validation of a novel model incorporating MRI-based radiomics signature with clinical biomarkers for distinguishing pancreatic carcinoma from mass-forming chronic pancreatitis. Transl Oncol 18:101357

Ma X, Wang YR, Zhuo LY et al (2022) Retrospective analysis of the value of enhanced CT radiomics analysis in the differential diagnosis between pancreatic cancer and chronic pancreatitis. Int J Gen Med 15:233–241

Mashayekhi R, Parekh VS, Faghih M, Singh VK, Jacobs MA, Zaheer A (2020) Radiomic features of the pancreas on CT imaging accurately differentiate functional abdominal pain, recurrent acute pancreatitis, and chronic pancreatitis. Eur J Radiol 123:108778

Park S, Chu LC, Hruban RH et al (2020) Differentiating autoimmune pancreatitis from pancreatic ductal adenocarcinoma with CT radiomics features. Diagn Interv Imaging 101(9):555–564

Peng L, Zha Y, Zeng F, Liu B, Yan Y (2020) The value-based T2 histogram analysis for differential diagnosis in solid pancreatic lesions. Chin J Magn Reson Imaging 11(3):201–206. https://doi.org/10.12015/issn.1674-8034.2020.03.008 (in Chinese)

Ren S, Zhang J, Chen J et al (2019) Evaluation of texture analysis for the differential diagnosis of mass-forming pancreatitis from pancreatic ductal adenocarcinoma on contrast-enhanced CT images. Front Oncol 9:1171

Ren S, Zhao R, Zhang J et al (2020) Diagnostic accuracy of unenhanced CT texture analysis to differentiate mass-forming pancreatitis from pancreatic ductal adenocarcinoma. Abdom Radiol (NY) 45(5):1524–1533

Ren H, Mori N, Hamada S et al (2021) Effective apparent diffusion coefficient parameters for differentiation between mass-forming autoimmune pancreatitis and pancreatic ductal adenocarcinoma. Abdom Radiol (NY) 46(4):1640–1647

Zhang MM, Yang H, Jin ZD, Yu JG, Cai ZY, Li ZS (2010) Differential diagnosis of pancreatic cancer from normal tissue with digital imaging processing and pattern recognition based on a support vector machine of EUS images. Gastrointest Endosc 72(5):978–985

Zhang Y, Cheng C, Liu Z et al (2019) Radiomics analysis for the differentiation of autoimmune pancreatitis and pancreatic ductal adenocarcinoma in 18F-FDG PET/CT. Med Phys 46(10):4520–4530

Zhang Y, Cheng C, Liu Z et al (2019) Differentiation of autoimmune pancreatitis and pancreatic ductal adenocarcinoma based on multi-modality texture features in 18F-FDG PET/CT. J Biomedical Eng 36(5):755–762. https://doi.org/10.7507/1001-5515.201807012 (in Chinese)

Zhang J, Li Q, Wang J et al (2019) Contrast-enhanced CT and texture analysis of mass-forming pancreatitis and cancer in the pancreatic head. Natl Med J China 99(33):2575–2580. https://doi.org/10.3760/cma.j.issn.0376-2491.2019.33.004 (in Chinese)

Zhou T, Xie CL, Chen Y et al (2021) Magnetic resonance imaging-based radiomics models to predict early extrapancreatic necrosis in acute pancreatitis. Pancreas 50(10):1368–1375

Zhu M, Xu C, Yu J et al (2013) Differentiation of pancreatic cancer and chronic pancreatitis using computer-aided diagnosis of endoscopic ultrasound (EUS) images: a diagnostic test. PLoS One 8(5):e63820

Zhu J, Wang L, Chu Y et al (2015) A new descriptor for computer-aided diagnosis of EUS imaging to distinguish autoimmune pancreatitis from chronic pancreatitis. Gastrointest Endosc 82(5):831–836

Ziegelmayer S, Kaissis G, Harder F et al (2020) Deep convolutional neural network-assisted feature extraction for diagnostic discrimination and feature visualization in pancreatic ductal adenocarcinoma (PDAC) versus autoimmune pancreatitis (AIP). J Clin Med 9(12):4013

Collins GS, Dhiman P, Andaur Navarro CL et al (2021) Protocol for development of a reporting guideline (TRIPOD-AI) and risk of bias tool (PROBAST-AI) for diagnostic and prognostic prediction model studies based on artificial intelligence. BMJ Open 11(7):e048008

Sounderajah V, Ashrafian H, Rose S et al (2021) A quality assessment tool for artificial intelligence-centered diagnostic test accuracy studies: QUADAS-AI. Nat Med 27(10):1663–1665

Vasey B, Nagendran M, Campbell B et al, DECIDE-AI expert group (2022) Reporting guideline for the early-stage clinical evaluation of decision support systems driven by artificial intelligence: DECIDE-AI. Nat Med 28(5):924–933

Cruz Rivera S, Liu X, Chan AW et al (2020) Guidelines for clinical trial protocols for interventions involving artificial intelligence: the SPIRIT-AI extension. Nat Med 26(9):1351–1363

Liu X, Cruz Rivera S, Moher D, Calvert MJ, Denniston AK, SPIRIT-AI and CONSORT-AI Working Group (2020) Reporting guidelines for clinical trial reports for interventions involving artificial intelligence: the CONSORT-AI extension. Nat Med 26(9):1364–1374

Sounderajah V, Ashrafian H, Aggarwal R et al (2020) Developing specific reporting guidelines for diagnostic accuracy studies assessing AI interventions: the STARD-AI Steering Group. Nat Med 26(6):807–808

Mongan J, Moy L, Kahn CE Jr (2020) Checklist for Artificial Intelligence in Medical Imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell 2(2):e200029

Guiot J, Vaidyanathan A, Deprez L et al (2022) A review in radiomics: making personalized medicine a reality via routine imaging. Med Res Rev 42(1):426–440

Si L, Zhong J, Huo J et al (2022) Deep learning in knee imaging: a systematic review utilizing a Checklist for Artificial Intelligence in Medical Imaging (CLAIM). Eur Radiol 32(2):1353–1361

Ursprung S, Beer L, Bruining A et al (2020) Radiomics of computed tomography and magnetic resonance imaging in renal cell carcinoma-a systematic review and meta-analysis. Eur Radiol 30(6):3558–3566

Acknowledgements

The authors would like to express their gratitude to Dr. Yong Chen for sharing his experience in pancreatitis radiomics, Dr. Zihao Liu for sharing his knowledge of nuclear medicine, Dr. Yijing Yao for sharing her knowledge of ultrasound, Dr. Run Yang, Dr. Haoda Chen, and Dr. Leijie Huang for sharing their knowledge of clinical practice in pancreatitis, Dr. Shiqi Mao for his suggestions on data visualization, and Dr. Guangcheng Zhang for English language polishing.

Funding

This study has received funding from the National Natural Science Foundation of China (81771789, 81771790); the Yangfan Project of the Science and Technology Commission of Shanghai Municipality (22YF1442400); the Shanghai Science and Technology Commission Science and Technology Innovation Action Clinical Innovation Field (18411953000); the Medicine and Engineering Combination Project of Shanghai Jiao Tong University (YG2019ZDB09); and the Research Fund of Tongren Hospital, Shanghai Jiao Tong University School of Medicine (TRKYRC-XX202204, 2020TRYJ(LB)06, 2020TRYJ(JC)07, TRGG202101, TRYJ2021JC06).

Author information

Contributions

JYZ and YFH performed the literature search, data extraction, and quality assessment. JYZ performed the meta-analyses, visualized the data, and drafted the original version of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1: Supplementary Note S1. Review Protocol. Supplementary Note S2. Search Strategy and Study Selection. Supplementary Note S3. Consensus Reached during Data Extraction and Quality Assessment. Supplementary Note S4. Data Synthesis and Analysis Methods. Supplementary Table S1. Data Extraction Sheet. Supplementary Table S2. Methodological Quality according to RQS Checklist. Supplementary Table S3. Reporting Completeness according to TRIPOD Statement. Supplementary Table S4. Pre-processing Steps according to IBSI Guideline. Supplementary Table S5. Risk of Bias and Concern on Application Assessment according to QUADAS-2 Tool. Supplementary Table S6. Types of Prediction Model Studies Covered by the TRIPOD Statement. Supplementary Table S7. Trials Classifications for Image Mining Tools Development Process. Supplementary Table S8. Category of Five Levels of Supporting Evidence of Meta-analyses. Supplementary Table S9. Study Characteristics of Included Studies. Supplementary Table S10. PICOT of Included Studies. Supplementary Table S11. Radiomics Methodological Consideration of Included Studies. Supplementary Table S12. RQS Rating per Study. Supplementary Table S13. TRIPOD Adherence per Study. Supplementary Table S14. Pre-processing Steps Performed in Each Study. Supplementary Table S15. QUADAS-2 Assessment per Study. Supplementary Table S16. Model Metrics of Studies Included in Meta-analysis. Supplementary Table S17. Diagnostic Performance of Meta-analyzed Clinical Questions regardless of Imaging Modality. Supplementary Table S18. Subgroup Analysis of Study Quality according to Study Characteristics. Supplementary Table S19. Correlation between Ideal Percentage of RQS, TRIPOD Adherence Rate, Sample Size and Impact Factor. Supplementary Figure S1. Forest Plot of Diagnostic Odds Ratio. Supplementary Figure S2. Forest Plot of Pooled Sensitivity. Supplementary Figure S3. Forest Plot of Pooled Specificity. Supplementary Figure S4. Forest Plot of Pooled Positive Likelihood Ratio. Supplementary Figure S5. Forest Plot of Pooled Negative Likelihood Ratio. Supplementary Figure S6. HSROC Curve of the Model Performance. Supplementary Figure S7. Funnel Plot of Studies Included in Meta-analysis. Supplementary Figure S8. Deeks Funnel Plot of Studies Included in Meta-analysis. Supplementary Figure S9. Trim and Fill Analysis of Studies Included in Meta-analysis.

Additional file 2: PRISMA checklist.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhong, J., Hu, Y., Xing, Y. et al. A systematic review of radiomics in pancreatitis: applying the evidence level rating tool for promoting clinical transferability. Insights Imaging 13, 139 (2022). https://doi.org/10.1186/s13244-022-01279-4

DOI: https://doi.org/10.1186/s13244-022-01279-4