Abstract

In this paper, we consider the inverse problem of identifying the unknown source of a time-fractional diffusion equation on a columnar symmetric domain. This problem is ill-posed. First, we establish a conditional stability result for this inverse problem. Then we obtain a regularization solution by the Tikhonov regularization method and derive error estimates under the a priori and a posteriori choice rules of the regularization parameter. Three numerical examples illustrate the validity and effectiveness of the method.

1 Introduction

Diffusion equations with fractional-order derivatives play an increasingly important role in fields such as mechanics, chemistry, electrical engineering and medicine [1–11]. Time-fractional diffusion equations can describe anomalous diffusion phenomena in many fields of science [12–15]. These fractional-order models are more adequate than integer-order models, because fractional-order derivatives make it possible to describe the properties of different substances [16].

In recent years, many regularization methods have been proposed for inverse problems of the time-fractional diffusion equation, such as backward problems [17–22], inverse source problems [23–27], Cauchy problems [28–31] and initial value problems [32, 33]. In [34], the authors used the quasi-boundary value method to identify the initial value of the heat equation on a columnar symmetric domain. In [35], the authors used a quasi-boundary regularization method to identify the initial value of the time-fractional diffusion equation on a spherically symmetric domain. In this work, we use the Tikhonov regularization method to identify the space-dependent source of the time-fractional diffusion equation on a columnar symmetric domain. We give not only an a priori choice of the regularization parameter, but also an a posteriori choice that depends only on the measured data. To the best of our knowledge, there are few papers on the time-fractional diffusion equation on a columnar symmetric domain. We focus on an inverse problem for the following time-fractional diffusion equation on a columnar symmetric domain:

\[
\begin{cases}
D_{t}^{\alpha }u(r,t)-u_{rr}(r,t)-\frac{1}{r}u_{r}(r,t)=f(r), & 0<r<r_{0},\ 0<t\leq T,\\
u(r,0)=0, & 0\leq r\leq r_{0},\\
u(r_{0},t)=0, & 0\leq t\leq T,\\
\lim_{r\rightarrow 0}u(r,t)\ \text{is bounded}, & 0\leq t\leq T,\\
u(r,T)=g(r), & 0\leq r\leq r_{0},
\end{cases}
\tag{1.1}
\]

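where \(r_{0}\) is the radius of the cylinder, \(g(r)\) is given and \(f(r)\) is the unknown source. \(D_{t}^{\alpha }\) is the Caputo fractional derivative of order \(0<\alpha <1\), defined by

\[
D_{t}^{\alpha }u(r,t)=\frac{1}{\Gamma (1-\alpha )}\int _{0}^{t}\frac{\partial u(r,s)}{\partial s}\frac{ds}{(t-s)^{\alpha }}. \tag{1.2}
\]
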
We use the final-time data to identify the unknown source \(f(r)\). In applications, the function \(g(r)\) is obtained by measurement; we denote the measured data by \(g^{\delta }(r)\) and assume that

\[
\|g^{\delta }-g\|\leq \delta , \tag{1.3}
\]

where \(\delta >0\) is the noise level of the data.

The rest of this paper is organized as follows. Section 2 gives some preliminaries. Section 3 analyzes the ill-posedness of the problem and gives a conditional stability result. Section 4 derives error estimates under the a priori and a posteriori parameter choice rules. Section 5 presents three numerical examples that demonstrate the effectiveness of the proposed method.

2 Preliminaries

In this section, we give some preliminary results that are used in the subsequent analysis.

Definition 2.1

([6])

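The Mittag-Leffler function is

\[
E_{\alpha ,\beta }(z)=\sum_{k=0}^{\infty }\frac{z^{k}}{\Gamma (\alpha k+\beta )},\quad z\in \mathbb{C},
\]
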
where \(\alpha >0\) and \(\beta \in \mathbb{R}\) are arbitrary constants.
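
As an illustration of Definition 2.1, the series can be summed directly for moderate arguments. The Python sketch below is a minimal version under stated assumptions: real \(z\), \(\alpha >0\) and \(\beta >0\); the name `mittag_leffler` is hypothetical. Terms are evaluated in log space so that the Gamma function does not overflow. For large negative arguments the alternating series loses accuracy through cancellation, so this is not a general-purpose implementation.

```python
import math


def mittag_leffler(z: float, alpha: float, beta: float = 1.0,
                   tol: float = 1e-16, max_terms: int = 2000) -> float:
    """Truncated series E_{alpha,beta}(z) = sum_k z**k / Gamma(alpha*k + beta).

    Sketch only: assumes real z, alpha > 0, beta > 0 and moderate |z|; for large
    negative z the alternating series suffers from cancellation.
    """
    if alpha <= 0 or beta <= 0:
        raise ValueError("this sketch assumes alpha > 0 and beta > 0")
    if z == 0:
        return 1.0 / math.gamma(beta)
    log_abs_z = math.log(abs(z))
    total = 0.0
    for k in range(max_terms):
        # |z|**k / Gamma(alpha*k + beta), evaluated in log space to avoid overflow
        magnitude = math.exp(k * log_abs_z - math.lgamma(alpha * k + beta))
        # for negative z the sign alternates with k
        total += magnitude if (z > 0 or k % 2 == 0) else -magnitude
        # the term magnitudes are unimodal in k, so stop once they become negligible
        if k > 0 and magnitude < tol * max(1.0, abs(total)):
            break
    return total
```

For instance, `mittag_leffler(-1.0, 2.0)` reproduces \(\cos 1\), because \(E_{2,1}(-x^{2})=\cos x\), and `mittag_leffler(1.0, 1.0)` reproduces \(e\).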

Lemma 2.1

([36])

For the Mittag-Leffler function, we have

Lemma 2.2

([6])

For \(\gamma >0\), we have

where \(E_{\gamma ,\beta }^{(k)}(y):=\frac{d^{k}}{dy^{k}}E_{\gamma , \beta }(y)\).

Lemma 2.3

([37])

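For \(0<\alpha <1\), \(\eta \geq 0\), we have \(0\leq E_{\alpha ,1}(-\eta )\leq 1\). Moreover, \(E_{\alpha ,1}(-\eta )\) is completely monotonic, that is,

\[
(-1)^{n}\frac{d^{n}}{d\eta ^{n}}E_{\alpha ,1}(-\eta )\geq 0,\quad \eta \geq 0,\ n=0,1,2,\ldots .
\]
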
Lemma 2.4

([38])

For any \(\mu _{n}\) satisfying \(\mu _{n}\geq \mu _{1}> 0\), there exists a positive constant \(C\), depending on \(\alpha \), \(T\), \(\mu _{1}\), \(r_{0}\), such that

where \(C(\alpha ,T,\mu _{1},r_{0})=r_{0}^{2}(1-E_{\alpha ,1}(-(\frac{ \mu _{1}}{r_{0}})^{2}T^{\alpha }))\).
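
In Sect. 3 this lemma is applied to the singular values of \(K\). As an illustration only, the Python sketch below evaluates \(\sigma _{n}=(\frac{r_{0}}{\mu _{n}})^{2}(1-E_{\alpha ,1}(-(\frac{\mu _{n}}{r_{0}})^{2}T^{\alpha }))\), the form that separation of variables gives for problem (1.1) with zero initial and boundary data (an assumption here, since the corresponding formulas are not reproduced), and checks the two-sided bound \(\frac{C}{\mu _{n}^{2}}\leq \sigma _{n}\leq \frac{r_{0}^{2}}{\mu _{n}^{2}}\), which we assume is the inequality of the lemma. Because \((\frac{\mu _{n}}{r_{0}})^{2}T^{\alpha }\) quickly becomes large, \(E_{\alpha ,1}(-x)\) is computed from its completely monotone integral representation (valid for \(0<\alpha <1\)) instead of the power series. Only the standard library is used; \(\alpha =0.6\), \(r_{0}=\pi \) and \(T=1\) are example values, and all helper names are hypothetical.

```python
import math


def j0(x: float, m: int = 100) -> float:
    """Bessel J0 from J0(x) = (1/pi) * int_0^pi cos(x*sin(t)) dt with the trapezoid rule.

    The integrand is smooth, even and periodic, so the rule converges very fast."""
    # the two endpoint terms 0.5*cos(0) + 0.5*cos(x*sin(pi)) equal 1
    total = 1.0 + sum(math.cos(x * math.sin(k * math.pi / m)) for k in range(1, m))
    return total / m


def j0_zeros(n: int) -> list:
    """First n positive zeros mu_1 < ... < mu_n of J0, by bisection around (k - 1/4)*pi."""
    zeros = []
    for k in range(1, n + 1):
        a, b = (k - 0.25) * math.pi - 0.5, (k - 0.25) * math.pi + 0.5
        fa = j0(a)
        for _ in range(60):
            c = 0.5 * (a + b)
            fc = j0(c)
            if (fa < 0) == (fc < 0):
                a, fa = c, fc
            else:
                b = c
        zeros.append(0.5 * (a + b))
    return zeros


def ml_neg(x: float, alpha: float, n: int = 20000) -> float:
    """E_{alpha,1}(-x) for x >= 0 and 0 < alpha < 1 via
    E_alpha(-x) = sin(alpha*pi)/(alpha*pi) * int_0^inf exp(-(v*x)**(1/alpha)) / (v**2 + 2*v*cos(alpha*pi) + 1) dv,
    mapped to [0, 1] by v = w/(1 - w) and integrated with the composite Simpson rule (n even)."""
    if x == 0:
        return 1.0
    c = math.cos(alpha * math.pi)

    def integrand(w: float) -> float:
        if w >= 1.0:
            return 0.0  # the exponential factor vanishes as v -> infinity when x > 0
        v = w / (1.0 - w)
        return math.exp(-((v * x) ** (1.0 / alpha))) / ((v * v + 2.0 * v * c + 1.0) * (1.0 - w) ** 2)

    h = 1.0 / n
    s = integrand(0.0) + integrand(1.0)
    s += 4.0 * sum(integrand((2 * i - 1) * h) for i in range(1, n // 2 + 1))
    s += 2.0 * sum(integrand(2 * i * h) for i in range(1, n // 2))
    return math.sin(alpha * math.pi) / (alpha * math.pi) * s * h / 3.0


def singular_values(alpha: float, T: float, r0: float, n: int):
    """Assumed form sigma_n = (r0/mu_n)^2 * (1 - E_{alpha,1}(-(mu_n/r0)^2 * T^alpha))."""
    mus = j0_zeros(n)
    sigmas = [(r0 / mu) ** 2 * (1.0 - ml_neg((mu / r0) ** 2 * T ** alpha, alpha)) for mu in mus]
    return mus, sigmas


alpha, T, r0 = 0.6, 1.0, math.pi  # example values; r0 = pi and T = 1 as in Sect. 5
mus, sigmas = singular_values(alpha, T, r0, 8)
C = r0 ** 2 * (1.0 - ml_neg((mus[0] / r0) ** 2 * T ** alpha, alpha))  # constant of Lemma 2.4
for mu, s in zip(mus, sigmas):
    print(f"mu = {mu:8.4f}  C/mu^2 = {C / mu ** 2:.3e}  sigma = {s:.3e}  "
          f"r0^2/mu^2 = {r0 ** 2 / mu ** 2:.3e}  1/sigma = {1 / s:8.2f}")
```

The printed column \(1/\sigma _{n}\) grows roughly like \(\mu _{n}^{2}\), which is the amplification behind the ill-posedness discussed in Sect. 3.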

Lemma 2.5

For any \(p>0\), \(\nu >0\), \(s\geq \mu _{1}>0\), we have

where \(C_{1}=C_{1}(p,C)>0\) and \(C_{2}=C_{2}(p, C, \mu _{1})>0\).

Proof

If \(0<p<4\), then \(\lim_{s\rightarrow 0}F(s)=\lim_{s\rightarrow +\infty }F(s)=0\), so \(F\) attains its maximum on \((0,\infty )\) at an interior point, and

where \(s^{\ast }\in (0,\infty )\) satisfies \(F'(s^{\ast })=0\), that is, \(s^{\ast }=(\frac{(4-p)C^{2}}{p\nu })^{\frac{1}{4}}\).

If \(p\geq 4\), we obtain

Lemma 2.5 is proved. □
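
The proof does not write out \(F\). A sketch with explicit constants follows, assuming \(F(s)=\frac{\nu s^{4-p}}{\nu s^{4}+C^{2}}\): this is the form that the lower bound \(\sigma _{n}\geq \frac{C}{\mu _{n}^{2}}\) of Lemma 2.4 would produce in the bias term of Theorem 4.1, and it is consistent with the stated \(s^{\ast }\) and with \(C_{1}=C_{1}(p,C)\). It is not the authors' own computation.

```latex
F(s)=\frac{\nu s^{4-p}}{\nu s^{4}+C^{2}},\qquad
F'(s)=\frac{\nu s^{3-p}\bigl[(4-p)C^{2}-p\nu s^{4}\bigr]}{(\nu s^{4}+C^{2})^{2}}.

% 0 < p < 4: F' vanishes only at s^*, where \nu (s^*)^4 + C^2 = 4C^2/p
F(s)\leq F(s^{\ast })=\frac{p\,\nu (s^{\ast })^{4-p}}{4C^{2}}
=\frac{1}{4}\,p^{\frac{p}{4}}(4-p)^{\frac{4-p}{4}}C^{-\frac{p}{2}}\,\nu ^{\frac{p}{4}}=:C_{1}\nu ^{\frac{p}{4}}.

% p >= 4 and s >= \mu_1: s^{4-p} is nonincreasing in s
F(s)\leq \frac{\nu s^{4-p}}{C^{2}}\leq \frac{\mu _{1}^{4-p}}{C^{2}}\,\nu =:C_{2}\nu .
```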

Lemma 2.6

For any \(p>0\), \(\nu >0\), \(s\geq \mu _{1}>0\), we have

where \(C_{3}=C_{3}(p,C)>0\) and \(C_{4}=C_{4}(p, C, \mu _{1})>0\).

Proof

If \(0<p<2\), then \(\lim_{s\rightarrow 0}M(s)=\lim_{s\rightarrow +\infty }M(s)=0\), so \(M\) attains its maximum on \((0,\infty )\) at an interior point, and

where \(s^{\ast }\in (0,\infty )\) satisfies \(M'(s^{\ast })=0\), that is, \(s^{\ast }=(\frac{(2-p)C^{2}}{(p+2)\nu })^{\frac{1}{4}}\).

If \(p\geq 2\), we obtain

Lemma 2.6 is proved. □
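
As with Lemma 2.5, the function \(M\) is not written out. Assume \(M(s)=\frac{\nu s^{2-p}}{\nu s^{4}+C^{2}}\), which matches the stated stationary point \(s^{\ast }\) and arises (up to the factor \(r_{0}^{2}\)) when \(\frac{\nu \sigma _{n}\mu _{n}^{-p}}{\sigma _{n}^{2}+\nu }\) is bounded using \(\frac{C}{\mu _{n}^{2}}\leq \sigma _{n}\leq \frac{r_{0}^{2}}{\mu _{n}^{2}}\). The same steps as for Lemma 2.5 then give \(C_{3}=\frac{1}{4}(p+2)^{\frac{p+2}{4}}(2-p)^{\frac{2-p}{4}}C^{-\frac{p+2}{2}}\) and \(C_{4}=\frac{\mu _{1}^{2-p}}{C^{2}}\). The Python sketch below checks these bounds against a brute-force maximum on a logarithmic grid. The values of \(C\) and \(\mu _{1}\) are illustrative, and all names are hypothetical.

```python
def M(s: float, p: float, nu: float, C: float) -> float:
    """Assumed form of the function in Lemma 2.6 (not written out in the text)."""
    return nu * s ** (2 - p) / (nu * s ** 4 + C ** 2)


def s_star(p: float, nu: float, C: float) -> float:
    """Stationary point stated in the proof of Lemma 2.6 (valid for 0 < p < 2)."""
    return ((2 - p) * C ** 2 / ((p + 2) * nu)) ** 0.25


def lemma26_bound(p: float, nu: float, C: float, mu1: float) -> float:
    """C3 * nu**((p + 2)/4) for 0 < p < 2 and C4 * nu for p >= 2, with constants derived from s*."""
    if p < 2:
        c3 = 0.25 * (p + 2) ** ((p + 2) / 4) * (2 - p) ** ((2 - p) / 4) * C ** (-(p + 2) / 2)
        return c3 * nu ** ((p + 2) / 4)
    c4 = mu1 ** (2 - p) / C ** 2
    return c4 * nu


def grid_sup(p: float, nu: float, C: float, mu1: float,
             decades: int = 4, per_decade: int = 2000) -> float:
    """Brute-force maximum of M over s in [mu1, mu1 * 10**decades] on a logarithmic grid."""
    return max(M(mu1 * 10 ** (k / per_decade), p, nu, C) for k in range(decades * per_decade + 1))


C, mu1 = 1.3, 2.404825557695773  # illustrative values; C would come from Lemma 2.4
for p in (0.5, 1.5, 3.0):
    for nu in (1e-3, 1e-6):
        print(f"p={p}, nu={nu:g}: grid max {grid_sup(p, nu, C, mu1):.4e}, "
              f"bound {lemma26_bound(p, nu, C, mu1):.4e}")
```

When \(0<p<2\) and \(s^{\ast }\geq \mu _{1}\), the grid maximum essentially attains the bound, so for the assumed \(M\) the constant \(C_{3}\) cannot be improved.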

3 Ill-posedness and a conditional stability

By separation of variables, we obtain the solution of problem (1.1) as follows [39]:

where \(f_{n}=(f(r),\omega _{n}(r))\), \(\omega _{n}(r)=\frac{\sqrt{2}}{r_{0}J_{1}(\mu _{n})}J_{0}(\frac{\mu _{n}r}{r_{0}})\), \(n=1,2,3,\ldots \), and \(\{\omega _{n}(r)\}_{n=1}^{\infty }\) is an orthonormal basis of \(L^{2}[0,r_{0};r]\); \(J_{0}(\cdot )\) and \(J_{1}(\cdot )\) are the Bessel functions of the first kind of order zero and one, respectively. Here \(\mu _{n}\) denotes the \(n\)th positive real root of the equation

\[
J_{0}(x)=0,
\]

and the roots satisfy

\[
0<\mu _{1}<\mu _{2}<\cdots <\mu _{n}<\cdots ,\qquad \lim_{n\rightarrow \infty }\mu _{n}=\infty .
\]

Throughout this paper, \(L^{2}[0,r_{0};r]\) denotes the Hilbert space of Lebesgue measurable functions \(f\) with weight \(r\) on \([0,r_{0}]\). \((\cdot ,\cdot )\) and \(\|\cdot \|\) denote the inner product and norm on \(L^{2}[0,r_{0};r]\), respectively, with the norm

\[
\|f\|=\Bigl(\int _{0}^{r_{0}}r|f(r)|^{2}\,dr\Bigr)^{\frac{1}{2}}.
\]
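
The weight \(r\) in this inner product is what makes \(\{\omega _{n}(r)\}\) orthonormal. The Python sketch below checks \((\omega _{m},\omega _{n})=\delta _{mn}\) numerically for \(r_{0}=\pi \) (the value used in Sect. 5), with \(\omega _{n}(r)=\frac{\sqrt{2}}{r_{0}J_{1}(\mu _{n})}J_{0}(\frac{\mu _{n}r}{r_{0}})\). The Bessel functions are evaluated through their standard integral representation and \(\mu _{n}\) by bisection; only the standard library is used, and all helper names are hypothetical.

```python
import math


def bessel_j(order: int, x: float, m: int = 100) -> float:
    """Integer-order J_order(x) = (1/pi) * int_0^pi cos(order*t - x*sin(t)) dt, trapezoid rule.

    The integrand extends to a smooth, even, 2*pi-periodic function, so the rule converges very fast."""
    f = [math.cos(order * t - x * math.sin(t)) for t in (k * math.pi / m for k in range(m + 1))]
    return (0.5 * f[0] + sum(f[1:-1]) + 0.5 * f[-1]) / m


def j0_zeros(n: int) -> list:
    """First n positive roots of J0(x) = 0, by bisection on [(k - 1/4)*pi - 0.5, (k - 1/4)*pi + 0.5]."""
    zeros = []
    for k in range(1, n + 1):
        a, b = (k - 0.25) * math.pi - 0.5, (k - 0.25) * math.pi + 0.5
        fa = bessel_j(0, a)
        for _ in range(60):
            c = 0.5 * (a + b)
            fc = bessel_j(0, c)
            if (fa < 0) == (fc < 0):
                a, fa = c, fc
            else:
                b = c
        zeros.append(0.5 * (a + b))
    return zeros


def gram_matrix(n: int, r0: float = math.pi, steps: int = 2000) -> list:
    """Matrix of (omega_m, omega_n) = int_0^r0 r * omega_m(r) * omega_n(r) dr (composite Simpson rule)."""
    mus = j0_zeros(n)
    h = r0 / steps
    rs = [i * h for i in range(steps + 1)]
    weights = [1.0 if i in (0, steps) else (4.0 if i % 2 else 2.0) for i in range(steps + 1)]
    norms = [math.sqrt(2.0) / (r0 * bessel_j(1, mu)) for mu in mus]
    # omega_n(r) = sqrt(2) / (r0 * J1(mu_n)) * J0(mu_n * r / r0), sampled on the grid
    omegas = [[c * bessel_j(0, mu * r / r0) for r in rs] for mu, c in zip(mus, norms)]
    return [[h / 3.0 * sum(w * r * a * b for w, r, a, b in zip(weights, rs, om_m, om_n))
             for om_n in omegas] for om_m in omegas]


G = gram_matrix(3)
for row in G:
    print("  ".join(f"{entry: .6f}" for entry in row))
```

The printed matrix should be close to the \(3\times 3\) identity. Dropping the factor \(r\) from the integrand destroys both orthogonality and normalization.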

Imposing the condition \(u(r,T)=g(r)\), we have

where \(g_{n}=(g(r),\omega _{n}(r))\). Defining the operator \(K: f\rightarrow g\), we get

The operator \(K\) is a linear self-adjoint compact operator with singular values \(\{\sigma _{n}\}_{n=1}^{\infty }\), where

and we also have

Then we can obtain

So

By using Lemma 2.4 and (3.7), we have

and

Since \(\mu _{n}\rightarrow \infty \) as \(n\rightarrow \infty \), we have \(\frac{1}{\sigma _{n}}\rightarrow \infty \). Hence the Fourier coefficients \((g,\omega _{n}(r))\) of the exact data \(g(r)\) must decay rapidly. This decay cannot be guaranteed for measured data: a small perturbation of \(g(r)\) in the high-frequency components is amplified by the factor \(\frac{1}{\sigma _{n}}\) and causes a large error in the recovered source. Therefore problem (1.1) is ill-posed. We assume that the unknown source \(f(r)\) satisfies the a priori bound

\[
\|f(r)\|_{H^{p}}\leq E, \tag{3.12}
\]

where \(E>0\) is a constant and \(\|\cdot \|_{H^{p}}\) denotes the norm of the Hilbert space \(H^{p}\), defined as follows [40]:

The conditional stability of the inverse source problem is stated in Theorem 3.1.

Theorem 3.1

Let the a priori bound (3.12) hold. Then we have

Proof

Due to (3.10), (3.11), (3.12), and the Hölder inequality, we obtain

This completes the proof of Theorem 3.1. □
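
The Hölder step in the proof can be written out as follows. This sketch assumes \(\|f\|_{H^{p}}=(\sum_{n=1}^{\infty }\mu _{n}^{2p}|f_{n}|^{2})^{\frac{1}{2}}\) (the definition from [40] is not reproduced above), and it uses the lower bound \(\sigma _{n}\geq \frac{C}{\mu _{n}^{2}}\) together with \(g_{n}=\sigma _{n}f_{n}\). Hölder's inequality is applied with the conjugate exponents \(\frac{p+2}{2}\) and \(\frac{p+2}{p}\).

```latex
\begin{aligned}
\|f\|^{2}=\sum_{n=1}^{\infty }|f_{n}|^{2}
&=\sum_{n=1}^{\infty }\bigl(\mu _{n}^{2p}|f_{n}|^{2}\bigr)^{\frac{2}{p+2}}
  \bigl(\mu _{n}^{-4}|f_{n}|^{2}\bigr)^{\frac{p}{p+2}}\\
&\leq \Bigl(\sum_{n=1}^{\infty }\mu _{n}^{2p}|f_{n}|^{2}\Bigr)^{\frac{2}{p+2}}
  \Bigl(\sum_{n=1}^{\infty }\mu _{n}^{-4}|f_{n}|^{2}\Bigr)^{\frac{p}{p+2}}\\
&\leq E^{\frac{4}{p+2}}\Bigl(C^{-2}\sum_{n=1}^{\infty }\sigma _{n}^{2}|f_{n}|^{2}\Bigr)^{\frac{p}{p+2}}
 =E^{\frac{4}{p+2}}C^{-\frac{2p}{p+2}}\|g\|^{\frac{2p}{p+2}},
\end{aligned}
\qquad\text{so}\qquad
\|f\|\leq C^{-\frac{p}{p+2}}E^{\frac{2}{p+2}}\|g\|^{\frac{p}{p+2}} .
```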

4 Regularization method and convergence estimate

In this section, we use the Tikhonov regularization method to obtain a regularization solution of problem (1.1). From Sect. 3, we know that \(\{\omega _{n}(r)\}_{n=1}^{\infty }\) is an orthonormal basis of \(L^{2}[0,r_{0};r]\), that \(\{\sigma _{n}\}_{n=1}^{\infty }\) are the singular values of the linear self-adjoint compact operator \(K\), and that

We adopt the Tikhonov regularization method to solve the ill-posed problem; it minimizes the functional

\[
\|Kf-g\|^{2}+\nu \|f\|^{2},
\]

where \(\nu >0\) is the regularization parameter. By Theorem 2.12 of [41], the minimizer \(f_{\nu }(r)\) satisfies

\[
K^{*}Kf_{\nu }+\nu f_{\nu }=K^{*}g.
\]

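By the singular value decomposition of the compact self-adjoint operator \(K\), we have [42]

\[
f_{\nu }(r)=\sum_{n=1}^{\infty }\frac{\sigma _{n}}{\sigma _{n}^{2}+\nu }g_{n}\omega _{n}(r).
\]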

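Replacing \(g\) by the measured data \(g^{\delta }\), we obtain the regularization solution

\[
f_{\nu }^{\delta }(r)=\sum_{n=1}^{\infty }\frac{\sigma _{n}}{\sigma _{n}^{2}+\nu }g_{n}^{\delta }\omega _{n}(r),\qquad g_{n}^{\delta }=(g^{\delta }(r),\omega _{n}(r)). \tag{4.3}
\]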
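
In coefficient form, (4.3) replaces the unstable factor \(\frac{1}{\sigma _{n}}\) of the exact inverse by the filter \(\frac{\sigma _{n}}{\sigma _{n}^{2}+\nu }\), which is bounded by \(\frac{1}{2\sqrt{\nu }}\). The minimal Python sketch below compares the two on synthetic coefficients. \(\sigma _{n}=n^{-2}\) mimics the \(\mu _{n}^{-2}\) decay of the singular values, \(f_{n}=n^{-3}\) is an arbitrary smooth source, every data coefficient is perturbed by \(10^{-4}\), and \(\nu =10^{-4}\) is fixed by hand. None of these values comes from the paper, and the function names are hypothetical.

```python
def tikhonov_coefficients(g_delta, sigma, nu):
    """Coefficients of f_nu^delta in the basis {omega_n}: sigma_n / (sigma_n^2 + nu) * g_n^delta."""
    return [s / (s * s + nu) * g for s, g in zip(sigma, g_delta)]


def naive_coefficients(g_delta, sigma):
    """Unregularized inversion g_n / sigma_n, which amplifies the noise by 1 / sigma_n."""
    return [g / s for s, g in zip(sigma, g_delta)]


def l2(v):
    """Euclidean norm of a coefficient list (equals the L^2[0, r0; r] norm by Parseval)."""
    return sum(x * x for x in v) ** 0.5


# synthetic example: sigma_n = n^-2 (like mu_n^-2) and a smooth exact source f_n = n^-3
N = 200
sigma = [1.0 / n ** 2 for n in range(1, N + 1)]
f_exact = [1.0 / n ** 3 for n in range(1, N + 1)]
g_exact = [s * f for s, f in zip(sigma, f_exact)]
# deterministic perturbation of size 1e-4 in every coefficient (stand-in for measurement noise)
g_delta = [g + 1e-4 * (-1) ** n for n, g in enumerate(g_exact)]

err_naive = l2([a - b for a, b in zip(naive_coefficients(g_delta, sigma), f_exact)])
err_tik = l2([a - b for a, b in zip(tikhonov_coefficients(g_delta, sigma, 1e-4), f_exact)])
print(f"naive error {err_naive:.3e}, Tikhonov error {err_tik:.3e}")
```

The unregularized error is dominated by the largest factor \(\frac{1}{\sigma _{n}}\). The noise part of the Tikhonov error stays below \(\frac{\delta }{2\sqrt{\nu }}\), where \(\delta \) is the norm of the perturbation, and the remaining part is the bias term.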

4.1 An a priori parameter choice

In this subsection, we give error estimates under a suitable a priori choice of the regularization parameter.

Theorem 4.1

Let \(f(r)\) given by (3.10) be the exact solution of problem (1.1). Let \(g^{\delta }(r)\) satisfy condition (1.3). Let the a priori condition (3.12) hold for \(p>0\). Let \(f_{\nu }^{\delta }(r)\) given by (4.3) be the Tikhonov regularization solution. If the regularization parameter \(\nu \) satisfies

then the following error estimates hold:

where \(C_{1}\), \(C_{2}\) are positive constants depending on \(p\), \(C\), \(\mu _{1}\).

Proof

By the triangle inequality, we have

Using (4.3) and (1.3), we obtain

Considering (3.10) and condition (3.12), we can obtain

where

Using (3.11) and Lemma 2.5, we get

So

We choose the regularization parameter as follows:

then we have

Theorem 4.1 is proved. □
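
The parameter choice in Theorem 4.1 is not reproduced above. A common way to arrive at it is sketched below. It assumes that the proof bounds the noise term through \(\frac{\sigma _{n}}{\sigma _{n}^{2}+\nu }\leq \frac{1}{2\sqrt{\nu }}\) and the bias term through Lemma 2.5 (with \(F\) as in the form assumed there), and then balances the two terms. This is the usual argument, not a restatement of the authors' formulas.

```latex
\|f_{\nu }^{\delta }-f\|\leq \|f_{\nu }^{\delta }-f_{\nu }\|+\|f_{\nu }-f\|
\leq \frac{\delta }{2\sqrt{\nu }}+E\sup_{s\geq \mu _{1}}F(s).

% 0 < p < 4: \delta\nu^{-1/2} and E\nu^{p/4} balance at \nu = (\delta/E)^{4/(p+2)}
\nu =\Bigl(\frac{\delta }{E}\Bigr)^{\frac{4}{p+2}}
\;\Longrightarrow\;
\|f_{\nu }^{\delta }-f\|\leq \Bigl(\frac{1}{2}+C_{1}\Bigr)E^{\frac{2}{p+2}}\delta ^{\frac{p}{p+2}}.

% p >= 4: \delta\nu^{-1/2} and E\nu balance at \nu = (\delta/E)^{2/3}
\nu =\Bigl(\frac{\delta }{E}\Bigr)^{\frac{2}{3}}
\;\Longrightarrow\;
\|f_{\nu }^{\delta }-f\|\leq \Bigl(\frac{1}{2}+C_{2}\Bigr)E^{\frac{1}{3}}\delta ^{\frac{2}{3}}.
```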

4.2 An a posteriori selection rule

In this subsection, we use Morozov's discrepancy principle to choose the regularization parameter a posteriori. That is, we choose \(\nu \) as the solution of the equation

\[
\|Kf_{\nu }^{\delta }-g^{\delta }\|=\tau \delta , \tag{4.5}
\]

where \(\tau >1\) is a constant. By Lemma 4.1, Eq. (4.5) has a unique solution whenever \(\|g^{\delta }\|>\tau \delta \).

Lemma 4.1

Let \(\delta >0\) and \(d(\nu ):=\|Kf_{\nu }^{\delta }-g^{\delta }\|\). If \(\|g^{\delta }\|>\tau \delta \), then \(d(\nu )\) has the following properties:

- (a)

\(d(\nu )\) is a continuous function;

- (b)

\(\lim_{\nu \rightarrow 0}d(\nu )=0\);

- (c)

\(\lim_{\nu \rightarrow \infty }d(\nu )=\|g ^{\delta }\|\);

- (d)

\(d(\nu )\) is a strictly increasing function on \((0,\infty )\).

We omit the proof of Lemma 4.1.
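
Properties (a)–(d) justify solving (4.5) with a one-dimensional root search, because \(d(\nu )-\tau \delta \) changes sign exactly once on \((0,\infty )\). The minimal Python sketch below works with coefficients. By (4.3), \(Kf_{\nu }^{\delta }-g^{\delta }=-\sum_{n}\frac{\nu }{\sigma _{n}^{2}+\nu }g_{n}^{\delta }\omega _{n}\), so Parseval gives \(d(\nu )^{2}=\sum_{n}(\frac{\nu }{\sigma _{n}^{2}+\nu })^{2}|g_{n}^{\delta }|^{2}\). Bisection in \(\log \nu \) is our choice and not necessarily the authors' procedure. The synthetic data, \(\tau =1.1\) and all names are hypothetical.

```python
import math


def discrepancy(nu, sigma, g_delta):
    """d(nu) = ||K f_nu^delta - g^delta||, computed from coefficients via Parseval."""
    return math.sqrt(sum((nu / (s * s + nu) * g) ** 2 for s, g in zip(sigma, g_delta)))


def morozov_nu(sigma, g_delta, delta, tau=1.1, lo=1e-16, hi=1e4, iters=200):
    """Solve d(nu) = tau * delta by bisection on log(nu); d is continuous and strictly increasing."""
    if math.sqrt(sum(g * g for g in g_delta)) <= tau * delta:
        raise ValueError("Lemma 4.1 requires ||g^delta|| > tau * delta")
    if not discrepancy(lo, sigma, g_delta) < tau * delta < discrepancy(hi, sigma, g_delta):
        raise ValueError("the bracket [lo, hi] does not contain the root; widen it")
    a, b = math.log(lo), math.log(hi)
    for _ in range(iters):
        m = 0.5 * (a + b)
        if discrepancy(math.exp(m), sigma, g_delta) < tau * delta:
            a = m  # residual too small: nu must increase
        else:
            b = m
    return math.exp(0.5 * (a + b))


# synthetic data: sigma_n = n^-2, f_n = n^-3, noise vector of norm exactly delta
N = 200
sigma = [1.0 / n ** 2 for n in range(1, N + 1)]
g_exact = [s / n ** 3 for n, s in enumerate(sigma, start=1)]
delta = 1e-4
g_delta = [g + delta * (-1) ** n / math.sqrt(N) for n, g in enumerate(g_exact, start=1)]
nu = morozov_nu(sigma, g_delta, delta)
print(f"nu = {nu:.3e}, d(nu) = {discrepancy(nu, sigma, g_delta):.3e}, tau*delta = {1.1 * delta:.3e}")
```

Searching in \(\log \nu \) suits the fact that suitable parameters can span many orders of magnitude. Because \(d\) is strictly increasing (Lemma 4.1(d)), every bisection step keeps the unique root inside the bracket.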

Lemma 4.2

Let \(\nu \) be the solution of (4.5). Then the following inequality holds:

where \(C_{3}\), \(C_{4}\) are positive constants depending on \(p\), \(C\), \(\mu _{1}\).

Proof

Using (4.5), we have

where

Utilizing (3.11) and Lemma 2.6, we get

then

So we can obtain

□

Next, we give the error estimate under the a posteriori choice rule.

Theorem 4.2

Let \(f(r)\) given by (3.10) be the exact solution of problem (1.1). Let \(f_{\nu }^{\delta }\) given by (4.3) be the Tikhonov regularization solution. Let the regularization parameter \(\nu \) be chosen as the solution of Eq. (4.5). Then the following error estimates hold:

Proof

Utilizing the triangle inequality, we have

Using Lemma 4.2 and (4.4), we get

For the second term on the right-hand side of (4.6), using (1.3) and (4.5), we obtain for \(0<p<2\)

We have

Using Theorem 3.1, we get

For \(p\geq 2\), since \(H^{p}\) is continuously embedded in \(H^{2}\), there exists a constant \(m>0\) such that \(\|f(r)\|_{H^{2}}\leq m\|f(r)\|_{H^{p}} \leq mE\); hence

It is clear that

The proof of Theorem 4.2 is completed. □

5 Numerical experiments

In this section, we use three examples to illustrate the effectiveness and stability of the Tikhonov regularization method under the two parameter choice rules. First, we obtain \(g(r)\) by solving the following direct problem:

Let \(r_{0}=\pi \) and \(T=1\). We discretize the above equation by the finite difference method with \(\Delta r=\frac{\pi }{M}\), \(\Delta t= \frac{1}{N}\), \(r_{i}=i\Delta r\) (\(i=0,1,2,\ldots ,M\)) and \(t_{n}=n\Delta t \) (\(n=0,1,2,\ldots ,N\)). In our numerical computations, we take \(M=N=50\). The approximate value of \(u\) at the grid point \((r_{i},t_{j})\) is denoted by \(u_{i,j}\approx u(r_{i},t_{j})\). The time-fractional derivative is discretized by the following scheme [43, 44]:

where \(i=1,2,\ldots ,M-1\); \(n=1,2,\ldots ,N\) and \(b_{j}=(j+1)^{1-\alpha }-j^{1-\alpha }\).
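
The weights \(b_{j}\) are those of the L1 approximation of the Caputo derivative (1.2). We assume the elided scheme has the standard form \(D_{t}^{\alpha }u(t_{n})\approx \frac{\Delta t^{-\alpha }}{\Gamma (2-\alpha )}\sum_{j=0}^{n-1}b_{j}(u(t_{n-j})-u(t_{n-j-1}))\). The full scheme for the direct problem is not reproduced above, so the Python sketch below implements only this time discretization for a scalar function of \(t\) (the name `caputo_l1` is hypothetical). It checks the result on \(u(t)=t^{2}\), whose Caputo derivative is \(\frac{2t^{2-\alpha }}{\Gamma (3-\alpha )}\).

```python
import math


def caputo_l1(u_values, dt, alpha):
    """L1 approximation of the Caputo derivative D_t^alpha u at t_n = n*dt, n = 1..N.

    u_values[k] holds u(t_k) for k = 0..N; uses b_j = (j+1)**(1-alpha) - j**(1-alpha) and
    D^alpha u(t_n) ~ dt**(-alpha) / Gamma(2-alpha) * sum_{j=0}^{n-1} b_j * (u^{n-j} - u^{n-j-1}).
    """
    N = len(u_values) - 1
    b = [(j + 1) ** (1 - alpha) - j ** (1 - alpha) for j in range(N)]
    scale = dt ** (-alpha) / math.gamma(2 - alpha)
    return [scale * sum(b[j] * (u_values[n - j] - u_values[n - j - 1]) for j in range(n))
            for n in range(1, N + 1)]


alpha, T, N = 0.6, 1.0, 50  # N = 50 time steps, as in the text
dt = T / N
t = [k * dt for k in range(N + 1)]
approx = caputo_l1([tk ** 2 for tk in t], dt, alpha)
exact = 2.0 * T ** (2 - alpha) / math.gamma(3 - alpha)
print(f"L1 value at t = T: {approx[-1]:.6f}, exact: {exact:.6f}")
```

The L1 formula is exact for functions that are linear in \(t\) (the sum of the \(b_{j}\) telescopes to \(n^{1-\alpha }\)). For smooth \(u\), its error is of order \(\Delta t^{2-\alpha }\).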

The noisy data \(g^{\delta }\) are generated by adding a random perturbation, i.e.,

where ε reflects the relative error level.
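
The perturbation formula is not reproduced above. As an assumption for illustration only, the sketch below uses multiplicative uniform noise \(g^{\delta }(r_{i})=g(r_{i})(1+\varepsilon \theta _{i})\) with \(\theta _{i}\) uniform on \([-1,1]\). It estimates the resulting \(\delta \) in the norm of \(L^{2}[0,r_{0};r]\) with a simple Riemann sum on the grid \(r_{i}=i\Delta r\). The data \(g(r)=\sin r\) is a placeholder for the output of the direct solver, and all names are hypothetical.

```python
import math
import random


def add_relative_noise(g, eps, seed=None):
    """Hypothetical noise model: g_delta_i = g_i * (1 + eps * theta_i), theta_i ~ U[-1, 1]."""
    rng = random.Random(seed)
    return [gi * (1.0 + eps * rng.uniform(-1.0, 1.0)) for gi in g]


def weighted_norm(v, r, dr):
    """Riemann-sum approximation of the L^2[0, r0; r] norm (int_0^r0 r |v(r)|^2 dr)^(1/2)."""
    return math.sqrt(sum(ri * vi * vi for ri, vi in zip(r, v)) * dr)


M = 50
dr = math.pi / M
r = [i * dr for i in range(M + 1)]
g = [math.sin(ri) for ri in r]  # placeholder data; in the text, g comes from the direct solver
g_delta = add_relative_noise(g, 1e-3, seed=0)
delta = weighted_norm([a - b for a, b in zip(g_delta, g)], r, dr)
print(f"delta = {delta:.3e}")
```

Because \(|g^{\delta }(r_{i})-g(r_{i})|\leq \varepsilon |g(r_{i})|\) at every grid point, the resulting \(\delta \) never exceeds \(\varepsilon \) times the discrete norm of \(g\).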

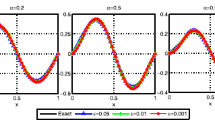

Example 1

Take the source function \(f(r)=r\sin(r)\).

Example 2

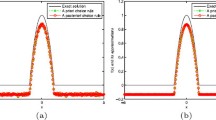

Consider the piecewise smooth function

Example 3

Consider the discontinuous function

Figure 1 compares the exact solution with the regularization solutions obtained under the a priori and a posteriori parameter choice rules for Example 1 with \(\alpha =0.2\), for \(\varepsilon =0.001\) and \(\varepsilon =0.0001\). Figure 2 shows the same comparison for Example 1 with \(\alpha =0.6\). Figures 3 and 4 show the corresponding comparisons for Example 2 with \(\alpha =0.2\) and \(\alpha =0.6\), respectively, and Figs. 5 and 6 show those for Example 3 with \(\alpha =0.2\) and \(\alpha =0.6\), respectively. Figures 1–6 show that the smaller ε is, the better the computed approximation. Moreover, smaller values of α also give better results. Finally, the results for Example 1 are better than those for Examples 2 and 3. In those examples the exact solutions are non-smooth (Example 2) and discontinuous (Example 3), and the reconstructions near the points of non-smoothness and discontinuity are inaccurate. This is the well-known Gibbs phenomenon. Nevertheless, given the ill-posedness of the problem, the results presented in Figs. 3–6 are reasonable.

The comparison of numerical effects between the exact solution and regularization solution for Example 1, \(\alpha =0.6\): (a) \(\varepsilon =0.001\), (b) \(\varepsilon =0.0001\)

The comparison of numerical effects between the exact solution and regularization solution for Example 1, \(\alpha =0.2\): (a) \(\varepsilon =0.001\), (b) \(\varepsilon =0.0001\)

The comparison of numerical effects between the exact solution and regularization solution for Example 2, \(\alpha =0.6\): (a) \(\varepsilon =0.001\), (b) \(\varepsilon =0.0001\)

The comparison of numerical effects between the exact solution and regularization solution for Example 2, \(\alpha =0.2\): (a) \(\varepsilon =0.001\), (b) \(\varepsilon =0.0001\)

The comparison of numerical effects between the exact solution and regularization solution for Example 3, \(\alpha =0.6\): (a) \(\varepsilon =0.001\), (b) \(\varepsilon =0.0001\)

The comparison of numerical effects between the exact solution and regularization solution for Example 3, \(\alpha =0.2\): (a) \(\varepsilon =0.001\), (b) \(\varepsilon =0.0001\)

6 Conclusion

In this paper, we use the Tikhonov regularization method to identify the source of the time-fractional diffusion equation on a columnar symmetric domain. Based on a conditional stability result, error estimates are obtained under both the a priori and the a posteriori choice rules of the regularization parameter. The numerical examples verify the efficiency and accuracy of the method. Our original contribution is that, to the best of our knowledge, this is the first work to identify the source of the time-fractional diffusion equation on a columnar symmetric domain; moreover, we give an a posteriori parameter choice rule that depends only on the measured data. In future work, we will consider the inverse problem of identifying the initial value of the time-fractional diffusion equation on a columnar symmetric domain and analyze optimal error estimates. In addition, this paper considers the Caputo fractional derivative of order \(0<\alpha <1\), whereas Refs. [45–52] study Caputo–Fabrizio fractional integro-differential equations, which are very useful in practice. We will consider the inverse problem for Caputo–Fabrizio fractional integro-differential equations and solve it with the Tikhonov regularization method.

References

Debnath, L.: Recent applications of fractional calculus to science and engineering. Int. J. Math. Math. Sci. 54, 3413–3442 (2003)

Hatano, Y., Hatano, N.: Dispersive transport of ions in column experiments: an explanation of long-tailed profiles. Water Resour. Res. 34, 1027–1033 (1998)

Ginoa, M., Cerbelli, S., Roman, H.E.: Fractional diffusion equation and relaxation in complex viscoelastic materials. Physica A 191, 449–453 (1992)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Metzler, R., Klafter, J.: Boundary value problems for fractional diffusion equations. Physica A 278, 107–125 (2000)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Singh, J., Kumar, D., Baleanu, D., Rathore, S.: On the local fractional wave equation in fractal strings. Math. Methods Appl. Sci. 42(5), 1588–1595 (2019)

Singh, J., Kumar, D., Baleanu, D.: New aspects of fractional Biswas–Milovic model with Mittag-Leffler law. Math. Model. Nat. Phenom. 14, 303 (2019)

Kumar, D., Singh, J., Baleanu, D.: On the analysis of vibration equation involving a fractional derivative with Mittag-Leffler law. Math. Methods Appl. Sci. (2019). https://doi.org/10.1002/mma.5903

Goswami, A., Singh, J., Kumar, D.: An efficient analytical approach for fractional equal width equations describing hydro-magnetic waves in cold plasma. Physica A 524, 563–575 (2019)

Goswami, A., Singh, J., Kumar, D.: An efficient analytical technique for fractional partial differential equations occurring in ion acoustic waves in plasma. J. Ocean Eng. Sci. 4(2), 85–99 (2019)

Sokolov, I.M., Klafter, J.: From diffusion to anomalous diffusion: a century after Einstein's Brownian motion. Chaos 15, 1–7 (2005)

Jin, B.T., Lazarov, R., Zhou, Z.: Error estimates for a semidiscrete finite element method for fractional order parabolic equations. SIAM J. Numer. Anal. 55, 445–466 (2013)

Eidelman, S.D., Kochubei, A.N.: Cauchy problem for fractional diffusion equations. J. Differ. Equ. 199, 211–255 (2004)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225, 1533–1552 (2007)

Gorenflo, R., Mainardi, F.: Fractional calculus: integral and differential equations of fractional order. In: Carpinteri, A., Mainardi, F. (eds.) Fractals and Fractional Calculus in Continuum Mechanics, pp. 223–276. Springer, New York (1997)

Liu, J.J., Yamamoto, M.: A backward problem for the time-fractional diffusion equation. Appl. Anal. 89, 1769–1788 (2010)

Ren, C., Xu, X., Lu, S.: Regularization by projection for a backward problem of the time-fractional diffusion equation. J. Inverse Ill-Posed Probl. 22, 121–139 (2014)

Yang, F., Fu, J.L., Li, X.X.: A potential-free field inverse Schrödinger problem: optimal error bound analysis and regularization method. Inverse Probl. Sci. Eng. https://doi.org/10.1080/17415977.2019.1700243

Xiong, X.T., Wang, J.X., Li, M.: An optimal method for fractional heat conduction problem backward in time. Appl. Anal. 91, 823–840 (2012)

Wang, L.Y., Liu, J.J.: Data regularization for a backward time-fractional diffusion problem. Comput. Math. Appl. 64, 3613–3626 (2012)

Yang, F., Fan, P., Li, X.X., Ma, X.Y.: Fourier truncation regularization method for a time-fractional backward diffusion problem with a nonlinear source. Mathematics 7, 865 (2019)

Zhang, Y., Xu, X.: Inverse source problem for a fractional diffusion equation. Inverse Probl. 27, 1–12 (2011)

Wang, W., Yamamoto, M., Han, B.: Numerical method in reproducing kernel space for an inverse source problem for the fractional diffusion equation. Inverse Probl. 29(9), 95009–95023 (2013)

Wang, J.G., Zhou, Y.B., Wei, T.: Two regularization methods to identify a space-dependent source for the time-fractional diffusion equation. Appl. Numer. Math. 68, 39–57 (2013)

Yang, F., Fu, C.L., Li, X.X.: A mollification regularization method for unknown source in time-fractional diffusion equation. Int. J. Comput. Math. 91, 1516–1534 (2014)

Yang, F., Fu, C.L.: The quasi-reversibility regularization method for identifying the unknown source for time-fractional diffusion equation. Appl. Math. Model. 39, 1500–1512 (2014)

Wei, T., Zhang, Z.Q.: Stable numerical solution to a Cauchy problem for a time-fractional diffusion equation. Eng. Anal. Bound. Elem. 40, 128–137 (2014)

Zheng, G.H., Wei, T.: Spectral regularization method for a Cauchy problem of the time-fractional advection-dispersion equation. J. Comput. Appl. Math. 233, 2631–2640 (2010)

Zheng, G.H., Wei, T.: A new regularization method for a Cauchy problem of the time-fractional diffusion equation. Adv. Comput. Math. 36, 377–398 (2012)

Yang, F., Zhang, P., Li, X.X.: The truncation method for the Cauchy problem of the inhomogeneous Helmholtz equation. Appl. Anal. 98, 991–1004 (2019)

Yang, F., Pu, Q., Li, X.X., Li, D.G.: The truncation regularization method for identifying the initial value on non-homogeneous time-fractional diffusion-wave equations. Mathematics 7, 1007 (2019)

Yang, F., Zhang, Y., Li, X.X.: Landweber iterative method for identifying the initial value problem of the time-space fractional diffusion-wave equation. Numer. Algorithms (2020). https://doi.org/10.1007/s11075-019-00734-6

Yang, F., Sun, Y.R., Li, X.X., Huang, C.Y.: The quasi-boundary value method for identifying the initial value of heat equation on a columnar symmetric domain. Numer. Algorithms 82(2), 623–639 (2019)

Yang, F., Wang, N., Li, X.X., Huang, C.Y.: A quasi-boundary regularization method for identifying the initial value of time-fractional diffusion equation on spherically symmetric domain. J. Inverse Ill-Posed Probl. 27(5), 609–621 (2019)

Haubold, H.J., Mathai, A.M., Saxena, R.K.: Mittag-Leffler functions and their applications. J. Appl. Math. 2011, Article ID 298628 (2011)

Pollard, H.: The completely monotonic character of the Mittag-Leffler function \(E_{\alpha }(-x)\). Bull. Am. Math. Soc. 54, 1115–1116 (1948)

Wang, J.G., Wei, T.: Quasi-reversibility method to identify a space-dependent source for the time-fractional diffusion equation. Appl. Math. Model. 39, 6139–6149 (2015)

Cheng, W., Zhao, L.L., Fu, C.L.: Source term identification for an axisymmetric inverse heat conduction problem. Comput. Math. Appl. 59, 142–148 (2010)

Sakamoto, K., Yamamoto, M.: Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382(1), 426–447 (2011)

Kirsch, A.: An Introduction to the Mathematical Theory of Inverse Problems, vol. 120. Springer, Berlin (2011)

Groetsch, C.W.: The Theory of Tikhonov Regularization for Fredholm Equations of the First Kind, vol. 105. Pitman, Boston (1984)

Murio, D.A.: Implicit finite difference approximation for time-fractional diffusion equations. Comput. Math. Appl. 56, 1138–1145 (2008)

Zhuang, P., Liu, F.: Implicit difference approximation for the time fractional diffusion equation. J. Appl. Math. Comput. 22, 87–99 (2006)

Tian, Y.S., Bai, Z.B., Sun, S.J.: Positive solutions for a boundary value problem of fractional differential equation with p-Laplacian operator. Adv. Differ. Equ. 2019, 349 (2019)

Aydogan, S.M., Baleanu, D., Mousalou, A., Rezapour, S.: On approximate solutions for two higher-order Caputo–Fabrizio fractional integro-differential equations. Adv. Differ. Equ. 2017, 221 (2017)

Aydogan, S.M., Baleanu, D., Mousalou, A., Shahram, R.: On high order fractional integro-differential equations including the Caputo–Fabrizio derivative. Bound. Value Probl. 2018, 90 (2018)

Baleanu, D., Mousalou, A., Rezapour, S.: A new method for investigating approximate solutions of some fractional integro-differential equations involving the Caputo–Fabrizio derivative. Adv. Differ. Equ. 2017, 51 (2017)

Baleanu, D., Mousalou, A., Rezapour, S.: On the existence of solutions for some infinite coefficient-symmetric Caputo–Fabrizio fractional integro-differential equations. Bound. Value Probl. 2017, 145 (2017)

Baleanu, D., Mousalou, A., Rezapour, S.: The extended fractional Caputo–Fabrizio derivative of order \(0\leq \sigma <1\) on \(C_{R}[0, 1]\) and the existence of solutions for two higher-order series-type differential equations. Adv. Differ. Equ. 2018, 255 (2018)

Baleanu, D., Rezapour, S., Mohammadi, H.: Some existence results on nonlinear fractional differential equations. Philos. Trans. R. Soc. 371, 20120144 (2013)

Baleanu, D., Rezapour, S., Saberpour, Z.: On fractional integro-differential inclusions via the extended fractional Caputo–Fabrizio derivation. Bound. Value Probl. 2019, 79 (2019)

Yang, F., Fan, P., Li, X.X.: Fourier truncation regularization method for a three-dimensional Cauchy problem of the modified Helmholtz equation with perturbed wave number. Mathematics 7, 705 (2019)

Wang, J.G., Wei, T., Zhou, Y.B.: Tikhonov regularization method for a backward problem for the time-fractional diffusion equation. Appl. Math. Model. 37, 8518–8532 (2013)

Acknowledgements

The authors would like to thank the editor and the referees for their valuable comments and suggestions that improve the quality of our paper.

Availability of data and materials

Not applicable.

Funding

The work is supported by the National Natural Science Foundation of China (Nos. 11561045, 11961044) and the Doctor Fund of Lanzhou University of Technology.

Author information

Contributions

The main idea of this paper was proposed by Fan Yang. Pan Zhang prepared the initial manuscript and performed all the steps of the proofs in this research. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Abbreviations

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Yang, F., Zhang, P., Li, XX. et al. Tikhonov regularization method for identifying the space-dependent source for time-fractional diffusion equation on a columnar symmetric domain. Adv Differ Equ 2020, 128 (2020). https://doi.org/10.1186/s13662-020-2542-1

DOI: https://doi.org/10.1186/s13662-020-2542-1