Abstract

In this paper, we investigate an inverse problem of determining an unknown source term of separable-variable form in the time-fractional diffusion equation, where the data are measured at a fixed time. This problem is ill-posed, and we use the Landweber iterative regularization method to solve it. Two kinds of convergence rates are obtained by using a priori and a posteriori regularization parameter choice rules, respectively. Numerical examples are provided to show the effectiveness of the proposed method.

1 Introduction

In recent years, diffusion equations with fractional-order derivatives have played an important role in modeling contaminant diffusion processes. A fractional diffusion equation mainly describes anomalous diffusion phenomena because fractional-order derivatives enable the description of memory and hereditary properties of heterogeneous substances [1]. Replacing the standard time derivative with a time-fractional derivative leads to the time-fractional diffusion equation, which can be used to describe superdiffusion and subdiffusion phenomena [1–10]. In some practical problems, the diffusion coefficients, a part of the boundary data, the initial data, or the source term may be unknown. Additional measurements are then needed to identify them, which leads to fractional diffusion inverse problems. There are now many research results on fractional diffusion inverse problems. In [11], the authors considered an inverse problem of recovering boundary functions from transient data at an interior point in a 1-D semi-infinite half-order time-fractional diffusion equation. In [12], the authors applied a quasi-reversibility regularization method to solve a backward problem for the time-fractional diffusion equation. In [13–15], the authors studied an inverse problem in a spatial fractional diffusion equation by using the quasi-boundary value method and the truncation method. In [16, 17], the authors determined the unknown source in one-dimensional and two-dimensional fractional diffusion equations. In [18], the authors used the dynamic spectral method to consider the inverse heat conduction problem of a fractional heat diffusion equation in a 2-D setting. In [19], the authors used an optimal regularization method to consider the inverse heat conduction problem of a fractional heat diffusion equation. In [20, 21], the authors used the quasi-reversibility regularization method and the Fourier regularization method to identify the unknown source for a fractional heat diffusion equation.

In this paper, we investigate an inverse problem for the time-fractional diffusion equation with variable coefficients in a general bounded domain [13, 22]:

where Ω is a bounded domain in \(\mathbb{R}^{d}\) with a sufficiently smooth boundary ∂Ω, \(D_{t}^{\alpha}(\cdot )\) is the Caputo fractional derivative of order α (\(0<\alpha\leq1\)), −L is defined on \(D(-L)=H^{2}(\Omega)\cap H_{0}^{1}(\Omega)\) and is a symmetric uniformly elliptic operator:

where the coefficient functions \(a_{ij}\) and \(c(x)\) satisfy

As in [13], define the Hilbert space

with the norm

Assume that the time-dependent source term \(q\in C[0,T]\) satisfies \(q(t)\geq q_{0}>0\) for all \(t\in[0,T]\) and is known. The space-dependent source term \(f(x)\) is unknown. We use the data \(u(x,T)=g(x)\) to determine \(f(x)\). The noisy data \(g^{\delta}\in L^{2}(\Omega)\) satisfy

\[
\Vert g^{\delta}-g\Vert \leq\delta, \tag{1.6}
\]

where \(\Vert \cdot \Vert \) denotes the \(L^{2}(\Omega)\) norm, and \(\delta>0\) is the noise level.

In [13], the authors used the modified quasi-boundary value method to solve problem (1.1), but the error estimates between the regularization solution and the exact solution have the saturation phenomenon under the two parameter choice rules, that is, the best convergence rate for the a priori parameter choice method is \(O(\delta ^{\frac{2}{3}})\), and for the a posteriori parameter choice method, it is \(O(\delta^{\frac{1}{2}})\). In [22], the authors used the Tikhonov regularization method to solve problem (1.1), but the error estimates between the regularization solution and the exact solution also have the saturation phenomenon under the two parameter choice rules. In this study, we use the Landweber iterative regularization method to solve this problem. Our error estimates under two parameter choice rules have no saturation phenomenon, and the convergence rates are all \(O(\delta^{\frac{p}{p+2}})\).
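To make the comparison of rates concrete, the following short calculation (a reader's check, not taken from the source) shows for which smoothness index \(p\) the rate \(\delta^{\frac{p}{p+2}}\) is better than the saturated rates quoted above. It assumes \(0<\delta<1\), so that a larger exponent means faster convergence.

```latex
\[
\frac{p}{p+2}>\frac{2}{3}\iff 3p>2p+4\iff p>4,
\qquad
\frac{p}{p+2}>\frac{1}{2}\iff 2p>p+2\iff p>2 .
\]
```

Under this reading, the Landweber rate exceeds the saturated a priori rate \(O(\delta^{\frac{2}{3}})\) once \(p>4\) and the saturated a posteriori rate \(O(\delta^{\frac{1}{2}})\) once \(p>2\), and its exponent approaches 1 as \(p\rightarrow\infty\).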

This paper is organized as follows. Section 2 presents the Landweber iterative regularization method. Section 3 presents the convergence estimates under a priori and a posteriori choice rules. Section 4 presents some numerical examples to show the effectiveness of our method. Section 5 presents a simple conclusion.

2 Landweber iterative regularization method

In this section, we first give some useful lemmas.

Lemma 2.1

([23])

For \(\eta>0\) and \(0<\alpha \leq1\), we have \(0\leq E_{\alpha,1}(-\eta)<1\), and \(E_{\alpha ,1}(-\eta)\) is completely monotonic, that is,

Lemma 2.2

([23])

For \(\beta\in\mathbb{R}\) and \(\alpha>0\), we have

Lemma 2.3

([24])

For \(0<\alpha<1\), \(\lambda >0\), and \(q\in C(0,T)\), we have

Moreover, if \(\lambda=0\), then

Lemma 2.4

([13])

For any \(\lambda_{n}\) satisfying \(\lambda_{n}\geq\lambda_{1}>0\), there exists a positive constant \(C_{1}\) depending on α, T, \(\lambda_{1}\) such that

Lemma 2.5

For any \(0< x<1\), we have

and

Due to Lemma 2.3, using the separation of variables, we obtain the solution of problem (1.1)

where \(\lambda_{n}\) are the eigenvalues of the operator −L, and the corresponding eigenfunctions are \(X_{n}(x)\), \(f_{n}=(f(x),X_{n}(x))\). Using \(u(x,T)=g(x)\), we have

where \(g_{n}=(g(x),X_{n}(x))\). Since −L is a symmetric uniformly elliptic operator, we get [25]

Let \(h_{n}(T):=\int_{0}^{T}q(\tau)(T-\tau)^{\alpha-1}E_{\alpha ,\alpha}(-\lambda_{n}(T-\tau)^{\alpha})\,d\tau\).

So we obtain

Then

that is,

We only need to solve the following first kind integral equation to obtain \(f(x)\):

where the kernel is

Since \(k(x,\xi)=k(\xi,x)\), K is a self-adjoint operator. If \(f\in L^{2}(\Omega)\), then \(g\in H^{2}(\Omega)\) by [25]. Because \(H^{2}(\Omega)\) is compactly embedded in \(L^{2}(\Omega)\), the operator \(K:L^{2}(\Omega)\rightarrow L^{2}(\Omega)\) is compact, so problem (1.1) is ill-posed [26]. Assume that \(f(x)\) has the following a priori bound:

where \(E>0\) is a constant. We first give conditional stability results about ill-posed problem (1.1).

Theorem 2.6

([13])

Let \(q(t)\in C[0,T]\) satisfy \(q(t)\geq q_{0}>0\) for all \(t\in[0,T]\), and let \(f(x)\in D((-L)^{-\frac{p}{2}})\) satisfy the a priori bound condition

Then we have

where \(C_{2}:=(C_{1}q_{0})^{-\frac{p}{p+2}}\).

Now we use the Landweber iterative method to obtain the regularization solution for (1.1). We rewrite the equation \(Kf=g\) in the form \(f=(I-aK^{*}K)f+aK^{*}g\) for some \(a>0\) and iterate this equation:

\[
f^{0,\delta}(x):=0,\qquad f^{m,\delta}(x)=\bigl(I-aK^{*}K\bigr)f^{m-1,\delta}(x)+aK^{*}g^{\delta}(x),\quad m=1,2,3,\ldots,
\]

where m is the iterative step number, which also serves as the regularization parameter, and a is called the relaxation factor and satisfies \(0< a<\frac {1}{\Vert K\Vert ^{2}}\). Since K is a self-adjoint operator, we obtain

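We get

\[
f^{m,\delta}(x)=\sum_{n=1}^{\infty}\frac{1-(1-ah_{n}^{2}(T))^{m}}{h_{n}(T)}g_{n}^{\delta}X_{n}(x), \tag{2.17}
\]

where \(g_{n}^{\delta}=(g^{\delta},X_{n}(x))\).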
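The relation between the iteration and its spectral (filter) form can be checked numerically. The sketch below is illustrative only: it replaces the operator K by a small symmetric matrix with decaying eigenvalues standing in for \(h_{n}(T)\), and the names `landweber`, `landweber_filter`, and `demo_operator` are hypothetical helpers, not part of the paper.

```python
import numpy as np

def landweber(K, g, a, m):
    """Run m Landweber steps f <- f + a*K^T(g - K f), starting from f = 0."""
    f = np.zeros(K.shape[1])
    for _ in range(m):
        f = f + a * (K.T @ (g - K @ f))
    return f

def landweber_filter(K, g, a, m):
    """Closed form for symmetric K: sum_n (1-(1-a*h_n^2)^m)/h_n * g_n * X_n."""
    h, X = np.linalg.eigh(K)          # eigenvalues h_n and eigenvectors X_n
    g_n = X.T @ g                     # coefficients of g in the eigenbasis
    filt = (1.0 - (1.0 - a * h**2) ** m) / h
    return X @ (filt * g_n)

def demo_operator(n=20, seed=0):
    """Assumed test operator: symmetric, eigenvalues 1/k^2 for k = 1..n."""
    rng = np.random.default_rng(seed)
    Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
    h = 1.0 / np.arange(1, n + 1) ** 2
    K = Q @ np.diag(h) @ Q.T
    return 0.5 * (K + K.T)            # remove rounding asymmetry

K = demo_operator()
g = K @ np.ones(K.shape[0])           # exact data for f = (1, ..., 1)
a = 0.9 / np.linalg.norm(K, 2) ** 2   # relaxation factor with 0 < a < 1/||K||^2
f_iter = landweber(K, g, a, 50)
f_filt = landweber_filter(K, g, a, 50)
print(np.max(np.abs(f_iter - f_filt)))
```

Both routines should agree up to rounding, which reflects that for a self-adjoint K the m-th iterate started from zero is obtained by applying the filter \(1-(1-ah_{n}^{2})^{m}\) to each spectral component.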

3 Error estimate under two parameter choice rules

In this section, we give two convergence estimates under an a priori regularization parameter choice rule and an a posteriori regularization parameter choice rule, respectively.

3.1 An a priori regularization parameter choice rule

Theorem 3.1

Let \(f(x)\) be the exact solution of problem (1.1), and let \(f^{m,\delta}(x)\) be the Landweber iterative regularization approximation solution. Choosing the regularization parameter \(m=[r]\), where

we have the following convergence rate estimate:

where \([r]\) denotes the largest integer less than or equal to r, and \(C_{3}=\sqrt{a}+(\frac{aq_{0}^{2}C_{1}^{2}}{p})^{-\frac{p}{4}}\) is a positive constant depending on a, p, \(q_{0}\), and \(C_{1}\).

Proof

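By the triangle inequality we have

\[
\bigl\Vert f^{m,\delta}(\cdot)-f(\cdot)\bigr\Vert \leq\bigl\Vert f^{m,\delta}(\cdot)-f^{m}(\cdot)\bigr\Vert +\bigl\Vert f^{m}(\cdot)-f(\cdot)\bigr\Vert , \tag{3.3}
\]

where \(f^{m}\) denotes the Landweber iterate computed from the exact data g.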

We first give an estimate for the first term. From conditions (1.6) and (2.17) we have

where \(H(n):=\frac{1-(1-ah_{n}^{2}(T))^{m}}{h_{n}(T)}\).

By Lemma 2.5 we get

that is,

So

Now we estimate the second term in (3.3). By (2.4), (2.14), and (2.17) we have

where \(Q(n):=(1-ah_{n}^{2}(T))^{m}\lambda_{n}^{-\frac{p}{2}}\).

Using Lemma 2.2 and Theorem 2.6, we have

Let \(\lambda_{n}:=t\) and

Let \(t_{0}\) satisfy \(F'(t_{0})=0\). Then we easily get

Thus

that is,

Thus we obtain

Hence

Combining (3.5) and (3.10) and choosing the regularization parameter \(m=[r]\), we get

where \(C_{3}:=\sqrt{a}+(\frac{aq_{0}^{2}C_{1}^{2}}{p})^{-\frac{p}{4}}\).

We complete the proof of Theorem 3.1. □
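The optimization step in the proof can be retraced explicitly. The following is a reader's sketch, not the authors' displayed computation. It assumes that Lemma 2.4 yields the lower bound \(h_{n}(T)\geq C_{1}q_{0}/\lambda_{n}\) (which is what the constant \(C_{3}\) suggests) and uses the shorthand \(A:=aq_{0}^{2}C_{1}^{2}\), which is not notation from the paper.

```latex
\[
F(t):=\Bigl(1-\frac{A}{t^{2}}\Bigr)^{m}t^{-\frac{p}{2}},\qquad
F'(t_{0})=0\iff\frac{2mA}{t_{0}^{2}-A}=\frac{p}{2}\iff t_{0}^{2}=\frac{(4m+p)A}{p},
\]
\[
F(t_{0})=\Bigl(\frac{4m}{4m+p}\Bigr)^{m}\Bigl(\frac{(4m+p)A}{p}\Bigr)^{-\frac{p}{4}}
\leq\Bigl(\frac{A}{p}\Bigr)^{-\frac{p}{4}}m^{-\frac{p}{4}} .
\]
```

With m of order \((\frac{E}{\delta})^{\frac{4}{p+2}}\), the noise term, bounded by \(\sqrt{am}\,\delta\) via the standard Landweber estimate, and the approximation term \((\frac{A}{p})^{-\frac{p}{4}}m^{-\frac{p}{4}}E\) are both of order \(E^{\frac{2}{p+2}}\delta^{\frac{p}{p+2}}\), which is consistent with the form of \(C_{3}\).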

3.2 An a posteriori regularization parameter choice rule

We use the Morozov discrepancy principle as the a posteriori regularization parameter choice rule and construct the regularization solution sequence \(f^{m,\delta}(x)\) by the Landweber iterative regularization method. Let \(\tau>1\) be a given fixed constant. Stop the algorithm at the first occurrence of \(m=m(\delta)\in\mathbb{N}_{0}\) with

\[
\bigl\Vert Kf^{m,\delta}(\cdot)-g^{\delta}(\cdot)\bigr\Vert \leq\tau\delta, \tag{3.12}
\]

where we assume that \(\Vert g^{\delta} \Vert \geq\tau\delta\).
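The stopping rule can be sketched as a loop that checks the residual before every update. This is a minimal illustration under stated assumptions: K is a matrix discretization of the operator, `landweber_discrepancy` and `demo_problem` are hypothetical names, and the diagonal test operator with eigenvalues \(1/n^{2}\) is chosen only for demonstration.

```python
import numpy as np

def landweber_discrepancy(K, g_delta, delta, a, tau=1.1, max_iter=100_000):
    """Landweber iteration from f = 0, stopped at the first m with
    ||K f_m - g_delta|| <= tau * delta. Returns (f_m, m)."""
    f = np.zeros(K.shape[1])
    for m in range(max_iter + 1):
        residual = K @ f - g_delta
        if np.linalg.norm(residual) <= tau * delta:
            return f, m
        f = f - a * (K.T @ residual)   # one Landweber step
    raise RuntimeError("discrepancy level not reached within max_iter steps")

def demo_problem(n=10, delta=1e-2, seed=0):
    """Assumed toy problem: diagonal K, smooth f_true, noise of norm delta."""
    k = np.arange(1, n + 1)
    K = np.diag(1.0 / k**2)
    f_true = 1.0 / k
    noise = np.random.default_rng(seed).standard_normal(n)
    noise *= delta / np.linalg.norm(noise)   # so that ||g_delta - g|| = delta
    return K, f_true, K @ f_true + noise

K, f_true, g_delta = demo_problem()
f_reg, m_stop = landweber_discrepancy(K, g_delta, delta=1e-2, a=0.9)
print(m_stop, np.linalg.norm(f_reg - f_true))
```

Checking the residual before the update means that the returned index is the first one satisfying the discrepancy condition, including \(m=0\) when the data norm is already below \(\tau\delta\).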

Lemma 3.2

Let \(\gamma(m)=\Vert Kf^{m,\delta}(\cdot)-g^{\delta}(\cdot)\Vert \). Then we have:

(a) \(\gamma(m)\) is a strictly decreasing function for any \(m\in (0,+\infty)\);

(b) \(\lim_{m\rightarrow+\infty}\gamma(m)=0\);

(c) \(\lim_{m\rightarrow0}\gamma(m)=\Vert g^{\delta }\Vert \);

(d) \(\gamma(m)\) is a continuous function.

Lemma 3.3

For fixed \(\tau>1\), combining Landweber’s iteration method with stopping rule (3.12), we obtain that the regularization parameter \(m=m(\delta,g^{\delta})\in\mathbb {N}_{0}\) satisfies

Proof

From (2.17) we get the representation

and

Since \(\vert 1-ah_{n}^{2}(T)\vert <1\), we conclude that \(\Vert KR_{m-1}-I\Vert \leq1\).

On the other hand, it is easy to see that m is the minimum value and satisfies

Hence

On the other hand, using (2.14), we obtain

Let

so that

Using Lemma 2.4, we have

Let \(t:=\lambda_{n}\) and

Suppose that \(t_{\ast}\) satisfies \(G'(t_{\ast})=0\). Then we easily get

so that

Then

Combining (3.15) with (3.17), we obtain

The proof of the lemma is complete. □

Theorem 3.4

Let \(f(x)\) be the exact solution of problem (1.1), and let \(f^{m,\delta}(x)\) be the Landweber iterative regularization approximation solution. Choosing the regularization parameter by Landweber’s iterative method with stopping rule (3.12), we have the following error estimate:

where \(C_{4}=(\frac{p+2}{q_{0}^{2}C_{1}^{2}})^{\frac{1}{2}}(\frac {q_{0}}{(\tau-1)})^{\frac{2}{p+2}}\) is a constant.

Proof

Using the triangle inequality, we obtain

Applying (3.2) and Lemma 3.3, we get

where \(C_{4}=(\frac{p+2}{q_{0}^{2}C_{1}^{2}})^{\frac{1}{2}}(\frac {q_{0}}{\tau-1})^{\frac{2}{p+2}}\).

For the second part of the right side of (3.20), we get

Combining (1.6) and (3.12), we have

Using Theorem 2.6 and (2.14), we have

So

Hence

The theorem is proved. □

4 Numerical implementation and numerical examples

In this section, we provide numerical examples to illustrate the usefulness of the proposed method. Since an analytic solution of problem (1.1) is difficult to obtain, we first solve the forward problem by the finite difference method to obtain the final data \(g(x)\). For details of the finite difference method, we refer to [27–30]. Assume that \(\Omega=(0,1)\) and take \(\Delta t=\frac{T}{N}\) and \(\Delta x=\frac {1}{M}\). The grid points on the time interval \([0,T]\) are labeled \(t_{n}=n\Delta t\), \(n=0,1,\ldots,N\), and the grid points in the space interval \([0,1]\) are \(x_{i}=i\Delta x\), \(i=0,1,2,\ldots,M\). Noisy data are generated by adding a random perturbation, that is,

where ε reflects the noise level. The total error level g can be given as follows:

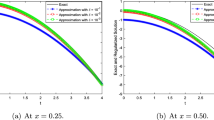

In our numerical experiments, we take \(T=1\). To compute the Mittag-Leffler function, we use the algorithm in [29]. In applications, the a priori bound E is difficult to obtain, and thus we only give numerical results under the a posteriori parameter choice rule. In the following three examples, the regularization parameter is given by (3.12) with \(\tau=1.1\). To avoid the 'inverse crime', we use a finer grid to compute the forward problem, that is, we take \(M=100\), \(N=200\) for the forward problem and \(M=50\), \(N=100\) for solving the regularized inverse problem. In the computational procedure, we let \(a(x)=x^{2}+1\), \(c(x)=-(x+1)\), and the time-dependent source term \(q(t)=e^{-t}\).
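For orientation, the two-parameter Mittag-Leffler function \(E_{\alpha,\beta}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(\alpha k+\beta)}\) can be evaluated by direct summation when \(|z|\) is moderate. The sketch below is not the routine of [29]; it is a plain truncated series, assumed adequate only for real z of modest size with \(\alpha>0\), \(\beta>0\), because cancellation destroys accuracy for large negative arguments. The name `mittag_leffler` is a hypothetical helper.

```python
import math

def mittag_leffler(alpha, beta, z, tol=1e-15, max_terms=500):
    """Truncated power series for E_{alpha,beta}(z), real z, alpha, beta > 0.

    Terms are formed through logarithms of the gamma function so that
    Gamma(alpha*k + beta) does not overflow for large k.
    """
    total = 0.0
    for k in range(max_terms):
        if z == 0.0:
            term = 1.0 / math.gamma(beta) if k == 0 else 0.0
        else:
            log_mag = k * math.log(abs(z)) - math.lgamma(alpha * k + beta)
            sign = -1.0 if (z < 0 and k % 2 == 1) else 1.0
            term = sign * math.exp(log_mag)
        total += term
        # stop once the current term is negligible relative to the sum
        if k > 0 and abs(term) < tol * max(1.0, abs(total)):
            break
    return total

# Sanity checks against classical special cases:
# E_{1,1}(z) = exp(z) and E_{2,1}(-z^2) = cos(z).
print(mittag_leffler(1.0, 1.0, -1.0), math.exp(-1.0))
print(mittag_leffler(2.0, 1.0, -4.0), math.cos(2.0))
```

For the eigenvalue sizes that arise in practice, \(\lambda_{n}T^{\alpha}\) quickly becomes large, which is why a dedicated algorithm such as the one cited in the text is preferable to this series.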

Example 1

Take function \(f(x)=[x(1-x)]^{\alpha }\sin5\pi x\).

Example 2

Consider the following piecewise smooth function:

Example 3

Consider the following initial function:

Figures 1, 2, and 3 show the exact and regularized source terms given by the a posteriori parameter choice rule for Examples 1, 2, and 3, respectively. From these three examples we can see that the smaller ε and α are, the better the agreement between the exact solution and the regularized solution. The numerical results in Figures 2 and 3 are worse than those for Example 1, because a piecewise function is difficult to represent with common or high-order finite elements. However, the numerical results are still reasonable. Overall, the numerical examples show that the Landweber iterative method is efficient and accurate.

Figure 1. The exact and regularized terms given by the a posteriori parameter choice rule for Example 1: (a) \(\alpha=0.2\), (b) \(\alpha=0.5\), (c) \(\alpha=0.9\).

Figure 2. The exact and regularized terms given by the a posteriori parameter choice rule for Example 2: (a) \(\alpha=0.2\), (b) \(\alpha=0.5\), (c) \(\alpha=0.9\).

Figure 3. The exact and regularized terms given by the a posteriori parameter choice rule for Example 3: (a) \(\alpha=0.2\), (b) \(\alpha=0.5\), (c) \(\alpha=0.9\).

5 Conclusion

In this paper, we solve an inverse problem of identifying the unknown source of separable-variable form in a time-fractional diffusion equation with variable coefficients in a general domain. We propose the Landweber iterative method to obtain a regularization solution. Error estimates are obtained under both the a priori and the a posteriori regularization parameter choice rules. Compared with the error estimates obtained in [13, 22], our error estimates have no saturation phenomenon, and the convergence rates are all \(O(\delta^{\frac{p}{p+2}})\) under the two parameter choice rules. Meanwhile, numerical examples verify that the Landweber iterative regularization method is efficient and accurate. In future work, we will continue to study source terms that depend on both the time and space variables.

References

Berkowitz, B, Scher, H, Silliman, SE: Anomalous transport in laboratory-scale, heterogenous porous media. Water Resour. Res. 36(1), 149-158 (2000)

Metzler, R, Klafter, J: Subdiffusive transport close to thermal equilibrium: from the Langevin equation to fractional diffusion. Phys. Rev. E 61(6), 6308-6311 (2000)

Scalas, E, Gorenflo, R, Mainardi, F: Fractional calculus and continuous-time finance. Phys. A 284, 367-384 (2000)

Sokolov, IM, Klafter, J: From diffusion to anomalous diffusion: a century after Einstein’s Brownian motion. Chaos 15, 1-7 (2005)

Bhrawy, AH, Baleanu, D: A spectral Legendre-Gauss-Lobatto collocation method for a space-fractional advection diffusion equations with variable coefficients. Rep. Math. Phys. 72(2), 219-233 (2013)

Fairouz, T, Mustafa, I, Zeliha, SK, Dumitru, B: Solutions of the time fractional reaction-diffusion equations with residual power series method. Adv. Mech. Eng. 8(10), 1-10 (2016)

Gómez-Aguilar, JF, Miranda-Hernández, M, López-López, MG, Alvarado-Martinez, VM, Baleanu, D: Modeling and simulation of the fractional space-time diffusion equation. Commun. Nonlinear Sci. Numer. Simul. 30(1), 115-127 (2016)

Benson, DA, Wheatcraft, SW, Meerschaert, MM: Application of a fractional advection-dispersion equation. Water Resour. Res. 36(6), 1403-1412 (2000)

Sun, HG, Zhang, Y, Chen, W, Donald, MR: Use of a variable-index fractional-derivative model to capture transient dispersion in heterogeneous media. J. Contam. Hydrol. 157, 47-58 (2014)

Zhang, Y, Sun, HG, Lu, BQ, Rhiannon, G, Roseanna, MN: Identify source location and release time for pollutants undergoing super-diffusion and decay: parameter analysis and model evaluation. Adv. Water Resour. 107, 517-524 (2017)

Murio, DA: Stable numerical solution of fractional-diffusion inverse heat conduction problem. Comput. Math. Appl. 53(1), 492-501 (2007)

Liu, JJ, Yamamoto, M: A backward problem for the time-fractional diffusion equation. Appl. Anal. 80(11), 1769-1788 (2010)

Wei, T, Wang, J: A modified quasi-boundary value method for an inverse source problem of the time-fractional diffusion equation. Appl. Numer. Math. 78, 95-111 (2014)

Wang, JG, Zhou, YB, Wei, T: Two regularization methods to identify a space-depend source for the time-fractional diffusion equation. Appl. Numer. Math. 68, 39-75 (2013)

Zhang, ZQ, Wei, T: Identifying an unknown source in time-fractional diffusion equation by a truncation method. Appl. Math. Comput. 219(11), 5972-5983 (2013)

Kirane, M, Malik, AS: Determination of an unknown source term and the temperature distribution for the linear heat equation involving fractional derivative in time. Appl. Math. Comput. 218(1), 163-170 (2011)

Kirane, M, Malik, AS, Al-Gwaiz, MA: An inverse source problem for a two dimensional time fractional diffusion equation with nonlocal boundary conditions. Math. Methods Appl. Sci. 36(9), 1056-1069 (2013)

Xiong, XT, Zhou, Q, Hon, YC: An inverse problem for fractional diffusion equation in 2-dimensional case: stability analysis and regularization. J. Math. Anal. Appl. 393, 185-199 (2012)

Xiong, XT, Guo, HB, Liu, XH: An inverse problem for a fractional diffusion equation. J. Comput. Appl. Math. 236, 4474-4484 (2012)

Yang, F, Fu, CL: The quasi-reversibility regularization method for identifying the unknown source for time fractional diffusion equation. Appl. Math. Model. 39, 1500-1512 (2015)

Yang, F, Fu, CL, Li, XX: The inverse source problem for time fractional diffusion equation: stability analysis and regularization. Inverse Probl. Sci. Eng. 23(6), 969-996 (2015)

Nguyen, HT, Le, DL, Nguyen, VT: Regularized solution of an inverse source problem for a time fractional diffusion equation. Appl. Math. Model. 40(19-20), 8244-8264 (2016)

Pollard, H: The completely monotonic character of the Mittag-Leffler function \(E_{\alpha}(-x)\). Bull. Am. Math. Soc. 54, 1115-1116 (1948)

Kilbas, AA, Srivastava, HM, Trujillo, JJ: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, vol. 204. Elsevier, Amsterdam (2006)

Sakamoto, K, Yamamoto, M: Initial value/boundary value problems for fractional diffusion-wave equations and applications to some inverse problems. J. Math. Anal. Appl. 382(1), 426-447 (2011)

Kirsch, A: An Introduction to the Mathematical Theory of Inverse Problems. Springer, New York (1996)

Murio, DA: Implicit finite difference approximation for the time-fractional diffusion equation. Comput. Math. Appl. 56(4), 1138-1145 (2008)

Yang, M, Liu, JJ: Implicit difference approximation for the time-fractional diffusion equation. J. Appl. Math. Comput. 22(3), 87-99 (2006)

Podlubny, I, Kacenak, M: Mittag-Leffler function, The MATLAB routine (2006). http://www.mathworks.com/matlabcentral/fileexchage

Murio, DA, Mejía, CE: Source terms identification for time fractional diffusion equation. Rev. Colomb. Mat. 42(1), 25-46 (2008)

Acknowledgements

The authors would like to thank the editor and referees for their valuable comments and suggestions that improved the quality of our paper. The work is supported by the National Natural Science Foundation of China (11561045, 11501272) and the Doctor Fund of Lan Zhou University of Technology.

Contributions

The main idea of this paper was proposed by FY, and XL prepared the initial manuscript and performed all the steps of the proofs in this research. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yang, F., Liu, X., Li, XX. et al. Landweber iterative regularization method for identifying the unknown source of the time-fractional diffusion equation. Adv Differ Equ 2017, 388 (2017). https://doi.org/10.1186/s13662-017-1423-8

DOI: https://doi.org/10.1186/s13662-017-1423-8