Abstract

We use techniques from (tracial noncommutative) polynomial optimization to formulate hierarchies of semidefinite programming lower bounds on matrix factorization ranks. In particular, we consider the nonnegative rank, the positive semidefinite rank, and their symmetric analogs: the completely positive rank and the completely positive semidefinite rank. We study convergence properties of our hierarchies, compare them extensively to known lower bounds, and provide some (numerical) examples.

1 Introduction

1.1 Matrix Factorization Ranks

A factorization of a matrix \(A \in \mathbb {R}^{m \times n}\) over a sequence \(\{K^d\}_{d\in \mathbb {N}}\) of cones that are each equipped with an inner product \(\langle \cdot ,\cdot \rangle \) is a decomposition of the form \(A=(\langle X_i,Y_j\rangle )\) with \(X_i, Y_j \in K^d\) for all \((i,j)\in [m]\times [n]\), for some integer \(d\in \mathbb {N}\). Following [34], the smallest integer d for which such a factorization exists is called the cone factorization rank of A over \(\{K^d\}\).

The cones \(K^d\) we use in this paper are the nonnegative orthant \(\mathbb {R}^d_+\) with the usual inner product and the cone \(\mathrm {S}^d_+\) (resp., \(\mathrm {H}^d_+\)) of \(d\times d\) real symmetric (resp., Hermitian) positive semidefinite matrices with the trace inner product \(\langle X, Y \rangle = \mathrm {Tr}(X^\textsf {T}Y)\) (resp., \(\langle X, Y \rangle = \mathrm {Tr}(X^* Y)\)). We obtain the nonnegative rank, denoted \({{\,\mathrm{rank}\,}}_+(A)\), which uses the cones \(K^d=\mathbb {R}^d_+\), and the positive semidefinite rank, denoted \(\hbox {psd-rank}_\mathbb {K}(A)\), which uses the cones \(K^d=\mathrm {S}^d_+\) for \(\mathbb {K}= \mathbb {R}\) and \(K^d=\mathrm {H}^d_+\) for \(\mathbb {K}=\mathbb {C}\). Both the nonnegative rank and the positive semidefinite rank are defined whenever A is entrywise nonnegative.
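To see why both ranks are defined for every entrywise nonnegative A, it suffices to exhibit one factorization. The Python/NumPy sketch below is our own illustration, and its function names are hypothetical. It takes the rows of A as \(X_i\) and the standard basis vectors as \(Y_j\), which gives factors in \(\mathbb {R}^n_+\). Putting the same vectors on the diagonal then gives factors in \(\mathrm {S}^n_+\), because the trace inner product of two diagonal matrices equals the usual inner product of their diagonals. As a result, \({{\,\mathrm{rank}\,}}_+(A) \le n\) and \(\hbox {psd-rank}_\mathbb {R}(A) \le n\). Applying the same construction to \(A^\textsf {T}\) gives the bound m.

```python
import numpy as np

def trivial_nonneg_factorization(A):
    """Hypothetical helper: X_i = i-th row of A, Y_j = e_j, both in R^n_+."""
    A = np.asarray(A, dtype=float)
    if (A < 0).any():
        raise ValueError("A must be entrywise nonnegative")
    m, n = A.shape
    X = [A[i, :].copy() for i in range(m)]
    Y = [np.eye(n)[j] for j in range(n)]
    return X, Y

def diagonal_psd_factors(vectors):
    """Hypothetical helper: embed nonnegative vectors as diagonal PSD matrices."""
    return [np.diag(v) for v in vectors]

A = np.array([[1.0, 0.0, 2.0],
              [3.0, 1.0, 0.5]])
X, Y = trivial_nonneg_factorization(A)
# <X_i, Y_j> with the usual inner product on R^n
A_plus = np.array([[x @ y for y in Y] for x in X])
# Tr(P^T Q) with the trace inner product on S^n
PX, PY = diagonal_psd_factors(X), diagonal_psd_factors(Y)
A_psd = np.array([[np.trace(P.T @ Q) for Q in PY] for P in PX])
print(np.allclose(A_plus, A), np.allclose(A_psd, A))  # True True
```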

The study of the nonnegative rank is largely motivated by the groundbreaking work of Yannakakis [78], who showed that the linear extension complexity of a polytope P is given by the nonnegative rank of its slack matrix. The linear extension complexity of P is the smallest integer d for which P can be obtained as the linear image of an affine section of the nonnegative orthant \(\mathbb {R}^d_+\). The slack matrix of P is given by the matrix \((b_i-a_i^\mathsf{T}v)_{v\in V,i\in I}\), where \(P= \text {conv}(V)\) and \(P= \{x: a_i^\mathsf{T}x\le b_i\ (i\in I)\}\) are the point and hyperplane representations of P. Analogously, the semidefinite extension complexity of P is the smallest d such that P is the linear image of an affine section of the cone \(\mathrm {S}^d_+\) and it is given by the (real) positive semidefinite rank of its slack matrix [34].
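As a concrete instance of the slack matrix \((b_i-a_i^\mathsf{T}v)_{v\in V,i\in I}\), the sketch below computes it for the unit square \([0,1]^2\). We chose this polytope only for illustration, and the code uses Python with NumPy. Rows are indexed by vertices and columns by facet inequalities. A zero entry means the vertex lies on the corresponding facet.

```python
import numpy as np

# Unit square P = [0,1]^2 (an illustrative choice, not taken from the text).
V = np.array([[0, 0], [1, 0], [1, 1], [0, 1]], dtype=float)    # vertices v
a = np.array([[-1, 0], [0, -1], [1, 0], [0, 1]], dtype=float)  # rows a_i
b = np.array([0, 0, 1, 1], dtype=float)                        # right-hand sides b_i

def slack_matrix(V, a, b):
    """Entry (v, i) equals b_i - a_i^T v; rows are vertices, columns are facets."""
    return b[None, :] - V @ a.T

S = slack_matrix(V, a, b)
print(S)
```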

The motivation to study the linear and semidefinite extension complexities is that polytopes with small extension complexity admit efficient algorithms for linear optimization. Well-known examples include spanning tree polytopes [54] and permutahedra [32], which have polynomial linear extension complexity, and the stable set polytope of perfect graphs, which has polynomial semidefinite extension complexity [40] (see, e.g., the surveys [18, 25]). The above connection to the nonnegative rank and to the positive semidefinite rank of the slack matrix can be used to show that a polytope does not admit a small extended formulation. Recently, this connection was used to show that the linear extension complexities of the traveling salesman, cut, and stable set polytopes are exponential in the number of nodes [29], and this result was extended to their semidefinite extension complexities in [51]. Surprisingly, the linear extension complexity of the matching polytope is also exponential [66], even though linear optimization over this set is polynomial-time solvable [23]. It is an open question whether the semidefinite extension complexity of the matching polytope is exponential.

Besides this link to extension complexity, the nonnegative rank also finds applications in probability theory and in communication complexity, and the positive semidefinite rank has applications in quantum information theory and in quantum communication complexity (see, e.g., [24, 29, 42, 55]).

For square symmetric matrices (\(m=n\)), we are also interested in symmetric analogs of the above matrix factorization ranks, where we require the same factors for the rows and columns (i.e., \(X_i = Y_i\) for all \(i\in [n]\)). The symmetric analog of the nonnegative rank is the completely positive rank, denoted \(\hbox {cp-rank}(A)\), which uses the cones \(K^d = \mathbb {R}_+^d\), and the symmetric analog of the positive semidefinite rank is the completely positive semidefinite rank, denoted \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {K}(A)\), which uses the cones \(K^d=\mathrm {S}^d_+\) if \(\mathbb {K}=\mathbb {R}\) and \(K^d=\mathrm {H}^d_+\) if \(\mathbb {K}=\mathbb {C}\). These symmetric factorization ranks are not always well defined since not every symmetric nonnegative matrix admits a symmetric factorization by nonnegative vectors or positive semidefinite matrices. The symmetric matrices for which these parameters are well defined form convex cones known as the completely positive cone, denoted \(\hbox {CP}^n\), and the completely positive semidefinite cone, denoted \(\mathrm {CS}_{+}^n\). We have the inclusions \(\hbox {CP}^n \subseteq \mathrm {CS}_{+}^n \subseteq \mathrm {S}_+^n\), which are known to be strict for \(n\ge 5\). For details on these cones see [6, 17, 50] and references therein.
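A symmetric factorization by nonnegative vectors always yields a matrix that is positive semidefinite, since it is a Gram matrix, and also entrywise nonnegative. This is consistent with the inclusion \(\hbox {CP}^n \subseteq \mathrm {S}_+^n\). The Python/NumPy sketch below is a quick numerical illustration with random nonnegative factors, and the sizes are arbitrary. Writing \(A = BB^\textsf {T}\) with \(B \ge 0\) of size \(n \times k\) also shows that \(\hbox {cp-rank}(A) \le k\).

```python
import numpy as np

rng = np.random.default_rng(0)
n, k = 4, 3
B = rng.random((n, k))        # entrywise nonnegative factors
X = [B[i] for i in range(n)]  # X_i in R^k_+, the same factors for rows and columns
A = np.array([[xi @ xj for xj in X] for xi in X])
# A = B B^T = sum_l b_l b_l^T with b_l the columns of B, so cp-rank(A) <= k
assert np.allclose(A, B @ B.T)
print(np.linalg.eigvalsh(A).min() >= -1e-10, (A >= 0).all())
```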

Motivation for the cones \(\hbox {CP}^n\) and \(\mathrm {CS}_{+}^n\) comes in particular from their use to model classical and quantum information optimization problems. For instance, graph parameters such as the stability number and the chromatic number can be written as linear optimization problems over the completely positive cone [45], and the same holds, more generally, for quadratic problems with mixed binary variables [13]. The \(\hbox {cp-rank}\) is widely studied in the linear algebra community; see, e.g., [6, 10, 68, 69].

The completely positive semidefinite cone was first studied in [50] to describe quantum analogs of the stability number and of the chromatic number of a graph. This was later extended to general graph homomorphisms in [72] and to graph isomorphism in [2]. In addition, as shown in [53, 72], there is a close connection between the completely positive semidefinite cone and the set of quantum correlations. This also gives a relation between the completely positive semidefinite rank and the minimal entanglement dimension necessary to realize a quantum correlation. This connection has been used in [38, 62, 63] to construct matrices whose completely positive semidefinite rank is exponentially large in the matrix size. For the special case of synchronous quantum correlations, the minimum entanglement dimension is directly given by the completely positive semidefinite rank of a certain matrix (see [37]).

The following inequalities are known. We have

\[ \hbox {psd-rank}_\mathbb {C}(A) \le \hbox {psd-rank}_\mathbb {R}(A) \le {{\,\mathrm{rank}\,}}_+(A) \le \min (m,n) \]

for any \(m\times n\) nonnegative matrix A, and \(\hbox {cp-rank}(A)\le \left( {\begin{array}{c}n+1\\ 2\end{array}}\right) \) for any \(n\times n\) completely positive matrix A. However, the situation for the cpsd-rank is very different. Exploiting the connection between the completely positive semidefinite cone and quantum correlations, it follows from results in [73] that the cone \(\mathrm {CS}_{+}^n\) is not closed for \(n\ge 1942\). The results in [22] show that this already holds for \(n\ge 10\). As a consequence, there does not exist an upper bound on the \(\hbox {cpsd-rank}\) as a function of the matrix size. For small matrix sizes, very little is known. It is an open problem whether \(\mathrm {CS}_{+}^5\) is closed, and we do not even know how to construct a \(5 \times 5\) matrix whose cpsd-rank exceeds 5.

The \({{\,\mathrm{rank}\,}}_+\), \(\hbox {cp-rank}\), and \(\text {psd-rank}\) are known to be computable; this follows using results from [65] since upper bounds exist on these factorization ranks that depend only on the matrix size, see [5] for a proof for the case of the \(\hbox {cp-rank}\). But computing the nonnegative rank is NP-hard [76]. In fact, determining the \({{\,\mathrm{rank}\,}}_+\) and \(\hbox {psd-rank}\) of a matrix are both equivalent to the existential theory of the reals [70, 71]. For the cp-rank and the cpsd-rank, no such results are known, but there is no reason to assume they are any easier. In fact, it is not even clear whether the cpsd-rank is computable in general.

To obtain upper bounds on the factorization rank of a given matrix, one can employ heuristics that try to construct small factorizations. Many such heuristics exist for the nonnegative rank (see the overview [30] and references therein), factorization algorithms exist for completely positive matrices (see the recent paper [39], also [20] for structured completely positive matrices), and algorithms to compute positive semidefinite factorizations are presented in the recent work [75]. In this paper, we want to compute lower bounds on matrix factorization ranks, which we achieve by employing a relaxation approach based on (noncommutative) polynomial optimization.

1.2 Contributions and Connections to Existing Bounds

In this work, we provide a unified approach to obtain lower bounds on the four matrix factorization ranks mentioned above, based on tools from (noncommutative) polynomial optimization.

We sketch the main ideas of our approach in Sect. 1.4 below, after having introduced some necessary notation and preliminaries about (noncommutative) polynomials in Sect. 1.3. We then indicate in Sect. 1.5 how our approach relates to the more classical use of polynomial optimization dealing with the minimization of polynomials over basic closed semialgebraic sets. The main body of the paper consists of four sections, each dealing with one of the four matrix factorization ranks. We start by presenting our approach for the completely positive semidefinite rank and then explain how to adapt it to the other ranks.

For our results, we need several technical tools about linear forms on spaces of polynomials, both in the commutative and noncommutative setting. To ease the readability of the paper, we group these technical tools in Appendix A. Moreover, we provide full proofs, so that our paper is self-contained. In addition, some of the proofs might differ from the customary ones in the literature since our treatment in this paper is consistently on the ‘moment’ side rather than using real algebraic results about sums of squares.

In Sect. 2, we introduce our approach for the completely positive semidefinite rank. We start by defining a hierarchy of lower bounds

\[ {\xi _{1}^{\mathrm {cpsd}}}(A) \le {\xi _{2}^{\mathrm {cpsd}}}(A) \le \cdots \le {{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A), \]

where \({\xi _{t}^{\mathrm {cpsd}}}(A)\), for \(t \in \mathbb {N}\), is given as the optimal value of a semidefinite program whose size increases with t. Not much is known about lower bounds for the cpsd-rank in the literature. The inequality \(\sqrt{{{\,\mathrm{rank}\,}}(A)} \le {{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A)\) is known; it follows by viewing a Hermitian \(d\times d\) matrix as a \(d^2\)-dimensional real vector. An analytic lower bound is given in [62]. We show that the new parameter \({\xi _{1}^{\mathrm {cpsd}}}(A)\) is at least as good as this analytic lower bound, and we give a small example where a strengthening of \({\xi _{2}^{\mathrm {cpsd}}}(A)\) is strictly better than both above-mentioned generic lower bounds. Currently, we lack evidence that the lower bounds \({\xi _{t}^{\mathrm {cpsd}}}(A)\) can be larger than, for example, the matrix size, but this could be because small matrices with large cpsd-rank are hard to construct or may not even exist. We also introduce several ideas leading to strengthenings of the basic bounds \({\xi _{t}^{\mathrm {cpsd}}}(A)\).
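The bound \(\sqrt{{{\,\mathrm{rank}\,}}(A)} \le {{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A)\) comes from the following observation. \(A = (\mathrm {Tr}(X_iX_j))\) is a Gram matrix of vectors in the real vector space of Hermitian \(d\times d\) matrices, and that space has dimension \(d^2\), so \({{\,\mathrm{rank}\,}}(A) \le d^2\). The Python/NumPy sketch below illustrates this numerically with random Hermitian positive semidefinite factors. The sizes and the rank tolerance are our own choices.

```python
import numpy as np

rng = np.random.default_rng(0)

def random_hermitian_psd(d, rng):
    """G G^* is Hermitian positive semidefinite for any complex G."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    return G @ G.conj().T

d, n = 2, 7                       # n > d^2 = 4, so rank(A) <= d^2 is a real restriction
X = [random_hermitian_psd(d, rng) for _ in range(n)]
A = np.array([[np.trace(Xi @ Xj).real for Xj in X] for Xi in X])  # Gram matrix
r = np.linalg.matrix_rank(A, tol=1e-8 * np.abs(A).max())
lower = int(np.ceil(np.sqrt(r)))  # the lower bound ceil(sqrt(rank A)) on cpsd-rank_C(A)
print(r, lower)                   # r is at most d^2, so the bound is at most d
```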

We then adapt these ideas to the other three matrix factorization ranks discussed above, where for each of them we obtain analogous hierarchies of bounds.

For the nonnegative rank and the completely positive rank, much more is known about lower bounds. The best-known generic lower bounds are due to Fawzi and Parrilo [26, 27]. In [27], the parameters \(\tau _+(A)\) and \(\tau _{\mathrm {cp}}(A)\) are defined, which, respectively, lower bound the nonnegative rank and the \(\hbox {cp-rank}\), along with their computable semidefinite programming relaxations \(\tau _\mathrm {+}^\mathrm {sos}(A)\) and \(\tau _\mathrm {cp}^\mathrm {sos}(A)\). In [27] it is also shown that \(\tau _+(A)\) is at least as good as certain norm-based lower bounds. In particular, \(\tau _+(\cdot )\) is at least as good as the \(\ell _\infty \) norm-based lower bound, which was used by Rothvoß [66] to show that the matching polytope has exponential linear extension complexity. In [26] it is shown that for the Frobenius norm, the square of the norm-based bound is still a lower bound on the nonnegative rank, but it is not known how this lower bound compares to \(\tau _+(\cdot )\).

Fawzi and Parrilo [27] use the atomicity of the nonnegative and completely positive ranks to derive the parameters \(\tau _+(A)\) and \(\tau _{\mathrm {cp}}(A)\); i.e., they use the fact that the nonnegative rank (cp-rank) of A is equal to the smallest d for which A can be written as the sum of d nonnegative (positive semidefinite) rank one matrices. As the \(\hbox {psd-rank}\) and \(\hbox {cpsd-rank}\) are not known to admit atomic formulations, the techniques from [27] do not extend directly to these factorization ranks. However, our approach via polynomial optimization captures these factorization ranks as well.

In Sects. 3 and 4, we construct semidefinite programming hierarchies of lower bounds \({\xi _{t}^{\mathrm {cp}}}(A)\) and \({\xi _{t}^{\mathrm {+}}}(A)\) on \(\hbox {cp-rank}(A)\) and \({{\,\mathrm{rank}\,}}_+(A)\). We show that the bounds \({\xi _{t}^{\mathrm {+}}}(A)\) converge to \(\tau _+(A)\) as \(t \rightarrow \infty \). The basic hierarchy \(\{{\xi _{t}^{\mathrm {cp}}}(A)\}\) for the cp-rank does not converge to \(\tau _{\mathrm {cp}}(A)\) in general, but we provide two types of additional constraints that can be added to the program defining \({\xi _{t}^{\mathrm {cp}}}(A)\) to ensure convergence to \(\tau _{\mathrm {cp}}(A)\). First, we show how a generalization of the tensor constraints that are used in the definition of the parameter \(\tau _{\mathrm {cp}}^\mathrm {sos}(A)\) can be used for this, and we also give a more efficient (using smaller matrix blocks) description of these constraints. This strengthening of \({\xi _{2}^{\mathrm {cp}}}(A)\) is then at least as strong as \(\tau _{\mathrm {cp}}^\mathrm {sos}(A)\), but requires matrix variables of roughly half the size. Alternatively, we show that for every \(\varepsilon >0\) there is a finite number of additional linear constraints that can be added to the basic hierarchy \(\{{\xi _{t}^{\mathrm {cp}}}(A)\}\) so that the limit of the sequence of these new lower bounds \({\xi _{t}^{\mathrm {cp}}}(A)\) is at least \(\tau _{\mathrm {cp}}(A)-\varepsilon \). We give numerical results on small matrices studied in the literature, which show that \({\xi _{3}^{\mathrm {+}}}(A)\) can improve over \(\tau _{+}^\mathrm {sos}(A)\).

Finally, in Sect. 5, we derive a hierarchy \(\{{\xi _{t}^{\mathrm {psd}}}(A)\}\) of lower bounds on the psd-rank. We compare the new bounds \({\xi _{t}^{\mathrm {psd}}}(A)\) to a bound from [52], and we provide some numerical examples illustrating their performance.

We provide two implementations of all the lower bounds introduced in this paper, available with the arXiv submission of this paper. One implementation uses Matlab and the CVX package [36], and the other uses Julia [8]. The implementations support various semidefinite programming solvers; for our numerical examples we used Mosek [56].

1.3 Preliminaries

In order to explain our basic approach in the next section, we first need to introduce some notation. We denote the set of all words in the symbols \(x_1,\ldots ,x_n\) by \(\langle \mathbf{x}\rangle = \langle x_1, \ldots , x_n \rangle \), where the empty word is denoted by 1. This is a semigroup with involution, where the binary operation is concatenation, and the involution of a word \(w\in \langle \mathbf{x}\rangle \) is the word \(w^*\) obtained by reversing the order of the symbols in w. The \(*\)-algebra of all real linear combinations of these words is denoted by \(\mathbb {R}\langle \mathbf{x} \rangle \), and its elements are called noncommutative polynomials. The involution extends to \(\mathbb {R}\langle \mathbf{x}\rangle \) by linearity. A polynomial \(p\in \mathbb {R}\langle \mathbf{x}\rangle \) is called symmetric if \(p^*=p\) and \(\mathrm {Sym} \, \mathbb {R}\langle \mathbf{x}\rangle \) denotes the set of symmetric polynomials. The degree of a word \(w\in \langle \mathbf{x}\rangle \) is the number of symbols composing it, denoted as |w| or \(\deg (w)\), and the degree of a polynomial \(p=\sum _wp_ww\in \mathbb {R}\langle \mathbf{x}\rangle \) is the maximum degree of a word w with \(p_w\ne 0\). Given \(t\in \mathbb {N}\cup \{\infty \}\), we let \(\langle \mathbf{x} \rangle _t\) be the set of words w of degree \(|w| \le t\), so that \(\langle \mathbf{x} \rangle _\infty =\langle \mathbf{x}\rangle \), and \(\mathbb {R}\langle \mathbf{x} \rangle _t\) is the real vector space of noncommutative polynomials p of degree \(\mathrm {deg}(p) \le t\). Given \(t \in \mathbb {N}\), we let \(\langle \mathbf{x} \rangle _{=t}\) be the set of words of degree exactly equal to t.
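To make the word notation concrete, here is a small Python sketch using our own encoding, which is not taken from the paper. A word is a tuple of symbol indices and the empty tuple stands for the empty word 1. Concatenation is tuple addition, and the involution reverses the tuple. The number of words of degree at most t in n symbols is \(1 + n + \cdots + n^t\).

```python
from itertools import product

def words(n, t):
    """All words of degree <= t in x_1..x_n, encoded as tuples of indices 1..n.
    The empty tuple () plays the role of the empty word 1."""
    return [w for k in range(t + 1) for w in product(range(1, n + 1), repeat=k)]

def star(w):
    """The involution w -> w^*: reverse the order of the symbols."""
    return tuple(reversed(w))

def concat(u, v):
    """The semigroup operation: concatenation of words."""
    return u + v

W = words(2, 2)
print(len(W))           # 7 = 1 + 2 + 4
print(star((1, 2, 2)))  # (2, 2, 1)
```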

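For a set \(S\subseteq \mathrm {Sym} \,\mathbb {R}\langle \mathbf{x}\rangle \) and \(t\in \mathbb {N}\cup \{\infty \}\), the truncated quadratic module at degree 2t associated to S is defined as the cone generated by all polynomials \(p^*g p \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}\) with \(g\in S\cup \{1\}\):

\[ {{\mathscr {M}}}_{2t}(S) = \mathrm {cone}\big \{p^*gp : p \in \mathbb {R}\langle \mathbf{x}\rangle ,\ g \in S \cup \{1\},\ \deg (p^*gp) \le 2t\big \}. \]

Likewise, for a set \(T \subseteq \mathbb {R}\langle \mathbf{x}\rangle \), we can define the truncated ideal at degree 2t, denoted by \(\mathscr {I}_{2t}(T)\), as the vector space spanned by all polynomials \(p h \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}\) with \(h \in T\):

\[ {{\mathscr {I}}}_{2t}(T) = \mathrm {span}\big \{ph : p \in \mathbb {R}\langle \mathbf{x}\rangle ,\ h \in T,\ \deg (ph) \le 2t\big \}. \]

We write \({{\mathscr {M}}}(S) = {{\mathscr {M}}}_\infty (S)\) and \({{\mathscr {I}}}(T) = {{\mathscr {I}}}_\infty (T)\). We say that \({{\mathscr {M}}}(S) + {{\mathscr {I}}}(T)\) is Archimedean when there exists a scalar \(R>0\) such that

\[ R - (x_1^2 + \cdots + x_n^2) \in {{\mathscr {M}}}(S) + {{\mathscr {I}}}(T). \]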

Throughout, we are interested in the space \(\mathbb {R}\langle \mathbf{x} \rangle _t^*\) of real-valued linear functionals on \(\mathbb {R}\langle \mathbf{x} \rangle _t\). We list some basic definitions: A linear functional \(L \in \mathbb {R}\langle \mathbf{x} \rangle _t^*\) is symmetric if \(L(w) = L(w^*)\) for all \(w \in \langle \mathbf{x} \rangle _t\) and tracial if \(L(ww') = L(w'w)\) for all \(w,w' \in \langle \mathbf{x} \rangle _t\). A linear functional \(L \in \mathbb {R}\langle \mathbf{x} \rangle _{2t}^*\) is said to be positive if \(L(p^*p) \ge 0\) for all \(p \in \mathbb {R}\langle \mathbf{x} \rangle _t\). Many properties of a linear functional \(L \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^*\) can be expressed as properties of its associated moment matrix (also known as its Hankel matrix). For \(L \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^*\) we define its associated moment matrix, which has rows and columns indexed by words in \(\langle \mathbf{x}\rangle _t\), by

\[ M_t(L) = \big (L(w^*w')\big )_{w,w' \in \langle \mathbf{x}\rangle _t}, \]

and as usual we set \(M(L) = M_\infty (L)\). It then follows that L is symmetric if and only if \(M_t(L)\) is symmetric, and L is positive if and only if \(M_t(L)\) is positive semidefinite. In fact, one can even express nonnegativity of a linear form \(L\in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^*\) on \({{\mathscr {M}}}_{2t}(S)\) in terms of certain associated positive semidefinite moment matrices. For this, given a polynomial \(g\in \mathbb {R}\langle \mathbf{x}\rangle \), define the linear form \(gL \in \mathbb {R}\langle \mathbf{x}\rangle _{2t-\deg (g)}^*\) by \((gL)(p)=L(gp)\). Then, we have

\[ L(p^*gp) \ge 0 \ \text {for all } p \in \mathbb {R}\langle \mathbf{x}\rangle _{t-d_g} \iff M_{t-d_g}(gL) \succeq 0, \qquad \text {where } d_g = \lceil \deg (g)/2 \rceil , \]

and thus \(L\ge 0\) on \({{\mathscr {M}}}_{2t}(S)\) if and only if \(M_{t-d_{g}}(gL) \succeq 0\) for all \(g\in S \cup \{1\}\). Also, the condition \(L=0\) on \({{\mathscr {I}}}_{2t}(T)\) corresponds to linear equalities on the entries of \(M_t(L)\).
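The moment and localizing matrices can be assembled directly from these definitions. The Python/NumPy sketch below does this for the trace evaluation \(L_\mathbf {X}(p) = \mathrm {Re}(\mathrm {Tr}(p(\mathbf {X})))\) introduced later in this section, and all helper names in it are hypothetical. In the code, the symbols \(x_1,\ldots ,x_n\) are encoded as indices \(0,\ldots ,n-1\), and a polynomial is a dictionary mapping words to real coefficients. The factors are random Hermitian positive semidefinite matrices, and g is the localizing polynomial \(\sqrt{A_{11}}\,x_1 - x_1^2\), which satisfies \(g(\mathbf {X}) \succeq 0\). Both matrices should therefore come out positive semidefinite.

```python
import numpy as np
from itertools import product

def words(n, t):
    # symbols x_1..x_n are encoded as indices 0..n-1; () is the empty word
    return [w for k in range(t + 1) for w in product(range(n), repeat=k)]

def evaluate(w, X):
    """w(X): the product X_{w[0]} X_{w[1]} ... (identity for the empty word)."""
    out = np.eye(X[0].shape[0], dtype=complex)
    for i in w:
        out = out @ X[i]
    return out

def trace_eval(poly, X):
    """L_X(p) = Re Tr(p(X)) for p given as a dict {word: real coefficient}."""
    return sum(c * np.trace(evaluate(w, X)).real for w, c in poly.items())

def localizing_matrix(g, X, k):
    """M_k(g L_X): rows/columns indexed by words w, w' of degree <= k,
    entry (g L_X)(w^* w') = L_X(g w^* w'). With g = 1 this is M_k(L_X)."""
    W = words(len(X), k)
    M = np.empty((len(W), len(W)))
    for a, w in enumerate(W):
        for b, v in enumerate(W):
            M[a, b] = trace_eval({u + w[::-1] + v: c for u, c in g.items()}, X)
    return M

rng = np.random.default_rng(0)
d, n = 2, 2
G = [rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d)) for _ in range(n)]
X = [Gi @ Gi.conj().T for Gi in G]                 # Hermitian PSD factors
A = np.array([[np.trace(Xi @ Xj).real for Xj in X] for Xi in X])
g = {(0,): np.sqrt(A[0, 0]), (0, 0): -1.0}         # sqrt(A_11) x_1 - x_1^2
M2 = localizing_matrix({(): 1.0}, X, 2)            # M_2(L_X)
M1g = localizing_matrix(g, X, 1)                   # M_{2 - d_g}(g L_X) with d_g = 1
print(np.linalg.eigvalsh(M2).min(), np.linalg.eigvalsh(M1g).min())
```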

The moment matrix also allows us to define a property called flatness. For \(t \in \mathbb {N}\), a linear functional \(L \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^*\) is called \(\delta \)-flat if the rank of \(M_t(L)\) is equal to that of its principal submatrix indexed by the words in \(\langle \mathbf{x}\rangle _{t-\delta }\), that is,

\[ {{\,\mathrm{rank}\,}}(M_t(L)) = {{\,\mathrm{rank}\,}}(M_{t-\delta }(L)). \]

We call L flat if it is \(\delta \)-flat for some \(\delta \ge 1\). When \(t=\infty \), L is said to be flat when \(\mathrm {rank}(M(L))<\infty \), which is equivalent to \({{\,\mathrm{rank}\,}}(M(L))={{\,\mathrm{rank}\,}}(M_s(L))\) for some \(s\in \mathbb {N}\).
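For a single variable (\(n=1\)) flatness can be checked by hand. With \(L_\mathbf {X}(p) = \mathrm {Re}(\mathrm {Tr}(p(X)))\), the matrix \(M_t(L_\mathbf {X})\) is the Hankel matrix \((\mathrm {Tr}(X^{j+k}))_{j,k=0}^t\). If the Hermitian matrix X has k distinct eigenvalues, this matrix has rank \(\min (t+1,k)\), so \(L_\mathbf {X}\) is 1-flat as soon as \(t \ge k\). The Python/NumPy sketch below, with hypothetical helper names and a relative rank tolerance we chose, checks this for two diagonal examples.

```python
import numpy as np

def moment_matrix_1var(X, t):
    """M_t(L_X) for n = 1: entry (j, k) is L_X(x^(j+k)) = Re Tr(X^(j+k))."""
    P = [np.linalg.matrix_power(X, k) for k in range(2 * t + 1)]
    return np.array([[np.trace(P[j + k]).real for k in range(t + 1)]
                     for j in range(t + 1)])

def numerical_rank(M, rel_tol=1e-9):
    """Count singular values above rel_tol times the largest one."""
    s = np.linalg.svd(M, compute_uv=False)
    return int((s > rel_tol * s[0]).sum())

def is_flat(X, t, delta=1):
    """delta-flatness: rank M_t(L_X) == rank M_{t-delta}(L_X)."""
    return (numerical_rank(moment_matrix_1var(X, t))
            == numerical_rank(moment_matrix_1var(X, t - delta)))

X2 = np.diag([1.0, 2.0])        # two distinct eigenvalues
X3 = np.diag([1.0, 2.0, 3.0])   # three distinct eigenvalues
print(is_flat(X2, 2), is_flat(X3, 2), is_flat(X3, 3))  # True False True
```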

A key example of a flat symmetric tracial positive linear functional on \(\mathbb {R}\langle \mathbf{x}\rangle \) is given by the trace evaluation at a given matrix tuple \(\mathbf {X}= (X_1,\ldots ,X_n) \in (\mathrm {H}^d)^n\):

\[ p \mapsto \mathrm {Tr}(p(\mathbf {X})). \]

Here, \(p(\mathbf {X})\) denotes the matrix obtained by substituting \(x_i\) by \(X_i\) in p, and throughout \(\mathrm {Tr}(\cdot )\) denotes the usual matrix trace, which satisfies \(\mathrm {Tr}(I) = d\) where I is the identity matrix in \(\mathrm {H}^d\). We mention in passing that we use \(\mathrm {tr}(\cdot )\) to denote the normalized matrix trace, which satisfies \(\mathrm {tr}(I) = 1\) for \(I \in \mathrm {H}^d\). Throughout, we use \(L_\mathbf {X}\) to denote the real part of the above functional, that is, \(L_\mathbf {X}\) denotes the linear form on \(\mathbb {R}\langle \mathbf{x}\rangle \) defined by

\[ L_\mathbf {X}(p) = \mathrm {Re}\big (\mathrm {Tr}(p(\mathbf {X}))\big ) \quad \text {for all } p \in \mathbb {R}\langle \mathbf{x}\rangle . \]

Observe that \(L_\mathbf {X}\) too is a symmetric tracial positive linear functional on \(\mathbb {R}\langle \mathbf{x}\rangle \). Moreover, \(L_\mathbf {X}\) is nonnegative on \({{\mathscr {M}}}(S)\) if the matrix tuple \(\mathbf {X}\) is taken from the matrix positivity domain \({\mathscr {D}}(S)\) associated to the finite set \(S \subseteq \mathrm {Sym} \, \mathbb {R}\langle \mathbf{x}\rangle \), defined as

\[ {\mathscr {D}}(S) = \bigcup _{d \ge 1} \big \{\mathbf {X}\in (\mathrm {H}^d)^n : g(\mathbf {X}) \succeq 0 \text { for all } g \in S\big \}. \]

Similarly, the linear functional \(L_\mathbf {X}\) is zero on \({{\mathscr {I}}}(T)\) if the matrix tuple \(\mathbf {X}\) is taken from the matrix variety \(\mathscr {V}(T)\) associated to the finite set \(T \subseteq \mathrm {Sym} \, \mathbb {R}\langle \mathbf{x}\rangle \), defined as

\[ \mathscr {V}(T) = \bigcup _{d \ge 1} \big \{\mathbf {X}\in (\mathrm {H}^d)^n : h(\mathbf {X}) = 0 \text { for all } h \in T\big \}. \]
To discuss convergence properties of our lower bounds for matrix factorization ranks, we will need to consider infinite dimensional analogs of matrix algebras, namely \(C^*\)-algebras admitting a tracial state. Let us introduce some basic notions we need about \(C^*\)-algebras; see, e.g., [9] for details. For our purposes, we define a \(C^*\)-algebra to be a norm closed \(*\)-subalgebra of the complex algebra \({{\mathscr {B}}}({\mathscr {H}})\) of bounded operators on a complex Hilbert space \({\mathscr {H}}\). In particular, we have \(\Vert a^*a\Vert = \Vert a\Vert ^2\) for all elements a in the algebra. Such an algebra \({{\mathscr {A}}}\) is said to be unital if it contains the identity operator (denoted 1). For instance, any full complex matrix algebra \(\mathbb {C}^{d\times d}\) is a unital \(C^*\)-algebra. Moreover, by a fundamental result of Artin-Wedderburn, any finite dimensional \(C^*\)-algebra (as a vector space) is \(*\)-isomorphic to a direct sum \(\bigoplus _{m=1}^M \mathbb {C}^{d_m\times d_m}\) of full complex matrix algebras [3, 77]. In particular, any finite dimensional \(C^*\)-algebra is unital.

An element b in a \(C^*\)-algebra \({{\mathscr {A}}}\) is called positive, denoted \(b\succeq 0\), if it is of the form \(b=a^*a\) for some \(a\in {{\mathscr {A}}}\). For finite sets \(S \subseteq \mathrm {Sym} \,\mathbb {R}\langle \mathbf{x}\rangle \) and \(T \subseteq \mathbb {R}\langle \mathbf{x}\rangle \), the \(C^*\)-algebraic analogs of the matrix positivity domain and matrix variety are the sets

\[ {\mathscr {D}}_{{\mathscr {A}}}(S) = \big \{\mathbf {X}\in {{\mathscr {A}}}^n : X_i^* = X_i \ (i \in [n]),\ g(\mathbf {X}) \succeq 0 \ (g \in S)\big \}, \qquad {\mathscr {V}}_{{\mathscr {A}}}(T) = \big \{\mathbf {X}\in {{\mathscr {A}}}^n : X_i^* = X_i \ (i \in [n]),\ h(\mathbf {X}) = 0 \ (h \in T)\big \}. \]

A state \(\tau \) on a unital \(C^*\)-algebra \({{\mathscr {A}}}\) is a linear form on \({{\mathscr {A}}}\) that is positive, i.e., \(\tau (a^*a)\ge 0\) for all \(a\in {{\mathscr {A}}}\), and satisfies \(\tau (1)=1\). Since \({{\mathscr {A}}}\) is a complex algebra, every state \(\tau \) is Hermitian: \(\tau (a^*) = \overline{\tau (a)}\) for all \(a \in {{\mathscr {A}}}\). We say that a state is tracial if \(\tau (ab) = \tau (ba)\) for all \(a,b \in {\mathscr {A}}\) and faithful if \(\tau (a^*a)=0\) implies \(a=0\). A useful fact is that on a full matrix algebra \(\mathbb {C}^{d\times d}\) the normalized matrix trace is the unique tracial state (see, e.g., [15]). Now, given a tuple \(\mathbf {X}=(X_1,\ldots ,X_n)\in {{\mathscr {A}}}^n\) in a \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \), the second key example of a symmetric tracial positive linear functional on \(\mathbb {R}\langle \mathbf{x}\rangle \) is given by the trace evaluation map, which we again denote by \(L_\mathbf {X}\) and is defined by

\[ L_\mathbf {X}(p) = \mathrm {Re}\big (\tau (p(\mathbf {X}))\big ) \quad \text {for all } p \in \mathbb {R}\langle \mathbf{x}\rangle . \]
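On the full matrix algebra \(\mathbb {C}^{d\times d}\), the defining properties of a tracial state can be checked numerically for the normalized trace \(\mathrm {tr}\). The Python/NumPy sketch below uses random complex matrices, and the helper name is ours. It checks that \(\mathrm {tr}\) is unital, tracial, and Hermitian in the sense \(\mathrm {tr}(a^*) = \overline{\mathrm {tr}(a)}\). It also checks that it is faithful, since \(\mathrm {tr}(a^*a) = \Vert a\Vert _F^2/d\) vanishes only for \(a = 0\).

```python
import numpy as np

def tr(M):
    """Normalized matrix trace on C^{d x d}: tr(I) = 1."""
    return np.trace(M) / M.shape[0]

rng = np.random.default_rng(1)
d = 3
a = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
b = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
a_star = a.conj().T

unital = np.isclose(tr(np.eye(d)), 1.0)
tracial = np.isclose(tr(a @ b), tr(b @ a))
hermitian = np.isclose(tr(a_star), np.conj(tr(a)))
positive = tr(a_star @ a).real > 0 and abs(tr(a_star @ a).imag) < 1e-10
faithful_value = np.isclose(tr(a_star @ a).real, np.linalg.norm(a, "fro") ** 2 / d)
print(unital, tracial, hermitian, positive, faithful_value)
```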
1.4 Basic Approach

To explain the basic idea of how we obtain lower bounds for matrix factorization ranks, we consider the case of the completely positive semidefinite rank. Given a minimal factorization \(A=(\mathrm {Tr}(X_iX_j))\), with \(d={{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A)\) and \(\mathbf {X}=(X_1,\ldots ,X_n)\) in \((\mathrm {H}_+^d)^n\), consider the linear form \(L_{\mathbf{X}}\) on \(\mathbb {R}\langle \mathbf{x}\rangle \) as defined in (5):

\[ L_\mathbf {X}(p) = \mathrm {Re}\big (\mathrm {Tr}(p(\mathbf {X}))\big ) \quad \text {for all } p \in \mathbb {R}\langle \mathbf{x}\rangle . \]
Then, we have \(A=(L_{\mathbf {X}}(x_ix_j))\) and \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A) = d=L_{\mathbf {X}}(1)\). To obtain lower bounds on \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A)\), we minimize L(1) over a set of linear functionals L that satisfy certain computationally tractable properties of \(L_{\mathbf{X}}\). Note that this idea of minimizing L(1) has recently been used in the works [59, 74] in the commutative setting to derive a hierarchy of lower bounds converging to the nuclear norm of a symmetric tensor.
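The two identities \(A=(L_{\mathbf {X}}(x_ix_j))\) and \(L_{\mathbf {X}}(1) = d\) can be verified directly. The Python/NumPy sketch below builds A from random Hermitian positive semidefinite factors and recovers it from \(L_\mathbf {X}\). Because this factorization is random, it need not be minimal, so in this sketch \(L_\mathbf {X}(1)=d\) is only an upper bound on \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A)\). The helper name is hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
d, n = 3, 4
G = [rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d)) for _ in range(n)]
X = [Gi @ Gi.conj().T for Gi in G]   # X_i in H^d_+ (a factorization, not necessarily minimal)

def L_X_word(word, X):
    """L_X(w) = Re Tr(w(X)) for a word given as a tuple of indices 0..n-1."""
    M = np.eye(X[0].shape[0], dtype=complex)
    for i in word:
        M = M @ X[i]
    return np.trace(M).real

A = np.array([[np.trace(Xi @ Xj).real for Xj in X] for Xi in X])
moments = np.array([[L_X_word((i, j), X) for j in range(n)] for i in range(n)])
print(np.allclose(moments, A), L_X_word((), X))   # L_X(1) = Tr(I) = d
```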

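The above linear functional \(L_{\mathbf{X}}\) is symmetric and tracial. Moreover, it satisfies some positivity conditions, since we have \(L_{\mathbf{X}}(q) \ge 0\) whenever \(q(\mathbf{X})\) is positive semidefinite. It follows that \(L_{\mathbf{X}}(p^*p) \ge 0\) for all \(p\in \mathbb {R}\langle \mathbf{x}\rangle \) and, as we explain later, \(L_{\mathbf{X}}\) satisfies the localizing conditions \(L_{\mathbf{X}}(p^*(\sqrt{A_{ii}} x_i - x_i^2)p) \ge 0\) for all p and i. Truncating the linear form yields the following hierarchy of lower bounds:

\[ {\xi _{t}^{\mathrm {cpsd}}}(A) = \min \Big \{ L(1) : L \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^* \text { tracial and symmetric},\ L(x_ix_j) = A_{ij} \ (i,j \in [n]),\ L \ge 0 \text { on } {\mathscr {M}}_{2t}\big (\{\sqrt{A_{ii}}\, x_i - x_i^2 : i \in [n]\}\big ) \Big \}. \]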
The bound \({\xi _{t}^{\mathrm {cpsd}}}(A)\) is computationally tractable (for small t). Indeed, as was explained in Sect. 1.3, the localizing constraint “\(L\ge 0\) on \({\mathscr {M}}_{2t}(S)\)” can be enforced by requiring certain matrices, whose entries are determined by L, to be positive semidefinite. This makes the problem defining \({\xi _{t}^{\mathrm {cpsd}}}(A)\) a semidefinite program. The localizing conditions ensure the Archimedean property of the quadratic module, which allows us to show certain convergence properties of the bounds \({\xi _{t}^{\mathrm {cpsd}}}(A)\).
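The claim that the localizing conditions ensure the Archimedean property can be checked directly. The identity below is our own short verification and uses only the definitions of Sect. 1.3. Write \(S = \{\sqrt{A_{ii}}\, x_i - x_i^2 : i \in [n]\}\) and use that each \(x_i\) is symmetric and \(\sqrt{A_{ii}}\) is a scalar. Then

\[ \begin{aligned} A_{ii} - x_i^2 &= \big (\sqrt{A_{ii}} - x_i\big )^*\big (\sqrt{A_{ii}} - x_i\big ) + 2\big (\sqrt{A_{ii}}\, x_i - x_i^2\big ) \quad (i \in [n]),\\ \mathrm {Tr}(A) - (x_1^2 + \cdots + x_n^2) &= \sum _{i=1}^n \Big [\big (\sqrt{A_{ii}} - x_i\big )^*\big (\sqrt{A_{ii}} - x_i\big ) + 2\big (\sqrt{A_{ii}}\, x_i - x_i^2\big )\Big ] \in {\mathscr {M}}_2(S), \end{aligned} \]

so \({\mathscr {M}}(S)\) is Archimedean with \(R = \mathrm {Tr}(A)\) (taking \(T = \emptyset \)).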

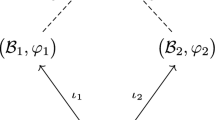

The above approach extends naturally to the other matrix factorization ranks, using the following two basic ideas. First, since the cp-rank and the nonnegative rank deal with factorizations by diagonal matrices, we use linear functionals acting on classical commutative polynomials. Second, the asymmetric factorization ranks (psd-rank and nonnegative rank) can be seen as analogs of the symmetric ranks in the partial matrix setting, where we know only the values of L on the quadratic monomials corresponding to entries in the off-diagonal blocks (this will require scaling of the factors in order to be able to define localizing constraints ensuring the Archimedean property). A main advantage of our approach is that it applies to all four matrix factorization ranks after suitable adaptations.

1.5 Connection to Polynomial Optimization

In classical polynomial optimization, the problem is to find the global minimum of a commutative polynomial f over a semialgebraic set of the form

\[ D(S) = \{x \in \mathbb {R}^n : g(x) \ge 0 \text { for all } g \in S\}, \]

where \(S \subseteq \mathbb {R}[\mathbf {x}] = \mathbb {R}[x_1,\ldots ,x_n]\) is a finite set of polynomials. Tracial polynomial optimization is a noncommutative analog, where the problem is to minimize the normalized trace \(\mathrm {tr}(f(\mathbf {X}))\) of a symmetric polynomial f over a matrix positivity domain \({\mathscr {D}}(S)\) where \(S \subseteq \mathrm {Sym} \, \mathbb {R}\langle \mathbf{x}\rangle \) is a finite set of symmetric polynomials. Notice that the distinguishing feature here is the dimension independence: the optimization is over all possible matrix sizes. Perhaps counterintuitively, in this paper, we use techniques similar to those used for the tracial polynomial optimization problem to compute lower bounds on factorization dimensions.

For classical polynomial optimization, Lasserre [46] and Parrilo [60] have proposed hierarchies of semidefinite programming relaxations based on the theory of moments and the dual theory of sums of squares polynomials. These can be used to compute successively better lower bounds converging to the global minimum (under the Archimedean condition). This approach has been used in a wide range of applications and there is an extensive literature (see, e.g., [1, 47, 49]). Most relevant to this work, it is used in [48] to design conic approximations of the completely positive cone and in [58] to check membership in the completely positive cone. This approach has also been extended to the noncommutative setting, first to the eigenvalue optimization problem [57, 61] (which will not play a role in this paper), and later to tracial optimization [14, 43].

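For our paper, the moment formulation of the lower bounds is most relevant: For all \(t \in \mathbb {N}\cup \{\infty \}\), we can define the bounds

\[ \begin{aligned} f_t &= \inf \big \{L(f) : L \in \mathbb {R}[\mathbf {x}]_{2t}^*,\ L(1) = 1,\ L \ge 0 \text { on } {\mathscr {M}}_{2t}(S)\big \},\\ f_t^\mathrm {tr} &= \inf \big \{L(f) : L \in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^* \text { tracial and symmetric},\ L(1) = 1,\ L \ge 0 \text { on } {\mathscr {M}}_{2t}(S)\big \}, \end{aligned} \]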
where \(f_t\) (resp., \(f_t^\mathrm {tr}\)) lower bounds the (tracial) polynomial optimization problem.
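As a small worked instance of the commutative bound \(f_t\), consider our own illustrative choice (not from the text) \(n = 1\), \(f = x\) and \(S = \{1 - x^2\}\), so that \(D(S) = [-1,1]\). For \(t = 1\), a feasible L is determined by \(y_1 = L(x)\) and \(y_2 = L(x^2)\). The polynomial \(1-x^2\) has degree 2, so its localizing condition reduces to a scalar inequality:

\[ M_1(L) = \begin{pmatrix} 1 & y_1 \\ y_1 & y_2 \end{pmatrix} \succeq 0, \qquad L(1 - x^2) = 1 - y_2 \ge 0 \quad \Longrightarrow \quad y_1^2 \le y_2 \le 1 \quad \Longrightarrow \quad L(f) = y_1 \ge -1. \]

The evaluation at \(x = -1\) (that is, \(y_1 = -1\), \(y_2 = 1\)) is feasible. Hence \(f_1 = -1 = \min _{x \in D(S)} f(x)\), so in this toy case the hierarchy is already exact at \(t = 1\).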

The connection between the parameters \({\xi _{t}^{\mathrm {cpsd}}}(A)\) and \(f_t^\mathrm {tr}\) is now clear: in the former we do not have the normalization property “\(L(1)=1\)” but we do have the additional affine constraints “\(L(x_i x_j) = A_{ij}\)”. This close relation to (tracial) polynomial optimization allows us to use that theory to understand the convergence properties of our bounds. Since throughout the paper we use (proof) techniques from (tracial) polynomial optimization, we will state the main convergence results we need, with full proofs, in Appendix A. Moreover, we give all proofs from the “moment side”, which is most relevant to our treatment. Below we give a short summary of the convergence results for the hierarchies \(\{f_t\}\) and \(\{f_t^\mathrm {tr}\}\) that are relevant to our paper. We refer to Appendix A.3 for details.

Under the condition that \({{\mathscr {M}}}(S)\) is Archimedean, we have asymptotic convergence: \(f_t \rightarrow f_\infty \) and \(f_t^\mathrm {tr} \rightarrow f_\infty ^\mathrm {tr}\) as \(t \rightarrow \infty \). In the commutative setting, one can moreover show that \(f_\infty \) is equal to the global minimum of f over the set \(D(S)\). However, in the noncommutative setting, the parameter \(f_\infty ^\mathrm {tr}\) is in general not equal to the minimum of \(\mathrm {tr}(f(\mathbf {X}))\) over \(\mathbf {X}\in {\mathscr {D}}(S)\). Instead, we need to consider the \(C^*\)-algebraic version of the tracial polynomial optimization problem: one can show that

\[ f_\infty ^\mathrm {tr} = \inf \big \{\tau (f(\mathbf {X})) : {{\mathscr {A}}} \text { a unital } C^*\text {-algebra with tracial state } \tau ,\ \mathbf {X}\in {\mathscr {D}}_{{\mathscr {A}}}(S)\big \}. \]
An important additional convergence result holds under flatness. If the program defining the bound \(f_t\) (resp., \(f_t^\mathrm {tr}\)) admits a sufficiently flat optimal solution, then equality holds: \(f_t = f_\infty \) (resp., \(f_t^\mathrm {tr} = f_\infty ^\mathrm {tr}\)). Moreover, in this case, the parameter \(f_t^\mathrm {tr}\) is equal to the minimum value of \(\mathrm {tr}(f(\mathbf {X}))\) over the matrix positivity domain \({\mathscr {D}}(S)\).

2 Lower Bounds on the Completely Positive Semidefinite Rank

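Let A be a completely positive semidefinite \(n \times n\) matrix. For \(t \in \mathbb {N}\cup \{\infty \}\), we consider the following semidefinite program, which, as we see below, lower bounds the complex completely positive semidefinite rank of A:

\[{\xi _{t}^{\mathrm {cpsd}}}(A) = \mathrm {min}\Big\{ L(1) : L\in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^* \text{ tracial and symmetric},\ L(x_ix_j) = A_{ij} \text{ for } i,j\in [n],\ L\ge 0 \text{ on } {{\mathscr {M}}}_{2t}(S_A^{\mathrm {cpsd}}) \Big\},\]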

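where we set

\[S_A^{\mathrm {cpsd}} = \big\{\sqrt{A_{11}}\,x_1 - x_1^2,\ \ldots ,\ \sqrt{A_{nn}}\,x_n - x_n^2\big\}. \tag{7}\]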

Additionally, define the parameter \({\xi _{*}^{\mathrm {cpsd}}}(A)\), obtained by adding the rank constraint \({{\,\mathrm{rank}\,}}(M(L)) < \infty \) to the program defining \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\), where we consider the infimum instead of the minimum since we do not know whether the infimum is always attained. (In Proposition 1 we show that the optimum is attained in \({\xi _{t}^{\mathrm {cpsd}}}(A)\) for \(t\in \mathbb {N}\cup \{\infty \}\).) This gives a hierarchy of monotone nondecreasing lower bounds on the completely positive semidefinite rank:

\[{\xi _{1}^{\mathrm {cpsd}}}(A)\le \cdots \le {\xi _{t}^{\mathrm {cpsd}}}(A)\le \cdots \le {\xi _{\infty }^{\mathrm {cpsd}}}(A)\le {\xi _{*}^{\mathrm {cpsd}}}(A)\le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A).\]

The inequality \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\le {\xi _{*}^{\mathrm {cpsd}}}(A)\) is clear and monotonicity as well: If L is feasible for \({\xi _{k}^{\mathrm {cpsd}}}(A)\) with \(t \le k \le \infty \), then its restriction to \(\mathbb {R}\langle \mathbf{x}\rangle _{2t}\) is feasible for \({\xi _{t}^{\mathrm {cpsd}}}(A)\).

The following notion of localizing polynomials will be useful. A set \(S\subseteq \mathbb {R}\langle \mathbf{x}\rangle \) is said to be localizing at a matrix tuple \(\mathbf {X}\) if \(\mathbf {X}\in {\mathscr {D}}(S)\) (i.e., \(g(\mathbf {X})\succeq 0\) for all \(g\in S\)) and we say that S is localizing for A if S is localizing at some factorization \(\mathbf {X}\in (\mathrm {H}_+^d)^n\) of A with \(d={{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\). The set \(S_A^{\mathrm {cpsd}}\) as defined in (7) is localizing for A, and, in fact, it is localizing at any factorization \(\mathbf {X}\) of A by Hermitian positive semidefinite matrices. Indeed, since \(X_i\succeq 0\) and

\[\lambda _\mathrm {max}(X_i)^2 \le {{\,\mathrm{Tr}\,}}(X_i^2) = A_{ii},\]

we have \(X_i\preceq \sqrt{A_{ii}}\, I\), and hence \(\sqrt{A_{ii}} X_i - X_i^2 = X_i^{1/2}(\sqrt{A_{ii}}\, I - X_i)X_i^{1/2} \succeq 0\) for all \(i\in [n]\).

We can now use this to show the inequality \({\xi _{*}^{\mathrm {cpsd}}}(A) \le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\). For this, set \(d = {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\), let \(\mathbf {X}\in (\mathrm {H}_+^d)^n\) be a Gram factorization of A, and consider the linear form \(L_\mathbf {X}\in \mathbb {R}\langle \mathbf {x}\rangle ^*\) defined by

\[L_\mathbf {X}(p) = \mathrm {Re}\big({{\,\mathrm{Tr}\,}}(p(X_1,\ldots ,X_n))\big)\quad \text{for } p\in \mathbb {R}\langle \mathbf {x}\rangle .\]

By construction \(L_\mathbf {X}\) is symmetric and tracial, and we have \(A=(L_\mathbf {X}(x_ix_j))\). Moreover, since the set of polynomials \(S_A^{\mathrm {cpsd}}\) is localizing for A, the linear form \(L_\mathbf {X}\) is nonnegative on \({\mathscr {M}}(S_A^{\mathrm {cpsd}})\). Finally, we have \({{\,\mathrm{rank}\,}}(M(L_\mathbf {X}))<\infty \), since the algebra generated by \(X_1, \ldots , X_n\) is finite dimensional. Hence, \(L_\mathbf {X}\) is feasible for \({\xi _{*}^{\mathrm {cpsd}}}(A)\) with \(L_\mathbf {X}(1)=d\), which shows \({\xi _{*}^{\mathrm {cpsd}}}(A) \le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\).
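The construction of \(L_\mathbf {X}\) can be checked numerically. The sketch below is our own illustration in Python with NumPy, not code from the paper: monomials are encoded as tuples of variable indices (a hypothetical encoding), \(L_\mathbf {X}(w) = \mathrm {Re}({{\,\mathrm{Tr}\,}}(w(\mathbf {X})))\), and the truncated moment matrix \(M_t(L_\mathbf {X})\) has entries \(L_\mathbf {X}(u^*v)\), where \(u^*\) reverses the word because each \(X_i\) is Hermitian. The factor matrices \(\mathrm {diag}(1,1,0)/\sqrt{2}\) and \(\mathrm {diag}(0,1,1)/\sqrt{2}\) are an assumed, not necessarily minimal, Gram factorization of \(\big (\begin{smallmatrix}1&1/2\\ 1/2&1\end{smallmatrix}\big )\).

```python
import itertools

import numpy as np


def words(n, t):
    """All words (tuples of variable indices) of length <= t in n noncommuting letters."""
    out = [()]
    for k in range(1, t + 1):
        out.extend(itertools.product(range(n), repeat=k))
    return out


def evaluate(word, X):
    """Evaluate the monomial `word` at the matrix tuple X (the empty word gives I)."""
    M = np.eye(X[0].shape[0], dtype=complex)
    for i in word:
        M = M @ X[i]
    return M


def trace_eval(word, X):
    """L_X(w) = Re Tr(w(X)) for a monomial w."""
    return float(np.real(np.trace(evaluate(word, X))))


def moment_matrix(X, t):
    """M_t(L_X): rows/columns indexed by words u, v, with entry L_X(u^* v).

    For Hermitian X_i the involution u -> u^* simply reverses the word.
    """
    W = words(len(X), t)
    return np.array([[trace_eval(tuple(reversed(u)) + v, X) for v in W] for u in W])


# Assumed Gram factorization (d = 3) of a 2 x 2 matrix A.
X = [np.diag([1.0, 1.0, 0.0]) / np.sqrt(2), np.diag([0.0, 1.0, 1.0]) / np.sqrt(2)]
A = np.array([[1.0, 0.5], [0.5, 1.0]])

M2 = moment_matrix(X, 2)                      # 7 x 7 matrix indexed by words of length <= 2
gram = np.array([[trace_eval((i, j), X) for j in range(2)] for i in range(2)])
print(M2[0, 0], np.linalg.matrix_rank(M2, tol=1e-8))
```

Since \(M_t(L_\mathbf {X})\) is a Gram matrix of the evaluated words, it is positive semidefinite, and its rank is at most the dimension of the algebra generated by the \(X_i\) (here 3, as every word evaluates to a diagonal \(3\times 3\) matrix).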

The inclusions in (8) below show the quadratic module \({{\mathscr {M}}}(S_A^{\mathrm {cpsd}})\) is Archimedean (recall the definition in (3)). Moreover, although there are other possible choices for the localizing polynomials to use in \(S_A^{\mathrm {cpsd}}\), these inclusions also show that the choice made in (7) leads to the largest truncated quadratic module and thus to the best bound. For any scalar \(c > 0\), we have the inclusions

which hold in light of the following identities:

In the rest of this section, we investigate properties of the hierarchy \(\{{\xi _{t}^{\mathrm {cpsd}}}(A)\}\) as well as some variations on it. We discuss convergence properties, asymptotically and under flatness, and we give another formulation for the parameter \({\xi _{*}^{\mathrm {cpsd}}}(A)\). Moreover, as the inequality \({\xi _{*}^{\mathrm {cpsd}}}(A) \le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\) is typically strict, we present an approach to strengthen the bounds in order to go beyond \({\xi _{*}^{\mathrm {cpsd}}}(A)\). Then, we propose some techniques to simplify the computation of the bounds, and we illustrate the behavior of the bounds on some examples.

2.1 The Parameters \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\) and \({\xi _{*}^{\mathrm {cpsd}}}(A)\)

In this section, we consider convergence properties of the hierarchy \({\xi _{t}^{\mathrm {cpsd}}}(\cdot )\), both asymptotically and under flatness. We also give equivalent reformulations of the limiting parameters \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\) and \({\xi _{*}^{\mathrm {cpsd}}}(A)\) in terms of \(C^*\)-algebras with a tracial state, which we will use in Sects. 2.3–2.4 to show properties of these parameters.

Proposition 1

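Let \(A \in \mathrm {CS}_{+}^n\). For \(t \in \mathbb {N}\cup \{\infty \}\) the optimum in \({\xi _{t}^{\mathrm {cpsd}}}(A)\) is attained, and

\[\lim _{t\rightarrow \infty } {\xi _{t}^{\mathrm {cpsd}}}(A) = {\xi _{\infty }^{\mathrm {cpsd}}}(A).\]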

Moreover, \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\) is equal to the smallest scalar \(\alpha \ge 0\) for which there exists a unital \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \) and \((X_1,\ldots ,X_n) \in {\mathscr {D}}_{{\mathscr {A}}}(S_A^{\mathrm {cpsd}})\) such that \(A = \alpha \cdot (\tau (X_iX_j))\).

Proof

The sequence \(({\xi _{t}^{\mathrm {cpsd}}}(A))_t\) is monotonically nondecreasing and upper bounded by \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\), which is finite since \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\). Hence its limit exists and is at most \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\).

As \({\xi _{t}^{\mathrm {cpsd}}}(A)\le {\xi _{\infty }^{\mathrm {cpsd}}}(A)\), we may add the redundant constraint \(L(1) \le {\xi _{\infty }^{\mathrm {cpsd}}}(A)\) to the problem \({\xi _{t}^{\mathrm {cpsd}}}(A)\) for every \(t \in \mathbb {N}\). By (10), we have \(\mathrm {Tr}(A) -\sum _ix_i^2 \in {{\mathscr {M}}}_2(S_A^{\mathrm {cpsd}})\). Hence, using the result of Lemma 13, the feasible region of \({\xi _{t}^{\mathrm {cpsd}}}(A)\) is compact, and thus, it has an optimal solution \(L_t\). Again by Lemma 13, the sequence \((L_t)\) has a pointwise converging subsequence with limit \(L \in \mathbb {R}\langle \mathbf {x}\rangle ^*\). This pointwise limit L is symmetric, tracial, satisfies \((L(x_ix_j)) = A\), and is nonnegative on \({\mathscr {M}}(S_A^{\mathrm {cpsd}})\). Hence, L is feasible for \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\), and \(L(1) = \lim _{t} L_t(1) = \lim _{t \rightarrow \infty } {\xi _{t}^{\mathrm {cpsd}}}(A) \le {\xi _{\infty }^{\mathrm {cpsd}}}(A)\). This implies that L is optimal for \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\), so the optimum is attained, and that \(\lim _{t \rightarrow \infty } {\xi _{t}^{\mathrm {cpsd}}}(A) = {\xi _{\infty }^{\mathrm {cpsd}}}(A)\).

The reformulation of \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\) in terms of \(C^*\)-algebras with a tracial state follows directly using Theorem 1. \(\square \)

Next, we give some equivalent reformulations for the parameter \({\xi _{*}^{\mathrm {cpsd}}}(A)\), which follow as a direct application of Theorem 2. In general, we do not know whether the infimum in \({\xi _{*}^{\mathrm {cpsd}}}(A)\) is attained. However, as a direct application of Corollary 1, we see that this infimum is attained if there is an integer \(t \in \mathbb {N}\) for which \({\xi _{t}^{\mathrm {cpsd}}}(A)\) admits a flat optimal solution.

Proposition 2

Let \(A \in \mathrm {CS}_{+}^n\). The parameter \({\xi _{*}^{\mathrm {cpsd}}}(A)\) is given by the infimum of L(1) taken over all conic combinations L of trace evaluations at matrix tuples in \({\mathscr {D}}(S_A^{\mathrm {cpsd}})\) for which \(A=(L(x_ix_j))\). The parameter \({\xi _{*}^{\mathrm {cpsd}}}(A)\) is also equal to the infimum over all \(\alpha \ge 0\) for which there exist a finite dimensional \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \) and \((X_1,\ldots ,X_n) \in {\mathscr {D}}_{{\mathscr {A}}}(S_A^{\mathrm {cpsd}})\) such that \(A = \alpha \cdot (\tau (X_iX_j))\).

In addition, if \({\xi _{t}^{\mathrm {cpsd}}}(A)\) admits a flat optimal solution, then \({\xi _{t}^{\mathrm {cpsd}}}(A)= {\xi _{*}^{\mathrm {cpsd}}}(A)\).

Next we show a formulation for \({\xi _{*}^{\mathrm {cpsd}}}(A)\) in terms of factorization by block-diagonal matrices, which helps explain why the inequality \({\xi _{*}^{\mathrm {cpsd}}}(A) \le {{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A)\) is typically strict. Here \(\Vert \cdot \Vert \) is the operator norm, so that \(\Vert X\Vert = \lambda _\mathrm {max}(X)\) for \(X \succeq 0\).

Proposition 3

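For \(A \in \mathrm {CS}_{+}^n\) we have

\[{\xi _{*}^{\mathrm {cpsd}}}(A) = \mathrm {inf}\Big\{ \sum _{m=1}^M d_m\, \mathrm {max}_{i\in [n]} \frac{\Vert X^m_i\Vert ^2}{A_{ii}} : M, d_1,\ldots ,d_M\in \mathbb {N},\ X^m_i\in \mathrm {H}_+^{d_m},\ A = {{\,\mathrm{Gram}\,}}\big(\oplus _{m=1}^M X^m_1,\ldots ,\oplus _{m=1}^M X^m_n\big)\Big\}. \tag{13}\]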

Note that using matrices from \(\mathrm {S}_+^{d_m}\) instead of \(\mathrm {H}_+^{d_m}\) does not change the optimal value.

Proof

The proof uses the formulation of \({\xi _{*}^{\mathrm {cpsd}}}(A)\) in terms of conic combinations of trace evaluations at matrix tuples in \({\mathscr {D}}(S_A^{\mathrm {cpsd}})\) as given in Proposition 2. We first show the inequality \(\beta \le {\xi _{*}^{\mathrm {cpsd}}}(A)\), where \(\beta \) denotes the optimal value of the program in (13).

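For this, assume \(L\in \mathbb {R}\langle \mathbf{x}\rangle ^*\) is a conic combination of trace evaluations at elements of \({{\mathscr {D}}}(S_A^{\mathrm {cpsd}})\) such that \(A=(L(x_ix_j))\). We will construct a feasible solution for (13) with objective value L(1). The linear functional L can be written as

\[L = \sum _{m=1}^M \lambda _m L_{\mathbf Y^m},\quad \text{where } \lambda _m>0 \text{ and } \mathbf Y^m = (Y^m_1,\ldots ,Y^m_n)\in {{\mathscr {D}}}(S_A^{\mathrm {cpsd}}) \text{ for } m\in [M].\]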

Let \(d_m\) denote the size of the matrices \(Y_1^m, \ldots , Y_n^m\), so that \(L(1)=\sum _m \lambda _m d_m\). Since \(\mathbf Y^m \in {{\mathscr {D}}}(S_A^{\mathrm {cpsd}})\), we have \(Y^m_i \succeq 0\) and \(A_{ii}I-(Y^m_i)^2\succeq 0\) by identities (10) and (12). This implies \(\Vert Y^m_i\Vert ^2 \le A_{ii}\) for all \(i\in [n]\) and \(m \in [M]\). Define \(\mathbf X^m = \sqrt{\lambda _m} \, \mathbf Y^m\). Then, \(L(x_ix_j)= \sum _m {{\,\mathrm{Tr}\,}}(X^m_iX^m_j)\), so that the matrices \(\oplus _m X^m_1,\ldots ,\oplus _m X^m_n\) form a Gram decomposition of A. This gives a feasible solution to (13) with value

\[\sum _m d_m\, \mathrm {max}_{i\in [n]} \frac{\Vert X^m_i\Vert ^2}{A_{ii}} = \sum _m d_m\lambda _m\, \mathrm {max}_{i\in [n]} \frac{\Vert Y^m_i\Vert ^2}{A_{ii}} \le \sum _m d_m\lambda _m = L(1),\]

which shows \(\beta \le L(1)\), and hence \(\beta \le {\xi _{*}^{\mathrm {cpsd}}}(A)\).

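For the other direction, we assume that the matrices \(X^m_i\in \mathrm {H}_+^{d_m}\) (\(i\in [n]\), \(m\in [M]\)) satisfy

\[A = {{\,\mathrm{Gram}\,}}\big(\oplus _{m=1}^M X^m_1,\ldots ,\oplus _{m=1}^M X^m_n\big),\]

where no block tuple \(\mathbf X^m = (X^m_1,\ldots ,X^m_n)\) is zero (zero blocks can be discarded).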

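Set \(\lambda _m = \mathrm {max}_{i\in [n]} {\Vert X^m_i\Vert ^2/ A_{ii}}\), and define the linear form L by

\[L = \sum _{m=1}^M \lambda _m L_{\mathbf Y^m},\quad \text{where } \mathbf Y^m = \mathbf X^m/\sqrt{\lambda _m}.\]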

We have \(L(1)=\sum _m \lambda _m d_m\) and \(A=(L(x_ix_j))\), and thus it suffices to show that each matrix tuple \(\mathbf Y^m\) belongs to \({{\mathscr {D}}}(S_A^{\mathrm {cpsd}})\). For this we observe that \(\lambda _mA_{ii}\ge \Vert X^m_i\Vert ^2\). Therefore \(\lambda _m A_{ii} I \succeq (X_i^m)^2\), and thus \(A_{ii} I \succeq (Y_i^m)^2\). As \(Y_i^m\succeq 0\), this gives \(\sqrt{A_{ii}}\, I\succeq Y_i^m\), which implies \(\sqrt{A_{ii}} Y_i^m - (Y_i^m)^2 \succeq 0\). By Proposition 2, this shows \({\xi _{*}^{\mathrm {cpsd}}}(A) \le L(1)=\sum _m \lambda _m d_m\), and thus \({\xi _{*}^{\mathrm {cpsd}}}(A) \le \beta \). \(\square \)
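To make the objective of (13) concrete, here is a minimal NumPy sketch (our own illustration; the function names `block_objective` and `gram_of_blocks` are hypothetical) that evaluates \(\sum _m d_m \max _i \Vert X^m_i\Vert ^2/A_{ii}\) for a given block factorization, assuming A has a positive diagonal. It uses an assumed factorization of the matrix \(\big (\begin{smallmatrix}1&1/2\\ 1/2&1\end{smallmatrix}\big )\), once as a single \(3\times 3\) block and once split into three \(1\times 1\) blocks; both give the value 3/2.

```python
import numpy as np


def block_objective(blocks, A):
    """Objective of (13): sum over blocks m of d_m * max_i ||X_i^m||^2 / A_ii.

    blocks[m] is a list (X_1^m, ..., X_n^m) of Hermitian PSD d_m x d_m arrays.
    Assumes A has a positive diagonal.
    """
    total = 0.0
    for Xm in blocks:
        d_m = Xm[0].shape[0]
        # For a PSD matrix the operator norm is the largest eigenvalue.
        lam_m = max(np.linalg.eigvalsh(Xi)[-1] ** 2 / A[i, i] for i, Xi in enumerate(Xm))
        total += d_m * lam_m
    return total


def gram_of_blocks(blocks):
    """Gram matrix of the block-diagonal matrices (+)_m X_i^m: entries sum_m Tr(X_i^m X_j^m)."""
    n = len(blocks[0])
    return np.array([[sum(np.trace(Xm[i] @ Xm[j]).real for Xm in blocks)
                      for j in range(n)] for i in range(n)])


A = np.array([[1.0, 0.5], [0.5, 1.0]])
Y = [np.diag([1.0, 1.0, 0.0]) / np.sqrt(2), np.diag([0.0, 1.0, 1.0]) / np.sqrt(2)]

value = block_objective([Y], A)  # one block of size 3: 3 * (1/2)

# The same factorization split into three 1 x 1 blocks (one per diagonal position).
blocks_1x1 = [[np.array([[Yi[k, k]]]) for Yi in Y] for k in range(3)]
value_1x1 = block_objective(blocks_1x1, A)
```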

We can say a bit more when the matrix A lies on an extreme ray of the cone \(\mathrm {CS}_{+}^n\): in the formulation from Proposition 3, it suffices to restrict the minimization to factorizations of A involving only one block. However, we know very little about the extreme rays of \(\mathrm {CS}_{+}^n\), also in view of the recent result that the cone is not closed for large n [22, 73].

Proposition 4

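If A lies on an extreme ray of the cone \(\mathrm {CS}_{+}^n\), then

\[{\xi _{*}^{\mathrm {cpsd}}}(A) = \mathrm {inf}\Big\{ d\, \mathrm {max}_{i\in [n]} \frac{\Vert X_i\Vert ^2}{A_{ii}} : d\in \mathbb {N},\ X_1,\ldots ,X_n\in \mathrm {H}_+^{d},\ A = {{\,\mathrm{Gram}\,}}(X_1,\ldots ,X_n)\Big\}.\]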

Moreover, if \(\oplus _{m=1}^M X^m_1,\ldots ,\oplus _{m=1}^M X^m_n\) is a Gram decomposition of A providing an optimal solution to (13) and some block \(X^m_i\) has rank 1, then \({\xi _{*}^{\mathrm {cpsd}}}(A)={{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\).

Proof

Let \(\beta \) be the infimum in Proposition 4. The inequality \({\xi _{*}^{\mathrm {cpsd}}}(A) \le \beta \) follows from the reformulation of \({\xi _{*}^{\mathrm {cpsd}}}(A)\) in Proposition 3. To show the reverse inequality we consider a solution \( \oplus _{m=1}^M X^m_1,\ldots ,\oplus _{m=1}^M X^m_n \) to (13), where we may discard zero blocks, and set \(\lambda _m= \mathrm {max}_i\Vert X^m_i\Vert ^2/A_{ii}\). We will show \(\beta \le \sum _m d_m\lambda _m\). For this define the matrices \( A_m={{\,\mathrm{Gram}\,}}(X^m_1,\ldots ,X^m_n), \) so that \(A=\sum _m A_m\). As A lies on an extreme ray of \(\mathrm {CS}_{+}^n\) and each \(A_m\) is a nonzero matrix in \(\mathrm {CS}_{+}^n\), we must have \(A_m = \alpha _m A\) for some \(\alpha _m>0\) with \(\sum _m\alpha _m=1\). Hence, since

\[A = {{\,\mathrm{Gram}\,}}\big(X^m_1/\sqrt{\alpha _m},\ldots ,X^m_n/\sqrt{\alpha _m}\big)\quad \text{and}\quad d_m\, \mathrm {max}_{i\in [n]} \frac{\Vert X^m_i\Vert ^2}{\alpha _m A_{ii}} = \frac{d_m\lambda _m}{\alpha _m},\]

we have \(\beta \le d_m\lambda _m/\alpha _m\) for all \(m\in [M]\). Since \(\sum _m \alpha _m=1\), we have \(\sum _m d_m\lambda _m = \sum _m \alpha _m\cdot d_m\lambda _m/\alpha _m \ge \mathrm {min}_m d_m\lambda _m/\alpha _m\). So we have shown \(\beta \le \mathrm {min}_m d_m\lambda _m/\alpha _m \le \sum _m d_m\lambda _m.\) As the solution to (13) was arbitrary, Proposition 3 gives \(\beta \le {\xi _{*}^{\mathrm {cpsd}}}(A)\), and thus equality holds.

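Assume now that \(\oplus _{m=1}^M X^m_1,\ldots ,\oplus _{m=1}^M X^m_n\) is optimal to (13) and that there is a block \(X_i^m\) of rank 1. By Proposition 3 we have \(\sum _m d_m\lambda _m= {\xi _{*}^{\mathrm {cpsd}}}(A)\). From the argument just made above it follows that

\[{\xi _{*}^{\mathrm {cpsd}}}(A) = \beta \le \mathrm {min}_m \frac{d_m\lambda _m}{\alpha _m} \le \sum _m \alpha _m\frac{d_m\lambda _m}{\alpha _m} = \sum _m d_m\lambda _m = {\xi _{*}^{\mathrm {cpsd}}}(A).\]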

As \(\sum _m \alpha _m=1\) and all \(\alpha _m>0\), this implies \(d_m\lambda _m/\alpha _m =\mathrm {min}_{m'} d_{m'}\lambda _{m'}/\alpha _{m'}\) for all m; that is, all terms \(d_m\lambda _m/\alpha _m\) take the same value \({\xi _{*}^{\mathrm {cpsd}}}(A)\). By assumption, there exist some \(m\in [M]\) and \(i\in [n]\) for which \(X^m_i\) has rank 1. Then \(\Vert X^m_i\Vert ^2=\langle X^m_i,X^m_i\rangle = \alpha _m A_{ii}\), so \(\lambda _m\ge \alpha _m\); conversely, \(\Vert X^m_j\Vert ^2\le \langle X^m_j,X^m_j\rangle = \alpha _m A_{jj}\) for all \(j\in [n]\), so \(\lambda _m\le \alpha _m\). Hence \(\lambda _m =\alpha _m\), and thus \({\xi _{*}^{\mathrm {cpsd}}}(A) = d_m\). On the other hand, \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\le d_m\) since \((X^m_i/\sqrt{\alpha _m})_i\) forms a Gram decomposition of A, so equality \({\xi _{*}^{\mathrm {cpsd}}}(A)=d_m={{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\) holds. \(\square \)

2.2 Additional Localizing Constraints to Improve on \({\xi _{*}^{\mathrm {cpsd}}}(A)\)

In order to strengthen the bounds, we may require nonnegativity over a (truncated) quadratic module generated by a larger set of localizing polynomials for A. The following lemma gives one such approach.

Lemma 1

Let \(A \in \mathrm {CS}_{+}^n\). For \(v\in \mathbb {R}^n\) and \(g_v= v^\textsf {T}Av -\big (\sum _{i=1}^n v_ix_i\big )^2\), the set \(\{g_v\}\) is localizing for every Gram factorization by Hermitian positive semidefinite matrices of A (in particular, \(\{g_v\}\) is localizing for A).

Proof

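If \(X_1,\ldots ,X_n\) is a Gram decomposition of A by Hermitian positive semidefinite matrices, then \(\sum _i v_iX_i\) is Hermitian and

\[\Big\Vert \sum _{i=1}^n v_iX_i\Big\Vert ^2 = \lambda _\mathrm {max}\Big(\big(\sum _{i=1}^n v_iX_i\big)^2\Big) \le {{\,\mathrm{Tr}\,}}\Big(\big(\sum _{i=1}^n v_iX_i\big)^2\Big) = v^\textsf {T}Av,\]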

hence \(v^\textsf {T}AvI-(\sum _{i=1}^nv_iX_i)^2\succeq 0\). \(\square \)
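The inequality in the proof of Lemma 1 is easy to test numerically. In this sketch (our own illustration in NumPy; `random_psd` and `lemma1_gap` are hypothetical helper names), A is the Gram matrix of random complex Hermitian positive semidefinite matrices, and we compute the smallest eigenvalue of \(v^\textsf {T}Av\, I - (\sum _i v_iX_i)^2\) for many random vectors v; it should never be negative beyond rounding error.

```python
import numpy as np


def random_psd(d, rng):
    """A random d x d complex Hermitian positive semidefinite matrix."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    return G @ G.conj().T / (2 * d)


def lemma1_gap(X, v):
    """Smallest eigenvalue of (v^T A v) I - (sum_i v_i X_i)^2, where A = Gram(X)."""
    S = sum(vi * Xi for vi, Xi in zip(v, X))
    v_A_v = np.trace(S @ S).real  # v^T A v = Tr(S^2) because A_ij = Tr(X_i X_j)
    return np.linalg.eigvalsh(v_A_v * np.eye(S.shape[0]) - S @ S)[0]


rng = np.random.default_rng(0)
X = [random_psd(3, rng) for _ in range(4)]
gaps = [lemma1_gap(X, rng.standard_normal(4)) for _ in range(200)]
```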

Given a set \(V\subseteq \mathbb {R}^n\), we consider the larger set

\[S_{A,V}^{\mathrm {cpsd}} = S_A^{\mathrm {cpsd}}\cup \{g_v : v\in V\}\]

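of localizing polynomials for A. For \(t \in \mathbb {N}\cup \{\infty ,*\}\), denote by \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\) the parameter obtained by replacing in \({\xi _{t}^{\mathrm {cpsd}}}(A)\) the nonnegativity constraint on \({{\mathscr {M}}}_{2t}(S_A^{\mathrm {cpsd}})\) by nonnegativity on the larger set \({{\mathscr {M}}}_{2t}(S_{A,V}^{\mathrm {cpsd}})\). We have \({\xi _{t,\emptyset }^{\mathrm {cpsd}}}(A)={\xi _{t}^{\mathrm {cpsd}}}(A)\) and

\[{\xi _{t}^{\mathrm {cpsd}}}(A)\le {\xi _{t,V}^{\mathrm {cpsd}}}(A)\le {\xi _{\infty ,V}^{\mathrm {cpsd}}}(A)\le {\xi _{*,V}^{\mathrm {cpsd}}}(A)\le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\quad \text{for } t\in \mathbb {N}.\]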

By scaling invariance, we can add the above constraints for all \(v \in \mathbb {R}^n\) by setting V to be the unit sphere \(\mathbb {S}^{n-1}\). Since \(\mathbb {S}^{n-1}\) is a compact metric space, there exists a sequence \(V_1 \subseteq V_2 \subseteq \ldots \subseteq \mathbb {S}^{n-1}\) of finite subsets such that \(\bigcup _{k\ge 1} V_k\) is dense in \(\mathbb {S}^{n-1}\). Each of the parameters \({\xi _{t,V_k}^{\mathrm {cpsd}}}(A)\) involves finitely many localizing constraints, and, as we now show, they converge to the parameter \({\xi _{t,\mathbb {S}^{n-1}}^{\mathrm {cpsd}}}(A)\).
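One concrete choice of nested finite sets \(V_1\subseteq V_2\subseteq \ldots \) with dense union in \(\mathbb {S}^{n-1}\) is sketched below; this construction is our own assumption and is not prescribed by the text. We normalize the nonzero points of the dyadic grid \(\{-1,-1+2^{1-k},\ldots ,1\}^n\). Dyadic grids are nested, so the normalized sets are nested, and every unit vector u is within distance at most twice the grid's half-diagonal of some point of \(V_k\). The hypothetical helper `covering_radius` estimates this distance on random samples.

```python
import itertools

import numpy as np


def sphere_grid(n, k):
    """Hypothetical V_k: normalized nonzero points of the grid {-1, -1 + 2^(1-k), ..., 1}^n."""
    ticks = np.linspace(-1.0, 1.0, 2 ** k + 1)  # dyadic values, exact in floating point
    points = set()
    for p in itertools.product(ticks, repeat=n):
        p = np.array(p)
        norm = np.linalg.norm(p)
        if norm > 0:
            # Rounding merges grid points that define the same direction.
            points.add(tuple(np.round(p / norm, 12)))
    return points


def covering_radius(points, samples):
    """Largest distance from a sampled unit vector to its nearest point of the set."""
    P = np.array(sorted(points))
    return max(np.min(np.linalg.norm(P - u, axis=1)) for u in samples)


rng = np.random.default_rng(0)
samples = rng.standard_normal((200, 3))
samples /= np.linalg.norm(samples, axis=1, keepdims=True)
V2, V3, V4 = sphere_grid(3, 2), sphere_grid(3, 3), sphere_grid(3, 4)
```

For \(k=4\) and \(n=3\) the grid step is 1/8, so the estimate above guarantees a covering radius of at most \(2\cdot (1/8)\cdot \sqrt{3}/2\approx 0.22\).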

Proposition 5

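Consider a matrix \(A\in \mathrm {CS}_{+}^n\). For \(t \in \{\infty , *\}\), we have

\[\lim _{k\rightarrow \infty } {\xi _{t,V_k}^{\mathrm {cpsd}}}(A) = {\xi _{t,\mathbb {S}^{n-1}}^{\mathrm {cpsd}}}(A).\]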

Proof

Let \(\varepsilon > 0\). Since \(\bigcup _k V_k\) is dense in \(\mathbb {S}^{n-1}\), there is an integer \(k\ge 1\) so that for every \(u \in \mathbb {S}^{n-1}\) there exists a vector \(v \in V_k\) satisfying

The above Propositions 1 and 2 have natural analogs for the programs \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\). These show that for \(t = \infty \) (\(t = *\)) the parameter \({\xi _{t,V_k}^{\mathrm {cpsd}}}(A)\) is the infimum over all \(\alpha \ge 0\) for which there exist a (finite dimensional) unital \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \) and \(\mathbf {X}\in {\mathscr {D}}_{{\mathscr {A}}}(S_{A,V_k}^{\mathrm {cpsd}})\) such that \(A = \alpha \cdot (\tau (X_iX_j))\). Fix such \(\alpha \), \({{\mathscr {A}}}\), \(\tau \), and \(\mathbf {X}\).

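Below we will show that \(\mathbf{X}' = \sqrt{1-\varepsilon } \mathbf{X} \in {\mathscr {D}}_{{\mathscr {A}}}(S_{A,\mathbb {S}^{n-1}}^{\mathrm {cpsd}})\). This implies that the linear form \(L \in \mathbb {R}\langle \mathbf {x}\rangle ^*\) defined by \(L(p) = \alpha /(1-\varepsilon ) \tau (p(\mathbf{X'}))\) is feasible for \({\xi _{t,\mathbb {S}^{n-1}}^{\mathrm {cpsd}}}(A)\) with objective value \(L(1) = \alpha /(1-\varepsilon )\). As \(\alpha \) can be taken arbitrarily close to \({\xi _{t,V_k}^{\mathrm {cpsd}}}(A)\), and as \(V_k\subseteq V_{k'}\subseteq \mathbb {S}^{n-1}\) for \(k'\ge k\), this shows

\[{\xi _{t,V_{k'}}^{\mathrm {cpsd}}}(A) \le {\xi _{t,\mathbb {S}^{n-1}}^{\mathrm {cpsd}}}(A) \le \frac{{\xi _{t,V_{k'}}^{\mathrm {cpsd}}}(A)}{1-\varepsilon }\quad \text{for all } k'\ge k.\]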

Since \(\varepsilon >0\) was arbitrary, letting \(\varepsilon \) tend to 0 completes the proof.

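We now show \(\mathbf{X}' = \sqrt{1-\varepsilon } \mathbf{X} \in {\mathscr {D}}_{{\mathscr {A}}}(S_{A,\mathbb {S}^{n-1}}^{\mathrm {cpsd}})\). For this consider the map

\[f_{\mathbf{X}} : \mathbb {R}^n\rightarrow \mathbb {R},\quad v\mapsto \Big\Vert \sum _{i=1}^n v_iX_i\Big\Vert ^2,\]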

where \(\Vert \cdot \Vert \) denotes the \(C^*\)-algebra norm of \({\mathscr {A}}\). For \(\alpha \ge 0\) and \(a\in {{\mathscr {A}}}\) with \(a^*=a\), we have \(\alpha \ge \Vert a\Vert \) if and only if \(\alpha ^2-a^2\succeq 0\) in \({{\mathscr {A}}}\); if moreover \(a\succeq 0\), this is also equivalent to \(\alpha -a\succeq 0\). Since \(\mathbf {X}\in {\mathscr {D}}_{{\mathscr {A}}}(S_{A,V_k}^{\mathrm {cpsd}})\) we have \(v^\textsf {T}A v - f_{\mathbf{X}}(v) \ge 0\) for all \(v \in V_k\), and hence

Let \(u \in \mathbb {S}^{n-1}\) and let \(v \in V_k\) be such that (14) holds. Using Cauchy-Schwarz we have

Since \(\sqrt{A_{ii}} X_i - X_i^2\) is positive in \({\mathscr {A}}\), we have that \(\sqrt{A_{ii}} -X_i\) is positive in \({\mathscr {A}}\) by (9) and (10), which implies \(\Vert X_i\Vert \le \sqrt{A_{ii}}\). By the reverse triangle inequality, we then have

Combining the above inequalities we obtain that \(u^\textsf {T}A u - f_{\mathbf{X}'}(u) \ge 0\) for all \(u\in \mathbb {S}^{n-1}\), and hence \(u^\textsf {T}A u - \big (\sum _{i=1}^n u_i X_i'\big )^2\) is positive in \({\mathscr {A}}\). Thus, we have \(\mathbf {X}' \in {\mathscr {D}}_{{\mathscr {A}}}(S_{A,\mathbb {S}^{n-1}}^{\mathrm {cpsd}})\). \(\square \)

We now discuss two examples where the bounds \({\xi _{*,V}^{\mathrm {cpsd}}}(A)\) go beyond \({\xi _{*}^{\mathrm {cpsd}}}(A)\).

Example 1

Consider the matrix

\[A = \begin{pmatrix} 1 & 1/2\\ 1/2 & 1\end{pmatrix},\]

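with \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A) = 2\). We can also write \(A = \mathrm {Gram}(Y_1, Y_2)\), where

\[Y_1 = \frac{1}{\sqrt{2}}\begin{pmatrix}1&0&0\\ 0&1&0\\ 0&0&0\end{pmatrix},\qquad Y_2 = \frac{1}{\sqrt{2}}\begin{pmatrix}0&0&0\\ 0&1&0\\ 0&0&1\end{pmatrix}.\]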

With \(X_i= \sqrt{2} \ Y_i\) we have \(I - X_i^2 \succeq 0\) for \(i=1,2\). Hence the linear form \(L = L_\mathbf {X}/2\) is feasible for \({\xi _{*}^{\mathrm {cpsd}}}(A)\), which shows that \({\xi _{*}^{\mathrm {cpsd}}}(A) \le L(1) = 3/2\). In fact, this form L gives an optimal flat solution to \({\xi _{2}^{\mathrm {cpsd}}}(A)\), as we can check using a semidefinite programming solver, so \({\xi _{*}^{\mathrm {cpsd}}}(A) = 3/2\). In passing, we observe that \({\xi _{1}^{\mathrm {cpsd}}}(A) = 4/3\), which coincides with the analytic lower bound (18) (see also Lemma 6 below).

For \(e = (1,1) \in \mathbb {R}^2\) and \(V = \{e\}\), this form L is not feasible for \({\xi _{*,V}^{\mathrm {cpsd}}}(A)\), because for the polynomial \(p = 1-3 x_1 - 3x_2\) we have \(L(p^*g_ep) = -9/2 < 0\). This means that the localizing constraint \(L(p^*g_ep)\ge 0\) is not redundant: For \(t\ge 2\) it cuts off part of the feasibility region of \({\xi _{t}^{\mathrm {cpsd}}}(A)\). Indeed, using a semidefinite programming solver, we find an optimal flat solution of \({\xi _{3,V}^{\mathrm {cpsd}}}(A)\) with objective value \((5-\sqrt{3})/2\approx 1.634\), hence

\[{\xi _{*}^{\mathrm {cpsd}}}(A) = 3/2 < (5-\sqrt{3})/2 = {\xi _{3,V}^{\mathrm {cpsd}}}(A) \le {\xi _{*,V}^{\mathrm {cpsd}}}(A)\le {{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A) = 2.\]

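The value \(L(p^*g_ep) = -9/2\) in Example 1 can be reproduced with a few lines of NumPy. This is a sketch under an assumption: we take the factor matrices \(X_1 = \mathrm {diag}(1,1,0)\) and \(X_2 = \mathrm {diag}(0,1,1)\) (that is, \(X_i = \sqrt{2}\,Y_i\)), one choice consistent with the values \(L(1) = 3/2\) and \(-9/2\) stated in the example; since all matrices involved are real, \(L = L_\mathbf {X}/2\) reduces to half the trace.

```python
import numpy as np

# Assumed factor matrices X_i = sqrt(2) Y_i for Example 1.
X1 = np.diag([1.0, 1.0, 0.0])
X2 = np.diag([0.0, 1.0, 1.0])
A = np.array([[1.0, 0.5], [0.5, 1.0]])
I = np.eye(3)


def L(P):
    """L = L_X / 2 applied to the real matrix P = q(X1, X2) of a polynomial q."""
    return np.trace(P) / 2


e = np.array([1.0, 1.0])
S = X1 + X2
g_e = (e @ A @ e) * I - S @ S    # g_e(X) = (e^T A e) I - (X1 + X2)^2
p = I - 3 * X1 - 3 * X2          # p = 1 - 3 x1 - 3 x2 is symmetric, so p^* = p
print(L(I), L(p.T @ g_e @ p))    # prints 1.5 -4.5
```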
Example 2

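Consider the symmetric circulant matrices

\[M(\alpha ) = \begin{pmatrix} 1 & \alpha & 0 & 0 & \alpha \\ \alpha & 1 & \alpha & 0 & 0\\ 0 & \alpha & 1 & \alpha & 0\\ 0 & 0 & \alpha & 1 & \alpha \\ \alpha & 0 & 0 & \alpha & 1\end{pmatrix}.\]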

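For \(0\le \alpha \le 1/2\), we have \(M(\alpha ) \in \mathrm {CS}_{+}^5\) with \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(M(\alpha )) \le 5\). To see this, we set \(\beta =(1+\sqrt{1-4\alpha ^2})/2\) and observe that the matrices

\[X_i = \mathrm {Diag}\big(\sqrt{\beta }\, e_i + \sqrt{1-\beta }\, e_{i+1}\big)\in \mathrm {S}_+^5\quad (i\in [5],\ e_6 := e_1)\]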

form a factorization of \(M(\alpha )\). As \(M(\alpha )\) is supported by a cycle, we have \(M(\alpha )\in \mathrm {CS}_{+}^5\) if and only if \(M(\alpha )\in \hbox {CP}^5\) [50]. Thus, \(M(\alpha ) \in \mathrm {CS}_{+}^5\) if and only if \(0 \le \alpha \le 1/2\).
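The factorization of \(M(\alpha )\) can be checked numerically. The sketch below (our own NumPy illustration) assumes that \(M(\alpha )\) is the circulant matrix with first row \((1,\alpha ,0,0,\alpha )\) and that the factors are the diagonal matrices \(X_i = \mathrm {Diag}(\sqrt{\beta }\, e_i + \sqrt{1-\beta }\, e_{i+1})\) (indices modulo 5). The key identity is \(\sqrt{\beta (1-\beta )} = \sqrt{(1-(1-4\alpha ^2))/4} = \alpha \). The last lines evaluate the single-block objective of Proposition 3 at \(\alpha = 1/2\), which gives the value 5/2.

```python
import numpy as np


def M(alpha):
    """M(alpha): 5 x 5 symmetric circulant matrix with first row (1, alpha, 0, 0, alpha)."""
    row = np.array([1.0, alpha, 0.0, 0.0, alpha])
    return np.array([np.roll(row, i) for i in range(5)])


def diagonal_factors(alpha):
    """Assumed factors X_i = Diag(sqrt(beta) e_i + sqrt(1 - beta) e_{i+1}), indices mod 5."""
    beta = (1 + np.sqrt(1 - 4 * alpha ** 2)) / 2
    X = []
    for i in range(5):
        w = np.zeros(5)
        w[i] = np.sqrt(beta)
        w[(i + 1) % 5] = np.sqrt(1 - beta)
        X.append(np.diag(w))
    return X


def gram(X):
    """Gram matrix (Tr(X_i X_j))_{i,j}."""
    return np.array([[np.trace(Xi @ Xj) for Xj in X] for Xi in X])


# Proposition 3 with one block of size 5: 5 * max_i ||X_i||^2 / M_ii, with M_ii = 1.
X = diagonal_factors(0.5)
bound = 5 * max(np.max(np.diag(Xi)) ** 2 for Xi in X)
```

Since the factors are diagonal, they also give a completely positive factorization with 5 nonnegative vectors, matching the cp-rank remark in the text.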

By using its formulation in Proposition 3, we can use the above factorization to derive the inequality \({\xi _{*}^{\mathrm {cpsd}}}(M(1/2))\le 5/2\). However, using a semidefinite programming solver, we see that

\[{\xi _{2,V}^{\mathrm {cpsd}}}(M(1/2)) = 5,\]

where V is the set containing the vector \((1,-1,1,-1,1)\) and its cyclic shifts. Hence, the bound \({\xi _{2,V}^{\mathrm {cpsd}}}(M(1/2))\) is tight: It certifies \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(M(1/2))=5\), while the other known bounds, the rank bound \(\sqrt{\mathrm {rank}(M(1/2))}\) and the analytic bound (18), only give \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(M(1/2)) \ge 3\).

We now observe that there exist \(0<\varepsilon ,\delta <1/2\) such that \(\hbox {cpsd-rank}_\mathbb {C}(M(\alpha )) = 5\) for all \( \alpha \in [0,\varepsilon ] \cup [\delta ,1/2]\). Indeed, this follows from the fact that \({\xi _{1}^{\mathrm {cpsd}}}(M(0)) = 5\) (by Lemma 6), the above result that \({\xi _{2,V}^{\mathrm {cpsd}}}(M(1/2)) = 5\), and the lower semicontinuity of \(\alpha \mapsto {\xi _{2,V}^{\mathrm {cpsd}}}(M(\alpha ))\), which is shown in Lemma 7 below.

As the matrices \(M(\alpha )\) are nonsingular, the above factorization shows that their cp-rank is equal to 5 for all \(\alpha \in [0,1/2]\); whether they all have \(\hbox {cpsd-rank}\) equal to 5 is not known.

2.3 Boosting the Bounds

In this section, we propose some additional constraints that can be added to strengthen the bounds \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\) for finite t. These constraints may shrink the feasibility region of \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\) for \(t \in \mathbb {N}\), but they are redundant for \(t\in \{\infty ,*\}\). The latter is shown using the reformulation of the parameters \({\xi _{\infty ,V}^{\mathrm {cpsd}}}(A)\) and \({\xi _{*,V}^{\mathrm {cpsd}}}(A)\) in terms of \(C^*\)-algebras.

We first mention how to construct localizing constraints of “bilinear type”, inspired by the work of Berta, Fawzi and Scholz [7]. As with localizing constraints, these bilinear constraints can be modeled as semidefinite constraints.

Lemma 2

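Let \(A\in \mathrm {CS}_{+}^n\), \(t \in \mathbb {N}\cup \{\infty , *\}\), and let \(\{g,g'\}\) be localizing for A. If we add the constraints

\[L(p^*gpg')\ge 0\quad \text{for all } p\in \mathbb {R}\langle \mathbf{x}\rangle \text{ with } \deg (p^*gpg')\le 2t \tag{16}\]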

to \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\), then we still get a lower bound on \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\). However, the constraints (16) are redundant for \({\xi _{\infty ,V}^{\mathrm {cpsd}}}(A)\) and \({\xi _{*,V}^{\mathrm {cpsd}}}(A)\) when \(g,g' \in {{\mathscr {M}}}(S_{A,V}^{\mathrm {cpsd}})\).

Proof

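Let \(\mathbf {X}\in (\mathrm {H}^d_+)^n\), with \(d={{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\), be a Gram decomposition of A at which \(\{g,g'\}\) is localizing, and let \(L =L_\mathbf {X}\) be the real part of the trace evaluation at \(\mathbf {X}\). Then, \(p(\mathbf {X})^* g(\mathbf {X}) p(\mathbf {X})\succeq 0\) and \(g'(\mathbf {X})\succeq 0\), and thus, as the trace of a product of two Hermitian positive semidefinite matrices is nonnegative,

\[L(p^*gpg') = \mathrm {Re}\big({{\,\mathrm{Tr}\,}}(p(\mathbf {X})^*g(\mathbf {X})p(\mathbf {X})\,g'(\mathbf {X}))\big)\ge 0.\]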

So by adding the constraints (16) we still get a lower bound on \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\).
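The trace argument in this proof can be illustrated numerically. In the following NumPy sketch (our own illustration), A is the Gram matrix of random Hermitian positive semidefinite matrices, g is the generator \(\sqrt{A_{11}}x_1 - x_1^2\) of \(S_A^{\mathrm {cpsd}}\), g' is the polynomial \(g_v\) of Lemma 1 for a random v, and p ranges over random real combinations of \(1, x_1, x_2x_3\) (a hypothetical test family). Every value \(\mathrm {Re}({{\,\mathrm{Tr}\,}}(p(\mathbf {X})^*g(\mathbf {X})p(\mathbf {X})g'(\mathbf {X})))\) should be nonnegative.

```python
import numpy as np

rng = np.random.default_rng(1)


def random_psd(d):
    """A random d x d complex Hermitian positive semidefinite matrix."""
    G = rng.standard_normal((d, d)) + 1j * rng.standard_normal((d, d))
    return G @ G.conj().T / (2 * d)


d, n = 3, 3
X = [random_psd(d) for _ in range(n)]
A = np.array([[np.trace(Xi @ Xj).real for Xj in X] for Xi in X])  # A = Gram(X)
I = np.eye(d)

# g = sqrt(A_11) x_1 - x_1^2 and g' = g_v (Lemma 1), both evaluated at X.
gX = np.sqrt(A[0, 0]) * X[0] - X[0] @ X[0]
v = rng.standard_normal(n)
S = sum(vi * Xi for vi, Xi in zip(v, X))
gpX = (v @ A @ v) * I - S @ S


def bilinear_value(c):
    """Re Tr(p(X)^* g(X) p(X) g'(X)) for p = c0 + c1 x_1 + c2 x_2 x_3 with real c."""
    P = c[0] * I + c[1] * X[0] + c[2] * X[1] @ X[2]
    return np.trace(P.conj().T @ gX @ P @ gpX).real


values = [bilinear_value(rng.standard_normal(3)) for _ in range(200)]
```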

To show that the constraints (16) are redundant for \({\xi _{\infty ,V}^{\mathrm {cpsd}}}(A)\) and \({\xi _{*,V}^{\mathrm {cpsd}}}(A)\) when \(g,g'\in {{\mathscr {M}}}(S_{A,V}^{\mathrm {cpsd}})\), we let \(t\in \{\infty ,*\}\) and assume L is feasible for \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\). By Theorem 1 there exist a unital \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \) and \(\mathbf {X}\in {\mathscr {D}}(S_{A,V}^{\mathrm {cpsd}})\) such that \(L(p)=L(1) \tau (p(\mathbf {X}))\) for all \(p\in \mathbb {R}\langle \mathbf{x}\rangle \). Since \(g,g' \in {{\mathscr {M}}}(S_{A,V}^{\mathrm {cpsd}})\) we know that \(g(\mathbf {X}), g'(\mathbf {X})\) are positive elements in \({{\mathscr {A}}}\), so \(g(\mathbf {X}) = a^* a\) and \(g'(\mathbf {X}) = b^* b\) for some \(a,b \in {{\mathscr {A}}}\). Then, we have

\[L(p^*gpg') = L(1)\,\tau \big(p(\mathbf {X})^*a^*a\,p(\mathbf {X})\,b^*b\big) = L(1)\,\tau \big((a\,p(\mathbf {X})\,b^*)^*(a\,p(\mathbf {X})\,b^*)\big)\ge 0,\]

where we use that \(\tau \) is a positive tracial state on \({{\mathscr {A}}}\). \(\square \)

Second, we show how to use zero entries in A and vectors in the kernel of A to enforce new constraints on \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\).

Lemma 3

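Let \(A\in \mathrm {CS}_{+}^n\) and \(t \in \mathbb {N}\cup \{\infty , *\}\). If we add the constraints

\[L(p\,x_ix_j) = 0 \ \text{ for } p\in \mathbb {R}\langle \mathbf{x}\rangle _{2t-2},\ i,j\in [n] \text{ with } A_{ij}=0,\qquad L\Big(p\sum _{i=1}^n v_ix_i\Big) = 0 \ \text{ for } p\in \mathbb {R}\langle \mathbf{x}\rangle _{2t-1},\ v\in \ker (A) \tag{17}\]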

to \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\), then we still get a lower bound on \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\). Moreover, these constraints are redundant for \({\xi _{\infty ,V}^{\mathrm {cpsd}}}(A)\) and \({\xi _{*,V}^{\mathrm {cpsd}}}(A)\).

Proof

Let \(\mathbf {X}\in (\mathrm {H}^d_+)^n\) be a Gram factorization of A and let \(L_\mathbf {X}\) be as in (5). If \(Av=0\), then \(0=v^\textsf {T}Av = {{\,\mathrm{Tr}\,}}((\sum _{i=1}^n v_iX_i)^2)\) and thus \(\sum _{i=1}^nv_iX_i=0\), as this matrix is Hermitian. Hence \(L_\mathbf {X}((\sum _{i=1}^nv_ix_i)p)=\mathrm {Re}({{\,\mathrm{Tr}\,}}((\sum _{i=1}^nv_iX_i)p(\mathbf {X})))=0\). If \(A_{ij}=0\), then \({{\,\mathrm{Tr}\,}}(X_iX_j)=0\), which implies \(X_iX_j=0\), since \(X_i\) and \(X_j\) are positive semidefinite. Hence, \(L_\mathbf {X}(x_ix_jp)=\mathrm {Re}({{\,\mathrm{Tr}\,}}(X_iX_jp(\mathbf {X})))=0\). Therefore, adding the constraints (17) still lower bounds \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A)\).

As in the proof of the previous lemma, if \(t \in \{\infty ,*\}\) and L is feasible for \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\) then, by Theorem 1, there exist a unital \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \) and \(\mathbf {X}\) in \({\mathscr {D}}(S_{A,V}^{\mathrm {cpsd}})\) such that \(L(p)=L(1) \tau (p(\mathbf {X}))\) for all \(p\in \mathbb {R}\langle \mathbf{x}\rangle \). Moreover, by Lemma 12, we may assume \(\tau \) to be faithful. For a vector v in the kernel of A, we have \(0 = v^\textsf {T}A v = L((\sum _i v_i x_i)^2) = L(1) \tau ( (\sum _i v_i X_i)^2)\), and hence, since \(\tau \) is faithful, \(\sum _i v_i X_i = 0\) in \({{\mathscr {A}}}\). It follows that \(L(p (\sum _i v_i x_i)) = L(1) \tau (p(\mathbf {X}) \, 0) = 0\) for all \(p \in \mathbb {R}\langle \mathbf{x}\rangle \). Analogously, if \(A_{ij}=0\), then \(L(x_ix_j)=0\) implies \(\tau (X_iX_j)=0\) and thus \(X_iX_j=0\), since \(X_i, X_j\) are positive in \({{\mathscr {A}}}\) and \(\tau \) is faithful. This implies \(L(p x_i x_j) = 0\) for all \(p \in \mathbb {R}\langle \mathbf{x}\rangle \). This shows that the constraints (17) are redundant. \(\square \)

Note that the constraints \(L(p \, (\sum _{i=1}^nv_ix_i))=0\) for \(p\in \mathbb {R}\langle \mathbf{x}\rangle _t,\) which are implied by (17), are in fact redundant: if \(v \in \ker (A)\), then the vector \(\tilde v\) obtained by extending v with zeros satisfies \(\tilde v^\textsf {T}M_t(L)\tilde v = v^\textsf {T}Av = 0\), and hence belongs to \(\ker (M_t(L))\), since \(M_t(L)\succeq 0\). Also, for an implementation of \({\xi _{t}^{\mathrm {cpsd}}}(A)\) with the additional constraints (17), it is more efficient to index the moment matrices with a basis for \(\mathbb {R}\langle \mathbf{x}\rangle _{t}\) modulo the ideal \({\mathscr {I}}_t\big (\{ \sum _i v_i x_i: v \in \ker (A)\} \cup \{x_i x_j : A_{ij} = 0\}\big )\).
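For the monomial part of this ideal, a reduced index set is easy to enumerate. For a tracial L satisfying (17), a word of the form \(a\,x_ix_j\,b\) with \(A_{ij}=0\) gives \(L(u^*a\,x_ix_j\,b) = L(b\,u^*a\,x_ix_j) = 0\), so its row and column in \(M_t(L)\) vanish and the word can be dropped. The sketch below (our own illustration; the function names are hypothetical) keeps only the words with no factor \(x_ix_j\) where \(A_{ij}=0\). It deliberately ignores the kernel generators \(\sum _i v_ix_i\), which would additionally require a linear change of variables.

```python
def reduced_words(n, t, zero_pairs):
    """Words of length <= t in letters 0..n-1 containing no factor x_i x_j with (i, j) in zero_pairs.

    zero_pairs must list both (i, j) and (j, i) for every zero entry A_ij = 0.
    Only the monomial generators {x_i x_j : A_ij = 0} are handled here.
    """
    words, frontier = [()], [()]
    for _ in range(t):
        extended = []
        for w in frontier:
            for i in range(n):
                # Earlier adjacent pairs were already checked, so only test the new one.
                if w and (w[-1], i) in zero_pairs:
                    continue
                extended.append(w + (i,))
        words.extend(extended)
        frontier = extended
    return words


def zero_pattern(A):
    """Ordered pairs (i, j) with A[i][j] == 0 (exact zero pattern of A)."""
    n = len(A)
    return {(i, j) for i in range(n) for j in range(n) if A[i][j] == 0}


# Support pattern of the 5-cycle matrices M(alpha) with alpha > 0.
cycle = [[1 if (i - j) % 5 in (0, 1, 4) else 0 for j in range(5)] for i in range(5)]
basis = reduced_words(5, 2, zero_pattern(cycle))
```

For the 5-cycle pattern and \(t=2\), this keeps 21 of the 31 words of length at most 2.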

2.4 Additional Properties of the Bounds

Here, we list some additional properties of the parameters \({\xi _{t}^{\mathrm {cpsd}}}(A)\) for \(t \in \mathbb {N}\cup \{\infty , *\}\). First we state some properties for which the proofs are immediate and thus omitted.

Lemma 4

Suppose \(A\in \mathrm {CS}_{+}^n\) and \(t \in \mathbb {N}\cup \{\infty ,*\}\).

(1) If P is a permutation matrix, then \({\xi _{t}^{\mathrm {cpsd}}}(A) = {\xi _{t}^{\mathrm {cpsd}}}(P^\textsf {T}A P)\).

(2) If B is a principal submatrix of A, then \({\xi _{t}^{\mathrm {cpsd}}}(B) \le {\xi _{t}^{\mathrm {cpsd}}}(A)\).

(3) If D is a positive definite diagonal matrix, then \({\xi _{t}^{\mathrm {cpsd}}}(A) = {\xi _{t}^{\mathrm {cpsd}}}(D A D).\)

We also have the following direct sum property, where the equality follows using the \(C^*\)-algebra reformulations as given in Propositions 1 and 2.

Lemma 5

If \(A \in \mathrm {CS}_{+}^n\) and \(B \in \mathrm {CS}_{+}^m\), then \({\xi _{t}^{\mathrm {cpsd}}}(A\oplus B) \le {\xi _{t}^{\mathrm {cpsd}}}(A) + {\xi _{t}^{\mathrm {cpsd}}}(B)\), where equality holds for \(t \in \{\infty , *\}\).

Proof

To prove the inequality, we take \(L_A\) and \(L_B\) feasible for \({\xi _{t}^{\mathrm {cpsd}}}(A)\) and \({\xi _{t}^{\mathrm {cpsd}}}(B)\), and construct a feasible L for \({\xi _{t}^{\mathrm {cpsd}}}(A\oplus B)\) by \(L(p(\mathbf{x}, \mathbf{y})) = L_A(p(\mathbf{x}, \mathbf{0})) + L_B(p(\mathbf{0}, \mathbf{y}))\).

Now we show equality for \(t = \infty \) (\(t=*\)). By Proposition 1 (Proposition 2), \({\xi _{t}^{\mathrm {cpsd}}}(A\oplus B)\) is equal to the infimum over all \(\alpha \ge 0\) for which there exists a (finite dimensional) unital \(C^*\)-algebra \({{\mathscr {A}}}\) with tracial state \(\tau \) and \((\mathbf {X}, \mathbf{Y}) \in {{\mathscr {D}}}_{{\mathscr {A}}}(S_{A\oplus B}^{\mathrm {cpsd}})\) such that \(A = \alpha \cdot (\tau (X_iX_j))\), \(B = \alpha \cdot (\tau (Y_iY_j))\) and \((\tau (X_iY_j))=0\). This implies \(\mathbf {X}\in {{\mathscr {D}}}_{{\mathscr {A}}}(S_A^{\mathrm {cpsd}})\) and \(\mathbf Y\in {{\mathscr {D}}}_{{\mathscr {A}}}(S_B^{\mathrm {cpsd}})\). Let \(P_A\) be the projection onto the space \(\sum _i \mathrm {Im}(X_i)\) and define the linear form \(L_A \in \mathbb {R}\langle \mathbf {x}\rangle ^*\) by \(L_A(p) = \alpha \cdot \tau (p(\mathbf {X}) P_A)\). It follows that \(L_A\) is nonnegative on \({\mathscr {M}}(S_A^{\mathrm {cpsd}})\), and, as \(X_jP_A = X_j\),

\[L_A(x_ix_j) = \alpha \cdot \tau (X_iX_jP_A) = \alpha \cdot \tau (X_iX_j) = A_{ij}\quad \text{for all } i,j\in [n],\]

so \(L_A\) is feasible for \({\xi _{t}^{\mathrm {cpsd}}}(A)\) with \(L_A(1)=\alpha \tau (P_A)\). In the same way, we consider the projection \(P_B\) onto the space \(\sum _j \mathrm {Im}(Y_j)\) and define a feasible solution \(L_B\) for \({\xi _{t}^{\mathrm {cpsd}}}(B)\) with \(L_B(1)=\alpha \tau (P_B)\). By Lemma 12 we may assume \(\tau \) to be faithful, so that positivity of \(X_i\) and \(Y_j\) together with \(\tau (X_iY_j) = 0\) implies \(X_iY_j = 0\) for all i and j, and thus \(\sum _i \mathrm {Im}(X_i) \perp \sum _j \mathrm {Im}(Y_j)\). This implies \(I \succeq P_A + P_B\) and thus \(\tau (P_A+P_B)\le \tau (1)=1\). We have

\[L_A(1)+L_B(1) = \alpha \, \tau (P_A+P_B)\le \alpha ,\]

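so \({\xi _{t}^{\mathrm {cpsd}}}(A)+{\xi _{t}^{\mathrm {cpsd}}}(B) \le L_A(1)+L_B(1)\le \alpha \). Taking the infimum over all such \(\alpha \) gives \({\xi _{t}^{\mathrm {cpsd}}}(A)+{\xi _{t}^{\mathrm {cpsd}}}(B) \le {\xi _{t}^{\mathrm {cpsd}}}(A\oplus B)\), which completes the proof. \(\square \)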

Note that the \(\hbox {cpsd-rank}\) of a matrix satisfies the same properties as those mentioned in the above two lemmas, where the inequality in Lemma 5 is always an equality: \(\hbox {cpsd-rank}_\mathbb {C}(A \oplus B)=\hbox {cpsd-rank}_\mathbb {C}(A)+\hbox {cpsd-rank}_\mathbb {C}(B)\) [38, 62].

The following lemma shows that the first level of our hierarchy is at least as good as the analytic lower bound (18) on the cpsd-rank derived in [62, Theorem 10].

Lemma 6

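For any non-zero matrix \(A \in \mathrm {CS}_{+}^n\), we have

\[{\xi _{1}^{\mathrm {cpsd}}}(A)\ge \frac{\big(\sum _{i=1}^n \sqrt{A_{ii}}\big)^2}{\sum _{i,j=1}^n A_{ij}}. \tag{18}\]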

Proof

Let L be feasible for \({\xi _{1}^{\mathrm {cpsd}}}(A)\). Since L is nonnegative on \({{\mathscr {M}}}_{2}(S_A^{\mathrm {cpsd}})\), it follows that \(L(\sqrt{A_{ii}}x_i-x_i^2)\ge 0\), implying \(\sqrt{A_{ii}} L(x_i)\ge L(x_i^2)=A_{ii}\) and thus \(L(x_i)\ge \sqrt{A_{ii}}\). Moreover, the matrix \(M_1(L)\) is positive semidefinite. By taking the Schur complement with respect to its upper left corner (indexed by 1), it follows that the matrix \(L(1)\cdot A- (L(x_i)L(x_j))\) is positive semidefinite. Hence, the sum of its entries is nonnegative, which gives \(L(1)(\sum _{i,j}A_{ij})\ge (\sum _i L(x_i))^2\ge (\sum _i \sqrt{A_{ii}})^2\) and shows the desired inequality. \(\square \)

As an application of Lemma 6, the first bound \({\xi _{1}^{\mathrm {cpsd}}}\) is exact for the \(k\times k\) identity matrix: \({\xi _{1}^{\mathrm {cpsd}}}(I_k)={{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(I_k)=k\). Moreover, by combining this with Lemma 4, it follows that \({\xi _{1}^{\mathrm {cpsd}}}(A) \ge k\) if A contains a diagonal positive definite \(k\times k\) principal submatrix. A slightly more involved example is given by the \(5 \times 5\) circulant matrix A whose entries are given by \(A_{ij} = \cos ((i-j)4\pi /5)^2\) (\(i,j \in [5]\)); this matrix was used in [25] to show a separation between the completely positive semidefinite cone and the completely positive cone, and it was shown that \(\hbox {cpsd-rank}_\mathbb {C}(A) =2\). The analytic lower bound of [62] also evaluates to 2, hence Lemma 6 shows that our bound is tight on this example.
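The analytic bound (18), which \({\xi _{1}^{\mathrm {cpsd}}}\) dominates by Lemma 6, is a one-line computation. The short NumPy sketch below (our own illustration; `analytic_bound` is a hypothetical name) evaluates it on the identity matrix, on the \(5\times 5\) circulant matrix with entries \(\cos ((i-j)4\pi /5)^2\), and on the \(2\times 2\) matrix of Example 1. For the circulant matrix every row sums to \(1 + 2\cdot \tfrac{3+\sqrt{5}}{8} + 2\cdot \tfrac{3-\sqrt{5}}{8} = 5/2\), so the bound equals \(25/12.5 = 2\).

```python
import numpy as np


def analytic_bound(A):
    """Right-hand side of (18): (sum_i sqrt(A_ii))^2 / sum_{i,j} A_ij."""
    A = np.asarray(A, dtype=float)
    return np.sum(np.sqrt(np.diag(A))) ** 2 / np.sum(A)


idx = np.arange(5)
C = np.cos((idx[:, None] - idx[None, :]) * 4 * np.pi / 5) ** 2  # A_ij = cos((i - j) 4 pi / 5)^2

b_identity = analytic_bound(np.eye(4))                     # equals k = 4 for I_4
b_circulant = analytic_bound(C)                            # equals 2 up to rounding
b_example1 = analytic_bound([[1.0, 0.5], [0.5, 1.0]])      # equals 4/3
```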

We now examine further analytic properties of the parameters \({\xi _{t}^{\mathrm {cpsd}}}(\cdot )\). For each \(r \in \mathbb {N}\), the set of matrices \(A\in \mathrm {CS}_{+}^n\) with \({{\,\mathrm{cpsd-rank_{\mathbb {C}}}\,}}(A) \le r\) is closed; that is, the function \(A \mapsto \hbox {cpsd-rank}_\mathbb {C}(A)\) is lower semicontinuous. We show that the functions \(A \mapsto {\xi _{t}^{\mathrm {cpsd}}}(A)\) have the same property. The other bounds defined in this paper are also lower semicontinuous, with a similar proof.

Lemma 7

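For every \(t \in \mathbb {N}\cup \{\infty \}\) and \(V \subseteq \mathbb {R}^n\), the function

\[ \mathrm {S}^n \rightarrow \mathbb {R}\cup \{\infty \},\quad A \mapsto {\xi _{t,V}^{\mathrm {cpsd}}}(A) \]

is lower semicontinuous.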

Proof

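It suffices to show the result for \(t\in \mathbb {N}\), because \({\xi _{\infty ,V}^{\mathrm {cpsd}}}(A)=\mathrm {sup}_t\, {\xi _{t,V}^{\mathrm {cpsd}}}(A)\), and the pointwise supremum of lower semicontinuous functions is lower semicontinuous. We show that the level sets \(\{A \in \mathrm {S}^n: {\xi _{t,V}^{\mathrm {cpsd}}}(A) \le r\}\) are closed. For this, consider a sequence \((A_k)_{k\in \mathbb {N}}\) in \(\mathrm {S}^n\) converging to \(A \in \mathrm {S}^n\) such that \({\xi _{t,V}^{\mathrm {cpsd}}}(A_k) \le r\) for all k; we show that \({\xi _{t,V}^{\mathrm {cpsd}}}(A) \le r\). Let \(L_k\in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^*\) be an optimal solution to \({\xi _{t,V}^{\mathrm {cpsd}}}(A_k)\). As \(L_k(1) \le r\) for all k, it follows from Lemma 13 that there is a pointwise converging subsequence of \((L_k)_k\), still denoted \((L_k)_k\) for simplicity, whose limit \(L\in \mathbb {R}\langle \mathbf{x}\rangle _{2t}^*\) satisfies \(L(1)\le r\). To complete the proof we show that L is feasible for \({\xi _{t,V}^{\mathrm {cpsd}}}(A)\). The equalities \(L(x_ix_j)=A_{ij}\) follow by taking limits in \(L_k(x_ix_j)=(A_k)_{ij}\), and the nonnegativity of L on squares is preserved under pointwise limits. For the localizing constraints, fix \(0<\varepsilon \le 1\), \(p \in \mathbb {R}\langle \mathbf{x}\rangle _{t-1}\), and \(i \in [n]\). By the pointwise convergence of \(L_k\) to L and since \(A_k\rightarrow A\), there exists a \(K \in \mathbb {N}\) such that for all \(k \ge K\) we have

\[ |L_k(p^*x_ip)-L(p^*x_ip)|\le \varepsilon ,\quad |L_k(p^*x_i^2p)-L(p^*x_i^2p)|\le \varepsilon ,\quad \bigl |\sqrt{(A_k)_{ii}}-\sqrt{A_{ii}}\bigr |\le \varepsilon . \]

Hence, for \(k\ge K\), we have

\[ \begin{aligned} L(p^*(\sqrt{A_{ii}}x_i-x_i^2)p) &\ge L_k(p^*(\sqrt{A_{ii}}x_i-x_i^2)p) - \varepsilon (\sqrt{A_{ii}}+1)\\ &\ge L_k(p^*(\sqrt{(A_k)_{ii}}x_i-x_i^2)p) - \varepsilon \bigl(L(p^*x_ip)+1\bigr) - \varepsilon (\sqrt{A_{ii}}+1)\\ &\ge -\varepsilon \bigl(L(p^*x_ip)+\sqrt{A_{ii}}+2\bigr), \end{aligned} \]

where in the second inequality we use that \(0 \le L_k(p^* x_i p) \le L(p^* x_i p) + 1\), and in the last inequality we use that \(L_k\) is feasible for \({\xi _{t,V}^{\mathrm {cpsd}}}(A_k)\). Letting \(\varepsilon \rightarrow 0\) gives \(L(p^*(\sqrt{A_{ii}}x_i-x_i^2)p)\ge 0\).

Similarly, one can show \(L(p^*(v^\textsf {T}Av - (\sum _i v_i x_i)^2) p) \ge 0\) for \(v \in V\) and \(p \in \mathbb {R}\langle \mathbf{x}\rangle _{t-1}\).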

\(\square \)

If we restrict to completely positive semidefinite matrices with an all-ones diagonal, that is, to \(\mathrm {CS}_{+}^n \cap \mathrm {E}_n\), we can show an even stronger property. Here, \(\mathrm {E}_n\) is the elliptope, which is the set of \(n \times n\) positive semidefinite matrices with an all-ones diagonal.

Lemma 8

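For every \(t \in \mathbb {N}\cup \{\infty \}\), the function

\[ \mathrm {CS}_{+}^n \cap \mathrm {E}_n \rightarrow \mathbb {R},\quad A \mapsto {\xi _{t}^{\mathrm {cpsd}}}(A) \]

is convex, and hence continuous on the relative interior of its domain.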

Proof

Let \(A,B\in \mathrm {CS}_{+}^n\cap \mathrm {E}_n\) and \(0<\lambda <1\). Let \(L_A\) and \(L_B\) be optimal solutions for \({\xi _{t}^{\mathrm {cpsd}}}(A)\) and \({\xi _{t}^{\mathrm {cpsd}}}(B)\). Since the diagonals of A and B are the same, we have \(S_A^{\mathrm {cpsd}}=S_B^{\mathrm {cpsd}}\). So the linear functional \(L=\lambda L_A+(1-\lambda )L_B\) is feasible for \({\xi _{t}^{\mathrm {cpsd}}}(\lambda A+(1-\lambda )B)\), hence \( {\xi _{t}^{\mathrm {cpsd}}}(\lambda A+(1-\lambda )B)\le \lambda L_A(1)+(1-\lambda )L_B(1) = \lambda {\xi _{t}^{\mathrm {cpsd}}}(A)+ (1-\lambda ){\xi _{t}^{\mathrm {cpsd}}}(B). \) \(\square \)

Example 3

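In this example, we show that for \(t \ge 1\), the function

\[ \mathrm {CS}_{+}^2 \rightarrow \mathbb {R},\quad A \mapsto {\xi _{t}^{\mathrm {cpsd}}}(A) \]

is not continuous. For this, we consider the matrices

\[ A_k = \begin{pmatrix} 1 & 0\\ 0 & 1/k \end{pmatrix} \quad (k \ge 1), \]

with \({{\,\mathrm{cpsd-rank}\,}}_\mathbb {C}(A_k) = 2\) for all \(k\ge 1\). As \(A_k\) is diagonal positive definite, we have \({\xi _{t}^{\mathrm {cpsd}}}(A_k) = 2\) for all \(t,k\ge 1\), while \({\xi _{t}^{\mathrm {cpsd}}}(\lim _{k \rightarrow \infty } A_k) = 1\), as the limit matrix \(\bigl(\begin{smallmatrix} 1 & 0\\ 0 & 0\end{smallmatrix}\bigr)\) has cpsd-rank 1. This argument extends to \(\mathrm {CS}_{+}^n\) with \(n > 2\). This example also shows that the first level \({\xi _{1}^{\mathrm {cpsd}}}(\cdot )\) of the hierarchy can be strictly better than the analytic lower bound (18) of [62]: for \(A_k\), the latter equals \((1+1/\sqrt{k})^2/(1+1/k)\), which is strictly smaller than 2 for every \(k\ge 2\).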
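The strict separation in Example 3 can also be seen numerically. The sketch below assumes the concrete family \(A_k=\mathrm {diag}(1,1/k)\) of diagonal positive definite matrices (an illustrative choice) and evaluates the analytic bound \((\sum _i\sqrt{A_{ii}})^2/\sum _{i,j}A_{ij}\) of [62]. It stays strictly below the value \({\xi _{1}^{\mathrm {cpsd}}}(A_k)=2\) for \(k\ge 2\) and tends to 1 as \(k\rightarrow \infty \). The helper name is ours.

```python
import numpy as np

def analytic_cpsd_bound(A):
    """Evaluate (sum_i sqrt(A_ii))^2 / sum_{i,j} A_ij for a nonzero matrix A."""
    A = np.asarray(A, dtype=float)
    return np.sqrt(np.diag(A)).sum() ** 2 / A.sum()

for k in (1, 2, 10, 100):
    A_k = np.diag([1.0, 1.0 / k])   # illustrative choice A_k = diag(1, 1/k)
    # Closed form: (1 + 1/sqrt(k))^2 / (1 + 1/k), strictly below 2 for every k >= 2
    print(k, analytic_cpsd_bound(A_k))
```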

Example 4

In this example, we determine \({\xi _{t}^{\mathrm {cpsd}}}(A)\) for all \(t \ge 1\) and \(A \in \mathrm {CS}_{+}^2\). In view of Lemma 4(3), we only need to find \({\xi _{t}^{\mathrm {cpsd}}}(A(\alpha ))\) for \(0 \le \alpha \le 1\), where \( A(\alpha )= \bigl ({\begin{matrix} 1 &{} \alpha \\ \alpha &{} 1\end{matrix}}\bigr ). \)

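The first bound \({\xi _{1}^{\mathrm {cpsd}}}(A(\alpha ))\) is equal to the analytic bound \(2/(\alpha +1)\) from (18). Indeed, Lemma 6 gives \({\xi _{1}^{\mathrm {cpsd}}}(A(\alpha ))\ge 2/(\alpha +1)\), and the reverse inequality holds because the linear functional L given by \(L(x_i x_j) = A(\alpha )_{ij}\), \(L(x_1)=L(x_2)=1\) and \(L(1)=2/(\alpha +1)\) is feasible for \({\xi _{1}^{\mathrm {cpsd}}}(A(\alpha ))\): the Schur complement of \(L(1)\) in \(M_1(L)\) equals \(\frac{1-\alpha }{2}\bigl(\begin{smallmatrix} 1 & -1\\ -1 & 1\end{smallmatrix}\bigr)\succeq 0\), and \(L(x_i-x_i^2)=0\) for \(i=1,2\).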
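The feasibility of the functional L above can be checked directly: its moment matrix \(M_1(L)\), indexed by \(1, x_1, x_2\), must be positive semidefinite and the localizing values \(L(\sqrt{A_{ii}}x_i - x_i^2)\) must be nonnegative. The Python sketch below (function names are ours) performs these two checks with NumPy. The optional `scale` parameter, also ours, shrinks \(L(1)\) to illustrate that, with the other moments kept fixed, \(L(1)\) cannot go below \(2/(\alpha +1)\).

```python
import numpy as np

def first_level_functional(alpha, scale=1.0):
    """Moment matrix M_1(L) (rows/cols: 1, x_1, x_2) and localizing values for Example 4.

    L(1) = scale * 2/(alpha + 1), L(x_1) = L(x_2) = 1, L(x_i x_j) = A(alpha)_ij.
    """
    L1 = scale * 2.0 / (alpha + 1.0)
    Lx = np.array([1.0, 1.0])
    A = np.array([[1.0, alpha], [alpha, 1.0]])
    M = np.block([[np.array([[L1]]), Lx[None, :]],
                  [Lx[:, None], A]])
    # Localizing values L(sqrt(A_ii) x_i - x_i^2) = sqrt(A_ii) L(x_i) - A_ii
    loc = np.sqrt(np.diag(A)) * Lx - np.diag(A)
    return M, loc

def is_feasible(alpha, scale=1.0, tol=1e-12):
    M, loc = first_level_functional(alpha, scale)
    return bool(np.linalg.eigvalsh(M).min() >= -tol and loc.min() >= -tol)

print([is_feasible(a) for a in (0.0, 0.25, 0.5, 1.0)])   # [True, True, True, True]
print(is_feasible(0.5, scale=0.9))                       # False: L(1) below 2/(alpha+1)
```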

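For \(t \ge 2\), we show \({\xi _{t}^{\mathrm {cpsd}}}(A(\alpha )) = 2-\alpha \). By the above, this is true for \(\alpha = 0\) and \(\alpha = 1\), and in Example 1 we show \({\xi _{t}^{\mathrm {cpsd}}}(A(1/2)) =3/2\) for \(t\ge 2\). The function \(\alpha \mapsto {\xi _{t}^{\mathrm {cpsd}}}(A(\alpha ))\) is convex by Lemma 8, so it lies below the chord \(2-\alpha \) on [0, 1]. If it were strictly below \(2-\alpha \) at some \(\alpha \ne 1/2\), then, since 1/2 lies strictly between \(\alpha \) and one of the endpoints 0 or 1, convexity would force its value at 1/2 to be strictly below 3/2, a contradiction. This proves the claim.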
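Combining the two closed forms in Example 4 makes the improvement of the second level over the first explicit; the short computation below only uses the values \(2/(\alpha +1)\) and \(2-\alpha \) stated above.

```latex
\begin{aligned}
\xi_{2}^{\mathrm{cpsd}}(A(\alpha)) - \xi_{1}^{\mathrm{cpsd}}(A(\alpha))
  &= (2-\alpha) - \frac{2}{1+\alpha}
   = \frac{(2-\alpha)(1+\alpha) - 2}{1+\alpha} \\
  &= \frac{\alpha(1-\alpha)}{1+\alpha} \;\ge\; 0 \qquad (0 \le \alpha \le 1).
\end{aligned}
```

The gap vanishes exactly for \(\alpha \in \{0,1\}\) and is largest at \(\alpha =\sqrt{2}-1\), where it equals \(3-2\sqrt{2}\approx 0.17\).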

3 Lower Bounds on the Completely Positive Rank

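The best current approach for lower bounding the completely positive rank of a matrix is due to Fawzi and Parrilo [27]. Their approach relies on the atomicity of the completely positive rank, that is, the fact that \(\hbox {cp-rank}(A)\) is the smallest integer r for which A has an atomic decomposition \(A=\sum _{k=1}^r v_k v_k^\textsf {T}\) with nonnegative vectors \(v_k\). In other words, if \(\hbox {cp-rank}(A)=r\), then A / r can be written as a convex combination of r rank-one positive semidefinite matrices \(v_k v_k^\textsf {T}\) that satisfy \(0 \le v_k v_k^\textsf {T}\le A\) (entrywise) and \(v_k v_k^\textsf {T}\preceq A\). Based on this observation, Fawzi and Parrilo define the parameter

\[ \tau _{\mathrm {cp}}(A) = \min \Bigl\{\alpha : A \in \alpha \cdot \mathrm {conv}\bigl\{R \in \mathrm {S}^n : 0 \le R \le A,\ R \preceq A,\ {{\,\mathrm{rank}\,}}(R) \le 1\bigr\}\Bigr\} \]

as a lower bound for \(\hbox {cp-rank}(A)\). They also define the semidefinite programming parameter

\[ \tau _{\mathrm {cp}}^{\mathrm {sos}}(A) = \min \Bigl\{\alpha : \begin{pmatrix} \alpha & \mathrm {vec}(A)^\textsf {T}\\ \mathrm {vec}(A) & X\end{pmatrix} \succeq 0,\ X \in \mathrm {S}^{n^2},\ X_{(i,j),(i,j)} \le A_{ij}^2,\ X_{(i,j),(k,l)} = X_{(i,l),(k,j)}\ (i,j,k,l \in [n]),\ X \preceq A\otimes A\Bigr\}, \]

where \(\mathrm {vec}(A)\in \mathbb {R}^{n^2}\) has entries \(A_{ij}\) indexed by the pairs \((i,j)\), as an efficiently computable relaxation of \(\tau _\mathrm {cp}(A)\), and they show \({{\,\mathrm{rank}\,}}(A) \le \tau _{\mathrm {cp}}^{\mathrm {sos}}(A)\). Therefore, we have

\[ {{\,\mathrm{rank}\,}}(A) \le \tau _{\mathrm {cp}}^{\mathrm {sos}}(A) \le \tau _{\mathrm {cp}}(A) \le \hbox {cp-rank}(A). \]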

Instead of the atomic point of view, here we take the matrix factorization perspective, which allows us to obtain bounds by adapting the techniques from Sect. 2 to the commutative setting. Indeed, we may view a factorization \(A =(a_i^\mathsf{T}a_j)\) by nonnegative vectors as a factorization by diagonal (and thus pairwise commuting) positive semidefinite matrices.
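The identification of nonnegative factorizations with diagonal positive semidefinite factorizations is easy to check numerically: with \(X_i=\mathrm {Diag}(a_i)\), one has \(\langle X_i,X_j\rangle =\mathrm {Tr}(X_i^\textsf {T}X_j)=a_i^\textsf {T}a_j\), and diagonal matrices commute. The following NumPy sketch uses random nonnegative vectors as illustrative data.

```python
import numpy as np

rng = np.random.default_rng(1)
n, d = 4, 3
a = rng.random((n, d))        # rows: nonnegative vectors a_1, ..., a_n in R^d_+ (illustrative data)
A = a @ a.T                   # A = (a_i^T a_j), a completely positive matrix

# Diagonal PSD factors X_i = Diag(a_i); diagonal matrices pairwise commute
X = [np.diag(a_i) for a_i in a]
G = np.array([[np.trace(Xi.T @ Xj) for Xj in X] for Xi in X])  # trace inner products

print(np.allclose(G, A))                                              # True
print(all(np.allclose(Xi @ Xj, Xj @ Xi) for Xi in X for Xj in X))     # True
```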

Before presenting the details of our hierarchy of lower bounds, we mention some of our results in order to make the link to the parameters \(\tau _{\mathrm {cp}}^{\mathrm {sos}}(A)\) and \( \tau _\mathrm {cp}(A)\). The direct analog of \(\{{\xi _{t}^{\mathrm {cpsd}}}(A)\}\) in the commutative setting leads to a hierarchy that does not converge to \(\tau _{\mathrm {cp}}(A)\), but we provide two approaches to strengthen it that do converge to \(\tau _{\mathrm {cp}}(A)\). The first approach is based on a generalization of the tensor constraints in \(\tau _{\mathrm {cp}}^{\mathrm {sos}}(A)\). We also provide a computationally more efficient version of these tensor constraints, leading to a hierarchy whose second level is at least as good as \(\tau _{\mathrm {cp}}^\mathrm {sos}(A)\) while being defined by a smaller semidefinite program. The second approach relies on adding localizing constraints for vectors in the unit sphere as in Sect. 2.2.

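The following hierarchy is a commutative analog of the hierarchy from Sect. 2, where we may now add the localizing polynomials \(A_{ij}-x_ix_j\) for the pairs \(1 \le i < j \le n\), which was not possible in the noncommutative setting of the completely positive semidefinite rank. For each \(t \in \mathbb {N}\cup \{\infty \}\), we consider the semidefinite program

\[ {\xi _{t}^{\mathrm {cp}}}(A) = \min \bigl\{ L(1) : L \in \mathbb {R}[\mathbf{x}]_{2t}^*,\ L(x_ix_j) = A_{ij} \ (i,j \in [n]),\ L \ge 0 \text{ on } {{\mathscr {M}}}_{2t}(S_A^{\mathrm {cp}}) \bigr\}, \]

where we set

\[ S_A^{\mathrm {cp}} = \bigl\{\sqrt{A_{ii}}\,x_i - x_i^2 : i \in [n]\bigr\} \cup \bigl\{A_{ij} - x_ix_j : 1 \le i < j \le n\bigr\}. \]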

We additionally define \({\xi _{*}^{\mathrm {cp}}}(A)\) by adding the constraint \({{\,\mathrm{rank}\,}}(M(L)) < \infty \) to \({\xi _{\infty }^{\mathrm {cp}}}(A)\). We also consider the strengthening \({\xi _{t,\dagger }^{\mathrm {cp}}}(A)\), where we add to \({\xi _{t}^{\mathrm {cp}}}(A)\) the positivity constraints

\[ L(gu) \ge 0 \quad \text{for all } g \in \{1\} \cup S_A^{\mathrm {cp}} \text{ and } u \in [\mathbf{x}]_{2t-\deg (g)}, \tag{19} \]

and the tensor constraints

\[ \bigl(L((uv)^c)\bigr)_{u,v \in \langle \mathbf{x}\rangle _{=l}} \preceq A^{\otimes l} \quad \text{for all integers } 2 \le l \le t, \tag{20} \]

which generalize the case \(l=2\) used in the relaxation \(\tau _\mathrm {cp}^\mathrm {sos}(A)\). Here, for a word \(w \in \langle \mathbf {x}\rangle \), we denote by \(w^c\) the corresponding (commutative) monomial in \([\mathbf {x}]\). The tensor constraints (20) involve matrices indexed by the noncommutative words of length exactly l. In Sect. 3.4, we show a more economical way to rewrite these constraints as \( (L(mm'))_{m,m' \in [\mathbf {x}]_{=l}} \preceq Q_l A^{\otimes l} Q_l^\textsf {T}, \) thus involving smaller matrices indexed by the commutative monomials of degree l.
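To give a sense of the savings mentioned above, the Python sketch below counts the index sets involved: the tensor constraints (20) use matrices indexed by the \(n^l\) words in \(\langle \mathbf{x}\rangle _{=l}\), while the reformulation of Sect. 3.4 uses the \(\binom{n+l-1}{l}\) monomials in \([\mathbf{x}]_{=l}\). The sketch only counts indices via the map \(w\mapsto w^c\); it does not construct the matrix \(Q_l\), which is defined in Sect. 3.4. The function name is ours.

```python
from itertools import product
from math import comb

def word_vs_monomial_counts(n, l):
    """Sizes of the index sets <x>_{=l} (words) and [x]_{=l} (monomials)."""
    words = list(product(range(n), repeat=l))        # ordered words x_{i1} ... x_{il}
    # w -> w^c forgets the order of the letters; a sorted tuple identifies the monomial
    monomials = {tuple(sorted(w)) for w in words}
    assert len(monomials) == comb(n + l - 1, l)      # number of degree-l monomials
    return len(words), len(monomials)

for n, l in [(3, 2), (5, 3), (8, 4)]:
    print(n, l, word_vs_monomial_counts(n, l))       # (9, 6), (125, 35), (4096, 330)
```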

Note that, as before, we can strengthen the bounds by adding other localizing polynomials to the set \(S_A^{\mathrm {cp}}\). In particular, we can follow the approach of Sect. 2.2. Another possibility is to add localizing constraints specific to the commutative setting: we can add each monomial \(u \in [\mathbf{x}]\) to \(S_A^{\mathrm {cp}}\) (see Sect. 3.5.2 for an example).

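The bounds \({\xi _{t}^{\mathrm {cp}}}(A)\) and \({\xi _{t,\dagger }^{\mathrm {cp}}}(A)\) are monotonically nondecreasing in t, and they are invariant under simultaneously permuting the rows and columns of A and under scaling a row and the corresponding column of A by a positive number. In Propositions 6 and 7, we show

\[ \tau _{\mathrm {cp}}^{\mathrm {sos}}(A) \le {\xi _{2,\dagger }^{\mathrm {cp}}}(A) \le {\xi _{*,\dagger }^{\mathrm {cp}}}(A) \le \tau _{\mathrm {cp}}(A), \]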

and in Proposition 10, we show the equality \({\xi _{*,\dagger }^{\mathrm {cp}}}(A) = \tau _{\mathrm {cp}}(A)\).

3.1 Comparison to \(\tau _\mathrm {cp}^\mathrm {sos}(A)\)

We first show that the semidefinite programs defining \({\xi _{t,\dagger }^{\mathrm {cp}}}(A)\) are valid relaxations for the completely positive rank. More precisely, we show that they lower bound \(\tau _{\mathrm {cp}}(A)\).

Proposition 6

For \(A \in \hbox {CP}^n\) and \(t \in \mathbb {N}\cup \{\infty ,*\}\), we have \({\xi _{t,\dagger }^{\mathrm {cp}}}(A) \le \tau _{\mathrm {cp}}(A)\).

Proof

It suffices to show the inequality for \(t=*\), since \({\xi _{t,\dagger }^{\mathrm {cp}}}(A)\le {\xi _{*,\dagger }^{\mathrm {cp}}}(A)\) for every \(t \in \mathbb {N}\cup \{\infty \}\). For this, consider a decomposition \(A=\alpha \, \sum _{k=1}^r \lambda _k R_k\), where \(\alpha \ge 1\), \(\lambda _k>0\), \(\sum _{k=1}^r \lambda _k = 1\), \(0\le R_k\le A\), \(R_k\preceq A\), and \({{\,\mathrm{rank}\,}}R_k= 1\). There are nonnegative vectors \(v_k\) such that \(R_k=v_k v_k^\textsf {T}\). Define the linear map \(L\in \mathbb {R}[\mathbf{x}]^*\) by \(L=\alpha \sum _{k=1}^r \lambda _k L_{v_k}\), where \(L_{v_k}\) is the evaluation at \(v_k\) mapping any polynomial \(p\in \mathbb {R}[\mathbf{x}]\) to \(p(v_k)\).

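The equality \((L(x_ix_j))=A\) follows from the identity \(A=\alpha \sum _{k=1}^r \lambda _k R_k\). The constraints \( L((\sqrt{A_{ii}} x_i - x_i^2) p^2) \ge 0 \) follow because

\[ L\bigl((\sqrt{A_{ii}}x_i - x_i^2)p^2\bigr) = \alpha \sum _{k=1}^r \lambda _k \bigl(\sqrt{A_{ii}}(v_k)_i - (v_k)_i^2\bigr)\, p(v_k)^2 \ge 0, \]

where we use that \((v_k)_i \ge 0\) and \((v_k)_i^2 = (R_k)_{ii} \le A_{ii}\) imply \((v_k)_i^2 \le (v_k)_i \sqrt{A_{ii}}\). The constraints \( L((A_{ij} - x_ix_j) p^2) \ge 0 \) and the positivity constraints (19) follow in a similar way, using \(v_k\ge 0\) and \((v_k)_i(v_k)_j=(R_k)_{ij}\le A_{ij}\). Moreover, \({{\,\mathrm{rank}\,}}(M(L))\le r\), since each evaluation \(L_{v_k}\) has a rank-one moment matrix.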

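It remains to show that \(X_l \preceq A^{\otimes l}\) for all l, where we set \(X_l = (L(uv))_{u,v\in \langle \mathbf{x}\rangle _{=l}}\). Note that \(X_1=A\) and that \(X_l=\alpha \sum _{k=1}^r\lambda _k R_k^{\otimes l}\), since evaluating the word uv at \(v_k\) gives the \((u,v)\)-entry of \(R_k^{\otimes l}=v_k^{\otimes l}(v_k^{\otimes l})^\textsf {T}\). We adapt the argument used in [27] to show \(X_l \preceq A^{\otimes l}\) by induction on \(l \ge 2\). Suppose \(A^{\otimes (l-1)}\succeq X_{l-1}\). Combining \(A-R_k\succeq 0\) and \(R_k\succeq 0\) gives \((A-R_k)\otimes R_k^{\otimes (l-1)}\succeq 0\) and thus \(A\otimes R_k^{\otimes (l-1)}\succeq R_k^{\otimes l}\) for each k. Scaling by the factor \(\alpha \lambda _k\) and summing over k gives

\[ X_l = \alpha \sum _{k=1}^r \lambda _k R_k^{\otimes l} \preceq \alpha \sum _{k=1}^r \lambda _k\, A\otimes R_k^{\otimes (l-1)} = A \otimes X_{l-1}. \]

Finally, combining this with \(A^{\otimes (l-1)}-X_{l-1}\succeq 0\) and \(A\succeq 0\), we obtain

\[ A^{\otimes l} - X_l \succeq A^{\otimes l} - A\otimes X_{l-1} = A\otimes \bigl(A^{\otimes (l-1)} - X_{l-1}\bigr) \succeq 0. \]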

\(\square \)
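The induction step in the proof of Proposition 6 can be sanity-checked numerically. In the sketch below we take random nonnegative atoms \(v_k\), set \(R_k=v_kv_k^\textsf {T}\) and \(A=\sum _k R_k\) (that is, \(\alpha =r\) and \(\lambda _k=1/r\), an illustrative choice), and use that, for the functional L of the proof, \(X_l=\alpha \sum _k\lambda _kR_k^{\otimes l}\), because evaluating a word of length 2l at \(v_k\) gives an entry of \(R_k^{\otimes l}\). The helper names are ours; the script prints the smallest eigenvalue of \(A^{\otimes l}-X_l\) for \(l=1,2,3\), which should be nonnegative up to rounding.

```python
import numpy as np
from functools import reduce

def kron_power(M, l):
    """l-fold Kronecker power M ⊗ ... ⊗ M (l >= 1)."""
    return reduce(np.kron, [M] * l)

def tensor_gaps(V, max_l):
    """Smallest eigenvalue of A^{⊗l} - X_l for l = 1..max_l.

    Atoms are the rows v_k of V, R_k = v_k v_k^T and A = sum_k R_k,
    i.e. alpha = r and lambda_k = 1/r, so that X_l = sum_k R_k^{⊗l}.
    """
    R = [np.outer(v, v) for v in V]
    A = sum(R)
    gaps = []
    for l in range(1, max_l + 1):
        X_l = sum(kron_power(Rk, l) for Rk in R)
        gaps.append(np.linalg.eigvalsh(kron_power(A, l) - X_l).min())
    return gaps

V = np.random.default_rng(0).random((4, 3))   # four random nonnegative atoms in R^3
print(tensor_gaps(V, 3))                       # all values >= 0 up to rounding
```

For this atomic choice, \(A^{\otimes l}-X_l\) is a sum of Kronecker products of distinct-index terms \(R_{k_1}\otimes \cdots \otimes R_{k_l}\), each positive semidefinite, which is why the printed minima are nonnegative.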

Now we show that the new parameter \({\xi _{2,\dagger }^{\mathrm {cp}}}(A)\) is at least as good as \(\tau _\mathrm {cp}^\mathrm {sos}(A)\). Later in Sect. 3.5.1, we will give an example where the inequality is strict.

Proposition 7

For \(A \in \hbox {CP}^n\) we have \( \tau _{\mathrm {cp}}^{\mathrm {sos}}(A) \le {\xi _{2,\dagger }^{\mathrm {cp}}}(A). \)

Proof

Let L be feasible for \({\xi _{2,\dagger }^{\mathrm {cp}}}(A)\). We will construct a feasible solution to the program defining \(\tau _{\mathrm {cp}}^{\mathrm {sos}}(A)\) with objective value L(1), which implies \(\tau _{\mathrm {cp}}^{\mathrm {sos}}(A)\le L(1)\) and thus the desired inequality. For this, set \(\alpha = L(1)\) and define the symmetric \(n^2 \times n^2\) matrix X by \( X_{(i,j),(k,l)} =L(x_ix_jx_kx_l)\) for \(i,j,k,l \in [n]\). Then the matrix

\[ M = \begin{pmatrix} \alpha & \mathrm {vec}(A)^\textsf {T}\\ \mathrm {vec}(A) & X \end{pmatrix} \]

is positive semidefinite. This follows because M is obtained from the principal submatrix of \(M_2(L)\) indexed by the monomials 1 and \(x_ix_j\) (\(1\le i\le j\le n\)), where the rows/columns indexed by \(x_j x_i\) with \(1 \le i < j \le n\) are duplicates of the rows/columns indexed by \(x_i x_j\).

We have \(L((A_{ij} - x_ix_j)x_ix_j) \ge 0\) for all i, j: for \(i \ne j\) this follows from the constraint \(L((A_{ij} - x_ix_j)u) \ge 0\) with \(u = x_ix_j\) (from (19)), and for \(i = j\) it is the inequality \(L((A_{ii} - x_i^2)x_i^2) \ge 0\), which holds because of (10), the constraint \(L(p^2) \ge 0\) for \(\deg (p)\le 2\), and the constraint \(L(\sqrt{A_{ii}} x_i - x_i^2) \ge 0\). Since \(L(x_ix_j) = A_{ij}\), this gives \( X_{(i,j),(i,j)} = L(x_i^2x_j^2) \le A_{ij}L(x_ix_j) = A_{ij}^2. \) We also have \( X_{(i,j),(k,l)} = L(x_ix_jx_kx_l) = L(x_ix_lx_kx_j) = X_{(i,l),(k,j)}, \) and the constraint \((L(uv))_{u,v \in \langle \mathbf {x}\rangle _{=2}} \preceq A^{\otimes 2}\) says precisely that \(X \preceq A \otimes A\). Hence \(\alpha \) and X form a feasible solution for \(\tau _{\mathrm {cp}}^{\mathrm {sos}}(A)\) with objective value \(\alpha = L(1)\). \(\square \)
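The construction in the proof of Proposition 7 can be illustrated on an atomic functional. The sketch below assumes, for illustration only, that L is the sum of evaluations at a few random nonnegative vectors \(v_k\), so that \(\alpha =L(1)\) is the number of atoms and \(A=\sum _k v_kv_k^\textsf {T}\). It builds \(\alpha \), \(\mathrm {vec}(A)\) and X, storing the pair \((i,j)\) at the 0-based index \(in+j\), and checks the four conditions used in the proof: \(M\succeq 0\), \(X_{(i,j),(i,j)}\le A_{ij}^2\), \(X_{(i,j),(k,l)}=X_{(i,l),(k,j)}\) and \(X\preceq A\otimes A\). Function names are ours.

```python
import numpy as np

def tau_sos_point(V):
    """From atoms v_k (rows of V) and L = sum_k eval_{v_k}, build alpha, A, X as in the proof."""
    A = V.T @ V                                   # A_ij = L(x_i x_j)
    W = np.array([np.kron(v, v) for v in V])      # rows v ⊗ v; entry (i, j) sits at index i*n + j
    X = W.T @ W                                   # X_{(i,j),(k,l)} = L(x_i x_j x_k x_l)
    alpha = float(len(V))                         # L(1) = number of atoms
    return alpha, A, X

def check_constraints(alpha, A, X, tol=1e-9):
    n = A.shape[0]
    vecA = A.reshape(-1)                          # row-major: (i, j) -> i*n + j
    M = np.block([[np.array([[alpha]]), vecA[None, :]],
                  [vecA[:, None], X]])
    T = X.reshape(n, n, n, n)                     # T[i, j, k, l] = X_{(i,j),(k,l)}
    return {
        "M_psd": bool(np.linalg.eigvalsh(M).min() >= -tol),
        "diag_bound": bool(np.all(np.diag(X) <= vecA ** 2 + tol)),
        "swap_symmetry": bool(np.allclose(T, T.transpose(0, 3, 2, 1))),
        "X_below_AkronA": bool(np.linalg.eigvalsh(np.kron(A, A) - X).min() >= -tol),
    }

V = np.random.default_rng(2).random((3, 4))      # three random nonnegative atoms in R^4
print(check_constraints(*tau_sos_point(V)))      # all four checks should be True
```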

3.2 Convergence of the Basic Hierarchy

We first summarize convergence properties of the hierarchy \({\xi _{t}^{\mathrm {cp}}}(A)\). Note that, unlike in Sect. 2, where we can only claim the inequality \({\xi _{\infty }^{\mathrm {cpsd}}}(A)\le {\xi _{*}^{\mathrm {cpsd}}}(A)\), here we can show the equality \({\xi _{\infty }^{\mathrm {cp}}}(A) = {\xi _{*}^{\mathrm {cp}}}(A)\). This is because we can use Theorem 7, which allows us to represent certain truncated linear functionals by finite atomic measures.

Proposition 8

Let \(A \in \hbox {CP}^n\). For every \(t \in \mathbb {N}\cup \{\infty , *\}\) the optimum in \({\xi _{t}^{\mathrm {cp}}}(A)\) is attained, and \({\xi _{t}^{\mathrm {cp}}}(A) \rightarrow {\xi _{\infty }^{\mathrm {cp}}}(A) = {\xi _{*}^{\mathrm {cp}}}(A)\) as \(t\rightarrow \infty \). If \({\xi _{t}^{\mathrm {cp}}}(A)\) admits a flat optimal solution, then \({\xi _{t}^{\mathrm {cp}}}(A) = {\xi _{\infty }^{\mathrm {cp}}}(A)\). Moreover, \({\xi _{\infty }^{\mathrm {cp}}}(A) = {\xi _{*}^{\mathrm {cp}}}(A)\) is the minimum value of L(1) taken over all conic combinations \(L\) of evaluations at elements of \(D(S_A^{\mathrm {cp}})\) satisfying \(A = (L(x_ix_j))\).
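The last statement of Proposition 8 concerns conic combinations of evaluations at points of \(D(S_A^{\mathrm {cp}})\), the set of points at which the polynomials in \(S_A^{\mathrm {cp}}\) are nonnegative. The following sketch, with hypothetical data (two atoms with unit weights) and helper names of our own, builds such an L, checks that both atoms lie in \(D(S_A^{\mathrm {cp}})\) for \(A=(L(x_ix_j))\), and confirms that the truncated moment matrix \(M_2(L)\) has rank equal to the number of atoms. Proposition 8 then gives \({\xi _{\infty }^{\mathrm {cp}}}(A)\le L(1)=2\) for this A; the sketch does not compute the optimum itself.

```python
import numpy as np
from itertools import combinations_with_replacement

def monomial_exponents(n, t):
    """Exponent vectors of all monomials in n commuting variables of degree <= t."""
    exps = []
    for deg in range(t + 1):
        for combo in combinations_with_replacement(range(n), deg):
            e = [0] * n
            for i in combo:
                e[i] += 1
            exps.append(tuple(e))
    return exps

def atomic_moment_matrix(atoms, weights, t):
    """Truncated moment matrix M_t(L) of L = sum_k w_k * (evaluation at v_k)."""
    exps = monomial_exponents(len(atoms[0]), t)
    M = np.zeros((len(exps), len(exps)))
    for w, v in zip(weights, atoms):
        z = np.array([np.prod(np.power(v, e)) for e in exps])  # monomials evaluated at v
        M += w * np.outer(z, z)
    return M

def in_D(v, A, tol=1e-12):
    """Check sqrt(A_ii) v_i - v_i^2 >= 0 for all i and A_ij - v_i v_j >= 0 for i < j."""
    n = len(v)
    diag_ok = all(np.sqrt(A[i, i]) * v[i] - v[i] ** 2 >= -tol for i in range(n))
    off_ok = all(A[i, j] - v[i] * v[j] >= -tol
                 for i in range(n) for j in range(i + 1, n))
    return diag_ok and off_ok

# Hypothetical example data: two atoms with unit weights
atoms = [np.array([1.0, 0.0]), np.array([0.5, 2.0])]
weights = [1.0, 1.0]
A = sum(w * np.outer(v, v) for w, v in zip(weights, atoms))   # A = (L(x_i x_j)) = [[1.25, 1], [1, 4]]

M2 = atomic_moment_matrix(atoms, weights, t=2)
print([in_D(v, A) for v in atoms])    # [True, True]
print(np.linalg.matrix_rank(M2))      # 2, the number of atoms
print(sum(weights))                   # L(1) = 2.0
```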