Abstract

The positive semidefinite (psd) rank of a polytope is the smallest \(k\) for which the cone of \(k \times k\) real symmetric psd matrices admits an affine slice that projects onto the polytope. In this paper we show that the psd rank of a polytope is at least the dimension of the polytope plus one, and we characterize those polytopes whose psd rank equals this lower bound. We give several classes of polytopes that achieve the minimum possible psd rank including a complete characterization in dimensions two and three.

1 Introduction

Efficient representations of polytopes are of fundamental importance in contexts such as linear optimization where the complexity of many algorithms depends on the size of the representation. A standard idea to find a compact description of a complicated polytope \(P \subset \mathbb{R }^n\) is to look for a simpler convex set of higher dimension that has \(P\) as a linear image. Affine slices of closed convex cones offer a rich source of convex sets, and the following definition was introduced in [9].

Definition 1.1

Let \(P \subset \mathbb{R }^n\) be a polytope. If \(K \subset \mathbb{R }^m\) is a closed convex cone, \(L\) an affine space in \(\mathbb{R }^m\), and \(\pi \,:\, \mathbb{R }^m \rightarrow \mathbb{R }^{n}\) a linear map such that \(P = \pi (K \cap L)\), then we say that \(K \cap L\) is a \(K\)-lift of \(P\).

If linear optimization over affine slices of \(K\) admits efficient algorithms, then often, linear optimization over \(P\) can be done rapidly as well. Well studied cones in this context are nonnegative orthants and the cones of real symmetric positive semidefinite (psd) matrices. We will denote the \(m\)-dimensional nonnegative orthant by \(\mathbb{R }^{m}_{+}\) and the cone of \(m \times m\) psd matrices by \(\mathcal{S }_+^m\). Affine slices of \(\mathbb{R }^{m}_{+}\) are polyhedra over which linear optimization can be done efficiently via linear programming. Affine slices of \(\mathcal{S }_+^m\) are called spectrahedra, and linear optimization over them can be done efficiently via semidefinite programming. Recall that \(\mathbb{R }^{m}_{+}\) embeds into \(\mathcal{S }_+^m\) via diagonal matrices and hence, polyhedra are special cases of spectrahedra, and semidefinite programming generalizes linear programming.

There are many families of polytopes in \(\mathbb{R }^n\) with exponentially many facets (in \(n\)) that admit small (polynomial in \(n\)) polyhedral or spectrahedral lifts. Examples are the parity and spanning tree polytopes [16], the permutahedron [7] and the stable set polytope of a perfect graph [15]. When the lifts come from families of cones such as \(\{\mathbb{R }^{m}_{+}\}\) or \(\{\mathcal{S }_+^m \}\), it is useful to determine the smallest cone in the family that admits a lift of the polytope. This allows the notion of cone rank of a polytope with respect to a family of cones [9]. We recall the definitions needed in this paper.

Definition 1.2

[9]

(1) The nonnegative rank of a polytope \(P \subset \mathbb{R }^n\), denoted as \(\text {rank}_+\,P\), is the smallest \(k\) such that \(P\) has an \(\mathbb{R }^{k}_{+}\)-lift.

(2) The positive semidefinite rank of a polytope \(P \subset \mathbb{R }^n\), denoted as \(\text {rank}_{\text {psd}}\,P\), is the smallest \(k\) such that \(P\) has an \(\mathcal{S }_+^k\)-lift.

To describe our results, we need the following further definitions.

Definition 1.3

[16] Let \(P\) be a full-dimensional polytope in \(\mathbb{R }^n\) with vertex set \(\{p_1,\ldots ,p_v\}\) and an irredundant (facet) inequality representation

$$\begin{aligned} P = \{ x \in \mathbb{R }^n \,:\, \langle a_1, x \rangle \le \beta _1, \ldots , \langle a_f, x \rangle \le \beta _f \}, \end{aligned}$$

where \(\beta _j \in \mathbb{R }\) and \(a_j \in \mathbb{R }^n\). Then the nonnegative matrix in \(\mathbb{R }^{v \times f}\) whose \((i,j)\)-entry is \(\beta _j - \langle a_j, p_i \rangle \) is called a slack matrix of \(P\).
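To make Definition 1.3 concrete, the following minimal sketch computes a slack matrix from a vertex list and a facet list. The function name `slack_matrix` and the choice of the unit square as test polytope are illustrative assumptions, not part of the original text.

```python
from fractions import Fraction


def slack_matrix(vertices, facets):
    """Return the v x f matrix with (i, j)-entry beta_j - <a_j, p_i>.

    `facets` is a list of pairs (a_j, beta_j), each encoding the
    facet inequality <a_j, x> <= beta_j.
    """
    rows = []
    for p in vertices:
        row = []
        for a, beta in facets:
            inner = sum(Fraction(ai) * Fraction(pi) for ai, pi in zip(a, p))
            row.append(Fraction(beta) - inner)
        rows.append(row)
    return rows


# Hypothetical example: the unit square [0, 1]^2.
square_vertices = [(0, 0), (1, 0), (1, 1), (0, 1)]
square_facets = [((-1, 0), 0), ((1, 0), 1), ((0, -1), 0), ((0, 1), 1)]

S = slack_matrix(square_vertices, square_facets)
for row in S:
    print([int(x) for x in row])
```

Every entry is nonnegative because each vertex satisfies each facet inequality, and an entry is zero exactly when the vertex lies on the facet.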

Recall that the polar dual of a cone \(K \subset \mathbb{R }^m\) is the cone

$$\begin{aligned} K^{*} = \{ y \in \mathbb{R }^m \,:\, \langle x, y \rangle \ge 0 \;\; \forall \, x \in K \}. \end{aligned}$$

In the vector space of \(m \times m\) symmetric matrices we use the trace inner product \(\langle A, B \rangle = \text {Tr}(AB)\). Both \(\mathcal{S }_+^k\) and \(\mathbb{R }^k_+\) are self-dual cones, meaning that \(K^*=K\), and we will identify them with their polar duals in what follows. The notion of cone factorizations of slack matrices plays a central role in the theory of cone lifts of polytopes.

Definition 1.4

[9] Let \(M = (M_{ij}) \in \mathbb{R }_+^{p \times q}\) be a nonnegative matrix and \(K\) a closed convex cone whose polar dual is \(K^{*}\).

- A \(K\)-factorization of \(M\) is a pair of ordered sets \(a^1, \ldots , a^p \in K\) and \(b^1, \ldots , b^q \in K^*\) (called factors) such that \(\langle a^{i}, b^{j} \rangle = M_{ij}\).

- When \(K = \mathbb{R }^{m}_{+}\) (respectively, \(\mathcal{S }_+^m\)), a \(K\)-factorization of \(M\) is called a nonnegative (respectively, psd) factorization of \(M\).

- The smallest \(k\) for which \(M\) has an \(\mathbb{R }^{k}_{+}\)-factorization (respectively, \(\mathcal{S }_+^k\)-factorization) is called the nonnegative rank (respectively, psd rank) of \(M\). We denote these invariants of \(M\) as \(\text {rank}_+\,M\) and \(\text {rank}_{\text {psd}}\,M\).

Any positive scaling of a facet inequality of a polytope \(P\) can be used in Definition 1.3 and so the slack matrix of \(P\) is only defined up to positive scalings of its columns. We denote any such slack matrix of \(P\) by \(S_P\). Since scaling rows or columns of a matrix \(M\) by arbitrary positive real numbers does not affect the existence of a \(K\)-factorization of \(M\), all slack matrices of \(P\) will have the same behavior with respect to \(K\)-factorizations and, in particular, have the same nonnegative (respectively, psd) rank.

In what follows, \(P \subset \mathbb{R }^n\) is always an \(n\)-dimensional polytope. Yannakakis showed in [16] that \(\text {rank}_+\,P = \text {rank}_+\,S_P\) by proving that \(P\) has an \(\mathbb{R }^k_+\)-lift if and only if \(S_P\) has an \(\mathbb{R }^{k}_{+}\)-factorization. The nonnegative rank of a polytope has been the subject of many recent papers [3–6, 13]. The psd rank of a convex set \(C \subset \mathbb{R }^n\) was introduced in [9] where Yannakakis’ theorem was generalized (Theorem 2.4 [9]). Specializing to polytopes, this theorem says that \(P\) has a \(K\)-lift (in particular, \(\mathcal{S }_+^{k}\)-lift) if and only if \(S_P\) has a \(K\)-factorization (\(\mathcal{S }_+^{k}\)-factorization), and so, \(\text {rank}_{\text {psd}}\,P = \text {rank}_{\text {psd}}\,S_P\). (The extension of Yannakakis’ theorem in the case of polytopes also appeared in [3].) Since \(\mathbb{R }^{k}_{+}\) embeds into \(\mathcal{S }_+^{k}\) for each \(k\), we always have \(\text {rank}_{\text {psd}}\,P \le \text {rank}_+\,P\). It is easy to see that \(\text {rank}_+\,P \ge \text {rank}\,S_P = n+1\). In Proposition 3.2 we show that \(\text {rank}_{\text {psd}}\,P\) is also at least \(n+1\). This is not immediate since for a general nonnegative matrix \(M, \,\text {rank}\,M\) is not a lower bound for \(\text {rank}_{\text {psd}}\,M\), and the correct relationship is that \(\frac{1}{2}(\sqrt{1 + 8\ \text {rank}\,M}-1) \le \text {rank}_{\text {psd}}\,M\) [9]. Theorem 3.5 characterizes those \(n\)-polytopes whose psd rank equals \(n+1\), and we give several families of \(n\)-dimensional polytopes whose psd rank equals this lower bound.
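The quadratic bound quoted above can be recovered by a dimension count. The following short derivation is a sketch added for orientation; it is the standard argument and is not quoted from [9]. Here \(\mathcal{S }^k\) denotes the space of \(k \times k\) real symmetric matrices, a symbol not used elsewhere in this section. If \(M_{ij} = \langle A_i, B_j \rangle \) with \(A_i, B_j \in \mathcal{S }_+^k \subset \mathcal{S }^k\), then \(M\) is a matrix of inner products of vectors in a space of dimension \(k(k+1)/2\), so

$$\begin{aligned} \text {rank}\,M \le \dim \mathcal{S }^k = \frac{k(k+1)}{2} \quad \Longleftrightarrow \quad k \ge \frac{1}{2}\left( \sqrt{1 + 8\ \text {rank}\,M}-1\right) . \end{aligned}$$

For instance, \(\text {rank}\,M = 3\) only forces \(k \ge 2\), whereas Proposition 3.2 gives the stronger bound \(k \ge 3\) for the slack matrix of a polygon.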

We now recall a few useful facts about nonnegative and psd ranks of polytopes that will be needed in this paper. It follows from [9, Prop. 2] that \(\text {rank}_+\,P\) and \(\text {rank}_{\text {psd}}\,P\) are invariant under projective (and hence also, affine) transformations of \(P\). Further, transposing a matrix \(M\) does not affect the existence of a \(K\)-factorization of \(M\) if \(K\) is self-dual. Therefore, if \(P\) contains the origin in its interior, its polar polytope is \(P^\circ := \{ y \in \mathbb{R }^n \,:\, \langle x, y \rangle \le 1 \,\forall \, x \in P \}\), and \(\text {rank}_+\,P = \text {rank}_+\,P^\circ \) and \(\text {rank}_{\text {psd}}\,P = \text {rank}_{\text {psd}}\,P^\circ \) since we can obtain a slack matrix of \(P^\circ \) by transposing a slack matrix of \(P\) and rescaling rows. It is common to define the slack matrix of a polytope using any inequality description of the polytope, including redundant inequalities. This will not affect the nonnegative or psd rank of the polytope. However, since some of our results will become more cumbersome to state using this more general definition of a slack matrix, we restrict ourselves to Definition 1.3.

The psd rank of a polytope \(P\) quantifies the power of semidefinite programming to provide efficient algorithms for linear optimization over \(P\). For example, the stable set polytope of a perfect graph on \(n\) vertices is known to have psd rank \(n+1\) which provides the only known polynomial time algorithm (via semidefinite programming) for finding the highest weight stable set in a perfect graph. The connection between psd rank and semidefinite lifts allows psd rank to become a possible tool for settling questions concerning semidefinite programming in combinatorial optimization. A question that is currently active is whether the nonnegative rank of the perfect matching polytope of a complete graph \(K_n\) is polynomial in \(n\). This was raised in [16] where it was shown that there are no small symmetric \(\mathbb{R }^{k}_{+}\)-lifts of these polytopes. Both nonnegative and psd ranks of these polytopes are unknown at the moment. Another active question concerns the possible gap between \(\text {rank}_+\,P\) and \(\text {rank}_{\text {psd}}\,P\) which is a measure of the relative strength of linear vs. semidefinite programming for linear optimization over \(P\). No example where this gap is large is known so far. While nonnegative rank has been studied in several papers, the notion of psd rank is new. The results and techniques presented here further improve our understanding of psd rank of a polytope.

This paper is organized as follows. In Sect. 2, we introduce tools to study the psd rank of a general nonnegative matrix \(M\) using Hadamard square roots of \(M\). In Sect. 3, we specialize to slack matrices of polytopes and derive the lower bound of \(n+1\) for the psd rank of an \(n\)-dimensional polytope (Proposition 3.2). Theorem 3.5 characterizes \(n\)-dimensional polytopes with psd rank \(n+1\) in terms of the lowest rank of a Hadamard square root of a slack matrix of \(P\). In Sect. 4 we give several families of polytopes whose psd rank equals this lower bound. In the plane, the full-dimensional polytopes with psd rank three are exactly triangles and quadrilaterals (Theorem 4.7). Every polytope in \(\mathbb{R }^{n}\) with at most \(n+2\) vertices has psd rank \(n+1\) (Theorem 4.3). In \(\mathbb{R }^{3}\), the situation is more delicate and we exhibit polytopes of a fixed combinatorial type (octahedra) whose psd rank depends on the embedding of the polytope. Nonetheless, we show that the three-dimensional polytopes with psd rank four are exactly tetrahedra, quadrilateral pyramids, bisimplices, combinatorial triangular prisms, “biplanar” octahedra, and “biplanar” cuboids (Theorem 4.11). It follows from [8] that if \(S_P\) is a \(0/1\) matrix then \(\text {rank}_{\text {psd}}\,P = n+1\). Such polytopes are called \(2\)-level polytopes and include the stable set polytopes of perfect graphs. We exhibit polytopes that are not combinatorially equivalent to \(2\)-level polytopes whose psd rank achieves the lower bound. We also show polytopes that are combinatorially equivalent to \(2\)-level polytopes whose psd rank is not the minimum possible. Finally, we prove in Theorem 4.12 that for stable set polytopes, the results of Lovász prevail even in our general setting in the sense that the stable set polytope of a graph on \(n\) vertices has psd rank \(n+1\) if and only if the graph is perfect.

2 Hadamard Square Roots and psd Ranks of Matrices

Definition 2.1

A Hadamard square root of a nonnegative real matrix \(M\), denoted as \(\sqrt{M}\), is any matrix whose \((i,j)\)-entry is a square root (positive or negative) of the \((i,j)\)-entry of \(M\). Additionally, we let \(\root + \of {M}\) denote the all-nonnegative Hadamard square root of \(M\).

Let \(\text {rank}_{\! \! {\sqrt{\ }}}\,M := \,\text {min}\, \{ \text {rank}\,\sqrt{M} \}\) be the minimum rank of a Hadamard square root of a nonnegative matrix \(M\). We recall the basic connection between the psd rank of a nonnegative matrix \(M\) and \(\text {rank}_{\! \! {\sqrt{\ }}}\,M\) shown in [9, Prop. 4.8], and also in [3].

Proposition 2.2

If \(M\) is a nonnegative matrix, then \(\text {rank}_{\text {psd}}\,M \le \text {rank}_{\! \! {\sqrt{\ }}}\,M \). In particular, the psd rank of a \(0/1\) matrix is at most the rank of the matrix.

Proof

Let \(\sqrt{M}\) be a Hadamard square root of \(M \in \mathbb{R }^{p \times q}_+\) of rank \(r\). Then there exist vectors \(a_1, \ldots , a_p, b_1, \ldots , b_q \in \mathbb{R }^{r}\) such that \((\sqrt{M})_{ij} = \langle a_i, b_j \rangle \). Therefore, \(M_{ij} = \langle a_i, b_j \rangle ^2 = \langle a_i a_i^T, b_j b_j^T \rangle \) where the second inner product is the trace inner product for symmetric matrices defined earlier. Hence, \(\text {rank}_{\text {psd}}\,{M} \le r\). \(\square \)
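The identity \(\langle a_i, b_j \rangle ^2 = \langle a_i a_i^T, b_j b_j^T \rangle \) used in this proof can be checked numerically. The sketch below is only an illustration: the vectors `a` and `b` are arbitrary hypothetical choices, and the helper names are not from the original text.

```python
def dot(u, v):
    """Standard inner product of two vectors."""
    return sum(x * y for x, y in zip(u, v))


def outer(u):
    """Rank-one psd matrix u u^T."""
    return [[x * y for y in u] for x in u]


def trace_inner(A, B):
    """Trace inner product Tr(AB) of two symmetric matrices."""
    n = len(A)
    return sum(A[i][j] * B[i][j] for i in range(n) for j in range(n))


# Hypothetical vectors in R^2; their inner products form a Hadamard square root.
a = [(1, 2), (3, -1)]
b = [(2, 1), (1, 1)]

root = [[dot(ai, bj) for bj in b] for ai in a]
M = [[x * x for x in row] for row in root]

# The rank-one matrices a_i a_i^T and b_j b_j^T give a psd factorization of M.
factored = [[trace_inner(outer(ai), outer(bj)) for bj in b] for ai in a]

print(root)      # entries <a_i, b_j>
print(M)         # entrywise squares
print(factored)  # trace inner products of the rank-one factors
```

The size of the psd factors equals the length of the vectors, which is why the rank of a Hadamard square root bounds the psd rank from above.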

The upper bound in Proposition 2.2 can be strict even for simple examples.

Example 2.3

For the matrix

\(\text {rank}\,M = \text {rank}_{\! \! {\sqrt{\ }}}\,M = 3\) while \(\text {rank}_{\text {psd}}\,M = 2\). Assigning the first three psd matrices below to the rows of \(M\), and the next three to the columns of \(M\), we obtain a \(\mathcal{S }_+^2\)-factorization of \(M\):

Even though \(\text {rank}_{\! \! {\sqrt{\ }}}\,M\) is only an upper bound on \(\text {rank}_{\text {psd}}\,{M}\), we cannot find \(\mathcal{S }_+^k\)-factorizations of \(M\) with only rank one factors if \(k < \text {rank}_{\! \! {\sqrt{\ }}}\,M\) as shown in Lemma 2.4 below. Note that the psd factors corresponding to the first row and the third column of the matrix \(M\) in Example 2.3 both have rank two.

Lemma 2.4

The smallest \(k\) for which a nonnegative real matrix \(M\) admits a \(\mathcal{S }_+^k\)-factorization in which all factors are matrices of rank one is \(k = \text {rank}_{\! \! {\sqrt{\ }}}\,M\).

Proof

If \(k = \text {rank}_{\! \! {\sqrt{\ }}}\,M\), then there is a Hadamard square root of \(M \in \mathbb{R }^{p \times q}_+\) of rank \(k\) and the proof of Proposition 2.2 gives a \(\mathcal{S }_+^k\)-factorization of \(M\) in which all factors have rank one. On the other hand, if there exist \(a_1a_1^T, \ldots , a_pa_p^T, b_1b_1^T, \ldots , b_qb_q^T \in \mathcal{S }_+^k\) such that \(M_{ij}= \langle a_ia_i^T, b_jb_j^T \rangle = \langle a_i, b_j \rangle ^2\), then the matrix with \((i,j)\)-entry \(\langle a_i, b_j \rangle \) is a Hadamard square root of \(M\) of rank at most \(k\). \(\square \)

Example 2.5

For a \(0/1\) matrix \(M, \,\text {rank}_{\text {psd}}\,M \le \text {rank}_{\! \! {\sqrt{\ }}}\,M \le \text {rank}\,M\). In Example 2.3 we saw that the first inequality may be strict. We now show that the second inequality may also be strict. The following derangement matrix

$$\begin{aligned} M = \left[ \begin{array}{ccc} 0 & 1 & 1 \\ 1 & 0 & 1 \\ 1 & 1 & 0 \end{array} \right] \end{aligned}$$

has rank three and psd rank two. An \(\mathcal{S }_+^2\)-factorization in which all factors have rank one is obtained by assigning

to the three rows and the three columns, respectively. A Hadamard square root of \(M\) of rank two is
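One concrete choice consistent with this example can be verified directly. In the sketch below, the vectors `row_vecs` and `col_vecs` are an assumption (other choices work equally well): the rank-one factors are \(u u^T\) for these vectors, and the matrix of their inner products is a signed Hadamard square root of the \(3 \times 3\) derangement matrix.

```python
def det3(m):
    """Determinant of a 3 x 3 matrix by cofactor expansion."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


# The 3 x 3 derangement matrix: zeros on the diagonal, ones elsewhere.
M = [[0, 1, 1], [1, 0, 1], [1, 1, 0]]

# Assumed vectors in R^2; u u^T is the rank-one psd factor attached to u.
row_vecs = [(1, 0), (0, 1), (1, -1)]
col_vecs = [(0, 1), (1, 0), (1, 1)]

# Matrix of inner products: a Hadamard square root with one negative entry.
root = [[sum(x * y for x, y in zip(u, v)) for v in col_vecs] for u in row_vecs]
squares = [[x * x for x in row] for row in root]

print(root)
print(det3(M), det3(root))
```

Since `root` is a product of a \(3 \times 2\) and a \(2 \times 3\) matrix, its rank is at most two, while the nonzero determinant of \(M\) confirms that \(M\) itself has rank three.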

We now show a method to increase the psd rank of any matrix by one. This technique will be used later to study the psd rank of a polytope.

Proposition 2.6

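Suppose \(M \in \mathbb{R }^{p \times q}_+\) and \(\text {rank}_{\text {psd}}\,M = k\). If \(M\) is extended to

$$\begin{aligned} M^{\prime } = \left[ \begin{array}{cc} M & \mathbf{0} \\ w & \alpha \end{array} \right] \end{aligned}$$

where \(w \in \mathbb{R }^{q}_+\) is a row vector, \(\alpha > 0\), and \(\mathbf{0}\) is a column of zeros, then \(\text {rank}_{\text {psd}}\,M^{\prime } = k+1\). Further, the factor associated to the last column of \(M^{\prime }\) in any \(\mathcal{S }_+^{k+1}\)-factorization of \(M^{\prime }\) has rank one.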

Proof

Suppose \(M^{\prime }\) has a \(\mathcal{S }_+^k\)-factorization with factors \(A_1, \ldots , A_p, A \in \mathcal{S }_+^k\) associated to its rows and \(B_1, \ldots , B_q,B \in \mathcal{S }_+^k\) associated to its columns. Then \(A, B \ne 0\) since \(\langle A, B \rangle = \alpha \ne 0\). Let \(r= \text {rank}\,(B) >0\). Then there exists an orthogonal matrix \(U\) such that \(U^{-1} B U = \text {diag}(\lambda _1, \ldots , \lambda _{r}, 0, \ldots , 0) =: D\) where \(\lambda _1, \ldots , \lambda _r\) are the nonzero (positive) eigenvalues of \(B\). Let \(A_i^{\prime } := U^{-1}A_iU\) for \(i=1,\ldots ,p\). Then

$$\begin{aligned} 0 = \langle A_i, B \rangle = \langle U^{-1}A_iU, U^{-1}BU \rangle = \langle A_i^{\prime }, D \rangle . \end{aligned}$$

Since the diagonal entries of \(A_i^{\prime }\) are nonnegative, \(\langle D, A_i^{\prime } \rangle = 0\) implies that the first \(r\) diagonal entries of \(A_i^{\prime }\) are all zero. Therefore, the first \(r\) rows and the first \(r\) columns of \(A_i^{\prime }\) are all zero since \(A_i^{\prime }\) is psd. Now let \(B_j^{\prime } := U^{-1}B_jU\) for all \(j=1,\ldots ,q\). Then for all \(i=1,\ldots ,p\) and \(j=1,\ldots ,q\),

$$\begin{aligned} M_{ij} = \langle A_i, B_j \rangle = \langle A_i^{\prime }, B_j^{\prime } \rangle . \end{aligned}$$

However, since \(A_i^{\prime }\) has nonzero entries only in its bottom right \((k-r) \times (k-r)\) block, it also follows that \( M_{ij} = \langle \tilde{A_i}, \tilde{B_j} \rangle \) where \(\tilde{A_i}\) is the bottom right \((k-r) \times (k-r)\) submatrix of \(A_i^{\prime }\) and \(\tilde{B_j}\) is the bottom right \((k-r) \times (k-r)\) submatrix of \(B_j^{\prime }\). Thus, there exists a \(\mathcal{S }_+^{k-r}\)-factorization of \(M\) which is a contradiction to the fact that the psd rank of \(M\) is \(k\). Therefore, \(\text {rank}_{\text {psd}}\,M^{\prime } \ge k+1\).

An \(\mathcal{S }_+^{k+1}\)-factorization of \(M^{\prime }\) can be obtained from an \(\mathcal{S }_+^k\)-factorization \(A_1, \ldots , A_p, B_1, \ldots , B_q \in \mathcal{S }_+^k\) of \(M\) by setting

Now consider an \(\mathcal{S }_+^{k+1}\)-factorization of \(M^{\prime }\) and let \(B\) be the matrix associated to the last column of \(M^{\prime }\) in this factorization. If \(\text {rank}\,(B)=r\), then by the same argument as above, there exists an \(\mathcal{S }_+^{k+1-r}\)-factorization of \(M\). Since \(\text {rank}_{\text {psd}}\,M = k, \,k+1-r \ge k\) or equivalently, \(r \le 1\). Since \(B \ne 0\), it follows that \(\text {rank}\,(B)=1\). \(\square \)
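The upper bound \(\text {rank}_{\text {psd}}\,M^{\prime } \le k+1\) in this proof can be illustrated with a block-diagonal construction. The sketch below assumes one natural choice, which need not be the authors' exact one: each factor of \(M\) is padded with a zero row and column, the column factors receive \(w_j/\alpha \) in the new corner, the new row factor has \(\alpha \) in the corner, and the new column factor has \(1\) in the corner. The matrix \(M\), the row \(w\) and the value of \(\alpha \) are hypothetical test data.

```python
from fractions import Fraction


def outer(u):
    """Rank-one psd matrix u u^T."""
    return [[x * y for y in u] for x in u]


def trace_inner(A, B):
    """Trace inner product of two symmetric matrices."""
    n = len(A)
    return sum(A[i][j] * B[i][j] for i in range(n) for j in range(n))


def pad(A, corner):
    """Embed a k x k matrix in the top-left block of a (k+1) x (k+1) matrix."""
    k = len(A)
    padded = [list(row) + [0] for row in A]
    padded.append([0] * k + [corner])
    return padded


# Hypothetical data: M is the 3 x 3 derangement matrix with a size-2 factorization.
A = [outer(u) for u in [(1, 0), (0, 1), (1, -1)]]  # row factors of M
B = [outer(v) for v in [(0, 1), (1, 0), (1, 1)]]   # column factors of M
w, alpha, k = [1, 2, 3], 2, 2

zero = [[0] * k for _ in range(k)]
A_ext = [pad(Ai, 0) for Ai in A] + [pad(zero, alpha)]
B_ext = [pad(Bj, Fraction(wj, alpha)) for Bj, wj in zip(B, w)] + [pad(zero, 1)]

M_ext = [[trace_inner(X, Y) for Y in B_ext] for X in A_ext]
for row in M_ext:
    print([int(x) for x in row])
```

All padded matrices are psd because they are block diagonal with psd blocks, and the factor attached to the last column has rank one, in line with the second claim of the proposition.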

Example 2.7

The psd rank of an \(n \times n\) diagonal matrix with positive diagonal entries is \(n\). The statement holds for \(n=1\) and the general case follows by induction on \(n\) and the first part of Proposition 2.6. Each factor in an \(\mathcal{S }_+^n\)-factorization of such a diagonal matrix must have rank one. This follows by applying the second part of Proposition 2.6 to both the diagonal matrix and its transpose.

3 Hadamard Square Roots and psd Ranks of Polytopes

In this section we derive a lower bound to the psd rank of any polytope. We begin with the following easy fact.

Lemma 3.1

Let \(P \subset \mathbb{R }^n\) be an \(n\)-dimensional polytope. Then a slack matrix \(S_P\) has rank \(n+1\).

Proof

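Let the vertices of \(P\) be \(p_1, \ldots , p_v\) and the facet inequalities of \(P\) be \(\langle a_j, x \rangle \le \beta _j\) for \(j=1, \ldots , f\). Then the corresponding \(v \times f\) slack matrix \(S_P\) has \((i,j)\)-entry equal to \(\beta _j - \langle a_j, p_i \rangle \), and we may factorize \(S_P\) as

$$\begin{aligned} S_P = \left[ \begin{array}{cc} 1 & p_1^T \\ \vdots & \vdots \\ 1 & p_v^T \end{array} \right] \left[ \begin{array}{ccc} \beta _1 & \cdots & \beta _f \\ -a_1 & \cdots & -a_f \end{array} \right] . \end{aligned}$$

Since \(P\) is full-dimensional and bounded, both of the factors have rank \(n+1\). \(\square \)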
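The rank statement of Lemma 3.1 can be checked in exact arithmetic on a small example. The sketch below uses the unit square (a hypothetical test case with \(n = 2\)), builds the two factors described in the proof, and computes ranks by Gaussian elimination over the rationals; the helper names are illustrative.

```python
from fractions import Fraction


def rank(matrix):
    """Rank of a rational matrix via exact Gaussian elimination."""
    m = [[Fraction(x) for x in row] for row in matrix]
    rows, cols, r = len(m), len(m[0]), 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, rows):
            factor = m[i][c] / m[r][c]
            m[i] = [x - factor * y for x, y in zip(m[i], m[r])]
        r += 1
        if r == rows:
            break
    return r


def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]


# Hypothetical example: the unit square, n = 2.
n = 2
vertices = [(0, 0), (1, 0), (1, 1), (0, 1)]
facets = [((-1, 0), 0), ((1, 0), 1), ((0, -1), 0), ((0, 1), 1)]

V = [[1, *p] for p in vertices]                       # rows (1, p_i^T)
F = [[beta for _, beta in facets]] + \
    [[-a[k] for a, _ in facets] for k in range(n)]    # columns (beta_j, -a_j)
S = matmul(V, F)

print(S)
print(rank(V), rank(F), rank(S))
```

Both factors and the slack matrix have rank \(n+1 = 3\) here, even though the slack matrix is \(4 \times 4\).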

We now obtain a lower bound on the psd rank of a polytope.

Proposition 3.2

If \(P \subset \mathbb{R }^n\) is a full-dimensional polytope, then the psd rank of \(P\) is at least \(n+1\). Furthermore, if \(\text {rank}_{\text {psd}}\,P = n+1\), then every \(\mathcal{S }_+^{n+1}\)-factorization of the slack matrix of \(P\) only uses rank one matrices as factors.

Proof

The proof is by induction on \(n\). If \(n=1\), then \(P\) is a line segment and we may assume that its vertices are \(p_1, p_2\) and facets are \(f_1, f_2\) with \(p_1 = f_2\) and \(p_2 = f_1\). Hence its slack matrix is a \(2 \times 2\) diagonal matrix with positive diagonal entries. By the arguments in Example 2.7, \(\text {rank}_{\text {psd}}\,S_P = 2\) and any \(\mathcal{S }_+^{2}\)-factorization of it uses only matrices of rank one.

Assume the first statement of the proposition holds up to dimension \(n-1\) and consider a polytope \(P \subset \mathbb{R }^n\) of dimension \(n\). Let \(F\) be a facet of \(P\) with vertices \(p_1, \ldots , p_s\), facets \(f_1, \ldots , f_t\) and slack matrix \(S_F\). Suppose \(f_i\) corresponds to facet \(F_i\) of \(P\) for \(i=1, \ldots , t\). By induction hypothesis, \(\text {rank}_{\text {psd}}\,F = \text {rank}_{\text {psd}}\,S_F \ge n\). Let \(p\) be a vertex of \(P\) not in \(F\) and assume that the top left \((s+1) \times (t+1)\) submatrix of \(S_P\) is indexed by \(p_1, \ldots , p_s, p\) in the rows and \(F_1, \ldots , F_t, F\) in the columns. Then this submatrix of \(S_P\), which we will call \(S_F^{\prime }\), has the form

$$\begin{aligned} S_F^{\prime } = \left[ \begin{array}{cc} S_F & \mathbf{0} \\ w & \alpha \end{array} \right] \end{aligned}$$

with \(w \in \mathbb{R }^{t}_+\) and \(\alpha > 0\). By Proposition 2.6, the psd rank of \(S_F^{\prime }\) is at least \(n+1\) since the psd rank of \(S_F\) is at least \(n\). Hence, \(\text {rank}_{\text {psd}}\,P = \text {rank}_{\text {psd}}\,S_P \ge n+1\).

Suppose there is now a \(\mathcal{S }_+^{n+1}\)-factorization of \(S_P\) and therefore of \(S_F^{\prime }\). By Proposition 2.6 the factor corresponding to the facet \(F\) has rank one. Repeating the procedure for all facets \(F\) and all submatrices \(S_F^{\prime }\) we get that all factors corresponding to the facets of \(P\) in this \(\mathcal{S }_+^{n+1}\)-factorization of \(S_P\) must have rank one. To prove that all factors indexed by the vertices of \(P\) also have rank one, recall that the transpose of a slack matrix of \(P\) is (up to row scaling) a slack matrix of the polar polytope \(P^\circ \), concluding the proof. \(\square \)

Remark 3.3

The zero pattern in \(S_P\) has been used to provide lower bounds for \(\text {rank}_+\,P\) (see for instance [5, 16]). We note that the zero pattern of a slack matrix by itself is not enough to improve the lower bound on psd rank given in Proposition 3.2. For example, consider the slack matrix \(S_k\) of a \(k\)-gon in \(\mathbb{R }^2\). Then \(\text {rank}_{\text {psd}}\,S_k\) grows to infinity as \(k\) goes to infinity as shown in [9]. The Hadamard square \(S_k^2\), however, has the same zero pattern as \(S_k\), and since \(S_k\) is a Hadamard square root of \(S_k^2\), we get \(\text {rank}_{\text {psd}}\,S_k^2 \le \text {rank}\,S_k = 3\) by Proposition 2.2 and Lemma 3.1.

Example 3.4

The Birkhoff polytope \(B(n)\) is the convex hull of all \(n \times n\) permutation matrices. It was shown in [5] that \(\text {rank}_+\,B(n) = n^2\) when \(n \ge 5\). By Proposition 3.2, \(\text {rank}_{\text {psd}}\,B(n) \ge n^2-2n+2\). The permutahedron \(\Pi (n)\) is the convex hull of the vectors \((\pi (1), \ldots , \pi (n))\) where \(\pi \) is a permutation on \(n\) letters. It was shown in [7] that \(\text {rank}_+\,\Pi (n) = O(n \log n )\). By Proposition 3.2, \(\text {rank}_{\text {psd}}\,\Pi (n) \ge n\).
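Both bounds in this example come from adding one to the dimension of the polytope. The arithmetic, spelled out here as a reading aid using the standard dimensions of the two polytopes, is

$$\begin{aligned} \dim B(n) = (n-1)^2 \;&\Longrightarrow \; \text {rank}_{\text {psd}}\,B(n) \ge (n-1)^2 + 1 = n^2 - 2n + 2, \\ \dim \Pi (n) = n-1 \;&\Longrightarrow \; \text {rank}_{\text {psd}}\,\Pi (n) \ge (n-1) + 1 = n. \end{aligned}$$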

Theorem 3.5

If \(P \subset \mathbb{R }^n\) is a full-dimensional polytope, then \(\text {rank}_{\text {psd}}\,P = n+1\) if and only if \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P = n+1\).

Proof

By Proposition 2.2, \(\text {rank}_{\text {psd}}\,P \le \text {rank}_{\! \! {\sqrt{\ }}}\,S_P\). Therefore, if \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P = n+1\), then by Proposition 3.2, the psd rank of \(P\) is exactly \(n+1\).

Conversely, suppose \(\text {rank}_{\text {psd}}\,P = n+1\). Then there exists an \(\mathcal{S }_+^{n+1}\)-factorization of \(S_P\) which, by Proposition 3.2, has all factors of rank one. Thus, by Lemma 2.4, we have \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P \le n+1\). Since \(\text {rank}_{\! \! {\sqrt{\ }}}\,\) is bounded below by \(\text {rank}_{\text {psd}}\,\), we must have \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P = n+1\). \(\square \)

Theorem 3.5 says that if a full-dimensional polytope \(P \subset \mathbb{R }^n\) has the minimum possible psd rank \(n+1\), then there must be a Hadamard square root of \(S_P\) of rank \(n+1\) that serves as a witness. In the next section we exhibit several classes of \(n\)-polytopes whose psd rank is \(n+1\). We now give examples in the plane that show that many of the properties we have derived so far for \(n\)-polytopes of psd rank \(n+1\) fail when psd rank is larger than \(n+1\).

Example 3.6

Consider the pentagon \(P\) in \(\mathbb{R }^2\) with vertices

and a regular hexagon \(H\) in \(\mathbb{R }^2\). Then we have slack matrices:

Theorem 4.7 will show that these polytopes have psd rank at least four which is not the minimum possible in the plane. We make the following observations:

(i) \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P > \text {rank}_{\text {psd}}\,P\). This pentagon has psd rank four due to the \(\mathcal{S }_+^4\)-factorization given by the following matrices (the first five matrices correspond to the rows and the second five to the columns):

$$\begin{aligned}&\left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 3 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 1 &{} -1 \\ 0 &{} 1 &{} 1 &{} -1 \\ 0 &{} -1 &{} -1 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} -1 &{} 0 &{} 0 \\ -1 &{} 1 &{} 0 &{} 0 \\ 0 &{} 0 &{} 1 &{} -1 \\ 0 &{} 0 &{} -1 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} 0 &{} 0 &{} -1 \\ 0 &{} 1 &{} -1 &{} 0 \\ 0 &{} -1 &{} 1 &{} 0 \\ -1 &{} 0 &{} 0 &{} 1 \end{array} \right] ,\\&\left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} 1 &{} 0 &{} 0 \\ 1 &{} 1 &{} 0 &{} 0 \\ 0 &{} 0 &{} 1 &{} 1 \\ 0 &{} 0 &{} 1 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} 0 &{} 0 &{} 1 \\ 0 &{} 1 &{} 1 &{} 0 \\ 0 &{} 1 &{} 1 &{} 0 \\ 1 &{} 0 &{} 0 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 0 &{} 1 &{} 1 \\ 0 &{} 0 &{} 1 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} 1 &{} 1 &{} 1 \\ 1 &{} 1 &{} 1 &{} 1 \\ 1 &{} 1 &{} 1 &{} 1 \\ 1 &{} 1 &{} 1 &{} 1 \end{array} \right] ,\\&\left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} -1 &{} -1 &{} 1 \\ -1 &{} 1 &{} 1 &{} -1 \\ -1 &{} 1 &{} 1 &{} -1 \\ 1 &{} -1 &{} -1 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} -1 &{} 1 &{} -1 \\ -1 &{} 1 &{} -1 &{} 1 \\ 1 &{} -1 &{} 1 &{} -1 \\ -1 &{} 1 &{} -1 &{} 1 \end{array} \right] ,\quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} -1 &{} 0 \\ 0 &{} -1 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \end{array} \right] . \end{aligned}$$

One can check that \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P = 5\) in this case via the following algebraic calculation. Create a symbolic matrix with the same zeros as \(S_P\), say

$$\begin{aligned} S := \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c@{\quad }c} 0 &{} a &{} b &{} c &{} 0 \\ 0 &{} 0 &{} d &{} e &{} f \\ g &{} 0 &{} 0 &{} h &{} i \\ j &{} k &{} 0 &{} 0 &{} l \\ m &{} n &{} o &{} 0 &{} 0 \end{array} \right] . \end{aligned}$$

Then there is a Hadamard square root of \(S_P\) of rank at most four if and only if there is a solution to the system of polynomial equations

$$\begin{aligned} \big \{\text {det}(S) = 0, \, a^2 = 4, \,b^2= 12,\,\,c^2=4, \ldots , o^2 = 8 \big \}. \end{aligned}$$

Using a computer algebra package such as Macaulay2 [10], one can verify that this system of equations has no solutions. Therefore, when the psd rank of an \(n\)-polytope is greater than \(n+1\), there need not be any Hadamard square root of the slack matrix whose rank equals the psd rank of the polytope.

(ii) \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_H < \text {rank}\,\root + \of {S_H}\). The all-nonnegative Hadamard square root \(\root + \of {S_H}\) has rank 5. The following Hadamard square root has rank 4:

$$\begin{aligned} \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c@{\quad }c@{\quad }c} 0 &{} \sqrt{2} &{} 2 &{} 2 &{} \sqrt{2} &{} 0 \\ 0 &{} 0 &{} \sqrt{2} &{} 2 &{} 2 &{} \sqrt{2} \\ \sqrt{2} &{} 0 &{} 0 &{} \sqrt{2} &{} 2 &{} 2 \\ -2 &{} -\sqrt{2} &{} 0 &{} 0 &{} \sqrt{2} &{} 2 \\ 2 &{} -2 &{} -\sqrt{2} &{} 0 &{} 0 &{} \sqrt{2} \\ \sqrt{2} &{} 2 &{} -2 &{} -\sqrt{2} &{} 0 &{} 0 \end{array} \right] . \end{aligned}$$

Thus, it is not enough to check the all-nonnegative Hadamard square root of a slack matrix \(S_P\) to determine \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P\).

(iii) Recall that if \(Q\) is an \(n\)-dimensional polytope and \(\text {rank}_{\text {psd}}\,Q = n+1\), then \(\text {rank}_{\text {psd}}\,Q = \text {rank}_{\! \! {\sqrt{\ }}}\,S_Q\) and all \(\mathcal{S }_+^{n+1}\)-factorizations of \(S_Q\) have factors of rank one. However, if \(\text {rank}_{\text {psd}}\,Q = \text {rank}_{\! \! {\sqrt{\ }}}\,S_Q\) but \(\text {rank}_{\text {psd}}\,Q > n+1\), then there can be factorizations of \(S_Q\) by psd matrices of size \(\text {rank}_{\text {psd}}\,Q\) in which the factors do not all have rank one, as in the case of the hexagon \(H\). From above, \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_H = 4\). A \(\mathcal{S }_+^4\)-factorization of \(S_H\) is obtained by assigning the following six psd matrices of rank two to the columns:

$$\begin{aligned} \left[ \begin{array}{l@{\quad }l@{\quad }l@{\quad }l} 1 &{} -1 &{} 0 &{} 1 \\ -1 &{} 1 &{} 0 &{} -1 \\ 0 &{} 0 &{} 1 &{} 0 \\ 1 &{} -1 &{} 0 &{} 1 \end{array} \right] , \quad \left[ \begin{array}{l@{\quad }l@{\quad }l@{\quad }l} 1 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 1 &{} -1 \\ 0 &{} 1 &{} 1 &{} -1 \\ 0 &{} -1 &{} -1 &{} 1 \end{array} \right] , \quad \left[ \begin{array}{l@{\quad }l@{\quad }l@{\quad }l} 1 &{} 1 &{} 1 &{} 0 \\ 1 &{} 1 &{} 1 &{} 0 \\ 1 &{} 1 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 &{} 1 \end{array} \right] , \\ \left[ \begin{array}{l@{\quad }l@{\quad }l@{\quad }l} 1 &{} 1 &{} 0 &{} 1 \\ 1 &{} 1 &{} 0 &{} 1 \\ 0 &{} 0 &{} 1 &{} 0 \\ 1 &{} 1 &{} 0 &{} 1 \end{array} \right] , \quad \left[ \begin{array}{l@{\quad }l@{\quad }l@{\quad }l} 1 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} -1 &{} 1 \\ 0 &{} -1 &{} 1 &{} -1 \\ 0 &{} 1 &{} -1 &{} 1 \end{array} \right] , \quad \left[ \begin{array}{l@{\quad }l@{\quad }l@{\quad }l} 1 &{} -1 &{} 1 &{} 0 \\ -1 &{} 1 &{} -1 &{} 0 \\ 1 &{} -1 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 &{} 1 \end{array} \right] , \end{aligned}$$

and the following six psd matrices of rank one to the rows:

$$\begin{aligned}&\left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} 1 &{} 0 &{} 0 \\ 1 &{} 1 &{} 0 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \end{array} \right] , \quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 0 &{} 1 \\ 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 0 &{} 1 \end{array} \right] , \quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} -1 &{} 0 \\ 0 &{} -1 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \end{array} \right] , \quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 1 &{} -1 &{} 0 &{} 0 \\ -1 &{} 1 &{} 0 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \end{array} \right] , \\&\left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 0 &{} -1 \\ 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} -1 &{} 0 &{} 1 \end{array} \right] , \quad \left[ \begin{array}{c@{\quad }c@{\quad }c@{\quad }c} 0 &{} 0 &{} 0 &{} 0 \\ 0 &{} 1 &{} 1 &{} 0 \\ 0 &{} 1 &{} 1 &{} 0 \\ 0 &{} 0 &{} 0 &{} 0 \end{array} \right] . \end{aligned}$$
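The hexagon factorization in (iii) can be checked mechanically. The sketch below transcribes the six column factors and writes each rank-one row factor as \(u u^T\). The target matrix is taken to be the entrywise square of the Hadamard square root displayed in (ii), a circulant matrix with first row \((0,2,4,4,2,0)\); treating this as the slack matrix \(S_H\) is an assumption of the sketch.

```python
def trace_inner(A, B):
    """Trace inner product of two symmetric matrices."""
    n = len(A)
    return sum(A[i][j] * B[i][j] for i in range(n) for j in range(n))


def outer(u):
    """Rank-one psd matrix u u^T."""
    return [[x * y for y in u] for x in u]


# Column factors (rank two), transcribed from item (iii).
col_factors = [
    [[1, -1, 0, 1], [-1, 1, 0, -1], [0, 0, 1, 0], [1, -1, 0, 1]],
    [[1, 0, 0, 0], [0, 1, 1, -1], [0, 1, 1, -1], [0, -1, -1, 1]],
    [[1, 1, 1, 0], [1, 1, 1, 0], [1, 1, 1, 0], [0, 0, 0, 1]],
    [[1, 1, 0, 1], [1, 1, 0, 1], [0, 0, 1, 0], [1, 1, 0, 1]],
    [[1, 0, 0, 0], [0, 1, -1, 1], [0, -1, 1, -1], [0, 1, -1, 1]],
    [[1, -1, 1, 0], [-1, 1, -1, 0], [1, -1, 1, 0], [0, 0, 0, 1]],
]

# Row factors (rank one) are u u^T for these vectors.
row_vectors = [(1, 1, 0, 0), (0, 1, 0, 1), (0, 1, -1, 0),
               (1, -1, 0, 0), (0, 1, 0, -1), (0, 1, 1, 0)]
row_factors = [outer(u) for u in row_vectors]

product = [[trace_inner(A, B) for B in col_factors] for A in row_factors]

# Assumed slack matrix: circulant with first row (0, 2, 4, 4, 2, 0).
first = [0, 2, 4, 4, 2, 0]
S_H = [first[-i:] + first[:-i] for i in range(6)]

for row in product:
    print(row)
```

The same pattern, computing all trace inner products and comparing with the slack matrix, applies to any proposed psd factorization with rational entries.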

We are not aware of a systematic algorithm for finding exact psd factorizations of the type shown above. The factorizations in the above example were obtained by trial and error with pen and paper. We always tried to choose row factors of rank one and to keep all factors as sparse as possible.

We now give two applications of Propositions 2.6 and 3.2. The first yields a method to produce polytopes of psd rank \(k\) from polytopes of psd rank \(k-1\).

Proposition 3.7

If \(P \subset \mathbb{R }^n\) is an \(n\)-dimensional pyramid over an \((n-1)\)-polytope \(Q\) and \(\text {rank}_{\text {psd}}\,Q = k\), then \(\text {rank}_{\text {psd}}\,P = k+1\).

Proof

Let \(S_Q\) be the slack matrix of \(Q\). By assumption, \(\text {rank}_{\text {psd}}\,S_Q = k\). We may assume without loss of generality that \(Q\) lies in the hyperplane \(x_n = 0\) and that the apex \(v\) of \(P\) has \(v_n > 0\). The facets of \(P\) that contain \(v\) are in bijection with the facets of \(Q\). The only other facet inequality of \(P\) is \(x_n \ge 0\). A slack matrix of \(P\) is

$$\begin{aligned} S_P = \left[ \begin{array}{c@{\quad }c} S_Q &{} 0 \\ 0 &{} \alpha \end{array} \right] , \end{aligned}$$

where the last row is indexed by \(v\) and the last column by \(x_n \ge 0\). Therefore, \(\alpha > 0\) and by Proposition 2.6, the psd rank of \(S_P\) is \(k+1\). \(\square \)

The following result will be used in Sect. 4.

Proposition 3.8

If a polytope \(P\) has a facet of psd rank \(k\), then \(P\) has psd rank at least \(k+1\). In particular, if \(\text {rank}_{\text {psd}}\,P = n+1\) where \(P \subset \mathbb{R }^n\) is an \(n\)-polytope, then \(\text {rank}_{\text {psd}}\,F = i+1\) for every \(i\)-dimensional face \(F\) of \(P\).

Proof

The first fact is an immediate consequence of the proof of Proposition 3.2 where we saw that if \(F\) is a facet of psd rank \(k\), then Proposition 2.6 can be used to construct a submatrix \(S_F^{\prime }\) of the slack matrix \(S_P\) that has psd rank at least \(k+1\). The second statement then follows from Proposition 3.2. \(\square \)

4 Families of Polytopes of Minimum psd Rank

Recall that if \(P\) is an \(n\)-dimensional polytope in \(\mathbb{R }^n\) then \(\text {rank}_+\,P \ge n+1\). It is straightforward to see that the only \(n\)-dimensional polytopes of nonnegative rank \(n+1\) are simplices. The psd situation is much richer with many more classes of polytopes achieving the minimum possible psd rank as we show in this section.

Definition 4.1

An \(n\)-dimensional polytope \(P \subset \mathbb{R }^n\) is said to be \(2\)-level if it has a slack matrix all of whose entries are zero or one. Geometrically, \(P\) is 2-level if and only if for each facet of the polytope, all vertices of \(P\) lie on the union of this facet and exactly one other parallel translate of the hyperplane spanning this facet.

It follows from [8] that a \(2\)-level polytope in \(\mathbb{R }^n\) admits an \(\mathcal{S }_+^{n+1}\)-lift which can be constructed explicitly using sums of squares polynomials. In the language of the current paper, it follows that \(n\)-dimensional 2-level polytopes have psd rank \(n+1\). We can also see this directly from Theorem 3.5.

Corollary 4.2

Let \(P\) be an \(n\)-dimensional 2-level polytope in \(\mathbb{R }^n\). Then the psd rank of \(P\) is exactly \(n+1\). Further, all the factors in any \(\mathcal{S }_+^{n+1}\)-factorization of \(P\) have rank one.

Proof

Since a 2-level polytope has a \(0/1\) slack matrix \(S_P\), \(\text {rank}\,\root + \of {S_P} = \text {rank}\,S_P = n+1\). Therefore, \(\text {rank}_{\! \! {\sqrt{\ }}}\,S_P = n+1\), and by Theorem 3.5, the psd rank of a 2-level polytope equals \(n+1\). The second statement follows from Proposition 3.2. \(\square \)
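As a concrete instance of this argument, the following Python sketch builds the slack matrix of the unit cube \([0,1]^3\) with respect to the facet inequalities \(x_i \ge 0\) and \(1 - x_i \ge 0\), checks that it is a \(0/1\) matrix, and computes its rank exactly. The choice of the cube and the helper `rank` are assumptions made for illustration only.

```python
from fractions import Fraction
from itertools import product


def rank(rows):
    """Exact rank over the rationals via Gaussian elimination."""
    m = [[Fraction(x) for x in r] for r in rows]
    rk = 0
    for col in range(len(m[0])):
        pivot = next((i for i in range(rk, len(m)) if m[i][col] != 0), None)
        if pivot is None:
            continue
        m[rk], m[pivot] = m[pivot], m[rk]
        for i in range(rk + 1, len(m)):
            f = m[i][col] / m[rk][col]
            m[i] = [x - f * y for x, y in zip(m[i], m[rk])]
        rk += 1
    return rk


n = 3
vertices = list(product((0, 1), repeat=n))
# One row per vertex; columns are the slacks of x_i >= 0 and 1 - x_i >= 0.
slack = [[v[i] for i in range(n)] + [1 - v[i] for i in range(n)] for v in vertices]

is_zero_one = all(entry in (0, 1) for row in slack for entry in row)
# For a 0/1 matrix the positive Hadamard square root is the matrix itself,
# so its rank is the rank of the slack matrix.
print(is_zero_one, rank(slack))
```

Since the entries are all zero or one, taking entrywise positive square roots changes nothing, which is exactly why the rank stays at \(n+1 = 4\).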

Since any \(n\)-polytope with \(n+1\) vertices is a simplex which is \(2\)-level, its psd rank is \(n+1\). In fact, Theorem 3.5 implies the following stronger result.

Theorem 4.3

Any full-dimensional polytope in \(\mathbb{R }^n\) with \(n+2\) vertices has psd rank \(n+1\).

Proof

Suppose \(P\) is a polytope with \(n+2\) vertices. Then if \(f\) is the number of facets of \(P\), we have that \(S_P\) is an \((n+2) \times f\) matrix of rank \(n+1\). Let \(S_i\) denote the \(i\)th row of \(S_P\). Since \(\text {rank}\,S_P = n+1\), we have \(\sum _{i=1}^{n+2}a_iS_i = \left( 0, \ldots ,0 \right) \) for some \(a_i \in \mathbb{R }\), not all zero. Every facet of \(P\) contains at least \(n\) vertices, so each column of \(S_P\) must have at least \(n\) zeros, and when we consider the above equation component-wise, all but at most two of the summands must be zero. Thus, for each \(j = 1, \ldots , f,\, a_{i_0}\left( S_{i_0}\right) _j +a_{i_1}\left( S_{i_1}\right) _j = 0\) for some \(1 \le i_0, i_1 \le n+2\). For each \(a_i\) define \(b_i := \text {sgn}\left( a_i\right) \sqrt{\left| a_i\right| }\). Then \(b_{i_0}\sqrt{\left( S_{i_0}\right) _j} + b_{i_1}\sqrt{\left( S_{i_1}\right) _j} = 0\). Since this holds for each component, we have \(\sum _{i=1}^{n+2}b_i\sqrt{S_i} = \left( 0, \ldots ,0 \right) \). Thus, \(\root + \of {S_P}\) must have rank \(n+1\) and the result follows from Theorem 3.5. \(\square \)
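The construction of the \(b_i\) in this proof can be tried on a small case. The sketch below uses the quadrilateral with vertices \((0,0), (1,0), (0,1), (1,2)\) and facet inequalities \(x \ge 0\), \(y \ge 0\), \(1-x \ge 0\), \(1+x-y \ge 0\); this polygon, the dependency `a` and all function names are assumptions chosen for illustration. It checks that `a` annihilates the rows of the slack matrix and that the vector `b` built as in the proof annihilates the rows of the positive Hadamard square root.

```python
import math

# Rows: vertices (0,0), (1,0), (0,1), (1,2).
# Columns: slacks of x >= 0, y >= 0, 1 - x >= 0, 1 + x - y >= 0.
slack = [
    [0, 0, 1, 1],
    [1, 0, 0, 2],
    [0, 1, 1, 0],
    [1, 2, 0, 0],
]
a = [2, -1, -2, 1]  # a linear dependency among the rows of `slack`


def combine(coeffs, matrix):
    """Return the row vector sum_i coeffs[i] * matrix[i]."""
    return [sum(c * row[j] for c, row in zip(coeffs, matrix))
            for j in range(len(matrix[0]))]


# b_i = sgn(a_i) * sqrt(|a_i|), as in the proof.
b = [math.copysign(math.sqrt(abs(x)), x) for x in a]
root = [[math.sqrt(x) for x in row] for row in slack]

print(combine(a, slack))  # dependency among the rows of the slack matrix
print(combine(b, root))   # the same pattern survives for the square root
```

Each column of `slack` has exactly two nonzero entries, which is what lets the cancellation pass to the square roots term by term.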

There are \(\lfloor n^2/4\rfloor \) distinct combinatorial types of \(n\)-dimensional polytopes with \(n+2\) vertices [12]. In the plane, we get that all quadrilaterals have psd rank three. In \(\mathbb{R }^3\), the two combinatorial types of polytopes with five vertices are the pyramid over a quadrilateral and a double simplex (bipyramid over a triangle). A quadrilateral pyramid need not be \(2\)-level but it is combinatorially equivalent to a pyramid over a square which is 2-level. By Theorem 4.3, an \(n\)-dimensional double simplex (bipyramid over a simplex of dimension \(n-1\)) has psd rank \(n+1\). As the next proposition shows, for \(n \ge 3\) double simplices are polytopes of minimum psd rank that are not combinatorially equivalent to 2-level polytopes.

Proposition 4.4

There is no 2-level polytope that is combinatorially equivalent to a double simplex except in the plane.

Proof

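Let \(P \subset \mathbb{R }^n\) be an \(n\)-dimensional double simplex. Then the support of any \((n+2) \times 2n\) slack matrix of \(P\) where the first and last rows correspond to the vertices acquired when taking the bipyramid over a \((n-1)\)-dimensional simplex is, up to a permutation of the columns,

$$\begin{aligned} M := \left[ \begin{array}{c@{\quad }c} \mathbf{0}^T &{} \mathbf{1}^T \\ I_n &{} I_n \\ \mathbf{1}^T &{} \mathbf{0}^T \end{array} \right] , \end{aligned}$$

where \(I_n\) is the \(n \times n\) identity matrix and \(\mathbf{0}, \mathbf{1} \in \mathbb{R }^n\) are the all-zeros and all-ones vectors.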

The rank of \(M\) is \(n+1\) and hence the left kernel of \(M\) has dimension one and is generated by the vector \(z := (1,-1,-1,\ldots ,-1,-1,1) \in \mathbb{R }^{n+2}\) with all entries equal to \(-1\) except the first and last. Also, \(P\) is combinatorially equivalent to a 2-level polytope if and only if there is a (2-level) polytope with slack matrix \(M\).

Suppose \(M\) is the slack matrix of an \(n\)-dimensional polytope. Then we should be able to factorize \(M\) as in the proof of Lemma 3.1 into the form

Call the two factors \(V\) and \(F\). The left kernel of \(V\) is non-trivial since \(V\) is a \((n+2) \times (n+1)\) matrix. Let \(z^{\prime }\) be a non-zero element in the left kernel of \(V\). Then since \(z^{\prime }VF = 0\), it must also be that \(z^{\prime }M = 0\). This implies that \(z^{\prime }\) is a scalar multiple of \(z\) and hence \(z\) is in the left kernel of \(V\). But looking at the first column of \(V\), which is all ones, we see that \(z\) can be in the left kernel of \(V\) only if \(n=2\). \(\square \)

On the other hand, being combinatorially equivalent to a \(2\)-level polytope does not imply minimal psd rank. The regular octahedron in \(\mathbb{R }^3\) is a \(2\)-level polytope but we now show an octahedron whose psd rank is five.

Example 4.5

Consider the octahedron with vertices

which has slack matrix:

It can be checked algebraically as in Example 3.6 that no Hadamard square root of this slack matrix has rank four. However, the positive Hadamard square root has rank five and hence the psd rank of this octahedron is five.

Remark 4.6

We have seen that having the combinatorial type of a 2-level polytope is not enough for minimal psd rank, while being the image under a projective transformation of a 2-level polytope is enough. Proposition 4.4 shows that not all polytopes of minimal psd rank are projectively equivalent to 2-level polytopes. Strictly weaker than being projectively equivalent to a 2-level polytope is the existence of a positive scaling of each row and column of \(S_P\) that turns it into a \(0/1\)-matrix. This clearly implies minimal psd rank, and includes double simplices. So one could suppose this to be a necessary and sufficient condition for having minimal psd rank. This turns out to be false. Consider the prism with vertices \((0,0,0),\, (1,0,0),\, (0,1,0),\, (1,2,0),\, (0,0,1), \,(1,0,1), (0,1,1), \,(1,2,1)\) which has slack matrix

The positive square root of this matrix has rank four, so the polytope has minimal psd rank, but it is easy to see that we can never turn the submatrix from the first two rows and the fourth and sixth columns into a \(0/1\)-matrix by any scaling.
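The two claims about this prism can be checked numerically. In the Python sketch below the facet inequalities \(x \ge 0\), \(y \ge 0\), \(1-x \ge 0\), \(1+x-y \ge 0\), \(z \ge 0\), \(1-z \ge 0\) and their ordering are assumptions, chosen so that the fourth and sixth columns are the ones with the obstruction; the displayed slack matrix may order or scale its columns differently. The helper `rank` is a plain floating-point elimination, not an exact computation.

```python
import math


def rank(rows, tol=1e-9):
    """Numerical rank by Gaussian elimination with partial pivoting."""
    m = [list(map(float, r)) for r in rows]
    rk = 0
    for col in range(len(m[0])):
        pivot = max(range(rk, len(m)), key=lambda i: abs(m[i][col]), default=None)
        if pivot is None or abs(m[pivot][col]) < tol:
            continue
        m[rk], m[pivot] = m[pivot], m[rk]
        for i in range(rk + 1, len(m)):
            f = m[i][col] / m[rk][col]
            m[i] = [x - f * y for x, y in zip(m[i], m[rk])]
        rk += 1
    return rk


base = [(0, 0), (1, 0), (0, 1), (1, 2)]
vertices = [(x, y, z) for z in (0, 1) for (x, y) in base]
# Assumed facet order: x, y, 1 - x, 1 + x - y, z, 1 - z (all >= 0).
slack = [[x, y, 1 - x, 1 + x - y, z, 1 - z] for (x, y, z) in vertices]
root = [[math.sqrt(e) for e in row] for row in slack]

# Submatrix from the first two rows and the fourth and sixth columns.
sub = [[slack[i][j] for j in (3, 5)] for i in (0, 1)]
sub_det = sub[0][0] * sub[1][1] - sub[0][1] * sub[1][0]

print(rank(slack), rank(root), sub, sub_det)
```

Positive row and column scalings multiply a \(2 \times 2\) determinant by a positive number, so a submatrix with four nonzero entries and nonzero determinant can never be scaled to the all-ones matrix, which is the only \(0/1\) matrix with that support.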

In the plane we can fully characterize the polytopes of psd rank three.

Theorem 4.7

A convex polygon \(P\) in the plane has psd rank three if and only if it has at most four vertices.

Proof

The “if” direction was discussed after Theorem 4.3.

Now suppose that \(P\) is a convex polygon with 5 or more vertices. By an affine transformation we can suppose \(P\) has facets given by \(x \ge 0\) and \(y \ge 0\) with vertices on \((0,0), \,(1,0)\) and \((0,1)\). Let \((a,b)\) be the vertex sharing an edge with \((0,1)\) and \((c,d)\) the one sharing an edge with \((1,0)\). These facets are then given by the two inequalities \((b-1)x - ay + a \ge 0\) and \((c-1)y-dx + d \ge 0\) respectively, so we can take the \(5 \times 4\) submatrix of the slack matrix of \(P\) indexed by these vertices and facets, which is then

It is then enough to show that every possible Hadamard square root of the \(4 \times 4\) upper left portion of this matrix has rank four. This matrix is given by

Assume this matrix has rank three. Since the first three rows are independent, we can write the fourth row as a combination of the first three. In such a combination, the coefficients for the first three rows must be \(\pm \sqrt{a+b-1},\, \pm \sqrt{b}\) and \(\pm \sqrt{a}\), respectively. For ease of notation, let \(\alpha =b(d+c-1)\) and \(\beta =d(a+b-1)\). Then \(\alpha ,\beta > 0\) and \(\alpha \ge \beta \). Looking at the last column, we see that

$$\begin{aligned} \pm \sqrt{\alpha -\beta } = \pm \sqrt{\alpha } \pm \sqrt{\beta }. \end{aligned}$$

Out of these eight possible equations, the only four that are feasible are \(\pm \sqrt{\alpha -\beta }=\sqrt{\alpha }-\sqrt{\beta }\) and \(\pm \sqrt{\alpha -\beta }=-\sqrt{\alpha }+\sqrt{\beta }\), all of which imply \(\alpha =\beta \). Hence, \(cb-b=ad-d\) and we have that \(b/(a-1)=d/(c-1)\). Thus, the slope of the line between \((a,b)\) and \((1,0)\) equals the slope between \((c,d)\) and \((1,0)\), implying that the three are collinear and cannot all be vertices unless \((a,b)=(c,d)\). \(\square \)
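The rank argument can be illustrated on one explicit pentagon. The Python sketch below takes \((a,b) = (1,2)\) and \((c,d) = (2,1)\), builds the \(4 \times 4\) matrix of slacks of the vertices \((0,0), (1,0), (0,1), (a,b)\) with respect to the four facet inequalities used in the proof, and evaluates the determinant of every Hadamard square root. The specific pentagon, the row order and the function names are assumptions for illustration.

```python
import math
from itertools import product


def det(m):
    """Determinant by Laplace expansion along the first row."""
    if len(m) == 1:
        return m[0][0]
    total = 0.0
    for j, entry in enumerate(m[0]):
        if entry != 0:
            minor = [row[:j] + row[j + 1:] for row in m[1:]]
            total += (-1) ** j * entry * det(minor)
    return total


a, b, c, d = 1, 2, 2, 1  # pentagon (0,0), (1,0), (2,1), (1,2), (0,1)


def slacks(x, y):
    """Slacks for x >= 0, y >= 0 and the two facets named in the proof."""
    return [x, y, (b - 1) * x - a * y + a, (c - 1) * y - d * x + d]


S = [slacks(x, y) for (x, y) in [(0, 0), (1, 0), (0, 1), (a, b)]]
support = [(i, j) for i in range(4) for j in range(4) if S[i][j] != 0]


def signed_root(signs):
    """Hadamard square root of S with the given signs on its support."""
    root = [[0.0] * 4 for _ in range(4)]
    for (i, j), s in zip(support, signs):
        root[i][j] = s * math.sqrt(S[i][j])
    return root


smallest = min(abs(det(signed_root(signs)))
               for signs in product((1, -1), repeat=len(support)))
alpha, beta = b * (d + c - 1), d * (a + b - 1)
print(alpha, beta, smallest)
```

Here \(\alpha = 4 \ne 2 = \beta \), and the smallest determinant in absolute value over all \(2^9\) sign patterns stays well away from zero, so no Hadamard square root of this block drops to rank three.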

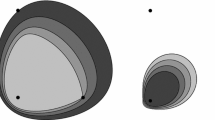

In \(\mathbb{R }^3\), it is more difficult to classify the convex polytopes of minimum psd rank. We have seen that all polytopes with four or five vertices have psd rank four. Additionally, we can say precisely which octahedra in \(\mathbb{R }^3\) have psd rank four. Let \(O \subset \mathbb{R }^3\) be a (combinatorial) octahedron. We say that \(O\) is planar with respect to a plane \(E\) if \(O \cap E\) contains four vertices of \(O\). For example, the regular octahedron is planar with respect to the \(xy,\, xz\), and \(yz\) planes. A combinatorial octahedron can be planar with respect to at most three planes. We say \(O\) is biplanar if it is planar with respect to at least two distinct planes.

Theorem 4.8

An octahedron \(O \subset \mathbb{R }^3\) has psd rank four if and only if \(O\) is biplanar.

Proof

First, assume \(O\) is biplanar. Then, by applying an affine transformation, we can assume that \(O\) is planar with respect to the \(xy\) plane and has vertices \((0,0,0),\, (1,0,0), \,(0,1,0),\, (a,b,0),\, (z_1,z_2,z_3)\), and \((w_1,w_2,w_3)\) where \(z_3 > 0, w_3 < 0\), and \(a+b > 1\).

For ease of notation, let \(\alpha = z_3 - w_3,\, \beta = w_1z_3 - z_1w_3\), and \(\gamma = w_2z_3 - z_2w_3\). Then \((0,0,0), \,(a,b,0),\, (z_1,z_2,z_3), \,(w_1,w_2,w_3)\) are coplanar if and only if \(b\beta = a\gamma \) and \((1,0,0),\, (0,1,0),\, (z_1,z_2,z_3),\, (w_1,w_2,w_3)\) are coplanar if and only if \(\alpha = \beta + \gamma \). The combinatorics of \(O\) dictates that these are the only possible further planarities, and since \(O\) is biplanar, at least one of these conditions must be satisfied.
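Both coplanarity criteria come from expanding a \(3 \times 3\) determinant of difference vectors. The Python sketch below checks on random integer data that these determinants equal \(b\beta - a\gamma \) and \(\beta + \gamma - \alpha \), respectively; the helper names and the random sampling are assumptions for illustration, and a symbolic expansion would serve equally well.

```python
import random


def det3(u, v, w):
    """Determinant of the 3x3 matrix with rows u, v, w."""
    return (u[0] * (v[1] * w[2] - v[2] * w[1])
            - u[1] * (v[0] * w[2] - v[2] * w[0])
            + u[2] * (v[0] * w[1] - v[1] * w[0]))


def sub(p, q):
    """Componentwise difference p - q."""
    return tuple(x - y for x, y in zip(p, q))


def identities_hold(a, b, z, w):
    alpha = z[2] - w[2]
    beta = w[0] * z[2] - z[0] * w[2]
    gamma = w[1] * z[2] - z[1] * w[2]
    # (0,0,0), (a,b,0), z, w are coplanar iff this determinant vanishes.
    d1 = det3((a, b, 0), z, w)
    # (1,0,0), (0,1,0), z, w are coplanar iff this determinant vanishes.
    e1 = (1, 0, 0)
    d2 = det3(sub((0, 1, 0), e1), sub(z, e1), sub(w, e1))
    return d1 == b * beta - a * gamma and d2 == beta + gamma - alpha


random.seed(0)
samples = [
    (random.randint(-9, 9), random.randint(-9, 9),
     tuple(random.randint(-9, 9) for _ in range(3)),
     tuple(random.randint(-9, 9) for _ in range(3)))
    for _ in range(200)
]
all_ok = all(identities_hold(a, b, z, w) for a, b, z, w in samples)
print(all_ok)
```

Integer arithmetic keeps the comparison exact, so agreement on the samples is not affected by rounding.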

Now \(O\) has slack matrix \(S_O\):

In the case \(b\beta = a\gamma \) or the case \(\alpha = \beta + \gamma \), row reduction shows that \(\root + \of {S_O}\) has rank four. Hence, \(O\) has psd rank four.

For the converse, suppose \(O\) is planar with respect to at most one plane. If a planarity condition is satisfied, assume it is satisfied by the vertices \(v_1,v_2,v_3,v_4\). By applying an affine transformation, we can assume that \(v_1 = (0,0,1)\), \(v_2 = (0,0,0)\), \(v_3 = (1,0,0)\), and \(v_5 = (0,1,0)\). Let \(v_4 = (z_1,z_2,z_3)\) and \(v_6 = (w_1,w_2,w_3)\) where we must have

to preserve the combinatorial structure. (These are not all of the required conditions, but we will use these particular ones below.)

Since \(O\) cannot satisfy planarity conditions on the set of vertices \(\left\{ v_1,v_2,v_5,v_6\right\} \) or \(\left\{ v_3,v_4,v_5,v_6\right\} \), we must have that

We calculate the slack matrix \(S_O\) and consider its \(5 \times 5\) submatrix \(M\) indexed by the vertices \(v_1,v_2,v_3,v_5,v_6\) in the rows and the facets \(F_{1,3,5},\, F_{2,3,6},\, F_{2,4,5},\, F_{1,3,6}, F_{1,4,5}\) in the columns where \(F_{i,j,k}\) is the facet defined by the vertices \(v_i,v_j,v_k\). After multiplying the rows and columns by positive constants, \(M\) has the form:

Now consider an arbitrary Hadamard square root \(\sqrt{M}\). For the purposes of calculating the rank of \(\sqrt{M}\), we can assume that the \((1,2),\, (1,3), \,(2,1), \,(2,4)\), and \((2,5)\) entries of \(\sqrt{M}\) are all 1. Let

be a symbolic matrix corresponding to a \(\sqrt{M}\) and let \(\tilde{z}_1,\ldots ,\tilde{w}_3\) be variables corresponding to \(z_1,\ldots ,w_3\). Consider the ideal \(I\) generated by the polynomials:

Now if \(\text {rank}_{\text {psd}}\,O = 4\), then \(\text {rank}_{\! \! {\sqrt{\ }}}\,M \le 4\) and, hence, there must exist real numbers \(x_1,\ldots ,x_7\) such that \((x_1,\ldots ,x_7,z_1,\ldots ,w_3)\) lies in \(V(I)\), the variety of \(I\).

The three possible planarity conditions on \(O\) are given by the equations:

Let \(J_1, J_2, J_3\) be the ideals generated by the three possible pairs of the polynomials defining the above planarity conditions. Then the product ideal \(J := J_1*J_2*J_3\) has variety \(V(J) = V(J_1) \cup V(J_2) \cup V(J_3)\). By our planarity assumption on \(O\), \((x_1,\ldots ,x_7,z_1,\ldots ,w_3)\) is not contained in \(V(J)\). Now \(V(I) \backslash V(J)\) is contained in the variety of the colon ideal \(I:J\) [2, Chap. 4.4, Theorem 7] and, hence, every polynomial in \(I:J\) vanishes at \((x_1,\ldots ,x_7,z_1,\ldots ,w_3)\). Using Macaulay2 [10], we can compute a set of generators of \(I:J\) and by elimination one sees that

lies in \(I:J\). However, \(f\) cannot vanish at any choice of \(z_1,\ldots ,w_3\) satisfying (1) and (2). Hence, we must have \(\text {rank}_{\text {psd}}\,O \ge 5\). \(\square \)

A cuboid, or combinatorial cube, is a polytope in \(\mathbb{R }^3\) that is combinatorially equivalent to a cube. Since the polars of cuboids are octahedra and psd rank is preserved under polarity, the cuboids of minimal psd rank are precisely those that are polars of biplanar octahedra. We call these biplanar cuboids. More explicitly, these are the cuboids for which there exist two sets of four facets whose supporting hyperplanes intersect in a point (possibly at infinity).

We will now argue that there are no polytopes in \(\mathbb{R }^3\) of psd rank four beyond the ones we have considered above (and their polars). Let \(P\) be a polytope in \(\mathbb{R }^3\) of psd rank four. By Proposition 3.8, all the facets of \(P\) must be triangles or quadrilaterals. Further, since \(\text {rank}_{\text {psd}}\,P^\circ = 4\), each vertex of \(P\) must be of degree three or four. Recall that the degree of a vertex of \(P\) is the number of edges of \(P\) incident to that vertex.

Lemma 4.9

Let \(P \subset \mathbb{R }^3\) be a three-dimensional polytope with \(\text {rank}_{\text {psd}}\,P = 4\). If \(p\) is a vertex of \(P\) of degree four, then the four facets incident to \(p\) must be triangles.

Proof

Let \(P\) and \(p\) be as above and suppose that the four facets incident to \(p\) are not all triangles. By Proposition 3.8, one of the facets surrounding \(p\) must be a quadrilateral and \(P\) contains the following structure (with \(p_1,\ldots ,p_5\) vertices of \(P\)):

Let \(S_P\) be a slack matrix of \(P\). Then \(S_P\) is of rank four. Further, since \(P\) has minimum psd rank, there exists a Hadamard square root \(\sqrt{S_P}\) of rank four. Let \(M\) be the \(5 \times 4\) submatrix of \(\sqrt{S_P}\) indexed by \(p, p_1,p_2,p_3, p_4\) in the rows and by the four facets incident to \(p\) in the columns. By scaling the columns of \(\sqrt{S_P}\) by nonzero scalars, we may assume that \(M\) is of the following form, with \(a,b,c,d,e\) nonzero:

The four rows of \(\sqrt{S_P}\) and \(S_P\) corresponding to the first four rows of \(M\) are linearly independent by the structure of \(M\). Hence, we can write the row of \(\sqrt{S_P}\) and \(S_P\) corresponding to the fifth row of \(M\) as a linear combination of the other four. Thus, we can write the fifth row of \(M\) and \(M^2\) as a linear combination of the first four. This results in two necessary equations: \(d + ae = abc\) and \(d^2 + a^2e^2 = (abc)^2\), which implies that \(ade=0\), a contradiction. \(\square \)
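The last implication is a one-line computation, spelled out here for convenience. Squaring the first equation and substituting the second gives

$$\begin{aligned} (abc)^2 = (d + ae)^2 = d^2 + 2ade + a^2e^2 = (abc)^2 + 2ade, \end{aligned}$$

so \(2ade = 0\), which is impossible when \(a\), \(d\) and \(e\) are all nonzero.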

Proposition 4.10

A polytope in \(\mathbb{R }^3\) of psd rank four has the combinatorial type of a simplex, quadrilateral pyramid, bisimplex, triangular prism, octahedron, or cube.

Proof

Let \(P\) be a polytope in \(\mathbb{R }^3\) of psd rank four with \(v\) vertices, \(e\) edges, and \(f\) facets. Let \(v_t\) and \(v_q\) denote the number of vertices of degree three and four in \(P\), and let \(f_t\) and \(f_q\) denote the number of triangular and quadrangular facets of \(P\).

By double counting edges, \(2e = 3f_t+4f_q\), and by considering \(P^\circ \), we also see that \(2e=3v_t+4v_q\). Now using Euler’s formula, \(v-e+f=2\), it is easy to deduce that \(v_t\) and \(f_t\) are even and that \(v_t+f_t=8\). Hence, we only need to consider polytopes where \((v_t,f_t)\) equals (0,8), (2,6), (4,4), (6,2), or (8,0). Further, by taking polars we need only consider the cases where \((v_t,f_t)\) equals (0,8), (2,6), or (4,4).
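For completeness, the deduction can be written out as follows, using only \(v = v_t + v_q\), \(f = f_t + f_q\) and the two edge counts above. Multiplying Euler's formula by four and splitting \(4e\) into two copies of \(2e\) gives

$$\begin{aligned} 8 = 4v - 4e + 4f&= (4v_t + 4v_q - 2e) + (4f_t + 4f_q - 2e) \\&= (4v_t + 4v_q - 3v_t - 4v_q) + (4f_t + 4f_q - 3f_t - 4f_q) \\&= v_t + f_t. \end{aligned}$$

Moreover, \(3f_t = 2e - 4f_q\) and \(3v_t = 2e - 4v_q\) are even, so \(f_t\) and \(v_t\) are even.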

When \((v_t,f_t) = (0,8)\), we have that every vertex is of degree four. Thus, by Lemma 4.9, every facet must be triangular. The only polytope in \(\mathbb{R }^3\) that satisfies these conditions is the octahedron.

Now suppose \((v_t,f_t) = (4,4)\). If there are no degree four vertices, then there are only four total vertices and the polytope must be the simplex. If there is a degree four vertex, then by Lemma 4.9 the polytope must contain the following configuration:

If vertex \(p_1,\, p_2, \,p_3\), or \(p_4\) has degree four, then we will be forced to include too many triangular facets. Thus, they all have degree three and the polytope is a quadrilateral pyramid.

Finally, suppose \((v_t,f_t) = (2,6)\). Then \(P\) must have a degree four vertex (call it \(p\)) and the configuration above is again included in the boundary complex of \(P\) with the four triangles shown being facets of \(P\). Since \(P\) has only two vertices of degree three, at least two of the vertices surrounding \(p\) must have degree four. Suppose two adjacent vertices among \(p_1,p_2,p_3,p_4\) have degree four. Then each of them must be contained in four triangular facets which means that each such vertex is incident to two triangular facets that are not shown in the figure. But since these degree four adjacent vertices already share a facet, they can share at most one of these four extra triangular facets. This creates a total of seven triangular facets in \(P\) contradicting \(f_t=6\). Therefore, the two vertices of degree four among \(p_1,p_2,p_3,p_4\) must be nonadjacent. As before, each is adjacent to two triangular facets that are not shown and since \(f_t=6\), it must be that the two vertices share these two triangular facets. Therefore, \(P\) is a bisimplex.

The facts that the polar of a bisimplex is combinatorially a triangular prism and that the polar of an octahedron is a cube complete the proof. \(\square \)

We now immediately obtain the following theorem which gives a complete classification of polytopes in \(\mathbb{R }^3\) of psd rank four.

Theorem 4.11

The polytopes in \(\mathbb{R }^3\) of psd rank four are precisely simplices, quadrilateral pyramids, bisimplices, combinatorial triangular prisms, biplanar octahedra, and biplanar cuboids.

A major catalyst for the use of semidefinite programming in combinatorial optimization was the Lovász theta body of a graph [11, 14], denoted as \(\text {TH}(G)\), which is a convex relaxation of the stable set polytope of a graph. Let \(G = ([n],E)\) be a graph with vertex set \([n] := \{1, \ldots , n \}\) and edge set \(E\). Recall that a stable set of \(G\) is a subset \(S \subseteq [n]\) such that for all \(i,j \in S\), the pair \(\{i,j\}\) is not in \(E\). The characteristic vector of a stable set \(S\) is \(\mathcal{X }^S \in \{0,1\}^n\) defined as \((\mathcal{X }^S)_i = 1\) if \(i \in S\) and \(0\) otherwise. The stable set polytope of \(G\) is the \(n\)-dimensional polytope

$$\begin{aligned} \text {STAB}(G) := \text {conv}\left\{ \mathcal{X }^S \,:\, S \text { is a stable set of } G \right\} , \end{aligned}$$

and \(\text {TH}(G)\) is the following projection of an affine slice of \(\mathcal{S }_+^{n+1}\):

Further, \(\text {TH}(G) = \text {STAB}(G)\) if and only if \(G\) is a perfect graph [11, Chap. 9]. Hence if \(G\) is perfect, \(\text {rank}_{\text {psd}}\,\text {STAB}(G) = n+1\) and the description of \(\text {TH}(G)\) gives an \(\mathcal{S }_+^{n+1}\)-lift of \(\text {STAB}(G)\). In the context of this paper, it is natural to ask if there are non-perfect graphs for which \(\text {rank}_{\text {psd}}\,\text {STAB}(G) = n+1\), via other \(\mathcal{S }_+^{n+1}\)-lifts.
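To make the definition of the stable set polytope concrete, the following Python sketch enumerates the stable sets of the 5-cycle, the smallest odd hole, together with their characteristic vectors. The function names and the brute-force enumeration are assumptions for illustration; the approach is only practical for very small graphs.

```python
from itertools import combinations


def stable_sets(n, edges):
    """All stable sets of the graph ([n], edges), as tuples of vertices."""
    edge_set = {frozenset(e) for e in edges}
    result = []
    for size in range(n + 1):
        for subset in combinations(range(1, n + 1), size):
            if all(frozenset(pair) not in edge_set
                   for pair in combinations(subset, 2)):
                result.append(subset)
    return result


def characteristic_vector(n, subset):
    """0/1 vector with a one in position i exactly when i is in subset."""
    return [1 if i in subset else 0 for i in range(1, n + 1)]


n = 5
cycle = [(i, i % n + 1) for i in range(1, n + 1)]  # edges {1,2}, ..., {5,1}
sets_of_cycle = stable_sets(n, cycle)
vectors = [characteristic_vector(n, s) for s in sets_of_cycle]

print(len(sets_of_cycle), max(sum(v) for v in vectors))
```

The stable set polytope of the 5-cycle is the convex hull of the eleven vectors found here: the empty set, five singletons and five pairs of nonadjacent vertices.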

Theorem 4.12

Let \(G\) be a graph with \(n\) vertices. Then STAB\((G)\) has psd rank \(n+1\) if and only if \(G\) is perfect.

Proof

We saw that \(\text {rank}_{\text {psd}}\,\text {STAB}(G) = n+1\) when \(G\) is a perfect graph with \(n\) vertices. Suppose \(G\) is not perfect. By Proposition 3.8, it is enough to show that \(\text {STAB}(G)\) has a face that is not of minimal psd rank. By the strong perfect graph theorem [1], \(G\) contains an odd hole or odd anti-hole \(H\) as an induced subgraph. Since \(\text {STAB}(H)\) forms a face of \(\text {STAB}(G)\), we just need to show that \(\text {STAB}(H)\) is not of minimal psd rank.

Let \(H=([2m+1],E)\) and assume \(H\) is an odd hole. The anti-hole case is exactly analogous and is omitted here. Now \(\text {STAB}(H)\) is a \((2m+1)\)-dimensional polytope with facet inequalities:

(1) \(x_i \ge 0\) for each \(i \in [2m+1]\),

(2) \(\mathbf{x}_e \le 1\) for each \(e \in E\),

(3) \(\mathbf{x}_{[2m+1]} \le m\),

where \(\mathbf{x}_T := \sum _{i \in T} x_i\) for every subset \(T\) of \([2m+1]\) and \(\mathbf{x}_e := x_{i} + x_{j}\) for \(e=\left\{ i,j \right\} \in E\). Let \(S\) be the slack matrix of \(\text {STAB}(H)\) and let \(S^\prime \) be the \((2m+3) \times (2m+3)\) submatrix of \(S\) where \(S^\prime \) is indexed by the stable sets

in the rows and the facets \(\mathbf{x}_{\left\{ 1,2\right\} } \le 1, x_{1} \ge 0, \ldots , x_{2m+1} \ge 0, \mathbf{x}_{[2m+1]} \le m\) in the columns. Then \(S^\prime \) has the form:

Let \(\sqrt{S^\prime }\) be an arbitrary Hadamard square root and suppose that \(\text {rank}\,\sqrt{S^\prime } \le 2m+2\). Then since the first \(2m+2\) columns are linearly independent, we must have that the final column is a linear combination of the first \(2m+2\). Let \(\alpha _1,\ldots ,\alpha _{2m+2}\) be coefficients in such a combination. By looking at the first, second, fourth, and last columns, we see that \(\alpha _1 = \pm \sqrt{m},\, \alpha _2 = \pm \sqrt{m-1}\), and \(\alpha _4 = \pm \sqrt{m} \pm \sqrt{m-1}\). Now by looking at the last row, we must have \(\pm \alpha _2 \pm \alpha _4 = \pm \sqrt{m-2}\), which is a contradiction. Hence, \(\text {rank}_{\! \! {\sqrt{\ }}}\,S > 2m+2\) and we have that \(\text {STAB}(H)\) is not of minimal psd rank. \(\square \)
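The final contradiction is a statement about signed sums of square roots, which can be checked numerically for small \(m\). The Python sketch below takes the values of \(\alpha _2\) and \(\alpha _4\) stated in the proof, runs through every choice of signs, and tests whether \(\pm \alpha _2 \pm \alpha _4\) can equal \(\pm \sqrt{m-2}\). The function name, the tolerance and the range of \(m\) are assumptions; this is a sanity check, not a replacement for the algebraic argument.

```python
import math
from itertools import product


def has_solution(m, tol=1e-9):
    """True if some sign choice gives |±alpha_2 ± alpha_4| = sqrt(m - 2)."""
    target = math.sqrt(m - 2)
    for s in product((1, -1), repeat=5):
        alpha2 = s[0] * math.sqrt(m - 1)
        alpha4 = s[1] * math.sqrt(m) + s[2] * math.sqrt(m - 1)
        lhs = s[3] * alpha2 + s[4] * alpha4
        # Comparing absolute values covers both signs of the right-hand side.
        if abs(abs(lhs) - target) < tol:
            return True
    return False


# An odd hole has 2m + 1 >= 5 vertices, so m >= 2.
solvable = [m for m in range(2, 51) if has_solution(m)]
print(solvable)
```

The possible absolute values of the left-hand side are \(\sqrt{m}\) and \(2\sqrt{m-1} \pm \sqrt{m}\), each of which differs from \(\sqrt{m-2}\), so the list of solvable \(m\) comes out empty.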

References

1. Chudnovsky, M., Robertson, N., Seymour, P., Thomas, R.: The strong perfect graph theorem. Ann. Math. 164(1), 51–229 (2006)

2. Cox, D., Little, J., O’Shea, D.: Ideals, Varieties and Algorithms. Springer, New York (1992)

3. Fiorini, S., Massar, S., Pokutta, S., Tiwary, H.R., de Wolf, R.: Linear vs. semidefinite extended formulations: exponential separation and strong lower bounds. In: Proceedings of STOC, New York (2012)

4. Fiorini, S., Rothvoss, T., Tiwary, H.R.: Extended formulations for polygons. Discrete Comput. Geom. 48(3), 658–668 (2012)

5. Fiorini, S., Kaibel, V., Pashkovich, K., Theis, D.O.: Combinatorial bounds on nonnegative rank and extended formulations. Discret. Math. 313(1), 67–83 (2013)

6. Gillis, N., Glineur, F.: On the geometric interpretation of the nonnegative rank. Linear Algebra Appl. 437(11), 2685–2712 (2012)

7. Goemans, M.: Smallest compact formulation of the permutahedron. Math. Program. B (to appear)

8. Gouveia, J., Parrilo, P.A., Thomas, R.R.: Theta bodies for polynomial ideals. SIAM J. Optim. 20(4), 2097–2118 (2010)

9. Gouveia, J., Parrilo, P.A., Thomas, R.R.: Lifts of convex sets and cone factorizations. Math. Oper. Res. 38, 248–264 (2013)

10. Grayson, D.R., Stillman, M.E.: Macaulay 2, a software system for research in algebraic geometry. http://www.math.uiuc.edu/Macaulay2/

11. Grötschel, M., Lovász, L., Schrijver, A.: Geometric Algorithms and Combinatorial Optimization, Algorithms and Combinatorics, 2nd edn. Springer, Berlin (1993)

12. Grünbaum, B.: Convex Polytopes, 2nd edn. Springer, New York (2003)

13. Kaibel, V., Pashkovich, K.: Constructing extended formulations from reflection relations. In: Günlük, O., Woeginger, G.J. (eds.) IPCO 2011, LNCS, pp. 287–300. Springer, Berlin (2011)

14. Lovász, L.: On the Shannon capacity of a graph. IEEE Trans. Inf. Theory 25(1), 1–7 (1979)

15. Lovász, L., Schrijver, A.: Cones of matrices and set-functions and 0–1 optimization. SIAM J. Optim. 1(2), 166–190 (1991)

16. Yannakakis, M.: Expressing combinatorial optimization problems by linear programs. J. Comput. Syst. Sci. 43(3), 441–466 (1991)

Acknowledgments

Gouveia was partially supported by the Centre for Mathematics at the University of Coimbra and Fundação para a Ciência e a Tecnologia, through the European Program COMPETE/FEDER, Robinson by the U.S. National Science Foundation Graduate Research Fellowship under Grant No. DGE-0718124, and Thomas by the U.S. National Science Foundation Grant DMS-1115293.

Gouveia, J., Robinson, R.Z. & Thomas, R.R. Polytopes of Minimum Positive Semidefinite Rank. Discrete Comput Geom 50, 679–699 (2013). https://doi.org/10.1007/s00454-013-9533-x
