Abstract

Background

Implementation Mapping is an organized method to select implementation strategies. However, there are 73 Expert Recommendations for Implementing Change (ERIC) strategies. Thus, it is difficult for implementation scientists to map all potential strategies to the determinants of their chosen implementation science framework. Prior work using Implementation Mapping employed advisory panels to select implementation strategies. This article presents a data-driven approach to implementation mapping, in which we systematically evaluated all 73 ERIC strategies using the Tailored Implementation for Chronic Diseases (TICD) framework. We illustrate our approach using implementation of risk-aligned bladder cancer surveillance as a case example.

Methods

We developed objectives based on previously collected qualitative data organized by TICD determinants, i.e., what needs to be changed to achieve more risk-aligned surveillance. Next, we evaluated all 73 ERIC strategies, excluding those that were not applicable to our clinical setting. The remaining strategies were mapped to the objectives using data visualization techniques to make sense of the large matrices. Finally, we selected strategies with high impact, based on (1) broad scope, defined as a strategy addressing more than the median number of objectives, (2) requiring low or moderate time commitment from clinical teams, and (3) evidence of effectiveness from the literature.

Results

We identified 63 unique objectives. Of the 73 ERIC strategies, 45 were excluded because they were not applicable to our clinical setting (e.g., not feasible within the confines of the setting, not appropriate for the context). Thus, 28 ERIC strategies were mapped to the 63 objectives. Strategies addressed 0 to 26 objectives (median 10.5). Of the 28 ERIC strategies, 10 required low and 8 moderate time commitments from clinical teams. We selected 9 strategies based on high impact, each with a clearly documented rationale for selection.

Conclusions

We enhanced Implementation Mapping via a data-driven approach to the selection of implementation strategies. Our approach provides a practical method for other implementation scientists to use when selecting implementation strategies and has the advantage of favoring data-driven strategy selection over expert opinion.


Introduction

Implementation mapping has recently been described as an organized way to develop or select implementation strategies through five specific tasks guided by an implementation science framework [1]. The process of selecting implementation strategies can be challenging for implementation scientists. Appropriate strategies are guided by an implementation science theory or framework and consider contextual factors and known implementation barriers, which may differ across key stakeholders such as leaders, nurses, or providers [2]. One specific approach to the selection of implementation strategies is to map strategies to the determinants of the chosen implementation science framework, as initially described in 2019 as part of implementation mapping [1]. Since then, several researchers have reported on their application of implementation mapping. According to these reports, researchers used advisory groups (e.g., task force or stakeholder advisory group) to select implementation strategies from potentially applicable Expert Recommendations for Implementing Change (ERIC) strategies [3, 4]. While this approach worked, selection of strategies likely depended on the composition of these advisory groups and on the opinion of the individuals comprising them. Thus, one potential area for improvement in the application of implementation mapping is the use of a systematic data-driven approach to reviewing and prioritizing all 73 ERIC strategies.

For this reason, we operationalized implementation mapping through a data-driven process, considering all 73 ERIC strategies and every determinant of the Tailored Implementation for Chronic Diseases (TICD) framework. We used data visualization techniques to make the resulting large number of objectives and ERIC strategies manageable. In this manuscript, we illustrate our data-driven approach to implementation mapping using implementation of risk-aligned bladder cancer surveillance as a case example. Our approach is intended for use by implementation scientists who seek a rigorous selection process for implementation strategies.

Case example: risk-aligned bladder cancer surveillance

Bladder cancer is one of the most prevalent cancers in the Department of Veterans Affairs (VA) [5]. The vast majority of patients with bladder cancer have early stage cancer, which only grows superficially within the bladder [6]. Early stage bladder cancer patients undergo resection of the cancer from the bladder and are then at varying risks of cancer recurrence within the bladder—categorized as low, intermediate, and high according to current guidelines [7]. To detect these recurrences, patients undergo regular surveillance cystoscopy procedures, during which providers directly inspect the bladder via an endoscope. Given the broad range of cancer recurrence risks, providers should align the frequency with which patients undergo surveillance cystoscopy procedures with each patient’s individual risk of cancer recurrence. However, we previously found that there is both underuse of surveillance among high-risk and overuse of surveillance among low-risk patients, with up to three quarters of low-risk patients undergoing more procedures than are recommended [8]. Thus, we embarked on selecting implementation strategies to promote risk-aligned bladder cancer surveillance using a data-driven approach to implementation mapping.

Methods

Overview

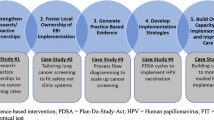

We employed implementation mapping guided by the TICD framework. Implementation mapping is a systematic process based on five tasks to develop or select strategies for the implementation of evidence-based practice [1]. The TICD framework was chosen because (1) it is an implementation science framework designed to guide efforts to improve care delivery; (2) it is based on a systematic review of 12 prior frameworks; (3) it has been widely used with more than 700 citations in the literature; and (4) it includes a patient factors domain [9]. The TICD includes 57 practice determinants across 7 domains [9]. In the following sections, we describe the implementation mapping tasks used to select and specify implementation strategies for risk-aligned bladder cancer surveillance (Fig. 1). The final task is an ongoing comprehensive evaluation of implementation outcomes to measure the impact of the strategies being pilot tested in four VA sites.

Needs assessment

The implementation mapping process was based on a needs assessment, for which we identified facilitators of and barriers to risk-aligned bladder cancer surveillance. This was done via staff interviews across six VA sites and has recently been published [10]. In this prior mixed-methods work, we used a quantitative approach to identify the six VA sites. Two sites commonly provided risk-aligned surveillance and four sites were deemed to have room for improvement, defined as sites that performed high-intensity surveillance for low-risk and low-intensity surveillance for high-risk early stage bladder cancer [10]. We purposively sampled 14 participants (6 providers, 2 nurses, 2 schedulers, 4 leaders) from risk-aligned sites and 26 participants (12 providers, 3 nurses, 3 schedulers, 8 leaders) from sites with room for improvement for semi-structured interviews. In sites with room for improvement, we found that the absence of routines to incorporate risk-aligned surveillance into clinical workflow was a salient determinant contributing to less risk-aligned surveillance. Irrespective of site type, we found a lack of knowledge of guideline recommendations among nurses and providers, including attending and resident physicians and advanced practice providers. We concluded that future implementation strategies would need to address the lack of routines to incorporate risk-aligned surveillance into clinical workflow, potentially via reminders or templates. In addition, implementation strategies addressing knowledge and resources would likely contribute to more risk-aligned surveillance [10].

Identification of performance and change objectives

This task entailed identification of two types of objectives, performance objectives and change objectives. Performance objectives are observable actions that need to be performed to provide risk-aligned bladder cancer surveillance and define “who has to do what” [11]. Change objectives are defined by what needs to be changed related to a specific determinant to accomplish the performance objective [11].

The performance objectives were organized by TICD framework domains and determinants and then by employee type (provider, nurse, scheduler, leader, patient). Performance objectives were formulated based on qualitative data from the prior staff interviews [10] and then reviewed and discussed in group sessions with the research team to ensure they aligned with the qualitative data. These performance objectives were then discussed with one patient advisory group and one physician advisory group to solicit input.

To formulate change objectives, we then created a change matrix. Each row represented a specific performance objective, and the columns listed the 57 determinants of the TICD framework [9]. In each cell of the change matrix, we denoted the change objective, i.e., what needs to be changed to accomplish the performance objective. Directionality was taken into account, i.e., the change objective had to logically affect the performance objective. To formulate the change objectives, two authors (AOO and FRS) independently entered an initial change objective into each applicable cell. Next, they reviewed each other’s work and met to discuss edits, including adding change objectives that had not been identified on the initial pass and marking cells as unrelated to a performance objective after discussion. The change matrix was then reviewed by the research team and edited until consensus was reached on the content of each cell. From this final change matrix, we obtained the unique change objectives and then reduced their number by combining change objectives with conceptually overlapping topics.
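The change matrix behaves like a sparse grid keyed by (performance objective, TICD determinant), with a change objective written into each applicable cell. The Python sketch below is only an illustration under stated assumptions: the objective texts and the shortened determinant labels ("Knowledge", "Routines") are hypothetical, and the `merge_map` stands in for the team's consensus judgment about conceptual overlap, which in the study was made by discussion, not by code. It shows how exact duplicates collapse automatically while overlapping topics still need an explicit, documented decision.

```python
from collections import defaultdict

# Hypothetical change matrix: keys are (performance objective, TICD determinant)
# pairs; values are the change objective written into that cell. Cells judged
# unrelated to a performance objective are simply absent.
change_matrix: dict[tuple[str, str], str] = {
    ("PO1: clinician assesses recurrence risk", "Knowledge"): "Clinician knows guideline risk categories",
    ("PO1: clinician assesses recurrence risk", "Routines"): "Clinician documents risk and surveillance interval",
    ("PO2: clinician orders risk-aligned cystoscopy", "Knowledge"): "Clinician knows guideline risk categories",
    ("PO2: clinician orders risk-aligned cystoscopy", "Routines"): "Clinician records surveillance interval in the note",
}

# Hypothetical, hand-curated merge map standing in for the team's consensus
# that two differently worded change objectives overlap conceptually.
merge_map = {
    "Clinician records surveillance interval in the note": "Clinician documents risk and surveillance interval",
}

def unique_change_objectives(matrix):
    """Return the distinct change objectives written into the matrix cells."""
    return set(matrix.values())

def combine_overlapping(objectives, merges):
    """Collapse objectives judged to overlap onto a single retained label."""
    return {merges.get(obj, obj) for obj in objectives}

def determinants_per_objective(matrix, merges):
    """Trace each combined change objective back to its TICD determinant(s)."""
    index = defaultdict(set)
    for (_, determinant), obj in matrix.items():
        index[merges.get(obj, obj)].add(determinant)
    return dict(index)

unique = unique_change_objectives(change_matrix)
combined = combine_overlapping(unique, merge_map)
print(len(unique), len(combined))  # 3 2
```

In the study, the analogous step went from 107 unique to 63 combined change objectives; keeping the determinant trace is what allows each row of the later strategy matrix to be labeled by TICD determinant.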

Selection of implementation strategies

First, we developed an implementation strategy matrix linking unique change objectives (rows) to potential implementation strategies (columns). Implementation strategies were obtained and labeled according to the ERIC [12]. We reviewed all 73 ERIC strategies and excluded those that were not applicable for inclusion in our project (e.g., not feasible within confines or budget of the project, not appropriate for the context of working within VA, already completed as part of the mixed-methods needs assessment or as part of the research project development). Specifically, one author (FRS) performed an initial assessment of which ERIC strategies may not be applicable for inclusion in our project and specified reasons for exclusion. These decisions were then reviewed, discussed, and revised in meetings with two additional authors (AOO, LZ), and then with the entire research team. All decisions were documented along with reasons for exclusion (see methods journal tab in final implementation strategy matrix in Supplementary Material). Next, we wrote strategy-specific statements in each cell of the matrix on how each strategy could potentially affect a change objective. These statements were discussed by the team, and we came to consensus on the content for each cell of the implementation strategy matrix. The potential implementation strategies were then discussed with one patient advisory group and one physician advisory group to solicit input.

To prioritize strategies, we then created a plot from this matrix, showing how many and which change objectives are being addressed by each strategy. We categorized strategies into broad versus narrow scope based on whether or not they addressed eleven or more change objectives. Eleven or more was chosen as a cut-point because the median number of change objectives addressed by the strategies was 10.5. Next, we evaluated 3 factors for each strategy: (1) broad versus narrow scope based on number of change objectives addressed, (2) qualitative assessment of the required time commitment from local staff, and (3) likely impact of the strategy in our clinical setting based on the available evidence from prior studies. When drawing conclusions about likely impact, we specifically considered the clinical setting in which the prior studies were conducted and whether that setting was comparable to the setting of the current study. As a final task, we decided which strategy should be included or excluded, and reasons for inclusion and exclusion were documented along with the theoretical change methods driving each strategy [13].
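The scope part of this prioritization reduces to column totals of the implementation strategy matrix and a median cut-point. The Python sketch below assumes a hypothetical binary matrix with placeholder strategy names ("Strategy A" to "Strategy D") and hypothetical time-commitment and evidence ratings; in the study these ratings came from qualitative team judgment and the literature. It also assumes the three factors combine as a simple conjunctive filter, which is a simplification: the final inclusion decisions were made and documented by the team.

```python
from statistics import median

# Hypothetical strategy matrix: each strategy (column) maps to the set of
# change objectives (rows) it was judged to affect. Labels are placeholders.
strategy_matrix: dict[str, set[str]] = {
    "Strategy A": {"CO1", "CO2", "CO3", "CO4"},
    "Strategy B": {"CO1", "CO2", "CO5"},
    "Strategy C": {"CO2", "CO3", "CO4", "CO6"},
    "Strategy D": {"CO7"},
}

# Hypothetical ratings standing in for the team's qualitative assessments.
time_commitment = {"Strategy A": "low", "Strategy B": "moderate",
                   "Strategy C": "moderate", "Strategy D": "high"}
has_supporting_evidence = {"Strategy A": True, "Strategy B": True,
                           "Strategy C": True, "Strategy D": False}

def scope_counts(matrix):
    """Number of change objectives addressed by each strategy (column totals)."""
    return {strategy: len(objectives) for strategy, objectives in matrix.items()}

def classify_scope(counts):
    """Broad scope = strictly more than the median count. With integer counts
    and a median of 10.5, this is the same as addressing 11 or more."""
    cut = median(counts.values())
    return {s: ("broad" if n > cut else "narrow") for s, n in counts.items()}, cut

def candidate_strategies(matrix, time_commitment, evidence):
    """Assumed conjunctive rule: broad scope, low/moderate time, supporting evidence."""
    scope, _ = classify_scope(scope_counts(matrix))
    return sorted(
        s for s in matrix
        if scope[s] == "broad"
        and time_commitment[s] in {"low", "moderate"}
        and evidence[s]
    )

print(candidate_strategies(strategy_matrix, time_commitment, has_supporting_evidence))
# ['Strategy A', 'Strategy C']
```

Using "strictly above the median" rather than a fixed number keeps the cut-point tied to the data; for the study's counts it coincides with the stated rule of 11 or more change objectives.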

Specification and production of implementation materials and activities

This task included operationalization and specification of each implementation strategy according to the seven dimensions described by Proctor et al.: actors, actions, targets of actions, temporality, dose, implementation outcomes affected, and theoretical justification [14]. In addition, we produced implementation materials for each strategy (e.g., cheat sheets, posters, templates for the electronic medical record) with corresponding implementation activities. These were documented, including fidelity measures (i.e., non-modifiable components of each strategy) and allowable adaptations (i.e., allowable modifications based on local needs). Given the iterative nature of implementation mapping, we occasionally revisited a prior task during the mapping process.
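Because every strategy was specified along the same seven dimensions, plus fidelity measures and allowable adaptations, a fixed record type is one way to keep specifications uniform and to spot gaps. The Python dataclass below is a hypothetical template, not the study's actual specification format; the field names and the example values (including the actor and the placeholder justification) are assumptions for illustration only.

```python
from dataclasses import dataclass, field

@dataclass
class StrategySpecification:
    """Hypothetical record for one strategy: the seven Proctor et al.
    dimensions plus fidelity and adaptation fields."""
    name: str
    actors: str
    actions: str
    action_targets: str
    temporality: str
    dose: str
    implementation_outcomes: list[str]
    theoretical_justification: str
    fidelity_components: list[str] = field(default_factory=list)
    allowable_adaptations: list[str] = field(default_factory=list)

    def missing_dimensions(self):
        """Return the names of any of the seven dimensions left blank."""
        proctor = ("actors", "actions", "action_targets", "temporality",
                   "dose", "implementation_outcomes", "theoretical_justification")
        return [name for name in proctor if not getattr(self, name)]

reminder = StrategySpecification(
    name="Remind clinicians",
    actors="Hypothetical: local clinical informatics staff",
    actions="Add a surveillance-interval prompt to the cystoscopy note template",
    action_targets="Providers documenting surveillance cystoscopy",
    temporality="",  # deliberately left blank in this illustration
    dose="At every surveillance cystoscopy",
    implementation_outcomes=["fidelity", "penetration"],
    theoretical_justification="Hypothetical placeholder",
)
print(reminder.missing_dimensions())  # ['temporality']
```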

Results

Identification of performance and change objectives

We identified 49 performance objectives, i.e., observable actions that need to be performed to provide risk-aligned bladder cancer surveillance (Supplementary Material). To demonstrate the process from start to finish, Fig. 1 includes an example (right column). In the example, a performance objective is that each clinician conducts a risk assessment and then selects the appropriate frequency of risk-aligned bladder cancer surveillance (Fig. 1). Each performance objective was mapped against the 57 determinants of the TICD framework to develop the change matrix. The full change matrix is shown in the Supplementary Material, and an example is shown in Table 1. A change objective in the example shown in Fig. 1 (right column) is that a clinician documents a guideline-concordant risk assessment of the bladder cancer, the clinical reasoning behind such assessment, and the appropriate frequency of future surveillance cystoscopy procedures. The full change matrix included 107 unique change objectives in its cells. After combining those with conceptually overlapping objectives, 63 change objectives remained.

Selection of implementation strategies

The 63 unique change objectives were mapped against ERIC implementation strategies in the implementation strategy matrix. Of the 73 ERIC strategies, 45 were excluded because they were not applicable to our clinical setting (e.g., not feasible within the confines of the setting, not appropriate for the context; see the full implementation strategy matrix in the Supplementary Material for documentation of all reasons). Thus, 28 ERIC strategies were mapped to the 63 change objectives within the implementation strategy matrix (Supplementary Material). In the Fig. 1 example (right column), one ERIC strategy was development of a reminder to integrate the appropriate frequency of surveillance cystoscopy procedures into routine care, which would make it easier for clinicians to document guideline-concordant risk assessment and surveillance.

To better interpret the information contained in the implementation strategy matrix, we created a plot showing how many and which change objectives are being addressed by each strategy (Fig. 2). Each ERIC strategy addressed 0 to 26 change objectives (median 10.5, Fig. 2). Fourteen strategies had a broad scope because they addressed between 11 and 26 change objectives. Of the 28 ERIC strategies, 10 required low and 8 moderate time commitments from clinical teams. We selected 9 strategies based on high impact (Fig. 3), each with a clearly documented rationale for selection and justification (Table 2).
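The reported median and the number of broad-scope strategies are consistent with each other, and the arithmetic also shows why "11 or more" and "more than the median" coincide here. Writing the 28 per-strategy counts in ascending order as $x_{(1)} \le \dots \le x_{(28)}$ (notation introduced only for this derivation), the median of an even number of values is the mean of the two middle ones:

```latex
\tilde{x} = \frac{x_{(14)} + x_{(15)}}{2} = 10.5
\quad\Longrightarrow\quad x_{(14)} + x_{(15)} = 21 .

% counts are integers and x_{(14)} \le x_{(15)}, hence
x_{(14)} \le 10 \quad\text{and}\quad x_{(15)} \ge 11 ,

% so x_{(1)},\dots,x_{(14)} \le 10 and x_{(15)},\dots,x_{(28)} \ge 11:
\#\{\, i : x_{(i)} \ge 11 \,\} = 28 - 14 = 14 .
```

Any set of 28 integer counts with a median of 10.5 therefore splits into exactly 14 narrow-scope and 14 broad-scope strategies.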

Fig. 2. Summary plot of the implementation strategy matrix. Each row represents one of the 63 change objectives listed by TICD determinant along with the employee type who would have to implement the change. Each column represents one of the 28 ERIC strategies that were mapped to the change objectives. If a strategy was classified as affecting a change objective, the cell in the matrix was filled blue. At the bottom of each column, the number of change objectives addressed by each strategy is listed. L = Leader; N = Nurse; P = Provider; * = second assignment for the same determinant – employee type combination; ** = third assignment for the same determinant – employee type combination

Fig. 3. Nine implementation strategies selected based on high impact. To select strategies with high impact, we considered (1) broad versus narrow scope based on number of change objectives addressed, (2) qualitative assessment of the required time commitment from local staff, and (3) likely impact of the strategy in the setting of our project based on the evidence available from prior studies.

Specification and production of implementation materials and activities

The culmination of the implementation mapping process was the production of implementation materials and activities. We used one of the 9 strategies, the implementation blueprint, to codify the remaining 8 strategies for staff members at target sites and to guide implementation efforts (Supplementary Material). We noted synergistic effects between strategies; e.g., a local champion can help with educational meetings. Thus, we grouped the 8 strategies into four multifaceted improvement approaches, i.e., groups of implementation strategies that can be delivered together. These included external facilitation (including facilitation, audit and provide feedback, and tailor strategies), educational meetings (including conducting educational meetings, and identifying and preparing a champion), reminders (including changing the record system and reminding clinicians), and preparing patients to be active participants (the only patient-facing improvement approach). For each improvement approach, the final blueprint included (1) what the approach entails, (2) the rationale for the approach, (3) specifics such as location, timing, and who needs to do what, (4) a checklist of tasks, (5) expectations regarding the minimum number of tasks performed, and (6) space to track any modifications made to the implementation strategies.
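Grouping the eight remaining strategies into four improvement approaches is a many-to-one mapping, and writing it down explicitly makes it easy to check that every strategy is assigned exactly once. The Python sketch below uses ERIC strategy labels paraphrased from the text; the dictionary and the `check_grouping` helper are illustrative assumptions, not the study's blueprint file.

```python
# Improvement approaches and the ERIC strategies grouped under each,
# paraphrased from the blueprint description in the text.
improvement_approaches: dict[str, list[str]] = {
    "External facilitation": [
        "Facilitation",
        "Audit and provide feedback",
        "Tailor strategies",
    ],
    "Educational meetings": [
        "Conduct educational meetings",
        "Identify and prepare champions",
    ],
    "Reminders": [
        "Change record systems",
        "Remind clinicians",
    ],
    "Prepare patients to be active participants": [
        "Prepare patients/consumers to be active participants",
    ],
}

def check_grouping(approaches, expected_total):
    """Verify each strategy appears in exactly one approach and none are missing."""
    all_strategies = [s for group in approaches.values() for s in group]
    duplicates = {s for s in all_strategies if all_strategies.count(s) > 1}
    if duplicates:
        raise ValueError(f"assigned to more than one approach: {sorted(duplicates)}")
    if len(all_strategies) != expected_total:
        raise ValueError(f"expected {expected_total} strategies, found {len(all_strategies)}")
    return len(all_strategies)

print(check_grouping(improvement_approaches, expected_total=8))  # 8
```

The ninth selected strategy, the implementation blueprint itself, is deliberately left out of the mapping because it is the vehicle that codifies the other eight.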

Discussion

We describe a rigorous, data-driven approach that considers every determinant of the TICD framework and every ERIC strategy during implementation mapping. We were able to interpret the large matrices by plotting the results of the implementation strategy matrix (Fig. 2) and the factors influencing strategy prioritization and selection (Fig. 3). This rigorous process allowed us to select implementation strategies primarily based on data rather than on opinions of the advisory groups alone. The implementation mapping process culminated in highly specified implementation strategies that were codified in an implementation blueprint.

Our approach is novel as the selection of implementation strategies was driven primarily by data. Prior work using implementation mapping employed advisory panels to select implementation strategies out of potential ERIC strategies [3, 4], which is more subjective, or did not clearly report how the selection was handled [20]. To overcome this limitation, we created an implementation strategy matrix, cross-walking all potentially applicable ERIC strategies against all change objectives. We then developed a plot visualizing this large matrix (Fig. 2). This allowed us to evaluate the scope of each ERIC strategy, based on the change objectives that were addressed. The plot also included visualization of which TICD framework domains and determinants were addressed by each strategy along with which employee types would be involved. This comprehensive representation of all mapping data then drove the decisions of which strategies to select.

To our knowledge, this study is the first to apply implementation mapping as recommended by Fernandez et al. [1] to improve guideline-concordant cancer care delivery in the clinic. Prior studies in oncology used implementation mapping to implement a phone navigation program [21] and exercise clinics [4], but not yet to directly improve cancer care delivery in the clinic.

We would like to emphasize that our data-driven approach to implementation mapping is not limited to a specific implementation science framework. Whereas our change objectives were categorized by TICD domains and determinants, other frameworks that systematically categorize determinants of evidence-based practice could be used in a similar fashion. For example, the initial description of implementation mapping specifically mentions the Consolidated Framework for Implementation Research [22] and the Theoretical Domains Framework [23] as other suitable framework options [1].

It is important to acknowledge issues of equity and stakeholder preferences and values in the selection of implementation strategies. In our data-driven approach, equity and stakeholder preferences were included to the extent that they were represented in the prior mixed-methods assessments of staff needs [10]. However, diversity among stakeholders recruited for interviews and advisory panels was somewhat limited, with 8% African American and 2% Hispanic representation among interview participants [10] and no African American representation in our advisory panels. This could be seen as a limitation of our specific work and case example. However, our data-driven approach could easily be adapted for projects focused on diversity, equity, and inclusion. For example, one could use the Health Equity Implementation framework [24] to incorporate equity-relevant determinants into the data-driven implementation mapping process, optimizing the scientific yield and equity of implementation efforts [25].

Despite our approach’s innovation and rigor, there are several limitations to discuss. First, opinions of the research team affected certain parts of the implementation mapping process, including the assessment of time commitment for local teams and the interpretation of the available literature when assessing the overall impact of a strategy. However, we tried to limit subjectivity as much as possible by restricting these judgments to focused questions and by including the perspectives of an implementation scientist, a urologist, an internist, and several implementation research staff members. Second, whereas our implementation mapping process was primarily driven by data, we did not formally assess whether an independent team would reproduce it. Third, the data-driven approach relied mostly on the work of the research team, and a formal co-design approach was not included in the selection of the implementation strategies. Fourth, this study focused on improving cancer surveillance in the VA, so findings regarding the impact of the selected implementation strategies may not readily translate to other healthcare settings or clinical problems. However, our data-driven approach to implementation mapping will likely be helpful to others regardless of the healthcare setting or clinical problem being addressed. Finally, implementation mapping in general is quite labor intensive. Our data-driven implementation mapping took about a year of part-time investigator and full-time research assistant effort. However, we were unable to quantify how much more effort our approach required compared with prior studies, as the authors of those studies did not report the time, personnel, and expertise needed for their work [1, 20, 21]. We recognize that this level of rigor may not always be possible in the current era of rapid research or during routine operational activities. Nevertheless, our visualization of the implementation strategy matrix (Fig. 2) could still be integrated into implementation mapping and will likely help researchers understand, interpret, and present results.

It is also quite possible that our data-driven approach yielded additional information that otherwise might have been overlooked in implementation mapping as previously applied. Future work could address the empirical question of whether our data-driven approach yields additional information compared with an advisory panel approach, and whether this information is important enough to justify the additional time needed to complete the highly data-driven implementation mapping process.

Conclusions

In conclusion, we described a data-driven and rigorous implementation mapping process to select implementation strategies for risk-aligned bladder cancer surveillance. The implementation strategies are currently being pilot-tested across four VA sites, with the goal of measuring implementation outcomes and adapting strategies to different local preferences. Once piloting is complete, future work will likely entail testing both the strategies and the clinical innovation (i.e., risk-aligned bladder cancer surveillance) in a larger number of sites. We hope that our work will inspire other implementation scientists to use similar data-driven processes in their selection of implementation strategies, minimizing the risk of bias being introduced by heavy reliance on the opinions of advisory groups.

Availability of data and materials

All data generated or analyzed during this study are included in this published article and its supplementary information files.

Abbreviations

- cysto: Cystoscopy
- ERIC: Expert Recommendations for Implementing Change
- TICD: Tailored Implementation for Chronic Diseases
- VA: Department of Veterans Affairs

References

Fernandez ME, ten Hoor GA, van Lieshout S, Rodriguez SA, Beidas RS, Parcel G, et al. Implementation mapping: using intervention mapping to develop implementation strategies. Front Public Health. 2019;7:158. Available at: https://www.frontiersin.org/article/10.3389/fpubh.2019.00158/full. Accessed 6 Dec 2019.

Waltz TJ, Powell BJ, Fernández ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. 2019;14:42.

Klaiman T, Silvestri JA, Srinivasan T, Szymanski S, Tran T, Oredeko F, et al. Improving prone positioning for severe acute respiratory distress syndrome during the COVID-19 pandemic. An implementation-mapping approach. Ann Am Thorac Soc. 2021;18:300–7 PMCID: PMC7869786.

Kennedy MA, Bayes S, Newton RU, Zissiadis Y, Spry NA, Taaffe DR, et al. We have the program, what now? Development of an implementation plan to bridge the research-practice gap prevalent in exercise oncology. Int J Behav Nutr Phys Act. 2020;17:128 PMCID: PMC7545878.

Moye J, Schuster JL, Latini DM, Naik AD. The future of cancer survivorship care for veterans. Fed Pract Health Care Prof VA DoD PHS. 2010;27:36–43 PMCID: PMC3035919.

Nielsen ME, Smith AB, Meyer A-M, Kuo T-M, Tyree S, Kim WY, et al. Trends in stage-specific incidence rates for urothelial carcinoma of the bladder in the United States: 1988 to 2006. Cancer. 2014;120:86–95.

Chang SS, Boorjian SA, Chou R, Clark PE, Daneshmand S, Konety BR, et al. Non-muscle invasive bladder cancer: American Urological Association/SUO guideline; 2016. Available at: https://www.auanet.org/education/guidelines/non-muscle-invasive-bladder-cancer.cfm. Accessed 1 Apr 2019.

Schroeck FR, Lynch KE, won Chang J, MacKenzie TA, Seigne JD, Robertson DJ, et al. Extent of risk-aligned surveillance for cancer recurrence among patients with early-stage bladder cancer. JAMA Netw Open. 2018;1:e183442 PMCID: PMC6241521.

Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35 PMCID: PMC3617095.

Schroeck FR, Ould Ismail AA, Perry GN, Haggstrom DA, Sanchez SL, Walker DR, et al. Determinants of risk-aligned bladder cancer surveillance-mixed-methods evaluation using the tailored implementation for chronic diseases framework. JCO Oncol Pract. 2022;18:e152–62.

Bartholomew LK, Markham C, Ruiter RAC, Fernandez ME, Kok G, Parcel GS. Planning health promotion programs: an intervention mapping approach. 4th ed. San Francisco: Wiley; 2016.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the expert recommendations for implementing change (ERIC) project. Implement Sci. 2015;10:21 PMCID: PMC4328074.

Kok G, Gottlieb NH, Peters G-JY, Mullen PD, Parcel GS, Ruiter RAC, et al. A taxonomy of behaviour change methods: an intervention mapping approach. Health Psychol Rev. 2016;10:297–312 PMCID: PMC4975080.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:1 PMCID: PMC3882890.

Shoemaker-Hunt SJ, Wyant BE. The effect of opioid stewardship interventions on key outcomes: a systematic review. J Patient Saf. 2020;16:S36–41 PMCID: PMC7447172.

Lau R, Stevenson F, Ong BN, Dziedzic K, Treweek S, Eldridge S, et al. Achieving change in primary care—effectiveness of strategies for improving implementation of complex interventions: systematic review of reviews. BMJ Open. 2015;5:e009993.

Kamath CC, Dobler CC, McCoy RG, Lampman MA, Pajouhi A, Erwin PJ, et al. Improving blood pressure management in primary care patients with chronic kidney disease: a systematic review of interventions and implementation strategies. J Gen Intern Med. 2020;35:849–69.

Greene J, Hibbard JH. Why does patient activation matter? An examination of the relationships between patient activation and health-related outcomes. J Gen Intern Med. 2012;27:520–6.

Miech EJ, Rattray NA, Flanagan ME, Damschroder L, Schmid AA, Damush TM. Inside help: an integrative review of champions in healthcare-related implementation. SAGE Open Med. 2018;6 Available at: https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5960847/, Accessed 15 Mar 2021. PMCID: PMC5960847.

Roth IJ, Tiedt MK, Barnhill JL, Karvelas KR, Faurot KR, Gaylord S, et al. Feasibility of implementation mapping for integrative medical group visits. J Altern Complement Med. 2021;27:S-71.

Ibekwe LN, Walker TJ, Ebunlomo E, Ricks KB, Prasad S, Savas LS, et al. Using implementation mapping to develop implementation strategies for the delivery of a cancer prevention and control phone navigation program: a collaboration with 2-1-1. Health Promot Pract. 2020:152483992095797.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50 PMCID: PMC2736161.

Cane J, O’Connor D, Michie S. Validation of the theoretical domains framework for use in behaviour change and implementation research. Implement Sci. 2012;7:37 PMCID: PMC3483008.

Woodward EN, Matthieu MM, Uchendu US, Rogal S, Kirchner JE. The health equity implementation framework: proposal and preliminary study of hepatitis C virus treatment. Implement Sci. 2019;14:26.

Woodward EN, Singh RS, Ndebele-Ngwenya P, Melgar Castillo A, Dickson KS, Kirchner JE. A more practical guide to incorporating health equity domains in implementation determinant frameworks. Implement Sci Commun. 2021;2:61.

Acknowledgements

This study was supported using resources and facilities at the White River Junction Department of Veterans Affairs (VA) Medical Center, the Richard L. Roudebush Veterans Affairs Medical Center, and the VA Informatics and Computing Infrastructure (VINCI), VA HSR RES 13-457. We acknowledge Teresa M. Damush, PhD, for providing advice during the implementation mapping process, as well as the members of the advisory groups: Carlos Glender, Dean Walker, Ronald Goff, Suzanne B. Molloy, Dan Charland, Rachel Moses, Jacob McCoy, Muta Issa, Kevin Rice, and M. Minhaj Siddiqui.

Disclaimer

Opinions expressed in this manuscript are those of the authors and do not constitute official positions of the U.S. Federal Government or the Department of Veterans Affairs.

Funding

This work is supported by the Department of Veterans Affairs Health Services Research & Development (IIR 18-215, I01HX002780-01). The funding organizations had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; and decision to submit the manuscript for publication.

Author information

Contributions

Conception and design (FRS, LZ), acquisition of data (FRS, AAOI, SLS, DRW, LZ), analysis (all authors) and interpretation (all authors) of data, drafting of the manuscript (FRS), critical revision of the manuscript for important intellectual content (all authors), obtaining funding (FRS), administrative, technical, or material support (FRS, DAH), supervision (FRS, DAH, LZ). All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the Department of Veterans Affairs Central Institutional Review Board (Study #19-01). Because no individual participants were recruited for the data and analyses presented here, consent to participate did not apply.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Additional file 1. Final performance objectives.

Additional file 2. Final change matrix.

Additional file 3. Final implementation strategy matrix.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Schroeck, F.R., Ould Ismail, A.A., Haggstrom, D.A. et al. Data-driven approach to implementation mapping for the selection of implementation strategies: a case example for risk-aligned bladder cancer surveillance. Implementation Sci 17, 58 (2022). https://doi.org/10.1186/s13012-022-01231-6

DOI: https://doi.org/10.1186/s13012-022-01231-6