Abstract

Background

A fundamental challenge of implementation is identifying contextual determinants (i.e., barriers and facilitators) and determining which implementation strategies will address them. Numerous conceptual frameworks (e.g., the Consolidated Framework for Implementation Research; CFIR) have been developed to guide the identification of contextual determinants, and compilations of implementation strategies (e.g., the Expert Recommendations for Implementing Change compilation; ERIC) have been developed which can support selection and reporting of implementation strategies. The aim of this study was to identify which ERIC implementation strategies would best address specific CFIR-based contextual barriers.

Methods

Implementation researchers and practitioners were recruited to participate in an online series of tasks involving matching specific ERIC implementation strategies to specific implementation barriers. Participants were presented with brief descriptions of barriers based on CFIR construct definitions. They were asked to rank up to seven implementation strategies that would best address each barrier. Barriers were presented in a random order, and participants had the option to respond to the barrier or skip to another barrier. Participants were also asked about considerations that most influenced their choices.

Results

Four hundred thirty-five invitations were emailed and 169 (39%) individuals participated. Respondents had considerable heterogeneity in opinions regarding which ERIC strategies best addressed each CFIR barrier. Across the 39 CFIR barriers, an average of 47 different ERIC strategies (SD = 4.8, range 35 to 55) was endorsed at least once for each, as being one of seven strategies that would best address the barrier. A tool was developed that allows users to specify high-priority CFIR-based barriers and receive a prioritized list of strategies based on endorsements provided by participants.

Conclusions

The wide heterogeneity of endorsements obtained in this study’s task suggests that there are relatively few consistent relationships between CFIR-based barriers and ERIC implementation strategies. Despite this heterogeneity, a tool aggregating endorsements across multiple barriers can support taking a structured approach to consider a broad range of strategies given those barriers. This study’s results point to the need for a more detailed evaluation of the underlying determinants of barriers and how these determinants are addressed by strategies as part of the implementation planning process.

Background

The gap between the identification of evidence-based innovations (EBIs) and their consistent and widespread use in healthcare is widely documented. Consequently, funders have prioritized implementation research dedicated to accelerating the pace of implementing EBIs in real-world healthcare settings. The challenges in implementing EBIs, or any major organizational change, are substantial and widespread. In an international survey of over 3000 executives, Meaney and Pung reported that two thirds of the respondents indicated that their companies had failed to achieve a true improvement in performance after implementing an organizational change [1]. Academic researchers can be even more critical of change efforts, noting that implementation efforts can even lead to organizational crises [2]. This is not surprising, given the lack of guidance about which implementation strategies to use for which EBIs in which contexts, and in what timeframe. Identification, development, and testing of implementation techniques and strategies, which constitute the “how to” of implementation efforts [3], are top priorities for implementation science [4,5,6,7]. Despite the identification and categorization of a range of implementation strategies [8,9,10] and research assessing their effectiveness [11], there is little guidance on how to match implementation strategies with known barriers.

A foundational, though unproven, hypothesis is that strategies must be tailored to local context [12,13,14,15]. Baker et al. [16] define tailoring as

“… strategies to improve professional practice that are planned, taking account of prospectively identified determinants of practice. Determinants of practice are factors that could influence the effectiveness of an intervention … and have been … referred to [as] barriers, obstacles, enablers, and facilitators [within the context in which the intervention occurs].”

While this overarching hypothesis seems intuitive, most studies have not tailored implementation strategies to context [17, 18]. A strategy that is successful in one context may be inert or result in failure in another. As Grol et al. state, “systematic and rigorous methods are needed to enhance the linkage between identified barriers and change strategies” [18]. Results from a systematic literature review [16] concluded that tailored implementation strategies:

“… can be effective, but the effect is variable and tends to be small to moderate. The number of studies remains small and more research is needed, including … studies to develop and investigate the components of tailoring (identification of the most important determinants, selecting interventions to address the determinants).”

Thus, tailoring strategies requires several steps: (1) assess and understand determinants within the local context, (2) identify change methods (theoretically and empirically based techniques that influence identified determinants) to address those determinants, and (3) develop or choose strategies that use those methods to address the determinants [19, 20].

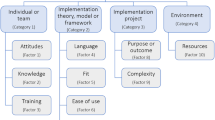

Theoretical frameworks have been developed to assess potential contextual determinants, referred to as determinant frameworks [21]. The Consolidated Framework for Implementation Research (CFIR) [22] is one of the most well-operationalized [23, 24] and widely used determinant frameworks, designed specifically to systematically assess potential determinants within local settings. The CFIR includes 39 constructs (i.e., determinants), organized into five domains: Innovation Characteristics (e.g., complexity, strength of the evidence), Outer Setting (e.g., external policy and incentives), Inner Setting (e.g., organizational culture, the extent to which leaders are engaged), Characteristics of Individuals Involved (e.g., self-efficacy using the EBI in a sustainable way), and Process (e.g., planning and engaging key stakeholders). The CFIR can be used to assess context prospectively. This information can help guide decisions about the types of strategies that may be appropriate and match the needs of the local context.

Compilations of implementation strategies such as the Expert Recommendations for Implementing Change (ERIC) [10] have been developed to support systematic reporting of implementation strategies both prospectively and retrospectively. The ERIC compilation has 73 discrete implementation strategies involving one action or process. These strategies can be viewed as the building blocks of multifaceted strategies used to address the potential determinants of implementation for a specific EBI. Previous research indicates that (1) most ERIC implementation strategies are rated as high in their potential importance [25], (2) high numbers of strategies are often selected as being applicable for particular initiatives prospectively [26,27,28], and (3) similarly high numbers of strategies are identified in retrospective analyses [27, 29].

There is a need to identify the specific strategies stakeholders should most closely consider using when planning implementation. Which strategies to consider is likely dependent on both the specific EBI or practice innovation and the implementation context. The CFIR [22] serves as a comprehensive framework for characterizing contextual determinants of implementation while the ERIC compilation of implementation strategies serves as a comprehensive collection of discrete implementation strategies. The purpose of this study was to identify which ERIC strategies best address specific CFIR determinants, framed as barriers. The primary task of the present study involved having a wide community of implementation researchers and practitioners specify the top 7 ERIC strategies for addressing specific CFIR-based barriers. These results were then used to build a tool that may serve as an aid for considering implementation strategies based on CFIR constructs as part of a broader planning effort.

Methods

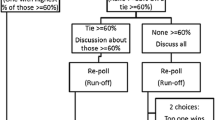

We elicited input from experts who were part of the broad implementation science and practice communities using an online platform that instructed experts to choose which ERIC strategies would best address specific CFIR-based barriers. Experts used a ranking method because previous research found that expert stakeholders tend to rate 70% of ERIC implementation strategies, in general, as “moderately important” or higher [25]. When a distribution is skewed in this way, with a high number of items rated as important, a ranking method forces respondents to compare choices relative to one another. When selecting the “top 7” strategies from a set of 73, ranking forces identification of the highest-value strategies among potential near equals.

Recruitment

Recruitment targeted individuals familiar with CFIR, including first authors of articles citing the 2009 CFIR article, participants of a user panel for www.CFIRGuide.org (a technical assistance website), and individuals who had directly contacted the CFIR research team for assistance. Notices of the study were also distributed through the National Implementation Research Network (NIRN), the Society for Implementation Research Collaboration (SIRC), and the Implementation Networks’ message boards and mailing lists. Interested persons contacted the study team and were added to the invitation list. The research team sent reminders to potential participants weekly until at least 20 participants had provided responses to each of the 39 CFIR-based barrier statements.

Procedure

The welcome page of the online survey provided an overview of the tasks and a link to a PDF version of the list of ERIC implementation strategies and their definitions for easy reference during the survey. The survey began with four demographic questions to capture participants’ self-reported implementation science expertise (“Implementation experts have knowledge and experience related to changing care practices, procedures, and/or systems of care. Based on the above definition, could someone accuse you of being an implementation expert?”), VA affiliation, research responsibilities, and clinical responsibilities.

The second section of the survey presented participants with a randomly selected CFIR barrier (the 39 CFIR constructs were each used to describe a barrier) and a brief barrier narrative (see Table 2). Participants could choose to skip or accept the barrier presented. If the participant selected “skip,” they could choose to (a) receive another randomly selected CFIR barrier (selection without replacement), (b) go to the final five questions of the survey, or (c) exit with the option to return to the survey at a more convenient time. If the barrier was accepted, the participant was taken to a new page where they were instructed to “Select and rank up to 7 strategies that would best address the following barrier.” The CFIR barrier and its accompanying description were displayed, followed by further instructions to “Drag and drop ERIC strategies from the left column to the Ranking box and order them so that #1 is the top strategy” (see Fig. 1). The left column listed all 73 ERIC discrete implementation strategies. Next to each strategy was an information icon that users could click to see a pop-up definition of the strategy. These definitions were identical to those published [10] and provided in the PDF at the beginning of the survey. Participants had unlimited time to select their strategies and place them in rank order. The bottom of the page provided an optional open comment section to add an explanation, rationale, or information about their confidence in their ranking. Responses were saved when the participants clicked on the “Next” button, after which another randomly selected CFIR-based barrier was presented that the participant could either skip or accept. The option to skip or accept CFIR categories was given to ensure respondents could opt out of providing responses if they were unfamiliar with a particular barrier. Participants were encouraged to provide responses for at least eight CFIR barriers.

The third and final section of the survey asked participants to report to what extent feasibility, improvement opportunity, validity, difficulty, and relevance of each ERIC strategy influenced their ranked choices, on a three-point scale from “not influential” to “extremely influential.”

Results

Participants

A total of 435 invitations were emailed, and 169 individuals provided responses (39% participation rate) including demographics. Most participants self-identified as an implementation expert (85.2%). A similar percentage (81.7%) indicated that more than 50% of their employment was dedicated to research. Only 3% indicated that none of their employment was dedicated to research and the remainder (15.4%) indicated less than 50% of their employment was dedicated to research. About one fourth of the participants had clinical responsibilities (26.6%) with 7.1% providing direct patient care, 12.4% serving a management or oversight role for direct care, and 7.1% indicating having both direct care and management responsibilities. About a third (34.3%) of the participants were either employed by or affiliated with the US Veterans Health Administration (VHA). Most respondents were from the USA (83%), Canada (7%), and Australia (4%), with the remainder from British, European, and African countries.

Each participant provided strategy rankings for an average of 6.1 CFIR barriers (range 1–36). The number of respondents for each CFIR barrier varied; across the barriers, an average of 26 respondents (range 21 to 33) selected up to seven strategies they felt would best address that barrier.

Endorsement of strategies

Participants identified an average of six strategies (SD = 4.75, range = 1 to 7, mode = 7) per barrier. There was considerable heterogeneity of opinion regarding which ERIC strategies best addressed each CFIR barrier. Across all 39 CFIR barriers, an average of 47 different ERIC strategies (SD = 4.8; range 35 to 55) was endorsed at least once, as being one of the top 7 strategies that would best address each barrier. Considering the 39 CFIR barriers and 73 ERIC strategies, there were 2847 possible barrier-strategy combinations (i.e., 39 CFIR barriers X 73 ERIC strategies). Altogether, at least one participant endorsed 1832 (64%) of the 2847 possible individual barrier-strategy combinations.

Table 1 shows the distribution of endorsements for a single example CFIR barrier, Reflecting and Evaluating (within the Process domain), which was described as, “There is little or no quantitative and qualitative feedback about the progress and quality of implementation nor regular personal and team debriefing about progress and experience.” Twenty-five participants identified ERIC strategies to address this barrier; 43 ERIC strategies were endorsed by at least one of the 25 respondents.

Over half of the 25 respondents endorsed two strategies (“Develop and implement tools for quality monitoring” and “Audit and Provide Feedback”). We characterize these strategies as level 1 endorsements because the majority of respondents endorsed those strategies. The top quartile of endorsement levels across all individual barrier-strategy combinations included combinations with at least 20% of respondents endorsing that combination. We refer to strategies with 20–49.9% endorsement as “level 2” endorsements. Table 1 lists eight level 2 strategies chosen by the participants that would best address the barrier related to lack of Reflecting and Evaluating.
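The endorsement levels described above can be sketched in a few lines of Python. This is a minimal illustration rather than the study's actual analysis code: the function name `endorsement_levels`, the input layout (one list of chosen strategies per respondent for a single barrier), and the treatment of exactly 50% as level 1 are assumptions made for the example. Rank order within a respondent's top seven is ignored here; a strategy counts as endorsed if it appears in the list at all.

```python
from collections import Counter


def endorsement_levels(responses, level1_min=50.0, level2_min=20.0):
    """Classify strategies for one barrier by percent of respondents endorsing them.

    responses: list of lists, one per respondent, each holding the (up to
    seven) strategy names that respondent selected for the barrier.
    Returns {strategy: (percent, level)} where level is 1, 2, or None.
    The thresholds are assumptions based on the 50% and 20% cut points
    described in the text.
    """
    n = len(responses)
    if n == 0:
        return {}

    # Count each strategy at most once per respondent.
    counts = Counter(s for picks in responses for s in set(picks))

    result = {}
    for strategy, count in counts.items():
        pct = 100.0 * count / n
        if pct >= level1_min:
            level = 1      # endorsed by at least half of respondents
        elif pct >= level2_min:
            level = 2      # endorsed by 20% up to just under 50%
        else:
            level = None   # endorsed, but below the top-quartile cut point
        result[strategy] = (pct, level)
    return result
```

With 25 respondents, for instance, a strategy chosen by 13 of them (52%) would be labeled level 1, and one chosen by 5 of them (20%) would be labeled level 2.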

Overall, level 2 strategies comprised 332 (18.1%) of the 1832 endorsed barrier-strategy combinations while level 1 comprised 39 (2.1%). Twenty-one of the 39 CFIR barriers did not have a level 1 recommended strategy; Level 1 endorsements ranged from 0 to 3. CFIR barriers had a range of 5 to 15 level 2 strategies endorsed by respondents. Table 2 lists the number of level 1 and level 2 endorsements for each CFIR barrier. The table includes the barrier description used for each CFIR construct. Additional file 1 lists the level of endorsement for each of the 2847 possible individual barrier-strategy combinations.

Table 3 summarizes considerations that influenced participants’ selections of strategies. The relevance of the strategy to the CFIR barrier was the single most influential consideration followed by the perceived improvement opportunity and feasibility of the chosen strategies. Level of difficulty, while endorsed by the majority of respondents as extremely or somewhat influential, nonetheless had the lowest level of endorsement.

Of the 169 participants, 73 (43%) provided comments in the optional comment boxes at the bottom of each ranking page; a total of 187 comments were provided. Comments were coded by themes that were inductively derived based on descriptive coding of content [30]. Coding was completed by the primary coder (BA) and then reviewed by the research team. These themes included confidence in choice, elaboration on choice, issues related to CFIR barrier, issues related to context, issues related to implementation strategies, technical issues with the survey platform, and nonspecific comments. Twenty-three of the comments were coded as reflecting multiple themes. Most comments involved participants elaborating on their choices. Table 4 provides a summary of the qualitative content.

Discussion

This is the first study to engage expert implementation stakeholders in identifying discrete ERIC implementation strategies that would best address specific barriers based on the 39 CFIR contextual determinants of implementation. Of the 39 CFIR barrier scenarios, 21 barriers had at least one and up to three ERIC strategies chosen as “in the top seven” by most participants (i.e., level 1 endorsements). Otherwise, there was substantial heterogeneity in strategies chosen to address barriers. A range of 35 to 55 strategies was endorsed by one or more respondents across the range of barriers. These results illustrate that with the exception of a few strategies, there is little consensus regarding which strategies are most appropriate to address CFIR barriers when experts used a ranking approach.

Previous research reported that most ERIC strategies (i.e., 54 of 73 or 74.0%) were rated as moderately to extremely important by participating experts [25]. In the current study, of the 22 ERIC strategies receiving level 1 endorsement, 16 (72.7%) had been rated in the top quadrant of importance and feasibility out of the list of 73 strategies [25]. In contrast, only two of the 22 strategies (9.1%) were rated in the lowest quadrant of both importance and feasibility. Thus, the present findings indicate that importance and feasibility may vary based on the presence of specific CFIR barriers.

The present results can be juxtaposed with those of Rogal and colleagues [29] where a retrospective analysis of ERIC strategies used to implement a new generation EBI for hepatitis C virus (HCV) across 80 VHA health stations was conducted. Respondents (stakeholders actively involved in the HCV EBI’s implementation) were presented with the list of ERIC strategies and asked, “Did you use X strategy to promote HCV care in your center?” Respondents indicated yes for an average of 25 strategies (SD = 14) with higher numbers of strategies being associated with higher numbers of new EBI treatment starts. Thus, the heterogeneity of ERIC strategies endorsed in the present study based on brief descriptions of barriers, independent of a specific EBI implementation, is consistent with the heterogeneity observed in Rogal et al.’s retrospective assessment of HCV EBI implementation. Similar results have been found in other healthcare sectors as well [27, 28].

Based on results from the current study, we developed the CFIR-ERIC Implementation Strategy Matching Tool, which is publicly available on www.cfirguide.org. Because of the wide diversity of responses by our expert respondents and the lack of consensus this represents for the majority of endorsements, this tool must be used with caution.

It is likely that the diversity of endorsements of specific implementation strategies to address certain barriers also reflects the diversity of assumptions about how barriers interact with the program-specific needs experienced by the respondents. Another source of diversity for the endorsements is likely due to differences in interpretation of both the barrier described and the strategy. Nevertheless, these nascent results provide a productive starting point for structuring planning for tailoring an implementation effort to known contextual barriers. As more implementation researchers document barriers and strategies using these frameworks, and as processes for the development and selection of implementation strategies become more widespread [19, 20], the knowledge base for a barrier-strategy mapping scheme will strengthen.

Example application of the CFIR-ERIC tool

To illustrate the potential value of these findings, we provide a case example based on a published implementation evaluation of a telephone lifestyle coaching program (TLC) within VHA [31]. This study evaluated CFIR constructs across 11 medical centers that had varying success in implementing the program. Implementation effectiveness was assessed by measuring penetration, defined as “integration of a practice within a service setting” [32], and was operationalized as the number of Veteran patients who were referred to TLC divided by the number of Veterans who were candidate beneficiaries of the program. The site with the highest penetration had a rate of referrals that was more than seven times higher than the lowest penetration site. Seven CFIR constructs were identified as being associated with outcomes; in nearly all cases, these were rated as barriers at sites with lower penetration. One core theory within implementation science is that implementation will have the highest likelihood for success if implementation strategies are selected based on an assessment of context, all else being equal. In the case of TLC implementation, implementation strategies could be selected to address the specific barriers identified and packaged as a multi-level, multifaceted set of supports to implement TLC in medical centers.

The CFIR-ERIC Implementation Strategy Matching Tool could be used to generate a list of ERIC strategies to consider for addressing each CFIR barrier. In this example, all 73 ERIC strategies received at least one endorsement across the seven CFIR barriers associated with TLC outcomes. The top 37 strategies are presented in Table 5, listing only strategies with a level 1 or 2 recommendation for at least one of the seven CFIR barriers. This list highlights the heterogeneity and thus nascent nature of the tool. However, it provides a useful starting point. Strategies are ordered by “cumulative percent”; the top listed strategies are ones that have the highest cumulative level of endorsement across all seven CFIR barriers. For example, Identify and Prepare Champions is the first listed strategy. This means that using this strategy has the highest likelihood of addressing facets of one or more barriers; in fact, it was a level 1 or 2 endorsement for six of the seven barriers. Thus, a single strategy may simultaneously address multiple barriers, depending on how it is operationalized. Six of the strategies listed in Table 5 have level 1 endorsements (indicated by bolding) but are not all positioned at the top of the list. Level 1 strategies are highly specific to individual CFIR barriers and merit close reflection, independent of the cumulative percentages. This example illustrates how the CFIR-ERIC Implementation Strategy Matching Tool can be used to support broad consideration of implementation strategies based on the assessment of contextual barriers.
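One plausible way to produce an ordering like the one described above is to sum each strategy's endorsement percentage over the barriers a user selects. The sketch below assumes that "cumulative percent" is this simple sum, which the text does not confirm. The function name `rank_strategies` and the nested-dictionary layout are hypothetical and are not taken from the published tool.

```python
def rank_strategies(endorsement_pct, selected_barriers):
    """Order strategies by summed endorsement across the selected barriers.

    endorsement_pct: {barrier: {strategy: percent of respondents endorsing}}
    selected_barriers: iterable of barrier names the user has prioritized.
    Returns a list of (strategy, cumulative_percent) tuples, highest first.
    Treating "cumulative percent" as a plain sum is an assumption.
    """
    totals = {}
    for barrier in selected_barriers:
        # Barriers with no recorded endorsements contribute nothing.
        for strategy, pct in endorsement_pct.get(barrier, {}).items():
            totals[strategy] = totals.get(strategy, 0.0) + pct

    # Sort by descending total; break ties alphabetically for stable output.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))
```

Under this scheme, a strategy with moderate endorsement for many barriers can outrank one with majority endorsement for a single barrier, which is consistent with the observation that level 1 strategies do not all sit at the top of the list.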

There are several possible explanations for why respondents lacked consensus on their endorsed strategies, even when presented with seemingly specific contextual barriers. First, respondents had experience in implementation (practice or evaluation) in diverse settings around the world. Though CFIR constructs have been rated as highly operationalized [23], the definitions are nonetheless designed to be relatively abstract so that they can be applied across a range of settings and to align with higher level theories that underlie each construct. Additionally, while the barriers described a specific challenge, they did not elucidate why the challenge was occurring, which can be the most important information needed to inform strategy selection. Therefore, the respondent had to make assumptions about how exactly a particular “barrier” arose based on a specific determinant or combination of determinants (low knowledge, skills, capacity, negative attitudes, etc.). These interpretations of cause likely influenced opinions about which strategies would best address each barrier. Second, ERIC strategies have a range of specificity. For example, a strategy of identifying and preparing a champion is relatively discrete and clear, but facilitation is a process that draws on a broad range of additional strategies. Additionally, strategies likely comprise either single or multiple mechanisms of change [33]. The ERIC strategies do not include descriptions of the mechanism of change (i.e., why it works), though work to link these strategies to potential mechanisms is currently underway [34, 35]. Thus, respondents may draw on a wide range of assumptions about how each strategy’s mechanisms may address lower-level determinants associated with each CFIR barrier. Taking all these considerations into account leads to the highly likely proposition that respondents each chose strategies based on an idiographic array of underlying assumptions.
It is also plausible that multiple strategies and strategy combinations can, in fact, be used to successfully address determinants, indicating equifinality (i.e., multiple pathways) in producing positive implementation outcomes [36]. Thus, future research needs to elicit greater detail from respondents about how they envision their choice of strategies will address determinants to shed more light on the selection process [33]. We recommend that researchers explicitly specify hypothesized causal pathways through which implementation strategies exert their effects [35, 37] and specify implementation strategies using established reporting guidelines [3, 38]. Both of these steps will move researchers toward a greater understanding of when, where, how, and why implementation strategies are effective in improving implementation outcomes.

Systematic approaches to identifying determinants and matching strategies to address them have been developed [9, 20, 35, 39]. One way to address differences in assumed mechanisms and implied pathways of change, as highlighted above, is to use a transparent process to systematically and consistently match implementation strategies to address barriers such as intervention mapping (IM) [20]. IM has been recognized as a useful method for planning multifaceted implementation strategies [9, 37, 40]. A key feature of IM since its inception [41] has been its utility for developing multifaceted implementation interventions to enhance the adoption, implementation, and maintenance of clinical guidelines [42] and EBIs [43,44,45,46,47,48,49].

IM guides the development or selection of strategies by (1) conducting or using results from a needs assessment to identify determinants (barriers and facilitators) to implementation and identifying program adopters and implementers; (2) describing adoption and implementation outcomes and performance objectives (specific tasks related to implementation), identifying determinants of these, and creating matrices of change objectives; (3) choosing theoretical methods (mechanisms of change [19]); and (4) selecting or designing implementation strategies (i.e., practical applications) and producing implementation strategy protocols and materials. The detailed steps in this process help explain how the range of potential assumptions made by respondents as they chose strategies to address CFIR determinants in the present study may have contributed to the general lack of consensus in choices.

Within IM, identifying a barrier is not sufficient to guide the choice of a strategy. To thoroughly understand a problem with enough specificity to guide the effective selection of discrete strategies, the causes of each barrier must be specified along with the desired outcome in very specific terms (i.e., performance objective for implementation and their determinants). It is then necessary to identify specific methods or techniques [19, 20, 50] that can influence these determinants and operationalize these methods into concrete strategies. Behavior change techniques and methods (which stem from the basic sciences of behavior, organizational, and policy change) then are transparently and clearly specified within each selected implementation strategy.

Most intervention developers who follow systematic processes to create complex interventions at multiple levels would not select strategies (even strategies that had been widely used and clearly defined) without careful planning to ensure that the strategies would effectively address determinants of the problem or desired outcome. Ratings of considerations that most influenced the selection of strategies are consistent with this. Relevance and improvement opportunity were the primary influences on the rankings, and these considerations are also integral to the first three steps of IM noted above. Feasibility was also influential, but it did not seem to overshadow the need for a careful evaluation of the needs of the initiative through a process like IM. The work and resource requirements of an implementation strategy (i.e., difficulty) were influential, but not as influential as the other considerations. This may reflect the assumption that if an implementation initiative is a priority, the necessary work and resources will be allocated to address barriers to implementation.

In implementation science and practice, a process akin to IM (systematically identifying barriers, determinants of these, change methods for addressing them, and development or selection of specific strategies) is not often followed or clearly documented, leading to gaps in understanding which strategies work and why they produce their effects. Implementation science is a relatively young field, and frameworks to help identify implementation determinants, such as CFIR, have only recently been developed and operationalized. Nonetheless, exemplary studies can be found [49]. For example, Garbutt and colleagues utilized CFIR to characterize contextual determinants of EBI use, analyzed those determinants systematically via a theoretical framework, identified specific behavior change targets, and then selected relevant implementation strategies [51].

Limitations

This study has several limitations that may impact the validity of the inferences that can be made from this data set. In the absence of consensus in the field regarding how to identify “implementation experts,” this project asked individuals to self-identify whether or not they are implementation experts based upon a practical definition (“Implementation experts have knowledge and experience related to changing care practices, procedures, and/or systems of care. Based on the above definition, could someone accuse you of being an implementation expert?”). The breadth of this definition aimed to be inclusive of both researchers and practitioners who primarily work in the field. However, 81.7% of respondents indicated that research constituted more than half of their job duties. Thus, different results may have been elicited if more non-research practitioners had been recruited. It is also possible that relying upon more objective indicators of expertise (e.g., individuals’ implementation science and practice-focused funding, publications, or relevant service on editorial boards or expert advisory committees) may have yielded a different pool of participants and different results.

As noted previously, the diversity of endorsements linking specific implementation strategies to specific barriers may reflect the diverse assumptions respondents brought to the task regarding how barriers interact with the program-specific needs given respondents’ unique histories of implementing different innovations. Given the abstract characterizations of both the barriers and the strategies, gathering data on these assumptions may have produced further insights into the endorsements provided. Future projects focusing on the implementation of specific innovations would benefit from adopting two practices. First, when a specific innovation is being investigated, it is possible to more clearly operationalize implementation strategies as they are applied to that innovation, using established reporting guidelines to specify the details of each strategy and articulate how they address contextual barriers [3, 38]. Second, within the specific initiative, respondents can specify the causal pathways through which included implementation strategies are hypothesized to exert their effects [35, 37].

Conclusions

Self-identified implementation experts engaged in a process to select up to seven ERIC implementation strategies that would best address specific CFIR-based barriers. Respondents’ endorsements formed the basis for the CFIR-ERIC Implementation Strategy Matching Tool. This tool can serve as a preliminary aid to implementers and researchers by supporting consideration of a broad array of candidate implementation strategies that may best address CFIR-based barriers. Due to the heterogeneity of endorsements obtained in this study, the ranked considerations provided by this tool should be coupled with a systematic process, such as intervention mapping, to further develop or identify and tailor strategies to address local contextual determinants for successful implementation.
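
As an illustration of how endorsements might be aggregated across several user-selected barriers, the following minimal Python sketch sums endorsement rates per strategy and sorts the result. The barrier and strategy labels, the rates, and the function name rank_strategies are all hypothetical placeholders. Summation is assumed here as one plausible aggregation rule and is not a description of the tool's actual computation.

```python
from collections import defaultdict

# Hypothetical endorsement rates: the share of respondents who ranked a
# strategy among their top seven for a given barrier. All labels and values
# are illustrative placeholders, not study data.
ENDORSEMENTS = {
    "Barrier A": {"Strategy 1": 0.50, "Strategy 2": 0.25, "Strategy 3": 0.125},
    "Barrier B": {"Strategy 2": 0.50, "Strategy 3": 0.25, "Strategy 4": 0.125},
}


def rank_strategies(selected_barriers, endorsements=ENDORSEMENTS):
    """Sum each strategy's endorsement rate across the selected barriers
    and return (strategy, cumulative rate) pairs, highest first."""
    totals = defaultdict(float)
    for barrier in selected_barriers:
        for strategy, rate in endorsements[barrier].items():
            totals[strategy] += rate
    # Sort by descending total; break ties alphabetically for stable output.
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    for strategy, total in rank_strategies(["Barrier A", "Barrier B"]):
        print(f"{strategy}: {total:.3f}")
```

A ranked list of this kind is only a starting point. Under the assumed summation rule, a strategy endorsed moderately for several barriers can outrank one endorsed strongly for a single barrier, which is one reason the output should feed into, rather than replace, a structured planning process.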

Abbreviations

- CFIR: Consolidated Framework for Implementation Research

- EBI: Evidence-based innovation

- ERIC: Expert Recommendations for Implementing Change

- HCV: Hepatitis C virus

- IM: Intervention mapping

- NIRN: National Implementation Research Network

- SIRC: Society for Implementation Research Collaboration

- TLC: Telephone lifestyle coaching

- VHA: US Veterans Health Administration

References

Meaney M, Pung C. McKinsey Global Survey results: creating organizational transformations. Seattle: McKinsey & Company; 2008. p. 1–7.

Probst G, Raisch S. Organizational crisis: the logic of failure. Acad Manag Exec. 2005;19(1):90.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Institute of Medicine. Initial national priorities for comparative effectiveness research. Washington, DC: The National Academies Press; 2009.

Department of Health and Human Services. Dissemination and implementation research in health (R01 clinical trial optional). In: National Institutes of Health; 2017.

Eccles MP, Armstrong D, Baker R, Cleary K, Davies H, Davies S, Glasziou P, Ilott I, Kinmonth A-L, Leng G, et al. An implementation research agenda. Implement Sci. 2009;4:18.

Newman K, Van Eerd D, Powell BJ, Urquhart R, Cornelissen E, Chan V, Lal S. Identifying priorities in knowledge translation from the perspective of trainees: results from an online survey. Implement Sci. 2015;10:92.

Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, Glass JE, York JL. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2):123–57.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, Mandell DS. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44(2):177–94.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implement Sci. 2012;7:50.

Mittman BS. Implementation science in health care. In: Brownson RC, Proctor EK, editors. Dissemination and implementation research in health: translating science to practice. New York: Oxford; 2012. p. 400–18.

Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, Robertson N. Tailored interventions to overcome identified barriers to change: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2010;3(4):CD005470. https://doi.org/10.1002/14651858.CD005470.pub2.

Wensing M, Oxman A, Baker R, Godycki-Cwirko M, Flottorp S, Szecsenyi J, Grimshaw J, Eccles M. Tailored implementation for chronic diseases (TICD): a project protocol. Implement Sci. 2011;6:103.

Wensing M, Bosch M, Grol R. Selecting, tailoring, and implementing knowledge translation interventions. In: Straus S, Tetroe J, Graham ID, editors. Knowledge translation in health care: moving from evidence to practice. Oxford: Wiley-Blackwell; 2009. p. 94–113.

Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, Robertson N, Wensing M, Fiander M, Eccles MP, et al. Tailored interventions to address determinants of practice. Cochrane Database Syst Rev. 2015;4(4):CD005470.

Bosch M, Weijden T, Wensing MJP, Grol RPTM. Tailoring quality improvement interventions to identified barriers: a multiple case analysis. J Eval Clin Pract. 2007;13(2):161–8.

Grol R, Bosch M, Wensing M. Development and selection of strategies for improving patient care. In: Grol R, Eccles M, Davis D, editors. Improving patient care: the implementation of change in health care. 2nd ed. Chichester: Wiley; 2013. p. 165–84.

Kok G, Gottlieb NH, Peters G-JY, Mullen PD, Parcel GS, Ruiter RAC, Fernández ME, Markham C, Bartholomew LK. A taxonomy of behaviour change methods: an intervention mapping approach. Health Psychol Rev. 2016;10(3):297–312.

Bartholomew Eldredge LK, Markham CM, Ruiter RAC, Fernández ME, Kok G, Parcel GS. Planning health promotion programs: an intervention mapping approach. 4th ed. San Francisco: Jossey-Bass; 2016.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Tabak RG, Khoong EC, Chambers DA, Brownson RC. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–50.

Fernandez ME, Walker TJ, Weiner BJ, Calo WA, Liang S, Risendal B, Friedman DB, Tu SP, Williams RS, Jacobs S, et al. Developing measures to assess constructs from the inner setting domain of the consolidated framework for implementation research. Implement Sci. 2018;13:52.

Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, Proctor EK, Kirchner JE. Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implement Sci. 2015;10:109.

Waltz TJ, Powell BJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. Structuring complex recommendations: methods and general findings. Implement Sci. 2016;11(Suppl 1):A23.

Bunger AC, Powell BJ, Robertson HA, MacDowell H, Birken SA, Shea C. Tracking implementation strategies: a description of a practical approach and early findings. Health Res Policy Syst. 2017;15:15.

Boyd MR, Powell BJ, Endicott D, Lewis CC. A method for tracking implementation strategies: an exemplar implementing measurement-based care in community behavioral health clinics. Behav Ther. 2018;49:525–37.

Rogal SS, Yakovchenko V, Waltz TJ, Powell BJ, Kirchner JE, Proctor EK, Gonzalez R, Park A, Ross D, Morgan TR, et al. The association between implementation strategy use and the uptake of hepatitis C treatment in a national sample. Implement Sci. 2017;12:60.

Sandelowski M. What’s in a name? Qualitative description revisited. Res Nurs Health. 2010;33(1):77–84.

Damschroder LJ, Reardon CM, Sperber N, Robinson CH, Fickel JJ, Oddone EZ. Implementation evaluation of the Telephone Lifestyle Coaching (TLC) program: organizational factors associated with successful implementation. Transl Behav Med. 2017;7(2):233–41.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38(2):65–76.

Powell BJ, Fernandez ME, Williams NJ, Aarons GA, Beidas RS, Lewis CC, McHugh SM, Weiner BJ. Enhancing the impact of implementation strategies in healthcare: a research agenda. Front Public Health. 2019;7:3.

Powell BJ, Garcia K, Fernández ME. Implementation strategies. In: Chambers D, Vinson C, Norton W, editors. Optimizing the cancer control continuum: advancing implementation research. New York: Oxford University Press; 2018.

Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, Walsh-Bailey C, Weiner B. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136.

Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21:1055–80.

Weiner BJ, Lewis MA, Clauser SB, Stitzenberg KB. In search of synergy: strategies for combining interventions at multiple levels. J Natl Cancer Inst Monogr. 2012;2012(44):34–41.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348(mar07 3):g1687.

Colquhoun HL, Squires JE, Kolehmainen N, Fraser C, Grimshaw JM. Methods for designing interventions to change healthcare professionals’ behaviour: a systematic review. Implement Sci. 2017;12:30.

Powell BJ, Aarons GA, Amaya-Jackson L, Haley A, Weiner BJ. The collaborative approach to selecting and tailoring implementation strategies (COAST-IS). Implement Sci. 2018;13(Suppl 3):A79, p. 39–40.

Bartholomew LK, Parcel GS, Kok G. Intervention mapping: a process for developing theory and evidence-based health education programs. Health Educ Behav. 1998;25(5):545–63.

Bartholomew LK, Cushman WC, Cutler JA, Davis BR, Dawson G, Einhorn PT, Graumlich JF, Piller LB, Pressel S, Roccella EJ, Simpson L. Getting clinical trial results into practice: design, implementation, and process evaluation of the ALLHAT Dissemination Project. Clinical Trials. 2009;6(4):329–43.

van Nassau F, Singh AS, van Mechelen W, Paulussen TG, Brug J, Chinapaw MJ. Exploring facilitating factors and barriers to the nationwide dissemination of a Dutch school-based obesity prevention program “DOiT”: a study protocol. BMC Public Health. 2013;13:1201.

Verloigne M, Ahrens W, De Henauw S, Verbestel V, Mårild S, Pigeot I, De Bourdeaudhuij I, Consortium I. Process evaluation of the IDEFICS school intervention: putting the evaluation of the effect on children's objectively measured physical activity and sedentary time in context. Obes Rev. 2015;16(S2):89–102.

van Schijndel-Speet M, Evenhuis HM, van Wijck R, Echteld MA. Implementation of a group-based physical activity programme for ageing adults with ID: a process evaluation. J Eval Clin Pract. 2014;20(4):401–7.

Peels DA, Bolman C, Golsteijn RH, De VH, Mudde AN, van Stralen MM, Lechner L. Differences in reach and attrition between web-based and print-delivered tailored interventions among adults over 50 years of age: clustered randomized trial. J Med Internet Res. 2012;14(6):e179.

Fernandez ME, Gonzales A, Tortolero-Luna G, Williams J, Saavedra-Embesi M, Chan W, Vernon SW. Effectiveness of Cultivando La Salud: a breast and cervical cancer screening promotion program for low-income Hispanic women. Am J Public Health. 2009;99(5):936–43.

Fernández ME, Gonzales A, Tortolero-Luna G, Partida S, Bartholomew LK. Using intervention mapping to develop a breast and cervical cancer screening program for Hispanic farmworkers: Cultivando la salud. Health Promot Pract. 2005;6(4):394–404.

Peskin MF, Hernandez BF, Gabay EK, Cuccaro P, Li DH, Ratliff E, Reed-Hirsch K, Rivera Y, Johnson-Baker K, Emery ST, et al. Using intervention mapping for program design and production of iCHAMPSS: an online decision support system to increase adoption, implementation, and maintenance of evidence-based sexual health programs. Front Public Health. 2017;5:203.

Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, Eccles MP, Cane J, Wood CE. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: building an international consensus for the reporting of behavior change interventions. Ann Behav Med. 2013;46:81–95.

Garbutt JM, Dodd S, Walling E, Lee AA, Kulka K, Lobb R. Theory-based development of an implementation intervention to increase HPV vaccination in pediatric primary care practices. Implement Sci. 2018;13:45.

Acknowledgements

Special thanks to Caitlin Kelley, MSI, Informationist at the Center for Clinical Management Research, VA Ann Arbor Healthcare System for her technical work on developing the custom features of the Qualtrics-based survey used to obtain participant responses. Appreciation is also extended to Claire Robinson, Caitlin Reardon, and Nicholas Yankey for their assistance in piloting the usability of the survey and relevant support materials.

Funding

This work was supported by research grants from the US Department of Veterans Affairs (QLP 92-025; QUERI DM, DIB 98-001). BJP was supported by the National Institute of Mental Health through K01MH113806 and R25MH080916.

Availability of data and materials

A deidentified data set is embedded in the tool posted at www.cfirguide.org. The full set of deidentified data, inclusive of the qualitative responses, is also available from the corresponding author upon request.

Author information

Contributions

All authors made substantial contributions to the conception and design or analysis and interpretation of the data and drafting of the article or critical revision for important intellectual content. Portions of this manuscript were presented at the Society for Implementation Research Collaboration conference in 2017. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This evaluation was approved by the Institutional Review Board at the VA Ann Arbor Healthcare System.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Additional file

Additional file 1: The level of endorsement for each of the 2847 possible individual barrier-strategy combinations (XLSX 50 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Waltz, T.J., Powell, B.J., Fernández, M.E. et al. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implementation Sci 14, 42 (2019). https://doi.org/10.1186/s13012-019-0892-4
