Abstract

Background

Artificial intelligence (AI) is seen as one of the major disruptive forces in the future healthcare system. However, how to assess the value of these new technologies is still unclear, and no agreed international guideline based on health technology assessment exists. This study provides an overview of the available literature on the value assessment of AI in the field of medical imaging.

Methods

We performed a systematic scoping review of studies published between January 2016 and September 2020 using 10 databases (Medline, Scopus, ProQuest, Google Scholar, and six related grey literature databases). Information about the context (country, clinical area, and type of study) and the domains mentioned, with specific outcomes and items, was extracted. An existing domain classification from a European assessment framework was used as a point of departure; extracted data were grouped into domains, and a content analysis covering predetermined themes was performed.

Results

Seventy-nine studies were included out of 5890 identified articles. An additional seven studies were identified by searching reference lists, and the analysis was performed on 86 included studies. Eleven domains were identified: (1) health problem and current use of technology, (2) technology aspects, (3) safety assessment, (4) clinical effectiveness, (5) economics, (6) ethical analysis, (7) organisational aspects, (8) patients and social aspects, (9) legal aspects, (10) development of AI algorithm, performance metrics and validation, and (11) other aspects. The frequency of mentioning a domain varied from 20 to 78% within the included papers. Only 15/86 studies were actual assessments of AI technologies. The majority of data were statements from reviews or papers voicing future needs or challenges of AI research, i.e. not actual outcomes of evaluations.

Conclusions

This review of the value assessment of AI in medical imaging included 86 studies, from which 11 domains were identified. The domain classification based on a European assessment framework proved useful, and the current analysis added one new domain. The included studies covered a broad range of essential domains when addressing AI technologies, highlighting the importance of domains related to legal and ethical aspects.


Background

Artificial intelligence (AI) includes various technologies based on advanced algorithms and learning systems. Different terms are used in connection with AI, such as machine learning, deep learning, and convolutional neural networks [1]. Furthermore, there is no universally agreed-upon definition of AI, although it has been suggested to define it as a system capable of interpreting and learning from data to achieve a specific goal [2].

AI is seen as part of a digital transformation and could become one of the major disruptive tools in the future healthcare system [3]. Radiology and other imaging areas involve a vast amount of manual image review, and a steep increase in the number of medical images has been observed over the last decade, requiring more interpretation time from imaging specialists. This limiting factor could be reduced by incorporating machine learning-based computer-aided algorithms into the clinical workflow [4]. Pattern recognition in medical images and AI technologies seem to be a good match [5], and this area is likely to be one of the first to benefit from AI, which raises high expectations. In the first quarter of 2019, funding in imaging AI companies exceeded 1.2 billion USD [6]. A consultancy company values the annual market for the top 10 AI-based healthcare solutions at 150 billion USD in 2026 [7]. Healthcare payers and providers expect to achieve cost savings, improve patient satisfaction, and optimise workforce resources [8].

Most of the published AI studies within medical imaging are retrospective with a technical focus, including reporting of clinical performance metrics, validation, or robustness of the model [9]. Evaluation of this phase is thoroughly described, e.g. in the guideline Checklist for Artificial Intelligence in Medical Imaging—CLAIM [10]. However, the lack of proven clinical utility, feasibility and effect on patient outcomes has been mentioned by several studies [11,12,13,14] as well as ethical, legal, economic, sharing of data, and implementation issues [15,16,17]. AI is a complex technology and implementing it in a complex healthcare system, critical to society, requires a broad framework.

Health technology assessment (HTA) provides a broad framework for valuing healthcare technologies and has been tailored to specific areas such as telemedicine [18] and digital healthcare services [19]. Value is to be understood in a broad sense, referring to impact or effect in several different domains. HTA is a multidisciplinary process that summarises information collected in a systematic, transparent, unbiased, and robust manner. One example is the HTA framework from EUnetHTA [20], where evaluation is performed from nine perspectives called “domains”: (1) the health problem and current use of technology; (2) description and technical characteristics of the new technology; (3) safety assessment; (4) clinical effectiveness; (5) economic evaluation; (6) ethical analysis; (7) organisational aspects; (8) patient and social aspects; and (9) legal aspects.

It is important to develop a holistic, tailored HTA tool for evaluating the value of AI, so that the right AI technologies are implemented in the field of medical imaging. Therefore, we aimed to give a comprehensive overview of the relevant domains identified in the literature for assessing the value of AI in medical imaging. This is the first step in creating an evidence-based assessment tool for valuing AI technology.

Methods

A scoping review aims to ‘map the key concepts underpinning a research area and the main sources and types of evidence available’ [21]. As such, scoping reviews typically address broad questions, potentially include a range of methodologies and do not undertake quality assessment. This contrasts with the focused question of a systematic review, which is answered from a relatively narrow range of quality-assessed studies. This scoping review was conducted based on the PRISMA guideline [22]. The study was conducted at the Centre for Innovative Medical Technology at Odense University Hospital (Denmark), covered studies published between January 2016 and September 2020, and comprised the following five stages.

Stage 1: Identifying the research issues

All published papers containing information about the assessment of the value of AI technology in the field of medical imaging within public healthcare organisations, e.g. hospitals, dentists and universities, were considered eligible. Three types of studies were included to cover all aspects of AI in medical imaging: (1) actual evaluations of the value of a specific AI technology, (2) guidelines, statements, recommendations, white papers, evaluation models or HTA frameworks, and (3) review articles and surveys voicing future needs/research/challenges. Hence, we reviewed the available literature related to the assessment of the value of AI in medical imaging to gain a comprehensive understanding of which HTA domains or topics are used or perceived as relevant.

Stages 2 and 3: Identifying relevant studies and study selection

The search terms are summarised in Table 1. Clusters one and two are inspired by a previous review by Elhakim et al. [23] and cluster three by a search strategy used in a model for telemedicine [24]. The search strategy was verified by an experienced librarian from the University of Southern Denmark. Duplicates were removed, and most of the article selection process was conducted in the Covidence tool [25].
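Table 1 is not reproduced in this section. As a minimal sketch of how term clusters are commonly combined into one Boolean search string, the Python below assumes that synonyms within a cluster are joined with OR and that clusters are joined with AND. The `build_query` helper and the cluster terms are hypothetical placeholders, not the actual terms in Table 1 or the syntax of any specific database.

```python
def quote(term: str) -> str:
    """Wrap multi-word terms in double quotes so they are searched as phrases."""
    return f'"{term}"' if " " in term else term


def build_query(clusters: list[list[str]]) -> str:
    """OR the terms inside each cluster, then AND the clusters together."""
    groups = []
    for cluster in clusters:
        if not cluster:
            raise ValueError("each cluster needs at least one term")
        groups.append("(" + " OR ".join(quote(t) for t in cluster) + ")")
    return " AND ".join(groups)


# Hypothetical placeholder clusters (NOT the actual terms from Table 1).
clusters = [
    ["artificial intelligence", "machine learning", "deep learning"],  # technology
    ["radiology", "medical imaging"],                                   # application area
    ["health technology assessment", "value"],                          # assessment
]

print(build_query(clusters))
```

Real databases such as Medline (Ovid) and Scopus add their own field tags and truncation syntax, so a string like this would still need adapting for each database.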

Literature searches were conducted on 17–18 September 2020 in the following 10 databases: (1) Medline (Ovid), (2) Scopus, (3) ProQuest (includes EconLit), (4) Google Scholar, and six related grey literature and working paper resources, i.e. (5) International HTA Database [26], (6) OpenGrey [27], (7) National Institutes of Health [28], (8) National Health Services [29], (9) Folkehelseinstituttet [30], and (10) Folkhälsomyndigheten [31]. A single round of backward snowballing was performed on the one or two most central studies selected by each extractor to identify further studies. The selection criteria were developed iteratively by the entire research group through multiple consensus meetings. Two researchers (IF and TK) independently performed the abstract and full-text screenings, resolving disagreements mainly under the supervision of the senior researcher (KK). The inclusion and exclusion criteria are shown in Table 2.
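The dual, independent screening described above can be sketched as follows. This is a hypothetical illustration, not the Covidence workflow: the record IDs, the `Decision` enum and the `reconcile`/`resolve` helpers are assumptions, and the sketch models the simplest case in which every disagreement between the two screeners is referred to the senior researcher.

```python
from enum import Enum


class Decision(Enum):
    INCLUDE = "include"
    EXCLUDE = "exclude"


def reconcile(reviewer_a: dict[str, Decision],
              reviewer_b: dict[str, Decision]) -> tuple[dict[str, Decision], list[str]]:
    """Split independently screened records into agreements and conflicts."""
    if reviewer_a.keys() != reviewer_b.keys():
        raise ValueError("both reviewers must screen the same records")
    agreed: dict[str, Decision] = {}
    conflicts: list[str] = []
    for record_id in sorted(reviewer_a):
        if reviewer_a[record_id] == reviewer_b[record_id]:
            agreed[record_id] = reviewer_a[record_id]
        else:
            conflicts.append(record_id)  # to be settled by the senior researcher
    return agreed, conflicts


def resolve(agreed: dict[str, Decision], conflicts: list[str],
            senior: dict[str, Decision]) -> dict[str, Decision]:
    """Merge the senior researcher's rulings on conflicts into the final decisions."""
    missing = [r for r in conflicts if r not in senior]
    if missing:
        raise ValueError(f"no senior ruling for: {missing}")
    final = dict(agreed)
    final.update({r: senior[r] for r in conflicts})
    return final


# Hypothetical screening decisions for three records.
a = {"rec1": Decision.INCLUDE, "rec2": Decision.EXCLUDE, "rec3": Decision.EXCLUDE}
b = {"rec1": Decision.INCLUDE, "rec2": Decision.INCLUDE, "rec3": Decision.EXCLUDE}
agreed, conflicts = reconcile(a, b)
print(conflicts)  # ['rec2']
final = resolve(agreed, conflicts, {"rec2": Decision.INCLUDE})
```

Keeping agreed decisions and conflicts separate makes it straightforward to report how many records needed a third opinion.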

Stages 4 and 5: Charting the data and collating, summarising, and reporting the results

Initially, the required information on the included studies was extracted and summarised using an extraction template in Microsoft Excel. Included studies were reviewed by two researchers independently. The extracted items were: context (country, clinical area(s), type of study) and the domains mentioned in the study, including specific outcomes and items in each domain. The domain classification from EUnetHTA was used as a point of departure to group the extracted data into nine domains. If a topic did not fit these pre-existing domains, the extracted text was noted under “other aspects” for later evaluation. For the domains “clinical effectiveness” and “the health problem and current use of technology”, specific clinical outcomes were not part of the data extraction in this scoping review. We identified a new domain (development of AI algorithm, performance metrics and validation) but did not extract specific data for it, as this domain has already been thoroughly described. For quality assurance purposes, the principal investigator (IF) held several bilateral consultations and group sessions with all extractors to resolve inconsistencies and challenges. This produced a common understanding of which topics belonged under which domains, how detailed the extraction should be, and the arguments for excluding studies, which helped align the raw data.
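The extraction template itself was an Excel sheet; the Python sketch below only illustrates its logic under stated assumptions. Each hypothetical `ExtractionRecord` holds the context fields named above plus text snippets grouped by domain, and any snippet whose domain is not one of the nine EUnetHTA domains is filed under “other aspects” for later evaluation, mirroring the fallback rule described in the text. The field names, example values and short domain labels are illustrative.

```python
from dataclasses import dataclass, field

# The nine EUnetHTA domains used as the point of departure (short labels).
EUNETHTA_DOMAINS = frozenset({
    "health problem and current use of technology",
    "technology aspects",
    "safety assessment",
    "clinical effectiveness",
    "economics",
    "ethical analysis",
    "organisational aspects",
    "patients and social aspects",
    "legal aspects",
})
OTHER = "other aspects"  # catch-all, evaluated later


@dataclass
class ExtractionRecord:
    """One row of a hypothetical extraction template."""
    study_id: str
    country: str
    clinical_areas: list[str]
    study_type: str
    # domain label -> extracted outcomes/items mentioned for that domain
    domains: dict[str, list[str]] = field(default_factory=dict)

    def add(self, domain: str, text: str) -> str:
        """File a snippet under its domain, or under 'other aspects' if it fits none."""
        target = domain if domain in EUNETHTA_DOMAINS else OTHER
        self.domains.setdefault(target, []).append(text)
        return target


record = ExtractionRecord("study-01", "Denmark", ["radiology"], "review")
record.add("economics", "expected cost savings")
record.add("algorithm validation", "external validation cohort")  # not one of the nine
print(record.domains)
# {'economics': ['expected cost savings'], 'other aspects': ['external validation cohort']}
```

In a sketch like this, topics that keep accumulating under “other aspects” are where a candidate new domain would become visible during the later evaluation.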

In the second phase, the extracted data were analysed by two researchers and condensed for each domain. Qualitative content analysis [32] was performed, covering four predetermined themes/questions: (1) effects, outcomes, value or impacts mentioned, as well as future needs/challenges/topics relevant when evaluating the value of AI; (2) specific outcome measures; (3) frequency of use of a given topic or outcome group; and (4) potential overlap with other domains. A summary for each domain was made as the final step of the analysis.

Results

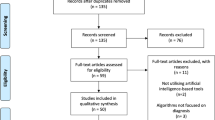

A study flow chart summarises the process of literature retrieval (Fig. 1). In total, the literature search yielded 5890 papers, of which 4292 remained after the removal of duplicates.

Fig. 1 PRISMA flow chart for the selection of studies. *The numbers in front of the listed exclusion reasons refer to the exclusion criteria in Table 2.

Based on titles and abstracts, 166 papers were eligible for full-text assessment, and a total of 79 papers [11, 12, 19, 33–108] fulfilled the inclusion criteria (see Additional file 1). A further seven papers were included based on screening of the reference lists of the most central articles [109–115]. Hence, 86 papers were included in the scoping review.
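The study counts above can be cross-checked with simple arithmetic. The sketch below derives the number of duplicates and exclusions at each stage from the counts reported in the text; these derived numbers are not stated in the text itself, and Fig. 1 remains the authoritative source. The `prisma_flow` helper is hypothetical.

```python
def prisma_flow(identified: int, after_duplicates: int, full_text: int,
                included_search: int, included_refs: int) -> dict[str, int]:
    """Derive per-stage removals from the counts reported at each screening stage."""
    if not identified >= after_duplicates >= full_text >= included_search >= 0:
        raise ValueError("counts must not increase from one stage to the next")
    if included_refs < 0:
        raise ValueError("reference-list count cannot be negative")
    return {
        "duplicates_removed": identified - after_duplicates,
        "excluded_title_abstract": after_duplicates - full_text,
        "excluded_full_text": full_text - included_search,
        "total_included": included_search + included_refs,
    }


# Counts as reported in the text.
flow = prisma_flow(5890, 4292, 166, 79, 7)
for stage, n in flow.items():
    print(f"{stage}: {n}")
# duplicates_removed: 1598
# excluded_title_abstract: 4126
# excluded_full_text: 87
# total_included: 86
```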

Characteristics and analysis of included AI-studies

The characteristics of the 86 included studies are presented in Table 3. The most frequently covered clinical areas are radiology (17%), medical imaging (14%) and radiomics (13%). Most studies (n = 61) voice future needs or challenges when evaluating AI, while 15 contain an actual evaluation and 10 are guidelines, statements or frameworks. Most studies were published in 2019 and 2020. The most frequently mentioned or perceived relevant domains are development of AI algorithm, performance metrics and validation (78%), followed by technology aspects (73%). Excluding “other aspects”, the frequency with which individual domains are mentioned ranges from 20% to 78%.
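Two kinds of percentages appear above: shares of study types, which partition the 86 studies, and domain frequencies, where a single study can mention several domains. The Python sketch below, with hypothetical helper names and toy domain data, shows both calculations; only the study-type counts are taken from the text.

```python
from collections import Counter


def proportions(counts: dict[str, int]) -> dict[str, float]:
    """Percentage of the total for each category (categories are mutually exclusive)."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no studies to summarise")
    return {name: 100 * n / total for name, n in counts.items()}


def domain_frequency(mentions: list[set[str]]) -> dict[str, float]:
    """Percentage of studies mentioning each domain; a study counts once per domain."""
    if not mentions:
        raise ValueError("no studies to summarise")
    tally = Counter(domain for study in mentions for domain in study)
    return {domain: 100 * c / len(mentions) for domain, c in tally.items()}


# Study-type counts as reported in the text (61 + 15 + 10 = 86).
study_types = {
    "future needs or challenges": 61,
    "actual evaluation": 15,
    "guideline, statement or framework": 10,
}
for name, pct in proportions(study_types).items():
    print(f"{name}: {pct:.1f}%")
# future needs or challenges: 70.9%
# actual evaluation: 17.4%
# guideline, statement or framework: 11.6%

# Toy data (hypothetical): the domains mentioned by each of four studies.
toy = [{"legal", "ethical"}, {"legal"}, set(), {"ethical", "economics"}]
print(domain_frequency(toy))  # legal 50.0, ethical 50.0, economics 25.0 (key order may vary)
```

Because a study can mention several domains, domain percentages do not sum to 100%, whereas study-type shares do (up to rounding).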

In total, we identified eleven domains relevant to assessing the value of AI (two more than the EUnetHTA domain classification). In addition, we extracted more specific data for eight domains: technology, safety, economics, ethics, organisational, patients and social, legal, and other aspects. For the remaining three domains (clinical effectiveness, the health problem and current use of technology, and development of AI algorithm, performance metrics and validation), we only noted whether information was present. Table 4 summarises the extracted information for each included domain, including specific outcome measures where applicable. Further details can be found in Additional file 2.

Clinical effects (domain 4)

Although clinical outcomes were not extracted from the included studies in this scoping review, the clinical domain is evidently of great importance. Several outcome measures for clinical effectiveness studies have been suggested in a systematic scoping review of AI methods applied to adult patients who underwent any health/medical intervention and reported therapeutic, preventive, or prognostic outcomes [116]. Based on 370 studies, that review found that AI was used primarily for prediction/prognosis, and that efficacy/effectiveness and morbidity outcomes were among the more frequently reported outcomes. Examples of reasonably broad clinical or patient outcomes mentioned in the reviewed literature include fewer false positives in screening mammography, progression of disease and misdiagnosis, avoidance of unnecessary stereotactic biopsies, improved treatment appropriateness (by physicians) and adherence (by patients), and prevention of iatrogenic adverse events.

Discussion

Main findings

This scoping review regarding the value assessment of artificial intelligence in medical imaging yielded 86 included papers. Eleven domains were identified. The frequency of mentioning a domain varied from 20 to 78% within included studies. The studies were divided into three study types: studies voicing future needs or challenges when evaluating the value of AI, actual evaluations, and lastly, guidelines, statements, or frameworks. Out of 86 studies, only 15 were actual evaluations, and thus most data were based on statements and not actual outcomes of evaluations.

Comparing findings to the literature

The domain classification from the EUnetHTA framework proved very useful: the extracted data used all nine pre-existing domains, and only one new domain was identified (apart from a few issues filed under “other aspects”). However, studies of the value assessment of AI in medical imaging include a broader range of important domains than studies in other areas. For example, an interview study in nine European countries investigated the information needs of hospital managers when deciding on investments in new treatments [117]. In that study, legal, social and ethical aspects were not deemed very important, which contrasts with our findings. Further, in telemedicine, a scoping review of empirical studies that applied the Model for Assessment of Telemedicine (MAST) shows that clinical, patient and economic effects are the most important areas [24]. Perhaps AI is unique in that all domains seem rather important, or perhaps this picture will change in a more empirical setting as more late-stage evaluations become available.

Limitations and strengths

Although we tried to provide a general overview of different aspects of value assessment for AI technology, this review has some limitations. The terminology in the area of AI is still relatively immature. Consequently, although the focus was on medical imaging, we included articles on pathology and radiomics. Pathology and radiomics were not fully covered by our search terms, but as this is a scoping review, we decided to keep these related studies and let the available data be reflected in our analysis. Also, some of the included studies were narrative reviews, which made it challenging to draw firm conclusions, since there was no strong evidence supporting the reported results or claims. Another limitation was the considerable overlap between some domains, where the included studies (and extractors) categorised data differently. This made it difficult to align the data analysis. We discussed overlaps in our joint meetings and, to handle these inconsistencies, selected the domain that best covered most of the included topics. For example, the topic of “explainable AI”/“black box” was initially extracted under the safety, technology and organisational domains, and sometimes under other aspects. Further, specific clinical outcomes are evidently of great importance when evaluating an AI technology. However, they were not part of the data extraction, because most studies are very disease-specific and the number of included studies would have been unmanageably high. As this is a scoping review, no critical appraisal of the included studies was performed.

Regarding the strengths of this review, broad coverage of the areas relevant to assessing the value of AI projects is in demand [17, 116, 118]. To the best of our knowledge, this is the first systematic scoping review of the value assessment of AI in the field of medical imaging. Our search included grey literature, which can be an important source of information [119]. Furthermore, we included studies with different research methodologies to ensure high coverage and a broad perspective in our data collection. The joint discussion sessions on topics with high overlap between domains have likely improved the data analysis. High overlap was particularly observed for patient privacy (overlapping the ethical, legal, and patient domains) and the previously mentioned black box topic. Furthermore, most studies were published recently (2019 or later), which shows great interest in this field and means that the review reflects the newest information on the topic. Basing a framework on data from recent publications can strengthen the value assessment in future evaluations in this field.

Future directions

A pipeline giving an overview of the evaluation of AI technologies is shown in Fig. 2. The majority of published AI studies within medical imaging are in the retrospective phase of Fig. 2, i.e. they have a technical focus. However, as our review shows, it is important to evaluate AI projects both clinically and with respect to many other areas, i.e. the prospective phase in Fig. 2, to ensure that AI technologies with no effect or with unintended effects are not implemented uncritically.

Results from this review were used as part of a project whose overall aim was to develop a Model for ASsessing the value of AI in medical imaging (MAS-AI) [120]. MAS-AI was developed in three phases. The first phase was this literature review. Next, we interviewed leading AI researchers in Denmark. The third phase consisted of two workshops in which decision-makers, patient organisations, and researchers discussed crucial topics when evaluating AI. The multidisciplinary team revised the model between workshops according to the comments received. The HTA framework MAS-AI is intended to support the introduction of AI technologies for medical imaging into healthcare.

It is important to ensure uniform and valid decisions regarding the adoption of AI technology with a structured process and tool. The MAS-AI model can help support these decisions and provide greater transparency for all parties involved.

Conclusion

This scoping review of the value assessment of artificial intelligence in medical imaging yielded 86 papers fulfilling the inclusion criteria, and eleven domains were identified: (1) the health problem and current use of technology, (2) technology aspects, (3) safety assessment, (4) clinical effectiveness, (5) economics, (6) ethical analysis, (7) organisational aspects, (8) patients and social aspects, (9) legal aspects, (10) development of AI algorithm, performance metrics and validation, and (11) other aspects. The domain classification from the EUnetHTA framework proved very useful, and the analysis identified only one genuinely new domain, domain 10 (a few issues were included under “other aspects”). The included studies cover a broad range of essential domains when addressing AI technologies; in contrast to other areas, legal and ethical aspects are highlighted as important in this review.

Availability of data and materials

The raw datasets used during the current study are available from the corresponding author on reasonable request.

Change history

23 January 2023

A Correction to this paper has been published: https://doi.org/10.1186/s12880-023-00965-z

Abbreviations

- AI: Artificial intelligence
- CLAIM: Checklist for Artificial Intelligence in Medical Imaging
- HTA: Health technology assessment
- EUnetHTA: European Network for Health Technology Assessment
- MAS-AI: Model for ASsessing the value of AI in medical imaging
- MAST: Model for ASsessment of Telemedicine

References

Hashimoto DA, Rosman G, Rus D, Meireles OR. Artificial intelligence in surgery: promises and perils. Ann Surg. 2018;268(1):70–6.

Vaisman A, Linder N, Lundin J, Orchanian-Cheff A, Coulibaly JT, Ephraim RKD, et al. Artificial intelligence, diagnostic imaging and neglected tropical diseases: ethical implications. Bull World Health Organ. 2020;98(4):288–9.

Transforming healthcare with AI. The impact on the workforce and organizations 2020. https://www.mckinsey.com/industries/healthcare-systems-and-services/our-insights/transforming-healthcare-with-ai. Accessed 23 June 2022.

Erickson BJ, Korfiatis P, Akkus Z, Kline TL. Machine learning for medical imaging. Radiographics. 2017;37(2):505–15.

Liu X, Faes L, Kale AU, Wagner SK, Fu DJ, Bruynseels A, et al. A comparison of deep learning performance against health-care professionals in detecting diseases from medical imaging: a systematic review and meta-analysis. Lancet Digit Health. 2019;1(6):e271–97.

Harris S PS. Funding analysis of companies developing machine learning solutions for medical imaging 2019. https://s3-eu-west-2.amazonaws.com/signifyresearch/app/uploads/2019/01/31125920/Funding-Analysis-of-Companies-Developing-Machine-Learning-Solutions-for-Medical-Imaging_Jan-2019.pdf. Accessed 23 June 2022.

Accenture. Artificial Intelligence: Healthcare’s New Nervous System. 2017.

Kent J. Providers embrace predictive analytics for clinical, financial benefits 2018. https://healthitanalytics.com/news/providers-embrace-predictive-analytics-for-clinical-financial-benefits. Accessed 23 June 2022.

Dinga R, Penninx BW, Veltman DJ, Schmaal L, Marquand AF. Beyond accuracy: measures for assessing machine learning models, pitfalls and guidelines. Cold Spring Harbor: Cold Spring Harbor Laboratory Press; 2019.

Mongan J, Moy L, Kahn CE Jr. Checklist for Artificial Intelligence in Medical Imaging (CLAIM): a guide for authors and reviewers. Radiol Artif Intell. 2020;2(2): e200029.

Gahungu N, Trueick R, Bhat S, Sengupta PP, Dwivedi G. Current challenges and recent updates in artificial intelligence and echocardiography. Curr Cardiovasc Imaging Rep. 2020;13(2):66.

Park SH, Han K. Methodologic guide for evaluating clinical performance and effect of artificial intelligence technology for medical diagnosis and prediction. Radiology. 2018;286(3):800–9.

Pellegrini E, Ballerini L, del Maria CVH, Chappell FM, González-Castro V, Anblagan D, et al. Machine learning of neuroimaging to diagnose cognitive impairment and dementia: a systematic review and comparative analysis. Ithaca: Cornell University Library; 2018.

Young AT, Vora NB, Cortez J, Tam A, Yeniay Y, Afifi L, et al. The role of technology in melanoma screening and diagnosis. Pigm Cell Melanoma Res. 2020;6:66.

Alami H, Lehoux P, Auclair Y, de Guise M, Gagnon MP, Shaw J, et al. Artificial intelligence and health technology assessment: anticipating a new level of complexity. J Med Internet Res. 2020;22(7): e17707.

ATV. BEDRE SUNDHED MED AI? En hvidbog fra ATV. 2019.

Li RC, Asch SM, Shah NH. Developing a delivery science for artificial intelligence in healthcare. NPJ Digit Med. 2020;3:107.

Kidholm K, Ekeland AG, Jensen LK, Rasmussen J, Pedersen CD, Bowes A, et al. A model for assessment of telemedicine applications: mast. Int J Technol Assess Health Care. 2012;28(1):44–51.

Haverinen J, Keränen N, Falkenbach P, Maijala A, Kolehmainen T, Reponen J. Digi-HTA: Health technology assessment framework for digital healthcare services. Finn J eHealth eWelfare. 2019;11(4):326–41.

EUnetHTA Joint Action 2. Work Package 8. HTA Core Model ® version 3.0 (Pdf) 2016. www.htacoremodel.info/BrowseModel.aspx. Accessed 23 June 2022.

Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32.

Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169(7):467–73.

Elhakim MT, Graumann O, Brønsro Larsen L, Nielsen M, Rasmussen BS. Kunstig intelligens til cancerdiagnostik i brystkraeftscreening. Ugeskr Laeger. 2020;182(16):1488–92.

Kidholm K, Clemensen J, Caffery LJ, Smith AC. The Model for Assessment of Telemedicine (MAST): a scoping review of empirical studies. J Telemed Telecare. 2017;23(9):803–13.

Babineau J. Product Review: Covidence (Systematic Review Software). J Can Health Libr Assoc. 2014;35(2):68–71.

International HTA database 2021. https://database.inahta.org. Accessed 23 June 2022.

OpenGrey 2021. http://www.opengrey.eu. Accessed 23 June 2022.

National Institutes of Health 2021. https://www.nih.gov. Accessed 23 June 2022.

National Health Services 2021. https://www.nhs.uk. Accessed 23 June 2022.

FHI Folkehelseinstituttet 2021. https://www.fhi.no. Accessed 23 June 2022.

Folkhälsomyndigheten 2021. https://www.folkhalsomyndigheten.se. Accessed 23 June 2022.

Miles MB, Huberman AM, Saldana J. Qualitative data analysis: a methods sourcebook 3rd edn. Sage; 2014.

Alexander CA, Wang L. Big data analytics in heart attack prediction. J Nurs Care. 2017;6(393):2167–1168.

Angehrn Z, Haldna L, Zandvliet AS, Berglund EG, Zeeuw J, Amzal B, et al. Artificial intelligence and machine learning applied at the point of care. Front Pharmacol. 2020;11.

Baig MA, Almuhaizea MA, Alshehri J, Bazarbashi MS, Al-Shagathrh F. Urgent need for developing a framework for the governance of AI in healthcare. Stud Health Technol Inform. 2020;272:253–6.

Bakalo R, Goldberger J, Ben-Ari R. Weakly and semi supervised detection in medical imaging via deep dual branch net. Ithaca: Cornell University Library; 2020.

BenTaieb A, Hamarneh G. Deep learning models for digital pathology. Ithaca: Cornell University Library; 2019.

Bhattad PB, Jain V. Artificial intelligence in modern medicine—the evolving necessity of the present and role in transforming the future of medical care. Cureus. 2020;12(5):66.

Bhavani SR, Chilambuchelvan A, Senthilkumar J, Manjula D, Krishnamoorthy R, Kannan A. A secure cloud-based multi-agent intelligent system for mammogram image diagnosis. Int J Biomed Eng Technol. 2018;28(2):185–202.

Bi WL, Hosny A, Schabath MB, Giger ML, Birkbak NJ, Mehrtash A, et al. Artificial intelligence in cancer imaging: Clinical challenges and applications. CA Cancer J Clin. 2019;69(2):127–57.

Bohr A, Memarzadeh K. The rise of artificial intelligence in healthcare applications. Artif Intell Healthc. 2020;66:25–60.

Boissoneault J, Sevel L, Letzen J, Robinson M, Staud R. Biomarkers for musculoskeletal pain conditions: use of brain imaging and machine learning. Curr Rheumatol Rep. 2017;19(1):5.

Brzezicki MA, Kobetic MD, Neumann S, Pennington C. Diagnostic accuracy of frontotemporal dementia. An artificial intelligence-powered study of symptoms, imaging and clinical judgement. Adv Med Sci. 2019;64(2):292–302.

Cardenas CE, Yang J, Anderson BM, Court LE, Brock KB. Advances in auto-segmentation. Semin Radiat Oncol. 2019;29(3):185–97.

Chan HP, Samala RK, Hadjiiski LM. CAD and AI for breast cancer-recent development and challenges. Br J Radiol. 2020;93(1108):20190580.

Chennubhotla C, Clarke LP, Fedorov A, Foran D, Harris G, Helton E, et al. An assessment of imaging informatics for precision medicine in cancer. Yearb Med Inf. 2017;26(1):110–9.

Cheung CY, Tang F, Ting DSW, Tan GSW, Wong TY. Artificial intelligence in diabetic eye disease screening. Asia Pac J Ophthalmol. 2019;24:24.

Chow JCL. Internet-based computer technology on radiotherapy. Rep Pract Oncol Radiother. 2017;22(6):455–62.

Coccia M. Artificial intelligence technology in cancer imaging: Clinical challenges for detection of lung and breast cancer. J Soc Admin Sci. 2019;6(2):82–98.

Coccia M. Artificial intelligence technology in oncology: a new technological paradigm. Ithaca: Cornell University Library; 2019.

Dalal V, Carmicheal J, Dhaliwal A, Jain M, Kaur S, Batra SK. Radiomics in stratification of pancreatic cystic lesions: machine learning in action. Cancer Lett. 2020;469:228–37.

Dallora AL, Anderberg P, Kvist O, Mendes E, Diaz Ruiz S, Sanmartin BJ. Bone age assessment with various machine learning techniques: a systematic literature review and meta-analysis. PLoS ONE. 2019;14(7): e0220242.

Dallora AL, Eivazzadeh S, Mendes E, Berglund J, Anderberg P. Machine learning and microsimulation techniques on the prognosis of dementia: a systematic literature review. PLoS ONE. 2017;12(6):66.

Dankwa-Mullan I, Rivo M, Sepulveda M, Park Y, Snowdon J, Rhee K. Transforming diabetes care through artificial intelligence: the future is here. Popul Health Manag. 2019;22(3):229–42.

Das D, Ito J, Kadowaki T, Tsuda K. An interpretable machine learning model for diagnosis of Alzheimer’s disease. PeerJ. 2019;6:66.

Deng Y, Sun Y, Zhu Y, Xu Y, Yang Q, Zhang S, et al. A new framework to reduce doctor’s workload for medical image annotation. IEEE Access. 2019;7:107097–104.

deSouza NM, Achten E, Alberich-Bayarri A, Bamberg F, Boellaard R, Clément O, et al. Validated imaging biomarkers as decision-making tools in clinical trials and routine practice: current status and recommendations from the EIBALL* subcommittee of the European Society of Radiology (ESR). Insights Imaging. 2019;10(1):87.

Du-Harpur X, Watt FM, Luscombe NM, Lynch MD. What is AI? Applications of artificial intelligence to dermatology. Brit J Dermatol. 2020;6:66.

Erdağlı H. PROMETHEE Analysis of Breast Cancer Imaging Devices. 2019.

Fraser AG. A manifesto for cardiovascular imaging: addressing the human factor. Eur Heart J Cardiovasc Imaging. 2017;18(12):1311–21.

Gatta R, Depeursinge A, Ratib O, Michielin O, Leimgruber A. Integrating radiomics into holomics for personalised oncology: from algorithms to bedside. Eur Radiol Exp. 2020;4(1):11.

Geis JR, Brady AP, Wu CC, Spencer J, Ranschaert E, Jaremko JL, et al. Ethics of artificial intelligence in radiology: summary of the joint European and North American Multisociety Statement. J Am Coll Radiol. 2019;16(11):1516–21.

Geras KJ, Mann RM, Moy L. Artificial intelligence for mammography and digital breast tomosynthesis: current concepts and future perspectives. Radiology. 2019;293(2):246–59.

Goldberg-Stein S, Chernyak V. Adding value in radiology reporting. J Am Coll Radiol. 2019;16(9):1292–8.

Goldenberg SL, Nir G, Salcudean SE. A new era: artificial intelligence and machine learning in prostate cancer. Nat Rev Urol. 2019;16(7):391–403.

Groot OQ, Bongers MER, Ogink PT, Senders JT, Karhade AV, Bramer JAM, et al. Does artificial intelligence outperform natural intelligence in interpreting musculoskeletal radiological studies? A systematic review. Clin Orthop Relat Res. 2020;30:30.

Hekler A, Utikal JS, Enk AH, Berking C, Klode J, Schadendorf D, et al. Pathologist-level classification of histopathological melanoma images with deep neural networks. Eur J Cancer. 2019;115:79.

Hopkins JJ, Keane PA, Balaskas K. Delivering personalized medicine in retinal care: from artificial intelligence algorithms to clinical application. Curr Opin Ophthalmol. 2020;31(5):329–36.

Huellebrand M, Messroghli D, Tautz L, Kuehne T, Hennemuth A. An extensible software platform for interdisciplinary cardiovascular imaging research. Comput Methods Programs Biomed. 2020;184:66.

Ibrahim A, Gamble P, Jaroensri R, Abdelsamea MM, Mermel CH, Chen PHC, et al. Artificial intelligence in digital breast pathology: techniques and applications. Breast. 2020;49:267–73.

Jaremko JL, Azar M, Bromwich R, Lum A, Alicia Cheong LH, Gibert M, et al. Canadian Association of radiologists white paper on ethical and legal issues related to artificial intelligence in radiology. Can Assoc Radiol J. 2019;70(2):107–18.

Kann BH, Thompson R, Thomas CR Jr, Dicker A, Aneja S. Artificial intelligence in oncology: current applications and future directions. Oncology. 2019;33(2):46–53.

Krupinski EA. An ethics framework for clinical imaging data sharing and the greater good. Radiology. 2020;295(3):683–4.

Kusunose K, Haga A, Abe T, Sata M. Utilization of artificial intelligence in echocardiography. Circ J. 2019;83(8):1623–9.

Lambin P, Leijenaar RTH, Deist TM, Peerlings J, De Jong EEC, Van Timmeren J, et al. Radiomics: the bridge between medical imaging and personalized medicine. Nat Rev Clin Oncol. 2017;14(12):749–62.

Langerhuizen DWG, Janssen SJ, Mallee WH, van den Bekerom MPJ, Ring D, Kerkhoffs G, et al. What are the applications and limitations of artificial intelligence for fracture detection and classification in orthopaedic trauma imaging? A systematic review. Clin Orthop Relat Res. 2019;477(11):2482–91.

Larson DB, Magnus DC, Lungren MP, Shah NH, Langlotz CP. Ethics of using and sharing clinical imaging data for artificial intelligence: a proposed framework. Radiology. 2020;295(3):675–82.

Le EPV, Wang Y, Huang Y, Hickman S, Gilbert FJ. Artificial intelligence in breast imaging. Clin Radiol. 2019;74(5):357–66.

Le Rest CC, Hustinx R. Are radiomics ready for clinical prime-time in PET/CT imaging? Q J Nucl Med Mol Imaging. 2019;63(4):347.

Lei W, Wang H, Gu R, Zhang S, Zhang S, Wang G. DeepIGeoS-V2: deep interactive segmentation of multiple organs from head and neck images with lightweight CNNs. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2019;11851 LNCS:61–9.

Liew C. The future of radiology augmented with artificial intelligence: a strategy for success. Eur J Radiol. 2018;102:152–6.

Liu Z, Wang S, Dong D, Wei J, Fang C, Zhou X, et al. The applications of radiomics in precision diagnosis and treatment of oncology: opportunities and challenges. Theranostics. 2019;9(5):1303–22.

Lundervold AS, Lundervold A. An overview of deep learning in medical imaging focusing on MRI. Ithaca: Cornell University Library; 2018.

Mathur P, Burns ML. Artificial intelligence in critical care. Int Anesthesiol Clin. 2019;57(2):89–102.

McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, et al. International evaluation of an AI system for breast cancer screening. Nature. 2020;577(7788):89–94.

Nagendran M, Chen Y, Lovejoy CA, Gordon AC, Komorowski M, Harvey H, et al. Artificial intelligence versus clinicians: systematic review of design, reporting standards, and claims of deep learning studies in medical imaging. BMJ. 2020;368:66.

O’Connor JP, Aboagye EO, Adams JE, Aerts HJ, Barrington SF, Beer AJ, et al. Imaging biomarker roadmap for cancer studies. Nat Rev Clin Oncol. 2017;14(3):169–86.

Oikonomou EK, Siddique M, Antoniades C. Artificial intelligence in medical imaging: a radiomic guide to precision phenotyping of cardiovascular disease. Cardiovasc Res. 2020;6:66.

Olthof AW, van Ooijen PMA, Rezazade Mehrizi MH. Promises of artificial intelligence in neuroradiology: a systematic technographic review. Neuroradiology. 2020;62(10):1265–78.

Papanikolaou N, Matos C, Koh DM. How to develop a meaningful radiomic signature for clinical use in oncologic patients. Cancer Imaging. 2020;20(1):33.

Peeken JC, Bernhofer M, Wiestler B, Goldberg T, Cremers D, Rost B, et al. Radiomics in radiooncology—challenging the medical physicist. Phys Med. 2018;48:27–36.

Peeken JC, Wiestler B, Combs SE. Image-guided radiooncology: the potential of radiomics in clinical application. Recent Results Cancer Res. 2020;216:773–94.

Pesapane F, Codari M, Sardanelli F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp. 2018;2(1):35.

Petrone PM, Casamitjana A, Falcon C, Artigues M, Operto G, Cacciaglia R, et al. Prediction of amyloid pathology in cognitively unimpaired individuals using voxel-wise analysis of longitudinal structural brain MRI. Alzheimers Res Ther. 2019;11(1):66.

Pocevičiūtė M, Eilertsen G, Lundström C. Survey of XAI in digital pathology. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics). 2020;12090 LNCS:56–88.

Qi Q, Li Y, Wang J, Han Z, Huang Y, Ding X, et al. Label-efficient breast cancer histopathological image classification. IEEE J Biomed Health Inf. 2019;23(5):2108–16.

Retson TA, Besser AH, Sall S, Golden D, Hsiao A. Machine learning and deep neural networks in thoracic and cardiovascular imaging. J Thorac Imaging. 2019;34(3):192–201.

Saba L, Biswas M, Kuppili V, Cuadrado Godia E, Suri HS, Edla DR, et al. The present and future of deep learning in radiology. Eur J Radiol. 2019;114:14–24.

Shen FX, Wolf SM, Gonzalez RG, Garwood M. Ethical issues posed by field research using highly portable and cloud-enabled neuroimaging. Neuron. 2020;105(5):771–5.

Sollini M, Antunovic L, Chiti A, Kirienko M. Towards clinical application of image mining: a systematic review on artificial intelligence and radiomics. Eur J Nucl Med Mol Imaging. 2019;46(13):2656–72.

Starmans MPA, van der Voort SR, Tovar JMC, Veenland JF, Klein S, Niessen WJ. Radiomics. In: Handbook of medical image computing and computer assisted intervention. 2019. p. 429–56.

Takahashi R, Kajikawa Y. Computer-aided detection: cost effectiveness analysis with learning model. In: 2017 Portland international conference on management of engineering and technology (PICMET); 2017. p. 1–8.

Tesche C, De Cecco CN, Albrecht MH, Duguay TM, Bayer RR II, Litwin SE, et al. Coronary CT angiography-derived fractional flow reserve. Radiology. 2017;285(1):17–33.

van Timmeren JE, Cester D, Tanadini-Lang S, Alkadhi H, Baessler B. Radiomics in medical imaging—“how-to” guide and critical reflection. Insights Imaging. 2020;11(1):66.

Xie Q, Faust K, Van Ommeren R, Sheikh A, Djuric U, Diamandis P. Deep learning for image analysis: personalizing medicine closer to the point of care. Crit Rev Clin Lab Sci. 2019;56(1):61–73.

Xie Y, Gunasekeran DV, Balaskas K, Keane PA, Sim DA, Bachmann LM, et al. Health economic and safety considerations for artificial intelligence applications in diabetic retinopathy screening. Transl Vis Sci Technol. 2020;9(2):22.

Yuan J, Fan Y, Lv X, Chen C, Li D, Hong Y, et al. Research on the practical classification and privacy protection of CT images of parotid tumors based on ResNet50 model. J Phys Conf Ser. 2020;66:1576.

Zaharchuk G. Next generation research applications for hybrid PET/MR and PET/CT imaging using deep learning. Eur J Nucl Med Mol Imaging. 2019;46(13):2700–7.

Keel S, Lee PY, Scheetz J, Li Z, Kotowicz MA, MacIsaac RJ, et al. Feasibility and patient acceptability of a novel artificial intelligence-based screening model for diabetic retinopathy at endocrinology outpatient services: a pilot study. Sci Rep. 2018;8(1):4330.

Kohli M, Prevedello LM, Filice RW, Geis JR. Implementing machine learning in radiology practice and research. Am J Roentgenol. 2017;208(4):754–60.

Lin H, Li R, Liu Z, Chen J, Yang Y, Chen H, et al. Diagnostic efficacy and therapeutic decision-making capacity of an artificial intelligence platform for childhood cataracts in eye clinics: a multicentre randomized controlled trial. EClinicalMedicine. 2019;9:52–9.

Pesapane F, Suter MB, Codari M, Patella F, Volonté C, Sardanelli F. Chapter 52—Regulatory issues for artificial intelligence in radiology. In: Faintuch J, Faintuch S, editors. Precision Medicine for Investigators, Practitioners and Providers. New York: Academic Press; 2020. p. 533–43.

Pesapane F, Volonté C, Codari M, Sardanelli F. Artificial intelligence as a medical device in radiology: ethical and regulatory issues in Europe and the United States. Insights Imaging. 2018;9(5):745–53.

Safdar NM, Banja JD, Meltzer CC. Ethical considerations in artificial intelligence. Eur J Radiol. 2020;122:108768.

Tang A, Tam R, Cadrin-Chênevert A, Guest W, Chong J, Barfett J, et al. Canadian Association of radiologists white paper on artificial intelligence in radiology. Can Assoc Radiol J. 2018;69(2):120–35.

Graili P, Ieraci L, Hosseinkhah N, Argent-Katwala M. Artificial intelligence in outcomes research: a systematic scoping review. Expert Rev Pharmacoecon Outcomes Res. 2021;66:1–23.

Kidholm K, Ølholm AM, Birk-Olsen M, Cicchetti A, Fure B, Halmesmäki E, et al. Hospital managers’ need for information in decision-making—an interview study in nine European countries. Health Policy. 2015;6:66.

Omoumi P, Ducarouge A, Tournier A, Harvey H, Kahn CE, Louvet-de Verchère F, et al. To buy or not to buy—evaluating commercial AI solutions in radiology (the ECLAIR guidelines). Eur Radiol. 2021;31(6):3786–96.

Paez A. Grey literature: an important resource in systematic reviews. J Evid Based Med. 2017;6:66.

Fasterholdt I, Kjølhede T, Naghavi-Behzad M, Schmidt T, Rautalammi Q, Hildebrandt M, et al. Model for ASsessing the value of AI in medical imaging (MAS-AI). Int J Technol Assess Health Care. 2022;38(1):e74, 1–6. https://doi.org/10.1017/S0266462322000551.

Acknowledgements

The authors thank research librarian Mette Brandt Eriksen for help with narrowing down the databases and for technical help with the searches, and trainee Laura Kuntz for conducting the searches and for honing the search criteria together with Iben Fasterholdt. Thanks also to student assistants Jonathan Messerschmidt and Martin Grønne Jensen for obtaining the full texts of the included studies, to Sara Eun Lendal for contributing to the language of the article, and to Valeria E. Rac for adding clarity.

Funding

This study was supported by an in-house fund at Odense University Hospital (Denmark) named “Konkurrencemidler” in Danish. The funder had no role in the design of the study and collection, analysis, and interpretation of data or in writing the manuscript.

Author information

Contributions

IF, BR and KK conceived and designed the study. IF and TK performed the literature search. IF, MNB, BR, TK, MMS and KK contributed to data collection and data analysis, while IF interpreted the data. IF, MNB, BR, TK, MMS, MGH and KK discussed the results and contributed to revising the final manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original online version of this article has been revised to remove an incorrect word from Figure 1.

Supplementary Information

Additional file 1.

Final searches for the scoping review. The final searches for the scoping review are provided.

Additional file 2.

Full data analysis for all domains. The full data analysis for all domains is provided.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Fasterholdt, I., Naghavi-Behzad, M., Rasmussen, B.S.B. et al. Value assessment of artificial intelligence in medical imaging: a scoping review. BMC Med Imaging 22, 187 (2022). https://doi.org/10.1186/s12880-022-00918-y

DOI: https://doi.org/10.1186/s12880-022-00918-y