Abstract

One of the most promising areas of health innovation is the application of artificial intelligence (AI), primarily in medical imaging. This article provides basic definitions of terms such as “machine/deep learning” and analyses the integration of AI into radiology. Publications on AI have drastically increased from about 100–150 per year in 2007–2008 to 700–800 per year in 2016–2017. Magnetic resonance imaging and computed tomography collectively account for more than 50% of current articles. Neuroradiology appears in about one-third of the papers, followed by musculoskeletal, cardiovascular, breast, urogenital, lung/thorax, and abdomen, each representing 6–9% of articles. With an irreversible increase in the amount of data and the possibility to use AI to identify findings either detectable or not by the human eye, radiology is now moving from a subjective perceptual skill to a more objective science. Radiologists, who were on the forefront of the digital era in medicine, can guide the introduction of AI into healthcare. Yet, they will not be replaced because radiology includes communication of diagnosis, consideration of patients’ values and preferences, medical judgment, quality assurance, education, policy-making, and interventional procedures. The higher efficiency provided by AI will allow radiologists to perform more value-added tasks, becoming more visible to patients and playing a vital role in multidisciplinary clinical teams.

Key points

- Over 10 years, publications on AI in radiology have increased from 100–150 per year to 700–800 per year
- Magnetic resonance imaging and computed tomography are the most involved techniques
- Neuroradiology appears as the most involved subspecialty (accounting for about one-third of the papers), followed by musculoskeletal, cardiovascular, breast, urogenital, lung/thorax, and abdominal radiology (each representing 6–9% of articles)
- Radiologists, the physicians who were on the forefront of the digital era in medicine, can now guide the introduction of AI in healthcare

Introduction

One of the most promising areas of health innovation is the application of artificial intelligence (AI) in medical imaging, including, but not limited to, image processing and interpretation [1]. Indeed, AI may find multiple applications, from image acquisition and processing to aided reporting, follow-up planning, data storage, data mining, and many others. Due to this wide range of applications, AI is expected to massively impact the radiologist’s daily life.

This article provides basic definitions of terms commonly used when discussing AI applications, analyses various aspects related to the integration of AI in the radiological workflow, and provides an overview of the balance between AI threats and opportunities for radiologists. Awareness of this trend is a necessity, especially for younger generations who will face this revolution.

Artificial intelligence: definitions

The term AI is applied when a device mimics cognitive functions, such as learning and problem solving [2]. More generally, AI refers to a field of computer science dedicated to the creation of systems performing tasks that usually require human intelligence, branching off into different techniques [3]. Machine learning (ML), a term introduced by Arthur Samuel in 1959 to describe a subfield of AI [4] that includes all those approaches that allow computers to learn from data without being explicitly programmed, has been extensively applied to medical imaging [5]. Among the techniques that fall under the ML umbrella, deep learning (DL) has emerged as one of the most promising. Indeed, DL is a technique belonging to ML, which in turn refers to a broader AI family (Fig. 1). In particular, DL methods belong to representation-learning methods with multiple levels of representation, which process raw data to perform classification or detection tasks [6].

Fig. 1 Deep learning as a subset of machine learning methods, which represent a branch of the existing artificial intelligence techniques. Machine learning techniques have been extensively applied since the 1980s. Deep learning has been applied since the 2010s due to the advancement of computational resources

ML incorporates computational models and algorithms that imitate the architecture of the biological neural networks in the brain, i.e., artificial neural networks (ANNs) [7]. Neural network architecture is structured in layers composed of interconnected nodes. Each node of the network performs a weighted sum of the input data, which is subsequently passed to an activation function. Weights are dynamically optimised during the training phase. There are three different kinds of layers: the input layer, which receives input data; the output layer, which produces the results of data processing; and the hidden layer(s), which extract the patterns within the data. The DL approach was developed to improve on the performance of conventional ANNs when using deep architectures. A deep ANN differs from a network with a single hidden layer by having a large number of hidden layers, which characterise the depth of the network [8]. Among the different deep ANNs, convolutional neural networks (CNNs) have become popular in computer vision applications. In this class of deep ANNs, convolution operations are used to obtain feature maps in which the intensity of each pixel/voxel is calculated as the sum of the corresponding pixel/voxel of the original image and its neighbours, weighted by convolution matrices (also called kernels). Different kernels are applied for specific tasks, such as blurring, sharpening, or edge detection. CNNs are biologically inspired networks mimicking the behaviour of the brain cortex, which contains a complex structure of cells sensitive to small regions of the visual field [3]. The architecture of deep CNNs allows for the composition of complex features (such as shapes) from simpler features (e.g. image intensities) to decode raw image data without the need to detect specific features [3] (Fig. 2).

Fig. 2 Comparison between classic machine learning and deep learning approaches applied to a classification task. Both depicted approaches use an artificial neural network organised in different layers (IL input layer, HL hidden layer, OL output layer). The deep learning approach avoids the design of dedicated feature extractors by using a deep neural network that represents complex features as a composition of simpler ones
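The node computation and the convolution operation described above can be illustrated with a minimal sketch in plain Python. It is not taken from any cited work: the function names (`node_output`, `convolve2d`), the sigmoid activation, the toy image, and the two hand-written kernels are assumptions chosen only for illustration. In a real CNN the kernel weights are learned during training rather than fixed by hand.

```python
import math


def sigmoid(x):
    """Logistic activation, squashing any real value into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def node_output(inputs, weights, bias):
    """One node: weighted sum of the inputs, passed to an activation function."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(weighted_sum)


def convolve2d(image, kernel):
    """Slide a kernel over a 2D image (no padding, stride 1).

    Each output value is the sum of a pixel and its neighbours, weighted by
    the kernel entries. As in most CNN libraries, the kernel is not flipped
    (strictly speaking, this is a cross-correlation).
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    feature_map = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0.0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4x5 "image" with a vertical edge between columns 2 and 3.
IMAGE = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

# Hand-written kernels (assumed values) for blurring and edge detection.
BLUR = [[1 / 9] * 3 for _ in range(3)]
VERTICAL_EDGE = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]

edge_map = convolve2d(IMAGE, VERTICAL_EDGE)  # large values where the edge lies
blur_map = convolve2d(IMAGE, BLUR)           # smoothed transition across the edge
```

In this toy case the edge kernel responds only where the window straddles the intensity step, while the blur kernel produces a gradual ramp across it. Stacking many such learned feature maps is what lets deeper layers compose shapes from simple intensity patterns.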

Despite their performance, the architecture of ML networks makes them prone to failing to reach convergence and to overfitting the training dataset. On the other hand, the complexity of deep network architectures makes them demanding in terms of computational resources and size of the training sample.

Success in DL application was possible mainly due to recent advancements in the development of hardware technologies, like graphics processing units [5]. Indeed, the high number of nodes needed to detect complex relationships and patterns within data may result in billions of parameters that need to be optimised during the training phase. For this reason, DL networks require a huge amount of training data, which in turn increases the computing power needed to analyse them. These are also the reasons why DL algorithms are showing increased performance and are, theoretically, not susceptible to the performance plateau of the simpler ML networks (Fig. 3).
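To give a feeling for how quickly the number of trainable parameters grows with depth and width, the following sketch counts the weights and biases of a fully connected network. The helper name `count_parameters` and the layer sizes are hypothetical, chosen only for illustration; real imaging networks, in particular CNNs, share weights and are sized differently.

```python
def count_parameters(layer_sizes):
    """Count weights and biases of a fully connected network.

    `layer_sizes` lists the number of nodes per layer, input layer first.
    Each pair of consecutive layers contributes n_in * n_out weights plus
    one bias per node of the receiving layer.
    """
    return sum(
        n_in * n_out + n_out
        for n_in, n_out in zip(layer_sizes, layer_sizes[1:])
    )


# Assumed input: a 64 x 64 pixel image flattened to 4096 values, two classes.
shallow = [64 * 64, 32, 2]               # one small hidden layer
deep = [64 * 64, 1024, 1024, 1024, 2]    # three wide hidden layers

shallow_params = count_parameters(shallow)
deep_params = count_parameters(deep)
print(f"shallow: {shallow_params:,} parameters")
print(f"deep:    {deep_params:,} parameters")
```

Even on this small assumed input the deeper network has roughly fifty times more parameters to optimise, which is consistent with the need for more training data and computing power described above.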

Fig. 4 Number of publications indexed on EMBASE obtained using the search query (‘artificial intelligence’/exp. OR ‘artificial intelligence’ OR ‘machine learning’/exp. OR ‘machine learning’ OR ‘deep learning’/exp. OR ‘deep learning’) AND (‘radiology’/exp. OR ‘radiology’ OR ‘diagnostic imaging’/exp. OR ‘diagnostic imaging’) AND ([english]/lim). EMBASE was accessed on April 24, 2018. For each year, the number of publications was stratified by imaging modality. US ultrasound, MRI magnetic resonance imaging, CT computed tomography, PET positron emission tomography, SPECT single-photon emission computed tomography. Diagnostic modalities different from those listed above are grouped under the “other topic” label (e.g. optical coherence tomography, dual-energy x-ray absorptiometry, etc.)

Radiologists are already familiar with computer-aided detection/diagnosis (CAD) systems, which were first introduced in the 1960s in chest x-ray and mammography applications [5]. However, advances in algorithm development, combined with the ease of access to computational resources, allow AI to be applied in radiological decision-making at a higher functional level [7].

The wind of change

The great enthusiasm for and dynamism in the development of AI systems in radiology is shown by the increase in publications on this topic (Figs. 4 and 5). Ten years ago, the total number of publications on AI in radiology only just exceeded 100 per year. Since then, the number has increased tremendously, reaching 700–800 publications per year in 2016–2017. In the last couple of years, computed tomography (CT) and magnetic resonance imaging (MRI) have collectively accounted for more than 50% of articles, though radiography, mammography, and ultrasound are also represented (Table 1). Neuroradiology (here evaluated as imaging of the central nervous system) is the most involved subspecialty (accounting for about one-third of the papers), followed by musculoskeletal, cardiovascular, breast, urogenital, lung/thorax, and abdominal radiology, each representing between 6 and 9% of the total number of papers (Table 2). AI currently has an impact on the field of radiology, with MRI and neuroradiology as the major fields of innovation.

Fig. 5 Number of publications indexed on EMBASE obtained using the search query (‘artificial intelligence’/exp. OR ‘artificial intelligence’ OR ‘machine learning’/exp. OR ‘machine learning’ OR ‘deep learning’/exp. OR ‘deep learning’) AND (‘radiology’ OR ‘diagnostic imaging’). EMBASE was accessed on April 24, 2018. For each year, the number of publications was subdivided, separating opinion articles, reviews and conference abstracts from original articles in seven main subgroups considering subspecialty or body part. Other fields of medical imaging different from those listed above are grouped under the “other topics” label (e.g. dermatology, ophthalmology, head and neck, etc.)

Recent meetings have also proven the interest in AI applications. During the 2018 European Congress of Radiology and the 2017 Annual Meeting of the Radiological Society of North America, AI represented the focus of many talks. Studies showed the application of DL algorithms for assessing the risk of malignancy for a lung nodule, estimating skeletal maturity from paediatric hand radiographs, classifying liver masses, and even obviating the need for thyroid and breast biopsies [9,10,11]; at the same time, the vendors showed examples of AI applications in action [9, 10].

AI in radiology: threat or opportunity?

A motto of radiology residents is: “The more images you see, the more examinations you report, the better you get”. The same principle works for ML, and in particular for DL. In the past decades, medical imaging has evolved from projection images, such as radiographs or planar scintigrams, to tomographic (i.e. cross-sectional) images, such as ultrasound (US), CT, tomosynthesis, positron emission tomography, MRI, etc., becoming more complex and data rich. Even though this shift to three-dimensional (3D) imaging began during the 1930s, it was not until the digital era that this approach allowed high anatomic detail to be obtained and functional information to be captured.

The increasing amount of data to be processed can influence how radiologists interpret images: from inference to mere detection and description. When too much time is taken for image analysis, the time for evaluating clinical and laboratory contexts is squeezed [12]. The radiologist is reduced to being only an image analyst. The clinical interpretation of the findings is left to other physicians. This is dangerous, not only for radiologists but also for patients: non-radiologists can have a full understanding of the clinical situation but do not have the radiological knowledge. In other words, if radiologists do not have the time for clinical judgement, the final meaning of radiological examinations will be left to non-experts in medical imaging.

In this scenario, AI is not a threat to radiology. It is indeed a tremendous opportunity for its improvement. In fact, similar to our natural intelligence, AI algorithms look at medical images to identify patterns after being trained using vast numbers of examinations and images. Those systems will be able to give information about the characterisation of abnormal findings, mostly in terms of conditional probabilities to be applied to Bayesian decision-making [13, 14].
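As a worked illustration of how a conditional probability feeds Bayesian decision-making, the sketch below updates a pre-test probability of disease after a positive or negative finding, using the odds form of Bayes' theorem. The function name and the numerical values (10% pre-test probability, 90% sensitivity, 80% specificity) are assumptions for illustration only and do not describe any specific AI system.

```python
def post_test_probability(pre_test_probability, sensitivity, specificity,
                          positive=True):
    """Update a disease probability after a test result (Bayes, odds form).

    post-test odds = pre-test odds * likelihood ratio, where
    LR+ = sensitivity / (1 - specificity) for a positive result and
    LR- = (1 - sensitivity) / specificity for a negative result.
    """
    for name, value in (("pre_test_probability", pre_test_probability),
                        ("sensitivity", sensitivity),
                        ("specificity", specificity)):
        if not 0.0 < value < 1.0:
            raise ValueError(f"{name} must lie strictly between 0 and 1")

    if positive:
        likelihood_ratio = sensitivity / (1.0 - specificity)
    else:
        likelihood_ratio = (1.0 - sensitivity) / specificity

    pre_odds = pre_test_probability / (1.0 - pre_test_probability)
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1.0 + post_odds)


# Assumed values, for illustration only.
after_positive = post_test_probability(0.10, 0.90, 0.80, positive=True)
after_negative = post_test_probability(0.10, 0.90, 0.80, positive=False)
print(f"after a positive finding: {after_positive:.3f}")
print(f"after a negative finding: {after_negative:.3f}")
```

With these assumed numbers, a positive finding raises the probability of disease from 10% to about 33%, while a negative finding lowers it to about 1.4%. The same finding therefore carries a different clinical meaning depending on the pre-test probability, which is why the clinical context remains part of the interpretation.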

This is crucial because not all abnormalities are representative of disease or need to be acted upon. AI systems learn on a case-by-case basis. However, unlike CAD systems, which just highlight the presence or absence of image features known to be associated with a disease state [15, 16], AI systems look at specific labelled structures and also learn how to extract image features either visible or invisible to the human eye. This approach mimics human analytical cognition, allowing for better performance than that obtained with old CAD software [17].

With the irreversible increase in imaging data and the possibility to identify findings that humans can or cannot detect [18], radiology is now moving from a subjective perceptual skill to a more objective science [12]. In fact, the radiologist’s work is currently limited by subjectivity, i.e. variations across interpreters, and the adverse effect of fatigue. The attention to inter- and intra-reader variability [19] and the work committed to improving the repeatability and reproducibility of medical imaging over the past decades prove the need for reproducible radiological results. In a broader perspective, the trend toward data sharing also works in this case [20]. The key point is that AI has the potential to replace many of the routine detection, characterisation and quantification tasks currently performed by radiologists using cognitive ability, as well as to accomplish the integration of data mining of electronic medical records in the process [1, 7, 21].

Moreover, the recently developed DL networks have led to more robust models for radiomics, an emerging field that deals with the high-throughput extraction of distinctive quantitative features from radiological images [22,23,24,25,26]. Indeed, data derived from radiomics investigation, such as intensity, shape, texture, wavelet features, etc., can be extracted from medical images [23, 27,28,29,30,31] and extracted by or integrated in ML approaches, providing valuable information for predicting treatment response, differentiating benign from malignant tumours, and assessing cancer genetics in many cancer types [23, 32,33,34]. Because of the rapid growth of this area, numerous published radiomics investigations lack standardised evaluation of both scientific integrity and clinical relevance [22]. However, despite the ongoing need for independent validation datasets to confirm the diagnostic and prognostic value of radiomics features, radiomics has shown several promising applications for personalised therapy [22, 23], not only in oncology but also in other fields, as shown by recent original articles that have proved the value of radiomics in the cardiovascular CT domain [35, 36].
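The simplest radiomics descriptors are first-order intensity statistics computed over a region of interest. The sketch below shows the idea on a toy 2D image; the function name `first_order_features`, the chosen statistics, and the toy values are assumptions for illustration, and dedicated radiomics software computes many more features (shape, texture, wavelet) under standardised definitions.

```python
import math
from collections import Counter
from statistics import mean, pstdev


def first_order_features(image, mask):
    """First-order intensity features of the pixels selected by `mask`.

    `image` and `mask` are 2D lists of equal size; a truthy mask entry marks
    a pixel as part of the region of interest.
    """
    values = [
        image[r][c]
        for r in range(len(image))
        for c in range(len(image[0]))
        if mask[r][c]
    ]
    if not values:
        raise ValueError("the region of interest is empty")

    # Shannon entropy (in bits) of the intensity histogram inside the region.
    n = len(values)
    entropy = -sum(
        (count / n) * math.log2(count / n)
        for count in Counter(values).values()
    )
    return {
        "n_pixels": n,
        "mean": mean(values),
        "std": pstdev(values),
        "min": min(values),
        "max": max(values),
        "entropy_bits": entropy,
    }


# Toy image and region of interest (assumed values).
IMAGE = [
    [10, 10, 50],
    [10, 20, 50],
    [90, 20, 20],
]
ROI = [
    [0, 0, 0],
    [0, 1, 1],
    [0, 1, 1],
]

features = first_order_features(IMAGE, ROI)
```

A feature vector of this kind, computed for each lesion, is the sort of input that can then be passed to an ML classifier, which is where the standardisation and validation issues raised above become critical.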

Finally, AI applications may enhance the reproducibility of technical protocols, improving image quality and decreasing radiation dose, decreasing MRI scanner time [39] and optimising staffing and CT/MRI scanner utilisation, thereby reducing costs [1]. These applications will simplify and accelerate technicians’ work, also resulting in an average higher technical quality of examinations. This may counteract one of the current limitations of AI systems, i.e. the low ability to recognise the effects of positioning, motion artefacts, etc., also due to the lack of standardised acquisition protocols [15, 33, 34]. In other words, AI needs high-quality studies, but its application will lead towards better quality. The holy grail of standardisation in radiology may become attainable, also increasing productivity.

The quicker and standardised detection of image findings has the potential to shorten reporting time and to create automated sections of reports [2]. Structured AI-aided reporting represents a domain where AI may have a great impact, helping radiologists use relevant data for diagnosis and presenting it in a concise format [40].

Recently, the radiological community has discussed how such changes will alter the professional status of radiologists. Negative feelings were expressed, reflecting the opinions of those who are thinking that medicine will not need radiologists at all [21, 41, 42]. Should we consider closing postgraduate schools of radiology, as someone suggested [12, 21]? No. Healthcare systems would not be able to work without radiologists, particularly in the AI era. This answer is not based on a prejudicial defence of radiology as a discipline and profession. Two main ideas should guide this prediction: first, “The best qualification of a prophet is to have a good memory” (attributed to Marquis of Halifax) [43]; and second, “One way to predict the future is to create it” (attributed to Abraham Lincoln) [44].

Radiologists were on the forefront of the digital era in medicine. They guided the process, being the first medical professionals to adopt computer science, and are now probably the most digitally informed healthcare professionals [45]. Although the introduction of new technologies was mostly perceived as new approaches for producing images, innovation also deeply changed the ways to treat, present and store images. Indeed, the role of radiologists was strengthened by the introduction of new technologies. Why should it be different now? The lesson of the past is that apparently disruptive technologies (e.g. non-x-ray-based modalities, such as ultrasound and MRI) that seemed to go beyond radiology were embraced by radiologists. Radiology extended its meaning to radiation-free imaging modalities, now encompassing almost all diagnostic medical imaging, as demonstrated by the presence of the word “radiology” in numerous journal titles. This historical effect resulted from the capacity of radiologists to embrace these radiation-free modalities. In addition, electronic systems for reporting examinations and archiving images were primarily modelled to serve radiologists.

The reasonable doubt is that we are now facing methods that not only cover the production of medical images but also involve detection and characterisation, properly entering the diagnostic process. Indeed, this is a new challenge, but also an additional value of AI. The professional role and satisfaction of radiologists will be enhanced by AI if they, as in the past, embrace this technology and educate new generations to use it to save time spent on routine and monotonous tasks, with strong encouragement to dedicate the saved time to functions that add value and influence patient care. This could also help radiologists feel less worried about the high number of examinations to be reported and rather focus on communication with patients and interaction with colleagues in multidisciplinary teams [46]. This is the way for radiologists to build their bright future.

We are at the beginning of the AI era. Until now, the clinical application of ML to medical imaging in terms of detection and characterisation has produced results limited to specific tasks, such as differentiation of normal from abnormal chest radiographs [41, 42, 47] or mammograms [48, 49]. The application of AI to advanced imaging modalities, such as CT and MRI, is now in its first phase. Examples of promising results are the differentiation of malignant from benign chest nodules on CT scans [50], the diagnosis of neurologic and psychiatric diseases [51, 52], and the identification of biomarkers in glioblastoma [53]. Interestingly, MRI has been shown to predict survival in women with cervical cancer [54, 55] and in patients with amyotrophic lateral sclerosis [56].

However, AI could already be used to accomplish tasks with a positive, immediate impact, several of them already described by Nance et al. in 2013 [57]:

1. Prioritisation of reporting: automatic selection of findings deserving faster action.

2. Comparison of current and previous examinations, especially in oncologic follow-up: tens of minutes are needed for this currently; AI could do this for us; we will supervise the process, extracting data to be integrated into the report and drawing conclusions considering the clinical context and therapy regimens; AI could also take into account the time interval between examinations.

3. Quick identification of negative studies: at least in this first phase, AI will favour sensitivity and negative predictive value over specificity and positive predictive value, finding the normal studies and leaving abnormal ones for radiologists [36]. This would be particularly useful in high-volume screening settings; this concept of quick negative should also represent a helpful tool for screening programmes in underserved countries [10, 37].

4. Aggregation of electronic medical records, allowing radiologists to access clinical information to adapt protocols or interpret exams in the full clinical context.

5. Automatic recall and rescheduling of patients: for findings deserving of an imaging follow-up.

6. Immediate use of clinical decision support systems for ordering, interpreting, and defining further patient management.

7. Internal peer-review of reports.

8. Tracking of residents’ training.

9. Quality control of technologists’ performance and tracked communication between radiologists and technologists.

10. Data mining regarding relevant issues, including radiation dose [38].
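The trade-off mentioned for the quick identification of negative studies (favouring sensitivity and negative predictive value over specificity and positive predictive value) can be made concrete with a small sketch. The function name `triage_metrics`, the scores, the labels and the two thresholds are hypothetical; the scores stand for the output of an unspecified AI model, with higher values meaning "more likely abnormal".

```python
def triage_metrics(scores, labels, threshold):
    """Diagnostic metrics when studies scoring below `threshold` are called negative.

    `labels` holds the reference standard: 1 for abnormal, 0 for normal.
    A metric whose denominator is zero is returned as None.
    """
    tp = fp = tn = fn = 0
    for score, abnormal in zip(scores, labels):
        flagged = score >= threshold
        if flagged and abnormal:
            tp += 1
        elif flagged and not abnormal:
            fp += 1
        elif not flagged and abnormal:
            fn += 1
        else:
            tn += 1

    def ratio(numerator, denominator):
        return numerator / denominator if denominator else None

    return {
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "ppv": ratio(tp, tp + fp),
        "npv": ratio(tn, tn + fn),
    }


# Hypothetical model scores and reference labels for ten studies.
SCORES = [0.05, 0.10, 0.20, 0.30, 0.40, 0.55, 0.60, 0.70, 0.85, 0.95]
LABELS = [0, 0, 0, 0, 1, 0, 0, 1, 1, 1]

low_threshold = triage_metrics(SCORES, LABELS, threshold=0.35)
high_threshold = triage_metrics(SCORES, LABELS, threshold=0.65)
```

On this toy dataset the lower threshold misses no abnormal study (sensitivity and negative predictive value of 1.0) at the price of two false positives, whereas the higher threshold removes the false positives but lets one abnormal study through. A "quick negative" workflow corresponds to the first operating point: studies below the threshold can be set aside with confidence, and everything else goes to the radiologist.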

In the mid-term perspective, other possibilities are open, such as:

-

1.

Anticipation of the diagnosis of cancerous lesions in oncologic patients using texture analysis and other advanced approaches [58].

-

2.

Prediction of treatment response to therapies for tumours, such as intra-arterial treatment for hepatocellular carcinoma [59].

-

3.

Evaluation of the biological relevance of borderline cases, such as B3-lesions diagnosed at pathology of needle biopsy of breast imaging findings [60].

-

4.

Estimation of functional parameters, such as the fractional flow reserve from CT coronary angiography using deep learning [61].

-

5.

Detection of perfusion defects and ischaemia, for example in the case of myocardial stress perfusion defects and induced ischaemia [62].

-

6.

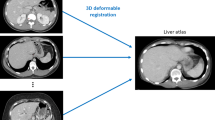

Segmentation and shape modelling, such as brain tumour segmentation [63] or, more generally, brain structure segmentation [64].

-

7.

Reducing diffusion MRI data processing to a single optimised step, for example making microstructure prediction on a voxel-by-voxel basis as well as automated model-free segmentation from MRI values [39].

The radiologists’ role and cooperation with computer scientists

The key point is the separation of diagnosis and prediction from action and recommendation. Radiologists will not be replaced by machines because radiology practice is much more than the simple interpretation of images. The radiologist’s duties also include communication of diagnosis, consideration of patients’ values and preferences, medical judgment, quality assurance, education, policy-making, interventional procedures, and many other tasks that, so far, cannot be performed by computer programmes alone [2].

Notably, it must be understood that the clinical role of radiologists cannot be “saved” only by performing interventional procedures. Even though interventional radiology is a fundamental asset to improve the clinical profile of radiology [45, 65,66,67], radiologists must act more as clinicians, applying their clinical knowledge in answering diagnostic questions and guiding decision-making, which represent their main tasks. Radiologists should keep their human control in the loop, considering clinical, personal and societal contexts in ways that AI alone is not able to do. So far, AI is neither astute nor empathic. Thus, physicians (here, radiologists) remain essential for medical practice, because ingenuity in medicine requires unique human characteristics [40, 68]. If the time needed for image interpretation were shortened, radiologists would be able to focus on inference to improve patient care. While AI is based on a huge increase in information, the hallmark of intelligence lies in reducing information to what is relevant [69].

However, it is impossible to exclude that the efficiency gain provided by AI may lead to a need for fewer radiologists. Of note, in the United States the competition for residency positions in radiology has decreased over time [70], potentially allowing for a better match between supply and demand for residency positions. However, the opposite hypothesis cannot be excluded either: AI-enhanced radiology may require more professionals in the field, including radiologists. In general, the history of automation shows that jobs are not lost. Rather, roles are reshaped; humans are displaced to tasks needing a human element [12]. The gain in efficiency provided by AI will allow radiologists to perform more value-added tasks, such as integrating patients’ clinical and imaging information, having more professional interactions, becoming more visible to patients and playing a vital role in integrated clinical teams [46]. In this way, AI will not replace radiologists; yet, those radiologists who take advantage of the potential of AI will replace the ones who refuse this crucial challenge.

Finally, we should remember that AI mimics human intelligence. Radiologists are key people for several current AI challenges, such as the creation of high-quality training datasets, definition of the clinical task to address, and interpretation of obtained results. Many labelled studies and findings provided by experienced radiologists are needed; such datasets are difficult to find and time-consuming to build, implying high costs [3]. However, even if crucial, the radiologist’s role should not be confined to data labelling. Radiologists may play a pivotal role in the identification of clinical applications where AI methods may make the difference. Indeed, they represent the final users of these technologies, who know where they can be applied to improve patient care. For this reason, their point of view is crucial to optimise the use of AI-based solutions in the clinical setting. Finally, the application of AI-based algorithms often leads to the creation of complex data that need to be interpreted and linked to their clinical utility. In this scenario, radiologists may play a crucial role in data interpretation, cooperating with data scientists in the definition of useful results.

Radiologists should negotiate the supply of these valuable datasets and clinical knowledge in exchange for a guiding role in the clinical application of AI programmes. This implies an increasing partnership with bioengineers and computer scientists. Working with them in research and development of AI applications in radiology is a strategic issue [45]. These professionals should be embedded in radiological departments, becoming everyday partners. Creating this kind of “multidisciplinary AI team” will help to ensure that patient safety standards are met and will create judicial transparency, which allows legal liability to be assigned to the radiologist as the human authority [71].

Another topical issue that needs to be faced is the legal implications of AI systems in healthcare. As soon as AI systems start making autonomous decisions about diagnoses and prognosis, and stop being only a support tool, a problem arises as to whether, when something ‘goes wrong’ following a clinical decision made by an AI application, the reader (namely, the radiologist) or the device itself or its designer/builder is to be considered at fault [72]. In our opinion, ethical and legal responsibility for decision making in healthcare will remain a matter of the natural intelligence of physicians. From this viewpoint, it is probable that the multidisciplinary AI team will take responsibility in difficult cases, treating what AI provided as relevant but not always conclusive. It has already been demonstrated that groups of human and AI agents working together make more accurate predictions compared to humans or AI alone, promising to achieve higher levels of accuracy in imaging diagnosis and even prognosis [71].

Although the techniques of AI differ from diagnosis to prognosis, both applications still need validation, and this is challenging due to the large amount of data needed to achieve robust results [73]. Therefore, rigorous evaluation criteria and reporting guidelines for AI need to be developed in order to establish its role in radiology and, more generally, in medicine [22].

Conclusions

AI will surely impact radiology, and more quickly than other medical fields. It will change radiology practice more than anything since Roentgen. Radiologists can play a leading role in this oncoming change [74].

An uneasiness among radiologists to embrace AI may be compared with the reluctance among pilots to embrace autopilot technology in the early days of automated aircraft aviation. However, radiologists are used to facing technological challenges because, since the beginning of its history, radiology has been the playing field of technological development.

An up-to-date radiologist should be aware of the basic principles of ML/DL systems, of the characteristics of the datasets used to train them, and of their limitations. Radiologists do not need to know the deepest details of these systems, but they must learn the technical vocabulary used by data scientists to efficiently communicate with them. The time to work for and with AI in radiology is now.

Abbreviations

- 3D: Three-dimensional
- AI: Artificial intelligence
- ANN: Artificial neural network
- CAD: Computer-aided detection/diagnosis
- CNN: Convolutional neural network
- CT: Computed tomography
- DL: Deep learning
- ML: Machine learning
- MRI: Magnetic resonance imaging


Availability of data and materials

The data that support the findings of this study are available from the corresponding author, MC, upon reasonable request.

Funding

This study was supported by Ricerca Corrente funding from the Italian Ministry of Health to IRCCS Policlinico San Donato.

Author information

Contributions

FP, MC and FS contributed to the design and implementation of the research, to the analysis of the results and to the writing of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Consent was not applicable due to the design of the study, which is a narrative review.

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Pesapane, F., Codari, M. & Sardanelli, F. Artificial intelligence in medical imaging: threat or opportunity? Radiologists again at the forefront of innovation in medicine. Eur Radiol Exp 2, 35 (2018). https://doi.org/10.1186/s41747-018-0061-6

DOI: https://doi.org/10.1186/s41747-018-0061-6