Abstract

Here we review present knowledge of the long-term behaviour of solar activity on a multi-millennial timescale, as reconstructed using the indirect proxy method. The concept of solar activity is discussed along with an overview of the dedicated indices used to quantify different aspects of variable solar activity, with special emphasis on sunspot numbers. Over long timescales, quantitative information about past solar activity is obtained using a method based on indirect proxies, such as the cosmogenic isotopes \(^{14}\)C and \(^{10}\)Be in natural stratified archives (e.g., tree rings or ice cores). We give a historical overview of the development of the proxy-based method for reconstructing past solar activity over millennia, as well as a description of the modern state of the art. Special attention is paid to the verification and cross-calibration of reconstructions. It is argued that the method of cosmogenic isotopes provides a solid basis for studies of past solar variability on long timescales (centuries to millennia) during the Holocene (the past \(\sim \)12 millennia). A separate section is devoted to reconstructions of extremely rare solar eruptive events in the past, based on cosmogenic-proxy data in terrestrial and lunar natural archives as well as on statistics of sun-like stars. Finally, the main features of the long-term evolution of solar magnetic activity, including the statistics of grand-minimum and grand-maximum occurrence, are summarized and their possible implications, especially for solar/stellar dynamo theory, are discussed.


1 Introduction

The concept of the perfection and constancy of the sun, postulated by Aristotle, was firmly held for centuries and was an official doctrine in Christian and Muslim countries. However, as people had noticed even before the time of Aristotle, some slight transient changes in the sun can be observed with the naked eye. Although scientists knew about the existence of “imperfect” spots on the sun from the early 17th century, it was only in the 19th century that the scientific community recognized that solar activity varies in the course of an 11-year solar cycle. Solar variability was later found to have many different manifestations, including the fact that the “solar constant”, or total solar irradiance, TSI (the total incoming solar electromagnetic radiation at all wavelengths per unit area at one astronomical unit, a.u.), is not constant. The sun appears much more complicated and active than a static hot plasma ball, with a great variety of nonstationary active processes going beyond the adiabatic equilibrium foreseen by the basic theory of the sun as a star. Such transient nonstationary (often eruptive) processes can be broadly regarded as solar activity, in contrast to the so-called “quiet” sun. Solar activity includes transient and long-lived phenomena in the solar photosphere and corona, such as spectacular solar flares, sunspots, prominences, coronal mass ejections (CMEs), etc. Accounts of the history of sunspot observations and studies can be found in various books (e.g., Arlt and Vaquero 2020; Brody 2002; Tassoul and Tassoul 2004).

The very existence of solar activity posed an enigma for solar physics, leading to the development of sophisticated models of the sun’s magnetic dynamo. The sun is the only star that can be studied in great detail and thus can be considered as a proxy for cool stars. Conversely, a study of the large population of sun-like stars can provide another view of the sun’s behaviour. Many dedicated ground-based and space-borne experiments have been and are being carried out to learn more about solar variability. Using the sun as a paradigm for cool stars leads to a better understanding of the processes driving the broader population of cool sun-like stars. Therefore, studying and modelling solar activity deepens our understanding of nature.

On the other hand, the study of variable solar activity is not of purely academic interest, as it directly affects the terrestrial environment. Although changes in the sun are barely visible without the aid of precise scientific instruments, these changes have a great impact on many aspects of our lives. In particular, the heliosphere (a spatial region of about 200–300 AU across) is mainly controlled by the solar magnetic field. This leads to the modulation of galactic cosmic rays (GCRs) by solar magnetic activity. Additionally, eruptive and transient phenomena in the sun/corona and in the interplanetary medium can lead to a sporadic acceleration of energetic particles with greatly enhanced flux. Such processes can modify the radiation environment on Earth and need to be taken into account for planning and maintaining space missions and even transpolar jet flights. Solar activity can cause, through the coupling of solar wind and the Earth’s magnetosphere, strong geomagnetic storms, which may disturb radio-wave propagation and navigation-system stability, or induce dangerous spurious currents in long pipes or power lines. Another important aspect is a potential link between solar-activity variations and the Earth’s climate (see, e.g., reviews by Dudok de Wit et al. 2015; Gray et al. 2010; Haigh 2007; Mironova et al. 2015).

It is important to study solar variability on different timescales. The primary basis for such studies is observational (or reconstructed) data. The sun’s activity has been systematically explored in different ways (solar, heliospheric, interplanetary, magnetospheric, terrestrial), including ground-based and space-borne experiments and dedicated space missions, during the last few decades, thus covering 3–4 solar cycles. However, it should be noted that the modern epoch was characterized, until the early 2000s, by high solar activity dominated by an 11-year cyclicity, and it is not straightforward to extrapolate present knowledge (especially empirical and semi-empirical relationships and models) to longer timescales. The most recent cycles 23–25 indicate a return to a normal moderate level of solar activity, as manifested, e.g., by the extended and weak solar minimum in 2008–2009 and by weak solar and heliospheric parameters, which are unusual for the space era but may be quite typical of normal activity (see, e.g., Gibson et al. 2011). On the other hand, contrary to some predictions, a grand minimum of activity has not started. Thus, in the near future we may experience interplanetary conditions quite different from those we have become used to during recent decades.

Therefore, the behaviour of solar activity in the past, before the era of direct measurements, is of great importance for a variety of reasons. For example, it improves our knowledge of the statistical behaviour of the solar-dynamo process, which generates the cyclically varying solar magnetic field, making it possible to estimate the fraction of time the sun spends in states of very low activity, called grand minima. Such studies require a long time series of solar-activity data. The longest direct series of solar activity is the 410-year-long sunspot-number series, which depicts the dramatic contrast between the (almost spotless) Maunder minimum and the modern period of very high activity. Thanks to the recent development of precise technologies, including accelerator mass spectrometry, solar activity can be reconstructed over multiple millennia from concentrations of cosmogenic isotopes \(^{14}\)C and \(^{10}\)Be in terrestrial archives. This allows one to study the temporal evolution of solar magnetic activity, and thus of the solar dynamo, on much longer timescales than are available from direct measurements.

This paper gives an overview of the present status of our knowledge of long-term solar activity, covering the period of the Holocene (the last \(\sim \)12 millennia). A description of the concept of solar activity and a discussion of observational methods and indices are presented in Sect. 2. The proxy method of solar-activity reconstruction is described in some detail in Sect. 3. Section 4 gives an overview of what is known about past solar activity. The long-term averaged flux of solar energetic particles (SEPs) is discussed in Sect. 5. Finally, conclusions are summarized in Sect. 6.

2 Solar activity: concept and observations

2.1 The concept of solar activity

The sun is far from the static state of the so-called “quiet” sun described by classical solar-structure theories; instead, it undergoes various nonstationary active processes. Such nonstationary and nonequilibrium (often eruptive) processes can be broadly regarded as solar activity. The concept of ‘solar activity’ has been in use for a long time (e.g., de La Rue et al. 1871) and is well established. The presence of magnetic activity, including stellar flares, is considered a common feature of sun-like stars (Maehara et al. 2012). Although a direct projection of the energy and occurrence frequency of superflares on sun-like stars (e.g., Shibata et al. 2013) does not agree with solar data (Aulanier et al. 2013) and terrestrial proxy data (see Sect. 5), the existence of solar/stellar activity is clear. Whereas solar activity is quite a common term nowadays, it is neither straightforwardly interpreted nor unambiguously defined. For instance, solar-surface magnetic variability, eruptive phenomena, coronal activity, radiation of the sun as a star, or even interplanetary transients and geomagnetic disturbances can be related to the concept of solar activity. All these manifestations are driven by solar magnetism. A variety of indices quantifying solar activity have been proposed to represent different observables and their effects. Most of the indices are highly correlated with each other due to the dominant 11-year cycle, but may differ in fine details and/or long-term trends. In addition to the solar indices, indirect proxy data are often used to quantify solar activity via its presumably known effect on the magnetosphere or heliosphere. The indices of solar activity that are often used for long-term studies are reviewed below.

2.2 Indices of solar activity

Solar (as well as other) indices can be divided into physical and synthetic ones according to the way they are obtained or calculated. Physical indices quantify directly measurable values of a real physical observable, such as the radio flux, and thus have a clear physical meaning, as they quantify physical features of different aspects of solar activity and their effects. Synthetic indices (the most common being the sunspot number) are calculated (or synthesized) using a prescribed algorithm from observed data or phenomena (often not measurable in physical units). Additionally, solar-activity indices can be either direct (i.e., relating directly to the sun) or indirect (relating to indirect effects caused by solar activity), as discussed in Sects. 2.2.1 and 2.2.2.

2.2.1 Direct solar indices

The most commonly used index of solar activity is the sunspot number. Sunspots are areas on the solar disc that appear dark in white light (of size up to tens of thousands of km and lifetime up to half a year). They are characterized by a strong magnetic field, which leads to a lower temperature (about 4000 K compared to 5800 K in the surrounding photosphere), observed as darkening at visible wavelengths. The sunspot number is a synthetic, rather than a physical, index, but it is a useful parameter for quantifying the level of solar activity. This index gives the weighted number of individual sunspots and/or sunspot groups, calculated in a prescribed manner from simple visual solar observations. The use of the sunspot number makes it possible to combine many thousands of regular and fragmentary solar observations made by professional and amateur astronomers in the past. The technique, initially developed by Rudolf Wolf, yielded the longest series of directly and regularly observed scientific quantities. Therefore, it is common to quantify solar magnetic activity via sunspot numbers. For details see the reviews on sunspot numbers and solar cycles (Clette and Lefèvre 2016; Clette et al. 2023; Hathaway and Wilson 2004; Hathaway 2015).
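A rough estimate shows why such a temperature deficit makes a spot look dark. Under the simplifying assumption that both the spot and the photosphere radiate as blackbodies, the Stefan–Boltzmann law gives, for the temperatures quoted above,

\[ \frac{F_{\text{spot}}}{F_{\text{phot}}} = \left(\frac{T_{\text{spot}}}{T_{\text{phot}}}\right)^{4} = \left(\frac{4000\ \text{K}}{5800\ \text{K}}\right)^{4} \approx 0.23, \]

so a spot emits less than a quarter of the bolometric flux of an equal area of the surrounding photosphere. This is a bolometric estimate; the contrast in a particular visible band differs from it.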

Wolf (WSN) and International (ISN) sunspot number series

The concept of the sunspot number was developed by Rudolf Wolf of the Zürich observatory in the middle of the 19th century. The sunspot series, initiated by him, is called the Zürich or Wolf sunspot number (WSN) series. The relative sunspot number \(R_{z}\) is defined as
\[ R_{z} = k\,(10\,G + N), \tag{1} \]
where G is the number of sunspot groups, N is the number of individual sunspots in all groups visible on the solar disc and k denotes the individual correction factor, which compensates for differences in observational techniques and instruments used by different observers, and is used to normalize different observations to each other.
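The definition of \(R_{z}\) translates directly into code. A minimal sketch (the function name and example counts are hypothetical; the default \(k=1\) is our assumption for an observer already on the reference scale):

```python
def wolf_number(groups: int, spots: int, k: float = 1.0) -> float:
    """Relative (Wolf) sunspot number R_z = k * (10 * G + N).

    groups: number of sunspot groups G on the visible disc
    spots:  number of individual spots N in all groups
    k:      observer's individual correction factor
    """
    if groups < 0 or spots < 0:
        raise ValueError("counts must be non-negative")
    if spots < groups:
        # Every group contains at least one spot.
        raise ValueError("N cannot be smaller than G")
    return k * (10 * groups + spots)


# Hypothetical day: 3 groups containing 17 spots, observer with k = 0.6.
print(wolf_number(3, 17, k=0.6))
```

The factor 10 makes a single isolated spot count as 11, so the group term dominates on quiet days.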

Fig. 1 Annual sunspot activity for the last centuries. a International sunspot number series versions 1 and 2 (the former is scaled by a factor of 1.67, see SILSO at http://sidc.be/silso/datafiles). b Number of sunspot groups: HS98 (Hoyt and Schatten 1998); U16 (Usoskin et al. 2016b); S16 (Svalgaard and Schatten 2016). Standard (Zürich) cycle numbering is shown between the panels. Approximate dates of the Maunder minimum (MM) and Dalton minimum (DM) are shown in the lower panel.

The value of \(R_{z}\) (see Fig. 1a) is calculated for each day using only one observation for that day, made by the “primary” observer (judged to be the most reliable observer during a given time). The primary observers were Staudacher (1749–1787), Flaugergues (1788–1825), Schwabe (1826–1847), Wolf (1848–1893), Wolfer (1893–1928), Brunner (1929–1944), Waldmeier (1945–1980) and Koeckelenbergh (since 1980). If observations by the primary observer are not available for a certain day, those of the secondary, tertiary, etc. observers are used (see the hierarchy of observers in Waldmeier 1961). The use of only one observer for each day aims to make \(R_{z}\) a homogeneous time series. As a drawback, such an approach ignores all other observations available for the day, which constitute a large fraction of the existing information. Moreover, possible errors of the primary observer cannot be detected or estimated. The observational uncertainties in the monthly \(R_{z}\) can be up to 25% (e.g., Vitinsky et al. 1986). The WSN series is based on observations performed at the Zürich Observatory during 1849–1981 using almost the same technique. This part of the series is fairly stable and homogeneous, although an offset due to a change of the weighting procedure might have been introduced in 1945–1946 (Clette et al. 2014); the correction for this effect is not clear and leads to uncertainties (Friedli 2020; Lockwood et al. 2014). Before 1849, however, there were many gaps in the data that were interpolated. If no sunspot observations are available for some period, the data gap is filled, without notice in the final WSN series, by interpolation between the available data and by employing some proxy data. In addition, earlier parts of the sunspot series were “corrected” by Wolf using geomagnetic observations (see details in Svalgaard 2012), which makes the series less homogeneous.
Therefore, the WSN series is a combination of direct observations and interpolations for the period before 1849, leading to possible errors and inhomogeneities, as discussed, e.g., by Vitinsky et al. (1986), Wilson (1998), Letfus (1999), Svalgaard (2012) and Clette et al. (2014). The quality of the Wolf series before 1749 is rather poor and hardly reliable (Hathaway and Wilson 2004; Hoyt et al. 1994; Hoyt and Schatten 1998).
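The one-observer-per-day rule can be sketched as follows. This is a minimal illustration, not the Zürich procedure itself: the observer names are hypothetical placeholders, and we assume that each report has already been multiplied by its observer's correction factor \(k\).

```python
from typing import Mapping, Optional, Sequence


def pick_daily_value(reports: Mapping[str, float],
                     hierarchy: Sequence[str]) -> Optional[float]:
    """Return the report of the highest-ranked observer available that day.

    reports:   observer name -> sunspot number for one day, assumed to be
               already multiplied by that observer's correction factor k
    hierarchy: observer names ordered primary, secondary, tertiary, ...
    """
    for observer in hierarchy:
        if observer in reports:
            # Only this single report is kept; all others are ignored.
            return reports[observer]
    # No ranked observer reported: the day is a data gap.
    return None


# Hypothetical observers "A" (primary), "B", "C"; A did not observe today.
print(pick_daily_value({"B": 41.0, "C": 38.0}, ["A", "B", "C"]))
```

The early return makes the drawback noted above explicit: once the highest-ranked report is found, every other report for that day is discarded.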

The main problem of the WSN was a lack of documentation: only the final product was available, without information on the raw data, which made a full revision of the series hardly possible. Although this information does exist, it was hidden in the handwritten notes of Rudolf Wolf and his successors. The situation is now improving thanks to an effort by the Rudolf Wolf Gesellschaft (http://www.wolfinstitute.ch) to scan and digitize Wolf’s original notes (Friedli 2020).

Note that the sun has been photographed routinely since 1876, so that full information on daily sunspot activity (the Greenwich series), with observational uncertainties small compared to the observed variability, is available for the last 140 years.

The routine production of the WSN series was terminated in Zürich in 1982. Since then, the sunspot-number series has been routinely updated as the International sunspot number (ISN) \(R_{i}\), provided by the Solar Influences Data Analysis Center in Belgium (Clette et al. 2007). The ISN series is computed using the same definition (Eq. 1) as the WSN but differs from it in an important way: instead of a single primary solar observation for each day, it uses a weighted average of more than 20 approved observers. The ISN (see SILSO at http://sidc.be/silso/datafiles) has recently been updated to version 2 with corrections for some known inhomogeneities (Clette et al. 2014). A potential user should be aware that ISN (v.2) is calibrated to Wolfer, in contrast to the earlier WSN and ISN (v.1), which are calibrated to Wolf. As a result, a constant scaling factor of 0.6 should be applied to compare ISN (v.2) with ISN (v.1). The two versions are shown in Fig. 1a. One can see that ISN v.2 is very close to v.1 (scaled up by a factor of 1.67), with a small difference after the 1940s because of the correction for the Waldmeier discontinuity (see below).
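The constant rescaling between the two ISN versions amounts to simple arithmetic. The sketch below (function names are ours) assumes only the factor 0.6 quoted above; it does not undo the other corrections, such as that for the Waldmeier discontinuity, that distinguish v.2 from v.1.

```python
# Factor quoted in the text: ISN v.2 (Wolfer scale) x 0.6 ~ ISN v.1 (Wolf scale).
V2_TO_V1 = 0.6


def isn_v2_on_v1_scale(r_v2: float) -> float:
    """Rescale an ISN v.2 value to the v.1 (Wolf) reference level."""
    return V2_TO_V1 * r_v2


def isn_v1_on_v2_scale(r_v1: float) -> float:
    """Rescale an ISN v.1 value to the v.2 (Wolfer) level, i.e. x 1/0.6 ~ 1.667."""
    return r_v1 / V2_TO_V1


# A hypothetical v.2 value of 100 corresponds to about 60 on the v.1 scale.
print(isn_v2_on_v1_scale(100.0))
```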

During the Second World War, a separate sunspot-number series, known as the American relative sunspot number \(R_{\text{A}}\), was launched in the US (Shapley 1949). It has been continuously updated since then (Schaefer 1997) under the auspices of the Department of Terrestrial Magnetism of the Carnegie Institute and the American Association of Variable Star Observers (AAVSO, https://www.aavso.org/aavso-sunspot-count-data), but it is not widely used, since the ISN is considered the reference sunspot series.

In addition to the international sunspot number \(R_{i}\), there is also a series of hemispheric sunspot numbers \(R_{\text{N}}\) and \(R_{\text{S}}\), which account for spots only in the northern and southern solar hemispheres, respectively (note that \(R_i=R_{\text{N}}+R_{\text{S}}\)). These series are used to study the N-S asymmetry of solar activity (Temmer et al. 2002).
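Hemispheric numbers lend themselves to a simple asymmetry measure. A minimal sketch, assuming the normalized form \((R_{\text{N}}-R_{\text{S}})/(R_{\text{N}}+R_{\text{S}})\), which is one commonly used definition and not necessarily the measure adopted by Temmer et al. (2002):

```python
def hemispheric_asymmetry(r_north: float, r_south: float) -> float:
    """Normalized north-south asymmetry (R_N - R_S) / (R_N + R_S).

    The denominator is the total R_i = R_N + R_S. The result lies in
    [-1, 1]; positive values mean the northern hemisphere dominates.
    """
    total = r_north + r_south
    if total == 0:
        raise ValueError("asymmetry is undefined for a spotless sun")
    return (r_north - r_south) / total


# Hypothetical monthly values: R_N = 30, R_S = 10.
print(hemispheric_asymmetry(30.0, 10.0))
```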

Group sunspot number (GSN) series

Since the WSN series is of lower quality before the 1850s and is hardly reliable before 1750, there was a need to re-evaluate early sunspot data. This tremendous work has been done by Hoyt and Schatten (1996, 1998), who performed an extensive archive search and nearly doubled the amount of original information compared to the Wolf series. They have produced a new series of sunspot activity called the group sunspot numbers (GSN—see Fig. 1b), which includes all available archival records. The daily group sunspot number \(R_{g}\) is defined as follows:
\[ R_{g} = \frac{12.08}{n} \sum_{i=1}^{n} k'_i\, G_i, \tag{2} \]
where \(G_i\) is the number of sunspot groups recorded by the i-th observer, \(k'_i\) is the observer’s individual correction factor, n is the number of observers for the particular day, and 12.08 is a normalization number scaling \(R_{g}\) to \(R_{z}\) values for the period 1874–1976. However, the exact scaling factor 12.08 has recently been questioned due to an inhomogeneity within the RGO data between 1874 and 1885 (Cliver and Ling 2016; Willis et al. 2016). \(R_{g}\) is more robust than \(R_{z}\) or \(R_i\) since it is based on the more easily identified sunspot groups and does not include the number of individual spots. In this way, the GSN avoids a problem related to the visibility of small sunspots, since a group of several small spots would appear as one blurred spot to an observer with a low-quality telescope. Another potential uncertainty may be related to the problem of grouping individual spots into sunspot groups consistently over time (Clette et al. 2014). This uncertainty, which directly affects the GSN, is also important for the WSN/ISN series, since the sunspot-group term contributes 50–90% of the WSN/ISN values. Another important advantage of the GSN series is that all the raw data are available. The GSN series includes not only one “primary” observation but all available observations, and it covers the period since 1610, thus being 140 years longer than the original WSN series. It is particularly interesting that the period of the Maunder minimum (1645–1715) was surprisingly well covered by daily observations (Hoyt and Schatten 1996; Ribes and Nesme-Ribes 1993), allowing for a detailed analysis of sunspot activity during this grand minimum (see also Sect. 4.2). Systematic uncertainties of the \(R_{g}\) values are estimated to be about 10% before 1640, less than 5% for 1640–1728 and 1800–1849, 15–20% for 1728–1799, and about 1% since 1849 (Hoyt and Schatten 1998). The GSN series is more homogeneous and transparent than the WSN series before 1849.
The two series are nearly identical after the 1870s (Hathaway and Wilson 2004; Hoyt and Schatten 1998; Letfus 1999). However, the GSN series still contains some gaps, uncertainties and possible inhomogeneities (see, e.g., Cliver and Ling 2016; Letfus 2000; Usoskin et al. 2003a; Vaquero et al. 2012).
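The daily group sunspot number can likewise be sketched as a function. In this minimal illustration with hypothetical counts and correction factors, the normalization 12.08 is left as a parameter because, as noted above, its exact value has been questioned:

```python
from typing import Sequence


def group_sunspot_number(groups: Sequence[int],
                         k_prime: Sequence[float],
                         norm: float = 12.08) -> float:
    """Daily group sunspot number R_g = (norm / n) * sum_i k'_i * G_i.

    groups:  G_i, number of groups reported by each of the n observers
    k_prime: k'_i, individual correction factor of each observer
    norm:    normalization to R_z (12.08 in the original definition)
    """
    if len(groups) != len(k_prime):
        raise ValueError("one correction factor per observer is required")
    n = len(groups)
    if n == 0:
        raise ValueError("no observers reported on this day")
    return norm / n * sum(k * g for g, k in zip(groups, k_prime))


# Hypothetical day: two observers report 4 and 5 groups, with k' = 1.0 and 0.8.
print(group_sunspot_number([4, 5], [1.0, 0.8]))
```

Unlike the primary-observer rule for \(R_{z}\), every available report enters the average.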

Fig. 2 Maunder butterfly diagram of sunspot occurrence reconstructed from different sources, as compiled by Muñoz-Jaramillo and Vaquero (2019).

Updates of the series

The search for other lost or missing records of past instrumental solar observations has continued since the extensive work by Hoyt and Schatten. Archival searches still yield interesting new findings of forgotten sunspot observations, often from outside major observatories; see the detailed review book by Vaquero and Vázquez (2009) and original papers by Casas et al. (2006), Vaquero et al. (2005, 2007), Arlt (2008, 2009) and Carrasco and Vaquero (2016). Interestingly, not only sunspot counts but also regular drawings, forgotten for centuries, are nowadays being recovered from dusty archives. Rainer Arlt (Arlt 2008, 2009; Arlt and Abdolvand 2011; Arlt et al. 2013) carried out very interesting work on recovering, digitizing and analyzing the regular drawings by Schwabe from 1825–1867 and by Staudacher from 1749–1796. This work extended the Maunder butterfly diagram backwards by several solar cycles (Arlt 2009; Arlt and Abdolvand 2011; Arlt et al. 2013; Usoskin et al. 2009c). Recently, more research groups have joined the effort to recover past data, notably groups from Spain (Victor Carrasco and José Vaquero) and Japan (Hisashi Hayakawa). Recent findings of lost data by several observers made it possible to correct some earlier uncertain data and to revise the pattern of past solar variability (e.g., Carrasco et al. 2020a, b, 2021a; Hayakawa et al. 2021a; Vaquero et al. 2011). In particular, the butterfly diagram has been extended and now covers, albeit in fragments, four hundred years, as shown in Fig. 2.

Recent corrections to the group number database have been collected (http://haso.unex.es/?q=content/data) by Vaquero et al. (2016) who updated the database of Hoyt and Schatten (1998) by correcting some errors and inexactitudes and adding more data.

Several inconsistencies and discontinuities have been found in the existing sunspot series. For instance, Leussu et al. (2013) have shown that the values of WSN before 1848 (when Wolf started his observations) were overestimated by \(\approx 20\%\) because of the incorrect \(k\)-factor ascribed by Wolf to Schwabe. This error, called the “Wolf discontinuity”, distorts the WSN/ISN series but does not affect the GSN series. Another reported error is the so-called “Waldmeier discontinuity” around 1947 (Clette et al. 2014), related to the fact that Waldmeier modified the procedure of counting spots, including ‘weighting’ of the sunspot number, without properly announcing the change, which led to higher sunspot numbers compared to the standard technique. This suggests that the WSN/ISN may be overestimated by 10–20% after 1947 (Clette et al. 2014; Lockwood et al. 2014), but this does not directly affect the GSN series. According to Friedli (2020), this weighting might already have been introduced intermittently in the early 20th century.

Clette et al. (2014) and Cliver and Ling (2016) proposed, based on the ratio of the number of groups reported by Wolfer to that based on the Royal Greenwich Observatory (RGO) data, that there might be a transition in the calibration of the GSN around the turn of the 19th to the 20th century (or even until 1915), related to the inhomogeneous quality of the RGO data used to build the GSN for that period. On the other hand, Carrasco et al. (2013) and Aparicio et al. (2014), using independent observations by David Hadden from Iowa and data from the Madrid Observatory, found that the problem with the RGO data is significant only before the 1880s but not after that. Willis et al. (2016) studied RGO data for the years 1874–1885 and found that the database of Hoyt and Schatten (1998) might have slightly underestimated the RGO number of groups during that time. Sarychev and Roshchina (2009) suggested that the RGO data are erroneous for the period 1874–1880 but quite homogeneous after that. Vaquero et al. (2014) reported that ISN values for the period prior to 1850 are discordant with the number of spotless days, and concluded that the problem could be related to the calibration constants by Wolf (as found by Leussu et al. 2013) and to the non-linearity of the ISN at low values. Most of these errors affect the WSN/ISN series, while the GSN is more robust.

Thus, such inconsistencies should be investigated, and new series, with corrections for the known problems, need to be produced. Several recent efforts have led to mutually inconsistent solar-activity reconstructions. One of the new reconstructions was made by Clette et al. (2014), who introduced a revised version of the ISN (v.2, see Fig. 1a), correcting the two apparent discontinuities, Wolf and Waldmeier, described above. In addition, the entire series was rescaled to the reference level of Wolfer, whereas the ‘classic’ WSN/ISN series was scaled to Wolf. This introduces a constant scaling factor of \(1.667 = 1/0.6\) between the ISN v.2 series and the other series. Keeping this scaling in mind, the ISN v.2 series differs systematically from the earlier one after the 1940s and for a few decades in the mid-19th century. This was not a fundamental revision but a scaling correction for a couple of errors.

A full revision of the GSN series was performed by Svalgaard and Schatten (2016), who used the numbers of sunspot groups from Hoyt and Schatten (1998) but applied a different method to construct the new GSN series. They also used daisy-chain linear regression to calibrate different observers, but did so in several steps. A few key observers, called the ‘backbones’, were selected, and other observers were re-normalized to the ‘backbones’ using linear regressions. Then the ‘backbones’ were calibrated to each other, again using linear scaling. Before 1800, when the daisy-chain calibration cannot be directly applied, two other methods were used: the ‘high-low’ method (observers reporting a larger number of groups were favoured over those reporting a smaller number) and the ‘brightest star’ method (only the highest daily number of sunspot groups per year was considered). This GSN series, called S16, is shown in Fig. 1b as the blue dotted curve. It suggests a much higher level of solar activity in the 19th and especially the 18th centuries than usually thought, comparable to that during the mid-20th century. As a result of the ‘brightest star’ method, it yields moderate values during the Maunder minimum, in contrast to the present paradigm of virtually no sunspots (Eddy 1976; Ribes and Nesme-Ribes 1993; Hoyt and Schatten 1996; Usoskin et al. 2015; Vaquero et al. 2015). Moreover, in contrast to S16, many recent studies have confirmed, using revised and newly found sunspot observations, the very low level of solar activity during the Maunder minimum (e.g., Carrasco et al. 2021b; Hayakawa et al. 2021b).
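The calibration chain and the ‘brightest star’ proxy can be caricatured in a few lines. This is a simplified sketch, not the S16 code: we assume that each linear regression reduces to a single proportionality factor fitted by least squares through the origin, and that an observer's overall factor is the product of the pairwise factors along the chain; function names and toy numbers are hypothetical.

```python
from typing import Dict, Sequence


def linear_k_factor(observer: Sequence[float],
                    backbone: Sequence[float]) -> float:
    """Scaling factor k minimizing sum((backbone - k * observer)**2).

    Both sequences hold group counts for the same overlapping days, so the
    fit is a least-squares line through the origin.
    """
    if len(observer) != len(backbone):
        raise ValueError("sequences must cover the same days")
    sxy = sum(x * y for x, y in zip(observer, backbone))
    sxx = sum(x * x for x in observer)
    if sxx == 0:
        raise ValueError("observer reported no groups on the overlapping days")
    return sxy / sxx


def chain_factor(step_factors: Sequence[float]) -> float:
    """Daisy chain: multiply the pairwise factors linking an observer,
    through intermediate observers, to the reference (backbone) level."""
    total = 1.0
    for k in step_factors:
        total *= k
    return total


def brightest_star(daily_groups_by_year: Dict[int, Sequence[int]]) -> Dict[int, int]:
    """'Brightest star' proxy: highest daily group count reported in each year."""
    return {year: max(counts)
            for year, counts in daily_groups_by_year.items() if counts}


# Hypothetical overlap: the backbone reports exactly twice the observer's counts.
print(linear_k_factor([1, 2, 3], [2, 4, 6]))
```

Every step here is a constant factor, which is precisely the linear assumption questioned below.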

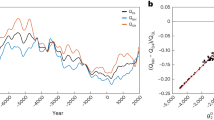

The traditional \(k\)-factor method of linear scaling for the inter-calibration of solar observers has been found to be invalid (Dudok de Wit et al. 2016; Lockwood et al. 2016c, b; Usoskin et al. 2016b), and the need for a modern non-parametric method has emerged. This is illustrated by Fig. 3, which shows the ratio of the sunspot group number reported by Wolfer, \(G_{\text{Wolfer}}\), to that reported by Wolf, \(G_{\text{Wolf}}\), for days on which both reports are available. This ratio clearly depends on the level of activity, being about two for low-activity days (\(G_{\text{Wolfer}}=1\)) and only \(\approx 1.2\) for high-activity days. The ratio is strongly non-linear because large sunspot groups dominate during periods of high activity. The horizontal dash-dotted line denotes the constant scaling \(k\)-factor of 1.667 used earlier (Clette et al. 2014) between Wolf and Wolfer. One can see that the use of the \(k\)-factor leads to a significant over-correction, by \(\approx 40\%\), of the numbers from Wolf when scaling them to Wolfer, especially during solar-cycle maxima. This has led to the concept of a \(c\)-factor, which depends on the level of activity (Fig. 3).
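At its core, an activity-dependent correction of this kind is a conditional mean of daily ratios. A minimal sketch, assuming paired daily group counts (the toy pairs below are invented for illustration, not taken from the Wolf and Wolfer records), computes the mean ratio \(G_{\text{Wolfer}}/G_{\text{Wolf}}\) for each value of \(G_{\text{Wolf}}\); it omits the exponential fit and the error bars shown in Fig. 3.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, Iterable, List, Tuple

K_FACTOR = 1.667  # constant Wolf-to-Wolfer factor, for comparison


def c_factor_table(pairs: Iterable[Tuple[int, int]]) -> Dict[int, float]:
    """Mean ratio G_Wolfer / G_Wolf for each value of G_Wolf.

    pairs: (G_Wolf, G_Wolfer) for days on which both observed.
    Days with G_Wolf == 0 are skipped because the ratio is undefined.
    """
    ratios: Dict[int, List[float]] = defaultdict(list)
    for g_wolf, g_wolfer in pairs:
        if g_wolf > 0:
            ratios[g_wolf].append(g_wolfer / g_wolf)
    return {g: mean(r) for g, r in sorted(ratios.items())}


# Invented pairs: the ratio falls as activity rises, unlike a constant k.
toy_pairs = [(1, 2), (1, 2), (1, 1), (5, 6), (5, 6)]
for g, c in c_factor_table(toy_pairs).items():
    print(f"G_Wolf={g}: c={c:.2f} (constant k={K_FACTOR})")
```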

Several new methods free of the linearity assumption have been proposed recently. An advanced method was proposed by Chatzistergos et al. (2017); it is also based on the daisy-chain procedure of observer calibration (as in S16) but replaces linear scaling with the observer’s acuity, quantified as the minimum observable sunspot-group size.

Another method, called the ADF method (Usoskin et al. 2016b, 2021a), is based on comparing the statistics of the active-day fraction (ADF) in the sunspot (group) records of an observer with those of the reference data set (the RGO record of sunspot groups for the period 1900–1976). From this comparison, the observational acuity threshold \(A_{\text{th}}\) can be determined, defined such that the observer is assumed to report all sunspot groups with an area greater than the threshold and to miss all smaller groups. This threshold characterizes the quality of the observer and is further used to calibrate his/her records. The threshold values for some principal observers of the 18th–19th centuries are given in Table 1. This method tends to slightly underestimate high activity and essentially overestimate low activity (Willamo et al. 2018). Based on the observational acuity thresholds determined for each observer, a new GSN series was constructed (Usoskin et al. 2016b), called U16, which is depicted in Fig. 1b as the red curve. It was slightly updated by Usoskin et al. (2021a). In the 18th–19th centuries, it lies lower than S16 around solar maxima but slightly higher than HS98.
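The threshold-fitting idea can be sketched on toy data. This is a deliberately simplified version, not the published algorithm: the actual method compares ADF statistics over many months of low activity with the RGO reference, whereas here we match a single ADF value by scanning a list of candidate thresholds. Group areas are in whatever unit the reference uses (e.g., millionths of the solar hemisphere), and all names and numbers are hypothetical.

```python
from typing import Sequence


def active_day_fraction(daily_counts: Sequence[int]) -> float:
    """Fraction of observed days with at least one reported group."""
    if not daily_counts:
        raise ValueError("no observed days")
    return sum(1 for c in daily_counts if c > 0) / len(daily_counts)


def reference_adf(daily_areas: Sequence[Sequence[float]], threshold: float) -> float:
    """ADF the reference record would show for an observer who sees every
    group with area > threshold and misses all smaller groups."""
    counts = [sum(1 for a in day if a > threshold) for day in daily_areas]
    return active_day_fraction(counts)


def fit_acuity_threshold(observer_counts: Sequence[int],
                         daily_areas: Sequence[Sequence[float]],
                         candidates: Sequence[float]) -> float:
    """Candidate threshold whose degraded reference ADF best matches the observer's."""
    target = active_day_fraction(observer_counts)
    return min(candidates,
               key=lambda a: abs(reference_adf(daily_areas, a) - target))


# Toy reference: group areas for four days; the observer saw groups on 2 of 4 days.
areas = [[], [10.0], [50.0], [5.0, 80.0]]
print(fit_acuity_threshold([0, 0, 1, 1], areas, [0.0, 20.0, 60.0, 100.0]))
```

A larger fitted threshold marks a less acute observer, whose counts then need a larger correction.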

Fig. 3 Correction \(c\)-factor of Wolf to Wolfer. The grey scale represents the probability density function (PDF) of the ratio of the numbers of groups reported by Wolfer and by Wolf, for days when Wolf reported a given number of groups. The big dots with error bars depict the mean values. The dashed line is a functional exponential fit. The horizontal dot-dashed line represents the constant correction \(k\)-factor of 1.667 (Clette et al. 2014). Modified after Usoskin et al. (2016b).

Another new method was proposed by Friedli (2020), who revised the WSN series based on Wolf’s recently digitized original notes, using the relation between the numbers of groups and individual spots. This series appears consistent with the GSN U16 series and close to the classical ISN series, but lower than the GSN S16 series.

Several attempts to test/validate different sunspot reconstructions using indirect proxies yielded indicative results: tests based on cosmogenic radionuclides (Asvestari et al. 2017b), including \(^{44}\)Ti measured in fallen meteorites (see Fig. 19), as well as geomagnetic and heliospheric proxies (Lockwood et al. 2016a, b) favor the ‘lower’ reconstructions (Hoyt and Schatten 1998; Usoskin et al. 2016b) over the ‘high’ reconstruction (Svalgaard and Schatten 2016). On the other hand, comparison with solar open-magnetic-field models (Owens et al. 2016) cannot distinguish between the different series.

The situation with the sunspot number series is still developing and can hardly be resolved at present. The old ‘classical’ WSN and GSN series need to be corrected for apparent inhomogeneities. Yet, the newly emerging revisions of the sunspot series are mutually inconsistent and require a consensus effort from the solar community. At the same time, the scientific community needs a ‘consensus’ series of solar activity, and work in this direction is underway (Clette et al. 2023). This Review will be updated as the situation progresses.

Other indices

An example of a synthetic index of solar activity is the flare index, representing solar-flare activity (e.g., Kleczek 1952; Özgüç et al. 2003). The flare index quantifies daily flare activity as follows: it is computed as the product of the flare’s relative importance \(I\) in the \(\text{H}_{\alpha }\) range and its duration \(t\), \(Q=I\cdot t\), thus being a rough measure of the total energy emitted by the flare. The daily flare index is produced by Bogazici University (Özgüç et al. 2003) and is available since 1936.
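As a minimal illustration of the definition \(Q=I\cdot t\), the Python sketch below computes a daily value from a list of flares. It assumes, as a simplification, that the daily index is the sum of \(Q\) over all flares of the day, and that importance and duration (here in minutes) are already given on the scales used by the index; the function name is hypothetical.

```python
def daily_flare_index(flares):
    """Sum of Q = I * t over one day's flares.

    `flares` is an iterable of (importance, duration_in_minutes) pairs;
    both scales are assumptions of this sketch.
    """
    return sum(importance * duration for importance, duration in flares)
```

A day without flares gives zero, and each flare contributes in proportion to both its importance and its duration.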

A traditional physical index of solar activity is related to the radio flux of the sun at a wavelength of 10.7 cm and is called the F10.7 index (e.g., Tapping and Charrois 1994). This index represents the flux (in solar flux units, \(1\ \text{sfu} = 10^{-22}\ \text{W}\,\text{m}^{-2}\,\text{Hz}^{-1}\)) of solar radio emission at a centimetric wavelength. There are at least two sources of the 10.7 cm flux: free-free emission from hot coronal plasma and gyromagnetic emission from active regions (Tapping 1987). It is a good quantitative measure of the level of solar activity that is not directly related to sunspots. The close correlation between the F10.7 index and sunspot number indicates that the latter is a good index of general solar activity, including coronal activity. The F10.7 record has been measured continuously since 1947.
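The unit definition above is simple arithmetic; a small Python sketch of the conversion, together with the frequency corresponding to the 10.7 cm wavelength, is given below. The helper names are illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s
SFU = 1e-22                     # 1 sfu in W m^-2 Hz^-1


def to_sfu(flux_w_m2_hz):
    """Convert a spectral flux density from W m^-2 Hz^-1 to solar flux units."""
    return flux_w_m2_hz / SFU


def wavelength_to_frequency(wavelength_m):
    """Frequency (Hz) corresponding to a wavelength (m)."""
    return SPEED_OF_LIGHT / wavelength_m


# 10.7 cm corresponds to roughly 2.8 GHz, which is why F10.7 is also quoted as the 2800 MHz flux
f_hz = wavelength_to_frequency(0.107)
```

For example, a spectral flux density of \(1.5\times 10^{-20}\ \text{W}\,\text{m}^{-2}\,\text{Hz}^{-1}\) corresponds to 150 sfu.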

Another physical index is the coronal index (e.g., Rybanský et al. 2005), which is a measure of the irradiance of the sun as a star in the coronal green line. Computation of the coronal index is based on observations of green-corona intensities (Fe XIV emission line at 530.3 nm) from coronal stations all over the world, with the data transformed to the Lomnický Štit photometric scale. This index is considered a basic optical index of solar activity. A synthesized homogeneous database of Fe XIV 530.3 nm coronal-emission line intensities has existed since 1943.

Sunspot area is often considered a physical index of solar activity (e.g., Baranyi et al. 2001; Balmaceda et al. 2005). This index gives the total area of visible spots on the solar disc in units of millionths of the sun’s visible hemisphere, corrected for the apparent distortion (foreshortening) due to the curvature of the solar surface. The area of individual groups may vary from tens of millionths (for small groups) up to several thousand millionths for huge groups. This index has a physical meaning related to the solar magnetic flux emerging in sunspots. Sunspot group areas were routinely measured by the Royal Greenwich Observatory (RGO) from daily photographic images of the sun for the period 1874–1976, and after 1976 the series was extended by the SOON network. Note that the quality of the RGO data before the 1880s–1890s may be uneven (see discussion in Sect. 2.2.1). Sunspot areas can be reconstructed even earlier using drawings of the sun by H. Schwabe for the years 1826–1867 (Arlt et al. 2013; Senthamizh Pavai et al. 2015) and images by Spörer for the period 1861–1894 (Diercke et al. 2015). In addition, some fragmentary solar drawings exist for even earlier periods, including the Maunder minimum in the 17th century (Fig. 2).
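The foreshortening correction mentioned above can be sketched as follows, assuming an orthographic projection of the solar disc (observer at infinite distance), so that a point at heliocentric angle \(\rho \) appears at distance \(r = R\sin \rho \) from the disc centre, where \(R\) is the disc radius on the image. The area in millionths of the hemisphere (msh) is then \(A = 10^{6} A_{\text{proj}}/(2\pi R^{2}\cos \rho )\), with \(A_{\text{proj}}\) the area measured on the image. The function name is illustrative, and real measurements involve further details (finite spot extent, limb effects) not handled here.

```python
import math


def area_msh(projected_area, disc_radius, r):
    """Foreshortening-corrected area in millionths of the solar hemisphere (msh).

    projected_area : area measured on the image, in the same length unit as
                     disc_radius (squared)
    disc_radius    : radius R of the solar disc on the image
    r              : distance of the spot from the disc centre, 0 <= r < R
    """
    if not 0.0 <= r < disc_radius:
        raise ValueError("the spot must lie inside the disc (0 <= r < R)")
    sin_rho = r / disc_radius                # orthographic projection: r = R sin(rho)
    cos_rho = math.sqrt(1.0 - sin_rho**2)
    hemisphere = 2.0 * math.pi * disc_radius**2
    return 1e6 * projected_area / (hemisphere * cos_rho)
```

The same projected area counts twice as much at \(\rho = 60^{\circ }\) as at the disc centre, because \(\cos \rho = 0.5\) there.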

An important quantity is the solar irradiance, total and spectral (Fröhlich 2012). Irradiance variations are physically related to solar magnetic variability (e.g., Solanki et al. 2000), and are often considered manifestations of solar activity, which is of primary importance for solar-terrestrial relations.

Other physical indices include spectral sun-as-star observations, such as the Ca II K index (e.g., Donnelly et al. 1994; Foukal 1996), the space-based Mg II core-to-wing ratio as an index of solar UV irradiance (e.g., Donnelly et al. 1994; Snow et al. 2005; Viereck and Puga 1999), and many others.

All the above indices are closely correlated with sunspot numbers on the solar-cycle timescale, but may show quite different behaviour on short or long timescales.

2.2.2 Indirect indices

Sometimes quantitative measures of solar-variability effects are also considered as indices of solar activity. These are related not to solar activity per se, but rather to its effect on different environments. Accordingly, such indices are called indirect and can be roughly divided into terrestrial/geomagnetic and heliospheric/interplanetary.

Geomagnetic indices quantify different effects of geomagnetic activity ultimately caused by solar variability, mostly by variations of solar-wind properties and the interplanetary magnetic field. For example, the aa-index, which provides a global index of geomagnetic activity relative to the quiet-day curve for a pair of antipodal magnetic observatories (in England and Australia), is available from 1868 (Mayaud 1972). An extension of the geomagnetic series is available from the 1840s using the Helsinki Ak(H) index (Nevanlinna 2004a, b). Although the homogeneity of the geomagnetic series is compromised (e.g., Love 2011; Lukianova et al. 2009), it still remains an important indirect index of solar activity. A review of the geomagnetic effects of solar activity can be found, e.g., in Pulkkinen (2007). It is noteworthy that geomagnetic indices, in particular low-latitude aurorae (Silverman 2006), are associated with coronal/interplanetary activity (high-speed solar-wind streams, interplanetary transients, etc.) that may not be directly related to the sunspot-cycle phase and amplitude, and therefore serve only as an approximate index of solar activity. One of the earliest instrumental geomagnetic indices is related to the daily magnetic declination range, the range of diurnal variation of magnetic needle readings at a fixed location, and is available from the 1780s (Nevanlinna 1995). However, this data exists as several fragmentary sets, which are difficult to combine into a homogeneous data series.

Several geomagnetic activity indices have been proposed recently. One is the IDV (inter-diurnal variability) index (Lockwood et al. 2013; Svalgaard and Cliver 2005) based on Bartels’ historical u-index of geomagnetic activity, related to the difference between successive daily values of the horizontal or vertical component of the geomagnetic field. Another index is IHV (inter-hourly variability) calculated from the absolute differences between successive hourly values of the horizontal component of the geomagnetic field during night hours to minimize the effect of the daily curve (Mursula and Martini 2006; Svalgaard et al. 2004). Some details of the derivation and use of these indirect indices for long-term solar-activity studies can be found, e.g., in the Living Review by Lockwood (2013).
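The two definitions can be made concrete with a minimal Python sketch. It is a simplified illustration rather than the published recipes: the IDV-like quantity is taken as the mean unsigned difference between successive daily means of one field component, and the IHV-like quantity as the sum of unsigned differences between successive hourly means within a night-time window selected by the caller. The window length, any averaging over longer periods and any normalization are assumptions left to the user, and the function names are hypothetical.

```python
import numpy as np


def idv_like(daily_means_nt):
    """Mean unsigned difference between successive daily means (nT)."""
    d = np.asarray(daily_means_nt, dtype=float)
    return float(np.mean(np.abs(np.diff(d))))


def ihv_like(night_hourly_means_nt):
    """Sum of unsigned differences between successive hourly means (nT) in a night window.

    The caller passes the hourly values of the chosen night-time interval in
    time order (possibly spanning midnight), which limits the influence of the
    regular daytime variation.
    """
    h = np.asarray(night_hourly_means_nt, dtype=float)
    return float(np.sum(np.abs(np.diff(h))))
```

Both quantities respond to irregular, storm-like changes of the field rather than to its absolute level, which is why they can serve as activity indices.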

Heliospheric indices are related to features of the solar wind or the interplanetary magnetic field measured (or estimated) in interplanetary space. For example, the time evolution of the total (or open) solar magnetic flux is extensively used (e.g., Krivova et al. 2007, 2021; Linker et al. 2021; Lockwood et al. 1999; Wang et al. 2005).

A special case of heliospheric indices is related to the galactic cosmic-ray intensity recorded in natural terrestrial archives. Since this indirect proxy is based on data recorded naturally throughout the ages and measured today, it makes it possible to reconstruct solar activity changes on long timescales, as discussed in Sect. 3.

2.3 Solar activity observations in the pre-telescopic epoch

Instrumental solar data is based on regular observation (drawings or counting of spots) of the sun using optical instruments, e.g., the telescope used by Galileo in the early 17th century. These observations have mostly been made by professional astronomers whose qualifications and scientific thoroughness are beyond doubt. They form the basis of the group sunspot number series (Hoyt and Schatten 1998), which can be extended more or less reliably back to 1610 (see discussion in Sect. 2.2.1). However, some fragmentary records of qualitative solar and geomagnetic observations exist even for earlier times, as discussed below (Sects. 2.3.1–2.3.2).

2.3.1 Instrumental observations: Camera obscura

The invention of the telescope revolutionized astronomy. However, another solar astronomical instrument, the camera obscura, also made it possible to obtain relatively good solar images and remained in use until the late 18th century. The camera obscura had been known since early times and was used in major cathedrals to determine the sun’s position (see the reviews by Heilbron 1999; Vaquero 2007; Vaquero and Vázquez 2009). The earliest known drawing of the solar disc was made by Frisius, who observed the solar eclipse of 1544 using a camera obscura. That observation was made during the Spörer minimum, and no spots were seen on the sun. The first known observation of a sunspot using a camera obscura was made by Kepler in May 1607, who erroneously ascribed the spot to a transit of Mercury. Although such observations were sparse and mostly associated with other phenomena (solar eclipses or planetary transits), there were also regular solar observations with a camera obscura. For example, about 300 pages of logs of solar observations made in the cathedral of San Petronio in Bologna from 1655–1736 were published by Eustachio Manfredi in 1736 (see the full story in Vaquero 2007). Therefore, observations and drawings made using a camera obscura can be regarded as instrumental observations (e.g., Tovar et al. 2021).

2.3.2 Naked-eye observations

Even before regular professional observations performed with the aid of specially-developed instruments (what we now regard as scientific observations) people were interested in unusual phenomena. Several historical records exist based on naked-eye observations of transient phenomena in the sun or in the sky.

From the pre-telescopic era, a large body of evidence of spots observed on the solar disc exists, going back as far as the middle of the 4th century BC (Theophrastus of Athens). The earliest known drawing of sunspots is dated December 8, 1128 AD, and was published in “The Chronicle of John of Worcester” (Willis and Stephenson 2001). However, such evidence from occidental and Muslim sources is scarce and mostly related to observations of transits of inner planets across the sun’s disc, probably because of the dominance of the dogma of the perfectness of the sun, which dates back to Aristotle’s doctrine (Bray and Loughhead 1964). Oriental sources are much richer in naked-eye sunspot records, but that data is also fragmentary and irregular (see, e.g., Clark and Stephenson 1978; Wittmann and Xu 1987; Yau and Stephenson 1988). Spots on the sun are mentioned in official Chinese and Korean chronicles from 165 BC to 1918 AD. While these chronicles are fairly reliable, the data is not straightforward to interpret, since it can be influenced by meteorological phenomena; e.g., dust loading in the atmosphere due to dust storms (Willis et al. 1980) or volcanic eruptions (Scuderi 1990) can facilitate sunspot observations. Direct comparison of Oriental naked-eye sunspot observations with European telescopic data shows that naked-eye observations can serve only as a qualitative indicator of sunspot activity and can hardly be interpreted quantitatively (see, e.g., Willis et al. 1996, and references therein). Moreover, as a modern experiment with naked-eye observations (Mossman 1989) shows, Oriental chronicles contain only a tiny fraction (\(1/200\) to \(1/1000\)) of the number of sunspots potentially visible with the naked eye (Eddy et al. 1989).
This indicates that records of sunspot observations in the official chronicles were highly irregular (Eddy 1983) and probably dependent on the dominant traditions during specific historical periods (Clark and Stephenson 1978). Although naked-eye observations tend to qualitatively follow the general trend in solar activity known a posteriori (e.g., Vaquero et al. 2002), extracting any independent quantitative information from these records is very difficult, as they are potentially influenced by the meteorological, astronomical and political factors at the time of observation.

Visual observations of the aurora borealis at middle latitudes form another proxy for solar activity (e.g., Basurah 2004; Hayakawa et al. 2019b; Křivský 1984; Lee et al. 2004; Schove 1983; Schröder 1992; Silverman 1992; Siscoe 1980; Vázquez and Vaquero 2010). Fragmentary records of aurorae can be found in both occidental and oriental sources since antiquity. The earliest known dated records of an aurora are from Babylon in 567 BCE and 660 BCE (Hayakawa et al. 2019a; Stephenson et al. 2004). Aurorae may appear at middle latitudes as a result of enhanced geomagnetic activity caused by transient interplanetary phenomena. Of particular interest are ‘sporadic’ and ‘equatorial’ aurorae (e.g., Hayakawa et al. 2018; He et al. 2021). Although auroral activity reflects coronal and interplanetary features rather than magnetic fields on the solar surface, there is a strong correlation between long-term variations of sunspot numbers and the frequency of auroral occurrence. Because of the phenomenon’s short duration and low brightness, the probability of seeing an aurora is strongly affected by other factors such as the weather (overcast sky, heat lightning), the Moon’s phase, season, etc. The fact that these observations were not systematic in early times (before the beginning of the 18th century) makes it difficult to produce a homogeneous data set. Moreover, the geomagnetic latitude of a given geographical location may change quite dramatically over centuries due to the migration of the geomagnetic axis, which also affects the probability of seeing aurorae (Oguti and Egeland 1995; Siscoe and Verosub 1983). For example, the geomagnetic latitude of Seoul (\(37.5^{\circ }\) N, \(127^{\circ }\) E), which is currently less than \(30^{\circ }\), was about \(40^{\circ }\) a millennium ago (Kovaltsov and Usoskin 2007). This dramatic change alone can explain the enhanced frequency of auroral observations recorded in oriental chronicles.
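How a site's geomagnetic latitude depends on the position of the dipole axis can be sketched in Python with a centred, tilted dipole approximation. This is a simplification and not necessarily the field model used by Kovaltsov and Usoskin (2007). The pole coordinates in the example are approximate present-day values, used here as an assumption; pole positions for past epochs must be taken from a paleomagnetic model and are not given here.

```python
import math


def dipole_latitude(lat_deg, lon_deg, pole_lat_deg, pole_lon_deg):
    """Geomagnetic latitude (deg) of a site in a centred, tilted dipole approximation.

    Uses the spherical-trigonometry relation
        sin(lat_m) = sin(lat) sin(lat_p) + cos(lat) cos(lat_p) cos(lon - lon_p),
    where (lat_p, lon_p) is the geographic position of the dipole's northern pole.
    Longitudes are in degrees east.
    """
    lat, lon, plat, plon = map(math.radians, (lat_deg, lon_deg, pole_lat_deg, pole_lon_deg))
    s = (math.sin(lat) * math.sin(plat)
         + math.cos(lat) * math.cos(plat) * math.cos(lon - plon))
    return math.degrees(math.asin(s))


# Seoul with an approximate present-day dipole pole (about 80.7 N, 72.7 W)
seoul_now = dipole_latitude(37.5, 127.0, 80.65, -72.68)
```

With these assumed pole coordinates, Seoul comes out below \(30^{\circ }\) geomagnetic latitude. Shifting the pole towards East Asian longitudes increases the value, which is the mechanism behind the change discussed above.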

2.3.3 Mathematical/statistical extrapolations

Due to the lack of reliable information regarding solar activity in the pre-instrumental era, it seems natural to try to extend the sunspot series back in time, before 1610 AD, by extrapolating its statistical properties. Indeed, numerous attempts of this kind have been made, even recently (e.g., de Meyer 1998; Nagovitsyn 1997; Rigozo et al. 2001; Zharkova et al. 2015). Such models aim to find the main features of the actually observed sunspot series, e.g., a modulated carrier frequency or a multi-harmonic representation, which are then extrapolated backwards in time. The main disadvantage of this approach is that it is not a reconstruction based upon measured or observed quantities, but rather a “post-diction” based on extrapolation. This method is often used for short-term predictions, but it can hardly be used for reliable long-term reconstruction of solar activity. In particular, it assumes that the sunspot time series is stationary, i.e., that a limited-time realization contains full information on its future and past. Clearly, such models cannot include periods exceeding the time span of the observations upon which the extrapolation is based. Hence, the pre- or post-diction becomes increasingly unreliable with growing extrapolation time, and its accuracy is hard to estimate.
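To make the limitation concrete, the Python sketch below fits a multi-harmonic model with prescribed periods by linear least squares and evaluates it outside the fitted interval. It is a generic illustration, not the procedure of any of the cited works; the periods are inputs chosen by the user. Whatever data are fitted, the extrapolation simply repeats the fitted stationary pattern, so a feature such as an extended grand minimum appears in the post-diction only if the chosen periods happen to produce it.

```python
import numpy as np


def _design_matrix(t, periods):
    """Columns: constant, then cos and sin for each prescribed period."""
    t = np.asarray(t, dtype=float)
    cols = [np.ones_like(t)]
    for p in periods:
        w = 2.0 * np.pi / p
        cols += [np.cos(w * t), np.sin(w * t)]
    return np.column_stack(cols)


def fit_harmonics(t, y, periods):
    """Least-squares coefficients of a constant plus sinusoids with fixed periods."""
    coef, *_ = np.linalg.lstsq(_design_matrix(t, periods), np.asarray(y, dtype=float), rcond=None)
    return coef


def eval_harmonics(coef, t, periods):
    """Evaluate the fitted model at arbitrary times, including outside the fitted span."""
    return _design_matrix(t, periods) @ coef
```

Fitting such a model to, say, 1700–2000 and evaluating it at 1600 merely continues the fitted oscillation backwards; nothing in the model can tell whether activity was actually suppressed at that time.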

Sometimes a combination of the above approaches is used, i.e., a mathematical model is fitted to indirect qualitative proxy data. In such models, a mathematical extrapolation of the sunspot series is slightly tuned and fitted to some proxy data for earlier times. For example, Schove (1955, 1979) fitted a slightly variable but phase-locked carrier frequency (about 11 years) to fragmentary data from naked-eye sunspot observations and auroral sightings. Phase locking was achieved by assuming exactly nine solar cycles per calendar century. This series, known as the Schove series, reflects qualitative long-term variations of solar activity, including some grand minima, but cannot claim to be a quantitative representation of the solar activity level. The Schove series played an important historical role in the 1960s. In particular, a comparison of the \(\varDelta ^{14}\)C data with this series succeeded in convincing the scientific community that secular variations of \(^{14}\)C in tree rings have a solar rather than climatic origin (Stuiver 1961). This formed a cornerstone of the precise method of solar-activity reconstruction, which uses cosmogenic isotopes from terrestrial archives. However, attempts to reconstruct the phase and amplitude of the 11-year cycle using this method were unsuccessful. For example, Schove (1955) made predictions of forthcoming solar cycles up to 2005, which failed. We note that none of these works is able to reproduce, for example, the Maunder minimum (which cannot be represented as a result of the superposition of different harmonic oscillations); they yield too high sunspot activity compared to that observed. From the modern point of view, the Schove series can be regarded as archaic.

The main source of data on the past solar activity before the era of direct observations is related to cosmogenic-isotope data measured in terrestrial archives (see Sect. 3).

2.4 The solar cycle and its variations

The sunspot-number series based on telescopic observations since 1610 covers the full range of solar variability, from the grand Maunder minimum in the 17th century to the Modern grand maximum in the second half of the 20th century (e.g., Acero et al. 2018). Accordingly, it allows us to study typical features of solar variability on a multi-centennial timescale.

2.4.1 Quasi-periodicities

11-yr Schwabe cycle

The main feature of solar activity is its pronounced quasi-periodicity with a period of about 11 years, known as the Schwabe cycle, which varies in both amplitude and duration. The first observation of possible regular variability in sunspot numbers was made by the Danish astronomer Christian Horrebow in the 1770s on the basis of his sunspot observations from 1761–1769 (see details in Gleissberg 1952; Vitinsky 1965), but the results were forgotten. It took over 70 years before the amateur astronomer Heinrich Schwabe announced in 1844 that sunspot activity varies cyclically with a period of about 10 years (Schwabe 1844). This cycle, called the 11-year or Schwabe cycle, is the most prominent variability in the sunspot-number series. The length and amplitude of the Schwabe cycle vary considerably, from 8 to 15 years in duration and by a few orders of magnitude in size. There are also empirical rules relating different parameters of the solar cycle, the best known being the Waldmeier relation (Waldmeier 1935, 1939), which relates the cycle height to the length of the ascending phase (strong cycles rise fast), and the Gnevyshev–Ohl rule (Gnevyshev and Ohl 1948), which groups cycles into pairs of an even-numbered cycle followed by a stronger odd-numbered cycle. The use of cosmogenic data has confirmed the robustness of the Waldmeier rule but could not reproduce the Gnevyshev–Ohl rule on the 1000-year timescale (Usoskin et al. 2021a). A detailed review of solar cyclic variability can be found in Hathaway (2015).
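Both empirical rules can be checked on a list of cycle parameters with a short Python sketch. It assumes the user already has, for each cycle, its number, its rise time (minimum to maximum) and a measure of its strength. Peak amplitude is used here for simplicity, whereas the Gnevyshev–Ohl rule is often formulated with cycle-integrated sunspot numbers. The function names are illustrative, and no real cycle data are included.

```python
import numpy as np


def waldmeier_correlation(rise_times, amplitudes):
    """Pearson correlation between rise time and cycle amplitude.

    The Waldmeier rule (strong cycles rise fast) implies a negative value.
    """
    return float(np.corrcoef(rise_times, amplitudes)[0, 1])


def gnevyshev_ohl_fraction(cycle_numbers, amplitudes):
    """Fraction of (even n, odd n + 1) cycle pairs in which the odd cycle is stronger."""
    amp = dict(zip(cycle_numbers, amplitudes))
    pairs = [(n, n + 1) for n in cycle_numbers if n % 2 == 0 and n + 1 in amp]
    if not pairs:
        return float("nan")
    return sum(amp[odd] > amp[even] for even, odd in pairs) / len(pairs)
```

A Gnevyshev–Ohl fraction close to 1 would indicate that the rule holds, while a value near 0.5 is what one would expect if the pairing were random.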

Solar cycles are numbered consecutively following Wolf's numbering, with solar cycle #1 starting in 1755. Presently, solar cycle #25 is in progress.

The Schwabe cycle is now recognized as a fundamental feature of solar activity originating from the solar-dynamo process. This 11-year cyclicity is prominent in many other parameters, including solar, heliospheric, geomagnetic, space-weather, climate and other ones. The background for the 11-year Schwabe cycle is the 22-year Hale magnetic polarity cycle. George Hale found that the polarity of sunspot magnetic fields changes in both hemispheres when a new 11-year cycle starts (Hale et al. 1919). This is related to the reversal of the global magnetic field of the sun with a period of \(\approx \)22 years. It is often considered that the 11-year Schwabe cycle is the modulus (absolute value) of the sign-alternating Hale cycle (e.g., Bracewell 1986; de Meyer 1998; Kurths and Ruzmaikin 1990; Mininni et al. 2001; Sonett 1983), but this is only a mathematical representation.
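The modulus representation can be illustrated with a toy Python example: a pure sinusoid with a 22-year period changes sign every 11 years, and its absolute value repeats every 11 years. The signal is synthetic and only demonstrates the mathematical point made above; it is not a model of the solar cycle, and the helper name is illustrative.

```python
import numpy as np


def dominant_period(x, dt):
    """Period of the strongest non-zero Fourier component of a uniformly sampled series."""
    x = np.asarray(x, dtype=float)
    spectrum = np.abs(np.fft.rfft(x - x.mean()))
    freqs = np.fft.rfftfreq(len(x), d=dt)
    k = 1 + int(np.argmax(spectrum[1:]))  # skip the zero-frequency bin
    return 1.0 / freqs[k]


dt = 0.1                                   # years
t = np.arange(2200) * dt                   # 220 years = ten 22-yr cycles
hale = np.sin(2 * np.pi * t / 22.0)        # toy sign-alternating "Hale" signal
schwabe_like = np.abs(hale)                # its modulus

hale_period = dominant_period(hale, dt)             # about 22 years
schwabe_period = dominant_period(schwabe_like, dt)  # about 11 years
```

Taking the modulus discards the sign information, which is why sunspot numbers alone show the 11-year rather than the 22-year periodicity.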

Phase catastrophe?

Sometimes the regular time evolution of solar activity is interrupted by periods of greatly depressed activity called grand minima. The last grand minimum (and the only one covered by direct solar observations) was the famous Maunder minimum of 1645–1715 (Eddy 1976, 1983). Other grand minima in the past, known from cosmogenic isotope data, include, e.g., the Spörer minimum around 1450–1550 and the Wolf minimum around the 14th century (see the detailed discussion in Sect. 4.2). Sometimes the Dalton minimum (ca. 1790–1820) is also considered to be a grand minimum. As suggested by Schüssler et al. (1997), it may be a separate, intermediate state of the dynamo between a grand minimum and normal activity, or an unsuccessful attempt of the sun to switch to the grand-minimum state (Frick et al. 1997; Sokoloff 2004). This is observed as a “phase catastrophe” (a disruption of the smooth evolution of a dynamical system in phase space) in the evolution of solar activity (e.g., Kremliovsky 1994; Vitinsky et al. 1986). A peculiarity in the phase evolution of sunspot activity around 1800 was also noted by Sonett (1983), who ascribed it to a possible error in the Wolf sunspot data, and by Wilson (1988a), who reported a possible misplacement of sunspot minima for cycles 4–6 in the WSN series. It has also been suggested that the phase catastrophe may be related to a tiny cycle, which might have been lost at the end of the 18th century because of very sparse observations (Usoskin et al. 2001a, 2002b, 2003b). Independent evidence for the existence of the lost cycle has been proposed based on the reconstructed sunspot butterfly diagram for that period (Usoskin et al. 2009c). However, without magnetic-polarity data it is impossible to conclude whether it was a full new (lost) cycle or an unusual burst of activity in the declining phase of the previous cycle, as proposed by Zolotova and Ponyavin (2011).
Cosmogenic-isotope data cannot resolve the existence of a full separate lost cycle (Jiang et al. 2011; Usoskin et al. 2021b).

Centennial Gleissberg cycle

The long-term change (trend) in the Schwabe cycle amplitude is known as the secular Gleissberg cycle (Gleissberg 1939) with a mean period of about 90 years. However, the Gleissberg cycle is not a cycle in the strict periodic sense but rather a modulation of the cycle envelope with a varying timescale of 60–140 years (e.g., Gleissberg 1971; Kuklin 1976; Ogurtsov et al. 2002).

See more discussion of this and longer cycles in Sect. 4.1 using cosmogenic data.

2.4.2 Randomness versus regularity

The short-term (days–months) variability of sunspot numbers is greater than the observational uncertainties, indicating the presence of random fluctuations (noise). As is typical for most real signals, this noise is not white but rather red, i.e., correlated (e.g., Frick et al. 1997; Ostryakov and Usoskin 1990; Oliver and Ballester 1996), and its variance depends on the level of the signal. While the existence of both regularity and randomness in the sunspot series is apparent, their relationship is not clear (e.g., Wilson 1994): are they mutually independent or intrinsically tied together? Moreover, the question of whether the randomness in sunspot data is due to chaotic or stochastic processes is still open.

Earlier, it was common to describe sunspot activity as a multi-harmonic process with several basic harmonics (e.g., Sonett 1983; Vitinsky 1965; Vitinsky et al. 1986) plus random noise that plays no role in the solar-cycle evolution. However, it has been shown (e.g., Charbonneau 2001; Mininni et al. 2002; Rozelot 1994; Weiss and Tobias 2000) that such an oversimplified approach depends on the chosen reference time interval and does not adequately describe the long-term evolution of solar activity. A multi-harmonic representation is based on the assumption that the benchmark series is stationary, but this assumption is broadly invalid for solar activity (e.g., Kremliovsky 1994; Polygiannakis et al. 2003; Sello 2000). Moreover, a multi-harmonic representation cannot, for obvious reasons, be extrapolated to timescales longer than that covered by the benchmark series. The fact that purely mathematical/statistical models cannot give good predictions of solar activity (as discussed below) implies that the solar cycle is not a multi-periodic or other purely deterministic process, and that chaotic or stochastic processes play an essential role in sunspot-cycle formation (e.g., Moss et al. 2008; Käpylä et al. 2012).

The old idea of a possible planetary influence on the dynamo has recently received new impetus, with an unspecified torque effect proposed to act on an assumed quasi-rigid, non-axisymmetric tachocline (Abreu et al. 2012). However, this result was criticized by Poluianov and Usoskin (2014) as an artefact of inappropriate analysis (an aliasing effect of incorrect smoothing). In addition, Cauquoin et al. (2014) have shown that such periodicities were not observed in \(^{10}\)Be data from 330 kyr ago. Another speculated planetary effect is tidal locking of the dynamo (Stefani et al. 2019). However, that work was reported to be methodologically flawed (Nataf 2022). Moreover, theoretical considerations suggest that these effects are too weak per se and would require an unknown strong amplification mechanism to become observable (Charbonneau 2022).

The question of whether solar cycles are independent of each other (short memory) or are synchronised by an internal/external clock is crucially important for the solar dynamo. The two cases can be straightforwardly distinguished with a statistical test, since the dispersion of the cycle period and phase would grow with the number of solar cycles if the cycles are independent (random walk), but remain constant for synchronized cycles (clock). An analysis of the directly observed sunspot number series (e.g., Gough 1981) favoured the random-walk hypothesis, but the synchronized clock could not be rejected because the series was too short (about 20 cycles). The recent 1000-year-long solar-cycle reconstruction (Sect. 3.6.1) has greatly increased the statistics, to 96 solar cycles, which made it possible to finally resolve this ambiguity. As shown by Weisshaar et al. (2023), the dispersion grows linearly with the number of solar cycles analyzed, and the ‘clock’ hypothesis can be rejected with very high confidence. Thus, the idea of internal or external synchronisation of the solar cycle contradicts the available data.
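The logic of the test can be illustrated with a minimal Python sketch operating on a list of cycle epochs (e.g., times of cycle minima). It is a simplified version of the idea, not the procedure of Weisshaar et al. (2023), and the function name is illustrative.

```python
import numpy as np


def lag_dispersion(epochs, max_lag):
    """Variance of the n-cycle epoch difference, T[k+n] - T[k], for n = 1..max_lag.

    For independent cycle lengths (random walk) this variance grows linearly
    with n; for cycles tied to a clock with independent jitter it stays
    roughly constant (about twice the jitter variance).
    """
    epochs = np.asarray(epochs, dtype=float)
    lags = np.arange(1, max_lag + 1)
    dispersion = np.array([np.var(epochs[n:] - epochs[:-n]) for n in lags])
    return lags, dispersion
```

With synthetic epochs built from independent cycle lengths, the variance at a lag of 20 cycles is roughly twenty times that at a lag of one cycle, whereas for clock-like epochs with independent jitter it stays nearly flat. The practical difficulty is that distinguishing the two requires many cycles, which is why the longer reconstructed record matters.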

Different numeric tests, such as an analysis of the Lyapunov exponents (Kremliovsky 1995; Mundt et al. 1991; Ostriakov and Usoskin 1990; Sello 2000), Kolmogorov entropy (Carbonell et al. 1994; Sello 2000) and Hurst exponent (Oliver and Ballester 1998; Ruzmaikin et al. 1994), confirm the irregular and unpredictable nature of the solar-activity time evolution (see, e.g., a review by Panchev and Tsekov 2007).
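As an illustration of one of these diagnostics, the following Python sketch estimates the Hurst exponent with the classical rescaled-range (R/S) method. It is a textbook version with illustrative window choices and no small-sample bias correction, so values for uncorrelated noise typically come out somewhat above 0.5; it is not the implementation used in the cited studies.

```python
import numpy as np


def hurst_rs(x, min_window=8, n_windows=12):
    """Classical rescaled-range (R/S) estimate of the Hurst exponent.

    For each window size s the series is cut into non-overlapping segments;
    in each segment the range R of the cumulative mean-removed sum is divided
    by the segment's standard deviation S. H is the slope of log(R/S) vs log(s).
    """
    x = np.asarray(x, dtype=float)
    n = len(x)
    sizes = np.unique(np.logspace(np.log10(min_window), np.log10(n // 2), n_windows).astype(int))
    rs_means = []
    for s in sizes:
        k = n // s
        segments = x[: k * s].reshape(k, s)
        profile = np.cumsum(segments - segments.mean(axis=1, keepdims=True), axis=1)
        r = profile.max(axis=1) - profile.min(axis=1)
        sd = segments.std(axis=1)
        ok = sd > 0
        rs_means.append(np.mean(r[ok] / sd[ok]))
    slope, _ = np.polyfit(np.log(sizes), np.log(rs_means), 1)
    return float(slope)
```

Values near 0.5 indicate uncorrelated fluctuations, values above 0.5 persistent (long-memory) behaviour, and values close to 1 arise for strongly non-stationary series such as a random walk.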

It was suggested quite a while ago that the variability of the solar cycle may be a temporal realization of a low-dimensional chaotic system (e.g., Ruzmaikin 1981). This concept became popular in the early 1990s when many authors considered solar activity as an example of low-dimensional deterministic chaos, described by a strange attractor (e.g., Kurths and Ruzmaikin 1990; Morfill et al. 1991; Mundt et al. 1991; Ostriakov and Usoskin 1990; Rozelot 1995; Salakhutdinova 1999; Serre and Nesme-Ribes 2000; Hanslmeier et al. 2013). Such a process naturally contains randomness, which is an intrinsic feature of the system rather than an independent additive or multiplicative noise. However, although this approach easily produces features seemingly similar to those of solar activity, quantitative parameters of the low-dimensional attractor varied greatly as obtained by different authors. Later it was realized that the analyzed data set was too short (Carbonell et al. 1993, 1994), and the results were strongly dependent on the choice of filtering methods (Price et al. 1992). Developing this approach, Mininni et al. (2000, 2001) suggested that one can consider sunspot activity as an example of a 2D Van der Pol relaxation oscillator with an intrinsic stochastic component.
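The kind of model mentioned last can be sketched as a Van der Pol oscillator driven by additive noise and integrated with the Euler–Maruyama scheme. This is a generic illustration under stated assumptions, not the model of Mininni et al. (2000, 2001): the form of the noise term and all parameter values below are hypothetical, and interpreting \(x\) as a field-like variable whose square tracks sunspot activity is an assumption of this sketch.

```python
import numpy as np


def noisy_van_der_pol(mu, omega, xi, sigma, dt, n_steps, x0=1.0, v0=0.0, seed=0):
    """Euler–Maruyama integration of a Van der Pol oscillator with additive noise:

        dx = v dt
        dv = [mu * (1 - xi * x**2) * v - omega**2 * x] dt + sigma dW

    Returns x at every step as a NumPy array of length n_steps.
    """
    rng = np.random.default_rng(seed)
    dw = rng.normal(0.0, np.sqrt(dt), size=n_steps)  # Wiener increments
    x, v = x0, v0
    out = np.empty(n_steps)
    for i in range(n_steps):
        # Both updates use the values from the previous step (explicit scheme)
        x, v = (x + v * dt,
                v + (mu * (1.0 - xi * x * x) * v - omega**2 * x) * dt + sigma * dw[i])
        out[i] = x
    return out


omega = 2 * np.pi / 22.0  # hypothetical: x has a ~22-yr period, so x**2 peaks roughly every 11 yr
x = noisy_van_der_pol(mu=0.2, omega=omega, xi=1.0, sigma=0.05, dt=0.01, n_steps=50_000)
activity_proxy = x**2     # hypothetical sunspot-like proxy
```

Without noise (sigma = 0), the trajectory settles on a limit cycle of amplitude close to \(2/\sqrt{\xi }\). With noise, cycle amplitudes and lengths fluctuate around it, so randomness enters as an intrinsic stochastic component of the oscillator rather than as noise added to the output.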

Such phenomenological or basic-principle models, while succeeding (to some extent) in reproducing the observed features of solar-activity variability, do not provide insight into the physical nature of the regular and random components of solar variability. In this sense, efforts to understand the nature of randomness in sunspot activity within the framework of dynamo theory are more advanced. Corresponding theoretical dynamo models have been developed (see reviews by Charbonneau 2020; Ossendrijver 2003), which include stochastic or chaotic processes (e.g., Brandenburg and Sokoloff 2002; Brooke and Moss 1994; Charbonneau and Dikpati 2000; Feynman and Gabriel 1990; Hoyng 1993; Lawrence et al. 1995; Moss et al. 1992; Schmalz and Stix 1991; Schmitt et al. 1996; Weiss et al. 1984). For example, Feynman and Gabriel (1990) suggested that the transition from a regular to a chaotic dynamo passes through a bifurcation. Charbonneau and Dikpati (2000) studied stochastic fluctuations in a Babcock–Leighton dynamo model and succeeded in qualitatively reproducing the anti-correlation between cycle amplitude and length (Waldmeier rule). Their model also predicts a phase lock of the Schwabe cycle, i.e., that the 11-year cycle is an internal “clock” of the sun. Most often, the idea of fluctuations is related to the \(\alpha \)-effect, which results from the electromotive force averaged over turbulent vortices and can thus contain a fluctuating contribution (e.g., Brandenburg and Spiegel 2008; Hoyng 1993; Moss et al. 2008; Ossendrijver et al. 1996). Note that a significant fluctuating component (with an amplitude greater than 100% of the regular component) is essential in all these models.

2.4.3 A note on solar activity predictions

Randomness (see Sect. 2.4.2) in the SN series is directly related to the predictability of solar activity. Forecasting solar activity has been a subject of intense study for many years (e.g., Gleissberg 1948; Newton 1928; Vitinsky 1965; Yule 1927) and has greatly intensified recently, with hundreds of journal articles published to predict the maxima of solar cycles 24 and 25 (see, e.g., reviews by Jiang et al. 2023; Nandy 2021; Pesnell 2012, 2016; Pesnell and Schatten 2018), following the boost in space-technology development and the increasing debate on solar-terrestrial relations. A systematic analysis (Nandy 2021) of the predictions published recently for cycles 24 and 25 implies that, while statistical and mathematical methods produce widely diverse results, physics-based predictions for the current cycle 25 converge and predict a moderately weak sunspot cycle, as confirmed by the developing solar cycle. The convergence of the physics-based models implies progress in the understanding of solar-cycle evolution, in contrast to purely mathematical methods. As concluded by Nandy (2021), predictions of a solar cycle made around the end of the preceding cycle and based on the observed polar field (flux) are fairly reliable. However, uncertainties in the observed magnetograms and sunspot emergence may affect the results (Jiang et al. 2023). Longer-term predictions are hardly possible because of the random fluctuations (mostly related to the tilt-angle distribution of bipolar sunspot pairs) in the solar-cycle dynamo mechanism. Detailed reviews of solar-activity prediction methods and results have recently been provided by Petrovay (2020) and Nandy (2021).
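The simplest empirical form of a polar-field precursor can be sketched as a linear regression of the next cycle's amplitude on the polar-field strength near the preceding minimum. This is an illustrative statistical sketch only: the linear form and the function names are assumptions, no real data are included, and it is not one of the physics-based (flux-transport or dynamo) prediction schemes discussed by Nandy (2021).

```python
import numpy as np


def fit_precursor(polar_field_at_min, next_amplitude):
    """Least-squares fit of amplitude = a * |B_polar| + b; returns (a, b)."""
    a, b = np.polyfit(np.abs(np.asarray(polar_field_at_min, dtype=float)),
                      np.asarray(next_amplitude, dtype=float), 1)
    return float(a), float(b)


def predict_amplitude(a, b, polar_field):
    """Predicted amplitude of the next cycle for a given polar-field value."""
    return a * abs(polar_field) + b
```

The absolute value is used because the sign of the polar field alternates from cycle to cycle. The fitted coefficients are meaningful only for the specific field and amplitude series used, and such a relation can forecast only one cycle ahead, consistent with the limits on predictability described above.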

Note that several ‘predictions’ of a general decline in the coming solar activity have been made recently (Abreu et al. 2008; Lockwood et al. 2011; Solanki et al. 2004); however, these are not true predictions but rather acknowledgements of the fact that the Modern grand maximum (Solanki et al. 2004; Usoskin et al. 2003c) has ceased. Similar caution applies to predictions of a grand minimum (e.g., Lockwood et al. 2011; Miyahara et al. 2010): a grand minimum should appear sooner or later, but presently we are hardly able to predict its occurrence.

2.5 Summary

In this section, the concept of solar activity and the indices used to quantify it have been discussed, along with the main features of the temporal behaviour of solar activity.

The concept of solar activity is quite broad and covers non-stationary and non-equilibrium (often eruptive) processes, in contrast to the “quiet” sun concept, and their effects upon the terrestrial and heliospheric environment. Many indices are used to quantify different aspects of variable solar activity. Quantitative indices include direct (i.e., related directly to solar variability) and indirect (i.e., related to terrestrial and interplanetary effects caused by solar activity) ones; they can be physical or synthetic. While all indices depict the dominant 11-year cyclic variability, their relationships on other timescales (short scales or long-term trends) may vary to a great extent.

The most common and longest available direct index of solar activity is the sunspot number, a synthetic index that is useful for the quantitative representation of overall solar activity outside grand minima. During grand minima such as the Maunder minimum, however, it provides only a rough indication of solar activity, whose level may drop below the sunspot-formation threshold. The sunspot-number series is available from 1610 AD, following the invention of the telescope, and covers, in particular, the Maunder minimum in the late 17th century. However, this series has large uncertainties before 1900 (Sect. 2.2.1). Fragmentary non-instrumental observations of the sun before 1610, while possibly hinting at relative changes in solar activity, cannot be interpreted quantitatively.

Solar activity in all its manifestations is dominated by the 11-year Schwabe cycle, which has, in fact, a variable length of 9–14 years for individual cycles. The amplitude of the Schwabe cycle varies greatly—from the almost spotless Maunder minimum to the very high cycle 19, possibly in relation to the Gleissberg or secular cycle. Longer super-secular characteristic times can also be found in various proxies of solar activity, as discussed in Sect. 4.

Solar activity contains essential chaotic/stochastic components, which lead to irregular variations and make the prediction of solar activity on timescales exceeding one solar cycle impossible.

3 Proxy method of past solar-activity reconstruction

In addition to direct solar observations, described in Sect. 2.2.1, there are also indirect solar proxies, which are used to study solar activity in the pre-telescopic era. Unfortunately, we do not have any reliable data that could give a direct index of solar variability before the beginning of the sunspot-number series. Therefore, one must use indirect proxies, i.e., quantitative parameters, which can be measured nowadays but represent different effects of solar magnetic activity in the past. It is common to use, for this purpose, signatures of terrestrial indirect effects induced by variable solar-magnetic activity, that are stored in natural archives. Such traceable signatures can be related to nuclear (used in the cosmogenic-isotope method) or chemical (used, e.g., in the nitrate method) effects caused by cosmic rays (CRs) in the Earth’s atmosphere, lunar rocks or meteorites.

The most common proxy of solar activity is provided by data on cosmogenic radionuclides (e.g., \(^{10}\)Be, \(^{14}\)C and \(^{36}\)Cl), which are produced by cosmic rays in the Earth’s atmosphere (e.g., Bard et al. 1997; Beer et al. 1990, 2012; Stuiver and Quay 1980; Usoskin 2017).

Other cosmogenic nuclides, which are used in geological and paleomagnetic dating, are less suitable for studies of solar activity (see, e.g., Beer et al. 2012). Cosmic rays are the main source of cosmogenic nuclides in the atmosphere (excluding anthropogenic factors during the last decades), with the maximum production in the lower stratosphere. After complicated transport in the atmosphere, the cosmogenic isotopes are stored in natural archives such as polar ice, trees, marine sediments, etc. This process is also affected by changes in the geomagnetic field and climate. Cosmic rays experience heliospheric modulation due to the solar wind and the frozen-in solar magnetic field. The intensity of modulation depends on solar activity; therefore, the cosmic-ray flux and the ensuing production of cosmogenic isotopes depend inversely on solar activity. An important advantage of cosmogenic data is that primary archiving is done naturally in a similar manner throughout the ages, and these archives are measured nowadays in laboratories using modern techniques. If necessary, the measurements can be repeated and improved, as has been done for some radiocarbon samples. In contrast to fixed historical archival data (such as sunspot or auroral observations), this approach makes it possible to obtain homogeneous data sets of stable quality and to improve the data quality with the invention of new methods (such as accelerator mass spectrometry). However, it only allows reconstruction of long-term variability, with annual resolution at best and often only decadal resolution, as well as the detection of extreme solar energetic particle (SEP) events. Cosmogenic-isotope data are the main regular indicator of solar activity on very long timescales. The redistribution of nuclides in terrestrial reservoirs and archiving may be affected by local and global climate/circulation processes, which are, to a large extent, unknown in the past. However, a combined study of data on different nuclides, whose responses to terrestrial effects are very different, may allow for disentangling external and terrestrial signals.

3.1 The physical basis of the method

3.1.1 Heliospheric modulation of cosmic rays

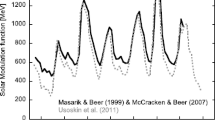

The flux of cosmic rays (highly energetic, fully ionized nuclei) is considered roughly constant in the vicinity of the Solar system, at least on the timescales relevant to the present study. However, before reaching the vicinity of Earth, galactic cosmic rays (GCR) undergo complicated transport in the heliosphere, which modulates their flux. Heliospheric transport of GCR is described by Parker’s theory (Parker 1965; Toptygin 1985) and includes four basic processes (Potgieter 2013): diffusion of particles due to their scattering on magnetic inhomogeneities; convection of particles by the out-blowing solar wind; adiabatic energy losses in the expanding solar wind; and drifts of particles in the magnetic field, including the gradient-curvature drift in the regular heliospheric magnetic field and the drift along the heliospheric current sheet, a thin magnetic interface between the two heliomagnetic hemispheres. Because of variable solar-magnetic activity, the CR flux in the vicinity of Earth is strongly modulated (see Fig. 4). The most prominent feature of CR modulation is the 11-year cycle, which is inversely related to solar activity. The 11-year cycle in CR lags behind the sunspot cycle by a variable delay, ranging from a month up to two years (e.g., Koldobskiy et al. 2022a). The time profile of the cosmic-ray flux as measured by a neutron monitor (NM) is shown in Fig. 4 (panel b) together with the sunspot numbers (panel a). Besides the inverse relation between them, some other features can also be noted. A 22-year cyclicity manifests itself in cosmic-ray modulation through the alternation of sharp and flat maxima in cosmic-ray data, originating from the charge-dependent drift mechanism (Jokipii and Levy 1977). One may also note short-term fluctuations, which are not directly related to sunspot numbers but are driven by interplanetary transients caused by solar eruptive events, e.g., flares or CMEs, or by fast solar-wind streams.
An interesting feature relates to recent decades. The CR flux in 2009 was the highest ever recorded by NMs (Moraal and Stoker 2010), owing to favourable heliospheric conditions (an unusually weak heliospheric magnetic field and a flat heliospheric current sheet). On the other hand, the sunspot minimum was comparable to other minima. The level of CR modulation during cycle 24 was moderate, shallower than in the previous cycles, reflecting the weak solar cycle 24. During the preceding 50 years of high and roughly stable solar activity, no trends were observed in CR data; however, as will be discussed later, the overall level of CR has changed significantly on centennial-to-millennial timescales.
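One simple way to quantify the variable delay of the CR cycle relative to sunspots is to find the lag at which the two series are most strongly anticorrelated. The sketch below assumes two evenly sampled monthly series; the function names `pearson` and `cr_lag_months` are hypothetical, and the input series are synthetic sine waves (an 11-year sunspot-like cycle and an inverted CR cycle lagging by 10 months), not the SILSO or Oulu NM data shown in Fig. 4.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / math.sqrt(vx * vy)


def cr_lag_months(ssn, cr, max_lag=36):
    """Return (lag, r): the lag in samples at which cr[t + lag] is most
    anticorrelated with ssn[t], searched over 0..max_lag."""
    best_lag, best_r = 0, 1.0
    for lag in range(max_lag + 1):
        # Align ssn[t] with cr[t + lag]; both slices have len(ssn) - lag items.
        r = pearson(ssn[: len(ssn) - lag], cr[lag:])
        if r < best_r:
            best_lag, best_r = lag, r
    return best_lag, best_r


# Synthetic monthly series (hypothetical, NOT observed data):
# a 132-month sunspot-like cycle and an inverted CR cycle delayed by 10 months.
months = range(600)
ssn = [100 + 80 * math.sin(2 * math.pi * t / 132) for t in months]
cr = [100 - 15 * math.sin(2 * math.pi * (t - 10) / 132) for t in months]

lag, r = cr_lag_months(ssn, cr)
print(lag, round(r, 3))
```

With real data one would first smooth both series and estimate the lag cycle by cycle, since it varies between cycles; this sketch returns a single lag for the whole interval.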

Cyclic variations since 1951. Panel a: Time profiles of International sunspot number v.2 (http://sidc.be/silso/datafiles); Panel b: Cosmic-ray flux as the count rate of a subpolar neutron monitor (Oulu NM http://cosmicrays.oulu.fi, Climax NM data used before 1964), 100% NM count rate corresponds to May 1965

A full solution to the problem of CR transport in the heliosphere is a complicated task that requires sophisticated 3D time-dependent self-consistent modelling. However, the problem can be substantially simplified for applications on long timescales. The assumptions of azimuthal symmetry (valid for times longer than the synodic solar-rotation period of \(\approx \)27 days) and of quasi-steady changes reduce it to a 2D quasi-steady problem. A further assumption of spherical symmetry of the heliosphere reduces the problem to a 1D case. This approximation can be used only for rough estimates since it neglects drift effects, but it is useful for long-term studies when the heliospheric parameters cannot be evaluated independently. Further, but still reasonable, assumptions (constant solar-wind speed, roughly power-law CR energy spectrum, slow spatial changes of the CR density) lead to the force-field approximation (Caballero-Lopez and Moraal 2004; Gleeson and Axford 1968), which can be solved analytically in the form of characteristic curves. In this case, the differential intensity \(J_i\) of cosmic-ray nuclei of type i with kinetic energy T at 1 AU is given as
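
\[
J_i(T,\phi )=J_{\text{LIS},i}(T+\varPhi _i)\,\frac{T\,(T+2T_{\text{r}})}{(T+\varPhi _i)\,(T+\varPhi _i+2T_{\text{r}})} \tag{3}
\]
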
where \(\varPhi _i=(Z_i e/A_i)\phi \) for nuclei of type i (with charge and mass numbers \(Z_i\) and \(A_i\)), T is the kinetic energy of the CR particle in MeV/nucleon, \(\phi \) is the modulation potential in MV, and \(T_{\text{r}}=938\) MeV is the proton’s rest-mass energy.
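The force-field expression \(J_i(T,\phi )=J_{\text{LIS},i}(T+\varPhi _i)\,T(T+2T_{\text{r}})/[(T+\varPhi _i)(T+\varPhi _i+2T_{\text{r}})]\) can be sketched in a few lines of Python. The LIS used here (`toy_lis`, a pure power law in momentum per nucleon) is a hypothetical placeholder, not one of the published LIS models cited in this section, and it is applied to helium only to illustrate how \(\varPhi _i\) scales with \(Z_i/A_i\).

```python
import math
from typing import Callable

T_R = 938.0  # MeV; rest-mass energy T_r used in the force-field formula


def toy_lis(t: float) -> float:
    """HYPOTHETICAL local interstellar spectrum for illustration only:
    a power law p**-2.7 in momentum per nucleon (arbitrary units).
    Not one of the published LIS models."""
    p = math.sqrt(t * (t + 2.0 * T_R))  # momentum per nucleon, MeV/c
    return p ** -2.7


def force_field_intensity(t: float, phi: float, z: int, a: int,
                          lis: Callable[[float], float] = toy_lis) -> float:
    """Modulated differential intensity J_i(T, phi) at 1 AU.

    t    -- kinetic energy T in MeV/nucleon
    phi  -- modulation potential in MV
    z, a -- charge and mass numbers of the nucleus
    lis  -- function returning J_LIS,i(T) for this species
    """
    big_phi = (z / a) * phi  # Phi_i = (Z_i e / A_i) phi, in MeV/nucleon
    t_lis = t + big_phi      # energy per nucleon outside the heliosphere
    factor = t * (t + 2.0 * T_R) / (t_lis * (t_lis + 2.0 * T_R))
    return lis(t_lis) * factor


# Suppression J/J_LIS at 1 GeV/nucleon for protons and helium at phi = 600 MV
for name, z, a in [("p", 1, 1), ("He", 2, 4)]:
    ratio = force_field_intensity(1000.0, 600.0, z, a) / toy_lis(1000.0)
    print(name, round(ratio, 3))
```

Because \(\varPhi _i\) scales with \(Z_i/A_i\), helium (\(Z/A=1/2\)) is shifted in energy per nucleon by only half as much as protons at the same \(\phi \), so its suppression ratio at a given T is closer to unity.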

The local interstellar spectrum (LIS) \(J_{\text{LIS}}\) forms the boundary condition for the heliospheric transport problem. Recent data from the Voyager 1 and 2 spacecraft, which have travelled beyond the termination shock, constrain the lower-energy (<100 MeV/nuc) range of the LIS (Bisschoff and Potgieter 2016; Webber et al. 2008), although residual modulation beyond the heliopause may still affect them (Herbst et al. 2012). The high-energy tail of the LIS can be evaluated using near-Earth measurements, e.g., with the AMS-02 detector (Aguilar et al. 2021). However, the LIS is not well known in the energy range affected by heliospheric modulation, between 0.1 and 20 GeV/nuc. Presently used and historical approximations of the LIS (e.g., Burger et al. 2000; Garcia-Munoz et al. 1975; Vos and Potgieter 2015; Webber and Higbie 2009) agree with each other for energies above 20 GeV but may contain uncertainties of up to a factor of 1.5 around 1 GeV (Asvestari et al. 2017a). These uncertainties in the boundary condition make the results of modulation theory somewhat model-dependent (see discussion in Herbst et al. 2010; Usoskin et al. 2005), so the adopted LIS model must be explicitly cited.

This approach gives results that are at least dimensionally consistent with the full theory and can be used for long-term studies (Caballero-Lopez and Moraal 2004; Usoskin et al. 2002a). The differential CR intensity is described by a single time-variable parameter, the modulation potential \(\phi \), which is mathematically interpreted as the average rigidity loss (rigidity being the particle’s momentum per unit charge) of a CR particle in the heliosphere. However, it is only a formal spectral parameter whose physical interpretation is not straightforward, especially on short timescales and during active periods of the sun (Caballero-Lopez and Moraal 2004). Despite its unclear physical meaning, this force-field approach provides a very useful and simple single-parameter approximation for the differential spectrum of GCR, since the spectra of different GCR species directly measured near Earth can be well fitted by Eq. (3) using only the parameter \(\phi \) over a wide range of solar-activity levels (Usoskin et al. 2011, 2017). Therefore, changes in the whole energy spectrum of cosmic rays (from 100 MeV/nucleon to 100 GeV/nucleon) due to solar modulation can be described by this single number within the framework of the adopted LIS.
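Because \(\phi \) is the only free parameter for a given LIS, estimating it from a measured spectrum reduces to a one-dimensional fit. A minimal sketch follows, assuming protons only, a hypothetical power-law LIS placeholder, an unweighted least-squares cost in log intensity, and a brute-force grid search over \(\phi \) (a simple stand-in, not the fitting procedure of the cited works). The “measurements” are synthetic values generated with \(\phi = 550\) MV, not real data; `toy_lis`, `proton_intensity` and `fit_phi` are hypothetical names.

```python
import math

T_R = 938.0  # MeV; rest-mass energy T_r


def toy_lis(t):
    """HYPOTHETICAL power-law LIS (arbitrary units): p**-2.7, p in MeV/c."""
    return (t * (t + 2.0 * T_R)) ** -1.35


def proton_intensity(t, phi):
    """Force-field intensity for protons (Z/A = 1, so Phi = phi)."""
    t_lis = t + phi
    return toy_lis(t_lis) * t * (t + 2.0 * T_R) / (t_lis * (t_lis + 2.0 * T_R))


def fit_phi(energies, fluxes, phi_grid):
    """Return the phi on phi_grid minimising the squared log-residuals."""
    def cost(phi):
        return sum((math.log(f) - math.log(proton_intensity(t, phi))) ** 2
                   for t, f in zip(energies, fluxes))
    return min(phi_grid, key=cost)


energies = [200.0, 500.0, 1000.0, 3000.0, 10000.0]          # MeV/nucleon
synthetic = [proton_intensity(t, 550.0) for t in energies]  # generated with phi = 550 MV
phi_hat = fit_phi(energies, synthetic, range(0, 2001, 5))    # 5 MV grid step
print(phi_hat)
```

With real spectra one would weight the residuals by the measurement errors and refine the grid or use a scalar minimiser; the grid step sets the resolution of the estimate.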

Since protons make up about 90% of GCR by particle number, heavier species were sometimes neglected in earlier studies, but this can lead to a significant error. Helium and heavier nuclei make up about 1/3 of GCR in terms of the number of nucleons, and since they are less modulated than protons in the heliosphere and magnetosphere, they can contribute up to 50% to atmospheric processes (Koldobskiy et al. 2019; Webber and Higbie 2003).
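A back-of-the-envelope check of the “about 1/3” nucleon fraction, under the simplifying assumption (ours, for illustration) that GCR consist only of protons (\(\approx \)90% by number, \(A=1\)) and helium nuclei (\(\approx \)10% by number, \(A=4\)):

```latex
\[
f_{\text{non-p}} \approx \frac{0.10 \times 4}{0.90 \times 1 + 0.10 \times 4}
 = \frac{0.40}{1.30} \approx 0.31 \approx \tfrac{1}{3}
\]
```

Including the small admixture of nuclei heavier than helium would raise this fraction slightly.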

3.1.2 Geomagnetic shielding