Abstract

In this work, a numerical scheme based on combined Lucas and Fibonacci polynomials is proposed for one- and two-dimensional nonlinear advection–diffusion–reaction equations. First, the given partial differential equation (PDE) is reduced to discrete form using the finite difference method and the \(\theta \)-weighted scheme. Thereafter, the unknown functions are approximated by Lucas polynomials, while their derivatives are approximated by Fibonacci polynomials. With these approximations, the nonlinear PDE is transformed into a system of algebraic equations that can be solved easily. Convergence of the method is investigated both theoretically and numerically. Performance of the proposed method is verified on several test problems. Efficiency of the technique is examined in terms of the root mean square (RMS), \(L_2\) and \(L_\infty \) error norms. The obtained results are then compared with those available in the literature.

1 Introduction

Partial differential equations (PDEs) are widely used to model physical, chemical, and biological processes. These processes are mostly modeled by heat, Laplace, and wave-type differential equations. Finding analytical solutions of these PDEs is not an easy task. Therefore, researchers continue to develop accurate and efficient numerical methods for solving them. Here we consider general heat-type time-dependent PDEs of the following form:

with initial and boundary conditions

where g is a source term depending on space and time, \(\Gamma \) and \(\partial \Gamma \) are the spatial domain and its boundary, respectively, \(\alpha \) and \(\gamma \) are positive constants, \(\nabla =\partial _{\xi }+\partial _{\eta }\), and \(\Delta =\partial _{\xi \xi }+\partial _{\eta \eta }\) is the Laplacian operator. When \(\alpha =0\) and \(\beta =1\), Eq. (1) becomes the heat equation, which models heat conduction in a solid body. This equation also models other real-world phenomena: in the Black–Scholes model it is applied to price estimation, and in chemical processes it takes the form of a diffusion equation [18]. In the form of the advection–diffusion equation it describes heat transfer in a solid body and the dissipation of salt in groundwater. In image processing it is used to handle images at different scales [28]. The solution of this equation plays a significant role in examining the behavior of such physical processes. Many researchers have applied different numerical methods to the heat equation. Mebrate [25] carried out a comparative study of the classical finite difference and finite element methods. Dabral et al. [12] used the B-spline finite element method. Hooshmandasl et al. [18] used wavelet methods to solve one-dimensional heat equations, while Dehghan [13] used a second-order finite difference scheme for two-dimensional heat equations. In [34], the authors applied the Haar wavelet method to two-dimensional fractional reaction–diffusion equations. In [2], the authors applied fifth-kind Chebyshev polynomials to the spectral solution of the convection–diffusion equation.

When \(\alpha =1\) and \(\beta =1/Re\) (where Re is the Reynolds number), Eq. (1) becomes the well-known Burgers' equation, which arises in turbulence, shock wave theory, viscous fluid flow, gas dynamics, cosmology, traffic flow, quantum field theory, and heat conduction [27]. Low-kinematic-viscosity shocks and the relation between the cellular and large-scale structure of the universe have been described by one- and three-dimensional Burgers' equations. When traffic is treated as a one-dimensional incompressible fluid, the density wave in traffic flow, which changes from a non-uniform to a uniform distribution, is described by Burgers' equation [10]. The equation was first introduced by Bateman for viscous fluid flow and was then extended by Burgers in 1948 to examine turbulence phenomena, which is why it is known as Burgers' equation [27]. Owing to its wide applications, many numerical methods have been implemented to study the behavior of the model. The one-dimensional Burgers' equation has been solved using various techniques in [23, 35]. Mittal and Jiwari [27] implemented the differential quadrature method for Burgers'-type equations. Similarly, El-Sayed and Kaya [20] solved the two-dimensional Burgers' equation using the decomposition method. Liao [24] used a fourth-order finite difference technique to study the two-dimensional Burgers' equation. Oruç [33] applied a meshless pseudo-spectral method to modified Burgers' equations. The same author [30] studied the three-dimensional fractional convection–diffusion equation using a meshless method based on multiple-scale polynomials. In this work, we study the aforementioned models using a combination of Lucas and Fibonacci polynomials. These polynomials can be obtained directly as a special case of the work in [4, 6]. They are non-orthogonal and do not require any transformation of the domain or the problem, which is an important feature of the proposed scheme. Moreover, higher-order derivatives of the unknown functions can be easily approximated via Lucas and Fibonacci polynomials, and the approach is straightforward and gives better accuracy with fewer nodal points.

Many researchers have applied these polynomials to fractional differential equations (FDEs). Abd-Elhameed and Youssri [3] applied Lucas polynomials to FDEs in the Caputo sense, and the solution of fractional pantograph differential equations (FPDEs) was computed using generalized Lucas polynomials in [39]. Other polynomials used for the approximation of FDEs are studied in [5, 7]. Cetin et al. [11] used a Lucas polynomial approach to study systems of higher-order differential equations, whereas Baykus and Sezer [9] applied a hybrid Taylor–Lucas collocation technique to delay differential equations. Nadir [29] obtained solutions of integro-differential equations using Lucas series. Mirzaee and Hoseini [26] applied Fibonacci polynomials to Volterra–Fredholm integral equations. Oruç [31, 32] was the first to apply a combined Lucas and Fibonacci polynomial approach to the numerical solution of evolutionary equations. Recently, Ali et al. [8, 15, 17] coupled Lucas polynomials with finite differences and obtained accurate solutions of various classes of one- and two-dimensional PDEs. In this paper, we apply the proposed method to one- and two-dimensional Burgers' and heat equations. The simulations are carried out in MATLAB 2013 on an Intel Core i7 machine with 4 GB RAM. The error bound of the scheme is also investigated. The paper is organized as follows. Section 2 gives the basic definitions and concepts used in this work. Section 3 formulates the methodology of the proposed scheme. Error analysis of the method is described in Sect. 4. Numerical experiments are presented in Sect. 5, followed by the conclusion of the paper.

2 Basic concepts and definition

In this section, we describe some necessary definitions and concepts required for our subsequent development.

Definition 2.1

[22] Lucas polynomials generalize the well-known Lucas numbers and are generated by the linear recurrence relation

\[ L_{k}(\xi )=\xi L_{k-1}(\xi )+L_{k-2}(\xi ),\quad k\ge 2, \tag{2} \]

with \(L_{0}(\xi )=2\) and \(L_{1}(\xi )=\xi \). Putting \({\xi }=1\) in Eq. (2) gives the Lucas numbers.
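As a small illustration of Definition 2.1, the sketch below evaluates Lucas polynomials with the standard three-term recurrence \(L_{k}(\xi )=\xi L_{k-1}(\xi )+L_{k-2}(\xi )\) and the starting values stated above. The function name `lucas_poly` is hypothetical and chosen only for this example.

```python
def lucas_poly(k, xi):
    """Evaluate L_k(xi) from L_k = xi*L_{k-1} + L_{k-2}, with L_0 = 2 and L_1 = xi."""
    prev, curr = 2, xi
    if k == 0:
        return prev
    for _ in range(k - 1):
        # Shift the pair (L_{j-1}, L_j) forward to (L_j, L_{j+1}).
        prev, curr = curr, xi * curr + prev
    return curr


# Setting xi = 1 recovers the Lucas numbers.
lucas_numbers = [lucas_poly(k, 1) for k in range(8)]
print(lucas_numbers)  # [2, 1, 3, 4, 7, 11, 18, 29]
```

For instance, `lucas_poly(3, 2)` evaluates \(L_{3}(\xi )=\xi ^{3}+3\xi \) at \(\xi =2\).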

Definition 2.2

[22] Fibonacci polynomials can be generated by the following linear recurrence relation:

\[ \mathcal {F}_{k}(\xi )=\xi \mathcal {F}_{k-1}(\xi )+\mathcal {F}_{k-2}(\xi ),\quad k\ge 2, \tag{3} \]

where \(\mathcal {F}_{0}(\xi )=0\) and \( \mathcal {F}_{1}(\xi )=1\). Putting \({\xi }=1\) in Eq. (3) generates the Fibonacci numbers.
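The Fibonacci polynomials of Definition 2.2 can be evaluated in the same way. The sketch below also uses the standard identity \(L_{k}(\xi )=\mathcal {F}_{k+1}(\xi )+\mathcal {F}_{k-1}(\xi )\) for \(k\ge 1\), which is not stated in this paper but is a well-known link between the two families. The function names are hypothetical.

```python
def fibonacci_poly(k, xi):
    """Evaluate F_k(xi) from F_k = xi*F_{k-1} + F_{k-2}, with F_0 = 0 and F_1 = 1."""
    prev, curr = 0, 1
    if k == 0:
        return prev
    for _ in range(k - 1):
        prev, curr = curr, xi * curr + prev
    return curr


def lucas_from_fibonacci(k, xi):
    """Lucas polynomial via the identity L_k = F_{k+1} + F_{k-1}, valid for k >= 1."""
    return fibonacci_poly(k + 1, xi) + fibonacci_poly(k - 1, xi)


# Setting xi = 1 recovers the Fibonacci numbers.
fibonacci_numbers = [fibonacci_poly(k, 1) for k in range(8)]
print(fibonacci_numbers)  # [0, 1, 1, 2, 3, 5, 8, 13]
```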

Function approximation. Let \(Y(\xi )\) be square integrable on (0, 1) and suppose that it can be expressed in terms of Lucas polynomials as

\[ Y(\xi )\approx \sum _{k=0}^{N}c_{k}L_{k}(\xi )=\mathbf{L} (\xi )\mathbf{C} , \]

where \(\mathbf{C} =\left[ c_{0},c_{1},...,c_{N}\right] ^{T}\) is the \((N+1)\times 1\) vector of unknown coefficients and \(\mathbf{L} (\xi )=[L_{0}(\xi ),L_{1}(\xi ),...,L_{N}(\xi )]\) is the row vector of Lucas polynomials, which becomes an \((N+1)\times (N+1)\) matrix when evaluated at the \(N+1\) collocation points. Similarly, the mth-order derivative of \(Y(\xi )\) can be approximated by the finite Lucas series

\[ Y^{(m)}(\xi )\approx \sum _{k=0}^{N}c_{k}L_{k}^{(m)}(\xi )=\mathbf{L} ^{(m)}(\xi )\mathbf{C} , \]

in which \(\mathbf{L} ^{(m)}(\xi )=\left[ L_{0}^{(m)}(\xi ), L_{1}^{(m)}(\xi ),..., L_{N}^{(m)}(\xi ) \right] \) is likewise a square matrix once evaluated at the collocation points.
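To make the function approximation concrete, the following sketch computes Lucas coefficients by collocation: the basis is evaluated at \(N+1\) equally spaced nodes and the resulting linear system is solved for the coefficient vector. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation; the helper names (`lucas_values`, `solve`, `lucas_fit`, `lucas_eval`), the choice of \(Y(\xi )=e^{\xi }\) on [0, 1], and the plain Gaussian elimination are all choices made for this example.

```python
import math


def lucas_values(n, x):
    """Return [L_0(x), ..., L_n(x)] using the three-term recurrence."""
    vals = [2.0, x]
    for k in range(2, n + 1):
        vals.append(x * vals[k - 1] + vals[k - 2])
    return vals[: n + 1]


def solve(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def lucas_fit(f, n, a=0.0, b=1.0):
    """Coefficients c_k such that sum_k c_k L_k matches f at n+1 equispaced nodes."""
    nodes = [a + (b - a) * i / n for i in range(n + 1)]
    matrix = [lucas_values(n, x) for x in nodes]
    return solve(matrix, [f(x) for x in nodes])


def lucas_eval(coeffs, x):
    """Evaluate the truncated Lucas series at x."""
    return sum(c * l for c, l in zip(coeffs, lucas_values(len(coeffs) - 1, x)))


coeffs = lucas_fit(math.exp, 6)
max_err = max(abs(lucas_eval(coeffs, i / 100) - math.exp(i / 100)) for i in range(101))
print(max_err)
```

As a sanity check, fitting \(Y(\xi )=\xi ^{2}\) with \(N=2\) returns coefficients close to \([-1, 0, 1]\), since \(\xi ^{2}=L_{2}(\xi )-L_{0}(\xi )\).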

Corollary 2.3

[22] Let \(L^{(m)}_{k}(\xi )\) be the mth-order derivative of the Lucas polynomial \(L_{k}(\xi )\); then it can be expanded in terms of Fibonacci polynomials by the following relation:

where \(\mathbf {D}\) is \((N+1) \times (N+1)\) differentiation matrix such that:

in which \(\mathbf {d}\) is square matrix of order \(N \times N\) defined as:

For example, if we choose \(N=3\), then we have

and for \(m=2\) the second-order derivative of \(\mathbf{L} (\xi )\) can be obtained using Eq. (6)

where \(k=[0,1,2,3]\); after element-wise multiplication we get
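The first-order case of the link between Lucas derivatives and Fibonacci polynomials is the standard identity \(L_{k}'(\xi )=k\,\mathcal {F}_{k}(\xi )\). The sketch below checks it exactly on integer coefficient lists for \(k=1,\dots ,6\). It does not reproduce the differentiation matrix \(\mathbf {D}\) of the corollary; the helper names `poly_family` and `deriv` are hypothetical.

```python
def poly_family(first, second, n_max):
    """Coefficient lists (lowest degree first) for p_k = xi*p_{k-1} + p_{k-2}."""
    polys = [first, second]
    for k in range(2, n_max + 1):
        shifted = [0] + polys[k - 1]  # multiply p_{k-1} by xi
        padded = polys[k - 2] + [0] * (len(shifted) - len(polys[k - 2]))
        polys.append([a + b for a, b in zip(shifted, padded)])
    return polys[: n_max + 1]


def deriv(p):
    """Differentiate a polynomial given by its coefficient list."""
    return [i * c for i, c in enumerate(p)][1:]


lucas = poly_family([2], [0, 1], 6)   # L_0 = 2, L_1 = xi
fib = poly_family([0], [1], 6)        # F_0 = 0, F_1 = 1

# Check L_k' = k * F_k coefficient by coefficient.
identity_holds = all(
    deriv(lucas[k]) == [k * c for c in fib[k]] for k in range(1, 7)
)
print(lucas[4], deriv(lucas[4]), fib[4])
print(identity_holds)
```

With \(N=3\), for example, \(L_{3}(\xi )=\xi ^{3}+3\xi \) has derivative \(3\xi ^{2}+3=3\,\mathcal {F}_{3}(\xi )\).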

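Now assume that a two-dimensional continuous function \(Y(\xi ,\eta )\) can be written in terms of Lucas polynomials as follows:

\[ Y(\xi ,\eta )\approx \sum _{k=0}^{N}\sum _{m=0}^{N}c_{km}L_{k}(\xi )L_{m}(\eta )=\mathbf{L} (\xi ,\eta )\mathbf{C} , \tag{9} \]

where \(L_{km}(\xi ,\eta )=L_{k}(\xi )L_{m}(\eta )\), \(\mathbf{C} =[c_{00},...,c_{0N},...,c_{N0},...,c_{NN}]^{T}\) is the vector of unknown Lucas coefficients of order \((N+1)^{2}\times 1\), and \(\mathbf{L} (\xi ,\eta )=[L_{00}(\xi ,\eta ),...,L_{0N}(\xi ,\eta ),...,L_{N0}(\xi ,\eta ),...,L_{NN}(\xi ,\eta )]\) becomes a square matrix of order \((N+1)^2\) when evaluated at the \((N+1)^2\) collocation points.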
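The two-dimensional basis can be sketched as a tensor product of one-dimensional Lucas values, assuming \(L_{km}(\xi ,\eta )=L_{k}(\xi )L_{m}(\eta )\) and the coefficient ordering \(c_{00},\dots ,c_{0N},\dots ,c_{N0},\dots ,c_{NN}\) used above. The function names are hypothetical.

```python
def lucas_values(n, x):
    """Return [L_0(x), ..., L_n(x)] using the three-term recurrence."""
    vals = [2.0, x]
    for k in range(2, n + 1):
        vals.append(x * vals[k - 1] + vals[k - 2])
    return vals[: n + 1]


def tensor_basis_row(n, xi, eta):
    """Row [L_k(xi) * L_m(eta)] with k as the slow index and m as the fast index."""
    lx, ly = lucas_values(n, xi), lucas_values(n, eta)
    return [a * b for a in lx for b in ly]


def evaluate_2d(coeffs, n, xi, eta):
    """Evaluate sum_{k,m} c_{km} L_k(xi) L_m(eta) for a flat coefficient vector."""
    return sum(c * b for c, b in zip(coeffs, tensor_basis_row(n, xi, eta)))


row = tensor_basis_row(1, 3.0, 5.0)
print(row)  # [4.0, 10.0, 6.0, 15.0]
```

Stacking one such row per collocation point \((\xi _{i},\eta _{j})\) gives a square matrix of order \((N+1)^{2}\).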

3 Solution methodology

Consider the following evolutionary equation:

where \( \pounds \) is a differential operator and \(g(\mathbf{x},t)\) is a given smooth function. The initial and boundary conditions are given as follows:

where \( {\mathfrak {B}} \) is the boundary operator. To approximate Eq. (10), we first define the time discretization

\[ t^{n}=n\,\delta t,\quad n=0,1,\dots ,M, \]

where \(\delta t=T/M\) is the time step size and T is the final time. Applying a finite difference scheme to the temporal part and the \( \theta \)-weighted scheme to the spatial part of Eq. (10), one can write

where \(Y^{n+1}(\mathbf{x} )=Y(\mathbf{x} ,t^{n+1})\) and so forth. Setting \(\theta =0.5\), the scheme in Eq. (12) reduces to the Crank–Nicolson scheme, which is \(O(\delta t^2)\) accurate in time.
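The effect of \(\theta \) on temporal accuracy can be illustrated on a scalar model problem. The sketch below assumes the common convention in which \(\theta \) weights the new time level, applied to \(y'=\lambda y\) with \(y(0)=1\); the model problem, the function name `theta_step_error`, and the parameter values are assumptions made for this example and are not taken from the paper.

```python
import math


def theta_step_error(dt, theta, lam=-1.0, t_end=1.0):
    """Integrate y' = lam*y, y(0) = 1, with the theta-weighted scheme.

    Each step solves (y_new - y)/dt = theta*lam*y_new + (1 - theta)*lam*y.
    Returns the absolute error at t_end.
    """
    steps = round(t_end / dt)
    y = 1.0
    for _ in range(steps):
        y = y * (1 + (1 - theta) * dt * lam) / (1 - theta * dt * lam)
    return abs(y - math.exp(lam * t_end))


# Halving dt should divide the error by about 4 for theta = 0.5 (second order)
# and by about 2 for theta = 1 (backward Euler, first order).
ratio_cn = theta_step_error(0.1, 0.5) / theta_step_error(0.05, 0.5)
ratio_be = theta_step_error(0.1, 1.0) / theta_step_error(0.05, 1.0)
print(ratio_cn, ratio_be)
```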

To discretize the spatial domain \(\Gamma =[a,b]\), we use regular grid points defined as follows:

At the \((n+1)\)th time level and at the collocation point \(\mathbf{x} =(\xi _{i},\eta _{j})\), Eq. (9) can be written as follows:

Using Eq. (13) in Eq. (12), we have

The above equation can also be written as

The boundary conditions (11) transform to

Matrix form of Eqs. (14) and (15) is given by

For \(k,m=0,\dots ,N\), the elements of the above matrices are given by

where \(\mathbf {H}\), \(\mathbf {G}\) and \(\mathbf {B}\) are square matrices of order \((N+1)^{2}\). The unknown coefficient vector \(\mathbf {C}\) is obtained by solving Eq. (16). Once the unknown coefficients have been computed, the solution of the problem under consideration is obtained from Eq. (9).
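The whole procedure can be sketched for a one-dimensional model problem. The code below is a minimal illustration under stated assumptions, not the authors' implementation: it takes the test problem \(Y_{t}=Y_{\xi \xi }\) on [0, 1] with \(Y(\xi ,0)=\sin (\pi \xi )\) and homogeneous Dirichlet boundary conditions (exact solution \(e^{-\pi ^{2}t}\sin (\pi \xi )\)), uses Crank–Nicolson time stepping on equally spaced collocation points, differentiates the Lucas basis directly on coefficient lists instead of through the Fibonacci differentiation matrix, and solves each linear system with plain Gaussian elimination. All function names are hypothetical.

```python
import math


def lucas_coeffs(n_max):
    """Coefficient lists (lowest degree first) of L_0, ..., L_{n_max}."""
    polys = [[2.0], [0.0, 1.0]]
    for k in range(2, n_max + 1):
        shifted = [0.0] + polys[k - 1]        # xi * L_{k-1}
        padded = polys[k - 2] + [0.0, 0.0]    # L_{k-2}, padded to equal length
        polys.append([a + b for a, b in zip(shifted, padded)])
    return polys[: n_max + 1]


def deriv(p):
    return [i * c for i, c in enumerate(p)][1:]


def polyval(p, x):
    acc = 0.0
    for c in reversed(p):  # Horner's rule
        acc = acc * x + c
    return acc


def solve(a, b):
    """Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - s) / m[r][r]
    return x


def heat_lucas(n=8, dt=1e-3, t_end=0.1, theta=0.5):
    """Lucas collocation in space, theta-weighted scheme in time, for Y_t = Y_xx."""
    nodes = [i / n for i in range(n + 1)]
    polys = lucas_coeffs(n)
    second = [deriv(deriv(p)) for p in polys]
    L = [[polyval(p, x) for p in polys] for x in nodes]     # basis values
    L2 = [[polyval(p, x) for p in second] for x in nodes]   # second derivatives
    c = solve(L, [math.sin(math.pi * x) for x in nodes])    # initial coefficients
    H, G = [], []
    for i in range(n + 1):
        if i in (0, n):   # boundary rows enforce Y = 0
            H.append(L[i][:])
            G.append([0.0] * (n + 1))
        else:             # interior rows carry the discretized PDE
            H.append([L[i][k] - theta * dt * L2[i][k] for k in range(n + 1)])
            G.append([L[i][k] + (1 - theta) * dt * L2[i][k] for k in range(n + 1)])
    for _ in range(round(t_end / dt)):
        rhs = [sum(g * ck for g, ck in zip(row, c)) for row in G]
        c = solve(H, rhs)
    return nodes, [sum(l * ck for l, ck in zip(row, c)) for row in L]


nodes, approx = heat_lucas()
exact = [math.exp(-math.pi ** 2 * 0.1) * math.sin(math.pi * x) for x in nodes]
max_err = max(abs(a - e) for a, e in zip(approx, exact))
print(max_err)
```

The matrices `H` and `G` play the role of the left- and right-hand operators in the matrix form above; here the boundary contribution is zero because the assumed boundary data are homogeneous.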

4 Truncation error estimate

To study the error estimate of the proposed scheme, we follow the approach of Abd-Elhameed and Youssri [3].

Theorem 4.1

Let \(Y(\xi ,\eta )\) and \(Y^{*}(\xi ,\eta )\) be the exact and approximate solutions of Eq. (1), and let \(Y(\xi ,\eta )\) be expanded in terms of the Lucas sequence. Then the truncation error is given by

Proof

Consider the absolute error between the exact and approximate solutions

where \(Y(\xi ,\eta )=\sum _{k=0}^{\infty } \sum _{m=0}^{\infty } \lambda _{k}\lambda _{m}L_{k}(\xi )L_{m}(\eta )\) and \(Y^{*}(\xi ,\eta )=\sum _{k=0}^{N} \sum _{m=0}^{N} \lambda _{k}\lambda _{m}L_{k}(\xi )L_{m}(\eta )\). Then the truncated term is given as

It is shown in [3]

where \(\vartheta \) is the well-known golden ratio. Therefore, Eq. (22) implies that

where \(\kappa =P \vartheta \). The above inequality can also be written as

Here, \(\Gamma (N+1,\kappa )\) is the incomplete gamma function and \(\Gamma (N+1)\) is complete gamma function [36]. In integral form Eq. (23) can be written as

Since \(\exp (-t)<1\) for all \(t>0\), we have

This completes the proof. \(\square \)
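The role of the incomplete gamma function can be illustrated numerically. The sketch below assumes, following the one-dimensional argument in [3], that the truncated term is controlled by the tail \(\sum _{k=N+1}^{\infty }\kappa ^{k}/k!\) of the exponential series, which equals \((e^{\kappa }/N!)\int _{0}^{\kappa }t^{N}e^{-t}\,dt\); bounding \(e^{-t}\) by 1 inside the integral then gives \(e^{\kappa }\kappa ^{N+1}/(N+1)!\). The value \(\kappa =2\) and the function names are chosen only for this example.

```python
import math


def exp_tail(n, kappa, terms=200):
    """Partial sum of kappa^k / k! for k = n+1, ..., n+terms (tail of the exp series)."""
    term = kappa ** (n + 1) / math.factorial(n + 1)
    total = 0.0
    for k in range(n + 1, n + 1 + terms):
        total += term
        term *= kappa / (k + 1)  # move from kappa^k/k! to kappa^(k+1)/(k+1)!
    return total


def tail_bound(n, kappa):
    """Upper bound e^kappa * kappa^(n+1) / (n+1)! obtained from exp(-t) < 1."""
    return math.exp(kappa) * kappa ** (n + 1) / math.factorial(n + 1)


kappa = 2.0
for n in (5, 10, 15):
    print(n, exp_tail(n, kappa), tail_bound(n, kappa))
```

Both quantities decay factorially in N, which is the mechanism behind the convergence claimed in Theorem 4.1.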

5 Numerical examples

In this section, one- and two-dimensional Burgers’ and diffusion equations have been solved using the proposed method. Performance of the technique is examined by computing \(L_2\), \(L_\infty \), and root mean square (RMS) error norms for different collocation points N and time T. The obtained results are then compared with available results in the literature.
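The error norms can be computed as in the sketch below. The paper's exact definitions are not reproduced in this text, so the commonly used forms are assumed here: \(L_\infty =\max _i|e_i|\), \(L_2=\sqrt{\sum _i e_i^2}\) and \(\mathrm{RMS}=\sqrt{\sum _i e_i^2/n}\), where \(e_i\) is the pointwise error at the i-th of n nodes. Some authors scale \(L_2\) by the mesh size; that variant is not shown.

```python
import math


def error_norms(exact, approx):
    """Return (L2, Linf, RMS) of the pointwise error between two value lists."""
    errs = [abs(e - a) for e, a in zip(exact, approx)]
    sum_sq = sum(d * d for d in errs)
    l_2 = math.sqrt(sum_sq)
    l_inf = max(errs)
    rms = math.sqrt(sum_sq / len(errs))
    return l_2, l_inf, rms


l_2, l_inf, rms = error_norms([0.0, 0.0], [3.0, 4.0])
print(l_2, l_inf, rms)
```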

Example 5.1

When \(\alpha =0, ~\beta =1\), \(\gamma =0\) and \(g(\xi ,t)=0\), Eq. (1) becomes the heat equation given by

with initial and boundary conditions

and exact solution [18].

Comparison of the above equations with Eqs. (10) and (11) gives

Applying the technique discussed in Sect. 3, Eq. (14) takes the following form:

where \(L^{''}_{k}(\xi )\) represents the second-order derivative of the Lucas polynomials, which can be obtained using Eq. (6). The matrices in Eqs. (17)–(19) take the following form:

The problem has been solved for different values of the number of nodal points N and time T. Computed solutions are compared with the results of the Chebyshev wavelet method in terms of various error norms, which are shown in Table 1. The table shows that the proposed scheme gives more accurate results than the cited work. Numerical convergence and CPU time are reported in Table 2 for various values of N. The table shows that the solution converges as the number of nodal points N increases (i.e., as \(d\xi \) decreases). Error norms for large time levels are recorded in Table 3, where better results are observed than for small time levels. The solution profile and absolute errors are presented in Fig. 1, which shows that the exact and approximate solutions agree well, indicating the efficiency of the proposed scheme.

Solution profile when \(T=0.1\), \(\delta t=0.001\), \(N=15\) of Example 5.1

Example 5.2

Consider Eq. (1) with \(\alpha =0\), \(\beta =1\), \(\gamma =-2\) and \(g(\xi ,t)=0\), which gives the homogeneous heat equation

with exact solution

Initial and boundary conditions are extracted from the exact solution. The problem has been solved by the procedure discussed in Sect. 3. The results are computed for different values of N and T. The RMS, \(L_2\), and \(L_\infty \) error norms have been calculated and compared with the results available in the literature [18] for different values of T with \(N=16\) and \(\delta t=0.001\); they are shown in Table 4. The table shows that the results achieved with the proposed method are better than those available in the literature, which demonstrates the proficiency of the method. For convergence in space, the results are shown in Table 5 for different values of \(d\xi \), showing that the solution converges as \(d\xi \) decreases. Graphs of the solutions along with the absolute errors for \(T=1\) and \(N=15\) are plotted in Fig. 2. The figure shows that the exact and approximate solutions match closely.

Solution profile when \(T=1\), \(\delta t=0.001\), \(N=15\) of Example 5.2

Example 5.3

Setting \(\alpha =1\), \(\gamma =0\) and \(g(\mathbf{x},t)=0\) in Eq. (1), we get the following two-dimensional nonlinear Burgers' equation:

There are two cases.

Case 1: In this case the exact solution is given as [16]

Initial and boundary conditions are extracted from the exact solution. The nonlinear part in Eq. (29) can be linearized by the following formula [8]:

Applying the technique discussed in Sect. 3, Eq. (14) takes the following form:

The matrices \(\mathbf {H, ~G}\) and \(\mathbf {B}\) in Eq. (16) for \(k,m=0,1,\dots ,N,\) are given as follows:

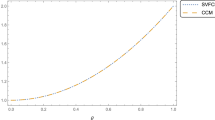
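The linearization formula from [8] is not reproduced in this text. A commonly used choice for the product term, assumed here purely for illustration, is the quasi-linearization \((Y\,Y_{\xi })^{n+1}\approx Y^{n+1}Y_{\xi }^{n}+Y^{n}Y_{\xi }^{n+1}-Y^{n}Y_{\xi }^{n}\), whose error is the product of the two increments and is therefore \(O(\delta t^{2})\). The sketch below checks that order on two smooth scalar functions standing in for \(Y\) and \(Y_{\xi }\); the functions and the name `linearization_error` are hypothetical.

```python
import math


def linearization_error(h, t=1.0):
    """Error of u1*v1 ~ u1*v0 + u0*v1 - u0*v0 for u = sin, v = cos over one step h."""
    u0, u1 = math.sin(t), math.sin(t + h)
    v0, v1 = math.cos(t), math.cos(t + h)
    linearized = u1 * v0 + u0 * v1 - u0 * v0
    # The difference equals (u1 - u0)*(v1 - v0), a product of two O(h) increments.
    return abs(u1 * v1 - linearized)


# Halving the step should reduce the error by a factor close to 4.
ratio = linearization_error(0.1) / linearization_error(0.05)
print(ratio)
```

Because the linearized term is linear in the unknowns at level \(n+1\), each time step still requires only one linear solve.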

The approximate solution of the problem has been computed for different values of the viscosity \(\beta \), time T, and number of nodal points N. The error norms \(L_2\), \(L_\infty \) and RMS have been calculated, and the obtained results are compared with those of the Haar wavelet method [16] and the differential quadrature method [19]. The results are presented in Tables 6 and 7. These tables show that the proposed method performs better than the methods available in the literature. The solution profile and error plots for \(T=2\), \(\delta t=0.001\), \(\beta =1\), and \([20 \times 20]\) nodal points are shown in Fig. 3, illustrating the efficiency of the proposed technique.

Solution profile when \(T=1\), \(\delta t=0.01\), \(N=20\) for case 1 Example 5.3

Case 2:

In this case exact solution of Eq. (29) is given as [19]

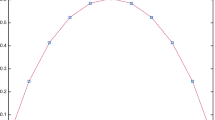

Here too, the initial and boundary conditions are extracted from the exact solution. The method discussed in the previous case is applied to this problem for different values of the viscosity \(\beta \). The error norms \(L_2\), \(L_\infty \) and RMS have been computed and compared with the results of the meshless collocation method [19] for different values of N. The obtained results are presented in Table 8, which shows that the present method gives better results than those available in the literature. The graphs of the numerical and exact solutions are shown in Fig. 4, which illustrates the efficiency of the current technique.

Solution profile when \(T=1\), \(\delta t=0.01\), \(\beta =0.01\), \(N=20\) of case 2 Example 5.3

Example 5.4

Finally, consider the case when \(\alpha =0,~\beta =1\), \(\gamma =0\) and \(g(\xi ,\eta ,t)=0\); then Eq. (1) takes the following form:

The initial and boundary conditions are extracted from exact solution [37]

The problem has been solved in the domain \([0,1]\times [0,1]\) over the time interval [0, 1] for different values of T and M. Error norms are computed and compared with the results of radial basis functions (RBFs) available in the literature; they are given in Tables 9 and 10. These tables show that the results obtained with the proposed technique are comparable with those of multiquadric (MQ) RBFs and better than those of Wendland (WL) RBFs [37]. The solution profile for \(T=0.2\) is plotted in Fig. 5, illustrating the efficiency of the suggested method.

Solution profile when \(T=0.2\), \(\delta t=0.001\), \(N=10\) of Example 5.4

6 Conclusion

In this paper, we studied a numerical technique based on Lucas and Fibonacci polynomials. First, we discretized the temporal part of the PDEs by finite differences and the spatial part by the \(\theta \)-weighted scheme with \(\theta =1/2\) (the Crank–Nicolson method). Then the unknown functions were expanded in Lucas series, while their derivatives were replaced by Fibonacci polynomials. Performance and convergence of the method were investigated on several test problems, including one- and two-dimensional linear and nonlinear equations. The results were compared with exact solutions as well as numerical results available in the literature. The comparison demonstrates the efficiency and applicability of the proposed methodology.

References

Abd-Elhameed, W.M.; Youssri, Y.H.: A novel operational matrix of Caputo fractional derivatives of Fibonacci polynomials: spectral solutions of fractional differential equations. Entropy 18(10), 345 (2016)

Abd-Elhameed, W.M.; Youssri, Y.H.: New formulas of the high-order derivatives of fifth-kind Chebyshev polynomials: Spectral solution of the convection-diffusion equation. Numer. Methods Partial Differ. Equ. (2021)

Abd-Elhameed, W.M.; Youssri, Y.H.: Spectral solutions for fractional differential equations via a novel Lucas operational matrix of fractional derivatives. Rom. J. Phys. 61, 795–813 (2016)

Abd-Elhameed, W.M.; Youssri, Y.H.: Generalized Lucas polynomial sequence approach for fractional differential equations. Nonlinear Dyn. 89, 1341–1355 (2017)

Abd-Elhameed, W.M.; Youssri, Y.H.: Fifth-kind orthonormal Chebyshev polynomial solutions for fractional differential equations. Comput. Appl. Math. 37(3), 2897–2921 (2018)

Abd-Elhameed, W.M.; Youssri, Y.H.: Spectral tau algorithm for certain coupled system of fractional differential equations via generalized Fibonacci polynomial sequence. Iran. J. Sci. Technol. Trans. A: Sci. 43, 543–554 (2019)

Abd-Elhameed, W.M.; Youssri, Y.H.: Sixth-kind Chebyshev spectral approach for solving fractional differential equations. Int. J. Nonlinear Sci. Numer. Simul. 20(2), 191–203 (2019)

Ali, I.; Haq, S.; Nisar, K.S.; Baleanu, D.: An efficient numerical scheme based on Lucas polynomials for the study of multidimensional Burgers-type equations. Adv. Differ. Equ. 2021(1), 1–24 (2021)

Baykus, N.; Sezer, M.: Hybrid Taylor-Lucas collocation method for numerical solution of high-order pantograph type delay differential equations with variables delays. Appl. Math. Inf. Sci. 11, 1795–1801 (2017)

Bonkile, M.P.; Awasthi, A.; Lakshmi, C.; Mukundan, V.; Aswin, V.S.: A systematic literature review of Burgers’ equation with recent advances. Pramana. 69, (2018)

Cetin, M.; Sezer, M.; Guler, C.: Lucas polynomial approach for system of high-order linear differential equations and residual error estimation. Math. Prob. Eng. (2015)

Dabral, V.; Kapoor, S.; Dhawan, S.: Numerical simulation of one dimensional heat equation B-spline finite element method. Indian J. Comput. Sci. Eng. 2, 222–235 (2011)

Dehghan, M.: A finite difference method for a non-local boundary value problem for two-dimensional heat equation. Appl. Math. Comput. 112, 133–142 (2000)

El-Sayed, S.M.; Kaya, D.: On the numerical solution of the system of two-dimensional Burgers equations by decomposition method. Appl. Math. Comput. 158, 101–109 (2004)

Haq, S.; Ali, I.: Approximate solution of two-dimensional Sobolev equation using a mixed Lucas and Fibonacci polynomials. Eng. Comput. 1–10 (2021)

Haq, S.; Ghafoor, A.: An efficient numerical algorithm for multi-dimensional time dependent partial differential equations. Comput. Math. Appl. 75(8), 2723–2734 (2018)

Haq, S.; Ali, I.; Nisar, K.S.: A computational study of two-dimensional reaction-diffusion Brusselator system with applications in chemical processes. Alexandria Eng. J. 6(5), 4381–4392 (2021)

Hooshmandasl, M.R.; Heydari, M.H.; Ghaini, F.M.: Numerical solution of the one-dimensional heat equation by using Chebyshev wavelets method. Appl. Comput. Math. 1, 6 (2012)

Hussain, I.; Mukhtar, S.; Ali, A.: A numerical meshless technique for the solution of the two dimensional Burger’s equation using collocation method. World Appl. Sci. J. 23(12), 29–40 (2013)

Kaya, D.: An explicit solution of coupled Burgers’ equations by decomposition method. Int. J. Math. Math. Sci. 27, 675–680 (2001)

Koc, A.B.; Cakmak, M.; Kurnaz, A.; Uslu, K.: A new Fibonacci type collocation procedure for boundary value problems. Adv. Differ. Equ. 1, 262 (2013)

Koshy, T.: Fibonacci and Lucas numbers with applications. Wiley, Hoboken (2019)

Kutluay, S.; Esen, A.; Dag, I.: Numerical solution of the Burgers’ equation by the least-squares quadratic B-spline finite element method. J. Comput. Appl. Math. 167, 21–33 (2004)

Liao, W.: A fourth-order finite-difference method for solving the system of two-dimensional Burgers equations. Int. J. Numer. Meth. Fluids 64(5), 565–590 (2009)

Mebrate, B.: Numerical solution of a one dimensional heat equation with Dirichlet boundary conditions. Am. J. Appl. Math. 3(6), 305–311 (2015)

Mirzaee, F.; Hoseini, S.F.: Application of Fibonacci collocation method for solving Volterra-Fredholm integral equations. Appl. Math. Comput. 273, 637–44 (2016)

Mittal, R.C.; Jiwari, R.: A differential quadrature method for numerical solutions of Burgers’-type equations. Int. J. Numer. Meth. Heat Fluid Flow 22(7), 880–895 (2012)

Mohebbi, A.; Dehghan, M.: High-order compact solution of the one-dimensional heat and advection-diffusion equations. Appl. Math. Model. 10, 3071–84 (2010)

Nadir, M.: Lucas polynomials for solving linear integral equations. J. Theor. Appl. Comput. Sci. 11, 13–19 (2017)

Oruç, O.: A meshless multiple-scale polynomial method for numerical solution of 3d convection-diffusion problems with variable coefficients. Eng. Comput. 1–14 (2019)

Oruc, O.: A new algorithm based on Lucas polynomials for approximate solution of 1D and 2D nonlinear generalized Benjamin-Bona-Mahony-Burgers equation. Comput. Math. Appl. 74(12), 3042–3057 (2017)

Oruc, O.: A new numerical treatment based on Lucas polynomials for 1D and 2D sinh-Gordon equation. Commun. Nonlinear Sci. Numer. Simul. 57, 14–25 (2018)

Oruç, O.: Two meshless methods based on pseudo spectral delta-shaped basis functions and barycentric rational interpolation for numerical solution of modified Burgers equation. Int. J. Comput. Math. 3(98), 461–479 (2021)

Oruç, O.; Esen, A.; Bulut, F.: A Haar wavelet approximation for two-dimensional time fractional reaction-subdiffusion equation. Eng. Comput. 1(35), 75–86 (2019)

Ozis, T.; Aksan, E.N.; Ozdes, A.: A finite element approach for solution of Burger’s equation. Appl. Math. Comput. 139, 417–428 (2003)

Rainville, D.E.: Special functions. New York. (1960)

Uddin, M.: On the selection of a good value of shape parameter in solving time-dependent partial differential equations using RBF approximation method. Appl. Math. Model. 38(1), 135–144 (2014)

Wazwaz, A.M.: Partial differential equations and solitary waves theory. Springer Science and Business Media, Berlin (2010)

Youssri, Y.H.; Abd-Elhameed, W.M.; Mohamed, A.S.; Sayed, S.M.: Generalized Lucas polynomial sequence treatment of fractional pantograph differential equation. Int. J. Appl. Comput. Math. 7(2), 1–16 (2021)

Acknowledgements

The authors would like to express their sincere thanks for the financial support of department of mathematics, Prince Sattam bin Abdulaziz University, Saudi Arabia, and to the Faculty of Engineering Sciences, GIK Institute, Topi 23640, KPK, Pakistan.

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interest.

Authors’ contributions

IA proposed the idea of this paper and prepared the initial manuscript. All authors read and approved the final manuscript.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ali, I., Haq, S., Nisar, K.S. et al. Numerical study of 1D and 2D advection-diffusion-reaction equations using Lucas and Fibonacci polynomials. Arab. J. Math. 10, 513–526 (2021). https://doi.org/10.1007/s40065-021-00330-4

DOI: https://doi.org/10.1007/s40065-021-00330-4