Abstract

Let E be a Banach space with the topological dual \(E^*\). The aim of this paper is two-fold. On the one hand, we prove some basic properties of Hadamard-type fractional integral operators. These results are related to earlier results about integral operators acting on different function spaces, but for the vector-valued case they are of independent interest. Note that we discuss them in a rather general setting: we study Hadamard–Pettis integral operators in both the single-valued and the multivalued case. On the other hand, we apply these results to obtain the existence of solutions of the fractional-type problem

with certain constants \(\lambda , b\), where \(h \in E\) and \(f: [1,e]\times E \rightarrow E\) is a Pettis integrable function such that, for every \(\varphi \in E^*\), \(\varphi f\) lies in an appropriate Orlicz space. Here \(\frac{d^\alpha }{d t^\alpha }\) stands for the Caputo–Hadamard fractional differential operator.

1 Introduction and preliminaries

The issue of fractional calculus for real-valued functions in the context of Orlicz spaces was first studied by O’Neil [28]. Following the appearance of [28], there has been significant interest in this topic (cf. [17, 26, 39, 40] and the references therein for some of the recent developments in this direction).

On the other hand, fractional calculus for functions that take values in a Banach space has been studied by many authors (see e.g. [1,2,3,4] or [31,32,33,34, 36, 37] and the references therein for background on these topics). Most of the above investigations are restricted to the case of E-valued Pettis integrable functions x with the property that \(\varphi x \in L_p\) for \(\varphi \in E^*\). Here we consider the case when \(\varphi x\) lies in some Orlicz space. This allows us to cover the case of all Lebesgue spaces \(L_p\) for \(p > 1\) considered in earlier papers (cf. [34,35,36], for instance), but we are not restricted to this case. As explained below, there are several interconnections between Pettis integrals and Orlicz spaces that are of special interest in fractional calculus.

We show that the Hadamard-type fractional integral operator maps the class of functions \(x \in P[I,E]\) such that \(\varphi x\) belongs to some appropriate Orlicz space into the space of (weakly) continuous functions. Afterwards, we establish an existence result for the fractional-type problem

combined with an appropriate boundary condition

with certain constants \(\lambda , b\), where \(h \in E\) and \(f: [1,e]\times E \rightarrow E\) is a Pettis integrable function. The multivalued Hadamard–Pettis integral and its applications are also studied.

The problem of proving the existence of solutions to the boundary value problem (1, 2) reduces to proving the existence of solutions of a Volterra equation [modelled on the problem (1, 2)]. Since the space of all Pettis integrable functions is not complete, not all methods of proof are available, and we restrict our attention to the (weakly) continuous solutions of the mentioned integral equation, that is, to the rather general case of pseudo-solutions of the problem (1, 2).

It is well known that fixed point theory is a powerful tool for proving the existence of solutions to boundary value problems. The key tool in our approach is the following Mönch fixed-point theorem, which was motivated by ideas in [5]:

Theorem 1

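[23] Let E be a metrizable locally convex topological vector space and let D be a closed convex subset of E. Assume that \(T : D \rightarrow D\) is a ww-sequentially continuous mapping which satisfies, for some \(x \in D\) and every subset \(V \subset D\), the implication

$$\begin{aligned} \overline{V} = \overline{\text{ conv }} \; (\{x\} \cup T(V)) \ \Longrightarrow \ V \text{ is relatively weakly compact}. \end{aligned}$$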

Then T has a fixed point in D.

Let \( \psi : {\mathbb R}^+ \rightarrow {\mathbb R}^+ \) be a Young function, i.e., \(\psi \) is increasing, even, convex, and continuous with \(\psi (0) = 0\) and \(\lim _{u \rightarrow \infty } \psi (u)=\infty \). The Orlicz space \(L_\psi = L_\psi ([a, b], {\mathbb R})\) is a Banach space consisting of all (classes of) measurable functions \(x: [a, b] \rightarrow {\mathbb R}\) for which there exists a number \(k>0\) such that

$$\begin{aligned} \int _a^b \psi \left( \frac{|x(s)|}{k}\right) \,ds \le 1. \end{aligned}$$

The (Luxemburg) norm \(\left\| x\right\| _\psi \) is defined as the infimum of all such numbers k. The Young complement \(\widetilde{\psi }\) of \(\psi \) is defined for \(u \in {\mathbb R}\) by \(\widetilde{\psi }(u) :=\sup _{v \ge 0}\{v\cdot |u| - \psi (v)\}.\)

The following properties of Young functions and Orlicz spaces generated by such functions are well-known and will be used in the sequel (see e.g. [22, 35]).

- 1.

A function \(\psi \) is a Young function if and only if \(\widetilde{\psi }\) is a Young function.

- 2.

On a finite measure space and if \(\psi \) is not the null function, \(C[a,b] \subset L_{\infty }[a,b] \subset L_\psi [a,b] \subset L_1[a,b]\).

- 3.

The inclusion \(L_{\psi _1} \subset L_{\psi _2}\) holds if and only if there exist positive constants \(u_0\) and K such that \(\psi _2(u) \le K \psi _1(u)\) for \(u \ge u_0.\)

- 4.

Functions from \(L_{\psi }\) have wider applicability than those from \(L_{p}\). Functions from the usual Lebesgue spaces have at most polynomial growth. By using the class \(L_{\psi }\) we may relax this requirement, so our assumptions will be less restrictive than the standard ones. However, \(L_p\) spaces are special cases of Orlicz spaces (for \(\psi (u) = \frac{|u|^p}{p}\)).

- 5.

For any Young function \(\psi \) we have \(\psi (u-v) \le \psi (u) - \psi (v)\) for \(0 \le v \le u\), and \({u}\cdot {v} \le \psi (u) + \widetilde{\psi }(v)\) for \(u,v\in {\mathbb R}\) (the Young inequality).
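As a worked instance of properties 4 and 5 (a routine computation, given here only as an illustration), take \(\psi (u) = \frac{|u|^p}{p}\) with \(p>1\) and let q be the conjugate exponent, \(\frac{1}{p}+\frac{1}{q}=1\). The supremum defining \(\widetilde{\psi }\) is attained at \(v = |u|^{1/(p-1)}\), which gives

$$\begin{aligned} \widetilde{\psi }(u) = \sup _{v \ge 0}\left\{ v |u| - \frac{v^p}{p}\right\} = |u|^{\frac{p}{p-1}}\left( 1-\frac{1}{p}\right) = \frac{|u|^q}{q}, \qquad u \cdot v \le \frac{|u|^p}{p} + \frac{|v|^q}{q}. \end{aligned}$$

So in this case \(L_\psi = L_p\) and \(L_{\widetilde{\psi }} = L_q\), and the Young inequality reduces to its classical form.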

Further, we have a simple property which will be used in estimating the order \(\alpha \) of the fractional integral operator:

Proposition 1

Fix \( \lambda _1 \in (0,1)\). Let \(\psi \) be a Young function such that \(\int _0^t \psi (s^{-\lambda _1}) \,ds\) is finite for any \(t>0\). If \( \lambda _2<\lambda _1\), then the integral

$$\begin{aligned} \int _0^t \psi (s^{-\lambda _2}) \,ds, \qquad t>0, \end{aligned}$$

is finite as well.

Proof

The proof of this Proposition is two-fold. On the one hand, if \(t \in (0,1]\) the finiteness of the integral in (3) follows immediately since

On the other hand, if \(t>1\), we have

From the finiteness of the integral \(\int _0^t \psi (s^{-\lambda _1}) \,ds\), \(t>0\), it follows that \(\psi (\xi )\) is finite for every \(\xi >0\), in particular for every large \(\xi \). This yields the finiteness of \(\int _1^t \psi (s^{-\lambda _2}) \,ds\) for \(t>1\). The result now follows by (4). This completes the proof. \(\square \)
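The two estimates behind the case distinction can be sketched as follows. This is a reconstruction under the assumption that the omitted displays are the natural ones; only the monotonicity of \(\psi \) is used. For \(s \in (0,1]\) and \(\lambda _2<\lambda _1\) we have \(s^{-\lambda _2} \le s^{-\lambda _1}\), while for \(s \in [1,t]\) we have \(s^{-\lambda _2} \le \max \{1,t^{-\lambda _2}\}\). Hence, for \(t>1\),

$$\begin{aligned} \int _0^t \psi (s^{-\lambda _2}) \,ds&= \int _0^1 \psi (s^{-\lambda _2}) \,ds + \int _1^t \psi (s^{-\lambda _2}) \,ds \\&\le \int _0^1 \psi (s^{-\lambda _1}) \,ds + (t-1)\, \psi \left( \max \{1,t^{-\lambda _2}\}\right) < \infty , \end{aligned}$$

and for \(t \in (0,1]\) only the first term is needed, with the upper limit 1 replaced by t.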

Throughout this paper, we consider the measure space \((I,\varOmega ,\mu )\), where \(I=[1,e]\), \(\varOmega \) denotes the Lebesgue \(\sigma \)-algebra \(\mathcal {L}(I)\) and \(\mu \) stands for the Lebesgue measure. E denotes a real Banach space with a norm \(\left\| \cdot \right\| \) and \(E^{*}\) its dual. By \(E_\omega \) we denote the space E endowed with the weak topology (i.e., the topology generated by the continuous linear functionals on E). We let \(C[I,E_\omega ]\) denote the Banach space of continuous functions from I to E, with its weak topology (see also [13]).

Let P[I, E] denote the space of E-valued Pettis integrable functions on the interval I (see [15] or [29] for the definition). Recall that (see e.g. [8,9,10,11,12,13,14,15]) a weakly measurable function \(x: I \rightarrow E\) is said to be \(\psi \)-Dunford integrable on I (where \(\psi \) is a Young function) if and only if \(\varphi x \in L_\psi (I)\) for each \(\varphi \in E^*\).

Recall that a map \(T:X \longrightarrow Y\), where X and Y are Banach spaces, is said to be weakly sequentially continuous (or: ww-sequentially continuous) if and only if it takes every sequence \((x_n)\) weakly convergent to \(x \in X\) into a sequence \((T(x_n))\) weakly convergent to T(x) ([6]).

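Recall that the De Blasi measure of weak noncompactness is the function defined on bounded subsets \(X \subset E\) by

$$\begin{aligned} \beta (X) := \inf \left\{ \varepsilon >0 : \text{ there exists a weakly compact set } K \subset E \text{ such that } X \subset K + \varepsilon B_1 \right\} , \end{aligned}$$

where \(B_1\) denotes the closed unit ball of E. Its properties which will be useful in our paper are well known and can be found in [14]. The following Ambrosetti-type lemma will be of interest to us: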

Lemma 1

Let \(V\subset C[I,E_\omega ] \) be a family of strongly equicontinuous functions. Then the function \(t\mapsto \beta \left( V\left( t\right) \right) \) is strongly continuous and

defines a measure of weak noncompactness in \(C[I,E_\omega ] .\)

We need to recall the definition of the pseudo-derivative [13]:

Definition 1

A function \(x:I \rightarrow E\) is said to be pseudo-differentiable if there exists a function \(y : I \rightarrow E \) such that, for every \(\varphi \in E^{*}\), the real-valued function \(\varphi (x(t))\) is differentiable a.e., to the value \(\varphi (y(t))\). In this case, the function y is called the pseudo-derivative of the function x.

If the space E has total dual \(E^{*}\) (i.e., the space \(E^{*}\) contains a countable subset \((x_n^{*})\) which separates the points of E), then the pseudo-derivative, if it exists, is uniquely determined up to a set of measure zero. However, a function can be pseudo-differentiable and yet neither strongly nor weakly differentiable (cf. [13]).

The following property will be used in the sequel:

Proposition 2

For any \( t \in {\mathbb R}^+\), \(\lambda \in (0,1)\) and any Young function \(\psi \), the set

is nonempty.

Proof

Obviously \(M_0^\psi =\{0\}\). We observe also that the derivative of the function

exists and is positive for some sufficiently large \(\rho >0\) (because \(\psi (u) \rightarrow 0\) as \(u \rightarrow 0\)). Consequently, for any \(~t>0\), there is a constant \(\rho _0>0\) such that

As \(M_0^\psi =\{0\}\), it means that for any \(t \ge 0\), \(M_{t}^\psi \not = \emptyset \). \(\square \)

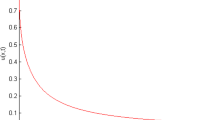

Given a fixed Young function \(\psi \) define a function \(\varPsi :{\mathbb R}^+ \rightarrow {\mathbb R}^+\) by

The following proposition provides a useful characterization of the function \(\varPsi \). Because our definition of \(\varPsi \) differs from typical N-functions studied in the theory of Orlicz spaces, we should present an important property:

Proposition 3

[30] The function \(\varPsi :{\mathbb R}^+ \rightarrow {\mathbb R}^+\) defined by (6) is increasing and continuous with \(\varPsi (0)=0\).

Definition 2

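(cf. [35, Definition 2.4]) For a Young function \(\psi \), we define the class \( {\mathcal {H}}^\psi (E)\) to be the class of all Pettis integrable functions \(x: I \rightarrow E\) that are \(\psi \)-Dunford integrable, i.e., such that \(\varphi x \in L_\psi (I)\) for every \(\varphi \in E^{*}.\) That is

$$\begin{aligned} {\mathcal {H}}^\psi (E) := \left\{ x \in P[I,E] : \varphi x \in L_\psi (I) \text{ for every } \varphi \in E^{*} \right\} . \end{aligned}$$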

Let us recall that the Pettis-integrability of strongly measurable functions is strictly related to our families of sets. Namely, we have the following theorem due to Uhl [41]:

Proposition 4

In order that a strongly measurable \(x : I \rightarrow E\) be in P[I, E] it is necessary and sufficient that there exists a Young function \(\psi \) such that \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) and \( \varphi x \in L_\psi \) for every \(\varphi \in E^{*}.\)

Since the appropriate Young function may differ from one \(x \in P[I,E]\) to another, we will use the above result in one direction. Namely, by fixing such a Young function \(\psi \) we will investigate a much more natural subspace \({\mathcal {H}}^\psi (E) \subset P[I,E]\). Let us recall that the condition \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) is essential (to exclude the case of \(\psi (x) = ax\)), because for \(\psi (u) = u\) (which does not satisfy this condition) we get \(L_\psi (I,E) = L^1(I,E)\). It is well known that the space of Dunford integrable functions (i.e. scalarly Lebesgue integrable functions) is different from P[I, E]. Note that, in contrast to \(L_p\) spaces, Orlicz spaces are not linearly ordered with respect to inclusion, so there exist Young functions \(\psi _1\), \(\psi _2\) for which neither \({\mathcal {H}}^{\psi _1}(E) \subset {\mathcal {H}}^{\psi _2}(E)\) nor \({\mathcal {H}}^{\psi _2}(E) \subset {\mathcal {H}}^{\psi _1}(E)\) holds (see [22, Theorem 13.1]).

Clearly, we also get the following immediate corollary:

Corollary 1

For any \(\alpha > 0\) and any Young function \(\psi \) we get \(C[I,E_\omega ] \subset {\mathcal {H}}^\psi (E) \).

The following result extends [29, Theorem 3.4]:

Proposition 5

[35, Proposition 2.2] If \(x \in {\mathcal {H}}^\psi (E)\), then \(x(\cdot )y(\cdot ) \in P[I,E]\) for every \(y(\cdot ) \in L_{\widetilde{\psi }}\).

We omit the proof since it is almost identical to that of [35, Proposition 2.2] (except that Young functions are used instead of N-functions). Let us recall that if a Young function M is additionally finite-valued, vanishes only at 0, and satisfies \(\lim _{x \rightarrow 0^+} \frac{M(x)}{x} = 0\) and \(\lim _{x \rightarrow \infty } \frac{M(x)}{x} = \infty \), then M is called an N-function.
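For orientation, here is a quick check of this definition on standard examples (elementary computations, stated as an illustration only). The function \(M(x)=e^{|x|}-|x|-1\) is an N-function, and so is \(M(x)=\frac{|x|^p}{p}\) for \(p>1\), whereas \(M(x)=|x|\) is a Young function that is not an N-function:

$$\begin{aligned} \lim _{x \rightarrow 0^+} \frac{e^{x}-x-1}{x} = 0, \qquad \lim _{x \rightarrow \infty } \frac{e^{x}-x-1}{x} = \infty , \qquad \lim _{x \rightarrow 0^+} \frac{|x|}{x} = 1 \ne 0. \end{aligned}$$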

We should also note, that the connection between the Pettis integrability and Orlicz spaces is much deeper (see [7]).

Proposition 6

[7, Theorem 2.3] A strongly measurable function \(x : I \rightarrow E\) is in P[I, E] if and only if there is an N-function \(\psi \) with \(\psi (s\cdot t) \le \psi (s) \cdot \psi (t)\) for \(s,t > 0\) such that \(\{\varphi x : \varphi \in E^*, \Vert \varphi \Vert \le 1 \}\) is relatively weakly compact in \(L_\psi (I)\).

We should present a comment about “optimality” of the space of solutions with respect to \(\alpha \). For the case of Riemann–Liouville and Caputo derivatives we discuss this problem in [35]. Here, the problem is much more complicated and a sufficient condition binding \(\alpha \) and \(\psi \) will be presented in Theorem 2.

2 Hadamard-type fractional integrals and derivatives of vector-valued functions

In this section, we present some definitions and properties of the Hadamard-type fractional Pettis integrals (and the corresponding fractional derivatives) for functions that take values in a Banach space.

Motivated by the definition of the Hadamard fractional integral of a real-valued function, we introduce the following:

Definition 3

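Let \(x:I\rightarrow E.\) The Hadamard-type fractional Pettis-integral (shortly HFPI) of x of order \(\alpha >0\) is defined by

$$\begin{aligned} \mathfrak {J}^\alpha _1 x(t) := \frac{1}{\varGamma (\alpha )} \int _1^t [\log (t/s)]^{\alpha -1} \frac{x(s)}{s} \,ds, \qquad t \in I. \end{aligned}$$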

In the preceding definition the sign “\( \int \)” stands for the Pettis integral. In contrast to the Riemann–Liouville integral, it is not a fractional integral of convolution type, so it should be investigated separately.
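To illustrate the definition, consider a constant function. The following computation is a sketch that assumes the standard Hadamard form of the integral, \(\mathfrak {J}^\alpha _1 x(t) = \frac{1}{\varGamma (\alpha )}\int _1^t [\log (t/s)]^{\alpha -1}\frac{x(s)}{s}\,ds\), and uses the substitution \(u=\log (t/s)\). For \(x(s) \equiv c \in E\) and \(t \in I\),

$$\begin{aligned} \mathfrak {J}^\alpha _1 c\,(t) = \frac{c}{\varGamma (\alpha )} \int _1^t [\log (t/s)]^{\alpha -1} \frac{ds}{s} = \frac{c}{\varGamma (\alpha )} \int _0^{\log t} u^{\alpha -1} \,du = \frac{(\log t)^{\alpha }}{\varGamma (\alpha +1)}\, c. \end{aligned}$$

More generally, the same substitution together with the Beta integral gives \(\mathfrak {J}^\alpha _1 \left[ (\log s)^{\gamma } c\right] (t) = \frac{\varGamma (\gamma +1)}{\varGamma (\alpha +\gamma +1)} (\log t)^{\alpha +\gamma }\, c\) for \(\gamma > -1\). The kernel depends on \(t/s\) rather than on \(t-s\).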

It is known (cf. [35]) that this operator makes sense if \(x \in P[I,E]\) is such that \(\varphi x \in L_p\), \(p>\max \{1,\alpha \}\), for every \(\varphi \in E^*\) (for a detailed study in this context, see [37]).

Definition 4

Let \(x : I \rightarrow E \). We define the Riemann–Liouville Hadamard fractional-pseudo (-weak) derivative, “shortly HFPD (HFWD)”, of x of order \(\alpha \in (0, 1)\) by

Here D denotes the pseudo-(weak-)differential operator. We use the notation \(\mathfrak {D}^{\alpha }_{p}\) and \(\mathfrak {D}^{\alpha }_{w}\) to characterize the Riemann–Liouville Hadamard fractional-pseudo derivatives and Hadamard fractional-weak derivatives, respectively.

Of course, \(\mathfrak {D}^{\alpha }_{1,p} x\) (\(\mathfrak {D}^{\alpha }_{1,w}x\)) makes sense if \(x:I \rightarrow E\) is a function such that \(\mathfrak {J}^{1- \alpha }_1 x\) is pseudo (weakly) differentiable on I. It is easy to see that, if \(\mathfrak {D}^{\alpha }_{1,w}x\) exists a.e. on I, then \(\mathfrak {D}^{\alpha }_{1,p}x\) exists on I and \(\mathfrak {D}^{\alpha }_{1,p}x =\mathfrak {D}^{\alpha }_{1,w}x\) a.e. on I. For background and details pertaining to these differential operators, we refer the reader to [37].

Besides the Riemann–Liouville Hadamard fractional-pseudo (weak) derivatives, we define the Caputo–Hadamard fractional-pseudo (weak) derivatives.

Definition 5

Let \(x : I \rightarrow E\). For \(\alpha \in (0, 1)\), we define the Caputo–Hadamard fractional-pseudo (-weak) derivative, “shortly CFPD (CFWD)”, of x of order \(\alpha \) by

We use the notation \(\frac{\mathrm {d}^{\alpha }_{\mathrm {p}}}{\mathrm {dt}^{\alpha }}\) and \(\frac{\mathrm {d}^{\alpha }_\omega }{\mathrm {dt}^{\alpha }}\) to characterize the Caputo fractional-pseudo derivatives and Caputo fractional-weak derivatives respectively.

The following theorem generalizes [37, Lemma 2.2] and plays a major role in our analysis.

Theorem 2

Let \(\alpha \in (0,1]\). For any Young function \(\psi \) with its complementary function \(\widetilde{\psi }\) such that

the operator \(\mathfrak {J}^\alpha _1\) sends \({\mathcal {H}}^\psi (E)\) into \( C[I,E_\omega ]\) (if we define \(\mathfrak {J}^\alpha _1 x(1) :=0\)). In particular, \(\mathfrak {J}^\alpha _1: C[I,E_\omega ] \rightarrow C[I,E_\omega ]\) is well-defined. Moreover, \( \varphi (\mathfrak {J}^\alpha _1 x )= \mathfrak {J}^\alpha _1 \varphi x \) holds for every \(\varphi \in E^{*}\).

Proof

At the beginning we note that the case \(\alpha =1\) is straightforward and we leave it to the reader. The “strategy” of the proof when \(\alpha \in (0,1)\) consists of three steps:

- (a)

The operator \(\mathfrak {J}^\alpha _1\) makes sense (i.e., \(\mathfrak {J}^\alpha _1 x\) exists for every \(x \in {\mathcal {H}}^\psi (E)\)),

- (b)

The operator \(\mathfrak {J}^\alpha _1: {\mathcal {H}}^\psi (E) \rightarrow C[I,E_\omega ]\) is well-defined (i.e., \(\mathfrak {J}^\alpha _1 x \in C[I,E_\omega ]\) holds for every \(x \in {\mathcal {H}}^\psi (E)\)),

- (c)

\(\mathfrak {J}^\alpha _1\) maps \( C[I,E_\omega ] \) into itself.

Let \(x \in {\mathcal {H}}^\psi (E)\), \(\alpha \in (0,1)\) and define a function \(y:I \rightarrow {\mathbb R}^+\) by

We claim that \(y \in L_{\widetilde{\psi }}(I)\); once our claim is established, the assertion (a) follows immediately from Proposition 5. To see this, fix \( t \in [1,e]\) and choose an arbitrary \(k>0\). By using an appropriate substitution and the properties of Young functions, one can get

Thus, in view of (10) and with the aid of Proposition 2, we infer that \(y \in L_{\widetilde{\psi }}(I)\). Consequently, the assertion (a) follows directly from Proposition 5. Moreover, as \(s\mapsto [\log (t/s)]^{\alpha -1}\frac{ x(s)}{s}\) is Pettis integrable on [1, t] for every \(t \in I\), it follows, by the definition of the Pettis integral, that for every \(t \in I\) there exists an element in E denoted by \(\mathfrak {J}^\alpha _1 x(t)\) such that

holds for every \(\varphi \in E^{*}\).

Next, we prove the assertion (b): let \(1\le \tau \le t \le e\). Without loss of generality, assume \(\mathfrak {J}^\alpha _1 x(t) - \mathfrak {J}^\alpha _1 x(\tau ) \not = 0\). Then there exists (as a consequence of the Hahn-Banach theorem) \(\varphi \in E^{*}\) with \(\left\| \varphi \right\| =1\) and \(\left\| \mathfrak {J}^\alpha _1 x(t) - \mathfrak {J}^\alpha _1 x(\tau ) \right\| =\varphi \left( \mathfrak {J}^\alpha _1 x(t) - \mathfrak {J}^\alpha _1 x(\tau ) \right) \). One can write the following chain of inequalities

where

We claim that \(h_i \in L_{\widetilde{\psi }}(I),~~~(i=1,2)\). Once our claim is established, we infer (in view of the Hölder inequality in Orlicz spaces) that

Fix \( 1\le \tau \le t \le e\) and \(k>0\). An appropriate substitution using the properties of Young functions leads to the estimate

Since

it follows

where

Similarly, by applying the classical mean value theorem and the monotonicity of \(\varPsi \), one can get

Thus, (14) is seen to be equivalent with the following estimate

This estimate shows, in view of the continuity of \(\widetilde{\varPsi }\) and the definition \(\mathfrak {J}^\alpha _1 x(1) :=0\), that \( \mathfrak {J}^\alpha _1 x\) is norm continuous, hence weakly continuous. Thus, \(\mathfrak {J}^\alpha _1\) maps \({\mathcal {H}}^\psi (E)\) into \( C[I,E_\omega ]\).

Finally, we prove the assertion (c). To do this, we recall that [19, p. 73] weak continuity implies strong measurability. Thus, every \(x \in C[I,E_\omega ]\) is strongly measurable on I. Also, for any \(\varphi \in E^*\), the continuous function \(\varphi x \) lies in \(L_\psi (I)\) for any Young function \(\psi \). Thus, in view of Proposition 4 we deduce that \( C[I,E_\omega ] \subseteq {\mathcal {H}}^\psi (E) \). That is, \(\mathfrak {J}^\alpha _1: C[I,E_\omega ] \rightarrow C[I,E_\omega ]\) is well-defined. This ensures the assertion (c). \(\square \)

Remark 1

Theorem 2 is an extension of [37, Lemma 2.2]: if we choose \(\psi (u)=\frac{|u|^p}{p}\) with \(p>1/\alpha \), it can be easily seen that [37, Lemma 2.2] is a simple particular case of our Theorem 2. Moreover, when \(\psi (u)=\frac{|u|^p}{p}\), \(p>1/\alpha \), it is not difficult to prove that \( \widetilde{\varPsi }(t)=\frac{t^{\alpha -\frac{1}{p}}}{\root q \of {q[1-(1-\alpha )q]}},~~~t \in {\mathbb R}^+,~~\frac{1}{p}+\frac{1}{q}=1.\)
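The exponent condition \(p>1/\alpha \) can be checked by hand. The sketch below assumes that hypothesis (10) asks for the finiteness of \(\int _0^t \widetilde{\psi }(s^{\alpha -1}) \,ds\) (the form in which it is used in the proof of Lemma 2). For \(\psi (u)=\frac{|u|^p}{p}\) we have \(\widetilde{\psi }(u)=\frac{|u|^q}{q}\), and the integral is finite if and only if \((1-\alpha )q<1\), that is, \(p>1/\alpha \). In that case

$$\begin{aligned} \int _0^t \widetilde{\psi }(s^{\alpha -1}) \,ds = \frac{1}{q} \int _0^t s^{-(1-\alpha )q} \,ds = \frac{t^{1-(1-\alpha )q}}{q[1-(1-\alpha )q]}. \end{aligned}$$

Taking the q-th root of the right-hand side reproduces the expression \(\frac{t^{\alpha -\frac{1}{p}}}{\root q \of {q[1-(1-\alpha )q]}}\) above, since \(\frac{1}{q}-(1-\alpha ) = \alpha -\frac{1}{p}\).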

In the following example we prove the existence of a Young function such that the hypotheses (10) of Theorem 2 are satisfied for all \(\alpha \in (0,1)\).

Example 1

Choose \(\psi (u)=e^{|u|}-|u|-1\) (whence \(\widetilde{\psi }(u)=(1+|u|) \log (1+|u|)-|u|\)); see [22, p.14]. We will show that, for this choice of \(\psi \), the integral (10) is finite for any \(\alpha \in (0, 1)\). Evidently, for any \(t \in [1,e]\) and any \(\epsilon \) near 0, integration by parts yields

Obviously, \( J_\epsilon \ge 0\) is bounded as \(\epsilon \downarrow 0\) and so we are done.
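The finiteness claimed in Example 1 can also be seen from a crude bound, without integration by parts. This is an independent sketch, not the computation of the example, and it assumes that (10) concerns \(\int _0^t \widetilde{\psi }(s^{\alpha -1}) \,ds\) with \(\widetilde{\psi }(u)=(1+|u|) \log (1+|u|)-|u|\). For \(u \ge 1\) we have \(\widetilde{\psi }(u) \le (1+u)\log (1+u) \le 2u \log (2u)\), and \(u = s^{\alpha -1} \ge 1\) for \(s \in (0,1]\), so

$$\begin{aligned} \int _0^1 \widetilde{\psi }(s^{\alpha -1}) \,ds \le 2 \int _0^1 s^{\alpha -1} \left[ \log 2 + (1-\alpha ) \log \frac{1}{s}\right] ds = \frac{2 \log 2}{\alpha } + \frac{2(1-\alpha )}{\alpha ^2} < \infty . \end{aligned}$$

On \([1,t]\) the integrand is bounded by \(\widetilde{\psi }(1)\), so the integral over \([0,t]\) is finite for every \(\alpha \in (0,1)\).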

When \(E={\mathbb R}\), the operator \(J^\alpha \) maps all elements of the Orlicz space \(L_\psi \) from Example 1 into \(C[I,E_w]\) for any \(\alpha \in (0,1)\). We should note that this property fails in the case of Lebesgue spaces \(L_p\). Recall that the image of \(L_p\) under \(J^\alpha \) is contained in \(C[I,E_w]\) if \(p>1/\alpha \); for instance, \(J^\alpha :L_2 \rightarrow C\) for \(\alpha \in (0.5,1)\) (see [37, Lemma 2.2]).

We now prove the following commutative property of the HFPI.

Lemma 2

Let \(\alpha , \beta \in (0,1)\) and \(\psi \) be a Young function with its complement \(\widetilde{\psi }\) such that

where \(\lambda :=\max \{1-\alpha , 1-\beta \}\). Then

holds for every \(x \in {\mathcal {H}}^\psi (E)\). In particular, (\(\maltese \)) holds for every \(x \in C[I,E_w]\).

Proof

Obviously, when \(\alpha +\beta \ge 1\), the continuity of \(\widetilde{\psi }\) yields the finiteness of the integral \(\int _0^t \widetilde{\psi }(s^{\alpha +\beta -1}) \,ds\) for \(t \in [1,e]\). If \(\alpha +\beta < 1\), the finiteness of the integral in (17) yields, in view of Proposition 1, the finiteness of each of the integrals \(\int _0^t \widetilde{\psi }(s^{\alpha +\beta -1}) ds\), \(\int _0^t \widetilde{\psi }(s^{\alpha -1}) \,ds\) and \(\int _0^t \widetilde{\psi }(s^{ \beta -1}) \,ds\) for all \(t \in [1,e]\). Thus, in view of Theorem 2, \( \mathfrak {J}^\alpha _1 x\), \( \mathfrak {J}^\alpha _1 (\mathfrak {J}^\beta _1 x)\) and \( \mathfrak {J}^{\alpha +\beta }_1 x\) exist on I for every \(x \in {\mathcal {H}}^\psi (E)\) as weakly continuous functions from I to E. Consequently, for any \(\varphi \in E^*\) we have (with the aid of [21, Property 2.26], since \(\varphi x \in L_1[1,e]\) for every \(\varphi \in E^*\))

that is

Hence \(\mathfrak {J}^\alpha _1 \mathfrak {J}^\beta _1 x(t)=\mathfrak {J}^{\alpha +\beta }_1 x(t)\), \(t \in I\). Similarly, we are able to show that \(\mathfrak {J}^\beta _1 \mathfrak {J}^\alpha _1 x(t)=\mathfrak {J}^{\alpha +\beta }_1 x(t)\).

If \(x \in C[I,E_w]\), then (\(\maltese \)) follows as a direct consequence of Theorem 2. \(\square \)
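As a sanity check of the semigroup property on the simplest example, take \(x \equiv c \in E\). The computation assumes the standard Hadamard form of \(\mathfrak {J}^\alpha _1\) and the elementary formula \(\mathfrak {J}^\alpha _1 \left[ (\log s)^{\gamma } c\right] (t) = \frac{\varGamma (\gamma +1)}{\varGamma (\alpha +\gamma +1)} (\log t)^{\alpha +\gamma } c\), \(\gamma >-1\), which follows from the Beta integral:

$$\begin{aligned} \mathfrak {J}^\alpha _1 \mathfrak {J}^\beta _1 c\,(t) = \mathfrak {J}^\alpha _1 \left[ \frac{(\log s)^{\beta }}{\varGamma (\beta +1)}\, c\right] (t) = \frac{\varGamma (\beta +1)}{\varGamma (\beta +1)\,\varGamma (\alpha +\beta +1)} (\log t)^{\alpha +\beta }\, c = \frac{(\log t)^{\alpha +\beta }}{\varGamma (\alpha +\beta +1)}\, c = \mathfrak {J}^{\alpha +\beta }_1 c\,(t). \end{aligned}$$

The final expression is symmetric in \(\alpha \) and \(\beta \), in agreement with the commutativity stated in the lemma.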

The following lemma is folklore in the case \(E = {\mathbb R}\), but to see that it also holds in the vector-valued case, we provide a proof. In our proof, we will use the following

Proposition 7

(see [27], Theorem 5.1) A function \(y:I \rightarrow E\) is an indefinite Pettis integral if and only if y is weakly absolutely continuous on I and has a pseudo-derivative on I. In this case, y is an indefinite Pettis integral of any of its pseudo-derivatives.

Lemma 3

Let \(0< \alpha < 1\) and \(\psi \) be a Young function with Young complement \(\widetilde{\psi }\) such that

If the function \(x:I \rightarrow E\) is weakly absolutely continuous on I and has a pseudo-derivative in \({\mathcal {H}}^\psi (E)\), then

In particular, if x has a weak derivative in \( C[I,E_\omega ]\), then

The proof of Lemma 3 will be given after a preliminary lemma is established.

Lemma 4

Let \(0< \alpha <1 \) and let \( x \in {\mathcal {H}}^\psi (E)\), where \(\psi \) is a Young function with its complement \(\widetilde{\psi }\) satisfying

Then

In particular, if \( x \in C[I,E_\omega ]\), we have \(\mathfrak {D}^{\alpha }_{\omega } \mathfrak {J}^{\alpha }_1 x = x\) on I.

Proof

Our assumption \(x \in {\mathcal {H}}^\psi (E)\) yields, in view of Lemma 2, that

Similarly, if \(x \in C[I,E_\omega ]\), we obtain

The claim now follows immediately, since the indefinite integral of a (Pettis integrable) weakly continuous function is (pseudo-differentiable) weakly differentiable with respect to the right endpoint of the integration interval and its (pseudo-) weak derivative equals the integrand at that endpoint. \(\square \)
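A quick check on a constant function illustrates the lemma. It is a sketch under two assumptions that are not restated here: that \(\mathfrak {J}^\alpha _1\) has the standard Hadamard form, and that the Riemann–Liouville Hadamard derivative is \(\mathfrak {D}^{\alpha } = \delta \, \mathfrak {J}^{1-\alpha }_1\) with \(\delta = t \frac{d}{dt}\). For \(x \equiv c \in E\),

$$\begin{aligned} \mathfrak {J}^{\alpha }_1 c\,(t) = \frac{(\log t)^{\alpha }}{\varGamma (\alpha +1)}\, c, \qquad \mathfrak {J}^{1-\alpha }_1 \mathfrak {J}^{\alpha }_1 c\,(t) = (\log t)\, c, \qquad t \frac{d}{dt} \left[ (\log t)\, c\right] = c. \end{aligned}$$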

We are now ready to write the proof of Lemma 3.

Proof of Lemma 3

We observe that, under the assumption imposed on \( D_{\mathrm {p}} x\) together with Proposition 7, the weak absolute continuity of x is equivalent to

where \(y :=D_{\mathrm {p}} x\) is one of the Pettis integrable pseudo-derivatives of x. Since \(y \in {\mathcal {H}}^\psi (E)\), we get \((\cdot )y(\cdot ) \in {\mathcal {H}}^\psi (E)\) as well: evidently, \(\varphi (s y(s))= s\varphi ( y(s))\in L_\psi (I)\) holds true for all \(\varphi \in E^*\) and \((\cdot )y(\cdot ) \in P[I,E]\) (it follows from Proposition 5). Thus

where the right hand side exists by Theorem 2. Consequently,

Hence, owing to Lemma 4 and with the aid of (18), it follows that

which is what we wished to show. The proof for the weak derivative is very similar to the one above for pseudo-derivatives, so we omit the details. \(\square \)

Remark 2

Definition 5 of the Caputo–Hadamard fractional-pseudo (weak) derivatives has the disadvantage that it completely loses its meaning if x fails to be pseudo- (weakly) differentiable on I.

For this reason, we use the property of Lemma 3 to define the general Caputo–Hadamard fractional-pseudo (weak) derivatives, i.e., we put

Lemma 3 implies that, for weakly absolutely continuous functions with an integrable pseudo- (weak) derivative, this definition coincides with the usual definition (Definition 5) of the Caputo–Hadamard fractional derivatives.

3 Caputo–Hadamard-type boundary value problem

In this section, we apply the results of Section 2 to investigate the existence of weak solutions of the following Caputo–Hadamard boundary value problem

with certain constants \(\lambda , b\), \(h \in E\), \(b \ne - 1\), where \(f: [1,e]\times E \rightarrow E\) is a Pettis integrable function.

To obtain formally the integral equation modelled off the problem (26), let \(\psi \) be a Young function such that

Assume that

Thus, if \(x \in C[I,E_\omega ]\) solves (26), then formally we have

That is

This reads as

with some (presently unknown) quantity x(1). Solving (28) for x(1) with \(x(1)+ b x(e)=h\), we get
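The elimination of \(x(1)\) can be sketched as follows, assuming that (28) has the form \(x(t) = x(1) + \lambda \, \mathfrak {J}^\alpha _1 f(t,x(t))\) (this form is an assumption here; only the boundary condition \(x(1)+ b x(e)=h\) and \(b \ne -1\) are taken from the text). Evaluating at \(t=e\) and inserting into the boundary condition gives

$$\begin{aligned} h = x(1) + b\left[ x(1) + \lambda \, \mathfrak {J}^\alpha _1 f(e,x(e))\right] \quad \Longrightarrow \quad x(1) = \frac{h}{1+b} - \frac{\lambda b}{1+b}\, \mathfrak {J}^\alpha _1 f(e,x(e)), \end{aligned}$$

which is where the requirement \(b \ne -1\) enters.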

The next theorem gives conditions that ensure the existence of solutions to the Volterra-type fractional integral equation (28) in \(C[I,E_\omega ]\). The case \(\alpha =1\) was studied in [13], for instance.

Let us mention that here by a Kamke function (of the Hadamard type) we mean a function \(g: {\mathbb {R}}_+ \rightarrow {\mathbb {R}}_+\) which is continuous and nondecreasing, satisfies \(g(1) =0\), and for which \(u \equiv 0\) is the only continuous solution of

Note that, in contrast to the case of the Riemann–Liouville fractional integrals (see [24], for instance), the problem of finding necessary and sufficient conditions for a function to be a Kamke function with respect to the Hadamard fractional integral remains open.

Recall that for any Young function \(\psi \) we have \(C(I) \subset L_{\infty }(I) \subset L_{{\psi }}(I)\), and hence there exists a constant \(q>0\) such that \(\Vert w\Vert _{{\psi }} \le q\cdot \Vert w\Vert _{\infty }\) for all \(w \in C(I)\) (see [22, Theorem 13.3]; the special case considered here is calculated in [20, Remark 1], namely we have \(q = (\psi ^{-1}((e-1)^{-1}))^{-1}\)), and we can use this constant in our assumptions too (see also [22, p. 79]). For brevity and to allow generalizations, let us keep the symbol q in the sequel.
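The value of q quoted from [20, Remark 1] can be recovered directly from the definition of the Luxemburg norm. The following sketch assumes that \(\psi \) is strictly increasing on \({\mathbb R}^+\), so that \(\psi ^{-1}\) exists. For \(w \in C(I)\), \(w \not \equiv 0\), and \(k>0\),

$$\begin{aligned} \int _1^e \psi \left( \frac{|w(s)|}{k}\right) ds \le (e-1)\, \psi \left( \frac{\Vert w\Vert _{\infty }}{k}\right) \le 1 \quad \text{whenever} \quad k \ge \frac{\Vert w\Vert _{\infty }}{\psi ^{-1}\left( (e-1)^{-1}\right) }, \end{aligned}$$

hence \(\Vert w\Vert _{\psi } \le \left( \psi ^{-1}((e-1)^{-1})\right) ^{-1} \Vert w\Vert _{\infty }\).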

Theorem 3

Let \(\alpha \in (0,1]\). Assume, that \(\psi \) is a Young function satisfying \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) and its complementary Young function \(\widetilde{\psi }\) satisfies

Let the function \( f: [1,e]\times E \rightarrow E\) be such that

- a)

for every \(t \in I\), \(f(t,\cdot )\) is ww-sequentially continuous,

- b)

for each \(x \in C[I,E_\omega ],~f (\cdot ,x(\cdot ))\) is strongly measurable,

- c)

for any \(r>0\) and for each \(\varphi \in E^*\) there exists an \(L_{{\psi }}(I,{\mathbb {R}})\)-integrable function \(M_r^{\varphi } : I \rightarrow {\mathbb R}^+\) such that \(| \varphi {(f(t,x))} | \le M_r^{\varphi }(t)\) for all \( t \in I\) and \(\left\| x\right\| \le r\). Moreover, for any \(s >0\) there exists a constant \(\kappa _s > 0\) such that for all \(\varphi \in E^*\), \(\Vert M_s^{\varphi } \Vert _\psi < \kappa _s\) and \(\int _0^\infty \frac{ds}{\Vert M_s^\varphi \Vert _\psi } = \infty \).

- d)

there exists a Kamke function g such that for any bounded subset X of E we have

$$\begin{aligned}{\beta }(f (I \times X)) \le g( {\beta }(X)) .\end{aligned}$$

- e)

the constant \( \lambda \in {\mathbb R}\) is such that there exists a positive constant r satisfying

$$\begin{aligned} \frac{\left\| h\right\| }{1+b} + \frac{4 |\lambda | \widetilde{\varPsi }(1) }{\varGamma (\alpha )} \cdot \left\| M_r^{\varphi }\right\| _\psi \left| \frac{ 2b+1}{(1+b) } \right| \le r . \end{aligned}$$

Then (28) has at least one solution in \(C[I,E_\omega ]\) satisfying \(x(1)+ b x(e)=h\).

Proof

We will consider the operator

where \(x \in C[I,E_\omega ]\). To shorten the proof, let us recall some estimates proved for the integral operator \(\mathfrak {J}^\alpha _1 f(t,x(t))\) in the previous section. Since for any \(x \in C[I,E_\omega ]\) the superposition \(f (\cdot ,x(\cdot ))\) is strongly measurable, it follows from Proposition 4 and our assumption c) that \(f (\cdot ,x(\cdot )) \in {\mathcal {H}}^\psi (E)\).

We divide our proof into steps, proving some important properties of T.

First, we note that the operator T is well-defined on \({\mathcal {H}}^\psi (E)\) (and, in view of Corollary 1, also on \(C[I,E_\omega ]\)) with its values in \(C[I,E_\omega ]\) (see Theorem 2). Let us also recall (see (11)) that the function \(y:I \rightarrow {\mathbb R}^+\) defined by

is in an appropriate Orlicz space, i.e., \(y \in L_{\widetilde{\psi }}(I)\) (cf. Theorem 2). Let us estimate \(\Vert y\Vert _{\widetilde{\psi }}\). By the definition of the Luxemburg norm, by (12), we obtain an estimation:

for any \(k > 0\).

We need to show the existence of an invariant set for T, i.e., \(T : Q \rightarrow Q\). Denote by \(\kappa \) the constant from c) related to the value r described in e). Let us define a convex and closed subset \(Q \subset C[I, E_\omega ]\):

Due to Proposition 3, Q is a strongly equicontinuous set of functions.

Step 1. Linking our assumptions on \(\alpha \) and \(\psi \) and using Hölder inequality in Orlicz spaces, as in Theorem 2, for any \(\varphi \in E^{*}\), for \(\Vert x\Vert \le r\) one can obtain an estimation

As a consequence of the Hahn–Banach theorem, for any \(x \in Q\) there exists \(\varphi \in E^{*}\) with \(\left\| \varphi \right\| =1\) and \(\left\| T(x)(t) \right\| = \varphi \left( T(x)(t) \right) \) and then by the assumption e)

Let \( t, \tau \in [1,e]\) with \(t >\tau \) and fix \(x \in Q\). Without loss of generality, assume \(Tx(t) - Tx(\tau ) \not = 0\). Let \(\varphi \in E^{*}\) with \(\left\| \varphi \right\| =1\). Thus, by (16)

where \(h_1\) is defined in (13).

Due to (16) and since all the constants on the right-hand side of the above inequality are independent of \(\varphi \), we are able to take the supremum over all \(\varphi \in E^*\) with \(\left\| \varphi \right\| \le 1\) and we obtain

This estimate proves that T maps Q into itself.

Step 2. It remains to prove that \(T: Q \rightarrow Q \) is ww-sequentially continuous. To see this, let \((x_n )\) be a sequence in Q weakly convergent to x. Thus \(x_n(t) \rightarrow x(t)\) in \((E, \omega )\) for each \(t \in I\).

Since f satisfies Assumption b), for any \(t \in I\) the sequence \((f(t,x_n(t)))\) converges weakly to f(t, x(t)), so the sequence \((y(t)\varphi (f(t,x_n(t))))\) is pointwise convergent. Assumption c) allows us to apply the Lebesgue dominated convergence theorem for the Pettis integral, so \((Tx_n(t))\) converges weakly to Tx(t) in \(E_\omega \). Since this holds for each \(\varphi \in E^*\) and \(t \in I\), the Dobrakov characterization of weak convergence in C[I, E] (cf. [13]) shows that \(T: Q \rightarrow Q \) is ww-sequentially continuous.

Step 3. Note that for arbitrary \(w \in P[I,E]\) and \(t \in [1,e]\) we have

Suppose now, that the set \(V \subset Q\) has the following property: \(\overline{V} = \overline{\text{ conv }} \; (\{x\} \cup T(V))\) for some \(x \in Q\).

As for any \(\varepsilon > 0\) there exists (sufficiently small) \(\tau \) such that for any \(x \in V\)

we can cover the set \(\left\{ \int _{t-\tau }^t \frac{\lambda [\log (t/s)]^{\alpha -1} }{s \varGamma (\alpha )} f(s,x(s)) \,ds: s \in [t-\tau ,t] , x \in V\right\} \) by a ball with radius \(\varepsilon \) and then, by the definition of the De Blasi measure of weak noncompactness (by assumption c) we get the uniform Pettis integrability of the set of functions satisfying (32)):

We now need to estimate the set of the above integrals on \([1,t-\tau ]\). Put \(v(s) = \beta (V(s))\). Note that from our assumption it follows that \( s \mapsto \frac{\lambda [\log (t/s)]^{\alpha -1} }{s \varGamma (\alpha )} g(v(s))\) is continuous on \([1,t-\tau ]\), hence uniformly continuous (see Lemma 1).

Thus there exists \(\delta > 0\) such that

provided that \(|q-s| < \delta \) and \(|\eta -s|<\delta \) with \(\eta ,s,q \in [1,t-\tau ]\). Divide the interval \([1,t-\tau ]\) into n parts \(1 = t_0< t_1< \cdots < t_n = t- \tau \) such that \(|t_i - t_{i-1}| < \delta \) for \(i=1,2,\ldots ,n\). Put \(T_i = [t_{i-1},t_i]\). As v is uniformly continuous, there exists \(s_i \in T_i\) such that \(v(s_i) = \beta (V(T_i))\) (\(i=1,2, \ldots ,n\)).

As

and by the mean value theorem for the Pettis integral

Hence

Note that from (33) it follows that

Finally, from the above estimations we get

As the last inequality is satisfied for any \(\varepsilon >0\), we obtain

Note that \(\beta (V(t)) = \beta (\overline{V}(t)) = \beta ( \overline{\text{ conv }} \; (\{x\} \cup T(V))(t)) = \beta (T(V)(t))\). Then

and then

From the definition of a Kamke function we get \(v(t) \equiv 0\), i.e., \(\beta (V(t)) = 0\), so the sets V(t) are relatively weakly compact in E. Being a strongly equicontinuous subset of \(C[I,E_\omega ]\) with relatively weakly compact sections, V is a relatively compact subset of this space (cf. Lemma 1).
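As a reminder, the De Blasi measure of weak noncompactness used in this step can be written in its standard form as follows. This is a sketch under the assumption that the definition adopted earlier in the paper agrees with the usual one; the symbol \(B_1\) for the closed unit ball of E is introduced here for illustration. For a bounded set \(A \subset E\),

$$\begin{aligned} \beta (A) = \inf \left\{ \varepsilon > 0 : A \subset K + \varepsilon B_1 \text{ for some weakly compact } K \subset E \right\} . \end{aligned}$$

With this definition, \(\beta (A) = 0\) if and only if A is relatively weakly compact, \(\beta (\overline{\text{ conv }} \; A) = \beta (A)\), and \(\beta (A \cup \{x\}) = \beta (A)\) for any \(x \in E\). These are the properties behind the chain of equalities for \(\beta (V(t))\) and behind the final conclusion that the sections V(t) are relatively weakly compact.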

All in all, the hypotheses of Theorem 1 are satisfied. Therefore, we can conclude that the operator T has at least one fixed point in Q, which completes the proof. \(\square \)

Obviously, if x is a weakly (but not weakly absolutely) continuous solution to (28), then not only does x no longer necessarily solve (26) (when the Caputo fractional weak derivative is taken in the sense of Definition 5), but, even worse, the problem (26) is “meaningless” on I.

Now, we are in the position to state and prove the following existence theorem.

Theorem 4

If \(f:I \times E \rightarrow E\) is a function such that all conditions from Theorem 3 hold, then the problem (26) [where the Caputo fractional weak derivative is taken in the sense (24)] has a pseudo-solution on [1, e].

Proof

Let \( x \in C[I,E_\omega ]\) solve (28). Since x is weakly continuous (but not necessarily weakly absolutely continuous) on [1, e], we have to invoke the definition (24) of the CHFD as follows (with the use of Lemma 4)

On the other hand, Eq. (28) implies

Thus, if we plug (29) into (36), we arrive at the boundary condition \( x(1)+b x(e)=h\). This may be combined with (35) in order to ensure the existence of a solution \( x \in C[I,E_\omega ]\) to the problem (26). This completes the proof. \(\square \)

Remark 3

We should also briefly present an example of the use of our theorem for the problem (28). We refer to [35, Section 6]. Both examples given there can easily be adapted to the case of (28).

Put \(\alpha =1/2\) and consider the Young function \(\psi (u)=e^{|u|}-|u|-1\). Then \(\widetilde{\psi }(u)=(1+|u|) \log (1+|u|)-|u|\). A direct calculation leads to

So, owing to the definition of \(\widetilde{\varPsi }\), we conclude that \(\widetilde{\varPsi }(1) \le 3\) and our result applies. Direct formulas can be deduced as in [35]. Note that no earlier theorem can be applied in such a general case.
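The formula for the complementary function in this example can be checked directly. The following computation is a sketch for \(v \ge 0\) (the general case follows by evenness), using the standard definition of the complementary Young function as a Legendre transform; the variable names are chosen here for illustration. The supremum of \(uv - \psi (u)\) over \(u \ge 0\) is attained where \(v = \psi '(u) = e^{u} - 1\), i.e., at \(u = \log (1+v)\), so

$$\begin{aligned} \widetilde{\psi }(v) = \sup _{u \ge 0} \left( uv - e^{u} + u + 1 \right) = v \log (1+v) - (1+v) + \log (1+v) + 1 = (1+v)\log (1+v) - v. \end{aligned}$$

Equivalently, \(\widetilde{\psi }(v) = \int _0^v \log (1+s) \, ds\), which is the integral of the inverse of \(\psi '\).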

As claimed in Sect. 2, the case studied here is more complicated than the case of the Riemann–Liouville integral and approaches based on convolution-type operators. All the properties of integral operators have to be proved without the use of the Hölder inequality for the convolution. This makes the present case independent of the study of standard Abel integral equations (cf. [35] or the book [18]). It is another type of weakly singular problem and requires the study of different integral operators acting on function spaces.

4 Multivalued problems

Let us briefly explain how to extend the above results to multivalued problems. We extend both the case of classical Pettis integrals (i.e., \(\alpha = 1\)) [12] and the case of Caputo (and Riemann–Liouville) integrals [9]. We should mention that, as the Hadamard integral is not a convolution of a real-valued integrable function with a Pettis integrable vector-valued one, we are unable to apply the property of such integrals proved in [9]. Still, we can apply Proposition 5 instead. As we need to replace fractional Pettis integrals by multivalued ones, we recall some necessary notions.

By cwk(E) we denote the family of all nonempty convex weakly compact subsets of E. For every \(C \in cb(E)\) the support function of C is denoted by \(s(\cdot ,C)\) and defined on \(E^*\) by \(s(\varphi ,C) = \sup _{x \in C} \varphi x \), for each \(\varphi \in E^*\). We say that F is scalarly integrable (Dunford integrable) if for any \(\varphi \in E^*\) the function \(s(\varphi ,F(\cdot ))\) is integrable.
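To illustrate the support function, here are two elementary cases, given as a sketch; the symbols \(x_0\), \(r\) and \(B_1\) (the closed unit ball of E) are introduced here only for illustration. For a singleton and for a closed ball one gets

$$\begin{aligned} s(\varphi ,\{x_0\}) = \varphi x_0 , \qquad s(\varphi , x_0 + r B_1) = \varphi x_0 + r \sup _{\Vert x\Vert \le 1} \varphi x = \varphi x_0 + r \Vert \varphi \Vert . \end{aligned}$$

In particular, for a single-valued F, scalar integrability in the above sense reduces to the integrability of \(\varphi F\) for every \(\varphi \in E^*\), i.e., to the usual Dunford integrability.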

Definition 6

A multifunction \(G:E\rightarrow 2^E\) is called weakly sequentially upper hemi-continuous (w-seq uhc) iff for each \(\varphi \in E^*\) the function \(s(\varphi ,G(\cdot )):E\rightarrow {\mathbb R}\) is sequentially upper semicontinuous from \((E,\omega )\) into \({\mathbb R}\).
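It may help to see what this definition says in the single-valued case. The following is a sketch, with \(g : E \rightarrow E\) a hypothetical single-valued map introduced for illustration and \(G(x) = \{g(x)\}\). Then \(s(\varphi ,G(x)) = \varphi g(x)\), and applying the definition to both \(\varphi \) and \(-\varphi \) gives, for every sequence \(x_n \rightarrow x\) in \((E,\omega )\),

$$\begin{aligned} \limsup _{n \rightarrow \infty } \varphi g(x_n) \le \varphi g(x) \quad \text{ and } \quad \limsup _{n \rightarrow \infty } \left( -\varphi g(x_n)\right) \le -\varphi g(x), \quad \text{ hence } \quad \lim _{n \rightarrow \infty } \varphi g(x_n) = \varphi g(x). \end{aligned}$$

Thus, for single-valued maps, w-seq uhc is exactly weak–weak sequential continuity, which is the type of condition imposed on f in the single-valued setting.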

The idea of the Aumann–Pettis integral was introduced by Valadier, and we apply it to Hadamard-type integrals:

Definition 7

Let \(F:I \rightarrow cwk(E).\) The Hadamard-type fractional Aumann–Pettis integral (shortly (HAP) integral) of F of order \(\alpha >0\) is defined by

where \(S^{HAP}_F\) denotes the set of all Hadamard–Pettis integrable selections of F of order \(\alpha >0\) provided that this set is not empty.

As a consequence of Proposition 6 we get a nice characterization:

Proposition 8

Assume that E is separable. Let \(F:I \rightarrow cwk(E)\) be (HAP) integrable of order \(\alpha > 0\). If \(\psi \) is a submultiplicative N-function, then \(\{\varphi f : f \in S^{HAP}_F , \varphi \in E^*, \Vert \varphi \Vert \le 1 \}\) is relatively weakly compact in \(L_\psi (I)\).

A subset \(K \subset {\mathcal {H}}^\psi (E)\) is said to be (HAP) uniformly integrable of order \(\alpha >0\) on I if, for each \(\varepsilon > 0\), there exists \(\delta _{\varepsilon }> 0\) such that

Following the idea of the proof from [16, Theorem 5.4], we can state a condition for (HAP)-integrability:

Lemma 5

Let \(\alpha \in (0,1]\). Assume that \(\psi \) is a Young function satisfying \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) and its complementary Young function \(\widetilde{\psi }\) satisfies

Let \(F : I \rightarrow cwk(E)\) be a measurable and scalarly integrable multifunction. Then the following statements are equivalent:

- (a1)

every measurable selection of F is Hadamard–Pettis integrable of order \(\alpha > 0\),

- (a2)

for every measurable subset A of I the (HAP) integral \(I_A\) belongs to cwk(E) and, for every \(\varphi \in E^*\), one has

$$\begin{aligned} s(\varphi ,I_A) = \int _A \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{ s(\varphi ,F(s))}{s} \; ds. \end{aligned}$$

Proof

Fix arbitrary \(t \in I\) and define a new multifunction \(G : I \rightarrow cwk(E)\) by the following formula:

In the proof of Theorem 2 we showed that \(s \mapsto \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{1}{s} \in L_{\widetilde{\psi }}(I)\). Then, for any \(\varphi \in E^*\) and \(f \in L_{\psi }(I)\), by Proposition 5 we conclude that \(s \mapsto \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{\varphi f(s)}{s}\) is integrable and then \(s \mapsto \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{f(s)}{s}\) is Pettis integrable.

We obtain our thesis by applying Theorem 4.5 in [16] directly for the multifunction G. \(\square \)
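The weak singularity of the Hadamard kernel used above can be made explicit by an elementary computation. This is a sketch that assumes the usual normalization of the Hadamard integral with the factor \(1/\varGamma (\alpha )\). Substituting \(u = \log (t/s)\), so that \(du = -ds/s\), gives for \(t \in [1,e]\)

$$\begin{aligned} \int _1^t \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{ds}{s} = \int _0^{\log t} u^{\alpha -1} \, du = \frac{(\log t)^{\alpha }}{\alpha }, \qquad \frac{1}{\varGamma (\alpha )} \int _1^t \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{ds}{s} = \frac{(\log t)^{\alpha }}{\varGamma (\alpha +1)} \le \frac{1}{\varGamma (\alpha +1)}. \end{aligned}$$

So the kernel is integrable on [1, t] for every \(\alpha > 0\), although it is unbounded near \(s = t\) when \(\alpha < 1\); for instance, for \(\alpha = 1/2\) the first integral equals \(2 (\log t)^{1/2}\).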

In view of the Definition 7 we are able to apply Theorem 2 for any \(f \in S^{HAP}_F\) separately, then apply the above Lemma and we get a new characterization of (HAP) integrals:

Proposition 9

Let \(\alpha \in (0,1]\). Assume that \(\psi \) is a Young function satisfying \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) and its complementary Young function \(\widetilde{\psi }\) satisfies

Then for any (HAP) multifunction \(F:I \rightarrow cwk(E)\) with \(s(\varphi ,F(\cdot )) \in {\mathcal {H}}^\psi (E)\), for every \(\varphi \in E^*\) and any measurable subset A of I we get \((HAP)\int _A F(s) \; ds \subset cwk(C[I,E_\omega ])\).

Let us note that the set \(\mathbf {S}_{F}^{HAP}\) of all Hadamard–Pettis integrable selections of F is nonempty and sequentially compact for the topology of pointwise convergence on \(\mathbf {L}^{\infty }\otimes E^*\) and that, as in a classical case, the multivalued integral \( (HAP)\int _I F(s) \; ds\) is convex.

The most important question is how to verify assumption b) from our Theorem 3. We can apply the following:

Lemma 6

Let \(\alpha \in (0,1]\). Assume that \(\psi \) is a Young function satisfying \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) and its complementary Young function \(\widetilde{\psi }\) satisfies

Assume that E has separable dual \(E^*\). Let \(v \in C[I,E_\omega ]\), and let \(F:I\times E\rightarrow 2^E\) be such that:

- (i)

\(F(\cdot ,x)\) has a weakly measurable selection for each \(x\in E\),

- (ii)

\(F(t,\cdot )\) is w-seq. uhc for each \(t\in I\),

- (iii)

F(t, x) are nonempty, closed and convex,

- (iv)

\(F(t,x) \subset G(t)\) a.e., G has nonempty convex and weakly compact values and is (HAP) uniformly integrable of order \(\alpha >0\) on I.

Then there exists at least one selection z of \(F(\cdot ,v(\cdot ))\) which is Hadamard-type fractional Pettis integrable of order \(\alpha >0\).

Proof

By Lemma 3.2 in [11] we get the existence of a weakly measurable and Pettis integrable selection \(z_0\) of \(F(\cdot , v(\cdot ))\). Clearly, as E is separable, this function is strongly measurable too.

Moreover, again as in Lemma 5, for any \(\varphi \in E^*\) by Proposition 5 we conclude that \(s \mapsto \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{\varphi z_0(s)}{s}\) is integrable and then \(s \mapsto \left( \log \frac{t}{s}\right) ^{\alpha -1} \frac{z_0(s)}{s}\) is Pettis integrable, so \(z_0\) is Hadamard-type fractional Pettis integrable of order \(\alpha >0\). \(\square \)

For the weakly compact-valued case, by applying selection arguments and the above results we are able to treat a multivalued problem as a single-valued one, considered in the previous section. We get the following multivalued extension of our main theorem from Sect. 3:

Theorem 5

Assume that E has separable dual \(E^*\). Let \(\alpha \in (0,1]\). Assume that \(\psi \) is a Young function satisfying \(\lim _{u \rightarrow \infty }\frac{\psi (u)}{u}=\infty \) and its complementary Young function \(\widetilde{\psi }\) satisfies

Let \(F:[1,e] \times E \rightarrow cwk(E)\) be such that:

- a)

for each \(t \in [1,e]\), \(F(t,\cdot )\) is w-seq. uhc,

- b)

for each \(x \in C[[1,e],E_\omega ]\), \(F(\cdot ,x)\) has a weakly measurable selection f,

- c)

for a.e. \(t \in [1,e]\) and each \(x \in C[[1,e],E_\omega ]\), \(F(t,x) \subset G(t)\), where G has nonempty convex and weakly compact values and is (HAP) uniformly integrable of order \(\alpha >0\) on [1, e], and for any \(\varphi \in E^*\), \(\int _0^\infty \frac{dr}{s(\varphi , G(r))} = \infty \).

Then (28) has at least one solution in \(C[I,E_\omega ]\) satisfying \(x(1) + b x(e) = h\).

Proof

The proof follows the steps outlined in the demonstration of Theorem 3 for the multivalued operator \(T : C[I,E_\omega ] \rightarrow cwk(C[I,E_\omega ])\)

We need to prove the existence of a fixed point of T. To this end, we verify the hypotheses of the Kakutani–Ky Fan fixed point theorem [5].

Indeed, by Lemma 6, for any \(x \in C[[1,e],E_\omega ]\) there exists a selection \(z_x\) of \(F(\cdot ,x(\cdot ))\) which is Hadamard-type fractional Pettis integrable of order \(\alpha >0\), and then \(S^{HAP}_F\) is nonempty. As \(E^*\) is separable, so is E and hence any weakly measurable selection is strongly measurable. Thus by the assumption c) we get \(z_x \in {\mathcal {H}}^\psi (E)\). It follows that \((HAP)\int _1^t F(s,x(s)) \; ds \not = \emptyset \) and F is (HAP) integrable, so T is well-defined on \(C[I,E_\omega ]\) with nonempty values. As for any Hadamard–Pettis selection the values are in \(C[I,E_\omega ]\), we have \(T : C[I,E_\omega ] \rightarrow 2^{C[I,E_\omega ]}\). By applying Proposition 9, we obtain that T has weakly compact values, i.e., \(T(x) \in cwk(C[I,E_\omega ])\).

Let \( U=\{x_f \in C[I,E_\omega ] : x_f(t) = \mathfrak {J}^\alpha _1 f(t) , t\in I, f \in S^{HAP}_G\}\). By our assumptions, \(S^{HAP}_G\) is (HAP) uniformly integrable of order \(\alpha >0\).

Moreover, by Assumption b), for any \(f \in S^{HAP}_G\) and \(\varphi \in E^*\) we have \(\varphi f \le s(\varphi ,G)\) and so, for arbitrary \(x_f \in U\) and \(t, \tau \in I\) we have:

By using the estimate (16) we obtain

As G is (HAP) uniformly integrable, the value \(\sup _{\varphi \in B(E^*)} s(\varphi , G)\) is bounded, and then we conclude that U is an equicontinuous subset of \(C[I,E_\omega ]\).

We now show that U is closed. Take a sequence \(x_{f_n}(t) = \mathfrak {J}^\alpha _1 f_n(t)\) converging uniformly to a continuous function x; we prove that \(x\in {U}\). As \(S^{HAP}_G\) is sequentially compact for the topology induced by the tensor product \(L^{\infty } \otimes E^*\), there exists a subsequence \(f_{n_k}\) convergent with respect to the Pettis norm to a selection \(f \in S^{HAP}_G\). It implies that \(\mathfrak {J}^\alpha _1 f_{n_k}(t)\) weakly converges to \(\mathfrak {J}^\alpha _1 f(t)\), whence \(x(t) = \mathfrak {J}^\alpha _1 f(t)\) for all \(t\in I\), and so the set U is closed.

As G(t) are weakly compact for \(t \in I\), by Ascoli’s theorem, U is weakly compact in \(C[I,E_\omega ]\).

On the other hand, we have the following estimate:

As \(S_G^{HAP}\) is (HAP) uniformly integrable, there exists a constant \(N > 0\) such that \(\Vert (HAP) \int _{1}^e s(\varphi , G(s)) \; ds \Vert \le N\). Then for any \(t \in I\)

Therefore, for a solution of our problem we obtain an “a priori” estimate, namely \(\Vert x \Vert \le M\). Denote by \(B_M\) the ball \(\{ x \in C[I,E] : \Vert x \Vert \le M \}\) and let \(V = {U} \cap B_M\). It is a weakly compact and convex set. In the next part of the proof we restrict T to the set V. It is clear that \(T(V) \subset V\).

Now we are in a position to show that T has a weakly–weakly sequentially closed graph (therefore, it has a closed graph when \(C[I,E_\omega ]\) is endowed with the weak topology, as this topology is metrizable on weakly compact sets). Let \((x_n,y_n)\in Gr T,\quad (x_n,y_n)\rightarrow (x,y)\) in \(C[I,E_\omega ]\).

Recall that \(y_n\) is of the following form

Since \(f_n(t) \in {F(t,x_n(t))} \subset G(t)\) a.e. and \(S_{G}^{HAP}\) is nonempty, convex and is sequentially compact for the “weak” topology of pointwise convergence on \(L^{\infty } \otimes E^*\), we extract a subsequence \((f_{n_k})\) of \((f_n)\) such that \((f_{n_k})_k\) converges \(\sigma (P[I,E] ,L^{\infty } \otimes E^*)\) to a function \(f \in S^{HAP}_G\).

Fix an arbitrary \(\varphi \in E^*\). Since for any measurable \(A \subset I\) we have \(h(t) = \chi _A(t) \in L_{\infty }(I)\), and since \((f_{n_{k}})\) \(\sigma (P[I,E] , L^{\infty } \otimes E^*)\)-converges to \(f \in S^{HAP}_G\), for any \(t \in I\) we have

for each measurable \(A \subset I\). Thus \(y_{n_k}(t) = x_{n_k}(1) + \mathfrak {J}^\alpha _1 f_{n_k}(t)\) has the property that \(\varphi y_{n_k}(t)\) converges to \(\varphi (x(1) + \mathfrak {J}^\alpha _1 f(t))\), whence \(y(t)= x(1) + \mathfrak {J}^\alpha _1 f(t)\).

In order to have \((x,y) \in Graph \; T\) it remains to prove that \(f\in S_{F(\cdot ,x(\cdot ))}^{HAP}\). By [38, Lemma 12], there exists a convex combination \(h_k\) of elements of the sequence \(\{f_{n_m} : m\ge k\}\) that converges weakly to a measurable selection h of G. As the last sequence is (HAP) uniformly integrable, a consequence of a convergence theorem (cf. [25, Theorem 8.1]) is that the sequence of their integrals \((\mathfrak {J}^\alpha _1 h_{k}(t))_k\) weakly converges to \(\mathfrak {J}^\alpha _1 h(t)\). It follows that \(f = h\) a.e.

By the weak sequential upper hemi-continuity of \(F(t,\cdot )\) and the weak convergence of \(h_{k}(t)\) in E we obtain that \(h(t)\in F(t,x(t))\) a.e., therefore \(f \in S_{F(\cdot ,x(\cdot ))}^{HAP}\), and so T has a closed graph.

Thus, T satisfies the hypothesis of the Kakutani fixed point theorem and so, it has a fixed point. Moreover, the set of fixed points is compact in \(C[I,E_\omega ]\). \(\square \)

References

Agarwal, R., Lupulescu, V., O’Regan, D., ur Rahman, G.: Multi-term fractional differential equations in a nonreflexive Banach space. Adv. Differ. Equ. 2013, 302 (2013)

Agarwal, R., Lupulescu, V., O’Regan, D., ur Rahman, G.: Fractional calculus and fractional differential equations in nonreflexive Banach spaces. Commun. Nonlinear Sci. Numer. Simul. 20, 59–73 (2015)

Agarwal, R., Lupulescu, V., O’Regan, D., ur Rahman, G.: Nonlinear fractional differential equations in nonreflexive Banach spaces and fractional calculus. Adv. Differ. Equ. 2015, 112 (2015)

Agarwal, R., Lupulescu, V., O’Regan, D., ur Rahman, G.: Weak solutions for fractional differential equations in nonreflexive Banach spaces via Riemann-Pettis integrals. Math. Nachr. 289, 395–409 (2016)

Arino, O., Gautier, S., Penot, J.P.: A fixed point theorem for sequentially continuous mappings with application to ordinary differential equations. Funkcial. Ekvac. 27, 273–279 (1984)

Ball, J.M.: Weak continuity properties of mapping and semi-groups. Proc. R. Soc. Edinburgh Sect. A 72, 275–280 (1973–1974)

Barcenas, D., Finol, C.E.: On vector measures, uniform integrability and Orlicz spaces. Vector Measures, Integration and Related Topics, pp. 51–57. Basel, Birkhäuser (2009)

Calabuig, J.M., Rodríguez, J., Rueda, P., Sánchez-Pérez, E.A.: On \(p\)-Dunford integrable functions with values in Banach spaces. J. Math. Anal. Appl. 464, 806–822 (2018)

Castaing, Ch., Godet-Thobie, C., Truong, L.X., Mostefai, F.Z.: On a fractional differential inclusion in Banach space under weak compactness condition. Advances in Mathematical Economics, vol. 20, pp. 23–75. Springer, Singapore (2016)

Cichoń, M.: Differential inclusions and abstract control problems. Bull. Austral. Math. Soc. 53, 109–122 (1996)

Cichoń, K., Cichoń, M.: Some applications of nonabsolute integrals in the theory of differential inclusions in Banach spaces. Vector Measures, Integration and Related Topics, pp. 115–124. Basel, Birkhäuser (2009)

Cichoń, K., Cichoń, M., Satco, B.: Differential inclusions and multivalued integrals. Discuss. Math. Differ. Incl. Control Optim. 33, 171–191 (2013)

Cichoń, M.: Weak solutions of differential equations in Banach spaces. Discuss. Math. Differ. Incl. Control Optim. 15, 5–14 (1995)

De Blasi, F.S.: On the property of the unit sphere in a Banach space. Bull. Math. Soc. Sci. Math. Roumanie (N.S.) 21, 259–262 (1977)

Diestel, J., Uhl Jr., J.J.: Vector Measures, Mathematical Surveys 15. American Mathematical Society, Providence (1977)

El Amri, K., Hess, Ch.: On the Pettis integral of closed valued multifunctions. Set-Valued Anal. 8, 329–360 (2000)

Fu, X., Yang, D., Yuan, W.: Generalized fractional integrals and their commutators over nonhomogenous metric measure spaces. Taiwan. J. Math. 18, 509–557 (2014)

Gorenflo, R., Vessella, S.: Abel Integral Equations, Lecture Notes in Mathematics, vol. 1461. Springer, Berlin (1991)

Hille, E., Phillips, R.S.: Functional Analysis and Semi-Groups, vol. 31. American Mathematical Society, Providence (1957)

Hudzik, H.: Orlicz spaces of essentially bounded functions and Banach–Orlicz algebras. Arch. Math. (Basel) 44, 535–538 (1985)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Krasnoselskii, M.A., Rutitskii, I.B.: Convex Functions and Orlicz Spaces. Noordhoff, Groningen (1961)

Kubiaczyk, I.: On a fixed point theorem for weakly sequentially continuous mapping. Discuss. Math. Differ. Incl. Control Optim. 15, 15–20 (1995)

Mydlarczyk, W., Okrasiński, W.: On a generalization of the Osgood condition. J. Inequal. Appl. 5, 497–504 (2000)

Musiał, K.: Topics in the theory of Pettis integration. In: School of Measure theory and Real Analysis, Grado, Italy (1992)

Nakai, E.: On generalized fractional integrals in the Orlicz spaces on spaces of homogeneous type. Sci. Math. Jpn. 54, 473–487 (2001)

Naralenkov, K.: On Denjoy type extension of the Pettis integral. Czechoslovak Math. J. 60(135), 737–750 (2010)

O’Neil, R.: Fractional integration in Orlicz spaces I. Trans. Am. Math. Soc. 115, 300–328 (1965)

Pettis, B.J.: On integration in vector spaces. Trans. Am. Math. Soc. 44, 277–304 (1938)

Salem, H.A.H.: On functions without pseudo derivatives having fractional pseudo derivatives. Quaest. Math. (2018). https://doi.org/10.2989/16073606.2018.1523247

Salem, H.A.H., El-Sayed, A.M.A., Moustafa, O.L.: A note on the fractional calculus in Banach spaces. Stud. Sci. Math. Hungar. 42, 115–130 (2005)

Salem, H.A.H.: On the fractional order m-point boundary value problem in reflexive Banach spaces and weak topologies. J. Comput. Appl. Math. 224, 565–572 (2009)

Salem, H.A.H.: On the fractional calculus in abstract spaces and their applications to the Dirichlet-type problem of fractional order. Comput. Math. Appl. 59, 1278–1293 (2010)

Salem, H.A.H., Cichoń, M.: On solutions of fractional order boundary value problems with integral boundary conditions in Banach spaces. J. Funct. Sp. Appl. 2013, 13 (2013). (Article ID 428094)

Salem, H.A.H., Cichoń, M.: Second order three-point boundary value problems in abstract spaces. Acta Math. Appl. Sin. Engl. Ser. 30, 1131–1152 (2014)

Salem, H.A.H.: On the theory of fractional calculus in the Pettis-function spaces. J. Funct. Sp. (2018) (Article ID 8746148)

Salem, H.A.H.: Hadamard-type fractional calculus in Banach spaces. Rev. R. Acad. Cie. Exactas Fis. Nat. Ser. A Math. (2018). https://doi.org/10.1007/s13398-018-0531-y

Satco, B.: Volterra integral inclusions via Henstock-Kurzweil-Pettis integral. Discuss. Math. Differ. Incl. Control Optim. 26, 87–101 (2006)

Sawano, Y., Sugano, S., Tanaka, H.: Generalized fractional integral operators and fractional maximal operators in the framework of Morrey spaces. Trans. Am. Math. Soc. 363, 6481–6503 (2011)

Sihwaningrum, I., Maryani, S., Gunawan, H.: Weak type inequalities for fractional integral operators on generalized non-homogeneous Morrey spaces. Anal. Theory Appl. 28, 65–72 (2012)

Uhl Jr., J.J.: A characterization of strongly measurable Pettis integrable functions. Proc. Am. Math. Soc. 34, 425–427 (1972)

Yeong, L.T.: Henstock–Kurzweil integration on Euclidean Spaces, vol. 12. World Scientific Publishing Co. Pte. Ltd, Singapore (2011)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cichoń, M., Salem, H.A.H. On the solutions of Caputo–Hadamard Pettis-type fractional differential equations. RACSAM 113, 3031–3053 (2019). https://doi.org/10.1007/s13398-019-00671-y

DOI: https://doi.org/10.1007/s13398-019-00671-y