Abstract

How does the presence of a robot affect pedestrians and crowd dynamics, and does this influence vary across robot types? In this paper, we take a first step towards answering this question by performing a crowd-robot gate-crossing experiment. The study involved 28 participants and two distinct robot representatives: a smart wheelchair and a Pepper humanoid robot. The collected data include video recordings, robot and participant trajectories, and participants’ responses to post-interaction questionnaires. Quantitative analysis of the trajectories suggests that the robot affects crowd dynamics in terms of trajectory regularity and interaction complexity. Qualitative results indicate that pedestrians tend to be more conservative and follow “social rules” when passing a wheelchair than when passing a humanoid robot. These insights can inform the design of social navigation strategies that allow more natural interaction by accounting for the robot’s effect on crowd dynamics.

1 Introduction

The use of robots within pedestrian spaces is becoming increasingly common. Mobile robots of various shapes, sizes and functions have been deployed in many essential areas. For example, humanoid robots such as Pepper have been used in homes and public environments [25], autonomous vehicles are increasingly seen on the road, and smart wheelchairs have been developed and tested in clinical trials [3, 7].

In many of these environments, the robot must interact with pedestrians in a safe and, where appropriate, social way, which requires an understanding of how pedestrians respond to different robots. However, state-of-the-art approaches typically model pedestrian dynamics using simulation or data collected in human-only experiments [11, 13]. The few works that have explored pedestrian dynamics in robot-populated environments either studied a single type of robot or involved only a small number of pedestrians [6, 21, 38]. Although human perception of, and interaction with, different types of robots has been studied in many areas, it remains largely unexplored in crowd-robot navigation scenarios.

In this paper, we aim to understand whether and how pedestrian crowd dynamics change in the presence of a robot, and how this change depends on the robot type. To approach this problem, we selected two robots (a Pepper humanoid robot and a smart wheelchair) that have been widely used and researched, and that represent two distinct types of robots pedestrians are likely to encounter in daily life in the future. Although these two types of smart machines would operate in overlapping areas, they differ in appearance, physical characteristics and level of autonomy, which makes them interesting cases to study. Specifically, we conducted a crowd-robot gate-crossing experiment with these two robots and measured the effect each robot exerted on the crowd macroscopically (i.e. as one moving body of people) and microscopically on groups of individuals (i.e. pedestrians close to and far away from the robot). In addition, the pedestrians’ perception of the robot and their associated actions were assessed qualitatively using surveys.

Our study makes the following contributions:

1. The first controlled crowd-robot experiment with a recorded pedestrian trajectory dataset in the presence of a robot, which yields novel results.

2. An understanding of how pedestrian and crowd dynamics are affected by a robot. More specifically, we use both local and global metrics to further explore the effect of the robot’s motion on participants in closest proximity to the robot. This understanding can inform the design of more natural crowd prediction methods and more realistic crowd simulation scenarios.

3. An understanding of how the type of robot affects pedestrian behaviour, which highlights considerations for designing robot motion planning algorithms that also take into account the robot’s effect on the crowd.

2 Related Work

2.1 Pedestrian Robot Interaction

The study of human–robot interaction has been an emerging area over the past few years. People’s perception of and reaction to a robot differ from those towards another human, and are strongly affected by factors such as demographics [22], the appearance and size of the robot [5], the robot’s perceived likeability and aggressiveness [23], and personal experience with pets or robots [34]. Despite this wide range of studies, human–robot interaction in navigation remains relatively unexplored.

Recently, a small number of works have explored pedestrian-robot interaction and its effect on pedestrian behaviour. In general, these studies fall into two categories: uncontrolled data collection with natural pedestrians, and controlled experiments with recruited participants. Rothenbucher et al. (2016) studied the interaction between autonomous vehicles and road users such as pedestrians and cyclists in everyday road-crossing scenarios [32]. The vehicle was operated by a human driver but was disguised as autonomous by hiding the driver from other road users’ view. Results indicated that most people interacted smoothly with the vehicle, while a small minority hesitated to cross because of the apparent lack of a driver. Kidokoro et al. [16] investigated the influence of a humanoid robot on pedestrian comfort in a shopping mall and classified pedestrian behaviour towards the robot into four distinct types: stop to interact, stop to observe, slow down to look, and uninterested. Results showed that 31% of the 1115 observed pedestrians changed their behaviour by either slowing down or stopping when they encountered the robot.

Although these studies with natural pedestrians yielded valuable qualitative insights into high-level pedestrian-robot interaction, they are subject to various uncontrolled factors, such as scenario context, and thus offer limited flexibility. Other research has quantitatively explored the robot’s influence on pedestrian dynamics in a controlled environment. Chen et al. [6] studied pedestrian-robot interaction in a corridor-exiting experiment with 11 participants and 1 robot. Results indicated that pedestrians’ overall speed was affected by the presence of the robot. Vassallo et al. (2018) investigated a gate-crossing scenario between one pedestrian and one robot, where the robot was programmed to replicate the interaction rules of human walkers [37]. Their results indicated no difference in crossing order between the human–human and the human–robot cases. Interestingly, their previous study indicated that pedestrians tend to give way to a robot programmed to move passively at a constant speed [38]. Similarly, Mavrogiannis et al. [21] conducted a within-subjects study to investigate the effect of distinct robot navigation algorithms on pedestrians’ behaviour. In each trial, 3 participants interacted with a lab-built robot that was either teleoperated or equipped with one of two navigation algorithms. Results showed that the robot navigation algorithm had an effect on human acceleration.

These studies revealed the robot’s influence on pedestrian dynamics at a local level. However, most of the experiments were conducted with a single pedestrian or a small number of pedestrians, which limits their applicability to crowd-robot navigation scenarios. In addition, these works were concerned with one specific type of lab-made robot, which prevents the results from being generalized to other robots. This leads to a question: does the presence of a robot, and its type, affect crowd dynamics, and if so, how? In our work, we address this question by performing a Crowd-Robot-Interaction (CRI) experiment with two different types of robot, and we highlight the different effects these two robots have on crowd dynamics.

2.2 Crowd Prediction and Robot Navigation

A human is capable of navigating through crowds by predicting the motion trajectories of surrounding pedestrians and taking them into account when planning his or her own movement. To achieve safe and human-like robot navigation, it is crucial to mimic this decision-making process by taking pedestrians’ trajectories into consideration. Early efforts in this area include the ‘social force model’ [11], which used ‘attractive’ and ‘repulsive’ forces to model pedestrian-destination and pedestrian-obstacle interactions, respectively. Several extensions to this model have been proposed [14, 40]. Yamaguchi et al. [39] proposed taking the grouping behaviour, smoothness of movement and preferred speed of pedestrians into account. The main concern with these models is that hand-crafted rules may not faithfully reflect realistic human behaviour.
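To make the idea of attractive and repulsive forces concrete, the following Python sketch performs one semi-implicit Euler step of a simplified, circular social force model in which forces are expressed per unit mass. It is not the exact formulation of [11], which uses elliptical repulsive potentials; the function name `social_force_step` and all parameter values (`v0`, `tau`, `A`, `B`, `radius`, `dt`) are illustrative assumptions rather than calibrated values.

```python
import numpy as np


def social_force_step(pos, vel, goals, dt=0.08, v0=1.3, tau=0.5,
                      A=2.0, B=0.3, radius=0.3):
    """One semi-implicit Euler step of a simplified circular social force model.

    pos, vel, goals: float arrays of shape (N, 2) in metres and m/s.
    Forces are per unit mass, i.e. accelerations in m/s^2.
    All default parameter values are illustrative, not calibrated.
    """
    # Attractive (driving) term: relax towards the desired velocity v0 * e,
    # where e is the unit vector pointing at each pedestrian's destination.
    to_goal = goals - pos
    e = to_goal / np.linalg.norm(to_goal, axis=1, keepdims=True)
    acc = (v0 * e - vel) / tau
    # Repulsive term: decays exponentially with the gap between two
    # pedestrians modelled as discs of the given radius.
    n = len(pos)
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            diff = pos[i] - pos[j]          # points from j towards i
            dist = np.linalg.norm(diff)
            acc[i] += A * np.exp((2 * radius - dist) / B) * diff / dist
    new_vel = vel + acc * dt
    new_pos = pos + new_vel * dt  # position uses the updated velocity
    return new_pos, new_vel
```

The hand-crafted nature of such rules is visible here: every behaviour is governed by a few fixed constants, which is exactly the limitation that motivates the data-driven approaches discussed next.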

Data-driven approaches were then proposed to address this problem. They allow natural human–human interaction to be captured and learned directly from real-world data. Machine learning and deep learning methods such as Long Short-Term Memory (LSTM) networks [1] have been applied to predict individual trajectories, with pairwise pedestrian interactions learned via a social-pooling layer. Generative Adversarial Networks [9, 18] and Transformer models [8], among others, have also been proposed for this task. However, the majority of these models are trained and validated on human trajectory datasets that either contain only pedestrian trajectories (e.g. the ETH dataset [27], the UCY dataset [20] and the Grand Central dataset [42]) or contain other non-robot road users (e.g. the Stanford Drone dataset [31], which also contains trajectories of bikers, skateboarders, cars, buses and golf carts recorded on a university campus). Moreover, as reported in [2], many of these datasets only cover low-to-medium-density crowd activity. A model trained on such data may fail to make correct predictions in new situations.

State-of-the-art work also presents approaches that incorporate pedestrian trajectory prediction into robot navigation. Kerfs [15] adopted an improved version of social-LSTM to predict trajectory distributions and then used a dynamic A* algorithm for robot route planning. Similarly, Pradhan et al. [29] predicted pedestrians’ positions and used a potential-function-based path planner for robot navigation in crowds. These approaches achieved robot navigation in human-populated environments by considering human–human interaction and how human behaviour would affect the robot’s decisions, but ignored the potential influence the robot would exert on the pedestrians.

To account for this mutual effect, some works combined the prediction and planning processes. Trautman et al. [36] addressed this mutual interaction by reasoning about the robot’s and pedestrians’ future trajectories jointly. Their solution was evaluated on the ETH pedestrian dataset [27]. Similarly, Kuderer et al. [36] treated the navigation problem as joint planning for the robot and the pedestrians. Unlike Trautman et al., they learned features of natural pedestrian behaviour from their own lab-collected pedestrian data using the principle of maximum entropy [19]. However, the data were recorded without a robot present, and the authors pointed out that pedestrians may react differently to robots than to other humans.

While these advances in trajectory prediction and robot navigation are significant, they leave a gap: the suitability of pedestrian trajectory datasets for robot navigation, which requires consideration of the potential effect created by the robot, has not been validated. Closing this gap comes down to understanding the interaction between the crowd and the robot, which we aim to address in this study.

3 Method

3.1 Research Questions

Motivated by the results presented in the literature, we believe that before solving the social navigation problem, it is essential to understand whether pedestrian and crowd behaviour changes when a robot is present. In this paper, we address two main research questions and test the corresponding hypotheses:

Q1. How does the presence of a robot influence pedestrian and crowd dynamics?

H1. We hypothesize that the robot will affect crowd dynamics at both a global and a local level. Globally, we expect a longer evacuation time and a lower average speed and acceleration when a robot is added to the crowd. Locally, we expect the robot to have a stronger effect on pedestrians in close proximity to it in terms of trajectory regularity and interaction complexity.

Q2. If the robot does influence crowd behaviour, how does the response vary across the robot type?

H2. We hypothesize that pedestrians will behave differently towards different types of robot. In this study, we expect the crowd to adopt more conservative behaviour, by giving more space and giving way, towards the smart wheelchair than towards the humanoid robot.

3.2 Crowd-Robot Gate-Crossing Experiment

In order to answer these questions, we conducted a preliminary study on crowd-robot interaction through a controlled gate-crossing experiment. Ethics approval for the study was granted by UCL Interaction Centre (UCLIC) Ethics Committee (ref. UCLIC_1819_011), following a thorough review of the experiment protocol, participant recruitment plan, data protection plan and risk assessments.

3.2.1 Choice of Robot

While a number of related works investigated pedestrian-robot interaction using a lab-made, fully autonomous robot platform [6, 21], we decided to use one commercial humanoid robot (Pepper) and one smart wheelchair, as shown in Fig. 1. A smart wheelchair is normally built on a standard powered wheelchair and carries a collection of sensors for perception and navigation. It can be either operated autonomously or controlled collaboratively by a user and a motion planner. In this study, we are interested in the latter. With a shared-control wheelchair, the user expresses his or her driving intention through an interface (e.g. a joystick), and the wheelchair’s movement results from a negotiation between the user input and the motion planner. This provides people who have mobility impairments, and who are considered unsafe to drive a traditional wheelchair, with a safe mobility solution that still allows them to express their driving intentions.

There are two reasons for using these two robots. First, both robots are already prevalent in their areas, which should allow us to generalize the findings to a wider range of robots. As the world’s first social humanoid robot, over 10,000 Pepper units have been sold and adopted by companies in education and hospitality [26]. Although not yet commercialized, smart wheelchairs have been widely used in research and tested in clinical trials. Both types of robot can be expected to operate in crowds in the near future. Second, the similarities and differences between these robots make them an interesting case to study. Pepper is 120 cm tall, which is comparable to the height of a person sitting in a powered wheelchair. However, the fact that the smart wheelchair carries a person may greatly alter a pedestrian’s perception and thus produce effects that need to be explored. State-of-the-art research on human-aware navigation has mainly been designed for fully autonomous robots, whereas navigation strategies for semi-autonomous robots such as the shared-control wheelchair, where pedestrians can see a human driver, remain to be fully explored. Bingqing et al. [41] suggested that, in contrast to a standalone fully autonomous robot, a shared-control wheelchair requires consideration of an additional interaction channel between the wheelchair user and the surrounding pedestrians, on the assumption that a visible human driver affects pedestrians’ perceptions, and thus their walking behaviour, compared with a standalone humanoid robot. Consequently, we included the smart (shared-control) wheelchair and Pepper in our study, and took a first step towards collecting such interaction data.

It should be noted that in this study we do not consider the potential influence of different navigation algorithms on pedestrian dynamics. Instead, we assume the robots are equipped with human-level navigation strategies. Accordingly, a Wizard-of-Oz [30] method was adopted in our experiment: the wheelchair was driven by an expert driver and the humanoid robot was teleoperated by an experienced operator. Although the wheelchair was not actually shared-controlled by a user and a planner in this experiment, we believe the intended end result of shared control would be similar to the behaviour demonstrated by the expert driver. Therefore, although no path planner was used, the expert driver and the wheelchair together were treated as a special type of robot: a shared-control wheelchair.

3.2.2 Participants

Twenty-eight participants (15 females, 13 males) from different age groups (M = 33 years, SD = 8.8 years) were recruited from our university and a participant pool. None had a mobility, sight or hearing impairment. Participants were given a copy of the information sheet and time to sign the consent form before the experiment. To prevent potential bias, the actual purpose of the experiment was not revealed to them during the introduction.

3.2.3 Apparatus

The experiment took place in an indoor pedestrian accessibility lab, which allowed us to control multiple variables. The lab consists of a platform constructed from 6 × 10 movable modules, each measuring 1.2 m × 1.2 m. We used a 6 m × 12 m area of the platform and constructed our gate using movable panels.

3.2.4 Task

In contrast to other work in the literature, which studied the interaction between one robot and one pedestrian [38] or a small group of people [21], we designed a robot-in-crowd gate-crossing task. The gate-crossing task has been widely used to analyse crowd dynamics [35] in situations such as entering train stations and evacuations. During the experiment, each participant wore a coloured hat for detection and tracking purposes (see Fig. 2). In each run, the 28 participants and the robot were randomly assigned an initial starting position, each represented by a circle in Fig. 3. In addition, we ensured that the people assigned to starting positions near the “robot” were not the same in each run, so as to reduce learning effects and potential bias caused by individual behaviour. Furthermore, for a more valid comparison, participants kept their starting positions across scenarios. For example, the starting positions in (S2, run1) differ from those in (S2, run2) but are the same as in (S3, run1). All pedestrians were instructed to walk together from one side of the platform to the other, crossing through a 2.2 m wide gate (see Fig. 3).

A vocal command signalled the start of each trial, and a trial was complete when all pedestrians had crossed a destination line at the end of the platform. After each scenario, all participants filled in a short survey designed to let them reflect on their behaviour.

Overview of the experimental plan. Each blue square with the red number represents one module on the platform. Black segments represent the movable panels which were used to form a gate. Gray circles represent the pedestrians and the blue circle stands for the robot. Positions were randomly assigned. (Color figure online)

3.2.5 Experimental Scenarios

We designed 4 test scenarios with 3 independent variables: robot occurrence, robot type and robot speed (see Table 1). Because of the inherent differences in the robots’ speed capabilities, we set the low speed to approximately 0.5 m/s for both the wheelchair and Pepper, while the high speed for the wheelchair was about 1 m/s, which is comparable to normal pedestrian walking speed. Each scenario was repeated 5 times.

3.2.6 Recorded Data

The experiment was recorded by an overhead fisheye IP video camera (Axis M3037) at 12.5 fps. An additional IP video camera was set up at the side to observe pedestrian behaviour qualitatively. To calibrate the fisheye camera, we used the well-known chessboard method, collecting 22 shots while varying the location and orientation of the chessboard. We applied the Scaramuzza fisheye model [33], which introduces mapping coefficients between distorted and corrected images. We used the MATLAB camera calibrator toolbox to calculate the coefficients and then undistort the videos (see Fig. 4a). Next, we computed the homography matrix between the undistorted image plane and the ground plane by mapping the pixel coordinates of 4 corners of the platform to their world coordinates. This process compensated for the rotation of the camera with respect to the ground plane and produced top-view images suitable for object tracking (see Fig. 4b).
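The four-point ground-plane mapping described above can be sketched in Python with NumPy using the direct linear transform (DLT). This is not the MATLAB pipeline used in the study: the helper names are hypothetical, the undistortion step is assumed to have been applied already, and the pixel coordinates and the 12 m × 6 m rectangle in the usage example are made up for illustration.

```python
import numpy as np


def homography_from_points(src, dst):
    """Estimate H (3x3) such that dst ~ H @ [src, 1] using the DLT.

    src: (N, 2) pixel coordinates in the undistorted image, N >= 4.
    dst: (N, 2) corresponding ground-plane coordinates in metres.
    """
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    A = np.asarray(rows, dtype=float)
    # H is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    H = vt[-1].reshape(3, 3)
    return H / H[2, 2]


def apply_homography(H, pts):
    """Map (N, 2) points through H, including the perspective division."""
    pts_h = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = pts_h @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


if __name__ == "__main__":
    # Hypothetical pixel positions of four platform corners and their
    # positions on a hypothetical 12 m x 6 m ground rectangle.
    corners_px = np.array([[100.0, 50.0], [900.0, 60.0], [880.0, 620.0], [120.0, 600.0]])
    corners_m = np.array([[0.0, 0.0], [12.0, 0.0], [12.0, 6.0], [0.0, 6.0]])
    H = homography_from_points(corners_px, corners_m)
    print(apply_homography(H, np.array([[500.0, 330.0]])))
```

With exactly four correspondences the DLT system has an exact one-dimensional solution; with more points the same code returns a least-squares estimate, and normalizing the coordinates first (Hartley normalization) would improve numerical conditioning.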

We then used the open-source software PeTrack [4] to extract the pedestrian trajectories. ID switches and tracker drift were resolved using semi-automatic processes. Finally, we used separate linear functions to map the tracked head locations to the ground, assuming an average height of 170 cm for the humans and heights of 120 cm and 140 cm for Pepper and the wheelchair, respectively. To filter out high-frequency jerk from the trajectories, we applied a Kalman smoother with a constant-acceleration model, configured with \(dt=1/12.5\) s, \(q=10\) (process noise constant) and \(r=1\) (observation noise constant). In total, 20 trials (4 scenarios × 5 runs) with valid interactions between the robot and the crowd were performed, from which 546 pedestrian trajectories and the corresponding robot trajectories were extracted.
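The smoothing step can be sketched as a forward Kalman filter followed by a Rauch-Tung-Striebel (RTS) backward pass, applied independently to the x and y coordinates. The defaults mirror the reported dt = 1/12.5 s, q = 10 and r = 1, but the paper does not state how q enters the process-noise matrix: this sketch assumes piecewise-constant white-noise acceleration scaled by q, and the initial covariance `p0` is a hypothetical choice. The function names are likewise illustrative.

```python
import numpy as np


def constant_acceleration_model(dt, q):
    """1-D model with state [position, velocity, acceleration]."""
    F = np.array([[1.0, dt, 0.5 * dt**2],
                  [0.0, 1.0, dt],
                  [0.0, 0.0, 1.0]])
    # Assumption: piecewise-constant white-noise acceleration scaled by q.
    g = np.array([[0.5 * dt**2], [dt], [1.0]])
    Q = q * (g @ g.T)
    H = np.array([[1.0, 0.0, 0.0]])  # only the position is observed
    return F, Q, H


def rts_smooth_1d(z, dt=1 / 12.5, q=10.0, r=1.0, p0=10.0):
    """Forward Kalman filter + backward RTS pass on one coordinate axis."""
    z = np.asarray(z, dtype=float)
    F, Q, H = constant_acceleration_model(dt, q)
    R = np.array([[r]])
    x = np.array([z[0], 0.0, 0.0])
    P = np.eye(3) * p0  # hypothetical initial uncertainty
    x_pred, P_pred, x_filt, P_filt = [], [], [], []
    for k in range(len(z)):
        if k > 0:  # predict (the first sample only initialises the state)
            x = F @ x
            P = F @ P @ F.T + Q
        x_pred.append(x.copy())
        P_pred.append(P.copy())
        S = H @ P @ H.T + R              # innovation covariance
        K = P @ H.T @ np.linalg.inv(S)   # Kalman gain
        x = x + K @ (z[k:k + 1] - H @ x)
        P = (np.eye(3) - K @ H) @ P
        x_filt.append(x.copy())
        P_filt.append(P.copy())
    # Backward pass: correct each filtered state using the smoothed successor.
    x_smooth = [None] * len(z)
    x_smooth[-1] = x_filt[-1]
    for k in range(len(z) - 2, -1, -1):
        C = P_filt[k] @ F.T @ np.linalg.inv(P_pred[k + 1])
        x_smooth[k] = x_filt[k] + C @ (x_smooth[k + 1] - x_pred[k + 1])
    return np.array([s[0] for s in x_smooth])
```

For a 2-D trajectory `traj` of shape (T, 2), the smoothed result would be `np.column_stack([rts_smooth_1d(traj[:, 0]), rts_smooth_1d(traj[:, 1])])`. Unlike a pure forward filter, the backward pass uses future samples as well, so it does not introduce a lag.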

4 Analysis

To evaluate the effect of the robot on crowd dynamics at both the global and the local level, we analysed the extracted trajectories macroscopically and microscopically and report the results quantitatively. Macroscopic features describe high-level crowd characteristics, while microscopic features take individuals’ properties into account. We based the analysis on common metrics used in previous works [21, 28, 38] and grouped them into two categories: ‘trajectory regularity’ and ‘interaction complexity’.

4.1 Preprocessing

Before applying the metrics, we defined a region of interest (ROI) spanning from 2 m before the gate to 0.5 m after it, as we observed that most interaction happened in this region. In addition, for a more valid comparison, we split all trajectories extracted from the ROI into sub-trajectories with a fixed length of 10 frames (0.8 s at 12.5 fps).
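A minimal sketch of the ROI filtering and segmentation step follows. It assumes (all hypothetically) that trajectories are NumPy arrays of shape (T, 2) in metres, that pedestrians walk in the +x direction and that the gate lies at a known coordinate `gate_x`. Frames left over at the end of an ROI run that do not fill a complete 10-frame segment are simply discarded here; the paper does not say how such remainders were handled.

```python
import numpy as np

FPS = 12.5
SEGMENT_FRAMES = 10  # 10 frames / 12.5 fps = 0.8 s


def roi_subtrajectories(traj, gate_x, before=2.0, after=0.5,
                        seg_len=SEGMENT_FRAMES):
    """Split one trajectory (T, 2) into fixed-length sub-trajectories in the ROI.

    Assumes walking direction +x and a gate at x = gate_x. Leftover frames
    that do not fill a whole segment are dropped (an assumption).
    """
    x = traj[:, 0]
    in_roi = (x >= gate_x - before) & (x <= gate_x + after)
    segments = []
    start = None
    # Scan runs of consecutive in-ROI frames; the appended False closes the last run.
    for t, inside in enumerate(np.append(in_roi, False)):
        if inside and start is None:
            start = t
        elif not inside and start is not None:
            for s in range(start, t - seg_len + 1, seg_len):
                segments.append(traj[s:s + seg_len])
            start = None
    return segments
```

Splitting by consecutive runs, rather than by simply masking frames, avoids stitching together frames from a pedestrian who leaves and re-enters the ROI.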

4.2 Trajectory Regularity

We evaluated the geometrical and physical properties of the sub-trajectories to quantify their irregularity and deviation from simple linear trajectory models. For this purpose, we used three metrics: average speed, average acceleration and path efficiency. Path efficiency is normally calculated as the ratio of the distance between the two end points (\(\vec {x}_{start}\) and \(\vec {x}_{end}\)) of a trajectory segment to the actual length of the segment [21]. In our experiment, however, the gate itself inherently lowers pedestrians’ path efficiency. We addressed this by dividing sub-trajectories that cross the gate into ‘before gate’ and ‘after gate’ parts, introducing a sub-goal \(\vec {x}_{sub}\) as the point on the sub-trajectory at the gate. The path efficiency \(\eta \) of a sub-trajectory \(\mathbf{X}^k\) is then defined as:

\[\eta (\mathbf{X}^k) = \frac{\Vert \vec {x}_{sub}-\vec {x}_{start}\Vert + \Vert \vec {x}_{end}-\vec {x}_{sub}\Vert }{\sum _{t}\Vert \vec {x}_{t+1}-\vec {x}_{t}\Vert }\]
where the sum runs over consecutive samples from start to end; for sub-trajectories that do not cross the gate, the numerator reduces to \(\Vert \vec {x}_{end}-\vec {x}_{start}\Vert \).
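The path efficiency metric described above (straight-line distance, routed through the gate sub-goal when the segment crosses the gate, divided by the travelled path length) can be sketched as follows. The function name and the `sub_idx` argument, which selects the sample treated as the gate sub-goal, are hypothetical; with `sub_idx=None` the function returns the plain start-to-end ratio.

```python
import numpy as np


def path_efficiency(seg, sub_idx=None):
    """Path efficiency of a sub-trajectory seg of shape (T, 2).

    If sub_idx is given, the straight-line reference passes through the
    gate sub-goal seg[sub_idx]; otherwise it joins the two end points.
    """
    steps = np.linalg.norm(np.diff(seg, axis=0), axis=1)
    path_length = steps.sum()
    if path_length == 0:
        return float("nan")  # undefined for a stationary segment
    start, end = seg[0], seg[-1]
    if sub_idx is None:
        direct = np.linalg.norm(end - start)
    else:
        sub = seg[sub_idx]
        direct = np.linalg.norm(sub - start) + np.linalg.norm(end - sub)
    return direct / path_length
```

For an L-shaped segment (0, 0) → (1, 0) → (1, 1), the plain ratio is √2/2 ≈ 0.71, whereas routing the reference through the corner as a sub-goal gives 1.0, which illustrates why the gate would otherwise penalize pedestrians who simply walk through it.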

4.3 Interaction Complexity

While the above metrics reflect the sub-trajectory regularity and motion complexity of each pedestrian, they do not capture pedestrian–pedestrian and pedestrian–robot interactions. We therefore applied three further metrics to evaluate the interaction complexity in each scene: evacuation time, local density and pass order inversion.

Evacuation time has been used to assess crowd dynamics in emergency situations. Here, we define it as the time elapsed between the first and the last pedestrian passing the gate, normalized by the number of pedestrians.
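As a worked example of the normalized evacuation time, the sketch below assumes that each pedestrian’s gate-passing time is the first frame at which their x-coordinate reaches the gate line `gate_x` (walking in +x), with frames counted from the start of the trial. Both helper names are hypothetical. For four pedestrians passing the gate at 2 s, 3 s, 6 s and 10 s, the evacuation time per person is (10 − 2)/4 = 2 s.

```python
def gate_pass_time(xs, gate_x, fps=12.5):
    """Time (s) of the first frame whose x-coordinate reaches gate_x,
    or None if the gate line is never reached."""
    for frame, x in enumerate(xs):
        if x >= gate_x:
            return frame / fps
    return None


def evacuation_time_per_person(pass_times):
    """(last gate passage - first gate passage) / number of pedestrians.

    Pedestrians who never reached the gate (None) are excluded, so they
    are not counted in the normalization either.
    """
    times = [t for t in pass_times if t is not None]
    if not times:
        raise ValueError("no pedestrian passed the gate")
    return (max(times) - min(times)) / len(times)
```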

For local density, Helbing et al. [12] proposed a formula based on the idea that each person occupies an area of fixed radius. In this paper, we adopt the definition proposed by Plaue et al. [28], which uses a nearest-neighbour Gaussian kernel estimator and thus accounts for differences in the area occupied by each pedestrian. For a point \(x_t\), the local density \(p(x_t)\) is defined as

\[p(x_t) = \frac{1}{2\pi }\sum _{i=1}^{K_t}\frac{1}{(\lambda d_t^i)^2}\exp \left( -\frac{\Vert \vec {x}^i_t-x_t\Vert ^2}{2(\lambda d_t^i)^2}\right) \]
where \(t\) is the time, \(K_t\) is the total number of pedestrians at time \(t\), \(d_t^i = \min _{j\ne i}\Vert \vec {x}^j_{t}-\vec {x}^i_t\Vert \) is the Euclidean distance from agent \(i\) to its nearest neighbour, and \(\lambda >0\) is a smoothing parameter. In this paper, we use the average local density of each sub-trajectory as an indicator.

In daily life, humans routinely adapt to others when there is a risk of collision. To analyse whether such adaptation occurs in human–robot navigation and how it differs across robot types, we used a signed definition of the minimal predicted distance (SMPD) [38]. As detailed in [24], the minimal predicted distance (MPD) estimates the risk of future collision as the distance at closest approach (DCA) between the robot and the pedestrian at each time step, assuming both keep a constant velocity:

\[\mathrm{MPD}(t) = \min _{u \ge t}\Vert X_{{\textit{pred}},h}(t,u)-X_{{\textit{pred}},r}(t,u)\Vert \]
where \(u\) is a future time parameter, and \(X_{{\textit{pred}},h}(t,u)\) and \(X_{{\textit{pred}},r}(t,u)\) are the future positions of the human and the robot extrapolated from their positions and velocities at time \(t\). Adding a sign to this metric indicates whether the robot or the pedestrian is predicted to be ahead. In our study, we define \(t_{{\textit{enter}}}\) as the time when the robot entered the ROI and \(t_{{\textit{pass}}}\) as the time when either the pedestrian or the robot passed the gate. We then computed \(\hbox {SMPD}(t_{{\textit{enter}}})\) and \(\hbox {SMPD}(t_{{\textit{pass}}})\) for each pedestrian-robot pair.
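Returning to the local density metric, the nearest-neighbour Gaussian kernel estimator of Plaue et al. [28] described above can be sketched for a single time step as follows. Each agent contributes a normalized Gaussian whose width is λ times its nearest-neighbour distance, so agents in crowded areas produce narrow, tall kernels. The function name and the default λ = 1 are illustrative assumptions, not values reported in the paper.

```python
import numpy as np


def local_density(x, positions, lam=1.0):
    """Nearest-neighbour Gaussian kernel density at point x (units: 1/m^2).

    x: (2,) evaluation point; positions: (K, 2) agent positions at one
    time step, K >= 2; lam: smoothing parameter lambda > 0.
    """
    diffs = positions[:, None, :] - positions[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)
    np.fill_diagonal(dists, np.inf)          # ignore self-distances
    d = dists.min(axis=1)                    # nearest-neighbour distance per agent
    sigma2 = (lam * d) ** 2                  # per-agent kernel variance
    sq = np.sum((positions - x) ** 2, axis=1)
    return float(np.sum(np.exp(-sq / (2 * sigma2)) / (2 * np.pi * sigma2)))
```

Because each kernel integrates to one over the plane, the density field integrates to the number of agents; the per-sub-trajectory indicator would then be the mean of `local_density(traj[t], positions_at_t)` over the frames of the segment.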

Given the limits of human perception, we only considered pedestrians who were behind the robot at \(t_{{\textit{enter}}}\). SMPD is positive if the robot is predicted to pass first and negative otherwise. A change in the sign of SMPD therefore means that the predicted crossing order between the robot and the participant has switched, which implies an adaptation of the pass order. We define four pass-order groups based on the sign of SMPD at \(t_{{\textit{enter}}}\) and \(t_{{\textit{pass}}}\): PosPos, NegNeg, PosNeg and NegPos. ‘PosPos’ and ‘NegNeg’ correspond to pedestrians who keep their pass order, ‘PosNeg’ to pedestrians who overtake the robot, and ‘NegPos’ to pedestrians who give way to the robot.
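The SMPD computation and the pass-order grouping can be sketched as below, with MPD obtained from the constant-velocity closest-approach rule described above. The paper does not spell out how the sign is obtained in the gate scenario, so this sketch assumes the sign is set by which agent is predicted, at constant velocity, to reach the gate line `x = gate_x` first (walking in +x). All names are hypothetical.

```python
import numpy as np


def time_to_gate(p, v, gate_x):
    """Constant-velocity time for a point to reach the line x = gate_x."""
    if p[0] >= gate_x:
        return 0.0
    if v[0] <= 0:
        return np.inf
    return (gate_x - p[0]) / v[0]


def smpd(p_h, v_h, p_r, v_r, gate_x):
    """Signed minimal predicted distance between a human (h) and the robot (r)."""
    p_h, v_h, p_r, v_r = (np.asarray(a, dtype=float) for a in (p_h, v_h, p_r, v_r))
    dp, dv = p_h - p_r, v_h - v_r
    dv2 = dv @ dv
    # Future offset u >= 0 at which the extrapolated agents are closest.
    u = 0.0 if dv2 == 0 else max(0.0, -(dp @ dv) / dv2)
    mpd = float(np.linalg.norm(dp + u * dv))
    # Assumed sign rule: positive if the robot is predicted to reach the gate first.
    robot_first = time_to_gate(p_r, v_r, gate_x) < time_to_gate(p_h, v_h, gate_x)
    return mpd if robot_first else -mpd


def pass_order_group(smpd_enter, smpd_pass):
    """Label a pedestrian-robot pair from the SMPD signs at t_enter and t_pass."""
    first = "Pos" if smpd_enter > 0 else "Neg"
    second = "Pos" if smpd_pass > 0 else "Neg"
    return first + second


MEANING = {"PosPos": "keeps order", "NegNeg": "keeps order",
           "PosNeg": "overtakes robot", "NegPos": "gives way to robot"}
```

For example, a pedestrian 1 m behind the robot walking at the same speed yields a positive SMPD; if the same pedestrian later speeds up to 2 m/s, the predicted gate arrival order flips and the pair is labelled ‘PosNeg’ (overtaking).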

4.4 Statistics

To assess the effect of the robot and whether it varies with robot type, we made detailed comparisons within and across scenarios. Normality was assessed with the Kolmogorov–Smirnov test, which indicated non-Gaussian distributions for some metrics. We therefore used Wilcoxon rank-sum tests to determine differences and their significance. All effects are reported at \(p<0.05\), and significant differences are marked with ‘*’ (\(p<0.05\)) in all figures.
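For readers reproducing the statistics without a statistics library, the following self-contained sketch implements a two-sided Wilcoxon rank-sum test using the large-sample normal approximation and average ranks for ties (without a tie correction of the variance). In practice, SciPy users would typically call `scipy.stats.ranksums`; the function name `rank_sum_test` and the sample values in the usage check are made up for illustration.

```python
import math


def rank_sum_test(a, b):
    """Two-sided Wilcoxon rank-sum test (normal approximation).

    Returns (z, p). Ties receive average ranks; the variance is not
    corrected for ties.
    """
    pooled = sorted([(v, 0) for v in a] + [(v, 1) for v in b])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        # Find the run of tied values starting at position i.
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = avg
        i = j + 1
    n1, n2 = len(a), len(b)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean = n1 * (n1 + n2 + 1) / 2
    sd = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2))  # = 2 * (1 - Phi(|z|))
    return z, p
```

The rank-based statistic makes no normality assumption, which is why it is appropriate once the Kolmogorov–Smirnov test rejects normality for some metrics.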

5 Results

5.1 Quantitative Result

5.1.1 Average Speed and Average Acceleration

Overall, our results indicate a significant difference between the human-only case (S1) and human–robot case (S2, S3, S4) in terms of average pedestrian speed and acceleration.

To further investigate local effects, we grouped all pedestrian sub-trajectories into two categories based on their spatial relationship to the robot. Following Hall’s theory of personal space [10], we evaluated each pedestrian’s distance to the robot at each time stamp. A pedestrian sub-trajectory was considered to be in proximity to the robot only if its median Euclidean distance to the robot was smaller than the robot’s close social distance (< 2.1 m). This gives 819 pedestrian sub-trajectory segments near the robot and 1067 far away from it.
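The near/far classification can be sketched as below, assuming the pedestrian and robot sub-trajectories are time-aligned arrays of shape (T, 2) in metres. The 2.1 m threshold is the one stated above; the function and constant names are hypothetical.

```python
import numpy as np

CLOSE_SOCIAL_DISTANCE = 2.1  # m, outer edge of Hall's close social distance


def is_near_robot(ped_seg, robot_seg, threshold=CLOSE_SOCIAL_DISTANCE):
    """True if the median pedestrian-robot distance over the segment is below threshold."""
    dists = np.linalg.norm(ped_seg - robot_seg, axis=1)
    return bool(np.median(dists) < threshold)
```

Using the median rather than the minimum distance keeps a single close frame from reclassifying a pedestrian who spends most of the segment far from the robot.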

Figure 5 depicts the average pedestrian speed categorized by proximity to the robot, with the average value in S1 used as the baseline. In most scenarios (except S2_farSpeed), the average speed of pedestrians both near and far away from the robot differs significantly from the baseline (S1, no robot). Additionally, within the same scenario, pedestrians’ average speed differs markedly depending on their proximity to the wheelchair (S2, S3) or Pepper (S4). For average pedestrian acceleration, similar differences exist between the no-robot and robot cases, while the local effect is less pronounced.

5.1.2 Path Efficiency

In general, path efficiency was high (> 85%) in all scenarios. When comparing the path efficiency of sub-trajectories by proximity to the robot within the same scenario, pedestrians close to the robot in S3 and S4 have slightly lower path efficiency than those far away from the robot.

Average pedestrian speed categorized by proximity to the robot. ‘N’ and ‘F’ denote the speed of pedestrians near and far away from the robot, respectively. Pedestrian speeds in the robot scenarios are significantly different from those in the no-robot scenario (S1). Within the same scenario, pedestrians in close proximity to the robot have a lower speed than pedestrians far away from the robot.

5.1.3 Evacuation Time

Figure 6 shows the evacuation time per person for all four scenarios. Comparing S1 (no robot) with S2–S4 (robot), we observe that the crowd evacuation time increased significantly (\(p<0.05\)) when a wheelchair or Pepper was involved. In addition, a significant difference is observed between the wheelchair and Pepper cases regardless of the robot speed.

5.1.4 Local Density

Figure 7 illustrates the average local density of each pedestrian or robot sub-trajectory. Significant differences can be observed between the no-robot (S1) and robot (S2–S4) cases, as well as between the wheelchair (S2, S3) and Pepper (S4) cases. When the wheelchair was involved, its speed also influenced the average pedestrian local density. As for the robots themselves, the local density around Pepper is significantly higher than that around the wheelchair.

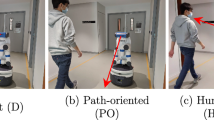

5.1.5 Pass Order

Figure 8 summarizes the human–robot pass order in all scenarios based on the SMPD analysis. About 50% of pedestrians kept their pass order when walking with a wheelchair or Pepper. Among the pedestrians who adapted their behaviour, fewer than 20% overtook the wheelchair, whereas over 80% overtook Pepper. Conversely, fewer than 20% of these pedestrians gave way to Pepper, while over 80% let the wheelchair pass first, regardless of the wheelchair speed setting. This behavioural difference is visualized in Fig. 9.

5.2 Qualitative Result

After each scenario, participants were asked to fill in a short survey. First, we asked participants whether they had noticed the wheelchair or the humanoid robot during the last scenario. Among the participants who acknowledged the presence of the ‘robot’, most responded that they looked at the robot before passing the gate (44% wheelchair, 49% Pepper) or while passing the gate (24% wheelchair, 17% Pepper). This result is consistent with our definition of the region of interest (the gate area from 2 m before to 0.5 m after the gate), which assumes that most human–robot interaction happens in that region. We further asked pedestrians to reflect on their behaviour.

In Fig. 10, a noticeable difference in pedestrian walking behaviour can be observed between the wheelchair case and the humanoid robot case. Specifically, 68% of pedestrians reported that they had adapted their behaviour when they noticed the wheelchair, either by giving more space (50%) or by deviating from their planned route (18%). In the humanoid robot case, although more than half of the pedestrians (52%) reported a change in behaviour, the change was less pronounced than in the wheelchair case.

Figure 11 depicts pedestrians’ perception of their own collision avoidance behaviour. 73% of pedestrians thought they avoided the wheelchair in the same way as they would avoid a person, while more than half (54%) admitted that they avoided the humanoid robot differently from how they would avoid a person. This difference may be explained by pedestrians’ perception of the robotic wheelchair being affected by the presence of a human driver and by their prior mental model of a wheelchair, which in turn affected their behaviour.

Q1: If you have noticed the humanoid robot/wheelchair and the person on it, did that change your behaviour comparing to that in a scenario without the humanoid robot/wheelchair? Change in avoidance behaviour. In both cases, more than 50% of participants reported that they adapted their walking behaviour to avoid the robot. People gave more space to the wheelchair than to Pepper.

6 Discussion

We studied how crowd dynamics, in terms of trajectory regularity and interaction complexity, are affected by the presence of a wheelchair or a Pepper humanoid robot. Overall, we find that both H1 and H2 are confirmed.

In terms of H1, our results indicate that the presence of a robot does affect crowd dynamics. In the quantitative results, the global influence on trajectory regularity is mainly reflected in lower average walking speed and average acceleration, while average path efficiency was less affected. This result is consistent with a previous study [6], in which the authors showed that a moving robot slows down a unidirectional flow. In addition, significant disparities were observed across the scenarios in terms of interaction complexity. Temporally, the evacuation time per person increased significantly when a wheelchair or Pepper was involved. Spatially, the average pedestrian local density in S1 was higher than in S2, S3 and S4. Furthermore, pedestrians’ local density decreases as the local density around the robot increases. These findings suggest that pedestrians adapted their trajectories in response to the presence of a robot, which is further supported by the qualitative results, in which pedestrians reported changes in both their perception and their walking behaviour. At a local level, when we categorized the sub-trajectories by their spatial relationship to the robot, we observed significant differences between the ‘near the robot’ and ‘far away from the robot’ groups in terms of average speed and path efficiency: pedestrians near the robot tended to move more slowly and with lower path efficiency than those far away from it. However, no significant effect of robot speed on these local results was observed.
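The near/far comparison above relies on per-sub-trajectory average speed and path efficiency. A minimal sketch follows, assuming path efficiency is the straight-line displacement divided by the travelled path length (a common definition, not confirmed here) and a hypothetical 1.5 m threshold for the ‘near the robot’ group; the paper's actual grouping rule may differ.

```python
import numpy as np

def path_efficiency(traj):
    """Straight-line displacement / travelled path length (1.0 = perfectly straight)."""
    p = np.asarray(traj, dtype=float)
    length = np.linalg.norm(np.diff(p, axis=0), axis=1).sum()
    # A stationary trajectory is treated as perfectly efficient by convention.
    return float(np.linalg.norm(p[-1] - p[0]) / length) if length > 0 else 1.0

def average_speed(traj, dt):
    """Mean step length divided by the sampling interval dt (m/s)."""
    p = np.asarray(traj, dtype=float)
    return float(np.linalg.norm(np.diff(p, axis=0), axis=1).mean() / dt)

def is_near_robot(traj, robot_traj, threshold=1.5):
    """Hypothetical grouping: 'near' if the pedestrian ever comes within
    `threshold` metres of the robot at the same time step (time-aligned arrays)."""
    d = np.linalg.norm(np.asarray(traj, float) - np.asarray(robot_traj, float), axis=1)
    return bool(d.min() <= threshold)
```

Splitting sub-trajectories with `is_near_robot` and comparing the two groups' `average_speed` and `path_efficiency` mirrors the structure of the local-level analysis, under these assumed definitions.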

In terms of H2, we observed an effect of robot type on crowd dynamics. The crowd also tended to take longer to evacuate with Pepper (S4) than with the wheelchair (S2, S3). Looking at how individuals adapted their behaviour, the pass-order results indicated that most pedestrians who adapted did so by overtaking Pepper, while giving way to the wheelchair. Compared with a previous study in which pedestrians gave way to a passively moving robot [38], our results suggest that this behaviour changes with robot type. Although further investigation of pedestrian factors would help us better understand this behaviour, the difference can be explained by the idea that pedestrians’ perception of the robotic wheelchair is affected by the presence of a human driver and by their prior mental model of a wheelchair, especially when the person appears to be mobility impaired. This leads them to follow ‘social rules’ and change their behaviour accordingly, whereas no such rule was observed when the overtaking target was a humanoid robot. Spatially, robot type also affects pedestrians’ local density: looking at the robots themselves, the wheelchair was surrounded by fewer people than Pepper. This finding is further supported by our survey results shown in Fig. 10, where more pedestrians were aware that they ‘gave more space’ to the wheelchair than to the humanoid robot. These results suggest that pedestrians’ local adaptive behaviour and the overall crowd interaction are affected by the presence of a robot, and that this effect varies across the two tested robot platforms.

Our experiment was conducted with two specific robots, but we believe these results could generalize to other robots: the two platforms are good representatives of mobile robots and cover a wide range of differences in appearance, size, and dynamic constraints. In addition, because the wheelchair introduces the distinct factor of a “human driver”, our results could also inform the design of navigation strategies for shared-control robots.

Q2: Did you treat avoiding the humanoid robot or wheelchair any differently to how you would have avoided a person? Difference in avoidance behaviour in human-only and human–robot environments. Over 70% of participants thought they avoided the wheelchair in the same way as they would avoid a person, whereas only about 40% did so in the Pepper case.

Therefore, we draw the following recommendations from our study:

1. It is important to consider the effect a robot exerts on surrounding pedestrians when planning its next motion; that is, prediction and planning should be considered jointly to capture natural interaction in complex environments.

2. To achieve social robot navigation with data-driven methods, pedestrian data recorded in environments without robots may be insufficient; it is preferable to obtain pedestrian data in which the specific robot is involved, as both the presence and the type of robot affect pedestrians’ trajectory regularity and crowd interaction complexity. By doing so, more natural social navigation could potentially be achieved, although generalization would need to be explored further. For example, Kim and Pineau (2016) approached social navigation with inverse reinforcement learning, learning a cost function from data collected while a human demonstrator drove a smart wheelchair through crowds [17]. This allows the wheelchair to navigate in a socially adaptive way, but such a cost function may not generalize well to other types of robot, as the crowd dynamics may be affected by the robot itself (and by the driver who accompanies it). On the other hand, if differences in pedestrian dynamics can be modelled in a crowd simulator, more realistic interactions between pedestrian crowds and a specific type of robot could be simulated, providing a powerful tool for validating the developed navigation algorithms.

3. Social navigation strategies should potentially be developed differently for a shared-control robot and for a fully autonomous humanoid robot. In this study, we did not separate “the driver” from “the robotic wheelchair” to isolate the influence of a human driver on pedestrians’ perception and behaviour; rather, we considered the natural use case in which the “wheelchair + driver” system is seen as a whole. In this experiment, the user did not actively interact with surrounding pedestrians but focused solely on the navigation task. In the future, it would be interesting to explore user–pedestrian interaction, such as a wheelchair user approaching a friend while navigating through a crowd.

Although the results obtained from this experiment may be limited to certain design factors, we believe this work is an important first step towards a better understanding of crowd–robot interaction in navigation.

7 Conclusion

In this paper, we presented the first crowd–robot crossing experiment, together with a trajectory dataset collected in the presence of two representative robots: a smart wheelchair and a Pepper humanoid robot. Quantitative analysis suggests that the presence of the wheelchair and Pepper affects crowd dynamics both locally and globally, and that this influence varies with robot type. In general, the effect is reflected in individual trajectory regularity and in interaction complexity. Qualitative results further support the idea that pedestrians behave more conservatively around the wheelchair than around Pepper, potentially due to the perceived presence of a human driver. These results suggest that the influence of the robot on crowds should be taken into account when designing pedestrian models for simulation and navigation strategies for different kinds of robot.

In the future, this work could be extended to explore the effect of different types of robot on pedestrian dynamics in bidirectional or more complex scenarios. In addition, human factors such as age, gender, and familiarity with robots, which could affect crowd dynamics in social navigation, could be investigated further.

Data availability

All trajectory data can be found at: https://github.com/akaimody123/PAMELA_dataset

References

Alahi A, Goel K, Ramanathan V, Robicquet A, Fei-Fei L, Savarese S (2016) Social LSTM: human trajectory prediction in crowded spaces. In: 2016 IEEE conference on computer vision and pattern recognition (CVPR), pp 961–971

Amirian J, Zhang B, Castro FV, Baldelomar JJ, Hayet JB, Pettre J (2020) OpenTraj: assessing prediction complexity in human trajectories datasets. In: Proceedings of the Asian conference on computer vision (ACCV)

Babel M, Pasteau F, Guégan S, Gallien P, Nicolas B, Fraudet B, Achille-Fauveau S, Guillard D (2015) Handiviz project: clinical validation of a driving assistance for electrical wheelchair. In: 2015 IEEE international workshop on advanced robotics and its social impacts (ARSO). IEEE, pp 1–6

Boltes M, Seyfried A (2013) Collecting pedestrian trajectories. Neurocomputing 100:127–133. https://doi.org/10.1016/j.neucom.2012.01.036

Butler J, Agah A (2001) Psychological effects of behavior patterns of a mobile personal robot. Auton Robots 10:185–202. https://doi.org/10.1023/A:1008986004181

Chen Z, Jiang C, Guo Y (2018) Pedestrian-robot interaction experiments in an exit corridor. In: 2018 15th international conference on ubiquitous robots (UR), pp 29–34

Fehr L, Langbein WE, Skaar SB (2000) Adequacy of power wheelchair control interfaces for persons with severe disabilities: a clinical survey. J Rehabil Res Dev 37(3):353–360

Giuliari F, Hasan I, Cristani M, Galasso F (2020) Transformer networks for trajectory forecasting. arXiv:2003.08111

Gupta A, Johnson J, Fei-Fei L, Savarese S, Alahi A (2018) Social GAN: socially acceptable trajectories with generative adversarial networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition (CVPR)

Hall ET (1963) A system for the notation of proxemic behavior. Am Anthropol 65(5):1003–1026. https://doi.org/10.1525/aa.1963.65.5.02a00020

Helbing D, Molnár P (1995) Social force model for pedestrian dynamics. Phys Rev E 51:4282–4286. https://doi.org/10.1103/PhysRevE.51.4282

Helbing D, Johansson A, Al-Abideen H (2007) Dynamics of crowd disasters: an empirical study. Phys Rev E Stat Nonlinear Soft Matter Phys 75:046109. https://doi.org/10.1103/PhysRevE.75.046109

Hoogendoorn SP, Daamen W (2005) Pedestrian behavior at bottlenecks. Transp Sci 39(2):147–159. https://doi.org/10.1287/trsc.1040.0102

Karamouzas I, Heil P, van Beek P, Overmars MH (2009) A predictive collision avoidance model for pedestrian simulation. In: Egges A, Geraerts R, Overmars M (eds) Motion in games. Springer, Berlin, pp 41–52

Kerfs J (2017) Models for pedestrian trajectory prediction and navigation in dynamic environments. Master’s thesis, California Polytechnic State University, San Luis Obispo

Kidokoro H, Kanda T, Brščic D, Shiomi M (2013) Will I bother here? A robot anticipating its influence on pedestrian walking comfort. IEEE Press, New York

Kim B, Pineau J (2016) Socially adaptive path planning in human environments using inverse reinforcement learning. Int J Soc Robot 8(1):51–66

Kosaraju V, Sadeghian A, Martín-Martín R, Reid I, Rezatofighi SH, Savarese S (2019) Social-BiGAT: multimodal trajectory forecasting using Bicycle-GAN and graph attention networks. In: NeurIPS

Kuderer M, Kretzschmar H, Sprunk C, Burgard W (2012) Feature-based prediction of trajectories for socially compliant navigation. https://doi.org/10.15607/RSS.2012.VIII.025

Lerner A, Chrysanthou Y, Lischinski D (2007) Crowds by example. Comput Graph Forum 26(3):655–664. https://doi.org/10.1111/j.1467-8659.2007.01089.x

Mavrogiannis C, Hutchinson AM, Macdonald J, Alves-Oliveira P, Knepper RA (2019) Effects of distinct robot navigation strategies on human behavior in a crowded environment. In: 2019 14th ACM/IEEE international conference on human–robot interaction (HRI). IEEE, pp 421–430

May D, Holler K, Bethel C, Strawderman L, Carruth D, Usher J (2017) Survey of factors for the prediction of human comfort with a non-anthropomorphic robot in public spaces. Int J Soc Robot. https://doi.org/10.1007/s12369-016-0390-7

Mumm J, Mutlu B (2011) Human–robot proxemics: physical and psychological distancing in human-robot interaction. In: 2011 6th ACM/IEEE international conference on human–robot interaction (HRI), pp 331–338

Olivier AH, Marin A, Crétual A, Pettré J (2012) Minimal predicted distance: a common metric for collision avoidance during pairwise interactions between walkers. Gait Posture 36(3):399–404. https://doi.org/10.1016/j.gaitpost.2012.03.021

Pandey AK, Gelin R (2018a) A mass-produced sociable humanoid robot: Pepper: the first machine of its kind. IEEE Robot Autom Mag 25(3):40–48

Pandey AK, Gelin R (2018b) A mass-produced sociable humanoid robot: Pepper: the first machine of its kind. IEEE Robot Autom Mag. https://doi.org/10.1109/MRA.2018.2833157

Pellegrini S, Ess A, Van Gool L (2009) You’ll never walk alone: modeling social behavior for multi-target tracking. pp 261–268, https://doi.org/10.1109/ICCV.2009.5459260

Plaue M, Chen M, Bärwolff G, Schwandt H (2011) Trajectory extraction and density analysis of intersecting pedestrian flows from video recordings. In: Stilla U, Rottensteiner F, Mayer H, Jutzi B, Butenuth M (eds) Photogramm Image Anal. Springer, Berlin, pp 285–296

Pradhan N, Burg T, Birchfield S (2011) Robot crowd navigation using predictive position fields in the potential function framework. In: Proceedings of the 2011 American control conference, pp 4628–4633

Riek LD (2012) Wizard of Oz studies in HRI: a systematic review and new reporting guidelines. J Hum Robot Interact 1(1):119–136. https://doi.org/10.5898/JHRI.1.1.Riek

Robicquet A, Sadeghian A, Alahi A, Savarese S (2016) Learning social etiquette: human trajectory understanding in crowded scenes. In: ECCV

Rothenbücher D, Li J, Sirkin D, Mok B, Ju W (2016) Ghost driver: a field study investigating the interaction between pedestrians and driverless vehicles. In: 2016 25th IEEE international symposium on robot and human interactive communication (RO-MAN), pp 795–802

Scaramuzza D, Martinelli A, Siegwart R (2006) A toolbox for easily calibrating omnidirectional cameras. https://doi.org/10.1109/IROS.2006.282372

Takayama L, Pantofaru C (2009) Influences on proxemic behaviors in human-robot interaction. IEEE Press, IROS’09, pp 5495–5502

Tamura Y, Le PD, Hitomi K, Chandrasiri NP, Bando T, Yamashita A, Asama H (2012) Development of pedestrian behavior model taking account of intention. In: 2012 IEEE/RSJ international conference on intelligent robots and systems, pp 382–387

Trautman P, Ma J, Murray RM, Krause A (2013) Robot navigation in dense human crowds: the case for cooperation. In: 2013 IEEE international conference on robotics and automation, pp 2153–2160

Vassallo C, Olivier AH, Souéres P, Crétual A, Stasse O, Pettre J (2017a) How do walkers behave when crossing the way of a mobile robot that replicates human interaction rules? Gait Posture. https://doi.org/10.1016/j.gaitpost.2017.12.002

Vassallo C, Olivier AH, Souéres P, Crétual A, Stasse O, Pettré J (2017b) How do walkers avoid a mobile robot crossing their way? Gait Posture 51:97–103. https://doi.org/10.1016/j.gaitpost.2016.09.022

Yamaguchi K, Berg AC, Ortiz LE, Berg TL (2011) Who are you with and where are you going? CVPR 2011:1345–1352. https://doi.org/10.1109/CVPR.2011.5995468

Yan X, Kakadiaris I, Shah S (2014) Modeling local behavior for predicting social interactions towards human tracking. Pattern Recognit 47(4):1626–1641. https://doi.org/10.1016/j.patcog.2013.10.019

Zhang B, Holloway C, Carlson T, Herrera R (2019) Shared-control in wheelchairs—building interaction bridges

Zhou B, Wang X, Tang X (2012) Understanding collective crowd behaviors: learning a mixture model of dynamic pedestrian-agents. https://doi.org/10.1109/CVPR.2012.6248013

Funding

This study was funded by EU H2020 (Grant No. 779942).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Zhang, B., Amirian, J., Eberle, H. et al. From HRI to CRI: Crowd Robot Interaction—Understanding the Effect of Robots on Crowd Motion. Int J of Soc Robotics 14, 631–643 (2022). https://doi.org/10.1007/s12369-021-00812-7

DOI: https://doi.org/10.1007/s12369-021-00812-7