Abstract

As robots have become increasingly common in human-rich environments, it is critical that they are able to exhibit social cues to be perceived as a cooperative and socially-conformant team member. We investigate the effect of robot gaze cues on people’s subjective perceptions of a mobile robot as a socially present entity in three common hallway navigation scenarios. The tested robot gaze behaviors were path-oriented (looking at its own future path), or human-oriented (looking at the nearest person), with fixed-gaze as the control. We conduct a real-world study with 36 participants who walked through the hallway, and an online study with 233 participants who were shown simulated videos of the same scenarios. Our results suggest that the preferred gaze behavior is scenario-dependent. Human-oriented gaze behaviors which acknowledge the presence of the human are generally preferred when the robot and human cross paths. However, this benefit is diminished in scenarios that involve less implicit interaction between the robot and the human.


1 Introduction

As robotic research matures, there is increasing interest in using robotic agents in socially assistive applications, including senior care facilities [29], health centres [33], and classrooms [19]. For mobile robots to become integrated into these environments, they need to be competent at navigating in human-populated spaces. In addition to being able to safely maneuver around humans, robots operating in these shared environments need to be equipped with appropriate social skills and behave in a socially acceptable manner. Particularly in environments such as senior care facilities, where humans lack adequate levels of social stimulation [29], robots that can establish a social connection with surrounding humans through non-verbal cues offer the opportunity to improve the well-being of the humans with whom they interact. Moreover, for humans to feel comfortable within a robot’s vicinity, it is important for the robot to act in a predictable manner so that it is perceived as a safe and trustworthy entity [30]. Humans typically navigate cooperatively when moving among other people [38]. A moving robot should similarly be capable of developing a mutual understanding with the humans in its vicinity. This will allow both the robot and the human to accurately interpret each other’s actions and to move predictably [22].

It is well known that humans use head and/or eye gaze (the direction in which one appears to be looking) in social situations to communicate intent and display mental states [11]. Extensive research that has been conducted regarding interpersonal communication suggests that more than half of the meaning in social situations is communicated non-verbally [4]. Therefore, it is critical that non-verbal cues such as eye gaze are integrated into existing navigation algorithms to improve the social aspects of the robot.

To enable effective human-robot collaboration and teaming, the robot must be viewed as a socially capable agent by humans. In Human-Robot Interaction (HRI) literature, this is referred to as the social presence of the robot, which is defined as the “sense of being together with another” [2]. With the goal of enabling social robots to operate more harmoniously and effectively around people, this paper investigates how different robot gaze behaviors during different navigation scenarios affect the robot’s perceived social presence by conducting user studies in both real-world and simulated environments. We compare three types of gaze behaviors: a default behavior with no head movement, a gaze which looks at the robot’s planned trajectory, and a gaze which briefly looks towards the user. The experiments consist of one navigation scenario in which the human and robot do not cross paths, and two scenarios in which they do cross paths. Our results show that the preferred robot gaze behavior is dependent on the navigation scenario. In particular, gazes that glance at the human are generally preferred in scenarios in which the human and robot cross paths.

2 Background

In this section, we briefly review the existing literature on the use of mobile robots and social cues in HRI, followed by a deeper look at the gaze behaviors employed by autonomous mobile robots.

2.1 Mobile Robots in HRI

As opposed to robots with a fixed base, mobile robots have access to a global workspace, and are therefore advantageous for tasks which require the robot to traverse between multiple areas. Fetch-and-carry tasks are a common application of mobile robots, typically contextualized as an assistive robot in household [7, 35] or warehouse environments [3]. In this context, both social navigation in human-populated spaces [8, 34] and direct social interactions with humans in the space, e.g., conversations [31] and handover/delivery actions [16, 26], are important fields of research. Trajectory planning in the presence of humans considers aspects such as safety and visibility to the human, while also abiding by social conventions or human preferences. Proxemics [27, 39] considers the distance a robot should maintain from a human, which is important for maximizing the robot’s perceived safety. In situations where the robot and human must give way to each other, effective communication and reactive planning are beneficial for cooperatively avoiding collisions [5].

2.2 Social Cues in HRI

Social cues are used by robots to communicate various types of information to nearby humans. Cid et al. [6] use a robotic head with mechanisms to control neck, eye, eyebrow, and mouth motions to mimic human facial expressions. Similarly, Zecca et al. [43] combine facial expressions with full body motions to recreate body language. However, these approaches require complex mechanisms to recreate recognizable expressions. Semantic-free audio cues have also seen some interest in HRI, but are still relatively unexplored [42]. Tatarian et al. [36] investigated the use of multiple social cues simultaneously, including proxemics, gaze, gestures, and dialogue, and found that each social cue had a distinct effect on how the robot was perceived.

Gaze is an important type of social cue [1]. Gaze cues have been investigated in a variety of HRI contexts for communicating different types of information. Moon et al. [28] use gaze cues during a robot-to-human handover to communicate handover location and timing information. Terziouglu et al. [37] utilize a variety of gaze cues, including gazes towards the task and target, as well as gazes towards the human collaborator to acknowledge completion of a task. In navigational scenarios, gaze can be used to convey navigational intent [15] or social presence with humans [20, 39]. The latter is the primary purpose for which we employ gaze behaviors in our current study, while acknowledging that other interpretations of the gaze may distract from the true intention of the robot.

2.3 Simulation in HRI

Due to the recent COVID-19 pandemic, there has been renewed interest in the effectiveness of simulated HRI user studies for remote experimentation as a cheaper and easier alternative to real-world experiments. Although there is evidence that results for simulated and real-world HRI experiments coincide, this is not conclusive. Video-based user studies and real-world studies were conducted to study preferences in robot approach directions for a fetch-and-deliver task in [40, 41], and robot politeness in [23]. In these studies, it was concluded that there was a high level of agreement between the results of the two user study modes, although users expressed a preference towards participating in the real-world studies, and effects tended to be weaker in the video-based studies. Similarly, in [13], real-world studies were compared to studies where the user would interact with the robot remotely. Again, no significant differences were found between results of the two user study modes, although it was noted that participants would experience a higher cognitive workload. On the other hand, in the simulated studies in [24] which compared real-world studies to virtual reality studies to research proxemics in HRI, significant differences were found between the two results.

2.4 Gaze in Navigational Scenarios

Existing studies have investigated different aspects of robot eye gaze during navigation, including different types of gaze behaviors and navigation scenarios. However, conflicting results have been reported regarding the effects of gaze behaviors on the robot’s perceived social presence [20, 39]. The primary differences between the two studies were the initiation timing of the gaze behavior and the navigation scenario explored. In the study conducted by Wiltshire et al. [39], a robot and a person are engaged in a series of interactions in a hallway navigation scenario where the person must give way to the robot crossing paths with the person perpendicularly. During each of the interactions, nonverbal cues of the robot such as proxemics or gaze behaviors were altered. From their user study, it was concluded that altering the gaze behavior of the robot did not result in a higher social presence for the robot, and that other non-verbal cues such as the proxemic behaviors exhibited by the robot are of more importance. In contrast, the study conducted by Khambhaita et al. [20], which used very similar eye gaze behaviors, concluded that altering the gaze behavior of the robot resulted in higher social presence. The gaze initiation timings in these two studies were not the same, which may have contributed to the difference in the results. Furthermore, the navigation scenario used by Khambhaita et al. is not the same as that used by Wiltshire et al. Therefore, it remains unclear, and worth exploring, whether the appropriate gaze behavior and the timing of its execution depend on the navigation scenario.

Another aspect of gaze during navigation is gaze fixation duration. Studies regarding gaze behaviors of adults during natural locomotion have found that they initiate gaze fixation 1.72 s before encountering an obstacle [18]. It was found that the duration of the fixation depended on the obstacle in question. For obstacles that were designed to be stimulating (stickers were placed on the obstacle), the fixation duration was found to be 0.53 s, while regular obstacles had a fixation duration of 0.2 s. However, the appropriate fixation duration for robots during navigation is still to be determined and is worth investigating.

3 Research Questions

As identified in the previous section, existing works on robot gaze during navigation have produced some conflicting results. In particular, whether and how navigation scenarios affect the perceived social presence of a robot along with robot gaze behavior has remained largely unexplored. Hence, our current work aims to address the following research questions:

1. How do different robot gaze behaviors during navigation affect people’s perception of the robot as a socially present entity?

2. Does the appropriate gaze behavior vary depending on the navigation scenario?

To answer these research questions, we conducted simulated and real-world user studies to investigate people’s perceived social presence of a robot when different gaze behaviors are used in different navigation scenarios.

4 User Study Design

In this section, we detail the user study design used to answer our research questions. We conducted user studies in which we measured the perceived social presence of a robot agent when users interact with it in various navigational scenarios and for various robot gaze behaviors. We first conducted a large simulated user study in which the user is shown videos of a human–robot interaction in a navigational scenario from a first-person perspective. This was followed by a smaller in-person user study involving the same gaze behaviors and scenarios.

4.1 Independent Variables

To answer our research questions, our study consists of a two-factor design, examining four gaze behaviors and three navigation scenarios, for a total of twelve conditions. These variables are detailed in the following sections.

4.1.1 Gaze Behaviors

We tested four gaze behaviors in our study, visualized in Fig. 1:

- Default (D): The robot always looks forwards, and does not move its head. This gaze serves as the control for the user study.

- Path-Oriented (PO): The robot looks at a point on the ground 1.5 m ahead along its planned path.

- Human-Oriented Short (HO-S) and Long (HO-L): The robot “acknowledges” the human by briefly directing its gaze at the human when passing them. Two gaze fixation durations are tested, based on the timings used by humans during locomotion [18]. These durations are 0.2 s for HO-S and 0.53 s for HO-L. The robot uses the default gaze before and after it looks at the human.
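The gaze behaviors above can be illustrated with a minimal Python sketch. It is not the implementation used in the study: the function names, the 2D polyline path representation, and the `time_since_glance_start` trigger are assumptions made for illustration, and the text does not specify exactly when the human-oriented glance begins. Only the 1.5 m lookahead and the 0.2 s and 0.53 s fixation durations come from the descriptions above.

```python
import math

def lookahead_point(path, distance=1.5):
    """Return the point `distance` metres along a polyline of (x, y) waypoints."""
    remaining = distance
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        seg = math.hypot(x1 - x0, y1 - y0)
        if seg > 0 and seg >= remaining:
            t = remaining / seg  # fraction of this segment still to travel
            return (x0 + t * (x1 - x0), y0 + t * (y1 - y0))
        remaining -= seg
    return path[-1]  # path is shorter than the lookahead distance

# Fixation durations in seconds for the two human-oriented gazes.
FIXATION_S = {"HO-S": 0.2, "HO-L": 0.53}

def gaze_target(behavior, path, human_xy, time_since_glance_start=None):
    """Pick a 2D gaze target; None means 'look straight ahead' (default gaze).

    `time_since_glance_start` is a hypothetical input: seconds since the
    glance at the human was triggered, or None if it has not been triggered.
    """
    if behavior == "PO":
        return lookahead_point(path)
    if behavior in FIXATION_S and time_since_glance_start is not None:
        if 0.0 <= time_since_glance_start <= FIXATION_S[behavior]:
            return human_xy
    return None  # D gaze, or HO gaze before/after the fixation window
```

For example, with the path `[(0, 0), (1, 0), (1, 2)]` the path-oriented target lies 0.5 m into the second segment, and an HO-S glance has already ended 0.3 s after it started while an HO-L glance is still active.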

4.1.2 Navigational Scenarios

Our study incorporates three different navigation scenarios as illustrated in Fig. 2. Hallway scenarios were chosen as they are typical in many buildings and are the most common scenario used in existing literature regarding gaze behaviors in navigational scenarios [12, 20, 25, 39]. Furthermore, these scenarios permit us to make direct comparisons with existing works.

- Two way (TW): The person and robot navigate down a hallway starting from opposite ends. An obstacle is placed in the path of the robot to accentuate the difference between the default and path-oriented gaze behaviors.

- Robot exits hallway (EXT): The robot moves down the hallway then enters a room on the human’s left. The robot briefly pauses before entering to attempt to give way to the human but always decides to enter the room first as the human is too far away to give way to.

- Robot enters hallway (ENT): The robot begins inside a room to the human’s left, where it is not initially visible to the human. The robot exits the room before turning right and moving down the hallway. The person is forced to give way to the robot for it to make its turn.

4.2 Dependent Variables

Like [20, 39], to measure the social presence of the robot we measure along the dimensions outlined in the Social Presence Inventory (SPI) developed by Harms and Biocca [14]. Specifically, we use the following dimensions:

- Co-Presence (CP): The SPI defines co-presence as “the degree to which the observer believes he/she is not alone and secluded, their level of peripheral or focal awareness of the other, and their sense of the degree to which the other is peripherally or focally aware of them”. Co-presence fundamentally measures subjective perception rather than the objective condition of being observable. This means that co-presence is well suited to self-report measures such as a Likert scale that indicates the level of awareness of each other. The sensory awareness of agents in a given interaction can also be measured through observations such as eye fixation, exhibited proxemic behavior, or physiological responses. The primary challenge with such methods is the difficulty of data collection, especially in a remote user study.

- Perceived Message Understanding (PMU): The SPI defines PMU as “the ability of the user to understand the message being received from the interactant as well as their perception of the interactant’s level of message understanding”. These measures can be used to evaluate how the legibility of the robot changes when gaze behavior is altered.

- Perceived Behavioral Interdependence (PBI): The SPI defines PBI as the “extent to which a user’s behavior affects and is affected by the interactant’s behavior”. In a given interaction, the key sense of access to others is based on the degree to which the agents appear to interact with each other. This can include explicit behaviors such as waving of hands to acknowledge the presence of each other or non-explicit behaviors such as eye contact.

In addition to the social presence metrics, we also measure the following factors:

- Perceived Safety: How safe the person feels in the presence of the robot.

- Naturalness: How similarly the robot behaves to a typical human.

All dependent variables are quantitative measures reflecting the subjective assessment of the human. These are acquired in the form of post-experiment survey questions, which are summarized in Table 1, and measured using a Likert scale. A 3-point Likert scale was used for the online study, whereas a 5-point Likert scale was used for the real-world study. The different scales were chosen as we believed anonymous online participants would be less attentive than in-person participants, and therefore would benefit from a smaller Likert scale which demands a lower mental load [32].

Note that for questions measuring social presence, like [20] we use only a subset of questions used in the original study. Specifically, we use only three questions for co-presence, and one question each for PMU and PBI. Additionally, like [20, 39] we have adapted questions to refer to the “robot” rather than “my partner” to be more suitable for the HRI context.

4.3 Hypotheses

We expect human-like gazes, such as human-oriented gazes which establish eye-contact with a human when they acknowledge the human’s presence, to feel more socially conformant and socially present (Hypothesis 1). As a result, we also expect human-oriented gazes to feel safer and more natural (Hypothesis 2). Therefore, we consider the following hypotheses:

- H1: human-oriented gazes will have higher perceived social presence compared to other gazes in all scenarios.

- H2: human-oriented gazes will improve the perceived safety and naturalness of the robot for all scenarios.

4.4 Implementation

The Fetch Mobile Manipulator robot, shown in Fig. 3, was chosen as the research platform. This robot has a head module that is capable of both pan and tilt, as well as a nonholonomic mobile base which can maneuver around a global workspace. We implemented the navigation and eye gaze behaviors described in Sect. 4.1 for both simulated and real-world robots.

4.4.1 Simulation Environment

For the simulated study, the Gazebo simulator (Footnote 1) was used to generate videos of the Fetch robot performing each gaze behavior in each navigational scenario. The simulated camera was taken from a first-person perspective of the human, and followed a scripted trajectory for each scenario.

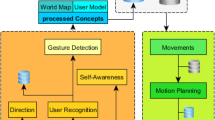

4.4.2 Real-World Implementation

Our real-world implementation is summarized in the system diagram shown in Fig. 4. We implemented our system using the Robot Operating System (ROS) framework (Footnote 2). The Head Control Node commands the robot head motion to exhibit different gaze behaviors. We use the Leg Detector ROS package (Footnote 3) to detect and track people around the robot using the robot’s laser scanner sensor, and the move_base package from the ROS Navigation stack for planning robot base motion. The Head Control Node receives the positions of the detected person from the Leg Detector, and the planned path of the robot from the Move Base node. The gaze behavior is executed by sending motion commands to the Point-Head Action Client, which controls the pan-tilt motions of the robot’s head.

To avoid collisions with obstacles or people, a safety feature was implemented where the robot would stop moving if the laser scanner detects an obstacle within a fixed distance (0.6 m in our experiments). However, this feature was not triggered in any of the user studies.
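A minimal sketch of such a safety check is given below. The function name and the plain list of range readings are assumptions for illustration rather than the study's code, as is the choice to treat "within" as strictly closer than the threshold. Only the 0.6 m distance comes from the text.

```python
import math

STOP_DISTANCE_M = 0.6  # threshold reported for the experiments

def should_stop(ranges, stop_distance=STOP_DISTANCE_M):
    """Return True if any valid laser return is closer than the stop distance.

    `ranges` is assumed to be a list of distances in metres; non-finite or
    non-positive readings are treated as invalid and ignored.
    """
    valid = (r for r in ranges if math.isfinite(r) and r > 0.0)
    return any(r < stop_distance for r in valid)
```

In a ROS system, a check of this kind would typically run on each incoming laser scan and command zero base velocity when it returns True.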

4.5 User Study Procedure

4.5.1 Simulated video study

Twelve videos (Footnote 4), one for each condition, were generated using the Gazebo simulator. Each participant was shown the videos in a randomized order, and participants were instructed to view the videos in full screen. After watching each video, participants were given a survey asking them to rate a series of statements (shown in Table 1) on a 3-point Likert scale. We distributed our study through Amazon Mechanical Turk and compensated each participant who successfully completed the study with 2 USD. To ensure data quality, we included a control question asking the participant to identify which direction the robot went (left/right) at the end of the video. Responses that failed the control question or were incomplete were rejected; 15 responses were rejected based on these criteria. In total, we accepted responses from 233 participants (Male = 142, Female = 91).

4.5.2 Real-World Studies

At the beginning of each experiment, the participant was shown simulated videos (the same as those used for the simulated studies) of the D gaze behavior for each of the three navigation scenarios so that they understood how they should behave in each scenario. Participants then experienced each of the 12 combinations of gaze behavior and navigation scenario. These were run in a pseudo-randomized order using a Balanced Latin Square (Footnote 5) to minimize effects caused by the specific ordering of trials in the experiment. Participants were informed about which navigation scenario they would face next, but not which gaze behavior the robot would exhibit. After each trial, participants were asked to respond to the same set of statements as in the simulated study (shown in Table 1) using a 5-point Likert scale. Trials in which either the participant walked too quickly or the human detection algorithm failed were repeated. At the end of the experiment, participants were asked if they had any additional comments. Overall, we recruited 36 participants (Male = 27, Female = 9; 35 between ages 18–25, 1 aged 31–40) from University premises to participate in the real-world user studies (Footnote 6). Participants did not receive any reimbursement for their participation.
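The following sketch shows one common way to build a balanced Latin square for an even number of conditions (the Williams construction). It assumes the square is applied over all 12 gaze-scenario pairs and that participants are assigned to rows cyclically. The text does not state either detail, so both the assignment and the function names are illustrative only.

```python
from itertools import product

GAZES = ["D", "PO", "HO-S", "HO-L"]
SCENARIOS = ["TW", "EXT", "ENT"]
CONDITIONS = list(product(GAZES, SCENARIOS))  # 12 gaze-scenario pairs

def balanced_latin_square(n):
    """Williams-style square for even n; row i is one trial order.

    Each condition appears once per row and once per column, and every
    ordered pair of distinct conditions is adjacent exactly once overall.
    """
    if n % 2:
        raise ValueError("this construction is only balanced for even n")
    square = []
    for i in range(n):
        row = []
        for j in range(n):
            # First row is 0, n-1, 1, n-2, ...; later rows shift it by i.
            offset = j // 2 if j % 2 == 0 else n - (j + 1) // 2
            row.append((i + offset) % n)
        square.append(row)
    return square

def trial_order(participant_index):
    """Trial order for a participant, cycling through the rows (assumed)."""
    square = balanced_latin_square(len(CONDITIONS))
    row = square[participant_index % len(CONDITIONS)]
    return [CONDITIONS[k] for k in row]
```

With 36 participants and 12 rows, cyclic assignment would use each row three times, so every condition would appear equally often in every trial position.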

Fig. 7 Results obtained for Perceived Message Understanding (PMU) for simulated (top) and real-world (bottom) user studies. Results are shown grouped by gaze behavior (left) and navigation scenario (right). Significant paired differences are indicated with (\(*\)) for \(p<0.05\), and with (\(**\)) for \(p<0.001\)

Fig. 8 Results obtained for Perceived Behavioral Interdependence (PBI) for simulated (top) and real-world (bottom) user studies. Results are shown grouped by gaze behavior (left) and navigation scenario (right). Significant paired differences are indicated with (\(*\)) for \(p<0.05\), and with (\(**\)) for \(p<0.001\)

5 Results

Figures 6, 7, 8, 9, and 10 show the results from the survey questions for each outcome variable. Two-way repeated measures ANOVA tests were first conducted between gaze behaviors and navigation scenarios to confirm whether an interaction effect exists between the two variables. For measures with a statistically significant interaction effect, post-hoc analyses were performed to determine differences between gazes (scenarios) for each scenario (gaze) independently. If the interaction effect was not statistically significant but main effects due to gaze (scenario) were, the data were collapsed along the scenario (gaze) variable before performing post-hoc analyses. Post-hoc analyses were performed using paired sample t-tests with Bonferroni corrections. Parametric tests were used as they have been shown to provide similar results to non-parametric tests for Likert items [10]. Post-hoc analysis results are summarized in Figs. 6, 7, 8, 9, and 10. Full post-hoc results can be found in the appendices.
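To make the post-hoc step concrete, the sketch below computes a paired-sample t statistic and a Bonferroni-adjusted significance level using only the Python standard library. The function names are hypothetical, and the assumption that the correction is taken over all pairs of levels within one factor is ours, since the text does not state the size of the comparison family. Converting the t statistic to a p-value requires a t-distribution, which is left to a statistics library.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, stdev

def paired_t(a, b):
    """Paired-sample t statistic and degrees of freedom.

    `a` and `b` are equal-length lists of ratings from the same participants.
    Raises ZeroDivisionError if all paired differences are identical.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

def bonferroni_alpha(levels, alpha=0.05):
    """Per-comparison alpha when every pair of levels is compared (assumed)."""
    m = len(list(combinations(levels, 2)))
    return alpha / m, m
```

For the four gaze behaviors this gives six pairwise comparisons within a scenario, so each comparison would be tested at 0.05 / 6 under this assumption.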

5.1 Co-Presence

5.1.1 Simulated Studies

There was a significant interaction effect between the gaze behavior and the navigation scenario for CP (\(F(6,1404)=3.391\), \(p=2.53\times 10^{-3}\)), therefore, post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 6a), we see that in general, either one or both of the human-oriented gaze behaviors (HO-S and HO-L) yield significantly higher CP measures. HO-L was most favoured for the TW scenario, HO-S was most favoured for the EXT scenario, and both HO-L and HO-S were equally most favoured for the ENT scenario. This supports Hypothesis 1.

Comparing navigation scenarios given each gaze behavior (Fig. 6b), we observe a common trend across all gaze behaviors. In general, people experience higher co-presence in the order of ENT, EXT, then TW, although the difference between the TW and EXT scenarios is not always significant, specifically for the PO and HO-L gazes.

5.1.2 Real-World Studies

Significant interaction effects were found between gaze behavior and navigation scenario (\(F(6,210)=3.599\), \(p=2.04\times 10^{-3}\)), therefore post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 6c), a common observation between all navigation scenarios is that HO-S and HO-L gaze behaviors both have significantly better CP compared to both D and PO gaze behaviors. No significant difference was found between HO-S and HO-L, nor between D and PO gazes except for the ENT scenario. This is a similar but more consistent observation compared to the simulated results, providing further support for Hypothesis 1.

Comparing navigation scenarios given each gaze behavior (Fig. 6d), user responses to each gaze behavior appear to be influenced differently by the navigation scenarios. Notably, while the ENT scenario has the highest CP when performing the PO or HO-S gazes, it is the scenario with the lowest CP for the D gaze.

5.2 Perceived Message Understanding

5.2.1 Simulated Studies

There was a significant interaction between the gaze behavior and the navigation scenario for the PMU metric (\(F(6,1404)=4.771\), \(p=8.00\times 10^{-5}\)), therefore, post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 7a), we observe that PMU is significantly higher for the D gaze compared to the HO-S gaze for the TW scenario, which is in opposition to Hypothesis 1. However, for the EXT and ENT scenarios, the HO-S gaze is rated to have the best PMU, which is in support of Hypothesis 1.

Comparing navigation scenarios given each gaze behavior (Fig. 7b), a couple of observations can be made. For the D gaze, the TW scenario yielded a significantly better PMU compared to the EXT scenario. For the HO-S gaze however, the TW scenario yielded significantly worse PMU compared to the other two scenarios. For HO-L gazes, the ENT scenario yielded significantly better PMU compared to the EXT scenario.

5.2.2 Real-World Studies

Significant interaction effects were found between gaze behavior and navigation scenario (\(F(6,210)=5.370\), \(p=3.50\times 10^{-5}\)), therefore post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 7c), for the EXT scenario, PO is rated to have the best PMU, and is significantly better compared to the D gaze. For the ENT scenario, the D gaze also has the worst PMU, however like the simulated studies the human-oriented gaze behaviors (HO-S and HO-L) are perceived to have the best PMU. Hypothesis 1 is therefore only supported for the ENT scenario.

Comparing navigation scenarios given each gaze behavior (Fig. 7d), we see that for the D gaze, the TW scenario had the best PMU compared to the other two scenarios, similar to the simulated results. For the PO gaze, the robot had the best PMU for the EXT scenario compared to the other two scenarios. For the HO-S gaze, the ENT scenario was found to have significantly better PMU compared to the EXT scenario.

5.3 Perceived Behavioral Interdependence

5.3.1 Simulated Studies

There was a significant interaction between the gaze behavior and the navigation scenario for the PBI metric (\(F(6,1404)=3.354\), \(p=2.76\times 10^{-3}\)), therefore, post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 8a), we found that PBI with human-oriented gaze behaviors (HO-S and HO-L) was significantly higher compared to both D and PO gazes for EXT and ENT scenarios. However, there is no statistically significant difference between any gaze behaviors for the TW scenario. This supports Hypothesis 1 predicting improved social presence metrics when human-oriented gaze behaviors are used, but for the EXT and ENT scenarios only.

Comparing navigation scenarios given each gaze behavior (Fig. 8b), there is a general trend where PBI is highest in the ENT scenario, followed by the EXT scenario, followed by the TW scenario, when path or person oriented gazes are used (PO, HO-S, HO-L). However, this trend is not observed with the D gaze behavior.

5.3.2 Real-World Studies

There was no significant interaction effect between gaze behavior and navigation scenario (\(F(6,210)=1.679\), \(p=0.13\)). There were significant main effects exhibited by gaze behaviors (\(F(3,105)=29.041\), \(p=9.41\times 10^{-14}\)), but not by navigational scenarios (\(F(2,70)=0.616\), \(p=0.54\)). Therefore, post hoc paired sample t-tests were performed to investigate the impact of gaze behaviors after collapsing results along the scenario variable.
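As a quick consistency check, the degrees of freedom reported for the real-world study follow from a standard two-way repeated-measures design with \(a=4\) gaze behaviors, \(b=3\) navigation scenarios, and \(n=36\) participants. This assumes that no sphericity correction was applied, which the text does not mention:

```latex
\begin{align*}
\text{gaze main effect:} \quad & \big(a-1,\ (a-1)(n-1)\big) = (3,\ 3 \times 35) = (3,\ 105) \\
\text{scenario main effect:} \quad & \big(b-1,\ (b-1)(n-1)\big) = (2,\ 2 \times 35) = (2,\ 70) \\
\text{interaction:} \quad & \big((a-1)(b-1),\ (a-1)(b-1)(n-1)\big) = (6,\ 6 \times 35) = (6,\ 210)
\end{align*}
```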

Comparing between gaze behaviors (Fig. 8c), a similar observation to simulated study results is made where PBI is significantly higher for human-oriented gaze behaviors (HO-S and HO-L) compared to D and PO gazes. However unlike the simulated study results, this holds for all navigation scenarios, fully supporting Hypothesis 1.

5.4 Perceived Safety

5.4.1 Simulated Studies

There was a significant interaction between the gaze behavior and the navigation scenario for the Safety metric (\(F(6,1404)=9.979\), \(p=8.21\times 10^{-11}\)), therefore, post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 9a), we observe mixed results for each scenario. For the TW scenario, the D gaze is found to be significantly safer compared to the HO-L gaze. For the EXT scenario, both D and HO-L gazes are found to be significantly safer compared to the PO gaze. In contrast, for the ENT scenario, the D gaze is significantly less safe compared to all other gazes. Overall, Hypothesis 2 is only partially supported for the EXT and ENT scenarios.

Comparing navigation scenarios given each gaze behavior (Fig. 9b), mixed observations are also made for each gaze. For the D gaze, the TW scenario is perceived as the safest. For all other gazes however, the ENT scenario is perceived as the safest. The EXT is perceived as the least safe for PO and HO-S gazes, while TW is the least safe for the HO-L gaze.

5.4.2 Real-World Studies

Unlike the simulated studies, no significant interaction effects were found between gaze behavior and navigation scenario (\(F(6,210)=1.842\), \(p=0.09\)). There were significant main effects exhibited by gaze behaviors (\(F(3,105)=13.043\), \(p=2.64\times 10^{-7}\)), but not by navigational scenarios (\(F(2,70)=1.812\), \(p=0.17\)). Therefore, post hoc paired sample t-tests were performed to investigate the impact of gaze behaviors after collapsing results along the scenario variable.

Comparing between gaze behaviors (Fig. 9c), both human-oriented gaze behaviors (HO-S and HO-L) are perceived to be significantly safer compared to D and PO gazes, fully supporting Hypothesis 2.

5.5 Naturalness

5.5.1 Simulated Studies

There was a significant interaction between the gaze behavior and the navigation scenario for the Naturalness metric (\(F(6,1404)=5.481\), \(p=1.30\times 10^{-5}\)), therefore, post hoc paired sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 10a), for the TW scenario, the D gaze is perceived as significantly more natural compared to the HO-L gaze, in contradiction to Hypothesis 2. In contrast, Hypothesis 2 is supported for the other two scenarios, where the D gaze is perceived as the least natural gaze, while the HO-S gaze is the most natural.

Comparing navigation scenarios given each gaze behavior (Fig. 10b), both the D and PO gazes are perceived as the most natural when performing the TW scenario. There is no significant difference found between navigation scenarios for the two human-oriented gaze behaviors (HO-S and HO-L).

5.5.2 Real-World Studies

Significant interaction effects were found between gaze behavior and navigation scenario (\(F(6,210)=2.687\), \(p=1.56\times 10^{-2}\)); therefore, post hoc paired-sample t-tests were performed for each gaze-scenario pair.

Comparing gaze behaviors within each navigation scenario (Fig. 7c), both human-oriented gaze behaviors (HO-S and HO-L) are perceived to be significantly more natural compared to D and PO gazes for the ENT scenario, supporting Hypothesis 3. No significant differences are found between gazes in either of the other scenarios.

Comparing navigation scenarios given each gaze behavior (Fig. 7d), no significant differences are found for any gaze.

6 Discussion

In this section, we discuss the simulated and real-world results separately before suggesting how they may help to guide future implementations of robot gaze in navigational scenarios. Although certain big-picture trends in user preferences were similar between the simulated and real-world studies, the two sets of results did not consistently agree. In particular, many of the statistically significant effects arising from gaze behaviors or navigational scenarios were different between the two study modes, which suggests that the user study mode has an effect on user preferences. However, this difference may be due to the different scales used.

6.1 Simulated Studies

The results for the SPI metrics are all generally in support of Hypothesis 1. The only results to the contrary are those for the PMU metric, which suggest that the robot’s intentions are less clear when it performs a short human-oriented gaze cue in the TW scenario. This may be due to the scenario itself being simple. As suggested by [9, 17, 18, 21], observing potential hazards during navigational scenarios is a principal factor in human gaze patterns, and can be more important than eye contact with other humans. In scenarios where the human is moving parallel to the robot and is not a hazard, a gaze that instead focuses on the environment for other potential hazards may therefore be easier for users to understand. However, other scenarios where a collision between the two parties is more likely will require some communication with the human to indicate what the robot will do. Another interesting observation is that the TW scenario has the lowest PBI for the path-oriented and human-oriented gazes. This is likely because the robot does not need to react to the human in this scenario, whereas in the other scenarios one party always gives way to the other and must therefore be reacting to the other’s presence.

For Hypothesis 2, we have mixed results: the human-oriented gazes are perceived as safer and more natural than other gaze behaviors only in situations where the human and robot cross paths (EXT and ENT). Similar to the PMU results, this may again be because, in the TW scenario, it is more appropriate for the robot to observe the environment for potential hazards. This suggests that gaze cues towards humans should be used to let the human feel acknowledged and safe when an interaction between the robot and human is required. However, gaze cues may otherwise be distracting or off-putting in other scenarios.

6.2 Real-World Studies

For the SPI metrics, the real-world study shows a trend similar to, but somewhat stronger than, that found in the simulated study. Hypothesis 1 is fully supported for the CP and PBI metrics. CP was also commented on the most by the participants, with 10 participants sharing comments similar to “The eye contact was essential to me, knowing the robot acknowledged that I was there” (Participant 5).

Regarding PMU, the results suggest that for the EXT scenario, the PO gaze conveys the robot’s intentions most clearly, while for the ENT scenario the human-oriented gazes were the clearest. Similar to the simulated studies, the first observation reflects how gaze patterns in humans are typically task-based [9, 17, 18, 21], which makes it easy to understand the intention of path-oriented gazes. For the second observation, however, the human happens to be in the direction that the robot wants to travel in the ENT scenario, and the human-oriented gazes therefore benefit from the dual effect of both acknowledging the human and looking at the robot’s planned path.

Some participants commented that they would have preferred a combination of path-oriented and human-oriented gazes, where the robot looks at where it will travel, quickly gazes at the human, then returns to looking at its path. This would allow the human-oriented gazes to be more predictable for all navigation scenarios. However, not every participant understood the intended meaning of the path-oriented gaze, and often attributed it to the robot being “rude” (Participants 16 and 22) or “depressed” (Participant 5), and “[was not] sure what the robot was doing” (Participant 35). A path-oriented gaze behavior that does not look at the ground may therefore help to convey the robot’s intentions more clearly.

The real-world results support Hypothesis 2. In all three navigational scenarios, the human-oriented gazes are perceived as safer compared to non-human-oriented gazes, with a marginal preference for shorter gazes. In contrast to the simulated results which suggest short human-oriented gazes feel more natural, both short and long human-oriented gazes felt equally more natural compared to the other gazes, but only for the ENT scenario. We received mixed opinions about which human-oriented gaze participants preferred. Some participants shared sentiments similar to “I preferred it when the robot looked at me for a shorter period. The longer period felt like it was glaring at me” (Participant 16), while other participants commented that “The short period felt like a glitch. The longer gaze felt better” (Participant 23). This suggests that an optimal gaze behavior should take user preference into account.

6.3 Design Implications

Overall, both hypotheses are supported to an extent. However, whether our hypotheses are supported can depend on the navigational scenario. The simulated studies seem to suggest that for simple navigational scenarios such as the TW scenario, where the robot and human do not have a significant interaction, human-oriented gazes may actually be detrimental to PMU, perceived safety, and naturalness of the robot compared to a simple default gaze behavior, despite exhibiting stronger co-presence. For the other scenarios, the human-oriented gazes tend to have a positive effect on all metrics. This suggests that the use of human-oriented gazes should depend on the scenario, and that they should be used when an important interaction, such as giving way, will occur between the human and the robot.

The real-world experiments suggest that human-oriented gazes are equal or superior to the other gazes on the majority of metrics in all scenarios. Only the PMU metric deviated from this pattern for the EXT scenario. To improve the PMU for these gazes, particularly when the human is not in the direction of the path the robot will take, a PO behavior should be adopted before and after the robot gazes at the human. There were generally no significant differences in preference between gaze lengths, although we believe this is due to differences in preferences between participants rather than the gazes themselves being negligibly different. Future work should examine whether it is possible to determine user preferences for gaze periods.
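The combined behavior suggested above, a path-oriented gaze before and after a brief look at the human, could be sketched as a simple time-based schedule. This is an illustrative sketch only: the names `GazeTiming` and `gaze_target` and the timing values are hypothetical and were not part of the study.

```python
from dataclasses import dataclass


@dataclass
class GazeTiming:
    """Hypothetical timing parameters in seconds; not values from the study."""
    glance_start: float = 1.0   # path-oriented gaze time before the glance
    glance_length: float = 0.5  # how long the robot looks at the human


def gaze_target(t, human_visible, timing=GazeTiming()):
    """Return "path" or "human" for time t since the human was detected.

    The robot looks along its path, glances once at the human, then
    returns to its path, so the glance is bracketed by path-oriented gaze.
    """
    if not human_visible:
        return "path"
    glance_end = timing.glance_start + timing.glance_length
    in_glance = timing.glance_start <= t < glance_end
    return "human" if in_glance else "path"


for t in (0.0, 1.2, 2.0):
    print(t, gaze_target(t, human_visible=True))
```

Exposing `glance_length` as a parameter leaves room for adapting the gaze period to individual preferences, which the mixed participant feedback suggests may be needed.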

The real-world results also show much more muted differences between navigation scenarios than the simulated results. This may be due to differences in sample size or an artifact of using differently sized Likert scales between the simulated and real-world surveys.

The ENT scenario tended to draw out the most significant differences between gazes, particularly for the real-world results. We draw a comparison between this observation and Wiltshire et al. [39], who found that gaze had no statistically significant effect on social presence. A possible reason that Wiltshire et al. offer for this observation is that, for the navigational scenario they studied, gaze had an effect at the automatic level of state attribution, whereas the SPI measures controlled and reflective processes. We speculate that the ENT scenario was the most stimulating experience for users, as the robot is initially unseen by the human before it comes out of the room, and therefore was more likely to trigger the second type of mental process. Effects on social presence for different gazes are thus more pronounced for this particular navigational scenario, and it is the situation that most necessitates a cue towards the human. This suggests that robot cues to the human are more important in scenarios where a physical conflict is more likely.

7 Limitations and Conclusion

In this paper, we investigate the effects of varying the gaze behavior of a mobile robot for various common hallway navigation scenarios. Our work addresses conflicting results from previous studies and establishes that the beneficial use of robot gaze is scenario-dependent. These results will be beneficial in guiding future designs of non-verbal cues of robots in HRI environments, and help robots to be perceived as more socially present, safe, and natural entities to improve the well-being of the humans they interact with.

To answer our research questions, we performed simulated and real-world user studies across three different navigation scenarios. Our results vary based on which scenario is analyzed, showing how people’s perception of robotic gaze cues is situation-dependent. Overall, we find that scenarios with more potential conflicts benefit from human-oriented robot gaze cues the most, typically scoring higher in the Social Presence Inventory metrics, safety, and naturalness. However, the use of these gaze cues in simple scenarios requiring no interaction between the parties may yield no benefit, or as the simulated results suggest may even be detrimental to how socially present people perceive the robot to be.

While we have addressed multiple common hallway scenarios, which was a limitation of past studies, further investigation is required to understand how the scenario should affect what kind of gaze behaviors the robot should employ. Our results may not generalize to the wide variety of dynamic navigation scenarios likely to occur in the real world. For example, scenarios with two or more robots or people introduce an extra layer of complexity to the system. The results from this study should provide valuable insight for developing systems that can tackle more complex navigation scenarios. Regarding the design of the survey, we did not use the full set of items provided by [14] to measure social presence, so a partial re-evaluation of the survey scales may be required to ensure our survey still measures the underlying concepts properly. Moreover, due to the different Likert scales used between the simulated and real-world studies, we are unable to do a direct statistical comparison between the two results.

We have identified possible avenues for future work, summarized here. Firstly, we believe that a combined path-oriented and human-oriented gaze behavior will be able to alleviate some of the issues the human-oriented gaze behavior had with regard to the clarity of the robot’s intentions. Improvements to the path-oriented gaze, such as looking forwards instead of the ground, are also suggested. Secondly, based on our mixed findings about people’s preferences towards longer or shorter gazes, a more in-depth investigation into people’s preferences over the human-oriented gaze period length is recommended, with the aim to be able to reactively adapt the gaze period length to people’s preferences.

Data Availability

The datasets generated during and/or analyzed during the current study are available from the corresponding author on reasonable request.

Notes

No external participants could be recruited for the real-world experiments due to the university COVID-19 policy.

References

Admoni H, Scassellati B (2017) Social eye gaze in human–robot interaction: a review. J Hum Robot Interact 6(1):25–63. https://doi.org/10.5898/JHRI.6.1.Admoni

Biocca F, Harms C, Burgoon JK (2003) Toward a more robust theory and measure of social presence: review and suggested criteria. Presence Teleoper Virtual Environ 12(5):456–480. https://doi.org/10.1162/105474603322761270

Bolu A, Korçak Ö (2021) Adaptive task planning for multi-robot smart warehouse. IEEE Access 9:27346–27358. https://doi.org/10.1109/ACCESS.2021.3058190

Burgoon JK, Manusov V, Guerrero LK (2021) Nonverbal communication. Routledge, New York

Che Y, Okamura AM, Sadigh D (2020) Efficient and trustworthy social navigation via explicit and implicit robot–human communication. IEEE Trans Robot 36(3):692–707. https://doi.org/10.1109/TRO.2020.2964824

Cid F, Moreno J, Bustos P et al (2014) Muecas: a multi-sensor robotic head for affective human robot interaction and imitation. Sensors 14(5):7711–7737. https://doi.org/10.3390/s140507711

Cosgun A, Christensen HI (2018) Context-aware robot navigation using interactively built semantic maps. Paladyn J Behav Robot 9(1):254–276. https://doi.org/10.1515/pjbr-2018-0020

Dautenhahn K, Walters M, Woods S et al (2006) How may I serve you? A robot companion approaching a seated person in a helping context. In: Proceedings of ACM SIGCHI/SIGART Conference HRI, pp 172–179. https://doi.org/10.1145/1121241.1121272

de Winter J, Bazilinskyy P, Wesdorp D et al (2021) How do pedestrians distribute their visual attention when walking through a parking garage? An eye-tracking study. Ergonomics 64(6):793–805. https://doi.org/10.1080/00140139.2020.1862310

de Winter JF, Dodou D (2010) Five-point Likert items: t test versus Mann–Whitney–Wilcoxon. Pract Assess Res Eval 15(1):11. https://doi.org/10.7275/bj1p-ts64

Emery NJ (2000) The eyes have it: the neuroethology, function and evolution of social gaze. Neurosci Biobehav Rev 24(6):581–604. https://doi.org/10.1016/S0149-7634(00)00025-7

Fiore SM, Wiltshire TJ, Lobato EJ et al (2013) Toward understanding social cues and signals in human–robot interaction: effects of robot gaze and proxemic behavior. Front Psychol 4:859. https://doi.org/10.3389/fpsyg.2013.00859

Gittens CL (2021) Remote HRI: a methodology for maintaining COVID-19 physical distancing and human interaction requirements in HRI studies. Inf Syst Frontiers. https://doi.org/10.1007/s10796-021-10162-4

Harms C, Biocca F (2004) Internal consistency and reliability of the networked minds measure of social presence. In: Seventh annual international workshop: Presence, Universidad Politecnica de Valencia Valencia, Spain

Hart J, Mirsky R, Xiao X et al (2020) Using human-inspired signals to disambiguate navigational intentions. In: Proceedings of international conference social robotics. Springer, pp 320–331. https://doi.org/10.1007/978-3-030-62056-1_27

He K, Simini P, Chan WP et al (2022) On-the-go robot-to-human handovers with a mobile manipulator. In: Proceedings of IEEE RO-MAN, pp 729–734. https://doi.org/10.1109/RO-MAN53752.2022.9900642

Hessels RS, Benjamins JS, van Doorn AJ et al (2020) Looking behavior and potential human interactions during locomotion. J Vis 20(10):5. https://doi.org/10.1167/jov.20.10.5

Hessels RS, van Doorn AJ, Benjamins JS et al (2020) Task-related gaze control in human crowd navigation. Atten Percept Psychophys 82(5):2482–2501. https://doi.org/10.3758/s13414-019-01952-9

Kanda T, Shimada M, Koizumi S (2012) Children learning with a social robot. In: Proceedings of the seventh annual ACM/IEEE international conference on human–robot interaction, pp 351–358. https://doi.org/10.1145/2157689.2157809

Khambhaita H, Rios-Martinez J, Alami R (2016) Head-body motion coordination for human aware robot navigation. In: Proceedings of the international workshop HFR, pp 1–8. https://hal.laas.fr/hal-01568838

Kitazawa K, Fujiyama T (2009) Pedestrian vision and collision avoidance behavior: investigation of the information process space of pedestrians using an eye tracker. In: Pedestrian and evacuation dynamics 2008. Springer, pp 95–108. https://doi.org/10.1007/978-3-642-04504-2_7

Klein G, Feltovich PJ, Bradshaw JM et al (2005) Common ground and coordination in joint activity, chap 6. Wiley, Hoboken, pp 139–184

Kumar S, Itzhak E, Edan Y et al (2022) Politeness in human–robot interaction: a multi-experiment study with non-humanoid robots. Int J Soc Robot 14(8):1805–1820. https://doi.org/10.1007/s12369-022-00911-z

Li R, van Almkerk M, van Waveren S et al (2019) Comparing human–robot proxemics between virtual reality and the real world. In: 2019 14th ACM/IEEE international conference on human–robot interaction (HRI). IEEE, pp 431–439

Lu DV (2014) Contextualized robot navigation. PhD thesis, Washington University in St. Louis

Mainprice J, Gharbi M, Siméon T et al (2012) Sharing effort in planning human-robot handover tasks. In: Proceedings of IEEE RO-MAN, pp 764–770. https://doi.org/10.1109/ROMAN.2012.6343844

Mead R, Matarić MJ (2017) Autonomous human–robot proxemics: socially aware navigation based on interaction potential. Auton Robot 41(5):1189–1201. https://doi.org/10.1007/s10514-016-9572-2

Moon A, Troniak DM, Gleeson B et al (2014) Meet me where I’m gazing: how shared attention gaze affects human–robot handover timing. In: Proceedings of ACM IEEE conference HRI, pp 334–341. https://doi.org/10.1145/2559636.2559656

Robinson F, Nejat G (2022) An analysis of design recommendations for socially assistive robot helpers for effective human–robot interactions in senior care. J Rehabil Assist Technol Eng 9:20556683221101389. https://doi.org/10.1177/20556683221101389

Salem M, Dautenhahn K (2015) Evaluating trust and safety in HRI: practical issues and ethical challenges. Emerging policy and ethics of human–robot interaction

Satake S, Kanda T, Glas DF et al (2009) How to approach humans? Strategies for social robots to initiate interaction. In: Proceedings of the 4th ACM/IEEE international conference on human robot interaction, pp 109–116. https://doi.org/10.1145/1514095.1514117

Schrum ML, Johnson M, Ghuy M et al (2020) Four years in review: statistical practices of Likert scales in human–robot interaction studies. In: Companion of the 2020 ACM/IEEE international conference on human–robot interaction, pp 43–52. https://doi.org/10.1145/3371382.3380739

Scoglio AA, Reilly ED, Gorman JA et al (2019) Use of social robots in mental health and well-being research: systematic review. J Med Internet Res 21(7):e13322. https://doi.org/10.2196/13322

Sisbot EA, Marin-Urias LF, Alami R et al (2007) A human aware mobile robot motion planner. IEEE Trans Robot 23(5):874–883. https://doi.org/10.1109/TRO.2007.904911

Srinivasa SS, Ferguson D, Helfrich CJ et al (2010) HERB: a home exploring robotic butler. Auton Robot 28(1):5–20. https://doi.org/10.1007/s10514-009-9160-9

Tatarian K, Stower R, Rudaz D et al (2021) How does modality matter? Investigating the synthesis and effects of multi-modal robot behavior on social intelligence. Int J Soc Robot. https://doi.org/10.1007/s12369-021-00839-w

Terzioğlu Y, Mutlu B, Şahin E (2020) Designing social cues for collaborative robots: the role of gaze and breathing in human–robot collaboration. In: Proceedings of ACM IEEE conference HRI, pp 343–357. https://doi.org/10.1145/3319502.3374829

Vemula A, Muelling K, Oh J (2017) Modeling cooperative navigation in dense human crowds. In: 2017 IEEE international conference on robotics and automation (ICRA). IEEE, pp 1685–1692. https://doi.org/10.1109/ICRA.2017.7989199

Wiltshire T, Lobato E, Wedell A et al (2013) Effects of robot gaze and proxemic behavior on perceived social presence during a hallway navigation scenario. Proc Hum Factors Ergon Soc 57:1273–1277. https://doi.org/10.1177/1541931213571282

Woods S, Walters M, Koay KL et al (2006a) Comparing human robot interaction scenarios using live and video based methods: towards a novel methodological approach. In: 9th IEEE international workshop on advanced motion control, 2006. IEEE, pp 750–755. https://doi.org/10.1109/HRI.2019.8673116

Woods SN, Walters ML, Koay KL et al (2006b) Methodological issues in HRI: a comparison of live and video-based methods in robot to human approach direction trials. In: ROMAN 2006—the 15th IEEE international symposium on robot and human interactive communication. IEEE, pp 51–58. https://doi.org/10.1109/ROMAN.2006.314394

Yilmazyildiz S, Read R, Belpaeme T et al (2016) Review of semantic-free utterances in social human–robot interaction. Int J Hum Comput Interact 32(1):63–85. https://doi.org/10.1080/10447318.2015.1093856

Zecca M, Mizoguchi Y, Endo K et al (2009) Whole body emotion expressions for KOBIAN humanoid robot—preliminary experiments with different emotional patterns. In: Proceedings of IEEE RO-MAN, pp 381–386. https://doi.org/10.1109/ROMAN.2009.5326184

Acknowledgements

We would like to thank our collaborators Matthias Beyrle and Jan Faber at the German Aerospace Center, DLR, for their expert advice and guidance on this project.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This project was supported by the Australian Research Council Discovery Projects Grant, Project ID: DP200102858.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethics Approval

This study has been approved by the Monash University Human Research Ethics Committee (Application ID: 25549).

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Appendices

Appendix A: Simulated Post-hoc results

The full set of results for the post-hoc t-test analyses with Bonferroni corrections for the simulated studies are shown in Tables 2, 3, 4, 5, 6, 7, 8, 9, 10, and 11. Note that the reported t-statistics are all computed as the condition in the first column minus that in the second column, i.e., a positive t-statistic implies the condition in the first column had a higher average rating compared to the second column. Statistically significant results (\(p<0.05\)) are bolded in all tables. To perform Bonferroni corrections, we adjusted the p-values rather than the alpha level of the test, and capped the reported p-values at a maximum value of 1.
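The two conventions described above, the sign of the t-statistic and the Bonferroni adjustment of p-values, can be illustrated with a minimal sketch. The function names and the ratings are made up for illustration, and the number of tests in a correction family is taken here to be the number of p-values passed in, which is an assumption rather than a detail stated in the text.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Paired-sample t-statistic, computed as first minus second.

    A positive value means the first condition was rated higher on average.
    Returns (t, degrees_of_freedom).
    """
    diffs = [a - b for a, b in zip(first, second)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1


def bonferroni_adjust(p_values):
    """Multiply each p-value by the number of tests and cap the result at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Made-up ratings from five participants for two conditions.
t, df = paired_t([5, 4, 5, 3, 4], [3, 4, 2, 2, 3])
print(round(t, 3), df)  # 2.746 4
print(bonferroni_adjust([0.004, 0.03, 0.5]))
```

Adjusting the p-values instead of the alpha level lets every table use the same \(p<0.05\) threshold regardless of how many comparisons it contains.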

Appendix B: Real-world Post-hoc results

The full set of results for the post-hoc t-test analyses with Bonferroni corrections for the real-world studies are shown in Tables 12, 13, 14, 15, 16, 17, 18, 19, 20, and 21. Like the simulated results, the reported t-statistics are all computed as the condition in the first column less that in the second column. Statistically significant results (\(p<0.05\)) are bolded in all tables. To perform Bonferroni corrections, we adjusted the p-values rather than the alpha level of the test, and capped the reported p-values at a maximum value of 1.

Note that for Tables 16, 17, 18, and 19, there was no significant interaction effect between gaze behavior and navigational scenario, so the data was collapsed in each dimension before performing post-hoc analyses on the other dimension.
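One way to collapse the data over a factor is to average each participant's ratings across that factor's levels before running the paired tests on the remaining factor. The sketch below assumes this averaging approach, which the text does not spell out; the function name `collapse` and the scores are hypothetical.

```python
from statistics import mean


def collapse(ratings, keep):
    """Average each participant's ratings over the factor that is dropped.

    ratings maps (participant, gaze, scenario) -> score.
    keep is "gaze" or "scenario"; the other factor is averaged out.
    Returns a dict mapping (participant, level) -> mean score.
    """
    index = {"gaze": 1, "scenario": 2}[keep]
    grouped = {}
    for key, score in ratings.items():
        grouped.setdefault((key[0], key[index]), []).append(score)
    return {k: mean(v) for k, v in grouped.items()}


# Made-up scores for one participant, two gazes, and two scenarios.
ratings = {
    (1, "D", "TW"): 3, (1, "D", "ENT"): 5,
    (1, "HO-S", "TW"): 4, (1, "HO-S", "ENT"): 6,
}
print(collapse(ratings, keep="gaze"))
```

Collapsing with `keep="gaze"` yields one value per participant and gaze, which can then be compared pairwise between gazes.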

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

He, K., Chan, W.P., Cosgun, A. et al. Robot Gaze During Autonomous Navigation and Its Effect on Social Presence. Int J of Soc Robotics 16, 879–897 (2024). https://doi.org/10.1007/s12369-023-01023-y

DOI: https://doi.org/10.1007/s12369-023-01023-y