Abstract

Several remote sensing-based methods have been developed to apply site-specific nitrogen (N) fertilization in crops. They consider spatial and temporal variability in the soil-plant-atmosphere continuum to modulate N applications to the actual crop nutrient status and requirements. However, deriving fertilizer N recommendations exclusively from remote proximal and remote sensing data can lead to substantial inaccuracies and new, more complex approaches are needed.

Therefore, this study presents an improved approach that integrates crop modelling, proximal sensing and forecasts weather data to manage site-specific N fertilization in winter wheat. This improved approach is based on four successive steps: (1) optimal N supply is estimated through the DSSAT crop model informed with a combination of observed and forecast weather data; (2) actual crop N uptake is estimated using proximal sensing; (3) N prescription maps are created merging crop model and proximal sensing information, considering also the contribution of the soil N mineralisation; (4) N-Variable Rate Application (N-VRA) is implemented in the field. A VRA method based on DSSAT fed with historical weather data and a business-as- usual uniform fertilization were also compared.

The methods were implemented in a 23.4 ha field in Northern Italy, cropped to wheat and characterized by large soil variability in texture and organic matter content. Results indicated that the model-based approaches consistently led to higher yields, agronomic efficiencies and gross margins than the uniform N application rate. Furthermore, the proximal sensing-based approach allowed capturing of the spatial variability in crop N uptake and led to a substantial reduction of the spatial variability in yield and protein content. This study grounds the development of web-based software as a friendly tool to optimize the N variable rate application in winter cereals.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

Feeding an estimated global population of 9 billion people by 2050 represents a significant challenge for agriculture. According to FAOSTAT (2021), wheat (Triticum aestivum L. and Triticum durum Desf.) is the crop with the largest harvested area worldwide and in Europe. In Italy, winter wheat is the most widely grown cereal and accounts for approximately 62.1% of the total cereal-cultivated land and for 47.1% of the total cereal production (ISTAT Agricoltura, 2021).

Nitrogen (N) fertilization in wheat is commonly based on yield goals, derived by applying uniform rates without considering the spatial and temporal variability in the soil-plant-atmosphere continuum (Johnson & Raun, 2003). Plant response to different N fertilization rates highly depends on the weather conditions occurred in a given growing season (Basso et al., 2007; Dumont et al., 2015). Therefore, uniform fertilization rates can lead to a misalignment between the N supply and the crop demand, resulting in economic and environmental losses. While an insufficient N supply leads to economic penalties by inhibiting crop development, yield and grain quality (Karatay & Meyer-Aurich, 2020), overfertilization causes environmental problems, such as N leaching, ammonia volatilization and N2O emissions (Perego et al., 2013; De Antoni Migliorati et al., 2014).

To this end, several site-specific approaches have been proposed to optimize the fertilizer rates. They mainly collect in-season spectral data to detect differences in crop growth and nutrient uptake and are then translated into site-specific N rates through algorithms relating optical measures to potential yield (Erdle et al., 2011). Unfortunately, although these approaches increase N use efficiency and yields while reducing N losses, they provide unsatisfactory results when adverse post-sensing weather conditions occur, leading to unexpected reductions in crop production (Raun et al., 2005). In addition, other adverse conditions (e.g. water stress) could be a confounding factor when sensing N status through proximal sensors (Colaco and Bramley, 2018). Therefore, a viable solution to this drawback relies on coupling process-based crop simulation models and seasonal weather forecasts (Hoogenboom, 2000; Jha et al., 2019; Morari et al., 2021). The latter are seasonal time-scale predictions of changes in the climate system, typically provided as monthly anomalies of meteorological variables up to three to six months ahead of time (Cantelaube & Terres, 2005).

Crop simulation models (CSMs) have been primarily employed to quantify the long-term effects of nutrients and water availability on crop growth and development over different environmental conditions (Delin & Stenberg, 2014; Jones et al., 2003). However, their point-based application across the whole field is not feasible due to processing costs and information availability. To address this issue, fields can be divided into homogeneous management zones (MZs) to simulate the crop-soil interactions within such zones to implement a variable rate application (VRA) approach (Basso et al., 2011; Cammarano et al., 2021). To implement this strategy, CSMs can be run with two distinct approaches: (i) using historical weather data (Basso et al., 2011, 2012; Miao et al., 2006; Wong & Asseng, 2006) or (ii) using seasonal forecasts (Cantelaube & Terres, 2005; Pagani et al., 2017). On the one hand, historical weather data are often freely available even though not as sensitive to current weather trends or climate shocks as the weather forecasts. On the other hand, seasonal forecasts and their reliability is strongly related to the geomorphology and atmospheric variability of the investigated area (Pavan & Doblas-Reyes, 2013). Crop simulation models and winter wheat are highly affected by the variability in agrometeorological variables, especially rainfall and temperature. Therefore, accurate seasonal seasonal forecasts are necessary for an early prediction of the final crop yield. In particular, seasonal forecasts are more reliable in areas where climate variability is driven by solid climate signals (such as in the United States; Basso & Liu 2019) than those produced in the Mediterranean region, where climate signals are less evident (Morari et al., 2021). In the next decades, advances in the science of seasonal forecasting will need to allow accurate and reliable forecasts even in areas, such as the Mediterranean Basin, where today they often perform poorly.

However, studies to date have only evaluated the performance of either CSMs fed with historical weather data (Giola et al., 2012; Brown et al., 2018) or seasonal forecasts (Ferrise et al., 2015; Togliatti et al., 2017), while comparisons among these methodologies have been limited (Basso & Liu, 2019). Significantly, so far, no on-farm N-VRA study has compared the agronomic and economic gains of using the uniform N application rate approach with adopting a CSM-based approach that integrates remotely sensed plant N status, seasonal forecasts or historical weather data.

The objectives of the current study were therefore to (1) evaluate an improved N-VRA method based on a CSM integrated with remotely sensed plant N status, seasonal forecasts or historical weather data, and (2) compare the CSM-based N-VRA approaches to the farmer’s uniform fertilization in terms of harvested crop yield and N use efficiency.

Materials and methods

Study site

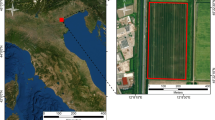

The experiment was conducted over two growing seasons (2018–2019 and 2019–2020) on a 23.4 ha field divided into 16 plots (1.45 ha each) located at Vallevecchia di Caorle (45°37’44.3"N 12°57’07.8"E), Italy. The area belongs to the Venice Lagoon watershed and is classified as vulnerable to nitrate pollution according to the Nitrates Directive 91/676 (EEC 1991). Each plot was rectangular (320 m x 32 m) and bordered by open ditches along the two longer sides. Soil type was mainly sandy-loam (Molli-Gleyic, Cambisols; FAO, 2001), and most of the experimental area was below sea level. The climate is sub-humid, with annual rainfalls of 829 mm and a monthly mean temperature increase from January (-0.1 °C) to July (29.6 °C). The reference evapotranspiration (ET0, yearly average of 860 mm) exceeds rainfall from May to September.

Winter wheat (Triticum aestivum L.) cv. Rebelde (APSOV sementi, Italy) was cultivated during the 2018–2019 season (planting date, November 19th, 2018; harvest date July 6th, 2019) on plots 9–16 and 2019–2020 season (planting date, December 12th, 2019; harvest date, July, 1st, 2020) on plots 17–24 (Fig. 1). Winter wheat was cultivated in the northern part during 2018–2019 and in the southern part during 2019–2020 to test the different methodologies in all management zones.

Minimum and maximum temperature (°C), solar irradiance (W m− 2), rainfall (mm), relative humidity (%), wind speed (m/s) and direction were collected hourly from an on-site automatic weather station (DeltaOHM Srl, Italy). The data was sent via GSM technology to an FTP server weekly. In addition, historical weather data (1992–2018) was retrieved from a nearby weather station (Agenzia Regionale per la Prevenzione e Protezione Ambientale del Veneto, ARPAV) located in Lugugnana, Italy. During the two growing seasons, the area was rainfed and managed according to minimum tillage practices and conventional herbicide, fungicide and insecticide treatments.

Definition of homogeneous management zones

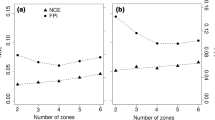

To identify the management zones (MZs), soil properties and yield data were combined using a fuzzy c-means unsupervised clustering algorithm (Odeh et al., 1992) in the Management Zone Analyst (Fridgen et al., 2004) software as reported by Cillis et al., (2018).

The analysis resulted in four different management zones (Fig. 1): two high yielding zones in the northern part of the experimental area (zones 3 and 4) and two low yielding zones (zones 1 and 2) in the southern part. The main soil properties of the four management zones are reported in Table 1.

Fertilization treatments

In this study, three fertilization treatments were tested:

-

1)

Uniform rate: 32 kg N ha-1 (both 2018–2019 and 2019–2020) applied before planting, 70 kg N ha-1 for 2018–2019 and 40 ha-1 for 2019–2020 supplied at tillering, and 70 kg N ha-1 supplied at flag leaf in 2018–2019 and 2019–2020 for both zones. The prescribed rates at tillering followed the business-as-usual quantity of fertilizer supplied by the farmer, higher in 2018–2019 than in 2019–2020 because of plots located in different fertility zones.

-

2)

VRA1: 32 kg N ha-1 applied at planting, N rates for the side dressing 1 and 2 defined by using the DSSAT crop simulation model fed with historical weather data;

-

3)

VRA2: 32 kg N ha-1 applied at planting, N rates for the side dressing 1 and 2 defined by coupling the DSSAT crop simulation model with seasonal forecasts and proximal sensing;

The locations of the different fertilization treatments evaluated in this study are presented in Fig. 2.

Crop and soil samples

2018–2019 and 2019–2020 seasons

Sixteen soil samples, distributed across the field to cover the different fertilization treatment x MZ combinations, were collected prior to the commencement of the growing seasons at three depths (0-0.2 m, 0.2–0.4 m and 0.4–0.6 m) on plots 9–16 during the 2018–2019 season and on plots 17–24 during the 2019–2020 season. Samples were then analyzed for bulk density, soil texture, pH, soil organic matter and total nitrogen. From sowing to harvest, soil moisture was recorded hourly at three different depths (0.2, 0.4 and 0.6 m) on four sampling points (Fig. 2) using fixed soil moisture sensors (HD3910.1; DeltaOHM Srl, Italy).

Soil preparation was carried out following a conventional tillage technique for both growing seasons. Prior to wheat planting, the plots were fertilized using NPK 8-24-24 at a uniform rate of 400 kg ha− 1 (32 kg N ha− 1, 96 kg P2O5 ha− 1 and 96 kg K2O ha− 1). Winter wheat seeds (cultivar Rebelde from Apsov Sementi, Italy) were planted on November 19th, 2018, on plots 9–16, and on December 12th, 2020, on plots 17–24 at an inter-row spacing of 0.125 m and a seeding rate of 220 kg ha− 1. The location of the different fertilization treatments (uniform, VRA1, and VRA2, discussed in the “CSM-based approaches for defining optimal N rates” section) evaluated in this study are presented in Fig. 2. During 2018–2019 and 2019–2020, the uniform treatment was tested over four different plots, while VRA1 and VRA2 treatments were tested over two plots.

During both growing seasons, normalized difference vegetation index (NDVI), leaf area index (LAI), plant height and total aboveground biomass were measured on each of the 16 sampling points at five specific growth stages: tillering (February 27th, 2019 and March 31st, 2020), stem elongation (April 16th, 2019 and April 21st, 2020), flag leaf (May 8th, 2019 and May 12th, 2020), flowering (May 24th, 2019, not collected in 2019–2020), and harvest (July 6th, 2019 and July 1st, 2020). LAI was determined using an AccuPAR LP-80 (Decagon Devices Inc., Pullman, WA, USA) ceptometer. LAI readings were taken within ± 2 h from solar noon. Plant height was measured on five plants per sampling point to monitor plant growth.

Estimation of the optimal N rates with a CSM

Crop simulation model

The decision support system for agrotechnology transfer (DSSAT; Jones et al., 2003)-CERES-Wheat model was used in the study to simulate winter wheat responses to different fertilization rates. The DSSAT crop simulation model has been extensively used over a wide range of soil, environmental conditions, and crops (Anar et al., 2019; Cammarano et al., 2020). The soil water movement is simulated with a tipping bucket approach where saturated downward flow occurs when a soil layer has a water content greater than the drain upper limit (Ritchie et al., 1998). In the CERES-wheat model, plant development is divided into specific phases and the rate of development is calculated as a function of growing degree days (GDDs). In addition, daily dry matter accumulation is computed using the intercepted photosynthetically active radiation (PAR) and crop-specific radiation use efficiency (RUE) values (Jones et al., 2003). The input dataset used in this study consisted of daily weather data (minimum and maximum air temperature, solar radiation and precipitation), soil texture and chemical (organic carbon, pH and total nitrogen) properties, agronomic management and crop parameters.

Crop model calibration and evaluation

The crop parameters were manually calibrated for the Rebelde cultivar through an iterative, trial-and-error procedure. The crop parameters involved in the calibration process were optimum vernalizing temperature (P1V), photoperiod response (P1D), phyllochron (PHINT), kernel number per unit canopy weight at anthesis (G1), standard kernel size under optimum conditions (G2) and non-stressed mature tiller weight (G3). First, the phenology was calibrated by adjusting P1V and P1D. Next, the aboveground biomass and the final yield were calibrated by acting on PHINT, G1, G2 and G3.

The calibration procedure was performed on an independent winter wheat dataset that included phenology (emergence, anthesis and maturity dates) and yield data collected during the 2014–2015 and 2015–2016 seasons in the same experimental field (Cillis et al., 2017). The calibration dataset included yield and phenology data from 8 different fertilization treatments in 2014–2015 and 15 fertilization treatments in 2015–2016, for a total of 23.

In addition, the model was evaluated using phenology (emergence, anthesis, harvest), plant biomass and N uptake, leaf area index and yield over the 2018–2019 and 2019–2020 growing seasons. The dataset included data from 7 different fertilization treatments in 2018–2019 and 6 fertilization treatments in 2019–2020, for a total of 13.

CSM-based approaches for defining optimal N rates

The calibrated model was adopted to determine optimal N rates for the 2018–2019 and 2019–2020 growing seasons for the VRA1 and VRA2 methods. DSSAT was run for the different MZs using two approaches: (1) using 27 years (1992–2018) of historical weather data (VRA1), and (2) informing the model with measured weather data up to the fertilization date, integrated with seasonal forecasts from the fertilization date up to harvest (VRA2).

In the VRA1 method, a set of simulations was run immediately before tillering using historical weather data (1992–2018). Then, the same set of simulations was rerun at the flag leaf stage, specifying the actual fertilization date for the 2nd top dressing.

For VRA2, following the approach described in Morari et al., (2021), the N rate was calculated according to the following steps: (1) use of the DSSAT crop simulation model to estimate the optimal N rates for each MZ; (2) estimation of the actual N uptake with proximal sensing technologies; (3) building of N prescription maps; (4) N variable rate application (VRA).

In the VRA2 method, two distinct sets of simulations were run, one at tillering (1st top dressing) and one at flag leaf (2nd top dressing). On the one hand, DSSAT was run at tillering using the combination of observed weather from sowing date to the 1st top dressing date, and seasonal forecasts from the 1st top dressing up to the harvest date. On the other hand, the weather dataset used for the simulations run at the flag leaf stage included the combination of measured data from sowing date to the 2nd top dressing and seasonal forecasts from the 2nd top dressing up to harvest.

The forecast data were provided as seasonal forecasts, a set of monthly anomalies of meteorological variables for the months following the prediction date (Ferrise et al., 2015), and coupled with the crop model following the approach described in Morari et al., (2021). Seasonal forecasts were produced by the United States National Centers for Environmental Prediction Climate Forecast System version 2 (CFS2; http://cfs.ncep.noaa.gov), which is a fully coupled model representing the complex interaction among the atmosphere, oceans, land and sea-ice extension (Saha et al., 2014).

Two different seasonal forecast datasets were employed for the VRA2 method: (i) the dataset utilized at the 1st top dressing (Forecast 1), which consisted of a combination of actual weather data (covering from planting date to the 1st top dressing) and seasonal forecasts (from the 1st top dressing to harvest date), and (ii) the dataset utilized at the 2nd top dressing (Forecast 2), which combined actual weather data (spanning from planting date to the 2nd top dressing) and seasonal forecasts (from the 2nd top dressing to harvest date).

Definition of the optimal N rates

DSSAT was run with either VRA1 and VRA2 method in each MZ with incremental N rates (from 0 kg N ha− 1 to 100 kg N ha− 1) for both top-dress applications. The optimal combination (1st + 2nd top dressing) was determined using the DSSAT economic analysis package to identify the most economically efficient among the simulated N combinations.

Base production costs were estimated using reference prices for each tillage and harvest operation. Grain price and N fertilizer cost were retrieved immediately before the fertilization date from the Bologna cereal market (http://www.agerborsamerci.it). The price for each N fertilization was set at 20 €/application.

Estimation of the actual N uptake with proximal sensing technologies

Definition of NDVI to N uptake relationships

During the 2018–2019 and 2019–2020 growing seasons, normalized difference vegetation index (NDVI) maps were generated on the aforementioned sampling dates using a Trimble FMX 1000 integrated display (Trimble, Sunnyvale, CA, USA) with data acquired by two proximal GreenSeeker (NTech Industries Incorporation, Ukiah, CA, USA) sensors placed 6-m apart on a bar mounted on a tractor.

On each of the aforementioned sampling dates, all the plants over a 1 m2 area around each sampling point were destructively harvested to determine the total aboveground biomass. Harvested plant samples were dried at 65 °C in a forced-air oven for 72 h for dry weight determination and total nitrogen content (Italian Ministry of Agricultural Food and Forestry Policies, 1999). Then, plant N uptake was calculated by multiplying the N content (in %) by the total aboveground dry biomass weight. Finally, NDVI to N uptake relationships were compared to those reported in Morari et al., 2021 (Figure S1, supplementary materials).

Definition of the actual N uptake maps

NDVI measurements collected immediately before the fertilization dates were converted into N uptake using the linear equation proposed by Bruce et al., (2019):

where GDDacc is the cumulative growing degree days from planting to the fertilization date.

The NDVI map was built using a 1 × 1 m-grid kriging analysis, and the N uptake was computed for each pixel according to the aforementioned linear equation. Since the N uptake and its variability at tillering are usually low, the N rates estimated by the CSM for VRA2 were corrected with in-season estimates of crop N status only at the second top-dressing.

N prescription maps and N variable rate application

While for the VRA1 methodology, the most economically convenient N amounts defined by the CSM were directly assigned in the prescription map, the most economically convenient N amounts for the VRA2 method were coupled with in-season estimates of crop N status following the methodology presented in Morari et al., (2021) and modified as follows:

where OPTIMAL Nup is the total N uptake (in kg N ha− 1) simulated by the model at harvest; ACTUAL Nup (in kg N ha− 1) is the average N uptake estimated in 15 × 30 m pixels using the relationship between NDVI and N uptake, No is the soil N contribution (in kg N ha− 1) from the fertilization date (ti) to the end of the season (tend). EffC is the average fertilization efficiency calculated by the crop model from the fertilization date (ti) to the end of the season (tend).

The optimal N rates defined using the aforementioned methodology for the VRA1 and VRA2 approaches were assigned to the specific polygon in the prescription map. In the VRA2 method plots, N fertilization rates were defined on the basis of the spatial resolution of the fertilizer spreader, which resulted in 15 × 30 m pixels (Fig. 3b, d).

The uniform fertilization treatment consisted of 32 kg N ha− 1 (both 2018–2019 and 2019–2020) applied before planting, 70 kg N ha− 1 for 2018–2019 and 40 ha− 1 for 2019–2020 supplied at tillering, and 70 kg N ha− 1 supplied at flag leaf in 2018–2019 and 2019–2020 for both zones. The prescribed rates at tillering followed the business-as-usual quantity of fertilizer supplied by the farmer, higher in 2018–2019 than in 2019–2020 because of plots located in different fertility zones. (Table 2).

Fertilizers were supplied using a DCM SW5 (DCM, Verona, Italy) ISOBUS, double disk centrifugal spreader equipped with a load cell system. The prescription maps were uploaded to a Trimble GFX-750 (Trimble, Sunnyvale, CA, USA) display with RTK correction. The spreader was calibrated right before each fertilization date. After each fertilization, the map with the applied N rates (as-applied map) was downloaded from the GFX display.

Yield and protein data collected at harvest

Yield data was recorded using a John Deere S680 (Deere & Co, Moline, IL, USA) equipped with a yield monitor and a differential global positioning system. The combine had a 9 m header and collected data every second. Raw yield data was imported using FarmWorks (Trimble, Sunnyvale, CA, USA) cleaned to exclude field-edge effects and harvester manoeuvres using the methodology described in Vega et al., (2019). Yield data were expressed as grain dry matter per hectare (t DM ha− 1).

In addition, 120 destructive wheat samples were collected in both growing seasons, following a mixed sampling scheme based on a regular grid (Chiericati et al., 2007): 40 samples were collected at the nodes of a 30 × 70 m grid and 80 additional samples were collected on transects set out in the east axis at 10 and 20 m from each node. Samples were air-dried at 65 °C for 24 h in a forced-air oven, sieved at 2 mm, and analyzed following the Kjeldahl method.

Nitrogen agronomic efficiency (AE) was calculated in 2018–2019 and 2019–2020 for each 15 × 30 m pixel, dividing the average harvested yield (kg DM ha− 1) by the average total N supplied (kg N ha− 1).

Statistical analysis

The goodness of fit between simulated and observed values during the model calibration and evaluation was determined using the root mean square error (RMSE), the Pearson’s correlation coefficient (r), and the Wilmott index of agreement (D-Index; Willmott 1982):

where Oi is the observed data, O is the mean of the observed data, Si is the simulated data and n is the number of simulated-observed pairs.

The D-Index ranges between 0 (poor fit between simulated and observed values) and 1 (good fit between simulated and observed values).

Yield and protein data were subjected to ordinary kriging using ArcGIS 10.7.1 (ESRI, Redlands, CA, USA) to produce field maps.

Treatment effects were assessed for each parameter of interest (yield, protein content and AE) using a one-way analysis of variance (ANOVA). Post hoc analysis to test differences between treatment means was conducted using Tukey’s honestly significant difference (HSD). For all the analyses, p < 0.10 indicated a significant difference between means.

Results

Weather and soil data

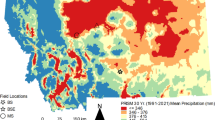

Rainfall totaled 675 mm and 489 mm during the 2018–2019 and the 2019–2020 growing seasons, respectively, while the average amount recorded for the same period between 1992 and 2018 was 485 mm. Both growing seasons were characterized by dry conditions from tillering to the end of April and wet May and June. Daily air temperature ranged from a minimum of -3.4 °C and − 4.9 °C in January to a maximum of 31.3 °C and 31.0 °C at the end of June, respectively (Fig. 4a, b).

Model calibration: 2014–2015 and 2015–2016 growing seasons

Cultivar parameters were adjusted manually during the calibration through an iterative, trial-and-error procedure. Estimated crop model parameters are reported in the supplementary material (Table S1). During the 2014–2015 and 2015–2016 seasons, no differences were observed in the phenological development among the different MZs.

The emergence date was simulated one day later than the observed date (15 DAP vs. 14 DAP) in 2014–2015 and 2 days earlier than the observed date (13 DAP vs. 15 DAP) in 2015–2016. The anthesis date was simulated one day earlier than the observed date (204 DAP vs. 205 DAP) in 2014–2015 and 2 days later than the observed date (201 DAP vs. 199 DAP) in 2015–2016. The maturity date was simulated within one day (234 DAP vs. 233 DAP) in 2014–2015 and 4 days (236 DAP vs. 232 DAP) in 2015–2016 (Fig. 5a).

The model showed a good performance in simulating the harvested yield compared to the observed values (Fig. 5b). The harvested yield had an RMSE of 750.7 kg DM ha− 1, a D-index of 0.82, and a correlation coefficient (r) of 0.76.

NDVI and N uptake maps

N uptake maps collected immediately before the 1st top dressing ranged between 0.4 and 23.5 kg N ha− 1 in 2018–2019 and between 1.1 and 10.6 kg N ha− 1 in 2019–2020. Due to the early sensing period, both maps showed limited spatial variability, with a standard deviation equal to 2.2 kg N ha− 1 for 2018–2019 (Fig. 6a) and 1.4 kg N ha− 1 for 2019–2020 (Fig. 6b).

N uptake maps collected immediately before the 2nd top dressing showed great spatial variability, with standard deviations equal to 24.8 kg N ha− 1 in 2018–2019 and 20.5 kg N ha− 1 in 2019–2020. Nitrogen uptake ranged between 15.7 and 137.1 kg N ha− 1 in 2018–2019 (Fig. 6c) and between 9.5 and 111.7 kg N ha− 1 in 2019–2020 (Fig. 6d). During the 2019–2020 growing season, the unfavorable weather conditions penalized winter wheat growth and resulted in an average N uptake at the flag leaf stage of 52.6 N ha− 1 compared to the 90.2 N ha− 1 observed in 2018–2019.

Identification of the optimal N rates.

Table 2 presents the N fertilization rates for the different treatments (uniform fertilization, VRA1 and VRA2) supplied at tillering (1st top-dressing; February 26th, 2019 and March 31st, 2020) and at flag leaf swollen (2nd top dressing; May 8th, 2019 and May 12th, 2020) during the 2018–2019 and 2019–2020 growing seasons. In addition, response curves of final yield to different total N fertilization rates for the four different management zones simulated using the VRA1 and VRA2 methods are reported in Fig. 7.

During the 2018–2019 season, the VRA1 method identified as optimal N rate 32 kg N ha− 1 at planting, 70 kg N ha− 1 at tillering and 60 kg N ha− 1 at flag leaf for zone 3, whereas 32 kg N ha− 1 + 70 kg N ha− 1 + 80 kg N ha− 1 were recommended for zone 4. During the 2019–2020 season, the VRA1 method recommended 32 kg N ha− 1 at planting, 60 kg N ha− 1 at tillering, 40 kg N ha− 1 at flag leaf for zone 1 and 32 kg N ha− 1 + 70 kg N ha− 1 + 30 kg N ha− 1 for zone 2 (Table 2).

The optimal N rates for the VRA2 methods were 32 kg N ha− 1 + 80 kg N ha− 1 for both zone 3 and 4, during 2018–2019 (Fig. 3a) and 32 kg N ha− 1 + 70 kg N ha− 1 for zone 1 and 32 kg N ha− 1 + 80 kg N ha− 1 for zone 2 in 2019–2020 (Fig. 3c). In 2018–2019 DSSAT simulations recommended to apply 70 kg N ha− 1 at the second top dressing (zone 3) and 90 kg N ha− 1 (zone 4) and no N application in 2019–2020. However, NDVI-derived N uptake values on 15 × 30 m pixels (Gobbo et al., 2021) corrected such recommendations suggesting to apply N rates of 20 kg N ha− 1 for zone 3 and 25 kg N ha− 1 for zone 4 in 2018–2019 (Fig. 3b) and 30 kg N ha− 1 for zone 1 and 15 kg N ha− 1 for zone 2 in 2019–2020 (Fig. 3d).

Comparison among weather datasets.

Table 3 presents the average of solar radiation (MJ m− 2) and temperatures (minimum and maximum), and the sum of rainfall recorded between the 1st top dressing (February 26th, 2019 and March 31st, 2020) and the harvest date (July 6th, 2019 and July 1st, 2020).

During the 2018–2019 growing season, the historical dataset was the closest to the observed temperatures, solar radiation and rainfall values. Forecast 1 and Forecast 2 had higher solar radiation (+ 1.3 and + 1.5 MJ m− 2), Tmin (+ 0.2 °C and + 0.4 °C), Tmax (+ 0.3 °C and + 1.2 °C) than the observed. In addition, the two seasonal forecast datasets severely underestimated the observed rainfall by 249.7 and 297.5 mm, respectively.

During the 2019–2020 growing season, the historical dataset had similar Tmin (+ 0.2 °C) and Tmax (-0.1 °C) values to the observed, while Forecast 1 and 2 were similar to the observed solar radiation (+ 0.4 MJ m− 2). Forecast 1 was the closest to observed rainfall (-10.1 mm), whereas the historical data and Forecast 2 had similar deviations from the observed (+ 30.3 mm and − 26.3 mm, respectively).

Yield and protein content maps

Yields measured across the field showed significant spatial variability in 2018–2019, with values ranging from less than 1.0 t DM ha− 1 to more than 9.0 t DM ha− 1. Yield averages at treatment level ranged from a minimum of 4.72 t DM ha− 1 for the VRA2-Zone 3 treatment to a maximum of 5.77 t DM ha− 1 for VRA1-Zone 3. Higher yields were observed in the northern part of the map, while lower values were observed in the southeastern part (Fig. 8a). Protein content showed great spatial variability, with values ranging from 13.6 to 17.0%. Low protein contents were observed in the northeastern part of the experimental area and lower values in the south and southeastern parts (Fig. 8b).

During the 2019–2020 season, measured yields were lower than in the 2018–2019 one due to unfavourable weather conditions that occurred immediately after planting (wetter and colder than usual) and from the 2nd node to booting (dryer than usual). Nevertheless, spatial variability in yield was also observed in 2019–2020, with values ranging from less than 1.0 t DM ha− 1 to more than 5.5 t DM ha− 1 (Fig. 8c). Treatment yield averages ranged from a minimum of 2.72 t DM ha− 1 for the uniform treatment to a maximum of 3.38 t DM ha− 1 for VRA2-Zone 1. As in 2018–2019, protein content showed a substantial spatial variability (Fig. 8d), with values ranging from 12.4 to 17.8%. On average, higher protein content values were observed in the VRA1 and VRA2 treatments (plots 2, 3, 7, and 8) and lower values in the uniform treatment (plots 1, 3, 4, and 5).

In 2018–2019, the VRA1 had a significantly higher yield (5.53 t DM ha− 1) than the uniform (4.90 t DM ha− 1) and VRA2 (4.84 t DM ha− 1) fertilization treatments. VRA1 and VRA2 treatments had slightly higher protein content, on average + 0.4% and 0.5%, respectively, but not significantly different than the uniform treatment. Moreover, the uniform treatment had the lowest agronomic efficiency (AE; 28.7 kg grain kg N− 1) when compared to VRA1 (31.7 kg grain kg N− 1 applied) and VRA2 (36.2 kg grain kg N− 1 applied). In addition, the uniform fertilization had the lowest gross margin (506.8 € ha− 1), while the VRA1 and VRA2 methodologies resulted in higher gross margins (659.2 € ha− 1 and 538.0 € ha− 1).

In 2019–2020, the uniform fertilization treatment had the lowest production (2.72 t DM ha− 1), with an average loss of 0.22 and 0.39 t DM ha− 1 compared with VRA1 and 2 (Table 4). The difference in production between the treatments was, however, not statistically significant. Both VRA1 and VRA2 treatments resulted in significantly higher protein content (16.4% and 16.3%) than the uniform treatment (14.1%). Moreover, the uniform treatments had the lowest agronomic efficiency (AE; 19.2 kg grain kg N− 1) (p < 0.01) when compared to VRA1 (22.3 kg grain kg N− 1 applied) and VRA2 (25.4 kg grain kg N− 1 applied).

The VRA1 and VRA2 treatments showed a significantly higher agronomic efficiency compared with the uniform application rate (p < 0.10) (Table 4). The uniform fertilization had the lowest gross margin (62.3 € ha− 1), while the VRA1 and VRA2 methodologies resulted in + 101% and + 84% greater gross margins (125.4 € ha− 1 and 167.9 € ha− 1), respectively.

Model evaluation: 2018–2019 and 2019–2020 growing seasons

The calibrated model was evaluated using the datasets collected over the 2018–2019 and 2019–2020 growing seasons. No differences were observed in the phenological development among the different MZs. The emergence date was simulated one day earlier than the observed date (23 DAP vs. 24 DAP) in 2018–2019 and 3 days later than the observed date (31 DAP vs. 28 DAP) in 2019–2020. The anthesis date was simulated one day later than the observed date (184 DAP vs. 182 DAP) in 2018–2019 and 3 days earlier than the observed date (156 DAP vs. 159 DAP) in 2019–2020. Finally, the harvest date was simulated within four days (214 DAP vs. 218 DAP) in 2018–2019 and 2 days (189 DAP vs. 191 DAP) in 2019–2020.

The model performed well in predicting the observed aboveground crop parameters. The comparison between observed and simulated harvested yield resulted in an RMSE of 437.9 kg DM ha− 1, a D-index of 0.94 and a coefficient of determination (r2) of 0.88 (Fig. 9a). The comparison resulted in an RMSE of 0.67 and a D-index of 0.93 for leaf area index (LAI; Fig. 9b) and an RMSE of 1263.2 kg DM ha− 1 and 15.5 kg N ha− 1, and a D-index of 0.97 and 0.96 for aboveground biomass and N uptake, respectively (Fig. 9c, d).

Discussion

Model parameterization

The yields achieved with the three fertilization approaches during the 2018–2019 season were in line with the seasonal average winter wheat yields reported for the Veneto region (5.5 t ha− 1). However, in 2019–2020, the yields measured at the trial were less than half of the average regional yields reported for that year(6.5 t ha− 1; ISTAT Agricoltura, 2021). During the 2018–2019, winter wheat was grown on the two most productive managements zones (Zone 3 and 4), resulting in yields close to the Veneto regional average. In 2019–2020, winter wheat was grown on the two least productive management zones, resulting in lower crop yields. Low yields in 2019–2020 were also related to outlier weather conditions observed at the experimental area, with planting conditions occurring in sub-ideal soil moisture due to the wet winter.

However, the model was able to successfully reproduce these seasonal dynamics, showing the robustness and importance of the calibration and evaluation process. The calibration and evaluation statistics obtained in this study (RMSE and D-index) agree with previous results (Attia et al., 2016; Li et al., 2018) and indicate the model evaluation results were satisfactory. A recent study by Zhang et al., (2019) used the DSSAT model to evaluate long-term responses in winter wheat productivity and N use to N fertilization. In this study, the calculated RMSE ranged between 1148.3 and 1307.5 kg DM ha− 1 for simulated biomass, 274.6 and 476.8 kg DM ha− 1 for yield, and between 3.11 and 17.78 kg N ha− 1 for canopy N. These results by Zhang et al., (2019) align with the RMSEs obtained in this study, which were 1263.2 kg DM ha− 1 for biomass, 437.9 kg DM ha− 1 for yield, and 15.5 kg N ha− 1 for canopy N.

Importantly, in the study, model performance during the evaluation dataset was better than during the calibration one (RMSE of 750.7 DM ha− 1 in the calibration vs. 437.9 kg DM ha− 1 in the evaluation for yields). This result is relatively rare across studies employing crop models (Jing et al., 2017; Roll et al., 2020), as weaker model performances in the evaluation phase often occur when the dataset used in the model calibration is comparatively small and/or contains experimental errors. Therefore, the satisfactory validation results achieved in this study show the importance of parameterizing crop models using large, multi-seasonal datasets characterized by limited experimental errors (Ehrhardt et al., 2018).

Proximal sensing and spatial variability

Proximally-sensed NDVI showed low spatial variability at tillering, while substantial spatial variability was observed immediately before the second top dressing at flag leaf. The use of proximal sensing captured spatial variability in plant vigor at a high spatial resolution. This aspect is essential for small fields such as the one used in this study (characterized by 30 × 500 m plots), where remote sensing platforms are highly influenced by field-edge effects (Mulla, 2013). Proximal sensors and biomass samples (Figure S1, supplementary materials) confirmed previous NDVI to N uptake relationships in winter wheat (Li et al., 2008; Morari et al., 2021).

As widely reported, the sensitivity of proximal sensors to changes in plant biomass decrease dramatically when approaching NDVI saturation (Aparicio et al., 2000; Naser et al., 2020).

To avoid this issue, this study adopted an early application of N fertilizers before the booting stage and used the linear correlation described in Bruce et al., (2019), which accounts for accumulated GDDs.

CSM-based approaches

Crop simulation models have been extensively applied in the scientific literature to determine optimal fertilization rates (De Antoni Migliorati et al., 2021; Cammarano et al., 2021) and assess the impact of climate change on crop production (Asseng et al., 2013, 2019; Cammarano et al., 2019). However, no on-farm N-VRA study has compared the agronomic and economic gains of the uniform N application rate approach with adopting CSM-based methodologies integrating remotely sensed plant N status and seasonal forecasts or historical weather data.

The two CSM-based approaches resulted in significantly higher wheat yield in 2018–2019, protein content in 2019–2020 and agronomic efficiency in 2018–2019 and 2019–2020 growing seasons (Table 4). CSM-based N variable rate resulted in a greater gross margin (+ 30% and + 84% for VRA1 and + 6% and + 101% for VRA2) than the values observed in the uniform treatment. These findings confirm the accuracy and profitability of CSM-based N rates when the model is parameterized using high-quality, multi-seasonal datasets. These results agree with the findings reported by other authors on N-VRA approaches. For example, Basso et al., (2011) utilized the SALUS model to provide N-VRA rates in Italy, showing how CSM-based approaches had similar AE and crop yield (on average, + 1.36%) and greater NUE than uniform fertilization. In a recent study, Argento et al., (2021) stated that variable-rate fertilization resulted in greater yield (from 2 to 10%) and greater profit than standard fertilization. Similar results were provided for winter wheat by Stamatiadis et al., (2018), where N-VRA utilized 38–72% fewer N inputs and had a 118 € ha− 1 higher revenue over fertilizer costs than the farmer’s uniform fertilization approach.

Using a CSM to provide in-season N recommendation offered clear advantages, but its implementability depends on the type of weather data used to inform the model. In this study, feeding the model with historical weather data resulted in greater agronomic and economic performance than the uniform treatment, requiring only a limited amount of data to run the simulations. Moreover, historical weather data are easily and freely accessible in many countries and do not require any further steps to be utilized in a CSM. On the other hand, feeding the model with seasonal forecasts data requires substantial technical capabilities and efforts to produce reliable seasonal forecasts. Of course, producing seasonal forecasts is not as easy as downloading historical data from a national or international database, on top of which it has to be noted that seasonal forecasts produced in the Mediterranean regions are usually less reliable than those produced in areas characterized by solid climate signals (Pavan & Doblas-Reyes, 2013; Basso & Liu, 2019). As reported in Costa-Saura et al., (2022), several sources of uncertainties exist for explaining this poor performance. Beside the already mentioned weak relationships between large-scale climate signals and local climate variability, model approximations of physical processes and the imperfect knowledge of the system’s initial conditions may lead to inaccurate predictions. Nonetheless, seasonal forecasting since the beginning has experiencing a persistent improvement (Weisheimer & Palmer, 2014). In particular, several research programs, in recent years, have been started to explore the source of uncertainties and improve seasonal forecasts in areas where they currently perform poorly, thus increasing their potential usefulness for decision making under different geographical and environmental contexts (Costa-Saura et al., 2022).

Togliatti et al., (2017), who coupled seasonal forecasts with the APSIM model, found that the accuracy in yield predictions was inversely proportional to the forecast length. In the current study, seasonal forecasts performed poorly in the 2018–2019 growing season, underestimating the observed rainfall by 45.7% for the Forecast 1 dataset and 54.4% for the Forecast 2 dataset. Conversely, seasonal forecasts produced in 2019–2020 had solar radiation, Tmin and rainfall values close to the observed ones, while Tmax was on average 1 °C higher than the observed. During both growing seasons, moving from an early prediction (Forecast 1; 120 days before harvest) to seasonal forecasts produced approximately 60 days before harvest did not improve the accuracy of the dataset compared to the observed weather variables. As discussed in Morari et al., (2021), accurate crop simulations were produced when the observed rainfall was similar to or lower than the climatological average (i.e. 2019–2020), while the system performed poorly when the growing season was characterized by abundant precipitation (i.e. 2018–2019).

Importantly, the accurate seasonal forecasts in 2019–2020 resulted in substantially better model performance, which led to VRA2 recording the higher yields and AE compared with running the model with historical weather (VRA1) (Table 4). These results confirm the remarks reported by Asseng et al., (2016), who observed how accurate short-term rainfall forecasts in dryland agriculture could increase farmer’s benefits because of better sowing, fertilization and fungicide application decisions.

Conclusion

Even if weather conditions differed substantially across the 2018–2019 and 2019–2020 growing seasons in this study, crop model-based fertilization recommendations resulted in consistently greater yields, N efficiency and profit for farmers than the uniform N application approach. These findings indicate that either (i) the use of historical weather data or (ii) seasonal forecasts provide farmers with better fertilization recommendations than the uniform, business-as-usual method, even when outlier conditions occur. In addition, the combination of proximally-sensed NDVI with seasonal forecasts well captured the spatial and temporal variability in plant vigor immediately before fertilization, which led to substantial increases in N use efficiency.

The results of this study confirm that a system that integrates crop modelling, proximal sensing and seasonal historic or forecast weather data could be used to develop an intuitive online tool that allows farmers to define the best N fertilization rates for the simulated crop. Therefore, future research will need to focus on integrating proximally and remotely sensed data into the crop simulation model through data assimilation or autocalibration strategies, leading to accurate yield estimates and optimal N rates for each management zone.

References

Anar, M. J., Lin, Z., Hoogenboom, G., Shelia, V., Batchelor, W. D., Teboh, J. M., et al. (2019). Modeling growth, development and yield of Sugarbeet using DSSAT. Agricultural Systems, 169, 58–70. https://doi.org/10.1016/j.agsy.2018.11.010

Aparicio, N., Villegas, D., Casadesus, J., Araus, J. I., & Royo, C. (2000). Spectral Vegetation Indices as Nondestructive Tools for Determining Durum Wheat Yield. Agronomy Journal, 91, 83–91. https://doi.org/10.2134/agronj2000.92183x

Argento, F., Anken, T., Abt, F., Vogelsanger, E., Walter, A., & Liebisch, F. (2021). Site-specific nitrogen management in winter wheat supported by low-altitude remote sensing and soil data. Precision Agriculture, 22(2), 364–386

Asseng, S., Ewert, F., Rosenzweig, C., Jones, J. W., Hatfield, J. L., Ruane, A. C., et al. (2013). Uncertainty in simulating wheat yields under climate change. Nature climate change, 3(9), 827–832. https://doi.org/10.1038/nclimate1916

Asseng, S., McIntosh, P. C., Thomas, G., Ebert, E. E., & Khimashia, N. (2016). Is a 10-day rainfall forecast of value in dry-land wheat cropping? Agricultural and Forest Meteorology, 216, 170–176. https://doi.org/10.1016/j.agrformet.2015.10.012

Asseng, S., Martre, P., Maiorano, A., Rötter, R. P., O’Leary, G. J., Fitzgerald, G. J., et al. (2019). Climate change impact and adaptation for wheat protein. Global Change Biology, 25(1), 155–173. https://doi.org/10.1111/gcb.14481

Attia, A., Rajan, N., Xue, Q., Nair, S., Ibrahim, A., & Hays, D. (2016). Application of DSSAT-CERES-Wheat model to simulate winter wheat response to irrigation management in the Texas High Plains. Agricultural Water Management, 165, 50–60. https://doi.org/10.1016/j.agwat.2015.11.002

Basso, B., Bertocco, M., Sartori, L., & Martin, E. C. (2007). Analyzing the effects of climate variability on spatial pattern of yield in a maize–wheat–soybean rotation. European Journal of Agronomy, 26(2), 82–91. https://doi.org/10.1016/j.eja.2006.08.008

Basso, B., & Liu, L. (2019). Seasonal crop yield forecast: Methods, applications, and accuracies. Advances in Agronomy, 154, 201–255. https://doi.org/10.1016/bs.agron.2018.11.002

Basso, B., Ritchie, J. T., Cammarano, D., & Sartori, L. (2011). A strategic and tactical management approach to select optimal N fertilizer rates for wheat in a spatially variable field. European Journal of Agronomy, 35(4), 215–222. https://doi.org/10.1016/j.eja.2011.06.004

Basso, B., Sartori, L., Cammarano, D., Fiorentino, C., Grace, P. R., Fountas, S., et al. (2012). Environmental and economic evaluation of N fertilizer rates in a maize crop in Italy: A spatial and temporal analysis using crop models. Biosystems Engineering, 113(2), 103–111. https://doi.org/10.1016/j.biosystemseng.2012.06.012

Brown, J. N., Hochman, Z., Holzworth, D., & Horan, H. (2018). Seasonal climate forecasts provide more definitive and accurate crop yield predictions. Agricultural and Forest Meteorology, 260, 247–254. https://doi.org/10.1016/j.agrformet.2018.06.001

Bruce, M. A., Moretto, J., Polese, R., & Morari, F. (2019). Optimizing durum wheat cultivation in Northern Italy: Assessing proximal and remote sensing derived from different platforms for variable-rate application of nitrogen. In Stafford, J. V. (Ed.) Proceedings of the 12th European Conference on Precision Agriculture. Precision agriculture’19 (pp. 73–84). Wageningen, The Netherlands: Wageningen Academic Publishers. https://doi.org/10.3920/978-90-8686-888-9_1

Cammarano, D., Basso, B., Holland, J., Gianinetti, A., Baronchelli, M., & Ronga, D. (2021). Modeling spatial and temporal optimal N fertilizer rates to reduce nitrate leaching while improving grain yield and quality in malting barley. Computers and Electronics in Agriculture, 182, 105997. https://doi.org/10.1016/j.compag.2021.105997

Cammarano, D., Ronga, D., di Mola, I., Mori, M., & Parisi, M. (2020). Impact of climate change on water and nitrogen use efficiencies of processing tomato cultivated in Italy. Agricultural Water Management, 241, 106336. https://doi.org/10.1016/j.agwat.2020.106336

Cammarano, D., Ceccarelli, S., Grando, S., Romagosa, I., Benbelkacem, A., Akar, T., et al. (2019). The impact of climate change on barley yield in the Mediterranean basin. European Journal of Agronomy, 106, 1–11. https://doi.org/10.1016/j.eja.2019.03.002

Cantelaube, P., & Terres, J. M. (2005). Seasonal weather forecasts for crop yield modelling in Europe. Tellus A: Dynamic Meteorology and Oceanography, 57(3), 476–487. https://doi.org/10.3402/tellusa.v57i3.14669

Chiericati, M., Morari, F., Sartori, L., Ortiz, B., Perry, C., & Vellidis, G. (2007). Delineating management zones to apply site-specific irrigation in the Venice lagoon watershed. In J. V. Stafford (Ed.), Precision Agriculture ‘07—Proceedings of the 6th European Conference on Precision Agriculture (pp. 599–605). Wageningen, The Netherlands: Wageningen Academic Publishers. https://doi.org/10.3920/978-90-8686-603-8

Cillis, D., Pezzuolo, A., Marinello, F., Basso, B., Colonna, N., Furlan, L., et al. (2017). Conservative Precision Agriculture: an assessment of technical feasibility and energy efficiency within the LIFE + AGRICARE project. In J A Taylor, D Cammarano, A Prashar, A Hamilton (Eds.) Proceedings of the 11th European Conference on Precision Agriculture. Advances in Animal Biosciences, 8(2), 439–443. https://doi.org/10.1017/S204047001700019X

Cillis, D., Pezzuolo, A., Marinello, F., & Sartori, L. (2018). Field-scale electrical resistivity profiling mapping for delineating soil condition in a nitrate vulnerable zone. Applied Soil Ecology, 123, 780–786. https://doi.org/10.1016/j.apsoil.2017.06.025

Colaço, A. F., & Bramley, R. G. (2018). Do crop sensors promote improved nitrogen management in grain crops? Field Crops Research, 218, 126–140

Costa-Saura, J., Mereu, V., Santini, M., Trabucco, A., Spano, D., & Bacciu, V. (2022). Performances of climatic indicators from seasonal forecasts for ecosystem management: The case of Central Europe and the Mediterranean. Agricultural and Forest Meteorology, 319, 108921. https://doi.org/10.1016/j.agrformet.2022.108921

De Antoni Migliorati, M., Scheer, C., Grace, P. R., Rowlings, D. W., Bell, M., & McGree, J. (2014). Influence of different nitrogen rates and DMPP nitrification inhibitor on annual N2O emissions from a subtropical wheat–maize cropping system. Agriculture Ecosystems & Environment, 186, 33–43. https://doi.org/10.1016/j.agee.2014.01.016

De Antoni Migliorati, M., Parton, W. J., Bell, M. J., Wang, W., & Grace, P. R. (2021). Soybean fallow and nitrification inhibitors: Strategies to reduce N2O emission intensities and N losses in Australian sugarcane cropping systems. Agriculture. Ecosystems & Environment, 306, 107150. https://doi.org/10.1016/j.agee.2020.107150

Delin, S., & Stenberg, M. (2014). Effect of nitrogen fertilization on nitrate leaching in relation to grain yield response on loamy sand in Sweden. European Journal of Agronomy, 52, 291–296. https://doi.org/10.1016/j.eja.2013.08.007

Dumont, B., Basso, B., Leemans, V., Bodson, B., Destain, J. P., & Destain, M. F. (2015). A comparison of within-season yield prediction algorithms based on crop model behaviour analysis. Agricultural and Forest Meteorology, 204, 10–21. https://doi.org/10.1016/j.agrformet.2015.01.014

Directive, E. C. C. (1991). Council Directive 91/676/EEC Concerning the Protection of Waters Against Pollution Caused by Nitrates from Agricultural Sources

Ehrhardt, F., Soussana, J. F., Bellocchi, G., Grace, P., McAuliffe, R., Recous, S., et al. (2018). Assessing uncertainties in crop and pasture ensemble model simulations of productivity and N2O emissions. Global Change Biology, 24(2), e603–e616. https://doi.org/10.1111/gcb.13965

Erdle, K., Mistele, B., & Schmidhalter, U. (2011). Comparison of active and passive spectral sensors in discriminating biomass parameters and nitrogen status in wheat cultivars. Field Crops Research, 124(1), 74–84. https://doi.org/10.1016/j.fcr.2011.06.007

FAOSTAT (2021). Winter wheat data for Europe. http://www.fao.org/faostat/en/#data/QCL. Accessed November 17th, 2021

Ferrise, R., Toscano, P., Pasqui, M., Moriondo, M., Primicerio, J., Semenov, M. A., et al. (2015). Monthly-to-seasonal predictions of durum wheat yield over the Mediterranean Basin. Climate Research, 65, 7–21. https://doi.org/10.3354/cr01325

Fridgen, J. J., Kitchen, N. R., Sudduth, K. A., Drummond, S. T., Wiebold, W. J., & Fraisse, C. W. (2004). Management Zone Analyst (MZA): Software for Subfield Management Zone Delineation. Agronomy Journal, 96(1), 1–17. https://doi.org/10.2134/agronj2004.1000

Giola, P., Basso, B., Pruneddu, G., Giunta, F., & Jones, J. W. (2012). Impact of manure and slurry applications on soil nitrate in a maize–triticale rotation: Field study and long term simulation analysis. European Journal of Agronomy, 38, 43–53. https://doi.org/10.1016/j.eja.2011.12.001

Gobbo, S., Morari, F., Ferrise, R., De Antoni Migliorati, M., Furlan, L., & Sartori, L. (2021). Evaluation of different crop model-based approaches for variable rate nitrogen fertilization in winter wheat. In Stafford, J. V. (Ed.) Proceedings of the 13th European Conference on Precision Agriculture. Precision agriculture’21 (pp. 49–56). Wageningen, The Netherlands: Wageningen Academic Publishers. https://doi.org/10.3920/978-90-8686-916-9_4

Hoogenboom, G. (2000). Contribution of agrometeorology to the simulation of crop production and its applications. Agricultural and Forest Meteorology, 103(1–2), 137–157. https://doi.org/10.1016/S0168-1923(00)00108-8

Istituto Nazionale di Statistica (ISTAT) (2021). Istituto Nazionale di Statistica (ISTAT): http://dati.istat.it. Accessed on November 17th, 2021

Italian Ministry of Agricultural Food and Forestry Policies (1999). Metodi ufficiali di analisi chimica del suolo, 13(09)

Jha, P. K., Athanasiadis, P., Gualdi, S., Trabucco, A., Mereu, V., Shelia, V., et al. (2019). Using daily data from seasonal forecasts in dynamic crop models for yield prediction: a case study for rice in Nepal’s Terai. Agricultural and Forest Meteorology, 265, 349–358. https://doi.org/10.1016/j.agrformet.2018.11.029

Jing, Q., Qian, B., Shang, J., Huffman, T., Liu, J., Pattey, E., et al. (2017). Assessing the options to improve regional wheat yield in eastern Canada using the csm–ceres–wheat model. Agronomy Journal, 109(2), 510–523. https://doi.org/10.2134/agronj2016.06.0364

Johnson, G. V., & Raun, W. R. (2003). Nitrogen response index as a guide to fertilizer management. Journal of Plant Nutrition, 26(2), 249–262. https://doi.org/10.1081/PLN-120017134

Jones, J. W., Hoogenboom, G., Porter, C. H., Boote, K. J., Batchelor, W. D., Hunt, L. A., et al. (2003). The DSSAT cropping system model. European Journal of Agronomy, 18(3–4), 235–265. https://doi.org/10.1016/S1161-0301(02)00107-7

Karatay, Y. N., & Meyer-Aurich, A. (2020). Profitability and downside risk implications of site-specific nitrogen management with respect to wheat grain quality. Precision Agriculture, 21(2), 449–472. https://doi.org/10.1007/s11119-019-09677-3

Li, Z., He, J., Xu, X., Jin, X., Huang, W., Clark, B., et al. (2018). Estimating genetic parameters of DSSAT-CERES model with the GLUE method for winter wheat (Triticum aestivum L.) production. Computers and Electronics in Agriculture, 154, 213–221. https://doi.org/10.1016/j.compag.2018.09.009

Li, F., Gnyp, M. L., Jia, L., Miao, Y., Yu, Z., Koppe, W., et al. (2008). Estimating N status of winter wheat using a handheld spectrometer in the North China Plain. Field Crops Research, 106(1), 77–85. https://doi.org/10.1016/j.fcr.2007.11.001

Miao, Y., Mulla, D. J., Batchelor, W. D., Paz, J. O., Robert, P. C., & Wiebers, M. (2006). Evaluating management zone optimal nitrogen rates with a crop growth model. Agronomy Journal, 98(3), 545–553. https://doi.org/10.2134/agronj2005.0153

Morari, F., Zanella, V., Gobbo, S., Bindi, M., Sartori, L., Pasqui, M., et al. (2021). Coupling proximal sensing, seasonal forecasts and crop modelling to optimize nitrogen variable rate application in durum wheat. Precision Agriculture, 22, 75–98. https://doi.org/10.1007/s11119-020-09730-6

Mulla, D. J. (2013). Twenty five years of remote sensing in precision agriculture: Key advances and remaining knowledge gaps. Biosystems engineering, 114(4), 358–371. https://doi.org/10.1016/j.biosystemseng.2012.08.009

Naser, M. A., Khosla, R., Longchamps, L., & Dahal, S. (2020). Using NDVI to differentiate wheat genotypes productivity under dryland and irrigated conditions. Remote Sensing, 12(5), 824. https://doi.org/10.3390/rs12050824

Odeh, I. O. A., McBratney, A. B., & Chittleborough, D. J. (1992). Soil pattern recognition with fuzzy-c‐means: Application to classification and soil landform interrelationships. Soil Science Society of America Journal, 56(2), 505–516. https://doi.org/10.2136/sssaj1992.03615995005600020027x

Pagani, V., Guarneri, T., Fumagalli, D., Movedi, E., Testi, L., Klein, T., et al. (2017). Improving cereal yield forecasts in Europe–The impact of weather extremes. European Journal of Agronomy, 89, 97–106. https://doi.org/10.1016/j.eja.2017.06.010

Pavan, V., & Doblas-Reyes, F. J. (2013). Calibrated multi-model ensemble summer temperature predictions over Italy. Climate Dynamics, 41(7–8), 2115–2132. https://doi.org/10.1007/s00382-013-1869-7

Perego, A., Giussani, A., Fumagalli, M., Sanna, M., Chiodini, M., Carozzi, M., et al. (2013). Crop rotation, fertilizer types and application timing affecting nitrogen leaching in nitrate vulnerable zones in Po Valley. Italian Journal of Agrometeorology, 2, 39–50

Raun, W. R., Solie, J. B., Stone, M. L., Martin, K. L., Freeman, K. W., Mullen, et al. (2005). Optical sensor-based algorithm for crop nitrogen fertilization. Communications in Soil Science and Plant Analysis, 36(19–20), 2759–2781. https://doi.org/10.1080/00103620500303988

Ritchie, J. T., Singh, U., Godwin, D. C., & Bowen, W. T. (1998). Cereal growth, development and yield. In G. Y. Tsuji, G. Hoogenboom, & P. K. Thornton (Eds.), Understanding Options for Agricultural Production (pp. 79–98). Dordrecht, The Netherlands: Kluwer Academic Publishers. https://doi.org/10.1007/978-94-017-3624-4_5

Röll, G., Memic, E., & Graeff-Hönninger, S. (2020). Implementation of an automatic time-series calibration method for the DSSAT wheat models to enhance multi‐model approaches. Agronomy Journal, 112(5), 3891–3912. https://doi.org/10.1002/agj2.20328

Saha, S., Moorthi, S., Wu, X., Wang, J., Nadiga, S., Tripp, P., et al. (2014). The NCEP climate forecast system version 2. Journal of Climate, 27(6), 2185–2208. https://doi.org/10.1175/JCLI-D-12-00823.1

Stamatiadis, S., Schepers, J. S., Evangelou, E., Tsadilas, C., Glampedakis, A., Glampedakis, M., et al. (2018). Variable-rate nitrogen fertilization of winter wheat under high spatial resolution. Precision Agriculture, 19(3), 570–587. https://doi.org/10.1007/s11119-017-9540-7

Togliatti, K., Archontoulis, S. V., Dietzel, R., Puntel, L., & VanLoocke, A. (2017). How does inclusion of weather forecasting impact in-season crop model predictions? Field Crops Research, 214, 261–272. https://doi.org/10.1016/j.fcr.2017.09.008

Vega, A., Córdoba, M., Castro-Franco, M., & Balzarini, M. (2019). Protocol for automating error removal from yield maps. Precision Agriculture, 20(5), 1030–1044. https://doi.org/10.1007/s11119-018-09632-8

Weisheimer, A., & Palmer, T. N. (2014). On the reliability of seasonal climate forecasts. Journal of The Royal Society Interface, 11(96), 20131162

Willmott, C. J. (1982). Some comments on the evaluation of model performance. Bulletin of the American Meteorological Society, 63(11), 1309–1313. https://doi.org/10.1175/1520-0477

Wong, M. T. F., & Asseng, S. (2006). Determining the causes of spatial and temporal variability of wheat yields at sub-field scale using a new method of upscaling a crop model. Plant and Soil, 283(1), 203–215. https://doi.org/10.1007/s11104-006-0012-5

Zhang, J., Liu, X., Liang, Y., Cao, Q., Tian, Y., Zhu, Y., et al. (2019). Using a portable active sensor to monitor growth parameters and predict grain yield of winter wheat. Sensors (Basel, Switzerland), 19(5), 1108. https://doi.org/10.3390/s19051108

Cited website

Acknowledgements

This project was funded by the Cassa di Risparmio di Padova e Rovigo (CARIPARO), project AGRIGNSSVeneto-Precision Positioning for Precision Agriculture. The authors are grateful to Dr. Franco Gasparini, Dr. Domenico Giora, Dr. Marco Benetti and Dr. Filippo Ferro for the technical assistance provided during the field experiment.

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that thay have no conflict of interest.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gobbo, S., De Antoni Migliorati, M., Ferrise, R. et al. Evaluation of different crop model-based approaches for variable rate nitrogen fertilization in winter wheat. Precision Agric 23, 1922–1948 (2022). https://doi.org/10.1007/s11119-022-09957-5

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11119-022-09957-5