Abstract

This paper investigates the potential for a causal relationship between certain supply-side policies and UK output and productivity growth between 1970 and 2009. We outline an open economy DSGE model of the UK in which productivity growth is determined by the tax and regulatory environment faced by firms. This model is estimated and tested using simulation-based econometric methods (indirect inference). Using Monte Carlo methods we investigate the power of the test as we apply it, allowing the construction of uncertainty bounds for the structural parameter estimates and hence for the quantitative implications of policy reform in the estimated model. We also test and confirm the model’s identification, thus ensuring that the direction of causality is unambiguously from policy to productivity. The results offer robust empirical evidence that temporary changes in policies underpinning the business environment can have sizeable effects on economic growth over the medium term.

1 Introduction

In this study, simulation-based econometric methods are used to investigate whether certain supply-side policies – specifically tax and regulatory policies – affected economic growth in recent UK history (1970-2009). This period saw major reform to the UK’s institutions: marginal tax rates were reduced and the regulative system was altered, notably including hiring and firing restrictions and union laws. The stated objective of these so-called ‘supply-side’ reforms was to reduce barriers to entrepreneurial innovation and so affect the macroeconomy via the production function. The episode has attracted great interest from academics and policymakers alike as a case study of what supply-side policy can or cannot achieve, and continues to do so. The UK government’s Plan for Growth (HM Treasury 2011) emphasized the business start-up and operation channel, to be targeted by reducing “burdens” from tax and regulation, in particular employment regulation.Footnote 1 No UK government since has signalled a movement away from this strategy; alongside its protective role, regulatory policy is viewed as a barrier to entrepreneurship and hence to growth.Footnote 2 The area has become heavily politicised, but there are empirical questions here which deserve examination and this is what we set out to do in this paper.

We take a DSGE model of the (highly open) UK economy in which supply-side policy affects incentives to set up innovative business ventures at the microfoundational level, and so plays a causal role in aggregate productivity behaviour over the short- to medium-run. The model is estimated and tested using Indirect Inference methods (Le et al. 2011). Two recent surveys of this testing method published in this journal (Le et al. 2016; Meenagh et al. 2019) set out how its power exceeds the traditional data-likelihood method. It also has low estimation bias in small samples. In this paper, we present the results of our own Monte Carlo exercise into the power of this test as we have applied it. We also apply a test of identification (Le et al. 2017) to the DSGE model, complete with its unambiguous relationship from policy to productivity growth, finding that it is indeed identified. With this assured, the result of the Indirect Inference test offers empirical support (or a lack of it, should the model be rejected) for the specified growth policy mechanism. Furthermore, the results of the power exercise imply uncertainty bounds for the structural parameter estimates we obtain and for the quantitative results of policy reform exercises conducted with the estimated model. The work is therefore complementary to existing empirical work on the macroeconomic effects of structural reforms, as there is no question about the exogeneity of policy in the identified model, and the conclusions rest on an estimated structural model that is formally evaluated by classical econometric methods.

We find that this model in which temporary supply-side policy shocks generate long-lasting productivity growth episodes is not rejected for the UK 1970-2009 sample with the estimated parameter set. Using the estimated model, a one-off 1 percentage point reduction in tax and regulatory policy leads in simulation to an average higher growth rate of 0.09 percentage points per annum over 70 quarters.
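To put the headline figure in level terms, a back-of-the-envelope calculation helps. The sketch below assumes, as a simplification rather than a model result, that the 0.09 percentage point average differential compounds annually over the 70-quarter horizon; the variable names are illustrative only.

```python
# Cumulative level effect of a small average growth differential.
growth_gain_pp = 0.09   # extra annual growth in percentage points (from the text)
quarters = 70           # simulation horizon in quarters (from the text)

years = quarters / 4                    # 70 quarters = 17.5 years
annual_gain = growth_gain_pp / 100      # 0.0009 expressed as a fraction

# Assumption: the average differential compounds once a year over the horizon.
level_gain = (1 + annual_gain) ** years - 1

print(f"{years} years; output level higher by about {100 * level_gain:.2f}%")
```

Under that assumption, the level of output ends the horizon roughly 1.6% above its no-reform path.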

The paper is structured as follows. A brief discussion of related work is provided in Section 2; the structural model is described in Section 3; Section 4 presents the empirical work, including discussion of data, methods and robustness checks; a policy reform experiment is given in Section 5; and Section 6 concludes.

2 Related Work

Numerous models exist of how innovation raises productivity, and how policy can enter that process. In New Endogenous Growth theory, spillovers drive a wedge between private and social returns to innovation (Aghion and Howitt 1992; Romer 1990); such models recommend subsidies to research, while lowering barriers to entry (such as regulation and tax) has an ambiguous effect on innovation (Aghion and Howitt 2006; Acemoglu 2008). Related empirical work generally uses formal R&D expenditure and patent counts to proxy innovation (e.g. Jaumotte and Pain 2005) but since formal R&D is dominated by large established firms, this overlooks innovation by small and/or new businesses. Acs et al. (2009) refocus the growth driver on entrepreneurs:Footnote 3 entrepreneurship is decreasing in regulatory and administrative burdens and government “barriers to entrepreneurship” including labour market rigidities, taxes and bureaucratic constraints. In Braunerhjelm et al. (2010), the distribution of resources between R&D and entrepreneurship is as important to growth as purposeful R&D investments (cf. Michelacci 2003). The implication is that “Policy makers would be seriously misguided in focusing exclusively on knowledge creation” (Acs and Sanders 2013, p. 787) while ignoring the effective commercialisation of knowledge by entrepreneurs. This is a key factor in our modelling choices (on which we say more below); we allow for tax and regulatory policies to affect incentives to profit-motivated innovative activities that may include formal R&D but are not limited to it.

Empirical work on structural policy-growth relationships falls roughly into three categories: aggregate growth regressions (e.g. Erken et al. 2008; Acs et al. 2012; Djankov et al. 2006; Djankov et al. 2010), simulated reform exercises using calibrated DSGE models, and microeconometric studies on policy’s role in firm- or industry-level panels (see e.g. Scarpetta et al. 2002 and Myles 2009). Studies in the first category have serious difficulty establishing causality while the third category, though often more successful at addressing identification issues than macro-regressions, cannot reveal the macroeconomic impacts of policy. This motivates our DSGE-based approach, and we discuss the second category here.

Blanchard and Giavazzi (2003) derive a New Keynesian DSGE model in which product and labour market regulation affect the number of firms, employment and the real wage. Everaert and Schule (2008) and Gomes et al. (2011) use similar calibrated models to analyse the macroeconomic impact of structural reforms in EU countries. Regulatory reforms are treated as reductions in price and wage mark-ups in labour and product markets which lower product and labour market slack, stimulating employment and investment. Cacciatore and Fiori (2016) add search and matching frictions, allowing hiring and firing costs to be modelled in a less reduced-form fashion.

Coenen et al. (2008) use a calibrated two-country DSGE model (a version of the New Area-Wide Model) to investigate the macroeconomic effects of reforms to labour-market distorting tax rates, following the reasoning of Prescott (2004) that higher tax wedges in the euro area relative to the US explain differences in output, hours worked and labour productivity. They use the model to simulate the effect of reducing the tax wedge from European to US levels and find an increase in output and hours worked above 10%. Similarly, Poschke (2010), in a DSGE model with heterogeneous firms, finds that raising administrative entry costs from US to German levels (c. 30% of GDP per capita) reduces the difference between US and German TFP by about one third – a large impact. The reform reduces substitutability among differentiated goods (i.e. competition is reduced) and so markups rise and the market share of high productivity firms falls in general equilibrium. The calibrated model’s performance is judged on whether it generates certain data features – a standard matching approach that has been called ‘calibrationist’ (Canova 1994, Chapter 3). Our empirical approach aligns us more closely with a growing macroeconomic literature concerned with estimating and formally evaluating DSGE models; see e.g. Schorfheide (2011) and Ruge-Murcia (2014). In using indirect inference methods and adopting the ‘directed’ Wald test (i.e. focusing the test on particular features of most interest, rather than testing the model in every dimension), our approach follows Le et al. (2011) – similar approaches are Dridi et al. (2007), Guerron-Quintana et al. (2017), and Hall et al. (2012).

3 Structural Model

As the backdrop for our investigation into the role of supply side policy in the growth process, we use an open economyFootnote 4 real business cycle model adapted from Meenagh et al. (2010). It is a two-country model, with one country modeled after the UK economy and the other representing the rest of the world; foreign prices and consumption demand are treated as exogenous, and international markets are cleared by the real exchange rate. The model omits many of the nominal and real frictions we are used to seeing in the standard New Keynesian framework, while still capturing UK real exchange rate movements (Meenagh et al. 2010). We are not alone in observing that “modern DSGE models need not embed large batteries of frictions and shocks to account for the salient features of postwar business cycles” (Ambler et al. 2012). Moreover, for an empirical analysis of the UK there is a clear advantage to abstracting from monetary policy which underwent numerous regime changes during this sample period. To emphasise, this simple DSGE model is intended as a vehicle within which to test empirically the central hypothesis about supply-side policy and economic growth using Indirect Inference methods.

Productivity is non-stationary (Eq. 27) and depends on time spent in innovative activity zt, the consumer’s choice (cf. Lucas 1990). This activity is subject to a proportional cost due to government policy, \(\tau _{t}^{\prime }\); this policy variable is subject to temporary but persistent shocks that generate long-lasting episodes of growth in TFP and output around balanced growth behaviour, via its incentive effects on zt. In this paper, zt is conceived of as entrepreneurship. A sizeable literature looks for a precise and workable definition of this ‘activity.’ Here we follow the synthesis definition of Wennekers and Thurik (1999), that entrepreneurship is the “ability and willingness [...] to perceive and create new economic opportunities [...] and to introduce their ideas in the market, in the face of uncertainty and other obstacles [...] it implies participation in the competitive process” (p. 46-47).Footnote 5 We discuss the growth process in more detail below.

3.1 Consumer Problem

The consumer chooses consumption (Ct) and leisure (xt) to maximise lifetime utility, U:

u(.) takes the form:

ρ1, ρ2 > 0 are coefficients of relative risk aversion; γt and ξt are preference shocks; 0 < 𝜃0 < 1 is consumption preference. The agent divides time among three activities: leisure, labour Nt supplied to the firm for real wage wt, and activity zt that is unpaid at t but known to have important future returns. The time endowment is:
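With the endowment normalised to one (a normalisation assumed here for illustration), the three uses of time described above, labour \(N_{t}\), leisure \(x_{t}\) and innovative activity \(z_{t}\), would satisfy

\[ N_{t}+x_{t}+z_{t}=1. \]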

Here the consumer chooses leisure, consumption, domestic and foreign bonds (b, bf) and bonds issued by the firm to finance its capital investment (\(\tilde {b}\)), and new shares (Sp) purchased at price q, subject to the real terms budget constraint.Footnote 6

Taxbill Tt is defined further below. The only taxed choice variable in the model is zt; all other taxes are treated as lump sum to rule out wealth effects. Since the zt choice is left aside until Section 3.4 on endogenous growth, the taxbill is not yet relevant. \(Q_{t}=\frac {{P_{t}^{f}}}{ P_{t}}.\hat {E}_{t}\) gives relative consumer prices. The nominal exchange rate \(\hat {E}_{t}\) is assumed fixed, so Qt is the relative import price. Footnote 7 Higher Qt implies a real depreciation of domestic goods on world markets and hence an increase in competitiveness; this can be thought of as a real exchange rate depreciation.

The consumer’s first order conditions yield the Euler equation (5), the intratemporal condition (6),Footnote 8 real uncovered interest parity (7), and the share price formula (8). First order conditions on \(\tilde {b}_{t+1}\) and \(b_{t+1}\) combine for \(\hat {r}_{t}=r_{t}\). Indeed, returns on all assets (\({S_{t}^{p}}\), \(b_{t+1}\), \(\tilde {b}_{t+1}\) and \(b_{t+1}^{f}\)) are equated.

Equation 8 rests on the further assumption that qt does not grow faster than the interest rate, \(\lim _{i\rightarrow \infty }\frac {q_{t+i}}{ \prod \limits _{j=0}^{i-1}(1+r_{t+j})}=0\).

The domestic country has a perfectly competitive final goods sector, producing a version of the final good differentiated from the product of the (symmetric) foreign industry. The model features a multi-level utility structure (cf. Feenstra et al. 2014). The level of Ct chosen above must satisfy the expenditure constraint,

\({p_{t}^{d}}\equiv \frac {{P_{t}^{d}}}{P_{t}}\). \({C_{t}^{d}}\) and \({C_{t}^{f}}\) are chosen to maximise \(\tilde {C}_{t}\) via the following utility function (Eq. 10), subject to the constraint that \(\tilde {C}_{t}\leqslant C_{t}\).

At a maximum the constraint binds; 0 < ω < 1 denotes domestic preference bias. Import demand is subject to a shock, σt. The elasticity of substitution between domestic and foreign varieties is constant at \(\sigma =\frac {1}{1+\rho }\). First order conditions imply the relative demands for the imported and domestic goods:
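The link between ρ and the elasticity of substitution can be checked numerically. The sketch below assumes a standard CES aggregator with the import demand shock switched off (set to one); the functional form, function names and parameter values are illustrative assumptions rather than the model's exact specification. It computes the relative demand implied by the first order conditions, confirms that its elasticity with respect to the relative price equals 1/(1+ρ), and cross-checks the closed form against a brute-force search over the budget line.

```python
import numpy as np

def ces_aggregate(c_d, c_f, omega, rho):
    """Assumed CES aggregator over domestic (c_d) and imported (c_f) goods."""
    return (omega * c_d ** (-rho) + (1 - omega) * c_f ** (-rho)) ** (-1 / rho)

def relative_demand(p_d, q, omega, rho):
    """Optimal c_d / c_f, from setting the marginal rate of substitution to p_d / q."""
    sigma = 1 / (1 + rho)
    return (omega * q / ((1 - omega) * p_d)) ** sigma

def brute_force_ratio(p_d, q, omega, rho, budget=1.0, n=200001):
    """Grid search along the budget line, used only to check the closed form."""
    c_d = np.linspace(1e-4, budget / p_d - 1e-4, n)
    c_f = (budget - p_d * c_d) / q
    best = np.argmax(ces_aggregate(c_d, c_f, omega, rho))
    return c_d[best] / c_f[best]

omega, rho = 0.7, 0.5            # hypothetical parameter values
sigma = 1 / (1 + rho)

r_base = relative_demand(1.0, 1.0, omega, rho)
r_high = relative_demand(1.0, 1.1, omega, rho)   # imports 10% dearer
elasticity = (np.log(r_high) - np.log(r_base)) / np.log(1.1)
r_check = brute_force_ratio(1.0, 1.1, omega, rho)

print(sigma, elasticity, r_high, r_check)
```

A rise in the relative import price shifts demand towards the domestic variety, with an elasticity that is constant at σ whatever the starting prices.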

Given Eq. 11 above, the symmetric equation for foreign demand for domestic goods (exports) relative to general foreign consumption is

* signifies a foreign variable; ωF and σF are foreign equivalents to ω and σ. \(Q_{t}^{\ast }\) is the foreign equivalent of Qt, import prices relative to the CPI, and \(\ln Q_{t}^{\ast }\simeq \ln {p_{t}^{d}}-\ln Q_{t}\).Footnote 9 An expression for \({p_{t}^{d}}\) as a function of Qt follows from the maximised Eq. 10:

A first order Taylor expansion around pd ≃ Q ≃ σ ≃ 1, with σ = 1, yields a loglinear approximation for this:

The export demand equation is then

where \(\check {c}\) collects constants and \(\varepsilon _{ex,t}=\sigma ^{F}[\ln \sigma _{t}^{\ast }+\frac {1-\omega }{\omega }\frac {1}{\rho }\ln \sigma _{t}]\). Assuming no capital controls, the real balance of payments constraint is satisfied.

3.2 Firm Problem

The representative firm produces the final good via a Cobb Douglas function with constant returns to scale and diminishing marginal returns to labour and capital, where At is total factor productivity:

The firm undertakes investment, purchasing new capital via debt issue (\( \tilde {b}_{t+1}\)) at t; the cost \(\hat {r}_{t}\) is payable at t + 1. Bonds are issued one for one with capital units demanded: \(\tilde {b}_{t+1}=K_{t}\). There are convex adjustment costs to capital. The cost of capital covers the return demanded by debt-holders, capital depreciation δ and adjustment costs, \(\tilde {a}_{t}\).Footnote 10 The profit function is:

\(\tilde {w}_{t}\) is the real unit cost of labour; κt and χt are cost shocks capturing random movements in marginal tax rates. From the consumer first order conditions, \(\hat {r}_{t}=r_{t}\). Substituting for this and for \(\tilde {b}_{t+1}=K_{t}\), profits are:

Here adjustment costs are explicit, having substituted \(\tilde {b}_{t+1} \tilde {a}_{t}=K_{t}\tilde {a}_{t}=\frac {1}{2}\zeta ({\Delta } K_{t})^{2}\). Parameter ζ is constant.

The firm chooses Kt and Nt to maximise expected profits, taking rt and \(\tilde {w}_{t}\) as given. Assume free entry and a large number of firms operating under perfect competition. The optimality condition for Kt equates the marginal product of capital (net of adjustment costs and depreciation) to its price, plus cost shock – d is the firm’s discount factor. Rearranged, this gives a non-linear difference equation in capital.

Given capital demand, the firm’s investment, It, follows via the capital accumulation identity.

The optimal labour choice gives the firm’s labour demand condition:

Internationally differentiated goods introduce a wedge between the consumer real wage, wt, and the real labour cost for the firm, \(\tilde {w}_{t}\).Footnote 11 The wedge is \({p_{t}^{d}}=\frac {w_{t}}{ \tilde {w}_{t}}\), implying, via Eq. 15, the following:

3.3 Government

The government spends on the consumption good (Gt) subject to its budget constraint.

Spending is assumed to be non-productive (transfers). As well as raising tax revenues Tt the government issues one-period bonds. Each period, revenues cover spending and the current interest bill: \(T_{t}=G_{t}+r_{t-1}b_{t}\), so \(b_{t}=b_{t+1}\). Revenue Tt is as follows.

τt is a proportional rate on time spent in innovative activity zt. Assuming that all policy costs on zt are genuine external social costs redistributed to the consumer via a reduction in the lumpsum levy Φt, tax revenue collected by government is equal to that taxbill paid by consumers.Footnote 12 Lumpsum tax Φt captures revenue effects of all other tax instruments, responding to changes in τtzt for revenue neutrality in the government budget constraint. Government spending is modeled as an exogenous trend stationary AR(1) process, where ∣ρg∣ < 1 and ηg,t is a white noise innovation.

3.4 Productivity Growth

Productivity growth is a linear function of time spent in an activity zt , where a1 > 0:

Policy, \(\tau _{t}^{\prime }\), drives growth systematically through zt.Footnote 13 This section derives the linear relationship between productivity growth and \(\tau _{t}^{\prime }\) driving the model’s dynamic behaviour in simulations. We adapt the endogenous growth process from Meenagh et al. (2007) to a decentralised framework. It resembles Lucas (1990) in that the agent can invest time in a growth-driving activity.Footnote 14

The consumer chooses zt to maximise utility (Eqs. 1 and 2), subject to Eqs. 3, 4 and 25. Assume for the consumer’s shareholdings that \({S_{t}^{p}}=\bar {S}=1\).Footnote 15 The rational agent expects zt to raise her consumption possibilities through her role as the firm’s sole shareholder, knowing that, given Eq. 27, a marginal change in zt permanently raises productivity from t + 1. This higher productivity is fully excludable and donated to the atomistic firm she owns; higher productivity is anticipated to raise household income via firm profits paid out as dividends, dt (everything left over from revenue after labour and capital costs are paid). The choice is thought not to affect economy-wide aggregates; all prices are taken as parametric (note that the productivity increase is not expected to increase the consumer real wage here, though it does so in general equilibrium; cf. Boldrin and Levine (2002, 2008)).Footnote 16

Rearranging the first order condition with respect to zt (see Appendix A for full derivation), the expression can be approximated as

This is in terms of \(\frac {\tau _{t}}{w_{t}}\equiv \tau _{t}^{\prime }\), the ratio of τt to the wage (the opportunity cost of zt). \(\tau _{t}^{\prime }\) is a unit free rate unlike τt which, like the wage, is a rate per unit of time. A first order Taylor expansion around \(\tau _{t}^{\prime }=\tau ^{\prime }\) of Eq. 28 gives the following linear relationship:

\(b_{1}=-a_{1}.\frac {\frac {\beta \rho _{\gamma }}{1-\beta \rho _{\gamma }} \frac {Y}{C}}{\frac {w}{C}(1+\tau ^{\prime })^{2}}<0\) for a policy raising the costs of innovation.Footnote 17 At this point, we revisit our conception of entrepreneurship which is admittedly broad. Our entrepreneurship growth channel encompasses business activities which push forward the production possibility frontier (the creative responder/destructor identified by Schumpeter (1947, 1942)) or raise the average productivity level in the economy (the arbitrageur emphasized by Kirzner (1973)), perhaps by implementing foreign technologies at home. There is no explicit entry or exit in this model, and no international spillover. To reiterate, the share-holder entrepreneur donates ideas resulting from zt to her firm, capturing the full return to zt, except for taxes and regulatory costs. Non-rival technology – leading to costless spillovers – and fixed innovating costs lead many to discard perfect competition as a viable framework for examining innovation. However, Boldrin and Levine (2008, 2002) argue against costless spillovers. Returns to technological progress generated by the entrepreneur may accrue formally to fixed factors of production, rather than appearing as supernormal profits; this is what happens in our model, while also the individual entrepreneur/owner acts taking prices and costs as parametric. This model provides a framework in which to test the hypothesis of interest: whether a causal relationship from supply-side policy ‘barriers’ to economic growth exists in the UK macroeconomic data. If a relationship from policy to growth is found, it is left to future work to examine which process drives it by defining the microstructure more minutely.
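The expression for \(b_{1}\) given at the start of this paragraph can be evaluated directly. The sketch below transcribes that formula; every numerical value passed to it is a hypothetical placeholder, not an estimate or calibration from this paper, and the function name is ours.

```python
def b1_coefficient(a1, beta, rho_gamma, y_over_c, w_over_c, tau_prime):
    """Slope of productivity growth with respect to tau', as stated in the text.

    a1         : effect of z on productivity growth (a1 > 0)
    beta       : discount factor
    rho_gamma  : AR(1) coefficient of the preference shock gamma
    y_over_c   : steady-state output to consumption ratio, Y/C
    w_over_c   : steady-state wage to consumption ratio, w/C
    tau_prime  : steady-state policy rate tau'
    """
    discount = beta * rho_gamma / (1 - beta * rho_gamma)
    return -a1 * (discount * y_over_c) / (w_over_c * (1 + tau_prime) ** 2)

# Hypothetical inputs, chosen only to illustrate sign and curvature.
b1_low_tau = b1_coefficient(0.05, 0.97, 0.9, 1.5, 1.0, tau_prime=0.2)
b1_high_tau = b1_coefficient(0.05, 0.97, 0.9, 1.5, 1.0, tau_prime=0.6)

print(b1_low_tau, b1_high_tau)
```

For any admissible inputs the coefficient is negative, and its magnitude shrinks as the expansion point τ′ rises because of the \((1+\tau ^{\prime })^{2}\) term in the denominator.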

To close this section, we outline the implications of policy incentives for labour in the model. Equations 28 and 27 relate zt to \(\tau _{t}^{\prime }\). Define \(\frac {\partial z_{t}}{\partial \tau _{t}^{\prime }}\equiv c_{1}\), a constant parameter featuring in the producer labour cost equation:

where \(e_{w,t}=-\ln \gamma _{t}+\ln \xi _{t}+\frac {1}{\rho }\left [ \frac { 1-\omega }{\omega }\right ]^{\sigma }\ln \sigma _{t}\). This equation is derived from the intratemporal condition (Eq. 6; see Appendix A for full derivation). \(\tau _{t}^{\prime }\) penalises zt, so c1 < 0, hence \(\frac {d\ln \tilde {w}_{t}}{d\tau _{t}^{\prime }}<0\) or \( \frac {d\ln N_{t}}{d\tau _{t}^{\prime }}>0\). Equation 30 is the rearranged labour supply condition; the worker responds to a higher penalty on zt by raising labour time.Footnote 18

3.5 Closing the Model

Goods market clearing in volume terms is:

All asset markets also clear.

A transversality condition rules out balanced growth financed by insolvent borrowing rather than growing fundamentals. The balance of payments is restricted so that the long run change in net foreign assets (the capital account) is zero. At a notional date T when the real exchange rate is constant, the cost of servicing the current debt is met by an equivalent trade surplus.

The numerical solution path is forced to be consistent with the constraints this condition places on the rational expectations. In practice it constrains household borrowing since government solvency is ensured already, and firms do not borrow from abroad. When solving the model, the balance of payments constraint is scaled by output so that the terminal condition imposes that the ratio of debt to GDP must be constant in the long run, \( {\Delta } \hat {b}_{t+1}^{f}=0\) as t → ∞, where \(\hat {b} _{t+1}^{f}=\frac {b_{t+1}^{f}}{Y_{t+1}}\). The model is loglinearised before solution and simulation; the full model listing is in Appendix B.

3.6 Exogenous Variables

Stationary exogenous variables consist of shocks to real interest rates (Euler equation), labour demand, real wages, capital demand, export demand and import demand. These are not directly observed but are implied as the difference between the data and the model predictions (cf. the ‘wedges’ of Chari et al. (2007)). Those differences ei,t, which we call structural residuals or shocks, are treated as trend stationary AR(1) processes:

ηi,t is an i.i.d. mean zero innovation term; i identifies the shock. We model foreign consumption demand, government consumption, foreign interest rates and policy variable \(\tau _{t}^{\prime }\) similarly. AR(1) coefficients ρi are estimated. Where expectations enter, they are estimated using a robust instrumental variable technique (Wickens 1982; McCallum 1976); they are the one step ahead predictions from an estimated VECM. We do not stationarise any of the endogenous variables and so the exogenous shocks (wedges) extracted from each structural equation are either stationary, trend-stationary, or non-stationary in the case of TFP. Where ai ≠ 0 and bi ≠ 0, the detrended residual \(\hat {e}_{i}\) is used:

The innovations ηi,t are approximated by the fitted residuals from estimation of Eq. 34, \(\hat {\eta }_{i,t}\). The Solow residual lnAt is modelled as a unit root process with drift driven by a stationary AR(1) shock and by the detrended exogenous variable \(\tau _{t}^{\prime }\), following Eq. 29.
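The treatment of a trend-stationary structural residual can be illustrated with a two-step sketch: remove a fitted constant and linear trend, then fit an AR(1) to what remains and keep the fitted innovations for later resampling. The two-step OLS procedure, the function names and the simulated series are assumptions made for illustration; the estimation details in the paper may differ.

```python
import numpy as np

def detrend(series):
    """Remove a fitted constant and linear trend by OLS; return the residual."""
    t = np.arange(len(series), dtype=float)
    X = np.column_stack([np.ones_like(t), t])
    coef, *_ = np.linalg.lstsq(X, series, rcond=None)
    return series - X @ coef

def fit_ar1(e_hat):
    """OLS of e_hat[t] on e_hat[t-1] without a constant.

    Returns the AR(1) coefficient and the fitted innovations.
    """
    lagged, current = e_hat[:-1], e_hat[1:]
    rho = float(lagged @ current / (lagged @ lagged))
    return rho, current - rho * lagged

# Simulated stand-in for a structural residual (hypothetical values).
rng = np.random.default_rng(0)
n, rho_true = 2000, 0.8
shock = np.zeros(n)
for t in range(1, n):
    shock[t] = rho_true * shock[t - 1] + rng.normal(scale=0.01)
series = 0.5 + 0.002 * np.arange(n) + shock   # constant + trend + AR(1)

rho_hat, eta_hat = fit_ar1(detrend(series))
print(rho_hat, eta_hat.std())
```

The vector `eta_hat` plays the role of the fitted innovations \(\hat {\eta }_{i,t}\): it is this set of shocks that a bootstrap would resample when simulating the structural model.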

Deterministic trends are removed from exogenous variables since they enter the model’s balanced growth path. We focus here on how the economy deviates from steady state in response to shocks - in particular, stationary innovations to the policy variable, \(\tau _{t}^{\prime }\). Such innovations will have a permanent shift effect on the path of TFP via its unit root. Due to their persistence they also generate transitional TFP growth episodes above long-run trend. Note that, were \(\tau _{t}^{\prime }\) to be non-stationary, it would cause simulated output to be I(2) which is not empirically defensible. We discuss this treatment of τ′ further in Sections 4.1.2 and 4.2.2 below.
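The two effects described here, a permanent level shift and a transitional growth episode, can be seen in a stripped-down version of the TFP process. The sketch assumes that the deviation of TFP growth from its drift equals \(b_{1}\) times last period's detrended \(\tau ^{\prime }\), and that \(\tau ^{\prime }\) follows an AR(1); all parameter values are hypothetical and the rest of the model is ignored.

```python
import numpy as np

def tfp_response(shock, rho_tau, b1, horizon):
    """Deviation of ln A from its baseline path after a one-off innovation to tau'."""
    tau = np.zeros(horizon)
    ln_a_dev = np.zeros(horizon + 1)
    tau[0] = shock
    for t in range(1, horizon):
        tau[t] = rho_tau * tau[t - 1]                 # persistent but temporary shock
    for t in range(horizon):
        ln_a_dev[t + 1] = ln_a_dev[t] + b1 * tau[t]   # unit root: effects accumulate
    return tau, ln_a_dev

shock, rho_tau, b1 = -0.01, 0.9, -0.1   # hypothetical values; b1 < 0 as in the text
tau_path, ln_a_dev = tfp_response(shock, rho_tau, b1, horizon=400)

# Closed form for the permanent shift: geometric sum of the AR(1) response.
long_run_shift = b1 * shock / (1 - rho_tau)

print(ln_a_dev[-1], long_run_shift)
```

The policy variable itself returns to zero, but the TFP level settles permanently higher by \(b_{1}\eta /(1-\rho _{\tau })\), and TFP growth runs above trend for as long as \(\tau ^{\prime }\) remains away from its mean.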

4 Empirical Work

This empirical work is an application of the Indirect Inference testing method given in Le et al. (2011). The method involves simulating the DSGE model by repeated resampling of the shocks implied by the data, and then comparing the properties of these model-generated simulations with the actual data. For that comparison we use a theory-neutral descriptive model, the ‘auxiliary model,’ from which a formal test statistic is derived. Our choice of auxiliary model and the method we apply, along with an exploration of its small sample properties, are discussed further below, though we refer readers to the recent surveys in this journal for a more in-depth treatment (Le et al. 2016; Meenagh et al. 2019).

4.1 Data

4.1.1 Macroeconomic Data for the UK

The sample is unfiltered UK macroeconomic data for 1970 to 2009; key series are plotted in Fig. 1 (sources in Appendix). In this model, shocks to policy can have long-lasting transitional effects on endogenous variables, and such shocks are occasionally large. In both cases the HP filter distorts the estimates of underlying trends (Hodrick and Prescott 1997); where we would want to analyse the model’s adjustment to the policy shock, the HP filter may interpret it as a change in underlying potential and remove it. For further discussion of the problems induced by filtering, see e.g. Hamilton (2018). Given our non-stationary data, we choose a Vector Error Correction Model as the auxiliary model - this is discussed further in Section 4.2.1 below. Footnote 19

4.1.2 Data for Policy Variable

For policy indicator \(\tau _{t}^{\prime }\) we collect UK data on regulation and tax, two key components of the business environment. On regulation, the focus is on the labour market; we use an index of centralized collective bargaining (CCB) produced by the World Economic Forum and a mandated cost of hiring index (MCH) from the World Bank Doing Business project; the latter reflects the costs of social security and other benefits such as holiday pay. More detail is given in Appendix C. Using the trade union membership rate as the indicator series, we interpolate the lower frequency indices with the proportionate variant of the Denton adjustment method (Denton 1971). An equally weighted arithmetic average of the resulting quarterly series for collective bargaining and mandated hiring costs gives the labour market regulation (LMR) indicator used here to reflect labour market inefficiency; see Fig. 2, Panel 1.Footnote 20

In the absence of a good ‘effective’ entrepreneur tax rate for 1970-2009, which is prohibitively complex to calculate, we use the top marginal income tax rate. This is not to say that every entrepreneur gets into the top income tax bracket; the expected return to entrepreneurship is generally small. This top marginal tax rate is a proxy for the profit motive central to the notion of entrepreneurship as we have defined it; cf. Lee and Gordon (2005). See also e.g. Baliamoune-Lutz and Garello (2014) who find that a reduction in marginal tax rates at the top of the income distribution relative to the marginal tax rate at average earnings increases entrepreneurship.

The top marginal income tax rate is measured annually; the series is interpolated to a quarterly frequency by constant match. The series falls consistently until 2009 with the introduction of the 50p tax rate on income over £150,000 (Fig. 2, Panel 1). The main policy indicator used in empirical work is an equally weighted average of top marginal income tax and labour market regulation (Tau Series (1), Fig. 2 Panel 2). The SME rate of corporation tax may well belong in \( \tau _{t}^{\prime }\); reductions in this rate lower the costs of running a new business. However, reducing corporation tax relative to other forms of taxation (employee or self-employed labour income) could distort incentives to incorporate at the small end of the firm size distribution for reasons unrelated to productivity growth. For instance, incorporation soared in the UK after the 2002 Budget when the starting rate on corporate profits up to £10,000 was reduced to zero (Crawford and Freedman 2010). Corporation tax is therefore excluded from the main \(\tau _{t}^{\prime }\) index. However, an alternative policy variable constructed from the labour market indicator and corporation tax rates (in place of top marginal income tax) is investigated in Section 4.4.1 (Tau Series (2), Fig. 2, Panel 2).
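The construction of the main index can be sketched in a few lines. Constant-match interpolation is taken here to mean that each quarter inherits the value of its year, and the index is the simple average of the two components; the input numbers below are made-up placeholders, not the UK series, and the function names are ours.

```python
import numpy as np

def constant_match(annual):
    """Annual to quarterly: every quarter takes the value of its year."""
    return np.repeat(np.asarray(annual, dtype=float), 4)

def equal_weight_index(*components):
    """Equally weighted arithmetic average of same-length quarterly series."""
    return np.mean(np.vstack(components), axis=0)

# Hypothetical inputs: three years of a top marginal tax rate (as fractions)
# and twelve quarters of a labour market regulation indicator.
top_rate_annual = [0.60, 0.40, 0.40]
lmr_quarterly = np.linspace(0.50, 0.39, 12)

top_rate_quarterly = constant_match(top_rate_annual)
tau_index = equal_weight_index(top_rate_quarterly, lmr_quarterly)

print(tau_index)
```

Because the tax component moves in steps while the regulation component moves smoothly, the averaged index inherits the step pattern at half its size.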

The index falls over the sample, irregularly due to steps in marginal income tax.Footnote 21 In our model of productivity growth τ′ is modelled exogenously as a stationary stochastic series with high persistence, i.e. before solving the model a linear trend term is estimated and removed, leaving the detrended \(\tau _{t}^{\prime }\) rate (see Section 3.6). We can justify this in two ways. Either the trend in τ′ is fully offset by other deterministic factors affecting TFP in the long run, or (our preferred assumption) the trend that we estimate in our sample is not a true long run trend. τ′ could not continue indefinitely along its sample trend, since this would imply tax/regulative levels going to minus infinity. The policy variable is by definition bounded between 0 and 1, and theoretically it should be stationary in the very long run. There are sound political economy arguments to say that such policies should be modelled as persistent AR(1) series which stabilise around a long-run positive mean (hitting the zero lower bound and staying there is politically infeasible for this variable).

An implication of this treatment is that the economy has a constant balanced growth path along the lines of a standard neoclassical growth model, since the growth of productivity is constant apart from the stationary shocks to τ′ and the residual error. The detrended series is plotted against the changes in the Solow residual (in logs) in Fig. 3. This shows some significant movements around trend in the policy variable and the interest is in whether such movements cause the behaviour of productivity. Since our results may be sensitive to the choice of detrending procedure, we conduct robustness tests on this in Section 4.4.1.

4.2 Indirect Inference Methods

See Le et al. (2016) for a full explanation of the methodology. Here we give a brief overview. J bootstrap samples are generated from the DSGE model and some parameter set 𝜃. Each sample is estimated using an auxiliary model, yielding coefficient vectors aj for j = 1,...,J. Using the variance-covariance matrix Ω for the distribution of aj implied by the structural model and 𝜃, we construct the small-sample distribution for the Wald statistic, \(WS(\theta )=(a_{j}-\overline{a_{j}(\theta )})^{\prime }W(\theta )(a_{j}-\overline{a_{j}(\theta )})\), where \(\overline{a_{j}(\theta )}\) is the mean of the J estimated vectors and \(W(\theta )=\hat{\Omega }(\theta )^{-1}\). The same auxiliary model is estimated with the observed data, yielding vector \(\hat{\alpha }\). The test statistic is then \(WS^{\ast }(\theta )=(\hat{\alpha }-\overline{a_{j}(\theta )})^{\prime }W(\theta )(\hat{\alpha }-\overline{a_{j}(\theta )})\). A WS∗(𝜃) falling in the 95th percentile of the distribution or above implies a rejection of the structural model with 𝜃 at 5% significance. The Wald percentile can be converted into an equivalent t-statisticFootnote 22 or p-value.

This Wald test procedure is the basis for estimation. Within a bounded parameter space an algorithm searches for a parameter set, 𝜃, which minimises the Wald percentile for this structural model.
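The mechanics of the Wald percentile can be sketched as follows. This is a minimal illustration under stated assumptions: the simulated coefficient vectors are random stand-ins rather than auxiliary-model estimates from DSGE bootstraps, and the helper names (`solve`, `wald`, `wald_percentile`) are hypothetical, not taken from the authors' code.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def wald(vec, mean, cov):
    """Quadratic form (vec - mean)' cov^{-1} (vec - mean)."""
    d = [v - mu for v, mu in zip(vec, mean)]
    return sum(di * wi for di, wi in zip(d, solve(cov, d)))


def wald_percentile(sim_coefs, data_coefs):
    """Percentile of the data-based Wald statistic within the simulated distribution."""
    J, k = len(sim_coefs), len(data_coefs)
    mean = [sum(a[i] for a in sim_coefs) / J for i in range(k)]
    cov = [[sum((a[i] - mean[i]) * (a[j] - mean[j]) for a in sim_coefs) / (J - 1)
            for j in range(k)] for i in range(k)]
    sim_ws = [wald(a, mean, cov) for a in sim_coefs]   # small-sample distribution
    ws_star = wald(data_coefs, mean, cov)              # statistic for observed data
    return 100.0 * sum(w < ws_star for w in sim_ws) / J


# Hypothetical stand-ins: in practice each a_j comes from estimating the
# auxiliary model on one bootstrap sample generated by the structural model.
rng = random.Random(1)
sim = [[rng.gauss(0.5, 0.1), rng.gauss(-0.2, 0.05)] for _ in range(1000)]
pct_close = wald_percentile(sim, [0.5, -0.2])   # data near the simulated mean
pct_far = wald_percentile(sim, [1.5, 0.4])      # data far from the simulated mean
```

Under this mapping the p-value is one minus the percentile divided by 100, so a Wald percentile of 72 corresponds to a p-value of 0.28. An estimation routine would wrap `wald_percentile` in a search over 𝜃, regenerating the simulated samples at each candidate parameter set.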

4.2.1 Auxiliary Model

The DSGE model solution can be written as a cointegrated VECM – we rearrange and approximate this as a VARX(1); see Appendix. This approximation to the structural model’s reduced form is the unrestricted auxiliary model used in the indirect inference Wald test (Eq. 38).

t captures the deterministic trend in \(\bar {x}_{t}\) (the balanced growth behaviour of the exogenous variables) affecting endogenous and exogenous variables (respectively yt and xt). Lagged difference regressors are in the error qt. Unit root variables, xt− 1, control for permanent effects of past shocks on x and y. Our research question is whether tax and regulation play a causal role in determining TFP and output growth, so these are initially the endogenous variables in the auxiliary VARX(1). This is therefore a ‘directed’ Wald test (Le et al. 2011). The policy variable \(\tau _{t-1}^{\prime }\) and net foreign assets \(b_{t-1}^{f}\) are included as lagged exogenous variables; unit root variable \(b_{t-1}^{f}\) captures the model’s stochastic trend.Footnote 23

Vector \(\hat {\alpha }\) contains OLS estimates of the coefficients on observed data for these lagged endogenous and exogenous variables plus the auxiliary model error variances. The vector aj is composed similarly and used to construct the Wald distribution. Auxiliary model errors are checked for stationarity. Though the trend term must be present to capture deterministic behaviour, we focus on the stochastic behaviour induced by the shocks and therefore exclude the deterministic trend from the test. We expand on this further below.

4.2.2 Dealing with Balanced Growth Behaviour

The model’s balanced growth path is its deterministic behaviour in the absence of stochastic shocks and with all long run conditions imposed. In the theoretical model, the deterministic behaviour of each endogenous variable along the BGP is a combination of the true deterministic trends in the exogenous variables, including (indeed principally) the exogenous trend in the I(1) TFP process. The deterministic trend behaviour implied by the model’s long run solution can be calculated and added back into the simulated data, using the sample estimates for the exogenous variable trends in place of the true trends.

In the actual data and also in our model-simulated samples with deterministic behaviour added back in, there are both deterministic and stochastic trend components in the endogenous variables. In the auxiliary VECM used as the descriptive basis for the test, the combination of deterministic trends in the exogenous variables would be captured by the deterministic trend term, while stochastic trends are captured by the non-stationary variables. As noted above, temporary shocks to τ′ have a permanent effect on I(1) productivity, which explains why τ′ has such a large effect on long run output (see Table 5).

However, though we allow for the deterministic trend in the VECM, in testing the model we ignore its coefficient. We rely for the test on the ‘dynamic terms’ in the VECM, relating output and productivity to each other. These provide high statistical power as our Monte Carlo experiments show (see Section 4.3 below). Adding in the trend terms from the model would diminish this power: there are many such terms and, when combined, they would have a very large standard error, making it too easy for the model to match the estimated output trend in the VECM.

Indeed, we would emphasise the well-understood point that these sample trends do not necessarily apply in the long run. For example τ′, as discussed above in Section 4.1.2, cannot continue on a strong downward trend indefinitely without absurd implications. Hence, another of the long-run conditions imposed when we calculate the balanced growth path for the model is that these trends are not very long run (population) trends. Effectively this means that the balanced growth behaviour of our model is like any neoclassical exogenous growth model in that the deterministic trend in TFP is exogenous; though in theory it would be affected by a deterministic trend in τ′, we assume that in the very long run the trend in τ′ is zero.

4.3 Test Power and Model Identification

4.3.1 Power Exercise for the Indirect Inference Wald Test

Since the Wald test is the basis for the estimation process, the results below and the associated variance decomposition and simulated policy reform rely for their validity on its power to reject misspecified models. In other macro-modelling applications the test’s power has been found to be considerable; see Le et al. (2016). Here we investigate the power of the test exactly as it has been applied here for this particular model via a Monte Carlo exercise. Table 1 reports the rejection rates when the DSGE model parameters are perturbed away from their true values (randomly up or down) to an increasing extent.Footnote 24 We find that structural coefficients 3% away from true are rejected by the test 99.3% of the time, while 3.5% falseness leads to rejection 100% of the time.

We would also like to know how often the test will reject a model when just a few of the coefficients are misspecified. The coefficients of most interest here are b1 and c1, since they determine the importance of the policy variable we have added into the model. The power exercise is therefore repeated when these two coefficients alone are falsified; we are particularly interested in picking up on falseness as the coefficient gets closer to zero, so that we can be sure that policy is significant in the model. When these two coefficients alone are 50% false (in the direction of zero), the model is rejected 99.08% of the time. This provides a ‘worst case’ bound for our estimates of these parameters; in practice it is unlikely that all other coefficients would have zero misspecification.
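The falsification step of the power exercise can be sketched as below. This is only an illustration: the parameter names and values are placeholders (1.2 echoes the example in the footnote on inducing falseness, and -0.12 the rounded estimate of b1), and `falsify` and `rejection_rate` are hypothetical helpers rather than the authors' routines.

```python
import random


def falsify(params, pct, rng):
    """Shift each parameter by +pct% or -pct%, with the direction chosen at random."""
    return {name: value * (1.0 + rng.choice((-1.0, 1.0)) * pct / 100.0)
            for name, value in params.items()}


def rejection_rate(wald_percentiles, critical=95.0):
    """Share of Monte Carlo samples whose Wald percentile is at or above the critical level."""
    return sum(p >= critical for p in wald_percentiles) / len(wald_percentiles)


rng = random.Random(42)
true_params = {"rho_2": 1.2, "b_1": -0.12}     # illustrative values only
false_params = falsify(true_params, 3.0, rng)  # parameter set that is 3% false

# Each Monte Carlo sample generated from the true model would then be tested
# against the falsified parameter set; the percentiles below are made up.
example_rate = rejection_rate([96.0, 50.0, 99.0, 95.0])
```

Repeating this over many samples and over increasing degrees of falseness gives the rejection rates of the kind reported in Table 1.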

4.3.2 Model Identification

We also formally test the model’s identification using the numerical test developed by Le et al. (2017), again using Monte Carlo methods. A true model is used to create numerous large samples, and the identification test checks whether another parameter set can generate the same auxiliary model distribution as the true model, by comparing the Indirect Inference Wald test rejection rates for true versus alternative parameterisations. If some alternative model is rejected 5% of the time (i.e. at the same rate as the true model) the model cannot be identified, as this occurs only when the reduced form descriptions of true and alternative models are indistinguishable. For a full explanation of the procedure, see Le et al. (2017).Footnote 25

We find that structural parameters are rejected 100% of the time when 1% away from true. When 0.7% away from the true set, alternative models are rejected 99.88% of the time. These results on identification and test power give an idea of the reliability of the method and of the estimation results which follow.

4.4 Estimation and Test Results

Table 2 presents structural coefficients that are held fixed throughout the analysis. Long run ratios \(\frac {M}{Y},\frac {X}{Y}, \frac {Y}{C}\) and \(\frac {G}{C}\) are set to UK post-war averages; these then imply values for \(\frac {X}{C}\) and \(\frac {M}{C}\). The rest are calibrated from Meenagh et al. (2010). Other parameters in the DSGE model are estimated via the Indirect Inference procedure. The estimates for this model, with τ(1) as the policy variable driving productivity, are given in Table 3. The associated Wald percentile is 72, equivalent to a p-value of 0.28, well within the non-rejection area of the bootstrap distribution. The implied AR(1) coefficients for the exogenous variables are reported in Table 4.Footnote 26, Footnote 27

A full set of impulse response functions was obtained for every shock in the model; the model generates standard RBC behaviour with this parameter set. The estimated import and export elasticities sum to 2.337, satisfying the Marshall-Lerner condition.Footnote 28 They are also consistent with US estimates obtained by Feenstra et al. (2014), and with UK estimates from Hooper et al. (2000). Given the long run constraint on the capital equation that ζ3 = 1 − ζ1 − ζ2, only ζ1 and ζ2 were estimated freely. The estimated capital equation coefficients imply a strong pull of past capital on the current value (0.636), indicating high adjustment costs, while the lower estimate of the coefficient on expected capital, ζ2, at 0.335 implies a discount rate for the firm far higher than the consumer’s rate. This captures the effects of idiosyncratic risks faced by the price-taking firm, e.g. the risk that the general price level will move once its own price is set in its industry. We assume that idiosyncratic risks to the firm’s profits cannot be insured and that managers are incentivised by these. We can also think of there being a (constant) equity premium on shares – though this, being constant, does not enter the simulation model. The impact of a policy shock at t on the change in log productivity next quarter is estimated at −0.1209.

Given the estimates for 𝜃 we calculate a variance decomposition, bootstrapping the model and calculating the variance in each simulated endogenous variable for each shock, as reported in Table 5. This gives some insight into the historical data from 1970-2009 given the non-rejection of the model with \(\hat {\theta }\). The policy variable plays a significant part in generating variation in the level of all variables, particularly output, consumption, labour supply (and hence the producer cost of labour \(\tilde {w}\)), exports and the real exchange rate. It is also responsible for generating over 18% of the variation in the quarterly growth rate of productivity. Therefore we can be sure this is distinct from an exogenous growth model; policy has an important role in the dynamics. This is because innovations in τ′ enter TFP, an I(1) process, therefore having permanent effects on the model and generating large variation in the endogenous variables. τ′ also has a direct effect on labour supply via Eq. 30.

This model has passed an extremely powerful test in which only 3% falsity leads to rejection in our Monte Carlo exercise. For a policymaker, as we have seen, this implies a very low range of parameter uncertainty and so a high degree of policy robustness. It also turns out that the estimated model can pass yet more powerful tests – see Appendix, Table 11 – but this is essentially otiose, given the high robustness achieved on the current test.

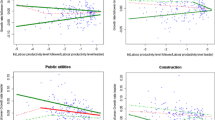

4.4.1 Robustness - Filtering Methods and Alternative Measures of τ′

The policy variable has been made stationary by removing a linear trend, on the basis that this removes the least information from the series. Removing a linear trend leaves stochastic variation which turns out to be stationary. Here we check whether the results reported above are sensitive to a change in the detrending method; we use the widely used HP filter for this check. The two different trends are plotted in Fig. 4. When the HP-filtered τ(1) variable is used to test the structural model with the estimated coefficients reported in Table 3, the model is still not rejected. The test statistic falls in the 92nd percentile of the bootstrap Wald distribution, equivalent to a p-value of 0.08.

When the τ′ series is stationarised by HP filtering rather than extracting a linear trend, the resulting innovations are smaller because the two-sided filter removes stochastic information. This explains the change in p-value for the test result. The HP filter is a different and probably worse detrending treatment of the variable (see Hamilton, 2018) which we report simply as a check on robustness to possible detrending processes. As the model is still not rejected by quite a margin, it demonstrates that the results we report do not stand or fall on the linear detrending method used to stationarise the policy variable.
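The contrast between the two detrending methods can be illustrated with a small sketch. It is written under several assumptions: the series is simulated rather than the paper's policy index, the smoothing parameter 1600 is the conventional quarterly choice and is not stated in the text, and the function names are hypothetical. The HP trend is obtained by solving the filter's first-order condition directly.

```python
import random


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def hp_trend(y, lam=1600.0):
    """HP trend: minimises sum (y - tr)^2 + lam * sum (second difference of tr)^2."""
    n = len(y)
    a = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for r in range(n - 2):                      # accumulate lam * D'D row by row
        idx, w = (r, r + 1, r + 2), (1.0, -2.0, 1.0)
        for i, wi in zip(idx, w):
            for j, wj in zip(idx, w):
                a[i][j] += lam * wi * wj
    return solve(a, y)                          # solves (I + lam * D'D) tr = y


def linear_detrend(series):
    """Residuals from an OLS-fitted linear time trend."""
    n = len(series)
    t_mean, y_mean = (n - 1) / 2.0, sum(series) / n
    slope = (sum((t - t_mean) * (y - y_mean) for t, y in enumerate(series))
             / sum((t - t_mean) ** 2 for t in range(n)))
    return [y - (y_mean + slope * (t - t_mean)) for t, y in enumerate(series)]


# Hypothetical series: downward trend plus a persistent deviation (80 quarters).
rng = random.Random(0)
dev, tau = 0.0, []
for t in range(80):
    dev = 0.9 * dev + rng.gauss(0.0, 0.01)
    tau.append(0.8 - 0.002 * t + dev)

hp_cycle = [y - tr for y, tr in zip(tau, hp_trend(tau))]
lin_cycle = linear_detrend(tau)
ss_hp = sum(c * c for c in hp_cycle)     # variation left after HP filtering
ss_lin = sum(c * c for c in lin_cycle)   # variation left after linear detrending
```

Because a straight line incurs no smoothness penalty, the HP cycle can never have a larger sum of squares than the linear-detrended residual, which is one way to see why the filtered series carries less stochastic variation.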

The estimation and test results presented in Table 3 are for τ(1); see Fig. 2. Here the results are checked for three measures of τ′ (Table 6). Using the Wald-minimising coefficients found above, we tested the DSGE model using τ(2) for the policy data, finding the test statistic still well inside the non-rejection region (the Wald percentile is roughly 85). The same tests were carried out using τ(3); again, the model is not rejected at 5% significance (Wald percentile 94.41). These robustness checks show that the model’s test performance is not overly sensitive to the weighting/composition of the policy index; the conclusions do not stand or fall on one component of the business environment versus another. The model passes the test for a policy driver reflecting labour market flexibility alone, and when tax indicators are added. However, the inclusion of the top marginal income tax rate with its large step changes yields a lower Wald percentile for the model and this policy component seems to have had important effects.Footnote 29

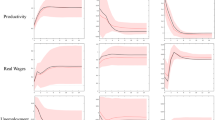

5 Growth Episode After a Policy Reform

Impulse response functions for a one-off 1 percentage point reduction in τ(1) illustrate the resulting growth episode, given the structural parameters in Table 3.Footnote 30 Although the policy shock is temporary, it affects the level of productivity permanently and shocks growth above its deterministic rate for a lengthy period (Fig. 5).Footnote 31 The 1 percentage point τ(1) shock is gradually reversed over time, taking roughly ten years to die away; on average this implies that the penalty is 0.5 percentage points lower for 10 years. The log level of output is 1.6 percentage points higher than its no-shock level after 18 years. This translates to an average higher growth rate of 0.09 percentage points per annum. The growth multiplier effect of an average 0.5 percentage point τ(1) reduction over ten years is therefore in the region of 0.17 for two decades.Footnote 32 Relating this to the UK data, Fig. 3 shows two large downward shocks around trend, the first in 1979, the second in 1988; these correspond to the 1979 budget and the 1988 budget, both of which contained sharp personal income tax rate cuts in the top band (from 0.83 to 0.6, and from 0.6 to 0.4 respectively). When the deterministic trend is extracted, these shocks are far smaller. Nevertheless, according to this model, such supply-side policy shocks would help to explain the observed reversal of UK economic decline between 1980 and the 2000s.
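The growth arithmetic in this paragraph can be retraced with a back-of-envelope calculation. The inputs are the rounded figures quoted in the text; reading the multiplier as the average growth gain per percentage point of average τ(1) reduction is an assumption made for this sketch, and the variable names are illustrative.

```python
# Figures quoted in the text (rounded as reported).
output_gain_pp = 1.6    # log output above its no-shock level after 18 years, in pp
horizon_years = 18
avg_tau_cut_pp = 0.5    # average reduction in tau(1) over ten years, in pp

avg_growth_gain = output_gain_pp / horizon_years   # pp per annum
multiplier = avg_growth_gain / avg_tau_cut_pp      # growth gain per pp of tau(1) cut

print(f"average growth gain: {avg_growth_gain:.3f} pp per annum")
print(f"implied multiplier:  {multiplier:.3f}")
```

With these rounded inputs the average growth gain is about 0.09 percentage points per annum and the implied multiplier is just under 0.18, in the same region as the figure quoted above; any small gap may reflect rounding in the reported inputs.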

In conjunction with the Directed Wald test results in Section 4.4, which show the estimated model passes empirically as the explanatory process for productivity, output and a range of other macroeconomic variables, the suggestion is that UK policy over the sample period had substantial effects on economic growth and welfare.Footnote 33

6 Conclusion

We set up an identified model in which policy reform causes short- to medium-run growth episodes, and estimate its structural parameters by indirect inference, a method attracting increasing attention in the macroeconomics literature and which is discussed further in two recent surveys in this journal (Le et al. 2016; Meenagh et al. 2019). The simulated features of this estimated model – summarised by an auxiliary model – were found through an indirect inference Wald test to be formally close to the UK data features. We interpret this as empirical evidence for the hypothesis that temporary movements in tax and regulatory policy around trend drive short-run productivity growth in our UK sample (1970-2009). Since policy shocks in the model are exogenous and uncorrelated with other shocks in the model, there is no ambiguity surrounding causation.

The tax and regulatory policy environment for this period is proxied by a weighted combination of the top marginal rate of personal income tax and a labour market regulation indicator. The estimation and test results suggest that these proxies for ‘barriers to entrepreneurship’ affected UK TFP growth negatively, consistent with the argument of Crafts (2012), Card and Freeman (2004) and Acs et al. (2009).

The Monte Carlo results we report on the statistical power of the indirect inference test as we apply it offer a sense of the robustness of these findings. The introduction of 3.5% misspecification into our structural coefficients results in rejection by the indirect inference test procedure 100% of the time. Even if only two of the structural parameter estimates are misspecified (those two being the coefficients governing the role of policy in the model), the test rejects with near certainty when those coefficients stray 50% below their true values: so for our parameter estimate of b1, the one-period ahead impact of a one percentage point increase in the supply-side policy indicator, the estimate we obtain is -0.12 and this ‘worst-case’ power exercise furnishes a lower bound for that estimate of -0.06.

We also subject the model to the identification test of Le et al. (2017) and conclude that it is identified. The causal mechanism embedded in the DSGE model – from an increase in labour market frictions and marginal tax rates to a decrease in productivity growth – is integral to the model data generating process. Therefore if in fact (in some alternative ‘true’ model) shocks to the tax and regulatory policy index increased productivity growth rather than decreasing it, or had no perceptible effect, this model would be rejected by the test.

The implication is that for policymakers to focus exclusively on knowledge creation policy (i.e. incentivising R&D) while ignoring incentives around entrepreneurship would indeed be “seriously misguided” (Acs and Sanders 2013, p. 787). The results indicate that the creation of an environment in which businesses operate flexibly and innovatively played a supportive role in UK macroeconomic performance in 1970-2009; a less flexible environment would, based on these results, have led to a relatively worse performance in this period.

When governments must spend without building up excessive debt, the temptation is to increase marginal tax rates at the top of the income distribution; this is also a natural response to increasing social inequality.Footnote 34 The question of whether top marginal tax rises come with an attached growth penalty is of some relevance when considering this policy option, though of course economic growth is just one consideration in the pursuit of a social welfare optimum (albeit an important one). Our treatment of the tax structure here has abstracted from key features. Next steps would be to look at distributional effects within a heterogeneous agent framework (e.g. Coenen et al. (2008)), and to look at how revenue is raised through various distortionary tax instruments. This paper offers empirical evidence on the role of supply-side policy in past UK growth at a highly aggregated level. Future work may, by introducing more complexity into the model, look at interactions between tax policy, regulatory policy and other macroeconomic policy interventions.

Notes

The “overarching ambitions” are: 1) “to create the most competitive tax system in the G20”; 2) “to make the UK one of the best places in Europe to start, finance and grow a business” (p.5); 3) to stimulate investment and exports; 4) to “create a more educated workforce that is the most flexible in Europe”. Human capital accumulation is notably last on this list and even then, the fourth point conflates two workforce objectives: skill accumulation and labour market flexibility. This last is to be achieved by ensuring the UK has the “Lowest burdens from employment regulation in the EU”, while the business environment is to be improved by achieving “A lower domestic regulatory burden,” amongst other policies (p.6).

The OECD characterises regulation as a barrier to entrepreneurship. See e.g. OECD (2015), Figure 25, a graph entitled “There is scope to reduce barriers to entrepreneurship” plotting UK Product Market Regulation (PMR) scores against the average ‘best’ five OECD countries in terms of freedom from PMR.

In their model, investment in R&D by incumbent firms yields intratemporal spillovers which generate entrepreneurial opportunities.

The UK economy is highly open and an empirical study such as this must acknowledge that, though our principal focus is the behaviour of output and TFP.

Otherwise, much of this description follows L. Minford and Meenagh (2019).

The price Pt of the consumption bundle is the numeraire.

\(b_{t+1}^{f}\) is a real bond - it costs what a unit of the foreign consumption basket (\(C_{t}^{\ast }\)) would cost, i.e. \(P_{t}^{\ast }\) (the foreign CPI). In domestic currency, this is \(P_{t}^{\ast }\hat {E}_{t}\). Assuming \(P_{t}^{\ast }\simeq {P_{t}^{f}}\) (i.e. exported goods from the home country have little impact on the larger foreign country) the unit cost of \( b_{t+1}^{f}\) is Qt.

Later we show that the return on labour time, wt, is equal at the margin to the return on zt.

\(Q_{t}^{\ast }=\frac { {P_{t}^{d}}}{P_{t}^{\ast }}\) - since \(Q_{t}=\frac {{P_{t}^{f}}}{P_{t}}\) and Pt is numeraire, \(Q_{t}={P_{t}^{f}}\). If domestic export prices hardly influence the foreign CPI then \(P_{t}^{\ast }\simeq {P_{t}^{f}}\).

The adjustment cost attached to \(\tilde {b}_{t+1}\) is: \(\tilde {b}_{t+1}\tilde { a}_{t}=\tilde {b}_{t+1}.\frac {1}{2}\zeta \left (\tilde {b}_{t+1}+\frac {\tilde {b }_{t}^{2}}{\tilde {b}_{t+1}}-2\tilde {b}_{t}\right ) =\frac {1}{2}\zeta ({\Delta } \tilde {b}_{t+1})^{2}\)

The firm’s real cost of labour is the nominal wage Wt relative to domestic good price, \({P_{t}^{d}}\), while the real consumer wage is Wt relative to the general price Pt.

It is possible that only a proportion 0 < ψ < 1 of the penalty paid on zt enters the government budget as revenue, the rest being deadweight loss that reduces the payoff to innovation without benefiting the consumer in other ways. In that case revenue is \(\tilde {T}_{t}=\psi \tau _{t}z_{t}+{\Phi }_{t}\) while the consumer tax bill is Tt = τtzt + Φt. Here ψ is assumed to be 1, though notionally it could vary stochastically.

All other factors - e.g. human capital or firm specific R&D investment - are in the error term.

In Lucas’ model, human capital accumulation increases labour efficiency and future earnings. The trade-off is between time spent in this productivity-enhancing activity and ordinary labour, which yields the current wage immediately.

This allows the substitution in the budget constraint that \( q_{t}{S_{t}^{p}}-(q_{t}+d_{t})S_{t-1}^{p}=-d_{t}\).

Given the time endowment 1 = Nt + xt + zt, the agent has indifference relations between zt and xt, between xt and Nt, and zt and Nt. The intratemporal condition in 6 gives the margin between xt and Nt; here we focus on the decision margin between zt and Nt, so the margin between zt and xt is implied. Therefore the substitution Nt = 1 − xt − zt can be made in the budget constraint.

Other terms in the expansion are treated as part of the error term.

Substituting into Eq. (28) from (27), rearranging for zt, then taking the derivative with respect to \(\tau _{t}^{\prime }\), we find \(c_{1}=-\frac {\frac {\beta \rho _{\gamma }}{1-\beta \rho _{\gamma }} \frac {Y_{t}}{C_{t}^{\rho _{1}}}}{\frac {w_{t}}{C_{t}^{\rho _{1}}}(1+\tau _{t}^{\prime })^{2}}\); we could potentially calibrate c1 from this, taking appropriate values for righthand side variables. However there is flexibility around what values are ‘appropriate’. The same is true for b1.

The model is solved using the Extended Path Algorithm similar to Fair and Taylor (1983), which ensures that the one period ahead expectations are consistent with the model’s own predictions. Additionally, the expectations satisfy terminal conditions which ensure that simulated paths for endogenous variables converge to long run levels consistent with the model’s own long run implications. These long run levels depend on the behaviour of the non-stationary driving variables (TFP and net foreign assets) as they evolve stochastically over the simulation period (deterministic trend behaviour is removed).

\(\tau _{t}^{\prime }\) excludes other types of regulation as data going back to 1970 is unavailable. However, the indices we use are highly correlated with the OECD index of product market regulation - see Appendix for further discussion.

KPSS and ADF test results support the decision to treat the series as trend stationary.

Since the Wald is a chi-squared, the square root is asymptotically a normal variable.

Though this is a significant approximation to the full solution, it is still a demanding test of the model which must match the joint behaviour of output and TFP, conditional on the non-stationary predetermined variable \( b_{t-1}^{f}\) and on τ′. Moreover, this level of approximation in the auxiliary model does not affect the power of the test (see Section 4.3; or the small sample properties of Indirect Inference in general, see Le et al. (2011) and Le et al. (2016)).

For example, if coefficient ρ2 is 1.2 then inducing falseness by + 3% means setting it at 1.236.

The auxiliary model used for the test is a 5 variable VARX(4); the fuller auxiliary model is used in order to be a closer approximation of the DSGE model’s solution.

Note that these AR(1) coefficients are high in many cases since we use unfiltered data when we extract the structural residuals (wedges).

The second row entry is the estimated persistence for eA, see Eq. 37. It is relatively low since TFP itself is unit root and eA enters the first difference of TFP (Eq. 36). Thus it is closer to the AR parameter that would be observed on the first differenced TFP series, were we to estimate \({\Delta } A_{t}=d+b_{1}\tau _{t-1}^{\prime }+\rho {\Delta } A_{t-1}+\eta _{A,t}\).

The current account balance improves when the real exchange rate depreciates.

Robustness checks were also carried out on the interpolation technique used for τ(1). The conclusions are unchanged when the Denton method is applied in levels rather than differences for the labour market indicators. Where components are interpolated to quarterly frequency, robustness checks around the interpolation technique show the conclusions are similarly unaffected (constant match interpolation was checked against quadratic interpolation).

In the exogenous τ′ process, \(\tau _{t}^{\prime }=\rho _{\tau }\tau _{t-1}^{\prime }+\eta _{\tau ,t}\), innovation ητ,0 = 0.01 while ητ,t = 0 for t > 0. All other ηi,t are set to zero for all t.

Labour supply falls initially, as the lower opportunity cost of z makes labour relatively less attractive. This causes output to fall at first, but as higher innovation in period 1 causes higher productivity next period, output rises from t = 2. Over the simulation, real wages rise to offset the income effect on labour supply from the productivity increase. Eventually Y and w converge to higher levels. Productivity growth also triggers a real business cycle upswing, not illustrated.

The episode is long-lasting because capital takes a long time to react fully to the rise in TFP, due to adjustment costs.

We use the utility function to calculate welfare implications of the reform, confirming these growth gains are not achieved at the expense of welfare. However, the welfare function is basic so we do not emphasise this exercise.

The UK government raised the top rate of income tax in 2009 from 40 to 50p, the first increase in this band for over 20 years.

\(\frac{dA_{t+i}}{dA_{t+i-1}}=\frac{A_{t+i}}{A_{t+i-1}}\). Hence for i ≥ 1,

$$ \frac{dA_{t+i}}{dz_{t}}=\frac{dA_{t+i}}{dA_{t+i-1}}\cdot \frac{dA_{t+i-1}}{dA_{t+i-2}}\cdots \frac{dA_{t+2}}{dA_{t+1}}\cdot \frac{dA_{t+1}}{dz_{t}}=A_{t+i}\frac{A_{t}}{A_{t+1}}a_{1} \qquad (40) $$

so \(\frac{dd_{t+i}}{dz_{t}}=\frac{Y_{t+i}}{A_{t+i}}A_{t+i}\frac{A_{t}}{A_{t+1}}a_{1}\). It may be objected that dzt will enhance output directly through its effect on productivity (holding inputs fixed), and will also induce the firm to hire more capital in order to exploit its higher marginal product (similarly for labour). I assume that the effect of dzt on the future dividend (dt+i = πt+i) is simply its direct effect through higher TFP, on the basis that any effects on the firm’s input demands are second order and can be ignored. Therefore the expected change in the dividend stream is based on forecasts for choice variables (set on other first order conditions) that are assumed independent of the agent’s own activities in context of price forecasts; she anticipates only the effect of zt on the level of output that can be produced with given inputs from t + 1 onwards.

The non-policy cost of generating new productivity via zt is assumed to be zero. The model abstracts from a fixed or sunk cost of innovating. Moreover, time in zt leads in a certain fashion to higher productivity, except in so far as the relationship is subject to a random shock.

Although in balanced growth \(\frac {C}{Y}\) is constant, in the presence of shocks the ratio will move in an unpredictable way (see Meenagh et al. 2007 for discussion). At any given point in the sample, the model is not in balanced growth, though it tends to it in the future if no further shocks are expected.

“The formula used to calculate the zero-to-10 ratings was: (Vmax - Vi) / (Vmax - Vmin) multiplied by 10. Vi represents the hiring cost (measured as a percentage of salary). The values for Vmax and Vmin were set at 33% (1.5 standard deviations above average) and 0%, respectively. Countries with values outside of the Vmax and Vmin range received ratings of either zero or 10, accordingly”. Fraser Institute (2009).

Alternative theories predict a negative correlation between MCH and union membership (the idea that unions are only needed when the government fails to represent workers’ interests directly) but the data indicate a positive correlation.

The interpolation is carried out for both level and first differences of y/x, where y is the low frequency series and x the higher frequency series (the union membership rate); the resulting series are very similar but first differences are smoother. We use the first difference output.

A fuller measure would reflect employment protection legislation including firing costs (see e.g. Botero et al. 2004), but data availability is a constraint. Correlations of our LMR indicators with (highly time-invariant) OECD EPL measures from 1985 for the UK are actually negative; our indicators do not fully capture the increases in dismissal regulation over the period and thus may slightly overstate the extent to which the UK labour market is ‘deregulated’; however, the strong decline of collective bargaining and union power over the period represents the removal of significant labour market friction.

In fact the matrix π is found when we solve for the terminal conditions on the model, which constrain the expectations to be consistent with the structural model’s long run equilibrium.

References

Acemoglu D (2008) Oligarchic versus democratic societies. J Eur Econ Assoc 6(1):1–44

Acs Z, Sanders M (2013) Knowledge spillover entrepreneurship in an endogenous growth model. Small Bus Econ 41(4):775–795

Acs Z, Braunerhjelm P, Audretsch D, Carlsson B (2009) The knowledge spillover theory of entrepreneurship. Small Bus Econ 32(1):15–30

Acs Z, Audretsch D, Braunerhjelm P, Carlsson B (2012) Growth and entrepreneurship. Small Bus Econ 39(2):289–300

Aghion P, Howitt P (1992) A model of growth through creative destruction. Econometrica 60:323–51

Aghion P, Howitt P (2006) Joseph Schumpeter lecture: appropriate growth policy: a unifying framework. J Eur Econ Assoc 4(2-3):269–314

Ambler S, Guay A, Phaneuf L (2012) Endogenous business cycle propagation and the persistence problem: the role of labour market frictions. J Econ Dyn Control 36:47–62

Baliamoune-Lutz M, Garello P (2014) Tax structure and entrepreneurship. Small Bus Econ 42(1):165–190

Blanchard O, Giavazzi F (2003) Macroeconomic effects of regulation and deregulation in goods and labor markets. Q J Econ 118(3):879–907

Boldrin M, Levine D (2002) Perfectly competitive innovation. Levine’s Working Paper Archive 625018000000000192, David K. Levine

Boldrin M, Levine D (2008) Perfectly competitive innovation. J Monet Econ 55(3):435–453

Botero J, Djankov S, La Porta R, Lopez-De-Silanes F (2004) The regulation of labor. Q J Econ 119(4):1339–1382

Braunerhjelm P, Acs Z, Audretsch D, Carlsson B (2010) The missing link: knowledge diffusion and entrepreneurship in endogenous growth. Small Bus Econ 34(2):105–125

Cacciatore M, Fiori G (2016) The macroeconomic effects of goods and labor market deregulation. Rev Econ Dyn 20:1–24

Canova F (1994) Statistical inference in calibrated models. J Appl Econom 9(S):S123–44

Card D, Freeman R (2004) What have two decades of British economic reform delivered? NBER Chapters. In: Seeking a Premier Economy: The Economic Effects of British Economic Reforms, 1980–2000, 9–62

Chari V, Kehoe P, McGrattan E (2007) Business cycle accounting. Econometrica 75(3):781–836

Coenen G, McAdam P, Straub R (2008) Tax reform and labour-market performance in the euro area: A simulation-based analysis using the New Area-Wide Model. J Econ Dyn Control 32:2543–2583

Crafts N (2012) British relative economic decline revisited: the role of competition. Explor Econ Hist 49(1):17–29

Crawford C, Freedman J (2010) Small business taxation. In: Mirrlees J, Adam S, Besley T, Blundell R, Bond S, Chote R, Gammie M, Johnson P, Myles G, Poterba J (eds) Dimensions of Tax Design: The Mirrlees Review. Oxford University Press

Denton FT (1971) Adjustment of monthly or quarterly series to annual totals: an approach based on quadratic minimization. J Am Stat Assoc 66:99–102

Djankov S, McLiesh C, Ramalho R (2006) Regulation and growth. Econ Lett 92(3):395–401

Djankov S, Ganser T, McLiesh C, Ramalho R, Shleifer A (2010) The effect of corporate taxes on investment and entrepreneurship. Am Econ J Macroecon 2(3):31–64

Dridi R, Guay A, Renault E (2007) Indirect inference and calibration of dynamic stochastic general equilibrium models. J Econ 136:397–430

Erken H, Donselaar P, Thurik R (2008) Total factor productivity and the role of entrepreneurship. Jena Economic Research Papers 2008–019

Everaert L, Schule W (2008) Why it pays to synchronize structural reforms in the euro area across markets and countries. IMF Staff Pap 55(2):356–366. https://www.imf.org/External/Pubs/FT/staffp/2008/02/everaert.htm

Fair RC, Taylor JB (1983) Solution and maximum likelihood estimation of dynamic nonlinear rational expectations models. Econometrica 51(4):1169–85

Feenstra R, Luck P, Obstfeld M, Russ K (2014) In search of the Armington elasticity. NBER Working Paper No. 20063

Fraser Institute (2009) Appendix: Explanatory Notes and Data Sources, Economic Freedom of the World: 2009 Annual Report, 191-202

Gomes S, Jacquinot P, Mohr M, Pisani M (2011) Structural Reforms and Macroeconomic Performance in the Euro Area countries: A Model-based Assessment. ECB Working Paper Series, No. 1323

Guerron-Quintana P, Inoue A, Kilian L (2017) Impulse response matching estimators for DSGE models. J Econ 196(1):144–155

Hall AR, Inoue A, Nason JM, Rossi B (2012) Information criteria for impulse response function matching estimation of DSGE models. J Econ 170:499–518

Hamilton J (2018) Why you should never use the Hodrick-Prescott filter. Rev Econ Stat 100(5):831–843

HM Treasury (2011) The plan for growth. Autumn Statement 2011

Hodrick R, Prescott E (1997) Postwar U.S. business cycles: an empirical investigation. J Money Credit Bank 29(1):1–16

Hooper P, Johnson K, Marquez J (2000) Trade Elasticities for the G-7 Countries. Princeton Studies in International Economics, 87

Jaumotte F, Pain N (2005) From ideas to development: the determinants of R&D and patenting. OECD Economics Department Working Paper No. 457, OECD, Paris

Kirzner IM (1973) Competition and Entrepreneurship. University of Chicago Press, Chicago and London

Le VPM, Meenagh D, Minford APL, Wickens MR (2011) How much nominal rigidity is there in the US economy? Testing a new Keynesian DSGE model using indirect inference. J Econ Dyn Control 35(12):2078–2104

Le VPM, Meenagh D, Minford APL, Wickens M, Xu Y (2016) Testing macro models by indirect inference: a survey for users. Open Econ Rev 27(1):1–38

Le VPM, Meenagh D, Minford APL, Wickens M (2017) A Monte Carlo procedure for checking identification in DSGE models. J Econ Dyn Control 76(C):202–210

Lee Y, Gordon RH (2005) Tax structure and economic growth. J Public Econ 89(5-6):1027–1043

Lucas RE Jr (1990) Supply-side economics: an analytical review. Oxf Econ Pap 42(2):293–316

McCallum B (1976) Rational expectations and the natural rate hypothesis: some consistent estimates. Econometrica 44:43–52

Meenagh D, Minford APL, Wang J (2007) Growth and relative living standards - testing Barriers to Riches on post-war panel data. CEPR Discussion Papers 6288

Meenagh D, Minford APL, Nowell E, Sofat P (2010) Can a real business cycle model without price and wage stickiness explain UK real exchange rate behaviour? J Int Money Financ 29(6):1131–1150

Meenagh D, Minford APL, Wickens M, Xu Y (2019) Testing DSGE models by indirect inference: a survey of recent findings. Forthcoming in Open Economies Review

Minford L, Meenagh D (2019) Testing a model of UK growth: a causal role for R&D subsidies. Forthcoming in Economic Modelling

Michelacci C (2003) Low returns in R&D due to the lack of entrepreneurial skills. Econ J 113:207–225

Myles GD (2009) Economic growth and the role of taxation - disaggregate data. OECD Economics Department Working Papers 715

OECD (2015) OECD Economic Surveys: United Kingdom. OECD Publishing, Paris

Poschke M (2010) The regulation of entry and aggregate productivity. Econ J 120(549):1175–1200

Prescott EC (2004) Why do Americans work so much more than Europeans? Federal Reserve Bank of Minneapolis Quarterly Review 28:2–13

Romer P (1990) Endogenous technical change. J Polit Econ 98:71–102

Ruge-Murcia F (2014) Indirect inference estimation of nonlinear dynamic general equilibrium models: with an application to asset pricing under skewness risk. Centre Interuniversitaire de Recherche en Economie Quantitative (CIREQ) Cahier 15–2014

Scarpetta S, Hemmings P, Tressel T, Woo J (2002) The Role of Policy and Institutions for Productivity and Firm Dynamics: Evidence from Micro and Industry Data, OECD Economics Department Working Papers, No. 329, OECD

Schorfheide F (2011) Estimation and evaluation of DSGE models: progress and challenges. NBER Working Paper Series Working Paper 16781

Schumpeter J (1942) Capitalism, Socialism and Democracy. Harper and Brothers, New York

Schumpeter J (1947) The creative response in economic history. J Econ Hist 7:149–159

Wennekers S, Thurik R (1999) Linking Entrepreneurship and Economic Growth. Small Bus Econ 13(1):27–55

Wickens M (1982) The efficient estimation of econometric models with rational expectations. Rev Econ Stud 49:55–67

Acknowledgments

The authors would like to thank anonymous referees and the Editor of this journal for constructive suggestions on an earlier version of this paper.

Appendices

Appendix A: Model Derivations, cont.

A.1 First order condition for z(t)

The first order condition for zt is:

At the \((N_{t},z_{t})\) margin, the optimal choice of \(z_{t}\) trades off the impacts of a small increase \(dz_{t}\) on labour earnings (lower in period t due to reduced employment time), subsidy payments (higher at t in proportion to the increase in \(z_{t}\)), and expected dividend income.Footnote 35 With substitution from 27, the first order condition can be rearranged as follows:

On the left hand side is the return on the marginal unit of Nt, the real consumer wage; on the right is the present discounted value of the expected increase in the dividend stream as a result of a marginal increase in zt, plus time t subsidy incentives attached to R&D activity. Footnote 36 Substituting again from 27 for zt yields

Modelling the preference shock to consumption, \(\gamma _{t}\), as a stationary AR(1) process such that \(\gamma _{t}=\rho _{\gamma }\gamma _{t-1}+\eta _{\gamma ,t}\), and setting \(\rho _{1}\simeq 1\), we approximate \(\frac {C_{t}}{ Y_{t}}\) as a random walk, so \(E_{t}\frac {Y_{t+i}}{C_{t+i}}=\frac {Y_{t}}{C_{t} }\) for all \(i>0\).Footnote 37 The expression becomes

where \(\frac {\tau _{t}}{w_{t}}\equiv \tau _{t}^{\prime }\). A first order Taylor expansion of the right-hand side of Eq. 28 around a point where \(\tau _{t}^{\prime }=\tau ^{\prime }\) gives a linear relationship between \(\frac {A_{t+1}}{A_{t}}\) and \(\tau _{t}^{\prime }\) of the form

where \(b_{1}=-a_{1}\cdot \frac {\frac {\beta \rho _{\gamma }}{1-\beta \rho _{\gamma }}\frac {Y}{C}}{\frac {w}{C}(1+\tau ^{\prime })^{2}}\). Other terms in the expansion are treated as part of the error term.
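As a reading aid, the factor \(\frac {\beta \rho _{\gamma }}{1-\beta \rho _{\gamma }}\) in \(b_{1}\) has the form of a geometric sum. The interpretation offered here is an assumption rather than a step shown in the text: it is what results if each future period \(i\) is weighted by the discount factor \(\beta ^{i}\) and by the persistence \(\rho _{\gamma }^{i}\) of the preference shock, with \(\frac {Y}{C}\) held at its current value under the random walk approximation.

\[
\sum_{i=1}^{\infty }(\beta \rho _{\gamma })^{i}=\frac {\beta \rho _{\gamma }}{1-\beta \rho _{\gamma }},\qquad |\beta \rho _{\gamma }|<1 .
\]

For purely illustrative values \(\beta =0.97\) and \(\rho _{\gamma }=0.9\) (not estimates from the paper), \(\beta \rho _{\gamma }=0.873\) and the factor is approximately \(0.873/0.127\approx 6.87\).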

A.2 Deriving the labour supply response to policy shocks

Taking the total derivative of the time endowment in 3 gives \(dx_{t}=-dN_{t}-dz_{t}\), and hence \(\frac {dx_{t}}{x_{t}}=\frac {-dN_{t}-dz_{t} }{x_{t}}\). Assuming \(\bar {N}\approx \bar {x}\approx \frac {1}{2}\) in some initial steady state with approximately no \(z\) activity implies

Substituting into the loglinearised intratemporal condition for ln wt from 23 and using 45a, we obtain

Integrating this and rearranging for the log of the firm’s real unit cost of labour, \(\ln \tilde {w}_{t}\), gives

where

Substituting into Eq. (28) from (27) and rearranging for \(z_{t}\), then taking the derivative with respect to \(\tau _{t}^{\prime }\), we find \(c_{1}=-\frac {\frac {\beta \rho _{\gamma }}{1-\beta \rho _{\gamma }} \frac {Y_{t}}{C_{t}^{\rho _{1}}}}{\frac {w_{t}}{C_{t}^{\rho _{1}}}(1+\tau _{t}^{\prime })^{2}}\). We could potentially calibrate \(c_{1}\) from this, taking appropriate values for the right-hand side variables. However, there is some latitude over which values are appropriate in practice.
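To make the calibration idea concrete, the expression for \(c_{1}\) can be evaluated at chosen values. The sketch below is illustrative only: the function name `policy_coefficient` and every input value are hypothetical, chosen to show the mechanics rather than to reproduce any calibration in the paper.

```python
def policy_coefficient(beta, rho_gamma, y, c, w, tau_prime, rho_1=1.0):
    """Evaluate the expression for c1 given in the text.

    beta       discount factor
    rho_gamma  AR(1) persistence of the preference shock
    y, c, w    output, consumption and the real wage
    tau_prime  policy variable tau'_t
    rho_1      consumption exponent (set close to 1 in the text)
    """
    persistence = beta * rho_gamma
    if not 0.0 <= persistence < 1.0:
        raise ValueError("beta * rho_gamma must lie in [0, 1)")
    discount_sum = persistence / (1.0 - persistence)
    numerator = discount_sum * y / c ** rho_1
    denominator = (w / c ** rho_1) * (1.0 + tau_prime) ** 2
    return -numerator / denominator


# Hypothetical inputs, not values used in the study.
c1 = policy_coefficient(beta=0.5, rho_gamma=0.5, y=1.5, c=1.0,
                        w=1.0, tau_prime=0.0)
c1_high_tau = policy_coefficient(beta=0.5, rho_gamma=0.5, y=1.5, c=1.0,
                                 w=1.0, tau_prime=1.0)
```

Because \(C_{t}^{\rho _{1}}\) appears in both numerator and denominator, it cancels, so the value depends on consumption only through the inputs chosen for \(Y_{t}\) and \(w_{t}\). The coefficient is negative and shrinks in magnitude as \(\tau _{t}^{\prime }\) rises.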

Appendix B: The Linearised System

The linearised system of optimality conditions and constraints solved numerically is given below. Each equation is normalised on one of the endogenous variables (constants are suppressed in the errors). Variables are in natural logs except where already expressed in percentages. For clarity, \( \ln ({C_{t}^{d}})^{\ast }\) and \(\ln {C_{t}^{f}}\) are denoted ln EXt and ln IMt.

Appendix C: Data Appendix

Table 7 contains all definitions and sources of data used in the study, as well as a symbol key. Most UK data are sourced from the UK Office for National Statistics (ONS); others come from the International Monetary Fund (IMF), the Bank of England (BoE), HM Revenue and Customs (HMRC) and the Organisation for Economic Co-operation and Development (OECD). Labour Market Indicators are taken from the Fraser Institute Economic Freedom Project, which sources them from the World Economic Forum’s Global Competitiveness Report (GCR) and the World Bank (WB). All data are seasonally adjusted and in constant prices unless specified otherwise.

C.1 Data for Policy Indicator

UK data on \(\tau _{t}^{\prime }\) reflects regulation and tax. On regulation, the focus (due to data range and availability) is on the labour market. Two components are selected from the labour market sub-section of the Economic Freedom (EF) indicators compiled by the Fraser Institute: the Centralized Collective Bargaining (CCB) index and Mandated Cost of Hiring (MCH) index. Of the labour market measures, these two components span the longest time-frame.

The original data source for CCB is the World Economic Forum’s Global Competitiveness Report (various issues). Survey participants answer the following question: “Wages in your country are set by a centralized bargaining process (= 1) or up to each individual company (= 7)”. The Fraser Institute converts these scores to a [0,10] interval. MCH is constructed from World Bank Doing Business data, reflecting “the cost of all social security and payroll taxes and the cost of other mandated benefits including those for retirement, sickness, health care, maternity leave, family allowance, and paid vacations and holidays associated with hiring an employee” (Fraser Institute 2009). These costs are also converted to a [0,10] interval; zero represents a hiring process with high regulatory burden.Footnote 38 Labour market flexibility increases with both indices in their raw form. These [0,10] scores are scaled to a [0,1] interval before being interpolated as follows.