Abstract

Considering co-simulation and solver coupling approaches, the coupling variables have to be approximated within a macro-time step (communication-time step), e.g., by using extrapolation/interpolation polynomials. Usually, the approximation order is assumed to be fixed. The efficiency and accuracy of a co-simulation may, however, be increased by using a variable approximation order. Therefore, a technique to control the integration order is required. Here, an order control algorithm for co-simulation and solver coupling methods is presented. The order controller is incorporated into the control algorithm for the macro-step size so that co-simulations with variable integration order and variable macro-step size can be carried out. Different numerical examples are presented, which illustrate the applicability and benefit of the proposed order control strategy. This contribution mainly focuses on mechanical systems. The presented techniques may, however, also be applied to nonmechanical dynamical systems.

1 Introduction

The term co-simulation covers a variety of numerical techniques that can be applied to couple different solvers in the time domain. A classical field of application for co-simulation methods is the simulation of multiphysical systems, see, e.g., [13, 16, 20, 49, 69]. In this case, each physical subdomain is modeled and simulated with its own (specialized) subsystem solver, and each subsystem may be considered as a black-box system. The subsystem solvers are numerically coupled by an appropriate co-simulation method. In the literature, many interesting multidisciplinary problems have been solved using co-simulation techniques, e.g., fluid/structure interaction problems [1, 43], analysis of coupled finite-element/multibody models [2, 3, 48], multidisciplinary simulations in the framework of vehicle dynamics [11, 36, 45], or coupled problems including particle models [14, 33, 70, 76].

Besides this classical area of application, co-simulation may also be applied advantageously to parallelize monodisciplinary dynamical systems, see, for instance, [4, 10, 31, 32, 38, 44, 46, 63, 74]. For this purpose, the global system is split into a certain number of subsystems, which are connected and simulated by a suitable co-simulation approach. As a consequence, the overall computation time may be reduced considerably.

A simple consideration demonstrates the computational benefit that may be achieved by a co-simulation-based parallelization approach. We consider a multibody system with \(n\) degrees of freedom, which is decomposed into \(r\) equally sized subsystems. The decomposed system is assumed to be integrated with an implicit predictor/corrector co-simulation method, see Sect. 3. Note that for implicit co-simulation approaches, an interface-Jacobian is required, which may, however, be calculated very easily in parallel with the predictor/corrector subsystem integrations so that the computation of the interface-Jacobian will not (markedly) increase the overall co-simulation time. For an implicit co-simulation approach, the main computational effort is caused by repeating the macro-step in order to carry out the corrector iteration over the macro-interval. Now, we assume that an \(\mathcal{O} \left ( n^{\alpha } \right )\)-algorithm is used for solving the equations of motion, where \(\alpha \) is usually a value between 1 and 3. Consequently, the simulation time for the monolithic model is given by \(T_{\mathit{Mono}} =\mathit{constant} \cdot n^{\alpha } \). Applying an implicit co-simulation scheme and assuming an average number of \(l\) corrector iterations within each macro-step, the simulation time of the co-simulation may roughly be estimated by \(T_{\mathit{Cosim}} =(1+l) \cdot \mathit{constant} \cdot \left ( \frac{n}{r} \right )^{\alpha } +\mathit{Overhead}\) (one predictor-step and \(l\) corrector-steps). Practical simulations show (see [40]) that the number of corrector steps \(l\) is frequently rather small (\(l=2\) or \(l=3\)). Hence, if the overall model is decomposed into a sufficiently large number of subsystems, the reduction of computation time may become very significant.

It should be noted that the estimation formula for \(T_{\mathit{Cosim}}\) will only yield reasonable results if the (average) integration step-size of the monolithic model equals the (average) integration step-size of the subsystems, see Sect. 6.6. The possible speed-up compared to a monolithic simulation may be improved even more if the co-simulation is carried out with a variable macro-step size (macro-step size controller). A further improvement may be achieved if a variable integration order is used (i.e., variable approximation order for the coupling variables). Applying an explicit co-simulation method, the possible speed-up may become even larger, since \(l=0\) for explicit co-simulations. It should be pointed out that if \(r\) is chosen too large, the overhead will become the dominant part of the computation time so that the co-simulation becomes inefficient [29, 40].
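As a rough numerical illustration of the estimate above, the following Python sketch evaluates both formulas. The function name `estimate_speedup` and the sample values (\(n=1000\), \(r=10\), \(\alpha =2\), zero overhead) are assumptions chosen for illustration only; they are not taken from the examples of this paper.

```python
def estimate_speedup(n, r, alpha, l, overhead=0.0, constant=1.0):
    """Ratio T_Mono / T_Cosim according to the rough estimate in the text.

    n: degrees of freedom, r: number of equally sized subsystems,
    alpha: complexity exponent of the solver, l: average number of
    corrector iterations per macro-step (l = 0 for explicit schemes).
    """
    t_mono = constant * n ** alpha
    t_cosim = (1 + l) * constant * (n / r) ** alpha + overhead
    return t_mono / t_cosim


# Hypothetical values: implicit scheme with l = 2 and explicit scheme (l = 0).
speedup_implicit = estimate_speedup(n=1000, r=10, alpha=2.0, l=2)
speedup_explicit = estimate_speedup(n=1000, r=10, alpha=2.0, l=0)
```

With zero overhead, the ratio reduces to \(r^{\alpha }/(1+l)\), which makes the influence of the number of subsystems and of the corrector iterations directly visible. A nonzero `overhead` argument can be used to see how the benefit shrinks when \(r\) is chosen too large.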

Besides the directly obvious speed-up due to the decomposition into subsystems, which can be solved independently and in parallel within each macro-step, an improvement with respect to the simulation time may also be achieved due to the multi-rate effect. If the global model can be split into a small stiff subsystem and a large non-stiff subsystem, only the smaller stiff subsystem has to be integrated with small subsystem integration-step sizes, while the larger non-stiff subsystem can be integrated efficiently and stably with larger subsystem integration-step sizes.

Remark 1

Parallelization of a dynamical system can be realized in different ways. Considering a monolithic dynamical model, which is discretized by an (implicit) time-integration scheme (e.g., BDF method, Runge–Kutta method), an algebraic system of equations has to be solved in each time step. Usually, parallelization is accomplished by solving the algebraic system of equations in parallel, i.e., the equation solver is parallelized. In contrast to this classical type of parallelization, a further parallelization can be achieved by making use of a co-simulation approach, as mentioned above. Hence, parallelization may be realized on two levels, namely

i) by a decomposition of the overall model into subsystems, which are integrated in parallel within each macro-time step, and

ii) by applying the classical type of parallelization, i.e., by using parallelized subsystem solvers for solving the algebraic subsystem equations.

Here, we omit a detailed literature review on co-simulation and solver coupling techniques. The interested reader is referred to the literature, see, e.g., [17, 18, 22, 56].

Within a co-simulation approach, a macro-time grid (also called communication-time grid) has to be defined, i.e., macro-time points \(T_{i}\) \((i=0,1,2,\ldots)\) have to be specified, where the subsystems exchange the coupling variables. Between the macro-time points, the coupling variables are approximated, e.g., by means of extrapolation/interpolation polynomials of degree \(k\). In the simplest case, an equidistant macro-time grid is used and the order of the approximation polynomials for the coupling variables is assumed to be constant. Using a co-simulation with constant order and constant macro-step size may, however, be rather inefficient. It has been shown in the literature that the efficiency, accuracy, and stability of a co-simulation implementation may be improved significantly by incorporating a macro-step size controller, see [6, 40, 51, 53, 54].

In the current manuscript, the possible improvement of a co-simulation with variable integration order is investigated. To realize a variable integration order, a strategy is required that determines the optimal integration order. To this end, the local truncation error within the macro-step from \(T_{N}\) to \(T_{N+1} = T_{N} + H_{N}\) (\(H_{N}\) denotes the current macro-step size) is estimated for different polynomial degrees \(k\). The optimal polynomial order minimizes the estimated local error. Applying an appropriate order control algorithm, co-simulations with variable integration order can be realized. In this work, only co-simulation implementations with variable macro-step size are considered. It should therefore be stressed that the control algorithm for the integration order and the macro-step size control algorithm cannot be considered independently, since both influence each other. As a consequence, order and step-size have to be controlled simultaneously.

In the paper at hand, mechanical co-simulation models with an arbitrary number of subsystems are investigated. The developed order control algorithm may, however, also be used for nonmechanical systems. Considering mechanical systems, the coupling of the subsystems can be realized either by constitutive laws (coupling by applied-forces) [1, 3, 9, 19, 21, 48, 58, 60] or by algebraic constraint equations (coupling by reaction forces) [5, 23, 30, 39, 55, 57, 59, 61, 62, 72, 73, 75]. In this work, we restrict ourselves to the case of applied-force coupling approaches (explicit and implicit). Furthermore, we only consider parallel co-simulation implementations of Jacobi type. Sequential integration techniques (Gauss–Seidel type) [26, 27] are not applied, since we focus on fully parallelizable models. To approximate the coupling variables between two macro-time points, Lagrange polynomials are used here. Other approximation approaches – acceleration based techniques [34, 35], interface-model-based approaches [47], context-based approximation polynomials [7], methods applying a least square fit [42], etc. – are not considered in the current manuscript.

The new contributions of this manuscript can be summarized as follows:

- An algorithm for controlling the order of the approximation polynomials for co-simulation and solver coupling methods is presented.

- The developed order controller is embedded into the macro-step size control algorithm.

- Efficiency, accuracy, and stability of a co-simulation implementation with variable integration order and variable macro-step size are analyzed with different numerical examples.

- The large potential of parallelizing dynamical models with the help of co-simulation is demonstrated.

The manuscript is organized as follows: In Sect. 2, the basic idea of co-simulation and the relevant definitions are briefly summarized. The classical explicit and implicit co-simulation methods are briefly recapitulated in Sect. 3. The different error estimators, which are used in the current work for controlling the macro-step size, are collected in Sect. 4. The order control strategy and the combined algorithm for controlling the integration order and the macro-step size simultaneously are presented in Sect. 5. Numerical examples are given in Sect. 6. The paper is concluded in Sect. 7. Calculation of the interface-Jacobian, which is required for the implicit co-simulation approach, is treated in Appendix A. Some additional simulation results for Example 3 in Sect. 6.3 are collected in Appendix B.

2 Co-simulation model

2.1 General case: arbitrary mechanical system decomposed into subsystems

To demonstrate the basic strategy behind a co-simulation approach, a general mechanical system is considered, which is mathematically defined by the following first-order DAE system (differential-algebraic system of equations)

The vector \(\boldsymbol{z}\) contains the position variables \(\boldsymbol{q}\), the velocity variables \(\boldsymbol{v}\), and also the Lagrange multipliers \(\boldsymbol{\lambda } \); \(\boldsymbol{B}\) represents a symmetric square matrix, which is not invertible in the general case, where Eq. (1) represents a DAE. For classical constrained multibody systems [12], for instance, the matrix \(\boldsymbol{B}\) is given by \(\left ( \begin{array}{c@{\quad }c@{\quad }c} \boldsymbol{I} & \boldsymbol{0} & \boldsymbol{0}\\ \boldsymbol{0} & \boldsymbol{M} & \boldsymbol{0}\\ \boldsymbol{0} & \boldsymbol{0} & \boldsymbol{0} \end{array} \right )\), where \(\boldsymbol{I}\) denotes the identity matrix and \(\boldsymbol{M}\) the mass matrix. In the ODE case, \(\boldsymbol{B}\) is invertible.

Next, the overall system is decomposed into \(r\) subsystems. To describe the coupling between the subsystems, appropriate coupling variables \(\boldsymbol{u}\) have to be defined. Throughout this manuscript, we assume that the subsystems are connected by applied forces/torques (applied-force coupling) so that the coupling between the subsystems is described by constitutive laws. Constraint coupling, where the subsystems are connected by algebraic constraint equations and corresponding reaction forces (Lagrange multipliers), is not considered here. In the framework of a co-simulation approach, macro-time points \(T_{i} \ (i=0,1,2,\dots )\) have to be defined. Macro-time points are discrete, not necessarily equidistant time points. With the macro-time points, the macro-step size \(H_{N} = T_{N+1} - T_{N}\) is defined. If a constant macro-step size \(H= T_{N+1} - T_{N} =\mathit{const}.\) is used, \(H\) has to be specified by the user so that the co-simulation runs stably and provides accurate results. If, in contrast, a variable macro-step size is used, the current macro-step size \(H_{N}\) is determined by the macro-step size controller with the help of an appropriate error estimator so that a user-defined error criterion is fulfilled. Between the macro-time points, the subsystems are integrated independently, since the subsystems only exchange information at the macro-time points. To carry out the subsystem integrations between the macro-time points, the coupling variables have to be approximated. In this work, Lagrange polynomials are used for approximating the coupling variables.

The states and multipliers of an arbitrary subsystem \(s\in \left \{ 1,\dots ,r \right \} \) are arranged in the vector \(\boldsymbol{z}^{s} = ( \boldsymbol{q}^{s^{T}}, \boldsymbol{v}^{s^{T}}, \boldsymbol{\lambda }^{s^{T}} )^{T}\). Note that the number of the subsystem is indicated by the superscript \(s\). The equations of motion of subsystem \(s\) read

The coupling variables \(\boldsymbol{u}\), which here represent input variables of the subsystems, can be defined implicitly by the coupling functions \(\boldsymbol{g}_{c}:=\boldsymbol{u}-\boldsymbol{\varphi } \left ( t, \boldsymbol{z},\boldsymbol{u} \right ) = \boldsymbol{0}\). It should be mentioned that the subsystem coupling is, on the one hand, defined by the constitutive laws of the coupling forces/torques and, on the other hand, by the decomposition technique. Note that basically three different decomposition techniques may be distinguished, namely force/force-decomposition, force/displacement-decomposition, and displacement/displacement-decomposition, see [58, 61, 75] for more details. It should further be stressed that for applied-force coupling approaches, the coupling variables are only functions of \(\boldsymbol{q}\) and \(\boldsymbol{v}\), i.e., the coupling functions may be expressed as \(\boldsymbol{g}_{c}:=\boldsymbol{u}-\boldsymbol{\varphi } \left ( t, \boldsymbol{q},\boldsymbol{v},\boldsymbol{u} \right ) = \boldsymbol{0}\). Making use of the coupling variables, the equations of motion of subsystem \(s\) can be rewritten as

Collecting the right-hand sides of all subsystem equations into the vector \(\boldsymbol{f}^{\mathit{co}} \left ( t, \boldsymbol{z},\boldsymbol{u} \right ) = ( \boldsymbol{f}^{\mathit{co},1} \left ( t, \boldsymbol{z}^{1},\boldsymbol{u} \right )^{T},\dots , \boldsymbol{f}^{\mathit{co},r} \left ( t, \boldsymbol{z}^{r},\boldsymbol{u} \right )^{T} )^{T}\), the decomposed co-simulation system can be formulated as

with \(\boldsymbol{z}= ( \boldsymbol{z}^{1^{T}},\dots , \boldsymbol{z}^{r^{T}} )^{T}\). Note that in the framework of a co-simulation approach, the coupling conditions are only enforced at the macro-time points \(T_{N}\). Between the macro-time points, approximated coupling variables are used. Further details and examples concerning the decomposition of an overall system into subsystems can, for instance, be found in [29, 40]. It should be emphasized again that co-simulation and the here investigated strategies for controlling the macro-step size and approximation order are not limited to mechanical systems.

2.2 Special case: system of coupled multibody models

In the subsequent sections, we focus on the special case that the subsystems are general constrained multibody systems. Then, the equations of motion (3) for an arbitrary subsystem \(s\) can be written as

The matrix \(\boldsymbol{H}^{s}\) defines the relationship between the (generalized) velocity variables \(\boldsymbol{v}^{s}\) and the (generalized) position variables \(\boldsymbol{x}^{s}\); \(\boldsymbol{M}^{s}\) denotes the symmetric mass matrix, and \(\boldsymbol{f}^{s}\) the vector of the applied and Coriolis forces. The algebraic constraint equations of the subsystem are collected in the vector \(\boldsymbol{g}^{\boldsymbol{s}} \left ( t, \boldsymbol{x}^{s} \right ) =\boldsymbol{0}\). The term \(\boldsymbol{H}^{s^{\mathrm{T}}} \left ( \boldsymbol{x}^{s} \right ) \boldsymbol{G}^{s ^{\mathrm{T}}} \left ( t, \boldsymbol{x}^{s} \right ) \boldsymbol{\lambda }^{s}\) with the constraint Jacobian \(\boldsymbol{G}^{s} = \frac{\partial \boldsymbol{g}^{\boldsymbol{s}}}{\partial \boldsymbol{x}}\) and the vector of Lagrange multipliers \(\boldsymbol{\lambda }^{s}\) represents the constraint forces/torques generated by the subsystem constraint equations. The coupling forces/torques of subsystem \(s\) are arranged in the vector \(\boldsymbol{f}^{c,\mathrm{s}}\). A comparison with the general case of Sect. 2.1 shows that one gets for the special case of a system of coupled multibody models the relationships \(\boldsymbol{B}^{s} \left ( t,\boldsymbol{z} \right ) = \mathrm{blockdiag} ( \boldsymbol{I}, \boldsymbol{M}^{s},\boldsymbol{0})\) and \(\boldsymbol{f}^{\mathit{co},s} = \left ( \begin{array}{c} \boldsymbol{H}^{s} \boldsymbol{v}^{s}\\ \boldsymbol{f}^{s} - \boldsymbol{H}^{s^{\mathrm{T}}} \boldsymbol{G}^{s^{\mathrm{T}}} \boldsymbol{\lambda }^{s} + \boldsymbol{f}^{\mathrm{c},\mathrm{s}}\\ \boldsymbol{g}^{s} \end{array} \right )\).

2.2.1 Example: two multibody systems coupled by a flexible bushing element

As an example, two arbitrary unconstrained mechanical subsystems are considered. It is assumed that the subsystems are mechanically coupled by a linear viscoelastic bushing element (flexible atpoint joint) [58], see Fig. 1. More precisely, point \(C_{i}\) of body \(i\) (center of mass \(S_{i}\), mass \(m_{i}\), inertia tensor \(\boldsymbol{J}_{i}\)) belonging to subsystem 1 is connected to marker \(C_{j}\) of body \(j\) (center of mass \(S_{j}\), mass \(m_{j}\), inertia tensor \(\boldsymbol{J}_{j}\)) related to subsystem 2 by a linear three-dimensional spring/damper element.

The coupling points \(C_{i}\), \(C_{j}\) are represented by the body fixed vectors \(\boldsymbol{r}_{C_{i}}\), \(\boldsymbol{r}_{C_{j}}\). Applying absolute coordinates, position and orientation of an arbitrary rigid body \(q\) is defined by the 3 coordinates of the center of mass \(\boldsymbol{r}_{S_{q}}\) and by 3 rotation parameters arranged in the vector \(\boldsymbol{\gamma }_{q} \in \mathbb{R}^{3}\) (e.g., 3 Bryant angles). The coordinates of the angular velocity \(\boldsymbol{\omega }_{q}\) are associated with the time derivative of the rotation parameters by the general expression \(\dot{\boldsymbol{\gamma }}_{q} = \boldsymbol{H} \left ( \boldsymbol{\gamma }_{q} \right ) \boldsymbol{\omega }_{q}\) with \(\boldsymbol{H} \in \mathbb{R}^{3\times 3}\). The equations of motion (Newton–Euler equations) for the coupling bodies \(i\) and \(j\) are given by

Coupling body \(i\) (subsystem 1):

Coupling body \(j\) (subsystem 2):

In the above equations, \(\boldsymbol{f}_{i}\) and \(\boldsymbol{f}_{j}\) represent the resultant applied forces, which are acting on the two coupling bodies. The resultant applied torques are denoted by \(\boldsymbol{\tau }_{i}\) and \(\boldsymbol{\tau }_{j}\).

The coupling force \(\boldsymbol{f}^{{c}}\) represents a spring/damper force, which is proportional to the relative displacement and to the relative velocity of the coupling points \(C_{i}\) and \(C_{j}\); \(\boldsymbol{f}^{{c}}\) can implicitly be described by the three coupling conditions

where the diagonal matrix \(\boldsymbol{C} = \mathrm{diag} \,\left ( c_{x}, c_{y}, c_{z} \right )\) collects the three spring constants and the diagonal matrix \(\boldsymbol{D} = \mathrm{diag}\, \left ( d_{x}, d_{y}, d_{z} \right )\) the three damping coefficients. Note that the total time derivative with respect to the inertial system \(K_{0}\) is denoted by \(\frac{d}{dt}\). Hence, the coupling forces and torques, which are acting on the two coupling bodies, read

3 Classical explicit and implicit co-simulation schemes

For approximating the coupling variables \(\boldsymbol{u}\) within the macro-step \(T_{N} \rightarrow T_{N+1}\), extrapolation/interpolation polynomials \(\boldsymbol{p}_{N+1} \left ( t \right )\) of degree \(k\) (local approximation order \(k+1\), i.e., \(\mathcal{O} \left ( H^{k+1} \right )\)) are used. Since the subsystems only exchange information at the macro-time points, the coupling conditions are only enforced at the macro-time points \(T_{N}\), i.e., \(\boldsymbol{g}_{c} \left ( t, \boldsymbol{z},\boldsymbol{u} \right ) = \boldsymbol{0} \) is only fulfilled at the macro-time points. Between the macro-time points, the subsystems can be integrated independently.
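The polynomial approximation of a coupling variable can be illustrated with a short Python sketch. It evaluates the Lagrange polynomial of degree \(k\) through the last \(k+1\) sampling points on a nonequidistant macro-time grid. The helper names `lagrange_eval` and `extrapolate` as well as the test signal \(u(t)=t^{2}\) are assumptions made for this illustration and do not stem from the paper.

```python
def lagrange_eval(ts, us, t):
    """Evaluate the Lagrange polynomial through the points (ts[i], us[i]) at time t."""
    total = 0.0
    for i, (ti, ui) in enumerate(zip(ts, us)):
        weight = 1.0
        for j, tj in enumerate(ts):
            if j != i:
                weight *= (t - tj) / (ti - tj)
        total += weight * ui
    return total


def extrapolate(T_hist, u_hist, k, t):
    """Extrapolate with degree k, using the last k+1 macro-time points."""
    return lagrange_eval(T_hist[-(k + 1):], u_hist[-(k + 1):], t)


# Hypothetical nonequidistant macro-time grid and sampled signal u(t) = t**2.
T_hist = [0.0, 0.1, 0.25]
u_hist = [T * T for T in T_hist]
T_next = 0.4

u_k0 = extrapolate(T_hist, u_hist, 0, T_next)  # constant extrapolation
u_k1 = extrapolate(T_hist, u_hist, 1, T_next)  # linear extrapolation
u_k2 = extrapolate(T_hist, u_hist, 2, T_next)  # quadratic, exact for t**2
```

For this smooth signal, the extrapolation error at `T_next` decreases with increasing degree, and the quadratic polynomial reproduces \(t^{2}\) up to rounding. For other signals, a higher degree is not necessarily more accurate, which is one motivation for controlling the order.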

Applying a co-simulation approach, system (1) is replaced by the weakly coupled co-simulation system

The new variables \(\boldsymbol{z}_{N+1}\) are obtained by integrating Eq. (10) from \(T_{N}\) to \(T_{N+1}\) with the initial conditions \(\boldsymbol{z}_{N}\).

It should be noted that for an explicit co-simulation method, the extrapolated coupling variables \(\boldsymbol{p}_{N+1} ( T_{N+1} )\) will usually not satisfy the coupling equations at the new macro-time point \(T_{N+1}\) so that \(\boldsymbol{p}_{N+1} \left ( T_{N+1} \right ) -\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{p}_{N+1} ( T_{N+1} ) \right ) \neq \boldsymbol{0}\). Consistent coupling variables \(\boldsymbol{u}_{N+1}\) can, however, simply be calculated by an update step. To this end, the coupling equations \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{u}_{N+1} \right )\) simply have to be solved for \(\boldsymbol{u}_{N+1}\). The update step will produce (small) jumps in the coupling variables, because \(\boldsymbol{p}_{N+1} \left ( T_{N+1} \right )\) is usually not equal to the updated coupling variables \(\boldsymbol{u}_{N+1}\).

3.1 Explicit co-simulation scheme

The classical explicit co-simulation approach can be described very easily by considering the general macro-time step from \(T_{N} \rightarrow T_{N+1}\) [52, 60, 64, 69]. We assume that at the beginning of the macro-time step, the variables of the subsystems (subsystem states and subsystem multipliers) as well as the coupling variables are known.

- In a first step, the vector collecting the extrapolation polynomials \(\boldsymbol{p}_{N+1}^{\mathit{ext}} \left ( t \right )\) for the coupling variables \(\boldsymbol{u} (t )\) in the time interval [\(T_{N}\),\(T_{N+1}\)] is generated by making use of the \(k+1\) sampling points \(\left ( T_{N}, \boldsymbol{u}_{N} \right ), \left ( T_{N-1}, \boldsymbol{u}_{N-1} \right ), \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right )\).

- In a second step, Eq. (10) is integrated from \(T_{N} \rightarrow T_{N+1}\) using the extrapolation polynomials \(\boldsymbol{p}_{N+1}^{\mathit{ext}} \left ( t \right )\). This yields the variables \(\boldsymbol{z}_{N+1}^{\mathit{ext}}\). In case of the explicit co-simulation scheme, the new variables at \(T_{N+1}\) are simply given by \(\boldsymbol{z}_{N+1}:= \boldsymbol{z}_{N+1}^{\mathit{ext}}\).

- In a final step, updated coupling variables \(\boldsymbol{u}_{N+1}\) are computed. Therefore, the variables \(\boldsymbol{z}_{N+1}\) are inserted into the coupling equations and \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{u}_{N+1} \right )\) is solved for \(\boldsymbol{u}_{N+1}\).

Now, the next macro-time step \(T_{N+1} \rightarrow T_{N+2}\) can be accomplished. The integration scheme is sketched in Fig. 2.
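The three steps of the explicit scheme can be sketched for a simple test problem. The following Python code is a minimal illustration under stated assumptions, not the implementation used in this work: two single-mass oscillators (mass `m`, ground spring `c`) are coupled by a spring/damper force \(u = c_{c} (x_{2} - x_{1}) + d_{c} (v_{2} - v_{1})\) (force/force-decomposition), the subsystems are integrated with a fixed-step RK4 method, and all function names and parameter values are hypothetical.

```python
import math


def lagrange_eval(ts, us, t):
    """Evaluate the Lagrange polynomial through the points (ts[i], us[i]) at time t."""
    total = 0.0
    for i, (ti, ui) in enumerate(zip(ts, us)):
        weight = 1.0
        for j, tj in enumerate(ts):
            if j != i:
                weight *= (t - tj) / (ti - tj)
        total += weight * ui
    return total


def rk4_subsystem(x, v, t0, t1, accel, n_micro=4):
    """Integrate x' = v, v' = accel(t, x, v) from t0 to t1 with n_micro RK4 steps."""
    h = (t1 - t0) / n_micro
    t = t0
    for _ in range(n_micro):
        k1x, k1v = v, accel(t, x, v)
        k2x, k2v = v + 0.5 * h * k1v, accel(t + 0.5 * h, x + 0.5 * h * k1x, v + 0.5 * h * k1v)
        k3x, k3v = v + 0.5 * h * k2v, accel(t + 0.5 * h, x + 0.5 * h * k2x, v + 0.5 * h * k2v)
        k4x, k4v = v + h * k3v, accel(t + h, x + h * k3x, v + h * k3v)
        x += h * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += h * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        t += h
    return x, v


def explicit_cosim(H, n_steps, k, m=1.0, c=1.0, cc=1.0, dc=0.0):
    """Explicit Jacobi-type co-simulation with constant macro-step size H."""
    x1, v1, x2, v2 = 1.0, 0.0, 0.0, 0.0  # assumed initial conditions
    T_hist = [0.0]
    u_hist = [cc * (x2 - x1) + dc * (v2 - v1)]
    for N in range(n_steps):
        T0, T1 = N * H, (N + 1) * H
        # Step 1: extrapolation polynomial from the last (at most) k+1 points.
        ts, us = T_hist[-(k + 1):], u_hist[-(k + 1):]
        p_ext = lambda t: lagrange_eval(ts, us, t)
        # Step 2: independent subsystem integrations with the extrapolated force.
        x1, v1 = rk4_subsystem(x1, v1, T0, T1, lambda t, x, v: (-c * x + p_ext(t)) / m)
        x2, v2 = rk4_subsystem(x2, v2, T0, T1, lambda t, x, v: (-c * x - p_ext(t)) / m)
        # Step 3: update step; here phi does not depend on u, so it is a plain evaluation.
        T_hist.append(T1)
        u_hist.append(cc * (x2 - x1) + dc * (v2 - v1))
    return x1, x2


x1_end, x2_end = explicit_cosim(H=0.01, n_steps=100, k=1)
# Analytical solution of the undamped, symmetric test problem at t = 1.
x1_ref = 0.5 * math.cos(1.0) + 0.5 * math.cos(math.sqrt(3.0))
x2_ref = 0.5 * math.cos(1.0) - 0.5 * math.cos(math.sqrt(3.0))
```

During the first macro-steps, fewer than \(k+1\) sampling points are available, so the sketch simply uses a lower polynomial degree there. This start-up rule is a simplification chosen for brevity.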

Note that for practical reasons, problems may occur in conjunction with feed-through systems. From the practical point of view, it may be complicated to perform the update step, e.g., if commercial simulation tools are used with reduced solver access. In this case, \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{u}_{N+1} \right )\) is just replaced by \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{p}_{N+1} \left ( T_{N+1} \right ) \right )\). As a result, problems with the error estimator may arise (see [40] for more details). In the following, we therefore assume that updated coupling variables \(\boldsymbol{u}_{N+1}\) are calculated.

3.2 Implicit co-simulation scheme

The classical implicit co-simulation scheme is based on a predictor/corrector approach, see, e.g., [58, 60]. Again, the general macro-time step from \(T_{N}\) to \(T_{N+1}\) is considered to explain the approach. The coupling variables \(\boldsymbol{u} \left ( t \right )\) are approximated by Lagrange polynomials of degree \(k\). At the beginning of the macro-time step, the subsystem variables and the coupling variables are assumed to be known.

Predictor step:

- Firstly, predictor polynomials \(\boldsymbol{p}_{N+1}^{\mathit{pre}} \left ( t \right )\) for the coupling variables \(\boldsymbol{u} (t )\) in the time interval [\(T_{N}\),\(T_{N+1}\)] are generated by making use of the \(k+1\) sampling points \(\left ( T_{N}, \boldsymbol{u}_{N} \right ), \left ( T_{N-1}, \boldsymbol{u}_{N-1} \right ), \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right )\).

- Secondly, Eq. (10) is integrated from \(T_{N} \rightarrow T_{N+1}\) with \(\boldsymbol{p}_{N+1}^{\mathit{pre}} \left ( t \right )\). This yields the variables \(\boldsymbol{z}_{N+1}^{\mathit{pre}}\). It should be mentioned that the predictor step is identical with the explicit co-simulation step of Sect. 3.1 (except for the update step).

Corrector iteration:

- Let \(\boldsymbol{u}_{N+1}^{*}\) denote arbitrary (initially unknown) coupling variables at the macro-time point \(T_{N+1}\). Using \(\boldsymbol{u}_{N+1}^{*}\), interpolation polynomials \(\boldsymbol{p}_{N+1}^{*} \left ( t \right )\) for the coupling variables \(\boldsymbol{u} (t )\) in the time interval [\(T_{N}\),\(T_{N+1}\)] can formally be generated with the help of the \(k+1\) sampling points \(\left ( T_{N+1}, \boldsymbol{u}_{N+1}^{*} \right ), \left ( T_{N}, \boldsymbol{u}_{N} \right ),\ \dots , \left ( T_{N-k+1}, \boldsymbol{u}_{N-k+1} \right )\). Note that \(\boldsymbol{u}_{N}\),…,\(\boldsymbol{u}_{N-k+1}\) denote the coupling variables at the previous macro-time points, which are assumed to be known.

- A formal integration of the subsystems with the polynomials \(\boldsymbol{p}_{N+1}^{*} \left ( t \right )\) will yield a relationship of the form \(\boldsymbol{z}_{N+1}^{*}\)(\(\boldsymbol{u}_{N+1}^{*}\)).

- By inserting \(\boldsymbol{z}_{N+1}^{*}\)(\(\boldsymbol{u}_{N+1}^{*}\)) into the coupling equations \(\boldsymbol{g}_{c}\), one formally gets the relationship \(\boldsymbol{g}_{c} \left ( T_{N+1}, \boldsymbol{z}_{N+1}^{*} ( \boldsymbol{u}_{N+1}^{*} ), \boldsymbol{u}_{N+1}^{*} \right )\). For arbitrary coupling variables \(\boldsymbol{u}_{N+1}^{*}\), the coupling equations are in general not fulfilled, i.e., \(\boldsymbol{g}_{c} \left ( T_{N+1}, \boldsymbol{z}_{N+1}^{*} ( \boldsymbol{u}_{N+1}^{*} ), \boldsymbol{u}_{N+1}^{*} \right ) \neq \boldsymbol{0}\).

To compute corrected coupling variables \(\boldsymbol{u}_{N+1}\), which fulfill the coupling equations \(\boldsymbol{g}_{c} \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{u}_{N+1} \right ) = \boldsymbol{0}\), a Newton iteration has to be carried out. Therefore, the relationship \(\boldsymbol{g}_{c} \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{u}_{N+1} \right ) = \boldsymbol{0}\) is linearized by a Taylor series expansion.

- In the first corrector iteration step, the predictor point \(\left ( T_{N+1}, \boldsymbol{z}_{N+1}^{\mathit{pre}} ( \boldsymbol{u}_{N+1}^{\mathit{pre}} ), \boldsymbol{u}_{N+1}^{\mathit{pre}} \right )\) is used as expansion point for the Taylor series expansion. Note that the predictor point may also be denoted as zeroth corrector point, i.e., \(\left ( T_{N+1}, \boldsymbol{z}_{N+1}^{\mathit{cor},0} ( \boldsymbol{u}_{N+1}^{\mathit{cor},0} ), \boldsymbol{u}_{N+1}^{\mathit{cor},0} \right )\). The Taylor series expansion yields the linearized coupling equations

  $$\begin{aligned} &\boldsymbol{g}_{c}^{\mathit{linear}} \bigl( T_{N+1}, \boldsymbol{z}_{N+1} ( \boldsymbol{u}_{N+1} ), \boldsymbol{u}_{N+1} \bigr) \\ &\quad := \boldsymbol{g}_{c} \bigl( T_{N+1}, \boldsymbol{z}_{N+1}^{\mathit{pre}} \bigl( \boldsymbol{u}_{N+1}^{\mathit{pre}} \bigr), \boldsymbol{u}_{N+1}^{\mathit{pre}} \bigr) + \left . \frac{d \boldsymbol{g}_{c}}{d \boldsymbol{u}_{N+1}^{*}} \right \vert _{\boldsymbol{u}_{N+1}^{\mathit{pre}}} \cdot \bigl( \boldsymbol{u}_{N+1} - \boldsymbol{u}_{N+1}^{\mathit{pre}} \bigr) = \mathbf{0}\, . \end{aligned}$$ (11)

  By solving the linear system (11) for \(\boldsymbol{u}_{N+1}\), corrected coupling variables \(\boldsymbol{u}_{N+1}^{\mathit{cor},1}\) are obtained.

  To compute corrected subsystem variables \(\boldsymbol{z}_{N +1}^{\mathit{cor},1}\), interpolation polynomials \(\boldsymbol{p}_{N +1}^{\mathit{cor},1} \left ( t \right )\) for the coupling variables \(\boldsymbol{u} (t )\) are generated by using the \(k+1\) sampling points \(\left ( T_{N+1}, \boldsymbol{u}_{N +1}^{\mathit{cor},1} \right ), \left ( T_{N}, \boldsymbol{u}_{N} \right ), \ldots , \left ( T_{N- k +1}, \boldsymbol{u}_{N - k +1} \right )\). Now, the subsystem integration from \(T_{N}\rightarrow T_{N+1}\) is repeated with the polynomials \(\boldsymbol{p}_{N +1}^{\mathit{cor},1} \left ( t \right )\), which yields the corrected subsystem variables \(\boldsymbol{z}_{N +1}^{\mathit{cor},1}\).

- Considering the \(\alpha \)th corrector step, the (\(\alpha -\)1)th corrector point \(\left ( T_{N+1}, \boldsymbol{z}_{N +1}^{\mathit{cor}, \alpha -1} \left ( \boldsymbol{u}_{N +1}^{\mathit{cor}, \alpha -1} \right ), \boldsymbol{u}_{N +1}^{\mathit{cor}, \alpha -1} \right )\) is used as expansion point for the Taylor series expansion and the above procedure is repeated.

- The procedure is repeated \(l\) times, until a convergence criterion is fulfilled. The final variables \(\boldsymbol{u}_{N +1}:= \boldsymbol{u}_{N +1}^{\mathit{cor}, l}\) are the corrected variables.

- By setting \(\boldsymbol{z}_{N+1}:= \boldsymbol{z}_{N+1}^{\mathit{cor},l}\), the next macro-step from \(T_{N+1} \rightarrow T_{N+2}\) is accomplished.

The implicit co-simulation scheme is illustrated in Fig. 3.
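The predictor/corrector procedure can be sketched in Python for a scalar coupling variable. The code below is a minimal illustration under stated assumptions, not the implementation used in this work: the test problem (two single-mass oscillators coupled by the force \(u = c_{c} (x_{2} - x_{1}) + d_{c} (v_{2} - v_{1})\)), the fixed-step RK4 subsystem solver, the forward-difference step `fd_step` for the interface-Jacobian, and all names and tolerances are hypothetical choices.

```python
import math


def lagrange_eval(ts, us, t):
    """Evaluate the Lagrange polynomial through the points (ts[i], us[i]) at time t."""
    total = 0.0
    for i, (ti, ui) in enumerate(zip(ts, us)):
        weight = 1.0
        for j, tj in enumerate(ts):
            if j != i:
                weight *= (t - tj) / (ti - tj)
        total += weight * ui
    return total


def rk4_subsystem(x, v, t0, t1, accel, n_micro=4):
    """Integrate x' = v, v' = accel(t, x, v) from t0 to t1 with n_micro RK4 steps."""
    h = (t1 - t0) / n_micro
    t = t0
    for _ in range(n_micro):
        k1x, k1v = v, accel(t, x, v)
        k2x, k2v = v + 0.5 * h * k1v, accel(t + 0.5 * h, x + 0.5 * h * k1x, v + 0.5 * h * k1v)
        k3x, k3v = v + 0.5 * h * k2v, accel(t + 0.5 * h, x + 0.5 * h * k2x, v + 0.5 * h * k2v)
        k4x, k4v = v + h * k3v, accel(t + h, x + h * k3x, v + h * k3v)
        x += h * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += h * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        t += h
    return x, v


def implicit_cosim(H, n_steps, k, m=1.0, c=1.0, cc=1.0, dc=0.0,
                   tol=1e-10, max_iter=10, fd_step=1e-6):
    """Implicit Jacobi-type co-simulation; returns x1, x2 and the total corrector count."""
    x1, v1, x2, v2 = 1.0, 0.0, 0.0, 0.0  # assumed initial conditions
    T_hist, u_hist = [0.0], [cc * (x2 - x1) + dc * (v2 - v1)]
    total_iters = 0
    for N in range(n_steps):
        T0, T1 = N * H, (N + 1) * H

        def residual(u_star):
            # Interpolation polynomial through (T1, u_star) and up to k previous points.
            ts = (T_hist[-k:] if k > 0 else []) + [T1]
            us = (u_hist[-k:] if k > 0 else []) + [u_star]
            p = lambda t: lagrange_eval(ts, us, t)
            a1, b1 = rk4_subsystem(x1, v1, T0, T1, lambda t, x, v: (-c * x + p(t)) / m)
            a2, b2 = rk4_subsystem(x2, v2, T0, T1, lambda t, x, v: (-c * x - p(t)) / m)
            return u_star - (cc * (a2 - a1) + dc * (b2 - b1)), (a1, b1, a2, b2)

        # Predictor: start from the extrapolated coupling variable at T1.
        u = lagrange_eval(T_hist[-(k + 1):], u_hist[-(k + 1):], T1)
        g, state = residual(u)
        iters = 0
        # Corrector: Newton iteration with a finite-difference interface-Jacobian.
        while abs(g) > tol and iters < max_iter:
            g_pert, _ = residual(u + fd_step)
            jac = (g_pert - g) / fd_step
            u -= g / jac
            g, state = residual(u)
            iters += 1
        x1, v1, x2, v2 = state
        T_hist.append(T1)
        u_hist.append(u)
        total_iters += iters
    return x1, x2, total_iters


x1_end, x2_end, n_corr = implicit_cosim(H=0.01, n_steps=100, k=1)
x1_ref = 0.5 * math.cos(1.0) + 0.5 * math.cos(math.sqrt(3.0))
x2_ref = 0.5 * math.cos(1.0) - 0.5 * math.cos(math.sqrt(3.0))
```

Because the test problem is linear, the residual is affine in \(\boldsymbol{u}_{N+1}^{*}\) and the Newton iteration converges after very few corrector steps. In the sketch, the perturbed integration for the Jacobian is carried out sequentially, whereas it could be run in parallel with the subsystem integrations, as noted in the introduction.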

Some remarks on the different possibilities to calculate/approximate the interface-Jacobian \(\frac{d \boldsymbol{g}_{c}}{d \boldsymbol{u}_{N+1}^{*}}\) can be found in Appendix A. In the following sections, basically two methods are used and compared, namely the calculation of the interface-Jacobian by a finite difference approach (Appendix A.1) and the approximate computation of the interface-Jacobian based on a low-order expansion technique (Appendix A.2).

4 Error estimators for the classical explicit and implicit co-simulation schemes

The efficiency and accuracy of a co-simulation may be improved significantly by using a macro-step size controller so that a nonequidistant communication-time grid can be realized. Therefore, error estimators are required, which can be used to estimate the numerical error produced by the co-simulation approach, i.e., the error introduced by the polynomial approximation of the coupling variables. In [40], three different error estimators for the classical explicit co-simulation approach (called ExLocal, ExMilne, ExSLocal) and two error estimators for the classical implicit co-simulation approach (called ImMilne, ImSLocal) have been derived. The basic idea for the construction of an error estimator, which estimates the local error generated in the macro-step \(T_{N} \rightarrow T_{N+1}\), is to compare two different solutions at \(T_{N+1}\): the state variables \(\boldsymbol{q}_{N+1}\), \(\boldsymbol{v}_{N+1}\) (or, alternatively, the coupling variables \(\boldsymbol{u}_{N+1}\)) of the actual co-simulation generated with the classical explicit/implicit approach are compared with reference variables \(\hat{\boldsymbol{q}}_{N+1}\), \(\hat{\boldsymbol{v}}_{N+1}\) (or alternatively \(\boldsymbol{u}_{N+1}^{\mathit{ext}}\), \(\boldsymbol{u}_{N+1}^{\mathit{pre}}\)). The differences \(\boldsymbol{q}_{N+1} - \hat{\boldsymbol{q}}_{N+1}\), \(\boldsymbol{v}_{N+1} - \hat{\boldsymbol{v}}_{N+1}\) (or, alternatively, \(\boldsymbol{u}_{N+1} - \boldsymbol{u}_{N+1}^{\mathit{ext}}\) for the explicit and \(\boldsymbol{u}_{N+1} - \boldsymbol{u}_{N+1}^{\mathit{pre}}\) for the implicit co-simulation) can be used to construct an error estimator. In this section, the main formulas for the five error estimators are briefly recapitulated. For a detailed description, we refer to [40]. It should be pointed out that the error estimators used here are only valid for continuous co-simulation systems. For discontinuous systems, systems with switches, etc., a modified error estimation strategy has to be applied.

In this work, the classical explicit and implicit co-simulation schemes are considered, see Sect. 3. Using extrapolation/interpolation polynomials of degree \(k\) (i.e., polynomial order \(k+1\)), the coupling variables are approximated with \(\mathcal{O} \left ( H^{k+1} \right ) \). Since the coupling variables and therefore the accelerations are approximated with \(\mathcal{O} \left ( H^{k+1} \right )\), the velocities \(\boldsymbol{v}_{N+1}\) will converge with \(\mathcal{O} \left ( H^{k+2} \right )\) and the position variables \(\boldsymbol{q}_{N+1}\) with order \(\mathcal{O} \left ( H^{k+3} \right )\) for both the explicit and implicit scheme. Hence, the following estimates hold:

$$\begin{aligned} \boldsymbol{q}_{N+1} - \boldsymbol{q} \left ( T_{N+1} \right ) = \mathcal{O} \left ( H^{k+3} \right ), \qquad \boldsymbol{v}_{N+1} - \boldsymbol{v} \left ( T_{N+1} \right ) = \mathcal{O} \left ( H^{k+2} \right ), \end{aligned}$$(12)

where \(\boldsymbol{q} \left ( T_{N+1} \right )\) and \(\boldsymbol{v} \left ( T_{N+1} \right )\) denote the analytical solutions with \(\boldsymbol{q} \left ( T_{N} \right ) = \boldsymbol{q}_{N}\) and \(\boldsymbol{v} \left ( T_{N} \right ) = \boldsymbol{v}_{N}\). As can be seen, the integration order of the co-simulation may directly be controlled by controlling the polynomial degree of the approximation polynomials (see Sect. 5.2). Note that the error bounds of Eq. (12) are only valid for the case that the errors resulting from the numerical subsystem integrations can be neglected, i.e., strictly speaking for the case that the subsystems are integrated analytically.

4.1 Error estimator 1 for explicit co-simulation (ExLocal)

The first error estimator for the classical explicit co-simulation is based on a local extrapolation technique [65, 71] and is denoted by ExLocal. In order to calculate an error estimator for \(\boldsymbol{q}_{N+1}\) and \(\boldsymbol{v}_{N+1}\), comparative solutions \(\hat{\boldsymbol{q}}_{N+1}\) and \(\hat{\boldsymbol{v}}_{N+1}\) are needed. If a local extrapolation technique is applied for deriving an error estimator, two solutions with different convergence orders are compared. For this purpose, two parallel co-simulations are carried out from \(T_{N}\) to \(T_{N+1}\), whereby the comparative solution is calculated with the same initial conditions (\(\hat{\boldsymbol{z}}_{N} = \boldsymbol{z}_{N}\)):

- The first simulation (actual co-simulation) is accomplished with the extrapolation polynomials \(\boldsymbol{p}_{N+1}^{\mathit{ext}} \left ( t \right ) = \boldsymbol{P}_{N+1}^{k} \left ( t; \left ( T_{N}, \boldsymbol{u}_{N} \right ), \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right ) \right )\) of degree \(k\) (approximation order \(k+1\)). This integration yields the variables \(\boldsymbol{q}_{N+1}\) and \(\boldsymbol{v}_{N+1}\).

- The second simulation (comparative solution) is carried out with the extrapolation polynomials \(\hat{\boldsymbol{p}}_{N+1}^{\mathit{ext}} \left ( t \right ) = \boldsymbol{P}_{N+1}^{k+1} \left ( t; \left ( T_{N}, \boldsymbol{u}_{N} \right ), \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right ), \left ( T_{N-k-1}, \boldsymbol{u}_{N-k-1} \right ) \right )\) of degree \(k+1\). The second integration provides the variables \(\hat{\boldsymbol{q}}_{N+1}\) and \(\hat{\boldsymbol{v}}_{N+1}\).

The two co-simulations are illustrated in Fig. 4. Within the local extrapolation technique, the local error of the co-simulation in the macro-step from \(T_{N} \rightarrow T_{N+1}\) can very easily be estimated by the difference of the two solutions. Considering the first simulation, the local errors of the position variable \(q_{i}\) and the velocity variable \(v_{i}\) are defined by

$$\begin{aligned} e_{i,N+1}^{\mathit{pos}} = \left \vert q_{i,N+1} - q_{i} \left ( T_{N+1} \right ) \right \vert , \qquad e_{i,N+1}^{\mathit{vel}} = \left \vert v_{i,N+1} - v_{i} \left ( T_{N+1} \right ) \right \vert \, . \end{aligned}$$(13)

Substituting the analytical solutions \(q_{i} \left ( T_{N+1} \right )\) and \(v_{i} \left ( T_{N+1} \right )\) by \(\hat{q}_{i,N+1} = q_{i} \left ( T_{N+1} \right ) + \mathcal{O} \left ( H^{k+4} \right )\) and \(\hat{v}_{i,N+1} = v_{i} \left ( T_{N+1} \right ) + \mathcal{O} \left ( H^{k+3} \right )\), one gets

$$\begin{aligned} e_{i,N+1}^{\mathit{pos}} = \left \vert q_{i,N+1} - \hat{q}_{i,N+1} \right \vert + \mathcal{O} \left ( H^{k+4} \right ), \qquad e_{i,N+1}^{\mathit{vel}} = \left \vert v_{i,N+1} - \hat{v}_{i,N+1} \right \vert + \mathcal{O} \left ( H^{k+3} \right ) . \end{aligned}$$(14)

Therefore, it is possible to use the expressions

$$\begin{aligned} \varepsilon _{i,N+1}^{\mathit{pos}} = \left \vert q_{i,N+1} - \hat{q}_{i,N+1} \right \vert , \qquad \varepsilon _{i,N+1}^{\mathit{vel}} = \left \vert v_{i,N+1} - \hat{v}_{i,N+1} \right \vert \end{aligned}$$(15)

as error estimators for the actual co-simulation. The estimators converge to the local errors \(e_{i,N+1}^{\mathit{pos}}\) and \(e_{i,N+1}^{\mathit{vel}}\) with order \(\mathcal{O} \left ( H^{k+4} \right )\) and \(\mathcal{O} \left ( H^{k+3} \right )\), respectively [40].

With the error estimators \(\varepsilon _{i,N+1}^{\mathit{pos}}\) and \(\varepsilon _{i,N+1}^{\mathit{vel}}\) according to Eq. (15), it is possible to monitor the local error of all state variables. To simplify the implementation, it would be possible to only monitor the state variables of the coupling bodies.

4.2 Error estimator 2 for explicit co-simulation (ExMilne)

The second error estimator for the classical explicit co-simulation approach is indicated by ExMilne and based on a Milne device approach [41]. Within this approach, two different solutions with the same convergence order but different leading error terms are compared. Again, two parallel co-simulations from \(T_{N}\) to \(T_{N+1}\) are executed with the same initial conditions (\(\hat{\boldsymbol{z}}_{N} = \boldsymbol{z}_{N}\)):

- The first simulation (actual co-simulation) is carried out with the extrapolation polynomials \(\boldsymbol{p}_{N+1}^{\mathit{ext}} \left ( t \right ) = \boldsymbol{P}_{N+1}^{k} \left ( t; \left ( T_{N}, \boldsymbol{u}_{N} \right ), \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right ) \right )\), which yields the variables \(\boldsymbol{q}_{N+1}\) and \(\boldsymbol{v}_{N+1}\).

- The second simulation (comparative solution) with the interpolation polynomials \(\hat{\boldsymbol{p}}_{N+1}^{\mathit{int}} \left ( t \right ) = \boldsymbol{P}_{N+1}^{k} \left ( t; \left ( T_{N+1}, \hat{\boldsymbol{u}}_{N +1} \right ), \left ( T_{N}, \boldsymbol{u}_{N} \right ), \dots , \left ( T_{N-k+1}, \boldsymbol{u}_{N-k+1} \right ) \right )\) provides the variables \(\hat{\boldsymbol{q}}_{N+1}\) and \(\hat{\boldsymbol{v}}_{N+1}\), where \(\hat{\boldsymbol{u}}_{N +1} = \hat{\boldsymbol{p}}_{N+1}^{\mathit{ext}} \left ( T_{N+1} \right )\) represent extrapolated coupling variables generated with the extrapolation polynomials \(\hat{\boldsymbol{p}}_{N+1}^{\mathit{ext}} \left ( t \right ) = \boldsymbol{P}_{N+1}^{k+1} ( t; ( T_{N}, \boldsymbol{u}_{N} ), \dots , ( T_{N-k}, \boldsymbol{u}_{N-k} ), ( T_{N-k-1}, \boldsymbol{u}_{N-k-1} ) )\) of degree \(k +1\).

The two co-simulations are illustrated in Fig. 5 for the case \(k=1\). Note that \(\boldsymbol{q}_{N+1}\) and \(\hat{\boldsymbol{q}}_{N+1}\) are calculated with polynomials of degree \(k\) and converge locally with \(\mathcal{O} \left ( H^{k+3} \right )\). In [40] (Appendix A.2), it is shown that the local errors of \(\boldsymbol{q}_{N+1}\) and \(\hat{\boldsymbol{q}}_{N+1}\) may be written as

where \(H_{N} = T_{N+1} - T_{N}\) represents the current macro-step size. The error constants \(C_{\mathit{pos},N+1}^{k}\) and \(C_{\mathit{pos},N+1}^{k+1}\) can be expressed as special integrals of the Lagrange basis polynomials \(L^{k} \left ( t \right )\), namely

The matrix \(\boldsymbol{A}_{N}^{\mathit{co}}\) represents a special Jacobian matrix, which does not explicitly depend on the macro-step size \(H_{N}\). The vector \(\Delta \boldsymbol{p}_{N+1}\) likewise does not depend explicitly on \(H_{N}\) and may be calculated as the difference of two polynomials. A direct calculation of the leading error term \(H_{N}^{2} C_{\mathit{pos},N+1}^{k+1} \boldsymbol{A}_{N}^{\mathit{co}} \Delta \boldsymbol{p}_{N+1}\) in Eq. (16) is not possible. The leading error term may, however, be determined in a straightforward manner with the help of the two solutions \(\boldsymbol{q}_{N+1}\) and \(\hat{\boldsymbol{q}}_{N+1}\). Subtracting Eq. (16a) from Eq. (16b) and multiplying by \(C_{\mathit{pos},N+1}^{k+1} / C_{\mathit{pos},N+1}^{k}\) gives

Obviously, the local error \(e_{i,N+1}^{\mathit{pos}} = \left \vert q_{i,N+1} - q_{i} \left ( T_{N+1} \right ) \right \vert \) of the actual co-simulation can be estimated by the error estimator

which converges to the local error \(e_{i,N+1}^{\mathit{pos}}\) with order \(\mathcal{O} \left ( H^{k+4} \right )\).

Also, an error estimator \(\varepsilon _{i,N+1}^{\mathit{vel}}\) for the velocity variables can be derived, namely

which approximates the local error \(e_{i,N+1}^{\mathit{vel}} = \left \vert v_{i,N+1} - v_{i} \left ( T_{N+1} \right ) \right \vert \) of the actual co-simulation with order \(\mathcal{O} \left ( H^{k+3} \right )\).

4.3 Error estimator 3 for the explicit co-simulation (ExSLocal)

The third error estimator for the classical explicit co-simulation approach is also constructed with the help of the local extrapolation technique and is termed ExSLocal. With the error estimators of Sects. 4.1 and 4.2, the local errors \(e_{i,N+1}^{\mathit{pos}} = \left \vert q_{i,N+1} - q_{i} \left ( T_{N+1} \right ) \right \vert \) and \(e_{i,N+1}^{\mathit{vel}} = \left \vert v_{i,N+1} - v_{i} \left ( T_{N+1} \right ) \right \vert \) of the state variables \(\boldsymbol{q}\) and \(\boldsymbol{v}\) can be estimated. In an alternative approach, the quality of the co-simulation is not directly measured by considering the local error of the state variables, but by regarding the local error of the coupling variables \(\boldsymbol{u}\). The coupling variables are generally defined by the implicit coupling conditions \(\boldsymbol{u}-\boldsymbol{\varphi } \left ( t, \boldsymbol{q},\boldsymbol{v},\boldsymbol{u} \right ) = \boldsymbol{0}\) and may therefore simply be considered as a nonlinear function of \(\boldsymbol{q}\) and \(\boldsymbol{v}\). Instead of using \(e_{i,N+1}^{\mathit{pos}}\) and \(e_{i,N+1}^{\mathit{vel}}\) for measuring the quality of the co-simulation, the local error \(\left \vert u_{i,N+1} - u_{i} \left ( T_{N+1} \right ) \right \vert \) of the coupling variables may be considered.

Applying the classical explicit co-simulation scheme, the extrapolated coupling variables \(\boldsymbol{p}_{N+1}^{\mathit{ext}} \left ( t \right ) = \boldsymbol{P}_{N+1}^{k} \left ( t; \left ( T_{N}, \boldsymbol{u}_{N} \right ),\ \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right ) \right )\) are used for integrating the subsystems. After the subsystem integration from \(T_{N}\) to \(T_{N+1}\) has been accomplished, updated coupling variables \(\boldsymbol{u}_{N+1}\) are computed at the new time point \(T_{N+1}\). Therefore, the new state variables \(\boldsymbol{q}_{N+1}\), \(\boldsymbol{v}_{N+1}\) are inserted into the coupling equations and the relationship \(\boldsymbol{u}_{N+1} -\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{q}_{N+1}, \boldsymbol{v}_{N+1}, \boldsymbol{u}_{N+1} \right ) = \boldsymbol{0}\) is solved for \(\boldsymbol{u}_{N+1}\).

The predicted coupling variables \(\boldsymbol{u}_{N+1}^{\mathit{ext}} = \boldsymbol{P}_{N+1}^{k} \left ( T_{N+1}; \left ( T_{N}, \boldsymbol{u}_{N} \right ), \dots , \left ( T_{N-k}, \boldsymbol{u}_{N-k} \right ) \right )\) are usually different from the updated coupling variables \(\boldsymbol{u}_{N+1}\). The difference \(\boldsymbol{u}_{N+1}^{\mathit{ext}} - \boldsymbol{u}_{N+1}\) may therefore be used to construct an error estimator based on the local extrapolation technique. It has been shown in [40] that the local error \(e_{i,N+1}^{u} = \left \vert u_{i,N+1}^{\mathit{ext}} - u_{i} \left ( T_{N+1} \right ) \right \vert \) of the predicted coupling variable \(u_{i,N+1}^{\mathit{ext}}\) can be estimated with the help of the updated coupling variable \(u_{i,N+1}\) according to

$$\begin{aligned} \varepsilon _{i,N+1}^{u} = \left \vert u_{i,N+1}^{\mathit{ext}} - u_{i,N+1} \right \vert \, . \end{aligned}$$(21)

The estimator \(\varepsilon _{i,N+1}^{u}\) converges with order \(\mathcal{O} \left ( H^{k+2} \right )\) to the local error \(e_{i,N+1}^{u}\) if consistent coupling variables \(u_{i,N+1}\) are used. Hence, this estimator can only be applied, if updated coupling variables are calculated at \(T_{N+1}\) by solving \(\boldsymbol{u}_{N+1} -\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{q}_{N+1}, \boldsymbol{v}_{N+1}, \boldsymbol{u}_{N+1} \right ) = \boldsymbol{0}\) for \(\boldsymbol{u}_{N+1}\). Note that \(e_{i,N+1}^{u}\) does not monitor the local error \(\left \vert u_{i,N+1} - u_{i} \left ( T_{N+1} \right ) \right \vert \) of the updated coupling variables, but the local error \(\left \vert u_{i,N+1}^{\mathit{ext}} - u_{i} \left ( T_{N+1} \right ) \right \vert \) of the predicted coupling variables.

It should be mentioned that for systems without feed-through, consistent coupling variables can be calculated explicitly in a very straightforward manner with the help of the new variables \(\boldsymbol{q}_{N+1}\) and \(\boldsymbol{v}_{N+1}\) by simply evaluating \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{q}_{N+1}, \boldsymbol{v}_{N+1} \right )\). For systems with feed-through, the update relationship \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{u}_{N+1} \right )\) is – for practical reasons – sometimes replaced by the simplified update \(\boldsymbol{u}_{N+1} =\boldsymbol{\varphi } \left ( T_{N+1}, \boldsymbol{z}_{N+1}, \boldsymbol{p}_{N+1} \left ( T_{N+1} \right ) \right )\). In this case, \(\varepsilon _{i,N+1}^{u}\) will in general not converge with \(\mathcal{O} \left ( H^{k+2} \right )\) to the local error \(e_{i,N+1}^{u}\). As a consequence, Eq. (21) will not represent a mathematically sound error estimator for the predicted coupling variables.
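The comparison of predicted and updated coupling variables described in this section can be illustrated with a minimal sketch for a single scalar coupling variable. The function names `lagrange_eval` and `exslocal_estimate` are hypothetical, and the "updated" value is simply taken from a known test signal \(u(t)=t^{2}\); in an actual co-simulation it would result from solving the coupling equations at \(T_{N+1}\).

```python
from typing import Sequence


def lagrange_eval(t: float, nodes: Sequence[float], values: Sequence[float]) -> float:
    """Evaluate the polynomial interpolating (nodes[i], values[i]) at time t."""
    total = 0.0
    for i, (t_i, u_i) in enumerate(zip(nodes, values)):
        weight = 1.0
        for j, t_j in enumerate(nodes):
            if j != i:
                weight *= (t - t_j) / (t_i - t_j)
        total += weight * u_i
    return total


def exslocal_estimate(t_new: float, times: Sequence[float], u_hist: Sequence[float],
                      u_updated: float, k: int) -> float:
    """Return |u_ext - u_updated| for an extrapolation polynomial of degree k.

    The k+1 most recent macro-time points are used as sampling points.
    """
    nodes = times[-(k + 1):]
    values = u_hist[-(k + 1):]
    u_ext = lagrange_eval(t_new, nodes, values)  # predicted coupling variable
    return abs(u_ext - u_updated)


# Toy data (assumption): u(t) = t^2 sampled on an equidistant macro-grid.
times = [0.0, 0.1, 0.2]
u_hist = [t * t for t in times]
eps_k1 = exslocal_estimate(0.3, times, u_hist, 0.3 * 0.3, k=1)  # linear extrapolation
eps_k2 = exslocal_estimate(0.3, times, u_hist, 0.3 * 0.3, k=2)  # quadratic extrapolation
```

For this quadratic signal, the linear extrapolation leaves a visible difference, whereas the quadratic extrapolation reproduces the signal up to round-off.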

4.4 Error estimator 4 for the implicit co-simulation (ImMilne)

The implicit co-simulation scheme according to Sect. 3.2 is based on a predictor/corrector approach. With the help of the Milne device technique, the difference between the predicted and corrected state variables can be used to construct an error estimator [40]. The local error of the corrected position variables \(q_{i,N+1}\) can be estimated by

where \(C_{\mathit{pos},N+1}^{k}\) are the error constants defined in Eq. (17). The estimator \(\varepsilon _{i,N+1}^{\mathit{pos}}\) converges to the local error \(e_{i,N+1}^{\mathit{pos}} = \left \vert q_{i,N+1} - q_{i} \left ( T_{N+1} \right ) \right \vert \) with order \(\mathcal{O} \left ( H^{k+4} \right )\).

A similar approach for the velocity variables yields the error estimator

where \(C_{\mathit{vel},N+1}^{k}\) denote the error constants of Eq. (20). The estimator \(\varepsilon _{i,N+1}^{\mathit{vel}}\) approximates the local error \(e_{i,N+1}^{\mathit{vel}} = \left \vert v_{i,N+1} - v_{i} \left ( T_{N+1} \right ) \right \vert \) of the corrected velocity variables with order \(\mathcal{O} \left ( H^{k+3} \right )\).

4.5 Error estimator 5 for the implicit co-simulation (ImSLocal)

This error estimator, which is based on a local extrapolation approach, can be used to monitor the error of the predicted coupling variables \(\boldsymbol{u}_{N+1}^{\mathit{pre}}\), i.e., to estimate the local error \(e_{i,N+1}^{u} = \left \vert u_{i,N+1}^{\mathit{pre}} - u_{i} \left ( T_{N+1} \right ) \right \vert \). As shown in [40], the difference between the predicted and corrected coupling variables, i.e.,

$$\begin{aligned} \varepsilon _{i,N+1}^{u} = \left \vert u_{i,N+1}^{\mathit{pre}} - u_{i,N+1} \right \vert , \end{aligned}$$(24)

converges with order \(\mathcal{O} \left ( H^{k+2} \right )\) to the local error \(e_{i,N+1}^{u}\).

5 Macro-step size and order control algorithm

The algorithm proposed here for controlling the macro-step size and the integration order (i.e., the polynomial degree \(k\) of the approximation polynomials for the coupling variables) is carried out in three steps. The suggested approach may be interpreted as a straightforward generalization of step size and order control strategies well-established in the field of classical time integration [8, 15, 67, 68]. In the first step, it is checked whether the macro-step can be accepted or has to be repeated because the estimated error is too large (Sect. 5.1). For that purpose, the error estimators for the local error of Sect. 4 are used. The second step contains the algorithm for the selection of the optimal integration order (Sect. 5.2). In the third step, the optimal step size for the next macro-step is determined (Sect. 5.3).

5.1 Step 1: Local error test

After the macro-step \(T_{N} \rightarrow T_{N+1}\) with \(T_{N+1} = T_{N} + H_{N}\) has been accomplished, the local error is estimated with the help of one of the five methods suggested in Sect. 4. The estimated error \(\boldsymbol{\varepsilon }_{N+1}\) – more precisely the weighted root mean square norm of \(\boldsymbol{\varepsilon }_{N+1}\) – has to pass the subsequent local error test

$$\begin{aligned} \left \Vert \boldsymbol{\varepsilon }_{N+1} \right \Vert _{\mathit{WRMS}} = \sqrt{\frac{1}{n^{*}} \sum _{i=1}^{n^{*}} \left ( \frac{\varepsilon _{i,N+1}}{W_{i,N+1}} \right )^{2}} \leq 1 \, . \end{aligned}$$(25)

Note that \(n^{*}\) designates the number of variables, which are used for controlling the macro-step size. Basically, three alternatives exist. First, all state variables of all subsystems may be considered in the sum of Eq. (25). Second, only the state variables of the coupling bodies are taken into account in the summation. Third, in connection with the error estimators of Sect. 4.3 and Sect. 4.5, the summation is carried out over the \(n_{c}\) coupling variables (i.e., \(n^{*} = n_{c}\)). The weights \(W_{i,N+1}\) depend on the user-defined relative and absolute error tolerances \(\mathit{rtol}\) and \(\mathit{atol}_{i}\) and also on the magnitude of the variables, which are used for the error estimation. Hence, essentially two cases have to be distinguished:

- i) The error of the state variables is estimated (ExLocal, ExMilne, ImMilne);

- ii) The error of the coupling variables is estimated (ExSLocal, ImSLocal).

In case i), the error analysis is carried out separately on position level and on velocity level. This is reasonable, since the position and velocity variables exhibit a different convergence behavior, see Sect. 4. Hence, in case i) the local error test

with the weights \(W_{i,N+1}^{\mathit{pos}} =\mathit{atol}_{i}^{\mathit{pos}} +\mathit{rtol}\cdot \left \vert q_{i,N+1} \right \vert \) and \(W_{i,N+1}^{\mathit{vel}} =\mathit{atol}_{i}^{\mathit{vel}} +\mathit{rtol}\cdot \left \vert v_{i,N+1} \right \vert \) is applied. In principle, all subsystem state variables can be used for the error estimation, i.e., the index \(i\) in Eq. (26) runs over all bodies. Alternatively, only the states of the coupling bodies are considered for the error estimation so that the index \(i\) only runs over the coupling bodies. It should be mentioned that the value \(\mathit{atol}_{i}\) can be chosen individually for each component, while \(\mathit{rtol}\) is identical for all components. It is clear that the proper choice of \(\mathit{atol}_{i}\) and \(\mathit{rtol}\) is problem-dependent and strongly affects the accuracy and the efficiency of the co-simulation.

In case ii), the local error test reads

with the weights \(W_{i,N+1}^{u} =\mathit{atol}_{i}^{u} +\mathit{rtol}\cdot \left \vert u_{i,N+1} \right \vert \). The index \(i\) in Eq. (27) runs over all coupling variables.
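The local error test can be sketched as follows. The weights follow the definition \(W_{i} = \mathit{atol}_{i} + \mathit{rtol}\cdot \vert x_{i} \vert \) given in the text; the weighted root mean square norm is written in its usual form (square root of the mean of the squared weighted errors), which is assumed here to match the norm used in the local error test. The function names are hypothetical.

```python
import math
from typing import Sequence


def wrms_norm(eps: Sequence[float], values: Sequence[float],
              rtol: float, atol: Sequence[float]) -> float:
    """Weighted root mean square norm of the estimated errors eps.

    values holds the variables the errors belong to (positions, velocities
    or coupling variables); atol holds one absolute tolerance per component.
    """
    if not eps:
        raise ValueError("at least one error component is required")
    total = 0.0
    for e_i, x_i, atol_i in zip(eps, values, atol):
        weight = atol_i + rtol * abs(x_i)  # W_i = atol_i + rtol * |x_i|
        total += (e_i / weight) ** 2
    return math.sqrt(total / len(eps))


def passes_local_error_test(eps: Sequence[float], values: Sequence[float],
                            rtol: float, atol: Sequence[float]) -> bool:
    """The macro-step is accepted if the weighted norm does not exceed 1."""
    return wrms_norm(eps, values, rtol, atol) <= 1.0


# Illustrative numbers only (not taken from the source).
norm = wrms_norm([1e-4, 2e-4], [1.0, 2.0], rtol=1e-4, atol=[1e-4, 1e-4])
accepted = passes_local_error_test([1e-4, 2e-4], [1.0, 2.0], 1e-4, [1e-4, 1e-4])
```

For the state-based estimators, the same function would be called once on position level and once on velocity level, each with its own tolerances.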

Now, two possibilities have to be considered:

- a) The local error test is passed, i.e., condition (26) or condition (27) is fulfilled. Then, the next macro-step \(T_{N+1} \rightarrow T_{N+2}\) with \(T_{N+2} = T_{N+1} + H_{N+1}\) can be carried out.

- b) The local error test is not passed. In this case, a repetition of the current macro-step \(T_{N} \rightarrow \tilde{T}_{N+1}\) (\(\tilde{T}_{N+1} = T_{N} + \tilde{H}_{N}\)) with a reduced step size \(\tilde{H}_{N}\) has to be accomplished.

For a concise notation, both possibilities are combined. Therefore, the macro-step carried out next is denoted by \(T_{M} \rightarrow T_{M+1}\) (\(T_{M+1} = T_{M} + H_{M}\)), where

In short, the index \(M\) is set to \(M:=N+1\) if the local error test is passed. If the local error test has failed, the index \(M\) is set to \(M:=N\).

5.2 Step 2: optimal integration order and order control algorithm

The task consists of the selection of the optimal polynomial degree \(k\) for the approximation polynomials for the next macro-step \(T_{M} \rightarrow T_{M+1}\). Note again that the next macro-step is either a new macro-step (case a)) or a repetition of the current macro-step (case b)).

5.2.1 Order control algorithms for BDF solvers

a) Basic idea:

The order control algorithm applied here for calculating the optimal polynomial degree \(k\) for the approximation polynomials is inspired by well-established order control approaches used in connection with BDF solvers. Regarding variable-order BDF solvers, the optimal integration order is determined by considering the leading error term in the remainder of a Taylor series expansion. The remainder of the Taylor series is used for estimating the local truncation error (LTE). The local truncation error of an arbitrary time integration method of order \(k\) depends on the (\(k+1\))th derivative of the solution vector \(\boldsymbol{z}\) and on the solver step size \(h\) [15, 67] and reads

$$\begin{aligned} \boldsymbol{LTE} \left ( k \right ) = c_{k+1} h^{k+1} \boldsymbol{z}^{\left ( k+1 \right )} + \mathcal{O} \left ( h^{k+2} \right ) . \end{aligned}$$(29)

Note that \(c_{k+1}\) terms the leading error constant, which generally depends on the numerical integration method. Considering concretely the time step from \(t_{N}\) to \(t_{N+1} = t_{N} +h\) carried out with a BDF integrator of order \(k\), the local truncation error reads \(\boldsymbol{LTE} \left ( k \right ) = \frac{1}{k +1} h^{k +1} \boldsymbol{z}^{\left ( k +1 \right )} \left ( t_{N +1} \right ) + {\mathcal{O}} \left ( h^{k +2} \right )\). Since \(\boldsymbol{z}^{\left ( k +1 \right )} \left ( t_{N +1} \right )\) is unknown at the beginning of the time step, the term \(\boldsymbol{z}^{\left ( k +1 \right )} \left ( t_{N +1} \right )\) is replaced by the \((k+ 1)^{\mathrm{st}}\) derivative of a predictor polynomial \(\boldsymbol{P}_{k +1}^{\mathit{pre}} \left ( t \right )\), i.e., \(\boldsymbol{z}^{\left ( k +1 \right )} \left ( t_{N +1} \right ) \approx \left . \frac{d^{k +1}}{dt^{k +1}} \boldsymbol{P}_{k +1}^{\mathit{pre}} \left ( t \right ) \right \vert _{t_{N +1}}\).

The integration order for the next solver step is determined with the aim to maximize the integration step size. Therefore, the local truncation error norms \(\left \Vert \boldsymbol{LTE} \left ( k-1 \right ) \right \Vert \approx \left \Vert c_{k} h^{k} \boldsymbol{z}^{(k)} \right \Vert \), \(\left \Vert \boldsymbol{LTE} \left ( k \right ) \right \Vert \approx \left \Vert c_{k+1} h^{k+1} \boldsymbol{z}^{\left ( k+1 \right )} \right \Vert \), and \(\left \Vert \boldsymbol{LTE} \left ( k+1 \right ) \right \Vert \approx \left \Vert c_{k+2} h^{k+2} \boldsymbol{z}^{(k+2)} \right \Vert \) are considered. The integration order, which provides the lowest estimated truncation error and consequently allows the largest step size, is used for the next step [50].

If higher order integration methods are used (\(k>2\)), the order selection algorithm is usually extended by a heuristic approach to detect numerical instabilities. The heuristic approach suggested in [68] is based on the idea that oscillations with high frequencies and growing amplitudes will arise, if the simulation gets unstable. As a consequence, the local truncation error will oscillate rapidly with increasing magnitudes, when solver instabilities occur.

b) Modified approach:

A slightly modified approach for controlling the integration order, which is, for instance, implemented in the IDA solver of the SUNDIALS package [25], has been suggested in [8]. Note that IDA is a variable-order, variable-coefficient BDF solver for the solution of implicit differential-algebraic equation systems. The modified approach also considers the leading error term of the remainder of a Taylor series expansion. However, the leading error term is scaled to be independent of the error constant. Within the control algorithm proposed in [8], the three terms \(\left \Vert h^{k-1} \boldsymbol{z}^{(k-1)} \right \Vert \), \(\left \Vert h^{k} \boldsymbol{z}^{(k)} \right \Vert \), and \(\left \Vert h^{k+1} \boldsymbol{z}^{(k+1)} \right \Vert \) are compared. If these three terms do not form a decreasing sequence, i.e., if the condition \(\left \Vert h^{k-1} \boldsymbol{z}^{(k-1)} \right \Vert > \left \Vert h^{k} \boldsymbol{z}^{(k)} \right \Vert > \left \Vert h^{k+1} \boldsymbol{z}^{(k+1)} \right \Vert \) is not fulfilled, the integration order is decreased to \(k-1\). If the condition is fulfilled and if also \(\left \Vert h^{k+1} \boldsymbol{z}^{(k+1)} \right \Vert > \left \Vert h^{k+2} \boldsymbol{z}^{(k+2)} \right \Vert \) is satisfied, the order may be increased to \(k+1\). Numerical studies have shown that, with the modified approach, the integration order is reduced sooner in case of numerical instabilities than with the approach described in a).
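The decision rule of the modified approach can be written down in a few lines. The following sketch is only a paraphrase of the rule as described in the text; it is not the code of the IDA solver, and the function name and argument names are hypothetical. The four arguments stand for the already computed norms \(\Vert h^{j} \boldsymbol{z}^{(j)} \Vert \) with \(j = k-1, k, k+1, k+2\).

```python
from typing import Optional


def bdf_order_change(term_km1: float, term_k: float, term_kp1: float,
                     term_kp2: Optional[float] = None) -> int:
    """Return -1 (decrease order), 0 (keep order) or +1 (increase order).

    The order is decreased if the three scaled derivative terms do not form
    a strictly decreasing sequence. It may be increased if the sequence is
    decreasing and the next higher term continues the decrease.
    """
    decreasing = term_km1 > term_k > term_kp1
    if not decreasing:
        return -1
    if term_kp2 is not None and term_kp1 > term_kp2:
        return 1
    return 0


# Illustrative values only.
raise_order = bdf_order_change(1.0, 0.5, 0.25, 0.1)
keep_order = bdf_order_change(1.0, 0.5, 0.25)
lower_order = bdf_order_change(0.1, 0.5, 0.25, 0.1)
```

Bounds on the admissible order (for instance a minimum order of 1) are deliberately left out of this sketch.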

5.2.2 Order control algorithm for co-simulation

The order control algorithm implemented here for controlling the integration order of the co-simulation may be regarded as a straightforward adaptation of the order control strategy suggested in [8] for BDF solvers. Within each macro-step, extrapolation polynomials \(\boldsymbol{P}_{M+1}^{j} = \boldsymbol{P}_{M+1}^{j} \left ( t; \left ( T_{M}, \boldsymbol{u}_{M} \right ), \dots , \left ( T_{M-j}, \boldsymbol{u}_{M-j} \right ) \right )\) of different degrees \(j=1,\dots ,k+1\) are considered. Then, corresponding scaled derivative norms according to \(\mathit{SDN} \left ( j \right ):= \left \Vert H_{N}^{j} \boldsymbol{P}_{M+1}^{j,(j)} \right \Vert \) are computed for the different polynomials, where \(H_{N}\) is the current macro-step size. Note that the upper index in brackets denotes the differentiation with respect to time \(t\), i.e., \(\boldsymbol{P}_{M+1}^{j,(j)} = \frac{d^{j} \boldsymbol{P}_{M+1}^{j}}{d t^{j}}\). If the condition

is fulfilled, the co-simulation is continued with the current polynomial degree \(k\). If the condition is not fulfilled, the polynomial degree is reduced to \(k-1\). If the condition (30) is fulfilled and if \(k+1\) consecutive macro-time steps have been carried out successfully with degree \(k\), also the term \(\bigl \Vert H_{N}^{k+2} \boldsymbol{P}_{M+1}^{k+2,(k+2)} \bigr \Vert \) is computed. The polynomial degree will be increased to \(k+1\), if \(\bigl \Vert H_{N}^{k+1} \boldsymbol{P}_{M+1}^{k+1,(k+1)} \bigr \Vert > \bigl \Vert H_{N}^{k+2} \boldsymbol{P}_{M+1}^{k+2,(k+2)} \bigr \Vert \) is fulfilled.
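The scaled derivative norms can be evaluated without constructing the polynomials explicitly: the \(j\)th derivative of the degree-\(j\) interpolation polynomial through \(j+1\) points is constant and equals \(j!\) times the Newton divided difference of these points. The sketch below uses this identity. The function names are hypothetical, and the Euclidean norm over the coupling variables is an assumption, since the norm is not specified further in the text.

```python
import math
from typing import Sequence


def divided_difference(times: Sequence[float], values: Sequence[float]) -> float:
    """Newton divided difference f[t_0, ..., t_j] of the given points."""
    coeffs = list(values)
    n = len(times)
    for level in range(1, n):
        for i in range(n - 1, level - 1, -1):
            coeffs[i] = (coeffs[i] - coeffs[i - 1]) / (times[i] - times[i - level])
    return coeffs[-1]


def scaled_derivative_norm(j: int, times: Sequence[float],
                           histories: Sequence[Sequence[float]], step: float) -> float:
    """SDN(j) = || H^j * d^j/dt^j P^j || over all coupling variables.

    times is the ascending list of macro-time points; histories contains one
    list of past values per coupling variable; step is the macro-step size H.
    The j+1 most recent points define the polynomial of degree j.
    """
    if j < 1 or len(times) < j + 1:
        raise ValueError("need at least j+1 macro-time points and j >= 1")
    nodes = times[-(j + 1):]
    total = 0.0
    for u_hist in histories:
        derivative = math.factorial(j) * divided_difference(nodes, u_hist[-(j + 1):])
        total += (step ** j * derivative) ** 2
    return math.sqrt(total)


# Toy data (assumption): one coupling variable u(t) = t^2, H = 0.1.
times = [0.0, 0.1, 0.2, 0.3]
histories = [[t * t for t in times]]
sdn1 = scaled_derivative_norm(1, times, histories, 0.1)
sdn2 = scaled_derivative_norm(2, times, histories, 0.1)
sdn3 = scaled_derivative_norm(3, times, histories, 0.1)
```

For the quadratic test signal, the third scaled derivative norm vanishes up to round-off, so the sequence SDN(1), SDN(2), SDN(3) is decreasing.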

The complete order control algorithm implemented here is summarized in the subsequent scheme and in the flowchart of Fig. 6 and is mainly based on the order controller of the IDA solver [25]:

- Check, if polynomial order has to be decreased:

  - If \(k=1\) and \(\mathit{SDN} \left ( 1 \right ) \leq \frac{1}{2} \mathit{SDN} \left ( 2 \right )\): \(k_{\mathit{new}}:= 0\)

  - Else if \(k>1\) and \(\max \left ( \mathit{SDN} \left ( k \right ),\mathit{SDN}(k-1) \right ) \leq \mathit{SDN}(k+1)\): \(k_{\mathit{new}}:=k-1\)

  - Else: \(k_{\mathit{new}}:=k\)

- Additional condition based on the number of consecutive failed macro-steps \(N_{\mathit{fail}}\):

  - If \(N_{\mathit{fail}} >5\): \(k_{\mathit{new}}:=0\)

  - Else if \(N_{\mathit{fail}} >3\): \(k_{\mathit{new}}:= \min \left ( k_{\mathit{new}},1 \right )\)

  - Else: \(k_{\mathit{new}}\) is not adjusted

- If the number of consecutive successful macro-steps with constant order \(k\) and without reducing the macro-step size fulfills \(N_{\mathit{succ}} >k\), then \(\mathit{SDN} \left ( k+2 \right )\) is computed and an adjustment of the order is considered:

  - If \(k=0\) and \(\mathit{SDN} \left ( 2 \right ) < \frac{1}{2} \mathit{SDN} \left ( 1 \right )\): \(k_{\mathit{new}}:=1\)

  - Else if \(k>0\) and \(\mathit{SDN} \left ( k \right ) \leq \min \left ( \mathit{SDN} \left ( k+1 \right ),\mathit{SDN} \left ( k+2 \right ) \right )\): \(k_{\mathit{new}}:=k-1\)

  - Else if \(k>0\) and \(\mathit{SDN} \left ( k+2 \right ) <\mathit{SDN} \left ( k+1 \right )\): \(k_{\mathit{new}}:=k+1\)

  - Else: \(k_{\mathit{new}}\) is not adjusted
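The scheme above translates almost literally into code. The following sketch assumes that the three blocks are evaluated one after the other in the listed order; this sequencing and the function name `select_order` are assumptions, and the flowchart of Fig. 6 remains the authoritative description. The argument `sdn` is any callable returning \(\mathit{SDN}(j)\), so that \(\mathit{SDN}(k+2)\) is only evaluated when it is actually needed.

```python
from typing import Callable


def select_order(k: int, sdn: Callable[[int], float], n_fail: int, n_succ: int) -> int:
    """Return the polynomial degree k_new for the next macro-step."""
    # Block 1: check whether the polynomial order has to be decreased.
    if k == 1 and sdn(1) <= 0.5 * sdn(2):
        k_new = 0
    elif k > 1 and max(sdn(k), sdn(k - 1)) <= sdn(k + 1):
        k_new = k - 1
    else:
        k_new = k

    # Block 2: restriction based on the number of consecutive failed macro-steps.
    if n_fail > 5:
        k_new = 0
    elif n_fail > 3:
        k_new = min(k_new, 1)

    # Block 3: after more than k consecutive successful macro-steps with
    # constant order, SDN(k+2) is taken into account as well.
    if n_succ > k:
        if k == 0 and sdn(2) < 0.5 * sdn(1):
            k_new = 1
        elif k > 0 and sdn(k) <= min(sdn(k + 1), sdn(k + 2)):
            k_new = k - 1
        elif k > 0 and sdn(k + 2) < sdn(k + 1):
            k_new = k + 1
    return k_new


# Illustrative SDN values only: a decreasing sequence favors a higher degree.
table = {1: 1.0, 2: 0.5, 3: 0.25, 4: 0.1}
raised = select_order(2, table.__getitem__, n_fail=0, n_succ=3)
reset = select_order(2, table.__getitem__, n_fail=6, n_succ=0)
```

An upper bound on the polynomial degree is not part of the scheme as listed and is therefore not enforced in this sketch.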

5.3 Macro-step size controller

After the polynomial degree \(k:= k_{\mathit{new}}\) for the next macro-step has been determined, the algorithm for the macro-step size selection is executed. The macro-step size controller computes a positive, real-valued scaling factor \(r^{\rho }\). This factor is used for calculating the new macro-step size \(H_{M}\) for the next macro-step \(T_{M} \rightarrow T_{M+1}\) (see Eq. (28)) by means of the simple relationship \(H_{M} = r^{\rho } \ \cdot H_{N}\). The calculation of the new macro-step size \(H_{M}\) is based on the estimated error \(\boldsymbol{\varepsilon }_{N+1}\). The idea is to select the new macro-step size \(H_{M}\) in such a way that the resulting estimated error exactly fulfills the local error condition (25), i.e., \(\left \Vert \boldsymbol{\varepsilon }_{M+1} \right \Vert _{\mathit{WRMS}} =1\).

The determination of \(H_{M}\) for the next macro-step \(T_{M} \rightarrow T_{M+1}\) on the basis of the estimated error \(\boldsymbol{\varepsilon }_{N+1}\) is only possible, if the next macro-step \(T_{M} \rightarrow T_{M+1}\) is carried out with the same polynomial degree \(k\) as the current macro-step \(T_{N} \rightarrow T_{N+1}\). Therefore, two cases have to be distinguished:

- 1) The two consecutive macro-steps \(T_{N} \rightarrow T_{N+1}\) and \(T_{M} \rightarrow T_{M+1}\) are carried out with the same polynomial degree. In this case, the error estimator \(\boldsymbol{\varepsilon }_{N+1}\) according to Sect. 4, which has been used for the local error test in Eq. (25), is also applied for calculating the new macro-step size \(H_{M}\).

- 2) After the macro-step \(T_{N} \rightarrow T_{N+1}\) has been accomplished, the polynomial degree is changed by the order control algorithm. In this second case, the error estimator \(\boldsymbol{\varepsilon }_{N+1}\) cannot be used to calculate the step size for the next macro-step. Hence, the alternative a priori error estimator

is used for the next macro-step \(T_{M} \rightarrow T_{M+1}\). The two vectors \(\boldsymbol{P}_{M+1}^{k+1}(t) = \boldsymbol{P}_{M+1}^{k+1} ( t; ( T_{M}, \boldsymbol{u}_{M} ), \dots , ( T_{M-k-1}, \boldsymbol{u}_{M-k-1} ) )\) and \(\boldsymbol{P}_{M+1}^{k}(t) = \boldsymbol{P}_{M+1}^{k} ( t; ( T_{M}, \boldsymbol{u}_{M} ), \dots , ( T_{M-k}, \boldsymbol{u}_{M-k} ) )\) collect approximation polynomials of degree \(k+1\) and \(k\), respectively. Note again that the upper index in brackets denotes the differentiation with respect to the time, i.e., \(\boldsymbol{P}_{M+1}^{k+1, \left ( k+1 \right )} = \frac{d^{k+1} \boldsymbol{P}_{M+1}^{k+1}}{d t^{k+1}}\). It should be mentioned that \(\tilde{\boldsymbol{\varepsilon }}_{M+1}^{u}\) simply estimates the local error of the predicted coupling variables.

Remark 1

The a priori error estimator \(\tilde{\boldsymbol{\varepsilon }}_{M+1}^{u}\) is only used to determine the new macro-step size \(H_{M}\). After the macro-step \(T_{M} \rightarrow T_{M+1}\) has been accomplished with \(H_{M}\), the error is again checked with the a posteriori error estimator \(\boldsymbol{\varepsilon }_{M+1}\) according to Sect. 4. Hence, acceptance of a macro-step, see Eq. (25), is always based on the a posteriori error estimator.

Remark 2

The a priori estimator only monitors the error of the coupling variables. Using the error estimators ExLocal, ExMilne, ImMilne, however, the error of the state variables is monitored. As a consequence, the a priori estimator and the a posteriori estimator may be based on different variables.

Considering case 1), the scaling factor \(r^{\rho } \) for the new (optimal) macro-step size \(H_{M}\) is calculated by one of the following two relations:

Relation (\(\alpha \)) is used for the error estimators ExLocal, ExMilne and ImMilne, which are based on state variables; (\(\beta \)) is applied in connection with the error estimators ExSLocal and ImSLocal, which are based on coupling variables.

Regarding case 2), the scaling factor \(r^{\rho } \) for the new macro-step size \(H_{M}\) is computed by

The exponents in Eqs. (32) and (33) refer to the convergence order of the co-simulation method, which is \(k+3\) on position level and \(k+2\) on velocity level for the explicit and implicit co-simulation approaches considered here. The coupling variables converge with order \(k+1\). A detailed derivation of Eqs. (32) and (33) can be found in [40]; \(\mathit{SF}\) is a user-defined safety factor, e.g., \(\mathit{SF}\in [2, 6]\). The safety factor has to be chosen carefully. If \(\mathit{SF}\) is chosen too small, the number of macro-step repetitions may increase significantly because more local error tests fail. Choosing \(\mathit{SF}\) too large may entail unnecessarily small macro-step sizes. If both the error of the position variables and the error of the velocity variables are estimated (ExLocal, ExMilne, ImMilne), the scaling factor is here chosen conservatively by means of \(r^{\rho } = \min \left ( r^{\mathit{pos}}, r^{\mathit{vel}} \right )\).
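The role of the safety factor and of the conservative minimum can be illustrated with a short Python sketch. It does not reproduce Eqs. (32) and (33); it assumes the elementary controller form \(r = ( 1 / ( \mathit{SF} \cdot \Vert \boldsymbol{\varepsilon } \Vert ) )^{1/p}\) familiar from step-size control of ODE solvers, with the exponent \(p\) supplied by the caller. The function name `scaling_factor` and its arguments are hypothetical.

```python
def scaling_factor(error_norms, exponents, sf=6.0):
    """Conservative scaling factor from one or more error norms.

    error_norms: WRMS norms of the estimated errors (e.g. position, velocity).
    exponents:   assumed exponent p for each norm (supplied by the caller).
    sf:          user-defined safety factor SF.
    Assumed form (not taken from the source): r = (1 / (sf * err)) ** (1 / p).
    """
    factors = []
    for err, p in zip(error_norms, exponents):
        if err <= 0.0:
            # A vanishing error estimate puts no restriction on the step size.
            factors.append(float("inf"))
        else:
            factors.append((1.0 / (sf * err)) ** (1.0 / p))
    # Conservative choice, e.g. r = min(r_pos, r_vel).
    return min(factors)
```

With this form, a larger `sf` always yields a smaller factor, which matches the statement that a large safety factor leads to small macro-step sizes. An infinite return value is harmless as long as the factor is subsequently limited by \(r_{\max }^{\rho }\).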

Summarizing, the scaling factor \(r^{\rho } \) is determined according to

Finally, the macro-step size control algorithm is explained. Depending on the number \(N_{\mathit{fail}}\) of consecutively failed macro-steps within the current step \(T_{N} \rightarrow T_{N+1}\), the following limitations for the scaling factor \(r^{\rho } \) are used (see also the flowchart in Fig. 7):

- \(N_{\mathit{fail}} =0\):
  - (a) If \(r^{\rho } \geq r_{\max }^{\rho } \): perform next macro-step with \(H_{M}:= r_{\max }^{\rho } \cdot \ H_{N}\) (e.g., \(r_{\max }^{\rho } =2.0\)) (restriction for maximum step-size increase).
  - (b) If \(r^{\rho } <1\): perform next macro-step with \(H_{M}:= \min \left \{ 0.9, \max ( r_{\min }^{\rho }, \ r^{\rho } ) \right \} \cdot \ H_{N}\) (e.g., \(r_{\min }^{\rho } =0.5\)).
  - (c) If \(1\leq r^{\rho } < r_{\max }^{\rho } \): perform next macro-step with \(H_{M}:= H_{N}\).
- \(N_{\mathit{fail}} =1\): repeat current macro-step with reduced step size \(H_{M}:= \min \left \{ 0.9, \max (0.25, \ 0.9 \cdot r^{\rho } ) \right \} \cdot \ H_{N}\).
- \(N_{\mathit{fail}} >1\) or corrector failed to converge (implicit co-simulation): repeat current macro-step with reduced step size \(H_{M}:= 0.25 \cdot \ H_{N}\).
- If \(H_{M} \leq H_{M,\min } = 10^{-12}\): simulation is aborted and an error message is printed.
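The limitations listed above can be condensed into a single function. The following Python sketch is an illustration of these rules, not the authors' implementation; the function name `limit_macro_step` and the argument names are hypothetical, and the default values are the example values quoted in the list.

```python
def limit_macro_step(h_n, r, n_fail, corrector_failed=False,
                     r_min=0.5, r_max=2.0, h_min=1.0e-12):
    """Return the macro-step size H_M for the next (or repeated) macro-step.

    h_n:    current macro-step size H_N
    r:      scaling factor r^rho proposed by the error estimator
    n_fail: number of consecutively failed attempts of the current step
    """
    if n_fail > 1 or corrector_failed:
        h_m = 0.25 * h_n                              # strong reduction
    elif n_fail == 1:
        h_m = min(0.9, max(0.25, 0.9 * r)) * h_n      # first repetition
    elif r >= r_max:
        h_m = r_max * h_n                             # case (a): capped increase
    elif r < 1.0:
        h_m = min(0.9, max(r_min, r)) * h_n           # case (b): bounded decrease
    else:
        h_m = h_n                                     # case (c): keep step size
    if h_m <= h_min:
        raise RuntimeError("macro-step size fell below H_M,min; simulation aborted")
    return h_m
```

Note that under these rules an accepted step is either kept, reduced by a factor of at most 0.9, or enlarged by exactly \(r_{\max }^{\rho }\); moderate proposals \(1 \leq r^{\rho } < r_{\max }^{\rho }\) leave the step size unchanged, which avoids frequent small step-size changes.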

It should be noted that the error tolerances \(\mathit{atol}_{i}\) and \(\mathit{rtol}\) for the co-simulation as well as the safety factor \(\mathit{SF}\) have to be specified by the user. Generally, they are problem-dependent.

The macro-step size control algorithm described above may be interpreted as an I-controller. Alternatively, a PI-controller may be used by incorporating the previous errors \(\varepsilon _{i,N}^{\mathit{pos}}\) and \(\varepsilon _{i,N}^{\mathit{vel}}\) [24].

6 Numerical examples

The co-simulation models considered here consist of the subsystems and the co-simulation interface. The communication between the co-simulation interface and the subsystems is managed over the Message Passing Interface v3.1 (Intel MPI Library). Each subsystem is executed by an individual MPI-rank. If multiple integrations of the same subsystem within a macro-time step are required, e.g., to numerically compute the interface Jacobian by a finite difference approach or in connection with the calculation of the error estimator, the additional subsystem integrations are parallelized via OpenMP. The parallel threads are synchronized at the macro-time points.

A flowchart of the parallel implementation of the explicit co-simulation method with the macro-step size and order control algorithm is shown in Fig. 8. A corresponding flowchart of the implicit co-simulation approach is depicted in Fig. 9.

It should be mentioned that the co-simulations have been executed fully parallelized on a high-performance supercomputer [37] with Intel Xeon Platinum 9242 processors. Each subsystem integration has been accomplished on an individual core; the different cores are connected over an InfiniBand interconnect. Note that the computation times shown in the subsequent figures reflect the wall-clock time (including communication latency).

6.1 Example 1: Nonlinear two-mass oscillator

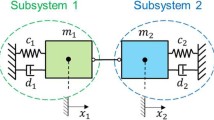

As a first example, we consider the nonlinear two-mass oscillator shown in Fig. 10 (masses \(m_{1}\), \(m_{2}\); spring constants \(c_{1}\), \(c_{2}\); damping coefficients \(d_{1}\), \(d_{2}\)). The two masses are coupled by the nonlinear force \(f^{\mathrm{c}} = c_{c} \cdot \left ( x_{2} - x_{1} \right ) + d_{c} \cdot \left ( v_{2} - v_{1} \right ) + C_{c} \cdot \left ( x_{2} - x_{1} \right )^{3} + D_{c} \cdot \left ( v_{2} - v_{1} \right )^{3}\). Furthermore, a penalty-based contact force \(F_{C} =- 10^{-2} \ \mathrm{N} \cdot e^{( 10^{7}\ \mathrm{m}^{-1} \cdot x_{1} )}\) is assumed to act at \(m_{1}\).

Applying a force/force-decomposition approach, the coupled co-simulation system is defined by the following semi-explicit index-1 DAE system

Subsystem 1:

Subsystem 2:

Coupling condition:

The simulations have been carried out with the subsequent parameters: \(m_{1} =50\ \mathrm{kg}\), \(m_{2} =1\ \mathrm{kg}\), \(c_{1} =1.0E5\ \frac{\mathrm{N}}{\mathrm{m}}\), \(c_{2} =1.0E3\ \frac{\mathrm{N}}{\mathrm{m}}\), \(c_{c} =1.0E5\ \frac{\mathrm{N}}{\mathrm{m}}\), \(d_{1} = d_{2} = d_{c} =0\), \(C_{c} =1.0E5\ \frac{\mathrm{N}}{\mathrm{m}^{3}}\), and \(D_{c} =1.0E-2\ \frac{\mathrm{Ns}^{3}}{\mathrm{m}^{3}}\). The initial conditions are: \(x_{1,0} =-2.0\ \mathrm{m}\), \(x_{2,0} =0\ \mathrm{m}\), \(v_{1,0} =100\ \frac{\mathrm{m}}{\mathrm{s}}\), and \(v_{2,0} =-200\ \frac{\mathrm{m}}{\mathrm{s}}\). Subsystem integration has been accomplished with a BDF integrator [25] (variable step-size, relative and absolute error tolerances \(\mathit{atol}_{x}^{\mathit{BDF}} = 10^{-9}\), \(\mathit{atol}_{v}^{\mathit{BDF}} = 10^{-6}\), \(\mathit{rtol}^{\mathit{BDF}} = 10^{-6}\)). The following parameters have been used for the macro-step size controller: \(\mathit{SF} =6.0\), \(\mathit{atol}_{x} = 10^{-8}\), \(\mathit{atol}_{v} = 10^{-5}\), \(\mathit{rtol} = 10^{-5}\), \(\mathit{atol}_{f_{c}} = 10^{-1}\), \(\mathit{rtol}_{f_{c}} = 10^{-3}\), \(r_{\min }^{\rho } =0.5\), and \(r_{\max }^{\rho } =2.0\).
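For orientation, the two force laws of Example 1 can be evaluated directly with the parameters given above. The following Python sketch is only an illustration; the function and constant names are hypothetical, and SI units are assumed throughout.

```python
import math

# Coupling parameters of Example 1 (SI units).
C_LIN = 1.0e5     # c_c in N/m
D_LIN = 0.0       # d_c in Ns/m
C_CUB = 1.0e5     # C_c in N/m^3
D_CUB = 1.0e-2    # D_c in Ns^3/m^3


def coupling_force(x1, v1, x2, v2):
    """Nonlinear coupling force f^c between the two masses."""
    dx = x2 - x1
    dv = v2 - v1
    return C_LIN * dx + D_LIN * dv + C_CUB * dx**3 + D_CUB * dv**3


def contact_force(x1):
    """Penalty-based contact force F_C acting at mass m_1.

    Note: math.exp raises OverflowError for arguments above roughly 709,
    i.e. for x1 larger than about 7.1e-5 m with this penalty exponent.
    """
    return -1.0e-2 * math.exp(1.0e7 * x1)
```

At the initial conditions, `coupling_force(-2.0, 100.0, 0.0, -200.0)` evaluates to \(7.3E5\ \mathrm{N}\), while the contact force is numerically zero because \(x_{1,0}\) is far from the contact position \(x_{1} \approx 0\). The very steep exponential explains why the step size and the polynomial degree drop sharply near contact.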

Figure 11 depicts the displacement \(x_{1} (t)\) and the corresponding velocity \(v_{1} (t)\) for the different error estimators of Sect. 4 in combination with the order control algorithm of Sect. 5. The reference solution has been generated with a monolithic model, which has been integrated with very small error tolerances. The macro-step sizes for the different error estimators are shown in Fig. 12; the degree \(k\) of the approximation polynomials is depicted in Fig. 13. It can clearly be seen that the macro-step size and also the polynomial degree are reduced at the time points where the contacts occur. The macro-step size is reduced very strongly with the simplified error estimators (ExSLocal and ImSLocal), since these two error estimators are based on the coupling force, which is large at the time points where the contacts occur. The total number of macro-steps and the resulting global error are illustrated in Fig. 14. Note that the scalar error norm used in Fig. 14(a) is based on the normalized root mean square error \(e\) according to

where \(\boldsymbol{z}^{\mathit{ref}} \in \mathbb{R}^{N\times N_{z}}\) denotes the reference solution; \(N_{z}\) is the number of state variables, and \(N\) is the number of output points.

6.2 Example 2: Comparative study between explicit and implicit co-simulation approaches

A chain of \(n_{K}\) masses is considered, see Fig. 15. The masses, described by the displacement coordinates \(x_{i}\) and the velocities \(v_{i} = \dot{x}_{i}\), are connected by nonlinear spring/damper elements. The spring/damper element depicted in Fig. 16, which connects the two masses \(m_{i -1}\) and \(m_{i}\), is defined by the constitutive equation

where \(c_{i}\), \(d_{i}\), \(C_{i}\), \(D_{i}\) characterize the linear and nonlinear stiffness and damping parameters. The chain model is used here because it is simple to scale with respect to the number of degrees of freedom. Also, the number of subsystems can be changed very easily. Therefore, the model is well suited to carry out reliable numerical studies in order to compare efficiency and accuracy of different co-simulation methods.

The model is separated into \(r\) subsystems via force/force-decompositions. The \(n_{c} = r -1\) coupling conditions are defined by the constitutive equations of the coupling spring/damper elements. Regarding the two adjacent subsystems \(s\) and \(s +1\), the coupling condition \(g_{s}\) is given by

At special time points \(t_{\alpha } \) (\(t_{\alpha } =0, 0.05~\mathrm{s}, 0.1~\mathrm{s}, \dots \)), impulse-shaped external forces \(F_{\mathit{Imp},j} = \frac{1}{2} \Delta F_{j} \left [ \tanh \left ( \frac{t- t_{\alpha }}{\delta } \right ) - \tanh \left ( \frac{t- \left ( t_{\alpha } + \Delta t \right )}{\delta } \right ) \right ]\) with \(\delta =1.0E-5\ \mathrm{s}\) and \(\Delta t=2.5E-3\ \mathrm{s}\) are applied at 30% of the masses (randomly selected). The force amplitude \(\Delta F_{j}\) is chosen randomly in the interval \(\pm [1.0E6,\ 5.0E6]\ \mathrm{N}\). Moreover, at 10% of the masses (randomly chosen), contact forces are applied by means of the penalty approach \(F_{\mathit{Con},i} =A\ e^{B x_{i}}\) with \(A=-1.0E-2\ \mathrm{N}\) and \(B=5.0E5\ \mathrm{m}^{-1} \).
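The impulse-shaped excitation and the penalty contact law can be written down directly from the expressions above. The following Python sketch is an illustration; the function names and argument names are hypothetical, and the default values are the ones quoted in the text.

```python
import math


def impulse_force(t, t_alpha, amplitude, delta=1.0e-5, dt=2.5e-3):
    """Smoothed rectangular pulse F_Imp,j starting at t_alpha.

    amplitude: Delta F_j in N, delta: smoothing time in s,
    dt: pulse duration Delta t in s.
    """
    rise = math.tanh((t - t_alpha) / delta)
    fall = math.tanh((t - (t_alpha + dt)) / delta)
    return 0.5 * amplitude * (rise - fall)


def chain_contact_force(x, a=-1.0e-2, b=5.0e5):
    """Penalty contact force F_Con,i = A * exp(B * x_i), with A in N, B in 1/m."""
    return a * math.exp(b * x)
```

The pulse reaches half of its amplitude at \(t = t_{\alpha }\) and the full amplitude in the middle of the interval \([ t_{\alpha }, t_{\alpha } + \Delta t ]\). Because \(\delta \) is much smaller than \(\Delta t\), the flanks are very steep, which is what forces the step-size and order controller to react at each \(t_{\alpha }\).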

A chain with 10,000 masses is considered here, which is decomposed into 191 subsystems. The simulations have been carried out with the subsequent parameters: \(m_{i} =1.0E-1\ \mathrm{kg}\) (varied in case study 3), \(c_{i} =1.0E7\ \frac{\mathrm{N}}{\mathrm{m}}\) (varied in case study 2), \(d_{i} =1.0E4\ \mathrm{Ns} / \mathrm{m}\) (varied in case study 1), \(C_{i} =1.0E14\ \frac{\mathrm{N}}{\mathrm{m}^{3}}\), \(D_{i} =1.0E2\ \frac{\mathrm{Ns}^{3}}{\mathrm{m}^{3}}\), simulation time \(t_{\mathit{sim}} =0.5\ \mathrm{s}\). Random initial conditions have been used (\(x_{0,i} \in \left [ -1.0E-3,\ 1.0E-3 \right ] \ \mathrm{m}\) and \(v_{0,i} \in \left [ -1.0E1,1.0E1 \right ]\ \frac{\mathrm{m}}{\mathrm{s}}\)). The subsystem integrations have been accomplished with a variable step-size BDF integrator [25] with the solver settings \(\mathit{rtol}^{\mathit{BDF}} =1.0E-6\), \(\mathit{atol}_{x}^{\mathit{BDF}} =1.0E-9\), and \(\mathit{atol}_{v}^{\mathit{BDF}} =1.0E-6\). The co-simulations have been carried out with the macro-step size and order control algorithm described in Sect. 5. Simulation results are presented for the two error estimators ExSLocal and ImSLocal, see Sect. 4, with the subsequent macro-step size controller parameters: \(\mathit{SF} =6.0\), \(\mathit{atol}_{\lambda _{c}} = 10^{-1}\), \(\mathit{rtol}_{\lambda _{c}} = 10^{-3}\), \(r_{\min }^{\rho } =0.5\), and \(r_{\max }^{\rho } =2.0\).

6.2.1 Case study 1

The main intention of Example 2 is to compare the numerical performance of the explicit with the implicit co-simulation approach. Giving general statements is, of course, not possible since the performance of a co-simulation approach strongly depends on the system and the model parameters. For the considered model, our simulations have, however, shown that the damping coefficients are the crucial parameters for comparing the efficiency of explicit and implicit methods. Within a first numerical study, the damping parameter \(d_{i}\) of the spring/damper elements is therefore varied in the range \(\left [ 1.0E3,\ 1.0E6 \right ] \ \mathrm{Ns} / \mathrm{m}\), see Figs. 17–21.

Figure 17 shows displacement and velocity of mass 6891 for \(d_{i} =1.0E4\ \mathrm{Ns} / \mathrm{m}\) simulated with the explicit co-simulation approach (variable co-simulation order; variable macro-step size with error estimator ExSLocal). Although the system is rather stiff and although steep gradients occur due to the impulse-shaped excitation, the co-simulation is stable and the results are accurate and close to the reference solution.

Figure 18 shows simulation results obtained with the implicit co-simulation method (variable co-simulation order; variable macro-step size with error estimator ImSLocal). The same model parameters have been used as for the explicit co-simulations depicted in Fig. 17; only the damping parameter has been increased to \(d_{i} =5.0E5\ \mathrm{Ns} / \mathrm{m}\). Again, a good agreement between the co-simulation and the reference solution can be observed.