Abstract

We consider boundary value problems for 1D autonomous damped and delayed semilinear wave equations of the type

with smooth coefficient functions a and b such that \(a(x,\lambda )>0\) and \(b(x,\lambda ,0,0,0,0) = 0\) for all x and \(\lambda \). We state conditions ensuring Hopf bifurcation, i.e., existence, local uniqueness (up to time shifts), regularity (with respect to t and x) and smooth dependence (on \(\tau \) and \(\lambda \)) of small non-stationary time-periodic solutions, which bifurcate from the stationary solution \(u=0\), and we derive a formula which determines the bifurcation direction with respect to the bifurcation parameter \(\tau \). To this end, we transform the wave equation into a system of partial integral equations by means of integration along characteristics and then apply a Lyapunov-Schmidt procedure and a generalized implicit function theorem. The main technical difficulties, which have to be managed, are typical for hyperbolic PDEs (with or without delay): small divisors and the “loss of derivatives” property. We do not use any properties of the corresponding initial-boundary value problem. In particular, our results are true also for negative delays \(\tau \).



1 Introduction

1.1 The Problem

This paper concerns 1D autonomous damped and delayed semilinear wave equations of the general type

with one Dirichlet and one Neumann boundary condition

It is supposed that \(b(x,\lambda ,0,0,0,0)=0\) for all x and \(\lambda \), i.e., that \(u=0\) is a stationary solution to (1.1)–(1.2) for all \(\tau \) and \(\lambda \).

The goal is to describe Hopf bifurcation, i.e., existence and local uniqueness (up to time shifts) of families (parametrized by \(\tau \) and \(\lambda \)) of non-stationary time-periodic solutions to (1.1)–(1.2), which bifurcate from the stationary solution \(u=0\).

Our main result, stated in Theorem 2 below, is quite similar to Hopf bifurcation theorems for delayed ODEs (see, e.g., [8, 12, Chapter 5.5], [13, Chapter 11], [38, 42]) and for delayed parabolic PDEs (see, e.g., [4, 7, 9, 33, 45, Chapter 6]). However, the analysis of Hopf bifurcation for hyperbolic PDEs is faced with considerable complications if compared to ODEs or parabolic PDEs (with or without delay). In the present paper we provide an approach for overcoming the following technical difficulties, which appear in dissipative hyperbolic PDEs and do not appear in ODEs or parabolic PDEs:

First, the question whether a nondegenerate time-periodic solution to a dissipative nonlinear wave equation is locally unique (up to time shifts in the autonomous case) and whether it depends smoothly on the system parameters is much more delicate than for ODEs or parabolic PDEs (cf., e.g., [14, 15]). One reason for this is the so-called loss of derivatives for hyperbolic PDEs. To overcome this difficulty, we use a generalized implicit function theorem [25, Theorem 2.2], which is applicable to abstract equations with a loss of derivatives property. Note that for smoothness of the data-to-solution map of hyperbolic PDEs it is necessary, in general, that the equation depends smoothly not only on the data and on the unknown function u, but also on the space variable x (and on the time variable t in the non-autonomous case). This is completely different from what is known for parabolic PDEs (cf. [10]).

Second, analysis of time-periodic solutions to hyperbolic PDEs usually encounters a complication known as the problem of small divisors [2, 18, 44]. Since Hopf bifurcations can be expected only in the so-called non-resonant case, where small divisors do not appear, we have to impose a condition (assumption (1.7) below) preventing small divisors from coming up. That condition has no counterparts in the case of ODEs or parabolic PDEs.

And third, linear autonomous hyperbolic PDEs with one space dimension differ essentially from those with more than one space dimension: They satisfy the spectral mapping property (see [39] in \(L^p\)-spaces and, more importantly for applications to nonlinear problems, [30] in C-spaces) and they generate Riesz bases (see, e.g., [11, 19]), which is not the case, in general, if the space dimension is larger than one (see the celebrated counter-example of M. Renardy in [41]). Therefore, the question of Fredholmness of the corresponding differential operators in appropriate spaces of time-periodic functions is, in general, very difficult.

The main consequence (from the point of view of mathematical techniques) of the fact that the space dimension of (1.1), (1.2) is one is the following: We can use integration along characteristics in order to replace (1.1), (1.2) by a nonlinear partial integral equation (see [1] for the notion “partial integral equation”). After that, we can apply known Fredholmness properties to the linearized partial integral equation ([24, 25, Corollary 4.11]) and, hence, we can apply the Lyapunov-Schmidt reduction method to the nonlinear partial integral equation.

Summarizing, the technical difficulties mentioned above are unusual from the point of view of ODEs and parabolic PDEs, although the results we aim at are quite usual for ODEs and parabolic PDEs. Therefore, the essential part of the present paper is quite technical. However, these technical difficulties appear quite naturally during the execution of a well-known and widely used algorithm in local bifurcation theory, the Lyapunov-Schmidt procedure. Mainly, they appear in the proof of the Fredholmness result (see Lemma 10 in Subsect. 4.1, where we use Nikolskii’s Fredholmness criterion given by Theorem 13) and in the proof of the unique solvability of the external Lyapunov-Schmidt equation (see Lemma 20, where we use our generalized implicit function Theorem 17). Roughly speaking, the technical difficulties appear because the abstract equation (4.3) (which is equivalent to our bifurcation problem (1.1)–(1.2)) does not depend smoothly on the control parameters \(\tau \) and \(\lambda \), nor on one of the state parameters, namely the frequency \(\omega \).

1.2 Main Results

Our goal is to investigate time-periodic solutions to (1.1)–(1.2). In order to work in spaces of functions with fixed time period \(2\pi \), we put the frequency parameter \(\omega \) explicitly into the equation by scaling the time variable t and introducing a new unknown function u as follows:

The problem (1.1)–(1.2) for the new unknown function u and the unknown frequency \(\omega \) reads

Throughout this paper we suppose (and we do not mention it further) that

Assumptions \(\mathbf{(A1)}\)–\(\mathbf{(A3)}\) below are standard for Hopf bifurcation. To formulate them, we consider the following eigenvalue problem for the linearization of (1.3) at \(u=0\), \(\omega =1\) and \(\lambda =0\):

Here \(\mu \in {\mathbb {C}}\) and \(u:[0,1] \rightarrow {\mathbb {C}}\) are eigenvalue and eigenfunction, respectively. The coefficients \(a_0\) and \(b^0_j\) in (1.4) are defined by

where \(\partial _jb\) is the partial derivative of the function b with respect to its jth variable.

Our first assumption states that for a certain delay \(\tau =\tau _0\) there exists a pair of purely imaginary geometrically simple eigenvalues of (1.4) (without loss of generality we may assume that the pair is \(\mu =\pm i\)):

(A1) There exists \(\tau _0\in {\mathbb {R}}\) such that for \(\mu =i\) and \(\tau =\tau _0\) there exists exactly one (up to linear dependence) solution \(u\ne 0\) to (1.4).

The second assumption is the so-called nonresonance condition:

(A2) If \(u\not =0\) is a solution to (1.4) with \(\mu =ik\), \(k \in {\mathbb {Z}}\), and \(\tau =\tau _0\), then \(k=\pm 1\).

The third assumption is the so-called transversality condition with respect to change of parameter \(\tau \). It states that for all \(\tau \approx \tau _0\) there exists exactly one eigenvalue \(\mu ={\hat{\mu }}(\tau )\approx i\) to (1.4) and that this eigenvalue crosses the imaginary axis transversally if \(\tau \) crosses \(\tau _0\). In order to formulate this more explicitly, we consider the adjoint problem to (1.4) with \(\mu =i\) and \(\tau =\tau _0\):

Because of assumption (A1) there exists exactly one (up to linear dependence) solution \(u\ne 0\) to (1.6). The transversality condition is the following:

(A3) For any solution \(u=u_0\ne 0\) to (1.4) with \(\tau =\tau _0\) and \(\mu =i\) and for any solution \(u=u_*\ne 0\) to (1.6) it holds

Note that \(\text{Re}\,{\hat{\mu }}'(\tau _0)=\rho \), and this real number does not depend on the choice of the eigenfunctions \(u_0\) and \(u_*\). The complex number \(\sigma \) depends on the choice of the eigenfunctions \(u_0\) and \(u_*\), but whether the condition \(\sigma \not = 0\) is satisfied does not depend on this choice.
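The transversality condition (A3) can be made concrete in a much simpler setting. The following sketch does not treat the eigenvalue problem (1.4); as an assumed toy model it uses the scalar delay ODE \(x'(t)=-x(t-\tau )\), whose characteristic equation \(\mu +e^{-\mu \tau }=0\) has the purely imaginary roots \(\mu =\pm i\) at \(\tau _0=\pi /2\). The helper names (`char_fn`, `char_fn_dmu`, `eigenvalue_near`) are hypothetical. The code follows the eigenvalue \({\hat{\mu }}(\tau )\approx i\) by Newton's method and approximates the analogue of \(\rho =\text{Re}\,{\hat{\mu }}'(\tau _0)\) by a central difference; implicit differentiation gives \({\hat{\mu }}'(\tau _0)=1/(1+i\pi /2)\), hence \(\rho =1/(1+\pi ^2/4)>0\).

```python
import cmath
import math


def char_fn(mu: complex, tau: float) -> complex:
    # Characteristic function of the toy delay ODE x'(t) = -x(t - tau)
    return mu + cmath.exp(-mu * tau)


def char_fn_dmu(mu: complex, tau: float) -> complex:
    # Derivative of char_fn with respect to mu
    return 1 - tau * cmath.exp(-mu * tau)


def eigenvalue_near(mu0: complex, tau: float, tol: float = 1e-14, max_iter: int = 50) -> complex:
    # Newton's method for the root of char_fn(., tau) closest to the start value mu0
    mu = mu0
    for _ in range(max_iter):
        step = char_fn(mu, tau) / char_fn_dmu(mu, tau)
        mu -= step
        if abs(step) < tol:
            break
    return mu


tau0 = math.pi / 2  # at tau0 the pair mu = +-i lies on the imaginary axis
h = 1e-5
mu_plus = eigenvalue_near(1j, tau0 + h)
mu_minus = eigenvalue_near(1j, tau0 - h)
rho_numeric = ((mu_plus - mu_minus) / (2 * h)).real

# Implicit differentiation of char_fn(mu(tau), tau) = 0 at (i, pi/2):
# mu'(tau0) = 1 / (1 + i*pi/2), hence Re mu'(tau0) = 1 / (1 + pi^2/4).
rho_exact = 1 / (1 + math.pi ** 2 / 4)
print(rho_numeric, rho_exact)
```

A positive value means that, in this toy model, the eigenvalue crosses the imaginary axis from left to right as \(\tau \) increases through \(\tau _0\).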

Definition 1

(i) We denote by \(C_{2\pi }({\mathbb {R}}\times [0,1])\) the space of all continuous functions \(u:{\mathbb {R}}\times [0,1] \rightarrow {\mathbb {R}}\) such that \(u(t+2\pi ,x)=u(t,x)\) for all \(t \in {\mathbb {R}}\) and \(x \in [0,1]\), with the norm

$$\begin{aligned} \Vert u\Vert _\infty :=\max \{|u(t,x)|: t \in {\mathbb {R}}, \; x \in [0,1]\}. \end{aligned}$$

(ii) For \(k \in {\mathbb {N}}\) we denote by \(C^k_{2\pi }({\mathbb {R}}\times [0,1])\) the space of all \(C^k\)-smooth functions \(u\in C_{2\pi }({\mathbb {R}}\times [0,1])\), with the norm \( \max \{\Vert \partial ^i_t\partial ^j_xu\Vert _\infty : 0 \le i+j\le k\}. \)

Now we are prepared to formulate our Hopf bifurcation theorem.

Theorem 2

Suppose that conditions \(\mathbf{(A1)}\)–\(\mathbf{(A3)}\) are fulfilled as well as

Let \(u=u_0 \ne 0\) be a solution to (1.4) with \(\tau =\tau _0\) and \(\mu =i\), and let \(u=u_* \ne 0\) be a solution to (1.6). Then there exist \(\varepsilon _0>0\) and a \(C^\infty \)-map

such that the following is true:

(i) Existence: For all \((\varepsilon ,\lambda )\in (0,\varepsilon _0]\times [-\varepsilon _0,\varepsilon _0] \) the function \(u=\varepsilon {\hat{u}}(\varepsilon ,\lambda )\) is a non-stationary solution to (1.3) with \(\omega ={{\hat{\omega }}}(\varepsilon ,\lambda )\) and \(\tau ={{\hat{\tau }}}(\varepsilon ,\lambda )\).

(ii) Asymptotic expansion: We have

$$\begin{aligned} [{\hat{u}}(0,0)](t,x)=\mathop {\mathrm {Re}}\nolimits u_0(x)\cos t - \mathop {\mathrm {Im}}\nolimits u_0(x)\sin t \text{ for all } t \in {\mathbb {R}} \text{ and } x \in [0,1], \end{aligned}\tag{1.8}$$

\({{\hat{\omega }}}(0,0)=1\), \({{\hat{\tau }}}(0,0)=\tau _0\) and

$$\begin{aligned} \partial _\varepsilon {{\hat{\omega }}}(0,\lambda )=\partial _\varepsilon {{\hat{\tau }}}(0,\lambda )=0 \text{ for all } \lambda \in [-\varepsilon _0,\varepsilon _0]. \end{aligned}\tag{1.9}$$

(iii) Local uniqueness: There exists \(\delta >0\) such that for all solutions \((u,\omega ,\tau ,\lambda )\) to (1.3) with \(u \ne 0\) and \(\Vert u\Vert _\infty + |\omega -1| + |\tau -\tau _0|+|\lambda |<\delta \) there exist \(\varepsilon \in (0,\varepsilon _0]\) and \(\varphi \in {\mathbb {R}}\) such that \(\omega ={{\hat{\omega }}}(\varepsilon ,\lambda )\), \(\tau ={{\hat{\tau }}}(\varepsilon ,\lambda )\) and \(u(t,x)=\varepsilon [{\hat{u}}(\varepsilon ,\lambda )](t+\varphi ,x)\) for all \(t\in {\mathbb {R}}\) and \(x\in [0,1]\).

(iv) Regularity: For all \(\varepsilon \in [0,\varepsilon _0]\), \(\lambda \in [-\varepsilon _0,\varepsilon _0]\) and \(k \in {\mathbb {N}}\) we have \({\hat{u}}(\varepsilon ,\lambda ) \in C^k_{2\pi }({\mathbb {R}} \times [0,1])\).

(v) Smooth dependence: The map \((\varepsilon ,\lambda ) \in [0,\varepsilon _0]\times [-\varepsilon _0,\varepsilon _0] \mapsto {\hat{u}}(\varepsilon ,\lambda ) \in C^k_{2\pi }({\mathbb {R}} \times [0,1])\) is \(C^\infty \)-smooth for any \(k \in {\mathbb {N}}\).
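Formula (1.8) says that the leading term of the bifurcating family is \(\text{Re}\,(u_0(x)e^{it})\). This also indicates why uniqueness in (iii) can only hold up to time shifts: shifting t by \(\varphi \) has the same effect as replacing \(u_0\) by \(e^{i\varphi }u_0\), which is again a solution to (1.4) with \(\tau =\tau _0\) and \(\mu =i\), because (1.4) is linear. The following minimal check evaluates both identities at a single point x; the value `u0_x` standing for \(u_0(x)\) and the shift `phi` are hypothetical sample numbers.

```python
import cmath
import math


def leading_term(u0_x: complex, t: float) -> float:
    # Right-hand side of (1.8) at a fixed point x, where u0_x stands for u0(x)
    return u0_x.real * math.cos(t) - u0_x.imag * math.sin(t)


def leading_term_complex(u0_x: complex, t: float) -> float:
    # The same quantity written compactly as Re(u0(x) * e^{it})
    return (u0_x * cmath.exp(1j * t)).real


u0_x = 0.3 - 1.7j  # hypothetical value of the eigenfunction u0 at some x
phi = 0.8          # hypothetical time shift
ts = [0.1 * j for j in range(63)]  # sample times covering one period

# (1.8) agrees with Re(u0 e^{it}) ...
same_form = all(abs(leading_term(u0_x, t) - leading_term_complex(u0_x, t)) < 1e-12 for t in ts)
# ... and shifting t by phi is the same as replacing u0 by e^{i phi} u0
shift_is_phase = all(
    abs(leading_term(u0_x, t + phi) - leading_term(cmath.exp(1j * phi) * u0_x, t)) < 1e-12
    for t in ts
)
print(same_form, shift_is_phase)
```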

Remark 3

The parametrizations \(u=\varepsilon \hat{u}(\varepsilon ,\lambda )\), \(\omega ={\hat{\omega }}(\varepsilon ,\lambda )\) and \(\tau ={\hat{\tau }}(\varepsilon ,\lambda )\) depend on the choice of the eigenfunctions \(u_0\) and \(u_*\), in general, while the sign of \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)\), determining the bifurcation direction, does not.
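Since (1.9) states that the first \(\varepsilon \)-derivatives of \({\hat{\omega }}\) and \({\hat{\tau }}\) vanish at \(\varepsilon =0\), a Taylor expansion shows why the sign of \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)\) decides on which side of \(\tau _0\) the bifurcating solutions exist. The following short derivation assumes \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)\ne 0\) and uses \({\hat{\tau }}(0,0)=\tau _0\), \({\hat{\mu }}(\tau _0)=i\) and \(\text{Re}\,{\hat{\mu }}'(\tau _0)=\rho \):

$$\begin{aligned} {\hat{\tau }}(\varepsilon ,0)-\tau _0&=\tfrac{1}{2}\,\partial _\varepsilon ^2{\hat{\tau }}(0,0)\,\varepsilon ^2+O(\varepsilon ^3),\\ \mathop {\mathrm {Re}}\nolimits {\hat{\mu }}\big ({\hat{\tau }}(\varepsilon ,0)\big )&=\rho \,\big ({\hat{\tau }}(\varepsilon ,0)-\tau _0\big )+O\big (({\hat{\tau }}(\varepsilon ,0)-\tau _0)^2\big )=\tfrac{\rho }{2}\,\partial _\varepsilon ^2{\hat{\tau }}(0,0)\,\varepsilon ^2+O(\varepsilon ^3). \end{aligned}$$

Hence, for small \(\varepsilon >0\), the bifurcating solutions exist for \(\tau >\tau _0\) if \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)>0\) and for \(\tau <\tau _0\) if \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)<0\), and the sign of \(\rho \,\partial _\varepsilon ^2{\hat{\tau }}(0,0)\) tells whether the eigenvalue \({\hat{\mu }}\) has positive or negative real part along the branch.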

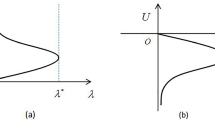

In descriptions of Hopf bifurcation phenomena, one of the main questions concerns the so-called bifurcation direction, i.e., whether the bifurcating time-periodic solutions exist for values of the bifurcation parameter (close to the bifurcation point) at which the stationary solution is unstable (in this case the Hopf bifurcation is called supercritical) or not. For ODEs and parabolic PDEs (with or without delay) it is known that, under reasonable additional assumptions, in the supercritical case the bifurcating time-periodic solutions are orbitally stable. For hyperbolic PDEs this relationship between bifurcation direction and stability is also believed to hold, but rigorous proofs are not yet available. More precisely, it is expected that the bifurcating non-stationary time-periodic solutions described by Theorem 2 are orbitally stable if all eigenvalues \(\mu \not =\pm i\) of (1.4) with \(\tau =\tau _0\) satisfy \(\mathop {\mathrm {Re}}\nolimits \mu <0\) and if

In any case, in Theorem 4 below we present a formula which shows how to calculate the number \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)\) by means of the eigenfunctions \(u_0\) and \(u_*\) and of the first three derivatives of the nonlinearity \(b(x,0,\cdot ,\cdot ,\cdot ,\cdot )\). Such formulae are known to be, in general, quite complicated and not explicit (see, e.g., [17, Section 3.3], [21, 22, Theorem I.12.2]; [23, Theorem 1.2(ii)], [29]). Therefore, in order to keep the technicalities simple, in Theorem 4 below we consider only nonlinearities of the type

with \(C^\infty \)-functions \(\beta _j:[0,1]\times {\mathbb {R}}^2 \rightarrow {\mathbb {R}}\) such that

Set

Our result about the bifurcation direction reads as follows:

Theorem 4

Let the assumptions of Theorem 2 and the conditions (1.10) and (1.11) be fulfilled. Then

Remark 5

We do not know whether generalizations of Theorems 2 and 4 to higher space dimensions and/or to quasilinear equations exist and what they should look like.

Our paper is organized as follows:

In Subsect. 1.3 we comment on some publications related to ours.

In Sect. 2 we show that any solution to (1.3) creates a solution to a semilinear first-order \(2 \times 2\) hyperbolic system, namely (2.1), and vice versa. In Sect. 3 we show (by using the method of integration along characteristics) that any solution to the first-order hyperbolic system (2.1) solves a system of partial integral equations, namely (3.1), and vice versa. Note that in Sects. 2 and 3 we perform only equivalent transformations, i.e., problem (1.3) is equivalent to problem (3.1). In particular, the technical difficulties of (1.3), like small divisors and loss of smoothness, are also present (in hidden form) in (3.1). But it turns out that in (3.1) they can be handled more easily than in (1.3).

In Sects. 4 and 5 we do a Lyapunov-Schmidt procedure in order to reduce locally the system (3.1) with infinite-dimensional state parameter to a problem with two-dimensional state parameter. Here the main technical results are Lemma 10 about Fredholmness of the linearization of (3.1) and Lemma 20 about local unique solvability and smooth dependence of the infinite dimensional part of the Lyapunov-Schmidt system. The proofs of those lemmas are much more complicated than the corresponding proofs for ODEs or parabolic PDEs (with or without delay).

In particular, in the proof of Lemma 10 (more exactly in the proof of Claim 4 there) we use assumption (1.7), and it turns out that the conclusions of Lemma 10 (and of Theorem 2 as well) are not true, in general, if (1.7) is not true.

In the proof of Lemma 20 we use a generalized implicit function theorem, which is a particular case of [25, Theorem 2.2] and concerns abstract parameter-dependent equations with a loss of smoothness property. This generalized implicit function theorem is presented in Subsect. 5.1.

In Sect. 6 we put the solution of the infinite dimensional part of the Lyapunov-Schmidt system into the finite dimensional part and discuss the behavior of the resulting equation. This is completely analogous to what is known from Hopf bifurcation for ODEs and parabolic PDEs.

In Sect. 7 we prove Theorem 4, and in Sect. 8 we give an example.

Finally, in Sect. 9 we discuss boundary conditions other than (1.2).

1.3 Remarks on Related Work

The main methods for proving Hopf bifurcation theorems are, roughly speaking, center manifold reduction and Lyapunov-Schmidt reduction. In order to apply them to evolution equations, one needs to have a smooth center manifold for the corresponding semiflow (for center manifold reduction) or a Fredholm property of the linearized equation on spaces of periodic functions (for Lyapunov-Schmidt reduction).

In [5, 22] Hopf bifurcation theorems for abstract evolution equations are proved by means of Lyapunov-Schmidt reduction, and in [16, 34, 43] by means of center manifold reduction. In [5, 22] it is assumed that the operator of the linearized equation is sectorial (see [5, Hypothesis (HL)] and [22, Hypothesis I.8.8]), hence this setting is not appropriate for hyperbolic PDEs. In [16, 34, 43] the assumptions concerning the linearized operator are more general, including non-sectorial operators. However, it is unclear if our problem (1.1), (1.2) can be written as an abstract evolution equation satisfying those conditions.

In [43] it is shown that 1D semilinear damped wave equations without delay of the type \(\partial _t^2u=\partial _x^2u-\gamma \partial _tu+f(u)\) with \(f(0)=0\), subjected to homogeneous Dirichlet boundary conditions, can be written as an abstract evolution equation satisfying the general assumptions of [43], and a corresponding Hopf bifurcation theorem is proved. But it turns out that nonlinearities of the type \(f(u,\partial _xu)\) cannot be treated this way. In [26] a Hopf bifurcation theorem is stated without proof for second-order quasilinear hyperbolic systems without delay with arbitrary space dimension subjected to homogeneous Dirichlet boundary conditions. In [23] a Hopf bifurcation theorem for general semilinear first-order 1D hyperbolic systems without delay is proved by means of Lyapunov-Schmidt reduction, and applications to semiconductor laser modeling are described. In [31, 35, 36] the authors considered Hopf bifurcation for scalar linear first-order PDEs without delay of the type \((\partial _t +\partial _x + \mu )u = 0\) on the semi-axis \((0,\infty )\) with a nonlinear integral boundary condition at \(x=0\).

In [3] small periodic forcings of an undamped linear autonomous wave equation are considered. Because of lack of damping, small divisors come up, and Nash-Moser iterations have to be used. However, Lyapunov-Schmidt reduction is applied there as well as in the present paper.

Concerning Hopf bifurcation for hyperbolic PDEs with delay, to the best of our knowledge only the two results [27, 28] of N. Kosovalić and B. Pigott exist. In [27] the authors consider 1D damped and delayed Sine-Gordon-like wave equations of the type

with \(f(-x,-u)=-f(x,u)\) and \(f(x,0)=\partial _uf(x,0)=0\). Because of the symmetry assumption on the nonlinearity f the bifurcating time-periodic solutions can be determined by means of Fourier expansions. In [28] these results are generalized to equations on d-dimensional cubes, but locally unique bifurcating solution families can be described for fixed prescribed spatial frequency vectors only.

Our results in the present paper extend those of [27] mainly by two facts: Our equation (1.1) is more general than (1.12) (and does not have any symmetry property, in general), and we allow the presence of the perturbation parameter \(\lambda \). The symmetry assumptions of [27] allow one to use Fourier series techniques, while we use integration along characteristics.

2 Transformation of the Second-order Equation into a First-order System

In this section we show that any solution u to (1.3) creates a solution \(v=(v_1,v_2)\) to the first-order hyperbolic system

and vice versa. Here the nonlinear operator B is defined as

with partial integral operators \(J_\lambda \) defined by

and with “pointwise” operators K and \(K_\lambda \) defined by

Definition 6

(i) We denote by \(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) the space of all continuous functions \(v:{\mathbb {R}}\times [0,1] \rightarrow {\mathbb {R}}^2\) such that \(v(t+2\pi ,x)=v(t,x)\) for all \(t \in {\mathbb {R}}\) and \(x \in [0,1]\), with the norm

$$\begin{aligned} \Vert v\Vert _\infty :=\max \{|v_1(t,x)|+|v_2(t,x)|: t \in {\mathbb {R}}, \; x \in [0,1]\}. \end{aligned}$$

(ii) For \(k \in {\mathbb {N}}\) we denote by \(C^k_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) the space of all \(C^k\)-smooth functions \(v\in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\), with the norm \(\max \{\Vert \partial ^i_t\partial ^j_xv\Vert _\infty : 0 \le i+j\le k\}\).

Lemma 7

For all \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) and \(k=2,3,\ldots \) the following is true:

(i) If \(u \in C_{2\pi }^k({\mathbb {R}}\times [0,1])\) is a solution to (1.3), then the function \(v \in C_{2\pi }^{k-1}({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\), which is defined by

$$\begin{aligned} v_1:=\omega \partial _tu+a(x,\lambda ) \partial _xu, \;v_2:=\omega \partial _tu-a(x,\lambda ) \partial _xu, \end{aligned}\tag{2.5}$$

is a solution to (2.1).

(ii) If \(v \in C_{2\pi }^{k-1}({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) is a solution to (2.1), then the function \(u \in C_{2\pi }^{k-1}({\mathbb {R}}\times [0,1])\), which is defined by

$$\begin{aligned} u(t,x):=\frac{1}{2}\int _0^x\frac{v_1(t,\xi )-v_2(t,\xi )}{a(\xi ,\lambda )}d\xi , \end{aligned}\tag{2.6}$$

is \(C^k\)-smooth and a solution to (1.3).

Proof

(i) Let \(u \in C_{2\pi }^k({\mathbb {R}}\times [0,1])\) be a solution to (1.3), and let \(v \in C_{2\pi }^{k-1}({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) be defined by (2.5). From (2.5) it follows that

Hence

and

From \(u(t,0)=\partial _xu(t,1)=0\) (cf. (1.3)) and (2.7) it follows that \(v_1(t,0)+v_2(t,0)=0\) and \(v_1(t,1)-v_2(t,1)=0\), i.e., the boundary conditions of (2.1). Further, from \(u(t,0)=0\) and (2.7) it also follows that \(u=J_\lambda v\). Hence, (2.7), (2.8) and the differential equation in (1.3) yield the differential equations in (2.1).

(ii) Let \(v \in C_{2\pi }^{k-1}({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) be a solution to (2.1), and let \(u \in C_{2\pi }^{k-1}({\mathbb {R}}\times [0,1])\) be defined by (2.6). From (2.1) and (2.6) it follows that

Hence, \(\partial _tu\) is \(C^{k-1}\)-smooth, and

Further, (2.6) yields

i.e. \(\partial _xu\) is \(C^{k-1}\)-smooth also, i.e. u is \(C^{k}\)-smooth, and \(2(\partial _xa\partial _xu+a\partial _x^2u)=\partial _xv_1-\partial _xv_2\), i.e.

But (2.1), (2.9) and (2.11) imply \(\omega ^2\partial ^2_tu-a^2\partial ^2_xu=B(v,\omega ,\tau ,\lambda )+\frac{1}{2}\partial _xa(v_1-v_2)\), i.e. the differential equation in (1.3). The boundary conditions in (1.3) follow from the boundary conditions in (2.1) and from (2.6) and (2.10). \(\square \)
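The transformations (2.5) and (2.6) of Lemma 7 can be checked numerically. The sketch below uses a hypothetical coefficient \(a(x)=1+x^2/2\) (at a fixed \(\lambda \)), a hypothetical frequency \(\omega =1.2\) and the sample function \(u(t,x)=\sin (\pi x/2)\cos t\), which satisfies \(u(t,0)=\partial _xu(t,1)=0\). It computes v from the exact derivatives of u via (2.5), recovers u by evaluating (2.6) with Simpson's rule, and checks that \(v_1+v_2\) vanishes at \(x=0\) and \(v_1-v_2\) vanishes at \(x=1\), as follows from (2.5) because \(v_1+v_2=2\omega \partial _tu\) and \(v_1-v_2=2a\partial _xu\). All function names are illustrative.

```python
import math

OMEGA = 1.2  # hypothetical frequency


def a(x: float) -> float:
    # hypothetical positive coefficient a(x, lambda) at a fixed lambda
    return 1.0 + 0.5 * x * x


def u(t: float, x: float) -> float:
    # sample function with u(t,0) = 0 and d_x u(t,1) = 0
    return math.sin(0.5 * math.pi * x) * math.cos(t)


def u_t(t: float, x: float) -> float:
    return -math.sin(0.5 * math.pi * x) * math.sin(t)


def u_x(t: float, x: float) -> float:
    return 0.5 * math.pi * math.cos(0.5 * math.pi * x) * math.cos(t)


def v_from_u(t: float, x: float) -> tuple:
    # transformation (2.5)
    v1 = OMEGA * u_t(t, x) + a(x) * u_x(t, x)
    v2 = OMEGA * u_t(t, x) - a(x) * u_x(t, x)
    return v1, v2


def u_from_v(t: float, x: float, n: int = 200) -> float:
    # transformation (2.6), evaluated with the composite Simpson rule (n even)
    step = x / n
    total = 0.0
    for j in range(n + 1):
        xi = j * step
        v1, v2 = v_from_u(t, xi)
        weight = 1 if j in (0, n) else (4 if j % 2 == 1 else 2)
        total += weight * (v1 - v2) / a(xi)
    return 0.5 * total * step / 3


print(u_from_v(0.7, 0.6), u(0.7, 0.6))
```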

Let us calculate the linearization of the operator B (cf. (2.2)) with respect to v at \(v=0\). To this end, we use the following notation:

Remark that \(b_j(x,0)=b_j^0(x)\) (cf. (1.5)). We have

with

For reasons which will become clear in Sects. 3 and 4 below, we rewrite system (2.1) in the following way:

with

The operators \({{\mathcal {B}}}_1,{{\mathcal {B}}}_2:C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\times {\mathbb {R}}^3\rightarrow C_{2\pi }({\mathbb {R}} \times [0,1])\), introduced in (2.16), define an operator

Moreover, the operator \({{\mathcal {B}}}(\cdot ,\omega ,\tau ,\lambda ):C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\rightarrow C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) is \(C^\infty \)-smooth because the function b is supposed to be \(C^\infty \)-smooth, and

with operators \({{\mathcal {J}}}(\omega ,\tau ,\lambda ),{{\mathcal {K}}}(\lambda )\in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2))\). Their components are defined by (cf. (2.13) and (2.16))

and

Hence, the linearization with respect to v in \(v=0\) of the right-hand side of (2.15) has a special structure: It is the sum of the partial integral operator \({{\mathcal {J}}}(\omega ,\tau ,\lambda )\) and of the “pointwise” operator \({{\mathcal {K}}}(\lambda )\), which has vanishing diagonal part. This structure will be used in Subsect. 4.1 below, cf. Remark 11.

3 Transformation of the First-order System into a System of Partial Integral Equations

In this section we show (by using the method of integration along characteristics) that any solution to (2.1), i.e. to (2.15), solves the system of partial integral equations

and vice versa. Here the operators \({{\mathcal {B}}}_1\) and \({{\mathcal {B}}}_2\) are from (2.16), and the functions \(c_1\), \(c_2\) and A are defined by (cf. (2.12) and (2.14))

Lemma 8

For all \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) the following is true:

(i) If \(v \in C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) is a solution to (2.1), then it is a solution to (3.1).

(ii) If \(v \in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) is a solution to (3.1) and if \(\partial _tv\) exists and is continuous, then v belongs to \(C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) and solves (2.1).

Proof

(i) Let \(v\in C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) be given. Because of \(c_1(x,x,\lambda )=1\) and \(A(x,x,\lambda )=0\) we get

From \(\partial _\xi A(x,\xi ,\lambda )=-1/a(\xi ,\lambda )\) and \(\partial _\xi c_1(x,\xi ,\lambda )=b_1(\xi ,\lambda )c_1(x,\xi ,\lambda )/a(\xi ,\lambda )\) it follows that

Similarly one shows that

This yields that, if v is a solution to (2.1), i.e., to (2.15), then it is a solution to (3.1).

(ii) Let \(v \in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) be a solution to (3.1). Because of \(c_1(0,0,\lambda )=1\) and \(A(0,0,\lambda )=0\), the first equation of (3.1) yields \(v_1(t,0)=-c_1(0,0,\lambda )v_2(t+\omega A(0,0,\lambda ),0)=-v_2(t,0)\), i.e., the first boundary condition of (2.1). Similarly, the second boundary condition of (2.1) follows from the second equation of (3.1).

Further, from (3.1) and from the assumption, that \(\partial _tv\) exists and is continuous, it follows that also \(\partial _xv\) exists and is continuous, i.e. \(v\in C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\).

Now, let us verify the differential equations in (2.1), i.e. in (2.15). From (3.1) it follows that

Because of \(\partial _xc_1(x,0,\lambda )=-b_1(x,\lambda )c_1(x,0,\lambda )/a(x,\lambda )\) and

the left-hand side of (3.2) is \( \left( \omega \partial _t-a(x,\lambda )\partial _x\right) v_1(t,x)+b_1(x,\lambda )v_2(t+\omega A(x,0,\lambda ),0), \) and the right-hand side of (3.2) is

Hence, the first equation of (2.15) is shown. Using \(\partial _xc_2(x,0,\lambda )=b_2(x,\lambda )c_1(x,0,\lambda )/a(x,\lambda )\), one gets similarly

i.e. the second equation of (2.15) is shown. \(\square \)
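The proof of Lemma 8 uses only the properties \(A(x,x,\lambda )=0\), \(\partial _\xi A(x,\xi ,\lambda )=-1/a(\xi ,\lambda )\), \(c_1(x,x,\lambda )=1\), \(\partial _\xi c_1(x,\xi ,\lambda )=b_1(\xi ,\lambda )c_1(x,\xi ,\lambda )/a(\xi ,\lambda )\) and \(\partial _xc_1(x,0,\lambda )=-b_1(x,\lambda )c_1(x,0,\lambda )/a(x,\lambda )\). Since the defining formulas for A and \(c_1\) are not reproduced above, the following sketch assumes the representations \(A(x,\xi )=\int _\xi ^x d\eta /a(\eta )\) and \(c_1(x,\xi )=\exp \int _x^\xi b_1(\eta )/a(\eta )\,d\eta \) (with \(\lambda \) fixed), which satisfy exactly these properties; with them, \(\xi \mapsto (t+\omega A(x,\xi ),\xi )\) is the characteristic of \(\omega \partial _t-a\partial _x\) through \((t,x)\). The coefficients `a` and `b1` are hypothetical, and the identities are checked by quadrature and central differences.

```python
import math


def a(x: float) -> float:
    # hypothetical a(x, lambda) > 0 at a fixed lambda
    return 1.0 + 0.3 * math.sin(x)


def b1(x: float) -> float:
    # hypothetical b_1(x, lambda) at a fixed lambda
    return 0.5 * x - 0.2


def integrate(f, lo: float, hi: float, n: int = 400) -> float:
    # composite Simpson rule; returns the signed integral of f from lo to hi
    h = (hi - lo) / n
    s = f(lo) + f(hi)
    for j in range(1, n):
        s += (4 if j % 2 else 2) * f(lo + j * h)
    return s * h / 3


def A(x: float, xi: float) -> float:
    # assumed representation: integral of 1/a from xi to x
    return integrate(lambda eta: 1.0 / a(eta), xi, x)


def c1(x: float, xi: float) -> float:
    # assumed representation: exp of the integral of b1/a from x to xi
    return math.exp(integrate(lambda eta: b1(eta) / a(eta), x, xi))


def d_dxi(f, x: float, xi: float, h: float = 1e-5) -> float:
    # central difference in the second argument
    return (f(x, xi + h) - f(x, xi - h)) / (2 * h)


def d_dx(f, x: float, xi: float, h: float = 1e-5) -> float:
    # central difference in the first argument
    return (f(x + h, xi) - f(x - h, xi)) / (2 * h)


print(d_dxi(A, 0.7, 0.2), -1.0 / a(0.2))
```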

4 Lyapunov-Schmidt Procedure

In this and the next section we carry out a Lyapunov-Schmidt procedure in order to reduce, locally for \(v \approx 0\), \(\omega \approx 1\), \(\tau \approx \tau _0\) and \(\lambda \approx 0\), the problem (3.1) with the infinite-dimensional state parameter \((v,\omega )\) to the problem (6.1) with the three-dimensional state parameter \((u,\omega )\).

For the sake of simplicity, we will write the problem (3.1) in a more abstract way. To this end, for \(\omega ,\lambda \in {\mathbb {R}}\) let us introduce operators \( {\mathcal {C}}(\omega ,\lambda ),{\mathcal {D}}(\omega ,\lambda )\in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)) \) with components \({\mathcal {C}}_j(\omega ,\lambda ),{\mathcal {D}}_j(\omega ,\lambda )\in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2);C_{2\pi }({\mathbb {R}} \times [0,1]))\), \(j=1,2\), which are defined by

and

Using this notation, the system (3.1) reads

where the nonlinear operator \({{\mathcal {B}}}\) is introduced in (2.16).

Remark 9

Also the first-order hyperbolic system (2.15) can be written in an abstract way, namely as

with \({{\mathcal {A}}}(\omega ,\lambda ) \in {{\mathcal {L}}}(C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2);C_{2\pi } ({\mathbb {R}}\times [0,1];{\mathbb {R}}^2))\) defined by

Remark that in the proof of Lemma 8 we showed that for all \(\omega ,\lambda \in {\mathbb {R}}\) it holds

and

It is easy to see that the operators \({\mathcal {C}}(\omega ,\lambda ),{\mathcal {D}}(\omega ,\lambda )\), \({{\mathcal {J}}}(\omega ,\tau ,\lambda )\) and \({{\mathcal {K}}}(\lambda )\) (cf. (2.18), (2.19)) are bounded with respect to \(\omega \) and \(\tau \) and locally bounded with respect to \(\lambda \), i.e., for any \(c>0\) it holds

Unfortunately, however, the operators \({\mathcal {C}}(\omega ,\lambda )\) and \({\mathcal {D}}(\omega ,\lambda )\) do not depend continuously (in the sense of the uniform operator norm in \({{\mathcal {L}}}(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2))\)) on \(\omega \) and \(\lambda \), in general, and \({{\mathcal {J}}}(\omega ,\tau ,\lambda )\) does not depend continuously on \(\omega \) and \(\tau \), in general. This is the main technical difficulty which we have to overcome in order to analyze the bifurcation problem (4.3). Note that this difficulty also appears if \(\tau \) is fixed to be zero (and \(\lambda \) is used as the bifurcation parameter), i.e., in the case of Hopf bifurcation for semilinear wave equations without delay.
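The lack of continuity in the operator norm can already be seen for a single time shift, the kind of term, such as \(v_2(t+\omega A(x,0,\lambda ),0)\), which appears in (3.1) (cf. the proof of Lemma 8): changing \(\omega \) changes the size of the shift. The following sketch makes the simplifying assumption that we look only at the shift \(v\mapsto v(\cdot +h)\) on \(2\pi \)-periodic functions of t alone, not at the full operators \({\mathcal {C}}(\omega ,\lambda )\) and \({\mathcal {D}}(\omega ,\lambda )\). For every \(h\ne 0\) the function \(\cos (kt)\) with kh close to \(\pi \) has sup-norm one, but differs from its shift by almost 2 in sup-norm; for a fixed function (k=1) the difference tends to zero, i.e., the shift is only strongly continuous in h. The function name `shift_gap` is hypothetical.

```python
import math


def shift_gap(k: int, h: float) -> float:
    # sup over t of |cos(k(t+h)) - cos(k t)| for the 2*pi-periodic function cos(k t).
    # Since cos(k(t+h)) - cos(k t) = -2 sin(k t + k h/2) sin(k h/2), the supremum
    # 2|sin(k h/2)| is attained at t* with k t* + k h/2 = pi/2.
    t_star = (0.5 * math.pi - 0.5 * k * h) / k
    return abs(math.cos(k * (t_star + h)) - math.cos(k * t_star))


for h in (1e-1, 1e-2, 1e-3):
    k = round(math.pi / h)  # high frequency with k*h close to pi
    print(h, shift_gap(1, h), shift_gap(k, h))
```

The second column tends to zero with h, while the third stays close to 2, so the distance in operator norm between the shift and the identity does not tend to zero.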

It should be emphasized that the equation (4.3) does not depend smoothly on \(\omega \), \(\tau \) and \(\lambda \). But after the Lyapunov-Schmidt reduction, the equation (6.1), which is locally equivalent to (4.3), depends smoothly on all its parameters. In other words, the Lyapunov-Schmidt reduction eliminates the main difficulties of the present paper, at the cost of hard technical work.

4.1 Fredholmness of the Linearization

We intend to show that the linearization of (4.3) at \(v=0\), i.e., the operator

is a Fredholm operator of index zero from the space \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself.

Lemma 10

Let the condition (1.7) be fulfilled. Then there exists \(\delta >0\) such that for all \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) with \(\omega \ne 0\) and \(|\lambda |<\delta \) the operator \(I-{\mathcal {C}}(\omega ,\lambda )-{\mathcal {D}}(\omega ,\lambda )\partial _v{{\mathcal {B}}}(0,\omega ,\tau ,\lambda )\) is a Fredholm operator of index zero from the space \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself.

The main complication in the proof is caused by the fact that the operators \({\mathcal {C}}(\omega ,\lambda )+{\mathcal {D}}(\omega ,\lambda )\partial _v{{\mathcal {B}}}(0,\omega ,\tau ,\lambda )\) are not completely continuous from the space \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself, in general.

The proof will be divided into a number of claims.

Claim 1

For all \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) with \(\omega \ne 0\) and all \(v \in C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) we have \({\mathcal {D}}(\omega ,\lambda ){{\mathcal {J}}}(\omega ,\tau ,\lambda )v \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\), and for any \(c>0\) it holds

Proof of Claim

The idea of the proof is to show that the composition of the two partial integral operators \({\mathcal {D}}(\omega ,\lambda )\) and \({{\mathcal {J}}}(\omega ,\tau ,\lambda )\) is an integral operator mapping \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into \(C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\). Indeed, for \(v \in C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) we have

where

Here we changed the integration variable \(\xi \) to a new integration variable

Note that for \(\omega \ne 0\) the inverse transformation \(\xi =\xi _{\eta ,t,\omega ,\lambda }(\zeta )\) exists and depends smoothly on \(\eta ,t,\omega ,\lambda \) and \(\zeta \).

Obviously, the partial derivatives of (4.10) with respect to t and x exist, and their absolute values can be estimated from above by a constant times \(\Vert v\Vert _\infty \). Moreover, as long as \(\omega \) and \(\lambda \) vary in the ranges \(1/c\le \omega \le c\) and \(|\lambda | \le c\), the constant may be chosen independent of \(\omega ,\tau \) and \(\lambda \) (depending on c only). The same can be shown for the terms

and

Claim 1 is therefore proved for the first component \({\mathcal {D}}_1(\omega ,\lambda ){{\mathcal {J}}}(\omega ,\tau ,\lambda )\). The same argument applies to the second component \({\mathcal {D}}_2(\omega ,\lambda ){{\mathcal {J}}}(\omega ,\tau ,\lambda )\).

Claim 2

For all \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) with \(\omega \ne 0\) and all \(v \in C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) we have \({\mathcal {D}}(\omega ,\lambda ){{\mathcal {K}}}(\lambda ){\mathcal {D}}(\omega ,\lambda )v \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\), and for any \(c>0\) it holds

Proof of Claim

The proof is similar to the proof of Claim 1. We have

Here we changed the integration variable \(\xi \) to

and denoted by \(\xi =\xi _{\eta ,t,\omega ,\lambda }(\zeta )\) the inverse transformation. Now we proceed as in the proof of Claim 1.

Remark 11

In the proof of Claim 2 we used that the diagonal part of the operator \({{\mathcal {K}}}(\lambda )\) vanishes. Indeed, if instead of (2.19) we had, for example, \( [{{\mathcal {K}}}_1(\lambda )v](t,x)=v_1(t,x)+b_2(x,\lambda )v_2(t,x), \) then \([{\mathcal {D}}_1(\omega ,\lambda ){{\mathcal {K}}}(\lambda ){\mathcal {D}}(\omega ,\lambda )v](t,x)\) would contain the additional summand

Because of \(A(x,\xi ,\lambda )+A(\xi ,\eta ,\lambda )=A(x,\eta ,\lambda )\), this equals

and this is, in general, not differentiable with respect to t if \(v_1\) is not differentiable with respect to t.

Claim 3

For all \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) with \(\omega \ne 0\) and all \(v \in C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) we have \({\mathcal {D}}(\omega ,\lambda ){{\mathcal {K}}}(\lambda ){\mathcal {C}}(\omega ,\lambda )v \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\), and for any \(c>0\) it holds

Proof of Claim

We have

Here we changed the integration variable \(\xi \) to

and \(\xi =\xi _{t,\omega ,\lambda }(\zeta )\) is the inverse transformation. Again, now we can proceed as in the proof of Claim 1.

Claim 4

Let the condition (1.7) be fulfilled. Then there exists \(\delta >0\) such that for all \(\omega ,\lambda \in {\mathbb {R}}\) with \(|\lambda |\le \delta \) the operator \(I-{\mathcal {C}}(\omega ,\lambda )\) is an isomorphism from \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) to itself. Moreover,

Proof of Claim

Take \(f \in C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\). We have to show that for all real numbers \(\omega \) and \(\lambda \) with \(\lambda \approx 0\) there exists a unique function \(v \in C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) satisfying the equation

and that \(\Vert v\Vert _\infty \le \text{ const }\Vert f\Vert _\infty \), where the constant does not depend on \(\omega ,\lambda \) and f. Equation (4.14) is satisfied if and only if for all \(t \in {\mathbb {R}}\) and \(x \in [0,1]\) it holds

System (4.15), (4.16) is satisfied if and only if (4.15) is true and if it holds

i.e., if and only if (4.15) and (4.17) are true and if

Equation (4.18) is a functional equation for the unknown function \(v_2(\cdot ,0)\). In order to solve this equation let us denote by \(C_{2\pi }({\mathbb {R}})\) the Banach space of all \(2\pi \)-periodic continuous functions \({\tilde{v}}:{\mathbb {R}} \rightarrow {\mathbb {R}}\) with the norm \(\Vert {\tilde{v}}\Vert _\infty :=\max \{|{\tilde{v}}(t)|: \; t \in {\mathbb {R}}\}\). Equation (4.18) is an equation in \(C_{2\pi }({\mathbb {R}})\) of the type

with \({\tilde{v}},{\tilde{f}}\in C_{2\pi }({\mathbb {R}})\) defined by \({\tilde{v}}(t):=v_2(t,0)\) and

and with \({\widetilde{{\mathcal {C}}}}(\omega ,\lambda )\in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}}))\) defined by

From the definitions of the functions \(c_1\) and \(c_2\) it follows that

and assumption (1.7) yields

Now, we distinguish two cases.

Case 1: \(c_0<1\). Then there exists \(\delta >0\) such that for all \(\lambda \in [-\delta ,\delta ]\) it holds \(c_1(1,0,\lambda )c_2(0,1,\lambda )\le \frac{1+c_0}{2}<1\). Therefore

Hence, for all \(\lambda \in [-\delta ,\delta ]\) the operator \(I-{\widetilde{{\mathcal {C}}}}(\omega ,\lambda )\) is an isomorphism from \(C_{2\pi }({\mathbb {R}})\) to itself, and

Therefore, for all \(\omega ,\lambda \in {\mathbb {R}}\) with \(|\lambda | \le \delta \) there exists exactly one solution \(v_2(\cdot ,0)\in C_{2\pi }({\mathbb {R}})\) to (4.18), and

where the constants do not depend on \(\omega ,\lambda \) and f. Inserting this solution into the right-hand side of (4.17) we get \(v_2 \in C_{2\pi }({\mathbb {R}}\times [0,1])\), and inserting this into the right-hand side of (4.15) we finally get \(v_1 \in C_{2\pi }({\mathbb {R}}\times [0,1])\). Thus \(v=(v_1,v_2) \in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) is the unique solution to (4.15), (4.16), and \(\Vert v\Vert _\infty \le \text{ const }\Vert f\Vert _\infty \), where the constant does not depend on \(\omega ,\lambda \) and f.

Case 2: \(c_0>1.\) Then there exists \(\delta >0\) such that for all \(\lambda \in [-\delta ,\delta ]\) it holds \(c_1(1,0,\lambda )c_2(0,1,\lambda )\ge \frac{1+c_0}{2}>1\). Equation (4.18) is equivalent to

This equation is again of the type (4.19), but now with \(\Vert {\widetilde{{\mathcal {C}}}}(1,0)\Vert _{ {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}}))}\le 1/c_0\). Hence, there exists \(\delta >0\) such that

we can, therefore, proceed as in the case \(c_0<1\).
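The two cases of this proof can be mimicked on a toy model. The sketch below assumes, purely for illustration, that \({\widetilde{{\mathcal {C}}}}\) acts as a constant multiple of a time shift, \([{\widetilde{{\mathcal {C}}}}{\tilde{v}}](t)=c\,{\tilde{v}}(t-\theta )\), where \(|c|\) plays the role of \(c_1(1,0,\lambda )c_2(0,1,\lambda )\); the actual operator is given by (4.21) and is not reproduced here. For \(|c|<1\) the equation \({\tilde{v}}={\widetilde{{\mathcal {C}}}}{\tilde{v}}+{\tilde{f}}\) is solved by fixed-point (Neumann series) iteration; for \(|c|>1\) it is first rewritten with the shift reversed, so that the new operator has norm \(1/|c|\). Functions are sampled on a uniform periodic grid, and \(\theta \) is assumed to be an integer number m of grid steps (a hypothetical simplification).

```python
import math


def shift(v, m):
    """Grid version of t -> v(t - theta), theta = m grid steps (periodic)."""
    n = len(v)
    return [v[(j - m) % n] for j in range(n)]


def fixed_point(q, m, g, tol=1e-13, max_iter=10_000):
    """Solve v = q * shift(v, m) + g by iteration; converges for |q| < 1."""
    v = list(g)
    for _ in range(max_iter):
        new = [q * s + gj for s, gj in zip(shift(v, m), g)]
        change = max(abs(a - b) for a, b in zip(new, v))
        v = new
        if change < tol:
            return v
    raise RuntimeError("fixed-point iteration did not converge")


def solve_model(c, m, f):
    """Solve v(t) = c * v(t - theta) + f(t) for |c| != 1 (toy model only)."""
    if abs(c) < 1:
        # Case 1: the operator v -> c * v(. - theta) has norm |c| < 1.
        return fixed_point(c, m, f)
    if abs(c) > 1:
        # Case 2: equivalently v(s) = v(s + theta)/c - f(s + theta)/c,
        # and v -> v(. + theta)/c has norm 1/|c| < 1.
        g = [-x / c for x in shift(f, -m)]
        return fixed_point(1 / c, -m, g)
    raise ValueError("|c| = 1 is excluded (c_0 = 1 is not covered by the proof)")


def residual(v, c, m, f):
    """Sup-norm defect of v in the equation v = c * shift(v, m) + f."""
    return max(abs(vj - (c * s + fj)) for vj, s, fj in zip(v, shift(v, m), f))


n, m = 64, 5
f = [math.sin(2 * math.pi * j / n) + 0.3 for j in range(n)]
for c in (0.5, 3.0):
    v = solve_model(c, m, f)
    print(c, residual(v, c, m, f))
```

In both cases the solution obeys the a priori bound \(\Vert v\Vert _\infty \le \Vert f\Vert _\infty /\big ||c|-1\big |\), the toy analogue of the uniform estimate in Claim 4.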

Remark 12

Definition (4.21) implies that \(\frac{d}{dt}{\widetilde{{\mathcal {C}}}}(\omega ,\lambda ){\tilde{v}}={\widetilde{{\mathcal {C}}}} (\omega ,\lambda )\frac{d}{dt}{\tilde{v}}\) for all \({\tilde{v}}\in C^1_{2\pi }({\mathbb {R}})\). This yields the estimate

Hence, \((I-{\widetilde{{\mathcal {C}}}}(\omega ,\lambda ))^{-1}\) is a linear bounded operator from \(C^1_{2\pi }({\mathbb {R}})\) into \(C^1_{2\pi }({\mathbb {R}})\) for \(\lambda \approx 0\). It follows that, for given \(f \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\), the solution v to (4.14) belongs to \(C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) and, moreover,

Let us return to the Fredholmness of the operator \(I-{\mathcal {C}}(\omega ,\lambda )-{\mathcal {D}}(\omega ,\lambda )({{\mathcal {J}}}(\omega ,\tau ,\lambda )+{{\mathcal {K}}}(\lambda ))\) from the space \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself for \(\omega \ne 0\) and \(\lambda \approx 0\). Note that the space \(C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) is completely continuously embedded into the space \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\). By Claim 1, for given \(\omega \ne 0\), the operator \({\mathcal {D}}(\omega ,\lambda ){{\mathcal {J}}}(\omega ,\tau ,\lambda )\) is completely continuous from \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself. Therefore, it remains to show that for \(\omega \ne 0\) and \(\lambda \approx 0\) the operator \(I-{\mathcal {C}}(\omega ,\lambda )-{\mathcal {D}}(\omega ,\lambda ){{\mathcal {K}}}(\lambda )\) is Fredholm of index zero from \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself. By Claim 4, this is true whenever the operator \(I-(I-{\mathcal {C}}(\omega ,\lambda ))^{-1}{\mathcal {D}}(\omega ,\lambda ){{\mathcal {K}}}(\lambda )\) is Fredholm of index zero from \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself, for \(\omega \ne 0\) and \(\lambda \approx 0\). For that we use the following Fredholmness criterion of S. M. Nikolskii (cf., e.g., [20, Theorem XIII.5.2]):

Theorem 13

Let U be a Banach space and \(K \in {{\mathcal {L}}}(U)\) be an operator such that \(K^2\) is completely continuous. Then the operator \(I-K\) is Fredholm of index zero.
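For the reader's convenience, here is one standard way to see Theorem 13 (a sketch; not necessarily the argument given in [20]). Since \(K^2\) is completely continuous, \(I-K^2\) is Fredholm of index zero by the Riesz-Schauder theory. The factorization below then shows that \(\ker (I-K)\) is finite dimensional and that \(\text{im}(I-K)\) contains a closed subspace of finite codimension, hence is itself closed with finite codimension:

```latex
(I-K)(I+K) = (I+K)(I-K) = I-K^2, \\
\ker(I-K) \subseteq \ker(I-K^2), \qquad
\operatorname{im}(I-K) \supseteq \operatorname{im}(I-K^2), \\
\operatorname{ind}(I-K) = \operatorname{ind}(I-sK)\big|_{s=1}
  = \operatorname{ind}(I-sK)\big|_{s=0} = \operatorname{ind}(I) = 0 .
```

For the last line, note that \((sK)^2=s^2K^2\) is completely continuous for every \(s\in [0,1]\), so \(I-sK\) is Fredholm for all such s; since \(s\mapsto I-sK\) is continuous in the operator norm and the index is locally constant on the set of Fredholm operators, the index does not change along this path.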

On account of Theorem 13, it remains to prove the following statement.

Claim 5

For given \(\omega \ne 0\) and \(\lambda \approx 0\), the operator \( ((I-{\mathcal {C}}(\omega ,\lambda ))^{-1}{\mathcal {D}}(\omega ,\lambda ){{\mathcal {K}}}(\lambda ))^2\) is completely continuous from \(C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) into itself.

Proof of Claim

A straightforward calculation shows that

The desired statement now follows from Claims 2 and 3.

Remark 14

For proving Lemma 10 we did not need the estimates (4.9), (4.11)–(4.13) and (4.22). These estimates will be used in the proof of Lemma 20 below (more exactly, in the proof of Claim 7 there).

4.2 Kernel and Image of the Linearization

This subsection concerns the kernel and the image of the operator

where

(cf. (2.18), (2.19), (4.1) and (4.2)). From now on we will use assumptions \(\mathbf{(A1)}\)–\(\mathbf{(A3)}\) and (1.7) of Theorem 2. In particular, we will fix a solution \(u=u_0\ne 0\) to (1.4) with \(\tau =\tau _0\) and \(\mu =i\) and a solution \(u=u_*\ne 0\) to (1.6) fulfilling assumption \(\mathbf{(A3)}\) (or, more precisely, (4.41) below).

We will describe the kernel and the image of the operator \({{\mathcal {L}}}\) by means of the eigenfunctions \(u_0\) and \(u_*\). To this end, we introduce two functions \(v_0,v_*:[0,1] \rightarrow {\mathbb {C}}^2\), two functions \({{\varvec{v}}}_0,{{\varvec{v}}}_*:{\mathbb {R}}\times [0,1] \rightarrow {\mathbb {C}}^2\) and four functions \(v_0^1,v_0^2,v_*^1,v_*^2:{\mathbb {R}}\times [0,1] \rightarrow {\mathbb {R}}^2\) by

and

where

Lemma 15

If the conditions of Theorem 2 are fulfilled, then \(\ker {{\mathcal {L}}}= \text{ span }\{v^1_0,v^2_0\}\).

Proof

Because \(u_0\) is a solution to (1.4) with \(\tau =\tau _0\) and \(\mu =i\), the complex-valued function \( {{\varvec{u}}}_0(t,x):=e^{it}u_0(x) \) is a solution to the linear homogeneous problem

On the other hand, if u is a solution to (4.29), then for all \(k \in {\mathbb {Z}}\) the functions

satisfy the ODE \(\left( -k^2-b_3^0(x)-b_4^0(x)e^{ik\tau _0}-ikb_5^0(x)\right) {\tilde{u}}_k(x)=a_0(x)^2{\tilde{u}}_k''(x)+b_6^0(x){\tilde{u}}_k'(x)\) with boundary conditions \({\tilde{u}}_k(0)={\tilde{u}}_k'(1)=0\). Assumptions \(\mathbf{(A1)}\) and \(\mathbf{(A2)}\) imply that \({\tilde{u}}_k=0\) for all \(k \in {\mathbb {Z}}\setminus \{\pm 1\}\) and \({\tilde{u}}_1=c u_0\) for some constant c, i.e., \(u\in \text{ span }\{{{\varvec{u}}}_0,\overline{{{\varvec{u}}}_0}\}\). In other words, \(\text{ span }\{{{\varvec{u}}}_0,\overline{{{\varvec{u}}}_0}\}\) consists of all solutions \(u:{\mathbb {R}} \times [0,1] \rightarrow {\mathbb {C}}\) to (4.29).

Now we apply Lemmas 7 and 8 with \(\omega =1\), \(\tau =\tau _0\), \(\lambda =0\) and with \(b(x,\lambda ,u_3,u_4,u_5,u_6)\) replaced by \(b_3^0(x)u_3+b_4^0(x)u_4+b_5^0(x)u_5+b_6^0(x)u_6\). We conclude that \(\text{ span }\{{{\varvec{v}}}_0,\overline{{{\varvec{v}}}_0}\}\) consists of all solutions \(v:{\mathbb {R}} \times [0,1] \rightarrow {\mathbb {C}}^2\) to the linear homogeneous equation \( v={\mathcal {C}} v-{\mathcal {D}}({{\mathcal {J}}}+{{\mathcal {K}}})v, \) where \({{\varvec{v}}}_0\) is defined by (4.26). As \(v_0^1=\text{ Re }{{\varvec{v}}}_0\) and \(v_0^2=\text{ Im }{{\varvec{v}}}_0\), the proof is complete. \(\square \)
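The proof of Lemma 15 decomposes a \(2\pi \)-periodic function of t into Fourier modes. As a small check of that bookkeeping, the sketch below samples \(u(t,x)=\text{Re}\,(e^{it}u_0(x))\) at a fixed x and computes discrete approximations of \({\tilde{u}}_k(x)=\frac{1}{2\pi }\int _0^{2\pi }u(t,x)e^{-ikt}\,dt\) (this normalization is an assumption; the paper's defining display is not reproduced here). Only the modes \(k=\pm 1\) survive, with \({\tilde{u}}_1=u_0/2\). The profile `u0` is an arbitrary hypothetical function, not a solution of (1.4).

```python
import cmath
import math


def fourier_coeff(samples, k):
    """Trapezoidal approximation of (1/2pi) * int_0^{2pi} u(t) exp(-ikt) dt
    from equispaced samples u(2*pi*j/n), j = 0..n-1 (exact for |k| < n/2
    when u is a trigonometric polynomial of low degree)."""
    n = len(samples)
    return sum(s * cmath.exp(-2j * math.pi * k * j / n)
               for j, s in enumerate(samples)) / n


def u0(x):
    """Hypothetical complex profile with u0(0) = 0 (not a solution of (1.4))."""
    return complex(math.sin(math.pi * x / 2), 0.3 * x)


x, n = 0.7, 32
samples = [(cmath.exp(2j * math.pi * j / n) * u0(x)).real for j in range(n)]
coeffs = {k: fourier_coeff(samples, k) for k in range(-4, 5)}
# Up to rounding: coeffs[1] == u0(x)/2, coeffs[-1] == conj(u0(x))/2,
# and all other coefficients vanish.
```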

In what follows we denote by “\(\cdot \)” the Hermitian scalar product in \({\mathbb {C}}^2\), i.e. \(v\cdot w:=v_1\overline{w_1}+v_2\overline{w_2}\) for \(v,w \in {\mathbb {C}}^2\). Further, for continuous functions \(v,w:[0,2\pi ]\times [0,1]\rightarrow {\mathbb {C}}^2\) we write

Moreover, we will work with the operator \({{\mathcal {A}}}\in {{\mathcal {L}}}\left( C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2);C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\right) \), the components of which are defined by

i.e. \({{\mathcal {A}}}={{\mathcal {A}}}(1,0)\) (cf. (4.5)), and its formal adjoint \({{\mathcal {A}}}^* \in {{\mathcal {L}}}\left( C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2);C_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\right) \), which is defined by

It is easy to verify that \(\langle {{\mathcal {A}}}v,w\rangle =\langle v,{{\mathcal {A}}}^*w\rangle \) for all \(v,w \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) which satisfy the boundary conditions in (2.1).

Lemma 16

If the conditions of Theorem 2 are fulfilled, then

Proof

It follows from Lemmas 10 and 15 that \(\text{ im }\,{{\mathcal {L}}}\) is a closed subspace of codimension two in \(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\). Hence, it suffices to show that

and that

To prove (4.30), fix an arbitrary \(v \in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\). There exists a sequence \(w^1,w^2,\ldots \in C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) such that \(\Vert v-w^k\Vert _\infty \rightarrow 0\) as \(k\rightarrow \infty \). Moreover, the functions \(({\mathcal {C}}+{\mathcal {D}}({{\mathcal {J}}}+{{\mathcal {K}}}))w^k\) satisfy the boundary conditions in (2.1). Also the function \(v_*^1\) satisfies the boundary conditions in (2.1). The last fact follows from the equalities \([(u_* +iU_*)+(u_* -iU_*)]_{x=0}=2u_*(0)=0\) and

because the eigenfunction \(u_*\) satisfies the boundary conditions in (1.6). Therefore, by (4.6),

where the operators \({{\mathcal {J}}}^*,{{\mathcal {K}}}^*\in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2))\) are the formal adjoint operators to \({{\mathcal {J}}}\) and \({{\mathcal {K}}}\). Due to (2.18) and (2.19), they are given by the formulas

and

respectively. It follows that

Similarly, \(\langle {{\mathcal {L}}}v,{{\mathcal {A}}}^*v_*^2\rangle =\langle v,({{\mathcal {A}}}^*-{{\mathcal {J}}}^*-{{\mathcal {K}}}^*)v_*^2\rangle \). Hence, in order to prove (4.30) it suffices to show that

Taking into account the definitions of the operators \({{\mathcal {A}}}^*\), \({{\mathcal {J}}}^*\) and \({{\mathcal {K}}}^*\) and of the function \(v_*\) (cf. (4.27)), it is easy to see that (4.33) is satisfied if and only if, for any \(x \in [0,1]\),

where \(v_{*1}=u_*+iU_*\) and \(v_{*2}=u_*-iU_*\) are the components of the vector function \(v_*\). Considering the sum and the difference of these two equations and taking into account that \(v_{*1}+v_{*2}=2u_*\) and \(v_{*1}-v_{*2}=2iU_*\), we get

Thus, (4.33) is equivalent to (4.34)–(4.35). In order to show (4.34), we use the equality \(b_1^0+b_2^0=b_5^0\) (cf. (2.14)) and note that (4.34) is equivalent to

On the other hand, (4.28) yields

Inserting (4.37) into (4.36), we conclude that (4.34) holds whenever \(u_*\) solves the ordinary differential equation in (1.6). Since \(u_*\) does solve it, (4.34) is satisfied.

Equation (4.35) is satisfied by the definition (4.28) of the function \(U_*\) and the equality \(b_1^0-b_2^0=-a_0'+b_6^0/a_0\) (cf. (2.14)). The proof of (4.33) and, hence, of (4.30) is therefore complete.

It remains to prove (4.31). To this end, we introduce functions \(w_0: [0,1] \rightarrow {\mathbb {C}}^2\), \({{\varvec{w}}}_0: {\mathbb {R}} \times [0,1] \rightarrow {\mathbb {C}}^2\) and \(w^1,w^2 \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) by

and

Note that the equations (4.39) define the functions \(w^1,w^2 \in C^1_{2\pi }({\mathbb {R}} \times [0,1];{\mathbb {R}}^2)\) uniquely, as follows from Claim 4 in Sect. 4.1 (see also Remark 12). Combining (4.6) with (4.39), we obtain

Therefore,

By (4.27) and (4.38), the right-hand side of (4.40) is equal to

Finally, we use (4.28) and the definition of \(\sigma \) in (A3) to get

Similarly,

Now, we normalize the eigenfunctions \(u_0\) and \(u_*\) so that

It follows that

which yields (4.31), as desired. \(\square \)

4.3 Splitting of Equation (4.3)

Given \(\varphi \in {\mathbb {R}}\), we introduce a time shift operator \(S_\varphi \in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2))\) by

It is easy to verify that

and

for all \(\varphi , \omega , \tau , \lambda \in {\mathbb {R}}\) and \(v \in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\). It follows that \(S_\varphi {{\mathcal {L}}}={{\mathcal {L}}}S_\varphi \); in particular,

Since \(\ker {{\mathcal {L}}}\) is finite dimensional, it has a topological complement \({{\mathcal {W}}}\) in \(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\), i.e., a closed subspace such that \(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)=\ker {{\mathcal {L}}}\oplus {{\mathcal {W}}}\). Since the map \(\varphi \in {\mathbb {R}} \mapsto S_\varphi \in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2))\) is strongly continuous, \({{\mathcal {W}}}\) can be chosen to be invariant with respect to \(S_\varphi \), i.e.,

(cf. [6, Theorem 2]). Further, let us introduce a projection operator \(P \in {{\mathcal {L}}}(C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2))\) by

where the functions \(v_*^j\) and \(w^k\) are given by (4.26) and (4.39). The projection property \(P^2=P\) follows from (4.42). Moreover, Lemma 16 implies that

Furthermore, from (4.38) it follows that \([S_\varphi {{\varvec{w}}}_0](t,x)={{\varvec{w}}}_0(t+\varphi ,x)=e^{i(t+\varphi )}w_0(x)=e^{i\varphi }{{\varvec{w}}}_0(t,x)\) and, hence,

Similarly one shows that \( S_\varphi v_*^1=\cos \varphi \,v_*^1-\sin \varphi \,v_*^2\) and \( S_\varphi v_*^2=\cos \varphi \,v_*^2+\sin \varphi \,v_*^1. \) On account of (4.44), for every \(v \in C_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) we obtain

Finally, we use the ansatz (cf. (4.47))

and rewrite equation (4.3) as a system of two equations, namely

5 The External Lyapunov-Schmidt Equation

In this section we solve the so-called external Lyapunov-Schmidt equation (4.53) with respect to \(w \approx 0\) for \(u\approx 0\), \(\omega \approx 1\), \(\tau \approx \tau _0\) and \(\lambda \approx 0\). More precisely, in Subsect. 5.1 we present a generalized implicit function theorem, which will be used in Subsect. 5.2 to solve equation (4.53).

5.1 A Generalized Implicit Function Theorem

In this subsection we present the generalized implicit function theorem, which is a particular case of [25, Theorem 2.2]. It concerns abstract parameter-dependent equations of the type

Here F is a map from \({{\mathcal {W}}}_0 \times {{\mathcal {P}}}\) to \(\widetilde{{\mathcal {W}}}_0\), \({{\mathcal {W}}}_0\) and \({\widetilde{{\mathcal {W}}}}_0\) are Banach spaces with norms \(\Vert \cdot \Vert _0\) and \(|\cdot |_0\), respectively, and \({{\mathcal {P}}}\) is a finite dimensional normed vector space with norm \(\Vert \cdot \Vert \). Moreover, it is supposed that

We are going to state conditions on F such that, similarly to the classical implicit function theorem, for all \(p \approx 0\) there exists exactly one solution \(w \approx 0\) to (5.1) and that the data-to-solution map \(p \mapsto w\) is smooth. Similarly to the classical implicit function theorem, we suppose that

However, unlike in the classical case, we do not suppose that \(F(w,\cdot )\) is smooth for all \(w \in {{\mathcal {W}}}_0\). In our applications the map \((w,p) \mapsto \partial _wF(w,p)\) is, in general, not even continuous with respect to the uniform operator norm in \({{\mathcal {L}}}({{\mathcal {W}}}_0;{\widetilde{{\mathcal {W}}}}_0)\). Hence, Theorem 17 below differs from the classical implicit function theorem not by a degeneracy of the partial derivatives \(\partial _wF(w,p)\) (as in implicit function theorems of Nash-Moser type), but by a degeneracy of the partial derivatives \(\partial _pF(w,p)\) (which do not exist for all \(w \in {{\mathcal {W}}}_0\)).

Thus, we consider parameter-dependent equations which do not depend smoothly on the parameter, but whose solutions do. For that, of course, some additional structure is needed, which will be described now.

Let \(\varphi \in {\mathbb {R}} \mapsto S(\varphi ) \in {{\mathcal {L}}}({{\mathcal {W}}}_0)\), \(\varphi \in {\mathbb {R}} \mapsto {\widetilde{S}}(\varphi ) \in {{\mathcal {L}}}(\widetilde{{{\mathcal {W}}}}_0)\), and \(\varphi \in {\mathbb {R}} \mapsto T(\varphi ) \in {{\mathcal {L}}}({{\mathcal {P}}})\) be strongly continuous groups of linear bounded operators on \({{\mathcal {W}}}_0\), \(\widetilde{{{\mathcal {W}}}}_0\) and \({{\mathcal {P}}}\), respectively. We suppose that

Furthermore, let \(A:D(A) \subseteq {{\mathcal {W}}}_0 \rightarrow {{\mathcal {W}}}_0\) be the infinitesimal generator of the \(C_0\)-group \(S(\varphi )\). For \(l\in {\mathbb {N}}\), let

denote the domain of definition of the l-th power of A. Since A is closed, \({{\mathcal {W}}}_l\) is a Banach space with the norm

We suppose that for all \(k,l\in {\mathbb {N}}\)

and, for all \(w,w_1,\ldots ,w_k \in {{\mathcal {W}}}_l\) and \(p,p_1,\ldots ,p_l \in {{\mathcal {P}}}\) with \(\Vert w\Vert _l+\Vert p\Vert \le 1\),

where the constants \(c_{kl}\) do not depend on \(w,w_1,\ldots ,w_k,p,p_1,\ldots ,p_l\).

Theorem 17

[25, Theorem 2.2] Suppose that the conditions (5.2)–(5.6) are fulfilled. Furthermore, assume that there exist \(\varepsilon _0 >0\) and \(c>0\) such that for all \(p \in {{\mathcal {P}}}\) with \(\Vert p\Vert \le \varepsilon _0\)

and

Then there exist \(\varepsilon \in (0,\varepsilon _0]\) and \(\delta >0\) such that for all \(p \in {{\mathcal {P}}}\) with \(\Vert p\Vert \le \varepsilon \) there is a unique solution \(w=\hat{w}(p)\) to (5.1) with \(\Vert w\Vert _0 \le \delta \). Moreover, for all \(k\in {\mathbb {N}}\) we have \(\hat{w}(p) \in {{\mathcal {W}}}_k\), and the map \(p \in {{\mathcal {P}}}\mapsto \hat{w}(p) \in {{\mathcal {W}}}_k\) is \(C^\infty \)-smooth.

Remark 18

The maps \(\varphi \in {\mathbb {R}} \mapsto S(\varphi ) \in {{\mathcal {L}}}({{\mathcal {W}}}_0)\) and \(\varphi \in {\mathbb {R}} \mapsto {\widetilde{S}}(\varphi ) \in {{\mathcal {L}}}(\widetilde{{\mathcal {W}}}_0)\) are not continuous, in general. Nevertheless, since \({{\mathcal {P}}}\) is supposed to be finite dimensional, the map \(\varphi \in {\mathbb {R}} \mapsto T(\varphi ) \in {{\mathcal {L}}}({{\mathcal {P}}})\) is \(C^\infty \)-smooth. This is essential in the proof of Theorem 17 in [25].

Remark 19

In Theorem 17 we do not suppose that \(\partial _wF(0,p)\) depends continuously on p in the sense of the uniform operator norm in \({{\mathcal {L}}}({{\mathcal {W}}}_0;{\widetilde{{\mathcal {W}}}}_0)\). Hence, assumptions (5.7) and (5.8) cannot be replaced by their versions with \(p=0\), in general.

5.2 Solution of the External Lyapunov-Schmidt Equation

In what follows, we use the following notation (for \(\varepsilon >0\) and \(k\in {\mathbb {N}}\)):

We are going to solve the so-called external Lyapunov-Schmidt equation (4.53) with respect to \(w \approx 0\) for \(u\approx 0\), \(\omega \approx 1\), \(\tau \approx \tau _0\) and \(\lambda \approx 0\).

Lemma 20

Let the conditions of Theorem 2 be fulfilled. Then there exist \(\varepsilon >0\) and \(\delta >0\) such that for all \(u \in {{\mathcal {U}}}_\varepsilon \) and \((\omega ,\tau ,\lambda )\in {{\mathcal {P}}}_\varepsilon \) there is a unique solution \(w=\hat{w}(u,\omega ,\tau ,\lambda ) \in {{\mathcal {W}}}\) to (4.53) with \(\Vert w\Vert _\infty <\delta \). Moreover, for all \(k\in {\mathbb {N}}\) it holds \(\hat{w}(u,\omega ,\tau ,\lambda ) \in C^k_{2\pi }\), and the map \((u,\omega ,\tau ,\lambda ) \in {{\mathcal {U}}}_\varepsilon \times {{\mathcal {P}}}_\varepsilon \mapsto \hat{w}(u,\omega ,\tau ,\lambda ) \in C^k_{2\pi }\) is \(C^\infty \)-smooth.

Note that \(w=0\) is a solution to (4.53) with \(u=0\), \(\omega =1\), \(\tau =\tau _0\) and \(\lambda =0\). This suggests that Lemma 20 can be obtained from an appropriate implicit function theorem. Unfortunately, the classical implicit function theorem does not work here, because the left-hand side of (4.53) is not differentiable with respect to \(\omega \), \(\tau \) and \(\lambda \) for all \(w\in C_{2\pi }\). Instead, we will apply Theorem 17.

Let us verify the assumptions of Theorem 17 in the following setting:

Note that \({{\mathcal {W}}}_0\) and \({\widetilde{{{\mathcal {W}}}}}_0\) are Banach spaces with the norm \(\Vert \cdot \Vert _\infty \). Conditions (5.2), (5.3) and (5.7) are fulfilled, the last one being true due to Lemma 10.

It remains to verify conditions (5.4)–(5.6) and (5.8).

We begin with verifying (5.4). We identify \(S_\varphi \) and \({\widetilde{S}}_\varphi \) with \(S_\varphi \) defined by (4.43) restricted to \({{\mathcal {W}}}_0\) and \({\widetilde{{{\mathcal {W}}}}}_0\), respectively. Let

It follows from (4.50) that \(S_\varphi {\widetilde{{{\mathcal {W}}}}}_0={\widetilde{{{\mathcal {W}}}}}_0\). Taking into account (4.44) and (4.45), we get

which gives (5.4).

To verify assumption (5.5), recall that the infinitesimal generator of the group \(S_\varphi \) is the differential operator \(A=\frac{d}{dt}\). Therefore,

We have

and

Taking into account that any \(u \in \ker {{\mathcal {L}}}\) is \(C^\infty \)-smooth and satisfies the equality \(\Vert \partial _t^ju\Vert _\infty =\Vert u\Vert _\infty \) (cf. Lemma 15), our task reduces to showing that for all \(k,l\in {\mathbb {N}}\) and all \(w,w_1,\ldots ,w_k \in {{\mathcal {W}}}^l\) the functions \({\mathcal {C}}(\omega ,\lambda )w\) and \({\mathcal {D}}(\omega ,\lambda )\partial ^k_v{{\mathcal {B}}}(u+w,\omega ,\tau ,\lambda )(w_1,\ldots ,w_k)\) depend \(C^l\)-smoothly on \((\omega ,\tau ,\lambda )\) and that condition (5.6) is fulfilled.

The proof goes through two claims.

Claim 6

For all \(l,m\in {\mathbb {N}}\) and \(w \in {{\mathcal {W}}}^{l+m}\) the map \((\omega ,\lambda ) \in {\mathbb {R}}^2 \mapsto {\mathcal {C}}(\omega ,\lambda ) w \in C_{2\pi }\) is \(C^{l+m}\)-smooth. Moreover,

where the constant \(c_{lm}\) does not depend on \(\omega \), \(\lambda \) and w for \(\omega \) and \(\lambda \) varying on bounded intervals.

Proof of Claim

Since \(w(\cdot ,x)\) is \(C^l\)-smooth, definition (4.1) implies that \({\mathcal {C}}(\cdot ,\cdot )w\) is \(C^l\)-smooth, and the derivatives can be calculated by the chain rule. For example,

It follows that \(\Vert \partial _\omega [{\mathcal {C}}(\omega ,\lambda )w]\Vert _\infty \le \text{ const }\Vert w\Vert _1\), where the constant does not depend on \(\omega \) and \(\lambda \) (varying in bounded intervals) and on \(w \in {{\mathcal {W}}}^1\).

Similarly one can handle \(\partial _\lambda {\mathcal {C}}(\omega ,\lambda )w\) and higher order derivatives, and similarly one can show (5.11).

Remark 21

In (5.12) the loss of derivatives property can be seen explicitly: taking a derivative with respect to \(\omega \) produces a derivative with respect to t. The same happens in formulas (5.14), (5.16) and (5.17) below.
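The loss of derivatives can be reproduced on a one-line model. Assume, for illustration only, that \({\mathcal {C}}(\omega ,\lambda )w\) contains a term of the form \(w(t-\omega \theta )\), a hypothetical stand-in for the \(\omega \)-dependent time shifts in (4.1), with a made-up constant \(\theta \) (`THETA` below). Then \(\partial _\omega [w(t-\omega \theta )]=-\theta w'(t-\omega \theta )\), which exists only if w is differentiable in t. The sketch compares difference quotients in \(\omega \) for the smooth function \(w=\sin \) and for the merely continuous \(w(s)=|\sin s|^{1/2}\); in the second case the quotient grows like \(h^{-1/2}\) as the step h shrinks.

```python
import math

THETA = 0.7  # hypothetical time shift per unit of omega


def C(omega, w):
    """Toy operator [C(omega) w](t) = w(t - omega * THETA)."""
    return lambda t: w(t - omega * THETA)


def quotient(omega, w, t, h):
    """Forward difference quotient of omega -> [C(omega) w](t)."""
    return (C(omega + h, w)(t) - C(omega, w)(t)) / h


def rough(s):
    """Continuous, but not differentiable at s = 0."""
    return math.sqrt(abs(math.sin(s)))


omega = 1.0

# Smooth w: the quotient approaches -THETA * w'(t - omega * THETA).
smooth = quotient(omega, math.sin, 0.3, 1e-7)
exact = -THETA * math.cos(0.3 - omega * THETA)

# Rough w at t = omega * THETA: the quotient behaves like sqrt(THETA / h).
blowup = [quotient(omega, rough, omega * THETA, h) for h in (1e-2, 1e-4, 1e-6)]
```

Bounding \(\partial _\omega \) of such a term therefore costs one t-derivative of w, which is exactly the structure of the estimate \(\Vert \partial _\omega [{\mathcal {C}}(\omega ,\lambda )w]\Vert _\infty \le \text{ const }\Vert w\Vert _1\) in the proof of Claim 6.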

Claim 7

For all \(k,l,m,n\in {\mathbb {N}}\), \(u \in \ker {{\mathcal {L}}}\) and \(w,w_1,\ldots ,w_k \in {{\mathcal {W}}}^{l+m+n}\), the map \((\omega ,\tau ,\lambda ) \in {\mathbb {R}}^3 \mapsto {\mathcal {D}}(\omega ,\lambda )\partial _v^k{{\mathcal {B}}}(u+w,\omega ,\tau ,\lambda )(w_1,\ldots ,w_k) \in C_{2\pi }\) is \(C^{l+m+n}\)-smooth. Moreover,

where the constant \(c_{klmn}\) does not depend on \(\omega \), \(\tau \), \(\lambda \), u and w for \(\Vert u\Vert _\infty \), \(\Vert w\Vert _{l+m+n}\), \(\omega \), \(\tau \) and \(\lambda \) varying on bounded intervals.

Proof of Claim

Differentiation of (4.2) with respect to \(\omega \) gives

where

Hence, for \(v,w \in {{\mathcal {W}}}^1\), it holds

Furthermore, similarly to (2.17), we have

where

and

Here the coefficients \({\tilde{b}}_k\) are defined appropriately (similarly to (2.12) and (2.14)), as follows:

and

Now, \([\partial _v{{\mathcal {B}}}_j(v,\cdot ,\cdot ,\cdot )w](\cdot ,x)\) is \(C^l\)-smooth because \(v(\cdot ,x)\) and \(w(\cdot ,x)\) are \(C^l\)-smooth. The derivatives can be calculated by the product and chain rules. In particular, for \(v,w \in {{\mathcal {W}}}^1\) we have

where

and

Moreover, for \(k=3,4,5,6\),

The functions \(\partial _\omega {\tilde{b}}_k\) are bounded as long as \(\Vert v\Vert _1\), \(\omega \), \(\tau \) and \(\lambda \) are bounded. Hence, we have

where the constant does not depend on \(\omega \), \(\tau \), \(\lambda \), v and w as long as \(\Vert v\Vert _1\), \(\Vert w\Vert _1\), \(\omega \), \(\tau \) and \(\lambda \) are bounded. Similarly one shows \( \Vert \partial _t[\partial _v{{\mathcal {B}}}_j(v,\omega ,\tau ,\lambda )w]\Vert _\infty \le \text{ const }\Vert w\Vert _1. \) Using (5.15) we get

where the constant does not depend on \(\omega \), \(\tau \), \(\lambda \), v and w as long as \(\Vert v\Vert _1\), \(\Vert w\Vert _1\), \(\omega \), \(\tau \) and \(\lambda \) are bounded. Similarly one shows the estimates (5.13) for \(\partial _\tau [{\mathcal {D}}(\omega ,\lambda )\partial _v{{\mathcal {B}}}(v,\omega ,\tau ,\lambda )w]\) and \(\partial _\lambda [{\mathcal {D}}(\omega ,\lambda )\partial _v{{\mathcal {B}}}(v,\omega ,\tau ,\lambda )w]\) and for the higher order derivatives.

Finally, we verify assumption (5.8) of Theorem 17.

Claim 8

There exist \(\delta >0\) and \(c>0\) such that, for all \(u \in \ker {{\mathcal {L}}}\) and \(\omega ,\tau ,\lambda \in {\mathbb {R}}\) with \(\Vert u\Vert _\infty +|\omega -1|+|\tau -\tau _0|+|\lambda |<\delta \), it holds

Proof of Claim

We will follow ideas which are used to prove coercivity estimates for singularly perturbed linear differential operators (see, e.g., [37, Lemma 1.3] and [40, Sect. 3]).

Suppose the contrary. Then there exist sequences \(w_n \in {{\mathcal {W}}}\), \(u_n \in \ker {{\mathcal {L}}}\) and \((\omega _n,\tau _n,\lambda _n) \in {\mathbb {R}}^3\) such that

and

We have to construct a contradiction.

For the sake of simpler writing we will use the following notation:

Note that the operators \({{\mathcal {E}}}_n\) are well defined due to Claim 4 from Sect. 4.1. By assumption (5.20), we have

Moreover, because of (4.13) it follows that \(\Vert (I-{{\mathcal {E}}}_n)w_n\Vert _\infty \rightarrow 0\) and, on account of (4.8) and (4.13), that

Let us show that the sequence \({{\mathcal {E}}}_n^2w_n\) is bounded in the space \(C^1_{2 \pi }\). A straightforward calculation shows that

with

From (4.8), (4.22), (5.18) and (5.23) it follows that, in order to show that \({{\mathcal {E}}}_n^2w_n\) is bounded in \(C^1_{2 \pi }\), it suffices to show that the operator sequences \({\mathcal {D}}_n^2\), \({\mathcal {D}}_n{\mathcal {C}}_n\) and \({{\mathcal {R}}}_n\) are bounded with respect to the uniform operator norm in \({{\mathcal {L}}}(C_{2\pi };C^1_{2\pi })\). Let us start with

This sequence is bounded in \({{\mathcal {L}}}(C_{2\pi };C^1_{2\pi })\) because of (4.8), (4.9) and (4.11). Then consider

This sequence is bounded in \({{\mathcal {L}}}(C_{2\pi };C^1_{2\pi })\) because of (4.8), (4.9) and (4.12). And finally, the operator sequence \({{\mathcal {R}}}_n\) is bounded in \({{\mathcal {L}}}(C_{2\pi };C^1_{2\pi })\) because of (4.8), (4.13), (4.22) and because the projection P belongs to \({{\mathcal {L}}}(C_{2\pi };C^1_{2\pi })\) (cf. (4.48)).

Let us summarize: We showed that the sequence \({{\mathcal {E}}}_n^2w_n\) is bounded in \(C^1_{2 \pi }\). By the Arzelà-Ascoli theorem, without loss of generality we may assume that this sequence converges in \(C_{2 \pi }\) to some function \(w_* \in C_{2 \pi }\). Then (5.22) implies the convergence

In particular, \(w_* \in {{\mathcal {W}}}\).

If we had

then it would follow that \(w_* \in \ker {{\mathcal {L}}}\cap {{\mathcal {W}}}\) and, on account of (4.47), \(w_*=0\), contradicting (5.18) and (5.25). Hence, it remains to prove (5.26).

In order to prove (5.26), we take arbitrary \(w,h \in C_{2\pi }\) and calculate

Hence,

Similarly, one shows, for all \(h_1,h_2,h_3 \in C_{2\pi }\), the convergence

Therefore, we get from (4.49), (5.18) and (5.21) that

for all \(h \in C_{2\pi }\), i.e., (5.26) is true.

We have shown that Theorem 17 can be applied to equation (4.53) in the setting (5.9). This implies the following fact.

Claim 9

There exist \(\varepsilon >0\) and \(\delta >0\) such that for all \(u \in {{\mathcal {U}}}_\varepsilon \) and \((\omega ,\tau ,\lambda )\in {{\mathcal {P}}}_\varepsilon \) there is a unique solution \(w=\hat{w}(u,\omega ,\tau ,\lambda ) \in {{\mathcal {W}}}\) to (4.53) with \(\Vert w\Vert _\infty <\delta \). Moreover, for all \(k\in {\mathbb {N}}\) the partial derivatives \(\partial _t^k\hat{w}(u,\omega ,\tau ,\lambda )\) exist and belong to \(C_{2\pi }\), and the map \((u,\omega ,\tau ,\lambda ) \in {{\mathcal {U}}}_\varepsilon \times {{\mathcal {P}}}_\varepsilon \mapsto \partial _t^k\hat{w}(u,\omega ,\tau ,\lambda ) \in C_{2\pi }\) is \(C^\infty \)-smooth.

To complete the proof of Lemma 20 by means of Claim 9, it remains to show that, for all \(k \in {\mathbb {N}}\),

and that the map

Let us first prove (5.27) by induction on k. For \(k=0\), condition (5.27) holds by Claim 9.

For the induction step, we use that \(\hat{w}(u,\omega ,\tau ,\lambda )\in {{\mathcal {W}}}^{k+1}\) (by Claim 9) and that \(\hat{w}(u,\omega ,\tau ,\lambda )\in C^k_{2\pi }\) (by the induction hypothesis), and we have to show that \(\hat{w}(u,\omega ,\tau ,\lambda )\in C^{k+1}_{2\pi }\) (induction assertion). We have

where the map \(F:{{\mathcal {W}}}\times {{\mathcal {U}}}_\varepsilon \times {{\mathcal {P}}}_\varepsilon \rightarrow {{\mathcal {W}}}\) is defined by

Hence, we have to show that

Obviously, for all \(w \in C_{2\pi }\), all \( (u,\omega ,\tau ,\lambda ) \in {{\mathcal {U}}}_\varepsilon \times {{\mathcal {P}}}_\varepsilon \) and all \(l \in {\mathbb {N}}\), we have \((I-P)(I-{\mathcal {C}}(\omega ,\lambda ))u \in C^{l}_{2\pi }\) and \(P{\mathcal {D}}(\omega ,\lambda ){{\mathcal {B}}}(u+w,\omega ,\tau ,\lambda ) \in C^{l}_{2\pi }\). Hence, in order to prove (5.29), it remains to show that

or, equivalently, that

By the definitions (2.16), (4.1) and (4.2) of \({{\mathcal {B}}}\), \({\mathcal {C}}\) and \({\mathcal {D}}\), we have

where

Hence, (5.30) is true.

Now let us prove (5.28), again by induction on k. For \(k=0\), condition (5.28) follows from Claim 9. For the induction step, we proceed as above and have to show that the map

under the assumption that

Thanks to (5.32), the maps

are \(C^\infty \)-smooth, which implies (5.31) as desired.

Remark 22

The uniqueness assertion of Lemma 9 and equality (5.10) yield

Moreover, the uniqueness assertion of Lemma 9 along with equality \({{\mathcal {B}}}(0,\omega ,\tau ,\lambda )=0\) implies that

Finally, differentiating the identity

with respect to u at \(u=0\), \(\omega =1\), \(\tau =\tau _0\) and \(\lambda =0\), we conclude that \({{\mathcal {L}}}\partial _u\hat{w}(0,1,\tau _0,0)=0\), i.e., \(\partial _u\hat{w}(0,1,\tau _0,0) \in \ker {{\mathcal {L}}}\cap {{\mathcal {W}}}\), and hence

6 The bifurcation equation

In this section we substitute the solution \(w=\hat{w}(u,\omega ,\tau ,\lambda )\) to (4.53) into (4.52) and solve the resulting so-called bifurcation equation

with respect to \(\omega \approx 1\) and \(\tau \approx \tau _0\) for \(u\approx 0\) and \(\lambda \approx 0\). The definition (4.48) of the projection P shows that equation (6.1) is equivalent to

By Lemma 15, the variable \(u \in \ker {{\mathcal {L}}}\) in (6.2) can be replaced by \(\xi \in {\mathbb {C}}\), by using the ansatz

We therefore obtain the following equation:

On account of (4.26) and (4.27), we have \(S_\varphi {{\varvec{v}}}_0=e^{i\varphi }{{\varvec{v}}}_0\) and \(S_\varphi {{\varvec{v}}}_*=e^{i\varphi }{{\varvec{v}}}_*\) and, hence, \(S_\varphi \text{ Re }\,(\xi {{\varvec{v}}}_0)=\text{ Re }\,(e^{i\varphi }\xi {{\varvec{v}}}_0)\). Now, (4.44), (4.45) and (5.33) yield

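Since the time shift \(S_\varphi \) acts on the parameter \(\xi \) as multiplication by \(e^{i\varphi }\), every solution can be shifted in time into one with real non-negative \(\xi \). Our task is, therefore, reduced to determining all solutions with \(\xi \approx 0\), \(\omega \approx 1\), \(\tau \approx \tau _0\), \(\lambda \approx 0\) and real non-negative \(\xi \). As \(\xi \) is considered a real parameter from now on, we denote it by \(\varepsilon \). Equation (6.4) then reads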

From \({{\mathcal {B}}}(0,\omega ,\tau ,\lambda )=0\) and (5.34) it follows that \(G(0,\omega ,\tau ,\lambda )\equiv 0\). This means that, to solve (6.6) with \(\varepsilon >0\), it suffices to solve the so-called scaled or restricted bifurcation equation

In particular, on account of (4.6) and (6.6), we have

By (5.34), (5.35), (6.8) and Lemma 15 we have \(H(0,1,\tau _0,0)=\langle {{\mathcal {L}}}v_0^1,{{\mathcal {A}}}^*{{\varvec{v}}}_*\rangle =0\). Hence, in order to solve (6.7) with respect to \(\omega \approx 1\) and \(\tau \approx \tau _0\) (for \(\varepsilon \approx 0\) and \(\lambda \approx 0\)) by using the classical implicit function theorem we have to show that

Let us calculate the partial derivatives in the Jacobian on the left-hand side of (6.10). By (4.24), (6.9) and Lemma 15, we have

Similarly, one gets

On the other hand, (2.18) implies that for \(k=1,2\)

Moreover, (4.5) yields \(\partial _\omega {{\mathcal {A}}}(1,0){{\varvec{v}}}_0=\partial _t {{\varvec{v}}}_0\). Hence, from (4.26) it follows that

and, for \(k=1,2\), that

where

are the components of the vector function \(v_0\) (cf. (4.26)). Analogously one gets \( [\partial _\tau {{\mathcal {J}}}_k(1,\tau _0,0)v_0^1](t,x)=b_4^0(x)\,\text{ Im } \left( e^{i(t-\tau _0)}u_0(x)\right) . \) We insert this into (6.11) and (6.12) and get

Here we used (4.32). By (4.36) and (4.41), it holds

Similarly,

Hence, assumption (A2) implies that

i.e., (6.10) is true.
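The mechanism of this step, namely checking that the real \(2\times 2\) Jacobian of \((\text{Re}\,H,\text{Im}\,H)\) with respect to \((\omega ,\tau )\) is invertible and then solving \(H=0\) near \((1,\tau _0)\), can be illustrated on a toy problem. The sketch below is only an illustration under stated assumptions: the function `H`, the constant `TAU0` and all coefficients are hypothetical and are not the paper's H from (6.8); they are chosen so that \(H(0,1,\tau _0,0)=0\) and so that H is even in \(\varepsilon \). Newton's method is used here as a constructive stand-in for the implicit function theorem.

```python
# Toy sketch: the function H below is HYPOTHETICAL (invented coefficients),
# chosen only so that H(0, 1, TAU0, 0) = 0 and H is even in eps.
TAU0 = 0.7  # hypothetical critical delay


def H(eps, omega, tau, lam):
    """Hypothetical complex-valued scaled bifurcation function."""
    return (1j * (omega - 1.0)
            + (1.0 + 0.5j) * (tau - TAU0)
            + (0.3 - 0.2j) * eps**2
            + 0.4 * lam
            + 0.1 * (omega - 1.0) ** 2)


def real_jacobian(eps, omega, tau, lam, h=1e-6):
    """Central-difference Jacobian of (Re H, Im H) w.r.t. (omega, tau)."""
    d_om = (H(eps, omega + h, tau, lam) - H(eps, omega - h, tau, lam)) / (2 * h)
    d_tau = (H(eps, omega, tau + h, lam) - H(eps, omega, tau - h, lam)) / (2 * h)
    return [[d_om.real, d_tau.real], [d_om.imag, d_tau.imag]]


def det2(m):
    """Determinant of a 2x2 matrix given as nested lists."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]


def solve_branch(eps, lam, tol=1e-12, max_iter=50):
    """Newton iteration for (omega, tau) solving H(eps, omega, tau, lam) = 0."""
    omega, tau = 1.0, TAU0  # start at the bifurcation point
    for _ in range(max_iter):
        value = H(eps, omega, tau, lam)
        if abs(value) < tol:
            return omega, tau
        J = real_jacobian(eps, omega, tau, lam)
        d = det2(J)
        r0, r1 = -value.real, -value.imag
        # Cramer's rule for J @ (d_omega, d_tau) = (r0, r1)
        d_omega = (r0 * J[1][1] - J[0][1] * r1) / d
        d_tau = (J[0][0] * r1 - J[1][0] * r0) / d
        omega, tau = omega + d_omega, tau + d_tau
    raise RuntimeError("Newton iteration did not converge")


# Analogue of the nondegeneracy condition (6.10) at (0, 1, TAU0, 0)
print(det2(real_jacobian(0.0, 1.0, TAU0, 0.0)))  # approximately -1.0
print(solve_branch(0.1, 0.0))
print(solve_branch(-0.1, 0.0))  # same pair: the toy branch is even in eps
```

Because \(\varepsilon \) enters the toy H only through \(\varepsilon ^2\), the branches for \(\varepsilon \) and \(-\varepsilon \) coincide, which mirrors the evenness of \({\hat{\omega }}\) and \({\hat{\tau }}\) in \(\varepsilon \).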

Now the classical implicit function theorem can be applied to solve (6.7) with respect to \(\omega \approx 1\) and \(\tau \approx \tau _0\) for \(\varepsilon \approx 0\) and \(\lambda \approx 0\). We therefore conclude that there exist \(\varepsilon _0>0\) and \(C^\infty \)-smooth functions \({\hat{\omega }},{\hat{\tau }}:[-\varepsilon _0,\varepsilon _0]^2\rightarrow {\mathbb {R}}\) with \({\hat{\omega }}(0,0)=1\) and \({\hat{\tau }}(0,0)=\tau _0\) such that \((\varepsilon ,\omega ,\tau ,\lambda ) \approx (0,1,\tau _0,0)\) is a solution to (6.7) if and only if

Moreover, equality (6.5) implies that \(F(-\xi ,\omega ,\tau ,\lambda )=-F(\xi ,\omega ,\tau ,\lambda )\), i.e., \(H(-\varepsilon ,\omega ,\tau ,\lambda )=H(\varepsilon ,\omega ,\tau ,\lambda )\). Hence, by the uniqueness part of the implicit function theorem, \({\hat{\omega }}(-\varepsilon ,\lambda )={\hat{\omega }}(\varepsilon ,\lambda )\) and \({\hat{\tau }}(-\varepsilon ,\lambda )={\hat{\tau }}(\varepsilon ,\lambda )\). This yields (1.9). Now, by (5.34) and (5.35), the corresponding solutions to (2.1), with \(\omega \) and \(\tau \) given by (6.16), read

where \(o(\varepsilon )/\varepsilon \rightarrow 0\) for \(\varepsilon \rightarrow 0\) uniformly with respect to \(\lambda \in [-\varepsilon _0,\varepsilon _0]\). Finally, we take into account (2.6) and conclude that the solutions to (1.3), corresponding to \(\omega \) and \(\tau \), are defined by

which proves (1.8).
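Both the reduction to real non-negative \(\xi \) and the evenness of \({\hat{\omega }}\) and \({\hat{\tau }}\) rest on the relation \(S_\varphi \text{ Re }\,(\xi {{\varvec{v}}}_0)=\text{ Re }\,(e^{i\varphi }\xi {{\varvec{v}}}_0)\). The sketch below checks this relation numerically under two hypothetical simplifications that are not taken from the paper's definitions: \(S_\varphi \) is modelled as the time shift \((S_\varphi v)(t,x)=v(t+\varphi ,x)\), and the vector function \({{\varvec{v}}}_0\) is replaced by the scalar stand-in \(e^{it}\sin \frac{\pi x}{2}\) (the eigenfunction of the example in Sect. 8). The shift \(\varphi =-\arg \xi \) rotates \(\xi \) onto the non-negative real axis, and \(\varphi =\pi \) maps \(\xi \) to \(-\xi \), consistent with \(F(-\xi ,\omega ,\tau ,\lambda )=-F(\xi ,\omega ,\tau ,\lambda )\).

```python
import cmath
import math

# Assumptions (hypothetical stand-ins, not the paper's definitions):
# S_phi is the time shift (S_phi v)(t, x) = v(t + phi, x), and the kernel
# element is the scalar function v0(t, x) = exp(i t) * sin(pi x / 2).


def v0(t, x):
    return cmath.exp(1j * t) * math.sin(math.pi * x / 2)


def shift(f, phi):
    """Time shift S_phi applied to a function f(t, x)."""
    return lambda t, x: f(t + phi, x)


def real_mode(xi):
    """The real-valued function Re(xi * v0)."""
    return lambda t, x: (xi * v0(t, x)).real


grid = [(0.1 * k, 0.05 * j) for k in range(63) for j in range(21)]


def max_diff(f, g):
    """Maximum of |f - g| over the sample grid in (t, x)."""
    return max(abs(f(t, x) - g(t, x)) for t, x in grid)


xi = 0.3 - 0.4j
phi = -cmath.phase(xi)  # shift that rotates xi onto the non-negative real axis

# S_phi Re(xi v0) == Re(e^{i phi} xi v0), up to rounding
print(max_diff(shift(real_mode(xi), phi), real_mode(cmath.exp(1j * phi) * xi)))
print(cmath.exp(1j * phi) * xi)  # approximately 0.5 = |xi|

# phi = pi turns xi into -xi
print(max_diff(shift(real_mode(xi), math.pi), real_mode(-xi)))
```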

7 The bifurcation direction: Proof of Theorem 4

Differentiating the identity \( \text{ Re }\,H(\varepsilon ,{\hat{\omega }}(\varepsilon ,0),{\hat{\tau }}(\varepsilon ,0),0)\equiv 0 \) twice with respect to \(\varepsilon \) at \(\varepsilon =0\) and taking into account (1.9), (6.14) and (6.15), we get

Furthermore, (6.8), (5.34) and (5.35) yield the equality

Now we use the special structure (1.10), (1.11) of the nonlinearity b as it is assumed in Theorem 4. It follows from (1.10), (1.11), (2.2) and (2.16) that \(\partial ^2_v{{\mathcal {B}}}(0,1,\tau _0,0)=0\). Moreover, for \(j=1,2\), it holds

Furthermore, (2.3), (2.4), (4.26) and (6.13) yield

Therefore,

Inserting this into (7.1) and (7.2), we obtain

This is exactly the desired formula in Theorem 4 with \(\sigma =1\) (cf. (4.41)).
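The second-order implicit differentiation behind this computation can be checked on a toy problem. In the sketch below, `H` is hypothetical (invented coefficients, \(\lambda =0\) fixed) and even in \(\varepsilon \), so that \({\hat{\omega }}'(0)={\hat{\tau }}'(0)=0\) and differentiating \(H(\varepsilon ,{\hat{\omega }}(\varepsilon ),{\hat{\tau }}(\varepsilon ))=0\) twice at \(\varepsilon =0\) leaves \(\partial _\varepsilon ^2H+\partial _\omega H\,{\hat{\omega }}''+\partial _\tau H\,{\hat{\tau }}''=0\). This complex equation is a real \(2\times 2\) linear system for \(({\hat{\omega }}'',{\hat{\tau }}'')\); the script solves it by Cramer's rule and compares \({\hat{\tau }}''(0)\) with a second difference of the numerically computed branch. For this toy H one has \(\text{Re}\,\partial _\omega H=0\) at the bifurcation point, so the formula reduces to \({\hat{\tau }}''(0)=-\text{Re}\,\partial _\varepsilon ^2H/\text{Re}\,\partial _\tau H\), which involves only the real part of H.

```python
# Toy sketch: H is HYPOTHETICAL (lambda = 0 fixed, invented coefficients),
# even in eps, with H(0, 1, TAU0) = 0.
TAU0 = 0.7  # hypothetical critical delay


def H(eps, omega, tau):
    return (1j * (omega - 1.0) + (1.0 + 0.5j) * (tau - TAU0)
            + (0.3 - 0.2j) * eps**2 + 0.1 * (omega - 1.0) ** 2)


def d1(f, x, h=1e-6):
    """Central first difference of a complex-valued function."""
    return (f(x + h) - f(x - h)) / (2 * h)


def d2(f, x, h=1e-4):
    """Central second difference of a complex-valued function."""
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2


def tau_second_derivative():
    """tau''(0) from H_ee + H_w * omega'' + H_t * tau'' = 0 (Cramer's rule)."""
    H_w = d1(lambda w: H(0.0, w, TAU0), 1.0)
    H_t = d1(lambda t: H(0.0, 1.0, t), TAU0)
    H_ee = d2(lambda e: H(e, 1.0, TAU0), 0.0)
    det = H_w.real * H_t.imag - H_w.imag * H_t.real
    return (H_w.imag * H_ee.real - H_w.real * H_ee.imag) / det


def solve_tau(eps, tol=1e-12, max_iter=50):
    """Newton's method for H(eps, omega, tau) = 0 near (1, TAU0); returns tau."""
    omega, tau = 1.0, TAU0
    for _ in range(max_iter):
        value = H(eps, omega, tau)
        if abs(value) < tol:
            return tau
        H_w = d1(lambda w: H(eps, w, tau), omega)
        H_t = d1(lambda t: H(eps, omega, t), tau)
        det = H_w.real * H_t.imag - H_w.imag * H_t.real
        d_omega = (H_t.real * value.imag - H_t.imag * value.real) / det
        d_tau = (H_w.imag * value.real - H_w.real * value.imag) / det
        omega, tau = omega + d_omega, tau + d_tau
    raise RuntimeError("Newton iteration did not converge")


predicted = tau_second_derivative()
h = 1e-2
observed = (solve_tau(h) - 2 * solve_tau(0.0) + solve_tau(-h)) / h**2
print(predicted, observed)  # both close to -0.6 for this toy H
```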

8 Example

Let us consider problem (1.1), (1.2) with

and a smooth function \(c:[0,1] \rightarrow {\mathbb {R}}\). The function \(u(x)= \sin \frac{\pi x}{2}\) then solves (1.4) with \(\mu =i\) and (1.6), and the choice \(u_0(x)=u_*(x)=\sin \frac{\pi x}{2}\) gives

Hence, if \(\int _0^1c(x)\sin ^2\left( \frac{\pi x}{2}\right) dx\not =0\), then all assumptions of Theorem 2 are satisfied. If, additionally, \(\beta _4^0(x)=0\) for all \(x \in [0,1]\), then

Therefore, if this number is positive, then the Hopf bifurcation is supercritical.
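The nondegeneracy condition of this example is a single scalar integral, so it is easy to test numerically for a given coefficient c. The sketch below approximates \(\int _0^1c(x)\sin ^2\left( \frac{\pi x}{2}\right) dx\) with the composite Simpson rule; the three coefficient functions are hypothetical choices for illustration. Since \(\sin ^2\frac{\pi x}{2}=\frac{1-\cos \pi x}{2}\), the exact values are \(\frac{1}{2}\) for \(c\equiv 1\), \(\frac{1}{\pi ^2}\) for \(c(x)=x-\frac{1}{2}\), and 0 for \(c(x)=\cos \pi x+\frac{1}{2}\), so in the last case this condition fails.

```python
import math


def simpson(f, a, b, n=1000):
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        raise ValueError("n must be even")
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3


def condition_integral(c):
    """Approximate the integral of c(x) * sin(pi x / 2)**2 over [0, 1]."""
    return simpson(lambda x: c(x) * math.sin(math.pi * x / 2) ** 2, 0.0, 1.0)


# Hypothetical coefficient functions c
examples = {
    "c(x) = 1": lambda x: 1.0,
    "c(x) = x - 1/2": lambda x: x - 0.5,
    "c(x) = cos(pi x) + 1/2": lambda x: math.cos(math.pi * x) + 0.5,
}
for name, c in examples.items():
    value = condition_integral(c)
    print(f"{name}: {value:.6f}, nonzero: {abs(value) > 1e-8}")
```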

9 Other Boundary Conditions

The results of Theorems 2 and 4 can be extended to boundary conditions other than (1.2), for example, to two Dirichlet conditions, two Robin (in particular, Neumann) conditions, or periodic boundary conditions. In those cases, however, the transformation (2.5) is no longer appropriate. Instead of (2.5), the following transformation can be used:

The inverse transformation is then given by

More precisely, if \(u \in C^2_{2\pi }({\mathbb {R}}\times [0,1])\) satisfies the second order differential equation in (1.3) and if \(v \in C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) is defined by (9.1), then v satisfies the first order system

with

and with operators K and \(K_\lambda \) defined in (2.4). Conversely, if \(v \in C^1_{2\pi }({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) satisfies (9.3) and if \(u \in C^1_{2\pi }({\mathbb {R}}\times [0,1])\) is defined by (9.2), then u is \(C^2\)-smooth and satisfies the differential equation in (1.3). Note that so far no boundary conditions have been used, only the time periodicity.

Now, for definiteness, suppose that u satisfies the Dirichlet boundary conditions

Then, according to (9.1), the function v satisfies

In this case the system (3.1) of partial integral equations reads

where the operator \({{\mathcal {B}}}\) is now defined by

while the functions \(b_1,b_2,c_1,c_2\) and A are introduced in Sects. 2 and 3.

More precisely, if \(v \in C_\pi ({\mathbb {R}}\times [0,1];{\mathbb {R}}^2)\) satisfies (9.3) and if \(\partial _tv\) exists and is continuous, then the function u, defined by (9.2), is \(C^2\)-smooth and satisfies the differential equation in (1.3) and the boundary condition (9.4).

The system (9.6) can be written, again, as an operator equation of the type (4.3) with operators \({\mathcal {C}}_1\) and \({\mathcal {D}}\) as in Sect. 4, with \({\mathcal {C}}_2\) slightly changed (cf. (4.1)) to

and with operator \({{\mathcal {B}}}\) from (9.7).

Now we can proceed as in Sects. 4–7. Specifically, the linearization of the operator \({{\mathcal {B}}}\) at \(v=0\) is, again, a sum of a partial integral operator and a pointwise operator with zero diagonal part (cf. (2.17)):

with, for \(k=1,2\),

(with functions \(b_3,b_4,b_5\) from (2.12)) and

The definition (4.26) of the function \(v_0\) has to be changed to

and similarly for \({{\varvec{v}}}_0\), \(v_0^1\) and \(v_0^2\). The definitions (4.27) of \(v_*\), \({{\varvec{v}}}_*\), \(v_*^1\) and \(v_*^2\) stay the same. The functions \(v_*^1\) and \(v_*^2\) satisfy the boundary conditions (9.5) because

Here \(u_0\) and \(u_*\) are eigenfunctions to the eigenvalue problems (1.4) (with \(\mu =i\) and \(\tau =\tau _0\)) and (1.6), where in both eigenvalue problems the boundary conditions are changed to (9.4). With these eigenfunctions, the formulas for \(\sigma \) and \(\rho \) in (A3) and the formula for \(\partial _\varepsilon ^2{\hat{\tau }}(0,0)\) in Theorem 4 remain unchanged.

References

Appell, J., Kalitvin, A.S., Zabrejko, P.P.: Partial Integral Operators and Integro-Differential Equations. Pure and Applied Mathematics, vol. 230. Marcel Dekker (2000)

Arnold, V.I.: Small divisor problems in classical and celestial mechanics. Russian Math. Surveys 18, 85–191 (1963)

Chen, B., Li, Y., Yang, X.: Periodic solutions to nonlinear wave equation with \(x\)-dependent coefficients under the general boundary conditions. J. Dyn. Differ. Equ. 31, 321–368 (2019)

Chen, S., Wei, J., Zhang, X.: Bifurcation analysis for a delayed diffusive logistic population model in the advective heterogeneous environment. J. Dyn. Differ. Equ. 32, 823–847 (2020)

Crandall, M.G., Rabinowitz, P.H.: The Hopf bifurcation theorem in infinite dimensions. Arch. Rat. Mech. Anal. 67, 53–72 (1978)

Dancer, E.N.: The \(G\)-invariant implicit function theorem in infinite dimensions. Proc. Royal Soc. Edinburgh 92A, 13–30 (1982)

Du, Y., Niu, B., Guo, Y.: Double Hopf bifurcation in delayed reaction-diffusion systems. J. Dyn. Differ. Equ. 32, 313–358 (2020)

Erneux, T.H.: Applied Delay Differential Equations. Surveys and Tutorials in the Applied Mathematical Sciences. Springer, Berlin (2009)

Faria, T.: Normal forms and Hopf bifurcation for partial differential equations with delays. Trans. AMS 352, 2217–2238 (2000)

Griepentrog, J.A., Recke, L.: Local existence, uniqueness and smooth dependence for nonsmooth quasilinear parabolic problems. J. Evol. Equ. 10, 341–375 (2010)

Guo, B.-Z., Wang, J.-M.: Control of Wave and Beam PDEs: The Riesz Basis Approach. Communications in Control Engineering. Springer (2019)

Guo, S., Wu, J.: Bifurcation Theory of Functional Differential Equations. Applied Mathematical Sciences. Springer, Berlin (2013)