Abstract

Vertical mixing in the ocean is an essential component of ocean circulation dynamics, including the meridional circulation. Nevertheless, various aspects of mixing, particularly its role in global ocean energetics, remain debated. One of the main reasons is the scarcity of observational evidence. With the recent expansion of global vertical-mixing observations, attempts have been made to estimate the ocean state using vertical-mixing observation data to better understand the role of mixing in the ocean. In this review, we discuss the current status of ocean state estimation and the future synthesis of vertical-mixing observation data into basin-scale ocean state estimation, including progress in data assimilation studies using numerical models. These efforts will help establish a future line of observational, modeling, and data synthesis studies along which issues in ocean mixing can be resolved consistently.

1 Introduction

Since the beginning of modern ocean observation with the Challenger expedition in the 1870s, various geophysical and biogeochemical variables have been observed. A wide variety of ocean data has been accumulated. This began with ship-based water sampling and continued with the development of instruments such as Conductivity-Temperature-Depth profilers (CTDs) and eXpendable BathyThermographs (XBTs). Several developments led to a drastic increase in the amount of observed data and a steady reduction of spatiotemporal gaps: international collaboration in the World Ocean Circulation Experiment (WOCE) and the development of global satellite observations in the 1990s, and the start of the international Argo program (Argo Science Team 2001) in the 2000s. Nevertheless, limited spatiotemporal coverage remains a problem, especially below the sea surface. High-accuracy observations are particularly sparse in regions with severe winter sea conditions and in regions far from major shipping routes and land.

Owing to improved sensor accuracy and the development of observation platforms, high-precision data can now be obtained. At the same time, data quality varies widely, from highly accurate shipboard observations to medium-accuracy data from developing sensors and autonomous instruments. Beyond sustained oceanographic observation, various approaches are therefore needed to capture ocean dynamics accurately at the basin scale. In this context, data synthesis offers a way to make better use of the wide variety of available observations.

Synthesis of oceanographic data has been attempted in various ways. For example, the World Ocean Atlas (Monterey and Levitus 1997) uses only observational data, producing gridded maps in time and space by interpolation under statistical assumptions. Many such approaches are based purely on observations and are effective in regions and periods with relatively dense observations. More recently, monthly gridded temperature and salinity fields compiled from Argo float data have been published (Hosoda et al. 2008).

In the late 1990s, as computing power continued to improve, the synthesis of observational data on a global scale using numerical models also flourished (e.g., Schiller et al. 2013). These research and development efforts drew on statistics, control engineering, and the "data assimilation" techniques already used in numerical weather prediction.

Data synthesis in oceanography using numerical models can be divided into two main categories. The first assimilates observational data sequentially and primarily aims to provide initial conditions for relatively short-term (days to months) prediction experiments. The second searches for a time evolution of the three-dimensional ocean state that stays close to the observations throughout the analysis period. The resulting smoothly evolving fields serve as a basis for several applications: short-, medium-, and long-term (seasons to decades) predictions, dynamical analyses, and estimates of the time evolution of heat and mass transports (e.g., Schiller et al. 2013). The former is often called "ocean reanalysis", whereas we hereafter refer to the latter as ocean state estimation (Wunsch and Heimbach 2013). For ocean state estimation, a method that emphasizes dynamical consistency is desirable, so that observed data are interpolated dynamically (e.g., Stammer et al. 2002a).

Following CLIVAR (Climate and Ocean: Variability, Predictability and Change; http://www.clivar.org), launched in 1995, GODAE (Global Ocean Data Assimilation Experiment; https://www.godae.org) was launched in 1997 to accelerate research on global ocean data synthesis. This effort continues today through the CLIVAR GSOP (Global Synthesis and Observations Panel; http://www.clivar.org/panels-and-working-groups/gsop/gsop.php) and GODAE OceanView (https://www.godae-oceanview.org). GODAE OceanView was succeeded by OceanPredict in 2019.

In recent years, global data synthesis studies have also begun to consider observed variables that are not among the forecast variables of numerical models. In particular, there is ongoing discussion of how to use observed information to refine the mapping of vertical mixing, which is essential for the dynamics of ocean circulation, including the meridional circulation.

In this paper, we present the current status of global ocean state estimation and discuss future approaches to synthesizing vertical-mixing observation data with data assimilation systems. Section 2 introduces current ocean state estimation, and Section 3 presents the optimization of vertical diffusion coefficients using temperature and salinity data. Section 4 discusses promising directions for the synthesis of vertical-mixing observations, and Section 5 summarizes future challenges.

2 Current ocean state estimation

Since the 2000s, several research groups have conducted ocean state estimation. This often involves a four-dimensional variational adjoint method (e.g., Sasaki 1970; Awaji et al. 2003; Wunsch and Heimbach 2007) or a Kalman smoother (e.g., Evensen and van Leeuwen 2000; Fukumori 2002). These methods typically require large computers and complex code, so only a limited number of institutions conduct data synthesis studies for ocean state estimation. Representative examples of global-scale, long-term state estimation are described below. The ECCO consortium, led by NASA's Physical Oceanography, Modeling, and Cryosphere Programs (https://ecco-group.org/home.cgi) in the United States, was the first to successfully estimate the global ocean state over several decades. It has provided high-quality datasets such as those reported by Stammer et al. (2002b) and Wunsch and Heimbach (2013). This technology was also transferred to the University of Hamburg, Germany, and developed separately as German ECCO (G-ECCO), which has also contributed significantly to global ocean state estimation (e.g., Köhl et al. 2012).

In Japan, the K7 consortium, formed by JAMSTEC and Kyoto University, has conducted long-term global ocean data synthesis studies since the early 2000s (Awaji et al. 2003; Masuda et al. 2003). In the 2010s, the consortium released a synthesized dataset for climate research (Estimated STate of Ocean for Climate research: ESTOC; Osafune et al. 2015). By applying anomaly data assimilation over the full ocean depth, this dataset successfully reproduces mid- to long-term changes in the deep ocean. Climate change research on deep-water warming (e.g., Fukasawa et al. 2004) using this system is a unique achievement that demonstrates the advantage of state estimation (Masuda et al. 2010). ESTOC has also been used to assess the reliability of estimates of the global increase in deep-ocean heat storage. It contributed significantly to studies showing that this increase amounts to 8–20% of that in the upper ocean (Kouketsu et al. 2011). Table 1 summarizes global ocean data synthesis efforts using smoother methods, updating the review by Schiller et al. (2013).

Estimation of the long-term global ocean state is thus becoming feasible. At this stage, the role of the ocean in global change should be further elucidated through a comprehensive understanding of changes in the subsurface ocean. This, in turn, requires a more accurate understanding of mid-depth and deep-ocean dynamics. To obtain more accurate estimates, it will be vital to expand subsurface observations. This includes enhancing repeat hydrography (http://www.go-ship.org), expanding the Argo array of autonomous profiling floats (http://www.jcommops.org/board?t=Argo), and extending that array into the deep ocean (http://www.jamstec.go.jp/ARGO/deepninja).

The performance of numerical models is also recognized as an important factor. Some research groups have constructed state-of-the-art basin-scale state estimates at 1/6°–1/10° horizontal resolution (SOSE: Mazloff et al. 2010; FORA-WNP30: Usui et al. 2017), pointing toward a new generation of global state estimates. Another promising direction is the use of observed information that does not correspond to model variables and has therefore been difficult to assimilate directly.

3 Ocean state estimation optimized by controlling vertical mixing

Vertical mixing, especially diapycnal diffusivity, is critical in determining the energetics of the global ocean. It is closely tied to the meridional overturning circulation and to the heat and mass budgets (e.g., Munk and Wunsch 1998). Global dissipation of about 2 TW is thought to be supplied mostly by internal-wave energy from tides and winds (e.g., Kunze 2017). As a result, mixing is highly intermittent in space and time (e.g., Kunze et al. 2006). Through wave dynamics, it depends on the ocean state, the energy sources, and the bottom topography (e.g., Hibiya et al. 2017). Deep-ocean mixing mainly provides the downward buoyancy flux that maintains the global meridional circulation, whereas the contribution of mixing in the main thermocline is about an order of magnitude smaller (e.g., Lumpkin and Speer 2007). Deep-ocean mixing is therefore an important factor in refining ocean state estimates. In numerical models, diffusion coefficients are commonly treated as external model parameters rather than as model variables.

In this context, it is important to determine whether model results change appreciably when the model's diffusion coefficient is changed, that is, whether it is appropriate to use the diffusion coefficient as a control variable. The dependence of numerical solutions on the magnitude of diffusion coefficients has been studied since the early stages of ocean circulation model development (e.g., Bryan 1987; Cummins et al. 1990; Sasaki et al. 2012; Richards et al. 2012; Melet et al. 2013; Oka and Niwa 2013). Recently, Furue et al. (2015) and Jia et al. (2015) examined in detail how regionally different vertical diffusion coefficients affect the representativeness of the model. Their results indicate that vertical diffusion coefficients with a spatiotemporal distribution reproduce realistic ocean circulation fields effectively, in the sense of reducing representativeness errors. Another study (Niwa and Hibiya 2004) evaluated the three-dimensional distribution of tidal mixing using a tidal model. All these studies have important implications for understanding the distribution of vertical mixing and the dynamics of ocean circulation.

Attempts have also been made to optimize diffusion coefficients at the ocean basin scale using general circulation models and conventional ocean observation data. Liu et al. (2012), using the G-ECCO system, applied the four-dimensional variational adjoint method to optimize global vertical and horizontal diffusion coefficients as control variables. The optimization was constrained by data such as temperature, salinity, and sea surface height. As a result, the model–data misfit (cost function) for temperature, salinity, and sea surface height anomalies was reduced by 10–20%, and that for the mean sea level by 45%. Liu et al. (2014) analyzed the geographic distribution of the optimized diffusion parameters (Fig. 1) and proposed a new parameterization based on their correlation with seafloor topography. Because a strong-constraint formalism was used, the effects of internal waves generated by surface winds and of waves propagating from remote sources are excluded implicitly. These are practical examples that can be adapted to other models and help elucidate the dynamics related to diffusion.

Fig. 1 Distribution of the estimated kgmskew, the eddy-induced thickness advection parameter (m² s⁻¹), at 1160 m, as applied by Liu et al. (2014). This parameter represents the skew part of the eddy-mixing scheme of Gent and McWilliams (1990) and Gent et al. (1995) (Eden et al. 2007). Black contours show the bottom depth H (m), and green contours show the barotropic stream function (Sv) (Liu et al. 2014)

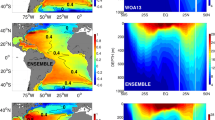
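The adjoint approach used by Liu et al. (2012) can be illustrated with a deliberately tiny example. What follows is a minimal sketch, not the G-ECCO system. It assumes a one-dimensional explicit vertical-diffusion model whose nondimensional interface diffusivities k (k = κΔt/Δz²) are the only control variables. The initial state is taken as known, and the model is treated as exact (strong constraint). The misfit is measured only at the final time against synthetic observations. The function names and all numerical values are hypothetical. The backward sweep is the adjoint of the forward time stepping, and it yields dJ/dk for every interface in one pass. The printed cost decrease comes from steepest descent using that gradient.

```python
import numpy as np

def tendency(T, k):
    """One explicit step of flux-form vertical diffusion; the end levels are held fixed.
    k[j] is the nondimensional diffusivity between levels j and j+1."""
    flux = k * np.diff(T)                    # k_j * (T_{j+1} - T_j)
    dT = np.zeros_like(T)
    dT[1:-1] = flux[1:] - flux[:-1]          # flux convergence at interior levels
    return dT

def forward(k, T0, nsteps):
    """Integrate the model and keep the whole trajectory (the adjoint needs it)."""
    traj = [T0.copy()]
    for _ in range(nsteps):
        traj.append(traj[-1] + tendency(traj[-1], k))
    return traj

def cost_and_gradient(k, T0, T_obs, nsteps):
    """J = 0.5*|T(final) - T_obs|^2 and dJ/dk from one backward (adjoint) sweep."""
    traj = forward(k, T0, nsteps)
    misfit = traj[-1] - T_obs
    J = 0.5 * np.sum(misfit ** 2)
    lam = misfit.copy()                      # adjoint variable at the final time
    grad = np.zeros_like(k)
    for n in range(nsteps - 1, -1, -1):
        mu = lam.copy()
        mu[0] = mu[-1] = 0.0                 # end levels are not updated by the diffusion operator
        grad += (mu[:-1] - mu[1:]) * np.diff(traj[n])
        lam = lam + tendency(mu, k)          # transpose of one step; end entries of lam are never used
    return J, grad

N, nsteps = 20, 50
z = np.linspace(0.0, 1.0, N)
T0 = np.exp(-((z - 0.5) / 0.1) ** 2)         # initial temperature anomaly (illustrative)
k_true = 0.1 + 0.2 * z[:-1]                  # hypothetical "true" interface diffusivities
T_obs = forward(k_true, T0, nsteps)[-1]      # synthetic, noise-free observations

k = np.full(N - 1, 0.2)                      # first guess
J0, g0 = cost_and_gradient(k, T0, T_obs, nsteps)
J = J0
for _ in range(20):                          # steepest descent with step halving
    J, g = cost_and_gradient(k, T0, T_obs, nsteps)
    step = 1.0
    while step > 1e-8:
        trial = np.clip(k - step * g, 0.01, 0.45)   # keep the explicit scheme stable
        J_trial, _ = cost_and_gradient(trial, T0, T_obs, nsteps)
        if J_trial < J:
            k, J = trial, J_trial
            break
        step *= 0.5
print(f"cost: {J0:.3e} -> {J:.3e}")
```

In a real system the adjoint code is much larger and is usually generated with automatic-differentiation tools. The structure is the same, however: store or recompute the forward trajectory, then run backward in time to accumulate the gradient.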

Toyoda et al. (2015) applied the Green's function method (e.g., Menemenlis et al. 2005) to temperature and salinity data to blend several existing vertical diffusion schemes in optimal proportions. Assuming a simple linear combination, they obtained the optimal mixing ratio of the vertical diffusion coefficients (Fig. 2). The mixed-layer scheme was excluded from this optimization. As a result, they improved the reproduction of the temperature distribution and circulation field, mainly in the deeper layers. Moreover, their method greatly reduced the number of degrees of freedom. By adopting a Monte Carlo-type strategy, it achieved optimization efficiently with relatively modest computational resources.

Fig. 2 Horizontally averaged vertical profiles of vertical diffusivity for various schemes. Toyoda et al. (2015) consider a linear combination of three vertical diffusivity schemes: Type III of Tsujino et al. (2000), one of the most skillful background vertical diffusivities (TJN; green); Gargett's (1984) state-dependent scheme (GGT; yellow); and Hasumi and Suginohara's (1999) scheme for bottom-intensified vertical diffusivity (HSM; blue). The optimization result is the best linear combination of the three schemes (red) (Toyoda et al. 2015)
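The Green's function approach of Toyoda et al. (2015) estimates a few blending weights from model responses rather than by adjoint integration. The core linear-algebra step can be sketched as follows. This is a minimal toy under stated assumptions, not their system. The "model" is a steady one-dimensional vertical advection–diffusion balance, w dT/dz = d/dz(κ dT/dz), with fixed end temperatures. The three basis profiles are hypothetical shapes that merely stand in for TJN, GGT, and HSM. In the spirit of Menemenlis et al. (2005), each weight is perturbed once to obtain a response column (a discrete Green's function). The weight corrections then follow from a least-squares fit to noisy synthetic observations. Equal, uncorrelated observation errors are assumed, so no error weighting is applied.

```python
import numpy as np

H, n, w = 4000.0, 81, 1e-7            # depth (m), number of levels, upwelling (m/s): illustrative
z = np.linspace(-H, 0.0, n)            # levels from the bottom (index 0) to the surface
zf = 0.5 * (z[:-1] + z[1:])            # interfaces between levels
dz = z[1] - z[0]

# Three hypothetical diffusivity profiles (m^2/s) standing in for the schemes to be blended.
basis = np.vstack([
    1e-4 * np.ones_like(zf),                        # uniform background
    1e-4 * np.exp(-((zf + 1000.0) / 300.0) ** 2),   # mid-depth enhancement
    1e-3 * np.exp(-(zf + H) / 500.0),               # bottom-intensified
])

def steady_temperature(kappa, T_bottom=1.0, T_top=20.0):
    """Solve w dT/dz = d/dz(kappa dT/dz) with fixed end values (the toy 'model')."""
    A = np.zeros((n, n))
    b = np.zeros(n)
    A[0, 0] = A[-1, -1] = 1.0
    b[0], b[-1] = T_bottom, T_top
    for i in range(1, n - 1):
        kd, ku = kappa[i - 1], kappa[i]              # interface below / above level i
        A[i, i - 1] = kd / dz**2 + w / (2 * dz)
        A[i, i] = -(kd + ku) / dz**2
        A[i, i + 1] = ku / dz**2 - w / (2 * dz)
    return np.linalg.solve(A, b)

def model(a):
    """Temperature profile for the blended diffusivity a[0]*basis[0] + a[1]*basis[1] + a[2]*basis[2]."""
    return steady_temperature(a @ basis)

def greens_function_estimate(a0, y, delta=0.1):
    """One Green's-function step: finite-difference response columns, then least squares."""
    base = model(a0)
    G = np.column_stack([(model(a0 + delta * e) - base) / delta for e in np.eye(a0.size)])
    da, *_ = np.linalg.lstsq(G, y - base, rcond=None)
    return a0 + da

def rms_misfit(a, y):
    return np.sqrt(np.mean((model(a) - y) ** 2))

rng = np.random.default_rng(1)
a_true = np.array([0.9, 1.3, 1.2])                 # hypothetical "true" weights
y = model(a_true) + 0.01 * rng.standard_normal(n)  # synthetic observations with noise
a0 = np.ones(3)                                    # first guess: equal weights
a_est = greens_function_estimate(a0, y)
print("weights:", np.round(a_est, 2))
print(f"RMS misfit: {rms_misfit(a0, y):.3f} -> {rms_misfit(a_est, y):.3f}")
```

The cost is one model run per weight plus a small least-squares solve. This is why reducing the problem to a handful of weights makes the optimization cheap. Because the model responds nonlinearly to κ, a single step is only approximate when the first guess is far from the optimum.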

These results may be highly model-dependent, because the dynamics represented by the diffusion coefficients may differ with model resolution and other factors. In this context, there are also distinctive state estimates that implicitly revise vertical mixing in the model by controlling model errors with oceanic initial conditions (DeVries and Primeau 2011; DeVries and Holzer 2019). Whichever approach is chosen, careful comparison and verification will be essential. This applies both to direct vertical-mixing observations (e.g., Waterhouse et al. 2014) and to mixing estimates inferred from existing temperature and salinity data, for example from the global Argo array (Whalen et al. 2012).

4 Data synthesis of vertical-mixing observations

Vertical-mixing observations are far fewer than temperature and salinity observations. In addition, vertical mixing has remarkably high spatiotemporal variability. Waterhouse et al. (2014) compiled the available microstructure profiles to detect global patterns of diapycnal mixing. Even so, it is generally difficult to construct a continuous global map of mixing from observations alone. The global-model data synthesis experiments described in the previous section used temperature, salinity, and sea surface height observations, but not vertical-mixing observations, to refine the model parameters (vertical diffusion coefficients). Thus, vertical-mixing observations themselves have not yet been synthesized in this way. Here, we discuss technical issues specific to synthesizing mixing data with numerical models and effective approaches to address them.

For observed quantities that are also computed as variables in the numerical model, such as temperature and salinity, data synthesis is relatively easy to envision. The nudging method, one of the simplest and most traditional approaches, could be used for this purpose. In this method, model variables are relaxed toward the observed values. The water properties modified in this way are then transported by advection and diffusion, so the result is a continuous dataset whose distribution is close to the observations. However, a map of vertical mixing cannot be created in the same manner. The turbulent energy dissipation rate obtained from observations is not computed in most numerical models, and it is not a conservative variable.
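Nudging a model variable that the model actually carries can be sketched as follows. This is a minimal illustration under assumed settings, not any operational scheme. A one-dimensional explicit diffusion model starts from a biased first guess. At a few "observed" levels it is relaxed toward a known steady profile with a strength of Δt/τ per step. The names run, obs_idx, and relax are hypothetical, as are all values. The unobserved level printed at the end shows how diffusion spreads the correction beyond the observed points.

```python
import numpy as np

def run(T0, nsteps, r=0.2, obs=None, obs_idx=None, relax=0.2):
    """Explicit 1-D diffusion with fixed end values, optionally nudged.
    r = kappa*dt/dz**2 (stable for r <= 0.5); relax = dt/tau, the nudging strength per step."""
    T = T0.copy()
    for _ in range(nsteps):
        # Diffusion step: the right-hand side is evaluated before the in-place update.
        T[1:-1] += r * (T[2:] - 2.0 * T[1:-1] + T[:-2])
        if obs is not None:
            # Newtonian relaxation toward the observations, at the observed levels only.
            T[obs_idx] += relax * (obs[obs_idx] - T[obs_idx])
    return T

N = 51
truth = np.linspace(0.0, 1.0, N)              # steady "true" profile with fixed end values
T0 = np.full(N, 0.5)
T0[0], T0[-1] = truth[0], truth[-1]           # biased first guess with correct boundaries
obs_idx = np.arange(5, N - 1, 10)             # sparse observed levels: 5, 15, 25, 35, 45

free = run(T0, 100)
nudged = run(T0, 100, obs=truth, obs_idx=obs_idx)

def rms(x):
    return np.sqrt(np.mean((x - truth) ** 2))

print(f"RMS error  free: {rms(free):.3f}  nudged: {rms(nudged):.3f}")
print(f"unobserved level 10  free: {free[10]:.3f}  nudged: {nudged[10]:.3f}  truth: {truth[10]:.3f}")
```

The same recipe has no direct counterpart for the dissipation rate ε. Most models do not carry ε as a variable, so there is nothing to relax toward the observations, and ε is not transported and conserved the way temperature is.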

The optimization of model parameters (vertical diffusion coefficients) using temperature, salinity, and sea surface height anomaly observations, as described above, can provide a realistic ocean state (dynamically self-consistent results) together with optimal parameters. It is a form of data synthesis of observed information and a dynamical interpolation based on a numerical model (the available formalism or model equations).

Vertical-mixing observation data could be used in analogy to temperature, salinity, and sea surface height observations. This suggests an approach in which vertical-mixing observations are used to optimize parameters such as vertical diffusion coefficients, together with the temperature, salinity, and circulation fields, as control variables. In this framework, the distribution of vertical diffusion coefficients obtained with observational information, including vertical mixing, represents a data synthesis of vertical-mixing observations. However, a system might compensate for most of the model representation errors by modifying the vertical-mixing parameters. In that case, the reliability of the diffusivity values would likely be compromised, and the optimized vertical-mixing map might not be usable as a synthesis of observational data. Therefore, physically based vertical-mixing parameterizations should be adopted as fully as possible (e.g., St. Laurent et al. 2002), and the results of careful optimization that mitigates the model's representation errors should be verified.
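The approach described above can be written as the minimization of a single cost function. The form below is a generic illustration under stated assumptions, not the cost function of any particular system discussed in this review. It assumes Gaussian, uncorrelated errors. Dissipation misfits are measured in ln ε because observed ε spans several orders of magnitude. The parameter term is one common way to limit how much model error the mixing parameters absorb.

```latex
J(\mathbf{x}_0, \boldsymbol{\theta}) =
    \sum_{k} \frac{\left[T_k(\mathbf{x}_0, \boldsymbol{\theta}) - T_k^{o}\right]^2}{\sigma_{T,k}^2}
  + \sum_{k} \frac{\left[S_k(\mathbf{x}_0, \boldsymbol{\theta}) - S_k^{o}\right]^2}{\sigma_{S,k}^2}
  + \sum_{l} \frac{\left[\ln \varepsilon_l(\mathbf{x}_0, \boldsymbol{\theta}) - \ln \varepsilon_l^{o}\right]^2}{\sigma_{\varepsilon,l}^2}
  + \sum_{p} \frac{\left(\theta_p - \theta_p^{b}\right)^2}{\sigma_{\theta,p}^2}
```

Here \(\mathbf{x}_0\) denotes the initial temperature, salinity, and circulation fields. \(\boldsymbol{\theta}\) denotes the mixing parameters (for example, q and the decay scale in the scheme of St. Laurent et al. 2002). The superscripts o and b denote observations and first-guess parameter values, and the σ values are assumed error scales. The third term can only be evaluated if the model diagnoses ε from its mixing parameterization, because ε is not a prognostic variable.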

5 Discussion

With the expansion of vertical-mixing observations, Yasuda et al. (http://omix.aori.u-tokyo.ac.jp), who proposed "ocean mixing" as a new academic research area, have started to synthesize these observations. This synthesis is an attempt to improve the reproduction of the mid-depth and deep ocean state by utilizing vertical-mixing observations. In this framework, the adjoint-based four-dimensional variational method is applied to estimate both the ocean state and the optimal three-dimensional distribution of vertical diffusivity. This is done by modifying parameters in parameterizations of tidally induced vertical mixing, which are based on the outputs of a global barotropic tide model (St. Laurent et al. 2002; Hibiya et al. 2006). The estimate of Hibiya et al. (2006) comprehensively includes remote sources, mainly associated with bottom topography.

St. Laurent et al. (2002) proposed a mixing scheme that is widely used in OGCMs at present. Following their scheme, we represent the turbulent dissipation rate, \(\varepsilon\), as

\[\varepsilon = \frac{q\,{E}_{g}\,F\left(z\right)}{\rho},\]

where q is the local dissipation efficiency, \(\rho\) is the reference density of seawater (kg m⁻³), \({E}_{g}\) (W m⁻²) is the rate of conversion of barotropic tidal energy into internal waves, and \(F\left(z\right)\) is a vertical distribution function. \(F\left(z\right)\) assumes that \(\varepsilon\) decays exponentially away from the ocean bottom and is normalized so that its integral over the water column is unity:

\[F\left(z\right) = \frac{e^{-\left(H+z\right)/h}}{h\left(1-e^{-H/h}\right)},\]

where z is the vertical coordinate (positive upward), \(H\) is the bottom depth, and \(h\) is the vertical decay scale. The vertical diffusivity, \(\kappa\), is calculated from the Osborn (1980) relationship for the mechanical energy budget of turbulence as \(\kappa = \Gamma \varepsilon {N}^{-2}\), where \(\Gamma\) is the mixing efficiency of turbulence and \(N\) is the buoyancy frequency. St. Laurent et al. (2002) assumed a constant q. Tanaka et al. (2010), however, showed that q can take different values for subinertial and superinertial tidal frequencies, referred to as \({q}_{\mathrm{sub}}\) and \({q}_{\mathrm{sup}}\), respectively.
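The chain from tidal conversion to diffusivity described above can be sketched in a few lines. The dissipation rate is ε = q E_g F(z)/ρ, where F(z) decays exponentially with height above the bottom with scale h and integrates to one over the column, and κ = Γε/N² (Osborn 1980). This is a minimal sketch under stated assumptions. It uses a single scalar conversion rate instead of a two-dimensional map from a tide model, and a constant N² instead of a model stratification. The values q = 1/3 and h = 500 m are often used with this scheme, and Γ = 0.2 is a commonly assumed mixing efficiency; here all of them, and ρ = 1035 kg m⁻³, are treated as assumptions. The column-integral check confirms the intended normalization: a fraction q of the converted energy is dissipated locally.

```python
import numpy as np

RHO = 1035.0   # reference density of seawater (kg m^-3); assumed value
GAMMA = 0.2    # mixing efficiency Gamma; assumed value

def vertical_structure(z, H, h):
    """F(z) (m^-1): exponential decay with height above the bottom (z = -H),
    normalized so that its integral from z = -H to z = 0 equals 1."""
    return np.exp(-(H + z) / h) / (h * (1.0 - np.exp(-H / h)))

def dissipation(z, E, H, q=1/3, h=500.0):
    """epsilon (W kg^-1) = q * E * F(z) / rho, with E the tidal conversion rate (W m^-2)."""
    return q * E * vertical_structure(z, H, h) / RHO

def diffusivity(z, E, H, N2, q=1/3, h=500.0):
    """Osborn (1980) relation: kappa = Gamma * epsilon / N^2 (m^2 s^-1)."""
    return GAMMA * dissipation(z, E, H, q, h) / N2

H = 4000.0                       # bottom depth (m)
z = np.linspace(-H, 0.0, 401)    # vertical coordinate, positive upward, 10-m spacing
E = 1e-3                         # illustrative conversion rate (W m^-2)
N2 = 1e-6                        # illustrative constant N^2 (s^-2), i.e. N = 1e-3 s^-1

eps = dissipation(z, E, H)
kappa = diffusivity(z, E, H, N2)

# Trapezoidal column integral: rho * int(eps dz) should equal q * E.
col = np.sum(0.5 * (eps[1:] + eps[:-1]) * np.diff(z)) * RHO
print(f"rho*int(eps dz) = {col:.4e} W m^-2 (q*E = {E / 3:.4e})")
print(f"kappa at the bottom = {kappa[0]:.2e} m^2/s, 1000 m above it = {kappa[100]:.2e} m^2/s")
```

With these inputs the near-bottom diffusivity is about 1.3 × 10⁻⁴ m² s⁻¹, and it decays by a factor of e every 500 m upward. In a model, N² decreases toward the bottom, which makes the profile of κ steeper than this constant-N² illustration suggests.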

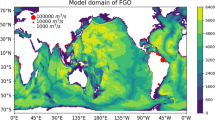

Osafune et al. (2014) calculated the adjoint sensitivity of these parameters, in addition to some other parameters, using turbulent dissipation rate observations along sections in the North Pacific. The adjoint sensitivity was then used as a guide for optimizing the parameters (Fig. 3). The sensitivity here is a statistically evaluated quantity. It indicates how the difference between the observed data and the numerical model results can be reduced by changing the vertical diffusivity. This is one approach to synthesizing vertical-mixing observations.

Fig. 3 Example of the adjoint signal distribution at 3000-m depth in the ongoing ESTOC for the case that includes turbulent dissipation rate observations. Red shading indicates that the mixing parameter values should be reduced, and blue indicates that they should be increased, to reduce the difference between observations and the state estimate for temperature and salinity. The parameters are those of St. Laurent et al. (2002). (Osafune, personal communication)
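Sensitivities like those in Fig. 3 are read by their sign: the parameter should move against the gradient of the cost. That reading can be sketched with a toy. This is a minimal illustration, not the ESTOC calculation. The cost here contains only misfits in ln ε at assumed depths, with no temperature or salinity terms. ε follows the St. Laurent et al. (2002) form with the parameters (q, h), where h is the decay scale. A central finite difference is used as a stand-in for the adjoint. An adjoint would deliver the same gradient for all parameters in one backward integration. The "observations" are synthetic, generated with a larger q and a smaller h than the first guess. All names and values are hypothetical.

```python
import numpy as np

RHO, H, E = 1035.0, 4000.0, 1e-3   # density, bottom depth, conversion rate: illustrative values

def log_dissipation(z, q, h):
    """ln(epsilon) for epsilon = q * E * F(z) / rho with exponential bottom-intensified F(z)."""
    F = np.exp(-(H + z) / h) / (h * (1.0 - np.exp(-H / h)))
    return np.log(q * E * F / RHO)

def cost(params, z_obs, log_eps_obs):
    """Sum of squared misfits in ln(epsilon); params = (q, h)."""
    q, h = params
    return np.sum((log_dissipation(z_obs, q, h) - log_eps_obs) ** 2)

def sensitivity(params, z_obs, log_eps_obs, rel_step=1e-4):
    """Central finite-difference gradient of the cost (a stand-in for an adjoint gradient)."""
    params = np.asarray(params, dtype=float)
    grad = np.zeros_like(params)
    for i in range(params.size):
        dp = np.zeros_like(params)
        dp[i] = rel_step * params[i]
        grad[i] = (cost(params + dp, z_obs, log_eps_obs)
                   - cost(params - dp, z_obs, log_eps_obs)) / (2.0 * dp[i])
    return grad

z_obs = np.linspace(50.0, 1000.0, 20) - H              # 50-1000 m above the bottom
log_eps_obs = log_dissipation(z_obs, q=0.5, h=300.0)   # synthetic "observations"
first_guess = np.array([1.0 / 3.0, 500.0])             # (q, h) in the model
grad = sensitivity(first_guess, z_obs, log_eps_obs)
for name, g in zip(("q", "h"), grad):
    advice = "reduce" if g > 0 else "increase"         # move against the gradient
    print(f"dJ/d{name} = {g:+.3e} -> {advice} {name}")
```

Here the signs point toward increasing q and reducing h, which matches the values used to generate the synthetic data. Mapping such signs at every grid point produces a picture like Fig. 3.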

The dataset obtained from this optimization will provide new insights into various scientific issues concerning the ocean interior that have attracted attention in recent years. Examples include identifying the mechanisms of deep-ocean climate change and of changes in the meridional circulation, including deep-water warming. In addition, the distribution of optimized vertical mixing, although it contains model representativeness errors, should be validated as a mapping product that synthesizes observations. Continued research along this line, combined with sustained observations, will lead to breakthroughs that clarify the influence of vertical mixing on ocean circulation and global change.

Furthermore, advances in computing may soon make it possible to construct a new numerical modeling framework in which turbulence variables are treated as model variables. A wide range of direct observations is also expected to be acquired densely through turbulence measurements. These measurements will come from platforms such as Argo floats and underwater gliders, which are now entering the practical stage.

References

Argo Science Team (2001) Argo: The global array of profiling floats. In: Koblinsky CJ, Smith NR (eds) Observing the Oceans in the 21st Century. Bureau of Meteorology, GODAE Project Office, Melbourne, pp 248–258

Awaji T, Masuda S, Ishikawa Y, Sugiura N, Toyoda T, Nakamura T (2003) State estimation of the North Pacific Ocean by a four-dimensional variational data assimilation experiment. J Oceanogr 59:931–943

Bryan F (1987) Parameter sensitivity of primitive equation ocean general circulation models. J Phys Oceanogr 17:970–985

Cosme E, Brankart JM, Verron J, Brasseur P, Krysta M (2010) Implementation of a reduced rank square-root smoother for high resolution ocean data assimilation. Ocean Model 33(1–2):87–100

Cummins PF, Holloway G, Gargett AE (1990) Sensitivity of the GFDL ocean general circulation model to a parameterization of vertical diffusion. J Phys Oceanogr 20:817–830

DeVries T, Holzer M (2019) Radiocarbon and Helium Isotope Constraints on Deep Ocean Ventilation and Mantle-3He Sources. J Geophys Res -Oceans 124:3036–3057. https://doi.org/10.1029/2018JC014716

DeVries T, Primeau F (2011) Dynamically and observationally constrained estimates of water-mass distributions and ages in the global ocean. J Phys Oceanogr 41:2381–2401. https://doi.org/10.1175/JPO-D-10-05011.1

Eden C, Greatbatch RJ, Willebrand J (2007) A diagnosis of thickness fluxes in an eddy-resolving model. J Phys Oceanogr 37:727–742

Evensen G, van Leeuwen PJ (2000) An ensemble Kalman smoother for nonlinear dynamics. Mon Wea Rev 128:1852–1867

Freychet N, Cosme E, Brasseur P, Brankart J-M, Kpemlie E (2012) Obstacles and benefits of the implementation of a reduced rank smoother with a high resolution model of the Atlantic ocean. Ocean Sci Discuss 9:1187–1229. https://doi.org/10.5194/osd-9-1187-2012

Fukasawa M, Freeland H, Perkin R, Watanabe T, Uchida H, Nishina A (2004) Bottom water warming in the North Pacific ocean. Nature 427:825–827. https://doi.org/10.1038/nature02337

Fukumori I (2002) A partitioned Kalman filter and smoother. Mon Wea Rev 130:1370–1383. https://doi.org/10.1175/1520-0493

Furue R, Jia Y, McCreary JP, Schneider N, Richards KJ, Müller P, Cornuelle BD, Martínez Avellaneda N, Stammer D, Liu C, Köhl A (2015) Impacts of regional mixing on the temperature structure in the equatorial Pacific Ocean. Part 1: Vertically uniform vertical diffusion. Ocean Modell 91:91–111

Gargett AE (1984) Vertical eddy diffusivity in the ocean interior. J Mar Res 42:359–393. https://doi.org/10.1357/002224084788502756

Gent PR, McWilliams JC (1990) Isopycnal mixing in ocean circulation models. J Phys Oceanogr 20:150–155

Gent PR, Willebrand J, McDougall TJ, McWilliams JC (1995) Parameterizing eddy-induced tracer transports in ocean circulation models. J Phys Oceanogr 25:463–474

Hasumi H, Suginohara N (1999) Effects of locally enhanced vertical diffusivity over rough topography on the world ocean circulation. J Geophys Res 104:23364–23374. https://doi.org/10.1029/1999JC099191

Hibiya T, Nagasawa M, Niwa Y (2006) Global mapping of diapycnal diffusivity in the deep ocean based on the results of expendable current profiler (XCP) surveys. Geophys Res Lett 33:L03611. https://doi.org/10.1029/2005GL025218

Hibiya T, Ijichi T, Robertson R (2017) The impacts of ocean bottom roughness and tidal flow amplitude on abyssal mixing. J Geophys Res 122:5645–5651. https://doi.org/10.1002/2016JC012564

Hosoda S, Ohira T, Nakamura T (2008) A monthly mean dataset of global oceanic temperature and salinity derived from Argo float observations. JAMSTEC Rep Res Dev 8:47–59

Hoteit I, Cornuelle B, Heimbach P (2010) An Eddy-permitting, dynamically consistent adjoint-based assimilation system for the tropical Pacific: hindcast experiments in 2000. J Geophys Res 115:C03001

Jia Y, Furue R, McCreary JP (2015) Impacts of regional mixing on the temperature structure of the equatorial Pacific Ocean. Part 2: Depth-dependent vertical diffusion. Ocean Modell 91:112–127

Köhl A, Stammer D (2008a) Variability of the meridional overturning in the North Atlantic from 50-year GECCO state estimation. J Phys Oceanogr 38:1913–1930

Köhl A, Stammer D (2008b) Decadal sea level changes in the 50-year GECCO ocean synthesis. J Clim 21:1866–1890

Köhl A, Siegismund F, Stammer D (2012) Impact of assimilating bottom pressure anomalies from GRACE on ocean circulation estimates. J Geophys Res. https://doi.org/10.1029/2011JC007623

Kouketsu S, Doi T, Kawano T, Masuda S, Sugiura N, Toyoda T, Igarashi H, Kawai Y, Katsumata K, Uchida H, Fukasawa M, Awaji T (2011) Deep ocean heat content changes estimated from observation and reanalysis product and their influence on sea level change. J Geophys Res 116:C03012. https://doi.org/10.1029/2010JC006464

Kunze E (2017) Internal-Wave-Driven Mixing: Global Geography and Budgets. J Phys Oceanogr 47:1325–1345. https://doi.org/10.1175/JPO-D-16-0141.1

Kunze E, Firing E, Hummon JM, Chereskin TK, Thurnherr AM (2006) Global abyssal mixing inferred from lowered ADCP shear and CTD strain profiles. J Phys Oceanogr 36:1553–1576. https://doi.org/10.1175/JPO2926.1

Liu C, Köhl A, Stammer D (2014) Interpreting layer thickness advection in terms of eddy–topography interaction. Ocean Modell 81:65–77

Liu C, Köhl A, Stammer D (2012) Adjoint-based estimation of eddy-induced tracer mixing parameters in the global ocean. J Phys Oceanogr 42:1186–1206

Lumpkin R, Speer K (2007) Global ocean meridional overturning. J Phys Oceanogr 37:2550–2562. https://doi.org/10.1175/JPO3130.1

Masuda S, Awaji T, Sugiura N, Ishikawa Y, Baba K, Horiuchi K, Komori N (2003) Improved estimates of the dynamical state of the North Pacific Ocean from a 4 dimensional variational data assimilation. Geophys Res Lett 30(16):1868. https://doi.org/10.1029/2003GL017604

Masuda S, Awaji T, Sugiura N, Matthews JP, Toyoda T, Kawai Y, Doi T, Kouketsu S, Igarashi H, Katsumata K, Uchida H, Kawano T, Fukasawa M (2010) Simulated rapid warming of abyssal North Pacific waters. Science 329:319–322. https://doi.org/10.1126/science.1188703

Mazloff MR, Heimbach P, Wunsch C (2010) An eddy-permitting southern ocean state estimate. J Phys Oceanogr 40:880–899. https://doi.org/10.1175/2009JPO4236.1

Melet A, Hallberg RW, Legg S, Polzin K (2013) Sensitivity of the ocean state to the vertical distribution of internal-tide driven mixing. J Phys Oceanogr. https://doi.org/10.1175/JPO-D-12-055.1

Menemenlis D, Fukumori I, Lee T (2005) Using Green’s functions to calibrate an ocean general circulation model. Mon Wea Rev 133:1224–1240

Monterey GI, Levitus S (1997) Climatological cycle of mixed layer depth in the world ocean. U.S. Gov Printing Office NOAA NESDIS, Washington, p 5

Munk W, Wunsch C (1998) Abyssal recipes II: energetics of tidal and wind mixing. Deep-Sea Res I 45:1977–2010. https://doi.org/10.1016/S0967-0637(98)00070-3

Niwa Y, Hibiya T (2004) Three-dimensional numerical simulation of M2 internal tides in the East China Sea. J Geophys Res. https://doi.org/10.1029/2003JC001923

Oka A, Niwa Y (2013) Pacific deep circulation and ventilation controlled by tidal mixing away from the sea bottom. Nat Commun 4:2419

Osafune S, Doi T, Masuda S, Sugiura N, Hemmi T (2014) Estimated state of ocean for climate research (ESTOC). JAMSTEC. https://doi.org/10.17596/0000106

Osafune S, Masuda S, Sugiura N, Doi T (2015) Evaluation of the applicability of the Estimated Ocean State for Climate Research (ESTOC) dataset. Geophys Res Lett 42(12):4903–4911

Osborn TR (1980) Estimates of the local rate of vertical diffusion from dissipation measurements. J Phys Oceanogr 10:83–89

Richards KJ, Kashino Y, Natarov A, Firing E (2012) Mixing in the western equatorial Pacific and its modulation by ENSO. Geophys Res Lett 39:L02604. https://doi.org/10.1029/2011GL050439

Sasaki Y (1970) Some basic formalisms in numerical variational analysis. Mon Weather Rev 98:875

Sasaki W, Richards KJ, Luo J-J (2012) Role of vertical mixing originating from small vertical scale structures above and within the equatorial thermocline in an OGCM. Ocean Modell 57–58:29–42

Schiller A, Lee T, Masuda S (2013) Methods and applications of ocean synthesis in climate research. In: Siedler G, Griffies SM, Gould J, Church JA (eds) Ocean circulation and climate: a 21st century perspective, International Geophysics, vol 103. Elsevier, Amsterdam, pp 581–601. https://doi.org/10.1016/B978-0-12-391851-2.00022-2

St. Laurent LC, Simmons HL, Jayne SR (2002) Estimating tidally driven mixing in the deep ocean. Geophys Res Lett 29:2106. https://doi.org/10.1029/2002GL015633

Stammer D, Wunsch C, Fukumori I, Marshall J (2002a) State estimation in modern oceanographic research. EOS 83(27):289

Stammer D, Wunsch C, Giering R, Eckert C, Heimbach P, Marotzke J, Adcroft A, Hill CN, Marshall J (2002b) Global ocean circulation during 1992–1997, estimated from ocean observations and a general circulation model. J Geophys Res 107(C9):3118

Tanaka Y, Hibiya T, Niwa Y, Iwamae N (2010) Numerical study of K1 internal tides in the Kuril straits. J Geophys Res 115:C09016. https://doi.org/10.1029/2009JC005903

Toyoda T, Sugiura N, Masuda S, Sasaki Y, Igarashi H, Ishikawa Y, Hatayama T, Kawano T, Kawai Y, Kouketsu S, Katsumata K, Uchida H, Doi T, Fukasawa M, Awaji T (2015) An improved simulation of the deep Pacific Ocean using optimally-estimated vertical diffusivity based on the Green’s function method. Geophys Res Lett 42:9916–9924. https://doi.org/10.1002/2015GL065940

Tsujino H, Hasumi H, Suginohara N (2000) Deep Pacific circulation controlled by vertical diffusivity at the lower thermocline depths. J Phys Oceanogr 30:2853–2865. https://doi.org/10.1175/1520-0485(2001)031<2853:DPCCBV>2.0.CO;2

Usui N, Wakamatsu T, Tanaka Y, Nishikawa S, Igarashi H, Nishikawa H, Ishikawa Y, Kamachi M (2017) Four-dimensional variational ocean reanalysis: a 30-year high-resolution dataset in the western North Pacific (FORA-WNP30). J Oceanogr 73:205–233. https://doi.org/10.1007/s10872-016-0398-5

Waterhouse AF et al (2014) Global patterns of diapycnal mixing from measurements of the turbulent dissipation rate. J Phys Oceanogr 44:1854–1872

Wenzel M, Schröter J (2007) The global ocean mass budget in 1993–2003 estimated from sea level. J Phys Oceanogr 37(2):203–213. https://doi.org/10.1175/JPO3007.1

Whalen CB, Talley LD, MacKinnon JA (2012) Spatial and temporal variability of global ocean mixing inferred from Argo profiles. Geophys Res Lett 39:L18612. https://doi.org/10.1029/2012GL053196

Wunsch C, Heimbach P (2007) Practical global oceanic state estimation. Physica D-Nonlinear Phenomena 20:197–208. https://doi.org/10.1016/j.physd.2006.09.040

Wunsch C, Heimbach P (2013) Dynamically and kinematically consistent global ocean circulation and ice state estimates. In: Siedler G, Church J, Gould J, Griffies S (eds) Ocean circulation and climate: a 21st century perspective, chapter 21. Elsevier, Amsterdam, pp 553–579. https://doi.org/10.1016/B978-0-12-391851-2.00021-0

Acknowledgements

This work was partly supported by Grants-in-Aid for Scientific Research on Innovative Areas (MEXT KAKENHI-JP15H05817/JP15H05819).

Author information

Contributions

SM reviewed and wrote the paper. SO provided figures and formulations of model schemes. All authors read and approved the final manuscript.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Masuda, S., Osafune, S. Ocean state estimations for synthesis of ocean-mixing observations. J Oceanogr 77, 359–366 (2021). https://doi.org/10.1007/s10872-020-00587-x

DOI: https://doi.org/10.1007/s10872-020-00587-x