Abstract

Propagation models of flow-like landslides can be calibrated by comparing on-site evidence of past occurrences with the propagation paths and the deposition zones resulting from numerical simulations of the phenomena. Most typically, the performance of these models is evaluated considering the events independently from one another and, heuristically, i.e., subjectively assessing the fit between numerical results and available on-site data. At regional scale, however, storms often trigger, within a given area, multiple landslides of the flow type that occur more or less simultaneously. At this scale, a procedure that objectively quantifies the success, or the errors, of the numerical simulations of multiple landslides is lacking. In this study, such a quantitative calibration procedure is proposed, and assessed, considering the debris flows that occurred in Sarno in 1998 (Italy). The numerical model used is called Debris Flow Predictor (DFP), which is able to simulate the propagation paths and the accumulation depths of multiple debris flows, at regional scale, from a series of predefined triggering areas. The model employs a cellular automata method with a probabilistic behavioral rule, which is a function of the adopted digital elevation model and a series of parameters related to the erosional, the depositional, and the spreading capacity of the propagating soil mass. The numerical simulations were evaluated over the study area considering the entire set of debris flow events, as well as the individual debris flows, following a preliminary discretization of both the mapped footprints and the remaining portion of the territory. The relative and total operator characteristic curves, in addition to 6 indicators derived from a confusion matrix, have been used to quantify the performance of the simulations. 
The results show that the quantitative evaluation of the numerical results is essential to properly calibrate the adopted model, i.e., to discriminate among different simulations arising from different sets of model parameters.

Introduction

Landslides of the flow-type, and debris flows in particular, may be catastrophic natural events due to the high mobility of the propagating material (from hundreds of meters to a few kilometers) and to their extremely high flow velocity (on the order of meters per second). The high destructive power of such phenomena poses a significant risk to the communities, the structures, and the infrastructures located along their propagation path (Hungr et al. 2001; Cascini et al. 2010). To properly devise risk mitigation measures aimed at reducing the potential destructive consequences of such phenomena, numerical analyses can be used to estimate their main characteristics, such as flow path, volume of soil mobilized, flow velocity, and extent of the impacted area.

Models of the post-failure stage of flow-like landslides are typically calibrated adopting on-site evidence, i.e., comparing the simulation results with past events, most often by means of trial-and-error procedures (McDougall 2017). Some recent literature contributions have dealt with the issue of parameter calibration for such models, such as Aaron et al. (2019), proposing an optimization approach based on the Gauss–Marquardt–Levenberg algorithm; Depina et al. (2020), who adopted a Bayesian updating approach for calibrating the distribution of the spatially variable parameters; and Brezzi et al. (2016), employing an Ensemble Smoother, i.e., a Bayesian data assimilation method, to minimize the variance of the estimated error between the model results and the field data collected. Other contributions explicitly proposed indicators to quantitatively evaluate the model capability to simulate the real event, such as Cepeda et al. (2010) and Lee et al. (2022), adopting methods based on receiver operating characteristic (ROC) analyses, and Anderson et al. (2016), who quantified the model performance in terms of three success metrics. However, most typically, the evaluation of numerical simulations of landslides of the flow type is based on a qualitative comparison of the fit between model results and data from real events (Lo et al. 2011; Dai et al. 2014; Pastor et al. 2014, 2015; Li et al. 2019; Zhao et al. 2020; Fan et al. 2020; Guo et al. 2022, among others). Another characteristic of the calibration of flow-like landslide models is that it usually emerges from the back analysis of single events. However, calibration can surely be more powerful if “groups of similar events are back-analyzed together because the resulting patterns are more broadly applicable and can be used in a statistically justifiable probabilistic way” (McDougall 2017). 
Following this line of thought, and concurrently attempting to overcome the widely adopted practice of subjective qualitative evaluation of model results, this study proposes a procedure to objectively assess the performance of flow-like landslide propagation models operating at regional scale.

Quantifying model performance based on historical data

Several features of past landslides of the flow-type can be used in the calibration procedure, such as the footprint of the phenomenon, its runout distance, the flow velocity, and the eroded or deposited volumes (Kwan and Sun 2007; Hungr and McDougall 2009; Lo et al. 2011; Cascini et al. 2014; Cuomo et al. 2015, 2019; Llano-Serna et al. 2016; Calvello et al. 2017; Liu et al. 2019). The easiest information to get from a past event, and indeed the one that is most often used for model calibration purposes also at local scale, is the footprint left on the territory by the landslide during its propagation phase. Footprints include the triggering, the run-out, and the deposition zones.

For the calibration of a numerical model at regional scale, we herein assume that the results of a propagation model (hereafter, model outcome) can be characterized using a case study for which the only information available is the digital elevation model (DEM). The DEM of the area of interest (hereafter, sample space) contains the footprints of the flow-like landslide event (hereafter, real event). Figure 1 shows a schematic of the procedure that has been defined for this purpose. The various steps of the procedure are described in the following sub-sections.

Real event compared with model outcome in the sample space

Landslide footprints can be used to derive a binary classification of the agreement between model outcome and real event by means of a 2 × 2 confusion matrix, also known as contingency table, computed evaluating the fit cell by cell in the sample space. In such a matrix, four outcomes are possible within any given DEM cell (Fig. 2):

- True Positive (TP), if the model correctly predicts a positive class, i.e., an area involved in the real event
- True Negative (TN), if the model correctly predicts a negative class, i.e., an area free of landslides
- False Positive (FP), if the model incorrectly predicts a positive class
- False Negative (FN), if the model incorrectly predicts a negative class

To approximate the continuous data (the real event) into a discrete variable, the resolution of the adopted DEM must be considered. Cells are assumed part of a landslide footprint if their barycenter is inside the polygon used to describe it.
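The cell-by-cell classification described above can be sketched as follows. This is a minimal illustration, not the implementation used in the study: the function names, the grid origin at (0, 0), the row index increasing with y, and the toy footprint and model outcome are all assumptions made for the example.

```python
def point_in_polygon(x, y, vertices):
    """Ray-casting test: True if the point (x, y) lies inside the polygon."""
    inside = False
    for i, (x1, y1) in enumerate(vertices):
        x2, y2 = vertices[(i + 1) % len(vertices)]
        # Only edges that straddle the horizontal line through the point matter.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def rasterize(vertices, n_rows, n_cols, cell_size):
    """Mark a cell as footprint when its barycenter falls inside the polygon.

    Assumption: the grid origin is (0, 0) and row r spans y in
    [r * cell_size, (r + 1) * cell_size).
    """
    return [
        [point_in_polygon((c + 0.5) * cell_size, (r + 0.5) * cell_size, vertices)
         for c in range(n_cols)]
        for r in range(n_rows)
    ]


def confusion_counts(real, model):
    """Count TP, FP, FN, TN over two equally sized boolean grids."""
    counts = {"TP": 0, "FP": 0, "FN": 0, "TN": 0}
    for real_row, model_row in zip(real, model):
        for observed, predicted in zip(real_row, model_row):
            if predicted:
                counts["TP" if observed else "FP"] += 1
            else:
                counts["FN" if observed else "TN"] += 1
    return counts


# Hypothetical 3 x 3 sample space with 5-m cells and a square footprint.
real = rasterize([(0, 0), (10, 0), (10, 10), (0, 10)], 3, 3, 5.0)
model = [[True, True, True], [True, False, False], [False, False, False]]
counts = confusion_counts(real, model)
```

In this toy case the footprint covers the four cells whose barycenters fall inside the square, and the four counts always sum to the number of cells in the sample space.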

Several indexes can be derived from the adopted confusion matrix, representative of either the positive or the negative performance of the model in relation to the real event. The following are the ones used hereinafter (see Fig. 2 for the meaning of the terms in the equations).

- Positive predicted value (PPV), which is an indicator of how much of the model outcome is included within the boundaries of the real event

$$\mathrm{PPV}=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FP}}=\frac{\mathrm{TP}}{\mathrm{Model\;outcome}}$$(1)

- True positive rate (TPR), which is an indicator of how much of the real event is correctly predicted by the model

$$\mathrm{TPR}=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}}=\frac{\mathrm{TP}}{\mathrm{Real\;Event}}$$(2)

- False positive rate (FPR), which is an indicator of how much of the area free of landslides is incorrectly predicted by the model

$$\mathrm{FPR}=\frac{\mathrm{FP}}{\mathrm{FP}+\mathrm{TN}}=\frac{\mathrm{FP}}{\mathrm{Sample\;Space}-\mathrm{Real\;Event}}$$(3)

- Critical success index (CSI), which is the ratio between the correct model predictions within the landslide boundaries and the union of model outcome and real event

$$\mathrm{CSI}=\frac{\mathrm{TP}}{\mathrm{TP}+\mathrm{FN}+\mathrm{FP}}=\frac{\mathrm{TP}}{\mathrm{Real\;Event}\cup \mathrm{Model\;outcome}}$$(4)

- True negative rate (TNR), which is an indicator of how much of the area free of landslides is correctly predicted

$$\mathrm{TNR}=\frac{\mathrm{TN}}{\mathrm{TN}+\mathrm{FP}}=1-\mathrm{FPR}$$(5)

- Accuracy (ACC), which is an indicator of how much of the sample space is correctly classified by the model, i.e., both the real event and the area free of landslides

$$\mathrm{ACC}=\frac{\mathrm{TP}+\mathrm{TN}}{\mathrm{TP}+\mathrm{FP}+\mathrm{FN}+\mathrm{TN}}=\frac{\mathrm{TP}+\mathrm{TN}}{\mathrm{Sample\;Space}}$$(6)

When the model outcomes can be ranked, for instance, considering the values of a model output variable in the cells of the sample space, other statistical tools can be used for computing the ability of a model to correctly predict a real event. They are the total operating characteristic (TOC) curve, introduced by Pontius and Si (2014), and the relative operating characteristic (ROC) analysis, originally developed for medical applications (Metz 1978) but nowadays frequently used in many other fields; in landslide analyses, it is mostly used in relation to susceptibility studies (e.g., Ciurleo et al. 2017; Piciullo et al. 2018). Both TOC and ROC plots visualize model performance considering varying thresholds of a model output variable representing the magnitude of the model prediction, therefore essentially generating and reporting different contingency tables in relation to the value assumed by that variable (Fig. 3). The ROC curve is plotted in the FPR-TPR plane, whereas the TOC graph is plotted in the (TP + FP)-TP plane. In both graphs, it is possible to identify common reference elements such as (i) the diagonal, which represents the locus of random points, also known as uninformed classifier; (ii) point A, which is the perfect classifier; (iii) point B, which is the limit of the random line; and (iv) point C, which is the worst classifier. A widely adopted indicator computed from these curves is the area under curve (AUC) introduced by Hanley and McNeil (1982). For the ROC curve, the AUC value varies in the range 0–1 and random guessing produces an AUC value of 0.5 (the diagonal line between (0, 0) and (1, 1)); thus, no realistic classifier should have an AUC value lower than 0.5 (Fawcett 2006). 
In the TOC curve, the AUC is not normalized, and therefore, its upper boundary depends on the sample space dimension, which is a function of N, the total number of cells free of debris flows, and P, the total number of cells involved in the debris flow event. However, when the AUC of a generic curve in the TOC space is divided by the area of the parallelogram 0ABC (in other words the AUC of the ideal forecast), the result is a normalized value which has the same meaning as the AUC in the ROC space. In the TOC space, it is much easier to directly identify the absolute values of the elements of the confusion matrix resulting from different values (thresholds) of the considered model output variable. Considering this, and in addition to the indexes reported above, the following new indicator is herein proposed to quantify the positive performance of a model for any given value of the considered model output variable (see Appendix for more details).

- Distance from uninformed classifier (DUC), which is the normalized distance of a generic point of the analysis’ curve from the line representing the random guess in ROC and TOC space

$$\mathrm{DUC}=\frac{\mathrm{TP}\cdot N-\mathrm{FP}\cdot P}{N\cdot P}$$(7)
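As a worked illustration of Eqs. 1 to 7, the sketch below evaluates the seven indicators from the four elements of a confusion matrix. The function name and the counts are hypothetical, and the code assumes non-degenerate counts (no zero denominators). It also checks numerically that Eq. 7 can be rewritten as TPR minus FPR, which follows algebraically from P = TP + FN and N = FP + TN.

```python
def indicators(tp, fp, fn, tn):
    """Performance indicators of Eqs. 1-7 from confusion-matrix counts."""
    p = tp + fn  # cells involved in the real event
    n = fp + tn  # cells free of landslides
    return {
        "PPV": tp / (tp + fp),
        "TPR": tp / p,
        "FPR": fp / n,
        "CSI": tp / (tp + fn + fp),
        "TNR": tn / n,
        "ACC": (tp + tn) / (p + n),
        "DUC": (tp * n - fp * p) / (n * p),
    }


# Hypothetical counts, chosen only to make the arithmetic easy to follow.
values = indicators(tp=3, fp=1, fn=1, tn=4)

# Eq. 7 rearranged: (TP*N - FP*P) / (N*P) = TP/P - FP/N = TPR - FPR.
duc_from_rates = values["TPR"] - values["FPR"]
```

With these counts, PPV and TPR are both 0.75, FPR is 0.2, and DUC is 0.55, i.e., the vertical distance of the point from the diagonal in the ROC plane.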

Post-processing the footprints of flow-like landslides using their geometrical features

Figure 4 shows how the shapes of the landslide footprints can be further processed to refine the comparison with the model outcome, using an objective procedure that can be easily automated, e.g., in a GIS environment. The “landslide track” can be defined as the line that is equidistant from the boundaries of the polygon identifying the landslide footprint. It can be derived by drawing several rings inside the landslide polygon at an assigned distance until the rings collapse on themselves. Subsequently, the vertices of the innermost polygons are joined to obtain a single line (Fig. 4a). At this point, it is possible to divide the landslide footprint into multiple polygons adopting a series of cross sections, drawn at equal distance along the track. Consequently, the landslide footprint can be described as a sequence of “landslide sectors” whose union is the real event (Fig. 4b). This procedure, as will be shown in the case study section, makes it possible to characterize and effectively display the results of a propagation model along the propagation path of each landslide in the sample space. Therefore, it will be used as a tool to evaluate the quality of the model results for the different phenomena comprising the regional event. As such, this procedure is also applicable to analyses performed at local scale.
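The sector assignment can be approximated in a few lines once a track is available. The sketch below is a simplification and not the GIS procedure itself: it assumes the track is already given as a polyline without zero-length segments, and it replaces the explicit cross-section polygons with a nearest-point projection of each cell barycenter onto the track. Function names, the sample track, and the default 50-m spacing are illustrative assumptions.

```python
import math


def chainage_along_track(point, track):
    """Distance along the polyline `track` of its point nearest to `point`."""
    px, py = point
    best_dist, best_chainage, walked = math.inf, 0.0, 0.0
    for (x1, y1), (x2, y2) in zip(track, track[1:]):
        dx, dy = x2 - x1, y2 - y1
        length = math.hypot(dx, dy)
        # Projection parameter of the point on the segment, clamped to [0, 1].
        t = ((px - x1) * dx + (py - y1) * dy) / (length * length)
        t = max(0.0, min(1.0, t))
        dist = math.hypot(px - (x1 + t * dx), py - (y1 + t * dy))
        if dist < best_dist:
            best_dist, best_chainage = dist, walked + t * length
        walked += length
    return best_chainage


def sector_index(point, track, spacing=50.0):
    """Index of the landslide sector (0-based) containing the point."""
    return int(chainage_along_track(point, track) // spacing)


# Hypothetical L-shaped track, 200 m long, starting at the triggering zone.
track = [(0.0, 0.0), (100.0, 0.0), (100.0, 100.0)]
sectors = [sector_index(p, track) for p in [(30.0, 5.0), (75.0, -3.0), (104.0, 60.0)]]
```

Near sharp bends the projection and the true cross sections can disagree, so this shortcut is best read as a first approximation of the sectors shown in Fig. 4b.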

Treating historical events at regional scale

When several flow-like landslides are triggered in a given zone in a short period, the different landslides can show either totally independent run-out paths or overlapping propagation paths. In such cases, it is important to separate the total involved area into individual footprints and individual areas of influence that can be attributed to the different phenomena. To this end, assumptions need to be made, and the results of the numerical models should be post-processed coherently. Consequently, for analyses at regional scale, in addition to partitioning the landslide footprints as presented before, the area of interest also needs to be partitioned to evaluate the results of the model outside the footprints. The partition of the sample space can be performed following the same logic used to define the landslide track. Starting from the boundaries of the landslide footprints, a series of ring buffers outside the landslide polygons are drawn at assigned distances, until the area of interest is completely covered. When these lines cross each other, the intersections are used to divide the area of interest into individual areas of influence of the different phenomena. In this way, the entire domain is partitioned, and the results of the model can be evaluated also considering each landslide individually (Fig. 5).
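On a raster, a comparable partition can be sketched by assigning each cell outside the footprints to its nearest footprint and to a distance ring. This is a stand-in for the buffer-intersection construction, under stated assumptions: distances are measured between cell barycenters rather than from the polygon boundary, ties go to the footprint with the lower identifier, and the brute-force search is only practical for small grids. All names and values are hypothetical.

```python
import math
from bisect import bisect_left


def partition_sample_space(labels, cell_size, ring_edges):
    """Assign every cell outside the footprints to (landslide id, ring index).

    labels: grid of integers, 0 outside any footprint, k > 0 inside footprint k.
    ring_edges: increasing outer distances of the rings, in the units of cell_size;
    cells beyond the last edge get ring index len(ring_edges).
    Footprint cells are returned as None.
    """
    footprint_cells = [
        (r, c, k) for r, row in enumerate(labels) for c, k in enumerate(row) if k
    ]
    partition = []
    for r, row in enumerate(labels):
        out_row = []
        for c, k in enumerate(row):
            if k:
                out_row.append(None)
                continue
            # Nearest footprint cell decides the area of influence.
            dist, owner = min(
                (math.hypot(r - fr, c - fc) * cell_size, fk)
                for fr, fc, fk in footprint_cells
            )
            out_row.append((owner, bisect_left(ring_edges, dist)))
        partition.append(out_row)
    return partition


# Hypothetical strip of 5-m cells with footprint 1 on the left and 2 on the right.
labels = [[1, 0, 0, 0, 0, 2]]
partition = partition_sample_space(labels, cell_size=5.0, ring_edges=[5.0, 50.0])
```

In the example, the two cells next to each footprint fall in the first ring of that footprint, and the strip is split halfway between the two phenomena.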

Case study

Landslide event: Sarno (Italy) 1998

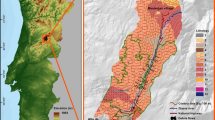

The area of interest in this study is part of a hilly territory known as Pizzo D’Alvano. This region contains shallow deposits of coarse-grained pyroclastic soils, which stem from the explosive eruptions of the Somma-Vesuvio volcano and overlay fractured limestone formations beneath. The deposits exhibit a highly diverse thickness, giving rise to a complex, multilayered pyroclastic covering. The current status of the cover is the result of several processes that occurred over the years, such as erosion, ancient landsliding, sediment transportation, and anthropogenic activities. The different geotechnical and hydrological properties of the pyroclastic layers, together with the water supplies coming from the fractured bedrock underlying the shallow deposit, lead to a groundwater circulation that is able to trigger landslide phenomena (Guadagno et al. 2005; Cascini et al. 2005). On 4–5 May 1998, several shallow landslides were triggered by intense and prolonged rainfall in the upper parts of the slopes, giving way to a large number of flow-like landslides (Cascini 2004). The landslides were characterized by relatively small triggering volumes of pyroclastic soils that significantly increased along the propagation path due to soil erosion, soil entrainment, and slope undercutting (Cascini et al. 2008; Cuomo et al. 2014). The mechanical properties of the soil involved, together with its high degree of saturation, led to long propagation distances. This allowed the landslides to reach densely populated areas, destroying many urban structures in four villages located at the foot of the hill and killing 159 people. A very large number of papers have been written on this case study, also in recent years (e.g., Picarelli et al. 2020; Santangelo et al. 2021), and many different terms have been used to categorize these landslides (Guadagno et al. 2011). For the purposes of this work, all these landslides of the flow type will hereinafter simply be called debris flows.

The specific area considered in this study has an extension of around 12 km². Several triggering zones have produced many concurrent and subsequent propagation events. The phenomena that affected the Episcopio settlement of the Sarno municipality can be grouped in five major debris flows (Fig. 6a). The DEM utilized in the debris flow model has a resolution of 5 m. The presence of buildings inside the urban area, which is known to significantly influence the propagation path of debris flows (Gao et al. 2017; Feng et al. 2019), has been considered by adding a height of 10 m to the elevation of the DEM cells that fall within the building footprints. Multiple triggering zones (Fig. 6a) have been defined at the topmost locations of the debris flow footprints because the adopted model is not able to simulate retrogressions. As these zones are not clearly discernible due to their size in relation to the propagation paths and to the figure’s dimensions, the triggering zones used for debris flow 3 are highlighted in the top-right part of Fig. 6a. The debris flow sectors of the five phenomena are shown in Fig. 6b. They were derived following the procedure described in the previous section, considering a partitioning space along the landslide track of 50 m. Debris flows 3 and 1 are, respectively, characterized by the longest, 3850 m, and the shortest, 1900 m, run-out distance. The partitions of the sample space are shown in Fig. 6c. In this case, the domain has been divided into two groups. Inside the debris flow footprints, the width of the offset rings was set to 10 m. Outside the debris flow boundaries, the first ring is 10-m wide (a relatively small buffer to account for possible DEM resolution and mapping errors), the second one is 40-m wide, while the others, up to a distance of 500 m, are 50-m wide. The remaining part of the sample space is not partitioned further.

Probabilistic model: debris flow predictor

To test the calibration procedure in our area of interest (Sarno), we deploy a relatively new model called debris flow predictor (DFP). DFP is an agent-based simulation, i.e., a cellular automata model of computation, wherein individual agents either occupy or do not occupy cells in a 5-m DEM (the matrix), following rules for calculation of direction of travel, spread (and the spawning of new agents), mass, scour, deposition, and interaction with other agents in discrete timesteps (Guthrie et al. 2008; Guthrie and Befus 2021). At its core, DFP uses probability curves for scour and deposition, themselves based on approximately 1,700 observations about debris flow behavior, that vary with the underlying slope. Agents continue to scour and deposit in the matrix in successive timesteps until such time as their mass is zero and they are terminated. They change the DEM in any single run (reset between runs), and multiple landslides will be affected by the DEM changes. Landslide shape, and spawning of new agents, is controlled by a probability density function whose parameters may be adjusted by the user within the program interface (Guthrie and Befus 2021).

where \({m}_{\mathrm{MAX}}\)= a limit on spreading behavior called Fan Maximum Slope, \(m\) = DEM slope, \(n\) = a curve shape parameter (skew fanning to low slopes) whereby numbers less than one are convex and numbers greater than one are concave and focus spreading to lower slopes, \({\sigma }_{\mathrm{L}}\)= low slope coefficient controlling the amount of spread on low slopes, and \({\sigma }_{\mathrm{S}}\) = steep slope coefficient controlling the amount of spread on steep slopes.

Other controls exist including the ability to limit the number of agents that can be spawned by any one agent (Max Spawns Allowed), a “frictional” function meant to simulate deposition in corners (mass loss per 45° turn), and a minimum initiation depth. Effects of local geology, in addition to the spreading functions, are controlled by post calculation multipliers for deposition and erosion.

The model has proven to be computationally efficient (Guthrie and Befus 2021; Crescenzo et al. 2021; Arghya et al. 2022), applicable to a wide variety of geographic settings at both the regional and local scale (e.g., Guthrie et al. 2022; Wasklewicz et al. 2022; Arghya et al. 2022) and easy to re-calibrate in order to test the methods applied herein.

The numerical analyses

In DFP, the travel, spread, and spawning of new agents in the sample space, which simulate the debris flow propagation, are controlled stochastically by means of a probability density function (Eq. 8); therefore, repeated numerical simulations in DFP produce different results. A probabilistic analysis conducted by means of DFP is a set of numerical simulations performed considering the same initial conditions and the same values of input parameters. Table 1 reports the values assumed by the DFP input parameters for the four probabilistic analyses that will be considered and comparatively evaluated herein. All of them employ the same initial conditions, i.e., the same triggering zones located at the topmost locations of the debris flow footprints (Fig. 6a). Each analysis includes 100 numerical simulations. The easiest way to assess the results of each analysis is by counting, in each cell of the sample space, the number of simulations for which the cell is part of the debris flow. From those numbers, each cell can be assigned a frequency from 0 to 100%, with a resolution of 1%, which is taken as the model-computed probability of presence of a debris flow in that cell.
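The counting step described above can be sketched as follows. The function name and the toy inputs are hypothetical; in particular, four small grids stand in for the 100 simulations of an analysis, so the resolution of the resulting frequencies is 25% here rather than 1%.

```python
def frequency_map(simulations):
    """Percentage of simulations in which each cell is part of a debris flow.

    simulations: list of equally sized grids, truthy where a cell is affected.
    """
    n_sims = len(simulations)
    n_rows, n_cols = len(simulations[0]), len(simulations[0][0])
    return [
        [100.0 * sum(bool(sim[r][c]) for sim in simulations) / n_sims
         for c in range(n_cols)]
        for r in range(n_rows)
    ]


# Four hypothetical simulation outcomes on a 2 x 2 sample space.
simulations = [
    [[1, 0], [1, 1]],
    [[1, 0], [0, 1]],
    [[1, 1], [0, 1]],
    [[1, 0], [0, 0]],
]
freq = frequency_map(simulations)
```

The top-left cell is affected in every simulation and therefore receives a frequency of 100%, while the cells reached only once receive 25%.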

The base case analysis is called A0, and it employs a set of input parameters values that have been effectively adopted to back analyze the occurrence of multiple debris flows in other study areas (Guthrie and Befus 2021). This base case analysis yielded, in this case study, unsatisfactory values of most performance indicators. The other three analyses (named A1, A2, and A3) were defined by changing, using trial-and-error, the values of the input parameters (6 out of 9) to which the numerical results were most sensitive. Significant changes from the base case affected the following four parameters: fan maximum slope, from 27° to 37° (value kept constant for analyses A1 to A3); sigma low slope, from 1.35 to 3.81 (analyses A2 and A3); skew fanning to low slopes, from 1.1 to 0.5 (analysis A3); and deposition multiplier, from 0.6 to 0.3 (analysis A3). For convenience in the illustration of the case study, the three analyses were aptly named in increasing order of overall performance, A3 being the best-performing one.

Results and discussion

Figure 7 shows the ROC and TOC curves for the four analyses. The curves were computed and drawn for the outcomes of the 100 simulations available for each analysis. Figure 7 also reports the AUC, whose value constantly increases passing from A0 to A3. From here onward, the evaluation of the model performance will be focused on the results of the analyses considering a threshold frequency of 5% (in Fig. 7 and hereafter: Ai–T5). In other words, only the cells that had a probability higher than 5% of being affected by a debris flow are considered. Figure 8 shows the results of the four analyses in the sample space, grouped in classes of frequencies from 5% onward. A qualitative evaluation of these four maps allows one to discriminate between A0–T5 and the other analyses, yet the differences between A1–T5, A2–T5, and A3–T5 are not straightforward to identify with this representation of the results. Instead, Fig. 9 shows a radar chart with the values of the six performance indicators previously defined for the four analyses. As already stated, they refer to contingency matrices computed considering a threshold frequency of 5%.
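To make the link between the frequency maps, the curves, and the 5% threshold concrete, the sketch below sweeps the threshold over the frequencies found in a map, collects the ROC points (FPR, TPR) and the TOC points (TP + FP, TP), and integrates the ROC curve with the trapezoidal rule. It is an illustration under assumptions, not the code used for Fig. 7: the function names and the toy data are hypothetical, and the normalization of the TOC area is deliberately left out.

```python
def roc_toc_points(freq, real):
    """ROC and TOC points obtained by lowering the frequency threshold."""
    pairs = [(f, bool(o)) for f_row, o_row in zip(freq, real)
             for f, o in zip(f_row, o_row)]
    p = sum(o for _, o in pairs)  # cells involved in the real event
    n = len(pairs) - p            # cells free of debris flows
    roc, toc = [(0.0, 0.0)], [(0, 0)]
    for t in sorted({f for f, _ in pairs}, reverse=True):
        tp = sum(1 for f, o in pairs if f >= t and o)
        fp = sum(1 for f, o in pairs if f >= t and not o)
        roc.append((fp / n, tp / p))
        toc.append((tp + fp, tp))
    return roc, toc


def area_under_curve(points):
    """Trapezoidal area under a curve given as (x, y) points sorted by x."""
    return sum((x2 - x1) * (y1 + y2) / 2
               for (x1, y1), (x2, y2) in zip(points, points[1:]))


def above_threshold(freq, threshold):
    """Binary model outcome: cells with a frequency higher than the threshold."""
    return [[f > threshold for f in row] for row in freq]


# Hypothetical frequency map (%) and mapped real event on a 2 x 3 sample space.
freq = [[90.0, 60.0, 0.0], [40.0, 10.0, 0.0]]
real = [[1, 1, 0], [0, 1, 0]]
roc, toc = roc_toc_points(freq, real)
auc_roc = area_under_curve(roc)
outcome_t5 = above_threshold(freq, 5.0)
```

In this toy case the ROC AUC is 8/9, which matches the fraction of (affected, unaffected) cell pairs ranked correctly by the frequencies, and the last TOC point is (P + N, P) because the lowest threshold flags every cell.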

Results of the four analyses in terms of performance indicators for contingency matrices computed considering a threshold frequency of 5%: a for all the analyses carried out, assessing the model results globally; b for the analysis A3–T5, considering each landslide event individually (dashed line) and the global event (continuous line)

It is worth noting that, for a single analysis, increasing PPV values correspond to decreasing TPR values because the two indices are linked. The values of TNR and ACC are not very sensitive to the different analyses, and this can be attributed to the large number of TN cells in the sample space, a common feature of debris flow events. Indeed, even when the debris flows are relatively large and turn into very catastrophic phenomena, the area affected by the event usually represents only a small part of the area available. Consequently, it may be stated that these kinds of indicators are not fit for evaluating the model performance at regional scale. In contrast, the newly proposed indicator DUC is relatively sensitive to the models’ results, even if it contains in its formulation the number of TN. Looking at the maps reporting the results (Fig. 8), it is straightforward to understand that A0–T5 does not simulate the landslide events in an acceptable way. This is confirmed quantitatively (Fig. 9a), as the values of 3 out of 6 performance indicators are lower than the assigned threshold of 0.5. Passing from A1–T5 to A3–T5, the PPV values decrease, and, coherently, the values of TPR, CSI, and DUC increase. Only the analysis A3–T5 exceeds the threshold of 0.5 for all the 6 considered indicators and, therefore, can be considered the best performing one. To explore the results of the analysis A3–T5 in more detail, Fig. 9b also reports the indicator values for the five debris flow events considered independently. Debris flow 1 yields the worst results in terms of PPV and CSI, and this can be attributed to a series of factors: the narrow landslide propagation path, whose width is comparable to the DEM resolution; a channelized deposition path that is difficult to reproduce with the adopted model considering the DEM characteristics in that area, which results in high numbers of false positives; and a possibly inaccurate identification of the mapped debris flow boundary. 
As it will be seen in the next steps, these false positive cells are mostly located in the 10-m buffer. Table 2 shows the numerical value of the 6 indicators adopted for the analysis A3–T5, considering each debris flow separately and the global event.

The landslide thickness map related to the analysis A3–T5 is reported in Fig. 10. It is worth mentioning that the mobilized and the deposited depths are in line with the data reported in Cascini (2004) and Cascini et al. (2013).

Considering the discretization of the debris flows, Fig. 11 reports the results for the single phenomena related to the analysis A3–T5, plotting the TP and the FN for each sector in which the footprints are discretized. It is relevant to stress that the sum of TP and FN makes up the real event (see Eq. 2). Therefore, the graphs are set up to easily convey how much, in each sector along the propagation track, the model is able to cover the area of the observed debris flow. For instance, it can be noted that for debris flow 1 (Fig. 11a), there is a gap of TP between the sectors 1600 m and 1750 m. Indeed, the real debris flow is channelized into a man-made drainage system which is not detectable in the adopted DEM, due to its relatively small cross section, and therefore, the modeled propagation occurs along a different path for those 150 m. Similarly, for debris flow 4 (Figs. 11d, 6b), the sectors between 1700 and 2100 m are characterized by a retrogressive failure due to undercutting at the slope foot. This phenomenon cannot be reproduced by this model without considering initiation zones in those sectors. The graphs also provide a visual indication of the width of the debris flow along the propagation path. Indeed, the distance between two consecutive cross-sections is fixed, and therefore, the total number of cells in each sector (TP and FN) is proportional to the average width of the real event in that sector. For instance, the middle part of debris flow 3 (Fig. 11c) and parts of debris flows 1 (Fig. 11a) and 5 (Fig. 11e) are clearly channelized.

Finally, the evaluation of the model results in the entire sample space is reported in Fig. 12, both for each debris flow considered individually and for the global event. It is important to stress that the rings corresponding to the − 10 ÷ 0 m and the 0 ÷ 10 m range are the closest rings to the boundaries of the debris flows, mapped, respectively, inside and outside the footprints. Consequently, the inner rings show only TP and FN values, whereas the outer rings show only FP and TN values. It is clearly visible that for all the debris flows, there is a relatively high number of cells computed as FP in the first ring outside the footprint. These “minor errors” can be attributed to the spatial accuracy of the model results, which is in turn highly influenced by the resolution of the adopted DEM, but they could also be related to the spatial accuracy of the adopted mapping and in particular to the identified position of the boundaries of the different debris flows along their propagation path. Another issue to address is the potential overlapping of the areas of influence of the different phenomena. For instance, for debris flow 1, the FP in the rings > 350 m are related to a propagation branch of debris flow 2 that entered the area of influence of debris flow 1. This model error is present in all the analyses conducted, and it may be attributed to a local problem in the DEM adopted in the piedmont area (Fig. 8).

Results of analysis A3–T5 in terms of number of cells in the elements of a contingency matrix (pie charts) and in the elements of the sample space partitioning (bar charts), considering each debris flow individually and the global event. Note: negative interval values refer to the rings inside the footprints of the debris flows, where only TP and FN can be calculated; positive interval values refer to the rings outside the footprints, where only FP and TN can be calculated

In summary, the calibration of the numerical model was conducted considering simultaneously five debris flows, some of which interacted during the propagation stage. To test the calibration procedure, four sets of input parameters were considered. The approach made it possible to select the best performing numerical model. The four analyses were indeed evaluated and compared employing six performance indicators obtained from the contingency table classification, the AUC values of the ROC and TOC curves, and two sets of infographics reporting the results for each debris flow. Although two performance indicators (i.e., TNR and ACC) did not provide useful information due to the large number of TN cells located in the sample space, the other four performance indicators (PPV, TPR, CSI, DUC) and the AUC of the ROC curve were successfully used to identify the optimal parameter set. A detailed inspection of the best fit simulation was then conducted adopting two purposefully designed infographics.

The comparison between the model results and the real event focused both on the overall global event and on the five individual debris flows. Considering the probabilistic nature of the adopted propagation model, in each analysis, each cell of the sample space was ranked on the basis of a computed probability of presence of a debris flow. In this setting, ROC curves and AUC values proved to be a good synthetic way to compare, for the global event, the results of the different numerical analyses (see Fig. 7). In fact, they clearly highlighted that the A3–T5 analysis yields the best results. The same conclusion is confirmed by the fact that only in this analysis is the threshold of 0.5 exceeded for all six considered indicators (see Fig. 9 and Table 2). This conclusion, however, cannot be drawn by looking only at the results in terms of propagation maps (see Fig. 8). To quantitatively assess the model outcome in more detail, considering for each debris flow different sectors along the propagation track and different areas outside the footprint, two graphical representations provided relevant insight into the areas where the fit between the model results and the real event was optimal or, conversely, not satisfactory (see Fig. 11 and Fig. 12). More generally, by using the proposed infographics, limitations and shortcomings in the adopted model and/or in the available data can be highlighted and potentially resolved. The graphs also provide a visual indication of the width of the debris flows along their propagation paths (Fig. 11) and a way to identify the spatial distribution of the model errors (Fig. 12). For instance, a gap of TP in two specific sectors of debris flows 1 and 4 can be explained by noting that, in those areas, (i) debris flow 1 is channelized into a man-made drainage system that is not detectable in the adopted DEM, and (ii) debris flow 4 is characterized by a retrogressive failure due to undercutting at the slope foot. Notably, for all the phenomena, the rings closest to the debris flow boundaries, ranging from −10 m to 0 m and from 0 m to 10 m, are highlighted as the most significant for the performance evaluation. The errors in these areas can be attributed to the spatial accuracy of both the model results and the adopted mapping.
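The ranking-based comparison can be sketched as follows: cells are sorted by decreasing computed probability, each distinct probability is used as a threshold, and the AUC is obtained with the trapezoidal rule. This is a generic ROC construction under the assumption that at least one cell lies inside and one outside the footprints; the function names and the six-cell example are hypothetical.

```python
def roc_points(probabilities, observed):
    """ROC points (FPR, TPR) obtained by lowering the probability threshold.

    probabilities: computed probability of debris flow presence per cell.
    observed: True where the cell belongs to a mapped footprint.
    Cells sharing the same probability enter the curve together.
    """
    pairs = sorted(zip(probabilities, observed), key=lambda pair: -pair[0])
    positives = sum(1 for flag in observed if flag)
    negatives = len(observed) - positives
    tp = fp = 0
    points = [(0.0, 0.0)]
    i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:
            if pairs[i][1]:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))
    return points

def area_under_curve(points):
    """Trapezoidal area under a curve given as ordered (x, y) points."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

# Hypothetical six-cell sample space, not data from the case study.
probs = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
obs = [True, True, False, True, False, False]
print(area_under_curve(roc_points(probs, obs)))
```

A simulation whose probabilities rank every footprint cell above every non-footprint cell reaches an AUC of 1, whereas a random ranking tends to 0.5, which is why the AUC offers a compact way to compare analyses run with different parameter sets.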

The proposed procedure, which includes the use of multiple performance indicators, clear rules for partitioning the sample space, and innovative graphical representations of the results, should be regarded as a tool for calibrating propagation models of landslides of the flow type. The procedure is mainly set up for applications at regional scale, as it assumes that debris flow propagation models can be effectively calibrated using only the footprints of past events and the DEM of the area where such events occurred. However, the performance indicators and the visual representations of the results introduced herein could also be profitably used when other relevant information on past flow-like landslides is available (e.g., velocity regime or mobilized volume), for instance in analyses performed with physically based models at local scale. The procedure could also potentially implement an optimization algorithm to search for the best parameter set, instead of comparing a predetermined number of simulations. It is important to highlight, however, that the comparison between the model outcome and the real event is based on a suite of parameters and performance indicators that cannot be looked at in isolation. In fact, the analyst must properly acknowledge the information value and the limitations of each single indicator, and must interpret the detailed information provided by the two proposed infographics. The role of “expert judgment” in the evaluation of the model results cannot, and indeed should not, be neglected. Combining a multi-objective optimization algorithm with expert judgment may be a more effective strategy to calibrate the input parameter set. However, such an implementation is computationally demanding, and evaluating it is beyond the scope of this paper.

Conclusions

Simulations of the post-failure stage of flow-like landslides are commonly calibrated considering single past occurrences and adopting qualitative expert judgment to assess the model results in relation to in situ evidence. In this study, a quantitative approach has been proposed to evaluate the performance of propagation models of flow-like landslides, considering multiple occurrences at regional scale. The approach has been validated using a case study of rainfall-induced debris flows in southern Italy and a model that simulates debris flow propagation probabilistically.

The main outcomes of the study are summarized below.

- At regional scale, the results of a propagation model for flow-like landslides can be assessed, and thus the model can be calibrated, using only information on the footprints of past flow-like landslide events.

- An innovative automated procedure in a GIS environment was devised to objectively process, at regional scale, footprint data over a sample space. The procedure has been effectively used for model calibration purposes in two ways: (i) to define a set of indicators of model performance that are based on a binary classification of the agreement between model outcome and real events, i.e., a contingency matrix, and (ii) to report the comparison between past flow-like landslides and numerical simulations by means of two sets of infographics.

- Multiple performance indicators must be used collectively to evaluate the results of a numerical analysis at regional scale. The evaluation must properly acknowledge the information value and the limitations of each single indicator. For instance, if TN cells are predominant in the sample space, as in the case study and as is often the case for flow-like landslide events, TNR and ACC are inadequate for evaluating model performance.

- The two infographics proposed in this study were effective in providing, for each considered debris flow of the case study, the model fit in different sectors along the propagation track and in different areas outside the footprint. Therefore, they can be considered useful visual tools to identify the relative magnitude and the main locations of model errors.

References

Aaron J, McDougall S, Nolde N (2019) Two methodologies to calibrate landslide runout models. Landslides 16:907–920. https://doi.org/10.1007/s10346-018-1116-8

Anderson E R, Griffin R E, Irwin D E (2016) Implications of different digital elevation models and preprocessing techniques to delineate debris flow inundation hazard zones in El Salvador Natural Hazard Uncertainty Assessment: Modeling and Decision Support 167–177. https://doi.org/10.1002/9781119028116.ch11

Arghya A B, Hawlader B, Guthrie R H (2022) A comparison of two runout programs for debris flow assessment at the Solalex-Anzeindaz region of Switzerland. In: Geohazards 8, Quebec City, pp 325–331

Brezzi L, Bossi G, Gabrieli F, Marcato G, Pastor M, Cola S (2016) A new data assimilation procedure to develop a debris flow run-out model. Landslides 13(5):1083–1096. https://doi.org/10.1007/s10346-015-0625-y

Calvello M, Cuomo S, Ghasemi P (2017) The role of observations in the inverse analysis of landslide propagation. Comput Geotech 92:11–21. https://doi.org/10.1016/j.compgeo.2017.07.011

Cascini L (2004) The flowslides of May 1998 in the Campania region, Italy: the scientific emergency management. Italian Geotechnical Journal 2:11–44

Cascini L, Cuomo S, Guida D (2008) Typical source areas of May 1998 flow-like mass movements in the Campania region, Southern Italy. Eng Geol 96:107–125. https://doi.org/10.1016/j.enggeo.2007.10.003

Cascini L, Cuomo S, Pastor M (2013) Inception of debris avalanches: remarks on geomechanical modelling. Landslides 10:701–711. https://doi.org/10.1007/s10346-012-0366-0

Cascini L, Cuomo S, Pastor M, Sorbino G (2010) Modeling of rainfall-induced shallow landslides of the flow-type. J Geotech Geoenviron Eng 136:85–98. https://doi.org/10.1061/ASCEGT.1943-5606.0000182

Cascini L, Cuomo S, Pastor M, Sorbino G, Piciullo L (2014) SPH run-out modelling of channelised landslides of the flow type. Geomorphology 214:502–513. https://doi.org/10.1016/j.geomorph.2014.02.031

Cascini L, Cuomo S, Sorbino G (2005) Flow-like mass movements in pyroclastic soils: remarks on the modelling of triggering mechanisms. Italian Geotech J 4:11–31

Cepeda J, Chávez JA, Cruz Martínez C (2010) Procedure for the selection of runout model parameters from landslide back-analyses: application to the Metropolitan Area of San Salvador, El Salvador. Landslides 7:105–116. https://doi.org/10.1007/s10346-010-0197-9

Ciurleo M, Cascini L, Calvello M (2017) A comparison of statistical and deterministic methods for shallow landslide susceptibility zoning in clayey soils. Eng Geol 223:71–81. https://doi.org/10.1016/j.enggeo.2017.04.023

Crescenzo L, Pecoraro G, Calvello M, Guthrie R H (2021) A probabilistic model for assessing debris flow propagation at regional scale: a case study in Campania region, Italy. In: EGU General Assembly Conference Abstracts, Online, pp 2224. https://doi.org/10.5194/egusphere-egu21-2224

Cuomo S, Calvello M, Villari V (2015) Inverse analysis for rheology calibration in SPH analysis of landslide run-out. In: Engineering geology for society and territory, 2 nd volume. Springer, Cham, pp 1635–1639 https://doi.org/10.1007/978-3-319-09057-3_291

Cuomo S, Ghasemi P, Martinelli M, Calvello M (2019) Simulation of liquefaction and retrogressive slope failure in loose coarse-grained material. Int J Geomech 19:04019116. https://doi.org/10.1061/(ASCE)GM.1943-5622.0001500

Cuomo S, Pastor M, Cascini L, Castorino GC (2014) Interplay of rheology and entrainment in debris avalanches: a numerical study. Can Geotech J 51:1318–1330. https://doi.org/10.1139/cgj-2013-0387

Dai Z, Huang Y, Cheng H, Xu Q (2014) 3D numerical modeling using smoothed particle hydrodynamics of flow-like landslide propagation triggered by the 2008 Wenchuan earthquake. Eng Geol 180:21–33. https://doi.org/10.1016/j.enggeo.2014.03.018

Depina I, Oguz EA, Thakur V (2020) Novel Bayesian framework for calibration of spatially distributed physical-based landslide prediction models. Comput Geotech 125:103660. https://doi.org/10.1016/j.compgeo.2020.103660

Fan X, Yang F, Siva Subramanian S, Xu Q, Feng Z, Mavrouli O, Ouyang PM, C, Jansen J D, Huang R, (2020) Prediction of a multi-hazard chain by an integrated numerical simulation approach: the Baige landslide, Jinsha River, China. Landslides 17:147–164. https://doi.org/10.1007/s10346-019-01313-5

Fawcett T (2006) An introduction to ROC analysis. Pattern Recogn Lett 27:861–874. https://doi.org/10.1016/j.patrec.2005.10.010

Feng SJ, Gao HY, Gao L, Zhang LM, Chen HX (2019) Numerical modeling of interactions between a flow slide and buildings considering the destruction process. Landslides 16:1903–1919. https://doi.org/10.1007/s10346-019-01220-9

Gao L, Zhang LM, Chen HX (2017) Two-dimensional simulation of debris flow impact pressures on buildings. Eng Geol 226:236–244. https://doi.org/10.1016/j.enggeo.2017.06.012

Guadagno FM, Forte R, Revellino P, Fiorillo F, Focareta M (2005) Some aspects of the initiation of debris avalanches in the Campania Region: the role of morphological slope discontinuities and the development of failure. Geomorphology 66:237–254. https://doi.org/10.1016/j.geomorph.2004.09.024

Guadagno F M, Revellino P, Grelle G (2011) The 1998 Sarno landslides: conflicting interpretations of a natural event. Italian J Eng Geol Environ 71–81. https://doi.org/10.4408/IJEGE.2011-03.B-009

Guo J, Cui Y, Xu W, Yin Y, Li Y, Jin W (2022) Numerical investigation of the landslide-debris flow transformation process considering topographic and entrainment effects: a case study. Landslides 19:773–788. https://doi.org/10.1007/s10346-021-01791-6

Guthrie RH, Befus A (2021) Debris flow predictor: an agent-based runout program for shallow landslides. Nat Hazard 21:1029–1049. https://doi.org/10.5194/nhess-21-1029-2021

Guthrie RH, Deadman PJ, Cabrera AR, Evans SG (2008) Exploring the magnitude–frequency distribution: a cellular automata model for landslides. Landslides 5:151–159. https://doi.org/10.1007/s10346-007-0104-1

Guthrie R H, Grasso K, Befus A (2022) A new landslide runout model and implications for understanding post wildfire and earthquake threats to communities in California. In: Lifelines 2022: Advancing Lifeline Engineering for Community Resilience, Los Angeles, pp 278–288. https://doi.org/10.1061/9780784484449.025

Hanley JA, McNeil BJ (1982) The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology 143:29–36. https://doi.org/10.1148/radiology.143.1.7063747

Hungr O, Evans SG, Bovis MJ, Hutchinson JN (2001) A review of the classification of landslides of the flow type. Environ Eng Geosci 7:221–238. https://doi.org/10.2113/gseegeosci.7.3.221

Hungr O, McDougall S (2009) Two numerical models for landslide dynamic analysis. Comput Geosci 35:978–992. https://doi.org/10.1016/j.cageo.2007.12.003

Kwan J S H, Sun H W (2007) Benchmarking exercise on landslide mobility modelling–runout analyses using 3dDMM. In: Proceedings of the 2007 International Forum on Landslide Disaster Management, Hong Kong, pp 945–966.

Lee S, An H, Kim M, Lim H, Kim Y (2022) A simple deposition model for debris flow simulation considering the erosion–entrainment–deposition process. Remote Sensing 14:1904. https://doi.org/10.3390/rs14081904

Li P, Shen W, Hou X, Li T (2019) Numerical simulation of the propagation process of a rapid flow-like landslide considering bed entrainment: a case study. Eng Geol 263:105287. https://doi.org/10.1016/j.enggeo.2019.105287

Liu W, Wang D, Zhou J, He S (2019) Simulating the Xinmo landslide runout considering entrainment effect. Environmental Earth Sciences 78:1–16. https://doi.org/10.1007/s12665-019-8596-2

Llano-Serna MA, Farias MM, Pedroso DM (2016) An assessment of the material point method for modelling large scale run-out processes in landslides. Landslides 13:1057–1066. https://doi.org/10.1007/s10346-015-0664-4

Lo CM, Lin ML, Tang CL, Hu JC (2011) A kinematic model of the Hsiaolin landslide calibrated to the morphology of the landslide deposit. Eng Geol 123:22–39. https://doi.org/10.1016/j.enggeo.2011.07.002

McDougall S (2017) 2014 Canadian Geotechnical Colloquium: landslide runout analysis—current practice and challenges. Can Geotech J 54:605–620. https://doi.org/10.1139/cgj-2016-0104

Metz CE (1978) Basic principles of ROC analysis. In: Seminars in nuclear medicine, WB Saunders, pp 283–298. https://doi.org/10.1016/S0001-2998(78)80014-2

Pastor M, Blanc T, Haddad B, Drempetic V, Morles MS, Dutto P, Martin Stickle M, Mira P, Fernández Merodo JA (2015) Depth averaged models for fast landslide propagation: mathematical, rheological and numerical aspects. Arch Comput Methods Eng 22:67–104. https://doi.org/10.1007/s11831-014-9110-3

Pastor M, Blanc T, Haddad B, Petrone S, Sanchez Morles M, Drempetic V, Issler D, Crosta GB, Cascini L, Sorbino G, Cuomo S (2014) Application of a SPH depth-integrated model to landslide run-out analysis. Landslides 11:793–812. https://doi.org/10.1007/s10346-014-0484-y

Picarelli L, Olivares L, Damiano E, Darban R, Santo A (2020) The effects of extreme precipitations on landslide hazard in the pyroclastic deposits of Campania Region: a review. Landslides 17:2343–2358. https://doi.org/10.1007/s10346-020-01423-5

Piciullo L, Calvello M, Cepeda JM (2018) Territorial early warning systems for rainfall-induced landslides. Earth Sci Rev 179:228–247. https://doi.org/10.1016/j.earscirev.2018.02.013

Pontius RG Jr, Si K (2014) The total operating characteristic to measure diagnostic ability for multiple thresholds. Int J Geogr Inf Sci 28:570–583. https://doi.org/10.1080/13658816.2013.862623

Santangelo N, Forte G, De Falco M, Chirico GB, Santo A (2021) New insights on rainfall triggering flow-like landslides and flash floods in Campania (Southern Italy). Landslides 18:2923–2933. https://doi.org/10.1007/s10346-021-01667-9

Wasklewicz T, Guthrie R H, Eickenberg P, Kramka B (2022) Lessons learned from the local calibration of a debris flow model and importance to a geohazard assessment. In: Geohazard VIII, Quebec, p 5

Zhao L, Liu X, Mao J, Shao L, Li T (2020) Three-dimensional distance potential discrete element method for the numerical simulation of landslides. Landslides 17:361–377. https://doi.org/10.1007/s10346-019-01282-9

Funding

Open access funding provided by Università degli Studi di Salerno within the CRUI-CARE Agreement.

Ethics declarations

Conflict of interest

The authors declare no competing interests.

Appendix: Derivation of the distance from uninformed classifier (DUC) index

The distance between a point M and a line r is the shortest distance between M and r. Let us consider as line r the random line in the TOC space, and let M be a point representing the result of a generic analysis. To define the distance between the point M and the line r, a straight line can be drawn through M perpendicular to r (Fig. 13a).

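The line r can be expressed in explicit form as:

$$ r:\; y = m x + q $$

where m is the slope of the line and q is the y-axis intercept.

First step: calculation of the normal line to r. By definition, if two lines are perpendicular, the slope of each is the negative reciprocal of the slope of the other; thus the slope of the normal line to r, hereafter n, is:

$$ m_n = -\frac{1}{m} $$

Second step: calculation of the intersection point between r and n. Since n passes through M = (x_M, y_M) with a known slope, its equation can be written as follows:

$$ n:\; y - y_M = -\frac{1}{m}\,(x - x_M) $$

The intersection point I = (x_I, y_I) between the two lines must satisfy both conditions at the same time:

$$ \begin{cases} y_I = m\,x_I + q \\ y_I - y_M = -\dfrac{1}{m}\,(x_I - x_M) \end{cases} $$

Substituting the first equation into the second one:

$$ m\,x_I + q - y_M = -\frac{1}{m}\,(x_I - x_M) \quad\Rightarrow\quad x_I = \frac{x_M + m\,(y_M - q)}{1 + m^2} $$

Inserting the x coordinate of the intersection point into the first equation:

$$ y_I = m\,x_I + q = \frac{m\,x_M + m^2\,y_M + q}{1 + m^2} $$

The intersection point coordinates are the following:

$$ I = \left( \frac{x_M + m\,(y_M - q)}{1 + m^2},\; \frac{m\,x_M + m^2\,y_M + q}{1 + m^2} \right) $$

Third step: calculation of the distance between the points M and I.

$$ d = \sqrt{(x_M - x_I)^2 + (y_M - y_I)^2} = \frac{\lvert m\,x_M - y_M + q \rvert}{\sqrt{1 + m^2}} $$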
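The first three steps amount to the standard construction of the distance between a point and a line through the foot of the perpendicular. As a numerical check, the minimal sketch below computes the intersection point and compares the Euclidean distance with the closed-form expression; the function names and the test values are hypothetical.

```python
import math

def foot_of_perpendicular(m, q, x_m, y_m):
    """Intersection I between r: y = m*x + q and its normal through M."""
    x_i = (x_m + m * (y_m - q)) / (1 + m * m)
    y_i = m * x_i + q
    return x_i, y_i

def distance_point_line(m, q, x_m, y_m):
    """Closed-form distance between M = (x_m, y_m) and r: y = m*x + q."""
    return abs(m * x_m - y_m + q) / math.sqrt(1 + m * m)

# Hypothetical line and point chosen so that the arithmetic is easy to follow.
m, q = 0.5, 1.0
x_m, y_m = 4.0, 8.0
x_i, y_i = foot_of_perpendicular(m, q, x_m, y_m)
print((x_i, y_i))
print(math.hypot(x_m - x_i, y_m - y_i), distance_point_line(m, q, x_m, y_m))
```

For this example, the segment from M to I has slope −2, i.e., the negative reciprocal of m = 0.5, and the two distance values coincide.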

Fourth step: specialization of the expression obtained to the TOC space. The equation of the random line, r, can be expressed in the TOC space (Fig. 13b) as follows:

$$ r:\; y = m x $$

where

$$ m = \frac{TP + FN}{TP + FN + FP + TN}, \qquad q = 0 $$

A generic point M, representative of the model result, has the following coordinates:

$$ M = (x_M,\, y_M) = (TP + FP,\; TP) $$

The distance between the model point M and the random line can be expressed as follows:

$$ d_M = \frac{\lvert m\,(TP + FP) - TP \rvert}{\sqrt{1 + m^2}} $$

Fifth step: normalization of the model point distance. Since the TOC space is not a normalized space, the distance obtained from the previous equation depends on the size of the sample space. Therefore, a comparison between different models is not straightforward. To define an index whose value ranges between 0 and 1, the distance d_M can be normalized by the maximum distance attainable in the TOC space, d_A, which is the distance between the perfect classifier (point A, with coordinates (TP + FN, TP + FN)) and the random line:

$$ d_A = \frac{(1 - m)\,(TP + FN)}{\sqrt{1 + m^2}} $$

Thus, the distance from uninformed classifier (DUC) is the following:

$$ DUC = \frac{d_M}{d_A} $$

It can be demonstrated that the DUC calculated in ROC space has the same value as the DUC computed in TOC space.
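A short sketch of why the two values coincide is given here. It is not part of the original derivation and assumes the usual conventions: TOC axes x = TP + FP and y = TP, ROC axes x = FPR and y = TPR, with P = TP + FN and N = FP + TN introduced as shorthand.

$$
\begin{aligned}
\text{TOC space:}\quad & m = \frac{P}{P+N}, \qquad
d_M = \frac{\lvert TP - m\,(TP + FP) \rvert}{\sqrt{1+m^2}}, \qquad
d_A = \frac{P\,(1-m)}{\sqrt{1+m^2}} \\
& DUC_{TOC} = \frac{\lvert TP\,(P+N) - P\,(TP+FP) \rvert}{P\,N}
= \frac{\lvert TP \cdot N - FP \cdot P \rvert}{P\,N}
= \lvert TPR - FPR \rvert \\[4pt]
\text{ROC space:}\quad & r:\; y = x, \qquad M = (FPR,\, TPR), \qquad A = (0,\, 1) \\
& d_M = \frac{\lvert TPR - FPR \rvert}{\sqrt{2}}, \qquad
d_A = \frac{1}{\sqrt{2}}, \qquad
DUC_{ROC} = \lvert TPR - FPR \rvert
\end{aligned}
$$

Under these assumptions, both expressions reduce to the difference between the true positive rate and the false positive rate, so the index does not depend on the space in which it is computed.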

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Crescenzo, L., Guthrie, R. & Calvello, M. Evaluating the performance of propagation models of flow-like landslides at regional scale. Landslides 21, 229–243 (2024). https://doi.org/10.1007/s10346-023-02162-z

DOI: https://doi.org/10.1007/s10346-023-02162-z