Abstract

This study presents an approach to two-pulse 3D particle tracking using methods developed within the Shake-The-Box (STB, Schanz et al. in Exp Fluids 57:70, 2016) Lagrangian particle tracking (LPT) framework. The original STB algorithm requires time-resolved data and reconstructs 3D trajectories using a particle position prediction–correction scheme. However, dual-frame 3D acquisition systems, consisting of a dual-cavity laser and double-frame cameras, remain commonly used for many particle-image-based investigations in a wide range of flow velocities and applications. While such systems can be used to capture short Multi-Pulse particle trajectories (Multi-Pulse STB, MP-STB—Novara et al. in Exp Fluids 57:128, 2016a; Novara et al. in Exp Fluids 60:44, 2019), the most widespread application is still a single-pulse illumination of each of the two available frames. As a consequence, 3D LPT approaches capable of dealing with two-pulse recordings are of high interest for both the scientific community and industry. Several methods based on various evaluation schemes have been developed in the past. In the present study, a Two-Pulse Shake-The-Box approach (TP-STB) is proposed, based on the advanced IPR algorithm presented by Jahn et al. (Exp Fluids 62:179, 2021), in combination with an iterative scheme of reconstruction and tracking, ideally with the help of a predictor gained by Particle Space Correlation. It basically constitutes a lean version of the MP-STB technique, with lower demands on experimental setup and processing time. The performances of TP-STB are assessed by means of comparison with the results from the time-resolved STB algorithm (TR-STB) both concerning synthetic and experimental data. The suitability of the algorithm for the analysis of dual-frame 3D particle imaging datasets is assessed based on the processing of existing images from a tomographic PIV experiment from 2012. The comparison with the results published by Henningsson et al. (J R Soc Interface 12:20150119, 2015) confirms the capability of TP-STB to accurately reconstruct individual particle tracks despite the limited time-resolution information offered by two-frame recordings.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

The problem of reconstructing the three-dimensional positions of particle flow tracers from their projections on multiple cameras lies at the heart of several 3D particle-image-based velocimetry and Lagrangian particle tracking (LPT) measurement techniques (Schröder and Schanz 2023).

While cross-correlation-based techniques such as tomographic-PIV (Tomo-PIV, Elsinga et al. 2006) make use of algebraic methods (e.g., MART, Herman and Lent 1976) to reconstruct particles as intensity peaks in a discretized voxel space, triangulation-based methods (3D-PTV, Nishino et al. 1989, Maas et al. 1993 and Iterative Particle Reconstruction, IPR, Wieneke 2013, Jahn et al. 2021) leverage epipolar geometry to reconstruct individual particles as positions and intensity values in the 3D domain.

Despite the inherent differences concerning accuracy, robustness and computational cost between the two approaches, in both cases the 3D reconstruction represents a bottleneck when the spatial resolution (i.e., particle image density, indicated in particles-per-pixel, \(\mathrm{ppp}\)) of the measurement is considered.

In fact, as the number of particles to be reconstructed increases (assuming constant properties of the imaging system), the reconstruction process becomes increasingly difficult due to the underdetermined nature of the problem. This typically results in a lower number and positional accuracy of the reconstructed particles, as well as an increasing number of spurious particles (ghost particles, Elsinga et al. 2011) which affect the accuracy of the measurement.

As a consequence, during the last decade, several methods have been developed to increase the performances of the reconstruction technique; an overview of these developments can be found in Scarano (2012) and Jahn et al. (2021). Among these methods, a number of techniques have been proposed to improve the accuracy of the reconstruction of instantaneous recordings by exploiting the coherence of the particle tracers moving with the flow over two or more realizations in the recording sequence.

Concerning cross-correlation-based methods, the Motion Tracking Enhancement technique (MTE, Novara et al. 2010) proposed the combined use of two or more recordings (for double-frame and time-resolved acquisition, respectively) to produce an enhanced initial guess for the Tomo-PIV algebraic reconstruction algorithm; for time-resolved recording sequences, a time-marching approach was introduced by Lynch and Scarano (2015) (sequential MTE, SMTE).

On the other hand, when Lagrangian particle tracking techniques are considered, the Shake-The-Box algorithm (STB, Schanz et al. 2013b, 2016) introduced a predictor/corrector scheme to integrate the temporal domain into the IPR-based reconstruction process. While Wieneke (2013) reported \(0.05\text{ ppp}\) as an upper limit for IPR (already one order of magnitude larger than typically employed for 3D-PTV single-pass triangulation), STB can deliver practically ghost-free tracks at particle image densities \({N}_{\mathrm{I}}\) exceeding \(0.15\text{ ppp}\) (Huhn et al. 2017; Bosbach et al. 2019); particle image densities up to \({N}_{\mathrm{I}}=0.2\text{ ppp}\) have been successfully tackled for synthetic data (Sciacchitano et al. 2021). The combination of accurate LPT results from STB and data assimilation algorithms (FlowFit, Gesemann et al. 2016, VIC+, Schneiders and Scarano 2016) allows to further enhance the spatial resolution of the measurement and provides access to the spatial gradients (i.e., flow structures) and to the instantaneous 3D pressure field.

When high-speed flows are considered, time-resolved sequences of recordings are not available due to the frequency limitation of current acquisition systems. In order to overcome this limitation and extend the advantages of STB to higher flow velocities, the use of multi-pulse systems (i.e., capturing four illuminations with a dual imaging setup) in combination with an iterative STB approach (Multi-Pulse Shake-The-Box, MP-STB) was proposed by Novara et al. (2016a); an iterative strategy based on the sequential application of IPR and particle tracking is employed to progressively reduce the complexity of the reconstruction problem. The use of multi-exposed frames (Novara et al. 2019) allows to acquire multi-pulse recordings for MP-STB with a single imaging system.

While performing LPT with STB requires either the availability of time-resolved recordings (TR-STB) or the use of a relatively complex multi-pulse setup for MP-STB, dual-frame 3D acquisition systems, consisting in a dual-cavity laser and a system of double-frame cameras, are commonly used for many particle-image-based investigations in a wide range of flow velocities and applications. As a consequence, particle tracking methods for two-pulse recordings are of high interest for the scientific community as they allow to extend the benefits of accurate LPT analysis to a wider range of applications and users and enable the processing of existing datasets recorded with dual-frame systems (i.e., older Tomo-PIV experiments).

These considerations motivated a number of attempts to perform Lagrangian particle tracking with dual-frame recordings. Fuchs et al. (2016) proposed a hybrid method that leverages tomographic PIV and 3D particle tracking. An iterative algorithm based on IPR reconstruction, 3D cross-correlation and two-pulse tracking was used by the DLR Göttingen group to analyze the single-exposed double-frame recording of Case C of the 4th International PIV Challenge in 2014 (Kähler et al. 2016). The same synthetic dataset was analyzed by Jahn et al. (2017) adopting the simultaneous IPR reconstruction of the two frames combined with a particle-matching-based filtering technique. A non-iterative 2D/3D particle tracking velocimetry algorithm was proposed by Fuchs et al. (2017); the authors reported that the technique was successful in tackling images with a particle image density up to \(0.06\text{ ppp}\). Lasinger et al. (2020) presented a variational approach to jointly reconstruct the individual tracer particles and dense 3D velocity fields. A novel technique for performing 3D-PTV from double-frame images (DF-TPTV) has been proposed by Cornic et al. (2020) where particle tracers are reconstructed on a voxel grid with a sparsity-based method and then tracked with the aid of a low-resolution displacement predictor from correlation; the algorithm has been applied to experimental data from a round jet in air at \(0.06\text{ ppp}.\)

Recently, an advanced version of the IPR algorithm has been proposed (Jahn et al. 2021), which significantly improves the performances of the reconstruction; in synthetic test cases, single recordings can be reconstructed up to a particle image density of \(0.14\text{ ppp}\) with a very low occurrence of ghost particles even at realistic conditions concerning the image noise.

In the present study, a Two-Pulse STB approach (TP-STB) is presented which makes use of the enhanced IPR algorithm combined with the iterative STB strategy commonly adopted for MP-STB (Novara et al. 2016a, 2019), and aided by velocity field prediction from Particle Space Correlation (PSC, Novara et al. 2016b). The TP-STB algorithm was already successfully applied to analyze two-pulse synthetic images from the First Challenge on Lagrangian Particle Tracking and Data Assimilation (Leclaire et al. 2021), conducted in the framework of the European Union’s Horizon 2020 project HOMER (Holistic Optical Metrology for Aero-Elastic Research). Results presented in Sciacchitano et al. (2021) showed that, using TP-STB, nearly the totality of particle tracers could be reconstructed up to \(0.16\text{ ppp}\), with an almost negligible number of spurious ghost particles and a very high particle position accuracy (positional mean error magnitude of approximately \(0.06\text{ px}\)).

In the present study the working principle and processing scheme of TP-STB are thoroughly described and its performances further characterized.

The TP-STB algorithm is presented in Sect. 2. A performance assessment is carried out in Sect. 3 based on the application of TP-STB to two recordings from a time-resolved sequence, where the TR-STB results provide a reference for the ground-truth solution. Both synthetic data from a significantly more challenging (higher image noise level) database generated within the HOMER project (Sciacchitano et al. 2022) and experimental data from a Rayleigh Bénard convection investigation (Weiss et al. 2022) have been used for the assessment (Sects. 3.1, 3.2, respectively). Finally, the TP-STB algorithm is applied to an existing dataset from a double-frame tomographic PIV investigation of the wake flow behind a flying desert locust (Henningsson et al. 2015); results from the LPT analysis, as well as the comparison with velocity fields from Tomo-PIV are presented in Sect. 4.

2 Iterative STB for two-pulse recordings

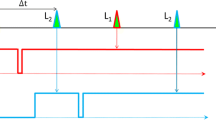

The iterative particle reconstruction/tracking strategy for TP-STB is shown in Fig. 1-left; the working principle of the algorithm is based on the MP-STB processing technique from Novara et al. (2016a, 2019). The 3D particle positions and intensities are reconstructed by means of advanced IPR (Jahn et al. 2021) for both recordings in the two-pulse sequence. Then, two-pulse tracks are identified, possibly with the aid of a velocity field predictor, between the two reconstructed 3D particle fields (see Sect. 2.1).

As the position of ghost particles mainly depends on geometrical properties (i.e., relative position of the tracers with respect to the cameras lines-of-sight), depending on the spatial velocity gradients in the investigated domain, the displacement of spurious particles is typically not coherent with the flow field (Elsinga et al. 2011). For this reason, only the particles that can be tracked over the two recordings are retained; unmatched particles (in gray in Fig. 1-left), possibly ghosts, are discarded (i.e., filtering step).

The positions of the retained particles are back-projected onto the image plane (projected images) and subtracted from the original recordings (recorded images) to obtain the residual images; the IPR reconstruction, tracking step and evaluation of projected/residual images constitute a single STB iteration. The residual images are used as input for another TP-STB iteration, starting with an IPR reconstruction of the residual particle field.

Assuming that a number of tracks can be successfully identified in the first STB iteration, the residual images will exhibit a lower particle image density than the original recordings, therefore offering a progressively easier reconstruction problem for the following iterations. If a particle is erroneously discarded during the filtering step (e.g., untracked particles due to insufficiently large search radii or to an inaccurate displacement predictor field) the related particle image will remain on the residual images, making it possible for the particle to be reconstructed again and tracked at a subsequent iteration. The two-pulse tracks identified in each iteration are added to the ones already known and all will be used to determine the residual images for yet another iteration.

The number of STB iterations required to achieve convergence depends on the experimental and imaging conditions (i.e., particle image density and diameter, number of cameras, image quality); the effect of this processing parameter is discussed in the performance assessment (Sect. 3).

2.1 Particle tracking strategy

The two-pulse particle tracking strategy is shown in Fig. 1-right. A search area is established around the reconstructed particles in the first pulse (\({t}_{1}\)); if a particle from \({t}_{2}\) is found within the search area, a track candidate is identified. If a predictor for the displacement field is available (orange arrow in Fig. 1-right), the search radius \({\delta }_{2p}\) can be reduced to avoid ambiguities.

For each candidate, a cost function is defined as the standard deviation of the particle intensity along the track candidate (\({\sigma }_{\mathrm{I}}\)); if a velocity predictor is available, the magnitude difference between the velocity estimated from the track candidate and that from the predictor (\({\varepsilon }_{\mathrm{pred}}\)) is integrated in the cost function. The relative contribution of these two parameters to the cost function can be weighted based on the confidence in the particle peak intensity consistency and in the accuracy of the predictor field.

Two-pulse tracks are obtained by filtering the track candidates to solve possible ambiguities; among multiple candidates sharing the same particle, the one which minimizes the cost function is retained, while the others are discarded.

An additional filtering of the tracks can be applied by defining maximum accepted values for the cost function terms; tracks exhibiting values exceeding these thresholds (\({\sigma }_{\mathrm{I}}^{\mathrm{max}}\) and \({\varepsilon }_{\mathrm{pred}}^{\mathrm{max}}\)) are discarded from the reconstruction in order to avoid possible outliers.

For the first TP-STB iteration, an estimate of the instantaneous 3D velocity field to be used as a displacement predictor can be obtained by analyzing the two 3D point clouds reconstructed from IPR with the Particle Space Correlation algorithm (PSC, see Sect. 2.2).

The PSC consists of a 3D cross-correlation approach in the particle space; unlike for tomographic PIV, where the cross-correlation is applied to the voxel space, the PSC makes use only of the discrete particle locations and intensities as obtained from IPR. A trilinear interpolation is used to evaluate the predicted displacement from the PSC result at the location of the reconstructed particles (orange arrow in Fig. 1-right).

Due to the large cross-correlation volumes, the resulting velocity field from PSC (\({u}_{\mathrm{PSC}}\)) is typically strongly modulated; for subsequent STB iterations, a displacement predictor can be obtained by interpolating the scattered velocity measurements from the tracks identified in the previous iterations (\({u}_{\mathrm{tracks}}\)). On the other hand, a constant shift can be used as a displacement predictor (\({u}_{\mathrm{const}}\)); \({u}_{\mathrm{const}}=0\) corresponds to a situation where no displacement predictor is used (Fig. 1-right-top).

A different set of particle tracking parameters (i.e., search radius \({\delta }_{2p}\), cost function parameters \({\sigma }_{\mathrm{I}}\text{, }{\varepsilon }_{\mathrm{pred}}\), relative weights and threshold values (\({\sigma }_{\mathrm{I}}^{\mathrm{max}}\), \({\varepsilon }_{\mathrm{pred}}^{\mathrm{max}}\)), the use and choice of velocity predictor (\({u}_{\mathrm{const}}, {u}_{\mathrm{PSC}}, {u}_{\mathrm{tracks}}\))) can be employed for each TP-STB iteration, depending on the experimental conditions and image quality. Alternatively, multiple tracking iterations can be performed on the same reconstructed particle fields, typically adopting increasingly relaxed tracking parameters as the predictor field from PSC is replaced by the more accurate result from the interpolation of tracks reconstructed at previous tracking iterations. This option is typically adopted in case a single TP-STB iteration is performed.

2.2 Particle Space Correlation

A key aspect of the tracking strategy of the Two-Pulse STB algorithm is the availability (or the lack) of a predictor for the particle displacement. As illustrated in Fig. 1-right, the use of an educated guess for the particle position in the second frame allows for the reduction of the search radius employed for the nearest neighbor search during the particle matching step. A smaller search area greatly reduces the possible ambiguities in the determination of the particle tracks candidate, resulting in a lower chance in creating spurious tracks (ghosts), particularly in high particle image density conditions.

While tomographic reconstruction and 3D cross-correlation would surely accomplish the purpose of providing a low spatial resolution estimate to be used as a predictor, the computational cost of the dense voxel-based reconstruction approach would nullify the benefits offered by LPT approaches in terms of computational efficiency.

As a result, in the framework of the development of the Multi-Pulse STB algorithm (which provided the basis for the current two-pulse approach) Novara et al. (2016b) introduced the Particle Space Correlation (PSC) method. The PSC makes use only of the particle peak locations and intensities as obtained from IPR to provide a lower resolution velocity field to be used as a predictor for particle tracking.

The PSC technique relies on a cross-correlation approach similar to that typically used in 3D PIV; after the IPR reconstruction of the two pulses (frame 1 and 2 at times \({t}_{1}\) and \({t}_{2}\), respectively), a Cartesian grid is defined. For each grid-point, a 3D interrogation volume is established and the particles belonging to such volume are identified in the two IPR objects.

For each particle in the first frame, the possible displacements (Fig. 2-left) are computed as:

where \(i\) and \(j\) indicate the particles at \({t}_{1}\) and \({t}_{2}\), respectively, and \(k\) the number of possible matches.

Working principle of the Particle Space Correlation in a reduced-dimensionality 2D example. Left: particles within the interrogation volume in the first and second frame (in black and gray, respectively). Right: map of the possible particle displacements; \({\delta }_{s}\) indicates the search radius while the black dotted circle the clustering around the most probable displacement (black arrow)

For each particle pair, the product of the particle peak intensities is computed as:

For each interrogation volume the possible displacements are collected into a 3D map (2D in the example in Fig. 2-right) in the space of the particle shifts. Assuming that the particles within the interrogation volumes exhibit a rather uniform displacement, a cluster of points in the map of the shifts indicates the location of the most probable displacement.

The search for the location of the cluster is limited to a search area defined by the search radius \({\delta }_{s}\); by doing so, the size of the search area is decoupled from that of the interrogation volume, allowing for a larger number of particles to contribute to the same peak without resulting in a significant increase of the computational cost needed to identify the cluster.

In the current implementation of the PSC, the search for the cluster position is performed by discretizing the search area in cubic elements, typically with an edge length of a pixel in world-space (a voxel); a Gaussian blob, typically of \(3\times 3\times 3\) elements and with a peak value proportional to \({I}_{k}^{*}\), is positioned at each shift location (orange dots in Fig. 2-right). By adding the contribution of each blob to the discretized map a peak is formed at the most probable displacement location; the signal-to-noise ratio is enhanced thanks to the \({I}_{k}^{*}\) factor which ensures a higher weight for the contributions of the brighter particles. As for typical PIV evaluations, a Gaussian fit of the cross-correlation peak is applied to retrieve the three components of the displacement vector with sub-pixel accuracy.

An alternative method for the detection of the cluster location in the map of the shifts can be envisioned. A clustering algorithm (HDBSCAN, McInnes et al. 2017), together with a weight of each point based on the \({I}_{k}^{*}\) factor, could be employed to identify the position of the most probable displacement cluster without the need for a computationally expensive discretization of the search volume.

Nevertheless, the computational cost of the PSC, even with the discretization approach, is still not comparable to that of the Tomo-PIV analysis, as no dense reconstruction of the particle field on a voxel grid is required.

An iterative procedure analogous to the volume deformation multi-grid cross-correlation technique (VODIM, Scarano and Poelma 2009) is applied to progressively reduce the interrogation volume size, therefore increasing the spatial resolution of the velocity field from PSC. Unlike for the case of a dense voxel intensity field, the volume deformation step within the PSC is not computationally expensive, as the individual particle peak locations are simply shifted according to the previously estimated velocity field.

As a consequence, a quick trilinear interpolation is required, as opposed to a costly interpolation of the full voxel space. Furthermore, the search radius is progressively reduced after the first iteration, which further reduces the computational cost.

3 Performance assessment: comparison with time-resolved STB

Typically, the performances of a novel algorithm are assessed by applying it to a dataset where the ground-truth solution is known (i.e., synthetically generated dataset).

On the other hand, the results presented in Sciacchitano et al. 2021 concerning the assessment of STB applied to time-resolved recordings, show that TR-STB is capable to provide a close-to-perfect reconstruction up to \(0.2\text{ ppp}\) with a very low number of ghost particles (\(<0.1\%\)) and a high particle peak positional accuracy (positional mean error magnitude \(<0.05\text{ px}\)).

As a consequence, it can be assumed that, under a wide range of particle image densities and imaging conditions, the results offered by TR-STB provide an accurate reference for the actual ground-truth 3D particle distribution. Therefore, in the present study, instead of creating a dedicated synthetic test-dataset, the performance assessment of TP-STB is carried out by analyzing a two-pulse recording sequence extracted from a longer acquisition, where the reference solution is obtained from a TR-STB analysis in the converged state (Schanz et al. 2016).

This approach offers the twofold advantage of allowing the assessment of the performances of TP-STB based on a synthetic dataset where the actual ground-truth is not known (HOMER internal database, Sciacchitano et al. 2022, Sect. 3.1)—and therefore without any bias due to pre-knowledge of parameters—and on experimental data from a time-resolved 3D investigation (Rayleigh Bénard convection, Weiss et al. 2022). The experimental images from the RBC investigation are of a superior quality when compared to those typically encountered in air experiments. In order to also assess the performance on TP-STB in more demanding imaging conditions, we use a synthetic test case that exhibits a high noise level and tracers’ diameter polydispersity. Additionally, we include another experimental case with images from a dual-frame investigation in air (see Sect. 4).

3.1 Synthetic dataset: HOMER Lagrangian particle tracking database

A detailed description of the HOMER LPT database can be found in Sciacchitano et al. (2022); synthetic images from a 3D imaging system have been generated based on a simulation of the air flow around a cylinder in ground effect, where the wall contains a flexible oscillating panel. Among the several cases within the database, a time-resolved sequence of recordings from a four-camera system is produced, where particle images with a significant noise level have been generated at particle image densities of \({N}_{\mathrm{I}}=0.05\text{ ppp}\) and \({N}_{\mathrm{I}}=0.12\text{ ppp}\). For each particle image density, a two-pulse sequence is analyzed by means of TP-STB; as mentioned above, performances in terms of track yield and reconstruction positional accuracy are assessed against a result extracted from a converged time-resolved STB (Schanz et al. 2016) analysis.

The simulated free-stream velocity \({V}_{\infty }\) is \(10 \, \mathrm{m}/\mathrm{s}\), the cylinder has a diameter \(D\) of \(10 \, \mathrm{mm}\) and it is located \(15\text{ mm}\) upstream of the upstream edge of the \(100\times 100\text{ m}{\text{m}}^{2}\) panel at a distance of \(10 \, \mathrm{mm}\) from the undeformed wall location (\(Z=0 \, \mathrm{mm}\)). The \(X\) axis is aligned with the streamwise direction, the wall-normal \(Z\) axis is directed away from the wall and the spanwise \(Y\) axis orientation follows the right-hand rule.

The measurement volume spans \(100\times 100\times 30 \, {\mathrm{mm}}^{3}\) in the \(X, Y\) and \(Z\) directions, respectively; the \(1920\times 1200\text{ px}\) camera sensors have a pixel pitch of \(10 \, \mathrm{\mu m}\). The four cameras are arranged in in-line configuration with viewing angles of \(-30^\circ , -10^\circ , +10^\circ\) and \(+30^\circ\) with respect to the \(Z\) axis; the average digital resolution is approximately \(10.94 \, \mathrm{px}/\mathrm{mm}\). The time-resolved sequence (TR) contains \(251\) recordings with a time separation between pulses of \(40 \, \mathrm{\mu s}\) (recordings 183–184 have been chosen for the TP-STB analysis); the maximum particle image shift is approximately \(4.4\text{ px}\).

The relatively low particle displacement between the two recordings would allow for the selection of a larger interval between the instants chosen for the TP-STB analysis (i.e., recordings 183–185 for \(\Delta t=80 \, \mathrm{\mu s}\) and maximum shift of \(8.8 \, \mathrm{px}\), or recordings 183–186 for \(\Delta t=120 \, \mathrm{\mu s}\) and maximum shift of \(13.2 \, \mathrm{px}\)). However, for sake of comparison with the results reported by Sciacchitano et al. (2022), the authors decided to keep the time separation originally proposed for the two-pulse analysis (\(\Delta t=40 \, \mathrm{\mu s}\)); the capability of TP-STB to tackle more realistic particle shifts typical of dual-frame investigations (10–15 px)are demonstrated for the experimental applications presented in Sects. 3.2 and 4, where the maximum particle displacement is approximately \(11\) and \(10.5\text{ px}\), respectively.

Approximately \(\mathrm{59,400}\) and \(\mathrm{140,000}\) tracks are identified by the TR-STB analysis for the \(0.05\) and \(0.12\text{ ppp}\) cases; details on the TR-STB analysis can be found in Sciacchitano et al. (2022).

A detail of the original particle images for camera 2 is presented in Fig. 3 for both particle image densities. The position of the detected particle images peaks is indicated with red markers (approximately \(100\) and \(140\) peaks for \(0.05\) and \(0.12\text{ ppp}\), respectively), while the locations of the back-projection of the particles tracked by TR-STB is marked in blue (approximately \(150\) and \(330\) particles for \(0.05\) and \(0.12\text{ ppp}\), respectively). The lower number of detected peaks, particularly for the higher seeding density case, confirms how peak detection algorithms, as well as the human eye, struggle in identifying individual peaks where a significant particle image overlap occurs. The very low percentage of ghost tracks in the reference TR-STB reconstruction (0.1 and 0.9% for the low and high seeding density cases, respectively—Table 3 in Sciacchitano et al. 2022) ensures that no significant portion of the back-projected peaks are caused by spurious particles in the reconstruction.

To counteract the relatively high image noise level, a constant value of \(100\text{ counts}\) is subtracted from the recorded images before TP-STB processing. The main processing parameters are summarized in Table 1; the TP-STB analysis was carried out applying \(11\) STB iterations. The IPR settings have been optimized for the \(0.12\text{ ppp}\) case and applied for the lower seeding density as well for sake of consistency and ease of description; however, for the \(0.05\text{ ppp}\) case a leaner processing would have sufficed to produce results of the same quality.

A single 3D particle field is reconstructed in about 1 and 2 min for \(0.05\) and \(0.12 \, \mathrm{ppp}\), respectively; as the particle image density on the residual images decreases with the STB iterations so does the IPR processing time, depending on the fraction of tracks successfully reconstructed in the previous iterations. For a detailed description of the IPR algorithm and settings used within the TP-STB the authors refer to Jahn et al. 2021.

The displacement field predictor was estimated by PSC with a final cross-correlation volume of \(20\times 20\times 5\text{ px}\) (approximately \(1.8\times 1.8\times 0.45 \, {\mathrm{mm}}^{3}\)). The search for two-pulse track candidates was conducted without the aid of a predictor field (search predictor in Table 1 set to \({u}_{\mathrm{const}}=0\)); instead, a large enough search radius (\(8 \, \mathrm{px}\)) was used which ensures that even the largest particles displacements with the time separation between the pulses can be captured. On the other hand, the displacement predictor fields \({u}_{\text{PSC}}\) and \({u}_{\mathrm{tracks}}\) have been used to evaluate the cost function term \({\varepsilon }_{\mathrm{pred}}\) (residual predictor in Table 1); a linearly increasing value from 1 to 10 px of \({\varepsilon }_{\mathrm{pred}}^{\mathrm{max}}\) is used to filter out possible outliers.

The performance assessment of the TP-STB results follows the same approach presented in Sciacchitano et al. (2021, 2022); a reconstructed particle is considered correct (i.e., hit) if a reference particle (from TR-STB) is found within a radius of \(1 \, \mathrm{px}\). On the other hand, a particle is considered a ghost either when no reference particle is present in its vicinity, or when a found reference particle has already been matched to a closer reconstructed particle (i.e., when two particles are reconstructed near a reference particle, the closest one is labeled as hit and the other one as ghost).

As only particles that could be tracked between the two pulses are considered for the analysis, the terms particle and track are used interchangeably in the present document.

Two processing strategies have been implemented, namely the iterative and the single-iteration TP-STB strategy. The iterative TP-STB follows the scheme presented in Fig. 1-left, where a new set of particle images is reconstructed with IPR at each iteration (original recordings for the first TP-STB iteration, and residual images for the following ones). At each of the \(11\) TP-STB iterations, a single particle tracking iteration is performed making use of a different set of parameters; the value of \({\varepsilon }_{\mathrm{pred}}^{\mathrm{max}}\) is progressively increased when the PSC predictor is replaced by a more accurate one based on the interpolation of previous tracks. The processing parameters are indicated in Table 1.

On the other hand, for the single-iteration TP-STB, the IPR reconstruction is performed only once starting from the recorded images, and the processing is concluded after the particle tracking step (i.e., without the iterative evaluation of back-projected and residual images shown in Fig. 1-left-bottom). The same particle tracking parameters shown in Table 1 are employed. The 11 tracking iterations are performed on the same 3D particle clouds from the single IPR reconstructions of the two pulses; after each tracking iteration, the tracked particles are removed from the reconstruction and the tracking continues on the remaining particles using the updated parameters set.

The fraction of correct and ghost particles as obtained from the analysis of both particle image density levels is presented in Fig. 4 as a function of the number of STB iterations applied. For the iterative TP-STB case (solid lines with markers) the rather conservative choice for the tracking parameters used for the first iterations (i.e., \({\varepsilon }_{\mathrm{pred}}^{\mathrm{max}}\)) is responsible for the lower number of reconstructed tracks attained for STB iterations 1–5. The star markers and dotted lines refer, instead, to the final result of the single-iteration TP-STB processing (i.e., after the last particle tracking iteration).

Fraction of reconstructed tracks from TP-STB with respect to the number of tracks from the reference TR-STB solution for both particle image densities. The fraction of correctly reconstructed tracks (hits in blue) and of ghost tracks (ghosts in orange) are shown for a reconstruction using only a single iteration (star marker and dotted line) and for the complete iterative TP-STB strategy (solid lines and markers)

Due to the already excellent performances of the single-recording enhanced IPR (Jahn et al. 2021), the beneficial effect of the iterative STB scheme depicted in Fig. 1-left can only be appreciated at the higher seeding density conditions; for \(0.12\text{ ppp}\), \(4\%\) more of the ground-truth tracks can be correctly identified when an iterative approach is adopted (\(\approx 86\%\)) with respect to the single-iteration result (\(\approx 82\%\)).

The relatively small advantage of the iterative over the single-iteration approach can be attributed to the advanced IPR algorithm used for reconstruction (Jahn et al. 2021), which is highly effective at this seeding density and noise level. Cases with even more particles, higher noise levels or less controlled imaging conditions (like in most experiments) will show a higher gain from the iterative processing.

On the other hand, the ghost particle level remains very low for both seeding densities (\(0.2\) and \(0.5\%\) for \(0.05\) and \(0.12\text{ ppp}\), respectively). When the fraction of spurious particles is considered, it is interesting to note that the ghost level for a single-recording IPR reconstruction (i.e., no particle is discarded when failing to build a two-pulse track) is approximately \(0.6\) and \(3.2\%\) for \(0.05\) and \(0.12\text{ ppp}\), respectively. This result shows once again the impact of exploiting the time information embedded in a sequence of recordings.

Given the better performances in terms of tracks yield, the results presented in the remainder of this section refer to the iterative TP-STB processing results; the fraction of correctly reconstructed tracks in the converged state is around \(91\%\) (\(\mathrm{54,300}\) tracks) and \(86\%\) (\(\mathrm{120,400}\) tracks) for \(0.05\) and \(0.12\text{ ppp}\), respectively.

The analysis of the HOMER Lagrangian Particle Tracking and Data Assimilation database presented in Sciacchitano et al. (2022) allows to put these values in relation to the actual ground-truth, despite it not being available to the authors. In fact, Table 3 in Sciacchitano et al. (2022) reports that TR-STB is capable of accurately reconstructing approximately \(98\) and \(96\%\) of the actual particle tracks at \(0.05\) and \(0.12\text{ ppp}\), respectively. Based on these values, the performances of TR-STB with respect to the actual ground-truth can be estimated as 89 and 82%, respectively.

It should be noted that the missing particles for the time-resolved case are mainly due to the high image noise; because of the simulated particle diameter polydispersity, a significant portion of the particle images cannot be detected on the recordings because their peak value drops below the noise level (Fig. 3).

On the other hand, the lower performances at higher seeding density, are mainly due to the increase in overlapping particle images which pose a challenge for the reconstruction approach, particularly when no time-resolution is available, as confirmed by the relatively poor performances of the peak detection algorithm when applied to the recorded images shown in Fig. 3. The fact that more than \(80\%\) of the ground-truth particles can be found despite the weak performance of the peak detection on the original images confirms the ability of the iterative processes to reveal previously hidden peaks by subtracting the images of known particles.

The analysis of the 3D particle peak positional accuracy is carried out by comparing the TP-STB results with the reference TR-STB solution making use of the matched particles (hits) for both seeding densities; the histograms of the errors in the streamwise (\(X\)) and wall-normal (\(Z\)) directions are presented in Fig. 5. The results in the spanwise component (\(Y\)) are not shown as they resemble closely those for the \(X\) direction. For the low seeding density case (Fig. 5-left), the root-mean-square (RMS) errors for the \(X\) and \(Z\) positions are approximately \(4.2 \, \mathrm{\mu m}\) (\(0.05\text{ px}\)) and \(11 \, \mathrm{\mu m}\) (\(0.12\text{ px}\)), respectively; the \(2.6\) factor between the accuracy in the two directions can be ascribed to the viewing direction of the imaging system being aligned with the wall-normal direction (\(Z\)). For the \(0.12 \, \mathrm{ppp}\) case, due to the higher complexity of the reconstruction problem (i.e., overlapping particle images), as expected, the positional errors increase to \(5.5 \, \mathrm{\mu m}\) (\(0.06 \, \mathrm{px}\)) and \(14 \, \mathrm{\mu m}\) (\(0.16 \, \mathrm{px}\)) for \(X\) and \(Z\), respectively. The results shown in Fig. 5 compare well with those presented in Sciacchitano et al. (2022), confirming that the TR-STB method is capable of providing a good approximation of the actual ground-truth particle distribution.

3.2 Experimental dataset: Rayleigh Bénard convection flow

The performance assessment of TP-STB based on experimental data is carried out by analyzing a two-pulse sequence from the time-resolved recordings from the Rayleigh Bénard convection cell investigation presented by Weiss et al. (2022). As for the analysis presented in the previous section, the results from TR-STB are taken as a reference of the unknown ground-truth 3D particle tracks field.

The convection flow is issued within a rectangular cell of \(320\times 320\times 20 \, {\mathrm{mm}}^{3}\) filled with water; a heated copper plate is located at the bottom of the cell, while a water-cooled borosilicate glass plate is used at the top in order to provide optical access for the imaging system (Fig. 6-left).

Left: Sketch of experimental setup (reproduced from Weiss et al. 2022). Right: detail of CMOS camera image; the full-frame image size is \(2160\times 2560 \, \mathrm{px}\)

The applied temperature difference between the top and the bottom plate varies between \(2\) and \(20\text{ K}\). The flow was seeded with fluorescent \(50 \, \mathrm{\mu m}\) polyethylene microspheres, illuminated by two-pulsed UV-LED arrays. A detailed description of the experimental setup can be found in Weiss et al. (2022).

A system of six scientific CMOS cameras was operated at a frequency facq= 40–60 Hz to acquire time-resolved sequences of recordings; a detail of a \(2160\times 2560 \, \mathrm{px}\) camera image is shown in Fig. 6-right. The particle image density is approximately \(0.075\text{ ppp}\); around \(\mathrm{332,000}\) instantaneous particles are successfully reconstructed and tracked by the TR-STB algorithm.

In order to increase the dynamic range of the TP-STB evaluation, a time separation of three frames has been chosen between the two recordings used for the two-pulse reconstruction. Given a digital resolution of approximately \(7.15 \, \mathrm{px}/\mathrm{mm}\), a maximum particle displacement of 11 PX is expected for the case presented in this section (\({f}_{\mathrm{acq}}=19\text{ Hz}\), maximum flow velocity magnitude of \({V}_{\mathrm{max}}\approx 0.01 \, \mathrm{m}/\mathrm{s}\)).

Due to the relatively low image noise and good image quality (i.e., consistent particle peak intensity for all cameras in the imaging system and over the recording sequence), a single-iteration of TP-STB has been employed for the results shown in the present section; the main processing parameters are presented in Table 2. The displacement field predictor was estimated by PSC with a final cross-correlation volume of \(20\times 20\times 10 \, \mathrm{px}\) (approximately \(2.8\times 2.8\times 1.4 \, {\mathrm{mm}}^{3}\)).

The number of correct tracks reconstructed by TP-STB, as well as their positional error, is computed with respect to the reference tracks from TR-STB obtained after filtering the particle positions along the tracks by means of the TrackFit spline interpolation scheme (Gesemann et al 2016); the cut-off frequency of the low-pass filter is determined from the spectral distribution of the unfitted tracks in order to remove the high-frequency measurement noise and preserve the physical part of the fluctuations.

The reference velocity values are computed by a linear fit of the reference particle positions from the fitted TR-STB tracks extracted at the two time-instants used for the TP-STB processing; this allows to isolate the contribution to the velocity error associated to the random positional noise due to the IPR reconstruction.

On the other hand, the truncation error due to the finite time separation between the two pulses is not included in the present analysis. This choice is motivated, on the one hand, by the fact that the magnitude of the truncation error strongly depends on the particular investigated flow (i.e., ratio between the temporal scales and the chosen time separation between pulses). On the other hand, the smallest time separation adopted for dual-frame investigation is not a free parameter that can be changed in post-processing, but it is typically set in order to limit the maximum particle displacement between the two frames to a value that would allow a robust velocimetry analysis either by cross-correlation or particle tracking (\(\approx 11 \, \mathrm{px}\) in the present investigation).

Approximately \(87\%\) of the reference tracks (\(\approx \mathrm{290,000}\) tracks) are correctly reconstructed by TP-STB; the ghost particle level remains below \(1\%\) (\(\approx 2800\) tracks). The positional and velocity analysis based on the correctly reconstructed tracks is presented in Fig. 7. In the chosen reference system, the \(XY\) plane is parallel to the top and bottom plates of the cell; on the other hand, \(Z\) is aligned with the imaging system viewing direction. The limited aperture of the imaging system (41.1 degree between the outer cameras) justifies the larger errors in the \(Z\) direction by a factor of approximately \(3.5\). The positional RMS errors are approximately \(5 \, \mathrm{\mu m}\) (\(0.04 \, \mathrm{px}\)) and \(19 \, \mathrm{\mu m}\) (\(0.14 \, \mathrm{px}\)) for \(X\) and \(Z\), respectively; the velocity RMS errors are approximately \(0.69\% {V}_{\mathrm{max}}\) and \(2.27\% {V}_{\mathrm{max}}\) for the in-plane and wall-normal components, respectively.

A visualization of the 3D instantaneous tracks obtained with TP-STB is presented in Fig. 8.

Instantaneous result from TP-STB; particles color-coded with the velocity component along \(Z.\) Blue indicates sinking particles while yellow indicates rising ones. Left: complete track field (approximately \(\mathrm{290,000}\) particles) marked with velocity vector. Right: spherical particle markers in a \(8 \, \mathrm{mm}\) \(XY\)-slice (top) and a \(10 \, \mathrm{mm}\) \(XZ\)-slice (bottom)

The scattered results from TP-STB are interpolated onto a regular grid by means of the FlowFit data assimilation algorithm (Gesemann et al 2016); the FlowFit algorithm makes use of the input from the Lagrangian velocities and leverages Navier–Stokes constraints to fit a system of smooth 3D B-splines, avoiding low-pass filtering effects and delivering access to the full velocity gradient tensor via analytical derivatives.

The results from the FlowFit algorithm applied to the reference and TP-STB Lagrangian tracks are presented in Fig. 9-top on the left and right column, respectively; an internal cell size of \(1 \, {\mathrm{mm}}^{3}\) was used (\(\approx 0.11\) particles-per-cell), while the solution was sampled with a resolution of \(0.25 \, \mathrm{mm}\) in all three directions.

Top: iso-contours of vertical velocity component obtained with the FlowFit data assimilation algorithm applied to the scattered LPT results (\(Z=-18 \, \mathrm{mm}\)); reference results from TR-STB (left), result from TP-STB (right). The magnitude of the difference between the two cases is shown over half the slice in the top-right plot. Bottom: 3D iso-surfaces of Q criterion (\(0.25 \, 1/{\mathrm{s}}^{2}\)) color-coded by the vorticity component along the \(Y\) axis (\({\omega }_{y}\)) in the area indicated by the black rectangle in the top-left plot

Contours of the vertical velocity component (\(w\)) are shown for the \(Z=-18 \, \mathrm{mm}\) position along the vertical axis \(Z\), in proximity of the warm plate. Furthermore, the magnitude of the difference between the two results is presented over the right half of the plotted area in Fig. 9-top-right.

The visual inspection of these velocity fields confirms the relatively good quality of the TP-STB results in terms of percentage of retrieved tracks and positional and velocity errors; TP-STB is capable of resolving the same small structures that can be identified in the reference solution. As expected, a slight modulation of the velocity fluctuations, as well as a higher noise level can be noticed when closely inspecting the two results.

These considerations are confirmed when looking at the velocity spatial gradients. In Fig. 9-bottom the flow structures identified by means of the Q criterion (Hunt et al. 1988) are presented; iso-surfaces at \(Q=0.25 \, 1/{\mathrm{s}}^{2}\) are color-coded by the \(Y\) component of the vorticity (\({\omega }_{y}\)). The reference results are shown on the left and the Two-Pulse-STB result on the right; for sake of visualization, these results are presented for a small portion of the investigated volume.

While, as observed concerning Fig. 9-top, the same structures can be identified in both results, the lower noise of the TR-STB solution can be inferred by the smoother Q criterion field. At the same time, the higher values of Q attained allow for the reconstruction of connected structures that appear incomplete in the two-pulse result (e.g., elongated ring at approximately \(X=10 \, \mathrm{mm}\) and \(Y=-30 \, \mathrm{mm}\), Fig. 9-bottom).

When comparing the two results, it must be taken into consideration that the reference result is obtained by the TR-STB algorithm in a fully converged state (Schanz et al 2016); in fact, the time instants chosen for the comparison refer to recordings \(190\) and \(193\) in the images sequence. As also shown for synthetic data (Sciacchitano et al. 2021, 2022), the STB algorithm is able to perform a close-to-perfect reconstruction with very low values of ghost particles and high positional, velocity and acceleration accuracies, when the information embedded in time-resolved recordings is exploited.

Furthermore, when the acceleration is available (as for TP-STB results), the full non-linear FlowFit optimization can be applied (second generation FlowFit, Gesemann et al 2016); on the other hand, since only the particle position and velocity can be extracted from the two-pulse tracking, the linear (first generation) FlowFit is used for the TP-STB results, where only the difference from the scattered velocity input and the velocity divergence are minimized as part of the cost function during the optimization problem.

Given the above considerations, the accuracy of the reconstruction attained by the two-pulse particle tracking with TP-STB (i.e., percentage of actual tracks identified, ghost level, positional and velocity errors), as well as the level of detail achieved in the data assimilation results (i.e., contours of velocity components, spatial velocity gradients) is remarkable when considering the large difference in terms of the number of recordings used for the reconstruction (i.e., two against hundreds) with respect to the time-resolved reference result.

On the other hand, it must be added that the excellent image quality attained in the present time-resolved investigation (unlike that of the synthetic case presented in Sect. 3.1) is expected to play an important role, particularly when the track yield and positional error are considered.

In order to assess the performances of TP-STB in more realistic imaging conditions for dual-frame experimental dataset, the tracking algorithm is applied to images from an actual tomographic PIV measurement from 2012 (using DEHS seeding tracers and dual-cavity laser illumination); the results of the TP-STB algorithm applied to this pre-existing dataset are presented in the following section.

4 Application to dual-frame tomographic PIV experimental data

The TP-STB Lagrangian particle tracking algorithm is applied to double-frame recordings from a 3D investigation of the wake flow behind a flying locust. The experiment has been conducted as a collaborative work between the Department of Biology of Lund University, LaVision GmbH (Göttingen, Germany), the Structure and Motion Laboratory (University of London) and the DLR Göttingen in 2012.

A tethered desert locust was flying in the 1-m wind-tunnel facility at the DLR Göttingen (Fig. 10-left); a flow speed of approximately \(3.3 \, \mathrm{m}/\mathrm{s}\) was chosen to match the locust's equilibrium flight speed.

Left: Experimental setup for the investigation of the wake flow of a flying desert locust by means of a 3D double-frame acquisition system (adapted from Henningsson et al. 2015). Right: instantaneous dual-frame recordings from two of the eight cameras; first and second frames on top and bottom row, respectively)

The air flow was seeded with \(1 \, \mathrm{\mu m}\) DEHS droplets and illumination was provided by a dual Nd: YAG laser system (Spectra Physics Quanta Ray Pro) with a pulse energy of \(\approx 1 \, \mathrm{J}/\mathrm{pulse}\); a volume of approximately \(200\times 240\times 50 \, {\mathrm{mm}}^{3}\) was illuminated along the streamwise (\(X\)), spanwise \(Y\) and vertical (\(Z\)) directions.

A system of eight LaVision Imager CMOS cameras (\(2560\times 2160 \, \mathrm{px}\)) in Scheimpflug configuration were used to acquire particle images (Fig. 10-left). The imaging system was calibrated making use of a 3D calibration target; the volume self-calibration correction (VSC, Wieneke 2008) was applied to reduce the calibration error down to the sub-pixel range; the optical transfer function (OTF, Schanz et al. 2013a) was calibrated alongside. The digital resolution is approximately \(10.85 \, \mathrm{px}/\mathrm{mm}\).

Several flight postures were investigated; for each configuration, a sequence of \(230\) double-frame recordings were acquired at a frequency ranging between \(5\) and \(10 \, \mathrm{Hz}\); the time separation between the two frames was set to \(200 \, \mathrm{\mu s}\); the maximum particle displacement between the frames is \(\approx 10.5 \mathrm{px}\) (maximum flow velocity magnitude up to \(\approx 5 \, \mathrm{m}/\mathrm{s}\)).

The experiment was designed for the reconstruction and velocity analysis of the particle images by means of tomographic PIV, a detailed description of the measurement scope is presented in Henningsson et al. (2015), as well as the analysis of the Tomo-PIV results, particularly concerning the flow patterns identified in the locust wake flow.

Given the double-frame acquisition strategy, unlike for the TP-STB applications presented in the previous sections, a reference solution from TR-STB is not available. Therefore, in the present study, the Two-Pulse STB results are compared with those obtained with tomographic PIV presented in Henningsson et al. (2015).

The choice of this particular experimental dataset to assess the suitability of TP-STB for double-frame recordings is motivated by the fact that this is an already existing dataset (from 2012), where no optimization of the experimental parameters for particle tracking purposes was performed.

On the contrary, given the maturity of the multiplicative algebraic reconstruction technique and the robustness of the 3D cross-correlation velocimetry of Tomo-PIV, the particle images where recorded at a relatively high particle image density (0.04–0.06 \(\mathrm{ppp}\)). Furthermore, as reported in Novara et al. (2016a, 2019) concerning four-pulse tracking, a few issues typical of double-frame laser illumination (e.g., inconsistent particle peak intensity across the cameras, laser pulse intensity difference between the two frames) are particularly critical in case individual particle tracers need to be triangulated and tracked.

Exemplary images from two of the eight cameras are presented in Fig. 10-right (cameras 1 and 2 on left and right, respectively); the two frames are shown in the top and bottom rows. The inhomogeneity of the laser illumination in the sensor plane (roughly parallel to the \(XY\) plane) is significant, a result of the laser beam profile, optical path (lenses and back-reflection mirror) and the aperture installed to cut the illumination to a rectangular beam. Also, a clear difference in terms of particle peak intensity is visible both between the two cameras and across the two frames from each camera.

This difference can be better appreciated in the detailed zoomed-in images presented in Fig. 11 where the same particles can be identified moving from left to right from frame 1 to frame 2; however, the peak intensities on the second frame appear approximately \(70\%\) of those on the first frame.

As a consequence, it is expected that a relevant number of particle peaks will drop below the image noise level in the dimmer frame, while being detectable in the brighter one; in this situation, the particles will still appear in one of the two IPR reconstructions, but fail to produce a two-pulse tracks during the tracking step of TP-STB (i.e., loss of tracks).

Another source of loss of tracks is due to the different particle peak intensity levels of the same tracer when imaged from different directions (i.e., cameras) with respect to the Mie scattering lobes, as some particles could disappear below the noise level in some camera images while being visible in others.

To mitigate this problem, and ensure that as many particles as possible can be reconstructed by IPR, the final triangulation iterations are performed with fewer cameras required to register a peak at the projection point (down to five cameras out of the eight available for the last two outer IPR iterations), following Wieneke (2013). Also, as proposed in Jahn et al. (2021), multiple triangulations using a permutation of the camera order are used, and the three brightest cameras are ignored during the particle-intensity update step of IPR, in order to suppress ghost particle creation. A summary of the IPR parameters is presented in Table 3-top.

Furthermore, before TP-STB processing, the images are pre-processed in order to equalize the particle peak intensity across the sensor plane, the different cameras and the frames via minimum image subtraction, division by the average image, multiplication by the mean average-intensity image over the two frames and multiplication of the second frame by the intensity ratio of the first and second frame of the average image. Finally, the intensity of the second frame is multiplied by a factor \(1.3\).

The result of the image pre-processing is shown in Fig. 12 for the same portion of the images presented in Fig. 11; while the pre-processing operation cannot resolve the issue of particle peaks disappearing below the noise level in the weaker frame, the more consistent peak intensity values represent an advantage during the peak detection step of the IPR, where the same intensity threshold can be used for all images.

Approximately \(\mathrm{370,000}\) particles are reconstructed by IPR for each frame of recording \(79\) from the recording sequence considered in Henningsson et al. (2015) (velocity field from Tomo-PIV at file B00079.vc7 in the online database, https://datadryad.org/stash/dataset/doi:10.5061/dryad.1cn55).

Given the relatively high noise levels and the large depth of the reconstruction volume, it is expected that the single-frame reconstruction will show considerably higher ghost levels as seen in the synthetic results in Sect. 3.1. However, these spurious reconstructions are typically not coherent with the flow motion (Elsinga et al. 2011) and can be filtered out by means of an accurate particle tracking scheme.

The TP-STB processing parameters are presented in Table 3. A total of three TP-STB iterations are performed; an outlier detection and removal procedure similar to what proposed by Schanz et al. (2016) is applied after the last STB iteration. As for the applications presented in Sect. 3, the tracking process is aided by a predictor from Particle Space Correlation. The multi-grid correlation approach was applied starting from a \(128\times 128\times 128 \, \mathrm{px}\) interrogation volume, progressively reduced to \(48\times 48\times 48 \, \mathrm{px}\) during four PSC iterations. The final size of the interrogation volume (\(4.42\times 4.42\times 4.42 \, {\mathrm{mm}}^{3}\)) is similar to that used for the tomographic PIV analysis presented in Henningsson et al. (2015); for the Tomo-PIV analysis a \(75\%\) overlap factor was used, resulting in a \(1.07\text{ mm}\) vector spacing.

Approximately \(\mathrm{140,000}\) particles are tracked between the two frames; \(20\:mm\)-thick volume slices are shown in Fig. 13, where tracked particles markers are color-coded with the spanwise velocity component (\(v\), along \(Y\)).

The stripy pattern observed in the light sheet from visual inspection of the camera images (Fig. 10-right) can be identified in the tracking result as well, particularly from the top view (local lower density of the markers at \(X\approx -125\text{ mm}\)). While the investigation of the flow topology and dynamics goes beyond the scope of the present study, the pattern outlined by the high and low spanwise velocity fluctuations is consistent with the findings reported in Henningsson et al. (2015).

In order to assess the accuracy of the TP-STB reconstruction, given the absence of a reference solution for the ground-truth velocity field, a comparison with the Tomo-PIV results obtained by Henningsson et al. (2015) is carried out.

As tomographic PIV results are obtained on a regular grid, the scattered LPT results are interpolated onto a comparable grid by means of the FlowFit algorithm; the size of the internal cell used for the B-splines fit was \(1.5\times 1.5\times 1.5 \, {\mathrm{mm}}^{3}\) (\(\approx 0.15\) particles-per-cell), while the result was sampled using a resolution of \(0.375\times 0.375\times 0.375 \, {\mathrm{mm}}^{3}\). The direct comparison between the Tomo-PIV and TP-STB/FlowFit results is presented in Fig. 14, where iso-surfaces of Q criterion are color-coded with the streamwise vorticity component \({\omega }_{x}\). For the full FlowFit regularization approach (i.e., divergence free optimization based on position and velocity input from TP-STB) two results are shown, corresponding to the complete TP-STB processing (three iterations, Fig. 14-bottom-left) and to a single TP-STB iteration (Fig. 14-top-right).

Iso-surfaces of Q criterion (\(\mathrm{10,000} \, 1/{\mathrm{s}}^{2}\)) color-coded by the streamwise vorticity component. Top-left: result from tomographic PIV (adapted from Henningsson et al. 2015). Top-right: result from TP-STB (single iteration) and FlowFit (full regularization). Bottom-left: result from TP-STB (three iterations) and FlowFit (full regularization). Bottom-right: result from TP-STB (three iterations) and FlowFit (interpolation)

As observed in Sect. 3.2, the visual inspection of the velocity spatial gradients provides an accurate feedback on the errors affecting the velocity field; spurious iso-surfaces of Q criterion indicate a higher noise level, while broken vortex filaments suggests a modulation of the signal (i.e., underestimation of the velocity fluctuations). Smoother and thicker vortex filaments suggest, instead, an improvement of the spatial resolution of the measurement.

The results shown in Fig. 14 confirm the capability of TP-STB LPT and FlowFit data assimilation in matching the flow topology of the results from tomographic PIV even in experimental conditions not expressly optimized for particle tracking. Furthermore, noticeable improvements in the areas marked with A, B and C in Fig. 14-middle suggest that the TP-STB algorithm is able to resolve regions of the flow where the filtering effect of the cross-correlation volume size results in a modulation of the signal (i.e., disconnected flow structures). On the other hand, the major improvement observed in the area marked with the letter D for the TP-STB result (longitudinal vortex connecting upstream structure with the downstream one) is to be ascribed to the smaller reconstruction volume along \(Z\) chosen for the Tomo-PIV analysis. The comparison between the full TP-STB processing result (Fig. 14-bottom-left) and the one from the faster single-iteration processing (Fig. 14-top-right), where the number of tracked particles reaches only \(\mathrm{110,000}\), confirms the benefit of the iterative STB approach shown, for synthetic data, in Fig. 4. However, even this lower-quality reconstruction compares well with the result from tomographic PIV.

Furthermore, a FlowFit result obtained with the three-iterations TP-STB result while omitting the penalization of the velocity divergence is presented in Fig. 14-bottom-right. This result presents what can be achieved by a simple B-spline interpolation of the LPT results if a data assimilation algorithm is not available. While the performances of this simpler approach do not match those of the full FlowFit optimization (e.g., region D), this result still shows a quality that is comparable to the Tomo-PIV solution. The computational time of the simple interpolation (\(\approx 85 \, \mathrm{s}\)) is \(30\%\) lower than that required by the full regularization (\(\approx 120\text{ s})\), and should compare well to a high-quality 3D cross-correlation.

In conclusion, the overall smoothness of the LPT solution, and the fact that flow structures are more clearly defined (e.g., larger diameter of the vortex filaments), combined with a similar, if not lower, noise level when compared to the Tomo-PIV result, suggest that some of the advantages of LPT approaches (i.e., in spatial resolution) can be extended to the domain of double-frame measurements, even in non-ideal imaging conditions.

The results presented above focus on the performance assessment of the TP-STB algorithm concerning the quality of the reconstructed flow field rather than on the track yield. In fact, as the evaluation of the particle image density based on particle peak detection on the recorded images becomes increasingly difficult for densities above \(0.04 \, \mathrm{ppp}\) (Novara 2013), the number of imaged particles cannot be accurately estimated, particularly for the camera images exhibiting the larger number of peaks. Furthermore, the number of particles detected on a camera image does not necessarily correspond to the number of particles that could potentially be tracked. In fact, mainly due to the Mie scattering lobes (Manovski et al. 2021), a particle visible to one camera (i.e., viewing direction) would not necessarily appear in the recorded images from other cameras in the imaging system.

Nevertheless, while the exact number of particles expected in the 3D volume cannot be accurately determined, an indication of a plausible range can be given by considering the variability of the particle image density across the imaging system as well as the properties of the IPR algorithm.

A conservative estimate for the number of particles in the volume can be obtained by counting the particle image peaks on the camera frame exhibiting the lowest particle image density (approximately \(0.036 \, \mathrm{ppp}\) for the second frame in camera 4); given the active size of the camera sensor, this amounts to approximately \(\mathrm{152,000}\) particles. However, the IPR algorithm makes use of a number of iterations where a reduced number of cameras is considered for the triangulation; this allows for the reconstruction of particles images compromised on a particular camera, possibly due to noise or overlapping situations (Jahn et al. 2021). Since the minimum number of cameras used for the IPR reconstruction for the present case is \(5\) (see Table 3), the number of peaks identified on the fourth weakest cameras (\(\approx \mathrm{182,000}\) on the second frame of camera 1, \(\approx 0.043 \, \mathrm{ppp}\)) can be used to provide an estimate for the lower bound of the track yield range of TP-STB. Based on these considerations, it can be concluded that the TP-STB is capable of successfully reconstructing between \(77\) and \(92\%\) of the total number of particles expected in the imaged 3D domain.

While the upper bound of \(92\%\) is consistent with the track yield performances of the algorithm presented in Sects. 3.1, 3.2, the challenges posed by experiments in air (low image signal-to-noise ratio, inhomogeneity of the illumination, particle diameter polydispersity, Mie scattering lobes among others) could result in the poorer performances indicated by the lower bound of the range.

Nevertheless, even if the conservative figure of \(77\%\) track yield is considered, it is interesting to note that this relatively low number of reconstructed tracks, combined with the FlowFit data assimilation algorithm, is enough to provide a better result (in terms of spatial resolution) than that from tomographic PIV.

Furthermore, the tracking scheme seems capable of filtering out spurious particles, even with the limited temporal resolution provided by two pulses only. The latter point is proven by the absence of characteristic artifacts introduced in the FlowFit solution by outliers in the input tracks, typically appearing as unphysical small vortex rings (donut-shaped) in the visualization of 3D iso-surfaces of Q criterion.

A final consideration is devoted to the subject of computational cost. Henningsson et al. (2015) reported that the analysis of the full dataset analyzed in the study took \(96\) days of around-the-clock processing on a server with 48 AMD cores and 64 Gb of RAM, in 2012. Assuming that the processing of the different recordings was not performed in parallel, it follows that the complete Tomo-PIV processing (reconstruction and 3D cross-correlation) required for a single double-frame realization was approximately \(10\) h. Despite the significant improvements in processors architecture that occurred in the last ten years, it can be safely assumed that the Tomo-PIV analysis would still result in a significant computational cost today.

On the other hand, the sparse reconstruction approach offered by the IPR reconstruction and individual particle tracking of the STB, greatly reduces the processing time required for the LPT analysis.

For the results presented in Fig. 14, on a 16 cores AMD Threadripper 1950X machine, the computational time for the full TP-STB processing (three iterations) amounted to \(7.5\) min (PSC: \(60 \, \mathrm{s}\), IPR + tracking: \(260 \, \mathrm{s}\), FlowFit: \(120 \, \mathrm{s}\)), while a single TP-STB iteration took \(5\) minutes (PSC: \(60 \, \mathrm{s}\), IPR + tracking: \(122 \, \mathrm{s}\), FlowFit: \(120 \, \mathrm{s}\)). The processing of the entire recording sequence would take under \(30\) h for three-iteration TP-STB, without making use of parallel processing.

5 Conclusions and outlook

An iterative Two-Pulse Shake-The-Box (TP-STB) approach has been presented aimed at extending the benefits of 3D Lagrangian particle tracking (accuracy, spatial resolution, computational efficiency) to double-frame particle image recordings from a multi-camera imaging system.

The method makes use of the advanced Iterative Particle Reconstruction algorithm presented by Jahn et al. (2021) in combination with the iterative scheme of reconstruction and tracking proposed in the framework of the Multi-Pulse STB algorithm development (Novara et al. 2016a, 2019), in order to take advantage of the, despite limited, temporal information contained in a two-pulse recording sequence.

An independent performance assessment of the TP-STB algorithm (Sciacchitano et al. 2021) already demonstrated the potential of the technique.

Here, the performances of TP-STB are further assessed in terms of particle yield and accuracy of the reconstruction, based on its application to a higher image-noise synthetic dataset (up to \(0.12 \, \mathrm{ppp}\)) and to an experimental one (at \(0.075\text{ ppp}\)); in both cases a reference for the ground-truth solution is provided by the results from time-resolved STB (TR-STB, Schanz et al. 2016) applied to the time-resolved recordings.

The results confirm the capabilities of the TP-STB technique and show that the majority of the tracers can be reconstructed, while the fraction of spurious peaks (ghost particles) remains low. As reported in the literature for the case of Multi-Pulse STB, the iterative STB strategy yields a higher number of correct tracks for TP-STB as well, particularly at higher particle image densities.

The positional accuracy is in good agreement with that reported from Sciacchitano et al. (2021) for similar data, thus confirming the suitability of adopting the TR-STB solution as a proxy for the ground-truth when a time-resolved sequence of recordings is available.

Finally, the TP-STB algorithm has been applied to existing double-frame images from a desert locust wake tomographic PIV investigation dataset acquired in 2012. The comparison of the LPT results to those from Tomo-PIV reported in Henningsson et al. (2015), confirmed the accuracy of the TP-STB reconstruction (combined with the FlowFit data assimilation algorithm) when operating on actual double-frame recordings where the imaging conditions had not been optimized for a particle tracking approach.

The visual inspection of the flow structures detected in the tracking result, matching and sometimes surpassing the accuracy of those from Tomo-PIV, suggests that the main advantages in terms of spatial resolution of LPT approaches can be extended to the domain of two-pulse recordings by making use of the proposed TP-STB technique.

The successful application of this tracking algorithm, even in challenging imaging conditions and high particle image density (up to \(0.12\) and \(0.075\text{ ppp}\) for synthetic and experimental data, respectively), can be mainly ascribed to the recent advances in IPR reconstruction (Jahn et al. 2021), particle tracking strategies (Schanz et al. 2016; Novara et al. 2016a and 2019) and the FlowFit data assimilation algorithm (Gesemann et al. 2016).

The large number of cameras used in the desert locust experiment enables a more accurate reconstruction when compared to typical three- or four-cameras system as both the tomographic reconstruction and IPR benefit from the higher number of projections. This feature opens the possibility of using the present dataset to investigate the effect of the number of views on the reconstruction accuracy; in fact, a reference solution could be obtained by making use of the full imaging system, and the sensitivity of the methods to the number of projections assessed making use of a subset of cameras. This analysis, however, goes beyond the scope of the present study.

Furthermore, a study of the uncertainty quantification in the determination of statistical quantities from TP-STB instantaneous track fields could be envisioned. Despite the fact that the results presented here do not indicate a strong presence of spurious tracks, the possible bias introduced by ghost tracks could be investigated, and the introduction of filtering techniques that would allow to mitigate this effect discussed. This investigation, possibly aided by dedicated tailored experiments, is devoted to a future study.

Availability of data and materials

Velocity fields from the tomographic-PIV experiment described in Henningsson et al. (2015) are available on the online database (https://datadryad.org/stash/dataset/doi:10.5061/dryad.1cn55).

References

Bosbach J, Schanz D, Godbersen P, Schröder A (2019) Dense Lagrangian particle tracking of turbulent Rayleigh Bénard convection in a cylindrical sample using Shake-The-Box. In: 17th European Turbulence Conference

Cornic P, Leclaire B, Champagnat F, Le Besnerais G, Cheminet A, Illoul C, Losfeld G (2020) Double-frame tomographic PTV at high seeding densities. Exp Fluids 61(2):1–24

Elsinga GE, Scarano F, Wieneke B, van Oudheusden BW (2006) Tomographic particle image velocimetry. Exp Fluids 41(6):933–947

Elsinga GE, Westerweel J, Scarano F, Novara M (2011) On the velocity of ghost particles and the bias errors in tomographic-PIV. Exp Fluids 50(4):825–838

Fuchs T, Hain R, Kähler CJ (2016) Double-frame 3D-PTV using a tomographic predictor. Exp Fluids 57(11):1–5

Fuchs T, Hain R, Kähler CJ (2017) Non-iterative double-frame 2D/3D particle tracking velocimetry. Exp Fluids 58(9):1–5

Gesemann S, Huhn F, Schanz D, Schröder A (2016) From noisy particle tracks to velocity, acceleration and pressure fields using B-splines and penalties. In: 18th International symposium on applications of laser and imaging techniques to fluid mechanics, Lisbon, Portugal, pp 4–7

Henningsson P, Michaelis D, Nakata T, Schanz D, Geisler R, Schröder A, Bomphrey RJ (2015) The complex aerodynamic footprint of desert locusts revealed by large-volume tomographic particle image velocimetry. J R Soc Interface 12(108):20150119

Herman GT, Lent A (1976) Iterative reconstruction algorithms. Comput Biol Med 6(4):273–294

Huhn F, Schanz D, Gesemann S, Dierksheide U, van de Meerendonk R, Schröder A (2017) Large-scale volumetric flow measurement in a pure thermal plume by dense tracking of helium-filled soap bubbles. Exp Fluids 58(9):1–19

Hunt JC, Wray AA, Moin P (1988) Eddies, streams, and convergence zones in turbulent flows. Studying turbulence using numerical simulation databases, 2. In: Proceedings of the Summer Program, 1988

Jahn T, Schanz D, Schröder A (2021) Advanced iterative particle reconstruction for Lagrangian particle tracking. Exp Fluids 62:179

Jahn T, Schanz D, Gesemann S, Schröder A (2017) 2-Pulse STB: 3D particle tracking at high particle image densities. In: 12th International symposium on PIV–PIV17. Busan, Korea

Kähler CJ, Astarita T, Vlachos PP, Sakakibara J, Hain R, Discetti S, Cierpka C (2016) Main results of the 4th International PIV Challenge. Exp Fluids 57(6):97

Lasinger K, Vogel C, Pock T, Schindler K (2020) 3D fluid flow estimation with integrated particle reconstruction. Int J Comput Vis 128(4):1012–1027

Leclaire B, Mary I, Liauzun C, Péron S, Sciacchitano A, Schröder A, Champagnat F (2021) First challenge on Lagrangian particle tracking and data assimilation: datasets description and planned evolution to an open online benchmark. In: 14th International symposium on particle image Velocimetry (Vol. 1, No. 1)

Lynch KP, Scarano F (2015) An efficient and accurate approach to MTE-MART for time-resolved tomographic PIV. Exp Fluids 56(3):1–16

Maas HG, Gruen A, Papantoniou D (1993) Particle tracking velocimetry in three-dimensional flows. Exp Fluids 15(2):133–146

Manovski P, Novara M, Mohan NKD, Geisler R, Schanz D, Agocs J, Schröder A (2021) 3D Lagrangian particle tracking of a subsonic jet using multi-pulse Shake-The-Box. Exp Therm Fluid Sci 123:110346

McInnes L, Healy J, Astels S (2017) hdbscan: Hierarchical density-based clustering. J Open Source Softw 2(11):205

Nishino K, Kasagi N, Hirata M (1989) Three-dimensional particle tracking velocimetry based on automated digital image processing. J Fluids Eng 111:384–391

Novara M, Batenburg KJ, Scarano F (2010) Motion tracking-enhanced MART for tomographic PIV. Meas Sci Technol 21(3):035401

Novara M, Schanz D, Reuther N, Kähler CJ, Schröder A (2016a) Lagrangian 3D particle tracking in high-speed flows: Shake-The-Box for multi-pulse systems. Exp Fluids 57(8):128

Novara M, Schanz D, Geisler R, Gesemann S, Voss C, Schröder A (2019) Multi-exposed recordings for 3D Lagrangian particle tracking with multi-pulse Shake-The-Box. Exp Fluids 60(3):44

Novara M, Schanz D, Gesemann S, Lynch K, Schröder A (2016b). Lagrangian 3D particle tracking for multi-pulse systems: performance assessment and application of Shake-The-Box. In: 18th International symposium on applications of laser and imaging techniques to fluid mechanics, Lisbon, Portugal

Novara M (2013) Advances in tomographic PIV. Doctoral thesis. Delft University of Technology, Delft, The Netherlands