Abstract

We analyze generic sequences for which the geometrically linear energy

remains bounded in the limit \(\eta \rightarrow 0\). Here \( e(u) \,{:}{=}\,\frac{1}{2}(Du + Du^T)\) is the (linearized) strain of the displacement \(u\), the strains \(e_i\) correspond to the martensite strains of a shape memory alloy undergoing cubic-to-tetragonal transformations, and the partition into phases is given by \(\chi _i:{B_{1}\left( 0\right) } \rightarrow \{0,1\}\). In this regime it is known that, in addition to simple laminates, branched structures are also possible, which would enable the alloy to form habit planes if austenite were present. In an ansatz-free manner we prove that the alignment of macroscopic interfaces between martensite twins is as predicted by well-known rank-one conditions. Our proof proceeds via the non-convex, non-discrete-valued differential inclusion

$$\begin{aligned} e(u) \in \bigcup _{1\le i\ne j\le 3} {\text {conv}}\{e_i,e_j\} \end{aligned}$$

satisfied by the weak limits of bounded energy sequences, all solutions of which we classify. In particular, there exist no convex integration solutions of the inclusion with complicated geometric structures.


1 Introduction

Due to the many possible applications of the eponymous shape memory effect, shape memory alloys have attracted a lot of attention from the engineering, materials science and mathematical communities. Their remarkable properties are due to certain diffusionless solid–solid phase transitions in the crystal lattice of the alloy, enabling the material to form microstructures. More specifically, the lattice transitions between the cubic austenite phase and multiple lower-symmetry martensite phases, triggered by crossing a critical temperature or applying stresses; see Bhattacharya [6] for a thorough introduction.

Fig. 1 A sketch of the cubic-to-tetragonal transformation. The left-hand side represents the cubic austenite phase, while the right-hand side represents the martensite variants that are elongated in the direction of one of the axes of the cube and shortened in the other two. Adapted from [6, Figure 4.5].

As a result, these materials often form microstructures. In shape memory alloys undergoing cubic-to-tetragonal transformations, see Fig. 1, one frequently observes the following types of microstructures:

1. Twins: Fine-scale laminates of martensite variants, see Fig. 2a and both sides of the interface at the center of Fig. 2b.

2. Habit planes: Almost sharp interfaces between austenite and a twin of martensites, where the twin refines as it approaches the interface, see Fig. 2a.

3. Second-order laminates, or twins within a twin: Essentially sharp interfaces between two different refining twins, see Fig. 2b.

4. Crossing second-order laminates: Two crossing interfaces between twins and pure phases, see for example Fig. 2c.

5. Wedges: Materials whose lattice parameters satisfy a certain relation can form a wedge of two martensite twins in austenite, see [6, Chapter 7.3.1] and Fig. 2d.

Furthermore, at least in Microstructures 1, 2 and 5, all observed interfaces form parallel to finitely many different hyperplanes relative to the crystal orientation. In this paper, we present a theorem characterizing all possible microstructures whose energy is comparable to that of a habit plane in the geometrically linear theory.

Fig. 2 a Optical micrograph of a habit plane with austenite on the right-hand side and twinned martensite on the left-hand side in a Cu–Al–Ni alloy undergoing cubic-to-orthorhombic transformations. Reprinted by permission from Springer Customer Service Centre GmbH [17]. b Optical micrograph of a second-order laminate in a Cu–Al–Ni alloy, by courtesy of C. Chu and R.D. James. c Optical micrograph of two crossing second-order laminates in an Indium–Thallium crystal. The bottom region is in the austenite phase. All other regions show twinned martensite variants, with the twinning in the left-hand region being almost parallel to the surface of the sample. Reprinted from [4], with permission from Elsevier. d Optical micrograph of a wedge in a Cu–Al–Ni alloy, by courtesy of C. Chu and R.D. James.

1.1 Contributions of the Mathematical Community

1.1.1 Modeling

Energy minimization was first used in the modeling of martensitic phase transformations by Khachaturyan, Roitburd and Shatalov [24,25,26, 36, 37] on the basis of linearized elasticity. This allowed the prediction of certain large-scale features of the microstructure, such as the orientation of interfaces between phases.

Variational models based on nonlinear elasticity go back to Ball and James [2, 3]. They formulated a model in which the microstructures correspond to minimizing sequences of energy functionals vanishing on

$$\begin{aligned} K \,{:}{=}\, \bigcup _{i=1}^m SO(3) U_i \end{aligned}$$

for finitely many suitable symmetric matrices \(U_i\) with \(i=1,\ldots ,m\) and \(m\in \mathbb {N}\). In their theory, the orientations of interfaces arise from a kinematic compatibility condition known as rank-one connectedness, see [6, Chapter 2.5]. For cubic-to-tetragonal transformations, Ball and James prove in an ansatz-free way that the fineness of the martensite twins in a habit plane is due to only certain mixtures of martensite variants being compatible with austenite. Their approach is closely related to the phenomenological (or crystallographic) theory of martensite independently introduced by Wechsler, Lieberman and Read [42] and Bowles and MacKenzie [8, 33].

A comparison of the nonlinear and the geometrically linear theories can be found in an article by Bhattacharya [5]. Formal derivations of the geometrically linear theory from the nonlinear one have been given by Kohn [28] and Ball and James [3]. A rigorous derivation via \(\varGamma \)-convergence has been given by Schmidt [41] with the limiting energy in general taking a more complicated form than the usually used piecewise quadratic energy densities.

1.1.2 Rigidity of Differential Inclusions

The interpretation of microstructure as minimizing sequences naturally leads to analyzing the differential inclusions

$$\begin{aligned} Du \in K, \end{aligned}$$

sometimes called the m-well problem, or variants thereof such as looking for sequences \(u_k\) such that \({\text {dist}}(Du_k,K) \rightarrow 0\) in measure. In fact, the statements of Ball and James are phrased in this way [2, 3]. A detailed discussion of these problems, which includes the theory of Young measures, has been provided by Müller [34].

However, differential inclusions in themselves are not accurate models: Using convex integration, Müller and Šverák [35] constructed, in two space dimensions, solutions of the differential inclusion \(Du \in SO(2) A \cup SO(2) B\) with \({\text {det}}(A) = {\text {det}}(B) = 1\) that exhibit a complex arrangement of phases, although one would naively only expect laminar solutions. Later, Conti, Dolzmann and Kirchheim [15] extended their result to three dimensions and the case of cubic-to-tetragonal transformations.

But Dolzmann and Müller [19] also noted that if the inclusion \(Du \in SO(2) A \cup SO(2) B\) is augmented with the information that the set \(\{Du \in SO(2)A\}\) has finite perimeter, then \(Du\) is in fact laminar. This result also holds in the case of cubic-to-tetragonal transformations, as shown by Kirchheim (see Footnote 1). There has been a series of generalizations including stresses [13, 16, 22, 31, 32]. However, these are more in the spirit of the geometric rigidity theorem due to Friesecke, James and Müller [21], since they rely on the perimeter being too small for lamination and as such do not give insight into the rigidity of twins. In the presence of a single rank-one connection and an additional anisotropic perturbation of the energy, this problem has more recently been overcome by Davoli and Friedrich [18] by exploiting a version of the geometric rigidity theorem for matrix fields with non-zero curl.

In contrast, the differential inclusion arising from the geometrically linear setting

$$\begin{aligned} e(u) \in \{e_1, e_2, e_3\}, \end{aligned}$$

where \(e_i\) for \(i=1,2,3\) are the linearized strains corresponding to the cubic-to-tetragonal transformation, see (4), is rigid in the sense that all solutions are laminates even without further regularizations, as proven by Dolzmann and Müller [19]. Quantifying this result, Capella and Otto [10, 11] proved that laminates are stable in the sense that if the energy (1) (including an interfacial penalization) is small, then the geometric structure of the configuration is close to a laminate. Additionally, there is either only austenite or only mixtures of martensite present. Capella and Otto also noted that for sequences with bounded energy such a result cannot hold due to a well-known branching construction of habit planes (Fig. 2a) given by Kohn and Müller [29, 30].

Therein, Kohn and Müller used a simplified scalar version of the geometrically linear model with surface energy to demonstrate that compatibility of austenite with a mixture of martensites only requires a fine mixture close to the interface so that the interfacial energy coarsens the twins away from the interface. Kohn and Müller also conjectured that the minimizers exhibit this so-called branching, which Conti [14] affirmatively answered by proving minimizers of the Kohn–Müller functional to be asymptotically self-similar.

In view of the results by Kohn and Müller and by Capella and Otto, it is natural to consider sequences with bounded energy in order to analyze the rigidity of branching microstructures.

1.1.3 Some Related Problems

So far, we have mostly discussed the literature describing the microstructure of single crystals undergoing cubic-to-tetragonal transformations. However, the variational framework can be used to address related problems, for which we highlight a few contributions as an exhaustive overview is outside the scope of this introduction.

An overview of microstructures arising in other transformations can be found in the book by Bhattacharya [6]. Rigorous results for cubic-to-orthorhombic transformations in the geometrically linear theory can be found in a number of works by Rüland [38, 39]. For the much more complicated cubic-to-monoclinic-I transformations with its twelve martensite variants, Chenchiah and Schlömerkemper [12] proved the existence of certain non-laminate microstructures in the geometrically linear case without surface energy.

For an overview of the available literature on polycrystalline shape memory alloys we refer the reader once again to Bhattacharya’s book [6, Chapter 13] and an article by Bhattacharya and Kohn [7].

Another problem is determining the shape of energy-minimizing inclusions of martensite with given volume in a matrix of austenite, for which scaling laws have been obtained by Kohn, Knüpfer and Otto [27] for cubic-to-tetragonal transformations in the geometrically linear setting.

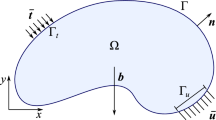

1.2 Definition of the Energy

In order to analyze the rigidity properties of branched microstructures we choose the geometrically linear setting, since the quantitative rigidity of twins is well understood due to the results by Capella and Otto [10, 11]. In fact, we continue to work with the same already non-dimensionalized functional, namely

where

Here \(u \in W^{1,2}({B_{1}\left( 0\right) }; \mathbb {R}^3)\) is the displacement and \(e(u) =\frac{1}{2}\left( Du + Du^T\right) \) denotes the strain. Furthermore, the partition into the phases is given by \(\chi _i \in L^\infty ({B_{1}\left( 0\right) } ; \{0,1\})\) for \(i=1,2,3\) with \( \sum _{i=1}^3\chi _i = 1\) and the strains associated to the phases are given by

In particular, we assume the reference configuration to be in the austenite state, but that the transformation has occurred throughout the sample, i.e., there is no austenite present. This simplifying assumption does rule out habit planes, see Fig. 2a, but a look at Fig. 2b suggests that we can still hope for an interesting result. Besides, the responsible mechanism for macroscopic rigidity is the rank-one connectedness of the average strains \(e(u_\eta ) \rightharpoonup e(u)\) in \(L^2\), which cannot distinguish between pure phases and mixtures.

The condition of the material being a shape memory alloy is encoded in the fact that \({\text {tr}}(e_i) = 0\) for all \(i=1,2,3\) as this corresponds to the transformation being volume-preserving.

Further simplifying choices are using equal isotropic elastic moduli with vanishing second Lamé constant for all martensite phases and penalizing interfaces by the total variation of \(D\chi _i\) for \(i=1,2,3\). Of course, as such it is unlikely that the model can give quantitatively correct predictions. Bhattacharya for example argues that assuming equal elastic moduli is not reasonable [5, Page 238].

We still expect our analysis to give relevant insight as we will for the most part prove compactness properties of generic displacements \(u_\eta \in W^{1,2}({{B_{1}\left( 0\right) }};\mathbb {R}^3)\) and partitions \(\chi _\eta \) for \(\eta >0\) such that

This regime is the appropriate one to analyze branching microstructures: On the one hand, (generalizations of) the Kohn–Müller branching construction of habit planes have bounded energy. On the other hand, the stability result of Capella and Otto [11] rules out branching in sequences with asymptotically vanishing energy by ensuring that in a strong topology there is either almost exclusively austenite or the configuration is close to a laminate. In other words, the branching construction implies that the stability result is sharp with respect to the energy regime as pointed out by Capella and Otto in their paper.

1.2.1 Compatibility Properties of the Stress-Free Strains

It is well known, see [6, Chapter 11.1], that for \(M_1\), \(M_2 \in \mathbb {R}^{3\times 3}\) and \(n \in {\mathbb {S}}^2\) the following two statements are equivalent:

- There exists a continuous function \(u:\mathbb {R}^3 \rightarrow \mathbb {R}^3\) such that for almost all \(x\in \mathbb {R}^3\) it holds that

$$\begin{aligned} e(u)(x) = {\left\{ \begin{array}{ll} M_1 &{}\quad {\text { if }} x \cdot n > 0,\\ M_2 &{}\quad {\text { if }} x \cdot n < 0, \end{array}\right. } \end{aligned} \tag{5}$$

  see Fig. 3a.

- The two strains are (symmetrically) rank-one connected in the sense that there exists \(a \in \mathbb {R}^3\) such that

$$\begin{aligned} M_1 - M_2 = a \odot n \,{:}{=}\, \frac{1}{2}( a \otimes n + n \otimes a) . \end{aligned}$$

Note that the condition is symmetric in \(a\) and \(n\); thus every rank-one connection generically gives rise to two possible normals. Additionally, as rank-one connectedness is also symmetric in \(M_1\) and \(M_2\), this allows for the construction of laminates.
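The symmetry of the condition in \(a\) and \(n\) can be checked by hand or with a few lines of code. The following is only a minimal sketch in plain Python with exact rational arithmetic; the helper names `outer`, `sym_outer` and `apply`, as well as the sample vectors, are illustrative assumptions and not notation from the text.

```python
from fractions import Fraction

def outer(a, n):
    """Tensor product a ⊗ n as a 3x3 nested list."""
    return [[Fraction(ai) * Fraction(nj) for nj in n] for ai in a]

def sym_outer(a, n):
    """Symmetrized tensor product a ⊙ n = (a ⊗ n + n ⊗ a) / 2."""
    an, na = outer(a, n), outer(n, a)
    return [[(an[i][j] + na[i][j]) / 2 for j in range(3)] for i in range(3)]

def apply(m, v):
    """Matrix-vector product m v."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]

# Hypothetical sample data; any pair of vectors would do.
a = (1, 2, 0)
n = (0, 0, 1)

# The strain jump a ⊙ n does not distinguish a from n, so both
# {x . n = 0} and {x . a = 0} are candidate interface planes.
assert sym_outer(a, n) == sym_outer(n, a)

# A gradient jump of the form a ⊗ n vanishes on vectors tangent to the
# plane {x . n = 0}, which is what permits a continuous displacement.
tangent = (1, -3, 0)  # satisfies tangent . n = 0
assert apply(outer(a, n), tangent) == [0, 0, 0]
```

Swapping the roles of `a` and `n` in the last check gives the second possible interface orientation.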

Fig. 3 a Geometry of an interface parallel to the plane \(\{x\cdot n =0\}\) in a laminate joining the strains \(M_1\) and \(M_2\). b Sketch relating the martensite strains with the cone C (dotted) of symmetrized rank-one matrices in the two-dimensional strain space S. Note that C is a union of three lines parallel to the edges of the triangle \(\mathscr {K}\).

In order to present the result of applying the rank-one connectedness condition to the case of cubic-to-tetragonal transformations notice that

Here, we call the two-dimensional space S strain space. It can be shown, either by direct computation or an application of [12, Lemma 3.1], that all rank-one directions in S are multiples of \(e_2 - e_1\), \(e_3 - e_2\) and \(e_1 - e_3\); this means that they are parallel to one of the sides of the equilateral triangle

spanned by \(e_1, e_2\) and \(e_3\) shown in Fig. 3b. In particular, the martensite strains are mutually compatible, but austenite is only compatible with certain convex combinations of martensites, which turn out to be \(\frac{1}{3} e_i + \frac{2}{3} e_j\) for \(i,j =1,2,3\) with \(i\ne j\).
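These compatibility statements can be verified numerically. The sketch below is hedged in two ways: it assumes the normalization \(e_1 = {\text {diag}}(-2,1,1)\), \(e_2 = {\text {diag}}(1,-2,1)\), \(e_3 = {\text {diag}}(1,1,-2)\), which is one common choice consistent with the trace-free condition but is not fixed by the text above, and it uses the standard criterion that a symmetric matrix is a symmetrized rank-one matrix if and only if its middle eigenvalue vanishes. All function names are illustrative.

```python
from fractions import Fraction

# ASSUMPTION: diagonals of the three martensite strains in one common
# normalization; the text only requires them to be trace-free.
E = {1: (-2, 1, 1), 2: (1, -2, 1), 3: (1, 1, -2)}

def is_sym_rank_one_diag(d):
    """True iff diag(d) equals a ⊙ n for some vectors a and n.

    Criterion: the ordered eigenvalues satisfy l_min <= 0 = l_mid <= l_max.
    For a diagonal matrix the eigenvalues are the diagonal entries.
    """
    lo, mid, hi = sorted(d)
    return lo <= 0 and mid == 0 and hi >= 0

def mix(lam, i, j):
    """Diagonal of the two-variant mixture lam * e_i + (1 - lam) * e_j."""
    return tuple(lam * x + (1 - lam) * y for x, y in zip(E[i], E[j]))

pairs = [(i, j) for i in E for j in E if i != j]

# The martensite strains are mutually compatible.
assert all(
    is_sym_rank_one_diag(tuple(x - y for x, y in zip(E[i], E[j])))
    for i, j in pairs
)

# Austenite (zero strain) is compatible with a two-variant mixture only
# for volume fractions 1/3 and 2/3, tested here on a grid of fractions.
grid = [Fraction(k, 12) for k in range(13)]
compatible = sorted(
    {lam for lam in grid for i, j in pairs if is_sym_rank_one_diag(mix(lam, i, j))}
)
assert compatible == [Fraction(1, 3), Fraction(2, 3)]
```

The grid of fractions is only a sanity check; the same conclusion follows by hand, since the mixture of \(e_1\) and \(e_2\) has diagonal \((1-3\lambda , 3\lambda -2, 1)\) under the assumed normalization.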

1.3 The Contributions of the Paper

We study the rigidity of branching microstructures due to “macroscopic” effects in the sense that we only look at the limiting volume fractions \(\chi _{i,\eta } \overset{*}{\rightharpoonup } \theta _i\) in \(L^\infty \) after passage to a subsequence, which completely determines the limiting strain \(e(u_\eta ) \rightharpoonup e(u)\) in \(L^2\).

Similarly to the result of Capella and Otto [11], our main result, Theorem 1, is local in the sense that we can classify the function \(\theta \) on a smaller ball \({B_{r}\left( 0\right) }\) of universal radius \(0<r<1\). As such, having posed the problem on \({B_{1}\left( 0\right) }\) instead of a more general domain does not present a significant restriction of the result. As the characterization of each of the four possible cases is a bit lengthy, we postpone a detailed discussion to Section 2.3. An important point is that we deduce all interfaces between different mixtures of martensites to be hypersurfaces whose normals are as predicted by the rank-one connectedness of the average strains on either side. In this respect our theorem improves on previously available ones, as they either explicitly assume the correct alignment of a habit plane, see e.g. Kohn and Müller [30], or require other ad-hoc assumptions; for example, Ball and James [2, Theorem 3] show habit planes to be flat under the condition that the austenite phase defines a connected set.

The broad strategy of our proof is to first ensure that in the limit the displacement satisfies the non-convex differential inclusion

$$\begin{aligned} e(u) \in \mathscr {K}, \end{aligned}$$

encoding that locally at most two variants are involved, see Definition (7) and Fig. 3, and then to classify all solutions. We stress that we do not need to assume any additional regularity in order to do so. In particular, the differential inclusion is rigid in the sense that it does not allow for convex integration solutions with extremely intricate geometric structure. To our knowledge this is the first instance of a rigidity result for a non-discrete differential inclusion in the framework of linearized elasticity.

The main idea is that “discontinuity” of e(u) and the differential inclusion \(e(u) \in \mathscr {K}\) balance each other: If \(e(u) \notin VMO\), see Definition 1, a blow-up argument making use of measures describing the distribution of values \(e(u)\in \mathscr {K}\), similar in spirit to Young measures, proves that the strain is independent of one direction. If \(e(u) \in VMO\) the differential inclusion gives us less information, but we can still prove that throughout some smaller ball \({B_{r}\left( 0\right) }\), \(r\in (0,1)\), only two martensite variants are involved by using an approximation argument. Finally, we classify all solutions which are independent of one direction.

The structure of the paper is as follows: in Section 2 we state and discuss our main theorem in detail. We then give an in-depth explanation of most auxiliary results required to prove Theorem 1 in Section 3. All proofs of the statements in Sections 2 and 3 are presented in Section 4 in the order of their appearance. Finally, Appendix A contains two lemmas of a technical nature, along with their proofs.

2 The Main Rigidity Theorem

Theorem 1

There exist universal radii \(r,\tilde{r} \in (0,1)\) such that the following holds: For \(n \in \mathbb {N}\) let \(\eta _n >0\) be a sequence with \(\lim _{n \rightarrow \infty } \eta _n = 0\). Let \(u_{\eta _n}\in W^{1,2}({B_{1}\left( 0\right) }; \mathbb {R}^3)\) and \(\chi _{\eta _n} \in L^\infty ({B_{1}\left( 0\right) } ; \{0,1\}^3)\) with \(\sum _{i=1}^3 \chi _{\eta _n,i} \equiv 1\) almost everywhere be sequences of displacements and partitions such that \(\limsup _{n\rightarrow \infty } E_{\eta _n}(u_{\eta _n},\chi _{\eta _n}) < \infty \) and such that there exist \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) and \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) with

in the limit \(n\rightarrow \infty \). Then for almost all \(x\in {B_{1}\left( 0\right) }\) we have \(\theta _i(x) \in [0,1]\) for \(i=1,2,3\),

Furthermore, all solutions to this differential inclusion are two-variant configurations, planar second-order laminates, planar checkerboards on \({B_{r}\left( 0\right) }\) or planar triple intersections on \({B_{\tilde{r}}\left( 0\right) }\), according to Definitions 2–6 below.

Note that after modifying \(u_{\eta _n}\) for \(n\in \mathbb {N}\) so that

any sequence with asymptotically bounded energy has subsequences (not relabeled) such that \(u_{\eta _n} \rightharpoonup u\) in \(W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) and \(\chi _{\eta _n} \overset{*}{\rightharpoonup } \theta \) in \(L^\infty ({B_{1}\left( 0\right) };\mathbb {R}^3)\) due to Korn’s and Poincaré’s inequalities.

The first part of the conclusion states that the volume fractions \(\theta _i\) for \(i=1,2,3\) act as barycentric coordinates for the triangle in strain space with vertices \(e_1\), \(e_2\) and \(e_3\). In terms of these, the differential inclusion \(e(u) \in \mathscr {K}\) boils down to locally only two martensite variants being present.
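To make the role of the barycentric coordinates concrete, the following minimal sketch converts between volume fractions and strains and tests the two-variant condition. It again assumes the normalization \(e_1 = {\text {diag}}(-2,1,1)\), \(e_2 = {\text {diag}}(1,-2,1)\), \(e_3 = {\text {diag}}(1,1,-2)\), which is not fixed by the text above; the function names `strain`, `barycentric` and `in_K` are hypothetical.

```python
from fractions import Fraction

# ASSUMPTION: diagonals of e_1, e_2, e_3 in one common normalization.
E = [(-2, 1, 1), (1, -2, 1), (1, 1, -2)]

def strain(theta):
    """Diagonal of sum_i theta_i e_i."""
    return tuple(sum(t * e[k] for t, e in zip(theta, E)) for k in range(3))

def barycentric(diag):
    """Recover theta from diag = sum_i theta_i e_i, assuming sum_i theta_i = 1.

    With the assumed normalization the k-th diagonal entry is 1 - 3 theta_k.
    """
    return tuple((1 - Fraction(m)) / 3 for m in diag)

def in_K(theta):
    """Two-variant condition: theta lies in the simplex and some theta_i is 0."""
    in_simplex = all(0 <= t <= 1 for t in theta) and sum(theta) == 1
    return in_simplex and any(t == 0 for t in theta)

half = Fraction(1, 2)
theta_a = (half, half, Fraction(0))   # mixture of variants 1 and 2
theta_b = (Fraction(0), half, half)   # mixture of variants 2 and 3
midpoint = tuple((s + t) / 2 for s, t in zip(theta_a, theta_b))

# The coordinates are recovered exactly from the strain.
assert barycentric(strain(theta_a)) == theta_a

# Both endpoints satisfy the inclusion, but their midpoint involves all
# three variants: the constraint set is not convex.
assert in_K(theta_a) and in_K(theta_b)
assert not in_K(midpoint)
```

The failing midpoint illustrates why weak limits, which average volume fractions, do not automatically satisfy the inclusion.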

In plain words, the classification of solutions states that

1. only two martensite variants are involved, see Definition 2,

2. or the volume fractions \(\theta \) only depend on one direction and look like a second-order laminate, see Definition 4,

3. or they are independent of one direction and look like a checkerboard of up to two second-order laminates crossing, see Definition 5,

4. or they are independent of one direction and macroscopically look like three second-order laminates crossing in an axis, see Definition 6.

Comparing this list to the list of observed microstructures in the introduction, we see that three crossing second-order laminates are missing. Indeed, we are unaware of them being mentioned in the currently available literature. One possible explanation for the absence of planar triple intersections in observations is that they could be an artifact of the linear theory. Another one is that their very rigid geometry, see Definition 6, could lead to them being unlikely to develop during the inherently dynamic process of microstructure formation.

Furthermore, we see that the theorem of course captures neither wedges (which are known to be missing in the geometrically linearized theory anyway [5]) nor habit planes due to austenite being absent. Unfortunately, an extension of the theorem including austenite does not seem tractable with the methods used here: The central step that allows us to classify all solutions of the differential inclusion is to show that most configurations are independent of some direction. Even those that do depend on all three variables have a direction in which they are very well-behaved, i.e., they are affine. However, with austenite being present this property is lost, as the following example shows:

Lemma 1

There exist solutions \(u: \mathbb {R}^3 \rightarrow \mathbb {R}^3\) of the differential inclusion \(e(u) \in \mathscr {K}\cup \{0\}\) such that e(u) has a fully three dimensional structure.

Note that Theorem 1 strongly restricts the geometric structure of the strain, even if the four cases exhibit varying degrees of rigidity. Therefore, we can interpret it as a rigidity statement for the differential inclusion \(e(u) \in \mathscr {K}\). For example, it can be used to prove that \(u(x) \equiv M \in \mathscr {K}\) is the only solution of the boundary value problem

with affine boundary data, for which convex integration constructions would give a staggering number of solutions with complicated geometric structures. This can be seen by transporting the decomposition into one-dimensional functions of Definitions 2–6 to the boundary using the fact that they are unique up to affine functions, see [11, Lemma 5].

2.1 Inferring the Microscopic Behavior

In order to properly interpret the various cases Theorem 1 provides, we first need a clear idea of precisely what information the local volume fractions contain. In principle, they share a downside of Young measures as descriptions of microstructures: They do not retain information about the microscopic geometric properties of the microstructures. In fact, the Young measures generated by finite energy sequences are fully determined by the volume fractions via the expression \(\sum _{i=1}^3\theta _i \delta _{e_i}\), since the Young measures concentrate on the matrices \(e_1\), \(e_2\) and \(e_3\), which span a non-degenerate triangle.

As every rank-one connection has two possible normals, see Equation (8), giving rise to two different twins, we cannot infer from the volume fractions which twin is used. Consequently, what looks like a homogeneous limit could in principle be generated by a patchwork of different twins. In fact, Fig. 4 shows an experimental picture of such a situation.

Additionally, without knowing which twin is present, the interpretation of changes in volume fractions is further complicated by the fact that there are at least three mechanisms which could be responsible:

1. If there is only one twin throughout \({B_{1}\left( 0\right) }\) then the volume fractions can vary freely in the direction of lamination because there are no restrictions on the thickness of martensite layers in twins apart from the very mild control coming from the interface energy.

2. If there is only one twin, the volume fractions may, perhaps somewhat surprisingly, vary perpendicularly to the direction of lamination in a sufficiently regular manner. Constructions exhibiting this behavior have been given by Conti [14, Lemma 3.1] and Kohn, Misiats and Müller (see Footnote 2) for the scalar Kohn–Müller model.

3. There is a jump in volume fractions across a habit plane or a second-order twin. As such a behavior costs energy, one would expect that it cannot happen too often. However, in the present setting we can only prove, roughly speaking, that the corresponding set of interfaces has Hausdorff dimension at most \(3-\frac{2}{3}\), which will be presented in a forthcoming paper (see Footnote 3).

2.2 Some Notation

The rank-one connections between the martensite strains are

where the possible normals are given by

Here, we use crystallographic notation, meaning we define \(\overline{1} \,{:}{=}\, -1\). In addition, we use round brackets “( )” for dual vectors, i.e., normals of planes, while square brackets “[ ]” are used for primal vectors, i.e., directions in real space.

These normals can be visualized as the surface diagonals of a cube aligned with the coordinate axes and with side lengths \(\frac{1}{\sqrt{2}}\), see Fig. 5a. We group them into three pairs according to which surface of the cube they lie in, i.e., according to the relation \(\nu _i\cdot E_i=0\), where \(E_i\) is the standard i-th basis vector of \(\mathbb {R}^3\): Let

Note that this grouping also appears in Equation (8). We will also frequently want to talk about the set of all possible twin and habit plane normals, which we will refer to by \(N\,{:}{=}\, N_1\cup N_2 \cup N_3\).
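The relation between the normals and the rank-one connections can be sanity-checked as follows. This is a sketch under stated assumptions: the strains are taken in the normalization \(e_1 = {\text {diag}}(-2,1,1)\), \(e_2 = {\text {diag}}(1,-2,1)\), \(e_3 = {\text {diag}}(1,1,-2)\), the normals are taken to be the surface diagonals \(\frac{1}{\sqrt{2}}(011)\), \(\frac{1}{\sqrt{2}}(01\overline{1})\) and their cyclic analogues, and the sign convention within each pair is left open, so the check is only up to sign. The names `M`, `connection` and the other helpers are illustrative.

```python
from fractions import Fraction

# ASSUMPTIONS: strain diagonals in one common normalization, and integer
# multiples m of the normals, nu = m / sqrt(2), grouped so that the
# vectors in M[i] are orthogonal to the i-th coordinate axis.
E = {1: (-2, 1, 1), 2: (1, -2, 1), 3: (1, 1, -2)}
M = {
    1: ((0, 1, 1), (0, 1, -1)),
    2: ((1, 0, 1), (1, 0, -1)),
    3: ((1, 1, 0), (1, -1, 0)),
}

def sym_outer(a, n):
    """Symmetrized tensor product a ⊙ n with exact rational entries."""
    return [[Fraction(a[i] * n[j] + n[i] * a[j], 2) for j in range(3)]
            for i in range(3)]

def diag_matrix(d):
    return [[Fraction(d[i]) if i == j else Fraction(0) for j in range(3)]
            for i in range(3)]

def scale(c, m):
    return [[c * x for x in row] for row in m]

def connection(i):
    """Check e_{i+1} - e_{i-1} = +-6 nu_i^+ ⊙ nu_i^- with cyclic indices."""
    j, k = i % 3 + 1, (i + 1) % 3 + 1
    diff = diag_matrix(tuple(x - y for x, y in zip(E[j], E[k])))
    m_plus, m_minus = M[i]
    # nu^+ ⊙ nu^- = (m^+ ⊙ m^-) / 2, hence 6 nu^+ ⊙ nu^- = 3 m^+ ⊙ m^-.
    rank_one = scale(3, sym_outer(m_plus, m_minus))
    return diff == rank_one or diff == scale(-1, rank_one)

# Grouping: every normal in the i-th pair is orthogonal to E_i.
assert all(m[i - 1] == 0 for i in M for m in M[i])

# Each pair of normals realizes the connection between the other two variants.
assert all(connection(i) for i in (1, 2, 3))
```

Under these assumptions the pair \(N_i\) connects exactly the two variants other than the \(i\)-th one.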

Throughout the paper we make use of cyclical indices 1, 2 and 3 corresponding to martensite variants whenever it is convenient.

Remark 1

An essential combinatorial property is that for \(i\in \{1,2,3\}\) and any \(\nu _i \in N_i\), \(\nu _{i+1} \in N_{i+1}\) there exists exactly one \(\nu _{i-1} \in N_{i-1}\) such that \(\{\nu _{i},\nu _{i+1},\nu _{i-1}\}\) is linearly dependent: Indeed, the linear relation is given by \(\nu _j \cdot d = 0\) for \(j \in \{1,2,3\}\) and a space diagonal

of the unit cube; see Fig. 5b. By virtue of \(|\nu \cdot \tilde{\nu }|=\frac{1}{2}\) for \(\nu \in N_i\) and \(\tilde{\nu } \in N_j\) with \(i\ne j\), the two vectors form \(60^{\circ }\) or \(120^{\circ }\) angles. In particular, we have for all \(\nu \in N_i\) and \(\tilde{\nu } \in N_j\) with \(i\ne j\), all \(x \in {\text {span}}\{\nu ,\tilde{\nu }\}\) and \(r>0\) that

Additionally, for every \(\nu \in N\) there exist precisely two \(d,\tilde{d} \in \mathscr {D}\) such that \(\nu \cdot d \ne 0\) and \(\nu \cdot \tilde{d} \ne 0\), and for every \(\tilde{\nu } \in N {\setminus } \{\nu \}\) we have \(\tilde{\nu } \cdot d = 0\) or \(\tilde{\nu } \cdot \tilde{d} = 0\). Furthermore, for all \(\nu \in N_i\) and \(\tilde{\nu } \in N_{i+1}\) with \(i\in \{1,2,3\}\) there exists a single \(d \in \mathscr {D}\) such that \(\nu \cdot d = \tilde{\nu } \cdot d =0\). In contrast, for each \(d\in \mathscr {D}\) we have \(\nu _i^+\cdot d=0\) and \(\nu _i^- \cdot d \ne 0\) or vice versa.
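The combinatorial statements of this remark involve only finitely many vectors and can therefore be checked by enumeration. The sketch below assumes that the normals are the normalized surface diagonals of the cube and that \(\mathscr {D}\) consists of the four space diagonals up to sign; to keep the arithmetic exact it works with the integer vectors \(m = \sqrt{2}\,\nu \), so that \(|\nu \cdot \tilde{\nu }| = \frac{1}{2}\) becomes \(|m\cdot \tilde{m}| = 1\). All names are hypothetical.

```python
# ASSUMPTION: integer multiples of the normals (nu = m / sqrt(2)) and the
# four space diagonals of the cube, each written up to sign.
N = {
    1: [(0, 1, 1), (0, 1, -1)],
    2: [(1, 0, 1), (1, 0, -1)],
    3: [(1, 1, 0), (1, -1, 0)],
}
D = [(1, 1, 1), (-1, 1, 1), (1, -1, 1), (1, 1, -1)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def det3(a, b, c):
    """Determinant of the 3x3 matrix with rows a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - a[1] * (b[0] * c[2] - b[2] * c[0])
            + a[2] * (b[0] * c[1] - b[1] * c[0]))

def cyc(i):
    """Map an integer to the cyclic index set {1, 2, 3}."""
    return (i - 1) % 3 + 1

def dependent_partners(i, nu_i, nu_next):
    """Normals in N_{i-1} that are linearly dependent on nu_i and nu_next."""
    return [nu for nu in N[cyc(i - 1)] if det3(nu_i, nu_next, nu) == 0]

def common_diagonals(vectors):
    """Space diagonals orthogonal to all given vectors."""
    return [d for d in D if all(dot(v, d) == 0 for v in vectors)]

for i in (1, 2, 3):
    for nu_i in N[i]:
        for nu_next in N[cyc(i + 1)]:
            # Angles of 60 or 120 degrees between normals of different pairs.
            assert abs(dot(nu_i, nu_next)) == 1
            # Exactly one third normal completes a linearly dependent triple,
            # and the triple is orthogonal to exactly one space diagonal.
            partners = dependent_partners(i, nu_i, nu_next)
            assert len(partners) == 1
            assert len(common_diagonals([nu_i, nu_next, partners[0]])) == 1

# Every normal fails to be orthogonal to precisely two space diagonals.
for nu in (v for group in N.values() for v in group):
    assert sum(1 for d in D if dot(nu, d) != 0) == 2

# For each space diagonal, exactly one normal of each pair is orthogonal to it.
for d in D:
    for i in (1, 2, 3):
        assert sum(1 for nu in N[i] if dot(nu, d) == 0) == 1
```

If any of the assumed vectors differed from those intended in the text by more than a sign, one of the assertions would fail.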

Fig. 5 a Sketch relating the normals \(\nu _{3}^+, \nu _3^- \in N_3\) of the gray planes and \(E_3\). Primal vectors are shown as dashed, dual vectors as continuous lines. The picture does not attempt to accurately capture the lengths of the vectors. b Sketch showing the linearly dependent normals \(\nu _1^+\), \(\nu _2^+\) and \(\nu _3^-\) spanning the gray plane. The point p indicates the intersection of the affine span of the space diagonal \([11\overline{1}] \in \mathscr {D}\), see definition (10), with the span of the normals.

Additionally, we will frequently want to express e(u) in terms of barycentric coordinates with respect to \(e_1\), \(e_2\) and \(e_3\), which are given by the function \(\theta : {B_{1}\left( 0\right) } \rightarrow [0,1]^3\) due to \(e(u) = \sum _{i=1}^3 \theta _i e_i\), see Theorem 1 or Lemma 2 below. For almost all \(x \in {B_{1}\left( 0\right) }\), the inclusion \(e(u)(x) \in \mathscr {K}\) can then be expressed as

Furthermore, for \(\nu \in N\), \(x\in \mathbb {R}^3\) and \(\alpha \in \mathbb {R}\) we will also set

to be the projection onto \({\text {span}} (\nu )\), respectively the plane normal to \(\nu \) containing \(\alpha \nu \). For \(x \in \mathbb {R}^3\) and \(r>0\) the symbol \({B_{r}\left( x\right) }\) denotes the corresponding three-dimensional ball, while for \(y \in \mathbb {R}^2\) the symbol \(B_r^{\,2}(y)\) denotes the two-dimensional ball. The essential infimum of a function \(h \in L^\infty (U)\) for \(U \subset \mathbb {R}^n\) is defined as \(\hbox {ess inf}_U h\, {:}{=}\, - \hbox {ess sup}_U - h\).

For the convenience of the reader, we also provide a definition of the space VMO(U) for an open Lipschitz domain \(U \subset \mathbb {R}^n\) for \(n\in \mathbb {N}\), which is modeled after the one given by Sarason [40] in the whole space case.

Definition 1

Let \(U \subset \mathbb {R}^n\) with \(n \in \mathbb {N}\) be an open domain and let \(f\in L^1(U)\). We say that the function f is of bounded mean oscillation, or \(f\in BMO(U)\), if we have

If we additionally have

then f is of vanishing mean oscillation, in which case we write \(f\in VMO(U)\).

It can be shown that at least for sufficiently nice sets U the space VMO is the BMO-closure of the continuous functions on U and as such it serves as a substitute for C(U) in our setting. Functions of vanishing mean oscillation need not be continuous, although they do share some properties with continuous functions, such as the “mean value theorem”, see Lemma 8 below. We stress that the uniformity in x of the convergence as \(r\rightarrow 0\) is crucial and cannot be omitted without changing the space, as can be proven by considering a function consisting of very thin spikes of height one clustering at some point.
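A one-dimensional toy computation illustrates how mean oscillation separates jumps from continuous behavior. The sketch assumes the usual notion of mean oscillation, namely the average of \(|f - \bar{f}|\) over an interval, where \(\bar{f}\) is the average of \(f\) there, and approximates it by a midpoint rule; the function `mean_oscillation` and the test functions are illustrative and not taken from the text.

```python
def mean_oscillation(f, center, r, samples=1000):
    """Midpoint-rule approximation of the mean oscillation of f on
    the interval (center - r, center + r)."""
    h = 2 * r / samples
    xs = [center - r + (k + 0.5) * h for k in range(samples)]
    values = [f(x) for x in xs]
    mean = sum(values) / samples
    return sum(abs(v - mean) for v in values) / samples

def step(x):
    """A jump at the origin: bounded, but not of vanishing mean oscillation."""
    return 1.0 if x > 0 else 0.0

def ramp(x):
    """A continuous function for comparison."""
    return x

for r in (1e-1, 1e-2, 1e-3):
    # The oscillation of the jump stays at 1/2 on every centered interval,
    # while that of the ramp is r / 2 and tends to zero.
    print(r, mean_oscillation(step, 0.0, r), mean_oscillation(ramp, 0.0, r))
```

The jump therefore violates the vanishing condition at a single point already, whereas the spike example mentioned above fails only because the convergence is not uniform in the center.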

Finally, for two real numbers \(s,t>0\) we use the notation \(s \lesssim t\) if there exists a universal constant \(C>0\) such that \(s \le C t\). In proofs, such constants may grow from line to line.

2.3 Description of the Limiting Configurations

In what follows we describe all types of configurations we can obtain as weak limits. We start with those in which globally only two martensite variants are involved.

Definition 2

Let \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) solve the differential inclusion \(e(u) \in \mathscr {K}\), i.e., there exists a measurable function \(\theta : {B_{1}\left( 0\right) } \rightarrow [0,1]^3\) such that for almost all \(x\in {B_{1}\left( 0\right) }\) we have \( e(u)(x) \equiv \sum _{i=1}^3 \theta _i(x) e_i\) and \(\theta (x) \in \widetilde{\mathscr {K}}\), see definition (12).

We say that the configuration e(u) is a two-variant configuration on \({B_{r}\left( 0\right) }\) with \(r>0\) if there exist \(i \in \{1,2,3\}\), \(\lambda \in \mathbb {R}\) and functions \(\smash {f_{\nu _i^+}, f_{\nu _i^-} }\in L^\infty (-r,r)\) for \(\nu \in N_i\) such that for almost all \(x \in {B_{r}\left( 0\right) }\) we have

For a definition of the normals \(\nu \) see Section 2.2.

An experimental picture of a two-variant configuration resolving the actual microstructure can be found in Fig. 4. In contrast, Fig. 6a only keeps track of the local volume fractions and indicates how they can vary in space. The deceptively similar overall geometric structure of both figures is due to the rank-one connections for the microscopic and macroscopic interfaces coinciding. This is also the reason why we cannot infer the microscopic structure from the limiting volume fractions. We can only attribute the affine change in \(x_i\) to Mechanism 2 from Section 2.1.

In the context of the other structures appearing in Theorem 1, two-variant configurations are best interpreted as their building blocks, since said structures typically consist of patches where only two martensite variants are involved. In the following, we will see that on these patches the microstructures are much more rigid than those in Fig. 6a as a result of the non-local nature of kinematic compatibility.

Apart from two-variant configurations, all others will only depend on two variables. We will call such configurations planar.

Definition 3

In the setting of Definition 2, a configuration e(u) is planar with respect to \(d \in \mathscr {D}\), see (10), on a ball \({B_{r}\left( 0\right) }\) with \(r>0\) if the following holds: For \(i\in \{1,2,3\}\) let \(\nu _i\) be the unique normal \(\nu _i \in N_i\) with \(\nu _i \cdot d = 0\), see Remark 1. Then for all \(i=1,2,3\) there exist functions \(f_{\nu _i}\in L^\infty (-r,r)\) and affine functions \(g_i:\mathbb {R}^3 \rightarrow \mathbb {R}\) with \(\partial _d g_i=0\) such that for almost all \(x\in {B_{r}\left( 0\right) }\) we have

There will be three cases of planar configurations, which at least in terms of their volume fractions look like a single second-order laminate, a “checkerboard” structure of two second order laminates crossing, or three single interfaces of second order laminates crossing in a common axis.

The first two cases are closely related to each other, the first one being almost contained in the second. However, the first case has slightly more flexibility away from macroscopic interfaces. Despite the caveat discussed in Section 2.1, we will name them planar second-order laminates.

Definition 4

In the setting of Definition 2, a configuration e(u) is a planar second-order laminate on a ball \({B_{r}\left( 0\right) }\) for \(r>0\) if it is planar and takes the following form: There exist an index \(i\in \{1,2,3\}\), \(\nu \in N_i\), \(A\subset (-r,r)\) measurable and a, \(b \in \mathbb {R}\) such that for almost all \(x \in {B_{r}\left( 0\right) }\) we have

A sketch of a planar second-order laminate can be found in Fig. 7, along with a matching experimental picture of a Cu–Al–Ni alloy, which, admittedly, undergoes a cubic-to-orthorhombic transformation.

Indeed, such configurations can be interpreted and constructed as limits of finite-energy sequences as follows, using Fig. 7 as a guide: for simplicity let us assume that A is a finite union of intervals, and that \(i=1\). Then on the interior of \(\{x\cdot \nu \in A\}\) the configuration will be generated by twins of variants 1 and 2, while on the interior of \(\{x\cdot \nu \in {A}^{\mathsf {c}}\}\), it will be generated by twins of variants 1 and 3. At interfaces, a branching construction on both sides will be necessary to join these twins in a second-order laminate. In order to realize the affine change in the direction of \(\nu \) we will need to combine Mechanisms 1 and 2 of Section 2.1 because \(\nu \) is neither a possible direction of lamination between variants 1 and 2 or variants 1 and 3, nor is it normal to one of them.

The second case consists of configurations in which two second-order laminates cross. In contrast to the first case, the strains are required to be constant away from macroscopic interfaces leading to only four different involved macroscopic strains.

Definition 5

In the setting of Definition 2, we will say that a configuration e(u) is a planar checkerboard on \({B_{r}\left( 0\right) }\) for \(r>0\) if it is planar and takes the following form: There exist \(i\in \{1,2,3\}\), \(A,B \subset (-r,r)\) measurable, \(a,b \ge 0 \) with \(a + b = 1\) and \(\nu _j \in N_j\) for \(j \in \{1,2,3\}{\setminus } \{i\}\) such that for almost all \(x \in {B_{r}\left( 0\right) }\) we have

a Sketch of a planar checkerboard with strains \(M_1\), ..., \(M_4\). The cross-section can be chosen such that the average strains are independent of the direction perpendicular to the cross-section. b Relation of the strains in strain space. c Checkerboard structure in an Indium–Thallium crystal. Note that here the bottom region is in the austenite phase. Reprinted from [4], with permission from Elsevier

For a sketch of such configurations, see Fig. 8. Again, we briefly discuss the construction of such limiting strains. On \(\{x\cdot \nu _{i+1} \in {A}^{\mathsf {c}}\}\cap \{ x\cdot \nu _{i-1} \in {B}^{\mathsf {c}}\}\) there is of course only the martensite variant i present. On all other patches there will be twinning and the macroscopic interfaces require branching constructions unless the interface and the twinning normal coincide, which can only happen if both strains lie on the same edge of \(\mathscr {K}\). In particular, on \(\{x\cdot \nu _{i+1} \in A,\, x\cdot \nu _{i-1} \in B\}\) there has to be branching towards all interfaces, i.e., the structure has to branch in two linearly independent directions.

Lastly, we remark on the case of three crossing second-order laminates.

Definition 6

In the setting of Definition 2, a configuration is called a planar triple intersection on \({B_{r}\left( 0\right) }\) for \(r>0\) if it is planar and the following holds: For \(i=1,2,3\) let \(\nu _i \in N_i\) and let \(\tilde{\nu }_i \in \{\nu _i,-\nu _i\}\) be oriented such that we have \(\tilde{\nu }_1 + \tilde{\nu }_2 + \tilde{\nu }_3 = 0\), see Remark 1. For all \(i=1,2,3\), there exist sets \(J_i \subset \mathbb {R}\) and \(x_0 \in {B_{r}\left( 0\right) }\) such that we have either

or

Furthermore, for all \(i=1,2,3\) there exist \(a,b_i \in \mathbb {R}\) with \(\sum _{i=1}^3 b_i =1\) such that for almost all \(x\in {B_{r}\left( 0\right) }\) we have

A sketch of a planar triple intersection can be found in Fig. 9. Note that due to the requirement \(x_0 \in {B_{r}\left( 0\right) }\), i.e., we ask the axis of intersection of the discontinuities to intersect \({B_{r}\left( 0\right) }\), the restriction of a planar triple intersection to a smaller ball does not necessarily yield a triple intersection again, which is why Theorem 1 is formulated for two different universal radii.

a Sketch of a planar triple intersection with \(a=0\) with average strains \(M_1\),..., \(M_6\). Again the cross-section is chosen such that the strains are independent of the direction perpendicular to the cross-section. The hatching indicates a possible choice for the microscopic twins, but does not encode the necessary branching. b Relation of the strains in strain space

There are a number of possible choices of microscopic twins for constructing triple intersections. We will only describe the simplest one here, which is depicted in Fig. 9a. Going around the central axis, the macroscopic interfaces alternate between being a result of Mechanism 1 from Section 2.1, namely varying the relative thickness of layers in a twin, and being a result of Mechanism 3, i.e., branching. Similarly to the case of second-order laminates, the affine changes require a combination of Mechanisms 1 and 2 on the individual patches in Fig. 9a.

3 Outline of the Proof

We will give the ideas behind each individual part of the proof of our main theorem in its own subsection. The contents of each are organized by increasing detail, so that the reader may skip to the next subsection once they are satisfied with the explanations given.

3.1 The Differential Inclusion

We first mention that the inclusion \(e(u) \in \mathscr {K}\) holds.

Lemma 2

For \(n \in \mathbb {N}\) let \(\eta _n >0\) be such that \(\lim _{n \rightarrow \infty } \eta _n = 0\). Consider sequences of displacements and partitions \(u_{\eta _n}\in W^{1,2}({B_{1}\left( 0\right) }; \mathbb {R}^3)\) and \(\chi _{\eta _n} \in L^\infty ({B_{1}\left( 0\right) }; \{0,1\}^3)\) with \(\sum _{i=1}^3 \chi _{\eta _n,i} =1\) almost everywhere such that \(\limsup _{n\rightarrow \infty } E_{\eta _n}(u_{\eta _n},\chi _{\eta _n}) < \infty \) and such that there exist \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) and \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) with

in the limit \(n\rightarrow \infty \). Then almost everywhere on \( {B_{1}\left( 0\right) }\) we have

The statement \(e(u) \equiv \sum _{i=1}^3 \theta _i e_i\) is an immediate consequence of the elastic energy vanishing in the limit, while the proof of the non-convex inclusion relies on the rescaling properties of the energy and the Capella–Otto rigidity result [11]. For \(x\in \mathbb {R}^2\) and \(r>0\) we will set

where \(\eta \) needs to be re-scaled as well due to it playing the role of a length scale, to obtain

The right-hand side consequently behaves better than just taking averages, which allows us to locally apply the result by Capella and Otto to get the statement.

3.2 Decomposing the Strain

Next, we link the convex differential inclusion

see also definition (6), to a decomposition of the strain into simpler objects, namely functions of only one variable and affine functions. Already Dolzmann and Müller [19] used the interplay of this decomposition with the non-convex inclusion \(e(u) \in \{e_1,e_2,e_3\}\) to get their rigidity result. In our case, it will however be more convenient to directly state the decomposition in terms of the barycentric coordinates \(\theta \) with respect to \(e_1\), \(e_2\) and \(e_3\). For all cyclical indices \(i=1,2,3\) the relation, valid almost everywhere in \({B_{1}\left( 0\right) }\),

ensures that both viewpoints are equivalent.

Lemma 3

There exists a universal \(r \in (0,1)\) with the following property: Let the displacement \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) and the function \(\theta \in L^\infty ({B_{1}\left( 0\right) };\mathbb {R}^3)\) almost everywhere on \({B_{1}\left( 0\right) }\) satisfy

Then for all \(\nu \in N\) and all cyclical indices \(i=1,2,3\) there exist \(f_\nu \in L^\infty (-r,r)\) and affine functions \(g_i : \mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x\in {B_{r}\left( 0\right) }\) we have

The only (marginally) new aspect of Lemma 3 compared to the previously known versions [19, Lemma 3.2] and [11, Proposition 3.9] is the statement \(f_\nu \in L^\infty \) for all \(\nu \in N\). We will thus only highlight the required changes to the proof of Capella and Otto [11, Proposition 3.9]. Essentially, the strategy here is to integrate the Saint–Venant compatibility conditions for linearized strains, which in our situation take the form of six two-dimensional wave equations, see Lemma 5. Thus it is not surprising that the decomposition is in fact equivalent to

being a symmetric gradient.
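
For orientation, the Saint–Venant conditions can be sketched as follows. On a simply connected domain in \(\mathbb {R}^3\), a symmetric matrix field E is a symmetric gradient if and only if, in the sense of distributions and for all indices \(i,j,k,l \in \{1,2,3\}\),

$$\begin{aligned} \partial _k \partial _l E_{ij} + \partial _i \partial _j E_{kl} - \partial _i \partial _l E_{jk} - \partial _j \partial _k E_{il} = 0. \end{aligned}$$

Assuming that the strains \(e_1\), \(e_2\), \(e_3\) are diagonal in the chosen coordinates (as is standard for the cubic-to-tetragonal transformation, but not restated in this section), the field \(E = \sum _{i=1}^3 \theta _i e_i\) is diagonal and only six scalar equations remain: for \(\{i,j,k\} = \{1,2,3\}\) we get

$$\begin{aligned} \partial _j \partial _k E_{ii}&= 0, \\ \partial _j^2 E_{ii} + \partial _i^2 E_{jj}&= 0. \end{aligned}$$

The first line is already a two-dimensional wave equation in characteristic coordinates. How the six equations are recombined into the precise form of Lemma 5 is not reproduced in this sketch.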

A central part of the proof of Lemma 3 is uniqueness up to affine functions of the decomposition [11, Lemma 3.8]. We can apply this result to characterize two-variant configurations as the only ones with \(\theta _i \equiv 0\) for some \(i =1,2,3\), i.e., as the only ones that indeed only combine two variants.

Corollary 1

Almost everywhere on \({B_{1}\left( 0\right) }\), let \(e(u) \in \mathscr {K}\) with \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) be such that the barycentric coordinates \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) satisfy \(\theta \in \widetilde{\mathscr {K}}\). Furthermore, for all \(\nu \in N\) and all cyclical indices \(i=1,2,3\) let there exist \(f_\nu \in L^\infty (-1,1)\) and affine functions \(g_i:\mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x \in {B_{1}\left( 0\right) }\) we have

If for some \(i \in \{1,2,3\}\) we have \(\theta _i \equiv 0\) a.e. on \({B_{1}\left( 0\right) }\), then the solution of the differential inclusion is a two-variant configuration on \({B_{1}\left( 0\right) }\) according to Definition 2.

Another very useful consequence of the decomposition (15) is that such functions have traces on hyperplanes as long as none of the individual one-dimensional functions are necessarily constant on them. See Fig. 10 for the geometry in a typical application.

Lemma 4

Let \(m,n,k,P \in \mathbb {N}{\setminus }\{0\}\) with \(n\ge 2\) and \(k<n\). Let \(\mathscr {C}\subset \mathbb {R}^m\) be a closed, convex set. For \(i=1,\ldots , P\) let \( \nu _i\in {\mathbb {S}}^{n-1}\) and \(f_i \in L^1_{\mathrm{loc}}( \mathbb {R}; \mathbb {R}^m) \) be such that \(F: \mathbb {R}^n \rightarrow \mathscr {C}\) for almost all \(x\in \mathbb {R}^n\) satisfies the decomposition

Furthermore, let \(V\subset \mathbb {R}^n\) be a k-dimensional subspace such that \(\nu _i \notin V^\perp \) for all indices \(i=1,\ldots , P\).

Then the decomposition (17) defines a locally integrable trace \(F|_V: V \rightarrow \mathscr {C}\), and for all \(\delta >0\) and \(\mathscr {H}^k\)-almost all \(x \in V\) we have

in the limit \(\delta \rightarrow 0\).

Finally, we give the wave equations constituting the Saint–Venant compatibility conditions.

Lemma 5

For \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) and \(\theta \in L^\infty ({B_{1}\left( 0\right) };\mathbb {R}^3)\) such that for almost all \(x\in {B_{1}\left( 0\right) }\) we have \(\sum _{i=1}^3 \theta _i(x) = 1\) and \( e(u)(x) = \sum _{i=1}^3 \theta _i(x) e_i\), the barycentric coordinates \(\theta \) distributionally satisfy the following wave equations:

3.3 Planarity in the Case of Non-trivial Blow-Ups

While the statements in the previous subsections either rely on rather soft arguments or were previously known, we now come to the main ideas of the paper. As \(\smash {\widetilde{\mathscr {K}}}\), see definition (12), is a connected set, there are no restrictions on varying single points continuously in \(\smash {\widetilde{\mathscr {K}}}\). However, the crucial insight is that two different points \(\theta ^{(1)}, \theta ^{(2)} \in \smash {\widetilde{\mathscr {K}}}\) with \(\smash {\theta ^{(1)}_1 = \theta ^{(2)} _1} >0\) are much more constrained.

To illustrate this rigidity, we first for simplicity assume that there exist functions \(f_1, f_2, f_3 \in L^\infty (-1,1)\) such that for almost all \(x \in {B_{1}\left( 0\right) }\) we have the decomposition

Furthermore, suppose that \(f_1\) is a BV-function with a jump discontinuity of size \(\delta f_1\) at \(x_1=0\) and that the other functions are continuous. Thus the blow-up of \(\theta \) at some point \((0,x')\in {B_{1}\left( 0\right) }\) takes two values \(\smash {\theta ^{(1)},\theta ^{(2)}} \in \widetilde{\mathscr {K}}\), which agree in their first component. Specifically, we have \(\smash {\theta ^{(1)}_1 = \theta ^{(2)}_1} =\theta _1(0,x')\). A look at Fig. 11 suggests that \(\theta _1(0,x')\) can take at most two values, which furthermore are independent of \(x'\). As it is a sum of two one-dimensional functions, some well-known, straightforward combinatorics imply that one of the two functions must be constant. Consequently \(\theta \) only depends on two directions.
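
The combinatorial step can be sketched as follows for functions with honest pointwise values; the almost-everywhere version would replace values by essential values, a simplification made only for this sketch. Let \(\varphi \) and \(\psi \) be functions of one variable such that \(\varphi (s) + \psi (t)\) takes at most two values as s and t vary independently. Suppose \(\varphi \) takes two values \(a \ne a'\) and \(\psi \) takes two values \(b \ne b'\). Then \(a+b \ne a'+b\), so these are the two admissible values. Since \(a + b' \ne a+b\) and \(a'+b' \ne a'+b\), we must have

$$\begin{aligned} a + b'&= a' + b, \\ a' + b'&= a + b. \end{aligned}$$

Subtracting the second identity from the first gives \(a - a' = a' - a\), i.e., \(a = a'\), a contradiction. Hence one of the two functions is constant.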

Illustration of the argument for two-valuedness of \(\theta _1\) near \(x_1= 0\). The length of the dotted line has to be \(3\sqrt{2}\,\, \delta f_1\), where \(\delta f_1 >0\) is the size of the jump of \(f_1\) at zero. Consequently, the function \(\theta _1\) can only take the two values 0 or \(1- \delta f_1\)

This can be adapted to our more complex decomposition (15), even without any a priori regularity of the one-dimensional functions. To this end, we require a topology for the blow-ups which respects the non-convex inclusion \(e(u) \in \mathscr {K}\), and a quantification of discontinuity for \(f_\nu \) which ensures that its blow-up is non-constant.

In order to keep the non-convexity, for \(x\in \mathbb {R}^3\) and \(\varepsilon > 0\) we consider the push-forward measures

in the limit \(\varepsilon \rightarrow 0\). This approach is very similar in spirit to using Young-measures, but without a further localization in the variable y. Positing that \(f_\nu \) does not have a constant blow-up along some sequence then means that \(f_\nu \) does not converge strongly to a constant on average, i.e., it does not converge to its average on average. If one allows the midpoints x of the blow-ups to depend on \(\varepsilon \), we see that this is equivalent to \(f_\nu \notin VMO\) according to Definition 1 given above.

The resulting statement is

Proposition 1

There exist universal radii \(r\in (0,\frac{1}{64})\) and \(\tilde{r} \in (0,r)\) with the following property: Almost everywhere on \({B_{1}\left( 0\right) }\), let \(e(u) \in \mathscr {K}\) with \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) be such that the barycentric coordinates \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) satisfy \(\theta \in \widetilde{\mathscr {K}}\). Furthermore, for all \(\nu \in N\) and all cyclical indices \(i=1,2,3\) let there exist \(f_\nu \in L^\infty (-1,1)\) and affine functions \(g_i:\mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x \in {B_{1}\left( 0\right) }\) we have

Additionally, let there exist \(i\in \{1,2,3\}\) and \(\nu \in N_i\) such that \(f_{\nu } \notin VMO(-\tilde{r}, \tilde{r})\). Then there exists \(d \in \mathscr {D}\), see (10), with \(d\cdot \nu =0\) such that the configuration is planar with respect to d on \({B_{r}\left( 0\right) }\) or we have \(\theta _i \equiv 0\) on \({B_{\tilde{r}}\left( 0\right) }\), i.e., a two-variant configuration.

Furthermore, in the first case there exist \(\alpha \in [-\tilde{r}, \tilde{r}]\), \(0<b<1\) and a Borel-measurable set \(B \subset H(\alpha ,\nu )\cap B_{1/8}(0)\) with \(\mathscr {H}^2(B \cap B_{r}(0))>0\) such that the trace \(\theta _i|_{H(\alpha ,\nu )}\) defined by Lemma 4 satisfies \(\theta _i|_{ H(\alpha ,\nu )} = b \chi _B \) on \(H(\alpha ,\nu )\cap B_{1/8}(0)\), \(\mathscr {H}^2\)-almost everywhere. (Here, Lemma 4 applies due to \(\tilde{\nu } \cdot \nu \ne 0\) for all \(\tilde{\nu } \in N_{i+1}\cup N_{i-1}\), which are precisely the normals of the decomposition (15) of \(\theta _i\), see Fig. 10.)

There is another slightly more subtle issue in the proof of Proposition 1: As already explained, our argument works by looking at a single plane at which we blow up. Consequently, we can only distinguish the two cases \(\theta _i \equiv 0\) and \(\theta _i \not \equiv 0\) on said hyperplane. Therefore we need a way of transporting the information \(\theta _i \equiv 0\) from the hyperplane to an open ball. Given our combinatorics this turns out to be the 3D analog of the question: “If \(F(x,y) = f(x)+g(y)\) is constant on the diagonal, is it constant on a non-empty open set?” Looking at the function \(F(x,y) = x - y\) one might think that the argument is doomed since F vanishes on the diagonal but clearly does not do us the favor of vanishing on a non-empty open set.

However, the fact that 0 is an extremal value for \(\theta _1\) saves us: If F is constant on the diagonal of a square and achieves its minimum there, then it has to be constant on the entire square, see also Fig. 12a. For later use we already state this fact in its perturbed form.

Lemma 6

Let \(f, g \in L^\infty (0,1)\) and \(c\in \mathbb {R}\) be such that \(f(x_1) + g(x_2) \ge c\) for almost all \(x \in (0,1)^2\) . Let \(\varepsilon \ge 0\) and let one of the following two statements be true:

1. For almost all \(x\in (0,1)^2\) the sum satisfies \(f(x_1) + g(x_2) \le c +\varepsilon \).

2. For almost all \(t\in (0,1)\) the sum satisfies \(f(t) + g(t) \le c + \varepsilon \).

With the essential infima as defined in Section 2.2, it then holds that

3. For almost every \(t\in (0,1)\) we have \(f(t) \le \hbox {ess inf}_{(0,1)} f + \varepsilon \), \(g(t) \le \hbox {ess inf}_{(0,1)} g + \varepsilon \) and \(c \le \hbox {ess inf}_{(0,1)} f + \hbox {ess inf}_{(0,1)} g \le c + \varepsilon \) .

If \(\varepsilon = 0\), then all three statements are equivalent.
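
The implication from the second statement to the third can be sketched in a few lines; this is an illustration of the mechanism and not necessarily the argument given later in the paper. Write \(m_f\) and \(m_g\) for the essential infima of f and g on (0, 1). For every \(\delta >0\) the sets \(\{f < m_f + \delta \}\) and \(\{g< m_g + \delta \}\) have positive measure, hence so does their product in \((0,1)^2\), on which \(c \le f(x_1) + g(x_2) < m_f + m_g + 2\delta \). Letting \(\delta \rightarrow 0\) gives \(c \le m_f + m_g\). If now \(f(t) + g(t) \le c + \varepsilon \) for almost all t, then for almost all t we get

$$\begin{aligned} m_f + m_g \le f(t) + g(t)&\le c + \varepsilon , \\ f(t) \le c + \varepsilon - g(t) \le c + \varepsilon - m_g&\le m_f + \varepsilon , \end{aligned}$$

and the bound for g follows by exchanging the roles of f and g. The lower bound \(f(x_1) + g(x_2) \ge c\) on the whole square is what rules out examples such as \(F(x,y) = x-y\).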

This statement can be lifted to three-dimensional domains. Its analogue states that in order to deduce that \(\theta _i\) for some \(i=1,2,3\) is constant and extremal, it is enough to know that the extremal value is attained on a line parametrized by \(l(t)\, {:}{=}\, x_0 + \sqrt{2}t E_i\) for \(t\in I\) and some interval \(I \subset \mathbb {R}\). Here, \(E_i\) is the i-th standard basis vector of \(\mathbb {R}^3\) and the restriction of \(\theta _i\) to the image of l is defined by Lemma 4. It will later be important that we have a precise description of the maximal set to which the information \(\theta _i = 0\) can be transported, which turns out to be the polyhedron

see Fig. 12b. The general strategy of the proof is described in Fig. 13.
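
The factor \(\sqrt{2}\) in the parametrization of l is a normalization, as the following short computation indicates. It assumes that the twinning normals are the usual ones for the cubic-to-tetragonal transformation, e.g. \(N_2 = \{\frac{1}{\sqrt{2}}(101), \frac{1}{\sqrt{2}}(\overline{1}01)\}\) and \(N_3 = \{\frac{1}{\sqrt{2}}(110), \frac{1}{\sqrt{2}}(\overline{1}10)\}\); they are defined in Section 2.2, which is not restated here. For \(i=1\) and \(\nu \in N_2 \cup N_3\) we then have \(\nu \cdot E_1 = \pm \frac{1}{\sqrt{2}}\) and thus

$$\begin{aligned} \nu \cdot l(t) = \nu \cdot x_0 + \sqrt{2}\, t \, \nu \cdot E_1 = \nu \cdot x_0 \pm t. \end{aligned}$$

Under this assumption the arguments of all one-dimensional functions entering \(\theta _1\) move with unit speed along l.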

a For \(f,g \in L^\infty (0,1)\), the information \(f(x_1) +g(x_2) = c\) along the dashed diagonal can be transported to the whole gray square provided \(f(x_1) +g(x_2) \ge c\). b Sketch of the polyhedron P with normals \(\nu _i^\pm \) for \(i=2,3\), which in the setting of Lemma 7 is the maximal set to which we can propagate the information \(\theta _1 \equiv 0\) or \(\theta _1\equiv 1\) on the dashed line l(I)

There is also a generalization of the one-dimensional functions being almost constant in two dimensions: In three dimensions, the one-dimensional functions are close to being affine on P in the sense that the inequality (23) holds. (Lemma 13, see Appendix A, ensures that then there exists an affine function which is uniformly close.) As we only need this part of the statement in approximation arguments we may additionally assume that the one-dimensional functions are continuous to avoid technicalities.

a In the setting of Lemma 7 we first transport the information \(\{\theta _1 \approx 0 \}\) from the dashed line l(I) to the gray plane \(H(0,\frac{1}{\sqrt{2}}(011))\cap P\) using the two-dimensional result. b In a second step, we use \(\{\theta _1 \approx 0 \}\) along another dashed line \(\tilde{l} (\tilde{I})\) parallel to \(E_1\) to propagate the information to \(H(\alpha ,\frac{1}{\sqrt{2}}(01\overline{1}))\cap P\) for all \(\alpha \in \mathbb {R}\)

The resulting statement is the following:

Lemma 7

There exists a universal radius \(r\in (0,1)\) with the following property: Let \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) be such that for all \(\nu \in N\) and all cyclical indices \(i=1,2,3\) there exist \(f_\nu \in L^\infty (-1,1)\) and affine functions \(g_i:\mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x \in {B_{1}\left( 0\right) }\) we have

Let \(i \in \{1,2,3\}\). Let \(I \subset \mathbb {R}\) be a closed interval and let \(x_0 \in \mathbb {R}^3\) such that \(x_0 + \sqrt{2} I E_i \subset {B_{r}\left( 0\right) }\). For \(t \in I\) we define \(l(t) \, {:}{=}\, x_0 + \sqrt{2} tE_i\) and the polyhedron P to be

see also Fig. 12b. For \(\varepsilon >0\) assume that either

Then it holds that \(P \subset {B_{1}\left( 0\right) }\) and in the respective cases we have

Furthermore, if additionally for all \(\nu \in N_{i+1} \cup N_{i-1}\) the one-dimensional functions \(f_\nu \) are continuous, then they all are almost affine in the sense that for all \((s,h,\tilde{h}) \in \mathbb {R}^3\) with \(s, s+ h, s+ \tilde{h}, s+h + \tilde{h} \in \nu \cdot l(I)\) for all \(\nu \in N_{i+1} \cup N_{i-1}\) we have

3.4 The Case \(f_\nu \in VMO\) for all \(\nu \in N\)

Having simplified the case where one of the one-dimensional functions is not of vanishing mean oscillation, we now turn to the case where all of them lie in VMO. The statement we will need to prove here is the following:

Proposition 2

There exists a universal constant \(r\in (0,1)\) such that the following holds: Almost everywhere on \({B_{1}\left( 0\right) }\), let \(e(u) \in \mathscr {K}\) with \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) be such that the barycentric coordinates \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) satisfy \(\theta \in \widetilde{\mathscr {K}}\). Furthermore, for all \(\nu \in N\) and all cyclical indices \(i=1,2,3\) let there exist \(f_\nu \in VMO(-1,1)\) and affine functions \(g_i:\mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x \in {B_{1}\left( 0\right) }\) we have

Then e(u) is a two-variant configuration on \({B_{r}\left( 0\right) }\) in the sense of Definition 2.

Remark 2

To fix ideas, let us first illustrate the argument in the case of continuous functions in the whole space: By the mean value theorem the case \(e(u) \in \{e_1,e_2,e_3\}\) is trivial. Therefore, we may suppose that there is a point \(x \in \mathbb {R}^3\) such that e(u)(x) lies strictly between two pure martensite strains, e.g., we have \(\theta _1(x) = 0\) and \(0< \theta _2(x), \theta _3(x) <1\), see Fig. 14. By continuity of \(\theta _2 \) and \(\theta _3\), the set \(\{\theta _1 = 0\}\) has non-empty interior, and, by the decomposition (15), any connected component of it should be a polyhedron P whose faces have normals lying in \(N_2\cup N_3\), see Fig. 15a. Additionally, continuity implies that

Unfortunately, on a face with normal in \(N_i\) for \(i=2,3\) only \(\theta _i\) will later be a well-defined function due to Lemmas 3 and 4 after dropping continuity. Therefore on such a face we can only use the above information in the form

In the setting of Remark 2: a Sketch of a connected component P of \(\theta _1^{-1}(0)\) with normals \(\nu _2^+\), \(\nu _3^-\) and \(\nu _3^+\). On the gray face we get the information \(\theta _2 \equiv 0\) or \(\theta _2 \equiv 1\). In particular, we get it along the line l, which is parallel to \(E_2\). b Sketch of the polyhedron Q that transports the information \(\theta _2 \equiv 0\) or \(\theta _2 \equiv 1\) along l to the inside of P

Using Lemma 7 we get a polyhedron Q that transports this information back inside P, see Fig. 15b. The goal is then to show that we can reach x in order to get a contradiction to e(u)(x) lying strictly between \(e_2\) and \(e_3\), which we will achieve by using the face of P closest to x.

In order to turn this string of arguments into a proof in the case \(f_\nu \in VMO(-1,1)\) for all \(\nu \in N\), the key insight is that non-convex inclusions and approximation by convolutions interact very nicely for VMO-functions. This elementary, if maybe a bit surprising, fact has previously been used in the degree theory for VMO-functions, see Brezis and Nirenberg [9, Inequality (7)], who attribute it to L. Boutet de Monvel and O. Gabber. For the convenience of the reader, we present a proof in Section 4.

Lemma 8

(L. Boutet de Monvel and O. Gabber) For \(n,d \in \mathbb {N}\) let \(U \subset \mathbb {R}^n\) be open and let \(K \subset \mathbb {R}^d\) be compact. Let \(f \in VMO(U)\) with \(f\in K\) almost everywhere. For \(\delta >0\) and \(x\in U\), let \(f_\delta (x)\, {:}{=}\, \fint _{{B_{\delta }\left( x\right) }} f(y) \, \mathrm {d}y\), where we extend f by 0 outside of U. Then \(f_\delta \) is continuous and we have that \({\text {dist}}(f_\delta , K) \rightarrow 0\) locally uniformly in U as \(\delta \rightarrow 0\).
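
The mechanism behind this statement can be sketched in one estimate, under the simplifying assumption \({B_{\delta }\left( x\right) } \subset U\) so that the extension by zero plays no role. Since \(f(y) \in K\) for almost all y, we have \({\text {dist}}(f_\delta (x),K) \le |f_\delta (x) - f(y)|\) for almost all \(y \in {B_{\delta }\left( x\right) }\), and averaging in y gives

$$\begin{aligned} {\text {dist}}(f_\delta (x), K) \le \fint _{{B_{\delta }\left( x\right) }} \left| f(y) - f_\delta (x) \right| \mathrm {d}y. \end{aligned}$$

The right-hand side is the mean oscillation of f on \({B_{\delta }\left( x\right) }\), which tends to zero uniformly in x for VMO-functions. For a function with a jump the estimate is of no use: for the sign function on \((-1,1)\) and \(K=\{-1,1\}\) we get \(f_\delta (0) = 0\) and \({\text {dist}}(f_\delta (0),K) = 1\) for all \(\delta \in (0,1)\).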

Unfortunately, formalizing the set \(\{\theta _{1,\delta } \approx 0\}\) in such a way that connected components are polyhedra is a bit tricky. We do get that they contain polyhedra on which the one-dimensional functions are close to affine ones, see Lemmas 7 and 13. (The latter can be found in Appendix A.) However, we do not immediately get the other inclusion: As the directions in the decomposition are linearly dependent, one of the one-dimensional functions deviating too much from its affine replacement does not translate into \(\theta _1\) deviating too much from zero.

We side-step this issue by first working on hyperplanes \(H(\alpha ,\nu _1^+)\) for some \(\alpha \in (-r,r)\) where \(r \in (0,1)\). In that case, the decomposition of \(\theta _1\) simplifies to two one-dimensional functions and thus we do get that connected components of \(\{\theta _{1,\delta } \approx 0\} \cap H(\alpha ,\nu _1^+)\) are parallelograms. The goal is then to prove that at least some of them do not shrink away in the limit \(\delta \rightarrow 0\). Making use of Lemma 7 we can go back to a full dimensional ball and get that the set \(\{\theta _1 =0\}\) has non-empty interior. This allows the argument for continuous functions to be generalized to VMO-functions.

3.5 Classification of Planar Configurations

It remains to exploit the two-dimensionality that was the result of Proposition 1. It allowed us to reduce the complexity of the decomposition (15) to three one-dimensional functions with linearly dependent normals and three affine functions. We first deal with the easier case where one of the one-dimensional functions is affine.

Lemma 9

There exists a universal number \(r\in (0,1)\) with the following property: Almost everywhere on \({B_{1}\left( 0\right) }\), let \(e(u) \in \mathscr {K}\) with \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) be such that the barycentric coordinates \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) satisfy \(\theta \in \widetilde{\mathscr {K}}\). Let the configuration be planar on \({B_{1}\left( 0\right) }\) with respect to the direction \(d \in \mathscr {D}\), and let the functions of one variable involved in the decomposition (14) be given by \(f_{\nu _i} \in L^\infty (-1,1)\) for \(\nu _i \in N_i\) with \(\nu _i \cdot d= 0\) for all \(i=1,2,3\). Furthermore, assume that there exists \(j\in \{1,2,3\}\) with \(\nu _j \in N_j\) such that the function \(f_{\nu _j}\) is affine on \((-1,1)\).

Then, on \({B_{r}\left( 0\right) }\), the configuration is a two-variant configuration, a planar second-order laminate or a planar checkerboard.

While the preceding lemma is mostly an issue of efficient book-keeping to reap the rewards of previous work, we now have to make a last effort to prove the rather strong rigidity properties of planar triple intersections:

Proposition 3

There exists a universal radius \(r\in (0,\frac{1}{2})\) with the following property: Almost everywhere on \({B_{1}\left( 0\right) }\), let \(e(u) \in \mathscr {K}\) with \(u \in W^{1,2}({B_{1}\left( 0\right) };\mathbb {R}^3)\) be such that the barycentric coordinates \(\theta \in L^\infty ({B_{1}\left( 0\right) };[0,1]^3)\) satisfy \(\theta \in \widetilde{\mathscr {K}}\). Let the configuration be planar on \({B_{1}\left( 0\right) }\) with respect to the direction \(d \in \mathscr {D}\) and let the functions of one variable involved in the decomposition (14) be given by \(f_{\nu _i} \in L^\infty (-1,1)\) for \(\nu _i \in N_i\) with \(\nu _i \cdot d= 0\) for all \(i=1,2,3\). Furthermore, let all \(f_{\nu _i}\) for \(i=1,2,3\) be non-affine on \(\left( -r,r \right) \).

Then the configuration is a two-variant configuration on \({B_{r}\left( 0\right) }\) or a planar triple intersection on \({B_{2r}\left( 0\right) }\).

Letting \(\pi _i(x)\, {:}{=} \, \nu _i \cdot x\) for \(i=1,2,3\) and \(x\in \mathbb {R}^3\), the idea is to prove for all \(i=1,2,3\) that there exist \(J_i \subset (-\frac{1}{2},\frac{1}{2})\) such that we have

i.e., they are product sets in suitable coordinates. Note that the condition \(e(u) \in \mathscr {K}\) almost everywhere is then equivalent to \(\bigcup _{i=1}^3 \theta _i^{-1}(0) = {B_{1}\left( 0\right) }\). Writing this in terms of \(J_i\) with \(i=1,2,3\) allows us to apply Lemma 10 below to conclude that \(J_i\) is an interval for all \(i=1,2,3\). The actual representation of the strain is then straightforward to obtain.

Lemma 10

Let \(\nu _1, \nu _2, \nu _3 \in {\mathbb {S}}^1\) be linearly dependent by virtue of \(\nu _1 + \nu _2 + \nu _3 = 0\). For \(i=1,2,3\) and \(x \in \mathbb {R}^2\) let \(\pi _i(x) = x\cdot \nu _i\). Let \(J_1,J_2,J_3 \subset \left( -8,8\right) \) be measurable such that

1. we have

$$\begin{aligned} \left| B^{\,2}_{8}(0) \cap \left( \pi _1^{-1}(J_1)\cap \pi _2^{-1}(J_2)\cap \pi _3^{-1}(J_3)\right) \right|&=0,\nonumber \\ \left| B^{\,2}_{8}(0) \cap \left( \pi _1^{-1}({J}^{\mathsf {c}}_1)\cap \pi _2^{-1}({J}^{\mathsf {c}}_2)\cap \pi _3^{-1}({J}^{\mathsf {c}}_3)\right) \right|&= 0, \end{aligned}$$(25)

2. and the two sets \(J_1\) and \(J_2\) neither have zero nor full measure in \(\left( -1,1 \right) \) in the sense that

$$\begin{aligned} 0< \left| J_1\cap \left( -1,1 \right) \right|&<2,\nonumber \\ 0< \left| J_2\cap \left( -1,1\right) \right|&<2. \end{aligned}$$(26)

Then there exists a point \(x_0 \in B^{\,2}_2(0)\) such that

or

To illustrate the proof let us first assume that \(J_1\) and \(J_2\) are intervals of matching “orientations”, e.g., we have \(J_1 = J_2 = (-\infty ,0)\), in which case Fig. 16a suggests that also \(J_3 = (-\infty ,0)\).
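This example can be checked directly from (25). The following is a sketch that ignores the localization to \(B^{\,2}_{8}(0)\) and uses only the identity \(\pi _1 + \pi _2 + \pi _3 \equiv 0\), which follows from \(\nu _1 + \nu _2 + \nu _3 = 0\):

$$\begin{aligned} \pi _1(x)<0,\ \pi _2(x)<0&\implies \pi _3(x) = -\pi _1(x) - \pi _2(x) > 0,\\ \pi _1(x)\ge 0,\ \pi _2(x)\ge 0&\implies \pi _3(x) = -\pi _1(x) - \pi _2(x) \le 0. \end{aligned}$$

As \(\nu _1\) and \(\nu _2\) are linearly independent, the map \(x \mapsto (\pi _1(x),\pi _2(x))\) is a linear bijection of \(\mathbb {R}^2\), so each positive value of \(\pi _3\) is attained on a set of positive length within the first region, and each negative value within the second. The first line of (25) therefore forces \(|J_3 \cap (0,\infty )| = 0\) and the second forces \(|{J}^{\mathsf {c}}_3 \cap (-\infty ,0)| = 0\), i.e., \(J_3 = (-\infty ,0)\) up to a null set.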

If they are not intervals of matching “orientations”, we will see that, locally and up to symmetry, more of \(J_1\) lies below, for example, the value 0 than above, while the opposite holds for \(J_2\). The corresponding parts of \(J_1\) and \(J_2\) are shown in Fig. 16b. One then needs to prove that sufficiently many lines \(\pi _3^{-1}(s)\) for parameters \(s\in \mathbb {R}\) close to 0 intersect the “surface” of \(\pi _1^{-1}(J_1) \cap \pi _2^{-1}(J_2)\), see Lemma 11 below. As a result less than half the parameters around 0 are contained in \(J_3\). The same argument for the complements ensures that also less than half of them are not contained in \(J_3\), which cannot be true.

Sketches illustrating the proof of Lemma 10. The arrows in the middle indicate the three linearly dependent directions \(\nu _1, \nu _2, \nu _3\). a In the setting of Lemma 10, the set \(\pi _3^{-1}(J_3)\) (hatched) may only intersect \(\pi _1^{-1}({J_1}^{\mathsf {c}}) \cap \pi _2^{-1}({J_2}^{\mathsf {c}})\) (light gray) and its complement may only intersect \(\pi _1^{-1}(J_1) \cap \pi _2^{-1}(J_2)\) (dark gray). b The line \(\pi _3^{-1}(s)\) intersects both a subset of \(\pi _1^{-1}(J_1) \cap \pi _2^{-1}(J_2)\) (dark gray) and a subset of \(\pi _1^{-1}({J_1}^{\mathsf {c}}) \cap \pi _2^{-1}({J_2}^{\mathsf {c}})\) (light gray)

To link intersecting lines to the “surface area” we use that our sets are of product structure, i.e., they can be thought of as unions of parallelograms, and that the intersecting lines are not parallel to one of the sides of said parallelograms. In the following and final lemma, we measure-theoretically ensure the line \(\pi _3^{-1}(s)\) for \(s\in \mathbb {R}\) intersects a product set \(\pi _1^{-1}(J_1) \cap \pi _2^{-1}(J_2)\) by asking

Lemma 11

Let \(\nu _1, \nu _2, \nu _3 \in {\mathbb {S}}^1\) with \(\nu _1 + \nu _2 + \nu _3 = 0\). Let \(J_1, J_2 \subset \mathbb {R}\) be measurable with \(|J_1|,|J_2|>0\). Then the set

is measurable and satisfies \(A \subset \pi _3\left( \pi _1^{-1}(J_1) \cap \pi _2^{-1}(J_2) \right) \), as well as \(|A|\ge |J_1| + |J_2|\).
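As a hedged sanity check on the lower bound, one can describe the superset explicitly; the defining formula of \(A\) is not used here, so the remark concerns only the set containing \(A\). Since \(\pi _3 = -(\pi _1 + \pi _2)\) and \(x \mapsto (\pi _1(x),\pi _2(x))\) is a linear bijection of \(\mathbb {R}^2\), we have

$$\begin{aligned} \pi _3\left( \pi _1^{-1}(J_1) \cap \pi _2^{-1}(J_2) \right)&= \left\{ -(s+t) : s \in J_1,\ t \in J_2 \right\} = -(J_1 + J_2),\\ J_1 = (a_1,b_1),\ J_2 = (a_2,b_2)&\implies -(J_1+J_2) = \left( -(b_1+b_2), -(a_1+a_2)\right) . \end{aligned}$$

In the interval case the superset has length exactly \(|J_1| + |J_2|\), so the bound \(|A| \ge |J_1| + |J_2|\) cannot be improved in general.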

4 Proofs

Proof of Theorem 1

We first use Lemma 2 to see that the limiting differential inclusion \(e(u)\in \mathscr {K}\) in fact holds. Furthermore, we obtain a function \(\theta \in L^\infty ({B_{1}\left( 0\right) };\mathbb {R}^3)\) almost everywhere satisfying \(e(u) = \sum _{i=1}^3 \theta _i e_i\) and \(\theta \in \widetilde{\mathscr {K}}\). Next, we apply Lemma 3 to deduce the existence of a universal radius \(r_1\in (0,1)\) such that for all \(\nu \in N\) and all cyclical indices \(i=1,2,3\) there exist \(f_\nu \in L^\infty (-r_1,r_1)\) and affine functions \(g_i :\mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x \in {B_{r_1}\left( 0\right) }\) we have

Let \( r_3 < r_2 \in (0,\frac{1}{2})\) be the two universal radii of Proposition 1. By a rescaling argument, we may suppose that \(r_2\) is also the universal radius of Proposition 3. Let \(r_4\, {:}{=}\, r_1r_2\) and \(r_5\, {:}{=}\, r_1r_3\), so that by another rescaling argument, the conclusions of Propositions 1 and 3 hold for the respective radii \(r_5<r_4\). If \(f_\nu \in VMO(-r_5,r_5)\) for all \(\nu \in N\), then a rescaling argument and Proposition 2 imply that there exists a universal \(r_6 \in (0,r_5)\) such that the solution of the differential inclusion is a two-variant configuration on \({B_{r_6}\left( 0\right) }\). If \(f_{\nu _i} \notin VMO(-r_5,r_5)\) for some \(\nu _i \in N_i\) and \(i \in \{1,2,3\}\) we can use the same rescaling argument and Proposition 1 to deduce that the configuration is planar on \({B_{r_4}\left( 0\right) }\) or a two-variant configuration on \({B_{r_5}\left( 0\right) }\).

We are thus left with classifying planar configurations on \({B_{r_4}\left( 0\right) }\), i.e., there exists \(d\in \mathscr {D}\) such that for all \(j=1,2,3\) there exist \(\nu _j \in N_j\) with \(d\cdot \nu _j = 0\), functions \(\tilde{f}_{\nu _j} \in L^\infty (-r_4,r_4)\) and affine functions \(\tilde{g}_j:\mathbb {R}^3 \rightarrow \mathbb {R}\) with \(\partial _d \tilde{g}_j = 0\) such that for almost all \(x\in {B_{r_4}\left( 0\right) }\) we have

If, additionally, one of the functions \(\tilde{f}_{\nu _j}\) for \(j\in \{1,2,3\}{\setminus } \{i\}\) is affine on \((-r_4,r_4)\), we can apply Lemma 9 after rescaling to see that the configuration is a two-variant configuration, a planar second-order laminate or a planar checkerboard on \({B_{r_7}\left( 0\right) }\) for some universal \(r_7>0\). Otherwise \(f_{\nu _j}\) is not affine on \((-r_4,r_4)\) for all \(j \in \{1,2,3\}{\setminus }\{i\}\), and \(f_{\nu _i}\) is not affine on \((-r_4,r_4)\) by virtue of \(f_{\nu _i} \not \in VMO(-r_5,r_5)\) and \(r_5<r_4\). Therefore, remembering that we can apply Proposition 3 with the radius \(r_4\), we obtain that the configuration is a two-variant configuration on \({B_{r_4}\left( 0\right) }\) or a planar triple intersection on \({B_{2r_4}\left( 0\right) }\).

Let \(r\, {:}{=}\, \min \{r_4,r_5,r_6,r_7\}\) and \(\tilde{r}\, {:}{=}\, 2r_4\). Restricting to the smaller ball \({B_{r}\left( 0\right) }\) where possible, we see that we have a two-variant configuration, a planar second-order laminate or a planar checkerboard on \({B_{r}\left( 0\right) }\), or a planar triple intersection on \({B_{\tilde{r}}\left( 0\right) }\), concluding the proof. \(\quad \square \)
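For orientation, the radii used in the proof can be collected as follows. The simplification of the minimum and the final inequality are observations not spelled out in the text; they follow from \(r_6< r_5 < r_4\) and from \(r_1 < 1\), \(r_2 < \frac{1}{2}\), respectively.

$$\begin{aligned}&r_1 \in (0,1), \quad r_3< r_2 \in (0,\tfrac{1}{2}),\\&r_4 = r_1 r_2, \quad r_5 = r_1 r_3 < r_4, \quad r_6 \in (0,r_5), \quad r_7 > 0,\\&r = \min \{r_4,r_5,r_6,r_7\} = \min \{r_6,r_7\}, \quad \tilde{r} = 2r_4 = 2 r_1 r_2 < r_1. \end{aligned}$$

In particular, \({B_{\tilde{r}}\left( 0\right) } \subset {B_{r_1}\left( 0\right) }\), so the larger ball on which the planar triple intersection is obtained still lies inside the ball on which the decomposition from Lemma 3 holds.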

4.1 Construction of a Fully Three-Dimensional Structure in the Presence of Austenite

Here we flesh out the previously announced example in Lemma 1. The idea is to construct a two-variant configuration which can be shifted in strain space to include austenite, see Fig. 18.

Proof of Lemma 1

Recall \(\nu _1^+= \frac{1}{\sqrt{2}} (011),\nu _1^- = \frac{1}{\sqrt{2}} (01\overline{1}) \) from Section 2.2 and let \(\nu _3 \, {:}{=}\, \nu _3^+ =\frac{1}{\sqrt{2}} (110)\). It is clear that \(\{\nu _1^+,\nu _1^-,\nu _3\}\) is a basis of \(\mathbb {R}^3\), see also Fig. 17. Let \(\chi _1^+, \chi _1^-, \chi _3: \mathbb {R}\rightarrow \{0,1\}\) be measurable characteristic functions. For \(x\in \mathbb {R}^3\), we define the volume fractions to be

which clearly a.e. satisfy \(0\le \theta _i \le 1\) for all \(i=1,2,3\) and \(\theta _1 + \theta _2 + \theta _3 \equiv 1\). As \(\{\nu _1^+,\nu _1^-,\nu _3\}\) constitutes a basis of \(\mathbb {R}^3\), the structure is indeed fully three-dimensional.
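The basis property can be confirmed by a short determinant computation, writing the three vectors as the rows of a matrix and expanding along the first column:

$$\begin{aligned} \det \begin{pmatrix} \nu _1^+ \\ \nu _1^- \\ \nu _3 \end{pmatrix} = \frac{1}{2\sqrt{2}} \det \begin{pmatrix} 0 &{} 1 &{} 1 \\ 0 &{} 1 &{} -1 \\ 1 &{} 1 &{} 0 \end{pmatrix} = \frac{1}{2\sqrt{2}} \cdot \left( 1\cdot (-1) - 1 \cdot 1 \right) = -\frac{1}{\sqrt{2}} \ne 0. \end{aligned}$$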

Straightforward case distinctions ensure that \(\theta _i = 0\) for some \(i=1,2,3\) or \(\theta _i =\frac{1}{3}\) for all \(i=1,2,3\) almost everywhere. Setting \(G\, {:}{=}\, \sum _{i=1}^3 \theta _i e_i\) we see that this implies \(G \in \mathscr {K}\cup \{0\}\) almost everywhere. A sketch of cross-sections through G on \(H(c,\nu _3)\) both with \(\chi _3(c) =0\) and \(\chi _3(c) =1\) is given in Fig. 18.
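The step from the case distinction to \(G \in \mathscr {K}\cup \{0\}\) can be spelled out as follows. This is a sketch assuming that the martensite strains from definition (4), which is not reproduced in this section, satisfy \(e_1 + e_2 + e_3 = 0\), as is standard for the cubic-to-tetragonal strains; it also uses \(0\le \theta _i \le 1\) and \(\theta _1 + \theta _2 + \theta _3 \equiv 1\).

$$\begin{aligned} \theta _i = 0 \text { for some } i \in \{1,2,3\}&\implies \theta \in \widetilde{\mathscr {K}} \text { and hence } G \in \mathscr {K},\\ \theta _1 = \theta _2 = \theta _3 = \tfrac{1}{3}&\implies G = \tfrac{1}{3}\left( e_1 + e_2 + e_3\right) = 0. \end{aligned}$$

The zero strain in the second case is the one associated with austenite.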

Sketch showing the basis \(\{\nu _1^+, \nu _1^-,\nu _3\}\) and, in gray, a plane normal to \(\nu _3\), parallel to which the cross-sections of Fig. 18 are chosen

a Cross-section of the construction for Lemma 1 with \(x\cdot \nu _3 = c\). If \(c\in \mathbb {R}\) is such that \(\chi _3(c) = 0\), then the strains \(M_1, M_2,M_3\) are as in Subfigure (b); if \(\chi _3(c) = 1\), they are as in Subfigure (c)

Finally, in order to identify G as the symmetric gradient of a displacement we define functions \(F_1^+,F_1^-, F_3 : \mathbb {R}\rightarrow \mathbb {R}\) such that for almost all \(s\in \mathbb {R}\) we have

For \(x\in \mathbb {R}^3\) we then set

The identity \(e(u) \equiv G\) a.e. on \(\mathbb {R}^3\) is straightforward to check. \(\quad \square \)

4.2 The Differential Inclusion

Proof of Lemma 2

For simplicity, we suppress the sequence parameter in the notation throughout the proof. Let \(\eta >0\). For Borel sets \(B\subset {B_{1}\left( 0\right) }\) we interpret the energies

as finite Radon measures on \({B_{1}\left( 0\right) }\).

Let \(y \in {B_{1}\left( 0\right) }\) and \(r>0\) be such that \({B_{r}\left( y\right) }\subset {B_{1}\left( 0\right) }\). We rescale \({B_{r}\left( y\right) }\) to the unit ball by setting \(\hat{\eta }\, {:}{=} \,\frac{\eta }{r}\), and defining \(\hat{u}_{\hat{\eta }} \in W^{1,2}({B_{1}\left( 0\right) }; \mathbb {R}^3)\) and \(\hat{\chi }_{\hat{\eta }} \in L^\infty ( {B_{1}\left( 0\right) } ;\{0,1\}^3)\) with \(\sum _{i=1}^3 \hat{\chi }_{\hat{\eta }, i}=1 \) a.e. for \(\hat{x} \in {B_{1}\left( 0\right) } \) to be

By the Capella-Otto rigidity result [11, Theorem 2.2] there exists a universal radius \(0<s<1\) such that

The energy of the rescaled functions is

so that rescaling back to \({B_{r}\left( y\right) }\), we get

After passing to a subsequence (not relabeled), we have \(E_\eta {\mathop {\rightharpoonup }\limits ^{*}} E\) as Radon measures in the limit \(\eta \rightarrow 0\). Consequently, weak lower semi-continuity of the \(L^1\)-norm and upper semi-continuity of the total variation on compact sets imply

For \(T\, {:}{=}\, \left\{ y \in {B_{1}\left( 0\right) } : \limsup _{r\rightarrow 0} r^{-3 + \frac{2}{3}}E\left( \overline{{B_{r}\left( y\right) }}\right) >0\right\} \) and for all \(\varepsilon >0\) we get \(\mathscr {H}^{3-\frac{2}{3}+\varepsilon }(T) = 0\) by [1, statement (2.40)]. Using [1, Theorem 2.49 (iii) and Theorem 2.53], along with Lebesgue point theory, we thus obtain for almost all \(y \in {B_{1}\left( 0\right) }\)

\(\square \)

4.3 Decomposing the Strain

Proof of Lemma 3

First we notice that for all \(i=1,2,3\) and almost all \(x\in {B_{1}\left( 0\right) }\) the relation \(e(u)(x) = \sum _{j=1}^3 \theta _j(x) e_j\), the definition (4) of the strains \(e_j\) and the assumption \(\sum _{j=1}^3 \theta _j(x) = 1\) imply

Therefore, we only have to argue that for all \(i=1,2,3\) a decomposition for \(e(u)_{ii}\) analogous to Equation (15) holds.

The remaining proof is essentially a translation of the proofs of Capella and Otto [11, Lemma 3.7 and Proposition 3.9] into our setting. To this end, we use the “dictionary”

where the left-hand side shows our objects and the right-hand side shows the corresponding ones of Capella and Otto. The two main changes are the following:

1. In our case all relevant second mixed derivatives vanish (see Lemma 5), instead of being controlled by the energy. Furthermore, whenever Capella and Otto refer to their “austenitic result”, we just have to use the fact that \(e(u)_{11} + e(u)_{22} + e(u)_{33} \equiv 0\).

2. We need to check at every step that boundedness of all involved functions is preserved.

We will briefly indicate how boundedness of all functions is ensured. The functions in [11, Lemma 3.7] are constructed by averaging in certain directions. This clearly preserves boundedness. The proof of [11, Proposition 3.9] works by applying pointwise linear operations to all functions, which again preserves boundedness, and by identifying certain functions as being affine, which are also bounded on the unit ball. \(\quad \square \)

Proof of Corollary 1

By symmetry we can assume \(i=1\). Applying [11, Lemma 3.8] to \(\theta _1\) we see that for every \(x\in {B_{1}\left( 0\right) }\) there exists \(r>0\) such that the functions \(y \mapsto f_\nu (y\cdot \nu )\) for \(\nu \in N_2 \cup N_3\) are affine on the ball \({B_{r}\left( x\right) }\). Consequently, they are affine on \({B_{1}\left( 0\right) }\). Thus we can find affine functions \(\tilde{g}_2, \tilde{g}_3 : \mathbb {R}^3 \rightarrow \mathbb {R}\) such that for almost all \(x\in {B_{1}\left( 0\right) }\) the decomposition (16) reduces to

As the vectors \(\nu _1^+\) and \(\nu _1^-\) form a basis of the plane \(H(0,E_1)\) defined in (13), we can find functions \(\tilde{f}_{\nu _1^+}, \tilde{f}_{\nu _1^-} \in L^\infty (-1,1)\), an affine function \(\hat{g}_3 :\mathbb {R}^3 \rightarrow \mathbb {R}\) and \(\lambda \in \mathbb {R}\) such that for almost all \(x\in {B_{1}\left( 0\right) }\) we have

Therefore, for almost all \(x\in {B_{1}\left( 0\right) }\) we have \(\hat{g}_3(x) = -\lambda x_1\) due to the assumption \(\theta (x)\in \widetilde{\mathscr {K}}\) in the form of \(\sum _{i=1}^3\theta _i(x)= 1\), and thus the decomposition simplifies to

\(\square \)

Proof of Lemma 4

For \(t \in \mathbb {R}\) and \(\delta >0\) let

For all \(x\in V\) and all \(\delta >0\) we have that