Abstract

In this paper we deal with a reaction–diffusion equation in a bounded interval of the real line with a nonlinear diffusion of Perona–Malik type and a balanced bistable reaction term. Under very general assumptions, we study the persistence of layered solutions, showing that it strongly depends on the behavior of the reaction term close to the stable equilibria \(\pm 1\), described by a parameter \(\theta >1\). If \(\theta \in (1,2)\), we prove the existence of steady states oscillating between (and touching) \(\pm 1\), called compactons, while in the case \(\theta =2\) we prove the presence of metastable solutions, namely solutions with a transition layer structure which is maintained for an exponentially long time. Finally, for \(\theta >2\), solutions with an unstable transition layer structure persist only for an algebraically long time.


1 Introduction

The goal of this paper is to investigate the persistence of phase transition layer solutions to the reaction–diffusion equation

$$\begin{aligned} u_t=Q(\varepsilon ^2u_x)_x-F'(u), \end{aligned}$$(1.1)

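where \(u=u(x,t): [a,b]\times (0,+\infty )\rightarrow \mathbb {R}\), complemented with homogeneous Neumann boundary conditions

$$\begin{aligned} u_x(a,t)=u_x(b,t)=0, \qquad t>0, \end{aligned}$$(1.2)

and initial datum

$$\begin{aligned} u(x,0)=u_0(x), \qquad x\in [a,b]. \end{aligned}$$(1.3)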

In (1.1) \(\varepsilon >0\) is a small parameter, \(Q:\mathbb {R}\rightarrow \mathbb {R}\) is a diffusion flux of Perona–Malik type [21], while \(F:\mathbb {R}\rightarrow \mathbb {R}\) is a double well potential with wells of equal depth. More precisely, we assume that \(Q\in C^1(\mathbb {R})\) satisfies

for all \(s\in \mathbb {R}\) and that there exists \(\kappa >0\) such that

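The prototype examples we have in mind are

$$\begin{aligned} Q(s)=\frac{s}{1+s^2} \qquad \text{ and } \qquad Q(s)=s\,e^{-s^2}, \end{aligned}$$(1.6)

which satisfy assumptions (1.5) with \(\kappa = 1\) and \(\kappa = \frac{1}{\sqrt{2}}\), respectively (see the left-hand picture of Fig. 1).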
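As a quick sanity check of the thresholds \(\kappa \), the following Python sketch takes the two prototype fluxes to be \(Q(s)=s/(1+s^2)\) and \(Q(s)=s\,e^{-s^2}\). These are the classical Perona–Malik choices, and identifying them with (1.6) is our assumption. The sketch verifies that \(Q'\) is positive just below \(\kappa \), vanishes at \(\kappa \) and is negative just above it. All function names are illustrative.

```python
import math

# Prototype Perona-Malik fluxes (assumed to be the examples in (1.6)).
def q_rational(s: float) -> float:
    return s / (1.0 + s * s)

def q_gauss(s: float) -> float:
    return s * math.exp(-s * s)

# Closed-form derivatives Q'(s), i.e. the diffusion coefficients.
def dq_rational(s: float) -> float:
    return (1.0 - s * s) / (1.0 + s * s) ** 2

def dq_gauss(s: float) -> float:
    return (1.0 - 2.0 * s * s) * math.exp(-s * s)

def central_diff(f, s: float, h: float = 1e-6) -> float:
    """Second-order finite-difference approximation of f'(s)."""
    return (f(s + h) - f(s - h)) / (2.0 * h)

def sign_pattern(dq, kappa: float, h: float = 1e-3) -> tuple:
    """(Q' > 0 just below kappa, Q'(kappa) == 0 up to rounding, Q' < 0 just above kappa)."""
    return (dq(kappa - h) > 0.0, abs(dq(kappa)) < 1e-12, dq(kappa + h) < 0.0)

cases = [("s/(1+s^2)", q_rational, dq_rational, 1.0),
         ("s*exp(-s^2)", q_gauss, dq_gauss, 1.0 / math.sqrt(2.0))]
for name, q, dq, kappa in cases:
    # Cross-check the closed-form derivative against a finite difference.
    assert abs(dq(0.37) - central_diff(q, 0.37)) < 1e-8
    print(name, "kappa =", round(kappa, 6), "pattern:", sign_pattern(dq, kappa))
```

Both rows should report the pattern (True, True, True).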

Fig. 1 In the left-hand picture we depict the two prototype examples for the diffusion Q; in the right-hand picture there are several plots of the potential function (1.10) for different choices of the parameter \(\theta \)

Regarding the reaction term, we require that the potential \(F\in C^1(\mathbb {R})\) satisfies

and that there exist constants \(0<\lambda _1\le \lambda _2\), \(\eta >0\) and \(\theta >1\) such that:

Therefore, (1.7) ensures that F is a double well potential with wells of equal depth in \(u=\pm 1\) and (1.8) describes the behavior of F close to the minimum points. In particular, notice that by integrating (1.8) and using (1.7), we obtain

The simplest example of potential F satisfying (1.7)–(1.8) is

$$\begin{aligned} F(u)=\frac{1}{2\theta }|1-u^2|^\theta , \end{aligned}$$(1.10)

which is depicted in Fig. 1 for different choices of \(\theta >1\). It is worth mentioning that when \(\theta =2\) in (1.10), we obtain the classical double well potential \(F(u)=\frac{1}{4}(1-u^2)^2\), which, in particular, satisfies \(F''(\pm 1)>0\); on the other hand, if \(\theta \in (1,2)\) the second derivative of the potential (1.10) blows up as \(u\rightarrow \pm 1\), while for \(\theta >2\) we have the degenerate case \(F''(\pm 1)=0\). Finally, notice that (1.7) implies that the reaction term \(f=-F'\) satisfies \(\displaystyle \int _{-1}^1 f(s)\,ds=0\), which is why we call f a balanced bistable reaction term.
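The trichotomy \(F''(\pm 1)=+\infty \), \(F''(\pm 1)>0\), \(F''(\pm 1)=0\) can be checked directly on an explicit potential. The sketch below assumes the form \(F(u)=|1-u^2|^\theta /(2\theta )\), which reduces to \(\frac{1}{4}(1-u^2)^2\) for \(\theta =2\). It uses the closed-form second derivative valid for \(|u|<1\), namely \(F''(u)=-(1-u^2)^{\theta -1}+2(\theta -1)u^2(1-u^2)^{\theta -2}\), and evaluates it at \(u=1-\delta \) for decreasing \(\delta \).

```python
import math

def F(u: float, theta: float) -> float:
    """Double well potential, assumed of the form |1 - u^2|^theta / (2 theta)."""
    return abs(1.0 - u * u) ** theta / (2.0 * theta)

def F_second(u: float, theta: float) -> float:
    """Closed-form F''(u) for |u| < 1, from F'(u) = -u (1 - u^2)^(theta - 1)."""
    w = 1.0 - u * u
    return -w ** (theta - 1.0) + 2.0 * (theta - 1.0) * u * u * w ** (theta - 2.0)

def fd_second(u: float, theta: float, h: float = 1e-4) -> float:
    """Central finite-difference approximation of F''(u), used as a cross-check."""
    return (F(u + h, theta) - 2.0 * F(u, theta) + F(u - h, theta)) / h ** 2

# Sanity check of the closed form away from the wells.
assert abs(F_second(0.5, 1.5) - fd_second(0.5, 1.5)) < 1e-5

for theta in (1.5, 2.0, 3.0):
    row = [F_second(1.0 - d, theta) for d in (1e-2, 1e-4, 1e-6)]
    print(f"theta={theta}: F''(1-delta) =", ["%.3e" % v for v in row])
```

The rows behave differently as \(\delta \) shrinks:

- \(\theta =1.5\): the values grow without bound.
- \(\theta =2\): the values approach \(F''(1)=2\).
- \(\theta =3\): the values tend to zero.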

The competition between a balanced reaction term satisfying the additional assumption \(F''(\pm 1)>0\) and a classical linear diffusion is described by the celebrated Allen–Cahn equation [1], which can be obtained from (1.1) by choosing \(Q(s)=s\) and reads as

$$\begin{aligned} u_t=\varepsilon ^2u_{xx}-F'(u). \end{aligned}$$(1.11)

This model has been extensively studied since the early works [3, 4, 13]. It is well known that, when the diffusion coefficient \(\varepsilon >0\) is very small, the solution of (1.11) subject to (1.2)–(1.3) exhibits a peculiar phenomenon, known in the literature as metastability. If the initial datum has a transition layer structure, that is, \(u_0\) is close to a step function taking values in \(\{\pm 1\}\) and has sharp transition layers, then the corresponding solution evolves very slowly in time. The layers move towards one another or towards the endpoints of the interval (a, b) at an extremely low speed. Once two layers are close enough, or one of them is sufficiently close to a or b, they disappear quickly, after which the solution again enters a slow motion regime. This phenomenon repeats until all the transitions disappear and the solution reaches a stable configuration, given by one of the two stable equilibria \(u=\pm 1\). A rigorous description of such metastable dynamics first appeared in the seminal work [4]. In particular, it is proved there that the time needed for the annihilation of the closest layers is of order \(\exp (Al/\varepsilon )\), where \(A:=\min \left\{ F''(\pm 1)\right\} >0\) and l is the distance between the layers. Therefore, the dynamics strongly depends on the parameter \(\varepsilon >0\), and the evolution of the solution is extremely slow when \(\varepsilon \rightarrow 0^+\). Moreover, the assumption \(F''(\pm 1)>0\) is necessary for metastability: almost 25 years after the publication of [3, 4, 13], the authors of [2] proved that in the degenerate case \(F''(\pm 1)=0\) the exponentially small speed of the layers is replaced by an algebraic upper bound.
On the other hand, the slow motion phenomena described above appear only for potentials \(F\in C^2(\mathbb {R})\). In the case of a potential of the form (1.10) with \(\theta \in (1,2)\), there exist stationary solutions to (1.11)–(1.2) that attain the values \(\pm 1\), with an arbitrary number of layers located anywhere inside the interval (a, b); for details see [8] or [10]. In addition, [10] considers a more general equation than (1.11), in which the linear diffusion \(u_{xx}\) is replaced by the (nonlinear) p-Laplace operator \((|u_x|^{p-2}u_x)_x\). It is shown there that the aforementioned phenomena strongly rely on the interplay between the parameters \(p,\theta >1\):

- there exist stationary solutions with a transition layer structure for any \(\theta \in (1,p)\);
- the metastable dynamics appears in the case \(\theta =p\);
- the solutions exhibit an algebraic slow motion for any \(\theta>p>1\).

The main novelty of our work consists in considering a general function Q satisfying (1.4)–(1.5), with the purpose of extending the aforementioned results to the reaction–diffusion model (1.1). The choice of a function Q satisfying (1.4)–(1.5) is inspired by [21], where the authors introduced the so-called Perona–Malik equation (PME)

$$\begin{aligned} u_t=Q(u_x)_x, \end{aligned}$$(1.12)

with Q as in (1.6), to describe noise reduction and edge detection of digitized images. To be more precise, the authors of [21] consider the multi-dimensional version of (1.12) in the cylinder \(\left\{ (x,t)\in \mathbb {R}^3 \, : \, x\in \Omega ,\, t\ge 0\right\} \), where \(\Omega \subset \mathbb {R}^2\) is a bounded open set, with initial datum \(u(x,0)=u_0(x)\) and homogeneous Neumann boundary conditions on \(\partial \Omega \). The initial datum \(u_0\) represents the brightness (or the grey level) of a picture to be denoised. It is numerically shown that the evolution according to (1.12) smooths the zones where \(|\nabla u_0|<\kappa \) and enhances the zones where \(|\nabla u_0|>\kappa \), providing a sharper image than the initial one.

Ever since Perona and Malik proposed it in 1990, the nonlinear forward-backward heat Eq. (1.12) has attracted the interest of the mathematical community. On the one hand, numerical experiments exhibit good stability properties and produce the desired effect of fading out flat noise. On the other hand, from the analytical point of view, the forward-backward character of (1.12) induces a general skepticism, partially supported by some negative results about the ill-posedness of (1.12). This is usually referred to in the literature as “the Perona–Malik paradox” [19].

Without claiming to be complete, we list some fundamental analytical results about (1.12). In [14], the author proves that if \(u:\mathbb {R}^2\rightarrow \mathbb {R}\) is a \(C^1\) solution of (1.12), then there exist \(a,b\in \mathbb {R}\) such that \(u(x,t)=ax+b\) for any \((x,t)\in \mathbb {R}^2\). This result is a consequence of the forward-backward character of the equation, since it is well known to be false, in general, for forward parabolic equations: for instance, the function \(u(x,t)=e^{-t}\sin (x)\) is an entire solution of the classical heat equation.
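The entire solution mentioned above can be checked numerically. The sketch below approximates the residual \(u_t-u_{xx}\) by central finite differences at a few sample points, including a negative time. It does this for \(u=e^{-t}\sin x\), which solves the heat equation on all of \(\mathbb {R}^2\). For comparison, it also treats \(e^{t}\sin x\), which instead solves the backward equation \(u_t=-u_{xx}\). The helper names are illustrative.

```python
import math

def residual(u, x: float, t: float, sign: float = 1.0, h: float = 1e-4) -> float:
    """Central-difference approximation of u_t - sign * u_xx at the point (x, t)."""
    u_t = (u(x, t + h) - u(x, t - h)) / (2.0 * h)
    u_xx = (u(x + h, t) - 2.0 * u(x, t) + u(x - h, t)) / h ** 2
    return u_t - sign * u_xx

def forward(x: float, t: float) -> float:
    """e^{-t} sin x: solves the heat equation u_t = u_xx for all (x, t)."""
    return math.exp(-t) * math.sin(x)

def backward(x: float, t: float) -> float:
    """e^{t} sin x: solves the backward equation u_t = -u_xx instead."""
    return math.exp(t) * math.sin(x)

for x, t in [(0.7, -3.0), (1.3, 0.0), (2.1, 2.0)]:
    print(f"(x, t) = ({x}, {t}): "
          f"heat residual of e^-t sin x = {residual(forward, x, t):.1e}, "
          f"heat residual of e^t sin x = {residual(backward, x, t):.1e}")
```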
The initial boundary value problem (IBVP) associated with (1.12) and the homogeneous Neumann boundary conditions (1.2) has been investigated in [18], where the authors prove the following results:

- If the initial datum satisfies \(|u_0'(x)|>\kappa \) for some \(x\in [a,b]\), then (IBVP) does not admit any \(C^1\) solution defined for every \(t\ge 0\).
- \(C^1\) solutions of (IBVP) are unique. This is false for weaker solutions, as shown in [17].
- If the initial datum satisfies \(|u_0'(x)|<\kappa \) for all \(x\in [a,b]\), then there exists a unique global classical solution, namely a unique solution \(u\in C^2((a,b)\times (0,T))\) for any \(T>0\).

Finally, we recall that in [15] it is shown that PME admits a natural regularization by forward-backward diffusions possessing better analytical properties than PME itself.

Many papers have also been devoted to the study of (1.12) in the presence of reaction and/or convection terms. For instance, [12] considers a Burgers-type equation with Perona–Malik diffusion, and [6] studies the existence of wavefront solutions for a reaction–convection equation with Perona–Malik diffusion. Regarding the reaction–diffusion model (1.1), the corresponding multi-dimensional model is studied in [20]. There it is shown that combining the properties of an anisotropic diffusion such as the Perona–Malik one with those of bistable reaction terms provides a better processing tool, which enables noise filtering, contrast enhancement and edge preservation.

To the best of our knowledge, the long time behavior of phase transition layer solutions to reaction–diffusion models with a nonlinear diffusion of the form \(Q(u_x)_x\) has been studied only in the case of the p-Laplacian [10] and of mean curvature operators [9, 11]. Both cases are rather different from (1.4)–(1.5). To highlight the differences, let us expand the term \(Q(u_x)_x\) as \(Q'(u_x)u_{xx}\) and notice that the derivative of Q plays the role of the diffusion coefficient. In the case of the p-Laplacian, \(Q'\) is singular at \(s=0\) for \(p\in (1,2)\) and degenerate (\(Q'(0)=0\)) for \(p>2\). In the cases studied in [9, 11], the function Q is explicitly given by

$$\begin{aligned} Q(s)=\frac{s}{\sqrt{1+s^2}} \qquad \text{ or } \qquad Q(s)=\frac{s}{\sqrt{1-s^2}}. \end{aligned}$$(1.13)

Hence, in the first case the derivative \(Q'\) is strictly positive for any \(s\in \mathbb {R}\). Since \(Q'(s)\rightarrow 0\) as \(|s|\rightarrow \infty \), the diffusion coefficient degenerates for large values of \(|u_x|\). In the latter case \(Q'(s)\rightarrow +\infty \) as \(s\rightarrow \pm 1\), so the diffusion coefficient is strictly positive but singular at \(\pm 1\). In the case considered in this article, (1.4)–(1.5) imply not only that the diffusion coefficient degenerates at \(\pm \infty \) and at \(\pm \kappa \), but also that it is strictly negative when \(|u_x|>\kappa \). Thus, our work provides the first investigation of the long time behavior of phase transition layer solutions in the case of a degenerate nonlinear diffusion term \(Q(u_x)_x\) with a function Q that changes monotonicity. However, as we will see, it is sufficient that \(Q'>0\) in a neighborhood of the origin to prove our results; see also Remark 3.2. Moreover, [9,10,11] consider an explicit formula for Q, while here Q is not explicit but a generic function satisfying (1.4)–(1.5). Indeed, even for specific examples such as (1.6), one cannot obtain explicit formulas for the functions involved, which makes the computations much more complicated; for details, see Remark 2.2.
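To make the comparison concrete, the sketch below tabulates the diffusion coefficient \(Q'(s)\) for the fluxes discussed above. It covers the following cases:

- the p-Laplacian \(Q(s)=|s|^{p-2}s\);
- the two mean-curvature fluxes \(s/\sqrt{1+s^2}\) and \(s/\sqrt{1-s^2}\). This is our reading of the operators in [9, 11], consistent with the properties listed in the text;
- the Perona–Malik flux \(s/(1+s^2)\).

Only the sign and size pattern matters here; the sample points are arbitrary.

```python
import math

def make_p_laplacian(p: float):
    """Q(s) = |s|^(p-2) s has Q'(s) = (p-1)|s|^(p-2) for s != 0."""
    return lambda s: (p - 1.0) * abs(s) ** (p - 2.0)

def dq_mean_curvature(s: float) -> float:   # Q(s) = s / sqrt(1 + s^2)
    return (1.0 + s * s) ** -1.5

def dq_lorentz(s: float) -> float:          # Q(s) = s / sqrt(1 - s^2), defined for |s| < 1
    return (1.0 - s * s) ** -1.5 if abs(s) < 1.0 else math.nan

def dq_perona_malik(s: float) -> float:     # Q(s) = s / (1 + s^2)
    return (1.0 - s * s) / (1.0 + s * s) ** 2

coefficients = {
    "p-Laplacian p=1.5": make_p_laplacian(1.5),
    "p-Laplacian p=3": make_p_laplacian(3.0),
    "mean curvature": dq_mean_curvature,
    "Lorentz-Minkowski": dq_lorentz,
    "Perona-Malik": dq_perona_malik,
}
samples = (1e-4, 0.5, 0.999, 3.0, 100.0)

for name, dq in coefficients.items():
    print(f"{name:>18}:", "  ".join("%10.3e" % dq(s) for s in samples))
```

Reading the rows:

- the \(p=1.5\) coefficient blows up near \(s=0\), while the \(p=3\) coefficient vanishes there;
- the mean-curvature coefficient decays for large \(|s|\);
- the Lorentz–Minkowski coefficient blows up as \(s\rightarrow 1\), with nan marking \(|s|\ge 1\), where that flux is undefined;
- only the Perona–Malik coefficient becomes negative, for \(|s|>1\).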

The main contribution of this paper is to show that the well-known results about the classic model (1.11) described above can be extended to (1.1), even if Q is a non-monotone function. Roughly speaking, the condition \(Q'>0\) in \((-\kappa ,\kappa )\) for some generic \(\kappa \) is enough to obtain the following:

- when F satisfies (1.7)–(1.8) with \(\theta \in (1,2)\), the existence of compactons, which are stationary solutions (hence, invariant under the dynamics of (1.1)) with a transition layer structure;
- the existence of metastable patterns if \(\theta =2\);
- the existence of algebraically slowly moving structures when \(\theta >2\).

The dynamical (in)stability of the compactons remains an interesting open problem. It is not clear whether small perturbations of compactons generate a slow motion dynamics similar to the case \(\theta =2\), or whether the transition layer structure is maintained for all times \(t>0\).

1.1 Plan of the Paper

We close the Introduction with a short plan of the paper.

Sect. 2 is devoted to the stationary problem associated with (1.1). We consider steady states both in the whole real line and in bounded intervals, showing that there are substantial differences depending on the value of the power \(\theta \) appearing in (1.8). Indeed, the existence of the aforementioned compactons is a peculiarity of the case \(\theta \in (1,2)\), and it is established in Proposition 2.7. In the case \(\theta \ge 2\), instead, we focus on the existence of periodic solutions in the real line and on their restrictions to a bounded interval [a, b] (see Propositions 2.8 and 2.9, respectively). These oscillate between the values \(\pm {\bar{s}}\), with \({\bar{s}}\approx 1\) (strictly less than one).

In Sect. 3 we prove some variational results and lower bounds on the energy associated with (1.1) (for its definition, we refer to (3.1)). These will be crucial to prove the results of Sect. 4, where we study the asymptotic behavior of the solutions to (1.1)–(1.2)–(1.3). We show that if the dynamics starts from an initial datum with N transition layers inside the interval [a, b], then this configuration is maintained for extremely long times. As previously mentioned, the time the solution takes to annihilate the unstable structure of the initial datum strongly depends on the parameter \(\theta >1\) appearing in (1.8). We have either metastable dynamics (exponentially slow motion) in the critical case \(\theta =2\) or algebraic slow motion in the degenerate case \(\theta >2\). We underline again that in the case \(\theta \in (1,2)\) solutions with N transition layers are either stationary solutions (compactons) or close to them. Numerical simulations illustrating the analytical results are provided at the end of Sect. 4.

2 Stationary Solutions

The aim of this section is to analyze the stationary problem associated with (1.1) and to prove the existence of some special solutions to

$$\begin{aligned} Q(\varepsilon ^2\varphi ')'=F'(\varphi ), \end{aligned}$$(2.1)

with \(\varepsilon >0\), Q satisfying assumptions (1.4)–(1.5) and F as in (1.7)–(1.8), both in the whole real line and in a bounded interval complemented with homogeneous Neumann boundary conditions (1.2). To prove the results of this section we actually need more regularity of the diffusion flux Q, namely \(Q \in C^3(\mathbb {R})\). We underline, however, that the basic examples (1.6) we have in mind satisfy this additional assumption as well.

2.1 Standing Waves

We start by considering problem (2.1) in \(\mathbb {R}\), focusing on standing waves, which can be defined as follows: an increasing standing wave \(\Phi _\varepsilon :=\Phi _\varepsilon (x)\) is a solution to (2.1) in the whole real line satisfying either

for some \(x_\pm \in \mathbb {R}\) with \(x_-<x_+\) or

Similarly, a decreasing standing wave \(\Psi _\varepsilon :=\Psi _\varepsilon (x)\) satisfies (2.1) and either

for some \(x_\pm \in \mathbb {R}\) with \(x_-<x_+\) or

It is easy to check that solutions to (2.1)–(2.2) and (2.1)–(2.3) are invariant under translations. Thus, to single out a unique solution we add the normalization \(\Phi _\varepsilon (0)=0\) and rewrite the problems (2.1)–(2.2) and (2.1)–(2.3) as

Analogously, in the decreasing case we have

As we will see below, there is a fundamental difference depending on whether F satisfies (1.8) with \(\theta \in (1,2)\) or with \(\theta \ge 2\). In the first case, the standing waves touch the values \(\pm 1\), namely the increasing standing waves satisfy (2.2). Conversely, if \(\theta \ge 2\) the standing waves reach the values \(\pm 1\) only in the limit: for instance, in the increasing case, they satisfy (2.3). To prove this claim, as well as the existence of a unique solution to (2.4) (or, alternatively, to (2.5)), we first need the following technical result.

Lemma 2.1

Let \(Q \in C^1(\mathbb {R})\) satisfy (1.4)–(1.5). Denote by

and

Then, there exists a unique function \(J_\varepsilon \), strictly positive on \((0,\ell \varepsilon ^{-2}]\), which inverts the equation \(P_\varepsilon (s)=\xi \) in \([0,\kappa \varepsilon ^{-2}]\): for any \(s\in [0,\kappa \varepsilon ^{-2}]\) and \(\xi \in [0,\ell \varepsilon ^{-2}]\) there holds

Moreover, the following expansion holds true

Proof

In order to study the invertibility of the equation \(P_\varepsilon (s)=\xi \), we observe that

Hence, \(P_\varepsilon \) is an even function satisfying \(P_\varepsilon '(0)=P_\varepsilon '(\pm \kappa \varepsilon ^{-2})=0\) and

because of the definition (2.7) and the assumptions on Q (1.4)–(1.5). Thus, the equation \(P_\varepsilon (s)=\xi \) has exactly two solutions in \([-\kappa \varepsilon ^{-2},\kappa \varepsilon ^{-2}]\), provided that \(\xi \in [P_\varepsilon (0),P_\varepsilon (\kappa \varepsilon ^{-2})]\). Going further, one has \(P_\varepsilon (0)=0\) while

where the constant \(\ell \), defined in (2.6), is strictly positive. Indeed, Q is strictly increasing in \([0,\kappa ]\), so \(\kappa \, Q(\kappa )\) is greater than \({\tilde{Q}}(\kappa )\), which represents the area underneath the graph of Q in the interval \([0,\kappa ]\). Hence, (2.8) holds true and it remains to prove (2.9). Using (2.10), we deduce

Since the function H in (2.11) satisfies \(H(0)=H'(0)=0\) and \(H''(0)=Q'(0)>0\), for \(s \sim 0\) we have

and the latter equality gives a hint that \(J_\varepsilon \) behaves like the square root of s for \(s \sim 0\). To prove it, let us study the behavior of \((H^{-1})^2\) close to the origin. We have

so that

Thus,

where \(R(s)={\mathcal {O}}(s^2)\). Hence, we can state that

Indeed,

By using (2.8)–(2.11), one obtains

that is (2.9) and the proof is complete. \(\square \)
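For the reader's convenience, here is one way to see the square-root behavior behind (2.9). It is a sketch under an assumption. Consistently with the properties used in the proof (\(P_\varepsilon (0)=0\), \(P_\varepsilon '\) vanishing at \(0\) and \(\pm \kappa \varepsilon ^{-2}\), \(P_\varepsilon (\kappa \varepsilon ^{-2})=\ell \varepsilon ^{-2}\), \(H(0)=H'(0)=0\), \(H''(0)=Q'(0)\)), we take \(P_\varepsilon (s)=\varepsilon ^{-2}H(\varepsilon ^2 s)\), with \(H(\sigma )=\sigma Q(\sigma )-{\tilde{Q}}(\sigma )\) and \({\tilde{Q}}(\sigma )=\int _0^\sigma Q(r)\,dr\). We use that Q is odd, so \(Q''(0)=0\), and that \(Q\in C^3(\mathbb {R})\):

$$\begin{aligned} H'(\sigma )&=\sigma Q'(\sigma ), \qquad H''(\sigma )=Q'(\sigma )+\sigma Q''(\sigma ), \qquad H'''(\sigma )=2Q''(\sigma )+\sigma Q'''(\sigma )={\mathcal {O}}(\sigma ),\\ H(\sigma )&=\frac{Q'(0)}{2}\,\sigma ^2\bigl (1+r(\sigma )\bigr ), \qquad r(\sigma )={\mathcal {O}}(\sigma ^2),\\ \bigl (H^{-1}(\tau )\bigr )^2&=\frac{2\tau }{Q'(0)}\Bigl (1+r\bigl (H^{-1}(\tau )\bigr )\Bigr )^{-1}=\frac{2\tau }{Q'(0)}+{\mathcal {O}}(\tau ^2),\\ J_\varepsilon (\xi )&=\varepsilon ^{-2}H^{-1}(\varepsilon ^2\xi )=\frac{1}{\varepsilon }\sqrt{\frac{2\xi }{Q'(0)}}\,\bigl (1+{\mathcal {O}}(\varepsilon ^2\xi )\bigr ). \end{aligned}$$

In particular, \(J_\varepsilon \) is of order \(\varepsilon ^{-1}\sqrt{\xi }\) near \(\xi =0\), which is the origin of the factor \(\varepsilon \) in (2.13).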

Remark 2.2

It is interesting to notice that, even if we consider the explicit examples in (1.6), it is not possible to give an explicit formula for the function \(J_\varepsilon \) in (2.8). As we will see in the rest of the paper, not having an explicit formula for \(J_\varepsilon \) considerably complicates the proof of our results, in which the expansion (2.9) plays a crucial role.
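Since no closed form is available, \(J_\varepsilon \) can only be evaluated numerically. The following sketch does this by bisection for the prototype flux \(Q(s)=s/(1+s^2)\), for which \(\kappa =1\), \(Q'(0)=1\) and \({\tilde{Q}}(\sigma )=\tfrac12\ln (1+\sigma ^2)\). It assumes that \(P_\varepsilon (s)=\varepsilon ^{-2}H(\varepsilon ^2 s)\) with \(H(\sigma )=\sigma Q(\sigma )-{\tilde{Q}}(\sigma )\), which is consistent with the properties listed in the proof of Lemma 2.1. Bisection is legitimate because \(P_\varepsilon \) is increasing on \([0,\kappa \varepsilon ^{-2}]\). The sketch then compares \(J_\varepsilon (\xi )\) with the leading term \(\varepsilon ^{-1}\sqrt{2\xi /Q'(0)}\). The names H, P, J and ELL are illustrative.

```python
import math

KAPPA = 1.0   # threshold for Q(s) = s / (1 + s^2)
Q0 = 1.0      # Q'(0) for the same flux

def H(sigma: float) -> float:
    """Assumed H(sigma) = sigma*Q(sigma) - int_0^sigma Q, for Q(s) = s/(1+s^2)."""
    s2 = sigma * sigma
    return s2 / (1.0 + s2) - 0.5 * math.log1p(s2)

ELL = H(KAPPA)  # ell = kappa*Q(kappa) - tilde Q(kappa) = (1 - ln 2) / 2 > 0

def P(s: float, eps: float) -> float:
    """Assumed P_eps(s) = eps^-2 * H(eps^2 * s)."""
    return H(eps * eps * s) / (eps * eps)

def J(xi: float, eps: float, iters: int = 200) -> float:
    """Invert P_eps(s) = xi on [0, kappa/eps^2] by bisection (P_eps is increasing there)."""
    if not 0.0 <= xi <= ELL / (eps * eps):
        raise ValueError("xi must lie in [0, ell / eps^2]")
    lo, hi = 0.0, KAPPA / (eps * eps)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if P(mid, eps) < xi:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

def leading_term(xi: float, eps: float) -> float:
    """Leading term eps^-1 * sqrt(2 xi / Q'(0)) of the expansion of J_eps."""
    return math.sqrt(2.0 * xi / Q0) / eps

eps = 0.1
for xi in (1e-4, 1e-2, 1.0, 10.0):
    ratio = J(xi, eps) / leading_term(xi, eps)
    print(f"eps^2*xi = {eps * eps * xi:.0e}:  J / leading term = {ratio:.6f}")
```

The ratio tends to 1 as \(\varepsilon ^2\xi \rightarrow 0\), with a deviation of order \(\varepsilon ^2\xi \). For this flux, \(H(\sigma )=\sigma ^2/2-3\sigma ^4/4+\dots \), which gives a ratio close to \(1+\tfrac{3}{2}\varepsilon ^2\xi \).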

We have now all the tools to prove the following existence result.

Proposition 2.3

Let Q satisfy (1.4)–(1.5) and F satisfy (1.7)–(1.8). Then, there exists \(\varepsilon _0>0\) such that problem (2.4) admits a unique solution \(\Phi _\varepsilon \in C^2(\mathbb {R})\) for any \(\varepsilon \in (0,\varepsilon _0)\). Moreover we have the following alternatives:

(i) If \(\theta \in (1,2)\), then the profile \(\Phi _\varepsilon \) satisfies (2.2); more precisely, one has

$$\begin{aligned} \Phi _\varepsilon (x^\varepsilon _1)=1, \quad \Phi _\varepsilon (x^\varepsilon _2)=-1, \end{aligned}$$(2.12)

where

$$\begin{aligned} x^\varepsilon _1=\varepsilon {\bar{x}}_1+o(\varepsilon ), \quad x^\varepsilon _2=-\varepsilon {\bar{x}}_2+o(\varepsilon ), \end{aligned}$$(2.13)

for some \({\bar{x}}_i>0\), \(i=1,2\), which do not depend on \(\varepsilon \).

(ii) If \(\theta =2\), then \(\Phi _\varepsilon \) satisfies (2.3) with the following exponential decay:

$$\begin{aligned} \begin{aligned}&1-\Phi _\varepsilon (x)\le c_1 e^{-c_2x}, \quad&\text{ as } x\rightarrow +\infty ,\\&\Phi _\varepsilon (x)+1\le c_1 e^{c_2x},&\text{ as } x\rightarrow -\infty , \end{aligned} \end{aligned}$$

for some \(c_1,c_2>0\).

(iii) If \(\theta >2\), then \(\Phi _\varepsilon \) satisfies (2.3) with algebraic decay:

$$\begin{aligned} \begin{aligned}&1-\Phi _\varepsilon (x)\le d_1 |x|^{-d_2}, \quad&\text{ as } x\rightarrow +\infty ,\\&\Phi _\varepsilon (x)+1\le d_1|x|^{-d_2},&\text{ as } x\rightarrow -\infty , \end{aligned} \end{aligned}$$

for some \(d_1,d_2>0\).

Proof

In order to prove the existence of a solution to (2.4), we multiply the ordinary differential equation by \(\Phi '_\varepsilon =\Phi '_\varepsilon (x)\), deducing

As a consequence,

where \(P_\varepsilon \) is defined in (2.7). In order to solve the Cauchy problem (2.14), we apply Lemma 2.1; hence, we need to require \(F(\Phi _\varepsilon ) \le \ell \varepsilon ^{-2}\), namely we choose

Condition (2.15) ensures that we can find monotone solutions to (2.14) by applying the standard method of separation of variables. In particular, we obtain a solution \(\Phi _\varepsilon \), satisfying \(\Phi '_\varepsilon \in [0,\kappa \varepsilon ^{-2}]\), which is implicitly defined by
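The requirement \(F(\Phi _\varepsilon )\le \ell \varepsilon ^{-2}\), along a profile with values in \([-1,1]\), is guaranteed as soon as \(\varepsilon ^2\max _{[-1,1]}F\le \ell \). The sketch below makes this bound concrete, taking \(\ell =\kappa Q(\kappa )-{\tilde{Q}}(\kappa )\) as the proof of Lemma 2.1 indicates. It computes \(\ell \) by Simpson's rule for the two fluxes \(s/(1+s^2)\) and \(s\,e^{-s^2}\), and checks the results against the closed forms \(\tfrac12(1-\ln 2)\) and \(e^{-1/2}-\tfrac12\). For the potential \(F(u)=|1-u^2|^\theta /(2\theta )\), whose maximum on \([-1,1]\) is \(F(0)=1/(2\theta )\), it then reports the resulting sufficient bound \(\varepsilon \le \sqrt{2\theta \ell }\).

```python
import math

def simpson(f, a: float, b: float, n: int = 1000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    for k in range(1, n):
        total += (4.0 if k % 2 else 2.0) * f(a + k * h)
    return total * h / 3.0

def ell(q, kappa: float) -> float:
    """ell = kappa*Q(kappa) - int_0^kappa Q(r) dr, positive when Q increases on [0, kappa]."""
    return kappa * q(kappa) - simpson(q, 0.0, kappa)

# name -> (flux Q, threshold kappa, closed form of ell)
fluxes = {
    "s/(1+s^2)": (lambda s: s / (1.0 + s * s), 1.0, 0.5 * (1.0 - math.log(2.0))),
    "s*exp(-s^2)": (lambda s: s * math.exp(-s * s), 1.0 / math.sqrt(2.0),
                    math.exp(-0.5) - 0.5),
}

for name, (q, kappa, exact) in fluxes.items():
    value = ell(q, kappa)
    for theta in (1.5, 2.0, 3.0):
        # max of F(u) = |1-u^2|^theta/(2 theta) on [-1, 1] is F(0) = 1/(2 theta)
        eps_bound = math.sqrt(2.0 * theta * value)
        print(f"{name}: ell={value:.6f} (closed form {exact:.6f}), "
              f"theta={theta}: eps <= {eps_bound:.4f}")
```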

where \(J_\varepsilon \) is defined in (2.8). Since \(J_\varepsilon \left( F(u)\right) =0\) if and only if \(F(u)=0\) (that is, \(u= \pm 1\)), in order to prove the uniqueness of \(\Phi _\varepsilon \) and its behavior described in the properties (i), (ii) and (iii) of the statement, we need to study the convergence of the following improper integrals

Substituting (2.9) in the first integral of (2.16), we end up with

where

The crucial point is that the convergence of the integral in (2.17) is determined by \({\bar{x}}_1\) alone, since there exists \(\varepsilon _0>0\) such that \(|I(\varepsilon )|<\infty \) for any \(\varepsilon \in (0,\varepsilon _0)\). Indeed, using the estimate \(|\rho (\varepsilon ^2F(u))| \le C \varepsilon ^2 F(u)\), one gets

and we can choose \(\varepsilon >0\) sufficiently small such that

Moreover, notice that \({\bar{x}}_1\) does not depend on \(\varepsilon \), while \(I(\varepsilon )=o(\varepsilon )\). By using (1.9), we obtain

Hence, \({\bar{x}}_1 < +\infty \) if and only if \(\theta < 2\), and point (i) of the statement follows; the first equality in (2.13) is a consequence of (2.17). Furthermore, points (ii)–(iii) of the statement follow from the standard separation of variables; indeed, (2.14) can be rewritten as

with \(J_\varepsilon \ge 0\) satisfying

In particular, we have exponential decay if \(\theta =2\) and algebraic decay if \(\theta >2\).
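The dichotomy between exponential and algebraic decay can be seen from a short comparison argument. This is a sketch under stated assumptions, not necessarily the computation the authors have in mind. Assume, as (1.8)–(1.9) indicate, that \(F(u)\ge c_0(1-u)^\theta \) for u close to 1. Assume also, from the leading term of (2.9), that \(J_\varepsilon (\xi )\ge \frac{c_*}{\varepsilon }\sqrt{\xi }\) for small \(\xi \), with constants \(c_0,c_*>0\). Setting \(w:=1-\Phi _\varepsilon >0\), for \(x\ge x_0\) large we get \(w'=-J_\varepsilon (F(\Phi _\varepsilon ))\le -\frac{c}{\varepsilon }w^{\theta /2}\) with \(c:=c_*\sqrt{c_0}\), and therefore

$$\begin{aligned} \theta =2:&\qquad w(x)\le w(x_0)\,e^{-c(x-x_0)/\varepsilon },\\ \theta >2:&\qquad \frac{d}{dx}\,w^{1-\theta /2}=\Bigl (\frac{\theta }{2}-1\Bigr )w^{-\theta /2}(-w')\ge \frac{(\theta -2)c}{2\varepsilon } \quad \Longrightarrow \quad w(x)\le \Bigl (\frac{(\theta -2)c}{2\varepsilon }\,(x-x_0)\Bigr )^{-\frac{2}{\theta -2}}. \end{aligned}$$

Within this sketch one may take \(c_2=c/\varepsilon \) in (ii) and \(d_2=2/(\theta -2)\) in (iii). The behavior as \(x\rightarrow -\infty \) is analogous, with \(1+\Phi _\varepsilon \) in place of w.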

The computations are analogous for the second integral in (2.16), and we have thus proved the existence of a unique solution of (2.4) satisfying properties (i), (ii) and (iii). Precisely, if \(\theta \ge 2\) there exists a unique solution of (2.14). If \(\theta \in (1,2)\), (2.14) has infinitely many solutions, but the additional requirement \(\Phi _\varepsilon '(x)\ge 0\) for any \(x\in \mathbb {R}\) guarantees that there is a unique solution of (2.4). \(\square \)

Remark 2.4

We notice that the condition \(\Phi '_\varepsilon \in [0,\kappa \varepsilon ^{-2}]\) also allows for large values of the first derivative; more precisely, one has

As a corollary of Proposition 2.3, we can prove the existence of a unique solution to (2.5), with properties analogous to (i)–(iii).

Corollary 2.5

Under the same assumptions of Proposition 2.3, there exists \(\varepsilon _0>0\) such that problem (2.5) admits a unique solution \(\Psi _\varepsilon \) for any \(\varepsilon \in (0,\varepsilon _0)\). Moreover, if \(\theta \in (1,2)\), then

where \(x^\varepsilon _i\), \(i=1,2\) are defined in (2.13). On the other hand, if \(\theta =2\) (\(\theta >2\)) the profile \(\Psi _\varepsilon \) has an exponential (algebraic) decay towards the states \({\mp }1\).

Proof

Using the symmetry of Q and, in particular, the fact that \(Q'\) is an even function, it is a simple exercise to verify that \(\Psi _\varepsilon (x):=\Phi _\varepsilon (-x)\), with \(\Phi _\varepsilon \) given by Proposition 2.3, is the unique solution to (2.5). \(\square \)
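For completeness, the exercise can be carried out as follows. We assume that (2.1) takes the form \(Q(\varepsilon ^2\varphi ')'=F'(\varphi )\) and use that Q is odd. With \(\Psi _\varepsilon (x)=\Phi _\varepsilon (-x)\):

$$\begin{aligned} \Psi _\varepsilon '(x)&=-\Phi _\varepsilon '(-x), \qquad Q\bigl (\varepsilon ^2\Psi _\varepsilon '(x)\bigr )=-Q\bigl (\varepsilon ^2\Phi _\varepsilon '(-x)\bigr ),\\ \frac{d}{dx}\,Q\bigl (\varepsilon ^2\Psi _\varepsilon '(x)\bigr )&=\Bigl [Q\bigl (\varepsilon ^2\Phi _\varepsilon '\bigr )\Bigr ]'(-x)=F'\bigl (\Phi _\varepsilon (-x)\bigr )=F'\bigl (\Psi _\varepsilon (x)\bigr ). \end{aligned}$$

Moreover, \(\Psi _\varepsilon (0)=\Phi _\varepsilon (0)=0\) and \(\Psi _\varepsilon '\le 0\), and the behaviors at \(\pm \infty \) are exchanged. Uniqueness follows by applying the same reflection to any other solution of (2.5), which yields a solution of (2.4).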

Remark 2.6

The existence of a unique solution \(\Psi _\varepsilon \) to (2.5) can also be proven independently of that of \(\Phi _\varepsilon \). It is enough to adapt the proof of Proposition 2.3 by inverting the equation \(P_\varepsilon (s)=\xi \) in the interval \([-\kappa \varepsilon ^{-2},0]\) (in this case the inverse is \(-J_\varepsilon \), see (2.8)). This yields a unique solution \(\Psi _\varepsilon \) with nonpositive derivative \(\Psi '_\varepsilon \in [-\kappa \varepsilon ^{-2},0]\).

The previous results are instrumental in proving the existence of a special class of stationary solutions on a bounded interval in the case \(\theta \in (1,2)\), as we will see in the next section. Indeed, in this case the standing wave reaches \(\pm 1\) at a finite value of the x-variable and with zero derivative. This allows us to construct infinitely many steady states oscillating between \(\pm 1\) and satisfying the boundary conditions (1.2). On the contrary, if \(\theta \ge 2\), all the standing waves satisfy (2.3) and are strictly monotone. Hence they never satisfy the homogeneous Neumann boundary conditions and cannot solve (2.1)–(1.2) in any bounded interval.

2.2 Compactons

We here consider the so-called compactons, which are, by definition, stationary solutions connecting two phases on a finite interval. More explicitly, we prove the existence of infinitely many solutions to the stationary problem associated with (1.1)–(1.2), namely

oscillating between \(-1\) and \(+1\) (touching them), provided that \(\varepsilon \) is sufficiently small. The existence of such solutions is shown by proving that, given an arbitrary set of real numbers in [a, b], for sufficiently small \(\varepsilon \), there are two solutions \(\varphi _1\) and \(\varphi _2\) to (2.19) having such numbers as zeros, satisfying

and oscillating between \(-1\) and \(+1\) (\(+1\) and \(-1\), respectively).

Proposition 2.7

Let \(1<\theta <2\), \(N\in {\mathbb {N}}\) and let \(h_1,h_2,\dots , h_N\) be any N real numbers such that \(a<h_1<h_2<\cdots<h_N<b\). Then, for any \(\varepsilon \in (0,{\bar{\varepsilon }})\) with \({\bar{\varepsilon }}>0\) sufficiently small, there exist two solutions \(\varphi _1\) and \(\varphi _2\) to (2.19) satisfying (2.20), oscillating between \(-1\) and \(+1\) and between \(+1\) and \(-1\) respectively, and having precisely N zeros at \(h_1,h_2,\dots , h_N\).

Proof

We start by proving the existence of the solution \(\varphi _1\) to (2.19) on the interval [a, b] satisfying the first condition in (2.20), oscillating between \(-1\) and \(+1\) and having \(h_1,h_2,\dots , h_N\) as zeros. To this aim, we consider the function

where \(\Phi _\varepsilon \) is the increasing standing wave solution of Proposition 2.3. Then, the function \(\Phi _{\varepsilon }^1\) has a zero at \(h_1\). Furthermore, by (2.4) and (2.12), recalling that

for some \(\bar{x}_1, \bar{x}_2>0\), we can conclude that \(\Phi _{\varepsilon }^1\) takes the value \(-1\) on \((-\infty , h_1+x_2^\varepsilon ]\) and \(+1\) on \([h_1+x_1^\varepsilon , +\infty )\). Notice that, since \(x_2^\varepsilon <0\) for \(\varepsilon \) sufficiently small, \(h_1+x_2^\varepsilon <h_1\). Let us now fix \(\varepsilon >0\) small enough so that \(h_1+x_2^\varepsilon >a\). The restriction of \(\Phi _{\varepsilon }^1\) to the interval \([a,h_1+x_1^\varepsilon ]\), denoted by the same symbol, is equal to \(-1 \) for every \(x\in [a,h_1+x_2^\varepsilon ]\) and touches \(+1\) at \(h_1+x_1^\varepsilon \). Let us introduce the notation

for every \(j=1,\dots , N\), and for every \(i=1,\dots , N-1\) let us define the function

For each fixed \(i=1,\dots , N-1\), the function \(\Phi _{\varepsilon }^{i+1}\) has a zero at \(h_{i+1}\). If i is odd, it takes the value \(-1\) for every \(x \ge h_{i+1}+y_{i+1}^\varepsilon \) and \(+1\) for every \(x\le h_{i+1}-y_{i}^\varepsilon \). If i is even, it takes the value \(-1\) for every \(x \le h_{i+1}-y_{i}^\varepsilon \) and \(+1\) for every \(x\ge h_{i+1}+y_{i+1}^\varepsilon \). Choose \(\varepsilon \) smaller if necessary, so that \(h_i+ y_i^\varepsilon \le h_{i+1} -y_i^\varepsilon \) for every \(i=1,\dots , N-1\) (namely \(2y_i^\varepsilon \le h_{i+1} -h_i \)). Then the restriction of \(\Phi _{\varepsilon }^{i+1}(x)\) to the interval \([h_i+y_i^\varepsilon , h_{i+1}+y_{i+1}^\varepsilon ]\), still denoted by the same symbol, takes the value \((-1)^{i+1}\) for every \(x\in [h_i+y_i^\varepsilon , h_{i+1}-y_i^\varepsilon ]\) and touches \((-1)^i\) at \(h_{i+1}+y_{i+1}^\varepsilon \). Selecting \(\varepsilon >0\) sufficiently small so that \(h_N+y_N^\varepsilon < b\), we define \(\varphi _1\) as follows

By construction, the resulting map \(\varphi _1\in C^2([a,b])\) solves (2.19) and has exactly N zeros at points \(h_1,h_2,\dots , h_N\).

Arguing as above, we can construct the compacton \(\varphi _2\) which satisfies the second condition in (2.20) in the following way

The proof is thereby complete. \(\square \)
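Once the layer profile is known, the gluing in the proof above is essentially combinatorial. The Python sketch below reproduces it with a hypothetical stand-in profile: \(z\mapsto \sin (\pi z/(2w))\) on \([-w,w]\), and \(\pm 1\) outside. Like \(\Phi _\varepsilon \) for \(\theta \in (1,2)\), this profile reaches \(\pm 1\) at finite distance with zero slope, but it is not the standing wave of Proposition 2.3. The sketch also assumes symmetric layers, \(x_1^\varepsilon =-x_2^\varepsilon =w\). The admissibility test mirrors the smallness conditions on \(\varepsilon \) used in the proof: the layers lie inside (a, b) and do not overlap.

```python
import math
from typing import Callable, Sequence

def stand_in_profile(w: float) -> Callable[[float], float]:
    """Hypothetical compacton-like layer: -1 for z <= -w, +1 for z >= w, sine in between."""
    def profile(z: float) -> float:
        if z <= -w:
            return -1.0
        if z >= w:
            return 1.0
        return math.sin(math.pi * z / (2.0 * w))
    return profile

def admissible(a: float, b: float, zeros: Sequence[float], w: float) -> bool:
    """Layers of half-width w centred at the zeros fit in (a, b) without overlapping."""
    if not (a < zeros[0] - w and zeros[-1] + w < b):
        return False
    return all(h2 - h1 >= 2.0 * w for h1, h2 in zip(zeros, zeros[1:]))

def glue(x: float, zeros: Sequence[float], w: float, profile) -> float:
    """phi_1: equals -1 near a, with alternating increasing/decreasing layers at the zeros."""
    for i, h in enumerate(zeros):
        if abs(x - h) <= w:
            orientation = 1.0 if i % 2 == 0 else -1.0
            return orientation * profile(x - h)
    # On a plateau, the value is fixed by how many layers lie to the left of x.
    crossed = sum(1 for h in zeros if h < x)
    return -1.0 if crossed % 2 == 0 else 1.0

a, b, zeros, w = 0.0, 1.0, [0.2, 0.5, 0.8], 0.1
assert admissible(a, b, zeros, w)
phi1 = [glue(a + k * (b - a) / 10, zeros, w, stand_in_profile(w)) for k in range(11)]
phi2 = [-v for v in phi1]   # the second compacton, starting from +1
print([round(v, 3) for v in phi1])
```

With the layers as wide as the profile allows, the plateaus between them shrink to points. Taking \(w=0.2\) with the same zeros already fails the admissibility test, which mirrors the need for \(\varepsilon \) small.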

We point out again that, since the integrals in (2.16) are finite only if \(\theta \in (1,2)\), the compacton solutions constructed in Proposition 2.7 exist only in this case.
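The threshold \(\theta =2\) can be observed numerically. Replacing \(J_\varepsilon \) by the leading term of (2.9) suggests that \({\bar{x}}_1\) is proportional to \(\int _0^1 F(u)^{-1/2}\,du\); the prefactor \(\sqrt{Q'(0)/2}\) below is our assumption and does not affect convergence. The sketch evaluates \(\sqrt{Q'(0)/2}\int _0^{1-\delta }F(u)^{-1/2}\,du\) for \(F(u)=|1-u^2|^\theta /(2\theta )\) and shrinking \(\delta \). To resolve the neighborhood of \(u=1\), it uses the substitution \(1-u=e^{y}\) and the trapezoidal rule in y.

```python
import math

def xbar_truncated(theta: float, delta: float, q0: float = 1.0, n: int = 20000) -> float:
    """sqrt(q0/2) * int_0^{1-delta} F(u)^(-1/2) du with F(u) = |1-u^2|^theta/(2 theta).

    With t = 1 - u = e^y we have du = -t dy and 1 - u^2 = t (2 - t), so the
    integral becomes int_{log(delta)}^0 t / sqrt(F) dy, computed with trapezoids.
    """
    y0 = math.log(delta)
    h = -y0 / n
    total = 0.0
    for k in range(n + 1):
        t = math.exp(y0 + k * h)
        f_val = (t * (2.0 - t)) ** theta / (2.0 * theta)
        weight = 0.5 if k in (0, n) else 1.0
        total += weight * t / math.sqrt(f_val)
    return math.sqrt(q0 / 2.0) * total * h

for theta in (1.5, 2.0, 3.0):
    values = [xbar_truncated(theta, d) for d in (1e-4, 1e-6, 1e-8, 1e-10)]
    print(f"theta={theta}:", ["%.4g" % v for v in values])
```

For \(\theta =1.5\) the values stabilize. For \(\theta =2\) they grow like \(\log (1/\delta )\), and for \(\theta =3\) like \(\delta ^{-1/2}\). This is consistent with the integrals in (2.16) being finite only for \(\theta <2\).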

2.3 Periodic Solutions for \(\theta \ge 2\)

As already mentioned in the previous section, when \(\theta \ge 2\) the integrals in (2.16) diverge, so solutions to (2.19) cannot touch the values \(\pm 1\) and compacton solutions no longer exist. In this case, we construct a different type of stationary solutions with a transition layer structure, which can be seen as restrictions of periodic solutions on the whole real line. The study of all periodic solutions to (2.1) is beyond the scope of the paper. It is strictly connected to the specific form of the potential F, which in our case is a very generic function satisfying (1.7)–(1.8) and may give rise to infinitely many kinds of periodic solutions in the whole real line; see two examples in Figs. 2 and 3 below. Indeed, assumptions (1.7)–(1.8) only ensure that F is a double well potential with wells of equal depth at \(\pm 1\) and describe its behavior close to these minimum points; they give no information on the shape of F between them. Here, we are interested in periodic solutions oscillating between values close to \(\pm 1\), and assumptions (1.7)–(1.8) are enough to prove their existence. Denote by

As mentioned before, assumptions (1.7)–(1.8) give no information on the structure of \({\mathcal {Z}}\), the set of all critical points of F inside \((-1,1)\), which could be a discrete set or even an interval. Multiplying (2.1) by \(\varphi '\), we deduce that

where C is an appropriate integration constant. For instance, if \(C=0\) we obtain the constant solutions \(\pm 1\) or the standing wave constructed in Proposition 2.3. In the next result, we prove that the choice \(C\in \left( -\Gamma ,0\right) \) gives rise to periodic (bounded) solutions.

Proposition 2.8

Let \(\varepsilon >0\), \(P_\varepsilon \) as in (2.7), F satisfying (1.7)–(1.8) with \(\theta \ge 2\) and \(C\in \left( -\Gamma ,0\right) \), where \(\Gamma \) is defined in (2.21). Denote by \({\bar{s}}\in (0,1)\) the unique number such that \(-F({\bar{s}})=C\). Then there exists \(\varepsilon _0>0\) such that, for any \(\varepsilon \in (0,\varepsilon _0)\), problem (2.22) admits periodic solutions \(\Phi _{T_\varepsilon }\), oscillating between \(-{\bar{s}}\) and \({\bar{s}}\), with fundamental period \(2T_\varepsilon \), where

with \(J_\varepsilon \) defined in (2.8).

Proof

Fix \(C\in \left( -\Gamma ,0\right) \) and notice that assumption (1.7), together with definition (2.21), implies the existence of a unique \({\bar{s}}\in (0,1)\) such that \(-F({\bar{s}})=C\). Since periodic solutions are determined only up to translations, we can assume, without loss of generality, that \(\Phi _{T_\varepsilon }(0)=-{\bar{s}}\). By using (2.8), from Eq. (2.22) we infer that \(\Phi _{T_\varepsilon }\) is implicitly defined as

provided that \(F(u)+C \in [0,\ell \varepsilon ^{-2}]\), where \(\ell \) is defined in (2.6). Hence, we need to require

and, since \(C<0\), such condition is satisfied again as soon as (2.15) holds. We now have to verify the convergence of the improper integral

By using (2.9) and the Taylor expansion \(F(s)-F({\bar{s}})= F'({\bar{s}})(s-{\bar{s}})+o\left( |s-{\bar{s}}|\right) \), with \(F'({\bar{s}})<0\) because \({\bar{s}}\in (0,1)\), we deduce

Similarly, one can prove that

and, as a consequence, the improper integral (2.24) is finite. Therefore, we have constructed a solution \(\Phi _{T_\varepsilon }:[0, T_\varepsilon ({\bar{s}})]\rightarrow \mathbb {R}\), satisfying

Let us now define

It is easy to check that \(\Phi _{T_\varepsilon }\) solves (2.22) in \(\left[ {T_\varepsilon ({\bar{s}})},2T_\varepsilon ({\bar{s}})\right] \) and

We have thus extended the solution to the interval \(\left[ {T_\varepsilon ({\bar{s}})},2T_\varepsilon ({\bar{s}})\right] \), obtaining a solution on \(\left[ 0,2T_\varepsilon ({\bar{s}})\right] \); iterating the same argument, we extend the solution to the whole real line by \(2T_\varepsilon ({\bar{s}})\)-periodicity, and the proof is complete. \(\square \)
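The extension step can be sketched in a few lines of Python. This is a minimal illustration, assuming that the function introduced after "Let us now define" (whose display is not reproduced here) is the even reflection \(\Phi _{T_\varepsilon }(x)=\Phi _{T_\varepsilon }(2T_\varepsilon ({\bar{s}})-x)\) on \([T_\varepsilon ({\bar{s}}),2T_\varepsilon ({\bar{s}})]\). The names extend_periodically, phi and T are hypothetical; phi stands for any profile on \([0,T]\) going from \(-{\bar{s}}\) to \({\bar{s}}\).

```python
import math
from typing import Callable


def extend_periodically(phi: Callable[[float], float], T: float) -> Callable[[float], float]:
    """Extend phi: [0, T] -> R to the whole line.

    Hypothetical sketch: on [T, 2T] we use the even reflection phi(2T - x),
    then we extend by 2T-periodicity.
    """
    if T <= 0:
        raise ValueError("T must be positive")

    def Phi(x: float) -> float:
        y = x % (2.0 * T)  # Python's % returns a value in [0, 2T) also for negative x
        return phi(y) if y <= T else phi(2.0 * T - y)

    return Phi


if __name__ == "__main__":
    T, s_bar = 1.5, 0.9
    profile = lambda x: -s_bar * math.cos(math.pi * x / T)  # from -s_bar at 0 to s_bar at T
    Phi = extend_periodically(profile, T)
    print([round(Phi(x), 6) for x in (-1.0, 0.0, 1.5, 3.0, 4.5)])
```

In this toy case the extension coincides with \(-{\bar{s}}\cos (\pi x/T)\) on the whole line, since the cosine is even about \(x=T\) and \(2T\)-periodic. The reflected function is \(C^1\) at \(x=T\) because the derivative of the profile vanishes where it reaches \({\bar{s}}\), which for (2.22) follows from \(F({\bar{s}})+C=0\) and \(J_\varepsilon (0)=0\).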

We now make use of the solutions constructed in Proposition 2.8 to construct solutions to (2.19) having N equidistant zeroes in [a, b].

Proposition 2.9

Let \(\varepsilon >0\), Q satisfying (1.4)–(1.5), F satisfying (1.7)–(1.8) with \(\theta \ge 2\) and let us fix \(N \in \mathbb {N}\). Then, if \(\varepsilon \in (0,{\bar{\varepsilon }})\) with \({\bar{\varepsilon }}>0\) sufficiently small, there exists \({\bar{s}}\) close to (and strictly less than) \(+1\), such that problem (2.19) admits a solution oscillating between \(\pm {\bar{s}}\) and with N equidistant zeroes inside the interval [a, b], located at

where \(T_\varepsilon ({\bar{s}})\) is defined in (2.23).

Proof

The solution we are looking for can be constructed by suitably shifting \(\Phi _{T_\varepsilon }\), the periodic solution of Proposition 2.8, and tuning \({\bar{s}}\). In order to construct a solution \(\psi \) with exactly N zeroes in [a, b] such that \(\psi '(a)=\psi '(b)=0\), we proceed as follows: first, we define \(\psi (x)= \Phi _{T_\varepsilon }(x-a)\) (recall that \(\Phi _{T_\varepsilon }(0)=-{\bar{s}}\)). In this way \(\psi (a)=-{\bar{s}}\) and \(\psi '(a)=0\). Moreover, the first zero \(h_1\) of \(\psi \) is located exactly at \(a+T_\varepsilon /2\), while \(h_2=h_1+T_\varepsilon \), \(h_3=h_2+T_\varepsilon \), and so on, leading to (2.25). Thus, in order for b to be the midpoint between the last zero \(h_N\) and the next zero of \(\psi \) (so that \(\psi '(b)=0\)), we have to choose \({\bar{s}}\) in such a way that \(b=a+N T_\varepsilon ({\bar{s}})\). In other words, we have to prove that, if \(\varepsilon \in (0,{\bar{\varepsilon }})\), for any \(N \in \mathbb {N}\) and \(a,b \in \mathbb {R}\) with \(a<b\) there exists \({\bar{s}}\approx 1\) such that

To this purpose, by substituting the expansion (2.9) into the definition (2.23), we infer

Thus, we have to find \({\bar{s}}\approx 1\) such that

Since the integral on the left hand side is a monotone function of \({\bar{s}}\approx 1\) [4] and it satisfies

we can state that if \(\varepsilon \) is sufficiently small, then there exists a unique \({\bar{s}}\approx 1\) such that \(T_\varepsilon ({\bar{s}})= (b-a)/N\), and the proof is complete. \(\square \)
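The existence argument can be mimicked numerically, but only at leading order and under explicit assumptions. The Python sketch below replaces \(J_\varepsilon (y)\) by \(\sqrt{2y}/\varepsilon \) (the linear-diffusion approximation, which we assume is the first term of (2.9)), so that \(T_\varepsilon ({\bar{s}})\approx \varepsilon T_0({\bar{s}})\) with \(T_0({\bar{s}})=\int _{-{\bar{s}}}^{{\bar{s}}}ds/\sqrt{2(F(s)-F({\bar{s}}))}\); the Perona–Malik corrections contained in the exact \(T_\varepsilon \) of (2.23) are ignored. It also uses the hypothetical potential \(F(u)=(1-u^2)^2/4\), which is quadratic at \(\pm 1\) (that is, \(\theta =2\)). For this F, \(T_0\) increases from \(\pi \) to \(+\infty \) on \((0,1)\), so a bisection finds \({\bar{s}}\) with \(\varepsilon T_0({\bar{s}})=(b-a)/N\); the layers are then placed at \(h_i=a+(i-\tfrac12)(b-a)/N\), as derived in the proof above. All function names are ours.

```python
import math


def level_gap(s, s_bar):
    """F(s) - F(s_bar) for the illustrative F(u) = (1 - u^2)^2 / 4, in factored form.

    Factoring avoids the cancellation caused by subtracting two close values of F.
    """
    return (s_bar - s) * (s_bar + s) * (2.0 - s * s - s_bar * s_bar) / 4.0


def half_period(s_bar, n=4000):
    """Leading-order T_0(s_bar): time needed to travel from -s_bar to s_bar.

    The substitution s = s_bar * sin(phi) removes the square-root singularities at
    the turning points; the phi-integral is approximated by the midpoint rule.
    """
    dphi = math.pi / n
    total = 0.0
    for k in range(n):
        phi = -0.5 * math.pi + (k + 0.5) * dphi
        s = s_bar * math.sin(phi)
        total += s_bar * math.cos(phi) / math.sqrt(2.0 * level_gap(s, s_bar))
    return total * dphi


def solve_s_bar(length, n_layers, eps, lo=1e-8, hi=1.0 - 1e-12):
    """Bisection for s_bar such that eps * T_0(s_bar) = length / n_layers."""
    target = length / (n_layers * eps)
    if not half_period(lo) < target < half_period(hi):
        raise ValueError("target half-period outside the bracket; change eps or N")
    for _ in range(60):  # T_0 is increasing in s_bar, so bisection applies
        mid = 0.5 * (lo + hi)
        if half_period(mid) < target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def layer_positions(a, b, n_layers):
    """Equidistant zeros h_i = a + (i - 1/2)(b - a)/N, for i = 1, ..., N."""
    return [a + (i - 0.5) * (b - a) / n_layers for i in range(1, n_layers + 1)]
```

For instance, with \([a,b]=[0,1]\) and \(N=2\), calling solve_s_bar(1.0, 2, eps) for eps = 0.1 and then eps = 0.05 returns a larger \({\bar{s}}\) for the smaller \(\varepsilon \), in line with the limit \({\bar{s}}\rightarrow 1\) discussed in Remark 2.10.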

Remark 2.10

We point out that in the case \(\theta \in (1,2)\) we proved the existence of compactons with a generic number \(N\in {\mathbb {N}}\) of layers located at arbitrary positions \(h_1,h_2,\dots ,h_N\) in the interval [a, b]. On the other hand, in Proposition 2.9 we proved that, for \(\theta \ge 2\), there exist solutions with N layers which oscillate between the values \(\pm {\bar{s}}\), with \({\bar{s}}\approx 1\) determined by the condition \(T_\varepsilon (\bar{s})= (b-a)/N\); however, the positions of the layers are prescribed by (2.25) and cannot be chosen arbitrarily. Such a result can also be proved when \(\theta \in (1,2)\), but only if \(\varepsilon \) is large enough: indeed, one has

and one can easily see that the right hand side of (2.26) diverges if \(\varepsilon \rightarrow 0^+\).

Finally, notice that we proved that for \(\varepsilon \in (0,{\bar{\varepsilon }})\) there exists a unique \({\bar{s}}\) (depending on \(\varepsilon \)) such that \(T_\varepsilon ({\bar{s}})= (b-a)/N\) and, in particular, \(\displaystyle {\lim \nolimits _{\varepsilon \rightarrow 0^+}}{\bar{s}}=1,\) meaning that the smaller \(\varepsilon \) is, the closer \({\bar{s}}\) is to 1.

2.3.1 Particular Cases of Potential F

To illustrate what can happen for particular choices of the potential F satisfying (1.7)–(1.8), we consider two specific examples. In the first one, F is given by (1.10) with \(\theta \ge 2\) and, as a consequence, the admissible energy levels leading to periodic (bounded) solutions are \(C \in \left( -\frac{1}{2\theta },0\right) \), see Fig. 2.

On the other hand, consider a symmetric potential F with a local minimum at \(u=0\) and, as a consequence, two local maxima at \(\pm {\bar{u}}\), for some \({\bar{u}} \in (0,1)\) (see Fig. 3, where \(-F\) is depicted). In this case, the periodic solutions described in Proposition 2.8 appear for \(C \in \left( -F(0), 0\right) \) (hence with \(\Gamma =F(0)\) in (2.21)), see the green line in Fig. 3. Moreover, if \(C= -F(0)\), homoclinic solutions appear (see the blue line in Fig. 3), while for \(C \in \left( -F({\bar{u}}), -F(0)\right) \) one can construct new periodic solutions entirely contained either in the negative or in the positive half-plane. Of course, the case of a non-symmetric potential is even more involved (for instance, there will be several energy levels corresponding to different homoclinic solutions), and its study will be the object of further investigation.
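The case analysis for a symmetric potential can be encoded as a small classifier. This Python sketch is illustrative only: the potential \(F(u)=(1-u^2)^2(0.1+u^2)\) is a hypothetical choice, not taken from the paper, with a local minimum at 0 (\(F(0)=0.1\)), local maxima at \(\pm {\bar{u}}\) with \({\bar{u}}^2=4/15\), and quadratic wells at \(\pm 1\). The function name classify_level is ours, and levels not discussed in the text (for instance \(C\le -F({\bar{u}})\)) are reported as not covered.

```python
import math


def F(u):
    """Hypothetical symmetric double well: local min at 0, local max at +-sqrt(4/15)."""
    return (1.0 - u * u) ** 2 * (0.1 + u * u)


def classify_level(C, F=F, grid=100_000):
    """Type of bounded solution associated with the energy level C (symmetric F)."""
    F0 = F(0.0)
    # F(u_bar): the interior local maximum of F on (0, 1), located by a grid search
    F_top = max(F(k / grid) for k in range(1, grid))
    if math.isclose(C, -F0):
        return "homoclinic"
    if -F0 < C < 0.0:
        return "periodic, crossing zero"  # oscillates between -s_bar and s_bar
    if -F_top < C < -F0:
        return "periodic, one sign"  # stays either in u < 0 or in u > 0
    return "not covered"


if __name__ == "__main__":
    for level in (-0.05, -0.1, -0.15, -0.3):
        print(level, classify_level(level))
```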

3 Variational Results

In this section we collect and prove some variational results needed to show the slow motion of the solutions to (1.1)–(1.2) in the case \(\theta \ge 2\), whose analysis is performed in Sect. 4. The idea is to apply the strategy first developed by Bronsard and Kohn in [3], subsequently improved by Grant in [16] and successfully used in many other models, see for instance [9,10,11] and references therein.

3.1 Lyapunov Functional

We start by introducing the energy associated with (1.1)–(1.2)

where \({\tilde{Q}}\) is defined in (2.6); we first prove that (3.1) is a Lyapunov functional for the model (1.1)–(1.2), that is, a functional whose time derivative is non-positive when computed along the solutions to (1.1)–(1.2).

Lemma 3.1

Let \(u\in C([0,T],H^2(a,b))\cap C^1([0,T],H^1(a,b))\) be a solution of (1.1)–(1.2). Let \(E_\varepsilon \) be the functional defined in (3.1). Then

and

Proof

(3.3) directly follows from (3.2): indeed once (3.2) is proved, an integration with respect to time over the interval [0, T] yields (3.3).

As for the proof of (3.2), by differentiating with respect to time the energy \(E_\varepsilon \), we have

where we have used that \({\tilde{Q}}'(\varepsilon ^2 u_x)=Q(\varepsilon ^2 u_x)\). Integrating by parts, exploiting the boundary conditions (1.2) and that \(Q(0)=0\), we deduce

From this, since u satisfies Eq. (1.1), we end up with (3.2), thus completing the proof. \(\square \)
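The dissipation identity can be observed on a discretization. The Python sketch below rests on assumptions that should be checked against the displays of (1.1) and (3.1), which are not reproduced here: we take (1.1) in the form \(u_t=(Q(\varepsilon ^2u_x))_x-F'(u)\) and \(E_\varepsilon [u]=\int _a^b\big (\varepsilon ^{-3}{\tilde{Q}}(\varepsilon ^2u_x)+\varepsilon ^{-1}F(u)\big )dx\), a pair consistent with \({\tilde{Q}}'=Q\). We also pick \(Q(s)=s/(1+s^2)\), for which \({\tilde{Q}}(s)=\tfrac12\log (1+s^2)\), and \(F(u)=(1-u^2)^2/4\); all names are hypothetical. The scheme uses cell averages with zero flux at both ends (a discrete analogue of (1.2)); its right-hand side equals \(-(\varepsilon /h)\) times the gradient of the discrete energy, so sufficiently small explicit Euler steps decrease the energy.

```python
import math

EPS = 0.1  # illustrative value of the small parameter


def Q(s):
    """Perona-Malik type flux: odd, Q(0) = 0, increasing on [-1, 1]."""
    return s / (1.0 + s * s)


def Q_tilde(s):
    """Primitive of Q vanishing at 0, so that Q_tilde' = Q."""
    return 0.5 * math.log1p(s * s)


def F(u):
    return 0.25 * (1.0 - u * u) ** 2


def dF(u):
    return u ** 3 - u


def energy(u, h, eps=EPS):
    """Discrete energy: h * sum(eps^-3 Q_tilde(eps^2 Du) + eps^-1 F(u))."""
    gradient_part = sum(Q_tilde(eps ** 2 * (u[i + 1] - u[i]) / h) for i in range(len(u) - 1))
    potential_part = sum(F(v) for v in u)
    return h * (gradient_part / eps ** 3 + potential_part / eps)


def rhs(u, h, eps=EPS):
    """Discrete (Q(eps^2 u_x))_x - F'(u) with zero flux at x = a and x = b."""
    m = len(u)
    flux = [0.0] * (m + 1)  # flux[i] lives on the face between cells i-1 and i
    for i in range(1, m):
        flux[i] = Q(eps ** 2 * (u[i] - u[i - 1]) / h)
    return [(flux[i + 1] - flux[i]) / h - dF(u[i]) for i in range(m)]


def simulate(m=40, steps=500, dt=1e-3, eps=EPS):
    """Explicit Euler on [a, b] = [0, 1]; returns the final state and the energy history."""
    h = 1.0 / m
    u = [0.8 * math.cos(3.0 * math.pi * (i + 0.5) * h) for i in range(m)]
    energies = [energy(u, h, eps)]
    for _ in range(steps):
        r = rhs(u, h, eps)
        u = [ui + dt * ri for ui, ri in zip(u, r)]
        energies.append(energy(u, h, eps))
    return u, energies


if __name__ == "__main__":
    _, history = simulate()
    print(history[0], history[-1])
```

The time step is kept well below the explicit stability limit (here \(dt\cdot 4\varepsilon ^2/h^2\approx 0.064\)); with a much larger step the monotone decay is no longer guaranteed for the discrete scheme.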

Remark 3.2

Equality (3.3) plays a crucial role in Sect. 4, where we analyze the slow motion of some solutions of (1.1)–(1.2); hence, our analysis applies only to sufficiently regular solutions. However, it is important to notice that all the stationary solutions constructed in Sect. 2 are such that the diffusion coefficient \(Q'(\varepsilon ^2u_x)\) is strictly positive (see (2.8) and (2.18)), namely the solutions lie in the region where (1.1) is parabolic. Similarly, all the solutions we consider in the rest of the paper satisfy the same estimates and, as a consequence, they have the regularity needed to apply Lemma 3.1.

3.2 Lower Bounds

The aim of this subsection is to prove some lower bounds for the energy \(E_\varepsilon \), defined in (3.1), of a function which is sufficiently close in the \(L^1\) sense to a jump function taking the values \(-1\) and \(+1\) (see (3.8)). These variational results differ in nature depending on whether \(\theta =2\) or \(\theta >2\); moreover, we underline that, unlike the result of Subsect. 3.1, Eq. (1.1) plays no role in their proofs.

3.2.1 A Crucial Inequality

The first tool we need to prove the aforementioned lower bounds is an inequality involving the functions Q and \(J_\varepsilon \). To motivate it, we recall the equation which identifies the standing wave solutions, that is

which in turn, using (2.10), can be rewritten as follows

We now observe that (2.8) implies \(\Phi '_\varepsilon =J_\varepsilon (F(\Phi _\varepsilon ))\), so that substituting into (3.4), we arrive at

Therefore, we look for an inequality which reduces to an equality along the standing wave solutions. Motivated by these considerations, we state and prove the following lemma.

Lemma 3.3

Let \(\varepsilon ,L>0\). If \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5) and \(J_\varepsilon \) satisfies (2.8), then

for any \((x,y)\in [-\kappa \varepsilon ^{-2}, \kappa \varepsilon ^{-2}]\times [0,\ell \varepsilon ^{-2}]\), where \(\kappa \) and \(\ell \) are defined in (1.5) and (2.6), respectively.

Proof

Since, by assumption, \({\tilde{Q}}\) is even, in order to prove (3.6) it is sufficient to study the sign of the function

for all \( x \in [0,\kappa \varepsilon ^{-2}]\) and for all \( y \in [0,\ell \varepsilon ^{-2}]\). For any (x, y) in the interior of this rectangle we have

if and only if \(Q(\varepsilon ^2 x)= Q(\varepsilon ^2 J_\varepsilon (y))\). Since Q is strictly increasing in the interval \([0,\kappa \varepsilon ^{-2}]\), we thus have \(g_x(x,y)=0\) if and only if \(x=J_\varepsilon (y)\). Let us now evaluate \(g_y\) in such points:

where we used (2.7) and (2.8). It follows that the only interior critical points of the function g are given by \(x=J_\varepsilon (y)\), and we have

Let us now study the function g on the boundary of the rectangle \([0, \kappa \varepsilon ^{-2}]\times [0,\ell \varepsilon ^{-2}]\), which is formed by four segments; we start with

and

where we used the fact that \(J_\varepsilon (0)=0\) and \(Q(0)=0\). Next, for \(y \in [0, \ell \varepsilon ^{-2}]\) we consider the function

and we have

Recalling (2.6), we observe that the function \(g_1\) is such that

Moreover \(g_1(0)= {\tilde{Q}}(\kappa ) >0\) while

implying that \(g(\kappa \varepsilon ^{-2},y) \ge 0\) for all \(y \in [0, \ell \varepsilon ^{-2}]\). Finally, for \(x \in [0,\kappa \varepsilon ^{-2}]\) we consider

where in the last equality we used (2.6)–(2.8), which imply \(J_\varepsilon (\ell \varepsilon ^{-2}) = \kappa \varepsilon ^{-2}\). Going further, we have

and

since Q is increasing and \(x \le \kappa \varepsilon ^{-2}\). Hence, \(g(x, \ell \varepsilon ^{-2}) \ge 0\) for all \(x \in [0, \kappa \varepsilon ^{-2}]\).

We have thus proved that g is nonnegative on the boundary. Since the minimum of g over the rectangle is attained either on the boundary or at an interior critical point, where \(g=0\), we conclude that g is nonnegative for all \((x,y)\in [0,\kappa \varepsilon ^{-2}]\times [0,\ell \varepsilon ^{-2}]\), and the proof is complete. \(\square \)

The inequality (3.6) is crucial because it allows us to state that if \(\bar{u}\) is a monotone function connecting the two stable points \(+1\) and \(-1\) and (2.15) holds true, then the energy (3.1) satisfies

Our next goal is to show that the positive constant \(c_\varepsilon \) defined in (3.7) represents the minimum energy needed for a single transition between \(-1\) and \(+1\); having this in mind, we fix once and for all \(N\in {\mathbb {N}}\) and a piecewise constant function v with N transitions as follows:

The aforementioned lower bounds will allow us to state that if \(\{ u^\varepsilon \}_{\varepsilon >0}\) is a family of functions sufficiently close to v in \(L^1\), then

where the remainder term \(R_{\theta ,\varepsilon }\) goes to zero as \(\varepsilon \rightarrow 0^+\) at a rate depending on \(\theta \).

3.2.2 Lower Bound in the Critical Case \(\theta =2\)

Let us start by proving the lower bound in the case \(\theta =2\), where the remainder term \(R_{\theta ,\varepsilon }\) is exponentially small as \(\varepsilon \rightarrow 0^+\).

Proposition 3.4

Assume that \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5) and that \(F\in C^1(\mathbb {R})\) satisfies (1.7)–(1.8) with \(\theta =2\). Let us set

where \(\kappa \) is given in (1.5). Moreover, let v be as in (3.8) and \(A\in (0,r\sqrt{2\lambda _1 {\mathcal {Q}}^{-1}})\) with \(\lambda _1>0\) (independent of \(\varepsilon \)) as in (1.8). Then, there exist \(\varepsilon _0,C,\delta >0\) (depending only on Q, F, v and A) such that if \(u\in H^1(a,b)\) satisfies

then for any \(\varepsilon \in (0,\varepsilon _0)\),

where \(E_\varepsilon \) and \(c_\varepsilon \) are defined in (3.1) and (3.7), respectively.

Proof

Fix \(u\in H^1(a,b)\) satisfying (3.10) and \(\varepsilon \) such that (2.15) holds true. Take \({\hat{r}}\in (0,r)\) so small that

Then, choose \(0<\rho <\eta \) (with \(\eta \) given by (1.8)) sufficiently small that

Let us focus our attention on \(h_i\), one of the discontinuity points of v, and, to fix ideas, let \(v(h_i\pm r)=\pm 1\), the other case being analogous. We claim that assumption (3.10) implies the existence of \(r_+\) and \(r_-\) in \((0,{\hat{r}})\) such that

Indeed, assume by contradiction that \(|u-1|\ge \rho \) throughout \((h_i,h_i+{\hat{r}})\); then

and this leads to a contradiction if we choose \(\delta \in (0,{\hat{r}}\rho )\). Similarly, one can prove the existence of \(r_-\in (0,{\hat{r}})\) such that \(|u(h_i-r_-)+1|<\rho \).

Now, we consider the interval \((h_i-r,h_i+r)\) and claim that

for some \(C>0\) independent of \(\varepsilon \). Observe that from (3.6) it follows that, for any \(a\le c<d\le b\),

Hence, if \(u(h_i+r_+)\ge 1\) and \(u(h_i-r_-)\le -1\), then from (3.16) we can conclude that

which implies (3.15). On the other hand, notice that in general we have

where we again used (3.16). Let us estimate the first two terms of (3.17). Regarding \(I_1\), assume that \(1-\rho<u(h_i+r_+)<1\) and consider a minimizer \(z:[h_i+r_+,h_i+r]\rightarrow \mathbb {R}\) of \(I_1\) subject to the boundary condition \(z(h_i+r_+)=u(h_i+r_+)\); the existence of such a minimizer can be proved following [7, Chapter 4]. If the range of z is not contained in the interval \((1-\eta ,1+\eta )\), then from (3.16), it follows that

by the choice of \(r_+\) and \(\rho \), see (3.13). Suppose, on the other hand, that the range of z is contained in the interval \((1-\eta ,1+\eta )\). Then, the Euler–Lagrange equation for z is

Denoting by \(\psi (x):=(z(x)-1)^2\), we have \(\psi '=2(z-1)z'\) and

where we used the fact that \(\varepsilon ^2|z'|\le \kappa \) (see (2.18)) and (3.9). Since \(|z(x)-1|\le \eta \) for any \(x\in [h_i+r_+,h_i+r]\), using (1.8) with \(\theta =2\), we obtain

where \(\mu =A/(r-{\hat{r}})\) and we used (3.12). Thus, \(\psi \) satisfies

We compare \(\psi \) with the solution \({\hat{\psi }}\) of

which can be explicitly calculated to be

By the maximum principle, \(\psi (x)\le {\hat{\psi }}(x)\) so, in particular,

Then, we have

Thanks to the expansion

and (1.9)–(2.9), we can choose \(\varepsilon >0\) small enough that

for any \(s\in [z(h_i+r),1]\); as a consequence, (3.19) yields

From (3.16)–(3.22) it follows that, for some constant \(C>0\),

Combining (3.18) and (3.23), we get that the constrained minimizer z of the proposed variational problem satisfies

The restriction of u to \([h_i+r_+,h_i+r]\) is an admissible function, so it must satisfy the same estimate and we have

The term \(I_2\) on the right hand side of (3.17) is estimated similarly by analyzing the interval \([h_i-r,h_i-r_-]\) and using the second condition of (3.13) to obtain the corresponding inequality (3.18). The obtained lower bound reads:

Finally, by substituting (3.24) and (3.25) in (3.17), we deduce (3.15). Summing up all of these estimates for \(i=1, \dots , N\), namely for all transition points, we end up with

and the proof is complete. \(\square \)

3.2.3 Lower Bound in the Supercritical Case \(\theta >2\)

We now deal with the case \(\theta >2\), where we have a weaker lower bound for the energy, which is stated and proved in the following proposition.

Proposition 3.5

Assume that \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5) and that \(F\in C^1(\mathbb {R})\) satisfies (1.7)–(1.8) with \(\theta >2\). Let v as in (3.8) and define the sequence

Then, for any \(j\in {\mathbb {N}}\) there exist constants \(\delta _j>0\) and \(C>0\) such that if \(u\in H^1(a,b)\) satisfies

and

with \(\varepsilon \) sufficiently small, then

Proof

We prove our statement by induction on \(j\ge 1\). Let us begin by considering the case of only one transition \(N=1\) and let \(h_1\) be the only point of discontinuity of v and assume, without loss of generality, that \(v=-1\) on \((a,h_1)\). Also, we choose \(\delta _j\) small enough such that

Our goal is to show that for any \(j\in {\mathbb {N}}\) there exist \(x_j\in (h_1- 2j\delta _j,h_1)\) and \(y_j\in (h_1, h_{1}+2j\delta _j)\) such that

and

where \(\{k_j\}_{{}_{j\ge 1}}\) is defined in (3.26). We start with the base case \(j=1\), and we show that hypotheses (3.27) and (3.28) imply the existence of two points \(x_1{\in (h_1-2\delta _j,h_1)}\) and \(y_1\in (h_1,h_1+2\delta _j)\) such that

Here and throughout, C represents a positive constant that is independent of \(\varepsilon \), whose value may change from line to line. From hypothesis (3.27), we have

so that, denoting by \(S^-:=\{y:u(y)\le 0\}\) and by \(S^+:=\{y:u(y)>0\}\), (3.33) yields

Furthermore, from (3.29) with \(j=1\), we obtain

and therefore there exists \(y_1\in S^+\cap (h_1,h_1+2\delta _j)\) such that

Since F vanishes only at \(\pm 1\) and \(u(y_1)>0\) we can choose \(\varepsilon \) so small that the latter condition implies \(|u(y_1)-1|<\eta \); hence, from (1.9), it follows that \(u(y_1)\ge 1-C\varepsilon ^{\frac{1}{\theta }}\). The existence of \(x_1{\in S^-\cap (h_1-2\delta _j,h_1)}\) such that \(u(x_1)\le -1+C\varepsilon ^\frac{1}{\theta }\) can be proved similarly.

Now, let us prove that (3.32) implies (3.31) in the case \(j=1\), and as a trivial consequence we obtain the statement (3.28) with \(j=1\) and \(N=1\). Indeed, by using (3.6) and (3.32) one deduces

Reasoning as in (3.21) and using (1.9), we infer

and, as a trivial consequence,

where \(\alpha \) is defined in (3.26). This concludes the proof in the case \(j=1\) with one transition \(N=1\).

We now enter the core of the induction argument, proving that if (3.31) holds true for any \(i\in \{1,\dots ,j-1\}\), \(j\ge 2\), then (3.30) holds true. By using (3.27) we have

Furthermore, by using (3.29) and (3.31) in the case \(j-1\), we deduce

implying

Finally, from (3.35) and (3.36) there exists \(y_{j}\in S^+\cap (y_{j-1},y_{j-1}+2\delta _j)\) such that

and, as a consequence, we have the existence of \(y_{j}\in (y_{j-1},y_{j-1}+2\delta _j)\) as in (3.30). The existence of \(x_{j}\in (x_{j-1}-2\delta _j,x_{j-1})\) can be proved similarly.

Proceeding as done to obtain (3.34), one can easily check that (3.30) implies

Since the definition (3.26) implies \(\alpha (k_j+1)=k_{j+1}\), the induction argument is completed, as well as the proof in case \(N=1\).

The previous argument can be easily adapted to the case \(N>1\). Let v be as in (3.8), and set \(a=h_0, h_{N+1}=b\). We argue as in the case \(N=1\) in each point of discontinuity \(h_i\), by choosing the constant \(\delta _j\) so that

and by assuming, without loss of generality, that \(v=-1\) on \((a,h_1)\). Proceeding as in (3.32), one can obtain the existence of \(x^i_1\in (h_i-2\delta _j,h_i)\) and \(y^i_1\in (h_i,h_i+2\delta _j)\) such that

On each interval \((x_1^i,y_1^i)\) we bound from below \(E_\varepsilon \) as in (3.34), so that by summing one obtains

that is (3.28) with \(j=1\). Arguing inductively as done in the case \(N=1\), we obtain (3.29) for \(E_\varepsilon \) in the general case \(j\ge 2\). \(\square \)

3.2.4 Some Comments on the Lower Bounds

First of all, let us notice that in the proof of Proposition 3.5, we simply took advantage of the behavior of Q in a neighborhood of zero, and of the fact that the potential \(F \in C(\mathbb {R})\) satisfies \(F(u)>0\) for any \(u \ne \pm 1\) and (1.9) for some \(\theta >0\). Hence, Proposition 3.5 holds also in the case \(\theta \in (0,2]\); moreover, since in such a case \(\alpha >1\), the increasing sequence in (3.26) is unbounded, and we can rewrite the estimate (3.28) as

provided that

Nevertheless, if \(\theta =2\), we underline that (3.11) provides a stronger lower bound, where the error is exponentially small rather than algebraically small.

On the other hand, if \(\theta >2\) then \(\alpha \in (0,1)\), and, consequently

Therefore, in the case \(\theta >2\) one only has the lower bounds
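The dichotomy between \(\alpha \ge 1\) and \(\alpha \in (0,1)\) can be read off from the recursion \(\alpha (k_j+1)=k_{j+1}\) used in the proof of Proposition 3.5. When \(\alpha <1\), the recursion has the fixed point \(k^*=\alpha /(1-\alpha )\) and \(k_{j+1}-k^*=\alpha (k_j-k^*)\), so \(k_j\rightarrow k^*\) geometrically; when \(\alpha \ge 1\) and \(k_1>0\), the sequence is unbounded. The Python sketch below simply iterates the recursion; since definition (3.26) is not reproduced here, both \(\alpha \) and the first term \(k_1\) are left as inputs, and the function name is hypothetical.

```python
def k_sequence(alpha, k1, n):
    """First n terms of the recursion k_1 = k1, k_{j+1} = alpha * (k_j + 1)."""
    ks = [k1]
    for _ in range(n - 1):
        ks.append(alpha * (ks[-1] + 1.0))
    return ks


if __name__ == "__main__":
    # alpha < 1: the terms approach the fixed point alpha / (1 - alpha) = 1
    print(k_sequence(0.5, 0.5, 8))
    # alpha = 1: k_j = k_1 + (j - 1) grows without bound
    print(k_sequence(1.0, 1.0, 8))
```

In the first case the exponents saturate, which is why only a fixed algebraic power of \(\varepsilon \) can be gained when \(\theta >2\).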

As a direct consequence of Propositions 3.4–3.5 we have the following result, showing that if the family \(\{ u^\varepsilon \}_{\varepsilon >0}\) makes N transitions between \(+1\) and \(-1\) in an energy-efficient way (see (3.39) for the rigorous definition), then \(E_\varepsilon [u^\varepsilon ]\) converges, as \(\varepsilon \rightarrow 0\), to the minimum energy of the classical Ginzburg–Landau functional (see [3]).

Corollary 3.6

Let v be as in (3.8) and let \(u^\varepsilon \in H^1(a,b)\) be such that

and assume that there exists a function \(\nu \, : \, (0,1) \rightarrow (0,1)\) such that

where \(c_\varepsilon \) is defined in (3.7). Then

Proof

By Propositions 3.4–3.5 and from (3.39) we have:

where

with A appearing in Proposition 3.4 and \(\{ k_j \}_{j \in \mathbb {N}}\) defined in (3.26). Moreover, by using (2.9) and (3.20), we deduce that

and substituting into the definition (3.7), we end up with

Hence, the thesis follows by simply passing to the limit as \(\varepsilon \rightarrow 0^+\) in (3.40). \(\square \)

Example of a function satisfying the assumptions of Corollary 3.6 We conclude this section by showing that there exists a family of functions satisfying assumptions (3.38)–(3.39). First of all, it is easy to check that the compactons \(\varphi _1\) and \(\varphi _2\) constructed in Proposition 2.7 satisfy (3.38) and \(E_\varepsilon [\varphi _{1}] =E_\varepsilon [\varphi _{2}] = N c_\varepsilon \). However, such stationary solutions exist only in the case \(\theta \in (1,2)\); the idea is to use a construction similar to the one used for compactons to obtain a function satisfying (3.38)–(3.39) also when \(\theta \ge 2\); we underline that in this case these profiles are not stationary solutions.

Let us thus consider the increasing standing wave \(\Phi _\varepsilon \), solution to (2.4), and observe that

Now, choose \(\varepsilon _0>0\) small enough so that condition (2.15) holds true for any \(\varepsilon \in (0,\varepsilon _0)\), and fix \(N\in {\mathbb {N}}\) transition points \(a<h_1<h_2<\dots<h_N<b\). Denoting by

the middle points, we define

Notice that \(u^\varepsilon (h_j)=0\), for \(j=1,\dots ,N\) and for definiteness we choose \(u^\varepsilon (a)<0\) (the case \(u^\varepsilon (a)>0\) is analogous). Let us now prove that \(u^\varepsilon \) satisfies (3.38)–(3.39). It is easy to check that \(u^\varepsilon \in H^1(a,b)\) and satisfies (3.38); concerning (3.39), the definitions of \(E_\varepsilon \) and \(u^\varepsilon \) give

From (3.5), it follows that

where \(c_\varepsilon \) is defined in (3.7). Summing up all the terms we end up with \(E_\varepsilon [u^\varepsilon ]\le Nc_\varepsilon \) which clearly implies (3.39).

4 Slow Motion

In this last section we investigate the long time dynamics of the solutions to (1.1)–(1.2)–(1.3), with a special focus on the speed at which they approach an asymptotic configuration, which heavily depends on the parameter \(\theta \) appearing in the potential F (see assumptions (1.7) and (1.8)). Specifically, the main results of this section are Theorems 4.1 and 4.4: in the former we prove that in the critical case \(\theta =2\) the solutions to (1.1)–(1.2)–(1.3) exhibit a metastable behaviour, namely, they maintain the same unstable structure of the initial datum for a time which is exponentially long with respect to the parameter \(\varepsilon \); in the latter, concerning the supercritical case \(\theta >2\), we prove slow motion with a speed rate which is only algebraic with respect to \(\varepsilon \).

Preliminary assumptions Here and in the rest of the section, we fix a function v as in (3.8) and we assume that the initial datum in (1.3) depends on \(\varepsilon \) and satisfies

Moreover, we assume that there exist \(C, \varepsilon _0>0\) such that, for any \(\varepsilon \in (0,\varepsilon _0)\),

where \(R_{\theta ,\varepsilon }\) is defined in (3.41), that is \(u^\varepsilon _0\) satisfies the assumptions of Corollary 3.6.

We emphasize that an initial datum satisfying assumptions (4.1)–(4.2) is, in general, far from being a stationary solution only when \(\theta \ge 2\); indeed, when \(\theta \in (1,2)\), in Proposition 2.7 we proved the existence of a particular class of stationary solutions (compactons), which have an arbitrary number of transition layers located at arbitrary positions inside the interval [a, b]. Hence, in that case an initial datum satisfying (4.1)–(4.2) is either a steady state or a small perturbation of one; thus, proving that the corresponding time dependent solution maintains the same structure for long times is either trivially true (indeed, the same structure is maintained for all \(t>0\)) or only a partial result, since we do not know whether such structure will be lost at some point. In other words, in the case \(\theta \in (1,2)\) transition layers need not evolve in time and could persist forever. On the contrary, if \(\theta \ge 2\), then stationary solutions can only have equidistant layers (see Proposition 2.9), so that an initial configuration such as \(u^\varepsilon _0\) is in general far away from any steady state.

4.1 Exponentially Slow Motion

In this subsection we examine the persistence of layered solutions to (1.1)–(1.2)–(1.3) when \(\theta =2\) in assumption (1.8) on the potential F. In particular, we prove that in this case a metastability phenomenon occurs: the solutions maintain the same behavior as the initial datum for an \(\varepsilon \)-exponentially long time, that is, at least for a time equal to \(m\,e ^{A/\varepsilon }\) for some \(A>0\) and any \(m>0\), both independent of \(\varepsilon \), as stated in the following theorem.

Theorem 4.1

(exponentially slow motion when \(\theta =2\)) Assume that \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5) and that \(F\in C^1(\mathbb {R})\) satisfies (1.7)–(1.8) with \(\theta =2\). Let v be as in (3.8) and \(A\in (0,r\sqrt{2\lambda _1 {\mathcal {Q}}^{-1}})\), with \({\mathcal {Q}}\) defined in (3.9) and \(\lambda _1>0\) (independent of \(\varepsilon \)) as in (1.8). If \(u^\varepsilon \) is the solution of (1.1)–(1.2)–(1.3) with initial datum \(u_0^{\varepsilon }\) satisfying (4.1) and (4.2) with \(R_{\theta ,\varepsilon }= \exp (-A/\varepsilon )\), then

for any \(m>0\).

The proof of Theorem 4.1 strongly relies on the following result which provides an estimate from above of the \(L^2\)-norm of the derivative with respect to time of the solution \(u^\varepsilon (\cdot ,t)\) to (1.1)–(1.2)–(1.3) under the assumptions of Theorem 4.1.

Proposition 4.2

Under the same assumptions of Theorem 4.1, there exist constants \(\varepsilon _0, C_1, C_2>0\) (independent of \(\varepsilon \)) such that

for all \(\varepsilon \in (0,\varepsilon _0)\).

Proof

Let \(\varepsilon _0>0\) be so small that, for all \(\varepsilon \in (0,\varepsilon _0)\), (4.2) holds and

where \(\delta \) is the constant of Proposition 3.4. Let \({\hat{T}}>0\); we claim that if

then there exists \(C>0\) such that

Indeed, inequality (4.7) follows from Proposition 3.4 if \(\Vert u^\varepsilon (\cdot ,{\hat{T}})-v\Vert _{{}_{L^1}}\le \delta \). By using the triangle inequality, (4.5) and (4.6), we obtain

Substituting (4.2) and (4.7) in (3.3), one has

It remains to prove that inequality (4.6) holds for \({\hat{T}}\ge C_1\varepsilon ^{-1}\exp (A/\varepsilon )\). If

there is nothing to prove. Otherwise, choose \({\hat{T}}\) such that

Using Hölder’s inequality and (4.8), we infer

so that there exists \(C_1>0\) such that

and the proof is complete. \(\square \)

Now we are ready to prove Theorem 4.1.

Proof of Theorem 4.1

Fix \(m>0\). As a consequence of the triangle inequality we have that

Hence, since the second term in the right-hand side of (4.9) tends to 0 by (4.1), in order to prove (4.3), it is sufficient to show that

To this aim, we first observe that up to taking \(\varepsilon \) so small that \(m<C_1\varepsilon ^{-1}\), we can apply (4.4) to deduce

Moreover, for all \(t\in [0,m\exp (A/\varepsilon )]\) we have

where we applied Hölder’s inequality and (4.11). We thus obtained (4.10) and the proof is complete. \(\square \)
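For the reader's convenience, the Hölder step used in the proofs above can be written out in full. The following standard chain (fundamental theorem of calculus in time, Minkowski's integral inequality, then the Cauchy–Schwarz inequality in space and in time) holds for \(u^\varepsilon \in C^1([0,T],H^1(a,b))\) as in Lemma 3.1 and any \(t\in [0,T]\); it is a sketch of the general mechanism, not a reproduction of the omitted displays:

```latex
\[
\Vert u^\varepsilon(\cdot,t)-u_0^\varepsilon\Vert_{L^1}
\le \int_0^t \Vert u_t^\varepsilon(\cdot,s)\Vert_{L^1}\,ds
\le \sqrt{b-a}\int_0^t \Vert u_t^\varepsilon(\cdot,s)\Vert_{L^2}\,ds
\le \sqrt{(b-a)\,t}\,\Big(\int_0^t \Vert u_t^\varepsilon(\cdot,s)\Vert_{L^2}^2\,ds\Big)^{1/2}.
\]
```

Hence the \(L^1\) drift up to time t is controlled by the product of t and the dissipated quantity \(\int _0^t\Vert u^\varepsilon _t\Vert ^2_{L^2}\,ds\), which is the quantity estimated in Proposition 4.2.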

Remark 4.3

We stress that the constant \({\mathcal {Q}}\) defined in (3.9) (which appears for the first time in the constant A of the lower bound (3.11)) plays a relevant role in the dynamics of the solution; indeed, such lower bound is needed to prove Theorem 4.1 and, from estimate (4.3), we can clearly see that the bigger A is (that is, the smaller \({\mathcal {Q}}\) is), the slower the dynamics. To illustrate the effect of even a small variation of \({\mathcal {Q}}\): if we choose \(Q'\) so that its maximum is 4 instead of 1 (as it is for the examples (1.6) we considered), then the time taken for the solution to drift away from the initial datum \(u_0\) reduces from \(T_\varepsilon \) to \(\sqrt{T_\varepsilon }\); further details are given by the numerical simulations of Sect. 4.4.
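The square-root claim can be checked directly on the admissible range of A in Theorem 4.1. Ignoring the constant m, taking A close to its upper bound \(A_{\max }({\mathcal {Q}})=r\sqrt{2\lambda _1{\mathcal {Q}}^{-1}}\), and assuming, as the remark indicates, that raising the maximum of \(Q'\) from 1 to 4 multiplies \({\mathcal {Q}}\) by 4, one gets:

```latex
\[
A_{\max}(4\mathcal{Q}) = r\sqrt{\frac{2\lambda_1}{4\mathcal{Q}}} = \tfrac12\,A_{\max}(\mathcal{Q}),
\qquad
\exp\Big(\frac{A_{\max}(\mathcal{Q})}{2\varepsilon}\Big)
= \Big(\exp\Big(\frac{A_{\max}(\mathcal{Q})}{\varepsilon}\Big)\Big)^{1/2}.
\]
```

So a time scale \(T_\varepsilon =e^{A/\varepsilon }\) becomes \(\sqrt{T_\varepsilon }\).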

4.2 Algebraic Slow Motion

In this subsection we consider the case in which the potential F satisfies assumption (1.8) with \(\theta >2\), and we show that the evolution of the solutions changes drastically with respect to the critical case \(\theta =2\) studied in Sect. 4.1. Indeed, the exponentially slow motion proved in Theorem 4.1 is a peculiar phenomenon of non-degenerate potentials, while if \(\theta >2\), then the solution maintains the same unstable structure of the initial profile only for an algebraically long time with respect to \(\varepsilon \), that is, at least for a time equal to \( l\varepsilon ^{-\beta }\), for any \(l>0\), with \(\beta >0\) defined in (3.37). This is a consequence of the fact that when \(\theta >2\) we no longer have a lower bound like the one in Proposition 3.4 (with an exponentially small remainder), but only a lower bound with an algebraically small remainder, see Proposition 3.5. Our second main result is the following one.

Theorem 4.4

(algebraic slow motion when \(\theta >2\)) Assume that \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5) and that \(F\in C^1(\mathbb {R})\) satisfies (1.7)–(1.8) with \(\theta >2\). Moreover, let v be as in (3.8) and let \(\left\{ k_j\right\} _{j\in {\mathbb {N}}}\) be as in (3.26). If \(u^\varepsilon \) is the solution to (1.1)–(1.2)–(1.3), with initial profile \(u_0^{\varepsilon }\) satisfying (4.1) and (4.2) with \(R_{\theta ,\varepsilon }=\varepsilon ^{k_j}\), then

for any \(l>0\).

Proof

The proof follows the same steps as the proof of Theorem 4.1, using Proposition 3.5 instead of Proposition 3.4. In particular, proceeding as in the proof of Proposition 4.2, one can prove that there exist \(\varepsilon _0, C_1, C_2>0\) (independent of \(\varepsilon \)) such that

for all \(\varepsilon \in (0,\varepsilon _0)\). Thanks to the latter estimate, we can prove (4.12) in the same way we proved (4.3) (see (4.9) and the following discussion). \(\square \)

4.3 Layer Dynamics

In this last subsection, we conclude our investigation by describing the slow motion of the transition points \(h_1,\ldots ,h_N\). More precisely, we treat both the critical case \(\theta =2\) and the supercritical one \(\theta >2\), showing that the transition layers evolve with a velocity which goes to zero as \(\varepsilon \rightarrow 0^+\), as stated in Theorem 4.6.

For this, let us fix a function v as in (3.8) and define its interface I[v] as the set of its discontinuity points,

\[ I[v]:=\{h_1,h_2,\ldots ,h_N\}. \]
Moreover, for any function \(u:[a,b]\rightarrow {\mathbb {R}}\) and for any closed subset \(K\subset \mathbb {R}\backslash \{\pm 1\}\), the interface \(I_K[u]\) is defined by

\[ I_K[u]:=u^{-1}(K). \]
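Finally, we recall the notion of Hausdorff distance between two subsets A and B of \({\mathbb {R}}\), denoted by d(A, B) and given by

\[ d(A,B):=\max \Big \{\sup _{\alpha \in A}d(\alpha ,B),\ \sup _{\beta \in B}d(\beta ,A)\Big \}, \]

where \(d(\beta ,A):=\inf \{|\beta -\alpha |: \alpha \in A\}\) for every \(\beta \in B\), and \(d(\alpha ,B)\) is defined analogously for every \(\alpha \in A\).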
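These notions are easy to evaluate on a grid. The Python sketch below is illustrative only: it samples a hypothetical two-layer profile u (a product of tanh factors, not a solution of (1.1)), approximates \(I_K[u]=u^{-1}(K)\) by the grid points where u takes values in K = [-1/2, 1/2] (a hypothetical choice of the closed set K), and computes the Hausdorff distance, that is, the larger of the two one-sided distances \(\sup _{\alpha \in A}d(\alpha ,B)\) and \(\sup _{\beta \in B}d(\beta ,A)\), to the set \(\{h_1,h_2\}\) of jump points of the corresponding step function v.

```python
import math

def hausdorff(A, B):
    """Hausdorff distance between two finite, nonempty subsets of R."""
    def dist(point, S):
        # d(point, S) = inf{|point - s| : s in S}
        return min(abs(point - s) for s in S)
    return max(max(dist(b, A) for b in B), max(dist(a, B) for a in A))

def interface_K(xs, us, K=(-0.5, 0.5)):
    """Grid approximation of I_K[u] = u^{-1}(K) for a closed interval K."""
    lo, hi = K
    return [x for x, u in zip(xs, us) if lo <= u <= hi]

# Hypothetical profile on [a, b] = [0, 1]: close to v = -1, +1, -1 with jumps at h1, h2.
a, b, eps = 0.0, 1.0, 0.05
h1, h2 = 0.3, 0.7
n = 4001
xs = [a + (b - a) * i / (n - 1) for i in range(n)]
us = [-math.tanh((x - h1) / eps) * math.tanh((x - h2) / eps) for x in xs]

I_K_u = interface_K(xs, us)
I_v = [h1, h2]
d = hausdorff(I_K_u, I_v)
print(f"{len(I_K_u)} grid points in I_K[u]; d(I_K[u], I[v]) = {d:.4f}")
```

For this profile the distance is of order \(\varepsilon \) (close to \(\varepsilon \,\mathrm {artanh}(1/2)\)), so it shrinks with the layer width; this is the kind of smallness quantified by Lemma 4.5 below.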

Before stating the main result of this subsection (Theorem 4.6), we prove the following lemma, which is purely variational, meaning that it does not involve Eq. (1.1). It establishes that if a function \(u\in H^1([a,b])\) is close to v in \(L^1\) and its energy \(E_\varepsilon [u]\) (defined in (3.1)) exceeds the minimum energy needed to have N transitions by a quantity that is small with respect to \(\varepsilon \), then the distance between the interfaces \(I_K[u]\) and \(I[v]\) remains small.

Lemma 4.5

Assume that \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5), that \(F\in C^1(\mathbb {R})\) satisfies (1.7)–(1.8) with \(\theta \ge 2\), and let v be as in (3.8). Given \(\delta _1\in (0,r)\) and a closed subset \(K\subset \mathbb {R}\backslash \{\pm 1\}\), there exist positive constants \({\hat{\delta }},\varepsilon _0\) such that, for all \(\varepsilon \in (0,\varepsilon _0)\), if \(u\in H^1([a,b])\) satisfies

for some \(M_\varepsilon >0\) and with \(E_\varepsilon [u]\) defined in (3.1), we have

Proof

Fix \(\delta _1\in (0,r)\) and choose \(\rho >0\) small enough that

and

where

By using the first assumption in (4.13) and by reasoning as in the proof of (3.14) in Proposition 3.4, we can prove that, denoting by \(h_1,\dots ,h_N\) the discontinuity points of v, for each \(i= 1, \dots , N\) there exist

such that

Now suppose by contradiction that (4.14) is violated. Using (3.6), we deduce

On the other hand, we have

Substituting the latter bound in (4.15), we deduce

which implies, because of the choice of \(\rho \), that

which contradicts assumption (4.13). Hence, the bound (4.14) holds and the proof is complete. \(\square \)

Finally, thanks to Theorems 4.1 and 4.4 and Lemma 4.5, we can prove the main result of the present subsection, which describes the slow motion of the transition layers as \(\varepsilon \) goes to 0. In particular, we highlight that the minimum time needed for the distance between the interface of the time-dependent solution \(u^\varepsilon (\cdot ,t)\) and that of the initial datum to exceed a fixed quantity is exponentially large as \(\varepsilon \rightarrow 0^+\) in the critical case \(\theta =2\), and only algebraically large in the supercritical case \(\theta >2\).

Theorem 4.6

Assume that \(Q\in C^1(\mathbb {R})\) satisfies (1.4)–(1.5) and that \(F\in C^1(\mathbb {R})\) satisfies (1.7)–(1.8) with \(\theta \ge 2\). Let \(u^\varepsilon \) be the solution of (1.1)–(1.2)–(1.3), with initial datum \(u_0^{\varepsilon }\) satisfying (4.1) and (4.2). Given \(\delta _1\in (0,r)\) and a closed subset \(K\subset \mathbb {R}\backslash \{\pm 1\}\), set

Then, there exists \(\varepsilon _0>0\) such that if \(\varepsilon \in (0,\varepsilon _0)\)

where A and \(k_j\) are defined as in Propositions 3.4 and 3.5, respectively.

Proof

First of all, we notice that if \(u_0^\varepsilon \) satisfies (4.1) and (4.2), then there exists \(\varepsilon _0>0\) so small that \(u_0^\varepsilon \) automatically verifies assumption (4.13) for all \(\varepsilon \in (0,\varepsilon _0)\). Hence, we are in a position to apply Lemma 4.5, obtaining that for all \(\varepsilon \in (0,\varepsilon _0)\)

Now, for each fixed \(\varepsilon \in (0,\varepsilon _0)\), we consider \(u^\varepsilon (\cdot ,t)\) for all times \(t>0\) such that

Then \(u^\varepsilon (\cdot ,t)\) satisfies the first condition in assumption (4.13), by (4.3) if \(\theta =2\) or by (4.12) if \(\theta >2\). The second condition in (4.13) follows from the fact that the energy \(E_\varepsilon [u^\varepsilon ](t)\) is a non-increasing function of t: since \(u_0^\varepsilon \) satisfies this condition, so does \(u^\varepsilon (\cdot ,t)\) for every \(t>0\). Then, we also have that

for all t as in (4.17). Combining (4.16) and (4.18), and using the triangle inequality, we deduce that

for all t as in (4.17), as desired. \(\square \)

Remark 4.7

We underline that, according to Theorem 4.6, one must wait an extremely long time (exponentially or algebraically long, depending on whether \(\theta =2\) or \(\theta >2\)) to see an appreciable change in the position of the zeros of \(u^\varepsilon \). Once again, this is a meaningful slow-motion result only because \(\theta \ge 2\): in this case an initial datum \(u_0^\varepsilon \) satisfying (4.1)–(4.2) is neither a stationary solution nor close to one, so that there exists a finite time \({\bar{t}}\) at which the solution \(u^\varepsilon (\cdot , {\bar{t}})\) has drifted apart from \(u_0^\varepsilon \). Hence, proving that the layers of \(u^\varepsilon (\cdot , t)\) stay close to those of \(u_0^\varepsilon \) for long times is a nontrivial result.

4.4 Numerical Experiments

We conclude the paper with some numerical simulations showing the slow evolution of the solutions to (1.1)–(1.2)–(1.3) rigorously described in the previous analysis. All the numerical computations, done for the sole purpose of illustrating the theoretical results, were performed using the built-in Matlab\(^\copyright \) solver pdepe, which solves initial–boundary value problems for parabolic and elliptic PDEs in one space dimension. In all the examples we consider Eq. (1.1) with Q given by one of the two explicit functions in (1.6), while \(F(u)=\frac{1}{2\theta }|1-u^2|^\theta \) (see (1.10)) with different values of \(\theta \ge 2\), depending on whether we aim at showing exponential or algebraic slow motion.
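For readers who wish to check the ingredients of the experiments, the following Python sketch (not the authors' Matlab code) encodes the two diffusions \(Q(s)=\frac{s}{1+s^2}\) and \(Q(s)=se^{-s^2}\) together with the potential (1.10) and its derivative \(F'(u)=-u|1-u^2|^{\theta -2}(1-u^2)\), which we computed by hand from (1.10). It checks numerically that \(Q'\) changes sign at \(\kappa =1\) and \(\kappa =1/\sqrt{2}\), respectively, that the maximum of \(Q'\) equals 1 for both, and that \(F''(1)\) is positive for \(\theta =2\) but vanishes for \(\theta >2\) (the degenerate case).

```python
import math

# Prototype diffusions (1.6)
def Q1(s):
    return s / (1.0 + s * s)

def Q2(s):
    return s * math.exp(-s * s)

# Their derivatives, computed by hand
def dQ1(s):
    return (1.0 - s * s) / (1.0 + s * s) ** 2

def dQ2(s):
    return (1.0 - 2.0 * s * s) * math.exp(-s * s)

# Potential (1.10) and its derivative F'(u) = -u |1-u^2|^(theta-2) (1-u^2),
# written as -u * sign(g) * |g|^(theta-1), g = 1 - u^2, so it stays finite for theta > 1
def F(u, theta):
    return abs(1.0 - u * u) ** theta / (2.0 * theta)

def dF(u, theta):
    g = 1.0 - u * u
    return -u * math.copysign(abs(g) ** (theta - 1.0), g)

print("Q1(0), Q2(0):", Q1(0.0), Q2(0.0))
grid = [i / 1000.0 for i in range(-3000, 3001)]
print("max Q1' on [-3, 3]:", max(dQ1(s) for s in grid))
print("max Q2' on [-3, 3]:", max(dQ2(s) for s in grid))

# Sign change of Q' at kappa = 1 and kappa = 1/sqrt(2)
k2 = 1.0 / math.sqrt(2.0)
print("Q1' changes sign at 1:", dQ1(0.99) > 0 and dQ1(1.01) < 0)
print("Q2' changes sign at 1/sqrt(2):", dQ2(k2 - 0.01) > 0 and dQ2(k2 + 0.01) < 0)

# Central-difference estimate of F''(1): positive for theta = 2, vanishing for theta > 2
h = 1e-4
for theta in (2.0, 3.0, 4.0):
    curv = (dF(1.0 + h, theta) - dF(1.0 - h, theta)) / (2.0 * h)
    print(f"theta = {theta}: F''(1) is approximately {curv:.4f}")
```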

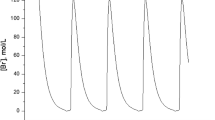

4.4.1 Example No. 1

We start with an example illustrating the result of Theorem 4.1: here \(F(u)=\frac{1}{4}(1-u^2)^2\) (hence we are in the critical case \(\theta =2\)), and we choose \(Q(s)=\frac{s}{1+s^2}\). In the left picture of Fig. 4 we can see that the solution maintains six transitions until \(t=7\times 10^3\), when the closest layers suddenly collapse; after that, one has to wait until \(t=2.9\times 10^4\) to see another appreciable change in the solution (see the right hand picture of Fig. 4). It is worth mentioning that the distance between the first layers which disappear (left hand picture) is \(d=0.9\), while the distance between the layers disappearing in the right hand picture is \(d=1\); hence, a small variation in the distance between the layers at the initial time \(t=0\) gives rise to a large change in the time taken for the solution to annihilate them.
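The sensitivity to the initial distance can be quantified from the two reported times alone. Assuming, as in the classical analysis of the linear case [4], that the collapse time behaves like \(Ce^{c\,d/\varepsilon }\) for some constants C and c (an assumption here, not a result proved in this section), the ratio of the two times determines the exponential rate \(c/\varepsilon \). The sketch below only performs this arithmetic on the values \(t=7\times 10^3\) (for \(d=0.9\)) and \(t=2.9\times 10^4\) (for \(d=1\)) read off Fig. 4.

```python
import math

# Values read off Example No. 1 (Fig. 4): layer distance d and collapse time t.
d1, t1 = 0.9, 7.0e3
d2, t2 = 1.0, 2.9e4

ratio = t2 / t1
# Under the ASSUMED law t ~ C * exp(c * d / eps): log(t2 / t1) = (c / eps) * (d2 - d1).
rate = math.log(ratio) / (d2 - d1)
print(f"time ratio = {ratio:.2f}, implied c/eps = {rate:.1f}")
```

In other words, increasing the distance by about 10% multiplies the collapse time by roughly 4.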

In this figure we consider the same problem as in Fig. 4, the only difference being that \(Q(s)= \frac{\alpha s}{1+s^2}\), \(\alpha >0\). In the left hand side \(\alpha =1/4\), so that \({\mathcal {Q}}=1/4\), while on the right hand side \(\alpha ={\mathcal {Q}}=2\)

4.4.2 Example No. 2

In this second numerical experiment, we emphasize the role of the constant \({\mathcal {Q}}\) defined in (3.9) in the metastable dynamics of the solutions. In particular, as noticed in Remark 4.3, \({\mathcal {Q}}\) affects the evolution of the solution, since it appears in the minimum time needed for the solution to drift apart from its initial transition layer structure (see Theorem 4.1). More precisely, if \({\mathcal {Q}}=1\) as in the example of Fig. 4, the first bump collapses at \(t \approx 7190\) and the second at \(t \approx 3\times 10^4\); this should be compared with Fig. 5, where all the data are the same as in Fig. 4 except for the choice of \({\mathcal {Q}}\). In the left hand side of Fig. 5 we choose Q such that \({\mathcal {Q}}=1/4\), and the evolution becomes much slower, as the first bump collapses at \(t=10^{10}\) instead of \(t=7190\); on the contrary, if \({\mathcal {Q}}=2\) (right hand picture), the dynamics accelerates and the first two bumps disappear at \(t\approx 200\) and \(t\approx 470\), respectively.

4.4.3 Example No. 3

In this example we show what happens when the initial datum \(u_0\) is a discontinuous small perturbation of the unstable equilibrium \(u=0\). In the left hand side of Fig. 6 we can see how, in extremely short times, such a configuration develops into a continuous function with two transition layers; after that (right hand picture), we have to wait until \(t \approx 10^{11}\) to see the first interface collapse. In particular, this numerical experiment shows that, even if the solution does not satisfy assumptions (4.1)–(4.2) at \(t=0\), by \(t=9\) it has already entered the framework described by Theorem 4.1, and we witness the exponentially slow motion of the solution. This behavior is not surprising, since it is consistent with the well known behavior of the solutions to the linear diffusion equation \(u_t= \varepsilon ^2 u_{xx} -F'(u)\): indeed, in [5] the author rigorously proves that there are different phases in the dynamics, the first one being the generation of a metastable layered solution, which is governed by the ODE \(u_t = -F'(u)\). We conjecture that the same results hold true also for the nonlinear diffusion (1.1), since \(Q(0)=0\).
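The generation phase can be illustrated with the ODE \(u_t=-F'(u)\) quoted above, which ignores diffusion entirely (for (1.1) this is only the conjectured behavior). For \(\theta =2\), \(-F'(u)=u-u^3\), and setting \(w=u^{-2}\) gives \(w_t=2-2w\), so that \(u(t)^{-2}-1=(u_*^{-2}-1)e^{-2t}\) for \(0<u_*<1\). The Python sketch below compares the resulting closed-form time to reach a level \(u\in (u_*,1)\) with an explicit Euler integration started from \(u_*=10^{-2}\), the amplitude used in Fig. 6; the level 0.9 and the step size are arbitrary illustrative choices.

```python
import math

def reaction_rhs(u):
    # -F'(u) for F(u) = (1 - u^2)^2 / 4, i.e. theta = 2
    return u - u ** 3

def time_to_reach_closed_form(u_star, level):
    # from u(t)^(-2) - 1 = (u_star^(-2) - 1) * exp(-2 t), valid for 0 < u_star < level < 1
    return 0.5 * math.log((u_star ** -2 - 1.0) / (level ** -2 - 1.0))

def time_to_reach_euler(u_star, level, dt=1e-4):
    # explicit Euler for u_t = -F'(u), starting from u_star > 0
    u, t = u_star, 0.0
    while u < level:
        u += dt * reaction_rhs(u)
        t += dt
    return t

u_star, level = 1e-2, 0.9
print(f"closed form: {time_to_reach_closed_form(u_star, level):.3f}")
print(f"Euler:       {time_to_reach_euler(u_star, level):.3f}")
```

By symmetry, starting from \(-u_*\) the solution approaches \(-1\) in the same time; the time grows only like \(\log (1/u_*)\), which is why the layered structure appears so quickly in Fig. 6.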

Here, we consider the same problem as in Fig. 4 with the discontinuous initial datum \(u_0(x) = u_* \chi _{(-4,-3) \cup (1,4)}-u_* \chi _{(-3,1)}\), where \(u_* =10^{-2}\). In the left hand side we depict the formation of a transition layer structure in relatively small times, while on the right hand side we see the subsequent slow motion

4.4.4 Example No. 4

In this last numerical experiment, we illustrate the results of Theorem 4.4 by choosing F as in (1.10) with \(\theta >2\). In both pictures of Fig. 7 we select \(Q(s)=se^{-s^2}\), and either the initial datum of Fig. 4 (left picture) or the discontinuous one of Fig. 6 (right picture), so that we can compare the numerical experiments. In the left hand picture, since here \(\theta =4\), the time taken for two bumps to disappear is \(t \approx 450\), much smaller than in the right hand side of Fig. 4, where one has to wait until \(t \approx 10^4\). Similarly, in the right side of Fig. 7 (where \(\theta =3\)), the first interface disappears at \(t \approx 8\times 10^4 \ll 10^{11}\), which is the time observed in the right hand side of Fig. 6.

Data availability

No data was used for the research described in the article.

References

Allen, S., Cahn, J.: A microscopic theory for antiphase boundary motion and its application to antiphase domain coarsening. Acta Metall. 27, 1085–1095 (1979)

Bethuel, F., Smets, D.: Slow motion for equal depth multiple-well gradient systems: the degenerate case. Discrete Contin. Dyn. Syst. 33, 67–87 (2013)

Bronsard, L., Kohn, R.: On the slowness of phase boundary motion in one space dimension. Commun. Pure Appl. Math. 43, 983–997 (1990)

Carr, J., Pego, R.L.: Metastable patterns in solutions of \(u_t=\varepsilon ^2u_{xx}-f(u)\). Commun. Pure Appl. Math. 42, 523–576 (1989)

Chen, X.: Generation, propagation, and annihilation of metastable patterns. J. Differ. Equ. 206, 399–437 (2004)

Corli, A., Malaguti, L., Sovrano, E.: Wavefront solutions to reaction-convection equations with Perona–Malik diffusion. J. Differ. Equ. 208, 474–506 (2022)

Dacorogna, B.: Direct methods in the calculus of variations. Appl. Math. Sci. vol. 78. Springer-Verlag, Berlin (1989)

Drábek, P., Robinson, S.B.: Continua of local minimizers in a non-smooth model of phase transitions. Z. Angew. Math. Phys. 62, 609–622 (2011)

Folino, R., Plaza, R.G., Strani, M.: Metastable patterns for a reaction–diffusion model with mean curvature-type diffusion. J. Math. Anal. Appl. 493, 124455 (2021)

Folino, R., Plaza, R.G., Strani, M.: Long time dynamics of solutions to \(p\)-Laplacian diffusion problems with bistable reaction terms. Discrete Contin. Dyn. Syst. 41, 3211–3240 (2021)

Folino, R., Strani, M.: On reaction-diffusion models with memory and mean curvature-type diffusion. J. Math. Anal. Appl. 522, 127027 (2023)

Folino, R., Strani, M.: On the speed rate of convergence of solutions to conservation laws with nonlinear diffusions. Nonlinear Anal. 196, 111762 (2020)

Fusco, G., Hale, J.: Slow-motion manifolds, dormant instability, and singular perturbations. J. Dyn. Differ. Equ. 1, 75–94 (1989)

Gobbino, M.: Entire solutions of the one-dimensional Perona–Malik equation. Commun. Partial Differ. Equ. 32, 719–743 (2007)

Guidotti, P.: A backward-forward regularization of the Perona–Malik equation. J. Differ. Equ. 252, 3226–3244 (2012)

Grant, C.P.: Slow motion in one-dimensional Cahn–Morral systems. SIAM J. Math. Anal. 26, 21–34 (1995)

Höllig, K.: Existence of infinitely many solutions for a forward–backward heat equation. Trans. Am. Math. Soc. 278, 299–316 (1983)