Abstract

Background

The focus of the paper is on scenario studies that examine energy systems. Studies of this type are usually based on formal energy models, from which energy policy recommendations are derived. To be valuable for strategic decision-making, these complex scenario studies must be comprehensible. We aim to highlight and mitigate the lack of transparency in such model-based scenario studies.

Methods

In the first part of the paper, the important concept of transparency in the context of energy scenarios is introduced. In the second part, we develop transparency criteria based on expert judgement. The set of selected criteria is structured into ‘General Information’, ‘Empirical Data’, ‘Assumptions’, ‘Modeling’, ‘Results’, and ‘Conclusions and Recommendations’. Based on these criteria, a transparency checklist is generated.

Results

The proposed transparency checklist is not intended to measure the quality of energy scenario studies, but to deliver a tool which enables authors of energy scenario studies to increase the level of transparency of their work. The checklist thus serves as a standardized communication protocol and offers guidance for interpreting these studies. A reduced and a full version of the checklist are provided. The former simply lists the transparency criteria and can be adopted by authors with ease; the latter provides details on each criterion. We also illustrate how the transparency checklist may be applied by means of examples.

Conclusions

We argue that transparency is a necessary condition for a reproducible and credible scenario study. Many energy scenario studies are at present characterized by an insufficient level of transparency. In essence, the checklist represents a synthesizing tool for improving their transparency. The target group of this work is experts, in their role of authors and/or readers of energy scenario studies. By applying the transparency checklist, the authors of energy scenario studies signal their commitment to a high degree of transparency, in consonance with scientific standards.

Background

Model-based energy scenarios

Scenario analysis is becoming an increasingly recognized area of research. As a result, the number of scenario studies published in recent years has risen tremendously. In 2011, for example, the European Environment Agency (EEA) listed 263 scenario (Footnote 1) studies [1]. Despite its limitations (e.g. availability bias, Footnote 2) [2], scenario analysis is regarded as an adequate method to deal with what Lempert et al. [3] call ‘deep uncertainty’. Furthermore, Wright and Goodwin [4] propose an approach to overcome some of these limitations. In the context of energy research, energy scenarios are considered to be a suitable and helpful means of depicting possible future pathways in an energy system. They have two main purposes: first, to offer orientation and contribute to discussions about energy futures [5]; second, to support strategic decision-making on energy issues. In the latter case, they can be seen as ‘a useful tool to helping decision-makers in government and industry to prepare for the future and to develop long-term strategies in the energy sector’ (p. 89) [6]. Attempts to classify scenarios have been made by other authors (see e.g. [7, 8]).

Due to the complex nature of energy systems, most energy scenario studies benefit from models (Footnote 3), which may capture qualitative and quantitative aspects of the systems. We call these ‘model-based energy scenario studies (ESS)’.

Challenges in dealing with model-based energy scenarios

The complexity of the present and future energy systems and their highly uncertain and dynamic nature pose challenges for energy scenario analysis. The related questions most likely have to be tackled from an interdisciplinary perspective, which consequently leads to the application of a broad diversity of methods and models, with their underlying assumptions. This diversity represents a challenge for the readers (Footnote 4) of the ESS. In our view, comprehensibility or intelligibility of a particular model-based ESS requires two conditions to be met for a reader:

First, the reader needs to have the technical expertise or skills to understand what has been done in the study. Energy scenarios in model-based ESS vary depending on their primary purpose (e.g. assessing mitigation possibilities [9]). Even if a single method is used to construct a model-based ESS, various modeling techniques may be employed by the authors of the study [7]. For example, a model resulting from applying a particular simulation method may encapsulate a series of scientific techniques such as Monte Carlo simulations and Kalman filtering. Often, model-based ESS are the result of adopting several methods. In addition, the results of model-based ESS may be used as input data for further model-based investigations regarding questions of future developments. Suffice to say here that the adaptation of different models and the abundance of ad hoc techniques from which results can be derived are a source of rich diversity in energy scenarios [1, 10].

Due to the increasing importance of ESS and the expanding computing possibilities, the total number of available energy models has grown considerably. These models vary significantly in terms of structure and application which leads to greater complexity in understanding and interpreting model-based ESS. Thus, navigating through this type of study becomes a challenging task. The heterogeneity of applied energy models and corresponding model-based energy scenarios demands specific technical skills for the adequate assessment of such (often complex) interdisciplinary studies. This represents not only a key barrier to the comprehensibility of a particular study but it also makes the comparability of the study more difficult. Over time, a number of studies (e.g. [11–16]) have presented numerous classifications of energy models which provide insight into the differences and similarities between the models to facilitate the understanding of ESS.

The second requirement for the comprehensibility of model-based energy scenario studies is transparency. Arguably, transparency is even more important than technical skills, for it is a basic requirement of any research. Transparency is a key concept of scientific work and is particularly relevant for studies looking to the future [17]. Transparency is a necessary but not sufficient condition for a reproducible and valuable scenario study. In ESS, transparency means that the information necessary to comprehend, and perhaps reproduce, the model results is adequately communicated by the authors of the study. Bossel [18] uses the concepts of ‘black box’, ‘glass box’ and ‘grey box’ to highlight ‘different possibilities for simulating system behavior’ (p. 19). These ideas can be related to different degrees of transparency, to be chosen by the modeler to characterize a certain system (e.g. energy) in a model-based study. In this manner, the black box represents a low level of transparency, and the glass box a high level of transparency. We argue that the employment of the latter is desirable in scientific work because it allows reproducibility. In addition, Weßner and Schüll [19] consider the provision of background information about a study, for example, whether it is financed by a third party, to be important to ensure scientific integrity.

Considering one important part of ESS, a concrete example of the need for transparency is the communication of assumptions (Footnote 5; for further explanations see the ‘Methods’ and ‘Results and discussion’ sections). Ideally, the ESS author (Footnote 6) would fully articulate all the assumptions, thereby helping the reader understand how a particular model-based scenario study has been constructed. In practice, when a large model or a set of large models is used, there is a trade-off between completeness and succinctness, and only the main assumptions can be communicated exhaustively in the model documentation. In extreme cases, critical assumptions are made implicitly, unnecessarily obscuring the modeling exercise. In the worst case, such an approach may be a deliberate strategy to attach objectivity to ideology [20]. It can be concluded that providing comprehensibility for ESS is challenging, since the selection of information to be communicated needs to take into account various aspects.

Aim and outline

This paper addresses and attempts to mitigate opacity in model-based ESS. In particular, we adopt the view that comprehensibility is necessary, if ESS are intended to successfully fulfil their purpose of adding value to strategic decision-making. To do this effectively, there is a need to fully assess the essential content of an ESS. But many of the existing studies are not sufficiently comprehensible for a complete external evaluation of the quality and usefulness of such studies. Since readers do have different questions and educational backgrounds, there is a need for comprehensibility on several communication levels (inter alia proposed in [21]). In the best case, a wide spectrum of addressees such as experts, decision-makers and the public is enabled to build their own opinions based on the outcome of ESS.

However, even the minimum requirement, which is for us full traceability of ESS for experts, often cannot be met [5]. In general, two kinds of required comprehensibility can be distinguished in this context: First, model comprehensibility, which aims at ensuring comparability and reproducibility by experts. The second one is study-results comprehensibility which enables the interpretation of ESS outcomes also for non-experts. If results comprehensibility cannot be sufficiently provided, it is an obvious precondition that at least experts who work in the field of energy scenario construction and application need to be able to fully retrace the work of other experts in order to explain it to the remaining addressees.

We tackle this issue by highlighting the important role of transparency. We aim to provide an additional approach for practical use cases where a glass box model is difficult to achieve. Its purpose is to ensure at least the provision of the information necessary for expert judgement, for both model comprehensibility and results comprehensibility. For this reason, the target group of our work’s outcome is limited to experts, in their role of authors and/or readers of ESS.

The next subsection provides a review of existing scientific work that addresses quality criteria in model-based studies with guidelines of good practice. The ‘Methods’ section then explains the methodology applied to determine the transparency criteria for model-based scenario studies. The ‘Results and discussion’ section introduces the ESS transparency checklist, in which the identified criteria are collected, and discusses several of its key points. Finally, in the ‘Conclusions’ section, a further collection of transparency criteria for addressees other than experts is suggested.

Literature review

The issue of insufficient comprehensibility in scenario studies is not new, and previous work has contributed to addressing it. For example, a recent study of the International Risk Governance Council [22] provides a comprehensive methodological review on energy scenario and modeling techniques. The work emphasizes a clear (i.e. transparent) communication of the scenario and model outcome, especially in terms of possible uncertainties and biases. Although [22] delivers novel insights with regard to shortcomings of energy scenario methods, it provides little guidance on the possible ways of ensuring transparency in model-based ESS. In this section, two main bodies of literature are examined: one dealing more generally with model comprehensibility; the other, with existing tools for model documentation and transparency.

In general, there are several approaches to tackle the need for comprehensibility in model-based ESS. One is the use of standards which mainly refers to requirements for documentation and data handling. Standards enable the reader of ESS to find a common understanding of the whole modeling process. Examples which strive for the standardization of applied models, data sets or assumptions are calls for research projects in the context of the German Energiewende [23] as well as requirements of methodologies [24] and planning tools for policy advice in the USA [25].

Open source and access approaches for both model code and the related data are another way of dealing with the previously described challenges. The concept incorporates advantages such as improved reproducibility of results or distributed peer reviewing, which partially eliminates shortcomings regarding the comprehensibility of energy scenarios. While the idea of open source and access is claimed to be essential for transparent research and reproducibility [26, 27], the matter has not been fully established in model-based energy scenario analysis yet. Nevertheless, open access policy is a requirement for funding grants in some research fields, such as the Public Access Plan of the US Department of Energy (DOE) [28]. The plan demands that all DOE-granted publications have to be uploaded to a public repository, while for the related data, a data management plan has to be provided.

The issue of model documentation in scientific work is notably addressed in the field of ecology. Benz et al. [29] introduce ECOBAS, a standardized model documentation system that facilitates the model creation, documentation and exchange in the field of ecology and environmental sciences. ECOBAS is designed to overcome the difficulties in writing model documentation and applying the documentation to any model language. Schmolke et al. [30] and Grimm et al. [31] propose ‘transparent and comprehensive ecological modeling evaluation’ (TRACE), a tool for planning, performing and documenting good modeling practice. The authors aim to establish expectations of what modelers should clearly communicate when presenting their model (e.g. clear model description and sensitivity analysis of the model output). The purpose of a TRACE-based document is to provide convincing evidence that a model is thoroughly designed, correctly implemented, well-tested, understood and appropriately applied for its intended purpose.

In the context of model-based research in the social sciences, Rahmandad and Sterman [32] provide reporting guidelines to facilitate model reproducibility. They distinguish between a ‘minimum’ and a ‘preferred’ reporting requirement. For computer-simulation models, they further differentiate between a ‘model’ and a ‘simulation’ reporting requirement. Two types of simulation methods are commonly used: agent-based modeling (ABM) and system dynamics (SD). For studies using ABM, Grimm et al. [33] suggest a framework via the Overview, Design concepts, and Details (ODD) protocol and provide examples of how to apply it. The ODD can be understood as a communication tool to enable ABM replication. Later, the authors assess the critical points raised against ODD and offer an updated and improved version of the protocol [34]. Concerning the SD approach, Rahmandad and Sterman [32] illustrate how to implement their reporting guidelines using an innovation diffusion model. In principle, the SD modeling approach is suitable for a high level of model transparency. However, although the qualitative visualization of an SD model is common practice, this is not always the case for the model code. Efforts to enhance the documentation of such models are made by e.g. [35].

In the context of policy analysis, Gass et al. [36] propose a hierarchical approach for producing and organizing documentation of complex models. It recommends four major documentation levels: (1) rote operation of the model, (2) model use, (3) model maintenance and (4) model assessment. Another documentation framework, especially designed for energy system models, is published by Dodds et al. [37]. The focus of the work lies on the challenges due to the increasing complexity which is affected by the ongoing development of often applied optimization models. Although the proposed design metrics are influenced by the structure of optimization models, the presented approach incorporates a way of dealing with the evolution of different model types and thus their input and output data as well.

In essence, the literature shows that standards enable comprehensibility through the harmonization of regulations, frameworks and documentations, whereas open source approaches provide comprehensibility through transparency. However, on the one hand, standards, such as ECOBAS or ODD, are often specifically designed for a certain field of research (ECOBAS: ecology and environmental sciences) or model type (ODD: agent-based models). In this sense, we think that they are an adequate way to tackle what we call model comprehensibility, but do not provide full result comprehensibility. On the other hand, we consider open source approaches to be an extreme case of transparency that does not automatically facilitate the comprehensibility of studies for policy advice. For instance, in order to benefit from full open source, substantial investment in familiarization with the source is required. Thus, depending on the background knowledge of an ESS user, open source may also compromise the comprehensibility of a study due to information overload. The latter can be tackled through different levels of details (for a broader discussion of this issue, see section ‘Results and discussion’). Our contribution to the current state of research therefore addresses a synthesis of standardization and increasing, but balanced, transparency in energy scenario studies (including result and model comprehensibility), if these are to be seen as the result of reproducible, scientific research.

Methods

Conceptual framework

In order to clarify the meaning of frequently used terms within the following text sections, Fig. 1 shows the data and information flow within a model-based ESS. Its purpose is to consistently put the key terms ‘Empirical data’, ‘Assumptions’, ‘Model exercise’, ‘Results’ and ‘Conclusions and recommendations’ into a context representing the background for the construction and discussion of transparency criteria. Thus, the conceptual framework follows the typical steps of conducting a model-based ESS: collection and preparation of empirical data for the model-based data processing, assumption-making, model application and preparation of model outputs as well as deriving comprehensive conclusions and recommendations. As depicted in Fig. 1, empirical data can be divided into primary input data which is imported to the model directly and secondary input data which needs pre-processing before being imported. The model exercise contains at least one model (here called ‘model A’). However, in some cases, a combination of two models or more is applied (for simplicity, we depicted a combination of only two models via a linkage stream). Similar to input data, outputs are divided into primary and secondary data. Further, this figure illustrates that assumptions can be made for the model as well as optionally for the pre-processing, post-processing, additional applied model(s) and linkage(s) between models. Results represent the last step of the model exercise. They are given based on the model output data. Finally, conclusions and recommendations are made based on the whole chain from the empirical data to the results (indicated by the solid arrow in Fig. 1).
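The data and information flow described above can be illustrated with a minimal sketch. It is not the framework of Fig. 1 itself: the function names, the toy quantities (`demand_gwh`, `emission_factor_mt_per_twh`), the growth factor and the rounding step are all hypothetical and chosen only to show that assumptions can enter at pre-processing, in each model, and at post-processing, and that they can be recorded alongside the results.

```python
from typing import Dict, List, Tuple

Data = Dict[str, float]


def preprocess(secondary: Data, log: List[str]) -> Data:
    """Secondary input data needs pre-processing before it enters the model."""
    log.append("pre-processing: demand converted from GWh to TWh")
    return {"demand_twh": secondary["demand_gwh"] / 1000.0}


def model_a(inputs: Data, log: List[str]) -> Data:
    """Toy 'model A': scales demand by an assumed (hypothetical) growth factor."""
    log.append("model A: demand grows by 20 % until the target year")
    return {"future_demand_twh": inputs["demand_twh"] * 1.2}


def model_b(linked: Data, emission_factor: float, log: List[str]) -> Data:
    """Toy 'model B', fed by model A through a linkage stream."""
    log.append("model B: emission factor stays constant over time")
    return {"emissions_mt": linked["future_demand_twh"] * emission_factor}


def postprocess(output: Data, log: List[str]) -> Data:
    """Post-processing of model output before it is reported as a result."""
    log.append("post-processing: results rounded to one decimal place")
    return {name: round(value, 1) for name, value in output.items()}


def run_exercise(primary: Data, secondary: Data) -> Tuple[Data, List[str]]:
    """Run the whole chain and return the results with every assumption made."""
    log: List[str] = []
    inputs = preprocess(secondary, log)
    demand = model_a(inputs, log)
    # Primary input data is passed to the model directly, without pre-processing.
    emissions = model_b(demand, primary["emission_factor_mt_per_twh"], log)
    results = postprocess({**demand, **emissions}, log)
    return results, log


results, assumptions = run_exercise(
    primary={"emission_factor_mt_per_twh": 0.4},
    secondary={"demand_gwh": 500_000.0},
)
print(results)
for entry in assumptions:
    print("-", entry)
```

Keeping the assumption log next to the results mirrors the point of the framework: conclusions rest on the whole chain, so each step's assumptions should be retrievable and not only those of the main model.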

Construction of transparency criteria

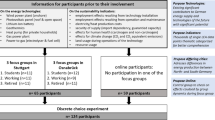

With the aim of increasing the transparency of ESS, we collected criteria on information needed to understand the fundamental contents of an ESS, initially adopting the perspective of an ESS user. The applied methodology can be seen as an alternating combination of two established approaches: qualitative expert interviews [38] and expert validations. Applying the conceptual framework introduced above, we set up a first collection of transparency criteria. It is based on the results of an ESS assessment workshop conducted by members of the Helmholtz School on Energy Scenarios [39]. In individual preparation for this workshop, specific questions (Footnote 7) on two explicit ESS [40, 41] had to be answered. As all of the participants of this workshop are ESS authors as well as users, the initial compilation of transparency criteria represents the first round of the expert validations.

Meeting the identified transparency criteria means creating a complete and comprehensible overview of the underlying work and premises of an ESS. We found that this information needs to be provided in a format that can be easily used. Standard protocols or rather strict guidelines can help to list and describe assumptions as well as to transparently communicate the functional links between these and the model results. However, formulating such an instrument in a way that is too constricted bears the risk of high entry barriers and prohibits transferability over a wide range of model-based ESS. We therefore concluded that a simple as well as flexible tool is essential and intentionally propose the format of a checklist.

Thus, we subsequently rearranged the first collection of transparency criteria for ESS and extended it by frequently asked questions (FAQ). The first version of the checklist represented the template for individual interviews and discussions with three German post-doc researchers, one expert working with energy system modeling and two experts in the field of energy scenario assessment and scientific policy advice. Hence, the listing was updated by the feedback of these selected experts. For the second expert validation round especially, the perspective of ESS authors was emphasized. The idea of a transparency checklist for ESS was presented during the second workshop of the openmod initiative [26], attended by 35 researchers from European research institutions who are experienced in the field of energy system modeling. The presented version of the checklist was again evaluated and updated with respect to the feedback of the openmod workshop. Considering the outcome of our literature research, we finally added study examples to each transparency criterion and conducted final expert interviews. This time, one post-doc social scientist and one post-doc energy system modeler were asked to suggest improvements to the checklist.

In the following sections, we call the final product of this construction process the ‘ESS transparency checklist’.

Limitations of the construction approach

Individual expert interviews are a well-established methodology in social science [42]. In addition, the expert validation has similarities to DELPHI approaches [43]. Hence, it provides the evaluation of a broad set of opinions on an interdisciplinary research topic and subsequently complements the individual expert interviews. It represents therefore an appropriate way to avoid expert dilemma and to gain an intersubjective collection of transparency criteria as far as possible [44]. However, only a limited number of experts were involved in the checklist compilation. Also, it should be noted that the energy modelers obviously dominated the selection of respondents. Thus, the checklist cannot claim to reflect a representative range of opinions in the scientific community dealing with ESS.

Moreover, providing a checklist template offers, on the one hand, a convenient way to compare the outcome of the interviews and to extend it with additional viewpoints. On the other hand, as an existing guideline, it also restricts the spectrum of conceivable criteria to be discussed. Consequently, the construction methodology could be improved both by adding further approaches such as constellation analysis [45] and by involving a broader and more balanced selection of experts.

Results and discussion

The ESS transparency checklist can be seen as an overlay communication protocol between ESS authors and users which is not intended to automatically assess the quality of ESS and their content (data, model or assumptions), but at least to enable readers to assess these points for other addressees or their own work.

From the point of view of an ESS author, the collection of transparency criteria results in a checklist containing questions which are frequently asked by modeling experts trying to understand an ESS done by other experts. In this context, a checklist gives inexperienced ESS authors a summary of important aspects that need to be considered, especially when performing a scenario-based analysis used for deriving recommendations for decision-makers.

From the user’s perspective, the ESS transparency checklist is a catalogue of FAQ related to a section of the appropriate ESS document or report. Consequently, adding the proposed checklist to an energy scenario study means in a broader sense providing effective access to individually relevant and structured information to the user by an additional table of contents. The experienced reader benefits from this representation format of transparency criteria because time-consuming searching through the document for specific information can be avoided.

The ESS transparency checklist and its application

We distinguish between two versions of the transparency checklist (see section ‘Appendix’). The full version, provided at the end of this manuscript, can be seen as the checklist’s manual which clarifies in detail what is meant by the various criteria. This is realized in three ways: by asking relevant expert questions, by using simple made-up examples and by referencing an existing study that meets the particular criteria. Although we consider the ESS transparency checklist to be, in principle, applicable to any model-based scenario study, the examples provided are, given our focus, pertinent to energy scenarios.

The second version of the transparency checklist is the reduced version. This version is intended for application to a particular ESS (cf. Table 1). As an additional table of contents, this reduced version only consists of the transparency criteria and a second table column. In the latter, an ESS author only has to enter the page numbers of the study on which a certain transparency criterion is considered to be fulfilled. However, the extent to which any criterion is met depends on the ESS authors’ assessment. Since the checklist’s primary purpose is to raise awareness of transparency, we intentionally chose this open way of dealing with the transparency criteria to keep the cost of its application low. Thus, ESS authors can use the reduced version of the checklist with ease and add it to any document without needing to change the structure or content of the ESS to which it is applied.
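As a rough illustration of the reduced version, the following sketch renders a two-column table of criteria and page references. It is an assumption-laden example, not the checklist of Table 1: the list contains only the criteria named in this text, and the function name `render_reduced_checklist`, the plain-text layout and the page numbers are hypothetical.

```python
from typing import Dict, List

# Only criteria mentioned in the text; the checklist in the Appendix has more.
CRITERIA: List[str] = [
    "Key term definitions",
    "Assumptions for data modification",
    "Identification of uncertain developments",
    "Uncertainty consideration",
    "Storyline construction",
]


def render_reduced_checklist(pages: Dict[str, str]) -> str:
    """Return a plain-text table: transparency criterion | page(s) in the study."""
    unknown = set(pages) - set(CRITERIA)
    if unknown:
        raise KeyError(f"not a checklist criterion: {sorted(unknown)}")
    width = max(len(criterion) for criterion in CRITERIA)
    lines = [f"{'Transparency criterion'.ljust(width)} | Page(s)"]
    for criterion in CRITERIA:
        # Criteria without a page entry stay visible instead of being dropped.
        lines.append(f"{criterion.ljust(width)} | {pages.get(criterion, '-')}")
    return "\n".join(lines)


# Hypothetical page numbers entered by an ESS author.
example = render_reduced_checklist({
    "Key term definitions": "4-5",
    "Storyline construction": "12",
})
print(example)
```

Listing every criterion, including those without a page entry, keeps the table usable as an additional table of contents while leaving the judgement of whether a criterion is met to the ESS authors.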

Discussion of transparency criteria—the full version

In the following, we discuss the categories of the ESS transparency checklist in more detail. This discussion is mainly intended for practitioners, to facilitate full application of the checklist (see section ‘Appendix’).

This should be enabled by additional columns for a further description of the criteria. The column ‘transparency question’ contains one or more exemplary expert questions which can be assigned to a transparency criterion. The column ‘examples and further description’ shows simple examples of formulations that would contribute to fulfilling the transparency criterion. Finally, the column ‘applied study’ gives a concrete example of an existing ESS that, from our point of view, provides this transparency.

General information

The first part of the ESS transparency checklist targets basic background information required for the interpretation and classification of a study. Apart from giving information about the ESS author(s) and the institution(s), one key point is the communication of the objectives (e.g. overarching research question) and the funding of the study [46]. Another point that affects the interpretation of a study is the use of key terms, which usually depends on the professional background of the ESS authors. Often, similar terms are used which have very different meanings or definitions. Thus, we propose providing a glossary that defines those terms to avoid potential misunderstandings and misinterpretation of the study’s outcomes (‘Key term definitions’).

Input data preparation

The main part of the ESS transparency checklist deals with the model exercise which, in a more simplified way, could be described as data processing with a model. Thus, the checklist’s categories coming along with the model exercise (‘Empirical data’, ‘Assumptions’, ‘Results and conclusions’) include topics such as data sources, data selection, data processing or the interpretation of data.

As well as distinguishing several types of data like inputs and outputs, the ESS transparency checklist also stresses a distinction between data influenced by assumptions and data that is gained from the past. The category Empirical data therefore asks how the latter is treated. This kind of data is also known as primary data and includes data from statistical surveys (e.g. databases of the International Energy Agency [47]) or measurements of physical quantities (e.g. technical datasheets). According to the three levels of transparency [18], listing the sources of the empirical data and pointing out the modifications that are applied corresponds to providing the ‘opaque box’. Thus, full transparency goes a step further and means also enabling access to the used primary data as it is described by [48] for the treatment of fundamental electricity data.

‘Pre-processing’ of data is often done to unify the given data from different sources or to adapt it to the requirements of the modeling environment. Due to a lack of appropriate empirical data, this also commonly goes along with the formulation of assumptions (‘Assumptions for data modification’). A typical example is the spatial unification of technical characteristics and costs, which means that known regional differences of these parameters are ignored or considered to be negligible. Since such assumptions seem to be quite irrelevant with regard to the overarching objective of the study, they are rarely documented. However, assumptions are an indispensable part of an ESS and therefore play a crucial role for the input and output data preparation as well as for the application of different model types (cf. Fig. 1). Knowledge of these assumptions is thus a precondition for ensuring complete reproducibility of the modeling exercise.

Assumptions communication

Besides the assumptions for data modification, estimations about the future developments also cannot be made without assumptions. The ESS transparency checklist emphasizes this issue with the following criteria for assumptions communication which are based on the three typical steps of a scenario construction process:

First, the necessity of the model exercise to rely on assumptions leads to intrinsic uncertainties within the study’s conclusions and derived recommendations. In principle, two fundamentally different types of uncertainty exist. On the one hand, input data is associated with possible ranges of values and therefore may affect the outcome of ESS (data uncertainty). On the other hand, the model approach itself might include undetectable inaccuracies intrinsically (model uncertainty). The latter might also be interpreted as an error due to abstractions of the real world in order to create a simplified model. In this sense, Brock and Durlauf [49] give the example of economic actors which cannot fulfil the assumptions regarding rational behavior in macroeconomics (i.e. homo oeconomicus). A broader definition of uncertainty is given by Walker et al. [50] who describe uncertainty as ‘any departure from the unachievable ideal of complete determinism’ (p. 8). Trutnevyte et al. [51] point out that different dimensions of uncertainty (e.g. input data uncertainty, unawareness or unpredictability of events) lead to an infinite number of robust scenarios. Therefore, the decisions about which uncertain developments are included and excluded in an ESS play a major role for the interpretation of its outcomes. The transparency criterion ‘Identification of uncertain developments’ addresses the associated requirement to communicate those uncertainties which are explicitly assessed in the study.

For the derivation of model inputs, usually, a range of future developments can be identified which is addressed by bandwidths of qualitative or quantitative values. In terms of quantitative values, it is a good practice in scenario studies to deal with them by performing parameter variations on input data. However, the number of practicable model runs is limited, and the number of assumptions can become quite large. Hence, parameter variations cannot be done for all quantitative values, and also qualitative assumptions are not covered by this approach.
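To illustrate why parameter variations cannot cover all quantitative values, the following minimal Python sketch counts the model runs needed for a full combination of bandwidth values and for a variation of one parameter at a time. The parameter names and values are purely hypothetical and are not taken from any study.

```python
from itertools import product

# Hypothetical bandwidths (low / reference / high) for three quantitative assumptions.
bandwidths = {
    "fuel_price_eur_per_mwh": [20.0, 30.0, 45.0],
    "co2_price_eur_per_t": [10.0, 40.0, 100.0],
    "demand_growth_pct_per_year": [-0.5, 0.0, 1.0],
}


def full_factorial(bandwidths):
    """Return every combination of the bandwidth values as a list of dicts."""
    names = list(bandwidths)
    return [dict(zip(names, combo)) for combo in product(*bandwidths.values())]


def one_at_a_time(bandwidths, reference_index=1):
    """Return the reference case plus runs that change a single parameter each."""
    reference = {name: values[reference_index] for name, values in bandwidths.items()}
    runs = [reference]
    for name, values in bandwidths.items():
        for i, value in enumerate(values):
            if i != reference_index:
                # Copy the reference case and overwrite only one parameter.
                runs.append({**reference, name: value})
    return runs


full_runs = full_factorial(bandwidths)
oat_runs = one_at_a_time(bandwidths)
print(len(full_runs), "runs for all combinations")
print(len(oat_runs), "runs when varying one parameter at a time")
```

The number of combined runs grows multiplicatively with every additional assumption, whereas the one-at-a-time variation grows only additively but ignores interactions between parameters. Stating which of such designs was used is one way of documenting how uncertainties were considered.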

There are qualitative assumptions which are considered only indirectly in a study. For example, if a very high share of electric vehicles is assumed, this goes along with lifestyle changes, although those are not stated explicitly in the study. Qualitative assumptions like lifestyle changes can also enter an ESS directly by being translated into quantitative values for model input. But even this translation process goes along with assumptions which are not necessarily made explicit. Therefore, the significance of specific assumptions for the study’s outcome is only comprehensible if it is complemented by information on how the corresponding uncertainties are treated in the study. Thus, the second aspect of assumption communication is about how the main uncertainties are considered (‘uncertainty consideration’). This includes statements on whether the bandwidths for certain numerical values which go into the model are justified by approaches such as own estimations, literature research or expert workshops. Even the explicit information that, instead of a bandwidth, only a single numerical value is chosen arbitrarily can contribute to an increase in transparency.

Third, to combine the involved assumptions into an applicable model framework, a storyline is usually constructed (‘storyline construction’). ‘Storyline-based scenarios are expressed as qualitative narratives that in length may range from brief titles to very long and detailed descriptions’ (p. 26) [52]. For the users of model-based ESS, it is of interest what the storylines are about, how they were constructed and which normative assumptions (see Footnote 8) are included there, as they are used to determine the relevant ranges for numerical assumptions.

Modeling

A critical aspect regarding transparency is the balance between enabling access to all relevant information and information overload. The easiest way of tackling this issue is to provide information on different levels of detail. In the case of listing all assumptions, this can be realized by selecting the crucial or new ones for the main document of the study while referring to an extra document which contains the complete list of assumptions.

Balanced information sharing is especially important when it comes to the description of the modeling itself. Thus, to be transparent and nevertheless avoid overstraining the user, we suggest different levels of detail for the model documentation beginning with a factsheet. The model factsheet lists basic information which is useful for comparing similar models. This includes the model category, its temporal resolution or its geographical and sectoral focus. As an example for model classification, we propose Fig. 2 given at the end of the full version of the ESS transparency checklist.
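A model factsheet of this kind can also be kept in a machine-readable form, which eases the comparison of similar models. The following Python sketch is only one conceivable representation: the class name, the field names and the two example models are hypothetical and do not reproduce the classification of the checklist.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ModelFactsheet:
    """Basic model information as named in the text (hypothetical field names)."""
    name: str
    category: str
    temporal_resolution: str
    geographical_focus: str
    sectoral_focus: str


def differences(a, b):
    """Return the factsheet fields (other than the name) in which two models differ."""
    da, db = asdict(a), asdict(b)
    return {key: (da[key], db[key]) for key in da if key != "name" and da[key] != db[key]}


# Two invented models used only for illustration.
model_a = ModelFactsheet("Model A", "optimization", "hourly", "Germany", "electricity")
model_b = ModelFactsheet("Model B", "optimization", "annual", "Germany", "electricity and heat")

for field_name, (value_a, value_b) in differences(model_a, model_b).items():
    print(f"{field_name}: {value_a} vs. {value_b}")
```

Keeping the factsheet short and uniform is the point of this information level; further details belong to the model documentation.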

Another criterion on the ESS transparency checklist targets ‘model-specific properties’ and aims at providing an explicit critical assessment of what the model can and cannot show. Concerning the aforementioned risk of information overload, we suggest listing here only key aspects such as new equations or modules implemented. The ‘model documentation’ further refers to a more detailed information level of the modeling process. This entails a description of how the perception of a real world problem is translated into a quantitative abstract model using mathematics (predominantly equations). It may also touch upon issues such as the range of application of the model and the feasibility of implementing the policies examined in the model. A separate document (following e.g. the TRACE guidelines [31]) may be used in this case, thereby mitigating the impact of information overload. Providing a structured and user-friendly documentation can be a challenging task as its users might have different expectations about the content. These can range from tutorials for out-of-the-box model application to concrete source code implementation details for further model development. In addition, model source code usually evolves continuously, which requires the documentation to be maintained as well. In this regard, Dodds et al. [37] propose an approach called model archaeology to incorporate the effect of different model versions on the documentation in a structured way. While open source represents the most detailed information level in this context, it should be stressed that even limited access to a model’s source code contributes to an increase in the transparency of an ESS.

As mentioned above, the model itself represents a source of uncertainty. It is state of the art to perform validation tests to tackle this issue. But as models applied in energy scenario studies cover whole systems and usually assess possible future developments, classical experimental validation techniques are rarely applicable to these models. However, several approaches exist to check the model’s outcome. They range from simple structural validity tests (e.g. plausibility checks) to more sophisticated methods like empirical validity tests (e.g. back testing of the system’s historical behavior) [18]. For a better external assessment of the model quality, the ESS transparency checklist therefore also lists the criterion ‘model validation’ where the applied methods can be documented.
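A back test can be as simple as comparing a model run for past years with the observed values. The following Python sketch computes the mean absolute percentage error as one possible deviation measure. The measure, the tolerance and all numbers are assumptions made for illustration and are not prescribed by the checklist.

```python
def mean_absolute_percentage_error(observed, simulated):
    """Return the mean absolute deviation of simulated from observed values in percent."""
    if not observed or len(observed) != len(simulated):
        raise ValueError("observed and simulated must be non-empty and of equal length")
    if any(value == 0 for value in observed):
        raise ValueError("observed values must be non-zero for a percentage error")
    relative_errors = [abs(s - o) / abs(o) for o, s in zip(observed, simulated)]
    return 100.0 * sum(relative_errors) / len(relative_errors)


def passes_back_test(observed, simulated, tolerance_percent):
    """Check the deviation against a tolerance chosen (and documented) by the authors."""
    return mean_absolute_percentage_error(observed, simulated) <= tolerance_percent


# Invented historical values and model results for three past years.
observed = [100.0, 200.0, 400.0]
simulated = [110.0, 190.0, 400.0]

mape = mean_absolute_percentage_error(observed, simulated)
print(f"Deviation from historical data: {mape:.1f} %")
print("Back test passed:", passes_back_test(observed, simulated, tolerance_percent=10.0))
```

Whatever measure is chosen, reporting it together with the tolerance and the historical period used allows readers to judge the validation themselves.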

Moreover, typical experts’ questions aim at distinguishing inputs and outputs. Therefore, independent of what happens within a specific model, a clear labeling of the inputs and outputs of the model is required. This information becomes especially important if more than one model is involved in an ESS. In order to be able to assess whether a result is already predetermined by assumptions going into the model exercise, it is necessary to show by which input parameters an output value could be affected. Consequently, the transparency criterion ‘model interaction’ emphasizes the data exchange of a model with its environment. This environment can either be other applied models or in the simplest case the inputs and outputs of the whole model exercise.

To illustrate this model interaction in terms of transparent information exchange, various approaches exist. Standardized model documentation protocols, such as ODD or TRACE (see section ‘Literature review’), propose simple tables and class diagrams using Unified Modeling Language (UML) [53] for the documentation of model variables. We think that these methods are also applicable to show model interaction. The ODD protocol [33] suggests flow charts or pseudo-code for a transparent overview of processes and of interactions between models. In addition, a kind of interaction matrix could be provided. For instance, the rows of the matrix list all inputs and outputs involved in the model exercise, whereas each individual model of the model exercise is represented by a column. Finally, by marking the appropriate cells of the ‘interaction matrix’, the model interaction could be indicated.
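The interaction matrix described above can be sketched in a few lines of Python. The two models and their data items are invented for illustration. Marking cells with ‘in’ and ‘out’ instead of a plain check mark is our own assumption, made so that the direction of the data exchange remains visible.

```python
# Hypothetical model exercise with two coupled models.
models = {
    "demand_model": {
        "inputs": ["population", "gdp"],
        "outputs": ["electricity_demand"],
    },
    "dispatch_model": {
        "inputs": ["electricity_demand", "fuel_prices"],
        "outputs": ["co2_emissions"],
    },
}


def interaction_matrix(models):
    """Build rows (data items) x columns (models) with 'in', 'out' or '' per cell."""
    items = []
    for spec in models.values():
        for item in spec["inputs"] + spec["outputs"]:
            if item not in items:
                items.append(item)
    matrix = {}
    for item in items:
        row = {}
        for name, spec in models.items():
            if item in spec["outputs"]:
                row[name] = "out"
            elif item in spec["inputs"]:
                row[name] = "in"
            else:
                row[name] = ""
        matrix[item] = row
    return matrix


def exogenous_inputs(matrix):
    """Items read by at least one model but produced by none of them."""
    return [
        item for item, row in matrix.items()
        if "in" in row.values() and "out" not in row.values()
    ]


def format_matrix(matrix, model_names):
    """Render the matrix as plain text, one data item per line."""
    lines = [" | ".join(["item"] + list(model_names))]
    for item, row in matrix.items():
        lines.append(" | ".join([item] + [row[name] or "-" for name in model_names]))
    return "\n".join(lines)


matrix = interaction_matrix(models)
print(format_matrix(matrix, models.keys()))
print("Inputs of the whole model exercise:", exogenous_inputs(matrix))
```

Rows that are marked only as inputs are the inputs of the whole model exercise, while rows marked as output of one model and input of another show the data exchanged between models.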

Model output and results

Besides the required knowledge about the origin of certain data, we take the view that, on a more detailed information level, all numerical values generated within the study also need to be accessible. The associated criterion ‘output data access’ is strongly connected to the transparency criteria regarding the results communicated in a study. But, since the raw model outputs are usually post-processed for the presentation of the study’s outcome, we explicitly distinguish them from the ‘Results’, which represent information in a more condensed way. Although the usual purpose of these data modifications is to reduce the complexity of the results (e.g. for answering the overarching research question), even assumptions can play a role at this point of the model exercise. In the simplest case, applying mathematical aggregation functions such as summation does not affect the meaning of the results (e.g. summing up the CO2 emissions of administrative regions to obtain the total CO2 emissions of a state). In contrast to this, data interpretation is another source of output data modification. ESS authors need to be aware that even if such data modifications are not intended, implicit assumptions such as individual opinions can affect the data interpretation and accordingly the results of the model exercise itself. Consequently, by asking authors to state the adaptations applied to the output data, the transparency criterion ‘post-processing’ aims at raising awareness on the ESS authors’ side.

In order to assess the discussed types of uncertainty (see section ‘Assumptions communication’), different methods exist. The most prominent examples are sensitivity and robustness analyses. While the former investigates the effect of input parameter variations on the results within the same model, the latter employs different models to validate the outcome of a specific ESS.

In the case of sensitivity analyses, Hamby [54] reviews alternative approaches. He shows that the use of sensitivity analysis techniques provides valuable insights with regard to the correlation of specific input parameters with the model output and also enables the elimination of certain input data due to its insignificance on the results.
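As a minimal illustration of a sensitivity analysis, the following Python sketch varies one input parameter at a time by plus and minus 10 % and reports the relative change in the output per relative change in the input. This is only one of many possible techniques and is not meant to reproduce a specific method from the literature. The function `toy_model`, its parameters and the reference values are hypothetical stand-ins for an energy model.

```python
def toy_model(params):
    """Hypothetical stand-in for an energy model: annual cost of supplying a demand."""
    cost_per_mwh = (
        params["fuel_price"] / params["efficiency"]
        + params["co2_price"] * params["emission_factor"]
    )
    return params["demand_mwh"] * cost_per_mwh


def oat_sensitivity(model, reference, rel_step=0.1):
    """Relative output change per relative input change, one parameter at a time."""
    base = model(reference)
    result = {}
    for name, value in reference.items():
        high = model({**reference, name: value * (1 + rel_step)})
        low = model({**reference, name: value * (1 - rel_step)})
        # Central difference, normalized by the reference output and the step size.
        result[name] = (high - low) / (2 * rel_step * base)
    return result


# Invented reference case.
reference = {
    "demand_mwh": 1000.0,
    "fuel_price": 30.0,       # EUR per MWh of fuel
    "efficiency": 0.5,        # MWh of electricity per MWh of fuel
    "co2_price": 40.0,        # EUR per tonne of CO2
    "emission_factor": 0.4,   # tonnes of CO2 per MWh of electricity
}

sensitivities = oat_sensitivity(toy_model, reference)
for name, value in sorted(sensitivities.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.2f}")
```

Parameters with values close to zero have little influence on the output near the reference case. Such a ranking only holds locally and does not capture interactions between parameters, which is why the chosen technique and step size should be documented alongside the results.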

Weisberg [55] defines a robustness analysis as ‘an indispensable procedure in the arsenal of theorists studying complex phenomena’ (p. 742), which examines ‘a group of similar, but distinct, models for a robust behaviour’ (p. 737), searching for ‘predictions common to several independent models’ (p. 730). Brock and Durlauf [49] further suggest Bayesian analysis as a method to quantify model uncertainty, but argue that a robustness analysis is most appropriate for models which are characterized by rather similar purposes. An example for an uncertainty analysis in ESS using a Bayesian model is given by Culka [56].

To attribute the effects of the input parameters and of the model selection on the results, both sensitivity and robustness analyses are listed as criteria in the ESS transparency checklist.

Conclusions and recommendations of model-based ESS

Although the ESS transparency checklist aims first and foremost at ensuring model comprehensibility for experts, making the model exercise transparent is not sufficient to justify conclusions. As a fundamental part of a scientific study, the conclusions represent, in contrast to the model outputs, the outcome of the whole modeling framework. This part of the checklist in particular helps ESS users to fully grasp the studies’ outcomes. The conclusions should not be drawn from the model results alone, but should also take the underlying assumptions into consideration. Concerning communication of the latter, the Progressive Disclosure of Information (PDI) strategy offers a detailed guideline [21].

Since ‘Conclusions and recommendations’ in ESS are generally intended to give some kind of advice to decision-makers, another level of information detail is required to assess the study’s outcome in terms of answering the overarching research question. This means that even if the necessary information for an uncertainty assessment by external experts is provided, we recommend that ESS authors give a statement about the effect of uncertainties, because they are the most knowledgeable about the model exercise. For instance, it can be explicitly stressed which alternative future developments are also possible even if they are not covered by the study’s results.

Information on how the uncertainties have been dealt with is required not only for the formulation of the conclusions but also for the formulation of the recommendations. Depending on the degree of uncertainty, three types of statements are possible: probabilistic, possibilistic or deterministic. This has an influence on how recommendations are communicated. As an example for improving current practice, ESS authors could learn from climate research, which delivers a prototype for giving policy advice that takes uncertainty communication into account [57]. Probabilistic statements for explorative scenarios can rarely be made since the capability to predict future developments is limited [58]. A common misinterpretation by ESS users is mistaking business-as-usual scenarios for predictions of what will happen (instead of what can happen).

In addition, the missing transparency of how concrete proposals for decision-making are derived from the theoretical model exercise was a major criticism of existing model-based ESS during the process of developing the transparency criteria. The ESS authors face the risk that their recommendations are perceived as untraceable or, in extreme cases, arbitrary if they fail to clarify the relationship between their conclusions and their recommendations. In order to mitigate this risk, the ESS authors should provide a clear argument for their recommendations. This would mean that the description of the causal chain captured by their model is complemented by an argumentation analysis to support their recommendations, thereby highlighting the process by which the results-recommendation relationship is created.

Limitations of the ESS transparency checklist

The ESS transparency checklist as a first step to improve transparency in ESS is an expert-to-expert tool. This entails a restricted perspective of the issue of lacking transparency in ESS as well as a limited transferability to non-experts. However, the difference in perspective between experts (here, modelers or ESS authors) and non-experts is important. For example, Walker et al. [50] explicitly distinguish, in the context of uncertainty, between the modeler’s view and the decision-makers’ or policymakers’ view. To some extent, the transparency criteria in their current form are beneficial for non-experts as they provide a first insight into the key assumptions and methodologies of an ESS. Nevertheless, although this may be of value to non-experts, addressing their needs in a more comprehensive manner requires an approach that differs from the ESS transparency checklist. For instance, an adaptation or enlargement of the transparency checklist might ensure applicability to a broader audience.

Furthermore, asking ESS authors to fill out the checklist themselves may raise the question of quality assurance, and, as a qualitative empirical tool, the checklist is exposed to potential conflicts of interest of energy modelers. For quality assurance, we rely on the practice of good scientific conduct in the modeling community.

Finally, the ESS transparency checklist facilitates and also requires a certain level of standardization, which is a key element in order to provide comparability of model-based ESS. Consequently, it determines to some extent in which way ESS are presented (i.e. what information is conveyed and at what level of transparency). However, it is important to stress that standardization is not a panacea, since ESS are very diverse and the current version of the checklist naturally does not exhaust all reporting possibilities. This is one reason why ESS authors might find it challenging to apply the checklist to their study.

Conclusions and outlook

If ESS are to meet their purpose, openness to public scrutiny is needed. We argue that a high degree of transparency in consonance with scientific standards is still pending in model-based ESS. In this paper, an instrument to tackle this issue has been proposed in the form of the ESS transparency checklist. This tool is conceived as an addition to transparency approaches such as open source, which represent so-called glass box models (i.e. models with a high level of transparency). It presents the key information of model-based ESS in a compact and standardized manner. In practical cases where, for various reasons, ESS authors are unable to provide their glass box model, the checklist may be used as a tool that meets a minimum requirement for transparency.

The ESS checklist is the outcome of a process which includes literature review, expert interviews and expert validations. Its structure follows the method of data and information processing within a model-based ESS. Thus, it distinguishes between input parameter modification in advance of the modeling (pre-processing) and the condensing of raw model outputs (post-processing). Stressing, in particular, the importance of assumptions communication and model documentation, the checklist considers different levels of detail to provide information for study users with different degrees of knowledge about a modeling exercise. Although the ESS transparency checklist appears to be a useful tool primarily for modeling experts, it is a first step towards a standardized communication protocol between performers and assessors of complex studies in the field of energy scenarios.

We do not expect to leave this issue completely resolved. Instead, an attempt is made to highlight one weakness in ESS, and we put forward our initial suggestion for improvement. For instance, we suggest the development of transparency criteria for a broader spectrum of addressees, such as the public or policy-makers. The reduced version of the ESS transparency checklist (see Additional file 1) can be a valuable starting point for this purpose, but further questions (e.g. ‘What does a solver routine do?’) need to be considered as well. In order to identify transparency criteria, e.g. for politicians or public stakeholders, we suggest further surveys, specially adapted to these addressees and taking into account customized communication strategies as proposed by Kloprogge et al. [21]. A useful way of addressing the needs of non-experts may be to produce a modeling guide for them. Such a guide could contain fundamental issues and answers to questions a non-expert user should ask about the model exercise.

We think that the ESS transparency checklist is a simple but very helpful tool for authors and readers of ESS and expect that its adoption will help improve the quality of such studies in the future. We would like to invite potential users to benefit by applying it to their studies and reports. Comments and critiques from the research community and experienced users of model-based ESS are welcomed.

Notes

In this paper, we refer to the following definition of the word ‘scenario’ given by the Intergovernmental Panel on Climate Change (IPCC): ‘A plausible and often simplified description of how the future may develop, based on a coherent and internally consistent set of assumptions about driving forces and key relationships’ (p. 86) [59].

‘Availability bias’ describes the tendency of ESS authors to include their knowledge of historic events (e.g. the past development of electricity prices) and own experience into the rationale behind their model parametrization or model methodology. In consequence, unexpected or disruptive elements might be neglected in the modeling approach. For the availability heuristic and availability biases in the context of risk, see [61, 62].

In this paper, a ‘model’ is defined as a mathematically consistent framework including an inter-dependent set of equations which aims to analyse how phenomena occur in a complex system. It is usually in the form of a computer algorithm.

In general the terms ‘reader’ or ‘user’ are reserved to designate those people who—expert or not—use the outcome of ESS, e.g. for decision making or subsequent modeling exercises. Note that this paper addresses expert users in particular.

By assumptions, we mean reasonable, best guess definitions for unknown values or relationships between variables which are supposed to be plausible but cannot be directly validated by measured data. In ESS, this applies either for future developments, generalization in order to reduce complexity (of data or models) or incomplete data sets for which measurements are not fully available. Thus, assumptions may differ depending on the professional background, intention, or even ideology of persons who make them.

The term ‘ESS author’ refers to those people who develop ESS. Thus, in this paper, ESS authors can be understood as modeling experts having the intention to document an ESS. Often ESS authors are also readers of ESS.

A complete summary of the questions from the workshop is attached to the ‘Appendix’ section below.

These normative assumptions are a part of the storyline. For instance, they do influence the distinction of what is (not) included in the data processing and therefore define the system boundaries of the model(s).

Abbreviations

- ABM: Agent-based modeling
- DOE: US Department of Energy
- ESS: Energy scenario studies
- FAQ: Frequently asked questions
- IPCC: Intergovernmental Panel on Climate Change
- ODD: Overview, Design concepts and Details
- PDI: Progressive Disclosure of Information
- SD: System dynamics
- TRACE: Transparent and comprehensive ecological modeling evaluation
- UML: Unified Modeling Language

References

European Environmental Agency (2011) Catalogue of scenario studies. Knowledge base for forward-looking information and services. EEA Technical report No. 1. Available via: http://scenarios.pbe.eea.europa.eu/. Accessed September 2015

Gregory WL, Duran A (2001) Scenarios and acceptance of forecasts. In: Principles of Forecasting 30. Springer US, New York, pp 519–540. doi:10.1007/978-0-306-47630-3_23

Lempert RJ, Popper SW, Bankes SW (2003) Shaping the next one hundred years: new methods for quantitative, longer-term policy analysis. Santa Monica, Pittsburgh

Wright G, Goodwin P (2009) Decision making and planning under low levels of predictability: enhancing the scenario method. Int J Forecast 4:813–825

Dieckhoff C, Appelrath HJ, Fischedick M, Grunwald A, Höffler F, Mayer C, Weimer-Jehle W (2014) Schriftenreihe Energiesysteme der Zukunft: Zur Interpretation von Energieszenarien. Analysis ISBN: 978-3-9817048-1-5, Deutsche Akademie der Technikwissenschaften e. V., München

Dieckhoff C, Fichtner W, Grunwald A, Meyer S, Nast M, Nierling L, Renn O, Voß A, Wietschel M (2011) Energieszenarien: Konstruktion; Bewertung und Wirkung - “Anbieter” und “Nachfrager” im Dialog. KIT Scientific Publishing, Karlsruhe, 89. doi:10.5445/KSP/1000021684

Börjeson L, Höjer M, Dreborg KH, Ekvall T, Finnveden G (2006) Scenario types and techniques: towards a user’s guide. Futures (7), 723–739 doi:10.1016/j.futures.2005.12.002

Möst D, Fichtner W, Grunwald A (2008) Energiesystemanalyse: Tagungsband des Workshops “Energiesystemanalyse” vom 27. November 2008 am KIT Zentrum Energie. Proceedings, Universität Karlsruhe, Karlsruhe

Hoffman KC, Wood DO (1976) Energy system modeling and forecasting. Annual Review of Environment and Resources (1), 423–453

Keles D, Möst D, Fichtner W (2011) The development of the German energy market until 2030—a critical survey of selected scenarios. Energy Policy 2(39):812–825. doi:10.1016/j.enpol.2010.10.055

Jebaraj S, Iniyan S (2004) A review of energy models. Renew Sust Energ Rev 10(4):281–311. doi:10.1016/j.rser.2004.09.004

Connolly D, Lund H, Mathiesen BV, Leahy M (2010) A review of computer tools for analysing the integration of renewable energy. Applied Energy, 1059–1082

Bhattacharyya SC, Timilsina GR (2010) A review of energy system models. International Journal of Energy Sector Management 4(4):494–518. doi:10.1108/17506221011092742

Droste-Franke B, Paal B, Rehtanz C, Sauer DU, Schneider JP, Schreurs M, Ziesemer T (2012) Balancing renewable electricity: energy storage, demand side management, and network extension from an interdisciplinary perspective, 1st edn. Springer, Heidelberg. doi:10.1007/978-3-642-25157-3

Droste-Franke B, Carrier M, Kaiser M, Schreurs M, Weber C, Ziesemer T (2014) Improving energy decisions towards better scientific policy advice for a safe and secure future energy system, 1st edn. Springer, Switzerland. doi:10.1007/978-3-319-11346-3

Després J, Hadjsaid N, Criqui P, Noirot I (2015) Modelling the impacts of variable renewable sources on the power sector: reconsidering the typology of energy modelling tools. Energy 80:486–495. doi:10.1016/j.energy.2014.12.005

Schüll E, Gerhold L (2015) Nachvollziehbarkeit. In: Standards und Gütekriterien der Zukunftsforschung. VS Verlag für Sozialwissenschaften, Wiesbaden 197

Bossel H (2007) Systems and models: complexity, dynamics, evolution, sustainability. Books on Demand GmbH, Norderstedt

Weßner A, Schüll E (2015) Code of Conduct - Wissenschaftliche Integrität. In: Standards und Gütekriterien der Zukunftsforschung. VS Verlag für Sozialwissenschaften, Wiesbaden 197

Grunwald A (2011) Energy futures: diversity and the need for assessment. Futures (8), 820-830 doi:10.1016/j.futures.2011.05.024

Kloprogge P, van der Sluijs J, Wardekker A (2007) Uncertainty communication—issues and good practice. Report ISBN: 978-90-8672-026-2, Copernicus Institute for Sustainable Development and Innovation, Utrecht

International Risk Governance Council (2015) Assessment of future energy demand—a methodological review providing guidance to developers and users of energy models and scenarios. Concept Note, International Risk Governance Council http://www.irgc.org/wp-content/uploads/2015/05/IRGC-2015-Assessment-of-Future-Energy-Demand-WEB-12May.pdf

Menzen G (2014) Bekanntmachung Forschungsförderung im 6. Energieforschungsprogramm “Forschung für eine umweltschonende, zuverlässige und bezahlbare Energieversorgung”. Bundesanzeiger, 18

Sullivan P, Cole W, Blair N, Lantz E, Krishnan V, Mai T, Mulcahy D, Porro G (2015) Standard scenarios annual report: U.S. Electric Sector Scenario Exploration. Golden

Lawrence Berkeley National Laboratory (2015) Resource planning portal. Available via: http://resourceplanning.lbl.gov. Accessed 15 July 2015

Openmod initiative (2015) Open energy modeling initiative. Available via: http://openmod-initiative.org/manifesto.html. Accessed 09 November 2015

NFQ Solutions (2015) PriceProfor Engine. Available via: http://ekergy.github.io/. Accessed 29 Aug 2016.

U.S. Department of Energy (2014) Available via: http://www.energy.gov/sites/prod/files/2014/08/f18/DOE_Public_Access%20Plan_FINAL.pdf. Accessed 23 October 2015

Benz J, Hoch R, Legovic T (2001) ECOBAS—modelling and documentation. Ecological Modelling (1–3), 3–15 doi: 10.1016/S0304-3800(00)00389-6

Schmolke A, Thorbek P, DeAngelis DL, Grimm V (2010) Ecological models supporting environmental decision making: a strategy for the future. Trends Ecol Evol 25(8):479–486

Grimm V, Augusiak J, Focks A, Frank BM, Gabsi F, Johnston ASA, Liu C, Martin BT, Meli M, Radchuk V et al (2014) Towards better modelling and decision support: documenting model development, testing, and analysis using TRACE. Ecological Modelling, pp 129–139. doi:10.1016/j.ecolmodel.2014.01.018

Rahmandad H, Sterman JD (2012) Reporting guidelines for simulation-based research in social sciences. System Dynamics Review (4), 396–411 doi:10.1002/sdr.1481

Grimm V, Berger U, Bastiansen F, Eliassen S, Ginot V, Giske J, Goss-Custard J, Grand T, Heinz SK, Huse G et al (2006) A standard protocol for describing individual-based and agent-based models. Ecological Modelling, pp 115–126

Grimm V, Berger U, DeAngelis DL, Polhill JG, Giske J, Railsback SF (2010) The ODD protocol: a review and first update. Ecological Modelling, pp 2760–2768

Martinez-Moyano IJ (2012) Documentation for model transparency. System Dynamics Review (2), 199–208 doi: 10.1002/sdr.1471

Gass SI, Hoffman KL, Jackson RHF, Joel LS, Saunders PB (1981) Documentation for a model: a hierarchical approach. Commun ACM 24(11):728–733

Dodds EP, Keppo I, Strachan N (2015) Characterising the evolution of energy system models using model archaeology. Environmental Modeling & Assessment 20(2):83–102

Wassermann S (2015) Das qualitative Experteninterview. In: Methoden der Experten- und Stakeholdereinbindung in der sozialwissenschaftlichen Forschung. Springer, Wiesbaden. doi:10.1007/978-3-658-01687-6

Betz G, Pregger T (2014) Helmholtz Research School on Energy Scenarios: advanced module—case studies. 17-20 February 2014, Stuttgart, Karlsruhe http://www.energyscenarios.kit.edu/

Greenpeace (2012) energy [r]evolution - a sustainable world energy outlook 4th edn. Greenpeace International http://www.greenpeace.org/international/Global/international/publications/climate/2012/Energy%20Revolution%202012/ER2012.pdf

European Commission (2011) EU Energy Roadmap 2050—impact assessment and scenario analysis Part 2/2. Available via: https://ec.europa.eu/energy/sites/ener/files/documents/sec_2011_1565_part2.pdf. Accessed 30 Mar 2015

Bogner A, Littig B, Menz W (2009) Expert interviews—an introduction to a new methodological debate. In: Interviewing Experts. Palgrave Macmillan, United Kingdom. doi:10.1057/9780230244276_1

Cuhls K (2009) Delphi-Befragungen in der Zukunftsforschung. In: Zukunftsforschung und Zukunftsgestaltung. Springer, Berlin Heidelberg. doi:10.1007/978-3-540-78564-4_15

Landeta J (2006) Current validity of the Delphi method in social sciences. Technol Forecast Soc Chang 5(73):467–482. doi:10.1016/j.techfore.2005.09.002

Ohlhorst D, Kröger M (2015) Konstellationsanalyse: Einbindung von Experten und Stakeholdern in interdisziplinäre Forschungsprojekte. In: Methoden der Experten- und Stakeholdereinbindung in der sozialwissenschaftlichen Forschung. Springer, Wiesbaden. doi:10.1007/978-3-658-01687-6_6

Acatech (2015) Mit Energieszenarien gut beraten. Anforderungen an wissenschaftliche Politikberatung. Deutsche Akademie der Technikwissenschaften, Deutsche Akademie der Naturforscher Leopoldina und Union der deutschen Akademien der Wissenschaften, Berlin

International Energy Agency; International Renewable Energy Agency Policies and Measures Databases (PAMS). Available via: http://www.iea.org/policiesandmeasures/renewableenergy/. Accessed April 2016

European Regulators’ Group for Electricity and Gas (2010) Comitology Guidelines on Fundamental Electricity Data Transparency. Initial Impact Assessment, European Regulators’ Group for Electricity and Gas, Brussels http://www.poyry.co.uk/sites/www.poyry.co.uk/files/17.pdf

Brock WA, Durlauf SN (2005) Local robustness analysis. Theory and application. J Econ Dyn Control 29(11):2067–2092. doi:10.1016/j.jedc.2005.06.001

Walker WE, Harremoës P, Rotmans J, van der Sluijs JP, van Asselt MBA, Janssen P, Krayer von Krauss MP (2003) Defining uncertainty. A conceptual basis for uncertainty management in model-based decision support. Integr Assess 4(1):5–17. doi:10.1076/iaij.4.1.5.16466

Trutnevyte E, Guivarch C, Lempert R, Strachan N (2016) Reinvigorating the scenario technique to expand uncertainty consideration, 34th edn. Springer, Netherlands, p 135. doi:10.1007/s10584-015-1585-x

Trutnevyte E, Barton J, O’Grady Á, Ogunkunle D, Pudjianto D, Robertson E (2014) Linking a storyline with multiple models: a cross-scale study of the UK power system transition. Technological Forecasting and Social Change, pp 26–42

Object Management Group (2011) Object Management Group. Available via: http://www.omg.org/spec/UML/2.4.1/Superstructure/PDF/. Accessed 09 September 2015

Hamby DM (1994) A review of techniques for parameter sensitivity analysis of environmental models. Environ Monit Assess 32(2):135–154

Weisberg M (2006) Robustness analysis. Philos Sci 73(5):730–742

Culka M (2016) Uncertainty analysis using Bayesian model averaging: a case study of input variables to energy models and inference to associated uncertainties of energy scenarios. Energy, Sustainability and Society 6(1)

Mastrandrea MD, Mach KJ, Plattner GK, Edenhofer O, Stocker TF, Field CB, Matschoss PR (2011) The IPCC AR5 guidance note on consistent treatment of uncertainties: a common approach across the working groups. Clim Chang 8(4):675–691. doi:10.1007/s10584-011-0178-6

Betz G (2010) What’s the worst case? The methodology of possibilistic prediction. Analyse & Kritik (1), 87–106

Intergovernmental Panel on Climate Change (2008) Synthesis report. Contribution of working groups I, II and III to the fourth assessment report of the intergovernmental panel on climate change. In: Climate Change 2007. IPCC, Geneva, p 86

Resch G, Liebmann L, Lamprecht M, Hass R, Pause F, Kahles M (2014) Phase out of nuclear power in Europe—from vision to reality. Report, GLOBAL 2000, Vienna http://www.bund.net/fileadmin/bundnet/publikationen/atomkraft/130304_bund_atomkraft_phase_out_nuclear_power_europe_dt_kurzfassung.pdf

WETO (2011) World and European energy and environment transition outlook. Available via: http://espas.eu/orbis/document/world-and-european-energy-and-environment-transition-outlook. Accessed 30 March 2015

Davis CB, Chmieliauskas A, Dijkema GPJ, Nikolic I Enipedia. In: Energy & Industry group, Faculty of Technology, Policy and Management, TU Delft. Available via: http://enipedia.tudelft.nl/wiki/Main_Page. Accessed April 2016

European Commission Eurostat. Available via: http://ec.europa.eu/eurostat/. Accessed April 2016

International Energy Agency (2014) The future and our energy sources—ETP 2014 report. Available via: http://www.iea.org/etp/etp2014. Accessed 30 March 2015

Jacobson MZ, Delucchi MA, Bazouin G, Bauer ZAF, Heavey CC, Fisher E, Morris SB, Diniana JYP, Vencill TA, Yeskoo TW (2015) 100% clean and renewable wind, water, and sunlight (WWS) all-sector energy roadmaps for the 50 United States. Energy & Environmental Science, pp 2093–2117

Vögele S, Hansen P, Poganietz WR, Prehofer S, Weimer-Jehle W (2015) Building scenarios on energy consumption of private households in Germany using a multi-level cross-impact balance approach. Preprint, Institute of Energy and Climate Research, Jülich

O’Mahony T, Zhou P, Sweeney J (2013) Integrated scenarios of energy-related CO2 emissions in Ireland: a multi-sectoral analysis to 2020. Ecological Economics, pp 385–397

Trutnevyte E, Stauffacher M, Scholz RW (2012) Linking stakeholder visions with resource allocation scenarios and multi-criteria assessment. European Journal of Operational Research, pp 762–772

Feix O, Obermann R, Strecker M, König R (2014) 1. Entwurf Netzentwicklungsplan Strom. Report, German Transmission System Operators, Berlin, Dortmund, Bayreuth, Stuttgart http://www.netzentwicklungsplan.de/file/2674/download?token=BbPkI0OO.

Suwala W, Wyrwa A, Pluta M, Jedrysik E, Karl U, Fehrenbach D, Wietschel M, Boßmann T, Elsland R, Fichtner W et al (2013) Shaping our energy system—combining European modelling expertise: case studies of the European energy system in 2050. Energy System Analysis Agency, Karlsruhe

Weber C (2005) Uncertainty in the Electric Power Industry. Springer, New York. doi:10.1007/b100484

International Energy Agency (2009) Transport, energy and CO2: moving toward sustainability. Report ISBN: 978-92-64-07316-6, International Energy Agency, Organisation for Economic Co-operation and Development, Paris

Façanha C, Blumberg K, Miller J (2012) Global transportation energy and climate roadmap—the impact of transportation policies and their potential to reduce oil consumption and greenhouse gas emissions. Report, International Council on Clean Transportation, Washington http://www.theicct.org/sites/default/files/publications/ICCT%20Roadmap%20Energy%20Report.pdf

Barlas Y (1996) Formal aspects of model validity and validation in system dynamics. Syst Dyn Rev 12(3):183–210. doi:10.1002/(SICI)1099-1727(199623)12:3<183::AID-SDR103>3.0.CO;2-4

Sterman J (2000) Business dynamics: systems thinking and modeling for a complex world. McGraw-Hill Education Ltd, Boston

Wansart J (2012) Analyse von Strategien der Automobilindustrie zur Reduktion von CO2-Flottenemissionen und zur Markteinführung Alternativer Antriebe. Springer Gabler, Wiesbaden. doi:10.1007/978-3-8349-4499-3

Dargay J, Gately D, Sommer M (2007) Vehicle ownership and income growth, worldwide: 1960-2030. Energy J 4:143–170

Brouwer AS, van den Broek M, Zappa W, Turkenburg WC, Faaij A (2015) Least-cost options for integrating intermittent renewables in low-carbon power systems. Applied Energy, pp 48–74

Frew BA, Becker S, Dvorak MJ, Andresen GB, Jacobson MZ (2016) Flexibility mechanisms and pathways to a highly renewable US electricity future. Energy 101:65–78

Global Fuel Economy Initiative (undated) 50by50. Available via: http://www.fiafoundation.org/connect/publications/50by50-global-fuel-economy-initiative. Accessed 30 March 2015

Tomaschek J (2013) Long-term optimization of the transport sector to address greenhouse gas reduction targets under rapid growth: application of an energy system model for Gauteng province, South Africa. Dissertation, Universität Stuttgart, Stuttgart. doi:10.18419/opus-2313

Acknowledgements

We gratefully acknowledge the aforementioned funding. We would also like to thank those who contributed to developing the transparency criteria and to reviewing our work, including two anonymous reviewers as well as Sebastian Cacean, Christian Dieckhoff, Wolf Fichtner, Bert Droste-Franke, Patrick Jochem, Wolfgang Weimer-Jehle and the members of the Helmholtz Research School on Energy Scenarios. Finally, we would like to thank Gregor Betz and Thomas Pregger for providing the questionnaire for the initial workshop.

Members of the interdisciplinary Helmholtz Research School on Energy Scenarios

Karl-Kiên Cao, Felix Cebulla, Jonatan J. Gómez Vilchez, Babak Mousavi, Sigrid Prehofer.

Funding

We acknowledge support by the Deutsche Forschungsgemeinschaft and the Open Access Publishing Fund of Karlsruhe Institute of Technology. This work was also supported by the Helmholtz Research School on Energy Scenarios.

Authors’ contributions

All authors were involved in the conception and refinement of the ESS transparency checklist as well as in writing and revising the manuscript, but with different main responsibilities. The transparency checklist was discussed and elaborated in workshops conducted by KKC, FC, BM and SP; the expert interviews within that process were carried out mainly by FC and BM. JGV was mainly responsible for the article structure and for the first draft of the abstract, introduction and conclusions. JGV and BM were mainly responsible for the literature review, SP for the section on the construction of the transparency checklist and KKC for the results section. Apart from these main responsibilities, all sections also include parts designed or drafted by other authors. KKC and FC integrated all feedback, comments and revisions into the paper and revised it according to the journal’s standards. Finally, all authors (KKC, FC, JGV, BM and SP) helped integrate the reviewers’ comments. All authors read and approved the final manuscript.

Authors’ information

Karl-Kiên Cao, M.Sc. (KKC): Mr. Cao received his Bachelor’s degree in Electrical Power Engineering from the Baden-Wuerttemberg Cooperative State University Mannheim in 2010. He received his M.Sc. in Electrical Engineering and Information Technologies from the Karlsruhe Institute of Technology. Since 2013, he has been a PhD student at the German Aerospace Center, Institute of Engineering Thermodynamics in Stuttgart.

Dipl.-Wi.-Ing. Felix Cebulla (FC): Mr. Cebulla studied Industrial Engineering for Energy and Environmental Management at the University of Flensburg and the Victoria University of Wellington. After his graduation in 2012, he started his PhD at the German Aerospace Center, Institute of Engineering Thermodynamics in Stuttgart.

Jonatan J. Gómez Vilchez, M.A. (JGV): Mr. Gómez Vilchez holds a Master in Transport Economics (University of Leeds). He is a PhD student at the Chair of Energy Economics, Institute for Industrial Production at Karlsruhe Institute of Technology (KIT).

Dipl.-Geogr. Sigrid Prehofer (SP): Mrs. Prehofer studied geography and political science at the Free University of Berlin and social work in Vienna and Berlin. Since July 2012, she has been a PhD student at ZIRIUS (Research Center for Interdisciplinary Risk and Innovation Studies) in Stuttgart.

Babak Mousavi, M.Sc. (BM): Mr. Mousavi holds a Master in Industrial Engineering from Amirkabir University of Technology in Tehran. Since March 2013, he has been a PhD student at IER (Institute of Energy economics and the Rational use of energy) in Stuttgart.

Competing interests

The authors declare that they have no competing interests.

Additional file

Additional file 1:

The ESS transparency checklist is freely available from [10.1186/s13705-016-0090-z]. The XLSX file named “Cao et al. (2016) Additional file 1_Checklist” contains the reduced version. Both the reduced and the full versions of the ESS transparency checklist are shown in the ‘Appendix’ section. (XLSX 39.5 kb)

Appendix

Questionnaire for the initial collection of transparency criteria

Assumptions

What assumptions about price paths and technology costs in the future were made and how do they affect the scenario results and derived recommendations? Is there sufficient transparency to assess this? Are the assumptions well-founded or could other developments be assumed just as well?

Scenario methods

How can the basic methodology of the scenario construction be described and how can we distinguish it from other approaches? In particular, which methodology has been used to develop future technology splits for the electricity, heat and transport sectors and how far have economic and infrastructural aspects been taken into account (keyword: system costs)? Is the study sufficiently transparent to assess this?

Consistency

Are assumptions and scenario results consistent (consumption and demand drivers in the energy sectors, supply/generation, costs, and conclusions)? Is there sufficient transparency to judge this? Are interactions between the electricity, heat and transport sectors considered?

Uncertainty

How does the study communicate uncertainties of its main findings? Are scenarios considered to be plausible worlds, or possible future pathways, or likely evolutions of the energy system? Do the main findings of the studies represent possibility statements? Are uncertainties expressed in a probabilistic way, either qualitatively or quantitatively? Does the study claim to arrive at robust results? And is the specific way the study presents uncertainties adequate?

Policy advice

Does the study derive policy recommendations from the scenarios? If so, how? And is this reasoning valid? Which additional normative assumptions enter the derivation of policy recommendations and are they made sufficiently transparent?

Reception

How has the study been received (in the public, by stakeholders, in the media, etc.)? Has this reception been politically biased? Were the findings over-simplified and did this seriously distort the original content of the study?

Classification of energy system models based on [81]

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Cao, KK., Cebulla, F., Gómez Vilchez, J.J. et al. Raising awareness in model-based energy scenario studies—a transparency checklist. Energ Sustain Soc 6, 28 (2016). https://doi.org/10.1186/s13705-016-0090-z

DOI: https://doi.org/10.1186/s13705-016-0090-z