Abstract

Evaluation and communication of the relative degree of certainty in assessment findings are key cross-cutting issues for the three Working Groups of the Intergovernmental Panel on Climate Change. A goal for the Fifth Assessment Report, which is currently under development, is the application of a common framework with associated calibrated uncertainty language that can be used to characterize findings of the assessment process. A guidance note for authors of the Fifth Assessment Report has been developed that describes this common approach and language, building upon the guidance employed in past Assessment Reports. Here, we introduce the main features of this guidance note, with a focus on how it has been designed for use by author teams. We also provide perspectives on considerations and challenges relevant to the application of this guidance in the contribution of each Working Group to the Fifth Assessment Report. Despite the wide spectrum of disciplines encompassed by the three Working Groups, we expect that the framework of the new uncertainties guidance will enable consistent communication of the degree of certainty in their policy-relevant assessment findings.

1 Introduction

Assessment Reports of the Intergovernmental Panel on Climate Change (IPCC) provide comprehensive assessments of climate change, its impacts, and response strategies by synthesizing and evaluating scientific understanding. A guiding principle of IPCC assessments has been to be policy relevant without being policy prescriptive (IPCC 2008). The reports are written by experts, with the objective of assessing the state of knowledge to inform decision making, and the reports undergo multiple rounds of review by experts from the scientific community and by governments to ensure they are comprehensive and balanced. For these assessments, the theme of consistent treatment of uncertainties, including the use of calibrated uncertainty language, has long cut across IPCC Working Groups. Such calibrated language aims to allow clear communication of the degree of certainty in assessment findings, including findings that span a range of possible outcomes. It also avoids describing uncertainties in more casual terms, which may carry different meanings in different disciplines and/or languages.

Since the First Assessment Report (FAR; IPCC 1990), IPCC Assessment Reports have used calibrated language to characterize the scientific understanding and associated uncertainties underlying assessment findings. Starting with the Third Assessment Report (TAR), guidance outlining a common approach for treatment of uncertainties across the Working Groups has been provided to all authors in each assessment cycle (Moss and Schneider 2000; IPCC 2005; Mastrandrea et al. 2010; see Mastrandrea and Mach, this issue, for further description). The purpose of each uncertainties guidance paper has been to encourage, across the Working Groups, consistent characterization of the degree of certainty in key findings based on the strength of and uncertainties in the underlying knowledge base.

In the previous IPCC assessment cycle, the Guidance Notes for Lead Authors of the IPCC Fourth Assessment Report on Addressing Uncertainties (IPCC 2005) attempted to create a common framework that could be used by all three Working Groups. It responded to divergence in usage of uncertainty language across the Working Groups in the TAR (IPCC 2001a, 2001b, 2001c) and was developed following the IPCC Workshop on Describing Uncertainties in Climate Change to Support Analysis of Risk and of Options (Manning et al. 2004). This framework further distinguished the quantitative metrics—confidence and likelihood—that had been employed by Working Groups I and II in the TAR, including revisions to the confidence scale presented in the TAR guidance paper (Moss and Schneider 2000).

In the AR4, all Working Groups employed calibrated uncertainty language, with Working Group III doing so for the first time (IPCC 2007a, 2007b, 2007c). In practice, however, each Working Group favored different metrics and approaches for evaluating and communicating the degree of certainty in key findings. In addition, interpretation and usage of the quantitative confidence and likelihood scales were sometimes inconsistent between Working Groups I and II. Working Group III employed only the qualitative summary terms for evidence and agreement, a choice based on the nature of the disciplinary sciences it assesses and on its interpretation of the uncertainty language appropriate to characterize this information.

Given this context, there was considerable interest in developing a revised uncertainties guidance paper for the Fifth Assessment Report (AR5) cycle. In July of 2010, discussions took place at an IPCC Cross-Working Group Meeting on Consistent Treatment of Uncertainties. Following this meeting, a writing team, including a Co-Chair from each IPCC Working Group, scientists from the Technical Support Units, and other experts in treatment of uncertainties, drafted the guidance paper for the AR5, building from the uncertainties guidance provided to TAR and AR4 authors (Moss and Schneider 2000; IPCC 2005). This guidance paper also addressed the recommendations made by the 2010 independent review of the IPCC by the InterAcademy Council (InterAcademy Council 2010). After extensive discussions among the author team and other meeting participants to determine a framework and calibrated language that could be consistently applied across all three IPCC Working Groups, the Guidance Note for Lead Authors of the IPCC Fifth Assessment Report on Consistent Treatment of Uncertainties (Mastrandrea et al. 2010) was finalized in November of 2010 and was subsequently accepted by the Panel at the 33rd Session of the IPCC in May of 2011. The authors of this paper are the IPCC Working Group Co-Chairs and members of the Working Group Technical Support Units who were authors of the AR5 Guidance Note.

2 Overview of the AR5 guidance note

The AR5 Guidance Note (Mastrandrea et al. 2010) provides Lead Authors of all three IPCC Working Groups with an approach for considering key findings in the assessment process, for supporting key findings with traceable accounts in the chapters, and for characterizing the degree of certainty in key findings with two metrics:

“• Confidence in the validity of a finding, based on the type, amount, quality, and consistency of evidence (e.g., mechanistic understanding, theory, data, models, expert judgment) and the degree of agreement. Confidence is expressed qualitatively.

• Quantified measures of uncertainty in a finding expressed probabilistically (based on statistical analysis of observations or model results, or expert judgment).” (Mastrandrea et al. 2010)

The following sections describe important elements of the framework for treatment of uncertainties provided in the AR5 Guidance Note.

2.1 Traceable accounts for key findings

The AR5 Guidance Note instructs an author team, when writing a chapter of an IPCC assessment, to describe and evaluate the state of knowledge related to topics under its purview: particularly, the type, amount, quality, and consistency of evidence and the degree of agreement.

Types of evidence include, for example, mechanistic or process understanding, underlying theory, model results, observational and experimental data, and formally elicited expert judgment. The amount of evidence available can range from small to large, and that evidence can vary in quality. Evidence can also vary in its consistency, i.e., the extent to which it supports single or competing explanations of the same phenomena, or the extent to which projected future outcomes are similar or divergent.

The degree of agreement is a measure of the consensus across the scientific community on a given topic and not just across an author team. It indicates, for example, the degree to which a finding follows from established, competing, or speculative scientific explanations. Agreement is not equivalent to consistency. Whether or not consistent evidence corresponds to a high degree of agreement is determined by other aspects of evidence such as its amount and quality; evidence can be consistent yet low in quality.

The assessment of the state of knowledge for topics covered in AR5 chapters will necessarily provide descriptions of relevant evidence and agreement. For each topic, the description should summarize the author team’s evaluation of evidence and agreement for that topic and any conclusions the author team reaches based on its assessment. It should also present the range of relevant scientific explanations where a diversity of views exists. For example, the author team could summarize the literature published on a topic and its evaluation of the scientific validity of potential explanations for the information available.

A key finding is a conclusion of the assessment process that the author team may choose to include in the chapter’s Executive Summary. The author team’s evaluation of evidence and agreement provides the basis for any key findings it develops and also the foundation for determining the author team’s degree of certainty in those findings. The description of the author team’s evaluation of evidence and agreement is called a traceable account in the Guidance Note. Each key finding presented in a chapter’s Executive Summary will include reference to the chapter section containing the traceable account for the finding.

2.2 Process for evaluating the degree of certainty in key findings

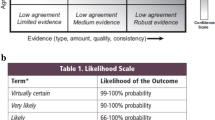

The AR5 Guidance Note outlines the following process for evaluating the degree of certainty in key findings and communicating that degree of certainty using calibrated uncertainty language. Figure 1 illustrates this general evaluation process and summarizes the appropriate calibrated uncertainty language used in different situations. In all cases, the traceable account for a key finding presents the outcome of the author team’s assessment, describing the corresponding evaluation of evidence and agreement and the basis for any calibrated language assigned.

Fig. 1 Process for Evaluating and Communicating the Degree of Certainty in Key Findings. This schematic illustrates the process for evaluating and communicating the degree of certainty in key findings that is outlined in the Guidance Note for Lead Authors of the IPCC Fifth Assessment Report on Consistent Treatment of Uncertainties (Mastrandrea et al. 2010)

2.2.1 Summary terms for evidence and agreement

The first step in this process (the upper left of Fig. 1) is for the author team to consider the appropriate summary terms corresponding to its evaluation of evidence and agreement. As outlined in paragraph 8 of the Guidance Note and depicted in Fig. 2, the summary terms for evidence (characterizing the type, amount, quality, and consistency of evidence) are limited, medium, or robust. The Guidance Note indicates that evidence is generally most robust when there are multiple, consistent independent lines of high-quality evidence. The summary terms for the degree of agreement are low, medium, or high.

Fig. 2 A depiction of evidence and agreement statements and their relationship to confidence. The nine possible combinations of summary terms for evidence and agreement are shown, along with their flexible relationship to confidence. In most cases, evidence is most robust when there are multiple, consistent independent lines of high-quality evidence. Confidence generally increases towards the top-right corner as suggested by the increasing strength of shading. Figure reproduced and legend adapted from Mastrandrea et al. (2010)

2.2.2 Levels of confidence

A level of confidence provides a qualitative synthesis of an author team’s judgment about the validity of a finding; it integrates the evaluation of evidence and agreement in one metric. As the second step in determining the degree of certainty in a key finding, the author team decides whether there is sufficient evidence and agreement to evaluate confidence. This task is relatively simple when evidence is robust and/or agreement is high. For other combinations of evidence and agreement, the author team should evaluate confidence whenever possible. For example, even if evidence is limited, it may be possible to evaluate confidence if agreement is high. Evidence and agreement may not be sufficient to evaluate confidence in all cases, particularly when evidence is limited and agreement is low. In such cases, the author team instead presents the assigned summary terms as part of the key finding.

The qualifiers used to express a level of confidence are very low, low, medium, high, and very high. Figure 2 depicts summary statements for evidence and agreement and their flexible relationship to confidence.

2.2.3 Likelihood and probability

As a third step, if it has evaluated confidence, the author team determines whether the type of available evidence allows probabilistic quantification of the uncertainties associated with a finding, such as a probabilistic estimate of a specific occurrence or a range of outcomes. Probabilistic information may originate from statistical or modeling analyses, elicitation of expert views, or other quantitative analyses. When probabilistic quantification is not possible, the author team presents the assigned level of confidence.

If uncertainties can be quantified probabilistically, the finding can be characterized using likelihood or a more precise presentation of probability. Table 1 presents the calibrated language for describing quantified uncertainties through the likelihood scale. Depending on the nature of the probabilistic information available, author teams can present probability more precisely than with the likelihood scale, for example presenting a complete probability distribution or a percentile range. In particular, the AR5 Guidance Note encourages author teams to provide information, where possible, on the tails of distributions important for risk management.
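As an illustration of how the likelihood scale can be applied, the following minimal Python sketch maps a probability to calibrated terms. Table 1 is not reproduced in this text, so the probability bounds below are an assumption taken from the likelihood scale as given in the AR5 Guidance Note and should be checked against that table. The function names are hypothetical, and returning the narrowest applicable term is a simplification for illustration, not a rule stated in the Guidance Note.

```python
from typing import List, Tuple

# Assumed likelihood scale: (term, lower bound, upper bound) in percent.
# These bounds should be verified against Table 1 of the Guidance Note.
LIKELIHOOD_SCALE: List[Tuple[str, float, float]] = [
    ("virtually certain", 99.0, 100.0),
    ("very likely", 90.0, 100.0),
    ("likely", 66.0, 100.0),
    ("about as likely as not", 33.0, 66.0),
    ("unlikely", 0.0, 33.0),
    ("very unlikely", 0.0, 10.0),
    ("exceptionally unlikely", 0.0, 1.0),
]


def applicable_terms(probability_percent: float) -> List[str]:
    """Return every term whose range contains the given probability."""
    if not 0.0 <= probability_percent <= 100.0:
        raise ValueError("probability must lie between 0 and 100 percent")
    return [
        term
        for term, lower, upper in LIKELIHOOD_SCALE
        if lower <= probability_percent <= upper
    ]


def narrowest_term(probability_percent: float) -> str:
    """Return the applicable term with the smallest probability range."""
    if not 0.0 <= probability_percent <= 100.0:
        raise ValueError("probability must lie between 0 and 100 percent")
    candidates = [
        (upper - lower, term)
        for term, lower, upper in LIKELIHOOD_SCALE
        if lower <= probability_percent <= upper
    ]
    # Ties at range boundaries are broken alphabetically, which is arbitrary.
    return min(candidates)[1]


if __name__ == "__main__":
    for p in (99.5, 95.0, 70.0, 50.0, 5.0):
        print(p, applicable_terms(p), "->", narrowest_term(p))
```

Because the ranges overlap, several terms can apply to the same probability. Which term an author team uses remains a matter of judgment about the available evidence; the sketch only makes the overlap explicit.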

In most cases, the author team should have high or very high confidence in a finding characterized probabilistically. In some cases, however, the author team may find it appropriate to quantify uncertainties probabilistically even with lower levels of confidence in the underlying evidence and agreement. For example, the author team may sometimes determine that it is more informative to develop probabilistic key findings on a topic for which some aspects are understood with higher confidence than others. If confidence in a finding characterized probabilistically is not high or very high, the author team should also explicitly present the level of confidence as part of the finding.
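The three-step process described in Sections 2.2.1 to 2.2.3 can be summarized in a short Python sketch. It is an illustration under stated assumptions, not part of the Guidance Note: the function name and the output phrasing are hypothetical, and the evidence, agreement, confidence, and likelihood values are inputs supplied by the author team's judgment rather than anything the code derives.

```python
from typing import Optional

EVIDENCE_TERMS = ("limited", "medium", "robust")
AGREEMENT_TERMS = ("low", "medium", "high")
CONFIDENCE_LEVELS = ("very low", "low", "medium", "high", "very high")


def calibrated_language(
    evidence: str,
    agreement: str,
    confidence: Optional[str] = None,
    likelihood: Optional[str] = None,
) -> str:
    """Select the calibrated language to present with a key finding.

    confidence is None when evidence and agreement are judged insufficient
    to evaluate it; likelihood is None when uncertainties cannot be
    quantified probabilistically.
    """
    if evidence not in EVIDENCE_TERMS:
        raise ValueError(f"unknown evidence term: {evidence!r}")
    if agreement not in AGREEMENT_TERMS:
        raise ValueError(f"unknown agreement term: {agreement!r}")

    # Steps 1 and 2: without a confidence evaluation, present summary terms.
    if confidence is None:
        if likelihood is not None:
            raise ValueError("likelihood is considered only after confidence")
        return f"{evidence} evidence, {agreement} agreement"
    if confidence not in CONFIDENCE_LEVELS:
        raise ValueError(f"unknown confidence level: {confidence!r}")

    # Step 3: without probabilistic quantification, present confidence.
    if likelihood is None:
        return f"{confidence} confidence"

    # Probabilistic findings state confidence explicitly unless it is
    # high or very high.
    if confidence in ("high", "very high"):
        return likelihood
    return f"{likelihood} ({confidence} confidence)"


if __name__ == "__main__":
    print(calibrated_language("limited", "low"))
    print(calibrated_language("robust", "high", confidence="high"))
    print(calibrated_language("medium", "medium", "medium", "likely"))
```

The sketch deliberately does not compute confidence from evidence and agreement, because the relationship between them is described as flexible and rests on the author team's evaluation.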

2.3 Commonly encountered categories of key findings

The AR5 Guidance Note lists commonly encountered categories of findings, providing author teams with instructions for assigning calibrated uncertainty language for each category. These criteria, described in paragraph 11 of the Guidance Note, revise the list of quantitative categories presented in the AR4 Guidance Notes (IPCC 2005). The AR5 Guidance Note attempts to make the instructions more broadly applicable across all three Working Groups, describing how summary terms for evidence and agreement, confidence, likelihood, and other probabilistic measures should be used to characterize findings in different situations. The AR5 Guidance Note also explicitly removes the concept of this listing being a “hierarchy.” It instead indicates that calibrated uncertainty language should be used to provide the most information to the reader and should reflect the nature of the available evidence and agreement, which varies by discipline and by topic.

2.4 Other aspects of the AR5 guidance note

The AR5 Guidance Note, as in the TAR guidance paper (Moss and Schneider 2000) and AR4 Guidance Notes (IPCC 2005), contains further, more general advice for effectively constructing key findings of the assessment process and for communicating their degree of certainty. Author teams are encouraged to be aware of group dynamics that can affect the assessment process, to consider ways to phrase findings clearly and precisely, and to consider all plausible sources of uncertainty. Additionally, the AR5 Guidance Note more extensively encourages author teams to provide information on the range of possible outcomes, including the tails of distributions of variables relevant to decision-making and risk management. Finally, the AR5 Guidance Note describes formulation of conditional findings, for which the degrees of certainty in findings (effects) conditional on other findings (causes) can be evaluated and communicated separately. Construction of conditional findings can combine information from multiple chapters within one Working Group or across Working Groups.

3 Working group perspectives

For the three IPCC Working Groups, the sections below provide perspectives on application of the AR5 uncertainties guidance in the current assessment cycle, highlighting considerations and challenges for each contribution.

3.1 Working group I: perspective and challenges

The consideration of uncertainties is key to the comprehensive and robust assessment of the “Physical Science Basis of Climate Change” by Working Group I of the IPCC. Working Group I has successfully applied the calibrated IPCC uncertainty language in past assessment reports. Indeed, quantifying uncertainty ranges is a central task of experimental and theoretical science in general, and of climate science in particular. The common approach is to report an uncertainty estimate based, e.g., on the standard deviation. The challenge when writing IPCC assessment reports, however, is to translate this numerical information into text that is widely understandable and is interpreted correctly.

Since the TAR, when a first version of an IPCC guidance document on the treatment of uncertainties (Moss and Schneider 2000) was made available to the authors, key scientific statements in Working Group I’s contribution have been accompanied by an assessment of the associated uncertainty, communicated using calibrated uncertainty language: levels of confidence express expert judgments on the correctness of the underlying science, and likelihood statements indicate the assessed likelihood of an outcome or a result. This approach was expanded in the AR4, using the IPCC uncertainties guidance paper for the AR4 (IPCC 2005; Manning 2006), and key statements from the TAR were updated accordingly. The Working Group I contribution to the AR5, currently underway, will use this by now well-established approach as laid out in the revised AR5 guidance paper (Mastrandrea et al. 2010).

3.1.1 Example illustrating progress in the assessment and communication of uncertainty

One instructive example of how scientific understanding has improved and how uncertainties have correspondingly decreased is the evolution of the key detection and attribution statement on observed changes in the global mean temperature in Working Group I contributions to IPCC Assessment Reports over the past two decades. “Detection and attribution” here refers to the methodology that quantitatively links the observed changes in the climate system to specific causes and drivers of these changes (see Hegerl et al. 2010).

The Working Group I Summary for Policymakers (SPM) in the Second Assessment Report (SAR) stated that “[t]he balance of evidence suggests a discernible human influence on global climate” (IPCC 1996). The TAR Working Group I SPM then concluded that “[t]here is new and stronger evidence that most of the warming observed over the last 50 years is attributable to human activities” (IPCC 2001a). The AR4 Working Group I SPM further strengthened previous statements by concluding that “[m]ost of the observed increase in global average temperatures since the mid-20th century is very likely due to the observed increase in anthropogenic greenhouse gas concentrations” (IPCC 2007a).

The advance from the SAR to the AR4 in attributing the observed changes to specific causes is paralleled by the advance in detecting the changes in global mean temperature, which the AR4 presented as fact, without uncertainty qualifiers, in the statement that the “warming of the climate system is unequivocal.” This factual statement was the result of the assessment of multiple, independent lines of evidence. Factual statements carry substantial weight in key documents such as the Summaries for Policymakers of Working Group contributions or the Synthesis Report. A prerequisite for such statements is therefore a clear consensus in the underlying report, based on multiple lines of independent, high-quality evidence.

3.1.2 Example of a complex, multi-step process to arrive at a synthesis statement of uncertainty

Even though the physically based climate sciences offer various mathematical methods to determine and estimate uncertainties, overall statements ultimately require some amount of expert judgment. A good example of this complex assessment process is the range of projected global mean temperature increase for a selected emissions scenario (Meehl et al. 2007). The quantitative information comes from multiple lines of independent evidence, based on simulations using a hierarchy of climate models and different experimental set-ups as illustrated in Fig. 3. Using a vast range of climate model simulations, individual physics tests, and idealized simulations from models including additional processes such as the global carbon cycle, each approach provides an outcome or range of the increase of global mean temperature by the end of the 21st century. All these estimates are obtained from formal calculations applying well-defined and well-established mathematical and statistical techniques. A synthesis of the results for each scenario is shown in Fig. 4.

Fig. 3 Illustration of the various sources of information which flow into a final synthesis statement with uncertainty range. This approach was used in Chapter 10 of the Working Group I contribution to AR4 (Meehl et al. 2007). Information comes from the coordinated model intercomparison project CMIP3, under the auspices of WCRP; from physics tests of parameterizations using individual models; from a range of earth system models of intermediate complexity, EMICs; and from climate models which include additional Earth System processes (e.g., the carbon cycle). Model simulations build the base from which formal calculations of uncertainty ranges are performed. These different ranges are then synthesized using expert judgment

Fig. 4 Working Group I AR4 synthesis of projected global mean temperature increase in 2090 to 2099 (relative to the 1980 to 1999 average) and associated uncertainties for the six SRES marker scenarios. All quantitative estimates for projected warming by the end of the 21st century available at the time of the assessment from a hierarchy of climate and carbon cycle models have been combined into a single estimate of the range of uncertainty for each emissions scenario using expert judgment. For more technical information on the individual estimates and their combination see Meehl et al. (2007) and Knutti et al. (2008). [Figure from Meehl et al. 2007]

The challenge of the assessment process is to synthesize this information into one uncertainty range. Because the information varies in type, quality, and origin, no formal procedure is available, and expert judgment is called for in the synthesis, the last step of the assessment of uncertainty. The result of this final expert judgment is the −40% to +60% range shown as the grey bars in Fig. 4. This range captures the basic spread of the model projections when all the combined information is considered. More importantly, its asymmetry conveys the message that uncertainty is larger at the upper end of the temperature range. This result is based on process understanding stemming from carbon cycle simulations and expresses the consensus that the global mean feedback, when including the carbon cycle response in climate models, is positive.

Meehl et al. (2007) therefore concluded in the Executive Summary of Chapter 10 of Working Group I AR4 that “[A]n assessment based on AOGCM projections, probabilistic methods, EMICs [Earth System Models of Intermediate Complexity], a simple model tuned to the AOGCM responses, as well as coupled climate carbon cycle models, suggests that for non-mitigation scenarios, the future increase in global mean SAT [surface air temperature] is likely to fall within −40 to +60% of the multi-model AOGCM mean warming simulated for a given scenario. The greater uncertainty at higher values results in part from uncertainties in the carbon cycle feedbacks”. The best estimates and asymmetric likely ranges of projected global mean temperature increase for the SRES non-mitigation scenarios were elevated to both the Technical Summary and the SPM of Working Group I AR4, and further highlighted in key figures and tables of both documents. A more extensive discussion of these issues can be found in the review by Knutti et al. (2008). It is important to recognize that the ultimate expert judgment (Fig. 3) is only made possible by the large amount of model-based information which resulted from a concerted and timely effort of the climate modeling community.
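The arithmetic behind the quoted −40% to +60% range can be sketched in a few lines of Python. The function name is hypothetical, and the 3.0 °C multi-model mean used in the example is an illustrative value chosen for easy arithmetic, not a result from Meehl et al. (2007).

```python
from typing import Tuple


def likely_range(
    mean_warming_c: float,
    lower_frac: float = -0.40,
    upper_frac: float = 0.60,
) -> Tuple[float, float]:
    """Return (lower, upper) bounds of the likely range in degrees C.

    The bounds are expressed as fractions of the multi-model mean warming,
    following the -40% to +60% range quoted in the text.
    """
    if mean_warming_c <= 0:
        raise ValueError("mean warming must be positive")
    lower = mean_warming_c * (1.0 + lower_frac)
    upper = mean_warming_c * (1.0 + upper_frac)
    return lower, upper


if __name__ == "__main__":
    mean = 3.0  # hypothetical multi-model mean warming, for illustration only
    lower, upper = likely_range(mean)
    print(f"likely range: {lower:.1f} to {upper:.1f} degrees C")
    print(f"margin below mean: {mean - lower:.1f}, above mean: {upper - mean:.1f}")
```

For the hypothetical 3.0 °C mean, the range is roughly 1.8 to 4.8 °C. The margin above the mean is one and a half times the margin below it, which is the asymmetry the text attributes in part to uncertainties in carbon cycle feedbacks.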

3.1.3 Example of assignment of likelihood to a partial range of a variable

With increased interest in the projection of extreme events and the assessment of related risks, which necessarily concern the tails of distributions, it can be useful to make an uncertainty statement for specific parts of the range of a variable. This option was already applied by Working Group I in the AR4 and provided much new and useful insight, although with such an approach the degree of information is inhomogeneous across the range. Equilibrium climate sensitivity (ECS, i.e., the global mean equilibrium temperature increase when doubling atmospheric CO2 concentration) is a fundamental quantity in the climate system which is widely used to characterize climate models. For many years, the scientific consensus was that ECS lies between 1.5 and 4.5°C. Working Group I AR4 concluded that the likely range for ECS is 2°C to 4.5°C, that ECS is very unlikely to be smaller than 1.5°C, and that the likelihood of ECS being larger than 4.5°C cannot be quantified (“[V]alues substantially higher than 4.5°C cannot be excluded…”, IPCC 2007a). By applying the calibrated language to only parts of a range, quantitative information about the distribution of a variable can be given with differentiated uncertainty for specific parts of the distribution. It is expected that this approach will also be used in the Working Group I AR5 assessment, e.g., for sea level rise projections whose upper end is much less well constrained than the lower end (Nicholls and Cazenave 2010). A differentiated expression of likelihood is required when confidence varies across a range.

The assessment and consistent treatment of uncertainties as illustrated above will continue to be a central component in the ongoing Working Group I AR5 assessment. This was recognized early on in the preparations for the AR5. Working Group I has therefore organized targeted IPCC Expert Meetings and Workshops to support the science community in the assessment process in areas where the current scientific understanding is limited and where uncertainties are recognized to be particularly large (IPCC Workshop on Sea Level Rise and Ice Sheet Instabilities; IPCC 2010a) and where the systematic assessment of uncertainties will be of key importance in the assessment process (IPCC Expert Meeting on Assessing and Combining Multi Model Climate Projections; IPCC 2010b).

In order to ensure the consistent evaluation of uncertainties within the Working Group I contribution—across the assessment of observations, paleoclimate information, process understanding, forcing, attribution, and projections of future climate change and its predictability—the treatment of uncertainties in the Working Group I contribution to AR5 will be laid out in Chapter 1: Introduction. The use of calibrated IPCC uncertainty terminology throughout the report will require, in each case, a traceable account of how the chapter author team has arrived at the particular estimate of a level of confidence and quantitative uncertainty. This account is crucial and will be an integral part of the Working Group I assessment.

3.2 Working group II: perspective and challenges

The Working Group II contribution to the AR5 spans a wide spectrum of topics, with expanded coverage of climate change impacts and adaptation as compared to the AR4 contribution (IPCC 2007b). New chapters will focus on oceans, on additional dimensions of human systems, and on further aspects of adaptation. Impacts will be considered more extensively under multiple scenarios of climate change and of socioeconomic development, as well as in the context of adaptation and mitigation strategies, particularly at the regional scale. The contribution will include expanded consideration of the interaction among climate change and other stressors. Throughout, the contribution will emphasize information to inform risk management decisions, particularly information on the range of possible outcomes, including the tails of distributions where probabilistic information is available.

The Working Group II contribution will assess knowledge derived from many disciplines, and the nature of relevant uncertainties will vary, requiring different approaches for evaluating the degree of certainty in key findings. All chapters will be encouraged to describe evaluations of evidence and agreement with traceable accounts, including discussion of the range of relevant scientific interpretations of the evidence available. Traceable accounts will constitute a fundamental component of each chapter’s assessment, communicating the author team’s conclusions and the evaluation of evidence and agreement underpinning those conclusions. Based on their evaluations of evidence and agreement, author teams will be able to choose to express their degree of certainty in findings using summary terms for evidence and agreement, levels of confidence, or likelihood terms or other probabilistic measures, using the AR5 Guidance Note.

Construction of conditional findings is expected to be particularly relevant for the Working Group II contribution. Conditional findings as introduced in the AR5 Guidance Note can separately characterize the degree of certainty in causes and effects. For example, a Working Group II author team could construct a finding about impacts or adaptation that is conditional on a Working Group I finding about changes in the physical climate system. A finding about potential changes in the physical climate system can describe expected directions or magnitudes of change, providing information about possible climate outcomes such as best estimates, ranges, or relative likelihoods. Presented climate outcomes will have implications for impacts and adaptation, and thus findings about the physical climate system, impacts, and adaptation are linked. The degree of certainty in a finding about impacts or adaptation, however, can be evaluated separately from the degree of certainty in a finding about the climate outcome to which it is linked. A finding about impacts or adaptation will be based on evaluation of evidence and agreement regarding the consequences of a given change in climate, which is independent of the evaluation of whether the change will occur. In this way, a finding regarding impacts of or appropriate adaptation responses to a climate outcome (were it to occur) could have a high degree of certainty even if the degree of certainty in the climate outcome itself is low. Conversely, a finding regarding impacts or adaptation could have a low degree of certainty even if the degree of certainty in the climate outcome is high.
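One way to picture the separation between the certainty in a cause and the certainty in its effect is a small data structure. The Python sketch below is illustrative only: the class names, the field names, the rendering format, and the placeholder statements are all hypothetical and do not come from the Guidance Note.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    """A statement with its own calibrated degree of certainty."""

    statement: str
    certainty: str  # e.g., a confidence level or a likelihood term


@dataclass(frozen=True)
class ConditionalFinding:
    """An effect whose certainty is evaluated separately from its cause."""

    cause: Finding   # e.g., a climate outcome assessed by Working Group I
    effect: Finding  # e.g., an impact assessed by Working Group II

    def render(self) -> str:
        # Each degree of certainty is reported next to its own statement.
        return (
            f"If {self.cause.statement} ({self.cause.certainty}), "
            f"then {self.effect.statement} "
            f"({self.effect.certainty}, conditional on the climate outcome)."
        )


if __name__ == "__main__":
    # Placeholder statements, chosen only to show the structure.
    example = ConditionalFinding(
        cause=Finding("climate outcome X occurs", "low confidence"),
        effect=Finding("impact Y follows", "high confidence"),
    )
    print(example.render())
```

Keeping the two certainty fields apart mirrors the point made above: a high degree of certainty in the effect does not imply a high degree of certainty in the cause, and vice versa.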

Throughout the Working Group II contribution, findings will require specification of underlying assumptions on which impacts and adaptation outcomes are dependent. For example, projected impacts are based not only on projected changes in climate, but also on assumptions about trajectories for socioeconomic development and their implications for vulnerability to climate changes. Figure 5 illustrates the linkages among impacts and adaptation outcomes conditional on a hypothetical range of climate outcomes and on one set of socioeconomic assumptions. Each combination of climate outcome and socioeconomic assumptions will have different implications for impacts and adaptation, but there may also be commonalities across such combinations that emerge, for example when a given impact or adaptation outcome is projected for a range of possible climate outcomes. Impacts or adaptation findings need to communicate clearly the climate and socioeconomic assumptions on which they are based and where such commonalities exist. It is important to distinguish, for example, between impacts or adaptation outcomes associated with only one future climate and socioeconomic scenario and outcomes that arise under a range of future scenarios.

Fig. 5 Schematic illustrating the flow of information from projected changes in climate and socioeconomic factors to impacts and adaptation outcomes. Climate projections inform possible climate outcomes. Together with assumed changes in socioeconomic factors, climate outcomes have implications for impacts and adaptation. The degree of certainty in findings about climate outcomes and about impacts and adaptation outcomes can be evaluated separately. Findings about impacts and adaptation need to clearly communicate the climate and socioeconomic assumptions on which they are based.

The Guidance Note mentions the importance of developing findings that synthesize across underlying lines of evidence, providing general, substantive conclusions that appropriately reflect the available evidence. Along these lines in the Working Group II contribution, an author team may consider constructing a key finding that extends beyond the spatial or temporal scale of available evidence. That is, an author team may consider a finding about a region based on evidence for several countries within that region. Such generalizations beyond the scale of available evidence affect the degree of certainty in those findings, and author teams will need to consider and communicate assumptions made.

The Working Group II contribution to the AR5 will employ all forms of calibrated uncertainty language presented in the AR5 Guidance Note: summary terms for evidence and agreement, as well as the more clearly distinguished confidence and likelihood metrics. With the confidence metric qualitatively calibrated, it is expected to be broadly applicable across the Working Group II contribution for findings where probabilistic information is not available, for example, for findings related to adaptation and for findings that qualitatively project future outcomes. Where probabilistic information is available, likelihood terms or more precise probabilistic measures will be used, with an emphasis on providing as much information as possible on the full distribution of possible outcomes, including the tails, to inform policy decisions for risk management. In all cases, it is important that findings in the Working Group II contribution explicitly communicate their degree of certainty, specify assumptions appropriately, and be clearly supported by traceable accounts in the underlying chapter text.
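As a minimal sketch of how the likelihood terms relate to probability ranges, the following Python snippet encodes the likelihood scale of the AR5 Guidance Note and lists the terms whose range contains a given probability. The function names and the rule of picking the narrowest applicable range are assumptions made for illustration only; in practice the choice of term rests on the expert judgment of the author team, not on a mechanical lookup.

```python
# Likelihood scale of the AR5 Guidance Note: (term, lower bound, upper bound).
# Ranges are nested, so several terms can apply to the same probability.
LIKELIHOOD_SCALE = [
    ("virtually certain", 0.99, 1.00),
    ("very likely", 0.90, 1.00),
    ("likely", 0.66, 1.00),
    ("about as likely as not", 0.33, 0.66),
    ("unlikely", 0.00, 0.33),
    ("very unlikely", 0.00, 0.10),
    ("exceptionally unlikely", 0.00, 0.01),
]


def applicable_terms(p):
    """Return every likelihood term whose range contains probability p."""
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return [term for term, low, high in LIKELIHOOD_SCALE if low <= p <= high]


def narrowest_term(p):
    """Hypothetical helper: pick the applicable term with the narrowest range.

    This selection rule is an illustrative assumption, not part of the guidance.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    candidates = [
        (high - low, term)
        for term, low, high in LIKELIHOOD_SCALE
        if low <= p <= high
    ]
    return min(candidates)[1]


if __name__ == "__main__":
    for p in (0.995, 0.95, 0.5, 0.05):
        print(p, applicable_terms(p), narrowest_term(p))
```

Because the ranges overlap, a probability of 0.95 is consistent with both "very likely" and "likely"; the sketch reports all applicable terms so that this nesting stays visible.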

3.3 Working group III: perspective and challenges

The Working Group III contribution to the AR5 will explore a wide range of available options and instruments for greenhouse gas mitigation. The outline of the Working Group III contribution to the AR5 foresees a detailed assessment of options for reducing emissions from land use and land-use change and for transforming energy systems and the energy use of households, industry, transport, and other sectors. It will also include a review of the emerging literature on geoengineering options such as managing the carbon cycle and altering the global radiation balance.

In order to provide a comprehensive and consistent assessment of mitigation options available to society and decision makers, the IPCC has initiated a dialogue with several scientific communities in the areas of climate science, mitigation, and adaptation. These communities are engaging in a coordinated scenario-building process where the IPCC no longer commissions specific scenarios, but restricts its role to facilitating the scenario process which is conducted by self-organized bodies of the scientific communities (Moss et al. 2010). The new scenarios are characterized by combining (i) levels of radiative forcing (Representative Concentration Pathways - RCPs) (van Vuuren et al. 2011) with (ii) a set of socioeconomic reference assumptions that specify future developments for key parameters such as GDP and population (van Vuuren et al. 2010; Kriegler et al. 2010).

In order to be policy relevant without being policy prescriptive, an ex-post analysis of these scenarios has to take into account and make explicit (i) the underlying value judgments of scenarios and (ii) the interaction between ends and means, i.e., how the direct and indirect consequences of the development of mitigation options affect the assessment of the ends (e.g., global stabilization targets) and vice versa. For example, low-stabilization scenarios might imply an extensive use of biomass, which may be associated with serious conflicts over land use and trade-offs between clean energy and low-cost food supply. The IPCC intends to assess multiple scenarios describing different plausible future pathways for mitigation. These multiple scenarios should also include second-best scenarios, which explicitly consider, for example, the limited availability of technologies, fragmented carbon markets, or suboptimal policy instruments.

Beyond these ex-post analyses pointing out the options decision-makers might have at their disposal, a well-designed uncertainty analysis is required to address epistemic uncertainties about the socioeconomic system. These epistemic uncertainties can be characterized by model uncertainty and parametric uncertainty.

Model uncertainty refers to different philosophies of representing socioeconomic and technology systems as well as value systems. For example, economic Partial Equilibrium models (such as energy system models) differ substantially from economic General Equilibrium models (such as Computable General Equilibrium Models). In general, model uncertainty may be reduced by eliminating some model structures from the set of plausible models. One way of doing so is validating models against empirical evidence to identify “better” models and consequently discard “bad” models. This requires, however, a clear idea about the relevant empirical evidence and the appropriate type of validation exercises, a particularly difficult issue for policy evaluation models that commonly use counterfactual no-policy cases in the evaluation process. In the economic community, for example, there is no consensus regarding which stylized facts of past economic development should be reproduced by models. There is substantial disagreement about the relevant scale (global, regional) and the relevant time horizon (short-term, long-term) of stylized facts.

However, even “perfect validation” is only a necessary but not a sufficient condition for the selection of models. “Ockham’s razor” proposes that if a model explains the same empirical phenomena using less specific or more plausible assumptions and parameters than another model, then it can be deemed preferable (Edenhofer et al. 2006). Yet to date, the theoretical and empirical foundation of model types within economics remains insufficient to allow for a consensus within the scientific community according to these principles. In other words, under the present state of the art, the uncertainties about the appropriate economic model structure would remain even if there were a consensus on the stylized facts.

A promising approach for explaining differences in model results is to conduct modeling comparison exercises of climate and energy economics models that trace the underlying drivers of different model results. Such exercises also enable evaluation and explanation of results when sensitivity analysis leads to the same qualitative results despite structural differences among models (e.g., most models used in climate economics find that more ambitious mitigation targets increase costs). The Joint IPCC Workshop of Working Group III and II on Socioeconomic Scenarios for Climate Change Impact and Response Assessments, held in November 2010, included discussion of the importance of modeling comparison exercises by the scientific community to enhance the understanding of underlying model philosophies and the robustness of the results.

Parameter uncertainty refers to a lack of empirical knowledge to calibrate the parameters of a model to their “true” values. Parameter uncertainty implies uncertainty in the predictions of any one model, and discrepancies may result even for otherwise very similar models when different assumptions about parameter values are adopted. Parameter uncertainty can be addressed in model-specific uncertainty analyses, including sensitivity analysis and Monte Carlo simulations. Examples of uncertain parameters include technology costs, resource availabilities, and political decisions such as those on international cooperation. Sensitivity analyses should be conducted not only on uncertain economic assumptions such as base rates of technological progress and price elasticities, but also across so-called second-best worlds, in which certain assumptions about, for example, first-best policies, market fragmentation, or technology availability are varied to study their impacts on mitigation strategies. The evaluation of second-best worlds typically reveals that such imperfections can have a pronounced effect on costs of mitigation (Clarke et al. 2009; Edenhofer et al. 2009; Edenhofer et al. 2010; Luderer et al. 2011). Conducting such studies is thus vital to represent policy options in a more realistic manner, and Working Group III intends to emphasize the assessment of such scenarios in AR5.
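The two techniques named above can be sketched in a few lines of Python. The cost function, the parameter names `tech_cost` and `elasticity`, their ranges, and the uniform sampling distributions are all hypothetical and chosen only for illustration; they do not represent any of the models cited here. The sketch shows a one-at-a-time sensitivity analysis, which varies each parameter across its range while holding the others at base values, and a Monte Carlo simulation, which samples all parameters jointly and summarizes the resulting distribution of outcomes.

```python
import random
import statistics


def toy_mitigation_cost(tech_cost, elasticity, target=0.5):
    """Hypothetical cost function, for illustration only.

    Cost rises with technology cost and with the square of the
    mitigation target, and falls with the price elasticity.
    """
    return tech_cost * target ** 2 / elasticity


def one_at_a_time_sensitivity(base, ranges):
    """Vary each parameter over (low, high) with the others held at base values."""
    result = {}
    for name, (low, high) in ranges.items():
        cost_low = toy_mitigation_cost(**dict(base, **{name: low}))
        cost_high = toy_mitigation_cost(**dict(base, **{name: high}))
        result[name] = (cost_low, cost_high)
    return result


def monte_carlo(ranges, n_samples=10_000, seed=0):
    """Sample all parameters jointly (uniform, an assumption) and summarize costs."""
    rng = random.Random(seed)
    costs = []
    for _ in range(n_samples):
        sample = {name: rng.uniform(low, high) for name, (low, high) in ranges.items()}
        costs.append(toy_mitigation_cost(**sample))
    costs.sort()
    return {
        "p05": costs[int(0.05 * n_samples)],
        "median": statistics.median(costs),
        "p95": costs[int(0.95 * n_samples)],
    }


if __name__ == "__main__":
    base = {"tech_cost": 100.0, "elasticity": 0.5}
    ranges = {"tech_cost": (50.0, 150.0), "elasticity": (0.2, 0.8)}
    print(one_at_a_time_sensitivity(base, ranges))
    print(monte_carlo(ranges))
```

Reporting the 5th and 95th percentiles alongside the median keeps the tails of the cost distribution visible, which the sensitivity ranges alone do not convey.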

The new AR5 Guidance Note provides calibrated language for systematic evaluation and representation of these uncertainties. At a first level, both model uncertainty and parametric uncertainty can be characterized by the criteria of evidence and agreement. The Guidance Note indicates that evidence is generally most robust when there are multiple, consistent and independent lines of high-quality evidence. For example, the reference to modeling comparison exercises and to sensitivity studies should enable authors to trace back their assessments to scientific literature that aggregates findings from multiple independent modeling efforts. Admittedly, model comparison exercises will not resolve the problem of an incomplete representation of reality, as some feedback loops ignored in current models could become important in the future. In addition, the conventional validation procedure will not work in such a context. Modeling comparison exercises, however, can still be used as a social learning process to facilitate an ongoing discussion about feedback loops that might be important in the future, and they are valuable to justify the level of agreement within the scientific community. In addition, modeling comparison exercises and sensitivity studies may allow authors to qualitatively aggregate evidence and agreement into confidence statements. Even if the evidence base for quantitative evaluation of likelihoods in the context of Working Group III is limited, the AR5 will benefit substantially from qualitative explication of the uncertainties underlying the assessment of mitigation options.

4 Conclusion

The AR5 uncertainties guidance paper provides a common framework for the evaluation and communication of the degree of certainty in assessment findings. We have provided a summary of the process author teams in AR5 will employ for developing key findings from evaluation of evidence and agreement and describing this evaluation in traceable accounts within chapters. As also described in this paper, the AR5 uncertainties guidance presents calibrated language for characterizing the degree of certainty in findings based on this evaluation: summary terms for evidence and agreement, levels of confidence, and quantified measures including likelihood. We anticipate that the metrics employed most frequently in the contribution of each Working Group will vary depending on the nature of the underlying evidence and relevant disciplines. The perspectives presented here highlight ways in which the three Working Groups will apply the framework of the AR5 uncertainties guidance consistently across the spectrum of relevant knowledge.

References

Clarke L, Edmonds J, Krey V, Richels R, Rose S, Tavoni M (2009) International climate policy architectures: overview of the EMF 22 international scenarios. Energ Econ 31:S64–S81

Edenhofer O, Lessmann K, Kemfert C, Grubb M, Koehler J (2006) Induced technological change: exploring its implications for the economics of atmospheric stabilization. The Energy Journal 93:57–107

Edenhofer O, Carraro C, Hourcade J-C, Neuhoff K, Luderer G, Flachsland C, Jakob M, Popp A, Steckel J, Strohschein J, Bauer N, Brunner S, Leimbach M, Lotze-Campen H, Bosetti V, de Cian E, Tavoni M, Sassi O, Waisman H, Crassous-Doerfler R, Monjon S, Dröge S, van Essen H, del Río P, Türk A (2009) The economics of decarbonization. Report of the RECIPE project. Potsdam Institute for Climate Impact Research, Potsdam

Edenhofer O, Knopf B, Leimbach M, Bauer N (eds) (2010) The economics of low stabilization. Energ J 31 (Special Issue 1)

Hegerl GC, Hoegh-Guldberg O, Casassa G, Hoerling MP, Kovats RS, Parmesan C, Pierce DW, Stott PA (2010) Good practice guidance paper on detection and attribution related to anthropogenic climate change. In: Stocker TF, Field CB, Qin D, Barros V, Plattner G-K, Tignor M, Midgley PM, Ebi KL (eds) Meeting report of the Intergovernmental Panel on Climate Change Expert Meeting on detection and attribution related to anthropogenic climate change. IPCC Working Group I Technical Support Unit, University of Bern, Bern, pp 1–8

InterAcademy Council (2010) Climate change assessments, review of the processes and procedures of the IPCC. InterAcademy Council, Amsterdam, The Netherlands. http://reviewipcc.interacademycouncil.net/. Accessed 20 February 2011

IPCC (1990) Climate change: The IPCC scientific assessment. Report prepared for IPCC by Working Group I. In: Houghton JT, Jenkins GJ, Ephraums JJ (eds) Cambridge University Press, Cambridge

IPCC (1996) Climate change 1995: The science of climate change. Contribution of Working Group I to the Second Assessment Report of the Intergovernmental Panel on Climate Change. In: Houghton JT, Meira Filho LG, Callander BA, Harris N, Kattenberg A, Maskell K (eds) Cambridge University Press, Cambridge

IPCC (2001a) Climate change 2001: The scientific basis. Contribution of Working Group I to the Third Assessment Report of the Intergovernmental Panel on Climate Change. In: Houghton JT, Ding Y, Griggs DJ, Noguer M, van der Linden PJ, Dai X, Maskell K, Johnson CA (eds) Cambridge University Press, Cambridge

IPCC (2001b) Climate change 2001: Impacts, adaptation, and vulnerability. Contribution of Working Group II to the Third Assessment Report of the Intergovernmental Panel on Climate Change. In: McCarthy JJ, Canziani OF, Leary NA, Dokken DJ, White KS (eds) Cambridge University Press, Cambridge

IPCC (2001c) Climate change 2001: Mitigation. Contribution of Working Group III to the Third Assessment Report of the Intergovernmental Panel on Climate Change. In: Metz B, Davidson O, Swart R, Pan J (eds) Cambridge University Press, Cambridge

IPCC (2005) Guidance notes for lead authors of the IPCC Fourth Assessment Report on addressing uncertainties. Intergovernmental Panel on Climate Change (IPCC), Geneva, Switzerland. http://www.ipcc.ch/pdf/supporting-material/uncertainty-guidance-note.pdf. Accessed 20 February 2011

IPCC (2007a) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. In: Solomon S, Qin D, Manning M, Chen Z, Marquis MC, Averyt KB, Tignor M, Miller HL (eds) Cambridge University Press, Cambridge

IPCC (2007b) Climate change 2007: Impacts, adaptation and vulnerability. Contribution of Working Group II to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. In: Parry ML, Canziani OF, Palutikof JP, van der Linden PJ, Hanson CE (eds) Cambridge University Press, Cambridge

IPCC (2007c) Climate change 2007: Mitigation. Contribution of Working Group III to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. In: Metz B, Davidson OR, Bosch PR, Dave R, Meyer LA (eds) Cambridge University Press, Cambridge

IPCC (2008) Appendix A to the principles governing IPCC work. Procedures for the preparation, review, acceptance, adoption, approval and publication of IPCC reports. Intergovernmental Panel on Climate Change (IPCC). http://www.ipcc.ch/organization/organization_procedures.shtml. Accessed 20 February 2011

IPCC (2010a) Workshop report of the Intergovernmental Panel on Climate Change workshop on sea level rise and ice sheet instabilities. In: Stocker TF, Qin D, Plattner G-K, Tignor M, Allen S, Midgley PM (eds) IPCC Working Group I Technical Support Unit, University of Bern, Bern

IPCC (2010b) Meeting report of the Intergovernmental Panel on Climate Change expert meeting on assessing and combining multi model climate projections. In: Stocker TF, Qin D, Plattner G-K, Tignor M, Midgley PM (eds) IPCC Working Group I Technical Support Unit, University of Bern, Bern

Knutti R, Allen MR, Friedlingstein P, Gregory JM, Hegerl GC, Meehl GA, Meinshausen M, Murphy JM, Plattner G-K, Raper SCB, Stocker TF, Stott PA, Teng H, Wigley TML (2008) A review of uncertainties in global temperature projections over the twenty-first century. J Clim 21:2651–2663

Kriegler E, O’Neill B, Hallegatte S, Kram T, Lempert R, Moss R, Wilbanks T (2010) Socio-economic scenario development for climate change analysis. Working paper. http://www.ipcc-wg3.de/meetings/expert-meetings-and-workshops/WoSES. Accessed 29 April 2011

Luderer G, Bosetti V, Jakob M, Leimbach M, Edenhofer O (2011) The economics of decarbonizing the energy system: results and insights from the RECIPE model intercomparison. Climatic Change

Manning MR (2006) The treatment of uncertainties in the fourth IPCC assessment report. Adv Clim Change Res 2:13–21

Manning M, Petit M, Easterling D, Murphy J, Patwardhan A, Rogner H-H, Swart R, Yohe G (eds) (2004) IPCC workshop on describing scientific uncertainties in climate change to support analysis of risk and of options: Workshop report. Intergovernmental Panel on Climate Change (IPCC), Geneva, Switzerland. http://www.ipcc.ch/pdf/supporting-material/ipcc-workshop-2004-may.pdf. Accessed 20 February 2011

Mastrandrea MD, Field CB, Stocker TF, Edenhofer O, Ebi KL, Frame DJ, Held H, Kriegler E, Mach KJ, Matschoss PR, Plattner G-K, Yohe GW, Zwiers FW (2010) Guidance note for lead authors of the IPCC Fifth Assessment Report on consistent treatment of uncertainties. Intergovernmental Panel on Climate Change (IPCC). http://www.ipcc-wg2.gov/meetings/CGCs/index.html#UR. Accessed 20 February 2011

Meehl GA, Stocker TF, Collins WD, Friedlingstein P, Gaye AT, Gregory JM, Kitoh A, Knutti R, Murphy JM, Noda A, Raper SCB, Watterson IG, Weaver AJ, Zhao Z-C (2007) Global climate projections. In: Solomon S, Qin D, Manning M, Chen Z, Marquis MC, Averyt K, Tignor M, Miller HL (eds) Climate change 2007: The physical science basis. Contribution of Working Group I to the Fourth Assessment Report of the Intergovernmental Panel on Climate Change. Cambridge University Press, Cambridge, pp 747–845

Moss RH, Schneider SH (2000) Uncertainties in the IPCC TAR: Recommendations to lead authors for more consistent assessment and reporting. In: Pachauri R, Taniguchi T, Tanaka K (eds) Guidance papers on the cross cutting issues of the Third Assessment Report of the IPCC. World Meteorological Organization, Geneva, pp 33–51. http://www.ipcc.ch/pdf/supporting-material/guidance-papers-3rd-assessment.pdf. Accessed 20 February 2011

Moss RH, Edmonds JA, Hibbard KA, Manning MR, Rose SK, van Vuuren DP, Carter TR, Emori S, Kainuma M, Kram T, Meehl GA, Mitchell JFB, Nakicenovic N, Riahi K, Smith SJ, Stouffer RJ, Thomson AM, Weyant JP, Wilbanks TJ (2010) The next generation of scenarios for climate change research and assessment. Nature 463:747–756

Nicholls RJ, Cazenave A (2010) Sea-level rise and its impact on coastal zones. Science 328:1517–1520

van Vuuren DP, Riahi K, Moss RH, Edmonds J, Thomson A, Nakicenovic N, Kram T, Berkhout F, Swart R, Janetos A, Rose SK, Arnell N (2010) Developing new scenarios as a common thread for future climate research. Working paper. http://www.ipcc-wg3.de/meetings/expert-meetings-and-workshops/WoSES. Accessed 29 April 2011

van Vuuren DP, Edmonds J, Kainuma M, Riahi K, Thomson A, Hibbard K, Hurtt GC, Kram T, Krey V, Lamarque J-F, Matsui T, Meinshausen M, Nakicenovic N, Smith SJ, Rose SK (2011) The representative concentration pathways: an overview. Climatic Change

Acknowledgments

We thank John Houghton, Robert Lempert, Martin Manning, and several anonymous reviewers for comments on an earlier draft of the manuscript.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Mastrandrea, M.D., Mach, K.J., Plattner, GK. et al. The IPCC AR5 guidance note on consistent treatment of uncertainties: a common approach across the working groups. Climatic Change 108, 675 (2011). https://doi.org/10.1007/s10584-011-0178-6

DOI: https://doi.org/10.1007/s10584-011-0178-6