Abstract

In this paper, we investigate the stability of neural networks with both time-varying delays and uncertainties. A novel delayed intermittent control scheme is designed to ensure the globally asymptotical stability of the addressed system. Some new delay-dependent sufficient criteria for globally asymptotical stability are derived in terms of linear matrix inequalities (LMIs) by using free-weighting matrix techniques and the Lyapunov–Krasovskii functional method. Finally, a numerical simulation is provided to show the effectiveness of the proposed approach.

1 Introduction

Neural networks (NNs) have been widely investigated recently because of their potential applications in many areas such as associative memory, pattern recognition, parallel computing, and image processing; see [1,2,3,4,5,6,7] and references therein. In these applications, the stability of the equilibrium points of the network is an important factor in the design of NNs. However, due to the inherent communication between neurons and the finite switching speed of amplifiers, time delays are unavoidable in neural networks, and their existence may cause poor performance and even instability. Therefore, stability analysis of time-delay neural networks has received considerable attention, and many interesting results have been obtained in the literature [8,9,10,11,12,13,14,15,16,17].

In recent years, the discontinuous control approaches, such as intermittent and impulsive control, have aroused a great deal of interest in many applications because these control methods can reduce the amount of the transmitted information and thus are more economic [18,19,20,21,22]. An intermittent control scheme comprises working and rest times in turn, and only in each working time, the controller is activated. Compared with impulsive control which is activated only at certain instants, intermittent control has the advantage of easy implementation in process control and engineering applications because of its nonzero control width. Owing to these merits, the intermittent control method has been widely applied to the fields of chaotic systems and networks; see, e.g., [18, 23,24,25,26,27,28,29,30,31,32,33] and references therein.

Nevertheless, most research focuses on the periodic case, i.e., the control width and period are fixed. For example, the authors of [25, 26] considered the exponential stabilization problem for chaotic systems without or with constant delays by periodically intermittent control. In [27, 28], the authors treated neural networks with time-varying delays, but these studies assumed that the time-varying delays are differentiable. Moreover, the stability criteria were presented in terms of transcendental equations or nonlinear matrix inequalities, which are computationally difficult. In [18], a class of time-delay neural networks was also studied via periodically intermittent control under the restriction of differentiability of the time-varying delays. Some delay-dependent sufficient conditions for exponential stability were obtained in the form of linear matrix inequalities, which are easily checked with the MATLAB LMI toolbox. However, subject to changes in the real environment, some systems, such as wind power generation and information exchange between routers on the internet, are typically aperiodically intermittent [29, 30]. Periodically intermittent control may then be unreasonable and inadequate in practice. Therefore, it is of significance to investigate the cases where the control width and period are not fixed.

Very recently, in [31], an aperiodically intermittent pinning control was introduced to guarantee the synchronization of hybrid-coupled delayed dynamical networks. Hu et al. [32] addressed stabilization and synchronization of chaotic systems without delays under an adaptive intermittent control strategy with generalized control width and period. In [33], by designing an intermittent control scheme with non-fixed control width and period, Song et al. further considered the stabilization and synchronization of chaotic systems with mixed time-varying delays. Note that all those results assume that the parameters are known. In practice, the exact values of the parameters of a neural network are difficult to obtain because of external disturbances and modeling inaccuracies. Discarding parametric uncertainties may lead to wrong conclusions about the dynamical behavior of the system. On the other hand, receiving a signal and transmitting it from the controller to the controlled system takes some time, so it is more reasonable for the control input to depend on previous state variables. Moreover, introducing time delays in a control scheme can sometimes yield better control performance, as with delayed state-feedback control [34]. Such a scheme can adjust the value of the time delay in the controller without increasing the control gain, and it has attracted considerable attention in recent years [35, 36]. To the best of our knowledge, although there are many results on intermittent control for stability of neural networks, few results are concerned with the stability analysis of uncertain neural networks by using delayed intermittent control. This motivates our present study.

Based on the above discussions, in this paper, we investigate the globally asymptotical stability of neural networks with both time-varying delays and uncertainties. A delayed intermittent controller with non-fixed control width and period is designed. It is new in the sense that, by selecting an appropriate matrix B, it can be activated in all states or only in some states, and the non-fixed control width and period make the control scheme more flexible. By constructing a proper Lyapunov–Krasovskii functional, some new delay-dependent sufficient criteria for globally asymptotical stability of the addressed system are derived in terms of LMIs. The proposed stability criteria establish the relationship between the transmission delay in the system and the time delay in the controller, and they can be easily verified with the MATLAB LMI toolbox. Moreover, the value of the time delay in the controller can be adjusted without increasing the control gain, and free-weighting matrices with full cross-terms are employed, which provide more feasible results than those in the previous work [32, 33]. Finally, a numerical example is studied to show the effectiveness of the proposed approach.

The rest of this paper is organized as follows. Section 2 introduces some preliminary assumptions, basic definitions, and necessary lemmas. In Sect. 3, the globally asymptotical stability results with corresponding proofs are presented. The effectiveness of the developed methods is shown by a numerical example in Sect. 4.

Notations

By \(\mathbb{R}^{n}\) we denote the n-dimensional real space equipped with the Euclidean norm \(\Vert \cdot \Vert \); \(\mathbb{R} ^{n\times m}\) refers to the \(n\times m\)-dimensional real space. Let \(\mathbb{Z}^{+}\) represent the set of positive integers, and \(\mathbb{N}\) denote the set of nonnegative integers; \(A<0\) or \(A>0\) means that the matrix A is a symmetric and negative definite or positive definite matrix. If A, B are symmetric matrices, \(A>B\) means that \(A-B\) is a positive definite matrix. By \(A^{-1}\) and \(A^{T}\) we denote the inverse and the transpose of A, respectively. Let \(\alpha \vee \beta \) denote the maximum value of α and β. For any \(\mathrm{J}\subseteq \mathbb{R}\) and \(\mathrm{S} \subseteq \mathbb{R}^{k}\) (\(1\leq k\leq n\)), set \(C(\mathrm{J}, \mathrm{S})=\{\varphi :\mathrm{J}\rightarrow \mathrm{S}\text{ is continuous}\}\) and \(C^{1}(\mathrm{J},\mathrm{S})=\{\varphi :\mathrm{J}\rightarrow \mathrm{S}\text{ is continuously differentiable}\}\). For each \(\varphi \in C^{1}([-\rho ,0],\mathbb{R}^{n})\), the norm is defined by \(\Vert \varphi \Vert _{\rho }=\sup_{s\in [-\rho ,0]}\{ \vert \varphi (s) \vert , \vert \dot{\varphi }(s) \vert \}\). With ∗ we always denote the symmetric block in a symmetric matrix, and \(\varLambda =\{1,2,\ldots,n\}\).

2 Preliminaries

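Consider the time-delay neural network with uncertainties

$$ \begin{cases} \dot{x}(t)=-C(t)x(t)+W_{1}(t)f(x(t))+W_{2}(t)f(x(t-\tau (t)))+J, & t>0, \\ x(t)=\phi (t), & t\in [-\rho ,0], \end{cases} \tag{1} $$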

where \(x(t)=(x_{1}(t),x_{2}(t),\ldots,x_{n}(t))\in \mathbb{R}^{n}\) is the neural state vector, \(f(x(t))=(f_{1}(x_{1}(t)), f_{2}(x_{2}(t)),\ldots,f_{n}(x_{n}(t)))^{T}\in \mathbb{R}^{n}\) is the neuron activation function, \(C(t)=C+\Delta C(t)\), \(W_{1}(t)=W_{1}+\Delta W_{1}(t)\), \(W_{2}(t)=W_{2}+\Delta W_{2}(t)\), in which C is a positive diagonal matrix, \(W_{1},W_{2}\in \mathbb{R}^{n\times n}\) are the connection weight matrices of the neurons, and \(\Delta C(t)\), \(\Delta W_{1}(t)\), \(\Delta W_{2}(t)\) are time-varying parametric uncertainties, \(\phi \in C^{1}([-\rho ,0],\mathbb{R}^{n})\) is the initial state, J is the external input, \(\tau (t)\) is a time-varying delay which satisfies \(0\leq \tau (t)\leq \tau \), where τ is a constant.

The following assumptions are made throughout this paper:

- \((H_{1})\):

The activation function \(f\in C(\mathbb{R}^{n},\mathbb{R} ^{n})\), \(f_{j}(0)=0\), \(j\in \varLambda \), is such that there exist constants \(l_{j}^{-}\) and \(l_{j}^{+}\) such that

$$\begin{aligned}& l_{j}^{-}\leq \frac{f_{j}(\alpha _{1})-f_{j}(\alpha _{2})}{\alpha _{1}- \alpha _{2}}\leq l_{j}^{+}, \quad \forall \alpha _{1},\alpha _{2}\in \mathbb{R}, \alpha _{1}\neq \alpha _{2}. \end{aligned}$$

For convenience, we denote

$$ L_{1}=\operatorname{diag}\bigl(l_{1}^{-}l_{1}^{+},l_{2}^{-}l_{2}^{+}, \ldots,l _{n}^{-}l_{n}^{+}\bigr), \quad\quad L_{2}=\operatorname{diag} \biggl(\frac{l_{1}^{-}+l_{1}^{+}}{2}, \frac{l_{2} ^{-}+l_{2}^{+}}{2},\ldots,\frac{l_{n}^{-}+l_{n}^{+}}{2} \biggr). $$

- \((H_{2})\):

The time-varying parametric uncertainties \(\Delta C(t)\), \(\Delta W_{1}(t)\), \(\Delta W_{2}(t)\), \(\Delta B(t)\) are of the form

$$ \bigl(\Delta C(t),\Delta W_{1}(t),\Delta W_{2}(t), \Delta B(t) \bigr)=HA(t) (E _{1},E_{2},E_{3},E_{4}), $$

where \(A(t)\) is an unknown matrix satisfying \(A^{T}(t)A(t)\leq I\), and H, \(E_{1}\), \(E_{2}\), \(E_{3}\), \(E_{4}\) are known constant matrices of appropriate dimensions.
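As a small illustration of assumptions \((H_{1})\) and \((H_{2})\), the sketch below builds \(L_{1}\) and \(L_{2}\) from given sector bounds and forms one uncertainty term \(HA(t)E_{1}\) after checking \(A^{T}(t)A(t)\leq I\). The sector bounds are the ones quoted in Sect. 4; the matrices `H`, `E1` and the choice \(A(t)=\sin (t)I\) are hypothetical values chosen only for illustration, and the function names are not from the paper.

```python
import numpy as np

def sector_matrices(l_minus, l_plus):
    """Return (L1, L2) of assumption (H1) from the sector bounds l_j^- and l_j^+."""
    l_minus = np.asarray(l_minus, dtype=float)
    l_plus = np.asarray(l_plus, dtype=float)
    L1 = np.diag(l_minus * l_plus)          # diag(l_j^- * l_j^+)
    L2 = np.diag((l_minus + l_plus) / 2.0)  # diag((l_j^- + l_j^+) / 2)
    return L1, L2

def uncertainty(H, A_t, E):
    """Return H A(t) E after checking the bound A(t)^T A(t) <= I of (H2)."""
    # A^T A <= I holds exactly when the largest singular value of A is <= 1.
    if np.linalg.norm(A_t, 2) > 1.0 + 1e-12:
        raise ValueError("A(t) violates A^T A <= I")
    return H @ A_t @ E

# Sector bounds quoted in Sect. 4.
L1, L2 = sector_matrices([-0.2, -0.3], [0.5, 0.5])

# Hypothetical H, E_1 and A(t) = sin(t) I (illustration only).
H = 0.1 * np.eye(2)
E1 = np.eye(2)
delta_C = uncertainty(H, np.sin(0.7) * np.eye(2), E1)
```

Any \(A(t)\) whose largest singular value exceeds one is rejected, which mirrors the norm bound in \((H_{2})\).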

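Suppose that \(x^{\star }=(x_{1}^{\star },x_{2}^{\star },\ldots,x_{n} ^{\star })^{T}\) is an equilibrium point of system (1). By the transformation \(y=x-x^{\star }\), the equilibrium point \(x^{\star }\) can be shifted to the origin. Then system (1) turns into

$$ \begin{cases} \dot{y}(t)=-C(t)y(t)+W_{1}(t)F(y(t))+W_{2}(t)F(y(t-\tau (t))), & t>0, \\ y(t)=\varphi (t), & t\in [-\rho ,0], \end{cases} \tag{2} $$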

where \(\varphi (t)=\phi (t)-x^{\star }\), \(\varphi \in C^{1}([-\rho ,0], \mathbb{R}^{n})\) and \(F(y)=f(y+x^{\star })-f(x^{\star })\).

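To achieve the stability of system (2), a delayed intermittent control with non-fixed control width and period is first designed. The controlled system of (2) can be described as follows:

$$ \begin{cases} \dot{y}(t)=-C(t)y(t)+W_{1}(t)F(y(t))+W_{2}(t)F(y(t-\tau (t)))+B(t)u(t), & t>0, \\ y(t)=\varphi (t), & t\in [-\rho ,0], \end{cases} \tag{3} $$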

where \(B(t)=B+\Delta B(t)\), \(B\in \mathbb{R}^{n\times m}\) represents a known real matrix, \(\Delta B(t)\) is a time-varying parametric uncertainty, and \(u(t)\in \mathbb{R}^{m}\) is a control input of the form

$$ u(t)= \begin{cases} Ky(t-\gamma ), & t_{k}\leq t< t_{k}+d_{k}, \\ 0, & t_{k}+d_{k}\leq t< t_{k+1}, \end{cases} \tag{4} $$

where γ is a positive constant delay, \(K\in \mathbb{R}^{m \times n}\) is the controller gain to be designed, \(d_{k}\) is the so-called control width, and \(t_{k+1}-t_{k}\) is the control period. The control instants satisfy \(0=t_{1}< t_{2}<\cdots <t_{k}<\cdots \), \(\lim_{k\rightarrow \infty }t_{k}=+\infty \), \(k\in \mathbb{Z}^{+}\).
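The working/rest structure of the controller can be sketched in a few lines. The code below assumes that the control input equals \(Ky(t-\gamma )\) on each working interval \([t_{k},t_{k}+d_{k})\) and zero on each rest interval \([t_{k}+d_{k},t_{k+1})\), as described in the text. The instants `starts`, the widths `widths`, and the function names are hypothetical; the gain is the one reported in Sect. 4.

```python
from bisect import bisect_right

def is_working(t, starts, widths):
    """True if t lies in some working interval [t_k, t_k + d_k)."""
    k = bisect_right(starts, t) - 1  # index of the last control instant t_k <= t
    if k < 0:
        return False                 # t precedes the first control instant
    return t < starts[k] + widths[k]

def control_input(t, starts, widths, K, y_delayed):
    """u(t) = K y(t - gamma) in working time and u(t) = 0 in rest time.

    K is a list of rows (m x n) and y_delayed is the state y(t - gamma).
    """
    if is_working(t, starts, widths):
        return [sum(k_ij * y_j for k_ij, y_j in zip(row, y_delayed)) for row in K]
    return [0.0] * len(K)

# Hypothetical aperiodic schedule: neither the period nor the width is constant.
starts = [0.0, 1.0, 2.5]
widths = [0.6, 0.9, 0.5]
K = [[-0.0667, -11.5328]]  # gain reported in Sect. 4

u_on = control_input(0.3, starts, widths, K, [1.0, 0.0])   # inside [0.0, 0.6)
u_off = control_input(0.8, starts, widths, K, [1.0, 0.0])  # inside [0.6, 1.0)
```

Storing the instants in a sorted list keeps the lookup logarithmic in the number of intervals, which matters only for long schedules.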

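Then, one may combine (3) and (4) into the following closed-loop system:

$$ \begin{cases} \dot{y}(t)=-C(t)y(t)+W_{1}(t)F(y(t))+W_{2}(t)F(y(t-\tau (t)))+D(t)y(t-\gamma ), & t_{k}\leq t< t_{k}+d_{k}, \\ \dot{y}(t)=-C(t)y(t)+W_{1}(t)F(y(t))+W_{2}(t)F(y(t-\tau (t))), & t_{k}+d_{k}\leq t< t_{k+1}, \\ y(t)=\varphi (t), & t\in [-\rho ,0], \end{cases} \tag{5} $$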

where \(D(t)=B(t)K\) and \(\rho =\gamma \vee \tau \).
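For intuition, the closed loop can be integrated numerically. The following is a minimal forward-Euler sketch of the nominal system (all uncertainties set to zero), assuming the dynamics \(\dot{y}=-Cy+W_{1}F(y(t))+W_{2}F(y(t-\tau (t)))\) with the extra term \(Dy(t-\gamma )\) added only during working time. The weights `W1`, `W2`, the matrix `C`, the delay function `tau`, the activation `tanh`, and the schedule are hypothetical placeholders; only \(B=[0,1]^{T}\), the gain K, \(\gamma =0.01\), \(\tau =0.11\), and the initial function are taken from Sect. 4.

```python
import numpy as np

def simulate(C, W1, W2, D, F, tau, tau_max, gamma, starts, widths, phi, t_end, h=1e-3):
    """Forward-Euler integration of the nominal intermittently controlled system."""
    rho = max(gamma, tau_max)
    n_hist = int(np.ceil(rho / h))          # grid points covering [-rho, 0)
    n_steps = int(round(t_end / h))
    y = np.zeros((n_hist + n_steps + 1, C.shape[0]))
    for i in range(n_hist + 1):             # initial function on [-rho, 0]
        y[i] = phi((i - n_hist) * h)
    starts = np.asarray(starts, dtype=float)
    widths = np.asarray(widths, dtype=float)
    for j in range(n_hist, n_hist + n_steps):
        t = (j - n_hist) * h
        y_tau = y[max(j - int(round(tau(t) / h)), 0)]   # y(t - tau(t))
        y_gam = y[max(j - int(round(gamma / h)), 0)]    # y(t - gamma)
        dy = -C @ y[j] + W1 @ F(y[j]) + W2 @ F(y_tau)
        k = np.searchsorted(starts, t, side="right") - 1
        if k >= 0 and t < starts[k] + widths[k]:        # working time only
            dy = dy + D @ y_gam
        y[j + 1] = y[j] + h * dy
    times = (np.arange(n_hist + n_steps + 1) - n_hist) * h
    return times, y

# Hypothetical network data (illustration only).
C = np.eye(2)
W1 = np.array([[2.0, -0.1], [-5.0, 3.0]])
W2 = np.array([[-1.5, -0.1], [-0.2, -2.5]])
B = np.array([[0.0], [1.0]])                 # B = [0, 1]^T as in Sect. 4
K = np.array([[-0.0667, -11.5328]])          # gain reported in Sect. 4
times, y = simulate(
    C, W1, W2, B @ K, np.tanh,
    tau=lambda t: 0.11 * abs(np.sin(t)), tau_max=0.11, gamma=0.01,
    starts=[0.0, 0.5, 1.0, 1.5], widths=[0.4, 0.3, 0.45, 0.35],
    phi=lambda t: np.array([-0.5 * np.sin(t), 0.5 * np.cos(t)]),
    t_end=2.0,
)
```

The sketch makes no claim about stability for these placeholder weights; it only shows how the delayed feedback term is switched on and off along the schedule.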

Definition 1

([37])

System (5) is said to be globally asymptotically stable if it is stable in the sense of Lyapunov and \(\lim_{t\rightarrow \infty }y(t) = 0\) for any initial condition \(\varphi \in C^{1}([- \rho ,0],\mathbb{R}^{n})\).

Definition 2

([18])

System (5) is said to be globally exponentially stable if there exist two positive constants \(\varepsilon >0\) and \(\tilde{A}>0\) such that the solution \(y(t)\) of (5) satisfies \(\Vert y(t) \Vert \leq \tilde{A} \Vert \varphi \Vert _{\rho }e^{-\varepsilon t}\), for all \(t\geq 0\), \(\varphi \in C^{1}([-\rho ,0],\mathbb{R}^{n})\).

Lemma 1

([36])

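Suppose that \(M=M^{T}>0\) is a real matrix of appropriate dimensions and \(\omega (\cdot ):[a,b]\mapsto \mathbb{R}^{n}\) is a vector function such that the integrations concerned are well defined. Then

$$ \biggl( \int _{a}^{b}\omega (s)\,ds \biggr)^{T}M \biggl( \int _{a}^{b}\omega (s)\,ds \biggr)\leq (b-a) \int _{a}^{b}\omega ^{T}(s)M\omega (s)\,ds. $$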
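Assuming Lemma 1 is the standard Jensen-type integral inequality \((\int _{a}^{b}\omega )^{T}M(\int _{a}^{b}\omega )\leq (b-a)\int _{a}^{b}\omega ^{T}M\omega \), a discretized sanity check can be written as follows. Both integrals are replaced by midpoint Riemann sums, so the script tests the discrete analogue of the inequality; the test function `omega` and the matrix `M` are hypothetical.

```python
import numpy as np

def jensen_gap(omega, M, a, b, n=2000):
    """Right-hand side minus left-hand side of the Jensen-type inequality,
    with both integrals approximated by midpoint Riemann sums on n cells."""
    h = (b - a) / n
    s = a + (np.arange(n) + 0.5) * h                 # cell midpoints
    w = np.array([omega(si) for si in s])            # shape (n, dim)
    integral = w.sum(axis=0) * h                     # approximates the integral of omega
    lhs = integral @ M @ integral
    rhs = (b - a) * np.einsum("ij,jk,ik->", w, M, w) * h
    return rhs - lhs

# Hypothetical data: a positive definite M and a smooth vector function.
M = np.array([[2.0, 0.5], [0.5, 1.0]])
gap = jensen_gap(lambda s: np.array([np.sin(s), s ** 2]), M, 0.0, 1.0)
```

A nonnegative `gap` is expected for any positive definite `M`, with equality only when `omega` is constant on the interval.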

Lemma 2

([38])

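For matrices \(\tilde{J}\), E, and V of appropriate dimensions with \(V^{T}=V\),

$$ V+\tilde{J}LE+E^{T}L^{T}\tilde{J}^{T}< 0 $$

holds for all matrices L satisfying \(L^{T}L\leq I\) if and only if there exists a constant \(\varepsilon >0\) such that

$$ V+\varepsilon \tilde{J}\tilde{J}^{T}+\varepsilon ^{-1}E^{T}E< 0. $$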
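Lemma 2 is what later removes the unknown matrix \(A(t)\) from the stability conditions. Assuming it takes the usual form (\(V+\tilde{J}LE+E^{T}L^{T}\tilde{J}^{T}<0\) for all \(L^{T}L\leq I\) if and only if \(V+\varepsilon \tilde{J}\tilde{J}^{T}+\varepsilon ^{-1}E^{T}E<0\) for some \(\varepsilon >0\)), the sketch below searches a grid for such an ε and then spot-checks the robust inequality on random admissible L. The matrices and helper names are hypothetical.

```python
import numpy as np

def max_eig(S):
    """Largest eigenvalue of the symmetric part of S."""
    return np.linalg.eigvalsh((S + S.T) / 2.0).max()

def find_epsilon(V, J, E, grid=np.logspace(-3, 3, 61)):
    """Return some eps > 0 with V + eps*J J^T + E^T E / eps < 0, or None."""
    for eps in grid:
        if max_eig(V + eps * J @ J.T + E.T @ E / eps) < 0:
            return float(eps)
    return None

def holds_for_samples(V, J, E, n_samples=200, seed=0):
    """Check V + J L E + (J L E)^T < 0 on random L with spectral norm <= 1."""
    rng = np.random.default_rng(seed)
    for _ in range(n_samples):
        L = rng.standard_normal((J.shape[1], E.shape[0]))
        L = L / max(1.0, np.linalg.norm(L, 2))   # enforce L^T L <= I
        JLE = J @ L @ E
        if max_eig(V + JLE + JLE.T) >= 0:
            return False
    return True

# Hypothetical data (illustration only).
V = -3.0 * np.eye(2)
J = np.array([[1.0, 0.0], [0.0, 0.5]])
E = np.array([[0.5, 0.2], [0.0, 1.0]])
eps = find_epsilon(V, J, E)
robust = holds_for_samples(V, J, E)
```

Random sampling can only refute the robust inequality, never prove it; the certificate is the ε found by `find_epsilon`.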

3 Main results

This section is devoted to studying the globally asymptotical stability of the neural network (5) by constructing a suitable Lyapunov–Krasovskii functional. First, the following stability results can be derived in terms of LMIs.

Theorem 1

Under conditions \((H_{1})\) and \((H_{2})\), the neural network (5) is globally asymptotically stable if, for given constants \(\alpha >0\), \(\beta \geq -\alpha \), \(c\geq 0\), there exist \(n\times n\) matrices \(P>0\), \(Q>0\), \(n\times n\) diagonal matrices \(X>0\), \(Y>0\), an \(n\times n\) matrix R, and a \(2n\times 2n\) matrix

such that the following inequalities hold:

and the control width and period satisfy

where \(M_{11}=\alpha P-\gamma ^{-1}Q-L_{1}X\), \(M_{12}=P-RC(t)\), \(M_{13}=T _{12}\), \(M_{14}=\gamma ^{-1}Q\), \(M_{15}=L_{2}X\), \(M_{22}=\tau e^{\alpha \tau }T_{22}+\gamma e^{\alpha \gamma }Q-R-R^{T}\), \(M_{24}=RD(t)\), \(M_{25}=RW _{1}(t)\), \(M_{26}=RW_{2}(t)\), \(M_{33}=\tau T_{11}-T_{12}-T_{12}^{T}-L _{1}Y\), \(M_{36}=L_{2}Y\), \(M_{44}=-\gamma ^{-1}Q\), \(N_{11}=-\beta P-\gamma ^{-1}Q-L_{1}X\).

Proof

We construct a Lyapunov–Krasovskii functional of the form

where

Calculating the derivative of \(V(t)\) along the trajectory of neural network (5) on the interval \(t\in [t_{k},t_{k+1})\), it follows that

By Lemma 1, we have

Then

Consider \(n\times n\) diagonal matrices \(X>0\), \(Y>0\). From assumption \((H_{1})\), we get

and

It then follows from inequalities (10)–(15) that

In addition, when \(t_{k}\leq t< t_{k}+d_{k}\), we introduce the auxiliary equality as follows:

Then, from (16) and (17), we obtain

where \(\eta (t)= ( y^{T}(t), \dot{y}^{T}(t), y^{T}(t-\tau (t)), y ^{T}(t-\gamma ), F^{T}(y(t)), F^{T}(y(t-\tau (t)) ))^{T}\), which together with (7) yields

Let \(G_{1}(t)=e^{\alpha t}V(t)\). By (18), one can see that \(G_{1}(t)\) is a monotone decreasing function on \(t\in [t_{k},t_{k}+d_{k})\). Then

which implies that

When \(t_{k}+d_{k}\leq t< t_{k+1}\), from the second equation of system (5), it follows that

Since \(\alpha +\beta >0\), from (8), (16) and (21), we obtain

Let \(G_{2}(t)=e^{-\beta t}V(t) \). Then \(G_{2}(t)\) is a monotone decreasing function on \(t\in [t_{k}+d _{k},t_{k+1})\) and we have

which implies that

Thus, combining (20) and (24), one may deduce that

When \(t_{k}\leq t< t_{k}+d_{k}\), it follows from (19) and (25) that

When \(t_{k}+d_{k}\leq t< t_{k+1}\), it follows from (9), (23) and (26) that

Therefore, we obtain from (27) and (28) that

Combining this with condition (9), we have \(\lim_{t\rightarrow \infty }V(t)=0\), which implies that \(\lim_{t\rightarrow \infty }y(t)=0\). Hence, system (5) is globally asymptotically stable. The proof is completed. □

Especially, in the case of system (5) without parametric uncertainties, i.e., when \(\Delta C(t)=\Delta W_{1}(t)=\Delta W_{2}(t)= \Delta B(t)=0\), system (5) reduces to

where the matrix \(D=BK\). Then the following corollary is easily derived.

Corollary 1

Suppose that \((H_{1})\) holds. Then the neural network (29) is globally asymptotically stable if, for given constants \(\alpha >0\), \(\beta \geq -\alpha \), \(c\geq 0\), there exist \(n\times n\) matrices \(P>0\), \(Q>0\), \(n\times n\) diagonal matrices \(X>0\), \(Y>0\), an \(n\times n\) matrix R, and a \(2n\times 2n\) matrix

such that the following inequalities hold:

and the control width and period satisfy (9), where \(\tilde{M} _{12}=P-RC\), \(\tilde{M}_{24}=RD\), \(\tilde{M}_{25}=RW_{1}\), \(\tilde{M} _{26}=RW_{2}\), and other parameters are the same as in Theorem 1.

It is worth noticing that Theorem 1 can be applied only if the uncertainty \(A(t)\) and the matrix \(D(t)\) are exactly known. When only an estimate of \(A(t)\) is known, or when K is a control gain still to be designed, it is difficult to use the above results. Next, we give a result for this general case, in which the control gain K is determined through some transformations.

Theorem 2

Under conditions \((H_{1})\) and \((H_{2})\), the neural network (5) is globally asymptotically stable if, for given constants \(\mu _{i}\), \(i=1,2,\ldots,7\), \(\alpha >0\), \(\beta \geq -\alpha \), \(c\geq 0\), there exist an \(n\times n\) diagonal matrix \(S>0\) and an \(m\times n\) matrix Z such that the following inequalities hold:

and the control width and period satisfy (9), where \(\phi _{11}=\alpha S-\gamma ^{-1}\mu _{4}S-\mu _{1}SL_{1}+(\varepsilon _{1}\mu _{3}^{2})HH^{T}\), \(\phi _{12}=S-\mu _{3}CS\), \(\phi _{13}=\mu _{6}S\), \(\phi _{14}=\gamma ^{-1}\mu _{4}S\), \(\phi _{15}=\mu _{1}SL_{2}\), \(\phi _{22}= \mu _{7}\tau e^{\alpha \tau }S+\mu _{4}\gamma e^{\alpha \gamma }S-2\mu _{3}S+(\varepsilon _{2}\mu _{3}^{2})HH^{T}\), \(\phi _{24}=\mu _{3}BZ\), \(\phi _{25}=\mu _{3}W_{1}S\), \(\phi _{26}=\mu _{3}W_{2}S\), \(\phi _{33}=\mu _{5} \tau S-2\mu _{6}S-\mu _{2}SL_{1}\), \(\phi _{36}=\mu _{2}SL_{2}\), \(\phi _{44}=- \gamma ^{-1}\mu _{4}S\), \(\tilde{\phi }_{11}=-\beta S-\gamma ^{-1}\mu _{4}S- \mu _{1}SL_{1}+(\varepsilon _{1}\mu _{3}^{2})HH^{T}\), \(\tilde{\phi }_{22}= \mu _{7}\tau e^{\alpha \tau }S+\mu _{4}\gamma e^{\alpha \gamma }S-2\mu _{3}S+(\varepsilon _{3}\mu _{3}^{2})HH^{T}\).

Moreover, the gain matrix K is given by \(K=ZS^{-1}\).

Proof

When \(t_{k}\leq t< t_{k}+d_{k}\), the condition \(M_{1}<0\) in Theorem 1 can be rewritten as

where \(\varGamma _{1}^{T}=(\begin{array}{cccccc}H^{T}R^{T}&0&0&0&0&0\end{array})^{T}\), \(\varGamma _{2}^{T}=( \begin{array}{cccccc}0&H ^{T}R^{T}&0&0&0&0\end{array})^{T}\), \(\varUpsilon _{1}= (\begin{array}{cccccc}0&-E_{1}&0&0&0&0\end{array})\), \(\varUpsilon _{2}= (\begin{array}{cccccc}0&0&0&E_{4}K&E_{2}&E_{3}\end{array})\), and \(M_{2}\) is defined as in Corollary 1.

When \(t_{k}+d_{k}\leq t< t_{k+1}\), the condition \(N_{1}<0\) in Theorem 1 can be written as

where \(\varUpsilon _{3}=(\begin{array}{cccccc}0&0&0&0&E_{2}&E_{3}\end{array})\) and \(N_{2}\) is defined as in Corollary 1. By Lemma 2, (33) and (34) are equivalent to the following inequalities:

Then applying Schur complement lemma, we have from the above inequalities that

where \(\hat{M}=M_{2}+\varepsilon _{1}\varGamma _{1}^{T}\varGamma _{1}+ \varepsilon _{2}\varGamma _{2}^{T}\varGamma _{2}\) and \(\hat{N}=N_{2}+ \varepsilon _{1}\varGamma _{1}^{T}\varGamma _{1}+\varepsilon _{3}\varGamma _{2}^{T} \varGamma _{2}\). Let \(X=\mu _{1}P\), \(Y=\mu _{2}P\), \(R=\mu _{3}P\), \(Q=\mu _{4}P\), \(T_{11}=\mu _{5}P\), \(T_{12}=\mu _{6}P\), \(T_{22}=\mu _{7}P\) in \(M_{2}\) and \(N_{2}\), and let \(S=P^{-1}\), \(Z=KS\), where P is a diagonal matrix. Pre- and post-multiplying inequality (6) by \(\operatorname{diag}\{P^{-1},P^{-1}\}\), and inequalities (37) and (38) by \(\operatorname{diag}\{P^{-1},P^{-1},P^{-1},P^{-1},P^{-1},P^{-1},I,I\}\), we find that inequalities (6), (37), and (38) are equivalent to inequalities (30), (31), and (32), which completes the proof. □

Remark 1

Up to now, many interesting results on intermittent control have been reported in the literature [18, 25,26,27,28]. Note that most of them assume that the control scheme is periodic or depends only on the current state. However, in many applications, a fixed control width and period may be inadequate [29, 30], and delays always arise in the process of applying the control input. The control schemes in [18, 25,26,27,28] may therefore be unsuitable in such settings. In this paper, we focus on delayed intermittent control with non-fixed control width and period for the stability of uncertain neural networks with delays. Because the value of the time delay in the controller can be changed without increasing the control gain, and because the control width and period are non-fixed, the delayed intermittent control is more flexible and practical in engineering control and industrial processes.

Remark 2

The control term in system (3) has the form Bu. Hence, by selecting a proper matrix B, the controller can be applied to some of the states rather than to all of them, which is important in engineering control problems. A numerical example is given in Sect. 4.

In particular, if there exists no time delay in the controller, i.e., \(\gamma =0\), it is easy to prove the following corollary.

Corollary 2

Under conditions \((H_{1})\) and \((H_{2})\), neural network (5) with \(\gamma =0\) is globally asymptotically stable if, for given constants \(\mu _{i}\), \(i=1,2,3,5,6,7\), \(\alpha >0\), \(\beta \geq -\alpha \), \(c\geq 0\), there exist an \(n\times n\) diagonal matrix \(S>0\) and an \(m\times n\) matrix Z such that (30) and the following inequalities hold:

and the control width and period satisfy (9), where \(\pi _{11}=\alpha S-\mu _{1}SL_{1}+\varepsilon _{4}\mu _{3}^{2}HH^{T}\), \(\pi _{12}=S-\mu _{3}CS+\mu _{3}BZ\), \(\pi _{13}=\mu _{6}S\), \(\pi _{14}=\mu _{1}SL _{2}\), \(\pi _{22}=\mu _{7}\tau e^{\alpha \tau }S-2\mu _{3}S+\varepsilon _{5}\mu _{3}^{2}HH^{T}\), \(\pi _{24}=\mu _{3}W_{1}S\), \(\pi _{25}=\mu _{3}W_{2}S\), \(\pi _{33}=\mu _{5}\tau S-2\mu _{6}S-\mu _{2}SL_{1}\), \(\pi _{35}=\mu _{2}SL _{2}\), \(\tilde{\pi }_{11}=-\beta S-\mu _{1}SL_{1}+\varepsilon _{6}\mu _{3} ^{2}HH^{T}\), \(\tilde{\pi }_{12}=S-\mu _{3}CS\).

Moreover, the gain matrix K is taken as \(K=ZS^{-1}\).

Proof

Consider a Lyapunov–Krasovskii functional \(V=V_{1}+V _{2}+V_{3}\), where \(V_{1}\), \(V_{2}\) and \(V_{3}\) are the same as in Theorem 1. When \(t_{k}\leq t< t_{k}+d_{k}\), if

it can be derived that \(\dot{V}(t)\leq \eta ^{T}(t)M_{5}\eta (t)- \alpha V(t) \leq -\alpha V(t)\), where

\(\varphi _{11}=\alpha P-L_{1}X\), \(\varphi _{12}=P-RC+RD\), \(\varphi _{13}=T_{12}\), \(\varphi _{14}=L_{2}X\), \(\varphi _{22}=\tau e^{\alpha \tau }T_{22}-R-R^{T}\), \(\varphi _{24}=RW_{1}\), \(\varphi _{25}=RW_{2}\), \(\varphi _{33}=\tau T_{11}-T_{12}-T_{12}^{T}-L_{1}Y\), \(\varphi _{35}=L_{2}Y\), \(\varGamma _{3}^{T}=(\begin{array}{ccccc}H^{T}R^{T}&0&0&0&0\end{array})^{T}\), \(\varGamma _{4}^{T}=(\begin{array}{ccccc}0&H^{T}R^{T}&0&0&0\end{array})^{T}\), \(\varUpsilon _{4}=(\begin{array}{ccccc}0&-E_{1}+E_{4}K&0&0&0\end{array})\), \(\varUpsilon _{5}=(\begin{array}{ccccc}0&0&0&E_{2}&E_{3}\end{array})\).

When \(t_{k}+d_{k}\leq t< t_{k+1}\), if

we have \(\dot{V}(t)\leq \eta ^{T}(t)N_{5}\eta (t)+\beta V(t)\leq \beta V(t)\), where

\(\hat{\varphi }_{11}=-\beta P-L_{1}X\), \(\hat{\varphi }_{12}=P-RC\), \(\varUpsilon _{6}=(\begin{array}{ccccc}0&-E_{1}&0&0&0\end{array})\).

Then, by following the steps of the proof of Theorem 1, under conditions (6), (41), and (42), system (5) with \(\gamma =0\) is globally asymptotically stable. Next, we make some transformations in order to obtain equivalent stability conditions that can be solved with the LMI toolbox. By Lemma 2 and the Schur complement lemma, (41) and (42) are equivalent to the following inequalities:

where \(\bar{M}=M_{6}+\varepsilon _{4}\varGamma _{3}^{T}\varGamma _{3}+ \varepsilon _{5}\varGamma _{4}^{T}\varGamma _{4}\), \(\bar{N}=N_{6}+\varepsilon _{6}\varGamma _{3}^{T}\varGamma _{3}+\varepsilon _{5}\varGamma _{4}^{T}\varGamma _{4}\). Similarly, repeating the arguments as in Theorem 2, one can obtain that (6), (43), and (44) are equivalent to (30), (39), and (40). The proof is completed. □

It should be noted that the approach proposed in this paper can also be used to study the stability of system (5) when the control scheme is periodic.

In this case, the intermittent controller \(u(t)\) can be written as

where δ denotes the control width and \(T>0\) is the control period. System (5) turns into the following form:

where \(k\in \varLambda \). Then we can get the result as follows.

Corollary 3

Under conditions \((H_{1})\) and \((H_{2})\), the neural network (46) is globally exponentially stable if, for given constants \(\mu _{i}\), \(i=1,2,\ldots,7\), \(\alpha >0\), \(\beta \geq -\alpha \), \(c\geq 0\), there exist an \(n\times n\) diagonal matrix \(S>0\) and an \(m\times n\) matrix Z such that inequalities (30)–(32) hold and the control period and control width satisfy

Proof

When \(kT\leq t< kT+\delta \), based on (27) and (47), we get

where \(C_{1}=e^{(\alpha +\beta )\delta }\). When \(kT+\delta \leq t<(k+1)T\), using (28) and (47), we can obtain

where \(C_{2}=e^{ \vert \beta \vert c-\beta T+2(\alpha +\beta )\delta }\). Therefore, it follows from (48) and (49) that

where \(\zeta =\frac{(\alpha +\beta )\delta -\beta T}{2T}>0\). Letting

one gets

Thus, \(\Vert y(t) \Vert \leq C_{4} \Vert \phi \Vert _{\rho }e^{-\zeta t}\), where \(C_{4}=e^{\zeta \delta } \sqrt{C_{2}C_{3}/\lambda _{\min }(P)}\). The proof is completed. □
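The decay rate in this proof, \(\zeta =\frac{(\alpha +\beta )\delta -\beta T}{2T}\), is easy to evaluate for a candidate periodic schedule. The helper below computes ζ and, for \(\alpha +\beta >0\), the smallest ratio \(\delta /T\) that keeps it positive. The function names and the values \(T=1\), \(\delta =0.8\) are hypothetical; \(\alpha =\beta =0.1\) are the constants used in Sect. 4.

```python
def decay_rate(alpha, beta, delta, T):
    """zeta = ((alpha + beta) * delta - beta * T) / (2 * T)."""
    if T <= 0 or not (0 <= delta <= T):
        raise ValueError("need T > 0 and 0 <= delta <= T")
    return ((alpha + beta) * delta - beta * T) / (2.0 * T)

def min_width_ratio(alpha, beta):
    """Infimum of delta / T for which zeta > 0, assuming alpha + beta > 0."""
    if alpha + beta <= 0:
        raise ValueError("need alpha + beta > 0")
    return max(beta / (alpha + beta), 0.0)

# alpha and beta from Sect. 4; T and delta are hypothetical.
zeta = decay_rate(0.1, 0.1, delta=0.8, T=1.0)
ratio = min_width_ratio(0.1, 0.1)
```

With these constants the controller must be active for more than half of each period for ζ to be positive; when \(\beta \leq 0\) the ratio is zero, since the uncontrolled system then does not grow in the sense of the estimate used in the proof.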

Remark 3

In [18, 27, 28], the exponential stability of time-delay neural networks has been extensively studied by the periodically intermittent control method. However, these studies were based on the fact that time-varying delays are differentiable. Corollary 3 removes the restriction of differentiability of the transmission delays and gets the globally exponential stability of uncertain neural networks (46), which improves the results in [18, 27, 28]. Moreover, the fact that the value of time delay in the controller is adjustable makes our results more practical in real applications.

4 Numerical illustrations

In this section, a numerical simulation is provided to show the effectiveness of the proposed approach.

Consider a 2D neural network (5) with parameters as follows:

It is obtained that \(l_{1}^{-}=-0.2\), \(l_{1}^{+}=0.5\), \(l_{2}^{-}=-0.3\), \(l_{2}^{+}=0.5\), i.e.,

$$ L_{1}=\operatorname{diag}(-0.1,-0.15), \quad\quad L_{2}=\operatorname{diag}(0.15,0.1). $$

The delayed intermittent controller u is designed as

where

and \(\gamma =0.01\).

In particular, we consider the case that \(\varphi _{1}(t)=-0.5\sin t\), \(\varphi _{2}(t)=0.5\cos t\), \(\alpha =0.1\), \(\beta =0.1\), \(c=0.4\), \(\tau =0.11\), \(\mu _{1}=1.01\), \(\mu _{2}=0.38\), \(\mu _{3}=0.08\), \(\mu _{4}=0.5\), \(\mu _{5}=1.4\), \(\mu _{6}=0.26\), \(\mu _{7}=0.93\). By MATLAB LMI toolbox, it follows from Theorem 2 that

Then the gain matrix of the control law is taken as \(K=[\begin{array}{cc} -0.0667 & -11.5328 \end{array}] \).

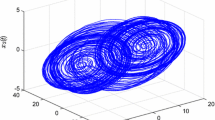

The numerical simulation of neural network (5) with controller \(u=0\) on \([0,+\infty )\) is presented in Fig. 1, which shows that the system is unstable. Under the designed intermittent controller (50) (see Fig. 3), neural network (5) becomes a closed-loop system. In this case, Fig. 2 illustrates that the neural network (5) is globally asymptotically stable. Since \(B=[0,1]^{T}\), the controller is imposed only on the second variable. The stability of system (5) is then achieved through the interaction of \(x_{1}\) and \(x_{2}\).

Figure 1: State trajectories for neural networks (5) without control input

Figure 3: Delayed intermittent controller (50)

Remark 4

The established stability conditions in this paper depend both on the upper bound of transmission delays of the system and time delay in the controller. The relationship between the two delays is shown in Tables 1 and 2, which can be conveniently checked by the MATLAB LMI toolbox.

5 Conclusion

This paper was dedicated to the stability problem of neural networks with both time-varying delays and uncertainties. A novel delayed intermittent controller with non-fixed control width and period has been designed. It can be activated in all states or only in some states, and the value of the time delay in the controller can be adjusted without increasing the control gain. Moreover, the control width and period are non-fixed, which makes the control scheme more flexible in real applications. Some delay-dependent stability criteria have been presented by using free-weighting matrix techniques and the Lyapunov–Krasovskii functional method. It is shown that such criteria can provide better feasibility results than some existing ones. From the viewpoint of switched systems, the intermittently controlled system can be seen as a switched system composed of an unstable uncontrolled subsystem and a stable controlled subsystem. Switching phenomena are likely to cause impulsive effects in systems. Hence, how to extend our results to impulsive systems is an interesting problem for the future.

References

Chua, L., Yang, L.: Cellular neural networks: applications. IEEE Trans. Circuits Syst. 35, 1273–1290 (1988)

Wöhler, C., Anlauf, J.: A time delay neural network algorithm for estimating image-pattern shape and motion. Image Vis. Comput. 17, 281–294 (1999)

Wu, J.: Introduction to Neural Dynamics and Signal Transmission Delay. de Gruyter, New York (2001)

Li, X., O’Regan, D., Akca, H.: Global exponential stabilization of impulsive neural networks with unbounded continuously distributed delays. IMA J. Appl. Math. 80, 85–99 (2015)

Xiong, W., Shi, Y., Cao, J.: Stability analysis of two-dimensional neutral-type Cohen–Grossberg BAM neural networks. Neural Comput. Appl. 28, 703–716 (2017)

Adhikari, S., Kim, H., Yang, C., Chua, L.: Building cellular neural network templates with a hardware friendly learning algorithm. Neurocomputing 312, 276–284 (2018)

Lv, X., Li, X., Cao, J., Duan, P.: Exponential synchronization of neural networks via feedback control in complex environment. Complexity 2018, 1–13 (2018)

Chen, J., Li, X., Wang, D.: Asymptotic stability and exponential stability of impulsive delayed Hopfield neural networks. Abstr. Appl. Anal. 2013, 1 (2013)

Hu, J., Sui, G., Lv, X., Li, X.: Fixed-time control of delayed neural networks with impulsive perturbations. Nonlinear Anal., Model. Control 23, 904–920 (2018)

Yang, D., Li, X., Qiu, J.: Output tracking control of delayed switched systems via state-dependent switching and dynamic output feedback. Nonlinear Anal. Hybrid Syst. 32, 294–305 (2019)

Huang, C., Zhang, H., Huang, L.: Almost periodicity analysis for a delayed Nicholson’s blowflies model with nonlinear density-dependent mortality term. Commun. Pure Appl. Anal. 18, 3337–3349 (2019)

Li, X., Shen, J., Rakkiyappan, R.: Persistent impulsive effects on stability of functional differential equations with finite or infinite delay. Appl. Math. Comput. 329, 14–22 (2018)

Lv, X., Rakkiyappan, R., Li, X.: μ-Stability criteria for nonlinear differential systems with additive leakage and transmission time-varying delays. Nonlinear Anal., Model. Control 23, 380–400 (2018)

Huang, C., Liu, B.: New studies on dynamic analysis of inertial neural networks involving non-reduced order method. Neurocomputing 325, 283–287 (2019)

Roska, T., Chua, L.: Cellular neural networks with delay type template elements and nonuniform grids. Int. J. Circuit Theory Appl. 20, 469–481 (1992)

Gilli, M.: Strange attractors in delayed cellular neural networks. IEEE Trans. Circuits Syst. I, Fundam. Theory Appl. 40, 849–853 (1993)

Huang, C., Su, R., Cao, J., Xiao, S.: Asymptotically stable high-order neutral cellular neural networks with proportional delays and D operators. Math. Comput. Simul. (2019). https://doi.org/10.1016/j.matcom.2019.06.001

Zhang, Z., He, Y., Zhang, C., Wu, M.: Exponential stabilization of neural networks with time-varying delay by periodically intermittent control. Neurocomputing 207, 469–475 (2016)

Tan, X., Cao, J.: Intermittent control with double event-driven for leader-following synchronization in complex networks. Appl. Math. Model. 64, 372–385 (2018)

Li, X., Akca, H., Fu, X.: Uniform stability of impulsive infinite delay differential equations with applications to systems with integral impulsive conditions. Appl. Math. Comput. 219, 7329–7337 (2013)

Yang, X., Li, X., Xi, Q., Duan, P.: Review of stability and stabilization for impulsive delayed systems. Math. Biosci. Eng. 15, 1495–1515 (2018)

Li, X., Yang, X., Huang, T.: Persistence of delayed cooperative models: impulsive control method. Appl. Math. Comput. 342, 130–146 (2019)

Huang, J., Li, C., Huang, T., Han, Q.: Lag quasi-synchronization of coupled delayed systems with parameter mismatch by periodically intermittent control. Nonlinear Dyn. 71, 469–478 (2013)

Zhang, G., Shen, Y.: Exponential synchronization of delayed memristor-based chaotic neural networks via periodically intermittent control. Neural Netw. 55, 1–10 (2014)

Huang, J., Li, C., He, X.: Stabilization of a memristor-based chaotic system via intermittent control and fuzzy processing. Int. J. Control Autom. Syst. 11, 643–647 (2013)

Huang, J., Li, C., Han, Q.: Stabilization of delayed chaotic neural networks by periodically intermittent control. Circuits Syst. Signal Process. 28, 567–579 (2009)

Hu, C., Yu, J., Jiang, H., Teng, Z.: Exponential stabilization and synchronization of neural networks with time-varying delays via periodically intermittent control. Nonlinearity 23, 2369–2391 (2010)

Zhang, G., Lin, X., Zhang, X.: Exponential stabilization of neutral-type neural networks with mixed interval time-varying delays by intermittent control: a CCL approach. Circuits Syst. Signal Process. 33, 371–391 (2014)

Liu, X., Chen, T.: Synchronization of nonlinear coupled networks via aperiodically intermittent pinning control. IEEE Trans. Neural Netw. Learn. Syst. 26, 113–126 (2015)

Liu, X., Chen, T.: Synchronization of complex networks via aperiodically intermittent pinning control. IEEE Trans. Autom. Control 60, 3316–3321 (2015)

Liu, M., Huang, H., Hu, C.: Synchronization of hybrid-coupled delayed dynamical networks via aperiodically intermittent pinning control. J. Franklin Inst. 353, 2722–2742 (2016)

Hu, C., Yu, J.: Generalized intermittent control and its adaptive strategy on stabilization and synchronization of chaotic systems. Chaos Solitons Fractals 91, 262–269 (2016)

Song, Q., Huang, T.: Stabilization and synchronization of chaotic systems with mixed time-varying delays via intermittent control with non-fixed both control period and control width. Neurocomputing 154, 61–69 (2015)

Richard, J.: Time-delay systems: an overview of some recent advances and open problems. Automatica 39, 1667–1694 (2003)

Safa, A., Baradarannia, M., Kharrati, H., Khanmohammadi, S.: Global attitude stabilization of rigid spacecraft with unknown input delay. Nonlinear Dyn. 82, 1623–1640 (2015)

Zhu, H., Rakkiyappan, R., Li, X.: Delayed state-feedback control for stabilization of neural networks with leakage delay. Neural Netw. 105, 249–255 (2018)

Ahmadi, A., Khadir, B.: A globally asymptotically stable polynomial vector field with rational coefficients and no local polynomial Lyapunov function. Syst. Control Lett. 121, 50–53 (2018)

Wu, Y., Cao, J., Alofi, A., Mazrooei, A., Elaiw, A.: Finite-time boundedness and stabilization of uncertain switched neural networks with time-varying delay. Neural Netw. 69, 135–143 (2015)

Acknowledgements

The authors wish to express their gratitude to the editors and the reviewers for their helpful comments.

Availability of data and materials

Not applicable.

Funding

This work was supported by the National Natural Science Foundation of China (11501333, 11301308, 61673247) and the Research Fund for Distinguished Young Scholars and Excellent Young Scholars of Shandong Province (JQ201719, ZR2016JL024).

Author information

Contributions

All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Tian, Y., Wang, F., Wang, Y. et al. Stability of delay neural networks with uncertainties via delayed intermittent control. Adv Differ Equ 2019, 464 (2019). https://doi.org/10.1186/s13662-019-2401-0

DOI: https://doi.org/10.1186/s13662-019-2401-0