Abstract

This paper investigates the finite-time anti-synchronization of time-varying delayed neural networks. A simple intermittent adjustment feedback controller is designed to ensure that the drive and response systems achieve anti-synchronization in finite time. Using differential inequalities, finite-time stability theory and the Lyapunov functional method, we derive novel and effective criteria for finite-time anti-synchronization. These criteria extend some traditional anti-synchronization criteria by means of intermittent adjustment feedback control. Finally, two numerical examples are given to show the effectiveness of the proposed method.

1 Introduction

Nonlinear systems have been widely studied in many real-world applications [1–7]. Over the past 30 years, neural networks have become one of the most important classes of nonlinear systems and have been intensively applied to solve various optimization problems [8]. Time delays often occur in practical systems, including neural networks [9–12], and they can lead to complex dynamic behaviors such as divergence, oscillation and instability [13]. Stability analysis of delayed neural networks is therefore important both in theory and in practice.

In the past decades, synchronization and anti-synchronization of neural networks have attracted wide attention because of their potential applications in many fields, such as image encryption [14], physical systems [15], secure communication [16] and laser technology [17]. Many control methods and techniques have been proposed to synchronize neural networks, including adaptive control [18], nonlinear control [19] and active control [20]. Most of these methods rely on continuous control, whereas discontinuous control strategies have received much less attention. Intermittent control [21] is a discontinuous strategy in which the control input acts on the system only during certain time intervals. It is often regarded as more effective and economical than continuous control, and it has been widely applied to synchronization problems of neural networks [22–24].

Two systems are said to be anti-synchronized when their states have the same amplitude but opposite signs; in that case the sum of the two signals converges to zero. Anti-synchronization of neural networks has been studied, and several theoretical results have been established [25–27]. However, most existing anti-synchronization results for neural networks are asymptotic: the convergence time is unbounded, so the controller drives the slave system to anti-synchronize with the master system only as time tends to infinity. Such infinite-horizon control can be implemented in real systems, but at a higher cost. In practice, it is desirable to keep the control cost as low as possible and to stabilize the system as quickly as possible, and finite-time control techniques serve this purpose because they yield faster convergence. Finite-time synchronization of complex networks has been investigated in [28, 29]. However, few papers have considered the finite-time anti-synchronization of neural networks via feedback control with intermittent adjustment.

Motivated by the above discussion, this paper investigates the finite-time anti-synchronization of time-varying delayed neural networks. By applying an inequality technique and the Lyapunov stability theorem, we design an intermittent adjustment feedback controller under which the drive and response systems achieve anti-synchronization in finite time. Finally, two numerical examples are given to demonstrate the effectiveness of the proposed method.

The remainder of this paper is organized as follows. Section 2 presents the model formulation and some preliminaries. The main results are derived in Section 3. Section 4 gives two examples that illustrate the effectiveness of the results, and Section 5 draws conclusions.

Notations: Throughout this paper, \(\mathbb{R}^{n}\) denotes the n-dimensional real vector space and \(\mathbb{N}\) denotes the set of natural numbers. \(A_{m \times n}\) and \(I_{N}\) denote an \(m \times n\) matrix and the \(N \times N\) identity matrix, respectively. The superscript T denotes transposition. \(\Vert \cdot \Vert \) is the Euclidean norm in \(\mathbb{R}^{n}\); if A is a matrix, \(\Vert A \Vert \) denotes its operator norm. Unless stated otherwise, matrices are assumed to have compatible dimensions.

2 Model description and preliminaries

In this section, we introduce the neural network model and the definition of finite-time anti-synchronization, together with some lemmas that will be used later.

The neural network with time-varying delay is described by

\[ \dot{x}_{i}(t) = -c_{i}x_{i}(t) + \sum_{j=1}^{n} a_{ij}f_{j}(x_{j}(t)) + \sum_{j=1}^{n} b_{ij}f_{j}(x_{j}(t-\tau(t))), \quad i = 1,2,\ldots,n, \tag{1} \]

where \({x_{i}}(t)\) denotes the state variable associated with the ith neuron. In compact matrix form, (1) can be rewritten as

\[ \dot{x}(t) = -Cx(t) + Af(x(t)) + Bf(x(t-\tau(t))), \tag{2} \]

where \(x(t) = {({x_{1}}(t),{x_{2}}(t),\ldots,{x_{n}}(t))^{T}} \in{\mathbb {R}^{n}}\) is the state vector of the neural network at time t; \({c_{i}} > 0\) (\(i = 1,2,\ldots,n\)) are constants and \(C = \operatorname {diag}({c_{1}},{c_{2}},\ldots ,{c_{n}})\) is a diagonal matrix; \(A = {({a_{ij}})_{n \times n}}\) and \(B = {({b_{ij}})_{n \times n}}\) are the connection weight matrix and the delayed connection weight matrix, respectively, where \({a_{ij}}\) and \({b_{ij}}\) denote the strengths of connectivity between neurons i and j at time t and at time \(t - \tau(t)\); \(f(x(t)) = ({f_{1}}({x_{1}}(t)),\ldots,{f_{n}}({x_{n}}(t)))^{T}\) is the vector of neuron activation functions, which satisfies a global Lipschitz condition with Lipschitz constant \(L > 0\); and \(\tau(t)\) is the time-varying delay, with \(0 \leq{\tau_{1}} \leq\tau(t) \leq{\tau_{2}}\) and \(\dot{\tau}(t) \le h < 1\), where \({\tau _{1}}\), \({\tau_{2}}\) and h are constants. The initial condition of system (2) is \(x(s) = \phi(s) = {({\phi_{1}}(s),{\phi_{2}}(s),\ldots,{\phi_{n}}(s))^{T}}\), \(s \in [-\tau_{2}, 0]\).

In this paper, we take system (2) as the drive system, and the corresponding response system is given by

\[ \dot{y}_{i}(t) = -c_{i}y_{i}(t) + \sum_{j=1}^{n} a_{ij}f_{j}(y_{j}(t)) + \sum_{j=1}^{n} b_{ij}f_{j}(y_{j}(t-\tau(t))) + u_{i}(t), \quad i = 1,2,\ldots,n, \tag{3} \]

or equivalently

\[ \dot{y}(t) = -Cy(t) + Af(y(t)) + Bf(y(t-\tau(t))) + u(t), \tag{4} \]

where \(y(t) = ({y_{1}}(t),{y_{2}}(t), \ldots,{y_{n}}(t))^{T}\) is the state vector of the response system at time t, with initial condition \(y(s) = \omega(s) = {({\omega _{1}}(s),{\omega_{2}}(s),\ldots,{\omega_{n}}(s))^{T}} \in\mathbb{R}^{n}\), and \(u(t) = ({u_{1}}(t),{u_{2}}(t), \ldots,{u_{n}}(t))^{T}\) is the intermittent adjustment feedback controller to be designed to achieve anti-synchronization between the drive and response systems.

Define the anti-synchronization errors \({e_{i}}(t) = {y_{i}}(t) + {x_{i}}(t)\), \(i = 1,2,\ldots,n\), where \({x_{i}}(t)\) and \({y_{i}}(t)\) are the state variables of drive system (1) and response system (3), respectively. The error system is then

\[ \dot{e}_{i}(t) = -c_{i}e_{i}(t) + \sum_{j=1}^{n} a_{ij}F_{j}(e_{j}(t)) + \sum_{j=1}^{n} b_{ij}F_{j}(e_{j}(t-\tau(t))) + u_{i}(t), \quad i = 1,2,\ldots,n, \tag{5} \]

or equivalently

\[ \dot{e}(t) = -Ce(t) + AF(e(t)) + BF(e(t-\tau(t))) + u(t), \tag{6} \]

with initial conditions \({\psi_{i}}(s) = {\omega_{i}}(s) + {\phi_{i}}(s)\), \(i = 1,2,\ldots,n\). Here \(e(t) = {({e_{1}}(t),{e_{2}}(t),\ldots,{e_{n}}(t))^{T}} \in{\mathbb{R}^{n}}\), \(F(e(t)) = (F_{1}(e_{1}(t)),\ldots,F_{n}(e_{n}(t)))^{T}\), \({F_{j}}({e_{j}}(t)) = {f_{j}}({y_{j}}(t)) + {f_{j}}({x_{j}}(t))\) and \({F_{j}}({e_{j}}(t - \tau(t))) = {f_{j}}({y_{j}}(t - \tau (t))) + {f_{j}}({x_{j}}(t - \tau(t)))\).
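To see that the error system (6) is simply the sum of (2) and (4), the following Python sketch evaluates both right-hand sides at one time instant and checks that their sum equals the error dynamics written with \(F(e) = f(y) + f(x)\). The matrices, states and control value are arbitrary illustrative numbers (hypothetical), and \(f = \tanh\) is assumed as in Section 4.

```python
import math

def mat_vec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]

def rhs(c, A, B, z, z_del, u):
    """-C z + A f(z) + B f(z_del) + u with C = diag(c) and f = tanh."""
    fz = [math.tanh(v) for v in z]
    fzd = [math.tanh(v) for v in z_del]
    Af, Bf = mat_vec(A, fz), mat_vec(B, fzd)
    return [-ci * zi + a + b + ui for ci, zi, a, b, ui in zip(c, z, Af, Bf, u)]

# Hypothetical data for a single time instant t.
c = [1.0, 1.2]
A = [[2.0, -0.1], [-5.0, 3.0]]
B = [[-1.5, -0.1], [-0.2, -2.5]]
x, x_del = [0.4, -0.7], [0.1, 0.3]    # x(t), x(t - tau(t))
y, y_del = [-0.2, 0.9], [0.5, -0.6]   # y(t), y(t - tau(t))
u = [0.05, -0.3]                      # value of the controller u(t)

dx = rhs(c, A, B, x, x_del, [0.0, 0.0])   # drive system (2)
dy = rhs(c, A, B, y, y_del, u)            # response system (4)

# Error system (6) with e = y + x and F(e) = f(y) + f(x).
e = [yi + xi for xi, yi in zip(x, y)]
F = [math.tanh(yi) + math.tanh(xi) for xi, yi in zip(x, y)]
F_del = [math.tanh(yi) + math.tanh(xi) for xi, yi in zip(x_del, y_del)]
AF, BF = mat_vec(A, F), mat_vec(B, F_del)
de = [-ci * ei + a + b + ui for ci, ei, a, b, ui in zip(c, e, AF, BF, u)]

print(max(abs(a + b - d) for a, b, d in zip(dx, dy, de)))   # rounding-level difference
```

The identity holds for any data because every term of (2) and (4) is linear in \(x\), \(y\), \(f(x)\) and \(f(y)\); the check only guards against sign slips when the error system is derived by hand.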

To derive the anti-synchronization results, we need the following assumption, definition and lemmas.

Assumption 1

[30]

There exists a constant \(L > 0\) such that, for any \(x(t),y(t) \in{\mathbb{R}^{n}}\),

\[ \Vert f(y(t)) + f(x(t)) \Vert \le L \Vert y(t) + x(t) \Vert ; \]

therefore, \(\Vert {F(e(t))} \Vert \le L \Vert {e(t)} \Vert \).
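For the activation \(f = \tanh\) used in Section 4 with \(L = 1\), Assumption 1 can be sanity-checked numerically: \(\tanh\) is odd and 1-Lipschitz, so \(|\tanh(y) + \tanh(x)| = |\tanh(y) - \tanh(-x)| \le |y + x|\). The sketch below (hypothetical helper `assumption1_holds`) samples random pairs componentwise, which implies the vector-norm form with the same L; it is a check, not a proof.

```python
import math
import random

def assumption1_holds(f, L, trials=20_000, scale=10.0, seed=0):
    """Check |f(y) + f(x)| <= L |y + x| on random scalar pairs.

    A componentwise bound with constant L implies the vector-norm bound
    ||f(y) + f(x)|| <= L ||y + x|| with the same L.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        x, y = rng.uniform(-scale, scale), rng.uniform(-scale, scale)
        if abs(f(y) + f(x)) > L * abs(y + x) + 1e-12:   # small slack for rounding
            return False
    return True

print(assumption1_holds(math.tanh, L=1.0))   # odd and 1-Lipschitz: passes
print(assumption1_holds(math.cos, L=1.0))    # not odd: fails near y = -x
```

The second call shows why oddness matters: for \(y = -x\) the right-hand side vanishes while \(\cos y + \cos x = 2\cos x\) generally does not.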

Definition 1

[23]

Systems (1) and (3) are said to achieve finite-time anti-synchronization if, for a suitable intermittent adjustment feedback controller \({u_{i}}(t)\), there exists a constant \(\bar{T} > 0\) such that

\[ \lim_{t \to \bar{T}} \Vert e_{i}(t) \Vert = 0, \quad i = 1,2,\ldots,n, \]

and \(\Vert {{e_{i}}(t)} \Vert = 0\) for \(t > \bar{T} \), where T̄ depends on the initial state. The function T̄ is called the settling-time function, and its value is called the settling time.
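In simulations, Definition 1 can only be checked approximately, because a floating-point error never becomes exactly zero. The sketch below (hypothetical helper `empirical_settling_time`) returns the first sample time after which \(\max_i |e_i(t)|\) stays below a tolerance `tol`, which stands in for \(\Vert e_i(t) \Vert = 0\).

```python
def empirical_settling_time(times, errors, tol=1e-6):
    """First sample time after which max_i |e_i(t)| <= tol for all later samples.

    times  : increasing list of sample times
    errors : list of error vectors e(t_k), one per sample
    Returns None if the error is still above tol at the last sample.
    """
    settled_from = None
    for t, e in zip(times, errors):
        if max(abs(v) for v in e) <= tol:
            if settled_from is None:
                settled_from = t
        else:
            settled_from = None   # error left the tolerance band: reset
    return settled_from

# Synthetic scalar error that reaches zero in finite time (at t = 2):
# e(t) = (1 - t/2)^2 for t < 2 and e(t) = 0 afterwards.
ts = [0.01 * k for k in range(401)]
es = [[max(0.0, 1 - t / 2) ** 2] for t in ts]
print(empirical_settling_time(ts, es))
```

The estimate depends on `tol` and on the sampling grid, so it should be read as an empirical counterpart of T̄, not as the settling-time function itself.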

Lemma 1

[31]

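If \({a_{1}},{a_{2}},\ldots,{a_{n}}\) are positive numbers and \(0 < p < q\), then

\[ \Big( \sum_{i=1}^{n} a_{i}^{q} \Big)^{\frac{1}{q}} \le \Big( \sum_{i=1}^{n} a_{i}^{p} \Big)^{\frac{1}{p}}. \]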
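Lemma 1 states that \((\sum a_i^{q})^{1/q} \le (\sum a_i^{p})^{1/p}\) for positive \(a_i\) and \(0 < p < q\). In finite-time arguments of this kind it is typically applied with \(p = \alpha + 1\) and \(q = 2\), which turns \(\sum |e_i|^{\alpha+1}\) into a power of \(e^{T}e\). The sketch below checks both forms numerically, using \(\alpha = 0.95\) from Example 1 and the initial error \(e(0) = y(0) + x(0) = (0.5, 1.1)^{T}\); the helper name is hypothetical.

```python
import random

def lemma1_gap(a, p, q):
    """(sum a_i^p)^(1/p) - (sum a_i^q)^(1/q); Lemma 1 says this is >= 0 for 0 < p < q."""
    return sum(v ** p for v in a) ** (1 / p) - sum(v ** q for v in a) ** (1 / q)

rng = random.Random(1)
alpha = 0.95                      # controller exponent used in Example 1
p, q = alpha + 1, 2.0
worst = min(
    lemma1_gap([rng.uniform(1e-3, 5.0) for _ in range(rng.randint(1, 6))], p, q)
    for _ in range(10_000)
)
print(worst >= -1e-12)            # no violation beyond rounding

# Consequence for an error vector: sum |e_i|^(alpha+1) >= (e^T e)^((alpha+1)/2).
e = [0.5, 1.1]
lhs = sum(abs(v) ** (alpha + 1) for v in e)
rhs = sum(v * v for v in e) ** ((alpha + 1) / 2)
print(lhs >= rhs)
```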

Lemma 2

[32]

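Suppose that a function \(V(t)\) is continuous and non-negative for \(t \in[ {0, + \infty} )\) and satisfies

\[ \begin{cases} \dot{V}(t) \le -\alpha V^{\eta}(t), & HT \le t < HT + \theta T, \\ \dot{V}(t) \le 0, & HT + \theta T \le t < (H+1)T, \end{cases} \]

where \(\alpha> 0\), \(T > 0\), \(0 < \eta< 1\), \(0 < \theta< 1\) and \(H \in\mathbb {N}\). Then

\[ V^{1-\eta}(t) \le V^{1-\eta}(0) - \alpha\theta(1-\eta)t, \quad 0 \le t \le \bar{T}, \]

and \(V(t) \equiv 0\) for \(t \ge \bar{T}\), where the constant \(\bar{T} = \frac{V^{1-\eta}(0)}{\alpha\theta(1-\eta)}\) is the settling time.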
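The mechanism behind Lemma 2 can be illustrated with the scalar comparison system \(\dot{V} = -\alpha V^{\eta}\) on the control windows \([HT, HT + \theta T)\) and \(\dot{V} = 0\) on the rest windows. While control is on, \(V^{1-\eta}\) decreases at rate \(\alpha(1-\eta)\); since the accumulated control time up to t is at least \(\theta t\), V must reach zero no later than \(V^{1-\eta}(0)/(\alpha\theta(1-\eta))\). The Python sketch below (hypothetical helper names, arbitrary illustrative numbers) integrates this worst case with explicit Euler and compares the hitting time with that bound.

```python
def settling_time_bound(V0, alpha, eta, theta):
    """Bound V0^(1-eta) / (alpha * theta * (1 - eta)) on the time V needs to reach 0."""
    return V0 ** (1 - eta) / (alpha * theta * (1 - eta))

def first_zero_time(V0, alpha, eta, theta, T, dt=1e-4, t_max=100.0):
    """Euler-integrate dV/dt = -alpha V^eta on [HT, HT + theta T) and dV/dt = 0 elsewhere.

    Returns the first grid time at which V reaches 0, or None if V stays positive up to t_max.
    """
    V, t = V0, 0.0
    while t < t_max:
        if (t % T) < theta * T:
            # Control window: finite-time decay (clamped at zero).
            V = max(0.0, V - dt * alpha * V ** eta)
        # Outside the window V is left unchanged (dV/dt = 0).
        t += dt
        if V == 0.0:
            return t
    return None

# Hypothetical numbers, chosen only to exercise the lemma.
V0, alpha, eta, theta, T = 2.0, 3.0, 0.6, 0.5, 1.0
bound = settling_time_bound(V0, alpha, eta, theta)
hit = first_zero_time(V0, alpha, eta, theta, T)
print(bound, hit)
```

With these numbers V needs a control time of about 1.1, so it vanishes early in the third control window (shortly after t = 2), below the bound of about 2.2. The bound is conservative because it only credits the fraction θ of elapsed time, not the actual window positions.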

Lemma 3

[33]

Given any real matrices A, B, Q of appropriate dimensions and a scalar \(m > 0\) with \(0 < Q = {Q^{T}}\), the following inequality holds:

\[ A^{T}B + B^{T}A \le mA^{T}QA + m^{-1}B^{T}Q^{-1}B. \]
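Lemma 3 is a matrix inequality, i.e. the quadratic form \(x^{T}(mA^{T}QA + m^{-1}B^{T}Q^{-1}B - A^{T}B - B^{T}A)x\) is non-negative for every x; it equals \(\Vert \sqrt{m}\,Q^{1/2}Ax - Q^{-1/2}Bx/\sqrt{m} \Vert^{2}\). The sketch below checks this on random data. For simplicity it assumes a diagonal \(Q > 0\) (an assumption, the lemma allows any symmetric positive definite Q), and the helper names are hypothetical.

```python
import random

def mat_vec(M, v):
    """Matrix-vector product for a list-of-rows matrix."""
    return [sum(mij * vj for mij, vj in zip(row, v)) for row in M]

def lemma3_gap(A, B, q_diag, m, x):
    """x^T (m A^T Q A + m^{-1} B^T Q^{-1} B - A^T B - B^T A) x with Q = diag(q_diag) > 0."""
    ax, bx = mat_vec(A, x), mat_vec(B, x)
    quad_q = sum(qi * ai * ai for qi, ai in zip(q_diag, ax))       # (Ax)^T Q (Ax)
    quad_qinv = sum(bi * bi / qi for qi, bi in zip(q_diag, bx))    # (Bx)^T Q^{-1} (Bx)
    cross = sum(ai * bi for ai, bi in zip(ax, bx))                 # (Ax)^T (Bx)
    return m * quad_q + quad_qinv / m - 2 * cross

rng = random.Random(2)
worst = float("inf")
for _ in range(5000):
    n = rng.randint(1, 4)
    A = [[rng.uniform(-2, 2) for _ in range(n)] for _ in range(n)]
    B = [[rng.uniform(-2, 2) for _ in range(n)] for _ in range(n)]
    q = [rng.uniform(0.1, 10) for _ in range(n)]
    m = rng.uniform(0.1, 10)
    x = [rng.uniform(-1, 1) for _ in range(n)]
    worst = min(worst, lemma3_gap(A, B, q, m, x))
print(worst >= -1e-9)
```

In Theorem 1 a bound of this type (with \(m = m_1\)) is presumably what produces the \(m_{1}\Vert AA^{T}\Vert\) and \(m_{1}^{-1}L\) terms that appear in the condition evaluated in Section 4.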

3 Main results

In this section, we study the finite-time anti-synchronization between system (1) and system (3). To this end, we design the following intermittent adjustment feedback controller:

where \(0 < \alpha< 1\), \(\beta> 0\) is the control gain, \(k > 0\) is a tunable constant, \(\operatorname {sgn}( \cdot)\) is the sign function, \(H \in\mathbb{N}\), \(T > 0\) is the control period and \(\delta> 0\) is the control duration.

In the same way, (7) can be expressed in the following form:

where

Let \(\theta= \frac{\delta}{T}\) denote the ratio of the control duration δ to the control period T, called the control rate. Substituting (8) into (6), the error system between (2) and (4) can be expressed as

Remark 1

Consider the control windows \(HT \le t < HT + \theta T\), \(H \in\mathbb{N}\). If \(0 < \alpha< 1\), the controller \(u(t)\) is continuous with respect to t. If \(\alpha= 0\), \(u(t)\) becomes discontinuous, similar to the controller considered in [34]. If \(\alpha= 1\), the controller reduces to a typical linear feedback controller, which can only achieve asymptotic anti-synchronization, that is, anti-synchronization as time tends to infinity.
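The two ingredients discussed in Remark 1 can be sketched in Python: the on/off schedule \(HT \le t < HT + \theta T\) (with \(\delta = \theta T\)) and the power term \(\operatorname{sgn}(e)|e|^{\alpha}\), which equals e for \(\alpha = 1\) and \(\operatorname{sgn}(e)\) for \(\alpha = 0\). How these pieces combine with the gain β in (7) and (8) is an assumption here, not the paper's formula: the hypothetical `assumed_controller` applies \(-\frac{\beta}{2}e\) at all times, consistent with the \(\theta = 0\) case in Remark 3, and adds \(-k\operatorname{sgn}(e)|e|^{\alpha}\) inside the control windows.

```python
import math

def in_control_window(t, T, theta):
    """True when H*T <= t < H*T + theta*T for some H in N (t >= 0)."""
    return (t % T) < theta * T

def power_term(e, alpha):
    """sgn(e) * |e|**alpha: equals e for alpha = 1 and sgn(e) for alpha = 0 (sgn(0) = 0)."""
    return math.copysign(abs(e) ** alpha, e) if e != 0 else 0.0

def assumed_controller(e, t, beta, k, alpha, T, theta):
    """HYPOTHETICAL form of (8): -(beta/2) e always, plus -k sgn(e)|e|^alpha in the windows."""
    u = [-beta / 2 * ei for ei in e]
    if in_control_window(t, T, theta):
        u = [ui - k * power_term(ei, alpha) for ui, ei in zip(u, e)]
    return u

# Example 1 schedule (T = 2, theta = 0.6): control is on for t in [0, 1.2), [2, 3.2), ...
print([in_control_window(t, 2.0, 0.6) for t in (0.5, 1.5, 2.1, 3.5)])
print(power_term(-0.3, 1.0), power_term(-0.3, 0.0))
```

For \(0 < \alpha < 1\) the power term is continuous in e but has an unbounded gain \(|e|^{\alpha-1}\) near zero, which is what makes convergence in finite time possible; with \(\alpha = 1\) it is just extra linear feedback.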

Theorem 1

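Suppose Assumption 1 holds and there exists a positive constant \({m_{1}}\) such that the following condition holds:

\[ -2\Vert C \Vert + m_{1}\Vert AA^{T} \Vert + m_{1}^{-1}L + \frac{L}{1-h}\Vert BB^{T} \Vert + 1 - \beta < 0. \tag{10} \]

Then systems (2) and (4) achieve finite-time anti-synchronization under the intermittent adjustment feedback controller (8), and the settling time is estimated by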

where \(V(0) = {e^{T}}(0)e(0) = \sum_{i = 1}^{n} ({e_{i}}(0))^{2} \), \(e(0) = y(0) + x(0)\).
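Theorem 1 involves \(V(0) = e^{T}(0)e(0)\) and the scalar test that Section 4 evaluates when verifying condition (10), namely \(-2\Vert C\Vert + m_{1}\Vert AA^{T}\Vert + m_{1}^{-1}L + \frac{L}{1-h}\Vert BB^{T}\Vert + 1 - \beta < 0\). The sketch below computes V(0) for the initial values of Examples 1 and 2 and evaluates that expression. The helper names are hypothetical, and the norms in the last lines are illustrative values, not those of the examples.

```python
def initial_V(x0, y0):
    """V(0) = e(0)^T e(0), where e(0) = y(0) + x(0) is the anti-synchronization error."""
    return sum((yi + xi) ** 2 for xi, yi in zip(x0, y0))

def condition_lhs(norm_C, norm_AAT, norm_BBT, L, h, m1, beta):
    """-2||C|| + m1 ||A A^T|| + L / m1 + L / (1 - h) ||B B^T|| + 1 - beta (must be < 0)."""
    return -2 * norm_C + m1 * norm_AAT + L / m1 + L / (1 - h) * norm_BBT + 1 - beta

def smallest_admissible_beta(norm_C, norm_AAT, norm_BBT, L, h, m1):
    """Infimum of the gains beta for which condition_lhs(...) < 0."""
    return condition_lhs(norm_C, norm_AAT, norm_BBT, L, h, m1, beta=0.0)

print(initial_V([0.2, 0.5], [0.3, 0.6]))              # Example 1: e(0) = (0.5, 1.1)
print(initial_V([0.2, 0.5, 0.7], [0.3, 0.6, 0.8]))    # Example 2: e(0) = (0.5, 1.1, 1.5)

# Illustrative norms only (not computed from the examples' matrices).
norms = dict(norm_C=1.0, norm_AAT=30.0, norm_BBT=6.0, L=1.0, h=0.1, m1=0.2)
beta_min = smallest_admissible_beta(**norms)
print(beta_min, condition_lhs(**norms, beta=beta_min + 0.5) < 0)
```

Because the expression is affine in β with slope -1, the condition amounts to choosing the control gain β larger than the sum of the remaining terms.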

Proof

Construct the following Lyapunov function:

Note that \({e^{T}}(t)e(t) = \sum_{i = 1}^{n} ({e_{i}}(t))^{2}\). The derivative of \(V(t)\) with respect to t along the solutions of (9) is calculated as follows.

When \(HT \le t < HT + \theta T\), \(H \in\mathbb{N}\),

Using Lemma 3 and Assumption 1, the following inequality can be established:

Similarly,

Substituting (13) and (14) into \(\dot{V}(t)\) yields

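From Lemma 1 with \(p = \alpha+ 1\) and \(q = 2\) (recall that \(0 < \alpha< 1\)), we get

\[ \sum_{i=1}^{n} |e_{i}(t)|^{\alpha+1} \ge \Big( \sum_{i=1}^{n} e_{i}^{2}(t) \Big)^{\frac{\alpha+1}{2}}. \]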

Hence,

Therefore, by condition (10),

According to (14), we obtain

When \(HT + \theta T \le t < (H + 1)T\), \(H \in\mathbb{N}\), by condition (10),

Then we have

According to Lemma 2, the following inequality can be obtained:

Letting \(\eta= \frac{{\alpha+ 1}}{2}\) and replacing the constant α of Lemma 2 by k, we easily obtain

Hence, the error vector \(e(t)\) converges to zero within T̄. Consequently, systems (2) and (4) achieve finite-time anti-synchronization under controller (8). This completes the proof of the theorem. □

Remark 2

Theorem 1 gives an explicit estimate of the settling time of anti-synchronization. Its sufficient conditions guarantee that the neural networks achieve anti-synchronization within a finite time, rather than only as time tends to infinity, which is significant for engineering applications of network synchronization.

Remark 3

The larger the control rate θ, the faster the convergence. When \(\theta= 0\), the intermittent adjustment feedback controller (8) reduces to \(u(t) = - \frac{\beta}{2}e(t)\), and systems (2) and (4) can achieve asymptotic anti-synchronization. When \(\theta= 1\), controller (8) degenerates into a continuous control input. If we change controller (8) into the following form:

then the following corollary can be derived.

Corollary 1

Suppose Assumption 1 holds and there exists a positive constant \({m_{1}}\) such that the following conditions hold:

Then systems (2) and (4) achieve finite-time anti-synchronization under the continuous controller (15), and the settling time is estimated by

4 Numerical simulations

In this section, two numerical examples are given to show the effectiveness of Theorem 1.

Example 1

Consider the following model as the master system:

where \(x(t) = {({x_{1}}(t),{x_{2}}(t))^{T}}\), \(f(x(t)) = {(f({x_{1}}(t)),f({x_{2}}(t)))^{T}}\), \(f(x) = \tanh(x)\),

The corresponding slave system is

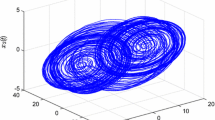

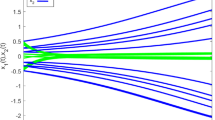

With \(\tau(t) = 1\), system (16) has a chaotic attractor, as shown in Figure 1; in the slave system (17), \(u(t)\) takes the form (8). Figures 2 and 3 show the state trajectories of systems (16) and (17) without controller (8), and Figure 4 shows the corresponding anti-synchronization errors.

Figure 2 State trajectories of \(x_{1}\) and \(y_{1}\) without controller (8).

Figure 3 State trajectories of \(x_{2}\) and \(y_{2}\) without controller (8).

Figure 4 Anti-synchronization errors \(e_{1}\) and \(e_{2}\) without controller (8).

In this simulation, the parameters of the intermittent adjustment feedback controller (8) are \(\beta= 25.1\), \(k = 12\), \(\alpha= 0.95\), \(T = 2\) and \(\theta= 0.6\), and we take \(L = 1\), \(h = 0.1\) and \({m_{1}} = 0.2\). The initial values are \(x(0) = (0.2,0.5)\) and \(y(0) = (0.3,0.6)\). Using MATLAB, we obtain \(- 2 \Vert C \Vert + m_{1} \Vert AA^{T} \Vert + m_{1}^{-1}L + \frac{L}{1 - h} \Vert BB^{T} \Vert + 1 - \beta= - 0.0755 < 0 \), so condition (10) of Theorem 1 is satisfied, and the settling time is \(\bar{T}= 5.5246\). Figures 5 and 6 show the state trajectories under controller (8), and Figure 7 shows the corresponding anti-synchronization errors.
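The following Python sketch simulates a drive-response pair in the setting of Example 1 with forward Euler. Only the controller parameters (\(\beta = 25.1\), \(k = 12\), \(\alpha = 0.95\), \(T = 2\), \(\theta = 0.6\)), the delay \(\tau(t) = 1\), the activation \(\tanh\) and the initial values x(0), y(0) come from the text. The matrices C, A, B are illustrative placeholders, not the ones behind Figures 5–7, and the controller form (\(-\frac{\beta}{2}e\) at all times plus \(-k\operatorname{sgn}(e)|e|^{\alpha}\) inside the control windows) is an assumption consistent with Remarks 1 and 3. Constant initial functions on \([-1, 0]\), the step \(dt = 10^{-3}\) and the horizon \(t \le 20\) are also assumed.

```python
import math

# Illustrative placeholders only: NOT the C, A, B matrices of Example 1.
C = [1.0, 1.0]                      # diagonal entries c_i of C
A = [[2.0, -0.1], [-5.0, 3.0]]      # connection weights a_ij
B = [[-1.5, -0.1], [-0.2, -2.5]]    # delayed connection weights b_ij

def rhs(z, z_del, u):
    """-C z + A f(z) + B f(z(t - tau)) + u with f = tanh, as in (2) and (4)."""
    n = len(z)
    return [
        -C[i] * z[i]
        + sum(A[i][j] * math.tanh(z[j]) for j in range(n))
        + sum(B[i][j] * math.tanh(z_del[j]) for j in range(n))
        + u[i]
        for i in range(n)
    ]

def controller(e, t, beta=25.1, k=12.0, alpha=0.95, T=2.0, theta=0.6):
    """ASSUMED form of (8): -(beta/2) e always, plus -k sgn(e)|e|^alpha for HT <= t < HT + theta T."""
    active = (t % T) < theta * T
    return [
        -beta / 2 * ei - (k * math.copysign(abs(ei) ** alpha, ei) if active else 0.0)
        for ei in e
    ]

def simulate(x0, y0, tau=1.0, dt=1e-3, t_end=20.0):
    """Forward-Euler integration of drive (2) and controlled response (4).

    Constant initial functions on [-tau, 0] are assumed and the delay is fixed at tau.
    Returns the drive and response states at t = 0, dt, ..., t_end.
    """
    d = int(round(tau / dt))                   # delay measured in steps
    xs = [list(x0) for _ in range(d + 1)]      # history covering [-tau, 0]
    ys = [list(y0) for _ in range(d + 1)]
    zero = [0.0] * len(x0)
    for s in range(int(round(t_end / dt))):
        t = s * dt
        x, y = xs[-1], ys[-1]
        e = [yi + xi for xi, yi in zip(x, y)]  # anti-synchronization error e = y + x
        dx = rhs(x, xs[-1 - d], zero)          # xs[-1 - d] is x(t - tau)
        dy = rhs(y, ys[-1 - d], controller(e, t))
        xs.append([xi + dt * v for xi, v in zip(x, dx)])
        ys.append([yi + dt * v for yi, v in zip(y, dy)])
    return xs[d:], ys[d:]

xs, ys = simulate([0.2, 0.5], [0.3, 0.6])
e_end = [xi + yi for xi, yi in zip(xs[-1], ys[-1])]
print(max(abs(v) for v in e_end))
```

Because the placeholder matrices differ from those of Example 1, the trajectories and the time at which the error becomes negligible will not match Figures 5–7 or \(\bar{T} = 5.5246\). The sketch only shows how the intermittent schedule and the delayed terms enter the computation. In MATLAB, a dedicated delay-equation solver such as `dde23` would be the natural replacement for the hand-written Euler loop.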

Figure 5 State trajectories of \(x_{1}\) and \(y_{1}\) under controller (8).

Figure 6 State trajectories of \(x_{2}\) and \(y_{2}\) under controller (8).

Figure 7 Anti-synchronization errors \(e_{1}\) and \(e_{2}\) under controller (8).

Example 2

Consider the following model as the master system:

where \(x(t) = {({x_{1}}(t),{x_{2}}(t),{x_{3}}(t))^{T}}\), \(f(x(t)) = {(f({x_{1}}(t)),f({x_{2}}(t)),f(x_{3}(t)))^{T}}\), \(f(x) = \tanh(x)\),

The corresponding slave system is

With \(\tau(t) = 1\), system (18) has a chaotic attractor, as shown in Figure 8. Figure 9 shows the state trajectories of systems (18) and (19) without the intermittent adjustment feedback controller (8), and Figure 10 shows the corresponding anti-synchronization errors.

Figure 9 State trajectories of \(x_{i}\) and \(y_{i}\), \(i=1,2,3\), without controller (8).

Figure 10 Anti-synchronization errors \(e_{1}\), \(e_{2}\) and \(e_{3}\) without controller (8).

In this simulation, the parameters of controller (8) are \(\beta= 13\), \(k = 12\), \(\alpha= 0.94\), \(T = 3\) and \(\theta= 0.6\), and we take \(L = 1\), \(h = 0.1\) and \({m_{1}} = 0.2\). The initial values are \(x(0) = (0.2,0.5,0.7)\) and \(y(0) = (0.3,0.6,0.8)\). Using MATLAB, we obtain \(- 2 \Vert C \Vert + m_{1} \Vert AA^{T} \Vert + m_{1}^{-1}L + \frac{L}{1 - h} \Vert BB^{T} \Vert + 1 - \beta= -0.2795 < 0 \), so condition (10) of Theorem 1 is satisfied, and the settling time is \(\bar{T}= 4.7276\). Figure 11 shows the state trajectories under controller (8), and Figure 12 shows the corresponding anti-synchronization errors.

Figure 11 State trajectories of \(x_{i}\) and \(y_{i}\), \(i=1,2,3\), under controller (8).

Figure 12 Anti-synchronization errors \(e_{1}\), \(e_{2}\) and \(e_{3}\) under controller (8).

Remark 4

In the intermittent adjustment feedback controllers (7) and (8), the parameter k plays an important role in accelerating convergence: the larger k is, the shorter the settling time T̄. Examples 1 and 2 show the simulation results for \(k = 12 \), and Table 1 lists the settling times of Examples 1 and 2 for different values of k.
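Remarks 3 and 4 describe how the control rate θ and the parameter k affect convergence. Assuming a settling-time estimate of the Lemma 2 type, \(\bar{T} = V^{1-\eta}(0)/(k\theta(1-\eta))\) with \(\eta = (\alpha+1)/2\) (the proof of Theorem 1 replaces the constant of Lemma 2 by k), \(\bar{T}\) is inversely proportional to both k and θ. The sketch below tabulates this assumed form for a hypothetical V(0); the printed numbers are not the entries of Table 1. The column \(\theta = 1\) corresponds to the continuous controller of Corollary 1.

```python
def settling_time(V0, k, alpha, theta):
    """ASSUMED estimate V0^(1-eta) / (k * theta * (1 - eta)) with eta = (alpha + 1) / 2."""
    eta = (alpha + 1) / 2
    return V0 ** (1 - eta) / (k * theta * (1 - eta))

V0 = 2.0   # hypothetical initial value of V
for k in (4, 8, 12, 16):
    row = [round(settling_time(V0, k, 0.95, th), 3) for th in (0.4, 0.6, 0.8, 1.0)]
    print(k, row)   # settling times for theta = 0.4, 0.6, 0.8, 1.0
```

Under this form, doubling k or doubling θ halves the estimate, which matches the qualitative statements of Remarks 3 and 4. It says nothing about the actual convergence of a particular network beyond what the theorem guarantees.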

Remark 5

The conditions of Theorem 1 and the settling time can be computed easily in MATLAB. Building on references [19] and [30], the proposed method stabilizes the error system in a shorter time, which gives it greater value for practical systems.

5 Conclusion

In this paper, we investigate the finite-time anti-synchronization of time-varying delayed neural networks using the Lyapunov functional method. A simple intermittent adjustment feedback controller is designed to drive the states of two systems to anti-synchronization within a finite time. Sufficient conditions for the anti-synchronization of the drive-response systems are established, which is important for practical applications. The main contribution of this paper is to show that systems (1) and (3) can achieve anti-synchronization in finite time.

Furthermore, two numerical examples are provided to verify the validity of the proposed anti-synchronization criteria. Compared with continuous control, intermittent adjustment feedback control can reduce the control time and cost. Therefore, the method may find wide application in transportation, communications and other areas. Finally, investigating the finite-time anti-synchronization of neural networks with discontinuous dynamic behaviors remains a challenging problem, which will be considered in future work.

References

Hu, H, Jiang, B, Yang, H: A new approach to robust reliable H∞ control for uncertain nonlinear systems. Int. J. Inf. Syst. Sci. 47(6), 1376-1383 (2016)

Li, H, Gao, Y, Shi, P, Lam, HK: A new approach to robust reliable H∞ control for uncertain nonlinear systems. IEEE Trans. Autom. Control 61(9), 2745-2751 (2016)

Dong, H, Wang, Z, Gao, H: Distributed H∞ filtering for a class of Markovian jump nonlinear time-delay systems over lossy sensor networks. IEEE Trans. Autom. Control 60(10), 4665-4672 (2013)

Liang, J, Sun, F, Liu, X: Finite-horizon H∞ filtering for time-varying delay systems with randomly varying nonlinearities and sensor saturations. Syst. Sci. Control Eng. 2(1), 108-118 (2014)

Ding, D, Wang, Z, Dong, H, Shu, H: Distributed H∞ state estimation with stochastic parameters and nonlinearities through sensor networks: the finite-horizon case. Automatica 48(8), 1575-1585 (2012)

Shen, B, Wang, Z, Ding, D, Shu, H: H∞ state estimation for complex networks with uncertain inner coupling and incomplete measurements. IEEE Trans. Neural Netw. Learn. Syst. 24(12), 2027-2037 (2013)

Chen, Y, Hoo, KA: Stability analysis for closed-loop management of a reservoir based on identification of reduced-order nonlinear model. Syst. Sci. Control Eng. 1(1), 12-19 (2013)

Forti, M, Tesi, A: New conditions for global stability of neural networks with application to linear and quadratic programming problems. IEEE Trans. Circuits Syst. 42(7), 354-366 (1995)

Cao, J, Lu, J: Adaptive synchronization of neural networks with or without time-varying delay. Chaos 16(1), 013133 (2006)

Abdurahman, A, Jiang, H, Teng, Z: Finite-time synchronization for fuzzy cellular neural networks with time-varying delays. Fuzzy Sets Syst. 297, 96-111 (2016)

Kaviarasan, B, Sakthivel, R, Lim, Y: Synchronization of complex dynamical networks with uncertain inner coupling and successive delays based on passivity theory. Neurocomputing 186, 127-138 (2016)

Abdurahman, A, Jiang, H, Teng, Z: Finite-time synchronization for memristor-based neural networks with time-varying delays. Neural Netw. 69, 20-28 (2015)

Wang, H, Song, Q, Duan, C: LMI criteria on exponential stability of BAM neural networks with both time-varying delays and general activation functions. Math. Comput. Simul. 81(4), 837-850 (2010)

Hammami, S: State feedback-based secure image cryptosystem using hyperchaotic synchronization. ISA Trans. 54, 837-850 (2015)

Olusola, OI, Vincent, UE, Njah, AN: LMI criteria on exponential stability of BAM neural networks with both time-varying delays and general activation functions. Nonlinear Dyn. 13(3), 258-269 (2013)

Wu, X, Zhu, C, Kan, H: An improved secure communication scheme based passive synchronization of hyperchaotic complex nonlinear system. Appl. Math. Comput. 252, 201-214 (2015)

Pisarchik, AN, Arecchi, FT, Meucci, R, DiGarbo, A: Synchronization of Shilnikov chaos in a \(\mathrm{CO}_{2}\) laser with feedback. Laser Phys. 11(11), 1235-1239 (2001)

Li, XF, Liu, XJ, Han, XP, Chu, YD: Adaptive synchronization of identical chaotic and hyper-chaotic systems with uncertain parameters. Nonlinear Anal. 11(4), 2215-2223 (2010)

Al-Sawalha, MM, Noorani, MSM: Anti-synchronization of two hyperchaotic systems via nonlinear control. Commun. Nonlinear Sci. Numer. Simul. 14(8), 3402-3411 (2009)

Ahmad, I, Saaban, AB, Ibrahim, AB, Shahzad, M: Global chaos synchronization of new chaotic system using linear active control. Complexity 21(1), 379-389 (2015)

Xia, W, Cao, J: Pinning synchronization of delayed dynamical networks via periodically intermittent control. Chaos 19(1), 013120 (2009)

Zhang, W, Li, C, Huang, T: Stability and synchronization of memristor-based coupling neural networks with time-varying delays via intermittent control. Neurocomputing 173, 1066-1072 (2016)

Zheng, M, Li, L, Peng, H, Xiao, J, Yang, Y, Zhao, H, Ren, J: Finite-time synchronization of complex dynamical networks with multi-links via intermittent controls. Eur. Phys. J. B 89(2), 1-12 (2016)

Anbuvithya, R, Mathiyalagan, K, Sakthivel, R, Prakash, P: Non-fragile synchronization of memristive BAM networks with random feedback gain fluctuations. Commun. Nonlinear Sci. Numer. Simul. 29(1), 427-440 (2015)

Sakthivel, R, Anbuvithya, R, Mathiyalagan, K, Ma, YK, Prakash, P: Reliable anti-synchronization conditions for BAM memristive neural networks with different memductance functions. Appl. Math. Comput. 275, 213-228 (2016)

Ahn, CK: Anti-synchronization of time-delayed chaotic neural networks based on adaptive control. Int. J. Theor. Phys. 48(12), 3498-3509 (2009)

Zhang, G, Shen, Y, Wang, L: Global anti-synchronization of a class of chaotic memristive neural networks with time-varying delays. Neural Netw. 46, 1-8 (2013)

Yang, X, Wu, Z, Cao, J: Finite-time synchronization of complex networks with nonidentical discontinuous nodes. Nonlinear Dyn. 73(4), 2313-2327 (2013)

Wang, W, Peng, H, Li, L, Xiao, J, Yang, Y: Finite-time function projective synchronization in complex multi-links networks with time-varying delay. Neural Process. Lett. 41(1), 71-88 (2015)

Ren, F, Cao, J: Anti-synchronization of stochastic perturbed delayed chaotic neural networks. Neural Comput. Appl. 18(5), 71-88 (2009)

Xu, L, Wang, X: Mathematical Analysis Methods and Examples. Higher Education Press (1983)

Mei, J, Jiang, M, Xu, W, Wang, B: Finite-time synchronization control of complex dynamical networks with time delay. Commun. Nonlinear Sci. Numer. Simul. 18(9), 2462-2478 (2013)

Boyd, S, El Ghaoui, L, Feron, E, Balakrishnan, V: Linear Matrix Inequalities in System and Control Theory. SIAM, Philadelphia (1994)

Lu, J, Ho, DW, Wu, L: Exponential stabilization of switched stochastic dynamical networks. Nonlinearity 22(4), 889-911 (2009)

Acknowledgements

This work was jointly supported by the Natural Science Foundation of Jiangsu Province of China under Grant No. BK20161126, the Graduate Innovation Project of Jiangsu Province under Grant No. KYLX16_0778, and the Fundamental Research Funds for the Central Universities JUSRP51317B.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

XS carried out the main results of this paper and drafted the manuscript. YY directed the study and helped to inspect the manuscript. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Sui, X., Yang, Y., Wang, F. et al. Finite-time anti-synchronization of time-varying delayed neural networks via feedback control with intermittent adjustment. Adv Differ Equ 2017, 229 (2017). https://doi.org/10.1186/s13662-017-1264-5
