Abstract

Short-term forecasting is an important tool for understanding environmental processes. In this paper, we incorporate machine learning algorithms into a conditional distribution estimator for the purpose of forecasting tropical cyclone intensity. Many machine learning techniques give a single-point prediction of the conditional distribution of the target variable, which does not give a full accounting of the prediction variability. Conditional distribution estimation can provide extra insight into predicted response behavior, which could influence decision-making and policy. We propose a technique that simultaneously estimates the entire conditional distribution and flexibly allows machine learning techniques to be incorporated. A smooth model is fit over both the target variable and covariates, and a logistic transformation is applied to the model output layer to produce an expression for the conditional density function. We provide two examples of machine learning models that can be used: polynomial regression and deep learning models. To achieve computational efficiency, we propose a case–control sampling approximation to the conditional distribution. A simulation study for four different data distributions highlights the effectiveness of our method compared to other machine learning-based conditional distribution estimation techniques. We then demonstrate the utility of our approach for forecasting purposes using tropical cyclone data from the Atlantic Seaboard. This paper gives a proof of concept for the promise of our method; further computational developments can fully unlock its insights in more complex forecasting and other applications.

Change history

26 August 2022

A Correction to this paper has been published: https://doi.org/10.1007/s10651-022-00543-6

References

Biswas MK, Carson L, Newman K, Bernardet L, Kalina E, Grell E, Frimel J (2017) Community HWRF users' guide v3.9a

Chung Y, Dunson DB (2009) Nonparametric Bayes conditional distribution modeling with variable selection. J Am Stat Assoc 104(488):1646–1660

Cloud KA, Reich BJ, Rozoff CM, Alessandrini S, Lewis WE, Delle Monache L (2019) A feed forward neural network based on model output statistics for short-term hurricane intensity prediction. Weather and Forecasting 34(4):985–997

Dalmasso N, Pospisil T, Lee AB, Izbicki R, Freeman PE, Malz AI (2020) Conditional density estimation tools in Python and R with applications to photometric redshifts and likelihood-free cosmological inference. Astronomy and Computing 30:100362

Dunson DB, Pillai N, Park J-H (2007) Bayesian density regression. Journal of the Royal Statistical Society: Series B (Statistical Methodology) 69(2):163–183

Efromovich S (2010) Orthogonal series density estimation. Wiley Interdisciplinary Reviews: Computational Statistics 2(4):467–476

Escobar MD, West M (1995) Bayesian density estimation and inference using mixtures. J Am Stat Assoc 90(430):577–588

Fahey MT, Thane CW, Bramwell GD, Coward WA (2007) Conditional Gaussian mixture modelling for dietary pattern analysis. Journal of the Royal Statistical Society: Series A (Statistics in Society) 170(1):149–166

Fan J-Q, Peng L, Yao Q-W, Zhang W-Y (2009) Approximating conditional density functions using dimension reduction. Acta Mathematicae Applicatae Sinica, English Series 25(3):445–456

Fithian W, Hastie T (2013) Finite-sample equivalence in statistical models for presence-only data. Ann Appl Stat 7(4):1917–1939

Fithian W, Hastie T (2014) Local case-control sampling: Efficient subsampling in imbalanced data sets. Ann Stat 42(5):1693

Friedman J, Hastie T, Tibshirani R (2010) Regularization paths for generalized linear models via coordinate descent. J Stat Softw 33(1):1

Friedman J, Hastie T, Tibshirani R (2009) glmnet: Lasso and elastic-net regularized generalized linear models. R package version 1(4)

Geweke J, Keane M (2007) Smoothly mixing regressions. Journal of Econometrics 138(1):252–290

Gilardi N, Bengio S, Kanevski M (2002) Conditional Gaussian mixture models for environmental risk mapping. In Proceedings of the 12th IEEE Workshop on Neural Networks for Signal Processing, pp. 777–786. IEEE

Gneiting T, Raftery AE (2007) Strictly proper scoring rules, prediction, and estimation. J Am Stat Assoc 102(477):359–378

Hall P, Wolff RC, Yao Q (1999) Methods for estimating a conditional distribution function. J Am Stat Assoc 94(445):154–163

Hall P, Racine J, Li Q (2004) Cross-validation and the estimation of conditional probability densities. J Am Stat Assoc 99(468):1015–1026

Hall P, Yao Q et al (2005) Approximating conditional distribution functions using dimension reduction. Ann Stat 33(3):1404–1421

Hannah LA, Blei DM, Powell WB (2011) Dirichlet process mixtures of generalized linear models. Journal of Machine Learning Research 12(6)

Hersbach H (2000) Decomposition of the continuous ranked probability score for ensemble prediction systems. Weather and Forecasting 15(5):559–570

He K, Zhang X, Ren S, Sun J (2015) Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification. In Proceedings of the IEEE International Conference on Computer Vision, pp. 1026–1034

Hornik K, Stinchcombe M, White H et al (1989) Multilayer feedforward networks are universal approximators. Neural Netw 2(5):359–366

Hothorn T, Zeileis A (2017) Transformation forests. arXiv preprint arXiv:1701.02110

Hyndman RJ, Bashtannyk DM, Grunwald GK (1996) Estimating and visualizing conditional densities. J Comput Graph Stat 5(4):315–336

Ioffe S, Szegedy C (2015) Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv preprint arXiv:1502.03167

Izbicki R, Lee AB (2016) Nonparametric conditional density estimation in a high-dimensional regression setting. J Comput Graph Stat 25(4):1297–1316

Izbicki R, Lee AB et al (2017) Converting high-dimensional regression to high-dimensional conditional density estimation. Electronic Journal of Statistics 11(2):2800–2831

Jara A, Hanson TE (2011) A class of mixtures of dependent tail-free processes. Biometrika 98(3):553–566

Jarner MF, Diggle P, Chetwynd AG (2002) Estimation of spatial variation in risk using matched case-control data. Biometrical Journal: Journal of Mathematical Methods in Biosciences 44(8):936–945

Kingma DP, Ba J (2014) Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980

Krüger F, Lerch S, Thorarinsdottir TL, Gneiting T (2016) Predictive inference based on Markov chain Monte Carlo output. arXiv preprint arXiv:1608.06802

Kundu S, Dunson DB (2014) Bayes variable selection in semiparametric linear models. J Am Stat Assoc 109(505):437–447

Lenk PJ (1988) The logistic normal distribution for Bayesian, nonparametric, predictive densities. J Am Stat Assoc 83(402):509–516

Li R, Bondell HD, Reich BJ (2019) Deep distribution regression. arXiv:1903.06023

Matheson JE, Winkler RL (1976) Scoring rules for continuous probability distributions. Manage Sci 22(10):1087–1096

Meinshausen N (2006) Quantile regression forests. Journal of Machine Learning Research 7:983–999

Müller P, Erkanli A, West M (1996) Bayesian curve fitting using multivariate normal mixtures. Biometrika 83(1):67–79

Park J-H, Dunson DB (2010) Bayesian generalized product partition model. Statistica Sinica, 1203–1226

Payne RD, Guha N, Ding Y, Mallick BK (2020) A conditional density estimation partition model using logistic Gaussian processes. Biometrika 107(1):173–190

Peng F, Jacobs RA, Tanner MA (1996) Bayesian inference in mixtures-of-experts and hierarchical mixtures-of-experts models with an application to speech recognition. J Am Stat Assoc 91(435):953–960

Pospisil T, Lee AB (2018) RFCDE: Random forests for conditional density estimation. arXiv preprint arXiv:1804.05753

Rojas AL, Genovese CR, Miller CJ, Nichol R, Wasserman L (2005) Conditional density estimation using finite mixture models with an application to astrophysics

Rosenblatt M (1969) Conditional probability density and regression estimators. Multivariate analysis II 25:31

Shahbaba B, Neal R (2009) Nonlinear models using Dirichlet process mixtures. Journal of Machine Learning Research 10(8)

Song X, Yang K, Pavel M (2004) Density boosting for Gaussian mixtures. In International Conference on Neural Information Processing, pp. 508–515. Springer

Taddy MA, Kottas A (2010) A Bayesian nonparametric approach to inference for quantile regression. Journal of Business & Economic Statistics 28(3):357–369

Tokdar ST, Kadane JB et al (2012) Simultaneous linear quantile regression: a semiparametric Bayesian approach. Bayesian Anal 7(1):51–72

Tokdar ST, Zhu YM, Ghosh JK et al (2010) Bayesian density regression with logistic Gaussian process and subspace projection. Bayesian Anal 5(2):319–344

Trippa L, Müller P, Johnson W (2011) The multivariate beta process and an extension of the Pólya tree model. Biometrika 98(1):17–34

Tung NT, Huang JZ, Khan I, Li MJ, Williams G et al. (2014) Extensions to quantile regression forests for very high-dimensional data

Weierstrass K (1885) Über die analytische Darstellbarkeit sogenannter willkürlicher Functionen einer reellen Veränderlichen. Sitzungsberichte der Königlich Preußischen Akademie der Wissenschaften zu Berlin 2:633–639

Wood SA, Jiang W, Tanner M (2002) Bayesian mixture of splines for spatially adaptive nonparametric regression. Biometrika 89(3):513–528

Additional information

Handling Editor: Luiz Duczmal.

We’d like to thank Dr. Kevin Gunn for lending equipment and technical assistance to obtain some of the results for the tropical cyclone intensity forecasting application.

Appendices

Appendix A: Case–control sampling justification

We exploit the relationship between an inhomogeneous Poisson process (IPP) model and our problem to recast case and control samples as presence and background samples from presence-only datasets. Presence-only data are generally used in species distribution modeling, where surveyors record all species presences within a pre-specified region and background points are sampled at random across the region. Fithian and Hastie (2013) lay out how to model presence-only data in a two-dimensional spatial domain using an IPP model. For our method, the domain for cases (and controls) is [0, 1], as previously noted. The IPP requires an intensity function \(\lambda \) to be specified, which governs how densely cases occur at each location in the domain. The average intensity over the domain, which equals the integrated intensity because the domain has unit length, is

\[\Lambda =\int _0^1 \lambda (\textit{u})\,d\textit{u}.\]

An IPP model with intensity function \(\lambda \) specifies the probability distributions of both the total number of cases and the locations of those cases. Conditional on the number of cases (governed by a Poisson distribution with mean \(\Lambda \)), the locations of the cases are independently and identically distributed with density

\[f(\textit{z})=\frac{\lambda (\textit{z})}{\Lambda }=\frac{\lambda (\textit{z})}{\int _0^1 \lambda (\textit{u})\,d\textit{u}}.\]
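A minimal numerical sketch of this normalization: the code evaluates a hypothetical intensity on an evenly spaced grid over [0, 1], computes \(\Lambda =\int _0^1\lambda (u)\,du\) with the trapezoidal rule, and divides to obtain the density \(\lambda (z)/\Lambda \) of case locations. The function `intensity_example` (the exponential of a quadratic) and the use of the trapezoidal rule are illustrative assumptions, not choices made in the paper.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal-rule integral of samples y taken at points x."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def intensity_example(z):
    # Hypothetical intensity lambda(z) on [0, 1]; illustration only.
    return np.exp(-8.0 * (z - 0.5) ** 2)


def case_density(intensity, n_grid=1001):
    """Return a grid on [0, 1] and the case-location density lambda(z) / Lambda."""
    z = np.linspace(0.0, 1.0, n_grid)
    lam = intensity(z)
    big_lambda = trapezoid(lam, z)   # Lambda: integrated (= average) intensity on [0, 1]
    return z, lam / big_lambda


z_grid, f = case_density(intensity_example)
_, f_scaled = case_density(lambda z: 3.0 * intensity_example(z))
print(trapezoid(f, z_grid))          # 1 up to rounding
print(np.max(np.abs(f - f_scaled)))  # rescaling the intensity leaves the density unchanged
```

Multiplying the intensity by any positive constant changes \(\Lambda \) but not the density, which is why only the shape of \(\lambda \) matters for the distribution of case locations.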

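If we define \(\lambda (\textit{z})=e^{q(\textit{z},{\varvec{X}})}\), then the IPP density of case locations becomes

\[f(\textit{z}\mid {\varvec{X}})=\frac{e^{q(\textit{z},{\varvec{X}})}}{\int _0^1 e^{q(\textit{u},{\varvec{X}})}\,d\textit{u}}.\]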

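We recognize this as the continuous logistic transformation formula given in Eq. (2). Because the cases are independently and identically distributed, the log likelihood objective function is

\[\ell (\varvec{\theta })=\sum _{i=1}^{n}\log \frac{e^{q(\textit{z}_i,{\varvec{X}}_i)}}{\int _0^1 e^{q(\textit{u},{\varvec{X}}_i)}\,d\textit{u}},\]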

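where \(i=1,\ldots ,n\) indexes observations and \(\varvec{\theta }\) is the parameter vector for the selected \(q\) model, as before. Fithian and Hastie (2013) discuss how to evaluate the integral in the denominator by approximating it with a finite set of control (background) samples. Let \(k=1,\ldots ,K\) index the control samples, so that \(\textit{z}_{ik}\) denotes the \(k\)th control value for observation \(i\). Approximating each integral by the average of \(e^{q}\) over the case and its \(K\) controls, the log likelihood objective function becomes, up to an additive constant that does not depend on \(\varvec{\theta }\),

\[\ell (\varvec{\theta })\approx \sum _{i=1}^{n}\log \frac{e^{q(\textit{z}_i,{\varvec{X}}_i)}}{e^{q(\textit{z}_i,{\varvec{X}}_i)}+\sum _{k=1}^{K}e^{q(\textit{z}_{ik},{\varvec{X}}_i)}}.\]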

We recognize this as the log likelihood objective function in Eq. (8). Fithian and Hastie approximate the integral using only the set of controls, rather than the case plus a set of controls. However, including the case only adds information about the integral beyond what the controls provide on their own, so we do not expect this to produce any inconsistencies or bias. They state that the control points can be sampled uniformly from the domain, which implies that selecting as few as \(K=1\) control can still provide valid inference about the target distribution function. Fithian and Hastie also state that the control points can be chosen through weighted sampling, using quadrature weights. The ridge penalty component in Eq. (8) could have been added to the IPP likelihood as well.
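The sketch below compares the exact IPP log likelihood, \(\sum _i [q(z_i,X_i)-\log \int _0^1 e^{q(u,X_i)}du]\), with the case-plus-controls approximation, in which each integral is replaced by the average of \(e^{q}\) over the case \(z_i\) and \(K\) controls drawn uniformly on [0, 1]. The model `q_example` and the simulated data are hypothetical. Using the average rather than the sum in the denominator only shifts the objective by the constant \(n\log (K+1)\), so both forms have the same maximizer.

```python
import numpy as np


def q_example(z, x):
    # Hypothetical smooth model q(z, x); illustration only.
    return -8.0 * (z - 0.3 - 0.4 * x) ** 2


def exact_loglik(q, z, x, n_grid=4001):
    """sum_i [q(z_i, x_i) - log int_0^1 exp(q(u, x_i)) du], integral by trapezoidal rule."""
    u = np.linspace(0.0, 1.0, n_grid)
    total = 0.0
    for zi, xi in zip(z, x):
        w = np.exp(q(u, xi))
        integral = np.sum((w[1:] + w[:-1]) * np.diff(u)) / 2.0
        total += q(zi, xi) - np.log(integral)
    return total


def case_control_loglik(q, z, x, K, rng):
    """Replace each integral by the mean of exp(q) over the case and K uniform controls."""
    total = 0.0
    for zi, xi in zip(z, x):
        controls = rng.uniform(0.0, 1.0, size=K)      # z_i1, ..., z_iK
        points = np.concatenate(([zi], controls))     # the case plus its controls
        total += q(zi, xi) - np.log(np.mean(np.exp(q(points, xi))))
    return total


rng = np.random.default_rng(0)
x = rng.uniform(size=50)
z = np.clip(0.3 + 0.4 * x + rng.normal(scale=0.25, size=50), 0.0, 1.0)
exact = exact_loglik(q_example, z, x)
approx = case_control_loglik(q_example, z, x, K=2000, rng=rng)
print(exact, approx)   # close for large K
```

With small \(K\) (for example \(K=1\)) each integral is approximated much more noisily, which is one plausible reason the number of controls matters in small samples.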

Note that for presence-only data, Fithian and Hastie detail possible sampling bias issues that can arise from imperfect detection of presences during data collection. We do not think detectability issues are relevant to our use of this framework: we assume the detectability parameter equals 1 in our context, so this source of sampling bias does not arise.

Appendix B: Computation

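The technique used to estimate the parameters depends on the chosen model: the polynomial regression model is fit with penalized logistic regression (Sect. B.1), and the deep learning model with gradient descent (Sect. B.2).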

B.1: Polynomial regression model

For the polynomial regression model, we can manipulate the data so that the parameters can be estimated with penalized logistic regression. Recall the polynomial regression model in Sect. 3. We can express the polynomial model in Eq. (3) in matrix form as \(q(\textit{z},{\varvec{X}})={\varvec{X}}(\textit{z},B)\varvec{\xi }\), where \({\varvec{X}}(\textit{z},B)\) is the full covariate matrix as a function of the transformed response \(\textit{z}\) and the highest polynomial power \(B\), and \(\varvec{\xi }=[\varvec{\xi }_{10},\ldots ,\varvec{\xi }_{{B}p} ]\) is the associated parameter vector. Then, we can rewrite the objective function in Eq. (8) using the polynomial regression from Sect. 3 as

With one control per case (\(K=1\)), we recognize this as the objective function of a logistic regression in which every binary outcome equals 1. If we add a small number of dummy observations whose covariate and outcome values are all 0, we can estimate the parameter vector using penalized logistic regression. For each run of the polynomial regression case–control method, we used two dummy observations. The glmnet package in R estimates these parameters very quickly, making this method practical and convenient (Friedman et al. 2009).
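With one control per case, each term of the case–control objective is \(\log \{e^{a_i}/(e^{a_i}+e^{b_i})\}=\log \sigma (a_i-b_i)\), where \(a_i\) and \(b_i\) are the polynomial model evaluated at the case and at its control and \(\sigma \) is the logistic function. The parameters are therefore the coefficients of a no-intercept logistic regression with outcome 1 and covariate row equal to the case row minus the control row. The NumPy sketch below fits this ridge-penalized logistic regression with damped Newton–Raphson iterations in place of glmnet. The basis in `poly_features` (products \(z^b x_j\) for \(b=1,\ldots ,B\) and \(j=0,\ldots ,p\), with \(x_0=1\)) is our assumption based on the indexing of \(\varvec{\xi }\), and the simulated data are hypothetical. We believe glmnet's binomial family requires at least two observations in each outcome class, which would explain the need for dummy observations there; this solver handles all-ones outcomes directly, and the ridge penalty keeps the estimate finite.

```python
import numpy as np


def poly_features(z, x, B):
    """Hypothetical basis z**b * x_j for b = 1..B, j = 0..p (x_0 = 1), ordered xi_10, ..., xi_Bp."""
    x1 = np.column_stack([np.ones(len(z)), x])                   # (n, p + 1)
    powers = np.column_stack([z ** b for b in range(1, B + 1)])  # (n, B)
    return (powers[:, :, None] * x1[:, None, :]).reshape(len(z), -1)


def fit_mcc_k1(z, x, B, ridge, rng, n_iter=50):
    """Maximize sum_i log sigmoid(d_i @ xi) - ridge * ||xi||^2, one uniform control per case."""
    controls = rng.uniform(0.0, 1.0, size=len(z))
    d = poly_features(z, x, B) - poly_features(controls, x, B)   # case row minus control row

    def objective(xi):
        # log sigmoid(s) = -log(1 + exp(-s)), computed stably with logaddexp
        return -np.sum(np.logaddexp(0.0, -(d @ xi))) - ridge * xi @ xi

    xi = np.zeros(d.shape[1])
    for _ in range(n_iter):                                      # damped Newton-Raphson
        p = np.exp(-np.logaddexp(0.0, -(d @ xi)))                # sigmoid(d_i @ xi)
        grad = d.T @ (1.0 - p) - 2.0 * ridge * xi
        hess = -(d.T * (p * (1.0 - p))) @ d - 2.0 * ridge * np.eye(d.shape[1])
        step = np.linalg.solve(hess, grad)                       # Newton update is xi - step
        t = 1.0
        while objective(xi - t * step) < objective(xi) and t > 1e-8:
            t /= 2.0                                             # backtrack if the objective drops
        xi = xi - t * step
    return xi


rng = np.random.default_rng(1)
n = 2000
x = rng.uniform(size=n)
z = np.clip(0.3 + 0.4 * x + rng.normal(scale=0.1, size=n), 0.0, 1.0)
xi_hat = fit_mcc_k1(z, x, B=2, ridge=1e-3, rng=rng)

# Fitted conditional mode at x = 0.5 (the simulated response is centered at 0.5 there).
grid = np.linspace(0.0, 1.0, 1001)
q_grid = poly_features(grid, np.full(grid.size, 0.5), B=2) @ xi_hat
print(grid[np.argmax(q_grid)])
```

Because the objective is concave, the backtracking step only guards against an overshooting Newton update; it does not change the maximizer.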

We ran preliminary simulations on a smaller number of datasets over a range of ridge penalties to determine the appropriate penalty for each scenario of the simulation and the application. For Models 1, 2, and 4, the ridge penalties were 0.025, 0.05, and 0.05, respectively. For Model 3, the ridge penalty was 0.025 for 200 observations and 0.01 for the other two sample sizes.

For the application, we considered a range of ridge penalties and chose the one that minimized the mean CRPS across the five cross-validation folds. Ridge penalties of 0.000001 and 0.0005 were chosen for lag 3 and lag 6, respectively.

B.2: Deep learning model

For the deep learning case–control approximation, we cannot manipulate the data as in Sect. B.1 and instead rely on gradient descent to estimate the parameters.

Deep learning models can be difficult to train with a basic gradient descent algorithm, so we extend or alter it in several ways designed to improve parameter estimation. We use mini-batch gradient descent with batches of 50 observations and an initial step size of 1. For the case study, the batch sizes are approximate because the number of observations is not divisible by 50. The models are run for 600 gradient descent steps in the simulation and 300 gradient descent steps in the case study. Adaptive moment estimation (Adam; Kingma and Ba 2014) is combined with a step decay that halves the initial step size every 100 steps for the simulation models and every 50 steps for the case study models. We apply the batch normalization algorithm described in Ioffe and Szegedy (2015). Model weights are initialized using the He initialization scheme (He et al. 2015), while the batch normalization shift and scale parameters are initialized to 0 and 1, respectively.
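The optimizer settings described above can be sketched in NumPy as a step-decay schedule that halves the initial step size every `halve_every` steps, He initialization, and a single Adam update. The Adam hyperparameters (0.9, 0.999, 1e-8) are the defaults proposed by Kingma and Ba (2014) and are an assumption here, as is the ReLU-type activation that motivates the He variance of 2/fan-in; all function names are hypothetical.

```python
import numpy as np


def step_size(step, initial=1.0, halve_every=100):
    """Step decay: the initial step size is halved every `halve_every` steps."""
    return initial * 0.5 ** (step // halve_every)


def he_init(fan_in, fan_out, rng):
    """He initialization: weights drawn from N(0, 2 / fan_in)."""
    return rng.normal(0.0, np.sqrt(2.0 / fan_in), size=(fan_in, fan_out))


def adam_update(theta, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam step with bias-corrected moment estimates; t counts steps from 1."""
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad ** 2
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    return theta - lr * m_hat / (np.sqrt(v_hat) + eps), m, v


# Simulation settings: 600 steps, halving every 100 steps (case study: 300 and 50).
schedule = [step_size(s) for s in range(600)]
print(schedule[0], schedule[100], schedule[599])   # 1.0 0.5 0.03125

rng = np.random.default_rng(0)
W = he_init(500, 400, rng)
theta, m, v = adam_update(np.zeros(1), np.array([-6.0]), np.zeros(1), np.zeros(1), t=1, lr=1.0)
print(theta)   # the first Adam step moves about lr in the descent direction
```

Because Adam rescales each gradient coordinate by its running root mean square, an initial step size of 1 translates into parameter moves of roughly unit size early in training, which the step decay then shrinks.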

Deep learning IPP approximation (\(K=20\)) conditional maximum 10-m wind speed distribution predictions for lag 3 and 6 using model constructed with observations from fold 3 as the testing dataset and all other observations as the training dataset. Estimated conditional response probabilities for 100 equally spaced quantiles sequenced between 0.5 and 99.5 are displayed with linear interpolation between quantiles. The 0.5th and 99.5th quantile density function values are rounded to 0. \(\Pr (\textit{Y}=\textit{y}|X)\) refers to the relative probability that the maximum 10-meter wind speed \(\textit{Y}=\textit{y}\) occurs given the HWRF-forecasted maximum 10-meter wind speed value \(X\). \(\textit{Y}|X\) refers to the conditional response value \(\textit{Y}\) given the covariate \(X\)

Polynomial MCC approximation (\(K=1\)) conditional maximum 10-m wind speed distribution predictions for lag 3 and 6 using model constructed with observations from fold 3 as the testing dataset and all other observations as the training dataset. Estimated conditional response probabilities for 100 equally spaced quantiles sequenced between 0.5 and 99.5 are displayed with linear interpolation between quantiles. The 0.5th and 99.5th quantile density function values are rounded to 0. \(\Pr (\textit{Y}=\textit{y}|X)\) refers to the relative probability that the maximum 10-meter wind speed \(\textit{Y}=\textit{y}\) occurs given the HWRF-forecasted maximum 10-meter wind speed value \(X\). \(\textit{Y}|X\) refers to the conditional response value \(\textit{Y}\) given the covariate \(X\)

The gradient descent algorithm requires the gradient vector, which contains the first derivative of the objective function with respect to each parameter. We obtain these derivatives using back-propagation. The individual chain rule components for the gradient vector calculations for a single observation and case/control value are given in Sect. B3. The gradient vector is then obtained by summing the appropriate terms across observations and cases/controls.
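For one observation, the case–control objective contributes \(q_0-\log \sum _{k=0}^{K}e^{q_k}\), where \(q_0\) is the model output at the case and \(q_1,\ldots ,q_K\) are the outputs at its controls. Its derivative with respect to \(q_k\) is \(\mathbb {1}\{k=0\}\) minus the softmax weight of \(q_k\); back-propagation then multiplies this by \(\partial q_k/\partial \varvec{\theta }\). The sketch below omits the ridge penalty, uses a hypothetical linear \(q\) only to illustrate the chain rule, and checks the analytic gradient against central finite differences.

```python
import numpy as np


def loglik_i(qvals):
    """q_case - log sum_k exp(q_k), with qvals[0] the case and qvals[1:] the controls."""
    shift = np.max(qvals)                     # log-sum-exp shift for stability
    return qvals[0] - (shift + np.log(np.sum(np.exp(qvals - shift))))


def grad_wrt_q(qvals):
    """d loglik_i / d q_k = 1{k == 0} - softmax(qvals)_k."""
    w = np.exp(qvals - np.max(qvals))
    g = -w / np.sum(w)
    g[0] += 1.0
    return g


def grad_wrt_theta_linear(features, theta):
    """Chain rule for a hypothetical linear model q = features @ theta (rows: case, controls)."""
    return features.T @ grad_wrt_q(features @ theta)


rng = np.random.default_rng(0)
features = rng.normal(size=(4, 3))            # case row plus K = 3 control rows
theta = rng.normal(size=3)

# Central finite differences as a check on the analytic gradient.
h = 1e-6
numeric = np.array([
    (loglik_i(features @ (theta + h * e)) - loglik_i(features @ (theta - h * e))) / (2 * h)
    for e in np.eye(3)
])
print(np.max(np.abs(numeric - grad_wrt_theta_linear(features, theta))))
```

The derivatives with respect to the \(q\) values always sum to zero, so adding any function of \(\varvec{X}\) alone to \(q\) leaves the objective unchanged; only the part of \(q\) that varies with \(\textit{z}\) is identified.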

The components for the batch normalization parameters can be obtained following the steps in Ioffe and Szegedy (2015).
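As a rough sketch of those steps, the code below implements the training-mode batch normalization transform for a mini-batch of hidden activations together with its backward pass, which yields the derivatives with respect to the scale \(\gamma \), the shift \(\beta \), and the inputs. The value `eps = 1e-5` is a common default and an assumption here, and the running averages used at prediction time are omitted.

```python
import numpy as np


def batch_norm_forward(h, gamma, beta, eps=1e-5):
    """Training-mode batch normalization for activations h of shape (batch, units)."""
    mu = h.mean(axis=0)
    inv_std = 1.0 / np.sqrt(h.var(axis=0) + eps)   # biased mini-batch variance
    h_hat = (h - mu) * inv_std
    return gamma * h_hat + beta, (h_hat, inv_std, gamma)


def batch_norm_backward(dout, cache):
    """Gradients of a scalar loss w.r.t. h, gamma and beta, given dout = dLoss/d(output)."""
    h_hat, inv_std, gamma = cache
    n = dout.shape[0]
    dgamma = np.sum(dout * h_hat, axis=0)
    dbeta = np.sum(dout, axis=0)
    dh_hat = dout * gamma
    dh = inv_std / n * (n * dh_hat - dh_hat.sum(axis=0) - h_hat * np.sum(dh_hat * h_hat, axis=0))
    return dh, dgamma, dbeta


rng = np.random.default_rng(0)
h = rng.normal(loc=2.0, scale=3.0, size=(50, 4))   # batch of 50 observations
gamma, beta = np.ones(4), np.zeros(4)              # initial scale 1 and shift 0
out, cache = batch_norm_forward(h, gamma, beta)
R = rng.normal(size=out.shape)                     # loss = sum(out * R), so dout = R
dh, dgamma, dbeta = batch_norm_backward(R, cache)

# Finite-difference check of one input entry.
eps_fd = 1e-6
hp, hm = h.copy(), h.copy()
hp[0, 0] += eps_fd
hm[0, 0] -= eps_fd
fd = (np.sum(batch_norm_forward(hp, gamma, beta)[0] * R)
      - np.sum(batch_norm_forward(hm, gamma, beta)[0] * R)) / (2 * eps_fd)
print(fd, dh[0, 0])
```

The input gradient accounts for the fact that the batch mean and variance themselves depend on every observation in the mini-batch.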

We ran preliminary simulations on a smaller number of datasets over a range of ridge penalties to determine the appropriate penalty for each simulation scenario. For 200, 1000, and 4000 observations, respectively, the penalties were 0.025, 0.002, and 0.0025 for Model 1; 0.015, 0.0025, and 0.001 for Model 2; 0.0075, 0.001, and 0.001 for Model 3; and 0.01, 0.001, and 0.0005 for Model 4.

For the application, we considered a range of ridge penalties and initial learning rates for the deep learning IPP approximation method. For both lags, the setting that minimized the mean CRPS across the five folds was a ridge penalty of 0.000001 with an initial learning rate of 1.

Appendix C: Predicted distribution plots for individual fold

The predicted distribution plots for a range of covariate values using the full tropical cyclone dataset are given in Sect. 5; however, the results in Table 2 were produced by fitting a separate model for each testing fold. Here, we present an example of the predicted distribution plots for the third fold for both methods and both lags. The predicted distributions for individual folds can differ somewhat from the overall predicted response distributions fitted using the full tropical cyclone dataset, as seen for the deep learning IPP approximation. The predicted conditional distributions of the polynomial MCC approximation for individual folds were generally similar to the overall predicted conditional distributions in Fig. 3.

Appendix D: CRPS control sensitivity analysis

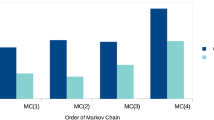

The simulation study results in Sect. 4 indicate that the number of controls \(K\) influences the performance of the deep learning approximation model when there are only 200 observations. With \(K=1\) control, the deep learning IPP approximation model was outperformed by QRF and DDR in three of the four data models, whereas with \(K=10\) controls it outperformed QRF and DDR in three of the four data models. In this section, we further explore the effect of \(K\) for these four data models with 200 observations.

Figure 6 gives the average CRPS divergence for 200 observations under each data model for \(K\in \{1,2,3,4,5,6,7,8,9,10,15,20\}\) controls. CRPS divergence decreases notably when increasing from \(K=1\) to \(K=2\) controls for all four data models. CRPS divergence continues to decrease for \(K>2\), but the improvements diminish as \(K\) increases.

These results suggest that it is important to use multiple controls when applying the deep learning IPP approximation to a small dataset. The CRPS divergence scores either improve or stay similar as more controls are used, but the benefit of increasing the number of controls beyond \(K=2\) is increasingly marginal. The results in Table 1 show that computation time is a significant consideration for the deep learning approximation method, although less so with only 200 observations. Still, this suggests that the optimal choice of \(K\) should balance accuracy against computation time rather than simply using the highest feasible \(K\).

Appendix E: CRPS grid points sensitivity analysis

The methods used for the case study were evaluated using the CRPS performance metric with 1000 evenly spaced grid points, consistent with the specifications used in Li et al. (2019). To investigate whether the case study results are impacted by the number of grid points, we conducted a sensitivity analysis. The CRPS scores for each case study method are calculated using 250, 500, 750, 1000, 1250, 1500, 1750, and 2000 evenly spaced grid points and the results are compared.
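A minimal sketch of grid-based CRPS, assuming the predictive CDF has been evaluated at evenly spaced grid points: the integral \(\int (F(u)-\mathbb {1}\{u\ge y\})^2\,du\) is approximated with the trapezoidal rule. The normal predictive distribution, the grid range, and the grid sizes are illustrative assumptions; for a normal forecast the grid value can be checked against the closed-form CRPS given by Gneiting and Raftery (2007).

```python
import numpy as np
from math import erf, exp, pi, sqrt


def normal_cdf(u, mu, sigma):
    """CDF of N(mu, sigma^2) evaluated elementwise."""
    return np.array([0.5 * (1.0 + erf((v - mu) / (sigma * sqrt(2.0)))) for v in u])


def crps_grid(cdf_values, grid, y):
    """Trapezoidal approximation of the integral of (F(u) - 1{u >= y})^2 over the grid."""
    integrand = (cdf_values - (grid >= y).astype(float)) ** 2
    return float(np.sum((integrand[1:] + integrand[:-1]) * np.diff(grid)) / 2.0)


def crps_normal(mu, sigma, y):
    """Closed-form CRPS of a N(mu, sigma^2) forecast for observation y."""
    z = (y - mu) / sigma
    pdf = exp(-0.5 * z * z) / sqrt(2.0 * pi)
    cdf = 0.5 * (1.0 + erf(z / sqrt(2.0)))
    return sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / sqrt(pi))


mu, sigma, y = 0.0, 1.0, 1.0
exact = crps_normal(mu, sigma, y)
results = {}
for n_points in (250, 500, 1000, 2000):
    grid = np.linspace(mu - 8 * sigma, mu + 8 * sigma, n_points)
    results[n_points] = crps_grid(normal_cdf(grid, mu, sigma), grid, y)
    print(n_points, results[n_points], exact)
```

In this toy example the discretization error is bounded by a term proportional to the grid spacing, mostly coming from the grid cell that contains the observation \(y\).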

Tables 3 and 4 give the CRPS scores and accompanying standard errors for each method using the various numbers of evenly spaced grid points for the lag 3 and lag 6 datasets, respectively. The results for all methods and datasets are highly insensitive to the number of evenly spaced grid points: the average CRPS scores are nearly identical across grid sizes, indicating that the takeaways of the case study were not influenced by our choice to use 1000 evenly spaced grid points.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Huberman, D.B., Reich, B.J. & Bondell, H.D. Nonparametric conditional density estimation in a deep learning framework for short-term forecasting. Environ Ecol Stat 29, 677–704 (2022). https://doi.org/10.1007/s10651-021-00499-z

DOI: https://doi.org/10.1007/s10651-021-00499-z