Abstract

We are interested in the clusters formed by a Poisson ensemble of Markovian loops on infinite graphs. This model was introduced and studied in Le Jan (C R Math Acad Sci Paris 350(13–14):643–646, 2012, Ill J Math 57(2):525–558, 2013). It is a model with long-range correlations and two parameters, \(\alpha \) and \(\kappa \). The non-negative parameter \(\alpha \) measures the intensity of loops, and \(\kappa \) plays the role of killing on vertices, penalizing (\(\kappa >0\)) or favoring (\(\kappa <0\)) the appearance of large loops. It was shown in Le Jan (Ill J Math 57(2):525–558, 2013) that for any fixed \(\kappa \) and large enough \(\alpha \), there exists an infinite cluster in the loop percolation on \({\mathbb {Z}}^d\). In the present article, we show that the phase transition on the integer lattice \({\mathbb {Z}}^d\) (\(d\ge 3\)) is non-trivial for \(\kappa =0\). More precisely, we show that there is no loop percolation for \(\kappa =0\) and \(\alpha \) small enough. Interestingly, we observe a critical-like behavior on the whole sub-critical domain of \(\alpha \): for \(\kappa =0\) and any sub-critical value of \(\alpha \), the probability of the one-arm event decays at most polynomially. For \(d\ge 5\), we prove that there exists a non-trivial threshold for the finiteness of the expected cluster size. For \(\alpha \) below this threshold, we calculate, up to a constant factor, the decay of the one-arm probability, of the two-point function, and of the tail distribution of the cluster size. These rates are comparable with the ones obtained from a single large loop and depend only on the dimension. For \(d=3\) or 4, we give better lower bounds on the decay of the one-arm probability, which show the importance of small loops for long connections. In addition, we show that the one-arm exponent in dimension 3 depends on the intensity \(\alpha \).


1 Introduction

Consider an unweighted undirected graph \(G=(V,E)\) and a random walk \((X_m,m\ge 0)\) on it with transition matrix \(Q\). Unless otherwise specified, we assume that \((X_m,m\ge 0)\) is a simple random walk (SRW) on \({\mathbb {Z}}^d\).

As in [17], an element \(\dot{\ell }= (x_1,\ldots ,x_n)\) of \(V^n, n\ge 2\), satisfying \(x_1\ne x_2,\ldots ,x_n\ne x_1\) is called a non-trivial discrete based loop. Two based loops of length \(n\) are equivalent if they coincide after a circular permutation of their coefficients, i.e., \((x_1,\ldots ,x_n)\) is equivalent to \((x_i,\ldots ,x_n,x_1,\ldots ,x_{i-1})\) for all \(i\). Equivalence classes of non-trivial discrete based loops for this equivalence relation are called (non-trivial) discrete loops.

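Given an additional parameter \(\kappa >-1\), we associate to each based loop \(\dot{\ell }=(x_1,\ldots ,x_n)\) the weight

$$\dot{\mu }_\kappa (\dot{\ell })=\frac{1}{n}\left( \frac{1}{1+\kappa }\right) ^{n}Q^{x_1}_{x_2}\cdots Q^{x_{n-1}}_{x_n}Q^{x_n}_{x_1}. \qquad (1)$$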

The push-forward of \(\dot{\mu }_\kappa \) on the space of discrete loops is denoted by \(\mu _\kappa \). (Note that our parameter \(\kappa \) in (1) corresponds to \(\frac{\kappa }{2d}\) in [17].)
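To make the weight and its push-forward concrete, here is a minimal Python sketch for SRW on \(\mathbb {Z}^d\). It assumes the standard Le Jan form of the based-loop weight, \(\dot{\mu }_\kappa (\dot{\ell })=\frac{1}{n}(\frac{1}{1+\kappa })^n\prod _{i=1}^n Q(x_i,x_{i+1})\) with \(x_{n+1}=x_1\) and \(Q(x,y)=\frac{1}{2d}\) for nearest neighbours; all helper names are hypothetical. A discrete loop is represented by the lexicographically smallest rotation of any of its based loops, and \(\mu _\kappa (\ell )\) is obtained by summing the weights of the distinct based loops in the class.

```python
from fractions import Fraction

# Illustrative sketch; ASSUMES the weight (1/n) * (1/(1+kappa))^n * prod Q(x_i, x_{i+1}).

def srw_step(x, y):
    """Q(x, y) for simple random walk on Z^d; points are integer tuples."""
    d = len(x)
    dist = sum(abs(a - b) for a, b in zip(x, y))
    return Fraction(1, 2 * d) if dist == 1 else Fraction(0)

def based_weight(based, kappa):
    """Weight of the based loop (x_1, ..., x_n), closing the cycle x_n -> x_1."""
    n = len(based)
    w = Fraction(1, n) * (1 / (1 + Fraction(kappa))) ** n
    for i in range(n):
        w *= srw_step(based[i], based[(i + 1) % n])
    return w

def rotations(based):
    """All distinct circular permutations of a based loop given as a tuple."""
    return {based[i:] + based[:i] for i in range(len(based))}

def canonical(based):
    """Representative of the discrete loop: its lexicographically smallest rotation."""
    return min(rotations(based))

def loop_weight(based, kappa):
    """Push-forward mu_kappa(l): sum over the distinct based loops in the class of l."""
    return sum(based_weight(b, kappa) for b in rotations(based))
```

For instance, with \(x=(0,0,0)\) and \(y=(1,0,0)\), the loop \((x,y)\) gets mass \(1/36\) for \(\kappa =0\), while the twice-traversed loop \((x,y,x,y)\) gets \(\frac{1}{2}(\frac{1}{6})^4\): only two of its four rotations are distinct, which is where a factor \(1/m(\ell )\) comes from.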

For \(\alpha >0\) and \(\kappa >-1\), let \(\mathcal {L}_{\alpha ,\kappa }\) be the Poisson loop ensemble of intensity \(\alpha \mu _\kappa \), i.e., \(\mathcal {L}_{\alpha ,\kappa }\) is a random countable collection of discrete loops such that the point measure \(\sum \nolimits _{\ell \in \mathcal {L}_{\alpha ,\kappa }}\delta _{\ell }\) is a Poisson random measure of intensity \(\alpha \mu _\kappa \). (Here, \(\delta _\ell \) denotes the Dirac mass at the loop \(\ell \).) The collection \(\mathcal {L}_{\alpha ,\kappa }\) is induced by the Poisson ensemble of non-trivial continuous loops defined by Le Jan [16].

The Poisson ensembles of Markovian loops were introduced informally by Symanzik [29]. They have been rigorously defined and studied by Lawler and Werner [13] in the context of two-dimensional Brownian motion (the Brownian loop soup). The random walk loop soup on graphs was studied by Lawler and Limic [11, Chapter 9], and its convergence to the Brownian loop soup by Ferreras and Lawler [12]. An extensive investigation of the loop soup on finite and infinite graphs was carried out by Le Jan [14, 15] for reversible Markov processes, and by Sznitman [32] for reversible Markov chains on finite graphs, from the point of view of the occupation field and its relation with random interlacements. A comprehensive study of Poisson ensembles of loops of one-dimensional diffusions was done by Lupu [19]. Let us also mention the works of Sheffield and Werner [28] and Camia [3], who studied clusters in the two-dimensional Brownian loop soup.

In this paper we are interested in percolative properties of clusters formed by \(\mathcal {L}_{\alpha ,\kappa }\) on \({\mathbb {Z}}^d\), motivated by the work of Le Jan and Lemaire [17]. An edge \(e\in E\) is called open for \(\mathcal {L}_{\alpha ,\kappa }\) if it is traversed by at least one loop from \(\mathcal {L}_{\alpha ,\kappa }\). Maximal connected components of open edges for \(\mathcal {L}_{\alpha ,\kappa }\) form open clusters of vertices; we denote by \(\mathcal {C}_{\alpha ,\kappa }(x)\) the open cluster containing \(x\). The percolation probability is defined as

$$\theta (\alpha ,\kappa )=\mathbb {P}[\mathcal {C}_{\alpha ,\kappa }(0)\text { is infinite}].$$

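It is known that \(\theta (\alpha ,\kappa )\) is a non-decreasing function of \(\alpha \) and a non-increasing function of \(\kappa \), see [17, Proposition 4.3]. In particular, if we define the critical thresholds

$$\alpha _c(\kappa )=\inf \{\alpha >0:\theta (\alpha ,\kappa )>0\},\qquad \kappa _c(\alpha )=\sup \{\kappa >-1:\theta (\alpha ,\kappa )>0\},$$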

then \(\theta (\alpha ,\kappa ) = 0\) for \(\alpha <\alpha _c(\kappa )\) or \(\kappa >\kappa _c(\alpha )\), and \(\theta (\alpha ,\kappa ) > 0\) for \(\alpha >\alpha _c(\kappa )\) or \(\kappa <\kappa _c(\alpha )\); moreover, \(\alpha _c(\kappa )\) is non-decreasing in \(\kappa \) and \(\kappa _c(\alpha )\) is non-decreasing in \(\alpha \). The first properties of these thresholds were proved by Le Jan and Lemaire [17, Proposition 4.3, Remark 4.4]:

- for fixed \(\kappa \), \(\alpha _c(\kappa )<\infty \), i.e., for large enough \(\alpha \) there is an infinite open cluster,
- for fixed \(\alpha \), \(\kappa _c(\alpha )<\infty \), i.e., there is no infinite open cluster for large enough \(\kappa \),
- on \({\mathbb {Z}}^2\), for any \(\alpha \), \(\kappa _c(\alpha )\ge 0\).

This picture can be complemented with the following result.

Theorem 1.1

For simple random walk loop percolation on \({\mathbb {Z}}^d\), \(d\ge 3\),

1. \(\alpha _c\mathop {=}\limits ^{def} \alpha _c(0)>0\),
2. for any \(\alpha >0\), \(\kappa _c(\alpha )\ge 0\),

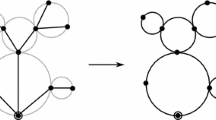

i.e., the percolation phase transition is non-trivial, see Fig. 1.

In contrast, for any connected recurrent graph \(G\), for \(\kappa =0\) and \(\alpha >0\), with probability \(1\), all the vertices are in the same open cluster.

The first statement of Theorem 1.1 follows directly from the stronger result of Theorem 1.3, which shows that for some positive values of \(\alpha \) the one-arm probability tends to \(0\) polynomially; the second statement is proved in Proposition 3.4, and the third in Proposition 7.3. We should mention that during the write-up of this paper, Titus Lupu posted a paper [20] in which he proves, using a new coupling between the loop percolation and the Gaussian free field, that \(\alpha _c\ge \frac{1}{2}\) for the loop percolation on \(\mathbb {Z}^d\), \(d\ge 3\). In Theorem 1.7 below, we provide an asymptotic expression for \(\alpha _c\) as the dimension \(d\rightarrow \infty \).

The loop percolation on \({\mathbb {Z}}^d\), \(d\ge 3\), has long-range correlations (see Proposition 3.1); it is translation invariant and ergodic with respect to the lattice shifts (see Proposition 3.2); and it satisfies the positive finite energy property, so there can be at most one infinite open cluster (see Proposition 3.3). It is worth comparing it with other percolation models with long-range correlations that have recently been actively studied, for instance, the vacant set of random interlacements [30] or the level sets of the Gaussian free field [2, 25]. As our main results show, the loop percolation behaves rather differently from these models in the sub-critical regime: the decay of the one-arm probability in the loop percolation is at most polynomial, whereas in the other models it is exponential or stretched exponential, see [22, 23]. The latter is a consequence of the so-called decoupling inequalities [22, 23, 31], which are pivotal in the study of phase transitions in those models but cannot be applied to the questions of this paper.

For \(x,y\in V\), we write \(x\mathop {\longleftrightarrow }\limits ^{\mathcal {L}_{\alpha ,\kappa }}y\) if \(y\in \mathcal {C}_{\alpha ,\kappa }(x)\) (equivalently, \(x\in \mathcal {C}_{\alpha ,\kappa }(y)\)). For two vertex sets \(A\) and \(B\), the notation \(A\overset{\mathcal {L}_{\alpha ,\kappa }}{\longleftrightarrow } B\) means that there exist \(x\in A\) and \(y\in B\) such that \(x\overset{\mathcal {L}_{\alpha ,\kappa }}{\longleftrightarrow } y\). For a vertex \(x\) and a set of vertices \(B\), we write \(x\overset{\mathcal {L}_{\alpha ,\kappa }}{\longleftrightarrow } B\) instead of \(\{x\}\overset{\mathcal {L}_{\alpha ,\kappa }}{\longleftrightarrow } B\).
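The definitions of open edges, open clusters and the connection relation can be illustrated on a finite list of loops. The Python sketch below uses hypothetical helper names and represents each loop by one of its based loops (a vertex tuple). It marks every edge \(\{x_i,x_{i+1}\}\), indices taken cyclically, as open and merges endpoints with a union-find structure, so that \(x\) and \(y\) are connected exactly when they end up in the same class. It only handles finitely many loops, whereas \(\mathcal {L}_{\alpha ,\kappa }\) on \(\mathbb {Z}^d\) is infinite.

```python
def open_edges(loops):
    """Edges {x_i, x_{i+1}} (indices mod n) traversed by at least one loop."""
    edges = set()
    for loop in loops:
        n = len(loop)
        for i in range(n):
            a, b = loop[i], loop[(i + 1) % n]
            if a != b:  # non-trivial loops never stay put, but guard anyway
                edges.add(frozenset((a, b)))
    return edges

def open_clusters(vertices, loops):
    """Maximal connected components of open edges; `vertices` must contain every loop vertex."""
    parent = {v: v for v in vertices}

    def find(v):
        while parent[v] != v:
            parent[v] = parent[parent[v]]  # path halving
            v = parent[v]
        return v

    for edge in open_edges(loops):
        a, b = tuple(edge)
        parent[find(a)] = find(b)
    groups = {}
    for v in vertices:
        groups.setdefault(find(v), set()).add(v)
    return list(groups.values())

def connected(x, y, vertices, loops):
    """True iff y lies in the open cluster of x."""
    return any(x in c and y in c for c in open_clusters(vertices, loops))
```

Isolated vertices (not traversed by any loop) form singleton clusters, in line with the definition of clusters of vertices.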

Our main object of interest in this paper is the one-arm probability for the loop percolation on \(\mathbb {Z}^d\) with \(\alpha <\alpha _c\) and \(\kappa =0\):

$$\mathbb {P}[0\overset{\mathcal {L}_{\alpha ,0}}{\longleftrightarrow }\partial B(0,n)],$$

where \(\partial B(0,n)\) is the boundary of the box of side length \(2n\) centered at \(0\). A general lower bound for \(\alpha >0\) can be obtained by calculating the probability of a connection by one big loop in \(\mathcal {L}_{\alpha ,0}\):

Theorem 1.2

For \(d\ge 3\), \(\alpha >0\) and \(\kappa =0\), there exists \(c = c(d)>0\) such that for all \(n\ge 1\),

It is interesting that the same model exhibits a non-trivial phase transition together with an at most polynomial decay of the one-arm connectivity in the sub-critical domain. This critical-like behavior might be understood as follows. By the second statement of Theorem 1.1, \(\kappa _c(\alpha )\ge 0\) for any \(\alpha >0\). On the other hand, \(\theta (\alpha ,0)=0\) for \(\alpha <\alpha _c\), so \(\kappa _c(\alpha )\le 0\), and hence \(\kappa _c(\alpha )=0\), for \(\alpha \in ]0,\alpha _c[\). Thus, \(]0,\alpha _c[\times \{0\}\) is a part of the critical curve of \((\alpha ,\kappa )\), see Fig. 1. The polynomial decay phenomenon appears exactly at the critical value 0 of the parameter \(\kappa \).

It is natural to ask whether \(n^{2-d}\) is the right order of \(\mathbb {P}[0\overset{\mathcal {L}_{\alpha ,0}}{\longleftrightarrow }\partial B(0,n)]\). We cannot answer this question for the whole sub-critical domain. We introduce an auxiliary parameter as follows: for \(d\ge 3\),

Our first step is the following polynomial upper bound:

Theorem 1.3

(a) For \(d\ge 3\), \(\alpha _{1}>0\).

(b) For \(d\ge 3\) and \(\alpha <\alpha _{1}\), there exist constants \(C(d,\alpha )<\infty \) and \(c(d,\alpha )>0\) such that \(\lim \nolimits _{\alpha \rightarrow 0}c(d,\alpha )=d-2\) and for \(N\ge 1\),

$$\begin{aligned} \mathbb {P}[0\overset{\mathcal {L}_{\alpha ,0}}{\longleftrightarrow } \partial B(0,N)]\le C(d,\alpha )\cdot N^{- c(d,\alpha )}. \end{aligned}$$

In fact, one can take \(c(d,\alpha ) = d-2 - C(d)(\log \frac{1}{\alpha })^{-1}\) as \(\alpha \rightarrow 0\), where \(C(d)\) is a large enough constant.

By Theorem 1.2, \(c(d,\alpha )\le d-2\), and Theorem 1.3 suggests that \(d-2\) might be the right exponent for the one-arm decay. This is indeed the case when the expected cluster size is finite, see Theorem 1.4. To state the result, we introduce another auxiliary parameter corresponding to the finiteness of the expected cluster size:

Our next result provides the strict positivity of \(\alpha _{\#}\) and the order of one-arm decay for \(d\ge 5\) together with the order of two point connectivity, the tail of cluster size and comparison between \(\alpha _{\#}\) and \(\alpha _{1}\):

Theorem 1.4

(a) For \(d\ge 5\), \(\alpha _{\#}>0\), and for \(d=3\) or \(4\), \(\alpha _{\#}=0\).

(b) For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exist constants \(0<c(d,\alpha )<C(d,\alpha )<\infty \) such that for all \(n\),

$$\begin{aligned} c(d,\alpha )n^{2-d}\le \mathbb {P}[0\overset{\mathcal {L}_{\alpha ,0}}{\longleftrightarrow }\partial B(0,n)]\le C(d,\alpha )n^{2-d}. \end{aligned}$$

(c) For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exist \(0<c(d,\alpha )<C(d,\alpha )<\infty \) such that for all \(x\in \mathbb {Z}^d\),

$$\begin{aligned} c(d,\alpha ) (||x||_{\infty }+1)^{2(2-d)}\le \mathbb {P}[x\in \mathcal {C}_{\alpha ,0}(0)]\le C(d,\alpha ) (||x||_{\infty }+1)^{2(2-d)}. \end{aligned}$$

(d) For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exist \(0<c(d,\alpha )<C(d,\alpha )<\infty \) such that for all \(n\),

$$\begin{aligned} c(d,\alpha )n^{1-d/2}\le \mathbb {P}[\#\mathcal {C}_{\alpha ,0}(0)>n]\le C(d,\alpha )n^{1-d/2}. \end{aligned}$$

(e) For \(d\ge 5\), \(\alpha _{\#}\le \alpha _{1}\).

Theorems 1.2 and 1.4 suggest the following picture for sub-critical loop percolation in dimension \(d\ge 5\): the large cluster typically contains a macroscopic loop of diameter comparable with the diameter of the cluster.

This scenario, however, cannot hold for sub-critical loop percolation in dimensions \(d = 3,4\), as we can get better lower bounds on the one-arm probability. In dimension \(d=3\), we prove that \(d-2\) is not the right exponent for the one-arm probability:

Theorem 1.5

For \(d=3\), for \(\alpha >0\), there exist \(\epsilon (\alpha ), c(\alpha )>0\) such that for all \(n\),

Note that \(\lim \nolimits _{\alpha \rightarrow 0}\epsilon (\alpha )=0\) by Theorem 1.3.

In dimension \(d=4\), we get an improved lower bound for the one-arm probability, still with exponent \(d-2\), but with an extra logarithmic correction:

Theorem 1.6

For \(d=4\), there exist \(\epsilon (\alpha ),c(\alpha )>0\) such that

We conjecture that, for the sub-critical loop percolation in dimension \(d=4\), the one-arm probability also admits an upper bound of a similar form, with a logarithmic correction.

The results of Theorems 1.5 and 1.6 imply that the structure of connectivities in sub-critical loop percolation in dimensions \(d=3,4\) is different from that in dimensions \(d\ge 5\): macroscopic loops are not essential for formation of large connected components.

All the upper bounds that we obtain hold either for \(\alpha <\alpha _{\#}\) or for \(\alpha <\alpha _{1}\), i.e., for subregimes of the sub-critical phase. We expect that for \(d\ge 5\), \(\alpha _{\#}= \alpha _c\), and for \(d\ge 3\), \(\alpha _{1}= \alpha _c\), but we do not have a proof yet. However, we can show that asymptotically, as \(d\rightarrow \infty \), all these thresholds coincide.

Theorem 1.7

Asymptotically, as \(d\rightarrow \infty \),

Outline of the paper. In the next section, we introduce the commonly used notation and collect some preliminary results about simple random walk on \(\mathbb {Z}^d\) and some properties of the loop measure \(\mu \). In Sect. 3, we prove some elementary properties of the loop percolation on \(\mathbb {Z}^d\), such as long-range correlations, translation invariance and ergodicity, the uniqueness of the infinite cluster, and the connectedness for \(\kappa <0\). Except for the translation invariance, these properties will not be used in the proofs of the main results. In Sect. 4, we prove Theorems 1.2 and 1.3. Finer results for the loop percolation in dimensions \(d\ge 5\) are presented in Sect. 5. In particular, the first five subsections are devoted to the proof of Theorem 1.4, which is split into five propositions (Propositions 5.1, 5.2, 5.3, 5.4, and 5.10), and the last subsection contains the proof of Theorem 1.7. The proofs of Theorems 1.5 and 1.6 (refined lower bounds in dimension \(d=3\) or \(4\)) are given in Sect. 6. In Sect. 7, we collect some results for the loop percolation on general graphs, such as triviality of the tail sigma-algebra, connectedness in recurrent graphs, and the continuity of \(\kappa _c(\alpha )\). We finish the paper with an overview of some open questions.

2 Notation and preliminary results

2.1 Notation

Let \(G = (V,E)\) be an unweighted undirected graph. For \(F\subseteq V\), let \(\partial F = \{x\in F:\exists y\in V{\setminus }F\text { such that }\{x,y\}\text { is an edge}\}\) be the boundary of \(F\).

Let \((X_n,n\ge 0)\) be a simple random walk (SRW) on \(G\). Let \(\mathbb {P}^x\) be the law of SRW started from \(x\in V\). Let \((G(x,y))_{x,y\in V}\) be the Green function for \((X_n,n\ge 0)\).

For \(F\subseteq V\), let \(\tau (F)\) be the entrance time of \(F\) and \(\tau ^{+}(F)\) be the hitting time of \(F\) by \((X_n,n\ge 0)\):

$$\tau (F)=\inf \{n\ge 0:X_n\in F\},\qquad \tau ^{+}(F)=\inf \{n\ge 1:X_n\in F\}.$$

For \(x\in V\), we use the notation \(\tau (x)\) and \(\tau ^{+}(x)\) instead of \(\tau (\{x\})\) and \(\tau ^{+}(\{x\})\).

Definition 2.1

The capacity of a set \(F\) is defined by

$${\text {Cap}}(F)=\sum \limits _{x\in F}\mathbb {P}^x[\tau ^{+}(F)=\infty ].$$

For finite subsets of vertices of a transient graph, the capacity is positive and monotone, i.e., for any finite \(F\subset F'\subset V\), \(0<{\text {Cap}}(F)\le {\text {Cap}}(F')\).
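The capacity, its monotonicity, and the last-exit decomposition \(\mathbb {P}^x[\tau (F)<\infty ]=\sum _{y\in F}G(x,y)\mathbb {P}^y[\tau ^{+}(F)=\infty ]\) can be checked numerically on a toy transient chain. The sketch below is an illustration under stated assumptions, not part of the paper: it takes SRW on an \(n\)-cycle in which each step survives with probability \(1/(1+\kappa )\) (killing plays the role of escaping to infinity), computes \(G=(I-Q)^{-1}\), and evaluates \({\text {Cap}}(F)\) as the sum over \(x\in F\) of the escape probabilities. Function names are hypothetical. Applying the last-exit identity at \(x\in F\) gives \(\sum _{y\in F}G(x,y)\mathbb {P}^y[\tau ^{+}(F)=\infty ]=1\), so the capacity also equals \(\mathbf {1}^{T}(G|_{F\times F})^{-1}\mathbf {1}\).

```python
import numpy as np

def killed_cycle_kernel(n, kappa):
    """Toy transient chain (assumption): SRW on the n-cycle, each step kept w.p. 1/(1+kappa)."""
    Q = np.zeros((n, n))
    for x in range(n):
        Q[x, (x + 1) % n] += 0.5
        Q[x, (x - 1) % n] += 0.5
    return Q / (1.0 + kappa)

def green_function(Q):
    """G(x, y) = expected number of visits to y starting from x, i.e. (I - Q)^{-1}."""
    return np.linalg.inv(np.eye(len(Q)) - Q)

def hitting_prob(Q, F):
    """h(x) = P^x[tau(F) < inf]: h = 1 on F and h = Q h off F."""
    n = len(Q)
    out = [x for x in range(n) if x not in F]
    h = np.ones(n)
    if out:
        A = np.eye(len(out)) - Q[np.ix_(out, out)]
        b = Q[np.ix_(out, F)].sum(axis=1)
        h[out] = np.linalg.solve(A, b)
    return h

def escape_prob(Q, F):
    """P^x[tau^+(F) = inf] for x in F; being killed counts as escaping."""
    h = hitting_prob(Q, F)
    return np.array([1.0 - Q[x] @ h for x in F])

def capacity(Q, F):
    """Cap(F) = sum over x in F of the escape probabilities."""
    return escape_prob(Q, F).sum()
```

For a single point, this reduces to \({\text {Cap}}(\{x\})=1/G(x,x)\), since \(G(x,x)\) is the inverse of the escape probability from \(x\).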

For \(x\in V\) and a loop \(\ell \), we write \(x\in \ell \) if \(\ell \) visits \(x\), i.e., for some based loop \(\dot{\ell }\) in the equivalence class \(\ell \), \(\dot{\ell }= (x_1,\ldots ,x_n)\) with \(x_1 = x\). For \(F\subseteq V\), we write \(\ell \cap F\ne \phi \) if \(\ell \) visits at least one vertex in \(F\), and \(\ell \subset F\) if all the vertices visited by \(\ell \) are contained in \(F\). For two sets of vertices \(F_1\) and \(F_2\), we write \(F_1\mathop {\longleftrightarrow }\limits ^{\ell }F_2\) if the loop \(\ell \) intersects both \(F_1\) and \(F_2\). If either of the two sets is a singleton, say \(\{x\}\), then we omit the brackets from the notation. For instance, \(x\mathop {\longleftrightarrow }\limits ^{\ell }y\) means that the loop \(\ell \) visits both \(x\) and \(y\).

For \(F\subseteq V\), \(\alpha >0\) and \(\kappa >-1\), we write

Since most of the time we will deal with the case \(\kappa = 0\), we adopt the following convention: when \(\kappa =0\), we omit \(\kappa \) from the notation.

For instance, we will write \(\mu = \mu _0\), \(\mathcal {L}_{\alpha } = \mathcal {L}_{\alpha ,0}\), \(\mathcal {C}_{\alpha } = \mathcal {C}_{\alpha ,0}\).

Throughout the rest of the paper, we denote by \(M_p^{+}\) the set of \(\sigma \)-finite point measures on the space of discrete loops on \(G\), and by \(\mathcal {F}\) the canonical \(\sigma \)-algebra on \(M_p^+\). For \(K\subseteq V\) and a point measure \(m=\sum \nolimits _{i\in \mathbb {N}}c_i\delta _{\ell _i}\) of loops, where \(\delta _{\ell _i}\) is the Dirac mass at the loop \(\ell _i\), define \(m_K=\sum \nolimits _{i\in \mathbb {N}}c_i\delta _{\ell _i}1_{\{\ell _i\cap K\ne \phi \}}\) and \(m^K=\sum \nolimits _{i\in \mathbb {N}}c_i\delta _{\ell _i}1_{\{\ell _i\subset K\}}\). We denote by \(\mathcal {F}_K\) the \(\sigma \)-field generated by \(\{m_K:m\in M_{p}^{+}\}\) and by \(\mathcal {F}^K\) the \(\sigma \)-field generated by \(\{m^K:m\in M_{p}^{+}\}\). A random set \(\mathcal {K}\) is called \((\mathcal {F}_{K})_{\{K\text { finite}\}}\)-optional iff for any deterministic \(K\subseteq V\), \(\{\mathcal {K}\subset K\}\) is \(\mathcal {F}_{K}\)-measurable. Then, define

Similar definitions hold for the filtration \((\mathcal {F}^{K})_{\{K\text { finite}\}}\).
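For a finite point measure stored as a list of (coefficient, loop) pairs, the restrictions \(m_K\) and \(m^K\) amount to two filters on the atoms. A minimal Python sketch with hypothetical names, representing each loop by one of its based loops:

```python
def restrict_visiting(m, K):
    """m_K: keep the atoms (c_i, loop_i) whose loop visits at least one vertex of K."""
    K = set(K)
    return [(c, loop) for c, loop in m if K.intersection(loop)]

def restrict_inside(m, K):
    """m^K: keep the atoms (c_i, loop_i) whose loop visits only vertices of K."""
    K = set(K)
    return [(c, loop) for c, loop in m if K.issuperset(loop)]
```

Every atom of \(m^K\) is also an atom of \(m_K\), since a loop contained in \(K\) visits \(K\).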

For \(x\in \mathbb {Z}^d\) and natural number \(n\in \mathbb {N}\), denote by \(B(x,n)\) the box of side length \(2n\) centered at \(x\).
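Reading "box of side length \(2n\) centered at \(x\)" as \(B(x,n)=\{y:\|y-x\|_\infty \le n\}\) (our interpretation), the inner boundary \(\partial B(x,n)\) from the definition of \(\partial F\) above is the set \(\{y:\|y-x\|_\infty =n\}\), which has \((2n+1)^d-(2n-1)^d\) points for \(n\ge 1\). A short Python sketch with hypothetical names:

```python
from itertools import product

def box(x, n):
    """B(x, n) = {y in Z^d : max_i |y_i - x_i| <= n}, points as integer tuples."""
    return {tuple(xi + o for xi, o in zip(x, off))
            for off in product(range(-n, n + 1), repeat=len(x))}

def inner_boundary(F):
    """dF = {x in F : some nearest neighbour of x lies outside F}."""
    F = set(F)
    out = set()
    for x in F:
        for i in range(len(x)):
            for s in (-1, 1):
                y = x[:i] + (x[i] + s,) + x[i + 1:]
                if y not in F:
                    out.add(x)
    return out
```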

2.2 Facts about random walk

Lemma 2.1

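([11, Proposition 4.6.4]) For a transient graph and a subset \(F\) of vertices, by the last passage time decomposition,

$$\mathbb {P}^x[\tau (F)<\infty ]=\sum \limits _{y\in F}G(x,y)\,\mathbb {P}^y[\tau ^{+}(F)=\infty ].$$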

Lemma 2.2

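([11, Theorem 4.3.1]) For simple random walk on \(\mathbb {Z}^d\), \(d\ge 3\), there exist \(0<c(d)\le C(d)<\infty \) such that for all \(x,y\in \mathbb {Z}^d\),

$$c(d)(||x-y||_{2}+1)^{2-d}\le G(x,y)\le C(d)(||x-y||_{2}+1)^{2-d}.$$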

More precisely, \(G(x,y)=\frac{d\Gamma (d/2)}{(d-2)\pi ^{d/2}}(||x-y||_{2}+1)^{2-d}+O((||x-y||_2+1)^{-d})\).
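The leading constant in Lemma 2.2 can be evaluated directly. In \(d=3\) it equals \(3/(2\pi )\approx 0.477\), the classical constant in \(G(x,y)\sim \frac{3}{2\pi \|x-y\|_2}\). A short Python sketch (function names are hypothetical) that keeps only the leading term and drops the \(O((\|x-y\|_2+1)^{-d})\) error:

```python
import math

def green_constant(d):
    """a_d = d * Gamma(d/2) / ((d - 2) * pi^(d/2)), the leading constant in Lemma 2.2."""
    if d < 3:
        raise ValueError("SRW on Z^d is transient only for d >= 3")
    return d * math.gamma(d / 2) / ((d - 2) * math.pi ** (d / 2))

def green_leading_term(d, r):
    """Leading-order approximation a_d * (r + 1)^(2 - d) of G(x, y), with r = ||x - y||_2."""
    return green_constant(d) * (r + 1) ** (2 - d)
```

In \(d=4\) the same formula gives \(2/\pi ^2\), since \(\Gamma (2)=1\).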

Lemma 2.3

([11, Proposition 6.5.1]) There exist \(0<c(d)<C(d)<\infty \) such that for \(n\ge 1\),

The following lemma provides an estimate on the capacity of the random walk range.

Lemma 2.4

For a SRW on \(\mathbb {Z}^d\), \(d\ge 3\), there exists \(c(d)>0\) such that

where \(F(d,n)=1_{d=3}\cdot n+1_{d=4}\cdot \frac{n^2}{\log n}+1_{d\ge 5}\cdot n^2\).

Proof

It suffices to show that there exists \(c' =c'(d)>0\) such that for all \(T\ge 0\),

Indeed, let \(\tau (n) = \tau (\partial B(0,n))\). By the strong Markov property and Harnack’s inequality,

By Kolmogorov’s maximal inequality for the coordinates,

We choose \(\delta = \frac{c'}{8}\) and apply (3) with \(T = \lfloor \frac{c'}{8} n^2\rfloor \) to get \(\mathbb {P}^{0}[{\text {Cap}}(\{X_0,\ldots ,X_{\tau (\lceil n/2\rceil )}\})>cF(d,n)]\ge \frac{c'}{2}\) for a suitable choice of \(c=c(d)\).

It remains to verify (3). By the Paley–Zygmund inequality it suffices to check that for some \(c(d)>0\) and \(C(d)<\infty \),

and

The first inequality was proved in [24, Lemma 4] for all \(d\ge 3\), and the second was proved in [24] for \(d=3\) and \(d\ge 5\), see the proof of Lemma 5 there. For \(d=4\), the second inequality is obtained in [24] with a logarithmic correction, which is not enough to imply (3). Below we provide a proof of the correct bound using a result about intersection of SRWs from [9, Theorem 2.2]. We prove that there exists \(C\) such that for the SRW on \(\mathbb {Z}^4\),

Let \((X^0_n)_{n\ge 0},(X^1_n)_{n\ge 0},(X^2_n)_{n\ge 0}\) be three independent SRWs. Denote by \(\mathbb {E}_{(i)}^{x}\) the expectation corresponding to the random walk \(X^i\) with initial point \(x\). Similarly, we define \((\mathbb {E}_{(i),(j)}^{x,y})_{i\ne j}\). For simplicity of notation, we denote by \(\mathbb {E}^{x,y,z}\) (or \(\mathbb {P}^{x,y,z}\)) the expectation (or probability) corresponding to \(X^0,X^1\) and \(X^2\) with initial points \(x,y\) and \(z\), respectively. Denote by \(X^0[0,T]\) the range of \(X^0\) up to time \(T\). Similarly, we define \(X^1[0,\infty [\) and \(X^2[0,\infty [\).

Let \(x_0 = (2T,0,\ldots ,0)\). By Lemmas 2.1 and 2.2, \(\mathbb {E}^0[{\text {Cap}}(\{X_0,\ldots ,X_{T}\})^2]\) is comparable to

For \(i=1\) and \(2\), define \(\tau _i=\inf \{j\ge 0:X^0_j\in X^{i}[0,\infty [\}\). By symmetry,

By conditioning on \(X^1\) and \(X^2\) and then applying the strong Markov property for \(X^0\) at time \(\tau _1\),

Then, we take the expectation with respect to \(X^2\) and get that

Note that \(||y-x_0||_{2}\ge T\) for all \(y\in B(0,T)\). By [9, Theorem 2.2], there exists \(C<\infty \) such that

Thus, (4) is proved and the proof of Lemma 2.4 is complete. \(\square \)
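For completeness, here is the elementary Paley–Zygmund step behind (3), written for a generic non-negative random variable \(Z\) (think of the capacity of the range) and a generic scale \(F>0\) (the relevant growth function). As a hedged reading of the two omitted moment bounds, we assume they take the form \(\mathbb {E}[Z]\ge aF\) and \(\mathbb {E}[Z^2]\le bF^2\) for constants \(a,b>0\):

$$\begin{aligned} \mathbb {P}\left[ Z>\tfrac{a}{2}F\right] \ge \mathbb {P}\left[ Z>\tfrac{1}{2}\mathbb {E}[Z]\right] \ge \left( 1-\tfrac{1}{2}\right) ^2\frac{\mathbb {E}[Z]^2}{\mathbb {E}[Z^2]}\ge \frac{a^2F^2}{4bF^2}=\frac{a^2}{4b}. \end{aligned}$$

The first inequality uses \(\frac{a}{2}F\le \frac{1}{2}\mathbb {E}[Z]\), and the second is the Paley–Zygmund inequality \(\mathbb {P}[Z>\theta \mathbb {E}[Z]]\ge (1-\theta )^2\mathbb {E}[Z]^2/\mathbb {E}[Z^2]\) with \(\theta =\frac{1}{2}\). If the second moment bound only holds up to an extra factor growing with \(T\), such as a logarithm, the right-hand side is no longer bounded away from \(0\), which is why the sharper bound (4) is needed in \(d=4\).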

2.3 Properties of the loop measure \(\mu \)

In this subsection, we present several properties of the loop measure \(\mu \). Most of them are taken from [15–17].

Lemma 2.5

(Proposition 18 in Chapter 4 of [15]) For a finite subset of vertices \(F\) of a transient graph,

$$\mu (\ell :\ell \cap F\ne \phi )=\log \det (G|_{F\times F}),$$

where \(G\) is the Green function viewed as a matrix \((G(x,y))_{x,y}\), and \(G|_{F\times F}\) is its sub-matrix with indexes on \(F\times F\).

As a corollary, for \(n\) different vertices \(x_1,\ldots ,x_n\),

We point out that Le Jan uses a different normalization for the Green function and refer to Proposition 18 in Chapter 4 of [15] for a proof.
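The identity of Lemma 2.5 can be sanity-checked on a toy transient chain, assuming it reads \(\mu (\ell :\ell \cap F\ne \phi )=\log \det (G|_{F\times F})\) with \(G\) normalized as the expected number of visits. With based-loop weights \(\frac{1}{n}\prod Q\), the total mass of based loops of length \(n\) is \(\mathrm {tr}(Q^n)/n\), so the mass of loops meeting \(F\) is the total loop mass minus the mass of loops staying in \(V\setminus F\). The Python sketch below (hypothetical names; the killed cycle is an illustrative assumption) truncates the series at a large length.

```python
import numpy as np

def killed_cycle_kernel(n, kappa):
    """Toy transient chain (assumption): SRW on the n-cycle, each step kept w.p. 1/(1+kappa)."""
    Q = np.zeros((n, n))
    for x in range(n):
        Q[x, (x + 1) % n] += 0.5
        Q[x, (x - 1) % n] += 0.5
    return Q / (1.0 + kappa)

def loop_mass(Q, nmax=400):
    """Total mass sum_{n >= 1} tr(Q^n) / n of based-loop weights (1/n) prod Q, truncated."""
    total, P = 0.0, np.eye(len(Q))
    for k in range(1, nmax + 1):
        P = P @ Q
        total += np.trace(P) / k
    return total

def mass_visiting(Q, F):
    """mu(l : l meets F) = mass of all loops minus mass of loops inside the complement of F."""
    out = [x for x in range(len(Q)) if x not in F]
    return loop_mass(Q) - loop_mass(Q[np.ix_(out, out)])
```

The check works because \(\sum _{n\ge 1}\mathrm {tr}(Q^n)/n=-\log \det (I-Q)\), and Jacobi's identity for minors of an inverse gives \(\det (G|_{F\times F})=\det (I-Q)|_{F^c\times F^c}/\det (I-Q)\).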

The following lemma is a special case of the result in [15, Eq. (4.3)]. In fact, Le Jan proves that the joint distribution of the numbers of visits to a set of points is a multivariate negative binomial distribution. The result about the excursions can be derived by an explicit calculation.

Lemma 2.6

([15]) Fix a vertex \(x_0\) in a transient graph with the Green function \(G\). Let \(\xi (x_0,\ell )\) count the number of visits of vertex \(x_0\) in the loop \(\ell \). Let \(\xi (x_0,\mathcal {L}_{\alpha })=\sum \nolimits _{\ell \in \mathcal {L}_{\alpha }}\xi (x_0,\ell )\) be the total number of visits of \(x_0\) for the loop ensemble \(\mathcal {L}_{\alpha }\). Then, \(\xi (x_0,\mathcal {L}_{\alpha })\) follows a negative binomial (or Pólya) distribution, i.e., for \(k\ge 0\),

$$\mathbb {P}[\xi (x_0,\mathcal {L}_{\alpha })=k]=\frac{\Gamma (k+\alpha )}{\Gamma (\alpha )\,k!}\left( 1-\frac{1}{G(x_0,x_0)}\right) ^{k}\left( \frac{1}{G(x_0,x_0)}\right) ^{\alpha }.$$

By cutting all the loops from \(\mathcal {L}_{\alpha }\) into excursions from \(x_0\), we get \(\xi (x_0,\mathcal {L}_{\alpha })\) excursions. Conditionally on \(\xi (x_0,\mathcal {L}_{\alpha })=k\), these \(k\) excursions form an i.i.d. sample of SRW excursions from \(x_0\) with finite length, i.e., conditioned to return to \(x_0\).
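A quick consistency check of Lemma 2.6, under the assumption that the negative binomial has shape \(\alpha \) and parameter \(p=\mathbb {P}^{x_0}[\tau ^{+}(x_0)<\infty ]=1-1/G(x_0,x_0)\). One can check that the loops visiting \(x_0\) exactly \(k\) times have \(\mu \)-mass \(p^k/k\), so \(\xi (x_0,\mathcal {L}_\alpha )\) is a sum of independent Poisson counts and its generating function is \(\exp (\alpha \sum _{k\ge 1}\frac{p^k}{k}(s^k-1))=(\frac{1-p}{1-ps})^{\alpha }\), the negative binomial one. The Python sketch below (hypothetical names) compares both sides numerically and checks the mean \(\alpha (G(x_0,x_0)-1)\).

```python
import math

def negbin_pmf(k, alpha, G):
    """Gamma(k+alpha)/(Gamma(alpha) k!) * p^k * (1-p)^alpha with p = 1 - 1/G (assumed form)."""
    p = 1.0 - 1.0 / G
    log_coef = math.lgamma(k + alpha) - math.lgamma(alpha) - math.lgamma(k + 1)
    return math.exp(log_coef + k * math.log(p) + alpha * math.log(1.0 - p))

def pgf_negbin(s, alpha, G):
    """Closed-form generating function ((1-p)/(1-ps))^alpha."""
    p = 1.0 - 1.0 / G
    return ((1.0 - p) / (1.0 - p * s)) ** alpha

def pgf_loop_soup(s, alpha, G, kmax=400):
    """exp(alpha * sum_k (p^k/k)(s^k - 1)): independent Poisson numbers of loops with k visits."""
    p = 1.0 - 1.0 / G
    return math.exp(alpha * sum(p ** k / k * (s ** k - 1.0) for k in range(1, kmax + 1)))
```

The value \(G(x_0,x_0)=1.5\) used in the test is an arbitrary illustrative choice, not a lattice constant.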

We proceed by describing a useful representation of the measure of a given loop visiting two disjoint sets as a linear combination of the measures of based loops starting on the boundary of one of the sets, see (6). We first introduce some notation. For a based loop \(\dot{\ell }\), the multiplicity of \(\dot{\ell }\) is defined as

The multiplicity of a loop \(\ell \), denoted also by \(m(\ell )\), is the multiplicity of any of the based loops in the equivalence class. By (1) and the definition of \(\mu \) as the push-forward of \(\dot{\mu }\), for any loop \(\ell \) of length \(n\),

For two disjoint subsets \(S_1\) and \(S_2\), consider the map \(L(S_1,S_2)\) from the space of loops visiting \(S_1\) and \(S_2\) to subsets of based loops such that

-

(a)

any \(\dot{\ell }= (x_1,\ldots ,x_n)\in L(S_1,S_2)(\ell )\) is in the equivalence class \(\ell \),

-

(b)

\(x_1\in S_1\),

-

(c)

there exists \(i\) such that \(x_i\in S_2\) and \(x_j\notin (S_1\cup S_2)\) for all \(j>i\).

Note that \(L(S_1,S_2)(\ell )\ne \phi \) if and only if \(\ell \) visits \(S_1\) and \(S_2\).

For any \(\dot{\ell }= (x_1,\ldots ,x_n)\in L(S_1,S_2)(\ell )\), we define recursively the sequence \((\tau _i)_{i\ge 0}\) as follows: for \(k\ge 0\),

We write \(\inf \{\phi \} = n+1\). By the definition of \(L(S_1,S_2)\), there exists \(k(\dot{\ell })\ge 1\) such that \(\tau _{2k(\dot{\ell })-1} \le n\) and \(\tau _{2k(\dot{\ell })} = n+1\). The value of \(k(\dot{\ell })\) is the same for all \(\dot{\ell }\in L(S_1,S_2)(\ell )\), and we denote it by \(k(\ell )\). In words, it is half of the number of excursions of \(\ell \) between \(S_1\) and \(S_2\).

Claim 1

For any loop \(\ell \) of length \(n\) visiting \(S_1\) and \(S_2\),

Proof

The claim is immediate from the fact that \(k(\ell ) = m(\ell )\cdot |L(S_1,S_2)(\ell )|\) and (5). \(\square \)
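The counting identity \(k(\ell )=m(\ell )\cdot |L(S_1,S_2)(\ell )|\) used in the proof of Claim 1 can be checked by brute force on small loops. In the Python sketch below (hypothetical names), \(k(\ell )\) is computed as the number of cyclic passages from \(S_2\) to \(S_1\) in the sequence of visits of \(\ell \) to \(S_1\cup S_2\), which is our reading of "half of the number of excursions between \(S_1\) and \(S_2\)", and \(L(S_1,S_2)(\ell )\) is the set of distinct rotations satisfying conditions (b) and (c).

```python
def rotations(loop):
    """Distinct based loops in the class of `loop` (a tuple of vertices)."""
    return {loop[i:] + loop[:i] for i in range(len(loop))}

def multiplicity(loop):
    """m(l): how many times `loop` repeats its shortest period."""
    n = len(loop)
    for period in range(1, n + 1):
        if n % period == 0 and loop == loop[:period] * (n // period):
            return n // period

def in_L(based, S1, S2):
    """Conditions (b) and (c): x_1 in S1, and the last visit to S1 or S2 lies in S2."""
    if based[0] not in S1:
        return False
    hits = [x for x in based if x in S1 or x in S2]
    return hits[-1] in S2

def k_of(loop, S1, S2):
    """Number of cyclic passages from S2 to S1 among the visits to S1 or S2."""
    labels = [1 if x in S1 else 2 for x in loop if x in S1 or x in S2]
    return sum(1 for i in range(len(labels)) if labels[i - 1] == 2 and labels[i] == 1)

def check_claim(loop, S1, S2):
    """Compare k(l) with m(l) * |L(S1, S2)(l)| for a loop visiting both S1 and S2."""
    L = [b for b in rotations(loop) if in_L(b, S1, S2)]
    return k_of(loop, S1, S2) == multiplicity(loop) * len(L)
```

With based-loop weights of the form \(\frac{1}{n}\prod Q\), every based loop in the class of \(\ell \) has the same product of transition probabilities, so this identity is exactly what turns a sum over the whole class into a sum over \(L(S_1,S_2)(\ell )\).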

We end this section with crucial estimates which will be frequently used in the proofs.

Lemma 2.7

(a) For \(d\ge 3\) and \(\lambda >1\), there exists \(C = C(d,\lambda )<\infty \) such that for \(N\ge 1\), \(M\ge \lambda N\), and \(K\subset B(0,N)\),

$$\begin{aligned} \mu (\ell : K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,M))\le C\cdot {\text {Cap}}(K)\cdot M^{2-d}. \end{aligned}$$

(b) For \(d\ge 3\), let \(F(d,n)=1_{d=3}\cdot n+1_{d=4}\cdot \frac{n^2}{\log n}+1_{d\ge 5}\cdot n^2\). For any \(\lambda >1\), there exists \(c = c(d,\lambda )>0\) such that for \(N\ge 1\), \(M\ge \lambda N\), and \(K\subset B(0,N)\),

$$\begin{aligned} \mu (\ell :K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,M),\quad {\text {Cap}}(\ell )>cF(d,M))\ge c\cdot {\text {Cap}}(K)\cdot M^{2-d}. \end{aligned}$$

Proof

(a) Let \((\tau _n)_{n\ge 0}\) be the sequence of stopping times defined recursively by

$$\begin{aligned} \tau _0&\overset{\text {def}}{=}\tau (K),\\ \tau _{2k+1}&\overset{\text {def}}{=}\inf \{n>\tau _{2k}:X_n\in \partial B(0,M)\},\\ \tau _{2k+2}&\overset{\text {def}}{=}\inf \{n>\tau _{2k+1}:X_n\in K\}. \end{aligned}$$

Then

$$\begin{aligned} \mu (\ell : K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,M))=\sum \limits _{n\ge 1}\frac{1}{n} \sum \limits _{x\in \partial K}\mathbb {P}^x[X_{\tau _{2n}}=x]. \end{aligned}$$

By the strong Markov property, for \(n\ge 0\),

$$\begin{aligned} \mathbb {P}^x[X_{\tau _{2n+2}}=x]=\sum \limits _{\begin{array}{c} y\in \partial K\\ z\in \partial B(0,M) \end{array}}\mathbb {P}^x[X_{\tau _{2n}}=y]\mathbb {P}^y[X_{\tau (\partial B(0,M))}=z]\mathbb {P}^z[X_{\tau (K)}=x]. \end{aligned}$$

By Harnack’s inequality, there exists a constant \(C = C(d,\lambda )\) such that

$$\begin{aligned} \max \limits _{y\in \partial K}\mathbb {P}^y[X_{\tau (\partial B(0,M))}=z]\le C\cdot \mathbb {P}^0[X_{\tau (\partial B(0,M))}=z]. \end{aligned}$$

Therefore,

$$\begin{aligned} \mathbb {P}^x[X_{\tau _{2n+2}}=x]&\le C\cdot \mathbb {P}^x[\tau _{2n}<\infty ]\sum \limits _{z\in \partial B(0,M)}\mathbb {P}^0[X_{\tau (\partial B(0,M))}=z]\mathbb {P}^z[X_{\tau (K)}=x]\\&\le C\cdot \left( \max \limits _{x\in \partial K}\mathbb {P}^x[\tau _{2n}<\infty ]\right) \cdot \mathbb {P}^0[X_{\tau _2}=x]\\&\le C\cdot \left( \max \limits _{x\in \partial K}\mathbb {P}^x[\tau _{2}<\infty ]\right) ^n\cdot \mathbb {P}^0[X_{\tau _2}=x]. \end{aligned}$$

Under the assumption \(\lambda >1\), there exists \(\rho = \rho (d,\lambda )<1\) such that

$$\begin{aligned} \max \limits _{x\in \partial K}\mathbb {P}^x[\tau _2<\infty ]<\rho <1. \end{aligned}$$

Thus, since \(\sum _{n\ge 1}\frac{1}{n}\rho ^{n-1}\le \sum _{n\ge 1}\rho ^{n-1}=\frac{1}{1-\rho }\), there exists \(C' = C'(d,\lambda )\) such that

$$\begin{aligned} \mu (\ell :K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,M))&\le C\cdot \mathbb {P}^0[\tau _2<\infty ]\cdot \left( \sum \limits _{n\ge 1}\frac{1}{n}(\max \limits _{x\in \partial K}\mathbb {P}^x[\tau _{2}<\infty ])^{n-1}\right) \\&\le C'\cdot \mathbb {P}^0[\tau _2<\infty ] \le C'\cdot \max \limits _{z\in \partial B(0,M)}\mathbb {P}^{z}[\tau (K)<\infty ]. \end{aligned}$$

By Lemmas 2.1 and 2.2 and using the assumption \(\lambda >1\), there exists \(C'' = C''(d,\lambda )\) such that for any \(z\in \partial B(0,M)\),

$$\begin{aligned} \mathbb {P}^z[\tau (K)<\infty ]\le C''\cdot M^{2-d}\cdot \sum \limits _{w\in \partial K}\mathbb {P}^{w}[\tau ^{+}(K)=\infty ] = C''\cdot M^{2-d}\cdot {\text {Cap}}(K). \end{aligned}$$

The result follows.

(b) By Lemma 2.4 and the monotonicity of the capacity of finite sets, there exists \(c =c(d,\lambda )>0\) such that

$$\begin{aligned}&\min \limits _{y\in \partial B(0,M)}\mathbb {P}^y[{\text {Cap}}(\{X_0,\ldots ,X_{\tau (K)}\})> cF(d,M)\,|\,X_{\tau (K)}=x]\\&\quad \ge \min \limits _{y\in \partial B(0,M)}\mathbb {P}^y[{\text {Cap}}(\{X_0,\ldots ,X_{\tau (\partial B(y,M-N))}\})>cF(d,M)\,|\,X_{\tau (K)}=x]\\&\quad \ge \min \limits _{z\in \partial B(0,M-N)}\mathbb {P}^0[{\text {Cap}}(\{X_0,\ldots ,X_{\tau (\partial B(0,M-N))}\})>cF(d,M)\,|\,X_{\tau (\partial B(0,M-N))}=z] \ge c. \end{aligned}$$

By (1) and (6) (and ignoring the loops with \(k(\ell ) \ge 2\)),

$$\begin{aligned}&\mu (\ell :K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,M),\quad {\text {Cap}}(\ell )>cF(d,M))\\&\quad \ge \sum \limits _{x\in \partial K,y\in \partial B(0,M)}\mathbb {P}^x[X_{\tau (\partial B(0,M))}=y]\cdot \mathbb {P}^y[X_{\tau (K)}=x,\ {\text {Cap}}(\{X_0,\ldots ,X_{\tau (K)}\})>cF(d,M)]\\&\quad \ge c\cdot \sum \limits _{x\in \partial K,y\in \partial B(0,M)}\mathbb {P}^x[X_{\tau (\partial B(0,M))}=y]\cdot \mathbb {P}^y[X_{\tau (K)}=x]. \end{aligned}$$

The rest of the proof is very similar to that of part (a): it is based on an application of Harnack’s inequality and Lemmas 2.1 and 2.2. We omit the details.

\(\square \)

3 Some basic properties of loop percolation

In this section we collect some elementary properties of the loop percolation on \(\mathbb {Z}^d\):

- long-range correlations, see Proposition 3.1,
- translation invariance and ergodicity, see Proposition 3.2,
- the uniqueness of the infinite cluster, see Proposition 3.3,
- the existence of percolation for \(\alpha >0\) and \(\kappa <0\), see Proposition 3.4.

We remark that except for the translation invariance, these properties will not be used in the proofs of the main results.

The following proposition shows the long range correlations in SRW loop percolation on \(\mathbb {Z}^d\) for \(d\ge 3\) and \(\kappa =0\).

Proposition 3.1

Let \(d\ge 3\), \(\alpha >0\), and \(\kappa = 0\). For \(x\in \mathbb {Z}^d\), we write \(x\in \mathcal {L}_\alpha \) if there exists \(\ell \in \mathcal {L}_\alpha \) such that \(x\in \ell \). For any \(x,y\in \mathbb {Z}^d\),

Proof

By Lemma 2.5, for \(F\subset \mathbb {Z}^d\),

Thus,

This gives the first statement. The second follows from Lemma 2.2. \(\square \)

Next, we prove the ergodicity of SRW loop soup under lattice shifts \((t_x)_{x\in \mathbb {Z}^d}\), \(t_x:\ell \mapsto \ell +x\).

Proposition 3.2

The Poisson loop ensemble associated with a simple random walk on \(\mathbb {Z}^d\) is invariant under lattice shifts. Moreover, it is ergodic under these translations.

Proof of Proposition 3.2

The translation invariance of Poisson loop ensemble comes from the translation invariance of its intensity measure and we omit its proof here. For the ergodicity, let us fix \(x\in \mathbb {Z}^d\), a measurable event \(A\) and show that

By the law of large numbers, it is enough to show that for measurable events \(A\) and \(B\),

Or equivalently, by translation invariance, it is enough to show

By a classical monotone class argument (Dynkin’s \(\pi -\lambda \) theorem), it is enough to show it for \(A,B\in \bigcup \nolimits _{K\text { finite}}\mathcal {F}^{K}\). (Recall that \(\mathcal {F}^K\) is the sigma-field generated by the loops inside \(K\).) We choose \(K\) large enough such that \(A\) and \(B\) are \(\mathcal {F}^{K}\)-measurable. Then, \(1_A\circ t_x^n\) is \(\mathcal {F}^{t_x^{-n}(K)}\)-measurable. For \(n\) large enough, \(t_x^{-n}(K)\cap K\) is empty. Recall that the restrictions of a Poisson random measure to disjoint sets are independent. Hence, for such \(n\), the loops inside \(t_x^{-n}(K)\) are independent of the loops inside \(K\), and therefore \(1_B\) and \(1_A\circ t_x^n\) are independent. Thus,

\(\square \)

Ergodicity implies that any translation invariant event has probability either 0 or 1. Consequently,

The next proposition states the uniqueness of infinite cluster.

Proposition 3.3

(Uniqueness of infinite cluster) For the Poisson loop ensemble associated with an SRW on \(\mathbb {Z}^d\), there is at most one infinite cluster in the corresponding loop percolation.

Proof

By Theorem 1 in [5], translation invariance and the positive finite energy property together imply uniqueness. Since translation invariance holds by Proposition 3.2, we only need to show the positive finite energy property: for every edge \(e=\{x,y\}\),

where \(\omega _e\in \{0,1\}\) and \(\omega _e =1\) if and only if the edge \(e\) is traversed by a loop from \(\mathcal {L}_\alpha \).

Since the restrictions of the Poisson loop ensemble to disjoint sets of loops are independent, and the loop \((x,y)\) traverses no edge other than \(e\), the event \(\{\text {the loop } (x,y)\text { is in } \mathcal {L}_\alpha \}\) is independent of \(\sigma (\{\omega _f:f\text { is an edge in }\mathbb {Z}^d\text { and }f\ne e\})\). Thus,

\(\square \)

Remark 3.1

In fact, for \(d\ge 3\), for an edge \(e=\{x,y\}\) in the integer lattice \(\mathbb {Z}^d\), one can also prove that

As a consequence, there exists at most one infinite cluster of closed edges.

We complete this section with a statement about the triviality of loop percolation on \(\mathbb {Z}^d\) for \(\alpha >0\) and \(\kappa <0\). In particular, it implies the second statement of Theorem 1.1.

Proposition 3.4

For \(d\ge 3\), \(\alpha >0\), \(\kappa <0\), and \(x\in \mathbb {Z}^d\), the graph \(\mathbb {Z}^d\) is covered by the loops \(\{\ell \in \mathcal {L}_{\alpha }:x\in \ell \}\) passing through \(x\).

Proof

For \(y\ne x\), by (1) and (6) applied to \(S_1 = \{x\}\) and \(S_2 = \{y\}\), and by ignoring all the loops with \(k(\ell )\ge 2\), we get that for any \(n\ge 1\),

Moreover, for any \(n\ge 1\),

Since the above inequalities hold for all \(n\ge 1\), \(\mu (\ell :x,y\in \ell ) = \infty \). Therefore,

\(\square \)

4 First results for the one-arm connectivity for \(\mathbb {Z}^d (d\ge 3)\) and \(\kappa =0\)

4.1 Lower bound: Proof of Theorem 1.2

For any \(d\ge 3\), \(\alpha >0\), and \(n\ge 1\),

\(\square \)

4.2 Upper bound: Proof of Theorem 1.3

4.2.1 \(\alpha _{1}>0\)

We will prove the following lemma.

Lemma 4.1

For \(d\ge 3\) and \(\beta >1\), there exists \(C(d,\beta )<\infty \) such that for all \(\alpha >0\) and \(n\ge 1\),

Lemma 4.1 implies that \(\alpha _{1}>0\) for all \(d\ge 3\).

Proof

Fix \(d\ge 3\) and \(\beta >1\). For \(\alpha >0\) and \(n\ge 1\), define the function

By Lemma 2.7, there exists \(C_1 = C_1(d)<\infty \) such that

We will prove that there exists \(C_2 = C_2(d,\beta )<\infty \) such that for all \(\alpha >0\) and \(n\ge 1\),

Before we prove (9), we show how it implies (8). Take \(C_3 = C_3(d,\beta )=\max (C_1,2C_2)\). On the one hand for \(\alpha \le C_3^{-2}\), by induction on \(m\), we obtain that \(f^{(\alpha )}(4^m)\le C_3\cdot \alpha \) for all \(m\ge 0\). Then by monotonicity of \(f^{(\alpha )}\), \(f^{(\alpha )}(n)\le C_3\cdot \alpha \) for all \(\alpha \le C_3^{-2}\) and \(n\ge 1\). On the other hand for \(\alpha \ge C_3^{-2}\) and \(n\ge 1\), \(f^{(\alpha )}(n) \le 1 \le C_3^2\cdot \alpha \). Thus (8) follows with \(C(d,\beta ) = \max (C_3,C_3^2)\).
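The induction in the previous paragraph can be checked numerically on its worst case. Display (9) is not reproduced in this section, so the sketch below assumes, purely for illustration, that it reads \(f^{(\alpha )}(4n)\le C_2\,(f^{(\alpha )}(n)^2+\alpha )\) with base case \(f^{(\alpha )}(1)\le C_1\alpha \). This form is consistent with the choice \(C_3=\max (C_1,2C_2)\), because \(C_2(C_3^2\alpha ^2+\alpha )\le 2C_2\alpha \le C_3\alpha \) whenever \(\alpha \le C_3^{-2}\). The constants and function names are hypothetical.

```python
def worst_case_iterates(alpha, C1, C2, steps=200):
    """Iterates g_0 = C1*alpha, g_{m+1} = C2*(g_m**2 + alpha).

    ASSUMED form of (9): f(4n) <= C2*(f(n)**2 + alpha), with f(1) <= C1*alpha.
    Under that assumption g_m is an upper bound for f(4**m).
    """
    g = C1 * alpha
    iterates = [g]
    for _ in range(steps):
        g = C2 * (g * g + alpha)   # float overflow yields inf, not an exception
        iterates.append(g)
    return iterates


def check_induction(alpha, C1, C2, steps=200):
    """True if every iterate stays below C3*alpha, where C3 = max(C1, 2*C2)."""
    C3 = max(C1, 2 * C2)
    bound = C3 * alpha * (1 + 1e-12)   # tiny slack for rounding
    return all(g <= bound for g in worst_case_iterates(alpha, C1, C2, steps))


C1, C2 = 1.5, 2.0                 # hypothetical constants
C3 = max(C1, 2 * C2)              # 4.0
print(check_induction(0.5 * C3 ** -2, C1, C2))   # alpha <= C3**-2: True
print(check_induction(20 * C3 ** -2, C1, C2))    # alpha too large: False
```

The second call shows that the smallness condition \(\alpha \le C_3^{-2}\) is what keeps the worst-case recursion in the linear regime; the actual \(f^{(\alpha )}\) is of course bounded by 1, which is why large \(\alpha \) is handled separately in the text.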

It remains to prove (9). For \(x\in \mathbb {Z}^d\), \(m\ge k\ge 1\), consider the events

It suffices to show that

See Fig. 2 for an illustration of this event. Moreover, we may suppose \(k\ge \frac{1}{\beta -1}\). Let

The key observation is that if \(E^\alpha (0,a_k,d_k)\) occurs and \(\mathcal {L}_\alpha \) does not contain a loop intersecting both \(B(0,b_k)\) and \(\partial B(0,c_k)\), then \(B(0,a_k)\) is connected to \(\partial B(0,b_k)\) by loops from \(\mathcal {L}_\alpha \) which are contained in \(B(0,c_k-1)\), and \(B(0,c_k)\) is connected to \(\partial B(0,d_k)\) by loops which are not contained in \(B(0,c_k-1)\). Since the two collections of loops are disjoint and \(\mathcal {L}_\alpha \) is a Poisson point process, the two events are independent. Thus,

Since \(c_k>b_k\) and \(\lim \nolimits _{k\rightarrow \infty }\frac{c_k}{b_k}=3\), by Lemmas 2.7 and 2.3,

It remains to show that for some \(C(d,\beta )<\infty \), \(\mathbb {P}[E^\alpha (0,a_k,b_k)]\le C(d,\beta )\cdot \mathbb {P}[E^\alpha (0,k,\lceil \beta k\rceil )]\) and \(\mathbb {P}[E^\alpha (0,c_k,d_k)]\le C(d,\beta )\cdot \mathbb {P}[E^\alpha (0,k,\lceil \beta k\rceil )]\).

Let \(s = s(d)\) be such that there exist \(x_1,\ldots ,x_s\in \mathbb {Z}^d\) such that \(B(x_i,k)\subseteq B(0,4k)\) and \(\cup _{i=1}^s\partial B(x_i,k)\supseteq \partial B(0,4k)\). Let \(S = S(d,\beta )\) be such that there exist \(y_1,\ldots , y_S\in \mathbb {Z}^d\) such that \(B(y_i,k)\cap B(0,\lceil 4\beta k\rceil -1)=\phi \) and \(\cup _{i=1}^S\partial B(y_i,k)\supseteq \partial B(0,\lceil 4\beta k\rceil )\). Such a choice of \(s\), \(S\) always exists, and we fix some suitable \(s\), \(S\) and some corresponding \(x_1,\ldots , x_s\) and \(y_1,\ldots , y_S\). Note that \(E^\alpha (0,a_k,b_k)\) implies that \(E^\alpha (x_i,k,\lceil \beta k\rceil )\) occurs for some \(1\le i\le s\), and \(E^\alpha (0,c_k,d_k)\) implies that \(E^\alpha (y_i,k,\lceil \beta k\rceil )\) occurs for some \(1\le i\le S\). Thus using translation invariance, \(\mathbb {P}[E^\alpha (0,a_k,b_k)]\le s\cdot \mathbb {P}[E^\alpha (0,k,\lceil \beta k\rceil )]\) and \(\mathbb {P}[E^\alpha (0,c_k,d_k)]\le S\cdot \mathbb {P}[E^\alpha (0,k,\lceil \beta k\rceil )]\). The result follows. \(\square \)

4.2.2 Upper bound on the one-arm probability for \(\alpha <\alpha _{1}\)

Let \(d\ge 3\), \(\alpha >0\) and \(\beta >1\). Define two random sequences \((\mathcal {A}_n)_n\) and \((\mathcal {B}_n)_n\) as follows. Let \(\mathcal {B}_n = \lceil \beta \mathcal {A}_n\rceil \) and

Since the graph \(\mathbb {Z}^d\) is transient, the total mass under \(\mu \) of the loops intersecting \(B(0,N)\) is finite for any \(N\) according to Lemma 2.5. Therefore, in the Poisson loop ensemble \(\mathcal {L}_{\alpha }\), the number of the loops intersecting \(B(0,N)\) is almost surely finite. Thus, \(\mathcal {A}_n\) are almost surely finite for all \(n\).

Consider

We first show that for all \(n\ge 1\),

The proof is by induction on \(n\). The case \(n=1\) follows from the definitions of \(r\), \(\mathcal {A}_1\) and \(\mathcal {B}_1\). For \(n\ge 2\),

Using the fact that the loops intersecting \(B(0,\mathcal {B}_{n-1})\) never visit \(\partial B(0,\mathcal {A}_n)\), we can rewrite the summands in the above display as

The event \(\{\mathcal {B}_{n-1} = b_{n-1},\quad \mathcal {A}_n = a_n,\quad 0\mathop {\longleftrightarrow }\limits ^{\mathcal {L}_\alpha }\partial B(0,b_{n-1})\}\) is measurable with respect to the loops intersecting \(B(0,b_{n-1})\), and the event \(\{B(0,a_n)\mathop {\longleftrightarrow }\limits ^{\mathcal {L}^{B(0,b_{n-1})^c}_\alpha }\partial B(0,\lceil \beta a_n\rceil )\}\) depends only on loops which do not intersect \(B(0,b_{n-1})\). Thus, the two events are independent. Moreover, the collection \(\mathcal {L}^{B(0,b_{n-1})^c}_\alpha \) of loops avoiding \(B(0,b_{n-1})\) is a subset of \(\mathcal {L}_\alpha \). Thus, by monotonicity,

As a result, we get

which is precisely (11).

Next we prove that there exists \(C = C(d)<\infty \) such that for any \(\delta \in (0,d-2)\) and \(n\ge 1\),

Define \(\mathcal {G}_{k}=\mathcal {F}_{B(0,\mathcal {B}_k)}\). Since \(\frac{\mathcal {B}_{k+1}}{\mathcal {B}_{k}}\) is \(\mathcal {G}_k\)-measurable,

where \(\mathbb {P}^{B(0,b_k)^c}\) is the law of the loops avoiding \(B(0,b_k)\) and

Since

By Lemma 2.7, there exists \(C = C(d)<\infty \) such that for all \(\lambda >2\),

Therefore,

and (12) follows.

We can now complete the proof of Theorem 1.3. From (11) and (12), for \(N\ge 1\) and \(\epsilon \in (0,d-2)\),

Choosing

we get

Note that for any \(\alpha <\alpha _{1}\), there exists \(\beta >1\) such that \(r<1\). Thus, there exist \(C = C(d,\alpha )<\infty \) and \(c = c(d,\alpha )\in (0,d-2)\) such that

Moreover, by Lemma 4.1, there exists \(C' = C'(d,\beta )<\infty \) such that for all \(\alpha \), \(r\le C'\cdot \alpha \). Therefore, one can choose \(c(d,\alpha )\) above so that \(\lim _{\alpha \rightarrow 0}(d-2-c)\cdot \log \frac{1}{\alpha }<\infty \). \(\square \)

5 Loop percolation in dimension \(d\ge 5\)

In this section we prove Theorems 1.4 and 1.7. The proof of Theorem 1.4 is split into 5 parts given in 5 different subsections of this section, see, respectively, Propositions 5.1, 5.2, 5.3, 5.4, and 5.10. Theorem 1.7 is restated and proved as Proposition 5.14 in the last subsection.

5.1 \(\alpha _{\#}>0\) if and only if \(d\ge 5\)

We prove here that the expected size of \(\mathcal {C}_\alpha (0)\) is finite for small enough \(\alpha \) if and only if \(d\ge 5\). The size of \(\mathcal {C}_\alpha (0)\) is stochastically dominated by the total progeny of a Galton–Watson process with offspring distribution defined by the size of

Thus, if for some \(\alpha >0\), \(\mathbb {E}[\#\mathcal {C}_\alpha (0,1)]<1\), then the Galton–Watson process is sub-critical, and the expected progeny is finite. The existence of such \(\alpha \) will follow from dominated convergence as soon as we show that \(\mathbb {E}[\#\mathcal {C}_\alpha (0,1)]<\infty \) for some \(\alpha >0\).
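The domination argument relies on the standard fact that a Galton–Watson process with offspring mean \(m<1\) has expected total progeny \(\sum _{k\ge 0}m^k=1/(1-m)\). A quick Monte Carlo sketch of this fact follows; the Binomial(2, m/2) offspring law is a hypothetical stand-in for the law of \(\#\mathcal {C}_\alpha (0,1)\), and the helper names are illustrative only.

```python
import random


def total_progeny(sample_offspring, rng, cap=10**6):
    """Number of individuals ever born (root included) in one Galton–Watson tree."""
    alive, total = 1, 1
    while alive:
        children = sum(sample_offspring(rng) for _ in range(alive))
        total += children
        alive = children
        if total > cap:
            raise RuntimeError("progeny exceeded cap; is the process sub-critical?")
    return total


def binomial_two(p):
    """Hypothetical offspring law Binomial(2, p), which has mean 2p."""
    return lambda rng: (rng.random() < p) + (rng.random() < p)


rng = random.Random(2024)
m = 0.5                                   # offspring mean, sub-critical
samples = [total_progeny(binomial_two(m / 2), rng) for _ in range(20_000)]
mean = sum(samples) / len(samples)
print(round(mean, 3), 1 / (1 - m))        # empirical mean vs. 1/(1-m) = 2
```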

Proposition 5.1

\(\alpha _{\#}>0\) if and only if \(d\ge 5\).

Proof

Let \(d\ge 3\) and \(\alpha >0\). We compute

which is finite if and only if \(d\ge 5\) by Lemma 2.2. In particular, if \(d= 3\) or \(4\), then \(\mathbb {E}[\#\mathcal {C}_\alpha (0)] \ge \mathbb {E}[\#\mathcal {C}_{\alpha }(0,1)] = \infty \) for all \(\alpha >0\). On the other hand, for \(d\ge 5\), by dominated convergence, there exists \(\alpha >0\) such that \(\mathbb {E}[\#\mathcal {C}_{\alpha }(0,1)]<1\). For such \(\alpha \), \(\#\mathcal {C}_\alpha (0)\) is stochastically dominated by the total progeny of a subcritical Galton–Watson process with offspring distribution \(\mathbb {P}[\#\mathcal {C}_\alpha (0,1)\in \cdot ]\). Thus, \(\mathbb {E}[\#\mathcal {C}_\alpha (0)]<\infty \). \(\square \)

Remark 5.1

Domination of the cluster size by the total progeny of a Galton–Watson process is used rather often in studies of sub-critical percolation models. In the context of loop ensembles, it was used in [18, Section 2] to study the distribution of connected components of loops on the complete graph.

5.2 One-arm connectivity

In this section, we prove the second statement in Theorem 1.4, which we restate in the following proposition.

Proposition 5.2

For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exist constants \(0<c(d,\alpha )<C(d,\alpha )<\infty \) such that for all \(n\),

We need to introduce the notion of loop distance and decompose the cluster at 0 according to the loop distance from 0.

Definition 5.1

Define a random loop distance \(\mathfrak {d}\) on \(\mathbb {Z}^d\):

Then, we decompose \(\mathcal {C}_{\alpha }(0)\) into a countable disjoint union:

Proof of Proposition 5.2

The lower bound follows from Theorem 1.2.

For \(k\ge 0\), set \(\mathcal {C}_k=\bigcup \nolimits _{i=0}^{k}\mathcal {C}_{\alpha }(0,i)\). Then,

If \(\mathcal {C}_k\cap \partial B(0,n)\ne \phi \), then \(\mathcal {C}_\alpha (0)\) contains a loop from \(\mathcal {L}_\alpha \) with diameter \(\ge \frac{n}{k}\). By considering the loop distance \(\mathfrak {d}(0,\ell )\) and using the first moment method, we get

On the other hand,

Since \(\mathcal {C}_{\alpha }(0,k)\) is \(\mathcal {F}_{\mathcal {C}_{k-1}}\)-measurable and

we get

Putting two bounds together,

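We choose \(k=k_0\) large enough such that \(\mathbb {E}[\#\mathcal {C}_{\alpha }(0,k_0)]\le 2^{1-d}\) (this is possible since \(\sum \nolimits _{k\ge 0}\mathbb {E}[\#\mathcal {C}_{\alpha }(0,k)]=\mathbb {E}[\#\mathcal {C}_{\alpha }(0)]<\infty \) for \(\alpha <\alpha _{\#}\)) and take \(C'(d,\alpha )=\max (2^{d-1}k_0^{d-2}C(d,\alpha ),1)\). Then, by induction on \(n\),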

The proof is complete by the monotonicity of \(\mathbb {P}[0\overset{\mathcal {L}_{\alpha }}{\longleftrightarrow }\partial B(0,n)]\) in \(n\). \(\square \)
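For a finite family of loops, the loop distance and the layers \(\mathcal {C}_\alpha (0,i)\) can be computed by breadth-first search. Since the displays of Definition 5.1 and (13) are not reproduced here, the sketch assumes that \(\mathfrak {d}(x,y)\) is the least number of loops in a chain \(\ell _1,\ldots ,\ell _k\) with \(x\in \ell _1\), \(y\in \ell _k\) and consecutive loops sharing a vertex (with \(\mathfrak {d}(x,x)=0\)), and that \(\mathcal {C}_\alpha (0,i)=\{y:\mathfrak {d}(0,y)=i\}\). Loops are represented only by their sets of visited vertices, and the function name is hypothetical.

```python
from collections import deque


def loop_distance_layers(loops, origin):
    """Return [L_0, L_1, ...] with L_i = {y : d(origin, y) = i}.

    `loops` is a list of sets of vertices (any hashable labels, e.g. tuples in
    Z^d); d is the chain-of-loops distance assumed in the text above.
    """
    loops_at = {}                          # vertex -> indices of loops visiting it
    for idx, loop in enumerate(loops):
        for v in loop:
            loops_at.setdefault(v, []).append(idx)

    dist = {origin: 0}
    expanded = set()                       # loops already used by the search
    queue = deque([origin])
    while queue:
        v = queue.popleft()                # vertices leave in nondecreasing distance
        for idx in loops_at.get(v, []):
            if idx in expanded:
                continue
            expanded.add(idx)
            for w in loops[idx]:
                if w not in dist:
                    dist[w] = dist[v] + 1
                    queue.append(w)

    layers = {}
    for v, k in dist.items():
        layers.setdefault(k, set()).add(v)
    return [layers[k] for k in sorted(layers)]


loops = [{0, 1, 2}, {2, 3}, {3, 4, 5}, {7, 8}]
print(loop_distance_layers(loops, 0))     # layers {0}, {1, 2}, {3}, {4, 5}
```

The union of the returned layers is the cluster of `origin`; marking each loop as expanded once is safe because the first vertex through which the search reaches a loop has minimal distance among that loop's vertices.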

Remark 5.2

Recall from Theorem 1.2 and Lemma 2.7 that the probability that \(\mathcal {L}_\alpha \) contains a loop of diameter \(\ge n\) passing through a given vertex \(x\in \mathbb {Z}^d\) is of order \(n^{2-d}\). Thus, for \(d\ge 5\) and \(\alpha <\alpha _{\#}\), the probability that \(\mathcal {C}_\alpha (0)\) contains a loop of diameter \(\ge n\) is of the same order as the probability of one arm to \(\partial B(0,n)\). This suggests that long connections in \(\mathcal {L}_\alpha \) for \(d\ge 5\) and \(\alpha <\alpha _{\#}\) arise because of a single big loop. This is indeed the case, as one can show that the probability that \(\mathcal {C}_\alpha (0)\) contains at least two loops of diameter \(\ge m\) is \(O(m^{6-2d})\), and the probability of having a path from \(0\) to \(\partial B(0,n)\) only through loops of \(\mathcal {L}_\alpha \) of diameter \(\le m\) is \(O(e^{-c(d,\alpha )\cdot \frac{n}{m}})\). Since we do not use such refined estimates, we omit details of their proofs. Curiously, as we will see later, the situation in dimensions \(d=3\) and 4 is rather different, as long connections through small loops are more likely than connections through a single big loop.

5.3 Two point connectivity

In this section we prove the third statement of Theorem 1.4 about the bounds on the two point connectivity, which we restate in the next proposition. As in the case of one-arm connectivity, the lower bound here comes from a connection by a single loop, while the upper bound combines single-loop connections with the upper bound on the decay of the one-arm probability.

Proposition 5.3

For \(d\ge 5\) and \(\alpha <\alpha _{\#}\),

Proof

Since \(\mathbb {P}[x\in \mathcal {C}_{\alpha }(0)]\ge \mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha }:0\mathop {\longleftrightarrow }\limits ^{\ell }x]\), the lower bound is given by Lemmas 2.5 and 2.2. It remains to show the upper bound.

Let \(n=||x||_{\infty }\). Without loss of generality we may suppose \(n\ge 3\). We divide the loops \(\mathcal {L}_{\alpha }\) into four independent sets of loops as follows:

- \(\mathcal {L}_{1,1}\overset{\text {def}}{=} \{\ell \in \mathcal {L}_{\alpha }:\ell \text { intersects }B(0,\lfloor n/3\rfloor )\text { and }B(x,\lfloor n/3\rfloor )\}\),
- \(\mathcal {L}_{1,0}\overset{\text {def}}{=} \{\ell \in \mathcal {L}_{\alpha }:\ell \text { intersects }B(0,\lfloor n/3\rfloor )\text { but not }B(x,\lfloor n/3\rfloor )\}\),
- \(\mathcal {L}_{0,1}\overset{\text {def}}{=} \{\ell \in \mathcal {L}_{\alpha }:\ell \text { intersects }B(x,\lfloor n/3\rfloor )\text { but not }B(0,\lfloor n/3\rfloor )\}\),
- \(\mathcal {L}_{0,0}\overset{\text {def}}{=} \{\ell \in \mathcal {L}_{\alpha }:\ell \text { avoids }B(0,\lfloor n/3\rfloor )\text { and }B(x,\lfloor n/3\rfloor )\}\).
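The four classes are determined loop by loop, so by the restriction property of Poisson point processes they are independent. The following sketch assigns a loop, represented by the list of vertices it visits, to its class \(\mathcal {L}_{i,j}\). It assumes that \(B(z,k)\) denotes the \(\ell ^\infty \) ball \(\{y:||y-z||_\infty \le k\}\); the helper names are hypothetical.

```python
def linf_dist(u, v):
    """ell^infinity distance between two lattice points given as tuples."""
    return max(abs(a - b) for a, b in zip(u, v))


def classify_loop(loop, x, n):
    """Return (i, j) such that the loop belongs to L_{i,j}.

    i = 1 iff the loop visits B(0, floor(n/3)); j = 1 iff it visits
    B(x, floor(n/3)).  Balls are ell^infinity balls (an assumption here).
    """
    r = n // 3
    origin = (0,) * len(x)
    i = int(any(linf_dist(v, origin) <= r for v in loop))
    j = int(any(linf_dist(v, x) <= r for v in loop))
    return i, j


x = (9, 0, 0, 0, 0)                  # ||x||_inf = n = 9, so both balls have radius 3
n = max(abs(c) for c in x)
examples = {
    "near both": [(2, 0, 0, 0, 0), (6, 0, 0, 0, 0)],
    "near 0 only": [(0, 0, 0, 0, 0), (5, 0, 0, 0, 0)],
    "near x only": [(10, 1, 0, 0, 0)],
    "neither": [(4, 4, 0, 0, 0)],
}
for name, loop in examples.items():
    print(name, classify_loop(loop, x, n))
```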

Let \(\mathcal {C}_\alpha ^k(z)\) be the cluster of \(z\) induced by the loops of \(\mathcal {L}_\alpha \) which are entirely contained in \(B(z,k)\). The main observation is that when \(x\in \mathcal {C}_{\alpha }(0)\), at least one of the four events occurs:

- \(E_1\overset{\text {def}}{=}\left\{ 0\overset{\mathcal {L}_{1,0}}{\longleftrightarrow }\partial B(0,\lfloor n/3\rfloor )\right\} \cap \left\{ x\overset{\mathcal {L}_{0,1}\cup \mathcal {L}_{0,0}}{\longleftrightarrow }\partial B(x,\lfloor n/3\rfloor )\right\} \),
- \(E_2\overset{\text {def}}{=}\left\{ 0\overset{\mathcal {L}_{1,0}\cup \mathcal {L}_{0,0}}{\longleftrightarrow }\partial B(0,\lfloor n/3\rfloor )\right\} \cap \left\{ x\overset{\mathcal {L}_{0,1}}{\longleftrightarrow }\partial B(x,\lfloor n/3\rfloor )\right\} \),
- \(E_3\overset{\text {def}}{=} \big \{\exists a\in \mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(0),\quad b\in \mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(x),\quad \ell \in \mathcal {L}_{1,1} : a\mathop {\longleftrightarrow }\limits ^{\ell }b\big \}\),
- \(E_4\overset{\text {def}}{=}\left\{ \exists a\in \mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(0),\quad b\in \mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(x), \quad \ell _1,\ell _2\in \mathcal {L}_{1,1} : \begin{array}{c} a\in \ell _1,~\ell _1\cap \mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(x) = \phi ,\\ b\in \ell _2,~\ell _2\cap \mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(0) = \phi \end{array}\right\} \).

Thus, \(\mathbb {P}[x\in \mathcal {C}_{\alpha }(0)]\le \mathbb {P}[E_1] +\mathbb {P}[E_2]+\mathbb {P}[E_3]+\mathbb {P}[E_4]\). By the mutual independence of \(\mathcal {L}_{1,1},\mathcal {L}_{1,0},\mathcal {L}_{0,1}\), and \(\mathcal {L}_{0,0}\) (they are restrictions of \(\mathcal {L}_\alpha \) to disjoint sets of loops), the translation invariance of \(\mathcal {L}_\alpha \), and Proposition 5.2,

Next we estimate \(\mathbb {P}[E_3]\):

Since \(||a-b||_{\infty }\ge ||x||_{\infty }-||a||_{\infty }-||x-b||_{\infty }\ge n-2\lfloor n/3\rfloor \ge n/3\) for all \(a\in B(0,\lfloor n/3\rfloor )\) and \(b\in B(x,\lfloor n/3\rfloor )\), by Lemmas 2.5 and 2.2, \(\mathbb {P}[E_3]\le C_2(d,\alpha )\cdot n^{2(2-d)}\).

Finally, we estimate \(\mathbb {P}[E_4]\) by first conditioning on \(\mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(0)\) and \(\mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(x)\), and then using the independence between the loops from \(\mathcal {L}_{1,1}\) that intersect \(\mathcal {C}_\alpha ^{\lfloor n/3\rfloor }(0)\) and those that do not:

Thus, for \(||x||_{\infty }\ge 3\), \(\mathbb {P}[x\in \mathcal {C}_\alpha (0)]\le (2C_1+C_2+C_3)\cdot ||x||_{\infty }^{2(2-d)}\). \(\square \)

Remark 5.3

As in the case of one arm connectivity, see Remark 5.2, one can show that for \(d\ge 5\) and \(\alpha <\alpha _{\#}\), the most likely way for \(0\mathop {\longleftrightarrow }\limits ^{\mathcal {L}_\alpha }x\) to occur is through a single large loop which passes near \(0\) and near \(x\); that is, connections between \(0\) and \(x\) that use two large loops, or only small loops, both have probability \(o(||x||^{2(2-d)})\).

5.4 Tail of the cluster size

In this section we prove the fourth statement of Theorem 1.4, showing that the tail of the distribution of \(\#\mathcal {C}_\alpha (0)\) is of order \(n^{1-d/2}\), see Proposition 5.4. The lower bound is given by the loops passing through \(0\). Roughly speaking, the upper bound is given by the total progeny of a sub-critical Galton–Watson process which dominates the cluster size. The existence of such a sub-critical Galton–Watson process is guaranteed by the assumption \(\alpha <\alpha _{\#}\). An upper bound on the tail of the total progeny of a sub-critical Galton–Watson process is given in Lemma 5.6. Later we will take the offspring distribution to be the distribution of \(\#\mathcal {U}_{\alpha }(0,K)\), where for \(K\ge 1\),

The crucial point is that for \(x,y\in \mathbb {Z}^d\), the following is an increasing event:

This enables us to dominate \((\#\mathcal {C}_{\alpha }(0,Ki))_{i\ge 0}\) by a sub-critical Galton–Watson process with offspring distributed as \(\#\mathcal {U}_{\alpha }(0,K)\). In order to apply Lemma 5.6 to the total progeny, we need an upper bound on the tail of \(\#\mathcal {U}_{\alpha }(0,K)\). This is given in Lemma 5.7.

Proposition 5.4

For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exist \(0<c(d,\alpha )<C(d,\alpha )<\infty \) such that for all \(n\),

The proof of the proposition is based on the following lemmas.

Lemma 5.5

Suppose \(\xi \) and \(\eta \) are \(\mathbb {N}\)-valued random variables with finite means. Denote by \(\bar{F}\) the tail of the distribution function of \(\xi \) and by \(\bar{G}\) that of \(\eta \). Suppose \(\bar{F}(x),\bar{G}(x)\le x^{-a}h(x)\), where \(a>1\) and \(h\) is slowly varying as \(x\rightarrow \infty \). Take a sequence \((\eta _i)_{i\ge 0}\) of independent copies of \(\eta \), which is also independent of \(\xi \). Then there exists \(C<\infty \) such that for \(n\ge 1\),

Lemma 5.6

Let \(\bar{F}(x) = 1 - F(x)\) be the tail of a distribution function \(F\). As in Lemma 5.5, we suppose that \(\bar{F}(x)\le x^{-a}h(x)\) where \(a>1\) and \(h\) is slowly varying when \(x\rightarrow \infty \). For a sub-critical Galton–Watson process \((Z_n)_{n\ge 0}\) with offspring distribution \(F\), let \(S_n=\sum \nolimits _{i=0}^{n}Z_i\). Then there exists a constant \(C<\infty \) such that

Recall the definition of the partition \((\mathcal {C}_\alpha (0,i),i\ge 0)\) of \(\mathcal {C}_\alpha (0)\) from (13).

Lemma 5.7

For \(d\ge 5\) and \(K\ge 1\), there exist \(0<c(d,\alpha )\le C(d,\alpha ,K)<\infty \) such that

Since \(\mathcal {U}_{\alpha }(0,K)\subset \bigcup \nolimits _{i=1}^{K}\mathcal {C}_{\alpha }(0,i)\), an upper bound of the same order holds for the tail distribution of \(\#\mathcal {U}_{\alpha }(0,K)\):

We postpone the proofs of the lemmas until the end of this section.

Proof of Proposition 5.4

The lower bound follows from Lemma 5.7.

For the upper bound, let \(d\ge 5\) and \(\alpha <\alpha _{\#}\). Since \(\#\mathcal {C}_{\alpha }(0)\) is finite almost surely, \(\lim \nolimits _{K\rightarrow \infty }\#\mathcal {U}_{\alpha }(0,K)=0\). By dominated convergence, we can choose \(K\) large enough such that \(\mathbb {E}[\#\mathcal {U}_{\alpha }(0,K)]<1\). Then we define a sub-critical Galton–Watson process \((Z_i)_{i\ge 0}\) with offspring distribution \(\mathbb {P}[\#\mathcal {U}_{\alpha }(0,K)\in \cdot ]\), so that \(\sum \nolimits _{i\ge 0}\#\mathcal {C}_{\alpha }(0,Ki)\) is stochastically dominated by \(S_{\infty }\overset{\text {def}}{=}\sum \nolimits _{i=0}^{\infty }Z_i\). By Lemmas 5.7 and 5.6, there exists \(C=C(d,\alpha )<\infty \) such that

Let \((\eta _i)_i\) be a sequence of independent copies of \(S_{\infty }\). We further suppose that they are independent of \(\mathcal {L}_{\alpha }\). Then for \(j=1,\ldots ,K-1\), \(\sum \nolimits _{i\ge 0}\#\mathcal {C}_{\alpha }(0,Ki+j)\) is stochastically dominated by \(\sum \nolimits _{i=1}^{\#\mathcal {C}_{\alpha }(0,j)}\eta _i\). By applying Lemma 5.5 for \(\sum \nolimits _{i=1}^{\#\mathcal {C}_{\alpha }(0,j)}\eta _i\), there exists \(C'=C'(d,\alpha )<\infty \) such that for \(j=1,\ldots ,K-1\),

A similar upper bound, with a larger constant, holds for \(\#\mathcal {C}_{\alpha }(0)\), since

The proof is complete. \(\square \)

It remains to prove the lemmas.

5.4.1 Proof of Lemma 5.5

By [21, Theorem 2], for \(\gamma >0\),

By taking \(\gamma =\mathbb {E}[\eta ]\), \(k=\xi \) and \(x=n/2\), there exists \(C<\infty \) such that

Since \(\mathbb {P}[\xi >\frac{n}{2\mathbb {E}[\eta ]}]\le (2\mathbb {E}[\eta ])^an^{-a}h(\frac{n}{2\mathbb {E}[\eta ]})\) by assumption,

By the definition of slowly varying function, there exists \(C'<\infty \) such that

\(\square \)

5.4.2 Proof of Lemma 5.6

Set \(H(x)=x^{-a}h(x)\). We may assume that \(H(x)\le 1\) for \(0<x \le 1\). Let \(m\overset{\text {def}}{=}\mathbb {E}[Z_1]\). By assumption, \(m<1\). Moreover, \(\mathbb {E}[Z_{k-1}]=m^{k-1}\), so by Markov's inequality, \(\mathbb {P}[Z_{k-1}>0]\le \mathbb {E}[Z_{k-1}]=m^{k-1}\).

Fix \(\delta >0\) and take \(\rho \in ]m,1[\) close enough to \(1\) so that \(m\rho ^{-a-\delta }<1\). (The reason for this choice of \(\rho \) will become clear in the proof.) Let \(\gamma =\rho -m\). Then,

By [21, Theorem 2], for \(\gamma >0\),

where \((\eta _i)_i\) are i.i.d. variables with the distribution \(F\). By conditioning on \(Z_{k-1}\) and then applying (19) with \(p=Z_{k-1}\) and \(x=\gamma \rho ^{k-1}n\),

By Potter’s Theorem, see [1, Theorem 1.5.6], there exists \(C'= C'(\delta )\) such that for all \(n\ge 1\) and \(c\in ]0,1[\), \(H(cn)\le C'\cdot c^{-a-\delta }\cdot H(n)\). Therefore,

For \(n>1/\rho \), \(Z_0=1<\rho n\). Thus,

and for \(n>1/\rho \),

Finally, there exists \(C''<\infty \) such that for \(n\ge 1\), \(\mathbb {P}[S_{\infty }>n]<C''\cdot H(n)\). \(\square \)
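The first-moment bound \(\mathbb {P}[Z_{k-1}>0]\le m^{k-1}\) used at the start of this proof can be compared with the exact survival probability, which equals \(1-f_k(0)\) for the \(k\)-fold iterate \(f_k\) of the offspring generating function. A small sketch with a hypothetical Binomial(2, p) offspring law (any sub-critical law would do):

```python
def survival_vs_first_moment(pgf, mean, generations):
    """List (k, P[Z_k > 0], m**k) for a Galton–Watson process with Z_0 = 1.

    Uses P[Z_k = 0] = f_k(0), where f_k is the k-fold composition of the
    offspring generating function f, computed by s_k = f(s_{k-1}), s_0 = 0.
    """
    s = 0.0
    rows = []
    for k in range(1, generations + 1):
        s = pgf(s)
        rows.append((k, 1.0 - s, mean ** k))
    return rows


p = 0.3                                   # hypothetical Binomial(2, p) offspring
pgf = lambda s: (1 - p + p * s) ** 2      # E[s^{Z_1}]
m = 2 * p                                 # mean 0.6 < 1: sub-critical
for k, surv, bound in survival_vs_first_moment(pgf, m, 8):
    print(k, round(surv, 6), round(bound, 6))
```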

5.4.3 Proof of Lemma 5.7

Lemma 5.7 follows from the following lemma.

Lemma 5.8

For \(d\ge 3\) and \(\alpha >0\), as \(x\rightarrow \infty \),

In particular, for \(p\ge 0\), \(\mathbb {E}[(\#\mathcal {C}_{\alpha }(0,1))^{p}]<\infty \) iff \(p<\frac{d}{2}-1\).
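The moment criterion is a routine consequence of the tail order. Assuming, as the proof of Lemma 5.8 below establishes, that \(\mathbb {P}[\#\mathcal {C}_{\alpha }(0,1)\ge x]\) is of order \(x^{1-\frac{d}{2}}\) for large \(x\), summation by parts gives, for \(X=\#\mathcal {C}_{\alpha }(0,1)\) and \(p>0\),

$$\begin{aligned} \mathbb {E}[X^p]=\sum \limits _{x\ge 1}\big (x^p-(x-1)^p\big )\,\mathbb {P}[X\ge x]\asymp \sum \limits _{x\ge 1}x^{p-1}\cdot x^{1-\frac{d}{2}}=\sum \limits _{x\ge 1}x^{p-\frac{d}{2}}, \end{aligned}$$

using \(x^p-(x-1)^p\asymp x^{p-1}\) for \(x\ge 1\). The last series is finite if and only if \(p-\frac{d}{2}<-1\), that is, \(p<\frac{d}{2}-1\).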

Indeed, the case \(K=1\) in Lemma 5.7 follows from Lemma 5.8. We prove the general case by induction on \(K\). Suppose that for any \(K\le m\) there exists \(C(d,\alpha ,K)<\infty \) such that

Let \((\eta _i)_{i}\) be a sequence of independent random variables with distribution \(\mathbb {P}[\#\mathcal {C}_{\alpha }(0,1)\in \cdot ]\), which is also independent of \(\mathcal {L}_{\alpha }\). Then, \(\#\mathcal {C}_{\alpha }(0,m+1)\) is stochastically dominated by \(\sum \nolimits _{i=1}^{\#\mathcal {C}_{\alpha }(0,m)}\eta _i\). The proof is complete by using (21) for \(K=1\) and \(K=m\), and by applying Lemma 5.5 with \(\xi =\#\mathcal {C}_{\alpha }(0,m)\). \(\square \)

It remains to prove Lemma 5.8. We first prove a result on the tail of the range of the first finite excursion of an SRW from 0.
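The proof of part (a) below uses the law of large numbers for the range of a transient SRW, whose growth rate is \(1-F\) (see [27, T1 in Section 4]). A quick simulation in \(d=3\), where \(F\) is Pólya's return probability \(\approx 0.3405\), illustrates this; the step count and seed are arbitrary choices, and finite-\(n\) corrections of order \(n^{-1/2}\) are expected.

```python
import random

POLYA_RETURN_PROB_Z3 = 0.3405373296    # F for the SRW on Z^3 (Polya's constant)


def range_fraction(n_steps, d=3, seed=0):
    """#{X_0, ..., X_n} / n for a simple random walk on Z^d started at 0."""
    rng = random.Random(seed)
    pos = [0] * d
    visited = {tuple(pos)}
    for _ in range(n_steps):
        axis = rng.randrange(d)             # pick a coordinate uniformly
        pos[axis] += rng.choice((-1, 1))    # and move +-1 along it
        visited.add(tuple(pos))
    return len(visited) / n_steps


frac = range_fraction(200_000)
print(round(frac, 4), round(1 - POLYA_RETURN_PROB_Z3, 4))   # both close to 0.66
```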

Lemma 5.9

Let \(\mathbb {P}^{0}\) be the law of SRW on \(\mathbb {Z}^d\) starting from \(0\). Let \(\mathbb {P}^{ex}\) be the law of the first excursion under \(\mathbb {P}^{0}[\cdot |\tau ^{+}(0)<\infty ]\). Let \(\mathbb {P}^{ex,2n}\) be the law of the first excursion given that the first excursion has exactly \(2n\) jumps. Set \(F=\mathbb {P}^{0}[\tau ^{+}(0)<\infty ]=1-\frac{1}{G(0,0)}\). Then,

(a) for any \(\epsilon >0\),

$$\begin{aligned} \lim \limits _{n\rightarrow \infty }\mathbb {P}^{ex,2n} \left[ \left| \frac{1}{2n}\#(\text {Range of excursion})-(1-F)\right| >\epsilon \right] =0; \end{aligned}$$

(b) as \(x\rightarrow \infty \), \(\mathbb {P}^{ex}[\#(\text {Range of excursion})>x]\sim \frac{d^{d/2}(1-F)^{d/2+1}}{(d/2-1)(2\pi )^{d/2}F}x^{1-\frac{d}{2}}\).

Proof

Firstly, by [6], for \(d\ge 3\), as \(n\rightarrow \infty \),

Then, by [10, Theorem 1.2.1],

Thus,

(a) We see that \(\mathbb {P}^{0}[\tau ^{+}(0)=2n]\) decays polynomially. By [8, Theorem 1],

$$\begin{aligned} \mathbb {P}^{0}\left[ \frac{1}{2n}\#(\text {Range of }\{X_0,\ldots ,X_{2n}\})>1-F+\epsilon \right] \text { goes to }0\text { exponentially fast}. \end{aligned}$$Then,

which also goes to 0 exponentially fast. On the other hand, for any fixed \(\delta >0\), the Radon–Nikodym derivative \(\frac{d\mathbb {P}^{ex,2n}}{d\mathbb {P}^{0}}\) restricted to \(\sigma (X_0,\ldots ,X_{\lfloor 2n-n\delta \rfloor })\) is bounded by a constant \(c(\delta )\):

$$\begin{aligned} \mathbb {E}^0\left[ \frac{d\mathbb {P}^{ex,2n}}{d\mathbb {P}^{0}} \Bigg |\sigma (X_0,\ldots ,X_{\lfloor 2n-n\delta \rfloor })\right]&= 1_{\{\tau ^{+}(0)>\lfloor 2n-n\delta \rfloor \}}\frac{\mathbb {P}^{X_{\lfloor 2n-n\delta \rfloor }}[\tau ^{+}(0)=\lceil n\delta \rceil ]}{\mathbb {P}^0[\tau ^{+}(0)=2n]}\\&\le \frac{\mathbb {P}^{X_{\lfloor 2n-n\delta \rfloor }}[X_{\lceil n\delta \rceil }=0]}{\mathbb {P}^0[\tau ^{+}(0)=2n]}\\&\le c(\delta ) \end{aligned}$$where the last inequality follows from the local central limit theorem together with Eq. (22). For any fixed \(\epsilon >0\), choose \(\delta \) small enough such that \((1-F-\epsilon )\frac{2}{2-\delta }<1-F-\epsilon /2\). Then, for \(n\) large enough,

$$\begin{aligned}&\mathbb {P}^{ex,2n} \left[ \frac{1}{2n}\#(\text {Range of excursion})<1-F-\epsilon \right] \\&\quad \le \mathbb {P}^{ex,2n}\left[ \frac{1}{2n}\#(\text {Range of }\{X_0,\ldots ,X_{\lfloor 2n-n\delta \rfloor }\})<1-F-\epsilon \right] \\&\quad \le \mathbb {P}^{ex,2n}\left[ \frac{1}{\lfloor 2n-n\delta \rfloor }\#(\text {Range of }\{X_0,\ldots ,X_{\lfloor 2n-n\delta \rfloor }\})<1-F-\epsilon /2\right] \\&\quad \le c(\epsilon )\mathbb {P}^{0}\left[ \frac{1}{\lfloor 2n-n\delta \rfloor }\#(\text {Range of }\{X_0,\ldots ,X_{\lfloor 2n-n\delta \rfloor }\})<1-F-\epsilon /2\right] \end{aligned}$$which goes to \(0\) as \(n\) tends to infinity since under \(\mathbb {P}^0\),

$$\begin{aligned} \frac{1}{n}\#(\text {Range of }\{X_0,\ldots ,X_n\})\overset{n\rightarrow \infty }{\longrightarrow } 1-F\text { in probability}, \end{aligned}$$see e.g. [27, T1 in Section 4].

(b) By decomposing the event according to the value of \(\tau ^{+}(0)\), we see that as \(x\rightarrow \infty \),

$$\begin{aligned} \mathbb {P}^{ex}[\#(\text {Range of excursion})>x]&= \sum \limits _{n\ge \lfloor x/2\rfloor }\mathbb {P}^{0}[\tau ^{+}(0)=2n]/ \mathbb {P}^{0}[\tau ^{+}(0)<\infty ]\\&\quad \times \mathbb {P}^{ex,2n}[\#(\text {Range of excursion})>x]. \end{aligned}$$By using the result in the first part and Eq. (22), as \(x\rightarrow \infty \)

$$\begin{aligned} \mathbb {P}^{ex}[\#(\text {Range of excursion})>x]&\sim \sum \limits _{n\ge \frac{x}{2(1-F)}}\mathbb {P}^{0}[\tau ^{+}(0)=2n]/ \mathbb {P}^{0}[\tau ^{+}(0)<\infty ]\\&\sim \sum \limits _{n\ge \frac{x}{2(1-F)}}\frac{(1-F)^2}{F} \frac{2d^{d/2}}{(4\pi n)^{d/2}}\\&\sim \frac{d^{d/2}(1-F)^{d/2+1}}{(d/2-1) (2\pi )^{d/2}F}x^{1-\frac{d}{2}}. \end{aligned}$$

\(\square \)
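The last step of part (b) is an elementary computation: with \(N=x/(2(1-F))\), one has \(\sum _{n\ge N}n^{-d/2}\sim N^{1-d/2}/(d/2-1)\), and collecting the constants gives the prefactor in (b). The sketch below checks this algebra numerically. It treats \(F\in (0,1)\) as a free parameter (for the SRW it is the return probability) and verifies only the summation, not the lemma itself; the function names are illustrative.

```python
import math


def tail_sum(x, d, F, n_terms=200_000):
    """sum_{n >= N} (1-F)^2/F * 2 d^{d/2} / (4 pi n)^{d/2}, N = ceil(x / (2(1-F)))."""
    s = d / 2
    N = math.ceil(x / (2 * (1 - F)))
    prefactor = (1 - F) ** 2 / F * 2 * d ** s / (4 * math.pi) ** s
    M = N + n_terms
    partial = math.fsum(n ** -s for n in range(N, M))
    # Euler–Maclaurin estimate of the remainder sum_{n >= M} n^{-s}
    remainder = M ** (1 - s) / (s - 1) + 0.5 * M ** -s
    return prefactor * (partial + remainder)


def closed_form(x, d, F):
    """d^{d/2} (1-F)^{d/2+1} / ((d/2 - 1) (2 pi)^{d/2} F) * x^{1 - d/2}."""
    s = d / 2
    return d ** s * (1 - F) ** (s + 1) / ((s - 1) * (2 * math.pi) ** s * F) * x ** (1 - s)


for d in (3, 4, 5, 6):
    x = 1e5
    print(d, tail_sum(x, d, 0.3) / closed_form(x, d, 0.3))   # ratios close to 1
```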

Proof of Lemma 5.8

By [4, Theorem 3.29], the result of Lemma 5.9 implies that the distribution of \(\#(\text {Range of excursion})\) under \(\mathbb {P}^{ex}\) is sub-exponential for \(d\ge 3\). By [4, Theorem 3.37], the random stopped sum \(S_{\tau }\overset{\text {def}}{=}\sum \nolimits _{i=1}^{\tau }\eta _i\) of i.i.d. sub-exponential variables \((\eta _i)_i\), with \(\tau \) independent of \((\eta _i)_i\), is again sub-exponential if \(\mathbb {E}[(1+\delta )^{\tau }]<\infty \) for some \(\delta >0\). Moreover,

Here, we are in a slightly different situation. In fact, by Lemma 2.6, we have that

where \((\mathrm {range}_i)_i\) are i.i.d. variables which are independent of the Poisson loop soup and follow the distribution of the range of excursion under \(\mathbb {P}^{ex}\). We see that

where \(\eta _i\overset{\text {def}}{=}\#\mathrm {range}_i\) for all \(i\). We will see that \(\max \nolimits _{i=1}^{\xi (0,\mathcal {L}_{\alpha })}\eta _i\) and \(\sum \nolimits _{i=1}^{\xi (0,\mathcal {L}_{\alpha })}\eta _i\) have the same tail behavior. On the one hand, since \(\xi (0,\mathcal {L}_{\alpha })\) has an exponentially decaying tail by Lemma 2.6, we can apply [4, Theorem 3.37]. Then, as \(x\rightarrow \infty \),

On the other hand,

By Lemma 5.9, \(\mathbb {P}[\eta _1>x]\rightarrow 0\) as \(x\rightarrow \infty \). Then, by dominated convergence,

Therefore, as \(x\rightarrow \infty \),

Thus, we must have that as \(x\rightarrow \infty \),

By Lemma 2.6,

By Lemma 5.9, as \(x\rightarrow \infty \),

Thus, as \(x\rightarrow \infty \),

In particular, for \(p\ge 0\), \(\mathbb {E}[(\#\mathcal {C}_{\alpha }(0,1))^{p}]<\infty \) iff \(p<\frac{d}{2}-1\). \(\square \)
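The dominated convergence step for the maximum can be made concrete. If \(\xi \) is independent of the i.i.d. \(\eta _i\) and \(t=\mathbb {P}[\eta _1>x]\), then \(\mathbb {P}[\max _{i\le \xi }\eta _i>x]=1-\mathbb {E}[(1-t)^{\xi }]\), and \((1-(1-t)^{\xi })/t\) increases to \(\xi \) as \(t\downarrow 0\), so the ratio to \(\mathbb {E}[\xi ]\,t\) tends to 1 whenever \(\mathbb {E}[\xi ]<\infty \). We assume this identity is what underlies the elided display; the sketch uses a hypothetical geometric law for \(\xi \), which, like \(\xi (0,\mathcal {L}_\alpha )\), has an exponential tail.

```python
import math


def max_tail(pmf_xi, t):
    """P[max_{i <= xi} eta_i > x] = 1 - E[(1 - t)**xi], where t = P[eta_1 > x].

    pmf_xi[k] = P[xi = k]; xi is assumed independent of the i.i.d. eta_i.
    """
    return 1.0 - math.fsum(p * (1.0 - t) ** k for k, p in enumerate(pmf_xi))


q = 0.4                                          # hypothetical geometric law for xi
pmf = [(1 - q) * q ** k for k in range(200)]     # P[xi = k] = (1-q) q^k, truncated
mean_xi = math.fsum(k * p for k, p in enumerate(pmf))
for t in (1e-1, 1e-3, 1e-6):
    print(t, max_tail(pmf, t) / (mean_xi * t))   # ratio increases to 1 as t -> 0
```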

5.5 \(\alpha _{\#}\le \alpha _{1}\)

In this section we complete the proof of Theorem 1.4 by showing the relation between \(\alpha _{\#}\) and \(\alpha _{1}\).

Proposition 5.10

For \(d\ge 5\), \(\alpha _{\#}\le \alpha _{1}\).

We prove Proposition 5.10 by showing that if \(\mathbb {E}[\#\mathcal {C}_\alpha (0)]<\infty \), then the probabilities of crossing annuli of large enough aspect ratio by chains of loops from \(\mathcal {L}_\alpha \) are uniformly smaller than \(1\). For a given annulus, we distinguish three possible situations: (a) one of the \(5\) disjoint subannuli is crossed by a single loop from \(\mathcal {L}_\alpha \), (b) there is a crossing with at least 3 big loops, and (c) every crossing contains many loops. The probabilities of these events are estimated in the three lemmas below.

The first lemma estimates the probability that one of the 5 disjoint subannuli of a given annulus is crossed by a loop.

Lemma 5.11

For integers \(\beta \ge 2\), \(n\ge 1\), and \(i=1,2,3,4,5\), let

Then, there exists \(C(d)<\infty \) such that for \(n\ge 1\),

The next lemma estimates the probability that there is a chain of loops from a given vertex which contains at least \(k\) loops of diameter \(\ge m\). (In the proof of Proposition 5.10 we will only need the case \(k=3\).) To state the lemma, we introduce some notation. For \(x,y\in \mathbb {Z}^d\) and \(m\ge 1\), define the events \(J_{x,m}\) and \(J_{x,y,m}\) by

For \(x\in \mathbb {Z}^d\), define the event

where “\(\circ \)” denotes disjoint occurrence with respect to loops rather than edges. More precisely, for \(x,y\in \mathbb {Z}^d\) and a set of loops \(O\), denote by \(x\overset{O}{\longleftrightarrow }y\) the event that \(x\) is connected to \(y\) by loops in \(O\):

with the convention that \(\{x\overset{\phi }{\longleftrightarrow }x\}\) is the sure event. Then,

Figure 3 illustrates the event \(E^{x}_{m,3}\).
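The connection \(x\overset{O}{\longleftrightarrow }y\) depends only on the vertex sets of the loops in \(O\). The Python sketch below computes it by breadth-first search over loops, and records along the way the "generations" of vertices at loop-chain distance \(0,1,2,\dots \) from \(x\). We assume, for illustration only, that this generation structure is what \(\mathcal {C}_{\alpha }(x,q)\) refers to (its definition lies outside this section and may differ in detail); disjoint occurrence \(\circ \) is not implemented. The names `chain_generations` and `connected` are hypothetical.

```python
def chain_generations(x, loops):
    """Generations of vertices reachable from x by chains of loops.

    `loops` is a list of loops, each given only by its set of visited vertices
    (order and multiplicity do not matter for connectivity). Generation q is
    the set of vertices whose shortest chain of loops from x has length q;
    generation 0 is {x}.
    """
    generations = [{x}]
    seen = {x}
    used = [False] * len(loops)
    while True:
        frontier = generations[-1]
        nxt = set()
        for j, loop in enumerate(loops):
            # A loop meeting generation q only adds vertices at distance q + 1.
            if not used[j] and loop & frontier:
                used[j] = True
                nxt |= loop - seen
        if not nxt:
            return generations
        seen |= nxt
        generations.append(nxt)


def connected(x, y, loops):
    """x <-> y by loops in `loops`; x <-> x holds even for an empty family."""
    return any(y in gen for gen in chain_generations(x, loops))


# Toy loops on Z (vertex sets only).
loops = [frozenset({0, 1}), frozenset({1, 2, 3}), frozenset({3, 4}), frozenset({7, 8})]
print(chain_generations(0, loops))
print(connected(0, 4, loops), connected(0, 7, loops))
```

In this toy example the chain \(\{0,1\},\{1,2,3\},\{3,4\}\) gives generations \(\{0\},\{1\},\{2,3\},\{4\}\), while the loop \(\{7,8\}\) is never reached.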

Lemma 5.12

For \(d\ge 5\), \(\alpha <\alpha _{\#}\), and \(k\ge 1\), there exists a constant \(C_k(d,\alpha )\) such that

Finally, the next lemma provides a bound on the probability of a long “geodesic” chain of loops from \(0\).

Lemma 5.13

For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exist \(c(d,\alpha )>0\) and \(C(d,\alpha )<\infty \) such that for \(k\ge 0\)

We now deduce Proposition 5.10 from the three lemmas above, and prove the lemmas afterwards.

Proof of Proposition 5.10

Let \(d\ge 5\) and \(\alpha <\alpha _{\#}\). To prove that \(\alpha \le \alpha _{1}\), we need to show that there exist \(\beta \ge 2\) and \(r<1\) such that

We cut the annulus into 5 concentric subannuli and, for \(i=1,2,3,4,5\), denote by \(W_{n,i}\) the event that the \(i\)-th subannulus is crossed by a single loop:

By Lemma 5.11, we can choose \(\beta \) large enough such that for \(n\ge 1\),

Next, we estimate the probability that a path of loops from \(B(0,n)\) to \(\partial B(0,\beta ^5n)\) contains at least \(3\) loops of diameter at least \(m\), where \(m=\lfloor n^{5/6}\rfloor \). By Lemma 5.12,

which tends to \(0\) as \(n\rightarrow \infty \).
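The power counting behind the last claim can be checked mechanically. The sketch below assumes (the displays are not reproduced here) that steps (I)–(III) of the proof of Lemma 5.12 give \(\mathbb {P}[E^{x}_{m,k}]\le C_k\, m^{2-d+(k-1)(4-d)}\), which is what \(\mathbb {P}[E^0_{m,1}]\le C m^{2-d}\) and the inductive factor \(m^{4-d}\) produce, and that the union bound runs over the \(O(n^{d-1})\) points of \(\partial B(0,n)\) with \(m\asymp n^{5/6}\). The function name is hypothetical.

```python
from fractions import Fraction


def lemma_5_12_exponent(d, k=3, m_power=Fraction(5, 6)):
    """Exponent of n in n^(d-1) * m^(2-d+(k-1)(4-d)) when m is of order n^m_power.

    n^(d-1): number of starting points x on the boundary of B(0, n).
    m^(2-d+(k-1)(4-d)): assumed form of the bound on P[E^x_{m,k}].
    """
    per_point = (2 - d) + (k - 1) * (4 - d)
    return (d - 1) + m_power * per_point


for d in range(5, 10):
    print(d, lemma_5_12_exponent(d), lemma_5_12_exponent(d, k=2))
```

With \(k=3\) the exponent is \((44-9d)/6<0\) for all \(d\ge 5\) (it equals \(-1/6\) for \(d=5\)), whereas in this count \(k=2\) would give the positive exponent \(2/3\) for \(d=5\), which is consistent with the lemma being used with \(k=3\).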

On the event

every path of loops in \(\mathcal {L}_\alpha \) from \(B(0,n)\) to \(\partial B(0,\beta ^5n)\) must cross at least one of the \(5\) subannuli using only loops of diameter smaller than \(m\). Indeed, on this event no subannulus is crossed by a single loop, so each loop of such a path intersects at most two adjacent subannuli; since the path contains at most \(2\) loops of diameter at least \(m\), these loops meet at most \(4\) of the \(5\) subannuli. As the \(\ell ^\infty \) distance between the inner and the outer boundary of each subannulus is at least \((\beta -1)n\), every such path must then contain at least \(\frac{(\beta -1)n}{m}\) loops, which implies that \(\mathcal {C}_{\alpha }(x,\frac{(\beta -1)n}{m})\ne \phi \) for some \(x\in \partial B(0,n)\). By Lemma 5.13 and a union bound over \(x\in \partial B(0,n)\), the probability of this event is bounded from above by \(C(d,\alpha )\cdot n^{d-1}\exp \{-c(d,\alpha )n^{1/6}\}\), which goes to \(0\) as \(n\rightarrow \infty \).
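Spelled out, the last bound reads as follows. Here we assume that Lemma 5.13 has the form \(\mathbb {P}[\mathcal {C}_{\alpha }(0,k)\ne \phi ]\le C(d,\alpha )e^{-c(d,\alpha )k}\), which is how it is used above, that this probability is non-increasing in \(k\), and that \(\partial B(0,n)=\{x:||x||_\infty =n\}\), so that \(\#\partial B(0,n)=(2n+1)^d-(2n-1)^d\le 2d(2n+1)^{d-1}\). By translation invariance and \(\beta \ge 2\),

$$\begin{aligned} \frac{(\beta -1)n}{m}&\ge \frac{(\beta -1)n}{n^{5/6}}=(\beta -1)n^{1/6},\\ \mathbb {P}\Big [\exists x\in \partial B(0,n):\mathcal {C}_{\alpha }\big (x,\tfrac{(\beta -1)n}{m}\big )\ne \phi \Big ]&\le \#\partial B(0,n)\cdot C(d,\alpha )\,e^{-c(d,\alpha )(\beta -1)n^{1/6}}\\&\le 2d(2n+1)^{d-1}\cdot C(d,\alpha )\,e^{-c(d,\alpha )n^{1/6}}. \end{aligned}$$

The stretched-exponential factor beats any polynomial, so the choice \(m=\lfloor n^{5/6}\rfloor \) works here; any power \(m\asymp n^{a}\) with \(a<1\) would do for this step, while the exponent \(5/6\) matters for the Lemma 5.12 step.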

Combining these three estimates, we conclude (24) with \(r=1/2\). \(\square \)

It remains to prove the lemmas.

Proof of Lemma 5.11

By Lemmas 2.7 and 2.3, there exists \(C(d)<\infty \) such that for \(n\ge 1\),

\(\square \)

Proof of Lemma 5.12

We prove (23) by induction in the following three steps:


(I)

Proof of (23) for \(k=1\): By considering

$$\begin{aligned} \min \{q\ge 0:\text {there exists }\ell \in \mathcal {L}_{\alpha }\text { such that }\ell \cap \mathcal {C}_{\alpha }(0,q)\ne \phi ,Diam(\ell )\ge m\} \end{aligned}$$and then using the first moment method,

$$\begin{aligned} \mathbb {P}[E^{0}_{m,1}]&\le \mathbb {E}[\#\mathcal {C}_{\alpha }(0)]\cdot \mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha } : 0\in \ell ,~Diam(\ell )\ge m]\\&\le \mathbb {E}[\#\mathcal {C}_{\alpha }(0)]\cdot \mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha } : 0\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,\lfloor m/2\rfloor )]\\&\le \alpha \cdot \mathbb {E}[\#\mathcal {C}_{\alpha }(0)]\cdot \mu (\ell : 0\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,\lfloor m/2\rfloor )). \end{aligned}$$

The last inequality holds because the number of loops of \(\mathcal {L}_{\alpha }\) in a set \(A\) of loops is Poisson with mean \(\alpha \mu (A)\), so that the probability that it is nonzero equals \(1-e^{-\alpha \mu (A)}\le \alpha \mu (A)\).

(II)

Suppose (23) holds for \(k\le K\). Then for \(k=K+1\), since \(E^x_{m,K}\) are increasing events and \(\mathcal {L}_{\alpha }\) is translation invariant, by the first moment method,

$$\begin{aligned} \mathbb {P}[E^{0}_{m,K+1}]\le \sum \limits _{x,y\in \mathbb {Z}^d}\mathbb {P} [\{0\overset{\mathcal {L}_{\alpha }}{\longleftrightarrow } x\}\circ \{\exists \ell \in \mathcal {L}_{\alpha }:x,y\in \ell ,Diam(\ell )\ge m\}\circ E^y_{m,K}]. \end{aligned}\tag{25}$$

Here \(A\circ B\) denotes the disjoint occurrence of two increasing events \(A\) and \(B\) in the sense of loops rather than edges. For each loop \(\ell \), set \(\omega (\ell )=1\) if \(\ell \in \mathcal {L}_{\alpha }\) and \(\omega (\ell )=0\) otherwise. As for the primitive loops considered in [17, Section 2.1], the law of the random configuration \((\omega (\ell ))_{\ell }\) is a product measure, by the definition of a Poisson random measure. The BK inequality is known to hold for product measures on \(\{0,1\}^N\) for every finite \(N\ge 1\); see e.g. [7, Theorem 2.12]. Although \(\{0,1\}^{\{\text {loop space on }\mathbb {Z}^d\}}\) is not a finite product, a finite-volume approximation applies here: each increasing event considered in this proof is the limit of a monotone sequence of increasing events that depend on only finitely many loops. Thus, we can apply the BK inequality:

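$$\begin{aligned} (25)\le \sum \limits _{x,y\in \mathbb {Z}^d}\mathbb {P} [0\overset{\mathcal {L}_{\alpha }}{\longleftrightarrow } x]\cdot \mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha }:x,y\in \ell ,Diam(\ell )\ge m]\cdot \mathbb {P}[E^y_{m,K}]. \end{aligned}$$

By translation invariance of \(\mathcal {L}_{\alpha }\), \(\mathbb {P}[E^y_{m,K}]=\mathbb {P}[E^0_{m,K}]\) and the sum over \(y\) does not depend on \(x\); since \(\sum \nolimits _{x\in \mathbb {Z}^d}\mathbb {P}[0\overset{\mathcal {L}_{\alpha }}{\longleftrightarrow } x]=\mathbb {E}[\#\mathcal {C}_{\alpha }(0)]\), we obtain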

$$\begin{aligned} \mathbb {P}[E^{0}_{m,K+1}]\le \mathbb {E} [\#\mathcal {C}_{\alpha }(0)]\cdot \mathbb {P}[E^{0}_{m,K}]\cdot \sum \limits _{y\in \mathbb {Z}^d}\mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha } : 0,y\in \ell ,Diam(\ell )\ge m]. \end{aligned}$$In the next step, we will bound \(\sum \nolimits _{y\in \mathbb {Z}^d}\mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha } : 0,y\in \ell ,~Diam(\ell )\ge m]\) by \(C(d,\alpha )\cdot m^{4-d}\), which will finish the proof of the lemma.


(III)

By comparing \(\#\mathcal {C}_{\alpha }(0,1)\) with \(m^2\),

$$\begin{aligned}&\sum \limits _{y\in \mathbb {Z}^d}\mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha } : 0,y\in \ell ,\quad Diam(\ell )\ge m] \le \mathbb {E}\left[ 1_{\{\#\mathcal {C}_{\alpha }(0,1)\ge m^2\}}\cdot \#\mathcal {C}_{\alpha }(0,1)\right] \\&\quad +m^2\mathbb {P}[\exists \ell \in \mathcal {L}_{\alpha } : 0\in \ell ,\quad Diam(\ell )\ge m]. \end{aligned}$$By Lemma 5.8, there exists \(C(d,\alpha )<\infty \) such that

$$\begin{aligned} \mathbb {E}[1_{\{\#\mathcal {C}_{\alpha }(0,1)\ge m^2\}}\cdot \#\mathcal {C}_{\alpha }(0,1)]\le C(d,\alpha )\cdot m^{4-d}. \end{aligned}$$

The second term is at most \(m^2\cdot \mathbb {P}[E^0_{m,1}]\), which by step (I) is bounded by \(C'(d,\alpha )\cdot m^{4-d}\). The proof of the lemma is thus complete. \(\square \)
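The BK inequality used in step (II) can be checked by brute force in the finite setting of [7, Theorem 2.12]. The Python sketch below enumerates \(\{0,1\}^N\) for a small \(N\) under an arbitrary product Bernoulli measure; the coordinates play the role of the indicators \(\omega (\ell )\), and the two increasing events, given by hypothetical families of minimal witness sets, are illustrative choices only. For increasing events, \(A\circ B\) occurs iff there are disjoint sets of open coordinates witnessing \(A\) and \(B\), which is what `disjoint_occurrence` tests.

```python
from itertools import product


def prob(event, p):
    """P[event] under the product measure on {0,1}^N with P[omega_i = 1] = p[i]."""
    total = 0.0
    for omega in product((0, 1), repeat=len(p)):
        weight = 1.0
        for bit, p_i in zip(omega, p):
            weight *= p_i if bit else 1.0 - p_i
        if event(omega):
            total += weight
    return total


def open_coords(omega):
    """Indices i with omega_i = 1 (the loops present, in the analogy)."""
    return {i for i, bit in enumerate(omega) if bit}


def increasing_event(minimal_sets):
    """Increasing event: some witness set in `minimal_sets` is entirely open."""
    return lambda omega: any(s <= open_coords(omega) for s in minimal_sets)


def disjoint_occurrence(min_a, min_b):
    """A o B for increasing A, B: disjoint open witness sets for A and for B."""
    def event(omega):
        opened = open_coords(omega)
        return any(s <= opened and t <= opened and not (s & t)
                   for s in min_a for t in min_b)
    return event


# Hypothetical increasing events on N = 5 coordinates.
min_a = [frozenset({0, 1}), frozenset({2})]
min_b = [frozenset({1}), frozenset({3, 4})]

for p in ([0.3, 0.5, 0.2, 0.6, 0.4], [0.9, 0.9, 0.1, 0.5, 0.5], [0.5] * 5):
    lhs = prob(disjoint_occurrence(min_a, min_b), p)
    rhs = prob(increasing_event(min_a), p) * prob(increasing_event(min_b), p)
    print(f"P[A o B] = {lhs:.4f} <= P[A] P[B] = {rhs:.4f}")
```

Exhaustive enumeration costs \(2^N\) evaluations, so this only illustrates the finite statement; the passage to infinitely many loops is the finite-volume approximation described in step (II).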

Proof of Lemma 5.13

Recall the definition of \(\mathcal {U}_\alpha (0,K)\) from (14).

For \(d\ge 5\) and \(\alpha <\alpha _{\#}\), there exists \(K(d,\alpha )\in \mathbb {N}\) large enough such that

Since \((\#\mathcal {C}_{\alpha }(0,K(d,\alpha )i))_{i\ge 0}\) is dominated by a sub-critical Galton–Watson process with offspring distribution \(\mathbb {P}[\#\mathcal {U}_{\alpha }(0,K(d,\alpha ))\in \cdot ]\),

\(\square \)
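The exponential decay in Lemma 5.13 comes from the elementary fact that a sub-critical Galton–Watson process \((Z_i)_{i\ge 0}\) with \(Z_0=1\) and mean offspring \(\rho <1\) satisfies \(\mathbb {P}[Z_i>0]\le \mathbb {E}[Z_i]=\rho ^i\). Assuming, as the choice of \(K(d,\alpha )\) above indicates, that \(\rho =\mathbb {E}[\#\mathcal {U}_{\alpha }(0,K(d,\alpha ))]<1\), and that \(\mathcal {C}_{\alpha }(0,k)\ne \phi \) forces \(\mathcal {C}_{\alpha }(0,K(d,\alpha )\lfloor k/K(d,\alpha )\rfloor )\ne \phi \), this gives a bound of order \(\rho ^{\lfloor k/K(d,\alpha )\rfloor }\). The Python sketch below computes \(\mathbb {P}[Z_i>0]\) exactly through iterates of the offspring generating function for a hypothetical offspring law and compares it with \(\rho ^i\).

```python
def survival_probabilities(offspring_pmf, generations):
    """P[Z_n > 0] for n = 0..generations, for a Galton-Watson process with Z_0 = 1.

    Uses P[Z_n = 0] = f^{(n)}(0), the n-fold iterate at 0 of the offspring
    generating function f(s) = sum_k p_k s^k.
    """
    def f(s):
        return sum(p_k * s ** k for k, p_k in enumerate(offspring_pmf))

    extinct_by_n = 0.0  # P[Z_0 = 0] = 0 since Z_0 = 1
    result = [1.0]
    for _ in range(generations):
        extinct_by_n = f(extinct_by_n)
        result.append(1.0 - extinct_by_n)
    return result


# Hypothetical sub-critical offspring law: mean 0.2 * 1 + 0.3 * 2 = 0.8 < 1.
pmf = [0.5, 0.2, 0.3]
mean = sum(k * p_k for k, p_k in enumerate(pmf))
for n, surv in enumerate(survival_probabilities(pmf, 10)):
    print(n, round(surv, 6), round(mean ** n, 6))
```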

5.6 Asymptotic expression for \(\alpha _c\) as \(d\rightarrow \infty \)

The main result of this section is Proposition 5.14, which shows that all the critical thresholds defined in this paper coincide asymptotically as the dimension \(d\rightarrow \infty \), and also gives their asymptotic value. The proof combines a careful estimate of \(\mathbb {E}[\#\mathcal {C}_{\alpha }(0,1)]\) with an upper bound on the critical value \(\alpha _c\) from [17, Proposition 4.3(ii)].

Proposition 5.14

Asymptotically, as \(d\rightarrow \infty \),

Proof

The upper bound on the critical value \(\alpha _c\) follows from the comparison between loop percolation and Bernoulli bond percolation in [17, Proposition 4.3(ii)],

where \(p_c\) is the critical value of Bernoulli bond percolation, and the asymptotic expansion for \(p_c\) as in [26, (11.19)],

For the lower bound on \(\alpha _{\#}\), recall from the proof of Proposition 5.1 that for \(d\ge 5\),

For \(\alpha \ge 1\),

Thus, the lower bound on \(\alpha _{\#}\) follows from the following claim:

For (26), it suffices to show that

We use Fourier transforms. For an absolutely summable function \(f : \mathbb {Z}^d\rightarrow \mathbb {C}\) and \(k\in ]-\pi ,\pi [^d\), the Fourier transform of \(f\) at \(k\) is defined by

Let \(D(x) = \frac{1}{2d} \mathrm {1}_{\{||x||_2 = 1\}}\) be the one-step transition probability of SRW started at \(0\). Then \(\hat{D}(k)=\frac{1}{d}\sum \nolimits _{i=1}^{d}\cos (k_i)\) and \(\hat{G}(k)=\frac{1}{1-\hat{D}(k)}\) in the sense of \(L^2(\mathrm {d}k)\) for \(d\ge 5\), where \(\hat{G}(k)\) is the Fourier transform of \(x\mapsto G(0,x)\).
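The closed form of \(\hat{D}(k)\) comes from pairing the neighbours \(\pm e_i\): \(\frac{1}{2d}(e^{ik_i}+e^{-ik_i})=\frac{1}{d}\cos (k_i)\). The Python sketch below checks this numerically by summing over the \(2d\) neighbours of \(0\); it assumes the kernel \(e^{ik\cdot x}\) in the transform, but since \(D\) is symmetric the other sign convention gives the same value.

```python
import cmath
import math


def d_hat_direct(k):
    """Fourier transform of D at k, summed directly over the 2d neighbours of 0."""
    d = len(k)
    total = 0j
    for i in range(d):
        for sign in (1, -1):
            # x = sign * e_i has ||x||_2 = 1, so D(x) = 1/(2d) and k.x = sign * k[i]
            total += cmath.exp(1j * sign * k[i]) / (2 * d)
    return total


def d_hat_formula(k):
    """Closed form (1/d) * sum_i cos(k_i) stated in the text."""
    return sum(math.cos(k_i) for k_i in k) / len(k)


k = (0.3, -1.2, 2.5, 0.0, 3.0)  # an arbitrary point of ]-pi, pi[^5
print(d_hat_direct(k), d_hat_formula(k))
```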

We first consider \(\sum \nolimits _{x\in \mathbb {Z}^d}(G(0,x))^2\). By Parseval’s identity, for \(d\ge 5\),

It will be convenient to use the probabilistic interpretation. Let \((U_i)_i\) be independent random variables uniformly distributed in \(]-\pi ,\pi [\), and define \(Z_d=\frac{1}{d}\sum \nolimits _{i=1}^{d}\cos (U_i)\). Then,

We expand \((1-Z_d)^{-2}=\sum \nolimits _{n\ge 0}(n+1)Z_d^n\) into a power series of \(Z_d\). We first estimate the error term

If \(|Z_d|<1/2\),

if \(|Z_d|\ge 1/2\),

Note that

and by the exponential Markov inequality, \(\mathbb {P}[|Z_d|\ge \frac{1}{2}] \le 2\cdot e^{-d/8}\). Thus, for some \(C'(m)<\infty \),

By Hölder’s inequality and the exponential Markov inequality,

We will show that there exists a universal constant \(C<\infty \) such that for \(d\ge 5\),

Once (29) is proved, by using the bound on \(\mathbb {E}[R_{d,6}]\), we get

and the first part of (27) follows by direct calculation of moments of \(Z_d\) up to order \(O(d^{-3})\).
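The moments of \(Z_d\) needed here are explicit: \(\mathbb {E}[\cos (U)^j]=\binom{j}{j/2}2^{-j}\) for even \(j\) and \(0\) for odd \(j\), so all odd moments of \(Z_d\) vanish, \(\mathbb {E}[Z_d^2]=\frac{1}{2d}\) and \(\mathbb {E}[Z_d^4]=\frac{3}{4d^2}-\frac{3}{8d^3}\). The Python sketch below computes \(\mathbb {E}[Z_d^n]\) exactly with rational arithmetic by repeated binomial convolution over the \(d\) independent coordinates, together with the truncated series \(\sum _{n\le 5}(n+1)\mathbb {E}[Z_d^n]\) and \(\sum _{n\le 5}\mathbb {E}[Z_d^n]\) coming from \((1-Z_d)^{-2}\) and \((1-Z_d)^{-1}\). These are truncations only; the remainder is what the bound on \(\mathbb {E}[R_{d,6}]\) controls, and the function names are hypothetical.

```python
from fractions import Fraction
from math import comb


def cos_moment(j):
    """E[cos(U)^j] for U uniform on ]-pi, pi[: C(j, j/2) / 2^j if j is even, else 0."""
    return Fraction(comb(j, j // 2), 2 ** j) if j % 2 == 0 else Fraction(0)


def z_moments(d, max_order):
    """Exact E[Z_d^n], n = 0..max_order, for Z_d = (1/d) * sum_{i<=d} cos(U_i)."""
    base = [cos_moment(j) for j in range(max_order + 1)]
    sums = [Fraction(1)] + [Fraction(0)] * max_order  # moments of the empty sum S = 0
    for _ in range(d):
        # E[(S + c)^n] = sum_j C(n, j) E[S^j] E[c^(n-j)] for independent S and c
        sums = [sum(comb(n, j) * sums[j] * base[n - j] for j in range(n + 1))
                for n in range(max_order + 1)]
    return [m / Fraction(d) ** n for n, m in enumerate(sums)]


def truncated_series(d, coeff, max_order=5):
    """sum_{n<=max_order} coeff(n) * E[Z_d^n]."""
    moments = z_moments(d, max_order)
    return sum(coeff(n) * moments[n] for n in range(max_order + 1))


d = 20
mom = z_moments(d, 4)
print("E[Z_d^2], E[Z_d^4]:", mom[2], mom[4])
print("sum (n+1) E[Z_d^n]:", truncated_series(d, lambda n: n + 1))
print("sum E[Z_d^n]:      ", truncated_series(d, lambda n: 1))
```

Symbolically, the first truncated series equals \(1+\frac{3}{2d}+\frac{15}{4d^2}-\frac{15}{8d^3}\) and the second \(1+\frac{1}{2d}+\frac{3}{4d^2}-\frac{3}{8d^3}\).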

It remains to prove (29). By convexity of the function \(h\mapsto (1-h)^{-7/3}\) and the definition of \(\hat{D}(k)\),

Thus, the uniform bound in (29) follows by induction from (30) as soon as we show that \(\mathbb {E}[(1-Z_d)^{-7/3}]<\infty \) for every \(d\ge 5\). This follows from the definition of \(\hat{D}(k)\), the fact that \(\inf \nolimits _{x\in ]-\pi ,\pi [\setminus \{0\}}\frac{1-\cos (x)}{x^2}>0\), and the finiteness of the integral \(\frac{1}{(2\pi )^d}\int \nolimits _{]-\pi ,\pi [^d}\frac{1}{||k||_2^{14/3}}\mathrm {d}^dk\) for every \(d\ge 5\).
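For the reader's convenience, the finiteness used in the last paragraph can be spelled out as follows, using only the formula for \(\hat{D}(k)\). Since \(\frac{1-\cos (x)}{x^2}=\frac{1}{2}\big (\frac{\sin (x/2)}{x/2}\big )^2\) is even and decreasing on \(]0,\pi ]\),

$$\begin{aligned} 1-\hat{D}(k)=\frac{1}{d}\sum _{i=1}^{d}\big (1-\cos (k_i)\big )\ge \frac{c_0}{d}\,||k||_2^2,\qquad c_0\overset{\text {def}}{=}\inf _{x\in ]-\pi ,\pi [\setminus \{0\}}\frac{1-\cos (x)}{x^2}=\frac{2}{\pi ^2}, \end{aligned}$$

and therefore

$$\begin{aligned} \mathbb {E}[(1-Z_d)^{-7/3}]=\frac{1}{(2\pi )^d}\int _{]-\pi ,\pi [^d}\frac{\mathrm {d}^dk}{(1-\hat{D}(k))^{7/3}}\le \Big (\frac{d}{c_0}\Big )^{7/3}\frac{1}{(2\pi )^d}\int _{]-\pi ,\pi [^d}\frac{\mathrm {d}^dk}{||k||_2^{14/3}}. \end{aligned}$$

Since \(]-\pi ,\pi [^d\) lies in the Euclidean ball of radius \(\pi \sqrt{d}\), polar coordinates bound the last integral by a constant times \(\int _0^{\pi \sqrt{d}}r^{d-1-14/3}\,\mathrm {d}r\), which is finite because \(d-1-\frac{14}{3}>-1\) for \(d\ge 5\).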

The proof of the first expansion in (27) is complete. Since \(\frac{1}{1-\hat{D}(k)}\in L^1\) by (29), the expansion for \(G(0,0)\) in (27) can be obtained similarly via the inverse Fourier transform; we omit the details. \(\square \)

6 Refined lower bound for \(d=3,4\)

6.1 \(d=3\): Proof of Theorem 1.5

In this section we prove that \(n^{2-d}\) is not the correct order of decay of the one-arm probability in dimension \(d=3\), by providing a lower bound on the one-arm probability of order \(n^{2-d+\varepsilon }\). The key ingredient of the proof is the following lemma, which gives a lower bound on the expected capacity of the open cluster at 0 formed by the loops \((\mathcal {L}_{\alpha })^{B(0,k)}\) contained inside the box \(B(0,k)\).

Lemma 6.1

For \(d=3\) and \(\alpha >0\), denote by \(\mathcal {C}_{\alpha }^k(0)\) the open cluster at \(0\) formed by the loops \((\mathcal {L}_{\alpha })^{B(0,k)}\) contained inside the box \(B(0,k)\). Then, there exist positive constants \(\epsilon (\alpha )\) and \(c(\alpha )\) such that

Before proving the lemma, we show how to deduce Theorem 1.5 from it.

Proof of Theorem 1.5

Let \(d =3\) and \(\alpha >0\). Take \(\mathcal {C}_\alpha ^{\lfloor n/2\rfloor }(0)\) as in the statement of Lemma 6.1. We always have

Note that \(\mathcal {C}_{\alpha }^{\lfloor n/2\rfloor }(0)\) depends only on the loops \((\mathcal {L}_{\alpha })^{B(0,\lfloor n/2\rfloor )}\) inside the box \(B(0,\lfloor n/2\rfloor )\). Thus, it is independent of the loops \((\mathcal {L}_{\alpha })_{(B(0,\lfloor n/2\rfloor ))^c}\) intersecting \((B(0,\lfloor n/2\rfloor ))^c\). Then, by Lemma 2.7, there exists \(c>0\) such that

Since, by Lemma 2.3, \(n^{-1}{\text {Cap}}(\mathcal {C}_{\alpha }^{\lfloor n/2\rfloor }(0))\le n^{-1}{\text {Cap}}(B(0,\lfloor n/2\rfloor ))\) and the right-hand side is uniformly bounded in \(n\), there exists a constant \(c = c(\alpha )\) such that

The proof is complete by Lemma 6.1. \(\square \)
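For completeness, here is the elementary inequality that turns a uniformly bounded capacity into a bound linear in the capacity. It is stated under the assumption, not visible in this excerpt, that the display obtained from Lemma 2.7 is of the form \(1-\mathbb {E}[\exp \{-c\,n^{-1}{\text {Cap}}(\mathcal {C}_{\alpha }^{\lfloor n/2\rfloor }(0))\}]\). If \(0\le X\le M\) almost surely, concavity of \(x\mapsto 1-e^{-x}\) (its graph lies above the chord from \(0\) to \(M\)) gives

$$\begin{aligned} 1-e^{-x}\ge \frac{1-e^{-M}}{M}\,x\quad (0\le x\le M),\qquad \text {hence}\qquad \mathbb {E}[1-e^{-X}]\ge \frac{1-e^{-M}}{M}\,\mathbb {E}[X]. \end{aligned}$$

Applied with \(X=c\,n^{-1}{\text {Cap}}(\mathcal {C}_{\alpha }^{\lfloor n/2\rfloor }(0))\) and \(M\) a uniform bound on \(c\,n^{-1}{\text {Cap}}(B(0,\lfloor n/2\rfloor ))\), this yields a lower bound proportional to \(n^{-1}\mathbb {E}[{\text {Cap}}(\mathcal {C}_{\alpha }^{\lfloor n/2\rfloor }(0))]\), to which Lemma 6.1 applies.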

We complete this section with the proof of the remaining lemma.

Proof of Lemma 6.1

Let \(d = 3\) and \(\alpha >0\).


(I)

By Lemma 2.7, there exist constants \(\lambda _1>1\) and \(c>0\) such that for \(n\ge 1\), \(m\ge 2n\), \(M\ge \lambda _1m\), and \(K\subset B(0,n)\),

$$\begin{aligned}&\mu (\ell : K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,m),\quad \ell \subset B(0,M),\quad {\text {Cap}}(\ell )>cm)\nonumber \\&\quad \ge \mu (\ell : K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,m),\quad {\text {Cap}}(\ell )>cm) - \mu (\ell : K\mathop {\longleftrightarrow }\limits ^{\ell }\partial B(0,M))\nonumber \\&\quad \ge c\cdot {\text {Cap}}(K)\cdot m^{-1}. \end{aligned}\tag{32}$$

(II)

Denote by \(\mathcal {C}_{\alpha }(K,m,M)\) the set of vertices visited by the loops from \(\mathcal {L}_\alpha \) which are contained in \(B(0,M)\) and intersect both \(K\) and \(\partial B(0,m)\). We claim that there exists \(\lambda _2 = \lambda _2(\alpha )>1\) such that for \(n\ge 1\), \(m\ge 2n\), \(M>\lambda _2m\), and \(K\subset B(0,n)\),