Abstract

Background

Implementation strategies are strategies to improve uptake of evidence-based practices or interventions and are essential to implementation science. Developing or tailoring implementation strategies may benefit from integrating approaches from other disciplines; yet current guidance on how to effectively incorporate methods from other disciplines to develop and refine innovative implementation strategies is limited. We describe an approach that combines community-engaged methods, human-centered design (HCD) methods, and causal pathway diagramming (CPD)—an implementation science tool to map an implementation strategy as it is intended to work—to develop innovative implementation strategies.

Methods

We use a case example of developing a conversational agent or chatbot to address racial inequities in breast cancer screening via mammography. With an interdisciplinary team including community members and operational leaders, we conducted a rapid evidence review and elicited qualitative data through interviews and focus groups using HCD methods to identify and prioritize key determinants (facilitators and barriers) of the evidence-based intervention (breast cancer screening) and the implementation strategy (chatbot). We developed a CPD using key determinants and proposed strategy mechanisms and proximal outcomes based on conceptual frameworks.

Results

We identified key determinants for breast cancer screening and for the chatbot implementation strategy. Mistrust was a key barrier to both completing breast cancer screening and using the chatbot. We focused design for the initial chatbot interaction to engender trust and developed a CPD to guide chatbot development. We used the persuasive health message framework and conceptual frameworks about trust from marketing and artificial intelligence disciplines. We developed a CPD for the initial interaction with the chatbot with engagement as a mechanism to use and trust as a proximal outcome leading to further engagement with the chatbot.

Conclusions

The use of interdisciplinary methods is core to implementation science. HCD is a particularly synergistic discipline, with multiple existing applications to implementation research. We present an extension of this work and an example of the potential value of an integrated, community-engaged approach in which HCD and implementation science researchers and methods combine the strengths of both disciplines to develop human-centered implementation strategies rooted in causal perspective and healthcare equity.

Background

The field of implementation science was created to address the gap between what should be done based on existing evidence and what is done in practice. Implementation strategies—“methods or techniques used to enhance the adoption, implementation, and sustainability of a clinical program or practice” [1]—are central to implementation science. In 2015, the Expert Recommendations for Implementing Change project compiled 73 different implementation strategies used in the field [2]. However, as implementation science has evolved, experts have recognized that (a) more implementation strategies exist than have been cataloged and (b) developing or tailoring implementation strategies may benefit from integrating approaches from other disciplines (e.g., behavioral economics and human-centered design) [3,4,5]. Yet, current guidance on how to effectively incorporate methods from other disciplines to develop and refine innovative implementation strategies is limited.

The causal pathway diagram (CPD) is an implementation science method that can be used to support development and refinement of implementation strategies [6]. CPDs help researchers to understand implementation strategies as they are intended to work. In building a CPD, researchers identify the implementation strategy, the mechanism(s) through which the strategy is thought to lead to the intended outcome, proximal outcomes which may provide signals of effect earlier than the intended outcome, and the distal (intended) outcome. CPDs also include moderators which may enhance or dampen pathway effect and pre-conditions which are necessary for the pathway to proceed. In developing and refining implementation strategies, CPDs help investigators map key determinants (barriers or facilitators) that implementation strategies address and mechanisms by which strategies are posited to effect change. Theory and existing evidence are typically used to construct CPDs [7]. One potential limitation of the CPD is that while there is an emphasis on incorporating theory and existing evidence, there are fewer examples of incorporating implementation partners’ and/or community needs and context in the initial creation of the CPD [8].
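The CPD components described above can be sketched as a simple data structure. This is a minimal, illustrative sketch rather than an established CPD tool: the class name `CausalPathwayDiagram`, its field names, and its helper methods are assumptions introduced here. The example values follow the case described in this article (chatbot strategy, engagement as mechanism, trust as proximal outcome); the distal outcome label is our shorthand, and moderators and pre-conditions are left empty because they are not enumerated at this point in the text.

```python
from dataclasses import dataclass, field, fields


@dataclass
class CausalPathwayDiagram:
    """Hypothetical container for the CPD components named in the text."""
    strategy: str
    determinants: list[str]       # barriers/facilitators the strategy addresses
    mechanisms: list[str]         # how the strategy is thought to work
    proximal_outcomes: list[str]  # early signals of effect
    distal_outcome: str           # intended outcome
    moderators: list[str] = field(default_factory=list)     # enhance or dampen effect
    preconditions: list[str] = field(default_factory=list)  # required for pathway to proceed

    def chain(self) -> str:
        """Render the core pathway from strategy to distal outcome."""
        steps = [
            self.strategy,
            " + ".join(self.mechanisms),
            " + ".join(self.proximal_outcomes),
            self.distal_outcome,
        ]
        return " -> ".join(steps)

    def unspecified(self) -> list[str]:
        """List components that are still empty and need team discussion."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Illustrative values only; the distal outcome wording is an assumption.
cpd = CausalPathwayDiagram(
    strategy="chatbot (initial interaction)",
    determinants=["mistrust"],
    mechanisms=["engagement"],
    proximal_outcomes=["trust"],
    distal_outcome="breast cancer screening",
)
print(cpd.chain())
print(cpd.unspecified())
```

Listing the still-empty components is one way a team might track which parts of the pathway remain to be informed by partner and community input.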

Co-creation—a collaborative process including people with a diversity of roles/positions to attain goals—is increasingly recognized as an approach for implementation scientists to integrate partners/communities in research [9]. In co-creation, researchers may employ several different methodologies and methods. For example, many researchers use community-engaged research approaches to meaningfully include community members in intervention development and implementation with goals to increase relevance, effectiveness, and sustainment of interventions [9, 10]. Co-creation can be framed as an overarching concept that includes co-design (intervention development) and co-production (intervention implementation) [11]. Co-design is specifically relevant for the design of novel implementation strategies and researchers often use human-centered design methods for co-designing interventions [12, 13]. In co-design, multiple methods may be used synergistically. For example, researchers can simultaneously use community-based participatory research methods to meaningfully include community members and human-centered design (HCD) methods to guide intervention design [14, 15].

HCD methods, which offer key standards and principles for designing interactive systems, are particularly useful in co-design of technology-based implementation strategies [16]. HCD is a “flexible, yet disciplined and repeatable approach to innovation that puts people at the center of activity” [17]. HCD methods elicit information regarding user environment and experience through continuous partner/user engagement and draw from multidisciplinary expertise [18]. An initial phase of HCD is to establish the context of use and requirements of users [19]. In this exploratory phase, researchers often collect and analyze qualitative data through interviews, focus groups, and/or co-design sessions. In addition to questions about context and user requirements, interviews will often include “mockups” or early prototypes for initial reactions and feedback.

Establishing context of use and user requirements in HCD is synergistic to the needs for specifying implementation strategies and developing CPDs [20]. HCD methods can be used to build and inform CPDs by gaining understanding of key determinants to the desired program or practice within a specific context and identifying potential facilitators and barriers to the implementation strategy itself. Early qualitative data from HCD methods and identification of key determinants can help to inform use of theory in building CPDs. Finally, HCD methods can further help to understand and test assumptions related to mechanisms of an implementation strategy [20].

Haines et al. described the application of HCD methods in defining context and connecting to evidence-based practices and implementation strategies [4]. We build on this work by describing a way to explicitly include partners (organizational and community) in the co-design of implementation strategies while maintaining a causal basis and perspective. We present an example incorporating HCD methods in CPDs to design an innovative outreach strategy to address inequities in breast cancer screening using mammography among Black women. In this case study, we illustrate how HCD methods were used to (1) identify and prioritize key determinants, (2) select and apply conceptual frameworks, and (3) understand (and design for) strategy mechanisms.

Methods

Case example: designing an outreach tool to address breast cancer screening inequities

Inequities in breast cancer mortality among Black people have been recognized for decades and yet persist [21, 22]. These inequities are partly due to later-stage breast cancer diagnosis [21]. Regular interval breast cancer screening with mammography aligned with the United States Preventive Services Task Force (USPSTF) guidelines is an evidence-based intervention to promote earlier diagnosis of and reduce mortality from breast cancer [23]. Therefore, addressing breast cancer screening inequities among Black people eligible for screening aligned with guidelines may facilitate early detection and improve breast cancer survival [24,25,26]. Research to date has demonstrated that Black women experience multiple barriers to breast cancer screening including reduced access to care, mistrust and decreased self-efficacy, fear of diagnosis, prior negative health care experiences, and lack of information regarding breast cancer risk [27,28,29,30,31,32,33,34,35]. Black women may also not feel included or prioritized in breast cancer screening campaigns [30].

Tailored interventions to improve breast cancer screening among Black women have demonstrated modest effect in improving breast cancer screening rates, yet many of these interventions, such as use of health navigators, are resource intensive and must be repeated annually [36,37,38,39,40,41,42,43,44,45,46,47]. Mobile technology interventions can be culturally tailored and may address limitations related to cost and time [48, 49]. Mobile technology interventions using short message service (SMS) text are accessible to individuals across a range of sociodemographic factors and have been shown to be effective in primary care behavioral and disease management interventions [50]. Black women have reported SMS text-based breast cancer screening interventions to be accessible and acceptable [51]. SMS text-based interventions are also more accessible than patient portal-based interventions which lack adequate reach due to substantial racial inequities in patient portal use [52, 53]. Mobile health interventions using conversational interfaces such as chatbots via SMS text can act as virtual health navigators providing individualized information about and connecting individuals to healthcare [54]. Prior research has shown chatbots increase levels of trust in web-based information and are easy to use and scale [55, 56].

While the use of health navigators is an established implementation strategy, there are few data on integrating conversational agents in primary care outreach and none that we are aware of that specifically address healthcare inequities in cancer screening [57]. Literature on digital health interventions emphasizes the need for careful attention to and planning for implementation to optimize integration in the healthcare system and patient use [58]. Moreover, evidence of bias in artificial intelligence raises caution in the design of chatbot interventions [59,60,61]. We identified chatbots as a promising, innovative implementation strategy to address breast cancer screening inequities, but one that warrants rigorous methods and community engagement to design and tailor.

In late 2020, we brought together a team of researchers and health system leaders at a large academic medical center to address inequities in breast cancer screening through the design of a chatbot that could facilitate outreach. At the time, breast cancer screening rates in the health system, measured using the National Committee for Quality Assurance Healthcare Effectiveness Data and Information Set measure based on the USPSTF guidelines, were 61.5% among Black women compared to 73.3% among White women (internal health system data) [23, 62]. The chatbot implementation strategy was favored as an intervention among interdisciplinary team members because of its innovation and its low resource burden on primary care, with better potential for sustainability. Usual care consisted of chart review and telephone outreach by a primary care health navigator and then connection to radiology for mammogram scheduling. The chatbot intervention could be sent to people due for screening and could be configured to schedule a mammogram during the chatbot interaction, expending fewer resources with greater efficiency. The study protocol was reviewed and determined exempt by the University of Washington Institutional Review Board. This manuscript adheres to the Enhancing the Quality and Transparency of health research (EQUATOR) Better Reporting of Interventions: template for intervention description and replication (TIDieR) checklist and guide and the Consolidated criteria for REporting Qualitative research (COREQ) guidelines [63, 64].

Personnel

To approach implementation-focused research questions, interdisciplinary teams of researchers, operational partners, and end-users are advantageous to develop optimized implementation strategies or innovations. Implementation science and human-centered design researchers co-leading efforts (e.g., as PI, Co-PI, or MPIs) can help to support integration of methods and perspectives. In patient-facing interventions—particularly those addressing inequities among marginalized communities—including patient/community partners can center intervention development on patient/community needs, facilitate participant recruitment, help refine study protocol, and support the analyses of collected data [10, 65].

In designing a chatbot for breast cancer screening outreach to address racial inequities, our team included an HCD researcher (G.H.), a primary care physician and early-stage investigator with focus in implementation science (L.M.M.), an HCD PhD candidate (R.L.), and a community-based organization leader (B.H.H.) with expertise in conducting interviews and focus groups for qualitative research and extensive community connections. We received project mentorship from the Optimizing Implementation in Cancer Control (OPTICC) team that includes several leaders and experts in implementation science (e.g., B.J.W., A.R.L.) [66]. We drew input from key health system partners including health care equity leadership (P.L.H.), primary care and population health leadership (N.A., V.F.), and primary care health navigators. We held regular interdisciplinary team meetings, most frequently in the initial stages of innovation design. Throughout the development of the chatbot tool, we sought feedback from community members through interviews, focus groups, and (planned) co-design sessions.

Positionality statement

Our team included trainees, researchers, clinicians, and operational leaders at the University of Washington and a community-based organization leader. Previous research interests/experience included communication technologies to promote health and well-being (G.H.) and improving quality and equity in primary care services (L.M.M.). B.H.H. provided health equity expertise; she has led a Seattle-based survivor and support organization for African American women with cancer for over 25 years; in that time, she has collaborated with researchers on over 60 grants. Most of our team identifies as women and several of our team members identify as Black women. Research analysis was conducted primarily by R.L., G.H., and L.M.M. (none of whom are Black/African American); all data analysis/interpretation was reviewed with B.H.H. in bi-weekly research meetings.

Identify and prioritize key determinants

Overview

Identifying key determinants (i.e., facilitators and barriers) is critical in implementation strategy development and is a first step in creating a CPD. Determinants may be identified initially through evidence review and contextually through qualitative (e.g., interviews) and/or quantitative (e.g., survey) methods. HCD methods can augment identification of key determinants and other CPD components by using mockups and/or early prototypes to elicit feedback on initial design and use. This approach is particularly useful because determinants can be elicited in the context of the implementation strategy, which may help to optimize determinant-strategy matching. We identified and prioritized key determinants through a rapid evidence review of breast cancer screening determinants among Black women and through HCD methods. We conducted qualitative analysis of semi-structured interviews that included a chatbot mockup and of focus groups with end-users, who were shown an early prototype of the chatbot that had been iterated based on qualitative analysis of the interviews. Interviews and focus groups were led almost entirely by B.H.H., a community member, to provide a comfortable environment for participants to share perspectives. Our underlying interpretive framework most closely followed social constructivism; we focused on the content of participant words and experiences with the goal of minimizing researcher interpretation [67]. Any question of participant meaning was reviewed with B.H.H.

Rapid evidence review

Objective

Our objective was to identify determinants to breast cancer screening among Black women emergent from recent literature.

Procedure

We conducted rapid evidence review following established methods described in the National Collaborating Centre for Methods and Tools Rapid Review Guidebook [68]. We defined a research question—“among Black women in the United States, what are determinants (i.e., facilitators and barriers) to breast cancer screening?”, searched for research evidence, critically appraised information sources, and synthesized evidence.

Search strategy

Our search strategy prioritized evidence in the past 3 years and included search terms in or related to the research question: (Mammogram, Mammography, Cancer Screening, Breast Cancer Screening), (Breast Cancer), (Women), (Black, African American, African American, Minority), (Race, Ethnicity), (Disparities, Determinants), and (Facilitators, Barriers). Searches were conducted in PubMed, Health Evidence, Public Health+, and the National Institute for Health and Care Excellence.
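As an illustration of how grouped search terms like those above might be assembled into a single query string, the following is a minimal sketch. It assumes that terms within a group are combined with OR and that groups are combined with AND; the text does not state how the terms were actually combined in each database, so this is an assumption, and the function name `build_query` is hypothetical.

```python
# Term groups as listed in the search strategy (the repeated term is kept as printed).
TERM_GROUPS = [
    ["Mammogram", "Mammography", "Cancer Screening", "Breast Cancer Screening"],
    ["Breast Cancer"],
    ["Women"],
    ["Black", "African American", "African American", "Minority"],
    ["Race", "Ethnicity"],
    ["Disparities", "Determinants"],
    ["Facilitators", "Barriers"],
]


def build_query(groups):
    """Join terms with OR inside a group and AND across groups (assumed logic)."""
    clauses = []
    for terms in groups:
        unique_terms = list(dict.fromkeys(terms))  # drop repeats, keep order
        # Quote multi-word terms so they are treated as phrases.
        quoted = [f'"{t}"' if " " in t else t for t in unique_terms]
        clauses.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(clauses)


query = build_query(TERM_GROUPS)
print(query)
```

In practice, each database has its own syntax and field tags, so a string like this would typically need adapting per source.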

Review criteria

We considered studies done in the USA as race is a social construct and the experience and impacts of individual and systemic racism differ geographically. We focused on results among Black/African American individuals given the research question and aim to identify specific determinants within this group; however, we did include studies with multiple racial groups represented. We focused on studies that included individuals aged 40–74 years to match the population eligible for average-risk breast cancer screening. Publications in the 3 years prior to evidence review were prioritized acknowledging determinants may change over time (e.g., with technology advancements such as online scheduling or policy changes allowing for mammogram scheduling without primary care provider (PCP) referral) and in keeping with methods in the National Collaborating Centre for Methods and Tools Rapid Review Guidebook [68].

Critical appraisal and evidence synthesis

Critical appraisal was guided by the 6S Pyramid framework developed and made available by the National Collaborating Centre for Methods and Tools [69]. We categorized data by source (i.e., search engine), study type (e.g., single study, meta-analysis), population, and results.

Interviews with mockup

Objective

Our objective was to elicit determinants to breast cancer screening among Black women living in western Washington as well as feedback about an initial mockup of the chatbot through interviews with community members.

Interview guide

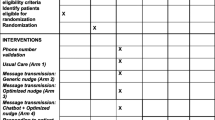

The interview guide was developed by our research team with additional input from members of the Breast Health Equity committee, a health system committee including operational leaders, physicians, and researchers dedicated to addressing inequities in care related to breast cancer screening, diagnosis, and treatment (Additional file 1: Appendix A). While we incorporated feedback from committee members after the guide was drafted, we did not pilot test with community members before starting interviews. Questions focused on determinants to breast cancer screening and past experiences with breast cancer screening. Additionally, two members of the research team (G.H. and R.L.) created an initial mockup of the chatbot tool, comprising several mockup screens of a chatbot for breast cancer screening outreach (Fig. 1).

Sample and recruitment

We used convenience sampling through fliers posted in primary care clinics and email to the research team’s established community networks to identify and recruit individuals who identified as Black/African American women between the ages of 40 and 74 years and lived in either King or Pierce counties in Washington state. We recruited 21 individuals for interviews which we estimated would be adequate to provide sufficient data for understanding our research question [70].

Procedure

Two members of the research team (B.H.H., A.G.) conducted interviews (n = 21); the community engagement lead on the team (B.H.H.) conducted the vast majority (n = 18). Interviews were conducted via Zoom videoconferencing technology and were audio recorded. In addition to questions regarding determinants to and experience of breast cancer screening, we showed participants screenshots of the initial mockup for the chatbot tool and asked specific questions for feedback. No field notes were collected during/after interviews. All interviews were transcribed.

Analysis

Four members of the research team (R.L. and three undergraduate students listed in acknowledgements: A.A., N.S., X.S.) read and coded the transcripts to generate and refine themes through several iterations until consensus was reached. Each interview was analyzed and coded once by individuals on the research team using a directed content analysis approach; codes were then discussed as a team [5, 71]. Deductive codes were created using prior research organizing breast cancer screening barriers as personal, structural, and clinical [34]. Inductive codes emerged from a close reading of an initial subset of the transcripts and were added to the codebook (Additional file 1: Appendix Table B). Qualitative data analysis resulted in themes around the chatbot design and determinants to breast cancer screening. We facilitated an ideation workshop with the research team and Breast Health Equity committee to brainstorm how this research might address the themes raised in the interviews. We used a 2 × 2 prioritization matrix as a tool to identify the most impactful and feasible ideas that arose. This analysis was used to develop an early chatbot prototype. Results were shared with participants in a newsletter with an invitation to respond to interpretation and/or presentation prior to manuscript submission (Additional file 1: Appendix C).
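The 2 × 2 prioritization matrix mentioned above sorts ideas along two axes, impact and feasibility. A minimal sketch of that sorting logic follows. The idea names, the 1 to 5 scores, and the cutoff are hypothetical placeholders rather than workshop data, and the text does not describe how the team rated ideas.

```python
from typing import NamedTuple


class Idea(NamedTuple):
    name: str
    impact: int       # hypothetical rating, 1 (low) to 5 (high)
    feasibility: int  # hypothetical rating, 1 (low) to 5 (high)


def quadrant(idea: Idea, cutoff: int = 3) -> str:
    """Place an idea in one of the four cells of the matrix."""
    high_impact = idea.impact >= cutoff
    high_feasibility = idea.feasibility >= cutoff
    if high_impact and high_feasibility:
        return "high impact / high feasibility"
    if high_impact:
        return "high impact / low feasibility"
    if high_feasibility:
        return "low impact / high feasibility"
    return "low impact / low feasibility"


# Placeholder ideas for illustration only.
ideas = [
    Idea("idea A", impact=5, feasibility=4),
    Idea("idea B", impact=5, feasibility=2),
    Idea("idea C", impact=2, feasibility=5),
    Idea("idea D", impact=1, feasibility=1),
]

matrix: dict[str, list[str]] = {}
for idea in ideas:
    matrix.setdefault(quadrant(idea), []).append(idea.name)

for cell, names in matrix.items():
    print(f"{cell}: {', '.join(names)}")
```

Ideas landing in the high impact / high feasibility cell would be the natural candidates to carry forward into a prototype.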

Focus groups

Objective

The objective was to elicit feedback on an early static prototype of the chatbot tool informed by the interviews.

Early prototype creation

The research team developed an early static prototype of the chatbot tool, iterating on the initial mockup using themes and feedback that emerged from qualitative data analysis of the individual interviews (Fig. S1). In addition to the prototype screens, we included short videos answering questions such as “Why should I get screened?”, “What can I expect from a mammogram?”, and “What happens if the mammogram is abnormal?”.

Focus group guide

The focus group guide was developed by our research team with additional input from members of the Breast Health Equity committee (Additional file 1: Appendix D). The guide included questions about perceptions of, engagement with, and usability of the chatbot based on the prototype screens and videos.

Sample and recruitment

The same sampling and recruiting methods were used for the focus groups as were used for the individual interviews. We conducted three focus groups with a total of nine participants.

Procedure

Focus groups were led by B.H.H. and joined by multiple members of the research team (V.F., A.G., R.L., L.M.M.). We showed participants three example interactions with the chatbot prototype: (1) patient-initiated scheduling of a mammogram, (2) system-initiated patient education, and (3) system-initiated re-scheduling. We then asked specific questions for feedback. The same procedures were followed as for the individual interviews; team members debriefed after each focus group.

Analysis

We used template analysis with pre-defined domains derived from focus group questions and interview themes to analyze focus group content [72]. Template analysis may be used as a rapid qualitative analysis approach for focus group data [73]. Template domains were agreed upon by investigators (L.M.M., G.H., R.L., B.H.H.). One investigator (L.M.M.) then reviewed focus groups and conducted content analysis using the templates. The completed templates were summarized in a matrix for data visualization and reviewed by all investigators; any disagreements were addressed and resolved. Results were shared with participants in a newsletter with invitation to respond to interpretation and/or presentation prior to manuscript submission (Additional file 1: Appendix C).

Synthesis: developing a causal pathway diagram

CPDs can help to map out the pathway of an implementation strategy as it is intended to work [6]. For innovative implementation strategies, CPDs can help establish and test theorized mechanisms. Conceptual frameworks help to inform pathway components and may be drawn from disciplines outside of implementation research for novel strategies.

From our rapid evidence review, qualitative interviews, and focus groups, we identified key determinants—both in the context of breast cancer screening and the chatbot implementation strategy—and hypothesized mechanisms. Our research team prioritized determinants that were identified across data sources (e.g., in interviews and in rapid evidence review). We focused CPD development on the initial engagement with the chatbot.
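Prioritizing determinants that appear across data sources can be sketched as a simple cross-tabulation. The example below is illustrative only: the function names are hypothetical, the determinant labels are shortened paraphrases of a few items reported in this article, and the two-source threshold is an assumption. As the Results note, the team also prioritized some single-source determinants on the basis of relevance, which a count alone would not capture.

```python
from collections import defaultdict

# A small subset of determinants by data source (labels are paraphrased).
findings = {
    "rapid_evidence_review": {
        "medical mistrust",
        "lack of knowledge about screening",
        "perceived lower quality in mobile clinics",
    },
    "interviews_and_focus_groups": {
        "medical mistrust",
        "lack of knowledge about screening",
        "time to make and wait for an appointment",
    },
}


def sources_by_determinant(findings):
    """Map each determinant to the set of sources in which it was identified."""
    index = defaultdict(set)
    for source, determinants in findings.items():
        for determinant in determinants:
            index[determinant].add(source)
    return index


def cross_source(findings, min_sources=2):
    """Return determinants identified in at least `min_sources` sources."""
    index = sources_by_determinant(findings)
    return sorted(d for d, srcs in index.items() if len(srcs) >= min_sources)


print(cross_source(findings))
```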

Using the selected key determinants, we worked to identify conceptual frameworks to develop a CPD. We expanded the search for conceptual frameworks outside of healthcare to include disciplines such as marketing and computer science that are relevant to the implementation strategy. We selected frameworks based on relevance to and connection of our implementation strategy and proposed mechanism. We used the conceptual frameworks to inform mechanisms through which we hypothesize the implementation strategy to work and moderators which could increase or decrease strategy effect via the strategy mechanism. We iterated on the CPD as a team and received feedback from the OPTICC center team (B.J.W. and A.R.L.).

Results

Informing CPD development: identifying and prioritizing key determinants

In the rapid evidence review, 41 relevant studies were identified out of 114 search results. A narrative synthesis was written summarizing determinants identified in the literature (Additional file 1: Appendix E). Determinants identified were cataloged and prioritized based on relevance to the implementation strategy. For example, one study found perceptions of lower quality of care if mammograms were done in a mobile clinic setting; we did not include this as a priority determinant because this would not be particularly modifiable in the chatbot design [33]. Priority determinants included facilitators such as having personal or family history of breast cancer and recommendations from PCPs and barriers such as medical mistrust (Table 1).

One priority barrier that emerged from the rapid evidence review was lack of knowledge about breast cancer screening; prior literature recommended patient education to explain and help individuals learn about the process of getting a mammogram [33, 76, 78]. This informed our design of the initial mockup and early chatbot prototype as a patient education and scheduling tool.

For the qualitative data analysis, we interviewed 21 of 39 individuals who responded to recruitment and completed a screening survey. Only 4 of 39 were ineligible due to living outside the 2 designated counties. Several people invited for interviews had to cancel due to schedule conflicts. All interviews were completed once started (no one dropped out of the study after starting an interview). Interview participants all identified as Black/African American, were between the ages of 40 and 69 years, and several were multilingual. We did not collect specific demographic characteristics for focus group participants. Focus group participants signed up for 1 of 4 focus groups; we did not have anyone drop out of focus groups once started.

In the qualitative analysis of interviews and focus groups, we elicited facilitators and barriers to breast cancer screening. Most of the determinants that emerged from interviews and focus groups were also identified in the evidence review (Table 1). Facilitators that appeared in evidence review and interviews and/or focus groups included recommendations and/or advocacy from a PCP, health-related social support, family or personal history of breast cancer, and adequate preparation before a mammogram (lack of preparation was framed as a barrier in evidence review). Overlapping barriers included lack of resources (e.g., cost, insurance, transportation), anxiety about what to expect, fear about negative outcomes associated with the procedure (e.g., pain), medical mistrust, prior negative experiences with the health system (including experiences of racism), lack of knowledge about breast cancer screening, lack of discussion with family and friends, and lack of clear recommendation from a PCP. Some determinants that arose from the interview and focus group data were not present in the evidence review but were prioritized given relevance to the implementation strategy. For example, participants identified the time spent to make an appointment and the time until the appointment as moderators to scheduling a mammogram (i.e., a barrier if the time to make an appointment and the time until the appointment are long and a facilitator if both are relatively short). In terms of initial reactions to the chatbot mockup, 18 of the 20 participants who were asked thought that the chatbot would be useful for scheduling (one participant was not asked this question).

In the template analysis of focus groups, we elicited facilitators and barriers to and feedback about the chatbot implementation strategy (Table 2; Additional file 1: Appendix F). Participants appreciated the purpose of the chatbot but thought that in many ways it fell short.

“I mean because that's what the app is for… To kind of make us feel… to draw us in and make us feel taken care of and informed. Educated.” (Participant, Focus Group 2).

Participants expressed mistrust in the chatbot persona, questioning the chatbot’s credibility and describing concerns about privacy and intent. They emphasized the importance of cultural inclusivity and familiarity but did not feel that the chatbot prototype achieved these goals.

“I do agree with the fact that it needs to be more culturally inclusive and appropriate for us. I didn't feel like it was personalized outside of [B.H.H.’s] involvement, there was nothing that really spoke to our people.” (Participant, Focus Group 2).

The chatbot presented to the focus groups was named “Sesi” which means “sister” in Sotho, a Bantu language spoken mostly in Southern Africa. Participants expressed frustration about conflating African and Black American experience.

“Sometimes, because we're Black, other communities patronize on us being Black… they just patronize us as if we know what it is to be in Africa and we don't. We've never been to Africa. We still have the same issues, yes, but we've never been there so we can't relate to certain things or cultures that have because we don't have that. We've never, that was not brought along with us here.” (Participant, Focus Group 3).

They questioned the value-add of the chatbot, which they presumed to be an app that would require effort to download onto a phone but might be used only once a year. However, participants thought that they would use the chatbot if it could be used to schedule a mammogram more efficiently than by telephone.

Overall, mistrust was a major theme in both the qualitative data analyses and the rapid evidence review. In interviews and focus groups, mistrust arose in the context of interactions with both the health care system and the chatbot technology. At the same time, participants noted aspects of the chatbot that increased trust and engagement. For example, they felt reassured to see women who looked like themselves in the chatbot interaction, such as an image of a Black female mammography technician. Given these findings, we decided to focus on optimizing trust in the design of the initial chatbot engagement.

Causal pathway diagram

We drew from multiple theoretical frameworks regarding trust as a determinant to the evidence-based intervention (breast cancer screening) and the implementation strategy (chatbot) (Table 3). The persuasive health message framework for developing culturally specific messages details source, channel, and message as distinct components in health messaging and has been used in prior breast cancer screening campaigns [81, 82]. We defined our implementation strategy components using these conventions—source (i.e., chatbot persona—communication style and identity), channel (i.e., form of message delivery, e.g., SMS text), and message (i.e., content of messages) (Fig. 2). As we focused first on the initial engagement with the chatbot, we attended to source credibility to engender trust. We drew from a conceptual framework in marketing that identifies expertise, homophily, and trustworthiness as characteristics of source credibility—or the belief that a source of information can be trusted [83]. Finally, to capture trustworthiness in technology, we used a conceptual framework regarding trust in artificial intelligence which includes personality and ability as human characteristics that are important drivers of trust in AI [84].

We used the CPD to model how we might address the barrier of mistrust using initial engagement with the chatbot as a mechanism and trust as a proximal outcome. Using the conceptual frameworks described above, we posited moderators to be (1) chatbot expertise, (2) chatbot designed for familiarity (i.e., homophily), and (3) chatbot personality or communication style. With our components defined, we created a CPD (Fig. 2).
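
As a minimal sketch of how the pathway described above could be recorded in a structured form, the following Python snippet encodes the barrier, mechanism, proximal outcome, and posited moderators named in the text. The class name, field names, and the `downstream_outcome` label are illustrative assumptions and are not part of the published CPD; Fig. 2 remains the authoritative representation.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CausalPathway:
    """One pathway of a causal pathway diagram (field names are illustrative)."""
    strategy: str
    determinant: str
    mechanism: str
    proximal_outcome: str
    downstream_outcome: str
    moderators: List[str] = field(default_factory=list)

    def chain(self) -> List[str]:
        """Return the hypothesized causal chain in order."""
        return [self.strategy, self.mechanism,
                self.proximal_outcome, self.downstream_outcome]

    def describe(self) -> str:
        """Render the pathway as a single readable line."""
        path = " -> ".join(self.chain())
        mods = "; ".join(self.moderators) or "none"
        return f"Targets: {self.determinant} | {path} | moderators: {mods}"


# Values below are taken from the text; the structure itself is an assumption.
cpd = CausalPathway(
    strategy="chatbot (source, channel, message)",
    determinant="mistrust",
    mechanism="initial engagement with the chatbot",
    proximal_outcome="trust",
    downstream_outcome="further engagement with the chatbot",
    moderators=[
        "chatbot expertise",
        "chatbot designed for familiarity (homophily)",
        "chatbot personality or communication style",
    ],
)
print(cpd.describe())
```

Writing the pathway down this way makes each link explicit, so a team can check that every posited moderator and outcome has a corresponding measure before testing the strategy.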

Discussion

We presented a case study example of the use of HCD methods to inform and build CPDs to design an implementation strategy rooted in causal perspective and informed by community partners. This approach addresses gaps in the use of implementation strategies including identifying and prioritizing determinants and knowledge of strategy mechanisms [6, 66].

Our work highlights the value of HCD methods which integrate end-users in the development of innovative implementation strategies and provides an approach for community/partner engagement that is especially useful when designing technology-based strategies. By using HCD methods, we were able to elicit determinants to both the evidence-based practice (breast cancer screening) and the proposed implementation strategy (chatbot). Moreover, we received nuanced feedback about the chatbot in its current design rather than as a hypothetical strategy (as visual tools in qualitative research can enhance the quality and clarity of data) [85]. By doing so, we gained important insights about the implementation strategy—e.g., our early prototype design lacked the depth of cultural inclusivity and familiarity needed to elicit trust and promote use of the chatbot despite being informed by breast cancer screening determinants elicited in our initial interviews. These insights were integral to the CPD development and prioritization of trust as a determinant to chatbot use and subsequent breast cancer screening.

Using HCD methods meant that we brought community partners into the design process in the earliest stages. Having text and visual content to react to allowed community partners to identify specifically what they liked and disliked about the design and how they would word chatbot messages differently, giving very concrete feedback to incorporate into future design iterations. There have been many calls to incorporate health equity in implementation science frameworks, strategies, and outcomes [65, 86, 87, 88, 89]. Integrating the perspectives of populations with marginalized identities into the development of implementation strategies can help to address health equity more effectively and mitigate intervention-generated inequities [90, 91].

The CPD, which was constructed from data elicited through HCD methods, directly informed our next steps in development of the chatbot prototype: (1) a factorial design experiment measuring degree of trust and engagement with different chatbot personas and (2) co-design sessions to craft chatbot messaging. While our case example details the design of an innovative implementation strategy, we believe this approach could be useful in tailoring a broad spectrum of implementation strategies and adds to existing literature on methods to tailor implementation strategies [92].
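
To illustrate what a factorial design over chatbot personas could look like, the sketch below enumerates every combination of three persona factors. The factors mirror the moderators posited in the CPD, but the two-level coding, the level labels, and the function name are hypothetical and do not describe the actual experimental design.

```python
from itertools import product

# Hypothetical factors and levels, chosen only for illustration.
factors = {
    "expertise": ["lower", "higher"],
    "familiarity": ["lower", "higher"],
    "communication_style": ["formal", "conversational"],
}


def full_factorial(factors):
    """Return one dict per experimental condition (all level combinations)."""
    names = list(factors)
    return [dict(zip(names, combo)) for combo in product(*factors.values())]


conditions = full_factorial(factors)
for number, condition in enumerate(conditions, start=1):
    print(number, condition)
```

With three two-level factors this yields eight persona conditions, each of which could be compared on measured trust and engagement.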

Limitations

Our case study example has several limitations. Topically, while most people will have access to SMS-based interventions, this intervention will not be accessible and/or acceptable for all eligible patients [51]. During this work, we received feedback that the chatbot may be especially effective among younger age groups, but that uptake may be lower among older adults. As the USPSTF guidelines are expected to change to recommend earlier screening starting at 40 years, this implementation strategy could be particularly acceptable for outreach to newly eligible patients [93]. We readily acknowledge that a single intervention will not fully address breast cancer screening inequities and should be implemented as one part of a multi-faceted health system approach.

Methodologically, the chatbot development case example could have been strengthened by using a determinant framework. We would encourage investigators interested in this approach to incorporate determinant frameworks in the initial evidence review and data collection. Our methodological approach could also be strengthened by increasing community engagement [10, 14]. While we incorporated several elements of community-engaged research (including community members on the research team, having a trained community member conduct research with community participants, and community member co-authorship), we could have further expanded community participation (e.g., by seeking community input in the initial intervention design or creating a community advisory board).

Conclusions

The use of interdisciplinary methods is core to implementation science [94]. HCD is a particularly synergistic discipline, with multiple existing applications to implementation research. We present an extension of this work and an example of the potential value of integrating HCD and implementation science researchers and methods to combine the strengths of both disciplines and develop human-centered, co-designed implementation strategies rooted in causal perspective and healthcare equity.

Availability of data and materials

Aggregated qualitative data is available by request in accordance with a funding agreement. Disaggregated data is not available to maintain privacy and confidentiality of interview and focus group participants.

Change history

25 April 2024

A Correction to this paper has been published: https://doi.org/10.1186/s43058-024-00586-9

Abbreviations

- CPD: Causal pathway diagram
- EHR: Electronic health record
- EQUATOR: Enhancing the Quality and Transparency of health research
- HCD: Human-centered design
- PCP: Primary care provider
- SMS: Short message service
- TIDieR: Template for intervention description and replication
- USPSTF: United States Preventive Services Task Force

References

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):139. https://doi.org/10.1186/1748-5908-8-139.

Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21. https://doi.org/10.1186/s13012-015-0209-1.

Beidas RS, Buttenheim AM, Mandell DS. Transforming mental health care delivery through implementation science and behavioral economics. JAMA Psychiat. 2021;78(9):941–2. https://doi.org/10.1001/jamapsychiatry.2021.1120.

Haines ER, Dopp A, Lyon AR, et al. Harmonizing evidence-based practice, implementation context, and implementation strategies with user-centered design: a case example in young adult cancer care. Implement Sci Commun. 2021;2(1):45. https://doi.org/10.1186/s43058-021-00147-4.

Lyon AR, Munson SA, Renn BN, et al. Use of human-centered design to improve implementation of evidence-based psychotherapies in low-resource communities: protocol for studies applying a framework to assess usability. JMIR Res Protoc. 2019;8(10):e14990. https://doi.org/10.2196/14990.

Lewis CC, Klasnja P, Powell BJ, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136. https://doi.org/10.3389/fpubh.2018.00136.

Lewis CC, Klasnja P, Lyon AR, et al. The mechanics of implementation strategies and measures: advancing the study of implementation mechanisms. Implement Sci Commun. 2022;3(1):114. https://doi.org/10.1186/s43058-022-00358-3.

Beidas RS, Dorsey S, Lewis CC, et al. Promises and pitfalls in implementation science from the perspective of US-based researchers: learning from a pre-mortem. Implement Sci IS. 2022;17(1):55. https://doi.org/10.1186/s13012-022-01226-3.

Pérez Jolles M, Willging CE, Stadnick NA, et al. Understanding implementation research collaborations from a co-creation lens: Recommendations for a path forward. Front Health Serv. 2022;2:942658. https://doi.org/10.3389/frhs.2022.942658.

Key KD, Furr-Holden D, Lewis EY, et al. The continuum of community engagement in research: a roadmap for understanding and assessing progress. Prog Community Health Partnersh Res Educ Action. 2019;13(4):427–34. https://doi.org/10.1353/cpr.2019.0064.

Vargas C, Whelan J, Brimblecombe J, Allender S. Co-creation, co-design, co-production for public health - a perspective on definition and distinctions. Public Health Res Pract. 2022;32(2):3222211. https://doi.org/10.17061/phrp3222211.

Slattery P, Saeri AK, Bragge P. Research co-design in health: a rapid overview of reviews. Health Res Policy Syst. 2020;18(1):17. https://doi.org/10.1186/s12961-020-0528-9.

Woodward M, Dixon-Woods M, Randall W, et al. How to co-design a prototype of a clinical practice tool: a framework with practical guidance and a case study. BMJ Qual Saf. Published online December 12, 2023:bmjqs-2023-016196. https://doi.org/10.1136/bmjqs-2023-016196.

Henderson VA, Barr KL, An LC, et al. Community-based participatory research and user-centered design in a diabetes medication information and decision tool. Prog Community Health Partnersh Res Educ Action. 2013;7(2):171–84. https://doi.org/10.1353/cpr.2013.0024.

Chen E, Leos C, Kowitt SD, Moracco KE. Enhancing community-based participatory research through human-centered design strategies. Health Promot Pract. 2020;21(1):37–48. https://doi.org/10.1177/1524839919850557.

ISO 9241–210:2019(en), Ergonomics of human-system interaction — part 210: human-centred design for interactive systems. Accessed February 24, 2024. https://www.iso.org/obp/ui/#iso:std:iso:9241:-210:ed-2:v1:en.

Holeman I, Kane D. Human-centered design for global health equity. Inf Technol Dev. 2019;26(3):477–505. https://doi.org/10.1080/02681102.2019.1667289.

Gasson S. Human-centered vs. user-centered approaches to information system design. J Inf Technol Theory Appl JITTA. 2003;5(2). https://aisel.aisnet.org/jitta/vol5/iss2/5.

Harte R, Glynn L, Rodríguez-Molinero A, et al. A human-centered design methodology to enhance the usability, human factors, and user experience of connected health systems: a three-phase methodology. JMIR Hum Factors. 2017;4(1):e8. https://doi.org/10.2196/humanfactors.5443.

Lyon AR, Brewer SK, Areán PA. Leveraging human-centered design to implement modern psychological science: Return on an early investment. Am Psychol. 2020;75(8):1067–79. https://doi.org/10.1037/amp0000652.

Hardy D, Du DY. Socioeconomic and racial disparities in cancer stage at diagnosis, tumor size, and clinical outcomes in a large cohort of women with breast cancer, 2007–2016. J Racial Ethn Health Disparities. 2021;8(4):990–1001. https://doi.org/10.1007/s40615-020-00855-y.

Eley JW, Hill HA, Chen VW, et al. Racial differences in survival from breast cancer. Results of the National Cancer Institute Black/White Cancer Survival Study. JAMA. 1994;272(12):947–54. https://doi.org/10.1001/jama.272.12.947.

Siu AL, U.S. Preventive Services Task Force. Screening for Breast Cancer: U.S. Preventive Services Task Force Recommendation Statement. Ann Intern Med. 2016;164(4):279–296. https://doi.org/10.7326/M15-2886.

Ahmed AT, Welch BT, Brinjikji W, et al. Racial disparities in screening mammography in the United States: a systematic review and meta-analysis. J Am Coll Radiol JACR. 2017;14(2):157-165.e9. https://doi.org/10.1016/j.jacr.2016.07.034.

Smith-Bindman R, Miglioretti DL, Lurie N, et al. Does utilization of screening mammography explain racial and ethnic differences in breast cancer? Ann Intern Med. 2006;144(8):541–53. https://doi.org/10.7326/0003-4819-144-8-200604180-00004.

Chapman CH, Schechter CB, Cadham CJ, et al. Identifying equitable screening mammography strategies for Black women in the United States using simulation modeling. Ann Intern Med. 2021;174(12):1637–46. https://doi.org/10.7326/M20-6506.

Ko NY, Hong S, Winn RA, Calip GS. Association of insurance status and racial disparities with the detection of early-stage breast cancer. JAMA Oncol. 2020;6(3):385–92. https://doi.org/10.1001/jamaoncol.2019.5672.

Jones CE, Maben J, Jack RH, et al. A systematic review of barriers to early presentation and diagnosis with breast cancer among black women. BMJ Open. 2014;4(2):e004076. https://doi.org/10.1136/bmjopen-2013-004076.

Katapodi MC, Pierce PF, Facione NC. Distrust, predisposition to use health services and breast cancer screening: results from a multicultural community-based survey. Int J Nurs Stud. 2010;47(8):975–83. https://doi.org/10.1016/j.ijnurstu.2009.12.014.

Passmore SR, Williams-Parry KF, Casper E, Thomas SB. Message received: African American women and breast cancer screening. Health Promot Pract. 2017;18(5):726–33. https://doi.org/10.1177/1524839917696714.

Thompson HS, Valdimarsdottir HB, Winkel G, Jandorf L, Redd W. The Group-Based Medical Mistrust Scale: psychometric properties and association with breast cancer screening. Prev Med. 2004;38(2):209–18. https://doi.org/10.1016/j.ypmed.2003.09.041.

Orji CC, Kanu C, Adelodun AI, Brown CM. Factors that influence mammography use for breast cancer screening among African American women. J Natl Med Assoc. 2020;112(6):578–92. https://doi.org/10.1016/j.jnma.2020.05.004.

Adegboyega A, Aroh A, Voigts K, Jennifer H. Regular mammography screening among African American (AA) women: qualitative application of the PEN-3 framework. J Transcult Nurs Off J Transcult Nurs Soc. 2019;30(5):444–52. https://doi.org/10.1177/1043659618803146.

Young RF, Schwartz K, Booza J. Medical barriers to mammography screening of African American women in a high cancer mortality area: implications for cancer educators and health providers. J Cancer Educ. 2011;26(2):262–9. https://doi.org/10.1007/s13187-010-0184-9.

Molina Y, Kim S, Berrios N, Calhoun EA. Medical mistrust and patient satisfaction with mammography: the mediating effects of perceived self-efficacy among navigated African American women. Health Expect Int J Public Particip Health Care Health Policy. 2015;18(6):2941–50. https://doi.org/10.1111/hex.12278.

Copeland VC, Kim YJ, Eack SM. Effectiveness of interventions for breast cancer screening in African American women: a meta-analysis. Health Serv Res. 2018;53(Suppl 1):3170–88. https://doi.org/10.1111/1475-6773.12806.

Sung JF, Blumenthal DS, Coates RJ, Williams JE, Alema-Mensah E, Liff JM. Effect of a cancer screening intervention conducted by lay health workers among inner-city women. Am J Prev Med. 1997;13(1):51–7.

West DS, Greene P, Pulley L, et al. Stepped-care, community clinic interventions to promote mammography use among low-income rural African American women. Health Educ Behav Off Publ Soc Public Health Educ. 2004;31(4 Suppl):29S-44S. https://doi.org/10.1177/1090198104266033.

Russell KM, Champion VL, Monahan PO, et al. Randomized trial of a lay health advisor and computer intervention to increase mammography screening in African American women. Cancer Epidemiol Biomark Prev Publ Am Assoc Cancer Res Cosponsored Am Soc Prev Oncol. 2010;19(1):201–10. https://doi.org/10.1158/1055-9965.EPI-09-0569.

Marshall JK, Mbah OM, Ford JG, et al. Effect of patient navigation on breast cancer screening among African American Medicare beneficiaries: a randomized controlled trial. J Gen Intern Med. 2016;31(1):68–76. https://doi.org/10.1007/s11606-015-3484-2.

Zhu K, Hunter S, Bernard L, et al. An intervention study on screening for breast cancer among single African-American women aged 65 and older. Ann Epidemiol. 2000;10(7):462–3. https://doi.org/10.1016/s1047-2797(00)00089-2.

Goel A, George J, Burack RC. Telephone reminders increase re-screening in a county breast screening program. J Health Care Poor Underserved. 2008;19(2):512–21. https://doi.org/10.1353/hpu.0.0025.

Hendren S, Winters P, Humiston S, et al. Randomized, controlled trial of a multimodal intervention to improve cancer screening rates in a safety-net primary care practice. J Gen Intern Med. 2014;29(1):41–9. https://doi.org/10.1007/s11606-013-2506-1.

Jibaja-Weiss ML, Volk RJ, Kingery P, Smith QW, Holcomb JD. Tailored messages for breast and cervical cancer screening of low-income and minority women using medical records data. Patient Educ Couns. 2003;50(2):123–32. https://doi.org/10.1016/s0738-3991(02)00119-2.

Gathirua-Mwangi WG, Monahan PO, Stump T, Rawl SM, Skinner CS, Champion VL. Mammography adherence in African-American women: results of a randomized controlled trial. Ann Behav Med Publ Soc Behav Med. 2016;50(1):70–8. https://doi.org/10.1007/s12160-015-9733-0.

Kreuter MW, Sugg-Skinner C, Holt CL, et al. Cultural tailoring for mammography and fruit and vegetable intake among low-income African-American women in urban public health centers. Prev Med. 2005;41(1):53–62. https://doi.org/10.1016/j.ypmed.2004.10.013.

Champion VL, Springston JK, Zollinger TW, et al. Comparison of three interventions to increase mammography screening in low income African American women. Cancer Detect Prev. 2006;30(6):535–44. https://doi.org/10.1016/j.cdp.2006.10.003.

De Jesus M, Ramachandra S, De Silva A, et al. A mobile health breast cancer educational and screening intervention tailored for low-income, uninsured latina immigrants. Womens Health Rep New Rochelle N. 2021;2(1):325–36. https://doi.org/10.1089/whr.2020.0112.

Ruco A, Dossa F, Tinmouth J, et al. Social media and mHealth technology for cancer screening: systematic review and meta-analysis. J Med Internet Res. 2021;23(7):e26759. https://doi.org/10.2196/26759.

Free C, Phillips G, Watson L, et al. The effectiveness of mobile-health technologies to improve health care service delivery processes: a systematic review and meta-analysis. PLoS Med. 2013;10(1):e1001363. https://doi.org/10.1371/journal.pmed.1001363.

Ntiri SO, Swanson M, Klyushnenkova EN. Text messaging as a communication modality to promote screening mammography in low-income African American women. J Med Syst. 2022;46(5):28. https://doi.org/10.1007/s10916-022-01814-2.

Peacock S, Reddy A, Leveille SG, et al. Patient portals and personal health information online: perception, access, and use by US adults. J Am Med Inform Assoc JAMIA. 2017;24(e1):e173–7. https://doi.org/10.1093/jamia/ocw095.

Anthony DL, Campos-Castillo C, Lim PS. Who isn’t using patient portals and why? Evidence and implications from a national sample of US adults. Health Aff (Millwood). 2018;37(12):1948–54. https://doi.org/10.1377/hlthaff.2018.05117.

Gardiner P, Hempstead MB, Ring L, et al. Reaching women through health information technology: the Gabby preconception care system. Am J Health Promot AJHP. 2013;27(3 Suppl). https://doi.org/10.4278/ajhp.1200113-QUAN-18.

Rickenberg R, Reeves B. The effects of animated characters on anxiety, task performance, and evaluations of user interfaces. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems. CHI ’00. Association for Computing Machinery; 2000:49–56. https://doi.org/10.1145/332040.332406.

Bickmore TW, Pfeifer LM, Byron D, et al. Usability of conversational agents by patients with inadequate health literacy: evidence from two clinical trials. J Health Commun. 2010;15(Suppl 2):197–210. https://doi.org/10.1080/10810730.2010.499991.

Graham AK, Lattie EG, Powell BJ, et al. Implementation strategies for digital mental health interventions in health care settings. Am Psychol. 2020;75(8):1080–92. https://doi.org/10.1037/amp0000686.

Parker VA, Lemak CH. Navigating patient navigation: crossing health services research and clinical boundaries. Adv Health Care Manag. 2011;11:149–83. https://doi.org/10.1108/s1474-8231(2011)0000011010.

Oca MC, Meller L, Wilson K, et al. Bias and inaccuracy in AI chatbot ophthalmologist recommendations. Cureus. 2023;15(9):e45911. https://doi.org/10.7759/cureus.45911.

Sallam M. ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. Healthc Basel Switz. 2023;11(6):887. https://doi.org/10.3390/healthcare11060887.

Garcia Valencia OA, Suppadungsuk S, Thongprayoon C, et al. Ethical implications of chatbot utilization in nephrology. J Pers Med. 2023;13(9):1363. https://doi.org/10.3390/jpm13091363.

HEDIS. NCQA. Accessed September 23, 2023. https://www.ncqa.org/hedis/.

Hoffmann TC, Glasziou PP, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687. https://doi.org/10.1136/bmj.g1687.

Tong A, Sainsbury P, Craig J. Consolidated criteria for reporting qualitative research (COREQ): a 32-item checklist for interviews and focus groups. Int J Qual Health Care J Int Soc Qual Health Care. 2007;19(6):349–57. https://doi.org/10.1093/intqhc/mzm042.

Adsul P, Chambers D, Brandt HM, et al. Grounding implementation science in health equity for cancer prevention and control. Implement Sci Commun. 2022;3(1):56. https://doi.org/10.1186/s43058-022-00311-4.

Lewis CC, Hannon PA, Klasnja P, et al. Optimizing Implementation in Cancer Control (OPTICC): protocol for an implementation science center. Implement Sci Commun. 2021;2(1):44. https://doi.org/10.1186/s43058-021-00117-w.

Creswell JW, Poth CN. Qualitative Inquiry and Research Design: Choosing Among Five Approaches. 4th ed. Los Angeles | London | New Delhi | Singapore | Washington DC: Sage; 2017. p. 15–40.

Dobbins M. Rapid review guidebook. Hamilton, ON: National Collaborating Centre for Methods and Tools; 2017. http://www.nccmt.ca/resources/rapid-review-guidebook. Accessed 18 Mar 2024.

Search | National Collaborating Centre for Methods and Tools. Accessed February 14, 2023. https://www.nccmt.ca/tools/eiph/search.

Hennink MM, Kaiser BN, Marconi VC. Code saturation versus meaning saturation: how many interviews are enough? Qual Health Res. 2017;27(4):591–608. https://doi.org/10.1177/1049732316665344.

Hsieh HF, Shannon SE. Three approaches to qualitative content analysis. Qual Health Res. 2005;15(9):1277–88. https://doi.org/10.1177/1049732305276687.

Brooks J, McCluskey S, Turley E, King N. The utility of template analysis in qualitative psychology research. Qual Res Psychol. 2015;12(2):202–22. https://doi.org/10.1080/14780887.2014.955224.

Fox AB, Hamilton AB, Frayne SM, et al. Effectiveness of an evidence-based quality improvement approach to cultural competence training: The Veterans Affairs’ “Caring for Women Veterans” Program. J Contin Educ Health Prof. 2016;36(2):96–103. https://doi.org/10.1097/CEH.0000000000000073.

Tsapatsaris A, Reichman M. Project ScanVan: mobile mammography services to decrease socioeconomic barriers and racial disparities among medically underserved women in NYC. Clin Imaging. 2021;78:60–3. https://doi.org/10.1016/j.clinimag.2021.02.040.

Wang H, Gregg A, Qiu F, et al. Breast cancer screening for patients of rural accountable care organization clinics: a multi-level analysis of barriers and facilitators. J Community Health. 2018;43(2):248–58. https://doi.org/10.1007/s10900-017-0412-x.

Huq MR, Woodard N, Okwara L, Knott CL. Breast cancer educational needs and concerns of African American women below screening age. J Cancer Educ Off J Am Assoc Cancer Educ. 2022;37(6):1677–83. https://doi.org/10.1007/s13187-021-02012-3.

Guo Y, Cheng TC, Yun LH. Factors associated with adherence to preventive breast cancer screenings among middle-aged African American women. Soc Work Public Health. 2019;34(7):646–56. https://doi.org/10.1080/19371918.2019.1649226.

Ferreira CS, Rodrigues J, Moreira S, Ribeiro F, Longatto-Filho A. Breast cancer screening adherence rates and barriers of implementation in ethnic, cultural and religious minorities: a systematic review. Mol Clin Oncol. 2021;15(1):139. https://doi.org/10.3892/mco.2021.2301.

Davis CM. Health beliefs and breast cancer screening practices among African American women in California. Int Q Community Health Educ. 2021;41(3):259–66. https://doi.org/10.1177/0272684X20942084.

Agrawal P, Chen TA, McNeill LH, et al. Factors associated with breast cancer screening adherence among church-going African American women. Int J Environ Res Public Health. 2021;18(16):8494. https://doi.org/10.3390/ijerph18168494.

Hall IJ, Johnson-Turbes A. Use of the Persuasive Health Message framework in the development of a community-based mammography promotion campaign. Cancer Causes Control CCC. 2015;26(5):775. https://doi.org/10.1007/s10552-015-0537-0.

Witte K. Fishing for success: Using the persuasive health message framework to generate effective campaign messages. In: Designing Health Messages: Approaches from Communication Theory and Public Health Practice. Sage Publications, Inc; 1995:145–166. https://doi.org/10.4135/9781452233451.n8.

Ismagilova E, Slade E, Rana NP, Dwivedi YK. The effect of characteristics of source credibility on consumer behaviour: a meta-analysis. J Retail Consum Serv. 2020;53: 101736. https://doi.org/10.1016/j.jretconser.2019.01.005.

Siau K, Wang W. Building trust in artificial intelligence, machine learning, and robotics. Cut Bus Technol J. 2018;31(2):47–53.

Glegg SMN. Facilitating interviews in qualitative research with visual tools: a typology. Qual Health Res. 2019;29(2):301–10. https://doi.org/10.1177/1049732318786485.

Gaias L, et al. Adapting strategies to promote implementation reach and equity (ASPIRE) in school mental health services. Psychol Sch. 2022. Accessed February 15, 2023. https://doi.org/10.1002/pits.22515.

Allen M, Wilhelm A, Ortega LE, Pergament S, Bates N, Cunningham B. Applying a race(ism)-conscious adaptation of the CFIR framework to understand implementation of a school-based equity-oriented intervention. Ethn Dis. 2021;31(Suppl 1):375–88. https://doi.org/10.18865/ed.31.S1.375.

Chinman M, Woodward EN, Curran GM, Hausmann LRM. Harnessing implementation science to increase the impact of health equity research. Med Care. 2017;55(9 Suppl 2):S16–23. https://doi.org/10.1097/MLR.0000000000000769.

Woodward EN, Matthieu MM, Uchendu US, Rogal S, Kirchner JE. The health equity implementation framework: proposal and preliminary study of hepatitis C virus treatment. Implement Sci IS. 2019;14(1):26. https://doi.org/10.1186/s13012-019-0861-y.

Veinot TC, Mitchell H, Ancker JS. Good intentions are not enough: how informatics interventions can worsen inequality. J Am Med Inform Assoc JAMIA. 2018;25(8):1080–8. https://doi.org/10.1093/jamia/ocy052.

Lorenc T, Oliver K. Adverse effects of public health interventions: a conceptual framework. J Epidemiol Community Health. 2014;68(3):288–90. https://doi.org/10.1136/jech-2013-203118.

Powell BJ, Beidas RS, Lewis CC, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44(2):177–94. https://doi.org/10.1007/s11414-015-9475-6.

Draft Recommendation: Breast Cancer: Screening | United States Preventive Services Taskforce. Accessed July 8, 2023. https://www.uspreventiveservicestaskforce.org/uspstf/draft-recommendation/breast-cancer-screening-adults#bcei-recommendation-title-area.

Mitchell SA, Chambers DA. Leveraging implementation science to improve cancer care delivery and patient outcomes. J Oncol Pract. 2017;13(8):523–9. https://doi.org/10.1200/JOP.2017.024729.

Acknowledgements

We thank Aiza Ali, Natasha Schmid, and Xuan Song for their work in qualitative data collection and analysis, Lorella Palazzo and Nora Henrikson for their support and feedback in rapid qualitative methods and rapid evidence review, respectively, and Predrag Klasnja for his advice and guidance in this work. We thank all the interview and focus group participants who gave us valuable feedback to inform this work.

Funding

Research reported in this publication was supported by the University of Washington Medicine Patients are First Innovation Pilot (institutional funding, no grant number), the National Cancer Institute of the National Institutes of Health under Award Number P50CA244432, and the Agency for Healthcare Research and Quality under Award Number K12HS026369. The content is solely the responsibility of the authors and does not necessarily represent the official views of University of Washington, the National Institutes of Health, or the Agency for Healthcare Research and Quality.

Author information

Contributions

L.M.M. and G.H. had full access to all the data in the study and take responsibility for the integrity of the data and the accuracy of the data analysis. L.M.M., R.L., B.H.H., A.G., A.R.L., B.J.W., N.A., P.L.H., V.F., and G.H. were involved in concept and design. L.M.M., R.L., B.H.H., A.G., N.A., V.F., and G.H. were involved in the data collection process. L.M.M., R.L., B.H.H., and G.H. analyzed and interpreted the data. L.M.M. drafted the manuscript and L.M.M., R.L., B.H.H., A.G., A.R.L., B.J.W., N.A., P.L.H., V.F., and G.H. revised the manuscript. L.M.M., R.L., B.H.H., A.G., A.R.L., B.J.W., N.A., P.L.H., V.F., and G.H. approved the final submitted version, agreed to be accountable for the report, and had final responsibility for the decision to submit for publication. L.M.M. and G.H. confirm that they had access to the data in the study and accept responsibility to submit for publication.

Ethics declarations

Ethics approval and consent to participate

The study protocol was reviewed and determined to be exempt by the University of Washington Institutional Review Board. Participants provided consent before interviews and focus groups. All participants were sent a newsletter update that summarized the manuscript and included all quotes for member checking, with an invitation to review the full manuscript prior to submission.

Consent for publication

N/A.

Competing interests

The authors report no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The original version of this article was revised: The name of co-author Bridgette H. Hempstead was misspelled as Bridgette R. Hempstead. The error has been corrected.

Supplementary Information

Additional file 1. Appendices.

Additional file 2: Fig. S1. Early Chatbot Prototype.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Marcotte, L.M., Langevin, R., Hempstead, B.H. et al. Leveraging human-centered design and causal pathway diagramming toward enhanced specification and development of innovative implementation strategies: a case example of an outreach tool to address racial inequities in breast cancer screening. Implement Sci Commun 5, 31 (2024). https://doi.org/10.1186/s43058-024-00569-w

DOI: https://doi.org/10.1186/s43058-024-00569-w