Abstract

Background

Attempting to implement evidence-based practices in contexts for which they are not well suited may compromise their fidelity and effectiveness or burden users (e.g., patients, providers, healthcare organizations) with elaborate strategies intended to force implementation. To improve the fit between evidence-based practices and contexts, implementation science experts have called for methods for adapting evidence-based practices and contexts and tailoring implementation strategies; yet, methods for considering the dynamic interplay among evidence-based practices, contexts, and implementation strategies remain lacking. We argue that harmonizing the three can be facilitated by user-centered design, an iterative and highly stakeholder-engaged set of principles and methods.

Methods

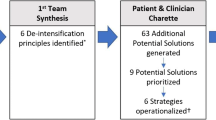

This paper presents a case example in which we used a three-phase user-centered design process to design and plan to implement a care coordination intervention for young adults with cancer. Specifically, we used usability testing to redesign and augment an existing patient-reported outcome measure that served as the basis for our intervention to optimize its usability and usefulness, ethnographic contextual inquiry to prepare the context (i.e., a comprehensive cancer center) to promote receptivity to implementation, and iterative prototyping workshops with a multidisciplinary design team to design the care coordination intervention and anticipate implementation strategies needed to enhance contextual fit.

Results

Our user-centered design process resulted in the Young Adult Needs Assessment and Service Bridge (NA-SB), including a patient-reported outcome measure and a collection of referral pathways that are triggered by the needs young adults report, as well as implementation guidance. By ensuring NA-SB directly responded to features of users and context, we designed NA-SB for implementation, potentially minimizing the strategies needed to address misalignment that may have otherwise existed. Furthermore, we designed NA-SB for scale-up; by engaging users from other cancer programs across the country to identify points of contextual variation which would require flexibility in delivery, we created a tool intended to accommodate diverse contexts.

Conclusions

User-centered design can help maximize usability and usefulness when designing evidence-based practices, preparing contexts, and informing implementation strategies—in effect, harmonizing evidence-based practices, contexts, and implementation strategies to promote implementation and effectiveness.

Background

Evidence-based practice (EBP) implementation is often challenged by the poor fit between EBPs and their implementation contexts (i.e., the “set[s] of characteristics and circumstances that consist of active and unique factors, within which the implementation is embedded”) [1, 2]. The use of an EBP (i.e., a practice with proven efficacy and effectiveness, including interventions, policies, and assessments [3]) in a context for which it is not well suited can compromise its effectiveness and burden users (e.g., patients, providers, healthcare organizations) with elaborate strategies intended to force implementation. However, EBPs are seldom designed to address the nuances of multiple, varying, complex, and changing practice contexts [1]. To accommodate nuanced contexts, EBP developers may produce increasingly complex EBPs [4], resulting in EBPs “that are ultimately too expensive, impractical, or even impossible to construct within real-world constraints” [5].

Despite consistent recognition that there is no implementation without some adaptation, methods to inform systematic EBP adaptation are in their infancy [6, 7]. Implementation scientists have identified various EBP characteristics that influence implementation [8]; such evidence may inform efforts to adapt EBPs to improve implementation. However, the relationship between EBP characteristics and implementation outcomes varies across EBPs and contexts [8], and the same EBP may demonstrate varying degrees of effectiveness in achieving the desired patient outcomes across different contexts [9]. All of this suggests that an EBP’s implementation and effectiveness are inextricably linked to the dynamic and multilevel contexts in which they are implemented [10]. Methods for considering the dynamic interplay between EBP and context have not been well articulated [6, 11].

To address discordance between EBPs and contexts, implementation scientists often turn to implementation strategies—i.e., “methods or techniques used to enhance the adoption, implementation, and sustainability” of EBPs [12, 13]. However, a “more is better” approach to deploying implementation strategies to compensate for poor EBP-context fit may burden EBP users. Moreover, implementation strategies have often shown only modest effect sizes [14]. For example, in a synthesis of systematic review findings on the effectiveness of clinical guideline implementation strategies, the authors concluded that the evidence base was modest [15]. Similarly, a systematic review of audit and feedback interventions found only small effect sizes [16]. These findings may be in part due to an insufficient consideration of key determinants, such as contextual appropriateness, when selecting or designing implementation strategies [17]. To this end, implementation scientists have called for methods for tailoring implementation strategies to EBPs and contexts [17, 18].

Rather than deploying cumbersome EBPs or implementation strategies to improve EBP-context fit, implementation scientists should seek to harmonize EBPs, contexts, and strategies (i.e., design each with respect to the other two). An analogy (Fig. 1) helps illustrate this harmonization: in embroidery, decisions about fabric, needle, and thread are interdependent. For example, a lightweight fabric and thin thread demand a smaller needle; using a large needle may damage both. Likewise, a too-thin thread may break if used with a thick needle or heavy fabric. Depending on the thread count, the fabric may require a stabilizer or alteration before embroidering. Similarly, an EBP (i.e., the thread), context (i.e., the fabric), and implementation strategies (i.e., the needle) should be harmonized to minimize user burden and optimize implementation. “Threading the needle” requires designing EBPs and implementation strategies that are aligned with key features of context.

There is a critical need for the development of “relational and dynamic approaches to theorizing the complex interplay between the characteristics of interventions, the activities of implementers, and the properties of variable broader contexts” [19]. Indeed, advancing methods for harmonizing EBPs, contexts, and implementation strategies has been articulated as a priority for implementation research [8, 20]. Here, we argue that such harmonizing may be facilitated with user-centered design (UCD), an iterative and highly stakeholder-engaged process for designing EBPs, preparing contexts, and informing implementation strategies. To demonstrate, we present a case example in young adult cancer care. Specifically, we describe a three-phase UCD process used to design a care coordination intervention for implementation in a comprehensive cancer center: (1) usability testing (optimizing the thread, i.e., the EBP), (2) ethnographic contextual inquiry (understanding and preparing the fabric, i.e., the context), and (3) prototyping with a multidisciplinary design team (threading the needle, i.e., designing the EBP and implementation strategies).

User-centered design

UCD, which is closely related to and often used interchangeably with the term “human-centered design” [21], is an iterative and highly stakeholder-engaged process for creating products which are directly responsive to their intended users and users’ contexts [22]. The primary goals of UCD are improving EBP usability (the ease with which it can be successfully used [23]) and usefulness (the extent to which it does what it is intended to do [24]). Usability and usefulness are theorized proximal determinants of perceptual implementation outcomes (i.e., acceptability, feasibility, and appropriateness; e.g., usability promotes acceptability) through which they also influence distal behavioral implementation outcomes (e.g., acceptability promotes reach) [25].

Most UCD definitions and frameworks share a common set of principles that contribute to harmonizing EBPs, contexts, and implementation strategies: (1) engaging prospective users to achieve a nuanced understanding of context, (2) refining EBPs based on user input to optimize usability and usefulness [26], and (3) a multidisciplinary design team collaborating to produce design and implementation prototypes. Together, these steps comprise an iterative cycle in which an EBP’s design and implementation strategies are refined until optimized for a given context [27]. For each of these steps, UCD offers myriad methods [26] and strategies [28] for harmonizing EBPs, contexts, and implementation strategies (summarized in Table 1). Although some of UCD’s discrete methods and principles resemble those traditionally used in implementation science (e.g., stakeholder engagement), UCD is unique in its offering of an extensive suite of methods that may be leveraged to refine EBPs, contexts, and implementation strategies. We present UCD as one promising set of approaches implementation scientists may consider drawing upon to promote adoption, implementation, and sustainment.

Figure 1 illustrates the potential of UCD for EBP-context-implementation strategy harmonization (i.e., design of each with respect to the other two). In this conceptual model, this harmonization promotes an EBP’s usability and usefulness and, subsequently, implementation (e.g., acceptability and, in turn, reach), thus limiting demand for implementation strategies. When combined with Proctor’s framework [32], this conceptual model suggests UCD’s potential to improve an EBP’s service and patient outcomes.

Methods

Case example: implementation of a care coordination intervention for young adults with cancer

Background and project objectives

Each year, more than 20,000 young adults between the ages of 18 and 30 are diagnosed with cancer [33]; many of them do not receive services to meet the range of needs they experience during and after cancer treatment [34,35,36,37,38]. Young adults’ unmet needs result in negative outcomes, including higher distress [35, 36], poorer health-related quality of life [39], and higher physical symptom burden [34]. Despite the complexity and scope of their needs, young adults often do not use potentially beneficial services/resources, even when access is not an issue [40,41,42]. This disconnect between young adult needs and their use of existing services/resources suggests the need for a care coordination model that (1) effectively assesses young adults’ multifaceted, age-specific, individual, and dynamic needs and (2) uses that information to efficiently connect them to services/resources.

A substantial step toward this care coordination model was the development of the first multidimensional measure of unmet needs designed specifically for adolescents and young adults: the Cancer Needs Questionnaire - Young People (CNQ-YP) [43, 44]. However, limitations to the usability and usefulness of patient-reported outcome measures like the CNQ-YP (e.g., length, wording ambiguity, redundancy or missing content, lack of connection between identified needs and follow-up actions) have frustrated their real-world implementation and effect on patient outcomes [45, 46]. Despite its potential limitations, we selected the CNQ-YP as a starting point for our intervention because of its specificity to the unique needs of young adults with cancer and because of preliminary evidence pointing to its face and content validity [43]. In this project, we used UCD to redesign the CNQ-YP to optimize its usability and usefulness in the North Carolina Cancer Hospital (NCCH), identify context modifications needed to promote receptivity to its implementation, and anticipate minimally necessary implementation strategies. Our UCD process (Table 2, Fig. 2) produced the Needs Assessment and Service Bridge (NA-SB), a care coordination intervention for young adults with cancer, and a plan for its implementation at NCCH. All procedures were approved by the University of North Carolina’s Institutional Review Board.

Multidisciplinary design team

In implementation research, stakeholder engagement has sometimes been limited or superficial [47,48,49]. In contrast, UCD demands an active and iterative approach to engagement, often with the same group of users reviewing prototypes at multiple time points [50]. Thus, at the beginning of the project, we convened an NA-SB design team composed of key stakeholder groups. Throughout the project, the investigator team presented prototypes and other information to the design team and, based on team members’ interactions with prototypes and collaborative discussion, made iterative improvements to NA-SB design and implementation strategies.

Design team members included researchers in cancer care delivery, patient-reported outcomes, UCD, and implementation science (n=4) and prospective NA-SB users, including NCCH clinical partners (oncologist; social worker/director of NCCH’s young adult program [n=2]) and young adult representatives (n=5) nominated by clinical partners. Nominees were primarily individuals who had previously expressed interest in research or advocacy activities related to young adult cancer and, thus, would be more likely to consider the extensive and ongoing participation that joining the design team would entail.

To recruit young adult representatives for the design team, clinical partners connected young adults via email to the project lead (EH). EH provided them with materials including a project summary, a breakdown of their expected role and time commitment, and a brief summary of UCD. EH then met with each young adult interested in participating to discuss the project and develop rapport, then met with them all together to build group rapport. Young adult representatives received a one-time $150 incentive for participation.

Review and refine prototypes (optimize the thread)

Overview

Usability testing involves hands-on evaluation of the extent to which a product or innovation can be used by specified users to achieve specified goals; usability testing can be used to iteratively refine EBPs to better align with context [51]. We conducted three rounds of usability testing to examine user interactions with the CNQ-YP: (1) an online survey assessing young adults’ needs and preferences for a needs assessment, using the CNQ-YP as a prototype for them to react to; (2) cognitive interviews [52] with young adults to triangulate survey data with in-depth evidence of their perceptions of the CNQ-YP’s usability and usefulness; and (3) concept mapping [53] exercises focused on usefulness, in which young adult providers mapped needs onto services/resources to address the needs.

Young adult survey

Objectives

The objectives are to identify missing content, streamline redundant or low-priority content, and identify other usability and usefulness concerns.

Instrument

The survey instrument (Additional File 1) included three sections: (1) study information, consent, and demographic items (i.e., age, gender, clinical characteristics, social support, educational/vocational status, health insurance status); (2) the CNQ-YP in its original form; and (3) items assessing respondents’ perception of the CNQ-YP. To assess general attitudes toward the tool, we used items from three Likert-type measures of feasibility, acceptability, and appropriateness [54]. We assessed usefulness through two Likert-type items asking (1) the extent to which respondents thought the CNQ-YP accurately captured their needs and (2) the likelihood that they would use services or resources offered to them based on indicated needs. For each of these measures, we qualitatively probed respondents on usability and usefulness issues driving their concerns with the tool’s feasibility, acceptability, or appropriateness.

Sample and recruitment

To be included in the survey, participants (n=100) had to be aged 18–30 and to have been diagnosed with cancer prior to survey administration. Although usability testing can be done with small samples (e.g., n=20) [51, 55], our target sample size was n=100 because we wanted to achieve breadth in usability data prior to achieving more depth through cognitive interviews. To promote young adult participant diversity (race, ethnicity, age, geographic region, setting of care, etc.), we recruited through key contacts (i.e., leaders of young adult programs and advocacy groups in the USA identified by our clinical partners), social media (i.e., a series of Twitter messages shared by tagging relevant groups and hashtags), and our design team.

Procedure

We administered the survey through a secure online platform, Qualtrics (Provo, UT). On average, the survey took 15 min to complete.

Analysis

We used descriptive statistics for respondents’ demographics, needs reported on the CNQ-YP tool, and perceptions of the CNQ-YP. To identify emergent themes regarding the CNQ-YP’s usability and usefulness in free-text responses, we used template analysis [56].

Cognitive interviews

Objective

The objective is to triangulate survey data on CNQ-YP usability and usefulness through a nuanced understanding of content, wording, or comprehension concerns.

Interview guide

With input from the design team, we developed the cognitive interview guide to encourage participants to “think aloud” as they read and reflected on the CNQ-YP itemset and probe them to comment on topics such as item content and wording, response options, format, length, comprehensiveness, and repetitiveness (Additional File 2).

Sample and recruitment

We purposively sampled from among survey participants. Consistent with cognitive interview methodology [52], the target sample size was small (i.e., n=5–10); however, we prioritized demographic variation when sampling to promote NA-SB’s relevance to diverse young adults. We recruited young adults (n=5) until we reached thematic saturation, i.e., when subsequent interviews did not generate new information regarding CNQ-YP’s usability or usefulness.

Procedure

EH conducted 1-h cognitive interviews (n=5) via Zoom, a video-conferencing platform. Interviews were audio-recorded. EH navigated the CNQ-YP through the screen-share function, soliciting participants’ input on each item. At the end of each interview, EH summarized her takeaways with interviewees for the purposes of member checking [57].

Analysis

We inductively identified themes, noting concerns related to the CNQ-YP’s usability and usefulness. We then created a table organizing participants’ concerns within each of the identified themes for presentation to the design team during our first workshop (described later).

Concept mapping

Objective

The objective is to promote the usefulness of the CNQ-YP by grouping needs by services/resources expected to address those needs.

Instrument

The design team approved changes to CNQ-YP content based on survey and cognitive interview results. We pre-loaded the resulting list of young adult needs into an online secure platform called Concept Systems Global Max © (CSGM). CSGM included two concept mapping exercises: (1) sorting an electronic deck of cards, each containing a young adult need, into like categories (i.e., “follow-up domains”) that could be addressed by the same service/resource (e.g., needs related to depression and anxiety might be grouped together as potentially addressable by referral to a mental health professional) and (2) rating needs on Likert-type response scales in terms of two key pragmatic properties: importance (i.e., severity of consequences if that need goes unmet) and actionability (i.e., likelihood that need can be met through a service or resource) [58].

Sample and recruitment

Concept mapping participants included cancer program providers (e.g., oncologists, nurses, and social workers) and staff (e.g., program managers and administrators)—i.e., the prospective NA-SB user groups expected to have the most knowledge about service and resource delivery for this population. Recruitment through the key contacts established during survey recruitment was intended to achieve the minimum sample size of n=15 needed for concept mapping analyses [59].

Procedure

Participants accessed the web-based concept mapping exercises through emailed links to the project in CSGM. The exercises took approximately 30 min to complete.

Analysis

CSGM used hierarchical cluster analysis to characterize how participants grouped needs, creating several potential cluster maps based on proximity among needs, where proximal needs were more frequently grouped together as triggering the same follow-up action than distal ones. CSGM also generated “go-zone graphs,” in which needs are displayed as points on a quadrant in terms of their relative importance and actionability. Concept mapping data were presented to the design team for interpretation during the first prototyping workshop (described later).
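
To make the logic of this analysis concrete, the following is a minimal sketch, not the CSGM implementation. It assumes a simplified pipeline: co-sort counts are clustered directly with greedy average linkage (concept mapping software typically applies additional steps before clustering), and the go-zone quadrants are assumed to be split at the mean of each rating. All need labels, card sorts, and ratings below are hypothetical.

```python
from itertools import combinations
from statistics import mean


def cosort_counts(sorts, needs):
    """Count how many participants placed each pair of needs in the same pile."""
    counts = {pair: 0 for pair in combinations(needs, 2)}
    for piles in sorts:  # one participant's complete card sort
        for pile in piles:
            ordered = sorted(pile, key=needs.index)  # match the key order above
            for pair in combinations(ordered, 2):
                counts[pair] += 1
    return counts


def cluster_needs(counts, needs, k):
    """Greedy average-linkage clustering: merge the most co-sorted clusters until k remain."""
    def similarity(a, b):
        return counts[(a, b)] if (a, b) in counts else counts[(b, a)]

    def linkage(c1, c2):
        return mean(similarity(a, b) for a in c1 for b in c2)

    clusters = [[need] for need in needs]
    while len(clusters) > k:
        i, j = max(
            combinations(range(len(clusters)), 2),
            key=lambda ij: linkage(clusters[ij[0]], clusters[ij[1]]),
        )
        clusters[i] += clusters[j]  # i < j, so deleting j keeps index i valid
        del clusters[j]
    return clusters


def go_zone(ratings):
    """Return needs rated at or above the mean on both importance and actionability."""
    mean_importance = mean(r[0] for r in ratings.values())
    mean_actionability = mean(r[1] for r in ratings.values())
    return [
        need
        for need, (importance, actionability) in ratings.items()
        if importance >= mean_importance and actionability >= mean_actionability
    ]


# Hypothetical data: four needs, three participants' card sorts, mean ratings.
needs = ["depression", "anxiety", "fertility", "finances"]
sorts = [
    [["depression", "anxiety", "fertility"], ["finances"]],
    [["depression", "anxiety"], ["fertility", "finances"]],
    [["anxiety", "depression"], ["finances", "fertility"]],
]
ratings = {  # need: (mean importance, mean actionability)
    "depression": (4.5, 4.0),
    "anxiety": (4.0, 4.2),
    "fertility": (4.2, 2.5),
    "finances": (3.0, 3.0),
}

clusters = cluster_needs(cosort_counts(sorts, needs), needs, k=2)
priority = go_zone(ratings)
```

Varying `k` in such a sketch yields alternative cluster solutions, analogous to the several candidate cluster maps that a design team could compare for interpretability.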

Identify user and contextual requirements (understand and prepare the fabric)

Overview

We used contextual inquiry [60], including ethnographic guided tours [61] and interviews, to gather detailed information about context to inform context modifications needed to promote receptivity to NA-SB implementation and the identification of minimally necessary implementation strategies. In contextual inquiry, which comes from UCD, in-depth data on a few carefully selected individuals provides a fuller picture of users and their context [62]. By documenting naturally occurring user tasks and interactions among patients and providers through in-depth observation, ethnography, a promising yet underused method for implementation research [63], provides rich data on implementation context [64, 65], making it useful for contextual inquiry. Ethnographic methods are relevant to UCD because they offer a more nuanced understanding of users and context than traditional questionnaires or interviews, including novel insights on user tasks, attitudes, and interactions with their environment [22, 26, 66]. Additional File 4 includes the Standards for Reporting Qualitative Research (SRQR) checklist adhered to for these data collection activities.

Guided tours

Objective

The objective is to capture the contextual elements beyond just those which users can verbalize, including details and motivations that have become habitual or implicit to the tasks they perform [67].

Instrument

To promote the flexibility required for guided tours [61, 68], we identified potential questions based on four domains of Maguire et al.’s typology of user and contextual factors to consider in UCD from which we could choose: (1) user characteristics, (2) user tasks, (3) physical and technical environment, and (4) organizational environment [26] (Additional File 3).

Sample and recruitment

To capture the perspective of potential NA-SB implementers, we conducted guided tours with our clinical partners at NCCH (n=2). To capture the patient perspective, we conducted guided tours with young adults ages 18–30 receiving inpatient or outpatient care at NCCH (n=10). Consistent with the preferred approach for determining sample size in qualitative research [69], young adults were recruited until thematic saturation was reached, i.e., when subsequent guided tours did not generate new information regarding contextual factors. Our clinical partners at NCCH facilitated the recruitment of young adults for guided tours by distributing a recruitment flyer and connecting EH via email to those interested.

Procedure

EH conducted 4-h guided tours with clinical partners as they completed clinical, administrative, and other duties, asking questions about their tasks and thoughts. EH followed young adults and accompanying family members from the moment they entered the hospital for their outpatient appointments until the moment they exited, asking them questions as they interacted with their environment and healthcare professionals, while attempting to minimize participant disruptions. For inpatient guided tours, EH spent 2 h with young adults receiving inpatient care. EH took extensive field notes and audio-recorded portions of the guided tours for which only consenting parties were present. We offered young adult participants a $50 participation incentive.

Analysis

We used template analysis, identifying a priori themes based on Maguire’s constructs and allowing for the identification of additional themes [56]. To calibrate our coding schema, EH and a colleague independently coded excerpts from one set of guided tour field notes and interview transcriptions per Maguire constructs; EH proceeded to code the remaining data. For each Maguire domain, we collaboratively synthesized user and contextual factors and created a “translation table” [70], which translated factors into their implications for NA-SB design and implementation (i.e., user and contextual requirements). For example, providers reported the importance of integrating new tools into the electronic medical record; we translated this into the requirement that NA-SB interfaces with NCCH’s electronic medical record. All requirements were vetted and prioritized by the design team during the second workshop (see description below).

Semi-structured interviews

Objectives

The objectives are to review the findings from guided tours with external users and identify any areas of divergence or additional needs or contextual features, thus promoting the generalizability of findings.

Interview guide

With input from the design team, we developed a semi-structured interview guide based on Maguire’s typology [26] and guided tour findings.

Sample

We conducted semi-structured interviews with young adult providers and advocates who had previously facilitated survey and concept mapping recruitment: program managers (n=2) and nurse navigators (n=2) serving primarily young adults, and consultants (n=2) involved in young adult program development. Given the variation across interviewees’ contexts (e.g., variation by location, institution type, model of young adult care, funding source), we considered the small sample size sufficient to achieve the objective of the interviews, which was to identify potential areas where NA-SB delivery or implementation may differ across contexts.

Procedure

EH conducted 1-h semi-structured telephone interviews. At the end of each interview, EH summarized major takeaways for member checking [57]. We audio-recorded and transcribed the interviews verbatim.

Analysis

We analyzed the interview data using template analysis [56].

Design prototypes based on user and contextual requirements (thread the needle)

Overview

UCD often involves engaging the design team in prototyping workshops or sessions during which limited versions of the intervention/product are generated collaboratively. This iterative prototyping process, which relies on visual cues to digest user data with multiple user groups, represents a novel method for coproduction in implementation science. Through two 3-h workshops, our design team collaboratively redesigned the CNQ-YP (i.e., the thread) with usability and usefulness in mind, redesigned NCCH care processes (i.e., the fabric) to facilitate the tool’s implementation and usefulness in routine care, and anticipated minimally necessary implementation strategies (i.e., the needle). These workshops resulted in NA-SB and a compilation of implementation strategies, each informed by context and designed to account for the other’s characteristics.

Design team workshop #1

Objective

During the first design team workshop, we used usability testing data to inform the elimination, addition, or refinement of CNQ-YP items. We also used concept mapping data to group needs into follow-up domains.

Sample

The sample comprised the design team members.

Materials

Design team members were given a summary of project information and usability testing results. Additionally, the study team developed index cards representing each item up for discussion (i.e., those for which usability or usefulness issues had been identified), which included usability testing data with respect to that item. We also developed index cards representing potential additional items elicited from usability testing data (see Fig. 3 for an example index card). Finally, attendees were given “cluster comparison worksheets” (see example in Fig. 3) which visually depicted, by cluster, the differences between various cluster maps generated from concept mapping data.

Procedure

To begin the design team workshop, EH gave a brief overview of the project and usability testing results. Next, we used index cards and stickers to discuss each item and vote on whether that item should be eliminated, added, or revised. Votes were then tallied to arrive at a decision about that particular item; where voting was split (i.e., more than two design team members in opposition), we discussed further until the design team reached consensus.
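
The tallying rule can be illustrated with a short sketch. This is an illustration under stated assumptions rather than the procedure we used in the workshop: it assumes that "opposition" means any vote not cast for the leading option, and the function name `tally_item_votes` and the example votes are hypothetical.

```python
from collections import Counter


def tally_item_votes(votes, max_opposed=2):
    """Tally votes for one item and flag a split vote.

    Assumption: a vote is "in opposition" if it differs from the leading option.
    A split (more than `max_opposed` opposing votes) calls for further discussion.
    """
    counts = Counter(votes)
    leading, n_leading = counts.most_common(1)[0]
    opposed = len(votes) - n_leading
    return {
        "leading": leading,
        "opposed": opposed,
        "needs_discussion": opposed > max_opposed,
    }


# Hypothetical votes from an 11-member design team on two items.
clear_vote = tally_item_votes(["revise"] * 9 + ["eliminate"] * 2)
split_vote = tally_item_votes(["revise"] * 7 + ["eliminate"] * 4)
```

In this sketch, the first item would be decided by the tally alone, whereas the second would return to the group for discussion until consensus.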

Once item revisions were made, we turned our focus toward grouping items into appropriate follow-up domains. We used the “cluster comparison worksheets” to review the concept mapping cluster maps and, through collaborative discussion, selected the most interpretable cluster map. We then moved items between clusters, as needed, and labeled each cluster according to the service that needs in that cluster should trigger. After grouping high-priority needs by follow-up domains, the design team identified services/resources at NCCH which corresponded to each follow-up domain, establishing explicit referral pathways for each domain. We also anticipated implementation strategies needed to facilitate this kind of multidisciplinary service provision through collaborative discussion.
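
The resulting structure (needs grouped into follow-up domains, each linked to a referral pathway) can be sketched as a simple lookup. The need labels, domains, and services below are hypothetical placeholders, not the actual NA-SB content; only the pairing of depression and anxiety with a mental health referral echoes the example given earlier in the concept mapping description.

```python
# Hypothetical mapping of needs to follow-up domains (placeholder content).
NEED_TO_DOMAIN = {
    "feeling depressed": "mental health",
    "feeling anxious": "mental health",
    "paying medical bills": "financial",
}

# Hypothetical referral pathway for each follow-up domain.
DOMAIN_TO_SERVICE = {
    "mental health": "referral to a mental health professional",
    "financial": "referral to a financial counselor",
}


def triggered_referrals(reported_needs):
    """Group a young adult's reported needs by domain and attach the referral each triggers."""
    referrals = {}
    for need in reported_needs:
        domain = NEED_TO_DOMAIN.get(need)
        if domain is None:
            continue  # need without a mapped follow-up domain; handled outside this sketch
        entry = referrals.setdefault(
            domain, {"service": DOMAIN_TO_SERVICE[domain], "needs": []}
        )
        entry["needs"].append(need)
    return referrals


example = triggered_referrals(["feeling anxious", "feeling depressed"])
```

Grouping by domain means that several related needs trigger a single referral rather than one referral per need.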

Analysis

Detailed notes were taken during design team workshop #1 to capture all discussion points leading to decisions on itemset content; the meeting was also recorded for further elaboration on meeting notes. After the workshop, EH drafted the revised patient-reported outcome measure, grouping needs by follow-up domain, and obtained additional design team feedback via email.

Design team workshop #2

Overview

After soliciting user and contextual data through guided tours and interviews, we convened the design team for a second workshop during which we presented the ethnography findings in juxtaposition with the patient-reported outcome measure and referral pathways produced during the first design team workshop. This juxtaposition allowed design team members to anticipate context modifications and needed implementation strategies with respect to the redesigned tool itself. Through three popular UCD methods, namely “storyboarding” (i.e., “sequences of images which show the relationship between user actions or inputs and system” [26]), “personas” (i.e., using caricatures of key user groups to convey users’ needs to the design team), and “scenarios of use” (i.e., using specific examples of how users, context, and NA-SB might interact) [26], we collaboratively specified who would deliver the needs assessment, when, how often, and with what materials and procedure. This workshop was also used to plan for NA-SB implementation.

Sample

The sample comprised design team members plus additional providers involved in young adult care at NCCH (n=6): (1) a pediatric oncology nurse practitioner, (2) a pediatric palliative care social worker, (3) a nurse navigator, (4) a pediatric palliative care physician, (5) a chaplain, and (6) a second young adult social worker. The purpose of including these individuals was to capture the perspectives of the range of provider groups that might interface with NA-SB in practice and to build buy-in for future NA-SB implementation at NCCH, potentially strengthening referral pathways.

Materials

We gave design team participants a packet of information including a project overview, the revised patient-reported outcome measure and referral pathways developed through design team workshop #1, and a summary of ethnography results. Other materials included a storyboard depicting the steps of NA-SB delivery, personas, and scenarios of use, all of which were generated based on ethnography findings. Four personas were crafted to represent four user types: (1) a young adult receiving care in pediatric oncology, (2) a young adult with frequent inpatient stays, (3) a young adult receiving maintenance treatment with appointments occurring less frequently, and (4) a young adult with a prognosis of less than 1 year. Scenarios of use reflected various appointment types and were presented using a flowchart of patients’ appointments. For example, one scenario included labs, treatment, and a clinical appointment; another included just treatment; a third included just a clinical appointment.

Procedure

First, we gave an overview of the project and ethnography results. Second, we discussed the ethnography translation table, giving the design team the opportunity to vet the research team’s translation of user and contextual factors into user and contextual requirements. We then engaged design team members through storyboarding, scenarios of use, and personas to inform the collaborative specification of NA-SB delivery. To facilitate this discussion, we divided NA-SB delivery into six segments: (1) young adult receives and completes needs assessment, (2) young adult “turns in” needs assessment, (3) data is documented, (4) data is interpreted to identify appropriate services/resources, (5) service/resource providers are notified, and (6) services and resources are provided. We then walked workshop attendees through each segment, priming them with the user and contextual requirements relevant to that segment. Together, we specified each segment of delivery, discussing both a pilot scenario as well as future broader implementation. The selected specification options were then vetted in terms of personas and scenarios of use generated from ethnographic data.

During the second design team workshop, we also discussed the future implementation of NA-SB, anticipating barriers and facilitators to implementation and brainstorming strategies to optimize NA-SB implementation. This discussion was informed by a list of barriers and facilitators gleaned from usability testing and ethnographic data. We used PollEverywhere to rank this list of barriers from most to least salient. We then discussed the three barriers ranked as the most salient in terms of the mechanisms driving those barriers as well as potential strategies to address them.
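To make the ranking step concrete, the following is a minimal illustrative sketch of one way individual rankings could be combined into a group ordering. It is not the procedure used by PollEverywhere or by the study team: the mean-rank aggregation rule, the function name `rank_barriers`, the ballots, and the barrier labels are all assumptions introduced here for illustration.

```python
from collections import defaultdict


def rank_barriers(ballots):
    """Order barriers from most to least salient by mean rank position.

    Each ballot is a list of barrier labels ordered from most salient
    (position 1) to least salient. A lower mean position means the
    barrier was, on average, ranked as more salient.
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for ballot in ballots:
        for position, barrier in enumerate(ballot, start=1):
            totals[barrier] += position
            counts[barrier] += 1
    mean_rank = {b: totals[b] / counts[b] for b in totals}
    # Sort by mean rank; break ties alphabetically so output is deterministic.
    return sorted(mean_rank, key=lambda b: (mean_rank[b], b))


# Hypothetical ballots from three attendees (placeholder labels, not study data).
ballots = [
    ["Barrier A", "Barrier B", "Barrier C", "Barrier D"],
    ["Barrier B", "Barrier A", "Barrier D", "Barrier C"],
    ["Barrier A", "Barrier C", "Barrier B", "Barrier D"],
]
ordered = rank_barriers(ballots)
top_three = ordered[:3]  # the barriers that would be carried into discussion
print(top_three)
```

Under this assumed rule, the three barriers with the lowest mean rank would be the ones taken forward for discussion of mechanisms and strategies; other aggregation rules (e.g., counting first-place votes) could yield a different ordering.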

Analysis

EH took detailed notes during design team workshop #2 to capture all discussion points leading to decisions on NA-SB delivery and implementation; the meeting was also recorded for further elaboration on meeting notes. We synthesized and analyzed notes inductively to document the results of design team prototyping and generate guidance for NA-SB delivery and implementation.

Results

By ensuring NA-SB directly responded to features of users and context, we designed NA-SB for implementation, potentially minimizing the strategies needed to address misalignment that may have otherwise existed. Furthermore, we designed NA-SB for scale-up; by engaging users from other cancer programs across the country to identify points of contextual variation which would require flexibility in delivery, we created a tool not overly tailored to one unique context. To allow for a more detailed focus on our methods, we have summarized our results in Additional File 5. This file includes results from usability testing (i.e., participant demographics, ratings of CNQ-YP needs, evaluation of CNQ-YP, and grouping of needs from concept mapping), ethnographic contextual inquiry (i.e., participant demographics, guided tour, and interview translation tables), and design team prototyping workshops (e.g., summaries of item decisions, selected concept mapping cluster map, anticipated implementation strategies). Briefly, the methods described above culminated in an NA-SB prototype, including a redesigned patient-reported outcome measure and referral pathways that are triggered based on needs young adults report, as well as a plan for implementation. To achieve our NA-SB prototype, many adaptations were made to the CNQ-YP to promote its usability and usefulness; for example, we added missing content (e.g., items on sexual health), removed unactionable content (i.e., needs that are not addressable by services), revised confusing or unpalatable items, streamlined the tool’s sequencing and response options, and, importantly, linked the needs assessed by the tool to referral pathways expected to address them. In addition to adapting the CNQ-YP, we also identified context modifications needed to promote receptivity to NA-SB implementation (e.g., changes in social worker workflow to accommodate NA-SB administration; modification of electronic medical record to allow for documentation of NA-SB). To address remaining gaps between NA-SB and its implementation context that were not addressed by intervention or context modifications, we anticipated needed implementation strategies (e.g., building buy-in among providers across disease groups; taking a phased-in approach to implementation).
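The idea of referral pathways that are triggered by reported needs can be illustrated with a small sketch. This is a hypothetical data structure, not the NA-SB itself: the item identifiers, domain names, pathway names, and the function `triggered_pathways` are placeholders assumed here, and the actual items, domains, and pathways are those reported in Additional File 5.

```python
def triggered_pathways(responses, item_domain, domain_pathway):
    """Return the referral pathways triggered by the needs a respondent reports.

    responses:      item id -> True if the young adult reports the need
    item_domain:    item id -> follow-up domain the item is grouped under
    domain_pathway: follow-up domain -> referral pathway for that domain
    Each pathway is listed once, in the order it is first triggered.
    """
    pathways = []
    for item, has_need in responses.items():
        if not has_need:
            continue  # unreported needs trigger nothing
        pathway = domain_pathway[item_domain[item]]
        if pathway not in pathways:
            pathways.append(pathway)
    return pathways


# Placeholder items, domains, and pathways (assumptions for illustration only).
item_domain = {"item_1": "domain_x", "item_2": "domain_x", "item_3": "domain_y"}
domain_pathway = {"domain_x": "pathway_x", "domain_y": "pathway_y"}
responses = {"item_1": True, "item_2": True, "item_3": False}

result = triggered_pathways(responses, item_domain, domain_pathway)
print(result)  # ['pathway_x']
```

Grouping items by follow-up domain, as in this sketch, means that several reported needs in the same domain lead to a single referral rather than duplicate ones.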

Discussion

Increasingly, we have seen critiques of the traditional research pipeline which “spans from basic science to treatment development to efficacy and occasional effectiveness trials and then to implementation” [71]. From our perspective, we need to move away from a strict adherence to this supposed linear research trajectory. There is a need to embed implementation research earlier in the pipeline and expand the scope of the field to address critical gaps that are slowing the uptake of evidence (e.g., designing interventions that are implementable; obtaining a rich understanding of context and using that understanding to improve implementation and sustainment). Such efforts may be enhanced through the application of methods from other disciplines like UCD.

Just as embroidering requires compatible thread, fabric, and needle, implementation may be optimized by harmonizing EBP, context, and implementation strategies. We acknowledge that this analogy is imperfect; for example, some might regard embroidery as decoration or embellishment; on the contrary, our intention with this analogy is to convey the integration of the thread such that it becomes a part of the fabric itself. Despite its imperfection, the analogy is useful as it urges implementation scientists to attend equally to features of EBPs, context, and implementation strategies. Doing so has the potential to limit the challenges associated with complex EBPs and implementation strategies that burden stakeholders. In this study, we leveraged methods from UCD to harmonize EBP, context, and implementation; the benefits and challenges associated with these methods are summarized in Table 3.

In this case example, usability testing elicited user concerns about the CNQ-YP that may have limited its uptake in practice, allowing our design team to redesign the CNQ-YP to maximize usability and usefulness. For example, through concept mapping, providers identified needs assessed by the CNQ-YP which, as originally written, could not be addressed with available services/resources (e.g., “I feel frustrated”); assessing such unactionable needs would have produced additional burden for users, without improving care. Through the survey and cognitive interviews, young adults identified important missing content (e.g., sexual health), and other areas in which the CNQ-YP’s content, length, wording, and response format were unacceptable. By addressing these usability and usefulness concerns upfront, we designed a tool to be more feasible, acceptable, and appropriate to users.

Considering EBP characteristics like usability and usefulness in a vacuum may compromise implementation and burden stakeholders. To avoid these concerns, we leveraged UCD contextual inquiry methods to describe both NA-SB’s specific implementation context (i.e., NCCH) as well as its broader future scale-up context (i.e., other young adult cancer programs in the USA). To explore the context, UCD offers frameworks (e.g., Maguire’s framework), as well as questionnaires (e.g., System Usability Scale [29]), and a menu of methods (e.g., diary keeping, user surveys [26]) compatible with others used by implementation scientists in the assessment of implementation determinants. Despite some overlap in UCD and implementation science methods, UCD goes further than traditional barriers/facilitators assessment by embedding users more deeply in the process. In this case example, we used ethnographic contextual inquiry to obtain a detailed understanding of users and context. Additionally, we went further than traditional barriers/facilitators assessments by engaging users in analysis to promote a shared understanding of the context: our design team reviewed ethnography findings to ensure that the user interpretation of context remained central, as opposed to relying solely on the researcher’s interpretation of contextual data.

UCD also provides methods for translating user and contextual factors into user and contextual requirements—i.e., usability and usefulness determinants [26]. Translating contextual factors into contextual requirements using UCD requirements engineering approaches (e.g., translation tables, personas, and scenarios-of-use) could help implementation scientists prioritize implementation determinants by focusing attention on the critical subset of contextual factors that influence EBP usability and usefulness [17]. In this case example, the ethnography provided valuable source data for workshop materials, helping us to leverage design team expertise to identify these usability determinants and prioritize contextual features to target with EBP redesign, context preparation, or implementation strategies. For example, during design team workshop #2, we presented several alternative scenarios of use, or simple descriptions of plausible user interactions with NA-SB, to inform the specification of NA-SB delivery. These scenarios provide user- and task-oriented information about the context in which an EBP has to operate [72], and also offer concrete examples for design team members to react to. For example, scenarios of use helped our design team walk through different patient visit types (e.g., just infusion versus infusion + clinical visit) to ensure that design decisions about staffing and timing for NA-SB administration suited the range of potential appointments.

We used UCD to enhance the usability and usefulness of NA-SB and reduce the number of implementation strategies needed to embed the tool in routine care. However, where EBP and context diverge, UCD can help tailor strategies which make EBP and context more compatible. In this case example, we anticipated the areas where NA-SB provision may clash with user or contextual requirements, some of which could not be addressed by EBP redesign or context preparation. For example, NA-SB, a tool that spans multiple domains of care, will require the cooperation of multiple departments and disciplines; although users are more likely to buy into a usable and useful tool [73, 74] and engaging users in its development likely generated some buy-in, additional implementation strategies targeting cross-department buy-in may be required. These remaining gaps in EBP-context fit inform the selection of strategies to promote NA-SB implementation. Leveraging UCD to identify the user and contextual requirements and tailor implementation strategies addresses an articulated need in the field [18, 75] and complements approaches for selecting and tailoring strategies that have recently been proposed in the implementation science literature [17]. Future work will assess the extent to which UCD minimizes the need for complex implementation strategies or, when needed, aids in the tailoring of strategies that are contextually appropriate and minimally burdensome.

As demonstrated by this case example, UCD can help implementation scientists to operationalize the field’s commitment to stakeholder engagement. For example, establishing a design team upfront ensured that users remained central throughout NA-SB development and implementation planning. Design team members offered key insights to inform data collection (e.g., review of instruments), data analysis (e.g., selection of concept mapping cluster map; prioritization of user and contextual requirements), and, ultimately, NA-SB and implementation strategy design. Further, design team members proved critical to the recruitment of users for usability testing and ethnographic data collection. UCD also offers methods for translating user feedback into design decisions, addressing another articulated gap in implementation science [76]. For example, the use of storyboards, personas, and scenarios of use allowed our design team to translate ethnographic data into NA-SB design features in a way that group discussion without such engagement methods may not have. Finally, UCD demands an iterative approach to user engagement, often with the same group of users reviewing prototypes at multiple time points; this type of iteration may be a key moderator in the relationship between stakeholder engagement and improved EBP design [50]. In keeping with the iterative nature of UCD, in future work, NA-SB will undergo additional refinement based on user interactions with our NA-SB prototype.

Applying UCD to implementation science has notable challenges. Embedding the extensive engagement UCD requires can sometimes be costly and time-intensive. Additionally, this level of engagement places issues of sampling and recruitment at the forefront. For example, the UCD process hinges on complex decisions about who counts as a user and which individuals accurately represent users more broadly. Prioritizing divergent feedback from multiple user groups [77], or weighing the relative importance of user feedback with the feasibility of design solutions, may not always be straightforward. Inexpert application of UCD methods may lead to “feature creep,” in which new ideas are incorporated into the EBP without careful consideration and evaluation of the effects of the added features. UCD’s emphasis on iterative design thinking and local insights may also raise concerns about diminishing fidelity as EBPs are recurrently revised to better align with context outside of the controlled environment where the EBP was originally designed and tested. Finally, implementation scientists may struggle to shoulder the challenges associated with incorporating new disciplines into already multidisciplinary teams and projects (e.g., reconciling terminology and frameworks). However, if implementation scientists are to leverage key insights from other disciplines, we must continue to surmount such roadblocks to knowledge integration.

Conclusions

Implementing change in dynamic healthcare settings is complex; understanding the nuances of implementation requires a multimodal, multidisciplinary purview. To this end, implementation scientists have borrowed knowledge and approaches from systems science [78, 79], organizational studies [80], cultural adaptation [81], community-based participatory research [82], behavioral psychology [83], and quality improvement [84], just to name a few. We argue that UCD methods like usability testing, ethnographic contextual inquiry, and design team prototyping can join the list of approaches available to implementation scientists. This may first require investigation of where UCD and implementation science converge and diverge. Fortunately, efforts to this effect are currently underway [85]. While points of divergence may represent barriers to integration of the two fields, they may also represent important new insights and approaches for implementation scientists to consider.

Just as embroidery requires the alignment of thread, fabric, and needle, EBP implementation and sustainment require harmonizing EBP, context, and implementation strategies. The importance of each of these has been acknowledged; however, methods for understanding the dynamic interplay among them and optimizing each with respect to the other two are lacking. UCD offers methods and approaches for achieving this. Future research should explore the utility of collaborating with UCD experts or embedding UCD approaches in implementation research [85]. In particular, we argue that UCD’s potential for promoting harmonization among EBP, context, and implementation should be tested empirically, work that is currently underway [86]. To the extent that UCD helps facilitate this harmonization, it will advance us toward the field’s goal of bridging the gap between research and practice.

Availability of data and materials

Data collection instruments are available as additional files.

Abbreviations

- EBP: Evidence-based practice

- UCD: User-centered design

- NA-SB: Needs Assessment and Service Bridge

- CNQ-YP: Cancer Needs Questionnaire - Young People

- CSGM: Concept Systems GlobalMax©

References

Chambers DA, Glasgow RE, Stange KC. The dynamic sustainability framework: addressing the paradox of sustainment amid ongoing change. Implement Sci. 2013;8(1):117. https://doi.org/10.1186/1748-5908-8-117.

Pfadenhauer LM, Mozygemba K, Gerhardus A, Hofmann B, Booth A, Lysdahl KB, et al. Context and implementation: a concept analysis towards conceptual maturity. Zeitschrift fur Evidenz, Fortbildung und Qualitat im Gesundheitswesen. 2015;109(2):103–14. https://doi.org/10.1016/j.zefq.2015.01.004.

Rabin BA, Brownson RC. Terminology for dissemination and implementation research. Dissemination and implementation research in health: Translating science to practice. 2017;2:19–45.

Chaffin M, Silovsky JF, Funderburk B, Valle LA, Brestan EV, Balachova T, et al. Parent-child interaction therapy with physically abusive parents: efficacy for reducing future abuse reports. J Consult Clin Psychol. 2004;72(3):500–10. https://doi.org/10.1037/0022-006X.72.3.500.

Lyon AR, Koerner K. User-centered design for psychosocial intervention development and implementation. Clin Psychol Sci Pract. 2016;23(2):180–200. https://doi.org/10.1111/cpsp.12154.

Chambers DA, Norton WE. The adaptome: advancing the science of intervention adaptation. Am J Prev Med. 2016;51(4):S124–31. https://doi.org/10.1016/j.amepre.2016.05.011.

Kirk MA, Moore JE, Stirman SW, Birken SA. Towards a comprehensive model for understanding adaptations’ impact: the model for adaptation design and impact (MADI). Implement Sci. 2020;15(1):1–15.

Greenhalgh T, Robert G, Macfarlane F, Bate P, Kyriakidou O. Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581–629. https://doi.org/10.1111/j.0887-378X.2004.00325.x.

Titler MG. The evidence for evidence-based practice implementation. In: Patient safety and quality: An evidence-based handbook for nurses. edn.: Agency for Healthcare Research and Quality (US); 2008.

Tierney S, Kislov R, Deaton C. A qualitative study of a primary-care based intervention to improve the management of patients with heart failure: the dynamic relationship between facilitation and context. BMC Fam Pract. 2014;15(1):153. https://doi.org/10.1186/1471-2296-15-153.

Nilsen P, Bernhardsson S. Context matters in implementation science: a scoping review of determinant frameworks that describe contextual determinants for implementation outcomes. BMC Health Serv Res. 2019;19(1):189. https://doi.org/10.1186/s12913-019-4015-3.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8(1):139. https://doi.org/10.1186/1748-5908-8-139.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10(1):21. https://doi.org/10.1186/s13012-015-0209-1.

Brownson RC, Colditz GA, Proctor EK. Chapter 15 Implementation strategies in dissemination and implementation research in health: translating science to practice: Oxford University Press; 2018.

Prior M, Guerin M, Grimmer-Somers K. The effectiveness of clinical guideline implementation strategies–a synthesis of systematic review findings. J Eval Clin Pract. 2008;14(5):888–97. https://doi.org/10.1111/j.1365-2753.2008.01014.x.

Ivers N, Jamtvedt G, Flottorp S, Young JM, Odgaard-Jensen J, French SD, O'Brien MA, Johansen M, Grimshaw J, Oxman AD. Audit and feedback: effects on professional practice and healthcare outcomes (Review). Cochrane Database Syst Rev. 2012;6. https://doi.org/10.1002/14651858.CD000259.pub3.

Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, et al. Methods to improve the selection and tailoring of implementation strategies. J Behav Health Serv Res. 2017;44(2):177–94. https://doi.org/10.1007/s11414-015-9475-6.

Grol R, Wensing M, Eccles M, Davis D. Improving patient care: the implementation of change in health care: John Wiley & Sons; 2013. https://doi.org/10.1002/9781118525975.

Kislov R, Pope C, Martin GP, Wilson PM. Harnessing the power of theorising in implementation science. Implement Sci. 2019;14(1):103. https://doi.org/10.1186/s13012-019-0957-4.

Eccles MP, Armstrong D, Baker R, Cleary K, Davies H, Davies S, et al. An implementation research agenda. Implement Sci. 2009;4(1):18. https://doi.org/10.1186/1748-5908-4-18.

DIS I: 9241-210: 2010. Ergonomics of human system interaction-part 210: human-centred design for interactive systems (formerly known as 13407). Switzerland: International Standardization Organization (ISO); 2010.

Johnson CM, Johnson TR, Zhang J. A user-centered framework for redesigning health care interfaces. J Biomed Inform. 2005;38(1):75–87. https://doi.org/10.1016/j.jbi.2004.11.005.

Standardization IOf: ISO 9241-11: Ergonomic requirements for office work with visual display terminals (VDTs): part 11: guidance on usability; 1998.

Nielsen J. Usability engineering: Elsevier; 1994.

Lyon AR, Bruns EJ. User-centered redesign of evidence-based psychosocial interventions to enhance implementation—hospitable soil or better seeds? JAMA Psychiatry. 2019;76(1):3–4.

Maguire M. Methods to support human-centred design. Int J Hum Comput Stud. 2001;55(4):587–634. https://doi.org/10.1006/ijhc.2001.0503.

Witteman HO, Dansokho SC, Colquhoun H, Coulter A, Dugas M, Fagerlin A, et al. User-centered design and the development of patient decision aids: protocol for a systematic review. Syst Rev. 2015;4(1):11. https://doi.org/10.1186/2046-4053-4-11.

Dopp AR, Parisi KE, Munson SA, Lyon AR. A glossary of user-centered design strategies for implementation experts. Transl Behav Med. 2018.

Brooke J. SUS-A quick and dirty usability scale. Usability Eval Industry. 1996;189(194):4–7.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50. https://doi.org/10.1186/1748-5908-4-50.

Lyon AR, Coifman J, Cook H, et al. The Cognitive Walkthrough for Implementation Strategies (CWIS): a pragmatic method for assessing implementation strategy usability. Research Square; 2020. https://doi.org/10.21203/rs.3.rs-136222/v1.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health Ment Health Serv Res. 2011;38(2):65–76. https://doi.org/10.1007/s10488-010-0319-7.

U.S. Cancer Statistics Working Group. U.S. Cancer Statistics Data Visualizations Tool, based on November 2018 submission data (1999-2016). U.S. Department of Health and Human Services, Centers for Disease Control and Prevention and National Cancer Institute; 2019.

Keegan TH, Lichtensztajn DY, Kato I, Kent EE, Wu X-C, West MM, et al. Unmet adolescent and young adult cancer survivors information and service needs: a population-based cancer registry study. J Cancer Surviv. 2012;6(3):239–50. https://doi.org/10.1007/s11764-012-0219-9.

Dyson GJ, Thompson K, Palmer S, Thomas DM, Schofield P. The relationship between unmet needs and distress amongst young people with cancer. Support Care Cancer. 2012;20(1):75–85. https://doi.org/10.1007/s00520-010-1059-7.

Sawyer SM, McNeil R, McCarthy M, Orme L, Thompson K, Drew S, et al. Unmet need for healthcare services in adolescents and young adults with cancer and their parent carers. Support Care Cancer. 2017;25(7):2229–39. https://doi.org/10.1007/s00520-017-3630-y.

Zebrack BJ, Corbett V, Embry L, Aguilar C, Meeske KA, Hayes-Lattin B, et al. Psychological distress and unsatisfied need for psychosocial support in adolescent and young adult cancer patients during the first year following diagnosis. Psychooncology. 2014;23(11):1267–75. https://doi.org/10.1002/pon.3533.

Zebrack BJ, Block R, Hayes-Lattin B, Embry L, Aguilar C, Meeske KA, et al. Psychosocial service use and unmet need among recently diagnosed adolescent and young adult cancer patients. Cancer. 2013;119(1):201–14. https://doi.org/10.1002/cncr.27713.

Smith A, Parsons H, Kent E, Bellizzi K, Zebrack B, Keel G, et al. Unmet support service needs and health-related quality of life among adolescents and young adults with cancer: the AYA HOPE Study. Front Oncol. 2013;3(75):1-11. https://doi.org/10.3389/fonc.2013.00075.

Smits-Seemann RR, Kaul S, Zamora ER, Wu YP, Kirchhoff AC. Barriers to follow-up care among survivors of adolescent and young adult cancer. J Cancer Surviv. 2017;11(1):126–32. https://doi.org/10.1007/s11764-016-0570-3.

Gardner MH, Barnes MJ, Bopanna S, Davis CS, Cotton PB, Heron BL, et al. Barriers to the use of psychosocial support services among adolescent and young adult survivors of pediatric cancer. J Adolescent Young Adult Oncol. 2014;3(3):112–6. https://doi.org/10.1089/jayao.2013.0036.

Barakat LP, Galtieri LR, Szalda D, Schwartz LA. Assessing the psychosocial needs and program preferences of adolescents and young adults with cancer. Support Care Cancer. 2016;24(2):823–32. https://doi.org/10.1007/s00520-015-2849-8.

Clinton-McHarg T, Carey M, Sanson-Fisher R, D’Este C, Shakeshaft A. Preliminary development and psychometric evaluation of an unmet needs measure for adolescents and young adults with cancer: the Cancer Needs Questionnaire - Young People (CNQ-YP). Health Qual Life Outcomes. 2012;10(1):13. https://doi.org/10.1186/1477-7525-10-13.

Clinton-McHarg T, Carey M, Sanson-Fisher R, Shakeshaft A, Rainbird K. Measuring the psychosocial health of adolescent and young adult (AYA) cancer survivors: a critical review. Health Qual Life Outcomes. 2010;8(1):25. https://doi.org/10.1186/1477-7525-8-25.

Howell D, Molloy S, Wilkinson K, Green E, Orchard K, Wang K, et al. Patient-reported outcomes in routine cancer clinical practice: a scoping review of use, impact on health outcomes, and implementation factors. Ann Oncol. 2015;26(9):1846–58. https://doi.org/10.1093/annonc/mdv181.

Papadakos JK, Charow RC, Papadakos CJ, Moody LJ, Giuliani ME. Evaluating cancer patient–reported outcome measures: readability and implications for clinical use. Cancer. 2019;125(8):1350–6. https://doi.org/10.1002/cncr.31928.

Oetzel JG, Zhou C, Duran B, Pearson C, Magarati M, Lucero J, et al. Establishing the psychometric properties of constructs in a community-based participatory research conceptual model. Am J Health Promot. 2015;29(5):e188–202. https://doi.org/10.4278/ajhp.130731-QUAN-398.

Brownson RC, Jacobs JA, Tabak RG, Hoehner CM, Stamatakis KA. Designing for dissemination among public health researchers: findings from a national survey in the United States. Am J Public Health. 2013;103(9):1693–9. https://doi.org/10.2105/AJPH.2012.301165.

Holt CL, Chambers DA. Opportunities and challenges in conducting community-engaged dissemination/implementation research. Transl Behav Med. 2017;7(3):389–92. https://doi.org/10.1007/s13142-017-0520-2.

Lai J, Honda T, Yang MC. A study of the role of user-centered design methods in design team projects. Artificial Intelligence for Engineering Design, Analysis and Manufacturing. 2010;24(3):303–16. https://doi.org/10.1017/S0890060410000211.

Lyon AR, Koerner K, Chung J. Usability Evaluation for Evidence-Based Psychosocial Interventions (USE-EBPI): a methodology for assessing complex intervention implementability. Implement Res Pract. 2020;1:2633489520932924.

DeMaio TJ, Rothgeb JM. Cognitive interviewing techniques: in the lab and in the field. In: Answering questions: methodology for determining cognitive and communicative processes in survey research. edn. San Francisco, CA: US: Jossey-Bass; 1996. p. 177–95.

Trochim W. The reliability of concept mapping. In: Annual Conference of the American Evaluation Association; 1993. p. 1993.

Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12(1):108. https://doi.org/10.1186/s13012-017-0635-3.

Faulkner L. Beyond the five-user assumption: benefits of increased sample sizes in usability testing. Behav Res Methods Instrum Comput. 2003;35(3):379–83. https://doi.org/10.3758/BF03195514.

King N. Using templates in the thematic analysis of text. Essential guide to qualitative methods in organizational research. 2004;2:256–70.

Carlson JA. Avoiding traps in member checking. Qual Rep. 2010;15(5):1102–13.

Acknowledgements

Not applicable

Funding

Dr. Haines’ effort was supported by funding from UNC Lineberger’s University Cancer Research Fund and by 2T32 CA122061 from the National Cancer Institute. Dr. Dopp is an investigator with the Implementation Research Institute at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (5R25MH08091607) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative (QUERI). Dr. Witteman is supported by a Canada Research Chair in Human-Centred Digital Health. Dr. Birken’s effort was supported by the National Center for Advancing Translational Sciences, National Institutes of Health, through Grant KL2TR002490. The content is solely the responsibility of the authors and does not necessarily represent the official views of the Canada Research Chairs program or the NIH.

Author information

Contributions

All authors (ERH, AD, AL, HW, MB, GV, DH, SB) were involved in the manuscript conceptualization, drafting, and editing. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

This study was approved by the University of North Carolina’s Institutional Review Board (19-0255).

Consent for publication

Not applicable

Competing interests

The authors declare that they have no competing interests.

Supplementary Information

Additional file 1.

This file contains the survey instrument used for the online survey of young adults, including demographic questions, questions from the Cancer Needs Questionnaire-Young People (CNQ-YP) tool, and questions about the tool’s usability and usefulness.

Additional file 2.

This file contains the cognitive interview guide, including the original Cancer Needs Questionnaire-Young People.

Additional file 3.

This file contains Maguire et al.’s framework of user and contextual factors to consider in user-centered design. The file also includes example questions within each domain of the framework that were used during ethnographic contextual inquiry (i.e., guided tours and semi-structured interviews).

Additional file 4.

This file contains the Standards for Reporting Qualitative Research (SRQR) checklist.

Additional file 5.

This file contains a summary of results from each phase of our user-centered design process: usability testing (i.e., participant demographics, ratings of CNQ-YP needs, evaluation of the CNQ-YP, and grouping of needs through concept mapping), ethnographic contextual inquiry (i.e., participant demographics, guided tour and interview translation tables), and design team prototyping workshops (e.g., summaries of item decisions, selected concept mapping cluster map, anticipated implementation strategies).

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Haines, E.R., Dopp, A., Lyon, A.R. et al. Harmonizing evidence-based practice, implementation context, and implementation strategies with user-centered design: a case example in young adult cancer care. Implement Sci Commun 2, 45 (2021). https://doi.org/10.1186/s43058-021-00147-4

DOI: https://doi.org/10.1186/s43058-021-00147-4