Abstract

Background

Evidence-based interventions (EBIs) could reduce cervical cancer deaths by 90%, colorectal cancer deaths by 70%, and lung cancer deaths by 95% if widely and effectively implemented in the USA. Yet, EBI implementation, when it occurs, is often suboptimal. This manuscript outlines the protocol for Optimizing Implementation in Cancer Control (OPTICC), a new implementation science center funded as part of the National Cancer Institute Implementation Science Consortium. OPTICC is designed to address three aims. Aim 1 is to develop a research program that supports the development, testing, and refinement of innovative, efficient methods for optimizing EBI implementation in cancer control. Aim 2 is to support a diverse implementation laboratory of clinical and community partners to conduct rapid implementation studies anywhere along the cancer care continuum for a wide range of cancers. Aim 3 is to build implementation science capacity in cancer control by training new investigators, engaging established investigators in cancer-focused implementation science, and contributing to the Implementation Science Consortium in Cancer.

Methods

Three cores serve as OPTICC’s foundation. The Administrative Core plans, coordinates, and evaluates the Center’s activities and leads its capacity-building efforts. The Implementation Laboratory Core (I-Lab) coordinates a network of diverse clinical and community sites, wherein studies are conducted to optimize EBI implementation, implement cancer control EBIs, and shape the Center’s agenda. The Research Program Core conducts innovative implementation studies, measurement and methods studies, and pilot studies that advance the Center’s theme. The Center takes a three-stage approach to optimizing EBI implementation: (I) identify and prioritize determinants, (II) match strategies, and (III) optimize strategies. This approach is informed by a transdisciplinary team of experts leveraging multiphase optimization strategies and criteria, user-centered design, and agile science.

Discussion

OPTICC will develop, test, and refine efficient and economical methods for optimizing EBI implementation by building implementation science capacity in cancer researchers through applications with our I-Lab partners. Once refined, these methods will be disseminated as toolkits accompanied by massive open online courses and an interactive website, the latter of which will also accumulate knowledge across OPTICC studies.

Optimizing Implementation in Cancer Control (OPTICC): protocol for an implementation science center

The next decade offers an unparalleled opportunity for implementation science to reduce cancer burden for fifteen million people in the USA who will be diagnosed with cancer [1]. Evidence-based interventions (EBIs) could reduce cervical cancer deaths by 90%, colorectal cancer deaths by 70%, and lung cancer deaths by 95% if widely and effectively implemented in the USA [1]. Yet, EBI implementation, when it occurs, is often suboptimal. In “implementation as usual,” implementation strategies are not often matched to important contextual factors; instead, they are selected based on personal preference and organizational routine, for example. Guidance for matching strategies to determinants (i.e., barriers and facilitators; see key terms and definitions in Table 1) is lacking even for established strategies like audit and feedback, which can be carried out in many ways [3, 4]. For implementation science to support optimized EBI implementation, four critical barriers must be overcome: (1) underdeveloped methods for determinant identification and prioritization [5], (2) incomplete knowledge of strategy mechanisms [6, 7], (3) underuse of methods for optimizing strategies [7], and (4) poor measurement of implementation constructs [8, 9].

Underdeveloped methods for determinant identification and prioritization

Settings in which cancer control EBIs are implemented can have dozens of implementation determinants [10, 11], complicating decisions about which to prioritize and target with implementation strategies. Typically, determinants of cancer control EBI implementation are identified by participant interviews, focus groups, or surveys with providers and/or healthcare administrators using general determinants frameworks such as the Consolidated Framework for Implementation Research (CFIR) or the Theoretical Domains Framework [11,12,13,14,15,16,17,18]. These easy-to-use methods for determinant identification can promote consistency and cumulativeness across studies; however, they are subject to the limitations of self-report, including low recognition by participants (insight), low saliency (recall), and low disclosure (social desirability). These methods are also dependent on the psychometric strength of the measures employed, which tends to be low or unknown [19]. Moreover, general determinants frameworks identify general determinants; EBI- or setting-specific determinants may go undetected. Finally, these methods (e.g., surveys, focus groups) often identify more determinants than can be addressed with available resources [11]. Yet methods for prioritizing identified determinants are rarely reported [5]. Those methods that have been reported favor prioritization of “feasible” determinants to address, not necessarily the determinants with greatest potential to undermine implementation [20]. To support optimized EBI implementation in cancer control, advances are needed in the methods for identifying and prioritizing determinants.

Incomplete knowledge of strategy mechanisms

Mechanisms are the processes through which implementation strategies produce their effects [2]. Much like knowing how hammers and screwdrivers work supports the selection of one tool over the other for specific tasks (e.g., hanging a picture), knowing how strategies work supports effective matching of strategies to determinants. For example, clinical reminders for cancer screening [strategy] are effective in addressing provider habitual behavior [determinant] by providing a cue to action [mechanism] at the point of care [7]. Although strategies have been compiled, labeled, and defined, their mechanisms remain largely unknown [6, 21]. Published systematic reviews of strategy mechanisms in mental health [6] and health care [21] revealed few mechanistic studies and only one empirically supported mechanism. Matching strategies to determinants absent knowledge of mechanisms is largely guesswork, like selecting a tool for a specific task without knowing how any of your tools work. This guesswork is evident in the relative lack of consensus among implementation scientists about which strategies among the 73 described in the Expert Recommendations for Implementing Change compilation best address the 39 potential determinants in the CFIR [22, 23]. More theoretical and empirical work is needed to establish strategy mechanisms to support effective strategy-determinant matching in cancer control EBI implementation.

Underuse of methods for optimizing strategies

In testing implementation strategies, researchers typically conduct a formative assessment to identify determinants, develop a multi-component strategy thought to address the determinants, pilot the strategy, and evaluate it in a randomized controlled trial (RCT). Well-conducted trials can generate robust evidence about the effectiveness of a multi-component strategy, as a package, in the form it took in the evaluation (e.g., the specific way audit and feedback was conducted). However, this approach has three limitations: (i) The reliance on RCTs for experimental control and the focus on implementation outcomes (e.g., screening rates) limit opportunities to determine whether strategy components are addressing identified determinants. (ii) RCTs of multi-component strategies provide limited information about which components drive effects, whether all components are needed, how component strategies interact, how strategies should be modified to be more effective, and which combination of strategy components is most cost-effective. (iii) The jump from pilot study to RCT leaves little room for optimizing strategy delivery, such as ensuring the most effective and efficient format, source, or dose is used. Thus, multi-component strategies evaluated in expensive, time-consuming RCTs are often suboptimal in their mode of delivery (e.g., in-person versus virtual), their potency to change clinical practice, and their costs to deploy. Moreover, when trials generate null results, as they often do, determining why is nearly impossible. These limitations can be addressed using principles from agile science [24, 25], a multidisciplinary method for creating and evaluating interventions through user-centered design and optimization.

Poor measurement of implementation constructs

To optimize EBI implementation in cancer control, implementers need reliable, valid, pragmatic measures to identify local implementation determinants, assess mechanism activation, and evaluate implementation outcomes. Systematic reviews indicate few such measures exist [9, 26, 27]. Most available measures of implementation constructs have unknown or dubious reliability and validity [9, 26,27,28,29]; moreover, many lack the pragmatic features valued by implementers: relevance, brevity, low burden, and actionability [30,31,32]. Although work to develop better measures to guide implementation research and practice is underway [33,34,35], more work is needed to address the measurement gap for key implementation constructs.

Optimizing Implementation in Cancer Control (OPTICC)

Optimizing Implementation in Cancer Control (P50CA244432) was funded by the National Cancer Institute as one of seven Implementation Science Centers through a one-time strategic request for proposals [36]. OPTICC is a collaboration of the University of Washington (UW), Kaiser Permanente Washington Health Research Institute (KPWHRI), and the Fred Hutchinson Cancer Research Center (FHCRC). OPTICC’s mission is to improve cancer outcomes by supporting optimized EBI implementation in community and clinical settings for a wide range of cancers across the cancer care continuum. OPTICC is guided by three specific aims.

- Aim 1. Develop a research program that supports development, testing, and refinement of innovative, efficient, and economical methods for optimizing EBI implementation in cancer control

- Aim 2. Support a diverse implementation laboratory of clinical and community partners to conduct rapid implementation studies anywhere along the cancer care continuum for a wide range of cancers

- Aim 3. Build implementation science capacity in cancer control by training new investigators, engaging established investigators in cancer-focused implementation science, and contributing to the Implementation Science Consortium in Cancer

Three cores support OPTICC’s aims. The Administrative Core plans, coordinates, and evaluates the Center’s activities and leads its capacity-building efforts. The Implementation Laboratory Core coordinates a network of diverse clinical and community sites to conduct studies to optimize EBI implementation, implement cancer control EBIs, and shape the Center’s agenda. The Research Program Core conducts innovative implementation studies, measurement and methods studies, and pilot studies that advance the Center’s theme of optimizing EBI implementation at any point along the cancer control continuum. The Center takes a three-stage approach to optimizing EBI implementation: identify and prioritize determinants, match strategies, and optimize strategies (Fig. 1). This protocol manuscript details the partners and methods guiding OPTICC’s work.

Methods/design

OPTICC’s Implementation Laboratory

OPTICC established an I-Lab to shape the Center’s research agenda and to partner with OPTICC’s Research Program Core in research to optimize implementation of EBIs in cancer control. The I-Lab is staffed by investigators and staff who conduct cancer implementation research in partnership with clinical and community organizations. We intentionally recruited I-Lab partners that could focus on OPTICC’s optimizing EBI implementation theme at any point along the cancer control continuum. These clinical systems and organizations have a history of research collaboration, and the capacity to grow their collaborations to include implementation research.

The I-Lab includes eight networks and organizations across six states. The I-Lab and its partners represent four main health-related settings: primary care clinics (e.g., federally qualified health clinics, hospital-affiliated clinics, private practices), larger health systems (with hospitals and their affiliated specialty and primary care clinics and services), cancer centers, and health departments (state and local). Table 2 details the characteristics of OPTICC’s I-Lab partners.

Engaging the I-Lab to shape the OPTICC research agenda

Throughout OPTICC’s life course, we will engage regularly with I-Lab representatives and various members of their organizations and networks. We will ensure that OPTICC’s research agenda includes I-Lab priorities through outreach communications (e.g., a quarterly newsletter), mutual participation in meetings and conferences (i.e., I-Lab representatives will attend OPTICC meetings and I-Lab leads will attend I-Lab partners’ meetings), and annual check-ins with individual I-Lab representatives to assess their organizations’ current cancer control priorities and implementation challenges. This level of engagement will enable OPTICC to (a) create requests for new proposals that address I-Lab partners’ specific cancer control priorities and implementation pain points and (b) foster partnerships between I-Lab members and investigators that result in proposals to do relevant, practice-based implementation research and speed the initial collaboration between investigators and their I-Lab partners.

OPTICC’s Research Program Core (RPC)

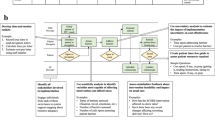

OPTICC’s RPC is staffed by a transdisciplinary group of investigators with expertise in implementation science, clinical psychology, organizational psychology, information science, computer science, sociology, medical anthropology, and public health. The core faculty use team science to develop, test, refine, and disseminate new methods for addressing the four critical barriers via studies taking place in the I-Lab. By design, project leads are supported by RPC core faculty to learn and apply OPTICC methods to build their implementation science capacity via team science. OPTICC approaches optimizing EBI implementation as a three-stage process (Fig. 1). Stage I is identify and prioritize determinants. Providing researchers and implementers with robust, efficient methods for determinant identification and prioritization will enable precise targeting of high-priority problems. Stage II is match strategies. Modeling via causal pathway diagrams (CPDs) to show relationships between strategies, mechanisms, moderators, and outcomes will clarify how strategies function, facilitate effective matching to determinants, and identify the conditions that affect strategy success. Stage III is optimize strategies. Supporting rapid testing in analog (artificially generated experimental conditions) or real-world conditions by testing causal pathways will maximize the accumulation and use of knowledge across projects.

Our focus on implementation strategies and their causal operations is rooted in Collins’s multiphase optimization strategy (MOST [37, 38]) for assessing intervention components and articulating optimization criteria (e.g., per-participant costs) for constructing effective behavioral interventions. In OPTICC, we anticipate that our I-Lab partners will generate questions that span at least seven optimization criteria (Table 3). Based on the state of the science and our partners’ goals, we will help I-Lab partners articulate which optimization criteria to prioritize, especially when they are in conflict, to inform study design and methods. For instance, one of our partners has the goal of increasing HPV home-testing reach using patient outreach materials as an implementation strategy while simultaneously optimizing these materials according to patient preference; these criteria are fortunately not in conflict. However, another partner has the goal of increasing practice facilitation impact as an implementation strategy to support colorectal cancer screening while also optimizing efficiency; these criteria may be in conflict, but we have designed a study to test for optimization of each simultaneously (see below). For each of the three optimization stages (Fig. 1), we propose to iterate new methods that can be used independently or be combined depending on which criteria are prioritized in a study. Across methods, we draw on agile science [24, 25], an extension of MOST that emphasizes constructing explicit representations of hypothesized causal pathways that connect strategies to mechanisms, determinants, and outcomes for planning evaluations and organizing evidence. Through agile science, OPTICC draws on user-centered design [39, 40] (UCD) to specifically optimize implementation strategies. 
UCD is a principled method of technology, EBI, or strategy development that focuses on the needs and desires of end users to create compelling, intuitive, and effective interfaces [41]. A key thread throughout OPTICC is an emphasis on efficient and economical learning, so ineffective ideas are discarded quickly, and additional resources expended only when preliminary evidence indicates that an idea is worth investigating further. Another key thread is an emphasis on usability of evidence for researchers and stakeholders. Below is a summary of our three-stage approach and associated new methods that address limitations of traditional implementation science approaches. Each method will be applied by study leads with support of Research Program Core faculty across OPTICC-funded studies, refined each year, and built into massive open online courses (MOOCs) and toolkits for international dissemination.

Stage I: Identify and prioritize determinants (Fig. 2)

This stage identifies determinants of implementation success [11] that are active in the specific implementation setting. Strategies not matched to high-priority determinants operating in the implementation setting are unlikely to be effective [5]. Existing methods for this stage have at least four limitations: (I) They typically do not consider relevant determinants identified in the literature. (II) They are subject to issues of recall, bias, and social desirability. (III) They do not sufficiently engage the end user in the EBI prior to assessment. (IV) Approaches to determinant prioritization typically rely on stakeholder ratings of feasibility, among other parameters, of addressing determinants, which may have little to do with impact or import. To address these limitations, we have developed four new, complementary stage I methods.

First, rapid evidence reviews will summarize and synthesize the research literature on known determinants for implementing the EBI of interest in the settings of interest. Unlike traditional systematic reviews, which can take 12+ months, rapid reviews may be completed in 3 months or less. Rapid evidence reviews are increasingly used to guide implementation [42,43,44,45] in healthcare settings, but they are not typically employed to identify implementation determinants. Implementation Study 1, for example, will ask “What are the known barriers to implementing mailed fecal immunochemical test kit programs in Federally Qualified Health Centers?” The RPC experts will collaborate with project leads and the practice partners to clarify the question and scope each review. Abstraction will focus on identified determinants and any information about timing (i.e., implementation phase), modifiability, frequency, duration, and prevalence. The output will be a list of determinants organized by consumer, provider, team, organization, system, or policy level that will inform observational checklists and interview guides for rapid ethnographic assessment.

Second, rapid ethnographic assessment (REA) will efficiently gather ethnographic data about determinants by seeking to understand people, tasks, and environments from stakeholder perspectives, engaging stakeholders as active participants and applying user-centered approaches to efficiently elicit information. Ethnographic observation will include semi-structured observations and shadowing intended or actual EBI users (e.g., in Implementation Study 1, primary care clinics that implement colorectal cancer screening interventions with and without fidelity), which overcomes self-report biases. Through combined written and audio-recorded field notes, researchers will document activities, interactions, and events (including duration, time, and location); note the setting’s physical layout; and map flows of people, work, and communication. For a range of experiences, ethnographic interviews will be informal during observation and formal through scheduled interactions with key informants. Interviews will be unstructured, descriptive, and ask task-related questions. Researchers will document occurrence or presence of barriers, noting the duration, time, location, and affected persons.

Third, design probes will elicit new and different information from observations and interviews [46,47,48]. Design probes are user-centered research toolkits with items such as disposable cameras, albums, and illustrated cards. End users are prompted to take pictures, make diary entries, draw maps, or make collages in response to tasks such as “Describe a typical day” or “Describe using [the EBI]”. With design probes, participants have 1 week to observe, reflect on, and report experiences to generate insights, reveal ideas, and illuminate lived experiences as they relate to implementing the EBI [46] (e.g., feelings, attitudes), which overcomes the limitation of assessing stakeholder perceptions in a vacuum. In follow-up interviews, participants will reflect on their engagement with the task. Through memo writing, research team members will analyze the data generated from design probes and interviews to identify new determinants, corroborate determinants discovered via REA, and describe the salience, meaning, and importance of determinants to end users.

Finally, our determinant prioritization methods rely on three criteria: criticality, chronicity, and ubiquity, which overcome the limitations of traditional rating categories that are not clearly linked to impact [49]. Criticality is how a determinant affects or likely affects an implementation outcome. Some determinants are prerequisites for outcomes (e.g., EBI awareness). The influence of other determinants on outcomes depends on their potency (e.g., strength of negative attitudes). Chronicity is how frequently a determinant occurs in the case of events (e.g., shortages of critical supplies) or persists in the case of states (e.g., unsupportive leadership). Ubiquity is how pervasive a determinant is, that is, how many EBI implementers it affects. For each identified determinant, granular data generated by the rapid review, rapid ethnographic assessment, and design probes will be organized in a table by these three criteria (criticality, chronicity, and ubiquity) and then independently rated by 3 researchers and 3 stakeholders using a 4-point Likert scale from 0 to 3 (e.g., not at all critical, somewhat critical, critical, necessary). Priority scores [50,51,52] and inter-rater agreement will be calculated. The outcome will be a list of determinants ordered by priority scores.
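The rating and ranking step described above can be illustrated with a short sketch. The protocol does not specify how priority scores or inter-rater agreement are computed, so the rules used here are assumptions made only for illustration: the priority score is taken to be the sum of the across-rater mean ratings on the three criteria, and agreement is taken to be the proportion of rater pairs giving identical ratings. The function names and the example ratings are hypothetical.

```python
from statistics import mean

CRITERIA = ("criticality", "chronicity", "ubiquity")


def priority_score(ratings):
    """Sum of the across-rater mean rating on each criterion (assumed rule)."""
    return sum(mean(r[c] for r in ratings) for c in CRITERIA)


def pairwise_agreement(ratings, criterion):
    """Proportion of rater pairs that gave identical ratings on one criterion."""
    values = [r[criterion] for r in ratings]
    pairs = [(a, b) for i, a in enumerate(values) for b in values[i + 1:]]
    return sum(a == b for a, b in pairs) / len(pairs)


def rank_determinants(ratings_by_determinant):
    """Determinant names ordered from highest to lowest priority score."""
    return sorted(
        ratings_by_determinant,
        key=lambda name: priority_score(ratings_by_determinant[name]),
        reverse=True,
    )


# Hypothetical 0-3 ratings from two raters, for illustration only.
example = {
    "unsupportive leadership": [
        {"criticality": 2, "chronicity": 3, "ubiquity": 2},
        {"criticality": 2, "chronicity": 2, "ubiquity": 2},
    ],
    "low EBI awareness": [
        {"criticality": 3, "chronicity": 3, "ubiquity": 3},
        {"criticality": 3, "chronicity": 2, "ubiquity": 3},
    ],
}

for name in rank_determinants(example):
    print(name, priority_score(example[name]))
```

A study using this approach would substitute its own scoring rule and a chance-corrected agreement statistic if one is preferred; the sketch only shows how ratings on the three criteria can be turned into an ordered list.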

Stage II: Match strategies (Fig. 3)

To effectively impact implementation outcomes, strategies must alter prioritized determinants. Drawing on agile science [24, 53], we will develop methods to create CPDs that represent evidence and hypotheses about mechanisms by which implementation strategies impact target determinants and downstream (distal) implementation outcomes. Per Hill [54] and Kazdin [55], we define implementation mechanisms as events or processes by which implementation strategies influence implementation outcomes. Our systematic review on mechanisms of implementation found that researchers frequently underspecify (or mis-specify) key factors by labeling them all “determinants,” without declaring the factor’s roles in a strategy’s operation [21]. Moreover, of the 46 studies we identified, none established a mechanism. Also, tests of hypothesized mechanisms often overlooked proximal outcomes and preconditions. As we established previously [7], CPDs would benefit implementation science by (1) driving precision in use of terms for easier comparison of results across studies; (2) articulating hypotheses about the roles of factors that influence implementation strategy functions, enabling explicit testing of these hypotheses; (3) formulating proximal outcomes that can be assessed quickly with rapid analog methods; (4) informing the choice of study designs by clarifying temporal dynamics of represented processes and constraints (e.g., preconditions) that a study must account for [7]; and (5) making evidence more useful and usable. OPTICC’s Research Program Core will support study leads to develop CPDs, which will serve as an organizing structure of our relational database for accumulating knowledge, which is described in more detail in the Discussion. We will create a toolkit for building CPDs with templates, guiding questions, and decision rules. Diagrams will include several key factors (Table 1, Fig. 4): (a) implementation strategy intended to influence the target determinant, (b) mechanism by which the strategy is hypothesized to affect the determinant, (c) target determinant, (d) observable proximal outcomes for testing mechanism activation and precursors to implementation outcomes, (e) preconditions for the mechanism to be activated and to affect outcome(s), (f) moderators (intrapersonal, interpersonal, organizational, etc.) that could impede strategy impact, and (g) implementation outcomes that should be altered by determinant changes.
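As a rough illustration of how the seven CPD components listed above might be recorded as a structured entry, the following sketch uses a Python dataclass. This is not OPTICC’s toolkit or database schema; the class name, field names, and the `missing_components` helper are hypothetical. The example values reuse the clinical reminder example given earlier in the text, and the components that the text does not specify are deliberately left empty.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CausalPathwayDiagram:
    """One hypothesized pathway from a strategy to implementation outcomes."""
    strategy: str                      # (a) implementation strategy
    mechanism: str                     # (b) how the strategy is thought to work
    target_determinant: str            # (c) determinant the strategy should alter
    proximal_outcomes: List[str] = field(default_factory=list)        # (d)
    preconditions: List[str] = field(default_factory=list)            # (e)
    moderators: List[str] = field(default_factory=list)               # (f)
    implementation_outcomes: List[str] = field(default_factory=list)  # (g)

    def missing_components(self):
        """Names of components that have not yet been specified."""
        return [name for name, value in vars(self).items() if not value]


# Example drawn from the clinical reminder illustration in the text;
# unspecified components are left empty rather than invented.
cpd = CausalPathwayDiagram(
    strategy="clinical reminders for cancer screening",
    mechanism="cue to action at the point of care",
    target_determinant="provider habitual behavior",
    implementation_outcomes=["screening rates"],
)

print(cpd.missing_components())
```

A completeness check of this kind could flag diagrams that omit proximal outcomes or preconditions, which the systematic review cited above found were often overlooked.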

CPD construction has five steps that will be elaborated in a methodology paper that includes applications across the initial OPTICC studies. In brief, the steps are as follows: One, select promising strategies to target prioritized determinants. OPTICC suggests that the following inputs inform strategy selection, weighted in this order: evidence (i.e., extant literature), plausibility (i.e., a hypothesized strategy-outcome causal chain stands up to logic), feasibility (i.e., the intended site has the capacity to carry out the strategy), and level of analysis (i.e., strategies with direct impact on prioritized determinants are prioritized over those with indirect impact). Two, confirm strategy-determinant alignment by articulating the mechanisms. To this end, articulate concrete operationalizations of the selected strategies, as they may take many forms. Three, identify preconditions, which are factors that must be in place for the selected strategy to activate the mechanism. Preconditions may occur at the intrapersonal, interpersonal, organizational, or system level. Four, identify moderators, which are factors that can amplify or weaken strategy effects and, like preconditions, can occur at multiple levels. Five, identify proximal outcomes. Too often study teams focus on distal implementation outcomes that may take months or even years to manifest. Identifying proximal outcomes means that observable, measurable, short-term changes can be rapidly detected, which could ultimately save time and money for our partners. If the operationalized strategy is thought to operate through multiple mechanisms, the same process should be repeated for those mechanisms as well. 
Once these diagrams are created, these steps should be repeated with different implementation strategy operationalizations to check if different ways of administering the strategy operate through the same mechanisms or if other moderators or preconditions should be considered. For instance, the implementation strategy learning collaboratives could occur in person or virtually, and it would be important to capture CPDs for each to determine if different factors emerge as important. If a strategy can be operationalized in several ways, diagrams should be created for each operationalization being considered for implementation. We acknowledge this represents an overly simplified, artificially linear representation of implementation, one that we will refine over time, but this process of aligning strategies ➔ mechanisms ➔ determinants ➔ outcomes, and mapping related factors, may still be a practical and useful tool for both researchers and stakeholders.

Stage III: Optimize strategies (Fig. 5)

OPTICC will develop and refine methods, guidelines, and decision rules for efficient and economical optimization of implementation strategies, with the objective of helping researchers and stakeholders construct strategies that precisely impact their target determinants. Drawing on MOST and underused experimental methods (Table 4), we will develop guidelines for selecting experimental designs that can efficiently answer key questions at different stages of implementation research and obtain the level of evidence needed for the primary research question. We will prioritize signal testing of individual strategies to identify their most promising forms, and studies for optimizing blended strategies, before testing them in a full-scale confirmatory RCT. Drawing on UCD, we will refine methods for ideation and low-fidelity prototyping to help researchers consider a broader range of alternatives for how an implementation strategy can be operationalized, enabling efficient testing of multiple versions and selection of the version that is most likely to balance effectiveness and burden or cost. We will package developed methods and guidelines as toolkits that will be housed on our publicly available website and will be searchable through the relational database.

While RCTs provide robust evidence for strategy effectiveness, they do not provide a way to efficiently and rigorously test strategy components [56, 57]. Faced with a similar problem in behavioral intervention science, MOST was developed to help behavioral scientists use a broader range of experimental designs to optimize interventions. The OPTICC Center will leverage these designs to optimize strategies, including factorial experiments, microrandomized trials (MRTs) [53, 62], sequential multiple assignment randomized trials (SMARTs), and single-case experimental designs (SCEDs) [60, 61]. These designs, described in Table 4, are highly efficient, requiring far fewer participants to test strategy components than a traditional RCT, enabling a range of research questions to be answered in less time and with fewer resources.
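To make the efficiency of one of these designs concrete, the sketch below enumerates the conditions of a full factorial experiment and estimates a component’s main effect. In a factorial design each component is switched on in half of the conditions, so every observation contributes to the estimate for every component. The three component names are borrowed from strategies mentioned in this paper but are used here only as hypothetical labels, and the outcome values are synthetic.

```python
from itertools import product
from statistics import mean


def factorial_conditions(components):
    """All on/off combinations of the components (2**k conditions)."""
    return [
        dict(zip(components, levels))
        for levels in product((0, 1), repeat=len(components))
    ]


def main_effect(observations, component):
    """Mean outcome with the component on minus mean outcome with it off."""
    on = [y for cond, y in observations if cond[component] == 1]
    off = [y for cond, y in observations if cond[component] == 0]
    return mean(on) - mean(off)


# Hypothetical strategy components, each either delivered (1) or not (0).
components = ["audit_and_feedback", "clinical_reminders", "learning_collaborative"]
conditions = factorial_conditions(components)

# Synthetic outcomes for illustration only: reminders add 10 points to a
# baseline of 50, and the other components have no effect.
observations = [(c, 50 + 10 * c["clinical_reminders"]) for c in conditions]

print(len(conditions))
for name in components:
    print(name, main_effect(observations, name))
```

With three components the design has eight conditions, and each main effect compares four conditions against the other four, rather than requiring a separate two-arm comparison per component.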

Testing and refining OPTICC’s methods through I-Lab partnered applications

Researchers and implementers can begin work in any of OPTICC’s EBI implementation stages and move forward or backward depending on their optimization goals. A linear progression (stage I ➔ II ➔ III) might be appropriate if researchers or implementers need to clarify critical determinants to select and then test strategies to alter them. Others may have an effective multicomponent strategy that could be optimized by moving backward to mapping strategy components (stage II) and then forward to optimization testing of strategy components before large-scale evaluation or use in clinical or community settings (stage III). OPTICC’s initial studies include those that approach the stages left to right (implementation study 2), right to left (implementation study 1), and those that stay within a single stage (pilot study 2) and those that span two stages (pilot study 1). Measurement development spans the stages; researchers and implementers need robust, useful measures of determinants (stage I), mechanisms (stage II), and outcomes (stage III). Project leads are supported by RPC faculty in their methods application specific to their project work and offered consultation from a national expert to further build their general implementation science capacity.

The first group of I-Lab pilot studies was identified through a competitive process as we wrote our grant proposal for OPTICC. Investigators were encouraged to focus on implementation challenges related to cancer control initiatives. OPTICC investigators evaluated proposals for fit with OPTICC’s methods as well as potential fit with one or more I-Lab partners. Several proposals built on investigators’ established relationships with I-Lab partners, ensuring that there was buy-in from an I-Lab member organization and that our first projects could hit the ground running. What follows is an overview of OPTICC’s initial studies highlighting which stage(s) of EBI implementation they occupy, the optimization goals they are motivated by, and the OPTICC methods applications (Table 5). There will be future open calls for OPTICC studies, which will engage additional I-Lab partners.

Implementation study 1 will partner with WPRN and/or BCCHP clinics to optimize the impact of practice facilitation on colorectal cancer screening in federally qualified health centers. Practice facilitation is an effective strategy for improving preventive service delivery and chronic disease management in primary care settings [63]. However, practice facilitation can be conducted in myriad ways, resulting in varying degrees of effectiveness. This study will test (using stage III methods) whether practice facilitation’s impact on colorectal cancer screening rates can be optimized through feedback on baseline determinants (using stage I methods) and monitoring strategy-determinant alignment (using stage II methods). The study is expected to increase colorectal cancer screening rates in safety-net clinic settings and optimize a widely used, yet poorly understood implementation strategy.

Implementation study 2 will partner with KPWA to optimize strategies to increase HPV self-sampling for cervical cancer screening. In a recently completed pragmatic trial, home-based testing increased screening by 50% in a hard-to-reach population [64, 65]; however, qualitative inquiry with women who did not complete screening highlighted opportunities to optimize implementation by distributing patient-centered outreach materials with HPV self-sampling kits. Using stage II methods, the study will develop outreach materials addressing determinants specific to home-based testing and known screening determinants that might be amplified in the home-testing environment. Using stage III methods, these materials will then be “tested for signal” to ensure they address identified determinants to screening completion. These optimized outreach materials will be ready for use and evaluation in a subsequent confirmatory RCT.

Pilot study 1 will partner with Harborview Medical Center/UW Medicine to assess the acceptability, feasibility, and demand for a ride-share transportation program for patients with an abnormal fecal immunochemical test (FIT) result. Transportation is a frequently cited determinant to colonoscopy completion and a likely contributor to lack of FIT follow-up [66]. Ride-share platforms are potentially scalable and cost-effective strategies because rides are scheduled by the health care team, costs are billed to the organization, and utilization does not require individual smartphone ownership. However, ride-share programs to address this transportation determinant to screening completion can be designed in different ways; using stage III methods, this study will explore ride-share employee, patient, and provider perspectives on different ride-share program models. A ride-share program optimized for patient acceptability and demand and provider acceptability and feasibility can be tested in a subsequent trial for effectiveness in reducing disparities in follow-up of abnormal FIT.

Pilot study 2 will partner with KPWA to identify promising strategies to implement hereditary breast cancer risk assessment guidelines. Clinical guidelines recommend routine ascertainment of individuals at increased hereditary breast and ovarian cancer risk to facilitate timely access to counseling, testing, and risk-management [67]. Yet only about 20% of eligible women have ever discussed genetic testing with a health professional [68]. The study leverages an existing implementation effort in Kaiser Permanente Washington to increase access to cancer genetic services. Using stage I and stage II methods, the study will match strategies to high-priority determinants to routine hereditary cancer risk assessment and delivery of genetic testing, and evaluate a staged, stakeholder-driven approach to program implementation planning. The study will generate usable knowledge for optimizing program implementation while testing the acceptability, feasibility, and usefulness of stage II methods with stakeholders.

Discussion

Response to COVID-19 pandemic

The OPTICC Center began in September 2019, just before the COVID-19 pandemic. In response to public health restrictions, clinical organizations modified their operations [69], which led to reductions in cancer care across the cancer control continuum [70,71,72]. In the state of Washington, where COVID-19 had the earliest impact in the USA, our I-Lab partners experienced similar changes and observed new barriers related to the pandemic. When we surveyed federally qualified health centers in one of our I-Lab networks, they reported substantial clinic closures and decreases in overall visits. Half reported a significant reduction in cancer screening activities, partly because staff time was shifted to the COVID-19 response. Another of our I-Lab networks analyzed Puget Sound Surveillance, Epidemiology, and End Results data from immediately before and after the start of the pandemic’s impact in the USA. They found that fewer cancers were being detected and that diagnoses had shifted to later stages than before COVID-19. Cancer patients had fewer in-office visits; some, but not all, of these visits were replaced by telemedicine. Some cancer treatments, such as chemotherapy, increased during the pandemic, while other treatments, such as surgeries, declined [72]. We will interview all I-Lab partners about the impact of COVID-19 on their cancer control efforts in January 2021 to tailor our research opportunities and capacity-building efforts to their current needs.

Health equity

As we prepare for the next group of pilot studies, the I-Lab leads will meet with representatives of each I-Lab member to learn their current cancer control priorities and implementation challenges, so that our next call for proposals can be driven largely by the implementation practice challenges faced by our partners, including those imposed by COVID-19. We will also prioritize studies that address health equity as cancer burden falls inequitably on traditionally underserved populations. To realize the Cancer Moonshot Blue Ribbon Panel’s vision [1], cancer control EBIs must be rapidly, effectively, and efficiently implemented in clinical and community settings where traditionally underserved populations receive care, work, and live. Some of the initial OPTICC studies will attempt to address health disparities by (1) testing and refining methods for optimizing EBI implementation in settings that serve racially, ethnically, and geographically diverse populations and (2) optimizing strategies that address determinants to cancer control EBIs that disadvantaged populations disproportionately experience.

Growing a diverse workforce through measurement studies

OPTICC will also advance implementation science measurement. With funding through a diversity supplement to grow the implementation science workforce, OPTICC investigators will create a scalable, flexible method of quantitatively identifying and prioritizing determinants to EBI implementation. Focusing on the Intervention Characteristics domain of the CFIR [18]—where few reliable and valid measures exist [73]—we will develop item banks for each determinant in that domain and administer the items to a large sample of healthcare professionals in our I-Lab. Study participants will be randomly assigned one of two cancer control EBIs, which they will then rate using the items. They will also complete a measure of implementation stage and intention to use the EBI, a proximal outcome to EBI adoption. We will then use item response theory to create robust, streamlined measures that can be used to assess the intervention characteristics of a wide range of EBIs yet can be tailored to different implementation contexts. In addition, we will use multiple regression to link each determinant to implementation stage and intention to use, facilitating the development of empirically valid cut-off scores indicating whether the determinant poses a barrier or facilitator to EBI implementation.
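
As a rough illustration of how item response theory can support streamlined measures, the sketch below uses a two-parameter logistic (2PL) model. The choice of the 2PL model, the item names, and the parameter values are all assumptions made for illustration; the protocol does not specify which IRT model will be fit, and rating-scale items would more likely call for a polytomous model. The sketch ranks items in a hypothetical bank by the information they provide at a given level of the latent determinant.

```python
import math


def two_pl_probability(theta, discrimination, difficulty):
    """Probability of endorsing an item under the 2PL model."""
    return 1.0 / (1.0 + math.exp(-discrimination * (theta - difficulty)))


def item_information(theta, discrimination, difficulty):
    """Fisher information of a 2PL item at latent level theta: a^2 * P * (1 - P)."""
    p = two_pl_probability(theta, discrimination, difficulty)
    return discrimination ** 2 * p * (1.0 - p)


def most_informative_items(item_bank, theta, k):
    """Return the names of the k items with the highest information at theta."""
    ranked = sorted(
        item_bank,
        key=lambda item: item_information(theta, item["a"], item["b"]),
        reverse=True,
    )
    return [item["name"] for item in ranked[:k]]


# Hypothetical item bank: "a" is discrimination, "b" is difficulty.
# Names and values are invented for illustration, not estimated from data.
item_bank = [
    {"name": "complexity_1", "a": 1.8, "b": 0.0},
    {"name": "complexity_2", "a": 0.6, "b": 0.0},
    {"name": "complexity_3", "a": 1.2, "b": 2.0},
]

short_form = most_informative_items(item_bank, theta=0.0, k=2)
print(short_form)
```

Selecting items by information at the latent levels of interest is one common way to shorten a measure while limiting the loss of precision.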

Dissemination

Across OPTICC-funded studies, our methods will be refined to ensure they are as efficient, economical, and useful to stakeholders as possible. We will develop toolkits for each method and associated training opportunities, for example in the form of massive open online courses (MOOCs). We will also create a website with separate pages for each OPTICC Center method. The front end of the website will be constructed leveraging user-centered design principles, including articulation of user archetypes and iterative design sessions. The back end of the website will contain a relational database to allow for accumulation of knowledge, first within OPTICC but ideally, in the longer term, across the Implementation Science Consortium and possibly beyond. The relational database will be explicitly structured around categories used in our CPDs to curate evidence across studies about determinants, strategies, mechanisms, etc., which are essentially common data elements. This information architecture will be a scientific contribution, as it will represent a way to unify and structure evidence about the operation of a wide range of diverse implementation strategies.
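
One way such a relational structure could look is sketched below using Python's built-in sqlite3 module. The table names, column names, and example rows are hypothetical and are not OPTICC's actual schema; the sketch only shows how determinants, strategies, and mechanisms can act as shared categories that link evidence from different studies.

```python
import sqlite3

# Hypothetical schema: one table per category, plus a linking table that
# records which study observed which strategy-mechanism-determinant pathway.
SCHEMA = """
CREATE TABLE determinant (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE strategy    (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE mechanism   (id INTEGER PRIMARY KEY, name TEXT NOT NULL UNIQUE);
CREATE TABLE pathway_evidence (
    id INTEGER PRIMARY KEY,
    study TEXT NOT NULL,
    strategy_id INTEGER NOT NULL REFERENCES strategy(id),
    mechanism_id INTEGER NOT NULL REFERENCES mechanism(id),
    determinant_id INTEGER NOT NULL REFERENCES determinant(id)
);
"""

CATEGORY_TABLES = {"determinant", "strategy", "mechanism"}


def build_database():
    """Create an in-memory database with the illustrative schema."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    return conn


def add_named(conn, table, name):
    """Insert a named entry into one of the category tables and return its id."""
    if table not in CATEGORY_TABLES:
        raise ValueError(f"unknown category table: {table}")
    return conn.execute(f"INSERT INTO {table} (name) VALUES (?)", (name,)).lastrowid


def strategies_for_determinant(conn, determinant_name):
    """List strategies with recorded evidence of addressing a determinant."""
    rows = conn.execute(
        """
        SELECT DISTINCT s.name
        FROM pathway_evidence e
        JOIN strategy s ON s.id = e.strategy_id
        JOIN determinant d ON d.id = e.determinant_id
        WHERE d.name = ?
        ORDER BY s.name
        """,
        (determinant_name,),
    ).fetchall()
    return [row[0] for row in rows]


conn = build_database()
# Placeholder rows for illustration only; these are not study results.
d_id = add_named(conn, "determinant", "transportation")
s_id = add_named(conn, "strategy", "ride-share program")
m_id = add_named(conn, "mechanism", "reduced travel burden")
conn.execute(
    "INSERT INTO pathway_evidence (study, strategy_id, mechanism_id, determinant_id) "
    "VALUES (?, ?, ?, ?)",
    ("example study", s_id, m_id, d_id),
)
print(strategies_for_determinant(conn, "transportation"))
```

Keeping each category in its own table means a determinant or strategy is named once and referenced by every study that reports on it, which is what allows evidence to accumulate across studies.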

Conclusions

We expect OPTICC to produce the following outcomes: (1) improved methods for identifying and prioritizing determinants, (2) refined methods for matching strategies to determinants, (3) optimized strategies ready for large-scale evaluation and use, and (4) new, reliable, valid, and pragmatic measures of key implementation constructs. In addition to changing the methods and measures used in implementation science, the Center will significantly impact public health by supporting cancer control EBI implementation, with research findings publicly available to implementers via a user-friendly website offering practical tools and guidance for optimizing EBI implementation.

Availability of data and materials

Not applicable.

References

Canfield DV, Dubowski KM, Whinnery JE, Lewis RJ, Ritter RM, Rogers PB. Increased cannabinoids concentrations found in specimens from fatal aviation accidents between 1997 and 2006. Forensic Sci Int. 2010;197(1-3):85–8.

Kazdin AE. Mediators and mechanisms of change in psychotherapy research. Annu Rev Clin Psychol. 2007;3:1–27.

Foy R, Eccles MP, Jamtvedt G, Young J, Grimshaw JM, Baker R. What do we know about how to do audit and feedback? Pitfalls in applying evidence from a systematic review. BMC Health Serv Res. 2005;5:50.

Ivers NM, Grimshaw JM, Jamtvedt G, Flottorp S, O'Brien MA, French SD, et al. Growing literature, stagnant science? Systematic review, meta-regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med. 2014;29(11):1534–41.

Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, Robertson N, Wensing M, Fiander M, Eccles MP, Godycki-Cwirko M, van Lieshout J, Jäger C. Tailored interventions to address determinants of practice. Cochrane Database Syst Rev. 2015;2015(4):CD005470. https://doi.org/10.1002/14651858.CD005470.pub3. PMID: 25923419; PMCID: PMC7271646.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Adm Policy Ment Health. 2016;43(5):783–98.

Lewis CC, Klasnja P, Powell BJ, Lyon AR, Tuzzio L, Jones S, et al. From classification to causality: advancing understanding of mechanisms of change in implementation science. Front Public Health. 2018;6:136.

Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implement Sci. 2014;9:118.

Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. 2015;10:155.

Lewis CC, Scott K, Marriott BR. A methodology for generating a tailored implementation blueprint: an exemplar from a youth residential setting. Implement Sci. 2018;13(1):68.

Krause J, Van Lieshout J, Klomp R, Huntink E, Aakhus E, Flottorp S, et al. Identifying determinants of care for tailoring implementation in chronic diseases: an evaluation of different methods. Implement Sci. 2014;9:102.

Colquhoun HL, Squires JE, Kolehmainen N, Fraser C, Grimshaw JM. Methods for designing interventions to change healthcare professionals’ behaviour: a systematic review. Implement Sci. 2017;12(1):30.

Cole AM, Esplin A, Baldwin LM. Adaptation of an evidence-based colorectal cancer screening program using the consolidated framework for implementation research. Prev Chronic Dis. 2015;12:E213.

Liang S, Kegler MC, Cotter M, Emily P, Beasley D, Hermstad A, et al. Integrating evidence-based practices for increasing cancer screenings in safety net health systems: a multiple case study using the Consolidated Framework for Implementation Research. Implement Sci. 2016;11:109.

VanDevanter N, Kumar P, Nguyen N, Nguyen L, Nguyen T, Stillman F, et al. Application of the Consolidated Framework for Implementation Research to assess factors that may influence implementation of tobacco use treatment guidelines in the Viet Nam public health care delivery system. Implement Sci. 2017;12(1):27.

McSherry LA, Dombrowski SU, Francis JJ, Murphy J, Martin CM, O’Leary JJ, et al. ‘It’s a can of worms’: understanding primary care practitioners’ behaviours in relation to HPV using the theoretical domains framework. Implement Sci. 2012;7(1):73.

Birken SA, Presseau J, Ellis SD, Gerstel AA, Mayer DK. Potential determinants of health-care professionals' use of survivorship care plans: a qualitative study using the theoretical domains framework. Implement Sci. 2014;9:167.

Damschroder LJ, Aron D, Keith R, Kirsh S, Alexander J, Lowery J. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Huijg JM, Gebhardt WA, Dusseldorp E, Verheijden MW, van der Zouwe N, Middelkoop BJ, et al. Measuring determinants of implementation behavior: psychometric properties of a questionnaire based on the theoretical domains framework. Implement Sci. 2014;9:33.

Aakhus E, Granlund I, Oxman AD, Flottorp SA. Tailoring interventions to implement recommendations for the treatment of elderly patients with depression: a qualitative study. Int J Mental Health Syst. 2015;9:36.

Lewis CC, Boyd MR, Walsh-Bailey C, Lyon AR, Beidas R, Mittman B, Aarons GA, Weiner BJ, Chambers DA. A systematic review of empirical studies examining mechanisms of implementation in health. Implement Sci. 2020;15(1):21. https://doi.org/10.1186/s13012-020-00983-3. PMID: 32299461; PMCID: PMC7164241.

Consolidated Framework for Implementation Research. Center for Clinical Management Research. 2020. Available from: https://cfirguide.org/choosing-strategies/.

Waltz TJ, Powell BJ, Fernandez ME, Abadie B, Damschroder LJ. Choosing implementation strategies to address contextual barriers: diversity in recommendations and future directions. Implement Sci. 2019;14(1):42.

Hekler EB, Klasnja P, Riley WT, Buman MP, Huberty J, Rivera DE, et al. Agile science: creating useful products for behavior change in the real world. Transl Behav Med. 2016;6(2):317–28.

Klasnja P, Hekler EB, Korinek EV, Harlow J, Mishra SR. Toward usable evidence: optimizing knowledge accumulation in HCI research on health behavior change. Proc SIGCHI Conf Hum Factor Comput Syst. 2017;2017:3071–82.

Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8:22.

Chor KH, Wisdom JP, Olin SC, Hoagwood KE, Horwitz SM. Measures for predictors of innovation adoption. Adm Policy Ment Health. 2015;42(5):545–73.

Weiner BJ, Amick H, Lee SY. Conceptualization and measurement of organizational readiness for change: a review of the literature in health services research and other fields. Med Care Res Rev. 2008;65(4):379–436.

Gagnon MP, Attieh R, Ghandour el K, Legare F, Ouimet M, Estabrooks CA, et al. A systematic review of instruments to assess organizational readiness for knowledge translation in health care. PLoS One. 2014;9(12):e114338.

Glasgow RE, Riley WT. Pragmatic measures: what they are and why we need them. Am J Prev Med. 2013;45(2):237–43.

Powell BJ, Stanick CF, Halko HM, Dorsey CN, Weiner BJ, Barwick MA, et al. Toward criteria for pragmatic measurement in implementation research and practice: a stakeholder-driven approach using concept mapping. Implement Sci. 2017;12(1):118.

Stanick CF, Halko HM, Dorsey CN, Weiner BJ, Powell BJ, Palinkas LA, et al. Operationalizing the ‘pragmatic’ measures construct using a stakeholder feedback and a multi-method approach. BMC Health Serv Res. 2018;18(1):882.

Kegler MC, Liang S, Weiner BJ, Tu SP, Friedman DB, Glenn BA, et al. Measuring constructs of the consolidated framework for implementation research in the context of increasing colorectal cancer screening in federally qualified health center. Health Serv Res. 2018;53(6):4178–203.

Fernandez ME, Walker TJ, Weiner BJ, Calo WA, Liang S, Risendal B, et al. Developing measures to assess constructs from the Inner Setting domain of the Consolidated Framework for Implementation Research. Implement Sci. 2018;13(1):52.

Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, et al. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12(1):108.

National Institutes of Health. Implementation Science for Cancer Control: Advanced Centers (P50 Clinical Trial Optional). (RFA-CA-19-006). National Cancer Institute; 2019. https://grants.nih.gov/grants/guide/rfa-files/rfa-ca-19-006.html.

Collins LM, Murphy SA, Nair VN, Strecher VJ. A strategy for optimizing and evaluating behavioral interventions. Ann Behav Med. 2005;30(1):65–73.

Collins LM, Murphy SA, Strecher V. The multiphase optimization strategy (MOST) and the sequential multiple assignment randomized trial (SMART): new methods for more potent eHealth interventions. Am J Prev Med. 2007;32(5 Suppl):S112–8.

Rogers Y, Sharp H, Preece J. Interaction design: beyond human-computer interaction. Chichester: Wiley; 2011.

Saffer D. Designing for interaction: creating innovative applications and devices. 2nd ed. Berkeley: New Riders; 2010.

Courage C, Baxter K. Understanding your users: a practical guide to user requirements methods, tools, and techniques. Burlington: Morgan Kaufmann Publishers, Inc.; 2004.

Owusu-Addo E, Ofori-Asenso R, Batchelor F, Mahtani K, Brijnath B. Effective implementation approaches for healthy ageing interventions for older people: a rapid review. Arch Gerontol Geriatr. 2021;92:104263.

Wolfenden L, Carruthers J, Wyse R, Yoong S. Translation of tobacco control programs in schools: findings from a rapid review of systematic reviews of implementation and dissemination interventions. Health Promot J Austr. 2014;25(2):136–8.

Teper MH, Godard-Sebillotte C, Vedel I. Achieving the goals of dementia plans: a review of evidence-informed implementation strategies. World Health Popul. 2019;18(1):37–45.

Slade SC, Philip K, Morris ME. Frameworks for embedding a research culture in allied health practice: a rapid review. Health Res Policy Syst. 2018;16(1):29.

Mattelmäki T. Applying probes – from inspirational notes to collaborative insights. CoDesign. 2005;1(2):83–102.

Sanders EBN, Stappers PJ. Probes, toolkits and prototypes: three approaches to making in codesigning. CoDesign. 2014;10(1):5–14.

Mattelmäki T. Probing for co-exploring. CoDesign. 2008;4(1):65–78.

van Tuijl AAC, Wollersheim HC, Fluit C, van Gurp PJ, Calsbeek H. Development of a tool for identifying and addressing prioritised determinants of quality improvement initiatives led by healthcare professionals: a mixed-methods study. Implement Sci Commun. 2020;1:92.

Wensing M, Oxman A, Baker R, Godycki-Cwirko M, Flottorp S, Szecsenyi J, et al. Tailored Implementation For Chronic Diseases (TICD): a project protocol. Implement Sci. 2011;6:103.

Gurses AP, Murphy DJ, Martinez EA, Berenholtz SM, Pronovost PJ. A practical tool to identify and eliminate barriers to compliance with evidence-based guidelines. Jt Comm J Qual Patient Saf. 2009;35(10):526–32, 485.

Flottorp SA, Oxman AD, Krause J, Musila NR, Wensing M, Godycki-Cwirko M, et al. A checklist for identifying determinants of practice: a systematic review and synthesis of frameworks and taxonomies of factors that prevent or enable improvements in healthcare professional practice. Implement Sci. 2013;8:35.

Klasnja P, Hekler EB, Korinek EV, Harlow J, Mishra SR. Toward usable evidence: optimizing knowledge accumulation in HCI research on health behavior change. In: 2017 CHI Conference on Human Factors in Computing Systems. Denver: ACM; 2017. p. 3071–82.

Hill AB. The environment and disease: association or causation? Proc R Soc Med. 1965;58:295–300.

Kazdin AE. Evidence-based treatment and practice: new opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. Am Psychol. 2008;63(3):146–59.

Collins LM, Dziak JJ, Kugler KC, Trail JB. Factorial experiments: efficient tools for evaluation of intervention components. Am J Prev Med. 2014;47(4):498–504.

Collins LM, Trail JB, Kugler KC, Baker TB, Piper ME, Mermelstein RJ. Evaluating individual intervention components: making decisions based on the results of a factorial screening experiment. Transl Behav Med. 2014;4(3):238–51.

Lei H, Nahum-Shani I, Lynch K, Oslin D, Murphy SA. A “SMART” design for building individualized treatment sequences. Annu Rev Clin Psychol. 2012;8:21–48.

Murphy SA. An experimental design for the development of adaptive treatment strategies. Stat Med. 2005;24(10):1455–81.

Dallery J, Cassidy RN, Raiff BR. Single-case experimental designs to evaluate novel technology-based health interventions. J Med Internet Res. 2013;15(2):e22.

Dallery J, Raiff BR. Optimizing behavioral health interventions with single-case designs: from development to dissemination. Transl Behav Med. 2014;4(3):290–303.

Liao P, Klasnja P, Tewari A, Murphy SA. Sample size calculations for micro-randomized trials in mHealth. Stat Med. 2016;35(12):1944–71.

Baskerville NB, Liddy C, Hogg W. Systematic review and meta-analysis of practice facilitation within primary care settings. Ann Fam Med. 2012;10(1):63–74.

Winer RL, et al. Effect of mailed human papillomavirus test kits vs usual care reminders on cervical cancer screening uptake, precancer detection, and treatment: a randomized clinical trial. JAMA Netw Open. 2019;2(11):e1914729.

Home-based HPV self-sampling to increase cervical cancer screening participation: a pragmatic randomized trial in a U.S. healthcare delivery system (Abstract FC 08-05). European Research Organization on Genital Infection and Neoplasia; 2017. Available from: https://www.eurogin.com/images/PDF/EUROGIN-2017.pdf.

Rohan EA, Slotman B, DeGroff A, Morrissey KG, Murillo J, Schroy P. Refining the patient navigation role in a colorectal cancer screening program: results from an intervention study. J Natl Compr Canc Netw. 2016;14(11):1371–8.

Moyer VA. Risk assessment, genetic counseling, and genetic testing for BRCA-related cancer in women: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2014;160(4):271–81.

Childers CP, Childers KK, Maggard-Gibbons M, Macinko J. National Estimates of Genetic Testing in Women With a History of Breast or Ovarian Cancer. J Clin Oncol. 2017;35(34):3800–6.

Uzzo RK, A. Geynisman, D. M. Coronavirus disease 2019 (COVID-19): Cancer screening, diagnosis, treatment, and posttreatment surveillance in uninfected patients during the pandemic. UpToDate.com. 2020.

DuBois RN. COVID-19, cancer care and prevention. Cancer Prev Res (Phila). 2020;13(11):889–92.

Carethers JM, Sengupta R, Blakey R, Ribas A, D'Souza G. Disparities in cancer prevention in the COVID-19 era. Cancer Prev Res (Phila). 2020;13(11):893–6.

Shankaran V, Chen CP. Perspectives on Cancer care in Washington State: Structural Inequities in Care Delivery and Impact of COVID-19. Value in Cancer Care Summit: Fred Hutch: Hutchinson Institute for Cancer Outcomes Research. Seattle: 2020. https://www.fredhutch.org/content/dam/www/research/institute-networksircs/hicor/vccs/2019/2020/VCC%20Summit%20Final%20Presentation_11.9.2020.pdf.

Lewis CC, Mettert K, Lyon AR. Determining the influence of intervention characteristics on implementation success requires reliable and valid measures: results from a systematic review. Implement Res Pract. accepted.

Acknowledgements

We would like to acknowledge the exceptional scientific editing services of Chris Tachibana.

OPTICC Consortium

Paula Blasi1, Diana Buist1, Allison Cole2,3, Shannon Dorsey4,5, Marlaine Figueroa Gray1, Nora B. Henrikson1, Rachel Issaka6,7, Salene Jones6, Sarah Knerr2, Aaron R. Lyon8, Lorella Palazzo1, Laura Panattoni6, Michael D. Pullmann8, Leah Tuzzio1, Thuy Vu2, John Weeks1, Rachel Winer9

1. Kaiser Permanente Washington Health Research Institute, Seattle, Washington, USA

2. Department of Health Services, University of Washington, Seattle, Washington, USA

3. Department of Family Medicine, University of Washington, Seattle, Washington, USA

4. Department of Global Health, University of Washington, Seattle, Washington, USA

5. Department of Psychology, University of Washington, Seattle, Washington, USA

6. Fred Hutch Cancer Research Center, Seattle, Washington, USA

7. Gastroenterology and Hepatology, University of Washington, Seattle, Washington, USA

8. Department of Psychiatry and Behavioral Sciences, University of Washington, Seattle, Washington, USA

9. Department of Epidemiology, University of Washington, Seattle, Washington, USA

Funding

Funding for the Optimizing Implementation in Cancer Control Center (P50CA244432) is provided by the National Cancer Institute.

Author information

Contributions

The OPTICC Center was developed in collaboration with the entire authorship team. This manuscript was led by CCL, who brought together relevant grant text for the introduction, methods, and discussion. CCL, BJW, and PK developed the methods featured in OPTICC’s Research Program Core. PH and LMB developed the Implementation Laboratory, with AJ contributing text for that section of this manuscript. All named authors (including RH and JB) and the OPTICC Team reviewed and approved the final version of this manuscript for publication.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Lewis, C.C., Hannon, P.A., Klasnja, P. et al. Optimizing Implementation in Cancer Control (OPTICC): protocol for an implementation science center. Implement Sci Commun 2, 44 (2021). https://doi.org/10.1186/s43058-021-00117-w

DOI: https://doi.org/10.1186/s43058-021-00117-w