Abstract

Background

Two of the current methodological barriers to implementation science efforts are the lack of agreement regarding constructs hypothesized to affect implementation success and identifiable measures of these constructs. In order to address these gaps, the main goals of this paper were to identify a multi-level framework that captures the predominant factors that impact implementation outcomes, conduct a systematic review of available measures assessing constructs subsumed within these primary factors, and determine the criterion validity of these measures in the search articles.

Method

We conducted a systematic literature review to identify articles reporting the use or development of measures designed to assess constructs that predict the implementation of evidence-based health innovations. Articles published through 12 August 2012 were identified through MEDLINE, CINAHL, PsycINFO and the journal Implementation Science. We then utilized a modified five-factor framework in order to code whether each measure contained items that assess constructs representing structural, organizational, provider, patient, and innovation level factors. Further, we coded the criterion validity of each measure within the search articles obtained.

Results

Our review identified 62 measures. Results indicate that organization, provider, and innovation-level constructs have the greatest number of measures available for use, whereas structural and patient-level constructs have the fewest. Additionally, relatively few measures demonstrated criterion validity, or reliable association with an implementation outcome (e.g., fidelity).

Discussion

In light of these findings, our discussion centers on strategies that researchers can utilize in order to identify, adapt, and improve extant measures for use in their own implementation research. In total, our literature review and resulting measures compendium increase the capacity of researchers to conceptualize and measure implementation-related constructs in their ongoing and future research.

Background

Each year, billions of dollars are spent in countries around the world to support the development of evidence-based health innovations [1, 2]—interventions, practices, and guidelines designed to improve human health. Yet, only a small fraction of these innovations are ever implemented into practice [3], and efforts to implement these practices can take many years [4]. Thus, new approaches are greatly needed in order to accelerate the rate at which existing and emergent knowledge can be implemented in health-related settings around the world.

As a number of scholars have noted, researchers currently face significant challenges in measuring implementation-related phenomena [5–7]. The implementation of evidence-based health innovations is a complex process. It involves attention to a wide array of multi-level variables related to the innovation itself, the local implementation context, and the behavioral strategies used to implement the innovation [8, 9]. In essence, there are many ‘moving parts’ to consider that can ultimately determine whether implementation efforts succeed or fail.

These challenges also stem from heterogeneity across the theories and frameworks that guide implementation research. There is currently no single theory or set of theories that offers testable hypotheses about when and why specific constructs will predict specific outcomes within implementation science [5, 10]. What does exist in implementation science, however, is a plethora of frameworks that identify general classes or typologies of factors that are hypothesized to affect implementation outcomes (i.e., impact frameworks [5]). Further, within the available frameworks, there is considerable heterogeneity in the operationalization of constructs of interest and the measures available to assess them. At present, constructs that have been hypothesized to affect implementation outcomes are often poorly defined within studies [11, 12]. Moreover, the measures used to assess these constructs are frequently developed without direct connection to substantive theory or guiding frameworks and with minimal analysis of psychometric properties, such as internal reliability and construct validity [12].

In light of these measurement-related challenges, increasing the capacity of researchers to both conceptualize and measure constructs hypothesized to affect implementation outcomes is a critical way to advance the field of implementation science. With these limitations in mind, the main goals of the current paper were threefold. First, we expanded existing multi-level frameworks in order to identify a five-factor framework that organizes the constructs hypothesized to affect implementation outcomes. Second, we conducted a systematic review in order to identify measures available to assess constructs that can conceivably act as causal predictors of implementation outcomes, and coded whether each measure assessed any of the five factors of the aforementioned framework. And third, we ascertained the criterion validity—whether each measure is a reliable predictor of implementation outcomes (e.g., adoption, fidelity)—of these measures identified in the search articles.

A multi-level framework guiding implementation science research

Historically, there has been great heterogeneity in the focus of implementation science frameworks. Some frameworks examine the impact of a single type of factor, positing that constructs related to the individual provider (e.g., practitioner behavior change: Transtheoretical Model [13, 14]) or constructs related to the organization (e.g., organizational climate for implementation: Implementation Effectiveness model [15]) impact implementation outcomes. More recently, however, many frameworks have converged to outline a set of multi-level factors that are hypothesized to impact implementation outcomes [9, 16–18]. These frameworks propose that implementation outcomes are a function of multiple types of broad factors that can be hierarchically organized to represent micro-, meso-, and macro-level factors.

What, then, are the multi-level factors hypothesized to affect the successful implementation of evidence-based health innovations? In order to address this question, Durlak and DuPre [19] reviewed meta-analyses and additional quantitative reports examining the predictors of successful implementation from over 500 studies. In contrast, Damschroder et al. [20] reviewed 19 existing implementation theories and frameworks in order to identify common constructs that affect successful implementation across a wide variety of settings (e.g., healthcare, mental health services, corporations). Their synthesis yielded a typology (i.e., the Consolidated Framework for Implementation Research [CFIR]) that largely overlaps with Durlak and DuPre’s [19] analysis. Thus, although these researchers utilized different approaches, with one identifying unifying constructs from empirical results [19] and the other identifying unifying constructs from existing conceptual frameworks [20], both concluded that structural- (i.e., community-level [19]; outer-setting [20]), organizational- (i.e., prevention delivery system organizational capacity [19]; inner setting [20]), provider-, and innovation-level factors predict implementation outcomes [19, 20].

The structural-level factor encompasses a number of constructs that represent the outer setting or external structure of the broader sociocultural context or community in which a specific organization is nested [3]. These constructs could represent aspects of the physical environment (e.g., topographical elements that pose barriers to clinic access), political or social climate (e.g., liberal versus conservative), public policies (e.g., presence of state laws that criminalize HIV disclosure), economic climate (e.g., reliance upon and stability of private, state, federal funding), or infrastructure (e.g., local workforce, quality of public transportation surrounding implementation site) [21, 22].

The organizational-level factor encompasses a number of constructs that represent aspects of the organization in which an innovation is being implemented. These aspects could include leadership effectiveness, culture or climate (e.g., innovation climate, the extent to which organization values and rewards evidence-based practice or innovation [23]), and employee morale or satisfaction.

The provider-level factor encompasses a number of constructs that represent aspects of the individual provider who implements the innovation with a patient or client. We use ‘provider’ as an omnibus term that refers to anyone who has contact with patients for the purposes of implementing the innovation, including physicians, other clinicians (e.g., psychologists), allied health professionals (e.g., dieticians), or staff (e.g., nurse care managers). These aspects could include attitudes towards evidence-based practice [24] or perceived behavioral control for implementing the innovation [25].

The innovation-level factor encompasses a number of constructs that represent aspects of the innovation that will be implemented. These aspects could include the relative advantage of utilizing an innovation above existing practices [26] and quality of evidence supporting the innovation’s efficacy (Organization Readiness to Change Assessment, or ORCA [27]).

But, where does the patient or client fit in these accounts? The patient-level factor encompasses patient characteristics such as health-relevant beliefs, motivation, and personality traits that can impact implementation outcomes [28]1. In efficacy trials that compare health innovations to a standard of care or control condition, patient-level variables are of primary importance both as outcome measures of efficacy (e.g., improved patient health outcomes) and as predictors (e.g., patient health literacy, beliefs about innovation success) of these efficacy outcomes. Patient-level variables such as behavioral risk factors (e.g., alcohol use [29]) and motivation [30, 31] often moderate the retention in and efficacy of behavioral risk reduction interventions. Moreover, patients’ distrust of medical practices and endorsement of conspiracy beliefs have been linked to poorer health outcomes and retention in care, especially among African-American patients and other vulnerable populations [32]. However, in implementation trials testing whether and to what degree an innovation has been integrated into a new delivery context, the outcomes of interest are different from those in efficacy trials, typically focusing on provider- or organizational-level variables [33, 34].

Despite the fact that they focus on different outcomes, what implementation trials have in common with efficacy trials is that patient-level variables are important to examine as predictors, because they inevitably impact the outcomes of implementation efforts [28, 35]. The very conceptualization of some implementation outcomes directly implicates the involvement of patients. For example, fidelity, or ‘the degree to which an intervention was implemented as it was prescribed in the original protocol or as it was intended by the program developers’ [6], necessarily involves and is affected by patient-level factors. Further, as key stakeholders in all implementation efforts, patients are active agents and consumers of healthcare from whom buy-in is necessary. In fact, in community-based participatory research designs, patients are involved directly as partners in the research process [36, 37]. Thus, as these findings reiterate, patient-level predictors explain meaningful variance in implementation outcomes, making failure to measure these variables as much a statistical as a conceptual omission.

For the aforementioned reasons, we posit that a comprehensive multi-level framework must include a patient-level factor. Therefore, in the current review, we employed a comprehensive multi-level framework positing five factors representing structural-, organizational-, patient-, provider-, and innovation-levels of analysis. We utilized this five-factor framework as a means of organizing and describing important sets of constructs, as well as the measures that assess these constructs. Figure 1 depicts our current conceptual framework. The left side of the figure depicts causal factors, or the structural-, organizational-, patient-, provider-, and innovation-level constructs that are hypothesized to cause or predict implementation outcomes. These factors represent multiple levels of analysis, from micro-level to macro-level, such that a specific innovation (e.g., evidence-based guideline) is implemented by providers to patients who are nested within an organization (e.g., clinical care settings), which is nested within a broader structural context (e.g., healthcare system, social climate, community norms). The right side of the figure depicts the implementation outcomes—such as adoption, fidelity, implementation cost, penetration, and sustainability [6] — that are affected by the causal factors. Together, these factors illustrate a hypothesized causal effect wherein constructs lead to implementation outcomes.

Available measures

What measures are currently available to assess constructs within these five factors hypothesized to predict implementation outcomes? The current systematic review seeks to answer this basic question and act as a guide to assist researchers in identifying and evaluating the types of measures that are available to assess structural, organizational, provider, patient, and innovation-level constructs in implementation research.

A number of researchers have provided reviews of limited portions of this literature [38–41]. For example, French et al. [40] focused on the organizational level by conducting a systematic review to identify measures designed to assess features of the organizational context. They evaluated 30 measures derived from both the healthcare and management/organizational science literatures, and their review found support for the representation of seven primary attributes of organizational context across available measures: learning culture, vision, leadership, knowledge need/capture, acquiring new knowledge, knowledge sharing, and knowledge use. Other systematic reviews and meta-analyses have focused on measures that assess provider-level constructs, such as behavioral intentions to implement evidence-based practices [38] and other research-related variables (e.g., attitudes toward and involvement in research activities) and demographic attributes (e.g., education [41]). Finally, it is important to note that other previous reviews have focused on the conceptualization [6] and evaluation [12] of implementation outcomes, including the psychometric properties of research utilization measures [12].

To date, however, no systematic reviews have examined measures designed to assess constructs representing the five types of factors—structural, organizational, provider, patient, and innovation—hypothesized to predict implementation outcomes. The purpose of the current systematic review is to identify measures available to assess this full range of five factors. In doing so, this review is designed to create a resource that will increase the capacity of and speed with which researchers can identify and incorporate these measures into ongoing research.

Method

We located article records by searching MEDLINE, PsycINFO, and CINAHL databases and abstracts of articles published in the journal Implementation Science through 12 August 2012. There was no restriction on beginning date of this search. (See Additional file 1 for full information about the search process). We searched with combinations of keywords representing three categories: implementation science, health, and measures. Given that the field of implementation science includes terminology contributions from many diverse fields and countries, we utilized thirteen phrases identified as common keywords from Rabin et al.’s systematic review of the literature [34]: diffusion of innovations, dissemination, effectiveness research, implementation, knowledge to action, knowledge transfer, knowledge translation, research to practice, research utilization, research utilisation, scale up, technology transfer, translational research.

As past research has demonstrated, use of database field codes or query filters is an efficient and effective strategy for identifying high-quality articles for individual clinician use [42, 43] and systematic reviews [44]. In essence, these database restrictions can serve to lower the number of ‘false positive’ records identified in the search of the literature, creating more efficiency and accuracy in the search process. In our search, we utilized several such database restrictions in order to identify relevant implementation science-related measures.

In our search of PsycINFO and CINAHL, we used database restrictions that allowed us to search each of the implementation science keywords within the methodology sections of records via PsycINFO (i.e., ‘tests and measures’ field) and CINAHL (i.e., ‘instrumentation’ field). In our hand search of Implementation Science we searched for combinations of the keyword ‘health’ and the implementation science keywords in the abstract and title. In our search of MEDLINE, we used a database restriction that allowed us to search for combinations of the keyword ‘health,’ the implementation science keywords, and the keywords ‘measure,’ ‘questionnaire,’ ‘scale,’ ‘survey,’ or ‘tool’ within the abstract and title of records listed as ‘validation studies.’ There were no other restrictions based on study characteristics, language, or publication status in our search for article records.
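As a rough illustration of how the three keyword categories described above could be combined, the sketch below assembles a generic Boolean search string. The names `or_group` and `build_query`, the AND/OR syntax, and the quoting are assumptions made for illustration only; they do not reproduce the database-specific syntax or field restrictions actually used, which are documented in Additional file 1.

```python
# Hypothetical sketch: combine the three keyword categories into one Boolean string.
# The operator syntax is generic and is not the exact syntax of any one database.

IMPLEMENTATION_TERMS = [
    "diffusion of innovations", "dissemination", "effectiveness research",
    "implementation", "knowledge to action", "knowledge transfer",
    "knowledge translation", "research to practice", "research utilization",
    "research utilisation", "scale up", "technology transfer",
    "translational research",
]
MEASURE_TERMS = ["measure", "questionnaire", "scale", "survey", "tool"]


def or_group(terms):
    """Join terms into a parenthesized OR group, quoting each term."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(health_term="health"):
    """Return implementation-science AND health AND measure keyword groups."""
    return " AND ".join([
        or_group(IMPLEMENTATION_TERMS),
        f'"{health_term}"',
        or_group(MEASURE_TERMS),
    ])


query = build_query()
print(query)
```

Keeping each category in its own list makes it easy to check that all thirteen implementation science phrases, including both spellings of "research utilization", are present before a search is run.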

Screening study records and identifying measures

Article record titles and abstracts were then screened and retained for further review if they met two inclusion criteria: written in English, and validated or utilized at least one measure designed to quantitatively assess a construct hypothesized to predict an implementation science-related outcome (e.g., fidelity, exposure [6, 34]). Retained full-text articles were then reviewed and vetted further based on the same two inclusion criteria utilized during screening of the article records. The remaining full-text articles were reviewed in order to extract all measures utilized to assess constructs hypothesized to predict an implementation science-related outcome. Whenever a measure was identified from an article that was not the original validation article, we used three methods to obtain full information: ancestry search of the references section of the article in which the measure was identified, additional database and Internet searches, and directly contacting corresponding authors via email.

Measure and criterion validity coding

We then coded each of the extracted measures to determine whether it included items assessing each of the five factors—structural, organizational, provider, patient, and innovation—based on our operational definitions noted above. Items were coded as structural-level factors if they assess constructs that represent the structure of the broader sociocultural context or community in which a specific organization is nested. For example, the Organizational Readiness for Change scale [45] assesses several features of structural context in which drug treatment centers exist, including facility structure (independent versus part of a parent organization) and characteristics of the service area (rural, suburban, or urban). Items were coded as organizational level factors if they assess constructs that represent the organization in which an innovation is being implemented. For example, the ORCA [27] assesses numerous organizational constructs including culture (e.g., ‘senior leadership in your organization reward clinical innovation and creativity to improve patient care’) and leadership (e.g., ‘senior leadership in your organization provide effective management for continuous improvement of patient care’). Items were coded as provider-level factors if they assess constructs that represent aspects of the individual provider who implements the innovation. For example, the Evidence-Based Practice Attitudes Scale [24] assesses providers’ general attitudes towards implementing evidence-based innovations (e.g., ‘I am willing to use new and different types of therapy/interventions developed by researchers’) whereas the Big 5 personality questionnaire assesses general personality traits such as neuroticism and agreeableness [46]. Items were coded as patient-level factors if they assess constructs that represent aspects of the individual patients who will receive the innovation directly or indirectly. 
These aspects could include patient characteristics such as ethnicity or socioeconomic status (Barriers and Facilitators Assessment Instrument [47]), and patient needs (e.g., ‘the proposed practice changes or guideline implementation take into consideration the needs and preferences of patients’; ORCA [27]). Finally, items were coded as innovation-level factors if they assess constructs that represent aspects of the innovation that is being implemented. These aspects could include the relative advantage of an innovation above existing practices [26] and quality of evidence supporting the innovation’s efficacy (ORCA [27]).

The coding process was item-focused rather than construct-focused, meaning that each item was evaluated individually and coded as representing a construct reflecting a structural, organizational, individual provider, individual patient, or innovation-level factor. In order for a measure to be coded as representing one of the five factors, it had to include two or more items assessing a construct subsumed within the higher-order factor. We chose an item-focused coding approach because there is considerable heterogeneity across disciplines and across researchers regarding psychometric criteria for scale development and the procedures by which constructs are operationalized.
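The two-item rule described above can be sketched in a few lines. This is a minimal illustration, not the coding procedure itself: the function name `factors_assessed`, the per-item code list, and the example measure are all hypothetical, and the item-level judgments were made by human coders.

```python
from collections import Counter

FACTORS = ("structural", "organizational", "provider", "patient", "innovation")
MIN_ITEMS = 2  # a factor is credited only when two or more items assess it


def factors_assessed(item_codes):
    """Return the factors credited to a measure, given one code per item.

    item_codes holds one entry per item: a factor name, or None when the
    item does not assess any of the five factors.
    """
    counts = Counter(code for code in item_codes if code in FACTORS)
    return [f for f in FACTORS if counts[f] >= MIN_ITEMS]


# Invented item codes for a hypothetical six-item measure.
example_items = ["organizational", "organizational", "provider",
                 "organizational", "patient", None]
print(factors_assessed(example_items))  # ['organizational']
```

In this invented example the single provider item and the single patient item do not count toward their factors, so the measure is credited with the organizational factor only.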

It is also important to note that we coded items based on the subject or content of the item rather than based on the viewpoint of the respondent who completed the item. For example, a measure could include items in order to assess the general culture of a clinical care organization from two different perspectives—the perspective of the individual provider, or from the perspective of administrators. Though these two perspectives might be construed to represent both provider and organizational-level factors, in our review, both were coded as organizational factors because the subject of the items’ assessment is the organization (i.e., its culture) regardless of who is providing the assessment.

Measures were excluded because items did not assess any of the five factors (e.g., they instead measured an implementation outcome such as fidelity [48]), were utilized only in articles examining a non-health-related innovation (e.g., end-user computing systems [49]), were unpublished or unobtainable (e.g., full measure available only in an unpublished manuscript that could not be obtained from corresponding author [50]), provided insufficient information for review (e.g., multiple example items were not provided in original source article, nor was measure available from corresponding author [51]), were redundant with newer versions of a measure (e.g., Typology Questionnaire redundant with Competing Values Framework [52]), or were only published in a foreign language (e.g., physician intention measure [53]).

In addition, we coded the implementation outcome present in each search article that utilized one of the retained measures in order to determine the relative predictive utility, or criterion validity, of each of these measures [54]. In essence, we wanted to determine whether each measure was reliably associated with one or more implementation outcomes assessed in the articles included in our review. In order to do so, two coders reviewed all search articles and identified which of five possible implementation outcomes was assessed based on the typology provided by Proctor et al.[6]2: adoption, or the ‘intention, initial decision, or action to try or employ an innovation or evidence-based practice’; fidelity, or ‘the degree to which an intervention was implemented as it was prescribed in the original protocol or as it was intended by the program developers’; implementation cost, or ‘the cost impact of an implementation effort’; penetration, or ‘the integration of a practice within a service setting and its subsystems’; sustainability, ‘the extent to which a newly implemented treatment is maintained or institutionalized within a service setting’s ongoing, stable operations’; or no implementation outcomes. In addition, for those articles that assessed an implementation outcome, we also coded whether each included measure was demonstrated to be a statistically significant predictor of the implementation outcome. Together, these codes indicate the extent to which each measure has demonstrated criterion validity in relation to one or more implementation outcomes.
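One way to picture the resulting codes is as one record per article in which a measure was used, summarized per measure. The sketch below is an assumption about how such records might be laid out; the `ArticleCode` class, its field names, and "Measure A" are hypothetical and do not come from the review.

```python
from dataclasses import dataclass
from typing import Optional

OUTCOMES = ("adoption", "fidelity", "implementation cost",
            "penetration", "sustainability")


@dataclass
class ArticleCode:
    """One coded use of a measure in one article (hypothetical record layout)."""
    measure: str
    outcome: Optional[str]       # one of OUTCOMES, or None if no outcome was assessed
    significant: Optional[bool]  # None when no outcome was assessed


def summarize(codes, measure):
    """Collect outcomes assessed and outcomes significantly predicted for a measure."""
    assessed = set()
    predicted = set()
    for c in codes:
        if c.measure != measure or c.outcome is None:
            continue
        assessed.add(c.outcome)
        if c.significant:
            predicted.add(c.outcome)
    return {"assessed": sorted(assessed), "predicted": sorted(predicted)}


# Invented codes for a hypothetical "Measure A".
codes = [
    ArticleCode("Measure A", "adoption", True),
    ArticleCode("Measure A", "fidelity", False),
    ArticleCode("Measure A", None, None),
]
print(summarize(codes, "Measure A"))
# {'assessed': ['adoption', 'fidelity'], 'predicted': ['adoption']}
```

Separating "assessed" from "predicted" keeps apart two situations that are easy to conflate: a measure that was never tested against an outcome, and a measure that was tested but showed no significant association.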

Reliability

The first and third authors (SC and CB) independently screened study records, reviewed full-text articles, identified measures within articles, coded measures, and assessed criterion validity. At each of these five stages of coding, inter-rater reliability was assessed by having each rater independently code a random sample representing 25% of the full items [55]. Coding discrepancies were resolved through discussion and consultation with the second author (AD).
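Agreement between two raters on a shared sample can be summarized as simple percent agreement, which is how agreement is reported in the Results. The sketch below assumes that statistic; the function name `percent_agreement` and the screening decisions are invented for illustration and are not data from the review.

```python
def percent_agreement(codes_a, codes_b):
    """Percentage of jointly coded records on which two raters assigned the same code."""
    if len(codes_a) != len(codes_b):
        raise ValueError("Both raters must code the same records.")
    if not codes_a:
        raise ValueError("At least one record is required.")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return 100.0 * matches / len(codes_a)


# Invented screening decisions for eight records (not data from the review).
rater_1 = ["retain", "exclude", "retain", "retain",
           "exclude", "exclude", "retain", "exclude"]
rater_2 = ["retain", "exclude", "retain", "exclude",
           "exclude", "exclude", "retain", "exclude"]
print(percent_agreement(rater_1, rater_2))  # 87.5
```

Percent agreement is easy to interpret but does not correct for agreement expected by chance, so it tends to look more favorable when one code is much more common than the others.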

Results

Literature search results

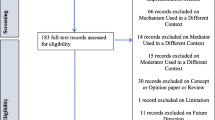

As depicted in Figure 2, these search strategies yielded 589 unique peer-reviewed journal article records. Of those, 210 full-text articles were reviewed and vetted further, yielding a total of 125 full-text articles from which measures were extracted. A total of 112 measures were extracted from these retained articles. Across each of the stages of coding, inter-rater reliability was relatively high, ranging from 87 to 100% agreement.

Our screening yielded a total of 62 measures. Table 1 provides the full list of measures we obtained. (See Additional file 2 for a list of the names and citations of excluded measures.) For each measure, we provide information about its name and original validation source, whether it includes items that assess each of the five factors, information about the constructs measured, and the implementation context(s) in which the scale was used: healthcare (e.g., nursing utilization of evidence-based practice, guideline implementation), workplace, education, or mental health/substance abuse settings. In addition, we list information about the criterion validity [54] of each measure by examining the original validation source and each search article that utilized the scale, the type of implementation outcome that was assessed in each article, and whether there was evidence that the measure was statistically associated with the implementation outcome assessed. It is important to note that we utilized only the 125 articles eligible for final review and the original validation article (if not located within our search) in order to populate information for the criterion validity and implementation context. Thus, this information represents only information available through these 125 articles and not from an exhaustive search of each measure within the available empirical literature.

Factors assessed

Of the 62 measures we obtained, most (42; 67.7%) assessed only one type of factor. Only one measure—the Barriers and Facilitators Assessment Instrument [47]—included items designed to assess each of the five factors examined in our review.

Of the five factors coded in our review, individual provider and organizational factors were the constructs most frequently assessed by these measures. Thirty-five (56.5%) measures assessed provider-level constructs, such as research-related attitudes and skills [56–58], personality characteristics (e.g., Big 5 Personality [46]), and self-efficacy [59].

Thirty-seven (59.7%) measures assessed organizational-level constructs. Aspects of organizational culture and climate were assessed frequently [45, 60] as were measures of organizational support or ‘buy in’ for implementation of the innovation [61–63].

Innovation-level constructs were measured by one quarter of these measures (16; 25.8%). Many of these measures assessed constructs outlined in Rogers’ diffusion of innovations theory [18] such as relative advantage, compatibility, complexity, trialability, and observability [26].

Structural-level (5; 8.1%) and patient-level (5; 8.1%) constructs were the least likely to be assessed. For example, the Barriers and Facilitators Assessment Instrument [47] assesses constructs associated with each of the five factors, including structural factors such as the social, political, societal context and patient factors such as patient characteristics. The ORCA [27] assesses patient constructs in terms of the degree to which patient preferences are addressed in the available evidence supporting an innovation.
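The percentages reported in this section follow directly from the counts and the total of 62 measures. The short sketch below recomputes them; the helper name `pct` is hypothetical, and the counts are those stated in the text.

```python
TOTAL_MEASURES = 62

# Number of measures with items assessing each factor, as reported in the text.
factor_counts = {
    "organizational": 37,
    "provider": 35,
    "innovation": 16,
    "structural": 5,
    "patient": 5,
}


def pct(count, total):
    """Percentage of total, rounded to one decimal place."""
    return round(100 * count / total, 1)


for factor, count in factor_counts.items():
    print(f"{factor}: {count} of {TOTAL_MEASURES} ({pct(count, TOTAL_MEASURES)}%)")
```

The counts sum to more than 62 because a single measure can include items assessing more than one factor.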

Implementation context and criterion validity

Consistent with our search strategies, most (47; 75.8%) measures were developed and/or implemented in healthcare-related settings. Most measures were utilized to examine factors that facilitate or inhibit adoption of evidence-based clinical care guidelines [56, 64, 65]. However, several measures were utilized to evaluate implementation of health-related innovations in educational (e.g., implementation of a preventive intervention in elementary schools [66]), mental health (technology transfer in substance abuse treatment centers [45]), workplace (e.g., willingness to implement worksite health promotion programs [67]), or other settings.

Surprisingly, almost one-half (30; 48.4%) of the measures located in our search had their criterion validity assessed neither in their original validation studies nor in the additional articles located in our search. That is, for nearly half of these measures, implementation outcomes such as adoption or fidelity were not assessed in combination with the measure in order to determine whether the measure is reliably associated with an implementation outcome. Of the 32 measures for which criterion validity was examined, adoption was the most frequent (29 of 32; 90.6%) implementation outcome examined. Only a small proportion (5 of 32; 15.6%) were examined in relation to fidelity, and no studies examined implementation cost, penetration, or sustainability. Again, it is important to note that we did not conduct an exhaustive search of each measure to locate all studies that have utilized it in past research, so it is possible that the criterion validity of these measures has, in fact, been assessed in other studies that were not located in our review. In addition, it is important to keep in mind that the criterion validity of recently developed scales may appear weak solely because these measures have been evaluated less frequently than more established measures.

Discussion

Existing gaps in measurement present a formidable barrier to efforts to advance implementation science [68, 69]. In the current review, we addressed these existing gaps by identifying a comprehensive, five-factor multi-level framework that builds upon converging evidence from multiple previous frameworks. We then conducted a systematic review in order to identify 62 available measures that can be utilized to assess constructs representing structural-, organizational-, provider-, patient-, and innovation-level factors, each of which is hypothesized to affect implementation outcomes. Further, we evaluated the criterion validity of each measure in order to determine the degree to which each measure has, indeed, predicted implementation outcomes such as adoption and fidelity. In total, the current review advances understanding of the conceptual factors and observable constructs that impact implementation outcomes. In doing so, it provides a useful tool to aid researchers as they determine which of five types of factors to examine and which measures to utilize in order to assess constructs within each of these factors (see Table 1).

Available measures

In addition to providing a practical tool to aid in research design, our review highlights several important aspects of the current state of measurement in implementation science. While there is a relative preponderance of measures assessing organizational-, provider-, and innovation-level constructs, there are relatively few measures available to assess structural- and patient-level constructs. Structural-level constructs such as political norms, policies, and relative resources/socioeconomic status can be important macro-level determinants of implementation outcomes [9, 19, 20]. Why, then, did our search yield so few available measures of structural-level constructs? Structural-level constructs are among the least likely to be assessed because their measurement poses unique methodological challenges for researchers. In order to ensure enough statistical power to test the effect of structural-level constructs on implementation outcomes, researchers must typically utilize exceptionally large samples that are difficult to obtain [17]. Alternatively, when structural-level constructs are deemed to be important determinants of implementation outcomes, researchers conducting implementation trials may simply opt to control for these factors in their study designs by stratifying or matching organizations on these characteristics [70] rather than measuring them. Though the influence of some structural-level constructs might be captured through formative evaluation [71], many structural-level constructs, such as relative socioeconomic resources and population density, can be assessed with standardized population-type measures like those included in national surveys such as the General Social Survey [72].

Though patient-level constructs may be somewhat easier to assess, there is also a relative dearth of measures designed to assess these constructs. We might assume that most innovations have been tested for patient feasibility in prior stages of research or formative evaluation [71], but this is not always a certainty. Thus, measures that assess the degree to which innovations are appropriate and feasible with the patient population of interest are especially important. Beyond feasibility, other important patient characteristics such as health literacy may also affect implementation, making it more likely that an innovation will be effectively implemented with some types of patients but not others [3]. Measures that assess these and other patient-level constructs will also be useful in closing these existing measurement gaps.

In addition to locating those measures outlined in Table 1, the current review also highlights additional strategies that will allow researchers to further expand the available pool of measures. Though measures utilized in research examining non-health-related innovations were excluded from the current review, many of these measures (see Additional file 2), along with those identified in other systematic reviews [38, 40], could also be useful to researchers to the extent that they are psychometrically sound and can be adapted to contexts of interest. Further, adaptation of psychometrically sound measures from other literatures assessing organizational-level constructs (e.g., culture [73, 74]), provider-level constructs (e.g., psychological predictors of behavior change [75, 76]), or others could also offer fruitful measurement strategies.

Criterion validity

Because of its primary relevance in the current review, we evaluated the criterion validity of the identified measures. Our review indicates that, for the vast majority of measures, criterion validity has either not been examined or has not been supported. For example, although the BARRIERS scale was the most frequently utilized measure of those included in our review (i.e., utilized in 12 articles), only four of those articles utilized the measure to predict an implementation outcome (i.e., adoption [77–80]). Instead, this measure was typically used to describe the setting as either amenable or not amenable to implementation, though no implementation activity was assessed by the measure itself. Thus, there is a preponderance of scales that currently serve descriptive purposes only. Though descriptive information obtained through these measures is useful for elicitation efforts, these measures might also provide important information if they are used as predictors of implementation outcomes.
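To make concrete what it means to use a measure as a predictor of an implementation outcome, the sketch below correlates hypothetical scale scores with a binary adoption indicator (a point-biserial correlation, computed here as an ordinary Pearson correlation). All data values and names are invented for illustration. They are not drawn from the BARRIERS scale or from any article in this review, and a real analysis would also need to consider sample size, clustering of providers within organizations, and the reliability of the measure.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length numeric sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / sqrt(sxx * syy)


# Hypothetical data: mean perceived-barrier score per provider (higher = more
# barriers) and whether that provider adopted the innovation (1 = yes, 0 = no).
barrier_scores = [2.1, 3.4, 1.8, 3.9, 2.5, 3.1, 1.5, 3.7]
adopted = [1, 0, 1, 0, 1, 0, 1, 0]

# With a binary outcome, Pearson's r is the point-biserial correlation.
r = pearson_r(barrier_scores, adopted)
print(f"point-biserial r = {r:.2f}")
```

In this invented example, a negative coefficient would indicate that providers reporting more barriers were less likely to adopt, which is the kind of association that evidence of criterion validity requires.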

The lack of criterion validity associated with the majority of the measures identified in the current review contributes to growing evidence regarding the weak psychometric properties of many available implementation science measures. As Squires et al. [12] recently discussed in their review of measures assessing research utilization, a large majority of these existing measures demonstrate weak psychometric properties. The basic psychometric properties of any measure, namely reliability (e.g., internal reliability, test-retest reliability) and validity (e.g., construct validity, criterion validity), should always be evaluated prior to including the measure in research [54]. This is especially true in the area of implementation science measurement, given that it is a relatively new area of study and newly developed measures may have had limited use. Thus, our review highlights the need for continued development and refinement of psychometrically sound measures for use in implementation science settings.

Our examination of implementation outcomes also provides us with a unique opportunity to identify trends in the type and frequency of implementation outcomes used within this sample of the literature. Measures identified in the current review have been predominantly developed and tested in relation to implementation outcomes that occur early in the implementation process (i.e., adoption, fidelity) rather than those outcomes that occur later in the implementation process (i.e., sustainability [81, 82]). Presumably, our use of broad search terms such as ‘implementation’ or ‘translational research’ would have identified measures that have been utilized by researchers examining both early (e.g., adoption, fidelity) and later (e.g., sustainability) stages of implementation. To that extent, our findings mirror the progression of the field of implementation science as a whole, with early theorizing and research focusing predominantly on the initial adoption of an innovation and more recent investigations giving greater attention to the long-term sustainability of the innovation. Future research will benefit by examining the degree to which the measures identified in our search (and the constructs they assess) affect later-stage outcomes such as penetration and sustainability in implementation trials or affect patient health and implementation outcomes simultaneously in hybrid effectiveness-implementation designs [33]. In addition, though we did not include patient health outcomes in our criterion validity coding scheme, we did come across a number of articles that included these outcomes (e.g., number of bloodstream infections [83]) alone or in combination with other implementation outcomes. The use of these outcome measures underscores the notion that patient-level variables continue to be relevant in implementation trials as they are in efficacy trials.

Limitations and future directions

The current review advances implementation science measurement by identifying a comprehensive, multi-level conceptual framework that articulates factors that predict implementation outcomes and provides a systematic review of quantitative measures available to assess constructs representing these factors. It is important to note, however, that the specific search strategies adopted in this systematic review affect the types of articles located and the conclusions that can be drawn from them in important ways.

Our use of multiple databases (i.e., MEDLINE, CINAHL, PsycINFO) which span multiple disciplines (e.g., medicine, public health, nursing, psychology) and search of the Implementation Science journal provides a broad cross-section of the empirical literature examining implementation of health-related innovations. Despite this broad search, it is possible that additional relevant literature and measures could be identified in future reviews through the use of other databases such as ERIC or Business Source Complete, which may catalogue additional health- and non-health implementation research from other disciplines such as education and business, respectively. Indeed, a recent review of a wide array of organizational literatures yielded 30 measures of organizational-level constructs [40], only 13% of which overlapped with the current review. Thus, additional reviews that draw on non-health-related literatures will help to identify additional potentially relevant measures.

Further, the specific keywords and database restrictions used to search for these keywords also affect the range of articles identified in the current review. For example, our use of the keyword ‘health’ was designed to provide a general cross-section of measures that would be relevant to researchers examining health-related innovations, broadly construed. Its use may have omitted articles that utilized only a specific health discipline keyword (e.g., cancer, diabetes, HIV/AIDS) but not ‘health’ as a keyword in the title or abstract. Similarly, our measure-related keywords or thirteen expert-identified implementation science keywords [34] may have captured a majority, but not the complete range, of implementation science articles. We did not account for truncation or spelling variants in our search and, in two databases, we limited our search to two instrument-related fields; both decisions could have resulted in missing potentially relevant studies and measures. Additional systematic reviews that utilize expanded sets of keywords (e.g., use of keywords as medical subject headings [MeSH]) may yield additional measures to complement those identified in the current review. Identification of qualitative measures would also further complement the current review.
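The effect of not accounting for truncation or spelling variants can be illustrated with a small Python sketch. It uses regular expressions as a stand-in for a database truncation operator; the function names are hypothetical, the actual search syntax of each database is not reproduced here, and the three titles are taken from this article's reference list purely as sample text.

```python
import re

# Sample titles drawn from this article's reference list.
titles = [
    "Barriers to research utilisation among nurses in Turkey",
    "Predictors of research utilization among pediatric occupational therapists",
    "Barriers to nurses' use of research: An Australian hospital study",
]


def exact_hits(keyword, texts):
    """Titles containing the keyword as a whole word, with one spelling only."""
    pattern = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    return [t for t in texts if pattern.search(t)]


def truncated_hits(stem, texts):
    """Titles containing any word that starts with the stem (like 'stem*')."""
    pattern = re.compile(r"\b" + re.escape(stem) + r"\w*", re.IGNORECASE)
    return [t for t in texts if pattern.search(t)]


exact = exact_hits("utilization", titles)    # misses the British spelling
truncated = truncated_hits("utili", titles)  # captures both spellings

print(len(exact), "title(s) matched by the exact keyword")
print(len(truncated), "title(s) matched by the truncated stem")
```

A truncated stem retrieves both the American and British spellings, whereas the exact keyword retrieves only one of them. The third title uses neither form and is missed by both approaches, which is one reason that expanded keyword sets or subject headings may be needed.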

Finally, as noted earlier, assessment of criterion validity was based only on articles that were identified in the current search. Thus, because separate systematic searches were not conducted on each of the 62 individual measures, our assessment of criterion validity is based on a sampling of the literature available from the search strategy adopted herein. As a consequence, further research would be required in order to ensure an exhaustive assessment of criterion validity for some or all of the identified measures.

Conclusion

As the nexus between research and practice, the field of implementation science plays a critical role in advancing human health. Though it has made tremendous strides in its relatively short life span, the field also continues to face formidable challenges in refining the conceptualization and measurement of the factors that affect implementation success [84]. The current research addresses these challenges by outlining a comprehensive conceptual framework and the associated measures available for use by implementation researchers. By helping researchers gain greater clarity regarding the conceptual factors and measured variables that impact implementation success, the current review may also contribute towards future efforts to translate these frameworks into theories. As has been demonstrated in nearly every domain of health, theory-based research—in which researchers derive testable hypotheses from theories or frameworks that provide a system of predictable relationships between constructs—has stimulated many of the greatest advances in effective disease prevention and health promotion efforts [85]. So, too, must implementation science translate existing frameworks into theories that can gain greater specificity in predicting the interrelations among the factors that impact implementation success and, ultimately, improve human health.

Endnotes

1. It is important to note that we do not consider patient perceptions of the innovation as patient-level factors [28] because the object of focus is the innovation rather than the patient per se. Thus, patient perceptions of the innovation, like provider perceptions of the innovation, are considered to be innovation-level factors.

2. Given that the constructs of acceptability, appropriateness, and feasibility in Proctor’s [6] conceptualization are redundant with our conceptualization of innovation-level factors, we omitted these three constructs from our coding of implementation outcomes.

References

National Institutes of Health (NIH): Dissemination and implementation research in health (R01). 2010, Bethesda, MD: NIH, http://grants.nih.gov/grants/guide/pa-files/PAR-10-038.html

Cooksey D: A review of UK health research funding. 2006, London: HM Treasury

Haines A, Kuruvilla S, Matthias B: Bridging the implementation gap between knowledge and action for health. Bull World Health Organ. 2004, 82: 724-731.

Balas EA, Boren SA: Managing clinical knowledge for health care improvement. Yearbook of medical informatics 2000: Patient-centered systems. Edited by: Bemmel J, McCray AT. 2000, Stuttgart, Germany: Schattauer, 65-70.

Grol R, Bosch M, Hulscher M, Eccles M, Wensing M: Planning and studying improvement in patient care: The use of theoretical perspectives. Milbank Q. 2007, 85: 93-138.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M: Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Hlth. 2011, 38: 65-76.

Proctor EK, Brownson RC: Measurement issues in dissemination and implementation research. Dissemination and implementation research in health: Translating science to practice. Edited by: Brownson R, Colditz G, Proctor E. 2012, New York: Oxford University Press, 261-280.

Greenhalgh T, Robert G, MacFarlane F, Bate P, Kyriakidou O: Diffusion of Innovations in Service Organizations: Systematic Review and Recommendations. Milbank Q. 2004, 82: 581-629.

Shortell SM: Increasing value: A research agenda for addressing the managerial and organizational challenges facing health care delivery in the United States. Med Care Res Rev. 2004, 61: 12S-30S.

Grimshaw J: Improving the scientific basis of health care research dissemination and implementation. 2007, Paper presented at the NIH Conference on Building the Sciences of Dissemination and Implementation in the Service of Public Health

Eccles M, Armstrong D, Baker R, Cleary K, Davies H, Davies S, Glasziou P, Ilott I, Kinmonth A, Leng G: An implementation research agenda. Implem Sci. 2009, 4: 18.

Squires JE, Estabrooks CA, O'Rourke HM, Gustavsson P, Newburn-Cook CV, Wallin L: A systematic review of the psychometric properties of self-report research utilization measures used in healthcare. Implem Sci. 2011, 6: 83.

Cohen SJ, Halvorson HW, Gosselink CA: Changing physician behavior to improve disease prevention. Prev Med. 1994, 23: 284-291.

Prochaska JO, DiClemente CC: Stages and processes of self-change of smoking: Toward an integrative model of change. J Consult Clin Psychol. 1983, 51: 390-395.

Klein KJ, Sorra JS: The Challenge of Innovation Implementation. The Academy of Management Review. 1996, 21: 1055-1080.

Glasgow RE, Vogt TM, Boles SM: Evaluating the public health impact of health promotion interventions: The RE-AIM framework. Am J Public Health. 1999, 89: 1322-1327.

Proctor EK, Landsverk J, Aarons G, Chambers D, Glisson C, Mittman B: Implementation research in mental health services: An emerging science with conceptual, methodological, and training challenges. Adm Policy Ment Hlth. 2009, 36: 24-34.

Rogers EM: Diffusion of innovations 5th edn. 2003, New York: Free Press

Durlak JA, DuPre EP: Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. Am J Community Psychol. 2008, 41: 327-

Damschroder L, Aron D, Keith R, Kirsh S, Alexander J, Lowery J: Fostering implementation of health services research findings into practice: A consolidated framework for advancing implementation science. Implem Sci. 2009, 4: 50.

Blankenship KM, Friedman SR, Dworkin S, Mantell JE: Structural interventions: concepts, challenges and opportunities for research. Journal of urban health: bulletin of the New York Academy of Medicine. 2006, 83: 59-72.

Gupta GR, Parkhurst J, Ogden JA, Aggleton P, Mahal A: Structural approaches to HIV prevention. The Lancet. 2008, 372: 764-775.

Aarons GA, Sommerfeld DH: Leadership, Innovation Climate, and Attitudes Toward Evidence-Based Practice During a Statewide Implementation. J Am Acad Child Adolesc Psychiatry. 2012, 51: 423-431.

Aarons GA: Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS). Mental Health Services Research. 2004, 6: 61-74. Article included in review

Ajzen I: The Theory of Planned Behavior. Organ Behav Hum Decis Process. 1991, 50: 179-211. Article included in review

Scott S, Plotnikoff R, Karunamuni N, Bize R, Rodgers W: Factors influencing the adoption of an innovation: An examination of the uptake of the Canadian Heart Health Kit (HHK). Implem Sci. 2008, 3: 41. Article included in review

Helfrich C, Li Y, Sharp N, Sales A: Organizational Readiness to Change Assessment (ORCA): Development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implem Sci. 2009, 4: 38. Article included in review

Feldstein AC, Glasgow RE: A Practical, Robust Implementation and Sustainability Model (PRISM) for integrating research findings into practice. Jt Comm J Qual Patient Saf. 2008, 34: 228-243.

Kalichman SC, Cain D, Eaton L, Jooste S, Simbayi LC: Randomized Clinical Trial of Brief Risk Reduction Counseling for Sexually Transmitted Infection Clinic Patients in Cape Town, South Africa. Am J Public Health. 2011, 101: e9-e17.

Noguchi K, Albarracin D, Durantini MR, Glasman LR: Who participates in which health promotion programs? A meta-analysis of motivations underlying enrollment and retention in HIV-prevention interventions. Psychol Bull. 2007, 133: 955-975.

Vernon SW, Bartholomew LK, McQueen A, Bettencourt JL, Greisinger A, Coan SP, Lairson D, Chan W, Hawley ST, Myers RE: A randomized controlled trial of a tailored interactive computer-delivered intervention to promote colorectal cancer screening: sometimes more is just the same. Ann Behav Med. 2011, 41: 284-299.

Dovidio JF, Penner LA, Albrecht TL, Norton WE, Gaertner SL, Shelton JN: Disparities and distrust: the implications of psychological processes for understanding racial disparities in health and health care. Soc Sci Med. 2008, 67: 478-486.

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C: Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012, 50: 217-226.

Rabin BA, Brownson RC, Haire-Joshu D, Kreuter MW, Weaver ND: A glossary for dissemination and implementation research in health. J Public Health Manag Pract. 2008, 14: 117-123.

Rycroft-Malone J: The PARIHS framework: A framework for guiding the implementation of evidence-based practice. J Nurs Care Qual. 2004, 19: 297-304.

Wallerstein NB, Duran B: Using Community-Based Participatory Research to Address Health Disparities. Health Promotion Practice. 2006, 7: 312-323.

Minkler M, Salvatore A: Participatory approaches for study design and analysis in dissemination and implementation research. Dissemination and implementation research in health: Translating science to practice. Edited by: Brownson R, Colditz G, Proctor E. 2012, New York: Oxford University Press, 192-212.

Eccles M, Hrisos S, Francis J, Kaner E, Dickinson H, Beyer F, Johnston M: Do self-reported intentions predict clinicians' behaviour: A systematic review. Implem Sci. 2006, 1: 28. Article included in review

Emmons KM, Weiner B, Fernandez ME, Tu SP: Systems antecedents for dissemination and implementation: a review and analysis of measures. Health Educ Behav. 2012, 39: 87-105.

French B, Thomas L, Baker P, Burton C, Pennington L, Roddam H: What can management theories offer evidence-based practice? A comparative analysis of measurement tools for organisational context. Implem Sci. 2009, 4: 28.

Squires JE, Estabrooks CA, Gustavsson P, Wallin L: Individual determinants of research utilization by nurses: A systematic review update. Implem Sci. 2011, 6: 1.

Shariff SZ, Sontrop JM, Haynes RB, Iansavichus AV, McKibbon KA, Wilczynski NL, Weir MA, Speechley MR, Thind A, Garg AX: Impact of PubMed search filters on the retrieval of evidence by physicians. CMAJ. 2012, 184: E184-190.

Lokker C, Haynes RB, Wilczynski NL, McKibbon KA, Walter SD: Retrieval of diagnostic and treatment studies for clinical use through PubMed and PubMed's Clinical Queries filters. J Am Med Inform Assoc. 2011, 18: 652-659.

Reed JG, Baxter PM: Using reference databases. The handbook of research synthesis and meta-analysis. Edited by: Hedges LV, Valentine JC. 2009, New York: Russell Sage, 73-101.

Lehman WEK, Greener JM, Simpson DD: Assessing organizational readiness for change. J Subst Abuse Treat. 2002, 22: 197-209. Article included in review

Costa PTJ, McCrae RR: Revised NEO Personality Inventory (NEO–PI–R) and the NEO Five Factor Inventory (NEO–FFI) professional manual. 1992, Odessa, FL: Psychological Assessment Resources. Article included in review

Peters MA, Harmsen M, Laurant MGH, Wensing M: Ruimte voor verandering? Knelpunten en mogelijkheden voor verbeteringen in de patiëntenzorg [Room for improvement? Barriers to and facilitators for improvement of patient care]. 2002, Nijmegen, the Netherlands: Centre for Quality of Care Research (WOK), Radboud University Nijmegen Medical Centre. Article included in review

Yamada J, Stevens B, Sidani S, Watt-Watson J, de Silva N: Content validity of a process evaluation checklist to measure intervention implementation fidelity of the EPIC intervention. Worldviews Evid Based Nurs. 2010, 7: 158-164.

Doll WJ, Torkzadeh G: The measurement of end-user computing satisfaction. MIS Quart. 1988, 12: 259-274.

Dufault MA, Bielecki C, Collins E, Willey C: Changing nurses' pain assessment practice: a collaborative research utilization approach. J Adv Nurs. 1995, 21: 634-645.

Brett JLL: Use of nursing practice research findings. Nurs Res. 1987, 36: 344-349.

Green L, Gorenflo D, Wyszewianski L: Validating an instrument for selecting interventions to change physician practice patterns: A Michigan consortium for family practice research study. J Fam Pract. 2002, 51: 938-942. Article included in review

Gagnon M, Sanchez E, Pons J: From recommendation to action: psychosocial factors influencing physician intention to use Health Technology Assessment (HTA) recommendations. Implem Sci. 2006, 1: 8.

American Psychological Association, National Council on Measurement in Education, American Educational Research Association: Standards for educational and psychological testing. 1999, Washington, DC: American Educational Research Association

Wilson DB: Systematic coding. The handbook of research synthesis and meta-analysis. Edited by: Cooper HM, Hedges LV, Valentine JC. 2009, New York: Russell Sage, 159-176.

Funk SG, Champagne MT, Wiese RA, Tornquist EM: BARRIERS: The barriers to research utilization scale. Appl Nurs Res. 1991, 4: 39-45. Article included in review

Melnyk BM, Fineout-Overholt E, Mays MZ: The evidence-based practice beliefs and implementation scales: Psychometric properties of two new instruments. Worldviews Evid Based Nurs. 2008, 5: 208-216. Article included in review

Pain K, Hagler P, Warren S: Development of an instrument to evaluate the research orientation of clinical professionals. Can J Rehabil. 1996, 9: 93-100. Article included in review

Rohrbach LA, Graham JW, Hansen WB: Diffusion of a school-based substance-abuse prevention program: Predictors of program implementation. Prev Med. 1993, 22: 237-260. Article included in review

Glisson C, James L: The cross-level effects of culture and climate in human service teams. J Organ Behav. 2002, 23: 767-794. Article included in review

Dückers MLA, Wagner C, Groenewegen PP: Developing and testing an instrument to measure the presence of conditions for successful implementation of quality improvement collaboratives. BMC Health Serv Res. 2008, 8: 172. Article included in review

Helfrich C, Li Y, Mohr D, Meterko M, Sales A: Assessing an organizational culture instrument based on the Competing Values Framework: Exploratory and confirmatory factor analyses. Implem Sci. 2007, 2: 13. Article included in review

Thompson CJ: Extent and factors influencing research utilization among critical care nurses. 1997, Texas Woman's University, Unpublished doctoral dissertation

Bahtsevani C, Willman A, Khalaf A, Ostman M: Developing an instrument for evaluating implementation of clinical practice guidelines: A test-retest study. J Eval Clin Pract. 2008, 14: 839-846. Article included in review

Humphris D, Hamilton S, O'Halloran P, Fisher S, Littlejohns P: Do diabetes nurse specialists utilise research evidence? Practical Diabetes International. 1999, 16: 47-50. Article included in review

Klimes-Dougan B, August GJ, Lee CS, Realmuto GM, Bloomquist ML, Horowitz JL, Eisenberg TL: Practitioner and site characteristics that relate to fidelity of implementation: The Early Risers prevention program in a going-to-scale intervention trial. Professional Psychology: Research and Practice. 2009, 40: 467-475. Article included in review

Jung J, Nitzsche A, Neumann M, Wirtz M, Kowalski C, Wasem J, Stieler-Lorenz B, Pfaff H: The Worksite Health Promotion Capacity Instrument (WHPCI): Development, validation and approaches for determining companies' levels of health promotion capacity. BMC Public Health. 2010, 10: 550. Article included in review

Meissner HI: Proceedings from the 3rd Annual NIH Conference on the Science of Dissemination and Implementation: Methods and Measurement. 2010, Bethesda, MD

Rabin BA, Glasgow RE, Kerner JF, Klump MP, Brownson RC: Dissemination and implementation research on community-based cancer prevention: A systematic review. Am J Prev Med. 2010, 38: 443-456.

Fagan AA, Arthur MW, Hanson K, Briney JS, Hawkins DJ: Effects of communities that care on the adoption and implementation fidelity of evidence-based prevention programs in communities: Results from a randomized controlled trial. Prev Sci. 2011, 12: 223-234.

Stetler C, Legro M, Rycroft-Malone J, Bowman C, Curran G, Guihan M, Hagedorn H, Pineros S, Wallace C: Role of “external facilitation” in implementation of research findings: A qualitative evaluation of facilitation experiences in the Veterans Health Administration. Implem Sci. 2006, 1: 23.

Smith TW, Marsden P, Hout P, Kim J: General social surveys, 1972–2010: Cumulative codebook. 2011, Chicago, IL: National Opinion Research Center

Jung T, Scott T, Davies HTO, Bower P, Whalley P, McNally R, Mannion R: Instruments for exploring organizational culture: A review of the literature. Public Adm Rev. 2009, 69: 1087-1096.

Schein EH: Organizational culture and leadership. 2010, San Francisco, CA: Jossey-Bass

Bandura A: Social cognitive theory: An agentic perspective. Annu Rev Psychol. 2001, 52: 1-26.

Fisher JD, Fisher WA: Changing AIDS-risk behavior. Psychol Bull. 1992, 111: 455-474.

Brown CE, Wickline MA, Ecoff L, Glaser D: Nursing practice, knowledge, attitudes and perceived barriers to evidence-based practice at an academic medical center. J Adv Nurs. 2009, 65: 371-381. Article included in review

Lewis CC, Simons AD: A pilot study disseminating cognitive behavioral therapy for depression: Therapist factors and perceptions of barriers to implementation. Adm Policy Ment Hlth. 2011, 38: 324-334. Article included in review

Lyons C, Brown T, Tseng MH, Casey J, McDonald R: Evidence-based practice and research utilisation: Perceived research knowledge, attitudes, practices and barriers among Australian paediatric occupational therapists. Aust Occup Therap J. 2011, 58: 178-186. Article included in review

Stichler JF, Fields W, Kim SC, Brown CE: Faculty knowledge, attitudes, and perceived barriers to teaching evidence-based nursing. J Prof Nurs. 2011, 27: 92-100. Article included in review

Aarons GA, Hurlburt M, Horwitz S: Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Hlth. 2011, 38: 4-23.

Rogers EM: Diffusion of innovations 4th edn. 1995, New York: Free Press

Chan K, Hsu Y-J, Lubomski L, Marsteller J: Validity and usefulness of members reports of implementation progress in a quality improvement initiative: findings from the Team Check-up Tool (TCT). Implem Sci. 2011, 6: 115. Article included in review

Dearing JW, Kee KF: Historical roots of dissemination and implementation science. Dissemination and implementation research in health: Translating science to practice. Edited by: Brownson R, Colditz G, Proctor E. 2012, New York: Oxford University Press, 55-71.

Glanz K, Rimer BK, Viswanath K: Health behavior and health education: Theory, research, and practice 4th edn. 2008, San Francisco, CA: Jossey-Bass

Estabrooks CA, Squires JE, Cummings GG, Birdsell JM, Norton PG: Development and assessment of the Alberta Context Tool. BMC Health Serv Res. 2009, 9: 234. Article included in review

Cummings GG, Hutchinson AM, Scott DS, Norton PG, Estabrooks CA: The relationship between characteristics of context and research utilization in a pediatric setting. BMC Health Serv Res. 2010, 10: 168. Article included in review

Scott S, Grimshaw J, Klassen T, Nettel-Aguirre A, Johnson D: Understanding implementation processes of clinical pathways and clinical practice guidelines in pediatric contexts: a study protocol. Implem Sci. 2011, 6: 133. Article included in review

Seers K, Cox K, Crichton N, Edwards R, Eldh A, Estabrooks C, Harvey G, Hawkes C, Kitson A, Linck P: FIRE (facilitating implementation of research evidence): a study protocol. Implem Sci. 2012, 7: 25. Article included in review

Estabrooks CA, Squires JE, Hayduk LA, Cummings GG, Norton PG: Advancing the argument for validity of the Alberta Context Tool with healthcare aides in residential long-term care. BMC Med Res Methodol. 2011, 11: 107. Article included in review

The AGREE Collaboration: The Appraisal of Guidelines for Research & Evaluation (AGREE) Instrument, 2001. 2001, London, UK: The AGREE Research Trust. Article included in review

Lavis J, Oxman A, Moynihan R, Paulsen E: Evidence-informed health policy 1 – Synthesis of findings from a multi-method study of organizations that support the use of research evidence. Implem Sci. 2008, 3: 53. Article included in review

Norton W: An exploratory study to examine intentions to adopt an evidence-based HIV linkage-to-care intervention among state health department AIDS directors in the United States. Implem Sci. 2012, 7: 27. Article included in review

de Vos M, van der Veer S, Graafmans W, de Keizer N, Jager K, Westert G, van der Voort P: Implementing quality indicators in intensive care units: Exploring barriers to and facilitators of behaviour change. Implem Sci. 2010, 5: 52. Article included in review

Corrigan PW, Kwartarini WY, Pramana W: Staff perception of barriers to behavior therapy at a psychiatric hospital. Behav Modif. 1992, 16: 132-144. Article included in review

Corrigan PW, McCracken SG, Edwards M, Kommana S, Simpatico T: Staff training to improve implementation and impact of behavioral rehabilitation programs. Psychiatr Serv. 1997, 48: 1336-1338. Article included in review

Donat DC: Impact of behavioral knowledge on job stress and the perception of system impediments to behavioral care. Psychiatr Rehabil J. 2001, 25: 187-189. Article included in review

Chau JPC, Lopez V, Thompson DR: A survey of Hong Kong nurses' perceptions of barriers to and facilitators of research utilization. Res Nurs Health. 2008, 31: 640-649. Article included in review

Fink R, Thompson CJ, Bonnes D: Overcoming barriers and promoting the use of research in practice. J Nurs Adm. 2005, 35: 121-129. Article included in review

Retsas A, Nolan M: Barriers to nurses' use of research: An Australian hospital study. Int J Nurs Stud. 1999, 36: 335-343. Article included in review

Thompson DR, Chau JPC, Lopez V: Barriers to, and facilitators of, research utilisation: A survey of Hong Kong registered nurses. Int J Evid Based Healthc. 2006, 4: 77-82. Article included in review

Temel AB, Uysal A, Ardahan M, Ozkahraman S: Barriers to Research Utilization Scale: Psychometric properties of the Turkish version. J Adv Nurs. 2010, 66: 456-464. Article included in review

Uysal A, Temel AB, Ardahan M, Ozkahraman S: Barriers to research utilisation among nurses in Turkey. J Clin Nurs. 2010, 19: 3443-3452. Article included in review

Brown T, Tseng MH, Casey J, McDonald R, Lyons C: Predictors of research utilization among pediatric occupational therapists. OTJR: Occup, Particip Health. 2010, 30: 172-183. Article included in review

Hutchinson AM, Johnston L: Bridging the divide: A survey of nurses' opinions regarding barriers to, and facilitators of, research utilization in the practice setting. J Clin Nurs. 2004, 13: 304-315. Article included in review

Facione PA, Facione CF: The California Critical Thinking Disposition Inventory: Test manual. 1992, Millbrae, CA: California Academic Press, Article included in review

Profetto-McGrath J, Hesketh KL, Lang S, Estabrooks CA: A study of critical thinking and research utilization among nurses. West J Nurs Res. 2003, 25: 322-337. Article included in review

Wangensteen S, Johansson IS, Björkström ME, Nordström G: Research utilisation and critical thinking among newly graduated nurses: Predictors for research use. A quantitative cross-sectional study. J Clin Nurs. 2011, 20: 2436-2447. Article included in review

Lunn LM, Heflinger CA, Wang W, Greenbaum PE, Kutash K, Boothroyd RA, Friedman RM: Community characteristics and implementation factors associated with effective systems of care. J Behav Health Serv Res. 2011, 38: 327-341. Article included in review

Shortell SM, O'Brien JL, Carman JM, Foster RW, Hughes EFX, Boerstler H, O'Connor EJ: Assessing the impact of continuous quality improvement/total quality management: Concept versus implementation. Health Serv Res. 1995, 30: 377-401. Article included in review

Zammuto RF, Krakower JY: Quantitative and qualitative studies of organizational culture. Research in organizational change and development. Volume 5. Edited by: Woodman RW, Pasmore WA. 1991, Greenwich, CT: JAI Press. Article included in review

McCormack B, McCarthy G, Wright J, Slater P, Coffey A: Development and testing of the Context Assessment Index (CAI). Worldviews Evid Based Nurs. 2009, 6: 27-35. Article included in review

Efstation JF, Patton MJ, Kardash CM: Measuring the working alliance in counselor supervision. J Couns Psychol. 1990, 37: 322-329. Article included in review

Dobbins M, Rosenbaum P, Plews N, Law M, Fysh A: Information transfer: What do decision makers want and need from researchers?. Implem Sci. 2007, 2: 20. Article included in review

Marsick VJ, Watkins KE: Demonstrating the value of an organization's learning culture: The Dimensions of the Learning Organization Questionnaire. Adv Dev Hum Resour. 2003, 5: 132-151. Article included in review

Estrada N, Verran J: Nursing practice environments: Strengthening the future of health systems: learning organizations and evidence-based practice by RNs. Commun Nurs Res. 2007, 40: 187. Article included in review

Sockolow PS, Weiner JP, Bowles KH, Lehmann HP: A new instrument for measuring clinician satisfaction with electronic health records. Computers, Informatics, Nursing: CIN. 2011, 29: 574-585. Article included in review

Green L, Wyszewianski L, Lowery J, Kowalski C, Krein S: An observational study of the effectiveness of practice guideline implementation strategies examined according to physicians' cognitive styles. Implem Sci. 2007, 2: 41. Article included in review

Poissant L, Ahmed S, Riopelle R, Rochette A, Lefebvre H, Radcliffe-Branch D: Synergizing expectation and execution for stroke communities of practice innovations. Implem Sci. 2010, 5: 44. Article included in review

Aarons GA, Sommerfeld D, Walrath-Greene C: Evidence-based practice implementation: The impact of public versus private sector organization type on organizational support, provider attitudes, and adoption of evidence-based practice. Implem Sci. 2009, 4: 83. Article included in review

Aarons G, Glisson C, Green P, Hoagwood K, Kelleher K, Landsverk J: The organizational social context of mental health services and clinician attitudes toward evidence-based practice: A United States national study. Implem Sci. 2012, 7: 56. Article included in review

Butler KD: Nurse Practitioners and Evidence-Based Nursing Practice. Clinical Scholars Review. 2011, 4: 53-57. Article included in review

Versteeg M, Laurant M, Franx G, Jacobs A, Wensing M: Factors associated with the impact of quality improvement collaboratives in mental healthcare: An exploratory study. Implem Sci. 2012, 7: 1. Article included in review

Melnyk BM, Bullock T, McGrath J, Jacobson D, Kelly S, Baba L: Translating the evidence-based NICU COPE program for parents of premature infants into clinical practice: Impact on nurses' evidence-based practice and lessons learned. J Perinat Neonatal Nurs. 2010, 24: 74-80. Article included in review

Estrada N: Exploring perceptions of a learning organization by RNs and relationship to EBP beliefs and implementation in the acute care setting. Worldviews Evid Based Nurs. 2009, 6: 200-209. Article included in review

Upton D, Upton P: Development of an evidence-based practice questionnaire for nurses. J Adv Nurs. 2006, 53: 454-458. Article included in review

Barwick M, Boydell K, Stasiulis E, Ferguson HB, Blase K, Fixsen D: Research utilization among children's mental health providers. Implem Sci. 2008, 3: 19.

McColl A, Smith H, White P, Field J: General practitioners' perceptions of the route to evidence based medicine: A questionnaire survey. Br Med J. 1998, 316: 361. Article included in review

Byham-Gray LD, Gilbride JA, Dixon LB, Stage FK: Evidence-based practice: What are dietitians' perceptions, attitudes, and knowledge?. J Am Diet Assoc. 2005, 105: 1574-1581. Article included in review

Good LR, Nelson DA: Effects of person-group and intragroup attitude similarity on perceived group attractiveness and cohesiveness: II. Psychol Rep. 1973, 33: 551-560. Article included in review

Wallen GR, Mitchell SA, Melnyk B, Fineout-Overholt E, Miller-Davis C, Yates J, Hastings C: Implementing evidence-based practice: Effectiveness of a structured multifaceted mentorship programme. J Adv Nurs. 2010, 66: 2761-2771. Article included in review

Shiffman RN, Dixon J, Brandt C, Essaihi A, Hsiao A, Michel G, O'Connell R: The GuideLine Implementability Appraisal (GLIA): Development of an instrument to identify obstacles to guideline implementation. BMC Med Inform Decis Mak. 2005, 5: 23. Article included in review

van Dijk L, Nelen W, D'Hooghe T, Dunselman G, Hermens R, Bergh C, Nygren K, Simons A, de Sutter P, Marshall C: The European Society of Human Reproduction and Embryology guideline for the diagnosis and treatment of endometriosis: An electronic guideline implementability appraisal. Implem Sci. 2011, 6: 7. Article included in review

Smith JB, Lacey SR, Williams AR, Teasley SL, Olney A, Hunt C, Cox KS, Kemper C: Developing and testing a clinical information system evaluation tool: prioritizing modifications through end-user input. J Nurs Adm. 2011, 41: 252-258. Article included in review

Price JL, Mueller CW: Absenteeism and turnover of hospital employees. 1986, Greenwich, CT: JAI Press. Article included in review

Price JL, Mueller CW: Professional turnover: The case of nurses. Health Syst Manage. 1981, 15: 1-160. Article included in review

Norman C, Huerta T: Knowledge transfer & exchange through social networks: Building foundations for a community of practice within tobacco control. Implem Sci. 2006, 1: 20. Article included in review

Leiter MP, Day AL, Harvie P, Shaughnessy K: Personal and organizational knowledge transfer: Implications for worklife engagement. Hum Relat. 2007, 60: 259. Article included in review

Davies A, Wong CA, Laschinger H: Nurses' participation in personal knowledge transfer: the role of leader-member exchange (LMX) and structural empowerment. J Nurs Manag. 2011, 19: 632-643. Article included in review

El-Jardali F, Lavis J, Ataya N, Jamal D: Use of health systems and policy research evidence in the health policymaking in eastern Mediterranean countries: views and practices of researchers. Implem Sci. 2012, 7: 2. Article included in review

Liden RC, Maslyn JM: Multidimensionality of Leader-Member Exchange: An empirical assessment through scale development. J Manag. 1998, 24: 43-72. Article included in review

Cowin L: The effects of nurses’ job satisfaction on retention: An Australian perspective. J Nurs Adm. 2002, 32: 283-291. Article included in review

Olade RA: Attitudes and factors affecting research utilization. Nurs Forum (Auckl). 2003, 38: 5-15. Article included in review

Aiken LH, Patrician PA: Measuring organizational traits of hospitals: The Revised Nursing Work Index. Nurs Res. 2000, 49: 146-153. Article included in review

Stetler C, Ritchie J, Rycroft-Malone J, Schultz A, Charns M: Improving quality of care through routine, successful implementation of evidence-based practice at the bedside: an organizational case study protocol using the Pettigrew and Whipp model of strategic change. Implem Sci. 2007, 2: 3. Article included in review

Hagedorn H, Heideman P: The relationship between baseline Organizational Readiness to Change Assessment subscale scores and implementation of hepatitis prevention services in substance use disorders treatment clinics: A case study. Implem Sci. 2010, 5: 46. Article included in review

Fineout-Overholt E, Melnyk BM: Organizational Culture and Readiness for System-Wide Implementation of EBP (OCR-SIEP) scale. 2006, Gilbert, AZ: ARCC llc Publishing. Article included in review

Melnyk BM, Fineout-Overholt E, Giggleman M: EBP organizational culture, EBP implementation, and intent to leave in nurses and health professionals. Commun Nurs Res. 2010, 43: 310. Article included in review

Cooke RA, Rousseau DM: Behavioral norms and expectations. Group & Organizational Studies. 1988, 13: 245-273. Article included in review

Goh SC, Quon TK, Cousins JB: The organizational learning survey: A re-evaluation of unidimensionality. Psychol Rep. 2007, 101: 707-721. Article included in review

Wang W, Saldana L, Brown CH, Chamberlain P: Factors that influenced county system leaders to implement an evidence-based program: A baseline survey within a randomized controlled trial. Implem Sci. 2010, 5: 72. Article included in review

Glisson C, Landsverk J, Schoenwald S, Kelleher K, Hoagwood KE, Mayberg S, Green P: Assessing the Organizational Social Context (OSC) of mental health services: implications for research and practice. Adm Policy Ment Health. 2008, 35: 98-113. Article included in review

James LR, Sells SB: Psychological climate. The situation: An interactional perspective. Edited by: Magnusson D. 1981, Hillsdale, NJ: Lawrence Erlbaum. Article included in review

Brehaut JC, Graham ID, Wood TJ, Taljaard M, Eagles D, Lott A, Clement C, Kelly A, Mason S, Stiell IG: Measuring acceptability of clinical decision rules: Validation of the Ottawa Acceptability of Decision Rules Instrument (OADRI) in four countries. Med Decis Making. 2010, 30: 398-408. Article included in review

Newton M, Estabrooks C, Norton P, Birdsell J, Adewale A, Thornley R: Health researchers in Alberta: An exploratory comparison of defining characteristics and knowledge translation activities. Implem Sci. 2007, 2: 1. Article included in review

Shortell SM, Jones RH, Rademaker AW, Gillies RR, Dranove DS, Hughes EFX, Budetti PP, Reynolds KSE, Huang CF: Assessing the impact of total quality management and organizational culture on multiple outcomes of care for coronary artery bypass graft surgery patients. Med Care. 2000, 38: 207-217. Article included in review

Wakefield BJ, Blegen MA, Uden-Holman T, Vaughn T, Chrischilles E, Wakefield DS: Organizational culture, continuous quality improvement, and medication administration error reporting. Am J Med Qual. 2001, 16: 128-134. Article included in review

Pacini R, Epstein S: The relation of rational and experiential information processing styles to personality, basic beliefs, and the ratio-bias phenomenon. J Pers Soc Psychol. 1999, 76: 972-987. Article included in review

Sladek R, Bond M, Huynh L, Chew D, Phillips P: Thinking styles and doctors' knowledge and behaviours relating to acute coronary syndromes guidelines. Implem Sci. 2008, 3: 23. Article included in review

Ofi B, Sowunmi L, Edet D, Anarado N: Professional nurses' opinion on research and research utilization for promoting quality nursing care in selected teaching hospitals in Nigeria. Int J Nurs Pract. 2008, 14: 243-255. Article included in review

Van Mullem C, Burke L, Dohmeyer K, Farrell M, Harvey S, John L, Kraly C, Rowley F, Sebern M, Twite K, Zapp R: Strategic planning for research use in nursing practice. J Nurs Adm. 1999, 29: 38-45. Article included in review

Baessler CA, Blumberg M, Cunningham JS, Curran JA, Fennessey AG, Jacobs JM, McGrath P, Perrong MT, Wolf ZR: Medical-surgical nurses' utilization of research methods and products. Medsurg Nurs. 1994, 3: 113. Article included in review

Bostrom A, Wallin L, Nordstrom G: Research use in the care of older people: a survey among healthcare staff. Int J Older People Nurs. 2006, 1: 131-140. Article included in review

Ohrn K, Olsson C, Wallin L: Research utilization among dental hygienists in Sweden – a national survey. Int J Dent Hyg. 2005, 3: 104-111. Article included in review