Abstract

Background

Interventions to alleviate stigma are demonstrating effectiveness across a range of conditions, though few move beyond the pilot phase, especially in low- and middle-income countries (LMICs). Implementation science offers tools to study complex interventions, understand barriers to implementation, and generate evidence of affordability, scalability, and sustainability. Such evidence could be used to convince policy-makers and donors to invest in implementation. However, the utility of implementation research depends on its rigor and replicability. Our objectives were to systematically review implementation studies of health-related stigma reduction interventions in LMICs and critically assess the reporting of implementation outcomes and intervention descriptions.

Methods

PubMed, CINAHL, PsycINFO, and EMBASE were searched for evaluations of stigma reduction interventions in LMICs reporting at least one implementation outcome. Study- and intervention-level characteristics were abstracted. The quality of reporting of implementation outcomes was assessed using a five-item rubric, and the comprehensiveness of intervention description and specification was assessed using the 12-item Template for Intervention Description and Replication (TIDieR).

Results

A total of 35 eligible studies published between 2003 and 2017 were identified; of these, 20 (57%) used qualitative methods, 32 (91%) were type 1 hybrid effectiveness-implementation studies, and 29 (83%) were evaluations of once-off or pilot implementations. No studies adopted a formal theoretical framework for implementation research. Acceptability (20, 57%) and feasibility (14, 40%) were the most frequently reported implementation outcomes. The quality of reporting of implementation outcomes was low. The 35 studies evaluated 29 different interventions, of which 18 (62%) were implemented across sub-Saharan Africa, 20 (69%) focused on stigma related to HIV/AIDS, and 28 (97%) used information or education to reduce stigma. Intervention specification and description were uneven.

Conclusion

Implementation science could support the dissemination of stigma reduction interventions in LMICs, though usage to date has been limited. Theoretical frameworks and validated measures have not been used, key implementation outcomes like cost and sustainability have rarely been assessed, and intervention processes have not been presented in detail. Adapted frameworks, new measures, and increased LMIC-based implementation research capacity could promote the rigor of future stigma implementation research, helping the field deliver on the promise of stigma reduction interventions worldwide.

Background

Health-related stigma – the co-occurrence of labeling, stereotyping, separating, status loss, and discrimination associated with a specific disease in the context of power imbalance [1] – deepens health disparities and drives population mortality and morbidity [2]. Interventions to alleviate stigma and its consequences are demonstrating effectiveness across a range of conditions, including HIV/AIDS, mental and substance use disorders, leprosy, epilepsy, and tuberculosis [3,4,5,6,7,8,9,10]. For example, social contact interventions, which facilitate interactions between individuals with a stigmatizing condition and those without it, have been shown to be effective at reducing community stigmatizing beliefs about mental health [6]; individual- and group-based psychotherapeutic interventions have been shown to reduce internalized stigma associated with HIV and mental health conditions [3, 10]; and socioeconomic rehabilitation programs have been shown to reduce stigmatizing attitudes towards people with leprosy [5]. Observed effects have tended to be small-to-moderate and limited to changes in attitudes and knowledge, with less evidence concerning long-term impacts on behavior change and health [11, 12]. Stigma can be intersectional, wherein multiple stigmatizing identities converge within individuals or groups, and effective interventions often grow complex to reflect this reality [13]. Interventions may be multi-component and multi-level [3], meaning that they may be especially difficult to implement, replicate, and disseminate to new contexts [14].

Few stigma reduction interventions move beyond the pilot phase of implementation, and those that do have tended to be in high-income countries. For example, mass media campaigns to reduce the stigma associated with mental health have been implemented at scale and sustained over time in England, Scotland, Canada, New Zealand, and Australia [11]; however, most interventions do not reach those who need them. This is especially true in low- and middle-income countries (LMICs), where reduced access to resources and lack of political support for stigma reduction interventions compound the burden and consequences of stigma [15, 16]. For example, most LMICs spend far less than needed on the provision of mental health services [17], making large-scale investment in mental health stigma reduction programs unlikely without strong evidence of affordability and sustainability. Furthermore, stigma in low-resource settings tends to be a greater impediment to accessing services than elsewhere [18]. Anti-homosexuality laws and other legislation criminalizing stigmatized identities both increase the burden of stigma and prevent the implementation of effective services and interventions [19]. The same cultural and structural factors that drive and facilitate stigmatizing attitudes threaten the credibility and uptake of the interventions themselves [20].

Implementation science seeks to improve population health by leveraging interdisciplinary methods to promote the uptake and dissemination of effective, under-used interventions in the real world [21]. The emphasis is on implementation strategies, namely on approaches to facilitate, strengthen, or sustain the delivery of evidence-based technologies, practices, and services [22, 23]. Implementation science studies use qualitative and quantitative methods to measure implementation outcomes, including acceptability, adoption, appropriateness, cost, feasibility, fidelity, penetration, and sustainability (Table 1) [24]; these are indicators of implementation success and process, proximal to service delivery and patient health outcomes. Increasingly, studies use psychometrically validated measures of implementation outcomes [25, 26]. A range of theoretical frameworks support implementation science, including those that can be used to guide the translation of research into practice (e.g., the Canadian Institutes of Health Research Model of Knowledge Translation [27]), study the determinants of implementation success (e.g., the Consolidated Framework for Implementation Research [28]), and evaluate the impact of implementation (e.g., the RE-AIM framework [29]) [30]. Depending on the level of evidence required and the research questions involved, studies fall along a continuum from effectiveness, to hybrid effectiveness-implementation [31], to implementation (Fig. 1). Whereas effectiveness studies focus a priori on generalizability and test the effect of interventions on clinical outcomes [32], hybrid study designs can be used to test intervention effects while examining the process of implementation (type 1), simultaneously test clinical interventions and assess the feasibility or utility of implementation interventions or strategies (type 2), or test implementation interventions or strategies while observing clinical outcomes (type 3) [31]. 
Non-hybrid implementation studies focus a priori on the adoption or uptake of clinical interventions in the real world [33].

Fig. 1. Continuum of study designs from effectiveness to implementation, as defined by Curran et al. [31]

Implementation science has particular relevance to the goal of delivering effective stigma reduction interventions in LMICs, offering tools to identify, explain, and circumvent barriers to implementation given severe resource constraints [34]. It can be used to study and improve complex interventions whose multiple, interacting components blur the boundaries between intervention, context, and implementation [14] and has the potential to generate evidence of affordability, scalability, and sustainability, which could be used to convince policy-makers and donors to invest in future implementation [35]. Moreover, it could bring policy-makers, providers, patients, and other stakeholders into the research process, promoting engagement around the study and delivery of interventions that may themselves be stigmatized [36]. However, the utility of implementation research depends on its rigor and replicability. To encourage growth and strength in the field of stigma implementation research, it is important to summarize previous work in the area, evaluate that rigor and replicability, and articulate priorities for future research. Our objectives were to systematically review implementation studies of health-related stigma reduction interventions in LMICs and critically assess the reporting of implementation outcomes and intervention descriptions.

Methods

We registered our systematic review protocol in the International Prospective Register of Systematic Reviews (PROSPERO #CRD42018085786) and followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines [37].

Search strategy

One author (CK) searched four electronic bibliographic databases (PubMed, CINAHL, PsycINFO, and EMBASE) through November 15, 2017, for studies fulfilling four search concepts – stigma, intervention, implementation outcomes, and LMICs. We developed a list of terms for each concept in collaboration with an information scientist. The full search strategy for all databases is presented in Additional file 1. The PsycINFO search excluded dissertations, while the CINAHL search was restricted to academic journals. Finally, the reference lists of included studies were reviewed for additional publications.

Study selection

Studies in any language were included if they (1) collected empirical data, (2) evaluated implementation of an intervention whose primary objective was to reduce stigma related to a health condition, (3) were based in an LMIC according to the World Bank [38], and (4) reported at least one implementation outcome as defined by Proctor et al. [24]. Studies evaluating interventions targeting stigma related to marginalized identities, behaviors, beliefs, or experiences (e.g., stigma related to race, economic status, employment, or sexual preference) were excluded if the interventions did not also target stigma related to a health condition. Unpublished and non-peer-reviewed research was excluded. The same inclusion and exclusion criteria applied to qualitative and quantitative studies. The Covidence tool was used to remove duplicate studies and to conduct study screening [39]. Two authors drawn from a team of four (CK, BJ, CSK, and LS) independently screened each title, abstract, and full-text article and noted reasons for excluding studies during full-text review. Studies passed the title/abstract screening stage if the title or abstract mentioned stigma reduction and if it was possible that the study had been conducted in an LMIC. Studies passed the full-text screening stage if all criteria above were met. Disagreements were resolved through discussion until consensus was reached.
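The two-stage screening logic described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the authors' tooling (screening itself was conducted in Covidence); the names `FullTextAssessment`, `passes_title_abstract`, and `reconcile` are hypothetical and introduced here purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class FullTextAssessment:
    """One reviewer's judgment of the four inclusion criteria (hypothetical structure)."""
    empirical_data: bool          # (1) collected empirical data
    health_stigma_primary: bool   # (2) primary objective is reducing health-related stigma
    lmic_setting: bool            # (3) based in an LMIC per the World Bank
    implementation_outcome: bool  # (4) reports at least one implementation outcome

    def include(self) -> bool:
        # A study passes full-text screening only if all four criteria are met.
        return all((self.empirical_data, self.health_stigma_primary,
                    self.lmic_setting, self.implementation_outcome))


def passes_title_abstract(mentions_stigma_reduction: bool, possibly_lmic: bool) -> bool:
    """Title/abstract stage: stigma reduction is mentioned and an LMIC setting is possible."""
    return mentions_stigma_reduction and possibly_lmic


def reconcile(reviewer_a: bool, reviewer_b: bool) -> str:
    """Combine two independent decisions; disagreements go to discussion."""
    if reviewer_a == reviewer_b:
        return "include" if reviewer_a else "exclude"
    return "discuss"
```

For instance, `reconcile(True, False)` returns `"discuss"`, mirroring the rule that disagreements were resolved by consensus rather than by either reviewer alone.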

Data abstraction

Two authors (CK and BJ) independently piloted a structured abstraction form with two studies; all co-authors reviewed, critiqued, and approved the form. For each study, one of three authors (CK, BJ, and CSK) abstracted study and intervention characteristics (Table 2) onto a shared spreadsheet. One of the two remaining authors verified each abstraction, and the group of three resolved any disagreement through discussion.

At the study level, we collected research questions, methods and study types, implementation research frameworks used, years of data collection, study populations, implementation outcomes reported [24], stigma, service delivery, patient health, and/or other outcomes reported, study limitations, and conclusions or lessons learned. Studies were categorized as effectiveness, type 1, 2, or 3 hybrid effectiveness-implementation, or implementation, according to Curran et al. [31]. We noted the stage of intervention implementation at the time of each study as either pilot/once-off, scaling up, implemented and sustained at scale, or undergoing de-implementation. Studies were considered to have used an implementation research framework if authors specified one within the introduction or methods. Implementation outcomes were defined according to Proctor et al. [24]. Patient-level service penetration – the percent of eligible patients receiving an intervention – was considered a form of penetration, though this distinction is not clear in Proctor et al. [24]. We developed a five-item rubric to assess the quality of reporting of implementation outcomes, noting whether the authors included the implementation outcomes in their study objectives; whether they specified any hypotheses or conceptual models for the implementation outcomes; whether they described measurement methods for the implementation outcomes; whether they used validated measures for the implementation outcomes [25]; and whether they reported the sample sizes for the implementation outcomes.

At the intervention level, we collected intervention names, intervention descriptions, countries, associated stigmatizing health conditions, and target populations. Interventions were categorized based on type, including information/education, skills, counselling/support, contact, structural, and/or biomedical [3]; socio-ecological level, including individual, interpersonal, organizational, community, and/or public policy; stigma domain targeted, including driver, facilitator, and/or manifestation [3]; and finally the type of stigma targeted, including experienced, community, anticipated, and/or internalized [40]. The 12-item Template for Intervention Description and Replication (TIDieR) was used to evaluate the comprehensiveness of intervention description and specification by the studies in the sample [41]. TIDieR is an extension of item five of the Consolidated Standards of Reporting Trials (CONSORT), providing granular instructions for the description of interventions to ensure sufficient detail for replicability [41]. Implementation science journals encourage the use of TIDieR or other standards when describing interventions [42]. Each item in the TIDieR checklist (e.g., who provides the intervention? What materials are used?) was counted as present if any aspect of the item was mentioned, regardless of quality or level of detail. When multiple studies in the sample evaluated the same intervention, TIDieR intervention specification was assessed across the studies. Risk of bias was not assessed, as the goal was not to synthesize results across the studies in the sample.
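One way to read "assessed across the studies" is that a TIDieR item counts as present for an intervention if any study of that intervention mentions it. The Python sketch below illustrates that reading under stated assumptions: the pooling-by-union rule is an interpretation rather than a documented procedure, the item labels are paraphrased from the TIDieR checklist, all 12 items are treated as applicable, and the function names are hypothetical.

```python
from typing import List, Set

# Paraphrased labels for the 12 TIDieR checklist items.
TIDIER_ITEMS = {
    1: "brief name",
    2: "why (rationale)",
    3: "what (materials)",
    4: "what (procedures)",
    5: "who provided",
    6: "how (mode of delivery)",
    7: "where",
    8: "when and how much",
    9: "tailoring",
    10: "modifications",
    11: "how well (planned)",
    12: "how well (actual)",
}


def pooled_items(studies: List[Set[int]]) -> Set[int]:
    """Union of TIDieR item numbers mentioned by any study of one intervention."""
    pooled: Set[int] = set()
    for items in studies:
        unknown = items - set(TIDIER_ITEMS)
        if unknown:
            raise ValueError(f"not TIDieR item numbers: {sorted(unknown)}")
        pooled |= items
    return pooled


def tidier_score(studies: List[Set[int]]) -> float:
    """Share of the 12 items reported (assumes every item is applicable)."""
    return len(pooled_items(studies)) / len(TIDIER_ITEMS)
```

With two hypothetical studies reporting items `{1, 2, 4, 6}` and `{2, 5, 7, 8}`, the pooled set holds seven distinct items, so the intervention would score 7 out of 12.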

Analysis

We calculated percentages for categorical variables and means and standard deviations (SD) for continuous variables. An implementation outcome reporting score was calculated for each study by summing the number of rubric items present and dividing by the total number of applicable items. A TIDieR specification score out of 12 was calculated for each intervention by summing the number of checklist items reported across studies of the same intervention and dividing by the total number of applicable items. These variables were used to summarize the aims, methods, and results of the studies and interventions in the sample. Qualitative synthesis and quantitative meta-analysis of study findings were not possible, given the heterogeneity in research questions and outcomes.
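The reporting score and the descriptive summaries described above amount to simple arithmetic, sketched here in Python. The sketch rests on several assumptions: the rubric item keys are hypothetical labels, a value of `None` marks an item as not applicable (the text does not say which items could be inapplicable), and the sample standard deviation is used because the text does not specify sample versus population SD.

```python
from statistics import mean, stdev
from typing import Dict, List, Optional, Tuple

# Hypothetical keys for the five rubric items.
RUBRIC_ITEMS = (
    "in_objectives",
    "hypothesis_or_model",
    "methods_described",
    "validated_measures",
    "sample_size_reported",
)


def reporting_score(ratings: Dict[str, Optional[bool]]) -> Optional[float]:
    """Items present divided by applicable items; None if nothing is applicable."""
    applicable = [ratings[item] for item in RUBRIC_ITEMS if ratings[item] is not None]
    if not applicable:
        return None
    return sum(applicable) / len(applicable)


def percent(count: int, total: int) -> int:
    """Whole-number percentage for a categorical variable."""
    return round(100 * count / total)


def summarize(scores: List[float]) -> Tuple[float, float]:
    """Mean and sample SD of a continuous variable (needs at least two values)."""
    return mean(scores), stdev(scores)
```

As a usage example with invented ratings, a study with three of four applicable items present would score 0.75, and `percent(20, 35)` reproduces the 57% reported for studies using qualitative methods.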

Results

Study selection

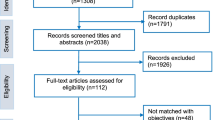

We screened 5951 studies and assessed 257 full-text articles for eligibility. A total of 35 studies met all eligibility criteria (Fig. 2) [43,44,45,46,47,48,49,50,51,52,53,54,55,56,57,58,59,60,61,62,63,64,65,66,67,68,69,70,71,72,73,74,75,76,77] and evaluated 29 different stigma reduction interventions (Table 3).

Study characteristics

The 35 studies in the sample were published between 2003 and 2017; the median year of publication was 2013 (Table 4). Study designs varied and included both qualitative and quantitative methods; 20 (57%) adopted at least one qualitative method, including interviewing, focus groups, or observation, while 8 (23%) reported results from cross-sectional surveys. One was an effectiveness study, with no a priori intent to assess implementation outcomes. The majority (32, 91%) were type 1 hybrid effectiveness-implementation studies; for example, Shah et al. [66] paired an effectiveness study with a process evaluation in order to assess provider-level acceptability and feasibility. None were type 2 or type 3 hybrid studies. Two were implementation studies; for example, Gurnani et al. [53] used routinely collected monitoring and evaluation data to assess the penetration of a structural intervention to reduce stigma around HIV/AIDS and sex work. Most (29, 83%) were evaluations of once-off or pilot implementations, while 6 (17%) evaluated implementation at scale. None evaluated the interventions undergoing scale-up, and none evaluated the process of de-implementation. No studies adopted a formal theoretical framework for implementation research.

Patient, provider, or community-level acceptability (20, 57%) and feasibility (14, 40%) were the most frequently reported implementation outcomes. Though authors usually reported whether participants found activities useful, enjoyable, or difficult, they rarely described why. Penetration was also relatively common (6, 17%). In comparison, appropriateness and fidelity were reported in 5 (14%) and 4 (11%) studies, respectively, while cost and sustainability were reported twice each, and adoption was reported once. In addition to these implementation outcomes, stigma (25, 71%) and service delivery outcomes (12, 34%) were most frequently reported, while patient health outcomes were rarely assessed (7, 20%).

Implementation outcome reporting scores were low, with a mean of 40% (SD 30%); 14 (40%) studies mentioned implementation outcomes in their study objectives, while 3 (9%) prespecified a hypothesis or conceptual model to explain implementation outcomes. For example, Rice et al. [56] used diffusion of innovation theory to inform their hypothesis about the penetration of messaging in intervention settings. Though 28 (80%) studies described methods for collecting implementation outcomes and 24 (69%) documented a sample size for those outcomes, none used validated measures of implementation outcomes in their quantitative data collection.

Intervention characteristics

Of the 29 interventions in the sample, 18 (62%) were implemented in sub-Saharan Africa (Table 5), 20 (69%) focused on stigma related to HIV/AIDS, and fewer addressed mental health (3, 10%), leprosy (2, 7%), or other conditions (6, 21%); the majority (28, 97%) used information or education to reduce stigma. For example, the Tchova Tchova program in Mozambique broadcast HIV education over the radio, including a debate segment where listeners could ask questions to an HIV specialist [72]. Skill- and capacity-building were the next most common types of stigma reduction interventions (13, 45%), followed by counseling (6, 21%) and contact events (6, 21%). The Stigma Assessment and Reduction of Impact program in Indonesia, for instance, taught participatory video production skills to people affected by leprosy [67, 68], while the Trauma-Focused Cognitive Behavioral Therapy program in Zambia counseled orphans and vulnerable children to reduce shame-related feelings around sexual abuse [61,62,63]. Few interventions used structural (1, 3%) or biomedical (1, 3%) approaches to reduce stigma. The drivers of stigma were targeted by 28 (97%) interventions, while few targeted its facilitators (4, 14%) or manifestations (10, 34%). In Senegal, the HIV Prevention 2.0 study targeted all three through its Integrated Stigma Mitigation Intervention approach, which addressed drivers related to knowledge and competency of service providers, facilitators related to peer support and peer-to-peer referral, and manifestations related to individual self-stigma and self-esteem [76]. Most interventions (24, 83%) focused on reducing community stigma, while fewer targeted experienced (11, 38%), anticipated (7, 24%), or internalized stigma (9, 31%). For example, the Indian film Prarambha was produced to raise awareness about HIV and designed to be viewed by individuals in HIV-vulnerable communities, thus targeting a driver of community stigma related to HIV [58]. 
While many interventions operated at the individual (23, 79%) and interpersonal levels (14, 48%), fewer were implemented at the community (11, 38%), organizational (6, 21%), or public policy (1, 3%) levels. Several interventions at the community, organizational, or public policy level specifically targeted the structural drivers of health-related stigma among key or vulnerable populations. In another example from India, the Karnataka Health Promotion Trust organization educated female sex workers on their legal rights and implemented sensitization and awareness training with government officials, police, and journalists [53].

Adherence to the TIDieR checklist for reporting interventions was uneven. On average, interventions met 60% (SD 10%) of the TIDieR criteria. All interventions specified how they were delivered (whether face-to-face, remotely, individually, or in a group), and the majority offered a rationale to justify the intervention (28, 97%) and described the procedures involved in delivering intervention components (28, 97%). Few interventions (5, 17%) documented how they were tailored to different target groups or contexts, and only 2 (7%) described modifications that took place over the course of implementation.

Discussion

We systematically reviewed implementation research conducted in support of stigma reduction interventions in LMICs. A broad, inclusive definition of implementation research was used, considering any studies that reported implementation outcomes while evaluating stigma reduction interventions. Few studies were found, with the majority of these evaluating interventions to reduce HIV-related stigma, taking place in sub-Saharan Africa, and evaluating pilot or once-off interventions. The interventions in the sample were diverse, adopting a variety of tactics to reduce stigma, though those that had been implemented at scale tended to incorporate mass media or target structural changes, rather than individual-level support or service delivery. Further, none took a trans-diagnostic approach seeking to reduce stigma associated with multiple health conditions.

A critical assessment of these studies suggested three key gaps in the literature. First, no study in the sample explicitly incorporated a conceptual framework for implementation research, evaluated implementation strategies using a type 2 or 3 hybrid study design, or used validated measures of implementation outcomes. Second, most studies focused on intervention acceptability and feasibility, and few assessed adoption, appropriateness, cost, fidelity, penetration, or sustainability. Third, intervention descriptions were sparse and often lacked the key details necessary for the eventual replication and adoption of those interventions. These gaps were consistent across the different stigmatizing health conditions – coverage of robust methods for implementation research was not greater among studies of interventions targeting any particular condition.

Theoretical frameworks, validated measures, and rigorous methods support the generalizability and ultimately promote the utility of implementation research [78]. Implementation science is a rapidly growing field, though essentially all available frameworks and measures for implementation determinants and outcomes have been developed in high-income countries [25, 30, 79]. Frameworks like the Consolidated Framework for Implementation Research are increasingly popular and have produced actionable results to enhance implementation in high-resource settings [80,81,82,83], though they may need to be translated and adapted to support implementation of stigma reduction and other complex interventions in LMICs. Improvements to measurement could also promote the comparability of findings across future stigma implementation studies, accelerating knowledge production in the field and easing the translation of findings into practice [84]. Robust measures are increasingly available [25], including measures of acceptability, appropriateness, feasibility [85], and sustainability [86, 87], though there is a major need for continued development and validation to ensure these are relevant to stigma interventions and valid in LMIC settings. With such measures and frameworks in hand, LMIC-based stigma researchers could start to assess how patient-, provider-, facility-, and community-level characteristics predict implementation outcomes. Such studies would help determine, for example, the projected health sector cost of providing in-service stigma reduction training to clinicians, or the patient-level factors associated with preference for peer counselors over lay counselors. Subsequent type 2 and 3 effectiveness-implementation hybrid study designs could compare implementation strategies and observe changes in relevant outcomes [31], for example, experimenting with the counselor cadre and assessing relative levels of adoption. 
Of course, for all this to be feasible, capacity-building and funding for implementation science among stigma researchers in LMICs are critical. Few opportunities for training and support of LMIC-based implementation researchers are currently available [88].

Future research (Box 1) will need to assess the complete range of implementation outcomes to further strengthen the evidence base for the delivery and scale-up of effective stigma reduction interventions. Studies in this sample concentrated on assessing acceptability and feasibility and rarely measured other implementation outcomes. For example, only five studies measured provider- or facility-level adoption or penetration. As such, little is known about the factors associated with the uptake of stigma reduction interventions by health facilities, staff, patients, or communities in LMICs. Appropriateness, fidelity, cost, and sustainability were also seldom evaluated. Appropriateness is important because uptake of an intervention is unlikely unless community members, patients, and providers perceive its utility and compatibility with their other activities. One study used an innovative approach to improve the appropriateness of a stigma reduction intervention by involving community members with leprosy as staff members to inform study design and implementation [67]. Another asked community members to help select and tailor intervention components to address local concerns [61]. Fidelity has been shown to be critical to ensuring that effectiveness is maximized and successful outcomes are replicable across settings [89]. Evidence of cost and cost-effectiveness is necessary to justify scale-up and funding by health systems and donors. Finally, sustainability ensures investments into stigma reduction efforts are not wasted [90, 91].

Detailed, transparent descriptions of interventions in manuscripts and supplemental materials are also important to ensure others can replicate the work and achieve comparable results to those seen in effectiveness studies [92]. The majority of stigma interventions in the sample performed well against the TIDieR criteria, offering some description of the who, what, when, where, and why of intervention delivery [41], though descriptions were generally sparse, and few manuscripts offered links to formal manuals or protocols detailing intervention content and procedures. This is consistent with other reviews highlighting deficiencies in the comprehensive reporting of processes for complex interventions [93]. Moreover, few studies in the sample reported on intervention tailoring, modifications that were made over the course of the study, or fidelity assessment. Stigma is multi-dimensional; as a result, successful stigma interventions are complex, operating across multiple components and socio-ecological levels [15]. Complex interventions like these work best when peripheral components are tailored to local contexts [94]; it is therefore important to define the core, standardized parts of an intervention, and those that can be or have been adapted to suit local needs. As noted above, fidelity assessment is important to ensuring effectiveness; more frequent reporting of fidelity would serve both to increase the range of implementation outcomes assessed and to improve performance against the TIDieR criteria. Future stigma implementation research could ease the translation of findings into practice and deepen intervention specification by providing intervention materials as manuscript appendices, comprehensively documenting and reporting adaptations or modifications to interventions, and incorporating fidelity assessment into implementation and evaluation [95].

This review had several limitations. First, studies of interventions with stigma reduction as a secondary objective or incidental effect were excluded, though many interventions have immense potential to reduce health-related stigma even if stigma reduction is not their primary goal. For example, integration of services to address stigmatizing conditions into primary care and other platforms (e.g., primary mental health care [96] or prevention of vertical transmission of HIV as part of routine antenatal care [97]) may improve service delivery and patient health outcomes and de-stigmatize the associated condition. Evaluations of the implementation of these approaches exist (e.g., using interviews to assess acceptability and feasibility of vertical transmission prevention and antenatal service integration in Kenya [98]) but were not captured by this review. Second, studies conducted in high-income countries were excluded, though they may represent a significant proportion of stigma implementation research. This review focused on the unique challenge of studying the implementation of stigma-specific interventions in LMICs, where there is a large burden of unaddressed stigma as well as significant financial and logistic constraints to deliver such interventions. Third, this review was focused on implementation science, seeking to develop generalizable knowledge beyond the individual context under study. Therefore, unpublished and non-peer-reviewed studies were excluded. We recognize that barriers to publication in academic journals are greater for investigators in LMIC settings. To limit bias against non-English speaking investigators, we did not restrict our search on the basis of language. Finally, the assessment of implementation outcomes by studies in the sample was too sparse to draw strong conclusions about factors that promote or inhibit successful and sustained implementation at scale.

Conclusion

Implementation science has the potential to support the development, delivery, and dissemination of stigma reduction interventions in LMICs, though usage to date has been limited. Rigorous stigma implementation research is urgently needed. There are clear barriers to successful implementation of stigma reduction interventions, especially in LMICs. Given these barriers, implementation science can help maximize the population health impact of stigma reduction interventions by allowing researchers to test and refine implementation strategies, develop new approaches to improve their interventions in various settings, explore and understand the causal mechanisms between intervention and impact, and generate evidence to convince policy-makers of the value of scale-up [99]. Such research will help us deliver on the promise of interventions to alleviate the burden of stigma worldwide.

Abbreviations

- LMICs: low- and middle-income countries

- TIDieR: Template for Intervention Description and Replication

- SD: standard deviation

References

Link BG, Phelan JC. Conceptualizing stigma. Ann Rev Sociol. 2001;27:363–85.

Hatzenbuehler ML, Phelan JC, Link BG. Stigma as a fundamental cause of population health inequalities. Am J Public Health. 2013;103(5):813–21.

Stangl AL, Lloyd JK, Brady LM, Holland CE, Baral S. A systematic review of interventions to reduce HIV-related stigma and discrimination from 2002 to 2013: how far have we come? J Int AIDS Soc. 2013;16(3 Suppl 2):18734.

Thornicroft G, Mehta N, Clement S, Evans-Lacko S, Doherty M, Rose D, Koschorke M, Shidhaye R, O'Reilly C, Henderson C. Evidence for effective interventions to reduce mental-health-related stigma and discrimination. Lancet. 2016;387(10023):1123–32.

Sermrittirong S, Van Brakel WH, Bunbers-Aelen JF. How to reduce stigma in leprosy–a systematic. Lepr Rev. 2014;85:149–57.

Livingston JD, Milne T, Fang ML, Amari E. The effectiveness of interventions for reducing stigma related to substance use disorders: a systematic review. Addiction. 2012;107:39–50.

Birbeck G. Interventions to reduce epilepsy-associated stigma. Psychol Health Med. 2006;11(3):364–6.

Sommerland N, Wouters E, Mitchell E, Ngicho M, Redwood L, Masquillier C, van Hoorn R, van den Hof S, Van Rie A. Evidence-based interventions to reduce tuberculosis stigma: a systematic review. Int J Tubercul Lung Dis. 2017;21(11):S81–6.

Hickling FW, Robertson-Hickling H, Paisley V. Deinstitutionalization and attitudes toward mental illness in Jamaica: a qualitative study. Rev Panam Salud Publica. 2011;29(3):169–76.

Shilling S, Bustamante JA, Salas A, Acevedo C, Cid P, Tapia T, Tapia E, Alvarado R, Sapag JC, Yang LH. Development of an intervention to reduce self-stigma in outpatient mental health service users in Chile. Rev Fac Cien Med Univ Nac Cordoba. 2016;72(4):284–94.

Gronholm PC, Henderson C, Deb T, Thornicroft G. Interventions to reduce discrimination and stigma: the state of the art. Soc Psychiatry Psychiatr Epidemiol. 2017;52(3):249–58.

Mak WW, Mo PK, Ma GY, Lam MY. Meta-analysis and systematic review of studies on the effectiveness of HIV stigma reduction programs. Soc Sci Med. 2017;188:30–40.

Bowleg L. The problem with the phrase women and minorities: intersectionality—an important theoretical framework for public health. Am J Public Health. 2012;102(7):1267–73.

Pfadenhauer LM, Gerhardus A, Mozygemba K, Lysdahl KB, Booth A, Hofmann B, Wahlster P, Polus S, Burns J, Brereton L. Making sense of complexity in context and implementation: the Context and Implementation of Complex Interventions (CICI) framework. Implement Sci. 2017;12:21.

Mascayano F, Armijo JE, Yang LH. Addressing stigma relating to mental illness in low-and middle-income countries. Front Psych. 2015;6:38.

Becker AE, Kleinman A. Mental health and the global agenda. New Engl J Med. 2013;369:66–73.

Saxena S, Thornicroft G, Knapp M, Whiteford H. Resources for mental health: scarcity, inequity, and inefficiency. Lancet. 2007;370(9590):878–89.

Thornicroft G, Alem A, Dos Santos RA, Barley E, Drake RE, Gregorio G, Hanlon C, Ito H, Latimer E, Law A. WPA guidance on steps, obstacles and mistakes to avoid in the implementation of community mental health care. World Psychiatry. 2010;9(2):67–77.

Beyrer C. Pushback: the current wave of anti-homosexuality laws and impacts on health. PLoS Med. 2014;11(6):e1001658.

Brown RP, Imura M, Mayeux L. Honor and the stigma of mental healthcare. Personal Soc Psychol Bull. 2014;40(9):1119–31.

Eccles MP, Mittman BS. Welcome to implementation science. Implement Sci. 2006;1:1.

Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Proctor EK, Kirchner JE. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, Hensley M. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76.

Lewis CC, Fischer S, Weiner BJ, Stanick C, Kim M, Martinez RG. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. 2015;10:155.

Lewis CC, Weiner BJ, Stanick C, Fischer SM. Advancing implementation science through measure development and evaluation: a study protocol. Implement Sci. 2015;10:102.

Tetroe J. Knowledge translation at the Canadian Institutes of Health Research: a primer. Focus Technical Brief. 2007;18:1–8.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–7.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10:53.

Curran GM, Bauer M, Mittman B, Pyne JM, Stetler C. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Medical Care. 2012;50(3):217.

Wells KB. Treatment research at the crossroads: the scientific interface of clinical trials and effectiveness research. Am J Psychiatry. 1999;156:5–10.

Cosby JL. Improving patient care: the implementation of change in clinical practice. Qual Saf Health Care. 2006;15(6):447.

Bauer MS, Damschroder L, Hagedorn H, Smith J, Kilbourne AM. An introduction to implementation science for the non-specialist. BMC Psychology. 2015;3:32.

Proctor E, Luke D, Calhoun A, McMillen C, Brownson R, McCrary S, Padek M. Sustainability of evidence-based healthcare: research agenda, methodological advances, and infrastructure support. Implement Sci. 2015;10:88.

Leykum LK, Pugh JA, Lanham HJ, Harmon J, McDaniel RR. Implementation research design: integrating participatory action research into randomized controlled trials. Implement Sci. 2009;4:69.

Moher D, Liberati A, Tetzlaff J, Altman DG, PRISMA Group. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. PLoS Med. 2009;6(7):e1000097.

World Bank Country and Lending Groups. https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups. Accessed 20 March 2018.

Covidence Systematic Review Software. www.covidence.org. Accessed 8 June 2018.

Turan B, Hatcher AM, Weiser SD, Johnson MO, Rice WS, Turan JM. Framing mechanisms linking HIV-related stigma, adherence to treatment, and health outcomes. Am J Public Health. 2017;107(6):863–9.

Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Altman DG, Barbour V, Macdonald H, Johnston M. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348:g1687.

Wilson PM, Sales A, Wensing M, Aarons GA, Flottorp S, Glidewell L, Hutchinson A, Presseau J, Rogers A, Sevdalis N. Enhancing the reporting of implementation research. Implement Sci. 2017;12:13.

el-Setouhy MA, Rio F. Stigma reduction and improved knowledge and attitudes towards filariasis using a comic book for children. J Egyptian Soc Parasitol. 2003;33:55–65.

Norr KF, Norr JL, McElmurry BJ, Tlou S, Moeti MR. Impact of peer group education on HIV prevention among women in Botswana. Health Care Women Int. 2004;25(3):210–26.

Witter S, Were B. Breaking the silence: using memory books as a counselling and succession-planning tool with AIDS-affected households in Uganda. Afr J AIDS Res. 2004;3(2):139–43.

Lawoyin OO. Findings from an HIV/AIDS programme for young women in two Nigerian cities: a short report. Afr J Reprod Health. 2007;11(2):99–106.

Boulay M, Tweedie I, Fiagbey E. The effectiveness of a national communication campaign using religious leaders to reduce HIV-related stigma in Ghana. Afr J AIDS Res. 2008;7:133–41.

Finkelstein J, Lapshin O, Wasserman E. Randomized study of different anti-stigma media. Patient Educ Couns. 2008;71(2):204–14.

Khumalo-Sakutukwa G, Morin SF, Fritz K, Charlebois ED, van Rooyen H, Chingono A, Modiba P, Mrumbi K, Visrutaratna S, Singh B, et al. Project Accept (HPTN 043): a community-based intervention to reduce HIV incidence in populations at risk for HIV in sub-Saharan Africa and Thailand. J Acquir Immune Defic Syndr. 2008;49(4):422–31.

Lapinski MK, Nwulu P. Can a short film impact HIV-related risk and stigma perceptions? Results from an experiment in Abuja, Nigeria. Health Commun. 2008;23(5):403–12.

Pappas-DeLuca KA, Kraft JM, Galavotti C, Warner GL, Mooki M, Hastings P, Koppenhaver T, Roels TH, Kilmarx PH. Entertainment-education radio serial drama and outcomes related to HIV Testing in Botswana. AIDS Educ Prev. 2008;20(6):486–503.

Zeelen J, Wijbenga H, Vintges M, de Jong G. Beyond silence and rumor: Storytelling as an educational tool to reduce the stigma around HIV/AIDS in South Africa. Health Educ. 2010;110(5):382–98.

Gurnani V, Beattie TS, Bhattacharjee P, Mohan HL, Maddur S, Washington R, Isac S, Ramesh BM, Moses S, Blanchard JF. An integrated structural intervention to reduce vulnerability to HIV and sexually transmitted infections among female sex workers in Karnataka state, south India. BMC Public Health. 2011;11:755.

Watt MH, Aronin EH, Maman S, Thielman N, Laiser J, John M. Acceptability of a group intervention for initiates of antiretroviral therapy in Tanzania. Global Public Health. 2011;6(4):433–46.

Denison JA, Tsui S, Bratt J, Torpey K, Weaver MA, Kabaso M. Do peer educators make a difference? An evaluation of a youth-led HIV prevention model in Zambian Schools. Health Educ Res. 2012;27(2):237–47.

Rice RE, Wu Z, Li L, Detels R, Rotheram-Borus MJ. Reducing STD/HIV stigmatizing attitudes through community popular opinion leaders in Chinese markets. Human Commun Res. 2012;38(4):379–405.

Al-Iryani B, Basaleem H, Al-Sakkaf K, Kok G, van den Borne B. Process evaluation of school-based peer education for HIV prevention among Yemeni adolescents. SAHARA J. 2013;10:55–64.

Catalani C, Castaneda D, Spielberg F. Development and assessment of traditional and innovative media to reduce individual HIV/AIDS-related stigma attitudes and beliefs in India. Front Public Health. 2013;1:21.

Li L, Lin C, Guan J, Wu Z. Implementing a stigma reduction intervention in healthcare settings. J Int AIDS Soc. 2013;16(3 Suppl 2):18710.

Li L, Wu Z, Liang LJ, Lin C, Guan J, Jia M, Rou K, Yan Z. Reducing HIV-related stigma in health care settings: a randomized controlled trial in China. Am J Public Health. 2013;103(2):286–92.

Murray LK, Dorsey S, Skavenski S, Kasoma M, Imasiku M, Bolton P, Bass J, Cohen JA. Identification, modification, and implementation of an evidence-based psychotherapy for children in a low-income country: the use of TF-CBT in Zambia. Int J Mental Health Syst. 2013;7:24.

Murray LK, Familiar I, Skavenski S, Jere E, Cohen J, Imasiku M, Mayeya J, Bass JK, Bolton P. An evaluation of trauma focused cognitive behavioral therapy for children in Zambia. Child Abuse Neglect. 2013;37(12):1175–85.

Murray LK, Skavenski S, Michalopoulos LM, Bolton PA, Bass JK, Familiar I, Imasiku M, Cohen J. Counselor and client perspectives of trauma-focused cognitive behavioral therapy for children in Zambia: a qualitative study. J Clin Child Adolesc Psychol. 2014;43(6):902–14.

French H, Greeff M, Watson MJ. Experiences of people living with HIV and people living close to them of a comprehensive HIV stigma reduction community intervention in an urban and a rural setting. SAHARA J. 2014;11:105–15.

French H, Greeff M, Watson MJ, Doak CM. A comprehensive HIV stigma-reduction and wellness-enhancement community intervention: a case study. JANAC. 2015;26:81–96.

Shah SM, Heylen E, Srinivasan K, Perumpil S, Ekstrand ML. Reducing HIV stigma among nursing students: a brief intervention. Western J Nursing Res. 2014;36(10):1323–37.

Lusli M, Peters RM, Zweekhorst MB, Van Brakel WH, Seda FS, Bunders JF, Irwanto. Lay and peer counsellors to reduce leprosy-related stigma--lessons learnt in Cirebon, Indonesia. Lepr Rev. 2015;86:37–53.

Lusli M, Peters R, van Brakel W, Zweekhorst M, Iancu S, Bunders J, Irwanto, Regeer B. The impact of a rights-based counselling intervention to reduce stigma in people affected by leprosy in Indonesia. PLoS Negl Trop Dis. 2016;10(12):e0005088.

Peters RM, Dadun, Zweekhorst MB, Bunders JF, Irwanto, van Brakel WH. A cluster-randomized controlled intervention study to assess the effect of a contact intervention in reducing leprosy-related stigma in Indonesia. PLoS Negl Trop Dis. 2015;9(10):e0004003.

Peters RMH, Zweekhorst MBM, van Brakel WH, Bunders JFG, Irwanto. ‘People like me don't make things like that’: participatory video as a method for reducing leprosy-related stigma. Global Public Health. 2016;11(5/6):666–82.

Salmen CR, Hickey MD, Fiorella KJ, Omollo D, Ouma G, Zoughbie D, Salmen MR, Magerenge R, Tessler R, Campbell H, et al. “Wan Kanyakla” (We are together): community transformations in Kenya following a social network intervention for HIV care. Social Sci Med. 2015;147:332–40.

Figueroa ME, Poppe P, Carrasco M, Pinho MD, Massingue F, Tanque M, Kwizera A. Effectiveness of community dialogue in changing gender and sexual norms for HIV prevention: evaluation of the Tchova Tchova Program in Mozambique. J Health Commun. 2016;21(5):554–63.

Tekle-Haimanot R, Pierre-Marie P, Daniel G, Worku DK, Belay HD, Gebrewold MA. Impact of an educational comic book on epilepsy-related knowledge, awareness, and attitudes among school children in Ethiopia. Epilepsy Behavior. 2016;61:218–23.

Tora A, Ayode D, Tadele G, Farrell D, Davey G, McBride CM. Interpretations of education about gene-environment influences on health in rural Ethiopia: the context of a neglected tropical disease. Int Health. 2016;8(4):253–60.

Wilson JW, Ramos JG, Castillo F, Castellanos EF, Escalante P. Tuberculosis patient and family education through videography in El Salvador. J Clin Tuberc Other Mycobact Dis. 2016;4:14–20.

Lyons CE, Ketende S, Diouf D, Drame FM, Liestman B, Coly K, Ndour C, Turpin G, Mboup S, Diop K, et al. Potential impact of integrated stigma mitigation interventions in improving HIV/AIDS service delivery and uptake for key populations in Senegal. J Acquir Immune Defic Syndr. 2017;74:S52–9.

Oduguwa AO, Adedokun B, Omigbodun OO. Effect of a mental health training programme on Nigerian school pupils’ perceptions of mental illness. Child Adolesc Psychiatry Mental Health. 2017;11:19.

Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implement Sci. 2014;9:118.

Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8:22.

Kirk MA, Kelley C, Yankey N, Birken SA, Abadie B, Damschroder L. A systematic review of the use of the consolidated framework for implementation research. Implement Sci. 2016;11:72.

Keith RE, Crosson JC, O’Malley AS, Cromp D, Taylor EF. Using the consolidated framework for implementation research (CFIR) to produce actionable findings: a rapid-cycle evaluation approach to improving implementation. Implement Sci. 2017;12:15.

Klafke N, Mahler C, Wensing M, Schneeweiss A, Müller A, Szecsenyi J, Joos S. How the consolidated framework for implementation research can strengthen findings and improve translation of research into practice: a case study. Oncol Nursing Forum. 2017;2017:E223–31.

Ilott I, Gerrish K, Booth A, Field B. Testing the Consolidated Framework for Implementation Research on health care innovations from South Yorkshire. J Eval Clin Pract. 2013;19(5):915–24.

Kimberlin CL, Winterstein AG. Validity and reliability of measurement instruments used in research. Am J Health Syst Pharmacy. 2008;65(23):2276–84.

Weiner BJ, Lewis CC, Stanick C, Powell BJ, Dorsey CN, Clary AS, Boynton MH, Halko H. Psychometric assessment of three newly developed implementation outcome measures. Implement Sci. 2017;12:108.

Luke DA, Calhoun A, Robichaux CB, Elliott MB, Moreland-Russell S. The Program Sustainability Assessment Tool: a new instrument for public health programs. Prev Chronic Dis. 2014;11:130184.

Instrumentation: Dissemination and Implementation Science Measures. https://www.jhsph.edu/research/centers-and-institutes/global-mental-health/dissemination-and-scale-up/instrumentation/index.html. Accessed 2 March 2018.

Kemp CG, Weiner BJ, Sherr KH, Kupfer LE, Cherutich PK, Wilson D, Geng E, Wasserheit J. Implementation science for integration of HIV and non-communicable disease services in sub-Saharan Africa: a systematic review. AIDS. 2018;32(Suppl 1):S93–S105.

Carroll C, Patterson M, Wood S, Booth A, Rick J, Balain S. A conceptual framework for implementation fidelity. Implement Sci. 2007;2:40.

Stirman SW, Kimberly J, Cook N, Calloway A, Castro F, Charns M. The sustainability of new programs and innovations: a review of the empirical literature and recommendations for future research. Implement Sci. 2012;7:17.

Iwelunmor J, Blackstone S, Veira D, Nwaozuru U, Airhihenbuwa C, Munodawafa D, Kalipeni E, Jutal A, Shelley D, Ogedegbe G. Toward the sustainability of health interventions implemented in sub-Saharan Africa: a systematic review and conceptual framework. Implement Sci. 2016;11:43.

Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof. 2006;29:126–53.

Riley BL, MacDonald J, Mansi O, Kothari A, Kurtz D, Edwards NC. Is reporting on interventions a weak link in understanding how and why they work? A preliminary exploration using community heart health exemplars. Implement Sci. 2008;3:27.

Craig P, Dieppe P, Macintyre S, Michie S, Nazareth I, Petticrew M. Developing and evaluating complex interventions: the new Medical Research Council guidance. BMJ. 2008;337:a1655.

Michie S, Fixsen D, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implement Sci. 2009;4:40.

Patel V, Belkin GS, Chockalingam A, Cooper J, Saxena S, Unützer J. Grand challenges: integrating mental health services into priority health care platforms. PLoS Med. 2013;10(5):e1001448.

Bond V, Chase E, Aggleton P. Stigma, HIV/AIDS and prevention of mother-to-child transmission in Zambia. Eval Program Planning. 2002;25(4):347–56.

Winestone LE, Bukusi EA, Cohen CR, Kwaro D, Schmidt NC, Turan JM. Acceptability and feasibility of integration of HIV care services into antenatal clinics in rural Kenya: a qualitative provider interview study. Global Public Health. 2012;7(2):149–63.

Lobb R, Colditz GA. Implementation science and its application to population health. Ann Rev Public Health. 2013;34:235–51.

Acknowledgements

This article is part of a collection that draws upon a 2017 workshop on stigma research and global health, which was organized by the Fogarty International Center, National Institutes of Health, United States. The article was supported by a generous contribution by the Fogarty International Center. We would like to thank Sarah Safranek at the University of Washington for her assistance in developing our search strategy. The publication of this paper was supported by the Fogarty International Center of the National Institutes of Health.

Funding

CK is supported by a grant from the National Institute of Mental Health (F31 MH112397-01A1). BJ and SD are supported by a grant from the National Institute of Mental Health (R01 MH110358-02). JS is supported by Grant FONDECYT 1160099, CONICYT, Chile.

Availability of data and materials

All data generated or analyzed during this study are included in this published article as a supplementary information file.

Author information

Contributions

CK, NJ, JS, JB, DR, and SB conceived the study. CK developed the review protocol and implemented data collection. CK, BJ, CSK, and LS reviewed studies for inclusion. CK, BJ, CSK, and LS abstracted, analyzed, and interpreted the data. All authors drafted the manuscript, and all authors contributed to revisions. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Additional file

Additional file 1:

S1. Systematic review search strategy. Search terms, number of results, and filters used when collecting studies from databases for the systematic review. S2. Abstraction form and dataset. Abstraction form and dataset used for our analysis. (ZIP 148 kb)

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated.

About this article

Cite this article

Kemp, C.G., Jarrett, B.A., Kwon, CS. et al. Implementation science and stigma reduction interventions in low- and middle-income countries: a systematic review. BMC Med 17, 6 (2019). https://doi.org/10.1186/s12916-018-1237-x
