Abstract

Background

The persistent gap between research and practice compromises the impact of multi-level and multi-strategy community health interventions. Part of the problem is a limited understanding of how and why interventions produce change in population health outcomes. Systematic investigation of these intervention processes across studies requires sufficient reporting about interventions. Guided by a set of best processes related to the design, implementation, and evaluation of community health interventions, this article presents preliminary findings of intervention reporting in the published literature using community heart health exemplars as case examples.

Methods

The process to assess intervention reporting involved three steps: selection of a sample of community health intervention studies and their publications; development of a data extraction tool; and data extraction from the publications. Publications from three well-resourced community heart health exemplars were included in the study: the North Karelia Project, the Minnesota Heart Health Program, and Heartbeat Wales.

Results

Results are organized according to six themes that reflect best intervention processes: integrating theory, creating synergy, achieving adequate implementation, creating enabling structures and conditions, modifying interventions during implementation, and facilitating sustainability. In the publications for the three heart health programs, reporting on the intervention processes was variable across studies and across processes.

Conclusion

Study findings suggest that limited reporting on intervention processes is a weak link in research on multiple intervention programs in community health. While it would be premature to generalize these results to other programs, important next steps will be to develop a standard tool to guide systematic reporting of multiple intervention programs, and to explore reasons for limited reporting on intervention processes. It is our contention that a shift to more inclusive reporting of intervention processes would help lead to a better understanding of successful or unsuccessful features of multi-strategy and multi-level interventions, and thereby improve the potential for effective practice and outcomes.


Background

Scholars commonly acknowledge inconsistent and sparse reporting about the design and implementation of complex interventions within the published literature [1–3]. Complex interventions (also referred to as multiple interventions) deliberately apply coordinated and interconnected intervention strategies, which are targeted at multiple levels of a system [4]. Variable and limited reporting of complex interventions compromises the ability to answer questions about how and why interventions work through systematic assessment across multiple studies [3]. In turn, limited evidence-based guidance is available to inform the efforts of those responsible for the design and implementation of interventions, and the gap remains between research and practice.

The momentum within the last five years to identify promising practices in many fields [5–7] increases the urgency and relevance of understanding how and why interventions work. However, complex community health programs involve many interacting processes [8–10]. It has been argued that much of the research on these programs has treated the complex interactions among intervention elements, and between intervention components and the external context, as a 'black box' [4, 11–14]. Of particular relevance to these programs are failures to describe or take into account community involvement in the design stages of an intervention [8]; the dynamic, pervasive, and historical influences of inner and outer implementation contexts [12, 14–17]; or pathways for change [13, 14]. A comprehensive set of propositions to guide the extraction of evidence relevant to the planning, implementation, and evaluation of complex community health programs is missing.

Our research team was interested in applying a set of propositions that arose out of a multiple intervention framework to examine reports on community health interventions [4]. To this end, we present a set of propositions that reflects best practices for intervention design, implementation, and evaluation for multiple interventions in community health, and we conduct a preliminary assessment of information reported in the published literature that corresponds to the propositions.

Propositions for the design, implementation and evaluation of community health interventions

The initial sources for the propositions were primary studies and a series of systematic and integrative reviews of many large-scale multiple intervention programs in community health (e.g., in the fields of tobacco control, heart health, injury prevention, HIV/AIDS, and workplace health) [8, 10, 18–24]. By multiple interventions, we mean multi-level and multi-strategy interventions [4]. Common to many of these programs were notable failures of well-designed research studies to achieve expected outcomes. Insights for the propositions were drawn from researchers' reflections on why their own multiple intervention effectiveness studies failed to yield hypothesized outcomes, and from reviews of community trials elaborating why some multiple intervention programs have not had their intended impact [8, 10, 22, 23, 25, 26]. The initial set of propositions, which describes how and why interventions contribute to positive outcomes, addresses the predominant and recurring reasons for these failures.

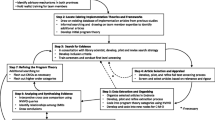

The propositions arise from and are organized within a multiple interventions program framework (see Figure 1 and Table 1). The framework is based on social ecological principles and supported by theoretical and empirical literature describing the design, implementation, and evaluation of multiple intervention programs [8–10, 18–21, 25–29]. The framework has four main elements, and several processes within these elements. The propositions address some of the common reasons reported to explain failures in multiple intervention research.

Methods

The preliminary assessment involved three main steps: selection of a sample of multiple intervention projects and publications, development of a data extraction tool, and data extraction from the publications.

Selection of a sample of multiple intervention projects and publications

A first set of criteria was established to guide the selection of a pool of community-based multi-strategy and multi-level programs to use as case examples. The intent was not to be exhaustive, but to identify a set of programs addressing a particular health issue whose publications we anticipated might report details relevant to the propositions. The team judged that such intervention features would most likely be reported for: a community-based primary prevention intervention program; a program that was well-resourced and evaluated, and thus offered a favourable opportunity for a pool of publications that potentially reported key intervention processes; and a health issue that had been tackled using multiple intervention programs for a prolonged period, thus reflecting the maturation of ideas in the field.

In the last 30 years, community-based cardiovascular disease prevention programs have been conducted worldwide and their results have been widely published. The first community-based heart health program was the North Karelia Project in Finland, launched in 1971 [30]. Subsequent pioneering efforts included research and demonstration projects in the United States and Europe, such as the Minnesota Heart Health Program and Heartbeat Wales [9, 31, 32]. Although specific interventions varied across these projects, the general approach was similar. Community interventions were designed to reduce major modifiable risk factors in the general population and priority subgroups, and were implemented in various community settings to reach well-defined population groups. Interventions were theoretically sound and were informed by research in diverse fields such as individual behaviour change, diffusion of innovations, and organizational and community change. Combinations of interventions employed multiple strategies (e.g., media, education, policy) and targeted multiple layers of the social ecological system (e.g., individual, social networks, organizations, communities). Many of these exemplar community heart health programs were well-resourced relative to other preventive and public health programs, including large budgets for both process and outcome evaluations. Thus, community-based cardiovascular disease prevention programs were chosen as the case exemplar from which to select publications and explore whether the specific intervention features defined by the propositions were in fact described.

To guide the selection of a pool of published literature on community-based heart health programs, a second set of criteria was established. These included: studies representative of community-based heart health programs that were designed and recognized as exemplars of multiple intervention programs; studies deemed to be methodologically sound in an existing systematic review; and reports published in English. Selection of published articles meeting these criteria involved a two-step process. First, a search of the Effective Public Health Practice Project [33] was conducted to identify a systematic review of community-based heart health programs. The most recent review found was by Dobbins and Beyers [25], who identified a pool of ten heart health programs rated as methodologically moderate or strong. From this pool, a subset of three projects was selected: the North Karelia Project (1971–1992), Heartbeat Wales (1985–1990), and the Minnesota Heart Health Program (1980–1993). All three were well-resourced and extensively evaluated, and provided a pool of rigorous studies describing intervention effectiveness.

Second, a subset of primary publications identified in Dobbins and Beyers' [25] systematic review was retrieved for each of the three programs. In total, four articles were retrieved and reviewed for the Minnesota Heart Health Program [34–37] and five articles for Heartbeat Wales [38–42]. For Heartbeat Wales, a technical report was also used because several of the publications referred to it for descriptions of the intervention [43]. The primary studies and detailed descriptions of the project design, implementation, and evaluation for the North Karelia Project were retrieved from its book compilation [30].

Development of a data extraction tool

The team was interested in identifying the types of intervention information reported, or not reported, in the published literature that corresponded with the best processes in the design, delivery, and evaluation of multiple intervention programs featured in the propositions. To enhance the consistency, accuracy, and completeness of this extraction, a systematic method was used to extract the intervention information reported in the selected research studies. Existing intervention extraction forms [44, 45] were first critiqued to determine their relevance for extracting the types of intervention information corresponding to the propositions. These forms provided closed-ended responses for various characteristics of interventions, but did not allow for the collection of information on the more complex intervention processes reflected in the propositions. Thus, the research team designed a data extraction tool to guide the extraction of intervention information compatible with the propositions.

To this end, an open-ended format was used to extract verbatim text from the publications. Standard definitions for each proposition were developed (see Tables 2 through 7 in the Results section), informed by key sources that described pertinent terms and concepts (e.g., sustainability, synergy) [46–51]. To enhance the completeness and consistency of data extraction, examples were added to the definitions following an early review of data extraction (see below).

Data extraction from the publications

Each of the three heart health projects was assigned to a pair of research team members. Each pair first extracted information from the studies independently and then compared results to identify any patterns of discrepancies. Throughout the process, all issues and questions related to data extraction were synthesized by a third party. Early on, examples were added to the definitions of the propositions to increase the consistency of extracted information with respect to content and level of detail. Through discussion within pairs and across the research team, consensus was reached on information pertinent to the propositions, and each pair consolidated the information onto a single form for its project. The consolidated forms containing each pair's consensus decisions were then used to compare patterns across the full set of articles. All members of the research team participated in identifying trends and issues related to reporting on relevant intervention processes. These trends and issues are described in the next section.

Results

Results are reported for each proposition in order from one through nine, and grouped according to the themes shown in the multiple interventions program framework (Figure 1). For each proposition, results are briefly described in the text. These descriptions are accompanied by a table that includes the operational definition for the proposition, findings related to reporting on the proposition, and illustrative verbatim examples from one or more of the projects.

Integrating theory (proposition one)

Information regarding the use of theories was most often presented as a list, with limited description of the complementary or unifying connections among the theories in the design of the interventions. Commonly, intervention programs projected changes at multiple socio-ecological levels, such as individual behaviour changes, in addition to macro-environmental changes. However, while theories were used for interventions targeting various levels of the system, the integration of multiple theories was generally implicit and simply reflected in the anticipated outputs. Although less common, the use of several theories was made more explicit through description of the use of a program planning tool, such as a logic model (Table 2).

Creating synergy (propositions two and three)

General references were frequently made to the rationale for combining, sequencing, and staging interventions as an approach to optimizing overall program effectiveness and/or sustainability. Such references were most often found in proposed explanations for shortfalls in expected outcomes. However, specific details regarding how intervention strategies were combined, sequenced, or staged across levels, as well as across sectors and jurisdictions, were usually absent. Thus, insufficient information was provided to understand potential synergies that may have arisen from coordinating interventions across sectors and jurisdictions. In contrast, more specific details were reported for the combining, sequencing, and staging of interventions within a single level of the system (e.g., a series of interventions directed at the intrapersonal level) (Table 3).

Achieving adequate implementation (propositions four and five)

Proposition four specifically considers the quantitative aspects of implementation. Reported information ranged from general statements to specific details. Although the population subgroups targeted by the intervention were often clearly identified, information on the estimated reach of the intervention was generally non-specific. The duration of specific intervention strategies and of the overall program tended to be reported in broad time periods such as weeks, months, or years, whereas specific exposure times for interventions were generally not reported. Some reports described the intensity of interventions, with authors describing strategies that included the passive receipt of information, interaction, and/or environmental changes. Investment level is another marker of the intensity of an intervention strategy; however, descriptions of investment were highly variable, ranging from no information to general information on the human and financial resources invested. In addition, authors often acknowledged challenges in reporting costs and benefits.

Proposition five considers the quality of implementation, represented by qualitative descriptions of the intervention. Reporting regarding the quality of the implementation was primarily implicit (Table 4).

Creating enabling structures and conditions (proposition six)

Reporting of information relative to the deliberate creation of structures and conditions was limited and generally implicit, often embedded in the details of intervention implementation (Table 5).

Modifying interventions during implementation (propositions seven and eight)

Although authors acknowledged the importance of flexibility in intervention delivery, information regarding adaptations to environmental circumstances was vague. Reference to context was often in discussion sections of studies, and provided as a partial explanation for unintended or unexpected outcomes. There was minimal description regarding the modification of interventions in response to information gained from process/formative evaluation, outcomes, or population trends – the core of proposition eight. Again, authors acknowledged the significance of process/formative evaluation in informing intervention implementation, with some examples to illustrate how interventions were guided in response to information gathered. At other times, in the summative evaluation, reporting focused on using process evaluation results to explain why expected outcomes were or were not achieved, rather than how the process evaluation results did or did not shape the interventions during implementation. Suggestions for improved program success, based on information gained from formative evaluations, were noted in some discussions (Table 6).

Facilitating sustainability (proposition nine)

Reporting on elements regarding the intention to facilitate sustainability of multiple intervention benefits was also variable. Authors made reference to the notion of sustainability at the onset of projects and described the conditions and supports that were in place to facilitate continued and extended benefits. Elements of sustainability represented in program outcomes were also described in some detail. In other examples, reporting only focused on sustainability of the program during the initial research phase of program implementation and discussed the desirability of continuing the program beyond the research phase (Table 7).

Discussion

The primary purpose of this paper was to conduct a preliminary assessment of information reported in the published literature on 'best' processes for multiple interventions in community health. Only with this information can questions of how and why interventions work be studied in systematic reviews and other synthesis methods (e.g., realist synthesis). The best processes were a set of propositions that arose from, and were organized within, a multiple interventions program framework. Community-based heart health exemplars were used as case examples.

Although some information was reported for each of the nine propositions, there was considerable variability in the quantity and specificity of information provided, and in the explicit nature of this information across studies. Several possible explanations may account for the insufficient reporting of implementation information. Authors are bound by word count restrictions in journal articles, and consequently, process details such as program reach might be excluded in favour of reporting methods and outcomes [3]. Reporting practices reflect what traditionally has been viewed as important in intervention research. There is emphasis on reporting to prove the worth of interventions over reporting to improve community health interventions. This follows from the emphasis on answering questions of attribution (does a program lead to the intended outcomes?), rather than questions of adaptation (how does a dynamic program respond to changing community readiness, shifting community capacity, and policy windows that suddenly open?) [16, 52].

An alternative explanation is that researchers are not attending to the processes identified in the propositions when they design multiple intervention programs. Following these propositions requires a transdisciplinary approach to integrating theory, implementation models that allow for contextual adaptation and feedback processes, and mixed methods designs that guide the integrative analysis of quantitative and qualitative findings. These all bring into question some of the fundamental principles that have long been espoused for community health intervention research, including issues of fidelity, the use of standardized interventions, the need to adhere to predictive theory, and the importance of following underlying research paradigms. When coupled with the challenges of operationalizing a complex community health research study that is time- and resource-limited, it is perhaps not surprising that the propositions were unevenly and weakly addressed.

It would be premature to generalize these results to other programs. The three multiple intervention programs (the North Karelia Project, Heartbeat Wales, and the Minnesota Heart Health Program) selected for this study were implemented between 1971 and 1993, and represented the 'crème de la crème' of heart health programs in terms of study resources and design. In particular, the North Karelia Project continues to receive considerable attention due to the impressive outcomes achieved [17]. We think it would be useful to apply the data extraction tool developed by our team to some of the more contemporary multiple intervention programs targeting chronic illness. Our findings would provide a useful basis of comparison to determine whether or not there has been an improvement over the past decade in the reporting of information that is pertinent to the propositions. Before embarking on this step, it would be helpful to have further input on the data extraction tool, particularly from those involved, within the Cochrane initiative, in developing new approaches to extract data on the processes of complex interventions [3].

Conclusion

Study findings suggest that limited reporting on intervention processes is a weak link in published research on multiple intervention programs in community health. Insufficient reporting prevents the systematic study of processes contributing to health outcomes across studies. In turn, this prevents the development and implementation of evidence-based practice guidelines. Based on the findings, and recognizing the preliminary status of the work, we offer two promising directions.

First, it is clear that a standard tool is needed to guide systematic reporting of multiple intervention programs. Such a tool could both inform the design of such research and ensure that important information is available to readers of this literature and to support systematic analyses across studies. In addition, a research tool that describes best processes for interventions could benefit practitioners who are responsible for program design, delivery, and evaluation.

Second, the reasons for limited reporting on intervention processes need to be understood. Some issues to explore include the influence of publication policies for relevant journals, and the types of research questions and processes that are used.

It is through a more concerted effort to describe and understand the black box processes of multiple intervention programs that we will move this field of research and practice forward. It is our contention that a shift to more inclusive reporting of intervention processes would help lead to a better understanding of successful or unsuccessful features of multi-strategy and multi-level interventions, and thereby improve the potential for effective practice and outcomes.

References

Oakley A, Strange V, Bonell C, Allen E, Stephenson J, Ripple Study Team: Process evaluation in randomised controlled trials of complex interventions. BMJ. 2006, 332: 413-416. 10.1136/bmj.332.7538.413.

Rychetnik L, Frommer M, Hawe P, Shiell A: Criteria for evaluating evidence on public health interventions. J Epidemiol Community Health. 2002, 56: 119-127. 10.1136/jech.56.2.119.

Armstrong R, Waters E, Moore L, Riggs E, Cuervo LG, Lumbiganon P, Hawe P: Improving the reporting of public health intervention research: advancing TREND and CONSORT. J Public Health (Oxf). 2008 Jan 19.

Edwards N, Mill J, Kothari AR: Multiple intervention research programs in community health. Can J Nurs Res. 2004, 36: 40-54.

Ciliska D, Jull A, Thompson C, (Eds): Purpose and procedure. Evid Based Nurs. 2008, 11: 1-2.

Haynes B, Glasziou P, (Eds): Purpose and procedure. Evid Based Med. 2007, 12: 161. 10.1136/ebm.12.6.161.

Reid S, (Ed): Purpose and procedure. Evid Based Ment Health. 2008, 11: 1-2. 10.1136/ebmh.11.1.1.

Merzel C, D'Afflitti J: Reconsidering community-based health promotion: promise, performance, and potential. Am J Public Health. 2003, 93: 557-574.

Edwards N, MacLean L, Estable A, Meyer M: Multiple intervention program recommendations for MHPSG technical review committees. 2006, Ottawa, Ontario: Community Health Research Unit

Smedley B, Syme SL: Promoting health: intervention strategies from social and behavioral research. Am J Health Promot. 2001, 15: 149-166.

Brownson RC, Haire-Joshu D, Luke DA: Shaping the context of health: a review of environmental and policy approaches in the prevention of chronic diseases. Annu Rev Public Health. 2006, 27: 341-370. 10.1146/annurev.publhealth.27.021405.102137.

Dooris M, Poland B, Kolbe L, deLeeuw E, McCall D, Wharf-Higgins J: Healthy settings: building evidence for the effectiveness of whole system health promotion: challenges and future directions. Global Perspectives on Health Promotion Effectiveness. Edited by: McQueen D, Jones C. 2007, New York: Springer

Krieger N: Proximal, distal, and the politics of causation: what's level got to do with it? Am J Public Health. 2008 Jan 2.

Pawson R: Evidence-based policy: a realist perspective. 2006, Thousand Oaks: Sage

Edwards N, Clinton K: Context in health promotion and chronic disease prevention. Background document prepared for Public Health Agency of Canada. 2008

Greenhalgh T, Robert G, MacFarlane F, Bate P, Kyriakidou O, Peacock R: Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004, 82: 581-629. 10.1111/j.0887-378X.2004.00325.x.

McLaren L, Ghali LM, Lorenzetti D, Rock M: Out of context? Translating evidence from The North Karelia project over place and time. Health Educ Res. 2007, 22: 414-424. 10.1093/her/cyl097.

COMMIT Research Group: Community intervention trial for smoking cessation (COMMIT): summary of design and intervention. J Natl Cancer Inst. 1991, 83: 1620-1628. 10.1093/jnci/83.22.1620.

Green LW, Glasgow RE: Evaluating the relevance, generalization, and applicability of research. Eval Health Prof. 2006, 29: 126-153. 10.1177/0163278705284445.

CART Project Team: Community action for health promotion: a review of methods and outcomes 1990–1995. Am J Prev Med. 1997, 13: 229-239.

Best A, Stokols D, Green LW, Leischow S, Holmes B, Buchholz K: An integrative framework for community partnering to translate theory into effective health promotion strategy. Am J Health Promot. 2003, 18: 168-176.

Deschesnes M, Martin C, Hill AJ: Comprehensive approaches to school health promotion: how to achieve broader implementation? Health Promot Int. 2003, 18: 387-396. 10.1093/heapro/dag410.

Ebrahim S, Smith GD: Exporting failure? Coronary heart disease and stroke in developing countries. Int J Epidemiol. 2001, 30: 201-205. 10.1093/ije/30.2.201.

Koepsell TD, Wagner EH, Cheadle AC, Patrick DL, Martin DC, Diehr PH, Perrin EB, Kristal AR, Allan-Andrilla CH, Dey LJ: Selected methodological issues in evaluating community-based health promotion and disease prevention programs. Annu Rev Public Health. 1992, 13: 31-57. 10.1146/annurev.pu.13.050192.000335.

Dobbins M, Beyers J: The effectiveness of community-based heart health projects: a systematic overview update. 1999, Hamilton, Ontario: Effective Public Health Practice Project

Goodman RM: Bridging the gap in effective program implementation: from concept to application. J Community Psychol. 2000, 28: 309-321. 10.1002/(SICI)1520-6629(200005)28:3<309::AID-JCOP6>3.0.CO;2-O.

Centres for Disease Control (CDC): Best practices for comprehensive tobacco control programs. 1999, Atlanta, US: Department of Health and Human Services, CDC, National Centre for Chronic Disease Prevention and Health Promotion

Stokols D: Translating social ecological theory into guidelines for community health promotion. Am J Health Promot. 1996, 10: 282-298.

Richard L, Lehoux P, Breton E, Denis J, Labrie L, Léonard C: Implementing the ecological approach in tobacco control programs: results of a case study. Eval Program Plann. 2004, 27: 409-421. 10.1016/j.evalprogplan.2004.07.004.

Puska P, Tuomilehto J, Nissinen A, Vartiainen E, (Eds): The North Karelia project: 20 year results and experiences. 1995, Helsinki: National Public Health Institute

Anderson LM, Brownson RC, Fullilove MT, Teutsch SM, Novick LF, Fielding J, Land GH: Evidence-based public health policy and practice: promises and limits. Am J Prev Med. 2005, 28: 226-230. 10.1016/j.amepre.2005.02.014.

Pang T, Sadana R, Hanney S, Bhutta ZA, Hyder AA, Simon J: Knowledge for better health: a conceptual framework and foundation for health research systems. Bull World Health Organ. 2003, 81: 815-820.

Public Health Research, Education and Development Program: Effective public health practice project. 2005, Hamilton: McMaster University, [http://www.myhamilton.ca/myhamilton/CityandGovernment/HealthandSocialServices/Research/EPHPP/AboutEPHPP.asp]

Kelder S, Perry C, Klepp K: Community-wide youth exercise promotion: long-term outcomes of the Minnesota heart health program and the class of 1989 study. J Sch Health. 1993, 63: 218-223.

Lando H, Pechacek T, Pirie P, Murray D, Mittelmark M, Lichtenstein E, Nothwehr F, Gray C: Changes in adult cigarette smoking in the Minnesota heart health program. Am J Public Health. 1995, 85: 201-208.

Luepker R, Murray D, Jacobs D, Mittelmark M: Community education for cardiovascular disease prevention: risk factor changes in the Minnesota heart health program. Am J Public Health. 1994, 84: 1383-1393.

Perry C, Kelder S, Murray D, Klepp K: Communitywide smoking prevention: long term outcomes of the Minnesota heart health program and class of 1989 study. Am J Public Health. 1992, 82: 1210-1216.

Nutbeam D, Catford J: The Welsh heart programme evaluation strategy: progress, plans and possibilities. Health Promot. 1987, 2: 5-18. 10.1093/heapro/2.1.5.

Nutbeam D, Smith C, Simon M, Catford J: Maintaining evaluation design in long term community based health promotion programmes: Heartbeat Wales case study. J Epidemiol Community Health. 1993, 47: 127-133.

Smail S, Parish R: Heartbeat Wales – a community programme. Practitioner. 1989, 233: 343-347.

Tudor-Smith C, Nutbeam D, Moore L, Catford J: Effects of Heartbeat Wales programme over five years on behavioural risks for cardiovascular disease: quasi-experimental comparison of results from Wales and matched areas. BMJ. 1998, 316: 818-822.

Phillips CJ, Prowle MJ: Economics of a reduction in smoking: case study from Heartbeat Wales. J Epidemiol Community Health. 1993, 47: 215-223.

Directorate of the Welsh Heart Programme: Take Heart: A consultative document on the development of community-based heart health initiatives within Wales. 1985, Cardiff

Dobbins M, Ciliska D, Cockerill R, Barnsley J, DiCenso A: A framework for the dissemination and utilization of research for health-care policy and practice. Online J Knowl Synth Nurs. 2002, 9: 1-12.

Riley B: OHHP: Taking Action for Healthy Living Local Reporting Forms. Data collection tools prepared for the Ontario Ministry of Health and Long-Term Care for monitoring and evaluating the OHHP (2003–2008). 2003

Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F: Implementation Research: A Synthesis of the Literature. 2005, Tampa: University of South Florida

Last J: A Dictionary of Epidemiology. 2001, Oxford: Oxford University Press

Mancini JA, Marek LI: Sustaining community-based programs for families: conceptualization and measurement. Fam Relat. 2004, 53: 339. 10.1111/j.0197-6664.2004.00040.x.

McLeroy K, Bibeau D, Steckler A, Glanz K: An ecological perspective on health promotion programs. Health Educ Q. 1988, 15: 351-377.

Richard L, Potvin L, Kishchuk N, Prlic H, Green LW: Assessment of the integration of the ecological approach in health promotion programs. Am J Health Promot. 1996, 10: 318-328.

Rychetnik L, Hawe P, Waters E, Barratt A, Frommer M: A glossary for evidence based public health. J Epidemiol Community Health. 2004, 58: 538-545. 10.1136/jech.2003.011585.

Mol A: Proving or improving: on health care research as a form of self-reflection. Qual Health Res. 2006, 16: 405-414. 10.1177/1049732305285856.

Multiple interventions for community health. [http://aix1.uottawa.ca/~nedwards]

Acknowledgements

We wish to acknowledge the research internship that brought us together as a team: Dr. Nancy Edwards' three-month Research Internship in Multiple Interventions in Community Health [53]. We also thank Ms. Christine Herrera and Ms. Heather McGrath for their research and technical support in preparing this article. Contributions were supported by awards to Dr. Riley (personnel award from the Heart and Stroke Foundation of Canada and the Canadian Institutes of Health Research), Dr. Kothari (Career Scientist Award from the Ontario Ministry of Health and Long-Term Care) and Dr. Edwards (Nursing Chair funded by the Canadian Health Services Research Foundation, the Canadian Institutes of Health Research, and the Government of Ontario).

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

BR conceived of the study, managed the project, and was the lead writer. JM led development of the data extraction tool. OM led the description of results. NE conceived of the multiple interventions framework and co-developed the propositions with BR. All authors contributed substantively to the operational definitions, data extraction, and writing. All authors have read and approved the final manuscript.

Anita Kothari, Donna Kurtz, and Linda I. von Tettenborn contributed equally to this work.

Rights and permissions

This article is published under license to BioMed Central Ltd. This is an Open Access article distributed under the terms of the Creative Commons Attribution License (http://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Riley, B.L., MacDonald, J., Mansi, O. et al. Is reporting on interventions a weak link in understanding how and why they work? A preliminary exploration using community heart health exemplars. Implementation Sci 3, 27 (2008). https://doi.org/10.1186/1748-5908-3-27
