Abstract

Herein, we review aspects of leading-edge research and innovation in materials science that exploit big data and machine learning (ML), two computer science concepts that combine to yield computational intelligence. ML can accelerate the solution of intricate chemical problems and even solve problems that otherwise would not be tractable. However, the potential benefits of ML come at the cost of big data production; that is, the algorithms demand large volumes of data of various kinds and from different sources, from material properties to sensor data. In this survey, we propose a roadmap for future developments with emphasis on computer-aided discovery of new materials and analysis of chemical sensing compounds, both prominent research fields for ML in the context of materials science. In addition to providing an overview of recent advances, we elaborate upon the conceptual and practical limitations of big data and ML applied to materials science, outlining processes, discussing pitfalls, and reviewing cases of success and failure.

1 Introduction

The ongoing revolution driven by artificial intelligence has the potential to transform society well beyond applications in science and technology. A key ingredient is machine learning (ML), for which increasingly sophisticated methods have been developed. This has raised the expectation that within a few decades machines may outperform humans in most tasks, including intellectual ones. Two converging movements are responsible for this revolution. The first may be referred to as “data-intensive discovery” [1], “e-Science”, or “big data” [3,4]. In this movement, massive amounts of data are transformed into knowledge. This is achieved with various computational methods, which increasingly employ ML techniques. The movement is characterized by a transition in which data move from a “passive” to an “active” role. By an “active” role, we mean that data are used not only to confirm or refute a hypothesis but also to help raise new hypotheses to be tested at an unprecedented scale. The transition to a fully active role for data will be complete only when computational methods (or machines) are capable of generating knowledge themselves. Within this novel paradigm, data must be organized so as to be machine-readable, particularly since computers at present cannot “read” and interpret text. Attempts to teach computers to read fall precisely within the realm of the second movement, in which natural language processing tools are being developed to process spoken and written text. Significant advances in this regard have recently been achieved by combining ML and big data, as may be appreciated from the astounding progress in speech processing [5] and machine translation. Computational systems are still far from the human ability to interpret text. Nevertheless, the increasingly synergistic use of big data and ML allows one to envisage intelligent systems that handle massive amounts of data with analytical ability. Then, beyond the potential to outperform humans, machines would also be able to generate knowledge without human intervention.

Regardless of whether such optimistic predictions become reality, big data and ML already have a significant impact owing to the generality of their approaches. To understand why, we need to distinguish the contributions of the two areas. Working with big data normally requires proper infrastructure, and the main difficulties lie in gathering and curating large amounts of data. In Materials Science, for instance, access to large databases and considerable computational power is essential, as illustrated by the discussion of sensor networks in this review. Standard ML algorithms, on the other hand, can operate on small datasets and in many cases require only limited computational resources. However, there are major limitations to what ML can achieve, owing to fundamental conceptual difficulties.

The goals of ML fall into two distinct types [6]: (i) classification of data instances in a large database, as in image processing and voice recognition; and (ii) making inferences based on the organization and/or structure of the data. Needless to say, the second goal is much harder to achieve. Consider, as an example, the application of ML to identify text authorship. In a classification experiment with tens of English literature books modelled as word networks, the authors of the books were identified with high accuracy using supervised ML [7]. Nonetheless, with current technology it would be impossible to analyse writing style in detail and establish correlations among authors, which would be a task of the second type. This will require considerable new developments, such as teaching computers to read. Today, the success of ML stems mostly from applications focused on the first goal, which encompasses most of its applications in materials science. Nonetheless, much more can be expected in the next few decades, as we shall discuss in the concluding Sect. 5.
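The following sketch roughly illustrates how a book can be turned into input for a supervised classifier. It models a text as an undirected network whose nodes are words and whose edges link adjacent words, then extracts a few simple topological features. The function name `word_network_features` and the choice of features are our own assumptions for illustration. The study in [7] used its own, richer set of network measurements.

```python
import re


def word_network_features(text: str) -> dict:
    """Model a text as an undirected word-adjacency network and return a few
    topological features (hypothetical feature set, for illustration only)."""
    # Lower-case the text and tokenize on runs of letters (apostrophes kept).
    words = re.findall(r"[a-z']+", text.lower())
    nodes = set(words)
    # Link each word to the next one; repeated consecutive words (self-loops) are skipped.
    edges = {frozenset((a, b)) for a, b in zip(words, words[1:]) if a != b}
    n, m = len(nodes), len(edges)
    return {
        "nodes": n,
        "edges": m,
        "mean_degree": 2 * m / n if n else 0.0,
    }


print(word_network_features("The cat saw the dog."))
# {'nodes': 4, 'edges': 4, 'mean_degree': 2.0}
```

Feature vectors computed in this way for books of known authorship would form the labelled training set for a classifier.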

Considerable work has been devoted to addressing the challenges that arise when materials science meets big data and ML. The evolution in computing resources allows scientists to produce and manipulate unprecedented data volumes to be stored and managed via algorithms with embedded intelligence [8]. Data processing has become a much more complex endeavour than just storage and retrieval, as concepts such as data curation and provenance come into play, particularly if the data are to be machine-readable. Materials scientists already employ substantial amounts of machine-readable data in at least two major fields: in exploring protein databanks and in crystallography. These are illustrative examples of machine-readable content that require artificial intelligence.

In this review paper, we discuss two areas in Materials Science that are fundamental on their own but also complement each other: ML-based discovery of new materials and ML-based analysis of chemical sensing compounds. The first presents the latest techniques to search the space of possibilities given by molecular interactions. The second reviews analytical methods to understand the properties of materials when used for sensing. The two areas are complementary because new materials can provide sensing capabilities that, in turn, can produce more data to feed algorithmic methods for materials discovery. In addition to the acronym ML, we will use the acronyms AI for artificial intelligence, DL for deep learning, and DNN for deep neural networks, the latter two being used interchangeably.

2 New trends in big data and machine learning relevant to materials sciences

Concepts and methodologies related to big data and ML have been employed to address many problems in materials sciences, as emphasized by the illustrative examples that we will discuss in the next sections. A description of the concepts and myths targeted at chemists and related professionals is given in the review by Richardson et al. [9]. In this section, we briefly introduce such concepts as background to assist the reader in following the paper.

2.1 Big data

The broadly advertised term “big data” has gained attention as a direct consequence of the rapid growth in the amount of data being produced in all fields of human activity. The magnitude of this increase is often highlighted even to the general public. A recent Forbes news piece, for instance, stated that “There are 2.5 quintillion bytes of data created each day at our current pace, but that pace is only accelerating with the growth of the Internet of Things (IoT)” [10]. However, big data is not just about massive data production, a perspective that has popularized the term as jargon laden with expectations [3]. Big data also refers to a collection of novel software tools and analytical techniques that can generate real value by identifying and visualizing patterns in dispersed and apparently unconnected data sources. Nevertheless, to grasp the genuine virtues and potential of big data in materials science, its meaning must be interpreted in light of the specificities of this particular domain. Big data might be understood as a movement driven by technological advances. These advances have accelerated data generation beyond the capacity of the resources available to any single company or institution. This accelerated pace raises many computational problems, but it also brings potential benefits: innovations induced by big data problems are likely to lead to a range of entirely new scientific discoveries that would not be possible otherwise.

In Materials Sciences, big data can be exploited in many ways. These include computer simulations, miniaturized sensors, combinatorial synthesis, the design of increasingly complex experimental procedures and protocols, and the immediate sharing of experimental results via databases and the Internet, to name a few. In quantum chemistry, for example, the ioChem-BD platform [11] is a tool for managing large volumes of simulation results on chemical structures, bond energies, spin angular momentum, and other descriptive measures. The platform provides inspection tools, including versatile browsing and visualization for a first-level understanding of the results. It also offers techniques and tools for systematic analysis. In data-driven medicinal chemistry [4], investigators must face critical issues that scale with the data. These include data sharing, modelling molecular behaviour, implementation and validation with experimental rigor, and identifying and defining ethical considerations.

Big data have often been characterized in terms of the so-called five Vs: volume, velocity, variety, veracity, and value [3, 4]. Although not a rigorous definition, this description captures the characteristic properties of the big data scenario.

As far as volume is concerned, size is relative across research fields: what is considered big in Materials Science may be small in computer science. What matters is the extent to which the data are manageable and usable by those who need to learn from them. Materials Science produces big data volumes by means of techniques such as parallel synthesis [12], high-throughput screening (HTS) [13], and first-principles calculations. Such calculations have been reported in notable efforts on quantum chemistry [14], on molecules in general, as in the AFLOW project [15], and on organic molecules, as in the ANI-1 project [16]. Big data volumes in materials science also originate from compilations of the literature and from patent repositories [17, 18].

Closely related to volume is velocity, which refers to the pace of data generation. Velocity may affect the capability of drawing conclusions and identifying alternative experimental directions, and it demands off-the-shelf analytical tools to support timely summarization and hypothesis validation.

Variety refers to the diversity of data types and formats currently available. Materials science has the advantage of an established universal language to describe compounds and reactions. Nevertheless, many problems arise when translating this language into computational models, whose usage varies across research groups and even across individual researchers.

Veracity in Materials Science concerns the potential lack of quality in data produced by imprecise simulations or collected from experiments that do not conform to a sufficiently rigid protocol, especially when biological organisms are involved.

Finally, value refers to the obvious need for data that are trustworthy, precise, and conclusive.

The importance of big data for materials science is highlighted in several initiatives, such as the BIGCHEM project described by Tetko et al. [19]. Their work is illustrative of the issues in handling big data in chemistry and life sciences: it includes a discussion on the importance of data quality, the challenges in visualizing millions of data instances and the use of data mining and ML for predictions in pharmacology. Of particular relevance is the search for suitable strategies to explore billions of molecules, which can be useful in various applications, especially in the pharmaceutical industry, to reduce the massive cost of identifying new lead compounds [19].

2.2 Machine learning (ML)

In computer science, the standard approach is to use programming languages to code algorithms that “teach” the computer to perform a particular task. ML, in turn, refers to implementing algorithms that tell the computer how to “learn”, given a set of data instances (or examples) and some underlying assumptions. Computer programs such as those built with DL frameworks can then execute tasks that are not explicitly defined in the code. As the name implies, ML depends on learning, a process that in humans takes years, even decades, and that often happens through the observation of both successes and failures. It is thus implicit that such learning depends on a large amount of experimental support. In its most usual approach, ML depends on an extensive set of successful and unsuccessful examples that will mold the underlying learning algorithm. This is where ML and big data meet. The abundance of both data and computing capacity has made feasible approaches that would not work otherwise, owing to a lack of sufficient examples to learn from and/or of processing power to drive the learning process. In fact, specialists argue that data collection and preparation in ML can demand more effort than the actual design of the learning algorithms [20]. Nevertheless, solving the issues involved in building effective learning programs is worthwhile. Computers can handle datasets much larger than humans can, as they are not susceptible to fatigue, and, unless mistakenly programmed, they hardly ever make numerical errors.

ML is a useful approach to problems for which designing explicit algorithms is difficult or infeasible, as in spam filtering or detecting meaningful elements in images. Such problems have a huge space of possible solutions. Rather than searching for an explicit solution, a more effective strategy is therefore to have the computer progressively learn patterns directly from examples. Many problems in materials science conform to this strategy, including protein structure prediction, virtual screening of host-guest binding behaviour, material design, property prediction, and the derivation of models for quantitative structure-activity relationships (QSARs). QSAR modelling is itself an ML practice based on classification and regression techniques. It is used, for example, to predict the biological activity of a compound from its physicochemical properties.
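In its simplest form, a QSAR model is a linear regression from molecular descriptors to activity. The sketch below rests on two assumptions: each compound has two hypothetical descriptors, and the activities are synthetic, noise-free values generated from a known linear rule. Under these assumptions, ordinary least squares (NumPy's `np.linalg.lstsq`) recovers that rule exactly. Real QSAR studies use many descriptors, noisy measurements, and often nonlinear learners.

```python
import numpy as np

# Hypothetical descriptors for five compounds: [logP, polar surface area / 100].
X = np.array([
    [1.0, 0.2],
    [2.0, 0.5],
    [3.0, 0.1],
    [4.0, 0.8],
    [5.0, 0.4],
])
# Synthetic activities generated from a known linear rule (illustration only).
true_w, true_b = np.array([0.8, -1.5]), 2.0
y = X @ true_w + true_b

# Fit activity = X @ w + b by ordinary least squares; the column of ones models b.
A = np.column_stack([X, np.ones(len(X))])
coef, *_ = np.linalg.lstsq(A, y, rcond=None)
w, b = coef[:-1], coef[-1]


def predict_activity(descriptors):
    """Predict the activity of a new compound from its descriptor vector."""
    return float(np.asarray(descriptors) @ w + b)


print(predict_activity([2.5, 0.3]))  # approximately 3.55
```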

2.3 An overview of deep learning

The latest achievements in ML are related to techniques broadly known as deep learning (DL), implemented with deep neural networks (DNNs) [21]. These techniques outperform earlier state-of-the-art algorithms in problems such as image and speech recognition (see Fig. 1a). DL algorithms rely on artificial neural networks (ANNs), a biology-inspired technique whose underlying principle is to approximate complex functions by mapping a large number of inputs to a proper output. The principles behind DL are not new; they date back to the introduction of the perceptron in 1958. After decades of disappointing results, including in the 1980s and 1990s, ANNs were revitalised in 2012 by the seminal paper of Krizhevsky et al. [22]. Their AlexNet architecture for image classification was inspired by the ideas of LeCun et al. [23]. The driving factors behind this drastic change in the profile of a 50-year-old research field were significant algorithmic advances coupled with huge processing power (thanks to GPU advances [24]), big datasets, and robust development frameworks.
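The perceptron mentioned above can be sketched in a few lines of NumPy. This minimal illustration of the classic perceptron learning rule is not code from any cited work. Weights are updated only when a sample is misclassified. The example learns the logical AND function, which is linearly separable. A single perceptron cannot learn non-separable functions such as XOR, a limitation that multilayer networks overcome.

```python
import numpy as np


def train_perceptron(X, y, epochs=100, lr=1.0):
    """Perceptron learning rule. X: (n_samples, n_features); y: labels in {-1, +1}."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        errors = 0
        for xi, yi in zip(X, y):
            # Update only if the sample lies on the wrong side of (or on) the boundary.
            if yi * (xi @ w + b) <= 0:
                w += lr * yi * xi
                b += lr * yi
                errors += 1
        if errors == 0:  # every sample classified correctly: stop early
            break
    return w, b


def predict(X, w, b):
    return np.where(X @ w + b > 0, 1, -1)


# Logical AND with labels -1 (false) and +1 (true).
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([-1, -1, -1, 1])
w, b = train_perceptron(X, y)
print(predict(X, w, b))  # [-1 -1 -1  1]
```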

Figure 1 illustrates the evolving popularity of DL and its applications. Figure 1a shows the rate of improvement in image classification after the introduction of DL methods. Figure 1b shows the growing interest in the topic, measured by the number of publications indexed by the Institute for Scientific Information. Figure 1c shows the increasing popularity of the major software packages [24]: Torch, Theano, Caffe, TensorFlow, and Keras.

Facts about deep learning. a The improvement of the image classification rate after the introduction of the AlexNet deep neural network. b The increasing number of publications as indexed by ISI (Institute for Scientific Information). c The popularity (Google Trends score) of major deep learning software packages in the current decade: Torch, Theano, Caffe, TensorFlow, and Keras [24]. Prepared by the authors of this paper

A common way of performing DL is by means of deep feedforward networks or, simply, feedforward neural networks, also known as multilayer perceptrons [25]. They work by approximating a function f*. For a classifier, y = f*(x) maps an input vector x to a category y. The network defines a mapping y = f(x; θ) and must learn the parameters θ that result in the best approximation of f*. One can think of the network as a pipeline of interconnected layers of basic processing units, the so-called neurons, which work in parallel; each neuron is a vector-to-scalar function. The model is inspired by neuroscience findings according to which a neuron receives input from many other neurons. Each input is multiplied by a weight, and the set of all the weights corresponds to the set of parameters θ. After receiving its vector of inputs, each neuron computes its activation value, and this computation proceeds layer by layer up to the output layer.
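The forward computation y = f(x; θ) can be written as a loop over layers. This minimal sketch makes three assumptions. First, θ is a list of (W, b) pairs of weight matrices and bias vectors; biases are a common convention not mentioned above. Second, hidden layers use the ReLU activation. Third, the weights are fixed by hand so that the output is easy to check; in practice, they would be learned.

```python
import numpy as np


def relu(z):
    return np.maximum(z, 0.0)


def forward(x, theta):
    """Compute y = f(x; theta); theta is a list of (W, b) pairs, one per layer."""
    h = np.asarray(x, dtype=float)
    for i, (W, b) in enumerate(theta):
        z = W @ h + b  # each row of W holds one neuron's weights on the previous layer
        # Hidden layers apply the activation; the output layer keeps raw scores.
        h = relu(z) if i < len(theta) - 1 else z
    return h


# Hand-picked parameters for a 2-3-2 network (illustration only).
theta = [
    (np.array([[1.0, 0.0], [0.0, 1.0], [1.0, -1.0]]), np.zeros(3)),
    (np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]), np.zeros(2)),
]
scores = forward([1.0, 2.0], theta)
category = int(np.argmax(scores))  # the predicted class y
print(scores, category)  # [1. 2.] 1
```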

Initially, the network does not know the correct weights. To determine them, it uses a set of labelled examples: every time the network f(x; θ) misses the correct class, the weights are adjusted by back-propagating the error. A widely used method for adjusting the weights during back-propagation is gradient descent. This method calculates the derivative of a loss function (e.g., mean squared error) with respect to each weight and subtracts it, scaled by a learning rate, from that weight. The adjustment is repeated for many labelled examples and over multiple iterations until the desired function is approximated. This whole process is called the training phase. The abundance of labelled data available today has drastically expanded the domains to which ML can be applied.
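The training loop described above can be sketched for a tiny network with one hidden layer. This illustrative sketch rests on our own assumptions: synthetic regression data (y = x^2 on [-1, 1]), a tanh hidden layer of 16 neurons, and the mean squared error as the loss. A hand-written backward pass applies the chain rule layer by layer before each gradient-descent update. DL frameworks compute these derivatives automatically.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic regression data: learn y = x^2 on [-1, 1].
X = np.linspace(-1.0, 1.0, 40).reshape(-1, 1)
Y = X ** 2

# Parameters theta = (W1, b1, W2, b2) of a 1-16-1 network, randomly initialized.
W1 = rng.normal(0.0, 1.0, (1, 16))
b1 = np.zeros(16)
W2 = rng.normal(0.0, 0.1, (16, 1))
b2 = np.zeros(1)


def loss_and_grads(W1, b1, W2, b2):
    n = len(X)
    # Forward pass.
    H = np.tanh(X @ W1 + b1)             # hidden activations, shape (n, 16)
    P = H @ W2 + b2                      # predictions, shape (n, 1)
    loss = float(np.mean((P - Y) ** 2))  # mean squared error
    # Backward pass: propagate dLoss/dP through each layer via the chain rule.
    dP = 2.0 * (P - Y) / n
    dW2 = H.T @ dP
    db2 = dP.sum(axis=0)
    dZ = (dP @ W2.T) * (1.0 - H ** 2)    # tanh'(z) = 1 - tanh(z)^2
    dW1 = X.T @ dZ
    db1 = dZ.sum(axis=0)
    return loss, (dW1, db1, dW2, db2)


lr = 0.05  # learning rate
initial_loss, _ = loss_and_grads(W1, b1, W2, b2)
for _ in range(3000):
    loss, (dW1, db1, dW2, db2) = loss_and_grads(W1, b1, W2, b2)
    # Gradient descent: subtract the learning-rate-scaled derivative from each weight.
    W1 -= lr * dW1
    b1 -= lr * db1
    W2 -= lr * dW2
    b2 -= lr * db2
final_loss, _ = loss_and_grads(W1, b1, W2, b2)
print(initial_loss, final_loss)
```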

With an appropriately designed and complex architecture, possibly consisting of dozens of layers and hundreds of neurons, an extensively trained artificial neural network defines a mathematical process whose dynamics are capable of embodying increasingly complex hierarchical concepts. As a result, the networks allow machines to mimic abilities once considered to be exclusive to humans, such as translating text or recognizing objects.

Once trained, the algorithms should be able to generalise and provide correct answers for new examples of a similar nature. This optimization requires balancing the fit to the training data. An overfitted model maps only the training examples correctly, whereas an underfitted model misses even examples it has already seen. For sensor networks, for example, overfitting produces a very low error on the training set because the model captures both the noise and the real signal. Since the noise is random, such a system often generalizes poorly. Therefore, a compromise is required that involves using several different training sets [26] and, most importantly, regularization techniques [25].
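Polynomial fits to noisy synthetic "sensor" readings can illustrate this trade-off. In this sketch, which is our own example and not taken from [25] or [26], a degree-9 polynomial plays the role of an overly flexible model. An L2 (ridge) penalty, one common regularization technique, is added via an augmented least-squares problem.

```python
import numpy as np

rng = np.random.default_rng(1)

# Synthetic "sensor" readings: a smooth signal plus random noise.
x_train = np.linspace(0.0, 1.0, 12)
y_train = np.sin(2 * np.pi * x_train) + rng.normal(0.0, 0.2, x_train.size)
# Held-out points compared against the noise-free signal.
x_val = np.linspace(0.02, 0.98, 50)
y_val = np.sin(2 * np.pi * x_val)


def design(x, degree):
    """Polynomial features [1, x, x^2, ..., x^degree]."""
    return np.vander(x, degree + 1, increasing=True)


def fit(x, y, degree, lam=0.0):
    """Minimize ||A w - y||^2 + lam * ||w||^2 (ridge when lam > 0)."""
    A = design(x, degree)
    # Ridge written as an augmented least-squares problem for numerical stability.
    A_aug = np.vstack([A, np.sqrt(lam) * np.eye(degree + 1)])
    y_aug = np.concatenate([y, np.zeros(degree + 1)])
    w, *_ = np.linalg.lstsq(A_aug, y_aug, rcond=None)
    return w


def mse(w, x, y, degree):
    return float(np.mean((design(x, degree) @ w - y) ** 2))


results = {}  # (degree, lam) -> (training MSE, validation MSE)
for degree, lam in [(1, 0.0), (9, 0.0), (9, 1e-3)]:
    w = fit(x_train, y_train, degree, lam)
    results[(degree, lam)] = (mse(w, x_train, y_train, degree),
                              mse(w, x_val, y_val, degree))
print(results)
```

Two properties hold by construction. The more flexible model fits the training data at least as well as the linear one, and the penalty can only raise the training error. How the validation errors compare depends on the particular noise draw. With only two residual degrees of freedom, the unregularized degree-9 fit is prone to reproducing noise rather than the signal.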

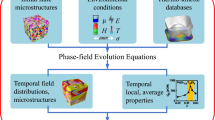

2.4 The flow path towards data‐based scientific discovery in materials science

Figure 2 illustrates the standard flow path from data production to the outcome of ML. The first step concerns methods to produce sufficient data to feed computational learning methods. Such data must initially be analysed by a domain expert, who will classify, label, validate, or reject the results of an experiment or simulation. The preprocessing step can be quite laborious. It is also critical: if not performed rigorously, it might invalidate the remainder of the process or compromise its results. Once the data are ready, an ML method is iterated over them. Such methods require a learning (or training) stage in which the computer learns from the known results provided by the domain expert. During training, knowledge is transferred from the training data to the computer in the form of algorithmic settings that are specific to each method. A model is then learned. This model is a mathematical abstraction that, when executed, can be applied to new, unseen data to produce accurate classifications or regressions and correct inferences as the final outcome of the process.
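The flow path of Fig. 2 can be mimicked end to end on synthetic data. This sketch uses our own simplifications rather than the procedure of any specific study. The two-class measurements stand in for expert-labelled results. Preprocessing consists of a train/test split followed by standardization using training statistics only. The learned model is a nearest-centroid classifier.

```python
import numpy as np

rng = np.random.default_rng(42)

# 1) Data production: hypothetical measurements for two material classes,
#    already labelled by a domain expert (0 = "reject", 1 = "accept").
X = np.vstack([rng.normal([0.0, 0.0], 0.5, (50, 2)),
               rng.normal([3.0, 3.0], 0.5, (50, 2))])
y = np.array([0] * 50 + [1] * 50)

# 2) Preprocessing: shuffle, split into training and unseen test sets,
#    and standardize using statistics from the training set only.
idx = rng.permutation(len(X))
train, test = idx[:70], idx[70:]
mu, sigma = X[train].mean(axis=0), X[train].std(axis=0)
Xs = (X - mu) / sigma

# 3) Training: learn one centroid per class (nearest-centroid classifier).
centroids = np.array([Xs[train][y[train] == c].mean(axis=0) for c in (0, 1)])


# 4) Outcome: apply the learned model to unseen data.
def classify(samples):
    # Distance from each sample to each centroid; pick the closest class.
    d = np.linalg.norm(samples[:, None, :] - centroids[None, :, :], axis=2)
    return d.argmin(axis=1)


accuracy = float((classify(Xs[test]) == y[test]).mean())
print(accuracy)
```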

3 Materials discovery

Using computational tools to discover new materials and evaluate material properties is an old endeavour. For instance, DENDRAL, the first documented project on computer-assisted organic synthesis, dates back to 1965 [27]. According to Szymkuc et al. [28], the early enthusiasm waned with successive failures to obtain predictive power sufficient to plan organic synthesis. So much so that teaching a computer to perform this task was at some point considered a “mission impossible” [28]. However, interest has been renewed by the enhanced capability afforded by big data and novel ML approaches. In particular, one may envisage, for the first time, exploring a considerable portion of the space of possible solutions defined by the elements in the periodic table and the laws of reactivity. The number of possible material structures in this space is estimated to be 10¹⁰⁰, which is larger than the number of particles in the universe [29]. In this vastness, the discovery of new materials faces resource and time constraints [30, 31]. Expensive experiments must be well planned, ideally targeting lead structures with a high potential of generating new materials with useful properties. It is in this scenario that ML offers its greatest potential for materials science. Of course, this comes at a cost. These complex spaces must be mathematically modelled, and a significant number of representative patterns must be available as examples for the ML system to learn from. ML classifiers can then be trained to predict material properties, as demonstrated for magnetic materials without requiring first-principles calculations [32].

Predicting material properties from basic physicochemical properties involves exploring quantitative structure-property relationships (QSPRs), the analogue of QSARs for nonbiological applications [33,34,35]. There are many examples of ML applied in this domain. For example, the reaction outcomes from the crystallization of templated vanadium selenites were predicted with a model based on a support vector machine (SVM). Its training set included a “dark” portion of unsuccessful reactions compiled from laboratory notebooks [36]. Briefly, an SVM is a widely used discriminative classifier that separates classes with a hyperplane chosen to maximize the margin between them [8]. The prediction of the target compound was attained with a success rate of 89 %, higher than that obtained with human intuition (78 %) [36]. For inorganic solid-state materials, atom-scale calculations have been a major tool to help understand material behaviour and accelerate material discovery [37]. Some of the relevant properties, however, can only be obtained at a very high computational cost, which has stimulated the use of data-based discovery. In addition to highlighting major recent advances, Ward and Wolverton [37] comment upon current limitations in the field, such as the limited availability of appropriate software for computing material properties.
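A linear SVM can be trained by minimizing the regularized hinge loss, which penalizes samples lying on the wrong side of the margin. The sketch below uses plain subgradient descent on synthetic, well-separated two-dimensional data. It is not the model of [36], which relied on its own descriptors. Production work would typically use a dedicated solver (e.g., scikit-learn's `SVC`) and possibly a nonlinear kernel. The parameters `lam`, `lr`, and `epochs` are illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(7)

# Synthetic, well-separated classes labelled +1 and -1.
n = 40
X = np.vstack([rng.normal([2.0, 2.0], 0.5, (n, 2)),
               rng.normal([-2.0, -2.0], 0.5, (n, 2))])
y = np.array([1.0] * n + [-1.0] * n)


def train_linear_svm(X, y, lam=0.01, lr=0.1, epochs=500):
    """Subgradient descent on lam/2 * ||w||^2 + mean(max(0, 1 - y (X w + b)))."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # samples inside the margin or misclassified
        grad_w = lam * w - (y[active, None] * X[active]).sum(axis=0) / len(X)
        grad_b = -y[active].sum() / len(X)
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = train_linear_svm(X, y)
# The separating hyperplane is w . x + b = 0; its sign gives the predicted class.
accuracy = float((np.sign(X @ w + b) == y).mean())
print(w, b, accuracy)
```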

To outline the contributions from the chemistry literature related to materials discovery, we selected a subset of topics that exemplify the many uses of ML.

3.1 Large databases and initiatives

Materials genome initiatives and multi-institutional international efforts seeking to establish a generic platform for collaboration [38, 39, 40] set a hallmark for the importance of big data and ML in chemistry and materials sciences. A major goal is perhaps to move beyond the trial-and-error empirical approaches prevailing in the past [35]. For example, the US Materials Genome Initiative (MGI) (https://www.mgi.gov/) established the following issues as major challenges [41]:

- Lead a culture shift in materials research to encourage and facilitate an integrated team approach;
- Integrate experiment, computation, and theory and equip the materials community with advanced tools and techniques;
- Make digital data accessible; and
- Create a world-class materials workforce that is trained for careers in academia or industry.

To encourage a cultural change in materials research, ongoing efforts intend to generate data that can serve both to validate existing models and to create new, more sophisticated models with enhanced predictive capabilities. This has been achieved with a virtual high-throughput experimentation facility involving a national network of labs for synthesis and characterization [41] and partnerships between academia and industry for tackling specific applications. For example, the Center for Hierarchical Materials Design is developing databases for materials properties and materials simulation software [41], and alliances have been established to tackle topics such as Materials in Extreme Dynamic Environments and Multiscale Modeling of Electronic Materials [41]. There are also studies of composite materials to improve aircraft fuel efficiency and of metal processing to produce lighter weight products and vehicles [41]. Regarding the integration of experiment, theory, and computer simulation, perhaps the most illustrative example is an automated system designed to create a material, test it and evaluate the results, after which the best next experiment is chosen in an iterative procedure. The whole process was conducted without human intervention. The system is already in use to speed up the development process of high-performance carbon nanotubes for use in aircraft [41]. Another large-scale program at the University of California, Berkeley, used high-performance computing and state-of-the-art theoretical tools to produce a publicly available database of the properties of 66,000 new and predicted crystalline compounds and 500,000 nanoporous materials [41].

There are cases that require combining different levels of theory and modelling with experimental results, particularly for more complex materials. In the Nanoporous Materials Genome Center, microporous and mesoporous metal-organic frameworks and zeolites are studied for energy-relevant processes, catalysis, carbon capture, gas storage, and gas- and solution-phase separation. The theoretical and computational approaches range from electronic structure calculations combined with Monte Carlo sampling methods to graph theoretical analysis, which are assembled into a hierarchical screening workflow [41].

An important feature of MGI is the provision of infrastructure for researchers to report their data in a way that allows curation. Programs such as the Materials Data Repository (MDR) and the Materials Resource Registry are being developed to allow for worldwide discovery. In some cases, these programs build on successful resources from other communities, such as the Virtual Astronomical Observatory’s registry [41]. This component of the “Make digital data accessible” goal of MGI has already provided extensive datasets. Examples include data on ~1,500 compounds for use in lithium-ion battery electrodes and on over 21,000 organic molecules for liquid electrolytes. These programs give industry immediate access to data that may help accelerate material development in applications such as hydrogen fuel cells, the pulp and paper industry, and solid-state lighting [41]. Additionally, the QM database of the “PubChemQC” project (http://pubchemqc.riken.jp/) [42] contains the ground-state electronic structures of 3 million molecules and 10 low-lying excited states for more than 3.5 million molecules (e.g., “water”, “ethanol”, “ethyl alcohol”). For this database, the ground-state structures were calculated with density functional theory (DFT) at the B3LYP/6-31G* level. The excited states were obtained with time-dependent DFT using the B3LYP functional and the 6-31+G* basis set. The project also employs ML (SVMs and regression) to predict DFT results related to the electronic structure of molecules.

3.2 Identification of compounds with genetic algorithms

In bioinspired computation, computer scientists define procedures that mimic mechanisms observed in nature. This is the case for genetic algorithms [43]. Inspired by Charles Darwin’s ideas of evolution, these algorithms mimic the “survival of the fittest” principle to set up an optimization procedure. Known functional compounds are crossed over, subject to a mutation factor, to produce novel compounds; the mutation factor introduces new properties into the offspring. Novel compounds with no useful properties are discarded, while those displaying useful properties (high fitness) are selected to produce new combinations. After a certain number of generations (or iterations), new functional compounds emerge. These compounds have some properties inherited from their ancestors, supplemented with other properties acquired along their mutation pathway. Of course, this is an oversimplified description of the process. In practice, the process depends on accurate modelling of the compounds, a proper definition of the mutation procedure, and a robust evaluation of fitness. Fitness may be obtained from calculations, as in the case of conductivity or hardness, reducing the need for expensive experimentation.

In genetic algorithms, each compositional or structural characteristic of a compound is interpreted as a gene. Examples of chemical genes include the fraction of individual components in a given material, polymer block sizes, monomer compositions, and processing temperature. The genome is the set of all the genes of a compound, while the resulting properties of a genome are called its phenotype. The task of a genetic algorithm is to scan the search space of the gene domains to identify the most suitable phenotypes, as measured by a fitness function. The relationship between genome and phenotype gives rise to the fitness landscape (see Tibbetts et al. [2] for a detailed background). Figure 3 illustrates a fitness landscape for two hypothetical genes. Say these are the block size and processing temperature of a polymer synthesis process that aims at high hardness. Note that when exploring a search space, the fitness landscape is not known in advance and is rarely two-dimensional. Instead, it is implicit in the problem model defined by the genes’ domains and in the definition of the fitness function. The problem is well modelled if the genes permit gainful movement over the fitness landscape and the fitness function correlates with the physical properties of interest. In the example in Fig. 3, the genetic algorithm moves along the landscape by producing new compounds while discarding those that do not improve fitness. The mechanism of the genetic algorithm therefore gives it a higher probability of moving towards phenotypes with the desired properties. For a comprehensive review focused on materials science, please refer to the work of Paszkowicz [44].

A fitness landscape considering two hypothetical genes as, for example, block size and processing temperature of polymer synthesis. Fitness could be hardness, for instance. The landscape contains local maxima, a global maximum, and a global minimum. The path of a genetic algorithm along 11 iterations is shown in red. Elaborated by the authors of this paper
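The loop described above can be sketched in a few lines of Python, in the spirit of Fig. 3. This is a minimal illustration, not the procedure of any cited work: the two gene domains (block size and processing temperature), the elitist selection, the uniform crossover, the mutation rate, and the analytic `fitness` function standing in for measured hardness are all hypothetical assumptions.

```python
import random

# Hypothetical gene domains for a polymer synthesis (illustrative only).
BLOCK_SIZES = range(10, 201, 10)     # block size (repeat units)
TEMPERATURES = range(120, 261, 5)    # processing temperature (°C)

def fitness(genome):
    """Hypothetical stand-in for hardness: a smooth surface with one peak.

    In practice the landscape is unknown and each call is an experiment
    or a simulation.
    """
    block, temp = genome
    return -((block - 120) / 50) ** 2 - ((temp - 200) / 30) ** 2

def random_genome(rng):
    return (rng.choice(BLOCK_SIZES), rng.choice(TEMPERATURES))

def crossover(a, b, rng):
    # Uniform crossover: each gene is inherited from one of the two parents.
    return tuple(rng.choice(pair) for pair in zip(a, b))

def mutate(genome, rng, rate=0.2):
    # With probability `rate`, a gene is replaced by a random value from its domain.
    domains = (BLOCK_SIZES, TEMPERATURES)
    return tuple(rng.choice(d) if rng.random() < rate else g
                 for g, d in zip(genome, domains))

def evolve(pop_size=20, generations=30, elite=4, seed=0):
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    for _ in range(generations):
        # Selection: the fittest genomes survive unchanged and become parents.
        population.sort(key=fitness, reverse=True)
        parents = population[:elite]
        children = [mutate(crossover(*rng.sample(parents, 2), rng), rng)
                    for _ in range(pop_size - elite)]
        population = parents + children
    return max(population, key=fitness)

best = evolve()
print(best, fitness(best))
```

Keeping the fittest genomes unchanged (elitism) guarantees that the best fitness never decreases from one generation to the next; in a real campaign each call to `fitness` is costly, which is why the number of evaluations, rather than the number of generations, is the figure of merit.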

The identification of more effective catalysts has also benefited from genetic algorithms, as in the work by Wolf et al. [45], where a set of oxides (B2O3, Fe2O3, GaO, La2O3, MgO, MnO2, MoO3, and V2O5) was taken as the initial population of an evolutionary process. Aimed at finding the catalysts that would optimize the conversion of propane into propene through dehydrogenation, the elements of the initial set were iteratively combined to produce four generations of catalysts. In total, the experiment produced 224 new catalysts, with an increase of 9 % in conversion (T = 500 °C, C3H8/O2, p(C3H8) = 30 Pa). A thorough review of evolutionary methods in the search for more efficient catalysts was given by Le et al. [29]. Bulut et al. [46] studied polyimide solvent-resistant nanofiltration membranes prepared by phase inversion. The aim was to optimize the composition space given by two volatile solvents (tetrahydrofuran and dichloromethane) and four nonsolvent additives (water, 2-propanol, acetone, and 1-hexanol). This system was modeled as a genome with eight variables, corresponding to a search space of 9 × 10²¹ possible combinations, which could not be exhaustively scanned regardless of the screening method. The solution was to employ a genetic algorithm driven by a fitness function defined by membrane retention and permeance. Over four generations and 192 polymeric solutions, the fitness function indicated an asymptotic increase in membrane performance.
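A back-of-the-envelope estimate shows why exhaustive scanning of such a space is ruled out. Assuming, purely for illustration, a hypothetical screening rate of one million formulations per second (far beyond any experimental throughput):

```latex
t = \frac{9 \times 10^{21}}{10^{6}\,\mathrm{s^{-1}}}
  = 9 \times 10^{15}\,\mathrm{s}
  \approx \frac{9 \times 10^{15}\,\mathrm{s}}{3.16 \times 10^{7}\,\mathrm{s\,yr^{-1}}}
  \approx 2.8 \times 10^{8}\,\mathrm{yr}
```

By contrast, the genetic algorithm in [46] needed only 192 polymeric solutions.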

3.3 Synthesis prediction using ML

The synthesis of new compounds is a challenging task, especially in organic chemistry. The search for machine-based methods to predict which molecules will be produced from a given set of reactants and reagents started in 1969 with Corey and Wipke [47], who demonstrated that synthesis (and retrosynthesis) predictions could be carried out by a computer. Their approach was based on templates produced by expert chemists that defined how atom connectivity would rearrange under a given set of conditions (see Fig. 4). Although it demonstrated the concept, the approach suffered from limited template sets, which prevented it from covering a wide range of conditions and made it fail in the face of even the smallest alterations.

Example of a reaction and its corresponding reaction template. The reaction is centered in the green-highlighted areas (27,28), (7,27), and (8,27). The corresponding template includes the reaction center and nearby functional groups. Reproduced with permission from the work of Jin et al. [48]

The use of templates (or rules) to transfer knowledge from human experts to computers, as in the work of Corey and Wipke, corresponds to an old computer science paradigm broadly referred to as “expert systems” [49]. This approach has had limited success in the past owing to the burden of producing rule sets comprehensive enough to yield results over a broad range of conditions, coupled with the difficulty of anticipating exceptional situations. Nevertheless, it has recently regained interest, as ML methods may contribute to automatic rule generation from large datasets, as is being explored in medicine, for instance [50].

In association with big data, ML became a means of extracting knowledge not only from experts but also from datasets. Coley et al. [51], for example, used a 15,000-patent dataset to train a neural network to identify the sequence of templates that would most likely produce a given organic compound during retrosynthesis. Segler and Waller [52] used 8.2 million binary reactions (involving 14.4 million molecules) acquired from the Reaxys web-based chemistry database (https://www.reaxys.com) to build a knowledge graph: a bipartite directed graph G = (M, R, E) made of two sets of nodes, where M is the set of molecules and R the set of reactions, plus a set E of labelled edges, each representing a role t ∈ {reactant, reagent, catalyst, solvent, product}. A schema of the approach is depicted in Fig. 5. A link prediction ML algorithm was employed, which predicts new edges from the characteristics of existing paths within the graph formed by edges of type reactant. In the example depicted in Fig. 5b, the reactant path between molecules 1 and 4 indicates a missing reaction node between them, i.e., node D in Fig. 5c. The experiments confirmed high accuracy in predicting the products of binary reactions and in detecting reactions that are unlikely to occur.

Graph representation of reactions. a Four molecules, 1, 2, 3, and 4, and three reactions, A, B, and C. b The graph representation in which the circles represent the molecule nodes, and the diamonds represent the reaction nodes; edges describe the role, reactant, reagent, catalyst, solvent, or product of each molecule in a given reaction. Notice the path, depicted in orange color, made of reactant edges between molecules 1 and 4. c The missing reaction node D that was indicated by the reactant path found between 1 and 4. Reproduced with permission from the work of Segler and Waller [52]
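The reactant-path idea of Fig. 5 can be illustrated on a toy graph. The sketch below is not the ML model of Segler and Waller [52]; it uses a simple common-neighbours heuristic, an assumption made for illustration: molecules are linked when they are co-reactants in a known reaction, and unlinked pairs joined by a reactant path through a shared partner are proposed as candidate missing reactions. The reactions and labels are hypothetical.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical toy graph loosely modelled on Fig. 5: each reaction node
# maps the molecules attached to it to the role of the connecting edge.
reactions = {
    "A": {"1": "reactant", "2": "reactant", "3": "product"},
    "B": {"2": "reactant", "4": "reactant", "3": "product"},
    "C": {"3": "reactant", "4": "catalyst", "1": "product"},
}

def coreactant_graph(reactions):
    """Project the bipartite graph onto molecules using reactant edges only."""
    neighbours = defaultdict(set)
    for roles in reactions.values():
        reactants = [m for m, role in roles.items() if role == "reactant"]
        for a, b in combinations(reactants, 2):
            neighbours[a].add(b)
            neighbours[b].add(a)
    return neighbours

def candidate_reactions(reactions):
    """Rank unlinked molecule pairs joined by a reactant path via a shared partner.

    Score = number of common co-reactant partners (common-neighbours heuristic).
    """
    nbrs = coreactant_graph(reactions)
    scores = {}
    for a, b in combinations(sorted(nbrs), 2):
        if b in nbrs[a]:
            continue  # already co-reactants in a known reaction
        common = nbrs[a] & nbrs[b]
        if common:
            scores[(a, b)] = len(common)
    return sorted(scores.items(), key=lambda kv: -kv[1])

print(candidate_reactions(reactions))  # [(('1', '4'), 1)]
```

A learned link predictor would replace the raw count of shared partners with a score computed from richer path and node features, but candidate generation follows the same reactant-path logic.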

Owing to the limitations of ad hoc procedures relying on templates, Szymkuc et al. [28] advocated that, for chemical syntheses, chemical rules from stereo- and regiochemistry may be coded together with elements of quantum mechanics to allow ML methods to explore pathways of known reactions from a large database. This tends to increase the data space to be searched. In recent literature, not restricted to the chemical field, DL appears to be the most promising approach for successfully exploring large search spaces [53, 54] and for achieving autonomous molecular design [55]. Schwaller et al. [56] used DL methods to predict outcomes of chemical reactions and found the approach suitable for assimilating latent patterns and generalizing from a pool of examples, even though no explicit rules were produced. Assuming that organic chemistry reactions share properties with those studied in linguistic theories [57], they employed state-of-the-art neural networks to translate reactants into products, much as text is translated from one language into another. In their work, a DNN was trained on Lowe’s dataset of US patents, which contains patents filed between 1976 and 2016 [58], including 1,808,938 reactions described using the SMILES [59] chemical language, a notation system that encodes molecular graphs as strings amenable to computational processing. Jin’s dataset [48], a cleaned version of Lowe’s dataset with 479,035 reactions obtained after removing duplicates and erroneous reactions, was also used. They achieved an accuracy of 65.4 % for single-product reactions over the entire Lowe’s dataset and of 80.3 % over Jin’s dataset. Gómez-Bombarelli et al. [60] also combined the SMILES notation and a DNN to map discrete molecules into a continuous multidimensional space in which molecules are represented as vectors.
In such a continuous space, one can predict the properties associated with existing vectors and search for new vectors with desired properties. Gradients indicate the directions in which the predicted properties vary in the desired way, and optimization techniques can be employed to search for the best candidate molecules. Once new vectors are found, a second neural network converts the continuous vector representation back into a SMILES string that reveals a potential lead compound. DNNs were also employed to predict reactions with 97 % accuracy on a validation set of 1 million reactions, clearly outperforming previous rule-based expert systems [61].
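Treating reaction prediction as translation first requires turning SMILES strings into token sequences. The sketch below shows only this preprocessing step, using a regular expression of the kind commonly used for atom-level SMILES tokenization; the exact pattern and the example reaction are assumptions for illustration, not the pipeline of [56].

```python
import re

# Atom-level SMILES tokenizer: bracket atoms, two-letter halogens, aromatic
# atoms, bonds, branches, ring-closure digits (including %nn), and the '>'
# separator used in reaction SMILES.
SMILES_TOKEN = re.compile(
    r"(\[[^\]]+]|Br?|Cl?|N|O|S|P|F|I|b|c|n|o|s|p|\(|\)|\.|=|#|-|\+|\\|/|:|~|@|\?|>|\*|\$|%[0-9]{2}|[0-9])"
)

def tokenize(smiles):
    tokens = SMILES_TOKEN.findall(smiles)
    # Guard against characters the pattern does not cover.
    if "".join(tokens) != smiles:
        raise ValueError(f"untokenizable characters in {smiles!r}")
    return tokens

# A reaction written as "reactants>>product" (acetic acid + ethanol -> ethyl
# acetate; only the major product is recorded, as in many patent datasets).
src, tgt = "CC(=O)O.OCC>>CC(=O)OCC".split(">>")
print(tokenize(src))  # ['C', 'C', '(', '=', 'O', ')', 'O', '.', 'O', 'C', 'C']
print(tokenize(tgt))  # ['C', 'C', '(', '=', 'O', ')', 'O', 'C', 'C']
```

The round-trip check ensures that no character is silently dropped, which would otherwise corrupt the source or target sequence fed to the translation network.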

With respect to inorganic chemistry, Ward et al. [62] surveyed models to predict the melting points of binary inorganic compounds, the formation enthalpy of crystalline compounds, the crystal structures likely to form at certain compositions, the band-gap energies of specific classes of crystals, and the mechanical properties of metal alloys. Nevertheless, according to Ward et al., there are no widely used general-purpose ML models for band-gap energy or glass-forming ability, even though large-scale databases with the corresponding properties have been available for years.

3.4 Quantum chemistry

The high computational cost of quantum chemistry has been a limiting factor in exploring the virtual space of all possible molecules from a quantum perspective. For this reason, researchers are increasingly resorting to ML approaches [63, 64]. Indeed, ML has been used to replace or supplement quantum mechanical calculations, for example, to predict the input parameters for semiempirical QM calculations [65], to model electronic quantum transport [66], or to establish a correlation between molecular entropy and electron correlation [63]. ML can also be employed to overcome or mitigate the limitations of ab initio methods [67] such as DFT, which are useful for describing chemical reactions, quantum interactions between atoms, and molecular and material properties but are not suitable for large or complex systems [68].

The coupling of ANNs and ab initio methods is exemplified by the PROPhet project [68] (PROPerty Prophet), which establishes nonlinear mappings between virtually any set of system properties (including scalar, vector, and/or grid-based quantities) and any other property. Among its functionalities, PROPhet can learn analytical potentials, nonlinear density functionals, and other structure-property or property-property relationships, reducing the computational cost of determining material properties and assisting in the design and optimization of materials [68]. Quantum chemistry-oriented ML approaches have also been used to predict the sites of metabolism for cytochrome P450 with a descriptor scheme in which a potential reaction site was identified by the steric and electronic environment of an atom and its location in the molecular structure [69].

ML algorithms can also stand in for costly DFT calculations of electronic properties, as described by Pereira et al. [70], who estimated HOMO and LUMO orbital energies using molecular descriptors based only on connectivity. Another aim was to develop new molecular descriptors, for which a database containing more than 111,000 structures was employed in connection with various ML models. With random forest models, the mean absolute error (MAE) was below 0.15 and 0.16 eV for the HOMO and LUMO orbitals, respectively [70]. The quality of the estimations improved considerably when the orbital energy calculated by the semiempirical PM7 method was included as an additional descriptor.

The prediction of crystal structures is among the most important applications of high-throughput experiments [71], which rely on ab initio calculations. DFT has been combined with ML to obtain interatomic potentials for searching for and predicting carbon allotropes [72]. In this method, the input structural information comes from liquid and amorphous carbon only, with no prior information on crystalline phases. The method can be combined with any structure prediction algorithm, and the ANN-based calculations were orders of magnitude faster than DFT [72].

With a high-throughput strategy, time-dependent DFT was employed to predict the electronic spectra of 20,000 small organic molecules, but the quality of these predictions was poor [73]. Significant improvement was attained with a specific ML method named Ansatz, which was employed to determine low-lying singlet-singlet vertical electronic spectra, with excitation energies reproduced within ± 0.1 eV for a training set of 10,000 molecules. Notably, the prediction error decreased monotonically with the size of the training set [73]; this experiment opened the prospect of addressing the considerably more difficult problem of determining transition intensities. As a proof-of-principle exercise, accurate potential energy surfaces and vibrational levels for methyl chloride were obtained with ab initio energies computed for only some of the nuclear configurations in a predefined grid [74]. ML using a self-correcting approach based on kernel ridge regression was employed to obtain the remaining energies, reducing the computational cost of the rovibrational spectra calculation by up to 90 %, since tens of thousands of nuclear configurations could be determined within seconds [74]. ANNs were trained to determine spin-state ordering and spin-state-dependent bond lengths in transition metal complexes, starting from descriptors obtained with empirical inputs for the relevant parameters [75]. Spin-state splittings of single-site transition metal complexes could be obtained within 3 kcal mol⁻¹, an accuracy comparable to that of DFT calculations. In addition to predicting structures validated with ab initio calculations, the approach is promising for screening transition metal complexes with properties tailored for specific applications.
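The idea of computing only part of a grid ab initio and filling in the rest by kernel ridge regression can be sketched in one dimension. This is a minimal illustration, not the self-correcting scheme of [74]: a hypothetical Morse potential stands in for ab initio energies, and the Gaussian kernel width `sigma` and regularization `lam` are arbitrary choices.

```python
import numpy as np

def morse(r, d=1.0, a=1.0, r_e=1.2):
    """Hypothetical 1D Morse potential standing in for ab initio energies."""
    return d * (1.0 - np.exp(-a * (r - r_e))) ** 2

def gaussian_kernel(x, y, sigma):
    """K[i, j] = exp(-(x_i - y_j)^2 / (2 sigma^2))."""
    return np.exp(-((x[:, None] - y[None, :]) ** 2) / (2.0 * sigma ** 2))

def krr_fit(x, y, sigma=0.3, lam=1e-6):
    """Kernel ridge regression: solve (K + lam*I) alpha = y - mean(y)."""
    offset = y.mean()
    k = gaussian_kernel(x, x, sigma)
    alpha = np.linalg.solve(k + lam * np.eye(len(x)), y - offset)
    return alpha, offset

def krr_predict(x_new, x_train, alpha, offset, sigma=0.3):
    return gaussian_kernel(x_new, x_train, sigma) @ alpha + offset

# "Expensive" energies are computed only on a coarse grid ...
r_train = np.linspace(0.8, 3.0, 21)
alpha, offset = krr_fit(r_train, morse(r_train))
# ... and the configurations in between are predicted by the model.
r_new = 0.5 * (r_train[:-1] + r_train[1:])
errors = np.abs(krr_predict(r_new, r_train, alpha, offset) - morse(r_new))
print(f"max |error| on unseen points: {errors.max():.1e}")
```

Once the model is fitted, predicting an energy costs a single kernel-vector product, which is why the remaining configurations can be obtained almost instantly.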

The performance of ML methods in such applications has been assessed via contests, as is common in computer science. An example is the Critical Assessment of Small Molecule Identification (CASMI) Contest (www.casmi-contest.org) [76], in which ML and chemistry-based approaches were found to be complementary. Fragmentation methods for identifying small molecules have improved considerably and should improve further in the coming years with the integration of additional high-quality experimental training data [76].

According to Goh et al. [35], DNNs have so far been used in quantum chemistry to a more limited extent than in computational structural biology and computer-aided drug design, possibly because the large amounts of training data they require may not yet be available. Nevertheless, Goh et al. state that such methods will eventually be applied extensively in quantum chemistry, owing to their observed superiority over traditional ML approaches, an opinion we entirely support. For example, DNNs applied to massive amounts of data could be combined with QM approaches to yield accurate QM results for a considerably larger number of compounds than is feasible today [63].

Although the use of DNNs in quantum chemistry may still be at an embryonic stage, significant contributions can already be identified. Tests have been made mainly with the calculation of atomization energies and other properties of organic molecules [35], using a subset of 7,000 compounds from a library of 10⁹ compounds, where the energies in the training set were obtained with the PBE0 hybrid functional [77], which combines Perdew–Burke–Ernzerhof (PBE) exchange and correlation with a fraction of Hartree–Fock exchange. DNN models outperformed other ML approaches, as a DNN could successfully predict static polarizabilities, ionization potentials, and electron affinities in addition to the atomization energies of the organic molecules [78]. Notably, the accuracy was similar to the error of the theory employed in the QM calculations used to obtain the training set. Applying DNNs to the dataset of the Harvard Clean Energy Project to discover organic photovoltaic materials, Aspuru-Guzik et al. [79] predicted HOMO and LUMO energies and power conversion efficiencies for 200,000 compounds, with errors below 0.15 eV for the HOMO and LUMO energies. DL methods have also been exploited to predict ground- and excited-state properties of thousands of organic molecules, where the accuracy for small molecules can even exceed that of QM ab initio methods [78]. Recent advances in the use of machine learning and computational chemistry methods to study organic photovoltaics are discussed in other works [80, 81, 82].

3.5 Computer‐aided drug design

Drug design has relied heavily on computational methods in a number of ways, from ab initio calculations of quantum chemistry properties, as previously discussed, to high-throughput screening of families of potential drug candidates. Huge amounts of data have been gathered over the last few decades with a range of experimental techniques, and these data may contain additional information on material properties. This is the case for mass spectrometry datasets, which may hold valuable hidden information on antibiotics and other drugs. In the October 2016 issue of Nature Chemical Biology [83], the use of big data concepts was highlighted in the discovery of bioactive peptidic natural products via a method referred to as DEREPLICATOR. This tool uses statistical analysis via Markov chain Monte Carlo to evaluate the match between spectra in the database of the Global Natural Products Social infrastructure (containing over one hundred million mass spectra) and those of known antibiotics. Crucial for the design of new drugs are their absorption, distribution, metabolism, excretion, and toxicity (ADMET) properties, on which the pharmacokinetic profile depends [84]. Experimentally determining ADMET properties is not feasible when a very large number of drug candidates must be screened; therefore, computational approaches are the only viable option. For example, predictions generated from various QSAR models can be compared with experimentally measured ADMET properties from databases [85,86,87,88,89,90,91]. These models are limited in that they may not be suitable for exploring novel drugs, which motivates increasing interest in ML methods: these can be trained to generate predictive models that discover implicit patterns in new data and thus yield more accurate models [84].
Indeed, ML predictive models have been used to identify potential anti-SARS-CoV-2 drugs, particularly those targeting viral proteins [92], and to evaluate drug toxicity [93].

Pires and Blundell developed the approach named pkCSM (http://structure.bioc.cam.ac.uk/pkcsm), in which the ADMET properties of new drugs are predicted with graph-based structural signatures [84]. In pkCSM, the graphs are constructed by representing atoms as nodes and covalent bonds as edges. The labels used to decorate the nodes and edges with physicochemical properties are also essential, similar to the approach used in embedded networks [5]. A structural signature is a vector that represents the distance patterns extracted from these graphs [84]. The workflow for pkCSM is depicted in Fig. 6; it involves two sets of descriptors for input molecules: general molecular properties and the distance-based graph signature. The molecular properties include lipophilicity, molecular weight, surface area, toxicophore fingerprint, and the number of rotatable bonds.

The workflow of pkCSM is represented by the two main sources of information, namely, the calculated molecular properties and shortest paths, for an input molecule. With these pieces of information, the ML system is trained to predict ADMET properties. Reproduced with permission from the work of Pires et al. [84]
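A simplified distance-based graph signature can be sketched as follows. The atom labels, the cumulative counting scheme, and the distance cutoff are illustrative assumptions; pkCSM's actual pharmacophore labels and signature construction [84] are not reproduced here. Atoms are nodes, covalent bonds are edges, and for each pair of atom labels the signature counts how many atom pairs lie within each topological distance.

```python
from collections import Counter, deque

def topological_distances(n_atoms, bonds, source):
    """Breadth-first search: number of bonds on the shortest path from source."""
    adj = {i: [] for i in range(n_atoms)}
    for a, b in bonds:
        adj[a].append(b)
        adj[b].append(a)
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist

def distance_signature(atom_labels, bonds, max_dist=6):
    """Cumulative counts of labelled atom pairs within each distance cutoff."""
    n = len(atom_labels)
    counts = Counter()
    for i in range(n):
        dist = topological_distances(n, bonds, i)
        for j in range(i + 1, n):
            if j not in dist:
                continue  # disconnected fragments contribute nothing
            pair = tuple(sorted((atom_labels[i], atom_labels[j])))
            # A pair at distance d is counted for every cutoff >= d.
            for cutoff in range(dist[j], max_dist + 1):
                counts[(pair, cutoff)] += 1
    return dict(sorted(counts.items()))

# Hypothetical example: heavy atoms of ethanol (C-C-O) with illustrative labels.
labels = ["hydrophobic", "hydrophobic", "polar"]
bonds = [(0, 1), (1, 2)]
print(distance_signature(labels, bonds, max_dist=3))
```

Concatenating such counts with the general molecular properties yields a fixed-length input that a supervised learner can map to ADMET endpoints.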

QSAR [94,95,96,97, 98], which predicts the biological activity of a molecule in computer-aided drug design, is ubiquitous in some of these applications. Its inputs are typically the physicochemical properties of the molecule. The use of DL for QSAR is relatively recent, as typified by the Merck challenge [99], in which activities for 15 drug targets were predicted and evaluated against a test set. In later work, this QSAR experiment was repeated with a dataset curated from PubChem containing over 100,000 data points, with 3,764 molecular descriptors per molecule used as DNN input features [100]. DNN models applied to the Tox21 challenge provided the highest performance [101], with 99 % of neurons in the first hidden layer displaying significant association with toxicophore features. Therefore, DNNs may be used to discover chemical knowledge by inspecting their hidden layers [101]. Virtual screening is also relevant to complement docking methods for drug design, as exemplified by DNNs that predict the activity of molecules in protein binding pockets [102]. In another example, Xu et al. [103] employed a dataset with 475 drug descriptions to train a DNN to predict whether a given drug may induce liver injury. They applied their trained predictor to a second dataset with 198 drugs, of which 172 (about 87 %) were correctly classified with respect to their liver toxicity. This type of predictor affords significant time and cost savings by rendering many experiments unnecessary. This QSAR practice is potentially useful for the nonbiological quantitative structure-property relationship (QSPR) [34], in which the goal is to predict physical properties from simpler physicochemical properties. Despite the existence of interesting works on the topic [75, 79], there is room for further research.

A highly relevant issue that can strongly benefit from novel procedures standing on big data and classical ML methods is drug discovery for neglected diseases. Cheminformatics tools have been assembled into a web-based platform in the project More Medicines for Tuberculosis (MM4TB), funded by the European Union [104]. The project relies on classical ML methods (Bayesian modelling, SVMs, random forest, and bootstrapping), collaboratively working on data acquired from the screening of natural products and synthetic compounds against the microorganism Mycobacterium tuberculosis.

Self-organizing maps (SOMs) are a particular type of ANN that have proven useful in rational drug discovery, where they assist in predicting the activity of bioactive molecules and their binding to macromolecular targets [105]. Antimicrobial peptide (AmP) activity was predicted using an adaptive neural network model with the amino acid sequence as input data [106]. The algorithm iterated to optimize the network structure, in which the number of neurons in a layer and their connectivity were free variables. High charge density and low aggregation in solution were found to yield higher antimicrobial activity. In another example of antimicrobial activity prediction, ML was employed to determine the activity of 78 antimicrobial peptide sequences generated through a linguistic model, which treats the amino acid sequences of natural AmPs as a formal language described by a regular grammar [107]. The system was not efficient in predicting the activity of the 38 shuffled sequences, a failure attributed to its low specificity. The authors [107] concluded that complementary methods with high specificity are required to improve prediction performance. An overview of the use of ANNs for drug discovery, including DL methods, is given by Gawehn et al. [108].
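To make the SOM idea concrete, the following is a minimal one-dimensional self-organizing map in plain Python. The two-dimensional descriptor vectors for hypothetical active and inactive molecules, the four-node map, its initialization along the diagonal, and the learning-rate and neighbourhood schedules are all illustrative assumptions rather than settings from [105].

```python
import math

def best_matching_unit(x, nodes):
    """Index of the node whose prototype is closest (Euclidean) to x."""
    return min(range(len(nodes)), key=lambda k: math.dist(x, nodes[k]))

def train_som(data, init_nodes, epochs=30, lr0=0.5, sigma0=1.0):
    """Train a 1D SOM: nodes lie on a line, and map neighbours are updated together."""
    nodes = [list(w) for w in init_nodes]
    for epoch in range(epochs):
        frac = epoch / epochs
        lr = lr0 * (1.0 - frac)              # learning rate decays linearly
        sigma = sigma0 * (1.0 - frac) + 0.1  # neighbourhood radius shrinks
        for x in data:
            bmu = best_matching_unit(x, nodes)
            for k, w in enumerate(nodes):
                # Gaussian neighbourhood measured on the map index, not in descriptor space.
                h = math.exp(-((k - bmu) ** 2) / (2.0 * sigma ** 2))
                for d in range(len(w)):
                    w[d] += lr * h * (x[d] - w[d])
    return nodes

# Hypothetical 2D descriptors for active and inactive molecules.
actives = [(0.9, 0.8), (0.85, 0.9), (0.95, 0.85)]
inactives = [(0.1, 0.2), (0.15, 0.1), (0.05, 0.15)]
data = [p for pair in zip(actives, inactives) for p in pair]  # interleaved
som = train_som(data, [(0.0, 0.0), (0.33, 0.33), (0.67, 0.67), (1.0, 1.0)])
print([best_matching_unit(x, som) for x in actives + inactives])
```

After training, each node acts as a prototype: a new molecule is assigned to its best-matching node, and the known activity of the molecules already mapped to that node serves as a prediction.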

To a lesser extent than for drug design, ML is also being employed to model drug-vehicle relationships, which are essential to minimize toxicity [109]. The authors of [109] applied ML to data from the National Institutes of Health’s (NIH) Developmental Therapeutics Program to build classification models and predict toxicity profiles for a drug. Specifically, they employed a random forest classifier to determine which drug carriers led to the least toxicity, with a prediction accuracy of 80 %. Since this method is generic and may be applied to wider contexts, we see great potential for its use in the near future, owing to the increasing possibilities introduced by nanotech-based strategies for drug delivery. To realize this potential, important knowledge gaps related to nanomaterials, immune responses, and immunotherapy will need to be filled [110].

As in many other application areas beyond materials science and pharmaceutical research, distinct ML methods have been compared by their performance on a common problem. DL now appears to perform better than other ML methods [111], especially where large datasets have been compiled over the years. Ekins [111] listed a number of applications of DNNs in the pharmaceutical field, including prediction of the aqueous solubility of drugs, drug-induced liver injury, ADME and target activity, and cancer diagnosis. In more recent work, Korotcov and collaborators [112] showed that DL yielded better predictions than SVMs and other ML approaches for drug discovery and ADME/Tox datasets. Results were reported for Chagas disease, tuberculosis, malaria, and bubonic plague.

DL has also succeeded in the problem of protein contact prediction [113]. In 2012, Lena et al. [114] surpassed the 30 % accuracy mark that had previously been unattainable for this problem. They used a recursive neural network trained on a 2,356-element dataset from the ASTRAL database [115], a big data compendium of protein sequences and relationships. Testing their network on 364 protein folds, they achieved an unprecedented accuracy of 36 %, which brought new hope to this complex field.

4 Sensor‐based data production for computational intelligence

The term Internet of Things (IoT) was coined at the end of the 20th century to mean that any type of device could be connected to the Internet, thus enabling tasks and services to be executed remotely [116]. In other words, the functioning of a device, appliance, etc. could be monitored and/or controlled via the Internet. If (almost) any object can be connected, three immediate consequences can be identified: (i) sensing must be ubiquitous; (ii) huge volumes of data will be generated; (iii) systems will be required to process the data and make use of the network of connected “things” for specific purposes. There is a virtually endless list of possible services, ranging across traffic control, health monitoring, surveillance, precision agriculture, control of manufacturing processes, and weather monitoring. In an example of sensors and sensing networks for monitoring health and the environment with wearable electronics, Wang et al. [117] emphasized the need to develop new materials for meeting the stringent requirements to develop IoT-related applications.

A comprehensive review on chemical sensing (or IoT) is certainly beyond the scope of this paper, and we shall, therefore, restrict ourselves to providing some illustrative examples on how chemical sensors are producing big data to make the point that sensors and biosensors are key to providing the data needed to solve problems by means of ML. Indeed, methods akin to big data and ML have been employed for analysing data from sensors and biosensors through computer-assisted diagnosis for the medical area and other areas where diagnosis relies on sensing devices, such as in fault prediction in industrial settings.

4.1 ML in sensor applications

Materials science is essential for IoT sensing and biosensing, as well as for intelligent systems, for a variety of reasons, including the development of new materials for innovative chemical (and electrochemical) sensing technologies (see, for instance, the review paper by Oliveira Jr et al. [118]). In recent decades, increasingly complex chemical sensing has produced large volumes of data from a wide range of analytical techniques. There has been a tradition in chemistry, probably best represented by contributions in chemometrics, of employing statistics and computational methods to treat not only sensing data but also other types of analytical data. Electronic noses (e-noses) are an illustrative example of the use of ML methods in sensing and biosensing [119, 120]. Ucar et al. [121] built an android nose to recognize the odors of six fruits by means of sensing units made of metal-oxide semiconductors whose outputs were classified using the Kernel Extreme Learning Machines (KELMs) method. In another work [122], the authors introduced a framework for multiple e-noses with cross-domain discriminative subspace learning, a more robust architecture for a wider odor spectrum. Robust e-sensing is also present in the work of Tomazzoli et al. [123], who employed multiple classification techniques, such as partial least squares-discriminant analysis (PLS-DA), k-nearest neighbors (kNN), and decision trees, to distinguish between 73 samples of propolis collected in different seasons based on the UV-Vis spectra of hydroalcoholic extracts. The relevance of this study lies in establishing standards for the properties of propolis, a biomass produced by bees and widely employed as an antioxidant and antibiotic due to its amino acids, vitamins, and bioflavonoids. As with many natural products, propolis displays immense variability, including dependence on the season in which it is collected, so stringent quality control is required for its reliable use in medicine.
Automated classification approaches represent, perhaps, the only possible way to attain low-cost quality control for natural products that are candidate materials for cosmetics and medicines. The quantification of extracellular vesicles and proteins, as biomarkers for various diseases, was achieved with a combination of impedance spectroscopy measurements and machine learning [124].
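Of the classifiers mentioned above, kNN is the simplest to sketch. The example below classifies a UV-Vis-like spectrum by majority vote among its nearest neighbours; the five-wavelength absorbance values and the season labels are invented for illustration and do not come from [123].

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Classify a spectrum by majority vote of its k nearest training spectra."""
    by_distance = sorted(train, key=lambda item: math.dist(item[0], query))
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]

# Hypothetical absorbance values at five wavelengths (not real propolis data).
train = [
    ((0.82, 0.61, 0.40, 0.22, 0.10), "summer"),
    ((0.80, 0.63, 0.42, 0.20, 0.11), "summer"),
    ((0.85, 0.60, 0.38, 0.21, 0.09), "summer"),
    ((0.55, 0.70, 0.58, 0.35, 0.18), "winter"),
    ((0.52, 0.72, 0.60, 0.33, 0.20), "winter"),
    ((0.57, 0.69, 0.55, 0.36, 0.17), "winter"),
]
print(knn_predict(train, (0.81, 0.62, 0.41, 0.21, 0.10)))  # summer
```

With real spectra one would typically standardize the absorbances and choose `k` by cross-validation; the Euclidean distance used here treats every wavelength as equally informative, which is itself an assumption.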

Disease monitoring and control are essential for agriculture, as is the case for orange plantations, particularly when diseases and deficiencies may yield similar visual patterns. Marcassa and coworkers [125] took images obtained from fluorescence spectra and employed SVMs and ANNs to distinguish between samples affected by Huanglongbing (HLB) disease and those with zinc deficiency stress. The ability to process large amounts of data and identify patterns allows one to integrate sensing and classifying tasks into portable devices such as smartphones. Mutlu et al. [126], for instance, used colored-strip images corresponding to distinct pH values to train a least-squares-SVM classifier. The results indicated that the pH values were determined with high accuracy.

The visualization of bio(sensing) data to gain insight, support decisions or, simply, acquire a deeper understanding of the underlying chemical reactions has been exploited extensively by several research groups, as reviewed in the papers by Paulovich et al. [127] and Oliveira et al. [128]. Previous results achieved by applying data visualization techniques to different types of problems point to potentially valuable traits when chemical data are inspected from a graphical perspective. Possible advantages of this approach include:

(i) The whole range of features describing a given dataset of sensing experiments can be used as input to multidimensional projection techniques, without discarding at an early stage information that might be relevant for a later classification. For example, in electrochemical sensors, entire voltammograms may be considered rather than only the oxidation/reduction peaks; in impedance spectroscopy, the whole spectrum can be processed to obtain a visualization instead of the impedance value at a single frequency.

(ii) Other multidimensional visualization techniques, such as parallel coordinates [129], allow identification of the features that contribute most to the distinguishing ability of the bio(sensor).

(iii) Various multidimensional projection techniques are available, including nonlinear ones, some of which have proven efficient for handling biosensing data [127]. An example is the use of impedance-based immunosensors to detect the pancreatic cancer biomarker CA19-9 [130].
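Point (i) can be illustrated with the simplest linear projection, principal component analysis (PCA). In the sketch below, every frequency point of hypothetical impedance-like curves is kept as a feature and the samples are projected onto two dimensions for plotting; the curve model, noise level, and group sizes are assumptions, and a nonlinear projection technique could replace the SVD step.

```python
import numpy as np

def pca_project(spectra, n_components=2):
    """Project whole spectra (one per row) onto their leading principal components."""
    centered = spectra - spectra.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

# Hypothetical impedance-like curves: two sample groups whose relaxation
# frequency differs by a decade; every frequency point is kept as a feature.
rng = np.random.default_rng(0)
freqs = np.logspace(1, 6, 50)

def curve(f_c):
    return 1.0 / (1.0 + freqs / f_c)

n = 10
group_a = curve(1e3) + 0.01 * rng.standard_normal((n, freqs.size))
group_b = curve(1e4) + 0.01 * rng.standard_normal((n, freqs.size))
scores = pca_project(np.vstack([group_a, group_b]))
print(scores.shape)  # (20, 2)
```

Plotting the two columns of `scores` gives the kind of two-dimensional map used to judge visually whether a sensor distinguishes the sample groups.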

The feature selection used by Thapa et al. [130], performed via manual visual inspection combined with the silhouette coefficient (a measure of cluster quality), was shown to enhance the immunosensor performance. More sophisticated approaches can also be employed, as in the work by Moraes et al. [131], in which a genetic algorithm was applied to inspect the real and imaginary parts of the electrical impedance measured by two sensing units. The method distinguished triglycerides from glucose by means of well-characterized visual patterns. Using predictive modelling with decision trees, Aileni [132] introduced a system named VitalMon, designed to identify correlations between parameters from biomedical sensors and health conditions. An important tenet of the design was the fusion of data from different sources (e.g., a wireless network) and of sensed data related to distinct parameters, such as breath, moisture, temperature, and pulse. Along the same lines, data visualization was combined with ML methods [133] for the diagnosis of ovarian cancer using mass spectrometry data.
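The silhouette coefficient has a simple definition: for sample i, s(i) = (b(i) - a(i)) / max(a(i), b(i)), where a(i) is the mean distance to the other members of its own class and b(i) is the smallest mean distance to the members of any other class. The sketch below uses the mean silhouette to rank individual features, such as impedance values at different frequencies. This single-feature ranking is an illustrative simplification, not the procedure of Thapa et al. [130], and the data are hypothetical.

```python
def mean_distance(i, values, labels, cls, dist):
    """Mean distance from sample i to the other samples of class cls."""
    ds = [dist(values[i], values[j]) for j in range(len(values))
          if labels[j] == cls and j != i]
    return sum(ds) / len(ds)


def silhouette(values, labels, dist):
    """Mean silhouette coefficient s(i) = (b - a) / max(a, b) over all samples."""
    scores = []
    for i, own in enumerate(labels):
        if labels.count(own) == 1:
            scores.append(0.0)  # usual convention for a singleton class
            continue
        a = mean_distance(i, values, labels, own, dist)
        b = min(mean_distance(i, values, labels, c, dist)
                for c in set(labels) if c != own)
        scores.append((b - a) / max(a, b) if max(a, b) > 0 else 0.0)
    return sum(scores) / len(scores)


def rank_features(samples, labels):
    """Rank feature indices by the silhouette obtained using that feature alone."""
    dist = lambda x, y: abs(x - y)
    ranking = []
    for f in range(len(samples[0])):
        column = [s[f] for s in samples]
        ranking.append((silhouette(column, labels, dist), f))
    return sorted(ranking, reverse=True)


# Hypothetical |Z| values (kOhm) at three frequencies; 1 = with biomarker, 0 = without
samples = [[10.1, 5.0, 2.2], [9.8, 5.1, 2.0], [10.3, 4.9, 2.4],
           [10.0, 7.9, 2.1], [10.2, 8.2, 2.3], [9.9, 8.0, 2.2]]
labels = [0, 0, 0, 1, 1, 1]
ranking = rank_features(samples, labels)
for score, f in ranking:
    print(f"feature {f}: silhouette = {score:.2f}")  # feature 1 ranks first
```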

Combining data visualization, feature selection and ML is key for electronic tongues (e-tongues) and electronic noses (e-noses), as these devices consist of arrays of sensing units and generate multivariate data [134]. For example, data visualization and feature selection were combined to process data from a microfluidic e-tongue to distinguish between gluten-free and gluten-containing foodstuffs [135]. ML methods can also be used to teach an e-tongue whether a taste is good or not, according to human perception. This was done with the capacitance data of an e-tongue applied to Brazilian coffee samples, as described by Ferreira et al. [136]. In that work, the best-performing technique was an ensemble feature selection process based on the random subspace method (RSM). Its suitability for predicting coffee quality scores from the e-tongue impedance data was supported by the high correlation between the predicted scores and those assigned by a panel of human experts.
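The random subspace method trains each member of an ensemble on a randomly chosen subset of the features and combines their outputs; agreement with a sensory panel can then be quantified with Pearson's correlation coefficient. The sketch below illustrates both ideas for regression of quality scores with a k-nearest-neighbour base learner. It is not the ensemble feature selection procedure of Ferreira et al. [136]: the base learner, the subset size, the number of members and the data are all hypothetical.

```python
import math
import random


def knn_predict(train_X, train_y, x, feats, k=3):
    """k-nearest-neighbour regression using only the feature indices in feats."""
    nearest = sorted((sum((tx[f] - x[f]) ** 2 for f in feats), ty)
                     for tx, ty in zip(train_X, train_y))[:k]
    return sum(ty for _, ty in nearest) / k


def rsm_predict(train_X, train_y, x, n_members=25, subset_size=2, seed=0):
    """Random subspace ensemble: average of members built on random feature subsets."""
    rng = random.Random(seed)  # same seed -> same subsets for every query
    n_feat = len(train_X[0])
    preds = [knn_predict(train_X, train_y, x, rng.sample(range(n_feat), subset_size))
             for _ in range(n_members)]
    return sum(preds) / n_members


def pearson(a, b):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)


# Hypothetical data: 4 sensing-unit features per coffee sample and a panel score
data = random.Random(42)
X = [[data.uniform(0, 5) for _ in range(4)] for _ in range(80)]
panel = [80 + 2.0 * x[0] - 1.5 * x[2] + data.gauss(0, 0.5) for x in X]
predicted = [rsm_predict(X[:60], panel[:60], x) for x in X[60:]]
print(f"r = {pearson(predicted, panel[60:]):.2f}")
```

Training on feature subsets makes the members differ from one another, so averaging them reduces the variance of any single model; the correlation is computed only on samples held out from training.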

4.2 Providing data for big data and ML applications with chem/biosensor networks

As discussed, sensors and biosensors are crucial for providing the information at the core of big data and ML. Large-scale deployments of essentially self-sustaining wireless sensor networks (WSNs) for personal health and environmental monitoring, whose data can be mined to offer a comprehensive overview of a person’s or an ecosystem’s status, were anticipated long ago [137]. In this vision, large numbers of distributed sensors continuously collect data that are aggregated, analysed, and correlated to report real-time changes in the quality of our environment or in an individual’s health. At present, deployments of chemical WSNs are limited in scale, and most of the sensors employed measure physical properties, such as temperature, pressure, conductivity, salinity, illumination, moisture, or movement/vibration, rather than chemical quantities. In environmental monitoring, there are examples of relatively large-scale deployments that encompass forest surveillance (e.g., the GreenOrbs WSN, with approximately 5,000 sensors connected to the same base station [138]), vineyard monitoring [139,140,141,142], volcanic activity monitoring [143], greenhouse monitoring [144, 145], soil moisture monitoring [146], water status monitoring [147], animal migration [148, 149] and marine environment monitoring [150, 151], among others [152]. In the wireless body sensor network (WBSN) arena, with over two-thirds of the world’s population already connected by mobile devices [153], the potential impact of WSNs and the IoT on human performance, health and lifestyle is enormous. While numerous wearable technologies for fitness, physical activity and diet are available, studies indicate that devices that monitor and provide feedback on physical activity may not offer any advantage over standard approaches [154]. This suggests that ML approaches may be required to generate a meaningful and effective improvement in an individual’s lifestyle.

However, physical sensors offer only a limited perspective of the environmental status or an individual’s condition. A much fuller picture requires more specific molecular information, an arena in which WSNs based on chemical and biochemical sensors are essential to bring the IoT to the next level of impact. In contrast to physical transducers such as thermistors, photodetectors, and movement sensors, chemical sensors and biosensors rely on intimate contact with the sample (e.g., blood, sweat or tears in the case of WBSNs, or water or soil in the case of environmental sensors). These classical chemical sensors and biosensors follow a generic measurement scheme in which a prefunctionalized surface presents receptor sites that selectively bind a species of interest in the sample.

Since the early breakthroughs of the 1960s and 1970s, which led to the development of a plethora of electrochemical and optochemical diagnostic devices, the vision of reliable and affordable sensors capable of functioning autonomously over extended periods (years) to provide continuous streams of real-time data has remained unrealized, despite significant investment in research and many thousands of published papers. For example, it has been over 40 years since the concept of an artificial pancreas, combining the glucose electrode with an insulin pump, was proposed [155]. Even now, no chemical sensor or biosensor can function reliably inside the body for longer than a few days. The root problem remains biofouling and other processes that rapidly change the response characteristics of the sensor, leading to drift and loss of sensitivity. Accordingly, in the past decade, scientists have begun to target more accessible media via less invasive means. This is in line with the exponential growth of the wearables market, which increasingly seeks to expand beyond physical parameters and bring reliable chemical sensing to the wrists of over 3 billion wearers by 2025 [156].

In this context, a number of low-cost devices that access molecular information via the analysis of sweat, saliva, interstitial fluid, and ocular fluid have been proposed. These bodily fluids contain relatively high concentrations of electrolytes, such as sodium, potassium and ammonium salts, in addition to biologically relevant small molecules, such as glucose, lactate, and pyruvate. Although the relative concentrations of these compounds in alternative bodily fluids deviate from those in blood, these fluids offer an accessible means to a wide range of clinically relevant data, which can be collated and analysed to build wearer-specific models. In recent years, several groups have made significant progress towards practical platforms for quantifying electrolytes in sweat. Watch-type devices that integrate ion-selective electrodes with a wearable system for harvesting and transporting sweat have shown an impressive ability to track specific electrolytes in real time [157], as illustrated in Fig. 7. Similarly, Javey et al. [158] developed a wearable electrochemical sensor array. The resulting fully integrated system, capable of real-time detection of sodium, potassium, glucose, and lactate, is worn as a band on the forehead or arm and transmits the data to a remote base station.

Fig. 7 SwEatch: watch-sensing platform for sodium analysis in sweat. 1: sweat harvesting device in 3D-printed platform base, 2: fluidic sensing chip, 3: electronic data logger and battery, and 4: 3D-printed upper casing. Reproduced with permission from the work of Glennon et al. [157]
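For ion-selective electrodes such as those in the sweat sensors described above, the ideal response follows the Nernst equation, E = E0 + (2.303RT/zF) log10 a, where a is the ion activity; at 25 °C the theoretical slope is about 59.2 mV per decade for a monovalent ion such as Na+. Because E0 and the slope drift in practice, such sensors must be calibrated. The sketch below shows a two-point calibration and its inversion under the simplifying assumption that activity equals concentration; the electrode potentials are hypothetical and are not taken from [157] or [158].

```python
import math

R = 8.314462618   # gas constant, J/(mol K)
F = 96485.33212   # Faraday constant, C/mol


def nernst_slope(temp_c=25.0, z=1):
    """Theoretical electrode slope, 2.303RT/(zF), in volts per decade of activity."""
    return math.log(10) * R * (temp_c + 273.15) / (z * F)


def two_point_calibration(c1, e1, c2, e2):
    """Return (E0, slope) from potentials e1, e2 (V) measured in standards c1, c2 (mol/L)."""
    slope = (e2 - e1) / (math.log10(c2) - math.log10(c1))
    return e1 - slope * math.log10(c1), slope


def concentration(e, e0, slope):
    """Invert E = E0 + slope * log10(c); activity is approximated by concentration."""
    return 10 ** ((e - e0) / slope)


# Hypothetical sodium electrode potentials in 10 mM and 100 mM standards
e0, slope = two_point_calibration(0.010, 0.150, 0.100, 0.207)
print(f"slope {slope * 1e3:.1f} mV/decade (theoretical {nernst_slope() * 1e3:.1f})")
print(f"sample at 0.180 V: {concentration(0.180, e0, slope) * 1e3:.1f} mM")
# → slope 57.0 mV/decade (theoretical 59.2)
# → sample at 0.180 V: 33.6 mM
```

A sub-Nernstian slope, such as the 57.0 mV per decade obtained here, is one symptom of the drift and sensitivity loss discussed above; repeating the calibration is one way to track it.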

Contact lenses provide another noninvasive means to access a wide range of molecular analytes through information-rich ocular fluid. Nearly 20 years ago, Badugu et al. [159] pioneered a smart contact lens that monitored ocular glucose through fluorescence changes. The initial design was further developed to encompass ions such as calcium, sodium, magnesium, and potassium [160]. Although the capability of such a device is self-evident, its restrictive sensing mode may ultimately hamper its application. Several years of significant inroads into flexible electronics and wireless power transfer were needed before electrochemical sensing could be combined with a conformable contact lens. Park et al. [161] demonstrated such a platform for real-time quantification of glucose in ocular fluid, indicating the potential of combining a reliable, accurate chemical sensing method with integrated power and electronics to access important clinical data noninvasively.

Although considerably more invasive than sweat or ocular fluid sensing, the accurate determination of biomarkers in interstitial fluid has a proven track record. Indeed, the first FDA-approved means for noninvasive glucose monitoring, the GlucoWatch [162], relied on interstitial fluid extracted by reverse iontophoresis, followed by electrochemical detection. This pioneering development from 2001 offered multiple measurements per hour and provided its wearer with an easy-to-use watch-like interface. Although ultimately hampered by skin irritation and calibration issues, it nonetheless marked a milestone in minimally invasive glucose measurement. Continuing with this approach, in 2017 the FDA approved the Abbott FreeStyle Libre, which enables wireless monitoring of blood sugar via analysis of interstitial fluid. On application, the device punctures the skin and places a 0.5 cm fibre wick through the outer skin barrier so that interstitial fluid can be sampled and monitored for glucose in real time for up to two weeks, after which the device is replaced. Data are accessed using a wireless, mobile-phone-like base station.

In addition to delivering acceptable analytical performance in the relevant sample media, there are a number of challenges for on-body sensors related to size, rigidity, power, communication, data acquisition, processing, and security [163], which must be overcome before they can realize their full potential and play a pivotal role in applications of the IoT in healthcare services.

A similar scenario is faced in the environmental arena, in that (bio)chemical sensing is inherently more expensive and complex than monitoring physical parameters such as temperature, light, depth, or movement. This is strikingly illustrated by the Argo Project, which currently has ca. 3,000–4,000 sensorized ‘floats’ distributed globally in the oceans, all of which track location, depth, temperature, and salinity. The floats were originally devised to monitor several core parameters (temperature, pressure, and salinity) and to share the data from this global sensor network via satellite communication links, providing an accurate in situ picture of the ocean status in real or near real time. Temperature data are accurate to ± 0.002 °C and pressure data to ± 2.4 dbar, whereas uncorrected salinity is accurate to ± 0.1 psu (this can be improved by relatively complex and time-consuming postacquisition processing). Interestingly, an increasing number of floats now include ‘biogeochemical’ sensors (308 floats, ca. 10%, as of April 2018) for nitrate (121), chlorophyll (186), oxygen (302) and pH (97); see http://www.argo.ucsd.edu and the maps in Fig. 8. Of these, nitrate is measured by direct UV absorbance, and chlorophyll is measured by absorbance/fluorescence in spectral regions characteristic of algal chlorophyll. These are optical measurements rather than conventional chemical sensor measurements. Moreover, it is likely that most, if not all, of the pH measurements are performed using optically responsive dyes rather than the well-known glass electrode. This indicates that chemical sensors are avoided when long-term, reliable and accurate measurements are required from remote locations and hostile environments. It is also notable that more complex chemical and biological measurements (i.e., those that require analysers incorporating reagents, microfluidics, etc.) are not included in the Argo project.
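Direct UV absorbance measurements of this kind rest on the Beer–Lambert law, A(λ) = ε(λ) l c, where ε is the molar absorptivity, l the optical path length and c the concentration. A minimal sketch, assuming a single absorber plus a wavelength-independent baseline offset, estimates c by a least-squares fit of absorbance over several wavelengths. Real in situ nitrate sensors must also account for other UV absorbers (e.g., bromide in seawater); the absorptivities, path length and absorbances below are hypothetical.

```python
def fit_concentration(absorbance, epsilon, path_cm):
    """Least-squares fit of A(lam) = epsilon(lam) * path * c + baseline.

    epsilon in L/(mol cm), path in cm; returns (c in mol/L, baseline).
    """
    x = [e * path_cm for e in epsilon]
    n = len(x)
    mx, my = sum(x) / n, sum(absorbance) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, absorbance))
    c = sxy / sxx                 # slope of A versus epsilon * path
    return c, my - c * mx         # intercept = baseline offset


# Hypothetical absorptivities at four UV wavelengths and a 1 cm path
epsilon = [7000.0, 5000.0, 3000.0, 1500.0]
true_c, offset = 2.0e-5, 0.01
absorbance = [e * 1.0 * true_c + offset for e in epsilon]
c, baseline = fit_concentration(absorbance, epsilon, 1.0)
print(f"{c * 1e6:.1f} umol/L, baseline {baseline:.3f}")  # → 20.0 umol/L, baseline 0.010
```

Fitting several wavelengths instead of reading a single one lets the baseline be separated from the analyte signal, which is one reason optical sensors of this type can remain stable over long deployments.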
Autonomous analyser platforms for tracking key parameters such as nutrients, dissolved oxygen, pH, heavy metals, and organics typically cost €15K or more per unit to buy, not including service and consumable charges. For example, the Sea-Bird Electronics dissolved oxygen sensors used in the Argo project cost $60K each [164], and Microlab autonomous environmental phosphate analysers cost ca. €20K per unit; i.e., they are far too expensive to serve as basic building blocks of larger-scale deployments for IoT applications.

Fig. 8 Distribution of sensorised floats monitoring the status of a variety of ocean parameters that encompass core parameters (temperature, velocity/pressure, salinity) and biogeochemical sensing (e.g., oxygen, nitrate, chlorophyll, pH). Reproduced with permission from the Argo documentation [165]

Progress towards disruptive improvements is encouraged by competitions organised by environmental agencies such as the Alliance for Coastal Technologies (ACT; http://www.act-us.info/nutrients-challenge/index.php), which launched the ‘Global Nutrient Sensor Challenge’ in 2015. The purpose was to stimulate innovation in the sector: participants had to deliver nutrient analysers capable of 3 months of independent in situ operation at a maximum unit cost of $5,000. The ACT estimated that the market for these devices is currently ca. 30,000 units in the USA and ca. 100,000 units globally (i.e., ca. $500 million per year). This market is set to expand further as new applications related to nutrient recovery grow in importance (e.g., from biodigester units and wastewater treatment plants), driven by the need to meet regulatory targets and by business opportunities linked to the rapidly increasing cost of nutrients.
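The $500 million figure is consistent with multiplying the global unit volume by the Challenge's $5,000 target price; whether the ACT derived it this way is an assumption. The lines below make that arithmetic explicit and, for a hypothetical 1,000-node network, contrast the target price with the €15K-per-unit cost of current analysers quoted earlier (the two currencies are kept separate).

```python
units_global = 100_000      # ACT estimate of the global market, units
target_price_usd = 5_000    # Challenge maximum unit cost, USD
market_usd = units_global * target_price_usd
print(f"Implied market: ${market_usd / 1e6:.0f} million")  # → Implied market: $500 million

# Hypothetical 1,000-node network: Challenge target versus current analyser cost
nodes = 1_000
network_usd = nodes * target_price_usd
network_eur = nodes * 15_000
print(f"At $5,000 per node: ${network_usd / 1e6:.0f} million")    # → At $5,000 per node: $5 million
print(f"At EUR 15K per node: EUR {network_eur / 1e6:.0f} million")  # → At EUR 15K per node: EUR 15 million
```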

4.3 Prospects for scalable applications of chemical sensors and biosensors

From the discussion so far, it is clear that there are substantial markets in personal health, environmental monitoring and other sectors for reliable chemical sensors and biosensors that are fit for purpose and have an affordable use model. Although progress since the early promising breakthroughs [166] has been painfully slow over the past 30–40 years, the beginnings of larger-scale use and a tentative move from single-use or centralised facilities towards real-time continuous measurements at the point of need are now apparent. As this trend develops, the range and volume of data collected will rise exponentially, and new types of services will emerge, most likely borrowing ideas and models from existing applications, such as the myriad products for personal exercise tracking, merged with new tools designed to deal with the more complex behaviour of molecular sensors.