Abstract

Radionuclides, whether naturally occurring or artificially produced, are readily detected through their particle and photon emissions following nuclear decay. Radioanalytical techniques use the radiation as a looking glass into the composition of materials, thus providing valuable information to various scientific disciplines. Absolute quantification of the measurand often relies on accurate knowledge of nuclear decay data and detector calibrations traceable to the SI units. Behind the scenes of the radioanalytical world, there is a small community of radionuclide metrologists who provide the vital tools to convert detection rates into activity values. They perform highly accurate primary standardisations of activity to establish the SI-derived unit becquerel for the most relevant radionuclides, and demonstrate international equivalence of their standards through key comparisons. The trustworthiness of their metrological work crucially depends on painstaking scrutiny of their methods and the elaboration of comprehensive uncertainty budgets. Through meticulous methodology, rigorous data analysis, performance of reference measurements, technological innovation, education and training, and organisation of proficiency tests, they help the user community to achieve confidence in measurements for policy support, science, and trade. The author dedicates the George Hevesy Medal Award 2020 to the current and previous generations of radionuclide metrologists who have devoted their professional lives to this noble endeavour.

Introduction

The discovery of spontaneous radioactive decay of unstable nuclei altered the way atomic structure was perceived. No longer was the atom indivisible and immutable, and a suite of subatomic particles has been identified since then. Fundamental research entered into the abstract world of quantum mechanics to describe non-intuitive phenomena at atomic and subatomic levels. Through interactions with particles, stable atoms could be transformed into manmade radionuclides. However, their radioactive decay remained a random process, governed by invariable nuclide-specific decay rates. Vast amounts of energy could be unleashed from nuclear reactions. The emitted radiation interacting with matter offered possibilities for powerful nuclear measurement techniques, as well as medical applications in imaging, diagnostics, and therapy. The risk of physical harm through radiation exposure of the human body has always been the downside of this phenomenon.

The nuclear dimension offered new possibilities and challenges in analytical chemistry. New radioisotopes were formed and characterised. Some of these nuclides were used as tracers to follow natural processes, such as element uptake in biological entities or oceanographic currents. The radioanalytical techniques and their applications are quite diverse, and touch many fields of research, including biology, hydrology, geology, cosmology, archaeology, atmospheric sciences, health protection, pharmacology, chemistry, physics, and engineering. Radioactivity plays a particular role in establishing a measure of time, as the exponential-decay law predicts the relationship between the amounts of decaying atoms and their progeny as a function of time. Determination of this ratio allows age dating of materials, for example for geochronology or nuclear forensics.
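As an illustration of the age-dating principle, the sketch below inverts the exponential-decay law for the simplest case only: a closed system, a stable daughter, and no daughter atoms present at time zero. Under these assumptions the atom ratio of daughter to parent is D/P = exp(λt) − 1. The function name and its arguments are hypothetical and are not taken from any of the cited works; real chronometers usually involve decay chains and require more elaborate equations.

```python
import math


def age_from_ratio(daughter_to_parent, half_life):
    """Return the age t for which D/P = exp(lambda * t) - 1.

    Assumptions (illustrative only): stable daughter, closed system,
    no daughter atoms at t = 0. The age is returned in the same time
    unit as the half-life.
    """
    decay_constant = math.log(2) / half_life
    # log1p(x) = ln(1 + x), numerically stable for small ratios
    return math.log1p(daughter_to_parent) / decay_constant


# A ratio D/P of 1 corresponds to exactly one half-life.
age = age_from_ratio(1.0, 100.0)
```

Because the half-life enters linearly, its relative uncertainty propagates directly into the calculated age, which is one reason why accurate half-life values matter to the user community.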

Thousands of radionuclides have been investigated for their decay properties, not only as a looking glass to the interior of the atom, but also as a means for their identification and quantification through radioanalytical techniques. Hundreds of nuclides are of particular importance in the context of human health, medical applications, nuclear energy production, environmental monitoring, or astrophysics. Acquiring reliable and precise data on decay properties, such as decay modes, branching ratios, photon and particle emission probabilities and energies, half-lives, as well as cross section data for nuclear production processes is a painstaking task which is still far from completion and perfection. Quite rare is the availability of primary standards for the activity of relevant radionuclides, to which the detection efficiency of a measurement setup could be calibrated.

Behind the vast scene of nuclear science and its many applications stands a small international community of radionuclide metrologists who lay the foundations for a common measurement system for radioactivity. They are at the apex of the metrology pyramid, providing the most accurate measurements of activity by means of primary standardisation techniques. Through key comparisons of their standards, they demonstrate international equivalence and realise the SI-derived unit becquerel (Bq). Through secondary standardisation, the world is provided with calibration sources to establish SI-traceability for all radioactivity measurements. Radionuclide metrology laboratories provide the tools needed to convert a count rate in a nuclear detector into an SI-traceable activity: certified radioactive sources for calibration, accurate half-life values, emission probabilities of various types of radiation (x rays, gamma rays, alpha particles, conversion electrons, Auger electrons), and evaluated decay schemes of the most important radionuclides. They provide sound methodology for the user communities, and are proactive in developments and quality control of personalised nuclear medicine.

This paper is part of the George Hevesy Medal Award 2020 lecture at the 12th International Conference on Methods and Applications of Radioanalytical Chemistry (MARC XII) held on April 3–8, 2022 in Kailua-Kona, Hawaii. It contains personal reflections of the author on highlights in his career and pays tribute to the hundreds of collaborators who helped to shape his contributions in the world of radionuclide metrology, neutron activation analysis, and experimental fission research. The original manuscript, containing more background information, figures and technical drawings of the primary standardisation instruments, is publicly available as a JRC Technical Report [1].

A common measurement system for activity

Primary standardisation of activity

The measurand of an activity measurement is the expectation value at a reference time of the number of radioactive decays per second of a particular radionuclide in a material. The result is expressed in the SI-derived unit becquerel, which is equivalent to 1 aperiodic decay per second. The unit becquerel is realised through reference measurements by means of primary standardisation techniques, which are commonly (but not exclusively) based on absolute counting of radioactive decays per unit time. What is considered a primary method differs from one radionuclide to another, depending on its decay scheme and in particular on the type of radiation that is emitted. In this respect, the standardisation of activity entails a higher work load than other SI units, since it has to be executed for each radionuclide of interest separately, by means of a selection of suitable techniques under specific conditions.

In an ideal world, a primary standardisation method for a particular radionuclide is designed such that (i) its calibration is based on physical principles, not on other radioactivity measurements, (ii) its result is insensitive to uncertainties associated with the nuclear decay scheme, (iii) it is under statistical control, i.e. all significant sources of uncertainties are identified and properly quantified, and (iv) the total uncertainty of the result is small. In the real world, these conditions may not be perfectly achieved. A method is called primary when it has a combination of the above characteristics that is competitive with the best methods available for the specific radionuclide. The transparency and completeness of the uncertainty budget as well as the accuracy of the measurement result are important criteria.

The radionuclide metrology community has published two special issues of Metrologia, one explaining the state-of-the-art of measurement techniques used in standardisation of activity [2], and another elaborating in detail the uncertainty components involved in using them [3]. Primary techniques can be subdivided into high-geometry methods that aim at 100% detection efficiency, defined-solid-angle counting methods which extrapolate the total activity from a measurement of a geometrically well-defined fraction of emissions, and coincidence counting methods which inherently return detection efficiencies from coincidence rates in multiple detectors [4]. All counting methods require excellent compensation for count losses resulting from pulse pileup and system dead time, which is generally achieved by live-time counting and imposing a comparably long dead time of a known type (extending or non-extending) when registering a count [5]. Most of the significant sources of uncertainty are known and quantifiable, yet presenting a complete uncertainty budget remains a challenge that may require extensive modelling [6].
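The two dead-time types mentioned above can be illustrated with the textbook relations for a Poisson process and a single fixed dead time τ: for a non-extending dead time the observed rate is m = n/(1 + nτ), and for an extending dead time m = n·exp(−nτ). The sketch below inverts both relations. It is a minimal illustration under these idealised assumptions, not the live-time procedure of [5], and the function names are hypothetical.

```python
import math


def correct_non_extending(observed_rate, dead_time):
    """Invert m = n / (1 + n*tau) for the true rate n."""
    return observed_rate / (1.0 - observed_rate * dead_time)


def correct_extending(observed_rate, dead_time, tol=1e-12, max_iter=1000):
    """Invert m = n * exp(-n*tau) by fixed-point iteration.

    Starting from n = m, the iteration converges to the lower of the
    two mathematical roots, which is the physical one when n*tau < 1.
    """
    rate = observed_rate
    for _ in range(max_iter):
        new_rate = observed_rate * math.exp(rate * dead_time)
        if abs(new_rate - rate) <= tol * new_rate:
            return new_rate
        rate = new_rate
    raise RuntimeError("dead-time correction did not converge")


# Example: true rate 1000 s^-1 and an imposed dead time of 10 microseconds.
tau = 1e-5
m_non_ext = 1000.0 / (1.0 + 1000.0 * tau)
m_ext = 1000.0 * math.exp(-1000.0 * tau)
n_non_ext = correct_non_extending(m_non_ext, tau)
n_ext = correct_extending(m_ext, tau)
```

Imposing a known dead time that is long compared with the pulse width makes the loss mechanism predictable, which is what allows formulas of this kind, or live-time clocks, to be applied with a small uncertainty.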

Whereas one can standardise the activity of a particular mononuclidic source, e.g. in the form of a radioactive deposit on an ultra-thin polymer foil, the realisation of the becquerel is generally performed for a radionuclide in acidic solution and expressed as an activity per unit mass of the solution, in kBq g−1. Source preparation involves quantitative dispensing of aliquots of the solution onto a substrate, the amounts being determined by weighing of the dispenser bottle on a calibrated microbalance before and after drop deposition [7]. The timing and duration of each measurement need to be accurately recorded by means of a clock synchronised to coordinated universal time (UTC), so that the results from activity measurements can be stated at a fixed reference time. The clock frequency of timing chips in live-time modules is verified by comparison with disseminated reference frequency signals. A standardisation of activity therefore requires SI-traceability to the unit of mass as well as time and frequency.
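The bookkeeping described above can be sketched as follows: the dispensed mass is the difference between two weighings of the dispenser bottle, and the measured activity is decay-corrected to the reference time before dividing by that mass. The sketch is deliberately simplified; it assumes that decay during the measurement itself is negligible and it omits the air-buoyancy correction of the weighing. All names are hypothetical.

```python
import math
from datetime import datetime, timedelta, timezone


def massic_activity_at_reference(activity_bq, mass_before_g, mass_after_g,
                                 t_measure, t_reference, half_life_s):
    """Return the activity per unit mass (Bq/g) at the reference time.

    Simplifications (assumptions of this sketch): no buoyancy
    correction, no correction for decay during the counting interval.
    """
    dispensed_mass_g = mass_before_g - mass_after_g
    elapsed_s = (t_measure - t_reference).total_seconds()
    # A_ref = A_meas * exp(+lambda * (t_meas - t_ref))
    decay_factor = math.exp(math.log(2) * elapsed_s / half_life_s)
    return activity_bq * decay_factor / dispensed_mass_g


# Example: a 500 Bq source measured exactly one half-life after the reference time.
t_ref = datetime(2022, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
t_meas = t_ref + timedelta(seconds=3600)
result = massic_activity_at_reference(500.0, 10.05, 10.00, t_meas, t_ref, 3600.0)
```

Using timezone-aware UTC timestamps avoids ambiguities from local time and daylight saving when results from different laboratories are referred to the same reference time.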

Dissemination of the becquerel

Metrology is key to unlocking the benefits as well as controlling the harm of ionising radiation, in view of the omnipresence and widespread use of radioactivity in numerous applications. The user community needs calibration sources to determine the efficiency of their detectors, so that count rates can be converted into activity values. The calibration sources are traceable to the primary standards through a chain of direct comparisons, each step involving an additional uncertainty propagation. Secondary standards in radioactivity are usually pure solutions carrying a single radionuclide, which are measured relative to a corresponding primary standard in the same geometry in a transfer instrument. Common practice is the use of a stable, re-entrant pressurised gas ionisation chamber enclosing a few grams of solution contained in a sealed glass ampoule [8, 9]. The activity ratio of the secondary to primary standard follows directly from the ratio of their ionisation currents. Calibration sources from a secondary standard can be produced from quantitative aliquots of the solution.
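The ratio measurement in a transfer instrument can be sketched in a few lines. The example assumes that both ampoules have the same geometry and filling, that both currents refer to the same reference time, and that a common background current has to be subtracted; the background term and the function name are assumptions of this sketch and are not specified in the text.

```python
def secondary_activity(primary_activity, current_primary, current_secondary,
                       background_current=0.0):
    """Scale the primary-standard activity by the ratio of net ionisation currents.

    Both currents must be in the same unit and decay-corrected to the
    same reference time (not done here).
    """
    net_primary = current_primary - background_current
    net_secondary = current_secondary - background_current
    return primary_activity * net_secondary / net_primary


# Example: net current ratio of 0.5 relative to a 100 kBq primary standard.
activity_kbq = secondary_activity(100.0, 10.1, 5.1, background_current=0.1)
```

Each such comparison adds the uncertainty of the current ratio to that of the primary standard, which is the uncertainty propagation along the traceability chain referred to above.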

Traceability is a vertical comparison scheme that connects activity measurements with a particular standard [10]. Ionisation chambers are also used as hospital calibrators for short-lived radionuclides to be administered to patients for diagnosis and therapy. Their calibration factors are usually provided by the manufacturer, based on a particular traceability chain. In proficiency tests, the calibration factors for different geometries can be compared to an independent secondary standardisation by a metrology institute [11, 12]. Recently, the first international proficiency test was held for combinations of nuclides which can be used together as theragnostic pairs [12]. Such actions bring quantitative personalised medicine closer to practice.

Mutual recognition of national standards

International collaboration in nuclear metrology is driven by common issues, such as trade, healthcare, environmental stewardship, nuclear security, and science [13, 14]. The common radioactivity measurement system is promoted by the Consultative Committee for Ionizing Radiation (CCRI). It discusses the needs for establishing the SI-derived unit becquerel and advises on organising Key Comparisons (KCs) of radioactivity standards among the National Metrology Institutes (NMIs) [15]. As a result of this horizontal comparison scheme, a common definition of the becquerel is established and users are not confined within state borders to seek particular radioactivity standards. Two approaches are used for comparison exercises. In the first approach, samples of a single radioactive solution are distributed to all participating laboratories and the results of their activity measurements are directly compared. The alternative approach is for NMIs to individually submit a standardised solution for indirect comparison through one of three transfer instruments operated by the International Bureau of Weights and Measures (BIPM): the SIR [16, 17], SIRTI [18], and ESIR [19].

A measure of equivalence

Equivalence of standards does not imply equality, but it ensures statistical consistency in the presence of measurement uncertainty [10]. The laboratories report extensive uncertainty budgets, addressing each potential source of error and associated uncertainty estimate, adhering to proper statistical rules [20] and vocabulary [21]. Since there is no objective arbiter in interlaboratory comparisons at the highest level of metrological accuracy, the Key Comparison Reference Value (KCRV) is a consensus value derived from the massic activity values provided by the participating laboratories. The ‘Degree of Equivalence’ compares the difference between the laboratory value and the KCRV with the expanded uncertainty of this difference [22].
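A degree of equivalence can be sketched as the pair (d, U(d)), with d the difference between the laboratory value and the KCRV and U(d) the expanded uncertainty of that difference. The variance formula used below, u²(d) = (1 − 2w)u² + u²(KCRV) for a laboratory that contributed to the KCRV with normalised weight w, is quoted from memory of the literature on weighted reference values and should be checked against [22]; the coverage factor k = 2 and all names are likewise assumptions of this sketch.

```python
import math


def degree_of_equivalence(value, uncertainty, kcrv, kcrv_uncertainty,
                          weight=0.0, coverage_factor=2.0):
    """Return (d, U(d), consistent) for one laboratory.

    weight is the normalised weight of this laboratory in the KCRV;
    use 0.0 for a result that was excluded from the reference value.
    """
    difference = value - kcrv
    variance = (1.0 - 2.0 * weight) * uncertainty ** 2 + kcrv_uncertainty ** 2
    expanded = coverage_factor * math.sqrt(variance)
    return difference, expanded, abs(difference) <= expanded


d, big_u, ok = degree_of_equivalence(10.5, 0.3, 10.0, 0.1, weight=0.25)
```

The weight term accounts for the correlation between a laboratory result and a reference value that it helped to determine; ignoring it would overstate the uncertainty of the difference for the contributing laboratories.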

For decades, the KCRV was calculated as an arithmetic mean, which acknowledges an equal weight among NMIs, but lacks efficiency at a scientific level by ignoring differences in the stated uncertainties. In May 2013, Section II of the CCRI was the first Consultative Committee that succeeded in adopting a weighting method, based on the ‘Power-Moderated Mean’ (PMM) [22,23,24]. In a trade-off between efficiency and robustness of the mean, the PMM uses an algorithm that applies statistical weighting of data when the stated uncertainties are realistic, yet moderates the weighting when they are not realistic and provides a criterion to identify extreme data eligible for rejection on statistical grounds. The PMM is easy to implement [25] and simulations show that it provides a robust mean and uncertainty, irrespective of the level of consistency of the data set. As a result of using the PMM, better consistency is generated between KCRVs and expected activity values from the response curve of the SIR ionisation chamber convoluted with nuclear decay data. It outperforms other methods, including the most popular method (D–L) used for meta-analysis in clinical trials, as demonstrated in Fig. 1 [22].
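The following sketch shows how a power-moderated mean might be computed. It follows the published description as recalled here: a Mandel–Paule step adds a common variance s² until the reduced chi-square no longer exceeds 1, a characteristic spread S is derived from the larger of the arithmetic-mean and Mandel–Paule variances, and the weights are proportional to [(u² + s²)^(α/2) · S^(2−α)]⁻¹. The default power α = 2 − 3/N, the bisection search, and all function names are assumptions of this sketch; the outlier-rejection criterion is omitted, and the details should be verified against [22] and [25].

```python
import math


def _reduced_chi2(x, u, s2):
    var = [ui ** 2 + s2 for ui in u]
    wsum = sum(1.0 / v for v in var)
    mean = sum(xi / v for xi, v in zip(x, var)) / wsum
    return sum((xi - mean) ** 2 / v for xi, v in zip(x, var)) / (len(x) - 1)


def mandel_paule_s2(x, u):
    """Common extra variance s2 that brings the reduced chi-square down to 1."""
    if _reduced_chi2(x, u, 0.0) <= 1.0:
        return 0.0
    lo, hi = 0.0, max(ui ** 2 for ui in u)  # assumes at least one u > 0
    while _reduced_chi2(x, u, hi) > 1.0:
        hi *= 2.0
    for _ in range(200):  # plain bisection
        mid = 0.5 * (lo + hi)
        if _reduced_chi2(x, u, mid) > 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def power_moderated_mean(x, u, alpha=None):
    """Return (reference value, its standard uncertainty, weights, spread S)."""
    n = len(x)
    if alpha is None:
        alpha = 2.0 - 3.0 / n  # assumed default, see lead-in
    s2 = mandel_paule_s2(x, u)
    var = [ui ** 2 + s2 for ui in u]
    var_mp = 1.0 / sum(1.0 / v for v in var)
    arith = sum(x) / n
    var_arith = sum((xi - arith) ** 2 for xi in x) / (n * (n - 1))
    spread = math.sqrt(n * max(var_arith, var_mp))
    inv = [1.0 / (math.sqrt(v) ** alpha * spread ** (2.0 - alpha)) for v in var]
    var_ref = 1.0 / sum(inv)
    weights = [var_ref * i for i in inv]
    x_ref = sum(w * xi for w, xi in zip(weights, x))
    return x_ref, math.sqrt(var_ref), weights, spread


x_ref, u_ref, weights, spread = power_moderated_mean(
    [10.0, 10.2, 9.8], [0.5, 0.5, 0.5])
```

With α = 0 all weights become equal and the arithmetic mean is recovered, whereas α = 2 reproduces the Mandel–Paule weighting; intermediate powers moderate the influence of the stated uncertainties.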

Fig. 1 Simulation results for measures of efficiency and robustness of the arithmetic (AM), Mandel–Paule (M–P), power-moderated (PMM), inverse-variance weighted (WM), and DerSimonian–Laird (D–L) mean for discrepant data sets as a function of the number of data. (Top) Standard deviation of the mean around the true value used in the simulation. (Bottom) The ratio of the standard deviation of the mean to the calculated uncertainty. The PMM is almost as robust as the AM, yet more efficient. WM, M–P and D–L underestimate the uncertainty

Meta-analysis of equivalence

Since mutual confidence in measurements is acquired through equivalence, metrology can inspire trust only if the measurement techniques are under statistical control. This means that each significant source of uncertainty should be identified and appropriately quantified. Trustworthiness is built on the completeness of the uncertainty budget, and conversely it is affected by understatement of uncertainty due to ignorance or desire to impress the world with bold claims of unmatched accuracy. The maturity of a metrological community can be assessed through meta-analysis of interlaboratory comparisons. A graphic tool, the PomPlot [26, 27], was developed to visualise equivalence in relation to the stated uncertainties. PomPlots can be made of a single intercomparison, a set of comparisons for a single laboratory, or an overview of comparisons within a specific community [26]. Laboratories can evaluate the quality of their uncertainty estimates through an aggregated PomPlot of their proficiency results [26, 27].

Figure 2 shows a combined PomPlot of SIR submissions of activity standards for various radionuclides from member NMIs. The PomPlot displays deviations D of individual results from the reference value on the horizontal axis and uncertainties u on the vertical axis, using the characteristic spread parameter S calculated in the PMM [22] as the yardstick. The diagonals represent the ζ-score when the u values are corrected for the uncertainty of the reference value and mutual correlation [22], otherwise the ζ-score lines diverge at the top [28]. If all data are normally distributed, the majority is found back within the ζ = ± 1 and ζ = ± 2 areas, and practically no outlier is found outside the ζ = ± 3 area.
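The coordinates of a data point in such a plot can be sketched as follows. For simplicity, the ζ-score is computed here from the stated uncertainty alone, without the correction for the uncertainty of the reference value and for mutual correlation mentioned above; this simplification and the function name are assumptions of the sketch.

```python
def pomplot_point(value, uncertainty, reference, spread):
    """Return (D/S, u/S, zeta) for one result.

    D is the deviation from the reference value, S the characteristic
    spread, and zeta = D/u (uncorrected, see lead-in).
    """
    deviation = value - reference
    return deviation / spread, uncertainty / spread, deviation / uncertainty


x_coord, y_coord, zeta = pomplot_point(10.5, 0.25, 10.0, 0.5)
# -> (1.0, 0.5, 2.0): the point lies on the zeta = 2 diagonal
```

Because both axes are scaled by the same S, results from comparisons with very different absolute values and uncertainties can be overlaid in one graph.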

Fig. 2 A combined PomPlot of a non-exhaustive set of key comparison results for activity standardisations of radionuclides, through the SIR (K1) and direct comparisons (K2). Several inconsistent data are due to the difficult standardisation of 125I. Overall, the uncertainties in the last two decades have been somewhat more homogeneous and realistic than in the past (see Fig. 4 in [26] for comparison)

In Fig. 2, there is a small fraction of outliers and data with understated uncertainties which belong at a lower position in the graph. Fortunately, the stated uncertainties turn out to be informative, since they correlate strongly with the deviations from the reference value. However, a more linear proportionality was found with the square root of the uncertainty [6, 26]. In other words, some uncertainties are overstated and others are understated, even at the highest level of metrology. This observation supports the use of a moderated power in the statistical weights of the PMM. PomPlots of proficiency tests of European radioactivity monitoring laboratories reveal a higher percentage of outliers and a subset with grossly understated uncertainties. It is not uncommon to find a high concentration of trustworthy laboratories near a u/S-value of 1, since they have a grasp of the typical uncertainties associated with their methods [27, 29, 30].

Primary standardisation at the JRC

Joint Research Centre

The EURATOM treaty [31] signed in 1957 founded the Joint Nuclear Research Centre (JNRC) of the European Commission (EC) that harbours “a bureau of standards specialising in nuclear measurements for isotope analysis and absolute measurements of radiation and neutron absorption” at the Geel site in Belgium. The Radionuclide Metrology team of the JRC started its pioneering work in 1959 “to ensure that a uniform nuclear terminology and a standard system of measurements are established” (Article 8) [31]. The group developed and applied accurate primary standardisation techniques for radioactivity, measured and evaluated nuclear decay schemes and atomic data. With time, the work was extended to characterisation of nuclear reference materials, the organisation of intercomparisons among European radioactivity monitoring laboratories, and performance of ultra-sensitive gamma-ray spectrometry in an underground facility. Through education and training programs, organisation of workshops and conferences, and scientific publications, nuclear research is promoted and sound methodology is passed on to the metrological community. Particular attention is paid to rigorous uncertainty estimation and data analysis techniques.

Below, a summary of measurement techniques applied at the JRC for primary standardisation work in the last two decades is presented. A more elaborate version with technical graphs is available in the report [1].

Source preparation

Two aspects in the preparation of quantitative sources for activity standardisation are usually of crucial importance: achieving an accurate quantification of the amount of radioactive solution contained in the source and a high transparency of the source for the emitted radiation [7, 32,33,34,35]. The most accurate quantification is done by mass weighing using the “pycnometer method” with a microbalance calibrated with SI-traceable reference weights, either using the direct method or the substitution method [7, 35]. A sufficient amount of solution should be dispensed on a series of sources to reduce the non-negligible random and systematic weighing errors. The radioactive drops are dispensed on an appropriate substrate bespoke to the method used, e.g. on an ultra-thin polymer foil or directly into a scintillator cocktail. Polyvinylchloride-polyvinylacetate copolymer (VYNS) support and cover foils are produced in a controlled manner on a spinning soaped glass plate, and covered with a vacuum-evaporated gold layer if conductivity is required. The source homogeneity is improved by adding seeding and wetting agents [7, 34]. The JRC source drier speeds up the drying process significantly by blowing heated nitrogen jets into the drop to curtail the formation of big crystals [32, 33]. To avoid contamination with dust particles, the source preparation is performed in a laminar flow of filtered air and the sources are kept dry in dust-free desiccators. Qualitative sources for high-resolution spectrometry can be produced by non-quantitative methods, such as electrodeposition [36,37,38], vacuum evaporation [33, 39], and collection of recoil atoms following alpha decay [40, 41].

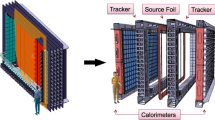

Large pressurised proportional counter

JRC’s Large Pressurised Proportional Counter (LPPC) consists of a cylindrical gas chamber and a central planar cathode dividing it into two D-shaped counters with a 21-μm-thick stainless steel anode wire each [42]. A simplified scheme [42] and a technical drawing [1] have been published elsewhere. The LPPC is operated with P10 counting gas, consisting of 90% argon and 10% methane. The counter gas is continuously refreshed, while kept at a constant pressure between 0.1 and 2 MPa [43]. The LPPC is used as a 4π geometry counter aiming at 100% counting efficiency for the activity of beta and alpha emitters, such as 204Tl [44], 39Ar [37], 238Pu [45], and 241Am [46, 47]. Mono-energetic x rays from 55Fe are used for calibration of the energy threshold. The source deposited on a gold-evaporated VYNS foil is integrated into the cathode.

4π β-γ coincidence counter

The coincidence method [48,49,50,51] offers an alternative to high-efficiency methods for radionuclides which emit at least two distinguishable types of radiation in their decay process, most commonly β or α particles (or low-energy x rays and Auger electrons from electron capture) followed by γ-ray emissions. The detection set-up consists of two detectors which, ideally, are exclusively sensitive to one type of radiation: a small version of the pressurised gas proportional counter (SPPC) as the particle detector and a 15 cm × 15 cm NaI(Tl) well-type scintillation crystal as the photon detector. Either by classical electronics [49] or by digital data acquisition [50], the individual count rates in both detectors as well as their coincidence count rate are recorded (Fig. 3). Ideally, the unknown source activity and detection efficiencies can be derived from a single measurement. In practice, the activity is mostly obtained from a linear extrapolation of a series of measurements performed at varying counting inefficiencies approaching zero.
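The principle can be sketched with the idealised relations N_β = N₀ε_β, N_γ = N₀ε_γ and N_c = N₀ε_βε_γ, from which N₀ = N_βN_γ/N_c and ε_β = N_c/N_γ follow. The second function illustrates the extrapolation: the apparent activity is fitted linearly against the inefficiency parameter (1 − ε_β)/ε_β and the intercept at zero inefficiency is taken as the result. This is a minimal sketch with an unweighted least-squares fit on count rates that are assumed to be already corrected for dead time, background and accidental coincidences; the function names are hypothetical.

```python
def ideal_coincidence(n_beta, n_gamma, n_coinc):
    """Return (activity, beta efficiency, gamma efficiency) in the ideal case."""
    activity = n_beta * n_gamma / n_coinc
    return activity, n_coinc / n_gamma, n_coinc / n_beta


def extrapolate_activity(measurements):
    """Unweighted linear extrapolation to zero beta inefficiency.

    measurements: iterable of (n_beta, n_gamma, n_coinc) count rates
    taken at different beta detection efficiencies.
    """
    xs, ys = [], []
    for n_beta, n_gamma, n_coinc in measurements:
        apparent, eff_beta, _ = ideal_coincidence(n_beta, n_gamma, n_coinc)
        xs.append((1.0 - eff_beta) / eff_beta)
        ys.append(apparent)
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x  # intercept at zero inefficiency


# Synthetic data: N0 = 1000 s^-1, with the particle detector also
# responding to 5% of the gamma rays it would otherwise miss.
data = [(905.0, 200.0, 180.0), (810.0, 200.0, 160.0), (715.0, 200.0, 140.0)]
n0 = extrapolate_activity(data)
```

In the synthetic data the single-measurement estimate N_βN_γ/N_c overestimates the activity by 0.6% to 2%, whereas the extrapolated intercept recovers the true value, which illustrates why the extrapolation is preferred in practice.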

A photo of the set-up [46] and technical drawings of the SPPC and well NaI crystal [1] have been published. The source is placed in the cathode of the SPPC, and the SPPC is then slid inside the 50 mm diameter well of the NaI detector. This ensures a favourable geometry and high detection efficiency for both detectors, thus reducing the time needed for reaching statistical accuracy in the coincidence channel. In the last two decades, coincidence methods have been used to standardise 152Eu [52], 65Zn [53], 54Mn [54], 192Ir [55], 241Am [46], 134,137Cs [56, 57], 125I [58, 59], and 124Sb [60] with accuracies of typically 0.1%–0.5%. For certain radionuclides (152Eu, 192Ir, 241Am), the efficiencies were high enough to use a sum-counting method to obtain the decay rate directly from the sum of the detector count rates minus the coincidence count rate.
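The sum-counting idea can be sketched in the same idealised picture: the rate of decays seen by at least one detector is N_β + N_γ − N_c = N₀[1 − (1 − ε_β)(1 − ε_γ)], which approaches N₀ when both efficiencies are high. The small residual correction in the sketch below uses the ideal efficiency estimates ε_β = N_c/N_γ and ε_γ = N_c/N_β; this correction step and the function name are assumptions of the sketch rather than the published procedure.

```python
def sum_counting(n_beta, n_gamma, n_coinc):
    """Return (sum rate, estimated non-detection probability, corrected rate)."""
    sum_rate = n_beta + n_gamma - n_coinc
    eff_beta = n_coinc / n_gamma
    eff_gamma = n_coinc / n_beta
    missed = (1.0 - eff_beta) * (1.0 - eff_gamma)
    return sum_rate, missed, sum_rate / (1.0 - missed)


# Example: N0 = 1000 s^-1 with efficiencies of 99% and 90%.
sum_rate, missed, corrected = sum_counting(990.0, 900.0, 891.0)
```

In this example only 0.1% of the decays escape both detectors, so that the uncertainty on the correction contributes very little to the final result.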

Formerly, the JRC operated an old coincidence set-up with an atmospheric pill-box gas counter, sandwiched between a bottom and top 7.6 cm × 5.1 cm cylindrical NaI crystal inside a lead castle. The set-up without the gas counter was used as a photon-photon coincidence counter for the standardisation of 125I [58], in which the efficiency was varied by source covering. The same x–x,γ coincidence technique was applied with two moveable integral line NaI detectors at various distances from the source. Both approaches yield the correct result in a single measurement under any condition, without the need for extrapolation [58].

4π γ counter

High-efficiency photon detection systems are ideally suited for 4π γ counting, which is an advantageous standardisation technique for radionuclides emitting cascades of γ-rays after decay [4, 61]. The JRC uses the well-type NaI(Tl) detector in the coincidence set-up, as well as an advanced version with a larger (20.3 cm × 20.3 cm) NaI(Tl) crystal and a narrow (2.5 cm) and deep (13.4 cm) well [1]. As the number of coincident photons per decay increases, the probability of non-detection and its associated uncertainty approach zero. The radionuclide-specific total counting efficiency can be determined by Monte Carlo simulation of the photon-particle transport or by bespoke analytical models [62,63,64,65]. The success of the modelling depends primarily on the accuracy of the input parameters, such as chemical composition and dimensions of the materials, as well as attenuation coefficients, particle ranges, and bremsstrahlung production data.
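Why cascades help can be shown with a simple probability argument: if the photons of a cascade are detected independently with total efficiencies ε_i, the decay is missed only when every photon escapes, so the counting efficiency is 1 − Π(1 − ε_i). The sketch below averages this over decay paths weighted by their probabilities. It ignores angular correlations, internal conversion, x rays and particle contributions, and is in no way a substitute for the Monte Carlo or analytical models of [62,63,64,65]; the names are hypothetical.

```python
def cascade_efficiency(photon_efficiencies):
    """Probability that at least one photon of a cascade is detected."""
    missed = 1.0
    for eff in photon_efficiencies:
        missed *= 1.0 - eff
    return 1.0 - missed


def total_counting_efficiency(paths):
    """Weighted sum over decay paths.

    paths: iterable of (path probability, [total efficiency per photon]).
    """
    return sum(prob * cascade_efficiency(effs) for prob, effs in paths)


single = cascade_efficiency([0.9])        # 0.9 for a single photon
double = cascade_efficiency([0.9, 0.8])   # close to 0.98 for a two-photon cascade
```

A relative error of 10% on each photon efficiency changes the single-photon result by about 9%, but the two-photon result by only about 0.4%, which illustrates the insensitivity of the method to the input data when cascades are long.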

The activity of 152Eu [52], 192Ir [55], and 166mHo [66] could be standardised by 4π γ counting with close to 100% counting efficiency, owing to their complex decay schemes, whereas this was not the case with 124Sb [60]. Reference activity values for PET nuclides (e.g. 18F, 111In) were determined for proficiency tests of hospital calibrators [11, 12]. The large detector and its portable lead shield can in principle be moved to production centres of short-lived PET nuclides for in-situ standardisations. Even though single γ-ray emitters are not ideally suited for the 4π γ counting method, the thermal neutron flux in a research reactor was determined with 1.5% accuracy through a measurement of the 198Au activity in an irradiated gold monitor [67]. The same instrument was used for the photon sum-peak counting method for the standardisation of 125I, in which the detection efficiency is determined from the ratio between the single photon detections and coincidence peaks [58] and correcting properly for random coincidences [59]. Both standardisation methods apply to point sources, but can be expanded to voluminous sources if heterogeneity of the detection efficiency is taken into account.

CsI(Tl) sandwich spectrometer

The windowless 4π CsI(Tl) sandwich spectrometer [68] consists of two cylindrical CsI(Tl) scintillation crystals with a semispherical cavity in their front faces. Pictures [46] and a technical drawing [1] have been published. The radioactive source is pressed between both crystals and emits its radiation quasi perpendicularly towards the inner wall of the spherical cavity formed in the centre of the detector. Radiation-induced scintillations are detected by photomultiplier tubes at the back of the crystals. The set-up is mounted inside a source-interlock chamber, which is continuously flushed with dry hydrogen to protect the hygroscopic CsI(Tl) crystals from contact with humidity in the air.

The detector is sensitive to alpha and beta particles, as well as x rays and gamma rays. Its response is limited at the low-energy side (below about 10 keV) by electronic noise and at the high-energy side (above a few hundred keV) by partial transparency to photons. The instrument is unique among standardisation laboratories and has an unparalleled ability to detect the decay of radionuclides with complex decay schemes at quasi-zero detection inefficiency. This was demonstrated in key comparisons of 152Eu [52], 238Pu [45], 192Ir [55], 124Sb [60], and 166mHo [66]. The wall of the internal cavity can be covered with plastic caps to stop the particles from being detected, thus allowing a switch from the 4π mixed particle-photon counting method to 4π photon counting. The activity of 125I has been standardised with both options, each requiring particular detection efficiency formulas [58, 59].

Counting at a defined solid angle

High-efficiency counting of α emitters in a solid source has the disadvantage that the α particles emitted at the smallest angles to the source plane can get absorbed in the carrier foil without being detected. Therefore, metrological accuracy can be gained by counting only the particles emitted perpendicularly to the source plane within a well-defined small solid angle (DSA) and multiplying the count rate with the geometry correction factor [69]. The JRC has two α-DSA counting set-ups consisting of a source chamber, distance tube, and a circular diaphragm in front of a large PIPS detector. Pictures and a technical drawing have been published [33]. Variations in geometrical efficiency can be realised by replacing diaphragms and distance tubes.
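For the simplest geometry, a point source on the symmetry axis of a circular diaphragm of radius R at distance d, the solid angle is Ω = 2π[1 − d/√(d² + R²)], and the activity follows from the count rate divided by the geometry factor Ω/4π. The sketch below covers this idealised case only; it assumes isotropic emission, a detector that registers every particle passing the diaphragm, and no scattering. Extended or off-axis sources require the algorithms cited in the text. The function names are hypothetical.

```python
import math


def geometry_factor(distance, radius):
    """Omega / (4*pi) for an on-axis point source and a circular diaphragm."""
    cos_half_angle = distance / math.hypot(distance, radius)
    return 0.5 * (1.0 - cos_half_angle)


def activity_from_dsa(count_rate, distance, radius):
    """Activity from the count rate within the defined solid angle."""
    return count_rate / geometry_factor(distance, radius)


# Example: d = 40 mm and R = 30 mm give a geometry factor of 0.1,
# so that 100 counts per second correspond to an activity of 1000 Bq.
g = geometry_factor(40.0, 30.0)
activity = activity_from_dsa(100.0, 40.0, 30.0)
```

Since the geometry factor depends only on the ratio d/R, the relative uncertainties of the distance and radius measurements translate directly into the uncertainty of the activity.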

Accurate measurements of the distance between source and diaphragm as well as the diaphragm radius were performed by optical techniques [33, 70] (Fig. 4), which have recently been automated with a 3D-coordinate measuring machine [71]. The solid angle subtended by the detector can be calculated with exact mathematical algorithms for circular and elliptical configurations [72,73,74,75]; however, the method gains significant robustness by taking into account the source activity distribution obtained with digital autoradiography [70, 76, 77]. An accuracy of 0.1% can be achieved on the activity. The solid angle is quite insensitive to temperature changes, provided that the diaphragm and distance tube have equal thermal expansion coefficients. The technique has been successfully applied to activity standardisation of 238Pu [45], 241Am [46], and 231Pa [28], certification of reference materials with tracer activity [78], and half-life measurements of 233U [79], 243Am [80], 225Ac [41, 81], 230U [82], and 235,238U.

Fig. 4 Dr Maria Marouli optically measuring the source thickness using a vertically travelling microscope with distance probe. The microscope has since been replaced by a 3D-coordinate measuring machine [71]

The DSA counting technique is not suitable for β emitters due to the high probability of scattering processes. However, two different set-ups have been constructed for activity measurements of x-ray emitters. Both have a source chamber, distance tube with diaphragm, and gas proportional counter with front window. In one counter, the window foil is sufficiently strong and thick to allow for vacuum pumping in the source chamber (drawing in [1]). It has been used for an activity standardisation [83] and half-life measurement [84] of 55Fe. The other set-up has an ultra-thin window to minimise photon absorption; however, it requires the use of helium gas in the source chamber to counterbalance the gas pressure in the detector. In the past, it was used to make a set of x-ray fluorescence sources for the calibration of Si(Li) detectors [85, 86].

Liquid scintillation counters

Through the work of Dr. T. Altzitzoglou, the JRC performed liquid scintillation counting (LSC) with the CIEMAT/NIST (C/N) efficiency tracing technique, as well as the triple-to-double-coincidence ratio (TDCR) method [87, 88]. The C/N 4π liquid scintillation measurements were performed using a Packard Tri-Carb model 3100 TR/AB (PerkinElmer, Inc., Waltham, MA, USA) and two Wallac Quantulus 1220 (PerkinElmer, Inc.) liquid scintillation spectrometers (Fig. 5). All instruments were operated with two phototubes in sum–coincidence mode at a temperature of about 12 °C. The TDCR set-up was developed and constructed at the JRC. It consisted of three photomultipliers surrounding the liquid scintillator vial at 120°, the signals of which were used to form double and triple coincidences.

Both methods require rather complex calculation techniques to derive the counting efficiency of the nuclide under study. This is done using the free parameter model, describing the physicochemical processes and statistics involved in photon emission. The free parameter can be deduced from the measurement of a tracer (C/N) or from the coincidence ratio (TDCR). LSC was applied for the standardisation of a suite of reference materials and solutions for key comparisons, measurements of decay data and characterisation of materials for proficiency testing exercises (e.g. [89]). Examples of nuclides measured are 3H (as tritiated water), 90Sr, 124Sb, 125I, 151Sm, 152Eu, 166mHo, 177Lu, 204Tl, 238Pu, and 241Am.
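The role of the free parameter in the TDCR method can be illustrated with a deliberately simplified case: a mono-energetic emitter, three identical phototubes, Poisson photoelectron statistics, and no quenching correction. Under these assumptions (which are far cruder than the full free parameter model used in practice), the mean number of photoelectrons m follows directly from the measured triple-to-double ratio. The function names are hypothetical.

```python
import math

def tdcr_efficiencies(m):
    """Double (logical sum) and triple coincidence efficiencies for a mean of
    m photoelectrons shared equally by three identical phototubes."""
    p = 1.0 - math.exp(-m / 3.0)          # detection probability per phototube
    triple = p ** 3
    double = 3.0 * p ** 2 - 2.0 * p ** 3  # at least two phototubes fire
    return double, triple

def free_parameter_from_tdcr(tdcr):
    """Invert TDCR = p / (3 - 2p) to recover the free parameter m."""
    if not 0.0 < tdcr < 1.0:
        raise ValueError("TDCR must lie strictly between 0 and 1")
    p = 3.0 * tdcr / (1.0 + 2.0 * tdcr)
    return -3.0 * math.log(1.0 - p)

def activity_from_tdcr(double_rate, triple_rate):
    """Activity (Bq) from the measured double and triple coincidence rates."""
    m = free_parameter_from_tdcr(triple_rate / double_rate)
    double_efficiency, _ = tdcr_efficiencies(m)
    return double_rate / double_efficiency
```

For a real β emitter the efficiencies must be integrated over the emission spectrum with a non-linear light yield, which is why numerical codes are needed.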

PomPlot of key comparisons

Figure 6 shows PomPlots [26, 27] of the key comparisons of activity measurements in which the JRC participated over the last two decades. The reference value was calculated from the PMM [22] of the official results from the NMIs, with exclusion of values based on secondary standardisation techniques and extreme values identified on statistical grounds [22]. For most KCs, the data sets are rather consistent and few outliers are identified. Only in the case of 125I was a scientifically motivated selection of unbiased data made instead of a consensus value of the majority of the data. This is due to a bias towards low values caused by volatility of iodine, incomplete modelling of the deexcitation process in LSC techniques, and inadequate corrections for accidental coincidences [59] in the photon sum-peak counting method [58].

PomPlots of CCRI(II) K2 key comparisons of activity. The radionuclides and the year of execution are indicated in the corner of the graphs. The red dots represent the JRC results. In the case of 177Lu [90, 91], the JRC result at u/S = 4.5 and ζ = 0.6 is off the scale due to the large uncertainty estimate. The same applies to extremely discrepant or uncertain data from other NMIs

A signature PomPlot of JRC’s results is presented in Fig. 7, which aggregates all the red dots from Fig. 6. It is immediately apparent that most of the JRC results are near the top of the graph, which means that their stated uncertainties are lower than state-of-the-art in the field. More importantly, the JRC data are very reliable, since 80% of the results score within ζ = ± 1 and all of them are within ζ = ± 2. This exceptional track record is based on redundancy of methods and realistic assessment of measurement uncertainty. The JRC is famous for using a multitude of techniques to underpin the final result, e.g. 7 methods for 125I, 6 for 241Am, 5 for 192Ir and 166mHo, and 4 for 238Pu. These methods are mutually consistent at a high level of accuracy, as demonstrated in Fig. 8 by the aggregate PomPlot of the partial results obtained with the individual techniques.

Aggregate PomPlot of JRC’s results in the KCs depicted in Fig. 6. The distribution of the data shows that the measurements are very accurate and the stated uncertainties are reliable

One of the most challenging aspects of metrology is achieving a complete uncertainty budget with ambitious but realistic claims on accuracy. Practice has shown that redundancy of methods is indispensable to explore the strengths and weaknesses of specific approaches and to gain confidence in the accuracy attributed to the reported activity value.

Secondary standardisation

Secondary standardisations are performed using primary-calibrated re-entrant pressurised ionisation chambers (IC) in combination with a current-measuring device or a charge capacitor with voltage over time readout. Thus, activity reference values are provided for proficiency tests of European monitoring laboratories [89] and nuclear calibrators in hospitals [11, 12]. Whereas the linearity range of nuclear counting devices is hampered by pulse pileup and dead-time effects [5], ICs can boast a wide dynamic range over which the ionisation current varies proportionally with the measured activity [92]. Owing to their favourable stability, reproducibility, and linearity properties, ICs are well suited for the measurement of the half-life of gamma-emitting radionuclides over periods varying from hours to decades.

JRC’s principal ionisation chamber (IC1) is a Centronic IG12 well-type chamber filled with argon gas at a pressure of 2 MPa and surrounded by a lead shield (photo in [1]). The ionisation current is integrated over time by a custom-made air capacitor, which is placed in another lead shield to reduce discharge effects by radiation. The capacitor voltage is sampled by means of an electrometer operating in voltage mode, triggered by a crystal oscillator with an SI-traceable frequency. This configuration shows better linearity and stability than a direct ionisation current readout with a conventional electrometer. The long-term stability can be further perfected by correcting for a minor anti-correlation with ambient humidity, possibly due to humidity-induced self-discharge of the air capacitor. The JRC has a second ionisation chamber (IC2) which is traceable to the National Physical Laboratory (NPL) reference chamber, thus allowing for bilateral equivalence checks.
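The charge-integration readout can be sketched as follows: with the current collected on a capacitor of capacitance C, the voltage ramps linearly and the current is I = C·dV/dt. The minimal example below estimates the slope by an unweighted least-squares line through the sampled voltages. It ignores leakage, background current, and the humidity correction mentioned above; the function name and arguments are hypothetical.

```python
def ionisation_current(times_s, voltages_v, capacitance_f):
    """Ionisation current (A) from a sampled capacitor voltage ramp.

    times_s: sampling times (s), voltages_v: capacitor voltages (V),
    capacitance_f: capacitance of the integrating capacitor (F).
    """
    n = len(times_s)
    if n < 2 or n != len(voltages_v):
        raise ValueError("need at least two matching time/voltage samples")
    t_mean = sum(times_s) / n
    v_mean = sum(voltages_v) / n
    sxx = sum((t - t_mean) ** 2 for t in times_s)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times_s, voltages_v))
    slope = sxy / sxx                  # dV/dt in V/s
    return capacitance_f * slope       # I = C * dV/dt
```

An activity value would then follow from dividing the background-corrected current by a nuclide-specific calibration factor obtained from a primary standard.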

Nuclear decay data

Need for reliable decay data

Quantitative analyses and SI-traceable measurements of radioactivity through particle and photon detection crucially rely on the accuracy of the associated emission probabilities in the decay of the particular radionuclide. Other critical nuclear data are half-lives, branching ratios, emission energies, total decay energies, and cross sections for nuclear reactions. Over the years, the scientific community has measured, compiled and evaluated decay data and combined them with theoretical studies with the aim of deriving consistent nuclear decay schemes with realistic uncertainty budgets [93, 94]. These reference data are highly desired input for modelling codes in numerous applications and for theoretical understanding of the structure of the nucleus.

Intermediate half-life measurements

Through the exponential-decay law, the activity of a radionuclide can be extrapolated to an arbitrary point in the future or the past [95, 96]. The accuracy of these extrapolations in time depends critically on the reliability of the half-life value and its assigned uncertainty. Nuclear half-life determinations through repeated decay rate measurements are undeservedly perceived as easy experiments with straightforward data analysis. In the past, authors rarely discussed the level of attention paid to the stability of the measurement conditions, the integrity of the data analysis, and the completeness of the uncertainty budget. As a result, the literature abounds with discrepant half-life data from poorly documented measurements. In the last decades, insight was gained in the propagation of errors and the essential information required for complete reporting [96,97,98,99,100]. Owing to the increased awareness, NMIs have adopted a more detailed reporting style, addressing all critical issues contributing to the uncertainty budget. Applying enhanced rigor in experimental execution and data analysis, the metrological community is currently achieving better consistency at a higher level of accuracy than in the past [100].
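The dependence of a decay correction on the half-life uncertainty can be made concrete with a short sketch. It applies the exponential-decay law and first-order uncertainty propagation, for which the relative uncertainty contributed by the half-life is (ln 2 · Δt / T1/2) · u(T1/2)/T1/2. The function name is hypothetical and only the half-life contribution is considered.

```python
import math

def decay_correct(activity, elapsed, half_life, u_half_life=0.0):
    """Extrapolate an activity over a time interval.

    elapsed and half_life share the same time unit; a negative elapsed
    value extrapolates into the past. Returns the corrected activity and
    the relative standard uncertainty contributed by the half-life alone.
    """
    lambda_t = math.log(2.0) * elapsed / half_life
    corrected = activity * math.exp(-lambda_t)
    relative_u = abs(lambda_t) * u_half_life / half_life
    return corrected, relative_u
```

The propagation factor grows linearly with the number of elapsed half-lives, so a 1% half-life uncertainty already contributes about 0.7% after one half-life.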

A least-squares fit of exponential functions to a measured radioactive decay rate series provides an estimate of the half-life and its statistical uncertainty on the condition that all deviations from the theoretical curve are normally distributed. The propagation of those random deviations towards the half-life value T1/2 decreases proportionally with the square root of the number of measurements [99]. However, this result may be biased and the error underestimated if the experiment suffers instabilities that exceed the duration of individual measurements [96,97,98, 100]. Medium-frequency perturbations reveal themselves as autocorrelated structures in the residuals from the fit to the decay curve. Typical examples are daily or annual oscillations due to environmental influences, step functions due to instrument scaling switches and recalibrations, jumps in detection efficiency due to occasional source repositioning, and non-random variations in background and impurity activity. An empirical decomposition algorithm has been designed to separate medium-frequency effects from the random statistical component in the fit residuals, such that custom error propagation factors to the half-life can be calculated [100].
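For the idealised case in which only random, normally distributed deviations are present, the fit can be sketched as a weighted straight-line fit to the logarithm of the decay rate. This is an illustrative simplification, not the empirical decomposition algorithm of [100]: it assumes a single nuclide, no background, and uncertainties small enough that the logarithmic transform introduces no appreciable bias. The function name is hypothetical.

```python
import math

def fit_half_life(times, rates, sigmas):
    """Weighted linear least-squares fit of ln(rate) = ln(R0) - lambda * t.

    Returns the half-life and its statistical standard uncertainty,
    in the time unit of `times`.
    """
    sw = swt = swy = swtt = swty = 0.0
    for t, r, s in zip(times, rates, sigmas):
        w = (r / s) ** 2              # weight = 1 / u(ln r)^2, with u(ln r) = s / r
        y = math.log(r)
        sw += w
        swt += w * t
        swy += w * y
        swtt += w * t * t
        swty += w * t * y
    delta = sw * swtt - swt ** 2
    slope = (sw * swty - swt * swy) / delta
    u_slope = math.sqrt(sw / delta)   # valid only for purely random deviations
    decay_constant = -slope
    half_life = math.log(2.0) / decay_constant
    u_half_life = half_life * u_slope / decay_constant
    return half_life, u_half_life
```

The uncertainty returned here covers the random component only. Autocorrelated residuals or systematic effects would have to be propagated separately.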

Systematic errors, however, lead to a biased fit without leaving a visible trace in the residuals. The associated error propagation does not reduce by performing additional decay rate measurements [96,97,98]. Common examples are under- or overcompensation of count loss through pulse pileup and system dead time, systematic errors in spectral analysis and corrections for interfering nuclides and background signals, non-linearities or slow changes in detector response, and source degradation. Taking these aspects properly into account requires a comprehensive understanding of the metrological limitations of the set-up. In gamma-ray spectrometry, the systematic errors can be significantly reduced by co-measuring a long-lived reference nuclide with γ-ray emissions in the same energy region. The strengths and weaknesses of the reference source method were demonstrated for 145Sm and 171Tm half-life measurements performed at the Paul Scherrer Institute (PSI) [101, 102].

The JRC has published several cases of good practice in half-life measurements using various instruments, i.e. re-entrant ionization chambers [92, 103,104,105,106,107], HPGe detectors [81, 82, 105, 106, 110], NaI(Tl) scintillation detectors [81, 82, 105], liquid scintillation counters [82, 107], the CsI(Tl) sandwich spectrometer [81, 82], planar ion-implanted silicon detectors [40, 81, 82, 108, 109, 111], x-ray [84] and alpha-particle defined solid angle counters [81, 82], and a pressurized proportional counter [42, 81, 82]. Over a period of 3 decades, the JRC was the only institute that published two unbiased values for the half-life of 109Cd [98, 106], whereas other data in the literature were discrepant (Fig. 9). This was recently confirmed through new measurement results from the NIST and the NPL [112]. A similar discrepancy between the literature values of the 55Fe half-life was solved with the most stable data set collected over a period of 18 years [42, 98], consistent with a previous measurement at the JRC [84] and a recent result from the PTB [113] (Fig. 10). Extensive research was done on important nuclides for detector calibration (54Mn, 65Zn, 109Cd, 22Na, 134Cs), medically interesting isotopes (177Lu, 171Tm, 99mTc), and in particular alpha-emitters considered for alpha immunotherapy, such as the decay products in the 230U [82, 108, 114] and 225Ac series [40, 41, 81] and 227Th [110] from the 227Ac series.

The level of attention paid to every detail in the uncertainty budget is exemplified in an answer to a critique on a paper regarding the 209Po half-life [111]. The linearity of the nuclear counting methods is well controlled by in-house made live-time modules which impose extending or non-extending dead time on the detection system, and additionally by taking into account secondary cascade effects between pulse pileup and dead time [5]. The linearity of the ionisation chamber is established by integrating the charge over an air capacitor, as demonstrated by achieving the most accurate half-life measurement of the medically important diagnostic nuclide 99mTc over the full working range of the instrument [92]. Its linearity can be further perfected by compensating for a small anti-correlation with ambient humidity [115].

Short and long half-life measurements

Sampling a decay curve is just one method to determine a half-life, applicable only on a ‘human’ time scale. For very short and very long half-lives, say below about a second and above about a century, other techniques are more suitable. Short lifetimes of excited nuclear levels ranging from about 1 ps to several ns are measured by the Recoil Distance Doppler-Shift method in specialised laboratories. Nano- and microsecond nuclear states are mostly measured electronically in delayed coincidence experiments or by time interval analysis. Extremely long half-lives exceeding a century are commonly determined through a specific activity measurement. These techniques and their sources of uncertainty are discussed in a review paper [96], together with propagation factors for applications in spectrometry, nuclear dating, activation analysis, and dosimetry. The fact that the paper is frequently downloaded underlines the fundamental importance of the exponential-decay law.

The JRC determined the half-life of the short-lived decay products in the 230U [114] and 225Ac [41] decay chains by delayed coincidence measurements using digital data acquisition in list mode. The experiments were performed in a small vacuum chamber with an ion-implanted planar silicon detector, measuring alpha and beta particle energies from a weak parent source facing the sensitive area at close distance [1]. Delayed coincidence spectra were built from the time differences between signals from the energy window of the parent and progeny nuclides, respectively. The true parent-progeny coincidences take an exponential shape, whereas random coincidences show up as a flat component. To avoid the problem of bias in least-squares fitting to Poisson-distributed data (instead of normally distributed data), unbiased half-life values were obtained with a maximum likelihood method, a moment analysis, and a modified least-squares fit [116].
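To give a flavour of the maximum likelihood approach, the sketch below treats a reduced problem: unbinned time differences that follow a pure exponential, observed within a finite coincidence window, with the flat random-coincidence component assumed negligible or already removed. It is not the procedure of [116], and the function name is hypothetical. Setting the derivative of the log-likelihood to zero gives the condition mean(t) = 1/λ − W/(exp(λW) − 1), which is solved here by bisection.

```python
import math

def truncated_exponential_mle(time_differences, window):
    """Maximum likelihood half-life from time differences observed in [0, window].

    Assumes a pure exponential distribution truncated at `window`
    (no flat random-coincidence background).
    """
    mean_t = sum(time_differences) / len(time_differences)
    if not 0.0 < mean_t < window / 2.0:
        raise ValueError("mean time difference is incompatible with a decaying exponential")

    def score(lam):
        # Expected mean of the truncated exponential minus the observed mean;
        # decreases monotonically with lam.
        return 1.0 / lam - window / math.expm1(lam * window) - mean_t

    lo, hi = 1e-6 / window, 500.0 / window   # search bracket for the decay constant
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if score(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    decay_constant = 0.5 * (lo + hi)
    return math.log(2.0) / decay_constant
```

Because the estimator works on the individual time differences, it does not depend on a normal approximation for sparsely populated histogram bins.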

The half-lives of the long-lived nuclides 129I [117, 118], 151Sm [119, 120], 231Pa [28], 233U [79], and 243Am [80] were determined through their specific activity, i.e. the activity per quantity of atoms of this particular radionuclide, e.g. expressed in Bq per gram. The specific activity is proportional, via the molar mass and the Avogadro number, to the decay constant, i.e. the ratio between the activity and the number of atoms of a nuclide. The metrology is based on three fields of expertise: mass metrology, mass spectrometry (or coulometry, titration), and absolute activity measurements. The tasks at hand are the careful weighing of the amount of material in solution, the determination of the relative amount of the element and isotope of interest, and the measurement of the massic activity of the specific radionuclide. The attainable accuracy is in the 0.1% region, but can be compromised if the chemical state of the element in solution is not exactly known. The mass content can be determined relative to a reference solution with known amounts of another isotope and by performing isotope dilution mass spectrometry of different mixtures of both solutions.
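The relation between specific activity and half-life can be written out in a few lines. The sketch assumes an isotopically pure sample, uses the exact SI value of the Avogadro constant, and expresses the result in Julian years of 365.25 days. The function names are hypothetical.

```python
import math

AVOGADRO = 6.02214076e23            # 1/mol, exact in the SI
SECONDS_PER_YEAR = 365.25 * 86400   # Julian year (a convention chosen for this sketch)

def half_life_years(specific_activity_bq_per_g, molar_mass_g_per_mol):
    """Half-life (years) from the specific activity of a pure radionuclide."""
    atoms_per_gram = AVOGADRO / molar_mass_g_per_mol
    decay_constant = specific_activity_bq_per_g / atoms_per_gram   # 1/s
    return math.log(2.0) / decay_constant / SECONDS_PER_YEAR

def specific_activity_bq_per_g(half_life_in_years, molar_mass_g_per_mol):
    """Inverse relation: specific activity (Bq/g) from a half-life in years."""
    decay_constant = math.log(2.0) / (half_life_in_years * SECONDS_PER_YEAR)
    return decay_constant * AVOGADRO / molar_mass_g_per_mol
```

Since the half-life is inversely proportional to the specific activity, the relative uncertainties of the massic activity and of the atom amount per gram add in quadrature in the result.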

An alternative route is through analysis of radionuclide mixtures both by mass spectrometry and activity spectrometry. For example, by determining the 243Am/241Am activity ratio and isotopic amount ratio in an americium reference material [121] by high-resolution alpha-particle spectrometry and thermal ionisation spectrometry, respectively, the half-life value for 243Am could be determined relative to 241Am [80]. In a similar manner, the half-life ratio of 235U/234U could be derived from high-resolution alpha-particle spectrometry on three uranium materials enriched in 235U and containing traces of 234U [122]. Since the 234U/238U half-life ratio of the parent-progeny pair 238U–234U is known from mass spectrometric analyses of uranium material in ‘secular equilibrium’, also the half-life ratio of 235U/238U could be determined with 0.5% accuracy.
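The reasoning behind these relative determinations can be summarised in a short derivation, in which A denotes activity, N the number of atoms, and T the half-life of each nuclide in the same material (the notation is chosen here for illustration):

```latex
\begin{align}
  A_i &= \lambda_i N_i = \frac{\ln 2}{T_i}\, N_i
  \quad\Rightarrow\quad
  \frac{A_{243}}{A_{241}} = \frac{N_{243}}{N_{241}} \cdot \frac{T_{241}}{T_{243}} , \\
  T_{243} &= T_{241} \cdot \frac{N_{243}/N_{241}}{A_{243}/A_{241}} , \\
  \frac{T_{235}}{T_{238}} &= \frac{T_{235}}{T_{234}} \cdot \frac{T_{234}}{T_{238}} ,
  \qquad
  \frac{T_{234}}{T_{238}} = \frac{N_{234}}{N_{238}}
  \quad \text{(secular equilibrium, } A_{234} = A_{238}\text{)} .
\end{align}
```

Only ratios of activities and atom amounts enter, so neither an absolute activity measurement nor a mass determination is required.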

Alpha-particle emission probabilities

Alpha-particle spectrometry is a radionuclide assay method with a wide suite of applications [123, 124]. Nuclear monitoring laboratories commonly perform measurements with ion-implanted silicon detectors on thin sources, with the aim of determining absolute activities or activity ratios of alpha-emitting nuclides. Advantageous properties of the technique are the low background levels that can be achieved due to a low sensitivity to other types of radiation, the intrinsic detection efficiency close to unity which reduces the efficiency calculation to a geometrical problem, and the uniqueness of the nuclide-specific alpha energy spectra. The main challenge is the limited energy resolution, which causes alpha energy peaks to be partially unresolved due to their width and low-energy tailing. Spectral deconvolution often requires fitting of analytical functions to each peak in the alpha energy spectrum. True coincidence effects between alpha particles and subsequently emitted conversion electrons are known to cause spectral distortions, which lead to significant changes in the apparent peak areas.

The JRC has a set of high-resolution alpha-particle spectrometers dedicated to reference measurements of alpha emission probabilities, in order to improve the nuclear decay scheme of heavy radionuclides [33]. Thin, homogeneous sources with low self-absorption are prepared with vacuum evaporation and electrodeposition techniques [7, 38, 125]. Small planar ion-implanted silicon detectors with 50–150 mm2 active area are used for optimum energy resolution. The feedback capacitor in the pre-amplifier is adapted to the detector capacitance for noise reduction. The detector flange and the preamplifier housing are stabilised in temperature by circulating water from a thermostatic bath. In addition, an off-line mathematical peak stabilisation method [126, 127] is applied to reduce peak shift in measurement series to one spectral bin width, even if the campaign extends over years. A strong magnetic field is applied on top of the source to deflect interfering conversion electrons away from the detector [128].

The fluctuations of the energy loss of charged alpha particles by ionisation collisions when travelling through thin layers of matter are represented by the Landau function, which corresponds to the mirror shape of the characteristic peaks in measured alpha-energy spectra. The Landau function consists of a complex integral, which is inconvenient for implementation in peak fitting software. Spectral fitting is done instead with a line shape model ‘BEST’ based on the convolution of a Gaussian with up to 10 left-handed exponential tailing functions and up to 4 right-handed exponentials [129]. It can deal with a wide range of energy resolutions, even down to the level where the peaks look like step functions [130]. It is the energy resolution and counting statistics of the measured spectra that determine the number of tailing parameters needed to reproduce the peak shape with sufficient accuracy [129]. Common spectra are well reproduced with up to three tailing parameters (Fig. 11).
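The building block of such a line shape, a Gaussian convolved with a left-handed exponential, has a closed form involving the complementary error function. The sketch below implements that single component and a weighted sum of several low-energy tails. It illustrates the general functional form only and is not the ‘BEST’ implementation of [129]; right-handed tails are omitted and all names are hypothetical.

```python
import math

def tailed_gaussian(u, mu, sigma, tau):
    """Unit-area Gaussian (centre mu, width sigma) convolved with a
    left-handed exponential of decay length tau (low-energy tail)."""
    z = ((u - mu) / sigma + sigma / tau) / math.sqrt(2.0)
    exponent = (u - mu) / tau + sigma ** 2 / (2.0 * tau ** 2)
    return math.exp(exponent) * math.erfc(z) / (2.0 * tau)

def alpha_peak(u, area, mu, sigma, taus, weights):
    """Peak model: weighted sum of tailed Gaussians sharing mu and sigma.

    `weights` should sum to 1 so that `area` is the total peak area.
    """
    return area * sum(w * tailed_gaussian(u, mu, sigma, tau)
                      for tau, w in zip(taus, weights))
```

A short tail length mainly describes the shoulder next to the peak maximum, whereas longer ones account for the slowly decaying low-energy tail. Each additional component adds two free parameters, which is why the number that can be justified depends on the counting statistics.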

Top: Traditional peak shape model based on the convolution of a Gaussian with three exponential tailing functions. Middle: Failed peak fit to a 240Pu alpha spectrum with very high statistical accuracy, using three tailing functions. Bottom: Fit of the same 240Pu spectrum with the ‘BEST’ shaping model [129]

The methodology has been used to significantly improve the emission probabilities in the decay of 235U [131], 240Pu [132, 133], 238U [134], the 230U decay series [135], the 225Ac/213Bi decay series [40], 236U [136], 226Ra [137], and 231Pa [138]. The published 231Pa results were the first using direct alpha-particle spectrometry with semi-conductor detectors and the values of emission probabilities were improved by an order of magnitude, which should help to solve inconsistencies and inaccuracies in the decay scheme. For 238U, the thickness of the sources was a trade-off between the conflicting requirements of energy resolution and counting statistics [139]. Spectra were continuously acquired over a period of 2 years, and an extra-large magnet system had to be used to cover the big 238U sources. Emission energies and a branching factor for 213Bi could be improved by removing spectral interferences through chemical separation by collecting recoil atoms on a glass plate [40]. Uncertainty calculations require particular attention to account for correlation effects from interferences and peak shape distortions [123]. Detailed uncertainty budgets were published for the determination of reference values for 226Ra, 234U, and 238U activity in water in the frame of proficiency tests [140, 141].

The energy resolution of silicon-based detectors cannot go below the physical limit of about 8 keV imposed by the finite number of electron–hole pairs that the alpha particle can produce in the Si lattice. These detectors are also imperfect with respect to linearity between pulse height and particle energy, since energy loss in dead layers varies with the particle's energy and the required energy for creating an electron–hole pair depends on the stopping power. More powerful detection techniques are magnetic spectrometers [142], cryogenic detectors [143], and time-of-flight (TOF) instruments [144]. The JRC is constructing the novel ‘A-TOF’ time-of-flight set-up with focussing magnets to reach a superior energy resolution and linearity (Fig. 12) [145].
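The principle of a time-of-flight energy measurement can be sketched with relativistic kinematics: the kinetic energy follows from the velocity L/t as E = (γ − 1)·m·c². The example assumes an alpha-particle rest energy of about 3727.379 MeV and an idealised straight flight path without energy loss. It does not describe the actual A-TOF design, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT = 299792458.0     # m/s, exact in the SI
ALPHA_REST_ENERGY_MEV = 3727.379 # approximate alpha-particle rest energy (assumed value)

def kinetic_energy_mev(flight_path_m, time_s):
    """Kinetic energy (MeV) of an alpha particle from its time of flight."""
    beta = flight_path_m / (time_s * SPEED_OF_LIGHT)
    gamma = 1.0 / math.sqrt(1.0 - beta ** 2)
    return (gamma - 1.0) * ALPHA_REST_ENERGY_MEV

def time_of_flight_s(flight_path_m, energy_mev):
    """Inverse relation: flight time (s) for a given kinetic energy (MeV)."""
    gamma = 1.0 + energy_mev / ALPHA_REST_ENERGY_MEV
    beta = math.sqrt(1.0 - 1.0 / gamma ** 2)
    return flight_path_m / (beta * SPEED_OF_LIGHT)

def relative_energy_resolution(energy_mev, relative_time_resolution):
    """First-order propagation: dE/E = gamma * (gamma + 1) * dt/t,
    which tends to 2 * dt/t at low velocity."""
    gamma = 1.0 + energy_mev / ALPHA_REST_ENERGY_MEV
    return gamma * (gamma + 1.0) * relative_time_resolution
```

Since the relative energy resolution is roughly twice the relative timing resolution, a longer flight path improves the energy resolution for a given timing precision.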

Drs. Krzysztof Pelczar and Stefaan Pommé at the construction of the A-TOF facility, which measures the energy of alpha particles emitted in radioactive decay. The energy resolution is improved by measuring the time-of-flight of the alpha particles over a flightpath of several meters. The aim is to provide accurate nuclear decay data of alpha-emitting nuclides

Internal conversion coefficients

Together with partners from the STUK (Radiation and Nuclear Safety Authority, Finland), the JRC introduced the use of windowless Peltier-cooled silicon drift detectors for high-resolution internal conversion electron (ICE) spectrometry and demonstrated its potential as an alternative to alpha spectrometry for the isotopic analysis of plutonium mixtures [146,147,148,149,150]. Whereas in alpha spectrometry the alpha transitions from the fissile isotope 239Pu suffer strong interference from the transitions of 240Pu [39, 151], in ICE spectrometry the respective L and M+ ICE energies of the Pu isotopes are well separated and ideally suited for isotopic fingerprinting [146, 148] (Fig. 13). The ‘BEST’ deconvolution software [129] is easily adapted to analyse mixed x-ray and ICE spectra in order to determine the relative ICE emission intensities from the fitted peak areas. For the plutonium isotopes 238,239,240Pu, a good agreement is found with the theoretically expected Internal Conversion Coefficients (ICC) calculated from the BrIcc database [148]. On the other hand, the theoretical ICC values for the 59 keV transition following 241Am decay were contradicted, since the experimental data are anomalous due to a nuclear penetration effect [149]. These findings contribute to a more reliable data set, complementary to some of the best results obtained with magnetic spectrometers, and completed with a comprehensive uncertainty budget. All necessary data are in place to use this analytical method for fingerprinting of plutonium mixtures.

Gamma-ray intensities

Gamma-ray spectrometry is the workhorse of radioanalysis, enabling identification and quantification of a mixture of radionuclides in one spectrum and requiring little effort in sample preparation. In particular, high-purity Ge detectors are routinely used owing to their superior energy resolution for gamma quanta. Their energy-dependent detection efficiency is generally established by fitting a smooth curve through measured count rates per Bq at specific gamma-ray energies from SI-traceable calibration sources, taking into account the γ-ray intensities and the half-life of the corresponding nuclide. Modelling is often used to convert the efficiency curve to different geometries [152]. Accurate decay data are therefore indispensable for the user community to perform SI-traceable activity measurements. Primary standardisation laboratories provide accurate decay data by performing γ-ray spectrometry on calibrated sources. The JRC has published γ-ray intensities of important nuclides in the frame of NORM (naturally occurring radioactive material) [153] and nuclear medicine, such as 235U [154], 124Sb [155], and the entire 227Ac [156] decay chain. A discussion is provided on the choice of proper spectral data for 238U spectrometry [157].

Cross sections

Production rates of nuclides through nuclear reactions are of great importance in nuclear science (e.g. astrophysics), the safe use of nuclear energy [158], the production of radiopharmaceuticals, and in nuclear techniques for analytical chemistry. Proper calibration of radioactivity measurements is an important aspect in experimental determinations of nuclear cross sections. For example, it was discovered that the cross section for natHf(d,x)177Ta, as well as for other reactions producing 177Ta, depended crucially on the choice of the γ-ray intensities in the decay of 177Ta [159]. A misprint in evaluated data led to an error by a factor of 3. Primary techniques can help to improve accuracy, for example by using 4π γ counting of a gold neutron fluence monitor in a NaI well detector to underpin the neutron capture cross section of 235U [160]. The GELINA linear accelerator facility at the JRC produces bunches of neutrons and uses time-of-flight to determine the energy of the neutrons inducing nuclear reactions in a remote experimental set-up along a flightpath. For example, neutron-induced ternary fission particles were measured in a ΔE–E detector set-up [161] and the evidence showed that the ternary-to-binary fission ratio was invariable among the resonances in the 235U(n,f) cross section [162].

k0 factors

In neutron activation analysis (NAA), γ-ray spectrometry is performed on a matrix that has been irradiated by neutrons from a nuclear research reactor with the aim of determining the concentration of elements in the material. The method heavily depends on a combination of nuclear data, including isotopic abundances, neutron capture cross sections and photon intensities. To reduce the combined uncertainty of such factors, Simonits and De Corte aggregated them into a universal constant—the ‘k0 factor’—which is determined directly for each relevant γ-ray by analysing a reference material relative to a co-irradiated gold neutron flux monitor [163, 164]. The meaning of the k0 factor was explicitly defined for 17 activation-decay schemes, together with the matching Bateman equations for the time dependence of the generated activity.

Pommé et al. generalised the k0-NAA formalism to extreme activation and measurement conditions [164]. Generalised activation-decay equations were derived, with inclusion of burnup effects and successive neutron capture in high-flux regimes, backward branching or looping, in-situ measurements, consecutive irradiations, and mathematical singularities [95]. The k0-NAA activation-decay formulas for high-flux conditions were redefined in a compact manner that closely resembles the classical NAA formulas. At high neutron densities, the direct applicability of the 197Au(n,γ)198Au reaction as a neutron flux monitor is disrupted by the high neutron capture cross section of the reaction product 198Au. A simple mathematical solution was presented to determine the ensuing burnup effect prior to any characterisation of the neutron field, merely based on the spectrometry of the induced 198Au and 199Au decay gamma rays [165, 166]. Thus, an iterative procedure for neutron flux monitoring can be avoided.

The Belgian Nuclear Research Centre (SCK CEN) and the JRC set up a joint NAA laboratory at the BR1 reactor of the SCK [167,168,169]. The data analysis was done in SCK’s γ-ray spectrometry service, which routinely performed environmental monitoring and health physics measurements [170,171,172,173]. A comprehensive uncertainty budget was presented to bring the k0-NAA method under statistical control [174]. The content of various reference materials was analysed, among which Al–Co, Al–Au, Al–Ag, and Al–Sc alloys, bronzes, mussel tissue, bovine liver, Antarctic krill, incineration ash, aerosol filters, and water. The SCK CEN performed additional NAA measurements for external clients. The irradiation and measurement conditions for a specific sample were chosen by means of a deterministic simulation tool that quickly predicted spectral shapes and detection limits, which was helpful to reduce interfering activity and to optimise the analytical power [175].

At the fast irradiation facility of the BR1, a series of measurements were performed to improve the k0 factors and related data for ten analytically important reactions leading to the short-lived radionuclides 20F, 71Zn, 77mSe, 80Br, 104Rh, 109mPd, 110Ag, 124m1Sb, 179mHf, and 205Hg [176]. Work was done to improve the k0-factor and various associated nuclear data for the 555.8 keV gamma-ray of the 104mRh–104Rh parent-daughter pair, involving adaptations with respect to the activation-decay type and true-coincidence effects [177]. An investigation was made of the 97Zr half-life value and the k0 and Q0 factors for Zr isotopes, which are important monitors for epithermal neutron flux [178]. All these reference values are applicable at other k0-NAA laboratories, provided that the applied convention for the characterisation of the neutron field is valid.

Fission product yields

One of the big questions about fission dynamics is how much of the motion energy of the elongating nucleus on its path towards neck rupture is transferred to thermal excitation of the nucleons through internal friction. Salient features of the fission fragments are asymmetry in their sizes due to favourable nuclear shell effects [179,180,181,182,183,184,185,186], and even–odd staggering in their proton yields reminiscent of the proton pairing in an even–even fissioning nucleus [186,187,188]. A study of the preponderance of fission fragments with even nuclear charge number can render unique insights in the viscosity of cold nuclear matter, i.e. the coupling between the collective motion of the fissioning nucleus and the intrinsic single-particle degrees of freedom of the nucleons. In a model where friction is invoked, the nucleus will arrive thermally excited at the scission point and the bonds between nucleon pairs will be broken. In an adiabatic model, the nuclear viscosity is negligibly small and nucleon pairing persists up to scission.

Physical methods to measure mass, charge, and energy of the fragments require complicated mass separators, such as e.g. the Lohengrin facility at the ILL in Grenoble. However, the mass and charge distributions of the heavy fragments are also accessible through common radioanalytical techniques. Since the fission fragments are in excess of neutrons, they promptly emit some neutrons and undergo isobaric decay with emission of characteristic gamma rays. Gamma-ray spectrometry of the fission products provides their individual yield and an unequivocal identification of their mass and charge numbers. At the 15-MeV linear accelerator of the University of Ghent [189, 190], fission of 238U was induced by bremsstrahlung at seven excitation energies close to the fission barrier [186, 187]. Irradiated 238U samples were transported with a rabbit system, back and forth between the irradiation site and a γ-ray spectrometer, to measure the yield of the short-lived fission products. Long-lived fragments were caught in aluminium foils [191] and measured at longer duration. Some elements were isolated by chemical separation to reduce spectral interference. As part of the radioanalytical process, a set of ‘best’ nuclear decay data were selected for 118 radionuclides, in particular half-lives and γ-ray emission probabilities, since some data appeared to be inconsistent with the observations in the experiment [186].

In addition to the radioanalytical work, double energy measurements of the fission fragments were performed with two opposing surface barrier detectors [182]. By combining the results from both techniques, it was possible to derive neutron emission probabilities as well as the mass, charge, and kinetic energy of the fragments at the moment of scission. The study showed the same, very pronounced proton odd–even effect as long as the excitation energy of the nucleus remained below the height of the fission barrier plus the pairing gap, i.e. the energy needed to break up a proton pair [187] (Fig. 14). At the same time, the added excitation energy appeared as increased kinetic energy of the fragments [182]. When the excitation energy was further increased above the pairing gap, the odd–even effect collapsed (Fig. 15) and the kinetic energy dropped as the fragments gained internal heat. This suggests that low-energy fission is a superfluid process with little or no dissipation of collective energy to quasi-particle excitations while the nucleus elongates towards the scission point.

Measured fragment charge odd–even effect as a function of the average excitation energy of 238U at the fission barrier [187]. The line represents a deconvolution of the data accounting for the continuous energy distribution of the bremsstrahlung used for excitation. The odd–even effect drops near the pairing gap at 2.2 MeV

The time dimension

The exponential-decay law

The exponential-decay law [192] is a cornerstone of nuclear physics, the common measurement system of radioactivity, and numerous applications derived from it, including radiometric dating and nuclear dosimetry. It follows directly from quantum theory and its validity has been amply confirmed by experiment [193]. Mathematically, the invariability of the decay constant λ is a necessary as well as a sufficient condition for exponential decay. The decay constants are specific to each radionuclide and inversely proportional to the half-life [96].
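The relation between the decay constant and the half-life, and the use of the exponential-decay law to normalise an activity measurement to a reference date, can be illustrated with a minimal sketch. The function names and the numerical values are hypothetical and serve only as an illustration; the sketch assumes a single radionuclide with a constant λ and ignores all measurement corrections.

```python
import math


def decay_constant(half_life):
    """Decay constant lambda = ln(2) / T_half, in the reciprocal unit of the half-life."""
    return math.log(2.0) / half_life


def surviving_fraction(elapsed, half_life):
    """Fraction exp(-lambda * t) of the atoms (or activity) left after a time 'elapsed'."""
    return math.exp(-decay_constant(half_life) * elapsed)


def activity_at_reference(measured_activity, elapsed, half_life):
    """Normalise an activity measured 'elapsed' time units after the reference date.

    'elapsed' and 'half_life' must be expressed in the same unit.
    """
    return measured_activity / surviving_fraction(elapsed, half_life)


# Illustrative numbers only (not taken from any experiment):
# 250 Bq measured two half-lives after the reference date.
half_life = 10.0
a_ref = activity_at_reference(250.0, 20.0, half_life)
print(a_ref)  # close to 1000 Bq
```

Any relative error in the half-life enters the normalised activity through the exponent, which is why comparisons of standards across long time intervals rely on accurate half-life values.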

Some authors have questioned the invariability of nuclear decay constants as well as the notion of radioactive decay as a spontaneous process [194]. They have put forward new hypotheses in which solar neutrinos, cosmic neutrinos, gravitational waves, cosmic weather, etc. are actors influencing the radioactive decay process. Their claims of violations of the exponential-decay law are based on observed instabilities in certain series of decay rate measurements. However, their work is compromised by poor metrology, in particular regarding the lack of an uncertainty evaluation of the attained stability in the experiments.

Most of these claims of new physics have been convincingly invalidated by high-quality metrology showing invariability of the decay constants such that the discussion is largely settled [195,196,197,198,199,200,201,202,203,204,205,206,207]. However, it was unsettling to experience that some editors from reputed journals agreed to publish those unsubstantiated claims permeated by cognitive bias, whereas they opposed publication of papers with sound metrological counterevidence. The problem lies not in the liberty taken to suggest new ideas, but in the lack of scrutiny to put these ideas to the test. In the words of Carl Sagan [208]: “At the heart of science is an essential balance between two seemingly contradictory attitudes—an openness to new ideas, no matter how bizarre or counterintuitive they may be, and the most ruthlessly sceptical scrutiny of all ideas, old and new. This is how deep truths are winnowed from deep nonsense.”

The 5th force

Incomplete uncertainty assessment has a direct impact on science and decision making. The controversy about violations of the exponential-decay law was fed by incomplete uncertainty budgets in measured decay curves with cyclic deviations at the permille level. New theories with high aspirations (involving a 5th force!) were proposed to claim that decay is induced by solar and cosmic neutrinos. Patents were issued to use repeated beta decay measurements as an alternative to elaborate solar neutrino flux monitoring experiments. Others patented methods to reduce radioactive waste by shortening the half-lives of long-lived nuclides. Questions were raised about the validity of nuclear dating in archaeology and geo- and cosmochronology. The very foundation of radionuclide metrology was affected, since direct comparison of activity measurements is made possible through normalisation to a reference date via the exponential-decay law. Establishing international equivalence of standards for the unit becquerel implicitly assumes the invariability of the decay constants, and therefore would require an adjustment if radioactivity were sensitive to variations in neutrino flux through solar dynamics and the Earth-Sun distance.

The CCRI(II) initiated a large-scale investigation on the most extensive set of long-term activity measurements supplied by 14 metrology institutes across the globe, together spanning a period of 60 years. Analysis of more than 70 decay curves for annual oscillations showed that there was no mutual correlation in the cyclic deviations from exponential decay [42, 195,196,197,198,199, 205]. This contradicts the assertion that decay rates follow the annual cycle of the solar neutrino flux due to the varying Earth-Sun distance. The most stable data sets had negligible deviations of the order of 0.0006%, thus confirming the invariability of the decay constants down to that level. On the other hand, environmental effects, such as humidity, temperature, and radon in air, had caused some of the observed cyclic effects in activity measurements.

Reanalysis of the often-cited ‘sinusoidal’ instability in 3H decay measurements by Falkenberg revealed that the shape of this artefact was caused by the introduction of an arbitrary ‘correction’ function to the data to account for the ‘degradation’ of the poorly executed experiment [197]. The annual cycle in ionisation chamber measurements of a 226Ra source at the PTB in the period 1983–1998 was in phase with radon emission rates in the laboratory, which may have caused a discharge effect in the capacitor. When a new current measurement method was introduced at the PTB in 1999, this annual cycle disappeared and a new, smaller cyclic effect was observed in phase with ambient humidity and temperature [196, 209]. The oscillations in the 32Si and 36Cl decay rate measurements at Brookhaven National Laboratory and Ohio State University, respectively, show a strong correlation with the dew point in a nearby weather station [204]. Radon decay rates in a closed vessel in Israel were clearly influenced by weather conditions, such as solar irradiance and rainfall [200,201,202]. Ambient humidity has been identified as the culprit for instabilities in several experiments, considering that it also penetrates temperature-controlled laboratories [115, 210]. Figure 16 shows a correlation plot of 134Cs activity measurements in JRC’s IC1 ionisation chamber with outdoor humidity.

Average values and standard uncertainty of residuals to exponential decay of 134Cs in JRC’s ionisation chamber IC1 in Geel, Belgium, binned as a function of ambient humidity obtained from a weather station in Antwerp at about 40 km distance [211]. Humidity causes an annual cycle in the data

Further claims were made of monthly oscillations in decay curves, allegedly associated with rotation of the solar interior. In this type of research, the Lomb-Scargle periodogram is a commonly used statistical tool to search for periodicity in unevenly sampled time series [212]. Whereas the original Lomb-Scargle solution is equivalent to an unweighted fit of a sinusoidal curve to the time series, the equations have been upgraded to apply statistical weighting of data and to allow for fitting a baseline value. Different statistical significance criteria should be applied for cyclic modulations fitted to time series using any of the four options, i.e. weighted or unweighted fitting with or without a free baseline [212]. Applying proper uncertainty propagation, it was found that the claimed monthly ‘solar-neutrino induced decay’ cycles were not statistically significant or else caused by terrestrial weather [193, 201, 202] (Fig. 17).
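One of the four fitting options mentioned above, a weighted fit with a free baseline, can be sketched as a linear least-squares problem at a single trial frequency. This is a minimal illustration using NumPy, not the procedure of ref. [212]; the function names, the synthetic data, and the significance helper are assumptions. The helper gives the amplitude exceeded with probability p by pure noise, assuming the sine and cosine components are uncorrelated and share the same standard uncertainty, so that the noise amplitude follows a Rayleigh distribution.

```python
import numpy as np


def fit_cycle(t, y, sigma, freq):
    """Weighted fit of y = c + a*sin(2*pi*f*t) + b*cos(2*pi*f*t) at one frequency.

    Returns (amplitude, standard uncertainty of amplitude, baseline c).
    """
    t = np.asarray(t, dtype=float)
    y = np.asarray(y, dtype=float)
    w = 1.0 / np.asarray(sigma, dtype=float)       # weights 1/sigma_i
    phase = 2.0 * np.pi * freq * t
    X = np.column_stack([np.ones_like(t), np.sin(phase), np.cos(phase)])
    Xw = X * w[:, None]                            # weighted design matrix
    cov = np.linalg.inv(Xw.T @ Xw)                 # covariance of (c, a, b)
    c, a, b = cov @ (Xw.T @ (y * w))
    amp = np.hypot(a, b)
    if amp > 0.0:
        grad = np.array([a, b]) / amp              # d(amp)/d(a, b)
        u_amp = np.sqrt(grad @ cov[1:, 1:] @ grad)
    else:
        u_amp = np.sqrt(0.5 * (cov[1, 1] + cov[2, 2]))
    return amp, u_amp, c


def rayleigh_threshold(u_component, p=0.01):
    """Amplitude exceeded with probability p by pure noise (Rayleigh distribution)."""
    return u_component * np.sqrt(-2.0 * np.log(p))


# Synthetic, noise-free demonstration on unevenly sampled times (illustrative only)
rng = np.random.default_rng(1)
t_demo = np.sort(rng.uniform(0.0, 5.0, 200))
y_demo = 0.5 + 2.0 * np.sin(2.0 * np.pi * 1.0 * t_demo + 0.3)
sigma_demo = np.full_like(t_demo, 0.1)
amp, u_amp, baseline = fit_cycle(t_demo, y_demo, sigma_demo, freq=1.0)
print(amp, u_amp, baseline)
```

In a real analysis the fitted amplitude would be compared with a threshold of this kind before any periodicity is claimed, and the choice of weighting and baseline changes the statistical criterion that applies.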

Amplitudes of annual (1 a⁻¹) and monthly (9.43 a⁻¹, 12.7 a⁻¹) sinusoidal cycles fitted to the residuals from exponential decay of various nuclides as a function of the uncertainty on the amplitude. The dotted lines correspond to 1% probability for outliers at the low and high side of the associated Rayleigh statistical distribution of random noise. The data were not corrected for environmental influences, which explains the presence of significant annual cycles

Some authors apply data dredging methods to propose unsubstantiated claims of causality between correlated variables. For example, repeated measurements of activity and the capacitance of a cable (and even umbilical cord blood parameters) have been associated with space weather variables, whereas the sign of the correlation changed from one measurement to the other. The most likely explanation is susceptibility of the measurements to ambient humidity, which correlated positively with all data sets [203, 206]. In conclusion, the claims of new physics are founded on the quicksand of poor metrology. The exponential-decay law remains the solid foundation of the common measurement system of radioactivity and requires no amendment for its application.

Radiochronometry

Relativity theory has done away with the intuitive concept of time being something that flows uniformly and equally through the universe, in the course of which all events happen [213, 214]. Events are not crisply ordered in past, present and future, only vaguely ordered in sequence of events. There is no ‘present’ that is common to the universe. Even the world depicted by relativity theory is an approximation, since the world is a quantum one with processes that transform physical quantities from one to the other. There is no special variable ‘time’; the variables evolve with respect to each other. Time in physics is defined by its measurement: time is what a clock reads. The illusion of a common time in our everyday life can only be applied as an approximation at close proximity. The value of a nuclear half-life pertains to the conditions in which it was measured, which is by default at rest on the surface of Earth. In this context, half-lives can be considered invariable.

Radiochronometry is based on the statistical laws ruling the temporal dependence of the expected number of radioactive atoms in a decay chain, commonly known as the Bateman equations [95]. Radioactivity provides unique clocks by which the geological timescale can be calibrated. Before the discovery of radioactive decay, the age of Earth was estimated as only tens of millions of years, based on the time needed for a molten globe to cool down to Earth’s current state. Now it is understood that the heat from radioactive elements present in the composition of Earth has kept its interior hot for billions of years. The inventory of remaining radionuclides stemming from Earth’s original accretion contains only long-lived nuclides (> 10⁸ y), since the shorter-lived nuclides have decayed away. This provides a measure of the time scale at which the synthetic events that created the material of the Solar system stopped.
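For the simplest chain, a parent decaying to a single progeny, the Bateman solution can be written in closed form. The sketch below is a minimal illustration under the assumptions of a closed system, no branching, and unequal decay constants; the function name and the numbers are hypothetical.

```python
import math


def bateman_two_member(n1_0, n2_0, lam1, lam2, t):
    """Expected atom numbers (N1, N2) of parent and progeny after time t.

    lam1 and lam2 are the decay constants of parent and progeny
    (lam2 = 0 describes a stable progeny). Requires lam1 != lam2.
    """
    if lam1 == lam2:
        raise ValueError("equal decay constants need the limiting formula")
    e1 = math.exp(-lam1 * t)
    e2 = math.exp(-lam2 * t)
    n1 = n1_0 * e1
    # Progeny: decay of the initial amount plus ingrowth from the parent
    n2 = n2_0 * e2 + n1_0 * lam1 / (lam2 - lam1) * (e1 - e2)
    return n1, n2


# Illustrative check: stable progeny, one parent half-life elapsed
lam_parent = math.log(2.0) / 1.0
n1, n2 = bateman_two_member(1000.0, 0.0, lam_parent, 0.0, 1.0)
print(n1, n2)  # both close to 500; the total atom number is conserved
```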

Age dating through the exponential decay of a radionuclide and the ingrowth of its progeny in a closed system can be achieved in various ways [96], either through the ratio of the atomic concentration of the nuclide at different times (e.g. carbon dating), the ratio of progeny to parent nuclides (e.g. uranium-lead dating), or isochron dating methods which apply a ratio with a stable isotope to gain independence from initial amounts of the progeny (e.g. lead-lead and rubidium-strontium dating). The dating equations are linearly proportional to the half-life of the parent or the ratio of the half-lives involved, which means that the relative uncertainty of the half-life constitutes an upper limit to the attainable accuracy on the age. Owing to the accuracy attainable with mass spectrometry techniques, the half-life uncertainty has become a potential bottleneck in the uncertainty budget of nuclear chronometry.
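The linear dependence of the age on the half-life is visible when the dating equations are written out for the simplest cases. The sketch below assumes a closed system, a stable progeny, no initial progeny for the progeny-to-parent method, and a rubidium-strontium-type isochron whose slope equals exp(λt) − 1; the function names and numbers are illustrative assumptions, not taken from ref. [96].

```python
import math

LN2 = math.log(2.0)


def age_from_surviving_fraction(fraction, half_life):
    """Age from the ratio N(t)/N(0) of the nuclide, as in carbon dating."""
    return -half_life * math.log(fraction) / LN2


def age_from_progeny_parent_ratio(ratio, half_life):
    """Age from the atom ratio D/P of a stable progeny to its parent."""
    return half_life * math.log1p(ratio) / LN2


def age_from_isochron_slope(slope, half_life):
    """Age from an isochron slope equal to exp(lambda * t) - 1."""
    return half_life * math.log1p(slope) / LN2


# Illustrative values in arbitrary time units: two half-lives have elapsed
t_half = 5.0
age_a = age_from_surviving_fraction(0.25, t_half)
age_b = age_from_progeny_parent_ratio(3.0, t_half)
age_c = age_from_isochron_slope(3.0, t_half)
print(age_a, age_b, age_c)  # each close to 10
```

In each function the half-life appears as a plain multiplicative factor, so a relative error in the half-life carries over unchanged to the calculated age.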

The most commonly used nuclear chronometers are mathematically equivalent to a single parent-progeny decay. Whereas the dating equation is easily obtained, the derivation of the uncertainty propagation formulas is less straightforward and has been circumvented by applying simulation tools. Only recently, Pommé et al. published succinct uncertainty propagation formulas for nuclear dating by atom ratio (mass spectrometry) and activity ratio (nuclear spectrometry) measurements of parent-progeny pairs [96, 215]. Additional approximating formulas were presented to show that, in certain conditions, the propagation factors of relative uncertainties on measured ratios and decay constants towards the age can approach unity, although much larger or smaller values are also possible [215]. A striking conclusion is that radiometric dating through atom ratio measurements is less sensitive to the progeny half-life, whereas activity ratio measurements are less sensitive to the parent half-life. Activity ratio measurements enhance the signal of the short-lived nuclide. They may be a good alternative to atom ratio measurements in cases where the atom concentration of the short-lived nuclide is particularly low.
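The different sensitivities of the two approaches can be checked numerically for a parent-progeny pair. The sketch below does not reproduce the published formulas of ref. [215]; it inverts the two-member Bateman solution for the age, assuming a closed system with no initial progeny, and estimates the propagation factors d ln t / d ln x by central differences. The function names, the decay constants, and the chosen regime (an age short compared with both half-lives) are illustrative assumptions.

```python
import math


def age_from_atom_ratio(ratio, lam1, lam2):
    """Age from the atom ratio N2/N1 (progeny/parent), no initial progeny."""
    d = lam2 - lam1
    x = ratio * d / lam1
    if x >= 1.0:
        raise ValueError("ratio at or beyond the equilibrium value")
    return -math.log1p(-x) / d


def age_from_activity_ratio(ratio, lam1, lam2):
    """Age from the activity ratio A2/A1 (progeny/parent), no initial progeny."""
    d = lam2 - lam1
    x = ratio * d / lam2
    if x >= 1.0:
        raise ValueError("ratio at or beyond the equilibrium value")
    return -math.log1p(-x) / d


def propagation_factor(age_fn, ratio, lam1, lam2, which, h=1e-6):
    """Central-difference estimate of d ln(age) / d ln(x), x named by 'which'."""
    up = {"ratio": ratio, "lam1": lam1, "lam2": lam2}
    down = dict(up)
    up[which] *= 1.0 + h
    down[which] *= 1.0 - h
    num = math.log(age_fn(**up)) - math.log(age_fn(**down))
    return num / (math.log1p(h) - math.log1p(-h))


def relative_age_uncertainty(factors, rel_uncertainties):
    """Combine uncorrelated relative uncertainties with their propagation factors."""
    return math.sqrt(sum((f * u) ** 2 for f, u in zip(factors, rel_uncertainties)))


# Illustrative case: half-lives of 100 and 10 time units, true age 0.1
lam1, lam2, t_true = math.log(2.0) / 100.0, math.log(2.0) / 10.0, 0.1
d = lam2 - lam1
atom_ratio = lam1 / d * -math.expm1(-d * t_true)
activity_ratio = atom_ratio * lam2 / lam1

atom_f_parent = propagation_factor(age_from_atom_ratio, atom_ratio, lam1, lam2, "lam1")
atom_f_progeny = propagation_factor(age_from_atom_ratio, atom_ratio, lam1, lam2, "lam2")
act_f_parent = propagation_factor(age_from_activity_ratio, activity_ratio, lam1, lam2, "lam1")
act_f_progeny = propagation_factor(age_from_activity_ratio, activity_ratio, lam1, lam2, "lam2")
print(atom_f_parent, atom_f_progeny, act_f_parent, act_f_progeny)
```

In this regime the atom-ratio age responds almost one-to-one to the parent decay constant and hardly at all to that of the progeny, while the activity-ratio age shows the opposite behaviour. Other age ranges give different factors, so the numbers are not general.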