Abstract

Decades of research show that contextual information from the body, visual scene, and voices can facilitate judgments of facial expressions of emotion. To date, most research suggests that bodily expressions of emotion offer context for interpreting facial expressions, but not vice versa. The present research aimed to investigate the conditions under which mutual processing of facial and bodily displays of emotion facilitates and/or interferes with emotion recognition. In two studies, we examined whether body and face emotion recognition are enhanced through integration of shared emotion cues and/or hindered through mixed signals (i.e., interference). We tested whether faces and bodies facilitate or interfere with emotion processing by pairing briefly presented (33 ms), backward-masked bodies with supraliminally presented faces (Experiment 1) and vice versa (Experiment 2). Both studies revealed strong support for integration effects, but not interference. Integration effects are most pronounced for low-emotional clarity facial and bodily expressions, suggesting that when more information is needed in one channel, the other channel is recruited to disentangle any ambiguity. That this occurs for briefly presented, backward-masked stimuli reveals low-level visual integration of shared emotional signal value.


Introduction

Emotion expressions are potent social stimuli. Decades of research have shown that emotion expressions draw attention and, depending on the modality by which they are expressed, can influence one’s judgments, categorizations, and general perception of individuals and the world around them (e.g., Adams et al., 2010; Adams et al., 2017; Albohn et al., 2019; Hugenberg, 2005; Ito & Urland, 2005). However, the social signal value of an expression can be driven by the context in which it is expressed. For example, the silhouette of a man smiling in a dark alley will be judged very differently than the same silhouette framed by a colorful sunset at the beach. Social-expressive information can also be influenced by more than just the scene in which an individual appears. Social information derived from expressions is influenced by who else is around the expresser (i.e., crowds of other people; see Im et al., 2017), face and background color (Minami et al., 2018), identity cues such as gender, race, and age (Adams et al., 2015; Adams et al., 2016; Albohn & Adams, 2020; Hugenberg, 2005), eye gaze (Adams & Kleck, 2003, 2005; Milders et al., 2011), and the posture of the body (Mondloch et al., 2013), among many others. That expression judgments change with different combinations of social stimuli underscores the importance of the context in which a social cue is presented and the influence it can have on judgments, as well as the fluidity of social perception in general.

In an initial demonstration of combinatorial processing of social visual cues, Adams and Kleck (2003, 2005) showed that the non-overlapping cues of facial expression and eye gaze aid in the recognition and intensity ratings of facial expressions, what they termed the shared signal hypothesis. Specifically, angry and happy faces with direct eye gaze, and fearful faces with averted eye gaze, were categorized more quickly and rated as expressing more intense emotion than the opposite eye-gaze pairings. Congruent versus incongruent pairings of facial expression, body language, visual scene, and vocal cues have likewise revealed enhanced processing at very early stages of visual processing (i.e., within 100 ms; Meeren et al., 2005), as well as along conscious and nonconscious processing routes (e.g., De Gelder et al., 2005).

Research also suggests a similar shared emotional signal value for faces and voices. De Gelder and Vroomen (2000) showed that individuals are often biased by emotional tone of voice when asked to make emotion judgments of faces, even when they are explicitly asked to ignore the accompanying voice. When participants were presented with incongruent pairings of happy/sad faces and voices, happy-face/sad-voice pairings were rated as sadder, and sad-face/happy-voice pairings as happier, than congruent pairings of face and voice emotion. Critically, the authors also showed that facial expression influenced categorization of voices. These results suggest that social-expressive cues that share signal value but have no other overlap have a bidirectional influence on each other, and that this integration occurs early in perceptual processing, not necessarily within deliberate conscious awareness (De Gelder & Vroomen, 2000).

Examining how different channels of information (face, body, voice) influence expression categorization allows inferences about the processes of emotion perception at the lowest levels of vision. When combined in congruent versus incongruent pairings, eye gaze, gender, body expressions, and even vocal expressions have shown facilitative effects on the recognition of facial expressions of emotion (Adams et al., 2015; Adams & Kleck, 2003, 2005; Meeren et al., 2005). There is some evidence of such facilitative effects even when cues from different channels are constrained to nonconscious processing routes (e.g., Adams et al., 2012; De Gelder et al., 2005; Milders et al., 2011). For instance, Adams et al. (2012) found that amygdala responses to subliminally presented anger and fear expressions (33-ms presentation, backward masked) varied as a function of eye-gaze direction. Specifically, they found greater amygdala responses to clear threat cue combinations, i.e., direct-gaze anger and averted-gaze fear expressions. Likewise, Milders et al. (2011) used an attentional blink paradigm and found evidence for pre-conscious processing and visual awareness of direct-gaze anger and averted-gaze fear. De Gelder et al. (2005) found similar combinatorial influences on face perception as a function of subliminally presented bodies, arguably due to the recruitment of partially overlapping neuroanatomical regions (see also De Gelder & Tamietto, 2011, p. 59). These findings suggest that social cues influence vision early in the visual processing stream. Yet, behavioral and neuroscientific research has long treated various sources of emotion information (e.g., expression, gaze, body, identity) as functionally distinct, engaging doubly dissociable, non-interactive processing routes (e.g., Bruce & Young, 1986; Haxby et al., 2000, 2002). Comparatively little work has examined the relative and combined influence of these stimulus modalities on emotion expression recognition and judgments.

Beyond facial and vocal cues, the other stimulus channel that has been investigated with regard to emotion expression perception is body expression, though with mixed results. Some research has shown that the emotion conveyed by body expressions largely informs facial emotion judgments (Aviezer et al., 2012; Aviezer et al., 2015), while other research has shown that facial emotion expressions are the driving force informing body emotion judgments (Robbins & Coltheart, 2012; Willis et al., 2011). Critically, however, these effects appear to be largest under conditions of ambiguity (e.g., anger and disgust, facial expressions that share similar configurations). Additionally, there is competing evidence as to whether face-body emotional information is integrated early (Gu et al., 2013) or later in the visual processing stream (Teufel et al., 2019). While there is some inconsistency in how influential each channel is on expression recognition, there is converging evidence that congruency in emotion expression across different modalities aids emotion recognition (for reviews, see Hu et al., 2020; Wang et al., 2017).

Overview

In the current research, we examined the influence of expressive faces on body emotion categorization, and of expressive bodies on face emotion categorization, when one of the two stimuli was presented outside conscious awareness. Given previous work that supports both integration and interference of body and face cues in emotion recognition, we made four predictions. First, we predicted that faces and bodies would inform one another bidirectionally, leading to greater processing fluency (i.e., faster reaction times [RTs] and higher accuracy) when emotion cues are congruent, regardless of the stimulus channel (i.e., a body and a face that share emotional content would be perceptually integrated). Second, if body and face cues compete for attentional resources, we predicted bidirectional processing interference (i.e., slower RTs and lower accuracy) when the body and facial emotion expressions were mismatched. Third, we predicted that there would be a bidirectional effect of body expression on face emotion processing, and of facial expression on body emotion processing (see also Lecker et al., 2020). Finally, across both studies we predicted that the perceived emotional clarity of the rapidly presented stimuli (body expressions in Experiment 1 or facial expressions in Experiment 2) would influence integration and interference effects. Specifically, highly intense (clearer) expressions would show the strongest integration and interference effects on the concurrently presented expressive stimulus, whereas less clear, more ambiguous expressions would produce minimal or no integration or interference effects. This prediction is in line with previous research showing that perceptual ambiguity in expressions causes greater decoding difficulty (Brainerd, 2018; Graham & Labar, 2007; Kim et al., 2017).

To investigate the possibility of both integration and interference effects, we employed a paradigm in which either bodies (Experiment 1) or faces (Experiment 2) were briefly presented (33 ms, backward masked). Our main question is whether bodies influence face perception and faces influence body perception, even when perceivers are not consciously aware that they are seeing more than one stimulus. To accomplish this, we used subliminal presentation to restrict conscious awareness of one stimulus while participants consciously viewed and responded to the other, in order to restrict the visual integration of the two to low-level visual processing (i.e., the pre-attentive stage). By restricting awareness in this way, we attempt to get as close as possible, within a non-fMRI design, to testing the contention that social vision begins at the subcortical level (see De Gelder & Tamietto, 2011). We acknowledge that using subliminal presentations is an approximation. However, we believe that this work, alongside Milders et al.’s (2011) attentional blink work, fMRI work on gaze and emotion (Adams & Kleck, 2003), and research using subliminal presentations (see, e.g., Brooks et al., 2012, for a meta-analysis), adds to a body of research that is highly suggestive of low-level visual integration, perhaps even at the subcortical level.

Subliminal bodies or faces were shown alongside a supraliminally presented face or body, respectively. In this way, participants were asked to respond to the emotional content of a supraliminally presented visual stimulus (body or face) while simultaneously being presented with a subliminal, backward-masked emotion stimulus (face or body, respectively). Thus, we are able to disentangle whether bodies, faces, or both exert integration effects, interference effects, or both on the emotional processing of the other. Because one of the stimuli is presented subliminally and backward masked, any integration or interference that occurs must arise very early in the visual stream, thereby offering evidence for social visual interaction at the earliest stages of visual processing. This would demonstrate visual integration based on social meaning rather than on shared visual characteristics of the stimuli, given that bodies and faces can signal the same emotion via very different visual representations.

Specifically, Experiment 1 was designed to investigate whether rapidly presented body expressions influenced the processing of supraliminal facial expressions. We predicted that we would find integration effects if the body expression emotion was congruent with the facial expression emotion and, similarly, interference effects if the two expression cues were incongruent.

Experiment 2 was designed to test whether rapidly presented face stimuli influenced the processing of supraliminal bodily emotion expressions. We predicted that if faces have a defining influence on body emotion categorization, then subliminal faces would enhance categorization of bodies when the two stimuli are congruent in emotion. We also predicted perceptual interference, resulting in slower RTs and lower accuracy, when the faces displayed an emotion incongruent with the emotion expressed by the body.

Overall, these two studies attempt to parse the influence of emotional bodies on emotional face judgments, and vice versa. If our hypotheses are correct, it would suggest that bodily and facial expressions of emotion are integrated when presented in congruent pairings, evidenced by higher accuracy (and faster RTs), but lead to interference effects when presented in incongruent pairings, evidenced by lower accuracy (and slower RTs).

Experiment 1

Method

Power considerations

To determine an appropriate sample size, we drew on previous research that has tested similar hypotheses (average N = 25; Kret et al., 2013; Meeren et al., 2005). To ensure adequate power, we used the effect size reported by Kret et al. (2013) to conduct an a priori power analysis. The effect size (\( {\eta}_p^2 \) = .25) was derived from the emotion by body part interaction for accuracy in Kret et al. (2013, p. 4). A repeated-measures, within-factors power analysis in G*Power revealed a minimum sample size of approximately 22 to achieve adequate power to detect an effect of this size (parameters: effect size f(U) = 0.58, α = .05, power = 0.95, number of groups = 2, number of measurements = 14, “as in SPSS” option enabled).
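As a rough check on the G*Power input, the textbook conversion from partial eta squared to Cohen's f, \( f = \sqrt{\eta_p^2 / (1 - \eta_p^2)} \), appears to reproduce the reported f(U) = 0.58 from \( {\eta}_p^2 \) = .25. The sketch below assumes only this conversion; it does not reproduce G*Power's full repeated-measures calculation or the effect of its “as in SPSS” option.

```python
import math


def partial_eta_sq_to_f(partial_eta_sq: float) -> float:
    """Convert partial eta squared to Cohen's f with the textbook formula
    f = sqrt(eta_p^2 / (1 - eta_p^2)). Assumed conversion, for illustration."""
    if not 0.0 <= partial_eta_sq < 1.0:
        raise ValueError("partial eta squared must lie in [0, 1)")
    return math.sqrt(partial_eta_sq / (1.0 - partial_eta_sq))


# Effect size from the emotion by body part interaction (Kret et al., 2013)
f = partial_eta_sq_to_f(0.25)
print(round(f, 2))  # 0.58
```

By conventional benchmarks (f = .25 medium, f = .40 large), an f of this size is a large effect.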

Participants

Participants were 37 (19 female, 17 male, 1 unidentified; Mage = 18.97 years, SD = 1.00) undergraduates at The Pennsylvania State University. One participant did not report their age. Each participant completed the experiment in exchange for course credit.

Stimuli

In Experiment 1, fear, anger, and neutral body stimuli were from the BEAST database (De Gelder & Van den Stock, 2011). Given the availability of stimuli in the BEAST database, we selected 11 fear, 13 anger, and 16 neutral body expressions. The average height of the body stimuli was 292 pixels.

A separate group of participants drawn from a community sample (N = 22; ten females, ten males, one gender nonconforming, one not reported; Mage = 37.13 years, SD = 13.47) rated each body stimulus on a 1–7 Likert scale ranging from 1 = “Very angry” to 7 = “Very fearful.” The average score each stimulus received on this scale served as our “emotional clarity” measure, as stimuli rated at the extremes likely reflect clearer, more prototypical, and more intense emotional displays. Conversely, stimuli with scores toward the center of the scale are likely to be more ambiguous, less prototypical, and less intense (i.e., they could be “angry,” “fearful,” or “neither,” depending on how the participant interpreted the scale).

We also determined categorization accuracy by transforming individual responses into discrete categories. Scale ratings were transformed into categorizations using the midpoint as the cutoff (participant ratings below 4 were coded as anger whereas ratings above 4 were coded as fear). Based on this procedure, 100% of the anger stimuli were categorized as anger and 94% of the fear stimuli were categorized as fear.
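The midpoint-cutoff coding can be sketched as follows. The ratings in the usage line are hypothetical, and the handling of ratings exactly at the midpoint (4) is not stated above; the sketch assumes such ratings were left uncategorized and therefore counted as non-matching.

```python
from typing import Optional, Sequence


def categorize(rating: float, midpoint: float = 4.0) -> Optional[str]:
    """Code a 1-7 rating (1 = "Very angry", 7 = "Very fearful") as a category.

    Ratings below the midpoint are coded as anger, ratings above it as fear.
    Midpoint ratings return None (an assumption; the handling is not specified).
    """
    if rating < midpoint:
        return "anger"
    if rating > midpoint:
        return "fear"
    return None


def percent_categorized_as(ratings: Sequence[float], intended: str) -> float:
    """Percentage of individual ratings whose coded category matches `intended`."""
    coded = [categorize(r) for r in ratings]
    return 100.0 * sum(c == intended for c in coded) / len(coded)


# Hypothetical ratings of one anger body stimulus by five raters
print(percent_categorized_as([1, 2, 3, 4, 6], "anger"))  # 60.0
```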

The face stimuli used in Experiment 1 were fear and anger expressions from the Pictures of Facial Affect Set (Ekman & Friesen, 1976) and the Montreal Set of Facial Displays of Emotion (MSFDE) (Beaupré et al., 2000). We selected 16 anger faces and 17 fear faces for a total of 33 face stimuli. The average height of the face stimuli was 217 pixels.

Procedure

Participants were greeted by an experimenter and filled out an informed consent form before proceeding. They were then instructed to place their chin in a chinrest that leveled their head so that their eyes were approximately aligned with the center of the computer screen. Participants were seated approximately 51 cm from the screen. The stimuli were presented on a PC monitor with a display resolution of 1440 × 900 pixels and a refresh rate of 60 Hz.

Once seated appropriately, participants were given instructions that they would be seeing an angry or fearful face. They were told that they would need to identify if the face was angry or fearful as quickly and as accurately as possible. They were told to press the up arrow to denote if the face was angry and the down arrow if the face was fearful. Participants were told to keep their eyes fixated on the center of the screen for the duration of the study, and to raise their hand if at any point they had questions.

Participants completed 192 randomized trials. Specifically, there were 32 fear-congruent trials, 32 fear-incongruent trials, and 32 fear-neutral trials, with the same pattern of trials for anger. Each trial started with a fixation dot that was displayed for 200 ms. In both studies, neutral trials were included to enhance the expressive primes and to reduce priming habituation to the stimuli, because some trials were repeated owing to an imbalance of stimuli across emotion and face/body categories.
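The 2 (face emotion) × 3 (body condition) × 32 structure described above yields 192 trials. A minimal sketch of building and shuffling such a list follows; the field names and seeding are hypothetical, and the assignment of specific (partly repeated) stimuli to trials is not modeled.

```python
import itertools
import random
from collections import Counter

FACE_EMOTIONS = ("anger", "fear")
BODY_CONDITIONS = ("congruent", "incongruent", "neutral")
TRIALS_PER_CELL = 32


def build_trials(seed=None):
    """Return a shuffled list of trial specifications (hypothetical format)."""
    trials = [
        {"face_emotion": face, "body_condition": cond}
        for face, cond in itertools.product(FACE_EMOTIONS, BODY_CONDITIONS)
        for _ in range(TRIALS_PER_CELL)
    ]
    random.Random(seed).shuffle(trials)  # seeded so the order is reproducible
    return trials


trials = build_trials(seed=1)
cells = Counter((t["face_emotion"], t["body_condition"]) for t in trials)
print(len(trials), set(cells.values()))  # 192 {32}
```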

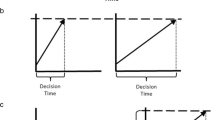

Following the fixation dot, a subliminal and backward-masked body and a supraliminal face appeared simultaneously in the participants’ left and right visual field. The body stimuli appeared briefly for 33 ms followed by a mask that consisted of the same body image but scrambled to appear like a random noise image typical of subliminal presentation paradigms (see Brooks et al., 2012). The face and mask were then presented for 1,000 ms. After 1,000 ms both stimuli (face and mask) disappeared and the participants had an additional 1,000 ms to respond whether the face displayed anger or fear. The face and body stimuli were paired so there were trials where they were congruent or incongruent in the emotion they displayed. Figure 1 displays the experimental procedure. Following completion of the study, participants completed a brief demographics questionnaire and were asked whether they noticed anything other than faces throughout the experiment. They were then debriefed and thanked for their participation.
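Because a display can only change on a screen refresh, the nominal durations above translate into whole frames on the 60-Hz monitor described earlier. The sketch below (helper names are hypothetical) shows that the 33-ms presentation corresponds to 2 frames, or about 33.3 ms.

```python
def ms_to_frames(duration_ms: float, refresh_hz: float = 60.0) -> int:
    """Nearest whole number of screen refreshes for a nominal duration."""
    return round(duration_ms * refresh_hz / 1000.0)


def frames_to_ms(n_frames: int, refresh_hz: float = 60.0) -> float:
    """Actual on-screen duration of a given number of refreshes."""
    return n_frames * 1000.0 / refresh_hz


# Nominal trial events from the procedure, in ms
events = {"fixation": 200, "masked body": 33, "face + mask": 1000}
for name, ms in events.items():
    n = ms_to_frames(ms)
    print(f"{name}: {n} frames = {frames_to_ms(n):.1f} ms")
# fixation: 12 frames = 200.0 ms
# masked body: 2 frames = 33.3 ms
# face + mask: 60 frames = 1000.0 ms
```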

Figure 1. Experimental design of Experiment 1. Faces were presented supraliminally, and emotional bodies were presented subliminally (i.e., 33 ms, backward masked). Participants were instructed to classify the face emotion as either angry or fearful.

Results

No participant reported seeing stimuli other than the supraliminally presented faces. Neutral body trials acted as filler for this paradigm and were thus not included in the analyses. Similarly, we made no explicit predictions about the face or body emotion (i.e., fear and anger) interacting with trial congruence, and thus we collapsed across emotion expression to examine congruent trial (anger face with anger body, fear face with fear body) and incongruent trial (anger face with fear body, fear face with anger body) differences.

Reaction time (RT)

RT was preprocessed by removing incorrect trials, removing responses faster than 200 ms, and log transforming participants’ RTs. We used a linear mixed-effects model to regress RT on fixed effects for trial congruency (congruent vs. incongruent), body expression emotional clarity, and their interaction. We included participants as a random effect. Because body expression emotional clarity was measured on a unidimensional scale (from very angry to very fearful), we rescaled each stimulus’s score so that it ranged from 1 = “not at all [emotion]” to 4 = “extremely [emotion].”
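The RT preprocessing and the rescaling of the clarity ratings can be sketched as below. The exact rescaling formula is not given in the text; folding the 1–7 mean rating around the midpoint, \(|r - 4| + 1\), is an assumption that reproduces the stated 1–4 range, and the natural log is assumed for the log transform.

```python
import math
from typing import Dict, List


def fold_clarity(mean_rating: float, midpoint: float = 4.0) -> float:
    """Assumed rescaling: fold a 1-7 angry-fearful rating into 1-4 clarity.

    1 or 7 (very angry / very fearful) -> 4 ("extremely"); 4 -> 1 ("not at all").
    """
    return abs(mean_rating - midpoint) + 1.0


def preprocess_rts(trials: List[Dict], min_rt_ms: float = 200.0) -> List[float]:
    """Drop incorrect trials and responses faster than 200 ms, then log-transform."""
    return [
        math.log(t["rt_ms"])
        for t in trials
        if t["correct"] and t["rt_ms"] >= min_rt_ms
    ]


# Hypothetical trials: one too fast, one incorrect, one usable response
example = [
    {"correct": True, "rt_ms": 150},
    {"correct": False, "rt_ms": 600},
    {"correct": True, "rt_ms": 500},
]
print(preprocess_rts(example))  # [log(500)], i.e., about 6.2146
print(fold_clarity(1.0), fold_clarity(4.0), fold_clarity(6.5))  # 4.0 1.0 3.5
```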

There were no RT differences across trial congruency (F(1, 3672.3) = 1.48, p = .224) or body-rating emotional clarity (F(1, 3672.5) = 0.28, p = .596). There was also no interaction between trial congruency and body expression emotional clarity, F(1, 3672.3) = 1.36, p = .244. The full linear mixed effects regression model is presented in the Online Supplemental Materials (OSM) 1.

Accuracy

Accuracy was assessed through a binomial generalized linear mixed-effects regression. We regressed trial-level accuracy (0 or 1) on fixed effects for trial congruency (congruent vs. incongruent), body expression emotion clarity, and their interaction. We included random intercepts for participants. Reported p-values were obtained through model comparison using the likelihood ratio test method.
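For a single added fixed effect, the likelihood ratio statistic is twice the difference in log-likelihoods between the fuller and reduced models and is referred to a χ2 distribution with 1 df. The stdlib-only sketch below assumes 1 df, for which the χ2 survival function reduces to erfc(√(χ2/2)); applied to the reported statistics it gives p values consistent with those reported (e.g., χ2(1) = 7.09 gives p ≈ .008). The helper names are hypothetical.

```python
import math


def lrt_statistic(loglik_reduced: float, loglik_full: float) -> float:
    """Likelihood ratio statistic: 2 * (logLik(full) - logLik(reduced))."""
    return 2.0 * (loglik_full - loglik_reduced)


def chi2_sf_df1(stat: float) -> float:
    """P(X > stat) for a chi-square variable with 1 df, via the normal tail.

    Valid only for df = 1: X = Z**2, so P(X > x) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(stat / 2.0))


# Statistics reported for Experiment 1 accuracy
for chi2 in (7.09, 2.14, 6.89):
    print(chi2, round(chi2_sf_df1(chi2), 4))
```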

There was a main effect of trial congruency, χ2(1) = 7.09, p = .008, indicating that the model with trial congruency as a fixed effect fit significantly better than the intercept-only model, but no main effect of body expression emotional clarity, χ2(1) = 2.14, p = .143. However, post hoc analyses of the congruency main effect revealed that congruent (M = 0.79) and incongruent (M = 0.79) trial accuracies did not differ significantly on average, z = -0.40, p = .69.

Critically, though, there was a body expression emotional clarity by trial congruency interaction, χ2(1) = 6.89, p = .009 (see Fig. 2).

As predicted, as body expression emotional clarity increased, accuracy increased for congruent trials (estimate = 0.49, z = 2.89, p = .004) and decreased, though not significantly, for incongruent trials (estimate = -0.14, z = -0.83, p = .409).

Although the slope for incongruent trials was not significant on its own, it was in the predicted direction. Furthermore, the congruent and incongruent slopes differed significantly (z = 2.63, p = .009), suggesting strong integration effects of congruent face and body emotions on facial emotion perception. The full binomial generalized linear mixed-effects regression model is presented in OSM 2.
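As a back-of-the-envelope check on the slope comparison, standard errors can be recovered from the reported estimates and z values (SE = estimate / z), and the two slopes compared with a Wald-type z test. The sketch assumes that the two slope estimates are uncorrelated, which the full model need not satisfy, and it uses rounded inputs; it nevertheless yields z ≈ 2.63, in line with the reported value.

```python
import math


def se_from_estimate(estimate: float, z: float) -> float:
    """Back out a standard error from a reported estimate and its z value."""
    return abs(estimate / z)


def slope_difference_z(b1: float, se1: float, b2: float, se2: float) -> float:
    """Wald z for b1 - b2, assuming zero covariance between the estimates."""
    return (b1 - b2) / math.sqrt(se1 ** 2 + se2 ** 2)


# Reported Experiment 1 simple slopes (congruent vs. incongruent trials)
b_cong, z_cong = 0.49, 2.89
b_incong, z_incong = -0.14, -0.83

z_diff = slope_difference_z(
    b_cong, se_from_estimate(b_cong, z_cong),
    b_incong, se_from_estimate(b_incong, z_incong),
)
print(round(z_diff, 2))  # 2.63
```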

Discussion

Experiment 1 was designed to test whether rapidly presented, subliminal, backward-masked body expressions facilitated (integration) or attenuated (interference) the processing of supraliminal facial expressions when face-body emotion pairings either matched (congruent trials) or mismatched (incongruent trials). We predicted that we would find integration effects for congruent emotion trials and interference effects for incongruent emotion trials. Although there were no significant RT differences between congruency trial types, classification accuracy differed, moderated by the emotional clarity of the subliminal body expression. In particular, faces paired with subliminal body expressions that were more intense and matched the face emotion were categorized more accurately than faces in mismatched face-body pairings or faces paired with subliminal body expressions of lower emotional clarity. This suggests that subliminal, backward-masked body emotion expressions enhance the categorization of consciously viewed facial emotion expressions. The non-significant slope for incongruent trials provides little to no evidence that mismatched face-body emotion pairings hindered supraliminal face emotion categorization, regardless of the emotional clarity of the subliminally presented body expression.

Experiment 2

Method

Power considerations

We used the same power analysis from Experiment 1 to determine appropriate sample size in Experiment 2. We aimed for approximately 20 participants, but oversampled to ensure reliable results.

Participants

Participants were 27 (15 females, 12 males; Mage = 19.3 years, SD = 1.17) undergraduates at The Pennsylvania State University. Each participant completed the experiment in exchange for course credit.

Stimuli

Experiment 2 used the same fear and anger body stimuli from the BEAST database as used in Experiment 1. The fear and anger face stimuli used in Experiment 2 were the same as the face stimuli used in Experiment 1 with the addition of 17 neutral faces from the same actors in those sets.

A separate group of participants (N = 27; 22 females, five males; Mage = 19.74 years, SD = 1.32) rated each anger and fear face stimulus on a 1–7 Likert scale ranging from 1 = “Very angry” to 7 = “Very fearful.” Scale ratings were transformed into categorizations using the midpoint as the cutoff (below 4 was coded as anger and above 4 was coded as fear). Based on participants’ ratings, 100% of the anger stimuli were categorized as anger and 100% of the fear stimuli were categorized as fear.

Procedure

Participants completed the same consent, seating, and chin rest procedure described in Experiment 1. Once seated appropriately, participants were given instructions that they would be seeing an angry or fearful body. They were told that they would need to identify if the body was angry or fearful as quickly and as accurately as possible. They were told to press the up arrow to denote if the body was angry and the down arrow if the body was fearful. Participants were told to keep their eyes fixated on the center of the screen for the duration of the study, and to raise their hand if at any point they had questions.

Participants completed 160 trials. Specifically, there were 32 fear-congruent trials, 32 fear-incongruent trials, and 16 fear-neutral trials, with the same pattern of trials for anger. Each trial started with a fixation dot that was displayed for 200 ms. Again, neutral trials were included to enhance the expressive primes and reduce habituation to the stimuli. Following the fixation dot, a subliminal face and a supraliminal body appeared simultaneously in both the left and right visual fields of the participants. The face (together with the body) appeared for 33 ms, after which the face was replaced by a mask consisting of the same face image scrambled to resemble a random noise image. The body and mask together were then presented for an additional 1,000 ms. After 1,000 ms both stimuli (body and mask) disappeared, and participants had an additional 1,000 ms to respond whether the body displayed anger or fear. The face and body stimuli were paired such that there were trials in which the stimuli were congruent, incongruent, or neutral with regard to the emotion each displayed. Following completion of the study, participants completed a brief demographics questionnaire and were asked whether they noticed anything other than bodies throughout the experiment. They were then debriefed and thanked for their participation.
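Because the neutral cells in Experiment 2 contain 16 rather than 32 trials per body emotion, the design totals (32 + 32 + 16) × 2 = 160 trials, and dropping the neutral filler trials leaves 64 congruent and 64 incongruent trials per participant for analysis. A minimal tally, with hypothetical names:

```python
from collections import Counter

BODY_EMOTIONS = ("anger", "fear")
TRIALS_PER_CONDITION = {"congruent": 32, "incongruent": 32, "neutral": 16}


def build_design():
    """List (body emotion, face-body condition) pairs, one per trial."""
    return [
        (body, condition)
        for body in BODY_EMOTIONS
        for condition, n in TRIALS_PER_CONDITION.items()
        for _ in range(n)
    ]


def analyzable_counts(trials):
    """Drop neutral filler trials and collapse across body emotion."""
    return Counter(condition for _, condition in trials if condition != "neutral")


design = build_design()
print(len(design), dict(analyzable_counts(design)))
# 160 {'congruent': 64, 'incongruent': 64}
```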

Results

Seven participants explicitly reported seeing something other than the supraliminally presented bodies. Additionally, five participants had questionable accuracy (< 65%) and abnormally skewed RT distributions. However, including or excluding either or both of these groups of participants did not change the overall significance of the results; we therefore report results with all participants included.

As in Experiment 1, neutral trials acted as filler and were not included in the analyses; moreover, only the anger and fear stimuli were rated for emotional clarity. Similarly, we collapsed across face/body emotion to directly examine the effect of trial congruency on expression recognition.

Reaction time

Reaction time was preprocessed as in Experiment 1. We conducted a linear mixed-effects model analysis, regressing RT on fixed effects for trial congruency (congruent vs. incongruent), face expression emotional clarity rating, and their interaction. Face expression emotional clarity was rescaled in the same way as the body expression emotional clarity ratings in Experiment 1 and ranged from 1 = “not at all [emotion]” to 4 = “extremely [emotion].” We included random intercepts for each participant.

There was a significant RT main effect for face expression emotional clarity (F(1, 2544.1) = 4.68, p = .031). Overall, individuals were quicker at responding to trials that contained faces rated as high in emotional clarity.

There was no main effect for trial congruency (F(1, 2544.7) = 2.08, p = .150) nor a significant face expression emotional clarity by trial congruency interaction (F(1, 2544.8) = 1.72, p = .190). The full linear mixed effects regression model is presented in OSM 3.

Accuracy

As in Experiment 1, accuracy was assessed through a binomial generalized linear mixed-effects regression. There was a main effect of trial congruency on accuracy, χ2(1) = 5.66, p = .018. Post hoc analyses revealed that congruent trials (M = 0.81) were significantly higher in accuracy than incongruent trials (M = 0.76), z = 4.20, p < .001.

There was a main effect of face expression emotional clarity, χ2(1) = 4.27, p = .039. Participants were more accurate for trials that contained highly intense subliminal face expressions compared to trials that had more ambiguous-appearing facial emotion expressions.

Critically, and in line with our hypotheses, there was an interaction between face expression emotional clarity and trial congruency, χ2(1) = 8.91, p = .003 (see Fig. 2). As predicted, when face expression emotional clarity increased there was an increase in accuracy for congruent trials (estimate = 0.61, z = 2.89, p < .001) and a decrease in accuracy for incongruent trials (estimate = -0.11, p = .497). Though the slope for incongruent trials was not significant on its own, there was a significant difference between the congruent and incongruent trial slopes (z = 2.99, p = .003). This result aligns with Experiment 1’s findings to suggest integration effects of congruent subliminal face emotions on supraliminal body emotion perception. The full binomial generalized linear mixed-effects regression can be found in OSM 4.

Discussion

Experiment 2 was designed to examine the effects of subliminal, backward-masked faces on supraliminally presented bodies. We predicted that we would find integration or interference depending on whether the subliminally presented face was congruent or incongruent with the emotion expressed by the supraliminal body. For the RT data, we found quicker responses on trials containing subliminal faces higher in emotional clarity, but no significant evidence of interference or integration. For the accuracy data, however, we did find integration effects: accuracy was higher for congruent than for incongruent face-body pairings, particularly for face stimuli rated higher in emotional clarity. While the slope for incongruent trials across face expression emotional clarity ratings was not significant on its own, it was in the hypothesized direction and significantly different from the slope for congruent trials. This suggests that while there may be nominal interference effects of face emotion on body emotion recognition, the more likely candidate for visual processing of emotion is the integration of similarly valenced but visually distinct stimuli.

General discussion

Decades of research and an abundance of evidence suggest that both the face and body are powerful social stimuli that can influence an individual’s impressions and categorization of others. Despite how powerful faces and bodies can be, no work (to our knowledge) has attempted to disentangle whether similarly valenced but visually distinct sources of information show integration, interference, or both types of effects in a within-person design (see Lecker et al., 2020, for similar conclusions). Given this dearth in the literature, the current research reports two studies designed to test visual integration and interference effects that emotional faces can exert on body expression recognition and emotional bodies on face expression recognition. We had several linked hypotheses: First, we expected a greater ability to integrate face-body and body-face cue pairings when congruent emotion expressions were present (e.g., a fear face presented with a fearful body) regardless of the visual channel of the stimuli. Second, we predicted interference effects of face-body and body-face cue pairings when incongruent emotion expressions were presented (e.g., a fear face presented with an angry body). Finally, we predicted that face/body expressions would exert an influence on body/face expressions even when they are not consciously processed, and that this relationship would be contingent on the emotional clarity of the subliminal expression presented.

Across two studies, we found strong evidence for integration and little to no evidence for interference. This held both for pairings of supraliminal faces with subliminal bodies (Experiment 1) and for pairings of supraliminal bodies with subliminal faces (Experiment 2). Importantly, in both studies the integration effects were moderated by the emotional clarity of the subliminally presented stimuli, suggesting that ambiguity plays a large role in determining when socially relevant cues are visually integrated. Overall, these findings reveal bidirectional integration effects: body emotion influences face emotion recognition, and face emotion influences body emotion recognition. That is, faces and bodies appear to reciprocally influence recognition of one another at low levels of visual processing.

Together, findings across the two studies provide evidence for integration effects and only nominal support for interference effects of face emotion expression on body emotion expression recognition, and vice versa. Facial expressions were integrated into body expression perception when both stimuli displayed a congruent emotion. However, we found little to no evidence that mismatched face and body emotions interfered with the processing of emotion displays. These effects were apparent regardless of which stimulus (face or body) was presented supraliminally versus subliminally.

The literature reports inconsistent results as to whether face and body emotion aids or hinders recognition. Despite these inconsistencies, examining how emotional bodies and faces inform one another is clearly a unique case. The emotional content of a face and a body can be congruent or incongruent, yet the two channels share no perceptual overlap other than both being visual (see De Gelder & Tamietto, 2011). Compare this with how other social cues are typically examined, such as the influence of identity cues on emotion perception. In those paradigms, the cues being examined typically share a perceptual channel. For example, in studies of face gender's influence on face emotion perception, the face carries both cues of interest.

These findings demonstrate that faces and bodies are not recognized and categorized in isolation but are instead influenced by the context surrounding them. Faces appear to give context to the expression of a person's body, and the body likewise aids in the interpretation of facial expressions. Our work suggests that emotion cues, even those as visually different as bodies and faces, mutually influence emotion perception starting at very early stages of visual processing. Thus, it is not just visual similarity but social signal similarity that influences low-level visual processing. Further, these findings have important implications for understanding how individuals are perceived holistically. They also complement and extend other studies showing that facial expressions inform body emotion judgments (Robbins & Coltheart, 2012; Willis et al., 2011).

Conclusion

There are wide, sometimes vehemently argued, disagreements about the very nature of emotion and emotional expression. Most emotion theories, however, appear to agree that facial expressions of emotion forecast the impending behavior of the expresser (e.g., Ekman, 1973; Fridlund, 1994; Frijda & Tcherkassof, 1997; Russell, 1997). As such, it should not be surprising that the face meaningfully influences the perception of bodily emotion, and vice versa, even though facial and bodily expressions look nothing alike. After all, the face and body forecast the same behavioral tendencies (e.g., to fight or to flee). Critically, humans are arguably innately prepared to extract such behavioral forecasts from one another, which requires integrating shared meaning across multiple expressive cues (i.e., the shared signal hypothesis; Adams et al., 2017). How early in visual processing this integration occurs, however, has remained unclear. In the current work, faces and bodies that shared emotional signal value (e.g., both conveying anger/fight or fear/flight) were integrated very early in visual processing, despite being highly distinct visual stimuli. These findings are consistent with prior claims for the existence of very low-level (subcortical) social vision (Tamietto & De Gelder, 2010) and highlight the need for continued research in this domain.

References

Adams, R. B., Jr., & Kleck, R. E. (2003). Perceived gaze direction and the processing of facial displays of emotion. Psychological Science, 14(6), 644–647. https://doi.org/10.1046/j.0956-7976.2003.psci_1479.x

Adams, R. B., Jr., & Kleck, R. E. (2005). Effects of direct and averted gaze on the perception of facially communicated emotion. Emotion, 5(1), 3–11. https://doi.org/10.1037/1528-3542.5.1.3

Adams, R. B., Jr., Ambady, N., Shimojo, S., & Nakayama, K. (Eds.). (2010). The Science of Social Vision (Vol. 7). Oxford University Press.

Adams, R. B., Jr., Franklin, R. G., Jr., Kveraga, K., Ambady, N., Kleck, R. E., Whalen, P. J., … Nelson, A. J. (2012). Amygdala responses to averted vs direct gaze fear vary as a function of presentation speed. Social Cognitive and Affective Neuroscience, 7(5), 568–577. https://doi.org/10.1093/scan/nsr038

Adams, R. B., Jr., Hess, U., & Kleck, R. E. (2015). The intersection of gender-related facial appearance and facial displays of emotion. Emotion Review, 7, 5–13. https://doi.org/10.1177/1754073914544407

Adams, R. B., Jr., Garrido, C. O., Albohn, D. N., Hess, U., & Kleck, R. E. (2016). What facial appearance reveals over time: When perceived expressions in neutral faces reveal stable emotion dispositions. Frontiers in Psychology, 7, 986. https://doi.org/10.3389/fpsyg.2016.00986

Adams, R. B., Jr., Albohn, D. N., & Kveraga, K. (2017). Social vision: Applying a social functional approach to face and expression perception. Current Directions in Psychological Science, 26(3), 243–248. https://doi.org/10.1177/0963721417706392

Albohn, D. N., & Adams, R. B., Jr. (2020). Emotion residue in neutral faces: Implications for impression formation. Social Psychological and Personality Science, 194855062092322. https://doi.org/10.1177/1948550620923229

Albohn, D. N., Brandenburg, J. C., & Adams, R. B., Jr. (2019). Perceiving emotion in the neutral face: A powerful mechanism of person perception. In U. Hess & S. Hareli (Eds.), The Social Nature of Emotion Expression: What Emotions Can Tell Us About the World. Springer Publishing Company. https://doi.org/10.1007/978-3-030-32968-6_3

Aviezer, H., Trope, Y., & Todorov, A. (2012). Holistic person processing: Faces with bodies tell the whole story. Journal of Personality and Social Psychology, 103, 20–37. https://doi.org/10.1037/a0027411

Aviezer, H., Messinger, D. S., Zangvil, S., Mattson, W., Gangi, D. N., & Todorov, A. (2015). Thrill of victory or agony of defeat? Perceivers fail to utilize information in facial movements. Emotion, 15(6), 791–797. https://doi.org/10.1037/emo0000073

Beaupré, M. G., Cheung, N., & Hess, U. (2000). The Montreal Set of Facial Displays of Emotion [Slides]. Department of Psychology, University of Quebec.

Brainerd, C. J. (2018). The emotional-ambiguity hypothesis: A large-scale test. Psychological Science, 29(10), 1706–1715. https://doi.org/10.1177/0956797618780353

Brooks, S. J., Savov, V., Allzén, E., Benedict, C., Fredriksson, R., & Schiöth, H. B. (2012). Exposure to subliminal arousing stimuli induces robust activation in the amygdala, hippocampus, anterior cingulate, insular cortex and primary visual cortex: A systematic meta-analysis of fMRI studies. NeuroImage, 59(3), 2962–2973. https://doi.org/10.1016/j.neuroimage.2011.09.077

Bruce, V., & Young, A. (1986). Understanding face recognition. British Journal of Psychology, 77(3), 305–327. https://doi.org/10.1111/j.2044-8295.1986.tb02199.x

De Gelder, B., & Tamietto, M. (2011). Faces, bodies, agent vision and social consciousness. In R. B. Adams, Jr., N. Ambady, K. Nakayama, & S. Shimojo (Eds.), The Science of Social Vision. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780195333176.003.0004

De Gelder, B., & Van den Stock, J. (2011). The bodily expressive action stimulus test (BEAST). Construction and validation of a stimulus basis for measuring perception of whole body expression of emotions. Frontiers in Psychology, 2(8), 1–6. https://doi.org/10.3389/fpsyg.2011.00181

De Gelder, B., & Vroomen, J. (2000). The perception of emotions by ear and by eye. Cognition and Emotion, 14(3), 289–311. https://doi.org/10.1080/026999300378824

De Gelder, B., Morris, J. S., & Dolan, R. J. (2005). Unconscious fear influences emotional awareness of faces and voices. Proceedings of the National Academy of Sciences of the United States of America, 102(51), 18682–18687. https://doi.org/10.1073/pnas.0509179102

Ekman, P. (1973). Cross-cultural studies of facial expression. In P. Ekman (Ed.), Darwin and Facial Expression: A Century of Research in Review (pp. 169–222). Academic Press.

Ekman, P., & Friesen, W. V. (1976). Pictures of Facial Affect. Consulting Psychologists Press. https://doi.org/10.1037/t49272-000

Fridlund, A. J. (1994). Human facial expression: An evolutionary view. Academic Press. https://doi.org/10.1016/b978-0-12-267630-7.50008-3

Frijda, N. H., & Tcherkassof, A. (1997). Facial expressions as modes of action readiness. In J. A. Russell & J. M. Fernández-Dols (Eds.), The Psychology of Facial Expression (pp. 78–102). Cambridge University Press. https://doi.org/10.1017/cbo9780511659911.006

Graham, R., & LaBar, K. S. (2007). Garner interference reveals dependencies between emotional expression and gaze in face perception. Emotion, 7(2), 296–313. https://doi.org/10.1037/1528-3542.7.2.296

Gu, Y., Mai, X., & Luo, Y. J. (2013). Do bodily expressions compete with facial expressions? Time course of integration of emotional signals from the face and the body. PLOS ONE, 8(7), e66762. https://doi.org/10.1371/journal.pone.0066762

Haxby, J. V., Hoffman, E. A., & Gobbini, M. I. (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–233. https://doi.org/10.1016/S1364-6613(00)01482-0

Haxby, J. V., Hoffman, E. A., & Gobbini, M. I. (2002). Human neural systems for face recognition and social communication. Biological Psychiatry, 51(1), 59–67. https://doi.org/10.1016/S0006-3223(01)01330-0

Hu, Y., Baragchizadeh, A., & O'Toole, A. J. (2020). Integrating faces and bodies: Psychological and neural perspectives on whole person perception. Neuroscience and Biobehavioral Reviews, 112, 472–486. https://doi.org/10.1016/j.neubiorev.2020.02.021

Hugenberg, K. (2005). Social categorization and the perception of facial affect: Target race moderates the response latency advantage for happy faces. Emotion, 5(3), 267–276. https://doi.org/10.1037/1528-3542.5.3.267

Im, H. Y., Albohn, D. N., Steiner, T. G., Cushing, C. A., Adams, R. B., Jr., & Kveraga, K. (2017). Differential hemispheric and visual stream contributions to ensemble coding of crowd emotion. Nature Human Behaviour, 1, 828–842. https://doi.org/10.1101/101527

Ito, T. A., & Urland, G. R. (2005). The influence of processing objectives on the perception of faces: An ERP study of race and gender perception. Cognitive, Affective and Behavioral Neuroscience, 5(1), 21–36. https://doi.org/10.3758/CABN.5.1.21

Kim, X. M., Mattek, A. M., Bennett, R. H., Solomon, K. M., Shin, J., & Whalen, P. J. (2017). Human amygdala tracks a feature-based valence signal embedded within the facial expression of surprise. Journal of Neuroscience, 37(39), 9510–9518. https://doi.org/10.1523/JNEUROSCI.1375-17.2017

Kret, M. E., Stekelenburg, J. J., Roelofs, K., & de Gelder, B. (2013). Perception of face and body expressions using electromyography, pupillometry and gaze measures. Frontiers in Psychology, 4(28). https://doi.org/10.3389/fpsyg.2013.00028

Lecker, M., Dotsch, R., Bijlstra, G., & Aviezer, H. (2020). Bidirectional contextual influence between faces and bodies in emotion perception. Emotion, 20(7), 1154–1164. https://doi.org/10.1037/emo0000619

Meeren, H. K. M., van Heijnsbergen, C. C. R. J., & de Gelder, B. (2005). Rapid perceptual integration of facial expression and emotional body language. Proceedings of the National Academy of Sciences, 102(45), 16518–16523. https://doi.org/10.1073/pnas.0507650102

Milders, M., Hietanen, J. K., Leppänen, J. M., & Braun, M. (2011). Detection of emotional faces is modulated by the direction of eye gaze. Emotion, 11(6), 1456–1461. https://doi.org/10.1037/a0022901

Minami, T., Nakajima, K., & Nakauchi, S. (2018). Effects of face and background color on facial expression perception. Frontiers in Psychology, 9(6), 1–6. https://doi.org/10.3389/fpsyg.2018.01012

Mondloch, C. J., Horner, M., & Mian, J. (2013). Wide eyes and drooping arms: Adult-like congruency effects emerge early in the development of sensitivity to emotional faces and body postures. Journal of Experimental Child Psychology, 114(2), 203–216. https://doi.org/10.1016/j.jecp.2012.06.003

Robbins, R. A., & Coltheart, M. (2012). The effects of inversion and familiarity on face versus body cues to person recognition. Journal of Experimental Psychology: Human Perception and Performance, 38(5), 1098–1104. https://doi.org/10.1037/a0028584

Russell, J. A. (1997). Reading emotion from and into faces: Resurrecting a dimensional-contextual perspective. In J. A. Russell & J. M. Fernández-Dols (Eds.), The psychology of facial expression (pp. 295–320). Cambridge University Press. https://doi.org/10.1017/cbo9780511659911.015

Tamietto, M., & De Gelder, B. (2010). Neural bases of the non-conscious perception of emotional signals. Nature Reviews Neuroscience, 11(10), 697–709. https://doi.org/10.1038/nrn2889

Teufel, C., Westlake, M. F., Fletcher, P. C., & von dem Hagen, E. (2019). A hierarchical model of social perception: Psychophysical evidence suggests late rather than early integration of visual information from facial expression and body posture. Cognition, 185(3), 131–143. https://doi.org/10.1016/j.cognition.2018.12.012

Wang, L., Xia, L., & Zhang, D. (2017). Face-body integration of intense emotional expressions of victory and defeat. PLOS ONE, 12(2), 1–17. https://doi.org/10.1371/journal.pone.0171656

Willis, M. L., Palermo, R., & Burke, D. (2011). Judging approachability on the face of it: The influence of face and body expressions on the perception of approachability. Emotion, 11(3), 514–523. https://doi.org/10.1037/a0022571

Acknowledgements

This work was supported by NIMH grant MH101194 awarded to R.B.A., Jr and K.K.

Additional information

Open practices statement

None of the data or materials for the experiments reported here are publicly available. None of the experiments were preregistered. Qualified researchers may contact the corresponding authors for stimuli and data.

Supplementary Information

ESM 1

(DOCX 17 kb)

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Albohn, D.N., Brandenburg, J.C., Kveraga, K. et al. The shared signal hypothesis: Facial and bodily expressions of emotion mutually inform one another. Atten Percept Psychophys 84, 2271–2280 (2022). https://doi.org/10.3758/s13414-022-02548-6

DOI: https://doi.org/10.3758/s13414-022-02548-6