Abstract

Previous studies have shown that after actively using a handheld tool for a period of time, participants show visual biases toward stimuli presented near the end of the tool. Research suggests this is driven by an incorporation of the tool into the observer’s body schema, extending peripersonal space to surround the tool. This study aims to investigate whether the same visual biases might be seen near remotely operated tools. Participants used tools—a handheld rake (Experiment 1), a remote-controlled drone (Experiment 2), a remote-controlled excavator (Experiment 3), or a handheld excavator (Experiment 4)—to rake sand for several minutes, then performed a target-detection task in which they made speeded responses to targets appearing near and far from the tool. In Experiment 1, participants detected targets appearing near the rake significantly faster than targets appearing far from the rake, replicating previous findings. We failed to find strong evidence of improved target detection near remotely operated tools in Experiments 2 and 3, but found clear evidence of near-tool facilitation in Experiment 4 when participants physically picked up the excavator and used it as a handheld tool. These results suggest that observers may not incorporate remotely operated tools into the body schema in the same manner as handheld tools. We discuss potential mechanisms that may drive these differences in embodiment between handheld and remote-controlled tools.


Remotely operated devices have taken on critical roles in today’s society—including bomb-disposal robots, robotic surgeons, and military drones—and accurate, precise understanding of our interactions with these devices is more important than ever. Teleoperation allows surgeons to perform complex procedures from anywhere in the world using robotic arms, but to program an effective interface we need to understand how the surgeon represents the relationship between their body and the tool. Neurological and cognitive psychological research has shown that the space immediately surrounding the body—peripersonal space—and our mental representation of our body—the body schema—are highly flexible and dependent on our interactions with the surrounding environment (e.g., Berlucchi & Aglioti, 1997; Graziano & Cooke, 2006). Distinct representations of peripersonal space arose to establish a defensive perimeter around the body and serve as a mental boundary between the space that allows immediate physical interaction with the environment and extrapersonal space (the region outside of peripersonal space; Graziano & Cooke, 2006). Observers process stimuli presented within peripersonal space differently than stimuli viewed outside of this action window, experiencing visual and attentional biases (e.g., Abrams, Davoli, Du, Knapp, & Paull, 2008), increased integration of multimodal information (Làdavas, Pellegrino, Farnè, & Zeloni, 1998), and automatic motor action preparation (Costantini, Ambrosini, Tieri, Sinigaglia, & Committeri, 2010).

Interestingly, research suggests that handheld tools, such as small rakes, can be incorporated into the body schema through active use (Maravita & Iriki, 2004). This research has also shown that as the body schema extends to include a tool, peripersonal space is also extended to the area surrounding the tool. As a result, stimuli appearing near handheld tools are subject to the same visual and motor biases as stimuli presented near the hands, even when these stimuli occupy space several feet away from the body (Maravita, Spence, Kennett, & Driver, 2002). The apparent flexibility of peripersonal space representations raises many questions about when and how tool use contributes to extension of the body schema and a resulting widening of peripersonal space. We investigated these questions by examining whether active use of a remotely controlled tool is sufficient to introduce mental representations of a new zone of peripersonal space around the tool’s functional action area.

Representations of peripersonal space encode the space proximal to the body, exhibiting several characteristics indicative of visuomotor representation of objects. When stimuli appear in peripersonal space, they are not only represented in terms of their physical location, but also dynamically based on the motor responses/grasps they elicit (e.g., Fogassi et al., 1996; Rizzolatti, Fadiga, Fogassi, & Gallese, 1997) separate from their visual characteristics (Murata et al., 1997; Rizzolatti et al., 1997). These findings suggest that observers represent objects in peripersonal space in terms of the potential actions these objects afford. A strictly visual representation would not need to be dynamic, or to represent the grasp an object elicits. A representation that integrates potential motor plans, however, would require information about an object’s motion as well as its grasp affordances.

Peripersonal space is not static, as the body constantly changes its position and posture. In order to segregate representations of objects into peripersonal and extrapersonal space, the brain must maintain a dynamic representation of the body. This representation, the body schema, is created from visual, tactile, and proprioceptive inputs stemming from the somatosensory cortex, posterior parietal lobe, and the insular cortex (Berlucchi & Aglioti, 1997). Interestingly, the body schema can be updated to include extracorporeal objects that are either in direct contact with the body or close to the body (Aglioti, Smania, Manfredi, & Berlucchi, 1996).

Research on altered vision near the hands speaks to the importance of action affordances to representations of peripersonal space (e.g., Brockmole, Davoli, Abrams, & Witt, 2013; Thomas, 2015, 2017). Much research illustrates biases in visual processing that occur near the hands presumably due to visuomotor representations within peripersonal space (for reviews, see Brockmole, et al., 2013; Goodhew, Edwards, Ferber, & Pratt, 2015). For example, several studies employing an attentional cueing paradigm (Posner, Walker, Friedrich, & Rafal, 1987) have found that observers are facilitated in detecting targets that appear within the hands’ grasping space (e.g., McManus & Thomas, 2018; Reed, Betz, Garza, & Roberts, 2010; Reed, Grubb, & Steele, 2006; Thomas, 2013). Due to the bimodal representation of peripersonal space, researchers have also found evidence of competition between visual and tactile stimuli presented in perihand space. In this phenomenon, known as the crossmodal competition effect, observers are slowed in detecting a tactile target on one hand when a competing visual stimulus is presented near the opposite hand (Brozzoli, Pavani, Urquizar, Cardinali, & Farnè, 2009; Maravita et al., 2002).

Interestingly, researchers have found that many of the visual biases present near the hands also exist near the ends of handheld tools, documenting cases of both crossmodal competition (e.g., Maravita et al., 2002) and improved target detection (Reed et al., 2010) near the ends of tools after observers spend a short time using the tool. These near-tool visual biases occur at distances from observers’ hands that exceed the boundaries typically classified as falling within peripersonal space (Maravita et al., 2002). Evidence indicates that these near-tool effects are driven by active use, as they are observed specifically near the functional end of tools (Farnè, Iriki, & Làdavas, 2005) and do not occur in observers who only passively hold a tool (Holmes, Calvert, & Spence, 2007a), suggesting users must have a functional relationship with a tool to experience an extension of peripersonal space around the tool. However, an alternative explanation for these near-tool effects is that tools—often novel, salient objects present on only one side of space in these paradigms—capture attention, biasing responses to stimuli appearing near their ends (Holmes, Calvert, & Spence, 2004; Holmes, Sanabria, Calvert, & Spence, 2007b).

The advent of remote-controlled devices has allowed observers to experience functional action areas that are completely separate from the physical body. Remote-controlled cars, helicopters, and drones create new action areas, allowing us to interact with the environment without having to trace a direct line from body to tool to external object. Whereas primate studies measuring receptive fields in vPMC bimodal neurons suggest that handheld tool use extends peripersonal space out from the body in a large, continuous bubble that contains the entirety of the updated action area (Maravita & Iriki, 2004), when observers use a remote tool, they create an action area that is not contiguous with the body. That is, regardless of where a remote-controlled tool operates, by definition, the user is not in physical contact with the functional part of the tool.

Although this is a relatively new avenue of research, some studies have investigated the influence of remote tool use on peripersonal space representations, such as by using a computer mouse with simple detection paradigms or crossmodal detection paradigms (Bassolino, Serino, Obaldi, & Làdavas, 2010; Gozli & Brown, 2011). Leveraging the idea that target detection is facilitated when targets appear in perihand space (Reed et al., 2006), the researchers found that participants were facilitated in detecting movement of an onscreen cursor when they had first practiced with a mouse whose movement corresponded to movement of the cursor, but showed no such facilitation after practice where movement of the mouse did not create corresponding visual movement of the cursor (Gozli & Brown, 2011). The improvement in movement detection of a functional over a nonfunctional mouse cursor may suggest that active use of a computer mouse can create a disconnected action area around a cursor, which may be represented as a part of peripersonal space (Gozli & Brown, 2011). Furthermore, using laser pointers and sticks has been shown to produce similar alterations in line bisection paradigms. Participants tend to mark the midpoint of a line left of center when the line is present within peripersonal space, and to the right of center when the line is outside of peripersonal space (Longo & Lourenco, 2006). However, both sticks and laser pointers seem to enlarge the size of peripersonal space, despite the fact that lasers do not allow any sort of physical interaction with the line (Costantini et al., 2014; Hunley, Marker, & Lourenco, 2017). More broadly, these results raise the possibility that a tool’s functional end does not have to be physically connected to the body to be incorporated into the body schema.

However, there are multiple potential differences between handheld and remote tools that could prevent remote tools from sharing the same embodied relationship between tool and user as handheld tools do. One characteristic of handheld tools that is not typically shared by remote tools is haptic feedback. When employing a handheld tool, users can physically feel when the tool makes contact with something. This is not the case when using a remote control, unless the controller has a built-in haptic feedback system. Tactile sensation has been shown to be an important aspect of tool embodiment; in fact, studies have found that haptic feedback alone is sometimes sufficient to simulate tool use, even in the absence of a visual signal (Park & Reed, 2015; Serino, Canzoneri, Marzolla, Di Pellegrino, & Magosso, 2015). Researchers found that blindfolded participants showed multisensory integration (a characteristic of peripersonal space) at increased distances after experiencing simulated tool use via simultaneous delivery of a tactile stimulation and the sound of a pencil tapping 100 cm from the hand (Serino et al., 2015). A lack of tactile sensation may prevent remotely controlled tools from being embodied in the same way as handheld tools.

Another potential obstacle for the embodiment of remote tools is the complexity of the relationship between user input and tool action. Most studies of the incorporation of handheld tools into the body schema involve sticks or rakes that enable simple reaching or pointing actions that are extensions of arm/hand movements (e.g., Reed et al., 2010). Handheld tool use lengthens participants’ perception of arm length, as evidenced by changes in estimates of the distance between two points touched on the arm before and after using a tool (Canzoneri et al., 2013). Remote-controlled tools, however, typically involve a more complex interface with a wider repertoire of actions, lacking a fully intuitive mapping between user body movement and tool movement. In the instances where participants seem to have successfully incorporated tools that are not directly connected to their bodies into the body schema (e.g., Bassolino et al., 2010; Sengül et al., 2013), simple, intuitive mappings that mirror handheld tool movement were employed. However, the most common remote controls use more complex arrays of multiple buttons and joysticks, which may hamper a remote-controlled tool’s incorporation into the body schema as a simple extension of the arm or hand.

In addition, unlike simple handheld tools, which essentially always afford actions when a user holds them appropriately, remote-controlled tools can only function in the external environment when they are turned on, powered up, and receiving signals from a controller. Typical handheld tools also tend to only modestly extend a user’s action area, whereas remotely controlled tools have a functional range often limited only by the distance the user can see, such as in the case of drones.

Across four experiments, we investigated the extent to which it is possible for observers to incorporate a complex, remotely operated tool into the body schema and in turn create a novel region of peripersonal space around that tool. In each experiment, participants actively used either a handheld or remote-controlled tool before completing an attentional cueing task in which they detected targets appearing near or far from the tool. This paradigm has proven to be robust in illustrating visual biases in perihand space (e.g., Agauas, Jacoby, & Thomas, 2020; Reed et al., 2006; Sun & Thomas, 2013) as well as near the ends of handheld tools (Reed et al., 2010). After replicating findings of facilitation near a handheld tool in Experiment 1, in Experiments 2 and 3 we examined whether similar visual biases would be observed for the space near a small flying drone (Experiment 2) and a remote-controlled excavator (Experiment 3). If active use of remote tools is sufficient to drive observers to incorporate these tools into the body schema and expand representations of peripersonal space, then participants should be faster to detect targets appearing near the tool than targets appearing outside of the tool’s functional area. However, if the expansion of peripersonal space around handheld tools relies on factors above and beyond tools’ functionality, then a target’s location with respect to a remote tool may not influence response times in the target-detection task. To preview our results, we failed to find strong evidence of near-tool facilitation for either the drone or excavator when participants engaged with these tools via a remote control. However, in a final experiment, we found that participants again showed a near-tool visual bias when they used the excavator by grasping it in their own hands instead of interacting with it via remote control. Taken together, these results suggest that the visual biases that occur near handheld tools may not transfer to the space around all remote-controlled tools.

Overview of attentional cueing task

Although the type of tools participants used and their manner of interacting with these tools varied across experiments, all participants performed the same attentional cueing task to test for visual biases near each tool after a training/use period. This attentional task (Posner et al., 1987) has reliably demonstrated visual biases in the space near the hands as well as handheld tools (e.g., Reed et al., 2010). Details on the cueing task are reproduced from McManus and Thomas (2018). Stimuli were presented on a monitor with a resolution of 1,024 × 768 pixels and a refresh rate of 60 Hz. During Experiments 1 and 2, viewing distance was fixed at 36 cm from the monitor using a chin rest. During Experiment 4, viewing distance was approximately 36 cm from the monitor, but viewing distance was not fixed using a chin rest. Stimuli consisted of a black central fixation cross and two empty black squares measuring 3.25° positioned 14.63° to the left and right of fixation. The target stimulus was a black dot (2.44°). Experiment 3 used the same display as the previous two, but as the monitor was on the ground, and viewing distance was approximately 105 cm (distance was not fixed using a chin rest), the visual angles between the central fixation point and the target boxes were only 5.04°.
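The change in visual angle between the 36-cm and 105-cm viewing distances can be checked with a short calculation. The sketch below assumes the standard visual-angle formula, θ = 2·atan(s / 2d); the text does not state which formula was used, and the function names are illustrative. Under that assumption, the on-screen offset that subtends 14.63° at 36 cm subtends roughly the 5.04° reported for Experiment 3 at 105 cm.

```python
import math


def visual_angle_deg(size_cm, distance_cm):
    """Visual angle (degrees) subtended by an extent of size_cm at distance_cm.

    Assumes the standard formula theta = 2 * atan(s / (2 * d)).
    """
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def extent_cm(angle_deg, distance_cm):
    """Inverse of visual_angle_deg: extent that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# Offset between fixation and a target box, recovered from the 36 cm setup.
offset_cm = extent_cm(14.63, 36)

# The same physical offset viewed from approximately 105 cm (Experiment 3).
angle_exp3 = visual_angle_deg(offset_cm, 105)
print(f"offset = {offset_cm:.2f} cm, angle at 105 cm = {angle_exp3:.2f} deg")
```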

In the cueing task, after a random delay of between 1,500 and 3,000 ms, the outline of one of the boxes darkened. Two hundred milliseconds after cue onset, a dot either appeared in the darkened box (valid cue trial), the opposite box (invalid cue trial), or did not appear (catch trial). Participants were instructed to press a button as soon as a dot appeared. Each block contained 56 valid trials, 16 invalid trials, and eight catch trials for a total of 80 trials per block. Trial type was randomized within blocks.
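As an illustration of the block structure described above, the following sketch builds one randomized block of 80 trials. It is not the authors' experiment code: the uniform distribution of the pre-cue delay, the random choice of cue side, and all names (`make_block`, `cue_side`, and so on) are assumptions made for the example.

```python
import random

CUE_TO_TARGET_MS = 200  # target follows cue onset by 200 ms
TRIAL_COUNTS = {"valid": 56, "invalid": 16, "catch": 8}


def make_block(rng):
    """Return one shuffled block of trial dictionaries."""
    trials = []
    for cue_type, count in TRIAL_COUNTS.items():
        for _ in range(count):
            cue_side = rng.choice(["left", "right"])  # assumed: random cue side
            if cue_type == "valid":
                target_side = cue_side
            elif cue_type == "invalid":
                target_side = "right" if cue_side == "left" else "left"
            else:
                target_side = None  # catch trial: no target appears
            trials.append({
                "cue_type": cue_type,
                "cue_side": cue_side,
                "target_side": target_side,
                # assumed: delay drawn uniformly from the stated range
                "pre_cue_delay_ms": rng.uniform(1500, 3000),
                "soa_ms": CUE_TO_TARGET_MS,
            })
    rng.shuffle(trials)  # trial type randomized within the block
    return trials


block = make_block(random.Random(0))
print(len(block), "trials in block")
```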

Experiment 1

Before examining how active use of remote-controlled tools may alter representations of peripersonal space, it is first important to ensure we can reproduce findings of visual biases near the end of handheld tools in our experimental population. Experiment 1 served as a replication of Reed et al. (2010), to justify the use of the Posner task to determine whether the space around a tool is represented as peripersonal space after active tool use. As in Reed et al.’s (2010) study, we asked participants to rake sand with a small handheld tool before performing the attentional cueing task while holding the raking tool near one of the target locations or keeping the tool away from the display. The attentional paradigm and tool use task were identical to the methods employed in Reed et al. (2010). However, in order to more closely approximate the spatial relationship between the remote-controlled tool and the attentional cueing display we used for Experiment 2, we made a few minor changes to the Reed et al. (2010) design. Whereas the rake used in the previous study had prongs that were perpendicular to its handle, the rake used in Experiment 1 had prongs that extended straight out, parallel to the handle. In addition, while participants in Reed et al. (2010) held the rake to the left or right side of target boxes, with the prongs facing inwards, participants in Experiment 1 held the rake above one of the target boxes (see Fig. 1).

Experimental setup for Experiment 1. The first panel shows a participant raking sand using the handheld rake. The bottom-left panel shows the rake configuration. The hand holding the rake is supported, and the rake is pointed directly above the closest cue box. The right hand is used to respond during the cueing task. The bottom-right panel shows the configuration when the rake is not used. The hand is still supported and is held flat on the support boxes in the same location, but is not holding the rake

Methods

Eighty-three undergraduate volunteers participated in the study for course credit (see Footnote 1). All participants had normal or corrected-to-normal vision.

Participants were first familiarized with the cueing task during a short practice block (20 trials) in which they responded with their dominant hand and kept their nondominant hand in their lap. They then performed eight blocks of trials where handheld tool proximity was manipulated across blocks. Before each block, participants received instructions on hand placement and tool use detailing one of four possible configurations: (1) respond with the left hand and hold the rake near the monitor with the right hand, using several boxes as support; (2) respond with the left hand and place the right hand on the support boxes, without the rake; (3) respond with the right hand and hold the rake near the monitor with the left hand; and (4) respond with the right hand and place the empty left hand on the support boxes (see Fig. 1).

Instructions were given verbally and provided in writing on the computer display. Before blocks in which participants held the rake near the display, they were instructed to rake a tray of sand for 1 minute. This was done to ensure there was active tool use before each block with the rake next to the monitor, which presumably caused participants to incorporate or reincorporate the tool into their body schema (Reed et al., 2010). To ensure consistent tool placement across observers, participants placed the end of the rake on three black guide dots shown along with the block instructions. The dots were presented over the left or right square, depending on the hand used to hold the rake. These guide dots disappeared when participants began the first trial in a block. Experimenters ensured that participants complied with all hand placement instructions. Participants ran through two blocks of each of the four configurations in a randomized order.

Results and discussion

Five participants who made response errors on more than 50% of catch trials were dropped from the study and replaced, as they were likely not waiting for the target to appear before making their responses. Another 15 participants were dropped from the study for failing to follow instructions. This generally meant that participants failed to maintain the correct hand or rake placement, or accidentally skipped the instruction screen before starting a block. To eliminate anticipation and inattention errors, trials with a reaction time of less than 200 ms or greater than 1,000 ms were excluded from analyses. For the remaining 63 participants, a total of 7.72% of trials with excessively long or short reaction times were omitted from analyses. These criteria are based on previous studies tracking target detection near the hands (e.g., Reed et al., 2010; Thomas, 2013).
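The two exclusion rules above can be expressed compactly. This is a minimal sketch with hypothetical function names and toy data, not the authors' analysis script; it also assumes that reaction times of exactly 200 ms or 1,000 ms are retained, since the text excludes only values below or above those bounds.

```python
def exceeds_catch_error_limit(catch_responses, limit=0.5):
    """True if a participant responded on more than `limit` of catch trials.

    catch_responses: list of booleans, True where a response was made
    even though no target appeared.
    """
    return sum(catch_responses) / len(catch_responses) > limit


def trim_reaction_times(rts_ms, low=200, high=1000):
    """Drop anticipations (< low) and inattention errors (> high).

    Returns the retained reaction times and the proportion removed.
    """
    kept = [rt for rt in rts_ms if low <= rt <= high]
    removed = (len(rts_ms) - len(kept)) / len(rts_ms)
    return kept, removed


# Toy data for illustration only.
kept, removed = trim_reaction_times([150, 250, 999, 1000, 1200])
print(kept, f"{removed:.0%} of trials removed")
```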

Reaction-time data (measured in ms) were submitted to a 2 × 2 × 2 within-subjects analysis of variance (ANOVA; Table 1 contains the ANOVA results; Table 2 contains the means for each condition), with factors of validity (valid vs. invalid cue), tool condition (rake on screen vs. no rake), and target distance. Target distance consisted of target near (if the target appeared on the same side of the monitor as the rake or empty, propped up hand) or target far (if the target appeared on the opposite side from the rake or empty hand).

The ANOVA yielded a main effect of validity, F(1, 62) = 236.245, p < .001, partial eta squared = .792, which was expected based on the paradigm. Responses were significantly faster on valid-cue trials than on invalid-cue trials. There was also a main effect of tool condition, F(1, 62) = 12.250, p = .001, partial eta squared = .165. Participants responded significantly faster when the rake was absent than when the rake was present.
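The effect sizes reported here follow from the F ratios and their degrees of freedom through the standard identity, partial eta squared = (F × df_effect) / (F × df_effect + df_error). The short sketch below (the function name is illustrative) applies that identity to the two main effects reported above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects reported for Experiment 1.
validity = partial_eta_squared(236.245, 1, 62)
tool_condition = partial_eta_squared(12.250, 1, 62)
print(f"validity: {validity:.3f}, tool condition: {tool_condition:.3f}")
```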

There was also a significant interaction between validity and tool condition, F(1, 62) = 11.077, p = .001, partial eta squared = .152. For both valid and invalid trials, participants were faster to respond when the rake was absent than when it was present; however, the difference between the rake-present and rake-absent reaction times was much greater in the invalid trials. Pairwise comparisons reflected this as well, as there was a significant difference between reaction times when the rake was present versus absent for invalid trials, t(62) = −3.981, p < .001, but not for valid trials, t(62) = −1.930, p = .058. This likely reflects the fact that reaction times on invalid trials were significantly longer than on valid trials. With longer reaction times, the differences between the rake and no-rake conditions in the invalid trials were larger.

Importantly, the ANOVA also yielded a significant interaction between tool condition and target distance, F(1, 62) = 9.381, p = .003, partial eta squared = .131. Figure 2 displays this interaction between tool condition and target distance, collapsing across the factor of validity. As can be seen from this figure, when participants held a rake near the display, they were facilitated in detecting targets near this rake. However, target distance had little influence on reaction times when participants instead rested their empty hand on boxes near the display. Pairwise comparisons confirmed that target detection was facilitated in the rake-present condition, t(62) = 2.773, p = .007, but not the rake-absent condition, t(62) = −.812, p = .420.
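For readers who want to see the form of the pairwise comparisons, the sketch below computes a paired-samples t statistic from per-participant mean reaction times for near and far targets. The data are invented for illustration, the function name is hypothetical, and the sign convention (far minus near, so that positive values indicate near-tool facilitation) is an assumption rather than something stated in the text.

```python
import math
import statistics


def paired_t(near_ms, far_ms):
    """Paired-samples t statistic and degrees of freedom.

    Differences are computed as far - near, so a positive t means
    faster responses to near targets (assumed sign convention).
    """
    diffs = [far - near for near, far in zip(near_ms, far_ms)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1


# Invented per-participant means (ms), for illustration only.
near = [300, 310, 320, 330, 340]
far = [301, 312, 323, 334, 345]
t_value, df = paired_t(near, far)
print(f"t({df}) = {t_value:.3f}")
```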

These results replicate previous findings; participants were faster to detect a target presented within the action area of a handheld tool than a target far from a tool. This attentional bias to the space around the rake suggests that peripersonal space had expanded to surround the tool. Participants were able to incorporate a handheld rake into their body schema, to interpret a rake as part of themselves, after using it to rake sand for only 1 minute, indicating that we were able to induce peripersonal space expansion in our experimental population. This replication of Reed et al.’s (2010) previous findings with a new rake design and location relative to the target boxes also suggests peripersonal space expansion is robust to these changes that more closely mimic the spatial relationships between tool and targets we employed in our second experiment.

Experiment 2

The primary question under investigation in this study is whether the visual biases typically associated with the space around a handheld tool also occur around a remote-controlled tool’s functional action area. In Experiment 2, we began to examine this question by asking a new group of participants to perform both the sand-raking and attentional cueing tasks we used in Experiment 1 with a remote controlled, instead of a handheld, tool. Participants first practiced operating a lightweight flying drone with a video-game-style controller before using this drone to rake sand in a pool approximately 5 feet away from where they were standing. They then performed the target-detection task under conditions in which the drone was either suspended above one of the target locations or was stowed far from the display. If active use of a remote-controlled tool with a complex interface—in the absence of haptic feedback and operated at a distance well outside the typical boundaries of peripersonal space—is sufficient to introduce visual biases in the space surrounding the tool, then we should expect to see a pattern of target detection facilitation near the drone that is similar to what we observed near the handheld rake in Experiment 1.

Methods

Another 70 volunteers, none of whom participated in Experiment 1, participated in Experiment 2 for course credit. The target-detection task of Experiment 2 was essentially a replication of the first experiment employing a drone with a rake attachment (straws 7 cm in length attached to the bottom of each of the four drone propellers) instead of a handheld rake (see Footnote 2). To give participants a sense of comfort and control with the drone, and to teach them that they could use the drone to interact with the environment, participants went through a 10-minute practice phase. Participants practiced flying the drone around the room for 5 minutes, then used the drone to rake a pool of sand for another 5 minutes. Participants stood in a box indicated by lines of tape on the ground. This was 5 feet from the edge of the pool of sand, which was approximately in the middle of the room. At this distance, participants were capable of easily observing the tool’s immediate effect on the environment. After the practice phase, participants filled out a brief questionnaire, which asked participants to rate their comfort, control, and the degree to which they viewed the drone as an extension of themselves using a 7-point Likert scale (see Appendix A). The questionnaire was administered at this time in order to most accurately assess performance and ability during the practice phase, as well as to give the best chance of capturing a subjective sense of tool embodiment. A research assistant responsible for administering the experiment was also asked to rate the level of control the participant displayed over the drone (see Appendix B). This was done to allow us to identify and control for participants who did not feel they could use the drone to interact with the space around them.

During the target-detection task test phase, four configurations were tested: (1) respond with the right hand, with the drone and rake attachment suspended over the left square; (2) respond with the right hand, without the drone present; (3) respond with the left hand, with the drone suspended over the right square; and (4) respond with the left hand, without the drone present (see Fig. 3). Before blocks with the drone present, participants raked the pool of sand for an additional minute to ensure they maintained a functional relationship with the drone. Blocks with the drone present began with onscreen instructions to rake with the drone for an additional minute, as well as guide dots above the target square as in Experiment 1. The drone was suspended in a way that the ends of the 7-cm prongs extending down from the drone were in contact with these guide dots, to ensure the distance and direction between the functional end of the drone and the target location were consistent with Experiment 1. Participants ran through two blocks of each of the four configurations in a randomized order. To conclude the experiment, participants were asked to fill out a posttest questionnaire designed to assess demand characteristics, in case the questionnaire administered after the practice phase clued participants in to the purpose of the study. Participants were asked what they anticipated the results of the experiment would be, and what they believed was the purpose of the experiment (see Appendix C).

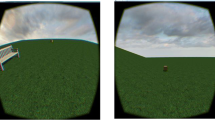

Experimental setup for Experiment 2. The left panel shows a participant raking sand with the drone, by hovering the drone over the pool until the prongs extending down from the drone make contact with the sand. The right panel shows the configuration for the target-detection task with the drone present. The drone is suspended over one of the cue boxes, the hand on the side with the drone is placed on the desk, and the opposite hand is used to respond to the target

Results and discussion

Data were put through the same exclusion criteria as in the previous experiment. Ten participants made response errors on more than 50% of catch trials and so were dropped from the study and replaced. Another 10 participants were dropped from the study for failing to follow the instructions. As before, trials with a reaction time of less than 200 ms or greater than 1,000 ms were excluded. This omitted 9.88% of trials from analyses. The three items on the participant questionnaire assessing drone ability were all found to be significantly correlated (Question 1 and Question 2: r = .739, p < .001; Question 2 and Question 3: r = .506, p < .001; Question 1 and Question 3: r = .488, p < .001), and so were averaged together to create a drone score for each participant (see Table 5). No participants were found to have drone scores outside 2.5 standard deviations from the mean, so no participants were eliminated based on self-assessed drone ability.
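The drone score and the outlier screen described above can be sketched as follows. The ratings are invented, the function names are hypothetical, and the use of the sample standard deviation is an assumption; the text specifies only that the three items were averaged and that a cutoff of 2.5 standard deviations from the mean was applied.

```python
import statistics


def drone_scores(item_ratings):
    """Average each participant's three 7-point questionnaire items."""
    return [statistics.mean(items) for items in item_ratings]


def outlier_indices(scores, cutoff_sd=2.5):
    """Indices of scores more than cutoff_sd standard deviations from the mean."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores)  # assumed: sample standard deviation
    return [i for i, s in enumerate(scores) if abs(s - mean) > cutoff_sd * sd]


# Invented ratings (comfort, control, extension of self), for illustration only.
scores = drone_scores([(4, 5, 6), (3, 3, 3), (7, 6, 5)])
print(scores, outlier_indices(scores))
```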

Data were first entered into a 2 × 2 × 2 within-subjects ANOVA, with factors of validity, tool condition, and target distance (see Table 3 for ANOVA results, and Table 4 for reaction-time means for each condition). Validity was defined as whether the target did or did not appear in the cued location, tool condition was defined as whether or not the drone was suspended above one target box, and target distance was defined as whether the target appeared near the drone if the drone was present, or whether the target appeared on the side of the nonresponding hand if the drone was absent (similar to Experiment 1, the distinction between target distance in the drone-absent condition was essentially meaningless).

As expected, there was a large main effect of validity, F(1, 49) = 237.019, p < .001, ηp2 = .829. Valid trials had significantly faster response times than invalid trials did. There was also a marginal main effect of tool condition, F(1, 49) = 3.555, p = .065, ηp2 = .068, which indicated that participants detected all targets slightly faster with the drone present than absent.
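The partial eta squared values reported alongside each F ratio can be recovered from the F value and its degrees of freedom using the standard identity ηp2 = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies that identity to the two main effects reported above; the function name is hypothetical, and the F values and degrees of freedom are copied from the text.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Recover partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effects reported for Experiment 2 (values copied from the text).
validity = partial_eta_squared(237.019, 1, 49)
tool_condition = partial_eta_squared(3.555, 1, 49)

print(round(validity, 3))        # 0.829
print(round(tool_condition, 3))  # 0.068
```

This offers a quick consistency check between a reported F ratio, its degrees of freedom, and its effect size.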

The ANOVA also yielded a marginal interaction between tool condition and target distance, F(1, 49) = 2.879, p = .096, ηp2 = .055. As can be seen in Fig. 4, which displays data for this experiment collapsed across the factor of validity, this interaction between tool condition and target distance was in the direction of the hypothesized results: With the drone absent, participants were slightly slower to detect targets on the side of the nonresponding hand (defined as target near) than when the target appeared on the opposite side of the monitor. Pairwise comparisons found this difference to be marginally significant, t(49) = 1.961, p = .056. With the drone present, participants were slightly faster to detect targets appearing near the drone than far. However, this difference did not approach significance, t(49) = −.896, p = .375.

Interestingly, the averaged participant self-reported drone score was not significantly correlated with researcher ratings (r = .235, p = .108). Participants’ subjective ratings of their drone scores were significantly lower than the researcher’s more standardized ratings (more standardized based on the fact that researchers could compare an individual participant’s drone ability to that of other participants; t = 3.92, p < .001). This could indicate that participants had some difficulties or frustration using the drone, which could impact their ability to incorporate the drone into their body schema. There were no participants whose drone score or researcher rating fell outside 2.5 standard deviations from the mean. Only a single participant was able to guess the purpose of the study based on the posttest answers, suggesting the measurement of self-reported tool efficacy did not introduce a strong demand characteristic for the majority of participants. Eliminating this person from analyses did not substantially alter the pattern of statistical significance across the remaining participants. We performed a median split of the reaction-time data by drone score to compare participants with high and low control over the drone, as participants with more control over the drone may be more likely to successfully incorporate that drone into their body schema. Running separate 2 × 2 × 2 ANOVAs on the low-scoring and high-scoring groups showed a main effect of tool condition in the low drone scoring participants, F(1, 24) = 4.362, p = .048, ηp2 = .154, which was not present in the high-scoring group (p = .582). The low-scoring group detected targets presented on either side of the screen faster with the drone present than with the drone absent. However, neither group showed the significant interaction between tool condition and target distance that would indicate the typical near-tool facilitation effect (p > .241 in both groups; see Appendix D).
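A median split of this kind can be sketched as follows. This is a minimal illustration rather than the procedure actually used: the function name, the participant IDs, and the rule that scores exactly at the median fall into the low group are all assumptions, since the text does not say how ties were handled.

```python
from statistics import median


def median_split(scores):
    """Split participant IDs into low and high groups around the median score.

    `scores` maps a participant ID to an averaged control score.
    Scores equal to the median go to the low group (an assumption).
    """
    cutoff = median(scores.values())
    low = [pid for pid, s in scores.items() if s <= cutoff]
    high = [pid for pid, s in scores.items() if s > cutoff]
    return low, high


# Hypothetical scores for four participants.
example = {"p1": 2.0, "p2": 3.5, "p3": 4.0, "p4": 5.0}
low_group, high_group = median_split(example)
print(low_group)   # ['p1', 'p2']
print(high_group)  # ['p3', 'p4']
```

Each group's reaction-time data would then be entered into its own 2 × 2 × 2 ANOVA.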

Based on these results, participants were unable to incorporate the drone into their body schema despite active use. Targets appearing near the drone were not detected significantly faster than targets far from the drone. This result could indicate that users do not incorporate a remote tool into the body schema through active use alone. However, an alternate explanation, which we address in the next experiment, is that the drone was not necessarily a very effective tool. Participants tended to rate their ability with the drone below the researcher ratings of the participant’s performance, indicating that they may not have felt comfortable or effective acting with the drone. If participants did not feel that the drone extended their action area, then it is unlikely that they would have incorporated the drone into the body schema. Previous studies have shown that effective active use is a prerequisite for this effect (Canzoneri et al., 2013; Maravita & Iriki, 2004); participants must be able to achieve some kind of goal using the tool. If participants feel they cannot accomplish a task with the tool, they may not be inclined to interpret the area around the tool as active space. While observing time spent flying the drone in Experiment 2, researchers noted that participants had difficulty with the task. The lightweight drone was relatively unstable with the rake attachment, and contact with the sand frequently resulted in a crash.

Aside from the drone’s questionable efficacy, it also differed from the handheld rake employed in Experiment 1 in several key ways that could have potentially limited its incorporation into the body schema. Unlike the rake, which retained an affordance for action as long as participants grasped it by the handle, the drone was not active during the target-detection phase of the experiment. After a raking session, the drone was switched off and hung passively above the monitor. Participants also put down the drone controller to use the keyboard during the testing phase. Previous work has found that near-tool facilitation fades as participants stop using the tool to achieve a goal and that rendering some kinds of tools nonfunctional also eliminates near-tool facilitation (Gozli & Brown, 2011). The lack of a continuous affordance for action may have prevented us from observing facilitation near the drone, a possibility we explore in Experiment 3. In addition, whereas participants in Experiment 1 could feel when the handheld rake made contact with the sand, the drone’s remote control did not provide the same sort of haptic feedback; participants’ sensation of the controller remained the same regardless of what the drone did. The absence of haptic feedback likewise could have prevented participants from embodying the drone. In addition, whereas participants in Experiment 1 used the rake as a natural extension of the hand/arm, performing a simple reaching motion, participants in Experiment 2 used the drone by steering with thumbsticks and pressing buttons. Finally, in Experiment 1, the rake extended participants’ functional action area by less than a foot, whereas participants in Experiment 2 flew the drone several feet away from themselves.

In Experiment 3, we examined performance with a sturdier and more reliable remote-controlled tool in order to address the issues of questionable tool efficacy and a lack of continuous tool affordances while retaining other key properties of remote tools—the absence of haptic feedback, a complex interface between user and tool, and a long range of operation.

Experiment 3

Although the results of Experiment 2 may suggest that active use is not sufficient for users to incorporate a remote-controlled tool into the body schema, the unreliability of the flying drone in completing the sand-raking task, as well as its inertness during the target-detection task, may have contributed to the lack of facilitation we observed. We examined this possibility in Experiment 3 by employing a remote-controlled toy excavator. Whereas the video-game-style controller for this tool was quite similar to the controller participants in Experiment 2 used to fly the drone, the excavator itself proved to be a far more effective tool for raking sand. Participants drove the excavator on the ground, eliminating concerns of crash landings, and the excavator easily handled contact with the sand. In addition, unlike participants in Experiment 2, who passively viewed the switched-off drone during the target-detection task, participants in Experiment 3 continuously maintained an active relationship with the excavator. They drove the excavator to the monitor at the start of each block of target-detection trials, used a response button built directly into the excavator’s controller, and had the ability to use the excavator’s functional end at any time during this phase of the experiment. The design of Experiment 3 therefore allowed us to examine how active use with a complex remote-controlled tool operated several feet away from the user in the absence of haptic feedback may or may not shape representations of peripersonal space.

Methods

Sixty volunteers, none of whom participated in Experiments 1 or 2, ran in Experiment 3 for course credit. The same target-detection task was used as in Experiment 2, but this time the drone was replaced by a remote-controlled excavator with a scoop attached to its arm, as seen in Fig. 5. Participants began the experiment by driving the excavator up a ramp and into a pool of sand, where they practiced using the excavator for three and a half minutes, with the goal of raking the sand by dragging the scoop through the pool. After the practice phase, participants and researchers answered questionnaires similar to the ones used in Experiment 2, in which the word drone was replaced with excavator. This was again done to gauge levels of comfort and control using the excavator to interact with the environment.

Experimental setup for Experiment 3. The left panel shows the excavator during the raking phase of the experiment. The participant drove the excavator in and out of the pool, so they maintained control over the excavator for the duration of the experiment. The right panel shows the participant completing the Posner cueing task with the excavator present. Participants responded to the target using a button on the remote, and positioned the excavator next to the monitor themselves

After filling out the questionnaire, the participant was seated in front of a monitor lying flat on the ground, approximately 110 cm from the participant’s eyes (head placement was not fixed). While this is a greater viewing distance than the desktop monitor setup employed in Experiments 1 and 2, moving the task onto the floor gave participants a comfortable, more natural space to use the remote-controlled excavator. By placing the excavator, sand, and monitor all on the floor, we hoped to encourage participants to begin to encode this floor space as a distinct new functional action area. This is also a more ecologically valid scenario in that a remote-controlled excavator would most likely be used on the ground rather than on a desk. The participant then ran through a practice block of the same Posner cueing paradigm, using a red button built into the excavator controller to respond to the target instead of a keyboard press. Incorporating the response button into the excavator controller allowed participants to continue holding the excavator controller during the target-detection phase of the experiment, ensuring they were able to steer/move the excavator at all times. Participants therefore had continuous control over the excavator for the duration of the experiment, as they did while holding the rake in Experiment 1.

Participants ran through eight blocks of the target-detection task under three configurations: (1) with the excavator on the right side, and the scoop placed directly next to the right target box; (2) with the excavator on the left side, and the scoop placed adjacent to the left target box; and (3) with the excavator away from the monitor (see Fig. 5). Configurations 1 and 2 were assigned to two blocks each, while Configuration 3 was assigned to four blocks. Block order was randomized. Each block with the excavator present was preceded by a minute of raking sand in the pool, which was approximately the same distance from the participant as was the monitor. After the minute was up, participants were responsible for using the remote control to drive the excavator out of the pool and over to the indicated side of the monitor. The participant then used the remote control to position the scoop of the excavator next to the target box before beginning the block. Before blocks without the excavator present, participants drove the excavator several feet away from the monitor. These steps were taken to ensure participants had control over the excavator at all times, even during the target-detection task. After running through eight blocks of the task, participants were again given a posttest to assess demand characteristics. The posttest was identical to the one used in Experiment 2 (see Appendix C).
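The block structure described above (two blocks each for Configurations 1 and 2, four blocks for Configuration 3, in random order) could be generated as in the sketch below. The configuration labels and function name are hypothetical, and an unconstrained shuffle is assumed because the text does not describe any constraints on the randomization.

```python
import random


def make_block_order(rng=None):
    """Return a randomized list of the eight target-detection blocks."""
    rng = rng or random.Random()
    blocks = (
        ["excavator_right"] * 2      # Configuration 1
        + ["excavator_left"] * 2     # Configuration 2
        + ["excavator_absent"] * 4   # Configuration 3
    )
    rng.shuffle(blocks)  # simple unconstrained shuffle (assumption)
    return blocks


# Passing a seeded generator makes the order reproducible.
order = make_block_order(random.Random(1))
print(len(order))  # 8
```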

Results and discussion

One participant responded to more than 50% of catch trials, and so was dropped from analysis. Another seven participants were dropped from analysis for either failing to follow instructions or due to mechanical problems with the excavator, leaving 52 participants included in analyses. As in Experiments 1 and 2, all trials shorter than 200 ms or longer than 1,000 ms were excluded, which accounted for 3.85% of all trials. All items on the participant questionnaire were again found to be correlated (Question 1 and Question 2: r = .681, p < .001; Question 2 and Question 3: r = .503, p < .001; Question 1 and Question 3: r = .549, p < .001), and were averaged into a control score. No participants had control scores outside 2.5 standard deviations from the mean (see Table 8). Two participants were able to guess the purpose of the study based on the posttest answers. Eliminating these participants from analyses did not substantially change the pattern of results seen in Table 6.

Data were again entered into a 2 × 2 × 2 within-subjects ANOVA, with the same factors as in Experiment 2 (validity, tool condition, and target distance). Factors were defined the same as in Experiment 2, with the exception that tool condition now consisted of either excavator present or excavator absent. ANOVA results are shown in Table 6, and reaction time means for each condition are shown in Table 7. There was a main effect of validity, F(1, 51) = 333.058, p < .001, ηp2 = .867, with valid trials showing faster RTs than invalid trials, which was expected based on the paradigm. There was no interaction between tool condition and target distance (p = .374).

While there was no interaction between tool condition and target distance, there was a main effect of tool condition, F(1, 51) = 4.711, p = .035, ηp2 = .085. While targets presented near the excavator were not detected any faster than targets presented far from the excavator, there did seem to be a general prioritization of the monitor, with faster target detection times when the excavator was present.

As in Experiment 2, the items found on the participant questionnaire were highly correlated. The researcher ratings were again uncorrelated with the participant’s control scores (r = −.033, p = .815). We found that the participants’ rating of their ability using the excavator was again significantly lower than the researchers’ rating, t(52) = 3.48, p = .001. This may mean that the participants did not feel comfortable using the excavator, but researchers noted that participants were largely successful in using the excavator to complete the raking task. Performing a median split on the data by control score and running separate ANOVAs for the high-scoring and low-scoring groups did not reveal any new effects or interactions, although the main effect of excavator condition was only seen in the low excavator scoring group, F(1, 25) = 4.666, p = .041, ηp2 = .157, who were significantly faster to detect targets when the excavator was present than when it was absent. Excavator condition did not show a significant main effect in the high scoring group (p = .342; see Appendix E), which is consistent with the results of Experiment 2 (see Fig. 6).

The results of Experiment 3 do not strongly suggest that the excavator was incorporated into the body schema. While there was a general prioritization of the monitor when the excavator was present (primarily for participants who reported feeling less control over the tool), there was no prioritization of the target appearing near the excavator scoop compared with the target appearing outside of the excavator’s active scooping space. One possible interpretation of the main effect of excavator presence that we observed is that participants did incorporate the excavator into the body schema, representing the entire monitor as within the remote-controlled tool’s action space. Although using the controller to initiate a scooping action would not allow participants to reach “far” targets during the detection task, the excavator was free to move across the entire floor at all times, so this is not entirely impossible. However, previous studies examining facilitation near the hands and near the ends of tools have consistently found processing biases specifically within a static hand/tool’s grasping/action space (e.g., Reed et al., 2010; Thomas, 2015). The more generous interpretation of our results as supporting incorporation of a remote tool into the body schema is therefore not consistent with previous standards set forth in the literature.

Although the results of Experiment 3 suggest that taking effective action alone is not necessarily sufficient for observers to incorporate a complex remote-controlled tool into the body schema in the absence of haptic feedback—even when the tool continuously affords action—it is also possible that the visual complexity and structure of the remote tool we tested may have biased attention in a qualitatively different manner than the relatively simple handheld rake we tested in Experiment 1. We examined this possibility in Experiment 4.

Experiment 4

In Experiment 1, we replicated previous work showing that observers are facilitated in detecting targets appearing near the functional end of a simple handheld tool. However, in Experiments 2 and 3, we failed to find strong evidence for a similar prioritization near the ends of remote-controlled tools. Although the handheld and remote-controlled tools we tested across experiments differed in ways that are endemic to these tools—that is, providing versus lacking haptic feedback, simple versus more complex interface, contiguous with the body versus fully separate from the body—they were also of substantially different shapes and sizes. Notably, both the drone and excavator were more visually complex than the simple sticks and rakes that research has traditionally shown can be incorporated into the body schema (e.g., Maravita et al., 2002; Reed et al., 2010). We ran Experiment 4 to investigate whether these differences in visual complexity were responsible for the pattern of results we found across the previous experiments. We again asked participants in this experiment to use the excavator to perform a raking task, but instead of interacting with this excavator via remote control, these participants picked up the excavator and used it as a handheld rake. Following the raking task, participants physically held the excavator near one side of the display during the target-detection task. If the visual characteristics of the excavator drove our failure to find facilitation near this tool in Experiment 3, then we should again see no evidence of faster reaction times to detect targets near the handheld excavator in Experiment 4. However, if participants detect targets near the excavator significantly faster than targets far from the excavator when they hold the excavator in their hand, it instead implies that observers can temporarily incorporate visually complex handheld tools into the body schema.

Methods

Fifty volunteers, none of whom participated in Experiments 1–3, ran in Experiment 4 for course credit. Setup was similar to Experiment 2: The same target-detection task was used, and stimuli were displayed on an upright, desk-mounted monitor. Participants used the same excavator as in Experiment 3, but they did not operate it with its remote control. Participants began the experiment by physically picking up the excavator (not the remote controller) and using its scoop to rake a tray of sand adjacent to the monitor (see Fig. 7), thereby using the excavator as a handheld tool instead of a remotely operated tool. Participants raked sand for 1 minute before beginning the experiment. No questionnaires followed the practice phase, as participants did not have to remotely operate any tool.

Experimental setup for Experiment 4. The left panel shows a participant during the raking phase of the experiment. Participants picked up the excavator and used it to rake sand as a handheld tool. The right panel shows the participant completing the Posner cueing task while holding the excavator. Participants responded to the target using a button on the keyboard

To begin the experimental phase, participants ran through a practice block of the Posner cueing paradigm, using the keyboard to respond. Participants then ran through eight blocks of the target-detection task under three configurations: (1) with them holding the excavator near the right side of the monitor, (2) with them holding the excavator near the left side of the monitor, and (3) with the excavator away from the monitor (see Fig. 7). As in Experiment 3, Configurations 1 and 2 were assigned to two blocks each, while Configuration 3 was assigned to four blocks, for a total of eight blocks. Block order was randomized. Each block with the excavator present was preceded by a minute of raking sand in the tray. During these blocks, the participant physically picked up the excavator (instead of the remote controller) and raked the sand using the excavator as a handheld tool. The participant then physically held the excavator next to the indicated side of the monitor, so that the scoop was directly next to a target box. Before blocks without the excavator present, participants put down the excavator away from the monitor.

Results and discussion

Three participants responded to more than 50% of catch trials, and so were dropped from analysis. Another participant was excluded for failing to follow instructions, leaving 46 participants included in analyses. As in Experiments 1 and 2, all trials shorter than 200 ms or longer than 1,000 ms were excluded, which accounted for 5.66% of all trials.

Data were entered into a 2 × 2 × 2 within-subjects ANOVA, with identical factors to previous experiments (validity, tool condition, and target distance). Factors were defined the same as in Experiment 3. ANOVA results are shown in Table 9, and reaction time means for each condition are shown in Table 10. There was an expected main effect of validity, F(1, 45) = 325.306, p < .001, ηp2 = .878, with valid trials showing faster RTs than invalid trials. There was a significant interaction between validity and target distance, F(1, 45) = 10.013, p = .003, ηp2 = .182. On invalid trials, targets near the excavator or on the side of the nonresponding hand were detected significantly faster than targets far from the excavator, t(45) = −3.297, p = .002, while on valid trials, targets defined as near the excavator or on the side of the nonresponding hand were detected at roughly the same speed as targets far from the excavator (reaction times averaged 368.9 ms and 369.3 ms, respectively). However, these comparisons are collapsed across the tool condition variable, so roughly half of the “near target” classifications fall under the excavator-absent condition. Under this condition, near and far targets are functionally identical, as there is nothing near the monitor to draw focus, so we do not find this interaction to be particularly meaningful. Critically, however, there was a significant interaction between tool condition and target distance, F(1, 45) = 20.752, p < .001, ηp2 = .316 (see Fig. 8). Pairwise comparisons confirmed that the presence of the excavator facilitated target detection: Target detection speed between near and far targets was not significantly different when the excavator was absent, t(45) = 1.828, p = .074, while targets presented near the excavator were detected significantly faster than far targets, t(45) = −3.867, p < .001. This finding mirrors the results we obtained in Experiment 1 with a different set of participants using a simpler, but functionally similar, tool, again providing evidence that target detection is facilitated near a recently used handheld tool.

These results, taken together with the failure to find changes in target detection near the remote-controlled excavator and drone, could indicate that remotely operated tools are incapable of expanding peripersonal space (at least under conditions where these tools include a complex interface, do not provide haptic feedback regarding contact with goal objects, and operate in space that is not contiguous with the body). Target detection near the excavator was facilitated when participants picked it up and used it as a handheld tool, yet participants were no faster to detect targets appearing near versus far from the same excavator when it was operated remotely.

General discussion

A large body of literature using a variety of paradigms suggests observers experience visual biases near handheld tools (e.g., Farnè et al., 2005; Maravita et al., 2002; Reed et al., 2010). In the first experiment of this study, we replicated one such finding: When our participants raked sand for 1 minute, they subsequently showed evidence of visual prioritization of the space near the rake, suggesting they incorporated this rake into their mental representation of their bodies. The literature documenting visual biases near handheld tools indicates that these effects are reliant on active tool use; an observer must use the tool to expand their action area, and they must understand that the tool allows them to interact with space that was previously out of their reach (Maravita et al., 2002; Reed et al., 2010). However, although participants in our second and third experiments did actively use remote-controlled tools, they did not show strong evidence that this active use led to the introduction of visual biases near these tools. In Experiment 4, when participants instead used one of these tools by holding it in their hands, they once again showed visual prioritization of the space near this tool. Taken together, these results suggest not all remotely controlled tools meet the conditions necessary for incorporation into the body schema. More specifically, our data indicate that active tool use alone is not sufficient to induce near-tool visual biases.

The results of our experiments provide compelling evidence that active use is not the sole driver of tool embodiment and suggest potential additional mechanisms that may contribute to a tool’s incorporation into the body schema. One key difference between the handheld and remote tools used in our study that potentially may explain the lack of visual biases we observed near the remote tools was the interface between user and tool. Both the handheld rake and handheld excavator effectively moved sand with a simple reaching action that provided direct tactile feedback. However, the remote-controlled drone and remote-controlled excavator required participants to steer/rake via thumbsticks and buttons instead of direct, intuitive arm movements. This method of remote control lacked a simple mapping to the tools’ movements and gave no tactile feedback. When participants picked up the rake (Experiment 1) or the excavator (Experiment 4), they could directly feel through the tool when they made contact with the sand, as well as its level of resistance as they moved the tool through the tray. This was not true when participants operated the remote tools, as neither the drone nor excavator remote controls provided haptic feedback when the tool made contact with the sand. In other words, whereas previous research has indicated observers must actively use a tool in order to incorporate it into the body schema (e.g., Maravita & Iriki, 2004), a key component of this active use may involve feeling the tool’s movements and effects on the environment through simple, direct action. This distinction could explain why participants failed to incorporate the remote tools into their body schema; the drone and excavator did not provide the same direct one-to-one tactile/haptic feedback as a consequence of raking action in the same manner as the handheld tools. Even in the absence of vision, haptic feedback may be critical in generating the sensation of tool use (Park & Reed, 2015; Serino et al., 2015).

Future research may address this possibility by building haptic feedback into the controls of a remote tool that simulates direct feedback, or by running a similar experiment in virtual reality using a controller with haptic feedback built in. Manipulations of interface complexity and the method of translating a participant’s physical movements to the movements of a remote tool may also shed additional light on how similarities between body and tool movement may contribute to embodiment (e.g., a reaching action on the tool interface leads to a reaching action of the tool).

Another difference between the handheld and remote-controlled tools we investigated that may have contributed to the incorporation of the former, but not the latter, into the body schema is their contiguity with the body. That is, incorporation may occur more readily when there is a physical connection between a tool and its user. In our experiments, we only observed near-tool visual biases when participants physically held the tools. While previous work has shown people exhibit flexibility in what is represented as part of the body schema (e.g., Aglioti et al., 1996), to incorporate a remote object, an observer would need to create a noncontiguous representation, spatially separate from the rest of the body, as well as a disconnected bubble of peripersonal space to surround it. Such a situation differs extensively from incorporating a handheld tool like a rake, which involves an extension of the existing body schema—rather than creation of a satellite representation—to encompass the rake. In other words, while much evidence suggests the body schema can stretch, it may not be easy for it to split.

An alternative but potentially related mechanism that may shape tool embodiment involves the distance between a tool and its user. Handheld tools generally increase a participant’s action area by less than a meter, so peripersonal space does not have to extend very far to incorporate the new area. It might be the case that a noncontiguous tool can be incorporated into the body schema as long as the action area around the tool remains within a limited range of the existing action area around the participant (e.g., Gozli & Brown, 2011). In Experiment 2, participants flew the drone around a medium-sized room during the training phase, whereas in Experiment 3, the excavator remained on the ground several feet away from a participant for the duration of the experiment. Perhaps these distances discouraged participants from incorporating these remote tools into the body schema. In addition, variations in the distance between a tool and participant during the raking and target detection phases of our experiment may have also contributed to the lack of facilitation we observed in Experiment 2. Whereas participants used the drone to rake sand in an area far outside of traditional peripersonal space, they performed a target-detection task in which the drone was much closer to the body (although still outside of immediate grasping space). In Experiment 3, however, the distance between participants and the excavator was approximately the same during both the raking and target-detection tasks, suggesting a mismatch between raking and detection distances alone cannot explain all of our results.

Finally, although our experiments were not explicitly designed to differentiate between the active use and attentional accounts of visual biases near the ends of handheld tools, the results of Experiments 2 and 3 do speak to this controversy. If tools capture multisensory attention rather than extending multisensory representations of peripersonal space (e.g., Holmes, Sanabria, Calvert, & Spence, 2007b), this attentional bias should manifest equally well with handheld tools and with salient remote-controlled tools that have been the object of recent focused practice. However, we found no evidence for attentional prioritization of the space near remote-controlled tools in Experiments 2 and 3 despite the fact that the two novel and presumably attentionally salient remote tools were placed on a side of a screen. While an active use account of peripersonal space expansion may be modified to fit with our obtained results (e.g., by incorporating haptic feedback), our findings are inconsistent with an attentional account of near-tool effects.

Although the null findings of Experiments 2 and 3 may be interpreted as suggesting that, at least under the circumstances tested here, observers represent remotely operated tools differently than handheld tools, these results warrant further investigation in future studies that examine the potential mechanisms driving embodiment discussed above. Additional work could more systematically manipulate haptic feedback, tool interface complexity, contiguity, and operating distance to disentangle which of these factors contribute most to a sense of embodiment. As remotely controlled tools become more prevalent in our day-to-day lives, as well as easier to use, it would be of great benefit to fully understand how users represent these tools. This is true for serious, high-stakes applications such as teleoperation, as well as for more casual activities such as drone racing. While the literature has documented the effects of incorporating simple handheld tools and objects into body representations (Aglioti et al., 1996; Canzoneri et al., 2013; Reed et al., 2010), it is important to uncover the boundary conditions under which more complex tool use expands representations of peripersonal space.

Notes

We conducted a power analysis using an estimated effect size of f = 0.25 for the key interaction between target distance and tool condition. This estimate was derived by averaging effect sizes documented in previous publications reporting facilitation near the hands or near a tool in target-detection tasks (e.g., McManus & Thomas, 2018; Sun & Thomas, 2013; Reed et al., 2010; Reed et al., 2006; Thomas, 2013). With alpha = 0.05, the analysis indicated that a sample size of 46 participants was sufficient to achieve power of 0.90. In all experiments reported here, we collected samples at least this large.
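As an illustration only, the following Python sketch shows one way a power analysis of this kind can be computed. The note does not state the design settings or software used, so everything beyond f = 0.25, alpha = 0.05, and target power = 0.90 is an assumption made here: a 2 (between) x 2 (within) design, a correlation of 0.5 between the repeated measures, sphericity, and the noncentrality convention lambda = f^2 * N * m / (1 - rho). The function names are hypothetical and the code uses only the standard library.

```python
import math


def _beta_cf(a, b, x, max_iter=500, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        # Even and odd steps of the continued fraction for this m.
        for num in (
            m * (b - m) * x / ((a + 2 * m - 1) * (a + 2 * m)),
            -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1)),
        ):
            d = 1.0 + num * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + num / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def reg_inc_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def noncentral_f_cdf(f_value, df1, df2, lam, terms=200):
    """Noncentral F CDF written as a Poisson mixture of beta CDFs."""
    y = df1 * f_value / (df1 * f_value + df2)
    weight = math.exp(-lam / 2.0)  # Poisson(lam / 2) probability of j = 0
    total = 0.0
    for j in range(terms):
        total += weight * reg_inc_beta(df1 / 2.0 + j, df2 / 2.0, y)
        weight *= (lam / 2.0) / (j + 1)
    return total


def central_f_critical(alpha, df1, df2):
    """Upper-alpha critical value of the central F distribution (bisection)."""
    def cdf(v):
        return reg_inc_beta(df1 / 2.0, df2 / 2.0, df1 * v / (df1 * v + df2))

    lo, hi = 0.0, 1.0
    while cdf(hi) < 1.0 - alpha:
        hi *= 2.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if cdf(mid) < 1.0 - alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def interaction_power(n_total, f=0.25, groups=2, measures=2, rho=0.5, alpha=0.05):
    """Power for a between x within interaction under the ASSUMED settings."""
    lam = f ** 2 * n_total * measures / (1.0 - rho)  # assumed convention
    df1 = (groups - 1) * (measures - 1)
    df2 = (n_total - groups) * (measures - 1)
    crit = central_f_critical(alpha, df1, df2)
    return 1.0 - noncentral_f_cdf(crit, df1, df2, lam)


def required_sample_size(target_power=0.90, groups=2, **kwargs):
    """Smallest balanced total N (a multiple of `groups`) reaching the target."""
    n_total = 2 * groups
    while interaction_power(n_total, groups=groups, **kwargs) < target_power:
        n_total += groups
    return n_total


n_required = required_sample_size()
print(n_required, round(interaction_power(n_required), 3))
```

Under these assumed settings the search stops at a total of 46 participants, which agrees with the figure in the note. That agreement does not confirm that these were the settings actually used; a different design or correlation would give a different required sample size.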

For the first eight participants, the attachment consisted of a small wooden rake with four metal prongs extending straight down from the bottom of the drone. This attachment proved too fragile for the task and upset the weight balance of the drone, so we switched to the straw attachments for the remaining 62 participants. Patterns of significance did not differ between analyses that included and analyses that excluded these eight participants.

References

Abrams, R. A., Davoli, C. C., Du, F., Knapp, W. H., & Paull, D. (2008). Altered vision near the hands. Cognition, 107(3), 1035–1047.

Agauas, S. J., Jacoby, M., & Thomas, L. E. (2020). Near-hand effects are robust: Three OSF pre-registered replications of visual biases in perihand space. Visual Cognition, 28(3), 192–204. doi:https://doi.org/10.1080/13506285.2020.1751763

Aglioti, S., Smania, N., Manfredi, M., & Berlucchi, G. (1996). Disownership of left hand and objects related to it in a patient with right brain damage. NeuroReport, 8(1), 293–296.

Bassolino, M., Serino, A., Ubaldi, S., & Làdavas, E. (2010). Everyday use of the computer mouse extends peripersonal space representation. Neuropsychologia, 48(3), 803–811.

Berlucchi, G., & Aglioti, S. (1997). The body in the brain: Neural bases of corporeal awareness. Trends in Neurosciences, 20(12), 560–564.

Brockmole, J. R., Davoli, C. C., Abrams, R. A., & Witt, J. K. (2013). The world within reach: Effects of hand posture and tool use on visual cognition. Current Directions in Psychological Science, 22(1), 38–44.

Brozzoli, C., Pavani, F., Urquizar, C., Cardinali, L., & Farnè, A. (2009). Grasping actions remap peripersonal space. NeuroReport, 20(10), 913–917.

Canzoneri, E., Ubaldi, S., Rastelli, V., Finisguerra, A., Bassolino, M., & Serino, A. (2013). Tool-use reshapes the boundaries of body and peripersonal space representations. Experimental Brain Research, 228(1), 25–42.

Costantini, M., Ambrosini, E., Tieri, G., Sinigaglia, C., & Committeri, G. (2010). Where does an object trigger an action? An investigation about affordances in space. Experimental Brain Research, 207(1/2), 95–103.

Costantini, M., Frassinetti, F., Maini, M., Ambrosini, E., Gallese, V., & Sinigaglia, C. (2014). When a laser pen becomes a stick: Remapping of space by tool-use observation in hemispatial neglect. Experimental Brain Research, 232(10), 3233–3241.

Farnè, A., Iriki, A., & Làdavas, E. (2005). Shaping multisensory action–space with tools: Evidence from patients with cross-modal extinction. Neuropsychologia, 43(2), 238–248.

Fogassi, L., Gallese, V., Fadiga, L., Luppino, G., Matelli, M., & Rizzolatti, G. (1996). Coding of peripersonal space in inferior premotor cortex (area F4). Journal of Neurophysiology, 76(1), 141–157.

Goodhew, S. C., Edwards, M., Ferber, S., & Pratt, J. (2015). Altered visual perception near the hands: A critical review of attentional and neurophysiological models. Neuroscience & Biobehavioral Reviews, 55, 223–233.

Gozli, D. G., & Brown, L. E. (2011). Agency and control for the integration of a virtual tool into the peripersonal space. Perception, 40(11), 1309–1319.

Graziano, M. S., & Cooke, D. F. (2006). Parieto-frontal interactions, personal space, and defensive behavior. Neuropsychologia, 44(6), 845–859.

Holmes, N. P., Calvert, G. A., & Spence, C. (2004). Extending or projecting peripersonal space with tools? Multisensory interactions highlight only the distal and proximal ends of tools. Neuroscience Letters, 372(1/2), 62–67.

Holmes, N. P., Calvert, G. A., & Spence, C. (2007a). Tool use changes multisensory interactions in seconds: Evidence from the crossmodal congruency task. Experimental Brain Research, 183(4), 465–476.

Holmes, N. P., Sanabria, D., Calvert, G. A., & Spence, C. (2007b). Tool-use: Capturing multisensory spatial attention or extending multisensory peripersonal space? Cortex, 43(3), 469–489.

Hunley, S. B., Marker, A. M., & Lourenco, S. F. (2017). Individual differences in the flexibility of peripersonal space. Experimental Psychology, 64(1), 49–55. doi:https://doi.org/10.1027/1618-3169/a000350

Làdavas, E., Di Pellegrino, G., Farnè, A., & Zeloni, G. (1998). Neuropsychological evidence of an integrated visuotactile representation of peripersonal space in humans. Journal of Cognitive Neuroscience, 10(5), 581–589.

Longo, M. R., & Lourenco, S. F. (2006). On the nature of near space: Effects of tool use and the transition to far space. Neuropsychologia, 44(6), 977–981.

Maravita, A., & Iriki, A. (2004). Tools for the body (schema). Trends in Cognitive Sciences, 8(2), 79–86.

Maravita, A., Spence, C., Kennett, S., & Driver, J. (2002). Tool-use changes multimodal spatial interactions between vision and touch in normal humans. Cognition, 83(2), B25–B34.

McManus, R., & Thomas, L. E. (2018). Immobilization does not disrupt near-hand attentional biases. Consciousness and Cognition. Advance online publication. doi:https://doi.org/10.1016/j.concog.2018.05.001

Murata, A., Fadiga, L., Fogassi, L., Gallese, V., Raos, V., & Rizzolatti, G. (1997). Object representation in the ventral premotor cortex (area F5) of the monkey. Journal of Neurophysiology, 78(4), 2226–2230.

Park, G. D., & Reed, C. L. (2015). Haptic over visual information in the distribution of visual attention after tool-use in near and far space. Experimental Brain Research, 233(10), 2977–2988.

Posner, M. I., Walker, J. A., Friedrich, F. A., & Rafal, R. D. (1987). How do the parietal lobes direct covert attention? Neuropsychologia, 25(1), 135–145.

Reed, C. L., Betz, R., Garza, J. P., & Roberts, R. J. (2010). Grab it! Biased attention in functional hand and tool space. Attention, Perception, & Psychophysics, 72(1), 236–245.

Reed, C. L., Grubb, J. D., & Steele, C. (2006). Hands up: Attentional prioritization of space near the hand. Journal of Experimental Psychology: Human Perception and Performance, 32(1), 166.

Rizzolatti, G., Fadiga, L., Fogassi, L., & Gallese, V. (1997). The space around us. Science, 277(5323), 190–191.

Serino, A., Canzoneri, E., Marzolla, M., Di Pellegrino, G., & Magosso, E. (2015). Extending peripersonal space representation without tool-use: Evidence from a combined behavioral-computational approach. Frontiers in Behavioral Neuroscience, 9, 4.

Sengül, A., Rognini, G., van Elk, M., Aspell, J. E., Bleuler, H., & Blanke, O. (2013). Force feedback facilitates multisensory integration during robotic tool use. Experimental Brain Research, 227(4), 497–507.

Sun, H. M., & Thomas, L. E. (2013). Biased attention near another’s hand following joint action. Frontiers in Psychology, 4, 443.

Thomas, L. E. (2013). Grasp posture modulates attentional prioritization of space near the hands. Frontiers in Psychology, 4, 312. doi:https://doi.org/10.3389/fpsyg.2013.00312

Thomas, L. E. (2015). Grasp posture alters visual processing biases near the hands. Psychological Science, 26(5), 625–632.

Thomas, L. E. (2017). Action experience drives visual-processing biases near the hands. Psychological Science, 28(1), 124–131.

Acknowledgments

We would like to thank the undergraduate researchers who assisted with data collection for this project: Emilee Anderson, Connor O’Fallon, Aisha Hassam, Morgan Jacoby, Brooke Billadeau, Allison Nadeau, and Kaylee Abfalter. We also thank Ganesh Padmanabhan and Enrique Alvarez Vazquez for their assistance with coding and building these experiments, and Dr. Rebecca Woods, Dr. Jeffrey Johnson, and Dr. Michael Robinson for guidance and insightful feedback. This work was supported by grants from the National Science Foundation (BCS #1556336) and the National Institute of General Medical Sciences (#P30 GM103505) to L. E. Thomas.

Open practices statement

The data and materials for all experiments are available upon request from Dr. Laura E. Thomas. None of the experiments was preregistered.

Appendices

Appendix A

Appendix B

Appendix C

Appendix D

Appendix E

Cite this article

McManus, R.R., Thomas, L.E. Vision is biased near handheld, but not remotely operated, tools. Atten Percept Psychophys 82, 4038–4057 (2020). https://doi.org/10.3758/s13414-020-02099-8