Abstract

This paper studies an iterative algorithm for hierarchical fixed point problems for a finite family of nonexpansive mappings in the setting of real Hilbert spaces. We establish the strong convergence of the proposed method under suitable conditions. Numerical examples are presented to illustrate the proposed method and the convergence result. The algorithm and result presented in this paper extend and improve several well-known algorithms and results in the literature.

1 Introduction

Throughout this paper, we assume that H is a real Hilbert space whose inner product and norm are denoted by \(\langle\cdot,\cdot \rangle\) and \(\|\cdot\|\), respectively. We also assume that \(T : H \to H\) is a nonexpansive operator, that is, \(\| Tx - Ty \| \leq\| x-y \|\) for all \(x,y \in H\). The fixed point set of T is denoted by \(F(T)\), that is, \(F(T) = \{ x \in H : Tx = x \}\). It is well known that \(F(T)\) is closed and convex (see [1]).

Let C be a nonempty closed convex subset of H and \(S : C \to H\) be a nonexpansive mapping. The hierarchical fixed point problem (in short, HFPP) is to find \(x\in F(T)\) such that

$$ \langle x-Sx, y-x\rangle\geq0, \quad\forall y\in F(T). \tag{1.1} $$

It is linked with some monotone variational inequalities and convex programming problems. Various methods have been proposed to solve (1.1); see, for example, [2–18] and the references therein.

Yao et al. [2] introduced the following iterative algorithm to solve HFPP (1.1):

where \(f: C\to H\) is a contraction mapping and \(\{\alpha_{n}\}\) and \(\{ \beta_{n}\}\) are sequences in \((0,1)\). Under some restrictions on parameters, they proved that the sequence \(\{ x_{n}\}\) generated by (1.2) converges strongly to a point \(z\in F(T)\) which is also a unique solution of the following variational inequality problem (VIP): Find \(z \in F(T)\) such that

In 2011, Ceng et al. [19] investigated the following iterative method:

where U is a Lipschitzian mapping and F is a Lipschitzian and strongly monotone mapping. They proved that under some appropriate assumptions on the operators and parameters, the sequence \(\{x_{n}\}\) generated by (1.4) converges strongly to the unique solution of the following variational inequality problem (VIP): Find \(z \in F(T)\) such that

In parallel, the hierarchical fixed point problem has been considered for a finite family of nonexpansive mappings. By using a \(W_{n}\)-mapping [20], Yao [21] introduced the following iterative method:

where A is a strongly positive linear bounded operator, that is, there exists \(\alpha>0\) such that \(\langle Ax ,x \rangle\ge\alpha\|x\|^{2}\), \(f: C\to H\) is a contraction mapping and \(\{\alpha_{n}\}\) and \(\{\beta _{n}\}\) are sequences in \((0,1)\). Under some restrictions on parameters, he proved that the sequence \(\{ x_{n}\}\) generated by (1.6) converges strongly to a unique solution of the following variational inequality problem defined on the set of common fixed points of nonexpansive mappings \(T_{i} : H \to H\), \(i =1,2, \ldots,N\): Find \(z \in\bigcap_{i=1}^{N} F(T_{i})\) such that

By combining Korpelevich’s extragradient method and the viscosity approximation method, Ceng et al. [22] introduced and analyzed implicit and explicit iterative schemes for computing a common element of the set of fixed points of a nonexpansive mapping and the set of solutions of a variational inequality problem for an α-inverse-strongly monotone mapping defined on a real Hilbert space. Under suitable assumptions, they established the strong convergence of the sequences generated by the proposed schemes.

By combining a Krasnoselskii-Mann type algorithm with the steepest-descent method, Buong and Duong [23] introduced the following explicit iterative algorithm:

where \(T_{i}^{k}=(1-\beta_{k}^{i})I+\beta_{k}^{i}T_{i}\) for \(1\leq i\leq N\), \(\{T_{i}\}^{N}_{i=1}\) are N nonexpansive mappings on a real Hilbert space H, \(T_{0}^{k}=I-\lambda_{k}\mu F\), and F is an L-Lipschitz continuous and η-strongly monotone mapping. They proved that the sequence \(\{x_{k}\}\) generated by (1.8) converges strongly to the unique solution of the following variational inequality problem: Find \(z \in\bigcap_{i=1}^{N} F(T_{i})\) such that

Recently, Zhang and Yang [24] considered the following explicit iterative algorithm:

where V is an α-Lipschitzian mapping on a real Hilbert space H, F is an L-Lipschitz continuous and η-strongly monotone mapping, and \(T_{i}^{k}=(1-\beta_{k}^{i})I+\beta_{k}^{i}T_{i}\) for \(1\leq i\leq N\). Under suitable assumptions, they proved that the sequence \(\{x_{k}\}\) generated by the iterative algorithm (1.10) converges strongly to the unique solution of the variational inequality problem of finding \(z \in\bigcap_{i=1}^{N} F(T_{i})\) such that

In this paper, motivated by the above works and related literature, we introduce an iterative algorithm for hierarchical fixed point problems of a finite family of nonexpansive mappings in the setting of real Hilbert spaces. We establish a strong convergence theorem for the sequence generated by the proposed method. Numerical examples are given to illustrate the theoretical results. The algorithm and results presented in this paper improve and extend some recent corresponding algorithms and results; see, for example, Yao et al. [2], Suzuki [14], Tian [15], Xu [16], Ceng et al. [19], Buong and Duong [23], Zhang and Yang [24], and the references therein.

2 Preliminaries

In this section, we present some known definitions and results which will be used in the sequel.

Definition 2.1

A mapping \(T:C\to H\) is said to be α-inverse strongly monotone if there exists \(\alpha>0\) such that

$$ \langle Tx-Ty, x-y\rangle\geq\alpha\|Tx-Ty\|^{2}, \quad\forall x,y\in C. $$

Lemma 2.1

[19]

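Let \(U:C \to H\) be a τ-Lipschitzian mapping, and let \(F:C\to H \) be a k-Lipschitzian and η-strongly monotone mapping. Then, for \(0\leq\rho\tau<\mu\eta\), the mapping \(\mu F-\rho U\) is \((\mu\eta -\rho\tau)\)-strongly monotone, i.e.,

$$ \bigl\langle(\mu F-\rho U)x-(\mu F-\rho U)y, x-y\bigr\rangle\geq(\mu\eta-\rho\tau)\|x-y\|^{2}, \quad\forall x,y\in C. $$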

Definition 2.2

[21]

A mapping \(T: H \to H\) is said to be an averaged mapping if there exists \(\alpha\in(0,1)\) such that

$$ T=(1-\alpha)I+\alpha R, \tag{2.1} $$

where \(I: H\to H\) is the identity mapping and \(R: H \to H\) is a nonexpansive mapping. More precisely, when (2.1) holds, we say that T is α-averaged.

It is easy to see that the averaged mapping T is also nonexpansive and \(F(T ) = F(R)\).
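
Both claims can be checked in two lines. The following is a brief sketch, assuming that (2.1) has the standard form \(T=(1-\alpha)I+\alpha R\) with \(\alpha\in(0,1)\) and R nonexpansive:

$$ \begin{aligned} \|Tx-Ty\| &=\bigl\|(1-\alpha) (x-y)+\alpha(Rx-Ry)\bigr\| \leq(1-\alpha)\|x-y\|+\alpha\|Rx-Ry\| \leq\|x-y\|, \\ Tx=x &\quad\Longleftrightarrow\quad \alpha(Rx-x)=0 \quad\Longleftrightarrow\quad Rx=x. \end{aligned} $$

The first line uses the triangle inequality and the nonexpansiveness of R; the second uses only \(\alpha>0\).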

Lemma 2.2

If the mappings \(\{T_{i}\}^{N}_{i=1}\) defined on a real Hilbert space H are averaged and have a common fixed point, then

$$ \bigcap_{i=1}^{N}F(T_{i})=F(T_{1}T_{2}\cdots T_{N}). $$

Lemma 2.3

[1]

Let C be a nonempty closed convex subset of a real Hilbert space H. If \(T : C \rightarrow C\) is a nonexpansive mapping with \(F(T)\neq \emptyset\), then the mapping \(I -T\) is demiclosed at 0, i.e., if \(\{x_{n}\}\) is a sequence in C weakly converging to x, and if \(\{(I -T )x_{n}\}\) converges strongly to 0, then \((I -T )x = 0\).

Definition 2.3

A mapping \(T : C \to H\) is said to be a k-strict pseudo-contraction if there exists a constant \(k \in[0, 1)\) such that

$$ \|Tx-Ty\|^{2}\leq\|x-y\|^{2}+k\bigl\|(I-T)x-(I-T)y\bigr\|^{2}, \quad\forall x,y\in C. $$

Lemma 2.4

[27]

Let C be a nonempty closed convex subset of a real Hilbert space H and \(S: C \to H\) be a k-strict pseudo-contraction mapping. Define \(B: C \to H\) by \(Bx=\lambda Sx+(1-\lambda)x\) for all \(x\in C\). Then, for \(\lambda\in[k,1)\), B is a nonexpansive mapping such that \(F(B)=F(S)\).

Lemma 2.5

[28]

Let \(T:C\to H\) be a k-Lipschitzian and η-strongly monotone operator. Let \(0<\mu<\frac{2\eta}{k^{2}}\), \(W=I-\lambda\mu T\) and \(\mu(\eta -\frac{\mu k^{2}}{2})=\tau\). Then, for \(0 < \lambda< \min\{1,\frac{1}{\tau}\}\), W is a contraction mapping with constant \(1-\lambda\tau\), that is,

$$ \|Wx-Wy\|\leq(1-\lambda\tau)\|x-y\|, \quad\forall x,y\in C. $$
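
As a quick numerical illustration of Lemma 2.5, the following Python sketch samples pairs of points and compares the empirical Lipschitz ratio of \(W=I-\lambda\mu T\) with the constant \(1-\lambda\tau\). The operator \(T(x)=2x+1\) on \(\mathbb{R}\), the parameter values and the helper name `lipschitz_ratio` are assumptions chosen for illustration; they are not taken from the paper.

```python
import random


def lipschitz_ratio(W, trials=1000, seed=0):
    """Largest sampled value of |W(x) - W(y)| / |x - y| over random pairs."""
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x, y = rng.uniform(-10, 10), rng.uniform(-10, 10)
        if x != y:
            worst = max(worst, abs(W(x) - W(y)) / abs(x - y))
    return worst


# Hypothetical operator: T(x) = 2x + 1 is 2-Lipschitzian and 2-strongly monotone on R.
k, eta = 2.0, 2.0
T = lambda x: 2.0 * x + 1.0

mu = 0.5                          # satisfies 0 < mu < 2*eta/k**2 = 1
tau = mu * (eta - mu * k**2 / 2)  # tau = 0.5
lam = 0.5                         # satisfies 0 < lam < min(1, 1/tau) = 1

W = lambda x: x - lam * mu * T(x)  # W = I - lam*mu*T, here W(x) = 0.5x - 0.25
ratio = lipschitz_ratio(W)
bound = 1 - lam * tau              # contraction constant claimed by the lemma
print(ratio, bound)
```

In this linear case W has slope 0.5, so the sampled ratio stays below the bound 0.75; the lemma's constant is an upper estimate and need not be attained.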

Lemma 2.6

[29]

Let \(\{x_{n}\}\) and \(\{y_{n}\}\) be bounded sequences in a Banach space E and \(\{\beta_{n}\}\) be a sequence in \([0,1]\) with \(0 < \liminf_{n\to\infty} \beta_{n} \leq\limsup_{n\to\infty} \beta_{n} < 1\). Suppose \(x_{n+1}=\beta_{n}x_{n}+(1-\beta_{n})y_{n}\), \(\forall n\geq0\) and \(\limsup_{n\to\infty}(\|y_{n+1}-y_{n}\|-\|x_{n+1}-x_{n}\|) \leq0\). Then \(\lim_{n\to\infty}\|y_{n}-x_{n}\|=0\).

We close this section by presenting the following lemma on the sequences of real numbers.

Lemma 2.7

[30]

Let \(\{a_{n}\}\) be a sequence of nonnegative real numbers such that

$$ a_{n+1}\leq(1-\upsilon_{n})a_{n}+\delta_{n}, $$

where \(\{\upsilon_{n}\}\) is a sequence in \((0,1)\) and \(\{\delta_{n}\}\) is a sequence such that

(i) \(\sum_{n=1}^{\infty}\upsilon_{n}=\infty\);

(ii) \(\limsup_{n\to\infty} \frac{\delta_{n}}{\upsilon_{n}} \leq0\) or \(\sum_{n=1}^{\infty}|\delta_{n}|<\infty\).

Then \(\lim_{n\to\infty}a_{n}=0\).
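
To see the lemma at work, the following Python sketch iterates the recursion with equality, assuming it has the standard form \(a_{n+1}\leq(1-\upsilon_{n})a_{n}+\delta_{n}\). The choices \(\upsilon_{n}=\frac{1}{n+1}\) and \(\delta_{n}=\frac{\upsilon_{n}}{n+1}\) and the function name `xu_recursion` are illustrative assumptions; they satisfy (i) and the first alternative in (ii).

```python
def xu_recursion(a1, steps):
    """Iterate a_{n+1} = (1 - v_n) a_n + d_n with v_n = 1/(n+1), d_n = v_n/(n+1).

    Returns the list [a_1, a_2, ..., a_steps].
    """
    a = a1
    history = [a]
    for n in range(1, steps):
        upsilon = 1.0 / (n + 1)    # sum of upsilon_n diverges (harmonic series)
        delta = upsilon / (n + 1)  # delta_n / upsilon_n = 1/(n+1) -> 0
        a = (1.0 - upsilon) * a + delta
        history.append(a)
    return history


history = xu_recursion(1.0, 10000)
print(history[0], history[99], history[-1])
```

For this particular choice, multiplying the recursion by \(n+1\) gives \(n a_{n}=1+\frac{1}{2}+\cdots+\frac{1}{n}\) when \(a_{1}=1\), so \(a_{n}\) decays like \(\frac{\ln n}{n}\), which matches the slow but certain decrease seen in the printed values.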

3 An iterative method and strong convergence results

Let C be a nonempty closed convex subset of a real Hilbert space H and \(\{T_{i}\}^{N}_{i=1}\) be N nonexpansive mappings on C such that \(\Omega=\bigcap_{i=1}^{N} F(T_{i})\neq\emptyset\). Let \(T: C \to C\) be a k-Lipschitzian and η-strongly monotone mapping, and \(f: C \to C\) be a contraction mapping with a constant τ. We consider the following hierarchical fixed point problem (in short, HFPP) of finding \(z \in\Omega\) such that

$$ \bigl\langle\rho f(z)-\mu T(z), x-z\bigr\rangle\leq0, \quad\forall x\in\Omega. \tag{3.1} $$

Now we suggest the following algorithm for finding a solution of HFPP (3.1).

Algorithm 3.1

For an arbitrarily given point \(x_{0}\in C\), let the iterative sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) be generated by

where \(T_{i}^{n}=(1-\delta_{n}^{i})I+\delta_{n}^{i}T_{i}\) and \(\delta_{n}^{i}\in (0,1)\) for \(i=1,2,\ldots,N\). Suppose the parameters satisfy \(0<\mu<\frac{2\eta}{k^{2}}\) and \(0\leq \rho< \frac{\nu}{\tau}\), where \(\nu= \mu ( \eta-\frac{\mu k^{2}}{2} )\). Also \(\{\gamma_{n}\}\), \(\{\alpha_{n}\}\) and \(\{\beta_{n}\}\) are sequences in \((0,1)\) satisfying the following conditions:

(a) \(0 < \liminf_{n\to\infty} \gamma_{n} \leq\limsup_{n\to \infty}\gamma_{n} < 1\),

(b) \(\lim_{n\to\infty}\alpha_{n}=0\) and \(\sum_{n=1}^{\infty }\alpha_{n}=\infty\),

(c) \(\{\beta_{n}\}\subset[\sigma,1)\) and \(\lim_{n\to\infty }\beta_{n}=\beta<1\),

(d) \(\lim_{n\to\infty}|\delta_{n-1}^{i}-\delta_{n}^{i}|=0\) for \(i=1,2,\ldots,N\).
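
The scheme can be sketched in a few lines of Python on \(H=\mathbb{R}\). The sketch assumes that the update has the form \(y_{n}=\beta_{n}x_{n}+(1-\beta_{n})T_{N}^{n}\cdots T_{1}^{n}x_{n}\), \(x_{n+1}=\alpha_{n}\rho f(y_{n})+\gamma_{n}x_{n}+((1-\gamma_{n})I-\alpha_{n}\mu T)y_{n}\), which is consistent with the reduction (3.3) in Remark 3.1(d) and with the relaxed mappings \(T_{i}^{n}\) defined above; it should be read as an illustration rather than the authors' implementation. The mappings \(T_{1}\), \(T_{2}\), f, T, the parameter sequences and the function name `algorithm_3_1` are hypothetical choices with \(\Omega=\{1\}\).

```python
def algorithm_3_1(x0, Ts, f, T, rho, mu, alpha, beta, gamma, delta, steps):
    """Run `steps` iterations of the assumed update on the real line."""
    x = x0
    for n in range(steps):
        # u = T_N^n ... T_1^n x_n, where T_i^n = (1 - delta_n^i) I + delta_n^i T_i
        u = x
        for i, Ti in enumerate(Ts, start=1):
            d = delta(n, i)
            u = (1.0 - d) * u + d * Ti(u)
        # Assumed form of the two-step update (see the lead-in).
        y = beta(n) * x + (1.0 - beta(n)) * u
        x = (alpha(n) * rho * f(y) + gamma(n) * x
             + (1.0 - gamma(n)) * y - alpha(n) * mu * T(y))
    return x


# Hypothetical data: both mappings are nonexpansive with the single common fixed point 1.
Ts = [lambda x: (x + 1.0) / 2.0, lambda x: 2.0 - x]
f = lambda x: x / 7.0        # contraction with constant tau = 1/7
T = lambda x: x              # 1-Lipschitzian and 1-strongly monotone (k = eta = 1)
rho, mu = 1.0, 1.0           # mu < 2*eta/k**2 = 2, nu = 0.5, rho < nu/tau = 3.5

x_final = algorithm_3_1(
    x0=5.0, Ts=Ts, f=f, T=T, rho=rho, mu=mu,
    alpha=lambda n: 1.0 / (2.0 * (n + 1)),         # condition (b)
    beta=lambda n: 0.5,                            # condition (c) with sigma = 0.5
    gamma=lambda n: 0.25,                          # condition (a)
    delta=lambda n, i: (n + i) / (n + i + 1.0),    # condition (d)
    steps=2000,
)
print(x_final)
```

Passing the parameter sequences as functions of n keeps the loop body close to the formulas. Since \(\Omega\) is a singleton here, the limit predicted by the theory is 1, and the iterate approaches it at a rate governed by \(\alpha_{n}\).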

Remark 3.1

Algorithm 3.1 can be viewed as an extension and improvement of some well-known algorithms.

(a) If \(\beta_{n}=0\), \(\gamma_{n}=\beta\), \(\mu=1\), \(\rho =\gamma\) and \(f(y_{n})=f(x_{n})\), then Algorithm 3.1 reduces to the one studied in [21].

(b) If \(\beta_{n}=0\), \(N=1\), \(\gamma_{n}=0\), \(\rho=1\) and \(f(y_{n})=f(x_{n})\), then Algorithm 3.1 can be seen as an extension of an algorithm considered in [2].

(c) If \(\beta_{n}=0\), \(N=1\), \(\delta_{n}^{1}=1\), \(\gamma_{n}=0\) and \(f(y_{n})=U(x_{n})\), then Algorithm 3.1 reduces to that considered and studied in [19].

(d) If \(\beta_{n}=0\), \(\gamma_{n}=1-\beta_{n}^{0}\) and \(\rho=0\), then Algorithm 3.1 reduces to the following algorithm:

$$ x_{n+1}=\bigl(1-\beta_{n}^{0} \bigr)x_{n}+\beta_{n}^{0}(I-\lambda_{n}\mu T)T_{N}^{n} \cdots T_{1}^{n} x_{n}, \tag{3.3} $$

where \(\lambda_{n}=\frac{\alpha_{n}}{\beta_{n}^{0}}\). We can see that (3.3) coincides with the algorithm proposed in [23].

(e) If \(\beta_{n}=0\), \(\gamma_{n}=0\) and \(f(y_{n})=V(x_{n})\), then Algorithm 3.1 reduces to the one considered in [24].

This shows that Algorithm 3.1 is quite general and unifying. We expect it to be widely applicable.

Lemma 3.1

The sequences \(\{x_{n}\}\) and \(\{y_{n}\}\) are bounded.

Proof

Let \(x^{*} \in\Omega\). We have

Since \(\lim_{n\to\infty}\alpha_{n}=0\), without loss of generality, we may assume that \(\alpha_{n} \leq\min\{\epsilon,\frac{\epsilon}{\tau}\}\) for all \(n\geq1\), where \(0 < \epsilon< 1 - \limsup_{n\to\infty}\gamma_{n}\). From (3.2) and (3.4), we obtain

where the second inequality follows from Lemma 2.5 and the third inequality follows from (3.4). By induction on n, we obtain

Hence, \(\{x_{n}\}\) is bounded, and consequently, we deduce that \(\{y_{n}\} \), \(\{Ty_{n}\}\), \(\{T_{1}x_{n+1}\}\), \(\|T_{1}^{n}x_{n+1}\|, \|T_{2}T_{1}^{n}x_{n+1}\|, \ldots , \|T_{N-1}^{n} \cdots T_{1}^{n}x_{n+1}\|, \|T_{N}T_{N-1}^{n} \cdots T_{1}^{n}x_{n+1}\|\) and \(\{f(y_{n})\}\) are bounded. □

Lemma 3.2

Let \(\{x_{n}\}\) be a sequence generated by Algorithm 3.1. Then:

(a) \(\lim_{n\to\infty}\|x_{n+1}-x_{n}\|=0\).

(b) The weak ω-limit set satisfies \(w_{w}(x_{n}) \subset\Omega\), where \(w_{w}(x_{n})=\{x: x_{n_{i}}\rightharpoonup x \mbox{ for some subsequence } \{x_{n_{i}}\} \mbox{ of } \{x_{n}\}\}\).

Proof

We estimate

It follows from the definition of \(T_{i}^{n+1}\) that

and from (3.6) we have

By induction on N, we have

Since \(\lim_{n\to\infty}|\delta_{n+1}^{i}-\delta_{n}^{i}|=0\) for \(i=1,2,\ldots,N\), and \(\|x_{n+1}\|, \|T_{1}x_{n+1}\|, \|T_{1}^{n}x_{n+1}\|, \|T_{2}T_{1}^{n}x_{n+1}\|, \ldots, \|T_{N-1}^{n} \cdots T_{1}^{n}x_{n+1}\|, \|T_{N}T_{N-1}^{n} \cdots T_{1}^{n}x_{n+1}\|\) are bounded, we obtain

Define \(w_{n}=\frac{x_{n+1}-\gamma_{n}x_{n}}{1-\gamma_{n}}\). Then \(x_{n+1}=(1-\gamma_{n})w_{n}+\gamma_{n}x_{n}\), and therefore, from (3.5), we have

Since \(\lim_{n\to\infty}\alpha_{n}=0\), \(\lim_{n\to\infty}\beta _{n}=\beta\), \(0<\liminf_{n\to\infty}\gamma_{n} \leq\limsup_{n\to\infty}\gamma_{n}<1\) and

we get

By Lemma 2.6, we have \(\lim_{n\to\infty}\|w_{n}-x_{n}\|=0\). Since \(\|x_{n+1}-x_{n}\|=(1-\gamma_{n})\|w_{n}-x_{n}\|\), we obtain

We next estimate

which implies that

Since \(\lim_{n\to\infty}\alpha_{n}=0\) and \(0<\liminf_{n\to\infty}\gamma_{n} \leq\limsup_{n\to\infty}\gamma _{n}<1\), we have

Define a mapping \(W: C\to H\) by

with \(\sigma\leq\beta<1\). It follows from Lemma 2.4 that W is a nonexpansive mapping and \(F(W)=\Omega\). Note that

Since \(\lim_{n\to\infty}\beta_{n}=\beta\) and \(\lim_{n\to\infty}\| x_{n}-y_{n}\|=0\), we obtain

Since \(\{x_{n}\}\) is bounded, without loss of generality we may assume that \(x_{n}\rightharpoonup x^{*}\in C\). It follows from Lemma 2.3 that \(x^{*}\in F(W)=\Omega\). Therefore, \(w_{w}(x_{n}) \subset\Omega\). □

Theorem 3.1

The sequence \(\{x_{n}\}\) generated by Algorithm 3.1 converges strongly to a point \(z \in\Omega=\bigcap_{i=1}^{N} F(T_{i})\), which is also the unique solution of HFPP (3.1).

Proof

Since \(\{x_{n}\}\) is bounded and \(x_{n} \rightharpoonup w\), from Lemma 3.2 we have \(w \in\Omega\). Since \(0 \leq\rho\tau< \mu\eta\), from Lemma 2.1 it can be easily seen that the operator \(\mu T-\rho f\) is \(\mu\eta -\rho\tau\) strongly monotone, and we get the uniqueness of the solution of HFPP (3.1). Let us denote this unique solution of HFPP (3.1) by \(z\in\Omega\).

Since \(\{x_{n}\}\) is bounded, there exists a subsequence \(\{x_{n_{k}}\}\) of \(\{x_{n}\}\) such that

Next, we show that \(x_{n}\rightarrow z\). We have

which implies that

Let \(\upsilon_{n}=\alpha_{n}(\nu- \rho\tau)\) and \(\delta_{n}=2\alpha_{n}\langle\rho f(z)-\mu T(z),x_{n+1}-z\rangle\). Then we have

It follows that

Thus, all the conditions of Lemma 2.7 are satisfied. Hence we deduce that \(x_{n}\to z\). This completes the proof. □

4 Examples

To illustrate Algorithm 3.1 and the convergence result, we consider the following examples.

Example 4.1

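Let \(\alpha_{n}=\frac{1}{2(n+1)}\), \(\beta_{n}=\frac{1}{n^{3}}\) and \(\gamma_{n}=\frac{1}{4}\). It is easy to show that the sequences \(\{\alpha_{n}\}\), \(\{\beta_{n}\}\) and \(\{\gamma_{n}\}\) satisfy conditions (a), (b) and (c). Let \(\delta_{n}^{i}=\frac{n+i}{n+i+1}\) for \(i=1,2\). Then

$$ \lim_{n\to\infty}\bigl|\delta_{n-1}^{i}-\delta_{n}^{i}\bigr| =\lim_{n\to\infty}\biggl|\frac{n-1+i}{n+i}-\frac{n+i}{n+i+1}\biggr| =\lim_{n\to\infty}\frac{1}{(n+i)(n+i+1)}=0. $$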

This implies that the sequence \(\{\delta_{n}^{i}\}\) satisfies condition (d).

Let \(T_{1}, T_{2} : \mathbb{R} \to\mathbb{R}\) be defined by

and let the mapping \(f: \mathbb{R}\to\mathbb{R}\) be defined by

It is easy to see that \(T_{1}\) and \(T_{2}\) are nonexpansive, and f is a contraction mapping with constant \(\frac{1}{7}\). Clearly,

Let \(T: \mathbb{R}\to\mathbb{R}\) be defined by

Then T is 1-Lipschitzian and \(\frac{1}{7}\)-strongly monotone.

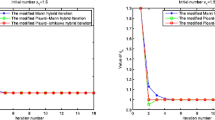

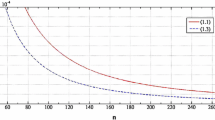

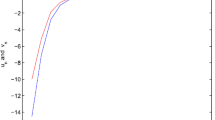

In all tests we take \(\rho=\frac{1}{30}\) and \(\mu=\frac{1}{7}\). In this example, \(\eta=\frac{1}{7}\), \(k=1\) and \(\tau=\frac{1}{7}\). It is easy to see that the parameters satisfy \(0<\mu<\frac{2\eta }{k^{2}}\) and \(0\leq\rho\tau<\nu\), where \(\nu=\mu ( \eta-\frac{\mu k^{2}}{2} )\). All code was written in Matlab; the values of \(\{y_{n}\}\) and \(\{x_{n}\}\) for different n are reported in Table 1.
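
The two parameter conditions can be verified with exact rational arithmetic. The following is a minimal Python sketch using the values stated above; the variable names are illustrative.

```python
from fractions import Fraction

# Values stated for Example 4.1.
mu = Fraction(1, 7)
eta = Fraction(1, 7)
k = Fraction(1)
tau = Fraction(1, 7)
rho = Fraction(1, 30)

nu = mu * (eta - mu * k**2 / 2)      # nu = mu * (eta - mu * k^2 / 2)
cond_mu = 0 < mu < 2 * eta / k**2    # 0 < mu < 2 * eta / k^2
cond_rho = 0 <= rho * tau < nu       # 0 <= rho * tau < nu

print(nu, rho * tau, cond_mu, cond_rho)  # prints: 1/98 1/210 True True
```

Here \(\nu=\frac{1}{98}\) and \(\rho\tau=\frac{1}{210}\), so both conditions hold with room to spare.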

Remark 4.1

Table 1 and Figure 1 show that the sequences \(\{y_{n}\}\) and \(\{x_{n}\}\) converge to 0. Also, \(\{0\}=\Omega\).

Example 4.2

All the mappings and parameters are the same as in Example 4.1 except \(T_{1}\), \(T_{2}\) and f. Let \(T_{1}, T_{2}, T_{3} : \mathbb{R}\to\mathbb{R}\) be defined by

and let \(f: \mathbb{R}\to\mathbb{R}\) be defined by

Then \(T_{1}\), \(T_{2}\) and \(T_{3}\) are nonexpansive mappings, and f is a contraction mapping with constant \(\frac{2}{7}\). Clearly,

Let \(\delta_{n}^{i}=\frac{n+i}{n+i+1}\) for \(i=1,2,3\).

All code was written in Matlab; the values of \(\{y_{n}\}\) and \(\{x_{n}\}\) for different n are reported in Table 2.

Remark 4.2

Table 2 and Figure 2 show that the sequences \(\{y_{n}\}\) and \(\{x_{n}\}\) converge to 1. Also, \(\{1\}=\Omega\).

References

Goebel, K, Kirk, WA: Topics in Metric Fixed Point Theory. Cambridge University Press, Cambridge (1990)

Yao, Y, Cho, YJ, Liou, YC: Iterative algorithms for hierarchical fixed points problems and variational inequalities. Math. Comput. Model. 52(9-10), 1697-1705 (2010)

Crombez, G: A hierarchical presentation of operators with fixed points on Hilbert spaces. Numer. Funct. Anal. Optim. 27, 259-277 (2006)

Mainge, PE, Moudafi, A: Strong convergence of an iterative method for hierarchical fixed-point problems. Pac. J. Optim. 3(3), 529-538 (2007)

Moudafi, A: Krasnoselski-Mann iteration for hierarchical fixed-point problems. Inverse Probl. 23(4), 1635-1640 (2007)

Cianciaruso, F, Marino, G, Muglia, L, Yao, Y: On a two-steps algorithm for hierarchical fixed point problems and variational inequalities. J. Inequal. Appl. 2009, Article ID 208692 (2009)

Gu, G, Wang, S, Cho, YJ: Strong convergence algorithms for hierarchical fixed points problems and variational inequalities. J. Appl. Math. 2011, Article ID 164978 (2011)

Marino, G, Xu, HK: Explicit hierarchical fixed point approach to variational inequalities. J. Optim. Theory Appl. 149(1), 61-78 (2011)

Ceng, LC, Ansari, QH, Yao, JC: Iterative methods for triple hierarchical variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 151, 489-512 (2011)

Bnouhachem, A: A modified projection method for a common solution of a system of variational inequalities, a split equilibrium problem and a hierarchical fixed-point problem. Fixed Point Theory Appl. 2014, Article ID 22 (2014)

Bnouhachem, A: An iterative method for common solutions of equilibrium problems and hierarchical fixed point problems. Fixed Point Theory Appl. 2014, Article ID 194 (2014)

Bnouhachem, A, Al-Homidan, S, Ansari, QH: An iterative algorithm for system of generalized equilibrium problems and fixed point problem. Fixed Point Theory Appl. 2014, Article ID 235 (2014)

Ceng, LC, Al-Mezel, SA, Latif, A: Hybrid viscosity approaches to general systems of variational inequalities with hierarchical fixed point problem constraints in Banach spaces. Abstr. Appl. Anal. 2014, Article ID 945985 (2014)

Suzuki, T: Moudafi’s viscosity approximations with Meir-Keeler contractions. J. Math. Anal. Appl. 325(1), 342-352 (2007)

Tian, M: A general iterative algorithm for nonexpansive mappings in Hilbert spaces. Nonlinear Anal. 73, 689-694 (2010)

Xu, HK: Viscosity method for hierarchical fixed point approach to variational inequalities. Taiwan. J. Math. 14(2), 463-478 (2010)

Wang, Y, Xu, W: Strong convergence of a modified iterative algorithm for hierarchical fixed point problems and variational inequalities. Fixed Point Theory Appl. 2013, Article ID 121 (2013)

Ansari, QH, Ceng, LC, Gupta, H: Triple hierarchical variational inequalities. In: Ansari, QH (ed.) Nonlinear Analysis: Approximation Theory, Optimization and Applications, pp. 231-280. Springer, New York (2014)

Ceng, LC, Ansari, QH, Yao, JC: Some iterative methods for finding fixed points and for solving constrained convex minimization problems. Nonlinear Anal. 74(16), 5286-5302 (2011)

Takahashi, W, Atsushiba, S: Strong convergence theorems for a finite family of nonexpansive mappings and applications. Indian J. Math. 41(3), 435-453 (1999)

Yao, Y: A general iterative method for a finite family of nonexpansive mappings. Nonlinear Anal. 66, 2676-2678 (2007)

Ceng, LC, Khan, AR, Ansari, QH, Yao, JC: Viscosity approximation methods for strongly positive and monotone operators. Fixed Point Theory 10(1), 35-71 (2009)

Buong, N, Duong, LT: An explicit iterative algorithm for a class of variational inequalities in Hilbert spaces. J. Optim. Theory Appl. 151, 513-524 (2011)

Zhang, C, Yang, C: A new explicit iterative algorithm for solving a class of variational inequalities over the common fixed points set of a finite family of nonexpansive mappings. Fixed Point Theory Appl. 2014, Article ID 60 (2014)

Combettes, PL: Solving monotone inclusions via compositions of nonexpansive averaged operators. Optimization 53, 475-504 (2004)

Byrne, C: A unified treatment of some iterative algorithms in signal processing and image reconstruction. Inverse Probl. 20, 103-120 (2004)

Zhou, HY: Convergence theorems of fixed points for k-strict pseudocontractions in Hilbert spaces. Nonlinear Anal. 69(2), 456-462 (2008)

Deng, BC, Chen, T, Li, ZF: Cyclic iterative method for strictly pseudononspreading in Hilbert space. J. Appl. Math. 2012, Article ID 435676 (2012)

Suzuki, T: Strong convergence of Krasnoselskii and Mann’s type sequences for one-parameter nonexpansive semigroups without Bochner integrals. J. Math. Anal. Appl. 305(1), 227-239 (2005)

Xu, HK: Iterative algorithms for nonlinear operators. J. Lond. Math. Soc. 66, 240-256 (2002)

Acknowledgements

Part of the second author's research was done during his visit to King Fahd University of Petroleum and Minerals (KFUPM), Dhahran, Saudi Arabia. The second author would like to express his thanks to KFUPM for providing excellent working facilities and conditions. In this research, JC Yao was partially supported by the grant MOST 102-2115-M-039-003-MY3.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally and significantly to writing this article. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Bnouhachem, A., Ansari, Q.H. & Yao, JC. An iterative algorithm for hierarchical fixed point problems for a finite family of nonexpansive mappings. Fixed Point Theory Appl 2015, 111 (2015). https://doi.org/10.1186/s13663-015-0358-6

DOI: https://doi.org/10.1186/s13663-015-0358-6