Abstract

A filter regularization method based on the modified ‘kernel’ idea is developed to solve a time-fractional inverse advection–dispersion problem. Convergence is proved under both a priori and a posteriori regularization parameter choice rules. Numerical examples are presented to illustrate the effectiveness of the proposed algorithm.

1 Introduction

In the last few decades, many problems in finance [1, 2], physics [3,4,5,6], control theory [7], hydrology [8, 9] and viscoelasticity [10] have been modeled mathematically by fractional partial differential equations. The most important advantage of using fractional partial differential equations in mathematical modeling is their non-local property: the next state of the system depends not only on its current state but also on all of its preceding states. Fractional-order models are more adequate than integer-order models for describing the memory and hereditary properties of different substances [11,12,13,14,15,16,17].

In practical physical applications, Brownian motion, diffusion with an additional velocity field and diffusion under the influence of a constant external force field are all modeled by the advection–dispersion equation [18]. However, the advection–dispersion equation is not suitable for anomalous diffusion, i.e., the fractional generalization may be different for the advection case and the transport in an external force field [19]. A straightforward extension of the continuous time random walk (CTRW) model leads to a fractional advection–dispersion equation. The time-fractional advection–dispersion equation is obtained by replacing the time derivative in the advection–dispersion equation by a generalized derivative of order α with \(0<\alpha \leq 1\) and can be used to simulate contaminant transport in porous media [20]. Direct problems for time-fractional advection–dispersion equations have been studied extensively in recent years [21,22,23,24]. By contrast, little has been done on inverse problems for time-fractional advection–dispersion equations.

In this paper, the inverse problem of time-fractional advection–dispersion equation is given by

where u is the solute concentration, the constants a (\(a>0\)) and b (\(b\geq 0\)) represent the dispersion coefficient and the average fluid velocity, respectively. The time-fractional derivative \({}_{0}D^{\alpha }_{t}u(x,t)\) is the Caputo fractional derivative of order α (\(0<\alpha \leq 1\)) defined by [11]
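For reference, the Caputo derivative is commonly written in the following standard textbook form (see, e.g., [11]). It is given here as a sketch of the usual definition, with the case \(\alpha =1\) understood as the ordinary first-order time derivative:

\[
{}_{0}D^{\alpha }_{t}u(x,t)=
\begin{cases}
\dfrac{1}{\Gamma (1-\alpha )}\displaystyle\int_{0}^{t}\frac{\partial u(x,s)}{\partial s}\,\frac{ds}{(t-s)^{\alpha }}, & 0<\alpha <1,\\[2ex]
\dfrac{\partial u(x,t)}{\partial t}, & \alpha =1,
\end{cases}
\]

where \(\Gamma (\cdot )\) denotes the Gamma function.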

The problem is to determine the solute concentration \(u(x,t)\) for \(0\leq x<1\) from the concentration measured at \(x=1\). It is well known that this problem is ill-posed, since small errors in the input data may cause large errors in the computed solution.

Recently, time-fractional inverse diffusion problems have been considered by some authors; see [25,26,27,28,29]. Although there are many papers on time-fractional inverse diffusion problems, we find only a few results on inverse problems for time-fractional advection–dispersion equations. Zheng and Wei [30, 31] applied the spectral regularization method and the modified equation method, respectively, to a time-fractional inverse advection–dispersion problem. Following the work of [30, 31], Zhao et al. [32] used an optimal filtering method to deal with a time-fractional inverse advection–dispersion problem. However, the theoretical studies in [30,31,32] were based only on an a priori regularization parameter choice rule. The main aim of the present work is to present a filter regularization method and to prove convergence estimates under both a priori and a posteriori regularization parameter choice rules. The advantages of the proposed approach over the existing results in [30,31,32] are discussed in detail in Remark 3.5, Remark 3.6, Remark 3.8 and Remark 4.6.

The idea of the filter regularization method by a modified ‘kernel’ is simple and natural: since the ill-posedness of the time-fractional inverse advection–dispersion problem is caused by the high-frequency components of the ‘kernel’, the ‘kernel’ function can be modified. As long as the modified ‘kernel’ function satisfies certain properties, a new regularization method can be established. In addition, the modified ‘kernel’ idea has been used to deal with several ill-posed problems [33,34,35,36].

This paper consists of six sections. Section 2 states the ill-posedness of the problem and presents a filter regularization method. In Sects. 3 and 4, the error estimates are proved under both prior and posterior regularization parameter choice rules. Numerical experiments are performed in Sect. 5. A brief conclusion is given in Sect. 6.

2 Ill-posedness of the problem and a filter regularization method

In order to apply the Fourier transform, all the functions are extended to the whole line \(-\infty < t<\infty \) by defining them to be zero for \(t<0\). Here, and in the following sections, \(\|\cdot \|\) denotes the \(L^{2}\)-norm, i.e.,

The Fourier transform of the function \(f(t)\) is written as

and \(\|\cdot \|_{p}\) denotes the \(H^{p}\)-norm, i.e.,

Applying the Fourier transform to (1.1) with respect to t, one easily obtains the solution of problem (1.1) in the frequency domain

or equivalently

where

It is easy to see that the real part of \(k(\xi )\) is

and the imaginary part of \(k(\xi )\) is

with

Note that \(k(\xi )\) has a positive real part and therefore the factor \(e^{(1-x)A}\) increases exponentially for \(0\leq x<1\) as \(|\xi |\rightarrow \infty \). The measured data function \(g^{\delta }(t)\in L^{2}( \mathbb{R})\) usually contains an error, and it satisfies

where \(\delta >0\) is the noise level. This means that a small error in the measured data \(g^{\delta }(t)\) will be amplified without bound by the factor \(e^{(1-x)A}\) and will destroy the solution of problem (1.1). Therefore, the time-fractional inverse advection–dispersion problem is severely ill-posed and regularization is needed. To this end, a filter regularization method for solving problem (1.1) is presented in this paper.
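The growth of the amplification factor can be checked numerically. The following minimal sketch assumes that \(k(\xi )\) is the root with non-negative real part of \(a k^{2}+b k=(i\xi )^{\alpha }\), which is what a Fourier transform in t of an equation of the form \({}_{0}D^{\alpha }_{t}u=a u_{xx}-b u_{x}\) would give. This form, and the function names `kernel_exponent` and `amplification`, are assumptions made for illustration and are not quoted from the text.

```python
import numpy as np

def kernel_exponent(xi, alpha, a=1.0, b=1.0):
    """Assumed k(xi): root of a*k**2 + b*k = (i*xi)**alpha with Re k >= 0."""
    xi = np.asarray(xi, dtype=float)
    # (i*xi)**alpha on the principal branch: |xi|**alpha * exp(i*alpha*pi/2*sign(xi))
    i_xi_alpha = np.abs(xi) ** alpha * np.exp(1j * alpha * np.pi / 2 * np.sign(xi))
    return (np.sqrt(b**2 + 4.0 * a * i_xi_alpha) - b) / (2.0 * a)

def amplification(xi, x, alpha, a=1.0, b=1.0):
    """Modulus of exp((1 - x) * k(xi)), the factor that multiplies the data error."""
    return np.exp((1.0 - x) * kernel_exponent(xi, alpha, a, b).real)

# Hypothetical sample frequencies; the factor is evaluated at x = 0.
frequencies = np.array([1.0, 10.0, 100.0, 1000.0])
factors = amplification(frequencies, x=0.0, alpha=0.7)
for xi, factor in zip(frequencies, factors):
    print(f"xi = {xi:7.1f}   amplification = {factor:.3e}")
```

Under these assumptions the factor grows monotonically with \(|\xi |\), so high-frequency noise components dominate an unregularized reconstruction.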

To regularize the problem, our aim is to replace the term \(e^{(1-x)A}\) by another term. For this purpose, a regularized solution in frequency space is defined as follows:

or equivalently

In the following section, the error estimates between the regularized solution \(u^{\beta ,\delta }(x,t)\) and the exact solution \(u(x,t)\) will be proved under both prior and posterior regularization parameter choice rules.
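A discrete version of the regularized solution can be sketched with the FFT. The sketch assumes the filtered form \(\widehat{u}^{\beta ,\delta }(x,\xi )=\frac{e^{(1-x)k(\xi )}}{1+\beta |e^{k(\xi )}|}\widehat{g}^{\delta }(\xi )\), which is inferred from the filter \(\frac{1}{1+\beta |e^{k(\xi )}|}\) used later in Lemma 4.1, together with the same assumed \(k(\xi )\) as the root of \(a k^{2}+b k=(i\xi )^{\alpha }\). The function names, the Gaussian test data and the periodic extension implied by the FFT are illustrative assumptions.

```python
import numpy as np

def kernel_exponent(xi, alpha, a=1.0, b=1.0):
    """Assumed k(xi): root of a*k**2 + b*k = (i*xi)**alpha with Re k >= 0."""
    xi = np.asarray(xi, dtype=float)
    i_xi_alpha = np.abs(xi) ** alpha * np.exp(1j * alpha * np.pi / 2 * np.sign(xi))
    return (np.sqrt(b**2 + 4.0 * a * i_xi_alpha) - b) / (2.0 * a)

def filter_regularized_solution(g_delta, dt, x, alpha, beta, a=1.0, b=1.0):
    """Apply exp((1-x)k) / (1 + beta*|exp(k)|) to the data in frequency space."""
    g_delta = np.asarray(g_delta, dtype=float)
    xi = 2.0 * np.pi * np.fft.fftfreq(g_delta.size, d=dt)  # angular frequencies
    k = kernel_exponent(xi, alpha, a, b)
    # Numerator and denominator are both scaled by exp(-Re k) to avoid overflow:
    # exp((1-x)k) / (1 + beta*exp(Re k))
    #   = exp(-x*Re k + i(1-x)*Im k) / (beta + exp(-Re k)).
    factor = np.exp(-x * k.real + 1j * (1.0 - x) * k.imag) / (beta + np.exp(-k.real))
    return np.fft.ifft(factor * np.fft.fft(g_delta)).real

# Hypothetical data: a smooth pulse plus small Gaussian noise.
t = np.arange(256) * (4.0 / 256)
g = np.exp(-(t - 2.0) ** 2)
rng = np.random.default_rng(0)
g_noisy = g + 1e-3 * rng.standard_normal(g.size)
u_half = filter_regularized_solution(g_noisy, dt=t[1] - t[0], x=0.5, alpha=0.5, beta=1e-3)
```

Because the filter factor is bounded by \(1/\beta \) in modulus, the reconstruction stays finite for any noisy input; the price is a bias that must be balanced against the noise level through the choice of β.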

3 Prior choice rule and error estimate

In this section, the \(L^{2}\)-estimate and the \(H^{p}\)-estimate are discussed under a prior regularization parameter choice rule. Before stating the main theorems, some auxiliary lemmas are given.

Lemma 3.1

([31])

If \(x\in [0,1)\), and \(k(\xi )\) is given by (2.3), then the following inequality holds:

Lemma 3.2

([37])

Let \(0< x< a\), \(0<\mu <1\), then

3.1 \(L^{2}\)-Estimate

Theorem 3.3

Suppose that \(u(x,\cdot )\) is the exact solution of problem (1.1) and \(u^{\beta ,\delta }(x,\cdot )\) is the filter regularized solution of problem (1.1). Let the assumption (2.4) and the prior condition \(\|u(0,\cdot )\|\leq E\) hold. If the regularization parameter β is selected by

then we have the following error estimate:

Proof

Due to Parseval’s formula and the triangle inequality, we have

Let

According to Lemma 3.2, it is obvious that

Combining the formulas (3.3)–(3.5), it follows that

using Eq. (3.1), the error estimate (3.2) is obtained. □

Remark 3.4

From (3.2), it is clear that a Hölder-type estimate in the interval \(0< x<1\) is obtained. However, the error estimate at \(x=0\) cannot be obtained. In order to obtain the error estimate at \(x=0\), a stronger prior bound is introduced as follows:

Remark 3.5

The error estimate (3.2) is of the same order as that of Theorem 1 in [30] and Theorem 2.1 in [32].

Remark 3.6

In [31], the authors regularize the time-fractional inverse advection–dispersion problem and derive the error estimate

Thus, the convergence rate in this work is better than the one in [31].

3.2 \(H^{p}\)-Estimate

Theorem 3.7

Suppose that \(u(0,\cdot )\) is the exact solution of problem (1.1) and \(u^{\beta ,\delta }(0,\cdot )\) is the filter regularized solution of problem (1.1). Let the assumption (2.4) and the prior condition \(\|u(0,\cdot )\|_{H^{p}}\leq E\) hold. If the regularization parameter β is selected by

then we have the following error estimate:

Proof

Using a technique analogous to that of Theorem 3.3, it is evident that

For the first term on the right-hand side of (3.10), the following inequality holds:

For the second term on the right-hand side of (3.10), noting that \(\|u(0,\cdot )\|_{H^{p}}\leq E\), we have

where E is the prior bound.

Now setting the function \(f(\xi ):=\frac{\beta |e^{k(\xi )}|}{1+ \beta |e^{k(\xi )}|}(1+|\xi |^{2})^{-\frac{p}{2}}\), and using Lemma 3.1, it is easy to see that:

(i) \(f(\xi )\leq (1+|\xi |^{2})^{-\frac{p}{2}}\leq |\xi |^{-p}\leq ( \ln \frac{1}{\beta })^{-p}\), for \(|\xi |\geq \ln \frac{1}{ \beta }\),

(ii) \(f(\xi )\leq \beta |e^{k(\xi )}|\leq \beta e^{\frac{|\xi |^{\frac{ \alpha }{2}}}{\sqrt{a}}}\leq \beta ^{1-\frac{\alpha }{2\sqrt{a}}}\), for \(|\xi |< \ln \frac{1}{\beta }\).

Then the following inequality can be obtained:

Taking into account (3.8), (3.10), (3.11), (3.12) and (3.13), the error estimate (3.9) is obtained. □

Remark 3.8

In [30,31,32], the convergence estimates have been obtained under a prior regularization parameter choice rule. However, in practical computations, such choices do not work well for all cases. Therefore, it is better to provide a posterior regularization parameter choice rule. In the next section, this topic is considered.

4 Posterior choice rule and error estimate

This section presents a posterior regularization parameter choice by Morozov’s discrepancy principle. Choose the regularization parameter β as the solution of the equation

where \(\tau >1\) is a constant and β denotes the regularization parameter. To establish the existence and uniqueness of the solution of Eq. (4.1), the following lemmas are needed.

Lemma 4.1

Let \(\rho (\beta ):= \|\frac{1}{1+\beta |e^{k(\xi )}|}\widehat{g} ^{\delta }(\xi )-\widehat{g}^{\delta }(\xi ) \|\). If \(0<\tau \delta <\|\widehat{g}^{\delta }(\xi )\|\), then

(a) \(\rho (\beta )\) is a continuous function;

(b) \(\lim_{\beta \rightarrow 0}\rho (\beta )=0\);

(c) \(\lim_{\beta \rightarrow +\infty }\rho (\beta )=\|\widehat{g}^{ \delta }\|\);

(d) \(\rho (\beta )\) is a strictly increasing function.

The proof is straightforward and is omitted here.

Remark 4.2

From Lemma 4.1, it is known that there exists a unique solution β satisfying Eq. (4.1).
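Since \(\rho (\beta )\) is continuous and strictly increasing, the parameter can be located by bisection. The sketch below assumes that Eq. (4.1) has the usual discrepancy form \(\rho (\beta )=\tau \delta \), with \(\rho \) as in Lemma 4.1 and a discrete root-mean-square norm. It also reuses the assumed \(k(\xi )\) as the root of \(a k^{2}+b k=(i\xi )^{\alpha }\). The function names, bracket endpoints and test data are hypothetical.

```python
import numpy as np

def real_part_of_k(xi, alpha, a=1.0, b=1.0):
    """Re k(xi) for the assumed root of a*k**2 + b*k = (i*xi)**alpha."""
    z = np.abs(xi) ** alpha * np.exp(1j * alpha * np.pi / 2 * np.sign(xi))
    return ((np.sqrt(b**2 + 4.0 * a * z) - b) / (2.0 * a)).real

def discrepancy(beta, g_hat, re_k):
    """Discrete rho(beta): RMS norm of g_hat/(1 + beta*exp(Re k)) - g_hat."""
    # |1/(1 + beta*e^{Re k}) - 1| = beta / (beta + e^{-Re k}), which cannot overflow.
    weight = beta / (beta + np.exp(-re_k))
    # Discrete Parseval: sqrt(mean |g|^2) = sqrt(sum |G|^2) / n for G = fft(g).
    return np.sqrt(np.sum(np.abs(weight * g_hat) ** 2)) / g_hat.size

def choose_beta(g_delta, dt, alpha, delta, tau=1.1, a=1.0, b=1.0,
                lo=1e-16, hi=1e16, iterations=200):
    """Solve rho(beta) = tau*delta by bisection on a logarithmic scale."""
    g_hat = np.fft.fft(g_delta)
    xi = 2.0 * np.pi * np.fft.fftfreq(g_delta.size, d=dt)
    re_k = real_part_of_k(xi, alpha, a, b)
    target = tau * delta
    if not discrepancy(lo, g_hat, re_k) < target < discrepancy(hi, g_hat, re_k):
        raise ValueError("tau*delta is outside the bracketed range of rho")
    for _ in range(iterations):
        mid = np.sqrt(lo * hi)  # geometric midpoint
        if discrepancy(mid, g_hat, re_k) < target:
            lo = mid
        else:
            hi = mid
    return np.sqrt(lo * hi)

# Hypothetical noisy data with a known RMS noise level.
t = np.arange(256) * (4.0 / 256)
g = np.exp(-(t - 2.0) ** 2)
rng = np.random.default_rng(0)
g_delta = g + 0.01 * rng.standard_normal(g.size)
delta = np.sqrt(np.mean((g_delta - g) ** 2))
beta = choose_beta(g_delta, dt=t[1] - t[0], alpha=0.5, delta=delta, tau=1.1)
```

Bisection on \(\log \beta \) is used here only because monotonicity guarantees a single crossing; any scalar root finder would serve equally well.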

Lemma 4.3

If β is the solution of Eq. (4.1), then the following inequality holds:

Proof

Due to the triangle inequality and Eq. (4.1), we have

The lemma is proved. □

Lemma 4.4

If β is the solution of Eq. (4.1), the following inequality also holds:

Proof

Due to the triangle inequality and Eq. (4.1), we have

Then (4.4) can be obtained. The lemma is proved. □

Now, the main theorem is given as follows.

Theorem 4.5

Suppose that the prior condition \(\|u(0,\cdot )\|\leq E\) and the assumption (2.4) hold, and there exists \(\tau >1\) such that \(0<\tau \delta <\|\widehat{g}^{\delta }\|\). The regularization parameter \(\beta >0\) is chosen by Morozov’s discrepancy principle (4.1). Then the following convergence estimate holds:

Proof

Due to the Parseval formula and Lemma 4.3, we have

Using Lemma 4.4, it follows that

Therefore, the conclusion of the theorem can be obtained directly from (4.8). □

Remark 4.6

If \(\alpha =1\), problem (1.1) corresponds to the standard inverse advection–dispersion problem [38]. If \(\alpha =1\) and \(b=0\), problem (1.1) corresponds to the classical inverse heat conduction problem [39]. If \(b=0\), problem (1.1) corresponds to the time-fractional inverse diffusion problem [40]. Thus, the results in this paper are valid for these special problems.

5 Numerical examples

In this section, three numerical examples are presented to illustrate the behavior of the proposed method. In our numerical experiment, we set \(a=1\) and \(b=1\) in (1.1).

The numerical examples are constructed in the following way. First, the boundary data \(u(0,t)=f(t)\) of the time-fractional advection–dispersion problem are prescribed at \(x=0\), and the function \(u(1,t)=g(t)\) is computed by solving the direct problem, which is well-posed. Second, a random perturbation is added to the data function to obtain the noisy vector \(g^{\delta }\), i.e.,

Here, the function “\(\operatorname{randn}(\cdot )\)” generates arrays of random numbers whose elements are normally distributed with mean 0 and standard deviation \(\sigma =1\); “\(\operatorname{randn}(\operatorname{size}(g))\)” returns an array of random entries of the same size as g; and the magnitude ϵ indicates the relative noise level. The total noise δ is measured in the sense of the root mean square error according to

Third, the regularized solution is obtained by solving the inverse problem. Finally, the regularized solution is compared with the exact solution.
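The noise-generation step of this procedure can be sketched as follows. The additive form \(g^{\delta }=g+\epsilon \cdot \operatorname{randn}(\operatorname{size}(g))\) and the root-mean-square definition of δ are assumptions based on the description above; NumPy's `standard_normal` stands in for `randn`, and the helper name `add_noise` and the sample data are hypothetical.

```python
import numpy as np

def add_noise(g, eps, seed=None):
    """Return noisy data g_delta = g + eps * N(0, 1) samples and its RMS noise level."""
    rng = np.random.default_rng(seed)
    g = np.asarray(g, dtype=float)
    g_delta = g + eps * rng.standard_normal(g.shape)  # same size as g
    delta = np.sqrt(np.mean((g_delta - g) ** 2))      # root mean square error
    return g_delta, delta

# Hypothetical stand-in for the data g(t) = u(1, t) from the direct problem.
t = np.linspace(0.0, 1.0, 256)
g = np.sin(2.0 * np.pi * t)
g_delta, delta = add_noise(g, eps=0.01, seed=0)
print(f"eps = 0.01, measured delta = {delta:.4e}")
```

For unit-variance noise the measured δ is close to ϵ, which is why ϵ can serve as a proxy for the noise level when the two parameter choice rules are compared.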

In the experiments under the prior regularization parameter choice rule, the regularization parameter β is chosen by (3.1), and in the experiments under the posterior regularization parameter choice rule, the regularization parameter β is chosen by (4.1) with \(\tau =1.1\).

Example 5.1

Consider a smooth function

Example 5.2

Consider a piecewise smooth function

Example 5.3

Consider the following discontinuous function:

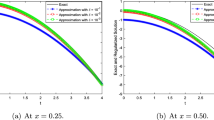

Figures 1, 3 and 5 show the comparison between prior and posterior methods for different α for Examples 5.1, 5.2, 5.3, respectively. Figures 2, 4 and 6 show the comparison between prior and posterior methods for different ϵ for Examples 5.1, 5.2, 5.3, respectively.

Figure 1. Comparison of the exact solution and its approximations for \(\epsilon =0.01\) at \(x=0.8\) in Example 5.1: (a) \(\alpha =0.1\), (b) \(\alpha =0.7\)

Figure 2. Comparison of the exact solution and its approximations for \(\alpha =0.3\) at \(x=0.1\) in Example 5.1: (a) \(\epsilon =0.01\), (b) \(\epsilon =0.001\)

Figure 3. Comparison of the exact solution and its approximations for \(\epsilon =0.001\) at \(x=0.8\) in Example 5.2: (a) \(\alpha =0.1\), (b) \(\alpha =0.7\)

Figure 4. Comparison of the exact solution and its approximations for \(\alpha =0.3\) at \(x=0.1\) in Example 5.2: (a) \(\epsilon =0.001\), (b) \(\epsilon =0.0001\)

Figure 5. Comparison of the exact solution and its approximations for \(\epsilon =0.001\) at \(x=0.8\) in Example 5.3: (a) \(\alpha =0.1\), (b) \(\alpha =0.7\)

Figure 6. Comparison of the exact solution and its approximations for \(\alpha =0.3\) at \(x=0.1\) in Example 5.3: (a) \(\epsilon =0.001\), (b) \(\epsilon =0.0001\)

Figures 1–6 show that the computed approximation improves as the noise level ϵ decreases and as the order α decreases. Moreover, both the prior and the posterior parameter choice rules work well, although the results under the prior rule are better than those under the posterior rule. Finally, these tests illustrate that the proposed method is effective not only for the smooth example but also for the piecewise smooth and discontinuous examples.

6 Conclusion

In this paper, an inverse problem of time-fractional advection–dispersion equations has been studied. A filter regularization method is suggested to deal with this problem, and its convergence is also discussed under both a prior regularization parameter choice rule and Morozov’s discrepancy principle. The numerical examples verify the efficiency and accuracy of the proposed computational method.

There are several potential extensions of the present method. On the one hand, the proposed method can be adapted to two-dimensional and three-dimensional inverse problems. On the other hand, it can be extended to the case of time-dependent coefficients. To this end, further investigation is needed.

References

Raberto, M., Scalas, E., Mainardi, F.: Waiting-times and returns in high-frequency financial data: an empirical study. Physica A 314, 171–180 (2002)

Sabatelli, L., Keating, S., Dudley, J., Richmond, P.: Waiting time distributions in financial markets. Eur. Phys. J. B 27, 273–275 (2002)

West, B., Bologna, M., Grigolini, P.: Physics of Fractal Operators. Springer, New York (2003)

Hilfer, R.: Applications of Fractional Calculus in Physics. World Scientific, Singapore (2000)

Xu, W., Sun, H., Chen, W., Chen, H.: Transport properties of concrete-like granular materials interacted by their microstructures and particle components. Int. J. Mod. Phys. B 32, 1840011 (2018)

Sun, H., Zhang, Y., Wei, S., Zhu, J., Chen, W.: A space fractional constitutive equation model for non-Newtonian fluid flow. Commun. Nonlinear Sci. Numer. Simul. 62, 407–417 (2018)

Machado, J.A.T.: Discrete-time fractional-order controllers. Fract. Calc. Appl. Anal. 4, 47–66 (2001)

Baeumer, B., Meerschaert, M.M., Benson, D.A., Wheatcraft, S.W.: Subordinated advection–dispersion equation for contaminant transport. Water Resour. Res. 37, 1543–1550 (2001)

Schumer, R., Benson, D.A., Meerschaert, M.M., Baeumer, B.: Multiscaling fractional advection–dispersion equations and their solutions. Water Resour. Res. 39, 1022–1032 (2003)

Bagley, R.L., Torvik, P.J.: A theoretical basis for the application of fractional calculus to viscoelasticity. J. Rheol. 27, 201–210 (1983)

Podlubny, I.: Fractional Differential Equations. Academic Press, San Diego (1999)

Hajipour, M., Jajarmi, A., Baleanu, D., Sun, H.: On an accurate discretization of a variable-order fractional reaction-diffusion equation. Commun. Nonlinear Sci. Numer. Simul. 69, 119–133 (2019)

Baleanu, D., Jajarmi, A., Asad, J.H.: Classical and fractional aspects of two coupled pendulums. Rom. Rep. Phys. 71, 103 (2019)

Meng, R., Yin, D., Drapaca, C.S.: Variable-order fractional description of compression deformation of amorphous glassy polymers. Comput. Mech. (2019). https://doi.org/10.1007/s00466-018-1663-9

Baleanu, D., Jajarmi, A., Hajipour, M.: On the nonlinear dynamical systems within the generalized fractional derivatives with Mittag-Leffler kernel. Nonlinear Dyn. 94, 397–414 (2018)

Baleanu, D., Sajjadi, S.S., Jajarmi, A., Asad, J.H.: New features of the fractional Euler–Lagrange equations for a physical system within non-singular derivative operator. Eur. Phys. J. Plus 134, 181 (2019)

Baleanu, D., Jajarmi, A., Bonyah, E., Hajipour, M.: New aspects of poor nutrition in the life cycle within the fractional calculus. Adv. Differ. Equ. 2018, 230 (2018)

Khalifa, M.E.: Some analytical solutions for the advection–dispersion equation. Appl. Math. Comput. 139, 299–310 (2003)

Metzler, R., Klafter, J.: The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

Bear, J.: Hydraulics of Groundwater. McGraw-Hill, New York (1979)

Meerschaert, M.M., Tadjeran, C.: Finite difference approximations for fractional advection–dispersion flow equations. J. Comput. Appl. Math. 172, 65–77 (2004)

Wang, K., Wang, H.: A fast characteristic finite difference method for fractional advection-diffusion equations. Adv. Water Resour. 34, 810–816 (2011)

Liu, F., Anh, V.V., Turner, I., Zhuang, P.: Time fractional advection–dispersion equation. J. Appl. Math. Comput. 13, 233–245 (2003)

Huang, F., Liu, F.: The time fractional diffusion equation and the advection–dispersion equation. ANZIAM J. 46, 317–330 (2005)

Cheng, J., Nakagawa, J., Yamamoto, M., Yamazaki, T.: Uniqueness in an inverse problem for a one-dimensional fractional diffusion equation. Inverse Probl. 25, 115002 (16 pp.) (2009)

Zhang, Y., Xu, X.: Inverse source problem for a fractional diffusion equation. Inverse Probl. 27, 035110 (12 pp.) (2011)

Jin, B., Rundell, W.: An inverse problem for a one-dimensional time-fractional diffusion problem. Inverse Probl. 28, 075010 (19 pp.) (2012)

Liu, J.J., Yamamoto, M.: A backward problem for the time-fractional diffusion equation. Appl. Anal. 89, 1769–1788 (2010)

Li, G., Zhang, D., Jia, X., Yamamoto, M.: Simultaneous inversion for the space-dependent diffusion coefficient and the fractional order in the time-fractional diffusion equation. Inverse Probl. 29, 065014 (36 pp.) (2013)

Zheng, G.H., Wei, T.: Spectral regularization method for the time fractional inverse advection–dispersion equation. Math. Comput. Simul. 81, 37–51 (2010)

Zheng, G.H., Wei, T.: A new regularization method for the time fractional inverse advection–dispersion problem. SIAM J. Numer. Anal. 49, 1972–1990 (2011)

Zhao, J.J., Liu, S.S.: An optimal filtering method for a time-fractional inverse advection–dispersion problem. J. Inverse Ill-Posed Probl. 24, 51–58 (2016)

Qian, Z., Fu, C.L.: Regularization strategies for a two-dimensional inverse heat conduction problem. Inverse Probl. 23, 1053–1068 (2007)

Qian, Z., Fu, C.L., Feng, X.L.: A modified method for high order numerical derivatives. Appl. Math. Comput. 182, 1191–1200 (2006)

Zhang, Z.Q., Ma, Y.J.: A modified kernel method for numerical analytic continuation. Inverse Probl. Sci. Eng. 21, 840–853 (2013)

Liu, S.S., Feng, L.X.: A posteriori regularization parameter choice rule for a modified kernel method for a time-fractional inverse diffusion problem. J. Comput. Appl. Math. 353, 355–366 (2019)

Feng, X.L., Ning, W.T., Qian, Z.: A quasi-boundary-value method for a Cauchy problem of an elliptic equation in multiple dimensions. Inverse Probl. Sci. Eng. 22, 1045–1061 (2014)

Qian, Z., Fu, C.L., Xiong, X.T.: A modified method for a non-standard inverse heat conduction problem. Appl. Math. Comput. 180, 453–468 (2006)

Berntsson, F.: A spectral method for solving the sideways heat equation. Inverse Probl. 15, 891–906 (1999)

Xiong, X.T., Gao, H., Liu, X.: An inverse problem for a fractional diffusion equation. J. Comput. Appl. Math. 236, 4474–4484 (2012)

Funding

This work was partially supported by National Natural Science Foundation of China (11871198), the Fundamental Research Funds for the Universities of Heilongjiang Province Heilongjiang University Special Project (RCYJTD201804), the National Science Foundation of Hebei Province (A2017501021) and the Fundamental Research Funds of the Central Universities (N182304024).

Author information

Contributions

The main idea of this paper was proposed by LF. SL prepared the manuscript initially and performed all the steps of the proofs in the research. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors have declared that no competing interests exist.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Liu, S., Feng, L. Filter regularization method for a time-fractional inverse advection–dispersion problem. Adv Differ Equ 2019, 222 (2019). https://doi.org/10.1186/s13662-019-2155-8

DOI: https://doi.org/10.1186/s13662-019-2155-8