Abstract

In this paper, the implicit midpoint method is presented for solving the Riesz tempered fractional diffusion equation with a nonlinear source term, where the tempered fractional partial derivatives are approximated by the modified second-order Lubich tempered difference operator. The stability and convergence of the numerical method are analyzed, and numerical experiments demonstrate that the proposed method is effective.


1 Introduction

In this paper, we consider the Riesz tempered fractional diffusion equation with a nonlinear source term

with the initial and boundary conditions

where \(1<\alpha< 2\), \(\lambda\geq0\), the diffusion coefficient κ is a positive constant, \(\varphi(x)\) is a known sufficiently smooth function, and \(g(x,t,u)\) satisfies the Lipschitz condition

where L is the Lipschitz constant, and the Riesz tempered fractional derivative \(\frac{\partial^{\alpha,\lambda}u(x,t)}{ \partial \vert x \vert ^{\alpha} }\) is expressed as [1, 2]

where \(c_{\alpha}=-\frac{1}{2\cos(\frac{\pi\alpha}{2})}\), \({}_{a}^{R} D^{\alpha,\lambda}_{x}\) and \({}_{x}^{R} D^{\alpha ,\lambda}_{b}\) stand for the left and right Riemann–Liouville tempered fractional derivatives which are defined as

where the symbols \({}_{a}^{R} D^{(\alpha,\lambda)}_{x}\) and \({}_{x}^{R} D^{(\alpha,\lambda)}_{b}\) are defined by

where \({\varGamma}(\cdot)\) is the Gamma function.
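For orientation, the definitions in the form commonly used for \(1<\alpha<2\) (see e.g. [24]) are sketched below; we assume this paper follows the same convention. With this convention the first-derivative terms cancel in the Riesz combination, and \(c_{\alpha}>0\) because \(\cos(\frac{\pi\alpha}{2})<0\) for \(1<\alpha<2\).

```latex
\begin{aligned}
\frac{\partial^{\alpha,\lambda}u(x,t)}{\partial|x|^{\alpha}}
  &= c_{\alpha}\bigl({}_{a}^{R}D^{\alpha,\lambda}_{x}+{}_{x}^{R}D^{\alpha,\lambda}_{b}\bigr)u(x,t),\\
{}_{a}^{R}D^{\alpha,\lambda}_{x}u &= {}_{a}^{R}D^{(\alpha,\lambda)}_{x}u-\alpha\lambda^{\alpha-1}\frac{\partial u}{\partial x}-\lambda^{\alpha}u,\qquad
{}_{x}^{R}D^{\alpha,\lambda}_{b}u = {}_{x}^{R}D^{(\alpha,\lambda)}_{b}u+\alpha\lambda^{\alpha-1}\frac{\partial u}{\partial x}-\lambda^{\alpha}u,\\
{}_{a}^{R}D^{(\alpha,\lambda)}_{x}u &= \frac{e^{-\lambda x}}{\varGamma(2-\alpha)}\frac{\partial^{2}}{\partial x^{2}}\int_{a}^{x}\frac{e^{\lambda s}u(s,t)}{(x-s)^{\alpha-1}}\,ds,\qquad
{}_{x}^{R}D^{(\alpha,\lambda)}_{b}u = \frac{e^{\lambda x}}{\varGamma(2-\alpha)}\frac{\partial^{2}}{\partial x^{2}}\int_{x}^{b}\frac{e^{-\lambda s}u(s,t)}{(s-x)^{\alpha-1}}\,ds.
\end{aligned}
```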

Moreover, if \(\lambda=0\), the Riesz tempered fractional derivative reduces to the usual Riesz fractional derivative (see e.g. [3,4,5,6,7,8]).

In recent years, differential equations with tempered fractional derivatives have been widely used to model phenomena in fields such as geophysics [9,10,11] and finance [12, 13]. Consequently, the construction of numerical algorithms for tempered fractional partial differential equations has attracted considerable attention (see e.g. [1, 2, 14,15,16,17,18,19,20,21,22,23,24]). Li and Deng [24] proposed the tempered weighted and shifted Grünwald–Letnikov formula, which is second-order accurate for the Riemann–Liouville tempered fractional derivative, and Zhang et al. [14] applied this approximation to the numerical simulation of the tempered fractional Black–Scholes equation for a European double barrier option. Based on the same approximation, Qu and Liang [15] constructed a Crank–Nicolson scheme for a class of variable-coefficient tempered fractional diffusion equations and discussed its stability and convergence. Yu et al. [16] proposed a third-order difference scheme for one-sided Riemann–Liouville tempered fractional diffusion equations and gave its stability and convergence analysis. Yu et al. [19] constructed a fourth-order quasi-compact difference operator for the Riemann–Liouville tempered fractional derivative and tested its effectiveness through numerical experiments. Zhang et al. [1] presented a modified second-order Lubich tempered difference operator for approximating the Riemann–Liouville tempered fractional derivative and verified its effectiveness both theoretically and numerically. The aim of this paper is to combine the implicit midpoint method with the modified second-order Lubich tempered difference operator to construct a new numerical scheme, and to give a theoretical analysis of this scheme.

The outline of this paper is as follows. In Sect. 2, the numerical scheme is proposed for solving the Riesz tempered fractional diffusion equation with a nonlinear source term. Section 3 is devoted to the stability and convergence analysis. In Sect. 4, we apply the proposed method (abbr. T-ML2) and the method of [24] (abbr. T-WSGL) to the test problems. Finally, conclusions are drawn in Sect. 5.

2 Numerical method

Let \(x_{i}=a+ih\), \(i=0,1,2,\ldots,M\), and \(t_{n}=n\tau\), \(n=0,1,2,\ldots,N\), where \(h=(b-a)/M\) is the spatial step size and \(\tau=T/N\) is the time step size. The exact solution and the numerical solution at the point \((x_{i},t_{n})\) are denoted by \(u(x_{i},t_{n})\) and \(u^{n}_{i}\), respectively.

To discretize the Riemann–Liouville tempered fractional derivatives, we introduce the modified second-order Lubich tempered difference operators \(\delta^{\alpha}_{x-}\) and \(\delta^{\alpha}_{x+}\) at the point \((x_{i},t_{n})\), which are defined as

where the weights \(g^{(\alpha,\lambda)}_{k}\) are given by

then we have the following lemma.

Lemma 2.1

([1]) If \(\widetilde{u}(x,t_{n})\in\mathscr{C} ^{2+\alpha}_{\lambda}(\mathbb {R})\) (\(1\leq n\leq N\)), then for a fixed step size h we have

where \(\widetilde{u}(x,t_{n})\) is the zero extension of \(u(x,t_{n})\) with respect to x to \(\mathbb {R}\), defined as

The fractional Sobolev space \(\mathscr{C}^{2+\alpha}_{\lambda }(\mathbb {R})\) is defined by

where \(\widehat{v}(\varpi)\) denotes the Fourier transform of \(v(x)\), defined by
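A quick heuristic for the second-order estimate in Lemma 2.1 is a Fourier (symbol) check; it is not the proof given in [1]. The sketch assumes, as the constant d defined for the matrix B later in this section suggests, that the left operator is generated by \(W(z)=(\frac{3\alpha-2}{2\alpha}-\frac{2(\alpha-1)}{\alpha}z+\frac{\alpha-2}{2\alpha}z^{2})^{\alpha}\) with a one-point shift and the tempering factor \(e^{-\lambda x}\). Applied to \(e^{i\omega x}\), such an operator has symbol \(h^{-\alpha}e^{s}W(e^{-s})\) with \(s=h(\lambda+i\omega)\), whereas \(e^{-\lambda x}D^{\alpha}(e^{\lambda x}\,\cdot)\) has symbol \((\lambda+i\omega)^{\alpha}\). All function names are hypothetical.

```python
import numpy as np

def lubich2_symbol(s, alpha):
    # Assumed shifted symbol e^{s} W(e^{-s}), with
    # W(z) = ((3a-2)/(2a) - 2(a-1)/a * z + (a-2)/(2a) * z^2)^a.
    z = np.exp(-s)
    quad = (3*alpha - 2)/(2*alpha) - 2*(alpha - 1)/alpha*z + (alpha - 2)/(2*alpha)*z**2
    return np.exp(s) * quad**alpha  # principal branch; valid for small |s|

def relative_symbol_error(h, omega, lam, alpha):
    # Exact symbol of e^{-lam x} D^alpha (e^{lam x} u) acting on e^{i omega x}.
    exact = (lam + 1j*omega)**alpha
    approx = lubich2_symbol(h*(lam + 1j*omega), alpha) / h**alpha
    return abs(approx/exact - 1.0)

def observed_symbol_orders(omega=2.0, lam=1.0, alpha=1.5, hs=(0.04, 0.02, 0.01)):
    # Halve h and estimate the order from the ratio of successive relative errors.
    errs = [relative_symbol_error(h, omega, lam, alpha) for h in hs]
    return [np.log2(errs[k]/errs[k + 1]) for k in range(len(errs) - 1)]

print(observed_symbol_orders())  # orders close to 2
```

Expanding \(e^{s}W(e^{-s})/s^{\alpha}\) shows why the modified coefficients matter: the \(O(s)\) term has coefficient \(1-\frac{\alpha}{2}-\alpha\cdot\frac{2-\alpha}{2\alpha}=0\), so the first surviving error term is \(O(s^{2})\).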

According to (1.5)–(1.7), we have

where

Applying the implicit midpoint method to (1.1) at the point \((x_{i},t_{n+\frac{1}{2}})\), we find

Applying (2.1) to discretize the Riesz tempered fractional derivative, we get

where there exists a constant \(c_{1}\) such that

Omitting the error term \(\mathscr{R}^{n+\frac{1}{2}}_{i}\), we obtain the following numerical scheme for solving (1.1)–(1.3):

where \(u_{i}^{n+\frac{1}{2}}=\frac{u^{n+1}_{i}+u^{n}_{i}}{2}\), \(g^{n+\frac{1}{2}}_{i}=g( x_{i},t_{n+\frac{1}{2}},u_{i}^{n+\frac{1}{2}} )\).
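The scheme is implicit in \(u^{n+1}\) through \(g^{n+\frac{1}{2}}_{i}\). A minimal sketch of one time step follows, assuming a dense matrix `D` that stands in for the spatial difference operator of (2.6) on the interior nodes (for instance κ times the discrete Riesz tempered operator) and resolving the nonlinearity by fixed-point iteration. The function name, the iteration and its tolerance are our own choices, not taken from the paper. When `D` is symmetric negative semi-definite, \(\|(I-\frac{\tau}{2}D)^{-1}\|_{2}\leq1\), so each iteration contracts with factor at most \(\frac{\tau L}{2}\); the restriction \(\tau\leq\frac{1-\mu}{L}\) of Theorem 3.1 guarantees this is below 1.

```python
import numpy as np

def implicit_midpoint_step(u_n, t_n, tau, D, g, x, tol=1e-12, max_iter=200):
    """Advance interior values u^n to u^{n+1} with the implicit midpoint rule
        (u^{n+1} - u^n)/tau = D u^{n+1/2} + g(x, t_{n+1/2}, u^{n+1/2}),
    where u^{n+1/2} = (u^n + u^{n+1})/2. D is an assumed stand-in matrix for the
    spatial operator (homogeneous Dirichlet boundary values are not stored)."""
    identity = np.eye(len(u_n))
    lhs = identity - 0.5*tau*D                  # I - (tau/2) D
    rhs_linear = (identity + 0.5*tau*D) @ u_n   # (I + (tau/2) D) u^n
    t_half = t_n + 0.5*tau
    u_next = u_n.copy()                         # initial guess u^{n+1,(0)} = u^n
    for _ in range(max_iter):
        u_half = 0.5*(u_n + u_next)
        u_new = np.linalg.solve(lhs, rhs_linear + tau*g(x, t_half, u_half))
        if np.max(np.abs(u_new - u_next)) < tol:
            return u_new
        u_next = u_new
    raise RuntimeError("fixed-point iteration did not converge; reduce tau")
```

With `D` equal to the zero matrix and \(g(x,t,u)=-u\), one step reduces to the scalar midpoint rule \(u^{n+1}=u^{n}\frac{1-\tau/2}{1+\tau/2}\), which is a convenient sanity check.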

Furthermore, the matrix form of (2.6) can be written as

where

here A is a symmetric Toeplitz matrix, which can be written as \(A=B+B^{T}\), where the matrix B is defined as

where \(d=-e^{h\lambda} ( \frac{3\alpha-2}{2\alpha}-\frac {2(\alpha-1)}{\alpha}e^{-h\lambda}+\frac{\alpha-2}{2\alpha }e^{-2h\lambda} )^{\alpha}\).
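The constant d suggests that the weights are built from the quadratic \(\frac{3\alpha-2}{2\alpha}-\frac{2(\alpha-1)}{\alpha}z+\frac{\alpha-2}{2\alpha}z^{2}\) raised to the power α, with \(d=-e^{h\lambda}W(e^{-h\lambda})\). The sketch below expands \(W(z)=\sum_{k}l_{k}z^{k}\) with J. C. P. Miller's recurrence for powers of a power series and evaluates d. The tempering factors that turn \(l_{k}\) into \(g^{(\alpha,\lambda)}_{k}\) are given in [1]; treating \(l_{k}\) as the building block is our assumption, and the function names are hypothetical.

```python
import numpy as np

def quadratic_coeffs(alpha):
    # Coefficients (a0, a1, a2) of the quadratic appearing inside d.
    return np.array([(3*alpha - 2)/(2*alpha), -2*(alpha - 1)/alpha, (alpha - 2)/(2*alpha)])

def generating_coeffs(alpha, n):
    """Taylor coefficients l_0..l_n of W(z) = (a0 + a1 z + a2 z^2)^alpha, from
    Miller's recurrence k*a0*l_k = sum_{j=1}^{min(k,2)} ((alpha+1)*j - k)*a_j*l_{k-j}."""
    a = quadratic_coeffs(alpha)
    l = np.zeros(n + 1)
    l[0] = a[0]**alpha
    for k in range(1, n + 1):
        acc = sum(((alpha + 1)*j - k) * a[j] * l[k - j] for j in range(1, min(k, 2) + 1))
        l[k] = acc / (k * a[0])
    return l

def d_constant(alpha, h, lam):
    # d = -e^{h lam} (a0 + a1 e^{-h lam} + a2 e^{-2 h lam})^alpha, as defined for B.
    a = quadratic_coeffs(alpha)
    z = np.exp(-h*lam)
    return -np.exp(h*lam) * (a[0] + a[1]*z + a[2]*z**2)**alpha

alpha, h, lam = 1.5, 0.1, 1.0
l = generating_coeffs(alpha, 400)
series = np.sum(l * np.exp(-h*lam*np.arange(l.size)))   # W(e^{-h lam}) as a series
print(d_constant(alpha, h, lam), -np.exp(h*lam)*series)  # the two values agree
```

The quadratic factors as \((1-z)(\frac{3\alpha-2}{2\alpha}+\frac{2-\alpha}{2\alpha}z)\), so for \(1<\alpha<2\) and \(h\lambda>0\) both factors are positive at \(z=e^{-h\lambda}\) and d is negative.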

Remark 2.1

In [24], Li and Deng combined the Crank–Nicolson method with a tempered weighted and shifted Grünwald–Letnikov operator to obtain a numerical method of accuracy \(O(\tau^{2}+h^{2})\) for the tempered fractional diffusion equation with a linear source term, where the second-order tempered weighted and shifted Grünwald–Letnikov operators are defined as

where the weights \(\widehat{g}^{(\alpha,\lambda)}_{k}\) are given as

and the weights \(w_{k}^{(\alpha)}\) are computed recursively by \(w_{0}^{(\alpha)}=1\), \(w_{k}^{(\alpha)}=(1-\frac{1+\alpha}{k})w_{k-1}^{(\alpha)}\), \(k\geq1\). The parameters \(\gamma_{1}\), \(\gamma_{2}\) and \(\gamma_{3}\) can be selected from the following three sets:

(1) \(S^{\alpha}_{1}( \gamma_{1},\gamma_{2},\gamma_{3} )= \{ \max \{ \frac{2(\alpha^{2}+3\alpha-4)}{\alpha^{2}+3\alpha+2},\frac{\alpha^{2}+3\alpha}{\alpha^{2}+3\alpha+4} \}\leq\gamma_{1}\leq\frac{3(\alpha^{2}+3\alpha-2)}{2(\alpha^{2}+3\alpha+2)}, \gamma_{2}=\frac{2+\alpha}{2}-2\gamma_{1}, \gamma_{3}=\gamma_{1}-\frac{\alpha}{2} \}\).

(2) \(S^{\alpha}_{2}( \gamma_{1},\gamma_{2},\gamma_{3} )= \{ \gamma_{1}=\frac{2+\alpha}{4}-\frac{\gamma_{2}}{2}, \frac{(\alpha-4)(\alpha^{2}+3\alpha+2)+24}{2(\alpha^{2}+3\alpha+2)}\leq\gamma_{2}\leq\min \{ \frac{(\alpha-2)(\alpha^{2}+3\alpha+4)+16}{2(\alpha^{2}+3\alpha+4)}, \frac{(\alpha-6)(\alpha^{2}+3\alpha+2)+48}{2(\alpha^{2}+3\alpha+2)} \}, \gamma_{3}=\frac{2-\alpha}{4}-\frac{\gamma_{2}}{2} \}\).

(3) \(S^{\alpha}_{3}( \gamma_{1},\gamma_{2},\gamma_{3} )= \{ \gamma_{1}=\frac{\alpha}{2}+\gamma_{3}, \gamma_{2}=\frac{2-\alpha}{2}-2\gamma_{3}, \max \{ \frac{(2-\alpha)(\alpha^{2}+\alpha-8)}{2(\alpha^{2}+3\alpha+2)},\frac{(1-\alpha)(\alpha^{2}+2\alpha)}{2(\alpha^{2}+3\alpha+4)} \} \leq\gamma_{3}\leq\frac{(2-\alpha)(\alpha^{2}+2\alpha-3)}{2(\alpha^{2}+3\alpha+2)} \}\).
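The recursion for \(w^{(\alpha)}_{k}\) and the parametrization of \(S^{\alpha}_{1}\) are easy to evaluate. The sketch below, with hypothetical helper names, generates the weights, compares them with \((-1)^{k}\binom{\alpha}{k}\) (which the recursion reproduces), and returns a triple \((\gamma_{1},\gamma_{2},\gamma_{3})\in S^{\alpha}_{1}\) for a chosen \(\gamma_{1}\). Every such triple satisfies \(\gamma_{1}+\gamma_{2}+\gamma_{3}=1\) and \(\gamma_{1}-\gamma_{3}=\frac{\alpha}{2}\), relations shared by all three sets.

```python
import math

def grunwald_weights(alpha, n):
    """w_0 = 1, w_k = (1 - (1 + alpha)/k) * w_{k-1} for k = 1..n."""
    w = [1.0]
    for k in range(1, n + 1):
        w.append((1.0 - (1.0 + alpha)/k) * w[-1])
    return w

def binomial_weight(alpha, k):
    # (-1)^k * binom(alpha, k), written with Gamma functions.
    return (-1)**k * math.gamma(alpha + 1) / (math.gamma(k + 1) * math.gamma(alpha - k + 1))

def s1_gamma1_range(alpha):
    """Admissible interval for gamma_1 in the set S_1^alpha."""
    p = alpha**2 + 3*alpha
    lower = max(2*(p - 4)/(p + 2), p/(p + 4))
    upper = 3*(p - 2)/(2*(p + 2))
    return lower, upper

def s1_triple(alpha, gamma1):
    """Return (gamma_1, gamma_2, gamma_3) in S_1^alpha for an admissible gamma_1."""
    lower, upper = s1_gamma1_range(alpha)
    if not lower <= gamma1 <= upper:
        raise ValueError(f"gamma1={gamma1} outside [{lower:.4f}, {upper:.4f}]")
    return gamma1, (2 + alpha)/2 - 2*gamma1, gamma1 - alpha/2

alpha = 1.5
lo, hi = s1_gamma1_range(alpha)
print(grunwald_weights(alpha, 3), s1_triple(alpha, 0.5*(lo + hi)))
```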

3 Stability and convergence analysis

In order to analyze the stability and convergence of the numerical method, we introduce some notation and lemmas.

Let

for any \(u^{n},v^{n}\in\widehat{\gamma}_{h}\), we define the following discrete inner product and the corresponding norm:

Remark 3.1

From [1], the matrix A is negative definite; in particular, \(\chi A \chi^{T} \leq0\) for any row vector \(\chi\in\mathbb {R}^{M-1}\). Therefore, Lemma 3.1 holds.
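Negative definiteness of a matrix of the form \(A=B+B^{T}\) can be checked numerically through the eigenvalues of the symmetric matrix A, since \(\chi A\chi^{T}=2\chi B\chi^{T}\). The Lubich-based entries of B are given in [1]; as a stand-in, the sketch below uses the lower Hessenberg Toeplitz matrix built from the shifted Grünwald weights \(w^{(\alpha)}_{k}\) of Remark 2.1. For that matrix, \(w^{(\alpha)}_{1}=-\alpha\), all other weights are nonnegative and \(\sum_{k}w^{(\alpha)}_{k}=0\), so \(B+B^{T}\) is strictly diagonally dominant with negative diagonal and hence negative definite. Helper names are hypothetical.

```python
import numpy as np

def grunwald_weights(alpha, n):
    # Shifted Grunwald weights: w_0 = 1, w_k = (1 - (1 + alpha)/k) * w_{k-1}.
    w = np.empty(n + 1)
    w[0] = 1.0
    for k in range(1, n + 1):
        w[k] = (1.0 - (1.0 + alpha)/k) * w[k - 1]
    return w

def shifted_toeplitz(weights, m):
    """Lower Hessenberg Toeplitz matrix with B[i, j] = weights[i - j + 1] for j <= i + 1."""
    B = np.zeros((m, m))
    for i in range(m):
        for j in range(min(i + 2, m)):
            B[i, j] = weights[i - j + 1]
    return B

def is_negative_definite(A, tol=0.0):
    # A symmetric matrix is negative definite iff its largest eigenvalue is negative.
    return np.linalg.eigvalsh(A).max() < -tol

m, alpha = 40, 1.5
B = shifted_toeplitz(grunwald_weights(alpha, m), m)
A = B + B.T
print(is_negative_definite(A))  # True for this stand-in matrix
```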

Lemma 3.1

For any \(u^{n} \in\widehat{\gamma}_{h}\), we have

Let \(\widehat{u}^{n}_{i}\) be the numerical solution of (2.6)–(2.8) starting from another initial value \(\widehat{\varphi}(x)\), and denote \(\eta^{n}=(0,\eta^{n}_{1},\eta^{n}_{2},\ldots,\eta ^{n}_{M-1},0 )\), where \(\eta^{n}_{i}=u^{n}_{i}-\widehat{u}^{n}_{i} \). Then we have the following results.

Theorem 3.1

For any given \(\mu\in(0,1)\), if \(0<\tau\leq\tau _{0}=\frac{1-\mu}{L}\), then the numerical scheme (2.6)–(2.8) is stable; that is, there exists a constant \(c_{2}\) such that

Proof

According to (2.6), we obtain the following equation:

where \(\widehat{ g }^{n+\frac{1}{2}}_{i}=g(x_{i},t_{n+\frac {1}{2}},\widehat{u}^{n+\frac{1}{2}}_{i} )\).

Multiplying (3.1) by \(h\eta^{n+\frac{1}{2}}_{i}\) and summing over i from 1 to \(M-1\), we have

Noticing that

Employing Lemma 3.1, we find

Substituting (3.3) and (3.4) into (3.2), we obtain

Since g satisfies the Lipschitz condition with respect to u, we have

It follows from (3.5) that

We can obtain the recursion from (3.6),

For any given \(\mu\in(0,1)\) and \(0< \tau\leq\tau_{0}=\frac{1-\mu }{L} \), we obtain from (3.7)

It follows from (3.8) and the discrete Gronwall inequality that

Therefore

where \(c_{2}=\sqrt{e^{\frac{2T L}{\mu}}}=e^{\frac{TL}{\mu}}\). The proof is completed. □
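The constant \(c_{2}\) can be traced through the following sketch of the energy argument. It uses the discrete inner product and norm of Sect. 3, the identity \((\eta^{n+1}-\eta^{n},\eta^{n+\frac12})=\frac12(\|\eta^{n+1}\|^{2}-\|\eta^{n}\|^{2})\), and \(\mathcal{L}_{h}\) (our notation) for the discrete spatial operator in (3.1), including the factor κ, so that \((\mathcal{L}_{h}v,v)\leq0\) by Lemma 3.1:

```latex
\begin{aligned}
\frac{\|\eta^{n+1}\|^{2}-\|\eta^{n}\|^{2}}{2\tau}
 &= (\mathcal{L}_{h}\eta^{n+\frac12},\eta^{n+\frac12})
   +\bigl(g^{n+\frac12}-\widehat{g}^{n+\frac12},\eta^{n+\frac12}\bigr)
 \le L\|\eta^{n+\frac12}\|^{2}
 \le \frac{L}{2}\bigl(\|\eta^{n+1}\|^{2}+\|\eta^{n}\|^{2}\bigr),\\
(1-\tau L)\|\eta^{n+1}\|^{2} &\le (1+\tau L)\|\eta^{n}\|^{2}
 \;\Longrightarrow\;
 \|\eta^{n+1}\|^{2} \le \Bigl(1+\frac{2\tau L}{1-\tau L}\Bigr)\|\eta^{n}\|^{2}
 \le \Bigl(1+\frac{2\tau L}{\mu}\Bigr)\|\eta^{n}\|^{2}\quad(\tau L\le 1-\mu),\\
\|\eta^{n}\|^{2} &\le \Bigl(1+\frac{2\tau L}{\mu}\Bigr)^{n}\|\eta^{0}\|^{2}
 \le e^{\frac{2TL}{\mu}}\|\eta^{0}\|^{2},
 \qquad\text{so}\quad \|\eta^{n}\|\le e^{\frac{TL}{\mu}}\|\eta^{0}\| \quad(n\tau\le T).
\end{aligned}
```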

Theorem 3.2

For any given \(\mu\in(0,1)\), if \(0<\tau\leq\tau _{0}=\frac{2-2\mu}{2L+1}\), then the numerical scheme (2.6)–(2.8) is convergent; that is, there exists a constant \(c_{3}\) such that

where \(\varepsilon^{n}=( 0,\varepsilon^{n}_{1},\varepsilon ^{n}_{2},\ldots,\varepsilon^{n}_{M-1},0 )\), \(\varepsilon ^{n}_{i}=u(x_{i},t_{n})-u^{n}_{i}\).

Proof

Subtracting (2.6) from (2.4), we get the error equation

where \(\widetilde{ g }^{n+\frac{1}{2}}_{i}=g(x_{i},t_{n+\frac {1}{2}},\frac{u(x_{i},t_{n+1})+u(x_{i},t_{n})}{2} )\).

Proceeding as in the proof of Theorem 3.1, we obtain

According to (1.4) and the Cauchy–Schwarz inequality, we obtain from (3.10)

It follows from (3.11) that

We can obtain the recursion from (3.12),

For any given positive number \(\mu\in(0,1)\), if \(0<\tau\leq\tau _{0}=\frac{2-2\mu}{2L+1}\), then we can conclude from (3.13) that

In view of the discrete Gronwall inequality, we have

It follows from (2.5) and the definition of the discrete norm that

Therefore

Substituting (3.17) into (3.15), we get

Thus

where \(c_{3}=\sqrt{\frac{{c_{1}}^{2}(b-a)T}{\mu}e^{\frac{(2 L+1)T}{\mu}}}\). The proof is completed. □
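A sketch of how \(c_{3}\) arises, writing \(\mathcal{L}_{h}\) (our notation) for the discrete spatial operator of the error equation, with \((\mathcal{L}_{h}v,v)\leq0\) by Lemma 3.1, \(K=2L+1\), \(\|\mathscr{R}^{n+\frac12}\|^{2}=h\sum_{i=1}^{M-1}|\mathscr{R}^{n+\frac12}_{i}|^{2}\leq(b-a)c_{1}^{2}(\tau^{2}+h^{2})^{2}\) by (2.5), and \(\varepsilon^{0}=0\):

```latex
\begin{aligned}
\|\varepsilon^{n+1}\|^{2}-\|\varepsilon^{n}\|^{2}
 &\le 2\tau\Bigl(L\|\varepsilon^{n+\frac12}\|^{2}+\|\mathscr{R}^{n+\frac12}\|\,\|\varepsilon^{n+\frac12}\|\Bigr)
 \le \tau K\|\varepsilon^{n+\frac12}\|^{2}+\tau\|\mathscr{R}^{n+\frac12}\|^{2}\\
 &\le \frac{\tau K}{2}\bigl(\|\varepsilon^{n+1}\|^{2}+\|\varepsilon^{n}\|^{2}\bigr)+\tau\|\mathscr{R}^{n+\frac12}\|^{2},\\
\|\varepsilon^{n+1}\|^{2} &\le \Bigl(1+\frac{\tau K}{\mu}\Bigr)\|\varepsilon^{n}\|^{2}+\frac{\tau}{\mu}\|\mathscr{R}^{n+\frac12}\|^{2}
 \qquad\Bigl(\frac{\tau K}{2}\le 1-\mu\Bigr),\\
\|\varepsilon^{n}\|^{2} &\le \frac{\tau}{\mu}\sum_{k=0}^{n-1}\|\mathscr{R}^{k+\frac12}\|^{2}\,e^{\frac{KT}{\mu}}
 \le \frac{c_{1}^{2}(b-a)T}{\mu}\,e^{\frac{(2L+1)T}{\mu}}\,(\tau^{2}+h^{2})^{2}.
\end{aligned}
```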

4 Numerical experiments

Let \(\lVert\varepsilon(h,\tau) \rVert=\sqrt{h\tau\sum^{N}_{n=1}\sum^{M-1}_{i=1}| u(x_{i},t_{n})-u^{n}_{i} |^{2}}\) denote the discrete \(L_{2}\) norm of the error over all grid points, where \(u(x_{i},t_{n})\) and \(u_{i}^{n}\) are the exact solution and the numerical solution at the grid point \((x_{i},t_{n})\) computed with step sizes h and τ, respectively. The observation order is defined as
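A minimal sketch for computing this error norm and observation orders from gridded data follows. It assumes the usual definition in which h and τ are refined by a common factor (2 by default), so that the order is \(\log_{2}(\lVert\varepsilon(2h,2\tau)\rVert/\lVert\varepsilon(h,\tau)\rVert)\); the function names and the array layout are hypothetical.

```python
import numpy as np

def l2_error(u_exact, u_num, h, tau):
    """Discrete L2 error sqrt(h*tau*sum_{n=1}^{N} sum_{i=1}^{M-1} |u(x_i,t_n) - u_i^n|^2).
    Both arrays have shape (N+1, M+1): rows t_0..t_N, columns x_0..x_M."""
    diff = u_exact[1:, 1:-1] - u_num[1:, 1:-1]   # drop t_0 and the boundary nodes
    return float(np.sqrt(h*tau*np.sum(np.abs(diff)**2)))

def observation_orders(errors, refinement=2.0):
    """Orders log(e_coarse/e_fine)/log(refinement) for successive grids, assuming
    h and tau are both divided by `refinement` from one grid to the next."""
    e = np.asarray(errors, dtype=float)
    return np.log(e[:-1]/e[1:]) / np.log(refinement)
```

For example, an error sequence that drops by a factor of 4 at each halving of h and τ, such as `[4.0e-3, 1.0e-3, 2.5e-4]`, yields orders of approximately 2.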

Example 1

Consider the following initial-boundary value problem for the Riesz tempered fractional diffusion equation:

where \(1< \alpha< 2\) and the nonlinear source term is

where \(A_{2}=1\), \(A_{3}=-2\), \(A_{4}=1\).

The exact solution of Example 1 is

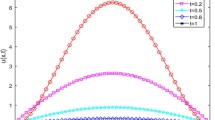

From Table 1, we observe second-order accuracy in both space and time for different values of α and λ, which is in line with our convergence analysis. The numerical solutions of Example 1 are shown in Fig. 1, and Fig. 2 shows that the global perturbation errors are controlled by the initial perturbation errors, which is consistent with our stability analysis.

Fig. 1 Numerical solutions for Example 1 with \(h=\tau =0.01\), \(\alpha=1.5\), \(\lambda=1\)

Fig. 2 Numerical solutions of the perturbation equations for Example 1 with \(h=\tau=0.01\), \(\alpha=1.5\), \(\lambda=1\) and the initial perturbation error \(\eta^{0}_{i}=10^{-4}\) (\(0\leq i \leq M \))

Example 2

Consider the following initial-boundary value problem for the Riesz tempered fractional diffusion equation:

where \(1< \alpha< 2\) and the linear source term \(g(x,t)\) is

The exact solution of Example 2 is

For comparison, we apply our numerical scheme (2.6)–(2.8) (T-ML2) and the method of [24] (T-WSGL) to Example 2 with different values of α and \(\lambda=1\). The errors and the corresponding observation orders are listed in Table 2; both T-ML2 and T-WSGL are effective for Example 2. However, T-WSGL requires the parameters \(\gamma_{1}\), \(\gamma_{2}\) and \(\gamma_{3}\) to be selected for each α, whereas T-ML2 requires no such choice, so T-ML2 is somewhat more convenient than T-WSGL for Example 2.

5 Conclusion

In this paper, the implicit midpoint method is proposed for solving the Riesz tempered fractional diffusion equation with a nonlinear source term. The numerical scheme is proved to be stable and convergent by the energy method, and numerical examples confirm the theoretical analysis and the effectiveness of the proposed method.

References

Zhang, Y., Li, Q., Ding, H.: High-order numerical approximation formulas for Riemann–Liouville (Riesz) tempered fractional derivatives: construction and application (I). Appl. Math. Comput. 329, 432–443 (2018)

Çelik, C., Duman, M.: Finite element method for a symmetric tempered fractional diffusion equation. Appl. Numer. Math. 120, 270–286 (2017)

Li, C., Zeng, F.: Numerical Methods for Fractional Calculus, pp. 170–174. Chapman & Hall, Boca Raton (2015)

Liao, H., Lyu, P., Vong, S.: Second-order BDF time approximation for Riesz space-fractional diffusion equations. Int. J. Comput. Math. 95, 144–158 (2017)

Wang, P., Huang, C.: An implicit midpoint difference scheme for the fractional Ginzburg–Landau equation. J. Comput. Phys. 312, 31–49 (2016)

Ding, H., Li, C.: High-order numerical algorithms for Riesz derivatives via constructing new generating functions. J. Sci. Comput. 71, 759–784 (2017)

Choi, Y., Chung, S.: Finite element solutions for the space-fractional diffusion equation with a nonlinear source term. Abstr. Appl. Anal. 2012, 183 (2012)

Çelik, C., Duman, M.: Crank–Nicolson method for the fractional diffusion equation with the Riesz fractional derivative. J. Comput. Phys. 231, 1743–1750 (2012)

Baeumer, B., Meerschaert, M.: Tempered stable Lévy motion and transient super-diffusion. J. Comput. Appl. Math. 233, 2438–2448 (2010)

Meerschaert, M., Zhang, Y., Baeumer, B.: Tempered anomalous diffusion in heterogeneous systems. Geophys. Res. Lett. 35, L17403 (2008)

Metzler, R., Klafter, J.: The restaurant at the end of the random walk: recent developments in the description of anomalous transport by fractional dynamics. J. Phys. A, Math. Gen. 37, R161 (2004)

Carr, P., Geman, H., Madan, D., Yor, M.: Stochastic volatility for Lévy processes. Math. Finance 13, 345–382 (2003)

Wang, W., Chen, X., Ding, D., Lei, S.: Circulant preconditioning technique for barrier options pricing under fractional diffusion models. Int. J. Comput. Math. 92, 2596–2614 (2015)

Zhang, H., Liu, F., Turner, I., Chen, S.: The numerical simulation of the tempered fractional Black–Scholes equation for European double barrier option. Appl. Math. Model. 40, 5819–5834 (2016)

Qu, W., Liang, Y.: Stability and convergence of the Crank–Nicolson scheme for a class of variable-coefficient tempered fractional diffusion equations. Adv. Differ. Equ. 2017, 108, 1–11 (2017)

Yu, Y., Deng, W., Wu, Y., Wu, J.: Third order difference schemes (without using points outside of the domain) for one sided space tempered fractional partial differential equations. Appl. Numer. Math. 112, 126–145 (2017)

Dehghan, M., Abbaszadeh, M.: A finite difference/finite element technique with error estimate for space fractional tempered diffusion-wave equation. Comput. Math. Appl. 75, 2903–2914 (2018)

Wu, X., Deng, W., Barkai, E.: Tempered fractional Feynman–Kac equation: theory and examples. Phys. Rev. E 93, 032151 (2016)

Yu, Y., Deng, W., Wu, Y., Wu, J.: High-order quasi-compact difference schemes for fractional diffusion equations. Commun. Math. Sci. 15, 1183–1209 (2017)

Zayernouri, M., Ainsworth, M., Karniadakis, G.: Tempered fractional Sturm–Liouville EigenProblems. SIAM J. Sci. Comput. 37, A1777–A1800 (2015)

Chen, M., Deng, W.: High order algorithms for the fractional substantial diffusion equation with truncated Lévy flights. SIAM J. Sci. Comput. 37, A890–A917 (2015)

Sabzikar, F., Meerschaert, M., Chen, J.: Tempered fractional calculus. J. Comput. Phys. 293, 14–28 (2015)

Zheng, M., Karniadakis, G.: Numerical methods for SPDEs with tempered stable processes. SIAM J. Sci. Comput. 37, A1197–A1217 (2015)

Li, C., Deng, W.: High order schemes for the tempered fractional diffusion equation. Adv. Comput. Math. 42, 543–572 (2016)

Acknowledgements

The authors thank the anonymous referees for their valuable comments and suggestions.

Availability of data and materials

The authors declare that all data and material in the paper are available and veritable.

Funding

This work is supported by National Science Foundation of China (No. 11671343), and the Project of Scientific Research Fund of Hunan Provincial Science and Technology Department (No. 2018WK4006).

Author information


Contributions

The two authors contributed equally and significantly to writing this article. Both authors wrote, read, and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Hu, D., Cao, X. The implicit midpoint method for Riesz tempered fractional diffusion equation with a nonlinear source term. Adv Differ Equ 2019, 66 (2019). https://doi.org/10.1186/s13662-019-1990-y
