Abstract

This paper presents enhanced results on robust finite-time passivity for uncertain discrete-time Markovian jumping BAM delayed neural networks with leakage delay. By constructing a proper Lyapunov–Krasovskii functional candidate and using the reciprocally convex combination method and the linear matrix inequality technique, we derive several sufficient conditions for verifying the passivity of discrete-time BAM neural networks. Further, some sufficient conditions for finite-time boundedness and passivity under uncertainties are proposed by employing zero inequalities. Finally, numerical examples with simulations show the enlarged feasible region of the proposed criteria and illustrate the applicability and usefulness of the proposed method.


1 Introduction and problem statement with preliminaries

Recurrent neural networks (RNNs) have attracted growing research interest in recent years. This class includes bidirectional associative memory (BAM) neural networks, Hopfield neural networks, cellular neural networks, Cohen–Grossberg neural networks, and neural and social networks, which have received great attention due to their wide applications in classification, signal and image processing, parallel computing, associative memories, optimization, cryptography, and so on. BAM neural network models were introduced by Kosko, see [1, 2]. BAM networks form a special class of RNNs that can store bipolar vector pairs. Their neurons are arranged in two layers, the X-layer and the Y-layer, and the neurons in one layer are fully interconnected to the neurons in the other layer. BAM neural networks are designed so that, for a given external input, they possess a unique globally asymptotically or exponentially stable equilibrium point. Hence, considerable effort has been devoted to the stability analysis of neural networks, and a large number of sufficient conditions have been proposed to guarantee the global asymptotic or exponential stability of such networks.

Furthermore, time delays in a network can cause poor performance, instability, or chaos. Time delays are commonly classified into two types: discrete and distributed delays. We take both types into account when modeling our network, because the axons in real neural networks have a wide variety of sizes and lengths. It is therefore worthwhile to investigate the dynamical behavior of neural systems with both kinds of time delays, see, for instance, [3–11].

In [12], Shu et al. considered BAM neural networks with discrete and distributed time delays and obtained sufficient conditions ensuring global asymptotic stability. Time delays in the leakage term also have a great impact on the dynamic behavior of neural networks. However, so far only a few works have considered neural networks with time delay in the leakage term, see, for instance, [13–17].

Further, the stability performance of state variables with leakage time delays was discussed by Lakshmanan et al. in [18]. When modeling a real nervous system, stochastic noises and parameter uncertainties are inevitable and should be taken into account. In a real nervous system, synaptic transmission is a noisy process brought on by random fluctuations in the release of neurotransmitters, and the connection weights of the neurons depend on resistance and capacitance values that are subject to uncertainty. Therefore, it is of practical significance to investigate stochastic disturbances in the stability of time-delayed neural networks with parameter uncertainties, see [19–22] and the references cited therein. Moreover, impulsive effects are likely to exist in a wide variety of evolutionary processes, in which the states change abruptly at certain moments of time [23–28].

Abrupt changes (jumps) in the network parameters can be modeled by a finite-state Markov process. Recently, the authors of [29, 30] investigated BAMNNs with Markovian jumps and, by exploiting a stochastic LKF approach, derived new sufficient conditions for global exponential stability in the mean square.

BAM-type NNs with Markovian jumping parameters and leakage terms were studied by Wang et al. in [31]. In [32], a robust stability problem was studied, and some delay-dependent conditions were derived for neutral-type NNs with time-varying delays. The authors of [33–35] developed stability conditions for neural networks using an integral inequality approach. Stability criteria for neural networks with time-varying delays were established in [36–38]. It should be noted that all the results reported in the literature above are concerned only with Markovian jumping SNNs with Lipschitz-type neuron activation functions. Up to now, very little attention has been paid to the global exponential stability of Markovian jumping SBAMNNs with non-Lipschitz activation functions, which frequently appear in realistic neural networks. This situation motivates the present problem, i.e., α-inverse Hölder activation functions.

The main objective of this paper is to study the delay-dependent exponential stability problem for a class of Markovian jumping uncertain BAM neural networks with mixed time delays, leakage delays, and α-inverse Hölder activation functions under stochastic noise perturbation.

To the best of the authors' knowledge, no result on the global exponential stability of Markovian jumping stochastic impulsive uncertain BAM neural networks with leakage delays, mixed time delays, and α-inverse Hölder activation functions is available in the existing literature. This motivates us to study the following BAM neural networks:

where \(x(t) = (x_{1}(t),x_{2}(t),\ldots,x_{n}(t))^{T}\in\mathbb{R}^{n}\) and \(y(t) = (y_{1}(t),y_{2}(t),\ldots,y_{n}(t))^{T}\in\mathbb{R}^{n}\) denote the states at time t; \(f(\cdot)\), \(g(\cdot)\), \(h(\cdot)\) and \(\tilde {f}(\cdot)\), \(\tilde{g}(\cdot)\), \(\tilde{h}(\cdot)\) denote the neuron activation functions, \(C = \operatorname{diag}\{{c_{i}}\}\), \(D = \operatorname{diag}\{ {d_{j}}\}\) are positive diagonal matrices; \(c_{i}>0\), \(d_{j}>0\), \(i,j=1,2,\ldots,n\), are the neural self inhibitions; \(W_{0} = (W_{0ji})_{n\times n}\), \(V_{0} = (V_{0ij})_{n\times n}\) are the connection weight matrices; \(W_{1} = (W_{1ji})_{n\times n}\), \(V_{1} = (V_{1ij})_{n\times n}\) are the discretely delayed connection weight matrices; and \(W_{2} = (W_{2ji})_{n\times n}\), \(V_{2} = (V_{2ij})_{n\times n}\) are the distributively delayed connection weight matrices; \(I = (I_{1},I_{2},\ldots,I_{n})^{T}\) and \(J = (J_{1},J_{2},\ldots ,J_{n})^{T}\) are the external inputs; \(\tau_{1}(t)\) and \(\tau_{2}(t)\) are the discrete time-varying delays which are bounded with \(0<\tau_{1}(t)<\bar{\tau }_{1}\), \(\dot{\tau}_{1}(t)\leq\tau_{1}<1\), and \(0<\tau_{2}(t)<\bar {\tau}_{2}\), \(\dot{\tau}_{2}(t)\leq\tau_{2}<1\), respectively; \(\sigma_{1}\) and \(\sigma_{2}\) are constant delays. 
The leakage delays \(\nu_{1} \geq0\), \(\nu_{2} \geq0\) are constants; \(\rho_{1}: \mathbb {R}^{n}\times\mathbb{R}^{n}\times\mathbb{R}^{n}\times\mathbb {R}^{+} \longrightarrow\mathbb{R}^{n}\) and \(\rho_{2}: \mathbb {R}^{n}\times\mathbb{R}^{n}\times\mathbb{R}^{n}\times\mathbb {R}^{+} \longrightarrow\mathbb{R}^{n}\) denote the stochastic disturbances; \(\omega(t) = (\omega_{1}(t),\omega_{2}(t),\ldots,\omega_{n}(t))^{T}\) and \(\tilde{\omega}(t) = (\tilde{\omega}_{1}(t),\tilde{\omega }_{2}(t),\ldots,\tilde{\omega}_{n}(t))^{T}\) are n-dimensional Brownian motions defined on a complete probability space \((\mathbb{A}, \mathcal{F}, \{\mathcal{F}_{t}\}_{t\geq 0},\mathbb{P})\) with a filtration \(\{{\mathcal{F}_{t}}\}_{t\geq0}\) satisfying the usual conditions (i.e., it is right-continuous and \(\mathcal{F}_{0}\) contains all \(\mathbb{P}\)-null sets), and \(\mathbb {E}\{{d\omega(t)}\} = \mathbb{E}\{{d\tilde{\omega}(t)}\} = 0\), \(\mathbb{E}\{{d\omega^{2}(t)}\} = \mathbb{E}\{{d\tilde{\omega }^{2}(t)}\} = dt\); \(M_{k}(\cdot): \mathbb{R}^{n}\times\mathbb{R}^{n} \rightarrow\mathbb{R}^{n}\) and \(N_{k}(\cdot): \mathbb{R}^{n}\times\mathbb {R}^{n} \rightarrow\mathbb{R}^{n}\), \(k \in\mathbb{Z}_{+}\), are continuous functions. The impulsive times \(t_{k}\) satisfy \(0 = t_{0} < t_{1} < \cdots< t_{k}\rightarrow\infty\) (i.e., \(\lim_{k\rightarrow\infty}t_{k} = +\infty\)) and \(\inf_{k \in\mathbb{Z}_{+}}\{{t_{k}- t_{k-1}}\} > 0\).

The main contributions of this research work are highlighted as follows:

- Uncertain parameters, Markovian jumping, stochastic noises, and leakage delays are taken into account in the stability analysis of BAM neural networks with mixed time delays.

- By constructing a suitable LKF, the global exponential stability of the addressed neural networks is established via less conservative stability conditions.

- As a novelty, uncertain parameters are incorporated into the Lyapunov–Krasovskii functional itself, which yields sufficient conditions for the global exponential stability of the designed neural networks.

- In the proposed BAM neural networks, with both time delay terms considered, the allowable upper bounds of the discrete time-varying delays are larger than those in some existing literature, see Table 1 of Example 4.1. This shows that the approach developed in this paper is new and less conservative than some available results.

Suppose that the initial conditions of the stochastic BAM neural networks (1) have the form \(x(t) = \phi(t) \) for \(t \in [-\bar{\omega},0]\) and \(y(t) = \psi(t)\) for \(t\in[-\bar {\tilde{\omega}},0 ]\), where \(\phi(t)\) and \(\psi(t)\) are continuous functions, \(\bar{\omega} = \max(\bar{\tau}_{1}, \nu_{1},\sigma _{1})\) and \(\bar{\tilde{\omega}} = \max(\bar{\tau}_{2},\nu _{2},\sigma_{2}) \). Throughout this section, we assume that the activation functions \(f_{i}\), \(\tilde{f}_{j}\), \(g_{i}\), \(\tilde{g}_{j}\), \(h_{i}\), \(\tilde{h}_{j}\), \(i,j = 1,2,\ldots,n\), satisfy the following assumptions:

Assumption 1

(1) \(f_{i}\), \(\tilde{f}_{j}\) are monotonically increasing continuous functions.

(2) For any \(\rho_{1}, \rho_{2}, \theta_{1}, \theta_{2}\in\mathbb{R}\), there exist scalars \(q_{i_{\rho_{1}}} > 0\), \(r_{i_{\rho_{1}}} > 0\) and \(\tilde{q}_{j_{\rho_{2}}} > 0\), \(\tilde{r}_{j_{\rho_{2}}} > 0\), which depend on \(\rho_{1}\) and \(\rho_{2}\), respectively, and constants \(\alpha>0\), \(\beta> 0\) such that

$$\begin{aligned}& \bigl\vert f_{i}(\theta_{1}) - f_{i}( \rho_{1}) \bigr\vert \geq q_{i_{\rho _{1}}} \vert \theta_{1} - \rho_{1} \vert ^{\alpha}, \quad\forall \vert \theta_{1} - \rho_{1} \vert \leq r_{i_{\rho_{1}}}, \quad\mbox{and} \\& \bigl\vert \tilde{f}_{j}(\theta_{2}) - \tilde{f}_{j}(\rho_{2}) \bigr\vert \geq \tilde{q}_{j_{\rho_{2}}} \vert \theta_{2} - \rho_{2} \vert ^{\beta},\quad \forall \vert \theta_{2} - \rho_{2} \vert \leq\tilde {r}_{j_{\rho_{2}}}. \end{aligned}$$

Assumption 2

\(g_{i}\), \(h_{i}\) and \(\tilde{g}_{j}\), \(\tilde {h}_{j}\) are continuous and satisfy

\(\forall s_{1}, s_{2}, \tilde{s}_{1}, \tilde{s}_{2} \in\mathbb{R}\), \(s_{1}\neq s_{2}\) and \(\tilde{s}_{1}\neq\tilde{s}_{2}\), \(i,j = 1,2,3,\ldots,n\). Denote \(E = \operatorname{diag}\{{e_{i}}\}\), \(K = \operatorname{diag}\{ {k_{i}}\}\) and \(\widetilde {E} = \operatorname{diag} \{{\tilde{e}_{j}}\}\), \(\widetilde{K} = \operatorname{diag}\{{\tilde {k}_{j}}\}\) respectively.

Remark 1.1

In [39], the function \(f_{i}\) used in Assumption 1 is called an α-inverse Hölder activation function, which is a non-Lipschitz function. Such activation functions play an important role in the stability analysis of neural networks and are common in engineering mathematics; for example, \(f(\theta) = \arctan\theta\) and \(f(\theta)=\theta^{3} + \theta\) are 1-inverse Hölder functions, and \(f(\theta)=\theta^{3}\) is a 3-inverse Hölder function.
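The inverse Hölder property in Assumption 1 can be probed numerically. The sketch below uses a hypothetical helper (not taken from [39]) that evaluates the smallest ratio \(|f(\theta)-f(\rho)|/|\theta-\rho|^{\alpha}\) on a grid around a chosen ρ; a clearly positive value is consistent with f being α-inverse Hölder near ρ, although a grid check is evidence rather than proof. For \(f(\theta)=\theta^{3}\) and α = 3 the exact bound is 1/4, since \(|a^{3}-b^{3}| = |a-b|(a^{2}+ab+b^{2}) \geq |a-b|^{3}/4\).

```python
import numpy as np


def empirical_inverse_holder_constant(f, rho, alpha, r, num=2001):
    """Smallest observed |f(theta) - f(rho)| / |theta - rho|**alpha
    over a uniform grid of theta with 0 < |theta - rho| <= r.

    Hypothetical helper for illustration only: a finite grid can
    overestimate the true infimum and never certifies it."""
    theta = rho + np.linspace(-r, r, num)
    dist = np.abs(theta - rho)
    keep = dist > 1e-12 * r  # drop theta == rho, where the ratio is undefined
    ratios = np.abs(f(theta[keep]) - f(rho)) / dist[keep] ** alpha
    return ratios.min()


# theta**3 with alpha = 3: the infimum 1/4 is attained at theta = -rho.
print(empirical_inverse_holder_constant(lambda t: t**3, rho=1.0, alpha=3, r=2.0))
# arctan with alpha = 1 near 0: arctan(theta)/theta is smallest at |theta| = r.
print(empirical_inverse_holder_constant(np.arctan, rho=0.0, alpha=1, r=1.0))
# theta**3 with alpha = 1 fails near 0: the ratio theta**2 collapses toward 0.
print(empirical_inverse_holder_constant(lambda t: t**3, rho=0.0, alpha=1, r=1.0))
```

The last call shows why the exponent matters: \(\theta^{3}\) is inverse Hölder only with α = 3, not with α = 1.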

Remark 1.2

From Assumption 2, we can see that \(e_{i}\), \(\tilde{e}_{j}\) and \(k_{i}\), \(\tilde{k}_{j}\) are positive scalars, so E, Ẽ, K, and K̃ are all positive definite diagonal matrices. The relations among the different activation functions \(f_{i}\), \(\tilde{f}_{j}\) (which are α-inverse Hölder activation functions), \(g_{i}\), \(\tilde{g}_{j}\), and \(h_{i}\), \(\tilde{h}_{j}\) are implicitly established in Theorem 3.2. To the best of our knowledge, such relations have not been provided in the existing literature.

In order to guarantee the global exponential stability of system (1), we assume that the stochastic noise contribution vanishes at the equilibrium point, i.e.,

Assumption 3

\(\rho_{1}(x^{*},y^{*},y^{*},t) = 0\) and \(\rho_{2}(y^{*},x^{*},x^{*},t) = 0\).

For such deterministic BAM neural networks, we have the following system of equations:

Thus system (1) admits one equilibrium point \((x^{*},y^{*}) = (x_{1}^{*},x_{2}^{*},\ldots,x_{n}^{*},y_{1}^{*},y_{2}^{*},\ldots,y_{n}^{*})^{T}\) under Assumption 3. In this regard, let \(u(t) = x(t)-x^{*}\) and \(v(t) = y(t)-y^{*}\), then system (1) can be rewritten in the following form:

where

Clearly, \(\bar{f}_{i}(s)\) and \(\bar{\tilde{f}}_{j}(s)\) are also α-inverse Hölder functions, and \(\bar{f}_{i}(0) = \bar{g}_{i}(0) = \bar{h}_{i}(0) = \bar{\tilde{f}}_{j}(0) = \bar{\tilde{g}}_{j}(0) = \bar{\tilde{h}}_{j}(0) = 0\), \(i,j=1,2,\ldots,n\).

Let \(\{r(t), t \geq0 \}\) be a right-continuous Markov chain on a complete probability space \((\Omega,\mathcal{F},\{\mathcal{F}_{t}\} _{t\geq0},\mathbb{P})\) taking values in a finite state space \(\mathfrak{M} = \{1,2,\ldots,N\}\) with generator \(\Gamma= (\gamma_{ij})_{N\times N}\) given by

$$\mathbb{P}\{r(t+\Delta t)=j \mid r(t)=i\} = \begin{cases} \gamma_{ij}\Delta t + o(\Delta t), & i \neq j, \\ 1 + \gamma_{ii}\Delta t + o(\Delta t), & i = j, \end{cases}$$

where \(\Delta t > 0\) and \(\lim_{\Delta t\rightarrow0}\frac{o(\Delta t)}{\Delta t}=0\). Here \(\gamma_{ij} \geq0\) is the transition rate from i to j if \(i \neq j\), while \(\gamma_{ii} = -\sum_{j=1, j\neq i}^{N}\gamma_{ij}\).
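A standard way to realize a Markov chain with such a generator is to draw an exponential holding time with rate \(-\gamma_{ii}\) in state i and then jump to \(j \neq i\) with probability \(\gamma_{ij}/(-\gamma_{ii})\). The NumPy sketch below does exactly this; the generator values are hypothetical (the generator of Example 4.1 is not reproduced here), and states are 0-indexed, so state k corresponds to k + 1 in \(\mathfrak{M}\).

```python
import numpy as np


def simulate_ctmc(gamma, i0, t_end, rng):
    """Simulate a right-continuous Markov chain with generator `gamma` on [0, t_end).

    Returns the jump times (starting with 0) and the state entered at each
    time. States are 0-indexed."""
    gamma = np.asarray(gamma, dtype=float)
    n = gamma.shape[0]
    off_diag = gamma - np.diag(np.diag(gamma))
    # A generator has nonnegative off-diagonal rates and zero row sums.
    if np.any(off_diag < 0) or not np.allclose(gamma.sum(axis=1), 0.0):
        raise ValueError("gamma is not a valid generator matrix")
    times, states = [0.0], [i0]
    t, i = 0.0, i0
    while True:
        rate = -gamma[i, i]                  # total rate of leaving state i
        if rate <= 0.0:                      # absorbing state: no further jumps
            break
        t += rng.exponential(1.0 / rate)     # exponential holding time
        if t >= t_end:
            break
        i = int(rng.choice(n, p=off_diag[i] / rate))  # jump i -> j w.p. gamma_ij / rate
        times.append(t)
        states.append(i)
    return np.array(times), np.array(states)


def occupancy(times, states, t_end, n):
    """Fraction of [0, t_end) spent in each state."""
    durations = np.diff(np.append(times, t_end))
    return np.bincount(states, weights=durations, minlength=n) / t_end


rng = np.random.default_rng(0)
gamma = np.array([[-1.0, 1.0],
                  [2.0, -2.0]])   # hypothetical rates for M = {1, 2}
times, states = simulate_ctmc(gamma, i0=0, t_end=20000.0, rng=rng)
# Long-run occupancy should approach the stationary distribution (2/3, 1/3) of this generator.
print(occupancy(times, states, 20000.0, n=2))
```

The validity check mirrors the definition above: off-diagonal rates are nonnegative and \(\gamma_{ii}\) balances each row.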

In this paper, we consider the following BAM neural networks with stochastic noise disturbance, leakage, mixed time delays, and Markovian jump parameters, which is actually a modification of system (3):

where \(u(t-\nu_{1})\), \(\tau_{1}(t)\), \(\tau_{2}(t)\), \(v(t)\), \(u(t)\), \(v(t-\nu_{2})\), \(\bar{f}(v(t))\), \(\bar{\tilde{f}}(u(t))\), \(\bar {g}(v(t-\tau_{1}(t)))\), \(\bar{\tilde{g}}(u(t-\tau_{2}(t)))\), \(\bar {h}(v(t))\), \(\bar{\tilde{h}}(u(t))\) have the same meanings as those in (3), \(\bar{\rho_{1}}(u(t-\nu_{1}), v(t), v(t-\tau _{1}(t)), t,r(t))\) and \(\bar{\rho_{2}}(v(t-\nu _{2}),u(t),u(t-\tau_{2}(t)),t,\tilde{r}(t))\) are noise intensity function vectors, and for a fixed system mode, \(C(r(t))\), \(D(r(t)) \), \(W_{0}(r(t))\), \(V_{0}(\tilde{r}(t))\), \(W_{1}(r(t))\), \(V_{1}(\tilde{r}(t))\), \(W_{2}(r(t))\), \(V_{2}(\tilde{r}(t))\), \(\bar {M}_{k}(r(t))\), and \(\bar{\tilde{N}}_{k}(\tilde{r}(t))\) are known constant matrices with appropriate dimensions.

For convenience, each possible value of \(r(t)\) and \(\tilde{r}(t)\) is denoted by i and j, respectively, with \(i, j \in\mathfrak{M}\), in the sequel. Then we write \(C_{i} = C(r(t))\), \(D_{j} = D(\tilde{r}(t))\), \(W_{0i}=W_{0}(r(t))\), \(V_{0j}= V_{0}(\tilde{r}(t))\), \(W_{1i}=W_{1}(r(t))\), \(V_{1j}= V_{1}(\tilde{r}(t))\), \(W_{2i}=W_{2}(r(t))\), \(V_{2j}= V_{2}(\tilde{r}(t))\), \(\bar{M}_{ki}=\bar{M}_{k}(r(t))\), and \(\bar{\tilde{N}}_{kj}=\bar{\tilde{N}}_{k}(\tilde{r}(t))\), where, for any \(i, j\in\mathfrak{M}\), \(C_{i}\), \(D_{j}\), \(W_{0i}\), \(V_{0j}\), \(W_{1i}\), \(V_{1j}\), \(W_{2i}\), \(V_{2j}\), \(\bar{M}_{ki}\), and \(\bar{\tilde{N}}_{kj}\) are known constant matrices.

Assume that \(\bar{\rho_{1}} : \mathbb{R}^{n} \times \mathbb{R}^{n}\times\mathbb{R}^{n}\times\mathbb {R}^{+}\times\mathfrak{M}\rightarrow\mathbb{R}^{n}\) and \(\bar{\rho_{2}} : \mathbb{R}^{n}\times\mathbb{R}^{n}\times\mathbb {R}^{n}\times\mathbb{R}^{+}\times \mathfrak{M}\rightarrow\mathbb{R}^{n}\) are locally Lipschitz continuous and satisfy the following assumption.

Assumption 4

for all \(u_{1}, u_{2}, v_{1}, v_{2}\in\mathbb{R}^{n}\) and \(r(t)=i\), \(\tilde{r}(t)=j\), \(i, j\in\mathfrak{M}\), where \(R_{1i}\), \(\widetilde{R}_{1j}\), \(R_{2i}\), \(\widetilde{R}_{2j}\), \(R_{3i}\), and \(\widetilde{R}_{3j}\) are known positive definite matrices with appropriate dimensions.

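Consider a general stochastic system \(dx(t)=f(x(t), t, r(t))\, dt + g(x(t), t, r(t))\, d\omega(t)\), \(t \geq0\), with the initial value \(x(0) = x_{0} \in\mathbb{R}^{n}\), where \(f: \mathbb{R}^{n}\times\mathbb{R}^{+}\times\mathfrak{M} \rightarrow \mathbb{R}^{n}\), \(g: \mathbb{R}^{n}\times\mathbb{R}^{+}\times\mathfrak{M} \rightarrow \mathbb{R}^{n\times n}\), and \(r(t)\) is the Markov chain. Let \(C^{2,1}(\mathbb{R}^{n} \times\mathbb{R}^{+} \times\mathfrak{M}; \mathbb{R}^{+})\) denote the family of all nonnegative functions V on \(\mathbb{R}^{n}\times\mathbb{R}^{+}\times\mathfrak{M}\) that are twice continuously differentiable in x and once continuously differentiable in t. For any \(V \in C^{2,1}(\mathbb{R}^{n}\times\mathbb{R}^{+}\times\mathfrak{M}; \mathbb{R}^{+})\), define \(\mathcal{L}V:\mathbb{R}^{n}\times \mathbb{R}^{+} \times\mathfrak{M} \rightarrow\mathbb{R}\) by

$$\mathcal{L}V(x,t,i) = V_{t}(x,t,i) + V_{x}(x,t,i)f(x,t,i) + \frac{1}{2}\operatorname{trace}\bigl[g^{T}(x,t,i)V_{xx}(x,t,i)g(x,t,i)\bigr] + \sum_{l=1}^{N}\gamma_{il}V(x,t,l),$$

where \(V_{t} = \partial V/\partial t\), \(V_{x} = (\partial V/\partial x_{1},\ldots,\partial V/\partial x_{n})\), and \(V_{xx} = (\partial^{2} V/\partial x_{a}\partial x_{b})_{n\times n}\).

By the generalized Itô formula, one can see that

$$\mathbb{E}V\bigl(x(t),t,r(t)\bigr) = \mathbb{E}V\bigl(x_{0},0,r(0)\bigr) + \mathbb{E}\int_{0}^{t}\mathcal{L}V\bigl(x(s),s,r(s)\bigr)\,ds.$$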

Let \(u(t;\xi)\) and \(v(t;\tilde{\xi})\) denote the state trajectories from the initial data \(u(\theta)=\xi(\theta)\) on \(-\bar{\omega}\leq\theta\leq0\) in \(L_{\mathcal{F}_{0}}^{2}([-\bar{\omega},0];\mathbb{R}^{n})\) and \(v(\theta)=\tilde{\xi}(\theta)\) on \(-\bar{\tilde{\omega}}\leq\theta \leq0\) in \(L_{\mathcal{F}_{0}}^{2}([-\bar{\tilde{\omega}},0];\mathbb{R}^{n})\). Clearly, system (4) admits the trivial solution \(u(t;0)\equiv0\) and \(v(t;0)\equiv0\) corresponding to the initial data \(\xi=0\) and \(\tilde{\xi}=0\), respectively. For simplicity, we write \(u(t;\xi)=u(t)\) and \(v(t;\tilde{\xi})=v(t)\).

Definition 1.3

The equilibrium point of neural networks (4) is said to be globally exponentially stable in the mean square if, for any \(\xi\in L_{\mathcal{F}_{0}}^{2}([-\bar{\omega},0];\mathbb{R}^{n})\) and \(\tilde{\xi} \in L_{\mathcal{F}_{0}}^{2}([-\bar{\tilde{\omega}},0];\mathbb{R}^{n})\), there exist positive constants η, \(\mathcal{T}\), \(\Pi_{\xi}\), and \(\Theta_{\tilde{\xi}}\), depending on ξ and ξ̃, such that, when \(t > \mathcal{T}\), the following inequality holds:

Definition 1.4

We introduce the stochastic Lyapunov–Krasovskii functional \(V \in C^{2,1}(\mathbb{R}^{+}\times\mathbb{R}^{n} \times\mathbb{R}^{n} \times\mathfrak{M};\mathbb{R}^{+})\) of system (4) and define the weak infinitesimal generator \(\mathcal{L}V\) of the random process, from \(\mathbb{R}^{+}\times\mathbb{R}^{n} \times\mathbb{R}^{n} \times \mathfrak{M}\) to \(\mathbb{R}\), by

Lemma 1.5

([39])

If \(f_{i}\) is an α-inverse Hölder function, then for any \(\rho_{0} \in\mathbb{R}\), one has

Lemma 1.6

([39])

If \(f_{i}\) is an α-inverse Hölder function and \(f_{i}(0) = 0\), then there exist constants \(q_{i_{0}} > 0\) and \(r_{i_{0}} > 0\) such that \(|f_{i}(\theta)| \geq q_{i_{0}}|\theta|^{\alpha}\), \(\forall|\theta| \leq r_{i_{0}}\). Moreover, \(|f_{i}(\theta)| \geq q_{i_{0}} r_{i_{0}}^{\alpha}\), \(\forall|\theta| \geq r_{i_{0}}\).

Lemma 1.7

([21])

For any real matrix \(M >0\), scalars a and b with \(0 \leq a < b\), vector function \(x(\alpha)\) such that the following integrals are well defined, we have

Lemma 1.8

([39])

Let \(x,y \in\mathbb{R}^{n}\), and let G be a positive definite matrix. Then

$$2x^{T}y \leq x^{T}Gx + y^{T}G^{-1}y.$$
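Lemma 1.8 is usually stated as \(2x^{T}y \leq x^{T}Gx + y^{T}G^{-1}y\), which follows from expanding \((G^{1/2}x - G^{-1/2}y)^{T}(G^{1/2}x - G^{-1/2}y) \geq 0\). Assuming that form, a quick randomized sanity check looks like this (the dimension and sample count are arbitrary test choices):

```python
import numpy as np

rng = np.random.default_rng(42)
n = 4
worst_gap = np.inf
for _ in range(1000):
    a = rng.standard_normal((n, n))
    g = a @ a.T + 0.1 * np.eye(n)            # random positive definite G
    x = rng.standard_normal(n)
    y = rng.standard_normal(n)
    lhs = 2.0 * x @ y
    rhs = x @ g @ x + y @ np.linalg.solve(g, y)   # y^T G^{-1} y without forming G^{-1}
    worst_gap = min(worst_gap, rhs - lhs)
print(worst_gap)  # nonnegative up to rounding error
```

Equality holds exactly when \(y = Gx\), which is why the bound is tight and cannot be improved in general.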

Lemma 1.9

([21])

Given constant matrices \(\Upsilon_{1}\), \(\Upsilon_{2}\), and \(\Upsilon_{3}\) with appropriate dimensions, where \(\Upsilon_{1}^{T}=\Upsilon_{1}\) and \(\Upsilon_{2}^{T}=\Upsilon_{2} > 0\), \(\Upsilon_{1}+\Upsilon_{3}^{T} \Upsilon_{2}^{-1} \Upsilon_{3} < 0\) if and only if

$$\begin{bmatrix} \Upsilon_{1} & \Upsilon_{3}^{T} \\ \Upsilon_{3} & -\Upsilon_{2} \end{bmatrix} < 0.$$
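Lemma 1.9 is the Schur complement equivalence that turns the nonlinear condition \(\Upsilon_{1}+\Upsilon_{3}^{T}\Upsilon_{2}^{-1}\Upsilon_{3} < 0\) into an LMI. The sketch below checks it numerically for the block arrangement \(\bigl[\begin{smallmatrix}\Upsilon_{1} & \Upsilon_{3}^{T}\\ \Upsilon_{3} & -\Upsilon_{2}\end{smallmatrix}\bigr]\) (the arrangement \(\bigl[\begin{smallmatrix}-\Upsilon_{2} & \Upsilon_{3}\\ \Upsilon_{3}^{T} & \Upsilon_{1}\end{smallmatrix}\bigr]\) is equivalent); the random matrices are arbitrary test data.

```python
import numpy as np


def is_negative_definite(m, tol=1e-10):
    """True if the symmetric part of m has all eigenvalues below -tol."""
    return bool(np.linalg.eigvalsh(0.5 * (m + m.T)).max() < -tol)


rng = np.random.default_rng(7)
n, k = 3, 2
trials = 2000
agree = negative = 0
for _ in range(trials):
    a = rng.standard_normal((n, n))
    ups1 = -(a @ a.T) - rng.uniform(-1.0, 3.0) * np.eye(n)   # symmetric, not necessarily definite
    b = rng.standard_normal((k, k))
    ups2 = b @ b.T + 0.1 * np.eye(k)                          # symmetric positive definite
    ups3 = rng.uniform(0.0, 1.5) * rng.standard_normal((k, n))
    reduced = ups1 + ups3.T @ np.linalg.solve(ups2, ups3)    # Upsilon1 + Upsilon3^T Upsilon2^{-1} Upsilon3
    block = np.block([[ups1, ups3.T], [ups3, -ups2]])
    lhs, rhs = is_negative_definite(reduced), is_negative_definite(block)
    agree += int(lhs == rhs)
    negative += int(rhs)
print(f"{agree} of {trials} random cases agree ({negative} negative definite)")
```

Both outcomes occur in the sample, so agreement is informative rather than trivially satisfied.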

Lemma 1.10

([21])

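For any constant matrix \(\Omega\in\mathbb{R}^{n \times n}\), \(\Omega = \Omega^{T} > 0\), scalar \(\gamma> 0\), and vector function \(\omega:[0, \gamma] \rightarrow\mathbb{R}^{n}\) such that the integrations concerned are well defined,

$$\biggl(\int_{0}^{\gamma}\omega(s)\,ds\biggr)^{T}\Omega\biggl(\int_{0}^{\gamma}\omega(s)\,ds\biggr) \leq \gamma\int_{0}^{\gamma}\omega^{T}(s)\Omega\omega(s)\,ds.$$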
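Lemma 1.10 is commonly cited as Jensen's integral inequality \(\bigl(\int_{0}^{\gamma}\omega(s)ds\bigr)^{T}\Omega\bigl(\int_{0}^{\gamma}\omega(s)ds\bigr) \leq \gamma\int_{0}^{\gamma}\omega^{T}(s)\Omega\omega(s)ds\). Assuming that form, the sketch below compares both sides with the trapezoidal rule for a hypothetical test function ω(s); because the trapezoidal weights are positive and sum to γ, the discrete analogue of the inequality holds as well, so the comparison is meaningful rather than coincidental.

```python
import numpy as np


def trapezoid_weights(s):
    """Positive trapezoidal-rule weights for the grid s (they sum to s[-1] - s[0])."""
    w = np.zeros_like(s)
    h = np.diff(s)
    w[:-1] += 0.5 * h
    w[1:] += 0.5 * h
    return w


gamma = 2.0
s = np.linspace(0.0, gamma, 401)
w = trapezoid_weights(s)

# Hypothetical vector function omega: [0, gamma] -> R^2 and weight matrix Omega > 0.
omega = np.stack([np.sin(3.0 * s), np.exp(-s) - 0.5], axis=1)   # shape (401, 2)
Omega = np.array([[2.0, 0.5],
                  [0.5, 1.0]])

integral = w @ omega                                   # approximates the integral of omega
lhs = integral @ Omega @ integral
pointwise = np.einsum("ki,ij,kj->k", omega, Omega, omega)   # omega(s_k)^T Omega omega(s_k)
rhs = gamma * np.sum(w * pointwise)
print(lhs, rhs, lhs <= rhs)
```

Equality occurs for constant ω, which is the situation in which Jensen-based bounds are tight.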

Lemma 1.11

([33])

For given matrices D, E, and F with \(F^{T}F \leq I\) and a scalar \(\epsilon> 0\), the following inequality holds:

$$DFE + E^{T}F^{T}D^{T} \leq \epsilon DD^{T} + \epsilon^{-1}E^{T}E.$$
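Lemma 1.11 is the standard bound used for norm-bounded uncertainties such as those in Assumption 5, usually written \(DFE + E^{T}F^{T}D^{T} \leq \epsilon DD^{T} + \epsilon^{-1}E^{T}E\). Assuming that form, the sketch samples random D, E, and ε together with an F rescaled so that \(F^{T}F \leq I\), and records the smallest eigenvalue of the gap between the two sides (all dimensions are arbitrary test choices).

```python
import numpy as np

rng = np.random.default_rng(3)
n, p, q = 4, 3, 2
smallest = np.inf
for _ in range(1000):
    d = rng.standard_normal((n, p))
    e = rng.standard_normal((q, n))
    f = rng.standard_normal((p, q))
    f /= max(1.0, np.linalg.norm(f, 2))      # spectral norm <= 1, i.e. F^T F <= I
    eps = rng.uniform(0.1, 10.0)
    lhs = d @ f @ e + e.T @ f.T @ d.T
    rhs = eps * d @ d.T + e.T @ e / eps
    smallest = min(smallest, np.linalg.eigvalsh(rhs - lhs).min())
print(smallest)  # nonnegative up to rounding error
```

The free scalar ε is what later appears as an extra decision variable when the uncertain terms are absorbed into an LMI.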

Remark 1.12

Lakshmanan et al. [18] analyzed the stability performance of time-delayed BAM neural networks in the presence of leakage delay. In [12], the authors discussed the asymptotic stability of BAM neural networks with mixed time delays and uncertain parameters. Moreover, comparisons of the maximum allowable upper bounds of the discrete time-varying delays have been listed. Lou and Cui [29] discussed exponential stability conditions for time-delayed BAM NNs with Markovian jump parameters. Further, Huang and Li [40] studied the effects of stochastic perturbations on neural networks and derived exponential stability criteria with the aid of Lyapunov–Krasovskii functionals. In all the above references, the stability problem for BAM neural networks was considered with only some of the following factors: leakage delays, mixed time delays, stochastic effects, Markovian jump parameters, or parameter uncertainties; none of them treated all of these factors together in an exponential stability analysis. Taking all of them into account simultaneously is challenging and is the aim of this research work.

2 Global exponential stability for deterministic systems

Theorem 2.1

Under Assumptions 1 and 2, the neural network system (4) is globally exponentially stable in the mean square if, for given \(\eta_{i},\widetilde{\eta}_{j}>0\) (\(i,j\in\mathfrak {M}\)), there exist positive definite matrices S, T, S̃, T̃, \(R_{2}\), \(\widetilde{R}_{2}\), \(N_{1}\), \(N_{2}\), \(N_{3}\), \(N_{4}\), \(N_{5}\), \(N_{6}\) and \(H_{i}\), \(\widetilde{H}_{j}\) (\(i,j\in\mathfrak{M}\)), positive definite diagonal matrices P, Q, and positive scalars \(\lambda_{i}\) and \(\mu_{j}\) (\(i,j\in\mathfrak {M}\)) such that the following LMIs are satisfied:

where

Proof

Let us construct the following Lyapunov–Krasovskii functional candidate:

where

By Assumption 4, (5) and (6), we obtain

It is easy to prove that system (4) is equivalent to the following form:

By utilizing Lemmas 1.6 and 1.10, from (4) and Definition 1.4, one has

By combining Eqs. (11)–(18), we can obtain that

where

and

Let \(\alpha= \min_{i\in\mathfrak{M}}\lambda_{\min}(-\Xi_{i})\) and \(\beta= \min_{j\in\mathfrak{M}}\lambda_{\min}(-\Omega_{j})\). From conditions (9) and (10), it is easy to see that \(\alpha>0\) and \(\beta>0\). This fact, together with (19), gives
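The step from (19) to the decay estimate rests on the Rayleigh-quotient bound \(\zeta^{T}\Xi_{i}\zeta \leq -\lambda_{\min}(-\Xi_{i})\|\zeta\|^{2} \leq -\alpha\|\zeta\|^{2}\) for every mode i. A minimal NumPy sketch with hypothetical negative definite mode matrices \(\Xi_{1}\), \(\Xi_{2}\) (not the matrices of Theorem 2.1); here α denotes the decay constant defined just above, not the Hölder exponent.

```python
import numpy as np

# Hypothetical negative definite matrices, one per Markov mode i in M = {1, 2}.
xi_modes = [np.array([[-3.0, 1.0], [1.0, -2.0]]),
            np.array([[-1.5, 0.2], [0.2, -4.0]])]

# alpha = min over modes of the smallest eigenvalue of -Xi_i.
alpha = min(np.linalg.eigvalsh(-xi).min() for xi in xi_modes)
print(alpha, alpha > 0)

# Check zeta^T Xi_i zeta <= -alpha * ||zeta||^2 on random vectors for every mode.
rng = np.random.default_rng(0)
for xi in xi_modes:
    z = rng.standard_normal((1000, 2))
    quad = np.einsum("ki,ij,kj->k", z, xi, z)
    assert np.all(quad <= -alpha * np.sum(z**2, axis=1) + 1e-9)
```

Taking the minimum over modes is what makes a single constant α valid regardless of which mode the Markov chain occupies.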

Then, for \(t = t_{k}\), by some simple calculations, one gets

Therefore \(V_{1}(t_{k},u(t_{k}),v(t_{k}),i,j) \leq V_{1}(t_{k}^{-},u(t_{k}^{-}),v(t_{k}^{-}),i,j)\), \(k\in\mathbb{Z}_{+}\), which implies that \(V(t_{k},u(t_{k}),v(t_{k}),i,j) \leq V(t_{k}^{-},u(t_{k}^{-}),v(t_{k}^{-}),i,j)\), \(k\in\mathbb{Z}_{+}\). Using mathematical induction, we have that, for all \(i,j \in\mathfrak{M}\) and \(k \geq1\),

Since \(t>t'\), it follows from Dynkin's formula that

Hence it follows from the definition of \(V(t,u(t),v(t),i,j)\), the generalized Itô formula, and (20) that

By (21), we get \(\lim_{t\rightarrow+\infty}\mathbb{E}\|u(t)\|^{2} = 0\) and \(\lim_{t\rightarrow+\infty}\mathbb{E}\|v(t)\|^{2} = 0\). Furthermore,

For \(\bar{f}_{l}(\theta)\) and \(\bar{\tilde{f}}_{m}(\theta)\), by Lemma 1.6 there exist constants \(q_{l0}>0\), \(\tilde{q}_{m0}>0\), and \(r_{l0}>0\), \(\tilde{r}_{m0}>0\) such that \(|\bar{f}_{l}(\theta)| \geq q_{l0}|\theta|^{\alpha}\), \(\forall |\theta| \leq r_{l0}\), \(l=1,2,\ldots,n\), and \(|\bar{\tilde{f}}_{m}(\theta) |\geq\tilde{q}_{m0}|\theta |^{\alpha}\), \(\forall |\theta| \leq\tilde{r}_{m0}\), \(m=1,2,\ldots,n\).

By (22), there exists a scalar \(\mathcal{T}>0\) such that, when \(t\geq\mathcal{T}\), \(\mathbb{E}\{u_{l}(t)\} \in[-\bar{r}_{0},\bar{r}_{0}]\), \(l=1,2,\ldots,n\), where \(\bar{r}_{0} = \min_{1\leq l \leq n} r_{l0}\), and \(\mathbb{E}\{v_{m}(t)\} \in[-\bar{\tilde{r}}_{0},\bar{\tilde{r}}_{0}]\), \(m = 1,2,\ldots,n\), where \(\bar{\tilde{r}}_{0} = \min_{1 \leq m \leq n}\tilde{r}_{m0}\). Hence, when \(t\geq\mathcal{T}\), one gets

where \(p = \min_{1\leq l \leq n}p_{l}\), \(\tilde{p} = \min_{1\leq m \leq n}\tilde{p}_{m}\) and \(q_{0} = \min_{1 \leq l \leq n}q_{l0}\), \(\tilde{q}_{0} = \min_{1 \leq m \leq n}\tilde{q}_{m0}\). By (23), we get

where

Let

where

Let \(\chi= \max_{i,j\in\mathfrak{M}}\{\pi_{\xi_{i}+\tilde {\xi}_{j}}\}\). It follows from (23), (24), and (25) that

Therefore

By Definition 1.3 and (26), we see that the equilibrium point of neural networks (4) is globally exponentially stable in the mean square sense. □

Remark 2.2

To the best of our knowledge, global exponential stability criteria for SBAMNNs with impulsive effects, Markovian jump parameters, mixed time delays, leakage delays, and α-inverse Hölder activation functions have not been discussed in the existing literature. Hence this paper provides new sufficient conditions for the global exponential stability of such neural networks, which generalize and improve the results in [9, 11, 21, 37, 38].

Remark 2.3

The criteria given in Theorem 2.1 depend on the time delays. It is well known that delay-dependent criteria are less conservative than delay-independent ones, particularly when the delay is small. Based on Theorem 2.1, the following result can be obtained easily.

Remark 2.4

If there are no stochastic disturbances in system (4), then the neural networks are simplified to

3 Global exponential stability of uncertain system

Now consider the following uncertain BAM neural networks with stochastic noise disturbance, Markovian jump parameters, and leakage and mixed time delays:

Assumption 5

The perturbed uncertain matrices \(\Delta C(t)\), \(\Delta D(t)\), \(\Delta W_{0i}(t)\), \(\Delta W_{1i}(t)\), \(\Delta W_{2i}(t)\), \(\Delta V_{0j}(t)\), \(\Delta V_{1j}(t)\), and \(\Delta V_{2j}(t)\) are time-varying functions satisfying: \(\Delta W_{0i}(t) = MF_{l}(t)N_{W_{0i}}\), \(\Delta W_{1i}(t) = MF_{l}(t)N_{W_{1i}}\), \(\Delta W_{2i}(t) = MF_{l}(t)N_{W_{2i}}\), \(\Delta V_{0j}(t) = MF_{l}(t)N_{V_{0j}}\), \(\Delta V_{1j}(t) = MF_{l}(t)N_{V_{1j}}\), \(\Delta V_{2j}(t) = MF_{l}(t)N_{V_{2j}}\), \(\Delta C(t) = MF_{l}(t)N_{C_{i}}\) and \(\Delta D(t)=MF_{l}(t)N_{D_{j}}\), where M, \(N_{W_{0i}}\), \(N_{W_{1i}}\), \(N_{W_{2i}}\), \(N_{V_{0j}}\), \(N_{V_{1j}}\), \(N_{V_{2j}}\), \(N_{C_{i}}\), and \(N_{D_{j}}\) are given constant matrices, respectively. \(F_{l_{z}}(t)\) (\(l = 0, 1, 2, 3\)) (where \(z= \mbox{either } i \mbox{ or } j\)) are unknown real time-varying matrices which have the following structure: \(F_{l_{z}}(t)= \operatorname{blockdiag}\{\delta _{l_{1}}(t)I_{z_{l_{1}}},\ldots,\delta _{l_{k}}(t)I_{z_{l_{k}}}, F_{l_{1}}(t), \ldots,F_{l_{s}}(t)\}\), \(\delta_{l_{z}} \in\mathbb {R}\), \(|\delta_{l_{z}}|\leq1\), \(1 \leq z \leq\tilde{k}\) and \(F_{l_{p}}^{T} F_{l_{p}}\leq I\), \(1\leq p \leq s\). We define the set \(\Delta_{l}\) as \(\Delta_{l} = \{ F_{l_{z}}^{T}(t)F_{l_{z}}(t)\leq I, F_{l_{z}}N_{l_{z}} = N_{l_{z}}F_{l_{z}}, \forall N_{l_{z}} \in\Gamma_{l_{z}}\} \), where \(\Gamma_{l_{z}} = \{N_{l_{z}}= \operatorname{blockdiag}[N_{l_{1}},\ldots ,N_{l_{k}},n_{l_{1}}I_{f_{l_{1}}}, \ldots, n_{l_{s}}I_{f_{l_{s}}}]\}\), \(N_{l_{z}}\) invertible for \(1 \leq z \leq\tilde{k}\) and \(n_{l_{p}} \in\mathbb{R}\), \(n_{l_{p}} \neq0\), for \(1 \leq p \leq s\) and p, k̃, \(s \in \mathfrak{M}\).

Also \(\Delta H_{i}\), \(\Delta\widetilde{H}_{j}\), \(\Delta R_{1i}\), \(\Delta R_{2i}\), \(\Delta R_{3i}\), \(\Delta\widetilde{R}_{1j}\), \(\Delta \widetilde{R}_{2j}\), \(\Delta\widetilde{R}_{3j}\), \(\Delta R_{2}\), \(\Delta\widetilde{R}_{2}\), ΔS, ΔT, ΔS̃, ΔT̃, \(\Delta N_{1}\), \(\Delta N_{2}\), \(\Delta N_{3}\), \(\Delta N_{4}\), \(\Delta N_{5}\), and \(\Delta N_{6}\) are positive definite diagonal matrices that are defined as follows: \(\Delta H_{i} = \check{E} \Sigma F_{H_{i}}\), \(\Delta\widetilde{H}_{j} = \check{E} \Sigma F_{\widetilde{H}_{j}}\) and \(\Delta R_{1i} = \check{E} \Sigma F_{R_{1i}}\), where Ě, \(F_{H_{i}}\), \(F_{\widetilde{H}_{j}}\), \(F_{R_{1i}}\), \(F_{R_{2i}}\), \(F_{R_{3i}}\), \(F_{\widetilde{R}_{1j}}\), \(F_{\widetilde{R}_{2j}}\), \(F_{\widetilde{R}_{3j}}\), \(F_{R_{2}}\), \(F_{\widetilde{R}_{2}}\), \(F_{S}\), \(F_{\widetilde{S}}\), \(F_{T}\), \(F_{\widetilde{T}}\), \(F_{N_{1}}\), \(F_{N_{2}}\), \(F_{N_{3}}\), \(F_{N_{4}}\), \(F_{N_{5}}\), and \(F_{N_{6}}\) are positive diagonal matrices (i.e., \(F_{H_{i}}F_{H_{i}}^{T} = \operatorname{diag}(h_{1}^{*},h_{2}^{*},\ldots,h_{n}^{*})\), \(F_{\widetilde {H}_{j}}F_{\widetilde{H}_{j}}^{T} = \operatorname{diag}(\tilde {h}_{1}^{*}, \tilde {h}_{2}^{*},\ldots,\tilde{h}_{n}^{*})\), where \(h_{i}^{*}, \tilde {h}_{j}^{*} > 0\) (\(i,j = 1,2,\ldots,n\))) and the remaining terms are defined in a similar way, which characterizes how the deterministic uncertain parameter in Σ enters the nominal matrices \(H_{i}\), \(\widetilde{H}_{j}\), \(R_{b_{i}}\) (\(b = 1, 2, 3\)), \(\widetilde {R}_{c_{j}}\) (\(c = 1, 2, 3\)), S, S̃, T, T̃, \(N_{1}\), \(N_{2}\), \(N_{3}\), \(N_{4}\), \(N_{5}\), and \(N_{6}\). The matrix Σ with real entries, which may be time-varying, is unknown and satisfies \(\Sigma^{T} \Sigma\leq I\).

Remark 3.1

The stability analysis of time-delayed neural networks relies heavily on the choice of the Lyapunov–Krasovskii functional and on LMI techniques; in particular, different types of LKFs are chosen for different neural network models. To the best of our knowledge, uncertain parameters have not previously been included in the terms of the Lyapunov–Krasovskii functional. This work fills that gap, and the approach yields less conservative stability results.

Theorem 3.2

Under Assumptions 1, 2, and 5, the neural network system (28) is globally robustly exponentially stable in the mean square if, for given \(\eta_{i}, \widetilde{\eta}_{j}>0\) (\(i,j\in \mathfrak{M}\)), there exist positive definite matrices S, T, S̃, T̃, \(R_{2}\), \(\widetilde{R}_{2}\), \(N_{1}\), \(N_{2}\), \(N_{3}\), \(N_{4}\), \(N_{5}\), \(N_{6}\) and \(H_{i}\), \(\widetilde{H}_{j}\) (\(i,j\in \mathfrak{M}\)), positive definite diagonal matrices ΔS, ΔT, \(\Delta{R}_{2}\), ΔS̃, ΔT̃, \(\Delta \widetilde{R}_{2}\), \(\Delta H_{i}\), \(\Delta\widetilde{H}_{j}\), \(\Delta N_{1}\), \(\Delta N_{2}\), \(\Delta N_{3}\), \(\Delta N_{4}\), \(\Delta N_{5}\), \(\Delta N_{6}\), P, Q, and positive scalars \(\lambda_{i}\) and \(\mu_{j}\) (\(i,j\in\mathfrak{M}\)) such that the following LMIs are satisfied:

where

where

The remaining entries of \(\Xi_{1}^{*}\) and \(\Delta_{1}^{*}\) are the same as in Theorem 2.1, and ∗ denotes the symmetric terms.

Proof

The matrices \(C_{i}\), \(H_{i}\), \(D_{j}\), \(\widetilde{H}_{j}\), \(R_{2}\), \(\widetilde{R}_{2}\), S, S̃, T, T̃, \(N_{1}\), \(N_{2}\), \(N_{3}\), \(N_{4}\), \(N_{5}\), and \(N_{6}\) in the Lyapunov–Krasovskii functional of Theorem 2.1 are replaced by \(C_{i}+\Delta C_{i}(t)\), \(H_{i}+\Delta H_{i}\), \(D_{j}+\Delta D_{j}(t)\), \(\widetilde{H}_{j}+\Delta\widetilde{H}_{j}\), \(R_{2}+\Delta R_{2}\), \(\widetilde{R}_{2}+\Delta\widetilde{R}_{2}\), \(S+\Delta S\), \(\widetilde{S}+\Delta\widetilde{S}\), \(T+\Delta T\), \(\widetilde {T}+\Delta\widetilde{T}\), \(N_{1}+\Delta N_{1}\), \(N_{2}+\Delta N_{2}\), \(N_{3}+\Delta N_{3}\), \(N_{4}+\Delta N_{4}\), \(N_{5}+\Delta N_{5}\), and \(N_{6}+\Delta N_{6}\), respectively.

Hence, by applying the same procedure as in Theorem 2.1, using Assumption 5 and Lemmas 1.8, 1.9, 1.10, and 1.11, and putting \(\eta= \max_{i,j\in\mathfrak{M}}\{ \max_{i\in\mathfrak{M}}\eta_{i}, \max_{j\in\mathfrak{M}}\widetilde{\eta}_{j}\}\), we obtain from (28) and Definition 1.4 (weak infinitesimal operator \(\mathcal{L}V\)) that

where \(\Psi(t)\) and \(\Phi(t)\) are given in Theorem 2.1. The rest of the proof follows that of Theorem 2.1, and we conclude that the uncertain neural network (28) is globally robustly exponentially stable in the mean square sense. □
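For reference, the weak infinitesimal operator used in the proof has the following standard form for a generic stochastic differential equation with Markovian switching, \(dx(t)=f(t,x(t),r(t))\,dt+g(t,x(t),r(t))\,d\omega(t)\), where \(r(t)\) has generator \(\Gamma=(\gamma_{ij})\). Here f, g, and Γ are generic placeholders rather than the specific terms of system (28), and Definition 2 may use different notation and include the delayed arguments:

```latex
\mathcal{L}V(t,x,i) = \frac{\partial V(t,x,i)}{\partial t}
  + \frac{\partial V(t,x,i)}{\partial x}\, f(t,x,i)
  + \frac{1}{2}\operatorname{trace}\Big[ g^{T}(t,x,i)\,
      \frac{\partial^{2} V(t,x,i)}{\partial x^{2}}\, g(t,x,i) \Big]
  + \sum_{j\in\mathfrak{M}} \gamma_{ij}\, V(t,x,j).
```

The last sum is the contribution of the Markovian jumps; it is what couples the mode-dependent matrices (such as \(H_{i}\) and \(\widetilde{H}_{j}\)) across modes in the resulting LMIs.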

4 Numerical examples

In this section, we provide two numerical examples with their simulations to demonstrate the effectiveness of our results.

Example 4.1

Consider the second-order stochastic impulsive BAM neural networks (4) with \(u(t) = (u_{1}(t),u_{2}(t))^{T}\), \(v(t) = (v_{1}(t),v_{2}(t))^{T}\), where \(\bar{\omega}(t)\) and \(\bar{\tilde{\omega}}(t)\) are two-dimensional Brownian motions, and \(r(t)\), \(\tilde{r}(t)\) are right-continuous Markov chains taking values in \(\mathfrak{M}=\{1,2\}\) with generator

The parameters of neural networks (4) take the following values:

Taking

The following activation functions are used in neural network system (4):

It is easy to verify that, for any \(a, b \in\mathbb{R}\) with \(a < b\), there exists a scalar \(c \in(a,b)\) such that

Therefore \(\bar{f}_{i}(\cdot)\) and \(\bar{\tilde{f}}_{j}(\cdot)\), \(i,j = 1,2\), are 1-inverse Hölder functions. In addition, for any \(a, b \in\mathbb{R}\), it is easy to check that

In a similar way, we obtain the same inequalities for \(\bar{\tilde{g}}_{j}(\cdot)\) and \(\bar{\tilde{h}}_{j}(\cdot)\) (\(j=1,2\)). Hence the activation functions \(\bar{f}_{i}(\cdot)\), \(\bar{\tilde{f}}_{j}(\cdot)\), \(\bar{g}_{i}(\cdot)\), \(\bar{\tilde{g}}_{j}(\cdot)\), \(\bar{h}_{i}(\cdot)\), \(\bar{\tilde{h}}_{j}(\cdot)\) (\(i, j=1,2\)) satisfy Assumptions 1 and 2.
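The mean value argument above can be checked numerically by sampling difference quotients. The sketch below uses a hypothetical activation \(f(x)=2x+\sin x\), not one of the functions of Example 4.1 (which are not reproduced here). Since \(f'(x)=2+\cos x\in[1,3]\), the mean value theorem gives \(1\cdot(b-a)\le f(b)-f(a)\le 3\cdot(b-a)\) for \(a<b\): the lower bound is an inverse Lipschitz bound, which is one simple way to meet the 1-inverse Hölder condition, and the upper bound is a Lipschitz constant. Sampling on a grid only estimates these constants; it is not a proof.

```python
import math


def f(x: float) -> float:
    """Hypothetical activation f(x) = 2x + sin(x); f'(x) = 2 + cos(x) lies in [1, 3]."""
    return 2.0 * x + math.sin(x)


def difference_quotient_bounds(func, lo: float, hi: float, n: int = 400):
    """Return (min, max) of (func(b) - func(a)) / (b - a) over all grid pairs a < b."""
    xs = [lo + (hi - lo) * k / (n - 1) for k in range(n)]
    ys = [func(x) for x in xs]
    q_min, q_max = math.inf, -math.inf
    for i in range(n):
        for j in range(i + 1, n):
            q = (ys[j] - ys[i]) / (xs[j] - xs[i])
            q_min = min(q_min, q)
            q_max = max(q_max, q)
    return q_min, q_max


q_min, q_max = difference_quotient_bounds(f, -10.0, 10.0)
# By the mean value theorem each quotient equals f'(c) for some c in (a, b),
# so the sampled values stay inside [1, 3].
print(f"lower bound ~ {q_min:.4f}, upper bound ~ {q_max:.4f}")
```

A strictly positive lower bound also confirms that the function is strictly increasing, i.e., monotonic and nonconstant.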

Solving the LMIs of Theorem 2.1 with the Matlab LMI Control Toolbox yields the following feasible solutions:

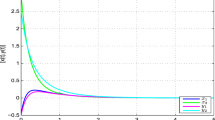

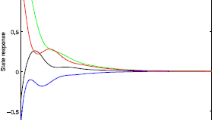
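The criteria require several decision matrices to be positive definite. As an independent sanity check on matrices returned by an LMI solver, one can use the fact that a symmetric matrix is positive definite exactly when its Cholesky factorization \(A=LL^{T}\) exists with positive diagonal entries. The plain-Python sketch below implements this test; the matrices in the usage lines are hypothetical and are not the feasible solutions reported for Example 4.1. Verifying the full block LMIs would additionally require assembling them from the system data, which is not done here.

```python
import math


def is_positive_definite(a, tol=1e-12):
    """Cholesky-based test: True iff the symmetric matrix `a` (list of lists) is positive definite."""
    n = len(a)
    # The test is only meaningful for symmetric matrices, so check that first.
    for i in range(n):
        for j in range(n):
            if abs(a[i][j] - a[j][i]) > tol * max(1.0, abs(a[i][j])):
                raise ValueError("matrix is not symmetric")
    l = [[0.0] * n for _ in range(n)]
    for j in range(n):
        # Pivot: diagonal entry minus the squares already placed in row j of L.
        d = a[j][j] - sum(l[j][k] ** 2 for k in range(j))
        if d <= tol:
            return False  # a non-positive pivot means the matrix is not positive definite
        l[j][j] = math.sqrt(d)
        for i in range(j + 1, n):
            s = a[i][j] - sum(l[i][k] * l[j][k] for k in range(j))
            l[i][j] = s / l[j][j]
    return True


# Hypothetical matrices for illustration only.
A_pd = [[2.0, -1.0], [-1.0, 2.0]]    # eigenvalues 1 and 3: positive definite
A_indef = [[1.0, 2.0], [2.0, 1.0]]   # eigenvalues -1 and 3: indefinite
print(is_positive_definite(A_pd), is_positive_definite(A_indef))
```

The tolerance `tol` is an assumed safeguard against solver round-off; a matrix whose smallest pivot is only marginally positive should be treated with caution.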

Figure 1 shows the time responses of the state variables \(u_{1}(t)\), \(u_{2}(t)\), \(v_{1}(t)\), \(v_{2}(t)\) with and without stochastic noise, and Fig. 2 depicts the Markovian jump modes \(r(t)=i\) and \(\tilde{r}(t)=j\). Solving LMIs (5)–(10) yields feasible solutions. The maximum allowable upper bounds of the discrete time delays \(\bar{\tau}_{1}\) and \(\bar{\tau}_{2}\) for neural networks (4) are listed in Table 1; these bounds are larger than those obtained from some existing results, which indicates that the proposed criteria are less conservative. Therefore, by Theorem 2.1, neural networks (4) are globally exponentially stable in the mean square sense for the maximum allowable upper bounds \(\bar{\tau}_{1} = \bar{\tau}_{2} = 7.46\).
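Maximum allowable delay bounds such as \(\bar{\tau}_{1} = \bar{\tau}_{2} = 7.46\) are commonly found by bisection over \(\bar{\tau}\), re-solving the LMIs at each trial value and keeping the largest feasible one. The search procedure used for Table 1 is not stated, so this is only a sketch under that assumption: `lmi_feasible` is a hypothetical stand-in for a call to an LMI solver, and the search assumes feasibility is monotone in \(\bar{\tau}\) (feasible below some threshold, infeasible above it).

```python
from typing import Callable


def max_feasible_delay(lmi_feasible: Callable[[float], bool],
                       lo: float, hi: float, tol: float = 1e-3) -> float:
    """Largest delay bound in [lo, hi] (to within tol) at which lmi_feasible is True.

    Assumes lmi_feasible(lo) is True and that feasibility is monotone in the
    delay bound: once the LMIs are infeasible, they stay infeasible for larger bounds."""
    if not lmi_feasible(lo):
        raise ValueError("LMIs are infeasible at the lower end of the interval")
    if lmi_feasible(hi):
        return hi  # the whole search interval is feasible
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lmi_feasible(mid):
            lo = mid  # feasible: the maximum is at or above mid
        else:
            hi = mid  # infeasible: the maximum is below mid
    return lo  # lo is always a value at which the LMIs were feasible


def toy_oracle(tau_bar: float) -> bool:
    """Hypothetical stand-in for an LMI solver call: feasible iff tau_bar <= 2.5."""
    return tau_bar <= 2.5


print(max_feasible_delay(toy_oracle, 0.0, 10.0))
```

Returning `lo` rather than the midpoint keeps the reported bound on the feasible side, so it never overstates the certified delay.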

Figure 1. The state responses \(u_{1}(t)\), \(u_{2}(t)\), \(v_{1}(t)\), \(v_{2}(t)\) of (1) with and without stochastic disturbances

Figure 2. The Markovian jump modes \(r(t)\) and \(\tilde{r}(t)\) in system (4)
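Mode trajectories like those in Fig. 2 can be generated by simulating a continuous-time Markov chain from its generator \(\Gamma=(\gamma_{ij})\): in mode i the chain stays for an exponentially distributed time with rate \(-\gamma_{ii}\), then jumps to mode \(j\neq i\) with probability \(\gamma_{ij}/(-\gamma_{ii})\). The generator used in the examples is not reproduced here, so the matrix `GEN` below is hypothetical; code indices 0 and 1 stand for modes 1 and 2 of \(\mathfrak{M}\).

```python
import random


def simulate_ctmc(gen, start, t_end, rng):
    """Simulate a continuous-time Markov chain with generator `gen` on [0, t_end).

    Returns the jump list [(0.0, start), (t1, mode1), ...]; the sample path is
    right-continuous, i.e. the chain is in mode_k on [t_k, t_{k+1})."""
    path = [(0.0, start)]
    t, i = 0.0, start
    while True:
        rate = -gen[i][i]               # total rate of leaving mode i
        if rate <= 0.0:
            break                       # absorbing mode: no further jumps
        t += rng.expovariate(rate)      # exponential holding time in mode i
        if t >= t_end:
            break
        # Jump to mode j != i with probability gen[i][j] / rate.
        others = [j for j in range(len(gen)) if j != i]
        i = rng.choices(others, weights=[gen[i][j] for j in others])[0]
        path.append((t, i))
    return path


def mode_at(path, t):
    """Mode in force at time t (right-continuous: a jump at time t is already applied)."""
    mode = path[0][1]
    for jump_time, new_mode in path:
        if jump_time > t:
            break
        mode = new_mode
    return mode


# Hypothetical two-mode generator: off-diagonal rates >= 0, rows sum to zero.
GEN = [[-3.0, 3.0],
       [4.0, -4.0]]
path = simulate_ctmc(GEN, start=0, t_end=10.0, rng=random.Random(0))
print(len(path) - 1, "jumps; mode at t = 5:", mode_at(path, 5.0))
```

Passing an explicit `random.Random` instance makes each mode trajectory reproducible, which is convenient when the same jump sequence is reused for the runs with and without noise.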

Example 4.2

Consider the second-order uncertain stochastic impulsive BAM neural networks (28) with \(u(t) = (u_{1}(t), u_{2}(t))^{T}\), \(v(t) = (v_{1}(t),v_{2}(t))^{T}\), where \(\bar{\omega}(t)\) and \(\bar{\tilde{\omega}}(t)\) are two-dimensional Brownian motions, and \(r(t)\), \(\tilde{r}(t)\) are right-continuous Markov chains taking values in \(\mathfrak{M}=\{1,2\}\) with generator

The associated parameters of neural networks (28) are as follows:

Taking

\(\nu_{1} = \nu_{2} = 1\), \(\bar{\tau}_{1} = \bar{\tau}_{2} = 0.4\), \(\sigma_{1} = \sigma_{2} = 0.3\). The following activation functions are used in neural network system (28):

Therefore, by Theorem 3.2, the uncertain delayed stochastic impulsive BAM neural networks (28) under consideration are globally robustly exponentially stable in the mean square sense.
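Time responses with and without noise, such as those in Fig. 1, are typically produced by an Euler–Maruyama discretization of the delayed stochastic system. Because the full model (28) and its parameters are not reproduced here, the sketch below uses a hypothetical scalar analogue with leakage delay σ = 0.3 and discrete delay τ = 0.4 (the delay values of Example 4.2), \(dx(t)=[-c\,x(t-\sigma)+b\tanh(x(t-\tau))]\,dt+\rho\,x(t)\,d\omega(t)\), with hypothetical c = 2, b = 0.5, ρ = 0.3, and a constant initial history. It omits the BAM coupling, impulses, Markovian switching, and uncertainties, so it illustrates only the numerical scheme, not the stability result.

```python
import math
import random


def euler_maruyama_delay(c, b, rho, sigma, tau, x0, t_end, dt, rng):
    """Euler–Maruyama scheme for the hypothetical scalar delay SDE
        dx = [-c x(t - sigma) + b tanh(x(t - tau))] dt + rho x(t) dW(t)
    with constant initial history x(s) = x0 for s <= 0. Delays are rounded to
    whole numbers of steps. Returns the samples x(0), x(dt), ..., x(t_end)."""
    n_sigma = round(sigma / dt)   # leakage delay in steps
    n_tau = round(tau / dt)       # discrete delay in steps
    lag = max(n_sigma, n_tau)
    x = [x0] * (lag + 1)          # history on [-lag*dt, 0]
    for _ in range(round(t_end / dt)):
        k = len(x) - 1            # index of the current time
        drift = -c * x[k - n_sigma] + b * math.tanh(x[k - n_tau])
        dw = rng.gauss(0.0, math.sqrt(dt))   # Brownian increment ~ N(0, dt)
        x.append(x[k] + drift * dt + rho * x[k] * dw)
    return x[lag:]


# Hypothetical coefficients; delays sigma = 0.3 and tau = 0.4 as in Example 4.2.
params = dict(c=2.0, b=0.5, sigma=0.3, tau=0.4, x0=1.0, t_end=10.0, dt=0.01)
noisy = euler_maruyama_delay(rho=0.3, rng=random.Random(1), **params)
clean = euler_maruyama_delay(rho=0.0, rng=random.Random(1), **params)
print(f"|x(10)| with noise: {abs(noisy[-1]):.2e}, without noise: {abs(clean[-1]):.2e}")
```

Setting ρ = 0 reproduces the noise-free trajectory with the same code path, which makes the two curves directly comparable.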

5 Conclusions

In this paper, we have studied the global exponential stability of BAM neural networks with leakage delay terms. By employing Lyapunov stability theory and the LMI framework, we have obtained a new sufficient condition that guarantees the global exponential stability of stochastic impulsive uncertain BAMNNs with two kinds of time-varying delays and leakage delays. A distinctive feature of this paper is that different types of uncertain parameters are introduced into the Lyapunov–Krasovskii functionals when the exponential stability behavior is studied. Two numerical examples illustrate the usefulness of both the deterministic and the uncertain results. To the best of our knowledge, there are no results yet on the exponential stability of inertial-type BAM neural networks with both kinds of time-varying delays obtained via the Wirtinger-based inequality; this is a topic for our future research.

References

Kosko, B.: Neural Networks and Fuzzy Systems—A Dynamical System Approach to Machine Intelligence. Prentice Hall, Englewood Cliffs (1992)

Kosko, B.: Adaptive bidirectional associative memories. Appl. Opt. 26(23), 4947–4960 (1987)

Feng, Z., Zheng, W.: Improved stability condition for Takagi–Sugeno fuzzy systems with time-varying delay. IEEE Trans. Cybern. 47(3), 661–670 (2017)

Joya, G., Atencia, M.A., Sandoval, F.: Hopfield neural networks for optimization: study of the different dynamics. Neurocomputing 43, 219–237 (2002)

Li, R., Cao, J., Alsaedi, A., Alsaadi, F.: Exponential and fixed-time synchronization of Cohen–Grossberg neural networks with time-varying delays and reaction-diffusion terms. Appl. Math. Comput. 313, 37–51 (2017)

Li, R., Cao, J., Alsaedi, A., Alsaadi, F.: Stability analysis of fractional-order delayed neural networks. Nonlinear Anal., Model. Control 22(4), 505–520 (2017)

Nie, X., Cao, J.: Stability analysis for the generalized Cohen–Grossberg neural networks with inverse Lipschitz neuron activations. Comput. Math. Appl. 57, 1522–1538 (2009)

Tu, Z., Cao, J., Alsaedi, A., Alsaadi, F.E., Hayat, T.: Global Lagrange stability of complex-valued neural networks of neutral type with time-varying delays. Complexity 21, 438–450 (2016)

Zhang, H., Wang, Z., Lin, D.: Global asymptotic stability and robust stability of a class of Cohen–Grossberg neural networks with mixed delays. IEEE Trans. Circuits Syst. I 56, 616–629 (2009)

Zhu, Q., Cao, J.: Robust exponential stability of Markovian jump impulsive stochastic Cohen–Grossberg neural networks with mixed time delays. IEEE Trans. Neural Netw. 21, 1314–1325 (2010)

Zhang, X.M., Han, Q.L., Seuret, A., Gouaisbaut, F.: An improved reciprocally convex inequality and an augmented Lyapunov–Krasovskii functional for stability of linear systems with time-varying delay. Automatica 84, 221–226 (2017)

Shu, H., Wang, Z., Lu, Z.: Global asymptotic stability of uncertain stochastic bi-directional associative memory networks with discrete and distributed delays. Math. Comput. Simul. 80, 490–505 (2009)

Balasundaram, K., Raja, R., Zhu, Q., Chandrasekaran, S., Zhou, H.: New global asymptotic stability of discrete-time recurrent neural networks with multiple time-varying delays in the leakage term and impulsive effects. Neurocomputing 214, 420–429 (2016)

Li, R., Cao, J.: Stability analysis of reaction-diffusion uncertain memristive neural networks with time-varying delays and leakage term. Appl. Math. Comput. 278, 54–69 (2016)

Senthilraj, S., Raja, R., Zhu, Q., Samidurai, R., Yao, Z.: Exponential passivity analysis of stochastic neural networks with leakage, distributed delays and Markovian jumping parameters. Neurocomputing 175, 401–410 (2016)

Senthilraj, S., Raja, R., Zhu, Q., Samidurai, R., Yao, Z.: Delay-interval-dependent passivity analysis of stochastic neural networks with Markovian jumping parameters and time delay in the leakage term. Nonlinear Anal. Hybrid Syst. 22, 262–275 (2016)

Li, X., Fu, X.: Effect of leakage time-varying delay on stability of nonlinear differential systems. J. Franklin Inst. 350, 1335–1344 (2013)

Lakshmanan, S., Park, J.H., Lee, T.H., Jung, H.Y., Rakkiyappan, R.: Stability criteria for BAM neural networks with leakage delays and probabilistic time-varying delays. Appl. Math. Comput. 219, 9408–9423 (2013)

Liao, X., Mao, X.: Exponential stability and instability of stochastic neural networks. Stoch. Anal. Appl. 14, 165–185 (1996)

Su, W., Chen, Y.: Global robust exponential stability analysis for stochastic interval neural networks with time-varying delays. Commun. Nonlinear Sci. Numer. Simul. 14, 2293–2300 (2009)

Zhang, H., Wang, Y.: Stability analysis of Markovian jumping stochastic Cohen–Grossberg neural networks with mixed time delays. IEEE Trans. Neural Netw. 19, 366–370 (2008)

Zhu, Q., Cao, J.: Exponential stability of stochastic neural networks with both Markovian jump parameters and mixed time delays. IEEE Trans. Syst. Man Cybern. 41, 341–353 (2011)

Bao, H., Cao, J.: Stochastic global exponential stability for neutral-type impulsive neural networks with mixed time-delays and Markovian jumping parameters. Commun. Nonlinear Sci. Numer. Simul. 16, 3786–3791 (2011)

Li, X., Song, S.: Stabilization of delay systems: delay-dependent impulsive control. IEEE Trans. Autom. Control 62(1), 406–411 (2017)

Li, X., Wu, J.: Stability of nonlinear differential systems with state-dependent delayed impulses. Automatica 64, 63–69 (2016)

Li, X., Bohner, M., Wang, C.: Impulsive differential equations: periodic solutions and applications. Automatica 52, 173–178 (2015)

Stamova, I., Stamov, T., Li, X.: Global exponential stability of a class of impulsive cellular neural networks with supremums. Int. J. Adapt. Control Signal Process. 28, 1227–1239 (2014)

Pan, L., Cao, J.: Exponential stability of stochastic functional differential equations with Markovian switching and delayed impulses via Razumikhin method. Adv. Differ. Equ. 2012, 61 (2012)

Lou, X., Cui, B.: Stochastic exponential stability for Markovian jumping BAM neural networks with time-varying delays. IEEE Trans. Syst. Man Cybern. 37, 713–719 (2007)

Wang, Z., Liu, Y., Liu, X.: State estimation for jumping recurrent neural networks with discrete and distributed delays. Neural Netw. 22, 41–48 (2009)

Wang, Q., Chen, B., Zhong, S.: Stability criteria for uncertainty Markovian jumping parameters of BAM neural networks with leakage and discrete delays. Int. J. Math. Comput. Phys. Electr. Comput. Eng. 8(2), 391–398 (2014)

Balasubramaniam, P., Krishnasamy, R., Rakkiyappan, R.: Delay-interval-dependent robust stability results for uncertain stochastic systems with Markovian jumping parameters. Nonlinear Anal. Hybrid Syst. 5, 681–691 (2011)

Gu, K.: An integral inequality in the stability problem of time-delay systems. In: Proceedings of the 39th IEEE Conference on Decision and Control, Sydney, Australia (2000)

Guo, S., Huang, L., Dai, B., Zhang, Z.: Global existence of periodic solutions of BAM neural networks with variable coefficients. Phys. Lett. A 317, 97–106 (2003)

Haykin, S.: Neural Networks. Prentice Hall, New York (1994)

Shi, Y., Cao, J., Chen, G.: Exponential stability of complex-valued memristor-based neural networks with time-varying delays. Appl. Math. Comput. 313, 222–234 (2017)

Wu, H.: Global exponential stability of Hopfield neural networks with delays and inverse Lipschitz neuron activations. Nonlinear Anal., Real World Appl. 10, 2297–2306 (2009)

Yang, X., Cao, J.: Synchronization of Markovian coupled neural networks with nonidentical node-delays and random coupling strengths. IEEE Trans. Neural Netw. 23, 60–71 (2012)

Li, Y., Wu, H.: Global stability analysis in Cohen–Grossberg neural networks with delays and inverse Holder neuron activation functions. Inf. Sci. 180, 4022–4030 (2010)

Huang, T., Li, C.: Robust exponential stability of uncertain delayed neural networks with stochastic perturbation and impulse effects. IEEE Trans. Neural Netw. Learn. Syst. 23, 867–875 (2012)

Balasubramaniam, P., Vembarasan, V.: Robust stability of uncertain fuzzy BAM neural networks of neutral-type with Markovian jumping parameters and impulses. Comput. Math. Appl. 62, 1838–1861 (2011)

Rakkiyappan, R., Chandrasekar, A., Lakshmanan, S., Park, J.H.: Exponential stability for Markovian jumping stochastic BAM neural networks with mode-dependent probabilistic time-varying delays and impulse control. Complexity 20(3), 39–65 (2015)

Park, J.H., Park, C.H., Kwon, O.M., Lee, S.M.: A new stability criterion for bidirectional associative memory neural networks. Appl. Math. Comput. 199, 716–722 (2008)

Mohamad, S.: Lyapunov exponents of convergent Cohen–Grossberg-type BAM networks with delays and large impulses. Appl. Math. Sci. 2(34), 1679–1704 (2008)

Park, J.H.: A novel criterion for global asymptotic stability of BAM neural networks with time-delays. Chaos Solitons Fractals 29, 446–453 (2006)

Acknowledgements

This work was jointly supported by the National Natural Science Foundation of China under Grant No. 61573096, the Jiangsu Provincial Key Laboratory of Networked Collective Intelligence under Grant No. BM2017002, the Rajiv Gandhi National Fellowship under the University Grant Commission, New Delhi under Grant No. F1-17.1/2016-17/RGNF-2015-17-SC-TAM-21509, and the Thailand research grant fund under Grant No. RSA5980019.

Author information


Contributions

All authors contributed equally and significantly to writing this article. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Notations

\(\mathbb{R}\) is the set of real numbers; \(\mathbb{R}^{n}\) is the n-dimensional Euclidean space; \(\mathbb{R}^{n\times n}\) denotes the set of all \(n\times n\) real matrices; \(\mathbb{Z}_{+}\) is the set of all positive integers. For any matrix A, \(A^{T}\) is the transpose of A and \(A^{-1}\) is the inverse of A; ∗ denotes the symmetric terms in a symmetric matrix. \(A>0\) (\(A<0\)) means that A is positive (negative) definite; \(\lambda_{\mathrm{min}}(\cdot)\) and \(\lambda_{\mathrm{max}}(\cdot)\) denote the minimum and maximum eigenvalues of a real symmetric matrix; \(I_{n}\) denotes the \(n\times n\) identity matrix; \(x = (x_{1},x_{2},\ldots,x_{n})^{T}\), \(y = (y_{1},y_{2},\ldots,y_{n})^{T}\) are column vectors; \(x^{T}y = \sum_{i=1}^{n} x_{i}y_{i}\), \(\|x\| = (\sum_{i=1}^{n} x_{i}^{2})^{1/2}\); \(\dot{x}(t)\), \(\dot{y}(t)\) denote the derivatives of \(x(t)\) and \(y(t)\), respectively; \(C^{2,1}(\mathbb{R}^{+}\times\mathbb{R}^{n}\times\mathfrak{M};\mathbb{R}^{+})\) is the family of all nonnegative functions \(V(t,u(t),i)\) on \(\mathbb{R}^{+}\times\mathbb{R}^{n}\times\mathfrak{M}\) that are twice continuously differentiable in u and once continuously differentiable in t; \((\mathbb{A},\mathcal{F},\{\mathcal{F}_{t}\}_{t\geq0},\mathbb{P})\) is a complete probability space, where \(\mathbb{A}\) is the sample space, \(\mathcal{F}\) is the σ-algebra of subsets of the sample space, \(\mathbb{P}\) is the probability measure on \(\mathcal{F}\), and \(\{\mathcal{F}_{t}\}_{t\geq0}\) denotes the filtration; \(L^{2}_{\mathcal{F}_{0}}([-\tilde{\omega},0];\mathbb{R}^{n})\) denotes the family of all \(\mathcal{F}_{0}\)-measurable \(C([-\tilde{\omega},0];\mathbb{R}^{n})\)-valued random variables \(\tilde{\xi} = \{\tilde{\xi}(\theta): -\tilde{\omega}\leq\theta\leq0\}\) such that \(\sup_{-\tilde{\omega}\leq\theta\leq0} \mathbb{E}|\tilde{\xi}(\theta)|^{2} < \infty\), where \(\mathbb{E}\{\cdot\}\) stands for the mathematical expectation operator with respect to the given probability measure \(\mathbb{P}\).


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Maharajan, C., Raja, R., Cao, J. et al. Global exponential stability of Markovian jumping stochastic impulsive uncertain BAM neural networks with leakage, mixed time delays, and α-inverse Hölder activation functions. Adv Differ Equ 2018, 113 (2018). https://doi.org/10.1186/s13662-018-1553-7


DOI: https://doi.org/10.1186/s13662-018-1553-7