Abstract

In this article, using the Razumikhin-type technique, we investigate the pth moment exponential stability of stochastic functional differential equations with Markovian switching and delayed impulses. Several stability theorems for impulsive hybrid stochastic functional differential equations are derived, under the assumption that the state variables at the impulse times may depend on the finite delay. These results are applied to a class of n-dimensional linear impulsive hybrid stochastic systems with bounded time-varying delay, and an effective M-matrix method is introduced to study the exponential stability of these hybrid systems. Examples and simulations are given to illustrate the results.


1 Introduction

Stochastic differential equations form an active field that draws attention from both theoretical and applied disciplines, and they have been successfully applied to problems in mechanics, electrical engineering, economics, physics and several other fields of engineering; see [1–6] and the references therein. Recently, the stability of stochastic differential equations with Markovian switching has received a lot of attention [7–12]. For example, Ji and Chizeck [7] and Mariton [8] studied the stability of the jump linear equation

$$\frac{dx(t)}{dt} = A(r(t))\,x(t),$$

where x(t) takes values in $\mathbb{R}^n$ and r(t) is a Markov chain taking values in S = {1, 2, ..., N}. Mao [9] discussed the stability of the nonlinear stochastic differential equation with Markovian switching

$$dx(t) = f(x(t), t, r(t))\,dt + g(x(t), t, r(t))\,d\omega(t).$$

In [10], Mao studied the stability of the stochastic functional differential equation with Markovian switching

$$dx(t) = f(x_t, t, r(t))\,dt + g(x_t, t, r(t))\,d\omega(t).$$
Impulsive effects are common phenomena caused by instantaneous perturbations at certain moments. Such phenomena are described by impulsive differential equations, which have been used efficiently to model many practical problems arising in engineering, physics and other sciences. Consequently, the theory of impulsive differential equations has attracted much attention in recent years [13–19], and many stability results for impulsive stochastic functional differential equations have been obtained [20–26]. However, there are few results on the stability of impulsive stochastic differential equations with Markovian switching. In [27], Wu and Sun established some criteria for the p-moment stability of stochastic differential equations with impulsive jumps and Markovian switching.

In this article, we extend the Razumikhin method [10, 12] to investigate the pth moment exponential stability of stochastic functional differential equations with Markovian switching and delayed impulses, formulated as Equation (2.1) in Section 2.

The state variables at the impulse times may depend on the finite delay, so the impulsive effects considered here are more general than those in [20, 22, 23]. Theorems on pth moment exponential stability are derived both for the case in which the delayed impulses stabilize the system and for the case in which the impulsive gain may be large. These results are applied to n-dimensional impulsive hybrid stochastic systems with bounded time-varying delay, and useful criteria in terms of M-matrices (see Berman and Plemmons [28]), which are much easier to verify, are established. Examples and simulations show that impulsive effects play an important role in the stability of hybrid stochastic systems.

The rest of this article is organized as follows. In Section 2, stochastic functional differential equations with Markovian switching and delayed impulses are presented, together with the definition of pth moment exponential stability. In Section 3, Razumikhin-type theorems on pth moment exponential stability for these equations are established. In Section 4, the results are applied to n-dimensional hybrid stochastic delay systems, and the M-matrix method is introduced to verify stability easily. Finally, examples are given in Section 5 to demonstrate the effectiveness of the results.

2 Preliminaries

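Let $\mathbb{R} = (-\infty, +\infty)$, $\mathbb{R}_+ = [0, +\infty)$, and let $\mathbb{R}^n$ denote the n-dimensional Euclidean space with the Euclidean norm $|\cdot|$. If A is a vector or matrix, its transpose is denoted by $A^T$ and its norm by $\|A\| = \sqrt{\lambda_{\max}(A^T A)}$, where $\lambda_{\max}(\cdot)$ is the maximum eigenvalue of a matrix. $\omega(t) = (\omega_1(t), \dots, \omega_m(t))^T$ is an m-dimensional Brownian motion defined on a complete probability space $(\Omega, \mathcal{F}, \{\mathcal{F}_t\}_{t \ge 0}, P)$ with a natural filtration $\{\mathcal{F}_t\}_{t \ge 0}$ satisfying the usual conditions.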
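As a small numerical illustration of the matrix norm $\|A\| = \sqrt{\lambda_{\max}(A^T A)}$ (the kind of value quoted for $\|B_1\|$, $\|B_2\|$ in Example 5.2), the following Python sketch computes it from the eigenvalues of $A^T A$ and cross-checks it against NumPy's spectral 2-norm. The function name `matrix_norm` is hypothetical and chosen for illustration only.

```python
import numpy as np


def matrix_norm(A):
    """Return ||A|| = sqrt(lambda_max(A^T A)).

    For a matrix this is the operator norm induced by the Euclidean norm;
    for a vector it reduces to the Euclidean length |A|.
    """
    A = np.atleast_2d(np.asarray(A, dtype=float))
    # A^T A is symmetric positive semidefinite, so eigvalsh applies.
    eigvals = np.linalg.eigvalsh(A.T @ A)
    # Clip tiny negative round-off before taking the square root.
    return float(np.sqrt(max(eigvals.max(), 0.0)))


if __name__ == "__main__":
    A = np.array([[1.0, 2.0], [3.0, 4.0]])
    print(matrix_norm(A), np.linalg.norm(A, 2))  # agree up to rounding
    print(matrix_norm([3.0, 4.0]))               # Euclidean length of a vector
```

The eigenvalue route mirrors the definition literally; `np.linalg.norm(A, 2)` computes the same quantity as the largest singular value.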

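Let $\tau > 0$ and let $PC([-\tau, 0]; \mathbb{R}^n)$ denote the family of functions $\psi : [-\tau, 0] \to \mathbb{R}^n$ that are continuous except at finitely many points t, at which $\psi(t^+)$ and $\psi(t^-)$ exist, equipped with the norm $\|\psi\| = \sup_{-\tau \le \theta \le 0} |\psi(\theta)|$, where $\psi(t^+)$ and $\psi(t^-)$ denote the right-hand and left-hand limits of the function $\psi(t)$ at t.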

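Denote by $PC^b_{\mathcal{F}_0}([-\tau, 0]; \mathbb{R}^n)$ the family of all bounded, $\mathcal{F}_0$-measurable, $PC([-\tau, 0]; \mathbb{R}^n)$-valued random variables. For p > 0, denote by $PC^p_{\mathcal{F}_t}([-\tau, 0]; \mathbb{R}^n)$ the family of all $\mathcal{F}_t$-measurable $PC([-\tau, 0]; \mathbb{R}^n)$-valued random variables ψ such that $E\|\psi\|^p < \infty$. Let r(t) (t ≥ 0) be a right-continuous Markov chain on the probability space taking values in a finite state space S = {1, 2, ..., N} with generator $\Gamma = (\gamma_{ij})_{N \times N}$ given by

$$P\{r(t+\Delta) = j \mid r(t) = i\} = \begin{cases} \gamma_{ij}\Delta + o(\Delta), & i \ne j,\\ 1 + \gamma_{ii}\Delta + o(\Delta), & i = j, \end{cases}$$

where Δ > 0. Here $\gamma_{ij} \ge 0$ is the transition rate from i to j if $i \ne j$, while $\gamma_{ii} = -\sum_{j \ne i} \gamma_{ij}$.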

We assume that the Markovian chain r(t) is independent of the Brownian motion ω(t). It is known that almost every sample path of r(t) is a right-continuous step function with a finite number of simple jumps in any finite subinterval of R+.
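The generator description above translates directly into a way of sampling a path of r(t): the chain stays in state i for an exponentially distributed time with rate $-\gamma_{ii}$ and then jumps to $j \ne i$ with probability $\gamma_{ij}/(-\gamma_{ii})$. The Python sketch below is this standard construction, not code from the article; the function names are hypothetical, states are 0-based (state i of S corresponds to index i − 1), and the generator in the usage lines is an arbitrary illustrative choice.

```python
import numpy as np


def check_generator(Gamma):
    """Validate a generator: nonnegative off-diagonal rates, zero row sums."""
    G = np.asarray(Gamma, dtype=float)
    if G.ndim != 2 or G.shape[0] != G.shape[1]:
        raise ValueError("generator must be a square matrix")
    off_diag = G - np.diag(np.diag(G))
    if (off_diag < 0).any():
        raise ValueError("off-diagonal rates gamma_ij must be nonnegative")
    if not np.allclose(G.sum(axis=1), 0.0):
        raise ValueError("rows must sum to zero: gamma_ii = -sum_{j!=i} gamma_ij")
    return G


def simulate_chain(Gamma, r0, T, rng):
    """Jump times and states of a right-continuous Markov chain on [0, T].

    The chain equals states[k] on [times[k], times[k+1]).
    """
    G = check_generator(Gamma)
    times, states = [0.0], [r0]
    t, i = 0.0, r0
    while True:
        rate = -G[i, i]
        if rate <= 0.0:  # absorbing state: no further jumps
            break
        t += rng.exponential(1.0 / rate)  # holding time ~ Exp(rate)
        if t > T:
            break
        probs = G[i].copy()
        probs[i] = 0.0
        probs /= probs.sum()  # jump probabilities gamma_ij / (-gamma_ii)
        i = int(rng.choice(len(probs), p=probs))
        times.append(t)
        states.append(i)
    return np.array(times), np.array(states)


def state_at(times, states, t):
    """Right-continuous lookup: the state entered at the last jump time <= t."""
    return int(states[np.searchsorted(times, t, side="right") - 1])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    Gamma = [[-1.0, 1.0], [2.0, -2.0]]  # illustrative two-state generator
    times, states = simulate_chain(Gamma, r0=0, T=10.0, rng=rng)
    print(len(times) - 1, "jumps; state at t = 5:", state_at(times, states, 5.0))
```

The lookup uses `side="right"` so that at a jump time the new state is returned, matching the right-continuity of the sample paths.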

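Consider the following impulsive hybrid stochastic functional differential equation:

$$\begin{cases} dx(t) = f(x_t, t, r(t))\,dt + g(x_t, t, r(t))\,d\omega(t), & t \ge 0,\ t \ne t_k,\\ \Delta x(t_k) = I_k\big(x(t_k^-), x_{t_k^-}, t_k, r(t_k)\big), & k = 1, 2, \dots,\\ x_0 = \xi, \end{cases} \tag{2.1}$$

where $x_t = \{x(t+\theta) : -\tau \le \theta \le 0\}$, the impulsive moments $t_k$ satisfy $t_k < t_{k+1}$, and $\Delta x(t_k) = x(t_k^+) - x(t_k^-)$ represents the jump in the state x at $t_k$, with $I_k$ determining the size of the jump. Moreover, $f : PC([-\tau, 0]; \mathbb{R}^n) \times \mathbb{R}_+ \times S \to \mathbb{R}^n$, $g : PC([-\tau, 0]; \mathbb{R}^n) \times \mathbb{R}_+ \times S \to \mathbb{R}^{n \times m}$ and $I_k : \mathbb{R}^n \times PC([-\tau, 0]; \mathbb{R}^n) \times \mathbb{R}_+ \times S \to \mathbb{R}^n$.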

Throughout this article, we assume that f, g and $I_k$ satisfy the conditions needed for the global existence and uniqueness of solutions for all t ≥ 0. Thus, for any bounded $\mathcal{F}_0$-measurable initial value ξ, there exists a unique stochastic process satisfying Equation (2.1), denoted by $x(t; \xi)$, which is left-continuous and has right-hand limits. We also assume that $f(0, t, i) \equiv 0$, $g(0, t, i) \equiv 0$ and $I_k(0, 0, t, i) \equiv 0$, k = 1, 2, ..., so that $x(t) \equiv 0$ is an equilibrium solution.

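Let $\mathcal{V}$ denote the family of all nonnegative functions V(x, t, i) on $\mathbb{R}^n \times [-\tau, \infty) \times S$ that are continuous on $\mathbb{R}^n \times (t_{k-1}, t_k] \times S$ and whose derivatives $V_t$, $V_x$, $V_{xx}$ are continuous on $\mathbb{R}^n \times (t_{k-1}, t_k] \times S$. For each $V \in \mathcal{V}$, we define an operator $\mathcal{L}V$ associated with Equation (2.1) as follows:

$$\mathcal{L}V(\varphi, t, i) = V_t(\varphi(0), t, i) + V_x(\varphi(0), t, i)\, f(\varphi, t, i) + \frac{1}{2}\operatorname{trace}\big[g^T(\varphi, t, i)\, V_{xx}(\varphi(0), t, i)\, g(\varphi, t, i)\big] + \sum_{j=1}^{N} \gamma_{ij} V(\varphi(0), t, j),$$

where $\varphi \in PC([-\tau, 0]; \mathbb{R}^n)$ and

$$V_t = \frac{\partial V}{\partial t}, \qquad V_x = \Big(\frac{\partial V}{\partial x_1}, \dots, \frac{\partial V}{\partial x_n}\Big), \qquad V_{xx} = \Big(\frac{\partial^2 V}{\partial x_u \partial x_v}\Big)_{n \times n}.$$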

Definition 2.1. The zero solution of Equation (2.1) is said to be pth moment exponentially stable if there exist constants η > 0 and C > 0 such that, for any initial value ξ and all t ≥ 0,

$$E|x(t; \xi)|^p \le C\, E\|\xi\|^p\, e^{-\eta t}.$$

Remark 2.1. When p = 2, pth moment exponential stability is usually called exponential stability in mean square.
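To make Definition 2.1 concrete, consider the simplest special case (an illustration, not taken from the article): a single mode, no delay and no impulses, with the scalar equation $dx(t) = a\,x(t)\,dt + c\,x(t)\,d\omega(t)$ for constants a, c and a deterministic initial value $x_0$. Its solution is $x(t) = x_0 \exp\big((a - c^2/2)t + c\,\omega(t)\big)$, and using $E\,e^{pc\,\omega(t)} = e^{p^2 c^2 t/2}$ one obtains

$$E|x(t)|^p = |x_0|^p \exp\Big(p\big(a - \tfrac{c^2}{2}\big)t\Big)\, E\,e^{pc\,\omega(t)} = |x_0|^p \exp\Big(p\Big(a + \frac{(p-1)c^2}{2}\Big)t\Big).$$

Hence the zero solution is pth moment exponentially stable exactly when $a + (p-1)c^2/2 < 0$, with $\eta = -p\big(a + (p-1)c^2/2\big)$ and C = 1; for p = 2 (mean square) the condition reads $2a + c^2 < 0$.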

3 Stability analysis

In the following, we establish some criteria for the pth moment exponential stability of Equation (2.1).

Theorem 3.1. Assume that and constants p > 0, c1 > 0, c2 > 0, such that

(i) for all (x, t, i) ∈ Rn × [−τ, ∞) × S;

(ii) for all t ∈ (tk-1, t k ] and whenever

(iii) for all i∈S,

(iv)

(v) for any

then the zero solution of Equation (2.1) is pth moment exponentially stable with pth moment exponent λ, where

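Proof. For any initial value ξ, denote the solution of (2.1) by x(t) = x(t; ξ), and extend r(t) = r(0) = r0 for all t ∈ [−τ, 0]. Let ε > 0 be small enough that t + ε ∈ (tk−1, tk). By the generalized Itô formula, we have

$$E V(x(t+\varepsilon), t+\varepsilon, r(t+\varepsilon)) - E V(x(t), t, r(t)) = \int_t^{t+\varepsilon} E\,\mathcal{L}V(x_s, s, r(s))\,ds.$$

Letting ε → 0, it follows that for t ∈ (tk−1, tk]

$$D^+ E V(x(t), t, r(t)) = E\,\mathcal{L}V(x_t, t, r(t)).$$

Let $W(t) = e^{\lambda t} E V(x(t), t, r(t))$. Then for t ∈ (tk−1, tk]

$$D^+ W(t) = \lambda W(t) + e^{\lambda t} E\,\mathcal{L}V(x_t, t, r(t)).$$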

From (iii), we have

Taking M > 0 such that

we can claim that for t ≥ -τ

It is easy to see that W(t) < M for t ∈ [-τ, 0]. Now, we shall prove that

Otherwise, there exists a t* ∈ (0, t1] such that

In view of the continuity of W(t) in [0, t1], there exists a t** ∈ (0, t*) such that

For t ∈ [t**, t*], θ ∈ [-τ, 0], we have

Then we obtain

Together with (3.3) and (ii), for t ∈ [t∗∗, t∗], we have

Thus

This is a contradiction. Hence (3.7) holds. From (iii), we obtain

Next, we shall show that

If it does not hold, there exists a such that

In view of the continuity of W(t) in (t1, t2], there exists a such that

For , we have qW(t) >W(t + θ). It follows from (3.3) and (ii) that for

Then, we have

which is a contradiction. Thus, (3.15) holds. By induction, we can prove that for k = 1, 2, ...

Therefore, we have for t ≥ -τ

From (i) and the above inequality, we have

which implies that

The proof of Theorem 3.1 is complete.

Remark 3.1. In Theorem 3.1, the zero solution of the hybrid stochastic functional differential equation without impulses is allowed to be unstable. In this case, the delayed impulses are the key to stabilizing the hybrid stochastic equation. This requires that the maximal interval between consecutive impulse times be sufficiently small and that the maximal impulsive gain be sufficiently small.

Theorem 3.2. Assume that and constants p > 0, c1 > 0, c2 > 0, , γ i > 0, µ > 0, λ > 0, i ∈ S, k = 1, 2, ... such that

(i) for all (t, x) ∈ [−τ, ∞) × Rn;

(ii) for all t ∈ (tk-1, t k ] and whenever

(iii) for all i ∈ S,

(iv) ;

(v) for any ,

then the zero solution of Equation (2.1) is pth moment exponentially stable, where

Proof. Since (v) holds, we can choose sufficiently small ε > 0 such that for all i ∈ S. Let W(t) = eλt EV (x(t), t, i), we have for t ∈ (tk−1, t k ]

From (iii), we have

Taking M > 0 such that

we shall show that for t ≥ -τ

It is easy to see that W(t) < M for t ∈ [−τ, 0]. Now, we shall prove that

If it does not hold, there exists a t* ∈ (0, t1] such that

In view of the continuity of W(t) in [0, t1], there exists a t** ∈ [0, t*) such that

For

Then

Together with (3.24) and (ii), for we have

Thus

This is a contradiction. Next, we shall show that

If it does not hold, we have

By the continuity of W(t) on [0, t1], there exists a such that

For we have

By (3.24) and (ii), for we have

Thus

which is a contradiction. It follows from (3.25), (3.35) and (3.28) that

Furthermore, we can prove that

Indeed, there exists a such that

If for Then (q + ε)W(t) >W(t + θ) for θ ∈ [−τ, 0]. Thus by (3.24) and (ii), for we have D+ W (t) ≤ (λ − γ)W(t) ≤ 0. It follows that

This is a contradiction. If there exists a such that

In view of the continuity of W(t) in (t1, t2], there exists a such that

Then for , θ ∈ [−τ, 0], (q + ε) W(t) >W(t + θ). Thus for , D+W (t) ≤ (λ−γ)W(t) ≤ 0. It follows that

which leads to a contradiction. Moreover, we can conclude that . If this does not hold, we have. To prove the conclusion, two cases are to be considered.

Case (i). For t ∈ (t1, t2], . From (3.26), (3.28) and (3.43), we have for t ∈ (t1, t2], θ ∈ [−τ, 0]

Then by (3.24) and (ii), for t ∈ (t1, t2]

Thus

which leads to a contradiction.

Case (ii). There exists a such that Since and in view of the continuity of W(t) in , there exists a such that

For we have

It follows from (3.24) and (ii) that for

Thus

This is a contradiction. By induction, we can prove that for k = 1, 2, ...

Therefore, we have for t ≥ −τ

The proof of Theorem 3.2 is complete.

Remark 3.2. In Theorem 3.2, the maximal impulsive gain is allowed to be large. This means that the hybrid stochastic equation without impulses is itself exponentially stable and remains exponentially stable under the delayed impulses. In this case, the minimal interval between consecutive impulse times must be sufficiently large for the hybrid stochastic differential equation with delayed impulses to keep its stability property.

4 Some consequences

In the following, we apply the above results to a class of linear impulsive hybrid stochastic systems by using Lyapunov functions and the M-matrix method.

Consider the following n-dimensional impulsive hybrid stochastic delay differential equation

where τ(t) is continuous with 0 ≤ τ(t) ≤ τ, and τ is a positive constant. For convenience, when r(t) = i we write $A(r(t)) = A_i$, $B(r(t)) = B_i$, $C(r(t)) = C_i$, $D(r(t)) = D_i$, where $A_i = (a_{uv}(i))_{n \times n}$, $B_i = (b_{uv}(i))_{n \times n}$, $C_i = (c_{uv}(i))_{n \times n}$ and $D_i = (d_{uv}(i))_{n \times n}$.

Theorem 4.1. Assume that there exist symmetric positive definite matrices Q i and constants such that

(i) for all i ∈ S

and

where mean that matrices are negative semi-definite;

(ii) for all i ∈ S

(iii) ;

(iv) for all ,

then the zero solution of Equation (4.1) is exponentially stable in the mean square, where

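Proof. We define $V(x, t, i) = x^T Q_i x$. Clearly,

$$\min_{i \in S}\lambda_{\min}(Q_i)\,|x|^2 \le V(x, t, i) \le \max_{i \in S}\lambda_{\max}(Q_i)\,|x|^2.$$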

Then for t ∈ (tk−1, t k ]

Since, for any vectors $x, y \in \mathbb{R}^n$ and any scalar ε > 0, the inequality

$$2x^T y \le \varepsilon\, x^T x + \varepsilon^{-1} y^T y$$

holds, it follows that

and

Substituting (4.8) and (4.9) into (4.6) we can derive that

Then

Next, if for we have

Thus

For t = t k , it follows from (ii) that

Consequently, the conclusions follow from Theorem 3.1. This completes the proof.
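The elementary inequality invoked in the proof of Theorem 4.1 for vectors x, y and a scalar ε > 0 is, presumably, the standard bound $2x^T y \le \varepsilon x^T x + \varepsilon^{-1} y^T y$, which follows from $0 \le |\sqrt{\varepsilon}\,x - y/\sqrt{\varepsilon}|^2$ and holds with equality when $y = \varepsilon x$. A quick randomized check in Python (an illustration only; the helper name `young_gap` is hypothetical):

```python
import numpy as np


def young_gap(x, y, eps):
    """Return eps * x^T x + y^T y / eps - 2 x^T y.

    This equals |sqrt(eps) * x - y / sqrt(eps)|^2, so it is never negative.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return eps * (x @ x) + (y @ y) / eps - 2.0 * (x @ y)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    worst = min(
        young_gap(rng.normal(size=3), rng.normal(size=3), eps)
        for eps in rng.uniform(0.01, 10.0, size=1000)
    )
    print("smallest gap over random trials:", worst)  # nonnegative up to rounding
```

In the proof, ε is a free parameter; choosing it well is what lets the cross terms between $x(t)$ and $x(t - \tau(t))$ be absorbed into the diagonal terms.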

Theorem 4.2. Assume that there exist symmetric positive definite matrices Q i and constants such that (4.4) holds and the following conditions hold:

(i) for all i ∈ S

(4.15)

and

(ii) ;

(iii) for all ,

then the zero solution of Equation (4.1) is exponentially stable in the mean square, where

Proof. By (iii), we choose sufficiently small ε > 0 such that We define

$V(x, t, i) = x^T(t)\, Q_i\, x(t)$. Similar to the proof of Theorem 4.1, we get for $t \in (t_{k-1}, t_k]$

For we have

Thus

Therefore, the conclusions follow from Theorem 3.2.

In the following, we establish tractable exponential stability conditions. To this end, we take $Q_i = r_i I$, where $r_i > 0$ (i ∈ S) are constants and I is the n × n identity matrix.

Corollary 4.1. Assume that there exist constants such that

(i) for all i ∈ S

(ii)

(iii) for all i ∈ S

then the zero solution of Equation (4.1) is exponentially stable in the mean square, where

Corollary 4.2. Assume that there exist constants such that (4.20) holds and the following conditions are satisfied:

(i)

(ii) for all i ∈ S

(4.22)

then the zero solution of Equation (4.1) is exponentially stable in the mean square, where

Next, we apply Corollaries 4.1 and 4.2 to establish some useful criteria in terms of M-matrices, which can be verified much more easily. If A is a vector or matrix, by A ≫ 0 we mean that all elements of A are positive. If A1 and A2 are vectors or matrices of the same dimensions, we write A1 ≫ A2 if and only if A1 − A2 ≫ 0. Moreover, we adopt the traditional notation by letting

Definition 4.1. (see [12, 28]) A square matrix A = (a_ij)_{N×N} is called a nonsingular M-matrix if A can be expressed in the form A = sI − B with s > ρ(B), where all the elements of B are nonnegative, I is the identity matrix and ρ(B) is the spectral radius of B.

Remark 4.1. If A is a nonsingular M-matrix, then A has nonpositive off-diagonal entries and positive diagonal entries, that is, $a_{ii} > 0$ and $a_{ij} \le 0$ for $i \ne j$, i, j = 1, ..., N.
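Definition 4.1 can be checked numerically. If A has a positive off-diagonal entry, no choice of s makes sI − A entrywise nonnegative, so A is not an M-matrix. Otherwise, taking s = max_i a_ii (or 0 if that is negative) makes B = sI − A ≥ 0, and since, by Perron–Frobenius, the difference s − ρ(sI − A) is the same for every admissible s, it suffices to test s > ρ(B) for this one choice. The Python sketch below follows that reasoning; the function name and the sample values of the generator Γ and the diagonal matrix Ξ are hypothetical illustrations, not data from the article.

```python
import numpy as np


def is_nonsingular_m_matrix(A, tol=1e-12):
    """Test Definition 4.1: A = sI - B with B >= 0 entrywise and s > rho(B)."""
    A = np.asarray(A, dtype=float)
    if A.ndim != 2 or A.shape[0] != A.shape[1]:
        raise ValueError("A must be square")
    n = A.shape[0]
    off_diag = A - np.diag(np.diag(A))
    if (off_diag > tol).any():
        return False  # some b_ij = -a_ij < 0, impossible for B >= 0
    s = max(float(np.max(np.diag(A))), 0.0)  # smallest admissible s >= 0
    B = s * np.eye(n) - A                    # entrywise nonnegative
    rho = float(np.max(np.abs(np.linalg.eigvals(B))))  # spectral radius
    return s - rho > tol


if __name__ == "__main__":
    Gamma = np.array([[-1.0, 1.0], [2.0, -2.0]])  # hypothetical generator
    Xi = np.diag([3.0, 4.0])                      # hypothetical diagonal matrix
    print(is_nonsingular_m_matrix(Xi - Gamma))    # True for these values
    print(is_nonsingular_m_matrix([[1.0, -2.0], [-2.0, 1.0]]))  # False
```

The tolerance `tol` guards against round-off in the eigenvalue computation; matrices that are singular M-matrices (s = ρ(B)) are correctly rejected.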

Corollary 4.3. Assume that there exist constants such that (4.20) holds. If there exists λ > 0 such that Ξ − Γ is a nonsingular M-matrix and, for any i ∈ S,

then the zero solution of Equation (4.1) is exponentially stable in the mean square, where Ξ is the diagonal matrix

Proof. Since Ξ − Γ is a nonsingular M-matrix, all the elements of (Ξ − Γ)−1 are nonnegative (see [28]). Hence (4.24) holds, namely all r i are positive. Note

that is

Then we have for i ∈ S

The required conclusion follows from Corollary 4.1.
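The first step of this proof rests on a standard property of nonsingular M-matrices [28]: their inverses are entrywise nonnegative. Because an inverse is itself nonsingular, no row of $(\Xi - \Gamma)^{-1}$ can vanish, so multiplying it by the all-ones vector gives a vector with strictly positive entries. The Python sketch below checks both facts for hypothetical values of Ξ and Γ (not from the article); it illustrates the general property only and does not reproduce (4.24). The function name is hypothetical.

```python
import numpy as np


def positive_solution(A):
    """For a nonsingular M-matrix A, return A^{-1} and r = A^{-1} 1.

    A^{-1} >= 0 entrywise and every row of A^{-1} has a positive entry,
    so every component of r is strictly positive.
    """
    A = np.asarray(A, dtype=float)
    A_inv = np.linalg.inv(A)
    r = A_inv @ np.ones(A.shape[0])
    return A_inv, r


if __name__ == "__main__":
    Gamma = np.array([[-1.0, 1.0], [2.0, -2.0]])  # hypothetical generator
    Xi = np.diag([3.0, 4.0])                      # hypothetical diagonal matrix
    A_inv, r = positive_solution(Xi - Gamma)
    print(A_inv)  # all entries nonnegative
    print(r)      # all entries positive
```

For these sample values, Ξ − Γ = [[4, −1], [−2, 6]], whose inverse is (1/22)[[6, 1], [2, 4]], so r = (7/22, 6/22).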

Corollary 4.4. Assume that there exist constants such that (4.17) holds. If there exists λ > 0 such that is a nonsingular M-matrix and, for any i ∈ S,

then the zero solution of Equation (4.1) is exponentially stable in the mean square, where is the diagonal matrix denoted by

5 Examples and numerical simulations

In this section, two examples are provided to illustrate our results.

Example 5.1. Let ω(t) be a scalar Brownian motion. Let r(t), t ≥ 0 be a right-continuous Markov chain taking values in S = {1, 2} with the generator

Consider the following scalar hybrid impulsive stochastic delay system

where $t_k = 0.003k$, $\tau(t) = 1$, $A_1 = -1$, $A_2 = -2$, $B_1 = 1$, $B_2 = 2$, $C_1 = 1$, $C_2 = 2$, $D_1 = 2$, $D_2 = 3$ and $d_{1k} = d_{2k} = 0.32$. Taking $r_1 = r_2 = 1$, we see that there exists λ > 0 such that

and

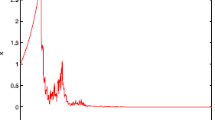

By Corollary 4.1, the zero solution of Equation (5.1) is exponentially stable in mean square. Figure 1 depicts the trajectory of x(t) for Equation (5.1), illustrating this stability, and Figure 2 depicts x(t) for Equation (5.1) without delayed impulses.

Figure 1. Trajectory of the state x(t) of Equation (5.1).

Figure 2. Trajectory of the state x(t) of Equation (5.1) without delayed impulses.

Remark 5.1. Figures 1 and 2 show that, although the hybrid stochastic delay system without impulses may be exponentially unstable in mean square, adding delayed impulses can make it exponentially stable in mean square; that is, impulses may change the stability behavior of a system.
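The article does not state how the trajectories in Figures 1 and 2 were computed. A common choice for such plots is the Euler–Maruyama scheme combined with a first-order approximation of the mode switching. The Python sketch below assumes a scalar linear model dx = (a_i x(t) + b_i x(t − τ)) dt + (c_i x(t) + e_i x(t − τ)) dω(t) with a constant delay τ, a constant initial segment, and impulses x(t_k^+) = impulse(x(t_k), x(t_k − τ), i) supplied as a function. This model form, the coefficient names, the function names and the usage values are hypothetical illustrations, not Equation (5.1) itself.

```python
import numpy as np


def simulate(a, b, c, e, Gamma, impulse, t_imp, tau, T, dt, x0, r0, rng):
    """Euler-Maruyama sketch for an ASSUMED scalar hybrid impulsive delay SDE.

    Mode i (0-based) follows a Markov chain with generator Gamma; on [-tau, 0]
    the state is the constant x0.  Returns the state and mode on the grid.
    """
    Gamma = np.asarray(Gamma, dtype=float)
    n = int(round(T / dt))
    lag = int(round(tau / dt))
    imp_steps = {int(round(tk / dt)) for tk in t_imp if 0.0 < tk <= T}
    x = np.empty(n + 1)
    modes = np.empty(n + 1, dtype=int)
    x[0], modes[0] = x0, r0
    i = r0
    for k in range(n):
        xd = x[k - lag] if k >= lag else x0  # delayed state x(t - tau)
        dW = rng.normal(0.0, np.sqrt(dt))    # Brownian increment
        drift = a[i] * x[k] + b[i] * xd
        diffusion = c[i] * x[k] + e[i] * xd
        x[k + 1] = x[k] + drift * dt + diffusion * dW
        if k + 1 in imp_steps:  # impulse at the grid time (k + 1) * dt
            xd_next = x[k + 1 - lag] if k + 1 >= lag else x0
            x[k + 1] = impulse(x[k + 1], xd_next, i)
        # Mode switch with probability about -gamma_ii * dt (first-order).
        rate = -Gamma[i, i]
        if rate > 0.0 and rng.random() < rate * dt:
            p = Gamma[i].copy()
            p[i] = 0.0
            i = int(rng.choice(len(p), p=p / p.sum()))
        modes[k + 1] = i
    return x, modes


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    x, modes = simulate(
        a=[-1.0, -0.5], b=[0.2, 0.1], c=[0.3, 0.2], e=[0.1, 0.1],  # illustrative
        Gamma=[[-1.0, 1.0], [1.0, -1.0]],                          # hypothetical
        impulse=lambda x_now, x_del, i: 0.5 * x_del,               # hypothetical
        t_imp=np.arange(1, 101) * 0.1, tau=0.1, T=10.0, dt=0.001,
        x0=1.0, r0=0, rng=rng,
    )
    print(x[-1])
```

Averaging |x|^2 over many independent runs would give a Monte Carlo estimate of the mean-square behavior discussed in Remark 5.1; a single path, as plotted in the figures, is only indicative.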

Example 5.2. Let r(t), t ≥ 0 be a right-continuous Markov chain taking values in S = {1, 2, 3} with the generator

Consider the following 3-D hybrid impulsive stochastic delay system

where

$t_k = 0.02k$, $\tau(t) = 0.5$, $\|B_1\| = 1.1817$, $\|B_2\| = 1.3650$, $\|C_1\| = 0.8$, $\|C_2\| = 1$, $\|D_1\| = \|D_2\| = 1$. It is easy to see that By computation, we have

Taking λ = 0.5, we have

Hence

Ξ − Γ is a nonsingular M-matrix. Then

Compute

By Corollary 4.3, the zero solution of Equation (5.2) is exponentially stable in mean square. Figure 3 depicts the trajectories of $x_1(t)$ and $x_2(t)$ of Equation (5.2).

Figure 3. Trajectory of the states x(t) of Equation (5.2).

References

Mao X: Exponential Stability of Stochastic Differential Equations. Marcel Dekker, New York; 1994.

Mao X: Stochastic Differential Equations and Applications. Ellis Horwood, Chichester, UK; 1997.

Karatzas I, Shreve SE: Brownian Motion and Stochastic Calculus. Springer-Verlag, Berlin; 1991.

Chang M: On Razumikhin-type stability conditions for stochastic functional differential equations. Math. Modell 1984, 5: 299–307. 10.1016/0270-0255(84)90007-1

Mao X: Razumikhin type theorems on exponential stability of neutral stochastic functional differential equations. SIAM J. Math. Anal 1997, 28(2):389–401. 10.1137/S0036141095290835

Janković S, Randjelović J, Jovanović M: Razumikhin-type exponential stability criteria of neutral stochastic functional differential equations. J. Math. Anal. Appl 2009, 355: 811–820. 10.1016/j.jmaa.2009.02.011

Ji Y, Chizeck HJ: Controllability, stabilizability and continuous-time Markovian jump linear quadratic control. IEEE Trans. Automat. Control 1990, 35: 777–788. 10.1109/9.57016

Mariton M: Jump Linear Systems in Automatic Control. Marcel Dekker, New York; 1990.

Mao X: Stability of stochastic differential equations with Markovian switching. Stochastic Process. Appl 1999, 79: 45–67. 10.1016/S0304-4149(98)00070-2

Mao X: Stochastic functional differential equations with Markovian switching. Funct. Diff. Equ 1999, 6: 375–396.

Shaikhet L: Stability of stochastic hereditary systems with Markov switching. Theory Stoch. Process 1996, 2: 180–184.

Mao X, Lam J, Xu S, Gao H: Razumikhin method and exponential stability of hybrid stochastic delay interval systems. J. Math. Anal. Appl 2006, 314: 45–66. 10.1016/j.jmaa.2005.03.056

Liu B, Liu X, Teo K, Wang Q: Razumikhin-type theorems on exponential stability of impulsive delay systems. IMA J. Appl. Math 2006, 71: 47–61.

Anokhin A, Berezansky L, Braverman E: Exponential stability of linear delay impulsive differential equations. J. Math. Anal. Appl 1995, 193: 923–941. 10.1006/jmaa.1995.1275

Lakshmikantham V, Bainov DD, Simeonov PS: Theory of Impulsive Differential Equations. World Scientific, Singapore; 1989.

Samoilenko AM, Perestyuk NA: Impulsive Differential Equations. World Scientific, Singapore; 1995.

Shen J, Yan J: Razumikhin type stability theorems for impulsive functional differential equations. Nonlinear Anal 1998, 33: 519–531. 10.1016/S0362-546X(97)00565-8

Liu XZ, Wang Q: The method of Lyapunov functionals and exponential stability of impulsive systems with time delay. Nonlinear Anal 2007, 66(7):1465–1484. 10.1016/j.na.2006.02.004

Wu QJ, Zhou J, Xiang L: Global exponential stability of impulsive differential equations with any time delays. Appl. Math. Lett 2010, 23: 143–147. 10.1016/j.aml.2009.09.001

Pan LJ, Cao JD: Exponential stability of impulsive stochastic functional differential equations. J. Math. Anal. Appl 2011, 382: 672–685. 10.1016/j.jmaa.2011.04.084

Liu B: Stability of solutions for stochastic impulsive systems via comparison approach. IEEE Trans. Automat. Control 2008, 53: 2128–2133.

Peng SG, Zhang Y: Razumikhin-type theorems on pth moment exponential stability of impulsive stochastic delay differential equations. IEEE Trans. Automat. Control 2010, 55: 1917–1922.

Cheng P, Deng FQ: Global exponential stability of impulsive stochastic functional differential systems. Stat. Probab. Lett 2010, 80: 1854–1862. 10.1016/j.spl.2010.08.011

Sakthivel R, Luo J: Asymptotic stability of impulsive stochastic partial differential equations with infinite delays. J. Math. Anal. Appl 2009, 356: 1–6. 10.1016/j.jmaa.2009.02.002

Anguraj A, Vinodkumar A: Existence, uniqueness and stability results of random impulsive semilinear differential systems. Nonlinear Anal Hybr. Syst 2010, 4: 475–483. 10.1016/j.nahs.2009.11.004

Li CX, Sun JT: Stability analysis of nonlinear stochastic differential delay systems under impulsive control. Phys. Lett. A 2010, 374: 1154–1158. 10.1016/j.physleta.2009.12.065

Wu HJ, Sun JT: p-moment stability of stochastic differential equations with impulsive jump and Markovian switching. Automatica 2006, 42: 1753–1759. 10.1016/j.automatica.2006.05.009

Berman A, Plemmons RJ: Nonnegative Matrices in the Mathematical Sciences. SIAM, Philadelphia; 1994.

Acknowledgements

The authors would like to thank the referee and the associate editor for their very helpful suggestions. This work was supported by the National Natural Science Foundation of China under Grant No. 60874088, and the Natural Science Foundation of Jiangsu Province of China under Grant No. BK2009271, and JSPS Innovation Program under Grant No. CXZZ11_0132.


Additional information

Competing interests

The authors declare that they have no competing interests.

Authors' contributions

All authors contributed equally to the manuscript. All authors read and approved the final manuscript.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 2.0 International License (https://creativecommons.org/licenses/by/2.0), which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

About this article

Cite this article

Pan, L., Cao, J. Exponential stability of stochastic functional differential equations with Markovian switching and delayed impulses via Razumikhin method. Adv Differ Equ 2012, 61 (2012). https://doi.org/10.1186/1687-1847-2012-61


DOI: https://doi.org/10.1186/1687-1847-2012-61