Abstract

This paper investigates a computational method for approximating the solution of fractional differential equations subject to local and nonlocal m-point boundary conditions. The method is a variant of the spectral method based on normalized Bernstein polynomials and their operational matrices. The operational matrices developed in this paper convert fractional differential equations, together with their nonlocal boundary conditions, into a system of easily solvable algebraic equations. Some test problems are presented to illustrate the efficiency, accuracy, and applicability of the proposed method.


1 Introduction

Recently, fractional differential equations (FDEs) have attracted the attention of many scientists around the globe, and the topic remains central to several special issues and books. Fractional-order operators are nonlocal in nature, and because of this property they are well suited to modeling a variety of natural and physical phenomena. This has motivated many scientists to develop fractional-order models based on the ideas of fractional calculus. Examples of such systems can be found in many disciplines of science and engineering, such as physics, biomathematics, chemistry, dynamics of earthquakes, dynamical processes in porous media, viscoelasticity theory of materials, and control theory of dynamical systems. Furthermore, certain observations indicate that fractional-order operators possess properties related to systems with long memory. For details of applications and examples, we refer the reader to [1–7].

The qualitative study of FDEs, that is, the analytical investigation of properties such as the existence and uniqueness of solutions, has been considered by several authors. In 1992, Gupta [8] studied the solvability of three-point boundary value problems (BVPs). Since then, many researchers have worked in this area and obtained useful results that guarantee the solvability of such problems and the existence of a unique solution. For readers interested in the existence theory of such problems, we refer to the detailed survey by Ruyun Ma [9]. In [10], the author derived an analytic relation that guarantees the existence of a positive solution of a general third-order multi-point BVP. Some analytic properties of solutions of FDEs are also discussed by El-Sayed in [11]. It is often impossible to obtain the exact solution when FDEs have to be solved under constraints in the form of boundary conditions. Therefore, the development of approximation techniques remains a central and active area of research.

Spectral methods, which belong to the class of approximation techniques, are often used to find approximate solutions of FDEs. The idea of a spectral method is to convert the FDE into a system of algebraic equations, and different techniques are used for this conversion. Well-known examples are the collocation method, the tau method, and the Galerkin method. The tau and Galerkin methods are analogous in the sense that the FDE is required to satisfy a set of algebraic equations, and a supplementary set of equations is then derived from the boundary conditions (see, e.g., [12] and the references therein). The collocation method [13, 14], another variant of the spectral approach, consists of two steps: first, a discrete representation of the solution is chosen; then the FDE is discretized to obtain a system of algebraic equations.

These techniques are extensively used to solve many scientific problems. Doha et al. [15] used collocation methods based on Chebyshev polynomials to find approximate solutions of initial value problems for FDEs. Similarly, Bhrawy et al. [16] derived an explicit relation expressing the fractional-order derivatives of Legendre polynomials in terms of their series representation, and used it to solve some scientific problems. Saadatmandi and Dehghan [17] and Doha et al. [18] extended the operational matrix method, derived operational matrices of fractional derivatives for orthogonal polynomials, and used them to solve different types of FDEs. Some recent results can be found in [15, 19, 20].

Other methods have also been developed for the solution of FDEs, including iterative techniques, reproducing kernel methods, and finite difference methods. Esmaeili and Shamsi [21] developed a procedure for approximating the solution of initial value problems for FDEs with a pseudo-spectral method, and Pedas and Tamme [22] studied the application of spline functions to FDEs. In [23], the author used a quadrature tau method to obtain the numerical solution of multi-point boundary value problems. The authors of [24–31] extended the spectral method to find smooth approximations to various classes of FDEs and fractional partial differential equations (FPDEs). Some recent results in which orthogonal polynomials are applied to various scientific problems can be found in [32–36].

Multi-point nonlocal boundary value problems appear in many important scientific phenomena, such as elastic stability and wave propagation. For such problems, Rehman and Khan [37, 38] introduced an efficient numerical scheme based on Haar wavelet operational matrices of integration for solving linear multi-point boundary value problems for FDEs. FDEs subject to multi-point nonlocal boundary conditions are more difficult to handle, and only a few articles are available in this area of research. Some good results on the solution of nonlocal boundary value problems can be found in [39–44].

Bernstein polynomials are used in many numerical methods. They enjoy many useful properties, but they lack the important property of orthogonality. Since orthogonality is one of the most important properties in approximation theory and numerical simulation, these polynomials cannot be used directly in the present technique of numerical approximation. To overcome this difficulty, the non-orthogonal Bernstein polynomials are transformed into an orthogonal basis [45–48]. However, as the degree of the polynomials increases, the transformation matrix becomes ill-conditioned [49, 50], which results in inaccuracies in numerical computations. Recently, Bellucci introduced an explicit relation for normalized Bernstein polynomials [51]. Bellucci applied the Gram-Schmidt orthonormalization process to sets of Bernstein polynomials of different scale levels, identified the pattern of the resulting polynomials, and generalized the result. The main results presented in this article are based on these generalized bases.

In this article, we present a procedure for approximating the solution of FDEs subject to local and nonlocal m-point boundary conditions. The method is designed to solve linear FDEs with constant coefficients, linear FDEs with variable coefficients, and nonlinear FDEs under local and nonlocal m-point boundary conditions. In particular, we consider the following generalized class of FDEs:

In equation (1) \(\lambda_{i}\in\mathbf{R}\), \(t\in{}[ 0,\omega]\), \(f(t)\in C([0,\omega])\). The orders of the derivatives are defined as

In equation (2) \(\lambda_{i}(t)\in C([0,\omega])\). In (3) \(F(U(t)^{\rho_{0}},U(t)^{\rho_{1}},\ldots, U(t)^{\rho_{p}})\) is a nonlinear function of \(U(t)\) and its fractional derivatives.

The main aim of this paper is to find a smooth approximation to \(U(t)\) that satisfies a given set of m-point boundary conditions. We consider the following two types of boundary constraints.

Type 1: Multi-point local boundary conditions defined as

Type 2: Nonlocal m-point boundary conditions, defined as

Our investigation uses the normalized Bernstein polynomials, based on the explicit relations presented in [51], to develop new operational matrices. We develop four operational matrices. Two of them are operational matrices of integration and differentiation, constructed with the same technique as for traditional orthogonal polynomials. We introduce two more operational matrices to deal with the local and nonlocal boundary conditions. To the best of our knowledge, such operational matrices have not previously been designed for normalized Bernstein polynomials.

The rest of the article is organized as follows. In Section 2, we recall some basic definitions from fractional calculus, approximation theory, and matrix theory, and present some properties of normalized Bernstein polynomials that are needed later. In Section 3, we present a detailed procedure for constructing the required operational matrices. In Section 4, these matrices are used in a new algorithm for solving FDEs. In Section 5, the proposed algorithm is applied to some test problems to show its efficiency. The last section is devoted to a short conclusion.

2 Some basic definitions

In this section, we present some basic notation and definitions from fractional calculus and well-known results which are important for our further investigation. More details can be found in [5, 7].

Definition 1

The Riemann-Liouville fractional-order integral of order \(\sigma\in\mathbf{R}_{+}\) of a function \(\phi(t) \in(L^{1}[a,b],\mathbf{R})\) is defined by

\[ I^{\sigma}\phi(t)=\frac{1}{\Gamma(\sigma)}\int_{a}^{t}(t-s)^{\sigma-1}\phi(s)\,ds. \]

We assume that the integral on the right-hand side exists.

Definition 2

For a given function \(\phi(t)\in C^{n}[a,b]\), the fractional-order derivative of order σ in the Caputo sense is defined as

\[ D^{\sigma}\phi(t)=\frac{1}{\Gamma(n-\sigma)}\int_{a}^{t}(t-s)^{n-\sigma-1}\phi^{(n)}(s)\,ds. \]

The right-hand side is assumed to be defined pointwise on \((a,\infty)\), where \(n=[\sigma]+1\) if σ is not an integer.

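For \(a=0\), these definitions lead to \(I^{\sigma}t^{k}=\frac{\Gamma(1+k)}{\Gamma(1+k+\sigma )}t^{k+\sigma}\) for \(\sigma>0\), \(k\geq0\), \(D^{\sigma}C=0\) for a constant C, and

\[ D^{\sigma}t^{k}=\begin{cases}\frac{\Gamma(1+k)}{\Gamma(1+k-\sigma)}t^{k-\sigma}, & k\in\mathbb{N},\ k\geq n,\\ 0, & k\in\mathbb{N}\cup\{0\},\ k<n.\end{cases} \]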

Throughout the paper, we use Bernstein polynomials of degree n. The Bernstein polynomials of degree n on \([0,1]\) are given explicitly by

\[ B_{i,n}(t)=\binom{n}{i}t^{i}(1-t)^{n-i},\qquad i=0,1,\ldots,n. \tag{8} \]

The set of polynomials defined by (8) has many useful properties: the polynomials are nonnegative on \([0,1]\), and linear combinations of them can approximate any continuous function on \([0,1]\) uniformly. The polynomials in (8) are not orthogonal; applying the Gram-Schmidt process to them yields the explicit form of the normalized Bernstein polynomials (see [51] for details):

where

The polynomials defined in (9) are not directly suitable for constructing operational matrices (the reason will become clear in the next section). Therefore, we generalize the relation further:

where

Note that in equation (10), \(\boldsymbol {\phi}_{j,n}(t)\) is of degree n for all choices of j. Inspecting (10), we observe that the minimum power of t, which is 0, occurs at the maximum value of k and the minimum value of l, while the maximum power of t is n. These polynomials are orthogonal on the interval \([0,1]\). To use them on the interval \([0,\omega]\), we replace t by \(t/\omega\). The orthogonal Bernstein polynomials on \([0,\omega]\) can then be written as follows:

where \(\mathbf{w}_{(j,n)}=\sqrt{2(n-j)+1}/\sqrt{\omega}\). The orthogonality relation for these polynomials is defined as follows:

As usual, any function \(f\in C[0,\omega]\) can be approximated in terms of the normalized Bernstein polynomials as

which can always be written as

where \(\mathbf{H}_{\mathbf{N}}\) and \(\boldsymbol {\mho}_{\mathbf{N}}^{\boldsymbol {\omega}}(\mathbf{t})\) are column vectors with \(\mathbf{N}=n+1\) entries containing the coefficients and the normalized Bernstein polynomials, respectively, defined as

Since N determines the size of the resulting system of algebraic equations, we refer to it as the scale level of the scheme.
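To make the approximation above concrete, the following Python/NumPy sketch builds an orthonormal basis on \([0,\omega]\) by numerically applying Gram-Schmidt to \(B_{0,n},\ldots,B_{n,n}\) in that order, and then computes the coefficient vector \(\mathbf{H}_{\mathbf{N}}\) of a function by Gauss-Legendre quadrature. The ordering is an assumption, chosen because it reproduces the leading factor \(\mathbf{w}_{(0,n)}=\sqrt{2n+1}/\sqrt{\omega}\); the sketch does not transcribe the closed form (10). The names `normalized_bernstein`, `evaluate`, and `coefficients` are hypothetical. Because the basis is stored in monomial form, the Gram-Schmidt step becomes ill-conditioned as n grows, so the sketch is only meant for small scale levels.

```python
import numpy as np
from math import comb


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega] (numerical sketch).

    Row j holds the ascending monomial coefficients of phi_j(t).
    """
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            # C(n,i) x^i (1-x)^(n-i) with x = t/omega, expanded in powers of t
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    # Gram matrix of monomials on [0, omega]; ill-conditioned for large n
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt against earlier basis vectors
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    """Return the matrix [phi_j(t_i)] of shape (len(t), n + 1)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def coefficients(f, phi, omega=1.0, nodes=64):
    """h_j = integral_0^omega f(t) phi_j(t) dt via Gauss-Legendre quadrature (f vectorized)."""
    x, w = np.polynomial.legendre.leggauss(nodes)
    t = 0.5 * omega * (x + 1.0)  # map the nodes from [-1, 1] to [0, omega]
    return evaluate(phi, t).T @ (0.5 * omega * w * f(t))


phi = normalized_bernstein(4, omega=2.0)
h = coefficients(lambda t: t**2, phi, omega=2.0)  # f(t) ~ H_N^T mho_N(t)
ts = np.linspace(0.0, 2.0, 9)
# t^2 lies in the span, so this error should be at round-off level
print(np.max(np.abs(evaluate(phi, ts) @ h - ts**2)))
```

For a function outside the span, such as \(\sin(\pi t)\), the same call returns the truncated expansion, whose accuracy improves as the scale level N increases.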

In [52], the integral of the triple product of fractional-order Legendre polynomials over the domain of interest was used to solve fractional differential equations with variable coefficients directly. Here we use the triple product of normalized Bernstein polynomials to construct a new operational matrix, which is essential for solving FDEs with variable coefficients. This construction relies on the following theorem.

Theorem 1

The definite integral of the product of three normalized Bernstein polynomials over the domain \([0,\omega]\) is a constant given by

where

and \(\mathbf{w}_{((a,b,c),n)}=\mathbf{w}_{(a,n)} \mathbf{w}_{(b,n)}\mathbf{w}_{(c,n)}\).

Proof

Consider the following expression:

Evaluating the integral and using the notation \(\Theta_{(a,b,c)}\) we can write

□
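The triple-product constants \(\Theta_{(a,b,c)}\) can also be checked numerically. The Python sketch below assumes an orthonormal basis obtained by numerical Gram-Schmidt on the Bernstein polynomials in monomial form (not the closed form used in Theorem 1) and integrates each product of three basis polynomials exactly with NumPy's polynomial routines. The helper names are hypothetical. A useful sanity check is that, for \(f(t)=1\) with coefficients \(d_{q}\), the sum \(\sum_{q}d_{q}\Theta_{(q,r,s)}\) reproduces the identity matrix, by orthonormality.

```python
import numpy as np
from math import comb
from numpy.polynomial import polynomial as Poly


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            # C(n,i) x^i (1-x)^(n-i) with x = t/omega, expanded in powers of t
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    # Gram matrix of monomials on [0, omega]
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def definite_integral(coef, omega):
    """integral_0^omega p(t) dt for ascending monomial coefficients coef."""
    return Poly.polyval(omega, Poly.polyint(coef))


def triple_product(phi, omega=1.0):
    """Theta[a, b, c] = integral_0^omega phi_a phi_b phi_c dt, exact up to round-off."""
    N = phi.shape[0]
    theta = np.zeros((N, N, N))
    for a in range(N):
        for b in range(N):
            ab = Poly.polymul(phi[a], phi[b])
            for c in range(N):
                theta[a, b, c] = definite_integral(Poly.polymul(ab, phi[c]), omega)
    return theta


phi = normalized_bernstein(3, omega=1.5)
theta = triple_product(phi, omega=1.5)
d = np.array([definite_integral(p, 1.5) for p in phi])  # coefficients of f(t) = 1
R_one = np.einsum("q,qrs->rs", d, theta)  # should be close to the identity matrix
```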

We now present the inverse of the well-known Vandermonde matrix. This inverse is used when the operational matrices are applied to problems with local boundary conditions.
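A standard way to invert a Vandermonde matrix uses Lagrange basis polynomials: column i of \(V^{-1}\) holds the monomial coefficients of the Lagrange polynomial that equals 1 at \(\tau_{i}\) and 0 at the other nodes. The Python sketch below implements this textbook construction; it is not the closed form for \(b_{(j,i)}\) stated in Theorem 2. Since \(c_{0}\) is fixed separately by the condition at \(t=0\) in Section 4, we also assume that the matrix used there has entries \(\tau_{i}^{j}\) for \(j=1,\ldots,p\). That matrix equals \(\operatorname{diag}(\tau)\) times a standard Vandermonde matrix, so its inverse follows by rescaling columns, which requires \(\tau_{i}\neq0\). Both function names are hypothetical.

```python
import numpy as np


def vandermonde_inverse(tau):
    """Inverse of V[i, j] = tau[i]**j (i, j = 0, ..., p-1) for distinct nodes.

    Column i of V^{-1} holds the ascending coefficients of the Lagrange polynomial
    L_i(t) = prod_{k != i} (t - tau_k) / (tau_i - tau_k), since
    (V @ V^{-1})[m, i] = L_i(tau_m) = delta_{mi}.
    """
    tau = np.asarray(tau, dtype=float)
    p = tau.size
    if np.unique(tau).size != p:
        raise ValueError("nodes must be distinct")
    inv = np.empty((p, p))
    for i in range(p):
        others = np.delete(tau, i)
        num = np.atleast_1d(np.poly(others))  # coefficients, highest power first
        inv[:, i] = num[::-1] / np.prod(tau[i] - others)
    return inv


def scaled_vandermonde_inverse(tau):
    """Inverse of M[i, j] = tau[i]**(j+1), i.e. M = diag(tau) @ V; needs tau != 0."""
    tau = np.asarray(tau, dtype=float)
    # M^{-1} = V^{-1} diag(1/tau): divide column i of V^{-1} by tau[i]
    return vandermonde_inverse(tau) / tau[None, :]


tau = np.array([0.25, 0.5, 0.75, 1.0])  # hypothetical nodes with tau_p = omega = 1
V = np.vander(tau, increasing=True)
V_inv = vandermonde_inverse(tau)
```

For large p or clustered nodes the Vandermonde matrix is badly conditioned, so in practice a small number of boundary points keeps this step accurate.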

Theorem 2

Consider a matrix V defined as

where \(\tau_{1}<\tau_{2}<\cdots<\tau_{p}\). The inverse of this matrix exists and is given by

where the entries \(b_{(j,i)}\) are defined by

3 Operational matrices of derivative and integral

We are now in a position to construct new operational matrices. Operational matrices of derivatives and integrals are frequently used in the literature to solve fractional-order differential equations. In this section, we construct four new operational matrices and prove the corresponding results. These matrices are the building blocks of the proposed method.

Theorem 3

The fractional integral of order σ of the function vector \(\boldsymbol {\mho}^{\boldsymbol {\omega}}_{\mathbf{N}}(\mathbf{t})\) (as defined in (17)) is given by

where \(\mathbf{P}_{(N\times N)}^{(\sigma,\omega)}\) is an operational matrix for fractional-order integration and is given as

where

where \(\overbrace{\Delta_{(s,k^{\prime},l^{\prime})}}\) is as defined in (11), and

Proof

Consider the general element of (17) and apply the fractional integral of order σ; we obtain

Using the definition of fractional-order integration we may write

where \(\Delta_{(r,k,l,\sigma,\omega)}^{\prime}=\frac{\overbrace {\Delta _{(r,k,l)}}\Gamma(l+r-k+1)}{\Gamma(l+r-k+1+\sigma)\omega^{l+r-k}}\). We can approximate \(t^{l+r-k+\sigma}\) with normalized Bernstein polynomials as follows:

Using equation (12), we can write

On further simplifications, we can get

Using (26) and (24) in (23) we get

Using the notation

and evaluating for \(r=0,1,\ldots,n\) and \(s=0,1,\ldots,n\) completes the proof of the theorem. □
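The construction in Theorem 3 can be mirrored numerically: apply \(I^{\sigma}t^{k}=\frac{\Gamma(1+k)}{\Gamma(1+k+\sigma)}t^{k+\sigma}\) to each monomial of a basis polynomial and project the result back onto the orthonormal basis, which gives \(P_{r,s}=\int_{0}^{\omega}(I^{\sigma}\phi_{r})(t)\phi_{s}(t)\,dt\) in closed form. The Python sketch below assumes an orthonormal basis obtained by numerical Gram-Schmidt on the Bernstein polynomials (rather than the closed form (10)) and uses the hypothetical names `integration_matrix` and `monomial_coefficients`; it illustrates the projection idea and does not transcribe the entries of Theorem 3. For \(u(t)=1\) and \(\sigma=1\) or \(\sigma=2\), the result \(t\) or \(t^{2}/2\) lies in the span, so \(\mathbf{H}_{\mathbf{N}}^{\mathbf{T}}\mathbf{P}\boldsymbol{\mho}_{\mathbf{N}}^{\boldsymbol{\omega}}(\mathbf{t})\) should reproduce it up to round-off; for non-integer σ the projection is only approximate.

```python
import numpy as np
from math import comb, gamma


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    """Return the matrix [phi_j(t_i)] of shape (len(t), n + 1)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def monomial_coefficients(phi, p, omega=1.0):
    """Coefficients of t^p: integral_0^omega t^p phi_j(t) dt, in closed form."""
    k = np.arange(phi.shape[1])
    return phi @ (omega ** (k + p + 1.0) / (k + p + 1.0))


def integration_matrix(phi, sigma, omega=1.0):
    """P[r, s] = integral_0^omega (I^sigma phi_r)(t) phi_s(t) dt.

    Uses I^sigma t^k = Gamma(k+1)/Gamma(k+1+sigma) t^(k+sigma) and
    integral_0^omega t^(k+sigma) t^m dt = omega^(k+m+sigma+1) / (k+m+sigma+1).
    """
    k = np.arange(phi.shape[1])
    ratio = np.array([gamma(j + 1) / gamma(j + 1 + sigma) for j in k])
    e = k[:, None] + k[None, :] + sigma + 1.0
    W = ratio[:, None] * omega ** e / e  # W[k, m] = <I^sigma t^k, t^m>
    return phi @ W @ phi.T


phi = normalized_bernstein(4)
ts = np.linspace(0.0, 1.0, 6)
h = monomial_coefficients(phi, 0)  # u(t) = 1
P_half = integration_matrix(phi, 0.5)
approx = evaluate(phi, ts) @ (P_half.T @ h)  # H^T P mho_N(t)
exact = np.sqrt(ts) / gamma(1.5)  # I^{1/2} 1 = t^{1/2} / Gamma(3/2)
```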

Theorem 4

The fractional derivative of order σ of the function vector \(\boldsymbol {\mho}^{\boldsymbol {\omega}}_{\mathbf{N}}(\mathbf{t})\) (as defined in (17)) is defined as

where \(\mathbf{D}_{(N\times N)}^{(\sigma,\omega)}\) is defined as

where

and

Proof

Applying the fractional derivative of order σ to a general element of (17), we may write

Using the definition of the fractional-order derivative we can easily write

where

We can approximate \(t^{l+r-k-\sigma}\) with the Bernstein polynomials as follows:

Using equation (12), we can write

On further simplification we can get

Using (35) and (33) in (31) we get

Using the notation

and evaluating for \(r=0,1,\ldots,n\), and \(s=0,1,\ldots,n\), we complete the proof of the theorem. □
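Theorem 4 can be mirrored in the same way. The Python sketch below assumes the Caputo derivative of Definition 2, so monomials \(t^{k}\) with integer \(k<\lceil\sigma\rceil\) are annihilated and the remaining ones map to \(\frac{\Gamma(1+k)}{\Gamma(1+k-\sigma)}t^{k-\sigma}\). Projecting onto an orthonormal basis (obtained here by numerical Gram-Schmidt on the Bernstein polynomials, not from the closed form (10)) gives \(D_{r,s}=\int_{0}^{\omega}(D^{\sigma}\phi_{r})(t)\phi_{s}(t)\,dt\) exactly. The function names are hypothetical, and the sketch illustrates the projection idea rather than reproducing the entries of Theorem 4.

```python
import numpy as np
from math import comb, gamma


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    """Return the matrix [phi_j(t_i)] of shape (len(t), n + 1)."""
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def monomial_coefficients(phi, p, omega=1.0):
    """Coefficients of t^p: integral_0^omega t^p phi_j(t) dt, in closed form."""
    k = np.arange(phi.shape[1])
    return phi @ (omega ** (k + p + 1.0) / (k + p + 1.0))


def derivative_matrix(phi, sigma, omega=1.0):
    """D[r, s] = integral_0^omega (D^sigma phi_r)(t) phi_s(t) dt (Caputo, sigma > 0).

    D^sigma t^k = 0 for integers k < ceil(sigma); otherwise it equals
    Gamma(k+1)/Gamma(k+1-sigma) t^(k-sigma), whose inner product with t^m is exact.
    """
    N = phi.shape[1]
    W = np.zeros((N, N))  # W[k, m] = <D^sigma t^k, t^m>
    for k in range(int(np.ceil(sigma)), N):
        for m in range(N):
            e = k + m - sigma + 1.0
            W[k, m] = gamma(k + 1) / gamma(k + 1 - sigma) * omega ** e / e
    return phi @ W @ phi.T


phi = normalized_bernstein(4)
ts = np.linspace(0.0, 1.0, 6)
h2 = monomial_coefficients(phi, 2)  # u(t) = t^2
D_half = derivative_matrix(phi, 0.5)
approx = evaluate(phi, ts) @ (D_half.T @ h2)  # projection of D^{1/2} t^2
exact = gamma(3) / gamma(2.5) * ts ** 1.5
```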

The operational matrices developed in the previous theorems are sufficient for solving FDEs with initial conditions. Here, however, we are interested in approximate solutions of FDEs under more complicated boundary conditions, so we need additional operational matrices to handle these conditions effectively.

The following matrix plays an important role in the numerical simulation of fractional differential equations with variable coefficients.

Theorem 5

For a given function \(f\in C[0,\omega]\) and \(u=\mathbf{H}_{\mathbf {N}}^{\mathbf{T}}\boldsymbol {\mho}^{\boldsymbol {\omega}}_{\mathbf {N}}(\mathbf{t})\), the product of \(f(t)\) and the fractional derivative of order σ of \(u(t)\) can be written in matrix form as

where \(\mathbf{Q}_{(N\times N)}^{(f,\sigma,\omega)}=\mathbf {D}_{(N\times N)}^{(\sigma,\omega)}\mathbf{R}_{(N\times N)}^{(f,\omega)}\), and \(\mathbf{D}_{(N\times N)}^{(\sigma,\omega)}\) is an operational matrix for fractional-order derivative and

where

and the entries \(\Theta_{(q,r,s)}\) are defined as in Theorem 1, and \(d_{q}\) are the spectral coefficients of the function \(f(t)\).

Proof

Applying Theorem 4 we can write

and

where

Consider the general element of \(\overbrace{\boldsymbol {\mho}^{\boldsymbol {\omega}}_{\mathbf{N}}(\mathbf{t})}\), and approximate it with normalized Bernstein polynomials as

where \(c_{i}^{r}\) can easily be calculated as

Since \(f(t)\in C[0,\omega]\), we can approximate it with normalized Bernstein polynomials as

Using equation (42) in (41) we get the following estimates:

In view of Theorem 1 we obtain the following estimate:

Evaluating (45) for \(s=0,1,\ldots, n\) and \(r=0,1,\ldots, n\) we can write

where \(\Omega_{(r,s)}=\sum_{q=0}^{n}d_{q}\Theta_{(q,r,s)}\). In simplified notation, we can write

Using (47) in (39) we get the desired result. The proof is complete. □
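The matrix \(\mathbf{R}^{(f,\omega)}\) in Theorem 5 has entries \(\Omega_{(r,s)}=\sum_{q}d_{q}\Theta_{(q,r,s)}\), which by linearity equals the integral of \(f_{N}\phi_{r}\phi_{s}\), where \(f_{N}=\sum_{q}d_{q}\phi_{q}\) is the projection of f. The Python sketch below uses that equivalent form and checks \(\mathbf{Q}=\mathbf{D}\mathbf{R}\) on a case where everything lies in the span: \(f(t)=t\), \(u(t)=t^{2}\), and \(\sigma=1\), so that \(f(t)D^{\sigma}u(t)=2t^{2}\). The basis is obtained by numerical Gram-Schmidt on the Bernstein polynomials (an assumption, not the closed form (10)), and all function names are hypothetical.

```python
import numpy as np
from math import comb, gamma
from numpy.polynomial import polynomial as Poly


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def monomial_coefficients(phi, p, omega=1.0):
    k = np.arange(phi.shape[1])
    return phi @ (omega ** (k + p + 1.0) / (k + p + 1.0))


def derivative_matrix(phi, sigma, omega=1.0):
    """Caputo operational matrix D[r, s] = <D^sigma phi_r, phi_s>."""
    N = phi.shape[1]
    W = np.zeros((N, N))
    for k in range(int(np.ceil(sigma)), N):  # lower monomials are annihilated
        for m in range(N):
            e = k + m - sigma + 1.0
            W[k, m] = gamma(k + 1) / gamma(k + 1 - sigma) * omega ** e / e
    return phi @ W @ phi.T


def product_matrix(phi, d, omega=1.0):
    """R[r, s] = sum_q d_q Theta[q, r, s] = integral_0^omega f_N phi_r phi_s dt."""
    f_N = d @ phi  # ascending monomial coefficients of the projection f_N
    N = phi.shape[0]
    R = np.zeros((N, N))
    for r in range(N):
        fr = Poly.polymul(f_N, phi[r])
        for s in range(N):
            R[r, s] = Poly.polyval(omega, Poly.polyint(Poly.polymul(fr, phi[s])))
    return R


phi = normalized_bernstein(4)
ts = np.linspace(0.0, 1.0, 6)
d = monomial_coefficients(phi, 1)  # f(t) = t
h = monomial_coefficients(phi, 2)  # u(t) = t^2
Q = derivative_matrix(phi, 1.0) @ product_matrix(phi, d)  # Q = D R
approx = evaluate(phi, ts) @ (Q.T @ h)  # approximates f(t) u'(t) = 2 t^2
```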

Since one of the aims of this paper is to solve FDEs under different types of local and nonlocal boundary conditions, some complicated situations arise. To handle them, we use the operational matrix developed in the next theorem.

Theorem 6

Let f be a function of the form \(f(t)=at^{n^{\prime}}\), where \(a\in \mathbb{R}\) and \(n^{\prime}\in\mathbb{N}\), and let \(u(t)\in C[0,\omega]\) with \(u(t)=\mathbf{H}_{\mathbf{N}}^{\mathbf{T}}\boldsymbol {\mho}^{\boldsymbol {\omega}}_{\mathbf{N}}(\mathbf{t})\) and \(\tau\leq \omega\). Then the product of \({}_{0}I_{\tau}^{\sigma}u(t)\) and \(f(t)\) can be written in matrix form as

where

The entries of the matrix are defined by

where

Proof

Consider a general element of \(\boldsymbol {\mho}^{\boldsymbol {\omega}}_{\mathbf {N}}(\mathbf{t})\). Computing its fractional integral of order σ from 0 to τ, we get

Using (12) we may write

Using the notation

we can write

Now \(a\Lambda_{(i,\sigma,\tau,\omega)}t^{n^{\prime}}\) can be approximated with Bernstein polynomials as follows:

where \(d_{(i,j)}\) can be calculated as

Using the notation \(\Omega_{(i,j)}=d_{(i,j)}\) and equation (52) in (51) we get the desired result. The proof is complete. □
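A minimal Python sketch of the matrix in Theorem 6, assuming that \({}_{0}I_{\tau}^{\sigma}u(t)\) denotes the value of the Riemann-Liouville integral \(I^{\sigma}u\) at \(t=\tau\). Under that reading, each basis function contributes the scalar \((I^{\sigma}\phi_{i})(\tau)\), and the matrix is the outer product of these scalars with the coefficients of \(at^{n'}\). The basis construction by numerical Gram-Schmidt and the function names are assumptions for illustration; the paper's own entries \(d_{(i,j)}\) are not reproduced here.

```python
import numpy as np
from math import comb, gamma


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def monomial_coefficients(phi, p, omega=1.0):
    k = np.arange(phi.shape[1])
    return phi @ (omega ** (k + p + 1.0) / (k + p + 1.0))


def boundary_matrix(phi, sigma, tau, a, n_prime, omega=1.0):
    """Omega[i, j] = Lambda_i * a * <t^n', phi_j> with Lambda_i = (I^sigma phi_i)(tau).

    For u = H^T mho_N(t), (I^sigma u)(tau) * a t^n' is approximated by H^T Omega mho_N(t).
    """
    k = np.arange(phi.shape[1])
    ratio = np.array([gamma(j + 1) / gamma(j + 1 + sigma) for j in k])
    lam = phi @ (ratio * tau ** (k + sigma))  # Lambda_i = (I^sigma phi_i)(tau)
    return np.outer(lam, a * monomial_coefficients(phi, n_prime, omega))


phi = normalized_bernstein(4)
ts = np.linspace(0.0, 1.0, 6)
h1 = monomial_coefficients(phi, 0)  # u(t) = 1, so (I^1 u)(0.5) = 0.5
Om = boundary_matrix(phi, sigma=1.0, tau=0.5, a=3.0, n_prime=2)
approx = evaluate(phi, ts) @ (Om.T @ h1)  # approximates 0.5 * 3 t^2 = 1.5 t^2
```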

4 Application of operational matrices

The operational matrices derived in the previous section play a central role in the numerical simulation of FDEs under different types of boundary conditions. We start our discussion with the following class of FDEs:

where \(\lambda_{i}\in\mathbf{R}\), \(t\in{}[0,\omega]\), \(f(t)\in C([0,\omega])\). The orders of the derivatives are defined as

We assume a series representation of the solution in terms of normalized Bernstein polynomials such that the following relation holds:

Applying the fractional integral of order σ and using Theorem 3, we can write \(U(t)\) explicitly as follows:

Our main aim is to determine the unknown vector \(\mathbf{H}_{\mathbf{N}}^{\mathbf{T}}\), which then yields the solution to the problem.

Type 1: Suppose we have to solve (54) under the following conditions:

The constant \(c_{0}\) is obtained directly from the initial condition \(U(0)=u_{0}\). The solution in (56) must satisfy all the conditions; therefore, using the conditions at the intermediate and boundary points, we have

To simplify notation, we set \(\tau_{p}=\omega\) in the last equation. Equation (58) can then be written in matrix form as

The matrix on the left is a Vandermonde-type matrix; by Theorem 2, its inverse exists. Using the inverse of V, we get the values of \(c_{i}\):

Using the values of \(c_{i}\) in equation (56) we may write

In simplified notation we can write

where \(g(t)=u_{0}+\sum_{i=1}^{p}\sum_{j=1}^{p}b_{(i,j)}(u_{j}-u_{0})t^{i}\). Approximating \(g(t)=\mathbf{G_{N}^{T}}\boldsymbol {\mho}_{\mathbf {N}}^{\boldsymbol {\omega}}(\mathbf{t})\) and making use of Theorem 6, we can easily get

In simplified notation we can write

where

is an \(N\times N\) matrix associated with the type 1 boundary conditions. We pause the current procedure here and derive the analogous relation for \(U(t)\) under type 2 boundary conditions.

Type 2: Suppose we need to solve the problem under the m-point nonlocal boundary conditions

Using the initial conditions, we can find the first p constants of integration in equation (56):

However, the last constant \(c_{p}\) is still unknown. To obtain a relation for \(c_{p}\), we use the nonlocal m-point boundary condition, which gives

From (65), the left-hand sides of (67) and (68) are equal; therefore, to obtain the value of \(c_{p}\), we can write

On further simplification we get

where \(\zeta_{1}=\{\sum_{i=0}^{m-2}\varphi_{i}\tau_{i}^{p}-\omega ^{p}\}\) and \(\zeta_{2}=\frac{1}{\zeta_{1}}\{\sum_{l=0}^{(n-1)}u_{l}\tau ^{l}-\sum_{i=0}^{m-2}\sum_{l=0}^{(p-1)}\varphi_{i}u_{l}\tau _{i}^{l}\}\). Now, using (70) in (66) we get

Now, in view of Theorem 6, we can write (71) as

Here \(\sum_{l=0}^{(p-1)}u_{l}t^{l}+\zeta_{2}t^{p}=\mathbf{G_{N}^{T}}\boldsymbol {\mho}_{\mathbf{N}}^{\boldsymbol {\omega}}(\mathbf{t})\). On further simplification we can write

where

For both local and nonlocal boundary conditions, we thus obtain \(U(t)\) in the matrix form

Here \(\mathbf{H}_{\mathbf{N}}^{\mathbf{T}}\) is the unknown vector to be determined. We use \(E=E^{1}\) if type 1 boundary conditions are given, and \(E=E^{2}\) otherwise. Using (74) and Theorem 4, we can write

Approximating \(f(t)=\mathbf{F_{N}^{T}} \boldsymbol {\mho}_{\mathbf{N}}^{\boldsymbol {\omega}}(\mathbf{t})\), and using (75), (55) in (54) we may write

After simplification, we get

Equation (77) is a matrix equation that can easily be solved for \(\mathbf{H}_{\mathbf{N}}^{\mathbf{T}}\); substituting \(\mathbf{H}_{\mathbf{N}}^{\mathbf{T}}\) into (74) gives the approximate solution of the problem.
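The overall idea of equations (74) to (77) can be illustrated with a reduced Python sketch. To keep it short, we assume the special case \(D^{\sigma}U+\lambda U=f\) with \(0<\sigma\leq1\) and the single condition \(U(0)=0\), so no integration constants remain and the boundary matrices \(E^{1}\), \(E^{2}\) and the vector \(\mathbf{G}_{\mathbf{N}}\) are not needed. This is therefore not the full algorithm for m-point problems. The basis is built by numerical Gram-Schmidt on the Bernstein polynomials, and the function names and test problems are hypothetical.

```python
import numpy as np
from math import comb, gamma


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def coefficients(f, phi, omega=1.0, nodes=64):
    """Spectral coefficients of f by Gauss-Legendre quadrature on [0, omega]."""
    x, w = np.polynomial.legendre.leggauss(nodes)
    t = 0.5 * omega * (x + 1.0)
    return evaluate(phi, t).T @ (0.5 * omega * w * f(t))


def integration_matrix(phi, sigma, omega=1.0):
    """P[r, s] = <I^sigma phi_r, phi_s>, computed exactly monomial by monomial."""
    k = np.arange(phi.shape[1])
    ratio = np.array([gamma(j + 1) / gamma(j + 1 + sigma) for j in k])
    e = k[:, None] + k[None, :] + sigma + 1.0
    return phi @ (ratio[:, None] * omega ** e / e) @ phi.T


def solve_linear_fde(f, sigma, lam, n=5, omega=1.0):
    """Sketch for D^sigma U + lam * U = f on [0, omega] with U(0) = 0, 0 < sigma <= 1.

    Setting D^sigma U = H^T mho_N(t) gives U = H^T P mho_N(t) (no integration
    constant because U(0) = 0); projecting the equation gives H^T (I + lam P) = F^T.
    """
    phi = normalized_bernstein(n, omega)
    P = integration_matrix(phi, sigma, omega)
    F = coefficients(f, phi, omega)
    H = np.linalg.solve((np.eye(n + 1) + lam * P).T, F)
    return lambda t: evaluate(phi, t) @ (P.T @ H)


ts = np.linspace(0.0, 1.0, 6)
# Integer-order check: U' + U = 2t + t^2 with U(0) = 0 has exact solution t^2
U1 = solve_linear_fde(lambda t: 2 * t + t**2, sigma=1.0, lam=1.0)
# Fractional case: D^{1/2} U + U = Gamma(3)/Gamma(2.5) t^{1.5} + t^2, exact U = t^2
U_half = solve_linear_fde(lambda t: gamma(3) / gamma(2.5) * t**1.5 + t**2, sigma=0.5, lam=1.0)
print(np.max(np.abs(U_half(ts) - ts**2)))
```

In the fractional case \(D^{1/2}U\) is not a polynomial, so the printed error reflects truncation at scale level N and should decrease as n grows.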

Next, we consider the linear FDEs with variable coefficients,

where \(\lambda_{i}(t)\in C([0,\omega])\), \(t\in{}[0,\omega]\), \(f(t)\in C([0,\omega])\). We start our analysis with the initial assumption

Applying the fractional integral of order σ, we can write

Proceeding as before, we obtain \(U(t)\) as

where \(E_{N\times N}\) and \(\mathbf{G_{N}^{T}} \boldsymbol {\mho}_{\mathbf{N}}^{\boldsymbol {\omega}}(\mathbf{t})\) are derived as above, depending on the type of boundary conditions. Using (81) and Theorem 5, we can write

Approximating \(f(t)=\mathbf{F_{N}^{T}}\boldsymbol {\mho}_{\mathbf {N}}^{\boldsymbol {\omega}}(\mathbf{t})\), and using (82), (79) in (78) we may write

On rearranging we get

Now we consider nonlinear FDEs, which are often very difficult to solve with operational matrix techniques. One approach is the collocation method, which turns the FDE into a system of nonlinear algebraic equations; these are then solved iteratively with Newton's method or another iterative method. A second approach is to linearize the nonlinear part of the fractional differential equation with a quasilinearization technique, which converts the nonlinear FDE into a sequence of linear FDEs that can be solved recursively. This technique has recently been used to find approximate solutions of many scientific problems.

The quasilinearization method was introduced by Bellman and Kalaba [55] as a generalization of the Newton-Raphson method for solving nonlinear ordinary or partial differential equations, and it originates in the theory of dynamic programming. In this method, the nonlinear equation is replaced by a sequence of linear equations that are solved recursively. Its main advantage is that, under suitable conditions, it converges monotonically and quadratically to the exact solution of the original equation [56]. Other applications of the quasilinearization method to scientific problems can be found in [57–60]. Consider a nonlinear fractional-order differential equation of the form

The general procedure is as follows. First, solve the linear part subject to the given local or nonlocal conditions:

This equation can easily be solved using the method developed in the previous discussion. Label the solution at this step as \(U_{0}(t)\).

The next step is to linearize the nonlinear part with a multivariate Taylor series expansion about \(U_{0}(t)\). After linearization and simplification, we get

The above equation is an FDE with variable coefficients and can be solved with the method developed in Section 4.2. The solution at this stage is labeled \(U_{1}(t)\); it is the solution of the problem at the first iteration. We then linearize the problem about \(U_{1}(t)\) to obtain the solution at the second iteration, and so on. The whole process can be written as the recurrence relation

with the boundary conditions

where \(b_{j}(t)=\lambda_{j}-\frac{\partial f}{\partial U^{\rho _{j}}}(U_{(r)}(t),U_{(r)}^{\rho_{1}}(t),U_{(r)}^{\rho_{2}}(t),\ldots, U_{(r)}^{\rho_{n}}(t))\) and

The above equation is a fractional differential equation with variable coefficients. Starting from the initial solution \(U_{0}(t)\), we carry out the iterations: the coefficients \(b_{i}(t)\) and the source term \(\overbrace{F(t)}\) are updated at every iteration r to obtain the next solution at \(r+1\). At every step, the problem is solved under the given boundary conditions. We assume that, if the iteration converges, it converges to the exact solution of the problem.
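The quasilinearization loop can be sketched in Python on a test problem of our own choosing (not one of the paper's examples): \(D^{\sigma}U+U^{2}=f\) with \(U(0)=0\) and \(0<\sigma\leq1\). Linearizing \(U^{2}\) about \(U_{(r)}\) gives \(D^{\sigma}U+2U_{(r)}U=f+U_{(r)}^{2}\), an FDE with the variable coefficient \(2U_{(r)}(t)\). In this sketch the variable-coefficient matrix is computed by Gauss-Legendre quadrature of \(b\,\phi_{r}\phi_{s}\), which agrees with \(\sum_{q}d_{q}\Theta_{(q,r,s)}\) whenever b lies in the span. The basis construction, the handling of the single initial condition, and all function names are assumptions for illustration.

```python
import numpy as np
from math import comb, gamma


def normalized_bernstein(n, omega=1.0):
    """Gram-Schmidt on B_{0,n}, ..., B_{n,n} over [0, omega]; rows are monomial coefficients."""
    B = np.zeros((n + 1, n + 1))
    for i in range(n + 1):
        for k in range(n - i + 1):
            B[i, i + k] = comb(n, i) * comb(n - i, k) * (-1) ** k / omega ** (i + k)
    e = np.arange(n + 1)
    G = omega ** (e[:, None] + e[None, :] + 1.0) / (e[:, None] + e[None, :] + 1.0)
    phi = []
    for row in B:
        v = row.copy()
        for q in phi:  # modified Gram-Schmidt
            v -= (v @ G @ q) * q
        phi.append(v / np.sqrt(v @ G @ v))
    return np.array(phi)


def evaluate(phi, t):
    t = np.atleast_1d(np.asarray(t, dtype=float))
    return np.vander(t, phi.shape[1], increasing=True) @ phi.T


def gauss(omega, nodes=64):
    """Gauss-Legendre nodes and weights mapped to [0, omega]."""
    x, w = np.polynomial.legendre.leggauss(nodes)
    return 0.5 * omega * (x + 1.0), 0.5 * omega * w


def coefficients(f, phi, omega=1.0):
    t, w = gauss(omega)
    return evaluate(phi, t).T @ (w * f(t))


def product_matrix(phi, g, omega=1.0):
    """R[r, s] = integral_0^omega g(t) phi_r(t) phi_s(t) dt by quadrature."""
    t, w = gauss(omega)
    V = evaluate(phi, t)
    return V.T @ ((w * g(t))[:, None] * V)


def integration_matrix(phi, sigma, omega=1.0):
    k = np.arange(phi.shape[1])
    ratio = np.array([gamma(j + 1) / gamma(j + 1 + sigma) for j in k])
    e = k[:, None] + k[None, :] + sigma + 1.0
    return phi @ (ratio[:, None] * omega ** e / e) @ phi.T


def quasilinearize(f, sigma, n=5, omega=1.0, iters=8):
    """Sketch for D^sigma U + U^2 = f, U(0) = 0, using D^sigma U = H^T mho_N(t)."""
    phi = normalized_bernstein(n, omega)
    P = integration_matrix(phi, sigma, omega)
    eye = np.eye(n + 1)
    H = coefficients(f, phi, omega)  # step 0: linear part D^sigma U = f
    for _ in range(iters):
        def u_r(t, c=P.T @ H):  # current iterate U_r = c^T mho_N(t)
            return evaluate(phi, t) @ c
        R = product_matrix(phi, lambda t: 2.0 * u_r(t), omega)  # b(t) = 2 U_r(t)
        rhs = coefficients(lambda t: f(t) + u_r(t) ** 2, phi, omega)
        H = np.linalg.solve((eye + P @ R).T, rhs)  # H^T (I + P R) = rhs^T
    return lambda t: evaluate(phi, t) @ (P.T @ H)


ts = np.linspace(0.0, 1.0, 6)
# U' + U^2 = 2t + t^4 with U(0) = 0 has exact solution U = t^2
U = quasilinearize(lambda t: 2 * t + t**4, sigma=1.0)
```

Here the exact solution \(t^{2}\) lies in the span, so the iterates should settle on it to round-off level after a few iterations, in line with the quadratic convergence of quasilinearization.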

5 Examples

To show the applicability and efficiency of the proposed method, we solve several fractional differential equations. The numerical simulations are carried out in MATLAB, although the algorithm can be implemented in any numerical computing environment.

Example 1

As a first problem, we solve the following linear integer-order boundary value problem:

where

It is easy to verify that the exact solution is \(U(t)=\sin(\pi t)\). We test our algorithm by solving this problem under the following sets of boundary conditions:

We approximate the solution of this problem under different types of boundary conditions and observe that the approximate solution is very accurate. To illustrate this, we compute the absolute difference between the exact and approximate solutions at different scale levels. The results are displayed in Figure 1 and Figure 2 for boundary conditions \(S_{1}\) and \(S_{2}\), respectively. We compare the exact and approximate solutions under boundary conditions \(S_{1}\) at different scale levels; the results are displayed in Table 1. As the scale level increases, the approximate solution becomes more and more accurate, and at scale level \(N=11\) it is accurate up to the seventh digit. The accuracy can be increased further by using higher scale levels. To demonstrate this, we run the algorithm at higher scale levels and measure \(\Vert E_{N}\Vert_{2}\) and \(\Vert E_{N}\Vert_{\infty}\) at each scale level N. Table 2 shows these results under boundary conditions \(S_{1}\) and \(S_{2}\). From this example, we conclude that the proposed method is convergent for linear integer-order differential equations.

To show the efficiency of the proposed method for nonlocal m-point boundary value problems, we solve Example 1 under the 7-point nonlocal boundary condition \(S_{3}\). The method works well: the absolute difference is much less than \(10^{-5}\), a very high accuracy for such complicated problems. We compare the approximate solution with the exact solution at different scale levels and also calculate \(\Vert E\Vert _{2}\) at different scale levels. The results are displayed in Figure 3.

Figure 3. Simulation and observation of Example 1. (a) Comparison of the approximate solution at different scale levels with the exact solution. (b) Absolute difference between the exact and approximate solutions. (c) Convergence of \(\|E\|_{2}\) at different scale levels.

Example 2

As a second example, consider the following fractional-order differential equation:

where \(g(t)\) is given by

We consider the following two types of boundary conditions:

It can easily be verified that the exact solution of the problem is \(U(t)=t^{3}(t-3)^{4}\).

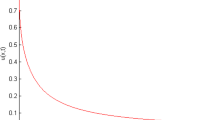

We solve this problem with the proposed method under boundary conditions \(S_{1}\) and observe that the approximate solution is very accurate even for small values of N. Figure 4(a) compares the approximate solution with the exact solution; the two match very well. The same figure also displays the absolute error of the new method, which is less than \(10^{-4}\) at scale level \(N=7\). We measure \(\|E\|_{2}\) at different values of N and observe that it decreases rapidly as N increases. We also approximate the solution of this problem under boundary condition \(\mathbf{S_{2}}\). The results are displayed in Figure 5 and show that the approximate solution converges to the exact solution as N increases.

Figure 4. Example 2. (a) Comparison of the approximate solution with the exact solution under boundary condition \(\mathbf{S_{1}}\). (b) Absolute error obtained with the new method at different scale levels. (c) \(\|E\|_{2}\) obtained with the new method at different values of N.

Figure 5. Example 2. (a) Comparison of the approximate solution with the exact solution under boundary condition \(\mathbf{S_{2}}\). (b) Absolute error obtained with the new method at different scale levels. (c) \(\|E\|_{2}\) obtained with the new method at different values of N.

Example 3

As a third example consider the following fractional differential equation with variable coefficients:

We consider the following types of boundary conditions:

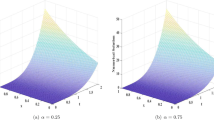

We select \(g(t)\) such that the exact solution of the problem is \(e^{t}\). We apply the proposed algorithm to this problem under boundary conditions \(S_{1}\) and observe that the method works well. The results are displayed in Figure 6, which shows a comparison of the exact and approximate solutions, the absolute error of the approximation, and \(\|E\|_{2}\). These results indicate that the method is highly efficient. We also solve the problem under boundary conditions \(S_{2}\); the results are displayed in Table 3 and show that the approximate solution is highly accurate in this case as well.

Figure 6. Example 3. (a) Comparison of the approximate solution with the exact solution under boundary condition \(\mathbf{S_{1}}\). (b) Absolute error obtained with the new method at different scale levels. (c) \(\|E\|_{2}\) obtained with the new method at different values of N.

Example 4

As a last example consider the following nonlinear fractional differential equation:

with boundary conditions

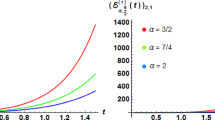

We select \(g(t)\) such that the exact and unique solution of the above problem is \(U(t)=e^{t/6}\). We approximate the solution of this problem with the proposed iterative method under boundary conditions \(S_{1}\), carrying out the iterations at different scale levels N. We observe that the method converges more rapidly to the exact solution for higher values of N. To illustrate this, we calculate \(\|E\|_{2}\) at each iteration for several values of N. The results are displayed in Figure 7: \(\|E\|_{2}\) falls below \(10^{-12}\) at the fifth iteration at scale level \(N=7\). Solving the problem under boundary conditions \(S_{2}\), we observe that the method provides a very accurate estimate of the solution. The results are displayed in Table 4.

6 Conclusion

From the experimental results and the analysis of the proposed method, we conclude that the method is efficient in solving linear and nonlinear fractional-order differential equations under different types of boundary conditions. It can handle both local and nonlocal boundary value problems. Our analysis uses normalized Bernstein polynomials, but the same approach can be used to construct analogous operational matrices for almost all types of orthogonal polynomials. Other orthogonal polynomials, such as Legendre, Jacobi, Laguerre, or Hermite polynomials, may also yield more accurate approximate solutions of such problems. It is not yet clear to us which family is best suited to this method. Further investigation is required to extend the method to other types of scientific problems.

References

Baleanu, D, Diethelm, K, Scalas, E, Trujillo, JJ: Fractional Calculus Models and Numerical Methods, Series on Complexity, Nonlinearity and Chaos. World Scientific, Boston (2012)

Kilbas, AA, Srivastava, HM, Trujillo, JJ: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, vol. 204. Elsevier, Amsterdam (2006)

Lazarevic, MP, Spasic, AM: Finite-time stability analysis of fractional order time-delay systems: Gronwall’s approach. Math. Comput. Model. 49(3), 475-481 (2009)

Magin, RL: Fractional Calculus in Bioengineering. Begell House, Redding (2006)

Podlubny, I: Fractional Differential Equations. Academic Press, San Diego (1999)

Sabatier, J, Agrawal, OP, Machado, JAT (eds.): Advances in Fractional Calculus: Theoretical Developments and Applications in Physics and Engineering. Springer, Dordrecht (2007)

Zaslavsky, GM: Hamiltonian Chaos and Fractional Dynamics. Oxford University Press, Oxford (2008)

Gupta, CP: Solvability of a three-point nonlinear boundary value problem for a second order ordinary differential equation. J. Math. Anal. Appl. 168, 540-551 (1992)

Ma, R: A survey on nonlocal boundary value problems. Appl. Math. E-Notes 7, 257-279 (2007)

Guezane-Lakoud, A, Zenkoufi, L: Existence of positive solutions for a third-order multi-point boundary value problem. Appl. Math. 3, 1008-1013 (2012)

El Sayed, AMA, Bin-Tahir, EO: Positive solutions for a nonlocal boundary-value problem of a class of arbitrary (fractional) orders differential equations. Int. J. Nonlinear Sci. Numer. Simul. 14(4), 398-404 (2012)

Canuto, C, Hussaini, MY, Quarteroni, A, Zang, TA: Spectral Methods in Fluid Dynamics. Springer, New York (1988)

Bhrawy, AH, Alofi, AS: A Jacobi-Gauss collocation method for solving nonlinear Lane-Emden type equations. Commun. Nonlinear Sci. Numer. Simul. 17, 62-70 (2012)

Funaro, D: Polynomial Approximation of Differential Equations. Lecture Notes in Physics. Springer, Berlin (1992)

Doha, EH, Bhrawy, AH, Ezz-Eldien, SS: Efficient Chebyshev spectral methods for solving multi-term fractional orders differential equations. Appl. Math. Model. 35, 5662-5672 (2011)

Bhrawy, AH, Alofi, AS, Ezz-Eldien, SS: A quadrature tau method for variable coefficients fractional differential equations. Appl. Math. Lett. 24, 2146-2152 (2011)

Saadatmandi, A, Dehghan, M: A new operational matrix for solving fractional-order differential equations. Comput. Math. Appl. 59, 1326-1336 (2010)

Doha, EH, Bhrawy, AH, Ezz-Eldien, SS: A Chebyshev spectral method based on operational matrix for initial and boundary value problems of fractional order. Comput. Math. Appl. 62, 2364-2373 (2011)

Ghoreishi, F, Yazdani, S: An extension of the spectral Tau method for numerical solution of multi-order fractional differential equations with convergence analysis. Comput. Math. Appl. 61, 30-43 (2011)

Vanani, SK, Aminataei, A: A Tau approximate solution of fractional partial differential equations. Comput. Math. Appl. 62, 1075-1083 (2011)

Esmaeili, S, Shamsi, M: A pseudo-spectral scheme for the approximate solution of a family of fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 16, 3646-3654 (2011)

Pedas, AA, Tamme, E: On the convergence of spline collocation methods for solving fractional differential equations. J. Comput. Appl. Math. 235, 3502-3514 (2011)

Bhrawy, AH, Al-Shomrani, MM: A shifted Legendre spectral method for fractional-order multi-point boundary value problems. Adv. Differ. Equ. 2012, 8 (2012)

Khalil, H, Khan, RA: The use of Jacobi polynomials in the numerical solution of coupled system of fractional differential equations. Int. J. Comput. Math. (2014). doi:10.1080/00207160.2014.945919

Shah, K, Ali, A, Khan, RA: Numerical solutions of fractional order system of Bagley-Torvik equation using operational matrices. Sindh Univ. Res. J. (Sci. Ser.) 47(4), 757-762 (2015)

Khalil, H, Khan, RA: A new method based on Legendre polynomials for solutions of the fractional two-dimensional heat conduction equation. Comput. Math. Appl. (2014). doi:10.1016/j.camwa.2014.03.008

Khalil, H, Khan, RA: New operational matrix of integration and coupled system of Fredholm integral equations. Chin. J. Math. 2014, Article ID 146013 (2014)

Khalil, H, Khan, RA: A new method based on Legendre polynomials for solution of system of fractional order partial differential equation. Int. J. Comput. Math. 91, 2554-2567 (2014). doi:10.1080/00207160.2014.880781

Khalil, H, Khan, RA, Al Smadi, MH, Freihat, A: Approximation of solution of time fractional order three-dimensional heat conduction problems with Jacobi polynomials. J. Math. 47(1), 35-56 (2015)

Khalil, H, Rashidi, MM, Khan, RA: Application of fractional order Legendre polynomials: a new procedure for solution of linear and nonlinear fractional differential equations under m-point nonlocal boundary conditions. Commun. Numer. Anal. 2016(2), 144-166 (2016)

Khalil, H, Khan, RA, Baleanu, D, Rashidi, MM: Some new operational matrices and its application to fractional order Poisson equations with integral type boundary constrains. Comput. Appl. Math. (2016). doi:10.1016/j.camwa.2016.04.014

Bhrawy, AH, Zaky, MA: A method based on the Jacobi tau approximation for solving multi-term time-space fractional partial differential equations. J. Comput. Phys. 281, 876-895 (2015)

Bhrawy, AH, Zaky, MA: Numerical simulation for two-dimensional variable-order fractional nonlinear cable equation. Nonlinear Dyn. 80(1-2), 101-116 (2015)

Bhrawy, AH, Zaky, MA: New numerical approximations for space-time fractional Burgers’ equations via a Legendre spectral-collocation method. Rom. Rep. Phys. 67(2), 340-349 (2015)

Zaky, MA, Bhrawy, AH, Van Gorder, RA: A space-time Legendre spectral tau method for the two-sided space-time Caputo fractional diffusion-wave equation. Numer. Algorithms 71(1), 151-180 (2016)

Bhrawy, AH, Zaky, MA: Shifted fractional-order Jacobi orthogonal functions: application to a system of fractional differential equations. Appl. Math. Model. 40(2), 832-845 (2016)

Rehman, M, Khan, RA: A numerical method for solving boundary value problems for fractional differential equations. Appl. Math. Model. 36, 894-907 (2012)

Rehman, M, Khan, RA: The Legendre wavelet method for solving fractional differential equations. Commun. Nonlinear Sci. Numer. Simul. 16, 4163-4173 (2011)

Liu, Y: Numerical solution of the heat equation with nonlocal boundary condition. J. Comput. Appl. Math. 110, 115-127 (1999)

Ang, W: A method of solution for the one-dimensional heat equation subject to nonlocal condition. Southeast Asian Bull. Math. 26, 185-191 (2002)

Dehghan, M: The one-dimensional heat equation subject to a boundary integral specification. Chaos Solitons Fractals 32, 661-675 (2007)

Noye, BJ, Hayman, KJ: Explicit two-level finite difference methods for the two-dimensional diffusion equation. Int. J. Comput. Math. 42, 223-236 (1992)

Avalishvili, G, Avalishvili, M, Gordeziani, D: On integral nonlocal boundary value problems for some partial differential equations. Bull. Georgian Natl. Acad. Sci. (N. S.) 5, 31-37 (2011)

Sajavicius, S: Optimization, conditioning and accuracy of radial basis function method for partial differential equations with nonlocal boundary conditions, a case of two-dimensional Poisson equation. Eng. Anal. Bound. Elem. 37, 788-804 (2013)

Yousefi, SA, Behroozifar, M: Operational matrices of Bernstein polynomials and their applications. Int. J. Syst. Sci. 41(6), 709-716 (2010)

Doha, EH, Bhrawy, AH, Saker, MA: On the derivatives of Bernstein polynomials: an application for the solution of high even-order differential equations. Bound. Value Probl. 2011, 829543 (2011)

Doha, EH, Bhrawy, AH, Saker, MA: Integrals of Bernstein polynomials: an application for the solution of high even-order differential equations. Appl. Math. Lett. 24(1), 559-565 (2011)

Juttler, B: The dual basis functions for the Bernstein polynomials. Adv. Comput. Math. 8(4), 345-352 (1998)

Farouki, RT: Legendre-Bernstein basis transformations. J. Comput. Appl. Math. 119(1), 145-160 (2000)

Hermann, T: On the stability of polynomial transformations between Taylor, Bernstein, and Hermite forms. Numer. Algorithms 13(2), 307-320 (1996)

Bellucci, MA: On the explicit representation of orthonormal Bernstein polynomials. http://arxiv.org/abs/1404.2293v2

Chen, Y, Sun, Y, Liu, L: Numerical solution of fractional partial differential equations with variable coefficients using generalized fractional-order Legendre functions. Appl. Math. Comput. 244, 847-858 (2014)

Knuth, DE: The Art of Computer Programming. Fundamental Algorithms, vol. 1. Addison-Wesley, Reading (1968)

Bellman, RE, Kalaba, RE: Quasilinearization and Non-linear Boundary Value Problems. Elsevier, New York (1965)

Lee, ES: Quasilinearization and Invariant Imbedding. Academic Press, New York (1968)

Agarwal, RP, Chow, YM: Iterative methods for a fourth order boundary value problem. J. Comput. Appl. Math. 10(2), 203-217 (1984)

Akyuz Dascioglu, A, Isler, N: Bernstein collocation method for solving nonlinear differential equations. Math. Comput. Appl. 18(3), 293-300 (2013)

Baird, CA Jr: Modified quasilinearization technique for the solution of boundary-value problems for ordinary differential equations. J. Optim. Theory Appl. 3(4), 227-242 (1969)

Mandelzweig, VB, Tabakin, F: Quasilinearization approach to nonlinear problems in physics with application to nonlinear ODEs. Comput. Phys. Commun. 141(2), 268-281 (2001)

Acknowledgements

The authors are thankful to the reviewers and the editor for their careful reading and useful suggestions, which improved the quality of the article.

Additional information

Competing interests

The authors declare that they have no competing interests.

Authors’ contributions

All authors contributed equally to the proofs and numerical simulations. All authors read and approved the final manuscript.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Khalil, H., Khan, R.A., Baleanu, D. et al. Approximate solution of linear and nonlinear fractional differential equations under m-point local and nonlocal boundary conditions. Adv Differ Equ 2016, 177 (2016). https://doi.org/10.1186/s13662-016-0910-7

DOI: https://doi.org/10.1186/s13662-016-0910-7