Abstract

In this paper, we present a class of new derivative-free gradient type methods for large-scale nonlinear systems of monotone equations. The methods combine the RMIL conjugate gradient method, the strategy of hyperplane projection and the derivative-free line search technique. Under some appropriate assumptions, the global convergence of the given methods is established. Numerical experiments indicate that the proposed methods are effective.

Similar content being viewed by others

1 Introduction

In this paper, we consider the following nonlinear systems of monotone equations:

where \(F:\mathbb{R}^{n} \rightarrow\mathbb{R}^{n}\) is monotone and continuous, which means F satisfies \((F(x) - F(y))^{T} (x - y) \geq0 \) for all \(x,y\in\mathbb{R}^{n}\). Nonlinear systems of monotone equations are widely used in economy, finance, engineering, industry and many other fields, so there are numerous iterative algorithms for solving (1).

Recently, La Cruz [3] presented a spectral method that uses the residual vector as search direction for solving large-scale systems of nonlinear monotone equations. Solodov and Svaiter [12] proposed a method which combined projection, proximal point and Newton method. According to the work of Solodov and Svaiter [12], Zhang and Zhou [17] developed a spectral gradient projection method for solving nonlinear monotone equations.

In particular, the conjugate gradient methods are widely used methods for solving large-scale nonlinear equations because of the low memory and simplicity [8, 10]. In the last few years, some authors proposed a series of methods for solving nonlinear monotone equations, which combined conjugate gradient methods and projection method [12]. For instance, Cheng [2] extended the PRP conjugate method to monotone equations. Yan et al. [13] proposed two modified HS conjugate method. Li and Li [7] designed a class of derivative-free methods based on line search technique. Ahookhosh et al. [1] developed two derivative-free conjugate gradient procedures. Papp and Rapajić [9] described some new FR type methods. Yuan et al. [14, 15] proposed new three-terms conjugate gradient methods. Dai et al. [4] gave a modified Perrys conjugate gradient method. Zhou et al. [20, 21] developed a class of methods. Zhang [16] developed a residual method-based secant condition.

For unconstrained optimization problem, Rivaie et al. [11] designed a RMIL conjugate gradient method. Fang and Ni [6] extended the RMIL method to solve large-scale nonlinear systems of equations with the ideas of nonmonotone line search. Numerical experiments show that the RMIL method is practically effective.

For systems of monotone equations, we describe a class of new derivative-free gradient type method, which is inspired by the efficiency of the RMIL method [11], and the strategy of projection method [12].

This paper is organized as follows. In Sect. 2, we propose the algorithm. In Sect. 3, we establish the global convergence. In Sect. 4, numerical results show the efficiency of the proposed methods. In Sect. 5, we give some conclusions. We denote by \(\|\cdot\|\) the Euclidean norm.

2 Algorithm

In this section, we first consider the conjugate gradient method for the following unconstrained optimization problem:

where \(f:\mathbb{R}^{n} \rightarrow\mathbb{R}\) is smooth.

Quite recently, Rivaie et al. [11] developed RMIL conjugate gradient method, and the search direction \(d_{k}\) is given by

where \(g_{k}\) is the gradient of f.

Numerical results show that RMIL conjugate gradient method is superior and more efficient than other conjugate gradient method.

We focus on the method for solving monotone equations (1). We have the projection procedure in [12], by performing some line search techniques to find a point

such that

On the other hand, for any \(x^{*}\) such that \(F(x^{*}) = 0\), by the monotonicity of F, we obtain

Equations (5) and (6) imply that the hyperplane

strictly separates the zeros of systems of monotone equations (1) from \(x_{k}\). Therefore, Solodov and Svaiter [12] could compute the next iterate \(x_{k+1}\) by projecting \(x_{k}\) onto the hyperplane \(H_{k}\). Specifically, \(x_{k+1}\) is obtained by

The steplength \(\alpha_{k}\) of (4) is determined by a proper line search technique. Recently, Zhang and Zhou[17], Zhou[18] presented the following derivative-free line search rule:

where \(\alpha_{k} = \max\{s,\rho s, {\rho}^{2} s ,\ldots\}\), \(d_{k}\) is the search direction, σ, s, ρ are constants, and \(\sigma>0\), \(s>0\), \(1 > \rho> 0 \).

Now, we extend RMIL conjugate gradient method [11] for solving nonlinear systems of monotone equations, which combined the projection method [12] and derivative-free line search technique [17, 18]. The steps of our algorithm are listed as follows.

Algorithm 1

(MRMIL)

Step 0: Choose an initial point \(x_{0}\in\mathbb{R}^{n}\). Let \(\delta> 0\), \(\sigma >0\), \(s >0\), \(\epsilon>0\), \(1 > \rho> 0\), \(k_{\mathrm{max}} > 0\). Set \(k = 0\).

Step 1: Choose the search direction \(d_{k}\) that satisfies the following sufficient descent condition:

and determine the initial steplength \(\alpha= s\).

Step 2: If

then set \(\alpha_{k} = \alpha\), \(z_{k} = x_{k} + \alpha_{k} d_{k}\) and go to step 3.

Else set \(\alpha_{k} = \rho\alpha_{k}\), and go to step 2.

Step 3: If \(\|F(z_{k})\| > \epsilon\), then compute

and go to step 4, otherwise stop.

Step 4: If \(\|F(x_{k+1})\| > \epsilon\) and \(k < k_{\mathrm{max}}\), then set \(k = k + 1\) and go to step 1, otherwise stop.

Let \(y_{k-1} = F_{k} - F_{k-1}\), \(\beta_{k} = \frac{F_{k}^{T} y_{k-1}}{\|d_{k-1}\| ^{2}}\), \(0 < \gamma< 1\). Now, based on the direction \(d_{k}\) of RMIL conjugate gradient algorithm for unconstrained optimization, we are going to construct three directions \(d_{k}\) that satisfy the sufficient descent condition(10).

MRMIL1 direction:

where \(\theta_{k} = \frac{(F_{k}^{T} y_{k-1})^{2}}{4\gamma\|F_{k}\|^{2} \|d_{k-1}\| ^{2}} + 1 \), \(0 < \gamma< 1\). We set \(u = \sqrt{2\gamma}\|d_{k-1}\|^{2} F_{k}\), \(v = \frac{1}{\sqrt {2\gamma}}(F_{k}^{T} y_{k-1}) d_{k-1}\), and use \(u^{T} v \leq\frac{1}{2}(\|u\|^{2} + \|v\|^{2})\), then, for \(k \in \mathbb{N}\), we have

The MRMIL1 method is the Algorithm1 with MRMIL1 direction which is defined by (13).

MRMIL2 direction:

where \(\theta_{k} = \frac{(F_{k}^{T} d_{k-1})^{2} \|y_{k-1}\|^{2}}{4\gamma\|F_{k}\| ^{2} \|d_{k-1}\|^{4}} + 1 \), \(0 < \gamma< 1\). We set \(u = \sqrt{2\gamma}\|d_{k-1}\|^{2} F_{k}\), \(v = \frac{1}{\sqrt {2\gamma}}(F_{k}^{T} d_{k-1}) y_{k-1}\), and use \(u^{T} v \leq\frac{1}{2}(\|u\|^{2} + \|v\|^{2})\), then, for \(k \in \mathbb{N}\), we have

The MRMIL2 method is Algorithm 1 with the MRMIL2 direction which is defined by (15).

MRMIL3 direction:

where \(\theta_{k} = \frac{F_{k}^{T} y_{k-1}}{4\gamma\|d_{k-1}\|^{2}} \), \(0 < \gamma< 1\). We set \(u = \sqrt{2\gamma}\|d_{k-1}\|^{2} F_{k}\), \(v = \frac{1}{\sqrt {2\gamma}}(F_{k}^{T} y_{k-1}) d_{k-1}\), and use \(u^{T} v \leq\frac{1}{2}(\|u\|^{2} + \|v\|^{2})\), then, for \(k \in \mathbb{N}\), we have

The MRMIL3 method is Algorithm 1 with the MRMIL3 direction which is defined by (17).

Using (13), (15) and (17), we get

From (14), (16), (18) and (19), it is not difficult to show that the directions \(d_{k}\) defined by the MRMIL1, MRMIL2 and MRMIL3 directions satisfy the sufficient descent condition

if we let \(\delta= 1 - \gamma\) and \(0< \gamma<1\).

3 Convergence analysis

In this section, so as to obtain the global convergence of MRMIL1, MRMIL2 and MRMIL3 method, we give the following assumptions.

Assumption 3.1

-

(1)

The solution set of the systems of monotone equations \(F(x)=0\) is nonempty.

-

(2)

\(F(x)\) is Lipschitz continuous on \(\mathbb{R}^{n}\), namely

$$ \bigl\Vert F(x) - F(y) \bigr\Vert \leq L \Vert x - y \Vert , \quad\forall x,y \in\mathbb{R}^{n}, $$(21)where L is a positive constant.

Assumption 3.1 implies that

where κ is a positive constant.

Now, we get Lemma 3.1 whose proof is similar to those in [12] and is omitted.

Lemma 3.1

Suppose Assumption3.1is satisfied and the sequence\(\{x_{k}\}\)is generated by the Algorithm1. For any\(x^{*}\)such that\(F({x^{*}}) = 0\), we have

In addition, the sequence\(\{ x_{k} \}\)satisfies

Lemma 3.2

Suppose Assumption3.1is satisfied and the sequences\(\{x_{k}, d_{k}\}\)are generated by the Algorithm1with the MRMIL1, MRMIL2 or MRMIL3 direction. Then we have

whereκ, γ, s, Lare constants, and\(\kappa>0\), \(1 > \gamma > 0\), \(s>0\), \(L>0\).

Proof

From (4) and the step 3 of Algorithm 1, we have

By step 1 and step 2 of Algorithm 1, we get

□

For \(k \in\mathbb{N}\), the boundedness of \(d_{k}\) can be divided into three cases.

Case 1 (MRMIL1 direction): The MRMIL1 direction is defined by (13). Using (21), (22), (25) and (26), we have

Case 2 (MRMIL2 direction): Analogously, the MRMIL2 direction is defined by (15). By (21), (22), (25) and (26), we get

Case 3 (MRMIL3 direction): The definition of MRMIL3 direction given by (17). (21), (22), (25) and (26) implies

From (13), (15), (17) and (22), we get

Combining with (27), (28) and (29), we find (24).

Lemma 3.3

Suppose Assumption3.1is satisfied and the sequence\(\{x_{k}, \alpha_{k}, d_{k}, F_{k}\}\)are generated by the Algorithm1with the MRMIL1, MRMIL2 or MRMIL3 direction. If there exists a constant\(\epsilon> 0\), such that\(\|F_{k}\| \geq \epsilon\)for all\(k \in \mathbb{N} \cup0\), then we have

Proof

If \(\alpha_{k} \neq s\), by the step 2 of Algorithm 1, we know that \(\rho^{-1}\alpha_{k}\) does not satisfy (11). Then we have

Combining with Assumption 3.1, (22) and (24), we have

It follows from (14), (16), (18), (21), (24), (32) and (33) that

Then we have

This implies (31). □

Theorem 3.4

Suppose Assumption3.1is satisfied and the sequences\(\{x_{k}, \alpha_{k}, d_{k}, F_{k} \}\)are generated by the Algorithm1with the MRMIL1, MRMIL2 or MRMIL3 direction. Then we have

In particular, the sequence\(\{ x_{k} \}\)converges to\(x^{*}\)and\(F(x^{*})=0\).

Proof

If (36) does not hold, then there exists a constant \(\epsilon> 0\) such that

From (14), (16) and (18). we get

Suppose x̃ is an arbitrary accumulation point of \(\{x_{k}\}\) and \(K_{1}\) is an infinite index set such that

From (23), (25) and (40), we get

On the other hand, together with the conclusion of Lemma 3.3 and (39), we obtain

Equations (41) and (42) are a contradiction, then the conclusion (36) is hold. From Assumption 3.1, Lemma 3.1 and (36), we see that the sequence \(\{x_{k}\}\) converges to some accumulation point \(x^{*}\) such that \(F(x^{*})=0\). □

4 Numerical experiments

In this section, we report some numerical test results for the MRMIL1 method, the MRMIL2 method, and the MRMIL3 method and compare with the DFPB1 method in [1] and the M3TFR2 method in [9]. Our tests are implemented in Matlab R2011a, run on a personal computer with 8 GB RAM and Intel CPU I5-3470.

In order to compare all methods, we employ the performance profiles [5], which are defined by the following fraction:

where P is the test set, \(|P|\) is the number of problems in the test set P, V is the set of optimization solvers, and \(t_{p,v}\) is the CPU time (or the number of the function evaluations, or the number of iterations) for \(p \in P\) and \(v \in V\).

We test the following problems with different starting points and various sizes: (1) the problems 1–7 from [2, 7, 13, 19] with sizes 1000, 5000, 10,000, 50,000; (2) the problem 8 from [7] with sizes 10,000, 20,164, 40,000;

All problems are initialized with the following eight starting points: \(x_{0}^{1}= 10\cdot(1,1,\ldots,1)^{T}\), \(x_{0}^{2}= -10\cdot(1,1,\ldots,1)^{T}\), \(x_{0}^{3}= (1,1,\ldots,1)^{T}\), \(x_{0}^{4}= -(1,1,\ldots,1)^{T}\), \(x_{0}^{5}= 0.1\cdot(1,1,\ldots,1)^{T}\), \(x_{0}^{6}= (1,\frac{1}{2},\ldots,\frac{1}{n})^{T}\), \(x_{0}^{7}= (\frac{1}{n},\frac{2}{n},\ldots,1)^{T}\), \(x_{0}^{8}= (\frac{n-1}{n},\frac{n-2}{n},\ldots,0)^{T}\).

For all methods, the stopping criteria are (1) \(\|F(x_{k})\|\leq\epsilon\) or (2) \(\|F(z_{k})\|\leq\epsilon\) or (3) the number of iterations exceeds \(k_{\mathrm{max}}\). where \(\epsilon= 10^{-4}\), \(k_{\mathrm{max}}=10^{5}\). Similar to [1, 9], we used the same parameters for five methods: initial steplength \(s=\| \frac{F_{k}^{t} d_{k}}{ (F(x_{k} + 10^{-8} d_{k}) - F_{k})^{T} d_{k} / 10^{-8}} \|\), \(\rho= 0.7\), \(\sigma= 0.3\), \(\gamma= \frac{1}{4}\).

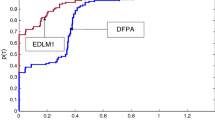

Figure 1 is for the iterations performance profiles related to five methods. As we can see, MRMIL3 guarantees better results than M3TFR2, DFPB1, MRMIL1 and MRMIL2 as it solves a higher percentage of problems when \(\tau\geq0.2\), It also can be seen that DFPB1, MRMIL1 and MRMIL2 have similar performances, especially when \(\tau\geq0.3\). Furthermore, M3TFR2 gives better results than DFPB1, MRMIL1 and MRMIL2, and the difference is significantly small as the performance ratio τ increases.

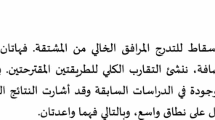

The number of function evaluations performance profiles are reported in Figure 2. we note that MRMIL3 performs better than the other four methods when \(\tau\geq0.2\). In addition, we also observe that MRMIL1 and MRMIL2 is more efficient than DFPB1 when \(\tau\geq0.3\), but more inefficient than M3TFR2.

Figure 3 shows the CPU time performance profiles. When \(\tau\leq0.15\), M3TFR2 and DFBP1 uses the shortest CPU time, but MRMIL3 gives the best result when \(\tau\geq0.15\).

5 Conclusion

In this paper, we give a class of new derivative-free gradient type methods for large-scale nonlinear systems of monotone equations. Under mild assumptions, we prove that the methods possess global convergence properties. Numerical experiments show that the proposed methods are promising, especially the MRMIL3 method, which is the most efficient one.

References

Ahookhosh, M., Amini, K., Bahrami, S.: Two derivative-free projection approaches for systems of large-scale nonlinear monotone equations. Numer. Algorithms 64(1), 21–42 (2013)

Cheng, W.: A PRP type method for systems of monotone equations. Math. Comput. Model. 50(1–2), 15–20 (2009)

Cruz, W.: A spectral algorithm for large-scale systems of nonlinear monotone equations. Numer. Algorithms 76(4), 1109–1130 (2017)

Dai, Z., Chen, X., Wen, F.: A modified Perrys conjugate gradient method-based derivative-free method for solving large-scale nonlinear monotone equations. Appl. Math. Comput. 270, 378–386 (2015)

Dolan, E., Moré, J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2001)

Fang, X., Ni, Q.: A new derivative-free conjugate gradient method for large-scale nonlinear systems of equations. Bull. Aust. Math. Soc. 95(3), 500–511 (2017)

Li, Q., Li, D.: A class of derivative-free methods for large-scale nonlinear monotone equations. IMA J. Numer. Anal. 31(4), 1625–1635 (2011)

Nocedal, J.: Conjugate gradient methods and nonlinear optimization. In: Linear and Nonlinear Conjugate Gradient-Related Methods, pp. 9–23. SIAM, Philadelphia (1996)

Papp, Z., Rapajić, S.: FR type methods for systems of large-scale nonlinear monotone equations. Appl. Math. Comput. 269, 816–823 (2015)

Polak, E.: Optimization: Algorithms and Consistent Approximations. Applied Mathematical Sciences, vol. 124. Springer, Berlin (2012)

Rivaie, M., Mamat, M., June, L., Mohd, I.: A new class of nonlinear conjugate gradient coefficients with global convergence properties. Appl. Math. Comput. 218(22), 11323–11332 (2012)

Solodov, M., Svaiter, B.F.: A globally convergent inexact Newton method for systems of monotone equations. In: Fukushima, M., Qim, L. (eds.) Reformulation: Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods, pp. 355–369. Kluwer Academic, Dordrecht (1998)

Yan, Q., Peng, X., Li, D.: A globally convergent derivative-free method for solving large-scale nonlinear monotone equations. J. Comput. Appl. Math. 234(3), 649–657 (2010)

Yuan, G., Hu, W.: A conjugate gradient algorithm for large-scale unconstrained optimization problems and nonlinear equations. J. Inequal. Appl. 2018, Article ID 113 (2018)

Yuan, G., Zhang, M.: A three-terms Polak–Ribiére–Polyak conjugate gradient algorithm for large-scale nonlinear equations. J. Comput. Appl. Math. 286, 186–195 (2015)

Zhang, L.: A derivative-free conjugate residual method using secant condition for general large-scale nonlinear equations. Numer. Algorithms (2019). https://doi.org/10.1007/s11075-019-00725-7

Zhang, L., Zhou, W.: Spectral gradient projection method for solving nonlinear monotone equations. J. Comput. Appl. Math. 196(2), 478–484 (2006)

Zhou, W.: Convergence properties of a quasi-Newton method and its applications. Ph.D. thesis, College of Mathematics and Econometrics, Hunan University, Changsha, China (2006)

Zhou, W., Li, D.: A globally convergent BFGS method for nonlinear monotone equations without any merit functions. Math. Comput. 77(624), 2231–2240 (2008)

Zhou, W., Li, D.: On the Q-linear convergence rate of a class of methods for monotone nonlinear equations. Pac. J. Optim. 14(4), 723–737 (2018)

Zhou, W., Wang, F.: A PRP-based residual method for large-scale monotone nonlinear equations. Appl. Math. Comput. 261, 1–7 (2015)

Acknowledgements

The author would like to express his sincere thanks to the editors and the referees for their valuable suggestions.

Availability of data and materials

Not applicable.

Funding

This research was funded by the National Natural Science Foundation of China (11871211) and the Natural Science Foundation of Huzhou University (2018XJKJ46).

Author information

Authors and Affiliations

Contributions

The author carried out the results, and read and approved the current version of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The author declares to have no competing interests.

Appendix: Test problems

Appendix: Test problems

We now list the following test problems.

- Problem 1.

([7], p. 1630) The function \(F(x) = (f_{1}(x), f_{2}(x), \ldots , f_{n}(x))^{T}\), where

$$ \begin{gathered} f_{1}(x) = 2 x_{1} + \sin(x_{1}) - 1, \\ f_{i}(x) = -2 x_{i-1} + 2 x_{i} + \sin(x_{i}) - 1, \quad2 \leq i \leq n-1, \\ f_{n}(x) = 2 x_{n} + \sin(x_{n}) - 1. \end{gathered} $$ - Problem 2.

([7], p. 1630) The function \(F(x) = (f_{1}(x), f_{2}(x), \ldots , f_{n}(x))^{T}\), where

$$ f_{i}(x) = 2 x_{i} - \sin(x_{i}), \quad1 \leq i \leq n. $$ - Problem 3.

([7], p. 1631) The function \(F(x) = (f_{1}(x), f_{2}(x), \ldots , f_{n}(x))^{T}\), where

$$ f_{i}(x) = 2 x_{i} - \sin \vert x_{i} \vert , \quad1 \leq i \leq n. $$ - Problem 4.

([13], p. 654) The function \(F(x) = (f_{1}(x), f_{2}(x), \ldots , f_{n}(x))^{T}\), where

$$ \begin{gathered} f_{1}(x) = \frac{1}{3} x_{1}^{3} + \frac{1}{2}x_{2}^{2}, \\ f_{i}(x) = -\frac{1}{2} x_{i}^{2} + \frac{i}{3}x_{i}^{3} + \frac {1}{2}x_{i+1}^{2}, \quad2 \leq i \leq n-1, \\ f_{n}(x) = -\frac{1}{2}x_{n}^{2} + \frac{n}{3}x_{n}^{3}. \end{gathered} $$ - Problem 5.

([13], p. 654) The function \(F(x) = (f_{1}(x), f_{2}(x), \ldots , f_{n}(x))^{T}\), where

$$ \begin{gathered} f_{1}(x) = x_{1} - \exp \biggl(\cos\biggl(\frac{x_{1} + x_{2}}{n+1}\biggr) \biggr), \\ f_{i}(x) = x_{i} - \exp \biggl(\cos\biggl( \frac{x_{i-1} + x_{i} + x_{i+1}}{n+1}\biggr) \biggr), \quad2 \leq i \leq n-1, \\ f_{n}(x) = x_{n} - \exp \biggl(\cos\biggl( \frac{x_{n-1} + x_{n}}{n+1}\biggr) \biggr). \end{gathered} $$ - Problem 6.

([19], p. 2236) The function \(F(x) = Ax + b(x) - e\), where \(b(x) = (e^{x_{1}}, e^{x_{2}},\ldots, e^{x_{n}})^{T} \) and \(e=(1,1,\ldots ,1)^{T}\) are the \(n\times1\) vectors,

$$ A = \begin{pmatrix} 2 & -1 & & & \\ -1 & 2 & -1 & & \\ & \ddots& \ddots& \ddots& \\ & & \ddots& \ddots& -1\\ & & & -1 & 2 \end{pmatrix} $$is the \(n\times n\) matrix.

- Problem 7.

([2], p. 19) The function \(F(x) = Ax - e\), where \(e=(1,1,\ldots,1)^{T}\) is the \(n\times1\) vector, and

$$ A = \begin{pmatrix} \frac{5}{2} & 1 & & & \\ 1 & \frac{5}{2} & 1 & & \\ & \ddots& \ddots& \ddots& \\ & & \ddots& \ddots& 1\\ & & & 1 & \frac{5}{2} \end{pmatrix} $$is the \(n\times n\) matrix.

- Problem 8.

([7], p. 1631) The function \(F(x) = Ax + h^{2} y - 10h^{2}e\), where \(h = \frac{1}{r+1}\), \(n=r^{2}\), \(x=(x_{1},x_{2},\ldots,x_{n})^{T}\), \(y=(x_{1}^{3},x_{2}^{3},\ldots,x_{n}^{3})^{T} \) and \(e=(1,1,\ldots,1)^{T}\) are the \(n\times1\) vectors. I is the \(m\times m\) identity matrix,

$$ B = \begin{pmatrix} 4 & -1 & & & \\ -1 & 4 & -1 & & \\ & \ddots& \ddots& \ddots& \\ & & \ddots& \ddots& -1\\ & & & -1 & 4 \end{pmatrix} $$is the \(r\times r\) matrix, and

$$ A = \begin{pmatrix} B & -I & & & \\ -I & B & -I & & \\ & \ddots& \ddots& \ddots& \\ & & \ddots& \ddots& -I\\ & & & -I & B \end{pmatrix} $$is the \(n\times n\) matrix.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fang, X. A class of new derivative-free gradient type methods for large-scale nonlinear systems of monotone equations. J Inequal Appl 2020, 93 (2020). https://doi.org/10.1186/s13660-020-02361-5

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-020-02361-5