Abstract

For large-scale unconstrained optimization problems and nonlinear equations, we propose a new three-term conjugate gradient algorithm under the Yuan–Wei–Lu line search technique. It combines the steepest descent method with the famous conjugate gradient algorithm, which utilizes both the relevant function trait and the current point feature. It possesses the following properties: (i) the search direction has a sufficient descent feature and a trust region trait, and (ii) the proposed algorithm globally converges. Numerical results prove that the proposed algorithm is perfect compared with other similar optimization algorithms.

Similar content being viewed by others

1 Introduction

It is well known that the model of small- and medium-scale smooth functions is simple since it has many optimization algorithms, such as Newton, quasi-Newton, and bundle algorithms. Note that three algorithms fail to effectively address large-scale optimization problems because they need to store and calculate relevant matrices, whereas the conjugate gradient algorithm is successful because of its simplicity and efficiency.

The optimization model is an important mathematic problem since it has been applied to various fields such as economics, engineering, and physics (see [1–12]). Fletcher and Reeves [13] successfully address large-scale unconstrained optimization problems on the basis of the conjugate gradient algorithm and obtained amazing achievements. The conjugate gradient algorithm is increasingly famous because of its simplicity and low requirement of calculation machine. In general, a good conjugate gradient algorithm optimization algorithm includes a good conjugate gradient direction and an inexact line search technique (see [14–18]). At present, the conjugate gradient algorithm is mostly applied to smooth optimization problems, and thus, in this paper, we propose a modified LS conjugate gradient algorithm to solve large-scale nonlinear equations and smooth problems. The common algorithms of addressing nonlinear equations include Newton and quasi-Newton methods (see [19–21]), gradient-based, CG methods (see [22–24]), trust region methods (see [25–27]), and derivative-free methods (see [28]), and all of them fail to address large-scale problems. The famous optimization algorithms of spectral gradient approach, limited-memory quasi-Newton method and conjugate gradient algorithm, are suitable to solve large-scale problems. Li and Li [29] proposed various algorithms on the basis of modified PRP conjugate gradient, which successfully solve large-scale nonlinear equations.

A famous mathematic model is given by

where \(f: \Re^{n}\rightarrow \Re \) and \(f\in C^{2}\). The relevant model is widely used in life and production. However, it is a complex mathematic model since it needs to meet various conditions in the field [30–33]. Experts and scholars have conducted numerous in-depth studies and have made some significant achievements (see [14, 34, 35]). It is well known that the steepest descent algorithm is perfect since it is simple and its computational and memory requirements are low. It is regrettable that the steepest descent method sometimes fails to solve problems due to the “sawtooth phenomenon”. To overcome this flaw, experts and scholars presented an efficient conjugate gradient method, which provides high performance with a simple form. In general, the mathematical formula for (1.1) is

where \(x_{k+1}\) is the next iteration point, \(\alpha_{k}\) is the step length, and \(d_{k}\) is the search direction. The famous weak Wolfe–Powell (WWP) line search technique is determined by

and

where \(\varphi \in (0, 1/2)\), \(\alpha_{k} > 0\), and \(\rho \in ( \varphi, 1)\). The direction \(d_{k+1}\) is often defined by the formula

where \(\beta_{k} \in \Re \). An increasing number of efficient conjugate gradient algorithms have been proposed by different expressions of \(\beta_{k}\) and \(d_{k}\) (see [13, 36–42] etc.). The well-known PRP algorithm is given by

where \(g_{k}\), \(g_{k+1}\), and \(f_{k}\) denote \(g(x_{k})\), \(g(x_{k+1})\), and \(f(x_{k})\), respectively; \(g_{k+1}=g(x_{k+1})=\nabla f(x_{k+1})\) is the gradient function at the point \(x_{k+1}\). It is well known that the PRP algorithm is efficient but has shortcomings, as it does not possess global convergence under the WWP line search technique. To solve this complex problem, Yuan, Wei, and Lu [43] developed the following creative formula (YWL) for the normal WWP line search technique and obtained many fruitful theories:

and

where \(\iota \in (0,\frac{1}{2})\), \(\alpha_{k} > 0\), \(\iota_{1} \in (0,\iota)\), and \(\tau \in (\iota,1)\). Further work can be found in [24]. Based on the innovation of YWL line search technique, Yuan pay much attention to normal Armijo line search technique and make further study. They proposed an efficient modified Armijo line search technique:

where \(\lambda, \gamma \in (0,1)\), \(\lambda_{1} \in (0,\lambda)\), and \(\alpha_{k}\) is the largest number of \(\{\gamma^{k}|k=0,1,2,\ldots \}\). In addition, experts and scholars pay much attention to the three-term conjugate gradient formula. Zhang et al. [44] proposed the famous formula

Nazareth [45] proposed the new formula

where \(y_{k}=g_{k+1}-g_{k}\) and \(s_{k}=x_{k+1}-x_{k}\). These two conjugate gradient methods have a sufficient descent property but fail to have the trust region feature. To improve these methods, Yuan et al. [46, 47] make a further study and get some good results. This inspires us to continue the study and extend the conjugate gradient methods to get better results. In this paper, motivated by in-depth discussions, we express a modified conjugate gradient algorithm, which has the following properties:

-

The search direction has a sufficient descent feature and a trust region trait.

-

Under mild assumptions, the proposed algorithm possesses the global convergence.

-

The new algorithm combines the steepest descent method with the conjugate gradient algorithm.

-

Numerical results prove that it is perfect compared to other similar algorithms.

The rest of the paper is organized as follows. The next section presents the necessary properties of the proposed algorithm. The global convergence is stated in Sect. 3. In Sect. 4, we report the corresponding numerical results. In Sect. 5, we introduce the large-scale nonlinear equations and express the new algorithm. Some necessary properties are listed in Sect. 6. The numerical results are reported in Sect. 7. Without loss of generality, \(f(x_{k})\) and \(f(x_{k+1})\) are replaced by \(f_{k}\) and \(f_{k+1}\), and \(\|\cdot \|\) is the Euclidean norm.

2 New modified conjugate gradient algorithm

Experts and scholars have conducted thorough research on the conjugate gradient algorithm and have obtained rich theoretical achievements. In light of the previous work by experts on the conjugate gradient algorithm, a sufficient descent feature is necessary for the global convergence. Thus, we express a new conjugate gradient algorithm under the YWL line search technique as follows:

where \(\delta =\max (\min (\eta_{5}|s_{k}^{T}y_{k}^{*}|,|d_{k}^{T}y _{k}^{*}|),\eta_{2}\|y_{k}^{*}\|\|d_{k}\|,\eta_{3}\|g_{k}\|^{2})+\eta _{4}*\|d_{k}\|^{2}\), \(y_{k}^{*}=g_{k+1}-\frac{\|g_{k+1}\|^{2}}{\|g _{k}\|^{2}}g_{k}\), and \(\eta_{i} >0\) (\(i=1, 2,3, 4, 5\)). The search direction is well defined, and its properties are stated in the next section. Now, we introduce a new conjugate gradient algorithm called Algorithm 2.1.

3 Important characteristics

This section lists some important properties of sufficient descent, the trust region, and the global convergence of Algorithm 2.1. It expresses the necessary proof.

Lemma 3.1

If search direction \(d_{k}\) meets condition of (2.1), then

and

Proof

It is obvious that formulas of (3.1) and (3.2) are true for \(k=0\).

Now consider the condition \(k \geq 1\). Similarly to (2.1), we have

and

Thus, the statement is proved. □

Similarly to (3.1) and (3.2), the algorithm has a sufficient descent feature and a trust region trait. To obtain the global convergence, we propose the following necessary assumptions.

Assumption 1

-

(i)

The level set of \(\pi =\{x|f(x) \leq f(x _{0})\}\) is bounded.

-

(ii)

The objective function \(f \in C^{2}\) is bounded from below, and its gradient function g is Lipschitz continuous, thats is, there exists a constant ζ such that

$$ \bigl\Vert g(x)-g(y)\bigr\Vert \leq \zeta \Vert x-y\Vert ,\quad x, y \in R^{n}. $$(3.3)The existence and necessity of the step length \(\alpha_{k}\) are established in [43]. In view of the discussion and established technique, the global convergence of the proposed algorithm is expressed as follows.

Theorem 3.1

If Assumptions (i)–(ii) are satisfied and the relative sequences of \(\{x_{k}\}\), \(\{d_{k}\}\), \(\{g_{k}\}\), and \(\{\alpha_{k}\}\) are generated by Algorithm 2.1, then

Proof

By (1.7), (3.1), and (3.3) we have

Summing these inequalities from \(k=0\) to ∞, under Assumption (ii), we obtain

This means that

Similarly to (1.8) and (3.1), we obtain

Thus, we obtain the following inequality:

where the last inequality is obtained since the gradient function is Lipschitz continuous. Then, we have

By (3.6) we arrive at the conclusion

as claimed. □

4 Numerical results

In this section, we list the numerical result in terms of the algorithm characteristics NI, NFG, and CPU, where NI is the total iteration number, NFG is the sum of the calculation frequency of the objective function and gradient function, and CPU is the calculation time in seconds.

4.1 Problems and test experiments

The tested problems listed in Table 1 stem from [48]. At the same time, we introduce two different algorithms into this section to measure the objective algorithm efficiency through the tested problems. We denote the two algorithms as Algorithm 2 and Algorithm 3. They are different from Algorithm 2.1 only at Step 5. One is determined by (1.10), and the other is computed by (1.11).

Stopping rule: If the inequality \(| f(x_{k})| > e_{1}\) is correct, let \(stop1=\frac{|f(x_{k})-f(x_{k+1})|}{| f(x_{k})|}\) or \(stop1=| f(x _{k})-f(x_{k+1})|\). The algorithm stops when one of the following conditions is satisfied: \(\|g(x)\|<\epsilon \), the iteration number is greater than 2000, or \(stop 1 < e_{2}\), where \(e_{1}=e_{2}=10^{-5}\) and \(\epsilon =10^{-6}\). In Table 1, “No” and “problem” represent the index of the the tested problems and the name of the problem, respectively.

Initiation: \(\iota =0.3\), \(\iota_{1}=0.1\), \(\tau =0.65\), \(\eta_{1}=0.65\), \(\eta_{2}=0.001\), \(\eta_{3}=0.001\), \(\eta_{4}=0.001\), \(\eta_{5}=0.1\).

Dimension: 1200, 3000, 6000, 9000.

Calculation environment: The calculation environment is a computer with 2 GB of memory, a Pentium(R) Dual-Core CPU E5800@3.20 GHz, and the 64-bit Windows 7 operation system.

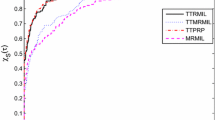

A list of the numerical results with the corresponding problem index is listed in Table 2. Then, based on the technique in [49], the plots of the corresponding figures are presented for the three discussed algorithms.

Other case: To save the paper space, we only list the data of dimension of 9000, and the remaining data are listed in the attachment.

4.2 Results and discussion

Obviously, the objective algorithm (Algorithm 2.1) is more effective than the other algorithms since the point value on the algorithm curve is largest among the three curves. In Fig. 1, the proposed algorithm curve is above the other curves. This means that the objective algorithm solves complex problems with fewer iterations, and Algorithm 3 is better than Algorithm 2. In Fig. 2, we obtain that the proposed algorithm has a large initial point, which means that it has high efficiency and its curve seems smoother than others. It is well known that the most important metric of an algorithm is the calculation time (CPU time), which is an essential aspect to measure the efficiency of an algorithm. Based on Fig. 3, the objective algorithm successfully fully utilizes its outstanding characteristics. Therefore, it saves time compared to the other algorithms in addressing complex problems.

5 Nonlinear equations

The model of nonlinear equations is given by

where the function of h is continuously differentiable and monotonous, and \(x \in R^{n}\), that is,

Scholars and writers paid much attention to this model since it significantly influences various fields such as physics and computer technology (see [1–3, 8–11]), and it has resulted in many fruitful theories and good techniques (see [47, 50–54]). By mathematical calculations we obtain that (5.1) is equivalent to the model

where \(F(x)=\frac{\|h(x)\|^{2}}{2}\), and \(\|\cdot \|\) is the Euclidean norm. Then, we pay much attention to the mathematical model (5.2) since (5.1) and (5.2) have the same solution. In general, the mathematical formula for (5.2) is \(x_{k+1}=x_{k}+\alpha_{k}d_{k}\). Now, we introduce the following famous line search technique into this paper [47, 55]:

where \(\alpha_{k}=\max \{s, s\rho, s\rho^{2}, \ldots\}\), \(s, \rho >0\), \(\rho \in (0,1)\), and \(\sigma >0\). Solodov [56] proposes a projection proximal point algorithm in a Hilbert space that finds the zeros of set-valued maximal monotone operators. Ceng and Yao [57–60] paid much attention to the research in Hilbert spaces and obtained successful achievements. Solodov and Svaiter [61] applied the projection technique to large-scale nonlinear equations and obtained some ideal achievements. For the projection-based technique, the famous formula

is flexible, where \(w_{k}=x_{k}+\alpha_{k}d_{k}\). The search direction is extremely important for the proposed algorithm since it largely determines the efficiency. Likewise, the algorithm contains the perfect line search technique. By the monotonicity of \(h(x)\) we obtain

where \(x^{*}\) is the solution of \(h(x^{*})=0\). We consider the hyperplane

It is obvious that the hyperplane separates the current iteration point of \(x_{k}\) from the zeros of the mathematical model (5.1). Then, we need to calculate the next iteration point \(x_{k+1}\) through projection of current point \(x_{k}\). Therefore, we give the following formula for the next point:

In [55], it is proved that formula (5.5) is effective since it not only obtains perfect numerical results but also has perfect theoretical characteristics. Thus, we introduce it here. The formula of the search direction \(d_{k+1}\) is given by

where \(\delta =\max (\min (\eta_{5}|s_{k}^{T}y_{k}^{*}|,|d_{k}^{T}y _{k}^{*}|),\eta_{2}\|y_{k}^{*}\|\|d_{k}\|,\eta_{3}\|g_{k}\|^{2})+\eta _{4}*\|d_{k}\|^{2}\), \(y_{k}^{*}=h_{k+1}-\frac{\|h_{k+1}\|^{2}}{\|h _{k}\|^{2}}h_{k}\), and \(\eta_{i} >0\) (\(i=1, 2,3\)). Now, we express the specific content of the proposed algorithm.

6 The global convergence of Algorithm 5.1

First, we make the following necessary assumptions.

Assumption 2

-

(i)

The objective model of (5.1) has a nonempty solution set.

-

(ii)

The function h is Lipschitz continuous on \(R^{n}\), which means that there is a positive constant L such that

$$ \bigl\Vert h(x)-h(y)\bigr\Vert \leq L\Vert x-y\Vert , \quad \forall x, y \in R^{n}. $$(6.1)

By Assumption 2(ii) it is obvious that

where θ is a positive constant. Then, the necessary properties of the search direction are the following (we omit the proof):

and

Now, we give some lemmas, which we utilize to obtain the global convergence of the proposed algorithm.

Lemma 6.1

If Assumption 2 holds, the relevant sequence \(\{x_{k}\}\) is produced by Algorithm 5.1, and the point \(x^{*}\) is the solution of the objective model (5.1). We obtain that the formula

is correct and the sequence \(\{x_{k}\}\) is bounded. Furthermore, either the last iteration point is the solution of the objective model and the sequence of \(\{x_{k}\}\) is bounded, or the sequence of \(\{x_{k}\}\) is infinite and satisfies the condition

This paper merely proposes, but omits, the relevant proof since it is similar to the proof in [61].

Lemma 6.2

Algorithm 5.1 generates an iteration point in a finite number of iteration steps, which satisfies the formula of \(x_{k+1}=x_{k}+\alpha _{k}d_{k}\) if Assumption 2 holds.

Proof

We denote \(\Psi = N \cup \{0\}\). We suppose that Algorithm 5.1 has terminated or the formula \(\|h_{k}\| \rightarrow 0\) is erroneous. This means that there exists a constant \(\varepsilon _{*}\) such that

We prove this conclusion by contradiction. Suppose that certain iteration indexes \(k^{*}\) fail to meet the condition (5.3) of the line search technique. Without loss of generality, we denote the corresponding step length as \(\alpha_{k^{*}}^{(l)}\), where \(\alpha _{k^{*}}^{(l)}=\rho^{l}s\). This means that

By (6.3) and Assumption 2(ii) we obtain

By (6.6) we obtain

It is obvious that this formula fails to meet the definition of the step length \(\alpha_{k^{*}}^{(l)}\). Thus, we conclude that the proposed line search technique is reasonable and necessary. In other words, the line search technique generates a positive constant \(\alpha_{k}\) in a finite frequency of backtracking repetitions. By the established conclusion we propose the following theorem on the global convergence of the proposed algorithm. □

Theorem 6.1

If Assumption 2 holds and the relevant sequences \(\{d_{k}, \alpha_{k}, x_{k+1},h_{k+1}\}\) are calculated using Algorithm 5.1, then

Proof

We prove this by contradiction. This means that there exist a constant \(\varepsilon_{0} > 0\) and an index \(k_{0}\) such that

On the one hand, by (6.2) and (6.4) we obtain

On the other hand, from (6.3) we have

These inequalities indicate that the sequence of \(\{d_{k}\}\) is bounded. This means that there exist an accumulation point \(d^{*}\) and the corresponding infinite set \(N_{1}\) such that

By Lemma 6.1 we obtain that the sequence of \(\{x_{k}\}\) is bounded. Thus, there exist an infinite index set \(N_{2} \subset N_{1}\) and an accumulation point \(x^{*}\) that meet the formula

By Lemmas 6.1 and 6.2 we obtain

Since \(\{d_{k}\}\) is bounded, we obtain

By the definition of \(\alpha_{k}\) we obtain the following inequality:

where \(\alpha_{k}^{*}=\alpha_{k}/\rho \). Now, we take the limit on both sides of (6.10) and (6.3) and obtain

and

The obtained contradiction completes the proof. □

7 The results of nonlinear equations

In this section, we list the relevant numerical results of nonlinear equations and present the objective function \(h(x)=(f_{1}(x), f_{2}(x), \ldots, f_{n}(x))\), where the relevant functions’ information is listed in Table 1.

7.1 Problems and test experiments

To measure the efficiency of the proposed algorithm, in this section, we compare this method with (1.10) (as Algorithm 6) using three characteristics “NI”, “NG”, and “CPU” and the remind that Algorithm 6 is identical to Algorithm 5.1. “NI” presents the number of iterations, “NG” is the calculation frequency of the function, and “CPU” is the time of the process in addressing the tested problems. In Table 1, “No” and “problem” express the indices and the names of the test problems.

Stopping rule: If \(\|g_{k}\| \leq \varepsilon \) or the whole iteration number is greater than 2000, the algorithm stops.

Initiation: \(\varepsilon =1e{-}5\), \(\sigma =0.8\), \(s=1\), \(\rho =0.9\), \(\eta_{1}=0.85\), \(\eta_{2}=\eta_{3}=0.001\), \(\eta_{4}= \eta_{5}=0.1\).

Dimension: 3000, 6000, 9000.

Calculation environment: The calculation environment is a computer with 2 GB of memory, a Pentium(R) Dual-Core CPU E5800@3.20 GHz, and the 64-bit Windows 7 operation system.

The numerical results with the corresponding problem index are listed in Table 4. Then, by the technique in [49], the plots of the corresponding figures are presented for two discussed algorithms.

7.2 Results and discussion

From the above figures, we safely arrive at the conclusion that the proposed algorithm is perfect compared to similar optimization methods since the algorithm (1.10) is perfect to a large extent. In Fig. 4 we see that the proposed algorithm quickly arrives at a value of 1.0, whereas the left one slowly approaches 1.0. This means that the objective method is successful and efficient for addressing complex problems in our life and work. It is well known that the calculation time is one of the most essential characteristics in an evaluation index of the efficiency of an algorithm. From Figs. 5 and 6, it is obvious that the two algorithms are good since their corresponding point values arrive at 1.0. This result expresses that the above two algorithms solve all of the tested problems and that the proposed algorithm is efficient.

8 Conclusion

This paper focuses on the three-term conjugate gradient algorithms and use them to solve the optimization problems and the nonlinear equations. The given method has some good properties.

-

(i)

The proposed three-term conjugate gradient formula possesses the sufficient descent property and the trust region feature without any conditions. The sufficient descent property can make the objective function value be descent, and then the iteration sequence \(\{x_{k}\}\) converges to the global limit point. Moreover, the trust region is good for the proof of the presented algorithm to be easily turned out.

-

(ii)

The given algorithm can be used for not only the normal unstrained optimization problems but also for the nonlinear equations. Both algorithms for these two problems have the global convergence under general conditions.

-

(iii)

Large-scale problems are done by the given problems, which shows that the new algorithms are very effective.

References

Birindelli, I., Leoni, F., Pacella, F.: Symmetry and spectral properties for viscosity solutions of fully nonlinear equations. J. Math. Pures Appl. 107(4), 409–428 (2017)

Ganji, D.D., Fakour, M., Vahabzadeh, A., et al.: Accuracy of VIM, HPM and ADM in solving nonlinear equations for the steady three-dimensional flow of a Walter’s B fluid in vertical channel. Walailak J. Sci. Technol. 11(7), 203–204 (2014)

Georgiades, F.: Nonlinear equations of motion of L-shaped beam structures. Eur. J. Mech. A, Solids 65, 91–122 (2017)

Dai, Z., Wen, F.: Some improved sparse and stable portfolio optimization problems. Finance Res. Lett. (2018). https://doi.org/10.1016/j.frl.2018.02.026

Dai, Z., Li, D., Wen, F.: Worse-case conditional value-at-risk for asymmetrically distributed asset scenarios returns. J. Comput. Anal. Appl. 20, 237–251 (2016)

Dong, X., Liu, H., He, Y.: A self-adjusting conjugate gradient method with sufficient descent condition and conjugacy condition. J. Optim. Theory Appl. 165(1), 225–241 (2015)

Dong, X., Liu, H., He, Y., Yang, X.: A modified Hestenes–Stiefel conjugate gradient method with sufficient descent condition and conjugacy condition. J. Comput. Appl. Math. 281, 239–249 (2015)

Liu, Y.: Approximate solutions of fractional nonlinear equations using homotopy perturbation transformation method. Abstr. Appl. Anal. 2012(2), 374 (2014)

Chen, P.: Christoph Schwab, sparse-grid, reduced-basis Bayesian inversion, nonaffine-parametric nonlinear equations. J. Comput. Phys. 316(C), 470–503 (2016)

Shah, F.A., Noor, M.A.: Some numerical methods for solving nonlinear equations by using decomposition technique. Appl. Math. Comput. 251(C), 378–386 (2015)

Waziri, M., Aisha, H.A., Mamat, M.: A structured Broyden’s-like method for solving systems of nonlinear equations. World Appl. Sci. J. 8(141), 7039–7046 (2014)

Wen, F., He, Z., Dai, Z., et al.: Characteristics of investors risk preference for stock markets. Econ. Comput. Econ. Cybern. Stud. Res. 48, 235–254 (2014)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7(2), 149–154 (1964)

Al-Baali, M., Narushima, Y., Yabe, H.: A family of three-term conjugate gradient methods with sufficient descent property for unconstrained optimization. Comput. Optim. Appl. 60(1), 89–110 (2015)

Egido, J.L., Lessing, J., Martin, V., et al.: On the solution of the Hartree–Fock–Bogoliubov equations by the conjugate gradient method. Nucl. Phys. A 594(1), 70–86 (2016)

Huang, C., Chen, C.: A boundary element-based inverse-problem in estimating transient boundary conditions with conjugate gradient method. Int. J. Numer. Methods Eng. 42(5), 943–965 (2015)

Huang, N., Ma, C.: The modified conjugate gradient methods for solving a class of generalized coupled Sylvester-transpose matrix equations. Comput. Math. Appl. 67(8), 1545–1558 (2014)

Mostafa, E.S.M.E.: A nonlinear conjugate gradient method for a special class of matrix optimization problems. J. Ind. Manag. Optim. 10(3), 883–903 (2014)

Albaali, M., Spedicato, E., Maggioni, F.: Broyden’s quasi-Newton methods for a nonlinear system of equations and unconstrained optimization, a review and open problems. Optim. Methods Softw. 29(5), 937–954 (2014)

Fang, X., Ni, Q., Zeng, M.: A modified quasi-Newton method for nonlinear equations. J. Comput. Appl. Math. 328, 44–58 (2018)

Luo, Y.Z., Tang, G.J., Zhou, L.N.: Hybrid approach for solving systems of nonlinear equations using chaos optimization and quasi-Newton method. Appl. Soft Comput. 8(2), 1068–1073 (2008)

Tarzanagh, D.A., Nazari, P., Peyghami, M.R.: A nonmonotone PRP conjugate gradient method for solving square and under-determined systems of equations. Comput. Math. Appl. 73(2), 339–354 (2017)

Wan, Z., Hu, C., Yang, Z.: A spectral PRP conjugate gradient methods for nonconvex optimization problem based on modified line search. Discrete Contin. Dyn. Syst., Ser. B 16(4), 1157–1169 (2017)

Yuan, G., Sheng, Z., Wang, B., et al.: The global convergence of a modified BFGS method for nonconvex functions. J. Comput. Appl. Math. 327, 274–294 (2018)

Amini, K., Shiker, M.A.K., Kimiaei, M.: A line search trust-region algorithm with nonmonotone adaptive radius for a system of nonlinear equations. 4OR 14, 133–152 (2016)

Qi, L., Tong, X.J., Li, D.H.: Active-set projected trust-region algorithm for box-constrained nonsmooth equations. J. Optim. Theory Appl. 120(3), 601–625 (2004)

Yang, Z., Sun, W., Qi, L.: Global convergence of a filter-trust-region algorithm for solving nonsmooth equations. Int. J. Comput. Math. 87(4), 788–796 (2010)

Yu, G.: A derivative-free method for solving large-scale nonlinear systems of equations. J. Ind. Manag. Optim. 6(1), 149–160 (2017)

Li, Q., Li, D.H.: A class of derivative-free methods for large-scale nonlinear monotone equations. IMA J. Numer. Anal. 31(4), 1625–1635 (2011)

Sheng, Z., Yuan, G., Cui, Z.: A new adaptive trust region algorithm for optimization problems. Acta Math. Sci. 38B(2), 479–496 (2018)

Sheng, Z., Yuan, G., Cui, Z., et al.: An adaptive trust region algorithm for large-residual nonsmooth least squares problems. J. Ind. Manag. Optim. 34, 707–718 (2018)

Yuan, G., Sheng, Z., Liu, W.: The modified HZ conjugate gradient algorithm for large-scale nonsmooth optimization. PLoS ONE 11, 1–15 (2016)

Yuan, G., Sheng, Z.: Nonsmooth Optimization Algorithms. Press of Science, Beijing (2017)

Narushima, Y., Yabe, H., Ford, J.A.: A three-term conjugate gradient method with sufficient descent property for unconstrained optimization. SIAM J. Optim. 21(1), 212–230 (2016)

Zhou, W.: Some descent three-term conjugate gradient methods and their global convergence. Optim. Methods Softw. 22(4), 697–711 (2007)

Cardenas, S.: Efficient generalized conjugate gradient algorithms. I. Theory. J. Optim. Theory Appl. 69(1), 129–137 (1991)

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Hestenes, M.R., Steifel, E.: Cassettari, D., et al.: Method of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49(6), 409–436 (1952)

Wei, Z., Yao, S., Liu, L.: The convergence properties of some new conjugate gradient methods. Appl. Math. Comput. 183(2), 1341–1350 (2006)

Yuan, G., Lu, X.: A modified PRP conjugate gradient method. Ann. Oper. Res. 166(1), 73–90 (2009)

Yuan, G., Lu, X., Wei, Z.: A conjugate gradient method with descent direction for unconstrained optimization. J. Comput. Appl. Math. 233(2), 519–530 (2009)

Yuan, G., Meng, Z., Li, Y.: A modified Hestenes and Stiefel conjugate gradient algorithm for large-scale nonsmooth minimizations and nonlinear equations. J. Optim. Theory Appl. 168(1), 129–152 (2016)

Yuan, G., Wei, Z., Lu, X.: Global convergence of BFGS and PRP methods under a modified weak Wolfe–Powell line search. Appl. Math. Model. 47, 811–825 (2017)

Zhang, L., Zhou, W., Li, D.H.: A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26(4), 629–640 (2006)

Nazareth, L.: A conjugate direction algorithm without line searches. J. Optim. Theory Appl. 23(3), 373–387 (1977)

Yuan, G., Wei, Z., Li, G.: A modified Polak–Ribière–Polyak conjugate gradient algorithm for nonsmooth convex programs. J. Comput. Appl. Math. 255, 86–96 (2014)

Yuan, G., Zhang, M.: A three-terms Polak–Ribière–Polyak conjugate gradient algorithm for large-scale nonlinear equations. J. Comput. Appl. Math. 286, 186–195 (2015)

Andrei, N.: An unconstrained optimization test functions collection. Environ. Sci. Technol. 10(1), 6552–6558 (2008)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91(2), 201–213 (2001)

Ahmad, F., Tohidi, E., Ullah, M.Z., et al.: Higher order multi-step Jarratt-like method for solving systems of nonlinear equations, application to PDEs and ODEs. Comput. Math. Appl. 70(4), 624–636 (2015)

Kang, S.M., Nazeer, W., Tanveer, M., et al.: Improvements in Newton–Raphson method for nonlinear equations using modified Adomian decomposition method. Int. J. Math. Anal. 9(39), 1910–1928 (2015)

Matinfar, M., Aminzadeh, M.: Three-step iterative methods with eighth-order convergence for solving nonlinear equations. J. Comput. Appl. Math. 225(1), 105–112 (2016)

Papp, Z., Rapajić, S.: FR type methods for systems of large-scale nonlinear monotone equations. Appl. Math. Comput. 269(C), 816–823 (2015)

Yuan, G., Wei, Z., Lu, X.: A new backtracking inexact BFGS method for symmetric nonlinear equations. Comput. Math. Appl. 55(1), 116–129 (2008)

Li, Q., Li, D.H.: A class of derivative-free methods for large-scale nonlinear monotone equations. IMA J. Numer. Anal. 31(4), 1625–1635 (2011)

Solodov, M.V., Svaiter, B.F.: A hybrid projection-proximal point algorithm. J. Convex Anal. 6, 59–70 (1999)

Ceng, L.C., Wen, C.F., Yao, Y.: Relaxed extragradient-like methods for systems of generalized equilibria with constraints of mixed equilibria, minimization and fixed point problems. J. Nonlinear Var. Anal. 1, 367–390 (2017)

Cho, S.Y.: Generalized mixed equilibrium and fixed point problems in a Banach space. J. Nonlinear Sci. Appl. 9, 1083–1092 (2016)

Cho, S.Y.: Strong convergence analysis of a hybrid algorithm for nonlinear operators in a Banach space. J. Appl. Anal. Comput. 8, 19–31 (2018)

Liu, Y.: A modified hybrid method for solving variational inequality problems in Banach spaces. J. Nonlinear Funct. Anal. 2017, Article ID 31 (2017)

Solodov, M.V., Svaiter, B.F.: A Globally Convergent Inexact Newton Method for Systems of Monotone Equations, Reformulation, Nonsmooth, Piecewise Smooth, Semismooth and Smoothing Methods, pp. 1411–1414. Springer, Berlin (1998)

Acknowledgements

The authors would like to thank the editor and the referees for their interesting comments, which greatly improved our paper. This work is supported by the National Natural Science Foundation of China (Grant No. 11661009), the Guangxi Science Fund for Distinguished Young Scholars (No. 2015GXNSFGA139001), and the Guangxi Natural Science Key Fund (No. 2017GXNSFDA198046). Innovation Project of Guangxi Graduate Education (No. YCSW2018046)

Author information

Authors and Affiliations

Contributions

The work of Dr. GY is organizing and checking this paper, and Dr. WH mainly has done the experiments of the algorithms and written the codes. All authors read and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare to have no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Yuan, G., Hu, W. A conjugate gradient algorithm for large-scale unconstrained optimization problems and nonlinear equations. J Inequal Appl 2018, 113 (2018). https://doi.org/10.1186/s13660-018-1703-1

Received:

Accepted:

Published:

DOI: https://doi.org/10.1186/s13660-018-1703-1