Abstract

Background

Theory and correlational research indicate organizational leadership and climate are important for successful implementation of evidence-based practices (EBPs) in healthcare settings; however, experimental evidence is lacking. We addressed this gap using data from the WISDOM (Working to Implement and Sustain Digital Outcome Measures) hybrid type III effectiveness-implementation trial. Primary outcomes from WISDOM indicated the Leadership and Organizational Change for Implementation (LOCI) strategy improved fidelity to measurement-based care (MBC) in youth mental health services. In this study, we tested LOCI’s hypothesized mechanisms of change, namely: (1) LOCI will improve implementation and transformational leadership, which in turn will (2) mediate LOCI’s effect on implementation climate, which in turn will (3) mediate LOCI’s effect on MBC fidelity.

Methods

Twenty-one outpatient mental health clinics serving youth were randomly assigned to LOCI plus MBC training and technical assistance or MBC training and technical assistance only. Clinicians rated their leaders’ implementation leadership, transformational leadership, and clinic implementation climate for MBC at five time points (baseline, 4-, 8-, 12-, and 18-months post-baseline). MBC fidelity was assessed using electronic metadata for youth outpatients who initiated treatment in the 12 months following MBC training. Hypotheses were tested using longitudinal mixed-effects models and multilevel mediation analyses.

Results

LOCI significantly improved implementation leadership and implementation climate from baseline to each follow-up at 4, 8, 12, and 18 months post-baseline (all ps < .01), producing large effects (range of ds = 0.76 to 1.34). LOCI’s effects on transformational leadership were small at 4 months (d = 0.31, p = .019) and nonsignificant thereafter (ps > .05). LOCI’s improvement of clinic implementation climate from baseline to 12 months was mediated by improvement in implementation leadership from baseline to 4 months (proportion mediated [pm] = 0.82, p = .004). Transformational leadership did not mediate LOCI’s effect on implementation climate (p = .136). Improvement in clinic implementation climate from baseline to 12 months mediated LOCI’s effect on MBC fidelity during the same period (pm = 0.71, p = .045).

Conclusions

LOCI improved MBC fidelity in youth mental health services by improving clinic implementation climate, which was itself improved by increased implementation leadership. Fidelity to EBPs in healthcare settings can be improved by developing organizational leaders and strong implementation climates.

Trial registration

ClinicalTrials.gov identifier: NCT04096274. Registered September 18, 2019.


Background

Many implementation theories and frameworks in healthcare assert the importance of organizational leadership and organizational implementation climate for achieving high fidelity to newly implemented clinical interventions [1,2,3,4,5,6]; however, the evidentiary basis for these claims is thin. The usual standard for making causal claims in the medical and social sciences is demonstration of effect within a randomized controlled trial [7]; yet, recent reviews indicate no trials have tested whether experimentally induced change in organizational leadership or organizational implementation climate contributes to improved implementation of clinical interventions in healthcare [8, 9]. In the present study, we address this gap using data from the WISDOM (Working to Implement and Sustain Digital Outcome Measures) hybrid type III effectiveness-implementation trial. The WISDOM trial showed that a strategy called Leadership and Organizational Change for Implementation (LOCI) [10, 11], which targets organizational leadership and organizational implementation climate, improved fidelity to measurement-based care (MBC) in outpatient mental health clinics serving youth [12]. In this paper, we tested LOCI’s hypothesized mechanisms of change. Specifically, we tested whether improvement in clinic leadership contributed to improvement in clinic implementation climate and whether improved implementation climate in turn contributed to improved MBC fidelity.

Measurement-based care in youth mental health

Measurement-based care is an evidence-based practice (EBP) that involves the collection of standardized symptom rating scales from patients prior to each treatment session and use of the results to guide treatment decisions [13]. Meta-analyses of over 30 randomized controlled trials indicate feedback from MBC improves the outcomes of mental health treatment relative to services as usual across patient ages, diagnoses, and intervention modalities [14,15,16,17]. There is also evidence MBC improves mental health medication adherence [18], reduces risk of treatment dropout [14], and is particularly effective for youths and for patients who are most at risk for treatment failure [14,15,16, 18].

Unfortunately, MBC is rarely used in practice. Only 14% of clinicians who deliver mental health services to youth in the USA use any form of MBC [19], and MBC usage rates are similarly low in other countries [20, 21]. When MBC use is mandated, less than half of clinicians view feedback and use it to guide treatment [22,23,24]. Digital MBC systems (i.e., measurement feedback systems) remove many practical barriers to MBC implementation by collecting measures from patients electronically (e.g., via tablet or phone) and instantaneously generating feedback [25]. However, even when these systems are available, clinician fidelity to MBC—defined as administering measures, viewing feedback reports, and using the information to guide treatment—is often substandard [22, 26]. Qualitative and quantitative studies of MBC implementation indicate clinicians’ work environments explain much of the variation in their attitudes toward, and use of, MBC [19, 27, 28], with organizational leadership and supportive organizational culture or organizational implementation climate identified as key determinants [13, 28,29,30].

Mechanisms of the LOCI strategy

LOCI is a multicomponent organizational implementation strategy that engages organizational executives and first-level leaders (i.e., those who administratively supervise clinicians) to build an organizational climate to support the implementation of a focal EBP with fidelity [10, 31]. It includes two overarching components: (1) monthly organizational strategy meetings between executives and LOCI consultants/trainers to develop and embed policies, procedures, and practices that support implementation of a focal EBP and (2) training and coaching for first-level leaders, to develop their skills in leading implementation. Organizational survey data and feedback guide planning, goal specification, and progress monitoring for both components. The aim of these components is to develop an organizational implementation climate [32, 33] in which clinicians perceive that use of a specific EBP with high fidelity is expected, supported, and rewarded [34].

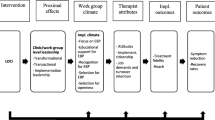

Figure 1 shows LOCI’s theoretical model as applied to MBC in the present study. This model forms the basis for the study hypotheses. The LOCI strategy draws on two leadership theories (full-range leadership [35, 36] and implementation leadership [37]) and on theories of organizational implementation climate [33, 34] to explain variation in implementation success and to identify targets for implementation improvement. As shown in Fig. 1, LOCI seeks to equip first-level leaders (e.g., clinical program managers) with two types of leadership behaviors believed to influence implementation success. Transformational leadership, drawn from the full-range leadership model, is a general type of leadership that reflects a leader’s ability to inspire and motivate employees to follow an ideal or course of action [38, 39]. Implementation leadership is a focused type of leadership that refers to leader behaviors that facilitate the organization’s specific strategic objective of successfully implementing a focal EBP, such as MBC [37, 40,41,42,43,44]. As shown in the figure, LOCI aims to increase first-level leaders’ use of these leadership behaviors in order to support the development of an implementation climate that prompts and supports clinicians’ use of the focal EBP with high fidelity.

Study theoretical model. Note: LOCI, Leadership and Organizational Change for Implementation strategy; MBC, measurement-based care. First-level leaders are those who administratively supervise clinicians (e.g., clinical managers). Random assignment of clinics to LOCI (vs. training and technical assistance only) is expected to cause improvement in clinic-level implementation leadership, transformational leadership, and implementation climate for digital MBC (Aim 1). Improvement in implementation leadership and transformational leadership is expected to mediate LOCI’s effect on improved clinic implementation climate (Aim 2). Improvement in clinic implementation climate is expected to mediate LOCI’s effect on improved fidelity to digital MBC as experienced by youth (Aim 3). In this study, the clinic level is synonymous with the organization level; however, this is not always the case in applications of LOCI. The LOCI strategy can be applied to organizations with multiple levels, resulting in theoretical models that describe how LOCI intervenes at multiple organizational levels to influence climate.

Correlational studies offer preliminary support for the relationships shown in Fig. 1. In a 5-year study of 30 outpatient mental health clinics serving youth, Williams et al. [45] showed that increases in implementation leadership at the clinic level were associated with increases in clinic EBP implementation climate, which subsequently predicted increases in clinicians’ self-reported use of evidence-based psychotherapy techniques. Other studies have shown that higher levels of organizational implementation climate predict higher observed fidelity to evidence-based mental health interventions in outpatient clinics and schools [46, 47]. In the only other fully powered trial of LOCI published to date [48], researchers studying mental health care in Norway showed that LOCI improved first-level leaders’ implementation leadership, transformational leadership, and clinic implementation climate for trauma-focused EBPs. However, no studies have tested the two key linkages in LOCI’s hypothesized theory of change, namely: (1) that improvement in clinic implementation leadership and transformational leadership contributes to subsequently improved clinic implementation climate, and (2) that improvement in clinic implementation climate explains LOCI’s effect on EBP fidelity.

Study contributions

This study makes three contributions to the literature. In Aim 1, we test LOCI’s effects on growth in first-level leaders’ use of implementation leadership (Hypothesis 1) and transformational leadership (Hypothesis 2), and on clinic implementation climate for MBC (Hypothesis 3), from baseline to 18 months post-baseline (i.e., 6 months after completion of LOCI). This aim seeks to replicate findings from an earlier trial [48] in which LOCI improved these outcomes in a different treatment setting (mental health clinics within Norwegian health trusts), patient population (adults), and set of EBPs (trauma-focused assessment and psychotherapies). In Aim 2, we test the hypotheses that experimentally induced improvement in first-level leaders’ implementation leadership (Hypothesis 4) and transformational leadership (Hypothesis 5) at T2 (4 months after baseline) will mediate LOCI’s effects on clinic implementation climate at T4 (12 months after baseline). In Aim 3, we test LOCI’s focal mechanism, namely: that improvement in clinic implementation climate from pre- to post-LOCI (i.e., T1 to T4) will mediate LOCI’s effect on MBC fidelity during the same period (Hypothesis 6). We believe this is the first study to test whether experimentally induced improvement in clinic leadership contributes to improved implementation climate and whether improvement in implementation climate improves observed fidelity to an EBP.

Method

Study design and procedure

Project WISDOM was a cluster randomized, controlled, hybrid type III effectiveness-implementation trial designed to test the effects of LOCI versus training and technical assistance only on MBC fidelity in outpatient mental health clinics serving youth. Details of the trial and primary implementation and clinical outcomes are reported elsewhere [12]. The trial enrolled 21 clinics serving youth in Idaho, Oregon, and Nevada, USA. Clinics were eligible if they were not actively implementing a digital MBC system and if they employed three or more clinicians delivering psychotherapy to youth (ages 4–18 years). Using covariate constrained randomization, clinics were randomly assigned to one of two parallel arms: (1) LOCI plus training and technical assistance in MBC or (2) training and technical assistance in MBC only. Clinic-level randomization aligned with the scope of the LOCI strategy and prevented contamination of outcomes at the clinician and patient levels. Clinic leaders could not be naïve to condition; however, clinicians and caregivers of youth were naïve to condition.

Following baseline assessments and randomization of clinics, executives and first-level leaders in the LOCI condition began participating in the LOCI implementation strategy. One month later, clinicians who worked with youths in both conditions received training to implement an evidence-based digital MBC system called the Outcome Questionnaire-Analyst (OQ-A; see below for details; [49, 50]). Following the initial OQ-A training, clinics in both conditions received two booster trainings and ongoing OQ-A technical assistance from the OQ-A purveyor organization until the trial’s conclusion.

To assess LOCI’s effects on its targeted mechanisms of change, clinicians who served youth in participating clinics were asked to complete web-based assessments evaluating their clinic’s leadership and clinic implementation climate for MBC at five time points: baseline (T1; following randomization of clinics but prior to initiation of LOCI or OQ-A training), 4 months post-baseline (T2), 8 months post-baseline (T3), 12 months post-baseline (T4; coinciding with the conclusion of LOCI), and 18 months post-baseline (T5; 6 months after LOCI concluded). Surveys were administered from October 2019 to May 2021. Clinic leaders provided the research team with rosters and emails of all youth-serving clinicians at each time point. Confidential survey links were distributed by the research team directly to clinicians via email. Clinicians received a small financial incentive for completion of each assessment (i.e., gift card to a national retailer) based on an escalating structure (US $30, US $30, US $45, US $50, US $55).

The primary implementation outcome of MBC fidelity was assessed for new youth outpatients who initiated treatment in the 12 months following clinician training in the MBC system. Upon intake to services, parents/caregivers of new youth patients were presented with study information requesting their consent for contact by the research team. Caregivers who agreed were contacted by research staff via telephone to complete screening, informed consent, and baseline measures (if eligible). After study entry, caregivers completed assessments reporting on the youth’s treatment participation (i.e., number of sessions) and symptoms monthly for 6 months following the youth’s baseline. Assessments were completed regardless of the youth’s continued participation in treatment, unless the caregiver formally withdrew (n = 7). Caregivers received a US $15 gift card to a national retailer for completion of each assessment. Enrollment and collection of follow-up data for youth occurred from January 2020 to July 2021. The CONSORT and StaRI guidelines were used to report the results of this mediation analysis within the larger trial [51, 52].

Participants

All licensed clinicians who worked with youth in participating clinics at each time point were eligible to participate in web-based surveys of clinic leadership and climate. This broad inclusion criterion ensured a full picture of clinic leadership and climate at each time point.

Inclusion criteria for youth were intentionally broad to reflect the trial’s pragmatic nature and the applicability of MBC to a wide range of mental health diagnoses. Eligible youth were new patients (i.e., no psychotherapy at the clinic in the prior 12 months), ages 4 to 17 years, who had been diagnosed by clinic staff with an Axis I DSM disorder deemed appropriate for outpatient treatment at the clinic; it was not required that youths be assigned to clinicians who completed surveys. Youths were excluded if they initiated treatment more than 7 days before the informed consent interview. Electronic informed consent was obtained from all participants. The Boise State University Institutional Review Board provided oversight for the trial (protocol no. 041‐SB19‐081) which was prospectively registered at ClinicalTrials.gov (identifier: NCT04096274).

Clinical intervention: digital measurement-based care

The OQ-A is a digital MBC system shown to improve the effectiveness of mental health services in over a dozen clinical trials across four countries [49, 53]. OQ-A measures are sensitive to change upon weekly administration and designed to detect treatment progress regardless of treatment protocol, patient diagnosis, or clinician discipline [53]. In this study, clinicians had access to parent- and youth-report forms of the Youth Outcomes Questionnaire 30.2 [54, 55] and the Treatment Support Measure [56, 57]. Measures were completed by caregivers and/or youth electronically (via tablet or phone). Administration typically took 3–5 min. Measures were automatically scored by the OQ-A system, and feedback reports were generated within seconds. Feedback included a graph of change in the youth’s symptoms, critical items (e.g., feelings of aggression), and a color-coded alert, generated by an empirical algorithm, indicating whether the youth was making expected progress or was at risk of negative treatment outcome.

Clinicians were instructed to administer a youth symptom measure to the caregiver and/or youth at each session, review the feedback within 7 days of the session, and use the feedback to guide clinical decision-making. Clinicians were encouraged to discuss feedback with the caregiver and/or youth when they believed it was clinically appropriate and to administer a Treatment Support Measure if a youth was identified as high risk for negative outcome. Consistent with prior MBC trials, clinicians were not given specific guidance on how to respond to feedback; instead, they were advised to use their clinical skills in partnership with patients and clinical supervisors.

Implementation strategies

OQ-A training and technical assistance

The initial 6-h OQ-A training provided to clinicians in both conditions was conducted in person by the OQ-A purveyor organization. Training focused on the conceptual and psychometric foundations of the measures, the value of clinical feedback, clinical application of measures and feedback with youth and families, and technical usage of the system. Learning activities included didactics, in vivo modeling and behavioral rehearsal, exercises with sample feedback reports, and use of the system in “playground mode.” Two live, virtual, 1-h booster trainings were offered to clinicians 3 and 5 months after the initial training. Professional continuing education hours were offered at no cost for all trainings to encourage participation. After the initial training, all clinics in both conditions received year-round technical assistance from the OQ-A purveyor organization. This included on-demand virtual training sessions, an online library of training videos, and a customer care representative to troubleshoot technical issues.

Leadership and Organizational Change for Implementation (LOCI)

Details of the LOCI implementation strategy are available elsewhere [11, 12]. Briefly, LOCI was implemented in quarterly cycles over 12 months. During each cycle, (1) executives and first-level leaders within LOCI clinics attended monthly organizational strategy meetings to review data and to develop clinic-wide policies, procedures, and practices to support OQ-A implementation, and (2) first-level leaders attended leadership development trainings (5 days total) and participated in brief (~ 15 min) weekly coaching calls, designed to enhance their leadership skills. Once per month, individual coaching calls were replaced by group coaching calls with all other first-level leaders in the LOCI condition.

To support enrollment in the study, clinic leaders in the training and technical assistance only condition were offered access to four professionally produced, web-based general leadership seminars (1 h each). Seminars covered general leadership topics such as giving effective feedback and leading change. The seminars were made available immediately after the OQ-A training.

Measures

MBC fidelity

The primary outcome of MBC fidelity was measured at the youth level using an empirically validated MBC fidelity index [22, 58, 59]. The index was generated using electronic metadata from the OQ-A system combined with monthly caregiver reports of the number of sessions youths attended. Following prior research [22], scores were calculated as the product of two quantities: (a) the youth’s completion rate (i.e., number of measures administered relative to the number of sessions attended within the 6-month observation period) and (b) the youth’s viewing rate (i.e., the number of feedback reports viewed by the clinician relative to the number of measures administered). Note that this product is equivalent to the ratio of viewed feedback reports to total sessions; it represents an events/trials proportion. MBC fidelity index scores summarize the level of MBC fidelity experienced by each youth (range = 0–1) and have been shown to predict clinical improvement of youths receiving MBC [22, 58, 59]. Importantly, this index captures the administration and viewing components of MBC fidelity but does not indicate whether clinicians used the feedback to guide treatment.
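The fidelity index described above can be sketched in Python as follows. This is a minimal illustration, assuming per-youth counts of attended sessions, administered measures, and viewed feedback reports are already available; the container `YouthFidelityCounts` and the function `mbc_fidelity_index` are hypothetical names, not part of the OQ-A system. The sketch also shows why the product of the two rates reduces to viewed reports divided by attended sessions.

```python
from dataclasses import dataclass


@dataclass
class YouthFidelityCounts:
    """Counts for one youth over the 6-month observation window (hypothetical container)."""
    sessions_attended: int      # from monthly caregiver reports
    measures_administered: int  # from OQ-A electronic metadata
    reports_viewed: int         # feedback reports opened by the clinician


def mbc_fidelity_index(c: YouthFidelityCounts) -> float:
    """Completion rate x viewing rate, which reduces to reports_viewed / sessions_attended."""
    if c.sessions_attended <= 0:
        raise ValueError("fidelity is undefined for a youth with no attended sessions")
    if not 0 <= c.reports_viewed <= c.measures_administered:
        raise ValueError("viewed reports must lie between 0 and measures administered")
    completion_rate = c.measures_administered / c.sessions_attended
    # With no measures administered there is nothing to view, so the product is 0.
    viewing_rate = (c.reports_viewed / c.measures_administered
                    if c.measures_administered else 0.0)
    return completion_rate * viewing_rate


counts = YouthFidelityCounts(sessions_attended=10, measures_administered=8, reports_viewed=6)
print(mbc_fidelity_index(counts))  # approximately 0.6, i.e., 6 viewed reports / 10 sessions
```

Treating a youth with no administered measures as having a score of 0 follows from the events/trials form (0 viewed reports out of k sessions); how the trial handled such edge cases is not stated in the text.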

Implementation leadership

Clinicians assessed the extent to which their first-level leaders exhibited implementation leadership behaviors with regard to the OQ-A using the 12-item Implementation Leadership Scale (ILS) [40]. The ILS includes four subscales assessing the extent to which the first-level leader is proactive, knowledgeable, supportive, and perseverant about implementation. Responses were made on a 0 (not at all) to 4 (very great extent) scale. Total scores were calculated as the mean of all items. In prior research, scores on the ILS exhibited excellent internal consistency, convergent and discriminant validity [40, 47, 60, 61], and sensitivity to change [45].

Transformational leadership

Clinicians assessed the extent to which their first-level leaders exhibited transformational leadership behaviors using the Multifactor Leadership Questionnaire (MLQ) [62, 63]. The MLQ is a widely used measure that has demonstrated excellent psychometric properties [64] and is associated with implementation climate for EBP as well as clinicians’ attitudes toward, and use of, EBPs [65,66,67,68]. Responses were made on a 5-point scale (“not at all” to “frequently, if not always”). Consistent with prior studies, we used the 20-item transformational leadership total score, calculated as the mean of four subscales: idealized influence, inspirational motivation, intellectual stimulation, and individual consideration.
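Because the transformational leadership total is the mean of four subscale scores rather than the mean of all 20 items, the two summaries can differ whenever subscales contain different numbers of items. The sketch below illustrates the scoring rule with placeholder item IDs (the text does not give the item-to-subscale mapping, so `SUBSCALES` is hypothetical) and assumes responses are coded 0 to 4.

```python
from statistics import mean

# Placeholder item IDs: the text does not list which MLQ items belong to which
# subscale, so this mapping is purely illustrative (and deliberately unbalanced).
SUBSCALES = {
    "idealized_influence": ["ii_1", "ii_2"],
    "inspirational_motivation": ["im_1"],
    "intellectual_stimulation": ["is_1"],
    "individual_consideration": ["ic_1"],
}


def transformational_total(responses: dict, subscales: dict = SUBSCALES) -> float:
    """Mean of the four subscale means (items assumed coded 0-4)."""
    subscale_means = [mean(responses[item] for item in items)
                      for items in subscales.values()]
    return mean(subscale_means)


responses = {"ii_1": 4.0, "ii_2": 4.0, "im_1": 0.0, "is_1": 2.0, "ic_1": 2.0}
print(transformational_total(responses))  # mean of subscale means
print(mean(responses.values()))           # plain item mean, for comparison
```

In this toy example the subscale-based total weights each subscale equally, whereas the plain item mean gives more weight to the subscale with more items.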

Clinic implementation climate

Clinicians’ perceptions of their clinics’ implementation climate for OQ-A were measured using the 18-item Implementation Climate Scale (ICS) [34]. The ICS includes six subscales assessing focus, educational support, recognition, rewards, selection, and openness. Responses were made on a 0 (not at all) to 4 (a very great extent) scale with the total score calculated as the mean of all items. Prior research provides evidence for the structural, convergent, and discriminant validity of scores on the ICS [27, 34, 69,70,71,72] as well as sensitivity to change [45].

Data aggregation

Best practice guidelines [73,74,75,76] recommend clinician ratings of first-level leadership and clinic implementation climate be aggregated and analyzed at the clinic level. To justify aggregation, guidelines recommend that researchers test the level of inter-rater agreement among clinicians within each clinic to confirm there is evidence of shared experience. We used the rwg(j) statistic [77] to assess inter-rater agreement among clinicians within each clinic. Across all clinics and all waves, average values of rwg(j) were above the recommended cutoff of 0.7 [78, 79] for implementation leadership (M = 0.82, SD = 0.27), transformational leadership (M = 0.87, SD = 0.24), and clinic implementation climate (M = 0.94, SD = 0.10).
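A minimal sketch of the multi-item agreement index follows, using the commonly cited uniform-null formulation rwg(j) = J(1 - s̄²/σ²_EU) / [J(1 - s̄²/σ²_EU) + s̄²/σ²_EU], where J is the number of items, s̄² is the mean observed item variance among raters in a clinic, and σ²_EU = (A² - 1)/12 is the variance expected if raters responded at random across A response options. Truncating the variance ratio at 1, so that rwg(j) is set to 0 when observed disagreement exceeds the random-responding benchmark, is a common convention that we assume here; the function name and example ratings are hypothetical.

```python
from statistics import mean, variance


def rwg_j(ratings: list, n_options: int) -> float:
    """Multi-item within-group agreement, rwg(j), for one clinic at one wave.

    ratings[r][j] is rater r's response to item j; at least two raters are needed.
    n_options is the number of response categories A (5 for a 0-4 scale).
    """
    n_items = len(ratings[0])
    # Variance expected if raters answered at random (uniform null): (A^2 - 1) / 12.
    sigma2_eu = (n_options ** 2 - 1) / 12
    # Average of the observed sample variances of each item across raters.
    mean_item_var = mean(variance([row[j] for row in ratings]) for j in range(n_items))
    # Truncate so that more-than-random disagreement yields 0 rather than a negative value.
    ratio = min(mean_item_var / sigma2_eu, 1.0)
    numerator = n_items * (1 - ratio)
    return numerator / (numerator + ratio)


clinic = [[3, 3, 4], [3, 4, 4], [4, 3, 4]]  # 3 hypothetical clinicians x 3 items
print(round(rwg_j(clinic, n_options=5), 3))
```

With the five response options used by all three scales, σ²_EU = (25 - 1)/12 = 2, so perfect agreement gives rwg(j) = 1 and random-like disagreement pushes it toward 0.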

Covariates

In order to increase statistical power and to address potential imbalance across clusters, we planned a priori to include covariates of state and clinic size (number of youths served in the prior year) in all analyses. In addition, in the mediation analyses described below, we included baseline values of the hypothesized mediator and outcome (when possible) to increase the plausibility of the no-unmeasured-confounding assumptions within the causal mediation approach [80, 81].

Data analysis

All analyses used an intent-to-treat approach. To test LOCI’s effects on growth in first-level leaders’ implementation leadership (H1), transformational leadership (H2), and clinic implementation climate (H3) for Aim 1, we used three-level linear mixed-effects regression models [82, 83] with random effects addressing the nesting of repeated observations (level 1) within clinicians (level 2) within clinics (level 3). Separate models were estimated for each outcome. At level 1, observations of leadership and climate collected from clinicians at each time point were modeled using a piecewise growth function that captured differences in change from baseline to each time point across conditions [84]. Implementation condition and clinic covariates were entered at level 3. Models were estimated using the mixed command in Stata 17.0 [85] under full maximum likelihood estimation, which accounts for missing data on the outcomes, assuming data are missing at random. Effect sizes were calculated as the standardized mean difference in change (i.e., difference in differences) from baseline to each time point (i.e., Cohen’s d) using formulas by Feingold [86]. Cohen suggested values of d could be interpreted as small (0.2), medium (0.5), and large (0.8) [87].
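The difference-in-change contrasts described above can be produced with dummy-coded piecewise terms. The sketch below shows one such coding, assumed for illustration: the text does not spell out the exact parameterization, so `piecewise_terms`, its term names, and the use of a pooled raw-score SD as the Cohen's d denominator are assumptions rather than the authors' specification. In this coding, the coefficient on each `loci_x_change_Tw` term is the between-condition difference in change from baseline to wave Tw, which is then standardized and labeled with Cohen's benchmarks.

```python
WAVES = ["T1", "T2", "T3", "T4", "T5"]  # baseline, 4, 8, 12, and 18 months


def piecewise_terms(wave: str, loci: int) -> dict:
    """Fixed-effect terms for one observation (state and clinic-size covariates omitted).

    change_Tw is 1 only for observations at wave Tw, so its coefficient is the
    control arm's mean change from T1 to Tw; loci_x_change_Tw adds LOCI's extra
    change, i.e., the difference in differences.
    """
    terms = {"intercept": 1, "loci": loci}
    for w in WAVES[1:]:
        at_wave = int(wave == w)
        terms[f"change_{w}"] = at_wave
        terms[f"loci_x_change_{w}"] = at_wave * loci
    return terms


def cohens_d(diff_in_change: float, pooled_sd: float) -> float:
    """Standardized difference in change, assuming a pooled raw-score SD denominator."""
    return diff_in_change / pooled_sd


def cohen_label(d: float) -> str:
    """Cohen's conventional benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    size = abs(d)
    if size >= 0.8:
        return "large"
    if size >= 0.5:
        return "medium"
    if size >= 0.2:
        return "small"
    return "below small"


print(piecewise_terms("T2", loci=1))
# Hypothetical values: a 0.9-point difference in change with a pooled SD of 1.0
print(cohen_label(cohens_d(0.9, 1.0)))
```

In a fitted model these fixed-effect rows would be combined with random effects for clinicians and clinics; the sketch only shows how each observation is coded.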

Aim 2 tested the hypotheses that experimentally induced improvement in first-level leaders’ implementation leadership (H4) and transformational leadership (H5) by T2 would mediate LOCI’s effect on improvement in clinic implementation climate by T4. These mediation hypotheses were tested using the multilevel causal mediation approach by Imai et al. [81], implemented in the R “mediation” package [88]. To align our analytic approach with our theoretical model, we estimated a 2-2-1 mediation model in which the primary antecedent (LOCI) and mediator (clinic-level aggregate leadership scores) entered the model at level 2 (i.e., the clinic level), and the outcome (clinician ratings of implementation climate) entered at level 1, representing latent clinic means [89]. Separate models were estimated for each type of leadership because Imai’s approach does not accommodate simultaneous mediators [81]. The inclusion of baseline values for the mediator (i.e., leadership) and outcome (i.e., climate) in each model modified the interpretation of the effects so that they represented the effect of LOCI on change in leadership from T1 to T2 and of change in leadership on change in climate from T1 to T4. To stabilize the effect estimates, we set the number of simulations used to estimate the direct and indirect effects to 10,000. These analyses produced estimates of LOCI’s indirect and direct effects on T4 implementation climate, as well as the proportion of LOCI’s total effect on implementation climate that was mediated by improvement in leadership (i.e., proportion mediated = pm). Indirect effects indicate the extent to which LOCI influenced T4 implementation climate through its effect on T2 leadership (i.e., mediation). Direct effects indicate the residual effect of LOCI on T4 implementation climate that was not explained by change in T2 leadership. The pm statistic is an effect size measure indicating how much of LOCI’s effect on implementation climate was explained by change in leadership.
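The logic behind simulation-based indirect effects, direct effects, and pm can be illustrated with a simplified single-level linear sketch. This is not the R mediation package and does not model the multilevel structure: it assumes linear mediator and outcome models, draws each coefficient independently from a normal distribution centered on its estimate with its standard error (quasi-Bayesian approaches draw the coefficients jointly), and summarizes draws with medians and percentile intervals. `simulate_mediation` and its arguments are hypothetical.

```python
import random
from statistics import median


def simulate_mediation(a, se_a, b, se_b, c_prime, se_c, n_sims=10_000, seed=1):
    """Monte Carlo indirect, direct, and proportion-mediated effects for a linear model.

    a: effect of condition (LOCI = 1) on the mediator (e.g., T2 leadership);
    b: effect of the mediator on the outcome (e.g., T4 climate), holding condition fixed;
    c_prime: direct effect of condition on the outcome.
    Each coefficient is drawn independently from a normal sampling distribution,
    a simplification of drawing all coefficients jointly.
    """
    rng = random.Random(seed)
    acme_draws, ade_draws, pm_draws = [], [], []
    for _ in range(n_sims):
        acme = rng.gauss(a, se_a) * rng.gauss(b, se_b)  # indirect effect: a * b
        ade = rng.gauss(c_prime, se_c)                  # direct effect
        acme_draws.append(acme)
        ade_draws.append(ade)
        pm_draws.append(acme / (acme + ade))            # share of the total effect mediated
    acme_sorted = sorted(acme_draws)
    lo = acme_sorted[int(0.025 * (n_sims - 1))]
    hi = acme_sorted[int(0.975 * (n_sims - 1))]
    return {
        "acme": median(acme_draws),
        "acme_95ci": (lo, hi),
        "ade": median(ade_draws),
        "prop_mediated": median(pm_draws),
    }


# Hypothetical coefficients for illustration only (not estimates from this trial)
print(simulate_mediation(a=1.0, se_a=0.2, b=0.6, se_b=0.15, c_prime=0.15, se_c=0.2))
```

Because pm divides the indirect effect by the total effect, individual draws become unstable when the total effect is close to zero, which is one reason its interval can be wide even when the indirect effect is clearly nonzero.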

Aim 3 tested the hypothesis that improvement in clinic implementation climate from T1 to T4 would mediate LOCI’s effect on MBC fidelity during the same time period (H6). The nested data structure was accommodated using a 2-2-1 model in which the primary antecedent (LOCI) and mediator (aggregate clinic-level T4 implementation climate scores) occurred at level 2 (i.e., clinic level) and the outcome (MBC fidelity) occurred at level 1 (i.e., youth level). Note that the inclusion of baseline values of clinic implementation climate in this model modified the interpretation of the effects so that they represent the effect of LOCI on change in climate from T1 to T4 and of change in climate on fidelity during the same time period. To address the events/trials nature of the MBC fidelity index, a generalized linear mixed-effects model with random clinic intercepts, a binomial response distribution, and a logit link function was used in the second step of the mediation analysis [82]. In total, 18 clinics enrolled 234 youth, all of whom had MBC fidelity data; however, one clinic was missing ratings of T4 implementation climate, resulting in a sample of 17 clinics and 231 youth for this analysis. A sensitivity analysis based on mean imputation of the missing T4 implementation climate value yielded the same inferential conclusions. A priori statistical power analyses conducted with the PowerUp! macro [90, 91] indicated the trial had power of 0.74–0.90 to detect minimally meaningful mediation effect sizes, depending on observed intraclass correlation coefficients and variance explained by covariates.
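With a logit link, the indirect effect is not a simple product of coefficients; it is a difference in predicted probabilities, holding condition fixed while switching the mediator from its control-condition value to its LOCI-condition value. The sketch below computes that difference for a single covariate profile, assuming a linear mediator model for clinic climate and a logit model for the per-session probability that feedback is viewed. The actual analysis averages over youths and clinic covariates and includes random clinic intercepts, which this sketch omits; all function names and coefficient values are hypothetical.

```python
import math


def inv_logit(x: float) -> float:
    """Inverse of the logit link: maps a linear predictor to a probability."""
    return 1.0 / (1.0 + math.exp(-x))


def acme_probability_scale(beta0, beta_loci, beta_climate,
                           alpha0, alpha_loci, condition=1):
    """Indirect effect of LOCI on the fidelity probability through climate.

    Hypothetical mediator model:  climate(t) = alpha0 + alpha_loci * t
    Hypothetical outcome model:   logit P(view | t, m) = beta0 + beta_loci * t + beta_climate * m
    Returns P(view | t, climate(1)) - P(view | t, climate(0)) with t = condition.
    """
    climate_loci = alpha0 + alpha_loci  # predicted climate under LOCI
    climate_control = alpha0            # predicted climate under control
    eta = beta0 + beta_loci * condition
    return (inv_logit(eta + beta_climate * climate_loci)
            - inv_logit(eta + beta_climate * climate_control))


# Hypothetical coefficients, chosen only to show the calculation
print(round(acme_probability_scale(-0.5, 0.2, 0.8, 2.0, 1.0), 3))
```

Because the logit curve is nonlinear, the same shift in climate produces different changes in probability depending on where a youth's baseline probability lies, which is why averaging over the sample matters in the full analysis.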

Results

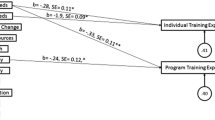

Figure 2 shows the flow of clinics, clinicians, and youth through the study. As is shown in Table 1, there were no differences by condition on the distribution of any clinic (K = 21), clinician (N = 252), or youth (N = 231) characteristics (all ps > 0.05). In total, 252 clinicians completed assessments for the study across 5 waves (average response rate = 88% across waves). The average number of participating clinicians per clinic was 12 (SD = 6.4). Nearly two-thirds of clinicians (n = 154, 61%) participated in 3 or more waves of data collection, and there were no differences by condition on clinician participation patterns (p = 0.114). A total of 234 youths were enrolled in the study. The average number of youths per clinic was 13.6 (SD = 13.3). The average number of assessments completed per youth was 5.9 (SD = 1.8) out of 7; 64% (n = 148) of youth had complete data, and 90% (n = 208) had 3 or more completed assessments. There were no differences in caregiver response rates for youth data by condition (p = 0.557).

CONSORT diagram showing the flow of clinics, clinicians, and youth through the WISDOM trial. Note: ITT, intent to treat; LOCI, Leadership and Organizational Change for Implementation strategy; T2, 4-month follow-up; T4, 12-month follow-up; WISDOM, Working to Implement and Sustain Digital Outcome Measures trial. ᵃOne clinic participated in LOCI for only 6 months. ᵇOne clinic that enrolled youth did not have T4 climate data.

Effects of LOCI on growth in clinic leadership and implementation climate

Figure 3 shows the growth in first-level leaders’ implementation leadership, transformational leadership, and clinic implementation climate from baseline to 18 months (6 months after LOCI was completed). Compared with clinicians in control clinics, clinicians in LOCI clinics reported significantly greater increases in their first-level leaders’ use of implementation leadership behaviors from baseline to 4 months (b = 1.27, SE = 0.18, p < 0.001), 8 months (b = 1.46, SE = 0.22, p < 0.001), 12 months (b = 1.28, SE = 0.27, p < 0.001), and 18 months (b = 1.07, SE = 0.37, p = 0.003). These results supported Hypothesis 1. As shown in Table 2, LOCI’s effects on implementation leadership were large at every follow-up (4, 8, 12, and 18 months post-baseline; d range = 0.97 to 1.34).

Fig. 3 Change in clinic leadership and climate by condition and wave. Note: Means were estimated using linear mixed-effects regression models. Error bars represent 95% confidence intervals. All models control for state and clinic size. P-values contrast the difference between conditions in change in the outcome from baseline to the referenced time point. LOCI, Leadership and Organizational Change for Implementation condition; Control, training and technical assistance only condition. T5 occurred 6 months after completion of the LOCI strategy. See Table 2 for effect sizes. ᵃK = 21 clinics, N = 248 clinicians, J = 803 observations. ᵇK = 21 clinics, N = 251 clinicians, J = 810 observations. ᶜK = 21 clinics, N = 247 clinicians, J = 809 observations.

Hypothesis 2 stated that growth in first-level leaders’ transformational leadership would be greater in LOCI clinics than in control clinics. This hypothesis was partially supported (see Fig. 3 and Table 2). Clinicians in LOCI clinics reported significantly greater growth in their first-level leaders’ use of transformational leadership behaviors from baseline to 4 months (b = 0.31, SE = 0.13, p = 0.019); however, this difference was no longer statistically significant at 8 months (b = 0.27, SE = 0.14, p = 0.061) and was not evident at 12 months (b = 0.21, SE = 0.16, p = 0.191) or 18 months (b = 0.15, SE = 0.19, p = 0.438).
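
The reported p-values can be roughly checked from each b and SE with a two-sided Wald z-test. The sketch below assumes a standard normal reference distribution; because the published estimates are rounded and the mixed-effects models may have used a t reference distribution or a small-sample adjustment, small discrepancies are expected (for example, b = 0.31 and SE = 0.13 give p of about 0.017 rather than the reported 0.019). The function name is illustrative.

```python
import math


def wald_p_two_sided(b: float, se: float) -> float:
    """Two-sided p-value for H0: b = 0 under a normal (Wald z) approximation."""
    if se <= 0:
        raise ValueError("SE must be positive")
    z = abs(b / se)
    # erfc(z / sqrt(2)) equals 2 * (1 - Phi(z)) for the standard normal CDF Phi
    return math.erfc(z / math.sqrt(2.0))


# Transformational leadership: between-condition difference in change (b, SE) from the text
estimates = {"4 months": (0.31, 0.13), "8 months": (0.27, 0.14),
             "12 months": (0.21, 0.16), "18 months": (0.15, 0.19)}
for wave, (b, se) in estimates.items():
    print(f"{wave}: z = {b / se:.2f}, p ~ {wald_p_two_sided(b, se):.3f}")
```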

Hypothesis 3 stated that growth in clinic implementation climate would be greater in LOCI clinics than in control clinics. This hypothesis was supported. Relative to clinicians in control clinics, clinicians in LOCI clinics reported significantly greater increases in their clinics’ implementation climate for MBC from baseline to 4 months (b = 0.56, SE = 0.10, p < 0.001), 8 months (b = 0.71, SE = 0.11, p < 0.001), 12 months (b = 0.55, SE = 0.12, p < 0.001), and 18 months (b = 0.43, SE = 0.15, p = 0.005). Table 2 shows that these effects were large during the intervention period and at posttest (i.e., at 4, 8, and 12 months post-baseline; d range = 0.98 to 1.25) and were somewhat attenuated at the 18-month follow-up (d = 0.76).
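
The d values in Table 2 place each b on a standardized scale. A minimal sketch, assuming the growth-model effect size described by Feingold [86] in the reference list, in which the model-estimated difference between conditions in change from baseline is divided by the pooled raw-score SD at baseline. The baseline SD is not reported in this section, so `SD_BASELINE` below is a placeholder and the printed values are not study results.

```python
def growth_model_d(b: float, sd_baseline: float) -> float:
    """Standardized difference in change between conditions (Feingold-style d).

    b: model-estimated difference between conditions in change from baseline to time t.
    sd_baseline: pooled raw-score SD of the outcome at baseline (assumed input).
    """
    if sd_baseline <= 0:
        raise ValueError("sd_baseline must be positive")
    return b / sd_baseline


# Placeholder SD for illustration only; not taken from the study
SD_BASELINE = 0.5
# Implementation climate estimates (months post-baseline, b) as reported in the text
for months, b in [(4, 0.56), (8, 0.71), (12, 0.55), (18, 0.43)]:
    print(months, round(growth_model_d(b, SD_BASELINE), 2))
```

Under this definition, a single baseline SD rescales every b by the same factor, so the d values for one outcome keep the same ordering as its b values.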

Indirect effects of LOCI on T4 implementation climate through T2 clinic leadership

Hypotheses 4 and 5 examined how LOCI improved T4 clinic implementation climate by testing mediation models. Hypothesis 4 stated that LOCI would have an indirect effect on T4 implementation climate through improvement in first-level leaders’ use of implementation leadership from T1 to T2. As shown in Table 3, this hypothesis was supported. The LOCI strategy had a significant indirect effect on T4 implementation climate through T2 implementation leadership (indirect effect = 0.51, p = 0.004), whereas LOCI’s direct effect was not statistically significant (direct effect = 0.11, p = 0.582). The proportion-mediated statistic indicated that 82% of LOCI’s total effect on clinic implementation climate at T4 was explained by improvement in implementation leadership from T1 to T2 (pm = 0.82).

Hypothesis 5 stated that LOCI would have an indirect effect on T4 implementation climate through improvement in first-level leaders’ transformational leadership. This hypothesis was not supported (see Table 3). There was no evidence of an indirect effect of LOCI through transformational leadership (indirect effect = 0.16, p = 0.135), whereas LOCI’s direct effect on T4 implementation climate remained statistically significant (direct effect = 0.38, p = 0.024). This pattern indicates that LOCI improved T4 implementation climate, but not through its effect on transformational leadership.
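
The proportion-mediated statistic can be approximated from the reported point estimates as the indirect effect divided by the total (indirect plus direct) effect. The sketch below applies this ratio to the Hypothesis 4 and Hypothesis 5 estimates; the function name is illustrative, and the p-values and confidence intervals for pm come from the mediation procedure itself, not from this ratio.

```python
def proportion_mediated(indirect: float, direct: float) -> float:
    """Share of the total effect carried by the mediator: indirect / (indirect + direct)."""
    total = indirect + direct
    if total == 0:
        raise ValueError("total effect is zero; proportion mediated is undefined")
    return indirect / total


# Point estimates reported in the text (outcome: T4 implementation climate)
pm_h4 = proportion_mediated(indirect=0.51, direct=0.11)  # via implementation leadership
pm_h5 = proportion_mediated(indirect=0.16, direct=0.38)  # via transformational leadership
print(f"H4: {pm_h4:.2f}, H5: {pm_h5:.2f}")
```

For Hypothesis 4 the ratio reproduces the reported pm = 0.82. The ratio for Hypothesis 5 (about 0.30) should not be interpreted, because the indirect effect was not statistically significant. In general, the ratio becomes unstable when the total effect is near zero or when the direct and indirect effects have opposite signs.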

Indirect effect of LOCI on MBC fidelity through implementation climate

Hypothesis 6 stated that improvement in clinic implementation climate from T1 to T4 would mediate LOCI’s effect on MBC fidelity, measured at the youth level, during the same time period. As shown in Table 3, results of the mediation analysis supported this hypothesis. The LOCI strategy had a statistically significant indirect effect on MBC fidelity through clinic implementation climate, increasing fidelity by 14 percentage points through this mechanism (indirect effect = 0.14, 95% CI = 0.01–0.37, p = 0.033). The direct effect of LOCI on fidelity after accounting for clinic implementation climate was not statistically significant (direct effect = 0.05, p = 0.482). The proportion-mediated statistic indicated that 71% of LOCI’s effect on MBC fidelity was explained by improvement in clinic implementation climate from T1 to T4 (pm = 0.71, p = 0.045).
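
Because fidelity was modeled with a logit link, reporting the indirect effect in percentage points requires translating the model back to the probability scale. The sketch below shows one common way to do this with potential outcomes: the natural indirect effect compares predicted fidelity under LOCI when the mediator takes its LOCI value versus its control value, and the natural direct effect compares LOCI with control while the mediator is held at its control value. All coefficients and clinic baseline values are hypothetical, clinic random intercepts are omitted for simplicity, and the linear mediator model (condition plus baseline climate) is an assumption modeled on the 2-2-1 design described in the methods.

```python
import math


def expit(x: float) -> float:
    """Inverse logit."""
    return 1.0 / (1.0 + math.exp(-x))


def mediation_effects(baseline, a0, a_t, a_base, b0, b_m, b_t):
    """Natural indirect, direct, and total effects on the probability scale.

    Mediator model (hypothetical): M = a0 + a_t * T + a_base * baseline.
    Outcome model (hypothetical): logit P(Y = 1) = b0 + b_m * M + b_t * T.
    Effects are averaged over the supplied baseline values.
    """
    n = len(baseline)
    m0 = [a0 + a_base * x for x in baseline]        # mediator under control
    m1 = [a0 + a_t + a_base * x for x in baseline]  # mediator under LOCI
    y11 = sum(expit(b0 + b_m * m + b_t) for m in m1) / n  # E[Y(1, M(1))]
    y10 = sum(expit(b0 + b_m * m + b_t) for m in m0) / n  # E[Y(1, M(0))]
    y00 = sum(expit(b0 + b_m * m) for m in m0) / n        # E[Y(0, M(0))]
    nie = y11 - y10  # change the mediator only, holding T = 1
    nde = y10 - y00  # change T only, holding the mediator at its control value
    return nie, nde, y11 - y00


nie, nde, te = mediation_effects(baseline=[1.8, 2.1, 2.4, 2.0],
                                 a0=0.5, a_t=0.6, a_base=0.8,
                                 b0=-3.0, b_m=1.2, b_t=0.2)
print(f"NIE = {nie:.3f}, NDE = {nde:.3f}, TE = {te:.3f}, pm = {nie / te:.2f}")
```

By construction the two pieces sum to the total effect, and the ratio of the indirect to the total effect gives a proportion-mediated statistic on the probability scale. The reported pm need not equal the ratio of the rounded point estimates (0.14/(0.14 + 0.05) is about 0.74, versus the reported 0.71), for example because of rounding or because pm may be estimated across simulation or bootstrap draws.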

Discussion

This study is the first to experimentally test the hypotheses that (a) increases in first-level leaders’ use of implementation leadership and transformational leadership improve clinic implementation climate, and (b) improvement in clinic implementation climate contributes to improved fidelity to an EBP. As such, it represents an important step toward answering recommendations for rigorous tests of mechanisms and causal theory in implementation science [73, 92]. Results support the hypotheses that (a) first-level leaders can help generate clinic implementation climates for a specific EBP through the use of implementation leadership behaviors, and (b) first-level leaders and organization executives can improve fidelity to EBPs by developing focused implementation climates in their organizations.

In this trial, increases in first-level leaders’ use of implementation leadership by 4 months post-baseline explained 82% of LOCI’s effect on improvement in clinic implementation climate by 12 months post-baseline. This finding aligns with qualitative data from another recent trial of MBC implementation in community mental health [93], which found that leader and clinical supervisor support for MBC were perceived as key implementation mechanisms. The link between implementation leadership and improvement in clinic implementation climate suggests that implementation leadership behaviors are important targets for implementation success. Accordingly, pre-service educational programs for health leaders, implementation purveyor organizations, and other stakeholders interested in supporting EBP implementation should consider integrating these leadership competencies into core curricula and training.

Contrary to our hypotheses (see Fig. 1), LOCI did not exert lasting effects on transformational leadership, and increases in transformational leadership did not explain LOCI’s effect on improvement in clinic implementation climate. This pattern of results aligns with theoretical models of leadership and climate which suggest that specific types of focused leadership (i.e., implementation leadership [40]) are needed to generate specific types of focused organizational climate and associated outcomes [37, 44]. This finding also suggests the LOCI strategy could be streamlined without loss of efficacy by scaling back (or eliminating) components that address transformational leadership, as has been done in implementation studies of autism interventions [94]. Streamlining the content of LOCI may increase LOCI’s feasibility and allow for greater development of implementation leadership skills.

Improvement in clinic implementation climate explained 71% of LOCI’s effect on youth-level MBC fidelity. The validation of this theoretical linkage within an experimental design lends credence to prior correlational studies and theory suggesting clinic implementation climate can contribute to improved EBP implementation in mental health settings [27, 44,45,46,47]. These results suggest organizational and system leaders can improve the implementation of EBPs by deploying organizational policies, procedures, and practices that send clear signals to clinicians about the importance of EBP implementation relative to competing priorities within practice settings.

Discussions about change in organizational leadership and organizational climate often center around how long it takes for these constructs to change and what level of resources are required. Results from this trial suggest changes in first-level leadership and clinic implementation climate can occur quickly, within 4 months, and that these changes can be lasting, even 6 months after supports (i.e., LOCI) are removed. A similarly brief timeframe for initial change, and similarly sustained period of maintenance of effect, was observed for implementation leadership and climate in the other large trial of LOCI, which occurred in a different country, patient population, and EBP [48]. Together, results from these trials confirm implementation leadership and implementation climate are modifiable with a combination of training, weekly coaching calls, data feedback, and goal setting.

This study highlights multiple directions for future research. Future studies should examine moderators of LOCI’s effectiveness with an eye toward the minimally necessary components to make LOCI effective. For example, there is some evidence that intervention complexity moderates the association between implementation climate and fidelity [47]. This is consistent with organizational climate theory, which indicates climate is most strongly related to employee behaviors when service complexity is higher [95] and when there is high interdependence among employees to complete tasks and high intangibility of the service provided [96]. Other research should look beyond the organization level at how systems can be modified in complementary ways to create supportive implementation climates for targeted interventions.

Strengths of this study include the use of an experimental, longitudinal design; enrollment of clinics in diverse policy environments (i.e., three different states); measurement of leadership and climate by third-party informants whose behavior is most salient to implementation success (i.e., clinicians); measurement of MBC fidelity through objective computer-generated data; time ordering of hypothesized antecedents and consequents; and use of rigorous causal mediation models to estimate direct and indirect effects. The study’s primary limitation concerns generalizability, given that all clinics and caregivers of youth volunteered to participate. In addition, the MBC fidelity measure does not assess whether clinicians used the feedback to inform clinical decisions. Data were not collected on other mechanisms that may explain LOCI’s effects, a gap that future qualitative research could fruitfully address. Because the LOCI condition included training and technical assistance, it was not possible to isolate LOCI’s independent effects; this is also a fruitful area for future research. Finally, we were unable to fully test LOCI’s hypothesized theory of change because the causal mediation approach we used precludes testing serial multiple mediator models (e.g., LOCI → leadership → climate → fidelity). Nonetheless, our results confirm the most consequential links in LOCI’s theoretical model and offer important directions for research and practice.

Conclusion

In this mediation analysis of the WISDOM trial, experimentally induced improvement in implementation leadership explained increases in clinic implementation climate, which in turn explained LOCI’s effects on MBC fidelity in youth mental health services. This offers strong evidence that fidelity to EBPs can be improved by developing organizational leaders and strong implementation climates.

Availability of data and materials

NJW and SCM had full access to all data in the study and take responsibility for the integrity of the data and the accuracy of the analyses. Requests for access to deidentified data can be sent to Dr. Williams at natewilliams@boisestate.edu, Boise State University School of Social Work, 1910 W University Dr., Boise, ID 83725.

Abbreviations

- DSM: Diagnostic and Statistical Manual of Mental Disorders
- EBP: Evidence-based practice
- H1–H6: Hypotheses 1–6
- ILS: Implementation Leadership Scale
- ICS: Implementation Climate Scale
- LOCI: Leadership and Organizational Change for Implementation strategy
- MBC: Measurement-based care
- MLQ: Multifactor Leadership Questionnaire
- OQ-A: Outcome Questionnaire-Analyst
- WISDOM: Working to Implement and Sustain Digital Outcome Measures trial

References

Weiner BJ, Belden CM, Bergmire DM, Johnston M. The meaning and measurement of implementation climate. Implement Sci. 2011;6(1):78.

Damschroder LJ, Aron DC, Keith RE, Kirsh SR, Alexander JA, Lowery JC. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4(1):50.

Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health. 2011;38(1):4–23.

Stetler CB, Ritchie JA, Rycroft-Malone J, Charns MP. Leadership for evidence-based practice: strategic and functional behaviors for institutionalizing EBP. Worldviews Evid Based Nurs. 2014;11(4):219–26.

Birken S, Clary A, Tabriz AA, Turner K, Meza R, Zizzi A, Larson M, Walker J, Charns M. Middle managers’ role in implementing evidence-based practices in healthcare: a systematic review. Implement Sci. 2018;13:1–14.

Nilsen P. Making sense of implementation theories, models and frameworks. Implement Sci. 2015;10(1):1–13.

Woodward J. Making things happen: a theory of causal explanation. Oxford University Press; 2005.

Meza RD, Triplett NS, Woodard GS, Martin P, Khairuzzaman AN, Jamora G, Dorsey S. The relationship between first-level leadership and inner-context and implementation outcomes in behavioral health: a scoping review. Implement Sci. 2021;16(1):69.

Williams NJ, Glisson C. Changing organizational social context to support evidence-based practice implementation: a conceptual and empirical review. In: Albers B, Shlonsky A, Mildon R, editors. Implementation Science 3.0. Switzerland: Springer; 2020. p. 145–72.

Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and Organizational Change for Implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implement Sci. 2015;10(1):11.

Aarons GA, Ehrhart MG, Moullin JC, Torres EM, Green AE. Testing the Leadership and Organizational Change for Implementation (LOCI) intervention in substance abuse treatment: a cluster randomized trial study protocol. Implement Sci. 2017;12(1):29.

Williams NJ, Marcus SC, Ehrhart MG, Sklar M, Esp S, Carandang K, Vega N, Gomes A, Brookman-Frazee L, Aarons GA. Randomized trial of an organizational implementation strategy to improve measurement-based care fidelity and youth outcomes in community mental health. J Am Acad Child Adolesc Psychiatry. In press.

Lewis CC, Boyd M, Puspitasari A, Navarro E, Howard J, Kassab H, Hoffman M, Scott K, Lyon A, Douglas S, et al. Implementing measurement-based care in behavioral health: a review. JAMA Psychiatry. 2019;76(3):324–35.

de Jong K, Conijn JM, Gallagher RA, Reshetnikova AS, Heij M, Lutz MC. Using progress feedback to improve outcomes and reduce drop-out, treatment duration, and deterioration: a multilevel meta-analysis. Clin Psychol Rev. 2021;85:102002.

Rognstad K, Wentzel-Larsen T, Neumer S-P, Kjøbli J. A systematic review and meta-analysis of measurement feedback systems in treatment for common mental health disorders. Adm Policy Ment Health. 2023;50(2):269–82.

Tam H, Ronan K. The application of a feedback-informed approach in psychological service with youth: systematic review and meta-analysis. Clin Psychol Rev. 2017;55:41–55.

Lambert MJ, Whipple JL, Hawkins EJ, Vermeersch DA, Nielsen SL, Smart DW. Is it time for clinicians to routinely track patient outcome? A meta-analysis. Clin Psychol Sci Pract. 2003;10(3):288.

Zhu M, Hong RH, Yang T, Yang X, Wang X, Liu J, Murphy JK, Michalak EE, Wang Z, Yatham LN. The efficacy of measurement-based care for depressive disorders: systematic review and meta-analysis of randomized controlled trials. J Clin Psychiatry. 2021;82(5):37090.

Jensen-Doss A, Haimes EMB, Smith AM, Lyon AR, Lewis CC, Stanick CF, Hawley KM. Monitoring treatment progress and providing feedback is viewed favorably but rarely used in practice. Adm Policy Ment Health. 2018;45(1):48–61.

Gilbody SM, House AO, Sheldon TA. Psychiatrists in the UK do not use outcomes measures: national survey. Br J Psychiatry. 2002;180(2):101–3.

Patterson P, Matthey S, Baker M. Using mental health outcome measures in everyday clinical practice. Australas Psychiatry. 2006;14(2):133–6.

Bickman L, Douglas SR, De Andrade AR, Tomlinson M, Gleacher A, Olin S, Hoagwood K. Implementing a measurement feedback system: a tale of two sites. Adm Policy Ment Health. 2016;43(3):410–25.

Garland AF, Kruse M, Aarons GA. Clinicians and outcome measurement: what’s the use? J Behav Health Serv Res. 2003;30(4):393–405.

de Jong K, van Sluis P, Nugter MA, Heiser WJ, Spinhoven P. Understanding the differential impact of outcome monitoring: therapist variables that moderate feedback effects in a randomized clinical trial. Psychother Res. 2012;22(4):464–74.

Lyon AR, Lewis CC, Boyd MR, Hendrix E, Liu F. Capabilities and characteristics of digital measurement feedback systems: results from a comprehensive review. Adm Policy Ment Health. 2016;43(3):441–66.

Mellor-Clark J, Cross S, Macdonald J, Skjulsvik T. Leading horses to water: lessons from a decade of helping psychological therapy services use routine outcome measurement to improve practice. Adm Policy Ment Health. 2016;43(3):279–85.

Williams NJ, Ramirez NV, Esp S, Watts A, Marcus SC. Organization-level variation in therapists’ attitudes toward and use of measurement-based care. Adm Policy Ment Health. 2022:1–16.

Gleacher AA, Olin SS, Nadeem E, Pollock M, Ringle V, Bickman L, Douglas S, Hoagwood K. Implementing a measurement feedback system in community mental health clinics: a case study of multilevel barriers and facilitators. Adm Policy Ment Health. 2016;43(3):426–40.

Marty D, Rapp C, McHugo G, Whitley R. Factors influencing consumer outcome monitoring in implementation of evidence-based practices: results from the National EBP Implementation Project. Adm Policy Ment Health. 2008;35(3):204–11.

Kotte A, Hill KA, Mah AC, Korathu-Larson PA, Au JR, Izmirian S, Keir SS, Nakamura BJ, Higa-McMillan CK. Facilitators and barriers of implementing a measurement feedback system in public youth mental health. Adm Policy Ment Health. 2016;43(6):861–78.

Aarons GA, Farahnak LR, Ehrhart MG. Leadership and strategic organizational climate to support evidence-based practice implementation. In: Dissemination and implementation of evidence-based practices in child and adolescent mental health. New York, NY, US: Oxford University Press; 2014. p. 82–97.

Klein KJ, Sorra JS. The challenge of innovation implementation. Acad Manag Rev. 1996;21(4):1055–80.

Klein KJ, Conn AB, Sorra JS. Implementing computerized technology: an organizational analysis. J Appl Psychol. 2001;86(5):811–24.

Ehrhart MG, Aarons GA, Farahnak LR. Assessing the organizational context for EBP implementation: the development and validity testing of the Implementation Climate Scale (ICS). Implement Sci. 2014;9(1):157.

Avolio BJ, Bass BM, Jung DI. Re-examining the components of transformational and transactional leadership using the multifactor leadership. J Occup Organ Psychol. 1999;72(4):441–62.

Bass BM, Avolio BJ. The implications of transactional and transformational leadership for individual, team, and organizational development. Res Organ Chang Dev. 1990;4(1):231–72.

Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74.

Bass BM. Two decades of research and development in transformational leadership. Eur J Work Organ Psy. 1999;8(1):9–32.

Bass BM. Does the transactional–transformational leadership paradigm transcend organizational and national boundaries? Am Psychol. 1997;52(2):130.

Aarons GA, Ehrhart MG, Farahnak LR. The Implementation Leadership Scale (ILS): development of a brief measure of unit level implementation leadership. Implement Sci. 2014;9(1):45.

Schneider B, Ehrhart MG, Macey WH. Organizational climate and culture. Annu Rev Psychol. 2013;64(1):361–88.

Schneider B, Ehrhart MG, Mayer DM, Saltz JL, Niles-Jolly K. Understanding organization-customer links in service settings. Acad Manag J. 2005;48(6):1017–32.

Barling J, Loughlin C, Kelloway EK. Development and test of a model linking safety-specific transformational leadership and occupational safety. J Appl Psychol. 2002;87(3):488.

Ehrhart MG, Schneider B, Macey WH. Organizational climate and culture: an introduction to theory, research, and practice. New York, NY, US: Routledge/Taylor & Francis Group; 2014.

Williams NJ, Wolk CB, Becker-Haimes EM, Beidas RS. Testing a theory of strategic implementation leadership, implementation climate, and clinicians’ use of evidence-based practice: a 5-year panel analysis. Implement Sci. 2020;15(1).

Williams NJ, Becker-Haimes EM, Schriger SH, Beidas RS. Linking organizational climate for evidence-based practice implementation to observed clinician behavior in patient encounters: a lagged analysis. Implement Sci Commun. 2022;3(1):1–14.

Williams NJ, Hugh ML, Cooney DJ, Worley JA, Locke J. Testing a theory of implementation leadership and climate across autism evidence-based interventions of varying complexity. Behav Ther. 2022.

Skar A-MS, Braathu N, Peters N, Bækkelund H, Endsjø M, Babaii A, Borge RH, Wentzel-Larsen T, Ehrhart MG, Sklar M. A stepped-wedge randomized trial investigating the effect of the Leadership and Organizational Change for Implementation (LOCI) intervention on implementation and transformational leadership, and implementation climate. BMC Health Serv Res. 2022;22(1):1–15.

Lambert MJ, Whipple JL, Kleinstäuber M. Collecting and delivering progress feedback: a meta-analysis of routine outcome monitoring. Psychotherapy. 2018;55(4):520.

Shimokawa K, Lambert MJ, Smart DW. Enhancing treatment outcome of patients at risk of treatment failure: meta-analytic and mega-analytic review of a psychotherapy quality assurance system. J Consult Clin Psychol. 2010;78(3):298–311.

Schulz KF, Altman DG, Moher D. CONSORT 2010 statement: updated guidelines for reporting parallel group randomised trials. J Pharmacol Pharmacother. 2010;1(2):100–7.

Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, Rycroft-Malone J, Meissner P, Murray E, Patel A. Standards for Reporting Implementation Studies (StaRI) statement. BMJ. 2017;356.

Lambert MJ. Helping clinicians to use and learn from research-based systems: the OQ-analyst. Psychotherapy. 2012;49(2):109.

Ridge NW, Warren JS, Burlingame GM, Wells MG, Tumblin KM. Reliability and validity of the youth outcome questionnaire self-report. J Clin Psychol. 2009;65(10):1115–26.

Dunn TW, Burlingame GM, Walbridge M, Smith J, Crum MJ. Outcome assessment for children and adolescents: psychometric validation of the Youth Outcome Questionnaire 30.1 (Y-OQ®-30.1). Clin Psychol Psychother. 2005;12(5):388–401.

Harmon SC, Lambert MJ, Smart DM, Hawkins E, Nielsen SL, Slade K, Lutz W. Enhancing outcome for potential treatment failures: therapist–client feedback and clinical support tools. Psychother Res. 2007;17(4):379–92.

Whipple JL, Lambert MJ, Vermeersch DA, Smart DW, Nielsen SL, Hawkins EJ. Improving the effects of psychotherapy: the use of early identification of treatment and problem-solving strategies in routine practice. J Couns Psychol. 2003;50(1):59.

Bickman L, Kelley S, Breda C, De Andrade A, Riemer M. Effects of routine feedback to clinicians on youth mental health outcomes: a randomized cluster design. Psychiatr Serv. 2011;62(12):1423–9.

Sale R, Bearman SK, Woo R, Baker N. Introducing a measurement feedback system for youth mental health: predictors and impact of implementation in a community agency. Adm Policy Ment Health. 2021;48(2):327–42.

Shuman CJ, Ehrhart MG, Torres EM, Veliz P, Kath LM, VanAntwerp K, Banaszak-Holl J, Titler MG, Aarons GA. EBP implementation leadership of frontline nurse managers: validation of the implementation leadership scale in acute care. Worldviews Evid Based Nurs. 2020;17(1):82–91.

Aarons GA, Ehrhart MG, Torres EM, Finn NK, Roesch SC. Validation of the Implementation Leadership Scale (ILS) in substance use disorder treatment organizations. J Subst Abuse Treat. 2016;68:31–5.

Bass BM, Avolio BJ. Manual for the Multifactor Leadership Questionnaire (Form 5X). Redwood City, CA: Mind Garden; 2000.

Avolio BJ. Full range leadership development. Sage Publications; 2010.

Antonakis J, Avolio BJ, Sivasubramaniam N. Context and leadership: an examination of the nine-factor full-range leadership theory using the Multifactor Leadership Questionnaire. Leadersh Q. 2003;14(3):261–95.

Guerrero EG, Fenwick K, Kong Y. Advancing theory development: exploring the leadership–climate relationship as a mechanism of the implementation of cultural competence. Implement Sci. 2017;12:1–12.

Aarons GA. Transformational and transactional leadership: association with attitudes toward evidence-based practice. Psychiatr Serv. 2006;57(8):1162–9.

Aarons GA, Sommerfeld DH. Leadership, innovation climate, and attitudes toward evidence-based practice during a statewide implementation. J Am Acad Child Adolesc Psychiatry. 2012;51(4):423–31.

Brimhall KC, Fenwick K, Farahnak LR, Hurlburt MS, Roesch SC, Aarons GA. Leadership, organizational climate, and perceived burden of evidence-based practice in mental health services. Adm Policy Ment Health. 2016;43:629–39.

Lyon AR, Cook CR, Brown EC, Locke J, Davis C, Ehrhart M, Aarons GA. Assessing organizational implementation context in the education sector: confirmatory factor analysis of measures of implementation leadership, climate, and citizenship. Implement Sci. 2018;13(1).

Williams NJ, Ehrhart MG, Aarons GA, Marcus SC, Beidas RS. Linking molar organizational climate and strategic implementation climate to clinicians’ use of evidence-based psychotherapy techniques: cross-sectional and lagged analyses from a 2-year observational study. Implement Sci. 2018;13(1):85.

Ehrhart MG, Torres EM, Hwang J, Sklar M, Aarons GA. Validation of the Implementation Climate Scale (ICS) in substance use disorder treatment organizations. Subst Abuse Treat Prev Policy. 2019;14(1):1–10.

Ehrhart MG, Shuman CJ, Torres EM, Kath LM, Prentiss A, Butler E, Aarons GA. Validation of the implementation climate scale in nursing. Worldviews Evid Based Nurs. 2021;18(2):85–92.

Williams NJ. Multilevel mechanisms of implementation strategies in mental health: integrating theory, research, and practice. Adm Policy Ment Health. 2016;43(5):783–98.

Chan D. Functional relations among constructs in the same content domain at different levels of analysis: a typology of composition models. J Appl Psychol. 1998;83(2):234–46.

Lengnick-Hall R, Williams NJ, Ehrhart MG, Willging CE, Bunger AC, Beidas RS, Aarons GA. Eight characteristics of rigorous multilevel implementation research: a step-by-step guide. Implement Sci. 2023;18:52.

LeBreton JM, Moeller AN, Wittmer JL. Data aggregation in multilevel research: best practice recommendations and tools for moving forward. J Bus Psychol. 2023;38(2):239–58.

James LR, Demaree RG, Wolf G. Estimating within-group interrater reliability with and without response bias. J Appl Psychol. 1984;69(1):85.

LeBreton JM, Senter JL. Answers to 20 questions about interrater reliability and interrater agreement. Organ Res Methods. 2008;11(4):815–52.

James LR, Choi CC, Ko C-HE, McNeil PK, Minton MK, Wright MA, Kim K-i. Organizational and psychological climate: a review of theory and research. Eur J Work Organ Psy. 2008;17(1):5–32.

VanderWeele T. Explanation in causal inference: methods for mediation and interaction. Oxford University Press; 2015.

Imai K, Keele L, Tingley D. A general approach to causal mediation analysis. Psychol Methods. 2010;15(4):309–34.

Raudenbush SW, Bryk AS. Hierarchical linear models: applications and data analysis methods. Vol. 1. Sage; 2002.

Lang JW, Bliese PD, Adler AB. Opening the black box: a multilevel framework for studying group processes. Adv Methods Pract Psychol Sci. 2019;2(3):271–87.

Hedeker D, Gibbons R. Longitudinal data analysis. Hoboken, NJ: John Wiley & Sons; 2006.

StataCorp. Stata statistical software: release 17. College Station, TX: StataCorp LLC; 2021.

Feingold A. Effect sizes for growth-modeling analysis for controlled clinical trials in the same metric as for classical analysis. Psychol Methods. 2009;14(1):43–53.

Cohen J. Statistical power analysis for the behavioral sciences. 2nd ed. Lawrence Erlbaum Associates; 1988.

Imai K, Keele L, Tingley D, Yamamoto T. Causal mediation analysis using R. In: Advances in social science research using R. Springer; 2010. p. 129–54.

Zhang Z, Zyphur MJ, Preacher KJ. Testing multilevel mediation using hierarchical linear models: problems and solutions. Organ Res Methods. 2009;12(4):695–719.

Kelcey B, Dong N, Spybrook J, Shen Z. Experimental power for indirect effects in group-randomized studies with group-level mediators. Multivar Behav Res. 2017;52(6):699–719.

Bulus M, Dong N, Kelcey B, Spybrook J. PowerUpR: power analysis tools for multilevel randomized treatments. R package version 1.1.0; 2021.

Williams NJ, Beidas RS. Annual Research Review: the state of implementation science in child psychology and psychiatry: a review and suggestions to advance the field. J Child Psychol Psychiatry. 2019;60(4):430–50.

Lewis CC, Boyd MR, Marti CN, Albright K. Mediators of measurement-based care implementation in community mental health settings: results from a mixed-methods evaluation. Implement Sci. 2022;17(1):1–18.

Brookman-Frazee L, Stahmer AC. Effectiveness of a multi-level implementation strategy for ASD interventions: study protocol for two linked cluster randomized trials. Implement Sci. 2018;13(1).

Hofmann DA, Mark B. An investigation of the relationship between safety climate and medication errors as well as other nurse and patient outcomes. Pers Psychol. 2006;59(4):847–69.

Mayer DM, Ehrhart MG, Schneider B. Service attribute boundary conditions of the service climate–customer satisfaction link. Acad Manag J. 2009;52(5):1034–50.

Acknowledgements

We are grateful for the partnership provided to us by participating clinics, leaders, clinicians, and families.

Funding

This research was supported by a grant from the US National Institute of Mental Health: R01MH119127 (PI: Williams). The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Author information

Contributions

NJW generated the study hypotheses and design, obtained funding for the research, led completion of research procedures and data collection, analyzed and interpreted the data, and wrote and edited the manuscript. MGE, GAA, SE, LBF, MS, and SCM contributed to study design, completion of research procedures, interpretation of data, and editing of the manuscript. SCM contributed to data analysis and drafting of the manuscript. MGE, GAA, SE, MS, KC, and NJW delivered the LOCI strategy. SE and NRV led all participant recruitment and data collection. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The Institutional Review Board (IRB) at Boise State University approved this study and served as the single IRB for all participating clinics (Protocol no. 041-SB19-081). Electronic informed consent was obtained from all study participants.

Consent for publication

Not applicable.

Competing interests

G. A. A. is co-editor in chief of Implementation Science. All decisions about this paper were made by another co-editor in chief. N. J. W., M. G. E., S. E., M. S., K. C., N. R. V., L. B. F., and S. C. M. declare that they have no competing interests.

Supplementary Information

Additional file 1.

StaRI checklist. CONSORT checklist.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Williams, N.J., Ehrhart, M.G., Aarons, G.A. et al. Improving measurement-based care implementation in youth mental health through organizational leadership and climate: a mechanistic analysis within a randomized trial. Implementation Sci 19, 29 (2024). https://doi.org/10.1186/s13012-024-01356-w

